Abstract

Conventional views of brain organization suggest that regions at the top of the cortical hierarchy process internally oriented information using an abstract amodal neural code. Despite this, recent reports have described the presence of retinotopic coding at the cortical apex, including the default mode network. What is the functional role of retinotopic coding atop the cortical hierarchy? Here we report that retinotopic coding structures interactions between internally oriented (mnemonic) and externally oriented (perceptual) brain areas. Using functional magnetic resonance imaging, we observed robust inverted (negative) retinotopic coding in category-selective memory areas at the cortical apex, which is functionally linked to the classic (positive) retinotopic coding in category-selective perceptual areas in high-level visual cortex. These functionally linked retinotopic populations in mnemonic and perceptual areas exhibit spatially specific opponent responses during both bottom-up perception and top-down recall, suggesting that these areas are interlocked in a mutually inhibitory dynamic. These results show that retinotopic coding structures interactions between perceptual and mnemonic neural systems, providing a scaffold for their dynamic interaction.

Understanding how mnemonic and sensory representations functionally interface in the brain while avoiding interference is a central puzzle in neuroscience1–5. Previous work has shown that representational interference can be reduced through operations including orthogonalization1, pattern separation6 and areal segregation5. However, the principles that preserve interaction across mnemonic and perceptual populations are less clear.

This puzzle is particularly perplexing because classic models of brain organization assume that perceptual and mnemonic cortical areas do not share neural coding principles. For example, the neural code that structures visual information processing in the brain, retinotopy, is not thought to be shared by mnemonic cortex. Instead, it is thought that retinotopic coding is replaced by abstract amodal coding as information propagates through the visual hierarchy7–10 toward memory structures at the cortical apex (for example, the default mode network (DMN))11–14. How can visual and mnemonic information interact effectively in the brain if they are represented using fundamentally different neural codes?

Recent work has suggested that even high-level cortical areas, including the DMN, exhibit retinotopic coding: they contain visually evoked population receptive fields (pRFs) with inverted response amplitudes15,16. But the functional relevance of this retinotopic coding at the cortical apex remains unclear. Here we propose that the retinotopic code spanning levels of the cortical hierarchy links functionally coupled mnemonic and perceptual areas of the brain, structuring their interaction. We investigated this proposal in three functional magnetic resonance imaging (fMRI) experiments.

Results

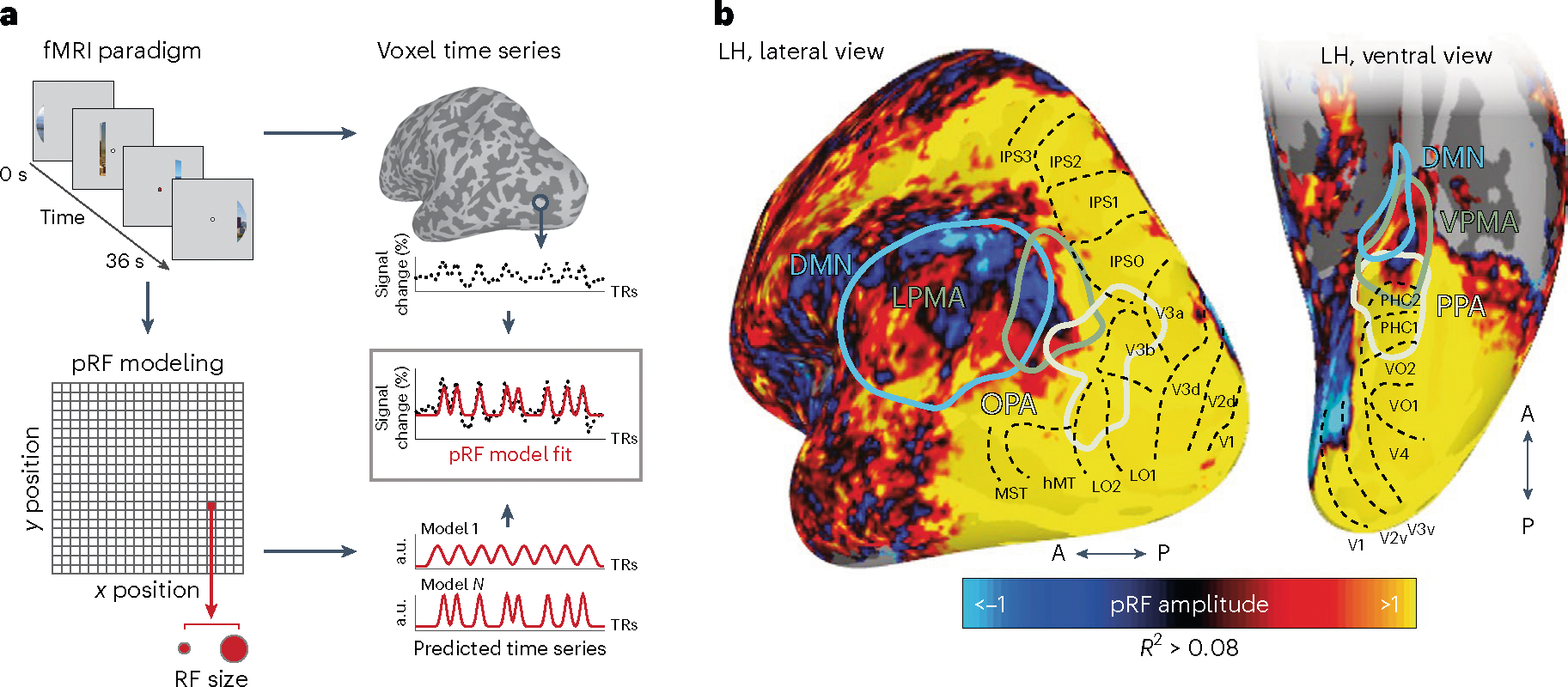

We began by determining whether retinotopic coding was present in mnemonic and perceptual regions spanning levels of the cortical hierarchy. In Experiment (Exp.) 1, participants (n = 17) underwent visual pRF mapping17,18 (Fig. 1a) with advanced multi-echo fMRI19,20 to maximize the signal-to-noise ratio and improve pRF model fitting in anterior regions of the temporal and parietal lobes20. As expected, an initial group-level whole-brain analysis revealed robust positive retinotopic responses extending from early to high-level visual areas (positive visually evoked pRFs, +pRFs) (Fig. 1b). We also observed robust and reliable pRFs beyond the anterior extent of known retinotopic maps21 in the anterior ventral temporal and lateral parietal cortex, regions of the cortical apex historically considered amodal12,13,22 (Fig. 1b). In striking contrast with classically defined visual areas, a large portion of these anterior voxels had pRFs with an inverted visual response (that is, negative visually evoked pRFs, −pRFs15,16). We will refer to voxels with positive or negative pRF response amplitudes as +pRFs and −pRFs, respectively. This agrees with prior studies that also observed −pRFs in the brain15,16, but the functional relevance of this inverted retinotopic code is unknown10.

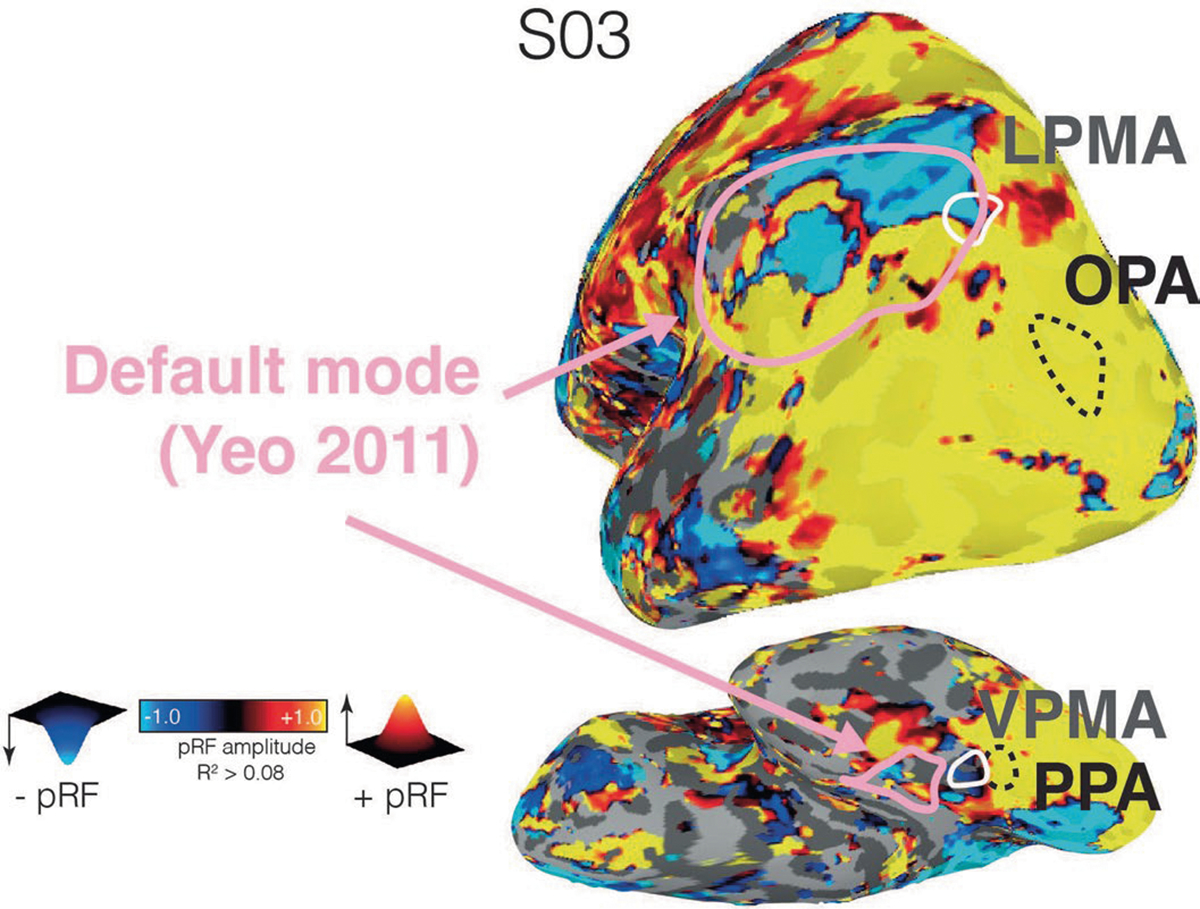

Fig. 1 |. pRF mapping reveals retinotopic coding throughout the posterior cortex, composed of both positive- and negative-amplitude pRFs.

a, pRF paradigm and modeling. In Exp. 1, participants underwent pRF mapping: they viewed visual scenes through a bar aperture that gradually traversed the visual field. Each visual-field traversal lasted 36 s (18 positions × 2 s), and the bar made eight traversals per run, with the direction of motion varying between traversals. To estimate the pRF for each voxel, a synthetic time series is generated for 40,000 visual-field locations (a 200 × 200 grid of x and y positions) and 100 sizes (sigma). This results in four million candidate time series that are fit to each voxel's activity. The fit yields four parameters describing each receptive field: x, y, sigma and amplitude. b, Negative-amplitude pRFs fall anterior to the cortex typically considered visual (beyond known retinotopic maps). The group average (n = 17) pRF amplitude map (thresholded at explained variance R-squared (R2) > 0.08) is shown on partially inflated representations of the left hemisphere, alongside ROIs: SPAs (OPA, PPA), PMAs (LPMA, VPMA) (localized in an independent group of participants24) and the DMN22. The known retinotopic maps in the posterior cortex (black dotted outlines21) contain exclusively positive-amplitude pRFs (hot colors), as visual stimulation evokes positive, retinotopically specific BOLD responses. Negative-amplitude pRFs (cool colors), where visual stimulation evokes a negative, spatially specific BOLD response, arise anterior to these retinotopic maps in the PMAs and DMN (see Fig. 2a for example time series from a representative subject).
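The grid search described in panel a can be sketched in a few lines of Python. This is a minimal illustration under our own simplifying assumptions, not the study's pipeline: it uses a toy 11 × 11 grid and two sizes rather than 200 × 200 positions and 100 sizes, omits convolution with a hemodynamic response function, and uses hypothetical function names (`gaussian_prf`, `predict`, `fit_amplitude`, `grid_search`). Its main point is that the amplitude is fit by least squares without a sign constraint, so the same procedure recovers both +pRFs and inverted −pRFs.

```python
import math
from itertools import product

# Hypothetical toy setup: an 11 x 11 visual-field grid (degrees), far coarser
# than the 200 x 200 positions x 100 sizes described in the caption.
COORDS = range(-5, 6)
GRID = [(x, y) for y in COORDS for x in COORDS]

# Binary bar apertures: a vertical bar sweeping left to right, then a
# horizontal bar sweeping bottom to top (one frame per bar position).
APERTURES = ([[1.0 if x == p else 0.0 for x, _ in GRID] for p in COORDS]
             + [[1.0 if y == p else 0.0 for _, y in GRID] for p in COORDS])

def gaussian_prf(x0, y0, sigma):
    """Isotropic 2D Gaussian pRF evaluated at every grid point."""
    return [math.exp(-((x - x0) ** 2 + (y - y0) ** 2) / (2 * sigma ** 2))
            for x, y in GRID]

def predict(x0, y0, sigma):
    """Predicted response per frame: pRF-aperture overlap (no HRF convolution here)."""
    prf = gaussian_prf(x0, y0, sigma)
    return [sum(w * a for w, a in zip(prf, frame)) for frame in APERTURES]

def fit_amplitude(pred, data):
    """Least-squares amplitude on mean-centred series; returns (amplitude, R^2)."""
    mp, md = sum(pred) / len(pred), sum(data) / len(data)
    pc = [p - mp for p in pred]
    dc = [d - md for d in data]
    ss_pred = sum(p * p for p in pc)
    ss_tot = sum(d * d for d in dc)
    if ss_pred == 0 or ss_tot == 0:
        return 0.0, 0.0
    amp = sum(p * d for p, d in zip(pc, dc)) / ss_pred
    ss_res = sum((d - amp * p) ** 2 for p, d in zip(pc, dc))
    return amp, 1 - ss_res / ss_tot

def grid_search(data, centres=range(-4, 5, 2), sizes=(1.0, 2.0)):
    """Return the candidate (x, y, sigma) whose prediction best explains the data.
    The amplitude is unconstrained in sign, so inverted (-pRF) responses are fit too."""
    best = None
    for x0, y0, sigma in product(centres, centres, sizes):
        amp, r2 = fit_amplitude(predict(x0, y0, sigma), data)
        if best is None or r2 > best["r2"]:
            best = {"x": x0, "y": y0, "sigma": sigma, "amplitude": amp, "r2": r2}
    return best

# Simulated voxel whose signal drops when the bar crosses (2, -2): a -pRF.
voxel = [10.0 - 2.0 * v for v in predict(2, -2, 1.0)]
print(grid_search(voxel))
```

On this simulated voxel the search returns x = 2, y = −2, sigma = 1.0 and an amplitude of about −2. A realistic implementation would add HRF convolution and vectorize the search to make a four-million-candidate grid tractable.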

At the group level, −pRFs appeared only beyond the anterior extent of known retinotopic maps21, in areas associated with the cortical apex, consistent with previous reports15,16 (Fig. 1b). This topographic profile of pRF amplitude was also evident at the single-participant level, with robust +pRFs present throughout posterior cerebral cortex and −pRFs emerging at the anterior edge of high-level visual cortex (Extended Data Fig. 1). In general, these anterior pRFs were not arranged topographically on the cortical surface in a manner that recapitulates the layout of the retina (that is, they did not constitute 'retinotopic maps'). However, these −pRFs were robust and reliable within individuals: a half-split analysis (Methods) confirmed that, for all regions of interest (ROIs), more than 78% of pRFs had a consistent amplitude sign (positive or negative) across splits, and both pRF amplitudes (all R > 0.1, P < 0.001) and visual-field-coverage maps (all Dice coefficients > 0.12, P < 0.04, Cohen's D > 0.54) were significantly reproducible across splits. Importantly, these anterior −pRF populations on the ventral and lateral surfaces are distinct from the population of −pRFs observed on the medial wall in peripheral early visual areas, which arise from stimulation outside the pRF (that is, surround suppression)16,23.
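The half-split reliability measures can be illustrated with a small sketch: the percentage of pRFs whose amplitude sign agrees across halves, the correlation of amplitudes across halves, and a Dice coefficient between binarized visual-field-coverage maps. The binarization threshold, the toy amplitudes and maps, and the function names are hypothetical; the study's actual procedure, including how significance was assessed, is described in its Methods and may differ.

```python
import math

def sign_consistency(amps_a, amps_b):
    """Percentage of pRFs whose amplitude sign (positive/negative) agrees across the two halves."""
    same = sum((a > 0) == (b > 0) for a, b in zip(amps_a, amps_b))
    return 100.0 * same / len(amps_a)

def pearson_r(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

def dice(map_a, map_b, threshold):
    """Dice coefficient between two coverage maps binarized at a (hypothetical) threshold."""
    bin_a = {i for i, v in enumerate(map_a) if v > threshold}
    bin_b = {i for i, v in enumerate(map_b) if v > threshold}
    total = len(bin_a) + len(bin_b)
    return 2 * len(bin_a & bin_b) / total if total else 0.0

# Toy pRF amplitudes for the same five vertices, estimated from each half of the runs.
half_1 = [1.2, -0.8, -1.1, 0.5, -0.3]
half_2 = [1.0, -0.6, -0.9, -0.1, -0.4]
print(sign_consistency(half_1, half_2))  # 80.0
print(round(pearson_r(half_1, half_2), 3))
print(dice([0.9, 0.7, 0.1, 0.0], [0.8, 0.2, 0.6, 0.0], threshold=0.5))  # 0.5
```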

If the functional role of the inverted retinotopic code at the cortical apex is to structure interactions with perceptual areas, we hypothesized that −pRFs would be concentrated in mnemonic, as compared with perceptual, functional areas. Consistent with this prediction, at the group level, we observed that −pRFs tended to fall within swaths of cortex that selectively respond during top-down recall of familiar places, the place memory areas (PMAs24) on the lateral and ventral surfaces (LPMA and VPMA, respectively) (Fig. 1b). These two mnemonic areas each lie immediately anterior to one of the scene perception areas25 (SPAs) of the human brain (occipital place area (OPA)25,26 and parahippocampal place area (PPA)27). In contrast to the PMAs, the SPAs tended to contain +pRFs.

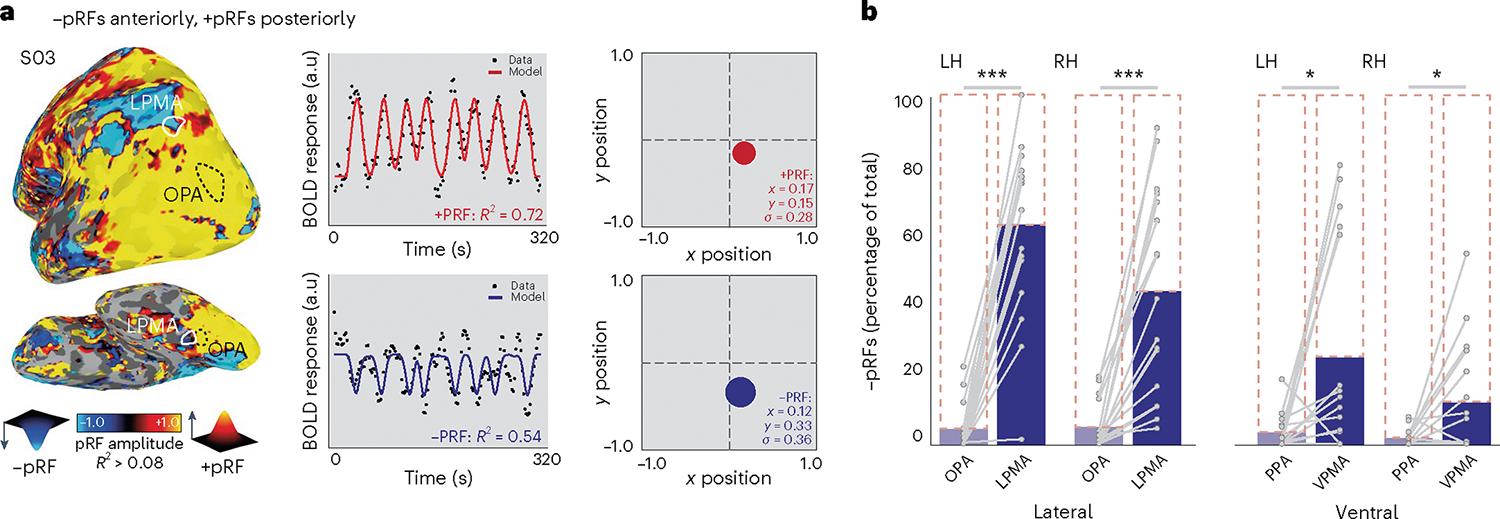

We confirmed this observation in individual participants by calculating the percentage of +/−pRFs within individually localized SPAs and PMAs (lateral: OPA, LPMA; ventral: PPA, VPMA), which better accounts for their nuanced functional topography24 (Fig. 2a, Extended Data Figs. 1–3 and Methods). This analysis confirmed that, unlike the perceptual SPAs, which almost exclusively contained +pRFs, the mnemonic PMAs contained a significant percentage of −pRFs (Fig. 2b). On the lateral surface, a two-way repeated measures analysis of variance (rmANOVA) revealed a main effect of ROI (F(1,16) = 85.83, P < 0.0001) and an ROI × hemisphere interaction (F(1,16) = 11.24, P = 0.004); post hoc tests showed more −pRFs in LPMA than in OPA in both the left (t(16) = 10.74, Pcorrected (Pcorr) = 0.000002, D = 3.43) and right (t(16) = 5.97, Pcorr = 0.00002, D = 1.89) hemispheres. On the ventral surface, the effect of greater −pRFs in the PMAs versus SPAs was generally stronger in the left than in the right hemisphere (two-way rmANOVA, main effect of ROI (F(1,16) = 10.01, P = 0.006); no ROI × hemisphere interaction (F(1,16) = 3.12, P = 0.09); left hemisphere: PPA versus VPMA (t(16) = 2.62, Pcorr = 0.02, D = 0.94); right hemisphere: PPA versus VPMA (t(16) = 2.57, Pcorr = 0.04, D = 0.90)). Note that in many subjects, the PMAs contained both −pRF and +pRF subpopulations. Both +/−pRFs within each memory area showed overall positive activation during a memory recall task, and activation did not differ between the two subpopulations (t-tests versus zero: LPMA (t(16) = 17.67, P = 6.35 × 10−12, D = 4.28); VPMA (t(11) = 12.43, P = 5.89 × 10−9, D = 2.31); paired comparisons: −LPMA versus +LPMA (t(16) = 0.44, P = 0.65, D = 0.04); −VPMA versus +VPMA (t(11) = 0.26, P = 0.79, D = 0.07) (Extended Data Fig. 4)). This suggests that the +/−pRF subpopulations in the PMAs are comparably engaged in memory recall, although they show opposite response profiles to visual stimulation.

Importantly, the higher proportion of −pRFs in the PMAs versus the SPAs held across a wide range of pRF R2 thresholds (R2 > 0.05 to R2 > 0.20). Taken together, these results show that −pRFs are disproportionately represented in category-selective mnemonic areas (PMAs), as compared with their perceptual counterparts (SPAs).
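The ROI summary used above, the percentage of suprathreshold pRFs with negative amplitude, and its robustness across R2 thresholds can be sketched as follows. The toy ROI values and the function name are hypothetical and serve only to show how the percentage is computed at each threshold in the stated range.

```python
def percent_negative(prfs, r2_threshold):
    """Percentage of suprathreshold pRFs in an ROI whose amplitude is negative.

    `prfs` is a list of (amplitude, R^2) pairs, one per vertex (hypothetical format).
    """
    kept = [amp for amp, r2 in prfs if r2 > r2_threshold]
    if not kept:
        return float("nan")  # no pRF survives this threshold
    return 100.0 * sum(amp < 0 for amp in kept) / len(kept)

# Toy ROI: (amplitude, R^2) for five vertices.
toy_roi = [(-1.0, 0.30), (0.8, 0.12), (-0.5, 0.07), (1.2, 0.25), (-0.9, 0.18)]

# Sweep the R^2 thresholds spanned in the text (R2 > 0.05 to R2 > 0.20).
sweep = {t: percent_negative(toy_roi, t) for t in (0.05, 0.10, 0.15, 0.20)}
print(sweep)
```

Because each threshold removes low-R2 vertices before the percentage is taken, a PMA versus SPA difference that survives the whole sweep is unlikely to be driven by weakly fit pRFs alone.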

Fig. 2 |. Transition to mnemonic cortex is marked by the appearance of negative pRFs.

a, pRF modeling reveals posterior–anterior inversion of pRF amplitude in individual participants. Left, pRF amplitude for a representative participant overlaid onto a lateral view of the left hemisphere (thresholded at R2 > 0.15; see Extended Data Fig. 1 for example ventral and lateral surface pRF amplitude maps from all participants and Extended Data Fig. 3 for amplitude maps with the default mode parcellation overlaid). Posterior visual cortex is dominated by positive-amplitude pRFs (hot colors), while cortex anterior to regions classically considered visual exhibits a high concentration of negative-amplitude pRFs (cool colors). This individual's OPA and LPMA are shown in white. Both the SPAs and PMAs contain pRFs (Extended Data Fig. 2). Right, time series, model fits and reconstructed pRFs for two surface vertices in this subject. Top, example prototypical positive-amplitude pRF from the lateral SPA (OPA) in the left and right hemispheres (LH, RH). Bottom, example negative-amplitude pRF from the LPMA. b, Memory areas (PMAs) contain a larger percentage of negative pRFs than perceptual areas (SPAs) (repeated measures ANOVA, Bonferroni-corrected t-tests). Blue bars depict the percentage of negative pRFs in individually localized SPAs and PMAs relative to the total number of pRFs in the area (dotted outline). On the ventral and lateral surfaces, SPAs are dominated by positive pRFs, whereas a transition from positive to negative pRFs is evident within PMAs. Individual participant data points are overlaid and connected in gray. *Ptwo-tailed < 0.05, ***Ptwo-tailed < 0.001. NS, not significant.

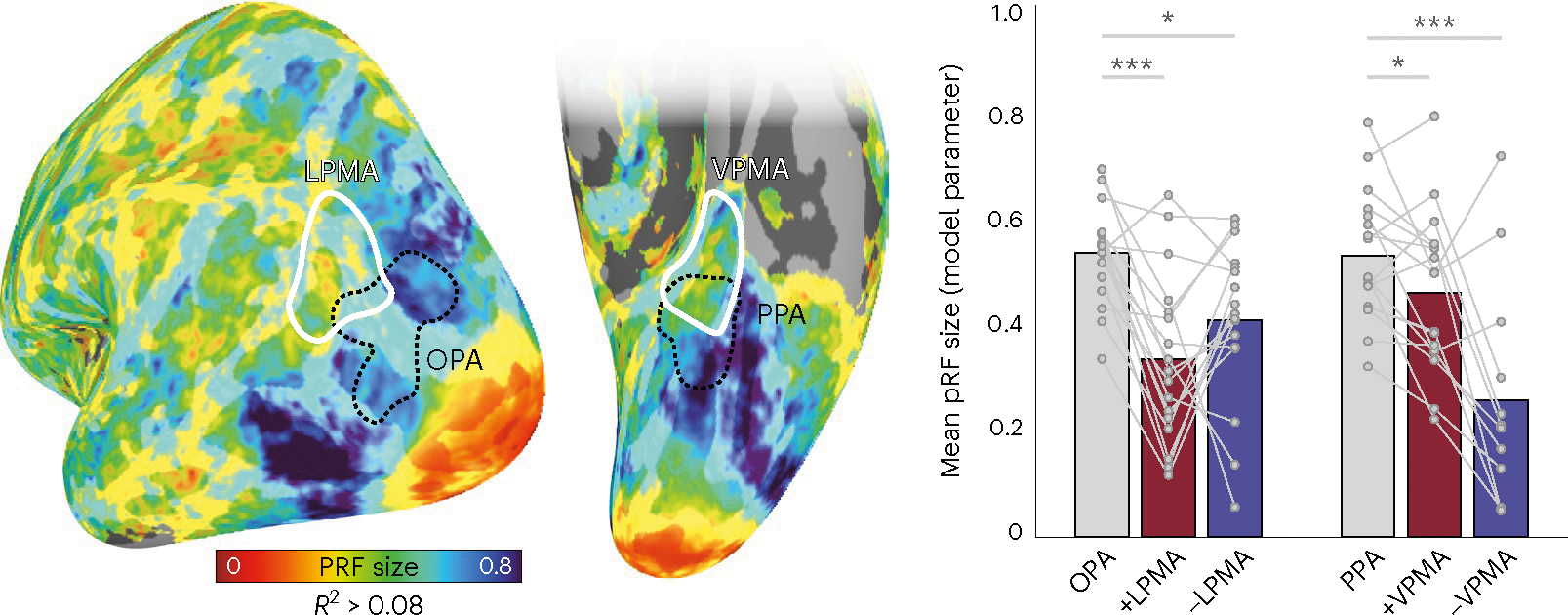

Both +pRFs and −pRFs in the PMAs tended to be smaller than +pRFs in the SPAs on both surfaces (lateral surface: main effect of ROI, F(2,32) = 10.27, P = 0.0003; OPA versus +pRFs in LPMA: t(16) = 5.61, Pcorr = 0.0001, D = 1.83; OPA versus −pRFs in LPMA: t(16) = 2.59, Pcorr = 0.01, D = 1.31; ventral surface: main effect of ROI, F(2,22) = 15.46, P = 0.000006; PPA versus +VPMA: t(11) = 2.83, Pcorr = 0.03, D = 0.99; PPA versus −VPMA: t(11) = 5.43, Pcorr = 0.00006, D = 1.85). Sizes of +pRFs and −pRFs in LPMA did not differ significantly (t(16) = 1.52, Pcorr = 0.14, D = 0.48), but on the ventral surface, +pRFs in VPMA were significantly larger than −pRFs in VPMA (t(11) = 2.87, Pcorr = 0.03, D = 1.06). The smaller pRFs in the PMAs compared with the SPAs are particularly notable, as they run counter to the typical pattern of pRFs becoming larger from early visual areas toward higher-level brain regions8,17,28,29. Although it is not clear how this additional spatial precision manifests in these anterior regions, this result suggests that the PMAs could represent highly specific information, even compared with their perceptual counterparts (Fig. 3).

Fig. 3 |. Memory areas contain smaller pRFs compared to their paired perceptual areas.

Left, group average pRF size with memory areas and perception areas overlaid. Nodes are thresholded at R2 > 0.08. Right, bars represent the mean pRF size for +pRFs in SPAs (OPA, PPA) and +/−pRFs in PMAs (LPMA, VPMA). Individual data points are shown for each participant. Across both surfaces, pRFs were on average significantly smaller in the PMAs than in their perceptual counterparts (repeated measures ANOVA, Bonferroni-corrected t-tests). *Ptwo-tailed < 0.05, ***Ptwo-tailed < 0.001.

The enriched concentration of −pRFs in mnemonic (PMA) compared with perceptual (SPA) areas led to a specific hypothesis regarding their functional role. The PMAs are thought to act as a bridge between the perceptual SPAs and the spatio-mnemonic system in the medial temporal lobe24, but the format for sharing information between these systems is not clear. We hypothesized that retinotopic coding could serve as a shared substrate to scaffold the interaction between perceptual and mnemonic systems. If this proposal is correct, we should observe evidence for a functional interaction between these areas that depends on retinotopic position. We performed three tests of this hypothesis: (1) Do paired +/−pRFs represent similar portions of the visual field (Exp. 1)? (2) Do the +/−pRFs identified during visual stimulation maintain their opponent activation profiles during top-down memory recall (Exp. 2)? (3) Is the functional link between +/−pRFs recapitulated when viewing familiar scene images (Exp. 3)?

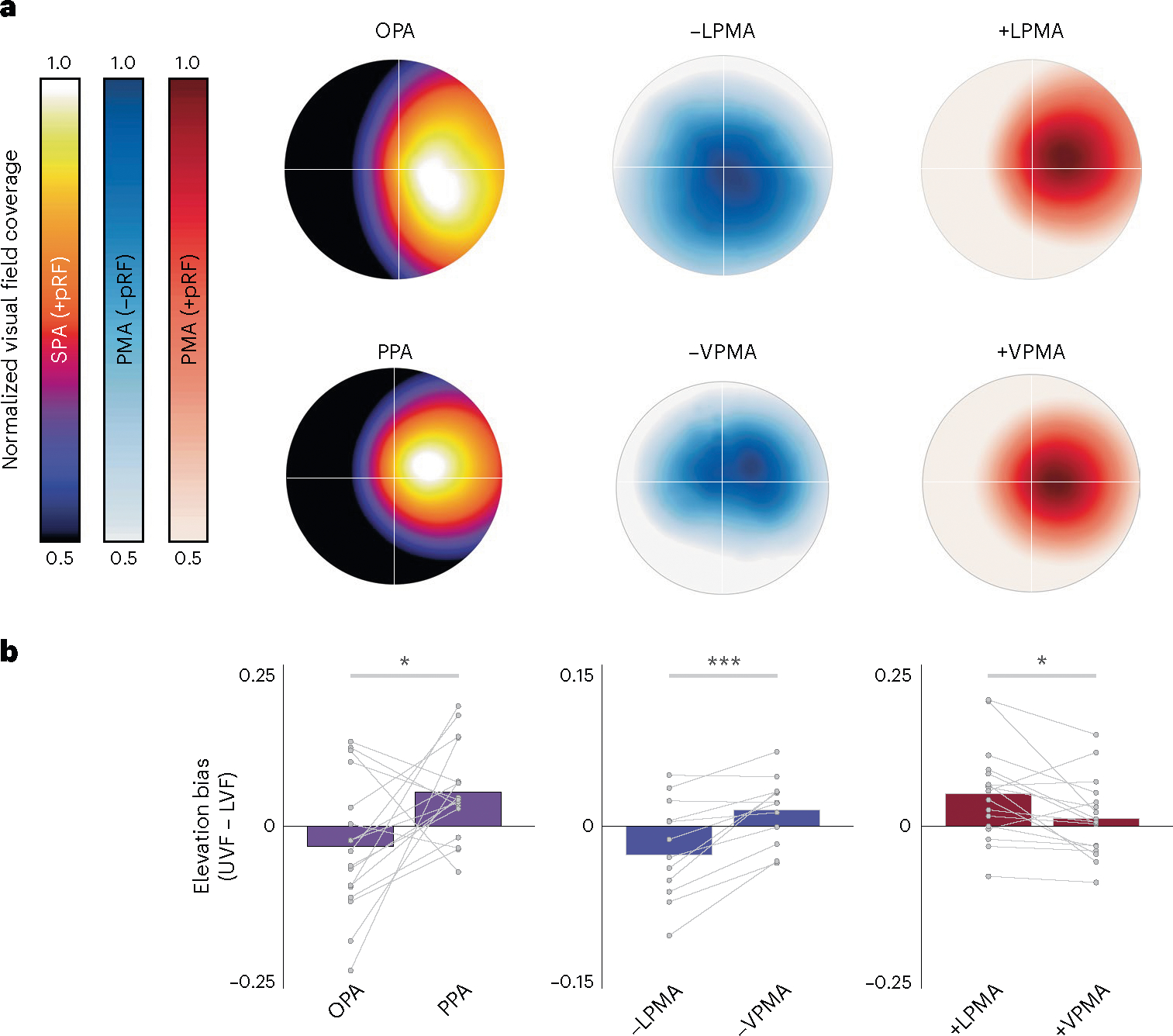

Next, we reasoned that if the retinotopic code scaffolds communication between perceptual and mnemonic systems, −pRFs within mnemonic areas should exhibit the same differential visual-field biases as their perceptual counterparts18. Visual-field representations in OPA and PPA are biased toward the lower and upper visual fields, respectively. Do +/−pRFs in their respective PMAs share these biases? Using pRF data (Exp. 1), we calculated visual-field-coverage estimates for each pRF population (+pRFs in SPAs, +pRFs and −pRFs in PMAs). Consistent with earlier reports, we found that OPA and PPA showed lower and upper field biases, respectively (elevation bias OPA versus PPA: t(16) = 2.42, P = 0.02, D = 0.98) (Fig. 4a). Critically, we found that the visual-field representations of the −pRFs in memory areas closely matched their paired perceptual counterparts (Fig. 4b). −pRFs in LPMA were biased toward the lower visual field (matching OPA), whereas −pRFs in VPMA were biased toward the upper visual field (matching PPA) (elevation bias −LPMA versus −VPMA: t(11) = 4.53, P = 0.0008, D = 0.94). By contrast, the +pRF populations in LPMA/VPMA did not show biases that corresponded to their perceptual counterparts: +pRFs in LPMA were biased toward the upper visual field, the opposite of OPA, and +pRFs in VPMA showed no clear elevation bias (elevation bias +LPMA versus +VPMA: t(16) = 2.78, P = 0.01, D = 0.65). These data show that the −pRFs in the PMAs represent similar visual-field locations as their paired SPAs, and, based on the known functional interaction between the paired PMAs and SPAs during scene processing24, we suggest that the visual-field representation may be, in part, inherited from feedforward connections originating in the SPAs.
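Visual-field coverage can be computed in several ways. The sketch below assumes one common approach, not necessarily the study's: at each location, coverage is the maximum Gaussian pRF value across the population, and the elevation bias is mean contralateral upper-field coverage minus lower-field coverage (UVF–LVF, as plotted in Fig. 4b). Right-hemisphere x coordinates are flipped so that the contralateral field always lies at positive x, matching the hemisphere alignment described for Fig. 4a. The grid, pRF values and function names are hypothetical.

```python
import math

# Hypothetical visual-field grid in degrees (-6..6, 1 deg steps).
COORDS = range(-6, 7)
GRID = [(x, y) for y in COORDS for x in COORDS]

def coverage_map(prfs):
    """Max-based coverage: at each grid point, the largest Gaussian pRF value in the population."""
    return [max(math.exp(-((x - px) ** 2 + (y - py) ** 2) / (2 * s ** 2)) for px, py, s in prfs)
            for x, y in GRID]

def elevation_bias(prfs, hemisphere):
    """Mean contralateral upper-field coverage minus lower-field coverage (UVF - LVF)."""
    # The left hemisphere represents the right visual field, so flip x for right-hemisphere
    # pRFs to place the contralateral field at positive x for both hemispheres.
    flip = 1 if hemisphere == "left" else -1
    cov = coverage_map([(flip * px, py, s) for px, py, s in prfs])
    upper = [c for (x, y), c in zip(GRID, cov) if x > 0 and y > 0]
    lower = [c for (x, y), c in zip(GRID, cov) if x > 0 and y < 0]
    return sum(upper) / len(upper) - sum(lower) / len(lower)

# Toy populations of (x, y, sigma): lower-right field pRFs in the left hemisphere,
# and an upper-left field pRF in the right hemisphere.
lh_lower = [(3.0, -3.0, 1.5), (2.0, -4.0, 1.0)]
rh_upper = [(-3.0, 3.0, 1.5)]
print(elevation_bias(lh_lower, "left"))   # negative: lower-field bias
print(elevation_bias(rh_upper, "right"))  # positive: upper-field bias
```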

Fig. 4 |. Shared visual-field representations between paired perception and memory areas.

a, Ventral and lateral SPAs/PMAs differentially represent the upper (ventral areas) and lower (lateral areas) visual fields. Group visual-field-coverage plots for SPAs and PMAs. The x-axis represents ipsilateral to contralateral visual field (aligned across hemispheres), while the y-axis represents elevation. All regions show the expected contralateral visual-field bias. b, With respect to elevation, SPAs differentially represent the lower (OPA) and upper (PPA) visual field, respectively (left column). The same differential representation of the lower and upper visual fields is evident for −pRFs in LPMA and VPMA (middle column) but not for +pRFs in these areas (right column). The elevation biases are quantified in b and compared using paired-sample t-tests. Bars represent average visual-field coverage for contralateral upper versus lower visual field (UVF–LVF) with individual participant data points overlaid. *Ptwo-tailed < 0.05, ***Ptwo-tailed < 0.001.

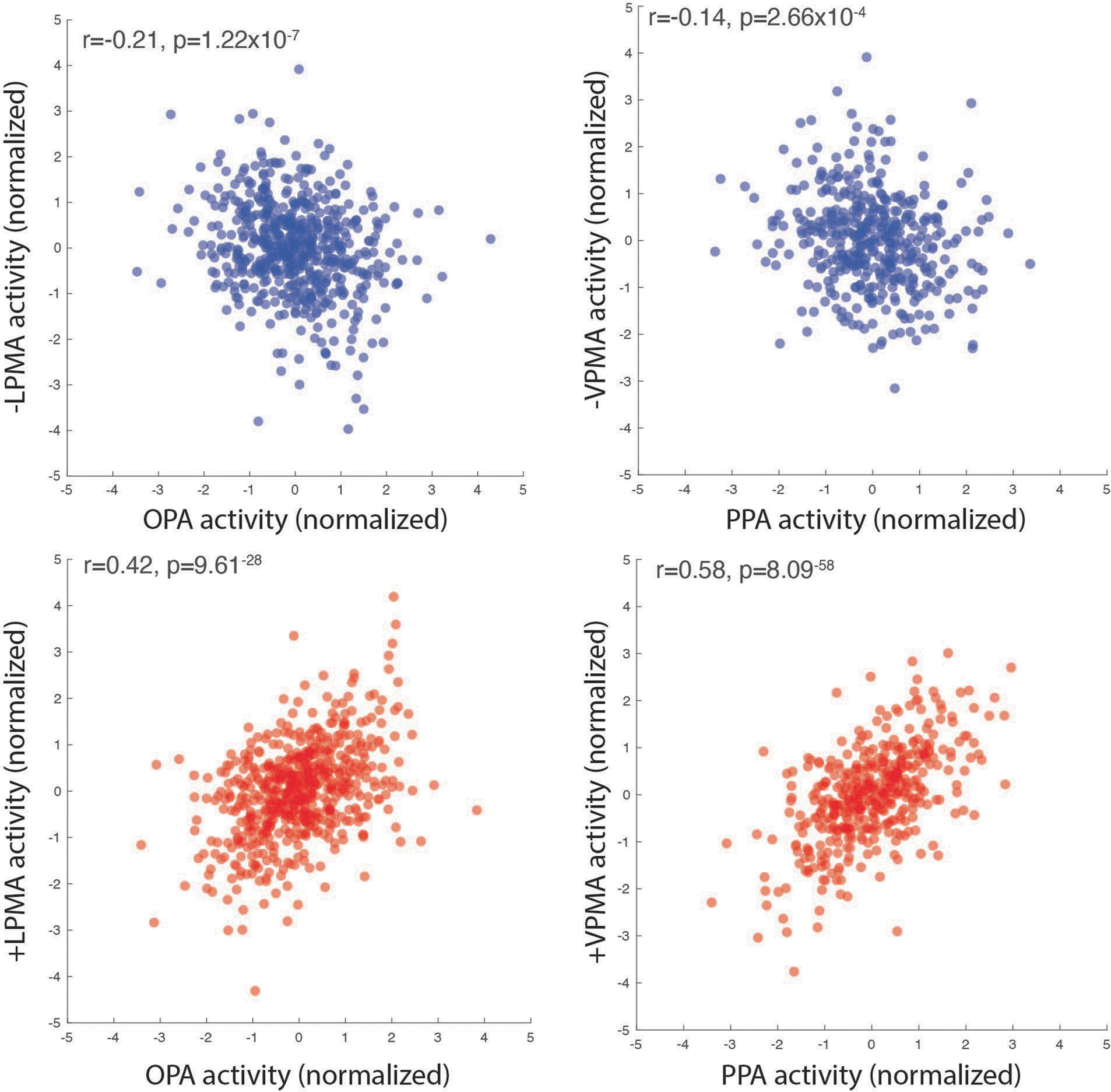

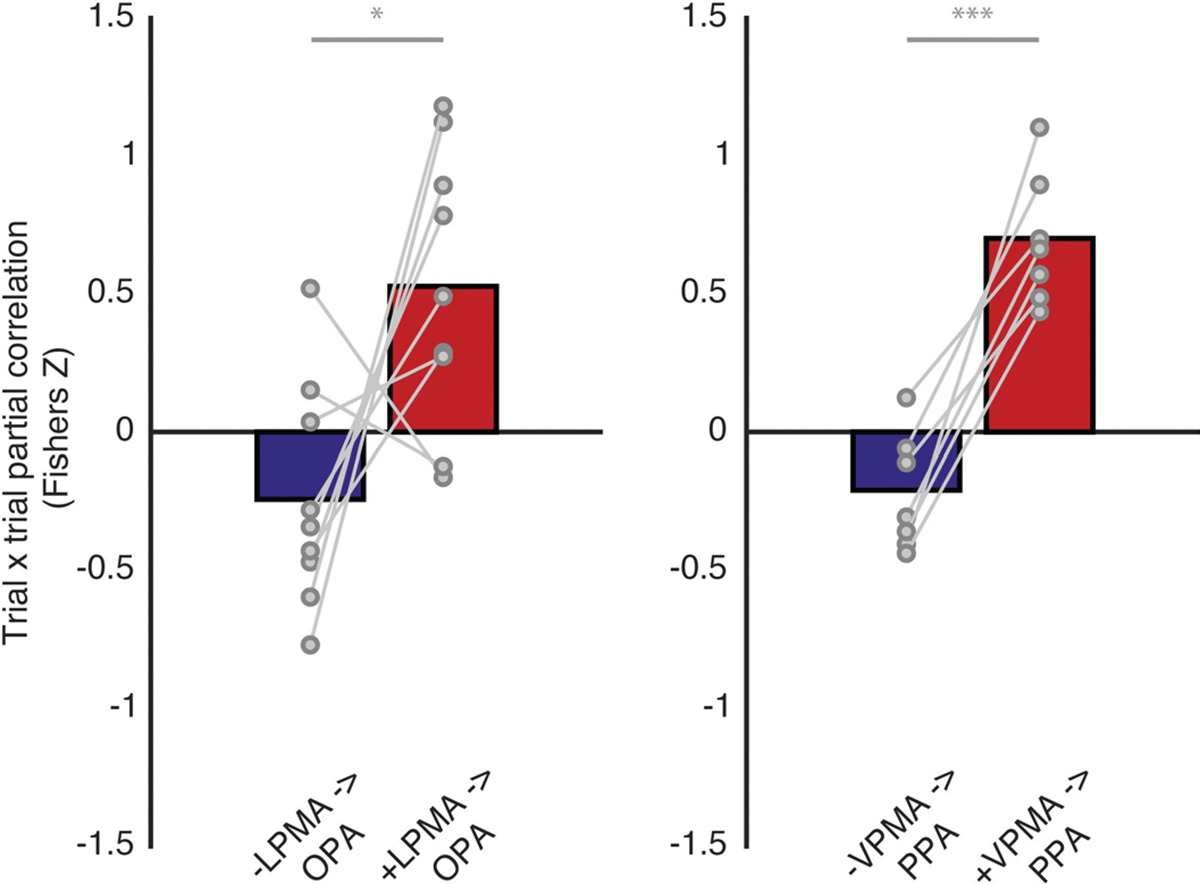

If, as hypothesized, +/−pRF populations reflect bottom-up sensory and top-down internal mnemonic processes, we predicted that the sign of the spatially specific opponent interactions observed during visual mapping (Exp. 1) would reverse during a top-down memory paradigm. Exp. 1 showed that when activity was high in +pRFs within the SPAs, activity was low in −pRFs within the PMAs. By contrast, we hypothesized that during a recall task, when −pRF activity was high, +pRF activity would be low. To test for this competitive interaction during recall, in Exp. 2 we examined the activation profile of +/−pRFs during a place memory task, in which participants recalled personally familiar visual environments (for example, their kitchen (Fig. 5a)). For this analysis, we first partitioned LPMA and VPMA into their +pRF and −pRF populations. Then, for each subject, we calculated the average activity (activation versus baseline) on each of the 36 trials of the memory task for each population and ROI (for example, −pRFs in the PMA, +pRFs in the SPA) and z-scored the activation values within each ROI. We then computed, for each subject, the partial correlation between the z-scored activation of each PMA pRF population and the SPA (for example, the correlation between −pRFs in LPMA and +pRFs in OPA, controlling for +pRFs in LPMA, and vice versa). Partial correlation allowed us to isolate the unique contribution of each of these distinct neural populations while controlling for non-specific effects (like motion and attention) that affect beta estimates on each trial. We compared the partial correlation values for the different pRF populations (that is, −LPMA × OPA versus +LPMA × OPA) across subjects using paired t-tests, separately for the lateral and ventral surfaces.
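The partial-correlation logic can be illustrated with a minimal sketch that uses the standard first-order formula, r_xy·z = (r_xy − r_xz r_yz) / √((1 − r_xz²)(1 − r_yz²)). The trial values and names below are hypothetical. The toy data are constructed so that a shared, non-specific factor drives all three populations, which makes the raw correlation between LPMA −pRFs and OPA +pRFs positive even though their specific components are perfectly opposed. Controlling for LPMA +pRFs removes the shared factor and exposes the opponent relationship.

```python
import math

def zscore(values):
    """Z-score trial-wise activations within one ROI (population SD)."""
    m = sum(values) / len(values)
    sd = math.sqrt(sum((v - m) ** 2 for v in values) / len(values))
    return [(v - m) / sd for v in values]

def pearson(a, b):
    """Pearson r computed as the mean product of z-scores."""
    return sum(x * y for x, y in zip(zscore(a), zscore(b))) / len(a)

def partial_corr(x, y, control):
    """First-order partial correlation of x and y, controlling for one covariate."""
    rxy, rxc, ryc = pearson(x, y), pearson(x, control), pearson(y, control)
    return (rxy - rxc * ryc) / math.sqrt((1 - rxc ** 2) * (1 - ryc ** 2))

# Toy data for 8 trials: a shared, non-specific factor (e.g. attention) drives every
# population, while a specific component pushes OPA and LPMA -pRFs in opposite directions.
shared = [1, 2, 3, 4, 5, 6, 7, 8]
specific = [1, -1, 2, -2, 1.5, -1.5, 0.5, -0.5]
opa_pos = [s + p for s, p in zip(shared, specific)]
lpma_neg = [s - p for s, p in zip(shared, specific)]
lpma_pos = list(shared)  # covariate: +pRFs in LPMA

print(round(pearson(lpma_neg, opa_pos), 3))                 # raw r is positive
print(round(partial_corr(lpma_neg, opa_pos, lpma_pos), 3))  # -1.0
```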

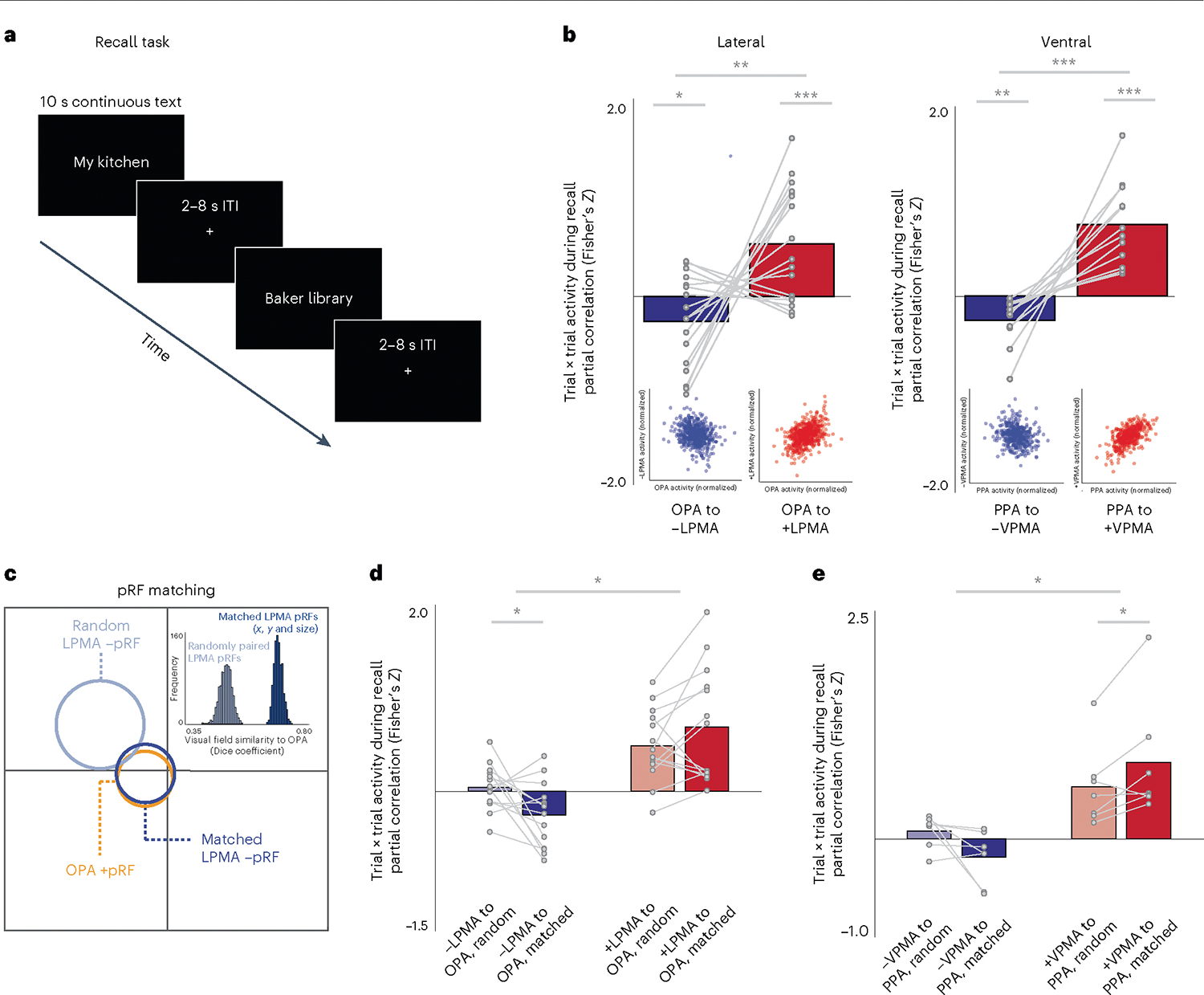

Fig. 5 |. Positive pRFs in SPAs and negative pRFs in PMAs exhibit a spatially specific push–pull dynamic during memory recall.

a, In Exp. 2, participants visually recalled personally familiar places for 10 s (cued with the stimulus name; 36 trials per participant), and we calculated activation versus baseline for each trial. b, Negative and positive pRF (−pRF, +pRF) populations in SPAs and PMAs exhibit an opponent interaction during recall. Correlations in trial × trial activation of pRFs in SPAs with +/−pRFs in PMAs were compared using paired-sample t-tests. On both the lateral and ventral surfaces, when the activity of −pRFs in the PMA is high, activity in the corresponding SPA is reduced. By contrast, when activity in +pRFs in a PMA is high, activity in the adjacent SPA is also high. Inset, scatter plots show normalized trial-wise activation of each region for all trials in all participants. c, Schematic of pRF matching between OPA and −pRFs in LPMA. Schematic pRFs are depicted as circles. The OPA pRF is shown in orange, along with its best-matched −pRF in LPMA (dark blue) and a randomly matched −pRF in LPMA (light blue). Inset, the histogram shows the Dice coefficients for iterative matching between OPA pRFs and randomly paired pRFs from LPMA (light blue) compared with matched −pRFs from LPMA (dark blue) for one example participant, showing better correspondence for matched than for random ('unmatched') pRFs. This pattern was consistent in all participants. d,e, Matched pRFs had a significantly stronger association in trial × trial activation than unmatched pRFs on the lateral (d) and ventral (e) surfaces, which suggests that the interaction between the perceptual and memory areas is spatially specific (paired t-tests). *Ptwo-tailed < 0.05, **Ptwo-tailed < 0.01, ***Ptwo-tailed < 0.001.

As predicted, we observed an opponent relationship between activation of the −pRFs in the PMAs and the +pRFs in the SPAs during the place memory recall task (−LPMA × OPA versus +LPMA × OPA: t(16) = 3.10, P = 0.0006, D = 1.52; −VPMA × PPA versus +VPMA × PPA: t(11) = 5.27, P < 0.0001, D = 2.98). In trials where the activity of −pRFs in PMAs was high, SPA activation was reduced (Fig. 5b) (t-test versus zero: lateral (t(16) = −2.19, P = 0.04, D = 0.53); ventral (t(11) = −3.63, P = 0.003, D = 1.04)). By contrast, the +pRFs in the PMAs did not show a push–pull dynamic: on trials where activity of +pRFs in the PMA was high, activation of the SPA also increased (t-test versus zero: lateral (t(16) = 3.76, P = 0.001, D = 1.69); ventral (t(11) = 5.88, P = 0.0001, D = 1.69)) (Fig. 5b). We found a similar pattern when we pooled all trials from all subjects (Fig. 5b insets and Extended Data Fig. 5), and we replicated this effect in an independently collected dataset (Extended Data Fig. 6).

Is the interaction between perceptual and mnemonic areas spatially specific? If the interaction between the PMAs and SPAs is structured by a retinotopic code, we expect the opponent dynamic between −pRFs in the PMAs and +pRFs in the SPAs to be stronger between pRFs representing shared regions of visual space. To test this, we again compared the partial correlation in trial × trial activation between +/−pRFs of the perception and memory areas, this time focusing on subpopulations of +/−pRFs with matched (versus unmatched) visual-field representations (in x, y and sigma) (Methods and Fig. 5c). Because of our stringent pRF matching criteria (Methods), fewer subjects could be included in this analysis (lateral: n = 14; ventral: n = 7).
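The matching step can be sketched as follows. The study's exact matching criteria are given in its Methods and are not reproduced here; this sketch assumes, purely for illustration, that each pRF is binarized as a disk of radius sigma on a visual-field grid, that a source pRF's matched partner is the candidate with the highest Dice overlap, and that an unmatched partner is drawn at random. All names and coordinates are hypothetical.

```python
import random

# Hypothetical visual-field grid: -8..8 deg in 0.5 deg steps.
COORDS = [i * 0.5 for i in range(-16, 17)]
GRID = [(x, y) for y in COORDS for x in COORDS]

def prf_disk(x0, y0, sigma):
    """Binarized pRF (an assumption): the set of grid points within one sigma of the centre."""
    return {(x, y) for x, y in GRID if (x - x0) ** 2 + (y - y0) ** 2 <= sigma ** 2}

def dice(a, b):
    """Dice coefficient between two sets of grid points."""
    total = len(a) + len(b)
    return 2 * len(a & b) / total if total else 0.0

def match_prfs(sources, candidates, rng):
    """Pair each source pRF with its best-overlapping candidate and with a random candidate.

    Returns the Dice scores for the matched and the randomly paired ('unmatched') partners.
    """
    cand_disks = [prf_disk(*c) for c in candidates]
    matched, unmatched = [], []
    for src in sources:
        src_disk = prf_disk(*src)
        scores = [dice(src_disk, disk) for disk in cand_disks]
        matched.append(max(scores))
        unmatched.append(rng.choice(scores))
    return matched, unmatched

# Toy (x, y, sigma) pRFs: +pRFs in OPA and -pRFs in LPMA.
opa = [(2.0, -2.0, 1.5), (4.0, -1.0, 2.0), (1.0, -4.0, 1.0)]
lpma_neg = [(2.5, -2.0, 1.5), (4.0, -1.5, 2.0), (1.0, -3.5, 1.0), (-5.0, 5.0, 1.0), (-3.0, 4.0, 2.0)]
matched, unmatched = match_prfs(opa, lpma_neg, random.Random(0))
print(matched, unmatched)
```

Trial-wise activation could then be averaged within the matched and unmatched subpopulations and entered into the partial correlation described for Exp. 2.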

This analysis revealed that the inhibitory interaction between −pRFs in PMAs and +pRFs in SPAs is structured by a retinotopic code (that is, it is spatially specific) (Fig. 5d,e). The opponent relationship between −pRF activation in the PMAs and matched +pRF activation in the SPAs was significant on both the lateral (F(1,13) = 16.45, P = 0.0013) and ventral (F(1,6) = 10.93, P = 0.016) surfaces, reflecting the fact that −pRFs in PMAs exhibit a negative relationship with spatially matched SPA pRFs, whereas +pRFs in PMAs show a positive relationship with spatially matched SPA pRFs. This relationship was also modulated by matching on the lateral (amplitude × matching: F(1,13) = 4.64, P = 0.05) and ventral (amplitude × matching: F(1,6) = 5.96, P = 0.05) surfaces. On the lateral surface, when trial × trial activity in OPA was high, activity in spatially matched LPMA −pRFs was relatively low (matched versus zero: t(13) = 2.73, P = 0.017, D = 0.75), and this anticorrelation was significantly stronger for matched than for unmatched −pRFs (t(13) = 2.48, P = 0.027, D = 1.01). On the ventral surface, a similar pattern was observed despite the small number of participants, but it did not reach significance (matched versus zero: t(6) = 1.83, P = 0.11, D = 0.76; matched versus unmatched: t(6) = 2.06, P = 0.08, D = 1.28). By contrast, +pRFs in the PMAs showed a positive correlation with spatially matched +pRFs in the SPAs (lateral matched versus zero: t(13) = 4.16, P = 0.0011, D = 1.15; ventral matched versus zero: t(6) = 3.41, P = 0.014, D = 1.37). These data show that the spatially specific opponent relationship between −pRFs in the PMAs and +pRFs in the SPAs observed during bottom-up visual stimulation (that is, pRF mapping in Exp. 1) is reversed during top-down memory recall, revealing a push–pull dynamic between +/−pRFs that represent similar regions of visual space.

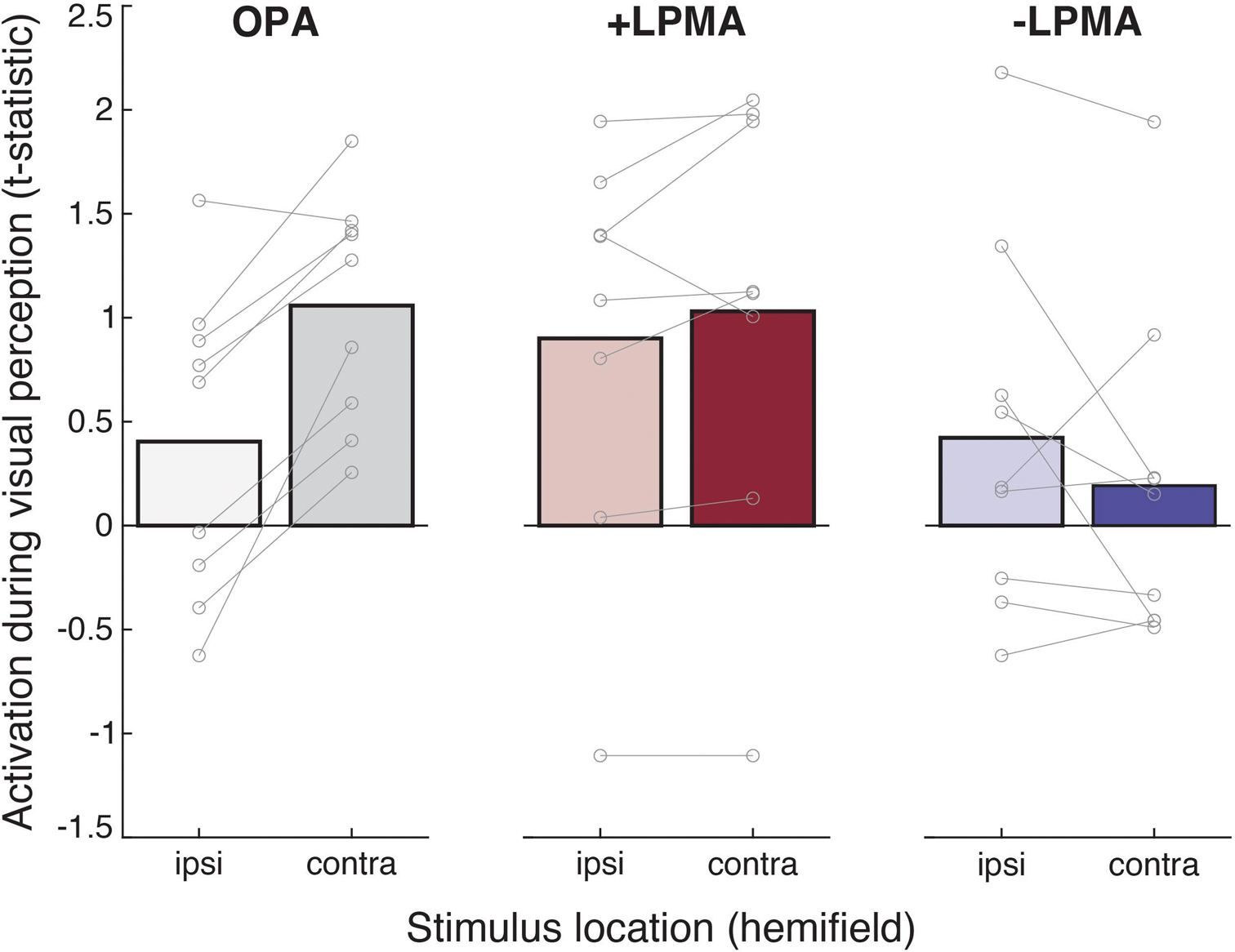

Although the spatially specific push–pull dynamic observed between +/−pRFs during both perception (Exp. 1) and recall (Exp. 2) is compelling, these two tasks represent situations in which activation of the visual and memory systems should be maximally opposed (that is, focusing attention externally on a visual stimulus versus focusing attention internally during visual recall). Do −pRFs in the PMAs and +pRFs in the SPAs interact in a mutually inhibitory fashion in contexts where perceptual and memory systems are expected to interact, such as during familiar scene perception? As a final test of the interaction between the SPA and PMA pRFs, we asked whether the mutually inhibitory interaction between −pRFs in the PMAs and +pRFs in the SPAs is evident when participants view images of locations that were familiar to them in real life. We tested two possible hypotheses. On the one hand, processing familiar scenes could be mutually excitatory for both the memory and perception areas, resulting in a positive trial × trial relationship between the SPAs and both +pRFs and −pRFs in PMAs. On the other hand, the opponent interaction might persist if the SPAs and −pRFs in the PMAs were interlocked in a mutually inhibitory relationship, as in predictive coding30.

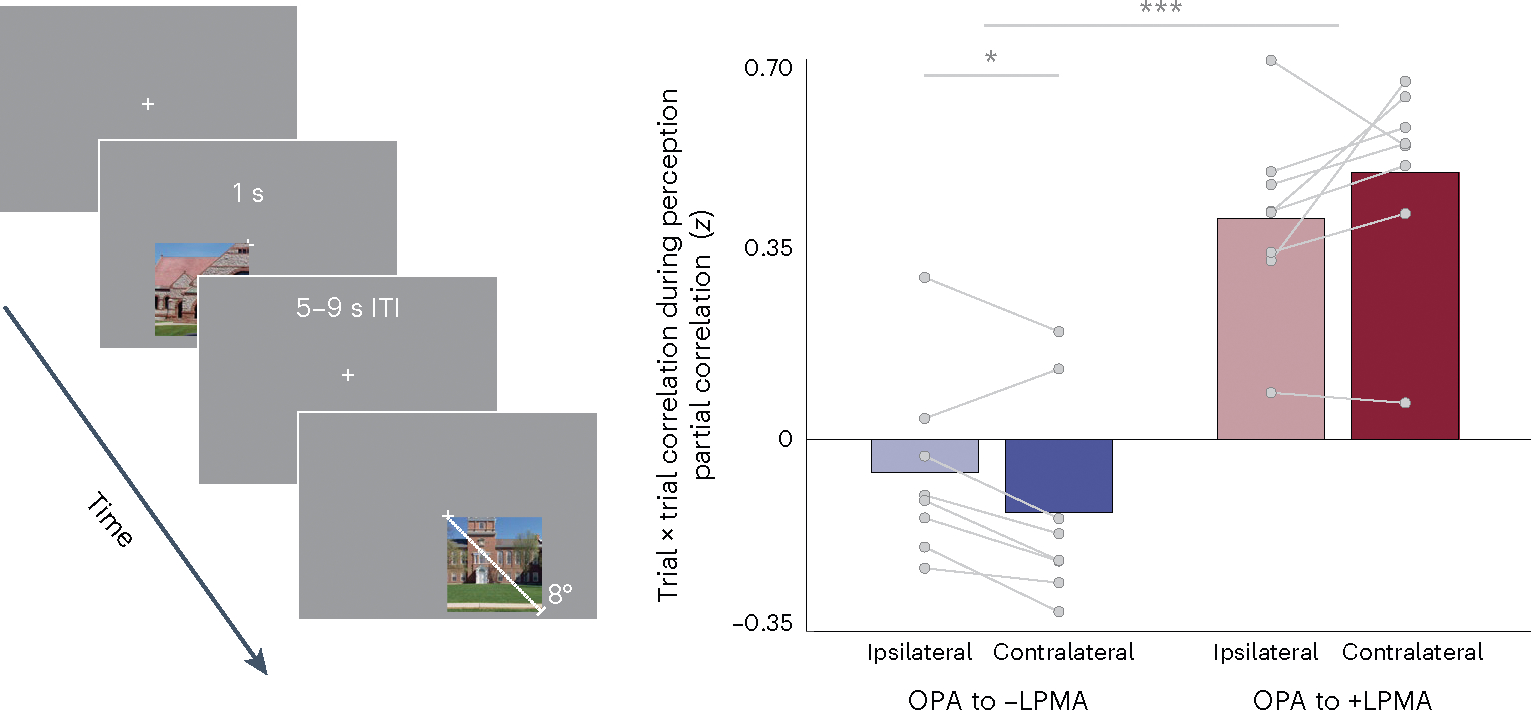

For this experiment (Exp. 3), a subset of participants (n = 8) passively viewed portions of images (lower left and right quadrants) of recognizable Dartmouth College landmarks (Fig. 6a). We focused on the lateral surface (OPA and LPMA) because −pRFs could be localized most robustly in LPMA in our main sample of participants. Familiar scene stimuli were presented in the lower quadrants to maximally stimulate OPA and LPMA, and we specifically considered +/−pRFs with centers in the contralateral lower visual field. For this analysis, we tested whether −pRFs in LPMA and +pRFs in OPA are correlated or anticorrelated during familiar scene perception. We hypothesized that activation of +pRFs in OPA would be inversely related to activation of −pRFs in LPMA, consistent with a mutually inhibitory interaction. In addition, we predicted that this interaction would be spatially specific: the inhibitory dynamic should be stronger when visual information is presented in the contralateral lower visual field (the preferred quadrant) than in the ipsilateral lower visual field (the non-preferred quadrant). Note that this is a coarser test of spatial specificity than that in Exp. 2, both because of the size of the stimuli presented in Exp. 3 and because it compares preferred versus non-preferred quadrants rather than matched versus unmatched individual pRFs.

Fig. 6 |. Positive pRFs in SPAs and negative pRFs in PMAs exhibit a push–pull dynamic during perception of familiar scene images.

Left, in Exp. 3, participants passively viewed portions of images depicting two familiar Dartmouth College landmarks in the lower visual field. We investigated the trial × trial correlation in activation between +pRFs in OPA and +/−pRFs in LPMA. Right, −pRFs in LPMA exhibit an opponent interaction with +pRFs in OPA. Six out of eight participants exhibited a negative correlation between these pRF populations, and this relationship was significantly stronger when stimuli were presented in the contralateral than in the ipsilateral visual field (repeated measures ANOVA, paired t-tests). By contrast, the correlation between +pRFs in OPA and +pRFs in LPMA was significantly positive for both contralateral and ipsilateral visual-field presentations. *Ptwo-tailed < 0.05, ***Ptwo-tailed < 0.001.

We found that a spatially specific inhibitory interaction between +pRFs in OPA and −pRFs in LPMA persists during familiar scene viewing (interaction between visual field and pRF association: F(1,7) = 8.27, P = 0.02; Fig. 6 and Extended Data Fig. 7). The opponent dynamic, that is, the difference between the trial × trial associations of OPA pRFs with −pRFs and with +pRFs in LPMA, differed by visual field (t(7) = 2.79, P = 0.026, D = 0.98). As in recall, this dynamic was driven by the opposing sign of the relationship between pRFs in OPA and +/−pRFs in LPMA. The relationship between pRFs in OPA and −pRFs in LPMA was negative in six out of eight participants for contralateral presentations, and this negative association was significantly stronger for stimuli in the contralateral than in the ipsilateral visual field (t(7) = 2.71, P = 0.03, D = 0.95). By contrast, the relationship between OPA and +pRFs in LPMA was positive in all eight participants and was numerically, but not significantly, stronger for the contralateral hemifield (t(7) = 1.75, P = 0.12, D = 0.62). Together, the opposing responses during visual stimulation (Exp. 1 and 3) and during recall (Exp. 2) strongly suggest that the spatially specific, mutually inhibitory interaction between −pRFs in mnemonic regions and +pRFs in perceptual regions is a generalizable description of their interaction that extends to naturalistic contexts, like familiar scene perception.
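The contralateral versus ipsilateral contrast can be sketched as a paired comparison of per-participant correlations. Whether the study Fisher-transformed correlations before testing, and how it defined its effect size D, are not stated here, so both the transform and the use of Cohen's d_z are assumptions. The correlation values are invented for illustration, and the sketch covers only the paired follow-up contrast, not the repeated measures ANOVA.

```python
import math

def fisher_z(r):
    """Fisher r-to-z transform, commonly applied before averaging or testing correlations."""
    return math.atanh(r)

def paired_t(a, b):
    """Paired-sample t statistic, degrees of freedom and Cohen's d_z for the differences a - b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean = sum(diffs) / n
    sd = math.sqrt(sum((d - mean) ** 2 for d in diffs) / (n - 1))
    return mean / (sd / math.sqrt(n)), n - 1, mean / sd

# Invented per-participant OPA x LPMA(-pRF) correlations, for illustration only.
contra = [-0.30, -0.20, -0.25, -0.10, -0.05, -0.15, -0.35, 0.02]
ipsi = [0.05, 0.00, -0.10, 0.10, 0.10, 0.00, -0.05, 0.08]

n_negative = sum(r < 0 for r in contra)
t, df, d_z = paired_t([fisher_z(r) for r in contra], [fisher_z(r) for r in ipsi])
print(n_negative, round(t, 2), df, round(d_z, 2))
```

A negative t here means the association is more negative for contralateral than ipsilateral presentations, which is the direction predicted by a spatially specific inhibitory interaction.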

Discussion

In this study, we observed that a shared retinotopic code between externally oriented (perceptual) and internally oriented (mnemonic) areas of the brain structures their mutual activity, such that pRFs representing similar regions of visual space have highly anticorrelated activation during both bottom-up scene perception and top-down memory recall. Together with recent reports showing retinotopic coding persisting as far as the 'cortical apex'15,16,31, including the DMN15,16 and the hippocampus31,32, our findings challenge conventional views of brain organization, which generally assume that retinotopic coding is replaced by abstract amodal coding as information propagates through the visual hierarchy7–10 toward memory structures11–14. In addition, by examining the interaction between functionally paired perceptual and memory-related areas, our work suggests that retinotopic coding may play an integral role in structuring information processing across the brain.

This conclusion has substantial implications for our systems-level understanding of information processing at the cortical apex. Along with earlier work in human and non-human primates15,16, our work demonstrates that high-level brain areas approaching the cortical apex12,13 explicitly represent visual information in the environment. These regions have an inverted retinotopic code5,17 that organizes their functional interaction with visual areas. This directly contrasts with the classic view of the cortical apex as an amodal and 'internally oriented' neural system. Large-scale deactivation of mnemonic areas at the cortical apex (for example, the DMN) during visual tasks and activation during internally focused tasks is among the most striking and widely replicated network-level patterns in functional neuroimaging33–36. Notably, deactivation within the DMN has generally not been thought to represent stimulus information, but instead to result from a trade-off between internally and externally oriented neural processes35,36. By contrast, our results suggest that the opponent activation profiles of DMN/non-DMN brain areas may reflect a mutually suppressive functional interaction between mnemonic and perceptual areas that are involved in processing the same stimulus, rather than a trade-off between dissociable processes (like internal and external cognition). As a result, it may be necessary to reconsider the DMN's role in tasks that require externally directed attention, as well as what representational content might be conveyed in negative blood-oxygen-level-dependent (BOLD) responses.

How general is retinotopic coding for structuring perceptual–mnemonic interactions? On the one hand, because visually guided navigation has unique mnemonic demands (for example, representing visual information out of view), scene areas might have privileged access to memory information37. The scene memory and perception areas may uniquely span the boundary between the DMN and visual cortex (Fig. 1b and Extended Data Fig. 3)24 (but see ref. 11). Likewise, these unique mnemonic processes could lead to preferential coding of retinotopic information in scene memory areas, making the opponent interaction observed here ‘scene specific’. On the other hand, the present findings and others15,31,32 show that pRFs, including −pRFs, are widely distributed throughout the brain. As such, retinotopic representations could be well-poised to structure interactions between many functionally coupled brain areas across cortex, beyond the domain of scenes, including other functional subnetworks situated within the DMN24,38–43. Matching visual (posterior) and language (anterior) areas have recently been identified at the anterior edge of visual cortex11, raising the question of whether these areas might also be coupled via opponent dynamics that structure their communication. Beyond retinotopic codes, it is important to consider that the format of visuospatial coding within a region may depend on the computational roles that region plays. For example, parietal, occipital and temporal areas use multiple visuospatial coding motifs8,44–46, including retinotopic8, spatiotopic44,45,47 and head/body-centered formats48. As a result, while our data show that a shared retinotopic code structures the activity of paired perceptual and mnemonic areas, future studies will be necessary to further elaborate the nature of the visuospatial coding spanning internally oriented and externally oriented networks in the brain.

Of particular interest is the fact that the PMAs contained a large proportion of +pRFs that, unlike the −pRFs, did not share the biased visual-field representations of their SPA counterparts. This raises two questions. First, what regions contribute visual information to the memory areas’ +pRFs? While the present data offer no definitive answer, it is possible that they represent information arriving locally from other visual areas, such as the visual maps in the dorsal intraparietal sulcus49, or via longer-range connections such as the vertical occipital fasciculus50. Second, what do the +pRFs contribute to the PMAs’ functions? The robust activation of both +pRFs and −pRFs in the PMAs during memory recall suggests that these populations have related activity and differ primarily in their response to visual stimulation. It should be noted that the PMAs are defined based on a single response property (response during recall of places versus people), and functional regions may contain subregions with different functional, structural and cytoarchitectonic characteristics (similar to the presence of multiple retinotopic maps within larger, functionally defined visual regions like OPA48). Future work is needed to elucidate what differential roles the +/−pRFs in the PMAs might play.

To summarize, our results and others15,16,31 show that retinotopy is a coding principle that straddles internally oriented (mnemonic) and externally oriented (perceptual) areas in the brain. The inverted retinotopic code at the cortical apex is functionally tied to the positive retinotopic code in perceptual areas and may be crucial for scaffolding communication between memory and perception.

Online content

Any methods, additional references, Nature Portfolio reporting summaries, source data, extended data, supplementary information, acknowledgements, peer review information; details of author contributions and competing interests; and statements of data and code availability are available at https://doi.org/10.1038/s41593-023-01512-3.

Methods

This study was approved by the Dartmouth College Institutional Review Board (Protocol no. 31288).

Participants

Seventeen adults (13 female; age = 22.8 ± 3.5 standard deviation (s.d.) years old) completed fMRI Exp. 1 and 2. A subset of nine participants (5 female; age = 23.5 ± 3.8 s.d. years old) completed Exp. 3, which examined perception of familiar places. No statistical methods were used to predetermine sample sizes, but our sample sizes are similar to those reported in previous publications24. Data collection and analysis were not performed blind to the conditions of the experiments. Participants were not aware of the experimental manipulation (single-blind). Participants had normal or corrected-to-normal vision, were not colorblind and were free from neurological or psychiatric conditions. Written consent was obtained from all participants in accordance with the Declaration of Helsinki and with a protocol and consent form approved by the Dartmouth College Institutional Review Board (Protocol no. 31288). Participants were compensated for their time using gift cards at a rate of US$20 per hour.

Visual stimuli and tasks

pRF mapping (Exp. 1).

During pRF mapping sessions, a bar aperture moved gradually across the visual field, revealing randomly selected scene fragments from 90 possible scenes. During each 36-s sweep, the aperture took 18 evenly spaced steps, one every 2 s (1 repetition time (TR)), to traverse the entire screen. Across the 18 aperture positions, all 90 possible scene images were displayed once. A total of eight sweeps were made during each run (four orientations, two directions). The bar aperture progressed in the following order for all six runs: left to right, bottom right to top left, top to bottom, bottom left to top right, right to left, top left to bottom right, bottom to top and top right to bottom left. The bar stimuli covered a circular aperture (diameter = 11.4° of visual angle). Participants performed a color detection task at fixation, indicating via button press when the white fixation dot changed to red. Fixation color changes occurred semi-randomly, with approximately two color changes per sweep. Stimuli were presented using PsychoPy (v.3.2.3)51.
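The sweep schedule above determines the stimulus time course that the pRF model is fitted against. The sketch below builds binary aperture masks for one run from the reported parameters (eight sweeps in the listed order, 18 steps per sweep, 11.4° circular aperture), giving 8 × 18 = 144 TRs (288 s) per run if no blank periods are inserted between sweeps (none are described). The bar width, grid resolution, the names `bar_masks` and `SWEEP_ORDER`, and the assumption that the bar centre steps evenly from one aperture edge to the other are hypothetical choices, not values from the study.

```python
import numpy as np

# Sweep order for every run, as listed in the text. Each entry is the direction of
# travel as (dx, dy), with +x = rightward and +y = upward in the visual field.
SWEEP_ORDER = [
    ("left to right", (1, 0)),
    ("bottom right to top left", (-1, 1)),
    ("top to bottom", (0, -1)),
    ("bottom left to top right", (1, 1)),
    ("right to left", (-1, 0)),
    ("top left to bottom right", (1, -1)),
    ("bottom to top", (0, 1)),
    ("top right to bottom left", (-1, -1)),
]
N_STEPS = 18            # aperture positions per 36-s sweep, one per TR
TR_S = 2.0              # seconds per aperture position
APERTURE_DIAM = 11.4    # diameter of the circular aperture (deg)
BAR_WIDTH = 1.5         # HYPOTHETICAL: bar width (deg); not reported in the text
GRID_N = 101            # HYPOTHETICAL: samples per side of the stimulus grid


def bar_masks(grid_n=GRID_N, bar_width=BAR_WIDTH):
    """Binary aperture masks, shape (n_TRs, grid_n, grid_n); 1 = scene visible."""
    r = APERTURE_DIAM / 2.0
    coords = np.linspace(-r, r, grid_n)
    x, y = np.meshgrid(coords, coords)      # row 0 = bottom of the visual field
    inside = x**2 + y**2 <= r**2            # circular aperture
    masks = []
    for _, (dx, dy) in SWEEP_ORDER:
        ux, uy = np.array([dx, dy], float) / np.hypot(dx, dy)  # unit travel direction
        along = x * ux + y * uy             # position along the direction of travel
        # ASSUMPTION: the bar centre steps evenly from one aperture edge to the other.
        for centre in np.linspace(-r, r, N_STEPS):
            masks.append((np.abs(along - centre) <= bar_width / 2.0) & inside)
    return np.stack(masks).astype(np.uint8)
```

Encoding each sweep as a direction of travel, rather than as separate orientation and direction flags, keeps the eight sweeps symmetric and makes the order in the text easy to audit.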

SPA localizer.

The SPAs, that is, OPA and PPA, are defined as regions that selectively activate when an individual perceives places (for example, a kitchen) compared with other categories of visual stimuli (for example, faces, objects, bodies)24,27,40,52. To identify these areas in each individual, participants performed an independent functional localizer scan. On each run of the localizer (two runs), participants passively viewed blocks of scene, face and object images presented in rapid succession (500-ms stimulus, 500-ms interstimulus interval (ISI)). Blocks were 24 s long, and each run comprised 12 blocks (four blocks per condition). There was no interval between blocks.

PMA localizer (recall task: Exp. 2).

The PMAs are defined as regions that selectively activate when an individual recalls personally familiar places (for example, their kitchen) compared with personally familiar people (for example, their mother)24. To identify these areas in each individual, participants performed an independent functional localizer. Before fMRI scanning, participants generated lists of 36 personally familiar people and 36 personally familiar places to establish individualized stimuli (72 stimuli in total). These stimuli were generated based on the following instructions.

“For your scan, you will be asked to visualize people and places that are personally familiar to you. So, we need you to provide these lists for us. For personally familiar people, please choose people that you know in real life (no celebrities) that you can visualize in great detail. You do not need to be in contact with these people now, as long as you knew them personally and remember what they look like. So, you could choose a childhood friend even if you are no longer in touch with this person. Likewise, for personally familiar places, please list places that you have been to and can richly visualize. You should choose places that are personally relevant to you, so you should avoid choosing places that you have only been to one time. You should not choose famous places where you have never been. You can choose places that span your whole life, so you could do your current kitchen, as well as the kitchen from your childhood home.”

During fMRI scanning, participants recalled these people and places. In each trial, participants saw the name of a person or place and recalled them in as much detail as possible for the duration that the name appeared on the screen (10 s). Trials were separated by a variable ISI (4–8 s). PMAs were localized by contrasting activity when participants recalled personally familiar places compared with people (see ‘ROI definition’ section). Every trial used a unique stimulus, and conditions (that is, people or place stimuli) were pseudo-randomly intermixed so that no more than two repeats per condition occurred in a row.
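The pseudo-random ordering rule above is a run-length constraint on a shuffled trial list. The sketch below reads it as “no condition appears more than twice in a row” (an interpretation; the wording could also be read as allowing three). Naively reshuffling a 72-trial list until it passes would rarely succeed, because valid orders are a tiny fraction of all orders, so this version checks with a memoized feasibility test that each choice can still be completed. The function name `constrained_order` and the labels `"A"`/`"B"` (for example, places and people) are hypothetical; this is not the authors' stimulus code.

```python
import random
from functools import lru_cache


def constrained_order(n_a, n_b, max_run=2, seed=None):
    """Random order of n_a 'A' trials and n_b 'B' trials in which no label
    occurs more than max_run times in a row."""
    rng = random.Random(seed)

    def next_run(label, last, run):
        # Run length after appending `label`, or None if it would exceed max_run.
        new_run = run + 1 if label == last else 1
        return new_run if new_run <= max_run else None

    def moves(a, b, last, run):
        # Legal next trials as (label, remaining_a, remaining_b, new_run, weight).
        out = []
        for label, ra, rb, weight in (("A", a - 1, b, a), ("B", a, b - 1, b)):
            r = next_run(label, last, run)
            if min(ra, rb) >= 0 and r is not None:
                out.append((label, ra, rb, r, weight))
        return out

    @lru_cache(maxsize=None)
    def feasible(a, b, last, run):
        # True if the remaining trials can still be placed without breaking the rule.
        if a == 0 and b == 0:
            return True
        return any(feasible(ra, rb, lab, r) for lab, ra, rb, r, _ in moves(a, b, last, run))

    if not feasible(n_a, n_b, None, 0):
        raise ValueError("no order satisfies the run-length constraint")

    seq, a, b, last, run = [], n_a, n_b, None, 0
    while a or b:
        # Keep only moves that leave a completable sequence, so we never dead-end.
        options = [m for m in moves(a, b, last, run) if feasible(m[1], m[2], m[0], m[3])]
        # Weight by remaining count so both conditions stay spread across the run.
        label, a, b, run, _ = rng.choices(options, weights=[m[4] for m in options])[0]
        last = label
        seq.append(label)
    return seq
```

Usage: `constrained_order(36, 36, seed=1)` returns 72 labels; each label can then be replaced by the next unused place or person from that participant's shuffled list, so every trial shows a unique name. The same function could be applied per visual-field location for the comparable constraint in Exp. 3.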

Perception of familiar scenes (Exp. 3).

A subset of participants in the main experiment (n = 9) took part in this experiment, which involved viewing images of two prominent Dartmouth College buildings (Baker Library and Rollins Chapel; one image per landmark). One participant was excluded for lack of familiarity with one building (Rollins Chapel). All remaining participants were familiar with the landmarks and had lived in the Hanover area for at least one year.

During scanning, participants passively viewed the familiar scene images. On each trial, participants maintained fixation while passively viewing the lower left or lower right quadrant of each image (display time: 1 s). The order of presentations was randomly intermixed, and no stimulus was allowed to repeat more than two times in a row in a particular location. Images subtended as much of the lower visual-field quadrant as possible (0°–8° visual angle). We focused on the lower quadrants to maximally stimulate OPA and LPMA. Before scanning, we showed participants the full image of each location to familiarize them with the specific image they would be seeing. Each image was presented eight times per location per run. Trials were separated by a variable ISI (4–8 s). We collected two imaging runs, resulting in 32 trials for each visual-field location.

fMRI data processing

MRI acquisition.

All data were collected at Dartmouth College on a Siemens Prisma 3T scanner (Siemens) equipped with a 32-channel head coil. Images were converted from DICOM to NIfTI format using dcm2niix (v.1.0.20190902)53.

T1 image.

For registration purposes, a high-resolution T1-weighted magnetization-prepared rapid acquisition gradient echo (MPRAGE) imaging sequence was acquired (TR = 2,300 ms, echo time (TE) = 2.32 ms, inversion time = 933 ms, flip angle = 8°, field of view = 256 × 256 mm, slices = 255, voxel size = 1 × 1 × 1 mm). T1 images were segmented and surfaces were generated using FreeSurfer54–56 (v.6.0), then aligned to the fMRI data using align_epi_anat.py and @SUMA_AlignToExperiment57.

fMRI acquisition.

fMRI data were acquired using a multi-echo T2*-weighted sequence. The sequence parameters were: TR = 2,000 ms, TEs = 14.6, 32.84 and 51.08 ms, GRAPPA factor = 2, flip angle = 70°, field of view = 240 × 192 mm, matrix size = 90 × 72, slices = 52, multiband factor = 2, voxel size = 2.7 mm isotropic. The initial two frames of data acquisition were discarded by the scanner to allow the signal to reach steady state.

Preprocessing.

Multi-echo data processing was implemented based on the multi-echo preprocessing pipeline from afni_proc.py in AFNI (v.21.3.10 ‘Trajan’)58. Signal outliers in the data were attenuated (3dDespike59). Motion correction was calculated based on the second echo, and these alignment parameters were applied to all runs. The optimal combination of the three echoes was calculated and the echoes were combined to form a single, optimally weighted time series (T2smap.py). Multi-echo independent component analysis (ICA) denoising19,60–62 was then performed (see ‘Multi-echo ICA’, below). Following denoising, signals were normalized to percent signal change.
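The “optimal combination” step above weights each echo by how much BOLD contrast it is expected to carry. A widely used scheme, and to our understanding the default in tedana's t2smap, first estimates T2* per voxel from a log-linear fit of the mono-exponential decay S(TE) = S0·exp(−TE/T2*) and then weights echo n by TE_n·exp(−TE_n/T2*). The sketch below assumes that scheme and the three TEs reported above; the function names are hypothetical, and unlike production tools it does no masking or clipping of implausible T2* values in noisy voxels.

```python
import numpy as np

TES_MS = np.array([14.6, 32.84, 51.08])  # echo times reported above (ms)


def fit_t2star(mean_signal, tes=TES_MS):
    """Log-linear fit of S(TE) = S0 * exp(-TE / T2*) for each voxel.
    mean_signal: (n_voxels, n_echoes) time-averaged signal, assumed > 0."""
    design = np.column_stack([np.ones_like(tes), -tes])  # log S = log S0 - TE / T2*
    coef, *_ = np.linalg.lstsq(design, np.log(mean_signal).T, rcond=None)
    return 1.0 / coef[1]                                 # T2* in ms, per voxel


def optimal_combination(data, tes=TES_MS):
    """T2*-weighted echo combination. data: (n_voxels, n_echoes, n_timepoints)."""
    t2s = fit_t2star(data.mean(axis=2), tes)
    # Weight for echo n is proportional to TE_n * exp(-TE_n / T2*), normalized per voxel.
    w = tes[None, :] * np.exp(-tes[None, :] / t2s[:, None])
    w /= w.sum(axis=1, keepdims=True)
    return np.einsum("ve,vet->vt", w, data)
```

The weights peak for echoes near TE ≈ T2*, which is why the middle echo (32.84 ms) typically dominates in cortex with T2* values in that range.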

Multi-echo ICA.

The data were denoised using multi-echo ICA (tedana.py19,61,62, v.0.0.10). Principal component analysis (PCA) was applied and thermal noise was removed using the Kundu decision tree method. Following this, the data were decomposed using ICA, and the resulting components were classified as signal or noise based on the known properties of T2* signal decay: BOLD-related components scale with echo time, whereas non-BOLD noise components largely do not. Components classified as noise were discarded, and the remaining components were recombined to construct the optimally combined, denoised time series.

pRF modeling.

A detailed description of the pRF model implemented in AFNI is provided elsewhere18. Given the position of the stimulus in the visual field at every time point, the model estimates the pRF parameters that yield the best fit to the data: pRF amplitude (positive, negative), pRF center location (x, y) and size (diameter of the pRF). Both Simplex and Powell optimization algorithms are used simultaneously to find the best time series/parameter sets (amplitude, x, y, size) by minimizing the least-squares error between the predicted and acquired time series for each voxel. Relevant to the present work, the amplitude measure refers to the signed (positive or negative) degree of linear scaling applied to the pRF model, which reflects the sign of the neural response to visual stimulation of its receptive field.
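To make the role of the signed amplitude concrete, the sketch below fits a 2D Gaussian pRF to one time series with SciPy rather than AFNI. It is an illustrative stand-in, not the AFNI model: the double-gamma HRF, the coarse grid search used to initialize, the “run Nelder-Mead (simplex) and Powell, keep the better fit” rule, and the use of the Gaussian s.d. (sigma) rather than a diameter are all assumptions. The amplitude is solved in closed form by least squares for each candidate (x, y, sigma), so it is free to come out negative, which is what identifies a −pRF. Function names are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.stats import gamma


def double_gamma_hrf(tr=2.0, length_s=30.0):
    """ASSUMPTION: SPM-style double-gamma HRF; AFNI's pRF model uses its own kernel."""
    t = np.arange(0.0, length_s, tr)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()


def prf_prediction(x0, y0, sigma, stim, xs, ys, h):
    """Mean-centred, unit-amplitude BOLD prediction for a 2D Gaussian pRF."""
    g = np.exp(-((xs - x0) ** 2 + (ys - y0) ** 2) / (2.0 * sigma**2))
    drive = stim.reshape(len(stim), -1) @ g.ravel()   # stimulus/pRF overlap per TR
    pred = np.convolve(drive, h)[: len(stim)]
    return pred - pred.mean()


def fit_prf(ts, stim, xs, ys, tr=2.0):
    """Fit one time series; returns signed amplitude, x, y, sigma and R^2."""
    h = double_gamma_hrf(tr)
    y = ts - ts.mean()
    ss_tot = y @ y

    def sse(p):  # p = (x0, y0, log_sigma); amplitude is solved in closed form
        sigma = np.exp(np.clip(p[2], -5.0, 5.0))      # keep sigma positive and finite
        pred = prf_prediction(p[0], p[1], sigma, stim, xs, ys, h)
        denom = pred @ pred
        if denom < 1e-12:              # pRF never stimulated: no better than a flat fit
            return ss_tot
        beta = (pred @ y) / denom      # least-squares scaling; may be negative
        resid = y - beta * pred
        return resid @ resid

    # Coarse grid for a starting point (assumption), then refine with both optimizers.
    grid = [(gx, gy, np.log(s))
            for gx in np.linspace(xs.min(), xs.max(), 9)
            for gy in np.linspace(ys.min(), ys.max(), 9)
            for s in (0.5, 1.0, 2.0)]
    start = min(grid, key=sse)
    fits = [minimize(sse, start, method=m) for m in ("Nelder-Mead", "Powell")]
    best = min(fits, key=lambda f: f.fun)
    x0, y0 = best.x[0], best.x[1]
    sigma = float(np.exp(np.clip(best.x[2], -5.0, 5.0)))
    pred = prf_prediction(x0, y0, sigma, stim, xs, ys, h)
    return {"amplitude": float(pred @ y / (pred @ pred)), "x": float(x0),
            "y": float(y0), "sigma": sigma, "r2": float(1.0 - best.fun / ss_tot)}
```

Solving the amplitude analytically keeps the nonlinear search to three parameters and guarantees that a response that dips whenever the bar crosses the receptive field is captured as a negative scaling rather than as a poor fit.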

Sampling of fMRI data to the cortical surface.

For each participant, the analyzed functional data were projected onto surface reconstructions of each individual participant’s hemispheres in the Surface Mapping with AFNI (SUMA) standard mesh (std.141 (ref. 63)), derived from the FreeSurfer autorecon script, using the SUMA57 software and the 3dVol2Surf command.

ROI definition.

SPAs (OPA and PPA) were established using the same criterion used in our earlier work24. SPAs were drawn based on a general linear test comparing the coefficients of the general linear model (GLM) during scene versus face blocks. Comparable results were observed when identifying the SPAs by comparing scene versus object blocks. A vertex-wise significance of P < 0.001 along with expected anatomical locations was used to define the ROIs52,64.

To define category-selective memory areas, the familiar people and places memory data were modeled by fitting a gamma function of the trial duration for trials of each condition (people and places) using 3dDeconvolve. Estimated motion parameters were included as additional regressors of no interest. PMAs were drawn based on a general linear test comparing coefficients of the GLM for people and place memory. A vertex-wise significance threshold of P < 0.001 was used to draw ROIs.

To control for differing ROI sizes across regions and people, we restricted all analyses to 300 vertices centered on the center of mass of each thresholded ROI. Consistent with earlier work, we chose 300 vertices to ensure that no region or participant disproportionately contributed to any effects24. The results were qualitatively similar when 600 vertices were considered, suggesting our findings did not depend on ROI size.
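The ROI-size control above amounts to a nearest-neighbour selection around the ROI's center of mass. The sketch assumes Euclidean distance between surface vertex coordinates and anchors the selection at the ROI vertex nearest the centroid (the centroid itself may not lie on the surface); a geodesic distance along the mesh would be a closer match to surface-based analysis, and the text does not specify which was used. The function name `restrict_roi` is hypothetical.

```python
import numpy as np


def restrict_roi(vertex_xyz, roi_idx, n_keep=300):
    """Keep the n_keep ROI vertices closest to the ROI's center of mass.
    ASSUMPTION: Euclidean distance on surface coordinates, not geodesic distance."""
    roi_idx = np.asarray(roi_idx)
    pts = vertex_xyz[roi_idx]
    com = pts.mean(axis=0)
    # Anchor at the ROI vertex nearest the center of mass (the mean may fall off the surface).
    anchor = pts[np.argmin(np.linalg.norm(pts - com, axis=1))]
    order = np.argsort(np.linalg.norm(pts - anchor, axis=1), kind="stable")
    return roi_idx[order[:n_keep]]   # ROIs smaller than n_keep are returned whole
```

Setting `n_keep=600` reproduces the size check described in the text.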

ROI analysis of pRF amplitude.

To calculate the percentage of −pRFs in each ROI, we applied the following procedures. First, pRFs were thresholded on the variance explained by the pRF model (R2 > 0.08), consistent with earlier work using R2 thresholds ranging between 0.05 and 0.10 (refs. 16,32,65,66). Our results were consistent at R2 thresholds between 0.05 and 0.20. Next, to avoid analyzing only a handful of pRFs within an ROI, only ROIs containing >25 suprathreshold pRFs (>8.3% of the 300-vertex ROI) were included. The percentage of suprathreshold pRFs with a negative amplitude within each ROI was then calculated and submitted for statistical analysis.
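The per-ROI summary above reduces to two filters and a proportion. The sketch applies the R2 > 0.08 threshold and the more-than-25-pRF inclusion rule (25/300 ≈ 8.3%) and returns NaN for excluded ROIs so they drop out of group statistics; the function and constant names are hypothetical.

```python
import numpy as np

R2_THRESHOLD = 0.08        # variance-explained threshold used in the text
MIN_SUPRATHRESHOLD = 25    # ROI included only with more than 25 (>8.3% of 300) pRFs


def percent_negative_prfs(amplitude, r2, r2_thresh=R2_THRESHOLD, min_n=MIN_SUPRATHRESHOLD):
    """Percentage of suprathreshold pRFs with negative amplitude in one ROI,
    or NaN if the ROI has too few suprathreshold pRFs to be analyzed."""
    amplitude, r2 = np.asarray(amplitude), np.asarray(r2)
    keep = r2 > r2_thresh
    if keep.sum() <= min_n:
        return np.nan
    return 100.0 * np.mean(amplitude[keep] < 0)
```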

Visual-field coverage.

Visual-field coverage (VFC) plots represent the sensitivity of each ROI to different positions in the visual field. To compute these, individual participant VFC plots were first derived. These plots combine the best Gaussian receptive field model for each suprathreshold voxel within each ROI. Here, a max operator is used, which stores, at each point in the visual field, the maximum value from all pRFs within the ROI. The resulting coverage plot thus represents the maximum envelope of sensitivity across the visual field. Individual participant VFC plots were averaged across participants to create group-level coverage plots.
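The max operator described above keeps, at each visual-field location, the largest value of any pRF in the ROI, so the map is an envelope rather than a sum. In the sketch below, each pRF is a unit-peak Gaussian with no R2 weighting, and the grid spans ±5.7° (the radius of the 11.4° mapping aperture); both are assumptions, and the function name is hypothetical. Group maps would be the element-wise mean of participant maps, for example `np.mean(np.stack(maps), axis=0)`.

```python
import numpy as np


def visual_field_coverage(x, y, sigma, extent=5.7, n=101):
    """Max-operator coverage map for one ROI, indexed [row = elevation, col = azimuth].
    ASSUMPTIONS: unit-peak Gaussians (no R^2 weighting) on a grid spanning +/- extent deg."""
    coords = np.linspace(-extent, extent, n)
    gx, gy = np.meshgrid(coords, coords)   # row index increases with elevation
    cov = np.zeros_like(gx)
    for x0, y0, s in zip(x, y, sigma):
        g = np.exp(-((gx - x0) ** 2 + (gy - y0) ** 2) / (2.0 * s**2))
        np.maximum(cov, g, out=cov)        # keep the envelope, not the sum
    return cov
```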

To compute the elevation biases, we calculated the mean pRF value (defined as the mean value in a specific portion of the visual-field coverage plot) in the contralateral upper visual field (UVF) and contralateral lower visual field (LVF) and computed the difference (UVF–LVF) for each participant, ROI and amplitude (+/−) separately. A positive value thus represents an upper visual-field bias, whereas a negative value represents a lower visual-field bias. Analysis of the visual-field biases considers pRF center location (like the center of mass calculation does), as well as pRF size and R2. This makes the mean pRF value a preferable summary metric to analyzing pRF center position alone18,32,40,48.
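The elevation bias above is a difference of regional means on a coverage map. The sketch takes a square map indexed [row = elevation, col = azimuth] with a shared coordinate axis, uses the fact that each hemisphere represents the opposite visual hemifield to select the contralateral half, and returns UVF − LVF. How the paper delimits the upper and lower portions (for example, whether points on the horizontal meridian are excluded) is not specified; excluding them here is an assumption, and the function name is hypothetical.

```python
import numpy as np


def elevation_bias(cov, coords, hemisphere):
    """UVF - LVF difference in mean coverage within the contralateral hemifield.
    cov: square coverage map indexed [row = elevation, col = azimuth];
    coords: the coordinate values shared by both axes (deg)."""
    gx, gy = np.meshgrid(coords, coords)
    contra = gx > 0 if hemisphere == "lh" else gx < 0   # LH represents the right field
    uvf = cov[contra & (gy > 0)].mean()                 # meridian points excluded (assumption)
    lvf = cov[contra & (gy < 0)].mean()
    return uvf - lvf    # > 0: upper-field bias; < 0: lower-field bias
```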

Reliability of pRF amplitude and VFC.

To quantify the reliability of pRF estimates, we conducted a series of split-half analyses. First, pRF models were computed on the average time series of all odd runs (1, 3, 5) and even runs (2, 4, 6) separately for each participant. Then, for each ROI, we identified all suprathreshold pRFs (R2 > 0.08) and pooled these pRFs across participants. This was done separately for +pRFs and −pRFs in each split (odd, even). Next, we conducted three tests of split-half reliability. First, we computed the percentage of pRFs in each ROI whose amplitude sign (that is, positive or negative) remained consistent across splits. Second, to determine whether the sign of pRF amplitudes was reliable, we computed Pearson’s correlation coefficient in amplitude across independent splits (odd, even) separately for positive and negative pRFs. We compared these r values against zero (that is, no correlation) using t-tests (two-tailed). Third, to determine whether the ROI visual-field preferences are reliable, we computed VFC maps from all suprathreshold pRFs in each ROI and split. Next, we calculated the Dice coefficient of the overlap between the split-half coverage maps and tested these values against zero (that is, no overlap) using t-tests (two-tailed).
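The three split-half measures above can each be written in a line or two. The sketch assumes that pRFs are already paired across the odd and even splits, and that coverage maps are binarized at 0.5 before computing Dice = 2|A∩B| / (|A| + |B|); the text does not state a binarization threshold, so that value is an assumption. Function names are hypothetical.

```python
import numpy as np


def sign_consistency(amp_odd, amp_even):
    """Percentage of pRFs whose amplitude sign (+/-) is the same in both splits."""
    return 100.0 * np.mean(np.sign(amp_odd) == np.sign(amp_even))


def amplitude_correlation(amp_odd, amp_even):
    """Pearson r between odd- and even-run amplitudes (run separately for + and - pRFs)."""
    return float(np.corrcoef(amp_odd, amp_even)[0, 1])


def dice(cov_a, cov_b, thresh=0.5):
    """Dice coefficient 2|A∩B| / (|A| + |B|) between two coverage maps.
    ASSUMPTION: maps are binarized at `thresh`; the text does not give a threshold."""
    a, b = np.asarray(cov_a) >= thresh, np.asarray(cov_b) >= thresh
    total = a.sum() + b.sum()
    return 2.0 * np.logical_and(a, b).sum() / total if total else float("nan")
```

Dice ranges from 0 (no overlap) to 1 (identical maps), which is why zero is the natural null value for the t-tests described above.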

Recall trial × trial analysis.

To assess the interaction between +pRFs in SPAs and −pRFs in PMAs during memory recall, we used the following procedure. For each participant and ROI, we sampled the pattern of activity (t-value versus baseline) elicited during the recall of each personally familiar place (36 places per participant) from the place memory localizer experiment. Suprathreshold pRFs were separated according to amplitude (+pRFs, −pRFs) before averaging the recall responses across pRFs. This produced 36 responses (one for each recalled place) per pRF amplitude in each participant’s ROI. We then z-scored these values for each participant and ROI separately.

After normalization, in each participant, we computed the partial correlation (Pearson’s R) between responses during memory recall of +pRFs in SPAs with −pRFs in PMAs, while controlling for the responses of +pRFs in PMAs and vice versa. To determine whether the +pRFs and −pRFs from the PMAs had differential influence on activity in the SPAs, we compared the correlation coefficients from each population against each other using paired t-tests. To determine whether the influence of the −pRFs and +pRFs was significant, we compared the Fisher-transformed correlation coefficients from each population (+pRFs and −pRFs in the PMAs) against zero (no correlation).
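The “unique” correlations above are partial correlations: each PMA population is correlated with the SPA +pRFs after the other PMA population has been regressed out of both. The sketch computes this by residualization (ordinary least squares with an intercept) for one participant's 36 z-scored recall responses, then applies the Fisher transform (arctanh) used for group statistics. Function names are hypothetical.

```python
import numpy as np


def zscore(v):
    """Standardize a vector to mean 0, s.d. 1 (population s.d.)."""
    v = np.asarray(v, float)
    return (v - v.mean()) / v.std()


def partial_corr(x, y, z):
    """Pearson correlation between x and y after regressing z (plus an intercept)
    out of both."""
    design = np.column_stack([np.ones(len(z)), z])

    def resid(v):
        beta, *_ = np.linalg.lstsq(design, v, rcond=None)
        return v - design @ beta

    rx, ry = resid(np.asarray(x, float)), resid(np.asarray(y, float))
    return float(rx @ ry / np.sqrt((rx @ rx) * (ry @ ry)))


def recall_interactions(spa_pos, pma_neg, pma_pos):
    """Fisher-z partial correlations for one participant (one value per recall trial)."""
    s, n, p = zscore(spa_pos), zscore(pma_neg), zscore(pma_pos)
    r_neg = partial_corr(s, n, p)   # SPA +pRFs vs PMA -pRFs, controlling for PMA +pRFs
    r_pos = partial_corr(s, p, n)   # SPA +pRFs vs PMA +pRFs, controlling for PMA -pRFs
    return np.arctanh(r_neg), np.arctanh(r_pos)
```

Across participants, the paired comparison and the tests against zero described above could then be run on these Fisher z values with `scipy.stats.ttest_rel` and `scipy.stats.ttest_1samp`.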

Spatial specificity analysis.

We matched +pRFs in the SPAs with both +/−pRFs in the PMAs separately (for example, OPA to −LPMA and OPA to +LPMA) using the following procedure. On each iteration (1,000 iterations in total; randomized PMA pRF order): (1) for every pRF in a PMA, we computed the pairwise Euclidean distance (in x, y and sigma) to all +pRFs in the paired SPA and found the SPA pRF with the smallest distance that was smaller than the median distance of all possible pRF pairs, and then (2) we required that all pRF matches were uniquely matched, so if an SPA pRF was the best match for two PMA pRFs, then the second PMA pRF was excluded. To prevent under-sampling, only subjects with more than 10 matched +/−pRFs on average in each region were considered (lateral surface: n = 14, ventral surface: n = 7).
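One iteration of the matching procedure above can be sketched as follows: compute all PMA-to-SPA distances in (x, y, sigma) space, use their median as the cutoff, visit PMA pRFs in a random order, and give each its nearest SPA pRF only if that distance beats the cutoff and the SPA pRF has not already been claimed. The function name and array layout are hypothetical, and the three dimensions are used unscaled, as the text does not mention any weighting.

```python
import numpy as np


def match_prfs(pma, spa, rng):
    """One matching iteration. pma, spa: (n, 3) arrays of (x, y, sigma).
    Returns a list of (pma_index, spa_index) pairs with unique SPA partners."""
    d = np.linalg.norm(pma[:, None, :] - spa[None, :, :], axis=2)  # all pairwise distances
    cutoff = np.median(d)                 # a match must beat the median pair distance
    used, pairs = set(), []
    for i in rng.permutation(len(pma)):   # randomized PMA order on every iteration
        j = int(np.argmin(d[i]))
        if d[i, j] < cutoff and j not in used:
            used.add(j)
            pairs.append((int(i), j))
        # otherwise this PMA pRF is dropped: no close match, or its best match is taken
    return pairs
```

Usage: calling `match_prfs(pma, spa, np.random.default_rng(seed))` 1,000 times yields the matched samples; the unmatched baseline pairs SPA pRFs with randomly drawn PMA pRFs instead.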

Following this, we compared the correlation in trial × trial activation between matched pairs of pRFs with that between ‘unmatched’ pairs. To create the ‘unmatched’ (random) pRF pairings, we randomly sampled pRFs in the memory area (repeated 1,000 times). We then computed the unique correlation in trial × trial activation during recall between SPA pRFs and PMA pRFs, using the same procedure as in our main analysis (for example, the partial correlation between SPA pRFs and PMA −pRFs, controlling for PMA +pRFs), for each iteration of the pRF matching. We compared the mean of the Fisher-transformed partial correlation values across iterations for the matched pRFs with the corresponding mean for the random (that is, unmatched) pRFs. To confirm that matched pRFs had more closely corresponding visual-field representations than unmatched pRFs, we calculated the visual-field overlap between pRF pairs in the matched samples and in the random samples (average Dice coefficient of the visual-field coverage for all matched versus unmatched iterations).

Perception of familiar scenes trial × trial analysis.

To assess the interaction between pRFs in OPA and +/−pRFs in LPMA during perception, we adopted the following procedure. We modeled task-evoked activity using a GLM with each image presentation fit as a separate regressor and calculated the average trial-wise activation of pRFs in OPA and +/−pRFs in LPMA. It should be noted that, because of our interest in spatially specific interactions, we only considered pRFs with centers in the contralateral lower visual field, where the stimuli were presented (that is, left hemisphere OPA pRFs had to have centers in the lower right quadrant). We then Fisher-transformed these values for each participant and ROI separately.

After normalization, in each participant, we calculated the partial correlation between the trial × trial activation of OPA and the negative and positive pRFs in LPMA separately for each hemifield (for example, OPA with −pRFs in LPMA, controlling for +pRFs in LPMA, when images were presented in the left hemifield). We compared the contralateral preference in the association (Fisher-transformed partial correlation values) between the +/−pRFs using a repeated measures ANOVA with visual field (ipsilateral, contralateral) and pRF association (OPA × +LPMA, OPA × −LPMA) as factors.

Statistical analysis.

Statistics were calculated using RStudio (v.1.3)67 and custom MATLAB code (v.2022a, MathWorks). Data distributions were assumed to be normal, but this was not formally tested. All individual participant data are shown. We conducted repeated measures analysis of variance using the ezANOVA function from the ‘ez’ package68. An alpha level of P < 0.05 was used to assess significance. We applied Bonferroni correction for multiple comparisons where appropriate.

Extended Data

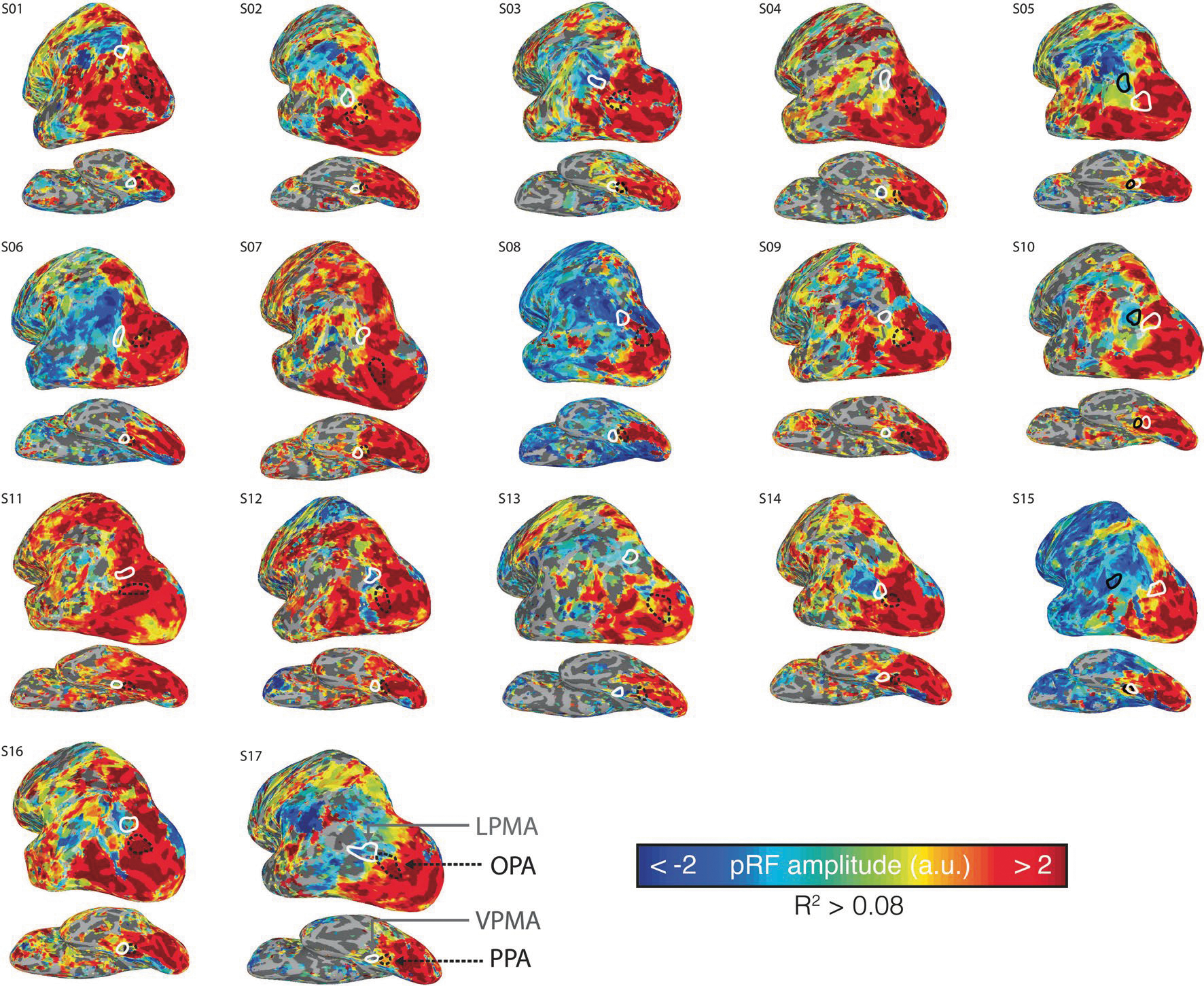

Extended Data Fig. 1 |. Transition from positive to negative-amplitude population receptive fields (+pRF, −pRF) moving anteriorly from posterior cerebral cortex is evident in individual participants.

Figure depicts amplitude maps from all participants’ left hemispheres. Only vertices surviving the threshold applied in the main text (R2 > 0.08) are shown. Individual participant SPAs and PMAs used for analysis are drawn in white (PMAs) and black (SPAs).

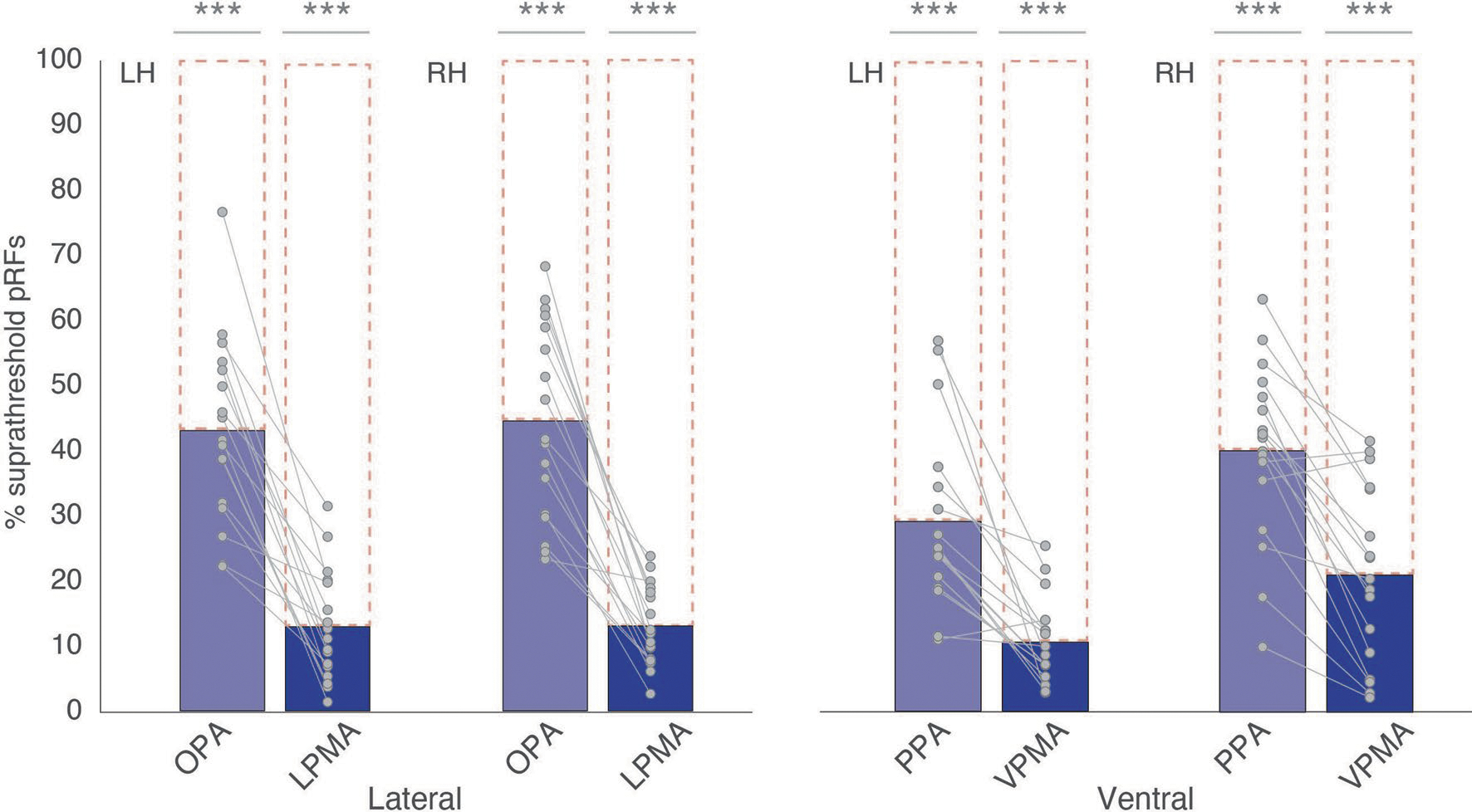

Extended Data Fig. 2 |. Retinotopic coding in SPAs and PMAs.

To quantify the extent to which retinotopic coding is expressed within each ROI, we first calculated the percentage of suprathreshold pRFs (R2 > 0.08) within our ROIs for each participant separately before testing each against a non-retinotopic prediction using t-tests (that is, t-test versus zero, with Bonferroni correction). Retinotopic coding was significantly present within each ROI (LH; OPA: t(16) = 12.51, pcorr = 3.35 × 10⁻⁹, D = 2.99; PPA: t(16) = 8.68, pcorr = 5.67 × 10⁻⁷, D = 2.17; LPMA: t(16) = 6.23, pcorr = 3.58 × 10⁻⁵, D = 1.59; VPMA: t(16) = 6.65, pcorr = 1.64 × 10⁻⁵, D = 1.66; RH; OPA: t(16) = 12.15, pcorr = 5.10 × 10⁻⁹, D = 3.03; PPA: t(16) = 11.97, pcorr = 6.32 × 10⁻⁹, D = 3.12; LPMA: t(16) = 8.75, pcorr = 5.05 × 10⁻⁷, D = 2.18; VPMA: t(16) = 6.39, pcorr = 2.68 × 10⁻⁵, D = 1.55). Bars represent the mean percentage of suprathreshold pRFs (R2 > 0.08) in each ROI/hemisphere for the lateral (left) and ventral (right) surfaces, respectively. Individual data points are overlaid. Each ROI exhibited a significant percentage of suprathreshold pRFs, ***ptwo-tailed < 0.001.

Extended Data Fig. 3 |. Comparison between the location of the SPAs, PMAs, and default mode network in one participant.

Comparison between the location of the SPAs, PMAs, and default mode network in one participant (example participant from Main text Fig. 2). This pattern was consistent in all individuals and at the group-level (Main text Fig. 1b). Default mode network defined using the Yeo et al., 2011 parcellation24.

Extended Data Fig. 4 |. Correlation in trial × trial activation during memory recall aggregated across participants.

Mean BOLD response amplitude relative to baseline during place recall trials for each ROI (OPA, LPMA) and pRF population (+/−).

Extended Data Fig. 5 |. Differential interaction between pRFs in SPAs with −/+ pRFs in memory areas is evident across all trials.

Each scatter plot and corresponding correlation value depicts the unique correlation between pRFs in the SPAs and −pRFs (blue) or +pRFs (red) in the PMAs (for example, the correlation between +pRFs in OPA and −pRFs in LPMA, controlling for +pRFs in LPMA), quantified using Pearson’s correlation. Each data point represents the z-scored activation on a given trial across all pRFs in the population (for example, all −LPMA pRFs) for a given participant.

Extended Data Fig. 6 |. Trial × trial interactions between −/+ pRFs in the place memory areas and scene perception areas exhibit a push-pull dynamic in independent data.

Recall trials were identical to the trials used in the localizer. Participants fixated on a dot projected in the center of the screen. They were then cued with the stimulus to be recalled for 1 s, followed by a 1-s dynamic mask and 10 s of imagery. Trials were separated by a 4–8 s jittered interstimulus interval. Participants completed 32 imagery trials (16 for each landmark) separated into two imaging runs. One participant was excluded from the analysis for lack of familiarity with the landmarks; the remaining participants were familiar with the locations and had lived in the Hanover area for at least one year. Two participants did not have −pRFs in the ventral surface regions of interest. We tested for the relationship between +/−pRFs in the scene perception and place memory areas using the same approach described in the main text. We examined the unique correlation between the −/+pRFs in the place memory areas and scene perception areas (that is, the correlation between activation of −pRFs in memory areas and pRFs in scene perception areas, while controlling for activation of +pRFs in the memory areas). Using paired t-tests, we found evidence for the opponent interaction between −pRFs and +pRFs in this independent sample: the relationships of the −pRFs and +pRFs in the memory areas with the scene perception area pRFs differed significantly (Lateral – t(8) = 2.61, p = 0.018; Ventral – t(6) = 7.82, p < 0.0001). As in our original analysis, the majority of participants showed a negative correlation between the trial × trial activation of the −pRFs in the place memory areas and pRFs in the scene perception areas (Ventral – 6/7 participants: t(6) = 2.79, p = 0.031; Lateral – 6/8 participants: t(8) = 1.79, p = 0.11).
Likewise, most participants showed a positive relationship between activation of +pRFs in the memory areas and pRFs in the perception areas (Ventral – 7/7 participants; t(6) = 7.77, p = 0.0002; Lateral – 7/8 participants; t(8) = 3.30, p = 0.01). This result gives us confidence that our original analysis was not influenced by potential circularity. * = ptwo-tailed < 0.05, *** = ptwo-tailed < 0.005.

Extended Data Fig. 7 |. Activation during recall of personally familiar places.

Mean BOLD response amplitude relative to baseline when familiar scenes were presented in each lower quadrant (hemifield: ipsilateral and contralateral) for each ROI (OPA, LPMA) and pRF population (+/−).

Acknowledgements

We thank I. Groen for assistance with specific analysis code. This work was supported by the National Institute of Mental Health under award number R01MH130529 (C.E.R.). A.S. was supported by the Neukom Institute for Computational Science and E.H.S. by the Biotechnology and Biological Sciences Research Council award number BB/V003917/1.

Footnotes

Competing interests

The authors declare no competing interests.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Additional information

Extended data Extended data is available for this paper at https://doi.org/10.1038/s41593-023-01512-3.

Supplementary information The online version contains supplementary material available at https://doi.org/10.1038/s41593-023-01512-3.

Peer review information Nature Neuroscience thanks Christopher Baldassano, Alexander Huth and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Reprints and permissions information is available at www.nature.com/reprints.

Code availability

Code used for data analysis is available on Open Science Framework (https://osf.io/sm2xf).

Data availability

Data are available on Open Science Framework (https://osf.io/sm2xf).

References

- 1. Libby A & Buschman TJ Rotational dynamics reduce interference between sensory and memory representations. Nat. Neurosci. 24, 715–726 (2021).

- 2. Kiyonaga A, Scimeca JM, Bliss DP & Whitney D Serial dependence across perception, attention, and memory. Trends Cogn. Sci. 21, 493 (2017).

- 3. Summerfield C & de Lange FP Expectation in perceptual decision making: neural and computational mechanisms. Nat. Rev. Neurosci. 15, 745–756 (2014).

- 4. Rademaker RL, Chunharas C & Serences JT Coexisting representations of sensory and mnemonic information in human visual cortex. Nat. Neurosci. 22, 1336–1344 (2019).

- 5. Favila SE, Lee H & Kuhl BA Transforming the concept of memory reactivation. Trends Neurosci. 43, 939–950 (2020).

- 6. Yassa MA & Stark CEL Pattern separation in the hippocampus. Trends Neurosci. 34, 515–525 (2011).

- 7. Holmes G Disturbances of vision by cerebral lesions. Br. J. Ophthalmol. 2, 353–384 (1918).

- 8. Wandell BA, Dumoulin SO & Brewer AA Visual field maps in human cortex. Neuron 56, 366–383 (2007).

- 9. Güçlü U & van Gerven MAJ Deep neural networks reveal a gradient in the complexity of neural representations across the ventral stream. J. Neurosci. 35, 10005–10014 (2015).

- 10. Groen IIA, Dekker TM, Knapen T & Silson EH. Visuospatial coding as ubiquitous scaffolding for human cognition. Trends Cogn. Sci. 10.1016/j.tics.2021.10.011 (2022).

- 11. Popham SF et al. Visual and linguistic semantic representations are aligned at the border of human visual cortex. Nat. Neurosci. 24, 1628–1636 (2021).

- 12. Huntenburg JM, Bazin PL & Margulies DS. Large-scale gradients in human cortical organization. Trends Cogn. Sci. 22, 21–31 (2018).

- 13. Margulies DS et al. Situating the default-mode network along a principal gradient of macroscale cortical organization. Proc. Natl Acad. Sci. USA 113, 12574–12579 (2016).

- 14. Bellmund JLS, Gärdenfors P, Moser EI & Doeller CF. Navigating cognition: spatial codes for human thinking. Science 362, eaat6766 (2018).

- 15. Szinte M & Knapen T. Visual organization of the default network. Cereb. Cortex 30, 3518–3527 (2020).

- 16. Klink PC, Chen X, Vanduffel W & Roelfsema PR. Population receptive fields in non-human primates from whole-brain fMRI and large-scale neurophysiology in visual cortex. eLife 10, e67304 (2021).

- 17. Dumoulin SO & Wandell BA. Population receptive field estimates in human visual cortex. Neuroimage 39, 647 (2008).

- 18. Silson EH, Chan AWY, Reynolds RC, Kravitz DJ & Baker CI. A retinotopic basis for the division of high-level scene processing between lateral and ventral human occipitotemporal cortex. J. Neurosci. 35, 11921–11935 (2015).

- 19. Kundu P, Inati SJ, Evans JW, Luh WM & Bandettini PA. Differentiating BOLD and non-BOLD signals in fMRI time series using multi-echo EPI. Neuroimage 60, 1759–1770 (2012).

- 20. Steel A, Garcia BD, Silson EH & Robertson CE. Evaluating the efficacy of multi-echo ICA denoising on model-based fMRI. Neuroimage 264, 119723 (2022).

- 21. Wang L, Mruczek REB, Arcaro MJ & Kastner S. Probabilistic maps of visual topography in human cortex. Cereb. Cortex 25, 3911–3931 (2015).

- 22. Yeo BTT et al. The organization of the human cerebral cortex estimated by intrinsic functional connectivity. J. Neurophysiol. 106, 1125–1165 (2011).

- 23. Shmuel A et al. Sustained negative BOLD, blood flow and oxygen consumption response and its coupling to the positive response in the human brain. Neuron 36, 1195–1210 (2002).

- 24. Steel A, Billings MM, Silson EH & Robertson CE. A network linking scene perception and spatial memory systems in posterior cerebral cortex. Nat. Commun. 12, 1–13 (2021).

- 25. Hasson U, Levy I, Behrmann M, Hendler T & Malach R. Eccentricity bias as an organizing principle for human high-order object areas. Neuron 34, 479–490 (2002).

- 26. Dilks DD, Julian JB, Paunov AM & Kanwisher N. The occipital place area is causally and selectively involved in scene perception. J. Neurosci. 33, 1331–1336 (2013).

- 27. Epstein R & Kanwisher N. A cortical representation of the local visual environment. Nature 392, 598–601 (1998).

- 28. Breedlove JL, St-Yves G, Olman CA & Naselaris T. Generative feedback explains distinct brain activity codes for seen and mental images. Curr. Biol. 10.1016/j.cub.2020.04.014 (2020).

- 29. Favila SE, Kuhl BA & Winawer J. Perception and memory have distinct spatial tuning properties in human visual cortex. Nat. Commun. 13, 5864 (2022).

- 30. Bastos AM et al. Canonical microcircuits for predictive coding. Neuron 76, 695–711 (2012).

- 31. Knapen T. Topographic connectivity reveals task-dependent retinotopic processing throughout the human brain. Proc. Natl Acad. Sci. USA 118, e2017032118 (2021).

- 32. Silson EH, Zeidman P, Knapen T & Baker CI. Representation of contralateral visual space in the human hippocampus. J. Neurosci. 41, 2382–2392 (2021).

- 33. Zabelina DL & Andrews-Hanna JR. Dynamic network interactions supporting internally-oriented cognition. Curr. Opin. Neurobiol. 40, 86–93 (2016).

- 34. Shulman GL et al. Common blood flow changes across visual tasks: II. Decreases in cerebral cortex. J. Cogn. Neurosci. 9, 648–663 (1997).

- 35. Fox MD et al. The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proc. Natl Acad. Sci. USA 102, 9673–9678 (2005).

- 36. Raichle ME. The brain’s default mode network. Annu. Rev. Neurosci. 38, 433–447 (2015).

- 37. Robertson CE et al. Neural representations integrate the current field of view with the remembered 360° panorama in scene-selective cortex. Curr. Biol. 26, 2463–2468 (2016).

- 38. Braga RM & Buckner RL. Parallel interdigitated distributed networks within the individual estimated by intrinsic functional connectivity. Neuron 95, 457–471.e5 (2017).

- 39. DiNicola LM, Braga RM & Buckner RL. Parallel distributed networks dissociate episodic and social functions within the individual. J. Neurophysiol. 123, 1144–1179 (2020).

- 40. Silson EH, Steel AD & Baker CI. Scene-selectivity and retinotopy in medial parietal cortex. Front. Hum. Neurosci. 10, 412 (2016).

- 41. Silson EH, Steel A, Kidder A, Gilmore AW & Baker CI. Distinct subdivisions of human medial parietal cortex support recollection of people and places. eLife 8, e47391 (2019).

- 42. Deen B & Freiwald WA. Parallel systems for social and spatial reasoning within the cortical apex. Preprint at bioRxiv 10.1101/2021.09.23.461550 (2021).

- 43. Ranganath C & Ritchey M. Two cortical systems for memory-guided behaviour. Nat. Rev. Neurosci. 13, 713–726 (2012).

- 44. Pertzov Y, Avidan G & Zohary E. Multiple reference frames for saccadic planning in the human parietal cortex. J. Neurosci. 31, 1059 (2011).

- 45. Gardner JL, Merriam EP, Movshon JA & Heeger DJ. Maps of visual space in human occipital cortex are retinotopic, not spatiotopic. J. Neurosci. 28, 3988 (2008).

- 46. Fetsch CR, Wang S, Gu Y, DeAngelis GC & Angelaki DE. Spatial reference frames of visual, vestibular, and multimodal heading signals in the dorsal subdivision of the medial superior temporal area. J. Neurosci. 27, 700–712 (2007).

- 47. Golomb JD & Kanwisher N. Higher level visual cortex represents retinotopic, not spatiotopic, object location. Cereb. Cortex 22, 2794–2810 (2012).

- 48. Silson EH, Groen IIA, Kravitz DJ & Baker CI. Evaluating the correspondence between face-, scene-, and object-selectivity and retinotopic organization within lateral occipitotemporal cortex. J. Vis. 16, 14 (2016).

- 49. Swisher JD, Halko MA, Merabet LB, McMains SA & Somers DC. Visual topography of human intraparietal sulcus. J. Neurosci. 27, 5326–5337 (2007).

- 50. Yeatman JD et al. The vertical occipital fasciculus: a century of controversy resolved by in vivo measurements. Proc. Natl Acad. Sci. USA 111, E5214–E5223 (2014).

- 51. Peirce JW. PsychoPy—psychophysics software in Python. J. Neurosci. Methods 162, 8–13 (2007).

- 52. Weiner KS et al. Defining the most probable location of the parahippocampal place area using cortex-based alignment and cross-validation. Neuroimage 170, 373–384 (2018).

- 53. Li X, Morgan PS, Ashburner J, Smith J & Rorden C. The first step for neuroimaging data analysis: DICOM to NIfTI conversion. J. Neurosci. Methods 264, 47–56 (2016).

- 54. Fischl B. FreeSurfer. Neuroimage 62, 774–781 (2012).

- 55. Fischl B et al. Whole brain segmentation: automated labeling of neuroanatomical structures in the human brain. Neuron 33, 341–355 (2002).

- 56. Dale AM, Fischl B & Sereno MI. Cortical surface-based analysis. I. Segmentation and surface reconstruction. Neuroimage 9, 179–194 (1999).

- 57. Saad ZS & Reynolds RC. SUMA. Neuroimage 62, 768–773 (2012).

- 58. Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput. Biomed. Res. 29, 162–173 (1996).

- 59. Jo HJ et al. Effective preprocessing procedures virtually eliminate distance-dependent motion artifacts in resting state FMRI. J. Appl. Math. 2013, 935154 (2013).

- 60. DuPre E et al. TE-dependent analysis of multi-echo fMRI with *tedana*. J. Open Source Softw. 6, 3669 (2021).

- 61. DuPre E et al. ME-ICA/tedana: 0.0.6. Zenodo 10.5281/ZENODO.2558498 (2019).

- 62. Evans JW, Kundu P, Horovitz SG & Bandettini PA. Separating slow BOLD from non-BOLD baseline drifts using multi-echo fMRI. Neuroimage 105, 189–197 (2015).

- 63. Argall BD, Saad ZS & Beauchamp MS. Simplified intersubject averaging on the cortical surface using SUMA. Hum. Brain Mapp. 27, 14–27 (2006).

- 64. Julian JB, Fedorenko E, Webster J & Kanwisher N. An algorithmic method for functionally defining regions of interest in the ventral visual pathway. Neuroimage 60, 2357–2364 (2012).

- 65. Gomez J, Barnett M & Grill-Spector K. Extensive childhood experience with Pokémon suggests eccentricity drives organization of visual cortex. Nat. Hum. Behav. 3, 611–624 (2019).

- 66. Gomez J et al. Development of population receptive fields in the lateral visual stream improves spatial coding amid stable structural-functional coupling. Neuroimage 188, 59–69 (2019).

- 67. R Core Team. R: a language and environment for statistical computing (R Foundation for Statistical Computing, 2013); www.r-project.org/

- 68. Lawrence MA. ez: easy analysis and visualization of factorial experiments. R package version 4.0.2 (2016).
