Abstract

Emotion and perception are tightly intertwined, as affective experiences often arise from the appraisal of sensory information. Nonetheless, whether the brain encodes emotional instances using a sensory-specific code or in a more abstract manner is unclear. Here, we answer this question by measuring the association between emotion ratings collected during a unisensory or multisensory presentation of a full-length movie and brain activity recorded in typically developed, congenitally blind and congenitally deaf participants. Emotional instances are encoded in a vast network encompassing sensory, prefrontal, and temporal cortices. Within this network, the ventromedial prefrontal cortex stores a categorical representation of emotion independent of modality and previous sensory experience, and the posterior superior temporal cortex maps the valence dimension using an abstract code. Sensory experience more than modality affects how the brain organizes emotional information outside supramodal regions, suggesting the existence of a scaffold for the representation of emotional states where sensory inputs during development shape its functioning.

Abstract and sensory coding of emotion coexist in the brain, with perceptual experience shaping sensory-dependent representations.

INTRODUCTION

The ability to comprehend and respond to affectively laden stimuli is vital: Observing the behavior of others enables us to predict their reactions and tailor appropriate responses (1, 2). Vision is our primary modality for acquiring knowledge and functioning in the external world, as it allows us to efficiently process a wealth of information from our surroundings [e.g., (3)]. For our social lives, we heavily rely on this sense to interpret nonverbal visual cues coming from other individuals, such as facial expressions and body postures, which convey crucial information about an individual’s affective state and intentions (4, 5). Also, recent research in artificial intelligence and neuroscience has highlighted the pivotal role of visual features in emotions, with convolutional neural networks predicting the emotional content of images and the activity of early visual cortex classifying perceived emotions (6). In line with this, the same mechanism responsible for the coding of stimulus properties in primary sensory areas (i.e., topographic mapping) supports the organization of affect in the right temporoparietal cortex (7).

While vision plays a dominant role in affective perception, the environment constantly engages multiple senses. Spoken communication, for instance, not only conveys semantic information but also provides cues about the speaker’s emotional state or intentions (8, 9), as in the case of vocal bursts (10–12). Similarly, humans retain a capacity for olfactory (13) and tactile (14) communication of emotion.

Understanding the interplay between different sensory modalities in the representation and expression of emotions has been a subject of interest in behavioral and brain studies [for a review, see (15)]. For instance, studies have shown that the multimodal presentation of emotional stimuli enhances recognition accuracy and speed (16, 17). This advantage may also reflect the brain organization, as regions like the superior temporal sulcus and the medial prefrontal cortex (mPFC) successfully categorize emotions across different modalities (18–20).

Despite substantial advances in the study of the interplay between perception and emotion, the majority of research has focused on unimodal emotional stimuli in typically developed individuals only [e.g., (21, 22)] making it challenging to discern whether and where in the brain emotional instances are represented using an abstract, rather than a sensory-dependent, code. In people with typical development, a fearful scream is likely to give rise to the mental imagery of someone in the act of screaming, and they are able to depict in their mind a specific facial expression and body posture, perhaps even a certain context, with a rich and dynamic representation close to what is commonly experienced in daily life (23, 24). Also, this process of mental imagery is associated with a specific pattern of brain activity in relation to the different sensory channels [see, for instance, (25, 26)]. Congenital sensory deprivation constitutes a unique model to dissect the differential contribution of specific sensory modalities to the brain representation of the external world accounting for mental imagery (27). In this regard, previous studies comparing typically developed and sensory-deprived individuals have consistently shown that the brain code for animacy (28, 29), spatial layout (30), and shape (31), among others, is primarily driven by specific computations rather than by modality or previous sensory experience (32–34).

This body of evidence prompts the inquiry of whether this sensory-independent principle, also known as supramodal organization, extends to the representation of affective states in the brain. Here, we answer this question by collecting moment-by-moment categorical and dimensional ratings of emotion during an auditory-only, visual-only, or multisensory version of a full-length movie (the “101 Dalmatians” live action movie) and by recording brain activity using functional magnetic resonance imaging (fMRI) in participants with typical development and in those who were blind or deaf since birth. Our results reveal an abstract and categorical representation of emotional states in the ventromedial prefrontal cortex (vmPFC) and of the valence dimension in the posterior portion of the superior temporal gyrus. In addition, we demonstrate that sensory experience more than modality affects how the brain organizes emotional information outside supramodal regions, suggesting the existence of a scaffold for the representation of emotional states where sensory inputs during development shape its functioning.

RESULTS

In this work, using real-time ratings of emotion and fMRI, we sought to answer six research questions aimed at dissecting the abstract, the modality-specific, and the experience-dependent coding of affect in the human brain. After having ensured that emotion reports were consistent between individuals, we asked (i) which brain regions encode the affective experience. For this purpose, we fit a categorical model of emotion into the activity of typically developed and sensory-deprived participants presented with the multisensory or unisensory versions of a naturalistic stimulus. Then, (ii) we tested through conjunction analyses whether specific brain areas represent emotion in an abstract manner, regardless of the stimulus modality and past sensory experience. Within the regions encoding the emotion model, we (iii) assessed the impact of sensory deprivation and modality by attempting to classify participants based on their brain activity. In regions representing the emotional experience through an abstract code, we tried to cross-decode emotion categories. For instance, we trained an algorithm on brain activity of people with typical sensory experience aiming to predict the emotional instances of congenitally blind or deaf individuals. This was (iv) to verify that these brain areas stored a specific, rather than an undifferentiated, experience of emotion. We then compared two alternative descriptions of the emotional experience (v) to reveal in which regions activity was better explained either by emotion categories (e.g., amusement and sadness) or affective dimensions (e.g., valence and arousal). Last, as in the case of emotion categories, we tried to predict the valence of the experience with the same cross-decoding approach, (vi) to test whether a coding of pleasantness independent of modality and previous sensory experience exists in the brain.

Behavioral experiments—Reliability of real-time valence ratings and categorical annotations of emotion

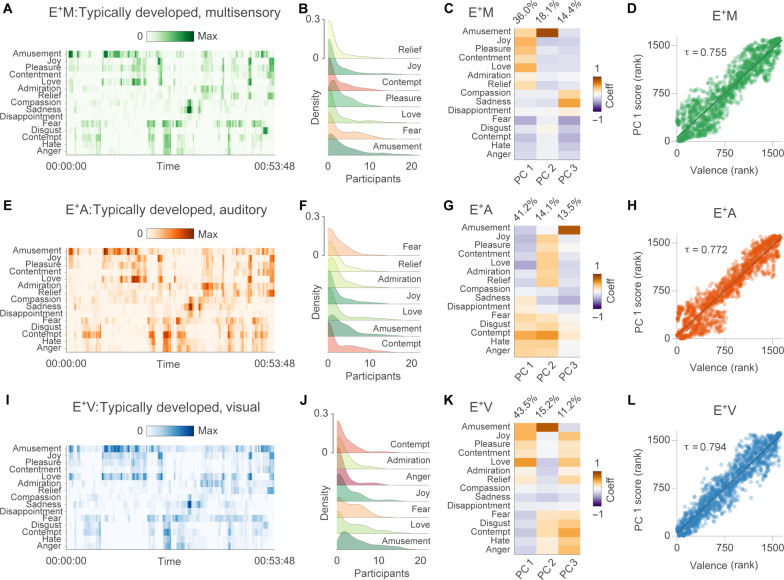

First, we explore the distribution of group-level moment-by-moment categorical reports of a collection of 15 emotions elicited by the naturalistic stimulation. Amusement, love, and joy are the positive emotions more frequently used by typically developed individuals (E+) to describe the affective experience across the multisensory (M), the auditory-only (A), and the visual-only (V) modalities. Negative states, instead, are more often labeled as fear or contempt (Fig. 1, A, B, E, F, I, and J). Single-participant annotations of the affective experience are reported in figs. S1 to S3. Using principal components (PC) analysis, we show that the first component, which contrasts positive and negative states, explains most of the variance in categorical ratings: 36.0% in the M condition, 41.2% in the A condition, and 43.5% in the V condition (Fig. 1, C, G, and K).

Fig. 1. Emotion ratings across sensory modalities.

(A) shows the group-level emotion ratings collected from typically developed participants in the multisensory condition (E+M). Darker colors indicate that a higher proportion of volunteers have reported the same emotion at a given point in time. (B) depicts the distribution of movie time points (i.e., density) as a function of the between-participant overlap in categorical ratings for the first seven emotions. In (C), we show the loadings and the explained variance of the first three PCs obtained from the emotion–by–time point group-level matrix. In (D), we report the correlation between the first PC and valence ratings collected in independent participants. (E) to (H) and (I) to (L) summarize the same information for the auditory (E+A) and the visual (E+V) conditions, respectively.

Also, in each modality, average dimensional ratings of valence obtained from independent participants correlate very strongly with the scores of the first PC extracted from categorical reports (M valence ~ M PC 1: τ = 0.755, M valence ~ M PC 2: τ = 0.138, and M valence ~ M PC 3: τ = 0.012; A valence ~ A PC 1: τ = 0.772, A valence ~ A PC 2: τ = 0.046, and A valence ~ A PC 3: τ = 0.038; V valence ~ V PC 1: τ = 0.794, V valence ~ V PC 2: τ = 0.018, and V valence ~ V PC 3: τ = 0.034; Fig. 1, D, H, and L).

The analysis of the between-participant agreement in categorical ratings reveals that the median Jaccard similarity index is J = 0.245 for sadness, J = 0.225 for amusement, J = 0.181 for love, and J = 0.158 for joy in the M condition. In the A condition, the highest median between-participant correspondence in categorical ratings is observed for amusement (J = 0.183), followed by contempt (J = 0.177), love (J = 0.176), and sadness (J = 0.167). Last, in the V condition, amusement (J = 0.229), sadness (J = 0.172), joy (J = 0.152), and love (J = 0.147) are the categories used more similarly by participants. For all emotion categories and modalities, the average Jaccard index is significantly higher than one might expect by chance (P valueBonferroni < 0.050). This finding confirms that our method can be used to reliably record the affective experience in real-time and that naturalistic stimulation significantly synchronizes people’s feelings. Nevertheless, the comparatively low value of the Jaccard index suggests that factors specific to each individual, such as personal experiences, cultural backgrounds, and linguistic nuances, may contribute to the variability in emotional reports. As far as the valence ratings are concerned, median between-participant Spearman’s correlation is moderate for all conditions (M: ρ = 0.483, A: ρ = 0.572, V: ρ = 0.456).
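As a minimal sketch of the agreement measure reported above, the Jaccard index for one emotion can be computed from two raters' binary time courses (1 = emotion reported at that time point) and then summarized by the median over all pairs of raters. The function names, the toy data, and the convention of returning 0 when neither rater ever reports the emotion are assumptions made for illustration; this is not the authors' analysis code.

```python
from itertools import combinations
from statistics import median


def jaccard(a, b):
    """Jaccard index between two binary time courses of equal length."""
    if len(a) != len(b):
        raise ValueError("time courses must have the same length")
    both = sum(1 for x, y in zip(a, b) if x and y)
    either = sum(1 for x, y in zip(a, b) if x or y)
    # Assumption: J is defined as 0 when neither rater reports the emotion.
    return both / either if either else 0.0


def median_pairwise_jaccard(ratings):
    """Median Jaccard index over all pairs of raters for one emotion."""
    return median(jaccard(a, b) for a, b in combinations(ratings, 2))


# Toy data: three hypothetical raters, eight time points, one emotion.
toy = [
    [0, 1, 1, 1, 0, 0, 0, 0],
    [0, 0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 1, 1, 0, 0, 1],
]
print(median_pairwise_jaccard(toy))
```

Because the index divides the overlap by the union of reported time points, even raters who broadly agree obtain modest values when the onsets and offsets of their reports differ, which is one reason the values above can be reliable yet comparatively low.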

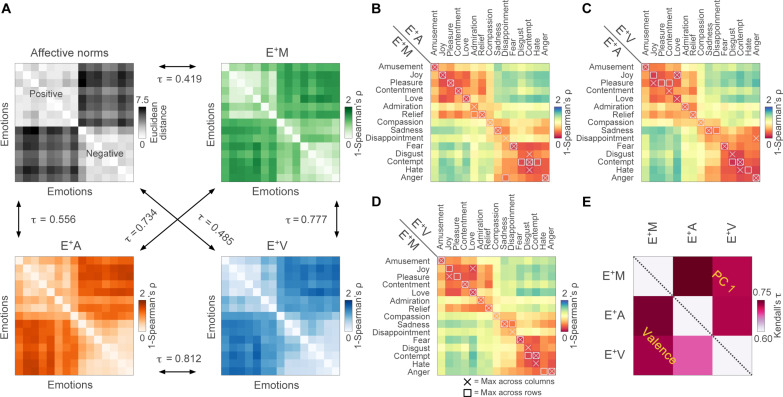

When exploring the similarity structure of behavioral ratings through representational similarity analysis (RSA), results show that group-level emotion ratings collected under the three experimental conditions relate to the arrangement of emotion labels within the space of affective norms (Fig. 2A). Specifically, the pairwise distance between emotions in the valence-arousal-dominance space correlates moderately with the representational dissimilarity matrix (RDM) built from categorical ratings collected in the M condition (τ = 0.419) and strongly with those relative to the A (τ = 0.556) and the V (τ = 0.485) modalities. Moreover, there is a very strong correlation between RDMs obtained from the three modalities, particularly between the two unimodal conditions (M ~ A: τ = 0.734, M ~ V: τ = 0.777, A ~ V: τ = 0.812).
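The RSA logic described above can be sketched as follows: build one dissimilarity matrix from the position of each emotion in the valence-arousal-dominance space, build another from the group-level rating time courses, and rank-correlate their upper triangles. The Euclidean distance, the tau-b variant of Kendall's correlation, and all numerical values below are assumptions for illustration only; they are not real affective norms or the study's data.

```python
from itertools import combinations
from math import dist, sqrt


def rdm_upper(points):
    """Upper triangle of a Euclidean RDM, one entry per pair of items."""
    return [dist(p, q) for p, q in combinations(points, 2)]


def kendall_tau_b(x, y):
    """Kendall's tau-b between two equal-length sequences (O(n^2))."""
    concordant = discordant = only_x_tied = only_y_tied = 0
    for (x1, y1), (x2, y2) in combinations(list(zip(x, y)), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue  # pairs tied in both variables drop out of tau-b
        if dx == 0:
            only_x_tied += 1
        elif dy == 0:
            only_y_tied += 1
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    untied_x = concordant + discordant + only_y_tied
    untied_y = concordant + discordant + only_x_tied
    denom = sqrt(untied_x * untied_y)
    return (concordant - discordant) / denom if denom else float("nan")


# Hypothetical valence-arousal-dominance coordinates (NOT real norms).
norms = {
    "joy": (0.9, 0.7, 0.8),
    "love": (0.9, 0.5, 0.7),
    "fear": (0.1, 0.8, 0.2),
    "sadness": (0.1, 0.3, 0.2),
}
# Hypothetical group-level ratings: proportion of raters reporting each
# emotion at five time points.
ratings = {
    "joy": (0.8, 0.6, 0.0, 0.0, 0.1),
    "love": (0.7, 0.5, 0.1, 0.0, 0.0),
    "fear": (0.0, 0.0, 0.9, 0.4, 0.0),
    "sadness": (0.0, 0.1, 0.3, 0.8, 0.6),
}
labels = sorted(norms)
norm_rdm = rdm_upper([norms[k] for k in labels])
rating_rdm = rdm_upper([ratings[k] for k in labels])
tau = kendall_tau_b(norm_rdm, rating_rdm)
print(f"tau between norm RDM and rating RDM: {tau:.3f}")
```

Only the upper triangle is compared because an RDM is symmetric with a zero diagonal, so the remaining entries carry no additional information.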

Fig. 2. Similarity in emotion ratings between modalities and their relation with affective norms.

(A) shows that group-level emotion ratings collected under the three experimental conditions correlate with each other and relate to the arrangement of emotions in the space of affective norms. In (B) to (D), we show that, in most of the cases, ratings of a specific emotion acquired under one condition correlate maximally with ratings of the same emotion acquired under a different condition. The bottom triangular part of the matrix in (E) depicts the correlation in valence between modalities, while the top triangular part shows the correlation in terms of PC1 scores derived from categorical reports.

Further exploring the specificity of categorical ratings of emotion across sensory modalities, we demonstrate that, in the vast majority of cases, the correlation between the time course of a specific emotion acquired under two distinct conditions is higher than its correlation with all other emotions (Fig. 2, B to D). When pairs of emotions are confounded between modalities, they are also similar in valence (e.g., joy and love) and semantically related (e.g., contempt, hate, and disgust).

Last, measuring the correlation between valence ratings collected under different sensory modalities (Fig. 2E, bottom triangular part), we observe strong associations for the M versus A (τ = 0.742) and the M versus V (τ = 0.728) conditions, as well as a moderate correlation between the two unimodal conditions (A ~ V: τ = 0.679). When this analysis is repeated on PC scores from categorical ratings (Fig. 2E, top triangular part), we confirm the strength of the M versus A (τ = 0.766) and the M versus V (τ = 0.721) relationships and reveal a strong correlation between the two unimodal conditions as well (A ~ V: τ = 0.723).

fMRI experiment—Fitting the emotion model in brain activity

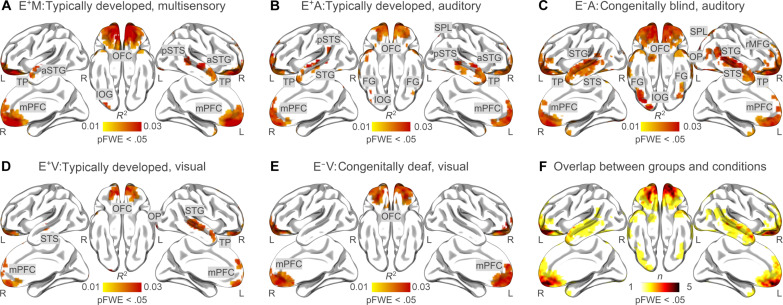

Brain activity evoked by 101 Dalmatians was measured in 50 volunteers. Participants were categorized into five groups based on their sensory experience and on the stimulus presentation modality. A group of congenitally blind individuals listened to the auditory-only stimulus (E−A), a sample of congenitally deaf watched the silent film (E−V), and three groups of typically developed individuals were presented with either the auditory-only (E+A), the visual-only (E+V), or the multisensory (E+M) versions of the stimulus. We used a voxel-wise encoding approach to identify brain regions that are involved in the representation of affect across different sensory modalities and in individuals with varying sensory experiences. Results in typically developed individuals presented with the multisensory version of the movie (i.e., E+M) show that the emotion category model is encoded bilaterally in the lateral orbitofrontal cortex (lOFC), the vmPFC, the mPFC, and the anterior portion of the bilateral superior temporal gyrus (aSTG). Also, the affective experience is mapped in the central and posterior segments of the right superior temporal gyrus and sulcus (STG/STS), the right superior parietal lobule (SPL), and the right inferior occipital gyrus (IOG; P valueFWC < 0.05, Fig. 3A).

Fig. 3. Group-level voxel-wise encoding of the emotion model.

(A) to (E) show the results of the group-level voxel-wise encoding analysis after correction for multiple comparisons (cluster-based correction, cluster forming threshold: P valueCDT < 0.001; family-wise threshold: P valueFWC < 0.05). Ratings of 15 distinct emotion categories provided by 62 E+ (M: n = 22; A: n = 20; V: n = 20) watching and/or listening to the 101 Dalmatians live action movie were used to explain fMRI activity recorded in E+ [(A) M: n = 10; (B) A: n = 10; (D) V: n = 10] and E− [(C) A: n = 11; (E) V: n = 9] people presented with the same movie. (F) shows the overlap of the group-level encoding results (P valueFWC < 0.05) between all groups and conditions. OFC, orbitofrontal cortex; pSTS, posterior superior temporal sulcus; rMFG, rostral middle frontal gyrus; TP, temporal pole; L, left hemisphere; R, right hemisphere.

When typically developed individuals listen to the audio version of the movie (i.e., E+A), emotion categories are represented bilaterally in the lOFC, the mPFC, the vmPFC, the STG/STS, the IOG, the SPL, the fusiform gyrus (FG), and in the left supramarginal gyrus (P valueFWC < 0.05, Fig. 3B). Similarly, the brain of congenitally blind individuals (i.e., E−A) represents emotions in the bilateral mPFC, the vmPFC, the lOFC, the STG/STS, the IOG, and the FG. In addition to these regions, the activity of the right lingual gyrus (LG), the right occipital pole (OP), the right ventral diencephalon, and the bilateral rostral middle frontal gyrus encodes the affective experience in congenitally blind people listening to the movie (P valueFWC < 0.05, Fig. 3C).

In the video-only condition, emotional instances are mapped mainly in the bilateral lOFC, the mPFC, the vmPFC, the right STG/STS, the right LG, and the right OP of the typically developed brain (E+V; P valueFWC < 0.05, Fig. 3D). Concerning the results obtained from congenitally deaf individuals (i.e., E−V), the affective experience is represented in the bilateral lOFC, the mPFC, the vmPFC, and the left STG/STS (P valueFWC < 0.05, Fig. 3E). Overall, the mPFC, the vmPFC, the OFC, and the STS represent emotion categories across the majority of conditions and in people with varying sensory experience (Fig. 3F).

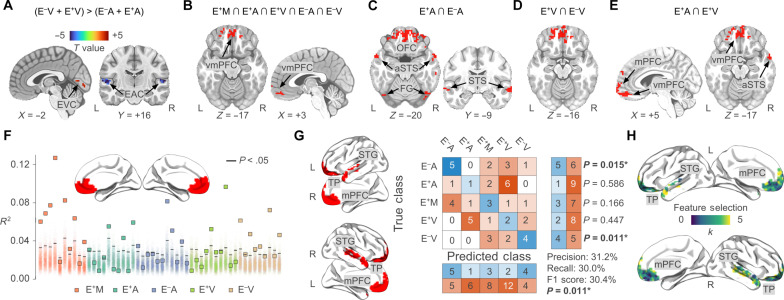

fMRI experiment—Univariate contrasts and conjunction analyses

Univariate comparisons between people with and without sensory deprivation—i.e., E−A ≠ E+A; E−V ≠ E+V; (E−A + E−V) ≠ (E+A + E+V)—show no significant differences in the extent to which individual voxels encode the emotion model (all clusters P valueFWC > 0.05). Instead, we observe that, regardless of sensory experience, emotion categories are encoded in the bilateral auditory cortex with larger fitting values when people are presented with the audio-only version of the movie—i.e., (E−V + E+V) < (E−A + E+A)—(Fig. 4A and Table 1). At the same time, the emotion model fits more the bilateral early visual cortex when typically developed and congenitally deaf people watch the silent movie—i.e., (E−V + E+V) > (E−A + E+A)—(Fig. 4A and Table 1).

Fig. 4. Univariate and multivariate analyses of the emotion network and its association with sensory modality and experience.

(A) depicts regions encoding the emotion model as a function of sensory modality. In red, voxels showing higher fitting values in the visual modality, and in blue those specific for the auditory one. (B to E) summarize the results of conjunction analyses. Areas in red in (B) map the emotion model regardless of sensory experience and modality. In (C) and (D), we show the overlap between typically developed and sensory-deprived individuals presented with the auditory and the visual stimulus, respectively. (E) represents the convergence between voxels encoding affect in unisensory modalities in typically developed participants. (F) shows single-participant results of the association between emotion ratings and the average activity of vmPFC. Squares represent the fitting of the emotion model in each participant (typically developed multisensory, E+M: red; typically developed auditory, E+A: cyan; congenitally blind auditory, E−A: blue; typically developed visual, E+V: green; congenitally deaf visual, E−V: brown). Shaded areas refer to single-participant null distributions, and solid black lines represent P value < 0.05. In (G), we show the results of multivoxel pattern classification analysis. Voxel-wise encoding R² maps are used to predict sensory experience and stimulus modality. The central part of the panel shows the confusion matrix and the performance of the multiclass (n = 5; chance ~20%) cross-validated (k = 5) classifier. Classification performance is significantly different from chance (P value = 0.011) and driven by the successful identification of sensory-deprived individuals. Feature importance analysis (H) shows that voxels of vmPFC were rarely (or never) selected to predict sensory experience and modality. aSTS, anterior superior temporal sulcus; EVC, early visual cortex; EAC, early auditory cortex.

Table 1. Results of univariate contrasts and conjunction analyses.

mOFC, medial orbitofrontal cortex; aSFG, anterior superior frontal gyrus; FP, frontal pole; aSTS, anterior superior temporal sulcus; pSTS, posterior superior temporal sulcus; TP, temporal pole; pOrbIFG, pars orbitalis of the inferior frontal gyrus; dmPFC, dorsomedial prefrontal cortex; LOC, lateral occipital cortex; ITG, inferior temporal gyrus; MTG, middle temporal gyrus; aMFG, anterior middle frontal gyrus.

| Cluster ID | Region | Coordinates |
|---|---|---|
| Contrast: (E−V + E+V) < (E−A + E+A) | | |
| 1 | Left TE 1.2 | −58, −15, +5 |
| 2 | Right TE 1.0 | +58, −14, +5 |
| 3 | Right TE 1.1 | +38, −31, +17 |
| 4 | Left TE 3.0 | −62, −22, +3 |
| Contrast: (E−V + E+V) > (E−A + E+A) | | |
| 1 | Bilateral Area 17 | 0, −84, −2 |
| 2 | Right Area 17 | +3, −71, +7 |
| Conjunction: E+M ∩ E+A ∩ E+V ∩ E−A ∩ E−V | | |
| 1 | Left mOFC | −4, +52, −17 |
| 2 | Right lOFC | +20, +43, −19 |
| 3 | Left aSFG | −18, +70, +11 |
| 4 | Left lOFC | −24, +46, −17 |
| 5 | Bilateral FP | 0, +67, +8 |
| 6 | Right aSFG | +6, +67, −2 |
| Conjunction: E+A ∩ E−A | | |
| 1 | Left mOFC | −1, +60, −5 |
| 2 | Right aSTS | +61, −8, −13 |
| 3 | Left aSTS | −59, +1, −18 |
| 4 | Left pSTS | −69, −38, +2 |
| 5 | Left TP | −31, +21, −30 |
| 6 | Right lOFC | +35, +24, −26 |
| 7 | Left aSFG | −14, +59, +37 |
| 8 | Right FG | +44, −67, −20 |
| 9 | Right pOrbIFG | +36, +47, −20 |
| 10 | Right dmPFC | +1, +58, +27 |
| 11 | Left pSTS | −62, −26, −2 |
| 12 | Right FG | +46, −50, −25 |
| 13 | Right LOC | +40, −76, −20 |
| 14 | Right ITG | +50, −60, −23 |
| 15 | Left FG | −44, −71, −20 |
| 16 | Right MTG | +68, −34, −13 |
| 17 | Right MTG | +61, −27, −20 |
| 18 | Right lOFC | +42, +24, −18 |
| 19 | Right aMFG | +40, +58, −12 |
| 20 | Right aMFG | +33, +58, −11 |
| 21 | Right aMFG | +25, +52, +39 |
| Conjunction: E+V ∩ E−V | | |
| 1 | Left mOFC | −7, +51, −16 |
| 2 | Left FP | −4, +68, −7 |
| 3 | Right lOFC | +20, +43, −19 |
| 4 | Left dmPFC | −3, +61, +14 |
| 5 | Left aSFG | −18, +70, +11 |
| 6 | Right aSFG | +17, +71, +9 |
| Conjunction: E+A ∩ E+V | | |
| 1 | Left mOFC | −1, +59, −13 |
| 2 | Right aSTG | +61, −1, −15 |
| 3 | Right aSFG | +14, +68, +20 |
| 4 | Left dmPFC | −2, +63, +21 |
| 5 | Right pSTS | +65, −34, 0 |
| 6 | Left STS | −65, −17, −6 |
| 7 | Left lOFC | −25, +44, −18 |
| 8 | Right aMFG | +21, +69, −5 |

The conjunction analysis between groups and conditions demonstrates that the vmPFC, the frontal pole, and the lOFC all map emotions regardless of the sensory experience and stimulus modality (equation e; Fig. 4B and Table 1). In addition, we show that the overlap between regions encoding affect in blind and sighted individuals listening to the audio-only movie extends to the bilateral temporal and occipital cortex, such as the STG/STS and the FG (equation f; Fig. 4C and Table 1). Instead, the conjunction between normally hearing and congenitally deaf volunteers watching the silent movie reveals that only medial prefrontal areas are involved in the mapping of emotion categories (equation g; Fig. 4D and Table 1). Last, when considering voxels encoding affect across modalities in typically developed people, we observe convergence in frontal and temporal regions (equation h; Fig. 4E and Table 1).
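The conjunction logic can be illustrated with a minimal sketch that treats each group-level result as the set of voxels surviving correction and keeps only the voxels shared by every set. This assumes that a conjunction amounts to a logical AND over thresholded maps; the exact definitions (equations e to h) are given elsewhere in the paper, and the voxel indices and the `conjunction` helper below are hypothetical.

```python
def conjunction(*significant_maps):
    """Voxels that are significant in every input map (logical AND)."""
    if not significant_maps:
        raise ValueError("at least one map is required")
    shared = set(significant_maps[0])
    for voxels in significant_maps[1:]:
        shared &= set(voxels)
    return shared


# Hypothetical voxel indices surviving correction in each group.
maps = {
    "E+M": {10, 11, 12, 40},
    "E+A": {10, 11, 30, 31},
    "E-A": {10, 11, 30, 50},
    "E+V": {10, 11, 12, 60},
    "E-V": {10, 11, 70},
}

all_groups = conjunction(*maps.values())          # five-way conjunction
auditory = conjunction(maps["E+A"], maps["E-A"])  # blind and sighted listeners
print(sorted(all_groups), sorted(auditory))
```

In this toy case the five-way conjunction is a subset of each pairwise one, mirroring the pattern above in which the overlap between blind and sighted listeners extends beyond the regions shared by all groups.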

To further show that the activity of the vmPFC is associated with emotion ratings at the single-participant level and avoid double-dipping (35), we obtain a mask of this region from the website version of Neurosynth (36) (https://neurosynth.org/; term: “vmpfc,” association test) and extract the average vmPFC signal across voxels in each participant. The resulting time series is used as a dependent variable in a general linear model having the emotion ratings as predictors. The strength of the association between the categorical model of emotion and the vmPFC average activity is measured using the coefficient of determination R², and statistical significance is assessed by permuting the time points of the predictor matrix 2000 times.
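The single-participant test described above can be sketched in plain Python: fit an ordinary least-squares model of the regional time series on the rating predictors, record the coefficient of determination, and compare it with a null distribution obtained by shuffling the rows (time points) of the predictor matrix. The helper names, the toy data, the seed, and the "+1" correction in the P value are assumptions for illustration; the authors' implementation may differ, for instance in how temporal autocorrelation is handled.

```python
import random


def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [v - f * w for v, w in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def r_squared(X, y):
    """Coefficient of determination of an OLS fit of y on X plus intercept."""
    rows = [[1.0] + list(r) for r in X]
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(p)]
    beta = solve(xtx, xty)
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    mean_y = sum(y) / len(y)
    ss_res = sum((t - f) ** 2 for t, f in zip(y, fitted))
    ss_tot = sum((t - mean_y) ** 2 for t in y)
    return 1.0 - ss_res / ss_tot


def permutation_p(X, y, n_perm=2000, seed=0):
    """Observed R^2 and its P value under row-shuffled predictors."""
    rng = random.Random(seed)
    observed = r_squared(X, y)
    rows = [list(r) for r in X]
    exceed = 0
    for _ in range(n_perm):
        rng.shuffle(rows)  # permute the time points of the predictor matrix
        if r_squared(rows, y) >= observed:
            exceed += 1
    # Assumption: count the observed value as one permutation (+1 correction).
    return observed, (exceed + 1) / (n_perm + 1)


# Toy data: 40 time points, two hypothetical rating predictors.
rng = random.Random(42)
X = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(40)]
y = [2.0 * a - 1.0 * b + rng.gauss(0, 0.5) for a, b in X]
observed, p_value = permutation_p(X, y)
print(f"R^2 = {observed:.3f}, permutation P value = {p_value:.4f}")
```

Shuffling the predictor rows breaks the temporal alignment between ratings and signal while leaving the predictors' own covariance intact, so the null distribution reflects the fit expected from a model of the same size with no true relationship.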

Results show that the emotion model significantly relates to the average vmPFC activity (P value < 0.05) in 28 of 50 participants (Fig. 4F): 8 E+M, 5 E+A, 4 E−A, 4 E+V, and 7 E−V. We also tested whether the significance of this relationship could be explained by gender, age, or the level of understanding of the movie plot [for further details, please see (37)]. Participants were split into two groups based on the significance of the association (i.e., 28 versus 22), and this variable was entered together with the participants’ gender into a χ² test for independence. For age and accuracy at the postscan questionnaire, we used unpaired t tests instead. Results show that none of these variables relates to the significance of the association between the vmPFC average activity and the emotion ratings [gender: χ²(1) = 0.034, P value = 0.854; age: t-stat(48) = −1.017, P value = 0.314; level of understanding: t-stat(48) = −1.320, P value = 0.193].

fMRI experiment—Classification of participants’ sensory experience and stimulus modality from brain regions encoding the emotion model

As an alternative to the univariate approach, we test in a multivariate fashion whether the pattern obtained from the fitting of the emotion model provides sufficient information to identify the participants’ previous sensory experience and the stimulus modality they are presented with. Findings show above-chance classification (F1 score: 30.4%, precision: 31.2%, recall: 30.0%; P value = 0.011; Fig. 4G), particularly when it comes to identifying sensory-deprived individuals. The five-class classifier correctly recognizes 45.5% of E−A individuals (P value = 0.015) and 44.4% of E−V volunteers (P value = 0.011). Instead, we fail to predict the sensory modality to which typically developed individuals are exposed (E+A: 10.0%, P value = 0.586; E+V: 20.0%, P value = 0.447; E+M: 30.0%, P value = 0.166). Also, the feature importance analysis reveals that, while voxels of bilateral dorsomedial prefrontal cortex and left anterior STS contribute the most to the classification, those of bilateral vmPFC are never selected by the algorithm (Fig. 4H).
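To make the reported metrics concrete, the sketch below computes macro-averaged precision, recall, and F1 from a confusion matrix and checks that the five per-class recognition rates quoted above average to the overall recall. Macro-averaging (with F1 taken as the mean of per-class F1 scores) is an assumption that happens to be consistent with the recall figure; the small confusion matrix is hypothetical, since the actual one appears only in Fig. 4G.

```python
def macro_scores(confusion):
    """Macro-averaged precision, recall and F1 from a confusion matrix.

    confusion[i][j] counts items of true class i predicted as class j.
    """
    k = len(confusion)
    precisions, recalls, f1s = [], [], []
    for c in range(k):
        tp = confusion[c][c]
        predicted = sum(confusion[r][c] for r in range(k))
        actual = sum(confusion[c])
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return tuple(sum(v) / k for v in (precisions, recalls, f1s))


# Hypothetical two-class confusion matrix, for illustration only.
precision, recall, f1 = macro_scores([[2, 0], [1, 1]])
print(f"precision={precision:.3f} recall={recall:.3f} F1={f1:.3f}")

# Per-class recognition rates (%) quoted in the text.
reported_recall = {"E-A": 45.5, "E-V": 44.4, "E+A": 10.0, "E+V": 20.0, "E+M": 30.0}
mean_recall = sum(reported_recall.values()) / len(reported_recall)
print(f"mean per-class recall: {mean_recall:.2f}%")
```

With five classes, a classifier guessing at random would recognize about 20% of each group, so the two sensory-deprived groups are the only ones identified at more than twice that rate.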

fMRI experiment—Classification of emotion categories from patterns of brain activity encoding the emotion model

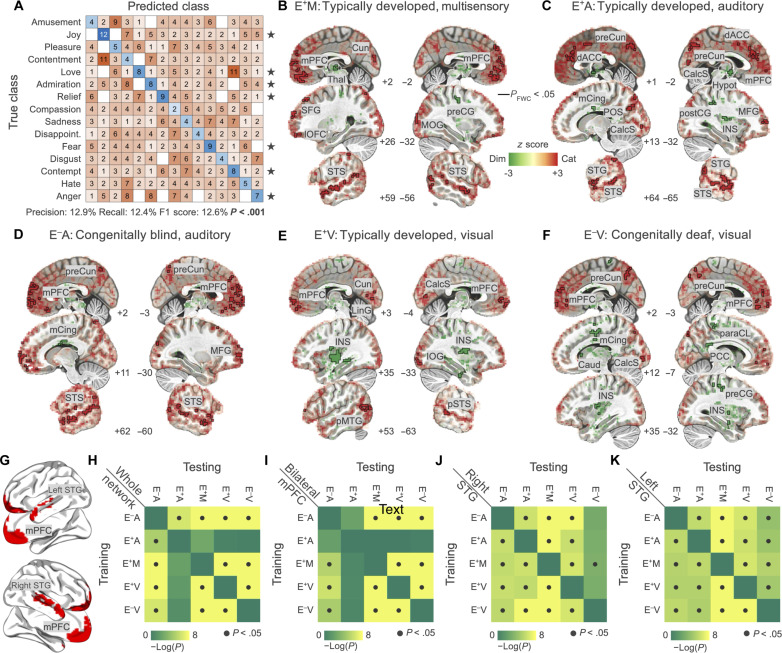

In the voxel-wise encoding analysis, we exclusively considered the fitting of the full model. Therefore, it could be argued that brain regions showing a significant association with the emotion model store the undifferentiated experience of a categorical emotion rather than the specific experience of an emotion. As this is a crucial aspect for the interpretation of our findings, particularly for what concerns the vmPFC, we tested whether emotional experiences could be classified from the activity pattern of this region. The prediction was performed in each group (e.g., test set: E+M) by training the classifier on individuals with a different sensory experience and exposed to a different stimulus modality (training set: E+A, E−A, E+V, and E−V), as in a cross-decoding analysis. Results show that, overall, it is possible to cross-decode emotion categories based on the pattern of mPFC (precision: 12.9%, recall: 12.4%, F1 score: 12.6%; P < 0.001; Fig. 5A). In addition, the analysis of classification performance at the single-class level reveals that 7 of 15 emotions can be predicted significantly better than chance. Among these, four are positive states: joy (F1 score: 24.7%; P < 0.001), love (F1 score: 17.0%; P = 0.004), admiration (F1 score: 16.7%; P = 0.004), and relief (F1 score: 19.0%; P = 0.001). Instead, three are negative emotions: fear (F1 score: 16.4%; P = 0.006), contempt (F1 score: 15.1%; P < 0.016), and anger (F1 score: 15.7%; P = 0.008). Because classification is performed using a cross-decoding approach, the pattern of mPFC activity for these seven emotions is shared between stimulus modalities and does not depend on previous sensory experience. Also, we report in fig. S4 the group average normalized maps of regression coefficients for all significant emotions.

Fig. 5. Direct comparison between the categorical and dimensional encoding models and cross-decoding of emotion categories and valence ratings from brain activity.

In (A), results for the decoding of emotion categories from mPFC activity. Standardized fitting coefficients of the emotion model are used to train a classifier and predict left-out observations from one group and condition. Performance of the classifier is significantly different from chance (P value < 0.001; black stars indicate single-class significance). In (B to F), red indicates that brain activity is better explained by the categorical model while green by the dimensional one. The black outline identifies regions in which the comparison is significant (P valueFWC < 0.05). (G) depicts areas included in all cross-decoding procedures. In (H) to (K), we show the results of the cross-validated ridge regression aimed at predicting valence ratings from brain activity. We trained the algorithm on each group and condition and tested the association between brain activity and valence in all other groups and conditions. Results are summarized by the 5-by-5 matrices showing the significance of the prediction for each pairing (dark gray dots denote P value < 0.05). Cun, cuneus; Thal, thalamus; preCG, precentral gyrus; MOG, middle occipital gyrus; preCun, precuneus; dACC, dorsal anterior cingulate cortex; mCing, mid-cingulate cortex; POS, parieto-occipital sulcus; CalcS, calcarine sulcus; Caud, caudate nucleus; Hypot, hypothalamus; postCG, postcentral gyrus; MFG, middle frontal gyrus; INS, insula; LinG, lingual gyrus; pMTG, posterior middle temporal gyrus; paraCL, paracentral lobule; PCC, posterior cingulate cortex.

fMRI experiment—Comparing the fitting of the categorical and dimensional encoding models

To answer the question of whether and where in the brain the representation of affect is better explained by a categorical rather than a dimensional account, we conducted a voxel-wise direct contrast between the adjusted coefficients of determination (adjR²) obtained from two alternative fitting models: a categorical model of emotion with 15 predictors and a dimensional one with 4 columns (the first being the valence dimension). Results show that, compared to the dimensional model, a categorical account of the self-reported emotional experience better explains the mPFC activity in all groups and conditions (P valueFWC < 0.05; Fig. 5, B to F). The same is true for the activity of the superior temporal cortex, with the exception of E−V (Fig. 5F). The medial parietal cortex (e.g., precuneus and parieto-occipital sulcus) shows a significant preference for the categorical model as well, although results appear more variable across groups and conditions. In addition, we observe regions where the dimensional model explains brain activity to a larger extent than the categorical one. These include the mid-cingulate cortex in E+A, E−A, and E−V individuals (Fig. 5, C, D, and F), the insula in E+A, E+V, and E−V participants (Fig. 5, C, E, and F), as well as the somatomotor cortex (e.g., pre- and postcentral gyrus and paracentral lobule) and subcortical regions (e.g., thalamus, hypothalamus, and caudate nucleus) in the E+M, E+A, and E−V groups (Fig. 5, B, C, and F).
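The reason for contrasting adjusted rather than raw coefficients of determination is that the two models differ in size (15 versus 4 predictors), and a larger model can only fit the data as well or better. A minimal sketch using the standard adjustment for a linear model with an intercept is shown below; the number of time points and the raw fit value are hypothetical, and the exact formula used in the study is assumed rather than confirmed.

```python
def adjusted_r2(r2, n_obs, n_predictors):
    """Standard adjusted R^2 for a linear model with an intercept."""
    dof = n_obs - n_predictors - 1
    if dof <= 0:
        raise ValueError("need more observations than predictors + 1")
    return 1.0 - (1.0 - r2) * (n_obs - 1) / dof


# Hypothetical case: both models reach the same raw R^2 on 1000 time points.
raw_r2, n_timepoints = 0.10, 1000
categorical = adjusted_r2(raw_r2, n_timepoints, 15)
dimensional = adjusted_r2(raw_r2, n_timepoints, 4)
print(f"categorical: {categorical:.4f}, dimensional: {dimensional:.4f}")
```

At equal raw fit the adjustment favors the smaller model, so a voxel that prefers the categorical account after adjustment does so despite the penalty for its additional predictors.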

fMRI experiment—Cross-decoding of valence from the activity of brain regions encoding the emotion model

To characterize the information content of brain regions significantly encoding the emotion model (Fig. 5G), we estimate the association between hemodynamic activity and valence ratings in each condition and group and then test whether the relationship holds in all other conditions and groups. Results show that the cross-decoding of hedonic valence from the whole emotion network is possible for all pairings of conditions and groups, with the exception of E+A (Fig. 5H). Specifically, using regression weights estimated in typically developed individuals listening to the movie, we successfully explain the association between brain activity and valence in congenitally blind individuals (E−A, P value = 0.007) but not in other groups (E+M, P value = 0.075; E+V, P value = 0.738; E−V, P value = 0.820). In line with this, the relationship between the hemodynamic activity and valence scores in E+A can be reconstructed from regression coefficients estimated in blind people exclusively (E−A, P value = 0.001; E+M, P value = 0.117; E+V, P value = 0.180; E−V, P value = 0.358).

To further improve the spatial specificity of the cross-decoding results, we conduct the same analysis on each region of the emotion network separately. Similar to what we report for the entire network, apart from E+A, cross-decoding is possible for all pairings of conditions and groups in the bilateral mPFC cluster (Fig. 5I). In this case, however, we have not been able to reconstruct the relationship between brain activity and valence in congenitally blind people starting from regression weights of typically developed individuals listening to the movie (P value = 0.131) nor vice versa (P value = 0.105). As far as the right STG cluster is concerned, we successfully cross-decode valence across all conditions and groups, with the exception of E−V (Fig. 5J). In particular, while the coefficients relating brain activity to valence scores in congenitally deaf individuals can be used to cross-decode pleasantness in people with typical development (E+A, P value <0.001; E+M, P value <0.001; E+V, P value = 0.001) and with congenital loss of sight (E−A, P value = 0.003), the opposite is true in the multisensory condition only (E+M, P value = 0.045; E−A, P value = 0.062; E+A, P value = 0.053; E+V, P value = 0.054). Last, regarding the left STG cluster, the cross-decoding of valence is significant for all conditions and groups (Fig. 5K).
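The logic of the cross-decoding procedure (train on one group and condition, test on all others) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the data are synthetic, the closed-form ridge solution with a fixed penalty stands in for the cross-validated ridge regression, the helper names are hypothetical, and Pearson correlation is used as a simple score in place of the significance testing reported in Fig. 5, H to K.

```python
import numpy as np


def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge weights, (X'X + alpha*I)^-1 X'y, on mean-centered data."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    penalty = alpha * np.eye(X.shape[1])
    return np.linalg.solve(Xc.T @ Xc + penalty, Xc.T @ yc)


def cross_decode(datasets, alpha=1.0):
    """Train on each dataset and test on every other one.

    Entry [i, j] is the correlation between the valence predicted in dataset j
    (using weights estimated in dataset i) and the valence observed in dataset j.
    The diagonal is left as NaN because training and test data would coincide.
    """
    names = list(datasets)
    scores = np.full((len(names), len(names)), np.nan)
    for i, train in enumerate(names):
        weights = fit_ridge(*datasets[train], alpha=alpha)
        for j, test in enumerate(names):
            if i == j:
                continue
            X_test, y_test = datasets[test]
            predicted = (X_test - X_test.mean(axis=0)) @ weights
            scores[i, j] = np.corrcoef(predicted, y_test)[0, 1]
    return names, scores


# Synthetic stand-in: 200 time points by 10 features per group, shared weights (assumed).
rng = np.random.default_rng(0)
true_weights = rng.normal(size=10)
datasets = {}
for label in ["E+M", "E+A", "E+V", "E-A", "E-V"]:
    activity = rng.normal(size=(200, 10))
    valence = activity @ true_weights + rng.normal(scale=0.5, size=200)
    datasets[label] = (activity, valence)

names, scores = cross_decode(datasets)
```

Because the synthetic groups share the same underlying weights, every off-diagonal entry of the 5-by-5 matrix is high here; in real data, a pairing such as those involving E+A may fail to generalize.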

DISCUSSION

In the present study, we explored how sensory experience and modality affect the neural representation of emotional instances, with the aim of uncovering to what extent the brain represents affective states through an abstract code. To this end, we used naturalistic stimulation under either unimodal or multimodal conditions and collected moment-by-moment categorical and dimensional emotion ratings. We also used fMRI to record brain activity in people with and without congenital auditory or visual deprivation while they were presented with the same emotional stimuli.

Our results reveal that the vmPFC represents emotion categories regardless of sensory experience and modality. In addition, we successfully decode the time course of emotional valence from the activity of the posterior portion of the superior temporal cortex (pSTS), even when participants lack visual or auditory inputs since birth. Through multivariate pattern classification, we show that sensory experience, but not stimulus modality, can be decoded from regions of the emotion network, except for vmPFC, which does not provide any discernible information for decoding. At the same time, this region stores a specific, rather than an undifferentiated, experience of emotion. The activity pattern of vmPFC for specific emotions, such as love or contempt, is shared between people with and without sensory deprivation. In addition to brain regions encoding the categorical description of the emotional experience, we report that the activity of the mid-cingulate, the insular, the somatomotor cortex and of the thalamus, the hypothalamus, and the caudate nucleus is better explained by affective dimensions, such as valence and arousal. Also, early sensory areas preferentially represent the emotion model based on the modality. Specifically, in blind and typically developed individuals exposed to the auditory movie, higher fitting values are observed in the early auditory cortex, whereas in the visual-only experiment, emotions are better fitted to the early visual areas of deaf and typically developed participants. Last, higher-order occipital regions, like the FG, encode the emotion model similarly in both sighted and blind individuals when listening to the narrative.

Understanding to what extent mental faculties and their corresponding neural representation can form and refine without sensory experience is of paramount importance. Previous research has shown that function-specific cortical modules can develop also in the case of congenital lack of sight or hearing (38). Although the study of the sensory-deprived brain has provided crucial insights into the architecture of human cognition, to what extent emotions are represented in a supramodal manner remains unknown.

Our voxel-wise encoding shows that vmPFC stores an abstract representation of emotion categories, as it is involved regardless of previous sensory experience and modality. To delve deeper into the role of this region, we examine with multivariate classification whether the pattern obtained from fitting the emotion model could effectively distinguish participants’ sensory experiences and the stimulus modality they are presented with. Multivariate results reinforce the notion of the supramodal nature of vmPFC, as information encoded in this area is not contaminated by sensory input. Also, within this region, the activity pattern for the experience of joy, love, admiration, relief, contempt, fear, and anger does not depend on sensory deprivation. Previous research on typically developed individuals only already pointed to the recruitment of this region in processing emotional stimuli conveyed through the visual, auditory, tactile, nociceptive, and olfactory modalities (18–20, 39). In this context, examining congenitally sensory-deprived individuals becomes essential as it ensures that the recruitment of the same brain area across various modalities does not depend on modality-specific mental imagery (27, 34, 38, 40). The vmPFC has been linked to the encoding of affective polarity and the regulation of emotional states (41–43). Also, lesions in this area alter emotional responses regardless of whether stimuli are presented through visual (44) or auditory modalities (45, 46). Together with these findings, our data support the idea that vmPFC is crucial for generating affective meaning (47, 48) and that such a meaning is represented in an experience- and modality-independent manner, as it is present in both the sensory-deprived and the typically developed brain.

Of note, according to the constructionist view, emotions can be conceptualized as abstract categories strongly dependent on language and culture (49, 50). In this regard, the fact that language helps in the construction of an emotional experience is supported by the evidence that brain regions processing semantics overlap with those involved in the representation of emotion. This is the case, for instance, for the mPFC and the temporoparietal regions (51). In line with this, we also observe the existence of an abstract code for the representation of emotion categories in vmPFC, which is independent of the sensory experience. It is interesting to note that, in our study, language was a communicative channel common across all stimulus modalities, either conveyed through speech in blind and normally hearing people or via subtitles in deaf and sighted participants. Although others reported convergence between modalities in vmPFC using emotionally laden low-level sensory stimuli (e.g., taste) (20), based on our findings, it could be hypothesized that the abstract code supporting the mapping of emotion in vmPFC relies on semantics. This idea aligns with a wealth of literature [e.g., (52)] suggesting that other regions of the brain, such as the anterior temporal lobe, store perceptual knowledge inaccessible to people with congenital sensory deprivation (e.g., blind people knowing the color of a banana) using a code based on language. The same mechanism also supports the development of complex social abilities such as theory of mind (53).

By obtaining categorical and valence ratings, we were also able to ascertain whether the abstract coding used by vmPFC could be accounted for by the dimension of pleasantness. Our findings indicate that the supramodal code of emotion in vmPFC is categorical rather than dimensional, as valence is mapped differently in typically developed individuals listening to the movie as compared to all other groups and conditions. Consistent with prior findings highlighting the influence of imagery on the perceptual processing of emotionally charged stimuli (54), we posit that visual imagery may underlie the distinctive mapping of pleasantness in the vmPFC of sighted individuals. The observation that the activity of this brain area cannot be explained merely by changes in valence dovetails with two other main findings in our study. First, we have been able to decode the experience of seven emotion categories from the pattern of vmPFC, suggesting that this area stores a specific, rather than an undifferentiated, experience of emotion. Second, a direct test comparing the categorical model and an alternative dimensional account of the experience reveals that activity of the mPFC is better explained by emotion categories in both sensory-deprived and typically developed people presented with different versions of the stimulus.

Notably, a recent study investigating perceived voice emotions has revealed the coexistence of both categorical and dimensional accounts of affective experiences in the brain, where the activity of frontal regions represents emotional instances in categories, whereas temporal areas through dimensions (55). Our findings not only reinforce but also expand the notion that the same emotional experience can be represented by distinct brain regions following either categorical or dimensional frameworks. We show that the activity of pSTS tracks changes in valence regardless of the stimulus modality and in people with and without congenital sensory deprivation. This area is known for its involvement in processing emotions (56, 57), with its connectivity being predictive of affective recognition performance (58). Moreover, pSTS is crucial for the multisensory integration of emotional information from faces and voices (59, 60), as well as from body postures (61, 62). The activity of the superior temporal cortex is also modulated by the valence of vocal expressions (63) and facial movements (64, 65). A prior study conducted on typically developed individuals showed that the left pSTS maps emotion categories, irrespective of the sensory modality involved (19). Our study confirms this finding, as we observe a convergence in left pSTS (x = −68, y = −14, z = −5) when examining the encoding results of the categorical model obtained from the multisensory, visual-only, and auditory-only experiments conducted in people with typical development. However, we also find that the encoding of emotional instances in pSTS is influenced by sensory experience, as congenitally deaf participants do not show a mapping of emotion categories in the same region. In this regard, it is well known that the superior temporal cortex of deaf people undergoes a sizable reorganization so that deafferented auditory areas are recruited during the processing of visual stimuli (66). 
The repurposing is more evident in the right hemisphere (67–69), and this might also account for our cross-decoding of valence from the left, but not the right, pSTS in congenitally deaf participants. These results collectively indicate that emotion category mapping in pSTS is contingent on sensory experience, as is the representation of valence in the right pSTS, whereas the left pSTS encodes pleasantness in a supramodal manner. Other brain areas, such as the mid-cingulate cortex, the insula, the somatomotor area, the thalamus, and the hypothalamus, show a preferential encoding of affective dimensions. Because valence explains the majority of variance in categorical ratings, it is unlikely that brain regions preferentially encoding affective dimensions are merely mapping the pleasantness of the experience. Other components, less associated with the distinction between positive and negative emotions, may be responsible for such a preference. In particular, among the first four affective dimensions, the one corresponding to the second component in the auditory modality and the third in the visual and multisensory experiments could reflect arousal. This intuition comes from two observations: (i) This component contrasts states generally characterized by lower physiological activation, such as sadness, compassion, or disappointment, with others inducing much stronger bodily reactions, such as fear, contempt, love, or joy; (ii) when plotted against each other (fig. S5), the valence ratings and the scores of the arousal component describe the typical V-shape observed across a number of datasets (70). Last, this hypothesis fits with the pattern of brain regions preferentially encoding the dimensional model, as previous studies reported the specific mapping of arousal in the thalamus and the hypothalamus (71), the mid-cingulate area (72), as well as in the insular cortex (73).

Together, our data indicate that the abstract coding of emotional instances is based on a categorical framework in vmPFC and a dimensional one in left pSTS. How does the brain represent emotional experiences beyond vmPFC and pSTS?

Our multivariate classification results reveal that sensory experience, more than the modality through which emotion is conveyed, plays a role in how the brain organizes emotional information. Behavioral studies have demonstrated that blind individuals have distinct experiences related to affective touch (74) and sounds [(10) but see also (75)] as compared to sighted individuals. Similarly, congenitally deaf people exhibit specific abilities in identifying musical happiness (76). Notwithstanding these differences in the processing of affective stimuli, the precise mechanisms through which sensory deprivation shapes how emotional experiences are encoded in the brain remain to be fully elucidated. In our data, we show a significant mapping of emotion categories in higher-order occipital regions, such as the FG, in both congenitally blind and typically developed individuals while listening to the movie. It is well known that the deafferented occipital cortex of blind people is recruited for perceptual [e.g., (77)] as well as more complex tasks [e.g., (53, 78)]. Most of these studies discuss this evidence in the context of crossmodal reorganization (79). In contrast, here, we show that the network of brain regions encoding emotion categories is similar between sensory-deprived and typically developed individuals, even beyond areas representing affect in a supramodal manner. In line with this, we observe null results in the within-modality comparisons of people with and without sensory deprivation. Hence, our data suggest that, as in the case of perceptual processes (80), the brain constructs a framework for the representation of emotional states, independently of sensory experience. Sensory inputs during development, however, shape the functioning of this scaffold.

If the influence of sensory experience is evident, that of sensory modality is less conspicuous upon first examination. As a matter of fact, when considering how distinct brain regions represent our emotion model, it is not possible to discern the specific stimulus that is presented to typically developed individuals. Nonetheless, we observe higher fitting values of the emotion model in the auditory cortices when participants are exposed to the audio version of the movie. Conversely, the video stimulus prompts higher fitting values in visual areas. This finding aligns with previous research, which has proposed the existence of emotion schemas within the visual cortex (6). The concept of emotion schema suggests that perceptual features consistently associate with distinct emotions and contribute to our comprehensive emotional experience. Our finding provides further support for the idea that emotions are not only processed in centralized supramodal emotional areas but also distributed across different sensory regions of the brain (81). The specificity of the relationship between sensory modality and elicited emotions is also testified by the differences we observe in the affective experience reported by participants of our behavioral studies. While amusement, love, joy, fear, and contempt are the predominant emotions across our stimuli, fear is more challenging to experience solely through the auditory modality, while contempt is more easily elicited in the audio version of the movie. These results are consistent with previous studies demonstrating distinct emotion taxonomies associated with different sensory modalities to convey affective states (82).

Last, we wish to acknowledge potential limitations of our study. First, we did not gather affective ratings from congenitally blind and deaf participants. This limitation arises from how we collect real-time reports of the affective experience in the context of naturalistic stimulation, which represents a real challenge (83). The precision of our method in estimating the onset and duration of a self-reported emotional instance, as well as the possibility to simultaneously collect information from multiple emotion categories, comes, unfortunately, at the cost of providing the participants with complex feedback (e.g., how many emotions, if any, are “on” at any given time during the movie) that, to the best of our knowledge and technical abilities, requires vision. Thus, while gathering real-time emotion reports in typically developed and deaf individuals is feasible with our method, doing the same in blind individuals is not. Of course, as far as reporting one’s own feelings is concerned, there could be alternatives for people lacking sight, such as verbal reports [e.g., pausing the movie at random times and asking participants how they feel, as in (48)]. However, we believe that having different methods for the collection of behavioral data between groups might have represented a bias in our work. Because of this, when conceiving the study, we opted to collect “normative” emotional reports from typically developed individuals only. Although the concept of normative in affective science may be controversial, the reliability of behavioral reports supports the rationale behind our choice. Future investigations could overcome this limitation by designing and implementing an innovative methodology to capture real-time emotion reports in the absence of sight. Second, although the between-subject similarity in emotion reports is significantly higher than one might expect by chance, we also note a degree of variability in the occurrence and duration of emotional instances (figs. S1 to S3). In this regard, a more evocative movie might better synchronize affective reports, resulting in better generalizability across individuals. In addition, our encoding models are based on the group-level emotion annotations provided by an independent group, thus limiting the possibility of explaining single-participant idiosyncrasies in the fMRI response. By collecting emotional reports and brain activity in the same individuals, instead, one might expect higher performance because of the fitting of such idiosyncrasies. However, this approach is not free from limitations either, as performing our task in the scanner recruits motor and attentional resources, which might represent a source of confound. Also, if the task is performed outside the scanner, being exposed to the same stimuli twice might alter the affective response. Third, we are aware that the sample size of each group could be considered small for classical fMRI studies, in which the acquisition time is typically limited to dozens of minutes. However, it is important to emphasize that across two experiments we acquired approximately 50 hours of fMRI data and 120 hours of behavioral reports. Also, we have not been able to collect personality characteristics of our participants, which might have a role in the brain representation of emotional experience. Future studies might address this by including a personality evaluation (e.g., Big Five Inventory) in the experimental protocol.

In conclusion, we have shown that emotional experiences are represented in an extensive network encompassing sensory, prefrontal, and temporal regions. Within this network, emotion categories are encoded using an abstract code in the vmPFC, and perceived valence is mapped in the left posterior superior temporal region independently of the stimulus modality and experience. Thus, abstract and sensory-specific representations of emotion coexist in the brain, as do categorical and dimensional accounts. Sensory experience, more than modality, affects how the brain organizes emotional information outside supramodal regions, suggesting the existence of a scaffold for the representation of emotional states where sensory inputs during development shape its functioning.

MATERIALS AND METHODS

Behavioral experiments—Participants

After providing written informed consent, a sample of 124 Italian native speakers with typical visual and auditory experience (E+) participated in a set of behavioral experiments. All participants retained the right to withdraw from the study at any time and received a small monetary compensation for their participation. They had normal hearing, normal or corrected vision, no history of neurological or psychiatric conditions, and no reported history of drug or alcohol abuse.

A first group of 62 participants provided categorical ratings of the affective experience associated with the movie 101 Dalmatians under three experimental conditions: listening to the auditory-only version of the movie (auditory-only condition, A; n = 20, 8 females, mean age ± SD = 36 ± 16), watching the silent film (visual-only condition, V; n = 20, 10 females, age = 29 ± 8), or watching and listening to the original version of the movie (multisensory condition, M; n = 22, 11 females, age = 30 ± 3). A second independent sample of 62 individuals provided valence ratings of the emotional experience evoked by the same movie under the same experimental conditions. Specifically, 21 individuals reported the (un)pleasantness of the experience during the M condition (13 females, age = 30 ± 5), 20 annotated the emotional valence during the A condition (9 females, age = 37 ± 11), and 21 participated in the V experiment (10 females, age = 29 ± 7). The mean age and the proportion of male and female individuals in each group did not differ from those of typically developed and sensory-deprived participants enrolled in the fMRI experiment (all P values > 0.05). All participants had either never watched the movie or had not watched it in the year before the experiment. The study was approved by the local Ethical Review Board (Comitato Etico Area Vasta Nord Ovest; protocol no. 1485/2017) and conducted in accordance with the Declaration of Helsinki.

Behavioral experiments—Stimuli and experimental paradigm

To study whether and to what extent sensory experience and stimulus modality affect the representation of emotions in the brain, we measured fMRI activity and real-time reports of emotion elicited by the 101 Dalmatians live-action movie (Walt Disney, 1996). We specifically selected 101 Dalmatians as it is characterized by a relatively simple plot that can be comprehended even by children or when it is narrated as in radio plays, a crucial requirement for our experimental goals. At the same time, the movie is rich in moments eliciting both positive (e.g., the wedding of the two lovers) and negative (e.g., the kidnapping of puppies) emotional states, as confirmed by the real-time affective reports we collected in the behavioral experiments (see below). A shortened version of 101 Dalmatians was created for M, A, and V stimulation (37). Scenes irrelevant to the main plot were removed to limit the overall duration of the experimental session to one hour, and the movie was split into six runs for fMRI protocol compliance. Six-second fade-in and fade-out periods were added at the beginning and the end of each run (iMovie software v10.1.10). A professional actor provided a voiceover of the story with a uniform pitch and no inflections in the voice, recorded in a studio insulated from environmental noise with professional hardware (Neumann U87ai microphone, Universal Audio LA 610 mk2 preamplifier, Apogee Rosetta converter, Apple MacOS) and software (Logic Pro). The voice track was then adequately combined with the original soundtracks and dialogues. Further, we added to each video frame subtitles of movie dialogues, text embedded in the video stream (e.g., newspaper), onomatopoeic sounds, and audio descriptions. Last, A and V versions of the movie were generated by discarding the video and audio streams, respectively.

Participants sat comfortably in a quiet room facing a 24″ Dell screen, wore headphones (Marshall Major III; 20 to 20,000 Hz; maximum SPL 97 dB), and were presented with either the multimodal or unimodal edited versions of the movie 101 Dalmatians. Volunteers were asked to report their moment-to-moment emotional experience (10-Hz sampling rate) using a collection of 15 emotion labels that were balanced between positive (amusement, joy, pleasure, contentment, love, admiration, relief, and compassion) and negative affective states (sadness, disappointment, fear, disgust, contempt, hate, and anger). As in our previous studies (7, 84), the emotion labels were displayed at the bottom of the screen, evenly spaced along the horizontal axis, with positive emotion categories on the left and negative ones on the right side. Participants could navigate through the emotions using the arrow keys on a QWERTY keyboard, and once selected, the label changed its color from white to red. The onset or end of an emotional instance was marked by pressing the keys “Q” or “A,” respectively. Subjects were able to select multiple emotions at a time and could constantly monitor their affective reports based on the changing color of the corresponding label. For each individual, we obtained a 32,280 (i.e., time points) by 15 (i.e., emotion labels) matrix of affective ratings, in which a value of 1 (or 0) indicated the presence (or absence) of a specific emotion at a given time. Before the experiment, participants received training on the task, in which a label appeared on the screen (e.g., joy), and they were asked to reach the corresponding emotion category and mark the onset as fast as they could. They were instructed to proceed to the actual experiment only if they felt comfortable performing the task.
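To make the structure of the resulting data concrete, the sketch below converts a list of reported emotional instances into the binary time point–by–emotion matrix described above. The event format (emotion index, onset, and offset in seconds), the function name, and the example events are hypothetical and chosen for illustration; only the 10-Hz sampling rate and the 32,280-by-15 matrix size come from the procedure.

```python
SAMPLING_HZ = 10       # sampling rate of the real-time reports
N_TIMEPOINTS = 32_280  # time points per participant
N_EMOTIONS = 15        # emotion labels


def ratings_matrix(events, n_timepoints=N_TIMEPOINTS,
                   n_emotions=N_EMOTIONS, hz=SAMPLING_HZ):
    """Build a binary time-by-emotion matrix from (emotion, onset_s, offset_s) events.

    A cell is 1 while the emotion is reported as present and 0 otherwise.
    Overlapping events for different emotions are allowed.
    """
    matrix = [[0] * n_emotions for _ in range(n_timepoints)]
    for emotion, onset_s, offset_s in events:
        start = max(0, round(onset_s * hz))
        stop = min(n_timepoints, round(offset_s * hz))
        for t in range(start, stop):
            matrix[t][emotion] = 1
    return matrix


# Hypothetical reports: emotion 1 from 12.0 to 15.5 s, emotion 6 from 14.0 to 20.0 s.
events = [(1, 12.0, 15.5), (6, 14.0, 20.0)]
matrix = ratings_matrix(events)

samples_emotion_1 = sum(row[1] for row in matrix)
samples_emotion_6 = sum(row[6] for row in matrix)
samples_both = sum(1 for row in matrix if row[1] and row[6])
```

In this toy case, the two instances overlap between 14.0 and 15.5 s, which illustrates how multiple emotions can be “on” at the same time point.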

We followed a similar procedure to collect real-time ratings of valence (i.e., dimensional ratings). This time, however, participants were provided with a single “valence” label and were asked to evaluate how pleasant or unpleasant their experience was at each moment by increasing (Q keypress) or decreasing (A keypress) the valence score. The valence scale ranged from −100 (extremely unpleasant) to +100 (extremely pleasant), the minimum step was set to 5 points, and a value of 0 indicated a neutral state. Participants were able to monitor their affective state in real-time and adjust the valence score at any moment (10-Hz sampling frequency). We collected a time series of 32,280 time points for each individual, with positive or negative values indicating the pleasantness or unpleasantness of their emotional experience at any given time. Both behavioral experiments were conducted using MATLAB (R2019b; MathWorks Inc., Natick, MA, USA) and Psychtoolbox v3.0.16 (85).

Behavioral experiments—Reliability of real-time valence ratings and categorical annotations of emotion

To evaluate the between-participant similarity in categorical ratings of emotion, for each volunteer and condition, we obtained the emotion–by–time point matrix expressing the onset and duration of emotional instances throughout the movie. We then computed the Jaccard similarity between all pairings of individuals. This index quantified the proportion of time points in which two volunteers reported the experience of the same state, over the number of time points in which either individual felt the emotion (0 = completely disjoint reports, 1 = perfect correspondence). The overlap between emotional reports was then summarized by computing the median across all possible pairings of participants in each condition and emotion category. Further, we tested the reliability of real-time reports of the affective experience by comparing the average Jaccard index measured between participants with a null distribution of Jaccard coefficients built from surrogate time series. Briefly, we used the amplitude-adjusted Fourier transform method (86) to generate 2000 emotion reports for each participant and emotion while keeping fixed the overall number of time points in which an emotional instance was reported. As Jaccard is computed for nonzero time points, this ensured the appropriateness of the null distribution (i.e., the union of time points was the same between the surrogate and the nonsurrogate time series). Then, for each emotion and modality, we obtained the 2000 average Jaccard indices under the null hypothesis of observing no overlap between emotional reports and estimated statistical significance by retrieving the position of the actual average Jaccard within the null distribution. We also adjusted the statistical significance threshold by the number of emotion categories (i.e., P valueBonferroni = 0.050; P valueUncorrected = 0.003). To complement the results obtained from this analysis, we computed a 1-idiosyncrasy index (depicted in figs. S1 to S3), which we believe could be informative in the context of measuring the agreement in emotional reports. Because Jaccard is computed for all time points in which at least one of two participants experienced a specific emotion (i.e., union), this index does not reflect the concordance in reporting the absence of an emotion. Our 1-idiosyncrasy index, instead, reaches its maximum (i.e., 1) whenever all participants either report the presence or the absence of a specific emotion at the same time and its minimum (i.e., 1 divided by the number of participants) when an emotion is reported by a single individual but not by all other participants (i.e., the maximum idiosyncrasy in the experience of an emotion). Thus, as compared to the Jaccard index, the 1-idiosyncrasy coefficient takes into account the between-participant concordance in reporting both the presence and the absence of emotion. For valence ratings, instead, the between-participant similarity was evaluated using Spearman’s ρ and summarized by computing the median of correlation values obtained from all pairings of participants in each condition. RSA (87) was used to measure whether group-level emotion ratings collected during movie watching recapitulated the arrangement of emotion categories within the space of affective dimensions. An advantage offered by this technique is that it allows comparing the information content obtained from different sources. Specifically, for each source of information (e.g., behavioral ratings during movie watching), an RDM is built by computing the distance between all possible pairings of elements (e.g., emotions). Different distance metrics can be adopted depending on the nature of the information (e.g., Spearman’s ρ, Euclidean). Then, dissimilarity matrices are compared (second-order isomorphism), typically using rank-correlation distance. 
Here, we first obtained from a large database of affective norms (88) the scores of valence, arousal, and dominance dimensions (1 to 9 Likert scale) (89) of each emotion label. An RDM was obtained by computing the between-emotion pairwise Euclidean distance in the space of affective dimensions. We then used Kendall’s τ to correlate the affective norms RDM with behavioral RDMs obtained from emotion ratings of each experimental condition (i.e., M RDM, A RDM, and V RDM). Behavioral RDMs were derived by estimating Spearman’s correlation ρ between all possible pairings of group-level emotion time series. In addition to evaluating the association between the affective norms RDM and each behavioral RDM, we also measured Kendall’s τ correlation between all pairings of behavioral RDMs, hence testing the correspondence between affective ratings collected under diverse sensory modalities. To further assess the specificity of categorical ratings across conditions, we tested whether the distance (Spearman’s ρ on group-level ratings) of a specific emotion acquired under two different experimental conditions (e.g., amusement M versus amusement A) was smaller than its distance to all other emotions (e.g., amusement M versus any other emotion A). This produced a nonsymmetric emotion-by-emotion RDM summarizing the distance between emotion ratings acquired under two modalities and was repeated for all condition pairings (i.e., M versus A, M versus V, and A versus V). For each emotion and RDM, we then identified the category with the minimum distance, thus allowing a direct inspection of the consistency of emotion ratings between sensory modalities. Last, to reveal the structure of categorical ratings and test their correspondence with valence scores collected in behavioral experiments, we performed PC analysis on the group-level emotion–by–time point matrix.
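
The comparison between the affective norms RDM and a behavioral RDM can be sketched as follows. This is only an illustration: the valence, arousal, and dominance scores and the behavioral dissimilarities are made-up placeholders rather than values from the norms database or from the ratings, and Kendall's τ is computed here in its tau-b form, which is an assumption about the variant.

```python
from itertools import combinations
from math import dist, sqrt


def euclidean_rdm(points):
    """Condensed RDM: Euclidean distance for every pairing of items."""
    return [dist(p, q) for p, q in combinations(points, 2)]


def kendall_tau_b(x, y):
    """Kendall's tau-b between two equal-length sequences (O(n^2) pairs)."""
    concordant = discordant = tied_x = tied_y = 0
    for (x1, y1), (x2, y2) in combinations(list(zip(x, y)), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue  # pair tied in both variables: ignored by tau-b
        if dx == 0:
            tied_x += 1
        elif dy == 0:
            tied_y += 1
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    pairs = concordant + discordant
    return (concordant - discordant) / sqrt((pairs + tied_x) * (pairs + tied_y))


# Hypothetical (valence, arousal, dominance) scores on a 1-to-9 scale.
norms = {
    "amusement": (7.8, 5.5, 6.2),
    "joy": (8.2, 5.6, 7.0),
    "fear": (2.9, 6.1, 3.3),
    "sadness": (2.4, 2.8, 3.8),
}
norms_rdm = euclidean_rdm(list(norms.values()))

# Hypothetical behavioral dissimilarities, same pair order as norms_rdm.
behavioral_rdm = [0.30, 1.20, 1.10, 1.30, 1.15, 0.60]

tau = kendall_tau_b(norms_rdm, behavioral_rdm)
```

Only the off-diagonal pairings enter the comparison, so each RDM is stored in condensed form (one value per pair of emotions).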
The number of components was set to three to comply with the affective norms by Warriner and colleagues (88), and the procedure was repeated on data obtained from the M, A, and V experiments. For each component, we inspected the variance explained and the coefficients of emotion categories. The time course of each component (i.e., PC scores) was also extracted and correlated (Kendall’s τ) with the behavioral valence scores collected under the same experimental condition (e.g., PC1 M versus valence M). In addition, we reported Kendall’s τ correlation between all possible condition pairings in terms of valence ratings and scores of the PCs. The strength of Spearman’s and Kendall’s correlations was interpreted following the recommendation from Schober and colleagues (90).

Behavioral experiments—The development of an emotion model for fitting brain activity

Single-participant matrices of categorical affective ratings were downsampled to the fMRI temporal resolution (i.e., 2 s) and then aggregated across individuals by summing the number of occurrences of each emotion at each time point. The resulting 1614 (i.e., downsampled time points) by 15 (i.e., emotions) matrix stored values ranging from 0 to the maximum number of participants experiencing the same emotion at the same time. To account for idiosyncrasies in group-level affective ratings, time points in which only one participant reported experiencing a specific emotion were set to 0. The group-level matrix of categorical ratings was then normalized by dividing values stored at each time point by the overall maximum agreement in the matrix. Because these ratings were used to explain the brain activity of independent participants in a voxel-wise encoding analysis, we convolved the 15 group-level emotion time series with a canonical hemodynamic response function (spm_hrf function; HRF) and added the intercept to the model. The entire procedure was repeated for ratings collected in the M, the A, and the V conditions. Similarly, single-participant valence ratings were downsampled to the fMRI temporal resolution and aggregated at the group level by computing the average valence score across individuals at each time point and convolved with a canonical HRF. These processing steps were applied to valence ratings obtained from the M, the A, and the V experiments.
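
The aggregation steps described above can be sketched as follows, assuming ratings that are already downsampled to the fMRI temporal resolution. The double-gamma kernel is a simple stand-in with commonly used shape parameters and is not the spm_hrf implementation; all function names and the toy ratings are hypothetical.

```python
from math import exp, gamma

TR = 2.0  # fMRI temporal resolution in seconds, as stated in the text


def double_gamma_hrf(tr, length=32.0):
    """Double-gamma kernel sampled every `tr` seconds (assumed shapes 6 and 16)."""
    def gamma_pdf(t, shape):
        return t ** (shape - 1) * exp(-t) / gamma(shape) if t > 0 else 0.0

    times = [i * tr for i in range(int(length / tr) + 1)]
    hrf = [gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0 for t in times]
    total = sum(hrf)
    return [h / total for h in hrf]


def group_model(ratings, hrf):
    """ratings[p][t][e] is 1 when participant p reports emotion e at time t."""
    n_time, n_emo = len(ratings[0]), len(ratings[0][0])
    # Sum the occurrences of each emotion across participants.
    counts = [[sum(p[t][e] for p in ratings) for e in range(n_emo)]
              for t in range(n_time)]
    # Reports made by a single participant are treated as idiosyncratic.
    counts = [[0 if c == 1 else c for c in row] for row in counts]
    # Normalize by the overall maximum agreement in the matrix.
    peak = max(max(row) for row in counts) or 1
    norm = [[c / peak for c in row] for row in counts]
    design = []
    for t in range(n_time):
        row = [sum(hrf[k] * norm[t - k][e] for k in range(min(t + 1, len(hrf))))
               for e in range(n_emo)]
        design.append(row + [1.0])  # intercept column
    return design


def make_ratings(n_time, n_emo, events):
    """Build a binary time-by-emotion matrix from (time, emotion) events."""
    matrix = [[0] * n_emo for _ in range(n_time)]
    for t, e in events:
        matrix[t][e] = 1
    return matrix


# Three hypothetical participants, six time points, two emotions.
ratings = [
    make_ratings(6, 2, [(1, 0), (2, 0), (4, 1)]),
    make_ratings(6, 2, [(1, 0), (2, 0), (3, 0)]),
    make_ratings(6, 2, [(2, 0)]),
]
design = group_model(ratings, double_gamma_hrf(TR))
```

In this toy case the second emotion is reported by one participant only, so its column is zero after thresholding and stays zero after convolution.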

fMRI experiment—Participants

Brain activity evoked by the same movie used in the behavioral experiments was measured in a group of 50 Italian volunteers (Table 2). Participants were categorized into five groups based on their sensory experience and on the stimulus presentation modality: a group of congenitally blind individuals listening to the auditory-only version of 101 Dalmatians (E−A; n = 11, 3 females, age = 46 ± 14), a sample of congenitally deaf individuals without cochlear implants watching the silent film (E−V; n = 9, 5 females, age = 24 ± 4), and three groups of typically developed individuals, who were presented with either the auditory-only (E+A; n = 10, 7 females, age = 39 ± 17), the visual-only (E+V; n = 10, 5 females, age = 37 ± 15), or the multisensory (E+M; n = 10, 8 females, age = 35 ± 13) versions of the stimulus. During the fMRI acquisition, participants were instructed to remain still and enjoy the movie. The scanning session lasted approximately 1 hour and was divided into six functional runs (3T Philips Ingenia scanner, Neuroimaging Center of NIT–Molinette Hospital, Turin; 32-channel head coil; gradient-recalled echo echo-planar imaging; 2000-ms repetition time, 30-ms echo time, 75° flip angle, 3-mm isotropic voxel, 240-mm field of view, 38 sequential ascending axial slices, 1614 volumes). Audio and visual stimulations were delivered using MR-compatible liquid crystal display goggles and headphones (VisualStim Resonance Technology; video resolution, 800 × 600 at 60 Hz; visual field, 30° × 22°; 5″; audio, 30-dB noise attenuation; 40-Hz to 40-kHz frequency response). High-resolution anatomical images were also acquired (3D T1w; magnetization-prepared rapid gradient echo; 7-ms repetition time, 3.2-ms echo time, 9° flip angle, 1-mm isotropic voxel, 224-mm field of view). The fMRI study was approved by the Ethical Committee of the University of Turin (protocol no. 195874/2019), and all participants provided written consent for participation.

Table 2. Demographics of the fMRI groups.

On average, the head displacement of congenitally blind individuals (E−A) was 1.564 ± 0.985 mm, while the head motion of typically developed individuals exposed to the same stimulus modality (E+A) was 0.864 ± 0.681 mm [t-stat(17.81) = 1.909; P value = 0.073]. As for the fMRI data collected during the visual experiment, head motion measured in congenitally deaf individuals (E−V) was, on average, 0.715 ± 0.401 mm, whereas in typically developed individuals (E+V) it was 0.709 ± 0.471 mm [t-stat(16.96) = 0.030; P value = 0.977]. In addition, no significant differences were found when we compared head motion values of people with sensory deprivation to those of typically developed individuals presented with the multisensory stimulus [E+M head motion: 0.889 ± 0.742 mm; E−A versus E+M: t-stat(18.41) = 1.784; P value = 0.091; E−V versus E+M: t-stat(14.11) = −0.645; P value = 0.529].

| Participant | Sex | Age | Group | Cause of sensory deprivation | Average absolute head movement between runs (mm) |
|---|---|---|---|---|---|
| Sub-012 | F | 30 | E+M | NA | 0.4817 |
| Sub-013 | F | 41 | E+M | NA | 0.9765 |
| Sub-014 | M | 28 | E+M | NA | 0.9133 |
| Sub-015 | F | 24 | E+M | NA | 0.3645 |
| Sub-016 | M | 25 | E+M | NA | 2.8519 |
| Sub-017 | F | 62 | E+M | NA | 0.5057 |
| Sub-018 | F | 28 | E+M | NA | 0.4484 |
| Sub-019 | M | 30 | E+M | NA | 1.1704 |
| Sub-022 | F | 51 | E+M | NA | 0.7486 |
| Sub-032 | F | 29 | E+M | NA | 0.4322 |
| Sub-003 | F | 25 | E+A | NA | 0.3679 |
| Sub-004 | F | 23 | E+A | NA | 0.6111 |
| Sub-005 | F | 25 | E+A | NA | 0.2670 |
| Sub-006 | M | 54 | E+A | NA | 1.2102 |
| Sub-007 | F | 69 | E+A | NA | 1.5587 |
| Sub-008 | F | 28 | E+A | NA | 0.8935 |
| Sub-009 | M | 55 | E+A | NA | 0.2066 |
| Sub-010 | F | 24 | E+A | NA | 0.5369 |
| Sub-011 | F | 30 | E+A | NA | 0.6053 |
| Sub-027 | F | 52 | E+A | NA | 2.3813 |
| Sub-020 | F | 25 | E+V | NA | 0.3853 |
| Sub-021 | F | 26 | E+V | NA | 0.3799 |
| Sub-023 | F | 38 | E+V | NA | 0.3930 |
| Sub-024 | F | 53 | E+V | NA | 0.4596 |
| Sub-025 | M | 53 | E+V | NA | 0.7694 |
| Sub-026 | M | 57 | E+V | NA | 1.4911 |
| Sub-028 | M | 24 | E+V | NA | 0.3934 |
| Sub-029 | M | 50 | E+V | NA | 0.3920 |
| Sub-030 | F | 23 | E+V | NA | 1.5959 |
| Sub-031 | M | 23 | E+V | NA | 0.8340 |
| Sub-033 | F | 68 | E−A | Optic nerve atrophy | 0.9561 |
| Sub-034 | M | 64 | E−A | Retinitis pigmentosa | 3.6607 |
| Sub-035 | F | 37 | E−A | Leber congenital amaurosis | 2.0198 |
| Sub-036 | M | 48 | E−A | Retinopathy of prematurity | 0.7649 |
| Sub-037 | M | 49 | E−A | Optic nerve atrophy | 1.7411 |
| Sub-038 | M | 32 | E−A | Retinal detachment | 0.7483 |
| Sub-039 | M | 42 | E−A | Retinitis pigmentosa | 0.5037 |
| Sub-041 | M | 49 | E−A | Retinopathy of prematurity | 2.4106 |
| Sub-042 | M | 19 | E−A | Retinal detachment | 1.1961 |
| Sub-043 | F | 41 | E−A | Bilateral retinoblastoma | 0.7613 |
| Sub-053 | M | 57 | E−A | Retinopathy of prematurity | 2.4453 |
| Sub-044 | M | 24 | E−V | Hereditary | 0.2717 |
| Sub-045 | F | 21 | E−V | Hereditary | 1.0623 |
| Sub-046 | M | 24 | E−V | Hereditary | 0.7904 |
| Sub-047 | M | 26 | E−V | Hereditary | 1.2151 |
| Sub-048 | M | 22 | E−V | Hereditary | 0.4575 |
| Sub-049 | F | 18 | E−V | Hereditary | 0.3076 |
| Sub-050 | F | 28 | E−V | Sensorineural hearing loss | 1.0553 |
| Sub-051 | F | 32 | E−V | Hereditary | 1.0574 |
| Sub-052 | F | 22 | E−V | Hereditary | 0.2204 |
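
The group comparisons of head motion reported with Table 2 can be checked against the tabulated values. The sketch below assumes an unequal-variance (Welch) two-sample t test, which is inferred from the fractional degrees of freedom and is not stated explicitly in the text; the function name is hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Average absolute head movement (mm) copied from Table 2.
deaf_visual = [0.2717, 1.0623, 0.7904, 1.2151, 0.4575,
               0.3076, 1.0553, 1.0574, 0.2204]            # E−V
typical_visual = [0.3853, 0.3799, 0.3930, 0.4596, 0.7694,
                  1.4911, 0.3934, 0.3920, 1.5959, 0.8340]  # E+V

t_stat, dof = welch_t(deaf_visual, typical_visual)
```

Under this assumption, the E−V versus E+V comparison should land close to the reported statistic and degrees of freedom; the P value would additionally require the t distribution, which is not part of the Python standard library.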

fMRI experiment—Preprocessing

For each participant, the high-resolution T1w image was brain extracted (OASIS template, antsBrainExtraction.sh) and corrected for inhomogeneity bias (N4 bias correction) with Advanced Normalization Tools v2.1.0 (91). The anatomical image was then nonlinearly transformed to match the MNI152 ICBM 2009c nonlinear symmetric template using AFNI v17.1.12 (3dQwarp) (92). Also, binary masks of white matter (WM), gray matter, and cerebrospinal fluid (CSF) were obtained from Atropos (93). We used these masks to extract the average time series of WM and CSF voxels from fMRI sequences, which were included in the functional preprocessing pipeline as regressors of no interest (94). Masks were transformed into the MNI152 space by applying the already computed deformation field (3dNwarpApply; interpolation: nearest neighbor). To ensure that after normalization to the standard space the WM mask included WM voxels only, we skeletonized the mask (erosion: 3 voxels; 3dmask_tool) and excluded (3dcalc) the Harvard-Oxford subcortical structures (i.e., thalamus, caudate nucleus, pallidum, putamen, accumbens, amygdala, and hippocampus). Similarly, the CSF mask was eroded by 1 voxel and multiplied by a ventricle mask (MNI152_T1_2mm_VentricleMask.nii.gz) distributed with the FSL suite (95). Last, both masks were downsampled to match the fMRI spatial resolution.

For each participant and functional run, we corrected slice-dependent delays (3dTshift) and removed nonbrain tissue (FSL bet -F) from the images. Head motion was compensated by aligning each volume of the functional run to a central time point (i.e., volume number 134) with rigid-body transformations (i.e., 6 degrees of freedom; 3dvolreg). The motion parameters, the aggregated time series of absolute and relative displacement, and the transformation matrices were generated and inspected. Also, we created a brain-extracted motion-corrected version of the functional images by estimating the average intensity of each voxel in time (3dTstat). This image was coregistered to the brain-extracted anatomical sequence (align_epi_anat.py, “giant move” option, lpc+ZZ cost function). To transform the functional data into the MNI152 space using a single interpolation step, we concatenated the deformation field, the coregistration matrix, and the 3dvolreg transformation matrices and applied the resulting warp (3dNwarpApply) to the brain-extracted functional images corrected for slice-dependent delays. Standard-space functional images were generated using sinc interpolation (5-voxel window), having the same spatial resolution as the original fMRI data (i.e., 3-mm isotropic voxel). Brain masks obtained from functional data were transformed into the standard space as well (3dNwarpApply, nearest-neighbor interpolation). Functional images were then iteratively smoothed (3dBlurToFWHM) until a 6-mm full width at half maximum level was reached. As in Ciric and colleagues (94), we used 3dDespike to replace outlier time points in each voxel’s time series with interpolated values and then normalized the signal so that changes in blood oxygen level–dependent (BOLD) activity were expressed as a percentage (3dcalc). In addition, WM, CSF, and brain conjunction masks were created by identifying voxels common to all functional sequences (3dmask_tool -frac 1).
We selected 36 predictors of no interest (36p) to be regressed out from brain activity, which included six head motion parameters (6p) obtained from 3dvolreg, average time series (3dmaskave) of WM (7p), average CSF signal (8p), and the average global signal (9p). For each of these nine regressors, we computed their quadratic expansions (18p; 1deval), the temporal derivatives (27p; 1d_tool.py), and the squares of derivatives (36p). Also, regressors of no interest were detrended using polynomial fitting (up to the fifth degree). Confounds were regressed out using a generalized least squares time series fit with restricted maximum likelihood estimation of the temporal autocorrelation structure (3dREMLfit). Regression residuals represented single-participant preprocessed time series, which were then used in voxel-wise encoding, univariate comparisons, multivariate classification, and cross-decoding analyses.
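
The construction of the 36 predictors from the nine base regressors can be sketched as follows. The backward-difference derivative with a leading zero is an assumption about how the temporal derivative is computed, the function name is hypothetical, and detrending and the regression itself are not shown.

```python
def expand_confounds(base):
    """Expand 9 base regressors (lists of samples) into the 36-parameter set."""
    def derivative(x):
        # Backward difference with a leading zero so the length is preserved.
        return [0.0] + [x[i] - x[i - 1] for i in range(1, len(x))]

    squares = [[v * v for v in x] for x in base]             # 10p to 18p
    derivatives = [derivative(x) for x in base]              # 19p to 27p
    squared_derivatives = [[v * v for v in d] for d in derivatives]  # 28p to 36p
    return base + squares + derivatives + squared_derivatives


# Toy input: six motion parameters plus WM, CSF, and global signal (9 rows),
# each with five time points of made-up values.
base = [[float((r + 1) * t) for t in range(5)] for r in range(9)]
confounds = expand_confounds(base)
```

Each of the four blocks contains nine regressors, which gives the 36 columns that are regressed out of the functional time series.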

fMRI experiment—Fitting the emotion model in brain activity