Abstract

Objective

Mentoring and coaching practices have supported the career and skill development of healthcare professionals (HCPs); however, their role in digital technology adoption and implementation for HCPs is unknown. The objective of this scoping review was to summarize information on healthcare education programs that have integrated mentoring or coaching as a key component.

Methods

The search strategy and keyword searches were developed by the project team and a research librarian. A two-stage screening process consisting of a title/abstract scan and a full-text review was conducted by two independent reviewers to determine study eligibility. Articles were included if they: (1) discussed the mentoring and/or coaching of HCPs on digital technology, including artificial intelligence, (2) described a population of HCPs at any stage of their career, and (3) were published in English.

Results

A total of 9473 unique citations were screened, identifying 19 eligible articles. Eleven articles described mentoring and/or coaching programs for digital technology adoption, while eight described mentoring and/or coaching for digital technology implementation. Program participants represented a diverse range of industries (i.e., clinical, academic, education, business, and information technology). Digital technologies taught within programs included electronic health records (EHRs), ultrasound imaging, digital health informatics, and computer skills.

Conclusions

This review summarizes the role of mentoring and/or coaching practices within digital technology education for HCPs. Future training initiatives for HCPs should consider appropriate resources, program design, the mentor-learner relationship, and security concerns, and should set clear expectations for program participants. Future research could explore mentor/coach characteristics that facilitate successful skill transfer.

Keywords: Digital technology adoption, digital technology implementation, health care providers, education < lifestyle, mentor, coach

Introduction

Background

Digital health can include a wide array of technologies, processes, and approaches. The World Health Organization (WHO) defines digital health technology as a term encompassing eHealth (information and communication technologies; ICT), mHealth (mobile wireless technologies), ‘big data’ in computing sciences, genomics, and artificial intelligence.1–4 These technologies are increasingly adopted and applied in healthcare settings, with a number of implications for healthcare delivery.5–7 Major implications include an interlinked digital ecosystem within health organizations that allows more efficient data collection, the use of data to predict trends and outcomes in healthcare, the discovery of novel treatments, and the creation of personalized healthcare delivery.8,9 Despite general excitement about digital technologies and their potential to deliver efficient, safe, and high-quality care, integrating these tools into clinical practice remains a significant challenge. 10

Healthcare professionals (HCPs) are at the forefront of adopting (learning to use) and implementing (integrating into the workflow) technologies in their practice. 11 Despite the advantages of digital health technologies, the healthcare workforce has generally been slow to adopt and implement these tools.12,13 Numerous factors contribute to this slow uptake, including low digital literacy among HCPs and the absence of consistent training to help them learn and engage with digital tools. 14 As such, some HCPs are ill-equipped to incorporate and use digital health technologies in their practice. The rapid evolution of these technologies may further exacerbate this challenge, as novel and sophisticated technologies are introduced faster than corresponding training is provided for HCPs.15,16 This may be daunting to HCPs who have inadequate training and limited knowledge of relevant digital systems. 17

Several initiatives to increase digital literacy exist. Notably, the Health Information Technology Competencies (HITCOMP) were developed as a guide to build digital capacity among various medical professions 18 in the domains of (1) patient care, (2) administration, (3) engineering/information systems/information and computer technology, (4) research/biomedicine, and (5) informatics. Many other digital health competencies have also been developed to enhance HCPs’ experience and performance in the digital health landscape.19–21 Despite the number of resources available, digital health competencies may not be well integrated into the training that HCPs currently receive, so adoption and implementation remain slow. 20 Education programs are clearly needed to prepare and involve HCPs in the adoption and implementation of technologies in the clinical workflow, 22 but it is unclear which types of initiatives are most effective.

Mentoring or coaching may help HCPs acquire and retain digital technology knowledge and skills more quickly.23–25 Although the terms are sometimes used interchangeably, mentoring and coaching have different objectives. Mentorship is a process through which an experienced individual (mentor) guides the professional development of a less experienced individual (mentee or learner). It is often characterized as an open-ended, reciprocal relationship that focuses on developing broad skills for one's career.24,26 Coaching, by contrast, has been defined as a process whereby an experienced individual (coach) expertly supports another individual in developing a specific skill with clear goals, expectations, and outcomes. 27

Studies in various fields have identified mentoring as an important factor in individuals' successful use and implementation of technology in practice.28–30 Further, coaching and mentoring practices in medical education have been associated with beneficial outcomes among medical trainees, including more engagement in self-reflection, enhanced workforce performance, more effective acquisition of new clinical skills, and greater positive well-being.24,31 When coaching was added to an undergraduate medical education curriculum, students showed improved academic performance and greater professionalism. They also reported that coaching helped them form their professional identity.31,32 In a workplace setting, coaching increased employees’ confidence and helped them discover personal strengths. This greater confidence allowed employees to engage in more conversations with their colleagues and supervisors, thus improving their relationships at work. 33

While efforts have been made to synthesize information on existing technology education programs for HCPs, 22 little is known about how these programs integrate or deliver mentoring and coaching. It is also unclear in what contexts mentoring and coaching are available, what their components are, and how effective they are. Moreover, while many programs focus on training HCPs to adopt innovative tools, fewer have described training for implementing them. 22 To support the design and development of future programs, the effectiveness of mentoring and coaching within adoption and implementation programs needs further examination. The extent to which these programs have been evaluated, and their outcomes (e.g., program effectiveness, program facilitators or barriers), also require further elucidation.

Thus, the overarching objective of this scoping review was to understand the current landscape of mentoring and/or coaching for HCPs in digital technology adoption and implementation, and to inform future education programs for HCPs. By reviewing the literature on mentoring or coaching within digital technology adoption and implementation programs in healthcare, this review summarizes information on educational programs that have integrated mentoring or coaching as a key component. In particular, it summarizes their curriculum content, modes of delivery, the roles of mentors or coaches, facilitators of and barriers to program facilitation, and the perceived benefits of mentoring or coaching. The research questions were:

What education programs on digital technology adoption and implementation for HCPs exist with mentoring and/or coaching as key components?

What digital technologies are taught in the programs?

What is/are the mode(s) of delivery? (e.g., online, in-person)

Where are the programs located? (e.g., rural, urban, online)

Who are the individuals identified as a mentor/coach and learners, and what are their common attributes?

What are the critical facilitators and barriers to mentoring and coaching for effective program facilitation?

What aspects of existing programs are evaluated, and what are the outcomes? (e.g., satisfaction, knowledge translation)

Methods

This scoping review followed the Arksey and O’Malley methodological framework 34 for scoping reviews, which is appropriate for examining the extent, range, and nature of the literature. This framework involves 1) identifying the research question, 2) identifying relevant studies, 3) study selection, 4) charting the data, and 5) collating, summarizing, and reporting the results. The review also followed the Preferred Reporting Items for Systematic reviews and Meta-Analyses extension for Scoping Reviews Checklist (PRISMA-ScR).35,36 A scoping review protocol was not published.

Stage 1: search strategy

Search terms were iteratively developed by members of the project team (DW, MZ, IK, RC), and search strategies were developed by an experienced medical librarian (MA) at a large academic health sciences network in Toronto, Canada. The search strategy was first developed for OVID Medline (see Multimedia Appendix 1 for the search strategy and initial results in Medline) and then translated to the other databases (see Multimedia Appendix 2 for search results in all other databases). Because the research areas relevant to our topic span fields beyond medicine, we searched the following databases to obtain a comprehensive sample of literature from disciplines including medical sciences, psychiatry/psychology, social work, nursing, education, and computer and information systems science: Medline, EMCARE, EMBASE, ERIC, and PsycINFO. No date limits were applied, and the last search was conducted in November 2023. If conference abstracts and proceedings appeared in the search results, a subsequent search in Google Scholar, PubMed, and ResearchGate was conducted to find corresponding published articles. All retrieved citations underwent a two-stage screening process (title/abstract and full-text screening). Finally, a hand-combing process was conducted, in which relevant works cited by the included articles also underwent the two-stage screening process (title/abstract and full-text review).

Stage 2: study selection

Search results from all databases were de-duplicated in EndNote and subsequently uploaded to Covidence Software© for screening. The two-stage screening process consisted of (1) a title/abstract scan and (2) a full-text review. An article was included if it:

Discussed the mentoring and/or coaching of HCPs on digital technology

Described a population of HCPs

Was published in English

We included studies examining mentoring and/or coaching on any digital technology because literature on artificial intelligence specifically was limited and because we were interested in the broader digital technology field. Our review included studies on HCPs at any stage of their career in order to examine providers’ use of technology across the career span. Only studies in English were included to ensure that study findings were properly understood.

Articles were excluded if they were not published in English, involved non-HCPs, were not primary research, were not healthcare-related, or involved surgical technology. Surgical technology was excluded because of the different nature of these studies (e.g., mentoring through robotic simulation), the lack of literature on its implementation, and the research team's expertise. Studies that were not primary research were excluded because they did not directly report implementation findings.

To ensure the reliability and consistency of the screening process, two reviewers (MZ, IK) piloted the screening on 100 of the Medline citations to establish inter-rater reliability. The threshold was set at a Cohen's kappa of 0.70, indicating substantial agreement. An additional 50 citations were reviewed until the threshold was met. Once it was met, the reviewers independently determined article eligibility based on the above criteria, using a single-reviewer method for both title/abstract and full-text review. Additional reviewers (RC, JS, DW) established inter-rater reliability with one of the initial reviewers (MZ, IK) before participating in the screening process.
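To make the reliability check concrete, the Python sketch below computes Cohen's kappa, κ = (p_o − p_e) / (1 − p_e), for two reviewers' include/exclude decisions and compares it with the 0.70 threshold. Here p_o is observed agreement and p_e is the agreement expected by chance. It is a minimal illustration of the standard formula, not the tooling the reviewers used. The function name `cohens_kappa` and the pilot decisions are hypothetical, and decisions are assumed to be coded as 1 (include) and 0 (exclude).

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters who coded the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and
    p_e is the agreement expected by chance from each rater's label frequencies.
    """
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items on which both raters chose the same label.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over labels.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = set(counts_a) | set(counts_b)
    p_e = sum((counts_a[k] / n) * (counts_b[k] / n) for k in labels)
    if p_e == 1.0:
        # Both raters used one identical label throughout, so kappa is undefined.
        # Treating this as perfect agreement is a convention assumed in this sketch.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


KAPPA_THRESHOLD = 0.70  # threshold reported for the pilot screening

# Hypothetical pilot decisions (1 = include, 0 = exclude); not data from this review.
reviewer_1 = [1, 1, 1, 0, 0, 0, 1, 0, 1, 0]
reviewer_2 = [1, 1, 0, 0, 0, 0, 1, 0, 1, 1]

kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"kappa = {kappa:.2f}; threshold met: {kappa >= KAPPA_THRESHOLD}")
```

With these made-up decisions, the reviewers agree on 8 of 10 citations (p_o = 0.80). Chance agreement is 0.50, so κ = 0.60, which falls below the 0.70 threshold. Under the procedure described above, further citations would be reviewed before independent screening began.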

Stage 3: data collection

A standardized data charting form was created by the project team to extract data from included articles. Table 1 lists the domains and subdomains of data extraction.

Table 1.

Data charting: Domains and subdomains.

| Domain | Subdomain |
|---|---|
| Program Description | Evaluation design; Intervention (i.e., training program, educational activity); Digital technology taught; Training received; Aspects of program evaluated (e.g., knowledge translation, satisfaction); Sample size; Demographics; Program format; Program location; Program length; Number of program iterations; Program outcomes; Implementation facilitators; Facilitators of program implementation; Barriers to program implementation; Perceived benefit of program implementation |
| Mentoring or Coaching Skills | Role/responsibility of mentor/coach; Role of mentor; Mentor stage of career; Mentor practice setting; Role of learner |

Stage 4: data analysis

To organize, summarize, and report the included articles, we used a data extraction template and two analytic approaches: deductive coding with the Kirkpatrick-Barr framework and inductive thematic analysis. The analysis also included numeric summaries using descriptive statistics for each domain (article details, study details, mentoring or coaching details, and implementation details). Program characteristics were reported to understand the types of existing programs and their effectiveness. Program outcomes were deductively coded using the Kirkpatrick-Barr framework of educational outcomes. 36 This framework was selected because it categorizes the outcomes of educational programs, including mentoring/coaching programs, into four levels. Level 1 is reaction (attitude towards the training experience and satisfaction). Level 2 is learning (2a, change in attitudes; 2b, acquisition of knowledge and skills). Level 3 is behavior (changes in behavior), and level 4 is results (changes in organizational practice). Two independent reviewers (MZ, JS) conducted an inductive thematic analysis of program implementation factors, facilitators, and barriers, as defined by Braun and Clarke. 37 The reviewers familiarized themselves with the data, generated an initial codebook, and used it to search for themes. They then met to discuss and define potential emerging themes, compared coding schemes, and iteratively determined overarching themes to create a framework for the findings. 37 Members of the project team, along with patient partners and professionals with expertise in medical education, health informatics, and AI, were involved in content validation. Any disagreements were resolved through discussion with the larger research team and the principal investigator.

Results

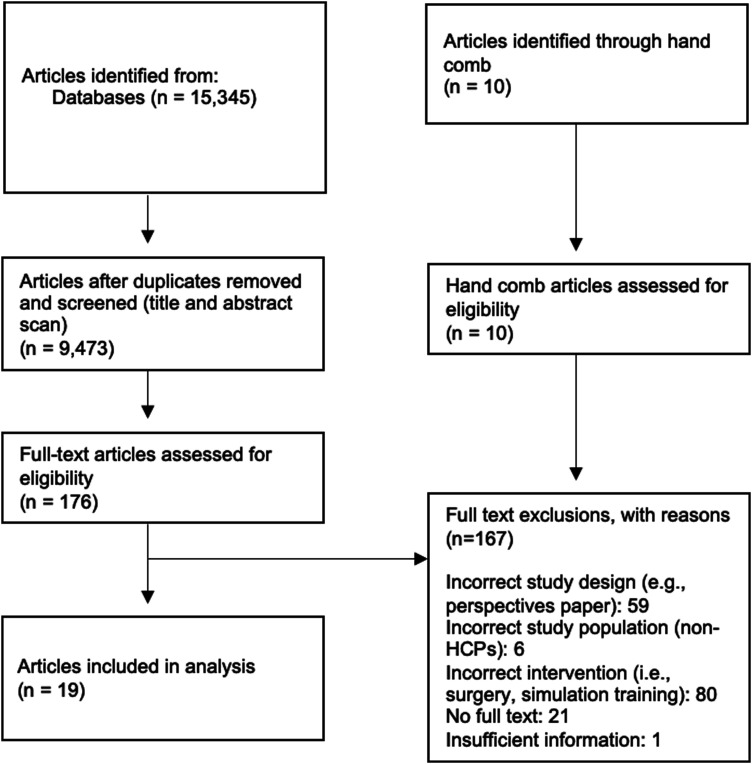

A total of 15,345 articles were initially identified, with 9473 remaining after de-duplication (Figure 1). Of these, 176 articles (1.86% of the de-duplicated citations) were included for full-text screening, and 19 articles (0.20%) met the inclusion criteria. No articles were identified from conference abstracts. Ten articles were identified through hand-combing of the reference lists of the included articles and were also screened by title/abstract and full text. None met the inclusion criteria, so all were excluded from data extraction. Thus, data from 19 articles were extracted for analysis.

Figure 1.

PRISMA flow diagram.
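The screening percentages depend on which denominator is used. The short Python sketch below recomputes them from the counts reported above: 15,345 records identified, 9473 after de-duplication, 176 full-text articles, and 19 included. The helper `pct` is a hypothetical illustration. The sketch shows that the 19 included articles are 0.20% of the de-duplicated citations. The 176 full-text articles are about 1.15% of all records identified and about 1.86% of the de-duplicated citations.

```python
# Screening counts as reported in the text (Figure 1).
records_identified = 15345
after_deduplication = 9473
full_text_screened = 176
included = 19


def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part` (unrounded)."""
    return 100 * part / whole


# The same count yields different percentages depending on the denominator.
for label, count in [("full-text screened", full_text_screened), ("included", included)]:
    print(
        f"{label}: {pct(count, records_identified):.2f}% of records identified, "
        f"{pct(count, after_deduplication):.2f}% of de-duplicated citations"
    )
```

Stating the denominator next to each percentage avoids mixing the pre- and post-de-duplication totals.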

Of the 19 included articles, 14 (73.7%) were case reports38–51 and 5 (26.3%) were empirical studies.52–56 As most articles (n = 14) were descriptive case reports, this scoping review reports overall descriptions of the programs identified. Programs described mentoring and/or coaching for either the adoption (n = 11; 57.9%) or the implementation (n = 8; 42.1%) of technology.

Articles were published between 2000 and 2023 (Table 2). Most articles (n = 12) described programs located in the United States.38–40,42–45,47,49–51,56 Two were from the United Kingdom,41,52 and the remaining five were based in Switzerland, 53 Australia, 54 Kenya, 46 Italy, 55 and Germany. 48

Table 2.

Summary of article characteristics (n = 19).

| Characteristics | Frequency, n (%) | References |
|---|---|---|
| Study Type | | |
| Case Report | 14 (73.7) | 38–51 |
| Empirical Study | 5 (26.3) | 52–56 |
| Publication Year | | |
| Before 2010 | 4 (21.1) | 38,42,43,49 |
| 2010–2019 | 7 (36.8) | 40,41,46,47,51–53 |
| 2020–2023 | 8 (42.1) | 39,44,45,48,50,54–56 |
| Country | | |
| United States | 12 (63.2) | 38–40,42–45,47,49–51,56 |
| United Kingdom | 2 (10.5) | 41,52 |
| Other | 5 (26.3) | 46,48,53–55 |

A variety of digital technologies were taught within the identified programs (Table 3), including electronic health records (EHRs),39,47,52,53,56 ultrasound imaging,41,50,55 digital health informatics,40,42,44,46,48,51,54 computer skills, 49 computerized physician order entry (CPOE), 43 and general technology skills.38,45

Table 3.

Summary of program characteristics.

| Characteristics | Frequency, n (%) | References |
|---|---|---|
| Program Type | | |
| Datathon/Hackathon | 2 (10.5) | 48,54 |
| Training Program | 11 (57.9) | 39,41–43,47,50–53,55,56 |
| Fellowship Program | 1 (5.3) | 38 |
| Informatics Course | 2 (10.5) | 40,44 |
| Workshop | 1 (5.3) | 49 |
| ICT Learning Program | 1 (5.3) | 46 |
| Unclear | 1 (5.3) | 45 |
| Technology Taught | | |
| Electronic Health Records | 5 (26.3) | 39,47,52,53,56 |
| Ultrasound | 3 (15.8) | 41,50,55 |
| Informatics | 7 (36.8) | 40,42,44,46,48,51,54 |
| Computer Skills | 1 (5.3) | 49 |
| CPOE | 1 (5.3) | 43 |
| Technology (General) | 2 (10.5) | 38,45 |
| Mode of Delivery | | |
| Online | 6 (31.6) | 39,41,48,54–56 |
| In-person | 8 (42.1) | 40,42–44,46,50,52,53 |
| Hybrid | 3 (15.8) | 38,49,51 |
| Not stated/unclear | 2 (10.5) | 45,47 |
| Program Setting | | |
| Urban | 8 (42.1) | 40,42–44,46,50,52,53 |
| Virtual | 6 (31.6) | 39,41,48,54–56 |
| Hybrid (Virtual/Urban) | 3 (15.8) | 38,49,51 |
| Not stated | 2 (10.5) | 45,47 |
| Program Length | | |
| ≥1 year | 7 (36.8) | 38,40,42–44,51,55 |
| >1 month | 4 (21.1) | 39,46,47,53 |
| >1 day | 2 (10.5) | 48,54 |
| Ongoing | 1 (5.3) | 41 |
| Not stated/unclear | 5 (26.3) | 45,49,50,52,56 |

ICT: information and communications technology; CPOE: computerized physician order entry.

Programs were delivered through various modes. Eight were delivered in person,40,42–44,46,50,52,53 six virtually,39,41,48,54–56 and three through a hybrid blend of in-person and virtual delivery.38,49,51 The mode of delivery was not described for two.45,47

Almost half of the programs (n = 8; 42.1%) were in an urban setting.40,42–44,46,50,52,53 Three programs were hybrid, with the in-person component located in an urban setting.38,49,51 Six programs were completely virtual.39,41,48,54–56 Settings were not specified for 2 programs.45,47 None were situated in a rural setting.

Characteristics

Mentor/coach

Characteristics are described in Table 4. Mentors/coaches in technology adoption programs represented a variety of professions, including physicians,39,55,56 faculty/education specialists,38,43,53 nurses/midwives,47,49,52 and students in healthcare training. 50 Mentors/coaches in technology implementation programs included physicians,40,42 faculty/education specialists, 42 healthcare researchers,42,48 informaticists, 44 ICT experts, 46 software engineers, 48 and entrepreneurs. 48 Four programs did not specify the professions of mentors/coaches.41,45,51,54

Table 4.

Participant characteristics.

| Characteristics | Frequency, n (%) | References |
|---|---|---|
| Role of Mentor | | |
| Physicians | 5 (26.3) | 39,40,42,55,56 |
| Faculty/education specialists | 4 (21.1) | 38,42,43,53 |
| Nurses/midwives | 3 (15.8) | 47,49,52 |
| Researcher | 1 (5.3) | 42 |
| Informaticist | 1 (5.3) | 44 |
| ICT* Expert | 1 (5.3) | 46 |
| Students | 1 (5.3) | 50 |
| Software Engineers | 1 (5.3) | 48 |
| Entrepreneurs | 1 (5.3) | 48 |
| Not stated/unclear | 4 (21.1) | 41,45,51,54 |
| Mentor's Practice Setting | | |
| Clinical | 6 (31.6) | 39,40,43,47,52,56 |
| Academic | 6 (31.6) | 38,40,46,49,50,52 |
| Not Stated | 9 (47.4) | 41,42,44,45,48,51,53–55 |
| Role of Learner | | |
| Students/trainees | 3 (15.8) | 41,45,52 |
| Residents | 6 (31.6) | 40,44,50,51,53,55 |
| Physicians | 7 (36.8) | 38,39,42,43,45,48,56 |
| Data scientists | 1 (5.3) | 54 |
| Healthcare researchers | 1 (5.3) | 46 |
| Nursing staff | 2 (10.5) | 47,49 |

* ICT: Information and Communications Technology.

Common attributes

Across the studies, mentors/coaches came from both clinical and academic settings, and one study described mentors from the engineering and business industries. Six programs included mentors whose practice setting was clinical. These included mentors in palliative care, 39 nursing,43,47 and physicians in clinics/hospitals.40,52,56 Mentors from academic settings participated in six programs.38,40,46,49,50,52 Mentors from industry included software engineers and business entrepreneurs. 48 All mentors described in the 19 identified programs provided guidance and support on health informatics, data analytics, and digital technology use, as well as feedback on learners’ performance.

Learner

Learners described in the adoption programs included students and trainees in healthcare programs (not residency programs),41,52 medical residents,50,53,55 physicians,39,43,56 and nursing staff.47,49

Learners described in the implementation programs included students and trainees in healthcare programs, 45 medical residents,40,44,51 physicians,38,42,45,48 data scientists, 54 and healthcare researchers. 46

Common attributes

In all 19 programs, learners entered with minimal or no knowledge of the skills on which they would be mentored. Most learners were healthcare professionals at various stages of their career. The exception was one implementation program, whose learners worked as data scientists. 54

Program type

Eleven programs described a mentor/coach providing close support to learners as they learned to use a digital technology (e.g., EHR).38,39,41,43,47,49,50,52,53,55,56 These studies described three program types: a fellowship program, training programs, and a workshop. In the fellowship program, fellows were introduced to new technological facilities, software, and tools that were available at the university. 38 The training programs included mentoring/coaching on the adoption of the EHR.39,47,52,53,56 Further, two programs provided mentors to ensure learners were scanning, uploading, and interpreting the ultrasound images appropriately.41,50 Another program 43 trained physicians to use a computerized physician order entry system with embedded coaches to provide feedback. Lastly, during a one-day program, mentors introduced learners to computers, furthered their computer skills, taught them to identify technology resources, and fostered continuous improvement in computer skills. 49

Eight programs described the mentoring/coaching of digital technology implementation, in which mentors guided learners from project ideation to completion.40,42,44–46,48,51,54 These studies described a variety of program types. As with the adoption programs, some implementation programs were training programs. Two programs were informatics courses,40,44 and in both, participants were mentored on the development of an informatics intervention. In one training program, physicians were given basic computer training and completed an informatics project under the supervision of a mentor. 42 Another training program, focused on informatics and health system leadership, involved the development of a capstone project guided by a mentor. 51 Another study described a learning program with topics including information and communication technology (ICT) and data management. 46 Two studies described datathons/hackathons.48,54 A hackathon is a short event where HCPs meet to develop technological solutions to healthcare issues. A datathon uses a similar format but focuses on collaboration between data scientists and HCPs. 54 These programs were conducted over a few days, with mentors guiding learners in developing health informatics projects.
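The adoption and implementation groupings can be cross-checked against the citation numbers used in the two preceding paragraphs. The Python sketch below expands those citation strings, treating en-dash ranges such as 44–46 as inclusive. It then confirms that the two groups are disjoint and together cover references 38–56, the 19 included articles. The helper `expand` is a hypothetical illustration, not part of the review's methods.

```python
def expand(spec: str) -> set[int]:
    """Expand a citation string such as '40,42,44–46' into a set of reference numbers."""
    refs: set[int] = set()
    for part in spec.split(","):
        if "–" in part:  # an en dash marks an inclusive range, as in the text
            start, end = (int(x) for x in part.split("–"))
            refs.update(range(start, end + 1))
        else:
            refs.add(int(part))
    return refs


# Citation lists as given in the adoption and implementation paragraphs.
adoption = expand("38,39,41,43,47,49,50,52,53,55,56")
implementation = expand("40,42,44–46,48,51,54")
included = set(range(38, 57))  # references 38–56 are the 19 included articles

print(len(adoption), len(implementation))  # 11 8
print(adoption.isdisjoint(implementation))  # True
print(adoption | implementation == included)  # True
```

The counts of 11 adoption and 8 implementation programs match the split reported for the included articles.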

Facilitators and barriers to mentoring and coaching for effective programs

Facilitators and barriers are described in detail in Table 5. Facilitators of effective programs included (1) resources, (2) program design, (3) the mentor-learner relationship, and (4) the program environment. Barriers to effective program implementation included:

- limited resources (e.g., lack of available datasets, limited monetary budget, or poor technology infrastructure)
- lack of mentor engagement
- short program duration
- lack of clarity on program expectations
- lack of financial compensation for mentors or stipends for learners
- low number of learners
- IT security concerns

Table 5.

Facilitators and barriers for effective programs.

| Category | Facilitator or barrier | Example |
|---|---|---|
| Facilitators | | |
| Resources | Stipend for learners38,40,44 | $5000 was provided to pay for laptops |
| | Access to datasets41,46,54 | Datasets provided for data management training |
| | Free learning resources 41 | Free web-based application for sending ultrasound scans |
| Program Design | Cross-disciplinary collaboration44,45,51,54 | HCPs and data scientists collaborated on a project |
| | Practical topics and examples during training46,54 | Using real-life data in projects was seen as useful |
| | Clearly defined roles and responsibilities of mentors and learners 46 | Highlighting project expectations and timelines for the program was needed |
| Strong Mentor-Learner Relationship | High mentor availability enhanced training42,46 | Mentors scheduled frequent meetings with learners |
| | Hands-on mentoring approach47,51 | Learners preferred mentors providing hands-on support |
| | High engagement of mentors can lead to increased completion and high quality of projects41,46,48 | Online discussion boards provided a space for mentors to interact with learners |
| Program Environment | Sense of community and supportive environment39,44 | Journal clubs where participants discussed their projects together |
| | Virtual environment allows for flexibility 48 | Mentors working from home could avoid conflicts with in-person meetings |
| Barriers | | |
| Short Program Duration | Not enough time to implement project47,51 | One study showed that 2 months was not long enough to introduce and train |
| | Longer program increases knowledge gain 48 | Hackathons longer than 48 h were preferred |
| Low Number of Mentors | Disproportionate mentor-to-learner ratio hinders skill development41,51 | One program had more learners than originally planned and provided less mentorship than planned |
| IT Security Concerns | Lack of clarity about learners’ authorization to access data 52 | Lack of clarity about nursing students’ access to patient electronic records |
| | Concerns around data security regulations41,48 | Removing patient-identifiable information from files as a data security concern |

Program evaluation and outcomes

Of the 19 articles, 15 (79%) presented the results of their training evaluation,38–40,42,46–56 as shown in Table 6. Reported outcomes were categorized according to the Kirkpatrick-Barr framework into four groups:

- level 1 (i.e., learner reaction and satisfaction with the education)39,40,48,49,51,52,55
- level 2a (i.e., change in attitude)38,39,42,47,53,54,56
- level 2b (i.e., change in knowledge or skill)38,39,42,46,47,50–54,56
- level 3 (i.e., change in behavior) 40

No articles reported level 4 outcomes, as none commented on change at the organizational level or in patient outcomes. Two (11%) of the 19 articles used validated survey measures to evaluate program outcomes.47,54
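Because one article can report outcomes at several Kirkpatrick-Barr levels, the per-level counts overlap and cannot simply be summed. The Python sketch below uses the reference lists given in the paragraph above, tallies articles by level, and counts each article only once in the overall total. The variable names are illustrative only.

```python
TOTAL_ARTICLES = 19

# Articles reporting outcomes at each Kirkpatrick-Barr level, as listed in the text.
# One article can appear at several levels (e.g., 38 and 39).
outcomes_by_level: dict[str, set[int]] = {
    "1 (reaction)": {39, 40, 48, 49, 51, 52, 55},
    "2a (attitude)": {38, 39, 42, 47, 53, 54, 56},
    "2b (knowledge/skill)": {38, 39, 42, 46, 47, *range(50, 55), 56},
    "3 (behavior)": {40},
    "4 (results)": set(),  # no article reported organizational or patient-level change
}

for level, refs in outcomes_by_level.items():
    print(f"Level {level}: {len(refs)} articles")

# Each article is counted once, however many levels it reports on.
evaluated = set().union(*outcomes_by_level.values())
share = 100 * len(evaluated) / TOTAL_ARTICLES
print(f"{len(evaluated)} of {TOTAL_ARTICLES} articles ({share:.0f}%) reported evaluation outcomes")
```

The deduplicated total is 15 articles (79%), which matches the reference range 38–40, 42, 46–56 given for the articles that presented evaluation results.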

Table 6.

Program outcomes.

| Level | Outcome |
|---|---|
| Level 1: Reaction/Satisfaction | |
| Overall satisfaction | Mixed results were found for overall satisfaction50,51 |
| Likelihood to recommend the program | Mixed results were found39,50,51 |
| Quality of mentorship | Instruction from mentors was rated highly40,54; mixed results were seen for contact with mentors, with one study reporting that approximately half of participants felt they had adequate mentor contact 51 and another reporting high mentor availability 40 |
| Level 2a: Change in Attitude | |
| Likelihood to use technology | Increased likelihood to use technology48,54 |
| Comfort with technology | Increased comfort with technology use38,53; increased confidence in starting a digital health venture or research project 48 |
| Level 2b: Change in Knowledge/Skill | |
| Increase in clinical/technological skills | Increased overall clinical skills 50; increased IT competence 47; increased skills in database creation 42; increased knowledge of research methodology 54; increased ultrasound skills and accreditation 50; increased EHR skills 56 |
| Increase in interprofessional skills | Increased knowledge in cross-disciplinary teamwork 54; improved communication skills53,54 |
| Increase in project delivery skills | High successful submission rate (∼85%) of project deliverables 46 |
| Level 3: Change in Behaviour | Positive changes to patient care, provider efficiency and workflow, reporting, or end-user training 40 |

Level 1 outcomes were reported in studies describing training programs, a datathon, and an informatics course. Training programs showed mixed overall satisfaction,50,51 mixed likelihood to recommend the program,39,50,51 and low levels of contact with mentors. 51 Both a datathon 54 and an informatics course 40 reported highly rated instruction from mentors. The informatics course 40 also reported high mentor availability.

Level 2 outcomes were reported in studies describing training programs, datathons/hackathons, an ICT learning program, and a fellowship program. Datathons/hackathons showed an increased likelihood to use technology,48,54 increased knowledge of research methodology, 54 increased knowledge in cross-disciplinary teamwork, 54 improved communication skills, 54 and increased confidence in initiating a digital health venture/research project. 48 The ICT learning program 46 showed a high rate of successful submission of project deliverables. Participants in the fellowship program 38 showed increased comfort with technology use. Training programs showed increased comfort with technology use, 53 clinical skills, 50 IT competence, 47 database creation skills, 42 ultrasound skills, 50 EHR skills, 56 and communication skills. 53

Level 3 outcomes were reported for an informatics course and showed positive changes to patient care, provider efficiency and workflow, reporting, or end-user training. 40

From these results, we developed four guiding principles: 1) A strong mentor-learner relationship is needed for successful training; 2) Interdisciplinary engagement contributes to knowledge transfer; 3) Program expectations should be established and clear; and 4) Sustainability of mentored skills should be promoted.

Discussion

Mentoring and coaching to develop skills are important components of implementation. According to the Fixsen framework, 57 there are three categories of implementation drivers: competency drivers, organization drivers, and leadership drivers. Competency drivers are the mechanisms that develop, improve, and sustain one's ability to implement; they include selection, training, and coaching, which together give participants the tools and skills needed for implementation. Coaching, in particular, develops, improves, and sustains professionals' ability to implement interventions by building their skills. 57 While literature exists on coaching and mentoring in healthcare and academic settings, little is known about their role in digital technology education. As such, this review aimed to map the current roles of mentoring and coaching in digital technology adoption and implementation, particularly the extent to which mentoring or coaching has been employed in education programs or interventions for adopting and implementing technology in healthcare. The review also aimed to develop a baseline understanding of the efficacy of mentoring and coaching within these programs.

The 19 identified studies were largely descriptive and covered a variety of program types. Few articles described the implementation of technology,40,51 whereas most described mentoring/coaching for technology adoption.38,39,41–50,52–56 This reveals a gap in the literature on mentoring and coaching HCPs to implement technology. Programs reported outcomes from the first three levels of the Kirkpatrick-Barr Framework and showed varied success. As described above, we developed four guiding principles that may be relevant for future program design and development.

Guiding principles

Principle 1: a strong mentor-learner relationship is needed for successful training

A high-quality mentor-learner relationship contributes to enhanced learning and greater knowledge transfer. In programs that required the completion of a project, a stronger mentor-learner relationship resulted in higher completion rates and higher-quality projects overall.41,46,48 It is essential for programs to create opportunities for mentors and learners to establish a positive connection. 51 This could include encouraging frequent interactions between mentors and learners,42,46 for example through online discussion boards that allow regular communication. 46 Mentors should also consider taking an active, hands-on approach by providing elaborate, detailed feedback on learner performance 52 to promote learning.47,51 A lower learner-to-mentor ratio (i.e., fewer learners per mentor) may increase engagement within these programs. 51

Principle 2: interdisciplinary engagement contributes to knowledge transfer

Diversity among mentors/coaches enhanced program success, particularly because implementing technologies requires a wide repertoire of knowledge across health information systems, databases, statistics, clinical care, and implementation strategies. 42 This diversity allowed participants to receive feedback on a variety of topics. Diversity among learners also contributed to program success, as teams with varied backgrounds can enhance learning and collaboration. For instance, Lyndon et al. 54 noted that a mixture of professionals from healthcare, computer science, and engineering backgrounds increased the creativity of projects developed at a datathon. 44 This is supported by prior literature: Agic et al. 58 noted that diverse and inclusive programs can enhance learner experience and improve accessibility. Diverse and inclusive programs can also help train HCPs who are better equipped to meet the diverse needs of the patient population. 59

Principle 3: program expectations should be established and clear

At an early stage, programs must ensure that participants (mentors/coaches and learners) are aware of their roles and responsibilities. For instance, mentors should be informed, before the program starts, of the program's expectations, their role, and the expertise they are expected to provide to learners. 47 The flexibility and time commitment required of mentors should also be made clear to ensure appropriate engagement with learners. 43 This can mitigate barriers such as schedule conflicts and mentor disengagement. As noted by several studies, coaches who were well informed of program expectations and trained for their specific roles provided higher-quality training.43,50,55 Learners can also be better supported if expectations are clearly communicated to them. In a program described by Monroe-Wise et al., 46 learners reported a lack of clarity on project expectations, such as important deadlines and mandatory deliverables. Programs should therefore establish a standard set of expectations for all participants early on so that learners receive mentorship of consistent quality throughout the program. This can promote stronger mentor-learner relationships, greater participation, and successful project completion.

Principle 4: sustainability of mentored skills should be promoted

It is important to ensure that skills obtained in training programs can be sustained in the long term. One way to achieve this is to provide learners with the resources they need to practice their skills; a lack of access to digital technologies (e.g., EHRs, ultrasound) limits their ability to do so. Further, it is essential for programs to use practical topics and examples so that learners understand how their knowledge applies in the real world. This concrete, practical approach is especially crucial because mentored skills may not be sustained after the program if learners have little need to use them or few opportunities to continue training. Organizational and administrative support is also essential for learners to use technology in their workplace.

Short program duration can also undermine sustainment. Wysham et al. 51 found that a number of learners failed to implement their projects within the one-year program. Furthermore, many learners returned to their clinical roles after completing the program with no capacity to advance their projects further. 51 Short program durations can therefore result in insufficient skills training, and future programs should design their timelines with this in mind.

Limitations

This review provides an overview of the role of mentoring and coaching in digital health technology, but it is not without limitations. First, including only studies written in English may have excluded studies describing programs in predominantly non-English-speaking countries; our findings were drawn largely from the United States and the United Kingdom. The descriptive nature of several included studies was also a limitation, as some presented only overall outcomes without detailed program descriptions, which limited our ability to compare program attributes. Additionally, only a few articles47,54 used validated surveys or reported their source or psychometric properties. Further, no quality assessment of the included articles was conducted, so the rigor of these evaluations should be taken into consideration. The generalizability of the findings is also limited, as none of the included articles were conducted in a rural setting. Additionally, most studies in our review examined mentoring rather than coaching, owing to a focus on broader technology skills (i.e., informatics, general computer use). The range of technologies was also limited: most studies examined digital technologies such as EHRs and informatics, leaving a gap around tools related to artificial intelligence (AI). While the authors identified recent conference abstracts that described an AI training program with mentorship,60,61 none had been published in full. Similarly, other mentorship/coaching programs known to the authors had no published works and therefore were not included in this review. This may reflect the emerging nature of this field of research, as the role of AI in healthcare is rapidly expanding. Future studies should examine gains in knowledge and the development of specific AI skills.

Conclusions

This study found that the use of coaching and mentoring in the implementation of digital technologies is limited. Emerging enablers of and barriers to coaching and mentorship in digital technologies include appropriate resources, program design, the mentor-learner relationship, the program environment, security concerns, clear program expectations, and an appropriate number of program participants. This scoping review recommends four guiding principles to apply when developing a coaching/mentoring program for the adoption or implementation of digital technology. Our findings highlight the importance of the mentor-learner relationship and of ensuring that expectations for participants are clear at the beginning of a program. Further, interdisciplinary collaboration and long-term, post-program sustainability should be considered during program design so that skills gained during participation are retained. Future research should explore coaching and mentorship attributes in depth to identify which characteristics of a mentor or coach serve as strong facilitators of skill transfer. Given the rapid evolution of technology in healthcare, proper training and support will be needed to ensure that the healthcare workforce is well equipped to use, implement, and evaluate technology in their practice.15,16

Supplemental Material

Supplemental material, sj-docx-1-dhj-10.1177_20552076241238075 for The role of mentoring and coaching of healthcare professionals for digital technology adoption and implementation: A scoping review by Jillian Scandiffio, Melody Zhang, Inaara Karsan, Rebecca Charow, Melanie Anderson, Mohammad Salhia and David Wiljer in DIGITAL HEALTH

Supplemental material, sj-docx-2-dhj-10.1177_20552076241238075 for The role of mentoring and coaching of healthcare professionals for digital technology adoption and implementation: A scoping review by Jillian Scandiffio, Melody Zhang, Inaara Karsan, Rebecca Charow, Melanie Anderson, Mohammad Salhia and David Wiljer in DIGITAL HEALTH

Supplemental material, sj-docx-3-dhj-10.1177_20552076241238075 for The role of mentoring and coaching of healthcare professionals for digital technology adoption and implementation: A scoping review by Jillian Scandiffio, Melody Zhang, Inaara Karsan, Rebecca Charow, Melanie Anderson, Mohammad Salhia and David Wiljer in DIGITAL HEALTH

Acknowledgements

The authors would like to thank Ms. Azra Dhalla, Ms. Dalia Al-Mouaswas, and Ms. Megan Clare for their support in the planning stage of the scoping review.

Footnotes

Contributorship: DW conceived of the study idea and oversaw its execution. DW, MZ, IK, and MA developed the search strategy. MA ran all database searches. MZ, IK, JS, and RC screened all titles/abstracts and full-text of the retrieved articles. MZ and JS extracted all data from identified articles. All authors contributed to data analysis. MZ and JS prepared the initial manuscript draft, while all authors contributed to the final version.

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethical approval: Waived for scoping review.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This project, “Accelerating the Appropriate Adoption of Artificial Intelligence in Health Care Through Building New Knowledge, Skills, and Capacities in the Canadian Health Care Professions” is funded by the Government of Canada's Future Skills Centre.

Guarantor: DW.

Consent: This manuscript presents a scoping review of published literature. Since no original, individual data were used for the analysis presented in this manuscript, the requirement for consent is not applicable.

ORCID iDs: Jillian Scandiffio https://orcid.org/0000-0001-6610-6164

Rebecca Charow https://orcid.org/0000-0002-8553-4006

Supplemental material: Supplemental material for this article is available online.

References

- 1. WHO Executive Board. eHealth: Report by the secretariat. 115th Session of the Executive Board. Provisional agenda item 4.13 EB115/39. 2004.

- 2. World Health Organization. mHealth. Use of appropriate digital technologies for public health: Report by Director-General. 71st World Health Assembly provisional agenda item 12.4 A71/20. 2018.

- 3. World Health Organization. Digital health, Agenda item 12.4, WHA71.7. 2018.

- 4. World Health Organization. Classification of digital health interventions v1.0: a shared language to describe the uses of digital technology for health (No. WHO/RHR/18.06). 2018.

- 5. Jiang F, Jiang Y, Zhi H, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol 2017; 2: 230–243.

- 6. Murdoch TB, Detsky AS. The inevitable application of big data to health care. JAMA 2013; 309: 1351–1352.

- 7. Tekkesin AI. Artificial intelligence in healthcare: past, present and future. Anatol J Cardiol 2019; 22: 8–9.

- 8. Ting DSW, Carin L, Dzau V, et al. Digital technology and COVID-19. Nat Med 2020; 26: 459–461.

- 9. Fogel AL, Kvedar JC. Artificial intelligence powers digital medicine. NPJ Digit Med 2018; 1: 5.

- 10. Petitgand C, Motulsky A, Denis JL, et al. Investigating the barriers to physician adoption of an artificial intelligence-based decision support system in emergency care: an interpretative qualitative study. Stud Health Technol Inform 2020; 270: 1001–1005.

- 11. Kyratsis Y, Ahmad R, Holmes A. Technology adoption and implementation in organisations: comparative case studies of 12 English NHS trusts. BMJ Open 2012; 2: e000872.

- 12. Aung YYM, Wong DCS, Ting DSW. The promise of artificial intelligence: a review of the opportunities and challenges of artificial intelligence in healthcare. Br Med Bull 2021; 139: 4–15.

- 13. Hazarika I. Artificial intelligence: opportunities and implications for the health workforce. Int Health 2020; 12: 241–245.

- 14. Ajmera P, Jain V. Modelling the barriers of health 4.0–the fourth healthcare industrial revolution in India by TISM. Oper Manag Res 2019; 12: 129–145.

- 15. van der Niet AG, Bleakley A. Where medical education meets artificial intelligence: ‘does technology care?’. Med Educ 2021; 55: 30–36.

- 16. Brinker S. https://chiefmartec.com/2016/11/martecs-law-great-management-challenge-21st-century/ (2016, accessed March 10 2022).

- 17. Mead D, Moseley L. Attitudes, access or application: what is the reason for the low penetration of decision support systems in healthcare? Health Informatics J 2001; 7: 195–197.

- 18. Sipes C, Hunter K, McGonigle D, et al. The health information technology competencies tool: does it translate for nursing informatics in the United States? Comput Inform Nurs 2017; 35: 609–614.

- 19. Jidkov L, Alexander M, Bark P, et al. Health informatics competencies in postgraduate medical education and training in the UK: a mixed methods study. BMJ Open 2019; 9: e025460.

- 20. Jimenez G, Spinazze P, Matchar D, et al. Digital health competencies for primary healthcare professionals: a scoping review. Int J Med Inform 2020; 143: 104260.

- 21. Caliskan SA, Demir K, Karaca O. Artificial intelligence in medical education curriculum: an e-Delphi study for competencies. PLoS One 2022; 17: e0271872.

- 22. Charow R, Jeyakumar T, Younus S, et al. Artificial intelligence education programs for health care professionals: scoping review. JMIR Med Educ 2021; 7: e31043.

- 23. Taherian K, Shekarchian M. Mentoring for doctors. Do its benefits outweigh its disadvantages? Med Teach 2008; 30: e95–e99.

- 24. Burgess A, van Diggele C, Mellis C. Mentorship in the health professions: a review. Clin Teach 2018; 15: 197–202.

- 25. Wiljer D, Hakim Z. Developing an artificial intelligence-enabled health care practice: rewiring health care professions for better care. J Med Imaging Radiat Sci 2019; 50: S8–S14.

- 26. Hussey LK, Campbell-Meier J. Is there a mentoring culture within the LIS profession? J Libr Adm 2017; 57: 500–516.

- 27. Karcher MJ, Kuperminc GP, Portwood SG, et al. Mentoring programs: a framework to inform program development, research, and evaluation. J Community Psychol 2006; 34: 709–725.

- 28. Bullock D. Moving from theory to practice: an examination of the factors that preservice teachers encounter as the attempt to gain experience teaching with technology during field placement experiences. J Technol Teach Educ 2004; 12: 211–237.

- 29. Kopcha TJ. A systems-based approach to technology integration using mentoring and communities of practice. Educ Technol Res Dev 2010; 58: 175–190.

- 30. Polselli R. Combining web-based training and mentorship to improve technology integration in the K-12 classroom. J Technol Teach Educ 2002; 10: 247–272.

- 31. Lovell B. What do we know about coaching in medical education? A literature review. Med Educ 2018; 52: 376–390.

- 32. Wolff M, Hammoud M, Santen S, et al. Coaching in undergraduate medical education: a national survey. Med Educ Online 2020; 25: 1699765.

- 33. Kombarakaran FA, Yang JA, Baker MN, et al. Executive coaching: it works! Consult Psychol J: Pract Res 2008; 60: 78.

- 34. Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol 2005; 8: 19–32.

- 35. Tricco AC, Lillie E, Zarin W, et al. PRISMA Extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med 2018; 169: 467–473.

- 36. Kirkpatrick DL. Evaluating training programs: the four levels. Berkley, CA: Berrett-Koehler, 1994.

- 37. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol 2006; 3: 77–101.

- 38. Axley L. The integration of technology into nursing curricula: supporting faculty via the technology fellowship program. Online J Issues Nurs 2008; 13: 12.

- 39. Alexander Cole C, Wilson E, Nguyen PL, et al. Scaling implementation of the serious illness care program through coaching. J Pain Symptom Manage 2020; 60: 101–105.

- 40. Singer JS, Cheng EM, Baldwin K, et al. The UCLA health resident informaticist program - a novel clinical informatics training program. J Am Med Inform Assoc 2017; 24: 832–840.

- 41. Reid G, Bedford J, Attwood B. Bridging the logistical gap between ultrasound enthusiasm and accreditation. J Intensive Care Soc 2018; 19: 15–18.

- 42. Cartwright CA, Korsen N, Urbach LE. Teaching the teachers: helping faculty in a family practice residency improve their informatics skills. Acad Med 2002; 77: 385–391.

- 43. Creamer JL, Elliot P. Embedded coaches lead to CPOE victory. Health Manag Technol 2005; 26: 26–28.

- 44. Kohn MS, Topaloglu U, Kirkendall ES, et al. Creating learning health systems and the emerging role of biomedical informatics. Learn Health Syst 2022; 6: e10259.

- 45. Linderman SW, Appukutty AJ, Russo MV, et al. Advancing healthcare technology education and innovation in academia. Nat Biotechnol 2020; 38: 1213–1217.

- 46. Monroe-Wise A, Kinuthia J, Fuller S, et al. Improving information and communications technology (ICT) knowledge and skills to develop health research capacity in Kenya. Online J Public Health Inform 2019; 11: e22.

- 47. Poe SS, Abbott P, Pronovost P. Building nursing intellectual capital for safe use of information technology: a before-after study to test an evidence-based peer coach intervention. J Nurs Care Qual 2011; 26: 110–119.

- 48. Braune K, Rojas PD, Hofferbert J, et al. Interdisciplinary online hackathons as an approach to combat the COVID-19 pandemic: case study. J Med Internet Res 2021; 23: e25283.

- 49. Schoessler SZ, Leever JS. Computer mentoring for school nurses: the New York state model. J Sch Nurs 2000; 16: 40–43.

- 50. Smith CJ, Wampler K, Matthias T, et al. Interprofessional point-of-care ultrasound training of resident physicians by sonography student-coaches. MedEdPORTAL 2021; 17: 11181.

- 51. Wysham NG, Howie L, Patel K, et al. Development and refinement of a learning health systems training program. EGEMS (Wash DC) 2016; 4: 1236.

- 52. Baillie L, Chadwick S, Mann R, et al. A survey of student nurses’ and midwives’ experiences of learning to use electronic health record systems in practice. Nurse Educ Pract 2013; 13: 437–441.

- 53. Lanier C, Dominice Dao M, Hudelson P, et al. Learning to use electronic health records: can we stay patient-centered? A pre-post intervention study with family medicine residents. BMC Fam Pract 2017; 18: 69.

- 54. Lyndon MP, Pathanasethpong A, Henning MA, et al. Measuring the learning outcomes of datathons. BMJ Innov 2022; 8: 72–77.

- 55. Mongodi S, Bonomi F, Vaschetto R, et al. Point-of-care ultrasound training for residents in anaesthesia and critical care: results of a national survey comparing residents and training program directors’ perspectives. BMC Med Educ 2022; 22: 647.

- 56. Khairat S, Kwong E, Chourasia P, et al. Improving physician electronic health record usability and perception through personalized coaching. Stud Health Technol Inform 2022; 298: 3–7.

- 57. Fixsen DL, Blasé KA, Naoom SF, et al. Implementation drivers: assessing best practices. Chapel Hill: University of North Carolina, Chapel Hill, Frank Porter Graham Child Development Institute, 2015.

- 58. Agic B, Fruitman H, Maharaj A, et al. Advancing curriculum development and design in health professions education: a health equity and inclusion framework for education programs. J Contin Educ Health Prof 2022; 43: S4–S8.

- 59. Lucey CR. Medical education: part of the problem and part of the solution. JAMA Intern Med 2013; 173: 1639–1643.

- 60. Xiang JJ, Park J, McCann J, et al. Teaching practice-based learning and improvement and digital health through EPIC self-reporting tools training. J Gen Intern Med 2022; 37: S655.

- 61. Zou Y, Weishaupt L, Enger S. Mcmedhacks: deep learning for medical image analysis workshops and hackathon in radiation oncology. Radiother Oncol 2022; 170: S4–S5.
