Abstract

Objectives

The use of interactive mobile health (mHealth) applications to monitor patient-reported postoperative pain outcomes is an emerging area in dentistry that requires further exploration. This study aimed to evaluate and improve the usability of an existing mHealth application.

Materials and methods

The usability of the application was assessed iteratively using a 3-phase approach: a rapid cognitive walkthrough (Phase I), lab-based usability testing (Phase II), and in situ pilot testing (Phase III). The study team conducted Phase I, while providers and patients participated in Phases II and III.

Results

The rapid cognitive walkthrough identified 23 potential issues that could negatively impact user experience, the majority of which were classified as system issues. The lab-based usability testing yielded 141 usability issues; 43% were encountered by patients and 57% by dentists. Usability problems encountered during pilot testing included undelivered messages due to mobile phone carrier and service-related issues, errors in patients’ phone number data entry, and problems in provider training.

Discussion

Through collaborative, iterative work with the vendor, the identified usability issues were addressed before launching a trial to assess the application’s efficacy.

Conclusion

The usability of the mHealth application for postoperative dental pain was substantially improved through iterative analysis and interdisciplinary collaboration.

Keywords: dental informatics, mHealth applications, usability testing, dental pain, patient-reported outcomes

Background and significance

Dental pain has been identified as one of the most commonly occurring dental adverse events.1 While providers recognize that the pain experienced by some patients is unavoidable, efforts should be made to mitigate and, where possible, prevent its physical and emotional burden through pharmacological and non-pharmacological management methods. Patient-reported outcomes (PROs) represent a critical component of comprehensive pain assessment.2 PROs allow clinicians to directly assess a patient’s symptoms, symptom burden, functional status, health behaviors, health-related quality of life, and care experiences.

Dentists’ limited ability to actively assess patients’ postoperative pain levels has led to pre-emptive opioid prescriptions (Rxs), despite the risks of addiction and inferior postoperative pain relief.3,4 For context, >500 000 people in the United States died from an opioid overdose over a 20-year span (1999 to 2020).5 For their part, US dentists have prescribed opioids more frequently than any other healthcare providers, accounting for ∼12% of all opioid prescriptions; 31% of these prescriptions were for 4-7 days, and 20% were for 8 days or more, durations that may be excessive for many dental procedures and increase the risk of abuse. For example, dentists’ opioid prescriptions for pain management after third molar extractions in opioid-naive adolescents and young adults were associated with a statistically significant 6.8% increase in persistent opioid use and a 5.4% increase in subsequent diagnoses of opioid abuse.6 These statistics underscore the public health impact of prescribed opioids, as well as the potential for dentistry to use PROs to record both the use and the dissemination of these drugs.

Data derived from patient-reported sources are useful for measuring pain and management outcomes because they provide first-hand patient accounts.7,8 Questionnaires have been administered to dental patients by phone or email to gauge their pain and management experiences.9 Still, post-procedural communication between patients and their healthcare providers does not always occur, and when it does, the interaction can be resource-intensive for both parties.10 To reduce this burden, medicine has used mobile health (mHealth) applications to gather PROs, for example, pain outcomes after surgery and patients’ pain medication use.10 Growing evidence suggests that mobile phones are effective11–13 for reporting patients’ symptoms, symptom burden, health status, health behaviors, and health-related quality of life. CareSmarts illustrates these mHealth reporting capabilities: this mHealth diabetes platform used automated text messaging software to encourage self-care and care coordination between nurses and physicians. Nundy et al found that HbA1c levels in the treatment group (mHealth application users) improved significantly, from 7.9% in the pre-period to 7.2% in the post-period, whereas no change in HbA1c was observed in the control group.11 The use of mHealth applications to inform clinical care remains largely unexplored in dentistry, despite growing evidence supporting such emerging health information technologies (IT). To accelerate their use, the mHealth landscape must be surveyed and these technologies comprehensively tested before they can be widely adopted in dentistry.12,13

Eighty-five percent of US adults own a mobile phone,14 and 67% of patients use their phones to search for health information.14 In addition, a significant proportion of low-income families connect to the Internet only through mobile phones.15 These data reflect a patient environment that is amenable to using mobile devices for health-related concerns, and they strengthen the impetus for dental providers to embrace health IT as a potential strategy for reporting and delivering quality oral healthcare.

Recently published literature on dental mHealth applications has focused on dental hygiene, oral disease screening, and oral disease prevention.16–20 However, to our knowledge, interactive mHealth applications21 that provide frequent monitoring of patient-reported postoperative pain outcomes have not been widely reported in the dental literature. To address this gap, we aimed to evaluate and improve the usability of an existing mobile application for monitoring dental pain. FollowApp.Care was selected for this purpose because of its suitability for dentistry and the company’s commitment to working with the research team to evaluate and improve the platform. In this research, we report on a multi-modal approach to evaluating and improving the usability of FollowApp.Care, an mHealth application focused on collecting and transmitting PROs after painful dental procedures. Our approach incorporated user-centered design, which is widely used for building and improving mHealth apps.22–28 This evidence-based, iterative design process considers end users’ needs in each phase of design.29 Our study demonstrates the first-ever implementation of the application in a dental clinical setting and provides important insights into its potential for improving patient outcomes.

Objectives

Our primary objectives were to: (1) perform usability and pilot tests to refine the user experience and interface of the mHealth application for each trial site; (2) identify potential implementation barriers and facilitators through a qualitative, user-centered, and iterative process; and (3) determine the mHealth application’s capacity to capture PROs in dental practice. These evaluations and improvements were needed to refine the mHealth application before its use in a future randomized controlled trial.

Methods

Study overview

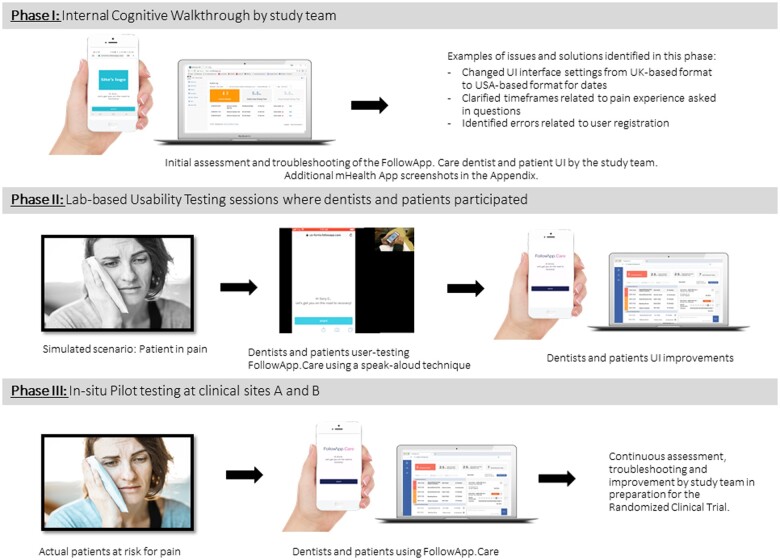

The following methods were used to evaluate and improve the usability of the mHealth application FollowApp.Care30: (1) rapid cognitive walkthroughs (Phase I); (2) usability testing (Phase II); and (3) in situ pilot testing (Phase III) (Figure 1). The study team conducted Phase I. Phases II and III were conducted at an academic dental institution (Site A) and a large multispecialty accountable care dental group practice (Site B). Permission to conduct the study at both sites was obtained from the Institutional Review Board at Site A (18-25477). A purposive sample of patients and providers was used for testing in Phases II and III. Written consent was obtained for participation in Phase II, the usability testing, in which representative users were given “real-life” use cases to complete using FollowApp.Care while the project team observed. For the in situ pilot testing (Phase III), written consent was obtained from providers, while implied consent was obtained from patients when they clicked on a link in the mHealth app. Figure 1 provides an overview of the 3 phases of the study.

Figure 1.

Overview of the 3 phases of the study: Phase I, cognitive walkthrough; Phase II, usability testing; and Phase III, pilot testing. We acknowledge Engin Akyurt on Unsplash for the photo “A girl with a toothache,” and Kopparapu and Tenzen on CleanPNG for “Apple Cartoon—iPhone 5” and “Apple Cartoon—Laptop.”

Study population

Phase I had no study subjects because it was conducted by study team members who were intentionally selected for their multidisciplinary expertise in usability methods, informatics, and/or clinical dentistry. Patients and dental providers from a large dental group practice in the Pacific Northwest and a large academic dental practice in California were invited to participate in Phases II and III. Provider participants were purposefully recruited from the participating clinics based on their interest in using an mHealth app to communicate with patients, and patients who attended clinic visits during the specified study period were invited to participate. Eligible providers were dentists with at least 2 clinic sessions per week (1 day) and at least 6 months of practice experience. Eligible patients were English-speaking adults (≥18 years) who had undergone specific dental procedures likely to cause postoperative pain. Both patients and dentists needed access to a working smartphone with internet capabilities. Purposive sampling was based on sex and age: we aimed to recruit ∼50% male and 50% female participants across all age groups.

Materials

FollowApp.Care is a communications platform for collecting patient-generated health data before or after a dental procedure to inform care decisions, drive quality, and generate actionable performance reports.30 This patient-monitoring mobile phone platform is currently used in the United States, the United Kingdom, Spain, and Australia. FollowApp.Care can be deployed through any text message-enabled smartphone and configured to deliver language translations (eg, Spanish) and to generate aggregate reports at the patient, provider, practice, and organizational levels. The research team engaged with FollowApp.Care because of the platform’s suitability for dentistry and the company’s commitment to participating in the usability assessments and iteratively improving its platform based on our findings.

The FollowApp.Care platform was used to administer the mHealth survey, which consisted of validated items from the PROMIS8 and APS-POQ-R31 surveys. FollowApp.Care had 2 user interfaces (UIs): one for dentists and one for patients. Dentists managed their patients’ mHealth survey responses through the dentists’ UI, and patients received surveys and submitted their answers through the patients’ UI. Neither dentists nor patients had to download FollowApp.Care from an app store, because it is a web-based app that can be accessed through any web browser.

Although dentists could access the dentists’ UI on their mobile phones, we focused on access through a web browser on a computer. After logging in, dentists could register patients, set up surveys, review their patients’ responses, and send patients manual or automated text messages through the app. Patients could use the patients’ UI only on their mobile phones, because access was granted through a unique link embedded in a text message (see Appendix for screenshots). Messages were always sent from a generic 5-digit number set up by FollowApp.Care; therefore, patients had no access to their dentists’ mobile phone numbers. Once a patient completed a survey, the responses were stored in the system, and the dentist was notified if the patient’s reported pain, swelling, or bleeding levels triggered an alert or if the patient sent the dentist a comment. Alerts were sent to providers by email or text message and included a link to the profile of the patient who triggered the alert. Dentists could then resolve the alert by contacting the patient through the app’s messaging feature, or “acknowledge” receipt of the alert, with the option to phone or otherwise contact the patient outside the app or to take no further action. Patients could also contact the provider’s office outside the app at any time.
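The alert rule described above can be sketched as a threshold check on each completed survey. This is a minimal Python illustration under stated assumptions, not FollowApp.Care’s implementation: the 0-10 rating scales, the threshold values, and the names `SurveyResponse`, `ALERT_THRESHOLDS`, and `check_alert` are all hypothetical, because the actual alert criteria are not reported here.

```python
from dataclasses import dataclass
from typing import Optional

# HYPOTHETICAL thresholds on assumed 0-10 rating scales; the real
# FollowApp.Care alert criteria are not reported in this study.
ALERT_THRESHOLDS = {"pain": 7, "swelling": 7, "bleeding": 5}


@dataclass
class SurveyResponse:
    """One completed postoperative survey (hypothetical structure)."""
    patient_id: str
    pain: int
    swelling: int
    bleeding: int
    comment: Optional[str] = None  # free-text message to the dentist


def check_alert(response: SurveyResponse) -> list[str]:
    """Return the reasons, if any, that this response should notify the dentist."""
    # A symptom triggers an alert when its rating meets or exceeds its threshold.
    reasons = [
        symptom
        for symptom, threshold in ALERT_THRESHOLDS.items()
        if getattr(response, symptom) >= threshold
    ]
    # A comment sent to the dentist also triggers a notification.
    if response.comment:
        reasons.append("comment")
    return reasons
```

Under these assumed thresholds, `check_alert(SurveyResponse("p01", pain=8, swelling=2, bleeding=0))` returns `["pain"]`, and a low-symptom response that includes a comment returns `["comment"]`. Keeping the thresholds in a single table makes them easy to adjust, which is a design assumption of this sketch rather than a documented platform feature.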

Phase I: rapid cognitive walkthrough

The multidisciplinary research team members individually performed a rapid cognitive walkthrough of the mHealth app from August 24, 2018, to November 5, 2018. The rapid cognitive walkthrough is an analysis method focused on understanding and improving the usability of a system through use and exploration.32 The walkthroughs occurred after the study team received a virtual overview of the mHealth application from the developers during the initial planning stages of the study. A facilitator organized the walkthrough process and prepared the walkthrough team for the session. A single notetaker recorded the output of the cognitive walkthrough. Subsequently, each team member completed the FollowApp.Care user-related tasks to identify potential issues. The tasks included completing patient surveys and reviewing the dentist’s dashboard. All reported issues found during the rapid cognitive walkthroughs were collected, organized, and reviewed for accuracy. There were no discussions about the design during the walkthrough.

To analyze the rapid cognitive walkthrough findings, we used a qualitative descriptive approach.33 A single investigator (A.M.I.-N.) classified each issue into the system, content, or workflow category: system issues were related to the mHealth UI, content issues to the survey questions, and workflow issues to using the mHealth app within the dental care workflow.34 A second investigator (M.F.W.) then confirmed the classification through a consensus process to minimize preconception bias.

Phase II: usability testing

One-hour semi-structured interviews were conducted for usability testing of FollowApp.Care from December 3 to December 7, 2018, at Sites A and B. The participants had no prior knowledge of or experience with the mHealth app. The semi-structured interviews consisted of simulated scenarios describing a 7-day postoperative dental experience. During each session, participants interacted with the mHealth app following the simulated scenario while using a think-aloud protocol.35 Each session was moderated by a research team member (M.F.W.), while another team member (A.M.I.-N.) managed the mHealth app’s force-send features, recorded the participants’ verbal thoughts, and observed their actions. Patients’ hand movements and the computer and mobile phone screens were recorded. The video recordings were imported into NVivo 12 software for qualitative analysis. Additionally, the usability of FollowApp.Care was evaluated against the heuristics from the Better EHR General Design Principles classification of the NCCI&DMHC.36 Finally, the System Usability Scale (SUS) survey37 was administered to participants at the end of each semi-structured interview using Qualtrics Survey Software.

All observational data were transcribed verbatim and converted into a structured table with timestamps. Data quality was assessed by randomly selecting 3 transcripts and checking them against the audio recordings. Two investigators conducted the initial coding by analyzing each sentence of the transcripts and tagging transcript lines with thematic codes. Advanced coding followed: we developed a data dictionary, compared the codes, identified patterns, and grouped the codes into unique themes. Conceptual saturation was considered reached when no new concepts emerged from the data, and theoretical saturation was considered achieved when no new properties or dimensions of a category or theme were identified. Two categorization rounds were conducted to reach consensus on the classification of codes and the integration of concepts. The thematic analysis involved categorizing each finding by the following:

- Finding type:
  - User comment: “Patient has never used an application like FollowApp before.”
  - Usability issue: “Dentist is confused by pain scale questions and would like the pain level to be more user friendly.”
  - User suggestion: “Patient would like to be able to send pictures to show what an ‘abscess or blister’ looks like, so they can confirm if he/she needs to come in or not.”
  - Other: “Dentist likes that questions are automated and sent at a certain time without him having to think about sending them.”
- Location of the usability issues and potential solutions to fix the problems. Examples include locations in the UI (patients’ interface or dentists’ interface), icons within a screen, questions in the questionnaire, and specific steps in the clinical workflow.
- Type of usability issue according to the heuristics from the Better EHR General Design Principles classification of the NCCI&DMHC.36 Each usability issue was further classified by:
  - Level of impact on usability:
    - High impact: issues that, if addressed, could dramatically improve usability.
    - Medium impact: issues that would have a significant positive impact and should be addressed but were not deemed urgent.
    - Low impact: issues with a minimal impact on user experience, to be addressed if extra time and resources allowed.
    - Out of scope: issues raised in the usability sessions that did not pertain to the study objectives.
  - Suggested timeframe to address the issue:
    - Short-term: issues to be addressed immediately.
    - Medium-term: issues to be addressed by the next major release of the application or within 1 year.
    - Longer-term: issues to be addressed within a 2-year timeframe.
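The coding scheme above can be represented as a small record type, which makes frequencies and percentages straightforward to compute. The sketch below is illustrative only: the class and field names are hypothetical, the study’s coding was done in NVivo 12 rather than in code, and assigning a single heuristic to each issue is a simplifying assumption.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FindingType(Enum):
    USER_COMMENT = "user comment"
    USABILITY_ISSUE = "usability issue"
    USER_SUGGESTION = "user suggestion"
    OTHER = "other"


class Impact(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"
    OUT_OF_SCOPE = "out of scope"


class Timeframe(Enum):
    SHORT_TERM = "short-term"    # address immediately
    MEDIUM_TERM = "medium-term"  # next major release or within 1 year
    LONGER_TERM = "longer-term"  # within 2 years


@dataclass(frozen=True)
class CodedFinding:
    """One coded finding (hypothetical record layout)."""
    finding_type: FindingType
    location: str                   # e.g. "dentists' UI", a survey question
    heuristic: Optional[str]        # heuristic violated; usability issues only
    impact: Optional[Impact]        # usability issues only
    timeframe: Optional[Timeframe]  # None if the issue will not be addressed


def tally(findings: list[CodedFinding], field: str) -> dict:
    """Map each value of `field` to (count, whole-number %) among usability issues."""
    issues = [f for f in findings if f.finding_type is FindingType.USABILITY_ISSUE]
    counts = Counter(getattr(f, field) for f in issues)
    total = len(issues)
    return {value: (n, round(100 * n / total)) for value, n in counts.items()}
```

Calling `tally(findings, "impact")` or `tally(findings, "heuristic")` gives the frequency and percentage per category; non-issue findings such as user comments are excluded from the denominator.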

The frequency and percentage of violations of each heuristic principle were calculated. Each SUS item was rated on a 5-point Likert scale ranging from strongly disagree to strongly agree, and responses were analyzed quantitatively using Brooke’s scoring system37 to determine the overall usability of the mHealth app in Phases II and III. The score yields a single composite number ranging from 0 to 100 that measures the overall usability of FollowApp.Care; mean overall usability scores were calculated along with standard deviations. The results from the usability testing were used to further refine and configure FollowApp.Care.
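Brooke’s scoring rule can be written out as follows. This is a sketch of the standard SUS arithmetic, not the study’s analysis code; the function names are hypothetical, and it assumes responses are coded 1 (strongly disagree) to 5 (strongly agree) in questionnaire order. Odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the 0-40 sum is multiplied by 2.5.

```python
from statistics import mean, stdev


def sus_score(responses: list[int]) -> float:
    """Score one completed SUS questionnaire using Brooke's method."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS requires 10 ratings between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1  # odd items are positively worded
        else:
            total += 5 - rating  # even items are negatively worded
    return total * 2.5           # rescale the 0-40 sum to 0-100


def summarize(scores: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of SUS scores for one group."""
    return mean(scores), stdev(scores)
```

A respondent who answers 3 (neutral) to every item scores 50, while the most favorable pattern (5 on odd items, 1 on even items) scores 100. Group-level values, such as the patient and provider averages, are then the means of these per-respondent scores.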

Phase III: pilot testing

The pilot testing was conducted from June 24, 2019, through January 14, 2020. Study team members worked with the study participants from each site and gathered all usability issues encountered while using the mHealth app either via email or on weekly team calls.

Quantitative data were collected through fidelity assessment and administration of the SUS questionnaire.37 The SUS questionnaire was given at 2 time points: (1) before starting to use the mHealth app and (2) after at least one patient had completed the Day 7 survey. The fidelity metrics for patients and providers are listed in Table 2. The SUS was administered through Qualtrics Survey Software and analyzed using the methods described for Phase II.

Table 2.

Pilot-testing results: fidelity measure outcomes.

| Category | Description | Result |
|---|---|---|
| Fidelity measures (patients) | Provided verbal consent and received the Information Sheet | 100% of patients completed it (35/35) |
| | FollowApp.Care profile was created | 100% of patients had a FollowApp.Care profile (35/35) |
| | Received SMS/email notifications on Day 0 | 100% of patients received them (35/35) |
| | Average patient response time | 6 hours 12 minutes (SD: 17 hours 55 minutes) |
| | Response rate, Day 1 | 54% of patients responded (19/35) |
| | Response rate, Day 3 | 57% of patients responded (20/35) |
| | Response rate, Day 5 | 54% of patients responded (19/35) |
| | Response rate, Day 7 | 57% of patients responded (20/35) |
| Fidelity measures (dentists) | Signed consent forms before training | 100% of dentists completed it (11/11) |
| | Completed 1-hour training | 100% of dentists completed it (11/11) |
| | Verified FollowApp.Care profile | 100% of dentists verified their profile (11/11) |
| | Unique identifiers provided | 100% of dentists had a unique identifier (11/11) |
| | Completed SUS survey | 100% of dentists completed the SUS survey (11/11) |
| | Number of log-ins | Total number of log-ins: 74 |
| | Number of successful log-ins | 71.6% of log-ins successful (53/74) |
| | Number of unsuccessful log-ins | 28.4% of log-ins unsuccessful (21/74) |
| | Number of alerts triggered | 60% of messages triggered an alert (9/15) |
| | Number of alerts resolved | 100% of alerts were resolved (9/9) |
| | Number of alerts resolved by chat | 44% of alerts were resolved by chat (4/9) |
| | Number of alerts resolved by phone | 11% of alerts were resolved by phone (1/9) |
| | Number of alerts resolved by acknowledgment | 22% of alerts were resolved by acknowledgment (2/9) |
| | Number of alerts unresolved | No alerts were left unresolved (0/9) |
| | Average response time to alerts | 9 hours 58 minutes (SD: 6 hours 55 minutes) |

The fidelity measures were calculated using descriptive statistics: frequency distributions with percentages were used to report each categorical metric, and means and standard deviations were used to characterize continuous variables.37
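The descriptive statistics behind the fidelity measures reduce to two operations: a percentage reported with its numerator and denominator for categorical metrics, and a mean with a standard deviation for durations. The helper names below are hypothetical, and the study does not state whether a sample (n − 1) or population standard deviation was used; this sketch assumes the sample form computed by Python’s `statistics.stdev`.

```python
from statistics import mean, stdev


def percent(numerator: int, denominator: int, decimals: int = 0) -> str:
    """Format a categorical fidelity metric as 'xx% (n/N)'."""
    value = round(100 * numerator / denominator, decimals)
    if decimals == 0:
        value = int(value)  # drop the trailing '.0' for whole percentages
    return f"{value}% ({numerator}/{denominator})"


def format_minutes(minutes: float) -> str:
    """Render a duration given in minutes as 'H h M min'."""
    hours, mins = divmod(round(minutes), 60)
    return f"{hours} h {mins} min"


def describe_durations(minutes: list[float]) -> str:
    """Mean (sample SD) of a continuous fidelity metric, e.g. response time."""
    return f"{format_minutes(mean(minutes))} (SD: {format_minutes(stdev(minutes))})"
```

For example, `percent(19, 35)` gives `"54% (19/35)"` and `percent(53, 74, 1)` gives `"71.6% (53/74)"`, matching the Day 1 response rate and successful log-ins in Table 2. The duration helper expects the raw per-patient or per-alert times in minutes, which are not reported here.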

Results

Subjects

The rapid cognitive walkthrough in Phase I had no study subjects, as it was conducted by our multidisciplinary team of experts, including 4 dentists, 2 dental hygienists, and researchers with usability expertise. Participants recruited for Phase II (usability testing) included 6 patients and 9 providers. Participants recruited for Phase III (pilot testing) included 34 patients and 12 dentists (see Table 1). Some dentists participated in Phase II only, while others participated in both Phases II and III. Most of the dental providers were general dentists; the exceptions were 1 oral surgeon and 1 endodontist. Nine dental providers came from the academic institution, and 7 worked at the multispecialty large accountable care group practice.

Table 1.

Demographic information of the participants involved in Phases II and III. No participants were enrolled for Phase I.

| Age group | Phase II patients, male | Phase II patients, female | Phase II patients, total | Phase II dentists, male | Phase II dentists, female | Phase II dentists, total | Total Phase II | Phase III patients, male | Phase III patients, female | Phase III patients, total | Phase III dentists, male | Phase III dentists, female | Phase III dentists, total | Total Phase III |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| <25 y.o. | — | — | 0 | — | — | 0 | 0 | 2 | 3 | 5 | — | — | 0 | 5 |
| 25-34 y.o. | — | 1 | 1 | 4 | 1 | 5 | 6 | 7 | 2 | 9 | 5 | 4 | 9 | 18 |
| 35-44 y.o. | 1 | — | 1 | 1 | 3 | 4 | 5 | 3ᵃ | 4ᵃ | 8ᵃ | — | 1 | 1 | 9 |
| 45-54 y.o. | — | — | 0 | — | — | 0 | 0 | 4 | 3 | 7 | — | 1 | 1 | 8 |
| 55-64 y.o. | — | 2 | 2 | — | — | 0 | 2 | — | — | 0 | — | — | 0 | 0 |
| 65-74 y.o. | 1 | 1 | 2 | — | — | 0 | 2 | — | — | 0 | — | — | 0 | 0 |
| 75-84 y.o. | — | — | 0 | — | — | 0 | 0 | 2 | 4 | 6 | — | — | 0 | 0 |
| Totals | 2 | 4 | 6 | 5 | 4 | 9 | 15 | 18ᵃ | 16ᵃ | 35 | 5 | 6 | 11 | 45 |

This table summarizes the demographic information of participants in Phase II (usability testing), which included 6 patients and 9 providers. Participants recruited for Phase III (pilot testing) included 34 patients and 12 dentists.

ᵃOne patient in Phase III (age group 35-44 years) did not provide gender information.

Phase I result: internal rapid cognitive walkthrough of prototype A

During the rapid cognitive walkthrough of the mHealth app, 23 issues with the potential to have a negative impact on users’ experience were identified. The majority were categorized as system issues (n = 17, 74%), followed by content issues (n = 4, 17%). Two issues (9%) related to the workflow and implementation of the app within the clinical context.

Overall, 13 issues belonged within the dentists’ UI (57%), and 10 belonged within the patients’ UI (43%). Among all issues identified in Phase I, 17 (74%) were classified as having a high impact on usability, 4 (17%) were classified as having a medium impact on usability, and 2 (9%) were classified as having a low impact on usability. We did not identify any issues that were out of the scope of the project; 21 (91%) of the issues needed to be addressed in the short term, and 2 (9%) in the medium term.

Phase II result: usability lab testing of prototype A

We conducted 15 semi-structured interviews lasting between 35 and 160 minutes, with an average duration of 60 minutes. Two rounds of analytical conceptualization and re-classification of issues to reach consensus yielded 141 usability issues. Of these, 42% were classified as system issues of the mHealth app interface (n = 59), 35% were related to content (n = 50), and 23% were associated with the workflow of using the mHealth app in the participants’ environment (n = 32). Of the 141 issues, 43% were encountered by patients (n = 61) and 57% by dentists (n = 80). In terms of impact on usability, 76 (54%) issues were classified as having a high impact, 16 (11%) a medium impact, and 3 (2%) a low impact; the remaining 46 issues (33%) were considered out of scope for this study. We identified 86 (61%) issues to be addressed in the short term, 6 (4%) in the medium term, and 50 (35%) that would not be addressed. Rewording questions on the mHealth survey to improve clarity for patients was an example of a short-term issue. An example of a medium-term issue arose when participating dentists pointed out that their communication with patients needed to be appended to their EHR for legal reasons, which required developing additional functionality. An issue considered long term and not addressable during the project period was adding the ability for patients to capture a photo of their health concern with their mobile device and send it to their dentist through the app.
An overlap between Phases I and II, identified by both the study team and the study subjects, involved terminology such as “Response Rate” and “Engagement Rate.” Both terms were viewed as “unclear” and violated the principles of “Match between system and world” and “Use user language.” All other issues found in Phase I were resolved before Phase II.
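The Phase II percentages follow directly from the issue counts. As a quick arithmetic check (a sketch in which the dictionary layout and the `percentages` helper are illustrative choices), each classification can be confirmed to sum to the 141 unique issues and then converted to whole-number percentages:

```python
# Counts reported for the 141 unique Phase II usability issues.
PHASE_II_TOTAL = 141
PHASE_II_COUNTS = {
    "category": {"system": 59, "content": 50, "workflow": 32},
    "user type": {"patients": 61, "dentists": 80},
    "impact": {"high": 76, "medium": 16, "low": 3, "out of scope": 46},
}


def percentages(counts: dict[str, int], total: int) -> dict[str, int]:
    """Whole-number percentage of `total` for each count."""
    return {label: round(100 * n / total) for label, n in counts.items()}


for dimension, counts in PHASE_II_COUNTS.items():
    # Each classification partitions the issues, so its counts must sum to the total.
    assert sum(counts.values()) == PHASE_II_TOTAL, dimension
    print(dimension, percentages(counts, PHASE_II_TOTAL))
```

This yields 42%, 35%, and 23% for system, content, and workflow issues; 43% and 57% for patients and dentists; and 54%, 11%, 2%, and 33% for the high, medium, low, and out-of-scope impact levels.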

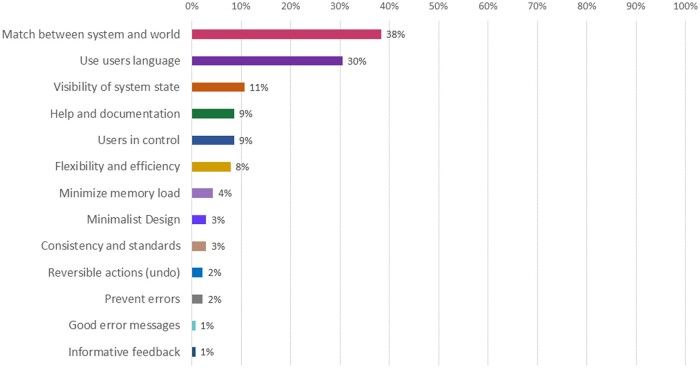

Issues that appeared more than once were documented only once, yielding 141 unique issues. In terms of the heuristic principles that were violated (Figure 2), 54 issues reflected a lack of match between the mHealth app and the world (38%), and in 43 cases, the system did not follow the users’ language (30%). Other usability issues included a lack of visibility of system state (n = 15, 11%), help and documentation challenges (n = 12, 9%), users not feeling in control (n = 12, 9%), lack of flexibility and efficiency (n = 11, 8%), and problems with minimizing the user’s memory load (n = 11, 4%). The remaining issues were associated with violations of the principles of minimalist design (n = 4, 3%), consistency and standards (n = 4, 3%), reversible actions (n = 3, 2%), preventing errors (n = 3, 2%), good error messages (n = 1, 1%), and informative feedback (n = 1, 1%).

Figure 2.

Issues found during the usability testing lab sessions: heuristic violations. This figure shows the count and percentage of violations of each heuristic principle, sorted from the most frequently violated principle (lack of match between the mHealth app and the world) to the least frequently violated principle (informative feedback).

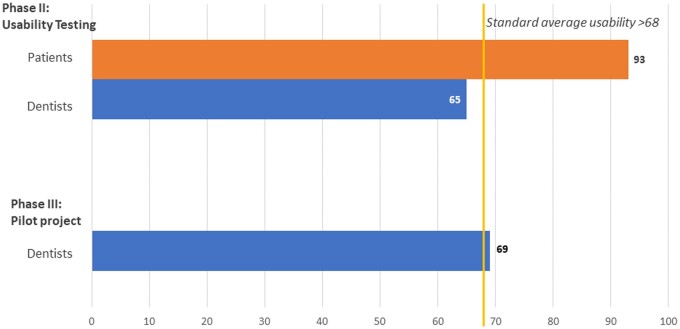

All participants in Phase II completed the SUS survey. Patients appeared to find the system highly usable, with an average SUS score of 93, whereas providers found it less usable, with an average score of 65 (Figure 3).

Figure 3.

Average SUS score by group: SUS scores among patients and dentists during Phases II and III. The average SUS score during Phase II (usability testing) was 93 among patients and 65 among providers. The average SUS score among providers during Phase III (pilot testing) was 69. *Patients did not complete the SUS in Phase III because the patients’ UI was not substantially changed after Phase II.

After all usability issues were identified, the mHealth app developers modified Prototype A’s dentists’ and patients’ UIs, transforming Prototype A into Prototype B. The major modifications to the dentists’ UI included a redesigned dashboard with more user-centered, task-oriented features. Changes to the patients’ UI were minor, such as improving screen visibility by adjusting the contrast between the text and the background. Screenshots of the patient UIs before and after the redesign are included in the Appendix (Supplementary Material).

Phase III result: pilot testing of prototype B

Table 2 reports the descriptive statistics for the fidelity measures recorded in Phase III. The SUS score for providers during pilot testing is shown in Figure 3. The usability errors identified during the pilot testing of prototype B included: (1) undelivered messages due to mobile phone carrier and service-related issues, (2) errors in patients’ mobile phone number data entry, (3) problems in the training of providers, and (4) mHealth app registration issues.

Discussion

Through a multi-phase iterative usability evaluation, we substantially improved the first version of an mHealth app for monitoring patient-reported postoperative dental pain. The evaluation highlighted the importance of formally assessing usability.38,39 It allowed us to discover challenges in using the patient and dentist UIs and in understanding the content of the pain-related questionnaires (based on the PROMIS40 and APS-POQ-R31 questionnaires), as well as disruptions to the clinical workflow. Our combined approach, which used different usability testing methods, provided perspectives from cognitive and heuristics experts, system experts, patient users, and dentist users.41 Together, these methods gave us a more comprehensive view of usability and revealed critical issues that might have been missed had we used only one method.42,43 We also demonstrated the value of engaging an existing mHealth application developer in the usability evaluation process: the findings were used to iteratively improve the developer’s production platform for patients and providers.

FollowApp.Care is a novel mobile application that has been successfully enhanced to efficiently capture PRO data on postoperative pain, in order to inform the clinical management of acute postoperative dental pain and ultimately to improve patient health outcomes, experience of care, and provider performance. A strength of our study lies in the ubiquity of mobile phones, which provide a convenient platform for collecting PRO data. The secure-messaging feature of FollowApp.Care is deployable on any text message-enabled smartphone, and the high engagement rates among dental patients underscore its suitability for our study. Furthermore, to the best of our knowledge, no other mobile application matching the specifications of our study has been developed and tested specifically for use in the dental office setting. The collection of PRO data and its use to inform clinical care are not routinely practiced at most dental offices, despite obvious benefits to patients and providers.44,45 FollowApp.Care aims to support both the collection and the use of PRO data. We expect that proactive collection of PRO data, combined with rapid responses from providers, will encourage active participation among patients and foster the belief that seemingly mundane actions, such as completing surveys, can make a significant difference in the quality of care they receive.

In the first phase, the cognitive walkthrough enabled early identification of system-related usability issues. This gave software engineers more time to transform prototype A into prototype B while other workflow and content issues were being evaluated. The lab-based usability testing in Phase II identified most of the issues with a high impact on usability. In addition, quantitative measures such as the System Usability Scale (SUS), administered in Phases II and III, showed that the provider UI had substantial usability challenges. The in situ pilot testing further surfaced issues that lab-based testing alone might have missed, such as undelivered messages, errors in data entry, and gaps in provider training. Had the cognitive walkthrough and lab-based usability testing been omitted, provider users in the pilot testing would likely have encountered more issues and frustration, which could have hindered adoption of the mHealth app.46 These findings are consistent with our previous work assessing and improving the usability of a dental EHR.43
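SUS scores are conventionally computed with Brooke's scoring rule.37 The minimal sketch below shows how each participant's ten ratings (each from 1 to 5) can be converted to a 0-100 score and then averaged within a user group, for example to compare the provider and patient interfaces. The function names and example responses are hypothetical and are not drawn from this study's data.

```python
from statistics import mean


def sus_score(responses):
    """Return the SUS score (0-100) for one participant's ten 1-5 ratings."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd-numbered items are positively worded: contribution = response - 1.
        # Even-numbered items are negatively worded: contribution = 5 - response.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    # Each contribution is 0-4, so the raw sum is 0-40; x2.5 rescales it to 0-100.
    return total * 2.5


def mean_sus(participants):
    """Average SUS score across a group (e.g., all dentists or all patients)."""
    return mean(sus_score(r) for r in participants)


if __name__ == "__main__":
    # Hypothetical responses for one participant, not study data.
    example = [4, 2, 4, 1, 5, 2, 4, 2, 4, 1]
    print(sus_score(example))  # 82.5
```

Because every participant's score lies on the same 0-100 scale, group means computed this way can be compared directly across interfaces or study phases.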

The multiple themes identified in the qualitative analysis of the usability testing interviews indicate that the mHealth app we evaluated is complex. Our analysis showed that the dentists' UI was more challenging to use than the patients' UI. This differs from the findings of Derks et al,47 who identified most of the usability issues in their mHealth app through patient testing, whereas we identified most issues through provider testing.

One limitation of this study is the limited generalizability of its results, given the small sample of participants. Provider participants were purposefully recruited from the participating clinics based on their interest in using an mHealth app to communicate with patients, so they may not be representative of the broader provider population. No participants with visual or motor disabilities were included in the study. We therefore have no information on the usability of the mHealth app when used with smartphone accessibility features such as "VoiceOver." In addition, replication of these results may be affected by external factors such as time and evolving patterns of technology use, and we cannot determine how future changes in technology use might affect the usability of the mHealth app. In Phase I, coding by a single author, with review by only one additional investigator, provides weaker assurance of the rigor and reproducibility of the findings than approaches involving multiple independent coders. For dentists, we mainly assessed the web-based UI on a computer rather than the mobile UI, because we found early on that dentists were much more likely to use their clinic computers than their personal mobile phones to access the system. We therefore did not compare these 2 interfaces for dentists and focused our efforts on the computer UI. All patients used their mobile phones to access the platform, so we did not test whether patients could access FollowApp.Care through email on a computer. The platform was also not interoperable with dental practices' patient records, although such a feature is planned for future development. Finally, the mHealth survey consists of items from 2 valid and reliable instruments, but its own reliability and validity still need to be assessed, because we cannot assume that the revised survey retains the psychometric properties of the original surveys.

Conclusion

This study demonstrated a substantial improvement in the first version of an mHealth app for monitoring patient-reported postoperative dental pain. It highlights the importance of multi-phase iterative usability evaluation in developing mHealth applications. We combined a rapid cognitive walkthrough, lab-based usability testing, in situ pilot testing, and quantitative SUS evaluation. Together, these methods allowed us to identify and address critical usability issues in the FollowApp.Care mHealth app for postoperative dental pain management. Our findings suggest that the app has the potential to improve patient outcomes and enhance the quality of care delivered by providers.


Contributor Information

Ana M Ibarra-Noriega, Diagnostic and Biomedical Sciences, School of Dentistry, University of Texas Health Science Center at Houston, Houston, TX 77054, United States.

Alfa Yansane, Preventive and Restorative Dental Sciences, School of Dentistry, University of California at San Francisco, San Francisco, CA 94143, United States.

Joanna Mullins, Skourtes Institute, Hillsboro, OR 97124, United States.

Kristen Simmons, Skourtes Institute, Hillsboro, OR 97124, United States.

Nicholas Skourtes, Skourtes Institute, Hillsboro, OR 97124, United States.

David Holmes, FollowApp.Care, London, W1G 8GE, United Kingdom.

Joel White, Preventive and Restorative Dental Sciences, School of Dentistry, University of California at San Francisco, San Francisco, CA 94143, United States.

Elsbeth Kalenderian, Marquette University School of Dentistry, Milwaukee, WI 53233, United States; Department of Dental Management, School of Dentistry, University of Pretoria, Pretoria, 0002, South Africa.

Muhammad F Walji, Diagnostic and Biomedical Sciences, School of Dentistry, University of Texas Health Science Center at Houston, Houston, TX 77054, United States; Department of Clinical and Health Informatics, UTHealth Houston McWilliams School of Biomedical Informatics, Houston, TX 77030, United States.

Author contributions

Ana M. Ibarra-Noriega, Muhammad F. Walji, and Elsbeth Kalenderian conceptualized the manuscript and wrote an initial draft. All authors expanded, proofread, and substantially edited the manuscript. Muhammad F. Walji, Elsbeth Kalenderian, and Joel White secured funding for the project.

Supplementary material

Supplementary material is available at JAMIA Open online.

Funding

This work was supported by the Agency for Healthcare Research and Quality (grant number U18HS026135).

Conflicts of interest

D.H. serves as the founder and CEO of FollowApp.Care. The other authors declare no conflicts of interest.

Data availability

The data underlying this article will be shared on reasonable request to the corresponding author.

References

- 1. Walji MF, Yansane A, Hebballi NB, et al. Finding dental harm to patients through electronic health record-based triggers. JDR Clin Trans Res. 2020;5(3):271-277.

- 2. The National Pharmaceutical Council. Pain: Current Understanding of Assessment, Management, and Treatments. National Pharmaceutical Council and the Joint Commission on Accreditation of Healthcare Organizations; 2001.

- 3. Moore PA, Hersh EV. Combining ibuprofen and acetaminophen for acute pain management after third-molar extractions: translating clinical research to dental practice. J Am Dent Assoc. 2013;144(8):898-908.

- 4. Dionne RA, Gordon SM, Moore PA. Prescribing opioid analgesics for acute dental pain: time to change clinical practices in response to evidence and misperceptions. Compend Contin Educ Dent. 2016;37(6):372-379.

- 5. Centers for Disease Control and Prevention. Understanding the Opioid Overdose Epidemic. Centers for Disease Control and Prevention; 2022. Accessed March 9, 2023. https://www.cdc.gov/opioids/basics/epidemic.html

- 6. Schroeder AR, Dehghan M, Newman TB, et al. Association of opioid prescriptions from dental clinicians for US adolescents and young adults with subsequent opioid use and abuse. JAMA Intern Med. 2019;179(2):145-152.

- 7. Turk DC, Dworkin RH, Burke LB, et al. Developing patient-reported outcome measures for pain clinical trials: IMMPACT recommendations. Pain. 2006;125(3):208-215.

- 8. Cook KF, Jensen SE, Schalet BD, et al. PROMIS measures of pain, fatigue, negative affect, physical function, and social function demonstrated clinical validity across a range of chronic conditions. J Clin Epidemiol. 2016;73:89-102.

- 9. Grossman S, Dungarwalla M, Bailey E. Patient-reported experience and outcome measures in oral surgery: a dental hospital experience. Br Dent J. 2020;228(2):70-74.

- 10. Anthony CA, Lawler EA, Ward CM, et al. Use of an automated mobile phone messaging robot in postoperative patient monitoring. Telemed J E Health. 2018;24(1):61-66.

- 11. Nundy S, Dick JJ, Chou C-H, et al. Mobile phone diabetes project led to improved glycemic control and net savings for Chicago plan participants. Health Aff (Millwood). 2014;33(2):265-272.

- 12. Zakerabasali S, Ayyoubzadeh SM, Baniasadi T, et al. Mobile health technology and healthcare providers: systemic barriers to adoption. Healthc Inform Res. 2021;27(4):267-278.

- 13. Zhou L, Bao J, Watzlaf V, et al. Barriers to and facilitators of the use of mobile health apps from a security perspective: mixed-methods study. JMIR Mhealth Uhealth. 2019;7(4):e11223.

- 14. California Health Care Foundation. Consumers and Health Information Technology: A National Survey. California Health Care Foundation; 2010.

- 15. Rideout V, Katz VS. Opportunity for all? Technology and learning in lower-income families. In: Joan Ganz Cooney Center at Sesame Workshop. New York, NY: Joan Ganz Cooney Center at Sesame Workshop; 2016.

- 16. Toniazzo MP, Nodari D, Muniz FWMG, et al. Effect of mHealth in improving oral hygiene: a systematic review with meta-analysis. J Clin Periodontol. 2019;46(3):297-309.

- 17. Scheerman JFM, van Empelen P, van Loveren C, et al. A mobile app (WhiteTeeth) to promote good oral health behavior among Dutch adolescents with fixed orthodontic appliances: intervention mapping approach. JMIR Mhealth Uhealth. 2018;6(8):e163.

- 18. Estai M, Kanagasingam Y, Xiao D, et al. End-user acceptance of a cloud-based teledentistry system and android phone app for remote screening for oral diseases. J Telemed Telecare. 2017;23(1):44-52.

- 19. Haron N, Zain RB, Ramanathan A, et al. m-Health for early detection of oral cancer in low- and middle-income countries. Telemed J E Health. 2020;26(3):278-285.

- 20. Fernández CE, Maturana CA, Coloma SI, et al. Teledentistry and mHealth for promotion and prevention of oral health: a systematic review and meta-analysis. J Dent Res. 2021;100(9):914-927.

- 21. Zhou L, Bao J, Setiawan IMA, et al. The mHealth app usability questionnaire (MAUQ): development and validation study. JMIR Mhealth Uhealth. 2019;7(4):e11500.

- 22. LeRouge C, Wickramasinghe N. A review of user-centered design for diabetes-related consumer health informatics technologies. J Diabetes Sci Technol. 2013;7(4):1039-1056.

- 23. Cafazzo JA, Leonard K, Easty AC, et al. The user-centered approach in the development of a complex hospital-at-home intervention. Stud Health Technol Inform. 2009;143:328-333.

- 24. Chowdhary K, Yu DX, Pramana G, et al. User-centered design to enhance mHealth systems for individuals with dexterity impairments: accessibility and usability study. JMIR Hum Factors. 2022;9(1):e23794.

- 25. Griffin L, Lee D, Jaisle A, et al. Creating an mHealth app for colorectal cancer screening: user-centered design approach. JMIR Hum Factors. 2019;6(2):e12700.

- 26. Vaughn J, Shah N, Jonassaint J, et al. User-centered app design for acutely ill children and adolescents. J Pediatr Oncol Nurs. 2020;37(6):359-367.

- 27. Tobias G, Spanier AB. Developing a mobile app (iGAM) to promote gingival health by professional monitoring of dental selfies: user-centered design approach. JMIR Mhealth Uhealth. 2020;8(8):e19433.

- 28. Zhang C, Ran L, Chai Z, et al. The design, development and usability testing of a smartphone-based mobile system for management of children’s oral health. Health Informatics J. 2022;28(3):14604582221113432.

- 29. Still B, Crane K. Fundamentals of User-Centered Design: A Practical Approach. CRC Press; 2017.

- 30. FollowApp.Care. 2022. Accessed October 5, 2022. https://www.followapp.care/

- 31. Gordon D, Polomano R, Gentile D, et al. Validation of the revised American Pain Society Patient Outcome Questionnaire (APS-POQ-R). J Pain. 2011;12(4):P3.

- 32. Wharton C, Rieman J, Lewis C, Polson P. The cognitive walkthrough method: a practitioner’s guide. In: Nielsen J, Mack RL, eds. Usability Inspection Methods. John Wiley & Sons, Inc.; 1994:105-140.

- 33. Sandelowski M. What’s in a name? Qualitative description revisited. Res Nurs Health. 2010;33(1):77-84.

- 34. Walji MF, Kalenderian E, Tran D, et al. Detection and characterization of usability problems in structured data entry interfaces in dentistry. Int J Med Inform. 2013;82(2):128-138.

- 35. Nielsen J. Usability Engineering. Morgan Kaufmann; 1994.

- 36. Zhang J, Walji M. Better EHR: Usability, Workflow and Cognitive Support in Electronic Health Records. National Center for Cognitive Informatics & Decision Making in Healthcare; 2014.

- 37. Brooke J. SUS: a “quick and dirty” usability scale. In: Jordan PW, Thomas B, Weerdmeester BA, McClelland IL, eds. Usability Evaluation in Industry. Taylor & Francis; 1996:189-194.

- 38. Hartzler AL, Izard JP, Dalkin BL, et al. Design and feasibility of integrating personalized PRO dashboards into prostate cancer care. J Am Med Inform Assoc. 2016;23(1):38-47.

- 39. Jake-Schoffman DE, Silfee VJ, Waring ME, et al. Methods for evaluating the content, usability, and efficacy of commercial mobile health apps. JMIR Mhealth Uhealth. 2017;5(12):e190.

- 40. HealthMeasures. Explore Measurement Systems: PROMIS [Internet]. 2024. https://www.healthmeasures.net/explore-measurement-systems/promis

- 41. Jaspers MWM. A comparison of usability methods for testing interactive health technologies: methodological aspects and empirical evidence. Int J Med Inform. 2009;78(5):340-353.

- 42. Cho H, Yen P-Y, Dowding D, et al. A multi-level usability evaluation of mobile health applications: a case study. J Biomed Inform. 2018;86:79-89.

- 43. Walji MF, Kalenderian E, Piotrowski M, et al. Are three methods better than one? A comparative assessment of usability evaluation methods in an EHR. Int J Med Inform. 2014;83(5):361-367.

- 44. Fagundes NCF, Minervini G, Furio Alonso B, et al. Patient-reported outcomes while managing obstructive sleep apnea with oral appliances: a scoping review. J Evid Based Dent Pract. 2023;23(1s):101786.

- 45. Leles CR, Silva JR, Curado TFF, et al. The potential role of dental patient-reported outcomes (dPROs) in evidence-based prosthodontics and clinical care: a narrative review. Patient Relat Outcome Meas. 2022;13:131-143.

- 46. Venkatesh V, Morris MG, Davis GB, Davis FD. User acceptance of information technology: toward a unified view. MIS Q. 2003;27(3):425-478.

- 47. Derks YP, Klaassen R, Westerhof GJ, et al. Development of an ambulatory biofeedback app to enhance emotional awareness in patients with borderline personality disorder: multicycle usability testing study. JMIR Mhealth Uhealth. 2019;7(10):e13479.
