Abstract

In-vehicle sensing technology has gained tremendous attention due to its ability to support major technological developments, such as connected vehicles and self-driving cars. In-vehicle sensing data are invaluable and important data sources for traffic management systems. In this paper we propose an innovative architecture of unobtrusive in-vehicle sensors and present methods and tools that are used to measure the behavior of drivers. The proposed architecture including methods and tools are used in our NIH project to monitor and identify older drivers with early dementia

Keywords: driver’s behavior, in-vehicle sensing, in-vehicle cameras, telematics sensors

I. Introduction

About one in ten older adults in the U.S. have Alzheimer’s disease (AD) and another 15 to 20% have mild cognitive impairment (MCI), a third of whom will develop dementia within 5 years. Individuals with dementia eventually become unable to perform complex everyday activities including driving so most current driving research focuses on MCI or early stage dementia. Our 5-year project, funded by NIH, focuses on creating an innovative in-vehicle sensors architecture, selecting a large group of older drivers, ages 65 to 85 years old, measuring driver’s behavior during 3-year period, and identify cognitive changes that can lead to early dementia. Leading vehicle manufacturing companies including Volvo [1], Ford [2], and Kia [3], have adopted driver alert system applications that alert the drivers to avoid accidents. Most of those systems used the same techniques and algorithms to monitor driver’s behavior but the use of those systems are limited to alerting the driver of any situations and avoid incidents from happening i.e Driver alert systems alert’s the driver if he/she is driving long. Advance Driver assistance systems alerts the driver if vehicle to moving onto other lane or if someone is in their blind spot. Measuring driving behaviors can divided into 3 types [9]

Driver Based: Drowsiness, Sensation seeking, impulsive etc.

Driving based: Distraction, attention etc

Qualitative based: Speeding, braking, lane changing etc.

Among above mentioned distraction and drowsiness are major behavior that changes with time and can be used to find key insights into driving of participants. One of them is distraction. Some of the usual distractions during driving are when drivers are looking off road and texting and using phone [4]–[6]. Some of the metrics that are used to measure distraction are head-pose [7] and gaze patterns [8]

II. IN-VEHICLE SENSOR ARCHITECTURE

We designed an innovative architecture of unobtrusive in-vehicle sensors. The concept of utilizing in-vehicle sensors to measure the behavior of drivers and detect cognitive change is in itself innovative and reflective of the rapid development of these sensors and their application for monitoring driver behavior. Published reports of the proposed sensors are limited, particularly in regard to small sample size, duration of the testing and the number of sensors and/or cognitive tests utilized. Furthermore, few prospective studies examined patients with abnormal aging (MCI and early dementia) and there have been no studies including a culturally diverse sample. Our proposal addresses these limitations with a fully powered study, complete package of unobtrusive sensors and array of cognitive tests including the LASSI-L and others that are particularly sensitive to the early changes in cognition. Furthermore, the algorithms to measure Driving Behavior Indices are unique and will contribute significantly to application of the results in real-world settings. The specific technical innovations of this project include the following

an adaptive driver behavior data sampling technique to improve the efficiency of the data collection for driver behavior analytics,

the data fusion of telematics, vision-sensing, and the environmental data to define highly detailed driver behavior indices,

the creation of a scalable spatial network query processing platform for heterogeneous data sources.

The architecture of the proposed in-vehicle sensors is shown in fig 1. Each in-vehicle sensor is comprised of two distributed sensing units:

Fig. 1.

Architecture of Vision Sensor.

in-vehicle vision sensing unit,

in-vehicle telemetry unit.

For example, the GPS clock information will share data throughout the in-vehicle sensor networks to achieve precise time synchronization. The proposed sensors supports power-full computing resources for real-time, customized onboard data processing, data-fusion, and machine-vision. Customized sensor enclosures are designed for the sensors to support a cooling system and to minimize the size of sensor hardware. Each in-vehicle sensor has local data storage to store in-vehicle sensing data. The data are uploaded to a central database during participants’ quarterly cognitive testing visits. In-Vehicle sensing units are the main components that are used in this study, there are two types of sensing units that are used.

A. Vision Sensors

The major components of the vision sensing unit are forward-facing camera, driver-facing camera, and MDVR device to store data. The vision sensor unit is mounted on the windshield, The vision sensors use computer vision algorithms to unobtrusively

track eye and head movements,

read facial micro-expression,

driving situation awareness (e.g., traffic light classification, taillights of front vehicles, and lane marking, stop sign, and vehicle detection.

Table I shows indices measured by driver-facing and front cameras. We describe next several methods and tools that are using vision sensors to measure driver’s behavior.

Table I.

Driver behavior indices measure by two in-vehicle cameras

| Camera | Feature | description |

|---|---|---|

| Driver’s Camera | Face Detection | The AI algorithm detects the face of the driver and the driver’s facial features. |

| Eyes Detection | The AI algorithm detects the eyes and analyzed weather driver eyes are open or closed. | |

| Yawning | Yawning is based on eyes and mouth detection. | |

| Distraction | Distraction is based on the head-pose angle. Head-pose estimation technique was applied but the initial angle is adjusted due to the placement of the camera on dashboard | |

| Smoking&Phone Use | Both of these indices use AI object detection algorithms to detection. | |

| Front Camera | Traffic Sign Detection | The AI algorithm detects the traffic signals and monitor if the driver passes on red sign or not |

| Object Detection | The AI algorithm detects objects on the roads, such as pedestrian or cyclist crossing the road, curbs or barriers, nearby vehicles, and others. | |

| Lane Crossing | AI algorithm that can detect lane departure | |

| Near-Collision | AI algorithm that can detect object or vehicle at a certain distance from it. | |

| Pedestrian detection | AI algorithm that can detect whether the driver yield at for the passenger crossing. |

1). Region of Interest and Face Detectio: :

Multiple techniques are used for face detection based on the region of interest. Region-of-Interest is identified by considering different metrics such are angle of the camera, height of the driver, place of the camera. Number of drivers are large in number so in order to detect the RoI and Face we used them interchangeably such that we use a less accurate but fast face detection library to have a rough estimate of the location of the face and then based on that location. RoI is selected with maximum area for the face or any false positive that it may result in and also to rule out the passenger face. After RoI is identified, it is passed to more accurate face detector to have an exact location with facial landmarks. Fig 2 & Fig 3 shows is results from our vision sensor system

Fig. 2.

Face Detection based on region-of-interest.

Fig. 3.

6 Facial landmark detection.

2). Eyes and Mouth Detection: :

Eyes and mouth detection are two more features implemented in our vision system. Both of these features are detected separately and are based on HAAR-Cascade classifiers. The functions return a bounding box for the detected area of eyes and mouth and can be passed as an array or tuple for RGB or grayscale image for further use. Apart from that we also implemented emotion recognition but were excluded due not sufficient accuracy [11]. Fig 4 & fig 5 illustrates mouth and eyes detection in our video sensor system.

Fig. 4.

Eyes Detection.

Fig. 5.

Mouth Detection.

3). Lane Detection:

Lane detection is another feature of the video sensor system that uses deep learning model to detect lane while driving. It takes image or video as an input and display a green marker on the current lane on which the driver is driving. Rain detection is also included in this framework that used machine learning model to predict the rain

B. Telemactic Sensors

The major sensing components of the telematics unit are

an on-chip RTK GNSS module,

an Inertial Measurement Unit (IMU),

an on-board diagnostics (OBD)

They enable obtaining high-precision positioning data, real-time vehicle state information (e.g., engine RPM, vehicle speed, and pedal position), and vehicles’ dynamic motions.

1). High-precision Positioning::

An on-chip RTK GNSS module allows capturing a complete picture of vehicles’ high-precision positioning data, making in-depth driver behavior analytics at lane and intersection levels possible. This new technology provides highly precise positioning data compared to typical GPS mod- ules (accuracy about 4.9 m). The precise vehicle localization achieved by this new technology is important to capture lane deviations, travel patterns in parking lot areas, and detailed turning behaviors.

2). Onboard Diagnostics::

A Bluetooth OBD scanner will obtain real-time data streams from the vehicle’s Controller Area Network (CAN bus). Standardized OBD-II protocol facilitates easy data translation into human-readable formats based on standardized OBD-II PIDs without reverse engineering work.

3). Motion and Orientation::

IMU sensors, consisting of tri-axial accelerometers, gyroscopes, and magnetometers, are used to capture vehicles’ dynamic motions and orientations. They estimate the harsh acceleration, braking, and cornering of vehicles, which are one of the popular indicators for driver behaviors. IMU sensors also identify driving over potholes and raised pavement markers, allowing for analysis of how drivers react to unexpected pavement defects and lane departure in conjunction with the other telematics data.

III. Driver Behavior Indices

Our objective is to measure and monitor changes of Driver Behavior Indices (DBIs) using the vision and telematics sensor data. The selection of the DBIs is designed to reflect older drivers’ cognitive function and driving performance. The DBIs is evaluated for each driver and summarized on a daily, weekly, and monthly basis. DBIs are classified into four categories. Examples of DBIs are shown in Table II.

Table II.

Driver behavior indices

| Categories | Driver Behavior Indices | Data Analytics |

|---|---|---|

| Travel Pattern | number of trips, driven miles, miles on the highway, miles during the night, daytime, and severe weather, miles on highway, etc. | map-matching, data queries, map data, weather data |

| Abnormal Driving | wayfinding, getting lost, ignore traffic signals and signs, near-collision events, distraction, drowsiness, etc. | machine vision, shortest path, outlier detection, trajectory clustering, frequent graph mining |

| Reaction Time | reaction time to traffic light change, front-vehicle taillight, pothole, etc. | vision sensing, data-fusion, vibration analysis, machine learning |

| Braking Patterns | eye movements and IMU data due to stop sign, traffic signal, trail light, losing focus, potholes, etc. | signal processing, gaze estimation, data mining, machine vision |

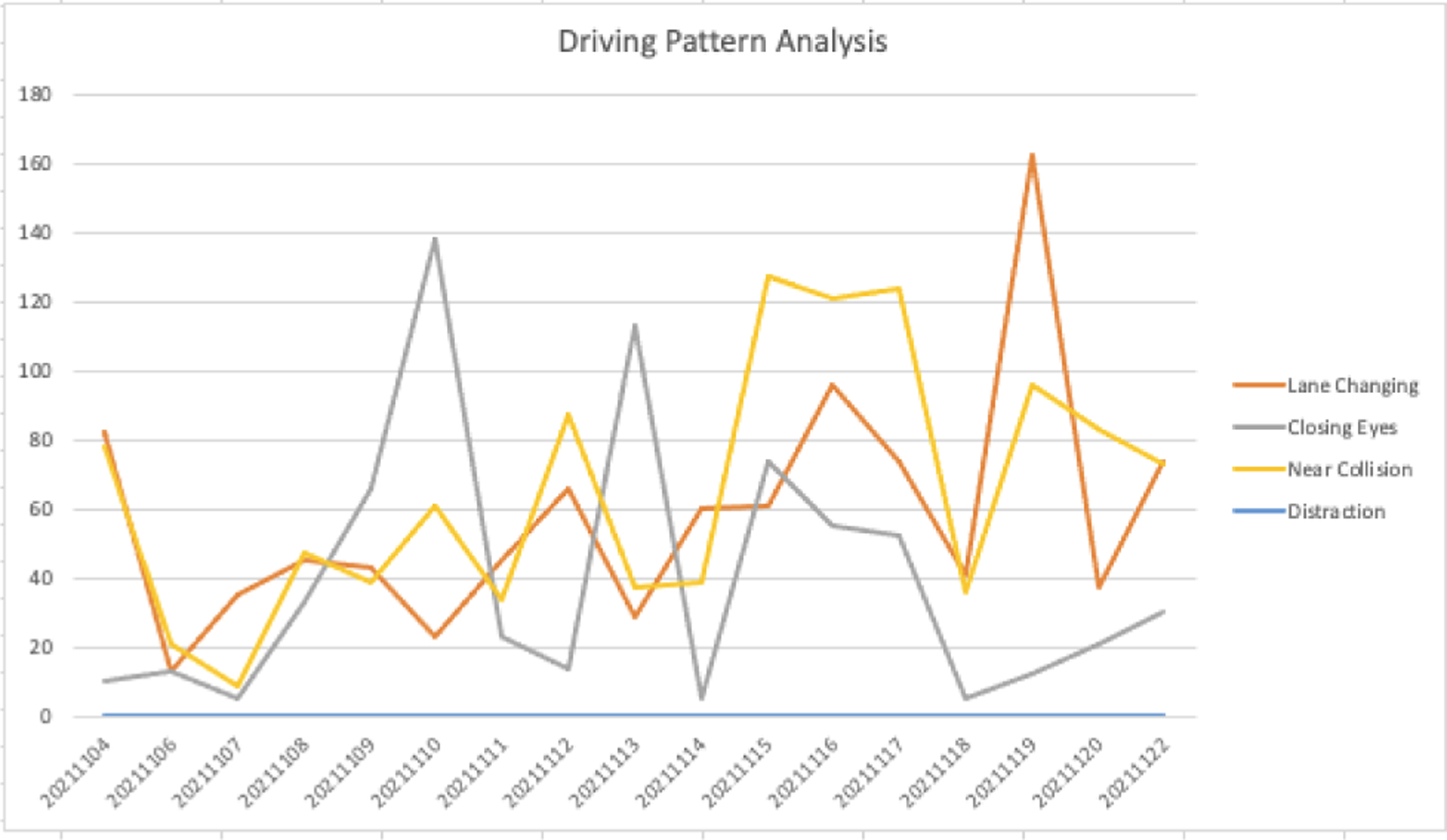

For illustration, fig 6 and 7 shows the driving pattern of a two senior drivers for 2-weeks period based on the data obtained from in-vehicle cameras that measured the number of times the driver closed eyes, number of distractions, crossing lines, and near collision events.details of the the data can be reviewed in previous works that are published is same domain [10]

Fig. 6.

Driving Pattern driver # 1

Fig. 7.

Driving Pattern driver # 2

IV. Conclusion

We presented an innovative architecture of an in-vehicle sensor system consisting of vision and telemetry sensors and includes a set of AI algorithms to measure driver behavior indices. The system was already installed in about 70 cars driven by older drivers in Florida with the objective to monitor and detect cognitive changes in these drivers. The final goal of the project is to identify those drivers that their cognitive changes imply early dementia. The detailed results of the study will be published soon.

Acknowledgment

The project mentioned in section I is supported by Award Number GT003128-NIH from the National Institute of Health. Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the National Institutes of Health.

Contributor Information

Muhammad Tanveer Jan, College of Engg and Computer Science Florida Atlantic University, Boca Raton, USA.

Sonia Moshfeghi, College of Engg and Computer Science Florida Atlantic University, Boca Raton, USA.

Joshua Conniff, Charles E. Schmidt College of Science Florida Atlantic University, Boca Raton, USA.

Jinwoo Jang, College of Engg and Computer Science Florida Atlantic University, Boca Raton, USA.

Kwangsoo Yang, College of Engg and Computer Science Florida Atlantic University, Boca Raton, USA.

Jiannan Zhai, College of Engg and Computer Science Florida Atlantic University, Boca Raton, USA.

Monica Rosselli, Charles E. Schmidt College of Science Florida Atlantic University, Boca Raton, USA.

David Newman, Christine E. Lynn College of Nursing Florida Atlantic University, Boca Raton, USA.

Ruth Tappen, Christine E. Lynn College of Nursing Florida Atlantic University, Boca Raton, USA.

Borko Furht, College of Engg and Computer Science Florida Atlantic University, Boca Raton, USA.

References

- [1]. [11/05/2022]. https://www.volvocars.com/uk/support/manuals/xc40/2020w17/driver-support/driver-alert-control/driver-alert-control Accessed.

- [2]. [11/05/2022]. https://www.ford.com/support/how-tos/search/driver.alert.system Accessed.

- [3]. [11/05/2022]. http://webmanual.kia.com/PREM_GEN5/AVNT/RJ/KOR/English/driverassistance001.html Accessed.

- [4].Stutts JC; Reinfurt DW; Staplin L; Rodgman E The Role of Driver Distraction in Traffic Crashes; U.S. Department of Transportation: Washington, DC, USA, 2001.

- [5].Zhao Y, Görne L, Yuen IM, Cao D, Sullman M, Auger D, Lv C, Wang H, Matthias R, Skrypchuk L, Mouzakitis A. An Orientation Sensor-Based Head Tracking System for Driver Behaviour Monitoring. Sensors (Basel). 2017. Nov 22;17(11):2692. doi: 10.3390/s17112692. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Khandakar A, Chowdhury MEH, Ahmed R, Dhib A, Mohammed M, Al-Emadi NAMA, Michelson D. Portable System for Monitoring and Controlling Driver Behavior and the Use of a Mobile Phone While Driving. Sensors. 2019; 19(7):1563. 10.3390/s19071563 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [7].Husen MN; Lee S; Khan MQ Syntactic pattern recognition of car driving behavior detection. In Proceedings of the 11th International Conference on Ubiquitous Information Management and Communication, Beppu, Japan, 5–7 January 2017; pp. 1–6. [Google Scholar]

- [8].Liao Y; Li SE; Wang W; Wang Y; Li G; Cheng B Detection of driver cognitive distraction: A comparison study of stop-controlled intersection and speed-limited highway. IEEE Trans. Intell. Transp. Syst 2016, 17, 1628–1637. [Google Scholar]

- [9].Zinebi K, Souissi N, & Tikito K (2018, April). Driver Behavior Analysis Methods: Applications oriented study. In Proceedings of the 3rd International Conference on Big Data, Cloud and Applications-BDCA. [Google Scholar]

- [10].Jan MT et al. (2023). Non-intrusive Drowsiness Detection Techniques and Their Application in Detecting Early Dementia in Older Drivers. In: Arai K (eds) Proceedings of the Future Technologies Conference (FTC) 2022, Volume 2. FTC 2022 2022. Lecture Notes in Networks and Systems, vol 560. Springer, Cham. 10.1007/978-3-031-18458-10_53. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [11].Altaher A, Salekshahrezaee Z, Abdollah Zadeh A, Rafieipour H, & Altaher A (2020). Using multi-inception CNN for face emotion recognition. Journal of Bioengineering Research, 3(1), 1–12. [Google Scholar]