Abstract

Reminiscence therapy (RT) can improve the mood and communication of persons living with Alzheimer’s Disease and Alzheimer’s Disease related dementias (PLWD). Traditional RT requires professionals’ facilitation, limiting its accessibility to PLWD. Social robotics has the potential to facilitate RT, enabling accessible, home-based RT. However, studies are needed to investigate how PLWD would perceive a robot-mediated RT (RMRT) and how to develop RMRT for positive user experience and successful adoption. In this paper, we developed a prototype of RMRT using a humanoid social robot and tested it with 12 participants (7 PLWD, 2 with mild cognitive impairment, and 3 informal caregivers). The robot automatically displayed a memory trigger on its tablet and engaged participants in a relatable conversation during RMRT. A mixed-method approach was employed to assess its acceptability and usability. Our results showed that PLWD had an overall positive user experience with the RMRT. Participants laughed and sang along with the robot during RMRT and demonstrated intention to use it. We additionally discuss the robot control method and several critical problems for RMRT. The RMRT can facilitate both verbal and nonverbal social interaction for PLWD and holds promise for engaging, personalized, and efficient home-based cognitive exercises for PLWD.

Index Terms: Social robots, reminiscence therapy, dementia care, cognitive exercise, Alzheimer’s disease

I. Introduction

An estimated 6.5 million people aged 65 or above live with Alzheimer’s Disease and Alzheimer’s Disease related dementias (AD/ADRD) in the United States [1]. The prevalence of dementia has been constantly increasing as a result of an aging population. It is predicted that the number of persons living with AD/ADRD (PLWD) in the U.S. will grow to 7.1 million by 2025 and 12.7 million by 2050 [1]. Dementias are associated with an irreversible, progressive decline of cognition, function, and behavior, which damages PLWD’s social relationships and sense of well-being and may lead to severe disability and death [2]. Reminiscence therapy (RT) is a popular psychosocial intervention widely used in dementia care. It involves a discussion of past experiences and events, using tangible prompts (e.g., photographs, music, and videos) to evoke PLWD’s memories and stimulate conversation [3], [4]. RT has shown positive effects on PLWD’s mood, cognition, communication, and quality of life [3], [4]. However, RT is traditionally provided by clinical practitioners or professional facilitators [5], [6], limiting its accessibility to PLWD. Rapidly evolving information and communication technologies (ICTs) such as smartphones, computers, and social robotics offer potential avenues to support the delivery of RT [7], [8].

Using ICTs, the materials (e.g., photographs, music, and videos) for reminiscence can be digitized and stored on computers, tablets, or smartphones, and be easily managed and retrieved during RT [8]. Artificial intelligence (AI) holds promise for automating the reminiscence process [9], making RT more widely accessible for PLWD. Currently, most technology-enabled RT programs are offered in the form of a program stored on a computer, tablet, or smartphone [10], such as the InspireD Reminiscence App [11]–[13], the Memory Tracks App [14], and Elisabot [15]. The interaction modalities of these computer-mediated programs are constrained to 2D visual signals and sound, lacking non-verbal interactive communication cues such as eye contact and gestures. By comparison, in a physically embodied social robot-mediated RT (RMRT), the robot usually facilitates the RT using an attractive physical appearance, expressive gestures and other body language, and/or facial expressions [16], as well as AI-enabled conversation. This approach helps promote social interactions with the PLWD both verbally and non-verbally and may enable a more positive user experience and more effective and engaging memory triggers (e.g., recall and recognition) and/or pleasant experiences in reminiscence activity [8], [17]. Thus, RMRT could potentially maximize the positive impacts of RT on PLWD’s mood, cognition, communication, and quality of life.

To date, a few studies have been conducted on RMRT [18], [19]. For example, during reminiscence work at a care facility in Japan, Yamazaki et al. [20] introduced a huggable, human-shaped pillow-phone robot, Hugvie, and pilot tested its effect in facilitating RT with PLWD. The results from five PLWD indicated that providing topics related to personal histories through robotic media could positively affect PLWD’s communication. Using a 19.05-cm-tall humanoid social robot, RoBoHoN, Wu et al. [21] developed an RMRT in which deep learning techniques were applied to recognize events, objects, and scenes in photographs, and tested the system’s usability with people (aged 22–42) without cognitive impairment in Taiwan. Their results showed that the robot could pose appropriate questions related to personal photographs and had the potential to guide the user to recall the past in an organized way. In another study using a 31.5-cm-tall social robot, Zenbo Junior, Gamborino et al. [22] developed a robot-assisted photo reminiscence system consisting of two parts: a graphical user interface (GUI) to display photos (i.e., Dynamic and Flexible Interactive PhotoShow) [23] and a social robot. This system was designed to autonomously guide an older adult PLWD throughout a reminiscence session, asking questions related to the picture that the user chose. The system could automatically extract visual information from the pictures using a pre-trained AI model to detect scenes and events. Gamborino et al. tested the system with 10 older adults without cognitive impairment in Taiwan. Most participants found the system responsive and the questions appropriate for the selected picture, and they praised the system. The majority of these studies were conducted with young adults or older adults without cognitive impairments; few studies focused on PLWD.
However, to ensure successful adoption of robotic technology among PLWD, it is necessary to evaluate the technology’s acceptability and usability specifically with PLWD [16], [24], especially considering that PLWD usually have more limited cognitive and physical capacity than people without such impairments, as well as limited experience with technologies [25]. Another limitation of the literature is the lack of such RMRT studies in the United States. The adoption of robots for dementia care delivery can differ across cultures and countries [25]. In addition, during an RMRT, the design of the user interface (UI) for PLWD covers the robot’s appearance, voice, and body movement, not only the verbal conversation and/or the GUI that displays the reminiscence image. We also noticed that most of the robots in previous RMRT studies lacked a built-in GUI for user interaction, meaning that an additional, separate GUI component (e.g., a computer or tablet [21], [22]) was needed to support user interaction.

Our long-term goal is to develop an RMRT wherein a social robot can automatically provide engaging, adaptive, and personalized reminiscence activities for people with dementia. Such an RMRT is designed to be an accessible, easy-to-use, and personalized cognitive exercise tool that PLWD can use by themselves at home, without the facilitation of professional clinicians. We believe this program will also help increase the accessibility of dementia care, improve the efficiency of care service, and ensure consistent quality of care. In this current work, we aimed to develop an engaging RMRT using a physically embodied social robot, Pepper [26], targeted for use by people with dementia in the United States. The Pepper robot has a tablet attached to its chest. In our previous online survey study [27], where participants watched an approximately 3-minute video of a Pepper robot presenting potential functions to assist dementia care, PLWD, caregivers, and the general public showed an overall positive attitude towards using the robot to assist with care for people with ADRD. However, participants’ perceptions of the robot were based merely on watching a video rather than on direct interaction with the robot. User perceptions of and attitudes towards the robot might differ between watching a video of the robot and interacting with it directly in the real world. Herein, this usability study was conducted to answer the following research questions: 1) How do PLWD perceive an RMRT program that is facilitated by a humanoid social robot? and 2) How effective is the robot in interacting with PLWD when delivering RT, i.e., does the RMRT program allow PLWD to complete tasks with a minimal amount of effort, and is the interface easy to learn and use? We developed a unique RMRT program and tested it at a dementia specialty clinic with a group of PLWD who used the program and interacted with the robot.
We evaluated the acceptability and usability of the program and robot in relation to the robot’s appearance, voice and body language, the GUI on the robot’s tablet, as well as the robot’s conversation in offering reminiscence.

II. Development of a Robot-Mediated Reminiscence Activity

We employed user-centered design principles [28] and guidelines to develop a prototype for a humanoid social robot (Pepper) equipped with a GUI to deliver reminiscence therapy for PLWD [29]–[31]. The program was primarily designed for individuals with mild or moderate dementia, as they possess better verbal communication capabilities and responsiveness [1]. Pepper is 1.21 m tall and 0.48 m wide and has 17 joints for graceful and expressive body movements. Pepper can appropriately adjust its body language (such as gestures and other body movements) as well as its voice according to what it is saying. It is equipped with several LEDs in its eyes, ears, and shoulders that change color to signal and support human-robot communication. Pepper’s size and attractive appearance make it appropriate, acceptable, and enjoyable to users in daily life as well as PLWD [16], [27], [32]–[34]. Pepper also has a range of sensors (e.g., cameras and microphones) and uses AI to perceive human interaction and the environment. Pepper can usually make good eye contact with the user during interaction. Pepper is equipped with a 246 × 175 × 14.5 mm tablet on its chest, which makes it convenient to display tangible prompts (e.g., photos and videos) to stimulate PLWD’s memories and conversations. The capability of multimodal interaction in Pepper could enable more effective and engaging memory triggers (e.g., recall and recognition) for PLWD in a reminiscence intervention [17].

A. General Reminiscence Knowledge Base

A multimedia file was created as a source of prompts for memory stimulation for PLWD. The file consists of general music, video clips of movies and TV shows (e.g., the typists from The Carol Burnett Show), and photographs that are familiar to the PLWD, including photos of local popular culture, holidays (e.g., Christmas), scenes (e.g., Downtown Knoxville), historical buildings, and sports (e.g., the famous football quarterback, Peyton Manning). The use of stimulating concrete prompts such as photographs, music, and videos that are associated with the PLWD’s past experience has been suggested to be most effective for RT [10]. The multimedia prompts for memory stimulation were identified from websites that provide copyright clearance, YouTube, and other websites in the public domain to use for this project. We downloaded the audio clips, video clips, and photographs from the websites and saved them into the reminiscence file. The video clips were approximately 3 minutes long and were chosen to avoid overly negative, complex, or violent content [35]. We reviewed these materials with experts in RT to ascertain that all the images, audio, and video clips were appropriate for PLWD. For example, during the discussion, we found that images including multiple objects (e.g., the left and middle photos in Figure 1) could be distracting to PLWD and should be avoided. Eventually, the reminiscence database consisted of nine topics: pets, cars, holidays, hobbies, chores, movies, music, sports, and local scenes.
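As an illustration only, the knowledge base described above could be organized as a topic-indexed collection of prompts. The following Python sketch is not the implementation used in this study: the class names, fields, and file paths are hypothetical, and only the nine topic names and the three media types are taken from the text.

```python
from dataclasses import dataclass
from enum import Enum


class MediaType(Enum):
    PHOTO = "photo"
    MUSIC = "music"
    VIDEO = "video"


# The nine topics named in the text.
TOPICS = ("pets", "cars", "holidays", "hobbies", "chores",
          "movies", "music", "sports", "local scenes")


@dataclass(frozen=True)
class Prompt:
    topic: str
    media_type: MediaType
    path: str          # hypothetical file location
    description: str   # short note on what the prompt shows


class KnowledgeBase:
    """Topic-indexed store of reminiscence prompts (illustrative only)."""

    def __init__(self):
        self._by_topic = {topic: [] for topic in TOPICS}

    def add(self, prompt):
        # Reject prompts filed under a topic that is not in the database.
        if prompt.topic not in self._by_topic:
            raise ValueError(f"unknown topic: {prompt.topic}")
        self._by_topic[prompt.topic].append(prompt)

    def prompts_for(self, topic):
        # Return a copy so callers cannot mutate the stored list.
        return list(self._by_topic[topic])


kb = KnowledgeBase()
kb.add(Prompt("sports", MediaType.PHOTO, "sports/quarterback.jpg",
              "Photo of a single football player"))
kb.add(Prompt("movies", MediaType.VIDEO, "movies/typists.mp4",
              "The typists, The Carol Burnett Show"))
```

Indexing by topic keeps the lookup for a selected topic trivial, which matches the one-topic-at-a-time flow of the activity.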

Fig. 1.

Examples of photos that could be used to assist reminiscing about sports (photo courtesy: Tennessee Athletics/UTsports.com). The left and middle photos, which contain multiple objects, could potentially be confusing to PLWD. By comparison, the picture on the right is more appropriate for PLWD during reminiscence.

B. A Prototype of Robot-Mediated Reminiscence Therapy

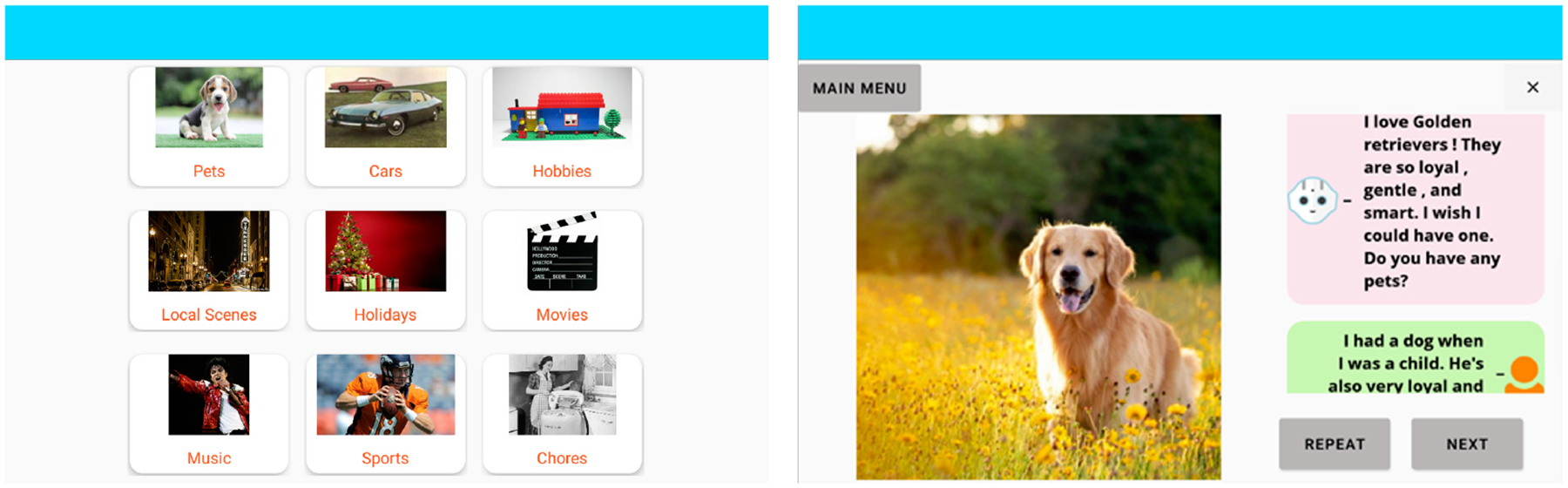

Research suggests human users prefer social machines (e.g., chatbots and robots) that convey humanness, which increases acceptance and eases social conversations with humans [36]. Studies find that female bots are perceived as more human than male bots and that female bots are preferred over male bots in the context of healthcare [37]. Therefore, we renamed Pepper to contextualize its gender as female, choosing the name Tammy, one of the most popular girl names in Tennessee. A standalone app, named Reminiscence, was implemented on the Tammy robot. Taking into account common interaction design principles, including visibility, consistency, affordance, feedback, and mapping [28], [38], [39], we designed the app to be as simple and easy to use as possible. After the user taps the app, a topic selection layer is displayed on Tammy’s tablet, as shown on the left side of Figure 2. Each topic is shown together with an image as well as text in order to facilitate PLWD’s understanding of the content of each topic. The user can browse each topic by tapping the topic button. After the user chooses a specific topic, Tammy automatically displays an image or video clip closely associated with this topic on its tablet. Based on the design principle of consistency, all topics have the same GUI design. Because the primary users of the RMRT are PLWD with short-term memory loss, who might forget what they need to do or why they are there during RMRT, we designed the robot to frequently remind the user about the next step. That is, at the very beginning of each topic, the Tammy robot provides verbal instruction for the next step, i.e., “When you are ready, please click the ‘NEXT’ button or say, ‘Tammy next’”, which also satisfies the basic design principle of visibility [28]. For each image or video clip, the Tammy robot has 3–4 conversational interactions with the PLWD. The conversation is hardcoded using the Topic and QiChatbot APIs.
The image or video clip is displayed on the left side of the Tammy robot’s tablet and the conversation on the right side of Tammy’s tablet, as shown on the right side of Figure 2.

Fig. 2.

Screenshot of touchscreen design in the robot for RMRT: (a) The touchscreen design for the topic selection layer. (b) An example of a PLWD-robot conversation.

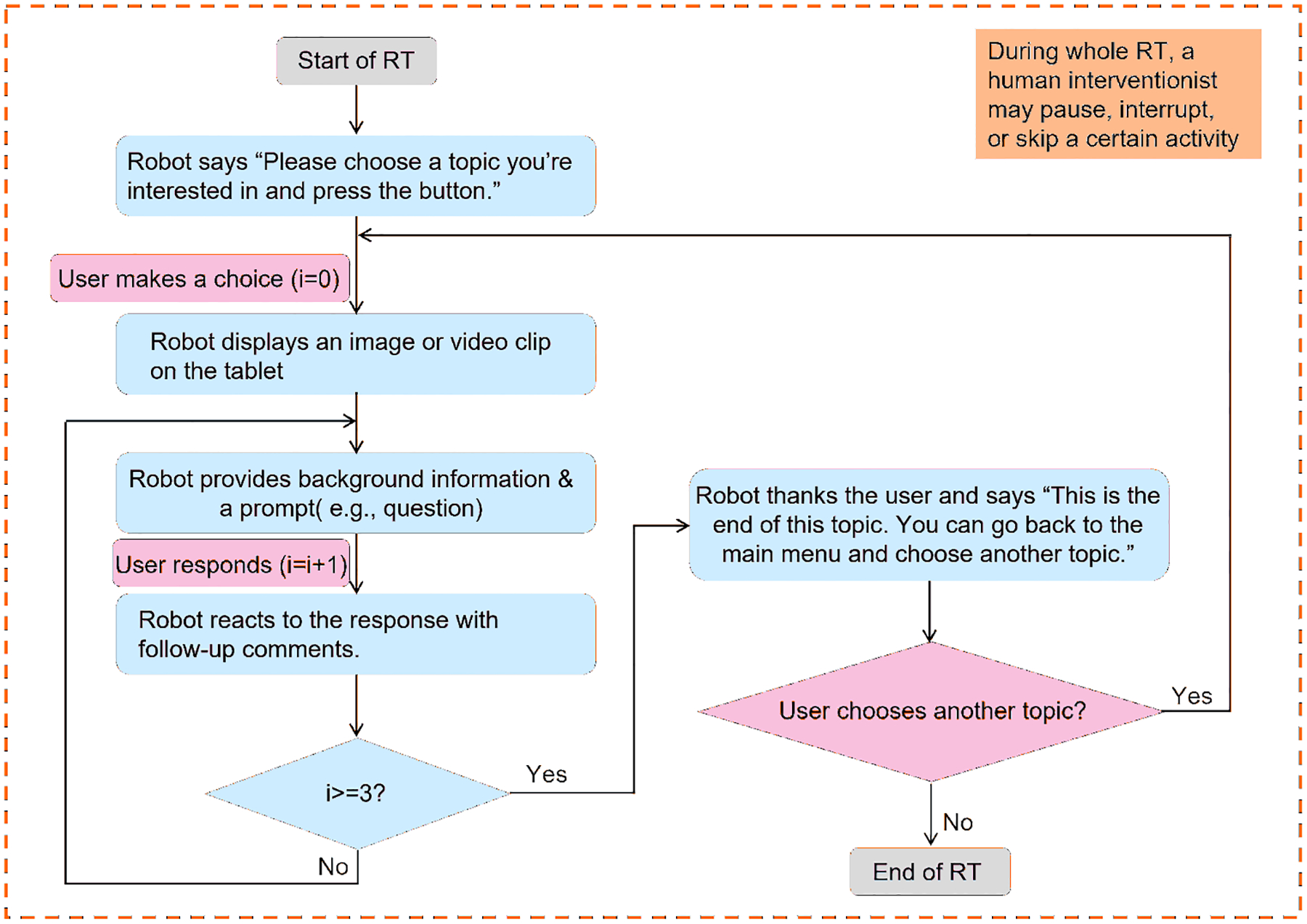

For each conversation round, Tammy starts with some background information about the image or video clip and then asks a relatable and engaging question (e.g., “Do you have a favorite memory with a pet?”) to encourage the PLWD to talk. The background information (e.g., “Pets are so adorable. Several months ago, I met a Golden retriever. He was a very kind and smart dog. I got to say hello and play with the dog. It was very exciting!”) is designed to let Tammy share a memory itself, which has been suggested as a good starting point and a good way of leading into a question more gently [29], [31]. Additionally, Tammy provides a follow-up comment associated with the user’s answer. After all the conversational interactions relating to the image or video clip, Tammy thanks the user for talking about this topic with it and provides a verbal reminder that this is the end of the topic and that the user can go back to the main menu and choose another topic. A flowchart of the robot-mediated reminiscence activity is shown in Figure 3.

Fig. 3.

The flowchart of the robot-mediated reminiscence activity. If the user does not make a choice (or does not respond), the human interventionist will verbally invite the user to make a choice (or respond). The interventionist may also help skip a certain activity or end the RT, according to the user’s willingness.

Under each topic, the user is able to control the robot Tammy by tapping buttons or using voice input. Specifically, the user can move to the next conversation by tapping the NEXT button or saying, “Tammy next”, which on the one hand helps the robot know that the user has finished their answer and, on the other hand, gives the user a sense of self-control to skip any question and mitigates potential RT stress [19], [40]. The user can tap the REPEAT button or say “Tammy repeat” to make the robot repeat what it just said. The user can go back to the topic selection layer by tapping the MAIN MENU button whenever they want to return to the main menu or stop the current topic. The GUI design for the three buttons (i.e., “NEXT”, “REPEAT”, and “MAIN MENU”) is illustrated on the right side of Figure 2.
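The control logic described in this section can be summarized as a small state machine. The sketch below is a minimal Python illustration under stated assumptions, not the app's actual code, which was built on the robot's Topic and QiChatbot facilities. The names `Command`, `Round`, `TopicSession`, and `parse`, the wording of the closing remark, and the second example round are all hypothetical. For brevity, the follow-up comment that Tammy gives after each answer is omitted.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Command(Enum):
    NEXT = auto()
    REPEAT = auto()
    MAIN_MENU = auto()


# Button labels and voice phrases mapped to commands.
# MAIN MENU is button-only in the text, so it has no voice phrase here.
_PHRASES = {
    "next": Command.NEXT,
    "tammy next": Command.NEXT,
    "repeat": Command.REPEAT,
    "tammy repeat": Command.REPEAT,
    "main menu": Command.MAIN_MENU,
}

# Hypothetical wording that paraphrases the closing reminder in the text.
CLOSING_REMARK = ("Thank you for talking about this topic with me. "
                  "This is the end of the topic. You can go back to the "
                  "main menu and choose another topic.")


def parse(raw):
    """Map a button label or recognized phrase to a Command, or None."""
    return _PHRASES.get(raw.strip().lower())


@dataclass(frozen=True)
class Round:
    background: str  # memory the robot shares first
    question: str    # relatable question that follows

    def utterance(self):
        return f"{self.background} {self.question}"


class TopicSession:
    """Scripted conversation for one topic."""

    def __init__(self, rounds):
        if not rounds:
            raise ValueError("a topic needs at least one round")
        self._rounds = list(rounds)
        self._index = 0
        self.active = True
        self.last_said = self._rounds[0].utterance()

    def handle(self, command):
        """Return the robot's next utterance, or None when leaving the topic."""
        if command is Command.REPEAT:
            return self.last_said
        if command is Command.MAIN_MENU:
            self.active = False
            return None
        if command is Command.NEXT:
            if self._index + 1 < len(self._rounds):
                self._index += 1
                self.last_said = self._rounds[self._index].utterance()
            else:
                self.last_said = CLOSING_REMARK
            return self.last_said
        raise ValueError(f"unsupported command: {command!r}")


session = TopicSession([
    Round("Pets are so adorable.", "Do you have a favorite memory with a pet?"),
    Round("Some dogs love to play fetch.", "Did you ever have a dog?"),  # hypothetical
])
```

Unrecognized input yields `None` from `parse`, so the caller can leave the session unchanged and let the human interventionist step in, as in Figure 3.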

III. Usability Study

A. Study Design and Participant Recruitment

We conducted a pilot study to assess the acceptance of the robot Tammy through usability testing involving people with dementia, which at the same time contributed to testing the feasibility of the RMRT program. We used survey and observation approaches to conduct the usability testing of Tammy and its GUI tablet. The study protocol was approved by the Institutional Review Board (IRB) of the University of Tennessee, Knoxville (IRB number: UTK IRB-21-06631-XM). We recruited potential participants by emailing flyers to collaborating organizations and senior centers, such as the Alzheimer’s Association and Alzheimer’s Tennessee, and requesting them to distribute flyers to their listservs. To reach more potential participants, we additionally recruited PLWD participants at a local dementia specialty clinic (Genesis Neuroscience Clinic, Knoxville, TN) between 24 January 2022 and 4 February 2022. Inclusion criteria were: 1) aged between 18 and 95, 2) able to understand and speak English fluently, 3) diagnosed with cognitive impairment or dementia or having experience in caring for people with cognitive impairment or dementia, and 4) no severe hearing or visual impairments that would interfere with the reminiscence process. Exclusion criteria were: 1) having any current significant systematic illness (e.g., brain injury, severe eye or hearing conditions) that could lead to difficulty complying with the experiment, or 2) unstable medical condition, such as unstable or significantly symptomatic cardiovascular disease or psychiatric disorder (e.g., major depression, schizophrenia, or bipolar disorder). Participants’ medical stability was assessed through a consultation with their clinician in the clinic, who confirmed their eligibility based on the criteria provided.
Our target population included both people with cognitive impairment and their caregivers, considering that both are important stakeholders of dementia care and may provide valuable insights (e.g., users’ requirements and opinions) about dementia assistive tools from different perspectives. The clinicians at the clinic helped recruit participants by asking persons with dementia whether they were interested in joining a research study on interacting with a social robot. Participants who were interested and met the eligibility criteria were provided written and verbal information about this study. To ensure that a potential participant was able to provide informed consent, after describing the study to the potential participant, a researcher asked them to explain the essential information about the study in their own words, for example, “Can you tell me what this study is about?” Persons who could not provide an adequate answer to the question were not included in the study. A researcher or a dedicated research nurse employed at the Genesis Neuroscience Clinic obtained an informed consent form from each participant who agreed to join the study. Data were collected at the Genesis Neuroscience Clinic, Knoxville, TN, for the participants recruited there, and at the laboratory at the University of Tennessee, Knoxville, TN, for the participants recruited elsewhere. The cognitive status of participants was self-reported via a survey (see Subsection III-C1) and cross-checked with the clinician at the Genesis Neuroscience Clinic for verification purposes.

B. Experimental Procedure

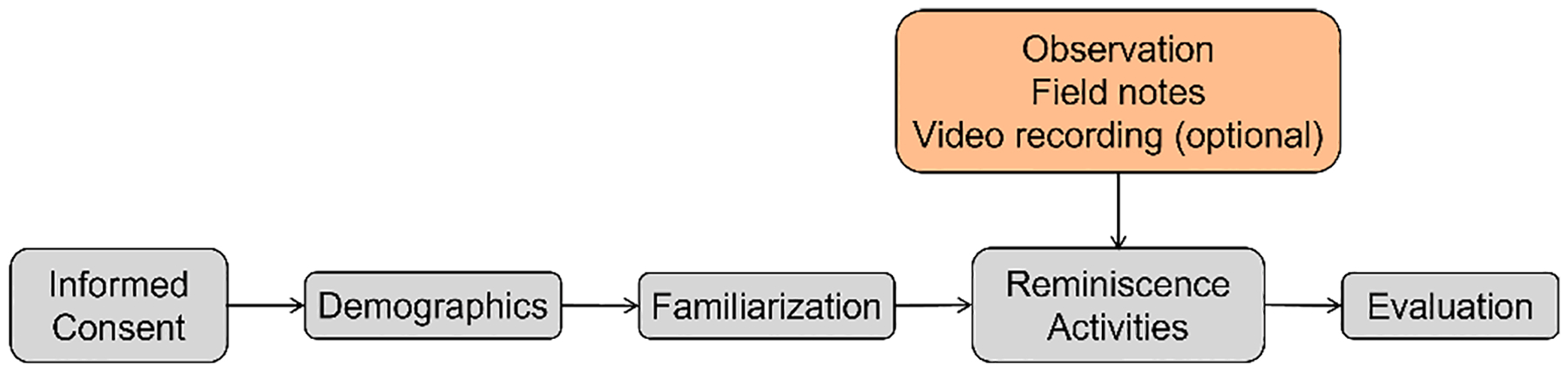

The experimental procedure for this usability study is illustrated in Figure 4. After obtaining the consent form from the participant, a trained researcher (i.e., human interventionist) distributed a survey questionnaire that collected each participant’s demographic data, background experience with technologies, and cognitive status (see Section III-C1). A participant with cognitive impairment or dementia could participate in the study accompanied by a family member. The experiment started with a familiarization session, where the robot welcomed the participant and introduced itself, using a short conversation, dance, and/or song. This session can support positive emotional reactions and alleviate PLWD’s possible fears and discomfort while promoting the feeling of safety in being around the robot Tammy. Then the interventionist demonstrated how to use the Reminiscence app on the robot, for example, tapping buttons and using voice input, and the participant started to try reminiscence activities mediated by Tammy. The participant could try as many reminiscence topics as they wanted. The interventionist encouraged the participant to use the Reminiscence app by themselves. However, if they had any problems using or interacting with the robot, the interventionist would provide help. During the reminiscence process, the interventionist observed the human-robot interaction and took field notes. After the reminiscence process, a questionnaire was distributed to gather data about the participant’s perception (e.g., usability and acceptability) of the RMRT. See Section III-C2 for details.

Fig. 4.

Experimental procedure for the usability study of robot-mediated reminiscence therapy.

C. Data Collection

A mixed-method approach, quantitative and qualitative, was employed to collect the data. Quantitative data about demographics and user experience were collected using participant-rated questionnaires. As shown in Figure 4, a demographic survey was provided before the participant interacted with the robot, and another questionnaire for evaluation was provided after the participant tested the RMRT. Details on the design of the two questionnaires are provided in the following subsections. Qualitative data were collected via the open-ended comments that participants provided, the researcher’s field notes during observation of the robot Tammy’s interaction, and participants’ video-recorded interactions with the robot during the reminiscence therapy. The researcher also noted potential critical problems in the RMRT, as well as participants’ thoughts, comments, and suggestions for the system. To maximize the number of participants recruited, video recording of participants’ interaction with Tammy during RMRT was voluntary. For participants who agreed to be video recorded, a GoPro camera placed in a corner was used to record their interactions with Tammy. After the experiment, all the videos were moved to a university-owned laptop for data analysis.

1). Demographic survey:

The survey questionnaire collected the participants’ demographic data (age, gender, and education), background experience or familiarity with using technologies (including smartphones, tablets, computers, and robots), and cognitive status (type of cognitive impairment and stage). See Appendix A for the questionnaire we used to collect characteristics of participants.

2). Questionnaire for evaluation:

After the participant interacted with the RMRT, the participant was asked to complete a 14-item questionnaire (post-test) to evaluate their experience with and perception of the robot Tammy and its tablet’s user interface (UI) during RMRT. The survey was adapted from two well-established questionnaires, the Questionnaire for User Interaction Satisfaction (QUIS) [41] and the System Usability Scale (SUS) [42]. We also applied the Almere model to gather data about the participants’ acceptance of Tammy [43]. QUIS measures the users’ overall system satisfaction and user satisfaction on specific interface factors, including screen visibility, terminology and system information, learning factors, and system capabilities [41]. SUS is a widely used tool for evaluating subjective usability of digital products, services, and designs, providing a holistic view of subjective (user-driven) assessment of system usability, including perceived ease of use, effectiveness, efficiency, and satisfaction [42]. The Almere model assesses the acceptance of assistive social agents by older adults, including the factors that positively influence their intention to use a system over a longer period of time. To minimize the cognitive burden for PLWD in filling out the three questionnaires, we combined these into one 12-item questionnaire (Table I). The items generated data on specific constructs that are critical for understanding the participants’ interaction behavior and experience with the robot and the user interface, affective reactions to it, acceptance, trust, and the effectiveness of the robotic user interface for reminiscence activity. The participants rated each item (Table I) on a five-point scale, from 1 = “strongly disagree” to 5 = “strongly agree”.

TABLE I.

Items to evaluate user experience for the RMRT usability study

| Item | Construct |
|---|---|
| Q1: The robot’s appearance is pleasing | Usability and UI: Robot’s appearance |
| Q2: The robot’s voice is clear | Usability and UI: Robot’s voice |
| Q3: The robot’s gestures and other body movements are appropriate | Usability and UI: Robot’s body movement |
| Q4: The robot’s tablet screen designs and layout are attractive | Usability and UI: Robot’s touchscreen design |
| Q5: I feel relaxed when talking to the robot | Anxiety |
| Q6: I would like the robot to be my friend | Social presence |
| Q7: I would follow the advice of the robot | Trust |
| Q8: The app is useful | Perceived usefulness |
| Q9: The app is easy to use | Perceived ease of use |
| Q10: The app is interesting | Perceived enjoyment |
| Q11: I would like to use the app in the future | Intention to use |
| Q12: I would like to recommend the app to others | Willingness to recommend |

In addition to the 12 items in Table I, we added one item to learn how the PLWD would like to control the robot Tammy (i.e., through the tablet, speech, or both) during the reminiscence activity. We also included one multiple-choice question to learn which functions of Tammy people with dementia would like to have in their daily life. Finally, we added an open-ended question (“Do you have any suggestions or comments?”) to gather additional insights into the participants’ opinions and suggestions about the RMRT (see Appendix B). During the study, participants could skip any question they did not want to answer.

D. Data Analysis

Descriptive statistics, including frequency, mean (M), standard deviation (SD), and proportions, were used to analyze the quantitative data. Considering the small sample size, we manually went through all the comments from the open-ended question as well as the field notes to develop an understanding of the participants’ experience with the RMRT. For the recorded videos, we used Simple Video Coder [44], a free, open-source software tool for efficiently coding social video data, to code participants’ interaction behaviors during RMRT, including the participants’ emotions (such as laughter/giggle/joy, frown, and confusion) and success or failure in using the RMRT.
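As a worked illustration of these descriptive statistics, the following Python sketch computes the mean and SD of one questionnaire item while ignoring skipped responses, and the frequency and proportion of categorical answers. It is not the analysis script used in this study. The function names are hypothetical, and the use of the sample (n − 1) standard deviation is an assumption, since the text does not state which form was used. The example data are the Q7 ratings from Table III and the control-method preferences reported in Section IV-B.

```python
from collections import Counter
from statistics import mean, stdev


def summarize(ratings):
    """Mean and sample SD of Likert ratings; None marks a skipped item."""
    answered = [r for r in ratings if r is not None]
    n = len(answered)
    return {
        "n": n,
        "mean": round(mean(answered), 2),
        # Assumption: sample SD (n - 1 denominator); undefined for n < 2.
        "sd": round(stdev(answered), 2) if n > 1 else 0.0,
    }


def proportions(labels):
    """Frequency and percentage of each category."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: (count, round(100 * count / total, 2))
            for label, count in counts.items()}


# Q7 ("I would follow the advice of the robot"), participants P1 to P12.
q7 = [4, 4, 5, 3, 4, 3, None, 1, 3, 4, 5, 5]
q7_summary = summarize(q7)  # the mean matches the 3.73 reported in Table III

# Preferred control method: 10 chose both, 1 tablet only, 1 speech only.
control = ["both"] * 10 + ["tablet", "speech"]
control_summary = proportions(control)  # "both" -> (10, 83.33)
```

Dropping skipped items per question, rather than dropping the whole participant, is what makes the Q6 and Q7 means in Table III rest on 11 responses while the other items rest on 12.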

IV. Results

A. Descriptive Statistics

A total of 12 older adults (4 females and 8 males), including 3 informal caregivers, 2 individuals with mild cognitive impairment, and 7 with ADRD, consented to participate in this usability study. Their demographic information is listed in Table II. The prototype RMRT was tested with ten participants at the Genesis Neuroscience Clinic and two participants at the laboratory. There was one participant aged 50–65, three aged 65–75, seven aged 75–85, and one aged above 85. All participants had an education level of high school graduate or higher. On average, participants were at least slightly familiar with smartphones, tablets, and computers. Regarding robots, all participants reported being “not at all familiar” with them. Among the seven participants with ADRD, one reported early-onset dementia, five reported mild dementia due to AD, and one reported frontotemporal dementia. Due to the COVID-19 pandemic, most participants wore masks when interacting with the robot. Participants spent 2–3 minutes talking about one topic with the robot. On average, it took 20 minutes to complete both the robot-mediated reminiscence activity and the questionnaires. Three participants agreed to have their interaction with the robot video recorded.

TABLE II.

Descriptive statistics of participants

| Demographic variables | Statistic |
|---|---|
| Gender (n = 12) | n (%) |
| Female | 4 (33.33%) |
| Male | 8 (66.67%) |
| Age (years) (n = 12) | n (%) |
| 50–65 | 1 (8.33%) |
| 65–75 | 3 (25.00%) |
| 75–85 | 7 (58.34%) |
| Above 85 | 1 (8.33%) |
| Highest level of education (n = 12) | n (%) |
| High school graduate | 1 (8.33%) |
| Some college | 4 (33.33%) |
| College graduate | 2 (16.67%) |
| Post-graduate | 5 (41.67%) |
| Familiarity with technology | Mean ± SD |
| Smartphones | 2.75 ± 1.22 |
| Tablets | 2.42 ± 1.08 |
| Computers | 2.73 ± 1.01 |
| Robots | 1.00 ± 0.00 |

For the items associated with familiarity with technology (i.e., Rows Smartphones, Tablets, Computers and Robots), 1 = Not at all familiar, 2 = Slightly familiar, 3 = Moderately familiar, 4 = Very familiar.

B. Evaluation Survey

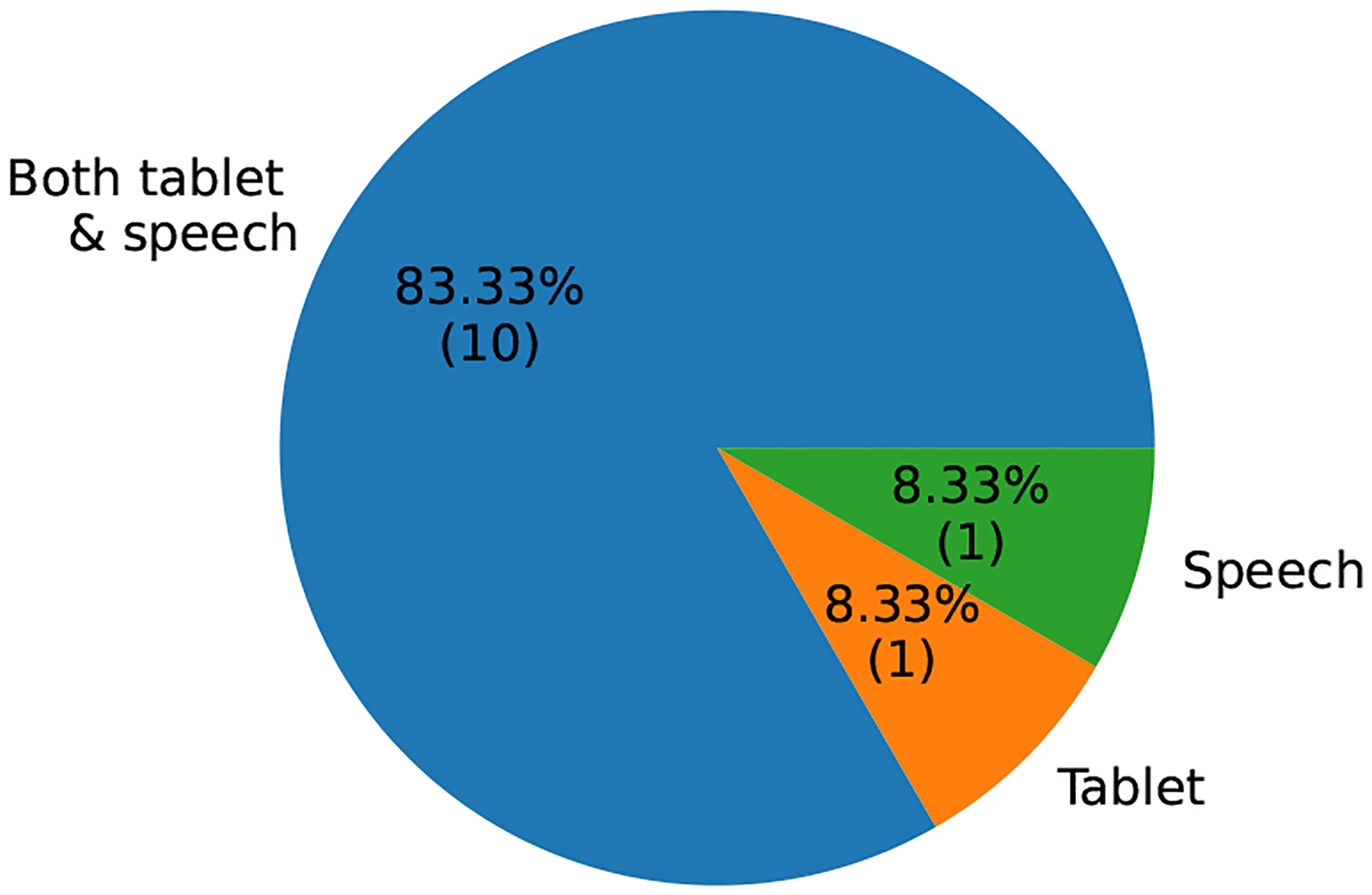

Participants’ individual responses to the 12 items of Table I are shown in Table III. Overall, except for Item Q7, the mean rating of each item fell between “somewhat agree” and “strongly agree”. With respect to the robot control method, ten participants (83.33%) indicated that they would like to control the robot using both tablet and voice (speech), while one participant preferred the tablet only and one preferred speech only, as plotted in Figure 5. Table IV lists participants’ individual responses regarding whether they hoped the robot would have each function (recorded as “1” in Table IV) or not (recorded as “0”).

TABLE III.

Individual participants’ responses to Items Q1–Q12 about system usability and user experience

| ID | Diagnosis | Q1 | Q2 | Q3 | Q4 | Q5 | Q6 | Q7 | Q8 | Q9 | Q10 | Q11 | Q12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| P1 | AD | 5 | 5 | 5 | 5 | 5 | Skipped | 4 | 3 | 4 | 4 | 4 | 4 |
| P2 | MCI | 5 | 5 | 5 | 5 | 5 | 5 | 4 | 5 | 5 | 5 | 5 | 5 |
| P3 | AD | 5 | 5 | 5 | 5 | 4 | 5 | 5 | 4 | 5 | 5 | 4 | 5 |
| P4 | CA | 5 | 4 | 5 | 5 | 5 | 4 | 3 | 5 | 4 | 5 | 4 | 5 |
| P5 | AD | 4 | 1 | 1 | 1 | 1 | 4 | 4 | 4 | 4 | 4 | 4 | 3 |
| P6 | MCI | 4 | 4 | 4 | 5 | 5 | 4 | 3 | 4 | 5 | 5 | 4 | 4 |
| P7 | CA | 5 | 4 | 5 | 4 | 4 | 5 | Skipped | 5 | 5 | 5 | 4 | 4 |
| P8 | FTD | 5 | 5 | 5 | 5 | 5 | 4 | 1 | 5 | 5 | 5 | 5 | 5 |
| P9 | CA | 5 | 5 | 5 | 5 | 5 | 5 | 3 | 5 | 5 | 5 | 5 | 5 |
| P10 | Early-onset | 5 | 5 | 5 | 5 | 5 | 5 | 4 | 5 | 5 | 5 | 5 | 5 |
| P11 | AD | 5 | 5 | 5 | 5 | 4 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| P12 | AD | 5 | 4 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| Mean | | 4.83 | 4.33 | 4.58 | 4.58 | 4.42 | 4.64 | 3.73 | 4.58 | 4.75 | 4.83 | 4.50 | 4.58 |

See Table I for Items Q1–Q12.

AD = Alzheimer’s disease, MCI = Mild cognitive impairment, CA = Informal caregiver of PLWD, FTD = Frontotemporal dementia, Early-onset = Early-onset dementia.

Scale for each item, 1 = strongly disagree, 2 = somewhat disagree, 3 = neither agree nor disagree, 4 = somewhat agree, 5 = strongly agree.

Since questionnaire questions were not mandatory, a participant may skip a question accidentally, which is marked as Skipped.
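The Mean row of Table III can be reproduced with a short calculation. The sketch below is illustrative only: it assumes that skipped items are excluded from an item's mean and that the SD is the sample standard deviation. Neither assumption is stated explicitly in the text, although both reproduce the reported values. The names `RESPONSES`, `item_mean`, and `item_sd` are hypothetical.

```python
from statistics import mean, stdev

# Ratings transcribed from Table III, in participant order P1..P12.
# None marks an item the participant skipped.
RESPONSES = {
    "Q1":  [5, 5, 5, 5, 4, 4, 5, 5, 5, 5, 5, 5],
    "Q2":  [5, 5, 5, 4, 1, 4, 4, 5, 5, 5, 5, 4],
    "Q3":  [5, 5, 5, 5, 1, 4, 5, 5, 5, 5, 5, 5],
    "Q4":  [5, 5, 5, 5, 1, 5, 4, 5, 5, 5, 5, 5],
    "Q5":  [5, 5, 4, 5, 1, 5, 4, 5, 5, 5, 4, 5],
    "Q6":  [None, 5, 5, 4, 4, 4, 5, 4, 5, 5, 5, 5],
    "Q7":  [4, 4, 5, 3, 4, 3, None, 1, 3, 4, 5, 5],
    "Q8":  [3, 5, 4, 5, 4, 4, 5, 5, 5, 5, 5, 5],
    "Q9":  [4, 5, 5, 4, 4, 5, 5, 5, 5, 5, 5, 5],
    "Q10": [4, 5, 5, 5, 4, 5, 5, 5, 5, 5, 5, 5],
    "Q11": [4, 5, 4, 4, 4, 4, 4, 5, 5, 5, 5, 5],
    "Q12": [4, 5, 5, 5, 3, 4, 4, 5, 5, 5, 5, 5],
}


def answered(ratings):
    """Drop skipped (None) entries so they do not count toward the statistics."""
    return [r for r in ratings if r is not None]


def item_mean(ratings):
    """Mean rating over the participants who answered the item."""
    return mean(answered(ratings))


def item_sd(ratings):
    """Sample standard deviation (n - 1 denominator) over answered ratings."""
    return stdev(answered(ratings))


if __name__ == "__main__":
    for item, ratings in RESPONSES.items():
        print(f"{item}: M = {item_mean(ratings):.2f}, SD = {item_sd(ratings):.2f}")
```

Under these assumptions, the computed means agree with the Mean row of Table III, including Items Q6 and Q7, where one participant each skipped the question.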

Fig. 5.

Distribution of the control methods that participants preferred for controlling the robot.

TABLE IV.

Count of functions that participants hoped the robot Tammy would have

| Participant ID | F1 | F2 | F3 | F4 | F5 | F6 | F7 | F8 | F9 | F10 |
|---|---|---|---|---|---|---|---|---|---|---|
| P1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 |
| P2 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 |
| P3 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 |
| P4 | 1 | 1 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 0 |
| P5 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | |
| P6 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 0 |
| P7 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 |
| P8 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 |
| P9 | 1 | 1 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 0 |
| P10 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| P11 | 1 | 1 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | 0 |
| P12 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | |
| Overall response | 12 | 11 | 6 | 5 | 9 | 8 | 11 | 11 | 9 | 1 |

F1 = Help call family and friends; F2 = Help contact medical services; F3 = Help do shopping; F4 = Help order food; F5 = Help prevent falling; F6 = Remind to turn off the stovetop; F7 = Remind to take medicine; F8 = Emergency call service; F9 = Keep me company; F10 = Other (Play music).

C. Observation, Comments, Field Notes, and Video Coding

Each participant tried two or more topics for the reminiscence activity. The three participants who agreed to be video recorded tried 7, 4, and 2 topics, respectively, and the video coding for these participants is shown in Tables V, VI, and VII, respectively. During the RMRT, participants shared their stories after the robot asked a question. However, two participants gave only a “yes” or “no” answer or simply skipped a question by tapping the “NEXT” button. We also observed that participants laughed, giggled, nodded, reflected on their own experience, and made eye contact when the robot talked with them (as illustrated in Tables V and VII). Some participants also sang along with the robot when the robot displayed a song on the Music topic (as coded in the 7th topic of Table V). Moreover, during the RMRT activities, we noticed that some participants sat very close to the robot in order to see the content on its tablet clearly, which obstructed the robot’s gestures.

TABLE V.

Video coding of Participant P1’s interaction with the robot during RMRT

| Topic | Behavior | N occur | Note |
|---|---|---|---|
| 1st topic | Success in choosing a topic | 1 | |
| | Laughter | 10 | |
| | Nodding | 2 | |
| | Failure in tapping NEXT | 2 | First time to use the RMRT, still learning |
| | Success in tapping NEXT | 3 | |
| | Confusion | 1 | Confused about what to do at the end of the topic |
| 2nd topic | Success in choosing a topic | 1 | |
| | Failure in voice control | 1 | The robot not ready to catch voice input |
| | Success in tapping NEXT | | |
| | Nodding | 1 | |
| | Success in tapping MAIN MENU | 1 | |
| 3rd topic | Success in choosing a topic | 1 | |
| | Failure in voice control | 1 | |
| | Success in tapping NEXT | | |
| | Reflection/Thinking | 1 | When the robot asked P1 to suggest some place |
| | Laughter | | |
| | Tending to shake hands with the robot | 1 | |
| | Success in tapping MAIN MENU | 1 | |
| 4th topic | Success in choosing a topic | 1 | |
| | Failure in voice control | 1 | |
| | Success in voice control | 1 | |
| | Success in tapping NEXT | | |
| | Nodding | 1 | |
| | Success in tapping MAIN MENU | 1 | |
| 5th topic | Success in choosing a topic | 1 | |
| | Failure in voice control | 1 | |
| | Success in voice control | 1 | |
| | Success in tapping NEXT | | |
| | Success in tapping MAIN MENU | 1 | |
| 6th topic | Failure in choosing a topic | 1 | P1 didn’t know to scroll up or down the screen |
| | Success in choosing a topic | 1 | |
| | Success in voice control | 1 | |
| | Success in tapping NEXT | 1 | |
| | Laughter | 1 | |
| | Nodding | 1 | |
| | Success in tapping MAIN MENU | 1 | |
| 7th topic | Success in choosing a topic | 1 | Successfully scrolling up the screen |
| | Ineffective voice control | 1 | No need of user input in RMRT |
| | Singing along with the robot | 2 | |
| | Laughter | 4 | |
| | Success in tapping NEXT | 5 | |
| | Nodding | 1 | |
| | Success in tapping MAIN MENU | 1 | |

Here the voice control was the voice input “Tammy next”.

TABLE VI.

Video coding of Participant P2’s interaction with the robot during RMRT

| Topic | Behavior | N occur | Note |
|---|---|---|---|
| 1st topic | Confusion | 2 | First time to use the RMRT, confused about next step. |
| | Dysfunction in tapping NEXT | 1 | P2 with a shaking hand |
| 2nd topic | Success in choosing a topic | 1 | |
| | Failure in voice control | 1 | |
| | Laughter | 1 | Although the robot did not respond successfully |
| | Success in tapping NEXT | | |
| | Dysfunction in tapping NEXT | 1 | Due to the shaking hand. |
| | Success in tapping MAIN MENU | 1 | |
| 3rd topic | Success in choosing a topic | 1 | |
| | Laughter | 1 | |
| | Success in tapping NEXT | | |
| | Dysfunction in tapping NEXT | 1 | Due to the shaking hand. |
| | Success in tapping MAIN MENU | 1 | |
| 4th topic | Success in choosing a topic | 1 | |
| | Success in tapping NEXT | | |
| | Not answering but directly tap NEXT | 1 | |
| | Forgetting tapping NEXT | 1 | |

Here the voice control was the voice input “Tammy next”.

TABLE VII.

Video coding of Participant P3’s interaction with the robot during RMRT

| Topic | Behavior | N occur | Note |
|---|---|---|---|
| 1st topic | Success in choosing a topic | 1 | |
| | Failure in voice control | 1 | Probably low voice or not right time |
| | Success in tapping NEXT | 5 | |
| | Laugh/giggle/joy | 2 | |
| | Tending to shake hand with the robot | 1 | |
| | Confusion | 1 | Confused about what to do at the end of the topic |
| 2nd topic | Success in choosing a topic | 1 | |
| | Ineffective voice input | 1 | No need of user input in RMRT |
| | Laugh/giggle/joy | 3 | |
| | Eye Contact with the robot | 1 | |
| | Success in tapping NEXT | 4 | |
| | Confusion | 1 | Confused when the robot not responding to P3. Forgetting to tap NEXT |
| | Reflection/thinking | 1 | The robot asked P3 to share their favorite songs |

Here the voice control was the voice input “Tammy next”.

When interacting with the robot during reminiscence activities, one participant verbally mentioned that it would be better if the user’s icon matched the user’s gender. Researchers also noticed that participants appeared to be very careful when answering question Q7 (Table I), leaving a comment such as “I don’t know.” One participant commented that it was their first time using a robot, and they remained skeptical of its advice. They suggested that with more experience using the robot, their response or trust might change. Five (42%) of the 12 participants provided open-ended comments, including “Exciting possibilities!”, “Research was very, very sweet, helpful, and knowledgeable. Great idea”, “I believe this is a wonderful tool for children with problems. Also, it would be great for adults that have problems. I love her (meaning the robot Tammy)!”, “very interesting!”, and “very nice to see”. In addition, two family caregivers verbally shared their opinions about the RMRT as well as technology-enabled dementia care. One caregiver expressed appreciation for our RMRT, mentioning that their loved one with dementia was very knowledgeable and could still engage in extensive discussions about their knowledge. The caregiver found that the RMRT was a good idea, as it could stimulate and encourage their loved one to converse. Another caregiver, a spouse of a person with early-onset dementia, mentioned that there was a critical shortage of supportive care resources for people with early-onset dementia compared to other types of dementia. They expressed hope that we could work more to assist in caring for this group.

The recorded interactions of participants P1, P2, and P3 with the robot during RMRT were analyzed and coded based on their behaviors and frequency of occurrence (N occur), as displayed in Tables V, VI, and VII, respectively. During the initial topic, all participants encountered difficulties in using the RMRT successfully, such as failing to tap the NEXT button and confusion regarding the subsequent steps after completing the first topic. However, after the initial topic, participants predominantly exhibited successful control of the robot for reminiscence activities. Specifically, P1 attempted to select seven topics and successfully chose topics of interest six times (85.7%). P1’s only failure resulted from unfamiliarity with scrolling on the screen. P2 was confused about the next step (i.e., choosing a topic) during their initial use of the RMRT program but was successful in choosing topics during the subsequent topics (2nd–4th topics in Table VI). P3 successfully chose both topics of interest. In terms of button usage, P1 initially failed to tap the NEXT button but later succeeded in using both the NEXT and MAIN MENU buttons. P3 consistently used the NEXT button effectively, whereas P2 experienced difficulties due to a shaking hand, resulting in three failures and seven successes in tapping the NEXT button and two successes in using the MAIN MENU button. Regarding voice control, P1, P2, and P3 encountered failures in controlling the robot via voice input in 4 (50%), 1 (100%), and 1 (50%) instances, respectively, as outlined in Tables V, VI, and VII.
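The voice-control percentages above can be checked against the coded events in Tables V, VI, and VII. The text does not state the denominator, so the sketch below rests on an assumption: one reading that reproduces the reported figures counts every coded voice event (failure, success, and ineffective input) as an attempt. The names `VOICE_EVENTS` and `failure_share` are hypothetical.

```python
# Voice-control events summed over all topics in Tables V (P1), VI (P2), and VII (P3).
# "ineffective" covers voice input given when the RMRT needed no user input.
VOICE_EVENTS = {
    "P1": {"failure": 4, "success": 3, "ineffective": 1},
    "P2": {"failure": 1, "success": 0, "ineffective": 0},
    "P3": {"failure": 1, "success": 0, "ineffective": 1},
}


def failure_share(counts):
    """Share of failures among all coded voice events (assumed denominator)."""
    total = sum(counts.values())
    return counts["failure"] / total


if __name__ == "__main__":
    for participant, counts in VOICE_EVENTS.items():
        print(f"{participant}: {counts['failure']} failures "
              f"({failure_share(counts):.0%} of coded voice events)")
```

If ineffective inputs were instead excluded from the denominator, the shares for P1 and P3 would differ from the reported 50%, which is why this reading is used here.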

V. Discussion

In this study, we used Tammy, a humanoid social robot [26], to develop an RMRT program for people with dementia. Tammy was able to automate the reminiscence activity for PLWD. A usability study was conducted at a local clinic and the laboratory with 7 individuals with ADRD, 2 with MCI, and 3 informal caregivers to evaluate the user experience and the system’s acceptability. Overall, participants reported a positive user experience with the RMRT and perceived the robotic user interface in the program as effective and easy to use.

A. User Experience and Usability of RMRT

The findings from this study revealed that although none of the participants were familiar with robots, they had an overall positive user experience with the RMRT activity and interaction with the robot, Tammy. As shown in Table III, on average, participants somewhat or strongly agreed with the statements for all items, except for Item Q7. Responses to Items Q1 and Q2 showed that, on average, participants considered Tammy’s appearance pleasing and its voice clear. In one previous study using the same robot, Pepper, with the same robotic voice [27], dementia caregivers and the general public (people without dementia and without experience in dementia care) were asked how likely people with dementia would be to interact with a video-based robot and how much they would like the sound of its voice. The caregivers and the general public rated the voice with a relatively low mean value (M = 3.17 and M = 3.18, respectively) and provided negative comments about its voice. However, in the present study, participants reported a more positive user experience in terms of Tammy’s voice, with a mean (M) rating of 4.33 and a standard deviation (SD) of 1.15. This might be related to the fact that participants interacted with the robot in person. Item Q3 in Table III shows that, overall, the participants rated Tammy’s body movements (e.g., gestures) highly (M = 4.58) on a five-point scale. This indicates that delivering reminiscence therapy by a physically embodied social robot can facilitate both verbal and nonverbal social interaction for PLWD [8], [17]. Additionally, their rating of the tablet’s GUI design (e.g., attractiveness) of the RMRT was M = 4.58, suggesting a high potential for adoption of our RMRT program by PLWD. Moreover, we found that the majority (> 91.6%) of participants rated six items (i.e., Q5–Q6 and Q8–Q11) 4 or above on a five-point scale (Table III), suggesting that they would use the RMRT program in the future. This finding means that using a robot, such as Tammy, has promise for successful adoption of RMRT by PLWD.

Regarding Item Q7 (i.e., “I would follow the advice of the robot”), participants provided the lowest rating (M = 3.73), with one participant skipping this question, one rating 1 (strongly disagree), and three participants rating 3 (neither agree nor disagree). The low rating might be explained by the fact that participants seemed hesitant and uncertain when responding to Item Q7. For example, many left comments like “I don’t know.” This suggests that human users may lack trust in using robots, which is consistent with findings from previous studies [45]. Herein, we conjecture that limited experience with robots as well as exposure to using them for only a short period of time influenced the participants’ ratings. However, it was reported that user trust is a critical factor influencing a user’s intention to use a robot [16], [46]. Possible ways to mitigate this issue are to provide literacy and training programs for PLWD about robots and to give them opportunities to interact with robots for a longer period of time [47]. Eleven of 12 participants provided a score of 4 or greater to Item Q12, meaning that the participants would highly recommend our RMRT to other PLWD. The finding reflects participants’ positive perception of and attitude towards the robot Tammy and the RMRT program and suggests that the RMRT as well as the robot have a high potential for adoption in the future.

Through observation and the video recording of the participants’ interaction with the robot during RMRT (Tables V–VII), we observed that participants sometimes laughed, giggled, made eye contact with the robot, and even tended to shake hands with the robot when the robot Tammy talked and performed body movements. The participants’ nonverbal behaviors indicate that a physically embodied social robot can facilitate both verbal and nonverbal social interaction with PLWD during RT, enabling a more engaging and positive user experience and thereby increasing the beneficial impacts of RT on PLWD’s mood. During the RMRT activities, we noticed that some participants sat very close to the robot in order to see the content on its tablet clearly, which might have reduced the effectiveness of the robot’s body movements in communication [48].

B. Effectiveness of User Interface in RMRT

Effectiveness is considered one of the key quality characteristics of a system, product, or service intended for human use [49]. The effectiveness of the robotic user interface can influence the usability and user experience (UX) of the RMRT program by PLWD. To evaluate the effectiveness of the user interface in RMRT, we considered the success of participants in completing tasks and the ease with which they learned and used the program. Through the video coding results in Tables V, VI, and VII, we observed the participants’ successes and failures in completing specific tasks, such as choosing a topic of interest for reminiscence, using/tapping buttons (NEXT, REPEAT, and MAIN MENU), and using voice input to control the robot. The majority of participants successfully chose topics of interest, indicating that the RMRT program was easy to learn and use. However, P2’s failures in tapping buttons suggest that the current button design in RMRT may not be effective for PLWD with shaking hands. Furthermore, voice control in the current prototype of RMRT was frequently unsuccessful, demonstrating a need to improve this interface. Participants’ ratings of ease of use (i.e., Item Q9 in Table I) in the post-test survey reflect the effectiveness of the GUI. As listed in Table III, all participants somewhat or strongly agreed that the Reminiscence app developed for the robot Tammy was easy to use, which supports the effectiveness of the robotic user interface in interacting with users during reminiscence. In conclusion, our findings suggest that the RMRT program’s robotic user interface is generally effective in allowing PLWD to choose topics of interest for reminiscence activities. However, improvements in button design for PLWD with shaking hands and in the effectiveness of the voice control interface are needed in future work.

C. Robot Control Method

Some participants showed interest in controlling the robot Tammy using voice control. However, during the experiments, we found that participants were unsuccessful most of the time in controlling Tammy via voice input, as happened with Participants P1, P2, and P3 (Tables V–VII). This may be attributed to the fact that all the participants in this study were older adults, who most of the time spoke at a low volume. Also, wearing masks during the interaction made it more difficult for Tammy to clearly understand participants’ speech. Another hindering factor was the delay between Tammy finishing its verbal output and starting to listen to the participant’s verbal input. In other words, if the user provided their voice input before Tammy was ready to listen, Tammy would not respond as expected, leading to a negative user experience for PLWD.

Preference for a robot control method depends on each individual user. In this study, one participant (P2, Table VI) showed a symptom of a shaking hand. This participant planned to tap the “NEXT” button to continue the procedure but, unfortunately, tapped the “NEXT” button twice within a very short time. In this situation, it might be more convenient for the participant to control the robot through voice input. Thus, we suggest that designers enable the use of both control methods in technologies such as robots, tablets, and computers to support and enhance PLWD’s interaction and experience.
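The accidental double tap described above can also be illustrated in code. The following is a minimal sketch of one common mitigation, tap debouncing, which ignores a second tap that arrives shortly after an accepted one. It was not part of the evaluated prototype, and the class name `DebouncedButton` and the one-second interval are hypothetical choices for illustration only.

```python
class DebouncedButton:
    """Accepts a tap only if enough time has passed since the last accepted tap."""

    def __init__(self, min_interval_s=1.0):
        # Hypothetical interval; a suitable value would need testing with users.
        self.min_interval_s = min_interval_s
        self._last_accepted_s = None

    def tap(self, timestamp_s):
        """Return True if the tap should trigger the button's action."""
        too_soon = (
            self._last_accepted_s is not None
            and timestamp_s - self._last_accepted_s < self.min_interval_s
        )
        if too_soon:
            return False  # treat as an unintended repeat and ignore it
        self._last_accepted_s = timestamp_s
        return True


if __name__ == "__main__":
    next_button = DebouncedButton(min_interval_s=1.0)
    for t in (0.0, 0.2, 1.5):
        print(f"tap at {t:.1f} s accepted: {next_button.tap(t)}")
```

A debounce interval trades responsiveness for robustness, so it would complement rather than replace the option of voice control suggested above.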

D. Personalization of RMRT

Through the observation of PLWD-robot interaction and based on the field notes taken during the experiment, we identified three aspects of personalization to incorporate in the current prototype of the RMRT. The first aspect is the design of the UI icons. As mentioned in Subsection IV-C, one participant mentioned that it would be better if the user’s icon matched the user’s gender. This finding was also confirmed in a previous study [16]. The second aspect is the personal photos and videos. Two participants suggested that topics specifically include photos and videos of individual life events, such as a wedding, which people with dementia could be very interested in talking about. In this study, we avoided the use of personal materials to reduce potential privacy concerns. In future work, we will create an additional module for PLWD where they can add personal photos and/or videos of interest to them and also talk with Tammy about their life stories, experiences, and memories to improve the potential effectiveness of RT for PLWD [10]. During the experiments, when the robot Tammy asked a simple question such as “do you have a favorite memory with a pet?”, two participants only provided a “yes” or “no” answer or directly skipped answering the question by tapping the “NEXT” button, while other participants shared their stories. Considering that PLWD might feel stressed because of the inability to answer difficult, open-ended questions, we hardcoded Tammy to ask easy, closed-ended questions most of the time. However, this turned out to lead to less conversation from the participants. Therefore, the third aspect of personalization should be that Tammy adaptively asks relatable, engaging, and appropriate questions for reminiscence. For example, when a user is cognitively able to answer a more difficult question, Tammy may ask a different question such as “what is your favorite memory with a pet?”. Conversely, if a user is cognitively unable to answer a difficult question, Tammy should adaptively ask easy questions such as “do you have a favorite memory with a pet?”. Such personalization can stimulate PLWD to converse as much as possible while promoting a sense of comfort and well-being. This personalization might be enabled by using advanced AI techniques, such as deep learning [15] and reinforcement learning [19].
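To make the idea of adaptive questioning concrete, the following is a minimal sketch that switches between the closed-ended and open-ended wording of the pet question quoted above. It deliberately uses a simple word-count heuristic rather than the deep learning or reinforcement learning approaches cited, and it is not the implementation used in the prototype. The class `QuestionSelector`, the `QUESTIONS` layout, the five-word threshold, and the three-answer window are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Question pair quoted in the text; the dictionary layout is hypothetical.
QUESTIONS = {
    "pet": {
        "closed": "Do you have a favorite memory with a pet?",
        "open": "What is your favorite memory with a pet?",
    },
}


@dataclass
class QuestionSelector:
    """Chooses open- or closed-ended wording from recent answer lengths."""

    min_words_for_open: int = 5  # hypothetical threshold, not from the study
    window: int = 3              # number of recent answers considered
    recent_lengths: List[int] = field(default_factory=list)

    def record_answer(self, answer: Optional[str]) -> None:
        """Store an answer's word count; None means the user tapped NEXT."""
        words = len(answer.split()) if answer else 0
        self.recent_lengths = (self.recent_lengths + [words])[-self.window:]

    def next_question(self, topic: str) -> str:
        """Start with the easy wording and switch once answers get longer."""
        if not self.recent_lengths:
            return QUESTIONS[topic]["closed"]
        average = sum(self.recent_lengths) / len(self.recent_lengths)
        style = "open" if average >= self.min_words_for_open else "closed"
        return QUESTIONS[topic][style]


if __name__ == "__main__":
    selector = QuestionSelector()
    selector.record_answer("Yes")
    print(selector.next_question("pet"))
    for _ in range(3):
        selector.record_answer("I had a dog named Max when I was young")
    print(selector.next_question("pet"))
```

Answer length is only a rough proxy for a user's ability or willingness to elaborate; a deployed system would likely need richer signals and validation with PLWD.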

E. Robot Functions Desired by PLWD

With respect to the functions that the participants would like Tammy to perform to support dementia care, the top four functions found in the present usability study of RMRT were helping call family and friends, helping contact medical services, setting reminders to take medicine, and providing emergency call service, as shown in Table IV. In contrast, the top four functions found in our previous study [27] were setting reminders to take medicine, emergency call service, helping contact medical services, and setting reminders to turn off the stovetop, which excluded “helping call family and friends”. One possible reason for this difference is that participants in the current study interacted with the robot in person, obtaining an impression that Tammy may be appropriate for calling family and friends, whereas, in the previous study, the participants watched a video of the robot and did not have a sense of its live presence. Additional studies with a larger sample are needed to identify the most important functions PLWD desire in a robot. As for Tammy playing music, one participant had a hobby of playing music and instruments. When answering this multiple-choice question, he specifically wrote down “play music” under Other in the survey. This finding suggests that the personalization of the robot Tammy for PLWD varies based on each individual PLWD’s hobbies (such as listening to music, reading books, or watching a movie) [50]. Individualized personalization could facilitate a more successful long-term adoption of social robots by PLWD for dementia care [16].

F. Accessible Technology-Enabled RT

Two family caregivers provided insights into users’ needs and challenges in dementia care from a family caregiver perspective, indicating that access to reminiscence therapy and other dementia care remains a challenge for PLWD and their caregivers. We suggest leveraging advanced digital health technologies, such as mobile devices, social robots, and AI, to support dementia care delivery, which will in turn support aging in place [8], [16], [51]. An early introduction of digital health, for example, in the stages of mild to moderate dementia, may lead to better user acceptance of the technology and enable the technology to assist more in dementia care [27], [52].

One reason for the shortage of supportive care resources for people with early-onset dementia compared with other types of dementia, mentioned by the family caregiver of the participant with early-onset dementia, might be that early-onset dementia is less common [53], [54]. We encourage cross-disciplinary collaboration (among, for example, policy and political decision-makers, clinicians, researchers, and ethicists) [55] to increase the use of dementia-care technologies for people with early-onset dementia and their caregivers to address their needs. In fact, dementia-care technologies can be provided in different forms, such as computers, tablets, smartphones, and social robots. Of note, dementia care that is assisted by physically embodied social robots can be more engaging and efficient and can lead to a more positive user experience. However, the cost may be an issue. As the cost of humanoid robots decreases, we expect that the use of robots in RT and other dementia care will increase. We suggest that researchers, professionals in healthcare, and robot manufacturers focus on making robots and dementia care affordable and accessible to all PLWD and caregivers.

G. Limitations and Future Work

In this study, we identified several limitations regarding the design of RMRT, PLWD-robot interaction during the reminiscence process, and the research design. Tammy was very limited in hearing participants’ verbal input. Verbal conversation is an essential interaction modality during RT. Therefore, our next step is to integrate a wearable, wireless Bluetooth microphone into Tammy to enable the robot to better capture PLWD’s verbal input. Additionally, sitting close to the robot to see the content on its tablet interfered with its body movements. This limitation concerns the design of physically embodied social robots. On the one hand, the tablet attached to the robot contributes to delivering an integrated robot-based system for reminiscence therapy. On the other hand, the tablet should be large enough for PLWD to see the content on the screen easily. One limitation in the study design is that the researchers renamed the robot Tammy to contextualize its gender as female, which may influence participants’ perception of the robot. Moreover, the gender assigned by the researchers may not align with how participants perceive the robot’s gender [56]. Future work should investigate how PLWD would gender the robot and how this could impact the acceptability, usability, and UX of the RMRT. One strategy to address this issue could be to allow participants to personalize the gender of the robot before interacting with it. This could increase the perceived humanness and other benefits related to gendering the robot.

Another limitation resides in the small sample size (12 participants). Nonetheless, this sample should be sufficient for identifying critical usability problems and improving the interaction design [57]. It is a common challenge to recruit PLWD to participate in research studies, especially those that involve technologies such as robots. The current study recruited participants from Knoxville, TN, USA. To broaden the applicability of the RMRT program, future studies should include a more diverse user group from different regions.

During the design and development of the current prototype of RMRT, experts in RT were concerned that the GUI design of PLWD-robot conversation (with the conversation displayed on the right side of the robot’s tablet, Figure 2) might be distracting to people with moderate dementia. In future work, we will make a prototype of RMRT suitable for people with moderate dementia and test it to evaluate its usability and feasibility. If needed, we will adapt the GUI design of the current prototype for people who cannot follow directions. Another limitation lies in potential bias in the results of this usability study. Due to voluntary participation, it could be that the participants were more interested in using the robot as it was a novel tool for them, which may have influenced the assessment of the participants’ interaction and experience with the RMRT program. Lack of personalization affected the participants’ interaction with Tammy. This feature will be addressed in future prototypes.

There are two potential limitations of the data collection methods employed in this study. The questionnaire we used to evaluate the participants’ experience did not include items on efficiency and user satisfaction, which are core factors in evaluating system usability. Another limitation is that the qualitative data were collected by using one open-ended question in the survey questionnaire. In future work, we will use individual interviews to collect additional insights into participants’ experiences.

Although the usability study provided insight into PLWD-robot interaction, further research is needed to evaluate the preliminary efficacy of the RMRT program and confirm its validity by implementing control groups and including a larger sample of PLWD. For example, a control group using a tablet-based reminiscence therapy program could be used.

VI. Conclusions

In this paper, we implemented a robot-mediated reminiscence therapy (RMRT) program using a humanoid social robot, which we named Tammy. Tammy was able to provide multimedia reminiscence therapy for PLWD and persons with mild cognitive impairment. We evaluated the acceptability and user experience of RMRT with PLWD through usability testing of Tammy and its tablet GUI. PLWD reported a positive experience with the program, had an overall positive emotional reaction to it, and were willing to use the program in the future. PLWD sang along with Tammy and/or laughed during reminiscence. The findings from the usability study suggest that RMRT has potential as an engaging, effective, and accessible home-based cognitive exercise for people with dementia. This usability study also identified several limitations in the current RMRT program, including Tammy’s limited auditory sensing capability and its lack of personalization capabilities. These will be addressed in the next prototype. This endeavor should facilitate a more effective long-term adoption of RMRT for PLWD.

Acknowledgments

This work is supported in part by the National Institutes of Health (NIH) under grant number R01AG077003. We thank Jordis Blackburn, Robert Bray, and Ziming Liu for their assistance in conducting the experiments.

Biographies

Fengpei Yuan is a doctoral candidate in the Department of Mechanical, Aerospace, and Biomedical Engineering at the University of Tennessee, Knoxville. Her research focuses on developing social robotics and human-centered AI to enhance dementia care. This includes supporting activities of daily living, fostering social interactions and communication, and facilitating cognitive rehabilitation and exercises for people with dementia.

Marie Boltz received the Ph.D. degree in Nursing Research and Theory Development from New York University, New York, USA. Dr. Boltz is the Elouise Ross Eberly and Robert Eberly Endowed Professor from the Nese College of Nursing at the Pennsylvania State University. Dr. Boltz’s areas of research include interventions that promote function and cognition in older adults, dementia care models, and care partner preparedness and wellbeing, and translational and health services research.

Dania Bilal received the Ph.D. degree in College of Communication and Information from Florida State University, Florida, USA. She is currently a Professor at the School of Information Sciences, The University of Tennessee in Knoxville, Tennessee. Dr. Bilal’s research sits at the intersection of human information behavior, human-computer interaction, human-AI Interaction, and information retrieval. She has numerous publications and made ample presentations at the national and international levels. Her current research focuses on human-centered voice digital assistants, human-robot interactions in health care and educational settings, and children and youth’s information behavior and interaction with system interfaces, unveiling their cognitive and affective experiences.

Ying-Ling Jao received the Ph.D. degree in Nursing from University of Iowa, Iowa, USA. Dr. Jao is an Associate Professor from the Nese College of Nursing at the Pennsylvania State University. Her research focuses on neurobehavioral symptoms of dementia, non-pharmacological interventions for dementia care, communication with older adults with dementia, use of technology in dementia care, and physical and social environments in nursing homes. She is currently leading two NIH funded dementia research projects that address neurobehavioral symptoms in nursing home residents with dementia.

Monica Crane earned her undergraduate degree from Yale University and completed her medical school training at Jefferson Medical College. She completed her residency and a fellowship in Geriatric Medicine at the University of Pennsylvania. Dr. Crane is the founder and medical director of the Genesis Neuroscience Clinic and a Clinical Assistant Professor at the University of Tennessee Graduate School of Medicine. She is passionate about the clinical care, treatment, and support of patients and families affected by Alzheimer’s disease and related dementias. Dr. Crane has participated in and led a number of clinical trials focused on Alzheimer’s disease and related neurodegenerative dementias.

Joshua Duzan received the B.Eng. degree in Biomedical Engineering from the University of Tennessee, Knoxville. He has been an intern at Genesis Neuroscience Clinic since May 2021 and has served as a Clinical Research Coordinator on the Perception-based Assistive Robot to Tend Neurodegenerative Dementia project.

Abdurhman Bahour is currently an undergraduate student in Computer Science at the University of Tennessee. His areas of interest include application development, machine learning, and cybersecurity.

Xiaopeng Zhao (Member, IEEE) received the Ph.D. degree in Engineering Science and Mechanics from Virginia Tech, Virginia, USA. He is currently a Professor in the Department of Mechanical, Aerospace, and Biomedical Engineering at the University of Tennessee, Knoxville, USA. Dr. Zhao’s areas of research are socially assistive robotics, human-centered AI, brain-computer interface, virtual reality, cognitive rehabilitation, medical informatics, wearable healthcare, automatic disease diagnosis, neuroscience, epidemic modeling and control, applied systems physiology, biomechanics, micro- and nano-systems, signal and image processing, system identification, systems dynamics, control feedback, optimal control, agent-based modeling, complex systems, nonlinear dynamics, bifurcation and chaos, and stochastic dynamics. He is also the director of the Detection, Care, and Treatment of Alzheimer’s Disease and Related Dementia (https://oneutadrd.utk.edu).

Appendix A


Q1. Age:

1) Under 50

2) 50–65

3) 65–75

4) 75–85

5) Above 85

Q2. What gender do you assign to yourself?

1) Female

2) Male

3) Prefer not to answer

4) Not listed; please specify____

Q3. Please indicate the highest level of education you completed:

1) 6th grade or less

2) 7th-11th grade

3) High school graduate

4) Some college

5) College graduate

6) Post-graduate

Q4. How familiar are you with the following technologies?

| Technology | Not at all familiar | Slightly familiar | Moderately familiar | Very familiar |
|---|---|---|---|---|
| Smartphones | | | | |
| Tablets | | | | |
| Computers | | | | |
| Robots | | | | |

Q5. Are you currently diagnosed with any of the following conditions?

1) Mild cognitive impairment

2) Mild dementia due to Alzheimer’s disease

3) Moderate dementia due to Alzheimer’s disease

4) Other dementia____

5) Not applicable

Appendix B


Q1. Do you agree or disagree with the following statements: The robot’s appearance is pleasing.

1) Strongly disagree

2) Somewhat disagree

3) Neither agree nor disagree

4) Somewhat agree

5) Strongly agree

Q2. Do you agree or disagree with the following statements: The robot’s voice is clear.

1) Strongly disagree

2) Somewhat disagree

3) Neither agree nor disagree

4) Somewhat agree

5) Strongly agree

Q3. Do you agree or disagree with the following statements: The robot’s gestures and other body movements are appropriate.

1) Strongly disagree

2) Somewhat disagree

3) Neither agree nor disagree

4) Somewhat agree

5) Strongly agree

Q4. Do you agree or disagree with the following statements: The robot’s tablet screen designs and layout are attractive.

1) Strongly disagree

2) Somewhat disagree

3) Neither agree nor disagree

4) Somewhat agree

5) Strongly agree

Q5. Do you agree or disagree with the following statements: I feel relaxed when talking to the robot.

1) Strongly disagree

2) Somewhat disagree

3) Neither agree nor disagree

4) Somewhat agree

5) Strongly agree

Q6. Do you agree or disagree with the following statements: I would like the robot to be my friend.

1) Strongly disagree

2) Somewhat disagree

3) Neither agree nor disagree

4) Somewhat agree

5) Strongly agree

Q7. Do you agree or disagree with the following statements: I would follow the advice of the robot.

1) Strongly disagree

2) Somewhat disagree

3) Neither agree nor disagree

4) Somewhat agree

5) Strongly agree

Q8. Do you agree or disagree with the following statements about the reminiscence app: The app is useful.

1) Strongly disagree

2) Somewhat disagree

3) Neither agree nor disagree

4) Somewhat agree

5) Strongly agree

Q9. Do you agree or disagree with the following statements about the reminiscence app: The app is easy to use.

1) Strongly disagree

2) Somewhat disagree

3) Neither agree nor disagree

4) Somewhat agree

5) Strongly agree

Q10. Do you agree or disagree with the following statements about the reminiscence app: The app is interesting.

1) Strongly disagree

2) Somewhat disagree

3) Neither agree nor disagree

4) Somewhat agree

5) Strongly agree

Q11. Do you agree or disagree with the following statements about the reminiscence app: I would like to use the app in the future.

1) Strongly disagree

2) Somewhat disagree

3) Neither agree nor disagree

4) Somewhat agree

5) Strongly agree

Q12. Do you agree or disagree with the following statements about the reminiscence app: I would like to recommend the app to others.

1) Strongly disagree

2) Somewhat disagree

3) Neither agree nor disagree

4) Somewhat agree

5) Strongly agree

Q13. How would you like to use the robot?

1) Tablet

2) Speech

3) Both tablet and speech

Q14. What are the functions you desire to have on this robot? (multi-choice)

1) Help me to call family and friends

2) Help me to contact medical services

3) Help me to do shopping

4) Help me to order food

5) Help me to prevent falling

6) Help me to turn off the stovetop

7) Remind me to take medicine

8) Emergency call service

9) Keep me company

10) Other____

Q15. Do you have any suggestions or comments?

Contributor Information

Fengpei Yuan, Department of Mechanical, Aerospace, and Biomedical Engineering, University of Tennessee, Knoxville, TN 37996, USA.

Marie Boltz, College of Nursing, Penn State University, University Park, PA 16802, USA.

Dania Bilal, School of Information Sciences, University of Tennessee, Knoxville, TN 37996, USA.

Ying-Ling Jao, College of Nursing, Penn State University, University Park, PA 16802, USA.

Monica Crane, Genesis Neuroscience Clinic, Knoxville, TN 37909, USA.

Joshua Duzan, Genesis Neuroscience Clinic, Knoxville, TN 37909, USA.

Abdurhman Bahour, Department of Electrical Engineering and Computer Science, University of Tennessee, Knoxville, TN 37996, USA.

Xiaopeng Zhao, Department of Mechanical, Aerospace, and Biomedical Engineering, University of Tennessee, Knoxville, TN 37996, USA.

References

- [1] Alzheimer’s Association, “2022 Alzheimer’s disease facts and figures,” Alzheimer’s & Dementia, vol. 18, 2022.

- [2] Duong S, Patel T, and Chang F, “Dementia: What pharmacists need to know,” Canadian Pharmacists Journal/Revue des Pharmaciens du Canada, vol. 150, no. 2, pp. 118–129, 2017.

- [3] Woods B, O’Philbin L, Farrell EM, Spector AE, and Orrell M, “Reminiscence therapy for dementia,” Cochrane database of systematic reviews, no. 3, 2018.

- [4] O’Philbin L, Woods B, Farrell EM, Spector AE, and Orrell M, “Reminiscence therapy for dementia: an abridged Cochrane systematic review of the evidence from randomized controlled trials,” Expert review of neurotherapeutics, vol. 18, no. 9, pp. 715–727, 2018.

- [5] Van Bogaert P, Tolson D, Eerlingen R, Carvers D, Wouters K, Paque K, Timmermans O, Dilles T, and Engelborghs S, “Solcos model-based individual reminiscence for older adults with mild to moderate dementia in nursing homes: a randomized controlled intervention study,” Journal of Psychiatric and Mental Health Nursing, vol. 23, no. 9–10, pp. 568–575, 2016.

- [6] Evans SC, Garabedian C, and Bray J, “‘now he sings’, the my musical memories reminiscence programme: Personalised interactive reminiscence sessions for people living with dementia,” Dementia, vol. 18, no. 3, pp. 1181–1198, 2019.

- [7] Lazar A, Thompson H, and Demiris G, “A systematic review of the use of technology for reminiscence therapy,” Health education & behavior, vol. 41, no. 1_suppl, pp. 51S–61S, 2014.

- [8] Khan A, Bleth A, Bakpayev M, and Imtiaz N, “Reminiscence therapy in the treatment of depression in the elderly: Current perspectives,” Journal of Ageing and Longevity, vol. 2, no. 1, pp. 34–48, 2022.

- [9] Morales-de Jesús V, Gómez-Adorno H, Somodevilla-García M, and Vilarino D, “Conversational system as assistant tool in reminiscence therapy for people with early-stage of Alzheimer’s,” in Healthcare, vol. 9, p. 1036, Multidisciplinary Digital Publishing Institute, 2021.

- [10] Cuevas PEG, Davidson PM, Mejilla JL, and Rodney TW, “Reminiscence therapy for older adults with Alzheimer’s disease: a literature review,” International journal of mental health nursing, vol. 29, no. 3, pp. 364–371, 2020.

- [11] Mulvenna M, Gibson A, McCauley C, Ryan A, Bond R, Laird L, Curran K, Bunting B, and Ferry F, “Behavioural usage analysis of a reminiscing app for people living with dementia and their carers,” in Proceedings of the European Conference on Cognitive Ergonomics 2017, pp. 35–38, 2017.

- [12] Ryan AA, McCauley CO, Laird EA, Gibson A, Mulvenna MD, Bond R, Bunting B, Curran K, and Ferry F, “‘there is still so much inside’: The impact of personalised reminiscence, facilitated by a tablet device, on people living with mild to moderate dementia and their family carers,” Dementia, vol. 19, no. 4, pp. 1131–1150, 2020.

- [13] Laird EA, Ryan A, McCauley C, Bond RB, Mulvenna MD, Curran KJ, Bunting B, Ferry F, and Gibson A, “Using mobile technology to provide personalized reminiscence for people living with dementia and their carers: appraisal of outcomes from a quasi-experimental study,” JMIR mental health, vol. 5, no. 3, p. e9684, 2018.

- [14] Cunningham S, Brill M, Whalley JH, Read R, Anderson G, Edwards S, and Picking R, “Assessing wellbeing in people living with dementia using reminiscence music with a mobile app (memory tracks): a mixed methods cohort study,” Journal of healthcare engineering, vol. 2019, 2019.

- [15] Carós M, Garolera M, Radeva P, and Giro-i Nieto X, “Automatic reminiscence therapy for dementia,” in Proceedings of the 2020 International Conference on Multimedia Retrieval, pp. 383–387, 2020.

- [16] Yuan F, Klavon E, Liu Z, Lopez RP, and Zhao X, “A systematic review of robotic rehabilitation for cognitive training,” Frontiers in Robotics and AI, 2021.

- [17] Bejan A, Gündogdu R, Butz K, Müller N, Kunze C, and König P, “Using multimedia information and communication technology (ICT) to provide added value to reminiscence therapy for people with dementia,” Zeitschrift für Gerontologie und Geriatrie, vol. 51, no. 1, pp. 9–15, 2018.

- [18] Asprino L, Gangemi A, Nuzzolese AG, Presutti V, and Russo A, “Knowledge-driven support for reminiscence on companion robots,” in AnSWeR@ ESWC, pp. 51–55, 2017.

- [19] Yuan F, Zhang R, Bilal D, and Zhao X, “Learning-based strategy design for robot-assisted reminiscence therapy based on a developed model for people with dementia,” in International Conference on Social Robotics, pp. 432–442, Springer, 2021.

- [20] Yamazaki R, Kochi M, Zhu W, and Kase H, “A pilot study of robot reminiscence in dementia care,” International Journal of Biomedical and Biological Engineering, vol. 12, no. 6, pp. 257–261, 2018.

- [21] Wu Y-L, Gamborino E, and Fu L-C, “Interactive question-posing system for robot-assisted reminiscence from personal photographs,” IEEE Transactions on Cognitive and Developmental Systems, vol. 12, no. 3, pp. 439–450, 2019.

- [22] Gamborino E, Herrera Ruiz A, Wang J-F, Tseng T-Y, Yeh S-L, and Fu L-C, “Towards effective robot-assisted photo reminiscence: Personalizing interactions through visual understanding and inferring,” in International Conference on Human-Computer Interaction, pp. 335–349, Springer, 2021.

- [23] Vi CT, Takashima K, Yokoyama H, Liu G, Itoh Y, Subramanian S, and Kitamura Y, “D-flip: Dynamic and flexible interactive photoshow,” in International Conference on Advances in Computer Entertainment Technology, pp. 415–427, Springer, 2013.

- [24] Vollmer Dahlke D and Ory MG, “Emerging issues of intelligent assistive technology use among people with dementia and their caregivers: A US perspective,” Frontiers in Public Health, vol. 8, p. 191, 2020.

- [25] Woods D, Yuan F, Jao Y-L, and Zhao X, “Social robots for older adults with dementia: A narrative review on challenges & future directions,” in International Conference on Social Robotics, pp. 411–420, Springer, 2021.