Abstract

With a growth of surveillance cameras, the amount of captured videos expands. Manually analyzing and retrieving surveillance video is labor intensive and expensive. It would be much more convenient to generate a video digest, with which we can view the video in a fast and motion-preserving way. In this paper, we propose a novel video synopsis approach to generate condensed video, which uses an object tracking method for extracting important objects. This method will generate video tubes and a seam carving method to condense the original video. Experimental results demonstrate that our proposed method can achieve a high condensation rate while preserving all the important objects of interest. Therefore, this approach can enable users to view the surveillance video with great efficiency.

Index Terms—: Video synopsis, seam carving, video surveillance

I. Introduction

IN the last decade millions of surveillance cameras have been deployed and used in transportation hubs, ATMs and many other public or private facilities. Due to the decreasing cost of deploying cameras, it is much easier and cheaper to surveille a specific location. With the development of the internet, millions of surveillance videos are transmitted through the web.

This would require viewing in real-time to determine if there are any important events and also identify any suspicious behavior for a large amount of captured video by companies and security organizations. However, without the help of considerable man power support, most surveillance videos are never watched or analyzed. Thus, the main challenge has been how to process surveillance video so that one can browse and retrieve important parts in the most effective and timely manner. One example is a video synopsis based method, which has the ability to preserve the integrity of moving objects in the compressed domain [1]. Another viable approach is image retargeting. Due to its unique feature of changing the image resolution without affecting the important parts, this approach has been extensively studied in the past [2], [3], including its extension to stereoscopic images [4], and videos [5]. Retargeting could be effective for preserving important information.

Various approaches to compute video digests have been proposed, among which the simplest is fast forward [6]–[8]. It is based on skipping individual frames according to a specific rate; thus limiting its capability as only entire frames can be removed. As a result, the condensation rate of fast forward is relatively low. In contrast, video summarization, which extracts key frames and presents them as a storyline, has a very high condensation rate [9]. However, it may lose all the dynamic content of the original video as only key frames are presented. Another approach, video montage, extracts relevant spatial-temporal segments of the video and combines them into a digest video [10]–[13]. The limitation of such an approach is that it may cause content loss and its complexity is quite high.

Video synopsis is a compact representation of video that enables efficient browsing and retrieval. This technique is able to generate digest video from the original version. Generally, video synopsis firstly defines objects of interest and handles them as tubes in space-time volume. Then each object can be temporary shifted to avoid collision between different objects. In this way, a condensed digest video can be generated. It should be noted that in contrast to other techniques, condensed video generated by video synopsis is able to express the complete dynamics of the scene. In addition, video synopsis may change the relative timing between objects in order to reduce temporal redundancy as much as possible [14].

Authors in [15], [16] and [17] have proposed a method to condense video from space-time video volume by extending seam-carving [3] in 2D images, which was recently proposed for image retargeting. [15] produced an efficient spatio-temporal group to do seam carving for summary video. [16] carved sheet from the space-time volume of video. In [17], the authors proposed the new concept of ribbon-carving (RC) in space-time video volume. Different from intensity-based cost function for seam-carving, RC uses an activity-based cost function. In this way, RC is able to recursively carve ribbons in order to generate digest video by minimizing an activity-aware cost function with dynamic programming. In addition, ribbon-carving uses a flex-parameter in order to tradeoff the anachronism of events and video condensation rate [17]. The trade off is that the condensation rate of RC is quite limited since it only carves the pixels of the static background.

In this paper, we propose a novel video synopsis approach to compute video digest. Based on seam carving [3] video tubes, our proposed approach is able to reduce the non-object content, as well as the redundancy in the movement of objects. In this way, we may condense a video in a high ratio while preserving the important motion of objects. We make the following contributions in this paper:

use optical flow based seam carving to condense video tubes

define a new stopping criterion for users to determine the condensation rate of video synopsis

The remainder organized as follows. Firstly, we introduce our proposed video synopsis method in Section II, in which we include tube based seam carving to condense the original video. Then we evaluate the performance of our proposed method in Section III. Finally, we draw our conclusions in Section IV.

II. Our Proposed Video Synopsis

In traditional video synopsis approaches, generally static background pixels or frames are discarded. Thus, the activity of objects can be preserved along with the video being more compact. Considering the purpose of video synopsis, which gives the viewer a compact representation of the whole content in the video, we propose a new video synopsis method. In our method, the background pixels and the video objects are processed. We propose to extract every object and apply seam carving to condense each activity. Taking advantage of seam carving, key actions of the objects are preserved and redundant movements are reduced. After these operations, a condensed video can be generated.

A. Tube Extraction

In our tube based video synopsis method, tube extraction is the first step. Recently many object tracking methods have been introduced [18]. These methods can be directly applied in situations where a moving object may correspond to an important event.

This problem of object tracking can be divided into two parts: detecting moving objects in each frame and associating the detected regions corresponding to the same object over time. In each frame, we use Gaussian Mixture Models (GMM) [19] to detect moving objects, and Blob analysis to connect corresponding pixels to moving objects.

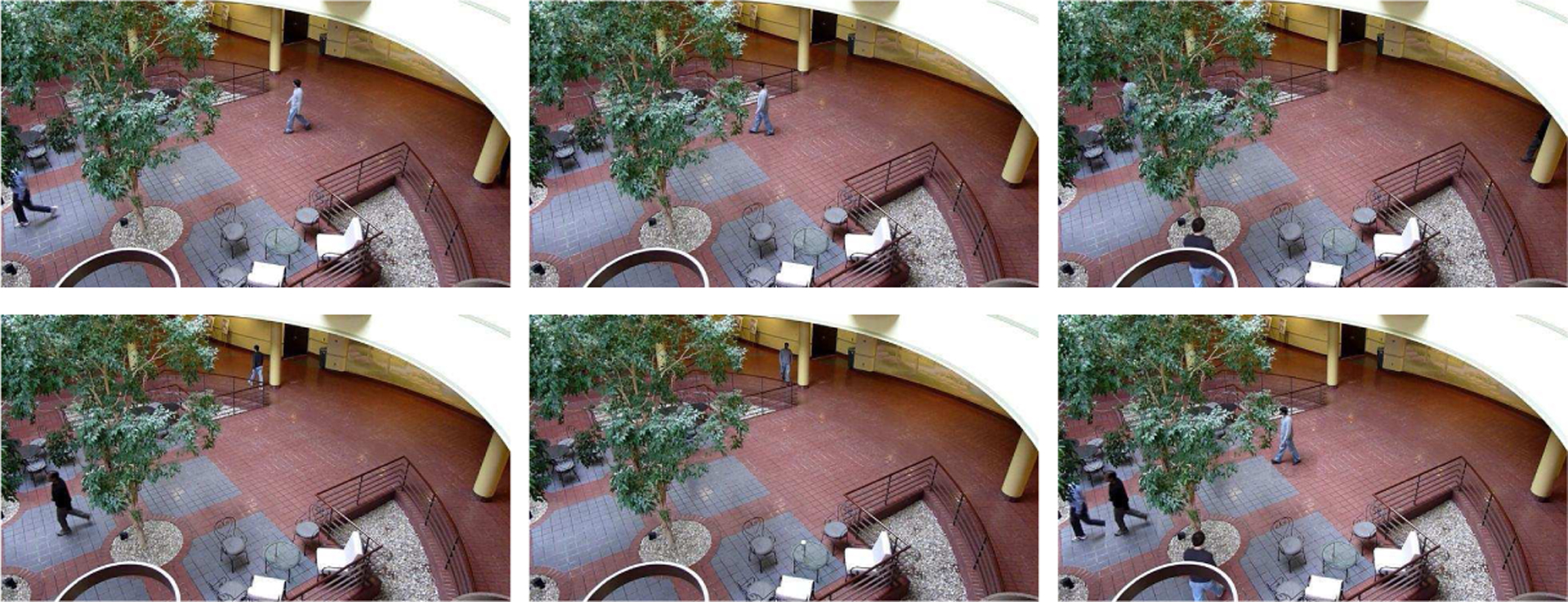

After obtaining the regions of moving objects in each frame, we need to associate them with every distinct object. The motion of an object is the association of the detection. Kalman filter is applied to estimate the motion of each track. Thus, the tracking location is predicted and the likelihood of the detection is determined. In this way, we can extract the track of each moving object. A boundary box is marked in each frame for every object. Fig. 1 shows the tracking results of some frames, which we select for the “Atrium” sequence in chronological order. As shown in Fig. 1, each moving object can be captured by our yellow boundary box. The number in the title of the box indicates the object ID.

Fig. 1:

Tracking results for “Atrium” Sequence.

With the help of object tracking, we can get all the necessary information of tubes, including the starting frame ID, the boundary box in each frame and the duration of the tube.

Using this information, we can create a binary mask sequence for each tube. The length of the sequence is the duration of the tube. The value of each pixel in the mask is:

| (1) |

where i stands for the tube number; x and y denote the coordinates of the pixel; t means the frame number; and bboxi denotes the ith boundary box.

B. Tube Condensing

In order to further condense the video, we propose to apply seam carving to video tubes derived from Section II-A to remove the less important pixels and preserve the most important regions. With boundary boxes we can extract a specific video clip for each tube or object.

Instead of using seam carving to change the resolution of the image/frame for image/video retargeting [3], we apply it to the x − t field in order to carve the temporal redundancy of the video. In traditional seam carving, the seam walks through pixels with the lowest gradient cost in the x − y field, which reflects less important content. The seam is from top to bottom and is eight-connected so continuity is preserved. Every carved seam will reduce the width of the image by one column. Contiguously carving seams with the least cost will return a smaller image with less distortion of important content.

In the x−t field of videos, seam carving acts similarly as it does in images. When we transpose the video clip and apply seam carving on the time dimension, we choose seam walking through a path with the least sum of optical flow value. In this case, the optical flow value represents the intensity of the object motion. Generally, the background pixel has less motion information; thus its optical flow value is very close to 0 and relatively much smaller than that of the foreground pixel, i.e. moving object pixel. As a result, when we apply seam carving to the video tubes, less significant motion pixels will be removed and fast moving pixels will be preserved due to the high optical flow values.

For seam carving in 2D images, firstly we need to calculate the energy map. In our proposed method, we transform the 3D problem into a 2D problem by calculating the mean of the optical flow value of every x − t plane. Fig. 2 shows an example of the energy map in the x − t plane of the original video. In this figure, white areas indicate background pixels, which have almost no motion information; blue areas represent moving objects with high optical flow values.

Fig. 2:

Seam carving in x − t plane.

Thus, the complexity is reduced and seam carving can be directly applied to the video tube. Dynamic programming (DP) is implemented in the traditional seam carving. We can reuse this idea in video synopsis. With DP, we can select the seam with the smallest energy. Fig. 2 shows the vertical seams (red) superposed in the x − t plane based on the energy map. Because the energy of every pixel is the mean of optical flow values, the vertically oriented seam is equivalent to a ribbon in a 3D cuboid, which has the least cost. In this way, we remove the ribbon to condense the tube by one frame.

C. Energy Optimization and Tube Stitching

After condensed video tubes are generated, we need to stitch the tubes back to the video. Considering that in short surveillance video, the background does not normally change, we construct the background by calculating the temporal median of the whole video clip. Then we need to figure out the order or the temporal mapping of the tubes before reinserting them into the background.

The process of stitching tubes back to the video is, in fact, a process of energy minimization. In Fig. 3, a brief framework of tube stitching is demonstrated. The generation of synopsis video is the computation of the index of each tube. The index is a temporal mapping M, which shifts dynamic object O in time from its original time in the original video to the time of the synopsis. A proper mapping M will minimize the following energy function:

| (2) |

where Eas represents the energy based on assessment. is the individual energy of the ith tube and is the pairwise one of the ith and jth tube [14]. α, β, γ are the weights set by users according to their relative importance for a particular instance. For example, increasing the weight of the assessment energy will lead to better overall quality, but in the meantime the energy of the other two may not remain unchanged and will lead to more overlaps.

Fig. 3:

The work flow of tube stitching.

In consideration of the huge computation with the large number of tubes and pixels, approaches of optimization are limited. In this paper, a greedy algorithm is applied to achieve a good result. We define the term states as the set of each tube’s position.

From the initial state, an attempt to shift backward every tube at a particular step is taken to find whether the energy decreases. The algorithm ends when the last tube exceeds the maximum length of the condensed video (defined by user) or the global energy does not decrease any more. To speed up the computation, different lengths of steps are defined. When a round of attempts fails, the algorithm will switch to a longer step to further the exploration. The step will not decrease until the energy reduces.

In this way, the algorithm returns an index of the starting frame number for each tube. According to the index, we can stitch each tube into the background properly to generate the condensed video.

D. Stopping criterion

Let us define the distance dis in the energy map as shown in Fig. 4. We can calculate the value disi on the ith row of the energy map and define MD as the mean of disi. As we can observe from Fig. 4, the value disi stands for the span of the object on the time domain given a fixed coordinate i. After every iteration of seam carving, MD will decrease due to condensing the motion. Users can set a threshold ThDis to control the condensing ratio. When MD decreases to ThDis, the seam carving process will stop. In this way, the condensation rate is under control.

Fig. 4:

The definition of the stopping criterion.

III. Experiments and Results

In order to evaluate the performance of the proposed approach, we have conducted some experiments using different surveillance videos as original video. We use three testing videos from [17] for performance comparison. A detailed description of these videos are provided in Table I. With our proposed video synopsis approach, we extract the moving objects from the video using object tracking methods and apply video seam carving to the object clips.

TABLE I:

Detailed description of three testing video sequences.

| Atrium | Highway | Overpass | |

|---|---|---|---|

| resolution | 640 × 360 | 320 × 80 | 320 × 120 |

| frames | 431 | 23,950 | 23,950 |

| frame rate | 30 | 30 | 30 |

In our experiments, we select a state-of-the-art video condensation method, ribbon-carving (RC) [17] and tube-arrangement from [14], for performance comparison with our method. In [17], the authors proposed a flex parameter ϕ to adjust the condensation rate by controlling the maximal deviation or jump from connectivity of seams. In ribbon carving, ϕ = 0 is actually frame removal. Generally, larger values of ϕ indicate a higher condensation ratio of video synopsis at the price of greater event anachronism.

The comparison results of condensation ratios are presented in Table II. As we can observe from this table, ϕ = 0 provides the minimal condensation rate. Even when we increase the value of ϕ, the increment of the condensation rate is trivial. Ribbon-carving only carves the stationary background pixels. In contrast, our proposed approach is able to carve not only the stationary background pixels, but also the low-motion object regions. In this way, our proposed method yields a much higher condensation rate as compared with ribbon-carving.

TABLE II:

Comparison results for video synopsis between RC [17] and our method.

| Methods | Atrium | Highway | Overpass | |||

|---|---|---|---|---|---|---|

| Frames | Ratio | Frames | Ratio | Frames | Ratio | |

| RC (ϕ = 0) | 409 | 1.05:1 | 8,114 | 2.95:1 | 11,880 | 2.02:1 |

| RC (ϕ = 1) | 385 | 1.12:1 | 3,186 | 7.52:1 | 9,174 | 2.61:1 |

| RC (ϕ = 2) | 380 | 1.13:1 | 3,031 | 7.90:1 | 8,609 | 2.78:1 |

| RC (ϕ = 3) | 379 | 1.14:1 | 2,970 | 8.06:1 | 8,331 | 2.87:1 |

| Tube-Rearrangement | 184 | 2.34:1 | 2135 | 11.21 | 1931 | 12.40:1 |

| Our approach | 87 | 4.95:1 | 1352 | 17.71:1 | 928 | 25.81:1 |

In addition to the performance of condensation rate, we also evaluate the performance of activity preserving of our method. Fig.5 and Fig.6 show the results of our method for the two tested sequences respectively. Fig.5 is from “Overpass” and Fig.6 is from “Atrium”. In both of Fig.5 and Fig.6, firstly 5 original frames are shown, each of which contains different objects (pedestrian, bicycle). In the last image, a condensed frame generated by our method is provided, which is able to include all these objects. Therefore, our proposed method is able to well preserve all the objects activity with much smaller number of frames in the condensed video.

Fig. 5:

Results of the condensed video frames for “Overpass” sequence. The first 5 frames are original frames; and the last one is the corresponding condensed frame. The original frame numbers are #9475, #10076, #10648, #10725, #11769. The frame number of the corresponding condensed frame is #459.

Fig. 6:

Results of the condensed video frames for “Atrium” sequence. The first 5 frames are original frames; and the last one is the corresponding condensed frame. The original frame numbers are #93, #104, #134, #202, #290. The frame number of the corresponding condensed frame is #39.

IV. Conclusions

In this paper, a novel approach of video synopsis is proposed, which applies the idea of seam carving to video tubes in order to achieve a higher condensation rate. The condensed video generated from our approach is natural-looking and with high fidelity. By altering the user-defined threshold, the length of the condensed video and the fidelity of the motion can be controlled. Different thresholds can be applied to different occasions for a better performance. Object indexing techniques enable viewers to track the selected object back to the original video.

It should be noted that the proposed method has limitations and some of these are listed as follows:

The tracking performance is based on an object detection and tracking algorithm. The performance may drop if the tracking method does not work well and extracts bad tubes.

The chronological order of events may be changed, which is not expected in some specific cases.

Acknowledgments

This work is supported by NSFC (Grant No.: 61370158; 61522202).

Contributor Information

Ke Li, School of Computer Science, Shanghai Key Laboratory of Intelligent Information Processing, Fudan University, Shanghai, China.

Bo Yan, School of Computer Science, Shanghai Key Laboratory of Intelligent Information Processing, Fudan University, Shanghai, China.

Weiyi Wang, School of Computer Science, Shanghai Key Laboratory of Intelligent Information Processing, Fudan University, Shanghai, China.

Hamid Gharavi, National Institute of Standards and Technology, Gaithersburg, MD, 20899 USA.

References

- [1].Zhong R, Hu R, Wang Z, and Wang S, “Fast synopsis for moving objects using compressed video,” IEEE Signal Processing Letters, vol. 21, no. 7, pp. 834–838, July 2014. [Google Scholar]

- [2].Kim W and Kim C, “A texture-aware salient edge model for image retargeting,” IEEE Signal Processing Letters, vol. 18, no. 11, pp. 631–634, Nov 2011. [Google Scholar]

- [3].Avidan S and Shamir A, “Seam carving for content-aware image resizing,” in ACM Transactions on Graphics (TOG), vol. 26, no. 3, 2007, p. 10. [Google Scholar]

- [4].Yoo JW, Yea S, and Park IK, “Content-driven retargeting of stereoscopic images,” IEEE Signal Processing Letters, vol. 20, no. 5, pp. 519–522, May 2013. [Google Scholar]

- [5].Rubinstein M, Shamir A, and Avidan S, “Improved seam carving for video retargeting,” in ACM transactions on graphics (TOG), vol. 27, no. 3. ACM, 2008, p. 16. [Google Scholar]

- [6].Yeung MM and Yeo B-L, “Video visualization for compact presentation and fast browsing of pictorial content,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 7, no. 5, pp. 771–785, 1997. [Google Scholar]

- [7].Nam J and Tewfik AH, “Video abstract of video,” in IEEE 3rd Workshop on Multimedia Signal Processing, 1999, pp. 117–122. [Google Scholar]

- [8].Petrovic N, Jojic N, and Huang TS, “Adaptive video fast forward,” Multimedia Tools and Applications, vol. 26, no. 3, pp. 327–344, 2005. [Google Scholar]

- [9].Oh J, Wen Q, Lee J, and Hwang S, Video abstraction. Hershey, PA: Idea Group Inc. and IRM Press, 2004. [Google Scholar]

- [10].Irani M, Anandan P. a., Bergen J, Kumar R, and Hsu S, “Efficient representations of video sequences and their applications,” Signal Processing: Image Communication, vol. 8, no. 4, pp. 327–351, 1996. [Google Scholar]

- [11].Rav-Acha A, Pritch Y, and Peleg S, “Making a long video short: Dynamic video synopsis,” in IEEE Conference on Computer Vision and Pattern Recognition, vol. 1, 2006, pp. 435–441. [Google Scholar]

- [12].Kang H-W, Chen X-Q, Matsushita Y, and Tang X, “Space-time video montage,” in IEEE Conference on Computer Vision and Pattern Recognition, vol. 2, 2006, pp. 1331–1338. [Google Scholar]

- [13].Rav-Acha A, Pritch Y, Lischinski D, and Peleg S, “Dynamosaicing: Mosaicing of dynamic scenes,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 29, no. 10, pp. 1789–1801, 2007. [DOI] [PubMed] [Google Scholar]

- [14].Pritch Y, Rav-Acha A, and Peleg S, “Nonchronological video synopsis and indexing,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 30, no. 11, pp. 1971–1984, 2008. [DOI] [PubMed] [Google Scholar]

- [15].Decombas M, Dufaux F, and Pesquet-Popescu B, “Spatio-temporal grouping with constraint for seam carving in video summary application,” in Digital Signal Processing (DSP), 2013 18th International Conference on, July 2013, pp. 1–8. [Google Scholar]

- [16].Chen B and Sen P, “Video carving,” Short Papers Proceedings of Eurographics, pp. 68–73, 2008. [Google Scholar]

- [17].Li Z, Ishwar P, and Konrad J, “Video condensation by ribbon carving,” IEEE Transactions on Image Processing, vol. 18, no. 11, pp. 2572–2583, 2009. [DOI] [PubMed] [Google Scholar]

- [18].Wu Y, Lim J, and Yang M-H, “Online object tracking: A benchmark,” in 2013 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2013, pp. 2411–2418. [Google Scholar]

- [19].Lee D-S, “Effective gaussian mixture learning for video background subtraction,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 5, pp. 827–832, 2005. [DOI] [PubMed] [Google Scholar]