Abstract

We assessed the performance of OpenAI’s ChatGPT-4 on United States Medical Licensing Examination (USMLE) STEP 1-style questions across the systems and disciplines that appear on the examination. ChatGPT-4 answered 86% of the 1300 questions correctly, exceeding the estimated passing score of 60%, with no significant differences in performance across content domains. The findings demonstrate an improvement over earlier models as well as consistent performance on topics ranging from complex biological processes to ethical considerations in patient care. This proficiency supports the use of artificial intelligence (AI) as an interactive learning tool and raises questions about how the technology can be used to educate students in the preclinical component of their medical education. The authors provide an example and discuss how students can use AI to receive real-time analogies and explanations tailored to their desired level of education. Appropriately applied, this technology may enhance learning outcomes for medical students in the preclinical component of their education.

Keywords: Medical education, ChatGPT, USMLE, Step 1, Machine learning, Artificial intelligence

Introduction

Artificial intelligence (AI) is revolutionizing our world. As an emerging technology, it has shown enormous potential in numerous fields with the arrival of self-driving vehicles, smart devices, and automated personal assistants [1]. This has since sparked conversation about other possible implications, particularly in the realm of education.

The basis for AI’s use in education lies in large language models (LLMs), computational tools that can comprehend, learn, and generate human-like text. These models are trained on extensive datasets and use an algorithm to discern relationships between words and grammatical structures in order to learn language patterns [2]. As a result, LLMs acquire rules from which they can generate their own coherent and contextually relevant sentences.

A prime example is OpenAI’s ChatGPT, whose fourth iteration builds on a transformer-based model and introduces several pivotal improvements over prior versions and other models [3]. The most notable is its scale: the parameter count reportedly expanded from 175 billion in GPT-3.5 to 1 trillion in GPT-4 [4]. By comparison, a neural network Microsoft developed for Bing has 135 billion parameters, and Google’s Bard has 540 billion [5, 6]. This larger scale is thought to give ChatGPT-4 a broad knowledge base, which may enable it to produce more nuanced and accurate responses than its competitors.

Owing to its widespread acclaim as the fastest-growing model, GPT-4 has been evaluated in numerous testing environments, passing the Uniform Bar Exam, Law School Admission Test, SAT, Graduate Record Examinations, and Advanced Placement exams with high percentile scores [7, 8]. In this paper, we focus on GPT-4 performance on the United States Medical Licensing Examination (USMLE) STEP 1, the first of three examinations in the USMLE sequence. It is administered in a single 8-hour session divided into 7 blocks of up to 40 questions each, covering the basic sciences underlying the practice of medicine [9]. In 2022, 29,039 examinees from US/Canadian schools took the exam, and 91% passed [10].

Earlier studies have demonstrated the success of the prior models, ChatGPT-3 and ChatGPT-3.5, with scores at or near the passing threshold on USMLE STEP 1-style questions, prompting discussion of their use as question analyzers and educational resources [11, 12]. These studies, however, reported only GPT’s overall performance. To our knowledge, no studies have assessed performance within the individual systems and disciplines of the USMLE STEP 1 exam. Such an assessment is particularly important for STEP 1 because students devote a considerable amount of time to self-directed learning in preparation for the examination. As students incorporate supplementary resources into their preparation, these domain-level performance metrics are needed to make accurate recommendations about their use.

In this study, we expand on the initial findings with GPT-3 and GPT-3.5 by using the newer GPT-4 model and reporting performance by system and discipline. The primary objective was to determine GPT-4’s accuracy on the question sets. The secondary objective was to identify potential implications of GPT-4 for medical education.

Materials and Methods

Sample Question Acquisition

With permission from AMBOSS, the authors sourced questions using an active subscription [13]. AMBOSS is a comprehensive learning platform that offers a question bank for the USMLE STEP 1 examination [13]. Its custom session interface lets users select questions from a series of systems and disciplines to focus their studies on particular topics. We used this feature to extract questions from 18 systems and 12 disciplines.

Analogous systems and disciplines were merged and reclassified as shown in Table 1, yielding 16 systems and 10 disciplines. For example, “Reproductive System” contains questions drawn from “Female Reproductive System & Breast,” “Male Reproductive System,” and “Pregnancy, Childbirth & the Puerperium.” In the same manner, “Pharmacology” incorporates questions from both “Clinical Pharmacology” and “Pharmacology and Toxicology.” “Biochemistry” and “Nutrition” were also merged.

Table 1.

Selected systems and disciplines

| Systems | Disciplines |
|---|---|
| Behavioral Health (BH) | Anatomy (AN) |
| Biostats, Epidemiology/Population Health & Interpretation of the Medical Literature (BP) | Biochemistry, Nutrition (BN) |
| Blood & Lymphoreticular System (BL) | Epidemiology, Biostatistics, and Medical Informatics (EB) |
| Cardiovascular System (CV) | Histology (HS) |
| Endocrine System (ES) | Microbiology & Virology (MV) |
| Gastrointestinal System (GI) | Molecular & Cell Biology (MC) |
| General Principles of Foundational Science (GP) | Pathology (PA) |
| Immune System (IS) | Pharmacology (PC) |
| Multisystem Processes & Disorders (MS) | Physiology (PS) |
| Musculoskeletal System (MK) | Prevention, Health Promotion (PH) |
| Nervous System & Special Senses (NS) | |
| Renal & Urinary Systems (RU) | |
| Reproductive System (RP) | |
| Respiratory System (RS) | |
| Skin & Subcutaneous Tissue (ST) | |
| Social Sciences Literature (SS) | |

For each system and discipline, we selected 50 questions at random from the AMBOSS question bank; systems and disciplines with fewer than 50 questions were omitted. Each set of questions was analyzed independently, so questions appearing in more than one system or discipline were not removed but were included in every set to which they belonged. Including these repeats, the analysis encompassed a total of n = 1300 questions (26 domains × 50 questions).
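The sampling design can be sketched in a few lines of Python. This is an illustration only, assuming hypothetical question IDs and pools; in the study the random draw was made through the AMBOSS custom-session interface, and the name `sample_domains` is ours. It shows why repeats arise (each domain is sampled independently, so a question tagged with both a system and a discipline can appear in two samples) and why 26 eligible domains of 50 questions give n = 1300.

```python
import random

QUESTIONS_PER_DOMAIN = 50


def sample_domains(pools, k=QUESTIONS_PER_DOMAIN, seed=0):
    """Draw k questions at random from each domain's pool of question IDs.

    Domains with fewer than k questions are omitted. Domains are sampled
    independently, so a question belonging to several domains may be drawn
    more than once overall; such repeats are kept.
    """
    rng = random.Random(seed)
    return {
        domain: rng.sample(ids, k)
        for domain, ids in pools.items()
        if len(ids) >= k
    }


# Hypothetical pools: 26 eligible domains share one set of 200 IDs (so repeats
# across domains are likely), plus one domain too small to be included.
shared_ids = [f"q{i}" for i in range(200)]
pools = {f"domain_{d}": shared_ids for d in range(26)}
pools["too_small"] = shared_ids[:30]

samples = sample_domains(pools)
total = sum(len(qs) for qs in samples.values())
print(len(samples), total)  # 26 1300
```

Sampling within each domain is without replacement, so no question repeats inside a single domain's set; repeats can only occur across domains.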

Conversation Input

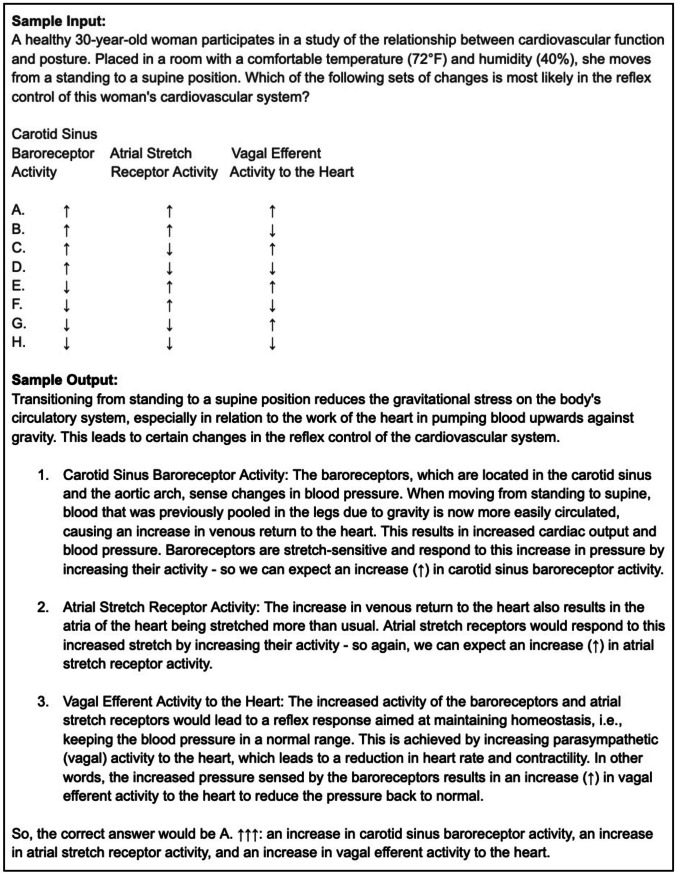

The authors entered each question stem and its answer choices into ChatGPT as an independent conversation. After each input and output, the conversation was deleted and a new one was started so that earlier exchanges could not influence subsequent responses. Questions containing media, such as histologic sections, gross anatomical specimens, genetic pedigrees, and biochemical pathways, were excluded because ChatGPT could not process image files. Questions with tables containing text and symbols, however, were included, as shown by the sample input and output in Fig. 1.
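A minimal sketch of this one-question-per-conversation protocol follows, assuming a hypothetical `ask_model` callable that accepts a list of chat messages and returns the reply text (the study used the ChatGPT interface directly, not an API). Building a fresh message list for every question plays the role of deleting the conversation, so no earlier question or answer is in the model's context. The `Question` type, its `has_media` flag, and the prompt layout are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Question:
    stem: str
    choices: dict            # answer letter -> answer text
    has_media: bool = False  # e.g. histologic section, specimen, pedigree, pathway


def build_prompt(q: Question) -> str:
    """Question stem, a blank line, then one 'X. text' line per answer choice."""
    options = [f"{letter}. {text}" for letter, text in sorted(q.choices.items())]
    return "\n".join([q.stem, ""] + options)


def run_independently(questions, ask_model):
    """Send each text-only question in its own brand-new conversation.

    `ask_model` is a hypothetical callable: list of messages -> reply text.
    """
    replies = []
    for q in questions:
        if q.has_media:  # image-based items were excluded from the study
            continue
        conversation = [{"role": "user", "content": build_prompt(q)}]  # fresh context
        replies.append(ask_model(conversation))
    return replies


# Usage with a stand-in model that records how many messages it was shown.
context_sizes = []


def fake_model(messages):
    context_sizes.append(len(messages))
    return "Answer: A"


questions = [
    Question("Stem 1", {"A": "x", "B": "y"}),
    Question("Stem 2 (with micrograph)", {"A": "x", "B": "y"}, has_media=True),
    Question("Stem 3", {"B": "y", "A": "x"}),
]
replies = run_independently(questions, fake_model)
print(replies, context_sizes)  # ['Answer: A', 'Answer: A'] [1, 1]
```

Every call sees exactly one message, which is the property the deletion step was meant to guarantee.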

Fig. 1.

Sample input and output for demonstration of conversation with ChatGPT. Sample question obtained from USMLE Step 1 Orientation [14]

Data Collection

Each ChatGPT answer was marked correct or incorrect by selecting that answer option in the AMBOSS question bank and comparing it with the feedback provided. If an output was inconclusive, the question was voided and excluded. Because AMBOSS provides student performance metrics for each question, the percentage of students who chose the correct answer was also recorded for comparison on each question.
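The grading rule can be sketched as follows, assuming, hypothetically, that each output states its choice as `Answer: <letter>`. In the study, outputs were read and graded by the authors against AMBOSS feedback; the regular expression here only stands in for that judgment. An output with no recognizable answer, or with conflicting answers, is voided and excluded, and the student percentages are averaged over the remaining questions.

```python
import re

# Hypothetical answer format; the study's grading was done by the authors.
ANSWER = re.compile(r"Answer:\s*([A-J])\b")


def grade(output: str, key: str) -> str:
    """Return 'correct', 'incorrect', or 'void' for one model output."""
    letters = set(ANSWER.findall(output))
    if len(letters) != 1:  # no answer, or conflicting answers -> inconclusive
        return "void"
    return "correct" if letters.pop() == key else "incorrect"


def summarize(grades, student_pct):
    """AI accuracy and mean student % correct over the non-voided questions."""
    kept = [(g, s) for g, s in zip(grades, student_pct) if g != "void"]
    n = len(kept)
    ai_accuracy = sum(g == "correct" for g, _ in kept) / n
    student_accuracy = sum(s for _, s in kept) / n
    return n, ai_accuracy, student_accuracy


outputs = [
    "... so the answer is C. Answer: C",
    "Answer: B",
    "It could be A or D.",
    "Answer: A ... on reflection, Answer: D",
]
keys = ["C", "D", "A", "A"]
student_pct = [62.0, 48.0, 70.0, 55.0]  # illustrative values, not AMBOSS data
grades = [grade(o, k) for o, k in zip(outputs, keys)]
print(grades)                          # ['correct', 'incorrect', 'void', 'void']
print(summarize(grades, student_pct))  # (2, 0.5, 55.0)
```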

Statistical Analysis

Statistical analysis was conducted with IBM SPSS 28. Accuracy percentages were calculated for each domain and for the cumulative dataset. Unpaired chi-squared tests were used to compare accuracy across all systems and, separately, across all disciplines. The questions were also divided into four quartiles according to student accuracy, and unpaired chi-squared tests were used to compare ChatGPT accuracy across these quartiles.
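The domain comparison is a chi-squared test of homogeneity on a k × 2 table of correct and incorrect answers. As a plain-Python sketch (the study used SPSS), the counts below are reconstructed from Table 2 under the assumption that every domain contributed exactly 50 scored questions, so each correct count is the listed AI accuracy times 50. The helper `chi2_sf` is our own; it uses the closed-form chi-squared upper tail for integer degrees of freedom instead of a statistics library.

```python
import math

# Correct answers per domain, reconstructed from Table 2 as AI accuracy x 50.
# ASSUMPTION: every domain contributed exactly 50 scored questions.
N_PER_DOMAIN = 50
SYSTEMS = {
    "BH": 44, "BP": 40, "BL": 42, "CV": 45, "ES": 41, "GI": 41, "GP": 40, "IS": 46,
    "MS": 42, "MK": 47, "NS": 39, "RU": 41, "RP": 48, "RS": 45, "ST": 45, "SS": 46,
}
DISCIPLINES = {
    "AN": 41, "BN": 40, "EB": 40, "HS": 45, "MV": 45,
    "MC": 42, "PA": 42, "PC": 46, "PS": 43, "PH": 48,
}


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) of a chi-squared variable with integer df."""
    if df % 2 == 0:
        # Even df: finite Poisson-type sum.
        term = total = math.exp(-x / 2)
        for k in range(1, df // 2):
            term *= (x / 2) / k
            total += term
        return total
    # Odd df: normal-tail term plus a finite series in sqrt(x).
    root = math.sqrt(x)
    total = math.erfc(root / math.sqrt(2))  # = 2 * (1 - Phi(sqrt(x)))
    pdf = math.exp(-x / 2) / math.sqrt(2 * math.pi)  # standard normal density at sqrt(x)
    term = root
    for r in range(1, (df - 1) // 2 + 1):
        total += 2 * pdf * term
        term *= x / (2 * r + 1)
    return total


def homogeneity_test(correct_counts, n=N_PER_DOMAIN):
    """Pearson chi-squared test on the k x 2 table of correct/incorrect counts."""
    k = len(correct_counts)
    pooled = sum(correct_counts) / (k * n)  # overall accuracy under H0
    exp_right, exp_wrong = n * pooled, n * (1 - pooled)
    stat = sum(
        (c - exp_right) ** 2 / exp_right + ((n - c) - exp_wrong) ** 2 / exp_wrong
        for c in correct_counts
    )
    df = k - 1
    return stat, df, chi2_sf(stat, df)


for name, counts in (("systems", SYSTEMS), ("disciplines", DISCIPLINES)):
    stat, df, p = homogeneity_test(list(counts.values()))
    print(f"{name}: chi2({df}) = {stat:.2f}, p = {p:.3f}")
```

Run as written, the sketch gives χ²(15) ≈ 20.38 for systems and χ²(9) ≈ 11.17 for disciplines, matching the Results, with p-values agreeing with the reported 0.158 and 0.265 to within rounding. With SciPy available, `scipy.stats.chi2_contingency` on the same table would be the usual shortcut.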

Results

A total of 1300 questions were analyzed: 800 from the 16 systems (Fig. 2) and 500 from the 10 disciplines (Fig. 3). ChatGPT answered 86% of all questions correctly. Table 2 lists its accuracy in each domain, along with the student accuracy reported by AMBOSS. There were no significant differences in accuracy across systems [χ²(15) = 20.38, p = 0.158] or across disciplines [χ²(9) = 11.17, p = 0.265]. Figure 4 shows ChatGPT accuracy by quartile of student accuracy. ChatGPT accuracy differed significantly across the quartiles [χ²(3) = 108.56, p < 0.001]: it was significantly higher in each higher student-accuracy quartile than in the quartiles below it (p < 0.001 for each pairwise comparison).
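The quartile analysis first assigns each question to a quartile of student accuracy and then tallies ChatGPT's correct and incorrect answers per quartile; the resulting 4 × 2 table is what a chi-squared test with 3 degrees of freedom compares. The sketch below assumes quartile cut points from Python's `statistics.quantiles` (SPSS may place the boundaries slightly differently), and its inputs are toy values, not study data, because per-question results are not reported here. The function name `quartile_table` is ours.

```python
import statistics
from bisect import bisect_right


def quartile_table(student_pct, gpt_correct):
    """Tally ChatGPT [correct, incorrect] per quartile of student % correct.

    Cut points come from statistics.quantiles (default 'exclusive' method);
    a value equal to a cut point falls into the upper quartile.
    Rows run from the lowest to the highest student-accuracy quartile.
    """
    cuts = statistics.quantiles(student_pct, n=4)
    table = [[0, 0] for _ in range(4)]
    for pct, ok in zip(student_pct, gpt_correct):
        table[bisect_right(cuts, pct)][0 if ok else 1] += 1
    return table


# Toy inputs for illustration only (not study data).
student = [20, 30, 40, 50, 60, 70, 80, 90]
gpt = [False, True, False, True, True, True, True, True]
table = quartile_table(student, gpt)
print(table)                            # [[1, 1], [1, 1], [2, 0], [2, 0]]
print([c / (c + w) for c, w in table])  # [0.5, 0.5, 1.0, 1.0]
```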

Fig. 2.

ChatGPT accuracy based on the various system domains analyzed

Fig. 3.

ChatGPT accuracy based on the various discipline domains analyzed

Table 2.

Accuracy comparisons for AMBOSS questions between ChatGPT and student output based on each system and discipline domain

| System | AI accuracy | Student accuracy | Discipline | AI accuracy | Student accuracy |
|---|---|---|---|---|---|
| Behavioral Health | 88% | 70% | Anatomy | 82% | 59% |
| Biostats, Epidemiology/Population Health & Interpretation of Medical Literature | 80% | 62% | Biochemistry, Nutrition | 80% | 58% |
| Blood & Lymphoreticular System | 84% | 60% | Epidemiology, Biostatistics, and Medical Informatics | 80% | 61% |
| Cardiovascular System | 90% | 64% | Histology | 90% | 57% |
| Endocrine System | 82% | 61% | Microbiology & Virology | 90% | 55% |
| Gastrointestinal System | 82% | 60% | Molecular & Cell Biology | 84% | 52% |
| General Principles of Foundational Science | 80% | 50% | Pathology | 84% | 59% |
| Immune System | 92% | 56% | Pharmacology | 92% | 57% |
| Multisystem Processes & Disorders | 84% | 54% | Physiology | 86% | 56% |
| Musculoskeletal System | 94% | 61% | Prevention, Health Promotion | 96% | 73% |
| Nervous System & Special Senses | 78% | 59% | | | |
| Renal & Urinary Systems | 82% | 60% | | | |
| Reproductive System | 96% | 58% | | | |
| Respiratory System | 90% | 59% | | | |
| Skin & Subcutaneous Tissue | 90% | 59% | | | |
| Social Sciences | 92% | 83% | | | |

Fig. 4.

ChatGPT accuracy compared to quartiles of student correct percentages

Discussion

AI Performance

In this study, we present a follow-up to previous studies that assessed the performance of ChatGPT on USMLE STEP 1-style questions [11, 12]. To our knowledge, however, this analysis is the first to break down ChatGPT-4’s performance by the systems and disciplines found on the exam. Our findings support the proficiency of ChatGPT-4, which achieved 86% accuracy on the 1300 questions assessed. This is a substantial improvement over a previous study that used the earlier ChatGPT-3 model on a similar question set and reported an accuracy of 44% [11]. The improvement is consistent with the newer model’s success on various other standardized exams [8]. According to the NBME, examinees must answer approximately 60% of questions correctly to pass the USMLE STEP 1 exam [15]. ChatGPT-4 exceeds that threshold by 26 percentage points, suggesting performance comparable to that of a student who has completed at least two years of medical school.

In our analysis, we found no significant difference in ChatGPT-4 accuracy across the 16 systems and 10 disciplines analyzed. These findings suggest that ChatGPT-4 is consistently proficient across preclinical domains. Notably, the model showed no deficit on questions that required ethical considerations or empathetic responses to patients: its performance in the “Social Sciences” system did not differ significantly from its performance in content areas requiring pure factual recall or multi-step reasoning about physiologic processes. These findings are consistent with a previous study in which ChatGPT’s responses to patient questions were rated as more empathetic than those of physicians [16].

In addition to assessing AI performance alone, we compared ChatGPT’s accuracy with that of medical students answering the same questions. ChatGPT accuracy tended to rise and fall with student accuracy across systems and disciplines. This could indicate similar reasoning between the AI and medical students, or it could reflect differences in question difficulty among systems and disciplines. Notably, the student accuracy reported by AMBOSS reflects performance at different points during students’ preparation, so these percentages may be lower than students’ performance when they sit for the actual examination.
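One way to make this trend concrete is a Pearson correlation between AI and student accuracy across the 26 domains in Table 2. This is an illustrative check of our own, not an analysis the authors report; with only 26 domains the coefficient can be dominated by a few outlying domains, so it should be read as descriptive rather than confirmatory. The dictionary keys use the abbreviations from Table 1.

```python
import math

# (AI accuracy %, student accuracy %) per domain, transcribed from Table 2.
TABLE2 = {
    "BH": (88, 70), "BP": (80, 62), "BL": (84, 60), "CV": (90, 64),
    "ES": (82, 61), "GI": (82, 60), "GP": (80, 50), "IS": (92, 56),
    "MS": (84, 54), "MK": (94, 61), "NS": (78, 59), "RU": (82, 60),
    "RP": (96, 58), "RS": (90, 59), "ST": (90, 59), "SS": (92, 83),
    "AN": (82, 59), "BN": (80, 58), "EB": (80, 61), "HS": (90, 57),
    "MV": (90, 55), "MC": (84, 52), "PA": (84, 59), "PC": (92, 57),
    "PS": (86, 56), "PH": (96, 73),
}


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


ai, student = zip(*TABLE2.values())
print(f"r = {pearson_r(ai, student):.2f} across {len(TABLE2)} domains")
```

A rank-based coefficient (Spearman) would be a reasonable alternative given the coarse, 2-point granularity of the AI percentages.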

Implications for Medical Education

The potential role of ChatGPT-4 in medical education has recently become a topic of discussion [17, 18]. Its high accuracy suggests that ChatGPT-4 could serve as a learning tool for medical students, who can engage in dialogue with the model to test their knowledge and understanding of various medical subjects and disciplines. As our sample input and output show, ChatGPT-4 demonstrates multi-step processing and provides detailed, logical explanations that can help students understand complex concepts. We also found that the output can be tailored further to provide explanations at a desired level of education. For instance, ChatGPT-4 can be prompted to provide simplified explanations or analogies while maintaining accurate reasoning. This is demonstrated below with a follow-up input to our sample question (Fig. 5).

Fig. 5.

Sample follow-up input and output for demonstration of conversation with ChatGPT

The response demonstrates that GPT-4 can be useful for providing alternative explanations when further understanding is needed. This resembles the role of a tutor and can be beneficial when the explanations in a textbook or other learning resource are insufficient for the student. In essence, GPT-4 can provide interactive feedback and present content at the level the student requests, which we believe could help bridge knowledge gaps and aid students struggling with complex concepts.

As a caveat, there is some concern that an increased dependence on AI could lead to less human interaction and weaker problem-solving skills. As with any learning tool, it would be critical to balance AI-based learning with traditional methods to ensure a comprehensive education. AI at its current stage of development also has limitations, such as the lack of image support in the version of ChatGPT-4 used in this study. As a result, students using this version cannot rely on it to help interpret ECGs, histology, or radiographic images. Although this feature was not available at the time of this study, we anticipate it will become available in the future, which will warrant further research.

Limitations of our study include possible bias in question selection for fields with a high proportion of image-based questions, as these questions were excluded. Furthermore, we were unable to assess more specific disciplines within the AMBOSS question set because too few questions were available in those domains. Nevertheless, ChatGPT-4 in its current state shows promise as a supplemental resource for preclinical medical education. Future studies should assess the practicality and efficacy of ChatGPT as a supplement to the current preclinical medical curriculum. Assessing its accuracy on other medical school and specialty board exams may also help establish the full potential of the software at its current stage of development.

Conclusion

GPT-4’s ability to process large volumes of information in seconds allows it to provide immediate, interactive feedback, which is valuable to students preparing for high-stakes examinations. For exams such as USMLE STEP 1, ChatGPT-4 demonstrated proficiency in all systems and disciplines assessed and could potentially play a role in fundamentally changing our approach to preclinical education. By leveraging this technology, we may be able to substantially enhance learning outcomes, especially in fields as critical and demanding as medicine.

Acknowledgements

The authors wish to acknowledge AMBOSS for allowing our use of their comprehensive question set, without which this research would not have been possible.

Author Contribution

All authors contributed to the study conception and design. Material preparation and data collection were performed by RG, BM, and JC. Analyses were performed by RG. The first draft of the introduction was written by JC, methods and results by RG, and discussion and conclusion by BM. All authors commented on previous versions of the manuscript. Revisions were completed by BM. All authors read and approved the final manuscript. Correspondence to RG.

Data Availability

The dataset obtained and analyzed during the current study is available from the corresponding author on reasonable request.

Declarations

Conflict of Interest

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Muthukrishnan N, Maleki F, Ovens K, Reinhold C, Forghani B, Forghani R. Brief history of artificial intelligence. Neuroimaging Clin N Am. 2020;30(4):393–399. doi: 10.1016/j.nic.2020.07.004.

2. Bommasani R, Liang P, Lee T. Language models are changing AI. We need to understand them. Stanford University, Human-Centered Artificial Intelligence; Nov 2022. https://hai.stanford.edu/news/language-models-are-changing-ai-we-need-understand-them. Accessed 29 Jul 2023.

3. GPT-4 is OpenAI’s most advanced system, producing safer and more useful responses. OpenAI. https://openai.com/gpt-4. Accessed 29 Jul 2023.

4. Martindale J. GPT-4 vs. GPT-3.5: how much difference is there? Digital Trends; Apr 2023. https://www.digitaltrends.com/computing/gpt-4-vs-gpt-35/. Accessed 29 Jul 2023.

5. Deutscher M. Microsoft researchers reveal neural network with 135B parameters. SiliconANGLE; Aug 2021. https://siliconangle.com/2021/08/04/microsoft-researchers-reveal-neural-network-135b-parameters/. Accessed 29 Jul 2023.

6. Almekinders S. Google Bard PaLM update: 540 billion parameters take fight to ChatGPT. Techzine; Apr 2023. https://www.techzine.eu/news/applications/104043/google-bard-palm-update-540-billion-parameters-take-fight-to-chatgpt/. Accessed 29 Jul 2023.

7. Katz D, Bommarito M, Gao S, Arredondo P. GPT-4 passes the bar exam. Social Science Research Network; Mar 2023. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4389233. Accessed 29 Jul 2023.

8. GPT-4. OpenAI; 2023. https://openai.com/research/gpt-4. Accessed 29 Jul 2023.

9. United States Medical Licensing Examination, Step 1 Overview. https://www.usmle.org/step-exams/step-1. Accessed 29 Jul 2023.

10. United States Medical Licensing Examination, 2022 Performance Data. https://www.usmle.org/performance-data. Accessed 29 Jul 2023.

11. Gilson A, Safranek CW, Huang T, et al. How does ChatGPT perform on the United States Medical Licensing Examination? The implications of large language models for medical education and knowledge assessment. JMIR Med Educ. 2023;9:e45312. doi: 10.2196/45312.

12. Kung TH, Cheatham M, Medenilla A, et al. Performance of ChatGPT on USMLE: potential for AI-assisted medical education using large language models. PLOS Digit Health. 2023;2(2):e0000198. doi: 10.1371/journal.pdig.0000198.

13. The smarter way to learn and practice medicine. AMBOSS. https://www.amboss.com. Accessed 29 Jul 2023.

14. United States Medical Licensing Examination, Step 1 Sample Test Questions. https://www.usmle.org/prepare-your-exam/step-1-materials/step-1-sample-test-questions. Accessed 29 Jul 2023.

15. United States Medical Licensing Examination, Examination Results and Scoring. https://www.usmle.org/bulletin-information/scoring-and-score-reporting. Accessed 29 Jul 2023.

16. Ayers JW, Poliak A, Dredze M, et al. Comparing physician and artificial intelligence chatbot responses to patient questions posted to a public social media forum. JAMA Intern Med. 2023;183(6):589–596. doi: 10.1001/jamainternmed.2023.1838.

17. Khan RA, Jawaid M, Khan AR, Sajjad M. ChatGPT - reshaping medical education and clinical management. Pak J Med Sci. 2023;39(2):605–607. doi: 10.12669/pjms.39.2.7653.

18. Lee H. The rise of ChatGPT: exploring its potential in medical education. Anat Sci Educ. 2023. doi: 10.1002/ase.2270.
