Abstract

We introduce a new approach to nonlinear sufficient dimension reduction in cases where both the predictor and the response are distributional data, modeled as members of a metric space. Our key step is to build universal kernels (cc-universal) on the metric spaces, which results in reproducing kernel Hilbert spaces for the predictor and response that are rich enough to characterize the conditional independence that determines sufficient dimension reduction. For univariate distributions, we construct the universal kernel using the Wasserstein distance, while for multivariate distributions, we resort to the sliced Wasserstein distance. The sliced Wasserstein distance ensures that the metric space possesses similar topological properties to the Wasserstein space, while also offering significant computation benefits. Numerical results based on synthetic data show that our method outperforms possible competing methods. The method is also applied to several data sets, including fertility and mortality data and Calgary temperature data.

Keywords: Distributional data, RKHS, Sliced Wasserstein distance, Universal kernel, Wasserstein distance, 62G08, 62H12

1. Introduction

Complex data objects such as random elements in general metric spaces are commonly encountered in modern statistical applications. However, these data objects do not conform to the operation rules of Hilbert spaces and lack important properties such as inner products and orthogonality, making them difficult to analyze using traditional multivariate and functional data analysis methods. An important example of the metric space-valued data objects is the distributional data, which can be modeled as random probability measures that satisfy specific regularity conditions. Recently, there has been an increasing interest in this type of data. Petersen and Müller [43] extended the classical regression to Fréchet regression, making it possible to handle univariate distribution on scalar or vector regression. Fan and Müller [14] extended the Fréchet regression framework to the case of multivariate response distributions. Besides scalar or vector-valued predictors, the relationship between two distributions is also becoming increasingly important. Petersen and Müller [42] proposed the log quantile density (LQD) transformation to transform the densities of these distributions to unconstrained functions in the Hilbert space . Chen et al. [9] further applied function-to-function linear regression to the LQD transformations of distributions and mapped the fitted responses back to the Wasserstein space through the inverse LQD transformation. Chen et al. [8] proposed a distribution-on-distribution regression model by adopting the Wasserstein metric and shows that it works better than the transformation methods in Chen et al. [9]. Recently, Bhattacharjee et al. [4] proposed a global nonlinear Fréchet regression model for random objects via weak conditional expectation. In practical applications, distribution-on-distribution regression has been utilized for analyzing mortality distributions across different countries or regions [20], distributions of fMRI brain imaging signals [42], and distributions of daily temperature and humidity [40], among others.

Distribution-on-distribution regression encounters similar challenges to classical regression, including the need for exploratory data analysis, data visualization, and improved estimation accuracy through data dimension reduction. In classical regression, sufficient dimension reduction (SDR) has proven to be an effective tool for addressing these challenges. To set the stage, we outline the classical sufficient dimension reduction (SDR) framework. Let be a -dimensional random vector in and a random variable in . Linear SDR aims to find a subspace of such that , where is the projection on to with respect to the usual inner product in . As an extension of linear SDR, [28] and [25] propose the general theory of nonlinear sufficient dimension reduction, which seeks a set of nonlinear functions in a Hilbert space such that .

In the last two decades, the SDR framework has undergone constant evolution to adapt to increasingly complex data structures. Researchers have extended SDR to functional data [16, 22, 30, 31], tensorial data [12, 29], and forecasting with large panel data [15, 35, 53] Most recently, Ying and Yu [52], Zhang et al. [54], and Dong and Wu [13] have developed SDR methods for cases where the response takes values in a metric space while the predictor lies in Euclidean space.

Let and be random distributions defined on , with finite -th moments . We do allow and to be random vectors, but our focus will be on the case where they are distributions. Modelling and as random elements in metric spaces and , we seek nonlinear functions defined on such that the random measures and are conditionally independent given . In order to guarantee the theoretical properties of the nonlinear SDR methods and facilitate the estimation procedure, we assume to reside in a reproducing kernel Hilbert space (RKHS). While the nonlinear SDR problem can be formulated in much the same way as that for multivariate and functional data, the main new element in this theory that still requires substantial effort is the construction of positive definite and universal kernels on and . These are needed for constructing unbiased and exhaustive estimators for the dimension reduction problem [27]. We achieve this purpose with specific choices of the metrics of Wasserstein distance and sliced Wasserstein distance: we will show how to construct positive definite and universal kernels and the RKHS generated from them to achieve nonlinear SDR for distributional data.

While acknowledging the recent independent work of Virta et al. [50], who proposed a nonlinear SDR method for metric space-valued data, our work has some novel contributions. First, we focus on distributional data and consider a practical setting where only discrete samples from each distribution are available instead of the distributions themselves, while Virta et al. [50] only illustrated the method with torus data, positive definite matrices, and compositional data. Second, we explicitly construct universal kernels over the space of distributions, which results in an RKHS that is rich enough to characterize the conditional independence. In contrast, Virta et al. [50] only assumed that the RKHS is dense in space but missed verifications.

The rest of the paper is organized as follows. Section 2 defines the general framework of nonlinear sufficient dimension reduction for distributional data. Section 3 shows how to construct RKHS on the space of univariate distributions and multivariate distributions, respectively. Section 4 proposes the generalized sliced inverse regression methods for distribution data. Section 5 establishes the convergence rate of the proposed methods for both the fully observed setting and the discretely observed setting. Simulation results are presented in Section 6 to show the numerical performances of proposed methods. In Section 7, we analyze two real applications to human mortality & fertility data and Calgary extreme temperature data, demonstrating the usefulness of our methods. All proofs are presented in Section 9.

2. Nonlinear SDR for Distributional Data

We consider the setting of distribution-on-distribution regression. Let be a probability space. Let be a subset of and the Borel -field on . Let be the set of Borel probability measures on ) that have finite -th moment and that is dominated by the Lebesgue measure on . We let and be nonempty subsets of equipped with metrics and , respectively. We let and be the Borel -fields generated by the open sets in the metric spaces and . Let be a random element mapping from to , measurable with respect to the product -field . We denote the marginal distributions of and by and , respectively, and the conditional distributions of and by and .

Let be the sub -field in generated by , that is, . Following the terminology in [27], a sub -field of is called a sufficient dimension reduction -field, or simply a sufficient -field, if . In other words, captures all the regression information of on . As shown in Lee et al. [25], if the family of conditional probability measures is dominated by a -finite measure, then the intersection of all sufficient -field is still a sufficient -field. This minimal sufficient -field is called the central -field for versus , denoted by . By definition, the central -field captures all the regression information of on and is the target that we aim to estimate.

Let be a Hilbert space of real-valued functions defined on . We convert estimating the central field into estimating a subspace of . Specifically, we assume that the central -field is generated by a finite set of functions in , which can be expressed as

| (1) |

For any sub--field of , let denote the subspace of spanned by the function such that is -measurable, that is,

| (2) |

We define the central class as following (2). We say that a subspace of is unbiased if it is contained in and consistent if it is equal to . To recover the central class consistently by an extension of Sliced Inverse Regression [32], we need to assume the central -field is complete [25].

Definition 1. A sub -field of is complete if, for each function such that is measurable and almost surely , we have almost surely . We say that is a complete class for versus if is complete -field for versus .

Although our theoretical analysis so far does not require and to be RKHS, using an RKHS provides a concrete framework for establishing an unbiased and consistent estimator. It also builds a connection between the classical linear SDR and nonlinear SDR in the sense that can be expressed as the inner product , where is the reproducing kernel. This inner product is a nonlinear extension of in linear SDR. In the next section, We will describe how to construct RKHS for univariate and multivariate distributions.

3. Construction of RKHS

A common approach to constructing a reproducing kernel is to use a classical radial basis function (such as the Gaussian radial basis kernel) and substitute the Euclidean distance with the distance in the metric space. However, not every metric can be used in such a way to produce positive definite kernels. We show that metric spaces that are of negative type can yield positive definite kernels with form . Moreover, as will be seen in Proposition 3 and the discussion following it, in order to achieve an unbiased and consistent estimation of the central class , we need the kernels for and to be cc-universal (Micchelli et al. 37). For ease of reference, we use the term ”universal” to refer to cc-universal kernels. We select the Wasserstein metric and sliced Wasserstein metric for our work, as they possess the desired properties for constructing universal kernels.

3.1. Wasserstein kernel for univariate distributions

For probability measures and in , the -Wasserstein distance between and is defined as the solution of the Kantorovich transportation problem [49]:

where is the Euclidean metric, and is the space of joint probability measures on with marginals and . When , the -Wasserstein distance has the following explicit quantile representation:

where and denote the quantile functions of and , respectively. The set endowed with the Wasserstein metric is called the Wasserstein metric space and is denoted by . Kolouri et al. [23, Theorem 4] show that Wasserstein space of absolutely continuous univariate distributions can be isometrically embedded in a Hilbert space, and thus the Gaussian RBF kernel is positive definite.

We now turn to universality. Christmann and Steinwart [10, Theorem 3] showed that if is compact and can be continuously embedded in a Hilbert space by a mapping , then for any analytic function whose Taylor series at zero has strictly positive coefficients, the function defines a c-universal kernel on . To accommodate the scenarios of and , we need to go beyond compact metric spaces. For this reason, we use a more general definition of universality that does not require the support of the kernel to be compact, called cc-universality [37, 46, 47]. Let be a positive definite kernel and the RKHS generated by . For any compact set , let be the RKHS generated by . Let be the class of all continuous functions with respect to the topology in restricted on .

Definition 2. [37] We say that is universal(cc-universal) if, for any compact set , any member of , and any , there is an such that .

Let and . The subscripts and here refer to “Gaussian” and “Laplacian”, respectively. [54] showed that both and on a complete and separable metric space that can be isometrically embedded into a Hilbert space are universal. We note that if is separable and complete, then so is [41, Proposition 2.2.8, Theorem 2.2.7]. Therefore, We have the following proposition that guarantees the construction of universal kernels on (possibly non-compact) .

Proposition 1. If is complete, then and are universal kernels on .

By Proposition 1, we construct the Hilbert spaces and as RKHS generated by Gaussian type kernel or Laplacian type kernel . Let be the class of square-integrable functions of under . Let be the set of measurable indicator functions on , that is,

Recall that a measure on is regular if, for any Borel subset and any , there is a compact set and an open set , such that . By Zhang et al. [54, Theorem 1], if is a regular measure, is dense in , and hence dense in , which is the space of simple functions. Since is dense in , is dense in .

3.2. Sliced-Wasserstein kernel for multivariate distributions

For multivariate distributions , the sliced -Wasserstein distance is obtained by computing the average Wasserstein distance of the projected univariate distributions along randomly picked directions. Let and be two measures in , where , . Let be the unit sphere in . For , let be the linear transformation , where is the Euclidean inner-product. Let and be the induced measures by the mapping . The sliced -Wasserstein distance between and is defined by

It can be verified that is indeed a metric. We denote the metric space by and call it the sliced Wasserstein space. It has been shown (for example, Bayraktar and Guo [2]) that the sliced Wasserstein metric is a weaker metric than the Wasserstein metric, that is with , . This relation implies two topological properties of the sliced Wasserstein space that are useful to us, which can be derived from the topological properties of -Wasserstein space established in Ambrosio et al. [1, Proposition 7.1.5], and Panaretos and Zemel [41, Chapter 2.2].

Proposition 2. If is a subset of , then is complete and separable. Furthermore, if is compact, then is compact.

With , [23] show that the square of sliced Wasserstein distance is conditionally negative definite and hence that the Gaussian RBF kernel is a positive definite kernel. The next lemma shows that the Gaussian RBF kernel and Laplacian RBF kernel based on the sliced Wasserstein distance are, in fact, universal kernels.

Lemma 1. If is complete, then both and are universal kernels on . Furthermore, if and are regular measures, and are dense in and , respectively.

It’s worth mentioning that in a recent study, Meunier et al. [36] demonstrated the universality of the Sliced Wasserstein kernel. However, our findings extend beyond the scope of Meunier et al. [36]’s work. Specifically, our results apply to scenarios where is non-compact, such as , by introducing cc-universality as defined in Definition 2.

4. Generalized Sliced Inverse Regression for Distributional Data

This section extends the generalized sliced inverse regression (GSIR) [25] for distributional data. We call this extension to univariate distribution settings as Wasserstein GSIR, or W-GSIR, and to multivariate distribution settings as Sliced-Wasserstein GSIR, or SW-GSIR.

4.1. Distributional GSIR and the role of universal kernel

To model the nonlinear relationships between random elements, we introduce the covariance operator in the RKHS, a concept similar to the constructions in [19, 25], [27, Chapter 12.2] and [30]. Let and be two arbitrary Hilbert spaces, and let denote the class of bounded linear operators from to . If , we use to denote . For any operator , we use to denote the adjoint operator of , to denote the kernel of to denote the range of , and to denote the closure of the range of . Given two members and of , the tensor product is the operator on such that for all . It is important to note that the adjoint operator of is .

We define , the mean element of in , as the unique element in such that

| (3) |

for all . Define the bounded linear operator , the second-moment operator of in , as the unique element in such that, for all and in ,

| (4) |

We write , . For Gaussian RBF kernel and Laplacian RBF kernel based on Wasserstein distance or sliced-Wasserstein distance, is bounded and is finite. By Cauchy-Schwartz inequality and Jensen’s inequality, it is guaranteed that items on the right-hand side of (3) and (4) are well-defined. The existence and uniqueness of and is guaranteed by Riesz’s representation theorem. We then define the covariance operator as . Then, for all , , we have . Similarly, we can define , , and . By definition, both and are self-adjoint, and .

To define the regression operators and , we make the following assumptions. Similar regularity conditions are assumed in [25, 27, 28].

Assumption 1.

and .

and .

The operators and are compact.

Condition (i) amounts to resetting the domains of and to and , respectively. This is motivated by the fact that members of and are constants almost surely, which are irrelevant when we consider independence. Since and are self-adjoint operators, this assumption is equivalent to resetting to and to , respectively. Condition (i) also implies that the mappings and are invertible, though, as we will see, and are unbounded operators.

Condition (ii) guarantees that and , which is necessary to define the regression operators and . By Proposition 12.5 of [27], and . Thus, the above assumption is not very strong.

As interpreted in Section 13.1 of [27], Condition (iii) in Assumption 1 is akin to a smoothness condition. Even though the inverse mappings and are well defined, since and are Hilbert Schmidt operators ([18]), these inverses are unbounded operators. However, these unbounded operators never appear by themselves but are always accompanied by operators multiplied from the right. Condition (iii) assumes that the composite operators and are compact. This requires, for example, that must send all incoming functions into the low-frequency range of the eigenspaces of with relatively large eigenvalues. That is, and are smooth in the sense that their outputs are low-frequency components of or .

With Assumption 1 and universal kernels and , we then have that the range of the regression operator is contained in central class . Furthermore, if the central class is also complete, it can be fully covered by the range of . The next proposition adapts the main result of Chapter 13 of [27] to the current context.

Proposition 3. If Assumption 1 holds, is dense in and is dense in , then we have . If, furthermore, is complete, then we have .

The universal kernels and proposed in Section 3 guarantees that is dense in and , respectively.

4.2. Estimation for distributional GSIR

By Proposition 3, for any invertible operator , we have . Two common choices are and . When we take , the procedure is a nonlinear parallel of SIR in the sense that we replace the inner product in the Euclidean space by the inner product in the RKHS . For easy reference, we refer to the method using as W-GSIR1 or SW-GSIR1 and as W-GSIR2 or SW-GSIR2. To estimate the space , we successively solve the following generalized eigenvalue problem:

where are the solutions to this constrained optimization problem in the first steps.

At the sample level, we estimate , , and by replacing the expectations with sample moments whenever possible. For example, suppose we are given i.i.d. sample of . We estimate by

The sample estimates and for and are similarly defined. The subspace and are spanned by the sets , and , respectively. Let denote the matrix whose -th entry is respectively, and let denote the projection matrix . For two Hilbert spaces with spanning systems and , and a linear operator , we use the notation to represent the coordinate representation of relative to spanning systems and . We then have the following coordinate representations of covariance operators:

where and . The details are referred to Section 12.4 of [27].

When , the generalized eigenvalue problem becomes

Let . To avoid overfitting, we solve this equation for via Tychonoff regularization, that is, , where is a tuning constant. The problem is then transformed into finding eigenvector of the following matrix

and then set , . In practice, we use , where is the maximum eigenvalue of and is a tuning parameter.

For the second choice , we also use the regularized inverse , leading to the following generalized eigenvalue problem:

To solve this problem, we first compute the eigenvectors of the matrix

and then set for .

Choice of tuning parameters:

We use the general cross validation criterion [21] to determine the tuning constant :

The numerator of this criterion is the prediction error, and the denominator is to control the degree of overfitting. Similarly, the GCV criterion for is defined as

We minimize the criteria over grid to find the optimal tuning constants. We choose the parameters and in the reproducing kernels and as the fixed quantities and , where , and metric is for univariate distributional data and for multivariate distributional data.

Order Determination:

To determine the dimension in (1), we use the BIC type criterion in [28] and [30]. Let , where ’s are the eigenvalues of the matrix and is taken to be 2 when and 4 when . Then we estimate by

Recently developed order-determination methods, such as the ladle estimator [34], can also be directly used to estimate .

5. Asymptotic Analysis

In this section, we establish the consistency and convergence rates of W-GSIR and SW-GSIR. We focus on the analysis of Type-I GSIR, where the operator is chosen as the identity map . The techniques we use are also applicable to the analysis of Type-II GSIR. To simplify the exposition, we define and .

5.1. Convergence rate for fully observed distribution

If we assume that the data are fully observed, we can establish the consistency and convergence rates of W-GSIR and SW-GSIR without fundamental differences from [30]. To make the paper self-contained, we present the results here without proof.

Proposition 4. Suppose far some linear operator where . Also, suppose . Then

If is bounded, then .

If is Hilbert-Schmidt, then .

The condition is a smoothness condition, which implies the range space of be sufficiently focused on the eigenspaces of the large eigenvalues of . The parameter characterizes the degree of ”smoothness” in the relation between and , with a larger indicating a stronger smoothness relation.

By a perturbation theory result in Lemma 5.2 of Koltchinskii and Giné [24], the eigenspaces of converge to those of at the same rate if the nonzero eigenvalues of are distinct. Therefore, as a corollary of Proposition 4, the W-GSIR and SW-GSIR estimators are consistent with the same convergence rates.

5.2. Convergence rate for discretely observed distribution

In practice, additional challenges arise when the distributions are not fully observed. Instead, we observe i.i.d. samples for each , where , which is called the discretely observed scenario. Suppose we observe , where and are independent samples from and , respectively. Let , be the empirical measures , where is the Dirac measure at . Then we estimate and by and , respectively. For the convenience of analysis, we assume the sample sizes are the same, that is, . It is important to note that there are two layers of randomness in this situation: the first generates independent samples of distributions for , and the second generates independent samples given each pair of distributions .

To guarantee the consistency of W-GSIR or SW-GSIR, we need to quantify the discrepancy between the estimated and true distributions by the following assumption.

Assumption 2. For , and , where as .

Let be or for and be the empirical measure of based on i.i.d samples. The convergence rate of empirical measures in Wasserstein distance on Euclidean spaces has been studied in several works, including [7, 11, 17, 26, 51]. When is compact, Fournier and Guillin [17] showed that . However, when is unbounded, such as , we need concentration assumptions or moment assumptions on the measure to establish the convergence rate. Let be the -th moment of . If for some , the result of [17] implies that . If , then the term is dominated by and can be removed. If is a log-concave measure, then Bobkov and Ledoux [6] showed a sharper rate that .

The convergence rate of empirical measures in sliced Wasserstein distance has been investigated by Lin et al. [33], Niles-Weed and Rigollet [39], and Nietert et al. [38]. Lin et al. [33]. When is compact, the result of Lin et al. [33] indicates that . When and for some , Lin et al. [33] established the rate . A sharper rate is shown in Nietert et al. [38] under the log-concave assumption on .

To ensure notation consistency, we define

We note that are independent but not necessarily identically distributed. Despite this, we still write the sample average as . Similarly, we define and as the sample covariance operators based on the estimated distribution and . Under Assumption 2, we have the following lemma showing the convergence rates of covariance operators.

Lemma 2. Under Assumption 2, if the kernel is Lipschitz continuous, that is, , for some , then , and are Hilbert-Schmidt operators, and we have , , and .

Based on Lemma 2, we establish the convergence rate of W-GSIR in the following theorem.

Theorem 1. Suppose for some linear operator , where . Suppose , then

If is bounded, then .

If is Hilbert-Schmidt, then .

The proof is provided in Section 9. The same convergence rate can be established for SW-GSIR.

6. Simulation

In this section, we evaluate the numerical performances of W-GSIR and SW-GSIR. We consider two scenarios: univariate distribution on univariate distribution regression and multivariate distribution on multivariate distribution regression. In Section 6.4, we compare the performance of W-GSIR and SW-GSIR with the result using functional-GSIR [30]. The code to reproduce the simulation results can be found at https://github.com/bideliunian/SDR4D2DReg.

6.1. Computational details

We use the Gaussian RBF kernel to generate the RKHS. We consider the discretely observed situation described in Section 5.2. Specifically, let be the empirical distributions for . When is univariate distributions, for , , we estimate and by

respectively, where are the -th order statistics of .

When is multivariate distribution supported on , we estimate the sliced Wasserstein distance using a standard Monte Carlo method, that is,

where are i.i.d. samples drawn from the uniform distribution on . The number of samples controls the approximation error: a larger gives a more accurate approximation but increases the computation cost. In our simulation settings, we set .

We consider two measures to evaluate the difference between estimated and true predictors. The first one is the RV Coefficient of Multivariate Rank (RVMR) defined below, which is a generalization of Spearman’s correlation in the multivariate case. For two samples of random vectors and , let , be their multivariate ranks, that is,

Then the RVMR between and is defined as the RV coefficient between and :

The second one is the distance correlation [48], a well-known measure of dependence between two random vectors of arbitrary dimension.

6.2. Univariate distribution-on-distribution regression

We generate normal distribution with mean and variance parameters being random variables dependent on , that is,

| (5) |

where and are random variables generated according to the following models:

Model I-1 : ; ;

Model I-2 : ; ;

Model I-3 : ; ;

Model I-4 : ; .

We let and and generate discrete observations from distributional predictors by where and . We note that the Hellinger distance between two Beta distributions and can be represented explicitly as

where is the Beta function.

We compute the distances and by the -distance between the quantile functions. We set and generate samples . We use half of them to train the nonlinear sufficient predictors via W-GSIR, and then evaluate the RVMR and distance correlation between the estimated and true predictors using the rest of the data set. The tuning parameters and the dimensions are determined by the methods described in Section 4.2. The experiment is repeated 100 times, and averages and standard errors (in parentheses) of the RVMR and Dcor are summarized in Table 1. The following are the identified true predictors for each model: Model I-1 uses , Model I-2 uses , Model I-3 uses , and Model I-4 uses and .

Table 1:

RVMR and Distance Correlation between the estimated predictors and the true predictors of models in Section 6.2, with their Monte Carlo standard errors in parentheses.

| Models | W-GSIR1 | W-GSIR2 | |||

|---|---|---|---|---|---|

|

| |||||

| 50 | 100 | 50 | 100 | ||

| RVMR | |||||

|

|

|||||

| I-1 | 100 | 0.791 (0.128) | 0.839 (0.115) | 0.776 (0.124) | 0.812 (0.159) |

| 200 | 0.832 (0.091) | 0.864 (0.087) | 0.808 (0.114) | 0.842 (0.129) | |

| I-2 | 100 | 0.597 (0.187) | 0.607 (0.206) | 0.555 (0.236) | 0.548 (0.235) |

| 200 | 0.694 (0.141) | 0.681 (0.172) | 0.709 (0.177) | 0.688 (0.190) | |

| I-3 | 100 | 0.846 (0.037) | 0.880 (0.037) | 0.836 (0.045) | 0.859 (0.049) |

| 200 | 0.864 (0.021) | 0.896 (0.025) | 0.797 (0.088) | 0.696 (0.046) | |

| I-4 | 100 | 0.558 (0.242) | 0.652 (0.253) | 0.729 (0.196) | 0.790 (0.215) |

| 200 | 0.643 (0.221) | 0.732 (0.183) | 0.767 (0.169) | 0.847 (0.145) | |

| Dcor | |||||

|

|

|||||

| I-1 | 100 | 0.958 (0.024) | 0.969 (0.022) | 0.952 (0.029) | 0.964 (0.034) |

| 200 | 0.967 (0.011) | 0.974 (0.013) | 0.963 (0.017) | 0.970 (0.020) | |

| I-2 | 100 | 0.932 (0.037) | 0.935 (0.041) | 0.896 (0.071) | 0.898 (0.066) |

| 200 | 0.952 (0.026) | 0.948 (0.032) | 0.934 (0.054) | 0.932 (0.048) | |

| I-3 | 100 | 0.971 (0.008) | 0.978 (0.005) | 0.968 (0.010) | 0.974 (0.007) |

| 200 | 0.974 (0.004) | 0.980 (0.004) | 0.970 (0.007) | 0.971 (0.008) | |

| I-4 | 100 | 0.921 (0.042) | 0.936 (0.042) | 0.937 (0.036) | 0.947 (0.038) |

| 200 | 0.937 (0.037) | 0.950 (0.027) | 0.951 (0.023) | 0.962 (0.025) | |

Fig 1 (a) displays a scatter plot of the true predictor versus the first estimated sufficient predictor for Model I-1. Fig 1 (b) and (c) show the scatter plots of the first two sufficient predictors for Model I-2, with the color indicating the values of the true predictor. These figures demonstrate the method’s ability to capture nonlinear patterns among predictor random elements.

Fig. 1:

Visualization of W-GSIR1 estimator for (a) Model I-1, and (b)(c) Model I-2, with and . The sufficient predictors are computed via W-GSIR1

6.3. Multivariate distribution-on-distribution regression

We now consider the scenario where both and are two-dimensional random Gaussian distributions. We generate , where and are randomly generated according to the following models:

II-1: , .

II-2: and , where , , and .

II-3: and , where , , and .

II-4: and , where , , and .

where and are two fixed measures defined by

and is the truncated gamma distribution on range with shape parameter and rate parameter . We generate discrete observations of , by where and . When computing and , we use the following explicit representations of the Wasserstein distance between two Gaussian distributions:

The following are the identified true predictors for each model: Model II-1 uses , Models II-2 and II-3 uses , Model II-4 uses .

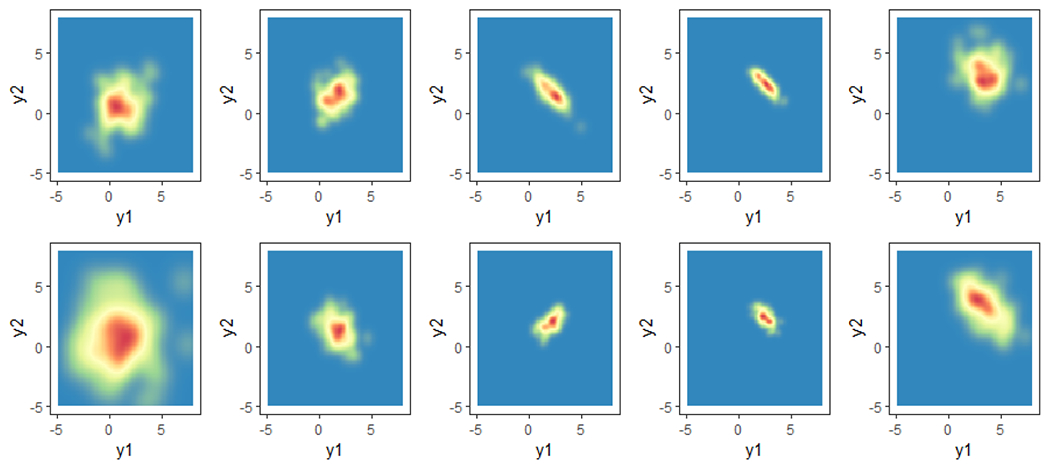

Using the true dimensions and the same choices for , , and the tuning parameters, we repeat the experiment 100 times and summarize the average and standard errors of RVMR and distance correlation between the estimated and true predictors in Table 2. In Fig. 2, we plot the 2-dimensional response densities associated with the 10%, 30%, 50%, 70%, and 90% quantiles of estimated predictor (first row) the true predictor (second row) for Model II-2. Comparing the plots, we can see that the two-dimensional response distributions show a similar variation pattern, which indicates the method successfully captured the nonlinear predictor in the responses. We also see that the first estimated sufficient predictor captures both the location and scale of the response distribution. With the increase of the estimated sufficient predictor, the location of the response distribution moves slightly rightward and upward, while the variance of the response distribution decreases at first and then increases.

Table 2:

RVMR and Distance Correlation between the estimated predictors and the true predictors of models in Section 6.3, with their Monte Carlo standard errors in parentheses.

| Models | SWGSIR1 | SWGSIR2 | |||

|---|---|---|---|---|---|

|

| |||||

| 50 | 100 | 50 | 100 | ||

| RVMR | |||||

|

|

|||||

| II-1 | 100 | 0.948 (0.063) | 0.957 (0.049) | 0.915 (0.130) | 0.910 (0.150) |

| 200 | 0.958 (0.041) | 0.970 (0.022) | 0.921 (0.087) | 0.934 (0.084) | |

| II-2 | 100 | 0.784 (0.036) | 0.791 (0.033) | 0.820 (0.038) | 0.822 (0.036) |

| 200 | 0.783 (0.023) | 0.791 (0.023) | 0.834 (0.033) | 0.824 (0.034) | |

| II-3 | 100 | 0.744 (0.061) | 0.755 (0.059) | 0.806 (0.067) | 0.812 (0.065) |

| 200 | 0.747 (0.040) | 0.753 (0.043) | 0.835 (0.069) | 0.841 (0.059) | |

| II-4 | 100 | 0.499 (0.166) | 0.500 (0.144) | 0.570 (0.170) | 0.567 (0.155) |

| 200 | 0.512 (0.156) | 0.477 (0.152) | 0.532 (0.157) | 0.501 (0.159) | |

| Dcor | |||||

|

|

|||||

| II-1 | 100 | 0.962 (0.024) | 0.963 (0.025) | 0.977 (0.018) | 0.977 (0.021) |

| 200 | 0.963 (0.017) | 0.964 (0.018) | 0.973 (0.023) | 0.970 (0.025) | |

| II-2 | 100 | 0.967 (0.013) | 0.967 (0.013) | 0.973 (0.010) | 0.975 (0.010) |

| 200 | 0.965 (0.011) | 0.966 (0.011) | 0.975 (0.008) | 0.975 (0.010) | |

| II-3 | 100 | 0.980 (0.009) | 0.981 (0.008) | 0.983 (0.007) | 0.984 (0.006) |

| 200 | 0.979 (0.007) | 0.979 (0.009) | 0.982 (0.007) | 0.983 (0.008) | |

| II-4 | 100 | 0.889 (0.031) | 0.886 (0.036) | 0.886 (0.033) | 0.892 (0.030) |

| 200 | 0.893 (0.033) | 0.886 (0.033) | 0.887 (0.034) | 0.889 (0.037) | |

Fig. 2:

Densities associated with the 10%, 30%, 50%, 70%, and 90% quantiles (left to right) of estimated predictor (first row) the true predictor (second row) for Model II-2 .

6.4. Comparison with functional-GSIR

Next, we compare the performance of W-GSIR with two methods using the GSIR framework but replacing Wasserstein distance by L1 or L2 distances. We call them L1-GSIR and L2-GSIR, respectively. Note that L2-GSIR is the same as functional-GSIR (f-GSIR) proposed in Li and Song [30]. Theoretically, L2-GSIR is an inadequate estimate since an L2 function need not be a density and vice versa. Nevertheless, we still naively implement L2-GSIR, treating density curves as L2 functions. To make a fair comparison, we first use the Gaussian kernel smoother to estimate the densities based on discrete observations and then evaluate the distances by numerical integration. For -GSIR , we take the Gaussian type kernel , with the same choice of tuning parameters as described in Subsection 4.2. We use , , and repeat the experiment 100 times with . The results are summarized in Table 3. We see that W-GSIR provides more accurate estimation than both L1-GSIR and L2-GSIR.

Table 3:

RVMR and Distance Correlation between the estimated predictors and the true predictors of models in Section 6.2, with their Monte Carlo standard errors in parentheses, computed using -GSIR, -GSIR, and W-GSIR.

| Models | -GSIR1 | -GSIR1 | W-GSIR1 |

|---|---|---|---|

|

| |||

| RVMR | |||

|

| |||

| I-1 | 0.258 (0.233) | 0.356 (0.276) | 0.839 (0.115) |

| I-2 | 0.322 (0.236) | 0.433 (0.244) | 0.607 (0.206) |

| I-3 | 0.307 (0.242) | 0.359 (0.205) | 0.880 (0.037) |

| I-4 | 0.313 (0.252) | 0.441 (0.278) | 0.652 (0.253) |

| Dcor | |||

|

|

|||

| I-1 | 0.773 (0.129) | 0.731 (0.171) | 0.969 (0.022) |

| I-2 | 0.778 (0.173) | 0.690 (0.203) | 0.935 (0.041) |

| I-3 | 0.779 (0.169) | 0.688 (0.196) | 0.978 (0.005) |

| I-4 | 0.779 (0.129) | 0.740 (0.176) | 0.936 (0.042) |

7. Applications

7.1. Application to human mortality data

In this application, we explore the relationship between the distribution of age at death and the distribution of the mother’s age at birth. We obtained our data from the UN World Population Prospects 2019 Databases (https://population.un.org), specifically focusing on the years 2015-2020. For each country, we compiled the number of deaths every five years from ages 0-100 and the number of births categorized by mother’s age every five years from ages 15-50. We represented this data as histograms with bin widths equal to 5 years. To obtain smooth probability density functions for each country, we used the R package ‘frechet’ to perform smoothing. We then calculated the relative Wasserstein distance between the predictor and response densities. The predictor and response densities are visualized in Fig. 3.

Fig. 3:

Density of (a) age at death and (b) mother’s age at birth for 194 countries, obtained using data from the UN World Population Prospects 2019 Databases (https://population.un.org)

We apply the proposed W-GSIR algorithm to the fertility and mortality data. The dimension of the central class is determined as one by the BIC-type procedure described in Subsection 4.2. We plot the age-at-death distributions versus the nonlinear sufficient predictors obtained by W-GSIR2 in Fig. 4. In Fig. 5, we present the summary statistics of the age-at-death distributions plotted against the sufficient predictors.

Fig. 4:

Densities of age at death for 194 countries in random order in (a) and (c), and versus the first nonlinear sufficient predictors obtained by W-GSIR2 in (b) and (d).

Fig. 5:

Summary statistics (mean, mode, standard deviation, and skewness) of the mortality distributions for 194 countries versus nonlinear sufficient predictors obtained by W-GSIR2.

Upon examining these plots, we obtained the following insights. The first nonlinear sufficient predictor effectively captures the location and variation information of the mortality distributions. Specifically, as the first sufficient predictor increases, the means of the mortality distributions decrease while the standard deviations increase. This suggests that the population’s death age tends to concentrate between 70 and 80 for large sufficient predictor values. Additionally, for densities with small sufficient predictors, there is an uptick at the ends of the 0-age side, which indicates higher infant mortality rates among the countries with such densities.

7.2. Application to Calgary temperature data

In this application, we are interested in the relationship between the extreme daily temperatures in spring (Mar, Apr, and May) and summer (Jun, Jul, and Aug) in Calgary, Alberta. We obtained the dataset from https://calgary.weatherstats.ca/, which contains the minimum and maximum temperatures for each day from 1884 to 2020. These data were previously analyzed in Fan and Müller [14]. We focused on the joint distribution of the minimum daily temperature and the difference between the maximum and minimum daily temperatures, which ensures that the distributions have common support. Each pair of daily values was treated as one observation from a two-dimensional distribution, resulting in one realization of the joint distribution for spring and one for summer each year. We then employed the spring extreme temperature distribution to predict the summer extreme temperature distribution. The dataset had observations, with discrete values for each joint distribution. We utilized the SW-GSIR method on the data, taking 50 random projections with . The sufficient dimension was determined as 2 using a BIC-type procedure. We illustrated the response summer extreme temperature distributions associated with the five percentiles of the first estimated sufficient predictors in Fig. 6. It is observed from Fig. 6 that as the estimated sufficient predictor value increases, the minimum daily temperature for summer rises slightly while the daily temperature range decreases.

Fig. 6:

Joint distribution of temperature range and minimum temperature in summer associated with the 10%, 30%, 50%, 70%, and 90% quantiles (from left to right) of SWGSIR2 predictor.

8. Discussion

The paper introduces a framework of nonlinear sufficient dimension reduction for distribution-on-distribution regression. The key strength of this paper is its ability to handle distributional data without linear structure. After explicitly building the universal kernels on the space of distributions, the proposed SDR method effectively reduces the complexity of the distributional predictors while retaining essential information from them.

Several related open problems persist in this topic. Firstly, a more systematic approach to selecting the kernel becomes essential, particularly when multiple universal kernels are available. Secondly, while the paper offers an adaptive method for choosing the bandwidth of the universal kernel in Section 4, the theoretical analysis of this bandwidth selection remains a potential area for future research. Additionally, more appropriate methods for determining the order in nonlinear SDR need to be developed, alongside establishing corresponding consistency results.

9. Technical Proofs

The section contains essential proof details to make the paper self-contained.

Geometry of Wasserstein space

We present some basic results that characterize when (i.e., the distributions involved are univariate). Their proofs can be found, for example, in [1] and [5]. In this case, is a metric space with a formal Riemannian structure [1]. Let be a reference measure with a continuous . The tangent space at is

where, for a set , denotes the -closure of , and id is the identity map. The exponential map from to is defined by , where the right-hand side is the measure on induced by the mapping mapping . The logarithmic map from to is defined by . It is known that the exponential map restricted to the image of log map, denoted as , is an isometric homeomorphism with inverse [5]. Therefore, is a continuous injection from to . This embedding guarantees that we can replace the Euclidean distance by the -metric in a radial basis kernel to construct a positive definite kernel.

Proof of Proposition 2: Recall that is the space of joint probability measures on with marginals and . Let be the mapping from to defined by . We first show that, if , then . This is true because, for any Borel set , we have

and similarly . Hence, for any , we have

where the last inequality is from the Cauchy-Schwartz inequality. Therefore,

Integrate the left-hand side with respect to and obtain . Therefore, the distance is a weaker metric than distance, which implies every open set in is open in . In other words, has a coarser topology than . Since is separable, so is [1, Remark 7.1.7]. Therefore, a countable dense subset of is also a countable dense subset of , implying is separable. Furthermore, if is a compact set in , then is compact [1, Proposition 7.1.5], implying is compact. This completes the proof of Proposition 2.

Proof of Lemma 1: By Theorem 3.2.2 of [3], the kernel is positive definite for all if and only if is conditionally negative definite. That is, for any with , and , . Kolouri et al. [23, Theorem 5] showed the conditional negativity of the sliced Wasserstein distance, which is implied by the negative type of the Wasserstein distance. By [44, 45], a metric is of negative type is equivalent to the statement that there is a Hilbert space and a map such that , , . By Proposition 2, is a complete and separable space. Then by the construction of the Hilbert space, is complete and separable. Therefore, there exists a continuous mapping from metric space to a complete and separable Hilbert space . Then by Zhang et al. [54, Theorem 1], the Gaussian type kernel is universal. Hence, and are dense in and , respectively. Same proof applies to the Laplacian-type kernel . This completes the proof of Lemma 1. □

Proof of Lemma 2. We will only show the details of the proof for the convergence rate of . By the triangular inequality,

where

By Lemma 5 of [18], under the assumption that and , we have

| (S.1) |

Now, we derive a convergence rate for . For simplicity, let , , , and . Then

| (S.2) |

Consider the expectation of the first term on the right-hand side. Here, the expectation involves two layers of randomness: that in and that in . Taking expectation with respect to and then , we have

Evoking the Lipschitz continuity condition on , we have

By Assumption 2, for . We then have for . Similarly, we have for . Therefore,

| (S.3) |

For the expectation of the second term on the right-hand side of equation (S.2), we have

| (S.4) |

Combine result (S.1)(S.3) and (S.4), we have

Then by Chebyshev’s inequality, we have

as desired. This completes the proof of Lemma 2.

Proof of Theorem 1. Let

Then the element of interest can be written as

Thus, we have

Since both and are compact operators, it suffices to show that

where . Writing as

we obtain

| (S.5) |

For the first term on the right-hand side, we have

| (S.6) |

where the last inequality follows from Lemma 2. For the second term on the right-hand side of (S.5), we write it as

Thus, we have

By the above derivations, we have and . Also, we have

and by Assumption 1. Therefore, we have

| (S.7) |

Finally, letting and rewriting the third term on the right-hand side of (S.5) as

we see that

| (S.8) |

Combining (S.6), (S.7), and (S.8), we prove the first assertion of the theorem.

The second assertion can then be proved by following roughly the same path and using the following facts:

- if is a bounded operator and is Hilbert-Schmidt operator and , then is a Hilbert Schmidt operator with

- if is Hilbert-Schmidt then so is and

Using the same decomposition as (S.5), we have

| (S.9) |

For the first term on the right-hand side of (S.9):

| (S.10) |

For the second term on the right-hand side of (S.9):

| (S.11) |

For the third term on the right-hand side of (S.9):

| (S.12) |

Combining the results (S.10), (S.11), and (S.12), we have

This completes the proof of Theorem 1.

Acknowledgments

We thank the Editor, Associate Editor and referees for their helpful comments. This research is partly supported by the NIH grant 1R01GM152812 and the NSF grants DMS-1953189, CCF-2007823 and DMS-2210775.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- [1].Ambrosio L, Gigli N, Savaré G, Gradient flows with metric and differentiable structures, and applications to the wasserstein space, Atti della Accademia Nazionale dei Lincei. Classe di Scienze Fisiche, Matematiche e Naturali. Rendiconti Lincei. Matematica e Applicazioni 15 (2004) 327–343. [Google Scholar]

- [2].Bayraktar E, Guo G, Strong equivalence between metrics of Wasserstein type, Electronic Communications in Probability 26 (2021) 1–13. [Google Scholar]

- [3].Berg C, Christensen JPR, Ressel P, Harmonic Analysis on Semigroups: Theory of Positive Definite and Related Functions, Springer, 1984. [Google Scholar]

- [4].Bhattacharjee S, Li B, Xue L, Nonlinear global Fréchet regression for random objects via weak conditional expectation, arXiv preprint arXiv:2310.07817 (2023). [Google Scholar]

- [5].Bigot J, Gouet R, Klein T, López A, Geodesic pca in the wasserstein space by convex pca, in: Annales de l’Institut Henri Poincaré, Probabilités et Statistiques, volume 53, Institut Henri Poincaré, pp. 1–26. [Google Scholar]

- [6].Bobkov S, Ledoux M, One-Dimensional Empirical Measures, Order Statistics, and Kantorovich Transport Distances, American Mathematical Society, 2019. [Google Scholar]

- [7].Boissard E, Le Gouic T, On the mean speed of convergence of empirical and occupation measures in Wasserstein distance, Annales de l’IHP Probabilités et Statistiques 50 (2014) 539–563. [Google Scholar]

- [8].Chen Y, Lin Z, Müller H-G, Wasserstein regression, Journal of the American Statistical Association, in press (2021) 1–14.35757777 [Google Scholar]

- [9].Chen Z, Bao Y, Li H, Spencer BF Jr, Lqd-rkhs-based distribution-to-distribution regression methodology for restoring the probability distributions of missing shm data, Mechanical Systems and Signal Processing 121 (2019) 655–674. [Google Scholar]

- [10].Christmann A, Steinwart I, Universal kernels on non-standard input spaces, in: in Advances in Neural Information Processing Systems, pp. 406–414. [Google Scholar]

- [11].Dereich S, Scheutzow M, Schottstedt R, Constructive quantization: Approximation by empirical measures, Annales de l’IHP Probabilités et Statistiques 49 (2013) 1183–1203. [Google Scholar]

- [12].Ding S, Cook RD, Tensor sliced inverse regression, Journal of Multivariate Analysis 133 (2015) 216–231. [Google Scholar]

- [13].Dong Y, Wu Y, Fréchet kernel sliced inverse regression, Journal of Multivariate Analysis 191 (2022) 105032 [Google Scholar]

- [14].Fan J, Müller H-G, Conditional wasserstein barycenters and interpolation/extrapolation of distributions, arXiv preprint arXiv:2107.09218 (2021). [Google Scholar]

- [15].Fan J, Xue L, Yao J, Sufficient forecasting using factor models, Journal of Econometrics 201 (2017) 292–306. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [16].Ferré L, Yao A-F, Functional sliced inverse regression analysis, Statistics 37 (2003) 475–488. [Google Scholar]

- [17].Fournier N, Guillin A, On the rate of convergence in wasserstein distance of the empirical measure, Probability Theory and Related Fields 162 (2015) 707–738. [Google Scholar]

- [18].Fukumizu K, Bach FR, Gretton A, Statistical consistency of kernel canonical correlation analysis., Journal of Machine Learning Research 8 (2007) 361–383. [Google Scholar]

- [19].Fukumizu K, Bach FR, Jordan MI, Dimensionality reduction for supervised learning with reproducing kernel hilbert spaces, Journal of Machine Learning Research 5 (2004) 73–99 [Google Scholar]

- [20].Ghodrati L, Panaretos VM, Distribution-on-distribution regression via optimal transport maps, Biometrika 109 (2022) 957–974. [Google Scholar]

- [21].Golub GH, Heath M, Wahba G, Generalized cross-validation as a method for choosing a good ridge parameter, Technometrics 21 (1979) 215–223. [Google Scholar]

- [22].Hsing T, Ren H, An rkhs formulation of the inverse regression dimension-reduction problem, The Annals of Statistics 37 (2009) 726–755. [Google Scholar]

- [23].Kolouri S, Zou Y, Rohde GK, Sliced wasserstein kernels for probability distributions, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5258–5267. [Google Scholar]

- [24].Koltchinskii V, Giné E, Random matrix approximation of spectra of integral operators, Bernoulli 6 (2000) 113–167. [Google Scholar]

- [25].Lee K-Y, Li B, Chiaromonte F, A general theory for nonlinear sufficient dimension reduction: Formulation and estimation, The Annals of Statistics 41 (2013) 221–249. [Google Scholar]

- [26].Lei J, Convergence and concentration of empirical measures under Wasserstein distance in unbounded functional spaces, Bernoulli 26 (2020) 767–798. [Google Scholar]

- [27].Li B, Sufficient Dimension Reduction: Methods and Applications with R, CRC Press, 2018. [Google Scholar]

- [28].Li B, Artemiou A, Li L, Principal support vector machines for linear and nonlinear sufficient dimension reduction, The Annals of Statistics 39 (2011) 3182–3210. [Google Scholar]

- [29].Li B, Kim MK, Altman N, On dimension folding of matrix-or array-valued statistical objects, The Annals of Statistics 38 (2010) 1094–1121. [Google Scholar]

- [30].Li B, Song J, Nonlinear sufficient dimension reduction for functional data, The Annals of Statistics 45 (2017) 1059–1095. [Google Scholar]

- [31].Li B, Song J, Dimension reduction for functional data based on weak conditional moments, The Annals of Statistics 50 (2022) 107–128. [Google Scholar]

- [32].Li K-C, Sliced inverse regression for dimension reduction, Journal of the American Statistical Association 86 (1991) 316–327. [Google Scholar]

- [33].Lin T, Fan C, Ho N, Cuturi M, Jordan MI, Projection robust wasserstein distance and riemannian optimization, arXiv preprint arXiv:2006.07458 (2020). [Google Scholar]

- [34].Luo W, Li B, Combining eigenvalues and variation of eigenvectors for order determination, Biometrika 103 (2016) 875–887. [Google Scholar]

- [35].Luo W, Xue L, Yao J, Yu X, Inverse moment methods for sufficient forecasting using high-dimensional predictors, Biometrika 109 (2022) 473–487. [Google Scholar]

- [36].Meunier D, Pontil M, Ciliberto C, Distribution regression with sliced wasserstein kernels, in: International Conference on Machine Learning, PMLR, pp. 15501–15523. [Google Scholar]

- [37].Micchelli CA, Xu Y, Zhang H, Universal kernels., Journal of Machine Learning Research 7 (2006). [Google Scholar]

- [38].Nietert S, Goldfeld Z, Sadhu R, Kato K, Statistical, robustness, and computational guarantees for sliced Wasserstein distances, Advances in Neural Information Processing Systems 35 (2022) 28179–28193. [Google Scholar]

- [39].Niles-Weed J, Rigollet P, Estimation of wasserstein distances in the spiked transport model, Bernoulli 28 (2022) 2663–2688. [Google Scholar]

- [40].Okano R, Imaizumi M, Distribution-on-distribution regression with wasserstein metric: Multivariate gaussian case, arXiv preprint arXiv:2307.06137 (2023). [Google Scholar]

- [41].Panaretos V, Zemel Y, An Invitation to Statistics in Wasserstein Space, Springer International Publishing, 2020. [Google Scholar]

- [42].Petersen A, Müller H-G, Functional data analysis for density functions by transformation to a hilbert space, The Annals of Statistics 44 (2016) 183–218. [Google Scholar]

- [43].Petersen A, Müller H-G, Fréchet regression for random objects with euclidean predictors, The Annals of Statistics 47 (2019) 691–719. [Google Scholar]

- [44].Schoenberg IJ, On certain metric spaces arising from euclidean spaces by a change of metric and their imbedding in hilbert space, The Annals of Mathematics 38 (1937) 787–793 [Google Scholar]

- [45].Schoenberg IJ, Metric spaces and positive definite functions, Transactions of the American Mathematical Society 44 (1938) 522–536. [Google Scholar]

- [46].Sriperumbudur B, Fukumizu K, Lanckriet G, On the relation between universality, characteristic kernels and rkhs embedding of measures, in: Proceedings of the thirteenth International Conference on Artificial Intelligence and Statistics, JMLR Workshop and Conference Proceedings, pp. 773–780. [Google Scholar]

- [47].Sriperumbudur BK, Fukumizu K, Lanckriet GR, Universality, characteristic kernels and rkhs embedding of measures., Journal of Machine Learning Research 12 (2011) 2389–2410. [Google Scholar]

- [48].Székely GJ, Rizzo ML, Bakirov NK, Measuring and testing dependence by correlation of distances, The Annals of Statistics 35 (2007) 2769–2794. [Google Scholar]

- [49].Villani C, Optimal Transport: Old and New, volume 338, Springer, 2009 [Google Scholar]

- [50].Virta J, Lee K-Y, Li L, Sliced inverse regression in metric spaces, arXiv preprint arXiv:2206.11511 (2022). [Google Scholar]

- [51].Weed J, Bach F, Sharp asymptotic and finite-sample rates of convergence of empirical measures in Wasserstein distance, Bernoulli 25 (2019) 2620–2648. [Google Scholar]

- [52].Ying C, Yu Z, Fréchet sufficient dimension reduction for random objects, Biometrika, in press (2022). [Google Scholar]

- [53].Yu X, Yao J, Xue L, Nonparametric estimation and conformal inference of the sufficient forecasting with a diverging number of factors, Journal of Business & Economic Statistics 40 (2022) 342–354. [Google Scholar]

- [54].Zhang Q, Xue L, Li B, Dimension reduction for fréchet regression, Journal of the American Statistical Association, in press (2023). [Google Scholar]