Summary

The brain can combine auditory and visual information to localize objects. However, the cortical substrates underlying audiovisual integration remain uncertain. Here, we show that mouse frontal cortex combines auditory and visual evidence; that this combination is additive, mirroring behavior; and that it evolves with learning. We trained mice in an audiovisual localization task. Inactivating frontal cortex impaired responses to either sensory modality, while inactivating visual or parietal cortex affected only visual stimuli. Recordings from >14,000 neurons indicated that after task learning, activity in the anterior part of frontal area MOs (secondary motor cortex) additively encodes visual and auditory signals, consistent with the mice’s behavioral strategy. An accumulator model applied to these sensory representations reproduced the observed choices and reaction times. These results suggest that frontal cortex adapts through learning to combine evidence across sensory cortices, providing a signal that is transformed into a binary decision by a downstream accumulator.

Keywords: audiovisual, decision-making, neural coding, mixed selectivity, prefrontal cortex, visual cortex, parietal cortex, optogenetics

Highlights

- Mice combine visual and auditory evidence additively to solve an audiovisual task

- Optogenetic inactivation indicates a key role for frontal cortex in the task

- After learning, neurons in frontal area MOs represent stimuli and predict choice

- An accumulator model applied to their activity reproduces mouse behavior

Coen, Sit, et al. train mice in an audiovisual task and show that they combine evidence across modalities additively, even when it is conflicting. This combination relies on activity in frontal area MOs. After learning, this activity represents auditory and visual stimuli additively and can be used to reproduce behavior.

Introduction

A simple strategy to combine visual and auditory signals, which is optimal if they are independent,1,2 is to add them. Given independent visual evidence $V$ and auditory evidence $A$, the log odds of a stimulus being on the right or left ($R$ or $L$) is a sum of functions that each depend on only one modality (see derivation in STAR Methods):

$$\log\frac{p(R \mid V, A)}{p(L \mid V, A)} = \log\frac{p(V \mid R)}{p(V \mid L)} + \log\frac{p(A \mid R)}{p(A \mid L)} + \log\frac{p(R)}{p(L)} \qquad \text{(Equation 1)}$$
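The additivity of log odds under independence can be checked numerically. The sketch below assumes, purely for illustration, that each modality yields a Gaussian evidence sample whose mean depends on the stimulus side; the means, standard deviations, and prior are hypothetical values, not quantities from this study. It compares the posterior log odds obtained from Bayes' rule with the sum of the two unisensory log-likelihood ratios and the log prior odds.

```python
import math

def gauss_pdf(x, mean, sd):
    """Density of a normal distribution at x."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))

# Hypothetical generative model (illustrative values only).
PRIOR_R = 0.6                                  # prior probability of "right"
VIS = {"R": (1.0, 1.5), "L": (-1.0, 1.5)}      # (mean, sd) of visual evidence V
AUD = {"R": (0.5, 1.0), "L": (-0.5, 1.0)}      # (mean, sd) of auditory evidence A

def posterior_log_odds(v, a):
    """log p(R|V,A) / p(L|V,A) from Bayes' rule, with V and A
    conditionally independent given the stimulus side."""
    joint_r = PRIOR_R * gauss_pdf(v, *VIS["R"]) * gauss_pdf(a, *AUD["R"])
    joint_l = (1.0 - PRIOR_R) * gauss_pdf(v, *VIS["L"]) * gauss_pdf(a, *AUD["L"])
    return math.log(joint_r / joint_l)

def additive_log_odds(v, a):
    """Sum of a visual-only term, an auditory-only term, and a constant."""
    f_v = math.log(gauss_pdf(v, *VIS["R"]) / gauss_pdf(v, *VIS["L"]))
    g_a = math.log(gauss_pdf(a, *AUD["R"]) / gauss_pdf(a, *AUD["L"]))
    prior = math.log(PRIOR_R / (1.0 - PRIOR_R))
    return f_v + g_a + prior

if __name__ == "__main__":
    for v, a in [(0.3, -1.2), (-0.8, 0.4), (2.0, 2.0)]:
        print(v, a, posterior_log_odds(v, a), additive_log_odds(v, a))
```

The two functions agree up to floating-point error for any evidence pair, including conflicting pairs in which the visual and auditory terms have opposite signs.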

Multisensory integration in humans and animals is often additive.3,4,5,6,7,8,9,10,11,12,13 Nevertheless, some studies suggest that humans,14,15,16 other primates,17 and mice18,19 can break this additive law. One way that the additive law could be broken is if one modality is dominant, meaning that if the modalities conflict, the non-dominant modality is ignored.18

In rodents and other mammals, neurons integrating across modalities have been observed in superior colliculus,20,21,22,23,24 thalamus,25,26,27,28 parietal cortex,4,6,7,18,29,30,31,32,33,34,35,36,37,38 frontal cortex,39 and possibly40 even primary sensory cortices.41,42,43,44,45,46,47,48,49,50 However, it is not clear which cortical areas support multisensory decisions or how multisensory signals are encoded by neuronal populations in these regions.51 Perturbation studies have focused primarily on parietal cortex and disagree as to whether this region is18 or is not29,52,51 critical for multisensory behavior.

The brain could use different strategies to make multisensory decisions. For example, while visual and auditory cortices might be necessary and sufficient for behavioral responses to unisensory visual and auditory stimuli, a third region might be required for multisensory responses while playing no role in responses to either modality alone. Alternatively, unisensory and multisensory evidence could be processed by the same circuits: information from both senses may converge on a brain region that has a causal role in behavioral responses to both modalities, alone or in combination. If this region added evidence from the two modalities, it could drive behavior according to the additive law (Equation 1).

We studied an audiovisual localization task in mice and found support for the second hypothesis: multisensory evidence is processed by circuits that also process unisensory evidence, and these circuits involve the frontal cortex. Mouse behavior was consistent with the additive model (Equation 1). Optogenetic inactivation of visual or parietal cortex affected responses to visual stimuli only. Inactivating anterior frontal cortex (secondary motor area MOs) affected responses to both modalities. Population recordings revealed that this region encoded stimuli of both modalities additively. Its sensory responses developed with task learning and persisted during passive stimulus presentation. An accumulator model applied to these passive responses reproduced the pattern of choices and reaction times observed in the mice.

Results

We developed a two-alternative forced-choice audiovisual spatial localization task for mice (Figure 1A). We extended a visual task where mice turn a steering wheel to indicate whether a grating of variable contrast was on their left or right53 by adding an array of speakers. On each trial, at the time the grating appeared, the left, center, or right speaker played an amplitude-modulated noise. On coherent multisensory trials (auditory and visual stimuli on the same side), and on unisensory trials (zero contrast or central auditory stimulus), mice earned a water reward for indicating the correct side. On conflict multisensory trials (auditory and visual stimuli on opposite sides), or on neutral trials (central auditory and zero contrast visual), mice were rewarded randomly (Figure S1A). Mice learned to perform this task proficiently (Figure S1B), reaching 96% ± 3% correct (mean ± SD, n = 17 mice) for the easiest stimuli (coherent trials with the highest contrast).
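The reward contingencies described above can be summarized as a small function. This is a minimal sketch: the function name and argument encoding are hypothetical, and random reward on conflict and neutral trials is modeled here as a coin flip over which side counts as correct, which is one possible reading of "rewarded randomly".

```python
import random

def rewarded_side(visual_side, auditory_side, rng=random):
    """Return the side ('left' or 'right') that earns reward on one trial.

    visual_side:   'left', 'right', or None (zero-contrast grating)
    auditory_side: 'left', 'right', or 'center'
    rng:           object with a .choice method (assumed coin flip on
                   conflict and neutral trials)
    """
    aud = None if auditory_side == "center" else auditory_side
    if visual_side is not None and aud is not None:
        if visual_side == aud:
            return visual_side                    # coherent multisensory
        return rng.choice(["left", "right"])      # conflict multisensory
    if visual_side is None and aud is None:
        return rng.choice(["left", "right"])      # neutral
    # Unisensory: exactly one modality carries location information.
    return visual_side if visual_side is not None else aud

if __name__ == "__main__":
    print(rewarded_side("right", "right"))   # coherent trial
    print(rewarded_side(None, "left"))       # unisensory auditory trial
    print(rewarded_side("left", "right"))    # conflict trial, random side
```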

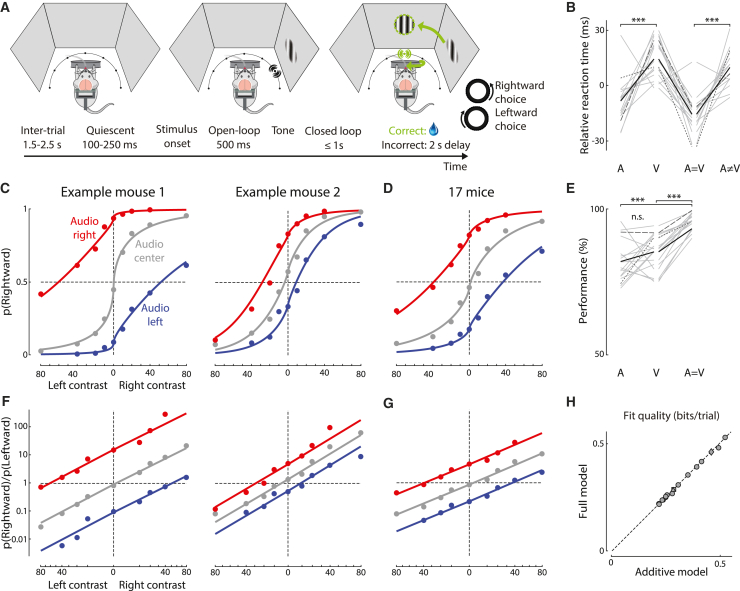

Figure 1.

Spatial localization task reveals additive audiovisual integration

(A) Behavioral task. Top: visual and auditory stimuli are presented using 3 screens and 7 speakers. In the example, auditory and visual stimuli are presented on the right, and the subject is rewarded for turning the wheel counter-clockwise to center the stimuli (a “rightward choice”). Bottom: task timeline. After an inter-trial interval of 1.5–2.5 s, mice must hold the wheel still for 100–250 ms. They then have 1.5 s to indicate their choice. During the first 500 ms of this period, the stimulus does not move (“open loop”), but during the final 1 s, stimulus position is yoked to wheel movement. After training, over 90% of choices occurred during open loop (Figures S1D–S1F).

(B) Median reaction times for each stimulus type, relative to mean across stimulus types. Only trials with 40% contrast were included. Gray lines: individual mice; black line: mean across 17 mice. Long and short dashes indicate example mice from left and right of (C).

(C) Fraction of rightward choices at each visual contrast and auditory stimulus location for two example mice. Curves: fit of the additive model.

(D) As in (C), but averaged across 17 mice (∼156,000 trials). Curves: combined fit across all mice.

(E) Mouse performance (% rewarded trials) for different stimulus types (“correct” is undefined on conflict trials). Plotted as in (B).

(F and G) Data from (C) and (D), replotted as odds of choosing right vs. left (in log coordinates, Y axis) as a function of visual contrast raised to the power $\gamma$. Model predictions are straight lines.

(H) Log2-likelihood ratio for the additive vs. full model where each combination of visual and auditory stimuli is allowed its own behavioral response that need not follow an additive law. (5-fold cross-validation, relative to a bias-only model). Triangles and diamonds: mice from left and right of (C). Squares: combined fit across 17 mice. There is no significant difference between models (p > 0.05). ∗∗∗p < 0.001 (paired t test).

Mice responded fastest in coherent trials with high-contrast visual stimuli (Figure 1B). Reaction times were typically 190 ± 120 ms (median ± MAD, n = 156,000 trials in 17 mice) and were 22 ± 20 ms faster in unisensory auditory than unisensory visual trials (mean ± SD, n = 17 mice, p < 0.001, paired t test), suggesting that the circuits responsible for audiovisual decisions receive auditory signals earlier than visual signals54,55 (Figures 1B, S1M, S1O, and S1Q). In multisensory trials, reaction times were 25 ± 18 ms faster for coherent than conflict trials (p < 0.001, paired t test). This suggests that multisensory inputs feed into a single integrator, rather than two unisensory integrators racing independently to reach threshold.5,56 Reaction times were faster at higher contrasts for unisensory visual and coherent multisensory trials (p < 0.001, linear mixed-effects model) and were possibly even faster in coherent trials than unisensory auditory trials, particularly at high contrast levels (p < 0.08, paired t test).

Spatial localization task reveals additive audiovisual integration

Mice used both modalities to perform the task, even when the two were in conflict. The fraction of rightward choices, which depended smoothly on visual contrast, further increased or decreased when sounds were on the right or on the left (Figures 1C and 1D, red vs. blue). Mice performed more accurately on coherent trials than unisensory trials (Figures 1E, S1N, and S1P), indicating that they attended to both modalities.8,12,54,55,57,58

To test whether mice make multisensory decisions additively, we fit the additive model to their choices. Equation 1 can be rewritten as:

$$p(R) = \sigma\big(b + f(V) + g(A)\big) \qquad \text{(Equation 2)}$$

where $p(R)$ is the probability of making a rightward choice, and $\sigma$ is the logistic function. We first fit this model with no constraints on the functions $f$ and $g$ and found that it provided excellent fits (Figure S2F). We further simplified it by modeling $f$ with a power function to account for contrast saturation in the visual system59:

$$f(V) = v_R V_R^{\gamma} - v_L V_L^{\gamma} \qquad \text{(Equation 3)}$$

$$g(A) = a_R A_R - a_L A_L \qquad \text{(Equation 4)}$$

Here $V_R$ and $V_L$ are right and left contrasts (at least one of which was always zero), and $A_R$ and $A_L$ are indicator variables for right and left auditory stimuli (with value 1 or 0 depending on the auditory stimulus position). This model performed almost as well as the 11-parameter unconstrained model (Figure S2F) with only 6 free parameters: bias ($b$), visual exponent ($\gamma$), visual sensitivities ($v_R$ and $v_L$), and auditory sensitivities ($a_R$ and $a_L$). In the rest of the paper, we thus adopted this simplified version of the additive model.
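The 6-parameter additive model can be written compactly in code. The sketch below assumes the model takes the form p(R) = σ(b + f(V) + g(A)), with f a power function of right and left contrast and g a weighted sum of right and left auditory indicators. The parameter values in `PARAMS` are hypothetical and chosen only for illustration; they are not fitted values from the study.

```python
import math

def logistic(x):
    """Logistic function mapping log odds to probability."""
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical parameter values (not fitted to any data).
PARAMS = {"b": 0.0, "gamma": 0.6, "v_R": 3.0, "v_L": 3.0, "a_R": 1.5, "a_L": 1.5}

def p_right(contrast_right, contrast_left, auditory, params=PARAMS):
    """Probability of a rightward choice under the additive model.

    contrast_right, contrast_left: contrasts in [0, 1]; at least one is zero
    auditory: 'right', 'left', or 'center'
    """
    gamma = params["gamma"]
    # Visual term: power function of contrast, right minus left.
    f_v = (params["v_R"] * contrast_right ** gamma
           - params["v_L"] * contrast_left ** gamma)
    # Auditory term: indicator variables for right and left sounds.
    g_a = (params["a_R"] * (auditory == "right")
           - params["a_L"] * (auditory == "left"))
    return logistic(params["b"] + f_v + g_a)

if __name__ == "__main__":
    for aud in ("left", "center", "right"):
        print(aud, [round(p_right(c, 0.0, aud), 3) for c in (0.0, 0.1, 0.4, 0.8)])
```

In log-odds coordinates, changing the auditory location shifts the curve by a constant (`a_R` or `a_L`) at every contrast, which is the signature of additivity.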

The additive model provided excellent fits to the multisensory decisions of all mice. It fit both the choices of individual mice (Figure 1C) and the choices averaged across mice (Figure 1D). A simple view of these data can be obtained by representing them in terms of log odds of rightward choices (as in Equation 1) vs. linearized contrast (contrast raised to the exponent $\gamma$, Equation 3). As predicted by the model, the responses to unisensory visual stimuli fall on a line, and auditory cues shift this line additively (Figures 1F, 1G, and S3A–S3O). The intercept of the line is determined by the bias $b$, the slope by the visual sensitivity $v$, and the additive offset by the auditory sensitivity $a$.

The additive model performed better than non-additive models, including models where one modality dominates the other (Figures S2A–S2E). It performed as well as a full model, which used 25 parameters to fit the response to each stimulus combination, without additive constraints (Figure 1H). The additive model could be fit from the unisensory choices alone, indicating that mice use the same behavioral strategy on coherent and conflict trials (Figure S3P). As predicted by the additive model, equal and opposite auditory and visual stimuli (i.e., stimuli eliciting an equal probability of left and right choices when presented alone) led to neutral behavior when presented together, i.e., a 50% chance of left or right choices (Figure S2G). In contrast, a model of sensory dominance would predict that these stimuli lead to choices determined by the dominant modality.
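The contrast between additive integration and sensory dominance on conflict trials can be illustrated with a toy comparison. The sketch below uses hypothetical sensitivities and a deliberately simple dominance rule (vision is ignored whenever a lateralized sound is present); this rule is an assumption made for illustration and is not necessarily the dominance model fit in Figures S2A–S2E.

```python
import math

def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical parameters (illustrative only), symmetric and unbiased.
GAMMA, V_SENS, A_SENS = 0.6, 3.0, 1.5

def visual_term(contrast, side):
    """side: +1 for right, -1 for left."""
    return side * V_SENS * contrast ** GAMMA

def auditory_term(side):
    """side: +1 for right, -1 for left, 0 for center."""
    return side * A_SENS

def p_right_additive(contrast, vis_side, aud_side):
    return logistic(visual_term(contrast, vis_side) + auditory_term(aud_side))

def p_right_auditory_dominant(contrast, vis_side, aud_side):
    # Assumed dominance rule: a lateralized sound overrides vision entirely.
    if aud_side != 0:
        return logistic(auditory_term(aud_side))
    return logistic(visual_term(contrast, vis_side))

# Contrast whose visual log odds match the auditory log odds in magnitude.
MATCHED_CONTRAST = (A_SENS / V_SENS) ** (1.0 / GAMMA)

if __name__ == "__main__":
    # Conflict trial: matched visual stimulus on the right, sound on the left.
    print(p_right_additive(MATCHED_CONTRAST, +1, -1))           # neutral choice
    print(p_right_auditory_dominant(MATCHED_CONTRAST, +1, -1))  # follows the sound
```

With equal and opposite stimuli, the additive model predicts choices at chance, whereas the dominance rule predicts choices toward the side of the dominant modality.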

Optogenetic inactivation identifies roles of sensory and frontal cortical areas

To determine which cortical regions are necessary to perform the task, we used laser-scanning optogenetic inactivation across 52 sites in dorsal cortex. We inactivated with transcranial laser illumination in mice expressing ChR2 in parvalbumin interneurons59,60,61,62 (3 mW; 462 nm; 1.5 s duration following stimulus onset; Figure 2A). We combined results across mice and hemispheres because they were qualitatively consistent and symmetric (Figures S4A and S4B). Control measurements established that mouse choices were unaffected by target locations just outside the brain (Figure S4C). Because of light scattering in the brain, we expect inactivation to impact areas ∼1 mm from the target location59,63,64 (Figure 2A). For this reason, and because brain curvature hides auditory cortex, laser sites between primary visual and auditory areas likely inactivated both visual and auditory cortices. We refer to these sites as “lateral sensory cortex.” We found that inactivating them impacted the choices, as did inactivating visual and frontal areas. However, inactivating these different regions had distinct impacts on task performance, which we detail below.

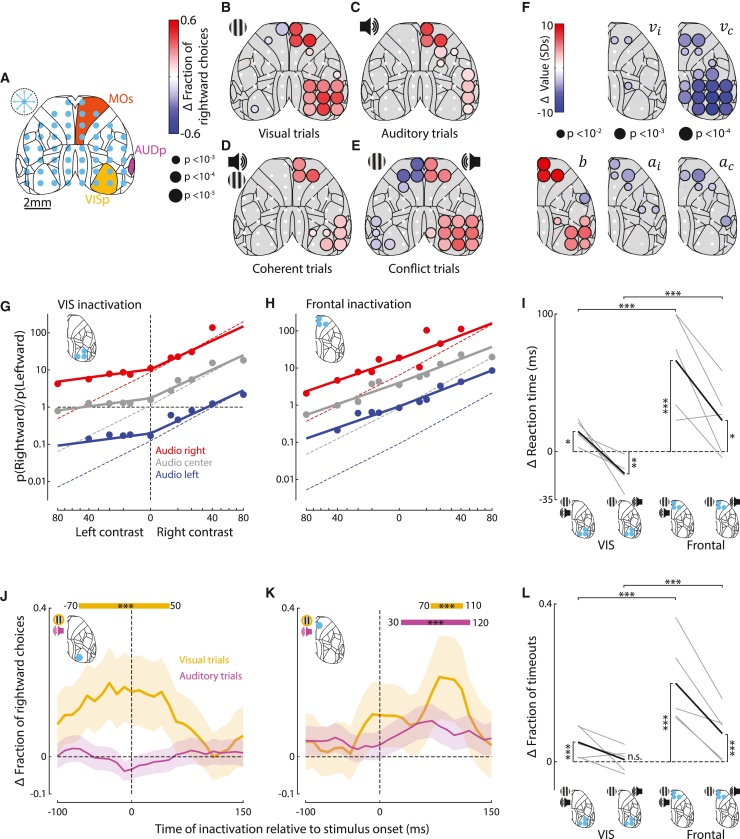

Figure 2.

Optogenetic inactivation identifies roles of sensory and frontal cortical areas

(A) Schematic of inactivation sites. On ∼75% of trials, a blue laser randomly illuminated one of 52 sites (blue dots) for 1.5 s following stimulus onset. Dashed circle: estimated radius (1 mm) of effective laser stimulation. Yellow, orange, and magenta: primary visual region (VISp), primary auditory region (AUDp), and secondary motor cortex (MOs).

(B) Change in the fraction of rightward choices for each laser site for unisensory left visual stimulus trials. Red and blue dots: increases and decreases in fraction of rightward choices; dot size represents statistical significance (5 mice, shuffle test, see STAR Methods). Data for right stimulus trials were included after reflecting the maps (see Figure S4A for both individually).

(C) As in (B), but for unisensory auditory trials.

(D) As in (B), but for coherent multisensory trials.

(E) As in (B), but for conflict multisensory trials.

(F) As in (B)–(E), but dot color indicates the change in parameters of the additive model. $b$, bias toward ipsilateral choices (relative to inactivation site); $v_I$ and $v_C$, sensitivity to ipsilateral and contralateral contrast; $a_I$ and $a_C$, sensitivity to ipsilateral and contralateral auditory stimuli.

(G) Fit of additive model to trials when a site in visual cortex was inactivated. Dashed lines: model fit to non-inactivation trials. Trials with inactivation of left visual cortex were included after reflecting the maps (5 mice, 6,497 trials). Inactivation significantly changed model parameters (paired t test, p < 0.05).

(H) As in (G), but for trials when frontal cortex was inactivated (5 mice, 5,612 trials). Inactivation significantly changed model parameters (paired t test, p < 0.05).

(I) Change in multisensory reaction times when visual or frontal cortex was inactivated contralateral to the visual stimulus. Gray and black lines: individual mice (n = 5) and the mean across mice. Reaction times are the mean across the medians for each contrast relative to non-inactivation trials. Values above 100 ms were truncated for visualization. On coherent trials, inactivating visual or frontal cortex increased reaction time, with a larger effect for frontal cortex. On conflict trials, inactivation of visual cortex decreased reaction time while inactivation of frontal cortex caused an increase. ∗p < 0.05, ∗∗p < 0.01, ∗∗∗p < 0.001 (linear mixed-effects model).

(J) Change in fraction of rightward choices when contralateral visual cortex was inactivated on visual (yellow, 519 trials) or auditory (magenta, 1,205 trials) trials. Inactivation was a 25 ms, 25 mW laser pulse at different time points. Curves show average over mice smoothed with a 70 ms boxcar window. Shaded areas: 95% binomial confidence intervals. ∗∗∗ indicates intervals where fraction of rightward choices differs significantly from controls (p < 0.001, Fisher’s exact test).

(K) As in (J), but for frontal inactivation (451 and 1,291 trials for auditory and visual conditions).

(L) As in (I), but for the change in the fraction of timeout trials. On coherent trials, inactivation of either visual or frontal cortex significantly increased timeouts. On conflict trials, only frontal inactivation changed the fraction of timeouts. ∗∗∗p < 0.001 (linear mixed-effects model).

Inactivating visual cortex impaired visual but not auditory choices. As seen in visual tasks,53,59,65 inactivation of visual cortex reduced responses to contralateral visual stimuli, whether presented alone (Figure 2B) or with auditory stimuli (Figures 2D and 2E). It had a smaller effect in coherent trials, when those choices could be based on audition alone (Figure 2D, p < 0.01, paired t test across 5 mice). Conversely, it did not affect unisensory auditory choices (Figure 2C), indicating that visual cortex does not play a substantial role in processing auditory signals in this task. Finally, bilateral inactivation of visual or parietal cortex reduced the fraction of choices toward the location of the visual stimulus in both unisensory visual and multisensory trials (Figures S5U–S5X).

Inactivating frontal cortex impaired choices based on either modality with similar strength, suggesting a role in integrating visual and auditory evidence (Figures 2B–2E). On visual trials, inactivating frontal cortex had a similar effect as inactivating visual cortex: it reduced responses to contralateral stimuli (Figure 2B, as in visual detection tasks53,59,65). However, it also caused a similar reduction in the responses to contralateral auditory stimuli (p > 0.05, t test across mice; Figure 2C). In coherent multisensory trials, frontal inactivation reduced responses to contralateral stimuli (Figure 2D). On conflict trials, it reduced the responses to the contralateral stimulus, whichever modality it came from (Figure 2E). Bilateral inactivation of frontal cortex slowed responses but did not bias the animal’s choices in either direction for any stimulus type (Figures S5Q–S5X).

Finally, inactivating lateral sensory cortex strongly impaired visual choices and weakly impaired auditory choices. It decreased correct responses to contralateral stimuli whether visual alone (Figure 2B), auditory alone (Figure 2C), or combined (Figures 2D and 2E) but had a larger effect on visual than auditory choices (Figures 2B and 2C, p < 0.05, t test across mice). These results might suggest a multisensory role but might, more simply, arise from light spreading into both visual and auditory areas: indeed, because of brain curvature, light likely passes through overlying tissue before reaching auditory cortex, which is required for auditory localization.66 Attenuation by this overlying tissue may explain the weaker effect on auditory choices. The minor effect of inactivating somatosensory cortex (Figure S4F) may also arise from light spreading.

The results of these inactivations were well captured by the additive model. The model accounted for the effects of inactivating visual cortex via a decrease in the sensitivity for contralateral visual stimuli (Figure 2F), which reduced performance for contralateral visual stimuli regardless of auditory stimuli (Figure 2G). Inactivating lateral sensory cortex had a similar effect and more weakly decreased contralateral auditory sensitivity (Figures 2F and S4E, p < 0.07, t test across mice). Inactivating frontal cortex reduced visual and auditory sensitivity by a similar amount (p > 0.65, t test across mice) and increased bias to favor ipsilateral choices (Figures 2F, 2H, and S4G). The model revealed that the effects of inactivating visual, lateral, and frontal cortices were statistically different from each other (Figure S4H). For example, inactivating frontal cortex reduced sensitivity to both contralateral and ipsilateral stimuli, but inactivating lateral sensory cortex only reduced sensitivity to contralateral stimuli (Figure 2F).

The effect of inactivation on reaction times revealed a difference between frontal and other cortices. Inactivating frontal cortex delayed responses in all stimulus conditions (Figures 2I and S5A–S5P). In contrast, the effect of inactivating visual cortex depended on the stimulus condition: responses to contralateral visual stimuli or coherent contralateral audiovisual stimuli were delayed, but responses to conflicting stimuli with a contralateral visual component were accelerated (Figures 2I and S5A–S5H). It effectively caused the mouse to ignore the contralateral visual stimulus and respond as on unisensory auditory trials (Figure 1B). The effects of inactivating the lateral cortex were similar to visual cortex but did not reach statistical significance. Similar results were seen in the fraction of timeouts, i.e., trials where the mouse failed to respond within 1.5 s (Figures 2L and S5I–S5P). Bilateral inactivation of visual or parietal cortex delayed responses to unisensory visual or coherent multisensory trials, while bilateral inactivation of frontal cortex delayed responses to all trial types (Figures S5Q–S5T). These data indicate that inactivating visual or lateral cortex mimicked the absence of a contralateral stimulus, which may speed or slow reaction times depending on whether this absence resolves a conflict. They also indicate that inactivating frontal cortex slows all choices, consistent with a process of multisensory evidence integration and possibly also of premotor planning or motor execution.

The critical time window for inactivation was earlier for visual cortex than for frontal cortex (Figures 2J and 2K). We used 25-ms laser pulses to briefly inactivate visual and frontal cortex at different times relative to stimulus onset on unisensory trials59 (see STAR Methods). Inactivating right visual cortex significantly increased the fraction of rightward choices if the laser pulse began between 70 ms prior and 50 ms after the appearance of a visual stimulus on the left (p < 0.001), but had no significant effect at any time after an auditory stimulus (Figure 2J); an impact of inactivation prior to stimulus onset likely results from continued suppression of neural activity following laser offset.59 Frontal inactivation impacted behavior later: 70–110 ms after contralateral visual stimuli and 30–120 ms after contralateral auditory stimuli (Figure 2K). The earlier critical window for frontal inactivation on auditory trials is consistent with the faster reaction times on these trials (Figure 1B). However, in both cases, inactivation of frontal cortex had no significant effect >120 ms after stimulus onset, suggesting that after this time, frontal cortex plays a limited role in sensory integration. These short inactivation pulses had no significant effect when stimuli were ipsilateral to the inactivation or when the laser was targeted outside the brain.

Together, these results suggest that visual cortex’s role in the task is to relay visual information to downstream structures including frontal cortex, which integrates it with auditory information from elsewhere to shape the mouse’s choice, with this whole process occurring over ∼120 ms.

Neurons in frontal area MOs encode stimuli and predict behavior

The results of frontal inactivation suggest that at least some neurons in frontal cortex may integrate evidence from both modalities. To test this hypothesis, we recorded acutely with Neuropixels probes during behavior (Figures 3A–3D). We recorded 14,656 neurons from frontal cortex across 88 probe insertions (56 sessions) from 6 mice (Figures 3A, S6A, and S6B) divided across the following areas: MOs, orbitofrontal (ORB), anterior cingulate (ACA), prelimbic (PL), infralimbic (ILA), and nearby olfactory areas (OLF). These regions exhibited a variety of neural responses, including neurons that were sensitive to visual and auditory location (Figures 3B and 3C) and to the animal’s upcoming choice (Figure 3D).

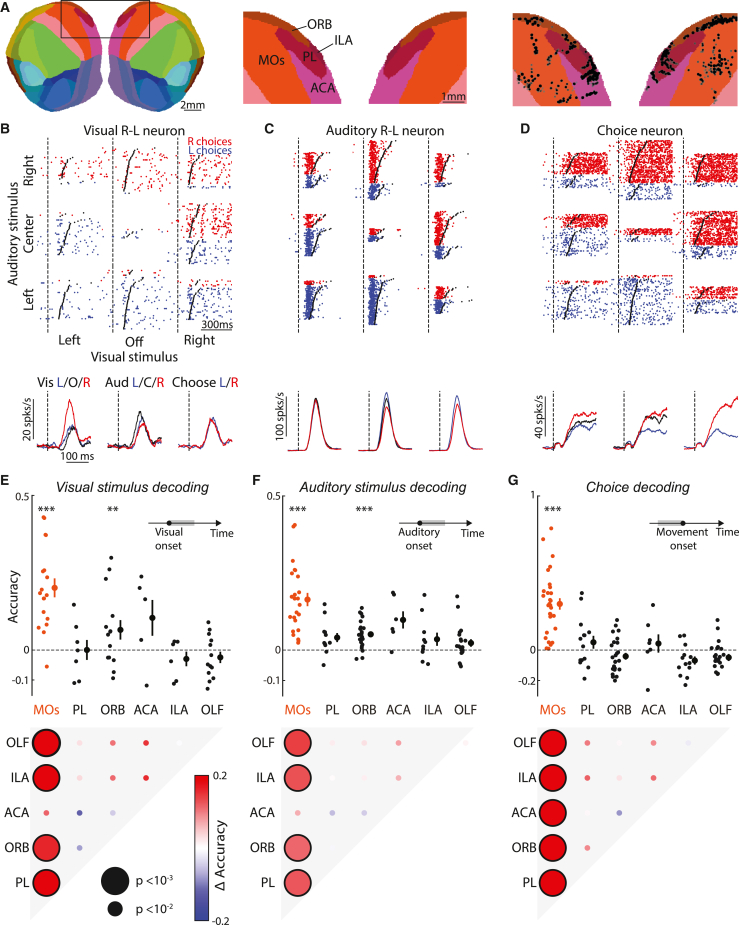

Figure 3.

Neurons in frontal area MOs encode stimuli and predict behavior

(A) Recording locations for cells (black dots, right) overlaid on a flattened cortical map (using the Allen Common Coordinate Framework67), showing locations in secondary motor cortex (MOs, 3,041 neurons), orbitofrontal (ORB, 5,112), anterior cingulate (ACA, 727), prelimbic (PL, 1,332) and infralimbic (ILA, 1,254) areas.

(B) Top: spike rasters, separated by trial condition, from a neuron sensitive to visual spatial location (dʹ = 1.85). Red/blue rasters: trials with a rightward/leftward mouse choice. Dashed line and black points: stimulus onset and movement initiation. Bottom: peri-stimulus time histogram (PSTH) of the neural response, averaged across different visual (left), auditory (center), or choice (right) conditions. Trials are not balanced; choice and stimulus location are correlated.

(C) As in (B), for a neuron sensitive to auditory spatial location (dʹ = −0.81).

(D) As in (B), for a neuron sensitive to the animal’s choice (dʹ = 2.61).

(E) Top: cross-validated accuracy (relative to a bias model, see STAR Methods) of a support vector machine decoder trained to predict visual stimulus location from population spiking activity time-averaged from 0 ms to 300 ms after stimulus onset. Accuracies 0 and 1 represent chance and optimal performance. Points: decoding accuracy from neurons in regions labeled in (A), or olfactory areas (OLF, 2,068 neurons), for one experimental session. Neurons were subsampled to equalize numbers across points. ∗∗∗p < 0.001, ∗∗p < 0.01 (5 sessions from 2 to 5 mice for each region, one-sided t test). Bottom: inter-regional comparison of decoding accuracy (linear mixed-effects model). Black outlines: statistically significant difference. Dot size: significance level.

(F) As in (E), for decoding of auditory stimulus location (6 sessions, 3–6 mice).

(G) As in (E), for decoding choices from spiking activity 0–130 ms preceding movement (7 sessions, 3–6 mice).

Among these frontal regions, task information was represented most strongly in MOs. MOs was the only region able to predict the animal’s upcoming choice before movement onset (Figures 3G and S6C) and encoded auditory and visual stimulus location significantly more strongly than the other regions (Figures 3E and 3F; p < 0.01, linear mixed-effects model; the difference with ACA did not reach significance). Activity in MOs began to predict the animal’s choice ∼100 ms before movement onset (Figure S6E) and was more accurate for neurons more anterior or lateral within MOs (Figure S6F). Furthermore, choice decoding from MOs activity was more accurate on sessions with higher behavioral performance (p < 0.05, linear mixed-effects model; Figure S6F), suggesting a link between MOs choice coding and behavioral engagement. Analysis of single cells yielded results consistent with population decoding: MOs neurons better discriminated stimulus location and choice than neurons of all other regions (Figures S6G–S6H; p < 0.05, linear mixed-effects model; differences with ACA and PL did not reach significance for visual location). These observations were robust to the correlation between stimuli and choices: even when controlling for this correlation, MOs still had the largest fraction of neurons with significant coding of stimulus location or pre-movement choice (Figures S6I–S6J). Once movements were underway, however, we could decode their direction from multiple regions, consistent with observations that ongoing movements are encoded throughout the brain68,69 (Figure S6D).

Frontal area MOs integrates task variables additively

Given the additive effects of visual and auditory signals on behavior, we asked whether these signals also combine additively in MOs. To analyze MOs responses to combined audiovisual stimuli during behavior, we used an ANOVA-style decomposition into temporal kernels.70 We focused on audiovisual trials of a single contrast so we could define binary variables $a_i, v_i, c_i = \pm 1$, encoding the laterality (left vs. right) of auditory stimuli, visual stimuli, and choices. The population firing rate vector $\mathbf{F}_i(t)$, on trial $i$ at time $t$ after stimulus onset, was decomposed as the sum of 6 temporal kernels:

$$\mathbf{F}_i(t) = \mathbf{B}(t) + a_i \mathbf{A}(t) + v_i \mathbf{V}(t) + a_i v_i \mathbf{N}(t) + \mathbf{M}(t - \tau_i) + c_i \mathbf{D}(t - \tau_i) \qquad \text{(Equation 5)}$$

Here, $\mathbf{B}$ is the mean stimulus response averaged across stimuli, $\mathbf{A}$ and $\mathbf{V}$ are the additive effects of auditory and visual stimulus location, and $\mathbf{N}$ is a potential non-additive interaction between them. Finally, $\mathbf{M}$ is a kernel for the mean effect of movement (regardless of direction and relative to $\tau_i$, the time of movement onset on trial $i$) and $\mathbf{D}$ is the differential effect of movement direction (right minus left). To test for additivity, we compared the cross-validated performance of this full model against an additive model where $\mathbf{N} = 0$.
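The kernel decomposition can be sketched for a single neuron as follows. The sketch assumes the rate on each trial is a sum of a mean stimulus kernel, signed auditory and visual kernels, an optional auditory-by-visual interaction kernel, and two movement kernels aligned to movement onset. The kernel shapes in `TOY_KERNELS` are hypothetical step functions chosen for illustration; in the study, kernels are fit to recorded activity.

```python
def step(amplitude):
    """Toy kernel: zero before its reference time, constant afterwards."""
    return lambda t: amplitude if t >= 0 else 0.0

# Hypothetical kernels (spikes/s). B, A, V, N are aligned to stimulus onset;
# M and D are aligned to movement onset.
TOY_KERNELS = {
    "B": step(5.0), "A": step(1.0), "V": step(2.0), "N": step(0.5),
    "M": step(3.0), "D": step(1.5),
}

def predicted_rate(t, a, v, c, tau, kernels, additive=True):
    """Predicted firing rate at time t (s after stimulus onset) on one trial.

    a, v, c: +1 (right) or -1 (left) for auditory side, visual side, choice
    tau:     movement onset time on this trial (s after stimulus onset)
    additive: if True, the interaction kernel N is fixed at zero
    """
    rate = kernels["B"](t) + a * kernels["A"](t) + v * kernels["V"](t)
    if not additive:
        rate += a * v * kernels["N"](t)   # non-additive interaction term
    rate += kernels["M"](t - tau) + c * kernels["D"](t - tau)
    return rate

if __name__ == "__main__":
    for a in (+1, -1):
        for v in (+1, -1):
            print(a, v,
                  predicted_rate(0.1, a, v, +1, 0.2, TOY_KERNELS),
                  predicted_rate(0.1, a, v, +1, 0.2, TOY_KERNELS, additive=False))
```

Under the additive model, the effect of flipping the visual side is the same for either auditory side. A nonzero interaction kernel makes this effect depend on the auditory side, which is what the comparison between the full and additive models tests.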

The results were consistent with additive integration of visual and auditory signals. The additive model of MOs responses outperformed the full model with interactions between visual and auditory stimuli (Figures 4A–4C), as well as an alternative full model with interactions between stimuli and movement (Figure S7A). (Better performance of the additive model reflects over-fitting of the full model, whose parameters are a superset of the additive model’s.) Similar results were seen during passive presentation of the task stimuli, when sensory responses could not be confounded by movement (Figures 4D–4F and S7T–S7V).

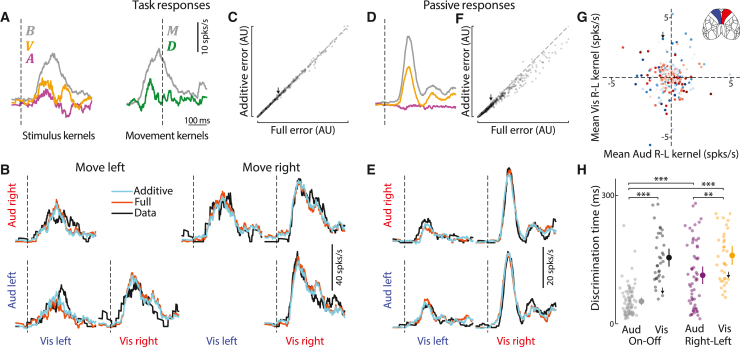

Figure 4.

Frontal area MOs encodes task variables additively

(A) Kernels from fitting the additive neural model to an example neuron. Dashed lines: stimulus onset (left) or movement onset (right). $\mathbf{B}$, mean stimulus response; $\mathbf{A}$ and $\mathbf{V}$, additive effects of auditory and visual stimulus location; $\mathbf{M}$, mean effect of movement (relative to $\tau_i$, movement onset time on trial $i$); $\mathbf{D}$, differential effect of movement direction (right minus left). The non-additive kernel $\mathbf{N}$ was set to 0.

(B) Cross-validated model fits to average neural activity in audiovisual conditions for the neuron from (A). Coherent trials with incorrect responses were too rare to include. Cyan and orange lines: predictions of additive ($\mathbf{N} = 0$) and full models. Black line: test-set average responses. Dashed lines: stimulus onset.

(C) Prediction error (see STAR Methods) across all neurons for additive and full models. Arrow indicates example cell from (A and B). The additive model has a smaller error (p = 0.037, linear mixed-effects model, 2,183 cells, 5 mice). Top 1% of errors were excluded for visualization, but not analyses.

(D–F) As in (A)–(C), but for neural activity during passive stimulus presentation, using only sensory kernels. In (F), $p < 10^{-10}$ (2,509 cells, 5 mice, linear mixed-effects model).

(G) Encoding of visual vs. auditory stimulus preference (time-averaged kernel amplitude for $\mathbf{V}$ vs. $\mathbf{A}$) for each cell. There was no significant correlation between $\mathbf{V}$ and $\mathbf{A}$. p > 0.05 (2,509 cells, Pearson correlation test). Red/blue: cells recorded in right/left hemisphere. Color saturation: fraction of variance explained by sensory kernels.

(H) Discrimination time (see STAR Methods) relative to stimulus onset during passive conditions. Auditory Right-Left neurons (magenta, n = 59) discriminated stimulus location earlier than Visual Right-Left neurons (gold, n = 36). Auditory On-Off neurons (sensitive to presence, but not necessarily location, gray, n = 82) discriminated earliest, even compared to Visual On-Off neurons (n = 36, black). Points: individual neurons. Bars: standard error. ∗∗p < 0.01, ∗∗∗p < 0.001 (Mann–Whitney U test).

MOs neurons provided a mixed representation of visual and auditory stimulus locations but encoded the two modalities with different time courses. Similar to the mixed multisensory selectivity observed in the parietal cortex of rat29 and primate,6 the auditory and visual stimulus preferences of MOs neurons were neither correlated nor lateralized: cells in either hemisphere could represent the location of auditory or visual stimuli with a preference for either location and could represent the direction of the subsequent movement with a preference for either direction (Figures 4G, S7C–S7E, S7R, and S7S). We could not detect a significant correlation of kernel size with recording location in MOs, although there was a trend toward larger choice kernels in anterior and lateral regions (Figures S7F–S7Q). Neurons that responded to one modality, however, also tended to respond to the other, as evidenced by a weak correlation in the absolute sizes of the auditory and visual kernels (Figure S7B). Nevertheless, representations of auditory and visual stimuli had different time courses: neurons could distinguish the presence and location of auditory stimuli earlier than for visual stimuli (Figure 4H). This is consistent with the more rapid behavioral reactions to auditory stimuli (Figure 1B) and the earlier critical window for MOs inactivation on unisensory auditory than visual trials (Figures 2J and 2K). Indeed, the earliest times in which MOs encoded visual or auditory stimuli (Figure 4H) matched the times for which MOs inactivation impacted behavioral performance (Figures 2J and 2K). This delay between visual and auditory signals resembles the delay previously observed between visual and vestibular signals.38

MOs encoded information about auditory onset (regardless of sound location) more strongly and earlier than information about visual onset or the location of either stimulus (Figures 4H and S7W). This may explain why mice exhibit auditory dominance in multisensory conflict trials in a detection task18,19 but not in our localization task.

Multisensory signals develop in MOs after task training

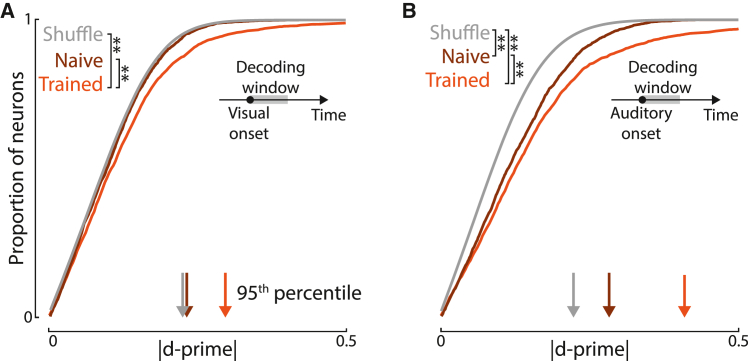

Neural populations of MOs encoded auditory and visual location more strongly in task-proficient mice (Figures 5A and 5B). We recorded the responses of 2,702 MOs neurons to the task stimuli in 4 naive mice during passive conditions with no instructed movements and compared their activity to that previously characterized in trained mice. MOs encoding of visual stimulus location was significantly higher in trained mice than naive mice (∗∗p < 0.01, Welch’s t test). In naive mice, individual MOs neurons showed no coding of visual position: their dʹ index (absolute mean difference of firing rates between stimulus conditions divided by mean trial-to-trial SD) was not significantly different from a shuffled control (Figure 5A). In naive mice, MOs did encode auditory location (p < 0.01, Welch’s t test), but this encoding grew stronger after task training (Figure 5B, p < 0.01, Welch’s t test). We conclude that training enhances sensory responses in MOs, particularly visual responses.
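The dʹ index described above can be computed per neuron from trial-wise firing rates. The following is a minimal sketch, assuming two lists of firing rates (one per stimulus condition) and reading "mean trial-to-trial SD" as the average of the two within-condition sample standard deviations. The function name and example numbers are hypothetical and are not taken from the authors' analysis code.

```python
from statistics import mean, stdev

def d_prime(rates_a, rates_b):
    """Absolute difference of mean firing rates divided by the mean of the
    two within-condition (trial-to-trial) standard deviations."""
    mean_sd = (stdev(rates_a) + stdev(rates_b)) / 2
    if mean_sd == 0:
        # Assumption: the index is undefined without trial-to-trial variability.
        raise ValueError("d-prime is undefined when both SDs are zero")
    return abs(mean(rates_a) - mean(rates_b)) / mean_sd

# Hypothetical firing rates (spikes/s) on left- and right-stimulus trials.
left_trials = [4.0, 6.0, 5.0, 5.0]
right_trials = [9.0, 11.0, 10.0, 10.0]
print(d_prime(left_trials, right_trials))
```

Because the index is taken as an absolute value, it is symmetric in the two conditions, which is why a shuffled control is needed to establish the baseline expected by chance.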

Figure 5.

Audiovisual integration in MOs develops through learning

(A) Cumulative histogram of absolute visual discriminability index (dʹ) scores for MOs neurons in naive mice (n = 2,700), trained mice (n = 2,956), and shuffled data. Training enriches the proportion of spatially sensitive neurons (∗∗p < 0.01, Welch’s t test). Naive mouse data was not significantly distinct from shuffled (p > 0.05, Welch’s t test). Arrows: 95th percentile for each category.

(B) As in (A), but for auditory stimuli. Training enriches the proportion of spatially sensitive neurons, although naive mouse data was significantly distinct from shuffled data (∗∗p < 0.01, Welch’s t test, n = 2,698/2,946 neurons for naive/trained mice).

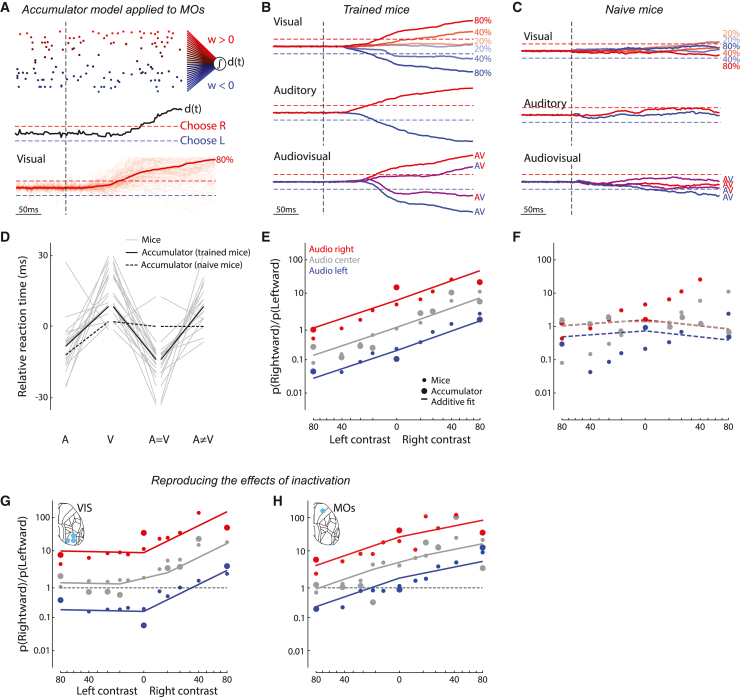

An accumulator applied to MOs activity reproduced decisions

Given that the MOs population code resembled the animals’ behavior in multiple ways, including the additive coding of visual and auditory stimuli and the earlier auditory responses, we next asked if the representation of multisensory task stimuli in MOs could explain the properties of the animals’ choices. We considered an accumulator model that makes choices based on the stimulus representation in MOs (Figures 6A and 6B). To isolate the stimulus representation and avoid the confound of movement encoding in MOs, we used passive stimulus responses and generated surrogate population spike trains by selecting (from all recordings) MOs neurons encoding the location of at least one of the sensory stimuli. These spike trains, $\mathbf{x}(t)$, were fed into an accumulator model1,37,71,72,73; they were scaled by a weight vector $\mathbf{w}$ and linearly integrated over time to produce a scalar decision variable $d(t)$:

$$d(t) = \int_0^t \mathbf{w} \cdot \mathbf{x}(s)\, ds \qquad \text{(Equation 6)}$$

Figure 6.

An accumulator applied to MOs activity in trained mice reproduced decisions

(A) Top: population spike train rasters for a single trial, colored according to the fitted weight for that neuron. Red and blue neurons push the decision variable toward the rightward or leftward decision boundary. Vertical dashed line: stimulus onset. Population activity was created from passive recording sessions in MOs of trained mice. Middle: evolution of the decision variable over this trial. Red/blue dashed lines: rightward/leftward decision boundaries. Bottom: decision variable trajectory for individual unisensory visual trials with 80% rightward contrast (thin) and their mean (thick).

(B) Mean decision variable trajectory for visual-only (top), auditory-only (middle), and multisensory (bottom) stimulus conditions.

(C) As in (B), but for naive mice.

(D) Median reaction times for different stimulus types, relative to mean across stimulus types, for mouse behavior (gray, n = 17; cf. Figure 1B) and the accumulator model fit to MOs activity in trained and naive mice (solid and dashed black lines).

(E) Mean behavior of the accumulator with input spikes from trained mice (large circles). Small circles represent mouse performance (n = 17; cf. Figure 1G). Solid lines: fit of the additive model to the accumulator model output. The accumulator model fits mouse behavior better than shuffled data (p < 0.01, shuffle test, see STAR Methods).

(F) As in (E), but for accumulator with input spikes from naive mice. There is no significant difference between the accumulator model and shuffled data (p > 0.05).

(G) Simulation of right visual cortex inactivation, plotted as in (E). Activity of visual-left-preferring cells was reduced by 60%. Small circles: mean behavior from visual-cortex-inactivated mice (5 mice; cf. Figure 2G). The accumulator model fits mouse behavior better than shuffled data (p < 0.01).

(H) Simulation of right MOs inactivation, plotted as in (E). Activities of neurons in left and right hemispheres were constrained to have positive and negative weights, and right-hemisphere activity was reduced by 60% before fitting. Small circles: mean behavior from MOs-inactivated mice (5 mice, small circles; cf. Figure 2H). The accumulator model fits mouse behavior better than shuffled data (p < 0.01).

The model chooses left or right when the decision variable crosses one of two decision boundaries placed at ±1.
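The integrate-to-bound rule can be sketched in a few lines. The example assumes binned spike counts (one list of per-neuron counts per time bin), a hand-picked weight vector, and a bin width `dt`. All names and values are illustrative, and the weight-fitting procedure described in STAR Methods is not reproduced here.

```python
def run_accumulator(spike_counts, weights, dt=0.01, bound=1.0):
    """Integrate weighted population activity until it crosses +bound or -bound.

    spike_counts: list of time bins, each a list of per-neuron spike counts.
    Returns (choice, reaction_time); both are None if no bound is reached.
    """
    decision = 0.0
    for step, counts in enumerate(spike_counts, start=1):
        # Summing weighted counts per bin is a discrete version of
        # integrating the weighted spike trains over time.
        decision += sum(w * c for w, c in zip(weights, counts))
        if decision >= bound:
            return "right", step * dt
        if decision <= -bound:
            return "left", step * dt
    return None, None

# Hypothetical three-neuron population: positive weights push rightward.
weights = [0.25, -0.25, 0.125]
trial = [[1, 0, 0], [1, 0, 1], [2, 1, 0], [1, 0, 1], [1, 0, 0]]
choice, reaction_time = run_accumulator(trial, weights)
print(choice, reaction_time)
```

In this sketch the reaction time is simply the end of the bin in which the bound is crossed, so stronger or earlier input produces faster choices.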

Although the model parameters were fit independently of mouse behavior, the model matched the average behavior of each mouse, as long as it was applied to MOs activity recorded in trained mice. Given an MOs representation, we found the weight vector that produced the fastest and most accurate choices possible (see STAR Methods). The model reproduced the different behavioral reaction times for different stimulus types: faster in auditory and coherent trials, and slower in visual and conflict trials (Figure 6D; cf. Figure 1B). Furthermore, as observed with mice, the model integrated multisensory stimuli additively (Figure 6E; cf. Figure 1G). In contrast, an accumulator model trained on MOs representations in naive mice failed to reproduce mouse behavior, with no significant difference in model performance between shuffle and test data (p > 0.05; Figures 6C and 6F). These results suggest that behavioral features of the responses, such as the different reaction times for auditory and visual stimuli and the additivity of visual and auditory evidence, reflect features in the MOs population code that appear only after the task has been learned.

The accumulator model even predicted the outcome of inactivation. Suppressing MOs neurons preferring left visual stimuli in the model reproduced the effects of inactivating right visual cortex (Figure 6G; cf. Figure 2G). However, the simple accumulator model could not reproduce the rightward bias observed with right MOs inactivation (Figure S7X; cf. Figure 2H) because MOs neurons preferring either stimulus position are found equally in both hemispheres (Figures S7C–S7E). To reproduce these effects, we made the additional assumption that the MOs neurons projecting to the downstream integrator from a given hemisphere were those preferring contralateral stimuli.74 In practice, this means that weights from neurons in the left vs. right hemisphere must be positive vs. negative. This refined model predicted the lateralized effect of MOs inactivation (Figure 6H). These results support the hypothesis that MOs neurons learn to additively integrate evidence from visual and auditory cortices, producing a population representation that is causally and selectively sampled by a downstream circuit that makes decisions.
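The logic of the refined model can be illustrated with a toy population. The sketch assumes sign-constrained weights (left hemisphere positive, right hemisphere negative) and scales right-hemisphere activity to 40% of its original value. The population, weights, and rates are hypothetical, and unlike the analysis described above, no refitting of weights is performed.

```python
def decision_drift(rates, weights):
    """Weighted sum of population firing rates: the drift of the decision variable."""
    return sum(w * r for w, r in zip(weights, rates))

# Hypothetical population: two left-hemisphere neurons (positive weights, pushing
# toward rightward choices) and two right-hemisphere neurons (negative weights).
hemisphere = ["left", "left", "right", "right"]
weights = [0.5, 0.5, -0.5, -0.5]
rates = [10.0, 10.0, 10.0, 10.0]  # balanced input, so no net drift

# Simulated right-MOs inactivation: right-hemisphere activity reduced by 60%.
inactivated = [r * 0.4 if h == "right" else r for r, h in zip(rates, hemisphere)]

intact_drift = decision_drift(rates, weights)
inactivated_drift = decision_drift(inactivated, weights)
print(intact_drift, inactivated_drift)
```

With balanced input the intact drift is zero, whereas the simulated inactivation leaves a net positive drift, that is, a bias toward rightward choices.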

Discussion

We found that mice localize stimuli by integrating auditory and visual cues additively and that this additive integration relies on frontal area MOs. Inactivation of frontal cortex impaired audiovisual decisions, especially when the inactivation targeted MOs. Recordings across frontal cortex revealed that MOs has the strongest representations of task variables. Its representations of visual and auditory signals persisted even when mice were not performing the task, but emerged largely after training. MOs neurons combined visual and auditory location signals additively, and an accumulator model applied to MOs activity recorded in passive conditions in trained mice predicts the direction and timing of behavioral responses.

Taken together, our findings implicate MOs as a critical cortical region for integration of evidence from multiple modalities. This is consistent with a general role for rodent MOs in sensorimotor transformations: this frontal region has been linked to multiple functions,75 including flexible sensory-motor mapping,76,77 perceptual decision-making,59,60,78,79,80,81 value-based action selection,82 and exploration-exploitation trade-off in visual and auditory behaviors58; furthermore, homologous regions of frontal cortex can encode multisensory information in primates.39

Sensory representations in rodent MOs have been seen to evolve with learning in unisensory visual tasks,83,84 consistent with our observations. Our results suggest that the circuits responsible for multisensory decisions resemble those for unisensory decisions: sensory information relevant for the decision is relayed to frontal cortex, where it is integrated and used to guide action. When mice are trained on a multisensory task, MOs learns to represent the multiple modalities, allowing the stimuli to control choices. The weak but significant MOs representation of auditory stimuli before task training might reflect an innate circuit for orienting towards localized sounds.

The effects of inactivation on responses and reaction times to both modalities were strongest when the laser was aimed at anterior MOs. These inactivations may affect wide regions, over 1 mm from the laser’s location.59,63,64 Nevertheless, if the critical region for multisensory processing were some surface area other than anterior MOs, one would see a stronger effect targeting the laser in that area. It is also possible that targeting MOs inactivates regions below it, such as ACA or ORB. However, electrode recordings revealed that these regions had no neural correlates of upcoming choice and weaker correlates of stimulus location (the difference with ACA did not reach significance). We therefore conclude that MOs is an important center for transforming visual and auditory stimuli into motor actions, operating either alone or in parallel with other circuits. It may be part of a distributed cortical and subcortical circuit for integrating sensory evidence, choosing an action plan, and planning and executing movements.

The circuit for audiovisual integration might include the border region between primary visual region (VISp) and primary auditory region (AUDp) (lateral sensory cortex), where inactivations affected both visual and auditory choices. However, our data cannot distinguish whether this reflects multisensory integration or simply lateral spread of the inactivation to both sensory cortices. If it is multisensory, it plays a different role from MOs: inactivating it had weaker effects (particularly on auditory stimuli, as might be expected if the effect arose from diffusion of light through the brain to underlying auditory cortex) and did not affect reaction time. Our data also cannot speak to the role in audiovisual integration of cortical areas below the surface (such as temporal association areas,85 entorhinal area, or perirhinal area). However, we can conclude that the role of parietal cortex in this task is purely visual. This might appear to contradict previous work implicating parietal cortex in multisensory integration4,6,7,18,29,30,31,32,33,34,35,36,86 or showing multisensory activity in primary sensory cortices.41,42,43,44,45,46,47,48,49,50 However, our finding agrees with evidence that parietal neurons can encode multisensory stimuli without being causally involved in a task.29,51,52,87

We hypothesize that the causal role of visual and auditory cortices in this task is unimodal and that these cortices relay their unimodal signals to other regions (possibly via unimodal higher sensory areas88) where the two information streams are integrated.86,87,89 We have confirmed this hypothesis for visual cortex but not for auditory cortex. Doing so would require better access to lateralized areas.

An additive integration strategy is optimal when the probability distributions of visual and auditory signals are conditionally independent given the stimulus location,1 but it may be a useful heuristic90 in a broader set of circumstances. In fact, in our task independence holds only approximately (see STAR Methods, Figure S8). Nevertheless, additive integration is a simple computation2 that does not require learning detailed statistics of the sensory world and performs close to the optimum in many situations.

The additive model we observe in mice derives from Bayesian integration, the predominantly accepted integration strategy in humans and other animals.3,4,5,6,7,8,9,10,11,12,13 However, there is a distinction between the model and some previous work. Typically, previous studies fit psychometric curves based on a cumulative Gaussian function,3,13 which necessitates using lapse rates.58 Our approach instead starts with a conditional independence assumption, which implies that psychometric curves are a logistic function applied to a sum of evidence from the two modalities (see STAR Methods, Figure S8). Our model does not speak to the shape or linearity of these evidence functions. We found empirically that power functions of contrast approximate the data well, but this was not a necessary assumption (see STAR Methods, Figure S8).
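The form of this model, a logistic function applied to a sum of per-modality evidence, can be written out as a short sketch. It assumes power functions of contrast for the visual evidence and a fixed offset for each auditory location. Parameter names and default values are hypothetical placeholders, not fitted values from this study.

```python
import math

def p_right(c_left, c_right, aud, *, bias=0.0, v_left=3.0, v_right=3.0,
            a_left=1.5, a_right=1.5, gamma=0.7):
    """Probability of a rightward choice under an additive log-odds model.

    c_left, c_right: visual contrast (0 to 1) on each side.
    aud: auditory location, one of 'left', 'right', or 'center'.
    """
    visual = v_right * c_right ** gamma - v_left * c_left ** gamma
    auditory = {"left": -a_left, "right": a_right, "center": 0.0}[aud]
    log_odds = bias + visual + auditory  # evidence from each modality simply adds
    return 1.0 / (1.0 + math.exp(-log_odds))

visual_only = p_right(0.0, 0.4, "center")
coherent = p_right(0.0, 0.4, "right")
conflict = p_right(0.0, 0.4, "left")
print(visual_only, coherent, conflict)
```

On the log-odds scale, adding a right auditory stimulus shifts the psychometric curve by a constant regardless of contrast, which is the signature of additive integration.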

Our finding of additive integration might appear to contradict observations from an audiovisual detection task, which suggested that mice were auditory dominant.18,19 However, the discrepancy might arise from differences in the neural representation of stimulus onsets vs. locations. Our task required localization, and the relevant auditory and visual signals combined additively in MOs, with temporal differences that explain the mice’s earlier reactions to auditory stimuli. However, we also saw that neural signals encoding auditory onset were stronger and substantially earlier than neural signals encoding either visual onset or stimulus location from either modality. These strong and early auditory onset signals might dominate behavior in a detection task.18 In other words, mice might integrate audiovisual signals additively when tasked with localizing a source but be dominated by auditory cues when tasked with detecting the source’s presence.

In summary, our data suggest that MOs neurons learn to additively integrate evidence from visual and auditory stimuli, producing a population representation that persists even outside the task and is suitable in the task for guiding a downstream circuit that makes decisions by integration-to-bound. This evidence may be conveyed to MOs via sensory cortices and then fed to downstream circuits that accumulate and threshold activity to select an appropriate action (Figure 7). Based on results in a unisensory task,95 we suspect the downstream integrator is a loop that includes MOs itself, together with basal ganglia and midbrain. As bilateral MOs inactivation slowed decision-making, but did not otherwise change behavior, we hypothesize that redundant circuitry can compensate for MOs when it is silenced.

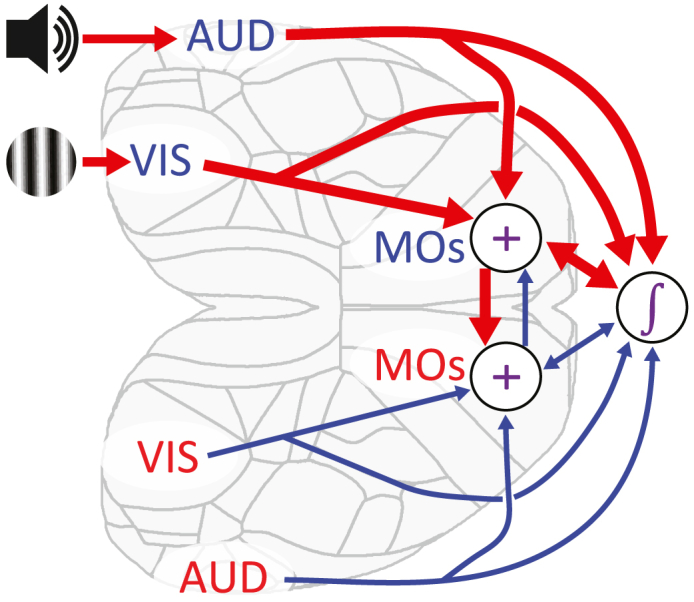

Figure 7.

Diagram of hypothesized audiovisual integration pathway through cortex

Our data suggest that visual and auditory unisensory information are conveyed via visual (VIS) and auditory (AUD) sensory cortices to MOs, where a bilateral representation results from interhemispheric connections. A downstream integrator, distributed over multiple brain regions, possibly including MOs itself, accumulates MOs activity, with a biased sampling of neurons responding to contralateral stimuli. An appropriate action is then determined by an integration to bound mechanism. Alternative pathways from visual and auditory cortices appear to be able to compensate for the absence of MOs activity (e.g., during bilateral inactivation).

The sensory code we observed in MOs has some apparently paradoxical features, but these would not prevent its efficient use by a downstream accumulator. First, a neuron’s preference for visual location showed no apparent relation to its preference for auditory location, consistent with reports from multisensory neural populations in primates6,37 and rats.29 Such “mixed selectivity” might allow downstream circuits to quickly learn to extract relevant feature combinations.91,92,93,94 Neurons encoding incoherent stimulus locations would not prevent a downstream decision circuit from learning to respond correctly; they could be ignored in the current task, but they would provide flexibility should task demands change. Second, although an approximately equal number of MOs neurons in each hemisphere preferred left and right stimuli of either modality, inactivation of MOs caused a lateralized effect on behavior. This apparent contradiction could be resolved if a specific subset of cortical neurons showed lateral bias74 or if the downstream decision circuit weighted MOs neurons in a biased manner. Indeed, midbrain neurons encoding choices in a similar task are highly lateralized,95 and the subcortical circuits connecting MOs to midbrain stay largely within each hemisphere. Indeed, when we constrained the accumulator model so that MOs neurons only contribute to contralateral choices, we reproduced the lateralized effects of MOs inactivation. Whether this downstream bias exists, and whether it depends on specific neural subtypes, is a question for future studies.

STAR★Methods

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Deposited data | | |
| Mouse Common Coordinate Framework | Allen Institute | https://doi.org/10.1016/j.cell.2020.04.007 |
| Sorted spikes, behavioral data | This paper | https://github.com/pipcoen/2023_CoenSit |
| Experimental models: Organisms/strains | | |
| Mouse: C57BL/6J | Jackson Labs | RRID: IMSR_JAX:000664 |
| Mouse: Ai32 | Jackson Labs | RRID: IMSR_JAX:012569 |
| Mouse: PV-Cre | Jackson Labs | RRID: IMSR_JAX:008069 |
| Software and algorithms | | |
| MATLAB version 2022b | The MathWorks, USA | www.mathworks.com |
| Rigbox/Signals | Bhagat et al., 202096 | https://github.com/cortex-lab/Rigbox |
| kilosort2 | Pachitariu et al., 201697 | https://github.com/MouseLand/Kilosort/tree/v2.0 |
| Phy | Cyrille Rossant | https://github.com/cortex-lab/phy |
| Motion analysis | Stringer et al., 201968 | https://github.com/MouseLand/facemap/tree/v1.0.0 |
| Python version 3.7.11 | Python Software Foundation | https://www.python.org |
| Python and MATLAB code to analyze data | This paper | https://github.com/pipcoen/2023_CoenSit, https://doi.org/10.5281/zenodo.7892397 |
| Other | | |
| Video displays | Adafruit | LP097QX1 |
| Fresnel lenses | Wuxi Bohai Optics, China | BHPA220-2-5 |
| Diffusing film | Window Film Company | Frostbite |
| Neuropixels probes | imec, Belgium | www.neuropixels.org |

Resource availability

Lead contact

Further information and requests for resources should be directed to the lead contact, Philip Coen (p.coen@ucl.ac.uk).

Materials availability

This study did not generate new unique reagents.

Experimental model and study participant details

Mice

Experimental procedures were conducted according to the UK Animals Scientific Procedures Act (1986) and under personal and project licenses released by the Home Office following appropriate ethics review.

Experiments were performed on 3 male and 15 female mice, aged between 9 and 21 weeks at time of surgery. The sex of the mice used did not influence the results. For all experiments, we used either transgenic mice expressing ChR2 in Parvalbumin-positive inhibitory interneurons (Ai32 [Jax #012569, RRID:IMSR_JAX: 012569] x PV-Cre [Jax #008069, RRID:IMSR_JAX: 008069]) or wild type C57BL/6J [Jackson Labs, RRID:IMSR_JAX:000664]. 17 mice contribute to behavioral data (Figure 1), 5 mice contribute to optogenetic inactivation data (Figure 2), and 6/4 mice contribute to electrophysiological recordings in trained/naive mice (Figures 3, 4, 5, and 6). Behavioral data (Figure 1) comprised both sessions without any optogenetic inactivation and non-inactivation trials within optogenetic experiments. Mice were either single-housed or co-housed in individually ventilated cages at the Biological Services Unit in University College London.

Method details

Terminology

Here, we define some terms used throughout the methods and manuscript. A “stimulus condition” refers to a particular combination of auditory and visual stimuli; for example, a visual stimulus of 40% contrast on the left and an auditory stimulus presented on the right. A “stimulus type” refers to a category that may comprise several stimulus conditions. We define five different stimulus types: unisensory auditory, unisensory visual, coherent, conflict, and neutral. “Unisensory auditory” trials are when an auditory stimulus is presented on the left or right, and contrast is zero (gray screen). “Unisensory visual” trials are when a stimulus of any contrast greater than zero is presented on the left or right, and the auditory stimulus is presented in the center (during behavior) or is absent (during passive conditions). “Coherent” trials are when a visual stimulus with non-zero contrast is presented on the same side as an auditory stimulus. “Conflict” trials are when a visual stimulus with non-zero contrast is presented on a different side from an auditory stimulus. “Neutral” trials are when the visual contrast is zero and the auditory stimulus is presented in the center. We refer to a single experimental recording (whether purely behavior, or combined with optogenetic inactivation or electrophysiology) as a “session.” Sessions can vary in duration and number of trials. Throughout the manuscript, “t test” indicates a two-sided t test unless otherwise specified. When referring to inactivation we use the term “site” to refer to a single target location (of which there were 52 in total) on dorsal cortex and “region” to refer to a collection of sites (3 sites in each case) in visual, lateral, somatosensory, or frontal cortex.
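The five stimulus types defined above amount to a small decision rule over the visual and auditory settings of a trial. The sketch below encodes that rule. The function name and argument encoding (contrast in percent, sides as strings, `None` for an absent sound) are assumptions made for illustration.

```python
def stimulus_type(visual_contrast, visual_side, auditory_side):
    """Classify a trial into one of the five stimulus types.

    visual_contrast: contrast in percent (0 means gray screen).
    visual_side: 'left' or 'right' (ignored when contrast is 0).
    auditory_side: 'left', 'right', 'center', or None (absent, passive only).
    """
    has_visual = visual_contrast > 0
    lateral_sound = auditory_side in ("left", "right")
    if not has_visual:
        if lateral_sound:
            return "unisensory auditory"
        if auditory_side == "center":
            return "neutral"
        raise ValueError("no stimulus presented")
    if not lateral_sound:
        # Central sound (behavior) or no sound (passive conditions).
        return "unisensory visual"
    return "coherent" if visual_side == auditory_side else "conflict"

print(stimulus_type(40, "left", "right"))
```

The example call corresponds to the stimulus condition given in the text (40% contrast on the left with sound on the right), which falls under the conflict type.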

Surgery

A brief (around 1 h) initial surgery was performed under isoflurane (1–3% in O2) anesthesia to implant a steel headplate (approximately 25 × 3 × 0.5 mm, 1 g) and, in most cases, a 3D-printed recording chamber. The chamber comprised two pieces of opaque polylactic acid which combined to expose an area approximately 4 mm anterior to 5 mm posterior to bregma, and 5 mm left to 5 mm right, narrowing near the eyes. The implantation method largely followed established methods60 and has been previously described.98 In brief, the dorsal surface of the skull was cleared of skin and periosteum. The lower part of the chamber was attached to the skull with cyanoacrylate (VetBond; World Precision Instruments) and the gaps between the chamber and skull were filled with L-type radiopaque polymer (Super-Bond C&B, Sun Medical). A thin layer of cyanoacrylate was applied to the skull inside the cone and allowed to dry. Thin layers of UV-curing optical glue (Norland Optical Adhesives #81, Norland Products) were applied inside the cone and cured until the exposed skull was covered. The head plate was attached to the skull over the interparietal bone with Super-Bond polymer. The upper part of the cone was then affixed to the headplate and lower cone with a further application of polymer. After recovery, mice were treated with carprofen for three days, then acclimated to handling and head-fixation before training.

Audiovisual behavioral task

The two-alternative forced choice task design was an extension of a previously described visual task.53 It was programmed in Signals, part of the Rigbox MATLAB package.96 Mice sat on a plastic apparatus with their forepaws on a rigid, rubber Lego wheel affixed to a rotary encoder (Kubler 05.2400.1122.0360). A plastic tube for delivery of water rewards was placed near the subject’s mouth.

Visual stimuli were presented using three computer screens (Adafruit, LP097QX1), arranged at right angles to cover 135° azimuth and 45° elevation, where 0° is directly in front of the subject. Each screen was roughly 11 cm from the mouse’s eyes at its nearest point and refreshed at 60 Hz. Intensity values were linearized53 with a photodiode (PDA25K2, Thor labs). The screens were fitted with Fresnel lenses (Wuxi Bohai Optics, BHPA220-2-5) to ameliorate reductions in luminance and contrast at larger viewing angles, and these lenses were coated with scattering window film (‘frostbite’, The Window Film Company) to reduce reflections. Visual stimuli were flashing vertical Gabors presented with a 9° Gaussian window, spatial frequency 1/15 cycles per degree, vertical position 0° (i.e. level with the mouse) and phase randomly selected on each trial. Stimuli flashed at a constant rate of 8Hz, with each presentation lasting for 50 ms (with some jitter due to screen refresh times).

Auditory stimuli were presented using an array of 7 speakers (102-1299-ND, Digikey), arranged below the screens at 30° azimuthal intervals from −90° to +90° (where −90°/+90° is directly to the left/right of the subject). Speakers were driven with an internal sound card (STRIX SOAR, ASUS) and custom 7-channel amplifier (http://maxhunter.me/portfolio/7champ/). The frequency response of each speaker was individually estimated in situ with white noise playback recorded with a calibrated microphone (GRAS 40BF 1/4″ Ext. Polarized Free-field Microphone). For each speaker, a compensating filter was generated to flatten the frequency response using the Signal Processing Toolbox in MATLAB. Throughout all sessions, we presented white noise at 50 dbSPL to equalize background noise between different training and experimental rigs.

Auditory stimuli were 50 ms pulses of filtered pink noise (8–16 kHz, 75–80 dbSPL), with 16 ms sinusoidal onset/offset ramps. To ensure mice did not entrain to any residual difference in the frequency response of the speakers, auditory stimuli were further modulated on each trial by a filter selected randomly from 100 pre-generated options, which randomly amplified and suppressed different frequency components within the 8–16 kHz range. As with visual stimuli, sound pulses were presented at a rate of 8 Hz. On multisensory trials, the modulation of visual and auditory stimuli was synchronized, but software limitations and hardware jitter resulted in visual stimuli preceding auditory stimuli by 10 ± 12 ms (mean ± SD).
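The timing of the sound pulses can be sketched as an amplitude envelope plus a list of onset times. The example assumes that "sinusoidal ramp" means a quarter-cycle sine rise and fall; the exact ramp shape is not specified in the text, and the function names are hypothetical. Noise generation and filtering are omitted.

```python
import math

def pulse_envelope(t_ms, pulse_ms=50.0, ramp_ms=16.0):
    """Amplitude (0 to 1) of one sound pulse at time t_ms after pulse onset."""
    if t_ms < 0 or t_ms > pulse_ms:
        return 0.0
    if t_ms < ramp_ms:
        # Onset ramp (assumed quarter-sine rise).
        return math.sin(0.5 * math.pi * t_ms / ramp_ms)
    if t_ms > pulse_ms - ramp_ms:
        # Offset ramp (assumed quarter-sine fall).
        return math.sin(0.5 * math.pi * (pulse_ms - t_ms) / ramp_ms)
    return 1.0

def pulse_onsets_ms(rate_hz=8.0, n_pulses=4):
    """Onset times of successive pulses presented at a fixed rate."""
    period_ms = 1000.0 / rate_hz
    return [i * period_ms for i in range(n_pulses)]

print(pulse_onsets_ms())
print([round(pulse_envelope(t), 3) for t in (0, 8, 16, 25, 42, 50)])
```

At 8 Hz the pulses start every 125 ms, so each 50 ms pulse is followed by 75 ms of silence.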

A trial was initiated after the subject held the wheel still for a short quiescent period (duration uniformly distributed between 0.1 and 0.25 s on each trial; Figure 1A). Mice were randomly presented with different combinations of visual and auditory stimuli (Figure S1A). Visual stimuli varied in azimuthal position (−60° or +60°) and contrast (0%, 10%, 20%, 40%, and 80%, and also 6% in a subset of mice). On unisensory auditory trials, contrast was zero (gray screen). Auditory stimuli varied only in azimuthal position: −60°, 0°, or +60°; on unisensory visual trials, auditory stimuli were positioned at 0°. A small number of “neutral trials” had zero visual contrast and an auditory stimulus at 0°. The ratio of unisensory visual/unisensory auditory/multisensory coherent/multisensory conflict/neutral trials varied between sessions but was approximately 10/10/5/5/1, and stimulus side was selected randomly on each trial. When a mouse was trained with 5 auditory azimuth locations (Figures S1K–S1L), the additional azimuths were −30° and +30°. A central auditory cue was chosen, rather than an absence of auditory stimuli, to avoid the auditory stimulus acting as a “trial onset” cue. However, for experiments with bilateral inactivation (Figures S5Q–S5X), this central auditory stimulus was removed to ensure that the effects of inactivating posterior parietal cortex on visual trials could not be attributed to a change in perception of this auditory cue.
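The trial-type ratio can be read as a set of sampling weights. The sketch below draws trial types with those weights using the standard library. It treats every trial as an independent draw, which is an assumption (it ignores trial repetitions and session-to-session variation), and the function name is hypothetical.

```python
import random

TRIAL_TYPES = ["unisensory visual", "unisensory auditory",
               "coherent", "conflict", "neutral"]
RATIO = [10, 10, 5, 5, 1]

def sample_trial_types(n_trials, seed=0):
    """Draw trial types independently with probabilities proportional to RATIO."""
    rng = random.Random(seed)
    return rng.choices(TRIAL_TYPES, weights=RATIO, k=n_trials)

# Expected proportion of each trial type implied by the ratio.
expected = {t: w / sum(RATIO) for t, w in zip(TRIAL_TYPES, RATIO)}
session = sample_trial_types(10000)
print({t: round(p, 3) for t, p in expected.items()})
```

Under this ratio, neutral trials make up only 1 in 31 trials, and unisensory trials of each modality make up roughly a third of a session.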

After stimulus onset there was a 500 ms open-loop period, during which the subject could turn the wheel without penalty, but stimuli were locked in place and rewards could not be earned. This period was included to disambiguate sensory responses from wheel movement, as stimulus and wheel movement are perfectly correlated during the closed-loop period. The mice nevertheless typically responded during this open-loop period (Figure S1F). At the end of the open-loop period, an auditory Go cue was delivered through all speakers (10 kHz pure tone for 0.1 s) and a closed-loop period began in which the stimulus position (visual, auditory, or both) became coupled to movements of the wheel. Wheel turns in which the top surface of the wheel was moved to the subject’s right led to rightward movements of stimuli on the speaker array and/or screen, that is, a stimulus on the subject’s left moved toward the central screen. For visual or auditory stimuli, the position updated at the screen refresh rate (60 Hz) or the rate of stimulus presentation (8 Hz), respectively. In trials where auditory stimuli were presented at 0°, the auditory stimulus did not move throughout the trial. A left or right turn was registered when the wheel was turned by an amount sufficient to move the stimulus by 60° in either azimuthal direction (∼30° of wheel rotation, although this varied across mice/sessions); if this had not occurred within 1 s of the auditory Go cue, the trial was recorded as a “timeout.” On unisensory visual, unisensory auditory, and multisensory coherent trials, the subject was rewarded for moving the stimulus to the center. If these trials ended with an incorrect choice, or a timeout, then the same stimulus conditions were repeated up to a maximum of 9 times. In neutral and conflicting multisensory trials, left and right turns were rewarded with 50% probability (Figure S1A), and trials were only repeated in the event of a timeout, not an unrewarded choice.
An incorrect choice or timeout resulted in an extra 2 s delay before the next trial for all stimulus conditions. After a trial finished (i.e. after either reward delivery or the end of the 2 s delay), an inter-trial interval of 1.5–2.5 s (uniform distribution) occurred before the software began to wait for the next quiescent period. Behavioral sessions were terminated at experimenter discretion once the mouse stopped performing the task (typically 1 h).
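The reward and repetition rules in this paragraph can be summarized as a small function. This is a sketch under stated assumptions: `correct_choice` denotes the turn that centers the stimulus, the cap of 9 repetitions is not tracked, and the function and argument names are hypothetical rather than taken from the task code.

```python
import random

def trial_outcome(stim_type, correct_choice, choice, rng=random):
    """Return (rewarded, repeat_trial) for one completed trial.

    choice: 'left', 'right', or 'timeout'.
    correct_choice: the turn that centers the stimulus; ignored on
    neutral and conflict trials, which have no correct answer.
    """
    if choice == "timeout":
        return False, True  # timeouts are repeated for every stimulus type
    if stim_type in ("neutral", "conflict"):
        # Either turn is rewarded with 50% probability; never repeated after a choice.
        return rng.random() < 0.5, False
    rewarded = choice == correct_choice
    return rewarded, not rewarded  # incorrect choices trigger a repeat

print(trial_outcome("coherent", "left", "left"))
print(trial_outcome("unisensory visual", "left", "right"))
```

Any unrewarded outcome, whether an incorrect choice or a timeout, would additionally incur the 2 s delay described above.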

Mice were trained in stages (Figure S1B). First, they were trained to 70% performance with only coherent trials; then auditory, visual, and neutral/conflict trials were progressively introduced based on experimenter discretion. Using this training protocol, of mice learned the task, and those that did learn reached the final stage in <30 sessions (Figure S1C).

Optogenetic inactivation

For optogenetic inactivation experiments (Figures 2, S4, and S5) we inactivated several cortical sites through the skull using a blue laser,59,60,61,62 in transgenic mice expressing ChR2 in Parvalbumin-expressing inhibitory interneurons (Ai32 x PV-Cre). Unilateral inactivation was achieved using a pair of mirrors mounted on galvo motors (GVSM002-EC/M, Thor labs) to orient the laser (L462P1400MM, Thor labs) to different points on the skull. On every trial, custom code drove the galvo motors to target one of 52 different coordinates distributed across the cortex (Figure 2A), along with 2 control targets outside of the brain (Figure S4C). A 3D-printed isolation cone prevented laser light from reaching the screens and influencing behavior. Inactivation coordinates were defined stereotaxically from bregma and were calibrated on each session. Anterior-posterior (AP) positions were distributed across 0, ±1, ±2, ±3, and −4 mm. Medial-lateral (ML) positions were distributed across ±0.6, ±1.8, ±3.0, and ±4.2 mm. On 75% of randomly interleaved trials, the laser (40 Hz sine wave, 462 nm, 3 mW) illuminated a pseudorandom location from stimulus onset until the end of the response window 1.5 s later (both open and closed loop periods, irrespective of mouse reaction time). The laser was not used on trial repetitions due to incorrect choices or timeouts. Pseudorandom illumination meant that a single cortical site was inactivated on only 1.4% of trials per session. This discouraged adaptation effects but required combining data across sessions for analyses. The galvo-mirrors were repositioned on every trial, irrespective of whether the laser was used, so auditory noise from the galvos did not predict inactivation. For bilateral optogenetic inactivation (Figures S5Q–S5X), the same strategy was used, but the galvo motors flipped between two locations at 40 Hz, effectively providing 20 Hz stimulation at each location.
The laser power was reduced to zero when the galvo motors moved between locations. This resulted in a reduced laser power of 2 mW.
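The per-site inactivation rate quoted above follows from the trial proportions. The sketch below is illustrative only: it assumes the target is drawn uniformly from all 54 galvo targets (52 cortical plus 2 control), which the text does not state explicitly, and the function names are hypothetical rather than taken from the authors' custom code.

```python
import random

N_CORTICAL_SITES = 52  # cortical targets, from the text
N_CONTROL_SITES = 2    # control targets outside the brain, from the text
P_LASER = 0.75         # fraction of trials with the laser on, from the text


def per_site_probability(p_laser=P_LASER,
                         n_targets=N_CORTICAL_SITES + N_CONTROL_SITES):
    """Probability that one given target is illuminated on a trial.

    Assumes a uniform draw over all targets (an assumption, not stated
    in the text).
    """
    return p_laser / n_targets


def draw_trial(rng, p_laser=P_LASER,
               n_targets=N_CORTICAL_SITES + N_CONTROL_SITES):
    """Return (target_index, laser_on) for one trial.

    The galvos move to a target on every trial, whether or not the laser
    is used, so galvo noise carries no information about inactivation.
    """
    target = rng.randrange(n_targets)
    laser_on = rng.random() < p_laser
    return target, laser_on


if __name__ == "__main__":
    print(f"{100 * per_site_probability():.1f}% of trials per site")
    print(draw_trial(random.Random(0)))
```

Under this uniformity assumption, 0.75 / 54 rounds to the 1.4% reported in the text.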

To investigate the effects of inactivation at different time points (Figures 2J and 2K) in separate experiments, the laser was switched on for 25 ms (DC) at random times relative to stimulus onset (−125 to +175 ms drawn from a uniform distribution). Inactivation was randomly targeted to visual areas (VISp; −4 mm AP, ±2 mm ML) or secondary motor area (MOs; +2 mm AP, ±0.5 mm ML) on 25% of trials.

Neuropixels recordings

Recordings in behaving mice were made using Neuropixels (Phase3A;99) electrode arrays, which have 384 selectable recording sites out of 960 sites on a 1 cm shank. Probes were mounted to a custom holder (3D-printed polylactic acid piece) affixed to a steel rod held by a micromanipulator (uMP-4, Sensapex Inc.). Probes had a soldered external reference connected to ground which was subsequently connected to an Ag/AgCl wire positioned on the skull. On the first day of recording mice were briefly anesthetized with isoflurane while one or two craniotomies were made with a biopsy punch. After at least 3 h of recovery, mice were head-fixed in the usual position. The craniotomies, as well as the ground wire, were covered with a saline bath. One or two probes were advanced through the dura, then lowered to their final position at approximately 10 μm/s.

Electrophysiological data were recorded with Open Ephys.100 Raw data within the action potential band (1-pole high-pass filtered above 300 Hz) were denoised by common mode rejection (that is, subtracting the median across all channels), and spike-sorted using Kilosort97 version 2.0 (www.github.com/MouseLand/Kilosort2). Units were manually curated using Phy to remove noise and multi-unit activity.101 Each cluster of events (‘unit’) detected by a particular template was inspected, and if the spikes assigned to the unit resembled noise (zero or near-zero amplitude; non-physiological waveform shape or pattern of activity across channels), the unit was discarded. Units containing low-amplitude spikes, spikes with inconsistent waveform shapes, and/or refractory period contamination were labeled ‘multi-unit activity’ and excluded from further analysis.
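The common mode rejection step described above can be illustrated with a minimal sketch: at each sample, the median across channels is subtracted from every channel. This is a plain-Python illustration, not the authors' pipeline; the function name and the list-of-lists data layout are assumptions made for the example.

```python
from statistics import median


def common_median_reference(data):
    """Subtract the across-channel median from every channel.

    data: list of channels, each a list of samples of equal length
    (hypothetical layout chosen for illustration).
    """
    n_samples = len(data[0])
    # Median across channels, computed separately for each time sample.
    medians = [median(ch[t] for ch in data) for t in range(n_samples)]
    return [[ch[t] - medians[t] for t in range(n_samples)] for ch in data]


if __name__ == "__main__":
    raw = [[1, 2], [3, 4], [100, 6]]  # 3 channels x 2 samples
    print(common_median_reference(raw))
```

Using the median rather than the mean keeps a single high-amplitude channel from dominating the common-mode estimate.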

To localize probe tracks histologically, probes were repeatedly dipped into a centrifuge tube containing DiI before insertion (ThermoFisher Vybrant V22888 or V22885). When probes were inserted along the same trajectory for multiple sessions (Figure S6A), they were coated with DiI on the first day, and subsequent recordings were estimated to have the same trajectory within the brain (although depth was independently estimated, Figure S6B). After experiments were concluded, mice were perfused with 4% paraformaldehyde. The brain was extracted and fixed for 24 h at 4°C in paraformaldehyde before being transferred to 30% sucrose in PBS at 4°C. The brain was then mounted on a microtome in dry ice and sectioned at 80 μm slice thickness. Sections were washed in PBS, mounted on glass adhesion slides, and stained with DAPI (Vector Laboratories, H-1500). Images were taken at 4× magnification for each section using a Zeiss AxioScan, in two colors: blue for DAPI and red for DiI. Probe trajectories were reconstructed from slice images (Figure S6A) using publicly available custom code (http://github.com/petersaj/AP_histology102). For each penetration, the point along the probe where it entered the brain was manually estimated using changes in the local field potential (LFP) signal (Figure S6B). Recordings were made in both left (47 penetrations) and right (41 penetrations) hemispheres. The position of each recorded unit within the brain was estimated from its depth along the probe. For visualization, the recorded cells were mapped onto a flattened cortex using custom code (Figure 3A). Given the small size of the frontal pole, neurons in this region could not be confidently separated from MOs, and so were considered part of MOs for the purpose of this manuscript ( of MOs cells; excluding these cells did not significantly impact results).

For recordings from naive mice (Figures 5, 6C, 6D, and 6F), data were acquired with 4-shank Neuropixels 2.0 probes, which have 384 selectable recording sites out of 5,120 sites on four 1 cm shanks.103 We recorded from the 96 sites closest to the tip of each shank. Electrophysiological data for these experiments were recorded with SpikeGLX (https://billkarsh.github.io/SpikeGLX/). The same procedures were followed as above for mouse surgery and manual curation of units. Changes in LFP signal were used to detect the point at which the probe entered the brain, and only cells within 1.25 mm of the brain surface (i.e. within MOs) were included in analyses.

Passive stimulus presentation recordings

Mice were presented with task stimuli under passive conditions after each behavioral recording session. Although the wheel remained in place, stimuli were presented in open-loop (entirely uncoupled from wheel movement) and mice did not receive rewards. Unisensory auditory, unisensory visual, coherent, and conflict trials were presented to mice. On unisensory visual trials, however, the auditory amplitude was set to zero (rather than the sound being positioned at 0° as in the task) to ensure visual sensory responses could be isolated. Due to time constraints, only one coherent and one conflicting stimulus combination was presented (80% contrast in both cases), and the trial interval was reduced (randomly selected from 0.5 to 1 s). Stimulus conditions were randomly interleaved, and each condition was repeated 50 times.

Quantification and statistical analysis

Statistics

Statistical tests used, the value of n, and what n represents in each analysis can be found in the corresponding figure legend or in the STAR Methods. Where relevant, the definitions of center, dispersion, and precision measures (e.g. MAD vs. SD) are described in the text or figure legend. Sample sizes were not estimated prior to data collection. Statistical tests were selected according to the typical distribution for each data type, but we did not perform additional analyses to test the statistical assumptions of each test. Blinding of the experimenter was not applicable to these analyses. Where data were excluded, the reasoning is described in the corresponding section.

Behavioral quantification

With the exception of specific analyses of timeout trials (Figures 2L and S5I–S5P), timeouts and repeats following incorrect choices were excluded. To remove extended periods of mouse inattention at the start and end of experimental sessions, we excluded trials before/after the first/last three consecutive choices without a timeout. The 6% contrast level was included in analyses of inactivation experiments (Figure 2) as all mice contributing to these analyses were presented with 6% contrast levels, but not all behavioral and electrophysiology sessions included 6% contrast.

On 91.5% of trials (142853/156118), subjects responded to the stimulus onset by turning the wheel within the 500 ms open-loop period (Figure S1F). For data analysis purposes, we therefore calculated mouse choice and reaction time from any wheel movements after stimulus onset (Figures S1D and S1E), even though during the task, rewards would only be delivered after the open-loop period had ended. These choices were defined by the first time point at which the movement exceeded ° of wheel rotation (the exact number varied across sessions/mice, Figure S1D), the same threshold required for reward delivery during the closed-loop period. This matched the outcome calculated during the closed loop period on 94.9% of trials (148203/156118). The reaction time was defined as the last time prior to the choice threshold at which velocity crossed 0 after at least 50 ms at 0 or opposite to the choice direction, and then exceeded of the choice threshold per second for at least 50 ms (Figure S1E). On 5.1% of trials (8380/164498), no such timepoint existed or movement was non-zero within 10 ms of stimulus onset; these trials were excluded. On 38.1% of trials (59498/156118), mice made sub-threshold movements prior to their calculated reaction time. To eliminate the possibility that these earlier movements were responsible for the neural decoding of choice (Figure 3G), we repeated this analysis using only trials without any movement prior to the calculated reaction time (Figure S6C), which did not change the results.
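The choice definition above (first time point at which wheel movement exceeds the choice threshold) can be sketched as follows. This illustrates only the threshold-crossing step, not the reaction time criterion. The function name, the sign convention for direction, and the use of the position at stimulus onset as the reference are assumptions; `threshold_deg` is left as a parameter because the threshold varied across sessions and mice.

```python
def first_threshold_crossing(times, positions, threshold_deg):
    """Return (time, direction) of the first threshold crossing, or None.

    times, positions: wheel samples starting at stimulus onset.
    threshold_deg: choice threshold in degrees of wheel rotation
        (hypothetical parameter; the actual value varied by session/mouse).
    direction: +1 or -1, the sign of the displacement from the position
        at stimulus onset (sign convention assumed for illustration).
    """
    start = positions[0]
    for t, p in zip(times, positions):
        displacement = p - start
        if abs(displacement) > threshold_deg:
            return t, (1 if displacement > 0 else -1)
    return None  # no choice detected in this trace


if __name__ == "__main__":
    times = [0.0, 0.1, 0.2, 0.3]
    positions = [0, 5, -12, -40]
    print(first_threshold_crossing(times, positions, threshold_deg=10))
```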

When calculating performance for each stimulus type for a single contrast (Figures 1E, S1N, and S1P), the value for each mouse was calculated within each session before taking the mean across sessions. We then took the mean across symmetric presentations of each stimulus condition (e.g. unisensory auditory left and right trials). In the case of reaction time (Figures S1H–S1J), we calculated the median for each session before taking the mean across sessions and symmetric presentations. For relative reaction time (Figures 1B, S1O, S1Q, and 6D) we also subtracted the mean across all stimulus types for each mouse. For both performance and reaction time, differences between stimulus types were quantified with a paired t test (n = 17 mice). Using this analysis, we established that reaction times were faster on unisensory auditory trials than unisensory visual trials (Figure 1B). To confirm that the earlier movements on unisensory auditory trials were genuine choices rather than reflexive movements unrelated to the stimulus location, we predicted whether stimuli were presented on the right or left in unisensory auditory and unisensory visual trials from the wheel velocity at each timepoint after stimulus onset. Trial data were subsampled for each session (to equalize the number of stimuli appearing on the left and right) and split into test and training data (2-fold cross validation). Mean prediction accuracy was calculated by first taking the mean across sessions, then across mice. Consistent with our conclusions from calculated reaction times, auditory location could be decoded earlier than visual location (Figure S1G). This indicates that mice were able to identify the location of an auditory stimulus earlier than a visual stimulus.
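The order of aggregation for relative reaction time (median within session, mean across sessions, mean across symmetric presentations, then subtraction of the mean across stimulus types) can be sketched for a single mouse as below. The nested-dictionary layout and the function name are hypothetical, chosen only to make the order of operations explicit.

```python
from statistics import mean, median


def relative_reaction_times(rts):
    """Relative reaction time per stimulus type for one mouse.

    rts: {stimulus_type: {side: [session_rts, ...]}}, where each
    session_rts is a list of reaction times (hypothetical layout).
    """
    per_type = {}
    for stim, sides in rts.items():
        # Median within each session, then mean across sessions, per side.
        side_means = [mean([median(s) for s in sessions])
                      for sessions in sides.values()]
        # Mean across symmetric (left/right) presentations.
        per_type[stim] = mean(side_means)
    # Subtract this mouse's mean across all stimulus types.
    grand_mean = mean(list(per_type.values()))
    return {stim: value - grand_mean for stim, value in per_type.items()}


if __name__ == "__main__":
    example = {
        "auditory": {"left": [[0.1, 0.2, 0.3], [0.2]], "right": [[0.2]]},
        "visual": {"left": [[0.3]], "right": [[0.3]]},
    }
    print(relative_reaction_times(example))
```

Repeating this for each mouse would give the paired per-mouse values that the paired t test compares between stimulus types.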

Video motion energy analysis

Because neural activity across the brain is related to bodily motion,68,69 we asked if mice still respond to stimuli in the passive condition. We filmed the mouse at 30 frames per second (DMK 23U618, The Imaging Source). We quantified the motion energy on each trial by averaging the absolute temporal difference in the pixel intensity values, across all pixels in a region of interest including the face and paws, and across a time period 0 to 400 ms after stimulus onset, which typically included the mouse response during behavior (Figure S1F). This analysis established that mice exhibit minimal movement in response to task stimuli during passive conditions (Figure S7T).
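A minimal sketch of the motion energy measure described above: the absolute frame-to-frame difference in pixel intensity, averaged across region-of-interest pixels and across frames in the 0 to 400 ms window after stimulus onset. The flattened-ROI frame layout and the function name are assumptions for illustration.

```python
def motion_energy(frames, frame_times, stim_onset, window=(0.0, 0.4)):
    """Mean absolute temporal difference in pixel intensity.

    frames: list of frames, each a flat list of ROI pixel intensities
        (hypothetical layout; the ROI is assumed to be already cropped).
    frame_times: acquisition time of each frame, in seconds.
    stim_onset: stimulus onset time, in seconds.
    window: analysis window relative to stimulus onset, in seconds.
    """
    per_frame = []
    for i in range(1, len(frames)):
        t = frame_times[i] - stim_onset
        if window[0] <= t <= window[1]:
            prev, cur = frames[i - 1], frames[i]
            # Average absolute difference across all ROI pixels.
            diff = sum(abs(c - p) for c, p in zip(cur, prev)) / len(cur)
            per_frame.append(diff)
    # Average across frames in the window (0.0 if the window is empty).
    return sum(per_frame) / len(per_frame) if per_frame else 0.0


if __name__ == "__main__":
    frames = [[0, 0], [2, 4], [2, 4], [10, 10]]
    times = [0.0, 0.1, 0.2, 0.9]
    print(motion_energy(frames, times, stim_onset=0.0))
```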

Wheel movement during active vs. passive conditions

To ask whether mice might still move the wheel in response to stimuli during passive stimulus presentation (Figures S7U–S7V) we calculated the absolute difference in wheel position between stimulus onset time and 0.5 s post-stimulus onset for five mice, and then took the mean across mice. We compared this value to a shuffled distribution generated from the same trials, using the same method, but with the stimulus onset time randomized within each trial (this process was repeated 1000 times). The wheel position at 0.5 s after stimulus onset was considered significant if the unshuffled value was in the top 5% of the values in the shuffled distribution.
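The shuffle comparison above can be sketched as a permutation-style test. This simplified version handles one set of trials rather than averaging across five mice, and it works in sample indices rather than seconds; the function names and data layout are hypothetical.

```python
import random


def mean_abs_displacement(traces, onsets, lag):
    """Mean |position(onset + lag) - position(onset)| across trials."""
    return sum(abs(tr[o + lag] - tr[o])
               for tr, o in zip(traces, onsets)) / len(traces)


def shuffle_p_value(traces, onsets, lag, n_shuffles=1000, seed=0):
    """Fraction of shuffles with displacement >= the observed value.

    traces: per-trial wheel position samples (hypothetical layout).
    onsets: sample index of stimulus onset in each trial.
    lag: number of samples corresponding to 0.5 s.
    Shuffling re-draws the onset index at random within each trial.
    """
    rng = random.Random(seed)
    observed = mean_abs_displacement(traces, onsets, lag)
    n_extreme = 0
    for _ in range(n_shuffles):
        fake_onsets = [rng.randrange(len(tr) - lag) for tr in traces]
        if mean_abs_displacement(traces, fake_onsets, lag) >= observed:
            n_extreme += 1
    return n_extreme / n_shuffles


if __name__ == "__main__":
    # 20 trials with a wheel step shortly after onset (index 48).
    moving = [[0] * 50 + [10] * 50 for _ in range(20)]
    p = shuffle_p_value(moving, [48] * 20, lag=5)
    print("significant" if p < 0.05 else "not significant", p)
```

A value below 0.05 corresponds to the observed displacement lying in the top 5% of the shuffled distribution.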

Psychometric modeling

The model we use throughout the text, the (parametric) additive model, is given by the equation