Abstract

The use of Augmented Reality (AR) for navigation purposes has shown beneficial in assisting physicians during the performance of surgical procedures. These applications commonly require knowing the pose of surgical tools and patients to provide visual information that surgeons can use during the performance of the task. Existing medical-grade tracking systems use infrared cameras placed inside the Operating Room (OR) to identify retro-reflective markers attached to objects of interest and compute their pose. Some commercially available AR Head-Mounted Displays (HMDs) use similar cameras for self-localization, hand tracking, and estimating the objects’ depth. This work presents a framework that uses the built-in cameras of AR HMDs to enable accurate tracking of retro-reflective markers without the need to integrate any additional electronics into the HMD. The proposed framework can simultaneously track multiple tools without having previous knowledge of their geometry and only requires establishing a local network between the headset and a workstation. Our results show that the tracking and detection of the markers can be achieved with an accuracy of on lateral translation, on longitudinal translation and for rotations around the vertical axis. Furthermore, to showcase the relevance of the proposed framework, we evaluate the system’s performance in the context of surgical procedures. This use case was designed to replicate the scenarios of k-wire insertions in orthopedic procedures. For evaluation, seven surgeons were provided with visual navigation and asked to perform 24 injections using the proposed framework. A second study with ten participants served to investigate the capabilities of the framework in the context of more general scenarios. Results from these studies provided comparable accuracy to those reported in the literature for AR-based navigation procedures.

Index Terms—: Augmented Reality, Computer-Assisted Medical Procedures, Navigation, Tracking

1. Introduction

IN recent years, an increasing number of Augmented Reality (AR) applications have found their use in medical domains, including educational, training, and surgical interventions [1], [2], [3], [4]. Among the multiple technologies capable of delivering augmented content, using AR Head-Mounted Displays (HMDs) has proven beneficial to aid physicians during surgical interventions [5], [6], [7], [8]. The use of HMDs in the Operating Room (OR) allows for the observation of relevant content in-situ, enables delivering visual guidance and navigation, promotes the visualization and understanding of diverse bi-dimensional and three-dimensional medical imaging modalities, and facilitates training and communication [9], [10].

A fundamental requirement to provide visual guidance and surgical navigation using AR is to know the threedimensional pose of the elements involved in the task (i.e., their position and orientation). Although multiple technologies can be used to estimate the pose of the objects of interest, optical tracking is one of the most common due to its computational simplicity, cost-effectiveness, and the availability of cameras used in AR applications [11]. This tracking technology uses computer vision algorithms to identify textural patterns or geometrical properties of objects of interest, from which their dimensions are well known, to estimate their pose.

In the medical context, providing surgical navigation requires knowing the pose of the patients, surgical tools, and, occasionally, the surgeons and the medical team’s head. Commercially available medical-grade tracking systems estimate the pose of the objects of interest using infrared cameras that detect the location of retro-reflective markers rigidly attached to them. These retro-reflective markers are arranged in unique configurations to distinguish between the multiple objects observed in the scene. Commercially available HMDs, use built-in infrared cameras for self-localization, hand tracking, and estimation of the objects’ depth in the environment. Thus, these cameras can also be used to locate the retro-reflective markers used in medicalgrade tracking systems1 [12], [13]. While combining the accuracy provided by medical-grade tracking systems with the visualization capabilities of AR HMDs could support the integration of these devices into surgical scenarios, simultaneously maintaining the fiducial markers in the line of sight of the HMD and the tracking system in a cluttered workspace may hinder its use in the OR. As an alternative, using the HMD for combined visualization and tracking of these markers may mitigate such challenges. However, the accuracy and feasibility of using cameras and sensors that may not have the accuracy and field of view of conventional tracking systems require detailed investigation. Therefore, it is essential to establish a framework capable of performing this task and investigate the accuracy of combined tracking and visualization when only using HMDs.

This work introduces a method that uses the builtin Time-of-Flight (ToF) sensor of commercially available HMDs2 to define, detect and localize passive retro-reflective markers commonly used during navigation-assisted medical procedures. This type of sensor provides a more extensive resolution range (16-bit) compared to existing approaches that use grayscale cameras (8-bit). In addition, this sensor is unaffected by ambient visible light [14], making it more suitable for applications with challenging lighting conditions. To illustrate the benefits of the proposed framework, we present a use case in the context of medical applications to enable navigation capabilities and aid physicians in performing percutaneous surgical procedures using the built-in sensors of the Microsoft HoloLens 2.

The main contribution of this work, as compared to the limited existing literature on the use of the AR HMDs’ builtin IR sensors for tool tracking purposes, is as follows: 1) We propose a framework that can identify retro-reflective markers and define rigid tools on-the-fly. This framework can also track multiple tools in 6 DoF with comparable accuracy to that offered by medical grade tracking systems simultaneously. These capabilities are provided without the need to modify the headset or integrate any additional hardware. To our knowledge, this is the first time that these capabilities have been integrated into off-the-shelf AR HMDs. 2) We validate the proposed framework with a comprehensive evaluation, including its stability, repeatability, and accuracy (Section 4) and its potential applicability in experiments involving real users (Section 5). 3) To enable the reproducibility of our work and facilitate its use by other researchers, we have made our code open-source.3 The source code incorporates multiple functionalities, such as wireless high-framerate data transfer for HoloLens 2 research mode, real-time sensor data preview, on-the-fly tool definition, simultaneous multi-tool detection, and tool pose visualization in 3D Slicer. Although this work uses the medical domain to exemplify the capabilities of the proposed framework, its applicability can be extended to other applications that use AR HMDs and demand reliable tracking of objects for performing tasks in the personal space. We envision that the experiments and resources provided in this manuscript can aid researchers in deciding if such devices are suitable for their specific applications.

2. Related Work

Using commercially available AR HMDs for medical applications has found application in several surgical fields. In spine surgery, providing surgical navigation for pedicle screw placement using AR HMDs has shown shorter placement time when compared to traditional procedures [15] and comparable accuracy to robotic-assisted computer-navigated approaches [16]. In addition, it has supported spinal pedicle guide placement for cannulation without using fluoroscopy [17] and enabled the registration between the patient’s anatomy and three-dimensional models containing surgical planning data [18]. This technology has also been used to provide visual guidance during the performance of osteotomies, contributing to an increase in the accuracy achieved by inexperienced surgeons compared to freehand procedures [19]. Additional studies have explored the potential of using AR HMDs to support users during the performance of hip arthroplasties, showing comparable results to those achieved using commercial computer-assisted orthopedic systems [20], [21]. Even more, using AR HMDs reduces the events during which the surgeons deviate their attention from the surgical scene or are exposed to fluoroscopy radiation due to unprotected parts when they turn their bodies [22], [23].

Different works have used the built-in sensors of commercially available HMDs to estimate the pose of objects of interest in medical applications. These approaches avoid incorporating external tracking systems and additional devices into the already cluttered OR. On the one hand, identifying markers in the visible spectrum using the built-in RGB cameras has been used to investigate the feasibility of using AR in surgical scenarios [18], [20]. The identification of this type of marker is not only limited to the visible spectrum and has also been investigated using multimodal images [24]. However, the low accuracy of the registration and motion tracking may hinder their successful integration into the surgical workflow [18]. On the other hand, the use of infrared cameras to track retro-reflective markers has been adopted by surgical grade tracking systems as they fulfill the accuracy requirements to provide surgical navigation. While commercially available HMDs frequently incorporate infrared cameras for self-localization, hand tracking, or depth estimation, navigation accuracy analysis with these cameras requires further investigation.

One of the initial works proposing the use of the built-in cameras of AR HMDs for the detection and tracking of retroreflective markers was proposed by Kunz et al. [12]. This work introduced two different approaches to detecting the markers using the built-in sensors of commercially available AR HMDs (i.e., Microsoft HoloLens 12). A first approach combined the reflectivity and depth information collected using the near field ToF sensor. A second approach used the frontal environmental grayscale cameras as a stereo pair to estimate the position of the objects of interest. This last approach required the addition of external infrared LEDs to illuminate the markers and increase their visibility in the grayscale images. However, using the environmental cameras provide a higher resolution than the depth camera used as part of the ToF sensor (648×480 vs. 448×450 pixels, respectively). Although an offline accuracy evaluation of both approaches is presented in this work, the tracking and implementation of the system are reported only for the depth-based approach, showing a tracking accuracy of 0.76 mm when the markers are placed at distances ranging between 40 to 60 cm. In addition, only translational and not rotational experiments were reported in this work.

Additional work presented by Gsaxner et al. [13] used the environmental grayscale cameras of the Microsoft HoloLens 22 for similar purposes. This work introduced two tracking pipelines that used stereo vision algorithms and Kalman filters to track retro-reflective markers in the context of surgical navigation. The proposed framework uses the stereo vision pipeline to initialize the tracking of the markers and a recursive single-constraint-at-a-time extended Kalman filter to keep track of the object of interest. Whenever the tracking using the Kalman filter is lost, the stereo vision pipeline is used to re-initialize the tracking of the objects. This work showed that the combination of these tracking pipelines enables real-time tracking of the markers with six degrees of freedom and an accuracy of 1.70 mm and 1.11°. Unlike Kunz et al. [12], this work investigated the tracking errors in the position and orientation of the objects. However, it required the integration of additional infrared LEDs to illuminate the retro-reflective markers.

The proposed framework differs from other works that use AR HMDs to track retro-reflective markers because it integrates algorithms for tool definition on-the-fly. This capability allows for dynamic integration and detection of tools, even when their geometry is unknown. Therefore, providing more flexibility than existing approaches. Our framework can also track and detect multiple tools simultaneously, allowing for performing tasks that involve manipulating various objects. In addition, our experiments comprehensively evaluate the proposed framework and the HMD sensors’ capabilities. Furthermore, compared to existing works, the proposed framework provides these capabilities without modifying or integrating additional hardware into the HMD. Although commercial IR tracking systems enable tool definition and multiple tool detection, our framework represents an initial step in incorporating such approaches into AR HMDs, providing comparable accuracy, and facilitating their use by other researchers.

3. Methods

This work presents a framework that uses the built-in cameras integrated into commercially available AR HMDs, to enable the tracking of retro-reflective markers without adding any external components. This section describes the necessary steps to calibrate these cameras, enable the detection of retro-reflective markers commonly attached to surgical instruments and robotic devices, identify multiple tools in the scene, and provide visual guidance to users or AR applications. To exemplify the potential of our framework, we present a use case in which the tool tracking results serve to provide visual navigation to users during the placement of pedicle screws in orthopedic surgery.

3.1. System Setup

The proposed framework was implemented using the Microsoft HoloLens 2 and a workstation equipped with an Intel Xeon(R) E5–2623 v3 CPU, an NVIDIA Quadro K4200 GPU, and 104 Gigabytes of 2133 MHz ECC Memory. The research mode provided for the HoloLens served to gain access to the device’s built-in sensors and cameras [14]. To optimize the acquisition and processing of the images collected using the HMD, we connected this device to the workstation using a USB-C to ethernet adapter and a cable that provided a 1000 Mbps transmit rate. A multi-threaded sensor data transfer system, implemented in Python via TCP socket connection, enabled the acquisition of the sensors’ data at a high frame rate and with low latency. The data transfer system was tested using two different approaches. A first approach, using DirectX, allowed to transfer and acquire the video from the cameras at an approximate framerate of 31 fps. A second approach, implemented using Unity 3D, reported an average speed of 21 fps. In addition, a user interface was designed to collect, process, and analyze the data collected from the HMD. This user interface also provided visual guidance and assisted users while performing tasks requiring the precise alignment of virtual and real objects in surgical scenarios.

3.2. Camera Calibration and Registration

Camera Calibration.

To investigate the feasibility of utilizing and combining the multiple cameras integrated into the HoloLens 2 for the tracking of retro-reflective markers, the left front (LF), right front (RF), main (RGB), and Articulated HAnd Tracking (AHAT) cameras need to be calibrated. This calibration process enables extracting the spatial relationship between the cameras and their intrinsic parameters and representing them using a unified coordinate system.

We used a checkerboard with a 9 × 12 grid containing individual squares of 2cm per side to calibrate the different sensors. The checkerboard was attached to a robotic arm, and the HoloLens was rigidly fixed on a head model to ensure steadiness during image capturing. A set of 120 pictures, acquired by placing the checkerboard at different positions and orientations using the robotic arm, were collected for each one of the cameras. The images for the RGB camera were captured using the HoloLens device portal with a resolution of 3904 × 2196 pixels, while the other cameras’ images were retrieved via the HoloLens research mode. For the AHAT camera, the calibration and registration procedure was completed using the reflectivity images.

After data collection, the images were randomly subdivided into two subsets. The first subset contained 90 pictures used for camera calibration, and the remaining 30 images served for testing. The Root Mean Square Error (RMSE) of the detected and reprojected corner points was used to evaluate the calibration and test image sets. The images selection process was repeated twenty times to ensure the repeatability of the results. The reprojection errors for the calibration and test sets resulted in pixels and pixels for the AHAT, pixels and pixels for the LF, pixels and pixels for the RF, and pixels and pixels for the RGB, respectively.

Camera Registration.

For the registration of the different cameras, a new set of 100 images were collected from the different sensors while placing the checkerboard at different poses. The checkerboard was kept steady before moving it to a different position to ensure synchronization between the multiple sensors. As an initial step, the RF camera was registered to the LF camera using the stereo camera calibration toolbox from Matlab. The following step registered the LF and RGB cameras to the AHAT. For the registration of these cameras, the three-dimensional positions of the checkerboard’s corner points were calculated using the respective intrinsic camera parameters. The extrinsic parameters between two cameras were later calculated by solving a leastsquares optimization problem:

| (1) |

where represents the three-dimensional corner points in the RGB and LF cameras, and denotes the corner points in AHAT space.

The registration error was evaluated using the RMSE between the detected corner points of one camera and the reprojected points of the other camera. The registration between the RF-LF cameras produced a 0.190 pixels reprojection error. In comparison, the registration between the LF-AHAT and RGB-AHAT cameras led to reprojection errors of 0.901 and 0.758 pixels, respectively.

In addition, the field-of-view (FoV) of every camera was estimated quantitatively in horizontal and vertical directions using the equations:

| (2) |

| (3) |

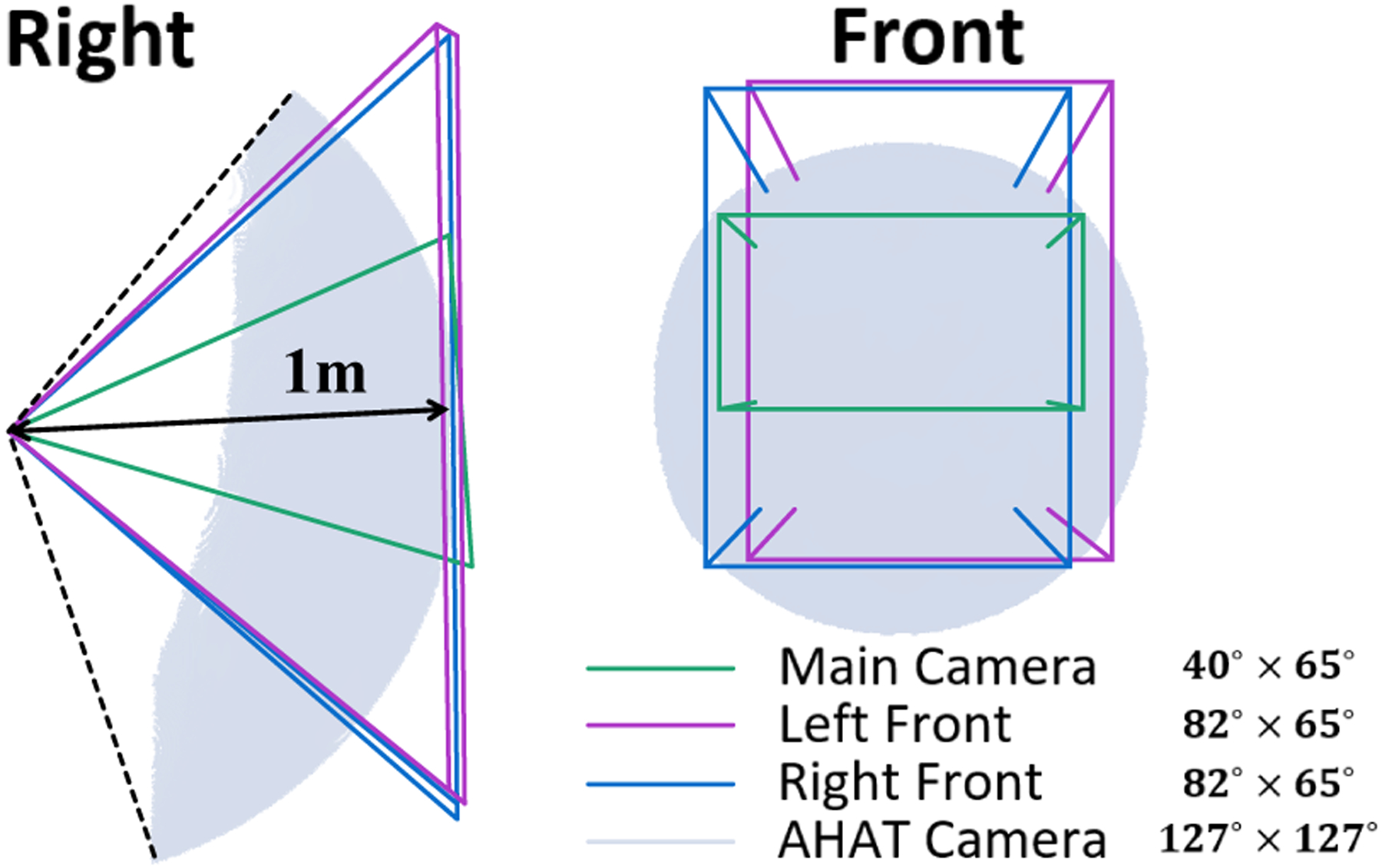

Once the registration between the cameras is complete, the different sensors can be presented in a common coordinate space using their corresponding camera parameters (see Figure 2). This figure depicts the FoV of the visible light cameras from the optical center to a unit plane using frustums. As the AHAT sensor data is only effective within a 1-meter distance, the FoV of this sensor is depicted by the furthest detection surface.

Fig. 2.

The spatial relationship between the built-in cameras of the HoloLens 2. The camera spaces of the RGB, LF, and RF cameras are displayed using frustums. The camera space of the AHAT camera is presented using a one-meter radius sphere. The FoV of all the cameras is depicted as height × width.

During the calibration process presented in this work, a angle down was observed for the AHAT compared to the other cameras. The FoV of the AHAT camera resulted in , which is about six times larger than the RGB camera and three times larger than the LF and RF cameras , making it extremely useful for the target detection within a meter distance, where most of the actions in the personal space take place. In addition, the relatively large FoV of the AHAT camera, combined with the sensor information of environmental infrared reflectivity and depth, makes this camera suitable for detecting retro-reflective markers used in surgical procedures.

3.3. Tool Tracking

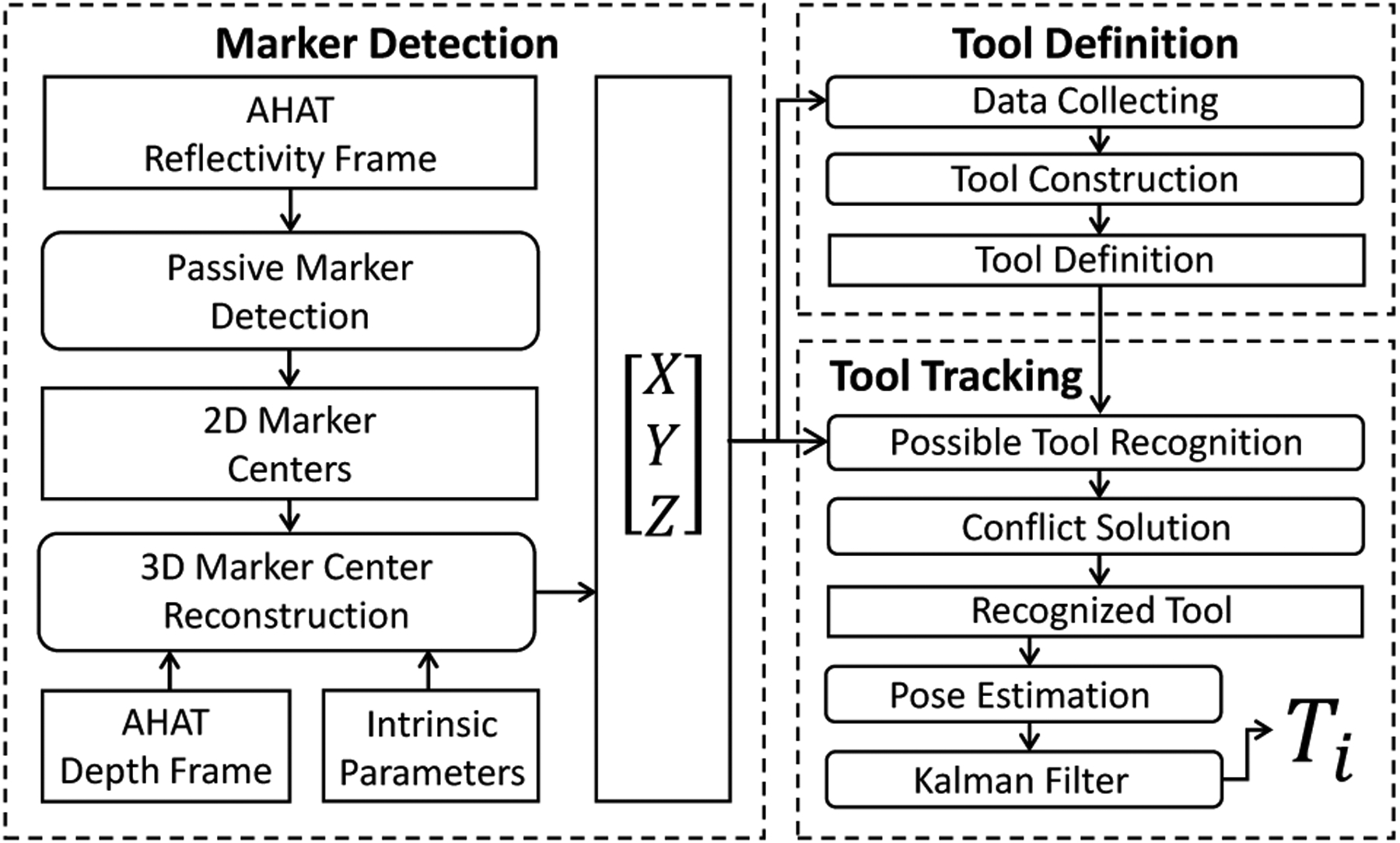

To explore the capabilities of the AHAT camera to track retro-reflective markers used in surgical scenarios, we propose a framework for the definition, recognition, and localization of tools that use this specific type of marker. As depicted in Figure 3, such a framework includes three main stages: three-dimensional marker’s center detection, tool definition, and multi-tool recognition and localization.

Fig. 3.

The proposed framework comprises three main stages. i) The three-dimensional position of all the retro-reflective markers observed using the AHAT camera is extracted from the scene. ii) The detected markers are grouped in subsets to identify particular arrangements corresponding to specific tools. iii) The pose of the identified tools is extracted from subsequent image frames.

3.3.1. Marker Detection

As an initial step, the three-dimensional positions of the individual retro-reflective markers are obtained using the intrinsic camera parameters and the reflectivity and depth images of the AHAT camera. This type of retro-reflective marker depicts extremely high-intensity values when observed in the reflectivity images of the AHAT camera. Therefore, an intensity-based threshold criterion was used to separate these objects of interest from the rest of the scene. The AHAT intensity images are represented using a 16-bit unsigned integer format. As depicted in Figure 4, most of the environment presents a reflectivity intensity below 300. At the same time, the retro-reflective markers report values larger than 500, with a peak value over 2000 at the center of the marker.

Fig. 4.

The proposed framework combines the reflectivity and depth images of the AHAT camera to detect and extract the three-dimensional position of passive retro-reflective markers. The marker detection stage facilitates identifying and defining individual tools composed of multiple spherical markers. This approach allows localizing and recognizing multiple tools in the scene without modifying the AR-HMD.

In addition, a connected component detection algorithm was used to separate individual markers from each other. Considering the environmental noise observed in the reflectivity images, including large connected high-reflection areas caused by flat reflective objects like glass or monitors or smaller areas resulting from random noise or surrounding objects, an additional threshold on the connected component area was applied to extract the individual retro-reflective markers. To estimate the area in pixels that a retro-reflective marker with radius at a distance would occupy in the image of the AHAT camera, we used the following equation:

| (4) |

where , and are the pixel size in and the focal distance in of the AHAT intrinsic parameters.

After applying the intensity-based and connected component detection algorithms, the three-dimensional position of every retro-reflective marker is calculated using the AHAT camera’s depth information , its intrinsic parameters, and the marker’s diameter. The central pixel of every marker is first back-projected into a unit plane using the intrinsic parameters. The depth information from the AHAT camera represents the distance between a certain point in the three-dimensional space and the camera’s optical center [25]. Hence, the position of this point can be expressed as follows:

| (5) |

While the calculated position corresponds to the actual center of the target when using flat markers, the radius of the target must be considered when using spherical markers. In this particular case, the estimated depth information provided by the AHAT camera corresponds to the central point on the surface of the marker and not to the center of the sphere. Therefore, when using spherical markers, the central position of the sphere can be computed as:

| (6) |

where represents the radius of the spherical marker.

3.3.2. Tool Definition

A common approach to tracking objects using retro-reflective markers involves attaching a set of these components to a rigid frame with a unique spatial distribution. This spatial information contributes to estimating the pose of a particular object and allows distinguishing between different instances that can be observed simultaneously in the scene. The procedure to define the properties of an object using our framework, from now on referred to as a tool, requires a series of steps that are described next.

First, a single image frame from the AHAT camera is used to extract the three-dimensional distribution of the retro-reflective markers that belong to a tool. The spatial distribution of such markers provides the geometrical properties of the tool and enables the generation of an initial configuration of it. This step allows identifying potential misdetection in subsequent frames by comparing the distance observed between the detected markers.

The following step uses an optimization method to calculate the shape of the rigid tool using the threedimensional positions of the markers extracted from every frame. In this step, we use to represent the set of markers , in the frame of the total collection, that defines the three-dimensional position of the individual markers with index observed in the tool definition.

The RMSE between one tool in two frames, and , is used to evaluate the difference as follows:

| (7) |

where is the Euclidean distance of the marker’s position with index observed in the frames and .

Furthermore, to estimate the optimal position of the set of markers that define a tool, the mean difference between the optimized and estimated positions in every frame are used as the minimization target as follows:

| (8) |

where I represents the total number of collected frames for the tool definition procedure.

Lastly, the mean translation of the set of markers is removed to generate the definition using:

| (9) |

Here, represents the final definition of the tool with origin at the geometric center of all the markers in the tool. This procedure is depicted in Figure 4.

3.3.3. Tool Recognition and Localization

To identify multiple tools, each with shape , from a single AHAT frame where multiple markers are detected, we estimate the Euclidean distance between the markers and tools using the following equations:

| (10) |

| (11) |

where indicates the marker in the tool or a scene, and represents the tool to be detected in the environment.

All subsets of detected markers whose shape fits the definition of the tool are found using a depthfirst graph searching algorithm; refers to the possible subset of markers that fits the target tool. To quantitatively depict the similarity between the possible solution and target tool definition, we used a loss function that considers the corresponding lengths of the tool:

| (12) |

where refers to the number of markers in the definition of the tool.

During the searching process, we define two thresholds to exclude incorrect matches. A first threshold identifies mismatches between the corresponding sides of the tool. A second threshold discerns between the obtained solution and the tool definition. These thresholds are applied as follows:

| (13) |

| (14) |

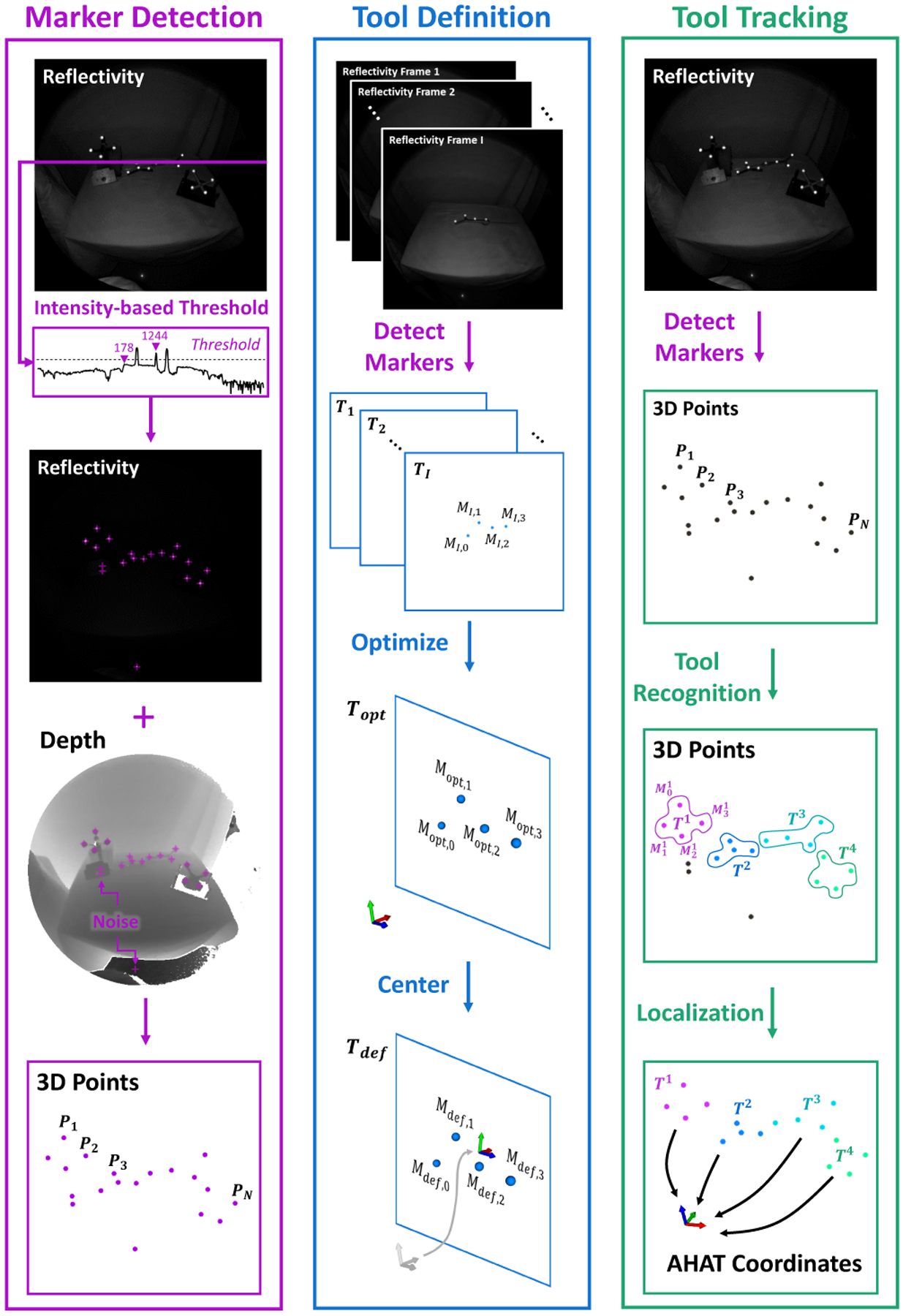

To select a proper value for these thresholds, the uncertainty of the three-dimensional position of the detected markers is considered. As shown in Figure 5c, the standard error for depth detection, , changes as a function of the depth at which the markers are observed. This value serves to calculate the maximum standard error for a side using . When a 95% probability for detection is desired, the thresholds for a single side can then be calculated as . Lastly, the error threshold for the whole tool is computed using .

Fig. 5.

(a) Experimental setup used to estimate the noise distribution provided by the built-in depth camera on the HMD. (b) The point-to-plane distance error distribution at approximately 200mm, 500mm, and 700mm depths. (c) The standard error of depth detection for every pixel versus depth. The vertical lines depict the mean depth at which the target was detected.

After the possible solutions for every tool have been extracted, the following step deals with any redundant information found in the possible solutions. This redundant information includes using the same retro-reflective marker more than once or using the same set of markers to track the same tool. When multiple solutions use the same set of markers, ordered using different index values, to estimate the pose of the same tool, the solution with the lowest error is preserved, and the remaining solutions are removed. After removing these redundant solutions, the remaining subsets are sorted according to their error using their respective tool definition . In this case, the solution with the least error is considered the reference. Any remaining solution that conflicts with the reference or aims at detecting the same tool is removed. This procedure is repeated until all the defined tools are found or no other solution exists. A final step uses an SVD algorithm to calculate the transformation matrices that map the tool coordinates into the AHAT camera space.

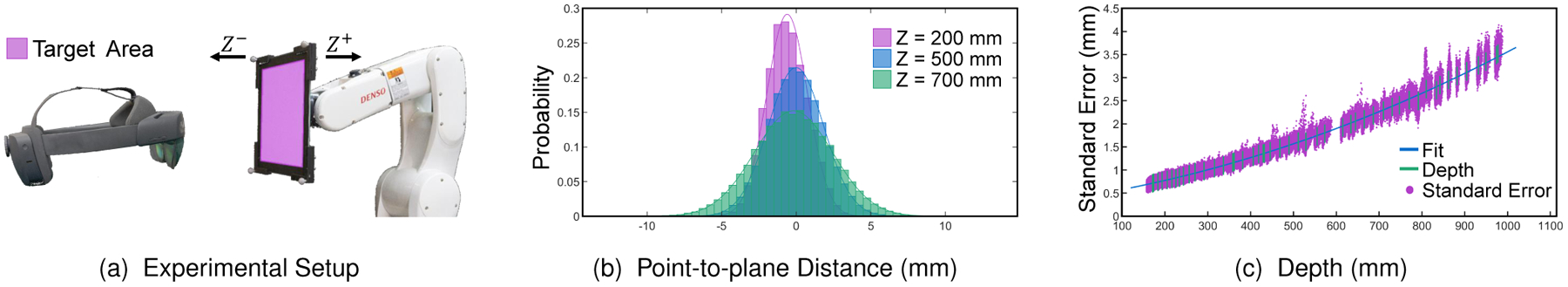

3.3.4. Precision Enhancement

In addition, we proposed using Kalman filters to enhance the precision that can be achieved with the proposed framework. On the one hand, using these filters could contribute to reducing the tremor observed by the cut-off error from the AHAT depth data. This source of error is observed because the AHAT provides an integer value corresponding to the depth detected in millimeters, limiting the maximum depth resolution to 1 mm and decreasing the tracking stability of the passive markers and tools. On the other hand, the implementation of Kalman filters could compensate for the detection error observed when using the AHAT sensor. However, to justify the use of Kalman filters, it is required that the signals involved follow a gauss distribution. This type of distribution allows the filters to behave as a best linear unbiased estimator. Therefore, we conducted an experiment to estimate if the AHAT’s detection error can be modeled using a gaussian distribution.

Our experimental setup used a glass board with an anodized aluminum surface and retro-reflective markers located at the corners. A robotic arm was used to move the glass board along the z-axis of the AHAT camera frame while keeping the board steady during data collection (Fig. 5a). The error distribution of the depth data was tested using 48 different depths, assigned randomly and ranging from 156 to 971 mm. These lower and upper boundaries correspond to the limits where the board occupies the whole image until the depth data is invalid. For every depth distance, 300 continuous AHAT frames were collected. The reflectivity information of the first frame was used to extract the target detection area. Every depth image contained in the 300 frames was used to create individual point clouds merged to produce a final set. A plane fitting algorithm used the merged point cloud to estimate the board’s position.

Finally, the fitting error was estimated using a point-to-plane function distance. As depicted in Figure 5b, the error presents a strong gauss-like distribution at different depths. An Anderson-Darling test was used to test normal distribution, where the p-value was found to be smaller than 0.0005 for all 48 distances. In addition, the standard deviation increased as a function of the depth. Further details regarding the error observed in the data at the different depths are presented in Figure 5c.

In addition, we investigated if the standard error depicted by the AHAT could be modeled as a function of the depth detected. For every pixel in the marker area at a specific depth, the uncertainty over 300 continuous frames was considered the standard error std(p), while the mean depth dep(p) was used as the ground truth. Because a non-linear increment of the std(p) over the dep(p) was observed, we used a quadratic polynomial function to fit the relationship between these variables using:

| (15) |

After modeling this relationship, the goodness of the fitting was evaluated using its coefficient of determination:

| (16) |

where represents the fitted value of pixel p.

For the data collected during our experiments, we obtained a coefficient of determination . This value indicates that our model can quantitively predict the relationship between the depth and its standard error.

These results support the use of a Kalman filter for the proposed framework. Thus, we used several independent filters for the individual markers for every tracked tool based on their depth value. After the filtered depth value is calculated, the three-dimensional position and transform matrix from the tool to AHAT space are adjusted. In addition, when the tool’s tracking is lost, every Kalman filter for the specific tool is reinitialized.

4. Experiments And Results

4.1. Localization Error

To assess the tracking accuracy of our algorithm, we conducted a set of experiments in which we compared different tracking technologies in a controlled environment. Among these tracking technologies, we used optical cameras to detect ArUco and ChArUco markers in the visible spectrum. Although existing works have used the environmental cameras of the HoloLens for the tracking of these markers [17], we used an M3-U3-13Y3C-CS 1/2” Chameleon3 color camera4 coupled with an M1614-MP2 lens5. This camera provided a resolution of 1280 × 1024 pixels and enabled the acquisition of images with better image quality than those acquired using the built-in cameras of the HoloLens. In addition, we compared the performance of our algorithm against an NDI Polaris Spectra6. This system is commonly used in surgical procedures to track passive retro-reflective markers in the infrared spectrum.

Stability, Repeatability, and Tracking Accuracy

To compare the stability, repeatability, and accuracy of the optical markers’ pose estimation using different tracking technologies, we conducted an experiment in which we translated and rotated the target markers using six different configurations. These experiments targeted multiple distances in the personal space at reaching distances. To precisely control the movements of the markers for the different sensors, we used a 3-axis linear translation stage and a rotation platform with an accuracy of and , respectively. The markers were left static for the first experiment, and 100 poses were collected. After data collection, the markers were moved along the x-axis by , and another set of 100 poses was recorded. The following step brought the markers back to their original position and a total number of 10,000 randomly selected distance values were calculated using these two data sets. We performed this procedure 20 times using different initial marker poses and environmental light conditions to ensure repeatability. A second experiment followed this procedure but used a translation of along the x-axis. The markers were moved along the z-axis using the same translations for the third and fourth experiments. Lastly, the markers were rotated 10 and around the world-up vector for the fifth and sixth experiments.

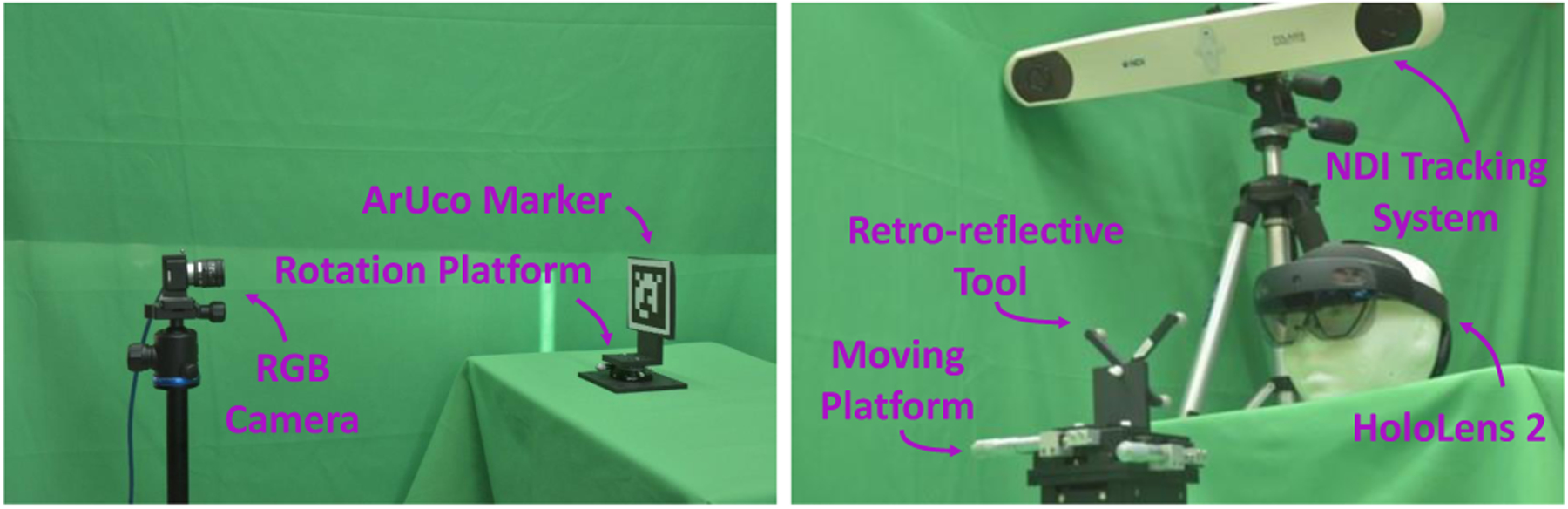

To ensure that the tracking systems could provide accurate values, we placed the markers at different distances from the sensors depending on the tracking technology used. For our proposed method, a tool composed of four retro-reflective spheres with a diameter of was placed at an approximate distance of from the AHAT camera. This distance corresponds to the approximate length of the user’s arms at normal working conditions and ensures the observation of the target in the central portion of the usable tracking space of the AHAT. The same tool was placed at approximately from the surgical tracking system. Similar to the case of the AHAT sensor, this distance depicts a standard distance at which the NDI tracking device is placed during surgical procedures and ensures the observation of the target in the central portion of the usable tracking space. In addition, for the detection of markers in the visible spectrum, we used a 6 × 6 ArUco marker and a 3 × 3 ChArUco marker, each with a side length of . These optical markers were placed at from the camera. The experimental setup used for tracking these targets is shown in Figure 6, and the results of this experiment are presented in Figure 7.

Fig. 6.

Experimental setup for tracking accuracy using ArUco and ChArUco (left) and retro-reflective (right) markers.

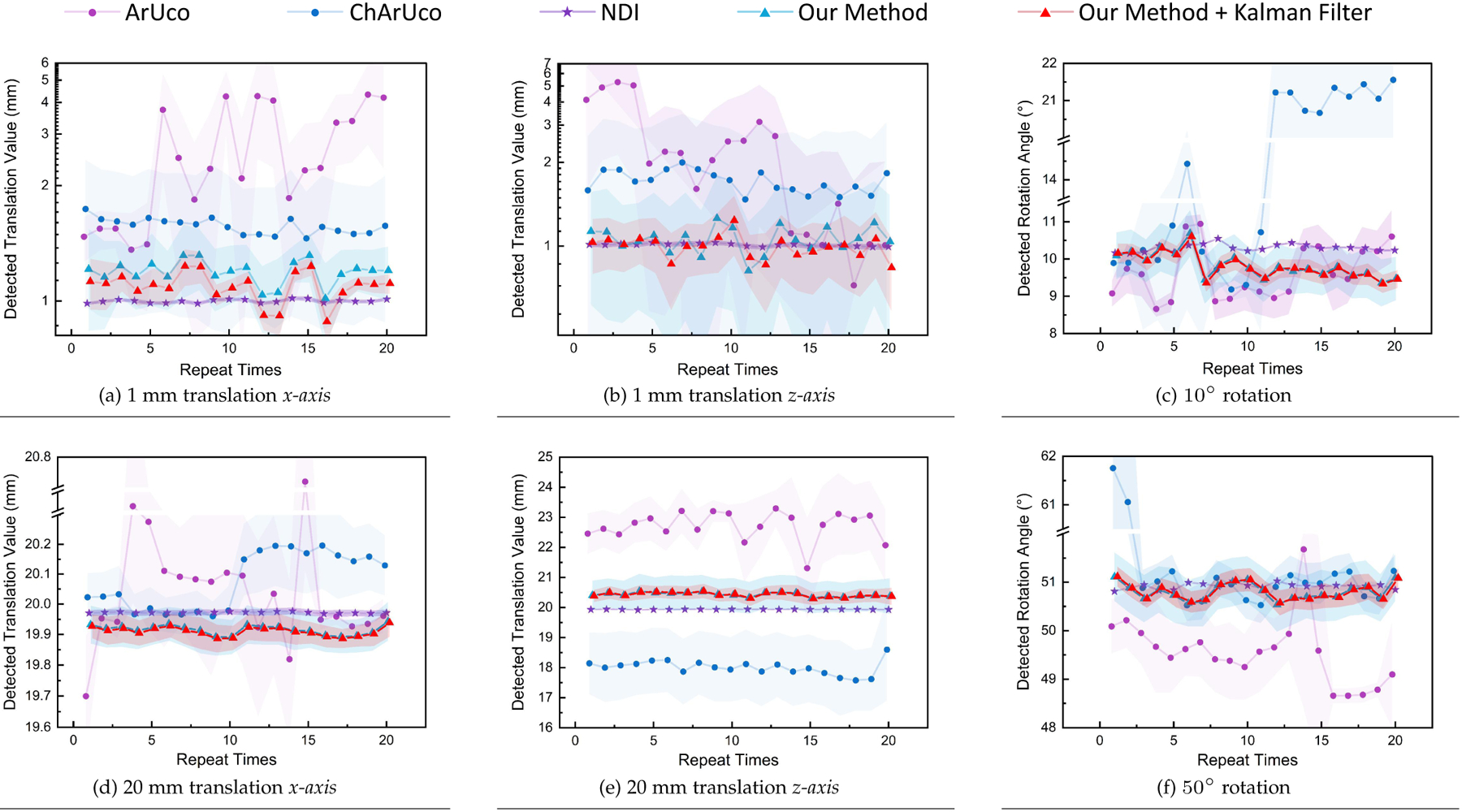

Fig. 7.

Experiment results of tool tracking accuracy assessment of our infrared depth camera and Kalman filter-based tracking method, compared with commercial IR and visible light marker tracking methods. (a),(d) Distribution of detected moving distances when moving optical platform 1 and 20 mm along x-axis over 20 repetitive tests. (b),(e) Distribution of detected moving distances when moving the optical platform 1 and 20 mm along the z-axis. (c),(f) Distribution of detected rotation angle when rotating the optical platform 10 and 50 degrees.

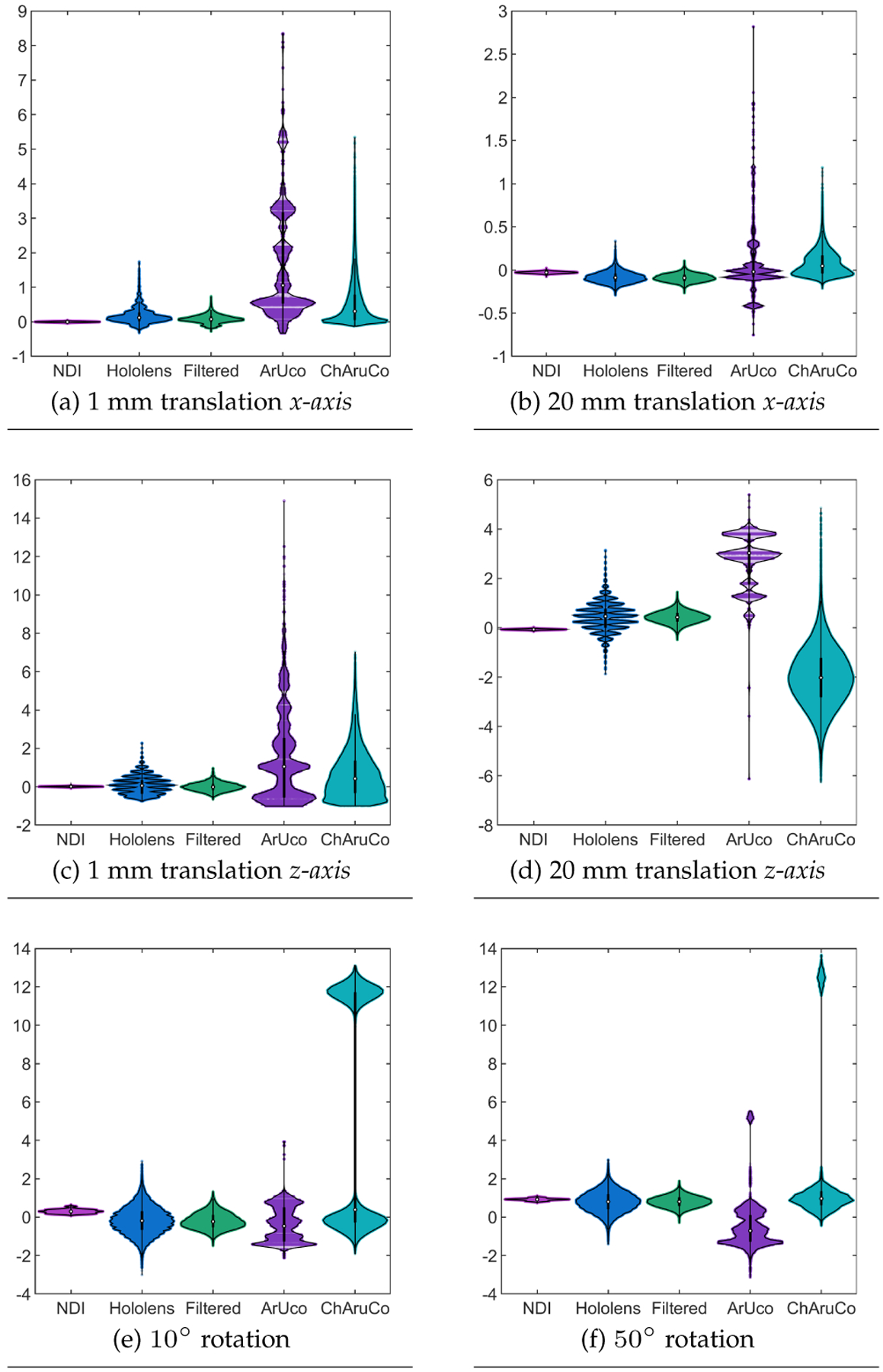

To evaluate the stability and tracking accuracy, we performed a statistical analysis of the results collected. Considering the non-normal distribution of the data collected, we used Kruskal-Wallis tests with to compare the results obtained for position and orientation. Posterior Bonferroni tests were used to reveal significant differences between the tracking technologies. The median and interquartile range (IQR) of all the experiments are summarized in Table 1 and presented in Figure 8.

TABLE 1.

Median and IQR accuracy errors reported by the different tracking technologies.

| Translation (x-axis) | Translation (z-axis) | Rotation | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tracking Technology | 1 mm | 20 mm | 1 mm | 20 mm | 10° | 50° | ||||||

| Median | IQR | Median | IQR | Median | IQR | Median | IQR | Median | IQR | Median | IQR | |

| ArUco | 1.0566 | 2.6093 | −0.020 | 0.267 | 1.0544 | 3.0490 | 3.032 | 2.001 | −0.461 | 1.717 | −0.713 | 1.339 |

| ChArUco | 0.3047 | 0.7078 | 0.047 | 0.195 | 0.4215 | 1.633 | −0.022 | 1.553 | 0.386 | 11.941 | 0.964 | 0.680 |

| NDI | −0.0007 | 0.0172 | −0.028 | 0.014 | 0.008 | 0.027 | −0.067 | 0.026 | 0.294 | 0.151 | 0.922 | 0.075 |

| Ours | 0.1223 | 0.2226 | −0.089 | 0.083 | 0.058 | 0.661 | 0.464 | 0.714 | −0.194 | 0.917 | 0.804 | 0.728 |

| Ours + Kalman | 0.0764 | 0.1332 | −0.092 | 0.063 | −0.010 | 0.289 | 0.424 | 0.320 | −0.231 | 0.583 | 0.807 | 0.395 |

Fig. 8.

Experiment results of tool tracking accuracy assessment of our infrared depth camera and Kalman filter-based tracking method, comparing with commercial IR tracking method and visible light marker tracking methods. (a),(c) Detected moving distances when moving optical platform 1 mm along x- and z-axis. (b),(d) Detected moving distances when moving optical platform 20 mm along x- and z-axis. (e),(f) Detected rotation angle when rotating the platform 10 and 50 degrees.

Overall, the lowest errors were reported by the NDI tracking system, followed by the proposed method with and without the addition of Kalman filters. When evaluating the results for 1 mm translations in the x-axis, the Kruskal-Wallis test revealed a significant interaction between the multiple tracking technologies . A posterior Bonferroni test revealed statistical significance among all the tracking technologies . The NDI tracking system provided significantly higher accuracy than the other tracking technologies, followed by the proposed method with and without Kalman filters and the ChArUco and ArUco markers (see Figure 8a). Regarding the 20 mm translations in the x-axis, the Kruskal-Wallis test revealed a significant interaction between the multiple tracking technologies . A posterior Bonferroni test showed that the NDI tracking system and the ArUco markers provided significantly higher accuracy than the ChArUco and the proposed method with and without the addition of the Kalman filters . However, the results presented in Figure 8b show that the precision provided by the ArUco markers is lower than for all the other tracking technologies.

Regarding position accuracy on the z-axis, a Kruskal-Wallis test revealed a significant interaction between the tracking technologies when the markers were translated 1 mm . A posterior Bonferroni test revealed statistical significance among all the tracking technologies . The NDI tracking system proved to be more accurate than the other compared technologies. The proposed method with and without Kalman filters followed the NDI system, while the ChArUco and ArUco reported the worst scores (Figure 8c). When translating the markers 20 mm, our Kruskal-Wallis test revealed a significant interaction between the multiple technologies . The posterior Bonferroni test revealed the same behavior then the one observed for the 1 mm translations in this axis (see Figure 8d).

Furthermore, results from the Kruskal-Wallis tests for the orientation errors revealed a significant interaction between the tracking technologies when rotating the target by and degrees. In contrast to the translation results, both versions of the proposed method reported lower median errors than the NDI tracking system and the ArUco and ChArUco markers. However, the precision reported by the NDI tracking system is higher than for the other tracking technologies (see Figures 8e and 8f).

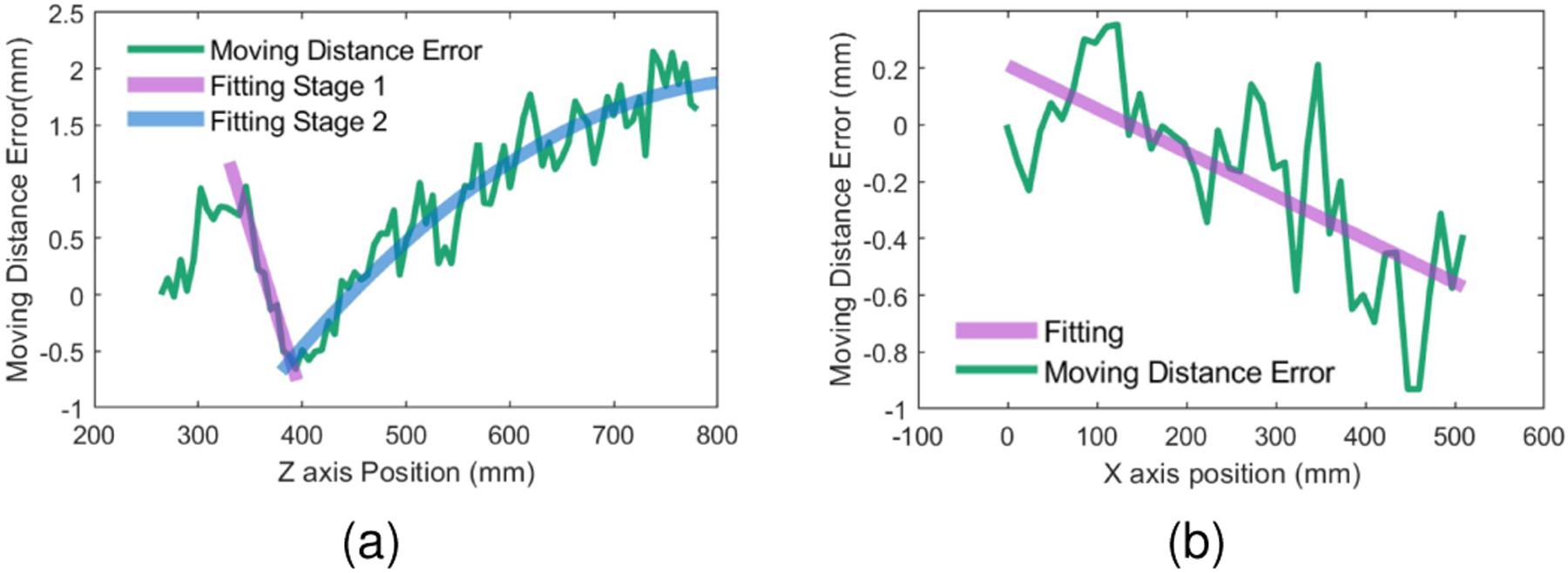

Workspace Definition

In addition to the tracking accuracy, a further experiment investigated the role that the FoV plays over the proposed method’s accuracy. To achieve more considerable displacement capabilities than the one used in the previous experiment, we moved our tracking tool along the x- and z-axis using a different linear stage. For this portion of the experiment, the NDI tracking system was used as the ground truth to evaluate the tracking accuracy. The passive tool was incrementally moved along the linear stage. After the movement of the linear stage was completed, the data corresponding to the tool’s pose was collected using the NDI tracking system and the AHAT camera of the HoloLens. A total number of 50 values were collected for every position. This process was repeated from the nearest to the furthest detection distances for the z-axis and from the center to the lateral margins of the x-axis that could be detected using the AHAT camera. The mean values of the data at every position were considered the real pose values and used to estimate the moving direction of the tool. The estimated displacement along the moving platform can then be calculated by projecting the tracked tool’s position to the moving direction and comparing it with its initial position.

The detection error observed during tool displacement using our system is shown in Figure 9 as a function of the observed depth. Such results show that our system can steadily trace the passive tool within depths of 250 and (Figure 9a) and within radial distances of when the tool is placed at a maximum distance of (Figure 9b). Using , it can be shown that these values are equivalent to an FoV of . Interestingly, the detection error observed for the z-axis depicts a different behavior before and after . In addition, the absolute difference between the error observed at 400 and results in a moving distance error of , or a distortion of 0.625%. For the radial direction, a smaller moving distance error can be observed. The absolute difference observed between 0 and translations depicts an error of , equivalent to a distortion of 0.15%.

Fig. 9.

The global tracking accuracy of the AHAT tracking method compared to NDI Polaris Spectra. (a) Moving distance detection error along the z-axis. (b) Moving distance detection error along the x-axis at 500mm depth.

4.2. Runtime and Latency

To investigate the runtime capabilities of our system, we placed different quantities of passive tools within the FoV of the AHAT camera. The sensor data collected by the AHAT was recorded and used for the offline runtime test. In this way, the frame rate of the algorithm during the test would not be limited by the sensor acquisition frequency. In addition, we collected and evaluated the runtime for detecting the ArUco and ChAruCo markers using an RGB camera with a resolution of 1280 × 1024 pixels. We used the average runtime of 10,000 frames on a Xeon(R) E5–2623 v3 CPU for this portion of the experiments.

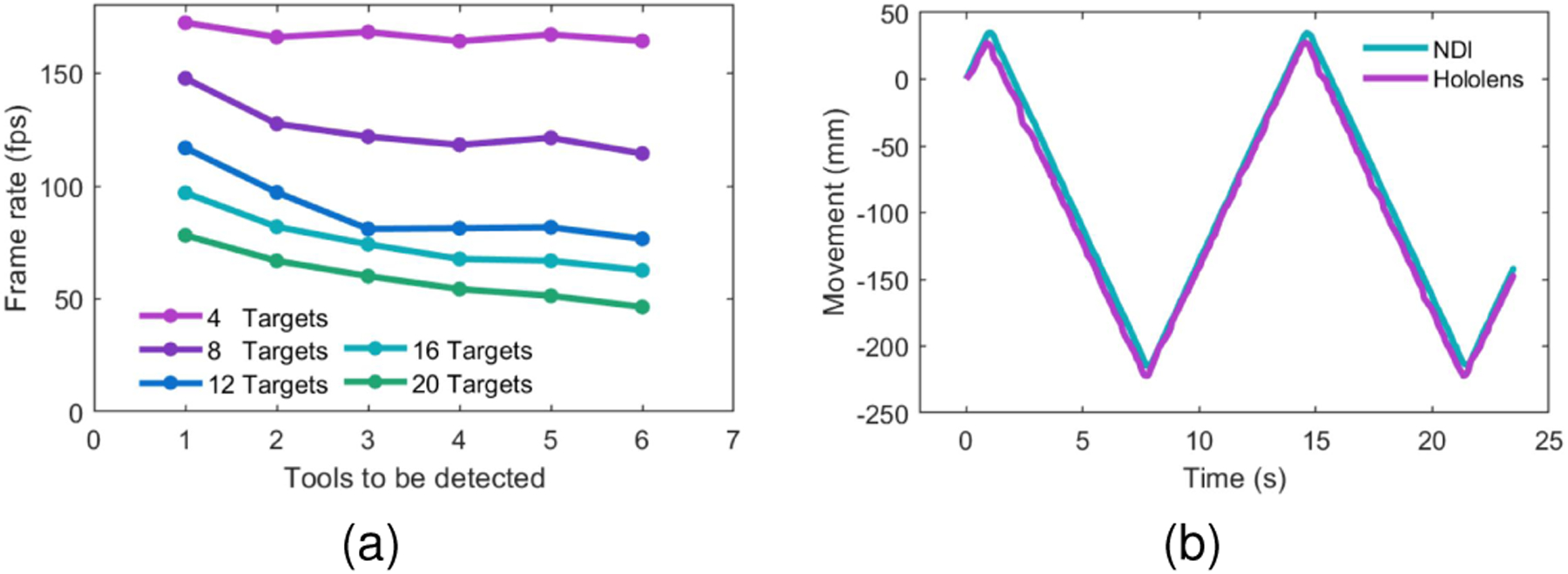

This experiment revealed a runtime of 28.4 ms (35.2 Hz) for the detection of the ArUco and 30.14 ms(33.2 Hz) for the ChArUco makers. The runtime results when using the AHAT camera proved to be influenced by both the number of retro-reflective markers detected in the scene and the number of loaded tools for detection. These results are depicted in Figure 10a. When only one passive tool is expected to be detected, the runtime of our system is 5.80 ms (172.37 Hz). However, when the passive markers corresponding to five different tools are detected and their five corresponding definitions are loaded, the runtime is 19.48 ms (51.34 Hz).

Fig. 10.

Frame rate and latency results of the proposed algorithm using the AHAT camera. (a) Tool detection frame rate under different conditions. Every series depicts several passive tools containing four retro-reflective markers detected using the AHAT camera. Different tools are loaded to evaluate the detection frame rate when multiple markers are observed. If more tools are defined than the number of markers observed, the system will indicate that the respective tool is not detected. (b) Movement detection of synchronously acquired localization data from the NDI tracking system and the proposed method. The target is kept in motion back and forth using a linear stage.

The latency of our system was then compared using the NDI tracking system as a reference. For this experiment, we moved back and forth a passive tool attached to a linear stage using a period of seconds. The localization data from the NDI and our system were collected simultaneously. The detected moving distance along the linear stage for both systems can be expressed as and with for the NDI and HoloLens, respectively (see Figure 10b). The moving signal of the HoloLens is first adjusted to match the amplitude of the NDI system using:

| (17) |

The following step computed the delay between our tracking system and the NDI system using:

| (18) |

where depicts the time difference in seconds between the signals and .

This experiment showed that the proposed method presents a time delay of 103.23 ms compared to the NDI tracking system.

5. Use Case

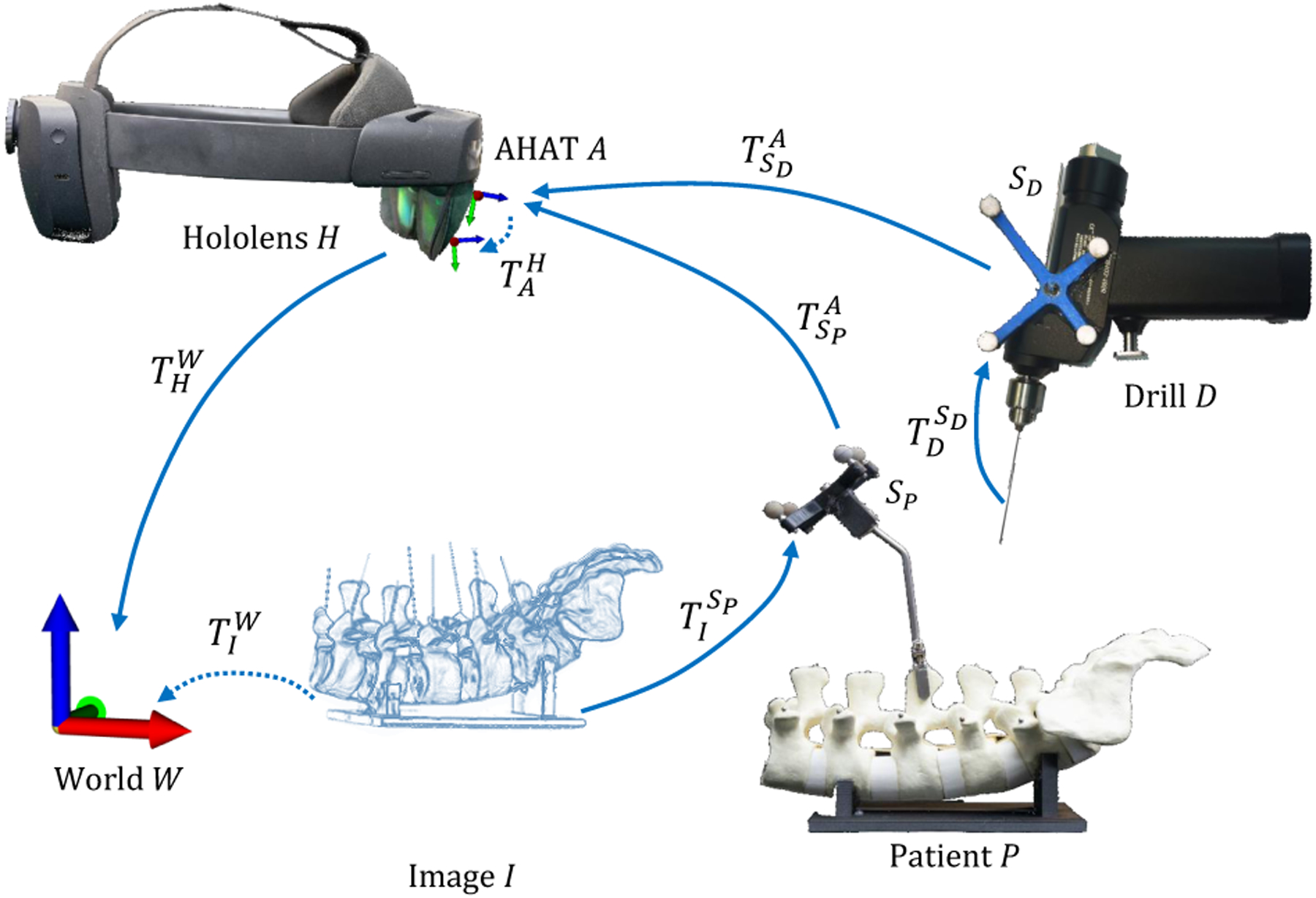

To highlight the relevance of the proposed framework for surgical applications, we introduced a use case in which we provided visual navigation using AR HMDs in orthopedic surgical procedures for the placement of pedicle screws. Two different tools containing retro-reflective markers were attached to a phantom spine model and a surgical drill to enable visual navigation. We used an O-arm imaging system and acquired a pre-operative computed tomography (CT) scan from the spine model. This data was used to plan the trajectories that the surgeons must follow during the placement of the pedicle screws. In addition, we added four metallic balls (BBs) to the phantom spine model that were visible in the CT and by direct observation. This action allowed us to determine the spatial relationship between the tool attached to the spine model and the image space (I). The spatial relationship between the different coordinate systems involved in this use case is depicted in Figure 11.

Fig. 11.

The spatial relationship between different components is required to provide surgical navigation using the proposed framework.

After successful tracking of the retro-reflective tools, the pose of the phantom model in world coordinates can be computed using the tool pose , estimated with the proposed framework, and the HMD’s self-localization data as follows:

| (19) |

Likewise, the pose of the surgical drill in world coordinates can be acquired using the tool pose utilizing:

| (20) |

This procedure requires calculating the transformation matrix from the AHAT camera to HoloLens view space . For this purpose, we designed a 3D-printed structure composed of a retro-reflective tool, , and four BBs to register the AHAT and view spaces (see Figure 1a). To calculate the position of the real BBs in the tracker space, we performed a pivot calibration. The spatial information extracted from the real BBs during the pivot calibration served to generate a set of virtual replicas that were aligned to their real counterparts. A computer keyboard enabled the user to control the 6 DoF of the virtual objects in the HoloLens world space . After proper alignment of the real and virtual objects, the spatial relationship between the AHAT camera and the view space was computed as follows:

| (21) |

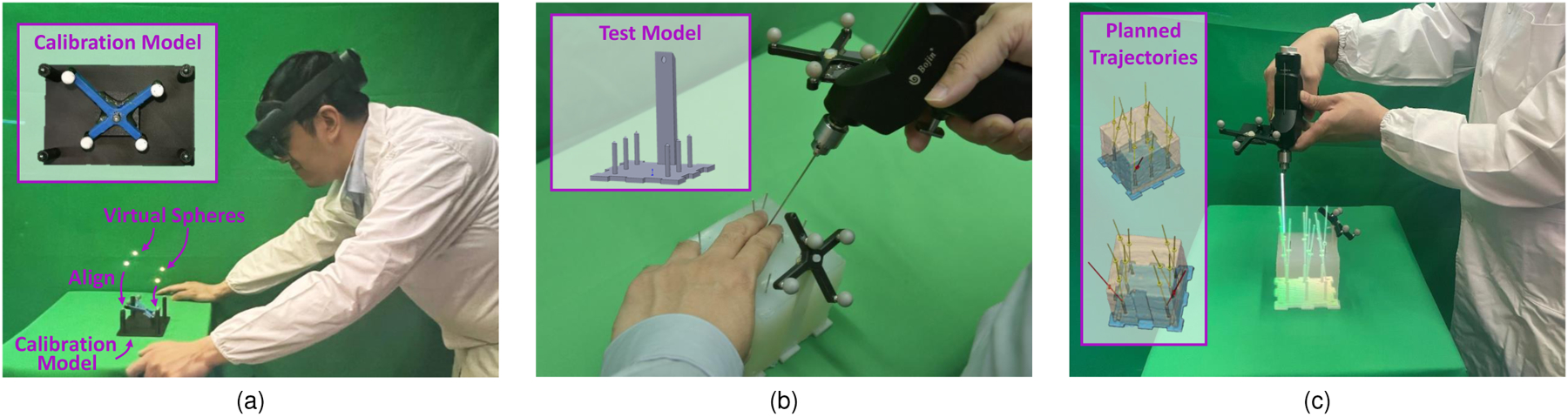

Fig. 1.

(a) Calibration model used to align a set of virtual and real spheres. This model enables the registration of the observer’s view and the image sensors. (b) Our tracking system enables the localization of surgical tools and anatomical models by attaching retro-reflective passive markers without integrating any other external trackers or electronics. (c) A surgeon can then use this method to insert surgical k-wires assisted by virtual trajectories displayed using an Augmented Reality Head-Mounted Display.

5.1. Surgical Navigation

To further evaluate the performance of our surgical navigation system, we designed a total of three phantom models to replicate traditional k-wire placement procedures (Figure 1b). These models were composed of a 3D printed base containing six cylindrical shapes with conical tips covered using silica gel (see Figure 1b). After constructing the phantom models, we collected CT volumes from them to generate their virtual replicas. An experienced surgeon later used the CT volumes to plan multiple trajectory paths aiming at the tips of the individual cones. A total of two different drilling paths were planned for every cone, leading to a total of 12 trajectories per phantom model. These trajectories defined the optimal path in which a k-wire should be inserted and were overlayed on the phantom using the HoloLens. In addition to the trajectories, virtual indicators in the form of concentric circles were presented over the surface of the phantom. The virtual prompts indicated the entry point that would lead to the optimal trajectory in the physical model (Figure 1c).

Seven surgeons took part in this portion of the study. Every participant performed an eye-tracking calibration procedure before the start of the experiment. After calibration, participants were asked to complete the registration procedure described in Equation 21 and depicted in Figure 1a. Once the virtual and real models were registered and the spatial relationship between the AHAT camera and the view space was computed, the pre-planned trajectories were presented to the participants using the AR HMD. Before collecting data for evaluation, every participant was allowed to perform multiple k-wire insertions in one of the three models to get familiar with the system. After this step, the remaining models containing 24 drilling paths were used for formal testing. To measure the accuracy achieved by the study participants, a registration step between pre- and post-operative imaging was performed. This step involved the acquisition of CT volumes from the models and allowed comparing the differences between the planned and real trajectories. The translation and angular errors between the optimal and real trajectories were used as metrics for the performance evaluation.

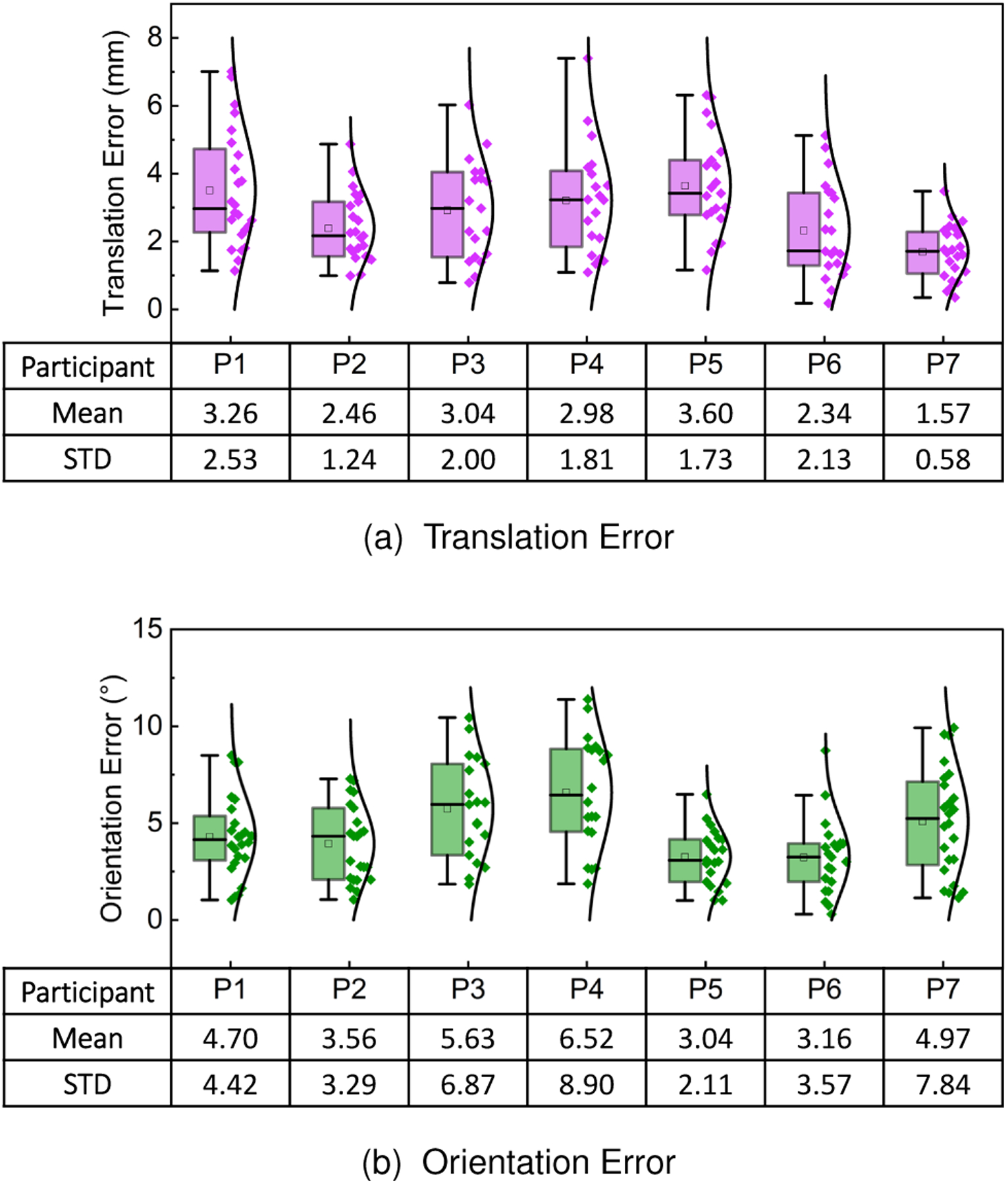

Among the 168 trials performed by the 7 participants, a total number of 16 were not considered for evaluation because the participants interfered with the line of sight of the tracking system. Overall, participants achieved a mean translation error of and a mean orientation error of . Results from this experiment, summarized in Figure 12, showed comparable to the accuracy values reported by other AR-based navigation systems for pedicle screw placement [18], [26], [27].

Fig. 12.

(a) Translation and (b) orientation errors for k-wire insertion using visual guidance and the proposed framework. The results correspond to the seven surgeons that participated in the study.

Although this experiment shows that the proposed methods provide comparable tracking accuracy and stability to successfully performing the insertion of the k-wires, the latency observed between the data processing using the workstation and the visualization of the optimal trajectories using the HMD seems to contribute to the observation of uncertainty during the alignment and localization of the targets. Furthermore, the elastic and smooth surface of the silicone gel used to create the test models replicates the challenges brought by percutaneous surgeries where few landmarks are visible and the working surface is soft and deformable. These properties increase the difficulty of performing the drilling task and the accurate insertion of the k-wire. However, the scores reported by the users demonstrate the performance of the proposed system when these challenges exist.

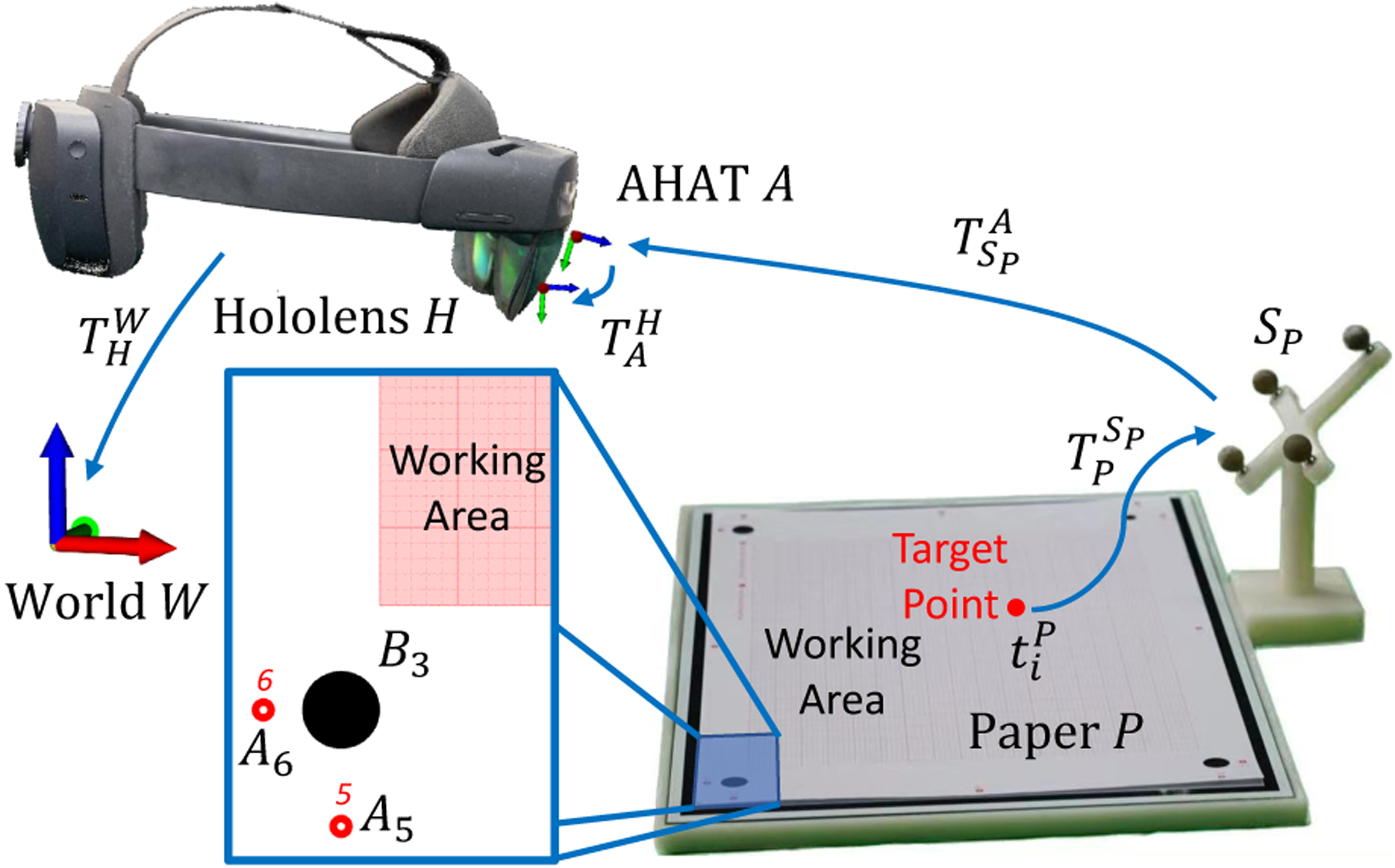

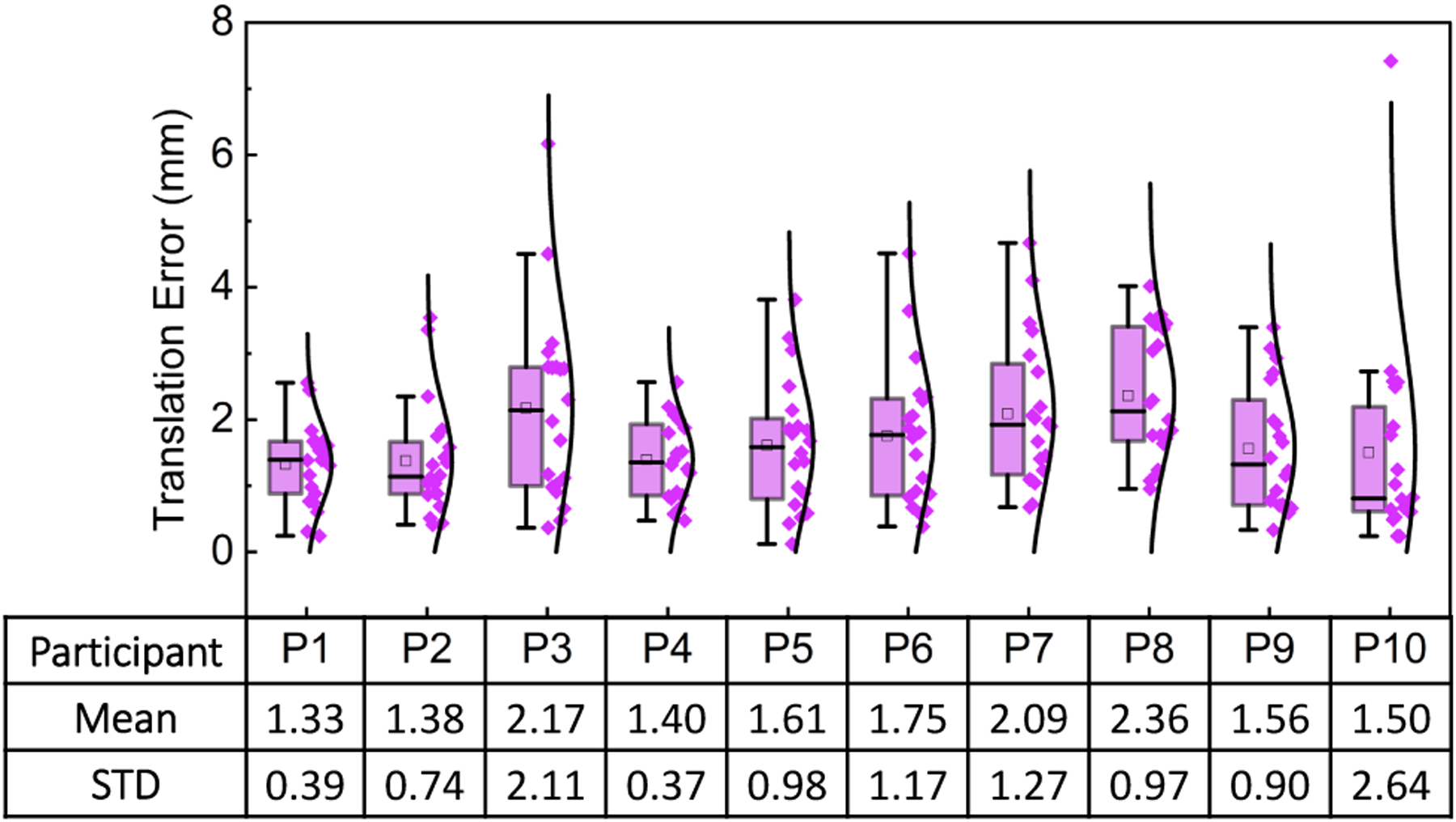

5.2. Pointing Accuracy

To explore the transferability of the proposed framework to more general human domains, we conducted a second study in which participants were presented with virtual points displayed over a flat surface and asked to mark the position where the virtual point was perceived. The experimental setup for this study, shown in Figure 13, consisted of a 3D printed structure including a rigid tool connected to a flat working area. The working area, with an effective size of , included an extra layer composed of glass and aluminum oxide to maximize the planarity of the surface. On top of this surface, a grid paper was used to record the participant’s answers. The grid paper was specially designed to contain two sets of points for registration. The primer set, , was used to perform registration between the paper and the tracking system. The second set, , was used to calculate the differences between the perceived and target position of the points presented to the participants.

Fig. 13.

Experimental setup for the pointing accuracy study. Participants of this study were asked to indicate the perceived position of a set of target points displayed over the surface of a grid paper.

Before starting the experiment, a registration procedure was conducted to calculate the rigid transformation between the paper and the rigid tool (see Figure 13). For every point in , a tracked pointer was used to indicate the circle’s center. Simultaneously, the NDI tracking system was used to estimate the position of the tool and the passive tracking pointer . The three-dimensional position of the registration point with respect to was calculated using:

| (22) |

Using the set of rigid transformations for the points in it is then possible to calculate .

During task performance, a set of random points were generated inside the working area with location . The position of these points, expressed in world coordinates, can be calculated using the pose of the marker tracked with the AHAT camera using:

| (23) |

After the computation of this value, a virtual point would then be presented to the participants, which will draw the point at their perceived position in the grid paper.

This portion of the study included ten participants (six male and four female). All of the participants, researchers in biomedical engineering, ranged in age from 22 to 28. Before starting their participation, every user was instructed to complete the calibration process of the HMD to adjust for their respective inter-pupillary distance. To familiarize the participants with the task, a training set of twenty points was presented to them. The points contained in the set were randomly generated. After completion of the training stage, participants were instructed to indicate the position of an evaluation set containing twenty randomly generated points. Once the data was collected from the participants, the grid paper was scanned and the data digitalized. To achieve this, we used the set of registration points and traditional image processing methods to extract the position of the perceived points marked in the grid paper. To estimate the error between every pair of target and perceived points, we computed the Euclidean distance.

Overall, participants perceived and reported the virtual points to be positioned within a mean distance of from the target points. These results suggest that the proposed framework could be used to provide visual guidance in applications that enable the observation of errors under . Examples of these are instructional, manufacturing, industrial, and training applications. A more detailed description of the results of this study is presented in Figure 14.

Fig. 14.

Translation errors for the pointing accuracy task using visual guidance and the proposed framework. The results correspond to the ten participants recruited during the study.

6. Discussion

This work introduces a framework to track retro-reflective markers using the built-in cameras of commercially available AR HMDs. Results regarding the error distribution of depth detection demonstrate that the error of the AHAT depth data can be modeled using a normal distribution. This normal distribution increases its standard error as a function of the detected depth (see Figure 5). Results from the test of tracking accuracy demonstrate that the integration of Kalman filters contributes to the reduction of the IQR, as depicted in Table 1. Therefore, they contribute to improve the precision of the methods proposed. As a result of the integration of the Kalman filters, this framework showed to be capable of achieving a tracking accuracy of on lateral translation, on longitudinal translation, and on rotation around the vertical axis (see Figure 7 and Table 1). These results are more accurate and precise than those achievable using traditional feature-based tracking algorithms such as the ArUco and ChArUco markers. Although it can be expected that feature-based tracking algorithms provide lower accuracy and precision, they are frequently used to enable the optical tracking of tools in AR applications. Therefore, they were considered during our study. Of note, our statistical analysis did not reveal statistical significance when comparing the tracking results of the NDI tracking system and the ArUco markers for 20 mm movements on the x-axis. However, when observing the distribution of the tracking results of the ArUco markers, the precision provided by this type of marker is worse than the one observed when using the NDI tracking system and the two variants of the proposed framework.

In addition, the proposed framework was demonstrated to be capable of tracking individual tools containing up to 4 retro-reflective markers at a frame rate of 172 Hz. This tracking frame rate remains over 50 Hz even when five different tools containing multiple retro-reflective markers are detected simultaneously. These reported speeds are faster than that of traditional feature-based tracking techniques such as ArUco (35 Hz) and ChArUco (33.2 Hz) markers. This frame rate is even higher than the frame rate of the AHAT camera (45 Hz). Therefore, allowing for tracking multiple targets before having a new sensor frame available.

In contrast to existing works, the proposed framework only uses the ToF camera of the HoloLens 2 without the addition of external components. Moreover, when compared to methods that use the grayscale environmental cameras of the headset for tool tracking, several benefits support using the depth sensor when hand distance tracking is needed. In this case, the tracking distance from 250 mm to 750 mm provided by the AHAT sensor nicely satisfies the specific application’s needs. At the same time, the LF and RF cameras have short baseline distances and focal lengths, restricting their performance at near distances [13]. In addition, using the AHAT camera provides an FoV of , while the overlap of the LF and RF cameras is smaller than in width. Moreover, the proposed algorithm does not require the addition of external infrared light to enhance the visibility of the passive markers. Therefore, mitigating the possibility of interfering with the functionalities of the HMD that rely on the use of this type of light, including environmental reconstruction and hand tracking.

In terms of applicability, the use case for the placement of k-wires during orthopedic interventions demonstrates its potential use in medical environments during the performance of surgical procedures [18], [26], [27]. Although the objective of this study was to investigate the accuracy that the users of the proposed framework could achieve, an interesting effect derived by visual inspection of the results is that participants that achieved smaller errors in translation depicted larger errors in orientation (e.g., participant 7) and vice versa (e.g., participant 5). This could be an indication that certain users pay more attention to one of these parameters during the alignment process. Similar observations have been noticed in the literature from works that investigate how the shape of an object influences alignment tasks in mixed and virtual reality applications [28]. In addition, results from the pointing accuracy test suggest that the alignment errors can be task-related. In this regard, the errors showed a decrease in the error from in the medical scenario to in the pointing accuracy test. However, further studies would need to be conducted in this regard.

Although this work introduces a method to track retroreflective markers using the built-in sensors of the Microsoft HoloLens 2, the applicability of such a framework should not be exclusive to this headset. In this regard, if a similar device contains a depth sensor, and the depth information is available, it would only be necessary to estimate the transformation between the headset and the depth sensor ( in Figure 11) to adopt the proposed framework. Although the manufacturer of the HoloLens provides an initial estimation, the method described in Section 3 can be used to find this spatial relationship when using other devices. This could be achieved with the help of a passive tool containing a set of retro-reflective markers and a 3D-printed structure like the one depicted in Figure 1a.

Limitations

The utility of the HMD’s depth camera for tracking and detecting retro-reflective markers presented in this work led to promising results in terms of precision and stability. However, certain limitations associated with the proposed framework exist. In this regard, compared to commercially available tracking systems, the accumulated error observed using our method increases as a function of the depth in which the tools are tracked. However, this accumulated error remains less than 1 mm at distances between 300 to 600 mm. In the future, this limitation caused by the precision achieved by the built-in depth sensor of the HMD could be addressed by modeling the error and designing an error compensation algorithm. In addition, although the camera calibration and registration procedure presented in Section 3.2 was conducted in a controlled environment, the cameras’ low resolution and high distortion contributed to the observation of errors. In the case of the HoloLens 2, and with the only exception of the LF-RF camera pair, the LF and main cameras are registered to the AHAT camera. However, as illustrated in the manuscript, the AHAT camera covers of angular range with only 512 pixels, leading to a small spatial resolution. In addition, the AHAT camera presents strong distortion, which may bring additional difficulties in detecting the corners in the checkerboard. Furthermore, the planar marker has several local minima during localization [29], which would further increase the ambiguities. Any mistake in estimating the checkerboard’s pose would contribute to an incorrect estimation of the three-dimensional corner points, thus increasing the registration error. When combined, all these errors are propagated through the transformation chain between the cameras, leading to the registration errors reported in this work. While the framework presented in this work only relies on the AHAT sensor, if the application would require the use of multiple cameras and observation of smaller errors, alternative methods of camera calibration and registration must be considered to reduce the observation of errors.

Another limitation of the proposed methods is the latency observed when presenting the virtual content to the user after the achievement of tool tracking. This issue currently represents the most significant challenge in providing visual information that could enable stable and reliable navigation. As the proposed framework relies on the quality of the network to transfer the data collected, the incorrect synchronization between the tool tracking result and the HMD’s self-localization data could lead to the observation of inconsistencies in the content displayed. These differences would be more noticeable when using wireless networks, where the sensor data transfer delay is longer and the frame rate is lower . More importantly, the results presented in this work model the properties of the AR HMD used during the experiments. Additional studies must be conducted to investigate if the results are consistent for multiple devices of the same type.

7. Conclusion

This paper proposes a framework that uses the built-in cameras of commercially available AR headsets to enable the accurate tracking of passive retro-reflective markers. Such a framework enables tracking these markers without integrating any additional electronics into the headset and is capable of simultaneous tracking of multiple tools. The proposed method showed a tracking accuracy of approximately 0.1 mm for translations on the lateral axis and approximately 0.5 mm for translations on the depth axis. The results also show that the proposed method can track retro-reflective markers with an accuracy of less than for rotations. Finally, we demonstrated the early feasibility of the proposed framework for k-wire insertion as performed in orthopedic procedures.

Acknowledgement

This research was partially supported by the National Institutes of Health (R01-EB023939, R01-EB016703, and R01-AR080315), the National Natural Science Foundation of China (U20A20389), and the Laboratory Innovation Fund of Tsinghua University.

Biographies

Alejandro Martin-Gomez is an Assistant Research Professor at the Computer Science Department of the Johns Hopkins University. Prior to receiving his appointment, he joined the Laboratory for Computational Sensing and Robotics at Johns Hopkins University as a Postdoctoral Fellow. He earned his Ph.D. in Computer Science at the Chair for Computer Aided Medical Procedures and Augmented Reality (CAMP) of the Technical University of Munich, Germany; his M.Sc. degree in Electronic Engineering from the Autonomous University of San Luis Potosi, Mexico; and his B.Sc. degree in Electronic Engineering from the Technical Institute of Aguascalientes, Mexico. His main research interests include improving visual perception for augmented reality and its applications in interventional medicine and surgical robotics.

Haowei Li is an undergraduate student in the Department of Biomedical Engineering, Tsinghua University. He has been a member of Prof. Guangzhi Wang’s lab since junior years. His current interests include computer aided surgery and augmented reality.

Tianyu Song is a Ph.D. candidate of Computer Science at the Chair for Computer Aided Medical Procedures and Augmented Reality (CAMP) of the Technical University of Munich. He received the B.Sc. degree in Theoretical and Applied Mechanics from Sun Yat-sen University, China and B.Sc. degree in Mechanical Engineering from Purdue University, USA in 2017. He earned his M.Sc. in Robotics at Johns Hopkins University, USA in 2019. After graduation, he worked at the Applied Research team at Verb Surgical/ Johnson&Johnson, USA for a year. His current interests include augmented reality and computer aided surgery.

Sheng Yang is a PhD candidate at Department of Biomedical Engineering, Tsinghua University. Also a member of Guangzhi Wang’s Lab, fortunately advised by Prof. Guangzhi Wang, with current research focus on Medical Robot, especially on Compute-Aided Image Guided Navigation System. He received the B.Sc degree and the M.Sc degree in Biomedical Engineering from Southeast University in 2016 and in 2019 respectively. His current interests include medical robot and medical image processing.

Guangzhi Wang, PhD, received his B.E. and M.S. Degrees in mechanical engineering in 1984 and 1987 respectively, and received his PhD Degree in Biomedical Engineering in 2003, all from Tsinghua University, Beijing, China. Since 1987, he has been with the Biomedical Engineering division at Tsinghua University. Since 2004, He is a tenured full Professor at Department of Biomedical Engineering, Tsinghua University. He is the author of hundreds of peerreviewed scientific papers and is the inventor of 30 granted Chinese and PCT patents. Since 2015 Professor Guangzhi Wang was elected as the vice president of Chinese Society of Biomedical Engineering, and since 2018, he was elected as vice president of Chinese Association of Medical Imaging Technology. His current research interest includes biomedical image processing, image based surgical planning and computer aided surgery.

Hui Ding is a senior engineer and laboratory director in the Department of Biomedical Engineering, Tsinghua University. She was awarded the M.Sc degree in computer science from the University of science and technology of China. She has been engaged in medical image processing, computer aided minimally invasive treatment planning, human motion modeling and analysis for rehabilitation. At present, her research work includes multimode image guided minimally invasive orthopedic and neurosurgery, ultrasonic fusion imaging. She is the inventor of more than 10 Chinese and PCT patents.

Nassir Navab is a Full Professor and Director of the Laboratory for Computer-Aided Medical Procedures (CAMP) at the Technical University of Munich (TUM) and Adjunct Professor at Laboratory for Computational Sensing and Robotics (LCSR) at Johns Hopkins University (JHU). He is a fellow of MICCAI society and received its prestigious Enduring Impact Award in 2021. He was also the recepient of 10 years lasting impact award of IEEE ISMAR in 2015, SMIT Society Technology award in 2010 and Siemens Inventor of the year award in 2001. He completed his PhD at INRIA and University of Paris XI, France, in January 1993, before enjoying two years of post-doctoral fellowship at MIT Media Laboratory and nine years of expeirence at Siemens Corporate Research in Princeton, USA. He has acted as a member of the board of directors of the MICCAI Society, 2007–2012 and 2014–2017, and serves on the Steering committee of the IEEE Symposium on Mixed and Augmented Reality (ISMAR) and Information Processing in Computer-Assisted Interventions (IPCAI). He is the author of hundreds of peer-reviewed scientific papers, with more than 52,000 citations and an h-index of 101 as of April, 2022. He is the author of more than thirty awarded papers including 11 at MICCAI, 5 at IPCAI, 2 at IPMI and 3 at IEEE ISMAR. He is the inventor of 51 granted US patents and more than 60 International ones. His current research interests include medical augmented reality, computer-assisted surgery, medical robotics, computer vision and machine learning.

Zhe Zhao is an attending surgeon of the Department of Orthopeadics, Beijing Tsinghua Changgung Hospital. Associate professor of School of Clinical Medicine, Tsinghua University. His research interest covers orthopaedic trauma, computer assisted surgery and orthopeadic implant development.

Mehran Armand received the Ph.D. degree in mechanical engineering and kinesiology from the University of Waterloo, Waterloo, ON, Canada, in 1998. He is currently a Professor of Orthopaedic Surgery, Mechanical Engineering, and Computer Science with Johns Hopkins University (JHU), Baltimore, MD, USA, and a Principal Scientist with the JHU Applied Physics Laboratory (JHU/APL). Prior to joining JHU/APL in 2000, he completed postdoctoral fellowships with the JHU Orthopaedic Surgery and Otolaryngology-Head and Neck Surgery. He currently directs the Laboratory for Biomechanical- and Image-Guided Surgical Systems, JHU Whiting School of Engineering. He also directs the AVICENNA Laboratory for advancing surgical technologies, Johns Hopkins Bayview Medical Center. His laboratory encompasses research in continuum manipulators, biomechanics, medical image analysis, and augmented reality for translation to clinical applications of integrated surgical systems in the areas of orthopaedic, ENT, and craniofacial reconstructive surgery.

Footnotes

e.g., Polaris NDI, Northern Digital Incorporated. Ontario, Canada.

i.e., Microsoft HoloLens 2. Microsoft. Washington, USA.

The code for our work can be accessed through the following link: https://github.com/lihaowei1999/IRToolTrackingWithHololens2.git

Edmund Optics. Teledyne FLIR Integrated Imaging Solutions, Inc.

CBC AMERICA LLC.

Northern Digital Incorporated

Contributor Information

Alejandro Martin-Gomez, Laboratory for Computational Sensing and Robotics, Whiting School of Engineering, Johns Hopkins University, United States of America..

Haowei Li, Department of Biomedical Engineering, Tsinghua University, China..

Tianyu Song, Chair for Computer Aided Medical Procedures and Augmented Reality, Department of Informatics, Technical University of Munich, Germany..

Sheng Yang, Department of Biomedical Engineering, Tsinghua University, China..

Guangzhi Wang, Department of Biomedical Engineering, Tsinghua University, China..

Hui Ding, Department of Biomedical Engineering, Tsinghua University, China..

Nassir Navab, Laboratory for Computational Sensing and Robotics, Whiting School of Engineering, Johns Hopkins University, United States of America.; Chair for Computer Aided Medical Procedures and Augmented Reality, Department of Informatics, Technical University of Munich, Germany.

Zhe Zhao, Department of Orthopaedics, Beijing Tsinghua Changgung Hospital. School of Clinical Medicine, Tsinghua University..

Mehran Armand, Laboratory for Computational Sensing and Robotics, Whiting School of Engineering, Johns Hopkins University, United States of America.; Department of Orthopaedic Surgery, Johns Hopkins University School of Medicine, United States of America.

References

- [1].Kamphuis C, Barsom E, Schijven M, and Christoph N, “Augmented reality in medical education?” Perspectives on medical education, vol. 3, no. 4, pp. 300–311, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2].Peters TM, Linte CA, Yaniv Z, and Williams J, Mixed and augmented reality in medicine. CRC Press, 2018. [Google Scholar]

- [3].Campisi CA, Li EH, Jimenez DE, and Milanaik RL, “Augmented reality in medical education and training: from physicians to patients,” in Augmented Reality in Education. Springer, 2020, pp. 111–138. [Google Scholar]

- [4].Mehrfard A, Fotouhi J, Taylor G, Forster T, Armand M, Navab N, and Fuerst B, “Virtual reality technologies for clinical education: evaluation metrics and comparative analysis,” Computer Methods in Biomechanics and Biomedical Engineering: Imaging & Visualization, vol. 9, no. 3, pp. 233–242, 2021. [Google Scholar]

- [5].Fida B, Cutolo F, di Franco G, Ferrari M, and Ferrari V, “Augmented reality in open surgery,” Updates in surgery, vol. 70, no. 3, pp. 389–400, 2018. [DOI] [PubMed] [Google Scholar]

- [6].Deib G, Johnson A, Unberath M, Yu K, Andress S, Qian L, Osgood G, Navab N, Hui F, and Gailloud P, “Image guided percutaneous spine procedures using an optical see-through head mounted display: proof of concept and rationale,” Journal of neurointerventional surgery, vol. 10, no. 12, pp. 1187–1191, 2018. [DOI] [PubMed] [Google Scholar]

- [7].Jud L, Fotouhi J, Andronic O, Aichmair A, Osgood G, Navab N, and Farshad M, “Applicability of augmented reality in orthopedic surgery-a systematic review,” BMC musculoskeletal disorders, vol. 21, no. 1, pp. 1–13, 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [8].Casari FA, Navab N, Hruby LA, Kriechling P, Nakamura R, Tori R, de Lourdes dos Santos Nunes F, Queiroz MC, Fürnstahl P, and Farshad M, “Augmented reality in orthopedic surgery is emerging from proof of concept towards clinical studies: a literature review explaining the technology and current state of the art,” Current Reviews in Musculoskeletal Medicine, vol. 14, no. 2, pp. 192–203, 2021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [9].Rahman R, Wood ME, Qian L, Price CL, Johnson AA, and Osgood GM, “Head-mounted display use in surgery: a systematic review,” Surgical innovation, vol. 27, no. 1, pp. 88–100, 2020. [DOI] [PubMed] [Google Scholar]

- [10].Fotouhi J, Mehrfard A, Song T, Johnson A, Osgood G, Unberath M, Armand M, and Navab N, “Development and pre-clinical analysis of spatiotemporal-aware augmented reality in orthopedic interventions,” IEEE transactions on medical imaging, vol. 40, no. 2, pp. 765–778, 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [11].Koulieris GA, Akşit K, Stengel M, Mantiuk RK, Mania K, and Richardt C, “Near-eye display and tracking technologies for virtual and augmented reality,” in Computer Graphics Forum, vol. 38, no. 2. Wiley Online Library, 2019, pp. 493–519. [Google Scholar]

- [12].Kunz C, Maurer P, Kees F, Henrich P, Marzi C, Hlaváč M, Schneider M, and Mathis-Ullrich F, “Infrared marker tracking with the hololens for neurosurgical interventions,” Current Directions in Biomedical Engineering, vol. 6, no. 1, 2020. [Google Scholar]

- [13].Gsaxner C, Li J, Pepe A, Schmalstieg D, and Egger J, “Inside-out instrument tracking for surgical navigation in augmented reality,” in Proceedings of the 27th ACM Symposium on Virtual Reality Software and Technology, 2021, pp. 1–11. [Google Scholar]

- [14].Ungureanu D, Bogo F, Galliani S, Sama P, Meekhof C, Stühmer J, Cashman TJ, Tekin B, Schönberger JL, Olszta P et al. , “Hololens 2 research mode as a tool for computer vision research,” arXiv preprint arXiv:2008.11239, 2020. [Google Scholar]