Abstract

Artificial intelligence (AI) and algorithms are heralded as significant solutions to the widening gap between the rising healthcare needs of ageing and multi-morbid populations and the scarcity of resources to provide such care.

Objective

This article investigates how the PMHnet algorithm – an AI prognostication tool developed in Denmark to predict the one-year all-cause mortality risk for patients hospitalized with ischemic heart disease – was presented to cardiologists working in the hospital setting, and how they responded to this novel decision-support tool.

Methods

Empirically, we draw upon ethnographic fieldwork in the Danish-led international research project, PM Heart, which since 2019 has developed the PMHnet algorithm and implemented the software into the electronic health record system in hospitals in Eastern Denmark (the Capital Region and Region Zealand).

Results

Paying careful attention to the hopes and concerns of cardiologists who will have to embrace and adapt to algorithmic tools in their everyday work of diagnosing and treating patients, we identify three analytical themes meriting attention when AI is implemented in healthcare: 1) the re-negotiation of agency and autonomy in human-algorithm relations, 2) accountability in algorithmic prognostication and 3) the complex relationship between association and causation actualized by predictive algorithms. From these analytical themes, we elicit methodological questions to guide future ethnographic explorations of how AI and advanced algorithms are put to use in the healthcare system, with what implications, and for whom.

Conclusion

We conclude that local, qualitative investigations of how algorithms are used, embraced and contested in everyday clinical practice are needed in order to understand their implications – good and bad, intended and unintended – for clinicians, patients and healthcare provision.

Keywords: Artificial intelligence, personalized medicine, qualitative (studies), predictive algorithms, clinical care, ethnography, methodological guidance, cardiovascular disease, precision medicine

Introduction

Healthcare systems are increasingly put under strain by multi-morbid and ageing populations as well as shortages of health professionals and care staff. Artificial intelligence (AI) is a solution promoted in health policy to address the widening gap between the healthcare needs of populations and the scarcity of human and economic resources.1–3 The hope is that more data-driven healthcare systems and the use of automation will provide better and more efficient care.4,5 Yet while bioinformatics research projects proliferate, integrating huge amounts and kinds of health data, the resulting algorithms seldom reach clinical implementation and use 6 – for illustrative examples in the field of cardiology, see recently published studies in this journal.7–12 In other areas (e.g., social media, the banking community, insurance and advertising), AI tools have been easier to bring to use, and in response, social science scholars have demonstrated the social, ethical and organizational implications of algorithmic systems for automating and supporting decision-making.13–19 Of particular scholarly concern are issues of algorithmic bias and discrimination, the delegation of agency and authority from humans to AI and the complexity and lack of transparency in algorithmic systems that render it difficult or even impossible to assess accountability in complex human-algorithm relations and processes. Indeed, the slow uptake of AI tools in healthcare practice pertains to uncertainties about whether decision-support tools might induce harm to patients and to challenges in developing a regulatory framework for AI applications in healthcare. IBM Watson for Oncology is an example of an algorithmic decision-support tool that made its way to the clinic yet failed to prove its legitimacy. As only very few decision-support tools developed with AI are actually implemented sustainably in clinical practice, knowledge about how clinicians perceive and use algorithmic tools is very limited.

The PMHnet algorithm is an AI prognostication tool developed to support clinical decision-making which is fully integrated into the electronic health record (EHR) system used in Eastern Denmark. The PMHnet algorithm predicts the one-year, all-cause mortality risk – or the survival prognosis – for patients hospitalized with ischemic heart disease (hereafter IHD), and was put into service in the middle of September 2023. 20 In this article, we investigate how the PMHnet algorithm was presented to its future users, that is, cardiologists working in the hospital setting, and how these users in turn responded to the fact that they will be utilizing the algorithm in their future clinical work. Empirically, we draw upon ethnographic engagements in the international, Danish-led research project, PM Heart, which since 2019 has developed the PMHnet algorithm and built the software into the EHR system used in Eastern Denmark; that is, in two out of Denmark's five regions responsible for hospital care (the Capital Region and Region Zealand). While our study cannot provide insights into the actual clinical use of the PMHnet algorithm, we pay close empirical attention to both alignment and friction in human-algorithm relations 21 by taking seriously the hopes and concerns of the clinicians who will have to embrace and adapt to AI tools in their everyday work of diagnosing and treating patients. In so doing, our analyses elicit significant methodological questions meriting special attention in future ethnographic explorations of how AI and advanced algorithms are put to use in the healthcare system, with what implications, and for whom. Such studies are paramount to critically investigate and evaluate how humans might work together with algorithms in meaningful ways.22,23

The article is organized as follows: First, we introduce the PM Heart research project and the PMHnet algorithm, followed by a presentation of our study. We then analyze how the Project Leader of the PM Heart research project presented the algorithm to the cardiologists who will use it in their daily work (part I), and how these future users reacted towards it, with both hopes and concerns for AI as a decision-support tool (part II). We identify three analytical themes meriting attention when AI is implemented in healthcare: 1) the re-negotiation of agency and autonomy in human-algorithm relations, 2) accountability in algorithmic prognostication and 3) the complex relationship between association and causation actualized by predictive algorithms. From these analytical themes, we then elicit methodological questions to guide future ethnographic explorations of the application of AI in healthcare. We conclude that local, qualitative investigations of how algorithms are used, embraced and contested in everyday clinical practice are needed to understand their implications – good and bad, intended and unintended – for clinicians, patients and the provision of healthcare.

The PM Heart research project and the PMHnet algorithm

The PM Heart research project was initiated in 2019 as a Nordic collaboration between clinical researchers at Copenhagen University Hospital and bioinformaticians at the University of Copenhagen, Denmark, Norwegian researchers and the Icelandic firm deCODE Genetics, a global leader in researching human genetics. The bioinformaticians in the project developed and trained the PMHnet algorithm; a neural network-based time-to-event model predicting the one-year all-cause mortality risk for patients hospitalized with IHD. To do so, they included 39,746 patients with IHD from the Eastern Danish Heart registry, who between 2006 and 2016 underwent coronary angiography. The model was trained using the data of 34,746 patients and subsequently tested in a population of 5000 patients. The PMHnet algorithm was built by extracting and integrating existing health data from the Danish national registries and EHRs, including prior diagnoses and procedure codes, laboratory test results, imaging (coronary angiographies), medication, genetics and clinical measurements. The final model took into account up to 584 patient-specific features. Upon development, the model was validated in an Icelandic cohort of 8287 patients, showing improved accuracy in prognosticating the survival prognosis in patients with IHD compared to the GRACE 2.0 risk score which is currently the most widely acknowledged tool for estimating all-cause mortality risk of these patients. 20

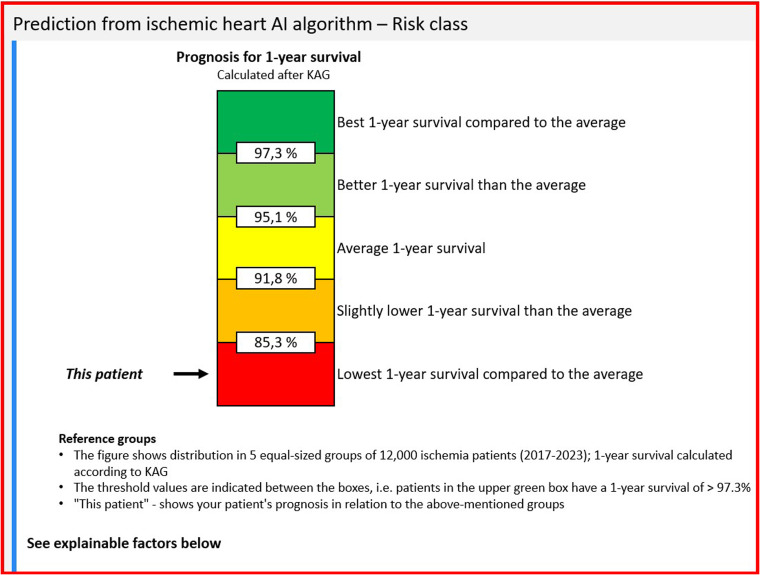

The hypothesis behind the algorithm is that clinicians who acquire information about the survival prognosis of the individual patient will be able to decide on better management compared to their colleagues who do not have the prognosis available. To test this hypothesis, a randomized controlled trial was initiated on 18 September 2023, at all hospitals in Denmark's Capital Region and Region Zealand treating patients with IHD. When a patient admitted to the hospital with IHD undergoes a coronary angiography, the EHR system automatically randomizes this patient to either an intervention group or a control group. For the intervention group, the PMHnet algorithm calculates the patient's one-year survival prognosis, which is then displayed to the treating clinician. For patients in the control group, a survival prognosis is also calculated but not disclosed to the clinician. Through this study, the researchers will investigate the clinical usefulness of the PMHnet algorithm as a decision-support tool, evaluating whether the use of the algorithm will 1) improve patients’ one-year survival, and 2) minimize their risk of re-admission compared to patients for whom cardiologists do not know the survival prognoses. The survival prognosis is not displayed as a number but as a risk category relative to the average survival prognosis, calculated from a population of 12,000 patients with IHD from 2017 to 2023. Figure 1 shows how the EHR displays the survival prognosis to the treating clinician.

Figure 1.

The survival prognosis calculated by the PMHnet algorithm as displayed in the electronic health record (EHR).
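The trial logic described above can be illustrated with a minimal sketch. It is not the EHR vendor's or the PM Heart project's implementation: the 1:1 allocation, the category labels, the `tolerance` band and all function and field names are assumptions introduced purely for illustration. The sketch shows only the two properties stated in the text, namely that a prognosis is calculated in both arms but disclosed only in the intervention arm, and that it is displayed as a category relative to a reference average rather than as a number.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrialRecord:
    patient_id: str
    arm: str                           # "intervention" or "control"
    risk_category: str                 # computed and stored in both arms
    shown_to_clinician: Optional[str]  # None in the control arm


def categorize(risk: float, reference_mean: float, tolerance: float = 0.25) -> str:
    """Map a predicted one-year mortality risk to a category relative to
    the reference population average. The labels and the tolerance band
    are hypothetical, not the categories used in the EHR."""
    if risk > reference_mean * (1 + tolerance):
        return "above average risk"
    if risk < reference_mean * (1 - tolerance):
        return "below average risk"
    return "average risk"


def enroll(patient_id: str, predicted_risk: float,
           reference_mean: float, rng: random.Random) -> TrialRecord:
    """Randomize a patient (1:1 allocation is assumed) and decide what is displayed."""
    arm = rng.choice(["intervention", "control"])
    category = categorize(predicted_risk, reference_mean)
    # The prognosis is calculated for every patient but disclosed
    # only when the patient is in the intervention arm.
    shown = category if arm == "intervention" else None
    return TrialRecord(patient_id, arm, category, shown)


record = enroll("patient-001", predicted_risk=0.20, reference_mean=0.10,
                rng=random.Random())
print(record.arm, "->", record.shown_to_clinician)
```

Keeping the undisclosed category on the record for control patients reflects the design in which outcomes can later be compared across both arms.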

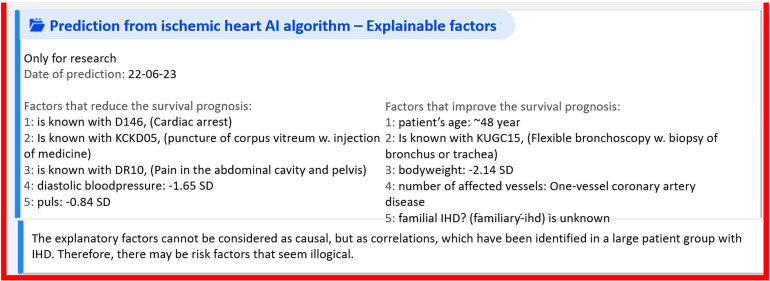

In addition to the survival prognosis, the EHR provides cardiologists with a list of so-called ‘explainable factors’, a list of the most important input features driving the prediction towards either non-survival (left) or survival (right) (see Figure 2).

Figure 2.

The survival prognosis with explainable factors.

To the left in Figure 2, a list displays five factors driving the prediction towards death, whereas the list to the right in the same box provides five factors driving the prediction towards survival. As examples of factors driving the prediction towards death for this patient, factor 1 reads ‘[patient] is known with D146, (cardiac arrest)’, designating a diagnostic code, and factor 2 reads: ‘[patient] is known with KCKD05, (puncture of corpus vitreum w. injection of medicine)’, designating a code for a clinical procedure. As examples of factors driving the prediction towards survival for the patient, factor 1 reads ‘patient's age: ∼48 years’, and factor 2 reads ‘[patient] is known with KUG15, (flexible bronchoscopy w. biopsy of bronchus or trachea)’, also designating a clinical procedure. The relation between these factors and the survival prognosis is a mathematical association, not necessarily a causal relationship. Yet even though an explainable factor might not be directly causally linked to the survival prognosis, this factor can be a proxy for something else that might have a causal relation to the prognosis. We return to the issue of association versus causation in the analysis.
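How a two-sided list of explainable factors can be derived may be sketched as follows. The source does not state how the PMHnet algorithm computes feature contributions, so the sketch simply assumes that each input feature has already been given a signed attribution score (positive pushing the prediction towards death, negative towards survival); the function name and the numeric scores are hypothetical.

```python
from typing import Dict, List, Tuple


def split_explainable_factors(
    contributions: Dict[str, float], k: int = 5
) -> Tuple[List[str], List[str]]:
    """Return the k strongest factors in each direction.

    Positive scores are assumed to push the prediction towards death,
    negative scores towards survival.
    """
    toward_death = sorted(
        (name for name, score in contributions.items() if score > 0),
        key=lambda name: contributions[name],
        reverse=True,
    )[:k]
    toward_survival = sorted(
        (name for name, score in contributions.items() if score < 0),
        key=lambda name: contributions[name],
    )[:k]
    return toward_death, toward_survival


# Factor names follow the example in Figure 2; the scores are invented.
example = {
    "D146 (cardiac arrest)": 0.30,
    "KCKD05 (puncture of corpus vitreum)": 0.12,
    "age ~48 years": -0.25,
    "KUG15 (flexible bronchoscopy)": -0.08,
}
death, survival = split_explainable_factors(example)
print("Towards death:", death)
print("Towards survival:", survival)
```

The ranking only orders features by the strength of their statistical association with the output. Nothing in it distinguishes a causal factor from a proxy, which is why a procedure code can appear high on either list.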

While the PMHnet algorithm provides the patient's survival prognosis and up to 10 explainable factors, it does not instruct the clinician what decisions to make. Regardless of the survival prognosis, the clinicians must comply with the recommendations for procedures and treatments that have well-documented effects, referred to by cardiologists as ‘Class I recommendations’ in national and international guidelines.24,25 However, there are some recommendations for disease management for which only less well-documented effects have been demonstrated, and clinicians might consider adjusting these Class II and III treatment recommendations upon knowing the patient's survival prognosis. Examples of adjustable treatment options include the extent of further examinations, the number of medications, the dose of medications, the duration of admission, recommendations for rehabilitation and the frequency and intensity of follow-up care.

Before we analyze the presentation of the PMHnet algorithm to the cardiologists who will use it, and their responses to it, we account for the ethnographic study and the empirical material providing the basis for this article.

Methods

All authors of this article are based in Copenhagen, Denmark, and actively involved in the PM Heart research project. Anna is the research administrator of the project, Peter and Søren are bioinformaticians and have developed and trained the PMHnet algorithm; moreover, Søren is the co-Leader of the PM Heart research project. Henning is a clinical researcher, cardiologist and the Project Leader of the research project. Together, Henning, Søren, Peter and Anna have been responsible for moving the PMHnet algorithm to clinical implementation. As a social scientist investigating the social, practical and organizational implications of predictive algorithms in healthcare, Iben has followed as closely as possible the development of the algorithm and the efforts to integrate it into the EHR system. Empirically, the article draws upon Iben's ethnographic engagements and fieldwork in more than fifteen project meetings held regularly since 2019, lasting between one and two hours. While a few meetings at the beginning of the research project were in-person meetings held at Copenhagen University Hospital, the vast majority of meetings have been on-line. In these meetings, the different project members updated each other on significant developments within each of the different researchers’ responsibilities and sought out solutions to potential challenges in moving the project forward. Moreover, Iben has conducted nine qualitative interviews with members of the PM Heart research project, each lasting approximately one hour.

Participation in research meetings and interviews has provided important insights into the visions and challenges of developing the PMHnet algorithm and moving it to clinical implementation. However, the main empirical material for this article constitutes participant observation carried out by Iben in a ‘roadshow’, that is, a series of visits around the hospitals in Denmark's Capital Region and Region Zealand. In each of these visits, Henning presented the PMHnet algorithm to the cardiologists on duty that particular day with a slideshow either before or after the daily morning conference, typically held at 8:00 a.m. The presentations lasted between 30 and 45 minutes and were followed by ten minutes of Q&A and joint discussion, hence approximately one hour in total. Iben attended six such presentations out of a total of ten: Hvidovre Hospital (August 16, 2023), Copenhagen University Hospital [Rigshospitalet] (August 17, 2023), Herlev Hospital (August 30, 2023) and Glostrup Hospital (September 4, 2023), all located in the Capital Region; and Slagelse Hospital (August 29, 2023) and Holbæk Hospital (September 8, 2023), both located in Region Zealand. During the presentations, Iben took extensive field notes, documenting how Henning presented the PMHnet algorithm to his colleagues and how the audience of cardiologists responded with comments and questions about the algorithm, how it had been developed and how to use it in their clinical decision-making. Observing and documenting these presentations, Iben obtained important insights into the cardiologists’ immediate responses and reactions to the PMHnet algorithm and the PM Heart research project more broadly. Overall, the cardiologists were predominantly positive about the prospect of using the PMHnet algorithm in their decision-making and were curious to learn and understand how knowing (more) precisely about patients’ survival prognoses might improve the cardiologists’ medical practice and thus patient outcomes. 
Yet during the Q&A sessions and the ensuing discussions, concerns also came to the fore about the uncertain and opaque influence algorithms might have on the cardiologists’ judgments and decisions. These reactions, positive as well as reserved, serve as the empirical catalyst for the following analysis and the ensuing methodological questions provided for future ethnographic studies of predictive algorithms in healthcare.

This ethnographic study has been approved by the Danish Data Protection Authorities. Danish Law does not require formal ethics approval of such studies. No patients participated in this study; therefore, patient consent was not necessary.

Analysis

Part I: Presenting the PMHnet algorithm to its future users

Early one morning at the end of August 2023, Iben, Henning and Anna sat in a large conference room in a hospital in Region Zealand, about a one-hour drive from Copenhagen. Little by little, the room filled up with clinicians, waiting for the daily morning conference to begin. At 8:00 a.m., Henning began his presentation of the PM Heart research project and the PMHnet algorithm. As an introduction, he rhetorically asked his audience of colleagues how many factors they as cardiologists can ‘keep in their heads’ and take into account when they make clinical decisions about patients with IHD. He then went on to supply the answer: ‘Well, on a good Monday morning, perhaps five, on a bad Friday afternoon, maybe three’. With this question, Henning was alluding both to the insufficient capacity of the human brain to simultaneously consider multiple factors, and to the inconsistent quality of human judgment. He listed a range of factors influencing the development and course of IHD, as well as the proliferating amounts and kinds of health data that might bring cardiologists closer to understanding and intervening in these processes: clinical data, biochemical data, omics data and more. He concluded: ‘To be able to use the entire knowledge we have about the individual patient, we need help. We believe we need AI. We need a system to help organize and keep track of all these data so that we can make the decision. I like thinking about it as an “AI Wingman” or a “Robot Butler”’. To consolidate his argument, he presented the headline of a feature article published in March 2023 in The Journal of the Danish Medical Association [Ugeskrift for Læger], saying: ‘Statement: AI will not replace people, but people who use AI will replace those who do not use AI’ [AI vil ikke erstatte folk, men folk, der bruger AI, vil erstatte dem, der ikke bruger AI]. 26 His slideshow furthermore featured a recent article in Berlingske, one of the major Danish newspapers, in which Denmark's Minister for The Interior and Health declared that the Danish government will invest heavily in AI in the future to solve the current and future problem of shortages of doctors and nurses in the healthcare system. 27

Characterizing the algorithm as a ‘Robot Butler’ and thus a helpful companion, Henning humanized the algorithm and conveyed the widespread expectation that AI tools will make medical work easier, more efficient and convenient. With the concept ‘The Sociology of Expectations’, social science scholars have analyzed the generative effects of expectations in science and technology innovation, demonstrating how the emphasis on future opportunities and capabilities provides interest, legitimation and investment around novel technologies.28–31 Drawing upon powerful statements and narratives about the significant role of AI as the solution to improve both medical practice and a strained healthcare system, Henning depicted to his colleagues a future in which the presence and use of AI is inevitable. In particular, the feature article headline statement effectively suspends arguments and resistance against the use of AI in clinical work: either you embrace AI and become savvy in using these technologies, or you can expect to become redundant in the future. As argued by Borup and colleagues, ‘expectations link technical and social issues, because expectations and visions refer to images of the future, where technical and social aspects are tightly intertwined’. 28 In other words, the anticipatory claims about and expectations of AI construct a ‘technological promise’ around not only the PMHnet algorithm but around algorithms in healthcare in general, in which the integration of algorithms in clinical work equates with better clinical decisions and patient care.

As part of their everyday work, cardiologists use a range of different risk scores in which they manually type in several patient-specific values. During each of his presentations, Henning compared the PMHnet algorithm to other tools – already in use in the clinic – for prognostication and risk scoring of patients, saying: ‘We use these scores all the time! We lean against these scores, and we trust them blindly’. Presenting the PMHnet algorithm to his colleagues, Henning on the one hand promoted algorithms as novel and groundbreaking technologies with the potential to revolutionize medical work, yet on the other hand, he simultaneously downplayed the novelty of these algorithms by comparing them to other decision-support tools already in use in the clinic, and thus as ‘normal procedure’. In so doing, he shifted between what Rikke Torenholt and Henriette Langstrup 32 in their study of algorithm systems in cancer care have termed a ‘logic of disruption’ and a ‘logic of continuation’. From within the logic of disruption, Henning portrayed the algorithm as superhuman, stressing its capacity to substitute incomplete and flawed human reasoning. Denoting many of the decisions and judgments made in everyday clinical practice as ‘guesswork’, he foregrounded the limited capacities of the human brain, only capable of taking into account a few patient-specific variables relevant to the diagnostic work and decision-making. Yet simultaneously, from within the logic of continuation, Henning emphasized the algorithm as inferior to the human brain and its capacities for reasoning, stressing the algorithm as only a support, or a ‘wingman’ in human-driven decision-making grounded in clinical experience. 
Continuously oscillating between these two logics, he encouraged the cardiologists in their need to collaborate with the algorithm to improve their practice and their decisions, while simultaneously guarding the unique competencies, agency and autonomy of the cardiologists, an issue we go further into below.

To sum up, in presenting the PMHnet algorithm to its future users, Henning constructed a ‘technological promise’ around AI in healthcare and oscillated between a logic of disruption and a logic of continuation to encourage cardiologists to embrace the algorithm in their everyday work. However, algorithms do not necessarily function as helpful wingmen or Robot Butlers who seamlessly tally with the goals and intentions of human actors, making clinical decision-making easier, more effective or precise.15,16,18 Therefore, the practical effects and implications of algorithms must be scrutinized. In the following part of the analysis, we examine the clinicians’ subsequent responses to the PMHnet algorithm. We have synthesized the hopes and concerns articulated by the clinicians into three overall themes: 1) the re-negotiation of agency and autonomy in human-algorithm relations, 2) accountability in algorithmic prognostication and 3) the complex relationship between association and causation actualized by predictive algorithms. These analytical themes help us articulate and understand the putative effects of the algorithm on clinical practice, and in so doing, elicit methodological questions warranting future exploration.

Part II: Cardiologists’ responses to the PMHnet algorithm

Theme 1: Agency and autonomy re-negotiated: Augmentation versus replacement?

One of the major concerns voiced by critics and in scholarship on AI is whether artificial forms of reasoning erode human agency and autonomy.15,21,33 At a hospital in the Capital Region, a cardiologist responded to Henning's presentation of the PMHnet algorithm with a mix of enthusiasm and concern, saying: ‘The day the algorithm decides whether I should treat the patient with beta-blockers, we [cardiologists] are no longer needed. But this [the PMHnet algorithm] is still a tool’. With this comment, the cardiologist negotiated agency and autonomy in human-algorithmic collaboration by delineating the algorithm as a tool for augmenting human decisions rather than a replacement for human agency and autonomy. 34 We might understand the cardiologist's insistence on the algorithm as ‘just a tool’ as a way of keeping at a distance the unpleasant feeling that algorithms might erode clinicians’ epistemic authority over their actions and choices and thus give rise to uncertainty about ‘who is the one deciding’.21,35 Henning confirmed this view by responding: ‘Nothing has been taken from us – we’ll just feel better [when making decisions]’. In other words, while welcoming technological tools that might enhance their practice through automated data processing, cardiologists hold on to the value that their decisions should be ‘their own’. 33 This is a balancing act: on the one hand, the algorithm is portrayed as a butler – another individual – with whom clinicians can share judgment and thus responsibility, so that they feel better when making difficult decisions with consequences for the lives and deaths of their patients. Yet, on the other hand, cardiologists seek to keep a firm line between human and algorithmic agency, guarding and upholding the value of ‘pure’ human self-determination.

To strike this balance, during his presentations, Henning would also remind his colleagues of the simple algorithms already widely used in the clinical setting, namely risk scores which cardiologists ‘trust blindly’. This point poignantly demonstrates that no clear boundaries can be drawn between human and technological agency. As argued by Hayles, 36 when part of the cognitive processing that precedes a decision is outsourced to an algorithm, cognition and the powers of making decisions are distributed throughout the algorithmic system. In other words, regardless of the complexity of an algorithm, sharing responsibility and judgment with a Robot Butler occasions a reconfiguration of what constitutes clinicians’ ‘own’ decisions. Autonomous agency and self-determination are thus not qualities cardiologists can possess, but rather situational achievements that must be re-negotiated in practice on an ongoing basis in the face of new technologies and, particularly, artificial modes of reasoning.21,37 As Figures 1 and 2 demonstrate, visualization techniques are applied to help clinicians use algorithmic risk predictions in a meaningful way when they make decisions and communicate with patients.38,39 The question for further inquiries is therefore not if algorithms reconfigure human agency but rather how they do it: How does the information provided by algorithms inform and shape the decisions of clinicians? How do clinicians use, collaborate with, and build trust in algorithms, and how do they experience and mobilize visualizations of risk and prognosis? For more questions, see Table 1.

Table 1.

Methodological questions for studying the implementation, and consequent implications, of predictive algorithms in healthcare practice.

| Analytical theme | Methodological questions |
|---|---|
| Theme 1: Agency and autonomy re-negotiated | Practice |
| Theme 2: Accountability in prognostication | Practice |
| Theme 3: Association versus causation | Practice |

Theme 2: Accountability in prognostication

At the heart of questions of agency and autonomy in human-algorithmic collaboration is also the question of accountability: who is responsible and can be held accountable for decisions in case algorithmic predictions fail?3,40 In presenting the PMHnet algorithm to cardiologists, Henning stressed that for non-required treatment and follow-up care for patients with IHD (referred to as Class II and III recommendations earlier), cardiologists decide from their personal experience or ‘feelings’ [fornemmelser]: ‘In choosing among Class II and III recommendations, on what do we ground our decisions? It's all about feelings: when do I feel safe? On what do we ground these feelings? Well, that might be the prognosis for the patient’. Some cardiologists inquired into this issue, questioning the repercussions in cases where the algorithm's predictions fail. One cardiologist asked: ‘How are we positioned legally if the algorithm says [mistakenly] that the patient's pulse is high, and we react accordingly (…)? Might the algorithm be used as an argument from the authorities asking why one did not offer a specific treatment?’ Another cardiologist depicted a similar scenario: ‘What about people whose prognoses are very bad, and we tell them. In response, they change their lives completely. One year later, they come back, saying “Hey, I’m still alive!! Yet now I’ve spent all the money I had!”’ Posing these questions, the cardiologists raised the question of how to assign blame, and to whom, for algorithmic predictions that turn out to be wrong, and pointed to the paradox that novel technologies developed to improve clinical practice might also unintendedly be appropriated by authorities as tools for surveillance, control and governance. 31

In social science scholarship, the opacity and complexity of algorithms have been identified as major hindrances to algorithmic accountability. Algorithms are often described and conceived of as ‘black boxes’ because the recipients of the output (in our case, cardiologists receiving a patient's survival prognosis) rarely understand how and why the algorithm arrived at this classification from the inputs.3,31,40–43 Efforts to render AI more explainable and interpretable (‘explainable AI’) are seen as a solution to this problem, for instance by increasing the transparency of how a given model functions for its users, and by aiding users to understand how a model behaved and why. 41 Indeed, the explainable factors provided by the PMHnet algorithm (see Figure 2) aim exactly at increasing interpretability, helping clinicians better understand why the algorithm arrived at a given prognosis. Yet as argued by McKee and Wouters, the intrinsic complexity of algorithms is difficult to address. 3 Furthermore, the technical provision of explainable factors does not in itself guarantee correct interpretation or understanding, a point we further elaborate on below (Theme 3: Association versus causation). How clinicians make sense of algorithmic output, and how they integrate it into their decision-making is highly individual and context-dependent. This point leads to another accountability problem: traceability, denoting the difficulty of detecting possible failures across the process of developing, training and implementing an algorithm. 
It remains challenging, if not impossible, to determine or assess the impact of an algorithm in human-algorithm decision-making and thus to apportion blame in distributed networks of human and AI actors.3,40 At the European Union level, the EU AI Act was adopted by the European Parliament on 13 March 2024 to ensure a regulatory framework for developing AI technologies and tools that balances innovation and patient safety.44–46 Within the field of healthcare, however, AI tools differ and are put to use in very different settings with the aim of solving different problems, meaning that no single or universal solution to accountability problems exists. Along with other scholars, we therefore stress the need for ethnographic studies of how AI tools are actually used in practice to examine what accountability issues emerge in the everyday use of algorithms, with what consequences and for whom.47–50 Such studies are paramount to document the effects and implications of AI in healthcare and thus to aim for algorithmic accountability.

Questions of accountability furthermore point to the inherent uncertainty of algorithmic predictions, namely that they are probabilistic, not certain, claims about the future – an important point that physicians, according to Katherine Goodman and colleagues, tend to get wrong. 38 On several occasions, cardiologists asked Henning what would happen to the PMHnet algorithm's predictions in the case of missing data. He replied that, in contrast to other similar tools, the PMHnet algorithm has the advantage that it runs even with missing data, although the prediction then becomes more uncertain. Also deliberating on the relationship between input and output in algorithmic models, a cardiologist reflected openly on clinicians’ flawed registration practices, the resulting poor quality of clinical data, 51 and the implications of erroneous data for the algorithm's predictions. To this apt point, Henning responded: ‘This is an important issue – we’ve trained the algorithm on these [erroneous] data. It's no better than the everyday clinic. Maybe this will force us to be a little more precise when we register (…) For many years, we’ve used the EHR as a typewriter, that is, registered patient data in a non-structured format. Maybe the PMHnet algorithm will make us write more structured data to improve its performance’. One example of unstructured data entry with major implications for calculating the survival prognosis of patients with IHD is smoking status. Although smoking status can be entered in the EHR as structured data, this is by no means common practice. Instead, clinicians write it as free text, which makes it more cumbersome to incorporate in an algorithm. As such, Henning and some of his colleagues in the PM Heart research project envisioned that the PMHnet algorithm would encourage and bring about an improved registration culture among clinicians. 
Yet how cardiologists will evaluate the (un)certainty of the algorithmic predictions, and with what consequences, remain questions open to further investigation.
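
The contrast between structured and free-text registration of smoking status can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the field name `smoking_status`, the example notes, the category labels and the keyword rules are assumptions made for illustration and do not describe the EHR or how the PMHnet algorithm processes its inputs. The sketch only shows why a structured field can be read directly, whereas free text first has to be interpreted by rules that are easily incomplete.

```python
import re
from typing import Optional

# Hypothetical structured record: the field name and value are assumptions.
structured_record = {"smoking_status": "current"}

# Hypothetical free-text notes, invented for this illustration.
free_text_notes = [
    "Pt. smokes 10 cigarettes daily.",
    "Former smoker, quit 2015.",
    "Denies smoking.",
    "Discussed diet and exercise.",
]


def smoking_from_structured(record: dict) -> Optional[str]:
    """A structured field can be read as-is."""
    return record.get("smoking_status")


def smoking_from_free_text(note: str) -> Optional[str]:
    """Guess smoking status from free text with simple keyword rules.

    The rules are deliberately naive; real clinical notes would need far
    more careful handling (negation, abbreviations, other languages).
    """
    text = note.lower()
    if re.search(r"\b(denies smoking|non-?smoker|never smoked)\b", text):
        return "non-smoker"
    if re.search(r"\b(former smoker|ex-?smoker|quit)\b", text):
        return "former"
    if re.search(r"\bsmok(es|er|ing)\b", text):
        return "current"
    return None  # smoking is not mentioned, so the value is missing


print(smoking_from_structured(structured_record))
for note in free_text_notes:
    print(repr(note), "->", smoking_from_free_text(note))
```

In this toy example, the last note yields no value at all, which mirrors the missing-data situation the cardiologists asked about.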

As a last point, the cardiologists’ questions about accountability and uncertainty illuminate the complex temporal relationships and assumptions at play in algorithmic predictions. Predictive algorithms like the PMHnet embody the assumption that in making decisions about, for example, medication and follow-up care, clinicians construct and attend to an estimate of the patient's future risk. Yet in practice, clinicians might have other considerations when making decisions; that is, considerations grounded in the here-and-now complex situations of patients that carry more weight than the risk of a future outcome. The fundamental premise of predictive algorithms is that knowledge about the probability of a given future outcome shapes decisions in the present in a desirable manner. The question remains, however, how – without further instructions from the PMHnet algorithm about how to treat and manage the patient – clinicians make sense and use of algorithmic predictions in practice: How do AI and algorithms redistribute accountability and responsibility in the practices and experiences of clinicians? How do clinicians understand and act upon algorithmic predictions? Does the implementation of algorithms change clinicians’ registration practices, and if so, how? For more questions, see Table 1.

Theme 3: Association versus causation

As touched upon previously, the provision of explainable factors does not necessarily guarantee interpretability and understanding of algorithmic output. Moreover, scholars have pointed out that associations themselves do not convey predictive value, and therefore acting on associations can be problematic.40,52 In every presentation, Henning carefully explained to his colleagues the importance of remembering that the explainable factors provided by the PMHnet algorithm and shown in the EHR are mathematical associations, not necessarily causal relationships between the provided factors and the calculated survival prognosis of the patient. At a presentation at a hospital in the Capital Region, one of the cardiologists was very impressed by the PM Heart research project and responded to the presentation, saying: ‘This is a fascinating project! It's incredibly well done that you’ve been able to build this model and integrate it into the EHR. (…) Could we possibly run into problems using the algorithm if it makes us ‘turn off the brain’ [slå hjernen fra]? What if I think I need to treat kidney disease in the patient, yet that doesn’t figure on the list as an explainable factor, and then I do nothing about it? Can we possibly overlook something? Have you discussed what happens when we see it [the prognosis and its explainable factors] in cold print?’

This question about association versus causation came up recurrently. To acknowledge it, Henning on several occasions used the screenshot of the algorithm in the EHR shown in Figure 2 as an illustrative example. In this example, bronchoscopy is listed as a significant factor driving the prediction towards survival for this patient. Referring to this example, Henning asked: ‘Does this mean that we should send the next patient with a very bad survival prognosis for a bronchoscopy? Of course, no! This is where we ought to use our common sense’. While in this example it seems obvious that having a bronchoscopy performed is not causally linked to better chances of survival, there might be other risk or protective factors for which the relation between association and causation is much more difficult to sort out and understand. Henning therefore also acknowledged the confusing mix of associations and causal relationships facing clinicians using this tool, expecting that he and his colleagues would repeatedly struggle to wrap their heads around it. This difficulty also relates to the complex and opaque nature of advanced algorithms discussed above,15,53,54 which means that the daily users, who have not been actively involved in developing the model, are poorly placed to understand its operations and how it arrives at a given prognosis and list of explainable factors.
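
How a factor can be associated with survival without causing it can be sketched with a small simulation in Python. The scenario is entirely hypothetical and is not derived from the PMHnet algorithm or its training data: we assume an unobserved characteristic (here called `frail`) that makes patients both less likely to undergo a procedure and less likely to survive, while the procedure itself has no effect on survival by construction. All probabilities and names are illustrative assumptions.

```python
import random


def simulate(n: int = 100_000, seed: int = 0):
    """Generate hypothetical patients as (frail, procedure, survived) tuples."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        frail = rng.random() < 0.5
        # Assumption: frail patients are referred for the procedure less often.
        procedure = rng.random() < (0.1 if frail else 0.6)
        # Assumption: survival depends on frailty only, never on the procedure.
        survived = rng.random() < (0.6 if frail else 0.9)
        rows.append((frail, procedure, survived))
    return rows


def survival_rate(rows, procedure, frail=None):
    """Share of survivors among patients with the given procedure status."""
    group = [s for f, p, s in rows
             if p == procedure and (frail is None or f == frail)]
    return sum(group) / len(group)


rows = simulate()

# Crude comparison: the procedure looks 'protective'.
crude_gap = survival_rate(rows, True) - survival_rate(rows, False)

# Comparison within each frailty group: the apparent benefit largely vanishes.
gap_frail = (survival_rate(rows, True, frail=True)
             - survival_rate(rows, False, frail=True))
gap_robust = (survival_rate(rows, True, frail=False)
              - survival_rate(rows, False, frail=False))

print(f"crude survival gap:       {crude_gap:.3f}")
print(f"gap among frail patients: {gap_frail:.3f}")
print(f"gap among robust patients: {gap_robust:.3f}")
```

In this toy setting, a model trained on such data could plausibly list the procedure as a factor driving the prediction towards survival, even though sending more patients for it would change nothing. Whether anything comparable underlies the bronchoscopy example is not something the sketch can establish.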

At a hospital in Copenhagen, another cardiologist raised the issue of association versus causation. After the presentation of the PMHnet algorithm, she asked: ‘Have you thought about what it means [in practice] that we find associations before we understand the causal relationship? How are we to instruct the patient?’ With this comment, she raised the question of patient involvement. At another presentation, a cardiologist voiced a similar concern about patients’ reactions to the use of AI in their care, asking: ‘Do patients have a right to know about the algorithm? My concern is: what happens if a headline appears in Ekstra Bladet [one of the largest tabloid newspapers in Denmark] saying that an algorithm is secretly running, concealed from the patients?’ In response to these questions, Henning explained that although patients are unable to ‘see’ the algorithm in their EHR because it is classified as research data, he would strongly encourage the cardiologists to mobilize the algorithm and its predictions when communicating with patients about their disease and their future. He continued: ‘This tool might change how we talk to the patients (…) It opens a larger debate about the fact that we’re probably not that good at talking to patients about their prognoses. Oncologists are much better at that!’ The third issue warranting further scrutiny is thus this: How do clinicians understand and delineate association and causation when using predictive algorithms, and what are the implications for how they make decisions? How is the provision of explainable factors meaningful and useful to clinicians in their decision-making, and how – if at all – do they mobilize algorithmic predictions when communicating with patients? See Table 1 for further questions.

Methodological guidance: Studying predictive algorithms in practice

For each of the themes elicited in the analysis, Table 1 provides a list of questions that we believe warrant special attention in social science studies of the implementation of predictive algorithms and its implications for clinical practice. The list of questions is not meant to be exhaustive, as more questions will inevitably emerge as predictive algorithms are increasingly put into service. Nevertheless, as the questions elaborate on the hopes and concerns of real end-users of the PMHnet algorithm, we believe them to be useful as guidance in future studies exploring how human-algorithm relations are crafted in ways that are meaningful to healthcare staff and of benefit to the patients.

Discussion

In presenting the PMHnet algorithm at different hospitals as the Project Leader of the PM Heart research project, Henning made great efforts to encourage his colleagues to welcome the algorithm into their everyday work. At the same time, he was candid when cardiologists in the audience asked whether he truly expected the algorithm to improve the survival of patients with IHD and to minimize patients’ risk of re-admission. To this question, on one occasion Henning responded: ‘Well, we might be optimistic. Yet we’ve been able to wheel in a barrow an awful lot of clinical data [from the hospital] to the university. From these data, some of the best mathematicians and bioinformaticians have developed a model, which we sent to Iceland and validated in a different country and entirely different healthcare system. Then we transported it back again to the [Danish] healthcare system and integrated it into the EHR. During the process, we tried to make the lawyers happy, and have you ever seen a happy lawyer? So the real exercise here has been this: is this possible? It's a different game’. Narrating the research process this way, Henning attempted to make visible the complexity and the technical, legal and organizational challenges of developing an algorithm and bringing it to clinical implementation. 55 Accordingly, he characterized the PM Heart research project and the PMHnet algorithm as a ‘proof of concept’, comparing their endeavors to constructing a ‘highway’ on which future algorithms could – hopefully – be transported into the clinical space. Along similar lines, an enthusiastic cardiologist termed the research project and the algorithm an ‘ice-breaker project’ paving the way for algorithms in healthcare. Building algorithms in healthcare is thus not so much about increasing predictive power in the clinic, at least in the short run. 
It is about developing and consolidating research collaboration and academic networks in precision medicine across borders and disciplines, and about navigating novel data-intensive technology through an uncharted organizational, legal and moral landscape.

The PMHnet algorithm is not primarily valuable because of its effects on patient treatment but because of its ability to pave the way for a future and upgraded 2.0 edition of the algorithm. Peter and Søren, the bioinformaticians in the PM Heart research project, are already working on this upgraded version which includes multiple and additional clinically relevant outcomes. When researchers think, experience and develop algorithms in generations, they succeed in bringing these technologies into clinical application, gradually and pragmatically. Yet as the future, upgraded algorithms will likely constitute improved and more sophisticated versions of the current ones, the generational approach also effectively suspends larger questions about the legitimacy of algorithms and their implications for health professionals, patients and the healthcare system; that is, whether algorithms are meaningful to and useful in the clinical work. Therefore, despite the probably insignificant effect of the PMHnet and similar algorithms in clinical practice, we should not dismiss their significance as infrastructuring entities. Regardless of their effects on clinical outcomes in the present, these ‘proofs of concept’ or ‘ice-breaker’ algorithms pave the way for new, yet-to-be-invented, algorithms that will transform our practices and experiences of health and disease.

While this study provided important insights into the presentation of an algorithm aimed at supporting and enhancing the clinical management of patients with IHD and the subsequent reception of it among future users, limitations apply. Our study is a case study, meaning that our empirical material is limited to one specific algorithm that was presented very locally in one country and thus in a very specific healthcare system. Our results might therefore be difficult to generalize to other algorithmic tools and other clinical, professional and healthcare settings. Currently, research projects developing AI decision-support tools are proliferating, and more research needs to be done to explore their implications across different clinical contexts. However, as the hopes and concerns of cardiologists identified in this study echo larger theoretical and scholarly debates about the ethics and implications of algorithms, we regard our findings as analytically generalizable. 56 We therefore believe that the proposed attention to how autonomy and agency, algorithmic accountability and the relation between association and causation, play out in practice in human-algorithm relations will be helpful: it serves as a guide to explore and understand the implications of algorithms in clinical decision-making across local contexts.

Conclusion

In this article, we have investigated how a predictive algorithm, the PMHnet, was presented to its future users, namely cardiologists working in the hospital setting in the eastern part of Denmark, and their immediate responses to it. Providing individual survival prognoses on the basis of a variety of health data, the algorithm is aimed at supporting and enhancing cardiologists’ decision-making in the management of patients with IHD. We identified three analytical themes in need of further scrutiny when AI decision-support tools are implemented in clinical practice: firstly, the cardiologists considered how to uphold human autonomy and agency when using the PMHnet algorithm; secondly, they questioned how to evaluate and negotiate accountability in human-algorithm relations; and thirdly, they deliberated on how confusion between association and causation in algorithmic prediction might affect clinical judgment. From these analytical themes, we have elicited a list of methodological questions to guide further ethnographic research into the practical use of predictive algorithms. As no algorithms, no professional user groups and no clinical or healthcare settings are identical, in-depth ethnographic studies in local, situated practices are needed to scrutinize these emerging and promissory technologies before they acquire status as natural, objective ‘Robot Butlers’ who seem to conveniently and effectively augment the decisions of clinicians. 57 Only through local investigations of how algorithms are used, embraced and contested in everyday clinical practices can we understand their implications – good and bad, intended and unintended – for clinicians, patients and healthcare provision. With this article, we intend to encourage such critical investigations, aiming for socially robust medical technologies that are useful and meaningful to clinical work.

Acknowledgments

The authors thank all members of the PM Heart research project, particularly those who agreed to participate in an interview with Iben. The authors also thank the cardiologists and hospital staff for sharing their expectations and concerns for the PMHnet algorithm and for engaging in discussions with us.

Footnotes

Contributorship: Iben formulated the research idea and prepared the first draft of the manuscript. Anna and Henning participated in generating the empirical material. Peter and Søren developed the algorithm and supported the writing of the section presenting the research project and the algorithm. All authors reviewed and edited the manuscript draft and approved the final version.

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethical approval: This ethnographic study has been approved by the Danish Data Protection Authorities. Danish Law does not require formal ethics approval.

Funding: The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This research was funded by NordForsk, grant number: 90580; The Novo Nordisk Foundation, grant number: NNF22OC0079382; and Rigshospitalets Rammebevilling, grant number: E-22503-19.

Guarantor: IMG.

ORCID iD: Iben Mundbjerg Gjødsbøl https://orcid.org/0000-0003-3938-5211

References

- 1. Bohr A, Memarzadeh K. The rise of artificial intelligence in healthcare applications. In: Bohr A and Memarzadeh K (eds) Artificial Intelligence in Healthcare. London: Academic Press, 2020, pp. 25–60. doi: 10.1016/B978-0-12-818438-7.00002-2.

- 2. Hazarika I. Artificial intelligence: opportunities and implications for the health workforce. Int Health 2020; 12: 241–245.

- 3. McKee M, Wouters OJ. The challenges of regulating artificial intelligence in healthcare. Comment on “clinical decision support and new regulatory frameworks for medical devices: are we ready for it? – a viewpoint paper”. Int J Health Policy Manag 2023; 12: 7261.

- 4. Khera R, Butte AJ, Berkwits M, et al. AI in medicine – JAMA’s focus on clinical outcomes, patient-centered care, quality, and equity. JAMA 2023; 330: 818–820.

- 5. Davenport T, Kalakota R. The potential for artificial intelligence in healthcare. Future Healthc J 2019; 6: 94–98.

- 6. Beede E, Baylor E, Hersch F, et al. A human-centered evaluation of a deep learning system deployed in clinics for the detection of diabetic retinopathy. In: Proceedings of the 2020 CHI conference on human factors in computing systems (CHI ’20). Association for Computing Machinery, New York, NY, USA, 1–12. doi: 10.1145/3313831.3376718.

- 7. Liao H-C, Lin C, Wang C-H, et al. The deep learning algorithm estimates chest radiograph-based sex and age as independent risk factors for future cardiovascular outcomes. Digit Health 2023; 9: 1–12. doi: 10.1177/20552076231191055.

- 8. Dong T, Sinha S, Zhai B, et al. Cardiac surgery risk prediction using ensemble machine learning to incorporate legacy risk scores: a benchmarking study. Digit Health 2023; 9: 1–20. doi: 10.1177/20552076231187605.

- 9. Tsai D-J, Lou Y-S, Lin C-S, et al. Mortality risk prediction of the electrocardiogram as an informative indicator of cardiovascular diseases. Digit Health 2023; 9: 1–16. doi: 10.1177/20552076231187247.

- 10. Niu Y, Wang H, Wang H, et al. Diagnostic validation of smart wearable device embedded with single-lead electrocardiogram for arrhythmia detection. Digit Health 2023; 9: 1–11. doi: 10.1177/20552076231198682.

- 11. Lee C-H, Liu W-T, Lou Y-S, et al. Artificial intelligence-enabled electrocardiogram screens low left ventricular ejection fraction with a degree of confidence. Digit Health 2022; 8: 1–16. doi: 10.1177/20552076221143249.

- 12. Spencer R, Thabtah F, Abdelhamid N, et al. Exploring feature selection and classification methods for predicting heart disease. Digit Health 2020; 6: 1–10. doi: 10.1177/2055207620914777.

- 13. Savolainen L, Uitermark J, Boy JD. Filtering feminisms: emergent feminist visibilities on Instagram. New Media Soc 2022; 24: 557–579.

- 14. Schüll ND. Addiction by Design. Princeton, NJ: Princeton University Press, 2014.

- 15. Besteman C, Gusterson H. (eds) Life by Algorithms: How Roboprocesses Are Remaking Our World. Chicago and London: The University of Chicago Press, 2019.

- 16. O’Neil C. Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. New York: Crown, 2016.

- 17. Tanninen M, Lehtonen T-K, Ruckenstein M. Tracking lives, forging markets. J Cult Econ 2021; 14: 449–463.

- 18. Pasquale F. The Black Box Society: The Secret Algorithms that Control Money and Information. Cambridge, MA: Harvard University Press, 2015.

- 19. Prainsack B, Van Hoyweghen I. Shifting solidarities: personalisation in insurance and medicine. In: Van Hoyweghen I, Pulignano V, Meyers G. (eds) Shifting Solidarities. New York: Palgrave Macmillan, 2020, pp. 127–151. doi: 10.1007/978-3-030-44062-6_7.

- 20. Holm PC, Haue AD, Westergaard D, et al. Development and validation of a neural network-based survival model for mortality in ischemic heart disease. Under review 2023. medRxiv 2023. doi: 10.1101/2023.06.16.23291527.

- 21. Savolainen L, Ruckenstein M. Dimensions of autonomy in human-algorithm relations. New Media Soc 2022: 1–19. doi: 10.1177/14614448221100802.

- 22. Ruckenstein M. Time to re-humanize algorithmic systems. AI Soc 2023; 38: 1241–1242.

- 23. Schwennesen N. Algorithmic assemblages of care: imaginaries, epistemologies and repair work. Sociol Health Illn 2019; 41: 176–192.

- 24. Fuchs A, Bojer AS, Christensen DM, et al. Forebyggelse af hjertesygdom [Prevention of heart disease]. Dansk Cardiologisk Selskab: Behandlingsvejledning | Forebyggelse af hjertesygdom (cardio.dk) (accessed 24 September).

- 25. Knuuti J, Wijns W, Saraste A, et al. 2019 ESC guidelines for the diagnosis and management of chronic coronary syndromes. Eur Heart J 2020; 41: 407–447.

- 26. Emmersen J, Astorp MS, Andersen S. Kunstig intelligens og lægestudiet [Artificial intelligence and the medical educational programme]. Ugeskrift for Læger 2023: Kunstig intelligens og lægestudiet | Ugeskriftet.dk (accessed 23 September 2023).

- 27. Jensen SL. Minister bebuder ai-offensiv: Skal afbøde mangel på varme hænder [Minister heralds ai-offensive: Shall avert shortage of warm hands]. Berlingske, 2017: Minister bebuder ai-offensiv: Skal afbøde mangel på varme hænder (berlingske.dk) (accessed 20 September 2023).

- 28. Borup M, Brown N, Konrad K, et al. The sociology of expectations in science and technology. Technol Anal Strateg Manag 2006; 18: 285–298. p. 286.

- 29. Jasanoff S, Kim S-H. (eds) Dreamscapes of Modernity: Sociotechnical Imaginaries and the Fabrication of Power. Chicago: The University of Chicago Press, 2015.

- 30. Jensen LG, Svendsen MN. Personalised medicine in the Danish welfare state: political visions for the public good. Crit Public Health 2022; 32: 713–724.

- 31. Hoeyer K. Data Paradoxes: The Politics of Intensified Data Sourcing in Contemporary Healthcare. Cambridge, MA: MIT Press, 2023.

- 32. Torenholt R, Langstrup H. Between a logic of disruption and a logic of continuation: negotiating the legitimacy of algorithms used in automated clinical decision-making. Health 2023; 27: 41–59.

- 33. Mackenzie C. Three dimensions of autonomy: a relational analysis. In: Veltman A, Piper M. (eds) Autonomy, Oppression, and Gender. New York: Oxford University Press, 2014, pp. 15–41. p. 17.

- 34. Davenport TH, Glover WJ. Artificial intelligence and the augmentation of healthcare decision-making. NEJM Catalyst 2018. Artificial Intelligence and the Augmentation of Health Care Decision-Making | NEJM Catalyst (accessed 23 September 2023).

- 35. Tanninen M, Lehtonen T-K, Ruckenstein M. Trouble with autonomy in behavioral insurance. Br J Sociol 2022; 73: 786–798.

- 36. Hayles NK. Unthought: The Power of the Cognitive Nonconscious. Chicago: The University of Chicago Press, 2017.

- 37. Beer D. Power through the algorithm? Participatory web cultures and the technological unconscious. New Media Soc 2009; 11: 985–1002.

- 38. Goodman KE, Rodman AM, Morgan DJ. Preparing physicians for the clinical algorithm era. N Engl J Med 2023; 389: 483–487.

- 39. Webmoor T. Algorithmic alchemy, or the work of code in the age of computerized visualization. In: Carusi A, Hoel AS, Webmoor T, et al. (eds) Visualization in the Age of Computerization. New York: Routledge, 2014, pp. 19–39.

- 40. Mittelstadt BD, Allo P, Taddeo M, et al. The ethics of algorithms: mapping the debate. Big Data Soc 2016; 3: 1–21. doi: 10.1177/2053951716679679.

- 41. Mittelstadt BD, Russell C, Wachter S. Explaining explanations in AI. Proceedings of the 2019 conference on fairness, accountability, and transparency, Atlanta, GA, United States, 29–31 January; 2019: 279–288. doi: 10.1145/3287560.3287574.

- 42. Poechhacker N, Kacianka S. Algorithmic accountability in context. Socio-technical perspectives on structural causal models. Front Big Data 2021; 3: 519957. 1–9.

- 43. Diakopoulos N. Algorithmic accountability: journalistic investigation of computational power structures. Digit Journal 2015; 3: 398–415.

- 44. Van Laere S, Muylle KM, Cornu P. Clinical decision support and new regulatory frameworks for medical devices: are we ready for it? – A viewpoint paper. Int J Health Policy Manag 2022; 11: 3159–3163.

- 45. European Parliament. Artificial intelligence act. Artificial intelligence act (europa.eu) (accessed 12 January 2024).

- 46. European Parliament. Artificial Intelligence Act: MEPs adopt landmark law. (https://www.europarl.europa.eu/news/en/press-room/20240308IPR19015/artificial-intelligence-act-meps-adopt-landmark-law) (accessed 18 March 2024).

- 47. Kitchin R. Thinking critically about and researching algorithms. Inf Commun Soc 2017; 20: 14–29.

- 48. Seaver N. What should an anthropology of algorithms do? Cult Anthropol 2018; 33: 375–385.

- 49. Seaver N. Algorithms as culture: some tactics for the ethnography of algorithmic systems. Big Data Soc 2017; 4: 1–12. doi: 10.1177/2053951717738104.

- 50. Neyland D. Bearing account-able witness to the ethical algorithmic system. Sci Technol Hum Values 2016; 41: 50–76.

- 51. Kristiansen TB, Kristensen K, Uffelmann J, et al. Erroneous data: the Achilles’ heel of AI and personalized medicine. Front Digit Health 2022; 4: 862095.

- 52. Varga TV, Niss K, Estampador AC, et al. Association is not prediction: a landscape of confused reporting in diabetes – a systematic review. Diabetes Res Clin Pract 2020; 170: 108497.

- 53. Schultze U, Aanestad M, Mähring M, et al. Living with monsters? Social implications of algorithmic phenomena, hybrid agency, and the performativity of technology. Springer 2018: IFIP WG 8.2 Working Conference on the Interaction of Information Systems and the Organization, IS&O 2018, San Francisco, CA, USA, December 11–12, 2018, Proceedings | SpringerLink (accessed 24 September 2023).

- 54. Marjanovic O, Cecez-Kecmanovic D, Vidgen R. Algorithmic pollution: making the invisible visible. J Inf Technol 2021; 36: 391–408.

- 55. Cathaoir KÓ, Gunnarsdóttir HD, Hartlev M. The journey of research data: accessing Nordic health data for the purposes of developing an algorithm. Med Law Int 2022; 22: 52–74.

- 56. Halkier B. Methodological practicalities in analytical generalization. Qual Inq 2011; 17: 787–797.

- 57. Latour B. Science in Action: How to Follow Scientists and Engineers Through Society. Cambridge, MA: Harvard University Press, 1987.