Abstract

We consider the problem of estimating common community structures in multi-layer stochastic block models, where each single layer may not have sufficient signal strength to recover the full community structure. In order to efficiently aggregate signal across different layers, we argue that the sum-of-squared adjacency matrices contain sufficient signal even when individual layers are very sparse. Our method uses a bias-removal step that is necessary when the squared noise matrices may overwhelm the signal in the very sparse regime. The analysis of our method relies on several novel tail probability bounds for matrix linear combinations with matrix-valued coefficients and matrix-valued quadratic forms, which may be of independent interest. The performance of our method and the necessity of bias removal is demonstrated in synthetic data and in microarray analysis about gene co-expression networks.

Keywords: network data, community detection, stochastic block models, spectral clustering, matrix concentration inequalities, gene co-expression network

1. Introduction

A network records the interactions among a collection of individuals, such as gene co-expression, functional connectivity among brain regions, and friendships on social media platforms. In the simplest form, a network can be represented by a binary symmetric matrix $A \in \{0,1\}^{n \times n}$ where each row/column represents an individual and the (i, j)-entry of A represents the presence/absence of interaction between the two individuals. In the more general case, $A_{ij}$ may take values in $\mathbb{R}$ to represent different magnitudes or counts of the interaction. We refer to Kolaczyk (2009), Newman (2009), and Goldenberg et al. (2010) for a general introduction to the statistical analysis of network data.

In many applications, the interactions between individuals are recorded multiple times, resulting in multi-layer network data. For example, in this paper, we study the temporal gene co-expression networks in the medial prefrontal cortex of rhesus monkeys at ten different developmental stages (Bakken et al., 2016). The medial prefrontal cortex is believed to be related to developmental brain disorders, and many of the genes we study are suspected to be associated with autism spectrum disorder at different stages of development. Another example of multi-layer network data is brain imaging, where we may infer one set of interactions among different brain regions from electroencephalography (EEG), and another set of interactions using resting-state functional magnetic resonance imaging (fMRI) measures. Similarly, one may expect the brain regions to form groups in terms of connectivity. The wide applicability and rich structures of multi-layer networks make them an active research area in the statistics, machine learning, and signal processing communities. See Tang et al. (2009), Dong et al. (2012), Kivelä et al. (2014), Xu and Hero (2014), Han et al. (2015), Zhang and Cao (2017), Matias and Miele (2017) and references therein.

In this paper, we study multi-layer network data through the lens of multi-layer stochastic block models, where we observe many simple networks on a common set of nodes. The stochastic block model (SBM) and its variants (Holland et al., 1983; Bickel and Chen, 2009; Karrer and Newman, 2011; Airoldi et al., 2008) are an important prototypical class of network models that allow us to mathematically describe the community structure and understand the performance of popular algorithms such as spectral clustering (McSherry, 2001; Rohe et al., 2011; Jin, 2015; Lei and Rinaldo, 2015) and other methods (Latouche et al., 2012; Peixoto, 2013; Abbe and Sandon, 2015). Roughly speaking, in an SBM, the nodes in a network are partitioned into disjoint communities (i.e., clusters), and nodes in the same community have similar connectivity patterns with other nodes. A key inference problem in the study of SBM is estimating the community memberships given an observed network.

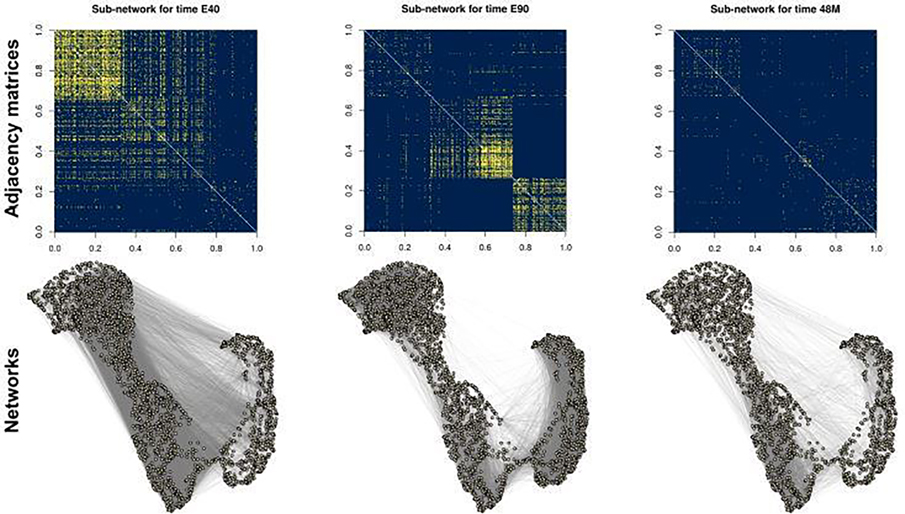

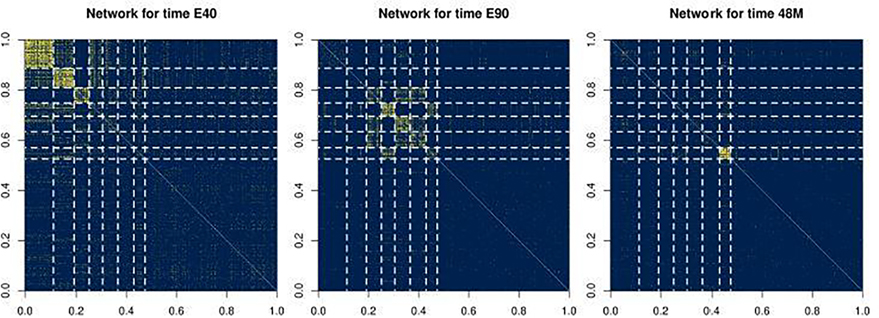

Compared to an individual layer, a multi-layer network contains more data and hopefully enables us to extract salient structures, such as communities, more easily. On the other hand, new methods must be developed in order to efficiently combine the signal from individual layers. To demonstrate the necessity for these methods, we plot the observed gene co-expression networks collected from Bakken et al. (2016) in Figure 1. The three networks correspond to gene co-expression patterns within the medial prefrontal cortex tissue of rhesus monkeys collected at different stages of development. We plot only the sub-network formed by a small collection of genes for simplicity. A quick visual inspection across the three networks suggests that the genes can be approximately divided into four common communities (i.e., clusters that persist throughout all three networks), where genes in the same community exhibit similar connectivity patterns. However, different gene communities are more visually apparent in different layers. For example, in the layer labeled as “E40” (for tissue collected at 40 days of development in the embryo), the last three communities are indistinguishable. In contrast, in the layer labeled as “E90,” the first community is less distinguishable, and in the layer labeled “48M” (for the tissue collected 48 months after birth), nearly all of the communities are indistinguishable. These qualitative observations are of scientific interest since these time-dependent densely-connected communities are evidence of “gene coordination,” a biological concept that describes when a community of genes is synchronized in ramping up or down in gene expression at certain stages of development (Paul et al., 2012; Werling et al., 2020). Hence, we can infer two potential advantages of analyzing such multi-layer network data in an aggregated manner. First, an aggregated analysis is able to reveal global structures that are not exhibited by any individual layer. Second, the common structure across different layers can help us to better filter out the noise, which allows us to obtain more accurate inference results. We describe the analysis in more detail and return to analyze the full dataset in Section 6.

Fig. 1.

The adjacency matrix (top row, yellow denoting the presence of an edge and blue denoting the lack thereof) and the corresponding network (bottom row) for three different developmental times of the rhesus monkey’s gene co-expression in the medial prefrontal cortex, based on a selected set of genes chosen to visually demonstrate the varying network structures. Likewise, the ordering of the genes in the adjacency matrices is chosen to visually demonstrate the clustering structure, and persists throughout all three adjacency matrices. The three developmental times are E40, E90 (for 40 or 90 days in the embryo) and 48M (for 48 months after birth), corresponding to the pair of plots on the left to the pair of plots on the right. The full dataset is analyzed in Section 6.

The theoretical understanding of estimating common communities in multi-layer SBMs is relatively limited compared to that for single-layer SBMs. Bhattacharyya and Chatterjee (2018) and Paul and Chen (2020) studied variants of spectral clustering for multi-layer SBMs, but the strong theoretical guarantee requires a so-called layer-wise positivity assumption, meaning each matrix encoding the probability of an edge among the communities must have only positive eigenvalues bounded away from zero. In contrast, Pensky and Zhang (2019) studied a different variant of spectral clustering, but established estimation consistency under conditions similar to those for single-layer SBMs. These results only partially describe the benefits of multi-layer network aggregation. Alternatively, Lei et al. (2019) considered a least-squares estimator, and proved consistency of the global optimum for general block structures without imposing the positivity assumption for individual layers, but that method is computationally intractable in the worst case.

The first main contribution of this paper is a simple, novel, and computationally-efficient aggregated spectral clustering method for multi-layer SBMs, described in Section 2. The estimator applies spectral clustering to the sum of squared adjacency matrices after removing the bias by setting the diagonal entries to 0. In addition to its simplicity, this estimator has two appealing features. First, summing over the squared adjacency matrices enables us to prove its consistency without requiring a layer-wise positivity assumption. Second, compared with single-layer SBMs, the consistency result reflects a boost of signal strength by a factor of $L^{1/2}$, where L is the number of layers. Such an $L^{1/2}$ signal boost is comparable to that obtained in Lei et al. (2019), but is now achieved by a simple and computationally tractable algorithm. The removal of the diagonal bias in the squared matrices is shown to be crucial in both theory (Section 3) and simulations (Section 5), especially in the most interesting regime where the network density is too low for any single layer to carry sufficient signal for community estimation. Interestingly, similar diagonal-removal techniques have also been discovered and studied in other contexts, such as Gaussian mixture model clustering (Ndaoud, 2018), principal components analysis (Zhang et al., 2022), and centered distance matrices (Székely and Rizzo, 2014).

Another contribution of this paper is a collection of concentration inequalities for matrix-valued linear combinations and quadratic forms. These are described in Section 4 and are an important ingredient for the aforementioned theoretical results. Specifically, an important step in analyzing our matrix-valued data is to understand the behavior of the matrix-valued measurement errors. Towards this end, many powerful concentration inequalities have been obtained for matrix operator norms under various settings, such as random matrix theory (Bai and Silverstein, 2010), eigenvalue perturbation and concentration theory (Feige and Ofek, 2005; O’Rourke et al., 2018; Lei and Rinaldo, 2015; Le et al., 2017; Cape et al., 2017), and matrix deviation inequalities (Bandeira and Van Handel, 2016; Vershynin, 2011). The matrix Bernstein inequality and related results (Tropp, 2012) are also applicable to linear combinations of noise matrices with scalar coefficients. In order to provide technical tools for our multi-layer network analysis, we extend these matrix-valued concentration inequalities in two directions. First, we provide upper bounds for linear combinations of noise matrices with matrix-valued coefficients. This can be viewed as an extension of the matrix Bernstein inequality to allow for matrix-valued coefficients. Second, we provide concentration inequalities for sums of matrix-valued quadratic forms, extending the scalar case known as the Hanson–Wright inequality (Hanson and Wright, 1971; Rudelson and Vershynin, 2013) in several directions. A key intermediate step in relating linear cases to quadratic cases is deriving a deviation bound for matrix-valued U-statistics of order two.

2. Community Estimation in Multi-Layer SBM

Throughout this section, we describe the model, theoretical motivation, and our estimator for clustering nodes in a multi-layer SBM. Motivated by such multi-layer network data with a common community structure as demonstrated in Figure 1, we consider the L-layer SBM containing n nodes assigned to K different communities,

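$$A_{\ell,ij} \sim \mathrm{Bernoulli}\left(\rho B_{\ell,\theta_i\theta_j}\right) \quad \text{independently for } 1 \le i < j \le n,\ 1 \le \ell \le L, \tag{1}$$

where ℓ is the layer index, θi ∈ {1,...,K} is the membership index of node i for i ∈ {1,...,n}, ρ ∈ (0,1] is an overall edge density parameter, and $B_\ell \in [0,1]^{K \times K}$ is a symmetric matrix of community-wise edge probabilities in layer ℓ. We assume $A_\ell$ is symmetric and $A_{\ell,ii} = 0$ for all ℓ ∈ {1,...,L} and i ∈ {1,...,n}.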
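To make the sampling scheme concrete, the following is a minimal NumPy sketch of drawing adjacency matrices from a multi-layer SBM of the kind described above. The function name `simulate_multilayer_sbm`, the 0-based community labels, and the two example matrices in `B_list` are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def simulate_multilayer_sbm(theta, B_list, rho, rng):
    """Draw one symmetric, zero-diagonal adjacency matrix per layer.

    theta  : length-n array of community labels in {0, ..., K-1} (0-based here)
    B_list : list of K x K symmetric matrices with entries in [0, 1]
    rho    : overall edge density in (0, 1]
    """
    theta = np.asarray(theta)
    n = theta.size
    iu = np.triu_indices(n, k=1)                       # index pairs with i < j
    layers = []
    for B in B_list:
        P = rho * np.asarray(B)[np.ix_(theta, theta)]  # n x n edge probabilities
        A = np.zeros((n, n), dtype=int)
        A[iu] = rng.random(iu[0].size) < P[iu]         # independent Bernoulli draws
        A = A + A.T                                    # symmetrize; diagonal stays 0
        layers.append(A)
    return layers

rng = np.random.default_rng(0)
theta = np.repeat([0, 1], 50)                          # two communities of size 50
B_list = [np.array([[0.9, 0.3], [0.3, 0.9]]),          # hypothetical layer matrices
          np.array([[0.3, 0.9], [0.9, 0.3]])]
layers = simulate_multilayer_sbm(theta, B_list, rho=0.1, rng=rng)
```

Only the upper triangle is sampled, so each unordered pair of nodes receives exactly one Bernoulli draw per layer.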

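Our statistical problem is to estimate the membership vector $\theta = (\theta_1,\dots,\theta_n) \in \{1,\dots,K\}^n$ given the observed adjacency matrices A1,...,AL. Let $\hat\theta \in \{1,\dots,K\}^n$ be an estimated membership vector. The estimation error is the number of mis-clustered nodes based on the Hamming distance,

$$d(\hat\theta, \theta) = \min_{\pi} \sum_{i=1}^{n} 1\left(\hat\theta_i \neq \pi(\theta_i)\right), \tag{2}$$

for the indicator function 1(·), where the minimum is taken over all label permutations π:{1,...,K} ↦ {1,...,K}. An estimator $\hat\theta$ is consistent if $n^{-1} d(\hat\theta, \theta) \to 0$ in probability.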
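The permutation-minimized Hamming distance can be computed by brute force when K is small. The sketch below is one way to do so; the function name `misclustered` and the 0-based labels are assumptions made for illustration, and the loop over all K! permutations is only practical for small K.

```python
from itertools import permutations
import numpy as np

def misclustered(theta_hat, theta, K):
    """Number of mis-clustered nodes, minimized over relabelings of theta."""
    theta_hat = np.asarray(theta_hat)
    theta = np.asarray(theta)
    best = theta.size
    for perm in permutations(range(K)):
        relabeled = np.asarray(perm)[theta]            # pi(theta_i) for every node i
        best = min(best, int(np.sum(theta_hat != relabeled)))
    return best

# Swapping the two label names costs nothing; one genuinely wrong node costs 1.
err_swap = misclustered([1, 1, 0, 0], [0, 0, 1, 1], K=2)
err_one = misclustered([0, 0, 0, 1], [0, 0, 1, 1], K=2)
```

Dividing the returned count by n gives the proportion of mis-clustered nodes.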

The assumption of a fixed common membership vector θ can be relaxed to each layer having its own membership vector but close to a common one. The theoretical consequence of this relaxation is discussed in Remark 1, after the main theorem in Section 3. We assume that K is known. The problem of selecting K from the data is an important problem and will not be pursued in this paper. Further discussion will be given in Section 7.

When L = 1, the community estimation problem for single-layer SBM is well-understood (Bickel and Chen, 2009; Lei and Rinaldo, 2015; Abbe, 2017). If K is fixed as a constant while n → ∞, ρ → 0 with balanced community sizes lower bounded by a constant fraction of n, and B is a constant matrix with distinct rows, then the community memberships can be estimated with vanishing error when nρ → ∞. Practical estimators include variants of spectral clustering, message passing, and likelihood-based estimators.

As mentioned in Section 1, in the multi-layer case, consistent community estimation has been studied in some recent works. The theoretical focus is to understand how the number of layers L affects the estimation problem. Paul and Chen (2020) and Bhattacharyya and Chatterjee (2018) show that consistency can be achieved if Lnρ diverges, but under the aforementioned positivity assumption, meaning that each Bℓ is positive definite with a minimum eigenvalue bounded away from zero. Such assumptions are plausible in networks with strong associativity patterns where nodes in the same communities are much more likely to connect to one another than nodes in different communities. But there are networks observed in practice that do not satisfy this assumption, such as those in Newman (2002) and Litvak and Van Der Hofstad (2013). See Lei (2018) and the references therein for additional discussion on such positivity assumptions in a more general context. To remove the positivity assumption, Lei et al. (2019) considered a least-squares estimator, and proved consistency when $L^{1/2} n\rho$ diverges (up to a small poly-logarithmic factor) and the smallest eigenvalue of $\sum_{\ell=1}^{L} B_\ell^2$ grows linearly in L. A caveat is that the least-squares estimator is computationally challenging, and in practice, one may only be able to find a local minimum using greedy algorithms.

In the following subsections, we will motivate a spectral clustering method from the least-squares perspective, investigate its bias, and derive our estimator with a data-driven bias adjustment.

2.1. From least squares to spectral clustering

In this subsection, we motivate how the least-squares estimator is well approximated by spectral clustering, which lays down the intuition for our estimator in Section 2.3. Let $\psi \in \{1,\dots,K\}^n$ be a membership vector and Ψ = [Ψ1,...,ΨK] be the corresponding n × K membership matrix where each Ψk = (Ψ1,k,...,Ψn,k) is an n×1 vector with Ψi,k = 1(ψi = k). Let Ik(ψ) = {i ∈ {1,...,n} : ψi = k} and nk(ψ) = |Ik(ψ)|, the size of the set Ik(ψ).

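The least-squares estimator of Lei et al. (2019) seeks to minimize the residual sum of squares,

$$\hat\theta = \arg\min_{\psi \in \{1,\dots,K\}^n} \sum_{\ell=1}^{L} \sum_{1 \le i < j \le n} \left(A_{\ell,ij} - \hat B_{\ell,\psi_i\psi_j}(\psi)\right)^2, \tag{3}$$

where

$$\hat B_{\ell,kk'}(\psi) = \begin{cases} \dfrac{\sum_{i \in I_k(\psi),\, j \in I_{k'}(\psi)} A_{\ell,ij}}{n_k(\psi)\, n_{k'}(\psi)}, & k \neq k', \\[2ex] \dfrac{\sum_{i,j \in I_k(\psi),\, i<j} A_{\ell,ij}}{n_k(\psi)\,(n_k(\psi)-1)/2}, & k = k', \end{cases}$$

is the sample mean estimate of Bℓ under a given membership vector ψ. Recall that the total-variance decomposition implies the equivalence between minimizing the within-block sum of squares and maximizing the between-block sum of squares. Hence, if we accept the approximation $n_k(\psi)(n_k(\psi)-1) \approx n_k^2(\psi)$, then after multiplying the least-squares objective function (3) by 2 and using the total-variance decomposition, the objective function becomes

$$\max_{\psi} \sum_{\ell=1}^{L} \sum_{1 \le k,k' \le K} n_k(\psi)\, n_{k'}(\psi) \left[\frac{\sum_{i \in I_k(\psi),\, j \in I_{k'}(\psi)} A_{\ell,ij}}{n_k(\psi)\, n_{k'}(\psi)}\right]^2,$$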

which is equivalent to

$$\max_{\psi} \sum_{\ell=1}^{L} \left\| \tilde\Psi^T A_\ell \tilde\Psi \right\|_F^2,$$

where ‖·‖F denotes the matrix Frobenius norm, and $\tilde\Psi = \Psi N^{-1/2}$ with $N = \mathrm{diag}(n_1(\psi),\dots,n_K(\psi))$ is the column-normalized version of Ψ where each column of $\tilde\Psi$ has norm 1. This means $\tilde\Psi$ is orthonormal, i.e., $\tilde\Psi^T \tilde\Psi = I_K$. The benefit of considering orthonormal matrices is that for any orthonormal matrix $U \in \mathbb{R}^{n \times K}$ and symmetric matrix $A \in \mathbb{R}^{n \times n}$,

$$\left\| U^T A U \right\|_F^2 \le \mathrm{tr}\left(U^T A^2 U\right).$$

The right-hand side of the above inequality is maximized by the leading K eigenvectors of A, where the eigenvalues are ordered by absolute value. For this U, the inequality becomes an equality. Additionally, under the multi-layer SBM, the expected values of the adjacency matrices {P1,...,PL} (where $P_\ell = \mathbb{E}[A_\ell]$ for ℓ ∈ {1,...,L}) share roughly the same leading principal subspace as determined by the common community structure. Putting all these facts together, we intuitively expect $\tilde\Theta$ to correspond to an approximate solution of the original least-squares problem, where $\tilde\Theta$ is the column-normalized version of the true membership matrix Θ.

Therefore, a relaxation of the approximate version of the original problem (3) is

$$\max_{U \in \mathbb{R}^{n \times K}:\; U^T U = I_K} \mathrm{tr}\left( U^T \Big( \sum_{\ell=1}^{L} A_\ell^2 \Big) U \right), \tag{4}$$

which is a standard spectral problem. For this reason, we often call U the “spectral embedding.” The community estimation is then obtained by applying a clustering algorithm to the rows of $\hat U$, a solution to (4).
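The spectral relaxation rests on a trace bound for orthonormal matrices. As a numerical sanity check, the sketch below takes that bound to be $\|U^T A U\|_F^2 \le \mathrm{tr}(U^T A^2 U)$ (an assumption about the exact form) and verifies on a random symmetric matrix that it holds for an arbitrary orthonormal U and is tight for the K eigenvectors with largest absolute eigenvalues. All variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
n, K = 8, 3

M = rng.standard_normal((n, n))
A = (M + M.T) / 2                                    # a generic symmetric matrix

def both_sides(U, A):
    """Return (||U^T A U||_F^2, tr(U^T A^2 U))."""
    lhs = np.linalg.norm(U.T @ A @ U, "fro") ** 2
    rhs = np.trace(U.T @ A @ A @ U)
    return lhs, rhs

# An arbitrary orthonormal n x K matrix from a QR factorization.
U_rand, _ = np.linalg.qr(rng.standard_normal((n, K)))
lhs_rand, rhs_rand = both_sides(U_rand, A)

# Leading K eigenvectors, with eigenvalues ordered by absolute value.
w, V = np.linalg.eigh(A)
U_top = V[:, np.argsort(-np.abs(w))[:K]]
lhs_top, rhs_top = both_sides(U_top, A)
```

For `U_top` both sides equal the sum of the K largest squared eigenvalues, which is also the largest value the right-hand side can take over orthonormal U.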

2.2. The necessity of bias adjustment

Let $P_\ell = \rho\Theta B_\ell \Theta^T - \mathrm{diag}(\rho\Theta B_\ell \Theta^T)$ denote the expected adjacency matrix, meaning that Pℓ is the matrix obtained by zeroing out the diagonal entries of $\rho\Theta B_\ell \Theta^T$. We now show that $\sum_{\ell} A_\ell^2$ is a biased estimate of $\sum_{\ell} P_\ell^2$, and that we can correct for this bias by simply removing its diagonal entries. Let Xℓ = Aℓ − Pℓ be the noise matrix. Then

$$\sum_{\ell=1}^{L} A_\ell^2 = \sum_{\ell=1}^{L} P_\ell^2 + \sum_{\ell=1}^{L} \left(X_\ell P_\ell + P_\ell X_\ell\right) + S, \tag{5}$$

where $S = \sum_{\ell=1}^{L} X_\ell^2$. The first term is the signal term, with each summand close to $(\rho\Theta B_\ell \Theta^T)^2$, and will add up over the layers because each matrix $P_\ell^2$ is positive semi-definite. The second term is a mean-0 noise matrix, which can be controlled using matrix concentration inequalities developed in Section 4 below.

The third term is a squared error matrix and will also add up over the layers, which may introduce bias if the overall edge density parameter ρ is too small.

We use a simple simulation study to illustrate the necessity of bias adjustment in spectral clustering applied to the sum of squared adjacency matrices. We set K = 2 and consider two edge-probability matrices,

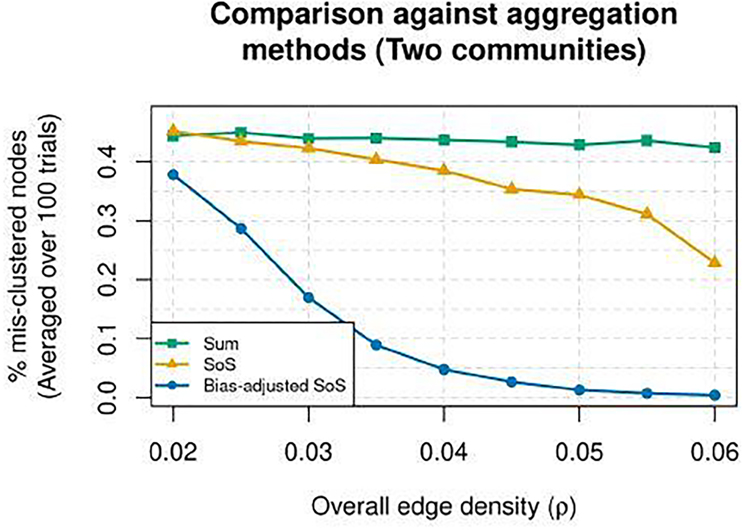

These two matrices are chosen such that spectral clustering applied to the sum of the adjacency matrices and the sum of squared adjacency matrices would be either sub-optimal or inconsistent in the very sparse regime. We set n = 200 nodes with 100 nodes in each community, the number of layers to be L = 30, and for each layer ℓ, Bℓ is randomly and independently chosen from B(1) and B(2) with equal probability. We use five different values of the overall edge density parameter ρ between 0.02 and 0.06. For each value of ρ, we generate a multi-layer SBM according to (1) and apply spectral clustering to three matrices: (1) the sum of adjacency matrices without squaring (i.e., “Sum”), (2) the sum of squared adjacency matrices (i.e., “SoS”), and (3) a bias-adjusted sum of squared adjacency matrices (i.e., “Bias-adjusted SoS”), which will be introduced in the next subsection. The results across 100 trials are reported in Figure 2. By construction, the “Sum” method performs poorly since the sum of adjacency matrices has only one significant eigen-component, meaning the result is sensitive to noise when K = 2 eigenvectors are used for spectral clustering. In fact, as described in Example 1 below, it is also easy to generate cases in which the sum of adjacency matrices carries no signal at all. The “SoS” method also performs poorly. This is because although the sum of squared adjacency matrices contains signal for clustering, the aforementioned bias is large when ρ is small. In contrast, our method “Bias-adjusted SoS” performs the best. A more detailed simulation study is presented in Section 5.

Fig. 2.

The average proportion of mis-clustered nodes for three methods (measured via Hamming distance shown in (2), averaged over 100 trials), with n = 200 and two equal-sized communities, across overall edge densities ρ ∈ [0.02, 0.06] and L = 30 layers. The performance of three methods is shown: Sum (green squares), SoS (orange triangles), and Bias-adjusted SoS (blue circles).

2.3. Bias-adjusted sum-of-squared spectral clustering

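We are now ready to quantify the amount of bias, and to describe our aforementioned bias-adjusted sum-of-squared method to cluster nodes in a multi-layer SBM. From (5), we see that the diagonal entries of the squared error term have positive expected value and hence may cause systematic bias in the principal subspace of $\sum_{\ell} A_\ell^2$. Now consider a further decomposition $S = S_1 + S_2$ of the squared error term $S = \sum_{\ell} X_\ell^2$, where $S_1$ and $S_2$ correspond to the off-diagonal and diagonal parts of $S$, respectively. Observe that only the diagonal entries of $S$ have positive expected value, so our effort will focus on removing the bias caused by $S_2$. Towards this end, observe that by construction, we have

$$(S_2)_{ii} = \sum_{\ell=1}^{L} \sum_{j=1}^{n} X_{\ell,ij}^2 = \sum_{\ell=1}^{L} d_{\ell,i} + \sum_{\ell=1}^{L} \sum_{j=1}^{n} \left(P_{\ell,ij}^2 - 2 A_{\ell,ij} P_{\ell,ij}\right), \tag{6}$$

where $d_{\ell,i} = \sum_{j} A_{\ell,ij}$ is the degree of node i in layer ℓ. The expected value of $d_{\ell,i}$ is $\sum_{j} P_{\ell,ij}$. In the very sparse regime, $\max_{\ell,ij} P_{\ell,ij}$ is very small, so $\sum_{\ell} d_{\ell,i}$ is the leading term in $(S_2)_{ii}$.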

Combining this calculation with a key observation that $d_{\ell,i}$ can be computed from the data, we arrive at the following bias-adjusted sum-of-squared spectral clustering algorithm. Let Dℓ be the diagonal matrix consisting of the degrees of Aℓ where (Dℓ)ii = dℓ,i. The bias-adjusted sum of squared adjacency matrices is

$$S_0 = \sum_{\ell=1}^{L} \left(A_\ell^2 - D_\ell\right). \tag{7}$$

The community membership is estimated by applying a clustering algorithm to the rows of the matrix whose columns are the leading K eigenvectors of $S_0$ given in (7).
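The procedure described above can be sketched in a few lines of NumPy. This is an illustrative sketch rather than the authors' implementation: the function names are hypothetical, eigenvalues are ranked by absolute value (an assumption carried over from Section 2.1), and a plain Lloyd iteration with farthest-point initialization stands in for whichever clustering algorithm is used in practice.

```python
import numpy as np

def bias_adjusted_sos(layers):
    """Sum over layers of A^2 - D, where D is the diagonal degree matrix of A."""
    n = layers[0].shape[0]
    total = np.zeros((n, n))
    for A in layers:
        A = np.asarray(A, dtype=float)
        total += A @ A - np.diag(A.sum(axis=1))
    return total

def spectral_embedding(S, K):
    """Eigenvectors of S for the K eigenvalues largest in absolute value."""
    w, V = np.linalg.eigh(S)
    return V[:, np.argsort(-np.abs(w))[:K]]

def kmeans(X, K, n_iter=100):
    """Plain Lloyd iterations; a simple stand-in for an approximate K-means solver."""
    centers = [X[0]]
    for _ in range(1, K):                            # farthest-point initialization
        d = np.min([np.sum((X - c) ** 2, axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(d)])
    centers = np.array(centers)
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        new = np.array([X[labels == k].mean(axis=0) if np.any(labels == k)
                        else centers[k] for k in range(K)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels

# Noise-free toy input: two disjoint cliques of size 5, observed in two layers.
m = 5
clique = np.ones((m, m)) - np.eye(m)
A = np.block([[clique, np.zeros((m, m))], [np.zeros((m, m)), clique]])
S0 = bias_adjusted_sos([A, A])
labels = kmeans(spectral_embedding(S0, K=2), K=2)
```

For binary, symmetric, zero-diagonal layers the diagonal of each squared adjacency matrix equals the degree vector, so subtracting the degree matrix is the same as zeroing the diagonal of the sum of squares.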

3. Consistency of bias-adjusted sum-of-squared spectral clustering

We now describe our theoretical result characterizing how multi-layer networks benefit community estimation. The hardness of community estimation is determined by many aspects of the problem, including number of communities, community sizes, number of nodes, separation of communities, and overall edge density. Here, we need to consider all of these aspects jointly across the L layers. To simplify the discussion, we primarily focus on the following setting but discuss additional settings in later remarks.

Assumption 1.

(a) The number of communities K is fixed and community sizes are balanced. That is, there exists a constant c such that each community size is in $[c^{-1} n/K,\ cn/K]$.

(b) The relative community separation is constant. That is, Bℓ = ρBℓ,0 where Bℓ,0 is a K × K symmetric matrix with constant entries in [0,1]. Furthermore, the minimum eigenvalue of $\sum_{\ell=1}^{L} B_{\ell,0}^2$ is at least cL for some constant c > 0.

Part (a) simplifies the effect of the community sizes and the number of communities. This setting has been well-studied in the SBM literature for L = 1 (Lei and Rinaldo, 2015). Part (b) puts the focus on the effect of the overall edge density parameter ρ, and requires a linear growth of the aggregated squared edge-probability matrices in terms of the minimum eigenvalue. This is much less restrictive than the layer-wise positivity assumption used in other work mentioned in Section 2, which requires each Bℓ,0 to be positive definite. We give two examples in which Assumption 1(b) is satisfied but the layer-wise positivity is not.

Example 1 (Identically distributed random layers). Consider a theoretical scenario in which the Bℓ,0's have i.i.d. Uniform(0,1) entries subject to symmetry. It is easy to verify that the expected sum matrix is a constant matrix with each entry being Lρ / 2. Therefore it is impossible to reconstruct the block structure from the sum of adjacency matrices when ρ is small.
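Example 1 can be checked by simulation. The sketch below draws symmetric matrices with Uniform(0,1) entries, confirms that their average is close to the constant 1/2, and computes the minimum eigenvalue of the sum of their squares divided by L, which is the quantity that Assumption 1(b) requires to stay bounded away from zero. The values K = 3 and L = 5000 and the helper name are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
K, L = 3, 5000

def random_symmetric_uniform(K, rng):
    """Symmetric K x K matrix whose diagonal and upper entries are i.i.d. Uniform(0,1)."""
    upper = np.triu(rng.random((K, K)))              # diagonal and upper triangle
    return upper + np.triu(upper, k=1).T             # mirror the strictly upper part

B0 = [random_symmetric_uniform(K, rng) for _ in range(L)]

mean_B = sum(B0) / L                                 # entries should be near 1/2
sum_sq = sum(B @ B for B in B0)
min_eig_per_layer = np.linalg.eigvalsh(sum_sq)[0] / L
```

The averaged matrix carries no block structure, whereas the aggregated squared matrices retain a minimum eigenvalue that grows roughly linearly in L.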

Example 2 (Community merge and split). Consider a more realistic scenario in which for {Bℓ :1 ≤ ℓ ≤ L}, some layers ℓ and community indices k, k′ have Bℓ,kj = Bℓ,k′j for all j. This can be interpreted as the merge of communities k and k′ at layer ℓ. In such cases, each layer may not contain full community information, and we must aggregate the layers to recover the full community structure. In our real data example, we actually observe that in most layers, all but one or two communities merge with a large, null community, and each non-null community is active in one or two layers.

Based on these assumptions, in the asymptotic regime n → ∞ and ρ → 0, it is well-known that consistent community estimation is possible for L = 1 when nρ → ∞. Hence, in the multi-layer setting when L → ∞, one should expect a lower requirement on overall density as we aggregate information across layers. This is shown in our following result.

Theorem 1. Under Assumption 1, if $L^{1/2} n\rho \ge C_1 \log^{1/2}(L+n)$ and $n\rho \le C_2$ for a large enough positive constant C1 and a positive constant C2, then spectral clustering with a constant factor approximate K-means clustering algorithm applied to $S_0$, the bias-adjusted sum of squared adjacency matrices in (7), correctly estimates the membership of all but a

proportion of nodes for some constant C with probability at least $1 - O((L+n)^{-1})$.

An immediate consequence of Theorem 1 is the Hamming distance consistency of the bias-adjusted sum-of-squared spectral clustering, provided that $L^{1/2} n\rho / \log^{1/2}(L+n) \to \infty$. This demonstrates the boost of signal strength by a factor of $L^{1/2}$ (up to a poly-logarithmic factor), made possible by aggregating layers, that we alluded to in Section 1.

The proof of Theorem 1 is given in Appendix D, where the main effort is to establish sharp operator norm bounds for the linear noise term $\sum_{\ell} (X_\ell P_\ell + P_\ell X_\ell)$ and the quadratic noise term $\sum_{\ell} X_\ell^2$. A refined operator norm bound for the off-diagonal part of $\sum_{\ell} X_\ell^2$ plays an important role (Theorem 5). Once the operator norm bound is established, the clustering consistency follows from a standard analysis of the K-means algorithm (Lemma D.1). These concentration inequalities in fact hold for more general classes of matrices, and we provide a systematic development in the next section.

Theorem 1 is stated in a simple form for brevity. It can be generalized in several directions to better suit practical scenarios with more careful bookkeeping in the proof. We describe some important extensions in the remarks below, where ‖·‖ denotes the operator norm (i.e., largest singular value).

Remark 1 (Varying membership across layers). Theorem 1 can be extended to accommodate varying membership across the layers. In particular, assume that the ℓth layer has membership matrix $\Psi_\ell \in \{0,1\}^{n \times K}$, such that each Ψℓ is close to a common membership matrix $\Psi \in \{0,1\}^{n \times K}$,

| (8) |

for some positive constant ϵℓ. Then we have the following generalization of Theorem 1.

Corollary 2 (Consistency under varying membership). Assume the multilayer adjacency matrices A1,...,AL are generated from individual membership matrices Ψ1,...,ΨL satisfying (8) for some sequence ϵ1,...,ϵL and common membership matrix Ψ. Under the same condition as in Theorem 1, if in addition for some positive constant C3, then the error bound of the bias-adjusted sum of squared spectral clustering is no more than

with high probability.

Remark 2 (Other regimes of network density). The condition $L^{1/2} n\rho \ge C_1 \log^{1/2}(L+n)$ is required in order for the error bound in Theorem 1 to imply consistency, and is suitable for the linear squared signal accumulation assumed in Part (b) of Assumption 1. If we assume a different growth speed of the minimum eigenvalue of $\sum_{\ell} B_{\ell,0}^2$, this requirement needs to be changed accordingly. In addition, the condition nρ ≲ 1 is used for notational simplicity. The regime nρ ≫ 1 would allow for consistent community recovery even when L = 1. For multi-layer models, if nρ ≥ C2 for some constant C2, the error bound in Theorem 1 becomes

for some constant C with high probability. Detailed explanations of this claim are given in Appendix D.

Remark 3 (More general conditions on community sizes). Let $n_{\min}$ be the size of the smallest community, and denote $\alpha = n_{\min}/n$. Our analysis can also allow the number of communities, K, and α to change with other model parameters (n, L, ρ). In particular, the lower bound of the signal term in (5) will be multiplied by α since the operator norm of is Ψ proportional to α. All the matrix concentration results, such as Theorem 5 and Lemma C.1, still hold as they do not rely on any block structures. Therefore under the same setting as Theorem 1, if we allow K and α to vary with (n, L, ρ), but have $\alpha L^{1/2} n\rho \ge C_1 \log^{1/2}(L+n)$ for some constant C1, then with high probability, Theorem 1 holds with error bound

4. Matrix Concentration Inequalities

We generically consider a sequence of independent matrices $X_1,\dots,X_L \in \mathbb{R}^{n \times r}$ with independent mean-0 entries. The goal is to provide upper bounds for operator norms of linear combinations of the form $\sum_{\ell} X_\ell H_\ell$ with $H_\ell \in \mathbb{R}^{r \times m}$ for ℓ ∈ {1,...,L}, and quadratic forms $\sum_{\ell} X_\ell G_\ell X_\ell^T$ with $G_\ell \in \mathbb{R}^{r \times r}$ for ℓ ∈ {1,...,L}. Here, Hℓ and Gℓ are non-random. To connect with the notation in previous sections, let Hℓ = Pℓ; then an operator norm bound on $\sum_{\ell} X_\ell H_\ell$ will help control the second term in (5). Let Gℓ = Ir be the r × r identity matrix; then $\sum_{\ell} X_\ell G_\ell X_\ell^T$ corresponds to the third term in (5). Our general results cover both the symmetric and asymmetric cases, as well as more general entries of Xℓ beyond the Bernoulli case.

Concentration inequalities usually require tail conditions on the entries of Xℓ. A standard tail condition for scalar random variables is the Bernstein tail condition.

Definition 1. We say a random variable Y satisfies a (v, R)-Bernstein tail condition (or is (v, R)-Bernstein), if $\mathbb{E}\left[|Y|^k\right] \le \frac{v}{2}\, k!\, R^{k-2}$ for all integers k ≥ 2.

The Bernstein tail condition leads to concentration inequalities for sums of independent random variables (van der Vaart and Wellner, 1996, Chapter 2). Since we are interested not only in linear combinations of Xℓ's, but also the quadratic forms involving $X_\ell G_\ell X_\ell^T$, we need the Bernstein condition to hold for the squared entries of X1,...,XL. Specifically we consider the following three assumptions.

Assumption 2. Each entry Xℓ,ij is (v1, R1)-Bernstein, for all ℓ ∈ {1,...,L} and i, j ∈{1,...,n}.

Assumption 3. Each squared entry $X_{\ell,ij}^2$ is (v2, R2)-Bernstein, for all ℓ ∈ {1,...,L} and i, j ∈ {1,...,n}.

Assumption 3’. The product $X_{\ell,ij} \tilde X_{\ell,ij}$ is (v2′, R2′)-Bernstein, for all ℓ ∈ {1,...,L} and i, j ∈ {1,...,n}, where $\tilde X_\ell$ is an independent copy of Xℓ.

There are two typical scenarios in which such a squared Bernstein condition in Assumption 3 holds. The first is the sub-Gaussian case: If a random variable Y satisfies the sub-Gaussian condition for some σ > 0, then we have , and hence $Y^2$ is $(4\sigma^4, \sigma^2)$-Bernstein. The second scenario is centered Bernoulli: If a random variable Y satisfies for some p ∈ [0, 1/2], then we have , and hence $Y^2$ is (2p, 1)-Bernstein. Our proof will also use the fact that if $Y^2$ is (v2, R2)-Bernstein, then the centered version is also (v2, R2)-Bernstein (Wang et al., 2016, Lemma 3).

We require Assumption 3’ in order to use a decoupling technique in establishing concentration of quadratic forms. One can show that if Assumption 3 holds then Assumption 3’ holds with (v2′, R2′) = (v2, R2). However, when the Xℓ,ij's are centered Bernoulli random variables with parameters bounded by p ≤ 1/2, then Assumption 3’ holds with $v_2' = 2p^2$ and $R_2' = 1$, while Assumption 3 holds with $v_2 = 2p$ and $R_2 = 1$, so that v2′ can potentially be much smaller than v2. We will explicitly keep track of the Bernstein parameters in our results for the sake of generality.
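The Bernoulli parameters quoted above can be checked directly from exact moments. The sketch below assumes the standard form of the Bernstein moment condition, $\mathbb{E}|Y|^k \le (v/2)\, k!\, R^{k-2}$ for all integers $k \ge 2$, and tests it up to a finite order `k_max`; the helper names are hypothetical, and a finite check illustrates the claims but does not prove them.

```python
from math import factorial

def is_bernstein(abs_moment, v, R, k_max=30):
    """Check E|Y|^k <= (v/2) k! R^(k-2) for k = 2, ..., k_max."""
    return all(abs_moment(k) <= 0.5 * v * factorial(k) * R ** (k - 2)
               for k in range(2, k_max + 1))

def centered_bernoulli_abs_moment(p, k):
    """E|Y|^k for Y = Bernoulli(p) - p."""
    return p * (1 - p) ** k + (1 - p) * p ** k

def squared_entry_moment(p):
    # |Y^2|^k = |Y|^(2k)
    return lambda k: centered_bernoulli_abs_moment(p, 2 * k)

def product_moment(p):
    # For an independent copy Y', E|Y Y'|^k = (E|Y|^k)^2
    return lambda k: centered_bernoulli_abs_moment(p, k) ** 2

results = {
    p: (is_bernstein(squared_entry_moment(p), 2 * p, 1),        # Assumption 3
        is_bernstein(product_moment(p), 2 * p ** 2, 1))         # Assumption 3'
    for p in (0.01, 0.1, 0.5)
}

# The smaller variance proxy 2 p^2 is too small for the squared entry itself.
squared_with_small_v = is_bernstein(squared_entry_moment(0.01), 2 * 0.01 ** 2, 1)
```

The last line shows why the two assumptions are tracked separately: for sparse Bernoulli noise the decoupled product admits a variance proxy of order p², while the squared entry only admits one of order p.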

4.1. Linear combinations with matrix coefficients

Theorem 3. Let X1,...,XL be a sequence of independent n × r matrices with mean-0 independent entries satisfying Assumption 2, and Hℓ be any sequence of r × m non-random matrices. Then for all t > 0,

| (9) |

A similar result holds, with $t^2/2$ replaced by $t^2/8$ and 2(m + n) replaced by 4(m + n) in (9), for symmetric Xℓ's of size n × n with independent (v1, R1)-Bernstein diagonal and upper-diagonal entries and Hℓ of size n × m.

The proof of Theorem 3, given in Appendix B, combines the matrix Bernstein inequality (Tropp, 2012) for symmetric matrices and a rank-one symmetric dilation trick (Lemma B.1) to take care of the asymmetry in XℓHℓ.
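For readers unfamiliar with dilation arguments, the sketch below illustrates the standard symmetric (Hermitian) dilation, which embeds a rectangular matrix in a symmetric one with the same operator norm so that inequalities for symmetric matrices apply. This is the textbook construction and is offered as background only; the rank-one variant used in Lemma B.1 may differ in its details.

```python
import numpy as np

def symmetric_dilation(M):
    """Embed an n x m matrix M into the symmetric (n+m) x (n+m) matrix [[0, M], [M^T, 0]]."""
    n, m = M.shape
    return np.block([[np.zeros((n, n)), M],
                     [M.T, np.zeros((m, m))]])

rng = np.random.default_rng(2)
M = rng.standard_normal((4, 3))
D = symmetric_dilation(M)

op_norm_M = np.linalg.norm(M, 2)                        # largest singular value of M
max_abs_eig_D = np.max(np.abs(np.linalg.eigvalsh(D)))   # spectral norm of the dilation
```

The nonzero eigenvalues of the dilation are plus and minus the singular values of M, which is why the two norms coincide.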

Remark 4. If n = m = r = 1, then Theorem 3 recovers the well-known Bernstein’s inequality as a special case with a different pre-factor.

If n ≥ min{m, Lr}, then and the probability upper bound in Theorem 3 reduces to

| (10) |

If n = 1 then and the probability bound reduces to

| (11) |

Remark 5. When L = 1, the setting is similar to that considered in Vershynin (2011). In the constant variance case (e.g., sub-Gaussian), , Theorem 3 implies a high probability upper bound of , which agrees with Theorem 1.1 of Vershynin (2011). The extra factor in our bound is because our result is a tail probability bound while Vershynin (2011) provides upper bounds on the expected value. However, in the sparse Bernoulli setting, where v1 ≪ R1 = 1, the upper bound in Theorem 3 is better because it correctly captures the factor multiplied by , whereas the result in Vershynin (2011) leads to .

4.2. Matrix U-statistics and quadratic forms

Let

| (12) |

where the summation is taken over all pairs (i, j), (i′, j′) ∈ {1,...,n}² and ei is the canonical basis vector in ℝⁿ with a 1 in the ith coordinate. In this subsection, we focus on the symmetric case because its bookkeeping is harder than that of the asymmetric case. The treatment of the asymmetric case is similar, and the corresponding results are stated separately in Appendix B for completeness.

Because Xℓ has centered and independent diagonal and upper diagonal entries, a term in (12) has non-zero expected value only if (i, j) = (i′, j′) or (i, j) = (j′, i′) since this would imply . This motivates the following decomposition of into a quadratic component with non-zero entry-wise mean value

| (13) |

and a cross-term component with entry-wise mean-0 value

| (14) |

It is easy to check that and . Intuitively, the spectral norm of should be small since it is the sum of many random terms with zero mean and small correlation, which can be viewed as a U-statistic with a centered kernel function of order two. This U-statistic perspective is a key component of the analysis and will be made clearer in the proof. For a similar reason, should also be small. Hence, the main contributing term in should be the deterministic term . To formalize this, define the following quantities,

where ‖·‖2,∞ is the maximum L2-norm of each row, and ‖·‖∞ is the maximum entry-wise absolute value. The following theorem quantifies the random fluctuations of , and around their expectations.

Theorem 4. Let X1,...,XL be independent n × n symmetric matrices with independent diagonal and upper diagonal entries satisfying Assumption 2 and Assumption 3’, and let G1,...,GL be n × n matrices. Define and , as in (13) and (14). Then there exists a universal constant C such that with probability at least 1−O((n + L)−1),

| (15) |

If in addition Assumption 3 holds, then with probability at least 1−O((n + L)−1),

| (16) |

and consequently,

| (17) |

The proof of Theorem 4 is given in Appendix B, where the main effort is to control ‖S1‖. Unlike the linear combination case, the complicated dependence caused by the quadratic form needs to be handled by viewing as a matrix-valued U-statistic indexed by the pairs (i, j), and using a decoupling technique due to de la Peña and Montgomery-Smith (1995). This reduces the problem of bounding ‖S1‖ to that of bounding , where are i.i.d. copies of X1,...,XL. The upper bounds in Theorem 4 look complicated. This is because we do not make any assumption about the Bernstein parameters or the matrices Gℓ. The bound can be much simplified or even improved in certain important special cases. In the sub-Gaussian case, where , the first term v1n log(L + n)σ1 in (15) dominates. This reflects the L1/2 effect for sums of independent random variables. For example, in the case Gℓ = G0 for all ℓ and Xℓ are i.i.d., we have , but when we consider the fluctuations contributed by , we have ignoring logarithmic factors. In other words, the signal is contained in whose operator norm may grow linearly as L, while the fluctuation in the operator norm of only grows at a rate of L1/2.

Additionally, in the Bernoulli case, the situation becomes more complicated when the variance v1 is vanishing, meaning that . In the simple case of Gℓ = In, we have σ1 = L1/2, σ2 = σ3 = 1. Thus the second term (v1Ln)1/2σ2 in (15) may dominate the first term when nv1 ≪ 1. In this case, we also have σ2′ = (Ln)1/2. Therefore, it is also possible that the term in (16) may be large. It turns out that in such very sparse Bernoulli cases, the bound on the fluctuation term ‖S1‖ can be improved by a more refined and direct upper bound for . The details are presented in the next subsection.

4.3. Sparse Bernoulli matrices

In this section, we focus on the case where Gℓ = In for all ℓ, and the Xℓ ‘s are symmetric with centered Bernoulli entries whose probability parameters are bounded by ρ. Here, ρ can be very small. In this case, Assumptions 2, 3 and 3’ hold with v1 = v2 = 2ρ, R1 = R2 = R2′ = 1, v2′ = 2ρ2, and the matrices Gℓ satisfy σ1 = L1/2, σ2 = σ3 =1, σ2′ = (Ln)1/2.

Ignoring logarithmic factors, the first part of Theorem 4 becomes

where the second term (Lnρ)1/2 can be dominating when nρ is small and Lnρ is large. This is suboptimal since intuitively we expect that the main variance term L1/2nρ is the leading term as long as its value is large enough, which only requires nρ ≫ L1/2. To investigate the cause of this suboptimal bound, observe that (Lnρ)1/2 originates from the second term R1(v1Ln)1/2σ2 in (15). Investigating the proof of Theorem 4, this term is derived by bounding by , which is suboptimal in this sparse Bernoulli case when applying the decoupling technique. The following result shows a sharper bound in this setting using a more refined argument.

Theorem 5. Assume Gℓ = In for all ℓ ∈{1,...,L} and X1,...,XL are symmetric with centered Bernoulli entries whose parameters are bounded by ρ. If L1/2nρ ≥ C1 log1/2(L + n) and nρ ≤ C2 for some constants C1, C2, then with probability at least 1−O((n + L)−1),

| (18) |

for some constant C.

The proof of Theorem 5 is given in Appendix C where we modify our usage of the decoupling technique. At a high level, the decoupling technique reduces the problem to controlling the operator norm of where is an i.i.d. copy of Xℓ. Instead of directly applying Theorem 3 with , we instead shift back to the original Bernoulli matrix by considering , where is the original uncentered binary matrix and . Then Theorem 3 is applied to and separately, where the entry-wise non-negativity of allows us to use the Perron–Frobenius theorem to obtain a sharper bound for .
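As an informal numerical companion to the setting of this subsection, the following sketch draws symmetric centered Bernoulli matrices with Gℓ = In and splits the sum of squares into its diagonal and off-diagonal parts. With Gℓ = In, the off-diagonal entries have mean zero (they are sums of products of distinct independent centered entries), while the diagonal entries carry the non-zero mean. The helper names, the choice n = 200, L = 50, ρ = 0.01, and the reference scales printed next to the norms are illustrative assumptions; the printed scales ignore constants and logarithmic factors and are not the bound in (18).

```python
import numpy as np

def centered_bernoulli_layers(n, L, rho, rng):
    """Draw L symmetric n x n matrices whose diagonal and upper-diagonal
    entries are independent Bernoulli(rho) variables minus their mean.
    (Hypothetical helper, for illustration only.)"""
    layers = []
    for _ in range(L):
        upper = np.triu(rng.random((n, n)) < rho).astype(float)
        A = upper + np.triu(upper, 1).T   # symmetrize; diagonal counted once
        layers.append(A - rho)            # center every entry
    return layers

def split_sum_of_squares(layers):
    """Return S = sum_l X_l^2 together with its diagonal and off-diagonal parts."""
    S = sum(X @ X for X in layers)
    diag_part = np.diag(np.diag(S))
    off_part = S - diag_part
    return S, diag_part, off_part

rng = np.random.default_rng(0)
n, L, rho = 200, 50, 0.01                 # illustrative values, not from the paper
S, diag_part, off_part = split_sum_of_squares(
    centered_bernoulli_layers(n, L, rho, rng))

# Diagonal part: each entry has expectation L * n * rho * (1 - rho).
print("||diag part||    :", np.linalg.norm(diag_part, 2),
      " reference L*n*rho*(1-rho):", L * n * rho * (1 - rho))
# Off-diagonal part: mean-zero fluctuation; L^{1/2} * n * rho is the scale
# discussed in the text (up to constants and logarithmic factors).
print("||off-diag part||:", np.linalg.norm(off_part, 2),
      " reference sqrt(L)*n*rho:", np.sqrt(L) * n * rho)
```

Varying L while holding nρ fixed gives a rough empirical view of how the two parts scale with the number of layers.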

5. Further simulation study

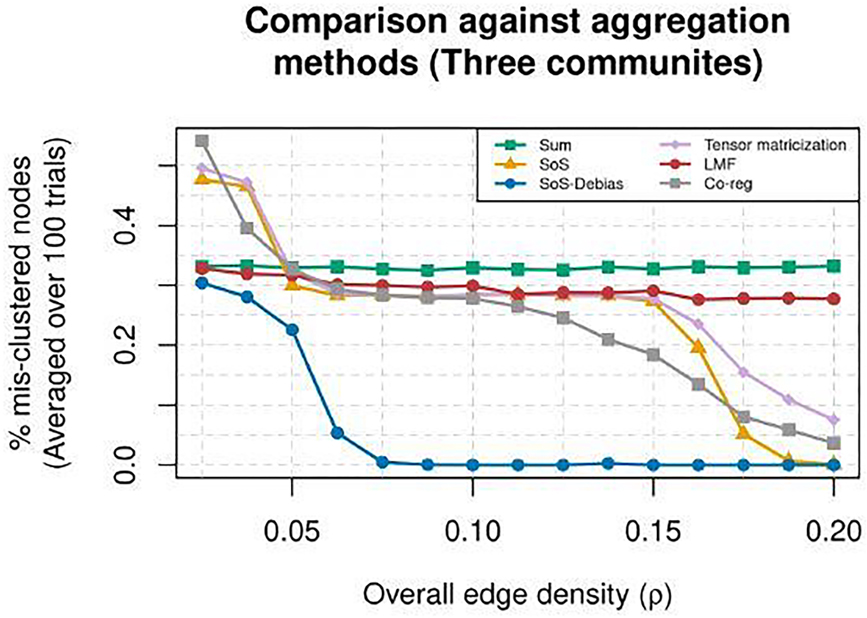

In the following simulation study, we show that bias-adjusting the sum of squared adjacency matrices, as constructed in (7), has a measurable impact on the downstream spectral clustering accuracy, and that our method performs favorably against competing methods. This builds upon the simulation initially shown in Section 2.2.

Data-generating process.

We design the following simulation setting to highlight the importance of bias adjustment for . We consider n = 500 nodes per network across K = 3 communities, with imbalanced sizes n1 = 200, n2 = 50, n3 = 250. We construct two edge-probability matrices that share the same eigenvectors,

| (19) |

The two edge-probability matrices are

We then generate L = 100 layers of adjacency matrices, where each layer is drawn by setting the edge-probability matrices Bℓ = ρB(1) for ℓ ∈{1,...,L / 2} and Bℓ = ρB(2) for ℓ ∈{L / 2 + 1,..., L}. Using this, we generate the adjacency matrices via (1), with ρ varying from 0.025 to 0.2.

We choose this particular simulation setting for two reasons. First, the first two eigenvectors in W are not sufficient to distinguish between the first two communities. Hence, methods based on are not expected to perform well since the third eigen-component cancels out in the summation. Second, the average degrees among the three communities are drastically different: 251ρ, 191ρ and 327ρ, respectively. This means that the variability of the diagonal entries of the degree matrices Dℓ will be high, which helps demonstrate the effect of our method’s bias adjustment.
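The data-generating process above can be sketched as follows. The display (19) and the two edge-probability matrices are not reproduced in this text, so the matrix W and the eigenvalues used below are illustrative assumptions: they were chosen because they share eigenvectors, have a third eigenvalue of opposite sign across the two matrices, and reproduce the average degrees 251ρ, 191ρ and 327ρ quoted above under the first matrix. The edge model (independent Bernoulli edges above the diagonal, no self-loops) and all function names are likewise assumptions.

```python
import numpy as np

# ASSUMPTION: illustrative stand-ins for the quantities in (19), not taken
# from the text. Columns of W are orthonormal; the first two columns take
# identical values on communities 1 and 2.
s = np.sqrt(2) / 2
W = np.array([[0.5, 0.5, -s],
              [0.5, 0.5,  s],
              [s,  -s,   0.0]])
B1 = W @ np.diag([1.5, 0.2,  0.4]) @ W.T   # hypothetical B^(1)
B2 = W @ np.diag([1.5, 0.2, -0.4]) @ W.T   # hypothetical B^(2)

def sample_layer(P, rng):
    """Symmetric 0/1 adjacency matrix with independent Bernoulli(P[i, j])
    edges above the diagonal and no self-loops (assumed convention)."""
    upper = np.triu(rng.random(P.shape) < P, 1).astype(int)
    return upper + upper.T

def generate_layers(rho, sizes=(200, 50, 250), L=100, seed=0):
    """First half of the layers use rho * B1, second half rho * B2."""
    rng = np.random.default_rng(seed)
    theta = np.repeat(np.arange(len(sizes)), sizes)   # true memberships
    layers = []
    for ell in range(L):
        B = B1 if ell < L // 2 else B2
        P = rho * B[np.ix_(theta, theta)]             # n x n edge probabilities
        layers.append(sample_layer(P, rng))
    return layers, theta

# Average degree of each community under B1, in units of rho.
print(B1 @ np.array([200, 50, 250]))
```

Calling `generate_layers(rho=0.1)` would then return the L = 100 adjacency matrices together with the membership vector used in the later clustering steps.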

Methods we consider.

We consider the following four ways to aggregate information across all L layers, three of which were used earlier in Figure 2: 1) the sum of adjacency matrices without squaring (i.e., considering , “Sum”), 2) the sum of squared adjacency matrices (i.e., considering , “SoS”), 3) our proposed bias-adjusted sum of squared adjacency matrices (i.e., considering (7), or equivalently and then zeroing out the diagonal entries, “SoS-Debias”), and 4) column-wise concatenating the adjacency matrices together, specifically, considering

(i.e., “Tensor matricization”). This method is commonly used in the tensor literature (see Zhang and Xia (2018) for example), where the L adjacency matrices can be viewed as an n × n × L tensor, and the column-wise concatenation converts the tensor into a matrix. Then, using one of the four constructions of the aggregated matrix M, we apply spectral clustering to M, meaning we first compute the matrix containing the leading K left singular vectors of M and then perform K-means on its rows.

Additionally, we consider two methods developed in Paul and Chen (2020), called Linked Matrix Factorization (i.e., “LMF”) and Co-regularized Spectral Clustering (i.e., “Co-reg”). These two methods fall outside the framework of the four methods discussed above. Instead, they use optimization procedures designed with different so-called fusion techniques to solve for an appropriate low-dimensional embedding shared among all L layers, and then perform K-means clustering on its rows.
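The four aggregation schemes and the subsequent spectral clustering step can be sketched as below. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the debiasing is implemented as zeroing the diagonal of the sum of squares (for a binary symmetric matrix with zero diagonal, the diagonal of its square equals the node degrees), and K-means is a plain Lloyd iteration with a deterministic farthest-point initialization rather than any particular library routine. LMF and Co-reg are not covered.

```python
import numpy as np

def aggregate(layers, method):
    """Build the aggregated matrix M from a list of n x n adjacency matrices."""
    if method == "Sum":
        return sum(np.asarray(A, dtype=float) for A in layers)
    if method == "SoS":
        return sum(A @ A for A in layers)
    if method == "SoS-Debias":
        M = sum(A @ A for A in layers)
        np.fill_diagonal(M, 0)            # remove the diagonal (degree) bias
        return M
    if method == "Tensor matricization":
        return np.hstack(layers)          # n x (n * L) column-wise concatenation
    raise ValueError(f"unknown method: {method}")

def kmeans(X, K, n_iter=100):
    """Lloyd's algorithm with farthest-point initialization (sketch only)."""
    centers = [X[0]]
    for _ in range(1, K):
        d = np.min([np.sum((X - c) ** 2, axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(d)])
    centers = np.array(centers)
    for _ in range(n_iter):
        d = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d.argmin(axis=1)
        new = np.array([X[labels == k].mean(axis=0) if np.any(labels == k)
                        else centers[k] for k in range(K)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels

def spectral_clustering(M, K):
    """K-means on the rows of the leading K left singular vectors of M."""
    U, _, _ = np.linalg.svd(M, full_matrices=False)
    return kmeans(U[:, :K], K)
```

For example, `spectral_clustering(aggregate(layers, "SoS-Debias"), K=3)` would return one estimated label per node for a list `layers` of adjacency matrices.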

Results.

The results shown in Figure 3 demonstrate that bias-adjusting the diagonal entries of has a noticeable impact on the clustering accuracy. Using the aforementioned simulation setting and methods, we vary ρ from 0.025 to 0.2 in 15 equally-spaced values, and compare the methods for each setting of ρ across 100 trials by measuring the average Hamming distance (defined in (2)) between the true memberships in θ and the estimated membership . We observe phenomena in Figure 3 that all agree with our intuition and theoretical results. Specifically, summing the adjacency matrices hinders our ability to cluster the nodes due to the cancellation of positive and negative eigenvalues (green squares), and the diagonal bias induced by squaring the adjacency matrices has a profound effect in the range of ρ ∈ [0.08, 0.17], which our bias-adjusted sum-of-squared method removes (purple diamonds versus blue circles). We also see that our bias-adjusted sum-of-squared method outperforms Linked Matrix Factorization (red circles) and Co-regularized Spectral Clustering (gray squares). While the LMF and Co-reg methods show some improvements over the Sum and SoS methods, respectively, they still behave qualitatively similarly. This observation suggests that these two methods may have similar difficulty in aggregating layers without positivity or removing the diagonal bias.

Fig. 3.

The average proportion of mis-clustered nodes for six methods (measured via the Hamming distance in (2), averaged over 100 trials), for n = 500 nodes in three unequally-sized communities, overall edge densities ρ ∈ [0.025, 0.2], and L = 100 layers. The six methods shown are: “Sum” (green squares), “SoS” (orange triangles), “Bias-adjusted SoS” (blue circles), “Tensor matricization” (purple diamonds), “LMF” (red circles), and “Co-reg” (gray squares).
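The error metric used in Figure 3 can be sketched as follows. Definition (2) is not reproduced in this section, so the code assumes the usual convention: the proportion of mis-clustered nodes, minimized over all relabelings of the K estimated communities. The function name is hypothetical, and the exhaustive search over permutations is only practical for small K such as K = 3.

```python
import numpy as np
from itertools import permutations

def hamming_error(theta, theta_hat, K):
    """Proportion of mis-clustered nodes, minimized over label permutations.

    theta, theta_hat: integer labels in {0, ..., K-1}, one per node.
    ASSUMPTION: this matches the Hamming distance referred to as (2)."""
    theta = np.asarray(theta)
    theta_hat = np.asarray(theta_hat)
    best = 1.0
    for perm in permutations(range(K)):
        relabeled = np.array(perm)[theta_hat]   # apply the relabeling
        best = min(best, float(np.mean(relabeled != theta)))
    return best

# A pure relabeling of the truth has zero error.
print(hamming_error([0, 0, 1, 1, 2, 2], [1, 1, 2, 2, 0, 0], K=3))  # 0.0
# One node out of four is mis-clustered.
print(hamming_error([0, 0, 1, 1], [0, 1, 1, 1], K=2))              # 0.25
```

Averaging this quantity over repeated trials for each value of ρ gives curves of the kind summarized in Figure 3.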

Intuition behind results.

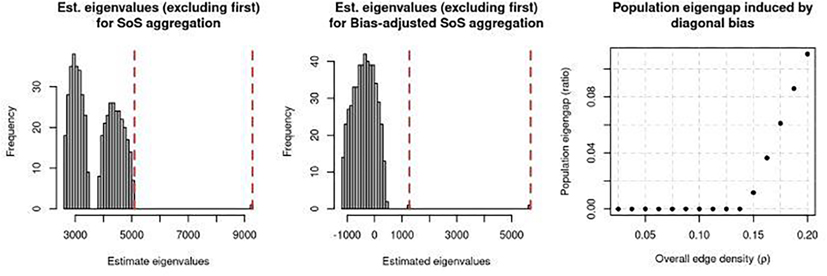

We provide additional intuition behind the results shown in Figure 3 by visualizing the impact of the diagonal terms on the overall spectrum and quantifying the loss of population signal due to the bias.

First, we demonstrate in Figure 4 that the third leading eigenvalue of when ρ = 0.15 is indistinguishable from the remaining bulk “noise” eigenvalues if the diagonal bias is not removed (left), but becomes well-separated once it is removed (middle). Recall that, by the construction in (19), all three eigenvectors are needed for recovering the communities. Hence, if the third eigenvalue of is indistinguishable from the fourth through last eigenvalues (i.e., the “noise”), then we should expect many nodes to be mis-clustered. This is exactly what Figure 4 (left) shows, where the third eigenvalue (denoted by the left-most red vertical line) is not separated from the remaining eigenvalues. However, when we appropriately bias-adjust via (7), Figure 4 (middle) shows that the third eigenvalue is well-separated from the remaining eigenvalues. This demonstrates the importance of bias adjustment for community estimation in this regime of ρ.

Fig. 4.

(Left): For one realization of A1,...,AL given the setup described in the simulation with ρ = 0.15, a histogram of all 500 eigenvalues of , where the red vertical dashed lines denote the second and third eigenvalues. (The first eigenvalue is too large to be shown.) (Middle): Similar to the left plot, but showing the 500 eigenvalues of the bias-adjusted variant of (i.e., setting the diagonal to be all 0’s). (Right): The population eigengap (λ3 – λ4) / λ3 computed from for varying values of ρ.

Next, in Figure 4 (right), we show that this lack-of-separation between the third eigenvalue and the noise can be observed on the population level. Specifically, we show that the population counterpart of has considerable diagonal bias that makes the accurate estimation of the third eigenvector nearly impossible when ρ is too small. To show this, for a particular value of ρ, recall from our theory that the population counterpart of is

and . Let λ1,...,λn denote the n eigenvalues of the above matrix, dependent on ρ. We then plot (λ3 – λ4) / λ4 against ρ in Figure 4 (right). This plot demonstrates that when ρ is too small, the diagonal entries (represented by ‘s) add a disproportionately large amount of bias that makes it impossible to accurately distinguish between the third and fourth eigenvectors. Additionally, the rise in the eigengap in Figure 4 (right) at ρ = 0.15 corresponds to when “SoS” starts to improve in Figure 3 (orange triangles). This means that starting at ρ = 0.15, the effect of the diagonal bias starts to diminish, and at larger values of ρ, the sum of squared adjacency matrices contains accurate information for community estimation (both with and without bias adjustment). We report additional results in Appendix E, where we report the time needed for each method, visualize the lack of concentration in the nodes’ degrees in sparse graphs and its effect on the spectral embedding, and show that the qualitative trends in Figure 3 remain the same when we consider either the varying-membership setting (described in Corollary 2) or an additional variant of spectral clustering where the eigenvectors are reweighted by their corresponding eigenvalues.
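A population-level eigengap of the kind plotted in Figure 4 (right) can be computed along the following lines. The population expression is not reproduced in this text, so the sketch relies on a modelling assumption: each layer is symmetric with independent Bernoulli edges above the diagonal and no self-loops, in which case the expectation of the squared adjacency matrix has off-diagonal entries Σk Pik Pkj and diagonal entries equal to the expected degrees. The function names, the `keep_diagonal` flag, the normalization by λK (as in the caption of Figure 4), and the two-community example are all hypothetical.

```python
import numpy as np

def population_sos(P_list, keep_diagonal=True):
    """E[sum_l A_l^2] for symmetric A_l with independent Bernoulli(P_l[i, j])
    edges above the diagonal and no self-loops (modelling assumption)."""
    n = P_list[0].shape[0]
    M = np.zeros((n, n))
    for P in P_list:
        P0 = P - np.diag(np.diag(P))               # no self-loops
        M_l = P0 @ P0                              # off-diagonal: sum_k P_ik P_kj
        diag = P0.sum(axis=1) if keep_diagonal else 0.0
        np.fill_diagonal(M_l, diag)                # diagonal: expected degrees
        M += M_l
    return M

def relative_eigengap(M, K):
    """(lambda_K - lambda_{K+1}) / lambda_K with eigenvalues sorted descending."""
    vals = np.sort(np.linalg.eigvalsh(M))[::-1]
    return (vals[K - 1] - vals[K]) / vals[K - 1]

# Hypothetical two-community example (not the paper's simulation setting).
theta = np.repeat([0, 1], [30, 20])
B_a = np.array([[0.9, 0.3], [0.3, 0.9]])
B_b = np.array([[0.3, 0.9], [0.9, 0.3]])
for rho in (0.02, 0.1, 0.5):
    P_list = [rho * B[np.ix_(theta, theta)] for B in (B_a, B_b)]
    with_bias = relative_eigengap(population_sos(P_list), K=2)
    without_bias = relative_eigengap(
        population_sos(P_list, keep_diagonal=False), K=2)
    print(f"rho={rho}: gap with diagonal={with_bias:.3f}, "
          f"gap without diagonal={without_bias:.3f}")
```

Sweeping ρ over a finer grid and plotting the two columns gives a rough analogue of the comparison between the biased and bias-adjusted population matrices.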

6. Data application: Gene co-expression patterns in developing monkey brain

We analyze the microarray dataset of developing rhesus monkeys’ tissue from the medial prefrontal cortex introduced in Section 1, originally collected in Bakken et al. (2016), to demonstrate the utility of our bias-adjusted sum-of-squared spectral clustering method. As described in other work that analyzes this data (Liu et al. (2018) and Lei et al. (2019)), this is a suitable dataset because it is well documented that the gene co-expression patterns in monkeys’ tissue from this brain region change dramatically over development. Specifically, the data from Bakken et al. (2016) consist of gene co-expression networks at ten different developmental times (starting from 40 days in the embryo to 48 months after birth) derived from microarray data, where each of the developmental time points corresponds to post-mortem tissue samples of multiple unique rhesus monkeys. With this data, we aim to show that our bias-adjusted sum-of-squared spectral clustering method produces insightful gene communities.

Preprocessing procedure.

The microarray dataset from Bakken et al. (2016) contains n = 9173 genes measured among many samples across the L = 10 layers, which we preprocess into ten adjacency matrices in the following way, in line with other work such as Langfelder and Horvath (2008). We use this specific set of n genes, following the analysis in Liu et al. (2018), since they map to the human genome. First, for each layer ℓ ∈ {1,...,L}, we construct the Pearson correlation matrix. Then, we convert each correlation matrix into an adjacency matrix by hard-thresholding at 0.72 in absolute value, resulting in ten adjacency matrices A1,...,AL. We choose this particular threshold since it yields sparse and scale-free networks that have many disjoint connected components individually but have one connected component after aggregation, as reported in Appendix F. Lastly, we remove all the genes corresponding to nodes whose total degree across all ten layers is less than 90. This value is chosen since the median total degree among all nodes that do not have any neighbors in five or more of the layers (i.e., a degree of zero in more than half the layers) is 89. In the end, we have ten adjacency matrices A1,...,AL ∈{0,1}7836×7836, each representing a network corresponding to 7836 genes. We note that the above procedure of transforming correlation matrices into adjacency matrices is unlikely to produce networks that severely violate the layer-wise positivity assumption commonly required by other methods. Such a violation could hypothetically happen if many pairs of genes display high negative correlations, but this is not typical in genomic data. Nonetheless, we are interested in what insights the bias-adjusted sum-of-squared spectral clustering method can reveal for this dataset.
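The preprocessing steps above can be sketched as follows. This is a hedged illustration rather than the authors' pipeline: the function names and the samples-by-genes input layout are hypothetical, an edge is placed when the absolute correlation is at least the threshold (whether the comparison is strict is an assumption), self-loops are dropped, and degrees are not recomputed after filtering.

```python
import numpy as np

def correlation_to_adjacency(expr, threshold=0.72):
    """expr: (samples x genes) expression matrix for one developmental layer.
    Returns a 0/1 adjacency matrix by hard-thresholding |Pearson correlation|."""
    C = np.corrcoef(expr, rowvar=False)          # genes x genes correlations
    A = (np.abs(C) >= threshold).astype(int)     # ASSUMPTION: '>=' at the cutoff
    np.fill_diagonal(A, 0)                       # ASSUMPTION: no self-loops
    return A

def filter_low_degree(layers, min_total_degree=90):
    """Drop genes whose total degree across all layers is below the cutoff."""
    total = sum(A.sum(axis=1) for A in layers)
    keep = np.flatnonzero(total >= min_total_degree)
    return [A[np.ix_(keep, keep)] for A in layers], keep

# Tiny synthetic usage example (random data, not the microarray dataset).
rng = np.random.default_rng(0)
expr_layers = [rng.normal(size=(12, 30)) for _ in range(3)]
layers = [correlation_to_adjacency(e) for e in expr_layers]
filtered, kept = filter_low_degree(layers, min_total_degree=1)
print(len(kept), "genes kept out of", layers[0].shape[0])
```

With the thresholds from the text (0.72 and 90) applied to the ten layers, the output of `filter_low_degree` would play the role of the adjacency matrices passed to the clustering step.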

Results and interpretation.

The following results show that bias-adjusted sum-of-squared spectral clustering finds meaningful gene communities. Prior to using our method, we select the dimensionality and number of communities to be K = 8 based on a scree plot of the singular values of the bias-adjusted variant of . We perform our bias-adjusted spectral clustering on this matrix with K = 8, and visualize three out of the ten adjacency matrices using the estimated communities in Figure 5 (which are the full adjacency matrices corresponding to the three adjacency matrices shown in Figure 1). We see that as development occurs from 40 days in the embryo to 48 months after birth, there are different gene communities that are most-connected. This visually demonstrates different biological processes in brain tissue that are most active at different stages of development. Labeling the communities 1 through 8 from top left to bottom right, our results show that starting at 40 days in the embryo, Community 1 is highly coordinated (i.e., densely connected), and ending at 48 months after birth, Community 7 is highly coordinated. All the genes in Community 8 are sparsely connected throughout all ten adjacency matrices, suggesting that these genes are not strongly correlated with many other genes throughout development.

Fig. 5.

Three of ten adjacency matrices where the genes are ordered according to the estimated K = 8 communities. Blue pixels correspond to the absence of an edge between the corresponding genes in Aℓ ‘s, while yellow pixels correspond to an edge. The dashed white lines denote the separation among the K = 8 gene communities. The adjacency matrices shown in Figure 1 correspond to the same three developmental times (from left to right), and are formed by selecting only the genes in Communities 1, 4, 5, and 7.

To interpret these K = 8 communities, we perform a gene ontology analysis, using the clusterProfiler::enrichGO function on the gene annotation in the Bioconductor package org.Mmu.eg.db to analyze the scientific interpretation of each of the K communities of genes within rhesus monkeys. Table 1 shows the results. We see that the first seven communities are highly enriched for cell processes closely related to brain development; we can interpret Figure 5 and Table 1 together as showing which biological systems are most active in a coordinated fashion at different developmental stages. Since genes in the eighth community are sparsely connected across all developmental times and are not enriched for any cell processes, we infer that these genes are unlikely to be coordinated to drive any process related to brain development. Together, these results demonstrate that the bias-adjusted sum-of-squared spectral clustering is able to find meaningful gene communities. Visualizations of all ten adjacency matrices (beyond those shown in Figure 5), explicit reporting of the edge densities, and stability analyses that demonstrate how the results vary when different tuning parameters are used are included in Appendix F.

Table 1.

Gene ontology of the estimated K = 8 communities of genes. Here, “GO ID” denotes the gene ontology ID, and “p-value” denotes the p-value of Fisher’s exact test for enrichment (i.e., significance or over-representation) of a particular GO term among the genes in said community compared to all other genes.

| Community | Description | GO ID | p-value |
|---|---|---|---|
| 1 | RNA splicing | GO:0008380 | 1.07 × 10⁻¹¹ |
| 2 | Nuclear transport | GO:0051169 | 3.15 × 10⁻⁵ |
| 3 | Neuron development | GO:0048666 | 2.08 × 10⁻⁸ |
| 4 | Chromosome segregation | GO:0007059 | 1.31 × 10⁻⁸ |
| 5 | Neuron projection development | GO:0031175 | 1.51 × 10⁻⁵ |
| 6 | Regulation of transporter activity | GO:0032409 | 5.68 × 10⁻⁶ |
| 7 | Anchoring junction | GO:0070161 | 8.86 × 10⁻⁵ |
| 8 | None | | |

7. Discussion

While we establish community estimation consistency in this paper, there are two major additional theoretical directions that we hope our results will help shed light on in future work. First, an important theoretical question in the study of stochastic block models is the critical threshold for community estimation. This involves finding a critical rate of the overall edge density and/or the separation between rows of Bℓ,0, and proving achievability of certain community estimation accuracy when the density and/or separation are above this threshold, as well as impossibility for non-trivial community recovery below this threshold. For single-layer SBMs, this problem has been studied by many authors, such as Massoulié (2014), Abbe and Sandon (2015), Zhang and Zhou (2016), and Mossel et al. (2018). The case of multi-layer SBMs is much less clear, especially for generally structured layers. The upper bounds proved in Paul and Chen (2020) and Bhattacharyya and Chatterjee (2018) imply achievability of vanishing error proportion when Lnρ → ∞ under a layer-wise positivity assumption. Our results require the stronger condition L1/2nρ / log1/2 (L + n) → ∞, but do not require a layer-wise positivity assumption. Ignoring logarithmic factors, is a factor of L1/2 the right price to pay for not having the layer-wise positivity assumption? The error analysis in the proof of Theorem 1 seems to suggest a positive answer, but a rigorous claim will require a formal lower bound analysis. We note that simplified constructions such as that in Zhang and Zhou (2016), designed for single-layer SBMs, are unlikely to work, since they do not reflect the additional hardness brought to the estimation problem by unknown layer-wise structures.

Second, the consistency result for multi-layer SBMs also makes it possible to extend other inference tools developed for single-layer data to multi-layer data. One such example is model selection and cross-validation (Chen and Lei, 2018; Li et al., 2020). The probability tools developed in this paper, such as Theorem 3, Theorem 4, and Theorem B.2, may be useful for other statistical inference problems involving matrix-valued measurements and noise. For example, our theoretical analyses could refine the theoretical analyses for multilayer graphs that go beyond SBMs, such as degree-corrected SBMs or random dot-product graphs in general (Nielsen and Witten, 2018; Arroyo et al., 2021). Alternatively, in dynamic networks where the network parameters change smoothly over time, one may use the nonparametric kernel smoothing techniques in Pensky and Zhang (2019) and the matrix concentration inequalities developed in this paper to control the aggregated noise and perhaps obtain a more refined analysis in those settings.


Acknowledgments:

We thank Kathryn Roeder, Fuchen Liu, and Xuran Wang for providing the data and code used in Liu et al. (2018) to pre-process the data. We also thank the editor, the associate editor, and two anonymous reviewers for their helpful suggestions to improve the manuscript. Jing Lei’s research is partially funded by NSF grant DMS-2015492 and NIH grant R01 MH123184.

Contributor Information

Jing Lei, Department of Statistics and Data Science, Carnegie Mellon University, USA.

Kevin Z. Lin, Department of Statistics, Wharton School of Business, University of Pennsylvania, USA

References

- Abbe E (2017), “Community detection and stochastic block models: recent developments,” The Journal of Machine Learning Research, 18, 6446–6531.

- Abbe E and Sandon C (2015), “Community detection in general stochastic block models: Fundamental limits and efficient algorithms for recovery,” 2015 IEEE 56th Annual Symposium on Foundations of Computer Science, 670–688.

- Airoldi EM, Blei DM, Fienberg SE, and Xing EP (2008), “Mixed membership stochastic blockmodels,” The Journal of Machine Learning Research, 9, 1981–2014.

- Arroyo J, Athreya A, Cape J, Chen G, Priebe CE, and Vogelstein JT (2021), “Inference for multiple heterogeneous networks with a common invariant subspace,” Journal of Machine Learning Research, 22, 1–49.

- Bai Z and Silverstein JW (2010), Spectral analysis of large dimensional random matrices, vol. 20, Springer.

- Bakken TE, Miller JA, Ding S-L, Sunkin SM, Smith KA, Ng L, Szafer A, Dalley RA, Royall JJ, Lemon T, et al. (2016), “A comprehensive transcriptional map of primate brain development,” Nature, 535, 367–375.

- Bandeira AS and Van Handel R (2016), “Sharp nonasymptotic bounds on the norm of random matrices with independent entries,” The Annals of Probability, 44, 2479–2506.

- Bhattacharyya S and Chatterjee S (2018), “Spectral clustering for multiple sparse networks: I,” arXiv preprint arXiv:1805.10594.

- Bickel PJ and Chen A (2009), “A nonparametric view of network models and Newman–Girvan and other modularities,” Proceedings of the National Academy of Sciences, 106, 21068–21073.

- Cape J, Tang M, and Priebe CE (2017), “The Kato–Temple inequality and eigenvalue concentration with applications to graph inference,” Electronic Journal of Statistics, 11, 3954–3978.

- Chen K and Lei J (2018), “Network cross-validation for determining the number of communities in network data,” Journal of the American Statistical Association, 113, 241–251.

- de la Peña VH and Montgomery-Smith SJ (1995), “Decoupling inequalities for the tail probabilities of multivariate U-statistics,” The Annals of Probability, 806–816.

- Dong X, Frossard P, Vandergheynst P, and Nefedov N (2012), “Clustering with multi-layer graphs: A spectral perspective,” IEEE Trans. Signal Processing, 60, 5820–5831.

- Feige U and Ofek E (2005), “Spectral techniques applied to sparse random graphs,” Random Structures & Algorithms, 27, 251–275.

- Goldenberg A, Zheng AX, Fienberg SE, and Airoldi EM (2010), “A survey of statistical network models,” Foundations and Trends® in Machine Learning, 2, 129–233.

- Han Q, Xu K, and Airoldi E (2015), “Consistent estimation of dynamic and multi-layer block models,” in International Conference on Machine Learning, pp. 1511–1520.

- Hanson DL and Wright FT (1971), “A bound on tail probabilities for quadratic forms in independent random variables,” The Annals of Mathematical Statistics, 42, 1079–1083.

- Holland PW, Laskey KB, and Leinhardt S (1983), “Stochastic blockmodels: First steps,” Social networks, 5, 109–137.

- Jin J (2015), “Fast Community Detection by SCORE,” Annals of Statistics, 43, 57–89.

- Karrer B and Newman ME (2011), “Stochastic blockmodels and community structure in networks,” Physical Review E, 83, 016107.

- Kivelä M, Arenas A, Barthelemy M, Gleeson JP, Moreno Y, and Porter MA (2014), “Multilayer networks,” Journal of Complex Networks, 2, 203–271.

- Kolaczyk ED (2009), Statistical analysis of network data, Springer.

- Langfelder P and Horvath S (2008), “WGCNA: An R package for weighted correlation network analysis,” BMC Bioinformatics, 9, 559.

- Latouche P, Birmele E, and Ambroise C (2012), “Variational Bayesian inference and complexity control for stochastic block models,” Statistical Modelling, 12, 93–115.

- Le CM, Levina E, and Vershynin R (2017), “Concentration and regularization of random graphs,” Random Structures & Algorithms, 51, 538–561.

- Lei J (2018), “Network representation using graph root distributions,” arXiv preprint arXiv:1802.09684.

- Lei J, Chen K, and Lynch B (2019), “Consistent community detection in multilayer network data,” Biometrika.

- Lei J and Rinaldo A (2015), “Consistency of spectral clustering in stochastic block models,” The Annals of Statistics, 43, 215–237.

- Li T, Levina E, and Zhu J (2020), “Network cross-validation by edge sampling,” Biometrika.

- Litvak N and Van Der Hofstad R (2013), “Uncovering disassortativity in large scale-free networks,” Physical Review E, 87, 022801.

- Liu F, Choi D, Xie L, and Roeder K (2018), “Global spectral clustering in dynamic networks,” Proceedings of the National Academy of Sciences, 115, 927–932.

- Massoulié L (2014), “Community detection thresholds and the weak Ramanujan property,” in Proceedings of the forty-sixth annual ACM symposium on Theory of computing, pp. 694–703.

- Matias C and Miele V (2017), “Statistical clustering of temporal networks through a dynamic stochastic block model,” Journal of the Royal Statistical Society: Series B (Statistical Methodology), 79, 1119–1141.

- McSherry F (2001), “Spectral partitioning of random graphs,” in Foundations of Computer Science, 2001. Proceedings. 42nd IEEE Symposium on, IEEE, pp. 529–537. [Google Scholar]

- Mossel E, Neeman J, and Sly A (2018), “A proof of the block model threshold conjecture,” Combinatorica, 38, 665–708. [Google Scholar]

- Ndaoud M (2018), “Sharp optimal recovery in the two component Gaussian mixture model,” arXiv preprint arXiv:1812.08078. [Google Scholar]

- Newman M (2009), Networks: an introduction, Oxford University Press. [Google Scholar]

- Newman ME (2002), “Assortative mixing in networks,” Physical review letters, 89, 208701. [DOI] [PubMed] [Google Scholar]

- Nielsen AM and Witten D (2018), “The multiple random dot product graph model,” arXiv preprint arXiv:1811.12172. [Google Scholar]

- O’Rourke S, Vu V, and Wang K (2018), “Random perturbation of low rank matrices: Improving classical bounds,” Linear Algebra and its Applications, 540, 26–59. [Google Scholar]

- Paul A, Cai Y, Atwal GS, and Huang ZJ (2012), “Developmental coordination of gene expression between synaptic partners during GABAergic circuit assembly in cerebellar cortex,” Frontiers in neural circuits, 6, 37. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Paul S and Chen Y (2020), “Spectral and matrix factorization methods for consistent community detection in multi-layer networks,” The Annals of Statistics, 48, 230–250. [Google Scholar]

- Peixoto TP (2013), “Parsimonious module inference in large networks,” Physical review letters, 110, 148701. [DOI] [PubMed] [Google Scholar]

- Pensky M and Zhang T (2019), “Spectral clustering in the dynamic stochastic block model,” Electronic Journal of Statistics, 13, 678–709. [Google Scholar]

- Rohe K, Chatterjee S, and Yu B (2011), “Spectral clustering and the high-dimensional stochastic block model,” The Annals of Statistics, 39, 1878–1915. [Google Scholar]

- Rudelson M and Vershynin R (2013), “Hanson-Wright inequality and sub-Gaussian concentration,” Electronic Communications in Probability, 18. [Google Scholar]

- Székely GJ and Rizzo ML (2014), “Partial distance correlation with methods for dissimilarities,” The Annals of Statistics, 42, 2382–2412.

- Tang W, Lu Z, and Dhillon IS (2009), “Clustering with multiple graphs,” in International Conference on Data Mining (ICDM), IEEE, pp. 1016–1021.

- Tropp JA (2012), “User-friendly tail bounds for sums of random matrices,” Foundations of Computational Mathematics, 12, 389–434.

- van der Vaart AW and Wellner JA (1996), Weak Convergence and Empirical Processes, Springer-Verlag.

- Vershynin R (2011), “Spectral norm of products of random and deterministic matrices,” Probability Theory and Related Fields, 150, 471–509.

- Wang T, Berthet Q, and Plan Y (2016), “Average-case hardness of RIP certification,” in Advances in Neural Information Processing Systems, pp. 3819–3827.

- Werling DM, Pochareddy S, Choi J, An J-Y, Sheppard B, Peng M, Li Z, Dastmalchi C, Santpere G, Sousa AM, et al. (2020), “Whole-genome and RNA sequencing reveal variation and transcriptomic coordination in the developing human prefrontal cortex,” Cell Reports, 31, 107489.

- Xu KS and Hero AO (2014), “Dynamic stochastic blockmodels for time-evolving social networks,” IEEE Journal of Selected Topics in Signal Processing, 8, 552–562.

- Zhang A and Xia D (2018), “Tensor SVD: Statistical and computational limits,” IEEE Transactions on Information Theory, 64, 7311–7338.

- Zhang AR, Cai TT, and Wu Y (2022), “Heteroskedastic PCA: Algorithm, optimality, and applications,” The Annals of Statistics, 50, 53–80.

- Zhang AY and Zhou HH (2016), “Minimax rates of community detection in stochastic block models,” The Annals of Statistics, 44, 2252–2280.

- Zhang J and Cao J (2017), “Finding common modules in a time-varying network with application to the Drosophila Melanogaster gene regulation network,” Journal of the American Statistical Association, 112, 994–1008.
