Abstract

In this paper, we propose a dense multi-scale adaptive graph convolutional network (DMA-GCN) method for the automatic segmentation of knee joint cartilage from MR images. Under the multi-atlas setting, the proposed approach introduces several novelties, described below. First, our models integrate local-level and global-level learning simultaneously. The local learning task aggregates spatial contextual information from aligned spatial neighborhoods of nodes at multiple scales, while global learning explores pairwise affinities between nodes located at different positions across the image. We propose two different building-model structures, in which the local and global convolutional units are combined in either an alternating or a sequential manner. Second, based on these models, we develop the DMA-GCN network, which uses a densely connected architecture with residual skip connections. This deeper GCN structure, spanning several block layers, is capable of providing more expressive node feature representations. Third, every unit of the overall network is equipped with its own adaptive graph learning mechanism, which allows the graph structures to be learned automatically during training. The proposed cartilage segmentation method is evaluated on the entire publicly available Osteoarthritis Initiative (OAI) cohort. To this end, we have devised a thorough experimental setup to investigate the effect of several factors of our approach on the classification rates. Furthermore, we present exhaustive comparative results against traditional methods, six deep learning segmentation methods, and seven graph-based convolution methods, including the currently most representative models in this field. 
The obtained results demonstrate that the DMA-GCN outperforms all competing methods across all evaluation measures, providing and for the segmentation of femoral and tibial cartilage, respectively.

Keywords: knee cartilage osteoarthritis (KOA), magnetic resonance imaging (MRI) segmentation, multi-atlas, graph neural networks (GNNs), deep learning, graph learning, semi-supervised learning (SSL)

1. Introduction

Osteoarthritis (OA) is one of the most prevalent joint diseases worldwide, causing pain and mobility issues, reducing the ability to lead an independent lifestyle, and ultimately decreasing the quality of life in patients. It primarily manifests among populations of advanced age, with an estimated of people over the age of 55 dealing with this condition. That percentage is likely to noticeably increase in the coming years, especially in the developing parts of the world where life expectancy is steadily on the rise [1].

Among the available imaging modalities, magnetic resonance imaging (MRI) constitutes a valuable tool in the characterization of the knee joint, providing a robust quantitative and qualitative analysis for detecting anatomical changes and defects in the cartilage tissue. Unfortunately, manual delineations performed by human experts are resource- and time-consuming, while also suffering from unacceptable levels of inter- and intrarater variability. Thus, there is an increasing demand for accurate and time-efficient fully automated methods for achieving reliable segmentation results.

Over the past decades, considerable research has been conducted toward these goals. However, the thin cartilage structure, together with the large variability and intensity inhomogeneity of MR images, poses significant challenges. Several methods have been proposed to address these issues, ranging from traditional image processing approaches, such as statistical shape models and active appearance models, to more automated ones employing classical machine learning and deep learning techniques. A comprehensive review of automated methods for knee articular cartilage segmentation can be found in [2].

1.1. Statistical Shape Methods

A wide variety of methods from the statistical shape model (SSM) and active appearance model (AAM) family have been extensively employed in knee joint segmentation. Since the shape of the cartilage structure is quite distinct and characteristic, these methods use it as a stepping stone toward the complete delineation of the whole knee joint [3]. Additionally, SSMs have been successfully used as shape regularizers within more complex segmentation pipelines, mainly as a final postprocessing step [4]. While conceptually simple, these methods are highly sensitive to the initial landmark selection process.

1.2. Machine Learning Methods

Under the classical machine learning setting, knee cartilage segmentation is cast as a supervised classification task, in which the label of each voxel is estimated from a set of handcrafted or automatically extracted image features. Typical examples of such approaches can be found in [5,6]. These methods are conceptually simple but usually offer mediocre results, owing to their poor generalization capabilities and their reliance on fixed feature descriptors that may not capture the data variability well.

1.3. Multi-Atlas Patch-Based Segmentation Methods

Multi-atlas patch-based methods have long been a staple in medical imaging applications [7,8]. Using an atlas library that comprises MR images and their corresponding label maps, these methods process one target image at a time, annotating it by propagating voxel labels from the atlas library. The implicit assumption in this framework is that the target image and the atlas images reside in a common coordinate space. This assumption is enforced by registering all atlases, along with their corresponding label maps, to the target image space via an affine or deformable transformation [9].

Multi-atlas patch-based methods usually consist of the following steps. For each target voxel, a search volume of fixed size centered on that voxel is formed, and the voxels at the spatially adjacent locations in every registered atlas yield a patch library. An optimization problem, such as sparse coding (SC), is then solved to reconstruct the target patch as a linear combination of the patches in its library. Established methods of this category are presented in [7,8]. Although capable of achieving appreciable results, these methods do not scale well to large datasets, because they must construct a patch library and solve an optimization problem for every voxel of every target image.
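As a rough illustration of the per-voxel procedure described above, the following sketch builds a patch library from registered atlases and labels one target voxel by nonnegative sparse coding followed by code-weighted label voting. It is a minimal sketch under stated assumptions, not the implementation of [7,8]: the ISTA solver, the patch radius `r`, the search radius `s`, the penalty `lam`, and all function names are hypothetical choices made for illustration.

```python
import numpy as np

def extract_patch(vol, center, r):
    """Flattened (2r+1)^3 patch of `vol` centred at voxel `center` (z, y, x)."""
    z, y, x = center
    return vol[z - r:z + r + 1, y - r:y + r + 1, x - r:x + r + 1].ravel()

def build_patch_library(atlases, label_maps, center, r=1, s=1):
    """Stack atlas patches (as columns) from a (2s+1)^3 search volume around
    `center` in every registered atlas, together with their central labels."""
    cols, labs = [], []
    z0, y0, x0 = center
    shifts = range(-s, s + 1)
    for img, lab in zip(atlases, label_maps):
        for dz in shifts:
            for dy in shifts:
                for dx in shifts:
                    c = (z0 + dz, y0 + dy, x0 + dx)
                    cols.append(extract_patch(img, c, r))
                    labs.append(lab[c])
    return np.stack(cols, axis=1), np.asarray(labs)

def nonneg_sparse_code(D, y, lam=0.05, n_iter=300):
    """ISTA for min_a 0.5*||y - D a||^2 + lam*||a||_1 subject to a >= 0."""
    step = 1.0 / np.linalg.norm(D, 2) ** 2          # 1 / Lipschitz constant of the gradient
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ a - y)
        a = np.maximum(a - step * (grad + lam), 0.0)  # proximal step for lam*||a||_1 with a >= 0
    return a

def fuse_label(target, atlases, label_maps, center, n_classes, r=1, s=1):
    """Label one target voxel by summing the sparse codes per atlas label."""
    D, lib_labels = build_patch_library(atlases, label_maps, center, r, s)
    D = D / (np.linalg.norm(D, axis=0) + 1e-12)     # unit-norm dictionary atoms
    y = extract_patch(target, center, r)
    y = y / (np.linalg.norm(y) + 1e-12)
    codes = nonneg_sparse_code(D, y)
    votes = np.bincount(lib_labels, weights=codes, minlength=n_classes)
    return int(np.argmax(votes)), votes
```

Because this optimization is solved once per target voxel, its cost grows with the number of voxels, atlases, and search positions, which is the scalability issue noted above.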

1.4. Deep Learning Methods

The recent resurgence of deep learning has had a great impact on medical imaging, with an increasing number of works reporting the use of deep architectures in various applications in the field. Early work was restricted to 2D models because of the heavy computational load imposed by the 3D structure of MR images, but recent advances in processing power have enabled a wide variety of fully 3D models, which offer markedly better performance than traditional methods [10,11]. In our study, we compare our proposed method against a series of representative deep architectures, with applications ranging from semantic segmentation to point-cloud classification and medical image segmentation. In particular, we consider the SegNet [12], DenseVoxNet [13], VoxResNet [14], PointNet [15], CAN3D [13], and KCB-Net [16] architectures (Section 9.4.2).

1.5. Graph Convolutional Neural Networks

Recently, intensive research has been conducted on graph convolutional networks, owing to their efficiency in handling non-Euclidean data [17]. These models can be divided into two general categories, namely, spectral-based [18,19] and spatial-based methods [20,21,22,23,24]. Spectral-based networks rely on graph signal processing principles, defining node convolutions through filters in the graph spectral domain. ChebNet [18] approximates the convolutional filters by Chebyshev polynomials of the diagonal matrix of Laplacian eigenvalues, while the GCN model in [19] performs a first-order approximation of ChebNet.
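To make the Chebyshev approximation concrete, the sketch below applies a polynomial filter $\sum_k \theta_k T_k(\tilde{L})$ to a graph signal using the recurrence $T_k = 2\tilde{L}T_{k-1} - T_{k-2}$, and checks it against the same polynomial evaluated directly on the eigenvalues. It is an illustrative sketch only: the coefficients `theta` are arbitrary, and for simplicity the largest eigenvalue is computed exactly, whereas practical implementations typically estimate it so that no eigendecomposition is needed.

```python
import numpy as np

def cheb_filter(L, x, theta):
    """Compute sum_k theta[k] * T_k(L_t) @ x with the Chebyshev recurrence,
    where L_t = 2 L / lam_max - I maps the Laplacian spectrum into [-1, 1]."""
    n = L.shape[0]
    lam_max = np.linalg.eigvalsh(L).max()
    L_t = 2.0 * L / lam_max - np.eye(n)
    t_prev, t_curr = x, L_t @ x                  # T_0(L_t) x and T_1(L_t) x
    out = theta[0] * t_prev
    if len(theta) > 1:
        out = out + theta[1] * t_curr
    for k in range(2, len(theta)):
        # T_k = 2 L_t T_{k-1} - T_{k-2}, applied to the signal x
        t_prev, t_curr = t_curr, 2.0 * (L_t @ t_curr) - t_prev
        out = out + theta[k] * t_curr
    return out

def cheb_filter_spectral(L, x, theta):
    """Reference: apply the same polynomial to the eigenvalues of L directly."""
    lam, U = np.linalg.eigh(L)
    lam_t = 2.0 * lam / lam.max() - 1.0
    g = np.polynomial.chebyshev.chebval(lam_t, theta)
    return U @ (g * (U.T @ x))

A = np.diag(np.ones(4), 1)
A = A + A.T                                      # path graph with 5 nodes
L = np.diag(A.sum(axis=1)) - A
x = np.arange(5, dtype=float)
theta = [0.5, -0.3, 0.2, 0.1]
y_recurrence = cheb_filter(L, x, theta)
y_spectral = cheb_filter_spectral(L, x, theta)
```

The two results coincide, which is why the recurrence form, requiring only matrix-vector products, is preferred on large graphs.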

Spatial-based graph convolutions, on the other hand, update a central node’s representation by aggregating the representations of its neighboring nodes. The message-passing neural network (MPNN) [20] considers graph convolutions as a message-passing process, whereby information is passed between nodes along the graph edges. GraphSAGE [25] applies node sampling to obtain a fixed number of neighbors for each node’s aggregation. A graph attention network (GAT) [24] assumes that the contribution of each neighboring node to the central one is determined by a relative weight of importance, computed via an attention mechanism with learnable parameters shared across nodes.

In recent years, GCNs have found extensive use in a diverse range of applications, including citation and social networks [19,26], graph-to-sequence learning tasks in natural language processing [27], molecular/compound graphs [28], and action recognition [29]. Considerable research has been conducted on the classification of remotely sensed hyperspectral images [30,31,32], mainly because GCNs can capture both the spatial contextual information of pixels and the long-range relationships of distant pixels in the image. Another application domain is traffic forecasting in smart transportation networks [33,34]. To capture the varying spatio-temporal relationships between nodes, these works develop integrated models that combine graph-based spatial convolutions with temporal convolutions.

Finally, to confront the vanishing-gradient problem faced by traditional graph-based models, deep GCNs have recently been proposed [35,36]. In particular, in [35], a densely connected graph convolutional network (DCGCN) is proposed for graph-to-sequence learning, which can capture both local and nonlocal features. In addition, Ref. [36] presents a densely connected block of GCN layers, which is used to generate effective shape descriptors from 3D meshes of images.

1.6. Outline of Proposed Method

The existing patch-based methods exhibit several drawbacks that can degrade their segmentation performance. First, for each target voxel, these methods construct a local patch library at a specific spatial scale, comprising neighboring voxels from the atlas images. Classifiers are then developed by considering pairwise similarities between voxels in that local region. Target labeling therefore relies solely on local learning and disregards the global contextual information among voxels. Hence, long-range relationships among distant voxels in the region of interest are ignored, although such voxels may belong to the same class despite a different textural appearance. Second, previous methods in the field resort to inductive learning to produce voxel segmentations, which implies that the features of the unlabeled target voxels are not leveraged during the labeling process. Finally, some recent segmentation methods employ graph-based approaches that describe voxel pairwise affinities more effectively via sparse code reconstructions [8,37]. Despite this better data representation, target voxel labeling is achieved using linear aggregation rules for transferring the atlas voxels’ labels, such as the traditional label propagation (LP) mechanism over the graph edges. Such first-order methods may fail to capture the full scope of dependencies among the voxel representations: the labeling of each voxel aggregates information strictly from its immediate neighborhood and fails to exploit long-range dependencies with potentially more similar patches in distant regions of the image, ultimately yielding suboptimal segmentation results.
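For reference, the linear aggregation rule criticized above can be sketched with the classic label propagation iteration $F \leftarrow \alpha S F + (1-\alpha) Y$, where $S = D^{-1/2} W D^{-1/2}$ is the symmetrically normalized affinity matrix. This is an assumption about the form of LP, taken from the standard formulation by Zhou et al., and not necessarily the exact variant used in [8,37]; the value of `alpha` and the function names are illustrative.

```python
import numpy as np

def label_propagation(W, Y, alpha=0.9, n_iter=500):
    """Iterate F <- alpha * S F + (1 - alpha) * Y with S = D^{-1/2} W D^{-1/2}.
    W: symmetric nonnegative affinities (no isolated nodes); Y: one-hot seed
    labels, with all-zero rows for unlabeled nodes."""
    d_inv_sqrt = 1.0 / np.sqrt(W.sum(axis=1))
    S = d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    F = Y.astype(float)
    for _ in range(n_iter):
        F = alpha * (S @ F) + (1.0 - alpha) * Y   # one linear propagation step
    return F

def label_propagation_closed_form(W, Y, alpha=0.9):
    """Fixed point F* = (1 - alpha) (I - alpha S)^{-1} Y of the iteration above."""
    d_inv_sqrt = 1.0 / np.sqrt(W.sum(axis=1))
    S = d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    return (1.0 - alpha) * np.linalg.solve(np.eye(len(W)) - alpha * S, Y)

W = np.diag(np.ones(5), 1)
W = W + W.T                                       # 6-node chain
Y = np.zeros((6, 2))
Y[0, 0] = 1.0                                     # seed of class 0 at one end
Y[5, 1] = 1.0                                     # seed of class 1 at the other end
F = label_propagation(W, Y)
predicted = F.argmax(axis=1)
```

Each step only mixes scores along existing edges with fixed weights, so the result is entirely determined by the predefined affinities; no features are learned.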

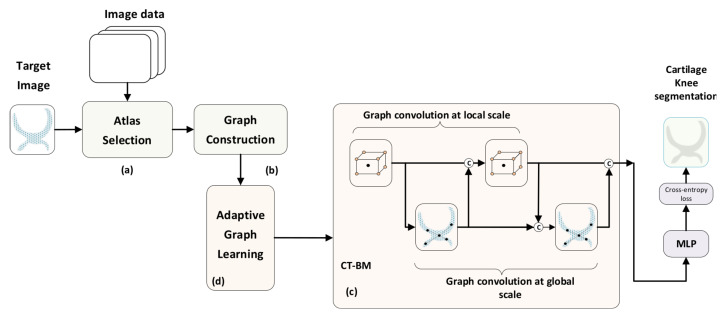

To properly address the above shortcomings, in this paper we present a novel method for the automatic segmentation of knee articular cartilage, based on recent advances in graph-based neural networks. More concretely, we propose the dense multi-scale adaptive graph convolutional network (DMA-GCN) method, which integrates local spatial-level learning and global-level contextual learning concurrently. Our goal is to generate, via convolutional learning, expressive node representations by merging pairwise node importance at multiple spatial scales with long-range dependencies among nodes, for enhanced volume segmentation. We approach the segmentation task as a multi-class classification problem with five classes: background (0), femoral bone (1), femoral cartilage (2), tibial bone (3), and tibial cartilage (4). Recognizing the crucial role of the cartilage structure in the assessment of the knee joint, and considering that its automatic segmentation is more difficult than that of the bones, we devote our efforts primarily to cartilage. Figure 1 depicts a schematic framework of the proposed approach. The main properties and innovations of the DMA-GCN model are described as follows:

Figure 1.

Outline of the proposed knee cartilage segmentation approach. It comprises the atlas subset selection (a), the graph construction part (b), a specific form of graph-based convolutional model (c), the adaptive graph learning (d), and the MLP network providing the class estimates for the segmentation of the target image. Black dots correspond to central nodes, and colored dots to neighboring nodes. The encircled C symbol represents an aggregation function.

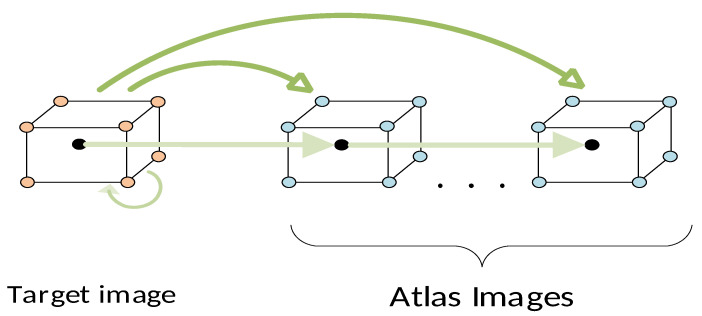

Multi-atlas setting: Our scheme is tailored to the multi-atlas approach, whereby label information from the (labeled) atlas images is transferred to segment the (unlabeled) target image. To this end, in a preliminary stage, images are aligned using a cost-effective affine registration. Subsequently, for each target image, we generate its corresponding atlas library according to a similarity criterion (Figure 1a).

Graph construction: This part refers to how images are represented in terms of nodes and how node data are organized to construct the overall graph (Figure 1b). Here, a graph node corresponds to a generic patch of size 5 × 5 × 5 around a central voxel, while the node feature vector is provided by a 3D-HOG feature descriptor. Accordingly, the image is represented as a collection of spatially stratified nodes that adequately covers all classes across the region of interest. Following the multi-atlas setting, we construct sequences of aligned data, comprising target nodes and the atlas nodes at spatially corresponding locations. Given the node sequences, we further generate sequence libraries composed of neighboring nodes at various spatial scales. The collection of all node libraries forms the overall graph structure, in which both local (spatially neighboring) and global (spatially distant) node relationships are incorporated.

Semi-supervised learning (SSL): Following the SSL scenario, the input graph data comprise both labeled nodes from the atlas library and unlabeled nodes from the target image to be segmented. In that respect, contrary to some existing methods, the features of the unlabeled data are leveraged during learning to compute the node embeddings and label the target nodes.

Local–global learning: As can be seen from Figure 1c, graph convolutions over the layers proceed along two directions, namely, the local spatial level and the global level. The local spatial branch includes the so-called local convolutional units, which operate on the subgraph of aligned neighborhoods of nodes (sequence libraries). The node embeddings generated by these units incorporate the contextual information between nodes at a local spatial level. To further improve local search, we integrate local convolutions at multiple scales, so that the local context around nodes is captured more effectively. The global branch, on the other hand, includes global convolutional units. These units provide the global node embeddings by taking into consideration the pairwise affinities of distant nodes distributed over the entire region of interest of the cartilage volume. The final node representations are then obtained by aggregating the embeddings computed at the local spatial and global levels.

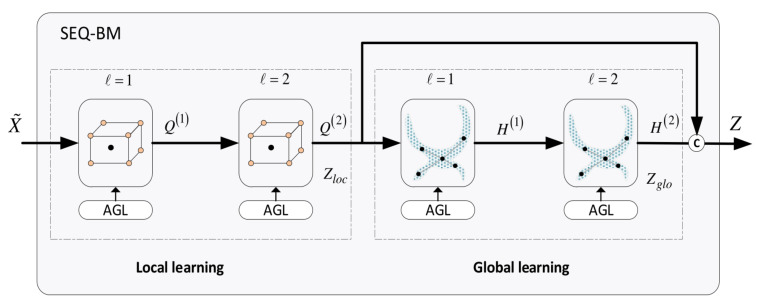

Convolutional building models: An important issue is how the local and global hidden representations of nodes are combined across the convolution layers. In this context, we propose two different structures: the cross-talk building model (CT-BM) and the sequential building model (SEQ-BM). Both models comprise four convolutional units overall, specifically, two local and two global units, which undertake local and global convolutions, respectively. The CT-BM (Figure 1c) performs intertwined local–global learning, with skip connections and aggregators; the links between the two paths indicate the cross-talks. The SEQ-BM, on the other hand, adopts a sequential learning scheme, in which local spatial learning is completed first, followed by the respective convolutions at the global level.

Adaptive graph learning (AGL): Using fixed graphs with predetermined adjacency weights among nodes can degrade the segmentation results. To confront this drawback, every local and global unit is equipped with an AGL mechanism, which automatically learns the proper graph structure at each layer. At the local spatial level, AGL adaptively designates the connectivity relationships between nodes via learnable attention coefficients. Hence, each local unit can concentrate on and aggregate features from relevant nodes in the local search region. At the global level, we propose a different AGL scheme for the global units, whereby graph edges are learned from the input features of each layer.

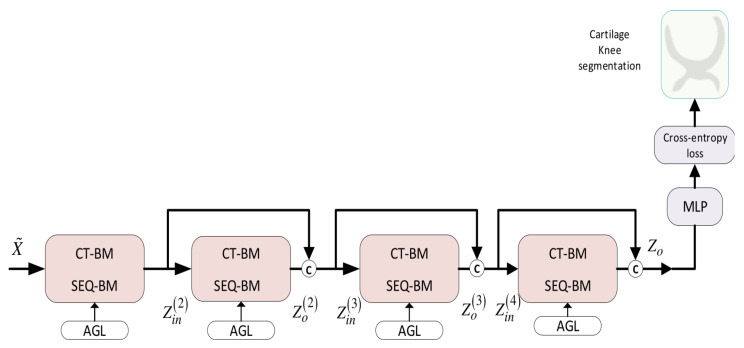

Densely connected GCN: The proposed CT-BM and SEQ-BM can be used as standalone models for the graph convolution task. Nevertheless, their depth is confined to two local–global layers, since attempts to deepen them are hindered by the vanishing-gradient effect. To circumvent this deficiency, we finally propose a densely connected convolutional network, the DMA-GCN model, which uses the CT-BM or SEQ-BM as the building block of the deep structure. It exhibits a deep architecture with skip connections, whereby each layer in the block receives the feature maps of all previous layers and transmits its outputs to all subsequent layers. Overall, the DMA-GCN combines several salient qualities: a deep structure with enhanced performance and better information flow, local–global level convolutions, and adaptive graph learning.
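The dense connectivity pattern itself can be sketched independently of the building blocks. In the minimal example below, each layer receives the concatenation of the input and of all previous layer outputs, and its own output is appended for all later layers. As a loudly stated simplification, each CT-BM/SEQ-BM block is replaced by a plain GCN layer with ReLU, the residual skip connections are omitted, and the growth width `g` and all function names are hypothetical.

```python
import numpy as np

def normalized_adjacency(A):
    """A_hat = D~^{-1/2} (A + I) D~^{-1/2}."""
    A_tilde = A + np.eye(A.shape[0])
    d = A_tilde.sum(axis=1)
    return A_tilde / np.sqrt(np.outer(d, d))

def dense_gcn_block(A_hat, X, weights):
    """Densely connected stack: layer l sees [X, H_1, ..., H_{l-1}] concatenated,
    and its output H_l is passed on to every subsequent layer."""
    feats = [X]
    for W in weights:                             # W: (width of the concatenation, g)
        inp = np.concatenate(feats, axis=1)       # feature maps of all previous layers
        feats.append(np.maximum(A_hat @ inp @ W, 0.0))  # stand-in unit: GCN layer + ReLU
    return np.concatenate(feats, axis=1)

rng = np.random.default_rng(0)
N, F, g, n_layers = 5, 3, 2, 3
A = np.diag(np.ones(N - 1), 1)
A = A + A.T                                       # 5-node path graph
X = rng.standard_normal((N, F))
weights = [rng.standard_normal((F + l * g, g)) for l in range(n_layers)]
out = dense_gcn_block(normalized_adjacency(A), X, weights)
```

The output width grows linearly with depth (here F + 3g columns), and every layer has a direct path to the input features, which is what eases gradient flow in deeper stacks.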

In summary, the main contributions of this paper are described as follows.

A novel multi-atlas approach based on graph convolutional networks, operating under the semi-supervised learning paradigm, is presented for knee cartilage segmentation from MR images.

With the aim to generate expressive node representations, we propose a new learning scheme that integrates graph information at both local and global levels concurrently. The local branch exploits the relevant spatial information of neighboring nodes at multiple scales, while the global branch incorporates global contextual relationships among distant nodes.

We propose two convolutional building models, the CT-BM and SEQ-BM. In the CT-BM, the local and global learning tasks are intertwined along the layer convolutions, while the SEQ-BM follows a sequential mode.

Both local and global convolutional units, at each layer, are equipped with suitable attention mechanisms, which allow the network to learn the graph connectivity among nodes automatically during training.

Using the proposed CT-BM and SEQ-BM as block units, we finally present a novel densely connected model, the DMA-GCN. Its deeper structure leads to improved segmentation results, while it retains all the salient properties of our approach.

We have devised a thorough experimental setup to investigate the capabilities of the suggested segmentation framework. In this setting, we examine different test cases and provide an extensive comparative analysis with other segmentation methods.

The remainder of this paper is organized as follows. Section 2 reviews some representative forms of graph convolutional networks related to our work and involved in the experimental analysis. Section 3 presents the image preprocessing steps and the atlas selection process. In Section 4, we discuss the node feature descriptor, as well as the graph construction of the images. Section 5 elaborates on the proposed local and global convolutional units, along with their attention mechanisms. Section 6 describes the suggested convolutional building blocks, while Section 7 presents our densely connected network. Section 8 discusses transductive vs. inductive learning and full-batch vs. mini-batch learning in our approach. Sections 9 and 10 provide the experimental setup and the comparative results of the proposed methodology, while Section 11 concludes this study.

2. Related Work

In this section, we review some representative models in the field of graph convolutional networks that are related to our work and are also included in the experiments.

Definition 1.

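A graph is defined as $\mathcal{G} = (\mathcal{V}, \mathcal{E})$, where $\mathcal{V} = \{v_1, \dots, v_N\}$ denotes the set of $N$ nodes and $\mathcal{E}$ is the set of edges $e_{ij} = (v_i, v_j)$ connecting the nodes. $X \in \mathbb{R}^{N \times F}$ is a matrix subsuming the node feature descriptors, with $F$ denoting the feature vector dimensionality. The graph is associated with an adjacency matrix $A \in \mathbb{R}^{N \times N}$ (binary or weighted), which encodes the connection links between nodes. A larger entry $a_{ij}$ indicates a strong relationship between nodes $v_i$ and $v_j$, while $a_{ij} = 0$ signifies the lack of connectivity. The graph Laplacian matrix is defined as $L = D - A$, where $D$ is the diagonal degree matrix with $d_{ii} = \sum_j a_{ij}$. Finally, the normalized graph adjacency matrix with added self-connections is denoted by $\hat{A} = \tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2}$, where $\tilde{A} = A + I_N$, with the corresponding degree matrix given by $\tilde{d}_{ii} = \sum_j \tilde{a}_{ij}$.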

2.1. Graph Convolutional Network (GCN)

The GCN proposed in [19] is a spectral convolutional model. It tackles the node classification task under the semi-supervised framework, i.e., where labels are available only for a portion of the nodes in the graph. Under this setting, learning is achieved by enforcing a graph Laplacian regularization term with the aim of smoothing the node labels:

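$$\mathcal{L} = \mathcal{L}_0 + \lambda \mathcal{L}_{\mathrm{reg}} \tag{1}$$

$$\mathcal{L}_{\mathrm{reg}} = \frac{1}{2} \sum_{i,j} a_{ij} \left\lVert f(\mathbf{x}_i) - f(\mathbf{x}_j) \right\rVert^2 = \operatorname{tr}\!\left( f(X)^\top L \, f(X) \right) \tag{2}$$

where $\mathcal{L}_0$ represents the supervised loss measured on the labeled nodes of the graph, $f(\cdot)$ is a differentiable function implemented by a graph neural network, $\lambda$ is a weighting factor balancing the supervised loss against the overall smoothness of the graph, $X$ is the node feature matrix with rows $\mathbf{x}_i$, and $L$ is the graph Laplacian.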
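The Laplacian smoothness penalty can be checked numerically: for a symmetric affinity matrix, half the affinity-weighted sum of squared output differences over all node pairs equals the trace of $f(X)^\top L f(X)$ with $L = D - A$. The sketch below verifies this on random data; the random outputs `F` merely stand in for a network's predictions and are an illustrative assumption.

```python
import numpy as np

def laplacian_regularizer(A, F):
    """Return (pairwise form, trace form) of the Laplacian smoothness penalty for
    a symmetric affinity matrix A (N x N) and node outputs F = f(X) (N x C)."""
    L = np.diag(A.sum(axis=1)) - A                # L = D - A
    diff = F[:, None, :] - F[None, :, :]          # f(x_i) - f(x_j) for all node pairs
    pairwise = 0.5 * np.sum(A * np.sum(diff ** 2, axis=2))
    trace_form = np.trace(F.T @ L @ F)
    return pairwise, trace_form

rng = np.random.default_rng(0)
A = rng.random((6, 6))
A = (A + A.T) / 2.0                               # symmetric weighted affinities
np.fill_diagonal(A, 0.0)
F = rng.standard_normal((6, 3))                   # stands in for the network output f(X)
pairwise, trace_form = laplacian_regularizer(A, F)
```

Expanding the squared norm gives $\sum_i d_{ii}\lVert f(\mathbf{x}_i)\rVert^2 - \sum_{i,j} a_{ij} f(\mathbf{x}_i)^\top f(\mathbf{x}_j)$, which is exactly $\operatorname{tr}(f(X)^\top D f(X)) - \operatorname{tr}(f(X)^\top A f(X))$.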

In a standard multilayer graph-based neural network framework, information flows across the nodes by applying the following layerwise propagation rule:

$$H^{(l+1)} = \sigma\!\left( \tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(l)} W^{(l)} \right) \tag{3}$$

where $\sigma(\cdot)$ denotes the activation function, $W^{(l)}$ is a layer-specific trainable weight matrix, and $H^{(l)}$ is the matrix of activations in the $l$th layer, with $H^{(0)} = X$. The authors in [19] show that the propagation rule in Equation (3) provides a first-order approximation of localized spectral filters on graphs. Most importantly, multilayer graph convolutional networks can be constructed by stacking several convolutional layers of the form in Equation (3). For instance, a two-layer GCN can be represented by

$$Z = \operatorname{softmax}\!\left( \hat{A} \, \operatorname{ReLU}\!\left( \hat{A} X W^{(0)} \right) W^{(1)} \right) \tag{4}$$

where $Z$ is the network’s output, $\operatorname{softmax}(\cdot)$ is the output-layer activation function for multi-class problems, and $\hat{A} = \tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2}$ is the normalized adjacency matrix. The weight matrices $W^{(0)}$ and $W^{(1)}$ are trained using a variant of gradient descent with the aid of a loss function.
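A forward pass of the two-layer GCN in Equation (4) takes only a few lines of NumPy. The sketch below is illustrative: the toy graph, the random weights, and the choice of five output classes (matching the five segmentation classes used in this paper) are assumptions, and training is omitted.

```python
import numpy as np

def normalized_adjacency(A):
    A_tilde = A + np.eye(A.shape[0])              # add self-connections
    d = A_tilde.sum(axis=1)                       # degrees of A_tilde
    return A_tilde / np.sqrt(np.outer(d, d))      # D~^{-1/2} A~ D~^{-1/2}

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)          # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def gcn_two_layer(A, X, W0, W1):
    A_hat = normalized_adjacency(A)
    H = np.maximum(A_hat @ X @ W0, 0.0)           # ReLU(A_hat X W0)
    return softmax(A_hat @ H @ W1)                # Z: one class distribution per node

rng = np.random.default_rng(1)
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X = rng.standard_normal((4, 3))
Z = gcn_two_layer(A, X, rng.standard_normal((3, 8)), rng.standard_normal((8, 5)))
```

Each row of `Z` is a probability distribution over the classes; in the semi-supervised setting, the cross-entropy loss would be evaluated only on the rows of labeled nodes.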

2.2. Graph Attention Network (GAT)

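A salient component of GATs [24] is an attention mechanism incorporated in the aggregation step of the graph attention layers (GALs), with the aim of automatically capturing valuable relationships between neighboring nodes. Let $H = \{\mathbf{h}_1, \dots, \mathbf{h}_N\}$, $\mathbf{h}_i \in \mathbb{R}^{D}$, and $H' = \{\mathbf{h}'_1, \dots, \mathbf{h}'_N\}$, $\mathbf{h}'_i \in \mathbb{R}^{D'}$, denote the inputs and outputs of a GAL, where $N$ is the number of nodes, while $D$ and $D'$ are the corresponding dimensionalities of the node feature vectors. The convolution process involves three distinct steps: the shared node embedding, the attention mechanism, and the update of node representations. As an initial step, a learnable linear transformation parameterized by the weight matrix $W \in \mathbb{R}^{D' \times D}$ is applied to every node, with the goal of producing expressive feature representations. Next, for every node pair, a shared attention mechanism is applied to the transformed features,

$$e_{ij} = \mathbf{a}^\top \left[ W\mathbf{h}_i \,\Vert\, W\mathbf{h}_j \right] \tag{5}$$

where $e_{ij}$ signifies the importance of node $j$ to node $i$, $\mathbf{a} \in \mathbb{R}^{2D'}$ is a learnable weight vector, and $\Vert$ denotes the concatenation operator. To take the graph structure into account, the attention coefficients are computed only between each node $i$ and its neighboring nodes $j \in \mathcal{N}_i$ (which, in [24], include node $i$ itself). In the GAT framework, the attention mechanism is implemented by a single-layer feed-forward neural network, parameterized by $\mathbf{a}$ and followed by a LeakyReLU nonlinearity, and the coefficients are normalized across each neighborhood with a softmax function:

$$\alpha_{ij} = \frac{\exp\!\left( \mathrm{LeakyReLU}\!\left( \mathbf{a}^\top \left[ W\mathbf{h}_i \,\Vert\, W\mathbf{h}_j \right] \right) \right)}{\sum_{k \in \mathcal{N}_i} \exp\!\left( \mathrm{LeakyReLU}\!\left( \mathbf{a}^\top \left[ W\mathbf{h}_i \,\Vert\, W\mathbf{h}_k \right] \right) \right)} \tag{6}$$

Given the attention coefficients, the node feature representations at the output of the GAL are updated via a linear combination of the neighboring nodes’ transformed features, followed by a nonlinearity $\sigma(\cdot)$:

$$\mathbf{h}'_i = \sigma\!\left( \sum_{j \in \mathcal{N}_i} \alpha_{ij} W \mathbf{h}_j \right) \tag{7}$$

To stabilize the learning procedure, the GAT extends the previous approach with multiple attention heads. In that case, the node features are computed by

$$\mathbf{h}'_i = \big\Vert_{k=1}^{K} \, \sigma\!\left( \sum_{j \in \mathcal{N}_i} \alpha_{ij}^{k} W^{k} \mathbf{h}_j \right) \tag{8}$$

where $K$ is the number of independent attention heads applied, while $\alpha_{ij}^{k}$ and $W^{k}$ denote the normalized attention coefficients associated with the $k$th attention head and its corresponding embedding matrix, respectively. In this work, we exploit the principles of the GAT-based attention mechanism in the proposed local convolutional units, with the goal of aggregating valuable contextual information from local neighborhoods at multiple search scales (Section 5.1).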
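The following sketch implements a dense, NumPy-only version of a GAT layer: masked attention scores, a softmax over each neighborhood (self-loops included), and concatenation of $K$ heads. It is a toy illustration, not the implementation of [24] or of our local units: the weights are random, `W` is given in row-vector (transposed) form, ELU is used as $\sigma$, and the LeakyReLU slope of 0.2 is an assumed setting.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def elu(x):
    return np.where(x > 0, x, np.expm1(np.minimum(x, 0.0)))

def gat_head(H, A, W, a):
    """One attention head. H: (N, D) inputs, A: (N, N) binary adjacency,
    W: (D, D') row-vector form of the embedding matrix, a: (2D',) attention vector."""
    Wh = H @ W                                    # shared embedding, (N, D')
    d_out = Wh.shape[1]
    # e_ij = a^T [W h_i || W h_j] splits into a term for node i and a term for node j
    e = leaky_relu((Wh @ a[:d_out])[:, None] + (Wh @ a[d_out:])[None, :])
    mask = (A + np.eye(len(A))) > 0               # neighborhoods include the node itself
    e = np.where(mask, e, -np.inf)                # masked attention: non-neighbors get 0 weight
    e = e - e.max(axis=1, keepdims=True)
    alpha = np.exp(e)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)  # softmax over each neighborhood
    return alpha, alpha @ Wh

def gat_layer(H, A, Ws, avecs):
    """Multi-head layer: concatenate sigma(sum_j alpha^k_ij W^k h_j) over the K heads."""
    return np.concatenate([elu(gat_head(H, A, W, a)[1]) for W, a in zip(Ws, avecs)], axis=1)

rng = np.random.default_rng(0)
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 1],
              [0, 1, 0, 0],
              [0, 1, 0, 0]], dtype=float)
H = rng.standard_normal((4, 3))
Ws = [rng.standard_normal((3, 2)) for _ in range(4)]     # K = 4 heads, D' = 2
avecs = [rng.standard_normal(4) for _ in range(4)]
alpha, _ = gat_head(H, A, Ws[0], avecs[0])
H_out = gat_layer(H, A, Ws, avecs)
```

A dense $N \times N$ score matrix keeps the example short; for large graphs, the scores would be computed only over the edge list.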

2.3. GraphSAGE

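The GraphSAGE network in [25] tackles the inductive learning problem, where labels must be generated for previously unseen nodes, or even entirely new subgraphs. GraphSAGE learns a set of aggregator functions $\mathrm{AGGREGATE}_k$, $k = 1, \dots, K$, which are used to aggregate information from each node’s local neighborhood. Node aggregation is carried out at multiple spatial scales (hops), with $k$ indexing the search depth. Among the different schemes proposed in [25], our experiments consider the max-pooling aggregator, in which each neighbor’s vector is independently fed through a fully connected neural network:

$$\mathbf{h}^{k}_{\mathcal{N}(v)} = \mathrm{AGGREGATE}^{\mathrm{pool}}_{k} = \max\!\left( \left\{ \sigma\!\left( W_{\mathrm{pool}} \mathbf{h}^{k-1}_{u} + \mathbf{b} \right), \ \forall u \in \mathcal{N}(v) \right\} \right) \tag{9}$$

where $\max$ denotes the element-wise max operator, $W_{\mathrm{pool}}$ is the weight matrix of learnable parameters, $\mathbf{b}$ is the bias vector, and $\sigma(\cdot)$ is a nonlinear activation function. Further, $\mathbf{h}^{k}_{\mathcal{N}(v)}$ denotes the result of the max-pooling aggregation over the neighboring nodes $\mathcal{N}(v)$ of node $v$. GraphSAGE then concatenates the current node’s representation with the aggregated neighborhood feature vector and computes, via a fully connected layer, the updated node feature representation:

$$\mathbf{h}^{k}_{v} = \sigma\!\left( W^{k} \cdot \mathrm{CONCAT}\!\left( \mathbf{h}^{k-1}_{v}, \mathbf{h}^{k}_{\mathcal{N}(v)} \right) \right) \tag{10}$$

where $W^{k}$ is a weight matrix associated with aggregator $\mathrm{AGGREGATE}_k$.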
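A single GraphSAGE layer with the max-pooling aggregator of Equations (9) and (10) can be sketched as follows. The sketch assumes ReLU for both nonlinearities, uses fixed neighbor lists instead of GraphSAGE's neighbor sampling, and applies the $\ell_2$ normalization of the output embeddings used in the GraphSAGE algorithm of [25]; the weights and function name are illustrative.

```python
import numpy as np

def sage_maxpool_layer(H, neighbors, W_pool, b, W):
    """One GraphSAGE layer with the max-pooling aggregator.
    H: (N, D) inputs h^{k-1}; neighbors: list of neighbor index lists;
    W_pool: (P, D); b: (P,); W: (D_out, D + P)."""
    out = []
    for v, nbrs in enumerate(neighbors):
        # Eq. (9): transform each neighbor independently, then element-wise max
        pooled = np.maximum(H[nbrs] @ W_pool.T + b, 0.0).max(axis=0)
        # Eq. (10): concatenate self and neighborhood vectors, then a dense layer
        h = np.maximum(W @ np.concatenate([H[v], pooled]), 0.0)
        out.append(h / max(np.linalg.norm(h), 1e-12))   # l2-normalize the embedding
    return np.stack(out)

rng = np.random.default_rng(0)
H = rng.standard_normal((4, 3))
neighbors = [[1, 2], [0], [0, 3], [2]]
H_next = sage_maxpool_layer(H, neighbors,
                            rng.standard_normal((5, 3)),   # W_pool: P = 5
                            np.zeros(5),                   # b
                            rng.standard_normal((6, 8)))   # W: D_out = 6, D + P = 8
```

Because the aggregator is a learned function of neighbor features rather than of node identities, the same weights can be applied to nodes and subgraphs unseen during training, which is the inductive property mentioned above.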

2.4. GraphSAINT

GraphSAINT [21] differs from the previously examined architectures in that, instead of building a full GCN on all the available training data, it samples the training graph itself, creating subgraphs of the original graph and building and training the associated GCNs on them. For each mini-batch sampled in this iterative process, a subgraph $\mathcal{G}_s = (\mathcal{V}_s, \mathcal{E}_s)$, where $\mathcal{V}_s \subset \mathcal{V}$, is used to construct a GCN. Forward and backward propagation are then performed, updating the node representations and the network parameters. An initial preprocessing step is required for the smooth operation of the process, whereby an appropriate sampling probability is assigned to each node and edge of the original graph.
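To illustrate the sampling step, the sketch below follows the spirit of GraphSAINT's node sampler, drawing nodes with probability proportional to the squared column norms of a normalized adjacency matrix and returning the induced subgraph. Several simplifications are assumptions rather than details from [21]: the row normalization $D^{-1}A$, drawing distinct nodes without replacement, and omitting the loss and aggregation normalization coefficients that GraphSAINT estimates during preprocessing.

```python
import numpy as np

def node_sampling_probs(A):
    """P(u) proportional to ||A_norm[:, u]||^2, with A_norm = D^{-1} A
    (assumed normalization; the graph must have no isolated nodes)."""
    A_norm = A / A.sum(axis=1, keepdims=True)
    w = (A_norm ** 2).sum(axis=0)
    return w / w.sum()

def sample_subgraph(A, n_nodes, rng):
    """Draw n_nodes distinct nodes and return them with the induced adjacency."""
    p = node_sampling_probs(A)
    nodes = np.sort(rng.choice(len(A), size=n_nodes, replace=False, p=p))
    return nodes, A[np.ix_(nodes, nodes)]

A = np.roll(np.eye(10), 1, axis=1)
A = A + A.T                                       # 10-node ring
A[0, 5] = A[5, 0] = 1.0                           # one chord
nodes, A_sub = sample_subgraph(A, 4, np.random.default_rng(0))
```

A GCN would then be built on `A_sub` and the features of `nodes` for one mini-batch, so that memory use depends on the subgraph size rather than on the full graph.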

3. Materials

In this section, we present the dataset used in this study, the image preprocessing steps, and finally, the construction of the atlas library.

3.1. Image Dataset

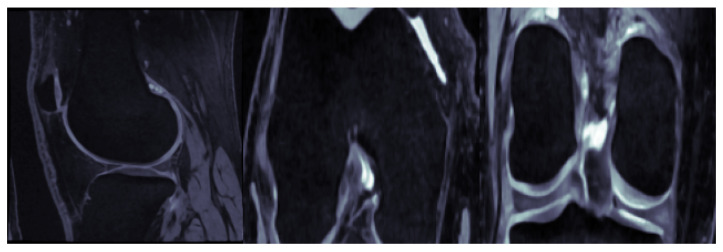

The MR images used in this study comprise the entirety of the publicly available, baseline Osteoarthritis Initiative (OAI) repository, for which segmentation masks are available, consisting of a total of 507 subjects. The specific MRI modality utilized across all the experiments corresponds to the sagittal 3D dual-echo steady-state (3D-DESS) sequence with water excitation, with an image size of voxels and a voxel size of mm. The respective segmentation masks serving as the ground truth are provided by the publicly available repository assembled by [38], including labels for the following knee joint structures (classes): background tissue, femoral bone (FB), femoral cartilage (FC), tibial bone (TB), and tibial cartilage (TC). Figure 2 showcases a typical knee MRI, in the three standard orthogonal planes (sagittal, coronal, axial).

Figure 2.

A typical knee MRI viewed in three orthogonal planes (left to right: sagittal, coronal, axial).

3.2. Image Preprocessing

The primary source of difficulty in automated cartilage segmentation stems from the similar texture and intensity profile of articular cartilage and background tissues, as depicted in most MRI modalities, a problem further accentuated by the usually high intersubject variability of the imaging data. To mitigate these issues, the images were preprocessed with the following steps:

Curvature flow filtering: A denoising curvature flow filter [39] was applied, with the aim of smoothing the homogeneous image regions, while simultaneously leaving the surface boundaries intact.

Inhomogeneity correction: N3 intensity nonuniformity (bias field) correction [40] was performed on all images, addressing the intensity variability within the same tissue class in each subject’s image.

Intensity standardization: MRI histograms were mapped to a common template, as described in [41], ensuring that all associated structures across the subjects shared a similar intensity profile.

Nonlocal-means filtering: A final filtering process smoothed out any leftover artefacts and further reduced noise. The method presented in [42] offers a robust performance and is widely employed in similar medical imaging applications. Finally, the intensity range of all images was rescaled to .
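The intensity standardization step can be illustrated with a landmark-based piecewise-linear mapping. The sketch below assumes that [41] follows this general scheme; the percentile landmarks, the simple averaging used to build the template, and the clamping beyond the outer landmarks (a property of `np.interp`) are simplifications chosen for illustration, not the exact procedure used in this work.

```python
import numpy as np

LANDMARK_PCTS = (1, 10, 20, 30, 40, 50, 60, 70, 80, 90, 99)  # assumed landmark percentiles

def landmarks(img, pcts=LANDMARK_PCTS):
    """Intensity values at the landmark percentiles of an image."""
    return np.percentile(img, pcts)

def learn_template(images, pcts=LANDMARK_PCTS):
    """Average the landmark intensities of a set of training images."""
    return np.mean([landmarks(im, pcts) for im in images], axis=0)

def standardize(img, template, pcts=LANDMARK_PCTS):
    """Piecewise-linear map of this image's landmarks onto the template's.
    Values beyond the outer landmarks are clamped by np.interp."""
    return np.interp(img, landmarks(img, pcts), template)

rng = np.random.default_rng(0)
ref = rng.gamma(2.0, 1.0, size=(16, 16, 16))
other = 3.0 * ref + 5.0                           # same anatomy, different intensity scale
template = learn_template([ref])
other_std = standardize(other, template)
```

After mapping, corresponding tissues in `other` and `ref` share the same intensity values between the outer landmarks, which is the goal of giving all subjects a similar intensity profile.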

3.3. Atlas Selection and ROI Extraction

The construction of an atlas library for each target image to be segmented necessitates the registration of all atlases to that particular target image. An affine transformation was employed, registering all atlas images to the target image space and accounting for deformations of a linear nature, such as rotations, translations, shearing, and scaling. The same transformation was also applied to the corresponding label map of each atlas, resulting in an atlas library registered to the target image.

Since the cartilage accounts for a very small percentage of the overall image volume, a region of interest (ROI) was defined for every target image, covering the entire cartilage structure and its surrounding area. A presegmentation mask was constructed by fusing the registered atlas cartilage masks through majority voting (MV), and it was then expanded by a binary morphological dilation to yield the ROI of the target image. This region served as the sampling volume for the target image and its corresponding atlas library. This process ensured that the selected ROI enclosed the entire cartilage tissue in both the target image and the corresponding atlas library.
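The ROI construction can be sketched with plain NumPy: a voxel enters the presegmentation mask if more than half of the registered atlas masks mark it as cartilage, and the mask is then dilated with a cubic structuring element. The strict-majority rule, the cubic element, and the dilation radius are illustrative assumptions; in practice a library routine such as a morphological dilation from an image processing package would typically be used.

```python
import numpy as np

def majority_vote(masks):
    """Voxel-wise strict majority over a list of binary atlas masks."""
    masks = np.asarray(masks, dtype=bool)
    return masks.sum(axis=0) > masks.shape[0] / 2

def binary_dilate(mask, radius=1):
    """Dilate with a (2r+1)^3 cube by OR-ing shifted copies (no wrap-around)."""
    padded = np.pad(mask, radius)                 # pads with False
    out = np.zeros(mask.shape, dtype=bool)
    size = 2 * radius + 1
    Z, Y, X = mask.shape
    for dz in range(size):
        for dy in range(size):
            for dx in range(size):
                out |= padded[dz:dz + Z, dy:dy + Y, dx:dx + X]
    return out

masks = [np.zeros((7, 7, 7), dtype=bool) for _ in range(3)]
masks[0][3, 3, 3] = masks[1][3, 3, 3] = True      # 2 of 3 atlases agree on this voxel
masks[2][0, 0, 0] = True                          # an isolated vote that MV discards
preseg = majority_vote(masks)
roi = binary_dilate(preseg, radius=1)
```

The dilation margin is what allows the ROI to cover cartilage voxels that the registered atlases slightly miss.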

Finally, to reduce the computational load and simultaneously increase the spatial correspondence between the target and atlas images, we included a final atlas selection step. Measuring the spatial misalignment within the ROI of every target–atlas pair using the mean squared difference (MSD), we kept only a fixed number of atlases exhibiting the smallest MSD values [37].
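The atlas selection step amounts to ranking the registered atlases by their MSD to the target inside the ROI. In the sketch below, the number of retained atlases `k` is a hypothetical parameter, and the synthetic atlases are simple intensity offsets of the target, used only to make the ranking easy to follow.

```python
import numpy as np

def select_atlases(target, atlases, roi, k):
    """Rank registered atlases by mean squared difference (MSD) to the target
    inside the ROI and return the indices of the k best-aligned ones."""
    msd = np.array([np.mean((target[roi] - atlas[roi]) ** 2) for atlas in atlases])
    order = np.argsort(msd, kind="stable")
    return order[:k], msd

rng = np.random.default_rng(0)
target = rng.random((8, 8, 8))
roi = np.zeros(target.shape, dtype=bool)
roi[2:6, 2:6, 2:6] = True
atlases = [target + 0.5, target + 0.1, target + 0.3]   # synthetic disagreement levels
best, msd = select_atlases(target, atlases, roi, k=2)
```

Restricting the metric to the ROI keeps the ranking focused on the cartilage region rather than on the whole field of view.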

4. Graph Constructions

In this section, we describe the node representation, the construction of aligned sequences of nodes, and the sequence libraries, which lead to the formation of the aligned image graphs used in the convolutions.

4.1. Node Representation

An important issue to address is how an image is transformed into a graph of nodes. In our setting, a node was described by a generic patch surrounding a central voxel . The image was then represented by a collection of nodes spatially distributed across the ROI volume.

Each node was described by a feature vector implemented via HOG descriptors [43], which aggregate the local information within the node patch. HOG descriptors are a staple feature descriptor in image processing and recognition. Here, we applied a variant suitable for 3D data [44]. For each voxel , we extracted an HOG feature description by computing the gradient magnitude and direction along the axes for each constituent voxel in the node patch. The resulting values were binned into a -dimensional feature vector, where each entry corresponded to a vertex of a regular icosahedron and represented the strength of the gradient along that particular direction.
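A simplified sketch of the icosahedron binning idea follows. It assumes central-difference gradients, zero padding outside the volume, and hard assignment of each gradient to the most aligned of the 12 icosahedron vertices, weighted by the gradient magnitude. The descriptor of [44] uses a more elaborate projection and normalization, so this only illustrates the binning principle; the patch half-width `half` is a hypothetical parameter.

```python
import math
from itertools import product

PHI = (1 + math.sqrt(5)) / 2  # golden ratio


def icosahedron_vertices() -> list[tuple[float, float, float]]:
    """The 12 unit vectors pointing to the vertices of a regular icosahedron."""
    norm = math.sqrt(1 + PHI ** 2)
    raw = []
    for a, b in product((-1, 1), repeat=2):
        raw += [(0, a, b * PHI), (a, b * PHI, 0), (b * PHI, 0, a)]
    return [tuple(c / norm for c in v) for v in raw]


def hog3d(volume: dict, center: tuple[int, int, int], half: int = 1) -> list[float]:
    """Magnitude-weighted orientation histogram over the cubic patch around `center`.

    `volume` maps voxel coordinates to intensities; missing voxels are treated as 0.
    Each gradient votes for the single icosahedron vertex it is most aligned with
    (a simplification of the projection used in the 3D HOG literature).
    """
    dirs = icosahedron_vertices()
    hist = [0.0] * len(dirs)

    def get(v):
        return volume.get(v, 0.0)

    cx, cy, cz = center
    for dx, dy, dz in product(range(-half, half + 1), repeat=3):
        x, y, z = cx + dx, cy + dy, cz + dz
        # Central-difference gradient along the x, y and z axes.
        g = (
            (get((x + 1, y, z)) - get((x - 1, y, z))) / 2,
            (get((x, y + 1, z)) - get((x, y - 1, z))) / 2,
            (get((x, y, z + 1)) - get((x, y, z - 1))) / 2,
        )
        mag = math.sqrt(sum(c * c for c in g))
        if mag == 0.0:
            continue
        best = max(range(len(dirs)), key=lambda k: sum(gi * di for gi, di in zip(g, dirs[k])))
        hist[best] += mag
    return hist


# Usage: an intensity ramp along x gives a gradient of (1, 0, 0) at every patch voxel.
ramp = {(x, y, z): float(x) for x, y, z in product(range(-3, 4), repeat=3)}
h = hog3d(ramp, (0, 0, 0), half=1)
print(len(h), sum(h))  # 12 27.0
```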

Finally, each node was associated with a class indicator vector , where if voxel belongs to class i and 0 otherwise.

4.2. Aligned Image Graphs

The underlying principle of the multi-atlas approach is that the target image and the atlas library are aligned via affine registration, thus sharing a common coordinate space. This allows label information to be transferred from the atlas images to the target one by operating on sequences of spatially corresponding voxels. Consistent with the multi-atlas setting, we applied a two-stage sampling process with the aim of constructing a sequence of aligned graphs involving the target image and its respective atlases. This sequence contained the so-called root nodes, which are distinguished from the neighboring nodes introduced in Section 4.3.

Target graph construction: This step used a spatially stratified sampling method to generate an initial set of target voxels , where D denotes the feature dimensionality. To ensure a uniform spatial coverage of all classes in the target ROI, we performed a spatial clustering step partitioning all contained voxels into clusters. After moving each cluster center to the nearest grid point, we obtained the global dataset , which defined a corresponding target graph of root nodes . These target nodes served as reference points from which the aligned sequences were subsequently generated.
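The stratified sampling step can be illustrated with plain k-means on voxel coordinates, followed by rounding each center to the nearest grid point. The clustering algorithm, the iteration count, the seed, and the `grid_step` parameter are assumptions for illustration; the text only specifies a spatial clustering step. For a non-convex ROI, a snapped center may fall outside the ROI, so a practical version would also check membership.

```python
import random


def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's k-means on voxel coordinates (illustrative, not optimized)."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            j = min(range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centers[c])))
            clusters[j].append(p)
        # Move each center to its cluster mean; keep it in place if the cluster is empty.
        centers = [
            tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else centers[j]
            for j, cl in enumerate(clusters)
        ]
    return centers


def stratified_root_nodes(roi_voxels, k, grid_step=1, seed=0):
    """Cluster the ROI voxels and snap every cluster center to the nearest grid point."""
    centers = kmeans(sorted(roi_voxels), k, seed=seed)
    return {tuple(grid_step * round(c / grid_step) for c in ctr) for ctr in centers}


# Usage: two well-separated groups of voxels yield one root node each.
pts = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (10, 10, 10), (11, 10, 10), (10, 11, 10)]
print(sorted(stratified_root_nodes(pts, k=2)))  # [(0, 0, 0), (10, 10, 10)]
```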


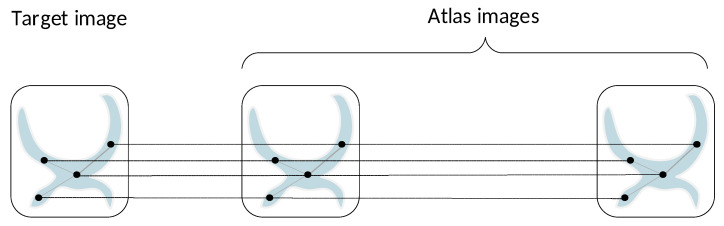

Sequences of aligned data: For each , we defined a sequence of aligned nodes , containing the target node and its counterparts from the atlas library, located at spatially corresponding positions:

(11)

The entire global dataset of root nodes, containing all sampled target nodes and their associated atlas nodes, was defined as the union of all those sequences:

(12)

where contains a total number of root nodes, while and denote the datasets of root nodes sampled from the target and the atlases, respectively. Accordingly, this led to a sequence of aligned graphs , , which is shown schematically in Figure 3. In this figure, two modes of pairwise relationships among root nodes can be distinguished. First, there are local spatial affinities along the horizontal axis between nodes belonging to the same node sequence. Second, there are global pairwise affinities between nodes within each image, as well as between nodes belonging to different images in the sequence. The latter type of search ensures that nodes of the same class, located at different positions in the ROI volume and with different textural appearance, are taken into account, which leads to more expressive learned node representations of the classes.

Figure 3.

Schematic illustration of a sequence of aligned image graphs of root nodes, including the target graph (left) and the graphs of its corresponding atlases (right). There are local spatial affinities at aligned positions (horizontal axis), as well as global pairwise similarities between nodes located at different positions in the ROIs.

4.3. Sequence Libraries

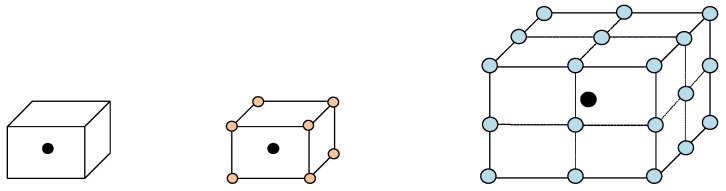

It should be stressed that the computationally inexpensive affine registration used in our method cannot cope with severe image deformations. Hence, in such cases, it may not provide sufficiently accurate alignment between the target and the atlases. To compensate for this deficiency, we expanded the domain of local search by considering neighborhoods around nodes. Specifically, for each node , we defined multihop neighborhoods at multiple scales:

(13)

for , where denotes the neighborhood at scale s, and S is the number of scales used. corresponds to the basic patch of the node itself. and are the 1-hop and 2-hop neighborhoods delineated as and volumes around , respectively. In our experiments, we considered two different spatial scales . Figure 4 illustrates the different node neighborhoods.

Figure 4.

A generic patch (left) representing a node . The corresponding 1-hop (, middle) and 2-hop (, right) neighborhoods, corresponding to and cubes, respectively. Black dots correspond to root nodes, while colored ones stand for the neighboring nodes. All nodes are represented by patches.

Next, for each sequence , we created the corresponding sequence libraries by incorporating the local neighborhoods of all root nodes belonging to that sequence. The sequence library at scale s was defined by

(14)

contains nodes, where denotes the size of the spatial neighborhood at scale s. Figure 5 provides a schematic illustration of a sequence library.
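The nested multi-scale neighborhoods and the sequence library built from them can be enumerated as below. The sketch assumes that neighboring nodes lie on a regular grid whose spacing (`stride`) equals the patch side, so that the scale-s neighborhood holds (2s+1)^3 node centers; the actual neighborhood volumes are not restated here, and `neighborhood` and `sequence_library` are hypothetical helper names. Because the target and its atlases share a coordinate space after registration, the same centers index the aligned neighborhoods in every image of a sequence.

```python
from itertools import product


def neighborhood(center, scale, stride):
    """Centers of the (2*scale + 1)^3 node patches around `center` (scale 0 = the node itself).

    Assumption: neighboring nodes lie on a grid with spacing `stride` (e.g. the patch side),
    so the patches tile the neighborhood without overlap.
    """
    cx, cy, cz = center
    r = range(-scale, scale + 1)
    return {(cx + i * stride, cy + j * stride, cz + k * stride) for i, j, k in product(r, r, r)}


def sequence_library(center, n_atlases, scale, stride):
    """(image index, node center) pairs: index 0 is the target, 1..n_atlases are the atlases."""
    hood = neighborhood(center, scale, stride)
    return {(img, v) for img in range(n_atlases + 1) for v in hood}


# Usage: nested neighborhoods for scales 0, 1, 2 around a root node, hypothetical stride of 3.
root = (30, 40, 50)
hoods = [neighborhood(root, s, stride=3) for s in range(3)]
print([len(n) for n in hoods])  # [1, 27, 125]
print(len(sequence_library(root, n_atlases=4, scale=1, stride=3)))  # 135 = 5 images x 27 nodes
```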

Figure 5.

Schematic illustration of a sequence library for a specific scale , comprising the aligned neighborhoods from the target and the atlas images. Green arrows indicate the different scopes of the attention mechanism. For a particular root node, attention is paid to its own neighborhood, as well as the other neighborhoods in the sequence.

The collection of all forms a global dataset of aligned neighborhoods described as follows:

(15)

for . is formed as the union of the dataset of root nodes and the dataset comprising their neighboring nodes at scale s. Its cardinality is , where is the number of root nodes, and the cardinality of . Further, corresponds to a subgraph , with . In this subgraph, connective edges are established in along the horizontal axis, namely, between root nodes and the neighboring nodes across the sequence libraries. Finally, since the neighborhoods are nested (each scale includes all smaller ones), the dataset at the largest scale contains the maximum number of nodes, forming the overall dataset :

(16)

The corresponding graph comprises an overall total number of nodes, including the root and neighboring nodes.

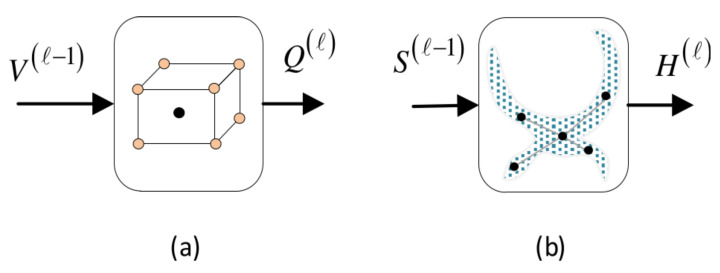

5. Convolutional Units

This section elaborates on the basic convolutional units, namely, the local convolutional unit and the global convolutional unit which serve as structural elements to devise our proposed models (Figure 6).

Figure 6.

Outline of the convolutional units employed. (a) The local convolutional unit , (b) the global convolutional unit .

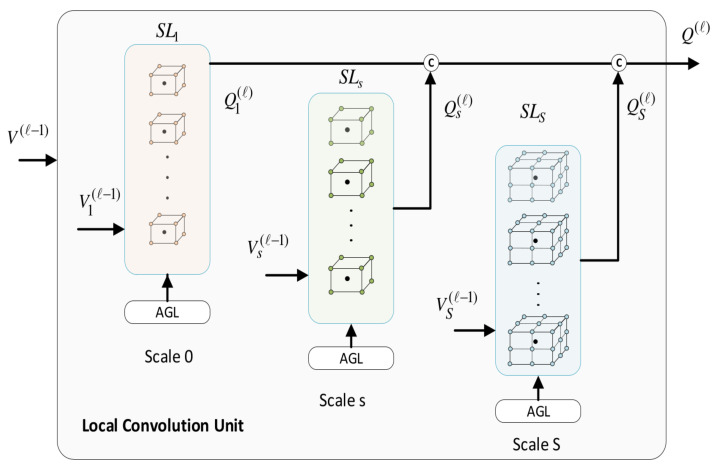

5.1. Local Convolutional Unit

The local convolutional unit undertakes the local spatial learning task, operating horizontally along the sequence libraries (SLs) of nodes. Instead of relying on predefined, fixed graph weights, we opted to apply a local attention mechanism that adaptively learns the graph structure at each layer. Specifically, we used the attention approach of GAT to capture the local contextual relationships among nodes in the search area.

A functional outline of at layer l is shown in Figure 7. It receives an input from the previous layer and provides its output , where and denote the dimensionalities of the input and output node features, respectively.

Figure 7.

Detailed description of the local convolutional unit, which aggregates local contextual information from node neighborhoods at different spatial scales.

Figure 7 provides a detailed architecture of . The model involves S sub-modules, each one associated with a specific spatial scale of aggregation. The sub-module s acts upon the subgraph , which subsumes the sequence libraries of root nodes. Its input is and, after a local convolution at scale s, it provides its own output . In this context, the structure of is adapted to the local attention mechanism. Let us assume that a root node belongs to the qth sequence library: . Then, node pays attention to two pools of neighboring nodes (Figure 5): (a) it aggregates relevant feature information from nodes of its own neighborhood, (self-neighborhood attention); (b) it aggregates features of nodes belonging to the other aligned neighborhoods in pertaining to the atlas images: . For these pairs of nodes, we compute normalized attention coefficients using Equation (6). In contrast, pairwise affinities between nodes belonging to different sequence libraries are disregarded, i.e., when and , . Note that we are primarily focused on computing comprehensive feature representations of the root nodes. Nevertheless, neighboring nodes are also updated; in this case, however, the attention is confined to the neighborhood of the root node they belong to.

For convenience, let us consider the input node features of the form , where and similarly, . The local-level convolution at scale s of a root node is obtained by:

(17)

The first term in the above equation refers to the self-neighborhood attention, which aggregates node features from within the same image, while the second term aggregates node information from the other aligned neighborhoods in the sequence. To stabilize the learning process and further enhance the local feature representations, we followed a multi-head approach, whereby K independent attention mechanisms are applied. Accordingly, the node convolutions proceed as follows:

(18)

where denote the attention coefficients between nodes and according to the kth attention head, while are the corresponding parameter weights used for the node embeddings. The attention parameters are shared across all nodes in and are learned separately for each layer l and each spatial scale. The outputs of the different sub-modules are finally aggregated to yield the overall output of the unit:

(19)

where ⊕ denotes the concatenation operator.
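The sketch below illustrates one local unit for a single root node: GAT-style attention (LeakyReLU scores on concatenated embeddings, softmax normalization, ELU output), repeated over K heads and S scales, with all outputs concatenated. It assumes that one softmax is taken over the union of the self-neighborhood and the aligned atlas neighborhoods; Equations (6) and (17) to (19) are not reproduced exactly, and all parameter values are toy numbers.

```python
import math


def matvec(W, h):
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def elu(x):
    return x if x > 0 else math.exp(x) - 1


def gat_head(h_root, pool, W, a):
    """One attention head: a root node attends over its neighbor pool.

    pool: feature vectors of the root's own neighborhood plus the aligned atlas neighborhoods
    (assumed to share one softmax). Returns the aggregated embedding and the coefficients.
    """
    z_i = matvec(W, h_root)
    zs = [matvec(W, h) for h in pool]
    # Unnormalized scores e_ij = LeakyReLU(a^T [W h_i || W h_j]).
    scores = [leaky_relu(sum(p * q for p, q in zip(a, z_i + z_j))) for z_j in zs]
    m = max(scores)  # subtract the max for a numerically stable softmax
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    out = [sum(al * z[d] for al, z in zip(alphas, zs)) for d in range(len(z_i))]
    return out, alphas


def local_unit(h_root, pools_by_scale, heads_by_scale):
    """Concatenate ELU(head output) over all heads and all scales."""
    out = []
    for pool, heads in zip(pools_by_scale, heads_by_scale):
        for W, a in heads:
            o, _ = gat_head(h_root, pool, W, a)
            out += [elu(x) for x in o]
    return out


I2 = [[1.0, 0.0], [0.0, 1.0]]
pool = [[1.0, 0.0], [0.0, 1.0]]
_, alphas = gat_head([1.0, 0.0], pool, I2, [0.0, 0.0, 1.0, 0.0])
print(alphas[0] > alphas[1])  # True: the first neighbor has the larger score

# Two scales with two heads each and a zero attention vector (uniform weights).
heads = [(I2, [0.0] * 4)] * 2
out = local_unit([1.0, 0.0], [pool, pool + [[1.0, 1.0], [0.0, 0.0]]], [heads, heads])
print(out)  # [0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5]
```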

The multilevel attention-based aggregation of contextual information from sequence libraries offers two notable advantages to our approach: (a) it yields comprehensive node representations, which assist in producing better segmentation results; (b) the graph learning mitigates the inaccuracies of affine registration under severe image deformations, which may otherwise lead to node misclassification.

5.2. Global Convolutional Unit

The global convolutional unit conducts the global convolution task; it acts upon the subgraph which includes the sequence of root nodes . aims at exploring the global contextual relationships among nodes located at different positions in the target image and the atlases (Figure 3). Accordingly, we established in suitable pairwise connective weights according to spectral similarity for nodes , . Node pairs belonging to the same sequence are processed by units; hence, they are disregarded in this case.

The global convolution at layer l is computed following the spectral convolution principles of GCN:

(20)

where and denote the input and output of , respectively, whereas are the corresponding dimensionalities. is the learnable embedding matrix and is the adjacency matrix, as defined in Section 2.1.

Similar to , we also incorporated the AGL mechanism to , so that global affinities could be automatically captured at each layer via learning. More concretely, we applied an adaptive scheme whereby the connective weights between nodes are determined from the module’s input signals [45]. The adjacency matrix elements were computed by:

(21)

where is obtained by applying batch normalization to the inputs, is the sigmoid activation function applied element-wise, and is the embedding matrix to be learned, shared across all nodes of . The adaptation scheme in Equation (21) assigns larger edge values to pairs of nodes with high spectral similarity and smaller values to dissimilar pairs.
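The global unit can be sketched as follows. The adjacency form used here, a sigmoid of inner products of batch-normalized and linearly embedded node features, is one plausible reading of Equation (21), not its reproduction. Batch normalization is simplified to per-feature standardization without learned scale and shift, same-sequence pairs are zeroed because they are handled by the local units, and propagation uses the standard symmetric GCN normalization with self-loops.

```python
import math


def standardize(H, eps=1e-5):
    """Batch normalization without learned scale/shift: zero mean, unit variance per feature."""
    n, d = len(H), len(H[0])
    mean = [sum(row[c] for row in H) / n for c in range(d)]
    var = [sum((row[c] - mean[c]) ** 2 for row in H) / n for c in range(d)]
    return [[(row[c] - mean[c]) / math.sqrt(var[c] + eps) for c in range(d)] for row in H]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def adaptive_adjacency(H, Theta, seq_id):
    """A_ij = sigmoid(z_i . z_j) with Z = BN(H) Theta; pairs in the same aligned sequence are zeroed."""
    Z = matmul(standardize(H), Theta)
    n = len(H)
    return [
        [0.0 if i != j and seq_id[i] == seq_id[j] else sigmoid(sum(p * q for p, q in zip(Z[i], Z[j])))
         for j in range(n)]
        for i in range(n)
    ]


def gcn_layer(H, A, W):
    """ReLU(D^-1/2 (A + I) D^-1/2 H W): symmetric normalization with self-loops."""
    n = len(H)
    A_hat = [[A[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in A_hat]
    norm = [[A_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    return [[max(0.0, x) for x in row] for row in matmul(matmul(norm, H), W)]


H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
I2 = [[1.0, 0.0], [0.0, 1.0]]
A = adaptive_adjacency(H, I2, seq_id=[0, 1, 0])  # nodes 0 and 2 belong to the same sequence
H_next = gcn_layer(H, A, I2)
print(A[0][2], len(H_next))  # 0.0 3
```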

In the descriptions above, we considered the GCN model equipped with AGL as the baseline scheme. In our experiments, however, we also examined several scenarios in which the global convolution task was tackled using alternative convolutional models, including GraphSAGE, GAT, GraphSAINT, etc.

6. Proposed Convolutional Building Blocks

In this section, we present two alternative building models, namely, the cross-talk building model (CT-BM) and the sequential building model (SEQ-BM). They are distinguished according to the way the local and global convolutional units are blended across the layers. Every constituent local and global unit within the structures has its individual embedding matrix of learnable parameters. Further, it is also equipped with its own AGL mechanism for adaptive learning of the graphs, as described in the previous section.

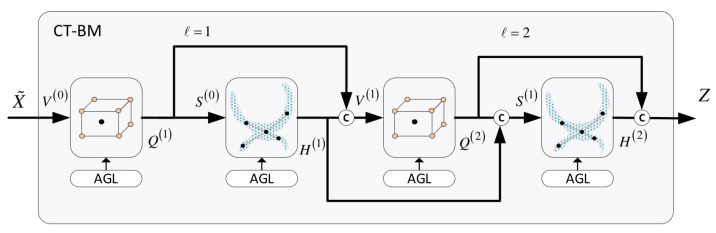

6.1. Cross-Talk Building Model (CT-BM)

The CT-BM is shown in the outline of our approach in Figure 1, and a more compact form is depicted in Figure 8. The model comprises two composite layers , each containing one local and one global unit. As can be seen, convolutions proceed in an alternating manner across the layers, whereby the local unit transmits its output to the next global unit, and vice versa. A distinguishing feature of this structure is the presence of skip connections and aggregators that implement cross-talk links between the local and global components. In particular, in addition to the standard flow from one unit to the next, each unit’s output is aggregated with the output of the subsequent unit.

Figure 8.

Illustration of the proposed cross-talk building model (CT-BM), where local and global convolutional units are combined following an alternating scheme.

The overall workflow of the CT-BM is outlined below:

- The first local unit yields

(22)

- The local unit’s output is passed to the first global unit to compute

(23)

- The second local unit receives an aggregated signal to provide its output,

(24)

- The second global unit produces

(25)

- The final output of the CT-BM is obtained by

(26)

6.2. Sequential Building Model (SEQ-BM)

The architecture of the SEQ-BM is illustrated in Figure 9. This model also contains two local and two global units. Contrary to the CT-BM, convolutions are conducted in the SEQ-BM sequentially. Concretely, the local learning task is first completed using the first two local convolutional units. The outputs of this stage are then transmitted to the subsequent stage which accomplishes the global learning task, using the two global convolutional units. The overall output of the SEQ-BM is formed by aggregating the resulting local and global features of the two stages.

Figure 9.

Illustration of the proposed sequential building model (SEQ-BM), where the local and global learning tasks are carried out sequentially.

The workflow of the SEQ-BM is outlined as follows:

- The local learning task is described by

(27)

(28)

- The global learning task is described by

(29)

(30)

- The final output of the SEQ-BM is obtained by

(31)

The alternating blending of the CT-BM integrates local and global features more effectively at each layer than the sequential combination of the SEQ-BM, as confirmed experimentally in Section 10.
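The two wirings can be contrasted with a symbolic trace, in which each unit is a placeholder that records its name and the aggregator is a stand-in. This is one reading of the prose descriptions (in the CT-BM, each unit's output is aggregated with that of the next unit; in the SEQ-BM, the outputs of the local and global stages are aggregated), not a reproduction of Equations (22) to (31).

```python
def agg(a: str, b: str) -> str:
    """Stand-in aggregator; the actual aggregation operator is not reproduced here."""
    return f"[{a} + {b}]"


def ct_bm(x, L1, G1, L2, G2):
    h1 = L1(x)            # first local unit
    h2 = G1(h1)           # first global unit
    h3 = L2(agg(h1, h2))  # cross-talk: second local unit sees both previous outputs
    h4 = G2(agg(h2, h3))  # cross-talk: second global unit sees both previous outputs
    return agg(h3, h4)


def seq_bm(x, L1, L2, G1, G2):
    h2 = L2(L1(x))        # local stage: two local units in a row
    h4 = G2(G1(h2))       # global stage: two global units in a row
    return agg(h2, h4)    # combine the local and global stage outputs


def unit(name):
    """Placeholder unit that records its name instead of computing features."""
    return lambda s: f"{name}({s})"


L1, L2, G1, G2 = unit("L1"), unit("L2"), unit("G1"), unit("G2")
print(seq_bm("X", L1, L2, G1, G2))  # [L2(L1(X)) + G2(G1(L2(L1(X))))]
print(ct_bm("X", L1, G1, L2, G2))
```

The trace makes the design difference visible: in the CT-BM, the global branch already influences the second local unit, whereas in the SEQ-BM the global units only see fully local features.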

7. Proposed Dense Convolutional Networks

In this section, we present two variants of our main model, the DMA-GCN network. The motivation behind this design is to extend the CT-BM and SEQ-BM structures to more layers of convolutions. Simply stacking additional layers, however, exposes the network to the vanishing gradient effect, where the gradients diminish during backpropagation, hindering effective learning or even degrading the results. This is the reason why the building blocks above are restricted to two-layered local–global convolutions.

To tackle this problem, we drew on recent advances in deep GCNs [36] and developed the DMA-GCN model with a densely connected convolutional architecture utilizing residual skip connections, as shown in Figure 10. As can be seen, the model consists of several blocks arranged across M layers of block convolutions. These blocks are implemented by either the CT-BM or the SEQ-BM described in Section 6, which leads to two alternative configurations, DMA-GCN(CT-BM) and DMA-GCN(SEQ-BM), respectively. Let and denote the input and output of the ith block, , with being the feature dimensionalities, respectively. The properties of the proposed DMA-GCN are discussed below:

- The skip connections interconnect the blocks across the layers. Concretely, each block receives as input the outputs of blocks from all preceding layers:

(32)

for , where ⊕ denotes the concatenation operator. This allows the generation of deeper GCN structures that can acquire more expressive node features. Overall, the DMA-GCN involves convolutional units. Within each block, two layers of local–global convolutions are performed internally; the resulting outputs are then integrated along the block layers to provide the final output:

(33)

Another beneficial effect of the skip connections is that they give the final output direct access to the outputs of all blocks in the dense network. This improves the backward flow of gradients and facilitates the effective learning of the parameters pertaining to the blocks. Since the operations within each block are confined to two-layered local–global convolutions, the network as a whole largely avoids the vanishing gradient problem.

- To keep the parametric complexity at a reasonable level, similar to [36], we define the feature dimensions of each block in DMA-GCN to be the same:

(34)

The node feature growth rate caused by the aggregators can be defined as , . The input dimensions grow linearly as we proceed to deeper block layers, with the last block showing the largest increase . To prevent the feature dimensionalities from growing too large, we initially considered a DMA-GCN model with blocks. The particular number of blocks in the above range was then decided after experimental validation (Section 10).

- Every block in DMA-GCN is equipped with its own AGL process to automatically learn the graph connective affinities at each layer. This is accomplished by an attention-based mechanism for the local convolutional units (Section 5.1) and by an adaptive construction of the adjacency matrices from the input node features for the global units (Section 5.2).
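The dense block connectivity and the resulting linear growth of the input dimension can be sketched as follows. The sketch assumes, as in DenseNet-style designs, that the original node features are concatenated together with the outputs of all preceding blocks and that every block outputs `d` features; `d0`, `block_input_dims`, and the numbers in the usage example are hypothetical, not the values used in the paper.

```python
def dense_forward(x, blocks):
    """x: one node's input features; blocks: callables returning that block's output features.

    Every block sees the concatenation of the input and of all earlier block outputs,
    and the final representation keeps direct access to every block output.
    """
    features = list(x)
    for block in blocks:
        features = features + block(features)  # concatenation of old and new features
    return features


def block_input_dims(d0, d, m):
    """Input width of blocks 1..m when each block adds d features (assumed growth rule)."""
    return [d0 + (i - 1) * d for i in range(1, m + 1)]


print(block_input_dims(32, 16, 4))  # [32, 48, 64, 80]
print(dense_forward([1.0, 2.0], [lambda f: [sum(f)], lambda f: [float(len(f))]]))
# [1.0, 2.0, 3.0, 3.0]
```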

Figure 10.

Description of the proposed DMA-GCN model, with densely connected block convolutional structure and residual skip connections.

The output of the DMA-GCN is fed to a two-layer MLP unit to obtain the label estimates:

(35)

We adopted the cross-entropy error to penalize the differences between the model’s output and the corresponding node labels

(36)

where denotes the subset of labeled nodes. The DMA-GCN network was trained under either the transductive or the inductive learning scheme, as discussed in the following section.

8. Network Learning

In our setting, we considered transductive semi-supervised learning (SSL) as the basic scheme for training the DMA-GCN models. In this case, both the unlabeled data from the target image to be segmented and the labeled data from the atlases were used to train the model. Nevertheless, in the experiments, we also investigated the inductive (supervised) learning scenario, whereby training was conducted using only labeled data from the atlases.

8.1. Transductive Learning (SSL)

The SSL scheme was adapted to the image segmentation task considered here. Depending on the data used for learning, SSL can operate in two modes, namely, mini-batch learning and full-batch learning. Below, we detail mini-batch learning and then conclude with full-batch learning, which is a special case of the former.

Mini-batch learning was implemented following a three-stage procedure. In stage 1, an initial model was learned and used to label an initial batch of data from . Stage 2 was an iterative process, in which out-of-sample batches were sequentially sampled from and labeled via refreshing learning. Finally, stage 3 labeled the remaining voxels of the target image using a distance-weighted voting scheme.

Stage 1: Learning. In this stage, , we started by sampling an initial unlabeled batch from the target image. Then, we followed the steps detailed in Section 4 to construct the corresponding graph of nodes. (a) Given , we created the corresponding aligned sequences (Section 4.2), giving rise to the dataset of root nodes . (b) Next, we incorporated neighborhood information by generating sequence libraries at multiple scales (Section 4.3), leading to the datasets , where denotes the neighboring nodes. (c) Finally, we considered the overall dataset that corresponded to the graph containing the root and neighboring nodes at that stage.

The next step was to perform convolutional learning on graph using DMA-GCN models. Upon completion of the training process, we accomplished the labeling of ,

(37)

denotes the model’s functional mapping, is the labeling function of the target nodes, and stands for the network’s weights, including the learnable parameters of embedding matrices and attention coefficients, across all layers of the DMA-GCN.

Stage 2: Iterative learning. This stage followed an iterative procedure, , whereby at each iteration, out-of-sample batches of yet unlabeled nodes were sampled from , of size . Considering the nodes in as root nodes, we then applied steps (a)–(c) of the previous stage to obtain the datasets , and the overall set , which corresponded to a graph of out-of-sample nodes. These data were then fed to the pretrained model from stage 1, . The model was initialized as to preserve previously acquired knowledge, and it was then subjected to several epochs of refreshing convolutional learning, with the aim of adapting to the newly presented data. The above sequential process terminated at when all target nodes were labeled.

Stage 3: Labeling of remaining voxels. This was the final stage of target image segmentation, entailing the labeling of target voxels not considered during the previous learning stages. Since the nodes were the central voxels of generic patches, multiple remaining voxels were scattered within volumes. These voxels were labeled by a voting scheme. Specifically, for each , the voting function accounted for both the spectral and the spatial distance from its surrounding labeled nodes:

(38)

(39)

where and are normalized weighting coefficients denoting the spectral and spatial proximity, measured by the ℓ2 norm (Euclidean distance) and the ℓ1 norm (Manhattan distance), respectively.
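The stage 3 vote can be sketched as follows. As an assumption, the proximity coefficients are taken to be 1/(1 + distance), normalized to sum to one over the labeled neighbors, with the ℓ2 norm on features and the ℓ1 norm on positions, and each neighbor votes with the product of its two coefficients; the exact weighting of Equations (38) and (39) is not reproduced here.

```python
import math


def weighted_vote(feat, pos, labelled):
    """Label an unlabeled voxel from nearby labeled nodes (hypothetical weighting).

    labelled: list of (feature vector, position, class label).
    Spectral proximity uses the l2 norm on features, spatial proximity the l1 norm on positions;
    both are mapped to 1 / (1 + distance) and normalized to sum to one.
    """
    spec = [1.0 / (1.0 + math.dist(feat, f)) for f, _, _ in labelled]
    spat = [1.0 / (1.0 + sum(abs(a - b) for a, b in zip(pos, p))) for _, p, _ in labelled]
    s_tot, p_tot = sum(spec), sum(spat)
    scores = {}
    for (_, _, label), s, p in zip(labelled, spec, spat):
        scores[label] = scores.get(label, 0.0) + (s / s_tot) * (p / p_tot)
    return max(scores, key=scores.get)


neighbors = [
    ((0.0, 0.0), (1, 0, 0), "femoral"),     # similar features, close in space
    ((5.0, 5.0), (1, 0, 0), "background"),  # dissimilar features, close in space
    ((0.1, 0.0), (3, 3, 3), "background"),  # similar features, far in space
]
print(weighted_vote((0.0, 0.0), (0, 0, 0), neighbors))  # femoral
```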

Full-batch learning is a special case of the above mini-batch learning. In that case, the dataset is a large body of data comprising all possible target nodes contained in the target ROI. Under this circumstance, the iterative stage 2 is skipped: full-batch learning consists of convolutional learning (stage 1), followed by the labeling of the remaining target voxels (stage 3).

8.2. Inductive Learning

Under this setting, the target data remain unseen during the entire training phase. Adapting to the multi-atlas scenario, we devised a supervised learning scheme with the following steps. (a) For each target image , we selected the most similar labeled image from the atlases, where is a spectral similarity function used to identify the nearest neighbors of . (b) The image , along with its corresponding atlas library, was used to learn a supervised model by applying exactly stage 1 of the previous subsection. (c) Finally, the target image was labeled by means of . The critical difference from the SSL scenario is that the model relies solely on labeled data and disregards target image information.

9. Experimental Setup

9.1. Evaluation Metrics

The overall segmentation accuracy achieved by the proposed methods was evaluated using three standard volumetric measures: the Dice similarity coefficient , the volumetric difference , and the volume overlap error . Denoting by the ground truth labels and by the estimated ones, these measures are defined as:

(40)

(41)

(42)

Since the large majority of voxels belong either to the background class or to the two bone classes, we also included the precision and recall measures to better evaluate the segmentation performance on each individual structure. All measures are computed exclusively within the ROI of each evaluated MRI.
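The measures can be computed from voxel sets restricted to the ROI, as sketched below. Dice, VOE, precision, and recall follow their standard definitions; for VD, the absolute volume difference normalized by the ground-truth volume is one common convention and is assumed here, since Equation (41) is not reproduced. The sketch has no guards for empty sets.

```python
def evaluate(seg: set, gt: set, roi: set) -> dict:
    """Overlap measures for one structure, computed only inside the ROI.

    seg, gt: voxel indices labeled as the structure by the method and by the ground truth.
    """
    s, g = seg & roi, gt & roi
    inter = len(s & g)
    return {
        "DSC": 2 * inter / (len(s) + len(g)),
        "VOE": 1 - inter / len(s | g),
        "VD": abs(len(s) - len(g)) / len(g),  # assumed convention: normalized by |G|
        "precision": inter / len(s),
        "recall": inter / len(g),
    }


roi = set(range(10))
m = evaluate(seg={3, 4, 5}, gt={1, 2, 3, 4}, roi=roi)
print(m)  # DSC = 4/7, VOE = 3/5, VD = 1/4, precision = 2/3, recall = 1/2
```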

9.2. Hyperparameter Setting and Validation

The overall performance of the proposed method depends on a multitude of preset parameters, the most prominent of which are the number of atlases comprising the atlas library , the number of heads K used in the multi-head attention mechanism, and the number of scales S corresponding to the different neighborhood scales. The optimal values of the above hyperparameters, as well as the performance of the DMA-GCN(SEQ) and DMA-GCN(CT) segmentation methods, were evaluated through 5-fold cross-validation.

9.3. Experimental Test Cases

The proposed methodology comprises several components affecting its overall capacity and performance. We hereby present a series of experimental test cases, aiming to shed light on those effects.

Local vs. global learning: In this scenario, we aimed to observe the effect of performing local-level learning in addition to global learning. The goal here was to determine the potential boost in performance facilitated by the inclusion of the attention mechanism in our models.

Transductive vs. inductive learning: The goal here was to ascertain whether the increased cost accompanying the transductive learning scheme could be justified in terms of performance, as compared to the less computationally demanding inductive learning.

Sparse vs. dense adjacency matrix: Here, we examined the effect on the overall performance of progressively sparsifying the adjacency matrix at each layer. We considered the following cases: (1) the default case with a dense and (2) thresholding so that each node was allowed connections to , or 20 spectrally adjacent ones.

Global convolution models: Finally, we tested the effect of varying the design of the global components by examining some prominent architectures, namely, GCN, ClusterGCN, GraphSAINT, and GraphSAGE.
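The sparsification test case (keeping only the k strongest spectral connections per node) can be sketched as a per-row top-k selection on a dense adjacency matrix. Whether the result is symmetrized and whether self-loops are kept are left open by the text; this sketch keeps rows independent and drops the diagonal, which is an assumption.

```python
def topk_sparsify(A, k):
    """Keep, for every node (row), only its k largest off-diagonal weights; zero the rest."""
    n = len(A)
    S = [[0.0] * n for _ in range(n)]
    for i in range(n):
        others = sorted((j for j in range(n) if j != i), key=lambda j: A[i][j], reverse=True)
        for j in others[:k]:
            S[i][j] = A[i][j]
    return S


A = [
    [1.0, 0.9, 0.1, 0.5],
    [0.9, 1.0, 0.2, 0.3],
    [0.1, 0.2, 1.0, 0.8],
    [0.5, 0.3, 0.8, 1.0],
]
S = topk_sparsify(A, k=1)
print(S[0])  # [0.0, 0.9, 0.0, 0.0]
```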

9.4. Competing Cartilage Segmentation Methods

The efficacy of our proposed method was evaluated against several published works dealing with the problem of automatic knee cartilage segmentation.

9.4.1. Patch-Based Methods

The patch-based sparse coding (PBSC) [8] and patch-based nonlocal-means (PBNLM) [7] methods are two state-of-the-art approaches in medical image segmentation. For consistency, as in the DMA-GCN, we set the patch size for both methods to and the corresponding search volume size to . The remaining parameters were set as described in the respective works.

9.4.2. Deep Learning Methods

Here, we opted to evaluate the DMA-GCN against some state-of-the-art deep learning architectures that were successfully applied in the field of medical image segmentation.

SegNet [12]: A convolutional encoder–decoder architecture for pixelwise classification, whose encoder is based on the VGG16 network [46]. Since we are dealing with 3D data, we split each input image patch into its constituent planes () and fed those to the network.

DenseVoxNet [13]: A convolutional network proposed for cardiovascular MRI segmentation. It comprises downsampling and upsampling sub-components and uses skip connections from each layer to its subsequent ones, encouraging a richer information flow across the layers. In our experiments, the model parameters were initialized with the same scheme as described in the original paper.

VoxResNet [14]: A deep residual network comprising a series of stacked residual modules, each one performing batch-normalization and convolution, also containing skip connections from each module’s input to its respective output.

KCB-Net [16]: A recently proposed network that performs cartilage and bone segmentation from volumetric images using a modular architecture: each of three sub-components is first trained to process a separate plane (sagittal, coronal, axial), and a 3D component then aggregates their outputs into a single overall segmentation map.

CAN3D [47]: This network uses successively dilated convolution kernels to aggregate multi-scale information, performing feature extraction within an increasingly dilated receptive field, which facilitates the final voxelwise classification at full resolution. Additionally, the loss function employed at the final layer combines the Dice similarity coefficient and , a variant of the standard used in evaluating segmentation results.

Point-Net [15]: Point-Net is a recently proposed architecture specifically geared towards point-cloud classification and segmentation. It incorporates an input transformation network inspired by the spatial transformer network (STN) [48], which aligns the input points to a canonical space; invariance to the ordering of the input points is obtained through a symmetric max-pooling function, which produces a global feature for the whole point cloud. That global feature is concatenated with the per-point features produced by a standard multilayer perceptron (MLP) operating on the initial point-cloud features, and the resulting aggregated features are passed through another MLP to provide the final segmentation map.

9.4.3. Graph-Based Deep Learning Methods

In regard to graph-based convolution models, we compared our DMA-GCN approach with a series of baseline GCN architectures used to carry out the global learning task. In addition, we included in the comparisons the multilevel GCN with automatic graph learning (MGCN-AGL) method [30], originally used for the classification of hyperspectral remote sensing images. The MGCN-AGL approach takes a form similar to that of the SEQ-BM in Figure 9, combining local learning via a GAT-based attention mechanism with global learning implemented by a GCN. A salient feature of this method is that the global contextual affinities are reconstructed from the node representations obtained after the local learning stage is completed.

9.5. Implementation Details

All models presented in this study were developed using the PyTorch Geometric library (https://github.com/pyg-team/pytorch_geometric, accessed on 1 May 2023), which is built upon PyTorch (https://pytorch.org) to handle graph neural networks. For the initial registration step, we used the elastix toolkit (https://github.com/SuperElastix/elastix, accessed on 1 May 2023). The code for all models proposed in this study is available at https://gitlab.com/christos_chadoulos/graph-neural-networks-for-medical-image-segmentation (accessed on 10 March 2024).

Regarding the network and optimization parameters used in our study, we made the following choices: after some initial experimentation, the parameter d controlling the node feature growth rate (Section 7) was set to , resulting in the input feature dimensionality progression for dense layers. All models were trained for up to 500 epochs using the Adam optimizer, with an early stopping criterion that halted training either when the validation error did not improve for 50 epochs or when it increased steadily for more than 10 consecutive epochs.
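The stopping rule can be written as a small function over the sequence of validation errors. The interpretation of the two criteria (no new best value for 50 epochs; a strictly increasing error for more than 10 consecutive epochs) is an assumption about how the rule was implemented, and `early_stop_epoch` is a hypothetical name.

```python
def early_stop_epoch(val_errors, max_epochs=500, patience=50, max_increase_run=10):
    """Epoch (1-based) at which training would stop under the two assumed criteria."""
    best = float("inf")
    since_best = 0      # epochs since the validation error last improved
    increase_run = 0    # consecutive epochs with a strictly increasing validation error
    prev = None
    for epoch, err in enumerate(val_errors[:max_epochs], start=1):
        if err < best:
            best, since_best = err, 0
        else:
            since_best += 1
        increase_run = increase_run + 1 if prev is not None and err > prev else 0
        prev = err
        if since_best >= patience or increase_run > max_increase_run:
            return epoch
    return min(len(val_errors), max_epochs)


rising = [1.0 + 0.01 * i for i in range(20)]
print(early_stop_epoch(rising))  # 12: the error has risen for 11 consecutive epochs
```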

10. Experimental Results

10.1. Parameter Sensitivity Analysis

In this section, we examine the effect of critical hyperparameters on the performance of the models under examination. The values and figures reported for each hyperparameter were obtained with the remaining hyperparameters fixed at their optimal values.

10.1.1. Number of Selected Atlases

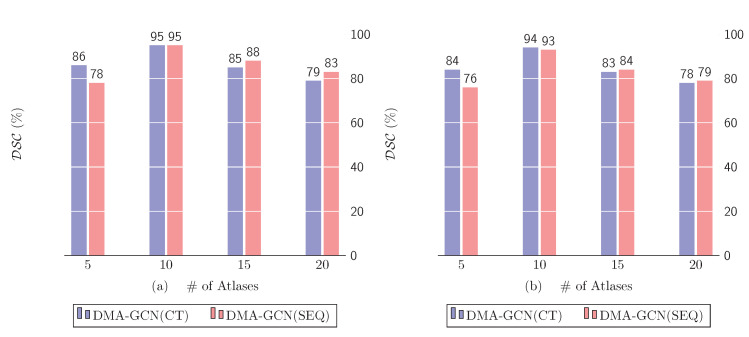

The number of selected atlases is a crucial parameter for all methods adopting the multi-atlas framework. Figure 11 shows how this parameter affects the performance of DMA-GCN(CT) and DMA-GCN(SEQ) for the following sampled values .

Figure 11.

Cartilage score vs. number of atlases. (a) Femoral cartilage, (b) tibial cartilage.

For both methods, the number of atlases has a similar effect on overall performance. The highest score in each case is achieved for atlases, and it slowly diminishes as that number grows. Constructing the graph by sampling voxels from a small pool of atlases increases the bias of the model, which then fails to capture the underlying structure of the data. Increasing that number allows the image graphs to include a greater proportion of nodes with dissimilar feature descriptions, which enhances the expressive power of the features but, beyond a certain point, also increases the variance of the model. Accordingly, a moderate number of aligned atlases seems to provide the best overall rates, as it strikes a reasonable balance between bias and variance.

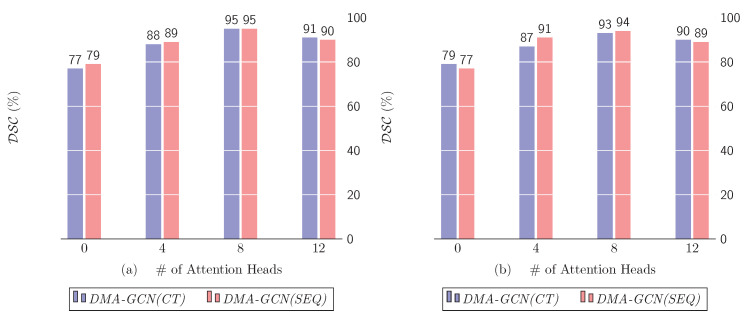

10.1.2. Number of Attention Heads

The number of attention heads is arguably one of the most influential parameters of the local units in our models. Figure 12 shows its effect on performance for . The trivial case of corresponds to the configuration in which the local convolutional units are disregarded, i.e., the convolution task is undertaken solely by the global units.

Figure 12.

Cartilage score vs. number of attention heads. (a) Femoral cartilage, (b) tibial cartilage.

For both DMA-GCN(CT) and DMA-GCN(SEQ), the best performance is achieved for . Most importantly, both charts show a sharp drop in performance when . This indicates that discarding the local convolutional units significantly degrades the overall performance of the DMA-GCN: in that case, the model ignores the local contextual information contained in the node libraries, and node features are formed exclusively by global graph convolutions.

Moreover, the independent attention heads provide different representations of the local pairwise affinities between nodes, which facilitates a better aggregation of local information. Nevertheless, beyond a threshold value, the results deteriorate, most likely due to overfitting.
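To make the role of the number of heads concrete, the following plain-Python sketch performs GAT-style multi-head attention aggregation for a single node over its local neighborhood. It follows the scoring function of the original GAT formulation (LeakyReLU of a learned vector applied to the concatenated projected features, then a softmax over the node and its neighbors) with random toy weights; the per-head nonlinearity is omitted, and concatenating head outputs is one common choice, not necessarily the one used in DMA-GCN. The loop variable `num_heads` stands for the hyperparameter studied in Figure 12.

```python
import math
import random


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def matvec(w, x):
    return [sum(a * b for a, b in zip(row, x)) for row in w]


def gat_node_update(center, neighbors, heads):
    """Multi-head attention aggregation for a single node.

    `heads` is a list of (W, a) pairs: W projects node features and a scores the
    concatenation [W h_i || W h_j]. Head outputs are concatenated.
    Returns (new_features, per-head attention weights).
    """
    features, attention = [], []
    for w, a in heads:
        z_i = matvec(w, center)
        z_all = [matvec(w, h) for h in [center] + neighbors]  # node itself + neighbors
        scores = [leaky_relu(sum(p * q for p, q in zip(a, z_i + z_j))) for z_j in z_all]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]  # numerically stable softmax
        total = sum(exps)
        alphas = [e / total for e in exps]
        attention.append(alphas)
        features.extend(sum(al * z[k] for al, z in zip(alphas, z_all)) for k in range(len(z_i)))
    return features, attention


def make_heads(num_heads, in_dim, out_dim, rng):
    """Random (W, a) parameters for each head (toy initialization)."""
    return [([[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)],
             [rng.gauss(0.0, 1.0) for _ in range(2 * out_dim)])
            for _ in range(num_heads)]


rng = random.Random(1)
center = [0.5, -0.2, 0.1]
neighbors = [[0.4, -0.1, 0.0], [0.9, 0.3, -0.5]]
for num_heads in (1, 2, 4):
    feats, attn = gat_node_update(center, neighbors, make_heads(num_heads, 3, 2, rng))
    print(num_heads, len(feats))
```

Each head learns its own attention distribution over the same neighborhood, and the output width grows linearly with the number of heads; the extra parameters are one plausible reason why too many heads can lead to overfitting.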

10.1.3. Sparsity of Adjacency Matrix

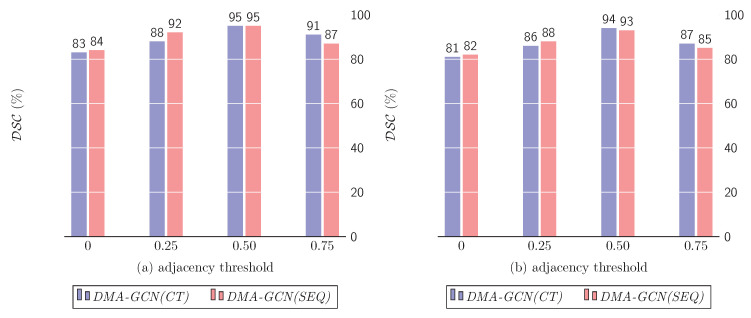

The adjacency matrix is the backbone of graph neural networks, encoding the graph structure and node connectivity. As noted in [30], a densely connected adjacency matrix may have a negative impact on the overall segmentation performance. We therefore evaluated a number of thresholds that served as cut-off points, discarding edges that were not sufficiently strong. A small threshold leaves most edges in the graph intact, while a larger one yields a sparser adjacency matrix by preserving only the most significant edges. Figure 13 summarizes the effects of thresholding at different sparsity levels.

Figure 13.

Cartilage score vs. adjacency threshold. (a) Femoral cartilage, (b) tibial cartilage.

As can be seen, large sparsity values considerably reduce segmentation accuracy, which suggests that preserving only the strongest graph edges narrows the aggregation range over neighboring nodes, and hence the expressive power of the resulting models. On the other hand, similar deficiencies are incurred for low sparsity values, i.e., with dense matrices . In that case, the neighborhood range is unduly expanded, allowing aggregations between nodes with weak spectral similarity. A moderate sparsity of the adjacency matrices, corresponding to a threshold value of , attains the best results for both DMA-GCN(CT) and DMA-GCN(SEQ).
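The thresholding described above amounts to zeroing every affinity below a cut-off. The sketch assumes affinities already normalized to [0, 1], keeps self-loops, and uses illustrative threshold values rather than those evaluated in Figure 13; the function names are hypothetical.

```python
def sparsify(adj, threshold):
    """Keep edges whose weight is >= threshold; self-loops are always kept."""
    n = len(adj)
    return [[adj[i][j] if (i == j or adj[i][j] >= threshold) else 0.0
             for j in range(n)] for i in range(n)]


def density(adj):
    """Fraction of off-diagonal entries that are non-zero."""
    n = len(adj)
    nonzero = sum(1 for i in range(n) for j in range(n) if i != j and adj[i][j] != 0.0)
    return nonzero / (n * (n - 1))


affinities = [
    [1.0, 0.9, 0.4, 0.1],
    [0.9, 1.0, 0.5, 0.2],
    [0.4, 0.5, 1.0, 0.8],
    [0.1, 0.2, 0.8, 1.0],
]
densities = {t: density(sparsify(affinities, t)) for t in (0.0, 0.3, 0.6, 0.85)}
```

On this 4-node toy graph, the off-diagonal density falls from 1.0 at a threshold of 0.0 to 2/12 at 0.85, where only the strongest edge (0.9) survives.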

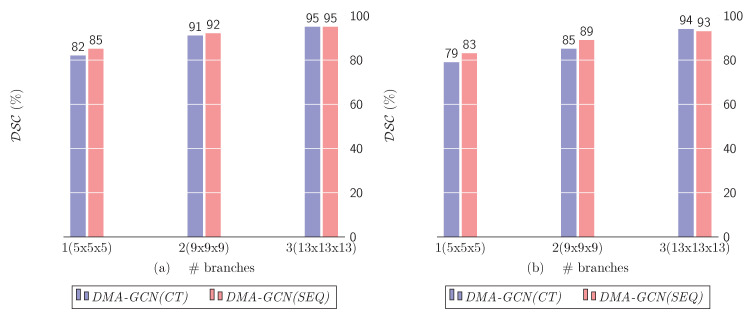

10.1.4. Number of Scales

The number of scales considered in the local attention mechanism plays an important role in the overall performance of the DMA-GCN. It defines the size of the spatial neighborhoods used by the local convolutional units, which strongly affects the resulting node feature representations. Figure 14 shows the segmentation rates for different numbers of scales . As can be seen, for both DMA-GCN(CT) and DMA-GCN(SEQ), incorporating additional neighborhoods of progressively larger scales consistently improves accuracy.

Figure 14.

Cartilage score vs. number of scales. (a) Femoral cartilage, (b) tibial cartilage.

In the trivial case of , each node aggregates local information by attending solely to its aligned root nodes. This restricted attention yields weak local node representations and hence degraded overall performance (left columns). Incorporating the one-hop neighborhoods expands the range of the attention mechanism and provides richer node features, resulting in significantly better results (middle columns). This trend continues when the two-hop neighborhoods of nodes are also included, yielding an even greater improvement (right columns).
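One plausible way to enumerate the spatial neighborhoods at each scale is sketched below. It assumes that an r-hop neighborhood on the voxel grid is the cube of voxels within Chebyshev distance r of the center, clipped at the volume border; this definition, the volume shape, and the function names are our assumptions for illustration, and the exact connectivity used by the local units may differ. Scale 0 returns only the root position itself.

```python
from itertools import product


def neighborhood(center, radius, shape):
    """Voxel coordinates within Chebyshev distance `radius` of `center`,
    clipped to the volume bounds. radius=0 returns only the center itself."""
    ranges = [range(max(0, c - radius), min(s, c + radius + 1))
              for c, s in zip(center, shape)]
    return list(product(*ranges))


def multiscale_neighborhoods(center, num_scales, shape):
    """Neighborhoods for scales 0..num_scales-1 (0 = root only, 1 = one-hop, ...)."""
    return [neighborhood(center, r, shape) for r in range(num_scales)]


shape = (64, 64, 32)  # hypothetical volume size
scales = multiscale_neighborhoods((10, 10, 10), 3, shape)
sizes = [len(n) for n in scales]
```

For an interior voxel, the three scales contain 1, 27, and 125 positions, so each additional scale considerably enlarges the set of nodes the attention mechanism can attend to.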

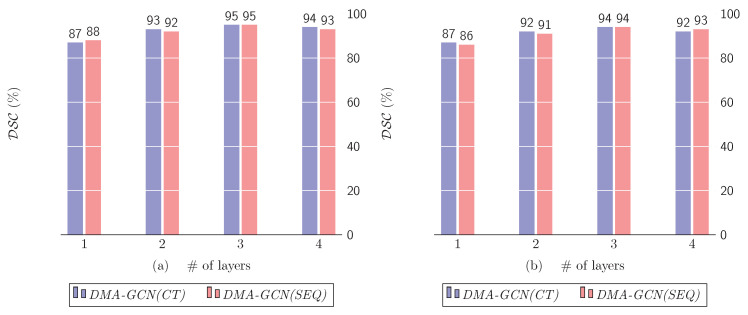

10.2. Number of Dense Layers

In this section, we examine the effect of the number M of dense layers used in the DMA-GCN models (Section 7). This number defines the depth of the networks and thus directly affects both the size and the representational capacity of the respective models. Figure 15 shows the results obtained using up to four dense layers. Note that the single-layer results correspond to the case where DMA-GCN(CT) and DMA-GCN(SEQ) reduce to their constituent block models, i.e., CT-BM and SEQ-BM, respectively.
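The dense connectivity behind the feature-growth parameter d can be sketched in plain Python. The sketch assumes DenseNet-style concatenation, in which each of the M dense layers emits d new features and receives the initial features plus the outputs of all earlier layers; the sizes below are hypothetical, and the residual skip connections of DMA-GCN are not modeled.

```python
import random


def dense_input_dims(d0, growth, num_layers):
    """Input width of each dense layer: the initial d0 features plus `growth`
    new features from every preceding layer."""
    return [d0 + m * growth for m in range(num_layers)]


def make_layer(in_dim, growth, rng):
    """Toy layer: linear map in_dim -> growth followed by ReLU."""
    w = [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)] for _ in range(growth)]

    def layer(h):
        assert len(h) == in_dim, "input width must match the dense-layer schedule"
        return [max(0.0, sum(a * b for a, b in zip(row, h))) for row in w]

    return layer


def dense_forward(x, layers):
    """Each layer sees the concatenation of the input and all earlier outputs."""
    feats = list(x)
    for layer in layers:
        feats = feats + layer(feats)  # dense (concatenation) connection
    return feats


d0, growth, num_layers = 8, 4, 3  # hypothetical sizes, not the values used in the paper
dims = dense_input_dims(d0, growth, num_layers)
rng = random.Random(0)
layers = [make_layer(dim, growth, rng) for dim in dims]
out = dense_forward([1.0] * d0, layers)
```

With 8 initial features and d = 4, the three layers receive 8, 12, and 16 inputs, and the final representation has 20 features.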

Figure 15.

Cartilage score vs. number of dense layers. (a) Femoral cartilage, (b) tibial cartilage.

Figure 15a shows a clear upward trend in the obtained rates as the number of dense layers increases. As expected, the lowest performance is obtained with the shallow single-layer network (CT-BM). The best results are achieved for , while adding a further layer slightly reduces accuracy, an effect most likely attributable to overfitting. A similar pattern of improvement can be observed for the DMA-GCN(SEQ) model. In conclusion, the proposed densely connected block architectures lead to deeper GCNs with multiple local–global convolutional layers, which can learn more comprehensive node features and thus offer better results.

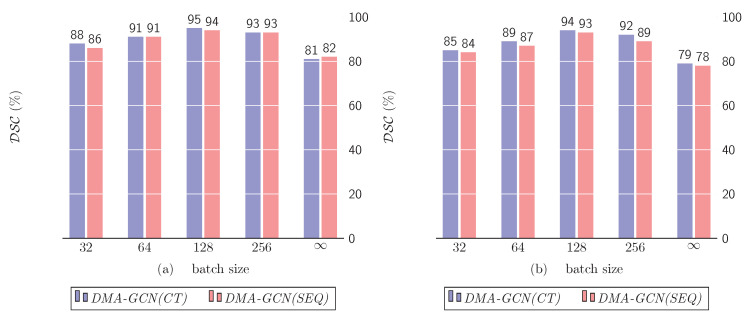

10.3. Mini-Batch vs. Full-Batch Training

The goal of this section is twofold. First, we compare the mini-batch and full-batch learning schemes (Section 8). Second, we examine the effect of the batch size on the performance of mini-batch learning. Figure 16a,b present the metrics of DMA-GCN(CT) and DMA-GCN(SEQ), respectively, for varying batch sizes. The first four columns refer to mini-batch learning, while the rightmost column represents the full-batch scenario.

Figure 16.

Cartilage score vs. batch size. (a) Femoral cartilage, (b) tibial cartilage.

Both figures show a similar pattern. In mini-batch learning, the best results were achieved for a batch size of 128. This value refers to the size of the initial batch as well as of the out-of-sample batches extracted from the target . Given these batches, we then formed the sequences of aligned nodes and the sequence libraries at multiple scales, generating the corresponding graphs of nodes used for convolutional learning. Finally, full-batch learning performed significantly worse than the mini-batch scenario.
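The partitioning of the target nodes into out-of-sample batches can be sketched as follows. The shuffling, the seed, and the function name are assumptions for illustration, and the subsequent construction of aligned-node sequences, multi-scale libraries, and graphs for each batch is not shown. Setting the batch size to the number of nodes recovers the full-batch case.

```python
import random


def make_batches(node_ids, batch_size=128, seed=0):
    """Shuffle node indices and split them into consecutive mini-batches.

    The last batch may be smaller; batch_size >= len(node_ids) gives full-batch.
    """
    ids = list(node_ids)
    random.Random(seed).shuffle(ids)
    return [ids[i:i + batch_size] for i in range(0, len(ids), batch_size)]


target_nodes = range(1000)  # hypothetical: indices of unlabeled target voxels
mini = make_batches(target_nodes, batch_size=128)
full = make_batches(target_nodes, batch_size=len(target_nodes))
```

With 1000 hypothetical target nodes and a batch size of 128, this yields seven full batches and one final batch of 104 nodes.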

10.4. Global Module Architecture

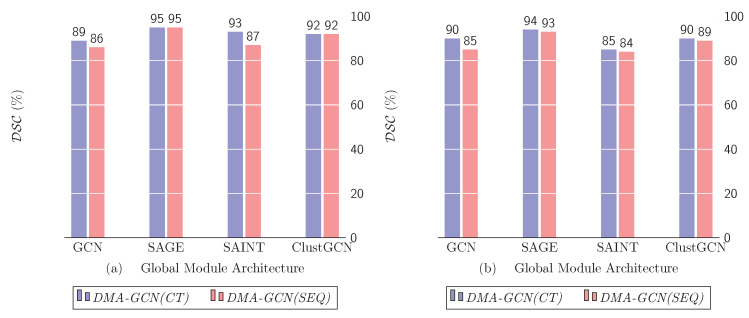

In this section, we examine the effectiveness of several popular graph-based networks within our approach. Concretely, in the context of the DMA-GCN structures, we considered several model combinations in which GAT-based attention and local information aggregation carried out the local learning task, while the global task was undertaken by GCN, GraphSAGE (SAGE), GraphSAINT (SAINT), or ClusterGCN (ClustGCN).

Figure 17 shows the measures for both DMA-GCN(CT) and DMA-GCN(SEQ), and Table 1 provides more detailed results. As can be seen, for both models, GraphSAGE clearly provides the best performance, possibly owing to its more sophisticated node sampling method. Nevertheless, all alternatives yield consistently good results.
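For reference, the mean-aggregator variant of GraphSAGE with uniform neighbor sampling can be sketched in plain Python. This follows the general GraphSAGE scheme rather than the exact configuration used in DMA-GCN: applying one weight matrix to the concatenation [h_i || mean] is written equivalently as the sum of two projections `w_self` and `w_neigh`, output normalization is omitted, and the toy graph and weights are hypothetical.

```python
import random


def sage_layer(h, adj_list, w_self, w_neigh, num_samples, rng):
    """One GraphSAGE-style layer with a mean aggregator and uniform neighbor sampling.

    h_i' = ReLU(W_self h_i + W_neigh * mean_{j in S(i)} h_j), where S(i) holds at most
    `num_samples` neighbors of node i drawn without replacement.
    """
    out = []
    for i, h_i in enumerate(h):
        neigh = adj_list[i]
        if len(neigh) > num_samples:
            neigh = rng.sample(neigh, num_samples)
        if neigh:
            mean = [sum(h[j][k] for j in neigh) / len(neigh) for k in range(len(h_i))]
        else:
            mean = [0.0] * len(h_i)
        out.append([max(0.0, sum(a * b for a, b in zip(r_s, h_i))
                        + sum(a * b for a, b in zip(r_n, mean)))
                    for r_s, r_n in zip(w_self, w_neigh)])
    return out


h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
adj_list = [[1, 2], [0], [0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
h_next = sage_layer(h, adj_list, identity, identity, num_samples=5, rng=random.Random(0))
```

Node 0, for instance, combines its own features [1, 0] with the mean [0.5, 1.0] of its two neighbors, giving [1.5, 1.0] with identity weights.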

Figure 17.

Cartilage score vs. global module architecture. (a) Femoral cartilage, (b) tibial cartilage.

Table 1.

Summary of segmentation performance measures (means ± stds) of the two cartilage classes of our proposed method DMA-GCN (CT/SEQ) by varying the global component of the sub-modules (GCN, SAINT, SAGE, ClustGCN). Best results for each category (CT vs SEQ) with respect to DSC index are highlighted.

| Module | CT | SEQ | Femoral DSC | Femoral Recall | Femoral Precision | Tibial DSC | Tibial Recall | Tibial Precision |
|---|---|---|---|---|---|---|---|---|
| GAT-GCN | ✓ | | | | | | | |
| GAT-GCN | | ✓ | | | | | | |
| GAT-SAGE | ✓ | | | | | | | |
| GAT-SAGE | | ✓ | | | | | | |
| GAT-SAINT | ✓ | | | | | | | |
| GAT-SAINT | | ✓ | | | | | | |
| GAT-ClustGCN | ✓ | | | | | | | |
| GAT-ClustGCN | | ✓ | | | | | | |

10.5. Transductive vs. Inductive Learning

In this final test case, we compare the transductive and inductive learning schemes (Section 8.1). Table 2 presents detailed results for both DMA-GCN(CT/SEQ) structures.

Table 2.

Summary of segmentation performance measures (means ± stds) of the two cartilage classes of our proposed methods DMA-GCN (CT/SEQ) by varying the overall learning paradigm (transductive vs. inductive). Best results for each category (CT vs SEQ) with respect to DSC index are highlighted.

| Module | SEQ | CT | Femoral DSC | Femoral Recall | Femoral Precision | Tibial DSC | Tibial Recall | Tibial Precision |
|---|---|---|---|---|---|---|---|---|
| Inductive | ✓ | | | | | | | |
| Inductive | | ✓ | | | | | | |
| Transductive | ✓ | | | | | | | |
| Transductive | | ✓ | | | | | | |

According to the results, transductive learning significantly outperforms the inductive scenario for both cartilage classes of interest and across all evaluation metrics. This can be attributed to two reasons. First, corroborating well-established findings in the literature, the superior rates underscore the importance of exploiting the SSL features of the unlabeled nodes in the training process, together with those of the labeled ones. Second, the refreshing learning stage applied in mini-batch learning (Section 10.3) allows the network to adjust appropriately to each newly observed out-of-sample batch. In contrast, the inductive model is trained once using the nearest neighbor image, and this network is then used to segment the target image by classifying the entire set of unlabeled batches from .

10.6. Comparative Results

Table 3 presents extensive comparative results, contrasting our DMA-GCN models with traditional patch-based approaches and state-of-the-art deep learning architectures established in the field of medical image segmentation. We also included six graph-based convolution networks in the comparisons, used as standalone models that perform only the global learning of the nodes. Finally, we applied the more integrated MGCN-AGL model [30] (denoted MGCN in Table 3). For the DMA-GCN models, we used the following parameter setting: atlases, attention heads, spatial scales, dense layers, and transductive learning with a mini-batch size of 128.

Table 3.

Summary of segmentation performance measures (means ± stds) of the two cartilage classes of our proposed methods DMA-GCN (CT) and DMA-GCN (SEQ) compared with the state of the art: 1. patch-based methods, 2. deep learning methods, 3. graph deep learning methods. Best results for all three categories (CT vs SEQ) with respect to the DSC index are highlighted.

| Method | Femoral DSC | Femoral Recall | Femoral Precision | Tibial DSC | Tibial Recall | Tibial Precision |
|---|---|---|---|---|---|---|
| PBSC | | | | | | |
| PBNLM | | | | | | |
| HyLP | | | | | | |
| SegNet | | | | | | |
| DenseVoxNet | | | | | | |
| VoxResNet | | | | | | |
| KCB-Net | | | | | | |
| CAN3D | | | | | | |
| PointNet | | | | | | |
| GCN | | | | | | |
| SGC | | | | | | |
| ClusterGCN | | | | | | |
| GraphSAINT | | | | | | |
| GraphSAGE | | | | | | |
| GAT | | | | | | |
| MGCN | | | | | | |
| DMA-GCN (SEQ) | | | | | | |
| DMA-GCN (CT) | | | | | | |
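The tables above report per-class segmentation measures, with DSC as the ranking criterion alongside recall and precision. For reference, these measures follow from the true-positive, false-positive, and false-negative voxel counts of a binary mask, as in the sketch below; the function name and the convention of returning 1.0 when the relevant counts are all zero are our assumptions.

```python
def segmentation_scores(pred, truth):
    """Return (DSC, recall, precision) for two binary masks given as flat 0/1 sequences."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if t and not p)
    dsc = 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) else 1.0  # 2|P∩T| / (|P| + |T|)
    recall = tp / (tp + fn) if (tp + fn) else 1.0
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    return dsc, recall, precision


pred = [1, 1, 0, 0, 1]
truth = [1, 0, 0, 1, 1]
dsc, recall, precision = segmentation_scores(pred, truth)
```

For this five-voxel example, two true positives, one false positive, and one false negative give DSC = 4/6 and recall = precision = 2/3.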

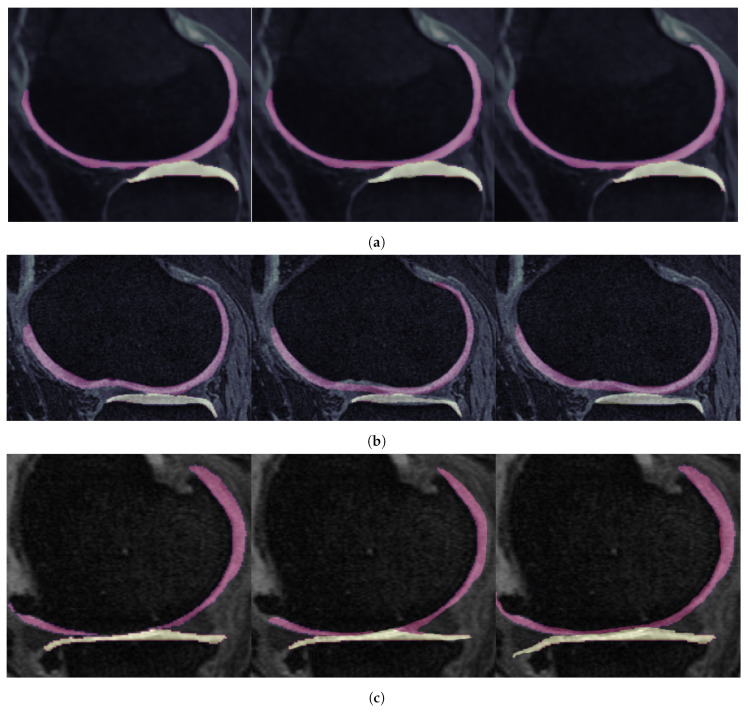

The results of Table 3 show that GAT and MGCN achieve higher rates than the other graph convolution networks, suggesting that the attention mechanism, combined with a multi-scale treatment of the data, can improve model performance. Furthermore, the proposed deep DMA-GCN(CT) and DMA-GCN(SEQ) models both outperform all competing methods in the experimental setup, achieving and , respectively, across all evaluation metrics and in both femoral and tibial segmentation. DMA-GCN(CT) performs slightly better than DMA-GCN(SEQ), indicating that the alternating combination of local–global convolutional units is more effective. However, both methods may fail to deliver satisfactory results in cases where the cartilage tissue is severely damaged or otherwise deformed. Figure 18 showcases a successful application of both DMA-GCN(SEQ) and DMA-GCN(CT), along with a borderline case exhibiting suboptimal results due to extreme cartilage thinning.

Figure 18.