Abstract

Animals in the natural world constantly encounter geometrically complex landscapes. Successful navigation requires that they understand geometric features of these landscapes, including boundaries, landmarks, corners and curved areas, all of which collectively define the geometry of the environment1–12. Crucial to the reconstruction of the geometric layout of natural environments are concave and convex features, such as corners and protrusions. However, the neural substrates that could underlie the perception of concavity and convexity in the environment remain elusive. Here we show that the dorsal subiculum contains neurons that encode corners across environmental geometries in an allocentric reference frame. Using longitudinal calcium imaging in freely behaving mice, we find that corner cells tune their activity to reflect the geometric properties of corners, including corner angles, wall height and the degree of wall intersection. A separate population of subicular neurons encodes convex corners of both larger environments and discrete objects. Both types of corner cell are non-overlapping with the population of subicular neurons that encode environmental boundaries. Furthermore, corner cells that encode concave or convex corners generalize their activity such that they respond, respectively, to concave or convex curvatures within an environment. Together, our findings suggest that the subiculum contains the geometric information needed to reconstruct the shape and layout of naturalistic spatial environments.

Subject terms: Neural circuits, Sensory processing

Longitudinal calcium imaging reveals the ability of corner cells to synchronize their activity with the environment, with the results implying the potential of the subiculum to contain the information required to reconstruct spatial environments.

Main

Neurons that contribute to building a ‘cognitive map’ for an environment, including hippocampal place cells13,14 and entorhinal grid cells15, integrate information from geometric environmental features to shape their spatial representations16–24. To accomplish this integration, the brain needs to represent the explicit properties of geometric features in the environment, such as boundary distances and corner angles. However, it is still unclear which geometric properties are encoded in the brain at the single-cell level, outside of egocentric (self-centred) and allocentric (world-centred) boundary coding in the hippocampal formation and associated regions25–30. Unlike traditional laboratory conditions, which often have straight walls, natural environments are full of concave and convex shapes, from networks of tree branches to winding burrow tunnels. Given that the combination of straight lines and curves can give rise to any shape, we hypothesized that the brain encodes the concave and convex curvatures of an environment (for example, corners and curved protrusions), in addition to straight boundaries24,25,29. One brain region that could play a role in encoding concave and convex environmental features is the subiculum, a structure that receives highly convergent inputs from both the hippocampal subregion CA1 and the entorhinal cortex31,32. Earlier work has demonstrated that neurons in the subiculum encode the locations of environmental boundaries and objects in an allocentric reference frame, as well as the axis of travel in multi-path environments25,33–35. Here, we describe single-cell neural representations for concave and convex environmental corners and curvatures in the dorsal subiculum, which reside interspersed with single-cell neural representations for environmental boundaries.

Subiculum neurons encode environmental corners

To record from large numbers of neurons in the subiculum, we performed in vivo calcium imaging using a single-photon (1P) miniscope in freely behaving mice (Fig. 1a, b). We primarily used Camk2a-Cre; Ai163 (ref. 36) transgenic mice, which exhibited stable GCaMP6s expression in subiculum pyramidal neurons (Extended Data Fig. 1a) and thus facilitated longitudinal tracking of individual neurons37 (Fig. 1c). Calcium signals were extracted with CNMF38 and OASIS39 deconvolution, and subsequently binarized to estimate spikes for all cells (Fig. 1d and Extended Data Fig. 1b). We treated these deconvolved spikes as equivalent to electrophysiological spikes and used them to calculate spike rates in downstream analyses.
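As a rough illustration of the binarization step described above, the following sketch converts a deconvolved trace into per-frame events and computes a mean event rate. The function names, the zero threshold and the frame rate are assumptions made for this example; they are not taken from the paper's pipeline.

```python
def binarize_events(deconvolved, threshold=0.0):
    """Mark each frame as 1 if its deconvolved amplitude exceeds the threshold.

    ASSUMPTION: a simple amplitude threshold; the actual criterion used for
    binarization is described in the paper's Methods, not here.
    """
    return [1 if amplitude > threshold else 0 for amplitude in deconvolved]


def mean_event_rate(events, frame_rate_hz):
    """Return the number of binarized events per second over the whole trace."""
    if not events:
        raise ValueError("events must contain at least one frame")
    return sum(events) * frame_rate_hz / len(events)


# Hypothetical four-frame trace sampled at a hypothetical 20 Hz.
trace = [0.0, 0.5, 0.0, 2.0]
events = binarize_events(trace)
rate = mean_event_rate(events, frame_rate_hz=20.0)
```

Binarizing discards the deconvolved amplitude, so every detected event contributes equally to the spike rates used downstream.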

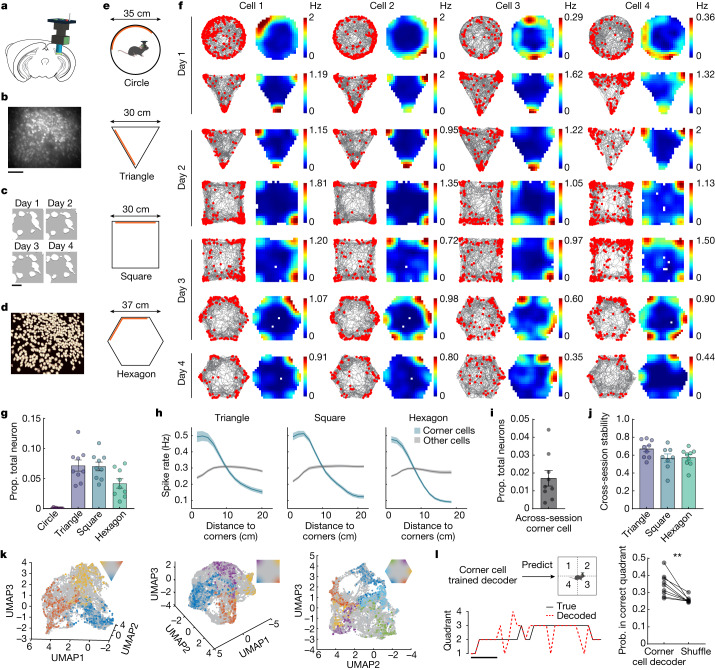

Fig. 1. The subiculum contains neurons that exhibit corner-associated activity.

a, Schematic of miniscope calcium imaging in the subiculum. b, Maximum intensity projections of subiculum imaging from a representative mouse. c, An enlarged region of interest from b across days. d, Extracted neurons from b. e, Open arena environment shapes. Orange bars indicate local visual cues. f, Four representative corner cells from three mice. Each column is a cell with its activity tracked across sessions. Raster plot (left) indicates extracted spikes (red dots) on top of the animal’s running trajectory (grey lines) and the spatial rate map (right) is colour coded for maximum (red) and minimum (blue) values. g, Proportion of corner cells in each environment (arena shapes, x-axis). Each dot represents a mouse, with a maximum of two sessions averaged within each mouse (mean ± standard error of the mean (s.e.m.); n = 9 mice). h, Positional spike rates plotted relative to the distance to the nearest corner (n = 9 mice). Solid line, mean; shaded area, s.e.m. i, Proportion (prop.) of corner cells across sessions (mean ± s.e.m.; two-tailed Wilcoxon signed-rank test against zero: P = 0.0039; n = 9 mice). j, Cross-session stability (Pearson’s correlation) of across-session corner cells in i for each environment. k, Three-dimensional (3D) embedding of the subiculum population activity in the triangle, square and hexagon from a representative mouse. Uniform manifold approximation and projection (UMAP) plots shown. Each dot is the population state at one time point. Time points within 5 cm of the corners are colour coded as shown in the inset. l, Left, an example of decoding the animal’s quadrant location over time using a decoder trained on corner cell activity. Black line, true quadrant location; red dotted line, decoded quadrant location. Right, quadrant decoding accuracy versus shuffle (mean ± s.e.m.: decoder versus shuffle, 0.35 ± 0.02 versus 0.26 ± 0.006; two-tailed Wilcoxon signed-rank test: P = 0.0039; n = 9 mice). y-axis indicates the probability (prob.) 
that the animal’s location was decoded in the correct quadrant. Scale bars, 100 μm (b), 10 μm (c), 5 s (l).

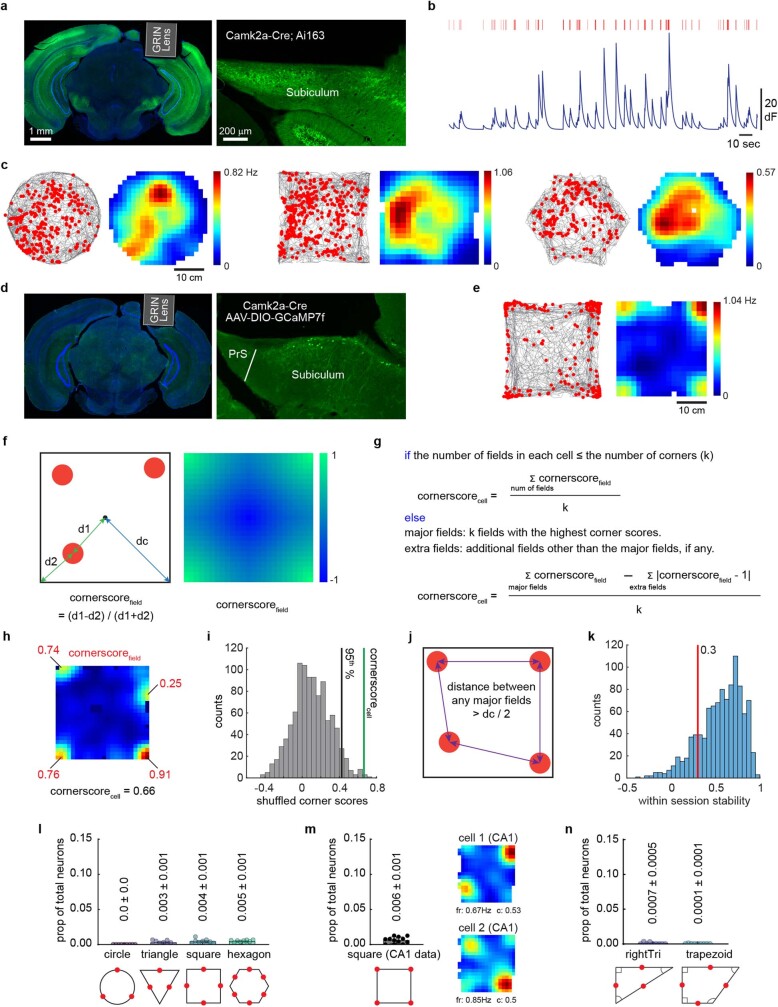

Extended Data Fig. 1. Histology and the development of a score to classify corner cells.

(a) Histology of GRIN lens implantation in the dorsal subiculum of an example Camk2a-Cre; Ai163 mouse. Green: GCaMP6, Blue: DAPI. Right, enlarged view of GCaMP6-expressing subiculum neurons. The experiment was replicated in 8 mice with similar results. (b) Representative de-noised calcium signal traces (dark blue) and de-convolved inferred spikes (red bars) extracted from CNMF-E. (c) Raster plots and rate maps of a representative place cell in the subiculum. Raster plot (left) indicates extracted spikes (red dots) on top of the animal’s running trajectory (grey lines) and the spatial rate map (right) is colour-coded for maximum (red) and minimum (blue) values. Activity was tracked across different environments. (d) Same as (a), but from a Camk2a-Cre mouse with AAV-DIO-GCaMP7f injected in the subiculum. GCaMP expression was restricted to the subiculum. PrS: presubiculum. (e) An example corner cell from the animal in (d), plotted as in (c). (f) Left, the definition of corner score for a given spatial field (cornerscorefield). d1: distance from the centre of the arena to the field; d2: distance from the field to the nearest corner; dc: the mean distance from the corners to the centre of the arena. Right, the distribution of cornerscorefield in a square environment. This represents the expected corner score if a neuron were active in a given pixel of this plot. Note that the cornerscore can range from −1 (blue) to 1 (green). (g) The definition of the corner score for a given cell (cornerscorecell, see Methods). (h) An example corner cell with corner score values for each field labeled in red, the final corner score for this cell is shown below. (i) Shuffling of cornerscorecell to determine a threshold for classifying a neuron as a corner cell. This example is from the same cell as in (h). See also Extended Data Fig. 2a–d and Methods. 
(j) To be classified as a corner cell, the distance between any two fields (major fields, if the number of fields > number of corners) needed to be greater than half of the dc value, as indicated by the blue line in (f). (k) As an additional criterion, to be classified as a corner cell, within-session stability needed to be greater than 0.3 (Pearson’s correlation between the two halves of the data). The distribution shows the within-session stability of all corner cells from the triangle, square, and hexagon sessions before applying this criterion (n = 1018 cells from 9 mice). (l) Proportion of neurons that passed the definition for a corner cell when corners of the environments were manually assigned to the walls. Red dots in the bottom schematic denote the locations that were assigned as ‘corners’. (m) Left: Proportion of corner cells in CA1 (n = 12 mice). Right: Rate maps of two example CA1 corner cells. Peak spike rates (fr) and corner scores (c) for the cells are indicated at the bottom. (n) Same as (l), but for the right triangle and trapezoid environments.

We placed animals in one of four open field arenas, including a circle, an equilateral triangle, a square and a hexagon. On each day, we recorded subiculum neurons from two of these four arenas (20 min per session) (Fig. 1e,f). Many subicular neurons exhibited place cell-like firing patterns that were spatially modulated but not geometry-specific across the different environments (Extended Data Fig. 1c), as previously reported40,41. However, we also observed a subset of subicular neurons that were active near the boundaries of the circle (Fig. 1f). Following the activity of these neurons in all the other non-circle environments revealed that they exhibited increased spike rates specifically at the corners of the environments (Fig. 1f). To ensure that these neurons were anatomically located in the subiculum, we used a viral strategy to restrict GCaMP expression to the subiculum (Extended Data Fig. 1d) and observed the same corner-associated neural activity (Extended Data Fig. 1e).

To classify neurons that exhibited corner-specific activity patterns, we devised a corner score that measures how close a given spatial field is to the nearest corner (Extended Data Fig. 1f). The score ranged from −1 for fields situated at the centroid of the arena, to +1 for fields perfectly located at a corner (Extended Data Fig. 1f and Methods). We defined a corner cell as a cell with: (1) a corner score greater than the 95th percentile of a distribution of shuffled scores generated by shuffling the spike times along the animal’s trajectory (Extended Data Fig. 1g–i, Extended Data Fig. 2a–d and Methods); (2) a distance between any two fields (major fields, if the number of fields is greater than the number of corners) greater than half the distance between the corner and centroid of the environment (Extended Data Fig. 1g,j and Methods); and (3) a within-session spatial stability value greater than 0.3 (Extended Data Fig. 1k and Methods). Using this definition, we classified 7.2 ± 0.9% (mean ± s.e.m., n = 9 mice) of neurons as corner cells in the triangle, 7.0 ± 0.7% in the square and 4.2 ± 0.8% in the hexagon (Fig. 1g and Extended Data Fig. 3). Notably, this method classified almost no neurons as corner cells in the circle when four or three equally spaced points on the wall were assigned as the ‘corners’ for the environment (Fig. 1g: 0.04 ± 0.03%, four points; Extended Data Fig. 1l: 0.0 ± 0.0%, three points). Applying the same procedure to all other environments, we confirmed that no more than 0.5% of neurons were classified as corner cells when we manually moved the corner location to the walls (Extended Data Fig. 1l). Furthermore, we imaged 5,212 CA1 neurons from 12 mice in a square environment. Only 0.6 ± 0.1% of CA1 neurons were classified as corner cells (Extended Data Fig. 1m), a significantly lower proportion than the proportion of subiculum neurons classified as corner cells in the square (Fig. 1g; Mann–Whitney test: P < 0.0001).
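The three classification criteria can be sketched in a few lines of Python. This is an illustration only: the expression (d1 − d2)/(d1 + d2) is an assumed form chosen because it reproduces the stated endpoints (−1 at the centroid, +1 at a corner), and the function names, the percentile helper and the arena coordinates are hypothetical. The Methods remain the authoritative definition, including the penalty applied when combining fields into a per-cell score, which is omitted here.

```python
import math


def corner_score_field(field_xy, corners, centre):
    """Score one spatial field: -1 at the arena centre, +1 exactly at a corner.

    ASSUMPTION: (d1 - d2) / (d1 + d2) is used because it matches the stated
    range; it is not copied from the paper's Methods.
    """
    d1 = math.dist(centre, field_xy)                    # centre to field
    d2 = min(math.dist(field_xy, c) for c in corners)   # field to nearest corner
    return (d1 - d2) / (d1 + d2)


def percentile(values, q):
    """Percentile with linear interpolation between order statistics (q in 0..100)."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def passes_corner_cell_criteria(cell_score, shuffled_scores, min_field_gap,
                                dc, stability):
    """Apply the three criteria listed in the text to one neuron.

    min_field_gap: smallest distance between any two (major) fields; pass
                   math.inf for a cell with a single field.
    dc:            mean distance from the corners to the arena centre.
    """
    return (cell_score > percentile(shuffled_scores, 95)   # criterion 1
            and min_field_gap > dc / 2                     # criterion 2
            and stability > 0.3)                           # criterion 3


# Hypothetical square arena, 30 units per side.
corners = [(0, 0), (30, 0), (30, 30), (0, 30)]
centre = (15, 15)
mid_wall_score = corner_score_field((15, 0), corners, centre)
```

Under this assumed expression, a field at the midpoint of a wall is equidistant from the centroid and the nearest corner in a square, so it scores 0, halfway between the two extremes.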

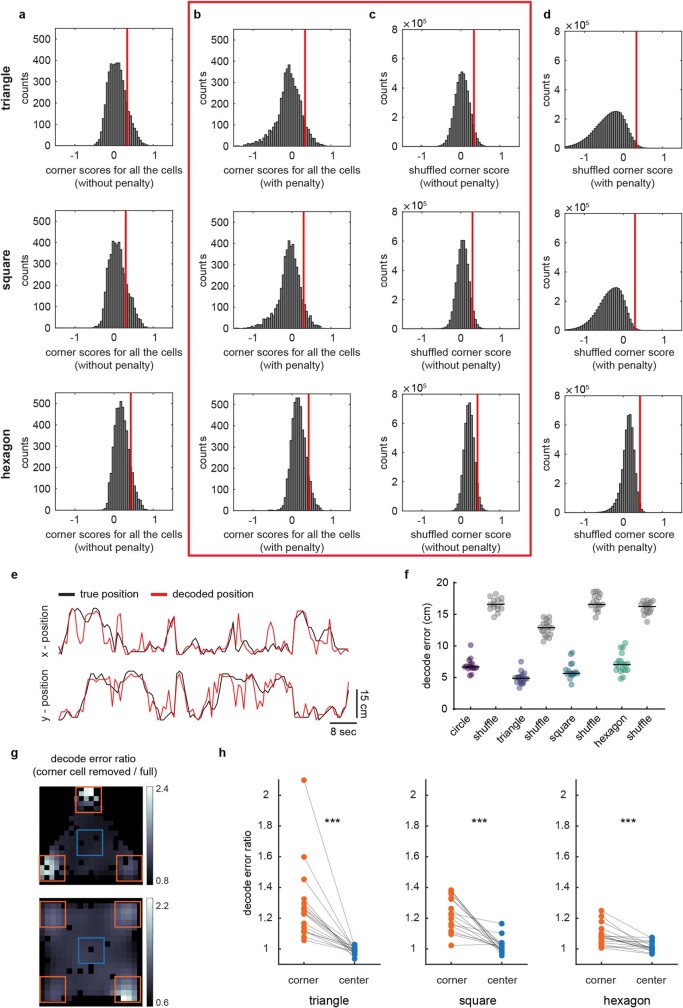

Extended Data Fig. 2. Corner score shuffling and spatial decoding.

(a) Distributions of corner scores calculated without applying the penalty (Methods) for all subiculum neurons recorded in the triangle (n = 4774 cells from 18 sessions of 9 mice), square (n = 4685 cells from 17 sessions of 9 mice), and hexagon (n = 4774 cells from 18 sessions of 9 mice) environments. The red lines represent the 95th percentile of the distributions in (b). Note: This calculation is for display purposes only and was not used for the analyses in this paper, also see Methods for details. (b) Distributions of corner scores calculated with penalty applied (Extended Data Fig. 1g), which was used in the analyses. The red lines represent the 95th percentile of the corresponding distributions. (c) Distributions of shuffled corner scores calculated without applying the penalty, which was used in the analyses. Each cell was shuffled 1000 times. The red lines represent the 95th percentile of the distributions in (b). The red box over (b) and (c) indicates the method used in corner score calculation and shuffling procedures in the current work, which resulted in the most stringent corner score criteria for defining corner cells. (d) Same as c, but distributions of shuffled corner scores calculated with penalty applied. The red lines represent the 95th percentile of the distributions in (b). Note: this method was not used in this paper. (e) An example of the true vs. decoded spatial x-y position using the full decoder (all recorded subiculum neurons). (f) Decoding performance of the full decoder in different environments. The decoder was trained and tested within each session using 10-fold cross-validation. Each dot is a session, black lines represent the median. Decoding errors were compared with the corresponding shuffle within each condition (two-tailed Wilcoxon signed-rank test: all p < 0.0001; n = 15, 18, 17, and 18 sessions for circle, triangle, square, and hexagon, respectively, from 9 mice). 
(g) Decoding error ratio from a triangle (top) or square (bottom) session, color-coded for larger (white) and smaller (black) error ratios. The error ratio was obtained by taking the error map from the corner cell removed decoder and dividing it by the error map of the full decoder. Corner areas (orange boxes) showed higher error ratios than the center area (blue box). (h) Quantitative comparisons of the decoding error ratios between the corner and the center areas (shown in g) for all sessions. Each line is a session (two-tailed Wilcoxon signed-rank test: triangle: p < 0.0001; square: p < 0.0001; hexagon: p = 0.0004; n = 18, 17, and 18 sessions for triangle, square, and hexagon, respectively, from 9 mice).

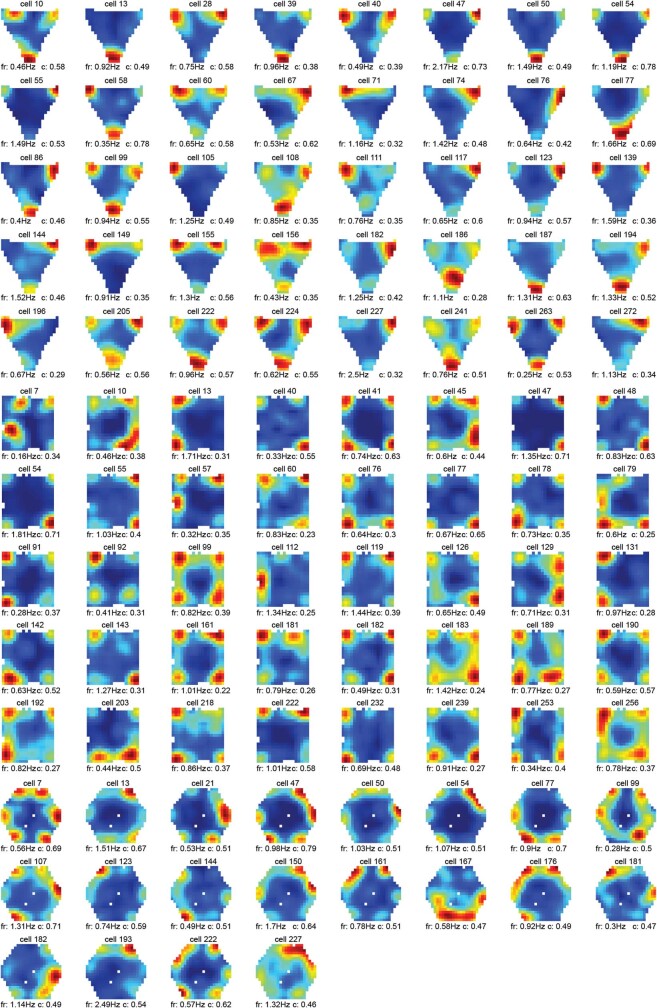

Extended Data Fig. 3. Rate map examples of identified corner cells.

Rate maps of identified corner cells in the triangle, square, and hexagon environments from a representative mouse. Corresponding peak spike rate (fr) and corner score (c) are labeled under each rate map.

To verify that neurons classified as corner cells encode locations near corners, we first plotted the spike rate for each bin on the rate map as a function of the distance to the nearest corner. As expected, corner cells showed a higher spike rate near the corners than the centroid, which was not observed in non-corner cells from the same animal (Fig. 1h). Second, a decoding analysis revealed that subicular neurons provided significant information regarding the animal’s spatial location (Extended Data Fig. 2e,f). Removing corner cells from this decoder resulted in higher decoding errors near the corners than at the centre of the environment, compared to the full decoder (Extended Data Fig. 2g,h). Accounting for the animal’s behaviour, as measured by a corrected peak spike rate at each corner (Extended Data Fig. 4a–f and Methods), we did not observe a bias in the corner cell population activity towards encoding specific corners (Extended Data Fig. 4f). Finally, across all non-circle geometries, 1.7 ± 0.4% of neurons were consistently classified as corner cells (referred to as ‘across-session corner cells’, Fig. 1i). These across-session corner cells exhibited stable corner-associated activity in all environments (Fig. 1j) (mean cross-session stability ranging from 0.57 to 0.67, Pearson’s correlation). Of note, the neural population classified as corner cells in one environment continued to show activity at corners in later sessions or conditions in which they were not classified as corner cells (Extended Data Fig. 4g,h), indicating that corner activity generally persisted across different geometries when considering the neurons as a population rather than only single cells classified based on their corner score.
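The distance-to-corner profile used in the first analysis can be approximated as follows. This is a minimal sketch under stated assumptions: the rate map is a list of rows, unvisited bins are marked `None`, and the bin size, distance step and arena coordinates are hypothetical values chosen for the example.

```python
import math
from collections import defaultdict


def rate_by_corner_distance(rate_map, bin_size, corners, step):
    """Average the rate-map bins grouped by distance to the nearest corner.

    rate_map: list of rows; each entry is a spike rate, or None if unvisited.
    bin_size: side length of one spatial bin (same units as the corners).
    step:     width of each distance band.
    Returns {band_start_distance: mean_rate}, ordered by increasing distance.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for row, rates in enumerate(rate_map):
        for col, rate in enumerate(rates):
            if rate is None:
                continue  # skip bins the animal never visited
            bin_centre = ((col + 0.5) * bin_size, (row + 0.5) * bin_size)
            distance = min(math.dist(bin_centre, c) for c in corners)
            band = int(distance // step)
            sums[band] += rate
            counts[band] += 1
    return {band * step: sums[band] / counts[band] for band in sorted(sums)}


# Hypothetical 3 x 3 rate map of a corner-like cell in a 30-unit square.
corners = [(0, 0), (30, 0), (30, 30), (0, 30)]
rate_map = [[4, 1, 4],
            [1, 0, 1],
            [4, 1, 4]]
profile = rate_by_corner_distance(rate_map, bin_size=10, corners=corners, step=10)
```

For a cell with corner-associated activity, the profile decreases with distance from the nearest corner, which is the pattern reported for corner cells in Fig. 1h.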

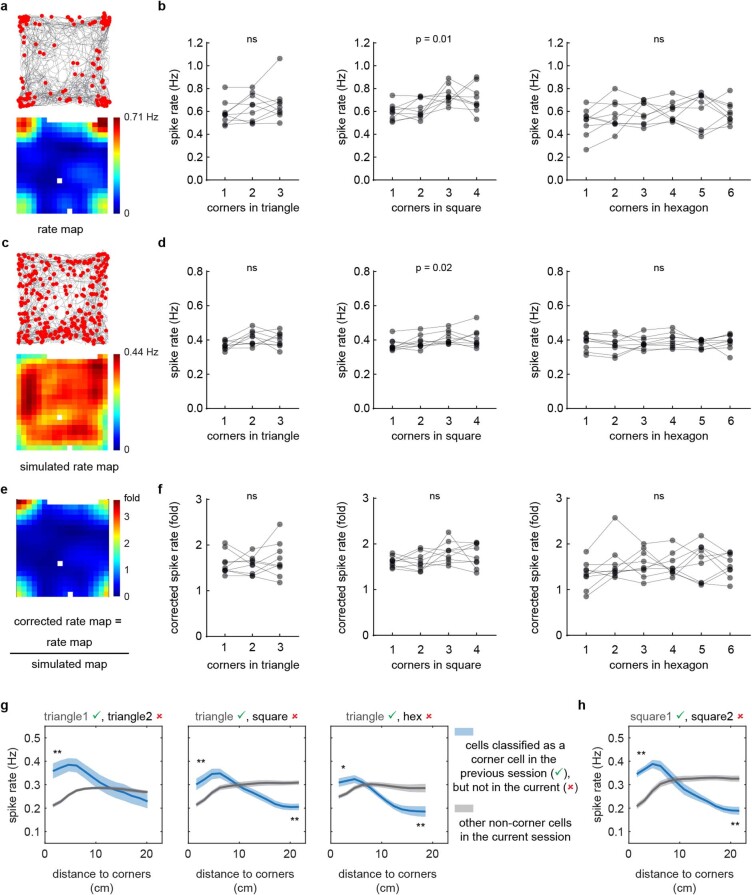

Extended Data Fig. 4. Corrected rate map and persistent activity of corner cells.

(a) Raster plot and the corresponding rate map of an example corner cell. The raster plot (top) indicates extracted spikes (red dots) on top of the animal’s running trajectory (grey lines) and the spatial rate map (bottom) is color-coded for maximum (red) and minimum (blue) values. (b) Spike rates of corner cells at each corner of the triangle, square, and hexagon, respectively, calculated using the rate maps of corner cells. Each line represents a mouse. There is a significant difference in the corner spike rates across different corners in the square (repeated measures ANOVA: F(2.24, 12.92) = 5.76, p = 0.010; n = 9 mice). ns: not significant. (c) Raster plot and the corresponding rate map of a simulated cell. The simulated rate map was generated using a simulated neuron that fires along the animal’s trajectory using the animal’s own speed at the overall mean spike rate observed across all neurons of a given mouse (Methods). (d) Same as (b), but calculated using simulated rate maps for each mouse. The difference in corner spike rates in the square persists even in the simulated rate maps (repeated measures ANOVA: F(2.56, 20.48) = 4.24, p = 0.022; n = 9 mice), indicating this effect is due to animals’ behavior. (e) An example of corrected rate map, by dividing the original rate map (i.e., a) by the simulated rate map (i.e., c). Therefore, the spike rates on the corrected rate map were automatically converted to fold changes relative to the simulated rate map. This method was used to correct for any measurements that might have been associated with the animal’s movement or occupancy, as purely behavior-related changes should be evident in both the original and simulated rate maps. (f) Same as (b), but calculated using the corrected rate maps of corner cells. Each line represents a mouse (repeated measures ANOVA: all p > 0.05; n = 9 mice). (g-h) Spike rates plotted relative to the distance to the nearest corner. 
Blue curves indicate neurons that were corner cells in the first session (green check) but not in the second session (red cross). The plots were generated based on the activity of neurons in the second session. Grey curves indicate other non-corner cells. Solid line: mean; Shaded area: SEM. Statistical tests were carried out as in Fig. 2k (two-tailed Wilcoxon signed-rank test: * p < 0.05, ** p < 0.01; n = 9 mice for each plot).
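The occupancy correction described in panel (e) amounts to a bin-wise division of the original rate map by the simulated one. The sketch below shows only that division; how the simulated map is generated is described in the Methods and is not reproduced here. The function name and the use of `None` for bins with no simulated activity are assumptions of this example.

```python
def corrected_rate_map(original, simulated):
    """Divide the original rate map by the simulated rate map, bin by bin.

    Each output value is a fold change relative to the simulated map.
    ASSUMPTION: bins where the simulated rate is zero (for example, unvisited
    bins) are returned as None rather than divided.
    """
    corrected = []
    for original_row, simulated_row in zip(original, simulated):
        corrected.append([
            o / s if s > 0 else None
            for o, s in zip(original_row, simulated_row)
        ])
    return corrected


# Hypothetical 2 x 2 maps: the lower-left bin was never visited.
original = [[2.0, 1.0],
            [0.0, 3.0]]
simulated = [[1.0, 0.5],
             [0.0, 1.5]]
fold_change = corrected_rate_map(original, simulated)
```

Because purely behaviour-related effects appear in both maps, they cancel in the ratio, leaving fold changes that can be compared across corners.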

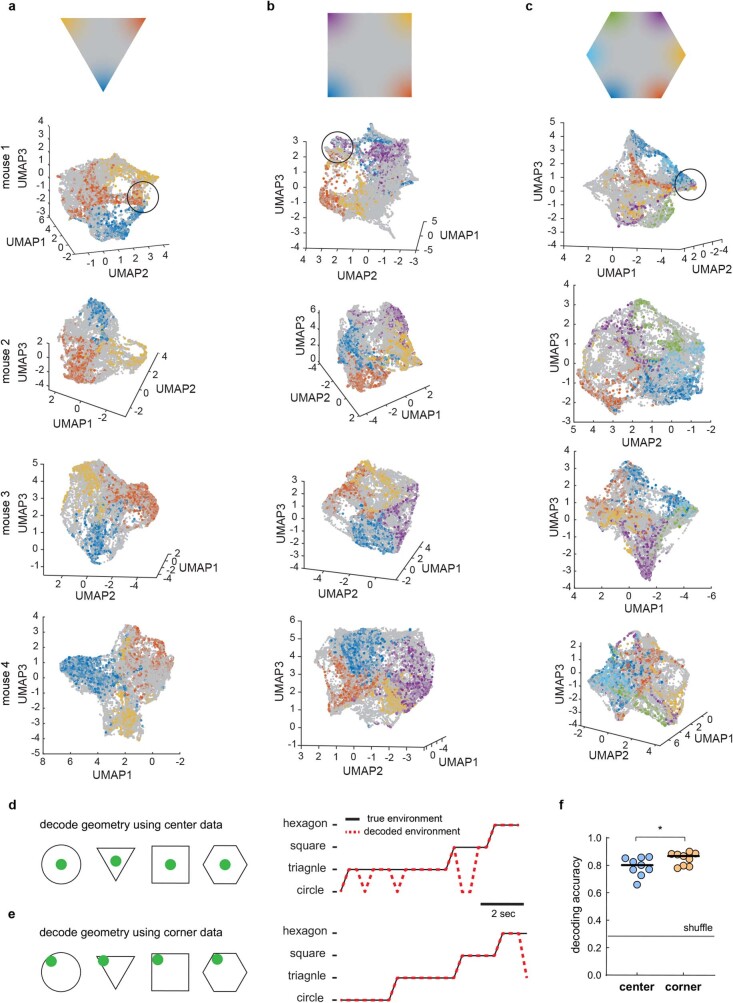

To visualize the representation of corners in the low-dimensional neural manifold of the subiculum, we performed three-dimensional (3D) embedding42 of the population activity of all recorded neurons in the triangle, square and hexagon (Fig. 1k, Extended Data Fig. 5a–c and Methods). Across different mice, we found that the representation of each corner for a given environment was distinct from other corners and the rest of the space and that the sequential order of corners was effectively preserved in the low-dimensional neural manifold (Fig. 1k and Extended Data Fig. 5a–c). On the other hand, corner representations also converged at a specific point on the manifold (as indicated by the black circles in Extended Data Fig. 5a–c). This convergence suggests that subiculum neurons also generalize the concept of corners, in addition to representing their distinct locations. A prediction of this ‘separated yet connected’ corner representation is that corner cells more generally encode the presence of a corner and only modestly encode the precise allocentric location of corners (for example, the northwest versus the southwest corner). To test this idea, we first trained a decoder on corner cell activity and used this decoder to predict the animal’s quadrant location in the square environment (Fig. 1l). While decoding performance significantly exceeded chance levels, the accuracy of the decoding was only moderate (approximately 35%, Fig. 1l), consistent with the idea that corner cells generalize their coding to all corners. Next, we implemented a decoder to predict the geometry (that is, identity) of the environment and compared the prediction accuracy of the decoder when using data from locations near versus away from the geometric features of the environment (that is, corners, boundaries). This approach revealed that subiculum neurons carried more information about the overall environmental geometry when the animal was closer to a geometric feature (Extended Data Fig. 
5d–f). Together, these results point to the subiculum as a region that encodes information related to corners and the geometry of the environment.
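To make the comparison between decoding accuracy and chance concrete, the sketch below labels positions by quadrant, scores a set of decoded labels and estimates a shuffle baseline by permuting those labels. It covers the evaluation step only and does not implement the decoder used in the paper; all function names, the arena size and the number of shuffles are assumptions.

```python
import random


def quadrant(x, y, size):
    """Return a quadrant index (0 to 3) for a position in a size x size square."""
    return (1 if x >= size / 2 else 0) + (2 if y >= size / 2 else 0)


def accuracy(true_labels, decoded_labels):
    """Fraction of time points whose decoded quadrant matches the true one."""
    matches = sum(t == d for t, d in zip(true_labels, decoded_labels))
    return matches / len(true_labels)


def shuffled_accuracy(true_labels, decoded_labels, n_shuffles=1000, seed=0):
    """Mean accuracy after randomly permuting the decoded labels.

    ASSUMPTION: a label permutation stands in for the shuffle procedure;
    the paper's own shuffle is described in its Methods.
    """
    rng = random.Random(seed)
    shuffled = list(decoded_labels)
    total = 0.0
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)
        total += accuracy(true_labels, shuffled)
    return total / n_shuffles


# Hypothetical session with equal time spent in each quadrant.
true_labels = [0, 1, 2, 3] * 25
baseline = shuffled_accuracy(true_labels, true_labels)
```

With four equally occupied quadrants the shuffle baseline sits near 0.25, which is the scale against which the reported accuracy of approximately 35% should be read.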

Extended Data Fig. 5. Neural manifold embedding and geometry decoding.

(a) Three-dimensional (3D) embedding of the population activity of recorded subiculum neurons in the triangle from four different mice. We applied a sequential dimensionality reduction method using PCA and UMAP to obtain this neural manifold embedding (Methods). Each dot represents the population state at one time point. Time points within 5 cm of the corners are color-coded, with the color graded to grey as a function of the distance away from the corner (top row). The example from mouse 1 is the same as Fig. 1k but from a rotated view. The black circle denotes the place that corner representations converged on each manifold. (b) same as (a), but in the square environment. (c) same as (a), but in the hexagon environment. For a-c, analyses were replicated in 9 mice with similar results. (d) Left: Schematic of using the data from the environmental centre (8 cm diameter) to decode the environmental identity (geometry). Right: A decoding example to predict the geometry of the environment. (e) Left: Schematic of using the data near (within 8 cm) a geometric feature of the environment (e.g., a corner) to decode the environmental identity (geometry). Right: A decoding example to predict the geometry of the environment. (f) Comparison of decoding accuracy in (d) and (e) (mean ± SEM: center vs. corner: 0.79 ± 0.02 vs. 0.85 ± 0.02; two-tailed Wilcoxon signed-rank test: p = 0.019; n = 9 mice).

Corner coding is specific to environmental corners

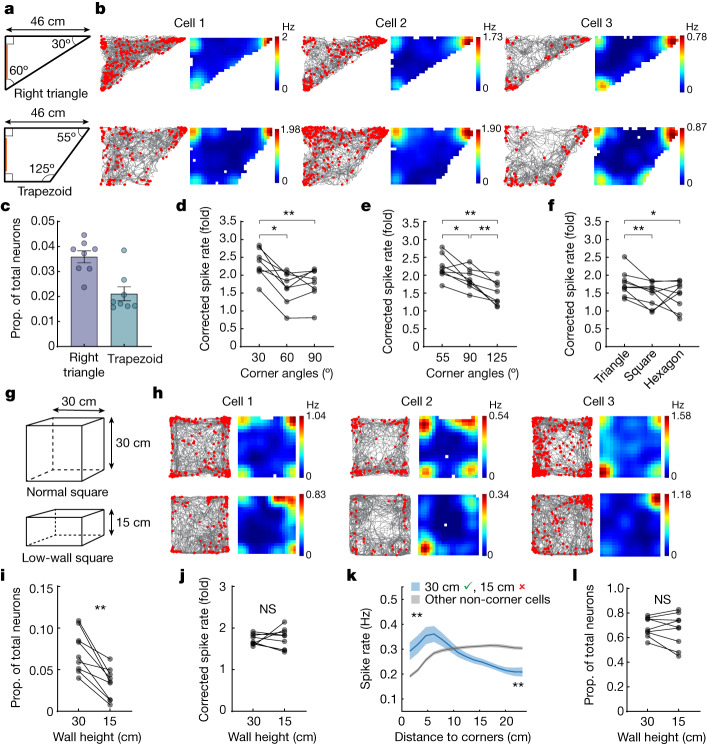

To investigate the degree to which corner cells specifically encode environmental corners, we considered three properties that comprise a corner: (1) the angle of the corner, (2) the height of the walls and (3) the connection between two walls. First, we imaged as animals explored two asymmetric environments: a right triangle (30-60-90° corners) or a trapezoid (55-90-125° corners) (Fig. 2a). In these asymmetric environments, corner cells composed 3.6 ± 0.3% and 2.1 ± 0.3% of all neurons recorded in the right triangle and the trapezoid, respectively (Fig. 2b,c; n = 8 mice). By comparison, there were essentially no neurons classified as corner cells when points on the wall were assigned as the ‘corners’ of these environments (Extended Data Fig. 1n). In the right triangle, corner cell peak spike rates were significantly higher for the 30° (2.32 ± 0.14, mean ± s.e.m.) corner compared to the 60° (1.67 ± 0.16) and 90° (1.76 ± 0.16) corners, but did not differ between the 60° and 90° corners (Fig. 2b,d). To rule out the possibility that this was due to the limited angular range of these acute angles, we compared the peak spike rates at the corners of the trapezoid and found that the peak spike rates of corner cells increased from 125° (1.49 ± 0.12, mean ± s.e.m.) to 90° (1.90 ± 0.10) to 55° (2.23 ± 0.12) (Fig. 2e). We also compared the peak spike rates at the corners using the aforementioned across-session corner cells in the triangle (60°, 1.76 ± 0.12, mean ± s.e.m., n = 9 mice), square (90°, 1.47 ± 0.11) and hexagon (120°, 1.44 ± 0.13), and found the peak spike rate was higher in the triangle compared to the square and hexagon (Fig. 2f). Together, these results suggest that corner cells encode information regarding corner angles, particularly within asymmetric environments.

Fig. 2. Corner cell coding is sensitive to corner angle and wall height.

a, Asymmetric arena shapes. Orange indicates visual cues. b, Three representative corner cells from three mice, plotted as in Fig. 1f. c, Proportion of corner cells in each environment. Each mouse averaged from two sessions (mean ± s.e.m.; n = 8 mice). d, Corrected peak spike rates of corner cells at each right triangle corner (repeated measures ANOVA: F(1.65, 11.53) = 18.54, P = 0.0004; 30° versus 60°: P = 0.016; 30° versus 90°: P = 0.0078; 60° versus 90°: P = 0.31; n = 8 mice). e, Same as d, for trapezoid (repeated measures ANOVA: F(1.85, 12.97) = 23.94, P < 0.0001; 55° versus 90°: P = 0.023; 55° versus 125°: P = 0.0078; 90° versus 125°: P = 0.0078; n = 8 mice). f, Corrected peak spike rates of across-session corner cells in different environments (arena shapes, x-axis; repeated measures ANOVA: F(2,16) = 3.88, P = 0.042; triangle versus square: P = 0.0078; triangle versus hexagon: P = 0.039; square versus hexagon: P = 0.91; n = 9 mice). g, Schematic of normal versus low-wall squares. h, Three representative corner cells from three mice, plotted as in b. i, Proportion of corner cells in 30 versus 15 cm square (P = 0.0039; n = 9 mice). j, Corrected peak spike rates of corner cells in 30 and 15 cm square (P = 0.73). k, Spike rates relative to the distance from the nearest corner in the 15 cm square. Blue curve denotes neurons that were corner cells in the 30 cm (green check) but not the 15 cm square (red cross). Blue and grey curves, approximately 5 cm from either the curve’s head or tail, were compared (all P = 0.0039). Solid line, mean; shaded area, s.e.m. l, Same as i, for place cells (P = 0.50). Pairwise comparisons throughout the figure use two-tailed Wilcoxon signed-rank test. NS, not significant.

Next, we imaged as animals explored the normal square environment (as in Fig. 1) with 30 cm high walls (normal square), followed by a low-wall square environment with 15 cm high walls (Fig. 2g,h). Quantitative analysis revealed that the proportion of corner cells significantly decreased from the normal (7.2 ± 0.8%, mean ± s.e.m.) to the low-wall square (3.3 ± 0.6%) (Fig. 2i). The remaining corner cells in the low-wall square had corner spike rates similar to their corner spike rates in the normal square (1.72 ± 0.04 versus 1.74 ± 0.08; n = 9 mice) (Fig. 2j). However, for neurons classified as corner cells in the normal square but not in the low-wall square, their spike rates near the corners of the low-wall square were still higher than those in non-corner cells (Fig. 2h,k), indicating that lowering the walls reduced, but did not abolish, their corner-related activity. Finally, in comparison to corner cells, the proportion of subiculum place cells did not change between the normal (68.5 ± 2.6%) and low-wall squares (66.4 ± 4.5%) (Fig. 2l). Together, these results indicate that the tuning of corner cells is sensitive to the height of the walls that constitute the corner.

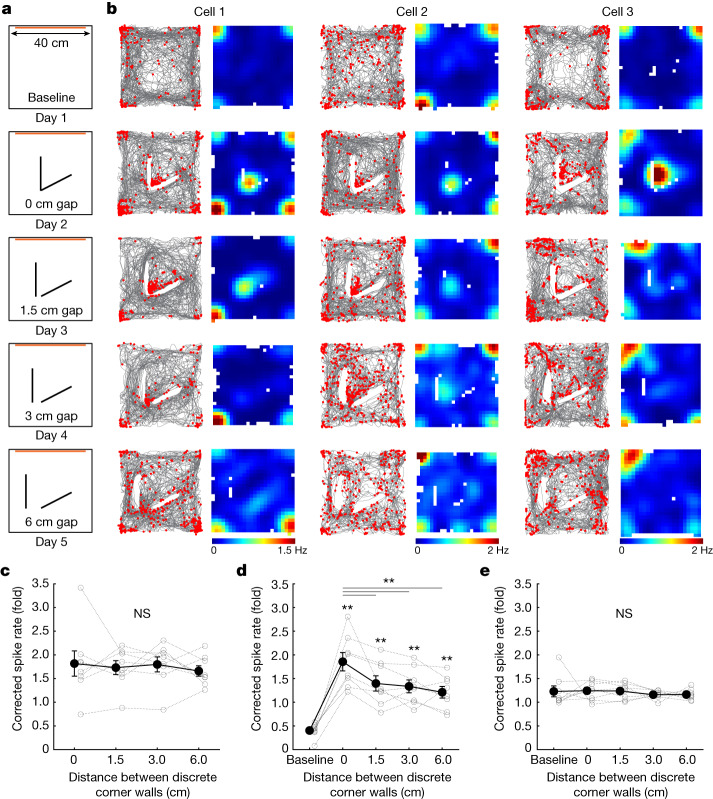

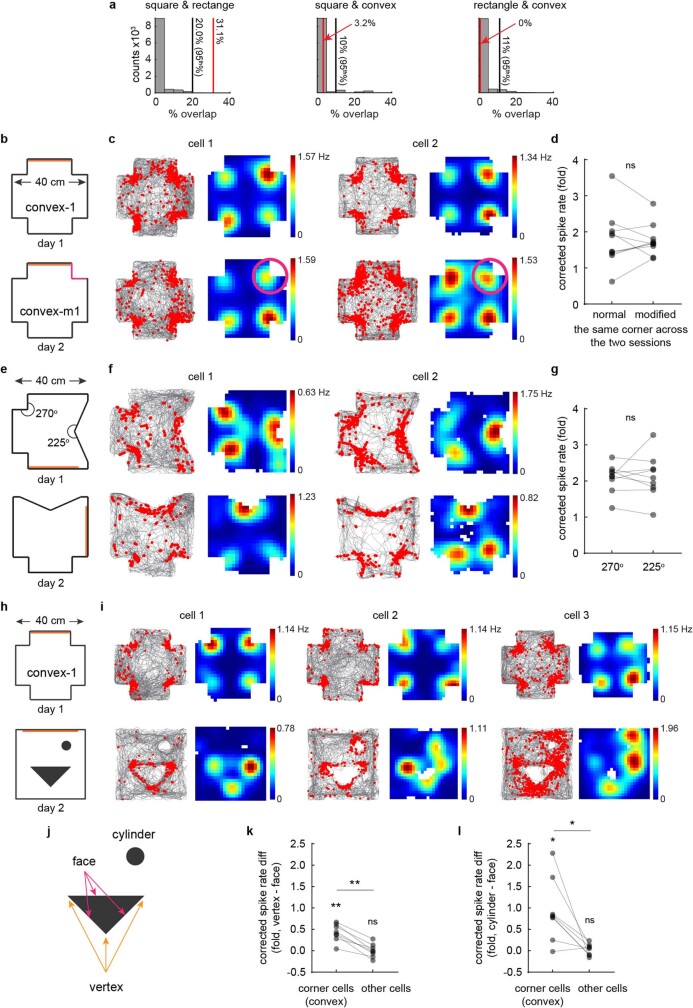

Finally, we imaged as animals explored a large square environment in which we inserted a discrete corner and gradually separated its two connected walls (1.5, 3 or 6 cm separation) (Fig. 3a). We identified corner cells in the baseline session and tracked their activity across all manipulations (Fig. 3b). Despite the insertion of the discrete corner, corner cells identified in baseline did not change their average peak spike rates at the corners of the square environment (Fig. 3b,c). Upon the insertion of the discrete corner, corner cells developed a new field near the inserted corner (Fig. 3b). As the distance between the walls of the discrete corner increased, the peak spike rate of corner cells at that corner decreased (Fig. 3d). Even at the largest gap of 6 cm, however, corner cell peak spike rate at the discrete corner was still significantly higher than at baseline (1.21 ± 0.12 versus 0.40 ± 0.05, mean ± s.e.m.) (Fig. 3d), indicating that the animal may still perceive the inserted walls as a corner. Furthermore, the peak spike rates of corner cells at 1.5 cm (1.40 ± 0.16), 3 cm (1.34 ± 0.14) and 6 cm (1.21 ± 0.12) gap were significantly attenuated compared to the 0 cm (1.86 ± 0.19) gap condition (Fig. 3d), suggesting that corner cells are sensitive to the connection of the walls that constitute the corner. In comparison, there was no effect at the inserted corner when we performed the same analyses using non-corner cells (Fig. 3e).

Fig. 3. Corner cell coding is sensitive to the proximity of the walls that constitute a corner.

a, Schematic of the open field arena and sessions in which a discrete corner was inserted into the centre of the environment. Orange bars indicate the locations of local visual cues. b, Raster plots and the corresponding rate maps of three representative corner cells from three different mice, plotted as in Fig. 1f. Each column is a cell in which its activity was tracked across sessions. Note that rate maps for each cell were plotted to have the same colour-coding scale for maximum (red) and minimum (blue) values. c, Corrected peak spike rates of baseline-identified corner cells at the environmental corners (not the inserted corner) across non-baseline sessions (repeated measures ANOVA: F(1.99, 13.99) = 0.30, P = 0.74; n = 8 mice). Black dots represent mean ± s.e.m.; grey lines represent each animal. d, Corrected peak spike rates of baseline-identified corner cells at the inserted corner across all the sessions, plotted as in c (repeated measures ANOVA: F(2.42, 16.96) = 25.62, P < 0.0001; two-tailed Wilcoxon signed-rank test: baseline versus 0 cm, P = 0.0078; baseline versus 1.5 cm, P = 0.0078; baseline versus 3 cm, P = 0.0078; baseline versus 6 cm, P = 0.0078; 0 cm versus 1.5 cm, P = 0.0078; 0 cm versus 3 cm, P = 0.0078; 0 cm versus 6 cm, P = 0.0078; n = 8 mice). e, Same as d, but for non-corner cells (repeated measures ANOVA: F(1.57, 10.97) = 0.33, P = 0.68; n = 8 mice).

Decoupling corner coding from non-geometric features

We next investigated whether corner cells in the subiculum were sensitive to non-geometric features of a corner. To test this, we placed the animals in a shuttle box with two connected square compartments that differed in colour and texture (Extended Data Fig. 6a). Corner cells showed increased spike rates uniformly across all the corners, regardless of the context (Extended Data Fig. 6b,c). In addition, their average peak spike rates at corners were comparable across the two contexts regardless of the context in which the corner cell was defined (Extended Data Fig. 6d–f). These results suggest that corner cells in the subiculum primarily encode corner-associated geometric features, rather than non-geometric properties, such as colours and textures.

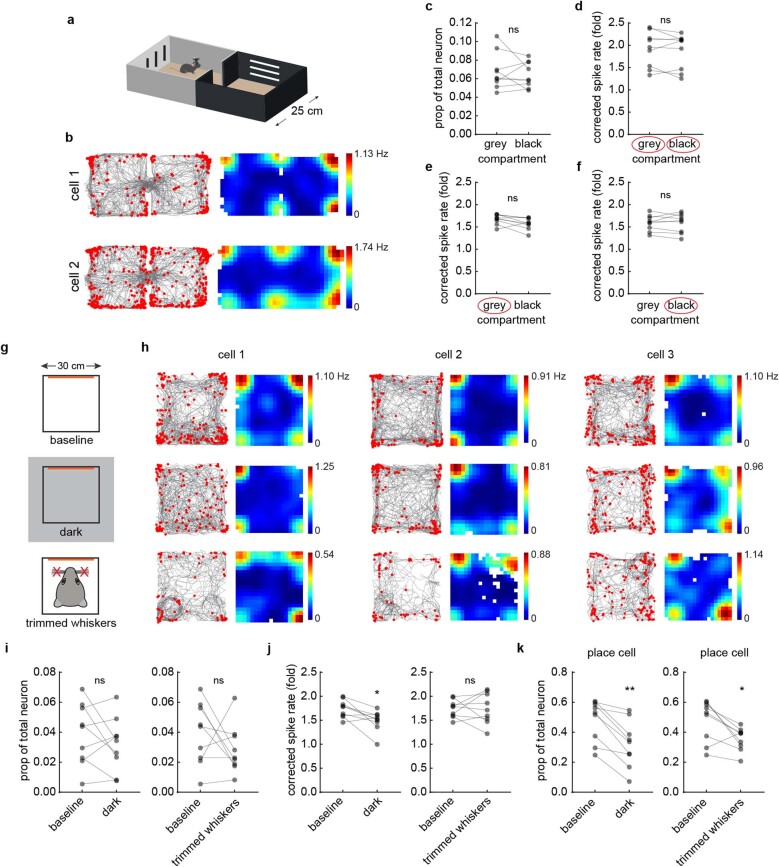

Extended Data Fig. 6. The response of corner cells to non-geometric and sensory manipulations.

(a) Schematic of a shuttle box composed of two compartments that differed in their visual and tactile cues. (b) Two example corner cells from two different mice recorded in the shuttle box shown in (a). Raster plot (left) indicates extracted spikes (red dots) on top of the animal’s running trajectory (grey lines) and the spatial rate map (right) is color-coded for maximum (red) and minimum (blue) values. (c) Proportion of neurons classified as corner cells in the grey vs. black compartments of the shuttle box (two-tailed Wilcoxon signed-rank test: p = 0.91; n = 9 mice). ns: not significant. (d) Average corrected peak spike rates of corner cells at the corners in the grey vs. black compartments (two-tailed Wilcoxon signed-rank test: p = 0.43; n = 9 mice). Corner cells included in this quantification were defined as corner cells in both grey and black compartments. (e) Same as (d), but using corner cells that were defined in the grey compartment (two-tailed Wilcoxon signed-rank test: p = 0.07). (f) Same as (d), but using corner cells that were defined in the black compartment (two-tailed Wilcoxon signed-rank test: p = 0.91). (g) Schematic of imaging in the dark or after trimming the whiskers. Orange bars indicate the location of local visual cues. (h) Raster plots and the corresponding rate maps of three corner cells from three different mice, as in (b). Each column is a neuron with activity tracked across all the conditions indicated on the left. (i) Left: Proportion of corner cells compared between baseline and dark (two-tailed Wilcoxon signed-rank test: p = 0.50; n = 9 mice). Right: Proportions of corner cells compared between baseline and whisker trimming (two-tailed Wilcoxon signed-rank test: p = 0.46, n = 9 mice). (j) Left: Comparison of the corrected peak spike rates of corner cells at square corners between baseline and dark (two-tailed Wilcoxon signed-rank test: p = 0.019; n = 9 mice). 
Right: Comparison of the corrected peak spike rates of corner cells at square corners between baseline and whisker trimming (two-tailed Wilcoxon signed-rank test: p > 0.99; n = 9 mice). (k) Same as (i), but for neurons classified as place cells in the subiculum (two-tailed Wilcoxon signed-rank test: left: p = 0.0039; right: p = 0.019; n = 9 sessions from 9 mice).

We then placed the animals in a square arena in complete darkness. In this condition, the representations of corners by corner cells persisted, and the proportion of corner cells remained unchanged (Extended Data Fig. 6g–i). Similarly, trimming the animals’ whiskers did not significantly affect the proportion of corner cells (Extended Data Fig. 6g–i). However, compared to the baseline, there was a decrease in the peak spike rates of corner cells in darkness, but not after whisker trimming (Extended Data Fig. 6j). By contrast, recording in darkness or after whisker trimming significantly decreased the number of place cells in the subiculum (Extended Data Fig. 6k). Together, our results suggest that visual information plays a more significant role than tactile information in the corner coding of the subiculum.

Subiculum neurons encode convex corners

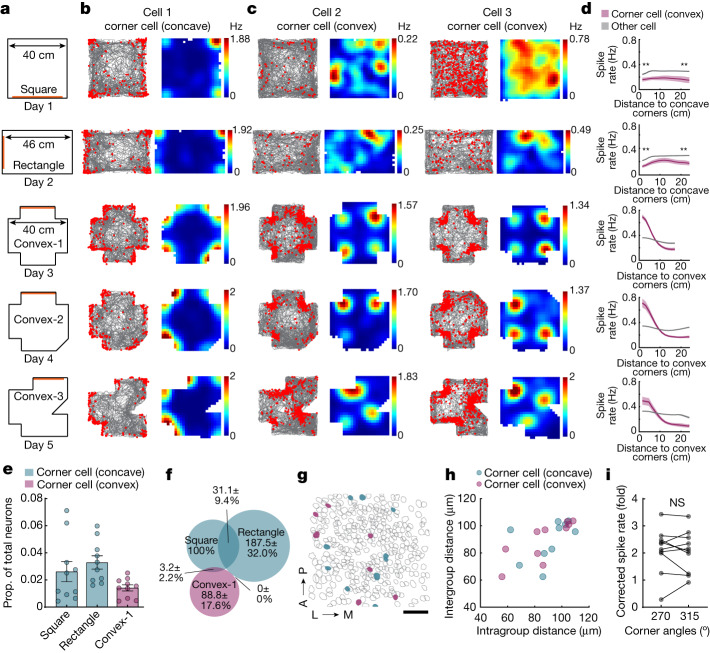

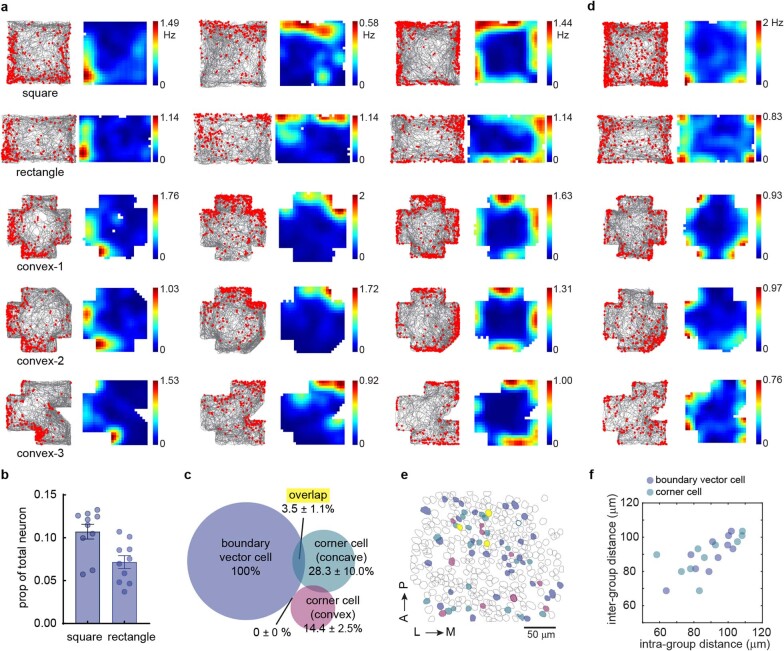

If corner sensitivity in the subiculum has an important role in encoding environmental geometry, it would be reasonable to anticipate distinct coding for concave versus convex corners, as these qualitative distinctions are critical for defining geometry. We next examined whether corner coding in the subiculum extended to other corner geometries. We designed more complex environments that included both concave and convex corners. We imaged as animals explored a square and a rectangle environment (concave corners, 30 min), followed by three environments with convex corners (convex-1, convex-2, convex-3) (Fig. 4a). First, we identified corner cells in the square and followed their activity across other environments. As in our prior experiments, we observed corner cells that increased their spike rate at the concave corners, but less so at the convex corners (Fig. 4b). Further investigation of neurons imaged in the convex-1 environment, however, revealed a small subset of neurons that increased their spike rate specifically at the convex corners (Fig. 4c). By tracking the activity of these convex corner cells to the convex-2 and -3 environments, we further found that they responded to convex corners regardless of the location of the corners or the overall geometry of the environment (Fig. 4c). Similar to corner cells that encode concave corners, corner cells encoding convex corners showed a higher spike rate near the convex corners than at the centroid (Fig. 4d, bottom three panels) (n = 10 mice). Tracking the activity of convex corner cells back to the square and rectangle environments, we observed that they had an overall lower spike rate compared to other subicular neurons (Fig. 4d). This low level of activity in the absence of convex corners suggests these corner cells respond specifically to convex corners. 
In environments with convex corners, the proportion of convex corner cells was 1.4 ± 0.2%, a slightly smaller proportion than that of concave corner cells identified in the square (2.6 ± 0.7%) and rectangle (3.3 ± 0.5%) in the same set of experiments (Fig. 4e). Corner cells encoding concave or convex corners were non-overlapping neural populations (Fig. 4f,g), as they overlapped less than expected by chance (Extended Data Fig. 7a). Corner cells encoding concave or convex corners were distributed in the subiculum in a salt-and-pepper pattern without clear clustering, as suggested by the similar intergroup and intragroup anatomical distances (Fig. 4g,h).
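
As a rough illustration of the intra- versus intergroup comparison, the sketch below computes mean pairwise Euclidean distances within and between two groups of cell centroids. The coordinates and function names are hypothetical and are not taken from the study; the exact distance measure used is an assumption. Anatomically intermingled groups should yield an intergroup distance that is not larger than the intragroup distances.

```python
import math
from itertools import combinations, product


def mean_intragroup_distance(points):
    """Mean Euclidean distance over all unordered pairs within one group."""
    pairs = list(combinations(points, 2))
    if not pairs:
        raise ValueError("need at least two points in the group")
    return sum(math.dist(p, q) for p, q in pairs) / len(pairs)


def mean_intergroup_distance(group_a, group_b):
    """Mean Euclidean distance over all pairs with one cell from each group."""
    pairs = list(product(group_a, group_b))
    if not pairs:
        raise ValueError("both groups must be non-empty")
    return sum(math.dist(p, q) for p, q in pairs) / len(pairs)


# Hypothetical centroid coordinates in micrometres, for illustration only.
concave_cells = [(0.0, 0.0), (30.0, 40.0), (60.0, 0.0)]
convex_cells = [(0.0, 40.0), (30.0, 0.0), (60.0, 40.0)]

intra_concave = mean_intragroup_distance(concave_cells)
intra_convex = mean_intragroup_distance(convex_cells)
inter = mean_intergroup_distance(concave_cells, convex_cells)
print(f"intra (concave) = {intra_concave:.1f}, "
      f"intra (convex) = {intra_convex:.1f}, inter = {inter:.1f}")
```

In this toy layout the two groups are interleaved, so the intergroup distance does not exceed either intragroup distance.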

Fig. 4. The subiculum contains neurons that exhibit convex corner-associated activity.

a, Schematic of the arenas containing concave and convex corners. Orange bars indicate visual cues. b, Raster plots and rate maps of a representative concave corner cell, plotted as in Fig. 1f. c, Same as b, but for two representative convex corner cells from two mice. d, Positional spike rates of convex corner cells relative to the distance to the nearest convex corner, organized as in a. Top two plots show positional spike rates of convex-1 identified convex corner cells relative to the distance to the nearest concave corner in the square and rectangle. Spike rates between convex corner cells and other neurons were compared within approximately 5 cm of either the curve’s head or tail (two-tailed Wilcoxon signed-rank test: all P = 0.0020; n = 10 mice). e, Proportions of corner cells in the square and rectangle and of convex corner cells in the convex-1 arena (mean ± s.e.m.; n = 10 mice). f, Venn diagram of the overlap between concave and convex corner cells. All numbers were normalized to the corner cells in square. g, Anatomical locations of concave (teal) and convex (purple) corner cells from a representative mouse, identified from square and convex-1 arena, respectively. Grey, non-corner cells; A, anterior; P, posterior; L, lateral; M, medial. h, Pairwise intra- versus intergroup anatomical distances for concave and convex corner cells (repeated measures ANOVA: F(1.41, 12.69) = 0.26, P = 0.70; n = 10 mice). The intergroup distance would be greater if the neuronal groups were anatomically clustered. i, Corrected peak spike rates of corner cells (identified in convex-3) at the convex corners (270° versus 315°) in the convex-3 arena (two-tailed Wilcoxon signed-rank test: P = 0.85; n = 10 mice). Scale bar, 50 μm (g).

Extended Data Fig. 7. Convex corner cells are not sensitive to non-geometric changes or corner angles but respond to certain properties of discrete objects.

(a) Related to Fig. 4f: comparing the overlap of corner cells with chance. Left: overlap of corner cells (both concave) between square and rectangle environments (red bar). Middle: overlap of corner cells (concave vs. convex) between square and convex-1 environments (red bar). Right: overlap of corner cells (concave vs. convex) between rectangle and convex-1 environments (red bar). The gray histogram illustrates the corresponding distribution of overlap expected by chance, with the black bar denoting the 95th percentile of each distribution. This distribution was generated by randomly selecting the same number of neurons, as indicated above for each environment, 1000 times in each mouse (n = 9 mice). Corner cells in the square and rectangle showed an overlap that was higher than chance (left), while the overlap between corner cells encoding concave or convex corners was minimal and below the chance level. (b) Schematic of the normal (convex-1) and the modified (convex-m1) convex environments. In convex-m1, one of the convex corners (in pink) was composed of walls of a different color and texture from the other three. Orange bars indicate the location of local visual cues. (c) Two representative corner cells encoding convex corners from two different mice. Each column is a neuron in which its activity was tracked across the two conditions indicated in (b). Raster plot (left) indicates extracted spikes (red dots) on top of the animal’s running trajectory (grey lines) and the spatial rate map (right) is color-coded for maximum (red) and minimum (blue) values. Pink circles delineate the location of the modified corner in the convex-m1 arena. (d) Corrected peak spike rates of corner cells (convex) at the location of the modified corner in the convex-1 vs. convex-m1 arenas (two-tailed Wilcoxon signed-rank test: p = 0.85; n = 10 mice). Corner cells were defined in each session. (e) Schematic of a convex environment containing 270° and 225° corners, as in (b). 
The second session was also rotated 90 degrees counterclockwise, but was combined with the first session for analysis. (f) Raster plots and the corresponding rate maps of two corner cells encoding convex corners from two different mice, as in (c). Each column is a neuron in which its activity was tracked across the two sessions. (g) Corrected peak spike rates of corner cells (convex) at 270° and 225° corners (two-tailed Wilcoxon signed-rank test: p = 0.73; n = 9 mice). (h) Schematic of the experiments, as in (b). Corner cells (convex) were identified in the convex-1 environment on day 1, then their activity was tested with inserted objects (a triangle and a cylinder) on day 2. (i) Raster plots and the corresponding rate maps of three corner cells encoding convex corners from three different mice. Each column is a neuron in which its activity was tracked across the two sessions. (j) Illustration showing vertex and face locations for the triangular object. (k) Differences between spike rates at the vertices and faces of the triangular object in corner (convex) and non-corner cells (two-tailed Wilcoxon signed-rank test against zero: corner cells: p = 0.0078; non-corner cells: p = 0.95; two-tailed Wilcoxon signed-rank test: corner cells vs. non-corner cells: p = 0.0078; n = 8 mice). (l) Differences between spike rates at the cylinder and the faces of the triangular object in corner (convex) and non-corner cells (two-tailed Wilcoxon signed-rank test against zero: corner cells: p = 0.016; non-corner cells: p = 0.74; two-tailed Wilcoxon signed-rank test: corner cells vs. non-corner cells: p = 0.016; n = 8 mice).
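
The chance-level overlap described in (a) can be approximated with a simple resampling procedure. The following is a minimal sketch under stated assumptions, not the study's analysis code: the neuron counts, the function names and the nearest-rank percentile are all hypothetical choices made for illustration.

```python
import math
import random


def chance_overlaps(n_total, n_a, n_b, n_shuffles=1000, seed=0):
    """Null distribution of overlap counts between two random neuron subsets."""
    rng = random.Random(seed)
    population = range(n_total)
    overlaps = []
    for _ in range(n_shuffles):
        group_a = set(rng.sample(population, n_a))
        group_b = set(rng.sample(population, n_b))
        overlaps.append(len(group_a & group_b))
    return overlaps


def percentile_nearest_rank(values, q):
    """q-th percentile (0 < q <= 100) using the nearest-rank definition."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical counts for one mouse (not values from the study).
N_TOTAL, N_CONCAVE, N_CONVEX = 300, 8, 4
null = chance_overlaps(N_TOTAL, N_CONCAVE, N_CONVEX)
threshold_95 = percentile_nearest_rank(null, 95)
observed_overlap = 0  # hypothetical observed number of shared cells
print(f"95th percentile of chance overlap: {threshold_95}; "
      f"observed: {observed_overlap}")
```

An observed overlap above the 95th percentile would count as higher than chance in this scheme. How the per-mouse distributions were pooled across animals is not specified here and is left out of the sketch.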

The activity of corner cells encoding convex corners was not affected by non-geometric changes to the corners, as they showed consistent spike rates for the same corner regardless of its colour or texture (Extended Data Fig. 7b–d). However, unlike corner cells that encode concave corners, corner cells encoding convex corners showed comparable spike rates for corners at various angles in an asymmetric environment (315°, 2.06 ± 0.24; 270°, 2.10 ± 0.27 and 2.09 ± 0.13; 225°, 2.12 ± 0.20; mean ± s.e.m.) (Fig. 4c,i and Extended Data Fig. 7e–g). We then introduced a triangular object and a cylindrical object to the centre of the environment. Corner cells encoding convex corners showed higher spike rates at the vertices of the triangular object compared to the faces (Extended Data Fig. 7h–k). Furthermore, most of the corner cells encoding convex corners increased their spike rates around the cylinder (Extended Data Fig. 7l). Together, these results demonstrate that the subiculum encodes both concave and convex corners.

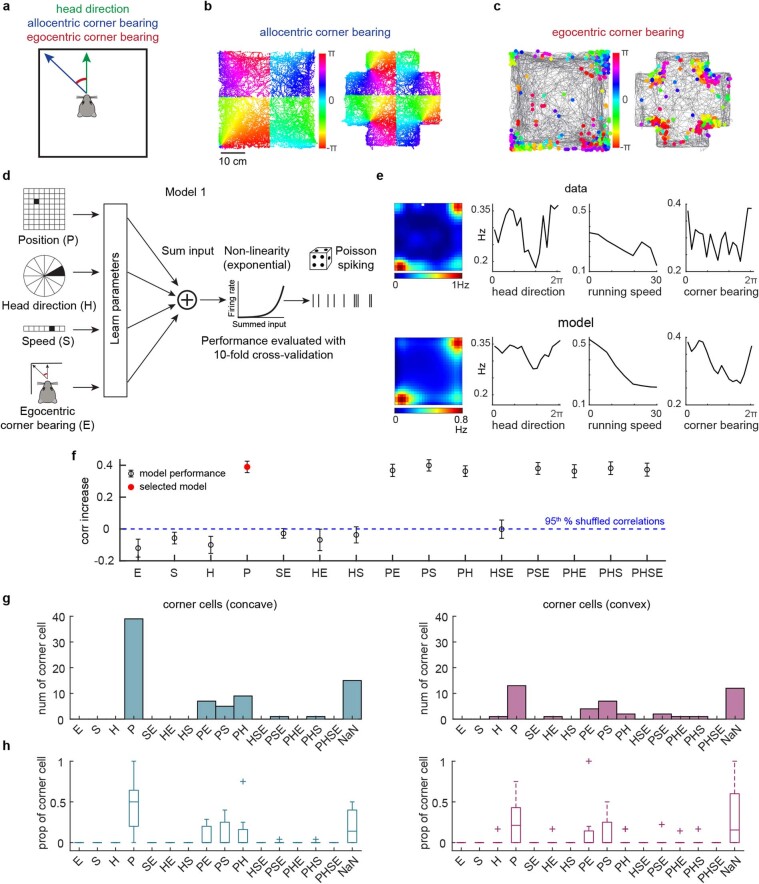

Corner coding in the subiculum is primarily allocentric

To determine whether the previously described corner cells encode corners from an allocentric or egocentric reference frame, we first trained a linear–nonlinear Poisson (LN) model with behavioural variables including the animal’s allocentric position (P), head direction (H), running speed (S) and egocentric bearing to the nearest corner (E) (Model 1, Extended Data Fig. 8a–d). We used corner cells from the square environment (40 cm) and the convex-1 environment for this analysis. For corner cells encoding either concave or convex corners, the majority fell into the allocentric position-only category (P), meaning that adding further variables did not improve model performance (Extended Data Fig. 8e–h); 15 concave and 12 convex corner cells could not be classified by the LN model. A smaller number of corner cells encoded head direction, running speed and/or egocentric corner bearing in conjunction with position (Extended Data Fig. 8g,h), indicating that corner cell coding in the subiculum is largely independent of modulation by the animal’s head direction, running speed and egocentric corner bearing.
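
The logic behind the position-only category can be sketched as a greedy forward search over model variables. This is a simplified illustration, not the procedure used in the study: the scores are hypothetical, and the fixed `min_gain` threshold is an assumption standing in for whatever criterion the Methods specify for deciding that an added variable improves the fit.

```python
def forward_search(score, variables, min_gain=0.0):
    """Greedy forward selection of model variables.

    score: callable mapping a frozenset of variable names to a held-out
           fit score (higher is better).
    Returns the selected variable set and its score.
    """
    selected = frozenset()
    best_score = None
    while True:
        remaining = sorted(set(variables) - selected)
        if not remaining:
            break
        # Try adding each remaining variable and keep the best candidate.
        candidate = max((selected | {v} for v in remaining), key=score)
        candidate_score = score(candidate)
        # Stop once the best extension no longer improves the fit enough.
        if best_score is not None and candidate_score - best_score <= min_gain:
            break
        selected, best_score = candidate, candidate_score
    return selected, best_score


# Hypothetical held-out scores for one cell (illustration only).
hypothetical_scores = {
    frozenset("P"): 0.30, frozenset("H"): 0.05,
    frozenset("S"): 0.02, frozenset("E"): 0.08,
    frozenset("PH"): 0.305, frozenset("PS"): 0.29, frozenset("PE"): 0.31,
}
best_model, best_score = forward_search(
    hypothetical_scores.__getitem__, "PHSE", min_gain=0.02
)
print(sorted(best_model), best_score)
```

With these made-up scores, position alone explains most of the fit and no second variable clears the threshold, so the cell would be labelled position only (P).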

Extended Data Fig. 8. Corner cells primarily correspond to an allocentric reference frame.

(a) Schematic for calculating egocentric corner bearing (red) using head direction (green) and allocentric corner bearing (blue) (Methods). (b) Behavioral data of allocentric corner bearing in square and convex-1 environments from one representative mouse. Each position is color-coded for the allocentric bearing of the nearest corner relative to the animal. Note the discrete color shifts represent changes in the closest corner to the animal (e.g. the northwest versus southwest corner). (c) A corner cell example with spikes color-coded according to the egocentric corner bearing in square and convex-1 environments. 0 degrees indicates the animal is directly facing the nearest corner. (d) Schematic of the linear-non-linear Poisson (LN) model framework with behavioral variables including allocentric position (P), allocentric head direction (H), linear speed (S) and egocentric corner bearing (E) (Model 1, see Methods). (e) True tuning curves (top) and model-derived response profiles (bottom) from an example corner cell. (f) An example of evaluating the model performance and selecting the best model using a forward search method. This example is from the corner cell in (e) and the best fit model (red dot) is the position (P) only model. (g) Number of corner cells (concave or convex) that were classified in each cell type category. This plot combined all the corner cells from all mice identified from the large square or convex-1 in Fig. 4 (a total of 77 corner cells (concave) and 44 corner cells (convex) from 10 mice). Some corner cells could not be classified potentially due to low spike rates (NaN). (h) Same as (g), but plotted using the proportion of total corner cells in each animal. For the box plots, the center indicates median, and the box indicates 25th and 75th percentiles. The whiskers extend to the most extreme data points without outliers (+).
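
The relationship in (a) between head direction, allocentric corner bearing and egocentric corner bearing can be written compactly. The sketch below assumes angles measured counterclockwise from the positive x axis and a bearing wrapped to [-180°, 180°); these conventions and the function names are assumptions, as the exact definitions are given in the Methods rather than here.

```python
import math


def nearest_corner(position, corners):
    """Corner closest to the animal's current position."""
    return min(corners, key=lambda c: math.dist(position, c))


def allocentric_bearing(position, corner):
    """Direction of the corner from the animal in room coordinates (0-360 deg)."""
    dx = corner[0] - position[0]
    dy = corner[1] - position[1]
    return math.degrees(math.atan2(dy, dx)) % 360.0


def egocentric_bearing(head_direction_deg, allocentric_bearing_deg):
    """Corner bearing relative to the head; 0 means facing the corner."""
    diff = allocentric_bearing_deg - head_direction_deg
    return (diff + 180.0) % 360.0 - 180.0


# Corners of a 40 cm square arena; the position is hypothetical.
corners = [(0.0, 0.0), (40.0, 0.0), (40.0, 40.0), (0.0, 40.0)]
position = (35.0, 35.0)

corner = nearest_corner(position, corners)
allo = allocentric_bearing(position, corner)
for head_direction in (45.0, 135.0, 315.0):
    ego = egocentric_bearing(head_direction, allo)
    print(f"head direction {head_direction:5.1f} deg -> egocentric bearing {ego:6.1f} deg")
```

Because the nearest corner changes as the animal crosses the arena midlines, the allocentric bearing jumps discretely at those positions, which matches the colour shifts noted in (b).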

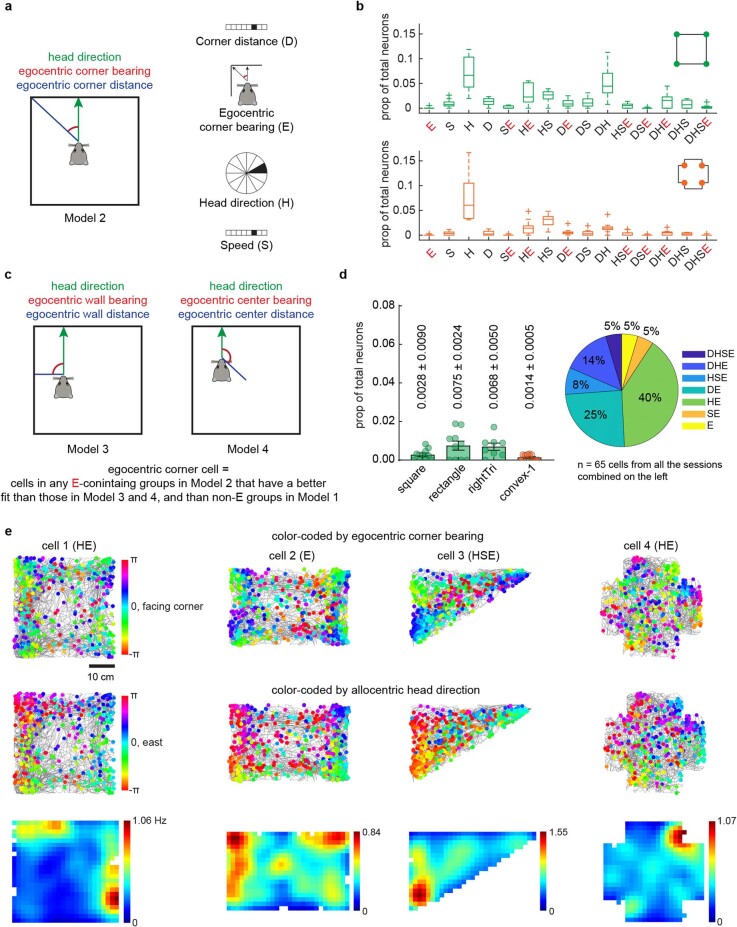

Inspired by recent studies of egocentric boundary or centre-bearing cells12,26,27,30,43,44, we expanded our investigation to consider egocentric corner coding across the entire population of subiculum neurons. We introduced additional LN models that incorporated egocentric corner bearing and distance to identify egocentric corner cells (Model 2, Extended Data Fig. 9a,b and Methods) and filtered out neurons that encoded egocentric boundaries or the centre of the environment (Models 3 and 4, Extended Data Fig. 9c and Methods). Results from both rotationally symmetric and asymmetric (for example, 30–60–90 triangle) environments consistently revealed that a small proportion of subiculum neurons (less than or equal to 0.75%) encoded corners in an egocentric reference frame (Extended Data Fig. 9d,e). This corresponded to 65 egocentric corner cells out of 12,550 total subiculum neurons, summed from 38 sessions (square, rectangle, rightTri and convex-1 combined, n = 10 mice). Two-thirds of these egocentric corner cells conjunctively encoded the animal’s head direction (Extended Data Fig. 9d,e). These neurons minimally overlapped (2 out of 65) with the allocentric corner cells classified in the corresponding session. Together, our results suggest that corner coding in the subiculum is primarily allocentric, a reference frame consistent with boundary vector cell (BVC) and place cell coding in the subiculum25,33,34.

Extended Data Fig. 9. A small number of subiculum neurons encode egocentric bearing from corners.

(a) Schematic of the linear-non-linear Poisson (LN) model framework with behavioral variables including egocentric corner bearing (E), egocentric corner distance (D), allocentric head direction (H) and linear speed (S) (Model 2). (b) Proportion of subiculum neurons that were classified by Model 2 in large square (green) or convex-1 (orange) environments (n = 10 mice). Neurons combined from all model groups featuring egocentric corner bearing (E, highlighted in red) account for 6.24 ± 1.20% (n = 10 mice) of the total recorded subiculum neurons in the square environment. Similarly, 3.1 ± 0.6% of neurons featured egocentric corner bearing for convex corners in the convex-1 environment. For the box plots, the center indicates median, the box indicates 25th and 75th percentiles. The whiskers extend to the most extreme data points without outliers (+). (c) Schematics of LN Models 3 and 4. Model 3 contains egocentric wall bearing (E), egocentric wall distance (D), allocentric head direction (H) and linear speed (S). Model 4 contains egocentric center bearing (E), egocentric center distance (D), allocentric head direction (H) and linear speed (S) (see Methods). (d) Left: Proportion of egocentric corner cells in the subiculum from the square (mean ± SEM; n = 10 mice), rectangle (n = 10), right triangle (n = 8), and convex-1 (bearing to convex corners; n = 10) environments. Right: pie chart showing the conjunctive coding of egocentric corner cells with other behavioral variables. (e) Representative egocentric corner cells from the square, rectangle, right triangle, and convex-1 (bearing to convex corners) environments. Each column represents a neuron. The first row shows spike raster plots color-coded with egocentric corner bearing on top of the animal’s running trajectory (grey lines). Similarly, the second row is color-coded with allocentric head direction. The third row shows positional rate maps.

Corner coding differs from boundary coding

We next examined the relationship between corner cells and previously reported BVCs in the subiculum25. We observed BVCs in the square (10.7 ± 0.9%, n = 10 sessions from 10 mice) and rectangle (7.2 ± 0.8%) environments (Extended Data Fig. 10a,b). Tracking the activity of BVCs identified in the square environment revealed stable boundary coding across both concave and convex environments (Extended Data Fig. 10a). We observed a lower than chance overlap (3.5 ± 1.1% versus 12.5%) between BVCs and corner cells encoding concave corners (Extended Data Fig. 10c), reflective of neurons that were active at both corners and boundaries (Extended Data Fig. 10d). However, we did not observe any overlap between BVCs and corner cells encoding convex corners (Extended Data Fig. 10c). Anatomically, BVCs and corner cells did not form distinct clusters but instead showed a salt-and-pepper distribution in the subiculum (Extended Data Fig. 10e,f). Together, these results suggest that corner cells are a separate neuronal population from BVCs in the subiculum.

Extended Data Fig. 10. Corner coding differs from boundary vector coding in the subiculum.

(a) Raster plots and rate maps of three boundary vector cells (BVCs) from three different mice, plotted as in Fig. 1f. BVCs were identified in the square environment. Each column is a cell in which its activity was tracked across sessions. (b) Proportion of neurons classified as BVCs in the square and rectangle sessions. Each dot represents a session (n = 10 mice). Histogram and error bars indicate mean ± SEM. (c) Venn diagram showing the overlap between BVCs and corner cells (concave or convex). BVCs and corner cells encoding concave corners were identified in the square environment, while corner cells encoding convex corners were identified in the convex-1 arena. All numbers were normalized to the number of BVCs. The overlap between corner cells and BVCs (3.5 ± 1.1%) was not higher than the threshold above the random overlap level (12.5%). (d) An example neuron classified as both a BVC and corner cell based on its activity in the square environment. (e) Anatomical locations of BVCs and corner cells (concave or convex) from a representative mouse. Color codes are the same as in (c). Unfilled grey circles represent other subicular neurons. A: anterior; P: posterior; L: lateral; M: medial. (f) Pairwise intra- vs. inter-group anatomical distances for BVCs and corner cells (concave + convex) (repeated measures ANOVA: F(1.37, 12.36) = 0.30, p = 0.66; n = 10 mice).

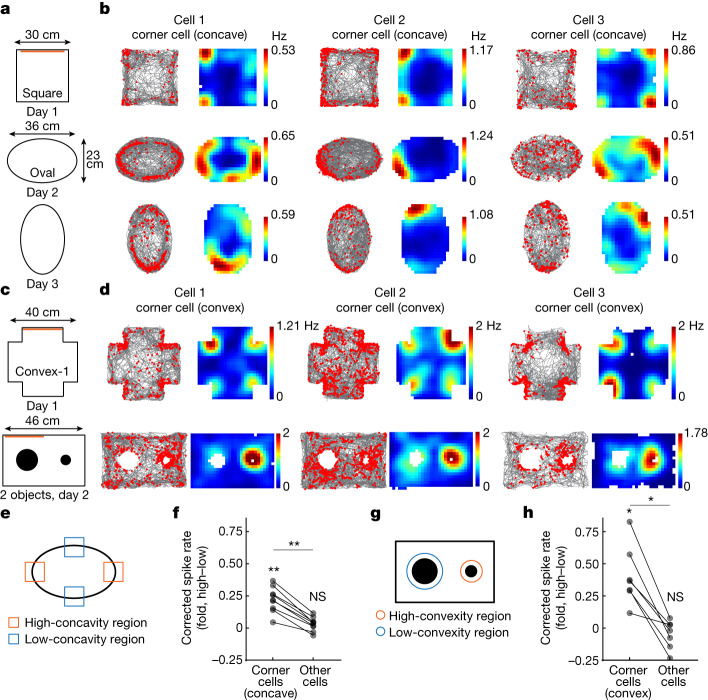

Corner coding generalizes to concavity and convexity

The observation of increased activity at the boundaries of the circular environment (Fig. 1e,f) and around the cylinder in corner cells (Extended Data Fig. 7h,i,l) led us to ask whether corner coding reflects a broader coding scheme for concavity and convexity in the subiculum. To test this idea, animals explored an oval environment to examine concavity coding (Fig. 5a,b) and different sizes of cylinders (3 cm versus 9 cm in diameter) to examine convexity coding (Fig. 5c,d). Corner cells, initially identified in the square environment, were examined for their activity in the high- versus low-concavity regions of the oval (Fig. 5a,b,e,f). Indeed, corner cells encoding concave corners showed higher spike rates at the high-concavity regions compared to the low-concavity regions (oval high–low, 0.22 ± 0.03, mean ± s.e.m.) (Fig. 5e,f). Similarly, corner cells encoding convex corners, identified in the convex-1 environment, showed higher spike rates around the high-convexity cylinder compared to the low-convexity cylinder (cylinder 3 cm–9 cm, 0.41 ± 0.09) (Fig. 5c,d,g,h). These effects were not observed in non-corner cells (oval high–low, 0.03 ± 0.02; cylinder 3 cm–9 cm, −0.05 ± 0.04) and the increase in the activity of corner cells was higher than in that of non-corner cells (Fig. 5f,h). Together, our results indicate that the subiculum encodes the concave and convex curvature of the environment through distinct neuronal populations.

Fig. 5. Corner cells generalize their activity to encode environmental concavity and convexity.

a, Schematic of the arenas. Orange bars indicate visual cues. The oval on day 3 was rotated 90° relative to day 2. b, Raster plots and rate maps of three corner cells from three mice. Each column is a cell that was tracked across sessions, plotted as in Fig. 1f. c, Schematic of the experiments. Orange bars indicate visual cues. On day 2, two cylindrical objects (3 cm and 9 cm in diameter) were placed in the rectangle environment. d, Raster plots and rate maps of three convex corner cells from three mice. Each column is a cell which was tracked across sessions. e, Illustration of the high- versus low-concavity regions in the oval arena. f, Spike rate differences between high- and low-concavity regions in the oval arena for corner and non-corner cells. Corner cells were identified in the square environment (two-tailed Wilcoxon signed-rank test against zero: corner cells: P = 0.0039; non-corner cells: P = 0.16; two-tailed Wilcoxon signed-rank test: corner cells versus non-corner cells: P = 0.0039; n = 9 mice, data averaged from day 2 and day 3 for each mouse). g, Illustration showing the high- versus low-convexity regions around the objects. h, Spike rate differences between high- and low-convexity regions around the objects for both corner and non-corner cells. Convex corner cells were identified in the convex-1 environment (two-tailed Wilcoxon signed-rank test against zero: corner cells, P = 0.016; non-corner cells, P = 0.58; two-tailed Wilcoxon signed-rank test: corner cells versus non-corner cells, P = 0.016; n = 7 mice).
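
Several of the P values reported for two-tailed Wilcoxon signed-rank tests in these legends (for example, P = 0.0039 with n = 9 and P = 0.016 with n = 7) coincide with the smallest value an exact two-tailed signed-rank test can return for that sample size, namely 2/2^n when every paired difference has the same sign and there are no ties or zeros. The check below is a sketch that assumes an exact test was used; the test implementation is not stated in this section, and the function name is hypothetical.

```python
def min_two_tailed_exact_p(n_pairs):
    """Smallest two-tailed P value of an exact signed-rank test.

    With n untied, non-zero paired differences there are 2**n equally likely
    sign patterns under the null hypothesis. Only the all-positive and the
    all-negative patterns are as extreme as a unanimous result, which gives
    a two-tailed P value of 2 / 2**n.
    """
    return 2.0 / 2 ** n_pairs


# Sample sizes and P values quoted in the figure legends of this section.
reported = {7: 0.016, 8: 0.0078, 9: 0.0039, 10: 0.0020}
for n, p_reported in reported.items():
    p_min = min_two_tailed_exact_p(n)
    print(f"n = {n}: minimum attainable P = {p_min:.2g} (reported {p_reported})")
```

Under that assumption, a reported value at this floor indicates that every animal showed the effect in the same direction.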

Discussion

Animals use boundaries and corners to orient themselves during navigation1–8. These features define the geometry of an environment and can serve as landmarks or indicate locations associated with ethologically relevant needs, such as a nest site or an entryway. Here, we report that alongside neurons that encode environmental boundaries25,29, the subiculum also contains distinct neural populations that encode concave and convex corners. This encoding is consistent across environments, with the activity of these neurons reflecting specific geometric properties of the corners, and generalized to a broader framework for coding environmental concavity and convexity. Such coding may have particular relevance to animals navigating natural environments, in which features such as burrows or nesting sites are often high in concavity or convexity.

A remaining question is how corner-specific firing patterns are generated. Given the dense CA1 to subiculum connectivity31,32,45 and recent observations that CA1 population codes can indicate the distance to objects and walls20, one possibility is that corner cell firing patterns arise from the convergent inputs of CA1 place cells. Namely, they could arise from a thresholded sum of the activity of place cells near environmental corners. This idea aligns with the previously observed clustering of place fields near environmental corners in CA1 place cells37,46, and could explain the sensitivity of corner cell firing rates to corner angles, as hippocampal place fields may show more overlap in smaller corner regions. Understanding how corner-specific patterns are generated could provide important insight into the algorithms the brain uses to construct a single-cell code for geometric features, and future work using targeted manipulations in the hippocampus may help resolve this question47.
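
The thresholded-sum idea can be made concrete with a toy model. The sketch below is purely illustrative: the Gaussian place fields, their widths and locations, and the threshold are all hypothetical values chosen to show the principle, not parameters estimated from data.

```python
import math


def place_field(position, centre, sigma=5.0):
    """Gaussian place-field activity (peak 1) at a given position (cm)."""
    d2 = (position[0] - centre[0]) ** 2 + (position[1] - centre[1]) ** 2
    return math.exp(-d2 / (2.0 * sigma ** 2))


def corner_cell_rate(position, input_centres, threshold=1.0):
    """Threshold-linear readout of the summed place-cell input."""
    drive = sum(place_field(position, c) for c in input_centres)
    return max(0.0, drive - threshold)


SIDE = 30.0  # side length of a square arena in cm
corners = [(0.0, 0.0), (SIDE, 0.0), (SIDE, SIDE), (0.0, SIDE)]

# Hypothetical inputs: three place fields clustered just inside each corner.
input_centres = []
for cx, cy in corners:
    sx = 1.0 if cx == 0.0 else -1.0
    sy = 1.0 if cy == 0.0 else -1.0
    input_centres += [
        (cx + 2.0 * sx, cy + 2.0 * sy),
        (cx + 5.0 * sx, cy + 2.0 * sy),
        (cx + 2.0 * sx, cy + 5.0 * sy),
    ]

for label, pos in [("corner", (2.0, 2.0)), ("mid-wall", (15.0, 2.0)),
                   ("centre", (15.0, 15.0))]:
    print(f"{label:8s} rate = {corner_cell_rate(pos, input_centres):.2f}")
```

In this toy arrangement the summed input exceeds the threshold only where several fields overlap, which happens near the corners and not along the walls or in the centre.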

Cells that explicitly encode geometric properties of an environment, such as the corner cells described here, differ from cells that respond to manipulations of an environment’s geometry. For example, entorhinal grid cells transiently change the physical distance between their firing fields when a familiar box is stretched or compressed23,48 and distort in polarized environmental geometries16. These changes in grid cell firing patterns represent alterations to either a familiar geometry or the geometric symmetry of the environment, but the grid pattern itself is not encoding geometric properties or specific elements that define the geometry. Likewise, changes in place cell firing rates, field locations or field size are indicative of an alteration to environmental geometry18,19 but provide little information about the specific elements that compose the geometry. On the other hand, corner coding in the subiculum represents a geometric feature universally across environmental shapes and tracks the explicit properties of corners, including angle, height and the degree to which the walls are connected. Thus, the subiculum may be well positioned to provide information to other brain regions regarding the geometry of the environment in an allocentric reference frame. To guide behaviour, however, this allocentric information needs to interface with egocentric information regarding an animal’s movements30. One possibility is that corner cells in the subiculum provide a key input to the recently observed corner-associated activity in the lateral entorhinal cortex (LEC)49. Unlike corner coding in the subiculum, LEC corner-associated activity is largely egocentric and speed modulated, raising the possibility that LEC integrates allocentric corner information with egocentric and self-motion information to prepare an animal to make appropriate actions when approaching a corner or curved areas (for example, deceleration or turning).

Methods

Subjects

All procedures were conducted according to the National Institutes of Health guidelines for animal care and use and approved by the Institutional Animal Care and Use Committee at Stanford University School of Medicine and the University of California, Irvine. For subiculum imaging, eight Camk2a-Cre; Ai163 (ref. 36) mice (four male and four female), one Camk2-Cre mouse (female, JAX: 005359) and one C57BL/6 mouse (male) were used. For the Camk2-Cre mouse, AAV1-CAG-FLEX-GCaMP7f was injected in the right subiculum at anteroposterior (AP): −3.40 mm; lateromedial (ML): +1.88 mm; and dorsoventral (DV): −1.70 mm. For the C57BL/6 mouse, AAV1-Camk2a-GCaMP6f was injected in the right subiculum at the same coordinates. For CA1 imaging, 12 Ai94; Camk2a-tTA; Camk2a-Cre (JAX id: 024115 and 005359) mice (seven male and five female) were used. Mice were group housed with same-sex littermates until the time of surgery. At the time of surgery, mice were 8–12 weeks old. After surgery, mice were singly housed at 21–22°C and 29–41% humidity. Mice were kept on a 12-hour light/dark cycle and had ad libitum access to food and water in their home cages at all times. All experiments were carried out during the light phase. Data from both males and females were combined for analysis, as we did not observe sex differences in, for example, corner cell proportions, spike rates to different corner angles, and concavity and convexity.

GRIN lens implantation and baseplate placement

Mice were anesthetized with continuous 1–1.5% isoflurane and head fixed in a rodent stereotax. A three-axis digitally controlled micromanipulator guided by a digital atlas was used to determine bregma and lambda coordinates. To implant the gradient refractive index (GRIN) lens above the subiculum, a 1.8-mm-diameter circular craniotomy was made over the posterior cortex (centred at −3.28 mm anterior/posterior and +2 mm medial/lateral, relative to bregma). For CA1 imaging, the GRIN lens was implanted above the CA1 region of the hippocampus centred at −2.30 mm anterior/posterior (AP) and +1.75 mm medial/lateral (ML), relative to bregma. The dura was then gently removed and the cortex directly below the craniotomy aspirated using a 27- or 30-gauge blunt syringe needle attached to a vacuum pump under constant irrigation with sterile saline. The aspiration removed the corpus callosum and part of the dorsal hippocampal commissure above the imaging window but left the alveus intact. Excessive bleeding was controlled using a haemostatic sponge that had been torn into small pieces and soaked in sterile saline. The GRIN lens (0.25 pitch, 0.55 NA, 1.8 mm diameter and 4.31 mm in length, Edmund Optics) was then slowly lowered with a stereotaxic arm to the subiculum to a depth of −1.75 mm relative to the measurement of the skull surface at bregma. The GRIN lens was then fixed with cyanoacrylate and dental cement. Kwik-Sil (World Precision Instruments) was used to cover the lens at the end of surgery. Two weeks after the implantation of the GRIN lens, a small aluminium baseplate was cemented to the animal’s head on top of the existing dental cement. Specifically, Kwik-Sil was removed to expose the GRIN lens. A miniscope was then fitted into the baseplate and locked in position so that the GCaMP-expressing neurons and visible landmarks, such as blood vessels, were in focus in the field of view. 
After the installation of the baseplate, the imaging window was fixed for the long term with respect to the miniscope used during installation. Thus, each mouse had a dedicated miniscope for all experiments. When the mouse was not being imaged, a plastic cap was placed in the baseplate to protect the GRIN lens from dust and dirt.

Behavioural experiments with imaging

After mice had fully recovered from the surgery, they were handled and allowed to habituate to wearing the head-mounted miniscope by freely exploring an open arena for 20 min every day for one week. The actual experiments took place in a different room from the habituation. The behaviour rig, an 80/20 built compartment, in this dedicated room had two white walls and one black wall with salient decorations as distal visual cues, which were kept constant over the course of the entire study. For experiments described below, all the walls of the arenas were acrylic and were tightly wrapped with black paper by default to reduce potential reflections from the LEDs on the scope. A local visual cue was always available on one of the walls in the arena, except for the oval environment. In each experiment, the floors of the arenas were covered with corn bedding. All animals’ movements were voluntary.

Circle, equilateral triangle, square, hexagon and low-wall square

This set of experiments was carried out in a circle, an equilateral triangle, a square, a hexagon and a low-wall square environment. The diameter of the circle was 35 cm. The side lengths were 30 cm for the equilateral triangle and square, and 18.5 cm for the hexagon. The height of all the environments was 30 cm except for the low-wall square, which was 15 cm. In total, we conducted 15, 18, 17, 18 and 12 sessions (20 min per session) from nine mice in the circular, triangular, square, hexagonal and low-wall square arenas, respectively. We recorded a maximum of two sessions per condition per mouse. For each mouse, we recorded one or two sessions per day. If two sessions were recorded from the same animal on a given day, they were carried out in different conditions with at least a two-hour gap between sessions. For each mouse, data from this set of experiments were aligned and concatenated, and the activity of neurons was tracked across the sessions. As described above, all the walls of the arenas were black. A local visual cue (strips of white masking tape) was present on one wall of each arena, covering the top half of the wall. For CA1 imaging, mice were placed into a familiar 25 × 25 cm square environment for a single, 20 min session recording.

Trapezoid and 30-60-90 right triangle

This set of experiments was carried out in a right triangle (30°, 60°, 90°) and a trapezoid environment. Corner angles from the trapezoid were 55°, 90°, 90° and 125°. The dimensions of the mazes were 46 (L) × 28 (W) × 30 (H) cm. In total, we conducted 16 sessions each (25 min per session) from eight mice for the right triangle and trapezoid. Data from this set of experiments were aligned and concatenated, and the activity of neurons was tracked across the sessions for each mouse. Other recording protocols were the same as described above.

Insertion of a discrete corner in a square environment

This set of experiments was carried out in a large square environment with dimensions of 40 (L) × 40 (W) × 40 (H) cm. The experiments comprised a baseline session followed by four sessions with the insertion of a discrete corner into the square maze. In these sessions, the walls that formed the discrete corner were gradually separated by 0, 1.5, 3 and 6 cm. Starting from 3 cm, the animals were able to pass through the gap without difficulty. The dimensions of the inserted walls were 15 (W) × 30 (H) cm. For each condition, we recorded eight sessions (30 min per session) from eight mice by conducting a single session from each mouse per day. Data from this set of experiments were aligned and concatenated, and the activity of neurons was tracked throughout the sessions.

Square, rectangle, convex-1, convex-2, convex-3 and convex-m1

This set of experiments was carried out in a large square, rectangle and multiple convex environments that contained both concave and convex corners. The dimensions of the square were 40 (L) × 40 (W) × 40 (H) cm and those of the rectangle were 46 (L) × 28 (W) × 30 (H) cm. The convex arenas were all constructed based on the square environment using wood blocks or PVC sheets that were tightly wrapped with the same black paper. The convex corners in the convex environments had angles of 270° and 315°. Note that, for four out of ten mice, their convex-2 and -3 arenas were constructed in a mirrored layout compared to the arenas of the other six mice to control for any potential biases that could arise from the specific geometric configurations in the environment (Fig. 4c). For convex-m1 (Extended Data Fig. 7b), the northeast convex corner was decorated with white, rough surface masking tape from the bottom all the way up to the top of the corner. For each condition, we recorded ten sessions (30 min per session) from ten mice, a single session from each mouse per day. For each mouse, data from this set of experiments were aligned and concatenated, and the activity of neurons was tracked across all the sessions.

Convex environment with an obtuse convex corner

This set of experiments was carried out in a convex environment that contained two 270° convex corners and one 225° convex corner (Extended Data Fig. 7e). The arena was constructed in the same manner as the other convex environments described above. For two days, we recorded a total of 18 sessions (30 min per session) from nine mice, two sessions per mouse. Please note, although the maze was rotated by 90° in the second session, we combined the two sessions together for the analysis.

Triangular and cylindrical objects

This set of experiments was first carried out in the convex-1 environment, followed by a 40 cm square environment containing two discrete objects (Extended Data Fig. 7h). The first object was an isosceles right triangle with the hypotenuse side measuring 20 cm in length and 7 cm in height (occasionally, animals climbed on top of the object). The second object was a cylinder with a diameter of 3 cm and a height of 14 cm. For this experiment, we recorded a total of eight sessions (30 min per session) from eight mice for each environment.

Shuttle box

The shuttle box consisted of two connected, 25 (L) × 25 (W) × 25 (H) cm compartments with distinct colours and visual cues (Extended Data Fig. 6a). The opening in the middle was 6.5 cm wide, so that the mouse could easily run between the two compartments during miniscope recordings. The black compartment was wrapped in black paper, but not the grey compartment. For two days, we recorded a total of 18 sessions (20 min per session) from nine mice, two sessions per mouse.

Recordings in the dark or with trimmed whiskers

This set of experiments was carried out in a square environment with dimensions of 30 (L) × 30 (W) × 30 (H) cm. The animals had experience in the environment before this experiment. The experiments consisted of three sessions: a baseline session, a session recorded in complete darkness, and a session recorded after the mice’s whiskers were trimmed. For the dark recording, the ambient light was turned off immediately after the animal was placed inside the square box. The red LED (approximately 650 nm) on the miniscope was covered by black masking tape. This masking did not completely block the red light, so the behavioural camera could still detect the animal’s position. Before the masking, the intensity of the red LED was measured as approximately 12 lux from the distance to the animal’s head. However, after the masking, the intensity of the masked red LED was comparable to the measurement taken with the light meter sensor blocked (complete darkness, approximately 2 lux). The blue LED on the miniscope was completely blocked from the outside. For the whisker-trimmed session, facial whiskers were trimmed (not epilated) with scissors until no visible whiskers remained on the face 12 h before the recording. For each condition, we recorded nine sessions (20 min per session) from nine mice by conducting a single session from each mouse per day. For each mouse, data from this set of experiments were aligned and concatenated, and the activity of neurons was tracked across these sessions. Note that according to previous reports50–52, the number of hippocampal place cells decreases in both darkness and whisker trimming conditions.

Square and oval

This set of experiments was carried out in the 30 cm square environment (day 1) and an oval environment (days 2 and 3) (Fig. 5a). The oval environment had an elliptical shape, with its major axis measuring 36 cm and minor axis measuring 23 cm. Notably, the oval experiment on day 3 was rotated 90° relative to day 2 (Fig. 5a). For each condition, we recorded nine sessions (25 min per session) from nine mice, a single session from each mouse per day. For each mouse, data from this set of experiments were aligned and concatenated, and the activity of neurons was tracked across all the sessions. Data from both the oval and rotated oval conditions were combined for analysis.

Two cylindrical objects

This set of experiments was first carried out in the convex-1 environment, followed by a 46 (L) × 28 (W) × 30 (H) cm rectangle environment containing two cylindrical objects (Fig. 5c). The first cylinder had a diameter of 3 cm and a height of 14 cm, while the second cylinder had a diameter of 9 cm and a height of 14 cm. For this experiment, we recorded a total of seven sessions (30 min per session) for each environment from seven mice.

Miniscope imaging data acquisition and preprocessing

Technical details for the custom-constructed miniscopes and general processing analyses are described in refs. 32,37,53 and at http://miniscope.org/index.php/Main_Page. In brief, this head-mounted scope had a mass of about 3 g and a single, flexible coaxial cable that carried power, control signals and imaging data to the miniscope open-source data acquisition (DAQ) hardware and software. In our experiments, we used Miniscope v.3, which had a 700 μm × 450 μm field of view with a resolution of 752 pixels × 480 pixels (approximately 1 μm per pixel). For subiculum imaging, we measured the effective image size (the area with detectable neurons) for each mouse and combined this information with histology. The anatomical region where neurons were recorded was approximately within a 450-μm diameter circular area centred around AP: −3.40 mm and ML: +2 mm. Owing to the limitations of 1-photon imaging, we believe the recordings were primarily from the deep layer of the subiculum. Images were acquired at approximately 30 frames per second (fps) and recorded to uncompressed avi files. The DAQ software also recorded the simultaneous behaviour of the mouse through a high-definition webcam (Logitech) at approximately 30 fps, with time stamps applied to both video streams for offline alignment.

For each set of experiments, miniscope videos of individual sessions were first concatenated and down-sampled by a factor of two, then motion corrected using the NoRMCorre MATLAB package54. To align the videos across different sessions for each animal, we applied an automatic two-dimensional (2D) image registration method (github.com/fordanic/image-registration) with rigid x–y translations according to the maximum intensity projection images for each session. The registered videos for each animal were then concatenated together in chronological order to generate a combined dataset for extracting calcium activity.

To extract the calcium activity from the combined dataset, we used extended constrained non-negative matrix factorization for endoscopic data (CNMF-E)38,55, which enables simultaneous denoising, deconvolving and demixing of calcium imaging data. A key feature includes modelling the large, rapidly fluctuating background, allowing good separation of single-neuron signals from background and the separation of partially overlapping neurons by taking a neuron’s spatial and temporal information into account (see ref. 38 for details). A deconvolution algorithm called OASIS39 was then applied to obtain the denoised neural activity and deconvolved spiking activity (Extended Data Fig. 1b). These extracted calcium signals for the combined dataset were then split back into each session according to their individual frame numbers. As the combined dataset was large (greater than 10 GB), we used the Sherlock HPC cluster hosted by Stanford University to process the data across 8–12 cores and 600–700 GB of RAM. While processing this combined dataset required significant computing resources, it enhanced our ability to track cells across sessions from different days. This process made it unnecessary to perform individual footprint alignment or cell registration across sessions. The position, head direction and speed of the animals were determined by applying a custom MATLAB script to the animal’s behavioural tracking video. Time points at which the speed of the animal was lower than 2 cm s−1 were identified and excluded from further analysis. We then used linear interpolation to temporally align the position data to the calcium imaging data.
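The last two steps above (speed filtering and temporal alignment) can be illustrated with a minimal Python sketch. It is not the custom MATLAB script used in the study: the function names, the finite-difference speed estimate, the clamping at the ends of the recording, and the choice to interpolate before masking are all assumptions made for illustration. Only the 2 cm s−1 threshold and the use of linear interpolation come from the text.

```python
import math
from bisect import bisect_left


def interp_linear(t_query, t_known, values):
    """Linearly interpolate `values` (sampled at increasing `t_known`) onto `t_query`.

    Queries outside the sampled range are clamped to the first/last value
    (an illustrative choice, not taken from the paper).
    """
    out = []
    for t in t_query:
        i = bisect_left(t_known, t)
        if i == 0:
            out.append(values[0])
        elif i == len(t_known):
            out.append(values[-1])
        else:
            t0, t1 = t_known[i - 1], t_known[i]
            w = (t - t0) / (t1 - t0)
            out.append(values[i - 1] + w * (values[i] - values[i - 1]))
    return out


def running_speed(t, x, y):
    """Speed (cm/s) from finite differences; the first sample copies the second."""
    speed = [
        math.hypot(x[i] - x[i - 1], y[i] - y[i - 1]) / (t[i] - t[i - 1])
        for i in range(1, len(t))
    ]
    return [speed[0]] + speed


def align_and_filter(t_cam, x, y, t_img, min_speed=2.0):
    """Resample position onto imaging time stamps and flag frames to keep.

    Returns interpolated x, y and a boolean list that is True where the
    interpolated speed is at least `min_speed` (2 cm/s in the text).
    """
    speed = running_speed(t_cam, x, y)
    xi = interp_linear(t_img, t_cam, x)
    yi = interp_linear(t_img, t_cam, y)
    si = interp_linear(t_img, t_cam, speed)
    keep = [s >= min_speed for s in si]
    return xi, yi, keep
```

The `keep` mask would then be applied to both the position samples and the calcium events so that slow or stationary periods do not contribute to occupancy or spike counts.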

Corner cell analyses

Calculation of spatial rate maps

After we obtained the deconvolved spiking activity of neurons, we binarized it by applying a threshold of three times the standard deviation of all the deconvolved spiking activity for each neuron. The position data were sorted into 1.6 × 1.6 cm non-overlapping spatial bins. The spatial rate map for each neuron was constructed by dividing the total number of calcium spikes by the animal’s total occupancy in a given spatial bin. The rate maps were smoothed using a 2D convolution with a Gaussian filter that had a standard deviation of two.
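The rate-map construction described above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' code: the function names are hypothetical, the sample standard deviation is used for the threshold, unvisited bins are set to zero, the rate is expressed in events per frame, the Gaussian standard deviation is interpreted in units of bins, and the convolution is zero-padded at the edges. None of these details is specified in the text.

```python
import math
from statistics import stdev


def binarize(deconv, n_sd=3.0):
    """Mark samples exceeding n_sd times the SD of the neuron's deconvolved trace."""
    thr = n_sd * stdev(deconv)  # sample SD (assumption)
    return [1 if v > thr else 0 for v in deconv]


def rate_map(x, y, events, width_cm, height_cm, bin_cm=1.6):
    """Events per frame of occupancy in each bin_cm x bin_cm spatial bin."""
    nx = math.ceil(width_cm / bin_cm)
    ny = math.ceil(height_cm / bin_cm)
    occ = [[0] * nx for _ in range(ny)]
    cnt = [[0] * nx for _ in range(ny)]
    for xi, yi, e in zip(x, y, events):
        # Clamp indices so positions on the arena edge fall in the outer bins.
        c = max(0, min(int(xi // bin_cm), nx - 1))
        r = max(0, min(int(yi // bin_cm), ny - 1))
        occ[r][c] += 1
        cnt[r][c] += e
    # Unvisited bins are set to 0.0 (assumption; NaN would be another choice).
    return [
        [cnt[r][c] / occ[r][c] if occ[r][c] else 0.0 for c in range(nx)]
        for r in range(ny)
    ]


def gaussian_kernel(sigma=2.0):
    """Normalized 1D Gaussian kernel truncated at 3 sigma."""
    radius = int(3 * sigma)
    k = [math.exp(-0.5 * (i / sigma) ** 2) for i in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]


def smooth(grid, sigma=2.0):
    """Separable 2D Gaussian smoothing with zero padding at the borders."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2

    def conv_rows(g):
        out = []
        for row in g:
            n = len(row)
            out.append([
                sum(k[j + r] * row[i + j] for j in range(-r, r + 1) if 0 <= i + j < n)
                for i in range(n)
            ])
        return out

    tmp = conv_rows(grid)                      # smooth along columns of each row
    tmp_t = [list(col) for col in zip(*tmp)]   # transpose
    out_t = conv_rows(tmp_t)                   # smooth along the other axis
    return [list(col) for col in zip(*out_t)]  # transpose back
```

Dividing the events-per-frame values by the frame interval would convert the map to events per second.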

Corner score for each field

To detect spatial fields in a given rate map, we first applied a threshold to filter the rate map. After filtering, each connected pixel region was considered a place field, and the x and y coordinates of the regional maxima for each field were the locations of the fields. We used a filtering threshold of 0.3 times the maximum spike rate for identifying corner cells in smaller environments (for example, the circle, triangle, square and hexagon), and a filtering threshold of 0.4 times the maximum spike rate for identifying corner cells in larger environments (for example, 40 cm square, rectangle and convex environments, Fig. 4). These thresholds were determined from a search of threshold values that ranged from 0.1 to 0.6. The threshold range that resulted in the best corner cell classification, as determined by the overall firing-rate difference between the corner and the centroid of an environment (for example, Fig. 1h), was 0.3–0.4 across different environments. The coordinates of the centroid and corners of the environments were automatically detected with manual corrections. For each field, we defined the corner score as:

$$\text{corner score} = \frac{d_1 - d_2}{d_1 + d_2}$$

where d1 is the distance between the environmental centroid and the field, and d2 is the distance between the field and the nearest environmental corner. The score ranges from −1 for fields situated at the centroid of the arena to +1 for fields perfectly located at a corner (Extended Data Fig. 1f).
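As an illustration of the field detection and per-field scoring described above, the following Python sketch thresholds a rate map, groups supra-threshold bins into connected regions, and scores each region's peak. It assumes the score takes the form (d1 − d2)/(d1 + d2), which is consistent with the stated range of −1 at the centroid to +1 at a corner. The function names and the use of 4-connectivity are assumptions, not details from the text.

```python
import math


def detect_fields(rate_map, frac=0.3):
    """Return the peak (row, col) of each connected region above frac * max rate.

    Regions are grown with 4-connectivity (assumption); `frac` is 0.3 or 0.4
    in the text, depending on the size of the environment.
    """
    ny, nx = len(rate_map), len(rate_map[0])
    thr = frac * max(max(row) for row in rate_map)
    seen = [[False] * nx for _ in range(ny)]
    peaks = []
    for r in range(ny):
        for c in range(nx):
            if seen[r][c] or rate_map[r][c] <= thr:
                continue
            # Flood-fill one region, tracking its maximum bin.
            stack, best = [(r, c)], (r, c)
            seen[r][c] = True
            while stack:
                i, j = stack.pop()
                if rate_map[i][j] > rate_map[best[0]][best[1]]:
                    best = (i, j)
                for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    a, b = i + di, j + dj
                    if (0 <= a < ny and 0 <= b < nx
                            and not seen[a][b] and rate_map[a][b] > thr):
                        seen[a][b] = True
                        stack.append((a, b))
            peaks.append(best)
    return peaks


def corner_score(field_xy, centroid_xy, corners_xy):
    """Score a field location: -1 at the centroid, +1 at a corner.

    d1 is the field-to-centroid distance and d2 is the distance from the
    field to the nearest corner, as defined in the text.
    """
    d1 = math.dist(field_xy, centroid_xy)
    d2 = min(math.dist(field_xy, corner) for corner in corners_xy)
    return (d1 - d2) / (d1 + d2)
```

For a 30 cm square with corners at (0, 0), (30, 0), (0, 30) and (30, 30) and its centroid at (15, 15), a field at the midpoint of a wall is equidistant from the centroid and the nearest corner and therefore scores 0 under this form. Peak indices returned by `detect_fields` are in bins and would need converting to the same units as the centroid and corner coordinates before scoring.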

Corner score for each cell