Abstract

Objective

Tongue segmentation is the basis for automated tongue recognition studies in Chinese medicine, but existing segmentation models suffer from defects such as network degradation and an inability to obtain global features, which seriously affect the segmentation result. This article proposes RTC_TongueNet, an improved model based on DeepLabV3 that combines an improved residual structure with a transformer and integrates the ECA (Efficient Channel Attention) mechanism into multiscale atrous convolution to improve tongue image segmentation.

Methods

We improve the DeepLabV3 backbone network by incorporating a transformer structure and an improved residual structure. The residual module takes one of two forms, chosen according to whether the input feature map is downsampled, so that shallow information is mapped to the deeper layers more frequently and the low-level features of the tongue image are extracted more effectively. In addition, an ECA attention mechanism is introduced after the concat operation in the ASPP (Atrous Spatial Pyramid Pooling) structure to strengthen information interaction and fusion, extract local and global features effectively, and make the model focus more on difficult-to-separate areas such as the tongue edge, yielding a better segmentation result.

Results

The RTC_TongueNet model was compared with the FCN (Fully Convolutional Networks), UNet, LRASPP (Lite Reduced ASPP), and DeepLabV3 models on two datasets. On both datasets, the MIOU (Mean Intersection over Union) and MPA (Mean Pixel Accuracy) values of the classic DeepLabV3 model were higher than those of the FCN, UNet, and LRASPP models. Compared with DeepLabV3, RTC_TongueNet increased MIOU by 0.9% and MPA by 0.3% on the first dataset, and increased MIOU by 1.0% and MPA by 1.1% on the second dataset. The RTC_TongueNet model performed best on both datasets.

Conclusion

In this study, based on DeepLabV3, we use the improved residual structure and a transformer as the backbone to extract both local and global image features. The ECA attention module is added to enhance channel attention, strengthening useful information and weakening the interference of useless information. The RTC_TongueNet model can segment tongue images effectively, and this study has practical and reference value for tongue image segmentation.

Keywords: Tongue segmentation, DeepLabV3, improved residual structure, transformer, attention mechanism

Introduction

Traditional Chinese medicine has a long history. It diagnoses diseases through four diagnostic methods: inspection, listening and smelling, inquiry, and pulse-taking. Among them, tongue diagnosis is the most important. Traditional Chinese medicine theory holds that the tongue is connected with the five internal organs through the meridians. The deficiency or excess of the organs, Qi and blood, and body fluids, as well as changes in disease, can be objectively reflected in tongue images. 1 Doctors can carry out syndrome differentiation and treatment by observing the patient's coating color, coating texture, tongue color, and tongue shape, combined with inquiry and pulse diagnosis. Therefore, tongue diagnosis is an important basis for clinicians in diagnosing diseases. 2 Tongue diagnosis requires professional knowledge and rich clinical experience. Tongue images are also easily affected by factors such as lighting conditions and staining of the tongue coating by colored food. The tongue image contains rich information. Using modern technology to obtain this information objectively, accurately, and effectively can assist doctors in tongue diagnosis, which is of great significance for modernizing traditional Chinese medicine diagnosis and treatment.

In recent years, with the development of modern technology, many scholars have studied the automation of tongue image diagnosis. Automatic diagnosis requires extracting information from tongue images and classifying them. However, because of interference from facial features, lips, teeth, the upper jaw, and other content, classifying the raw image directly performs poorly. 3 Segmenting the tongue from the background excludes the influence of these other parts and greatly improves tongue classification. Tongue segmentation is therefore the basis of research on automatic tongue image diagnosis: only when the tongue is segmented accurately and completely can tongue image classification be effectively improved.

In tongue segmentation, the edge position of the tongue, such as the gap between the tongue and the surrounding teeth, lips, upper jaw, and oral cavity, is difficult to segment because it is close to the color of the tongue. Currently, tongue segmentation has become a research hotspot in the field of modernization of traditional Chinese medicine. Many scholars have conducted related research on tongue segmentation, tried various segmentation methods, and achieved some research results.

Tongue image segmentation methods can be divided into traditional machine learning–based methods and deep learning–based end-to-end methods. Deep learning methods segment clearly better than traditional machine learning methods, so in recent years many scholars have studied tongue image segmentation with deep learning models. Much of this work fine-tunes, optimizes, or improves the classical semantic segmentation model UNet,4–7 which can achieve good tongue image segmentation. Some researchers have also optimized and improved the DeepLabV3 or DeepLabV3+ models 8 to obtain better tongue image segmentation. To improve segmentation precision and accuracy, attention mechanisms have been introduced to address inaccurate tongue and edge segmentation: the attention feature map filters the edge information and selects the important features. 9 Some scholars have improved segmentation performance by modifying the loss function.10,11 Others use residual networks or extended residual networks combined with feature pyramids to extract information at multiple scales,12,13 which also performs well in tongue image segmentation.

The above research on tongue segmentation has achieved good results, but these studies are all based on CNN models. Due to the problem of network degradation in deep CNN networks and the limitations of CNN models in feature extraction, global features of tongue images cannot be well extracted. In addition, CNN models also have shortcomings in long-distance dependency relationships, resulting in more fragmented and patchy tongue segmentation results and less smooth tongue segmentation edges, which cannot be well-segmented from the background such as lips, teeth, and upper jaw, affecting the segmentation effect. To solve this problem, this paper proposes an improved tongue segmentation model RTC_TongueNet. The RTC_TongueNet model combines the improved residual structure and transformer based on DeepLabV3 to extract local and global features of the image; at the same time, multiple different sizes of dilated convolutions are used to extract features at multiple scales; further combined with ECA attention module to enhance channel attention, strengthen useful information and weaken noise interference. Our improved model achieved good results on our own dataset and public dataset. The innovation and highlights of this paper mainly include the following three points:

Based on the DeepLabV3 network, the residual structure is improved into two variants to address network degradation. When the width and height of the input feature layer are twice those of the output feature layer, the input feature map passes through two branches; when they are equal to those of the output feature layer, it passes through three branches. The improved residual structure passes through only one convolutional layer in the main branch before being added to the shortcut branch. With fewer intermediate convolution operations, shallow information is mapped to the deeper layers more frequently, and the low-level features of the tongue image are extracted effectively.

To better extract global and local features, the model adds transformer blocks to capture long-distance dependencies. The backbone network combines the two residual structures with transformer blocks inserted at multiple positions, and this structure can effectively extract local and global features. 14

The Atrous Spatial Pyramid Pooling (ASPP) structure is further improved by adding an ECA attention mechanism after the concat operation. This aids information fusion between the outputs of the five parallel operations of DeepLabV3 and makes the model focus more on difficult-to-separate areas such as tongue edges and tooth marks. Experiments show that this change improves tongue segmentation.

The rest of this paper is structured as follows. The “Algorithm principles” section introduces the algorithms on which this paper builds. The “Improved DeepLabV3 model” section presents our improved network structure and its rationale. The “Experiment results” section compares our improved network with other classical methods and shows that the improvements are effective. The “Discussion” section summarizes the work and explains its shortcomings. The last part gives the conclusion of the paper.

Algorithm principles

DeepLabV3 network

DeepLab is a family of semantic segmentation networks developed by Google that is trained end to end. DeepLabV1 uses the VGG convolutional neural network as the backbone for feature extraction. It introduces dilated convolution in the backbone and, like Fully Convolutional Networks (FCN), converts the fully connected layers into convolutional layers to form a fully convolutional network. DeepLabV2 uses the ResNet convolutional neural network as the backbone. To prevent the loss of resolution caused by downsampling, the last two blocks no longer downsample; instead, their ordinary convolution layers are replaced with dilated convolution layers. The extracted features are sent to ASPP, which samples its input in parallel with dilated convolutions of different rates to extract high-level semantic information at multiple scales. 15 DeepLabV3 improves the ASPP structure of DeepLabV2. After the improvement, the feature maps output by the ASPP branches are concatenated along the channel dimension and their channel number is reduced by a 1 × 1 convolution. A final 1 × 1 convolution layer further fuses the result to produce the output. 16 This is shown in Figure 1.

Figure 1.

DeepLabV3 network structure.
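As a small illustration of why dilated (atrous) convolution enlarges the receptive field without adding weights, the sketch below computes the span covered by a k × k kernel with dilation rate r, namely k + (k − 1)(r − 1). The function names are illustrative assumptions and are not taken from the DeepLab code; the rates 12, 24, and 36 are the ones examined later in this paper.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Span, in pixels along one axis, covered by a dilated kernel."""
    if kernel_size < 1 or dilation < 1:
        raise ValueError("kernel_size and dilation must be positive")
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def num_weights(kernel_size: int) -> int:
    """Weights per input/output channel pair; independent of the dilation."""
    return kernel_size * kernel_size


if __name__ == "__main__":
    for rate in (1, 12, 24, 36):
        span = effective_kernel_size(3, rate)
        print(f"rate={rate:2d}: span={span} pixels, weights={num_weights(3)}")
```

The number of weights stays at nine for every rate, while the covered span grows linearly with the rate.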

Residual structure

Deep neural networks can extract high-level semantic information, but as the number of layers in the neural network continues to increase, there is a problem of network degradation where the training set loss does not decrease but increases. The ResNet network introduces the idea of “residual learning” based on traditional convolutional neural networks, which effectively solves the problem of network degradation by adding residual blocks to the network. The basic idea of a residual block is to directly connect the original feature map of the input end to the output end of the main branch through a shortcut branch, which improves the feature extraction ability of the network. A typical residual block can be represented by the following formula:

$$x_{l+1} = f\big(H(x_l) + F(x_l, W_l)\big) \tag{1}$$

where $x_l$ and $x_{l+1}$ are the input and output of the $l$-th layer, $f(\cdot)$ is the activation function, $H(x_l) = x_l$ is the identity mapping, and $F(x_l, W_l)$ is the residual part with weights $W_l$. The residual part generally consists of two or three convolution operations, 17 as shown in Figure 2.

Figure 2.

Residual structure in ResNet.
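The following toy sketch illustrates the residual idea on plain vectors: the block output is the activation applied to the input plus the output of a residual branch. It assumes ReLU as the activation and an identity shortcut, and the names `residual_block` and `zero_branch` are hypothetical. It only shows why a block whose residual branch outputs zero behaves like an identity mapping, which is the intuition behind avoiding degradation.

```python
from typing import Callable, List

Vector = List[float]


def relu(values: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in values]


def residual_block(x: Vector, residual_fn: Callable[[Vector], Vector]) -> Vector:
    """Compute relu(x + residual_fn(x)) with an identity shortcut."""
    fx = residual_fn(x)
    if len(fx) != len(x):
        raise ValueError("the residual branch must preserve the vector length")
    return relu([a + b for a, b in zip(x, fx)])


def zero_branch(x: Vector) -> Vector:
    """A residual branch that has learned nothing: it outputs zeros."""
    return [0.0 for _ in x]


if __name__ == "__main__":
    features = [1.0, 2.0, 0.5]
    # With a zero residual branch, non-negative features pass through unchanged.
    print(residual_block(features, zero_branch))
```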

Vision transformer

The transformer is an encoder–decoder structure that was originally applied in the NLP field. Dosovitskiy et al. first applied the transformer to computer vision and proposed the Vision transformer (ViT) model for image classification. 15 Liu et al.'s Swin transformer introduced a shifted window mechanism that allows the model to learn information across windows. Through downsampling layers, the model can handle high-resolution images, save computation, and attend to both global and local information. 18 In medical image semantic segmentation, many scholars have fused UNet and the transformer. Chen et al. proposed a network that fuses UNet and the transformer to aggregate information from multiresolution input images and achieved good medical image segmentation results. 19 Some scholars have also improved the DeepLabV3 model by fusing it with a transformer. Yang et al. proposed such a fusion for image segmentation of patellar fractures and achieved good results. 20 However, there are no reports on the use of these techniques in tongue image segmentation research.

The ViT model applied to tongue images mainly consists of three parts: the Linear Projection of Flattened Patches (Embedding layer), the transformer Encoder, and the MLP (Multilayer Perceptron) Head used for classification, as shown in Figure 3. The Embedding layer divides the image into image blocks of a given size and flattens each block into a one-dimensional vector. It then prepends a class token to the sequence and adds a Position Embedding to each vector before passing the sequence to the transformer Encoder. The transformer Encoder is a stack of repeated Encoder Blocks. Each Encoder Block includes Layer Norm applied to each token, a multihead attention mechanism, a Dropout layer, and an MLP Block. The MLP Head consists of Linear + tanh activation function + Linear. Finally, the output corresponding to the [class] token is extracted as the final classification result. 15

Figure 3.

Structure diagram of the Vision transformer (ViT).
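To make the Embedding layer concrete, the sketch below counts the tokens that reach the transformer Encoder for a given image and patch size. The 224 × 224 image and 16 × 16 patch used in the example are illustrative values, not settings reported in this paper, and the function names are hypothetical.

```python
def vit_sequence_length(image_height: int, image_width: int,
                        patch_size: int, class_token: bool = True) -> int:
    """Number of tokens fed to the encoder: one per patch, plus the class token."""
    if image_height % patch_size or image_width % patch_size:
        raise ValueError("image size must be divisible by the patch size")
    num_patches = (image_height // patch_size) * (image_width // patch_size)
    return num_patches + (1 if class_token else 0)


def flattened_patch_dim(patch_size: int, channels: int = 3) -> int:
    """Length of the one-dimensional vector obtained by flattening one patch."""
    return patch_size * patch_size * channels


if __name__ == "__main__":
    print(vit_sequence_length(224, 224, 16))  # 14 * 14 patches + 1 class token
    print(flattened_patch_dim(16))            # 16 * 16 pixels * 3 channels
```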

ECA attention mechanism

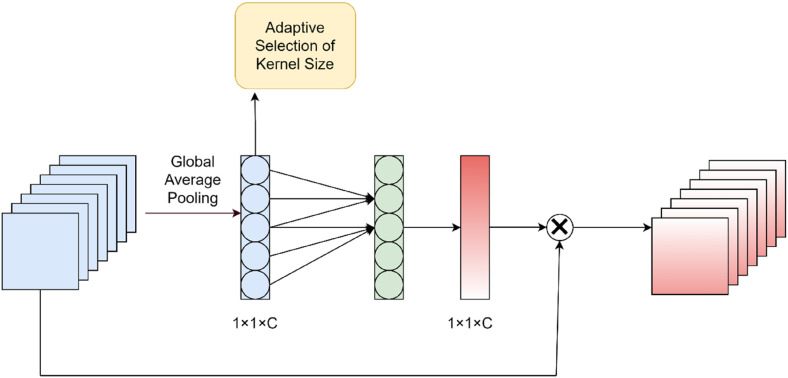

Weighing the improvement and the parameter count of various attention mechanisms, this paper uses the ECA attention mechanism. As shown in Figure 4, the ECA structure first applies global average pooling to each channel separately and then performs a one-dimensional convolution over each channel and its k neighbors to achieve local cross-channel information interaction. The global average pooling layer effectively reduces the number of parameters and integrates global information. The one-dimensional convolution not only provides local cross-channel interaction but also avoids the negative effect that dimensionality reduction has on channel attention learning in the earlier SE attention mechanism. The ECA structure also has fewer parameters than a two-dimensional convolution, so it maintains model performance while reducing model complexity. 21

Figure 4.

ECA attention mechanism.
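The steps described above can be sketched without any deep learning framework. The code below is a minimal illustration, not the authors' implementation: it assumes the feature map is a nested list indexed as [channel][row][column], that the one-dimensional kernel is shared by all channels, and that the channel axis is zero-padded at both ends. In a real network the kernel values would be learned.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][column]


def eca(features: FeatureMap, kernel: List[float]) -> FeatureMap:
    """Reweight channels using a shared 1-D kernel of odd length (ECA-style)."""
    if len(kernel) % 2 == 0:
        raise ValueError("kernel length must be odd")
    half = len(kernel) // 2
    num_channels = len(features)

    # 1) Global average pooling: one scalar descriptor per channel.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
              for ch in features]

    # 2) 1-D convolution over each channel and its neighbours (zero padding),
    # 3) followed by a sigmoid gate that yields one weight per channel.
    weights = []
    for c in range(num_channels):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = c + j - half
            if 0 <= idx < num_channels:
                acc += w * pooled[idx]
        weights.append(1.0 / (1.0 + math.exp(-acc)))

    # 4) Rescale every value of a channel by that channel's weight.
    return [[[value * weights[c] for value in row] for row in ch]
            for c, ch in enumerate(features)]


if __name__ == "__main__":
    toy = [[[1.0, 3.0]], [[2.0, 2.0]], [[0.0, 4.0]]]  # 3 channels, 1 x 2 each
    print(eca(toy, kernel=[0.2, 0.5, 0.2]))
```

The only parameters are the k kernel values, which is why the module adds almost nothing to the model size.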

Improved DeepLabV3 model

This paper proposes a new model RTC_TongueNet based on the improved DeepLabV3 model. The new model is improved from multiple perspectives such as residual structure, transformer structure, ASPP, and backbone network.

Improved residual structure

To better address network degradation, this paper improves the residual structure. When the width and height of the input feature layer are twice those of the output feature layer, the input feature map passes through two branches: the main branch is a 3 × 3 convolution, and the shortcut branch is a 1 × 1 convolution. When the width and height of the input feature layer are equal to those of the output feature layer, the input feature map passes through three branches: the main branch is a 3 × 3 convolution, one shortcut branch is a 1 × 1 convolution, and the other shortcut branch passes only through a BN layer, as shown in Figure 5. Compared with the residual structure in ResNet, the improved structure has one fewer convolutional layer in the main branch and fewer intermediate convolution operations, so shallow information is mapped to the deeper layers more frequently. In addition, every shortcut branch passes through a BN layer, which further mitigates vanishing and exploding gradients.

Figure 5.

Improved residual structure. (a) The width and height of the input feature layer are twice that of the output feature layer. (b) The width and height of the input feature layer are equal to the output feature layer.
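Read literally, the two cases in Figure 5 can be written compactly as below. This is a hedged reading rather than the authors' exact definition: the placement of the activation $f$, the use of stride $s = 2$ to halve the width and height, and the absence of a BN layer after the 3 × 3 main branch are all assumptions.

```latex
y =
\begin{cases}
f\!\left(\mathrm{Conv}^{s=2}_{3\times 3}(x)
  + \mathrm{BN}\!\left(\mathrm{Conv}^{s=2}_{1\times 1}(x)\right)\right),
  & \text{(a) input width and height are twice the output} \\[6pt]
f\!\left(\mathrm{Conv}^{s=1}_{3\times 3}(x)
  + \mathrm{BN}\!\left(\mathrm{Conv}^{s=1}_{1\times 1}(x)\right)
  + \mathrm{BN}(x)\right),
  & \text{(b) input and output sizes are equal}
\end{cases}
```

In case (b) the BN-only branch plays the role of the identity shortcut of a standard residual block.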

Improved transformer structure

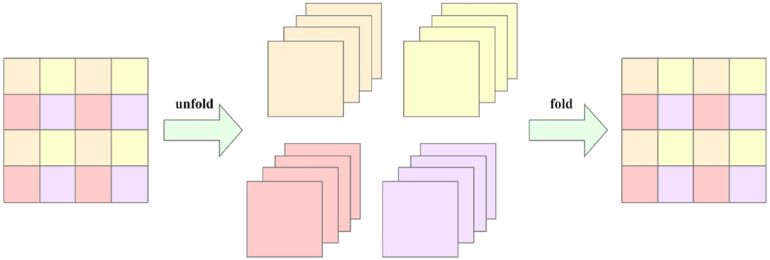

In this paper, multiple transformer block structures are used to model local and global information. Convolution operations are used to model local information, and an improved self-attention mechanism is used to model global information. Unlike traditional self-attention mechanisms, this method solves the problem of the transformer's lack of spatial inductive bias.

As shown in Figure 6, for a given input tensor $X \in \mathbb{R}^{H \times W \times C}$, a 3 × 3 convolution is used to model local information. The result is then passed through a 1 × 1 convolution to obtain the tensor $X_L \in \mathbb{R}^{H \times W \times D}$, which lifts the local information to a higher-dimensional space (C and D are numbers of channels, with C < D). Next, $X_L$ is unfolded into N nonoverlapping patches $X_U \in \mathbb{R}^{P \times N \times D}$, where $P = wh$ is the number of pixels in a patch, $N = WH/P$ is the number of patches, D is the number of channels, and w and h are the width and height of each patch. For each $p \in \{1, \ldots, P\}$, the relationship between patches is encoded using a transformer to obtain:

$$X_G(p) = \mathrm{Transformer}\big(X_U(p)\big), \quad 1 \le p \le P \tag{2}$$

Figure 6.

Improved transformer structure.

Figure 7 explains the encoding process of Figure 6 in detail. Each patch is evenly divided into tokens, and tokens at the same position in every patch are encoded together; that is, the tokens drawn in the same color in the figure are encoded together. This preserves both the order of the patches and the order of the tokens within each patch. We can therefore fold $X_G \in \mathbb{R}^{P \times N \times D}$ (after encoding) to obtain $X_F \in \mathbb{R}^{H \times W \times D}$ and then project $X_F$ to a low-dimensional (C-dimensional) space using a 1 × 1 convolution. The result is concatenated with the tensor X along the depth direction through a shortcut branch, and a final 1 × 1 convolution adjusts the number of channels back to C, the same as the tensor X. Note that $X_U(p)$ is encoded after local information has been integrated by the 3 × 3 convolution, so each token in a patch already contains information from the surrounding pixels of that patch area. $X_G(p)$ in turn encodes, for the p-th token of each patch, information gathered across all patches. As a result, each pixel in $X_G$ can encode information from all pixels in X.

Figure 7.

Encoding and decoding process.
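The unfold and fold steps amount to a change of indexing, which the following sketch makes explicit. It assumes row-major ordering of the patches and of the pixels inside a patch, zero-based indices, and image sizes divisible by the patch size; the function names are hypothetical. Pixels that share the same p form the group that is encoded together by the transformer.

```python
from typing import Dict, List, Tuple


def unfold_index(y: int, x: int, width: int,
                 patch_h: int, patch_w: int) -> Tuple[int, int]:
    """Map pixel (y, x) to (p, n): position inside its patch and patch index."""
    patches_per_row = width // patch_w
    n = (y // patch_h) * patches_per_row + (x // patch_w)
    p = (y % patch_h) * patch_w + (x % patch_w)
    return p, n


def fold_index(p: int, n: int, width: int,
               patch_h: int, patch_w: int) -> Tuple[int, int]:
    """Inverse of unfold_index: recover pixel (y, x) from (p, n)."""
    patches_per_row = width // patch_w
    y = (n // patches_per_row) * patch_h + p // patch_w
    x = (n % patches_per_row) * patch_w + p % patch_w
    return y, x


def attention_groups(height: int, width: int,
                     patch_h: int, patch_w: int) -> Dict[int, List[Tuple[int, int]]]:
    """Group pixels by p; each group holds exactly one pixel from every patch."""
    groups: Dict[int, List[Tuple[int, int]]] = {}
    for y in range(height):
        for x in range(width):
            p, _ = unfold_index(y, x, width, patch_h, patch_w)
            groups.setdefault(p, []).append((y, x))
    return groups


if __name__ == "__main__":
    # A 4 x 4 map with 2 x 2 patches gives P = 4 positions and N = 4 patches.
    for p, pixels in sorted(attention_groups(4, 4, 2, 2).items()):
        print(p, pixels)
```

Because `fold_index` inverts `unfold_index` exactly, folding the encoded tensor restores every pixel to its original location.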

ASPP structure with ECA attention

The DeepLabV3 model uses the ASPP structure to integrate as much global information as possible and extract useful information. This article attempts to improve the ASPP structure by adding an ECA attention mechanism after the concat operation.

The ASPP structure in DeepLabV3 consists of five parallel branches: a 1 × 1 convolution layer, three 3 × 3 convolution layers with different dilation rates for extracting multiscale image information, and a global pooling layer. The output feature maps of the five branches have the same size, 60 × 60 × 256. The five outputs are concatenated along the channel dimension into a 60 × 60 × 1280 tensor, the ECA attention mechanism is applied, and a 1 × 1 convolution layer then performs further feature fusion, giving an output of size 60 × 60 × 256. The improved ASPP structure takes the backbone's output as input, and its output passes through two convolutional layers before being restored to the original image size by bilinear interpolation.

The experiment shows that the addition of the ECA attention mechanism is more conducive to the information exchange between the output results after five parallel operations and can further obtain useful information. The ASPP structure with ECA attention is shown in Figure 8.

Figure 8.

Atrous Spatial Pyramid Pooling (ASPP) structure with ECA attention.
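The tensor sizes quoted above can be checked with the standard convolution output-size formula. The sketch below is only shape bookkeeping under two assumptions that the text does not state: each 3 × 3 dilated convolution uses stride 1 with padding equal to its dilation rate, and the globally pooled branch is resized back to 60 × 60 before concatenation. The function names are hypothetical.

```python
from typing import Dict, Sequence, Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def conv_output_size(size: int, kernel_size: int, dilation: int = 1,
                     padding: int = 0, stride: int = 1) -> int:
    """Output length of a (dilated) convolution along one axis."""
    span = dilation * (kernel_size - 1) + 1
    return (size + 2 * padding - span) // stride + 1


def aspp_eca_shapes(height: int = 60, width: int = 60, branch_channels: int = 256,
                    rates: Sequence[int] = (12, 24, 36)) -> Dict[str, Shape]:
    """Trace the shapes through concat -> ECA -> 1x1 fusion convolution."""
    branches = [(height, width, branch_channels)]            # 1x1 convolution branch
    for rate in rates:                                       # three dilated 3x3 branches
        h = conv_output_size(height, 3, dilation=rate, padding=rate)
        w = conv_output_size(width, 3, dilation=rate, padding=rate)
        branches.append((h, w, branch_channels))
    branches.append((height, width, branch_channels))        # pooled branch, resized back
    if any(b[:2] != (height, width) for b in branches):
        raise ValueError("branches must share one spatial size to be concatenated")
    concat = (height, width, sum(b[2] for b in branches))
    return {
        "concat": concat,                                    # channel-wise concatenation
        "eca": concat,                                       # ECA only rescales channels
        "fused": (height, width, branch_channels),           # after the 1x1 convolution
    }


if __name__ == "__main__":
    print(aspp_eca_shapes())
```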

Backbone network

Increasing the depth of a traditional convolutional neural network can cause exploding or vanishing gradients and leads to network degradation. Moreover, because image segmentation must decode the result back to the original image resolution, the downsampling factor should not be too large; this limits the receptive field of the CNN and makes it difficult to capture global information, whereas a transformer can effectively capture long-distance dependencies. Based on these considerations, this paper combines the two residual structures with transformer blocks to build its own backbone network for feature extraction. The new model uses the improved residual structure, multiple transformer blocks, and an ASPP module optimized by incorporating the ECA attention mechanism. The backbone structure of the network is shown in Figure 9, where newconv is the new residual structure described above and r is the dilation rate of the dilated convolution.

Figure 9.

RTC_TongueNet network structure diagram.

Experiment results

Dataset

In January 2023, to study the intelligent recognition of traditional Chinese medicine tongue images, we collected patient tongue images in the Gastroenterology Department of the Third Affiliated Hospital of Beijing University of Chinese Medicine and carried out research. All patients provided written informed consent. We used two datasets in the experiment. The details of the datasets are as follows:

BIOHit dataset: This is a public dataset provided by the Harbin Institute of Technology. The tongue images in this dataset were collected by a professional tongue imaging instrument with a constant light source under a semiclosed state. There are a total of 300 images in the dataset, and the image size is 768 × 576 with a jpg format. The dataset has been annotated with marked mask files.

Self-built tongue image dataset: This is a tongue image dataset collected by the Third Affiliated Hospital of Beijing University of Traditional Chinese Medicine in clinical practice. The dataset was collected by a professional tongue imaging instrument, and there are 642 images in total. The image size is 2592 × 1944 with a PNG format. The original images of the patients contain the outline of the instrument and the patient's facial information. After further manual cropping, we retained the part below the nose to construct our own dataset. The tongue was annotated using Labelme software by professional personnel, and the annotation results of the dataset have been confirmed and improved by medical experts.

In this study, we randomly divided the two tongue image datasets into training sets and test sets at a ratio of approximately 8:2. The training set of the BIOHit dataset consists of 240 images, and the test set consists of 60 images. Our own tongue image dataset consists of 530 images for training and 112 images for the test set. During training, a 256 × 256 portion was randomly cropped from each image as input and normalized. To reduce the overfitting of the model, random horizontal flipping was performed on the training set data to achieve data augmentation.
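One detail worth making explicit is that the random crop and the horizontal flip must be applied identically to an image and its mask, otherwise the labels no longer match the pixels. The sketch below illustrates this with plain nested lists. The flip probability of 0.5 and the function name are assumptions, since the text does not state them, and normalization is omitted.

```python
import random
from typing import List, Tuple

Grid = List[List[int]]


def random_crop_and_flip(image: Grid, mask: Grid, crop: int,
                         rng: random.Random,
                         flip_prob: float = 0.5) -> Tuple[Grid, Grid]:
    """Apply the same random crop and horizontal flip to an image and its mask."""
    height, width = len(image), len(image[0])
    if len(mask) != height or len(mask[0]) != width:
        raise ValueError("image and mask must have the same size")
    if crop > height or crop > width:
        raise ValueError("crop size exceeds the image size")

    # Draw the random parameters once so both inputs share them.
    top = rng.randint(0, height - crop)
    left = rng.randint(0, width - crop)
    flip = rng.random() < flip_prob

    def transform(grid: Grid) -> Grid:
        rows = [row[left:left + crop] for row in grid[top:top + crop]]
        return [row[::-1] for row in rows] if flip else rows

    return transform(image), transform(mask)


if __name__ == "__main__":
    toy_image = [[10 * r + c for c in range(8)] for r in range(6)]
    toy_mask = [[1 if 2 <= c <= 5 else 0 for c in range(8)] for _ in range(6)]
    cropped_image, cropped_mask = random_crop_and_flip(
        toy_image, toy_mask, crop=4, rng=random.Random(0))
    print(cropped_image)
    print(cropped_mask)
```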

Experimental environment

The experiments were run on the Windows 11 operating system with an NVIDIA GeForce RTX2060 GPU; the programming language was Python 3.10, and the deep learning framework was PyTorch 1.10. The default parameter settings were as follows: the optimizer was SGD, the number of epochs was 50, the batch size was 2, the initial learning rate was 0.001, and the loss function was CrossEntropyLoss.

Experimental evaluation indicators

Image semantic segmentation can be regarded as pixel-level classification. PA (Pixel Accuracy), Class PA, MPA (Mean PA), IOU (Intersection over Union), MIOU (Mean IOU), and other indicators are often used to evaluate the segmentation performance of models.22–26 In this experiment, we used the commonly used evaluation indicators for medical image segmentation, MIOU, MPA, and Dice similarity coefficient, to evaluate and compare the model's segmentation performance.

The evaluation metrics of image semantic segmentation are based on the confusion matrix. The meanings and formulas of each metric are shown below:

- PA: the proportion of correctly predicted pixels among all pixels. In the confusion matrix, the elements on the main diagonal are the correctly predicted pixels, and the elements at other positions are the incorrectly predicted pixels. TP is true positive; TN is true negative; FP is false positive; FN is false negative. $p_{ii}$ is the number of pixels of class i correctly identified as class i; $p_{ij}$ is the number of pixels of class i identified as class j; k + 1 is the number of classes, including the background:27–29

$$PA = \frac{\sum_{i=0}^{k} p_{ii}}{\sum_{i=0}^{k}\sum_{j=0}^{k} p_{ij}} \tag{3}$$

- MPA: Mean PA, that is, the pixel accuracy averaged over classes. For each class, compute the proportion of its pixels that are correctly classified, and then take the average of these per-class accuracies over all classes to obtain the MPA index: 30

$$MPA = \frac{1}{k+1}\sum_{i=0}^{k}\frac{p_{ii}}{\sum_{j=0}^{k} p_{ij}} \tag{4}$$

- MIOU: the average over all classes of the ratio between the intersection and the union of the predicted and true regions. In the formula, k is the number of foreground classes to be segmented, so there are k + 1 classes in total (the background is one class); $p_{ii}$ is the number of pixels of class i correctly identified as class i, that is, the TPs of class i; $p_{ij}$ with $j \ne i$ is the number of pixels of class i incorrectly identified as class j, that is, the FNs of class i; $p_{ji}$ with $j \ne i$ is the number of pixels of class j incorrectly identified as class i, that is, the FPs of class i:31–33

$$MIOU = \frac{1}{k+1}\sum_{i=0}^{k}\frac{p_{ii}}{\sum_{j=0}^{k} p_{ij} + \sum_{j=0}^{k} p_{ji} - p_{ii}} \tag{5}$$
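The three metrics can be computed directly from a confusion matrix, as in the following sketch. It assumes that rows index the true class and columns the predicted class, and that every class occurs at least once (otherwise a denominator would be zero). The function name and the toy matrix are illustrative and are not taken from the experiments.

```python
from typing import Dict, List


def segmentation_metrics(confusion: List[List[int]]) -> Dict[str, float]:
    """PA, MPA and MIOU from confusion[i][j] = pixels of true class i predicted as j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    true_totals = [sum(confusion[i]) for i in range(n)]                       # TP + FN
    pred_totals = [sum(confusion[j][i] for j in range(n)) for i in range(n)]  # TP + FP

    class_pa = [confusion[i][i] / true_totals[i] for i in range(n)]
    class_iou = [confusion[i][i] / (true_totals[i] + pred_totals[i] - confusion[i][i])
                 for i in range(n)]
    return {
        "PA": correct / total,
        "MPA": sum(class_pa) / n,
        "MIOU": sum(class_iou) / n,
    }


if __name__ == "__main__":
    # Toy two-class example: class 0 = background, class 1 = tongue.
    toy = [[8, 2],
           [1, 9]]
    for name, value in segmentation_metrics(toy).items():
        print(f"{name}: {value:.4f}")
```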

Experimental results

Comparison of the dilation coefficients in the ASPP structure

In the ASPP structure, the dilation coefficient may also affect the model performance. To determine the optimal dilation coefficient, this study conducted experiments on four groups of dilation coefficients on the second dataset. Each group has three values representing the dilation coefficients of the three parallel dilated convolutions in the ASPP structure. The experimental results are shown in Table 1.

Table 1.

Comparison table of dilation coefficients in ASPP structure.

| Dilation coefficient group | MIOU |
|---|---|
| 3, 6, 9 | 0.949 |
| 9, 18, 27 | 0.950 |
| 12, 24, 36 | 0.951 |
| 15, 30, 45 | 0.950 |

As can be seen from the experimental results, the model performs best when the dilation coefficient array is 12, 24, and 36. Subsequent experiments will be based on this result and use the dilation coefficient array of 12, 24, and 36 for experimentation.

Comparison of each model

In this experiment, five models, FCN, UNet, Lite Reduced ASPP (LRASPP), DeepLabV3, and RTC_TongueNet, were each evaluated on the two datasets. Table 2 shows the MIOU and MPA values of each model on the two datasets.

Table 2.

Performance of each model on the self-built tongue image dataset and BIOHit.

| Network | Our dataset MIOU | Our dataset MPA | BIOHit dataset MIOU | BIOHit dataset MPA |
|---|---|---|---|---|
| FCN | 0.869 ± 0.041 | 0.935 ± 0.051 | 0.862 ± 0.044 | 0.911 ± 0.054 |
| UNet | 0.936 ± 0.014 | 0.971 ± 0.022 | 0.924 ± 0.023 | 0.951 ± 0.017 |
| LRASPP | 0.926 ± 0.022 | 0.961 ± 0.021 | 0.903 ± 0.026 | 0.930 ± 0.019 |
| DeepLabV3 | 0.941 ± 0.016 | 0.976 ± 0.015 | 0.931 ± 0.019 | 0.968 ± 0.013 |
| RTC_TongueNet (ours) | 0.950 ± 0.012 | 0.979 ± 0.015 | 0.941 ± 0.019 | 0.979 ± 0.011 |

The experiment shows that the MIOU and MPA values of the classic DeepLabV3 model are higher than those of the FCN, UNet, and LRASPP models on both datasets. Compared with DeepLabV3, our improved model RTC_TongueNet increases MIOU by 0.9% and MPA by 0.3% on the first dataset, and both its MIOU and MPA are the highest among the five models. On the second dataset, RTC_TongueNet increases MIOU by 1.0% and MPA by 1.1% compared with DeepLabV3, and both values are again the highest among the five models. Overall, the RTC_TongueNet (ours) model performs best on both datasets.
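The gains quoted above are absolute differences between the mean values in Table 2, expressed in percentage points. The short sketch below reproduces that arithmetic; the dictionary simply copies the DeepLabV3 and RTC_TongueNet means from Table 2, and the helper name is hypothetical.

```python
# Mean values copied from Table 2 (standard deviations omitted).
TABLE_2 = {
    "Our dataset": {
        "DeepLabV3": {"MIOU": 0.941, "MPA": 0.976},
        "RTC_TongueNet": {"MIOU": 0.950, "MPA": 0.979},
    },
    "BIOHit": {
        "DeepLabV3": {"MIOU": 0.931, "MPA": 0.968},
        "RTC_TongueNet": {"MIOU": 0.941, "MPA": 0.979},
    },
}


def gain_in_points(dataset: str, metric: str) -> float:
    """Absolute gain of RTC_TongueNet over DeepLabV3, in percentage points."""
    baseline = TABLE_2[dataset]["DeepLabV3"][metric]
    improved = TABLE_2[dataset]["RTC_TongueNet"][metric]
    return round((improved - baseline) * 100, 1)


if __name__ == "__main__":
    for dataset in TABLE_2:
        for metric in ("MIOU", "MPA"):
            print(f"{dataset} {metric}: +{gain_in_points(dataset, metric)} points")
```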

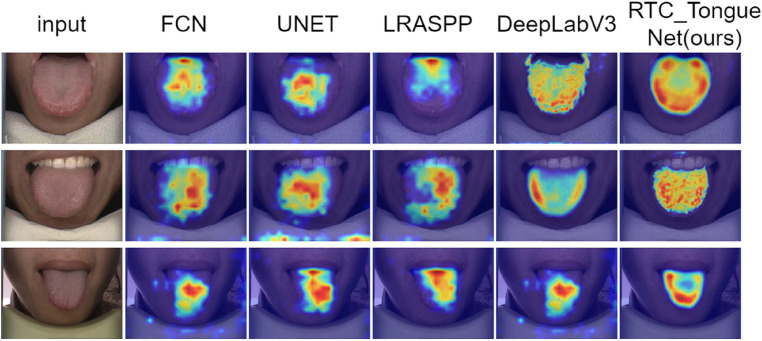

We further compare the tongue segmentation results of each model, as shown in Figure 10. The predictions show that FCN and the other baseline models suffer from problems such as inaccurate segmentation of the tongue edge, incorrect segmentation of the tongue root, and spots inside the tongue region. The improved RTC_TongueNet (ours) model gives the most accurate segmentation: it handles the tongue edge well, produces relatively smooth edges, and effectively identifies teeth and lips at the edge of the tongue and correctly assigns them to the background.

Figure 10.

Tongue segmentation effect of each model.

We further compare how the training loss of each model changes with the epoch on the second dataset. The results are shown in Figure 11. The loss of every model is stable after 30 epochs, indicating that each model has converged and fitted the data, which supports the reliability of the experimental results. The loss of the RTC_TongueNet (ours) model also decreases faster than that of the other models, stabilizes after 30 epochs, and reaches the smallest final value.

Figure 11.

Loss and Epoch change plots for each model.

Ablation experiment

To verify whether the improvement strategy has a positive effect on the model, the RTC_TongueNet (ours) model was tested using ablation experiments on the two datasets, respectively. The results are as follows:

In Tables 3 and 4 the components are added cumulatively, and each gain below is measured against the preceding configuration. After adding the ECA attention mechanism, the MIOU of the model improved on both datasets, while the MPA did not change on the first dataset and increased by 0.3% on the second dataset. After adding the transformer on top of ECA, MIOU increased by 0.4% on both datasets, and MPA increased by 0.2% on the first dataset and 0.6% on the second dataset. After further adding the new residual structure, MIOU increased by 0.4% and MPA by 0.1% on both datasets. These results suggest that the ECA attention mechanism makes the network attend more closely to the details of the tongue edge and effectively improves model performance; that the self-attention mechanism of the transformer block allows the network to gather information from different subregions for effective local and global feature extraction; and that the new residual structure helps address network degradation. The improvements attempted in this article therefore each have a positive effect on the training of RTC_TongueNet.

Table 3.

Ablation experiment of the RTC_TongueNet model on the self-built tongue image dataset.

| ECA | Transformer | Improved residual structure | MIOU | MPA |
|---|---|---|---|---|
| | | | 0.941 | 0.976 |
| √ | | | 0.942 | 0.976 |
| √ | √ | | 0.946 | 0.978 |
| √ | √ | √ | 0.950 | 0.979 |

Table 4.

Ablation experiment of the RTC_TongueNet model on the BIOHit dataset.

| ECA | Transformer | Improved residual structure | MIOU | MPA |
|---|---|---|---|---|
| | | | 0.931 | 0.968 |
| √ | | | 0.933 | 0.971 |
| √ | √ | | 0.937 | 0.978 |
| √ | √ | √ | 0.941 | 0.979 |
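The gains discussed in the ablation analysis can be recomputed directly from the tabulated values. The following is a minimal sketch, not part of the original experiments: the names `ABLATION`, `stepwise_gains`, and `total_gain` are hypothetical, and the numbers are simply copied from Tables 3 and 4. Because the tables report values rounded to three decimals, a recomputed gain may differ by about 0.1 percentage points from a figure quoted in the text.

```python
# MIOU / MPA values copied from Tables 3 and 4 (rounded to three decimals).
# Row order: baseline, +ECA, +ECA+Transformer, +ECA+Transformer+improved residual.
ABLATION = {
    "self-built": [(0.941, 0.976), (0.942, 0.976), (0.946, 0.978), (0.950, 0.979)],
    "BIOHit": [(0.931, 0.968), (0.933, 0.971), (0.937, 0.978), (0.941, 0.979)],
}


def stepwise_gains(rows):
    """Return the (MIOU, MPA) gain of each row over the previous row, in percentage points."""
    gains = []
    for (prev_miou, prev_mpa), (miou, mpa) in zip(rows, rows[1:]):
        gains.append((round((miou - prev_miou) * 100, 1),
                      round((mpa - prev_mpa) * 100, 1)))
    return gains


def total_gain(rows):
    """Return the (MIOU, MPA) gain of the full model over the baseline, in percentage points."""
    (base_miou, base_mpa), (full_miou, full_mpa) = rows[0], rows[-1]
    return (round((full_miou - base_miou) * 100, 1),
            round((full_mpa - base_mpa) * 100, 1))


if __name__ == "__main__":
    for name, rows in ABLATION.items():
        print(name, stepwise_gains(rows), total_gain(rows))
```

The total gains computed this way correspond to the comparison between the DeepLabV3 baseline row and the full RTC_TongueNet row of each table.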

Feature visualization

In semantic segmentation, the prediction process resembles a black box with poor interpretability. Grad-CAM addresses this by visualizing, in the form of a heat map, which image regions a trained model relies on. To determine whether the network has learned the features we expect, this study applies Grad-CAM to DeepLabV3 and to the improved DeepLabV3 during prediction. The resulting heat maps display the contribution of each image region to the segmentation and show where the network focuses during learning.

As shown in Figure 12, the input is the original image to be predicted, and RTC_TongueNet (ours) is the heat map generated by the improved model during prediction. The heat map of the improved model shows more intense colors in the tongue region and almost no blue dots in the background region, indicating that the improved model learns tongue features more readily and is less affected by background noise. This suggests that the model improvements guide the network to capture key features during learning.

Figure 12.

Heat map of each model presented by Grad-CAM.
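To make the heat map computation concrete, the following is a minimal sketch of the core Grad-CAM step, written in plain Python rather than taken from the code used in this study. It assumes that the feature maps of a chosen layer and the gradients of a target score with respect to them have already been extracted (for segmentation, the target score is often taken as the sum of the tongue-class outputs over all pixels, which is an assumption here). The function name `grad_cam` and the nested-list layout are hypothetical.

```python
def grad_cam(activations, gradients):
    """Combine feature maps into a Grad-CAM heat map.

    activations, gradients: nested lists shaped [channels][height][width],
    holding a layer's feature maps and the gradients of the target score
    with respect to those feature maps.
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weight = global average of that channel's gradients.
    weights = [sum(map(sum, grad)) / (height * width) for grad in gradients]
    # Weighted sum over channels, followed by ReLU to keep positive evidence only.
    cam = [[max(0.0, sum(w * act[i][j] for w, act in zip(weights, activations)))
            for j in range(width)] for i in range(height)]
    # Scale to [0, 1] so the map can be rendered with a color map.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


if __name__ == "__main__":
    acts = [[[1.0, 0.0], [0.0, 2.0]], [[0.0, 3.0], [1.0, 0.0]]]
    grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
    print(grad_cam(acts, grads))
```

In practice the resulting map is upsampled to the input resolution and overlaid on the original image; regions with values near 1 correspond to the warm colors of the heat map.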

Discussion

Tongue diagnosis is an important means of diagnosing diseases in traditional Chinese medicine (TCM). It requires the identification of tongue images, but traditional tongue image identification relies on the professional knowledge and experience of the doctor, which makes it subjective to a certain extent and susceptible to factors such as lighting and staining of the tongue coating by colored foods. In the development of TCM informatization, intelligent tongue diagnosis and identification systems have long been a research hotspot, and how to effectively segment and classify the tongue body is an important part of it.34 Tongue segmentation is easily affected by background structures such as the teeth and lips, as well as by regions near the tongue edge whose color is similar to that of the tongue. As a result, the segmentation effect is not always satisfactory. This study improves the network structure based on DeepLabV3 to enhance the tongue recognition effect of the model. Good tongue segmentation results were achieved on two different datasets, indicating that the model has a certain generalization ability.

In this study, the residual module was improved and divided into two structures. Different residual structures are used under different conditions to increase how often shallow information is mapped to the deep layers of the network, which extracts the underlying features of tongue images more effectively. The two residual structures were combined with transformer blocks at multiple locations to form the backbone network for effective local and global feature extraction. We further added the ECA attention mechanism after the concatenation operation in the ASPP structure so that, on top of the fused information, the model pays more attention to difficult-to-separate areas such as tongue edges and tooth marks and segments the tongue edge better. In our experiments, the improved model achieved higher evaluation indicators than the other classic models and completed the tongue segmentation task well.
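The channel attention step can be illustrated with a small sketch in plain Python. This is not the implementation used in RTC_TongueNet; it only follows the general ECA-Net recipe (global average pooling, a 1D convolution across neighboring channels, a sigmoid gate, and channel-wise rescaling), applied to a feature tensor such as the one produced by the ASPP concatenation. The function names, the nested-list layout, and the kernel weights passed in by the caller are assumptions; in a real network the kernel weights are learned.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive odd kernel size, following the channel-to-kernel mapping of ECA-Net."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca_attention(features, kernel):
    """Apply efficient channel attention to features shaped [channels][height][width].

    kernel: odd-length list of 1D convolution weights shared by all channels
    (learned in a real network; supplied by the caller in this sketch).
    Returns the rescaled features and the per-channel gates.
    """
    channels = len(features)
    area = len(features[0]) * len(features[0][0])
    # Squeeze: global average pooling gives one descriptor per channel.
    pooled = [sum(map(sum, fmap)) / area for fmap in features]
    # Local cross-channel interaction: 1D convolution with zero padding.
    pad = len(kernel) // 2
    padded = [0.0] * pad + pooled + [0.0] * pad
    logits = [sum(k * padded[c + i] for i, k in enumerate(kernel))
              for c in range(channels)]
    # Sigmoid gate per channel, then rescale every value of that channel.
    gates = [1.0 / (1.0 + math.exp(-z)) for z in logits]
    scaled = [[[g * v for v in row] for row in fmap]
              for g, fmap in zip(gates, features)]
    return scaled, gates


if __name__ == "__main__":
    feats = [[[2.0]], [[4.0]], [[6.0]]]
    out, gates = eca_attention(feats, [0.1, 0.2, 0.1])
    print(eca_kernel_size(256), gates)
```

Because the 1D convolution only mixes each channel with its nearest neighbors, the module adds very few parameters, which is the main reason it is inexpensive to place after a wide concatenated feature map.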

Although this study achieved good tongue segmentation results, it still has the following shortcomings: (1) limited by GPU memory, the image size accepted by the model is small, and information is lost when images are resized; (2) at present, the model can only segment static images and cannot segment tongue video in real time. These problems remain to be addressed in future research.

Conclusion

Tongue image segmentation is an important part of intelligent tongue diagnosis. Based on DeepLabV3, this paper combines the improved residual structure and transformer to fully extract local and global image features. Meanwhile, multiple dilated convolutions with different dilation rates are used for multiscale feature extraction. Furthermore, ECA attention modules are introduced to enhance channel attention, strengthen useful information, and reduce the interference of irrelevant information. In experimental comparisons, our improved model achieved good results on both our own dataset and a public dataset. This study has practical application value and reference value for tongue image segmentation research.

Footnotes

Contributorship: Yan Tang and Daiqing Tan jointly discussed the improvement points of the model and conducted experiments, drafted the paper together, and revised it according to their opinions. Zaijian Wang and Aiqing Han conceived and designed the study. Huixia Li, Muhua Zhu, Xuan Wang, JiaQi Wang, and Xiaohui Li labeled the data and carried out pre-processing. All authors reviewed and edited the manuscript and approved the final version of the manuscript.

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethical approval: National Key R&D Program of China (2020YFC2003100, 2020YFC2003102). All patients provided written informed consent.

Funding: The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the China University Industry-University-Research Innovation Fund (2021LDA09001).

Guarantor: ZW and AH

ORCID iDs: Daiqing Tan https://orcid.org/0009-0007-1559-7785

Aiqing Han https://orcid.org/0000-0002-1087-0873

References

- 1.Huang C-J, Lin H-J, Liao W-L, et al. Diagnosis of traditional Chinese medicine constitution by integrating indices of the tongue, acoustic sound, and pulse. Eur J Integr Med 2019; 27: 114–120.

- 2.Pang B, Zhang D, Li N, et al. Computerized tongue diagnosis based on Bayesian networks. IEEE Trans Biomed Eng 2004; 51: 1803–1810.

- 3.Wang X, Liu J, Wu C, et al. Artificial intelligence in tongue diagnosis: using deep convolutional neural network for recognizing unhealthy tongue with tooth-mark. Comput Struct Biotechnol J 2020; 18: 973–980.

- 4.Song H, Huang Z, Feng L, et al. RAFF-Net: an improved tongue segmentation algorithm based on residual attention network and multiscale feature fusion. Digit Health 2022; 8: 205520762211363.

- 5.Zhang H, Jiang R, Yang T, et al. Study on TCM tongue image segmentation model based on convolutional neural network fused with superpixel. Emran TB, ed. Evid Based Complement Alternat Med 2022; 2022: 1–12.

- 6.Zhou J, Zhang Q, Zhang B, et al. TongueNet: a precise and fast tongue segmentation system using U-Net with a morphological processing layer. Appl Sci 2019; 9: 3128.

- 7.Li L, Luo Z, Zhang M, et al. An iterative transfer learning framework for cross-domain tongue segmentation. Concurr Comput Pract Exp 2020; 32: e5714.

- 8.Yan B, Zhang S, Yang Z, et al. Tongue segmentation and color classification using deep convolutional neural networks. Mathematics 2022; 10: 4286.

- 9.Liu H, Feng Y, Xu H, et al. MEA-Net: multilayer edge attention network for medical image segmentation. Sci Rep 2022; 12: 7868.

- 10.Zhang X, Bian H, Cai Y, et al. An improved tongue image segmentation algorithm based on Deeplabv3+ framework. IET Image Proc 2022; 16: 1473–1485.

- 11.Cai Y, Wang T, Liu W, et al. A robust interclass and intraclass loss function for deep learning based tongue segmentation. Concurr Comput Pract Exp 2020; 32: e5849.

- 12.Huang X, Zhang H, Zhuo L, et al. TISNet-enhanced fully convolutional network with encoder-decoder structure for tongue image segmentation in Traditional Chinese Medicine. Comput Math Methods Med 2020; 2020: 1–13.

- 13.Zhou C, Fan H, Li Z. Tonguenet: accurate localization and segmentation for tongue images using deep neural networks. IEEE Access 2019; 7: 148779–148789.

- 14.Dosovitskiy A, Beyer L, Kolesnikov A, et al. An image is worth 16×16 words: transformers for image recognition at scale. ICLR 2021; arXiv:2010.11929.

- 15.Chen L-C, Papandreou G, Kokkinos I, et al. Deeplab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans Pattern Anal Mach Intell 2018; 40: 834–848.

- 16.Chen LC, Papandreou G, Schroff F, et al. Rethinking atrous convolution for semantic image segmentation. arXiv:1706.05587, 2017.

- 17.He K, Zhang X, Ren S, et al. Deep residual learning for image recognition. arXiv:1512.03385, 2015.

- 18.Liu Z, Lin Y, Cao Y, et al. Swin transformer: hierarchical vision transformer using shifted windows. Proc IEEE/CVF Int Conf Comput Vis 2021; 2021: 10012–10022.

- 19.Chen S, Qiu C, Yang W, et al. Multiresolution aggregation transformer UNet based on multiscale input and coordinate attention for medical image segmentation. Sensors 2022; 22: 3820.

- 20.Yang J, Tu J, Zhang X, et al. TSE Deeplab: an efficient visual transformer for medical image segmentation. Biomed Signal Process Control 2023; 80: 104376.

- 21.Wang Q, Wu B, Zhu P, et al. ECA-Net: efficient channel attention for deep convolutional neural networks. arXiv:1910.03151, 2020.

- 22.Mehta S, Rastegari M. Mobilevit: light-weight, general-purpose, and mobile-friendly vision transformer. arXiv:2110.02178, 2021.

- 23.Li Z, Ren X, Xiao L, et al. Research on data analysis network of TCM tongue diagnosis based on deep learning technology. J Healthc Eng 2022; 2022: 1–9.

- 24.Liu R, Tao F, Liu X, et al. RAANet: a residual ASPP with attention framework for semantic segmentation of high-resolution remote sensing images. Remote Sens (Basel) 2022; 14: 3109.

- 25.Tang Z, Duan J, Sun Y, et al. A combined deformable model and medical transformer algorithm for medical image segmentation. Med Biol Eng Comput 2023; 61(1): 129–137.

- 26.Zhou C, Fan H, Zhao W, et al. Reconstruction enhanced probabilistic model for semisupervised tongue image segmentation. Concurr Comput Pract Exp 2020; 32: e5844.

- 27.Kusakunniran W, Borwarnginn P, Karnjanapreechakorn S, et al. Encoder-decoder network with RMP for tongue segmentation. Med Biol Eng Comput 2023; 61: 1193–1207.

- 28.Xu H, Chen X, Qian P, et al. A two-stage segmentation of sublingual veins based on compact fully convolutional networks for Traditional Chinese Medicine images. Health Inf Sci Syst 2023; 11: 19.

- 29.Kusakunniran W, Borwarnginn P, Imaromkul T, et al. Automated tongue segmentation using deep encoder-decoder model. Multimed Tools Appl 2023; 82: 37661–37686.

- 30.Li Y, Cheng Z, Wang C, et al. RCCT-ASPPNet: dual-encoder remote image segmentation based on transformer and ASPP. Remote Sens (Basel) 2023; 15: 379.

- 31.Marhamati M, Latifi Zadeh AA, Mozhdehi Fard M, et al. LAIU-Net: a learning-to-augment incorporated robust U-Net for depressed humans’ tongue segmentation. Displays 2023; 76: 102371.

- 32.Qiu D, Zhang X, Wan X, et al. A novel tongue feature extraction method on mobile devices. Biomed Signal Process Control 2023; 80: 104271.

- 33.Huang Z, Miao J, Song H, et al. A novel tongue segmentation method based on improved U-Net. Neurocomputing 2022; 500: 73–89.

- 34.Gao S, Guo N, Mao D. LSM-SEC: tongue segmentation by the level set model with symmetry and edge constraints. Comput Intell Neurosci 2021; 2021: 1–14.