Abstract

Objective

Caregivers raising children with fetal alcohol spectrum disorders (FASD) have limited access to evidence-based supports. This single-arm feasibility trial assesses the Families Moving Forward (FMF) Connect app to determine readiness for a larger randomized controlled trial (RCT).

Methods

Eligible participants in this online trial were caregivers living in the United States who were raising children (ages 3–12) with FASD. Caregivers used FMF Connect for 12 weeks on their personal smartphones (iOS or Android). Pre- and post-assessments measured child behavior, parenting and family functioning, and app quality; user experience interviews were conducted post-intervention. App usage and crashes were monitored. Study objectives assessed the feasibility of the trial (recruitment, attrition, measure sensitivity), the intervention (technical functionality, acceptability), and implementation (caregiver usage).

Results

Recruitment strategies proved sufficient with 171 caregivers screened and 105 deemed eligible. Analyses identified a few predictive demographic and outcome variables related to attrition. Several study measures were sensitive to change. Additional trial and measurement improvements were identified. From a technological perspective, the FMF Connect app was functional; the Android prototype required more

Keywords: mHealth, psychology, prenatal alcohol, fetal alcohol spectrum disorders, parenting, intervention, implementation, feasibility

Introduction

Fetal alcohol spectrum disorders (FASD) is an umbrella term for a range of physical and neurobehavioral differences associated with prenatal alcohol exposure (PAE). These neurobehavioral differences include difficulties with planning, problem solving, learning and memory, and behavioral and emotional regulation, as well as with daily living, sensory processing, and social communication.1 Other signs, such as seizures, growth delays, and characteristic facial features, may also be present but are not required for a diagnosis within the FASD category.2 People living with FASD also have a variety of strengths, especially in social motivation, and contribute to society and those around them in many positive ways.3,4

FASD is estimated to affect 1.1% to 5% of children in the U.S.5 Despite this high prevalence, many affected individuals and their families do not have access to FASD-informed care, and many providers report not feeling equipped to diagnose or treat FASD.6–8 For families raising school-aged children with FASD, interventions that include a positive behavior support approach have been shown to improve child and family functioning,9,10 and early intervention is crucial to supporting positive outcomes.9,11 Although essential elements of FASD-informed care have been identified,9 evidence-based intervention for FASD remains inaccessible to many. Families of individuals living with FASD face significant barriers to accessing these interventions, including but not limited to a lack of widespread and systemic awareness of FASD, limited resources, and stigma.6,12,13

To address this significant need, the Families Moving Forward (FMF) Connect mobile health (mHealth) intervention was developed. FMF Connect is derived from the FMF Program, a behavioral consultation program specialized for caregivers of children with FASD aged 3–12. The FMF Program, which includes a positive behavior support approach, is traditionally delivered by a trained specialist in 90-min biweekly (or 60-min weekly) sessions, in person or via telehealth.14,15 In this standard format, the FMF Program is delivered individually and can be customized to caregiver needs. With diverse families, the FMF Program has been shown to positively affect caregiver outcomes, including knowledge of FASD, family needs met, parenting efficacy, and self-care, as well as child behavior.14–16 These findings have held up across several randomized controlled trials, and additional analyses have shown high treatment and provider satisfaction.17

In adapting the FMF Program into FMF Connect, a systematic user-centered design approach to development and testing was used (see Petrenko et al., 2020; 2021 for a detailed description of development and initial testing). Although the FMF Program is complex, its core components, underlying theory, and logic model were identified, adapted to an mHealth format, and augmented with new content and functionalities. These adaptations accounted for how users interact with technology and incorporated a backward design process18 and principles of behavior change.19 The FMF Connect app has five components (see Figure 1): Learning Modules (core intervention), Notebook (storing completed exercises and user notes), Dashboard (progress summary and behavior tracker tool), Family Forum (interactive caregiver forum), and Library (resources and fact sheets). The entire development process was informed by qualitative interviews with community members, which are essential to a user-centered design approach to intervention development.20,21

Figure 1.

Families Moving Forward (FMF) Connect components.

The FMF Connect prototype then underwent an initial feasibility trial to solicit feedback and guide further refinements.21 In this trial, 38 of 45 (84%) caregivers installed the app; on days they used it, users typically spent approximately 20 min at a time in the app, largely watching videos in the Learning Modules. Five usage tiers based on content completion were characterized, ranging from high usage (completing at least 9 of 12 Learning Modules) to low or no usage.21 About 56% of users had more than minimal use of the app, in line with a meta-analysis of retention in usual in-person child and adolescent mental health interventions.22 Qualitative interviews suggested that caregivers were generally positive about the app, but also identified a need for navigation and technological improvements and yielded recommendations for new features.21 Both usage data and qualitative interview results were incorporated into app refinements in preparation for further trials.

The current study

As the next step in a systematic development process, and consistent with best practices in intervention development,21 the current study describes a larger-scale feasibility study of the FMF Connect app. Feasibility studies are warranted when some aspect of a future randomized controlled trial (RCT) is uncertain; their aim is to test the feasibility of one or more aspects of that future RCT.23 Although the therapist-guided standard FMF Program has undergone several trials with promising results,14,15,17 conclusions about the efficacy of FMF Connect cannot be drawn without a rigorous RCT. The current study was therefore a non-randomized pilot study designed to test the feasibility of the intervention and of other aspects of a planned RCT (e.g., recruitment, data collection infrastructure) and to identify improvements needed before a larger trial. Specifically, the current study had the following aims:

Aim 1. Trial Feasibility

1a. Determine whether proposed recruitment and enrollment procedures produce rates sufficient to support a large-scale RCT, and describe sample demographics

1b. Investigate study attrition rate and attrition predictors

1c. Examine whether the assessment battery is sensitive to change

1d. Ascertain the optimal length between baseline and follow-up measurement

Aim 2. Intervention Feasibility

2a. Determine whether the FMF Connect app is technologically functional

2b. Investigate acceptability of the FMF Connect app to caregivers

Aim 3. Implementation Feasibility

3a. Examine caregiver implementation and usage predictors

Methods

Study design

This study was pre-registered at ClinicalTrials.gov (Identifier: NCT04194489) on November 12, 2019. The study included two rounds of feasibility testing of the FMF Connect mHealth intervention, each with single-group allocation to the intervention. The first round of feasibility testing (FT1) was conducted from January 31 to October 21, 2020 and included only iOS prototype users. The second round (FT2) was conducted from January 26 to July 30, 2021 and included only Android prototype users. The start of FT2 was substantially delayed by the unforeseen impact of the COVID-19 pandemic. Participants were caregivers of children (ages 3 to 12) with FASD or confirmed PAE. Feasibility objectives were evaluated using mixed methods. Participants completed quantitative surveys at baseline and again 12 weeks after receiving access to the FMF Connect app. Two additional surveys administered at the 12-week follow-up asked about participants' views of app quality and about how the COVID-19 pandemic affected their family and their use of the FMF Connect app. Following these surveys, qualitative interviews were conducted to understand participants' experiences using the app. Usage data were collected within the app. Methods were identical for both feasibility rounds and did not change substantively once the study was initiated. The COVID-19 Experience Survey was added to the follow-up battery early in FT1, before any participant began follow-up assessment, to ascertain the effect of the pandemic (which began in the U.S. during FT1).

Participants

Caregivers were recruited by the study team and other investigators in the Collaborative Initiative on FASD (CIFASD; www.cifasd.org) through a variety of mechanisms, including social media ads, existing FASD research registries, provider referrals, targeted flyers in parent support groups or online newsletters, and conferences. Caregivers were eligible if they had a child between the ages of 3 and 12 with an FASD diagnosis or confirmed PAE who had been in their care for at least 4 months and was expected to remain in the home for the next year. During screening, caregivers provided available details on PAE history and, when applicable, copies of FASD diagnostic evaluations. The first author, who has 20 years' experience in FASD diagnosis, reviewed all records to assess eligibility. Caregivers were also required to live in the U.S., be over the age of 18, be comfortable reading and writing in English, and have a smartphone with an iOS or Android operating system. Caregivers were excluded if they had ever received the standard FMF Program or if another caregiver in the home was already enrolled in the study.

The a priori target sample size was 60 caregivers with usable data. Based on the attrition rate predicted from initial testing (20%), the planned enrollment was about 75 caregivers. However, because early signals indicated increased attrition at follow-up during the COVID-19 pandemic, enrollment was expanded to a maximum of 120 caregivers.
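The enrollment target follows from inflating the usable-sample goal by the expected attrition rate, N_enroll = ⌈N_usable / (1 − attrition)⌉, so 60 / (1 − 0.20) = 75. The short Python sketch below reproduces that arithmetic; the function name is illustrative, and percentages are kept as integers so the ceiling is computed exactly rather than being exposed to floating-point rounding.

```python
def enrollment_target(usable_n: int, attrition_pct: int) -> int:
    """Smallest enrollment N for which N * (1 - attrition) >= usable_n.

    attrition_pct is an integer percentage (e.g., 20 for 20%), which keeps
    the arithmetic in exact integers.
    """
    retained_pct = 100 - attrition_pct
    # Ceiling division for positive integers: -(-a // b) rounds a / b up.
    return -(-usable_n * 100 // retained_pct)


# Planned enrollment at the 20% attrition rate predicted from initial testing.
print(enrollment_target(60, 20))  # 75
# Read the other way, a 120-caregiver cap still yields 60 usable cases at up to 50% attrition.
print(enrollment_target(60, 50))  # 120
```

The second call is only arithmetic; the text does not state which attrition rate the team assumed when expanding enrollment to 120.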

Procedures

All study procedures were approved by the University Institutional Review Board (IRB). All data were collected online. Recruitment materials directed interested participants to the study website where they could access a link to the study Screening & Consent Module in REDCap hosted by the University of Rochester.24,25 Participants completed informed consent in REDCap by choosing to read the document or watch an IRB-approved video version of the consent form. Participants were then directed to complete screening and demographic questionnaires, also within REDCap. Study staff reviewed and documented eligibility for each participant. Baseline surveys were then sent to eligible participants via REDCap.

Following baseline survey completion, participants were sent an invitation to install the FMF Connect app, along with instructions. Caregivers were told they could use the app at their discretion over the 12-week study period. Participants also received weekly emails highlighting app content and features, along with information on technical assistance. The Family Forum was monitored by two peer moderators, who were caregivers with experience in both the standard FMF Program and FMF Connect. The study team monitored usage and other metrics throughout each trial. Bugs and crashes were also tracked, and updates were released to address problems or expand functionality. After the 12-week trial, participants were invited to complete in REDCap the same battery of surveys administered at baseline, plus the Mobile Application Rating Scale User Version (uMARS26) and a study-developed COVID-19 Experience Survey. Participants received $40 per timepoint for completing surveys at baseline and follow-up.

After finishing follow-up surveys, participants were invited to complete an interview with a member of the research team (full-time bachelor's- and master's-level research staff and graduate students, including the 2nd, 5th, and 6th authors). Interviews were conducted and recorded via HIPAA-compliant Zoom, and recordings were stored on a secure server. A research team member led each semi-structured interview using open-ended questions. Areas of questioning included, but were not limited to, overall impressions of the app, experiences with the five main components, usage patterns, motivation to try the app, and barriers to use. Interviewers explicitly invited criticism and constructive feedback to minimize positive bias. Interviews lasted 50.38 min on average (SD = 15.62 min), ranging from 22 min 21 s to 83 min 30 s. Participants received an additional $25 for completing the interview.

Measures

Demographic Information: Demographic information was collected through a survey during the initial screening assessment. Variables included caregiver and child age, sex at birth, race, and ethnicity, as well as caregiver education, marital status, relation to child, income, and community type. Caregivers' comfort with technology and their smartphone operating system were also assessed. Additional details about services currently received by the child and family were collected in baseline surveys.

Eyberg Child Behavior Inventory (ECBI27): The ECBI is a 36-item caregiver-report measure of disruptive behavior in children ages 2 through 16, comprising an Intensity scale (how often each behavior occurs) and a Problem scale (whether the caregiver perceives the behavior as a problem). The current study focuses on the Intensity scale, whose items are rated on a 7-point frequency scale. Total Intensity scores are presented as T-scores (M = 50, SD = 10), with higher scores indicating more frequent behavior problems; a T-score greater than 60 is considered clinically elevated. Internal consistency in the current sample was high (Cronbach's α = .92 at baseline and .89 at follow-up).
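Each internal-consistency value in this section is Cronbach's α = (k / (k − 1)) · (1 − Σσᵢ² / σ²_total), where k is the number of items, σᵢ² is the variance of item i, and σ²_total is the variance of the summed scale score. The Python sketch below illustrates the formula. It assumes a complete respondents × items matrix; how missing item responses were handled is not described here.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix with no missing values."""
    k = len(responses[0])  # number of items
    item_columns = list(zip(*responses))  # transpose: one tuple per item
    sum_item_var = sum(variance(col) for col in item_columns)
    total_var = variance([sum(row) for row in responses])  # variance of scale totals
    return (k / (k - 1)) * (1 - sum_item_var / total_var)


# Toy 3-respondent, 2-item example (not study data).
print(round(cronbach_alpha([[1, 1], [2, 3], [3, 2]]), 3))  # 0.667
```

Sample variances are used throughout. Because α depends only on the ratio of variances, switching consistently to population variances would give the same value.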

Parenting Sense of Competence Scale (PSOC28): The PSOC is a 16-item self-report measure of the caregiver's sense of parenting efficacy (i.e., perceived competence, problem-solving ability, and capability) and satisfaction (i.e., the extent to which the individual enjoys the parenting role or experiences parenting frustration and anxiety). Items are answered on a 6-point scale ranging from “strongly disagree” to “strongly agree.” Higher scores on the Efficacy subscale indicate lower efficacy; higher scores on the Satisfaction subscale indicate higher satisfaction. Internal consistency was good for both subscales in the current sample (baseline: Satisfaction α = .72, Efficacy α = .81; follow-up: Satisfaction α = .81, Efficacy α = .74).

Family Needs Met questionnaire (FNM11): The FNM includes 20 items describing potential needs of caregivers of children with FASD or PAE and assesses the degree to which caregivers perceive these needs have been met. It is based on a measure developed for traumatic brain injury.29 Each need is rated on a four-point scale (1 = not at all met, 4 = a great deal met), and the score is the average response across items. Internal consistency in the current sample was high (baseline α = .94, follow-up α = .88).

FASD Knowledge and Advocacy questionnaire (K&A11): The K&A was developed for use in the FMF Program. It includes 45 items testing knowledge of FASD, advocacy, and key FMF Program principles: 13 items rated on a four-point scale ranging from strongly agree to strongly disagree, 10 true/false items, 19 multiple-choice items, and 3 short-answer items. Agreement items are scored correct if the response has the correct valence (e.g., credit for “agree” or “strongly agree” if the item statement is true). For the current study, a total score was computed by summing correct answers, excluding the three short-answer items. Internal consistency was good in this sample (baseline α = .74, follow-up α = .77).
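The K&A scoring rule can be expressed compactly in code. The Python sketch below is a hypothetical encoding: the `Item` structure, the response strings, and the function names are illustrative assumptions, not the instrument's actual data format. Agreement items earn credit for any response on the correct side of the scale, true/false and multiple-choice items require an exact match, and short-answer items are skipped. Under this rule the maximum total is 42 (13 + 10 + 19).

```python
from dataclasses import dataclass
from typing import Optional, Union

AGREE = {"strongly agree", "agree"}
DISAGREE = {"disagree", "strongly disagree"}


@dataclass
class Item:
    kind: str  # "agreement", "true_false", "multiple_choice", or "short_answer"
    # agreement: True if the statement is true; otherwise the keyed answer string
    key: Optional[Union[bool, str]] = None


def score_item(item: Item, response: str) -> int:
    """Return 1 if the response earns credit, else 0 (hypothetical encoding)."""
    if item.kind == "agreement":
        # Credit either response on the correct side of the four-point scale.
        return int(response in (AGREE if item.key else DISAGREE))
    if item.kind in ("true_false", "multiple_choice"):
        return int(response == item.key)
    return 0  # short-answer items contribute nothing


def ka_total(items: list[Item], responses: list[str]) -> int:
    """Sum of correct answers, excluding short-answer items."""
    return sum(
        score_item(item, resp)
        for item, resp in zip(items, responses)
        if item.kind != "short_answer"
    )


items = [
    Item("agreement", True),
    Item("agreement", False),
    Item("true_false", "true"),
    Item("multiple_choice", "b"),
    Item("short_answer"),
]
print(ka_total(items, ["agree", "strongly agree", "true", "c", "free text"]))  # 2
```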

Self-care and Support Assessment14,30: This 13-item questionnaire assesses caregiver self-care and social support. At follow-up, caregivers also rated how their level of self-care and their level of support had changed over the past three months. The current analyses used the single item on perceived change in self-care over the past three months, rated on a five-point scale: 1 = a lot less self-care, 2 = somewhat less self-care, 3 = no change in level of self-care, 4 = somewhat more self-care, and 5 = a lot more self-care.

Mobile Application Rating Scale User Version (uMARS26): The uMARS is a 26-item survey measuring user perceptions of the quality of mobile health apps. It includes six subscales: engagement, functionality, aesthetics, information, subjective quality, and perceived impact. The uMARS was completed at follow-up only. Caregivers rated items on five-point scales with item-specific anchors; higher scores indicate more positive impressions of the app. Internal consistency in the current sample was high: engagement α = .76, functionality α = .80, aesthetics α = .81, information α = .83, subjective quality α = .80, and perceived impact α = .95.

COVID-19 Experience Survey (study-developed): This 30-item survey was developed to assess the impact of the COVID-19 pandemic and related shutdowns on the caregiver and family. Caregivers completed the survey at follow-up only. The current study focused on 12 items assessing caregiver stress about COVID-19-related issues such as health, adjustment to new routines, and job stability, rated on a five-point scale ranging from not at all stressed to extremely stressed. These items were summed to create a total score, with higher scores indicating greater stress. Internal consistency of these items was high (α = .89).

Data analysis

This study was designed to assess the feasibility of the FMF Connect intervention, trial procedures and measurement, and intervention implementation. As a feasibility trial, it was not designed to determine the statistical significance of intervention efficacy or reliably estimate the magnitude of intervention effects. Data analyses were guided by primary feasibility objectives listed in Table 1, with corresponding data sources. Analyses were descriptive for the majority of objectives.

Table 1.

Primary feasibility objectives for FMF Connect trial.

| Objective | Data Source | Data Analytic Method |
|---|---|---|
| 1. Trial Feasibility | | |
| 1a. Do proposed recruitment and enrollment procedures produce sufficient rates to support a large-scale RCT? Who is reached and who is not? | Website analytics; Consent & Screening Database; Study team documentation; Study CONSORT diagram | Descriptives |
| 1b. What is the study attrition rate? What predicts attrition? | Study CONSORT diagram; Study team documentation; Demographic variables | Chi-square analyses, independent samples t-tests |
| 1c. Is the assessment battery sensitive to change? | Baseline and 12-week Follow-up surveys | Descriptives, pre-post comparison Cohen's d, repeated measures ANOVA |
| 1d. What is the optimal length between baseline and follow-up measurement? | Caregiver app usage patterns (see 3a) | Descriptives |
| 2. Intervention Feasibility | | |
| 2a. Does FMF Connect work from a technological perspective? | AWS crash reports; Qualitative interviews | Descriptives, qualitative content analysis |
| 2b. Is FMF Connect acceptable to caregivers? | uMARS ratings; Qualitative interviews | Descriptives, independent samples t-tests, Pearson's correlations, qualitative content analysis |
| 3. Implementation Feasibility | | |
| 3a. What does caregiver implementation look like? What predicts usage? | Usage data extracted from AWS | Descriptives, Pearson's correlations, independent samples t-tests, linear regression |

Although this trial was not designed to test intervention efficacy, measures of pre-post intervention effect size (Cohen's d) were calculated to determine whether selected measures were sensitive to change (Objective 1c). Group differences in outcome measures were also assessed by operating system (iOS vs. Android) using repeated measures ANOVAs. All quantitative analyses were conducted using SPSS software.
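The text does not say which pre-post variant of Cohen's d was computed. Two common choices are d_av, the mean change divided by the average of the baseline and follow-up SDs, and d_z, the mean change divided by the SD of the individual change scores. The Python sketch below computes both. It assumes complete paired data, meaning only caregivers with both timepoints are included.

```python
from statistics import mean, stdev


def cohens_d_av(pre: list[float], post: list[float]) -> float:
    """Mean change divided by the average of the pre and post SDs."""
    return (mean(post) - mean(pre)) / ((stdev(pre) + stdev(post)) / 2)


def cohens_d_z(pre: list[float], post: list[float]) -> float:
    """Mean change divided by the SD of paired change scores."""
    changes = [b - a for a, b in zip(pre, post)]
    return mean(changes) / stdev(changes)


# Toy paired scores (not study data); positive d means scores increased.
pre, post = [1, 2, 3, 4], [2, 4, 4, 6]
print(round(cohens_d_av(pre, post), 3))  # 1.026
print(round(cohens_d_z(pre, post), 3))   # 2.598
```

For measures where lower scores are better (e.g., ECBI Intensity), a negative d indicates improvement. With equal SDs at both timepoints, d_z exceeds d_av whenever the pre-post correlation is above .5, so the choice of variant affects comparisons of sensitivity to change.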

Qualitative data for Objective 2 (intervention feasibility; Table 1) were processed and analyzed as follows. Research staff de-identified and transcribed the recordings, which were stored on a secure server. Observations from the video files, including tone of voice and verbal and non-verbal cues, were integrated into the transcripts, and all files were rechecked for accuracy. Data were coded and analyzed with HIPAA-compliant Dedoose software.31 Four research staff members coded the same transcript using the codebook from the initial feasibility study,21 applying structural, evaluative, and values coding methods32 to best capture the ideas and intentions of the participant. Group coding continued until coders were confident in the a priori codebook and methods, for a total of three transcripts; the remaining transcripts were pair coded. All coders met as a group to discuss codes and reach consensus on discrepancies or questions. New codes were added to the codebook when necessary and confirmed with the whole team; when a new code was introduced, team members re-reviewed previously coded transcripts to apply it where relevant. Research staff never coded transcripts of interviews they had conducted.

Qualitative data analysis was guided by specific trial aims 2a and 2b (intervention feasibility; Table 1). Aim 2b was further broken down into three focused questions: 1) Did the user experience differ in terms of feasibility and acceptance for participants in the current trial relative to the initial feasibility study? 2) How did users perceive the new features/functionalities added following the initial feasibility trial? and 3) How did the COVID-19 pandemic impact families and their use of the FMF Connect app?

FMF Connect app usage data were extracted from the project's HIPAA-compliant Amazon Web Services (AWS) cloud infrastructure. Specifically, users' interactions with the app and progress through the Learning Modules were recorded in a database hosted on AWS. Recorded usage data include overall time spent using the app and time spent on each screen of the Learning Modules. Usage data were extracted, matched with individual measures and interviews, and then analyzed graphically and with correlation and regression techniques.
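The two usage variables analyzed later, total time in the app and Learning Module sections completed, are per-caregiver aggregates of screen-level records. The AWS database schema is not described here. The Python sketch below therefore assumes a hypothetical flat record per screen view (`ScreenEvent`, holding a caregiver ID, a section ID, seconds on screen, and a flag marking section completion) and shows one way to roll those records up.

```python
from collections import defaultdict
from typing import NamedTuple


class ScreenEvent(NamedTuple):
    """Hypothetical screen-view record; not the project's actual schema."""
    user_id: str
    section_id: str
    seconds: float
    completes_section: bool  # True if this view finished the section


def summarize_usage(events: list[ScreenEvent]) -> dict[str, dict[str, float]]:
    """Per-user total minutes in the app and number of distinct sections completed."""
    total_seconds = defaultdict(float)
    completed = defaultdict(set)
    for e in events:
        total_seconds[e.user_id] += e.seconds
        if e.completes_section:
            completed[e.user_id].add(e.section_id)  # set ignores repeat completions
    return {
        user: {"total_min": secs / 60, "sections_completed": len(completed[user])}
        for user, secs in total_seconds.items()
    }


events = [
    ScreenEvent("cg01", "m1-s1", 120, False),
    ScreenEvent("cg01", "m1-s1", 60, True),
    ScreenEvent("cg01", "m1-s2", 60, False),
    ScreenEvent("cg02", "m1-s1", 30, True),
    ScreenEvent("cg02", "m1-s1", 30, True),
]
print(summarize_usage(events))
```

Counting distinct completed sections, rather than completion events, keeps revisits from inflating the completion measure.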

Results

Trial feasibility

Objective 1a. Do proposed recruitment and enrollment procedures produce sufficient rates to support a large-scale RCT? Who is reached and who is not?

Access to the REDCap Screening & Consenting Module for the study was provided through the study's website. Review of website traffic showed 782 new users during the FT1 study period, with the majority of new users accessing the site during the first two months. The majority reached the site directly (42.7%) or via social media (41.4%; mostly through Facebook). During the FT2 study period, 609 new users visited the site, with the majority in April and June of 2021. Similarly, the majority reached the site directly (61.8%) or via social media (25.6%; mostly through Facebook).

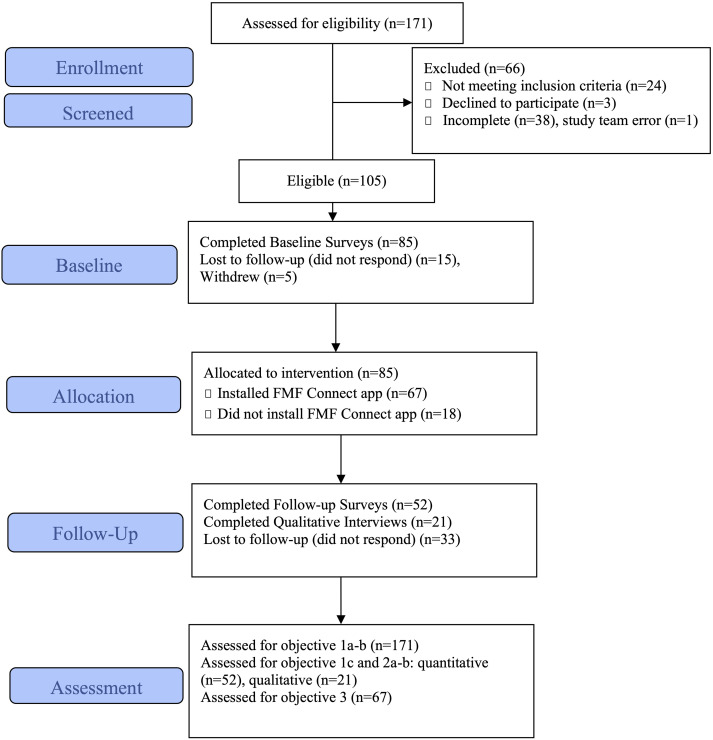

As shown in the CONSORT diagram in Figure 2, 171 unique caregivers initiated enrollment and screening in the online REDCap Consent & Screening module. Of caregivers enrolling in the study, 75% joined within the first 2.5 weeks. This suggests robust interest in the study and supports the feasibility of enrolling a sufficiently large sample for a larger-scale RCT.

Figure 2.

CONSORT extension for pilot and feasibility trials for FMF Connect.

Next, the number of participants determined to be eligible and their characteristics relative to the broader FASD population were considered. A total of 24 caregivers did not meet eligibility criteria, mainly because they had completed the standard FMF Program or the FMF Connect initial feasibility trial (n = 12), lived outside the U.S. (n = 3), or had a child outside the target age range (n = 6). Another 38 caregivers did not provide sufficiently complete data to determine eligibility. Most of these incomplete cases were pending because families had indicated they would mail or fax FASD record documentation. This problem was compounded early in recruitment, when COVID-19 pandemic-related shutdowns began 6 weeks after study launch and limited both participants' submission and staff processing of records. A study team error also resulted in 1 eligible participant not being advanced to the next stage of the study. Three caregivers elected not to participate and instead to wait for the future RCT. Study team documentation identified several procedures that will allow more efficient eligibility determination and record processing in the future RCT.

A total of 105 participants (61.4% of those enrolled) were determined eligible for the study and were sent baseline study measures. Five participants withdrew after eligibility determination: three were no longer interested or did not have time to participate, and two decided to wait for the subsequent RCT. Table 2 provides demographic information for eligible participants (excluding withdrawals). Although caregivers spanned a wide age range, nearly all were white women, most of them adoptive parents. Educational attainment and income skew higher than in the general U.S. population.33 Child age, sex at birth, and racial/ethnic composition are comparable to other FASD samples.5 Participants resided in 30 U.S. states; states without participants were primarily in the Deep South, the Great Plains, and outside the continental U.S. Beyond characterizing the present sample, these data indicate where recruitment efforts could be improved for the subsequent RCT.

Table 2.

FMF Connect feasibility trial participant characteristics (n = 100).

| Characteristics | Caregiver (n = 100) | Child (n = 100) |
|---|---|---|
| Age (years) | | |
| Mean (SD) | 46.48 (8.72) | 8.09 (2.62) |
| Range | 26.19–65.99 | 3.20–12.86 |
| Sex at birth, n (%) | | |
| Female | 98 (98.0) | 39 (39.0) |
| Ethnicity, n (%) | | |
| Hispanic/Latinx | 1 (1.0) | 16 (16.0) |
| Unknown | 4 (4.0) | 4 (4.0) |
| Race, n (%) | | |
| African American/Black | 0 (0.0) | 6 (6.0) |
| Asian | 1 (1.0) | 2 (2.0) |
| White | 92 (92.0) | 59 (59.0) |
| Native American/Alaska Native | 2 (2.0) | 6 (6.0) |
| Native Hawaiian/Pacific Islander | 0 (0.0) | 1 (1.0) |
| More than one race | 5 (5.0) | 23 (23.0) |
| Unknown | 0 (0.0) | 3 (3.0) |
| Education level, n (%) | | |
| Partial high school (10th or 11th grade) | 1 (1.0) | N/A |
| High school diploma/GEDᵃ | 4 (4.0) | N/A |
| Some college/trade school/Associate's degree | 25 (25.0) | N/A |
| Bachelor's degree | 34 (34.0) | N/A |
| Partial graduate school | 2 (2.0) | N/A |
| Finished graduate school | 34 (34.0) | N/A |
| Current marital status, n (%) | | |
| Never married | 6 (6.0) | N/A |
| Living with partner | 2 (2.0) | N/A |
| Married | 76 (76.0) | N/A |
| Separated | 2 (2.0) | N/A |
| Divorced | 10 (10.0) | N/A |
| Widowed | 4 (4.0) | N/A |
| Relation to childᵇ, n (%) | | |
| Biological parent | 3 (3.0) | N/A |
| Adoptive parent | 83 (83.0) | N/A |
| Foster parent | 7 (7.0) | N/A |
| Grandparent | 7 (7.0) | N/A |
| Other relative | 8 (8.0) | N/A |
| Family income, n (%) | | |
| $0-$15,000 | 4 (4.0) | N/A |
| $15,000-$24,999 | 3 (3.0) | N/A |
| $25,000-$34,999 | 3 (3.0) | N/A |
| $35,000-$49,999 | 5 (5.0) | N/A |
| $50,000-$74,999 | 27 (27.0) | N/A |
| $75,000-$99,999 | 21 (21.0) | N/A |
| $100,000-$149,999 | 23 (23.0) | N/A |
| $150,000 or more | 14 (14.0) | N/A |
| Prefer not to answer | 0 (0.0) | N/A |
| Community type, n (%) | | |
| Rural | 30 (30.0) | N/A |
| Suburban | 58 (58.0) | N/A |
| Urban | 12 (12.0) | N/A |
| Operating system, n (%) | | |
| iOS | 62 (62.0) | N/A |
| Android | 38 (38.0) | N/A |
| Comfort with technologyᶜ | | |
| Mean (SD) | 6.37 (1.02) | N/A |
| Range | 2–7 | N/A |
| Services | | |
| Receiving special education services or formal accommodations in school, n (%) | N/A | 68 (68.0) |
| Average rating: school meeting child's needsᵈ, mean (SD) | 2.53 (1.48) | N/A |
| Receiving individual or couples/family therapyᵉ, n (%) | 30 (30.0) | 47 (47.0) |
| Current or past parent training program, n (%) | 56 (56.0) | N/A |
| In-person or online support group, n (%) | 63 (63.0) | N/A |
| In-person or online FASD-specific support group, n (%) | 52 (52.0) | N/A |

ᵃ GED, General Educational Development test.

ᵇ Eight participants were foster parents but were recorded in other categories (e.g., a foster parent in the process of becoming an adoptive parent).

ᶜ Comfort with technology was rated on a Likert scale from 1 (low) to 7 (high).

ᵈ Caregivers rated how well they felt the school was meeting the child's needs on a Likert scale from 1 (not at all) to 5 (very well).

ᵉ Couples/family therapy is reported in the caregiver column only.

Objective 1b. What is the study attrition rate? What predicts attrition?

The CONSORT diagram (Figure 2) illustrates attrition across study procedures, including baseline survey completion, app installation, and follow-up survey completion. Chi-square analyses were used to determine whether caregiver sex, caregiver race and ethnicity, caregiver type, caregiver income, and caregiver education level predicted attrition at any point. Independent-samples t-tests were used to determine whether caregiver age or child age predicted attrition at any point. T-tests were also used to determine whether baseline measures predicted app installation or completion of follow-up measures.
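The demographic predictors of attrition were tested with Pearson chi-square tests of independence on a predictor-by-outcome contingency table. For example, the six education levels in Table 2 crossed with a two-level outcome (completed vs. did not complete a stage) give df = (6 − 1)(2 − 1) = 5, which matches the five degrees of freedom reported for the education analyses in this section. The stdlib-only Python sketch below computes the statistic. For p-values, SciPy's `scipy.stats.chi2.sf(stat, df)` or `scipy.stats.chi2_contingency` could be used.

```python
def chi_square_independence(table: list[list[int]]) -> tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c count table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if the row and column variables were independent.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


# Toy 2 x 2 table (not study data).
stat, df = chi_square_independence([[10, 20], [20, 10]])
print(round(stat, 3), df)  # 6.667 1
```

Table 2 includes sparse education categories (1 caregiver with partial high school and 2 with partial graduate school), so some expected cell counts necessarily fall below 5. That is the usual rule-of-thumb threshold for relying on the chi-square approximation.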

Not including withdrawals, 85% of participants completed baseline surveys. No demographic variables predicted attrition at baseline (ps > .05).

As described under Objective 2b (intervention acceptability), the COVID-19 pandemic may have contributed to attrition and affected app usage. Most FT1 participants (all iOS users) received surveys before COVID-19 pandemic-related shutdowns in mid-March 2020 (31 of 52 iOS users completed baseline surveys before March 13, 2020). The FMF Connect app was released to the first group of FT1 caregivers a few days before shutdowns began. The Android prototype was originally planned to launch within a month, after additional refinements to achieve parity with the iOS prototype. However, members of the small programming team were either diverted to University-wide pandemic-related efforts or could no longer work on the project because of their student status. Because of these unavoidable constraints, nearly a year passed before the Android prototype was ready.

A total of 67 participants installed the FMF Connect app across FT1 (iOS) and FT2 (Android), representing 78.8% of those who received it after completing baseline assessments. Caregiver education level predicted app installation at a trend level (χ²(5) = 10.037, p = .074), suggesting that caregivers with more education may have been more likely to install the app than caregivers with less education. No other demographic variables predicted app installation (caregiver age, child age, caregiver type, caregiver race/ethnicity, caregiver sex, caregiver income). Family needs met at baseline also predicted app installation at a trend level: caregivers who installed the app reported fewer family needs met at baseline (t(86) = 1.77, p = .081). No other baseline measures predicted app installation (ECBI, PSOC, ACEs, K&A).

Twelve weeks after receiving access to the app, 52 caregivers completed follow-up surveys (61.2% of caregivers receiving access to the app). Caregiver education level predicted completion of follow-up surveys (χ²(5) = 11.802, p = .038), with caregivers with higher education significantly more likely to complete follow-up than caregivers with less education. Caregiver age predicted completion of follow-up at a trend level, with younger caregivers more likely to complete follow-up assessments (t(96.563) = 1.859, p = .066). No other demographic variables (child age, caregiver type, caregiver race/ethnicity, caregiver sex, caregiver income) predicted completion of follow-up measures. PSOC efficacy at baseline predicted completion of follow-up assessments, with caregivers reporting more efficacy at baseline significantly more likely to complete follow-up measures (t(85) = -2.116, p = .037). No other baseline measures (ECBI, ACEs, FNM, K&A) predicted completion of follow-up measures. Usage variables also predicted completion of follow-up: caregivers with more app usage were more likely to complete follow-up measures (total time: t(47.092) = -4.65, p < .001; sections completed: t(43.809) = -4.53, p < .001).

A total of 21 caregivers completed interviews (14 iOS users and 7 Android users). Caregiver education level predicted completion of interviews (χ²(5) = 12.253, p = .031); caregivers with higher education were generally more likely to complete an interview than those with less education. No other demographic variables (caregiver age, child age, caregiver type, caregiver race/ethnicity, caregiver sex) predicted completion of interviews. Caregivers who completed interviews had children with less ECBI disruptive behavior at baseline (t(86) = 2.139, p = .035). A similar trend appeared at follow-up, with caregivers of children showing less ECBI disruptive behavior more often completing interviews (t(50) = 1.691, p = .097). Caregivers who completed interviews also reported fewer family needs met at baseline, at a trend level (t(86) = 1.887, p = .063). No other outcome measures (PSOC, ACEs, K&A, COVID stress, uMARS, self-care) predicted interview completion. Usage variables also predicted interview completion: caregivers with more usage were more likely to complete interviews (total time: t(25.255) = -2.48, p = .02; sections completed: t(48) = -2.576, p = .007).

No adverse events or other harms were observed or reported by participants.

Objective 1c. Is the assessment battery sensitive to change?

Table 3 provides means and standard deviations for all primary outcome measures at baseline and follow-up for the full sample and by device type. Cohen's d effect sizes are provided for pre-post comparisons based on paired-samples t-tests. Effect sizes were in the medium to large range for child behavior problems (ECBI Intensity) and in the small to medium range for parenting efficacy (PSOC) and FASD knowledge (K&A), across the full sample and both device types. Effect sizes for parenting satisfaction (PSOC) were more variable, ranging from negligible to medium. Effect sizes for family needs met were negligible. Caregivers' ratings of change in self-care over the prior 12 weeks averaged approximately 3, which corresponds to no change.
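The text does not specify which variant of Cohen's d was used for the paired comparisons. A minimal sketch of one common choice, d_z (the mean pre-post difference divided by the standard deviation of the differences), follows, together with a large-sample normal approximation to its 95% CI; both choices are assumptions for illustration. Differences are taken as baseline minus follow-up, so a decrease in ECBI scores yields a positive d.

```python
import math
import statistics


def cohens_dz(baseline, follow_up):
    """Cohen's d_z for paired data: mean of (baseline - follow_up) / SD of those differences."""
    diffs = [b - f for b, f in zip(baseline, follow_up)]
    return statistics.mean(diffs) / statistics.stdev(diffs)


def dz_confidence_interval(d, n, z=1.96):
    """Approximate CI for d_z using the large-sample SE sqrt(1/n + d**2 / (2n))."""
    se = math.sqrt(1 / n + d ** 2 / (2 * n))
    return d - z * se, d + z * se


# Hypothetical paired scores (not study data)
d = cohens_dz([10, 12, 14, 16], [9, 10, 13, 13])
print(round(d, 3))

# Full-sample ECBI Intensity values from Table 3 (d = 0.60, n = 52)
low, high = dz_confidence_interval(0.60, 52)
print(f"[{low:.2f}, {high:.2f}]")
```

With d = 0.60 and n = 52, this approximation gives roughly [0.30, 0.90], close to the reported [0.30, 0.89]; small discrepancies are expected if a different CI method was used.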

Table 3.

FMF Connect feasibility trial means, standard deviations, and measures of effect size for primary outcome measures. Values are M (SD) unless otherwise noted.

| Outcome Measure | iOS (n = 30) | Android (n = 22) | Total Sample (n = 52) |
|---|---|---|---|
| ECBI Intensity – Baseline | 71.03 (8.55) | 66.95 (9.94) | 69.31 (9.29) |
| ECBI Intensity – Follow-up | 67.17 (7.74) | 63.50 (7.57) | 65.62 (7.81) |
| ECBI Intensity Pre-Post effect size [95% CI] | 0.63 [0.24, 1.02] | 0.54 [0.08, 0.98] | 0.60 [0.30, 0.89] |
| PSOC Efficacy – Baseline | 22.93 (6.27) | 20.82 (5.55) | 22.04 (6.01) |
| PSOC Efficacy – Follow-up | 21.53 (4.70) | 19.23 (4.70) | 20.56 (4.80) |
| PSOC Efficacy Pre-Post effect size [95% CI] | 0.43 [0.05, 0.80] | 0.40 [−0.04, 0.83] | 0.42 [0.13, 0.70] |
| PSOC Satisfaction – Baseline | 32.67 (5.79) | 33.23 (6.80) | 32.90 (6.18) |
| PSOC Satisfaction – Follow-up | 33.07 (6.50) | 35.32 (8.00) | 34.02 (7.18) |
| PSOC Satisfaction Pre-Post effect size [95% CI] | 0.09 [−0.27, 0.45] | 0.50 [0.05, 0.94] | 0.25 [−0.03, 0.53] |
| Family Needs Met – Baseline | 2.18 (0.62) | 2.62 (0.73) | 2.36 (0.70) |
| Family Needs Met – Follow-up | 2.29 (0.43) | 2.64 (0.51) | 2.43 (0.49) |
| Family Needs Met Pre-Post effect size [95% CI] | 0.16 [−0.20, 0.52] | 0.02 [−0.40, 0.44] | 0.09 [−0.18, 0.36] |
| K&A FASD Knowledge – Baseline | 34.27 (3.88) | 34.68 (5.00) | 34.44 (4.34) |
| K&A FASD Knowledge – Follow-up | 34.80 (3.83) | 36.18 (5.06) | 35.38 (4.40) |
| K&A FASD Knowledge Pre-Post effect size [95% CI] | 0.20 [−0.16, 0.56] | 0.49 [0.04, 0.93] | 0.33 [0.05, 0.61] |
| Change in Self-Care – Follow-up mean (SD) | 2.73 (1.26) | 3.18 (1.14) | 2.92 (1.22) |

Some significant differences emerged between participants in the iOS and Android groups. Participants in the iOS group were significantly older than those in the Android group (F(1, 102) = 7.098, p = .009) and reported significantly more stress related to COVID-19 at follow-up (F(1, 56) = 16.505, p < .001). The two groups did not differ on constructs of interest at baseline (ECBI, PSOC, FNM, K&A, ACEs) or on other demographic variables (child age, caregiver type, caregiver race/ethnicity, caregiver sex, caregiver income).

Additionally, minimum detectable change (MDC) values were computed,34 using Cronbach's alpha as the reliability estimate. MDC values represent the number of points an individual would need to improve to be considered as having reliably changed on a given measure, given that measure's variability. MDC values are provided in Table 4, along with the percentage of the current sample (with follow-up data) meeting the criterion for reliable change. MDC was not computed for the K&A because it is a knowledge (criterion-referenced) measure rather than a scale measuring a single underlying construct.

Table 4.

FMF Connect feasibility trial minimum detectable change and percentage meeting criteria for reliable change by measure.

| Measure | MDC95 | n (%) meeting reliable change |
|---|---|---|
| ECBI Intensity | 7.09 | 10 (19.2%) |
| ECBI Problem | 9.46 | 13 (25.0%) |
| PSOC Efficacy | 7.04 | 3 (5.8%) |
| PSOC Satisfaction | 8.86 | 1 (1.9%) |
| Family Needs Met | 0.46 | 18 (34.6%) |
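The MDC formula itself is not given above. A widely used formulation, assumed here, is MDC95 = 1.96 × √2 × SEM, with SEM = SD × √(1 − reliability), where reliability is Cronbach's alpha and SD is the baseline SD (also an assumption). The sketch below implements that formula and counts participants whose improvement meets the threshold; whether the study used ≥ or > for the cutoff is not stated. Under this formula, the ECBI Intensity MDC95 of 7.09 with a baseline SD of 9.29 (Table 3) would imply an alpha of about .92; that value is back-calculated for illustration, not reported.

```python
import math


def mdc95(sd_baseline, alpha):
    """Assumed formulation: MDC95 = 1.96 * sqrt(2) * SEM, with SEM = SD * sqrt(1 - alpha)."""
    sem = sd_baseline * math.sqrt(1 - alpha)
    return 1.96 * math.sqrt(2) * sem


def count_reliable_improvement(baseline, follow_up, mdc, lower_is_better=True):
    """Count (and percent of) participants whose improvement meets or exceeds the MDC."""
    count = 0
    for b, f in zip(baseline, follow_up):
        # Improvement is a decrease for measures like the ECBI, an increase otherwise
        improvement = (b - f) if lower_is_better else (f - b)
        if improvement >= mdc:
            count += 1
    return count, 100 * count / len(baseline)


# Hypothetical inputs (not study data): SD = 10, alpha = .75
threshold = mdc95(10, 0.75)
print(round(threshold, 2))
print(count_reliable_improvement([70, 65, 80], [55, 64, 60], threshold))
```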

Descriptive statistics were complemented by repeated measures ANOVAs examining potential group differences by device type. Table 5 shows statistics for main effect and interaction terms for each measure. The main effect of time (change over the 12-week interval) was statistically significant for all measures except Family Needs Met. A main effect of device type was detected only for Family Needs Met. No interaction terms were significant, suggesting device type did not moderate change over time.

Table 5.

FMF Connect feasibility trial main and interaction effects for time and device type.

| Variable | Time F (df) | Time p | Device F (df) | Device p | Time × Device F (df) | Time × Device p |
|---|---|---|---|---|---|---|
| ECBI Intensity | 17.42 (1, 50) | <.001 | 3.09 (1, 50) | .085 | 0.06 (1, 50) | .815 |
| PSOC Efficacy | 8.84 (1, 50) | .005 | 2.41 (1, 50) | .127 | 0.04 (1, 50) | .850 |
| PSOC Satisfaction | 4.06 (1, 50) | .049 | 0.62 (1, 50) | .433 | 1.87 (1, 50) | .178 |
| Family Needs Met | 0.33 (1, 50) | .569 | 11.02 (1, 50) | .002 | 0.16 (1, 50) | .694 |
| K&A FASD Knowledge | 6.64 (1, 50) | .013 | 0.59 (1, 50) | .445 | 1.50 (1, 50) | .226 |
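Because each repeated measures ANOVA here has only two timepoints and two device groups, the Time × Device interaction is equivalent to comparing pre-post change scores between groups: the interaction F(1, N − 2) equals the square of a pooled-variance independent-samples t on the change scores, consistent with the (1, 50) degrees of freedom in Table 5 for N = 52. The sketch below illustrates that equivalence with hypothetical data and a hand-written one-way ANOVA as a cross-check. It assumes a standard mixed-design ANOVA without covariates and does not reproduce the time or device main effects.

```python
import math
import statistics


def interaction_via_change_scores(group_a, group_b):
    """Time x group F for a 2 (time) x 2 (group) mixed design.

    Each argument is a list of (baseline, follow_up) pairs. Returns (F, (df1, df2)),
    where F is the squared pooled-variance t comparing change scores between groups.
    """
    change_a = [f - b for b, f in group_a]
    change_b = [f - b for b, f in group_b]
    n_a, n_b = len(change_a), len(change_b)
    # Pooled variance of the change scores across the two groups
    pooled_var = ((n_a - 1) * statistics.variance(change_a)
                  + (n_b - 1) * statistics.variance(change_b)) / (n_a + n_b - 2)
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (statistics.mean(change_a) - statistics.mean(change_b)) / se
    return t ** 2, (1, n_a + n_b - 2)


def one_way_f(*groups):
    """One-way ANOVA F statistic, used here only to cross-check the result above."""
    all_values = [x for g in groups for x in g]
    grand_mean = statistics.mean(all_values)
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - statistics.mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Hypothetical (baseline, follow-up) scores, not study data
ios = [(70, 66), (72, 69), (68, 63), (75, 70)]
android = [(66, 64), (65, 61), (69, 66)]
f_int, df = interaction_via_change_scores(ios, android)
f_check = one_way_f([f - b for b, f in ios], [f - b for b, f in android])
print(round(f_int, 4), round(f_check, 4), df)
```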

An item review was conducted for the Family Needs Met and K&A measures, as these had previously been tailored specifically for the standard FMF Program.11,14 Item content and graphical displays of participant responses were reviewed. As documented in team notes, the team determined that further adaptation would be appropriate to better align items with FMF Connect app content and with the types of needs most likely to be met through self-directed use of a mobile health intervention. The team also concluded it would be useful to streamline the baseline and follow-up batteries by reducing the number of items, especially free-response items unlikely to be analyzed, to reduce participant burden and attrition.

Objective 1d, assessing the optimal length between baseline and follow-up measurement, is addressed below in the context of Objective 3, based on caregiver app usage patterns.

Intervention feasibility

Objective 2a. Does FMF Connect work from a technological perspective?

The iOS version of FMF Connect was stable, and users did not report notable issues or crashes to the development team. During the iOS feasibility trial, 4 app updates were released to add functionality (e.g., the behavior tracking tool and daily ratings) and to expand the library section with new content unlocked while progressing through the Learning Modules. App performance and the app's connection to cloud infrastructure were also improved.

The Android version of FMF Connect was less stable. Users experienced issues progressing through the Learning Modules, difficulties navigating the avatar builder, and problems accessing some app functionalities. To address these issues, 6 app updates were released between February and April 2021. In addition to fixing bugs reported by users, the updates expanded the video library and improved overall performance on older versions of the Android operating system.

Qualitative user experience interviews

Participants generally felt the app was functional but also identified some technological challenges. Ten of the caregivers who completed interviews (47.6%) reported at least one technological issue. Most issues were considered minor or infrequent; two Android users described more significant or multiple issues. Problems included app loading (9 people), streaming videos (6 people), app crashes (4 people), saving progress (3 people), installation (3 people), and login (2 people). Users also described problems with notifications (10 people). Participants also reported occasional errors in specific features; for example, one caregiver (FT058) said, “I wasn’t getting the Tip of the Day and all of the sudden, one Sunday, I got eight Tips of the Day in a row. So…I don’t know if there was a glitch or what the problem was but I got them all eight different times.”

Objective 2b. Is FMF Connect acceptable to caregivers?

This objective was assessed through quantitative ratings on the uMARS and qualitative interview data; results from the COVID-19 survey also informed this aim.

Mean app quality ratings on each of the four subscales and the overall uMARS score are presented in Table 6. Scores were generally in the very good to excellent range (4–5 out of 5). Consistent with the primary purpose of the app, the Information subscale received the highest ratings. iOS users rated the FMF Connect app significantly higher than Android users on all four subscales and the total score. This could be due to the greater technological functionality of the iOS version of the app. Differences between the groups may also have played a role; participants in the iOS group were significantly older and reported more stress related to COVID-19 at follow-up. These factors could have favorably influenced their evaluations of the app.

Table 6.

FMF Connect feasibility trial means and standard deviations on uMARS scales by device type. Values are M (SD).

| Scale | iOS (n = 29) | Android (n = 21) | Total (n = 50) | t-test |
|---|---|---|---|---|
| Engagement | 3.90 (0.49) | 3.45 (0.50) | 3.71 (0.53) | t(48) = 3.19, p = .002 |
| Functionality | 4.23 (0.53) | 3.83 (0.61) | 4.07 (0.60) | t(48) = 2.46, p = .018 |
| Aesthetics | 4.25 (0.55) | 3.89 (0.56) | 4.10 (0.58) | t(48) = 2.28, p = .027 |
| Information | 4.59 (0.48) | 4.26 (0.54) | 4.45 (0.53) | t(48) = 2.32, p = .030 |
| Total App Quality | 4.24 (0.41) | 3.86 (0.46) | 4.08 (0.47) | t(48) = 3.10, p = .003 |
| Perceived Impact | 3.80 (0.71) | 3.40 (1.22) | 3.63 (0.97) | t(29.515) = 1.36, p = .185ᵃ |

ᵃ Equal variances not assumed due to significant Levene's test (p = .014).

The uMARS also includes ratings of perceived impact. Mean scores were in the “neutral” to “agree” range, suggesting participants felt the app had some impact on their lives. Perceived impact ratings did not differ by device type.

Qualitative analysis

Based on participant matrices, overall themes and evaluations did not differ by device, with the exception of barriers related to the COVID-19 pandemic. Themes and evaluations are discussed below.

1. Did the user experience differ in terms of feasibility and acceptance for participants in the current trial relative to the initial feasibility study?

Qualitative analysis results indicated that participants in the current trial reported experiences similar to those of participants in the initial feasibility trial.21 Consistent with results from the initial trial, global evaluations and evaluations of specific components were overwhelmingly positive, with more than 2.75 times as many positive evaluations coded as negative ones. Overlap between the two trials was particularly evident in evaluations of quality of information, aspects of videos, user interface, ease of use, and app accessibility. As in the previous trial, the Learning Modules were received positively, with caregivers especially valuing the information and content. The format of the Learning Modules was also positively received, especially the table of contents and the videos personalized to the age and sex of the caregiver's child. One caregiver (FT048) noted: “You can kinda see yourself in [the videos]. And especially since it seems to be tailored towards kids in my daughter's age, grade, age range. Some of the other trainings I did were just, you know, just generic and you know, when you start talking about teens, and I am, ‘well, I have a five-year-old.’” As in the previous trial, negative evaluations generally focused on technological issues (see above) and lack of forum activity. One caregiver (FT018) said, “I wish there had been a little more talking among [caregivers in the Forum], because there's not any support groups, really for [FASD].”

In addition to evaluations, caregivers described their beliefs and values, which were consistent with those of caregivers in the previous trial,21 including beliefs about the complexity of FASD and of parenting a child with FASD. They also valued access to information and connection to others, given they felt FASD was an isolating diagnosis. As in previous trials,20,21 caregivers felt the app was responsive and well suited to their beliefs and values.

Finally, motivations for using the app and barriers to use were generally consistent with those identified in the previous trial.21 Common motivators for using the app were access to information, connection with others, and app features and exercises. Barriers were also similar to those previously identified; by far the most frequently reported barrier was not having enough time. Other barriers included length of content, difficulty navigating the app, and the effort required to engage with the app. As in the previous trial, caregivers offered both content and feature recommendations for the app. In the current trial, caregivers called for additional content (e.g., navigating the COVID-19 pandemic with children with FASD) and features (e.g., more notifications, a joint account with a partner).

2. How did users perceive the new features/functionalities added following the initial feasibility trial?

Two new features were added to the FMF Connect app following the previous trial: the Behavior Tracker tool and the Daily Ratings. The Behavior Tracker tool was designed to help caregivers notice and understand patterns in their children's behavior and was received positively: “I think my favorite part was the behavior tracker tool… I used it a lot because whenever he had a bad day or a good day, we saw a pattern” (FT045). Caregivers found the Behavior Tracker easy to use and emphasized its utility, with one (FT039) noting, “And once you’ve kind of noticed that, oh, these things are triggering his-some of his behaviors or we can reframe more, you know…it helps us notice okay, if we did this, if he gets this going on, schedule was off today…and he didn’t go to bed until 10 o’clock…the next day will be set up for a bad day.”

The Daily Ratings were implemented for iOS in FT1. Caregivers completed these ratings each day to indicate how well they felt they were doing at finding positives and at their own self-care. Almost all evaluations of these ratings were negative: caregivers reported that they did not like completing the ratings because they found the concepts difficult and often rated themselves low, which was discouraging. One caregiver (FT045) said: “Sometimes it made me like a little, not irritated, but like man I wish like I could do that…but I’d be like I just don’t have time, you know? …I’d get irritated like, I don’t have time for that today.” As a result, the Daily Ratings feature was not implemented in the Android version for FT2.

In addition to new features, a modification was made to the Learning Modules; for the iOS trial, the stepwise format of the Modules was retained and for the Android trial, this format was dropped. Similar to prior studies,20,21 iOS users provided mixed evaluations about the requirement to proceed through the Learning Modules in a stepwise format. A couple of Android users gave a positive evaluation of the open-access format to the modules. For example, one caregiver (FT071) stated: “Even just the perception of having the freedom to [skip around], I did like that and it made me more interested… I could always go back to it again and rewatch it and I wasn’t forced to sit there and do the whole thing.”

Finally, participants were asked about a proposed new feature called “FMF Connect Coaching”, in which they would have access to a live person who could communicate with the caregiver through the app. Caregivers generally reacted positively to this idea, with one (FT066) saying, “I think any real chat with anybody is more beneficial than like an artificial intelligence type of communication.”

3. How did the COVID-19 pandemic impact families and their use of the FMF Connect app?

The current trial occurred during the COVID-19 pandemic (iOS trial January–October 2020; Android trial January–July 2021), and caregivers felt this affected their use of the app. Participant matrices revealed that Android users did not describe the COVID-19 pandemic as a barrier; this code appeared only among iOS users. COVID-19-related barriers included decreased mental health, increased parenting load, and homeschooling. These barriers were often discussed alongside feelings of burnout and lack of time.

Caregivers’ responses to the COVID-19 survey show a similar pattern. Across the sample, mean stress was 26.22 (SD = 11.93, range 1–57), indicating on average caregivers felt “somewhat” worried or stressed related to the COVID-19 pandemic. Significant differences by device type were expected given the differences in timing of the trials. Indeed, iOS users reported significantly greater stress related to the COVID-19 pandemic compared to Android users (iOS mean 30.83, SD = 11.28; Android mean 19.62, SD = 9.68; t(49) = 3.70, p < .001).

User implementation feasibility

Objective 3a. What does caregiver implementation look like? What predicts usage?

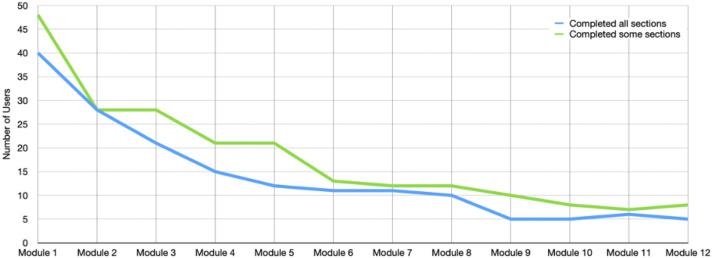

A total of 67 users installed the app (78.8% of the 85 who were sent the app). Of these, 29 iOS and 21 Android users completed at least 1 section of the Learning Modules (12 modules subdivided into 130 sections in total). The trajectory of module completion (Figure 3) was similar to that observed in the initial feasibility trial.21 Despite open access to all modules, Android users generally went in order (as was required for iOS users). Twenty-one participants (31.3% of those who installed) completed most or all of Level 1 (Modules 1–4), 12 (17.9%) completed most or all of Level 2 (Modules 5–8), and eight (11.9%) completed most or all of Level 3 (Modules 9–12).

Figure 3.

Trajectory of FMF Connect module completion.

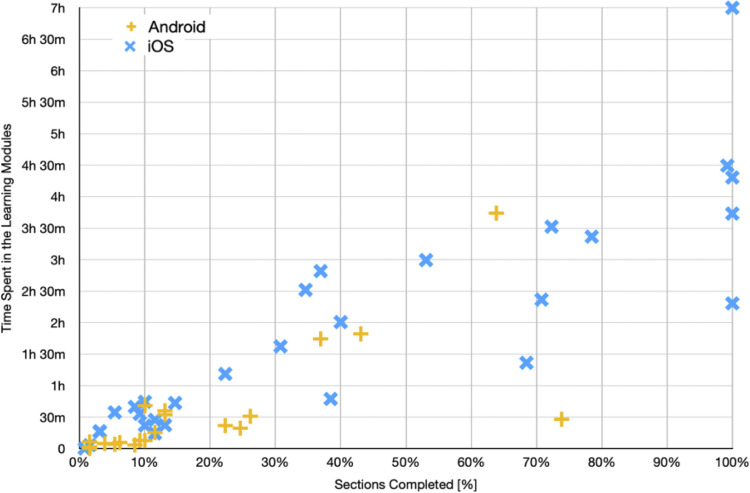

Total time spent across Learning Modules and percentage of sections completed are illustrated in Figure 4. The generally linear relationship between time and percentage completed suggests most participants spent a reasonable amount of time engaging with content rather than skipping quickly through screens. Consistent with Figure 4, participants who spent more time on the app completed more sections (r = .867, p < .001).

Figure 4.

Sections of FMF Connect completed by time spent in learning modules.

Usage data was also examined in relation to participant demographics and outcomes. Neither length of time using the app nor number of sections completed was significantly correlated with demographic or family factors, including caregiver age, child age, caregiver type, and stress associated with COVID-19.

Length of time using the app and number of sections completed were significantly related to Family Needs Met at baseline (length of time r = -.288, p = .043; number of sections completed r = -.297, p = .036): those with more needs met used the app less and completed fewer sections. Usage was not significantly related to any other outcome measure at baseline or follow-up (ECBI, PSOC, K&A, self-care, COVID stress, services). Greater length of time in the app was significantly associated with greater satisfaction with the app on the following uMARS subscales: Engagement (r = .507, p = .002), Aesthetics (r = .450, p = .006), Information (r = .394, p = .019), and Overall App Quality (r = .488, p = .003). Greater number of sections completed was significantly associated with greater satisfaction with app aesthetics (uMARS Aesthetics r = .425, p = .01).
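The significance of each correlation above can be checked from r and n with the standard conversion t = r√(n − 2) / √(1 − r²), with df = n − 2. The sketch below assumes n = 50 for the baseline Family Needs Met correlations (the 29 iOS and 21 Android users who completed at least one section); the text does not state n for these correlations. Under that assumption, r = −.288 gives t(48) ≈ −2.08, whose two-tailed p of about .04 is in line with the reported p = .043. Python 3.10+ also provides `statistics.correlation` for r; a hand-written version is included here to show the formula.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length samples."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic and df (n - 2) for testing the null hypothesis rho = 0."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2), n - 2


# r = -.288 from the text; n = 50 is an assumption (users completing at least one section)
t, df = r_to_t(-0.288, 50)
print(f"t({df}) = {t:.2f}")
```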

In regression analyses, usage was not associated with change on the ECBI, PSOC, FNM, or K&A.

Table 7 presents usage metrics by device type. iOS users demonstrated significantly higher usage across metrics than Android users. Although the significant group differences in age and COVID-19 stress could have contributed, this seems unlikely because these variables were not related to usage overall. Usage differences seem more likely to reflect satisfaction with the app (iOS users reported significantly greater satisfaction on the uMARS) and differences in the technological capabilities of the iOS and Android prototypes.

Table 7.

Usage metrics of FMF Connect by device type.

| Variable | iOS users (n = 29), M (SD) | iOS range | Android users (n = 21), M (SD) | Android range | t (df), pᵃ |
|---|---|---|---|---|---|
| Total time (in minutes) | 106.94 (101.94) | .12–419.93 | 33.89 (53.04) | .15–224.37 | t(44.201) = 3.29, p = .002 |
| Total sections completed | 51.38 (46.92) | 1–130 | 24.00 (26.49) | 2–96 | t(45.680) = 2.62, p = .012 |
| Proportion of modules completed | .395 (.361) | .008–1.00 | .185 (.204) | .015–.738 | t(45.680) = 2.62, p = .012 |

ᵃ Equal variances not assumed.
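The fractional degrees of freedom in Table 7 (and elsewhere where equal variances were not assumed) come from Welch's t-test. A minimal sketch computing Welch's t and the Welch–Satterthwaite df from summary statistics follows; working from rounded summary statistics rather than raw data is a simplification. Feeding in the Table 7 means and SDs gives approximately t(44.20) = 3.29 for total time and t(45.68) = 2.62 for sections completed, matching the table to rounding.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom from summary statistics."""
    v1 = sd1 ** 2 / n1  # squared standard error of the group 1 mean
    v2 = sd2 ** 2 / n2  # squared standard error of the group 2 mean
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Table 7: iOS (n = 29) vs. Android (n = 21)
t_time, df_time = welch_t(106.94, 101.94, 29, 33.89, 53.04, 21)
t_sections, df_sections = welch_t(51.38, 46.92, 29, 24.00, 26.49, 21)
print(f"Total time: t({df_time:.2f}) = {t_time:.2f}")
print(f"Sections completed: t({df_sections:.2f}) = {t_sections:.2f}")
```

The proportion row shows the same t and df as sections completed, as expected if proportion is sections completed divided by the 130 total sections: rescaling both groups by a constant leaves t and the Welch df unchanged.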

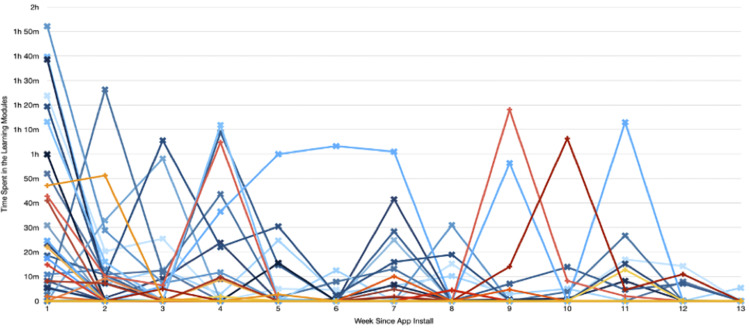

Objective 1d. What is the optimal length between baseline and follow-up measurement?

Figure 5 illustrates the amount of time spent in the Learning Modules by all users per week, centered for each user on the day they installed the app (Day 0). Nearly all users discontinued regular use before the 12-week measurement point, suggesting this is an appropriate “post-intervention” timepoint. An additional timepoint around 6 weeks may also be useful for the subsequent RCT because, for many users, the heaviest use appears to end around this period. Some of the large usage peaks toward the end of the trial seemed to correspond with prompts for follow-up survey and interview completion.

Figure 5.

This figure illustrates variation in the time spent in the learning modules by week for individual users.

Note: Each line represents the usage time for an individual user, centered for each user on the day they installed the app (Day 0). Nearly all users discontinued regular use before the 12-week measurement point, and heaviest use declined for most by 6 weeks. This usage information provides valuable data to inform timepoints for subsequent efficacy trials.
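Figure 5 centers each user's usage on their own install day. A minimal sketch of that aggregation follows, assuming a usage log of (user, date, minutes) records and an install date per user; the record format, function name, and user IDs are hypothetical, since the study's analytics pipeline is not described. Weeks are formed here by integer division of days since install by 7, so Day 0 through Day 6 fall in week 0; the binning actually used for the figure is not stated.

```python
from collections import defaultdict
from datetime import date


def weekly_usage(install_dates, sessions):
    """Total minutes per user per week, with week 0 starting on that user's install day.

    install_dates: {user_id: date}; sessions: iterable of (user_id, date, minutes).
    """
    totals = defaultdict(float)
    for user, day, minutes in sessions:
        days_since_install = (day - install_dates[user]).days
        week = days_since_install // 7  # Days 0-6 -> week 0, days 7-13 -> week 1, ...
        totals[(user, week)] += minutes
    return dict(totals)


# Hypothetical install dates and usage log (not study data)
installs = {"u1": date(2020, 3, 10), "u2": date(2021, 2, 1)}
log = [
    ("u1", date(2020, 3, 10), 12.5),
    ("u1", date(2020, 3, 16), 7.5),
    ("u1", date(2020, 3, 17), 10.0),
    ("u2", date(2021, 2, 15), 4.0),
]
print(weekly_usage(installs, log))
```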

Discussion

This larger-scale feasibility trial of the FMF Connect intervention aimed to answer vital questions to inform an upcoming full-scale RCT. The study measured trial feasibility across multiple levels, assessing recruitment, attrition, the sensitivity to change of the assessment battery, and the timing of follow-up measurement. The study evaluated intervention feasibility by investigating technological functioning and user acceptability. It also examined implementation feasibility by exploring typical usage patterns and predictors of usage. Overall, the results establish that the FMF Connect intervention is robustly feasible as a mobile health (mHealth) application.

In terms of recruitment, website traffic and study enrollment demonstrated clear interest in study participation. A total of 105 caregivers were deemed eligible, and the study team documented several procedures for more efficient eligibility processing in the future RCT. The current study yielded attrition rates similar to, or better than, those of other app-based interventions.35 Attrition analysis showed that none of the demographic variables collected were associated with completing baseline measures. However, several demographic and outcome variables were related to app installation or completion of follow-up surveys and interviews. Caregiver education level predicted app installation (at a trend level), follow-up survey completion, and interview participation. Strategies such as increasing incentive amounts, sending reminders across various platforms, and/or altering the length or format of follow-up assessments might help reduce this effect. Family needs met at baseline were also related to various attrition outcomes: caregivers who reported that more of their family needs were met were less likely to install the app, had lower usage, and were less likely to complete interviews. This suggests that FMF Connect may be best suited for families with more unmet needs. Furthermore, completion of follow-up surveys and interviews was more likely for caregivers with higher baseline parenting efficacy ratings or whose children had relatively less disruptive behavior. These findings may reflect that these caregivers experienced less emotional burnout and could more easily engage in research procedures. This recruitment and attrition analysis will help guide alternative strategies and benchmarks for a subsequent RCT. Because many participants' app usage dropped off around six weeks and most users discontinued use by 12 weeks, these timepoints may be ideal for assessment in future RCTs.

The study identified additional strategies to increase reach, such as using resources to engage more newly diagnosed individuals, targeting additional areas of the country, and attracting families with demographics not well represented in the current study (e.g., a wider range of racial and ethnic groups, socioeconomic and educational statuses, family structures, and lower comfort with technology). Because the sample consisted primarily of White, adoptive mothers, conclusions drawn from the present data may not generalize to groups not represented here. Further work, inclusive of different demographic groups, is needed to understand what differing needs and adaptations may be necessary to make FMF Connect accessible to all.

Although this study was not designed to test efficacy, sensitivity to change across outcome measures was examined and preliminary effect size estimates were obtained. Over the 3-month interval, this feasibility trial showed change with medium to large effect sizes for child behavior problems and small to medium effect sizes for parenting efficacy, for both the full sample and each device type. This demonstrates measure sensitivity to change over time and supports the use of these measures in the upcoming RCT. The pre-post effect sizes for child behavior and parenting satisfaction align with those of prior in-person trials of FASD interventions, while the effect sizes for family needs met and FASD knowledge were generally smaller in the present study.16,17 In general, similar patterns were seen for iOS and Android, even though data collection occurred a year apart. As noted above, the Android trial was delayed because the programming team was diverted to University and community needs related to developing tracking systems for the COVID-19 pandemic. The Family Needs Met and K&A measures, developed for the standard FMF Program, were less sensitive to change over time than other measures. This is not surprising given that the intervention timeframes of the FMF Connect app studies are notably shorter (by 6 to 9 months) than that of the longer, therapist-guided standard FMF Program. Additionally, the more intensive, in-person format of standard FMF may allow more tailored assessment and support of caregiver and family needs than an app-based intervention can. After item review, the study team determined these measures should be adapted for FMF Connect to better suit the app-based intervention and the timeframe of the app study.

Consistent with the initial smaller-scale feasibility testing of an earlier prototype,21 the FMF Connect intervention demonstrated feasibility from a technological perspective. The iOS prototype was more stable than the Android prototype, with no major issues or crashes. Android users experienced more issues progressing through the Learning Modules and accessing some app functionalities; 6 app updates were released during the trial to address these issues. From an acceptability standpoint, participants rated the app highly on a standardized scale, with most subscale scores falling in the “very good” to “excellent” range. iOS users reported higher satisfaction with the app than Android users across all subscales. Qualitative analysis of user interviews identified various themes regarding facilitators of and barriers to usage, many overlapping with themes from the previous trial of the FMF Connect app.21 Motivators included access to information and connecting with others, while barriers included not having enough time. New features not present in previous trials, the Behavior Tracker and Daily Ratings, received mixed feedback: the Behavior Tracker was widely well received, while the Daily Ratings were viewed as discouraging and difficult. A potential new feature, FMF Connect Coaching, was presented to users and was well received. The COVID-19 pandemic was a barrier for many users (especially iOS users), who indicated that this ongoing life event affected app use and aligned with feelings of burnout and lack of time. Overall, results of the current larger-scale feasibility trial indicate that user experience of the app largely improved or remained consistent compared with previous trials,21 and have informed additional app improvements for the upcoming RCT.

In terms of implementation feasibility, the trajectory of module completion and the variability in usage align with the initial feasibility trial.16 It is not surprising that participants who spent more time on the app completed more sections. Demographic factors, pre-existing levels of services, and COVID-19 stress did not predict length of time using the app or number of sections completed. Consistent with the attrition findings discussed above, caregivers with more family needs met used the app less and completed fewer sections. These results suggest, in line with literature documenting low availability of FASD-informed resources in many parts of the country,6,10 that the FMF Connect app may be more attractive to users whose needs are not already met. However, usage variability among participants could not be explained by data analysis in this preliminary trial. The upcoming RCT will provide further insight into which users may be more likely to use and benefit from FMF Connect.

The FMF Connect app demonstrates excellent user acceptability and feasibility. Through this larger-scale feasibility trial, however, the study team identified areas for further refinement before an RCT, such as bug fixes and module additions. An RCT of the FMF Connect app is needed to establish the effect of the intervention on child behavior and family factors. Evidence-based interventions for children and families with FASD remain remarkably limited.6,10 The FASD community, in general, still lacks access to essential services despite a demonstrated, ongoing need; for example, over 90% of individuals with FASD meet criteria for a mental health disorder.36,37 This larger-scale feasibility trial of the FMF Connect mHealth app demonstrates readiness for a larger RCT that can help fill this gap in care. Adapting the standard FMF Program to an additional, app-based format allows the intervention to reach a wider audience and benefit a greater number of families and children. The current study highlights real potential for positive outcomes in a larger-scale RCT that could put accessible resources in the hands of families and the FASD community.

Applicability to other interventions and populations

The current study has broader implications for mHealth interventions. Although this is the first app-based intervention for caregivers of children with FASD, two other online caregiver interventions for FASD have been developed.38,39 These online programs have demonstrated the feasibility of the intervention modality and its acceptance by the FASD community. Additionally, this study highlights the importance of incorporating community input to best meet the needs of the population. The user-centered design framework can be applied to mobile health interventions more broadly, as community feedback has been shown to increase applicability in intervention work. 40 Mobile health interventions could also be pivotal for other populations that lack services, as they allow easier access to care and a wider reach. 41 Other neurodivergent individuals and their caregivers could be of particular interest for mHealth interventions. An emerging literature demonstrates the acceptability and feasibility of mHealth for children with attention deficit hyperactivity disorder (ADHD) and for caregivers of autistic individuals.42–44 A new app, currently called My Health Coach, is also under development for adults with FASD, with community member input suggesting high acceptability. 45

Strengths, limitations, and future directions

This is the first large-scale feasibility trial of an mHealth intervention for caregivers of children with FASD. The strength of this study lies in its systematic approach to development and evaluation and its potential for scalable public health impact. Given the acceptability and feasibility demonstrated, there are now grounds for an RCT, which could support broader dissemination. Additionally, the inclusion of community member feedback is a particular strength. This ties into the methodological rigor of the study, as FMF Connect app development has closely followed best practices in implementation research. 21 A limitation of the current study relates to sampling. The current sample is not representative of the full diversity of individuals with FASD and their caregivers, as the sample was primarily white and the large majority of caregivers were adoptive mothers. Participant income was also skewed higher than that of the US general population, and all participants were required to have a smartphone with Internet access to be eligible. Careful analysis of participant reach and attrition factors in the current study identified opportunities for improvement for the RCT.

This study builds upon prior research demonstrating the feasibility and acceptability of adapting pre-existing caregiver interventions for FASD into mHealth interventions.20,21 The results of this trial provide vital information for a larger RCT and further evidence of the positive impact FMF Connect can have on families and individuals with FASD. The RCT will be the final phase of app development before wide dissemination, addressing a critical need for evidence-based, FASD-informed quality care.

Supplemental Material

Supplemental material, sj-doc-1-dhj-10.1177_20552076241242328 for Larger-scale feasibility trial of the families moving forward (FMF) connect mobile health intervention for caregivers raising children with fetal alcohol spectrum disorders by C.L.M. Petrenko, C. Kautz-Turnbull, M.N. Rockhold, C. Tapparello, A. Roth, S. Zhang, B. Grund, B. Wood and H. Carmichael Olson in DIGITAL HEALTH

Acknowledgements

This work was conducted in conjunction with the Collaborative Initiative on Fetal Alcohol Spectrum Disorders (CIFASD), which is funded by grants from the National Institute on Alcohol Abuse and Alcoholism. Additional information about the Collaborative Initiative on Fetal Alcohol Spectrum Disorders can be found on the CIFASD website. 19 The authors appreciate the CIFASD collaborators who assisted with recruitment, as well as FASD United. Seattle Children's Research Institute (SCRI) and the University of Washington are recognized for their contributions to intellectual property from the standard Families Moving Forward (FMF) Program. The standard FMF Program was developed and tested, and its materials refined, by a team led by Heather Carmichael Olson, PhD, based at and sponsored by these institutions (led by SCRI), with funding via multiple grants from the Centers for Disease Control and Prevention. We would also like to thank Jennifer Parr and Emily Speybroeck, who assisted with interview data collection, and additional staff at Mt. Hope Family Center for their support throughout the project. The authors appreciate all the families who made time in their busy schedules to participate in this study. This research would not have been possible without their valuable insights.

Footnotes

Contributorship: All authors contributed to the preparation of this study. CP conceived the study, oversaw the study team, led app content development, obtained necessary approvals, and led manuscript preparation. CKT assisted with qualitative interviews, led quantitative data analysis, and contributed to manuscript preparation. MR contributed to manuscript preparation and revision. AR and SZ contributed to qualitative interviews and led BG and BW in qualitative coding and analysis; all four authors contributed to manuscript preparation. CT led technological development of the app and contributed to manuscript revisions. HCO is the developer of the standard FMF Program, from which the app and original assessment battery are derived. She made significant contributions to intellectual property exchange and app content adaptation and development, and assisted with manuscript revisions. All authors reviewed and edited the manuscript and approved the final version of the manuscript.

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethical approval: All study procedures were approved by the University Institutional Review Board (STUDY00004433).

Funding: This research was supported by the National Institutes of Health under Award #U01AA026104. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

National Institute on Alcohol Abuse and Alcoholism (grant number U01AA026104).

Guarantor: CLMP.

ORCID iDs: C.L.M. Petrenko https://orcid.org/0000-0003-2091-3686

M.N. Rockhold https://orcid.org/0000-0002-1698-2702

C. Tapparello https://orcid.org/0000-0002-8016-8676

Supplemental material: Supplemental material for this article is available online.

References

- 1. Mattson SN, Bernes GA, Doyle LR. Fetal Alcohol Spectrum Disorders: a review of the neurobehavioral deficits associated with prenatal alcohol exposure. Alcoholism: Clinical and Experimental Research 2019; 43: 1046–1062.

- 2. Hoyme HE, Kalberg WO, Elliott AJ, et al. Updated clinical guidelines for diagnosing fetal alcohol spectrum disorders. Pediatrics 2016; 138: e20154256.

- 3. Flannigan K, Kapasi A, Pei J, et al. Characterizing adverse childhood experiences among children and adolescents with prenatal alcohol exposure and Fetal Alcohol Spectrum Disorder. Child Abuse Negl 2021; 112: 104888.

- 4. Kautz-Turnbull C, Adams TR, Petrenko CLM. The strengths and positive influences of children with Fetal Alcohol Spectrum Disorders. Am J Intellect Dev Disabil 2022; 127: 355–368.

- 5. May PA, Chambers CD, Kalberg WO, et al. Prevalence of Fetal Alcohol Spectrum Disorders in 4 US Communities. JAMA 2018; 319: 474–482.

- 6. Petrenko CLM, Tahir N, Mahoney EC, et al. Prevention of secondary conditions in fetal alcohol spectrum disorders: identification of systems-level barriers. Matern Child Health J 2014; 18: 1496–1505.

- 7. Ryan S, Ferguson DL. The person behind the face of Fetal Alcohol Spectrum Disorder: student experiences and family and professionals’ perspectives on FASD. Rural Special Education Quarterly 2006; 25: 32–40.

- 8. Elliott EJ, Payne J, Haan E, et al. Diagnosis of foetal alcohol syndrome and alcohol use in pregnancy: a survey of paediatricians’ knowledge, attitudes and practice. J Paediatr Child Health 2006; 42: 698–703.

- 9. Olson HC, Pruner M, Byington N, et al. FASD-informed care and the future of intervention. In: Abdul-Rahman OA, Petrenko CLM (eds) Fetal alcohol spectrum disorders: a multidisciplinary approach. Cham: Springer International Publishing, 2023, pp. 269–362. doi: 10.1007/978-3-031-32386-7_13.

- 10. Petrenko CLM, Alto ME. Interventions in fetal alcohol spectrum disorders: an international perspective. Eur J Med Genet 2017; 60: 79–91.

- 11. Olson HC, Oti R, Gelo J, et al. “Family matters:” fetal alcohol spectrum disorders and the family. Dev Disabil Res Rev 2009; 15: 235–249.

- 12. Petrenko CLM, Kautz-Turnbull C. Chapter two - from surviving to thriving: a new conceptual model to advance interventions to support people with FASD across the lifespan. In: Riggs NR, Rigles B (eds) International review of research in developmental disabilities. Cambridge, Massachusetts: Academic Press, 2021, pp. 39–75.

- 13. Roozen S, Stutterheim SE, Bos AER, et al. Understanding the social stigma of fetal alcohol spectrum disorders: from theory to interventions. Found Sci 2022; 27: 753–771.

- 14. Bertrand J. Interventions for children with fetal alcohol spectrum disorders (FASDs): overview of findings for five innovative research projects. Res Dev Disabil 2009; 30: 986–1006.

- 15. Olson HC, Montague RA. An innovative look at early intervention for children affected by prenatal alcohol exposure. In: Prenatal alcohol use and fetal alcohol spectrum disorders: diagnosis, assessment and new directions in research and multimodal treatment. Sharjah: Bentham Science, 2011, pp. 64–107.

- 16. Petrenko CLM, Pandolfino ME, Robinson LK. Findings from the families on track intervention pilot trial for children with fetal alcohol spectrum disorders and their families. Alcoholism: Clinical and Experimental Research 2017; 41: 1340–1351.

- 17. Petrenko C, Olson HC, Walker-Bauer M. Growing Evidence for a Scientifically-Validated FASD Intervention & Ideas for Providing FASD-Informed Care: The Families Moving Forward Program. The 7th International Conference on Fetal Alcohol Spectrum Disorder Research: Results and Relevance; 2017 Mar; Vancouver, BC, Canada.

- 18. Wiggins G, McTighe J. Understanding by design. Alexandria, VA: Association for Supervision and Curriculum Development, 1998.

- 19. Michie S, Wood CE, Johnston M, et al. Behaviour change techniques: the development and evaluation of a taxonomic method for reporting and describing behaviour change interventions (a suite of five studies involving consensus methods, randomised controlled trials and analysis of qualitative data). Health Technol Assess 2015; 19: 1–188.