Abstract

Fully supervised medical image segmentation methods use pixel-level labels to achieve good results, but obtaining large-scale, high-quality pixel-level labels is cumbersome and time-consuming. This study aimed to develop a weakly supervised model that uses only image-level labels to automatically segment four types of uterine lesions and three types of normal tissue on magnetic resonance (MR) images. MRI data were retrospectively collected from the database of our institution; T2-weighted images were selected and annotated at the image level only. The proposed two-stage model consists of four sequential parts: the pixel correlation module, the class re-activation map module, the inter-pixel relation network module, and the DeepLab v3+ module. The Dice similarity coefficient (DSC), the Hausdorff distance (HD), and the average symmetric surface distance (ASSD) were used to evaluate model performance. The original dataset consisted of 85,730 images from 316 patients with four types of lesions (endometrial cancer, uterine leiomyoma, endometrial polyps, and atypical hyperplasia of endometrium). Of these, 196, 57, and 63 patients were randomly assigned to training, validation, and testing, respectively. Trained from scratch, the proposed model achieved an average DSC of 83.5%, HD of 29.3 mm, and ASSD of 8.83 mm. Among weakly supervised methods that use only image-level labels, the proposed model performs on par with state-of-the-art approaches.

Supplementary Information

The online version contains supplementary material available at 10.1007/s10278-023-00931-9.

Keywords: Weakly supervised semantic segmentation, Uterine lesions, Magnetic resonance imaging, Deep learning

Introduction

Accurate determination of the type, number, shape, and location of lesions is of great importance for preoperative diagnosis, intraoperative planning, and postoperative evaluation. Taking pathological changes in the uterus as an example, accurate segmentation of endometrial cancer lesions on magnetic resonance (MR) images is crucial for determining the clinicopathological stage, which in turn guides the choice of surgical plan. In June 2023, the International Federation of Gynecology and Obstetrics (FIGO) revised the staging of endometrial cancer to make it more refined [1], placing higher demands on the accuracy of image segmentation. However, manual segmentation of lesions not only requires many experienced radiologists but also greatly increases their workload. With the development of deep learning, researchers have begun to use artificial intelligence (AI) models for automatic medical image segmentation.

Most high-performing segmentation models are fully supervised convolutional neural networks, which require a large number of high-quality pixel-level labels to supervise training [2–10]. In MR image analysis, however, the cost of obtaining many pixel-level clinical labels is prohibitive, so standardized annotated MR datasets remain small.

To reduce the burden of dense annotation, many studies have investigated weakly supervised semantic segmentation (WSSS) [11–19], using weak labels such as bounding boxes, scribbles, and image-level classification annotations to approach the performance of fully supervised methods. Scribble-based weak annotation [20] is one of the easiest for annotators, who only need to draw a few lines marking a small part of the foreground or background. To further reduce the manual labeling workload, some researchers have combined a small number of strongly annotated images with a large number of images carrying weak classification labels [21], achieving performance close to that of fully supervised methods. However, such hybrid supervision methods still require every image to carry either a strong or a weak annotation. Another strategy is semi-supervised learning, which jointly trains models on a small number of strongly annotated images and a large amount of unlabeled data [22–24]; the unlabeled data help improve the performance and generalization ability of the model. To reduce labeling cost further, weakly and semi-supervised frameworks have recently been proposed [21] in which a small number of scribble-labeled images and a large amount of unlabeled data are used together for training, again achieving performance close to that of fully supervised methods.

Among all weak annotations, image-level labels are the most readily available. However, segmentation from image-level labels is also the most challenging task, mainly because the labels carry no information about where the target regions are. WSSS with image-level labels is usually built on class activation maps (CAMs) [11], which can effectively localize objects in images. However, CAMs tend to cover only the most discriminative part of an object and often incorrectly activate background regions. In addition, when the dataset is augmented, the CAMs of the same image are usually inconsistent across different affine transformations. As a result, a considerable gap remains between a segmentation network supervised by CAMs and one supervised by ground truth (GT). To bridge this gap between WSSS and fully supervised semantic segmentation (FSSS), a number of CAM-based WSSS approaches have been proposed [12–19], mainly aiming to make CAMs cover the complete object region. Some methods use CAMs to generate seed regions and then expand them to cover the entire object [18, 19], while others directly generate more accurate saliency maps [13–16]. Chen introduced causal intervention into the segmentation model [16], using a category causal chain to alleviate unclear organ boundaries and an anatomical causal chain to alleviate organ co-occurrence, and achieved accurate organ segmentation on three MR image datasets. However, owing to the scarcity of suitable medical image resources, most CAM-based methods were designed for standardized natural image datasets, and their application to medical image segmentation has been relatively limited.

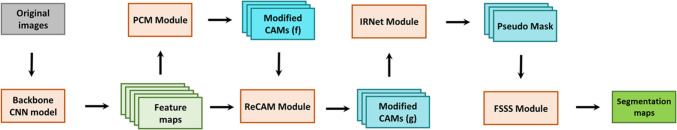

The development of automatic segmentation methods for the uterine region lags particularly far behind. To our knowledge, no weakly supervised model has yet been developed for segmenting uterine lesions. Therefore, based on a systematic investigation of state-of-the-art (SOTA) WSSS models and of the characteristics of uterine MR images, we propose a two-stage segmentation framework. The core idea comprises three parts: 1) generating initial CAMs with a classification network supervised by image-level labels; 2) refining the initial CAMs sequentially with the pixel correlation module (PCM), the class re-activation map (ReCAM) module, and the inter-pixel relation network (IRNet) to generate pseudo masks; and 3) using the pseudo masks as pixel-level labels to supervise a DeepLab v3+ network for the final segmentation.

Materials and Methods

Data Acquisition

The dataset used in this study came from the non-public image cohort of Dalian Women and Children's Medical Group. This single-center study retrospectively collected preoperative T2-weighted MR images from 316 patients. Images were acquired on a 3.0-T MR system (GE Healthcare) with 8-channel phased-array coils, using the following parameters: slice thickness 6 mm, slice gap 1 mm, repetition time 3040 ms, echo time 107.5 ms, and field of view 28 × 22.4 cm. Each MR image was resized to 790 × 940 pixels, a region of interest (ROI) of 470 × 435 pixels was cropped uniformly from the center of the image, and Z-score normalization was then applied to the cropped images. All images were analyzed independently by two radiologists with more than 15 years (16 and 19 years, respectively) of experience in gynecological imaging, and their image-level findings were recorded and organized into multi-label (multi-hot) labels for model training, validation, and testing. For evaluating model performance, the radiologists also outlined the lesions using ITK-SNAP (version 3.6.0, open-source software, https://itk.org/). Disagreements in manual segmentation were resolved through discussion, and the average of the two contours, computed with in-house code, was taken as the GT. The research protocol was approved by the Ethics Committee and the Institutional Review Committee, and all experiments and methods were carried out in accordance with the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards.
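The cropping, normalization, and label-encoding steps above can be sketched as follows. This is a minimal NumPy sketch, not the authors' in-house code: the resizing to 790 × 940 pixels is assumed to have been done beforehand by an image library, the resized slice is assumed to be stored as a (790, 940) array with the 470 × 435 crop taken in the same (rows, columns) order, normalization is assumed to be per slice, and the class order in the hypothetical `CLASSES` list is illustrative only.

```python
import numpy as np

# Hypothetical class order for the image-level multi-hot vector (assumption;
# the source lists four lesion types and three normal tissues but no order).
CLASSES = [
    "endometrial_cancer", "uterine_leiomyoma", "endometrial_polyps",
    "atypical_hyperplasia", "uterus", "cervix", "endometrium",
]


def center_crop(img: np.ndarray, out_h: int = 470, out_w: int = 435) -> np.ndarray:
    """Cut an out_h x out_w window from the center of a 2-D slice."""
    h, w = img.shape
    if out_h > h or out_w > w:
        raise ValueError("crop window is larger than the image")
    top = (h - out_h) // 2
    left = (w - out_w) // 2
    return img[top:top + out_h, left:left + out_w]


def zscore(img: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Per-slice Z-score normalization: zero mean, unit standard deviation."""
    img = img.astype(np.float64)
    return (img - img.mean()) / (img.std() + eps)


def multi_hot(present: list) -> np.ndarray:
    """Encode the image-level findings of one slice as a multi-hot vector."""
    vec = np.zeros(len(CLASSES), dtype=np.float32)
    for name in present:
        vec[CLASSES.index(name)] = 1.0
    return vec


# Usage on a synthetic slice that has already been resized to (790, 940).
slice_ = np.random.default_rng(0).normal(size=(790, 940))
x = zscore(center_crop(slice_))
y = multi_hot(["endometrial_cancer", "uterus", "endometrium"])
```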

Segmentation Pipeline

This study followed the current mainstream WSSS approach: 1) training a multi-label classification model with image-level class labels; 2) extracting the CAM of each class and using it as an initial seed to generate a binary (0–1) mask; and 3) training the segmentation model in a standard fully supervised manner, using the class masks as pseudo labels.
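Step 2 can be illustrated with a common way of turning CAMs into a label map: each CAM of a class present in the image is scaled to [0, 1], a constant background score is stacked in front, and the arg-max is taken; the binary 0–1 mask for class k is then `mask == k + 1`. This is a sketch under stated assumptions, not necessarily the thresholding the authors used; the function name and the background threshold of 0.3 are hypothetical.

```python
import numpy as np


def cams_to_mask(cams: np.ndarray, labels: np.ndarray, bg_threshold: float = 0.3) -> np.ndarray:
    """Convert per-class CAMs into a pseudo label map.

    cams:   (C, H, W) non-negative activation maps
    labels: (C,) image-level multi-hot vector
    Returns an (H, W) int map: 0 = background, k + 1 = class k.
    """
    c, h, w = cams.shape
    norm = np.zeros((c, h, w), dtype=np.float64)
    for k in range(c):
        # Only classes present in the image-level label may claim pixels.
        if labels[k] > 0 and cams[k].max() > 0:
            norm[k] = cams[k] / cams[k].max()
    background = np.full((1, h, w), bg_threshold)
    scores = np.concatenate([background, norm], axis=0)
    return scores.argmax(axis=0)  # ties go to the background channel


demo = np.zeros((2, 4, 4))
demo[0, :2, :2] = 2.0
mask = cams_to_mask(demo, np.array([1, 0]))
binary_class0 = (mask == 1).astype(np.uint8)  # 0-1 mask for class 0
```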

CAM

CNNs are widely used for medical image classification. In such tasks, the feature maps extracted by each convolutional layer retain location information and therefore have strong object localization capability. However, the final fully connected layer used for classification discards this spatial information. To preserve it, a global average pooling (GAP) layer [11] is inserted before the fully connected layer; GAP outputs the spatial average of each feature map in the last convolutional layer, and a weighted sum of these averages produces the final class score. Correspondingly, the CAM of a target class is obtained by applying the same weighted sum to the feature maps of the last convolutional layer. Current SOTA WSSS methods are mainly based on CAMs, and CAMs trained with weak classification labels can effectively localize objects in images.
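The relationship between the GAP classifier and the CAM can be made concrete in a few lines of NumPy. The sketch assumes a fully connected layer without bias; `features` stands for the last convolutional feature maps and `fc_weights` for the classifier weights (both names are illustrative). Because GAP and the weighted sum are both linear, the spatial mean of a class's CAM equals that class's score, which is why the same weights can localize the object.

```python
import numpy as np


def gap_logits(features: np.ndarray, fc_weights: np.ndarray) -> np.ndarray:
    """Class scores of a GAP + fully connected head.

    features:   (K, H, W) feature maps of the last convolutional layer
    fc_weights: (C, K) weights of the fully connected layer (no bias)
    """
    pooled = features.mean(axis=(1, 2))  # GAP: one average per feature map
    return fc_weights @ pooled           # weighted sum -> (C,) scores


def class_activation_map(features: np.ndarray, fc_weights: np.ndarray, cls: int) -> np.ndarray:
    """CAM_c(x, y) = sum_k w_k^c * f_k(x, y): the same weights applied before pooling."""
    return np.tensordot(fc_weights[cls], features, axes=([0], [0]))  # (H, W)


rng = np.random.default_rng(0)
feats = rng.random((8, 14, 14))
w = rng.normal(size=(3, 8))
cam = class_activation_map(feats, w, cls=1)
```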

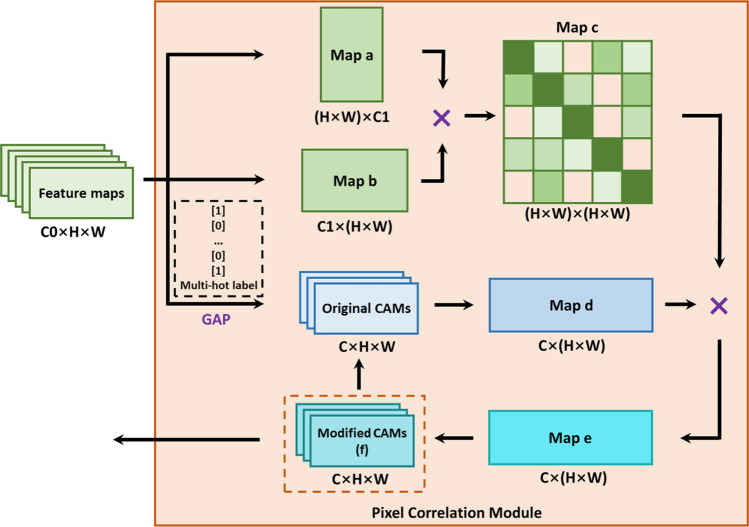

Pixel Correlation Module

The original CAMs usually cover only the most discriminative part of the target object and cannot be used directly to generate pseudo masks. Therefore, the first step of the proposed method was to refine the CAMs with the PCM [13]. A standard ResNet-50 was used as the backbone to extract feature maps, and the original CAMs were obtained by training this residual network with multi-hot class labels. The PCM uses a self-attention mechanism to reconstruct the original CAMs (Fig. 1) from the similarity between each pixel and its neighbors. The refined CAMs can be fed back as input CAMs, so the refinement can be iterated until the best result is obtained.
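A simplified version of the PCM refinement is sketched below, following a SEAM-style formulation in which affinities are the rectified cosine similarities between pixel features and each refined CAM value is an affinity-weighted average of the original CAM. This is an assumption-laden sketch, not the authors' module: the learned embedding layers are omitted, and affinities are computed over all pixel pairs; restricting them to a local window would give a neighborhood-based variant.

```python
import numpy as np


def pcm_refine(cams: np.ndarray, feats: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Refine CAMs with feature affinities.

    cams:  (C, H, W) class activation maps
    feats: (K, H, W) feature maps used to measure pixel similarity
    """
    c, h, w = cams.shape
    k = feats.shape[0]
    f = feats.reshape(k, h * w)
    f = f / (np.linalg.norm(f, axis=0, keepdims=True) + eps)  # unit-norm feature per pixel
    aff = np.maximum(f.T @ f, 0.0)                              # ReLU(cosine similarity), (HW, HW)
    aff = aff / (aff.sum(axis=1, keepdims=True) + eps)          # each row sums to about 1
    refined = cams.reshape(c, h * w) @ aff.T                    # weighted mean over similar pixels
    return refined.reshape(c, h, w)


# Toy example: two feature "regions"; the CAM fires on a single left-region pixel.
f_demo = np.zeros((2, 2, 4))
f_demo[0, :, :2] = 1.0
f_demo[1, :, 2:] = 1.0
c_demo = np.zeros((1, 2, 4))
c_demo[0, 0, 0] = 4.0
once = pcm_refine(c_demo, f_demo)
twice = pcm_refine(once, f_demo)  # iterating, as described in the text
```

In the toy example the activation spreads evenly over the pixels whose features resemble the seed pixel and stays at zero elsewhere.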

Fig. 1.

The network structure of the PCM module. × denotes matrix multiplication

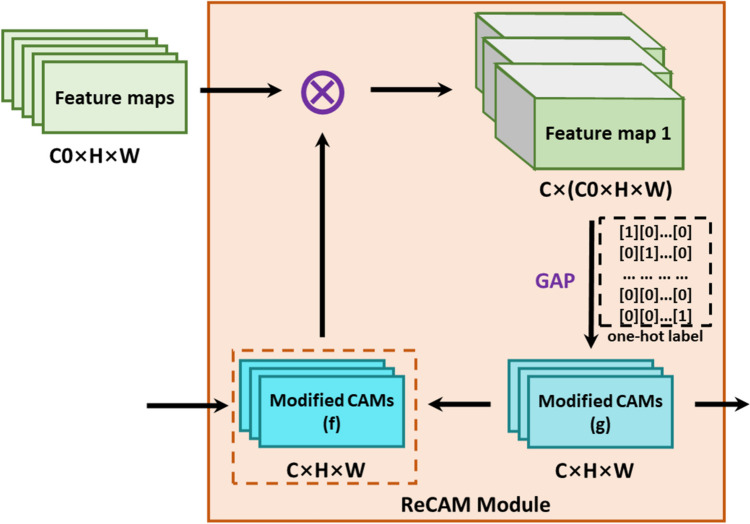

ReCAM Module

The feature maps extracted by the convolutional layers serve the multi-label classification task; that is, they contain location information for objects of multiple classes. This disperses the activation of each target region and slows model convergence. Therefore, the multi-hot labels were first converted into one-hot labels, and the ReCAM module was used to convert the multi-label classification task into several single-label classification tasks (Fig. 2) [16]. As with the PCM, the best CAMs can be obtained iteratively.
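The re-activation idea can be sketched as follows, assuming the ReCAM formulation in which each present class k gets its own feature vector (the feature maps weighted by the normalized CAM of class k and then average-pooled), which is classified with a softmax over all classes against the one-hot target k. The function name, shapes, and the absence of a learned backbone are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def recam_loss(feats: np.ndarray, cams: np.ndarray, labels: np.ndarray,
               weights: np.ndarray, eps: float = 1e-5) -> float:
    """Softmax cross-entropy over class-specific (re-activated) features.

    feats:   (K, H, W) backbone feature maps
    cams:    (C, H, W) current CAMs
    labels:  (C,) multi-hot image-level label
    weights: (C, K) classifier weights
    """
    losses = []
    for k in np.flatnonzero(labels):  # one single-label task per present class
        cam = np.maximum(cams[k], 0.0)
        cam = cam / (cam.max() + eps)                      # normalize CAM to [0, 1]
        f_k = (feats * cam[None]).mean(axis=(1, 2))        # CAM-weighted GAP feature
        logits = weights @ f_k
        z = logits - logits.max()                          # numerically stable softmax
        log_prob = z - np.log(np.exp(z).sum())
        losses.append(-log_prob[k])                        # one-hot target = class k
    return float(np.mean(losses)) if losses else 0.0


feats = np.zeros((2, 3, 3))
feats[0] = 1.0
cams = np.zeros((2, 3, 3))
cams[0] = 1.0
good = recam_loss(feats, cams, np.array([1, 0]), 10.0 * np.eye(2))
bad = recam_loss(feats, cams, np.array([1, 0]), 10.0 * np.array([[0.0, 1.0], [1.0, 0.0]]))
```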

Fig. 2.

The network structure of the ReCAM module. ⊙ denotes element-wise multiplication

IRNet Module

To further improve the accuracy of the pseudo pixel-level annotations, the refined CAMs were then processed by IRNet to generate more accurate pseudo masks [18]. IRNet consists of two branches that share the same backbone network. The first branch predicts a displacement vector field, which is further converted into an instance map. The second branch detects a class boundary map, from which semantic affinities between pairs of pixels are derived; these affinities drive a random walk that propagates the CAM activations. IRNet takes the refined CAMs as input: the CAMs are first converted into labels suitable for IRNet with a fully connected conditional random field (denseCRF) so that the relationship between pairs of pixels can be represented (for more explanations please see S1 in Supplementary).
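The affinity-driven random walk used by IRNet can be sketched on a small grid. In this simplified, hypothetical sketch, affinities are defined only between 4-connected neighbors as a_ij = (1 − max(B_i, B_j))^β from a class boundary map B with values in [0, 1], the transition matrix is the row-normalized affinity matrix, and the boundary-suppressed CAMs are propagated for a fixed number of steps. The values β = 10 and 50 steps are illustrative; the original IRNet considers pixel pairs within a larger radius and obtains B from its boundary branch.

```python
import numpy as np


def boundary_affinity(boundary: np.ndarray, beta: float = 10.0) -> np.ndarray:
    """Affinity matrix (HW, HW) between each pixel, itself, and its 4-neighbors."""
    h, w = boundary.shape
    b = boundary.ravel()
    aff = np.zeros((h * w, h * w))
    for r in range(h):
        for c in range(w):
            i = r * w + c
            for dr, dc in ((0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < h and 0 <= cc < w:
                    j = rr * w + cc
                    # Low affinity if either pixel lies on a class boundary.
                    aff[i, j] = (1.0 - max(b[i], b[j])) ** beta
    return aff


def random_walk(cams: np.ndarray, boundary: np.ndarray,
                beta: float = 10.0, steps: int = 50) -> np.ndarray:
    """Propagate (C, H, W) CAMs with the transition matrix T = D^-1 A."""
    c, h, w = cams.shape
    aff = boundary_affinity(boundary, beta)
    trans = aff / (aff.sum(axis=1, keepdims=True) + 1e-8)
    m = (cams * (1.0 - boundary)[None]).reshape(c, h * w)  # suppress boundary pixels
    for _ in range(steps):
        m = m @ trans.T  # new value of pixel i = sum_j T[i, j] * old value of pixel j
    return m.reshape(c, h, w)
```

With a boundary in the middle of a strip, activation seeded on one side spreads only up to the boundary and never crosses it.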

FSSS Module

DeepLab v3+ [25] was trained in a fully supervised manner, with the synthesized pseudo masks as training labels, to obtain the final segmentation results. DeepLab v3+ uses an encoder-decoder architecture, with DeepLab v3 as the encoder and an added decoder module that gradually recovers spatial information to capture sharp object boundaries. DeepLab v3+ also adopts atrous spatial pyramid pooling (ASPP), which applies parallel atrous convolutions with different dilation rates to extract multi-scale features with different receptive fields before upsampling. In addition, DeepLab v3+ uses a modified Xception model as its backbone. Xception is built mainly from depthwise separable convolutions, which reduce its computational complexity (for more explanations please see S2 in Supplementary).
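The efficiency argument for depthwise separable convolutions, and the receptive-field growth that ASPP exploits, can be checked with simple arithmetic. The sketch counts weights only (no biases) for an illustrative 3 × 3 layer with 256 input and output channels, and uses dilation rates 6, 12, and 18, which are the ASPP rates commonly used in DeepLab v3/v3+ at output stride 16; the source does not state the rates used in this study.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """k x k depthwise convolution followed by a 1 x 1 pointwise convolution."""
    return k * k * c_in + c_in * c_out


def effective_kernel(k: int, rate: int) -> int:
    """Spatial extent covered by a k x k atrous kernel with dilation `rate`."""
    return k + (k - 1) * (rate - 1)


standard = conv_params(3, 256, 256)
separable = depthwise_separable_params(3, 256, 256)
extents = [effective_kernel(3, r) for r in (6, 12, 18)]
print(standard, separable, extents)  # 589824 67840 [13, 25, 37]
```

For this layer the separable version needs about 8.7 times fewer weights, while the three atrous branches see 13, 25, and 37 pixels across with the same nine weights per channel.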

The overall network architecture is shown in Fig. 3.

Fig. 3.

The overall network architecture of the proposed WSSS model

Design of Loss Functions

The PCM was used to generate and refine the original CAMs. In this step, the network was trained with the multi-hot class labels using a binary cross-entropy (BCE) loss with sigmoid activation:

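$$\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{C}\sum_{i=1}^{C}\Big[\lambda_1\, y(i)\log s\big(x(i)\big) + \big(1 - y(i)\big)\log\Big(1 - s\big(x(i)\big)\Big)\Big] \tag{1}$$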

where C denotes the total number of object classes in the datasets, y(i) represents the multi-hot label for the i-th class, x(i) is the prediction logit of the i-th class, s(·) denotes the sigmoid function, and λ1 is the weight parameter used to balance the positive and negative samples. The ReCAM module decomposed a multi-label feature into a set of single-label features. Therefore, the softmax cross entropy (SCE) loss was used accordingly to adapt to the one-hot class labels. The SCE loss function can be expressed as

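$$\mathcal{L}_{\mathrm{SCE}} = -\sum_{i=1}^{C} y(i)\log\frac{\exp\big(\mathrm{CAM}_j(i)\big)}{\sum_{k=1}^{C}\exp\big(\mathrm{CAM}_j(k)\big)} \tag{2}$$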

where C denotes the total number of object classes in the dataset, y(i) represents the one-hot label for the i-th class, and CAM_j(k) represents the k-th element of CAM_j. The overall loss function is then expressed as follows:

$$\mathcal{L} = \mathcal{L}_{\mathrm{BCE}} + \lambda_2\,\mathcal{L}_{\mathrm{SCE}} \tag{3}$$

where λ2 represents the weight parameter used to balance the BCE and SCE losses.
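The three losses described above can be written directly in NumPy. This sketch assumes that λ1 weights the positive term of the BCE loss, that the SCE loss is a softmax cross-entropy of one class-specific score vector against a one-hot target, and that the overall loss adds λ2 times the SCE term to the BCE term; these placements and the function names are assumptions, not details confirmed by the source. The value λ1 = 0.16 in the usage line is the optimized value reported in the Discussion; λ2 = 1.0 is a placeholder.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def bce_loss(logits: np.ndarray, y: np.ndarray, lam1: float, eps: float = 1e-12) -> float:
    """Class-averaged BCE with multi-hot labels; lam1 weights the positive term."""
    s = sigmoid(logits)
    terms = lam1 * y * np.log(s + eps) + (1.0 - y) * np.log(1.0 - s + eps)
    return float(-terms.mean())


def sce_loss(cam_j: np.ndarray, y_onehot: np.ndarray) -> float:
    """Softmax cross-entropy of a class-specific score vector against a one-hot label."""
    z = cam_j - cam_j.max()                # stabilize the exponentials
    log_softmax = z - np.log(np.exp(z).sum())
    return float(-(y_onehot * log_softmax).sum())


def total_loss(logits, y_multi, cam_j, y_onehot, lam1: float, lam2: float) -> float:
    return bce_loss(logits, y_multi, lam1) + lam2 * sce_loss(cam_j, y_onehot)


example = total_loss(np.array([2.0, -1.0, 0.5]), np.array([1.0, 0.0, 1.0]),
                     np.array([3.0, 0.2, 0.1]), np.array([1.0, 0.0, 0.0]),
                     lam1=0.16, lam2=1.0)
```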

Experimental Environment

To verify the effectiveness of the proposed method, all experiments were run in the same software and hardware environment to exclude the influence of external factors. The whole segmentation model was implemented in the PyTorch deep learning framework (version 1.11). The computing platform consisted of four NVIDIA RTX 3090 Ti GPUs, each with 24 GB of memory, and the CUDA 11.2 and cuDNN 10.0 toolkits were installed for parallel acceleration.

Metrics for Evaluation

Segmentation performance was quantitatively evaluated with the Dice similarity coefficient (DSC), the Hausdorff distance (HD), and the average symmetric surface distance (ASSD). Higher DSC values indicate better segmentation. DSC was calculated only for MR images containing lesions, because images without lesions have no foreground target and their DSC is undefined. HD is the largest distance from a point in one boundary point set to the nearest point in the other set, taken over both directions. Lower HD values indicate higher segmentation accuracy in terms of boundary similarity. To suppress unreasonable distances caused by outliers and keep the overall statistics stable, the HD in this article was computed as the 95th percentile of these distances instead of the maximum. ASSD is the mean distance from each boundary point of one set to the nearest boundary point of the other set, averaged over both sets. As with HD, a lower ASSD indicates better segmentation.
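The three metrics can be sketched in NumPy for 2-D binary masks. Assumptions: boundary pixels are foreground pixels with at least one 4-connected background neighbor, distances are Euclidean and scaled by a hypothetical per-axis `spacing` in millimetres, HD95 takes the 95th percentile of the pooled directed distances from both sides (one common convention; others take the larger of the two directed 95th percentiles), and both masks must be non-empty for HD95 and ASSD. The pairwise distance matrix is fine for small masks but memory-hungry for large ones, where distance transforms are the usual alternative.

```python
import numpy as np


def dice(pred: np.ndarray, gt: np.ndarray) -> float:
    pred, gt = pred.astype(bool), gt.astype(bool)
    denom = pred.sum() + gt.sum()
    if denom == 0:
        return float("nan")  # undefined without any foreground
    return float(2.0 * np.logical_and(pred, gt).sum() / denom)


def boundary_points(mask: np.ndarray, spacing=(1.0, 1.0)) -> np.ndarray:
    """Coordinates (in mm) of foreground pixels touching the background."""
    m = mask.astype(bool)
    p = np.pad(m, 1, mode="constant", constant_values=False)
    interior = (p[1:-1, 1:-1] & p[:-2, 1:-1] & p[2:, 1:-1]
                & p[1:-1, :-2] & p[1:-1, 2:])
    return np.argwhere(m & ~interior) * np.asarray(spacing)


def directed_distances(pred, gt, spacing=(1.0, 1.0)):
    a, b = boundary_points(pred, spacing), boundary_points(gt, spacing)
    d = np.sqrt(((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1))
    return d.min(axis=1), d.min(axis=0)  # pred -> gt, gt -> pred


def hd95(pred, gt, spacing=(1.0, 1.0)) -> float:
    d_pg, d_gp = directed_distances(pred, gt, spacing)
    return float(np.percentile(np.concatenate([d_pg, d_gp]), 95))


def assd(pred, gt, spacing=(1.0, 1.0)) -> float:
    d_pg, d_gp = directed_distances(pred, gt, spacing)
    return float((d_pg.sum() + d_gp.sum()) / (len(d_pg) + len(d_gp)))
```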

Statistical Analysis

The segmentation experiment was repeated five times, and the mean and standard deviation of each metric were calculated. All statistical analyses were performed with R (version 4.0). Normality was assessed with the Lilliefors test. Repeated measurements were compared with the independent-samples t-test (normally distributed data) or the Mann–Whitney U test (non-normally distributed data). One-way analysis of variance (ANOVA) was used for multiple comparisons. All tests were two-sided, and p < 0.05 was considered statistically significant.

Results

Demographic Characteristics and Dataset Pretreatment

This study included 316 patients with four types of lesions (Table 1). The mean age of all patients was 46.2 years, and their axial, coronal, and sagittal MR images were collected as the original dataset (85,730 slices). The patients were randomly divided into three groups: 196 patients as the training set (51,438 slices, 60%), 57 patients as the validation set (17,146 slices, 20%), and the remaining 63 patients as the test set (17,146 slices, 20%). A simple cross-validation was carried out on the three subsets, and statistical analysis showed no significant difference in baseline characteristics among them. To balance the proportion of each lesion type, data augmentation was applied to the MR images of endometrial polyps and atypical hyperplasia of endometrium: these images were randomly scaled by a factor of 0.5 to 2, flipped horizontally and vertically, and rotated within −180° to 180°.
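The augmentation described above can be sketched with NumPy alone, using inverse mapping and nearest-neighbor sampling. Assumptions: the scale factor and angle are drawn uniformly from the stated ranges, each flip is applied with probability 0.5, pixels mapped from outside the image are filled with 0, and the helper names are hypothetical; the authors' augmentation library and interpolation method are not specified.

```python
import numpy as np


def rotate_scale_nearest(img: np.ndarray, scale: float, angle_deg: float) -> np.ndarray:
    """Rotate and scale a 2-D image about its center (nearest neighbor, zero fill)."""
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = np.deg2rad(angle_deg)
    cos_t, sin_t = np.cos(t), np.sin(t)
    rr, cc = np.mgrid[0:h, 0:w]
    dy, dx = rr - cy, cc - cx
    # Inverse mapping: undo the rotation and the scaling for every output pixel.
    src_x = (cos_t * dx + sin_t * dy) / scale + cx
    src_y = (-sin_t * dx + cos_t * dy) / scale + cy
    src_r = np.rint(src_y).astype(int)
    src_c = np.rint(src_x).astype(int)
    valid = (src_r >= 0) & (src_r < h) & (src_c >= 0) & (src_c < w)
    out = np.zeros_like(img)
    out[valid] = img[src_r[valid], src_c[valid]]
    return out


def random_augment(img: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    out = rotate_scale_nearest(img, rng.uniform(0.5, 2.0), rng.uniform(-180.0, 180.0))
    if rng.random() < 0.5:
        out = out[:, ::-1]  # horizontal flip
    if rng.random() < 0.5:
        out = out[::-1, :]  # vertical flip
    return out
```

Because only image-level labels are used, the label vector normally stays unchanged under these transforms. One caveat of zooming in (scale > 1) is that a small lesion near the edge can be pushed out of the frame, which would make the image-level label wrong for that sample.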

Table 1.

Characteristics of the patients and the train, validation and test datasets

| Characteristic | Overall population | Train set | Validation set | Test set |
|---|---|---|---|---|
| Age (years)ᵃ | 46.2 (22.4) | 47.6 (23.6) | 46.7 (26.0) | 44.5 (15.4) |
| Type of lesionᵇ | | | | |
| Endometrial cancer | 6963 (8.12) | 4231 (6.58) | 1391 (1.62) | 1341 (1.56) |
| Uterine leiomyoma | 9125 (10.6) | 5475 (8.52) | 1816 (2.12) | 1834 (2.14) |
| Endometrial polyps | 595 (0.69) | 355 (0.55) | 124 (0.14) | 116 (0.14) |
| Atypical hyperplasia of endometrium | 334 (0.39) | 199 (0.31) | 68 (0.08) | 67 (0.08) |
| Normal tissue/organsᵇ | | | | |
| Background | 85,730 (100) | 51,438 (60.0) | 17,146 (20.0) | 17,146 (20.0) |
| Uterus | 28,775 (33.6) | 17,348 (27.0) | 5711 (6.66) | 5716 (6.67) |
| Cervix | 4912 (5.73) | 2963 (4.61) | 1001 (1.17) | 948 (1.11) |
| Endometrium | 14,769 (17.2) | 8979 (14.0) | 2898 (3.38) | 2892 (3.37) |
| Totalᵇ | 85,730 (100.0) | 51,438 (60.0) | 17,146 (20.0) | 17,146 (20.0) |

ᵃData are presented as mean (standard deviation)

ᵇData are presented as number (percentage, %)

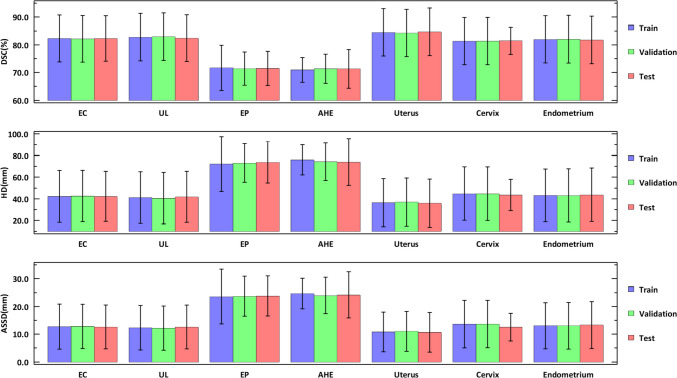

Assessment of the Segmentation Performance

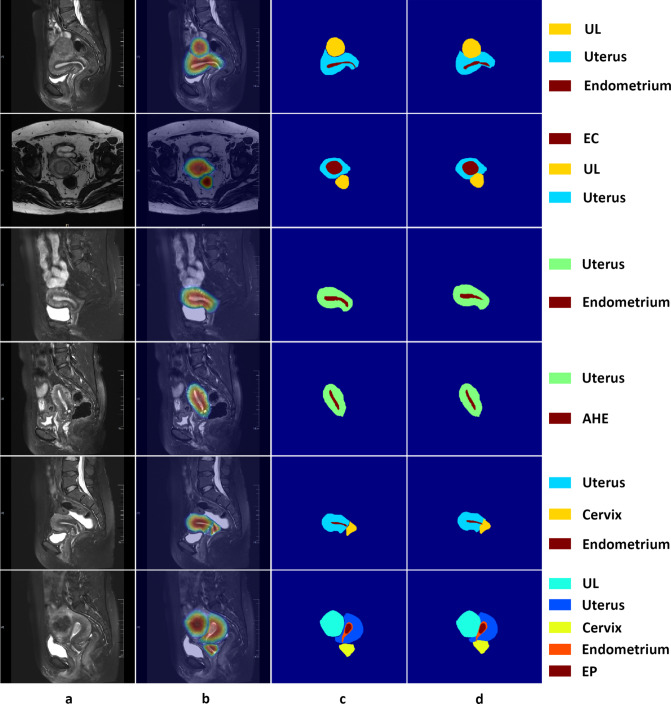

On the test set, the proposed method segmented the uterine region with an average DSC of 83.0%, HD of 40.3 mm, and ASSD of 12.0 mm (Fig. 4). Apart from normal uterine tissue (mean DSC 84.7%), uterine leiomyoma was segmented best, with a mean DSC of 82.4%, whereas atypical hyperplasia of endometrium had the lowest mean DSC (71.3%). The mean DSCs of endometrial cancer, endometrial polyps, cervix, and endometrium ranged from 71.5% to 82.3%. The highest (73.8 mm) and lowest (35.9 mm) mean HDs were obtained for the uterus and atypical hyperplasia of endometrium, indicating satisfactory agreement between the segmentation and the GTs. ASSD followed a trend similar to that of HD, with mean values ranging from 10.7 to 24.2 mm; the highest and lowest values were obtained for atypical hyperplasia of endometrium and the uterus, respectively. Qualitative results for six test images are shown in Fig. 5 and show good consistency between the segmentation and the GTs.

Fig. 4.

The segmentation performance of the proposed model in the train, validation, and test sets. Data are presented as mean ± standard deviation. EC denotes endometrial cancer, UL denotes uterine leiomyoma, EP denotes endometrial polyps, and AHE denotes atypical hyperplasia of endometrium

Fig. 5.

Visualization of the representative segmentation results on six cases of the test dataset. a Input images. b CAMs produced by the proposed model. c Ground truth. d Results predicted by the proposed model using the pseudo labels. EC denotes endometrial cancer, UL denotes uterine leiomyoma, EP denotes endometrial polyps, and AHE denotes atypical hyperplasia of endometrium

Ablation Studies

The ablation experiments evaluated the contribution of each module by adding it to, or removing it from, the model and measuring the resulting change in segmentation accuracy. The contributions of the three key components (PCM, ReCAM, and IRNet) were investigated using 2% of the training set (Table 2). Compared with the original CAMs, adding PCM to the baseline model improved the DSC by 5.3 percentage points, ReCAM alone improved it by 6.0 percentage points, and IRNet contributed the most, with an improvement of 10.3 percentage points.

Table 2.

The results of the ablation experiment. Data are presented as mean (standard deviation) (n = 5)

| Baseline * | PCM | ReCAM | IRNet | DSC (%) |

|---|---|---|---|---|

| √ | 64.9 (2.30) | |||

| √ | √ | 70.2 (3.62)a | ||

| √ | √ | 70.9 (3.47)a | ||

| √ | √ | 75.2 (3.14)aa | ||

| √ | √ | √ | 81.5 (1.55)b | |

| √ | √ | √ | 80.9 (1.64)b | |

| √ | √ | √ | 75.0 (2.53)bb | |

| √ | √ | √ | √ | 83.6 (1.27) |

*denotes the baseline model with the backbone network of ResNet-50

a represents a significant difference between the modified and the baseline model (p-value < 0.05)

aa represents a very significant difference between the modified and the baseline model (p-value < 0.01)

b represents a significant difference between the modified and the whole model (p-value < 0.05)

bb represents a very significant difference between the modified and the whole model (p-value < 0.01)

Comparison with SOTAs

To further evaluate the effectiveness of the proposed method, we compared it with SOTA methods, all of which use CAM-like localization: AffinityNet [17], SEAM [13], ReCAM [16], Puzzle-CAM [14], and L2G [15]. Because almost all of these methods were designed for natural images, their parameters were re-optimized on the same datasets used in our study for a fair comparison. All methods used the same backbone network as our model (ResNet-50), and the pseudo masks they produced were used to train the DeepLab v3+ segmentation model in full supervision. Compared with the previous WSSS methods, the pseudo labels synthesized by our method yielded the highest DSC: 82.8% on the validation set and 83.5% on the test set (Table 3). Our model also performed best in terms of HD and ASSD, with the lowest values of 34.7 mm and 10.5 mm on the validation set and 29.3 mm and 8.83 mm on the test set, respectively. Overall, the proposed model improved segmentation performance, significantly so against most of the compared methods, and was at least comparable to the strongest SOTA model.

Table 3.

Quantitative comparisons with previous state-of-the-art approaches on the validation and test sets. Data are presented as mean (standard deviation) (n = 5)

| Methods | Validation DSC (%) | Validation HD (mm) | Validation ASSD (mm) | Test DSC (%) | Test HD (mm) | Test ASSD (mm) |
|---|---|---|---|---|---|---|
| AffinityNet | 72.7 (2.45) ** | 69.1 (4.68) ** | 21.6 (1.81) ** | 73.5 (1.47) * | 58.1 (0.43) ** | 20.4 (1.46) ** |
| SEAM | 75.2 (2.30) ** | 52.8 (0.97) ** | 16.9 (2.86) ** | 75.6 (4.56) * | 56.7 (0.60) ** | 17.6 (5.10) ** |
| Puzzle-CAM | 76.8 (4.09) * | 49.7 (1.54) ** | 13.6 (0.94) * | 77.0 (0.93) * | 41.5 (2.48) ** | 15.6 (5.04) * |
| ReCAM | 78.8 (3.56) | 45.5 (3.16) ** | 12.2 (2.82) | 78.2 (2.42) | 43.9 (3.18) ** | 12.1 (1.47) |
| L2G | 81.0 (6.17) | 37.4 (2.69) | 11.1 (0.65) | 81.1 (3.47) | 36.3 (2.29) ** | 9.62 (8.51) |
| Ours | 82.8 (0.96) | 34.7 (2.14) | 10.5 (2.56) | 83.5 (5.33) | 29.3 (3.26) | 8.83 (4.21) |

*represents a significant difference between the previous and proposed methods (p-value < 0.05); **represents a very significant difference between the previous and proposed methods (p-value < 0.01)

Discussion

In this study, we present a two-stage deep learning model for the automated segmentation of four types of uterine lesions and three normal tissues. Using T2-weighted MR images with only image-level annotations, the model achieved good agreement with the GT (mean DSC = 83.5%, HD = 29.3 mm, ASSD = 8.83 mm on the test set). Precise segmentation of multiple lesions and tissues is a complicated task, but it is crucial for optimizing clinical management and for radiomics research. However, the heterogeneity of the lesions and the visual similarity between lesions and their surroundings make precise segmentation very difficult. Although many excellent segmentation models have emerged in recent years and shown good results on such tasks, fully automatic segmentation algorithms still need improvement before they can be put into clinical practice. The main difficulties are as follows: 1) there is no true gold standard for lesion boundaries, because lesions often adhere to the surrounding tissues; boundary delineation is therefore inevitably subjective, and annotations differ between doctors, making highly consistent segmentation results very difficult to obtain; 2) lesions usually have irregular geometric shapes, which makes suitable models difficult to build; and 3) medical images contain a huge amount of information, so manually delineating target regions in clinical practice is time-consuming and laborious and places a heavy burden on clinicians' daily work. Clinical segmentation of medical images therefore remains a difficult problem.

Deep learning models have achieved good results not only in image classification but also in semantic segmentation. A common deep learning approach to semantic segmentation is to cast it as a pixel-wise classification problem. Most high-performing segmentation models are fully supervised convolutional neural networks, which require a large number of high-quality pixel-level labels for training. However, obtaining many pixel-level clinical labels in MR image analysis is expensive, so the amount of standardized MR image data is very small. With only a few hundred patients, a deep learning model is difficult to train because of its data-hungry nature. Although the number of patients is relatively small, the MR images of each lesion usually contain many slices or voxels. Splitting the volumes into slices or voxels yields a large number of training samples, which in turn allows more complex deep learning models to be trained. This study expanded the datasets by slice splitting, and the good segmentation accuracy supports the feasibility of this approach.
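Slice splitting is only safe if the train/validation/test split is made at the patient level, as in this study (196/57/63 patients), so that slices of one patient never appear in two subsets. A minimal sketch, using a hypothetical toy cohort and an illustrative function name:

```python
import random


def split_by_patient(slices_by_patient: dict, n_train: int, n_val: int, seed: int = 0):
    """Randomly assign whole patients to train/val/test, then expand to slices."""
    ids = sorted(slices_by_patient)
    random.Random(seed).shuffle(ids)
    groups = {
        "train": ids[:n_train],
        "val": ids[n_train:n_train + n_val],
        "test": ids[n_train + n_val:],
    }
    # Every slice inherits the subset of its patient, so no patient leaks across subsets.
    slices = {
        name: [s for pid in pids for s in slices_by_patient[pid]]
        for name, pids in groups.items()
    }
    return groups, slices


# Toy cohort: 316 hypothetical patients with a variable number of slices each.
cohort = {f"P{i:03d}": [f"P{i:03d}_s{j}" for j in range(20 + i % 7)] for i in range(316)}
groups, slices = split_by_patient(cohort, n_train=196, n_val=57)
```

Splitting at the slice level instead would place neighboring, nearly identical slices of the same patient in both training and test sets and inflate the reported accuracy.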

Equivariance is an implicit constraint in FSSS: during data augmentation, the pixel-level labels undergo the same transformation as the input images. No such constraint exists in image-level supervised training, so affine-transformed versions of the same image yield different CAMs. Building on this property, Jiang found that more detailed regions can be learned by using local image patches as classification input [15]. The authors therefore randomly cropped local patches from the global images, trained a local network to learn detailed regional semantics, and transferred this local knowledge to the global network through online learning. Jo introduced a self-attention mechanism to generate CAMs from the original and tiled images, respectively [14], and narrowed the gap between the two with a reconstruction regularization. Wang found that the CAMs obtained from randomly scaled images differed from those obtained from the original images, and fed the original and transformed images into a Siamese network with identical structure and shared weights [13]; more accurate CAMs were then obtained through self-supervision and equivariant regularization. In addition, the ratio of positive to negative pixels differs between sub-images and the original images, which causes different regions to be activated when CAMs are generated with the same model structure.
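The equivariance idea can be expressed as a loss: for a transformation T, compare the CAM of the transformed image with the transformed CAM of the original image. The sketch below uses the mean absolute (L1) difference, the form used in SEAM's equivariant regularization, with a horizontal flip as T and two toy stand-ins for a CAM network (hypothetical functions, not real models): a pixel-wise map, which is equivariant and gives zero loss, and a map with a fixed positional bias, which is not.

```python
import numpy as np


def equivariance_loss(cam_fn, img: np.ndarray, transform) -> float:
    """Mean absolute difference between CAM(T(img)) and T(CAM(img))."""
    return float(np.mean(np.abs(cam_fn(transform(img)) - transform(cam_fn(img)))))


def hflip(a: np.ndarray) -> np.ndarray:
    return a[:, ::-1]


def pixelwise_cam(a: np.ndarray) -> np.ndarray:
    """Toy equivariant 'CAM': acts on each pixel independently."""
    return np.maximum(a, 0.0)


def biased_cam(a: np.ndarray) -> np.ndarray:
    """Toy non-equivariant 'CAM': adds a fixed left-to-right ramp."""
    return a + np.arange(a.shape[1])[None, :]


img = np.random.default_rng(0).normal(size=(6, 6))
loss_equivariant = equivariance_loss(pixelwise_cam, img, hflip)
loss_biased = equivariance_loss(biased_cam, img, hflip)
```

Minimizing such a term pushes a real CAM network toward the behavior of the first toy function.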

Inspired by these observations, our method also introduces a self-attention mechanism to consistently regularize the CAMs generated at different stages, improving segmentation accuracy through self-supervision. Unlike the methods above, our method does not apply reconstruction or equivariance operations to the CAMs. Instead, ROIs were automatically cropped from the raw MR images and used as the model input. Compared with the whole MR image, lesion regions are usually small and irregularly shaped, so cropping ROIs increases the proportion of positive pixels. The size of the rectangular area was determined by the anatomy of the uterus and the lesions and is closely related to the MRI scanning orientation; after repeated trials, an area of 470 × 435 pixels was chosen. This removes background regions that do not help segmentation and balances the proportions of positive and negative pixels. The optimized value of the weight parameter λ1 in the BCE loss (0.16; see Table S1 and Fig. S1 in Supplementary) supports this: λ1 balances the positive and negative error terms of the objective function, and values that are too large or too small indicate imbalanced positive and negative samples.

Although equivariant regularization can provide additional supervision for the network, ideal equivariance is difficult to achieve with classical convolutional layers alone. The ablation experiments showed that the self-attention-based PCM and ReCAM greatly improved segmentation accuracy (Table 2). PCM effectively captures contextual information and improves the pixel-level predictions: it captures the contextual appearance of each pixel and corrects the original CAMs through learnable affinity attention maps. ReCAM assigns higher attention to the target regions by weighting the feature maps with the original CAMs and then generates a separate CAM for each category, converting the multi-label classification task into multiple single-label classification tasks. Accordingly, the loss function is switched from BCE to SCE.

WSSS methods developed for natural images can meet the segmentation requirements in most cases using one-hot labels. However, medical images differ from natural images in one obvious respect: co-occurrence. For example, endometrial cancer lesions always appear together with the endometrium in MR images. Therefore, in addition to the background, an image often contains multiple types of objects and thus carries multiple class labels, namely, multi-hot labels. In other words, semantic segmentation of medical images can often be transformed into a multi-label classification problem. BCE is commonly used as the objective function for multi-label classification tasks. However, BCE has two main shortcomings:

1) each pixel in the CAM can respond to multiple types of targets that appear together in the same receptive field, so the original CAM may incorrectly invade regions belonging to other categories;
2) an inactive region in the CAM may still be part of the target class.

BCE loss is not class-exclusive: misclassification of one class does not penalize the activation of other classes. In contrast, SCE enforces class-exclusive learning, which has two effects on CAM generation:

1) it reduces false-positive pixels in regions where different classes are fused;
2) it motivates the model to explore class-specific features, which reduces false-negative pixels.
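Comparing the gradients of the two losses with respect to the class logits makes the difference in class exclusivity concrete. The sketch below is illustrative only; the class order and logit values are hypothetical. With BCE, the gradient on each class depends only on that class's own logit. With SCE, raising the logit of a co-occurring class changes the gradient on the target class, and that class is itself penalized.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    e = np.exp(z - np.max(z))  # subtract max for numerical stability
    return e / e.sum()

def bce_grad(logits, multi_hot):
    # d/dz of sum_k BCE(sigmoid(z_k), y_k): class k only sees z_k and y_k
    return sigmoid(logits) - multi_hot

def sce_grad(logits, target):
    # d/dz of -log softmax(z)[target]: all classes share one probability mass
    one_hot = np.zeros_like(logits)
    one_hot[target] = 1.0
    return softmax(logits) - one_hot

# Hypothetical class order: [lesion, endometrium, uterus]
y = np.array([1.0, 1.0, 0.0])   # lesion and endometrium co-occur
a = np.array([3.0, 0.0, 0.0])   # only the lesion class is strongly activated
b = np.array([3.0, 3.0, 0.0])   # endometrium also fires on the same pixels

print(bce_grad(a, y)[0], bce_grad(b, y)[0])  # identical: no cross-class interaction
print(sce_grad(a, 0)[1], sce_grad(b, 0)[1])  # SCE pushes the competing class down harder
```

Under gradient descent, the larger positive SCE gradient on the co-activated class drives its logit down. This is the class-exclusive pressure that BCE lacks.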

In the current study, endometrial polyps and atypical hyperplasia of endometrium had the lowest segmentation accuracy. This may be due to their small sample sizes: the additional data generated through augmentation could not provide sufficient diversity, which weakened the model's generalization for these classes. Moreover, endometrium images were the most numerous apart from uterus images. Even so, the model's performance on the endometrium was only moderate (DSC = 81.7%, HD = 43.7 mm, and ASSD = 13.3 mm), which may be attributed to its narrow and irregular shape.
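For reference, the three metrics reported above can be computed for a single 2D slice as in the NumPy sketch below. It is not the evaluation code used in the study, and it makes the following assumptions:

- The function names are hypothetical.
- Boundaries are foreground pixels with at least one 4-connected background neighbour.
- Distances are brute-force Euclidean distances between boundary pixels, scaled by a pixel spacing in mm.
- Both masks must be non-empty.

Published toolkits differ in details such as the boundary definition, 3D handling, or use of a 95th-percentile HD.

```python
import numpy as np

def dice(pred, gt):
    """Dice similarity coefficient: 2|A and B| / (|A| + |B|)."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    denom = pred.sum() + gt.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(pred, gt).sum() / denom

def boundary(mask):
    """Coordinates of foreground pixels with a 4-connected background neighbour."""
    m = mask.astype(bool)
    p = np.pad(m, 1, constant_values=False)
    interior = p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:] & m
    return np.argwhere(m & ~interior)

def surface_distances(pred, gt, spacing=(1.0, 1.0)):
    """Nearest-boundary distances pred->gt and gt->pred (both masks non-empty)."""
    a = boundary(pred) * np.asarray(spacing)
    b = boundary(gt) * np.asarray(spacing)
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)  # pairwise distances
    return d.min(axis=1), d.min(axis=0)

def hausdorff(pred, gt, spacing=(1.0, 1.0)):
    d_ab, d_ba = surface_distances(pred, gt, spacing)
    return max(d_ab.max(), d_ba.max())

def assd(pred, gt, spacing=(1.0, 1.0)):
    d_ab, d_ba = surface_distances(pred, gt, spacing)
    return (d_ab.sum() + d_ba.sum()) / (len(d_ab) + len(d_ba))

# Ground truth: 4 x 4 square; prediction: the same square shifted right by one pixel.
gt = np.zeros((8, 8), dtype=bool)
gt[2:6, 2:6] = True
pred = np.zeros((8, 8), dtype=bool)
pred[2:6, 3:7] = True
print(dice(pred, gt), hausdorff(pred, gt), assd(pred, gt))  # expected: 0.75, 1.0, 0.5
```

Passing the in-plane pixel spacing (in mm) as `spacing` converts HD and ASSD from pixels to millimetres, the unit reported in this study.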

Several limitations of this study should be acknowledged. First, given our current hardware, the model was relatively large, occupied a large amount of memory, and required a long training time. Therefore, only ResNet-50 was trained as the backbone network. On a more advanced platform, larger and more efficient CNN backbones (e.g., ResNet-101 or ResNet-269) could be trained to achieve better segmentation.

Second, the model's segmentation accuracy for lesions with irregular shapes, especially long and narrow lesions, was still low (Figs. 4 and 5). One possible reason is that there were few images of long and narrow lesions or tissues in this study, resulting in a small training set for these shapes. Another possible reason is that such lesions occupy a small proportion of the whole image, so their positive error terms in the objective function are relatively small. Besides, the additional data obtained through data augmentation may not provide more diverse information. One solution is to exploit the spatial correlation among MR slices and train 3D segmentation networks. Another is to add a small number of precisely annotated pixel-level labels to the training set, that is, to adopt a combined weakly and semi-supervised mode.

Third, only T2-weighted MR images were used in our study, so the model cannot be directly applied to other sequences. T2-weighted images are the most commonly used data in the diagnosis of uterine diseases. Nevertheless, multi-sequence or multimodal images could enrich the training dataset and make the model more robust. They could also provide richer semantic information and enhance the model's generalization ability.

Furthermore, the number of subjects was relatively small and no external datasets were involved. The original dataset was expanded by using every individual image, rather than one image per patient, as the basic training unit. The dataset therefore contained multiple strongly correlated images from the same patient, which may reduce the generalization ability of the proposed model.

Conclusion

This study proposed a two-stage, deep learning-based weakly supervised semantic segmentation method for four types of uterine lesions and three types of normal tissues in MR images. Segmentation accuracy was improved by sequentially applying the self-attention-based PCM and ReCAM, followed by IRNet as an effective refinement step. By combining the BCE and SCE loss functions for parameter optimization, the model achieved segmentation performance comparable to state-of-the-art (SOTA) WSSS models. The model performed well for lesions with regular shapes and clear boundaries, such as uterine fibroids. For lesions with irregular shapes (e.g., long and narrow lesions), it performed slightly worse. The current WSSS method is not yet sufficient to replace manual segmentation in MR image analysis, but it can significantly improve the efficiency of image analysis. In future studies, the model's clinical utility could be improved by incorporating more multimodal clinical information. In addition, segmentation performance could be further improved with more advanced pseudo-mask generation algorithms and more complex neural network models.

Abbreviations

- WSSS: Weakly supervised semantic segmentation
- FSSS: Fully supervised semantic segmentation
- CAM: Class activation map
- GAP: Global average pooling
- PCM: Pixel correlation module
- ReCAM: Class re-activation map
- IRNet: Inter-pixel relation network
- GT: Ground truth
- SCE: Softmax cross entropy
- BCE: Binary cross entropy
- DSC: Dice similarity coefficient
- HD: Hausdorff distance
- ASSD: Average symmetric surface distance
- EC: Endometrial cancer
- UL: Uterine leiomyoma
- EP: Endometrial polyps
- AHE: Atypical hyperplasia of endometrium
- ROI: Region of interest

Author Contribution

Yu-meng Cui: conceptualization; methodology; writing; funding acquisition; Hua-li Wang: conceptualization; resources; supervision; Rui Cao: resources; supervision; Hong Bai: methodology; validation; statistical analysis; Dan Sun: data curation; project administration; Jiu-Xiang Feng: data curation; project administration; Xue-feng Lu: conceptualization; review and editing; coding; funding acquisition.

Funding

This study received funding from the National Natural Science Foundation of China (No. 82003843), the Natural Science Foundation of Liaoning Province (No. 2020-BS-221), and the Dalian Medical Science Research Program (No. 2211023).

Data Availability

Data that support the findings of this study are available on request from the corresponding author. Data are not publicly available due to privacy or ethical restrictions.

Declarations

Ethics Approval

This study was approved by the Institutional Review Board of Dalian Women and Children’s Medical Group before patient information was accessed.

Consent to Participate

This study was performed under a waiver of informed consent due to the retrospective nature of the analysis and the anonymity of the data.

Competing Interests

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Berek JS, Matias-Guiu X, Creutzberg C, Fotopoulou C, Gaffney D, Kehoe S, Lindemann K, Mutch D, Concin N; Endometrial Cancer Staging Subcommittee, FIGO Women's Cancer Committee. FIGO staging of endometrial cancer: 2023. Int J Gynaecol Obstet. 2023;162(2):383–394. doi: 10.1002/ijgo.14923.

- 2. Rahimpour M, Saint Martin MJ, Frouin F, Akl P, Orlhac F, Koole M, Malhaire C. Visual ensemble selection of deep convolutional neural networks for 3D segmentation of breast tumors on dynamic contrast enhanced MRI. Eur Radiol. 2023;33(2):959–969. doi: 10.1007/s00330-022-09113-7.

- 3. Opfer R, Krüger J, Spies L, Ostwaldt AC, Kitzler HH, Schippling S, Buchert R. Automatic segmentation of the thalamus using a massively trained 3D convolutional neural network: higher sensitivity for the detection of reduced thalamus volume by improved inter-scanner stability. Eur Radiol. 2023;33(3):1852–1861. doi: 10.1007/s00330-022-09170-y.

- 4. Chen C, Zhang T, Teng Y, Yu Y, Shu X, Zhang L, Zhao F, Xu J. Automated segmentation of craniopharyngioma on MR images using U-Net-based deep convolutional neural network. Eur Radiol. 2023;33(4):2665–2675. doi: 10.1007/s00330-022-09216-1.

- 5. Jávorszky N, Homonnay B, Gerstenblith G, Bluemke D, Kiss P, Török M, Celentano D, Lai H, Lai S, Kolossváry M. Deep learning-based atherosclerotic coronary plaque segmentation on coronary CT angiography. Eur Radiol. 2022;32(10):7217–7226. doi: 10.1007/s00330-022-08801-8.

- 6. Rouvière O, Moldovan PC, Vlachomitrou A, Gouttard S, Riche B, Groth A, Rabotnikov M, Ruffion A, Colombel M, Crouzet S, Weese J, Rabilloud M. Combined model-based and deep learning-based automated 3D zonal segmentation of the prostate on T2-weighted MR images: clinical evaluation. Eur Radiol. 2022;32(5):3248–3259. doi: 10.1007/s00330-021-08408-5.

- 7. Corrado PA, Wentland AL, Starekova J, Dhyani A, Goss KN, Wieben O. Fully automated intracardiac 4D flow MRI post-processing using deep learning for biventricular segmentation. Eur Radiol. 2022;32(8):5669–5678. doi: 10.1007/s00330-022-08616-7.

- 8. Li Y, Wu Y, He J, Jiang W, Wang J, Peng Y, Jia Y, Xiong T, Jia K, Yi Z, Chen M. Automatic coronary artery segmentation and diagnosis of stenosis by deep learning based on computed tomographic coronary angiography. Eur Radiol. 2022;32(9):6037–6045. doi: 10.1007/s00330-022-08761-z.

- 9. Zheng Q, Zhang Y, Li H, Tong X, Ouyang M. How segmentation methods affect hippocampal radiomic feature accuracy in Alzheimer's disease analysis? Eur Radiol. 2022;32(10):6965–6976. doi: 10.1007/s00330-022-09081-y.

- 10. Cayot B, Milot L, Nempont O, Vlachomitrou AS, Langlois-Jacques C, Dumortier J, Boillot O, Arnaud K, Barten TRM, Drenth JPH, Valette PJ. Polycystic liver: automatic segmentation using deep learning on CT is faster and as accurate compared to manual segmentation. Eur Radiol. 2022;32(7):4780–4790. doi: 10.1007/s00330-022-08549-1.

- 11. Zhou B, Khosla A, Lapedriza A, Oliva A, Torralba A. Learning Deep Features for Discriminative Localization. In: IEEE Conference on Computer Vision and Pattern Recognition. 2016; pp. 2921–2929. doi: 10.1109/CVPR.2016.319.

- 12. Chen Z, Tian ZQ, Zhu JH, Li C, Du SY. C-CAM: Causal CAM for Weakly Supervised Semantic Segmentation on Medical Image. In: IEEE Conference on Computer Vision and Pattern Recognition. 2022; pp. 11676–11685.

- 13. Wang Y, Zhang J, Kan M, Shan S, Chen X. Self-supervised Equivariant Attention Mechanism for Weakly Supervised Semantic Segmentation. In: IEEE Conference on Computer Vision and Pattern Recognition. 2020; pp. 12272–12281. doi: 10.48550/arXiv.2004.04581.

- 14. Jo SH, Yu IJ. Puzzle-CAM: Improved localization via matching partial and full features. In: IEEE International Conference on Image Processing. 2021; pp. 639–643. doi: 10.1109/ICIP42928.2021.9506058.

- 15. Jiang PT, Yang YQ, Hou QB, Wei YC. L2G: A Simple Local-to-Global Knowledge Transfer Framework for Weakly Supervised Semantic Segmentation. In: IEEE Conference on Computer Vision and Pattern Recognition. 2022; pp. 16886–16896. doi: 10.1109/CVPR52688.2022.01638.

- 16. Chen ZZ, Wang T, Wu XW, Hua XS, Zhang HW, Sun QR. Class Re-Activation Maps for Weakly-Supervised Semantic Segmentation. In: IEEE Conference on Computer Vision and Pattern Recognition. 2022; pp. 959–968. doi: 10.1109/CVPR52688.2022.00104.

- 17. Ahn J, Kwak S. Learning pixel-level semantic affinity with image-level supervision for weakly supervised semantic segmentation. In: IEEE Conference on Computer Vision and Pattern Recognition. 2018; pp. 4981–4990. doi: 10.1109/CVPR.2018.00523.

- 18. Ahn J, Cho S, Kwak S. Weakly Supervised Learning of Instance Segmentation with Inter-pixel Relations. In: IEEE Conference on Computer Vision and Pattern Recognition. 2019; pp. 2204–2213. doi: 10.1109/CVPR.2019.00231.

- 19. Ou Y, Huang SX, Wong KK, Cummock J, Volpi J, Wang JZ, Wong STC. BBox-Guided Segmentor: Leveraging expert knowledge for accurate stroke lesion segmentation using weakly supervised bounding box prior. Comput Med Imaging Graph. 2023;107:102236. doi: 10.1016/j.compmedimag.2023.102236.

- 20. Lin D, Dai J, Jia J, He K, Sun J. ScribbleSup: Scribble-supervised convolutional networks for semantic segmentation. In: IEEE Conference on Computer Vision and Pattern Recognition. 2016; pp. 3159–3167. doi: 10.1109/CVPR.2016.344.

- 21. Gao F, Hu M, Zhong ME, Feng S, Tian X, Meng X, Ni-Jia-Ti MY, Huang Z, Lv M, Song T, Zhang X, Zou X, Wu X. Segmentation only uses sparse annotations: Unified weakly and semi-supervised learning in medical images. Med Image Anal. 2022;80:102515. doi: 10.1016/j.media.2022.102515.

- 22. Luo X, Wang G, Liao W, Chen J, Song T, Chen Y, Zhang S, Metaxas DN, Zhang S. Semi-supervised medical image segmentation via uncertainty rectified pyramid consistency. Med Image Anal. 2022;80:102517. doi: 10.1016/j.media.2022.102517.

- 23. Wang K, Zhan B, Zu C, Wu X, Zhou J, Zhou L, Wang Y. Semi-supervised medical image segmentation via a tripled-uncertainty guided mean teacher model with contrastive learning. Med Image Anal. 2022;79:102447. doi: 10.1016/j.media.2022.102447.

- 24. Huang M, Zhou S, Chen X, Lai H, Feng Q. Semi-supervised hybrid spine network for segmentation of spine MR images. Comput Med Imaging Graph. 2023;107:102245. doi: 10.1016/j.compmedimag.2023.102245.

- 25. Chen LC, Zhu Y, Papandreou G, Schroff F, Adam H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In: Proceedings of the European Conference on Computer Vision. 2018; pp. 801–818.
