Abstract

Our goal was to analyze radiology report text for chest radiographs (CXRs) to identify the imaging findings that have the greatest impact on report length and complexity. Identifying these imaging findings can highlight opportunities for designing CXR AI systems that increase radiologist efficiency. We retrospectively analyzed text from 210,025 MIMIC-CXR reports and 168,949 reports from our local institution collected from 2019 to 2022. Fifty-nine categories of imaging finding keywords were extracted from reports using natural language processing (NLP), and their impact on report length was assessed using linear regression with and without LASSO regularization. Regression was also used to assess the impact of additional factors contributing to report length, such as the signing radiologist and the use of terms of perception. For modeling CXR report word counts with regression, the mean coefficient of determination, R², was 0.469 ± 0.001 for local reports and 0.354 ± 0.002 for MIMIC-CXR when considering only imaging finding keyword features. Mean R² was significantly lower, at 0.067 ± 0.001 for local reports and 0.086 ± 0.002 for MIMIC-CXR, when considering only the use of terms of perception. For a combined model of the local report data accounting for the signing radiologist, imaging finding keywords, and terms of perception, the mean R² was 0.570 ± 0.002. With LASSO, the highest-value coefficients pertained to endotracheal tubes and pleural drains for local data and to masses, nodules, and cavitary and cystic lesions for MIMIC-CXR. Natural language processing and regression analysis of radiology report textual data can highlight imaging targets for AI models that offer opportunities to bolster radiologist efficiency.

Supplementary Information

The online version contains supplementary material available at 10.1007/s10278-023-00927-5.

Keywords: Natural language processing (NLP), Chest radiography, Artificial intelligence (AI)

Introduction

When employed alongside radiologists, artificial intelligence (AI) applications for chest radiographs (CXRs) have two primary potential benefits: increased diagnostic accuracy and increased efficiency of report production. The potential for increased diagnostic accuracy has received significant attention [1–4]. An analysis of the commercially available Annalise software (Annalise-AI, Sydney, Australia), which is capable of assessing CXRs for a total of 124 possible imaging findings, demonstrated that pulmonary nodules and acute rib fractures were the findings radiologists most often missed among the imaging findings considered “critical” by the software [1].

However, in the analysis of the Annalise software by Jones et al., 7 of 10 surveyed radiologists reported subjectively worse reporting times [1], which raises concern about the impact of AI software on radiologist efficiency. In mammography, one study found that interpretation time increased with each additional computer-aided detection (CAD) mark [5]. Additionally, a CXR AI application that presents a radiologist with an overabundance of information can potentially trigger alarm fatigue. This phenomenon has been previously investigated for electronic health records (EHRs) and involves physicians disregarding automatically generated patient safety alerts when the number of false positive alarms is perceived as high [6].

Nevertheless, the rising popularity of large language models (LLMs) such as ChatGPT (OpenAI, San Francisco, CA) has increased awareness of possible efficiency gains from AI-driven text generation [7]. One such opportunity is software that auto-populates structured report templates based on model predictions. This prospect is particularly appealing because it reduces the amount of text a radiologist needs to dictate and eliminates the potential for report errors related to voice recognition (VR) software. Studies have reported a wide range of error rates for radiology reports generated with VR software, including 5% [8], 11% [9], 18% [10], 22% [11], and 35% [12]. Because of COVID-19-related protocols, there has even been investigation into the impact of face masks on error rates [13]. Not only do these potential VR errors detract from report quality, but removing them requires a radiologist to spend additional time proofreading and editing reports. Consequently, AI applications that operate directly on CXRs to auto-populate a structured report template based on model predictions present a great opportunity for increasing report production efficiency, particularly if developed in parallel with AI-improved speech recognition [14].

If more parsimonious AI models have potential advantages in streamlining AI-augmented workflows, then, conceptually, this raises the question of which CXR findings are the best possible targets for convolutional neural networks (CNNs) and other AI strategies. Work related to software such as Annalise has already shown an opportunity for increased diagnostic accuracy related to acute rib fractures and pulmonary nodules [1]. AI models have also demonstrated value in detecting critical clinical findings such as pneumothoraces [15–17]. However, it is not necessarily clear which findings can help best reduce dictation time if automatically incorporated into a structured report template.

To address this question, we have performed natural language processing (NLP) using de-identified reports in the MIMIC-CXR dataset [18] as well as a local institutional dataset constituting an additional 168,949 single frontal view CXR reports. For each radiologist report, we computed text length based on sentence, word, and syllable counts, and then performed regression analysis both without regularization and with least absolute shrinkage and selection operator (LASSO) in order to identify categories of CXR imaging findings which have a high impact on report length.

Materials and Methods

Compliance with Ethical Standards

The authors have no potential conflicts of interest to report. This retrospective exploratory study was approved by our institutional review board with waiver of informed consent.

Data Resources

We obtained radiology report text from the public MIMIC-CXR database and from our local institution. The MIMIC-CXR database includes DICOM images and reports for 227,835 chest radiograph exams from 65,379 patients performed between 2011 and 2016 on patients presenting to the Beth Israel Deaconess Medical Center Emergency Department [18, 19]. For our local institutional data, we collected de-identified radiology report text for 168,949 single frontal view chest radiograph exams performed from January 1, 2019, to June 11, 2022.

Data Processing

For each report in our local dataset and MIMIC-CXR, we used NLP to calculate the number of sentences, words, and syllables. We also quantified text complexity and readability with the Flesch-Kincaid reading ease, Flesch-Kincaid grade level, and Coleman-Liau index [20, 21]. Text analysis was performed using the Anaconda distribution (Anaconda Inc., Austin, TX) of Python (https://www.python.org/, RRID:SCR_008394) and the spaCy library (https://spacy.io/).
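The counts and readability indices above follow standard published formulas. The following is a minimal, dependency-free sketch rather than the study's spaCy-based pipeline: the regex sentence splitter and the vowel-group syllable counter are simplifying assumptions that will miscount abbreviations, decimals such as "2.5 cm", and some words, so its outputs should not be expected to reproduce the reported values.

```python
import re

def count_syllables(word: str) -> int:
    """Approximate syllables as runs of consecutive vowels (heuristic assumption)."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))

def text_stats(text: str) -> dict:
    """Return sentence, word, syllable, and letter counts for one report."""
    # Naive sentence split on terminal punctuation; empty fragments are dropped.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Alphabetic tokens only; numbers such as "5" or "2.5" are ignored.
    words = re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", text)
    return {
        "sentences": len(sentences),
        "words": len(words),
        "syllables": sum(count_syllables(w) for w in words),
        "letters": sum(c.isalpha() for w in words for c in w),
    }

def flesch_reading_ease(words, sentences, syllables):
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)

def flesch_kincaid_grade(words, sentences, syllables):
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59

def coleman_liau(letters, words, sentences):
    L = letters / words * 100    # average letters per 100 words
    S = sentences / words * 100  # average sentences per 100 words
    return 0.0588 * L - 0.296 * S - 15.8

report = "The heart is normal. No pneumothorax."
s = text_stats(report)
print(s)
print(round(flesch_kincaid_grade(s["words"], s["sentences"], s["syllables"]), 2))
print(round(coleman_liau(s["letters"], s["words"], s["sentences"]), 2))
```

Keeping the formula functions separate from the counting step makes it easy to swap in a better tokenizer or syllable counter without touching the index definitions.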

Upon manual review of radiologist report samples from the MIMIC-CXR dataset, a portion of the reports were found to consist of preliminary “wet reads” concatenated with subsequent final reports. Because these concatenated preliminary and final reports distorted our quantifications of report length and complexity, we filtered out the 17,810 MIMIC-CXR reports containing “wet reads” (7.8% of the total dataset) and restricted our analysis to the remaining 210,025 reports. Additionally, we found that the extensive text processing performed to remove protected health information (PHI) so that MIMIC-CXR reports could be publicly released, as described by Johnson et al. [18], distorted our estimates of text complexity, particularly those based on underlying syllable and letter counts. Consequently, we elected to focus on length quantifications for comparison of the two datasets, and we report text complexity quantifications only for our local radiology report dataset, which was anonymized and de-identified at the patient level but, unlike MIMIC-CXR, was not de-identified with respect to clinical provider, location, or time information.
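One way to implement this exclusion is a simple text match. The sketch below assumes, hypothetically, that a wet read can be identified by a case-insensitive occurrence of the phrase "wet read"; the exact rule used to flag the 17,810 reports is not specified here, and the example strings are illustrative only.

```python
import re

# Hypothetical detection rule: any case-insensitive occurrence of "wet read".
WET_READ = re.compile(r"\bwet\s+read", re.IGNORECASE)

def split_wet_reads(reports):
    """Partition reports into (kept, excluded) by the presence of a wet read."""
    kept, excluded = [], []
    for text in reports:
        (excluded if WET_READ.search(text) else kept).append(text)
    return kept, excluded

reports = [
    "FINDINGS: No focal consolidation.",
    "WET READ: No pneumothorax. FINAL REPORT: No pneumothorax.",
]
kept, excluded = split_wet_reads(reports)
print(len(kept), len(excluded))
```

With the counts reported above, 227,835 - 17,810 = 210,025 reports remain, and 17,810 / 227,835 is approximately 7.8%.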

For our local dataset, 168,961 reports were collected and de-identified at the patient level during the collection process. Twelve of these reports were removed from the corpus after manual review identified a mismatch between the procedure code for a single view chest radiograph and the report text. The remaining 168,949 reports were then further filtered to remove text identifying the signing radiologist and workstation, which is automatically added to the report based on the configuration of the local dictation software, Nuance PowerScribe 360 (Nuance Communications, Burlington, MA). However, report header text related to the exam type, indication, and comparisons was not filtered out of either the local institutional dataset or MIMIC-CXR. Documentation of physician-to-physician communication for critical findings was also not removed. Exam indications, comparisons, and physician-to-physician communication documentation were retained because this information is frequently dictated by radiologists during report preparation.

The local dataset was collected from two hospitals in the same city in the southwestern USA, approximately 6 miles apart. One of these hospitals operates as a level I trauma center for the region, while the other operates as a level IV trauma center, offering advanced care and life support prior to transfer to a higher-level trauma center. Of the local studies, 82,890 were ordered from the emergency departments (EDs) of these two hospitals: 51,080 from the level I trauma center ED and 31,810 from the level IV facility ED. The remaining 86,059 studies were ordered either for inpatients or for outpatients at these facilities undergoing surgical or other interventional procedures not requiring hospital admission.

To assess keywords of interest in report text, we reviewed label sets employed in existing public CXR databases which in addition to MIMIC-CXR include PadChest, NIH CXR14, CheXpert, and CANDID-PTX [4, 22–24]. We also reviewed the 127-finding label set initially utilized for the Annalise software [3]. After reviewing these varying finding label sets, we pruned and merged categories while also adding specific keyword categories to cover certain findings and devices not already within these pre-existing label sets. This resulted in a total of 59 keyword categories for our analysis.

The two-sided Mann–Whitney U test was used for statistical comparison between distributions of text length and complexity values [25]. Regression without regularization and LASSO regression [26] were performed using scikit-learn (https://scikit-learn.org/) [27]. Regression was performed with tenfold cross-validation unless otherwise stated. The L1 regularization strength was set to α = 0.01.
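The statistical setup above can be sketched with SciPy and scikit-learn. Everything here is an illustrative assumption: the data are synthetic (random 0/1 indicator features and a simulated word count), features are assumed to be binary presence indicators, folds are shuffled with a fixed seed, and the "±" value is computed as the standard deviation across folds, since the fold construction and dispersion measure used in the study are not stated.

```python
import numpy as np
from scipy.stats import mannwhitneyu
from sklearn.linear_model import Lasso, LinearRegression
from sklearn.model_selection import KFold, cross_val_score

rng = np.random.default_rng(0)

# Synthetic stand-in data: 2,000 "reports" x 66 binary features
# (e.g., 7 terms of perception + 59 keyword categories).
X = rng.integers(0, 2, size=(2000, 66)).astype(float)
true_coef = rng.normal(0, 5, size=66)
y = 60 + X @ true_coef + rng.normal(0, 10, size=2000)  # simulated word counts

# Tenfold cross-validated R^2 for unregularized and L1-regularized regression.
cv = KFold(n_splits=10, shuffle=True, random_state=0)
for name, model in [("OLS", LinearRegression()), ("LASSO", Lasso(alpha=0.01))]:
    r2 = cross_val_score(model, X, y, cv=cv, scoring="r2")
    print(f"{name}: mean R2 = {r2.mean():.3f} +/- {r2.std():.3f}")

# Fit LASSO on the full dataset and count surviving (non-zero) coefficients.
lasso = Lasso(alpha=0.01).fit(X, y)
print("non-zero coefficients:", np.count_nonzero(lasso.coef_))

# Two-sided Mann-Whitney U test: word counts with vs. without feature 0.
has_term = X[:, 0] == 1
stat, p = mannwhitneyu(y[has_term], y[~has_term], alternative="two-sided")
print(f"U = {stat:.0f}, p = {p:.3g}")
```

In scikit-learn, `alpha` scales the L1 penalty relative to a squared-error term averaged over samples, so the same α = 0.01 shrinks coefficients differently depending on feature scaling; binary indicators keep that effect easy to reason about.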

Results

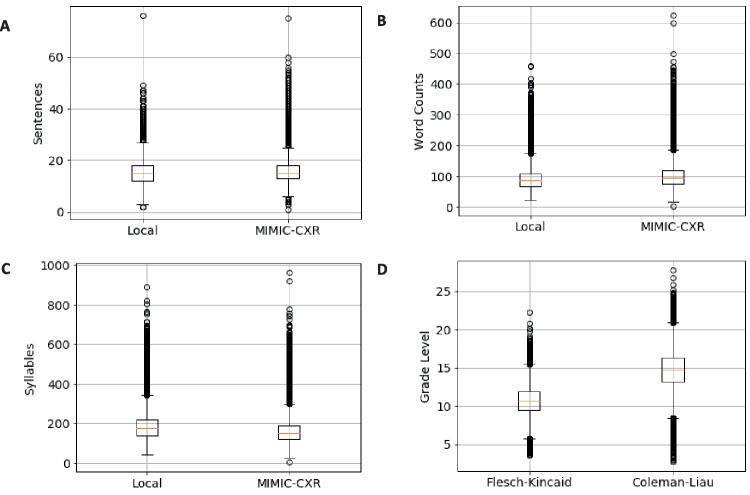

Figure 1 shows boxplots comparing quantifications of text length and complexity for our local dataset and MIMIC-CXR. Figure 1A, B, and C show boxplots of the total numbers of sentences, words, and syllables in each radiologist report for the local dataset and MIMIC-CXR. Figure 1D shows boxplots of the Flesch-Kincaid grade level and the Coleman-Liau index estimate of reading grade level for the local dataset. Mean sentence count was 15.3 for local data and 15.8 for MIMIC-CXR (p < 0.001). Mean word count was 90.8 for local data and 102.3 for MIMIC-CXR (p < 0.001). Mean syllable count was 182.4 for local data and 159.7 for MIMIC-CXR (p < 0.001). The mean Flesch-Kincaid grade level for local data was 10.7, while the mean Coleman-Liau index was 14.7.

Fig. 1.

Distributions of sentence, word, and syllable counts for local institution and MIMIC-CXR chest radiograph reports as well as Flesch-Kincaid grade level and Coleman-Liau index for local institutional data. A Boxplots for number of sentences in each report for local institutional data and MIMIC-CXR. B Boxplots for number of words in each report. C Boxplots for number of syllables in each report. D Flesch-Kincaid grade level and Coleman-Liau index for local institutional data only

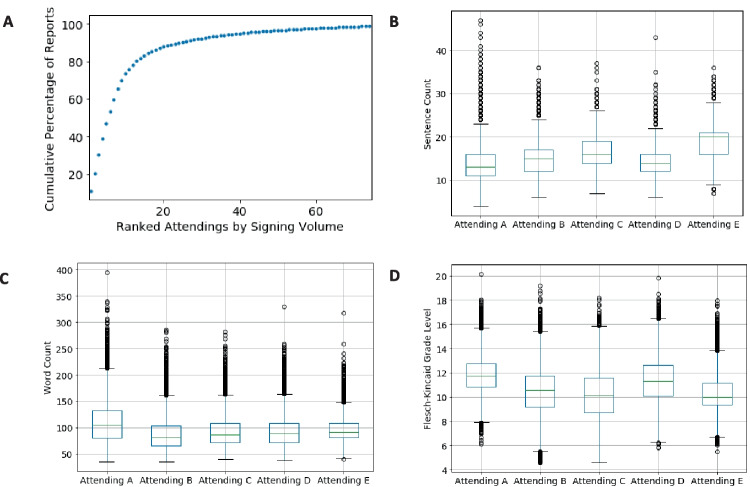

To understand factors underlying variation in report length and text complexity, such as personal radiologist reporting style, we assessed text length variation related to the radiologist finalizing the report, independent of the report findings. This information is not available in the public MIMIC-CXR dataset, so we performed this analysis exclusively on data from our local institution. One hundred eighty-five physicians finalized interpretations of single frontal view chest radiographs at our local institution during the data collection period. A portion of these were related to teleradiology and locum tenens coverage, and most reports were finalized by a much smaller subset of radiologists. Only 33 radiologists signed more than 500 reports, and only 21 signed more than 1000 reports in the study period. Figure 2A ranks the final signing radiologists by total volume of signed chest radiograph reports, plotted against the cumulative percentage of total reports, for the 75 highest-volume radiologists. Thirteen radiologists signed 80.3% of reports, and 5 radiologists signed 46.9% of reports. These top 5 radiologists signed a total of 79,292 reports, with individual totals of 18,284, 16,319, 16,276, 14,771, and 13,642, respectively. Figure 2B and C show boxplots of the numbers of sentences and words in these radiologists’ reports, and Figure 2D shows boxplots of Flesch-Kincaid grade level. The radiologists have been anonymized as Attendings A, B, C, D, and E in descending order of total report volume. Of note, Attendings C and E were affiliated with the Emergency Radiology division, and Attendings A, B, and D were affiliated with the Cardiothoracic Imaging division during the study period. All five attendings had more than 10 years of experience practicing radiology during the study period.
Additionally, the Supplementary Material shows example default chest radiograph templates for the local institution.
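The cumulative percentages in Figure 2A correspond to ranking radiologists by report volume and dividing the running total of signed reports by the total number of reports. Using only the five per-attending counts reported above, a short check (a sketch, not the code used to draw the figure) reproduces the stated 79,292 reports and 46.9% share:

```python
from itertools import accumulate

TOTAL_REPORTS = 168_949
# Report counts for the five highest-volume attendings (A-E), as reported above.
top5 = [18_284, 16_319, 16_276, 14_771, 13_642]

def cumulative_percent(counts, total):
    """Cumulative percentage of all reports covered by the top-k radiologists."""
    ranked = sorted(counts, reverse=True)
    return [100 * running / total for running in accumulate(ranked)]

shares = cumulative_percent(top5, TOTAL_REPORTS)
print(sum(top5))             # 79292
print(round(shares[-1], 1))  # 46.9
```

Applied to the full list of 185 per-radiologist counts, the same function would yield the complete curve in Figure 2A.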

Fig. 2.

Variation in report characteristics based on the signing radiologist for local institutional data. A Cumulative percentage of reports signed by radiology attendings ranked in order of highest to lowest volume for the top 75 radiologists in the dataset. B Boxplots for the number of sentences in each report for the top 5 highest volume radiologists. C Boxplots for the number of words in each report for the top 5 highest volume radiologists. D Boxplots for Flesch-Kincaid grade level for reports from the top 5 highest volume radiologists

In addition to variability associated with the attending who signed the report, there is a confounding factor in that our local institutional dataset includes a teaching hospital with approximately 40 residents in total per year in the radiology residency program. Of the 168,949 text reports considered in our study, 60,088 (35.6%) were submitted as preliminary reports into the medical record by residents before being edited and signed by an attending radiologist. Additionally, an unknown fraction of the remaining reports may have been drafted by a resident without being submitted as a preliminary report before the attending began editing the draft to create a final report; these cases cannot be quantified within our local institutional dataset. Resident involvement in report creation consequently confounds how text length and complexity vary with the radiologist who signed the final report.

Since radiologist-specific differences in style are not readily extractable from the MIMIC-CXR public dataset reports, we also investigated report verbosity unrelated to report findings by targeting “terms of perception” as described by Hartung et al. [28]. These terms are used by radiologists to convey the experience of image perception; they add verbosity to radiology reports without conveying clinically meaningful information. Examples include variants of language such as “there is,” “is present,” “is seen,” “is noted,” “is visualized,” and “is demonstrated.” Figure 3A shows a bar graph of the percentages of local reports containing different terms of perception, and Figure 3B shows these percentages for the MIMIC-CXR reports. Summary statistics for report lengths associated with these terms of perception are listed in Table 1 for the local dataset and Table 2 for MIMIC-CXR. Table 1 reports the median and interquartile range (IQR) of report word counts and the median Flesch-Kincaid grade level, as well as p-values for Mann–Whitney U tests comparing word counts and Flesch-Kincaid grade levels between reports containing each term of perception and those that do not. Table 2 reports the median word counts, IQRs, and Mann–Whitney p-values for each term of perception in MIMIC-CXR.

Fig. 3.

Bar graphs for percentage of reports containing various terms of perception for local institutional data (A) and MIMIC-CXR data (B)

Table 1.

Terms of perception in local institution reports

| Perceptive language | Median word count | Word count IQR | P-value (word count) | Median Flesch-Kincaid grade level | P-value (grade level) |
|---|---|---|---|---|---|
| All reports | 86 | 44 | - | 10.67 | - |
| Seems/appears | 87 | 44 | < 0.001 | 10.74 | < 0.001 |
| There is/are | 97 | 49 | < 0.001 | 9.92 | < 0.001 |
| Is/are demonstrated | 117 | 82 | < 0.001 | 11.52 | < 0.001 |
| Is/are visualized | 109 | 58.75 | < 0.001 | 11.31 | < 0.001 |
| Is/are seen | 101 | 53 | < 0.001 | 10.01 | < 0.001 |
| Is/are present | 96 | 47 | < 0.001 | 10.34 | < 0.001 |
| Is/are noted | 79 | 53.5 | < 0.001 | 10.36 | < 0.001 |

Table 2.

Terms of perception in MIMIC-CXR reports

| Perceptive language | Median word count | Word count IQR | P-value |
|---|---|---|---|
| All reports | 95 | 44 | - |
| Seems/appears | 107 | 53 | < 0.001 |
| There is/are | 99 | 46 | < 0.001 |
| Is/are demonstrated | 108 | 48 | < 0.001 |
| Is/are visualized | 104 | 42.75 | < 0.001 |
| Is/are seen | 100 | 46 | < 0.001 |
| Is/are present | 110 | 53 | < 0.001 |
| Is/are noted | 111 | 47 | < 0.001 |

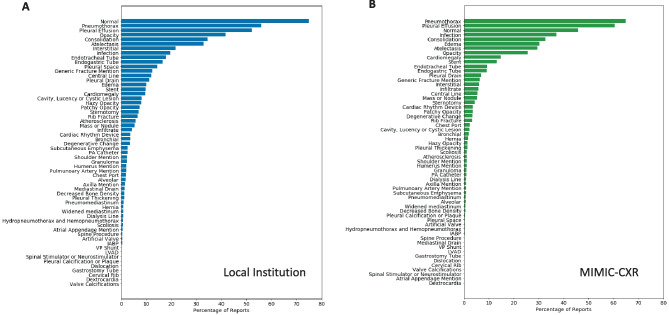

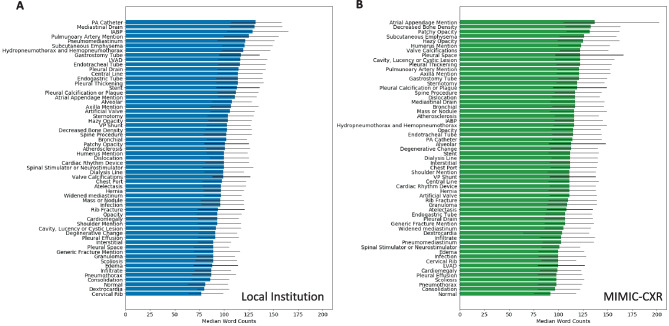

For assessing the impact of specific imaging findings on report length and complexity, we developed custom sets of keywords based in part on the label sets of multiple public CXR datasets and on those associated with Annalise [3, 4, 22–24, 29]. We further supplemented these label sets to target additional findings and devices not covered by them, defining a total of 59 keyword categories. For each keyword category, we then used NLP to identify its use in reports and quantified its impact on text length and complexity. Of note, while we searched for keyword usage, we did not discriminate between positive and negative usage. For example, a pertinent negative such as “no pneumothorax” or “no pleural effusion” was treated as equivalent to a description of a present pneumothorax or pleural effusion for the purposes of keyword categorization. Figure 4A shows a bar graph of the percentage of reports that include each keyword category for the local dataset, sorted by that percentage. Figure 4B shows these percentages for MIMIC-CXR. Figure 5A shows a bar graph of the median word counts and IQRs for the subset of reports that include each keyword category for the local dataset, with categories sorted by median report word count. Figure 5B shows the median word counts and IQRs for MIMIC-CXR.
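The presence-only keyword matching described above can be sketched as follows. The three categories and their keyword lists are hypothetical examples, not the study's 59-category lexicon. Because negation is deliberately ignored, "No pneumothorax." counts as a pneumothorax mention, mirroring the approach described above.

```python
import re

# Hypothetical example categories; the study defined 59 categories.
CATEGORIES = {
    "pneumothorax": ["pneumothorax", "pneumothoraces"],
    "endotracheal tube": ["endotracheal tube", "ET tube", "ETT"],
    "pleural drain": ["chest tube", "pleural drain", "pigtail catheter"],
}

def compile_categories(categories):
    """Build one case-insensitive, word-bounded regex per category."""
    compiled = {}
    for name, keywords in categories.items():
        alternatives = "|".join(re.escape(k) for k in keywords)
        compiled[name] = re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)
    return compiled

PATTERNS = compile_categories(CATEGORIES)

def keyword_features(text):
    """Binary presence of each category; negated mentions still count."""
    return {name: int(bool(p.search(text))) for name, p in PATTERNS.items()}

def percent_with_category(reports):
    """Percentage of reports containing at least one keyword per category."""
    totals = {name: 0 for name in PATTERNS}
    for text in reports:
        for name, present in keyword_features(text).items():
            totals[name] += present
    return {name: 100 * count / len(reports) for name, count in totals.items()}

reports = ["No pneumothorax.", "ETT tip 4 cm above the carina. Right chest tube."]
print(keyword_features(reports[0]))
print(percent_with_category(reports))
```

The per-report 0/1 dictionaries from `keyword_features` can also serve directly as regression features, one column per category.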

Fig. 4.

Percentage of reports containing at least one instance of an imaging finding category keyword for local institutional data (A) and MIMIC-CXR data (B). Displayed keyword presence is not selective for usage in a positive or negative context

Fig. 5.

Report word counts based on the presence of imaging finding keywords ranked by median word count for local institutional data (A) and MIMIC-CXR (B). Each colored bar shows the median word count. Each thin black bar spans the associated interquartile range from the first to third quartile of word counts

To better elucidate which of our enumerated keyword categories and other report factors contributed most to radiology report length, we then performed linear regression without regularization and LASSO regression on report word counts to identify the most relevant features. For local institutional data, we modeled report length both with and without accounting for attending-level variability. For the MIMIC-CXR data, we modeled report length based only on our set of 66 total features related to “terms of perception” and report finding keyword categories. With tenfold cross-validation, the mean coefficient of determination (R²) was 0.490 ± 0.001 for local data with a 66-feature linear model. Using LASSO, mean R² was 0.478 ± 0.001. When the complete dataset was modeled without folds, 46 features remained with non-zero coefficients, as shown in Fig. 6A. When accounting for attending-level variation in local data, we elected to model only reports from the 33 radiologists who had each signed at least 500 reports. This reduced the dataset size from 168,949 to 157,385 reports. For a linear regression model with a resulting total of 99 features (7 terms of perception, 59 finding keyword categories, and 33 possible signing radiologists), the mean R² was 0.570 ± 0.002. With LASSO regression, mean R² was 0.548 ± 0.002. For our selected regularization, 55 of the features had non-zero coefficients when the entire set of 157,385 reports was modeled. Terms of perception had significantly less impact on report length: when linear regression modeling was performed using only the 7 terms of perception, mean R² was 0.067 ± 0.001, compared with 0.469 ± 0.001 for modeling with only imaging finding-related keywords (p < 0.001).
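The combined local model adds one indicator per signing radiologist to the text features, after restricting to radiologists with at least 500 signed reports. A minimal sketch of that feature construction follows, assuming a pandas DataFrame with hypothetical column names (`word_count`, `radiologist`, plus one 0/1 column per term or keyword category); the data are synthetic and only two text features are shown.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import Lasso

def build_design_matrix(df, feature_cols, min_reports=500):
    """Keep radiologists with >= min_reports reports and one-hot encode them."""
    counts = df["radiologist"].value_counts()
    keep = counts[counts >= min_reports].index
    subset = df[df["radiologist"].isin(keep)]
    # Full one-hot encoding (no reference level): one column per radiologist.
    dummies = pd.get_dummies(subset["radiologist"], prefix="rad", dtype=float)
    X = pd.concat([subset[feature_cols].astype(float), dummies], axis=1)
    return X, subset["word_count"].to_numpy(dtype=float)

# Synthetic example: 4 hypothetical radiologists, one below the threshold.
rng = np.random.default_rng(1)
n = 3000
df = pd.DataFrame({
    "radiologist": rng.choice(["A", "B", "C", "D"], size=n, p=[0.4, 0.3, 0.25, 0.05]),
    "term_there_is": rng.integers(0, 2, n),
    "kw_pleural_drain": rng.integers(0, 2, n),
})
style = df["radiologist"].map({"A": 0, "B": 15, "C": -10, "D": 5})
df["word_count"] = 80 + 30 * df["kw_pleural_drain"] + style + rng.normal(0, 8, n)

X, y = build_design_matrix(df, ["term_there_is", "kw_pleural_drain"], min_reports=500)
lasso = Lasso(alpha=0.01).fit(X, y)
coefs = dict(zip(X.columns, np.round(lasso.coef_, 1)))
print(X.shape)
print({k: v for k, v in coefs.items() if v != 0})
```

Encoding every retained radiologist without a reference level matches the count of one feature per signing radiologist (33 in the text); together with the intercept this makes the indicators collinear, which LASSO tolerates but which leaves individual unregularized OLS radiologist coefficients non-unique.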

Fig. 6.

Non-zero LASSO coefficients for local institutional data (A) and MIMIC-CXR (B) ranked by coefficient value

As shown in Fig. 6A, perhaps surprisingly, one of the factors most strongly associated with shorter reports is the “is/are noted” term of perception. Importantly, in the local dataset, a common “normal” template phrase was “No osseous abnormalities are noted” (6878 occurrences, 45% of total usage of this term of perception), which likely contributed significantly to the negative association with report length even though this term is conventionally an indicator of verbosity. Similarly, pneumothorax was mentioned as a pertinent negative in 89,017 reports (95% of its usage), contributing to its negative association with report length. Pleural drains and endotracheal tubes had the highest impact on report length.

For MIMIC-CXR data, mean R² for a linear regression model was 0.387 ± 0.002. With only the 7 terms of perception, mean R² was 0.086 ± 0.002. Mean R² for only imaging finding-related keywords was 0.354 ± 0.002. With LASSO regression performed on all 66 features, mean R² was 0.369 ± 0.002, with 45 non-zero features. Figure 6B shows non-zero LASSO coefficients for a MIMIC-CXR model fit to the entire analyzed dataset.

Discussion

A fully autonomous AI system for CXR interpretation would ideally consider the highest possible number of clinically relevant imaging features for reporting purposes. However, for CXR AI systems designed to function in tandem with human readers, additional human factors must be considered, and an overabundance of possible predictive outputs could be deleterious. Drawbacks of an overabundance of predictive outputs include alarm fatigue [6], and, based on the literature on mammography CAD, we might also expect increased interpretation time if the AI system generates multiple predictions or image annotations that a radiologist must verify [5]. Consequently, it is worth considering which imaging features have not only the highest clinical impact but also the largest impact on report length and complexity.

Our regression analysis helps highlight imaging findings which correlate with increased report length, although as shown in Fig. 6, there were marked differences between MIMIC-CXR and our local institution dataset, likely related to institution-level and radiologist-level variability. Despite these differences, endotracheal tubes were an important factor in report length in both datasets, which underscores the value of work towards automated assessment of endotracheal tube positioning such as that of Lakhani et al. [30].

Pleural drains were also an important factor in report length in both datasets. This emphasizes the importance of targeted datasets such as CANDID-PTX, which contains 19,237 chest radiographs with segmented annotations for pneumothoraces, acute rib fractures, and intercostal chest tubes [23]. Targeted datasets like CANDID-PTX are especially valuable given that intercostal tube malpositioning was excluded as a label from the Annalise software specifically because of a lack of adequate training data for this critical finding [3].

The frequency with which reports mention pneumothorax, pleural effusion, opacity, and atelectasis, as either pertinent negatives or positive findings, also helps highlight the value of the multiple public chest radiograph datasets such as NIH CXR14 and CheXpert in addition to MIMIC-CXR which provide extracted labels for these findings [4, 18, 24]. However, of these 3 datasets, only MIMIC-CXR offers access to the radiologist reports as part of the dataset which allows for more detailed textual analysis.

The variation between our local institutional dataset and MIMIC-CXR also underscores that radiology report language can vary considerably both at the institutional level and at the level of individual radiologists. One limitation of this work is that additional institutions were not included in the analysis and that, for the public MIMIC-CXR report data, we were not able to model radiologist-level variation. For the local institutional dataset, modeling of individual radiologist-level variation is limited by the large percentage of reports pre-dictated by a resident before being finalized. Another limitation is that the de-identification process used to anonymize the MIMIC-CXR reports compromised our ability to accurately quantify text readability and complexity for this corpus. Additionally, given the higher mean coefficients of determination for imaging finding keyword models in the local institutional dataset compared with MIMIC-CXR, we suspect that the keyword selection process was at least partly biased toward local patterns of report language.

Despite these limitations and institutional variability, our analysis of 378,974 total chest radiograph reports (210,025 from MIMIC-CXR and 168,949 from our local institution) spotlights opportunities for AI model targets that can potentially increase radiologist efficiency. Targets such as endotracheal tubes, pleural drains, masses, nodules, and cavitary and cystic lesions are key contributors to increased report length. Consequently, AI models that focus on these types of findings create opportunities for increased efficiency without necessarily inducing alarm fatigue by exposing radiologists to a large number of model predictions.


Author Contribution

All authors contributed to the study’s conception and design. Data collection and analysis were performed by Dr. Carl Sabottke and Dr. Raza Mushtaq. The first draft of the manuscript was written by Dr. Carl Sabottke and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Data Availability

Data can be made available upon request to the authors, subject to data use agreement and Institutional Review Board restrictions.

Declarations

Ethics Approval

This retrospective study was approved by the Institutional Review Board affiliated with University of Arizona Tucson College of Medicine and Banner Health.

Consent to Participate

Informed consent for this retrospective study was waived by the Institutional Review Board.

Consent to Publish

No individual person’s data is contained within the manuscript which would require consent to publish. Informed consent for this retrospective study was waived by the Institutional Review Board.

Competing Interests

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Jones CM, Danaher L, Milne MR, et al. Assessment of the effect of a comprehensive chest radiograph deep learning model on radiologist reports and patient outcomes: a real-world observational study. BMJ Open. British Medical Journal Publishing Group; 2021;11(12):e052902. 10.1136/BMJOPEN-2021-052902. [DOI] [PMC free article] [PubMed]

- 2.Gipson J, Tang V, Seah J, et al. Diagnostic accuracy of a commercially available deep-learning algorithm in supine chest radiographs following trauma. British Journal of Radiology. British Institute of Radiology; 2022;95(1134). 10.1259/BJR.20210979 [DOI] [PMC free article] [PubMed]

- 3.Seah JCY, Tang CHM, Buchlak QD, et al. Effect of a comprehensive deep-learning model on the accuracy of chest x-ray interpretation by radiologists: a retrospective, multireader multicase study. Lancet Digit Health. Elsevier Ltd; 2021;3(8):e496–e506. 10.1016/S2589-7500(21)00106-0. [DOI] [PubMed]

- 4.Irvin J, Rajpurkar P, Ko M, et al. CheXpert: A Large Chest Radiograph Dataset with Uncertainty Labels and Expert Comparison. www.aaai.org. Accessed February 1, 2019.

- 5.Schwartz TM, Hillis SL, Sridharan R, et al. Interpretation time for screening mammography as a function of the number of computer-aided detection marks. Journal of Medical Imaging. Society of Photo-Optical Instrumentation Engineers; 2020;7(2):1. 10.1117/1.JMI.7.2.022408. [DOI] [PMC free article] [PubMed]

- 6.Ancker JS, Edwards A, Nosal S, Hauser D, Mauer E, Kaushal R. Effects of workload, work complexity, and repeated alerts on alert fatigue in a clinical decision support system. BMC Med Inform Decis Mak. BioMed Central; 2017;17(1). 10.1186/S12911-017-0430-8. [DOI] [PMC free article] [PubMed]

- 7.Elkassem AA, Smith AD. Potential Use Cases for ChatGPT in Radiology Reporting. American Roentgen Ray Society ; 2023. 10.2214/AJR.23.29198. [DOI] [PubMed]

- 8.Mcgurk S, Brauer K, Macfarlane T v., Duncan KA. The effect of voice recognition software on comparative error rates in radiology reports. Br J Radiol. Br J Radiol; 2008;81(970):767–770. 10.1259/BJR/20698753. [DOI] [PubMed]

- 9.Chang CA, Strahan R, Jolley D. Non-clinical errors using voice recognition dictation software for radiology reports: A retrospective audit. J Digit Imaging. 2011;24(4):724–728. doi: 10.1007/s10278-010-9344-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Motyer RE, Liddy S, Torreggiani WC, Buckley O. Frequency and analysis of non-clinical errors made in radiology reports using the National Integrated Medical Imaging System voice recognition dictation software. Ir J Med Sci. 2016;185(4):921–927. 10.1007/S11845-016-1507-6.

- 11. Quint LE, Quint DJ, Myles JD. Frequency and Spectrum of Errors in Final Radiology Reports Generated With Automatic Speech Recognition Technology. Journal of the American College of Radiology. Elsevier; 2008;5(12):1196–1199. 10.1016/j.jacr.2008.07.005.

- 12. Pezzullo JA, Tung GA, Rogg JM, Davis LM, Brody JM, Mayo-Smith WW. Voice Recognition Dictation: Radiologist as Transcriptionist. J Digit Imaging. Springer; 2008;21(4):384. 10.1007/S10278-007-9039-2.

- 13. Femi-Abodunde A, Olinger K, Burke LMB, et al. Radiology Dictation Errors with COVID-19 Protective Equipment: Does Wearing a Surgical Mask Increase the Dictation Error Rate? J Digit Imaging. Springer Science and Business Media Deutschland GmbH; 2021;34(5):1294–1301. 10.1007/S10278-021-00502-W.

- 14. Radford A, Kim JW, Xu T, Brockman G, McLeavey C, Sutskever I. Robust Speech Recognition via Large-Scale Weak Supervision. https://github.com/openai/. Accessed December 27, 2022.

- 15. Hillis JM, Bizzo BC, Mercaldo S, et al. Evaluation of an Artificial Intelligence Model for Detection of Pneumothorax and Tension Pneumothorax in Chest Radiographs. JAMA Netw Open. American Medical Association; 2022;5(12):e2247172. 10.1001/JAMANETWORKOPEN.2022.47172.

- 16. Thian YL, Ng D, Hallinan JTPD, et al. Deep learning systems for pneumothorax detection on chest radiographs: A multicenter external validation study. Radiol Artif Intell. Radiological Society of North America Inc.; 2021;3(4). 10.1148/RYAI.2021200190.

- 17. Malhotra P, Gupta S, Koundal D, Zaguia A, Kaur M, Lee HN. Deep Learning-Based Computer-Aided Pneumothorax Detection Using Chest X-ray Images. Sensors (Basel). 2022;22(6). 10.3390/S22062278.

- 18. Johnson AEW, Pollard TJ, Berkowitz SJ, et al. MIMIC-CXR, a de-identified publicly available database of chest radiographs with free-text reports. Sci Data. 2019;6(1):317. 10.1038/s41597-019-0322-0.

- 19. MIMIC-CXR Database v2.0.0. https://physionet.org/content/mimic-cxr/2.0.0/. Accessed April 4, 2020.

- 20. Kincaid J, Fishburne R, Rogers R, Chissom B. Derivation of New Readability Formulas (Automated Readability Index, Fog Count and Flesch Reading Ease Formula) for Navy Enlisted Personnel. Institute for Simulation and Training. 1975. https://stars.library.ucf.edu/istlibrary/56. Accessed December 21, 2022.

- 21. Liau TL, Bassin CB, Martin CJ, Coleman EB. Modification of the Coleman readability formulas.

- 22. Bustos A, Pertusa A, Salinas JM, de la Iglesia-Vayá M. PadChest: A large chest x-ray image dataset with multi-label annotated reports. Med Image Anal. Elsevier; 2020;66:101797. 10.1016/J.MEDIA.2020.101797.

- 23. Feng S, Azzollini D, Kim JS, et al. Curation of the CANDID-PTX dataset with free-text reports. Radiol Artif Intell. Radiological Society of North America Inc.; 2021;3(6). 10.1148/RYAI.2021210136.

- 24. Wang X, Peng Y, Lu L, Lu Z, Bagheri M, Summers RM. ChestX-ray8: Hospital-scale Chest X-ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases. https://uts.nlm.nih.gov/metathesaurus.html. Accessed February 1, 2019.

- 25. Mann HB, Whitney DR. On a Test of Whether One of Two Random Variables is Stochastically Larger than the Other. Ann Math Stat. Institute of Mathematical Statistics; 1947;18(1):50–60. 10.1214/AOMS/1177730491.

- 26. Tibshirani R. Regression shrinkage and selection via the lasso: a retrospective. J R Statist Soc B. 2011;73:273–282. 10.1111/j.1467-9868.2011.00771.x.

- 27. Pedregosa F, Michel V, Grisel O, et al. Scikit-learn: Machine Learning in Python. Journal of Machine Learning Research. 2011;12(85):2825–2830. http://jmlr.org/papers/v12/pedregosa11a.html. Accessed December 21, 2022.

- 28. Hartung MP, Bickle IC, Gaillard F, Kanne JP. How to create a great radiology report. Radiographics. Radiological Society of North America Inc.; 2020;40(6):1658–1670. 10.1148/RG.2020200020.

- 29. Johnson AEW, Pollard TJ, Berkowitz SJ, et al. MIMIC-CXR: A Large Publicly Available Database of Labeled Chest Radiographs. https://github.com/ncbi-nlp/NegBio. Accessed July 14, 2019.

- 30. Lakhani P, Flanders A, Gorniak R. Endotracheal tube position assessment on chest radiographs using deep learning. Radiol Artif Intell. Radiological Society of North America Inc.; 2021;3(1). 10.1148/RYAI.2020200026.

Data Availability Statement

Data can be made available upon request to the authors, subject to data use agreement and Institutional Review Board restrictions.