Abstract

The ubiquity of missing values in real-world datasets poses a challenge for statistical inference and can prevent similar datasets from being analyzed in the same study, precluding many existing datasets from being used for new analyses. While an extensive collection of packages and algorithms has been developed for data imputation, the overwhelming majority perform poorly when there are many missing values and few samples, which are unfortunately common characteristics of empirical data. Such low-accuracy estimates adversely affect the performance of downstream statistical models. We develop a statistical inference framework for regression and classification in the presence of missing data without imputation. Our framework, RIFLE (Robust InFerence via Low-order moment Estimations), estimates low-order moments of the underlying data distribution with corresponding confidence intervals to learn a distributionally robust model. We specialize our framework to linear regression and normal discriminant analysis, and we provide convergence and performance guarantees. This framework can also be adapted to impute missing data. In numerical experiments, we compare RIFLE with several state-of-the-art approaches (including MICE, Amelia, MissForest, KNN-imputer, MIDA, and Mean Imputer) for imputation and inference in the presence of missing values. Our experiments demonstrate that RIFLE outperforms other benchmark algorithms when the percentage of missing values is high and/or when the number of data points is relatively small. RIFLE is publicly available at https://github.com/optimization-for-data-driven-science/RIFLE.

1. Introduction

Machine learning algorithms have shown promise in various applications, including healthcare, finance, social data analysis, image processing, and speech recognition. However, this success has relied mainly on the availability of large-scale, high-quality datasets, which may be scarce in many practical problems, especially in medical and health applications (Pedersen et al., 2017; Sterne et al., 2009; Beaulieu-Jones et al., 2018). Moreover, many experiments and datasets in such applications suffer from small sample sizes. Although each study may contain only a few data points, an increasingly large number of datasets are publicly available. To fully and effectively use the information on related research questions contained in diverse datasets, information across these datasets (e.g., different questionnaires from multiple hospitals with overlapping questions) must be combined reliably.

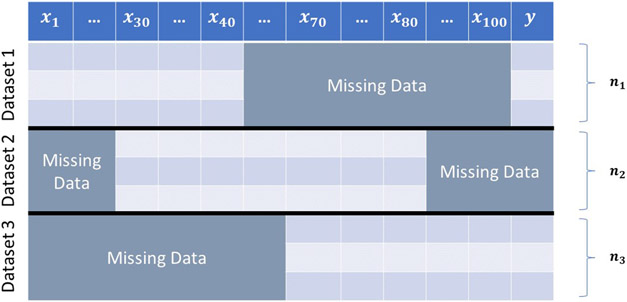

After integrating data from different studies, the resulting dataset can contain large blocks of missing values, since the studies may not share the same features (Figure 1).

Figure 1: Consider the problem of predicting the trait $y$ from the feature vector $x = (x_1, \dots, x_d)$. Suppose that we have access to three datasets. The first dataset includes measurements of $(x_1, \dots, x_{40}, y)$ for $n_1$ individuals. The second dataset collects data from another $n_2$ individuals by measuring a different subset of the features, with no measurements of the target variable $y$; and the third dataset contains measurements of another subset of the variables for $n_3$ individuals. How should one learn a predictor of $y$ from these three datasets?

There are three general approaches to handling missing values in statistical inference (classification and regression) tasks. A naïve method is to remove the rows containing missing entries. However, this is not an option when the percentage of missing values in a dataset is high. For instance, in the example of Figure 1, the entire dataset is discarded if we eliminate every row with at least one missing entry.
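The following NumPy sketch makes this concrete on a small, hypothetical block-missing matrix; the study sizes and observed feature ranges are made up for illustration and are not those of Figure 1. Every column is observed in some study, yet complete-case analysis keeps no rows at all.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 10  # total number of features across all studies (hypothetical)

# (rows, observed feature indices) for three hypothetical studies; NaN = not measured.
studies = [(5, range(0, 4)), (4, range(3, 8)), (6, range(6, 10))]
blocks = []
for n_rows, observed in studies:
    block = np.full((n_rows, d), np.nan)
    cols = list(observed)
    block[:, cols] = rng.normal(size=(n_rows, len(cols)))
    blocks.append(block)
data = np.vstack(blocks)

# Complete-case analysis: keep only rows with no missing entry.
complete_rows = ~np.isnan(data).any(axis=1)
print(data.shape, int(complete_rows.sum()))  # (15, 10) 0
```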

The most common way to handle missing values in a learning task is to impute them in a pre-processing stage. The idea behind data imputation is that missing values can be predicted from the available entries and correlated features. Imputation algorithms cover a wide range of methods, including imputing missing entries with column means or medians (Little & Rubin, 2019, Chapter 3), least-squares and linear regression-based methods (Raghunathan et al., 2001; Kim et al., 2005; Zhang et al., 2008; Cai et al., 2006; Buuren & Groothuis-Oudshoorn, 2010), matrix completion and expectation-maximization approaches (Dempster et al., 1977; Ghahramani & Jordan, 1994; Honaker et al., 2011), KNN-based methods (Troyanskaya et al., 2001), tree-based methods (Stekhoven & Bühlmann, 2012; Xia et al., 2017), and methods using various neural network architectures. Appendix A presents a comprehensive review of these methods.

Imputation allows practitioners to run standard statistical algorithms that require complete data. However, the performance of the prediction model can depend heavily on the accuracy of the imputer: high error rates in the imputed values can lead to catastrophic performance of the downstream statistical methods run on the imputed data.

Another class of methods for inference in the presence of missing values relies on robust optimization over the uncertainty sets on missing entries. Shivaswamy et al. (2006) and Xu et al. (2009) adopt robust optimization to learn the parameters of a support vector machine model. They consider uncertainty sets for the missing entries in the dataset and solve a min-max problem over those sets. The obtained classifiers are robust to the uncertainty of missing entries within the uncertainty regions. In contrast to the imputation-based approaches, the robust classification formulation does not carry the imputation error to the classification phase. However, finding appropriate intervals for each missing entry is challenging, and it is unclear how to determine the uncertainty range in many real datasets. Moreover, their proposed algorithms are limited to the SVM classifier.

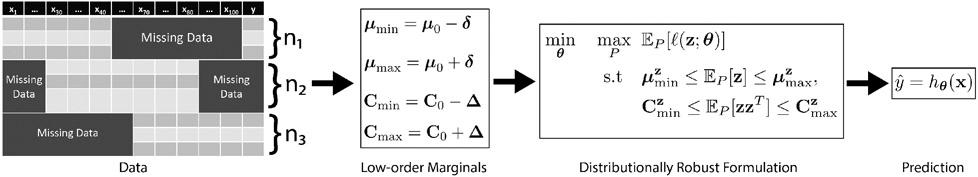

In this paper, we propose RIFLE (Robust InFerence via Low-order moment Estimations) for the direct inference of a target variable from a set of features containing missing values. The proposed framework does not require the data to be imputed in a pre-processing stage, although it can also be used as a pre-processing tool for imputation. The main idea is to estimate the first- and second-order moments of the data, together with their confidence intervals, by bootstrapping on the available entries of the data matrix. RIFLE then finds the optimal parameters of the statistical model for the worst-case distribution whose low-order moments (mean and variance) lie within the estimated confidence intervals (see Figure 2). In contrast to Shivaswamy et al. (2006) and Xu et al. (2009), we estimate uncertainty regions for the low-order marginals, rather than for individual data points, using the bootstrap. Furthermore, our framework is not restricted to a particular machine learning model such as support vector machines (Xu et al., 2009).

Figure 2: Prediction of the target variable without imputation. RIFLE estimates confidence intervals for low-order (first- and second-order) marginals from the input data containing missing values. Then, it solves a distributionally robust problem over the set of all distributions whose low-order marginals are within the estimated confidence intervals.

Contributions:

Our main contributions are as follows:

We present a distributionally robust optimization framework over the low-order marginals of the training data distribution for inference in the presence of missing values. The framework does not require data imputation as a pre-processing stage. As two case studies, we specialize it to ridge regression (Section 3) and classification (Section 4). The framework provides a novel strategy for inference in the presence of missing data, especially for datasets with large proportions of missing values.

We provide theoretical convergence guarantees and the iteration complexity analysis of the presented algorithms for robust formulations of ridge linear regression and normal discriminant analysis. Moreover, we show the consistency of the prediction under mild assumptions and analyze the asymptotic statistical properties of the solutions found by the algorithms.

While the robust inference framework is primarily designed for direct statistical inference in the presence of missing values without performing data imputation, it can also be adopted as an imputation tool. To demonstrate the quality of the proposed imputer, we compare its performance with several widely-used imputation packages such as MICE (Buuren & Groothuis-Oudshoorn, 2010), Amelia (Honaker et al., 2011), MissForest (Stekhoven & Bühlmann, 2012), KNN-Imputer (Troyanskaya et al., 2001), MIDA (Gondara & Wang, 2018), GAIN (Yoon et al., 2018) on real and synthetic datasets. Generally speaking, our method outperforms all of the mentioned packages when the number of missing entries is large.

2. Robust Inference via Estimating Low-order Moments

RIFLE is based on a distributionally robust optimization (DRO) framework over low-order marginals. Assume that $(x, y)$ follows a joint probability distribution $P^*$. A standard approach for predicting the target variable $y$ given the input vector $x$ is to find the parameter $\theta$ that minimizes the population risk with respect to a given loss function $\ell$:

$$\min_{\theta} \; \mathbb{E}_{(x, y) \sim P^*}\big[\ell(\theta; x, y)\big]. \tag{1}$$

Since the underlying data distribution is rarely available in practice, the above problem cannot be solved directly. The most common approach to approximating (1) is to minimize the empirical risk over $n$ i.i.d. samples $\{(x_i, y_i)\}_{i=1}^{n}$ drawn from $P^*$:

$$\min_{\theta} \; \frac{1}{n} \sum_{i=1}^{n} \ell(\theta; x_i, y_i).$$

The above empirical risk formulation assumes that all entries of $x_i$ and $y_i$ are available. Thus, to use the empirical risk minimization (ERM) framework in the presence of missing values, one must either remove or impute the missing data in a pre-processing stage. Training via robust optimization is a natural alternative in the presence of missing data. Shivaswamy et al. (2006) and Xu et al. (2009) suggest the following problem, which minimizes the loss for the worst case over an uncertainty set defined for each data point:

$$\min_{\theta} \; \frac{1}{n} \sum_{i=1}^{n} \max_{\tilde{x}_i \in \mathcal{N}_i} \ell(\theta; \tilde{x}_i, y_i), \tag{2}$$

where $\mathcal{N}_i$ represents the uncertainty region of data point $i$. Shivaswamy et al. (2006) obtain the uncertainty sets by assuming a known distribution on the missing entries. The main issue with their approach is that the constraints defined on different data points are entirely uncorrelated. Xu et al. (2009), on the other hand, define $\mathcal{N}_i$ as a “box” constraint around data point $i$ such that the perturbations can be linearly correlated. For this specific case, they show that solving the robust optimization problem is equivalent to minimizing a regularized reformulation of the original loss function. Such an approach has several limitations. First, it can only handle a few special cases (the SVM loss with linearly correlated perturbations on data points). Furthermore, Xu et al. (2009) is primarily designed for handling outliers and contaminated data; thus, it offers no mechanism for the initial estimation of $\mathcal{N}_i$ when several entries of a vector are missing. In this work, we instead take a distributionally robust approach, placing the uncertainty on the data distribution rather than defining an uncertainty set for each data point. In particular, we aim to fit the best parameters of a statistical learning model for the worst distribution in a given uncertainty set by solving:

$$\min_{\theta} \; \max_{P \in \mathcal{P}} \; \mathbb{E}_{(x, y) \sim P}\big[\ell(\theta; x, y)\big], \tag{3}$$

where $\mathcal{P}$ is an uncertainty set over the underlying data distribution. A key observation is that defining the uncertainty set in (3) is easier and computationally more efficient than defining the uncertainty sets in (2). In particular, $\mathcal{P}$ can be obtained naturally by estimating low-order moments of the data distribution using only the available entries. To explain this idea and simplify notation, let $z = (x, y)$, $\mu_z = \mathbb{E}[z]$, and $C_z = \mathbb{E}[z z^\top]$. While $\mu_z$ and $C_z$ are typically not known exactly, one can estimate them (within certain confidence intervals) from the available data by simply ignoring missing entries, assuming the missing values are missing completely at random (MCAR). Moreover, we can estimate the confidence intervals via bootstrapping. In particular, we can estimate $\mu_{\min}$, $\mu_{\max}$, $C_{\min}$, and $C_{\max}$ from data such that $\mu_{\min} \le \mu_z \le \mu_{\max}$ and $C_{\min} \le C_z \le C_{\max}$ with high probability (where inequalities between matrices and between vectors are component-wise). In Appendix B, we show how a bootstrapping strategy can be used to obtain these confidence intervals. Given the estimated confidence intervals, (3) can be reformulated as

$$\min_{\theta} \; \max_{P} \; \mathbb{E}_{(x, y) \sim P}\big[\ell(\theta; x, y)\big] \quad \text{s.t.} \quad \mu_{\min} \le \mathbb{E}_{P}[z] \le \mu_{\max}, \quad C_{\min} \le \mathbb{E}_{P}[z z^\top] \le C_{\max}. \tag{4}$$
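The moment boxes used in (4) can be sketched in NumPy as follows, assuming MCAR missingness encoded as NaN. Each entry of the mean vector and of the second-moment matrix is estimated from the rows where the relevant entries are observed, and each box is the point estimate plus or minus `c` bootstrap standard deviations. The function names, the ±c·std form of the box, and the default `n_boot` are illustrative assumptions; Appendix B describes the procedure actually used.

```python
import numpy as np

def available_moments(Z):
    """Moment estimates that ignore missing (NaN) entries.

    mu[i]   = mean of column i over the rows where it is observed.
    C[i, j] = mean of z_i * z_j over the rows where both are observed.
    """
    observed = ~np.isnan(Z)
    Z0 = np.where(observed, Z, 0.0)             # zeros contribute nothing to the sums
    O = observed.astype(float)
    mu = Z0.sum(axis=0) / np.maximum(O.sum(axis=0), 1.0)
    C = (Z0.T @ Z0) / np.maximum(O.T @ O, 1.0)  # O.T @ O counts co-observed rows
    return mu, C

def bootstrap_boxes(Z, c=1.0, n_boot=50, seed=0):
    """Boxes [estimate - c * std, estimate + c * std], std from a row bootstrap."""
    rng = np.random.default_rng(seed)
    n = Z.shape[0]
    mu_hat, C_hat = available_moments(Z)
    draws = [available_moments(Z[rng.integers(0, n, size=n)]) for _ in range(n_boot)]
    mu_std = np.std([m for m, _ in draws], axis=0)
    C_std = np.std([C for _, C in draws], axis=0)
    return (mu_hat - c * mu_std, mu_hat + c * mu_std,
            C_hat - c * C_std, C_hat + c * C_std)

# Usage on synthetic data with 30% MCAR missingness.
rng = np.random.default_rng(1)
Z = rng.normal(loc=2.0, size=(500, 3))
Z[rng.random(Z.shape) < 0.3] = np.nan
mu_lo, mu_hi, C_lo, C_hi = bootstrap_boxes(Z, c=2.0)
```

Resampling whole rows keeps the co-observation structure of each bootstrap replicate, so the interval width automatically grows for pairs of columns that are rarely observed together.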

Gao & Kleywegt (2017) apply distributionally robust optimization of the form (3) over the set of positive semidefinite (PSD) cones for robust inference under uncertainty. While their formulation uses balls as the constraints on the low-order moments of the data, we use box (entrywise) constraints, which are computationally more natural in the presence of missing entries when combined with bootstrapping. Furthermore, although their method applies to general convex losses, it relies on the ellipsoid method and on the existence of oracles for performing its steps, which is not practical for modern high-dimensional problems. Moreover, they assume concavity in the data (the existence of an oracle that returns the worst-case data points), which is unavailable in practice even for convex loss functions (including the linear regression and normal discriminant analysis models studied in this work).

In Section 3, we study the distributionally robust framework (4) for ridge linear regression. We design efficient, provably convergent first-order algorithms to solve the problem and show how they can be used for both inference and imputation in the presence of missing values. Further, in Appendix F, we study the framework for classification problems under the normality assumption on the features. In particular, we show how Framework (4) can be specialized to robust normal discriminant analysis in the presence of missing values.

3. Robust Linear Regression in the Presence of Missing Values

Let us specialize our framework to the ridge linear regression model. Given a data matrix $X \in \mathbb{R}^{n \times d}$ and a target vector $y \in \mathbb{R}^{n}$ without missing values, ridge regression finds the optimal regressor $\theta$ by solving

$$\min_{\theta} \; \frac{1}{n}\|X\theta - y\|_2^2 + \lambda \|\theta\|_2^2,$$

or equivalently by solving:

$$\min_{\theta} \; \theta^\top C \theta - 2 b^\top \theta + \lambda \|\theta\|_2^2, \qquad C = \tfrac{1}{n} X^\top X, \quad b = \tfrac{1}{n} X^\top y. \tag{5}$$

Thus, the second-order moments $C$ and $b$ of the data suffice to find the optimal solution. In other words, it suffices to compute the inner product of every pair of columns $a_i$, $a_j$ of $X$ and the inner product of every column $a_i$ of $X$ with the vector $y$. Since $C$ and $b$ are not fully observed when values are missing, one can use the available data (see (24) for details) to compute the point estimators $\hat{C}$ and $\hat{b}$. These point estimators can be highly inaccurate, especially when few rows are observed for both columns of a given pair. In addition, if the pattern of missing entries does not follow the MCAR assumption, $\hat{C}$ and $\hat{b}$ are in general biased estimators of $C$ and $b$.
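The sketch below, assuming NaN-encoded missing values, estimates $C = X^\top X / n$ and $b = X^\top y / n$ entry by entry from the rows in which both factors are observed, and then solves the ridge problem using only these moments. This entrywise estimator is an illustrative assumption in the spirit of the point estimators described above; the exact form of (24) in Appendix B may differ. With no missing values, the moment-based solution coincides with ordinary ridge regression on $(X, y)$.

```python
import numpy as np

def pairwise_estimates(X, y):
    """Estimate C = X^T X / n and b = X^T y / n from observed (non-NaN) entries."""
    OX, Oy = ~np.isnan(X), ~np.isnan(y)
    X0, y0 = np.where(OX, X, 0.0), np.where(Oy, y, 0.0)
    OXf, Oyf = OX.astype(float), Oy.astype(float)
    # Average each product over the rows in which both factors are observed.
    C_hat = (X0.T @ X0) / np.maximum(OXf.T @ OXf, 1.0)
    b_hat = (X0.T @ y0) / np.maximum(OXf.T @ Oyf, 1.0)
    return C_hat, b_hat

def ridge_from_moments(C, b, lam):
    """Minimizer of theta^T C theta - 2 b^T theta + lam * ||theta||^2."""
    return np.linalg.solve(C + lam * np.eye(C.shape[0]), b)

# Sanity check: with complete data this is ordinary ridge regression.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
y = X @ np.array([1.0, -2.0, 0.5, 0.0]) + 0.1 * rng.normal(size=200)
lam = 0.1
theta_moments = ridge_from_moments(*pairwise_estimates(X, y), lam)
theta_direct = np.linalg.solve(X.T @ X / 200 + lam * np.eye(4), X.T @ y / 200)

# With 30% of the entries of X missing, only the moment estimates change.
X_missing = X.copy()
X_missing[rng.random(X.shape) < 0.3] = np.nan
theta_missing = ridge_from_moments(*pairwise_estimates(X_missing, y), lam)
```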

3.1. A Distributionally Robust Formulation of Linear Regression

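As mentioned above, solving the linear regression problem only requires the second-order moments of the data ($C$ and $b$). Thus, for the linear regression model, the distributionally robust formulation (4) is equivalent to the following optimization problem:

$$
\begin{aligned}
\min_{\theta} \max_{C,\, b} \quad & \theta^\top C \theta - 2 b^\top \theta + \lambda \|\theta\|_2^2 \\
\text{s.t.} \quad & \hat{C} - c\Delta \le C \le \hat{C} + c\Delta, \\
& \hat{b} - c\delta \le b \le \hat{b} + c\delta, \\
& C \succeq 0,
\end{aligned}
\tag{6}
$$

where $\Delta$ and $\delta$ are the estimated widths of the confidence intervals around the point estimates $\hat{C}$ and $\hat{b}$, $c > 0$ is a hyper-parameter that scales these widths, and the last constraint guarantees that $C$ is positive semidefinite. We discuss the procedure for estimating the confidence intervals ($\hat{C}$, $\hat{b}$, $\Delta$, and $\delta$) in Appendix B.

3.2. RIFLE for Ridge Linear Regression

Since the objective function in (6) is convex in $\theta$ (ridge regression) and concave (linear) in $C$ and $b$, and the constraint set for $(C, b)$ is convex and compact, the minimization and maximization sub-problems can be interchanged (Sion, 1958). Thus, we can equivalently rewrite Problem (6) as:

$$\max_{C,\, b} \; g(C, b) \quad \text{s.t.} \quad \hat{C} - c\Delta \le C \le \hat{C} + c\Delta, \quad \hat{b} - c\delta \le b \le \hat{b} + c\delta, \quad C \succeq 0, \tag{7}$$

where $g(C, b) = \min_{\theta} \, \theta^\top C \theta - 2 b^\top \theta + \lambda \|\theta\|_2^2$. For any pair $(C, b)$, $g$ can be computed in closed form by setting $\theta^*(C, b) = (C + \lambda I)^{-1} b$, which gives $g(C, b) = -b^\top (C + \lambda I)^{-1} b$. By Danskin's theorem (Danskin, 2012), $\nabla_C\, g = \theta^* \theta^{*\top}$ and $\nabla_b\, g = -2\theta^*$, so we can apply projected gradient ascent to $g$ to find an optimal solution of (7), as described in Algorithm 1. At each iteration, we first perform one step of projected gradient ascent on the matrix $C$ and the vector $b$; then we update $\theta$ in closed form for the obtained $C$ and $b$. We initialize $C$ and $b$ with the entrywise point estimates computed from the available rows (see Equation (24) in Appendix B). The projection of $C$ onto the box constraint can be done entrywise and has the following closed form:

$$\Pi(C)_{ij} = \min\big\{\max\{C_{ij},\, \hat{C}_{ij} - c\Delta_{ij}\},\; \hat{C}_{ij} + c\Delta_{ij}\big\}.$$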

Algorithm 1: RIFLE for Ridge Linear Regression in the Presence of Missing Values
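The two building blocks of each iteration of Algorithm 1 can be sketched in NumPy: the closed-form value and Danskin gradients of $g(C, b) = \min_\theta \theta^\top C\theta - 2b^\top\theta + \lambda\|\theta\|_2^2$, namely $\theta^*\theta^{*\top}$ and $-2\theta^*$ at $\theta^* = (C + \lambda I)^{-1} b$, and the entrywise projection onto a box, which is a clip. The function names are hypothetical; the finite-difference check only verifies the gradient formula numerically.

```python
import numpy as np

def g_and_grad(C, b, lam):
    """Value of g(C, b) and its gradients (Danskin) at the inner minimizer."""
    theta = np.linalg.solve(C + lam * np.eye(len(b)), b)   # closed-form ridge step
    value = theta @ C @ theta - 2 * b @ theta + lam * theta @ theta
    return value, np.outer(theta, theta), -2 * theta, theta

def project_box(M, lower, upper):
    """Entrywise Euclidean projection onto {lower <= M <= upper}."""
    return np.clip(M, lower, upper)

# Finite-difference check of the Danskin gradient with respect to b and C[0, 0].
rng = np.random.default_rng(0)
A = rng.normal(size=(5, 3))
C, b, lam, eps = A.T @ A / 5, rng.normal(size=3), 0.5, 1e-6
value, grad_C, grad_b, theta = g_and_grad(C, b, lam)
e0 = np.eye(3)[0]
fd_b = (g_and_grad(C, b + eps * e0, lam)[0] - g_and_grad(C, b - eps * e0, lam)[0]) / (2 * eps)
E00 = np.outer(e0, e0)
fd_C = (g_and_grad(C + eps * E00, b, lam)[0] - g_and_grad(C - eps * E00, b, lam)[0]) / (2 * eps)
```

Only symmetric perturbations of $C$ (here a diagonal entry) are checked, because $\theta^\top C \theta$ depends only on the symmetric part of $C$.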

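Theorem 1. Let $(\tilde{\theta}, \tilde{C}, \tilde{b})$ be the optimal solution of (6). Assume that, for any $C$ and $b$ within the uncertainty (constraint) sets described in (6), the inner minimizer $\theta^*(C, b) = (C + \lambda I)^{-1} b$ is bounded in norm. Then Algorithm 1 computes an $\epsilon$-optimal solution of the objective function in (7) in $O(1/\epsilon)$ iterations.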

Proof. The proof is relegated to Appendix H.
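A compact NumPy sketch of the projected gradient ascent loop of Algorithm 1, under stated assumptions: each box is parameterized as point estimate ± `c` times a width, the PSD constraint is dropped (the relaxation used in the paper's implementation), a fixed step size is used, and `lam` is assumed large enough that $C + \lambda I$ stays invertible. Names and defaults are hypothetical and are not the released package's API.

```python
import numpy as np

def rifle_ridge(C_hat, b_hat, C_width, b_width, c=1.0, lam=1.0, step=0.01, n_iter=500):
    """Projected gradient ascent on g(C, b) over box constraints (PSD dropped)."""
    C_lo, C_hi = C_hat - c * C_width, C_hat + c * C_width
    b_lo, b_hi = b_hat - c * b_width, b_hat + c * b_width
    eye = np.eye(len(b_hat))
    C, b = C_hat.copy(), b_hat.copy()                 # initialize at point estimates
    theta = np.linalg.solve(C + lam * eye, b)
    for _ in range(n_iter):
        # Ascent step with Danskin gradients theta theta^T and -2 theta, then project.
        C = np.clip(C + step * np.outer(theta, theta), C_lo, C_hi)
        b = np.clip(b - step * 2 * theta, b_lo, b_hi)
        theta = np.linalg.solve(C + lam * eye, b)     # closed-form update of theta
    return theta, C, b

def g_value(C, b, lam):
    """g(C, b) = -b^T (C + lam I)^{-1} b."""
    return -b @ np.linalg.solve(C + lam * np.eye(len(b)), b)

# Usage with moments of a small complete dataset and a constant box width.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = X @ np.array([1.0, -1.0, 2.0]) + 0.1 * rng.normal(size=200)
C_hat, b_hat = X.T @ X / 200, X.T @ y / 200
theta_rob, C_rob, b_rob = rifle_ridge(C_hat, b_hat, 0.05 * np.ones((3, 3)), 0.05 * np.ones(3))
theta_plain = np.linalg.solve(C_hat + np.eye(3), b_hat)
```

In this example the worst case inflates $C$ along the sign pattern of $\theta$ and pulls $b$ toward zero, so the robust regressor is shrunk relative to plain ridge regression.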

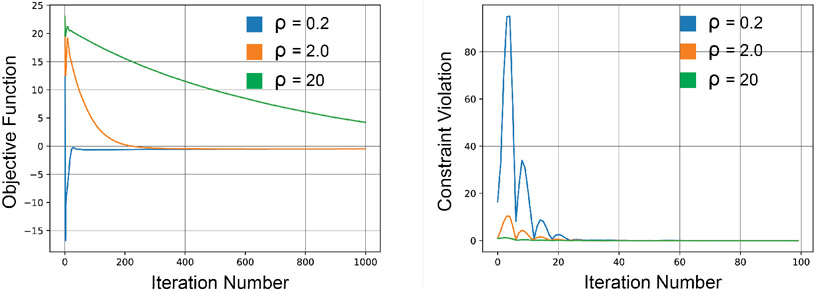

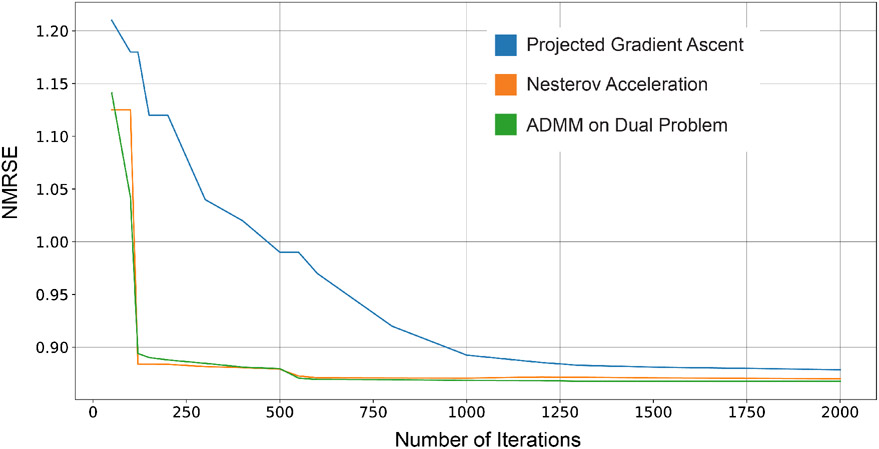

In Appendix C, we show how Nesterov's acceleration method improves the convergence rate of Algorithm 1 to $O(1/\sqrt{\epsilon})$. A technical issue with Algorithm 1 and its accelerated version in Appendix C is that projecting $C$ onto the intersection of the box constraints and the set of positive semidefinite matrices ($C \succeq 0$) is challenging and cannot be done in closed form. In our implementation of Algorithm 1, we relax the problem by removing the PSD constraint on $C$, which avoids this complexity and the time-consuming singular value decomposition at each iteration. As our experiments in Section 5 show, this relaxation does not drastically change the algorithm's performance. A more systematic approach is to write the dual of the maximization problem and solve the resulting constrained minimization problem with the Alternating Direction Method of Multipliers (ADMM); the detailed procedure is given in Appendix D. All these algorithms provably converge to the optimal points of Problem (6). In addition to the theoretical guarantees, we numerically evaluate the convergence of the resulting algorithms in Appendix K. Further, the proposed algorithms are consistent, as discussed in Appendix J.
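To illustrate the cost that the relaxation avoids, the sketch below projects a symmetric matrix onto the PSD cone by clipping negative eigenvalues, which requires an $O(d^3)$ eigendecomposition. Note that simply alternating this step with the entrywise clip does not, in general, return the projection onto the intersection of the box and the PSD cone; an exact method such as Dykstra's alternating projection algorithm would be needed for that. The function name is hypothetical.

```python
import numpy as np

def project_psd(M):
    """Frobenius-norm projection of a symmetric matrix onto the PSD cone."""
    S = (M + M.T) / 2                         # guard against round-off asymmetry
    eigvals, eigvecs = np.linalg.eigh(S)      # O(d^3) eigendecomposition
    return (eigvecs * np.maximum(eigvals, 0.0)) @ eigvecs.T

M = np.array([[1.0, 2.0], [2.0, 1.0]])        # eigenvalues 3 and -1
P = project_psd(M)                            # [[1.5, 1.5], [1.5, 1.5]]
```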

3.3. Performance Guarantees for RIFLE

Thus far, we have discussed how to efficiently solve the robust linear regression problem in the presence of missing values. A natural question is how the optimal solution obtained in the previous section performs on unseen test data. Theorem 2 answers this question from two perspectives. First, assuming that values are missing completely at random, our estimators are consistent. Second, in the finite-sample case, Theorem 2(b) states that, with a proper choice of confidence intervals, the test loss of the obtained solution is bounded by its training loss with high probability. Note that these guarantees on the performance of the robust estimator hold under the MCAR missing pattern; in Section 5, we also run several experiments on datasets with MNAR patterns to show how RIFLE works in practice on such data.

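Theorem 2. Assume the data domain is bounded and the missing pattern of the data follows MCAR. Let $X \in \mathbb{R}^{n \times d}$ and $y \in \mathbb{R}^{n}$ be the training data drawn i.i.d. from the ground-truth distribution $P^*$ with low-order moments $C^*$ and $b^*$. Further, assume that each entry of $X$ and $y$ is missing with probability p < 1. Let $(\tilde{\theta}, \tilde{C}, \tilde{b})$ be the solution of Problem (6).

(a). Consistency of the Covariance Estimator:

As the number of data points goes to infinity, the estimated low-order marginals converge to the ground-truth values almost surely. More precisely,

$$\lim_{n \to \infty} \tilde{C} = C^* \quad \text{almost surely}, \tag{8}$$

$$\lim_{n \to \infty} \tilde{b} = b^* \quad \text{almost surely}. \tag{9}$$

(b). Define the population loss

$$L^*(\theta) = \theta^\top C^* \theta - 2 {b^*}^\top \theta + \lambda \|\theta\|_2^2,$$

where $C^*$ and $b^*$ are the ground-truth second-order moments. Given $V$ (the maximum variance of pairwise feature products), with the probability of at least , we have:

$$L^*(\tilde{\theta}) \le \tilde{\theta}^\top \tilde{C} \tilde{\theta} - 2 \tilde{b}^\top \tilde{\theta} + \lambda \|\tilde{\theta}\|_2^2, \tag{10}$$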

where and c is the hyper-parameter for controlling the size of the confidence intervals as presented in (6)

Proof. The proof is relegated to Appendix H.

3.4. Imputation of Missing Values and Going Beyond Linear Regression

RIFLE can be used for imputing missing data. To this end, we impute the features of a given dataset independently. More precisely, to impute each feature containing missing values, we treat it as the target variable and the remaining features as the input in our methodology. Then, we train a model to predict that feature from the remaining features via Algorithm 1 (or its ADMM version, Algorithm 7, in the appendix). Let the obtained optimal solutions be $\tilde{\theta}$, $\tilde{C}$, and $\tilde{b}$. For a given missing entry, we can use $\tilde{\theta}$ only if all other features in the row of that entry are available. However, this is not usually the case in practice, as a row can contain more than one missing entry. Therefore, one can learn a separate model for each missing pattern in the dataset. Let us clarify this point through the example in Figure 1, which has three missing patterns (one for each dataset). For missing entries in Dataset 1, the first forty features are available. Let $x_i^{(40)}$ denote the vector of the first 40 features in row $i$. Assume that we aim to impute the entry in row $i$ and column $j > 40$, denoted by $x_{ij}$. To this end, we restrict the data matrix $X$ to its first 40 features, $X^{(40)}$, and consider the $j$-th column $x_{:,j}$ as the target variable. Then, we run Algorithm 1 on $X^{(40)}$ and $x_{:,j}$ to obtain the optimal $\tilde{\theta}$, $\tilde{C}$, and $\tilde{b}$. Consequently, we impute $x_{ij}$ as

$$\hat{x}_{ij} = \tilde{\theta}^\top x_i^{(40)}.$$

We can use the same methodology to impute the missing entries of each feature for the missing patterns in Dataset 2 and Dataset 3. While this approach is reasonable for the missing pattern in Figure 1, in many practical problems different rows have distinct missing patterns. Thus, in the worst case, Algorithm 1 must be executed once for each missing entry. Such an approach is computationally expensive and may be infeasible for large-scale datasets with many missing entries. Alternatively, one can run Algorithm 1 only once to obtain $\tilde{C}$ and $\tilde{b}$ (regarded as the “worst-case/pessimistic” estimates of the moments). Then, to impute each missing entry, $\tilde{C}$ and $\tilde{b}$ are restricted to the features available in that entry's row. Given the restricted $\tilde{C}$ and $\tilde{b}$, the regressor $\tilde{\theta}$ can be obtained in closed form (line 6 of Algorithm 1). In this approach, we run Algorithm 1 once and find the optimal $\tilde{\theta}$ for each missing entry from the estimated $\tilde{C}$ and $\tilde{b}$. This approach can lead to sub-optimal solutions compared to the former, but it is much faster and more scalable.
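A minimal sketch of the faster variant, assuming a single $d \times d$ second-moment matrix `M` over all features is available. In RIFLE this would be the pessimistic estimate returned by Algorithm 1; for illustration, the sanity check simply uses the empirical second-moment matrix of complete data. For each row, `M` is restricted to the observed features and each missing entry is predicted with the closed-form ridge regressor. The function name and the `lam` default are hypothetical, and, like the ridge model above, the sketch uses no intercept.

```python
import numpy as np

def impute_with_moments(X, M, lam=1e-6):
    """Impute NaNs in X from one second-moment matrix M over all d features."""
    X_imp = X.copy()
    for i in range(X.shape[0]):
        missing = np.isnan(X[i])
        A = np.flatnonzero(~missing)                  # features observed in row i
        if not missing.any() or A.size == 0:
            continue
        M_AA = M[np.ix_(A, A)] + lam * np.eye(A.size)
        for j in np.flatnonzero(missing):
            theta = np.linalg.solve(M_AA, M[A, j])    # closed-form ridge regressor
            X_imp[i, j] = X[i, A] @ theta
    return X_imp

# Sanity check on noiseless data where feature 2 equals x0 + 2 * x1.
rng = np.random.default_rng(0)
X_full = rng.normal(size=(1000, 2))
X_full = np.column_stack([X_full, X_full[:, 0] + 2 * X_full[:, 1]])
M = X_full.T @ X_full / len(X_full)
X_obs = X_full.copy()
X_obs[0, 2] = np.nan
X_imp = impute_with_moments(X_obs, M)
```

Rows sharing the same observed set reuse the same restricted system, so caching `M_AA` per missing pattern is a natural optimization for larger datasets.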

Beyond Linear Regression:

While the developed methods are primarily designed for ridge linear regression, one can apply non-linear transformations (kernels) to obtain non-linear models. In Appendix E, we show how to extend the developed algorithms to quadratic models. The RIFLE framework applied to the quadratically transformed data is called QRIFLE.
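As a hypothetical illustration of a quadratic transformation (the exact feature map used by QRIFLE is described in Appendix E and may differ), the sketch appends all pairwise products $x_i x_j$ with $i \le j$, turning $d$ features into $d + d(d+1)/2$. NaN propagates through multiplication, so a product is missing whenever either factor is, and the expanded matrix can be passed to the same missing-value pipeline.

```python
import numpy as np

def quadratic_features(X):
    """Append all products x_i * x_j (i <= j); NaN if either factor is missing."""
    iu, ju = np.triu_indices(X.shape[1])
    return np.hstack([X, X[:, iu] * X[:, ju]])

F = quadratic_features(np.array([[1.0, 2.0, np.nan]]))
# Columns: x0, x1, x2, x0*x0, x0*x1, x0*x2, x1*x1, x1*x2, x2*x2
```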

4. Robust Classification Framework

In this section, we study the proposed framework in (4) for the classification tasks in the presence of missing values. Since the target variable takes discrete values in classification tasks, we consider the uncertainty sets over the data’s first- and second-order marginals given each target value (label) separately. Therefore, the distributionally robust classification over low-order marginals can be described as:

$$\min_{w} \; \max_{P} \; \mathbb{E}_{(x, y) \sim P}\big[\ell(w; x, y)\big] \quad \text{s.t.} \quad \mu_j^{\min} \le \mathbb{E}_P[x \mid y = j] \le \mu_j^{\max}, \quad \Sigma_j^{\min} \le \mathrm{Cov}_P(x \mid y = j) \le \Sigma_j^{\max}, \quad j \in \{0, 1\}, \tag{11}$$

where $\mu_j^{\min}$, $\mu_j^{\max}$, $\Sigma_j^{\min}$, and $\Sigma_j^{\max}$ are the estimated confidence intervals for the first- and second-order moments of the data distribution given label $j$. Unlike the robust linear regression task in Section 3, evaluating the objective function in (11) may depend on higher-order marginals (beyond second order) because the loss function is nonlinear. As a result, Problem (11) is, in general, an intractable non-convex non-concave min-max optimization problem. For computational tractability, we restrict the distributions in the inner maximization problem to the set of normal distributions. In the following section, we specialize (11) to quadratic discriminant analysis as a case study. The methodology can be extended to other popular classification algorithms, such as support vector machines and multi-layer neural networks.

4.1. Robust Quadratic Discriminant Analysis

Learning a logistic regression model on datasets containing missing values has been studied extensively in the literature (Fung & Wrobel, 1989; Abonazel & Ibrahim, 2018). Besides deletion- and imputation-based approaches, Fung & Wrobel (1989) model the logistic regression task in the presence of missing values as a linear discriminant analysis problem, under the assumption that the predictors are normally distributed conditional on the labels. Mathematically, they assume that the data points with a given label follow a Gaussian distribution, i.e., $x \mid y = j \sim \mathcal{N}(\mu_j, \Sigma)$. They use the available data to estimate the parameters of each Gaussian distribution; the parameters of the logistic regression model can then be computed from the estimated parameters of the class-conditional Gaussians. As in the linear regression case, the estimates of the means and covariances are unbiased only when the data satisfy the MCAR condition. Moreover, when the dataset contains few data points, the variance of the estimates can be very high. Thus, to train a logistic regression model that is robust to the percentage and type of missing values, we specialize the general robust classification framework (11) to logistic regression. Instead of a common covariance matrix for the class-conditional distributions of $x$ (linear discriminant analysis), we consider the more general case in which each class-conditional distribution has its own covariance matrix (quadratic discriminant analysis). Assume that $x \mid y = j \sim \mathcal{N}(\mu_j, \Sigma_j)$ for $j = 0, 1$. We aim to find the optimal solution of the following problem:

$$
\begin{aligned}
\min_{w} \max_{\mu_0, \mu_1, \Sigma_0, \Sigma_1} \quad & \sum_{j \in \{0, 1\}} \Pr(y = j)\, \mathbb{E}_{x \sim \mathcal{N}(\mu_j, \Sigma_j)}\Big[-j \log \sigma(w^\top x) - (1 - j) \log\big(1 - \sigma(w^\top x)\big)\Big] \\
\text{s.t.} \quad & \mu_j^{\min} \le \mu_j \le \mu_j^{\max}, \quad \Sigma_j^{\min} \le \Sigma_j \le \Sigma_j^{\max}, \quad j \in \{0, 1\},
\end{aligned}
\tag{12}
$$

where $\sigma(t) = 1/(1 + e^{-t})$ is the sigmoid function.

To solve Problem (12), we first focus on the scenario in which the target variable has no missing values. In this case, each data point contributes to the estimation of either $(\mu_0, \Sigma_0)$ or $(\mu_1, \Sigma_1)$, depending on its label. As in the robust linear regression case, we can apply Algorithm 4 to estimate the confidence intervals for $\mu_j$ and $\Sigma_j$ using the data points whose target variable equals $j$.

The objective function is convex in $w$: the logistic loss is convex, and its expectation is a weighted sum of convex functions, which is convex. Thus, for fixed $(\mu_j, \Sigma_j)$, $j = 0, 1$, the outer minimization problem can be solved with respect to $w$ using standard first-order methods such as gradient descent.
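The link between Gaussian class-conditionals and logistic regression that underlies the approach of Fung & Wrobel (1989) can be checked numerically. Assuming a shared covariance $\Sigma$ and class prior $\pi_1 = \Pr(y = 1)$, Bayes' rule gives $\Pr(y = 1 \mid x) = \sigma(w^\top x + w_0)$ with $w = \Sigma^{-1}(\mu_1 - \mu_0)$ and $w_0 = -\tfrac{1}{2}(\mu_1^\top \Sigma^{-1}\mu_1 - \mu_0^\top \Sigma^{-1}\mu_0) + \log(\pi_1 / (1 - \pi_1))$. The sketch is illustrative and its function names are hypothetical; with class-specific covariances, as in the quadratic case, the log-odds additionally contain a quadratic term in $x$.

```python
import numpy as np

def lda_to_logistic(mu0, mu1, Sigma, pi1=0.5):
    """Logistic parameters (w, w0) implied by x | y=j ~ N(mu_j, Sigma)."""
    s0, s1 = np.linalg.solve(Sigma, mu0), np.linalg.solve(Sigma, mu1)
    w = s1 - s0
    w0 = -0.5 * (mu1 @ s1 - mu0 @ s0) + np.log(pi1 / (1 - pi1))
    return w, w0

def bayes_posterior(x, mu0, mu1, Sigma, pi1=0.5):
    """P(y=1 | x) from Gaussian densities (shared normalizing constants cancel)."""
    def log_density(mu):
        r = x - mu
        return -0.5 * r @ np.linalg.solve(Sigma, r)
    log_odds = log_density(mu1) - log_density(mu0) + np.log(pi1 / (1 - pi1))
    return 1.0 / (1.0 + np.exp(-log_odds))

mu0, mu1 = np.array([0.0, 1.0]), np.array([1.5, -0.5])
Sigma = np.array([[1.0, 0.3], [0.3, 2.0]])
w, w0 = lda_to_logistic(mu0, mu1, Sigma, pi1=0.3)
x = np.array([0.7, 0.2])
p_logistic = 1.0 / (1.0 + np.exp(-(w @ x + w0)))
p_bayes = bayes_posterior(x, mu0, mu1, Sigma, pi1=0.3)
```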

Although the robust reformulation of logistic regression stated in (12) is convex in and concave in and , the inner maximization problem is intractable with respect to and . We approximate Problem (12) in the following manner:

| (13) |

where and . To compute optimal and , we have:

| (14) |

Theorem 3. Let be the i-th element of vector . The optimal solution of Problem (14) has the following form:

| (15) |

Note that we relaxed (12) by taking the maximization problem over a finite set of estimations. We estimate each by bootstrapping on the available data using Algorithm 4. Define as:

| (16) |

Similarly, we can define:

| (17) |

Since the maximization problem is over a finite set, we can rewrite Problem (13) as:

| (18) |

Since the maximum of several functions is not necessarily smooth (differentiable), we add a quadratic regularization term to the maximization problem, which smooths the objective and accelerates convergence (Nouiehed et al., 2019), as follows:

| (19) |

First, we show how to solve the inner maximization problem. Note that the and are independent. We show how to find optimal . Optimizing with respect to is similar. Since the maximization problem is a constrained quadratic program, we can write the Lagrangian function as follows:

| (20) |

Having the optimal , the above problem has a closed-form solution with respect to each , which can be written as:

Since is a non-increasing function with respect to , we can find the optimal value of using the following bisection algorithm. Algorithm 2 demonstrates how to find an -optimal and efficiently using the bisection idea.

Algorithm 2: Finding the optimal solution of the inner maximization problem using the bisection idea

Remark 4. An alternative method for finding optimal , and is to sort values in first, and then finding the smallest such that if we set , the sum of is bigger than 1 (let j be the index of that value). Without loss of generality, assume that . Then, , which has a closed-form solution with respect to .
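Assuming the regularized inner problem for one weight vector takes the form $\max_{p \in \Delta} p^\top f - \tfrac{\gamma}{2}\|p\|_2^2$ over the probability simplex $\Delta$ (our reading of (19); the symbols $p$, $f$, $\gamma$, and $\eta$ here are illustrative), the KKT conditions give $p_i = \max\{0, (f_i - \eta)/\gamma\}$, where $\eta$ is chosen so that the weights sum to one. Because $\sum_i \max\{0, (f_i - \eta)/\gamma\}$ is non-increasing in $\eta$, bisection finds $\eta$; the sorting alternative of Remark 4 then amounts to the standard sort-based Euclidean projection of $f/\gamma$ onto the simplex. Both are sketched below with hypothetical function names.

```python
import numpy as np

def weights_bisection(f, gamma, n_iter=100):
    """argmax_{p in simplex} p @ f - (gamma / 2) * ||p||^2 via bisection on eta."""
    f = np.asarray(f, dtype=float)

    def total(eta):                              # sum of weights; non-increasing in eta
        return np.maximum(0.0, (f - eta) / gamma).sum()

    lo, hi = f.max() - gamma, f.max()            # total(lo) >= 1 and total(hi) = 0
    for _ in range(n_iter):
        mid = (lo + hi) / 2
        if total(mid) > 1:
            lo = mid
        else:
            hi = mid
    return np.maximum(0.0, (f - (lo + hi) / 2) / gamma)

def weights_sort(f, gamma):
    """Same maximizer via the sort-based projection of f / gamma onto the simplex."""
    v = np.asarray(f, dtype=float) / gamma
    u = np.sort(v)[::-1]                         # values in decreasing order
    css = np.cumsum(u)
    k = np.arange(1, v.size + 1)
    rho = np.flatnonzero(u - (css - 1) / k > 0)[-1]
    tau = (css[rho] - 1) / (rho + 1)             # tau corresponds to eta / gamma
    return np.maximum(v - tau, 0.0)

f = np.array([1.0, 0.5, -1.0])
p_bis = weights_bisection(f, gamma=1.0)          # approx. [0.75, 0.25, 0.0]
p_sort = weights_sort(f, gamma=1.0)              # [0.75, 0.25, 0.0]
```

A fixed iteration count is used instead of a tolerance test so that the loop terminates even when the bracket cannot shrink further in floating point.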

To update , we need to solve the following optimization problem:

| (21) |

Similar to the standard statistical learning framework, we solve the following empirical risk minimization problem by applying the gradient descent to on a finite data sample. Define as follows:

| (22) |

where are generated from the distribution . The empirical risk minimization problem can be written as follows:

| (23) |

Algorithm 3 summarizes the robust quadratic discriminant analysis method for the case in which the labels of all data points are available. Theorem 5 establishes the convergence of gradient descent applied to (23) to an $\epsilon$-optimal solution.

Algorithm 3: Robust Quadratic Discriminant Analysis in the Presence of Missing Values

Theorem 5. Assume that . Gradient descent algorithm requires gradient evaluations for converging to an -optimal saddle point of the optimization problem (23).
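For a fixed pair of class-conditional Gaussians, the sample-based ERM step can be sketched as follows: draw points from $\mathcal{N}(\mu_j, \Sigma_j)$ for each class, then run gradient descent on the average logistic loss, whose gradient is $\tfrac{1}{m}\tilde{X}^\top(\sigma(\tilde{X}w) - y)$ for the design matrix $\tilde{X}$ with an appended intercept column. This is a minimal illustration under assumptions (equal class sizes, an intercept, a fixed step size); it omits the robust weighting over bootstrap estimates, and all names are hypothetical.

```python
import numpy as np

def sample_classes(mus, Sigmas, m, rng):
    """Draw m points from each class-conditional N(mu_j, Sigma_j), j = 0, 1."""
    X = np.vstack([rng.multivariate_normal(mu, S, size=m) for mu, S in zip(mus, Sigmas)])
    y = np.repeat([0.0, 1.0], m)
    return X, y

def logistic_gd(X, y, step=0.5, n_iter=500):
    """Gradient descent on the average logistic loss, with an intercept column."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(Xb @ w)))     # sigmoid(w^T x)
        w -= step * Xb.T @ (p - y) / len(y)     # gradient of the average loss
    return w

rng = np.random.default_rng(0)
mus = [np.array([-1.0, -1.0]), np.array([1.0, 1.0])]
Sigmas = [np.eye(2), 0.5 * np.eye(2)]            # class-specific covariances
X, y = sample_classes(mus, Sigmas, m=500, rng=rng)
w = logistic_gd(X, y)
accuracy = np.mean(((np.hstack([X, np.ones((len(X), 1))]) @ w) > 0) == (y == 1))
```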

In Appendix F, we extend the methodology to the case where the target variable $y$ contains missing entries.

5. Experiments

In this section, we evaluate RIFLE's performance on a diverse set of inference tasks in the presence of missing values. We compare RIFLE with several state-of-the-art data imputation approaches on synthetic and real-world datasets. The experiments are designed to evaluate the model's sensitivity to factors such as the number of samples, the data dimension, and the type and proportion of missing values. Descriptions of all datasets used in the experiments can be found in Appendix I.

5.1. Evaluation Metrics

To evaluate RIFLE and other state-of-the-art imputation approaches, we need the ground-truth values of the missing entries. Hence, we artificially mask a proportion of the available data entries and predict them with different imputation methods. A method performs better than others if its predicted values are closer to the ground-truth values. To measure the performance of RIFLE and the existing approaches on a regression task for a test dataset of $n$ data points, we use the normalized root mean squared error (NRMSE), defined as:

$$\text{NRMSE} = \frac{\sqrt{\frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2}}{\sqrt{\frac{1}{n} \sum_{i=1}^{n} (y_i - \bar{y})^2}},$$

where $y_i$, $\hat{y}_i$, and $\bar{y}$ denote the true value of the $i$-th data point, the predicted value of the $i$-th data point, and the average of the true values, respectively. In all experiments, the generated missing entries follow either a missing completely at random (MCAR) or a missing not at random (MNAR) pattern. The procedure for generating these patterns is discussed in Appendix G.
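The evaluation protocol can be sketched as follows, assuming NRMSE is the root mean squared error divided by the standard deviation of the true values: hide entries independently with a fixed probability (MCAR), impute, and score only the hidden entries. Column-mean imputation serves here purely as a baseline stand-in for an imputer, and the function names are hypothetical. Under this definition, predicting the mean of the true values gives an NRMSE of exactly 1.

```python
import numpy as np

def mcar_mask(shape, rate, rng):
    """True where an entry is hidden; each entry is hidden independently."""
    return rng.random(shape) < rate

def nrmse(y_true, y_pred):
    """RMSE divided by the standard deviation of the true values."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return np.sqrt(np.mean((y_true - y_pred) ** 2) / np.mean((y_true - y_true.mean()) ** 2))

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 5))
mask = mcar_mask(X.shape, rate=0.4, rng=rng)
X_missing = np.where(mask, np.nan, X)
X_imputed = np.where(mask, np.nanmean(X_missing, axis=0), X_missing)  # mean imputation baseline
score = nrmse(X[mask], X_imputed[mask])
```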

5.2. Tuning Hyper-parameters of RIFLE

The hyper-parameter c in (7) controls the robustness of the model by adjusting the size of the confidence intervals. It is tuned by cross-validation over the set {0.1, 0.25, 0.5, 1, 2, 5, 10, 20, 50, 100}, choosing the value with the lowest NRMSE. The default value in the implementation is c = 1, since it performs consistently well across experiments. Furthermore, λ, the hyper-parameter of the ridge regularizer, is tuned over the set {0.01, 0.1, 0.5, 1, 2, 5, 10, 20, 50} using 20% of the data as a validation set. For K, the number of bootstrap samples used to estimate the confidence intervals, we tried 10, 20, 50, and 100 and observed no significant difference in test performance among these values.

We tune the hyper-parameters of the competing packages as follows. For KNN-Imputer (Troyanskaya et al., 2001), we try {2, 10, 20, 50} for the number of neighbors (K) and pick the best-performing value. For MICE (Buuren & Groothuis-Oudshoorn, 2010) and Amelia (Honaker et al., 2011), we generate 5 different imputed datasets and pick the one with the best performance on the test data. MissForest has multiple hyper-parameters: we keep the criterion as “MSE”, since our performance measure is NRMSE, and we tune the number of iterations and the number of estimators (trees) over {5, 10, 20} and {50, 100, 200}, respectively. For MIDA (Gondara & Wang, 2018) and GAIN (Yoon et al., 2018), we do not change the neural network architectures and use the default versions to impute the datasets.

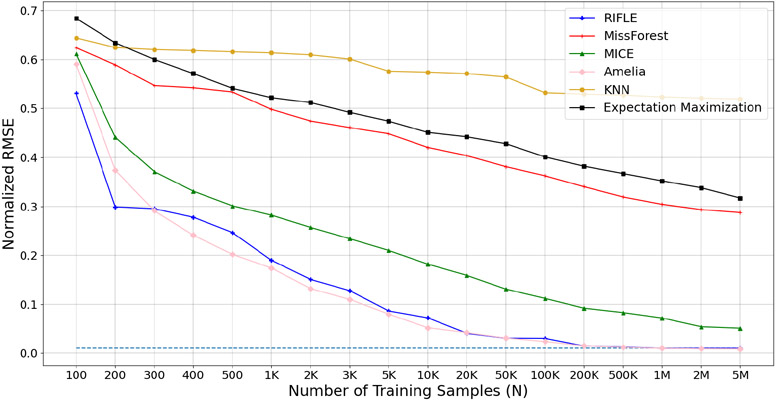

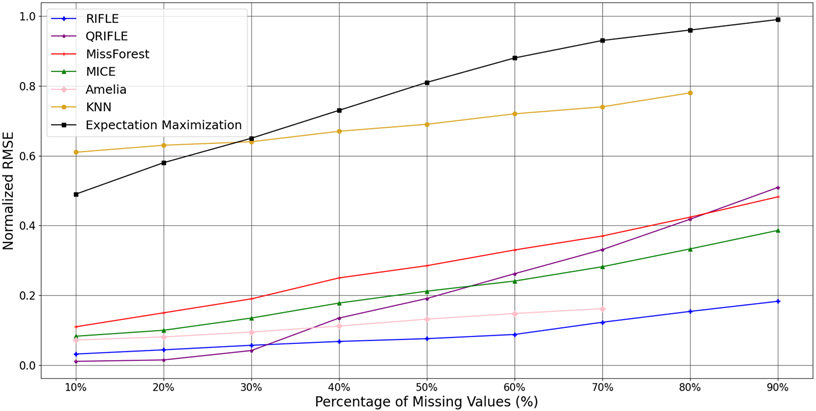

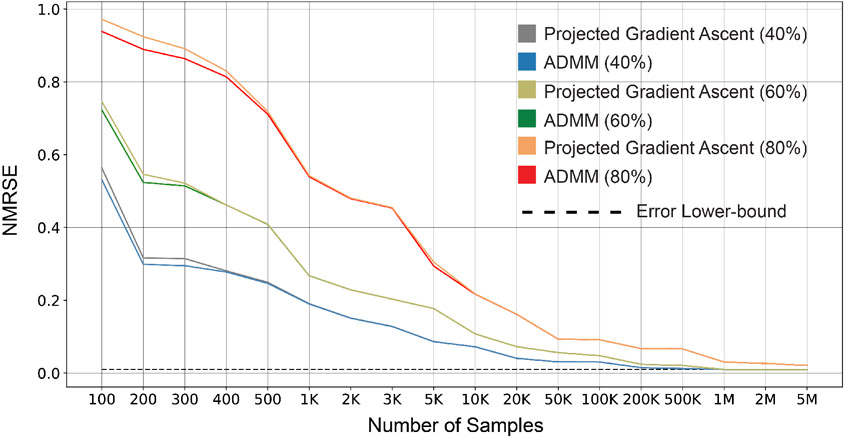

5.3. RIFLE Consistency

In Theorem 2, Part (a), we showed that RIFLE is consistent. In Figure 3, we investigate the consistency of RIFLE empirically on synthetic datasets with different proportions of missing values. The synthetic data has 50 input features following a jointly normal distribution whose mean entries are drawn randomly from the interval (−100, 100). The covariance matrix equals $\Sigma = AA^\top$, where the elements of $A \in \mathbb{R}^{50 \times 20}$ are drawn randomly from (−1, 1). The target variable is a linear function of the input features plus zero-mean normal noise with a standard deviation of 0.01. As depicted in Figure 3, RIFLE requires fewer samples to recover the ground-truth parameters of the model than MissForest, KNN Imputer, Expectation Maximization (Dempster et al., 1977), and MICE. Amelia performs well in this setting because the predictors are jointly normal and the underlying model is linear. Note that as the number of samples increases, the NRMSE of our framework converges to 0.01, the standard deviation of the zero-mean Gaussian noise added to each target value (the dashed line).
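The synthetic setup can be sketched as follows, assuming NumPy. The feature means, the $50 \times 20$ factor $A$, and the noise level follow the description above; the regression coefficients are not specified in the text, so drawing them uniformly from (−1, 1) is our assumption, as is the function name `make_synthetic`. Sampling $X = \mu + Z A^\top$ with standard normal $Z$ gives rows with covariance exactly $AA^\top$ without factorizing the rank-deficient matrix.

```python
import numpy as np


def make_synthetic(n_samples, n_features=50, rank=20, noise_std=0.01, seed=0):
    """Jointly normal features with covariance A A^T plus a noisy linear target."""
    rng = np.random.default_rng(seed)
    mu = rng.uniform(-100.0, 100.0, size=n_features)     # mean entries in (-100, 100)
    A = rng.uniform(-1.0, 1.0, size=(n_features, rank))  # 50 x 20 factor matrix
    Z = rng.standard_normal((n_samples, rank))
    X = mu + Z @ A.T                                     # rows ~ N(mu, A A^T)
    theta = rng.uniform(-1.0, 1.0, size=n_features)      # ASSUMPTION: coefficients not given in the text
    y = X @ theta + rng.normal(0.0, noise_std, size=n_samples)
    return X, y, theta, A


X, y, theta, A = make_synthetic(n_samples=500)
# Remove 40% of the input entries completely at random, as in Figure 3.
rng = np.random.default_rng(1)
X_obs = np.where(rng.random(X.shape) < 0.4, np.nan, X)
```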

Figure 3:

Comparing the consistency of RIFLE, MissForest, KNN Imputer, MICE, Amelia, and Expectation Maximization methods on a synthetic dataset containing 40% missing values.

5.4. Data Imputation via RIFLE

As explained in Section 3, while the primary goal of RIFLE is to learn a robust regression model in the presence of missing values, it can also be used as an imputation tool. We run RIFLE and several state-of-the-art approaches on five datasets from the UCI repository (Dua & Graff, 2017) (Spam, Housing, Clouds, Breast Cancer, and Parkinson) with different proportions of MCAR missing values (the datasets are described in Appendix I). We then compute the NRMSE of the imputed entries. Table 1 shows the performance of RIFLE compared to other approaches when the proportion of missing values is relatively high (30%, 50%, and 70%). RIFLE outperforms the other methods in most cases, particularly at higher missing-value proportions, and performs slightly better than MissForest, which uses a highly non-linear model (random forest) to impute missing values.

Table 1:

Performance comparison (NRMSE of imputed entries) of RIFLE, QRIFLE (Quadratic RIFLE), and state-of-the-art methods on several UCI datasets. We applied the imputation methods at three different missing-value proportions for each dataset. The best imputer is highlighted in bold font, and the second-best imputer is underlined. Each experiment is repeated 5 times, and the average and the standard deviation of the performance are reported.

| Dataset (missing %) | RIFLE | QRIFLE | MICE | Amelia | GAIN | MissForest | MIDA | EM |
|---|---|---|---|---|---|---|---|---|
| Spam (30%) | 0.87 ± 0.009 | 0.82 ± 0.009 | 1.23 ± 0.012 | 1.26 ± 0.007 | 0.91 ± 0.005 | 0.90 ± 0.013 | 0.97 ± 0.008 | 0.94 ± 0.004 |
| Spam (50%) | 0.90 ± 0.013 | 0.86 ± 0.014 | 1.29 ± 0.018 | 1.33 ± 0.024 | 0.93 ± 0.015 | 0.92 ± 0.011 | 0.99 ± 0.011 | 0.97 ± 0.008 |
| Spam (70%) | 0.92 ± 0.017 | 0.91 ± 0.019 | 1.32 ± 0.028 | 1.37 ± 0.032 | 0.97 ± 0.014 | 0.95 ± 0.016 | 0.99 ± 0.018 | 0.98 ± 0.017 |
| Housing (30%) | 0.86 ± 0.015 | 0.89 ± 0.018 | 1.03 ± 0.024 | 1.02 ± 0.016 | 0.82 ± 0.015 | 0.84 ± 0.018 | 0.93 ± 0.025 | 0.95 ± 0.011 |
| Housing (50%) | 0.88 ± 0.021 | 0.90 ± 0.024 | 1.14 ± 0.029 | 1.09 ± 0.027 | 0.88 ± 0.019 | 0.88 ± 0.018 | 0.98 ± 0.029 | 0.96 ± 0.016 |
| Housing (70%) | 0.92 ± 0.026 | 0.95 ± 0.028 | 1.22 ± 0.036 | 1.18 ± 0.038 | 0.95 ± 0.027 | 0.93 ± 0.024 | 1.02 ± 0.037 | 0.98 ± 0.017 |
| Clouds (30%) | 0.81 ± 0.018 | 0.79 ± 0.019 | 0.98 ± 0.024 | 1.04 ± 0.027 | 0.76 ± 0.021 | 0.71 ± 0.011 | 0.83 ± 0.022 | 0.86 ± 0.013 |
| Clouds (50%) | 0.84 ± 0.026 | 0.84 ± 0.028 | 1.10 ± 0.041 | 1.13 ± 0.046 | 0.82 ± 0.027 | 0.75 ± 0.023 | 0.88 ± 0.033 | 0.89 ± 0.018 |
| Clouds (70%) | 0.87 ± 0.029 | 0.90 ± 0.033 | 1.16 ± 0.044 | 1.19 ± 0.048 | 0.89 ± 0.035 | 0.81 ± 0.031 | 0.93 ± 0.044 | 0.92 ± 0.023 |
| Breast Cancer (30%) | 0.52 ± 0.023 | 0.54 ± 0.027 | 0.74 ± 0.031 | 0.81 ± 0.032 | 0.58 ± 0.024 | 0.55 ± 0.016 | 0.70 ± 0.026 | 0.67 ± 0.014 |
| Breast Cancer (50%) | 0.56 ± 0.026 | 0.59 ± 0.027 | 0.79 ± 0.029 | 0.85 ± 0.033 | 0.64 ± 0.025 | 0.59 ± 0.022 | 0.76 ± 0.035 | 0.69 ± 0.022 |
| Breast Cancer (70%) | 0.59 ± 0.031 | 0.65 ± 0.034 | 0.86 ± 0.042 | 0.92 ± 0.044 | 0.70 ± 0.037 | 0.63 ± 0.028 | 0.82 ± 0.035 | 0.67 ± 0.014 |
| Parkinson (30%) | 0.57 ± 0.016 | 0.55 ± 0.016 | 0.71 ± 0.019 | 0.67 ± 0.021 | 0.53 ± 0.015 | 0.54 ± 0.010 | 0.62 ± 0.017 | 0.64 ± 0.011 |
| Parkinson (50%) | 0.62 ± 0.022 | 0.64 ± 0.025 | 0.77 ± 0.029 | 0.74 ± 0.034 | 0.61 ± 0.022 | 0.65 ± 0.014 | 0.71 ± 0.027 | 0.69 ± 0.022 |
| Parkinson (70%) | 0.67 ± 0.027 | 0.74 ± 0.033 | 0.85 ± 0.038 | 0.82 ± 0.037 | 0.69 ± 0.031 | 0.73 ± 0.022 | 0.78 ± 0.038 | 0.75 ± 0.029 |

5.5. Sensitivity of RIFLE to the Number of Samples and Proportion of Missing Values

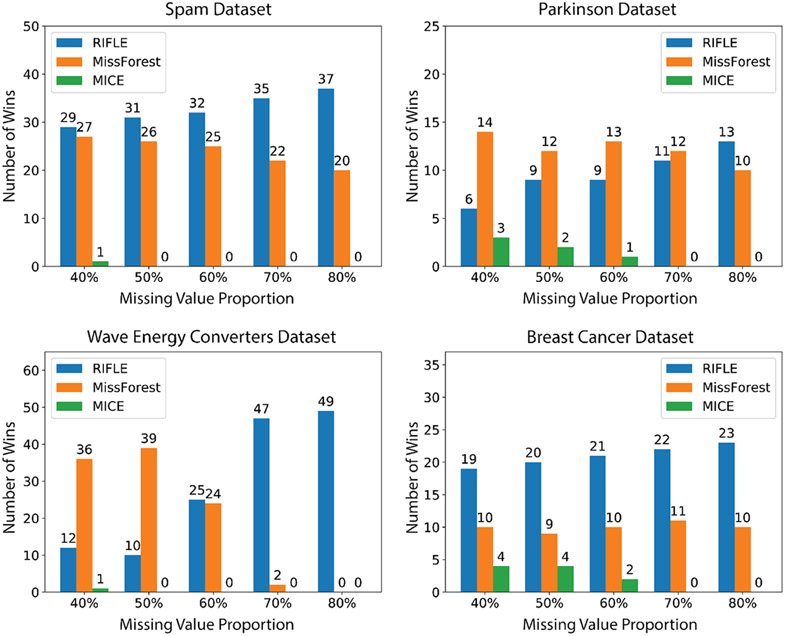

In this section, we analyze the sensitivity of RIFLE and other state-of-the-art approaches to the number of samples and the proportion of missing values. In the experiment in Figure 4, for each of four real datasets from the UCI Repository (Dua & Graff, 2017) (Spam, Parkinson, Wave Energy Converter, and Breast Cancer; see Appendix I), we create 5 versions containing 40%, 50%, 60%, 70%, and 80% MCAR missing values, respectively. Given a feature containing missing values, we say that an imputer wins that feature if its imputation NRMSE on that feature is lower than that of every other imputer. Figure 4 reports the number of features won by each imputer on these datasets. As the proportion of missing values increases, the number of features won by RIFLE increases. This suggests that, as an imputer, RIFLE is generally less sensitive to the proportion of missing values than MissForest and MICE.
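The win-counting rule used in Figure 4 can be written in a few lines. In this sketch, which assumes per-feature NRMSE values are already available for each imputer, a method wins a feature only if its error is strictly lower than every other method's, so ties award no win (the text does not say how ties are handled; this is our assumption). The function name is hypothetical, and the input values are made up for illustration; they are not results from Figure 4.

```python
import numpy as np


def count_wins(errors):
    """errors: dict name -> per-feature NRMSE array; a strict minimum wins the feature."""
    names = list(errors)
    table = np.vstack([errors[name] for name in names])   # shape: (methods, features)
    wins = dict.fromkeys(names, 0)
    for column in table.T:                                # one column per feature
        winners = np.flatnonzero(column == column.min())
        if winners.size == 1:                             # ties award no win
            wins[names[winners[0]]] += 1
    return wins


# Toy per-feature NRMSE values (made up for illustration only).
per_feature_nrmse = {
    "RIFLE": np.array([0.61, 0.70, 0.55, 0.80]),
    "MICE": np.array([0.65, 0.68, 0.59, 0.80]),
    "MissForest": np.array([0.63, 0.72, 0.57, 0.80]),
}
print(count_wins(per_feature_nrmse))  # {'RIFLE': 2, 'MICE': 1, 'MissForest': 0}
```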

Figure 4:

Performance comparison of RIFLE, MICE, and MissForest on four UCI datasets: Parkinson, Spam, Wave Energy Converter, and Breast Cancer. For each dataset, we count the number of features on which each method outperforms the others.

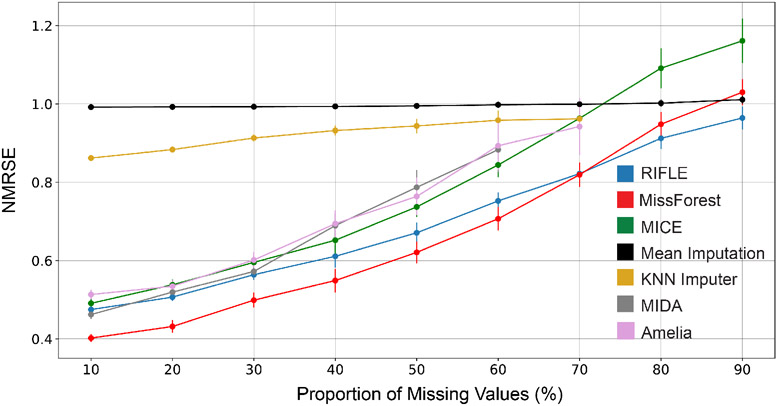

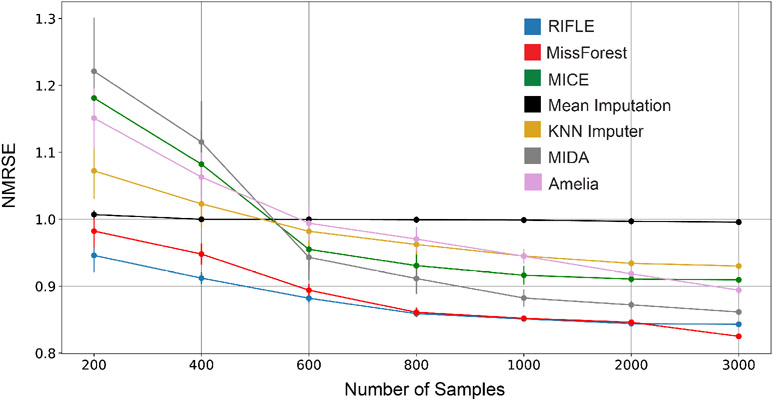

Figure 4 does not show how increasing the proportion of missing values changes the NRMSE of the imputers. Next, we analyze the sensitivity of RIFLE and several other imputers to changes in the proportion of missing values. For each fixed proportion of missing values (from 10% to 90%), we select 400 data points from the Drive dataset (described in Appendix I) and generate 10 random datasets with missing values in random locations. We impute each dataset with RIFLE, MissForest, Mean Imputation, and MICE. Figure 5 reports the average and the standard deviation of the NRMSE of the imputed entries for different proportions of missing values, showing the sensitivity of MissForest and RIFLE to the proportion of missing values in the Drive dataset. Finally, in Figure 6, we evaluate RIFLE and other methods on the BlogFeedback dataset (see Appendix I) containing 40% missing values. The results show that RIFLE's performance is less sensitive to decreasing the number of samples.

Figure 5:

Sensitivity of RIFLE, MissForest, Amelia, KNN Imputer, MIDA, and Mean Imputer to the percentage of missing values on the Drive dataset. Increasing the percentage of missing entries degrades the benchmarks' performance relative to RIFLE. The KNN-imputer implementation cannot be executed on datasets containing 80% (or more) missing entries. Moreover, Amelia and MIDA do not converge to a solution when the percentage of missing entries is higher than 70%.

Figure 6:

Sensitivity of RIFLE, MissForest, MICE, Amelia, Mean Imputer, KNN Imputer, and MIDA to the number of samples when imputing the Blog Feedback dataset containing 40% MCAR missing values. When the number of samples is limited, RIFLE outperforms the other methods, and for larger samples its performance is very close to that of the non-linear imputer MissForest.

5.6. Performance Comparison on Real Datasets

In this section, we compare the performance of RIFLE to several state-of-the-art approaches, including MICE (Buuren & Groothuis-Oudshoorn, 2010), Amelia (Honaker et al., 2011), MissForest (Stekhoven & Bühlmann, 2012), KNN Imputer (Troyanskaya et al., 2001), and MIDA (Gondara & Wang, 2018). There are two primary ways to predict a continuous target variable in a dataset with many missing values. The first is to impute the missing data with a state-of-the-art package and then run a linear regression. The alternative is to learn the target variable directly, as discussed in Section 3.

Table 2 compares the performance of mean imputation, MICE, EM, MIDA, MissForest, and KNN Imputer to that of RIFLE on three datasets: NHANES, Blog Feedback, and Superconductivity. The Blog Feedback and Superconductivity datasets contain 30% MNAR missing values generated by Algorithm 9, with 10000 and 20000 training samples, respectively. The NHANES data and its distribution of missing values are described in Appendix I.

Table 2:

Normalized RMSE of RIFLE and several state-of-the-art methods on the Superconductivity, Blog Feedback, and NHANES datasets. The first two datasets contain 30% Missing Not At Random (MNAR) missing values in the training phase, generated by Algorithm 9. Each method is applied 5 times to each dataset, and the results are reported as the average ± standard deviation of the NRMSE across runs.

| Method | Superconductivity | Blog Feedback | NHANES |
|---|---|---|---|
| Regression on Complete Data | 0.4601 | 0.7432 | 0.6287 |
| RIFLE | 0.4873 ± 0.0036 | 0.8326 ± 0.0085 | 0.6304 ± 0.0027 |
| Mean Imputer + Regression | 0.6114 ± 0.0006 | 0.9235 ± 0.0003 | 0.6329 ± 0.0008 |
| MICE + Regression | 0.5078 ± 0.0124 | 0.8507 ± 0.0325 | 0.6612 ± 0.0282 |
| EM + Regression | 0.5172 ± 0.0162 | 0.8631 ± 0.0117 | 0.6392 ± 0.0122 |
| MIDA Imputer + Regression | 0.5213 ± 0.0274 | 0.8394 ± 0.0342 | 0.6542 ± 0.0164 |
| MissForest | 0.4925 ± 0.0073 | 0.8191 ± 0.0083 | 0.6365 ± 0.0094 |
| KNN Imputer | 0.5438 ± 0.0193 | 0.8828 ± 0.0124 | 0.6427 ± 0.0135 |

Efficiency of RIFLE:

We run RIFLE for 1000 iterations with a step size of 0.01 in the above experiments. At each iteration, the main operation is finding the optimal regression coefficients for the current estimates of the low-order moments. The average running time of each method on each dataset is reported in Table 5 in Appendix L. The main reason RIFLE is more time-efficient than MICE, MissForest, MIDA, and KNN Imputer is that it predicts the target variable directly, without imputing all missing entries.
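For intuition about why each iteration is cheap, here is a minimal sketch of the inner step. It assumes the inner problem is the moment form of ridge regression, $\min_{\theta}\, \theta^\top C \theta - 2 b^\top \theta + \lambda \|\theta\|^2$, with $C$ an estimate of $\mathbb{E}[xx^\top]$ and $b$ an estimate of $\mathbb{E}[xy]$; these symbols and the name `theta_given_moments` are our notation for illustration. Setting the gradient to zero gives $\theta = (C + \lambda I)^{-1} b$, a single $d \times d$ linear solve.

```python
import numpy as np


def theta_given_moments(C, b, lam):
    """argmin_theta  theta^T C theta - 2 b^T theta + lam * ||theta||^2  (assumed objective)."""
    d = C.shape[0]
    # Gradient 2 C theta - 2 b + 2 lam theta = 0  =>  (C + lam I) theta = b.
    return np.linalg.solve(C + lam * np.eye(d), b)


rng = np.random.default_rng(0)
n = 200
X = rng.normal(size=(n, 5))
y = X @ np.array([1.0, -2.0, 0.5, 0.0, 3.0]) + 0.1 * rng.normal(size=n)
C = X.T @ X / n          # second-moment matrix E[x x^T]
b = X.T @ y / n          # cross moment E[x y]
theta = theta_given_moments(C, b, lam=0.1)
```

With complete data and $C = X^\top X / n$, $b = X^\top y / n$, this coincides with ordinary ridge regression with penalty $n\lambda$, which is easy to verify numerically. With missing data, the same solve is applied to moment estimates instead, so the per-iteration cost in this sketch does not depend on how many entries are missing.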

Since MICE and MIDA cannot predict values during the test phase without data imputation, we use them in a pre-processing stage to impute the data and then apply linear regression to the imputed dataset. On the other hand, RIFLE, KNN Imputer, and MissForest can predict the target variable without imputing the training dataset. Table 2 shows that RIFLE outperforms all other approaches on the Superconductivity and NHANES datasets and is second only to MissForest on Blog Feedback. In particular, RIFLE outperforms MissForest on two of the three datasets, even though the underlying model of RIFLE (linear) is simpler than the non-linear random forest model used by MissForest.
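For comparison, the simplest two-stage baseline in Table 2 (Mean Imputer + Regression) can be sketched as below, assuming NumPy and ordinary least squares with an intercept. The helper names are hypothetical; MICE or MIDA would replace only the imputation step. The test set is imputed with the training-set column means, so no test information leaks into the imputation.

```python
import numpy as np


def mean_impute(X, col_means=None):
    """Fill NaNs with column means (computed on X unless training means are given)."""
    if col_means is None:
        col_means = np.nanmean(X, axis=0)
    return np.where(np.isnan(X), col_means, X), col_means


def fit_ols(X, y):
    """Least squares with an intercept; returns [intercept, coefficients...]."""
    design = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef


def predict(coef, X):
    return coef[0] + X @ coef[1:]


# Impute-then-regress: reuse the training means when imputing test data.
rng = np.random.default_rng(0)
X_train = rng.normal(size=(300, 4))
y_train = 1.0 + X_train @ np.array([2.0, -1.0, 0.0, 0.5])
X_train[rng.random(X_train.shape) < 0.3] = np.nan
X_filled, means = mean_impute(X_train)
coef = fit_ols(X_filled, y_train)
X_test = rng.normal(size=(5, 4))
X_test[0, 1] = np.nan
y_hat = predict(coef, mean_impute(X_test, means)[0])
```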

5.6.1. Performance of RIFLE on Classification Tasks

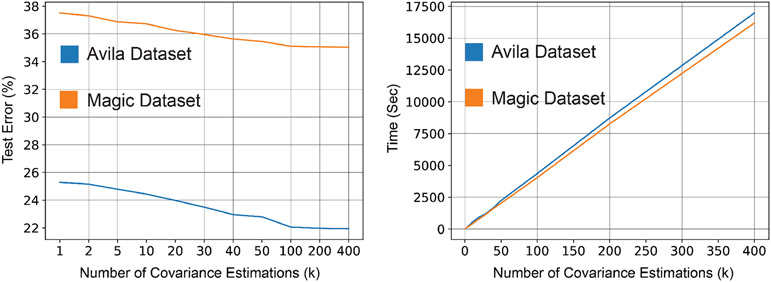

In Section 4, we discussed how to specialize RIFLE to robust normal discriminant analysis in the presence of missing values. Since the maximization problem over the second moments of the data is intractable, we solved the maximization problem over a set of $k$ covariance matrices estimated by bootstrap sampling. To investigate the effect of $k$ on the performance of the robust classifier, we train robust normal discriminant analysis models for different values of $k$ on two training datasets (Avila and Magic) containing 40% MCAR missing values. The description of the datasets can be found in Appendix I. For $k = 1$, there is no maximization problem, and thus the model is equivalent to the classifier proposed in Fung & Wrobel (1989). As shown in Figure 7, increasing the number of covariance estimations generally improves the test accuracy of the classifier. However, as shown in Theorem 5, the time required to complete the training phase grows linearly with the number of covariance estimations.

Figure 7:

Effect of the number of covariance estimations on the performance (left) and run time (right) of robust LDA on the Avila and Magic datasets. Increasing the number of covariance estimations (k) improves the model's accuracy on the test data at the cost of a longer training time.

5.6.2. Comparison of Robust Linear Regression and Robust QDA

An alternative to the robust QDA presented in Section 4 is to apply the robust linear regression algorithm (Section 3) and map its predictions to the classes by thresholding (positive values map to label 1 and negative values to label −1).
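The thresholding rule can be sketched as follows. Here, ordinary least squares on labels in {+1, −1} stands in for the robust linear regression of Section 3, which is not reproduced; the function names are hypothetical, and sending a score of exactly 0 to −1 is our choice, since the text only specifies positive and negative values.

```python
import numpy as np


def fit_linear_scores(X, labels):
    """Stand-in for robust regression: ordinary least squares on +/-1 labels."""
    theta, *_ = np.linalg.lstsq(X, labels.astype(float), rcond=None)
    return theta


def to_labels(X, theta):
    """Threshold at zero: positive score -> +1, otherwise -1 (a score of 0 goes to -1 here)."""
    return np.where(X @ theta > 0, 1, -1)


X = np.array([[2.0], [1.0], [-1.0], [-2.0]])
labels = np.array([1, 1, -1, -1])
theta = fit_linear_scores(X, labels)
predicted = to_labels(X, theta)
```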

Table 4 compares the two classifiers, along with other baselines, on five datasets with continuous, mixed, and discrete features. As the table shows, quadratic discriminant analysis performs better when all features are continuous, whereas robust linear regression handles categorical features better than robust QDA. This suggests that the QDA model relies heavily on the normality assumption.

Table 4:

Accuracy of RIFLE, Robust QDA, MissForest, MICE, KNN-Imputer, and Expectation Maximization (EM) on discrete, mixed, and continuous datasets. Robust QDA can perform better than the other methods when the input features are continuous and the target variable is discrete. However, RIFLE achieves higher accuracy than Robust QDA in the mixed and discrete settings.

| Dataset | Feature Type | RIFLE | Robust QDA | MissForest | MICE | KNN Imputer | EM |
|---|---|---|---|---|---|---|---|
| Glass Identification | Continuous | 67.12% ± 1.84% | 69.54% ± 1.97% | 65.76% ± 1.49% | 62.48% ± 2.45% | 60.37% ± 1.12% | 68.21% ± 0.94% |
| Annealing | Mixed | 63.41% ± 2.44% | 59.51% ± 2.21% | 64.91% ± 1.35% | 60.66% ± 1.59% | 57.44% ± 1.44% | 59.43% ± 1.29% |
| Abalone | Mixed | 68.41% ± 0.74% | 63.27% ± 0.76% | 69.40% ± 0.42% | 63.12% ± 0.98% | 62.43% ± 0.38% | 62.91% ± 0.37% |
| Lymphography | Discrete | 66.32% ± 1.05% | 58.15% ± 1.21% | 66.11% ± 0.94% | 55.73% ± 1.24% | 57.39% ± 0.88% | 59.55% ± 0.68% |
| Adult | Discrete | 72.42% ± 0.06% | 60.36% ± 0.08% | 70.34% ± 0.03% | 63.30% ± 0.14% | 60.14% ± 0.00% | 60.69% ± 0.01% |

Limitations and Future Directions:

The proposed framework for robust regression in the presence of missing values is limited to linear models. While in Appendix E we use polynomial kernels to apply non-linear transformations to the data, such an approach can increase the number of missing values in the kernel space, since a feature composed from several original features is missing whenever any of its constituents is missing. A future direction is to develop efficient algorithms for non-linear regression models such as multi-layer neural networks, decision tree regressors, gradient boosting regressors, and support vector regression models. In the case of robust classification, the methodology is extendable to any loss beyond quadratic discriminant analysis. Unlike the regression case, a limitation of the proposed method for robust classification is its reliance on the Gaussianity assumption of the data distribution (conditioned on each label). A natural extension is to assume that the underlying data distribution follows a mixture of Gaussian distributions.

Conclusion:

In this paper, we proposed a distributionally robust optimization framework over distributions whose low-order marginals lie within the estimated confidence intervals, for inference and imputation of datasets in the presence of missing values. We developed algorithms for regression and classification with convergence guarantees. We evaluated the method on synthetic and real datasets with different numbers of samples, dimensions, missing-value proportions, and types of missing values. In most experiments, RIFLE outperforms the existing methods.

Table 3:

Sensitivity of Linear Discriminant Analysis (LDA), Robust LDA (common covariance matrix), and Robust QDA (different covariance matrices for the two groups) to the number of training samples.

| Number of Training Data Points | LDA | Robust LDA | Robust QDA |
|---|---|---|---|
| 50 | 52.38% ± 3.91% | 62.14% ± 1.78% | 61.36% ± 1.62% |
| 100 | 61.24% ± 1.89% | 68.46% ± 1.04% | 70.07% ± 0.95% |
| 200 | 73.49% ± 0.97% | 73.35% ± 0.67% | 73.51% ± 0.52% |

Acknowledgments

This work was supported by the NIH/NSF Grant 1R01LM013315-01, the NSF CAREER Award CCF-2144985, and the AFOSR Young Investigator Program Award FA9550-22-1-0192.

A A Review of Missing Value Imputation Methods in the Literature

The fundamental idea behind many data imputation approaches is that the missing values can be predicted from the available data of other data points and correlated features. One of the most straightforward imputation techniques is to replace missing values with the mean (or median) of that feature computed from the available data; see Little & Rubin (2019, Chapter 3). However, this naïve approach ignores the correlation between features and does not preserve the variance of features. Another class of imputers is based on least-squares methods (Raghunathan et al., 2001; Kim et al., 2005; Zhang et al., 2008; Cai et al., 2006). Raghunathan et al. (2001) learn a linear model with multivariate Gaussian noise for the feature with the fewest missing entries. The procedure is then repeated on the updated data to impute the next feature with the fewest missing entries, until all features are completely imputed. One drawback of this approach is that errors from imputing earlier features can propagate to subsequent features. To impute the entries of a given feature, Kim et al. (2005) learn several univariate regression models, each with that feature as the response, and take the average of their predictions as the imputed value. This approach cannot capture correlations involving more than two features.

Many more complex algorithms have been developed for imputation, although many are sensitive to initial assumptions and may not converge. For instance, KNN-Imputer imputes a missing feature of a data point by taking the mean value of the K closest complete data points (Troyanskaya et al., 2001). MissForest, on the other hand, imputes the missing values of each feature by learning a random forest model on the other features of the training data (Stekhoven & Bühlmann, 2012). MissForest does not need to assume that all features are continuous (Honaker et al., 2011) or categorical (Schafer, 1997). However, neither KNN-imputer nor MissForest guarantees statistical or computational convergence. Moreover, when the proportion of missing values is high, both are likely to suffer a severe drop in performance, as demonstrated in Section 5. Another popular approach learns the parameters of a prior distribution on the data from the available values using the Expectation Maximization (EM) algorithm of Dempster et al. (1977); see also Ghahramani & Jordan (1994) and Honaker et al. (2011). The EM algorithm is also used in Amelia, which fits a jointly normal distribution to the data using EM and the bootstrap technique (Honaker et al., 2011). While Amelia performs well on datasets following a normal distribution, it is highly sensitive to violations of the normality assumption (as discussed in Bertsimas et al. (2017)). Ghahramani & Jordan (1994) adopt the EM algorithm to learn a joint Bernoulli distribution for the categorical data and a joint Gaussian distribution for the continuous variables independently. While these algorithms can be viewed as inference methods based on low-order estimates of moments, they do not account for the uncertainty in such estimates. By contrast, our framework uses robust optimization to account for the uncertainty around the estimated moments. Moreover, unlike some of the algorithms mentioned above, our optimization procedure for imputation and prediction is guaranteed to converge.

Another popular method for data imputation is multiple imputation by chained equations (MICE). MICE learns a parametric distribution for each feature conditional on the remaining features. For instance, it may assume that the feature currently being imputed is a linear function of the other features with zero-mean Gaussian noise. Each feature can have its own distribution and parameters (e.g., Poisson regression, logistic regression). Based on the learned parameters of the conditional distributions, MICE can generate one or more imputed datasets (Buuren & Groothuis-Oudshoorn, 2010). More recently, several neural network-based imputers have been proposed. GAIN (Generative Adversarial Imputation Network) learns a generative adversarial network from the available data and then imputes the missing values using the trained generator (Yoon et al., 2018). One advantage of GAIN over other existing GAN imputers is that it does not need a complete dataset during the training phase. MIDA (Multiple Imputation using Denoising Autoencoders) trains a denoising auto-encoder on the available data, treating the missing entries as noise. Like other neural network-based methods, these algorithms suffer from their black-box nature: they are difficult to interpret and explain, which makes them unpopular in mission-critical healthcare applications. In addition, no statistical or computational guarantees are provided for these algorithms.
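To make the chained-equations idea concrete, the sketch below implements a deliberately simplified, deterministic variant: starting from mean imputation, each incomplete feature is regressed (OLS with intercept) on all other features, cycling for a few rounds. It is not the `mice` R package of Buuren & Groothuis-Oudshoorn (2010): real MICE draws imputations from the fitted conditional distributions, supports other conditional models (e.g., logistic or Poisson regression), and can return several imputed datasets. The function name is hypothetical.

```python
import numpy as np


def chained_linear_impute(X, n_rounds=5):
    """Single, deterministic chained-equations imputation with linear models.

    Each feature with missing entries is regressed on all other features
    (using the current filled-in values), and only its missing cells are
    overwritten by the predictions.
    """
    X = np.array(X, dtype=float)                       # work on a copy
    missing = np.isnan(X)
    X = np.where(missing, np.nanmean(X, axis=0), X)    # start from column means
    for _ in range(n_rounds):
        for j in range(X.shape[1]):
            rows = missing[:, j]
            if not rows.any():
                continue
            design = np.column_stack([np.ones(len(X)), np.delete(X, j, axis=1)])
            coef, *_ = np.linalg.lstsq(design[~rows], X[~rows, j], rcond=None)
            X[rows, j] = design[rows] @ coef           # overwrite only the missing cells
    return X


rng = np.random.default_rng(0)
F = rng.normal(size=(100, 2))
full = np.column_stack([F, F[:, 0] + 2.0 * F[:, 1]])   # third feature is linear in the others
holey = full.copy()
holey[rng.random(100) < 0.2, 2] = np.nan
filled = chained_linear_impute(holey)
```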

Bertsimas et al. (2017) formulate the imputation task as a constrained optimization problem where the constraints are determined by the underlying classification model, such as KNN (k-nearest neighbors), SVM (Support Vector Machine), or decision trees. Their general framework is non-convex, and the authors relax the optimization problem for each choice of the cost function using first-order methods. A block coordinate descent algorithm then optimizes the relaxed problem. They demonstrate the convergence and accuracy of their algorithm numerically, but do not provide a theoretical analysis guaranteeing its convergence.

B Estimating Confidence Intervals of Low-order Moments

In this section, we explain the methodology for estimating confidence intervals for the low-order moments $\mathbf{b}$ and $\mathbf{C}$. Let $\mathbf{X}$ and $\mathbf{y}$ be the data matrix and the target vector for the $n$ given data points, whose entries are in $\mathbb{R} \cup \{*\}$, where the symbol $*$ represents a missing entry. Moreover, let $\mathbf{x}_i$ denote the $i$-th column (feature) of $\mathbf{X}$. We define the zero-filled column $\mathbf{x}'_i$ entrywise as:

$$(\mathbf{x}'_i)_k = \begin{cases} X_{ki} & \text{if } X_{ki} \neq *, \\ 0 & \text{if } X_{ki} = *. \end{cases}$$

Thus, $\mathbf{x}'_i$ is obtained by replacing the missing values of $\mathbf{x}_i$ with 0. We estimate the confidence intervals for the mean and covariance of the features using multiple bootstrap samples of the available data. Let $C^0_{ij}$ and $\Delta_{ij}$ be the center and the radius of the confidence interval for $C_{ij}$, respectively. We compute the center of the confidence interval for $C_{ij}$ as follows:

$$C^0_{ij} = \frac{1}{n_{ij}}\, \mathbf{x}'^{\top}_i \mathbf{x}'_j, \tag{24}$$

where $n_{ij}$ is the number of rows in which both features $i$ and $j$ are observed. Because missing entries are set to 0, only these rows contribute to the inner product, so this estimator is obtained from the rows where both features are available. More precisely, let $\mathbf{M}$ be the mask of the input data matrix, defined as:

$$M_{ki} = \begin{cases} 1 & \text{if } X_{ki} \neq *, \\ 0 & \text{if } X_{ki} = *. \end{cases}$$

Then $n_{ij} = (\mathbf{M}^{\top}\mathbf{M})_{ij}$ is the number of rows in the dataset where both features $i$ and $j$ are available. To estimate the confidence intervals for $\mathbf{C}$, we use Algorithm 4. For each pair $(i, j)$ of features, we draw $K$ bootstrap samples of size $n_{ij}$ (sampling with replacement) from the rows where both features are available and compute the second-order moment of the two features on each sample. The standard deviation of these $K$ estimates determines the radius $\Delta_{ij}$ of the corresponding confidence interval. Algorithm 4 summarizes the steps for computing the confidence interval radius for the $ij$-th entry of the matrix $\mathbf{C}$; the confidence intervals for $\mathbf{b}$ can be computed similarly. Having $\mathbf{C}^0$ and $\boldsymbol{\Delta}$, the confidence interval for the matrix $\mathbf{C}$ is computed as follows:

$$\mathbf{C}_{\min} = \mathbf{C}^0 - c\,\boldsymbol{\Delta}, \qquad \mathbf{C}_{\max} = \mathbf{C}^0 + c\,\boldsymbol{\Delta}.$$

Computing $\mathbf{b}_{\min}$ and $\mathbf{b}_{\max}$ can be done in the same manner. The hyper-parameter $c$ controls the robustness of the model by scaling the length of the confidence intervals. A larger $c$ yields wider confidence intervals and, thus, a more robust estimator. However, very large values of $c$ lead to overly wide confidence intervals that can degrade the performance of the trained model.
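The steps above can be sketched in NumPy as follows, with `np.nan` marking missing entries. The sketch computes the centers through the mask as in (24), the radius of each entry as the standard deviation of $K$ bootstrap estimates on the co-observed rows as in Algorithm 4, and the interval scaled by $c$. Function names are hypothetical, and the sketch assumes every pair of features is observed together in at least one row; intervals for the feature-target moments would follow by treating the target as an extra column.

```python
import numpy as np


def moment_centers(X):
    """C0[i, j] = x'_i . x'_j / n_ij with zero-filled columns x'_i (NaN = missing)."""
    M = (~np.isnan(X)).astype(float)          # mask: 1 = observed
    X0 = np.nan_to_num(X, nan=0.0)            # zero-filled data
    n_pair = M.T @ M                          # n_ij: rows where i and j are both observed
    return (X0.T @ X0) / n_pair, n_pair       # assumes every pair is co-observed at least once


def bootstrap_radius(x_i, x_j, K, rng):
    """Std. of K bootstrap estimates of the second moment E[x_i x_j]."""
    both = ~np.isnan(x_i) & ~np.isnan(x_j)
    prod = x_i[both] * x_j[both]              # one product per co-observed row
    n_ij = prod.size
    estimates = [prod[rng.integers(0, n_ij, size=n_ij)].mean() for _ in range(K)]
    return float(np.std(estimates))


def confidence_box(X, c=1.0, K=50, seed=0):
    """Entry-wise intervals [C0 - c * Delta, C0 + c * Delta] for the second-moment matrix."""
    rng = np.random.default_rng(seed)
    C0, _ = moment_centers(X)
    d = X.shape[1]
    delta = np.zeros((d, d))
    for i in range(d):                        # pairs are independent, so this loop parallelizes
        for j in range(i, d):
            delta[i, j] = delta[j, i] = bootstrap_radius(X[:, i], X[:, j], K, rng)
    return C0 - c * delta, C0 + c * delta


Xs = np.array([[1.0, 2.0], [3.0, np.nan], [np.nan, 4.0], [5.0, 6.0]])
C0, n_pair = moment_centers(Xs)               # n_pair = [[3, 2], [2, 3]]
```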

Algorithm 4: Estimating the Confidence Interval Length for Feature $i$ and Feature $j$.

Remark 6. Since the confidence intervals for different entries of the covariance matrix are computed independently of each other, they can be computed in parallel. In particular, if multiple cores are available, the features (columns of the covariance matrix) can be divided among the available cores.

C Solving Robust Ridge Regression with the Optimal Convergence Rate

The convergence of Algorithm 1 to the optimal solution of Problem (6) can be slow in practice, since at each iteration the algorithm requires a matrix inversion to update the regression coefficients and a projection onto the box constraints on the moment estimates. While the minimization step is solved in closed form, we can speed up the maximization step by applying Nesterov's acceleration method to the function defined in (7). Since this function is the minimum of convex functions, its gradient with respect to the moment variables can be computed using Danskin's theorem. Algorithm 5 describes the steps to optimize Problem (7) using Nesterov's acceleration method.
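A minimal sketch of an accelerated projected step follows, under simplifying assumptions that differ from Algorithm 5: the second-moment matrix $C$ is held fixed, only the feature-target moment $b$ is updated, and the inner problem is assumed to be $\min_\theta \theta^\top C \theta - 2 b^\top \theta + \lambda\|\theta\|^2$. By Danskin's theorem, the gradient of $g(b)$ is the partial gradient at the inner minimizer, $-2\theta^*(b)$; the update is a FISTA-style Nesterov step followed by projection onto the box, which for box constraints is element-wise clipping. All names are hypothetical.

```python
import numpy as np


def theta_star(C, b, lam):
    """Inner minimizer of theta^T C theta - 2 b^T theta + lam ||theta||^2 (assumed form)."""
    return np.linalg.solve(C + lam * np.eye(len(b)), b)


def grad_g_b(C, b, lam):
    """Danskin: gradient of g(b) = min_theta(...) is the partial gradient at theta*(b)."""
    return -2.0 * theta_star(C, b, lam)


def accelerated_box_ascent(C, lam, b_lo, b_hi, iters=500):
    """Maximize the concave g(b) over b_lo <= b <= b_hi with Nesterov (FISTA) momentum."""
    H = C + lam * np.eye(len(b_lo))
    step = np.linalg.eigvalsh(H).min() / 2.0      # 1 / L with L = 2 / lambda_min(H)
    b = (b_lo + b_hi) / 2.0                       # start at the box center
    z, t = b.copy(), 1.0
    for _ in range(iters):
        b_next = np.clip(z + step * grad_g_b(C, z, lam), b_lo, b_hi)  # ascent + projection
        t_next = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        z = b_next + ((t - 1.0) / t_next) * (b_next - b)              # momentum extrapolation
        b, t = b_next, t_next
    return b


C = np.diag([1.0, 2.0, 3.0])
b_lo = np.array([0.5, -2.0, -1.0])
b_hi = np.array([1.0, -1.0, 1.0])
b_opt = accelerated_box_ascent(C, lam=0.1, b_lo=b_lo, b_hi=b_hi)
```

In the diagonal example, $g(b) = -\sum_i b_i^2 / (C_{ii} + \lambda)$ is separable, so the maximizer is the point of the box closest to zero, here $(0.5, -1, 0)$, which makes the sketch easy to check.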

Algorithm 5: Applying Nesterov's Acceleration Method to Robust Linear Regression.

Theorem 7. Let () be the optimal solution of (6) and . Assume that for any given and , within the uncertainty sets described in (6), . Then, Algorithm 1 computes an -optimal solution of the objective function in iterations.

Proof. The proof is relegated to Appendix H.

D Solving the Dual Problem of the Robust Ridge Linear Regression via ADMM

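The Alternating Direction Method of Multipliers (ADMM) is a popular algorithm for efficiently solving linearly constrained optimization problems (Gabay & Mercier, 1976; Hong et al., 2016). In recent years, it has been extensively applied to large-scale optimization problems in machine learning and statistical inference (Assländer et al., 2018; Zhang et al., 2018). Consider the following optimization problem with two blocks of variables, $\mathbf{w}$ and $\mathbf{z}$, that are linearly coupled:

$$\min_{\mathbf{w}, \mathbf{z}} \; f(\mathbf{w}) + h(\mathbf{z}) \quad \text{s.t.} \quad \mathbf{A}\mathbf{w} + \mathbf{B}\mathbf{z} = \mathbf{e}. \tag{25}$$

The augmented Lagrangian of the above problem, with dual variable $\boldsymbol{\gamma}$ and penalty parameter $\rho > 0$, can be written as:

$$\mathcal{L}_{\rho}(\mathbf{w}, \mathbf{z}, \boldsymbol{\gamma}) = f(\mathbf{w}) + h(\mathbf{z}) + \boldsymbol{\gamma}^{\top}(\mathbf{A}\mathbf{w} + \mathbf{B}\mathbf{z} - \mathbf{e}) + \frac{\rho}{2}\|\mathbf{A}\mathbf{w} + \mathbf{B}\mathbf{z} - \mathbf{e}\|_2^2. \tag{26}$$

The ADMM scheme updates the primal and dual variables iteratively, as presented in Algorithm 6.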

Algorithm 6: General ADMM Algorithm.
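The two-block ADMM pattern alternates a minimization over the first block, a minimization over the second block, and a dual update on the coupling constraint. As an illustration only, the sketch below applies this pattern to the lasso, a standard textbook example unrelated to Problem (28): $\min \tfrac{1}{2}\|Ax - y\|^2 + \alpha\|z\|_1$ subject to $x - z = 0$. It uses the common scaled-dual form, where the dual variable is divided by the penalty parameter $\rho$; all names are hypothetical.

```python
import numpy as np


def soft_threshold(v, kappa):
    """Proximal operator of kappa * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - kappa, 0.0)


def admm_lasso(A, y, alpha, rho=1.0, iters=500):
    """min_{x,z} 0.5 ||A x - y||^2 + alpha ||z||_1  subject to  x - z = 0."""
    d = A.shape[1]
    x, z, u = np.zeros(d), np.zeros(d), np.zeros(d)   # u: scaled dual variable
    lhs = A.T @ A + rho * np.eye(d)                   # same matrix in every x-update
    Aty = A.T @ y
    for _ in range(iters):
        x = np.linalg.solve(lhs, Aty + rho * (z - u))  # step 1: minimize over the first block
        z = soft_threshold(x + u, alpha / rho)         # step 2: minimize over the second block
        u = u + x - z                                  # step 3: dual update on the coupling residual
    return x, z


# With A = I, the lasso solution is soft_threshold(y, alpha) = [2, 0, 0.5].
x, z = admm_lasso(np.eye(3), np.array([3.0, -0.5, 1.5]), alpha=1.0)
```

Both sub-problems here have cheap closed forms (a fixed linear solve and a soft-threshold), which is the property a good block split is meant to achieve.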

As we mentioned earlier, simultaneous projection onto the set of positive semi-definite matrices and the box constraints in Algorithm 1 and Algorithm 5 is computationally expensive. Moreover, careful step-size tuning is necessary in those algorithms to avoid inconsistency and guarantee convergence.

An alternative approach for solving Problem (6), which retains the positive semi-definite (PSD) constraint that is removed in the implementations of Algorithm 1 and Algorithm 5, is to solve the dual of the inner maximization problem. Since the objective of the maximization problem is concave in the moment variables and the relative interior of the feasible set is non-empty, the duality gap is zero. Hence, instead of solving the inner maximization problem, we can solve its dual, which is a minimization problem. Theorem 8 describes the dual of the inner maximization problem in (6). Thus, Problem (6) can be formulated as a minimization problem rather than a min-max problem, and this constrained minimization problem can be solved efficiently via ADMM. As we will show, ADMM applied to the dual problem requires neither step-size tuning nor simultaneous projections onto the box constraints and the PSD constraints.

Theorem 8. (Dual Problem) The inner maximization problem described in (6) can be equivalently formulated as:

Therefore, Problem (6) can be alternatively written as:

| (27) |

Proof. The proof is relegated to Appendix H.

To apply ADMM to the dual problem, we need to divide the optimization variables into two blocks, as in (25), such that both sub-problems in Algorithm 6 can be solved efficiently. To do so, we first introduce auxiliary variables into the dual problem.

Therefore, Problem (27) is equivalent to:

| (28) |

Since handling both constraints on in Problem (27) is difficult, we interchange with in the first constraint. Moreover, the non-negativity constraints on and are exchanged with non-negativity constraints on and . For the simplicity of presentation, assume that , , , , and . Algorithm 7 describes the ADMM algorithm applied to Problem (28).

Corollary 9. If the feasible set of Problem (6) has non-empty interior, then Algorithm 7 converges to an -optimal solution of Problem (28) in iterations.

Proof. Since the inner maximization problem in (6) is a convex optimization problem and its feasible set has a non-empty interior, the duality gap is zero by Slater's condition. Thus, according to Theorem 6.1 in He & Yuan (2015), Algorithm 7 converges to an optimal solution of the primal-dual problem at a linear rate. Moreover, the sequence of constraint residuals also converges to zero at a linear rate.

Remark 10. The optimal solution obtained from the ADMM algorithm can differ from the one given by Algorithm 1, because the latter removes the positive semi-definite constraint on the second-moment matrix. We investigate the difference between the solutions of the two algorithms in three cases. First, we generate a small positive semi-definite matrix and the matrix of confidence intervals as follows:

Moreover, let and are generated as follows:

Initializing both algorithms with a random matrix and a random vector within their respective confidence intervals, the ADMM algorithm returns a different solution from Algorithm 1. The difference in the test-phase performance of the two algorithms can also be observed in the experiments on synthetic datasets depicted in Figure 3, especially when the number of samples is small.

Algorithm 7: Applying ADMM to the Dual Reformulation of Robust Linear Regression.

Now, we show how to apply ADMM schema to Problem (28) to obtain Algorithm 7. As we discussed earlier, we consider two separate blocks of variables and . Assigning , and to the constraints of Problem (28) in order, we can write the corresponding augmented Lagrangian function as:

| (29) |

At each iteration of the ADMM algorithm, the parameters of one block are fixed, and the optimization problem is solved with respect to the parameters of the other block. For the simplicity of presentation, let , , , , and .

Two of the sub-problems are non-trivial because they contain positive semi-definite constraints. The first of them can be written as:

| (30) |

By completing the square and changing variables, we equivalently need to solve the following problem:

| (31) |

Thus, the solution is the projection of the matrix in (31) onto the set of PSD matrices, which can be computed by setting the negative eigenvalues in its eigenvalue decomposition to zero.
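A minimal NumPy sketch of this projection follows. Because the matrix being projected is symmetric but possibly indefinite, the sketch uses a symmetric eigendecomposition (`numpy.linalg.eigh`) rather than an SVD: singular values are the absolute values of the eigenvalues and would hide which directions are negative. The name `project_psd` is hypothetical.

```python
import numpy as np


def project_psd(S):
    """Nearest PSD matrix in Frobenius norm: clip the negative eigenvalues to zero."""
    S = (S + S.T) / 2.0                      # guard against round-off asymmetry
    eigvals, eigvecs = np.linalg.eigh(S)     # symmetric eigendecomposition
    return (eigvecs * np.maximum(eigvals, 0.0)) @ eigvecs.T


S = np.array([[1.0, 2.0], [2.0, 1.0]])       # eigenvalues 3 and -1
P = project_psd(S)
```

Here only the eigenvalue 3 survives, with eigenvector $(1, 1)/\sqrt{2}$, so the projection is $1.5$ times the all-ones matrix.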

The other non-trivial sub-problem in Algorithm 7 is the minimization in Line 10. By completing the square, it can be equivalently formulated as:

| (32) |

Consider the eigenvalue decomposition of the matrix G, whose diagonal factor contains the eigenvalues of G, and apply the corresponding change of variables. We then have:

With a further change of variables, Problem (32) can be reformulated as:

(33)

Note that the constraint of the above optimization problem is equivalent to the following:

Here, since the block matrix is symmetric, the Schur complement lemma implies that it is positive semi-definite if and only if its diagonal block is positive semi-definite and the Schur complement condition (the third inequality above) holds.
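The Schur complement argument can be checked numerically. The sketch below assumes the upper-left block `A` is positive definite (if `A` is only PSD, the standard statement also needs a range condition on `B`); the helper names are illustrative.

```python
import numpy as np


def is_psd(M, tol=1e-10):
    """Check positive semi-definiteness through the smallest eigenvalue."""
    return bool(np.linalg.eigvalsh(0.5 * (M + M.T)).min() >= -tol)


def block_psd_via_schur(A, B, C, tol=1e-10):
    """Test whether [[A, B], [B^T, C]] is PSD, assuming A is positive definite."""
    schur = C - B.T @ np.linalg.solve(A, B)  # Schur complement of A
    return is_psd(A, tol) and is_psd(schur, tol)


# [[2, 1], [1, 1]] is PSD (Schur complement 0.5); [[2, 1], [1, 0.4]] is not (-0.1).
ok = block_psd_via_schur(np.array([[2.0]]), np.array([[1.0]]), np.array([[1.0]]))
bad = block_psd_via_schur(np.array([[2.0]]), np.array([[1.0]]), np.array([[0.4]]))
```

Checking the smaller Schur complement instead of the full block matrix is what makes the reformulated constraint easier to handle.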

With one more change of variables, we can write Problem (33) as:

(34)

It can be shown that the optimal solution is an explicit function of the optimal Lagrange multiplier associated with the constraint of Problem (34). This multiplier can be obtained by a bisection procedure similar to Algorithm 2. Given the multiplier, the optimal solution of the original sub-problem is computed by solving a linear system.
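Since the exact form of Problem (34) is not reproduced here, the following is only an illustrative sketch of the same pattern (a solution expressed through the multiplier, bisection on the multiplier, then a linear solve) on an assumed problem: minimize ½xᵀGx − gᵀx subject to ‖x‖₂ ≤ r, with G positive definite. It is not Algorithm 2 itself, and the function name is hypothetical.

```python
import numpy as np


def ball_constrained_qp(G, g, radius, tol=1e-12, max_iter=200):
    """Solve min_x 0.5 x^T G x - g^T x  s.t. ||x||_2 <= radius, G positive definite.

    KKT conditions: (G + mu I) x = g, mu >= 0, mu * (||x|| - radius) = 0.
    ||x(mu)|| with x(mu) = (G + mu I)^{-1} g decreases in mu, so bisect on mu.
    """
    lam, U = np.linalg.eigh(G)
    c = U.T @ g  # coordinates of g in the eigenbasis of G

    def norm_x(mu):
        # ||x(mu)|| evaluated in the eigenbasis, without a new linear solve.
        return np.linalg.norm(c / (lam + mu))

    if norm_x(0.0) <= radius:  # constraint inactive
        mu = 0.0
    else:
        lo, hi = 0.0, np.linalg.norm(g) / radius  # guarantees ||x(hi)|| < radius
        for _ in range(max_iter):
            mid = 0.5 * (lo + hi)
            if norm_x(mid) > radius:
                lo = mid
            else:
                hi = mid
            if hi - lo <= tol * max(1.0, hi):
                break
        mu = hi  # upper end of the bracket keeps x feasible
    # Having mu, recover x by solving the linear system (G + mu I) x = g.
    x = np.linalg.solve(G + mu * np.eye(len(g)), g)
    return x, mu


G = np.diag([1.0, 4.0])
g = np.array([2.0, 4.0])
x, mu = ball_constrained_qp(G, g, radius=0.5)
```

The eigendecomposition is computed once, so each bisection step costs only a vector division; this is one reason to diagonalize G before searching for the multiplier.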

E Quadratic RIFLE: Using Kernels to Go Beyond Linearity

A natural extension of RIFLE to non-linear models is to transform the original data via kernels and then apply RIFLE to the transformed data. To this end, we applied polynomial kernels, which capture polynomial transformations of the features and their interactions. A drawback of this approach is that if the original data contain d features, the number of features in the transformed data grows rapidly with d and with the order of the polynomial kernel, which drastically increases the runtime of prediction and imputation. Thus, we only consider order 2, which leads to a dataset containing the interactions of different features of the original data. We call RIFLE applied to data transformed by the quadratic kernel Quadratic RIFLE (QRIFLE). Table 1 demonstrates the performance of QRIFLE alongside RIFLE and other state-of-the-art approaches. Moreover, we applied QRIFLE to a regression task where the relationship between the predictors and the target variable is quadratic (Figure 8). We observe that QRIFLE works better than RIFLE when the percentage of missing values is not high.
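To see how fast the feature count grows: a polynomial map of degree at most k on d features has C(d + k, k) monomials, including the constant term. The helper below (a hypothetical name) counts them; for the 81-feature Super Conductivity data, degree 2 already yields 3,402 non-constant features, and degree 3 yields 95,283.

```python
from math import comb


def n_polynomial_features(d: int, degree: int, include_bias: bool = False) -> int:
    """Number of monomials of total degree <= `degree` in d variables.

    Stars-and-bars gives C(d + degree, degree), which counts the constant
    monomial; subtract 1 to drop it.
    """
    total = comb(d + degree, degree)
    return total if include_bias else total - 1


print(n_polynomial_features(5, 2))   # 20  (d = 5, as in Appendix E.1)
print(n_polynomial_features(81, 2))  # 3402
```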

E.1 Performance of RIFLE and QRIFLE on Synthetic Non-linear Data

A natural question is how RIFLE performs when the underlying model is non-linear. To evaluate RIFLE and other methods, we have generated jointly normal data similar to the experiment in Figure 9. Here, we have 5000 data points, and the data dimension is d = 5. The target variable has the following quadratic relationship with the input features:

Figure 8: Performance of RIFLE, QRIFLE, MissForest, Amelia, KNN Imputer, MICE, and Expectation Maximization on quadratic artificial datasets with different percentages of missing values.

We evaluated the performance of KNN-Imputer (Troyanskaya et al., 2001), MICE (Buuren & Groothuis-Oudshoorn, 2010), Amelia (Honaker et al., 2011), MissForest (Stekhoven & Bühlmann, 2012), and Expectation Maximization (Dempster et al., 1977), alongside RIFLE and QRIFLE. QRIFLE applies RIFLE to the original data transformed by a polynomial kernel of degree 2. Although QRIFLE can learn quadratic models, the new features (interaction terms) contain a higher proportion of missing values than the original features, because an interaction term is observed only when both of its factors are observed. For instance, if 50% of the entries of each original feature are missing independently at random, 75% of the entries of the interaction terms will be missing. Moreover, the computational complexity increases, since QRIFLE works with on the order of d² features instead of d. Figure 8 demonstrates the performance of the aforementioned methods on the artificial data with 5000 samples containing different percentages of missing values. We generated 5 artificial datasets for each missing value percentage and ran each method 5 times on these datasets; Figure 8 reports the average performance of each method. For small percentages of missing values, QRIFLE performs better than the other approaches. However, as the percentage of missing values increases, the performance of QRIFLE drops, and RIFLE works much better than QRIFLE.
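The 75% figure can be reproduced by simulation. The sketch below assumes independent MCAR masks with p = 0.5 on d = 5 standard normal features and builds the degree-2 features by explicit products, so a NaN in either factor propagates to the product; it is an illustration, not the QRIFLE implementation.

```python
import numpy as np


def quadratic_expand(X):
    """Append all products x_i * x_j (i <= j) to X; NaN in either factor yields NaN."""
    d = X.shape[1]
    rows, cols = np.triu_indices(d)  # squares (i == j) and interactions (i < j)
    return np.hstack([X, X[:, rows] * X[:, cols]])


rng = np.random.default_rng(0)
n, d, p = 100_000, 5, 0.5
X = rng.standard_normal((n, d))
X[rng.random((n, d)) < p] = np.nan  # MCAR: each entry hidden with probability p

Q = quadratic_expand(X)  # shape (n, d + d(d+1)/2) = (n, 20)
a, b = np.triu_indices(d, k=1)  # interaction terms only (i < j)
frac_missing_original = np.isnan(X).mean()  # close to 0.50
frac_missing_interactions = np.isnan(X[:, a] * X[:, b]).mean()  # close to 0.75
```

Squared terms inherit the 50% missing rate of their single factor; only the cross terms reach 1 − 0.5² = 75%.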

F Robust Quadratic Discriminant Analysis (Presence of Missing Values in the Target Feature)

In Section 4 we formalized robust quadratic discriminant analysis assuming the target variable is fully available. In this appendix, we study Problem (12) when the target variable contains missing values.

If the target feature contains missing values, the proposed algorithm for solving the optimization problem (13) does not exploit the data points whose target feature is unavailable. However, such points can contain valuable statistical information about the underlying data distribution. Thus, we apply an Expectation Maximization (EM) procedure on the dataset as follows:

Consider a dataset in which some samples have an observed target variable and the remaining samples have missing labels. As in the previous case, we assume:

Thus, the probability of observing a data point can be written as:

The log-likelihood function can be written as follows:

We apply the EM procedure to jointly update the missing labels and the model parameters. Note that the posterior distribution of each missing label can be written as:

In the E-step, we update the missing labels by comparing the posterior probabilities of the two possible labels. Precisely, we assign label 1 to a sample if and only if:

In the M-step, we estimate the model parameters while keeping the labels fixed. Since all labels (both the observed ones and those estimated in the E-step) are assigned at this point, the parameters can be updated as follows:

(35)

(36)

(37)

(38)

(39)

(40)

We alternate between the E-step and the M-step to obtain the missing labels and the parameter estimates. Depending on the random initialization, we can obtain different estimates of the parameters. Having these estimates, we apply Algorithm 3 to solve the robust normal discriminant analysis problem formulated in (13).
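A minimal sketch of the hard-assignment EM loop described above, assuming two classes with Gaussian class-conditional distributions and class-specific covariances. The exact update equations (35) to (40) are not reproduced here, so the M-step below (class frequencies, sample means, sample covariances plus a small ridge `reg`) is an assumption, as are the function names.

```python
import numpy as np


def log_gaussian(X, mean, cov):
    """Row-wise log-density of N(mean, cov)."""
    d = X.shape[1]
    diff = X - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = np.einsum("ij,ij->i", diff, np.linalg.solve(cov, diff.T).T)
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + maha)


def hard_em_labels(X_lab, y_lab, X_unl, n_iter=100, reg=1e-6, seed=0):
    """Hard EM for two Gaussian classes; assumes y_lab contains both 0 and 1."""
    rng = np.random.default_rng(seed)
    y_unl = rng.integers(0, 2, size=len(X_unl))  # random initial labels
    X_all = np.vstack([X_lab, X_unl])
    d = X_all.shape[1]
    for _ in range(n_iter):
        y_all = np.concatenate([y_lab, y_unl])
        # M-step: prior, mean, covariance per class from observed + imputed labels.
        params = []
        for k in (0, 1):
            Xk = X_all[y_all == k]
            cov = np.cov(Xk, rowvar=False, bias=True) + reg * np.eye(d)
            params.append((len(Xk) / len(X_all), Xk.mean(axis=0), cov))
        # E-step: assign label 1 iff its unnormalized log-posterior is larger.
        log_post = [np.log(pi) + log_gaussian(X_unl, mu, cov) for pi, mu, cov in params]
        y_new = (log_post[1] > log_post[0]).astype(int)
        if np.array_equal(y_new, y_unl):  # labels stable: converged
            break
        y_unl = y_new
    return y_unl, params
```

Running it from several seeds gives the kind of spread in parameter estimates mentioned above.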

Algorithm 8: Expectation Maximization Procedure for Learning a Robust Normal Discriminant Analysis

G Generating Missing Values Patterns in Numerical Experiments

In this appendix, we define the MCAR, MAR, and MNAR patterns and discuss how to generate MCAR and MNAR patterns in a given dataset. Formally, the missing values in a dataset follow a missing completely at random (MCAR) pattern if the probability of an entry being missing is constant and independent of the other available and missing entries. A dataset follows a missing at random (MAR) pattern if the missingness of each entry depends only on the available data of other features. Finally, if the distribution of missing values follows neither an MCAR nor a MAR pattern, we call it missing not at random (MNAR).

To generate an MCAR pattern on a given dataset, we fix a constant probability 0 < p < 1 and make each data entry unavailable independently with probability p.
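The MCAR generator amounts to one independent Bernoulli(p) draw per entry. A NumPy sketch (`make_mcar` is an illustrative name) that stores missing entries as NaN:

```python
import numpy as np


def make_mcar(X, p, seed=None):
    """Hide each entry of X independently with probability p (MCAR)."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie in (0, 1)")
    rng = np.random.default_rng(seed)
    mask = rng.random(X.shape) < p  # True marks a missing entry
    X_missing = np.array(X, dtype=float)  # float copy, so NaN can be stored
    X_missing[mask] = np.nan
    return X_missing, mask


X = np.arange(20_000, dtype=float).reshape(1000, 20)
X_missing, mask = make_mcar(X, p=0.3, seed=0)
```

Because the mask ignores the values of `X` entirely, the missingness is independent of both observed and missing entries, which is exactly the MCAR condition.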

The generation of the MNAR pattern is based on the idea that if the value of an entry is farther from the mean of its corresponding feature, then the probability of missingness for that entry is larger. Algorithm 9 describes the procedure of generating MNAR missing values for a given column of a dataset:

Algorithm 9: Generating MNAR Pattern for a Given Column of a Dataset

Note that the above algorithm uses the cumulative distribution function (CDF) of a standard Gaussian random variable, and two of its parameters control the percentage of missing values in the given column: as they increase, more entries are likely to be missing. Since the availability of each data entry depends on its own value, the generated missing pattern is missing not at random (MNAR).
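Because the body of Algorithm 9 is not reproduced here, the following is a hypothetical instance of the idea rather than the exact procedure: an entry is hidden with probability Φ(alpha·|z| + beta), where z is the standardized value of the entry and Φ is the standard Gaussian CDF. For alpha ≥ 0, larger `alpha` or `beta` yields more missing values, mirroring the description above. The parameter and function names are ours, and the column is assumed to be non-constant.

```python
import numpy as np
from math import erf, sqrt

# Standard Gaussian CDF, vectorized over NumPy arrays.
std_normal_cdf = np.vectorize(lambda t: 0.5 * (1.0 + erf(t / sqrt(2.0))))


def make_mnar_column(x, alpha, beta, seed=None):
    """Hypothetical MNAR mask: values far from the column mean are hidden more often."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    z = np.abs(x - x.mean()) / x.std()  # distance from the mean in std units
    p_missing = std_normal_cdf(alpha * z + beta)  # grows with |z| when alpha >= 0
    mask = rng.random(x.shape) < p_missing  # True marks a missing entry
    x_missing = x.copy()
    x_missing[mask] = np.nan
    return x_missing, mask


x = np.random.default_rng(0).standard_normal(10_000)
x_missing, mask = make_mnar_column(x, alpha=1.0, beta=-1.0, seed=1)
```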

H Proof of Lemmas and Theorems

In this appendix, we prove all lemmas and theorems presented in the article. First, we prove the following lemma that is useful in several convergence proofs:

Lemma 11. Let . Assume that for any given and , . Then, the Lipschitz constant of the gradient of the function used in Problem (7) is equal to .

Proof. Since the problem is convex in and concave in and , we have:

Assume that . Define , as follows:

is convex in and and strongly concave with respect to . According to Lemma 1 in Barazandeh & Razaviyayn (2020), is Lipschitz continuous with the Lipschitz constant equal to:

where is the strong-concavity modulus of . Note that

Thus, . On the other hand,

Therefore, , which means . Note that is computed exactly in Algorithm 1 and Algorithm 5 at each iteration. Thus, during the optimization procedure, the norm of is bounded by the maximum norm of for any given and :

As a result, .

Proof of Theorem 1: Since the set of feasible solutions for the two variables is compact, and the function is concave with respect to both of them, the projected gradient ascent algorithm converges to a global maximizer at the rate given in (Bubeck, 2014, Theorem 3.3), where the constant in the iteration bound depends on the Lipschitz constant of the gradient of the function, which is given by Lemma 11.
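For illustration, here is a minimal sketch of projected gradient ascent with step size 1/L on a toy concave quadratic over a box, an assumed stand-in for the objective and feasible set of Problem (7). For a box, the Euclidean projection is entrywise clipping.

```python
import numpy as np


def projected_gradient_ascent(grad, project, x0, L, n_iter=500):
    """Maximize a smooth concave function: x <- P(x + grad(x) / L)."""
    x = project(np.asarray(x0, dtype=float))
    for _ in range(n_iter):
        x = project(x + grad(x) / L)
    return x


# Toy concave objective f(v) = -0.5 v^T Q v + q^T v on the box [0, 0.5]^2.
Q = np.array([[2.0, 0.5], [0.5, 1.0]])
q = np.array([1.0, 1.0])
L = np.linalg.eigvalsh(Q).max()  # Lipschitz constant of grad f
lo, hi = np.zeros(2), np.full(2, 0.5)
v = projected_gradient_ascent(
    grad=lambda v: q - Q @ v,
    project=lambda v: np.clip(v, lo, hi),  # Euclidean projection onto the box
    x0=np.zeros(2),
    L=L,
)
```

For this toy problem the KKT conditions give the maximizer (0.375, 0.5): the first coordinate is interior with zero partial derivative, and the second sits at its upper bound with a positive one.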

Proof of Theorem 7: Algorithm 5 applies the projected Nesterov acceleration method to the concave function. As proved in Nesterov (1983), the convergence rate of this method matches the lower bound for first-order methods on general smooth convex minimization (equivalently, concave maximization) problems. The Lipschitz constant appearing in the iteration complexity bound is computed by Lemma 11.
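A sketch of one common accelerated variant (FISTA-style momentum combined with projection), applied to the same kind of toy concave quadratic over a box. The momentum schedule t_{k+1} = (1 + √(1 + 4t_k²))/2 is the standard one for this variant and is an assumption about how Algorithm 5 is organized, not a transcription of it.

```python
import numpy as np


def projected_nesterov_ascent(grad, project, x0, L, n_iter=5000):
    """Accelerated projected gradient ascent (FISTA-style momentum) for concave f."""
    x_prev = project(np.asarray(x0, dtype=float))
    y, t = x_prev.copy(), 1.0
    for _ in range(n_iter):
        x = project(y + grad(y) / L)  # projected gradient step at the extrapolated point
        t_next = 0.5 * (1.0 + np.sqrt(1.0 + 4.0 * t * t))
        y = x + ((t - 1.0) / t_next) * (x - x_prev)  # momentum / extrapolation
        x_prev, t = x, t_next
    return x_prev


# Toy concave objective f(v) = -0.5 v^T Q v + q^T v on the box [0, 0.5]^2.
Q = np.array([[2.0, 0.5], [0.5, 1.0]])
q = np.array([1.0, 1.0])
L = np.linalg.eigvalsh(Q).max()
v = projected_nesterov_ascent(
    grad=lambda v: q - Q @ v,
    project=lambda v: np.clip(v, 0.0, 0.5),
    x0=np.zeros(2),
    L=L,
)
```

The only change from plain projected gradient ascent is the extrapolated point `y`, which is what improves the worst-case rate on the optimality gap from O(1/k) to O(1/k²).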

Proof of Theorem 8: First, note that if we multiply the objective function by −1, Problem (6) can be equivalently formulated as:

(41)

Assigning a Lagrange multiplier to each of the constraints, the Lagrangian function can be written as:

(42)

The dual problem is defined as:

(43)

The minimization of the Lagrangian takes the following form:

(44)

To avoid a value of −∞ for the above minimization problem, the coefficients of the linear terms in the unconstrained primal variables must be zero. Thus, the dual problem of (41) is formulated as:

(45)

Since the duality gap is zero, Problem (6) can be equivalently formulated as:

(46)

We can multiply the objective function by −1 and change the maximization to minimization, which gives the dual problem described in (27).

Proof of Theorem 2:

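(a) Consider the estimated confidence matrix obtained from the available samples. The first part of the theorem holds if this matrix converges to 0 as the number of samples goes to infinity (the same argument applies to the other estimated confidence interval). Assume that the bootstrap sample is drawn i.i.d. from the data points in which both features are available. Since the missing values follow an MCAR pattern, the joint distribution of the two features over these data points coincides with their population distribution, so each bootstrap estimate is unbiased for the corresponding entry of the ground-truth matrix. Moreover, since the samples are drawn independently, the variance of this estimate decreases in proportion to the inverse of the number of samples. Since the variance of the product of every two features is bounded, by the weak law of large numbers: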

Therefore, for any given pair of features, the bootstrap estimate converges in probability to the ground-truth value. This means that the length of the confidence interval converges in probability to 0, so the estimator is consistent by definition. The same argument proves the consistency of the estimator for any other pair of features.

(b) Fix two features. Consider i.i.d. pairs sampled via bootstrap from the entries where both features are available, and define the corresponding bootstrap estimate (for simplicity, we suppress its dependence on the two features in the notation). Given the initialization of the procedure, by Chebyshev's inequality we have:

Since the bootstrap samples are i.i.d., we have:

Combining the two inequalities above, we have:

Using a union bound argument over all pairs of features, with high probability the actual covariance matrix lies within the confidence intervals we have considered. In that case, we have:

which completes the proof.
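Part (a) states that, under MCAR, the bootstrap interval for a second-moment entry shrinks as the sample grows. The sketch below illustrates this by resampling the products x_i·x_j over the rows where both features are observed and reporting the bootstrap mean and standard deviation; using the standard deviation as the interval half-width is an assumption, and the function names are illustrative.

```python
import numpy as np


def bootstrap_second_moment(X, i, j, n_boot=200, seed=None):
    """Bootstrap mean and std of the estimate of E[x_i x_j] (pairwise deletion)."""
    rng = np.random.default_rng(seed)
    both = ~np.isnan(X[:, i]) & ~np.isnan(X[:, j])  # rows where both are observed
    prod = X[both, i] * X[both, j]
    if prod.size == 0:
        raise ValueError("features i and j are never observed together")
    idx = rng.integers(0, prod.size, size=(n_boot, prod.size))  # resample with replacement
    estimates = prod[idx].mean(axis=1)
    return estimates.mean(), estimates.std()


def simulate(n, p=0.3, rho=0.5, seed=0):
    """Correlated zero-mean Gaussian pair with MCAR missingness at rate p."""
    rng = np.random.default_rng(seed)
    cov = np.array([[1.0, rho], [rho, 1.0]])
    X = rng.multivariate_normal(np.zeros(2), cov, size=n)
    X[rng.random(X.shape) < p] = np.nan
    return X


est_small, sd_small = bootstrap_second_moment(simulate(500), 0, 1, seed=1)
est_large, sd_large = bootstrap_second_moment(simulate(20_000), 0, 1, seed=1)
```

With zero means, E[x_1 x_2] equals rho = 0.5, so the large-sample estimate should land close to 0.5 with a much smaller spread than the small-sample one.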

Proof of Theorem 3: Since the objective function is convex with respect to the matrix variable, and its feasible set is closed and bounded (hence compact), the problem is a convex maximization problem, and an optimal solution is attained at an extreme point of the feasible set. Therefore, each entry of the optimal solution takes either its lower or its upper bound, which gives the solution stated in the theorem.
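Assuming, for illustration, that the objective is linear in the matrix variable, i.e., θᵀCθ = Σᵢⱼ Cᵢⱼθᵢθⱼ, over the box C₀ − Δ ≤ C ≤ C₀ + Δ, the boundary argument gives an explicit rule: each entry goes to its upper bound when θᵢθⱼ > 0 and to its lower bound otherwise. The sketch below (hypothetical names) implements this rule and checks it against all vertices of a 2 × 2 box.

```python
import itertools

import numpy as np


def maximize_linear_over_box(theta, C0, Delta):
    """argmax_C theta^T C theta subject to C0 - Delta <= C <= C0 + Delta entrywise."""
    W = np.outer(theta, theta)  # theta^T C theta = sum_ij C_ij * W_ij
    # Entries with W_ij == 0 do not affect the objective; the lower bound is chosen.
    return np.where(W > 0, C0 + Delta, C0 - Delta)


theta = np.array([1.0, -2.0])
C0 = np.array([[1.0, 0.2], [0.2, 1.0]])
Delta = np.full((2, 2), 0.1)
C_star = maximize_linear_over_box(theta, C0, Delta)

# Brute-force check over all 2^4 vertices of the box.
best = max(
    theta @ (C0 + Delta * np.array(signs).reshape(2, 2)) @ theta
    for signs in itertools.product([-1.0, 1.0], repeat=4)
)
```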

I Dataset Descriptions

In this section, we introduce the datasets used in Section 5 to evaluate the performance of RIFLE on regression and classification tasks. Except for NHANES, none of the datasets contain missing values. For those datasets, we generate MCAR and MNAR missing values artificially (for MNAR patterns, we apply Algorithm 9 to the datasets).

Datasets for Evaluating RIFLE on Regression and Imputation Tasks

NHANES: The percentage of missing values varies across the features of the NHANES dataset. There are two sources of missing values in NHANES data: entries missing during data collection and entries missing as a result of merging different datasets in the NHANES collection. On average, approximately 20% of the data is missing.

Super Conductivity¹: The Super Conductivity dataset contains 21263 samples describing superconductors and their relevant features (81 attributes). All features are continuous, and the task is to predict the critical temperature based on the 81 given features. We used this dataset in the experiments summarized in Figure 10, Figure 11, and Table 2.

BlogFeedback²: The BlogFeedback data is a collection of 280 features extracted from HTML documents of blog posts. The task is to predict the number of comments in the upcoming 24 hours based on the features of more than 60K training data points. The test set is fixed and was provided separately from the training data in the original release. The dataset is used in the experiments described in Table 2.