Abstract

Entrustable professional activities (EPAs) are observable clinical skills and/or procedures that have been introduced into medical education at the student and resident levels in most specialties to determine readiness to advance into residency or independent practice, respectively. This publication describes the process and outcomes of a pilot study examining the feasibility of using two anatomic pathology and two clinical pathology EPAs in 6 pathology residency programs that volunteered for the study. Faculty development on EPAs and their assessment was provided to pilot program faculty, and EPA assessment tools were developed and used by the pilot programs. Pre- and post-study surveys were given to participating residents, faculty, and program directors to gauge baseline practices and to gather feedback on the EPA implementation experience. Results demonstrated overall good feasibility in implementing EPAs. Faculty acceptance of EPAs varied and was less than that of program directors. Residents reported a significant increase in the frequency with which faculty provided formative assessments that included specific examples of performance and specific ways to improve, as well as increased frequency with which faculty provided summative assessments that included specific ways to improve. EPAs offered the most benefit in setting clear expectations for performance of each task, in providing more specific feedback to residents, and in increasing program directors' understanding of resident strengths, abilities, and weaknesses.

Keywords: Assessment, Competency-based medical education, Entrustable professional activities, Pathology, Pilot program

Introduction

The ultimate goal of residency training is to graduate pathologists who are able to independently carry out the professional activities of their practice in order to best serve their patients and their specialty. The Accreditation Council for Graduate Medical Education (ACGME) and the American Board of Pathology have an interest in expanding competency-based medical education in residency training in order to ensure that newly practicing pathologists are competent in the discrete tasks of their practice. The ACGME and American Board of Medical Specialties have held joint symposia with the goal of accelerating the transition to competency-based medical education in graduate medical education.1 Entrustable professional activities (EPAs) can serve as a tool to reach those goals. EPAs describe a task that a trainee is expected to be able to complete without supervision once competency is reached.2 EPAs have been published in many specialties and for undergraduate medical education, many of which focus on procedural skills.3, 4, 5, 6, 7, 8, 9 Pathology has a unique set of tasks specific to the field, requiring tailored implementation for the use of EPAs in pathology residency training.

EPAs have been published for anatomic and clinical (AP and CP) pathology residency training10,11 and for two fellowship tracks.12,13 Four single-institution studies have examined incorporating EPAs into training.14, 15, 16, 17 However, widespread standardized use of EPAs is still lacking in AP and CP residency training. Incorporating assessment tools into practice is an iterative process involving complex educational systems and is difficult to standardize between institutions. As such, the National EPA Working Group, co-sponsored by the Association of Pathology Chairs (APC) and College of American Pathologists (CAP), designed a study for the implementation of EPAs in AP and CP residency training to evaluate feasibility, impact of EPAs on resident and faculty behaviors, and utility of EPAs for providing assessment data on resident performance.

We describe the first multi-institutional feasibility study of EPAs in pathology residency and detail the experience of 6 programs that implemented EPAs. This was a pilot study of four specific EPAs, two AP- and two CP-focused, with standardized assessment tools and EPA-specific faculty development across the institutions in the intervention group. Data was collected via pre- and post-implementation surveys to all residents, faculty, and program directors. Additional quarterly town-hall discussions with the implementation sites provided helpful insights into successes and barriers to implementing EPAs in pathology training. Results are presented and evaluated within the context of Kirkpatrick's four-level evaluation model.18

Materials and methods

Study design

The EPA pilot was originally designed as a non-random Intact-Group Design study, but due to attrition in the control group and the resultant small sample size for specific survey questions, data presented is limited to the intervention group in a time-series experimental design.18 Pathology residency training programs in the intervention group incorporated EPAs into pertinent rotations and added formative and summative evaluations of EPAs to the residents' assessment portfolios. Assessment data was required to be shared with the intervention programs’ Clinical Competency Committees (CCC). Residency programs in the control group made no changes to their pre-existing assessment methods. Surveys were analyzed from the start and end of the pilot. Participants were also surveyed at the mid-point of the study, but due to extremely low response rates, these results were not informative and therefore are excluded from this article. IRB review was obtained through the University of Vermont, with local institutional review obtained as required by each participating program.

Program recruitment

All pathology residency program directors (PDs) received an invitation to participate in the pilot in summer of 2019. The invitation was distributed through the Association of Pathology Chairs PD listserv, which reaches the majority of PDs and Associate PDs for US pathology residency programs. Interested PDs completed a brief survey to indicate whether they wanted to participate as an intervention site, a control site, or had no preference. Inclusion criteria for the intervention group included use of MedHub (Minneapolis, MN) or New Innovations (Uniontown, OH) as a program assessment platform, as EPA assessments would be built on these platforms for easy distribution. Sixteen programs volunteered (8 as intervention, 2 as control, 6 as either). Eleven programs were assigned to the intervention group and 5 were assigned to the control group.

The target pilot start date was July 2020; however, due to the COVID-19 pandemic, the pilot was delayed by 1 year and ran during the 2021-22 academic year. In the intervening year, several programs dropped out due to a change in program directorship (2), faculty staffing issues and/or lack of faculty buy-in (3), and inability to implement EPAs due to other ongoing projects in the residency program (2). At this point, only two programs remained in the control group. Targeted outreach to pathology residency programs recruited one additional program to the control group three months into the study period. One intervention program was excluded at the end of the study because no faculty completed the post-implementation survey.

Despite efforts to recruit adequate control programs, this group had half as many programs as the intervention group, with some control group surveys having fewer than 10 respondents in total across programs. Editorial feedback suggested that the control group was likely not of adequate size for a sufficiently powered analysis, so data and analysis are presented only for the intervention group (6 pathology residency programs) in a Time-Series Experimental Design model.18

EPA tool development

Four EPAs were selected for the pilot, including two EPAs specific to Anatomic Pathology (Performance of a medical autopsy and Frozen section preparation, review, and call-back) and two EPAs specific to Clinical Pathology (Evaluation and reporting of adverse transfusion events and Reporting of peripheral blood smear consultations). The EPA assessment tools were developed from previously published EPAs.10 The validation of the assessment tool and entrustment scale was performed during faculty development.19 Formative and summative assessments were developed for each EPA. The formative assessments included the knowledge and skills statements anchored in competency, blank fields for free text of one skill performed well and one skill needing improvement, and the entrustment scale using a modification of the O-Score and OCAT score.20,21 The summative assessments included entrustment ratings only, with a field for free text comments. The summative assessment entrustment ratings were mapped to ACGME Milestones 2.0.22

EPA implementation

Prior to the start of the 2021-22 academic year, several support materials were provided to intervention programs for implementation of EPAs. Background webinar presentations were made available on demand to explain the concept of EPAs and the goals of the pilot. A version oriented to residents and a version oriented to faculty were both made available to PDs at intervention sites. PDs were encouraged to identify “EPA Champions”—faculty members who frequently participated in a specific EPA task and were involved in resident education. PDs and EPA champions participated in “train-the-trainer” faculty development sessions. The training sessions utilized performance dimension training and frame of reference training to practice using the entrustment scale and to discuss reasons for ratings.19 PDs and/or EPA Champions then held similar training sessions at their home institutions and discussed how to incorporate EPAs into clinical workflow.

Because every program in the pilot has a different clinical service workflow, a fair amount of latitude was allowed in how EPAs were incorporated, but two main principles were followed. First, the formative assessment was incorporated into clinical workflow as a workplace-based assessment and completed at or near the time the clinical task was performed. Second, the summative assessment was completed by the EPA champion or PD at the end of a rotation or prior to the semi-annual CCC meetings and was to be based on review of all formative assessments completed during the rotation or in the prior 6 months, respectively. All formative and summative EPAs with associated Milestone recommendations were made available to the CCC ahead of each semi-annual meeting.

Quarterly town-hall meetings were held with PDs and EPA champions to discuss successes and challenges to EPA implementation. Each town hall was 1 h, with all PDs and EPA Champions from the intervention groups invited. Attendance was optional. Representatives from each program attended at least one town hall. All programs struggled with completing the formative EPAs in MedHub or New Innovations because of the cumbersome need to log in, find the correct assessment, and complete it. Therefore, paper copies of all formative assessments were provided for programs to print and complete in real time. Other themes from the town hall discussions are included with the qualitative comments.

Survey administration

Online surveys were administered using the REDCap electronic data capture tool hosted at the University of Vermont23,24 at the start (July 1–November 31, 2021) and end (June 1–July 13, 2022) of the pilot to gather information from PDs, faculty, and residents (in post-graduate years 1–4). The pre-implementation surveys gathered baseline data on assessment practices, formative and summative evaluation practices and satisfaction, resident understanding of expectations, and EPA implementation plans. The post-implementation surveys included key questions from the pre-surveys for comparative purposes, as well as questions to gather feedback on the EPA implementation experience.

At both the start and end of the pilot, PDs received links to access the online surveys via email from the principal investigator, which were then forwarded on to faculty and residents. Faculty and resident emails were not made available to the research team to ensure anonymity of responses. Multiple reminders were sent to encourage participation.

Survey participation was voluntary. A research information sheet was provided on the first page of each survey to facilitate informed consent. Respondents who did not consent exited the survey. Responses are identifiable only to the level of the institution and respondent group (PD, faculty, resident).

Survey responses were summarized using descriptive statistics. Comparison of results was completed using t-tests and chi-square tests, and statistical results are presented only if significant. Analyses were performed using IBM SPSS Statistics for Windows, version 23.0 (IBM Corp, Armonk, NY).
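The figure captions in this article state that post-survey means were compared to the pre-survey means via one-sample t-tests. As a rough illustration of that calculation, and not the authors' SPSS procedure, the following Python sketch computes the t statistic and degrees of freedom for one survey item. The function name `one_sample_t` and the ratings shown are hypothetical and are not study data.

```python
import math
import statistics


def one_sample_t(post_scores, pre_mean):
    """Return (t, df) for a one-sample t-test of post_scores against pre_mean.

    Hypothetical helper for illustration; not taken from the study's analysis.
    """
    n = len(post_scores)
    if n < 2:
        raise ValueError("need at least two post-survey responses")
    mean_post = statistics.mean(post_scores)
    sd_post = statistics.stdev(post_scores)  # sample SD (n - 1 denominator)
    if sd_post == 0:
        raise ValueError("all responses are identical; t is undefined")
    standard_error = sd_post / math.sqrt(n)
    t = (mean_post - pre_mean) / standard_error
    return t, n - 1


# Hypothetical Likert-type ratings for one item (NOT study data).
post = [4, 5, 3, 4, 4, 5, 3, 4]
pre_mean = 3.0  # hypothetical pre-survey mean used as the reference value

t, df = one_sample_t(post, pre_mean)
print(f"t({df}) = {t:.3f}")
```

The degrees of freedom equal the number of post-survey responses minus one, which matches the pattern in the reported statistics (for example, t(37) with Npost = 38). A P value would additionally require the t distribution, which a statistics package provides.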

Results

Participant demographics

The participating pathology programs, including number of residents and faculty, are listed in Table 1. The pre- and post-survey response rates are listed in Table 2. As was the expectation, all program directors responded. The rate of faculty participation was comparable between the pre- and post-surveys, whereas residents had notably lower response rates for the post-survey. Respondent demographics for both the pre-survey and post-survey are in Table 3.

Table 1.

Participating pathology programs.

| Pathology programs implementing EPAs | Number of residents | Number of faculty |
|---|---|---|
| Duke University | 24 | 31 |
| Houston Methodist Hospital | 20 | 45 |
| Montefiore Medical Center/Albert Einstein College of Medicine | 19 | 37 |
| University of Arizona/Banner University Medical Center Tucson | 16 | 10 |
| University of Vermont | 17 | 26 |
| Zucker School of Medicine at Hofstra/Northwell | 16 | 39 |

EPAs: Entrustable professional activities.

Table 2.

Survey response rates.

| | Program directors | Faculty | Residents |
|---|---|---|---|
| Number of survey recipients | 6 | 188 | 112 |
| Pre-survey response rate, % (n) | 100% (6) | 26% (48) | 53% (59) |
| Post-survey response rate, % (n) | 100% (6) | 27% (51) | 36% (40) |
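The percentages in Table 2 follow from the respondent counts divided by the number of survey recipients. The short Python sketch below reproduces that arithmetic from the counts in the table; the dictionary and function names are illustrative only.

```python
# Counts transcribed from Table 2.
recipients = {"Program directors": 6, "Faculty": 188, "Residents": 112}
pre_respondents = {"Program directors": 6, "Faculty": 48, "Residents": 59}
post_respondents = {"Program directors": 6, "Faculty": 51, "Residents": 40}


def response_rate(respondents, total):
    """Response rate as a whole-number percentage."""
    return round(100 * respondents / total)


# Map each group to its (pre-survey %, post-survey %) pair.
rates = {
    group: (
        response_rate(pre_respondents[group], total),
        response_rate(post_respondents[group], total),
    )
    for group, total in recipients.items()
}

for group, (pre_pct, post_pct) in rates.items():
    print(f"{group}: pre {pre_pct}%, post {post_pct}%")
```

The resident rate falls from 53% to 36%, while the faculty rate is essentially unchanged (26% and 27%).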

Table 3.

Respondent demographics.

| | Pre-survey | Post-survey |
|---|---|---|
| Program directors, years as PDa | n = 6 | n = 6 |
| 0–5 | 33% | 50% |
| 6–10 | 17% | 17% |
| 11–15 | 33% | 17% |
| 16–20 | 17% | 17% |
| > 20 | 0% | 0% |
| Program directors | n = 6 | n = 6 |
| Percentage who are members of CCC | 100% | 83% |
| Faculty, years as faculty | n = 45 | n = 48 |
| 0–5 | 22% | 29% |
| 6–10 | 16% | 13% |
| 11–15 | 18% | 8% |
| 16–20 | 13% | 13% |
| > 20 | 31% | 38% |
| Faculty | n = 44 | n = 40 |
| Percentage who are members of CCC | 25% | 33% |
| Residents, Post-graduate year | n = 59 | n = 39 |
| PGY-1 | 27% | 21% |
| PGY-2 | 24% | 36% |
| PGY-3 | 27% | 26% |
| PGY-4 | 20% | 15% |
| PGY-5 | 2% | 3% |
| Residents, training track | n = 59 | n = 39 |
| AP/CP | 95% | 92% |
| AP only | 5% | 5% |
| CP only | 0% | 3% |
| AP/NP | 0% | 0% |

The differences in pre- and post-survey years as PD are due to one PD retiring in the middle of the study (after 11–15 years as PD). PD = Program director; CCC = Clinical competency committee; PGY = Post-graduate year; AP = Anatomic Pathology; CP = Clinical Pathology; NP = Neuropathology.

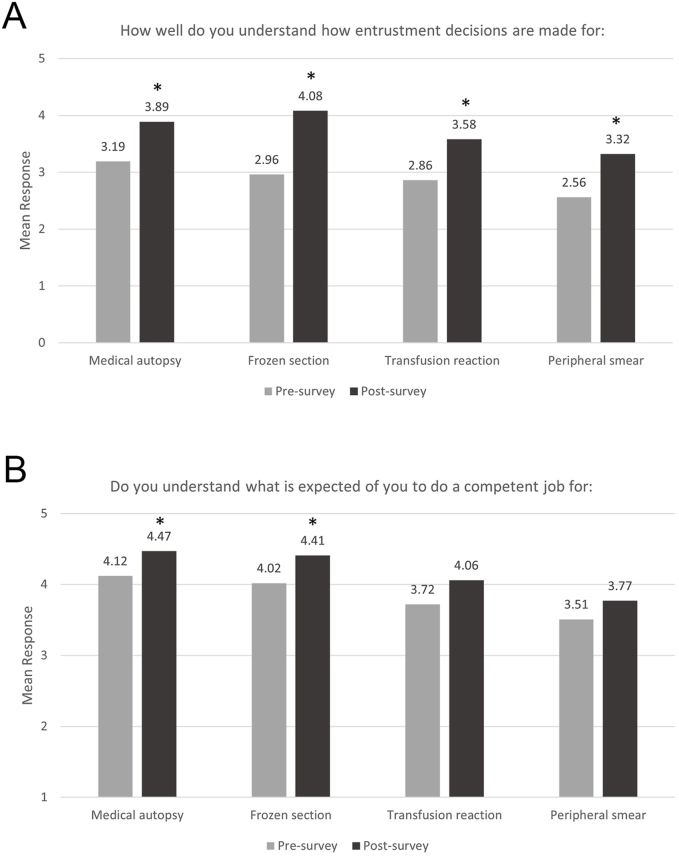

Understanding expectations

Residents were asked how well they understand how entrustment decisions are made in their respective programs (Fig. 1A) and whether they understand what faculty expect of them to do a complete and competent job for each task (Fig. 1B). Post-survey results were compared to the pre-survey results. Residents (Npre = 57 and Npost = 38) reported significantly better understanding of how entrustment decisions are made for all EPAs at the end of the pilot (medical autopsy: t(37) = 3.367, P = .002; frozen section: t(37) = 7.330, P < .001; transfusion reaction: t(37) = 3.337, P = .002 [Npre = 56 for this item, which differed from the other EPAs]; and peripheral smear: t(37) = 3.164, P = .003). Residents also reported significantly better understanding of what is expected of them to do a complete and competent job for the procedural EPAs (medical autopsy: Npre = 52 and Npost = 32; t(31) = 2.458, P = .02 and frozen section: Npre = 51 and Npost = 32; t(31) = 2.740, P = .01) and a non-significant increase in understanding for the transfusion reaction and peripheral smear EPAs (Fig. 1B).

Fig. 1.

Understanding expectations for each EPA task. (A) 6-point scale ranging from 0 (not at all) to 5 (extremely well). (B) 5-point scale ranging from 1 (do not understand) to 5 (completely understand). ∗P < .05; post-survey means were compared to the pre-survey via one-sample t-tests.

Program directors and faculty were asked whether the knowledge and skill statements in the EPA helped standardize expectations and evaluation efforts of faculty, and whether they helped residents understand what is expected of them (in comparison to the program's traditional teaching and evaluation methods). All program directors (N = 6) and 64% of faculty (N = 27 of 42) agreed or strongly agreed that EPAs helped standardize expectations and evaluation efforts of faculty. However, faculty were significantly less likely to agree with this statement (M = 3.64 on a 5-point scale) than were program directors (M = 4.33, t(41) = -4.648, P < .001). Similarly, all program directors (N = 6) and 64% of faculty (N = 27 of 42) agreed or strongly agreed that the EPAs helped residents understand what is expected of them. Faculty agreement with this statement (M = 3.64) is again significantly lower than program director agreement (M = 4.33, t(41) = -4.916, P < .001).

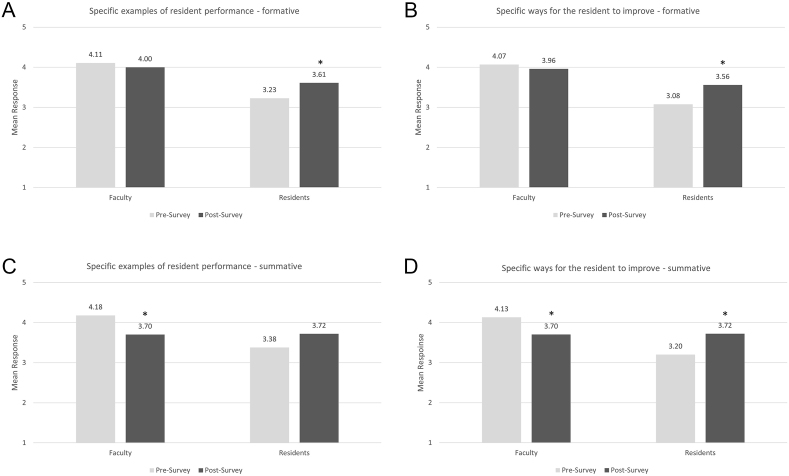

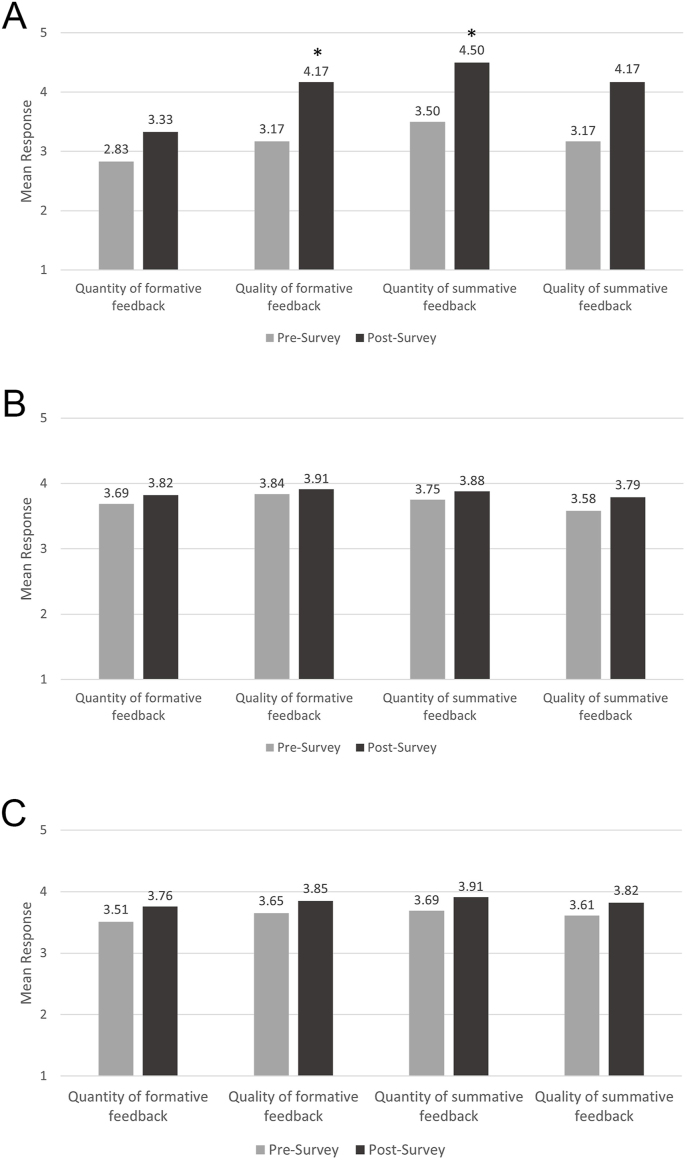

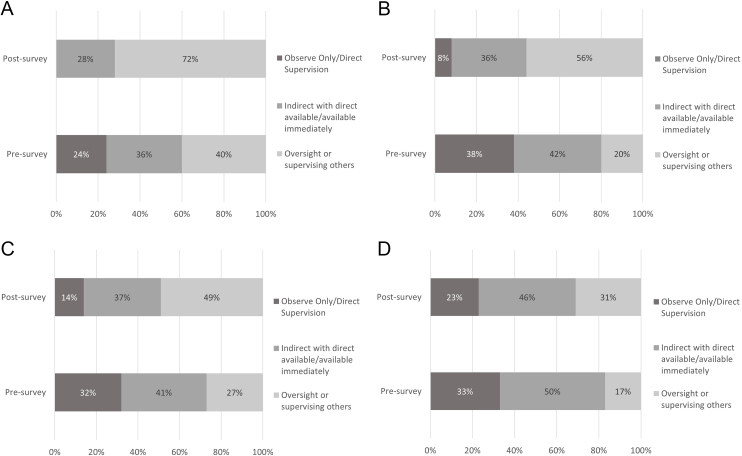

Frequency of specifics in feedback

Residents (Npre = 53 and Npost = 36) reported a significant increase in the frequency with which faculty provided formative assessments that included specific examples of performance (t(35) = 2.179, P = .036) and specific ways to improve (t(35) = 2.640, P = .012), as well as increased frequency with which faculty provided summative assessments that included specific ways to improve (t(35) = 2.820, P = .008) (Fig. 2A, B, and 2D). In contrast, faculty (Npre = 45 and Npost = 47) reported no significant change in the frequency of providing specific feedback in formative assessments (Fig. 2A and B), and a significant decrease in frequency of providing specific examples (t(46) = -2.873, P = .006) and specific ways to improve (t(46) = -2.490, P = .016) in summative feedback (Fig. 2C and D). With the implementation of EPAs, residents appear to be hearing more specific feedback, even if faculty report that they are not providing more specific feedback. Free text comments from residents describe the EPA feedback as being more “actionable,” “structured,” and “specific,” and that feedback provided “a better sense of current skill level” and “reinforced” current behaviors.

Fig. 2.

Frequency of providing specific formative (A and B) and summative (C and D) feedback. 5-point scale ranging from 1 (never do this) to 5 (always do this). ∗P < .05; post-survey means were compared to the pre-survey via one-sample t-tests.

Program directors and faculty were specifically asked whether EPAs helped faculty give residents more focused feedback. All program directors (N = 6) and 64% of faculty (N = 27 of 42) agreed or strongly agreed that EPAs helped faculty give more focused feedback. Faculty agreement with this statement (M = 3.74) is significantly lower than program director agreement (M = 4.67, t(41) = -6.105, P < .001). Of note, 93% (25 of 27) of faculty who agreed or strongly agreed that EPAs helped faculty give more focused feedback also agreed or strongly agreed that EPAs helped standardize expectations and evaluation efforts of faculty.

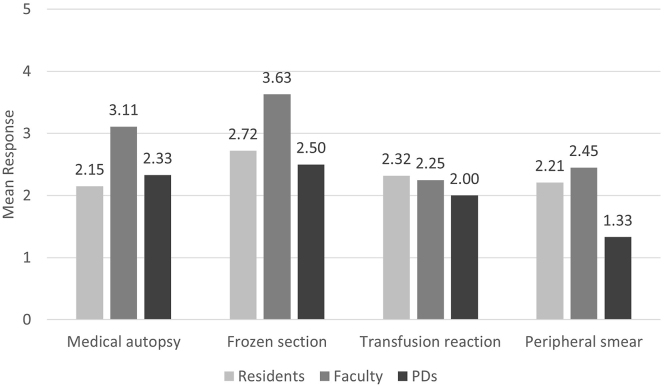

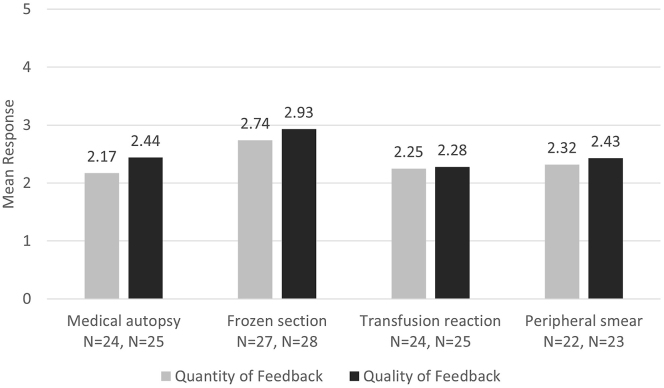

Impact on behaviors

Respondents rated the impact of EPAs on certain behaviors. Faculty (N = 18) self-reported that the EPA formative assessment tools for the medical autopsy and frozen sections had the highest impact on how they teach and provide feedback, although neither mean rating was particularly high (Fig. 3). Residents (N = 26) reported EPAs had less impact on their learning in comparison to the faculty responses. Program directors (N = 6) also reported EPAs having less impact on faculty teaching, especially for peripheral smear review. Notably, the procedural EPAs (medical autopsy and frozen section) had slightly higher mean impact ratings than the clinical interpretative EPAs (transfusion reaction and peripheral smear).

Fig. 3.

Impact of EPA formative assessment tools on learning/teaching and feedback. Residents reported impact on their learning. Faculty reported impact on their teaching and feedback. Program directors reported impact on faculty teaching and feedback overall. 6-point scale ranging from 0 (no impact) to 5 (significant impact).

Free text comments from faculty and residents most frequently praised the framework that EPAs created for providing feedback and how EPAs standardized a process. One program director noted that faculty felt more comfortable discussing deficiencies. Some faculty and residents noted that the feedback was not different from a conversation between faculty and resident, but the EPA did serve to document the interaction.

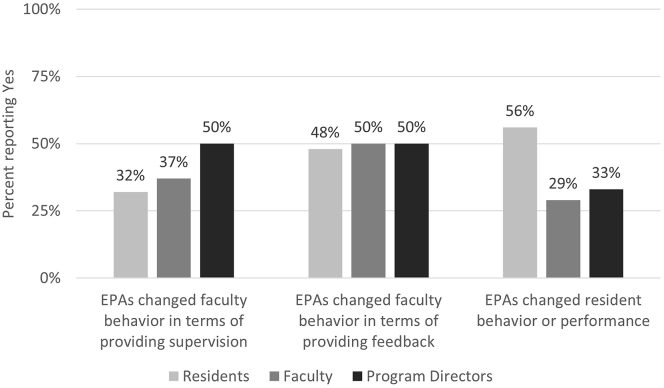

Respondents were asked whether the use of EPAs changed faculty behavior in providing supervision and feedback and whether EPAs changed resident behavior/performance (Fig. 4). Over half of the residents (N = 15 of 27) reported that EPAs changed their behavior or performance, almost double that noted by faculty (N = 11 of 38) and program directors (N = 2 of 6). Half of each group (residents N = 13 of 27, faculty N = 19 of 38, and program directors N = 3 of 6) agreed that faculty behavior changed in terms of providing feedback (responses include at least one resident/faculty from each program). About one-third of residents (N = 9 of 28) and faculty (N = 14 of 38) and half of program directors (N = 3 of 6) noted changes in providing supervision.

Fig. 4.

Impact of EPAs on faculty and resident behavior.

Residents self-reported the current level of supervision they need to perform each EPA task (Supplemental Figure 1). For medical autopsy, frozen section and transfusion reaction EPAs, residents reported requiring less supervision at the end of the pilot. There was no notable change for the peripheral smear EPA. Examples of ways residents reported changing their behavior included improving quality of reports (autopsy and peripheral smear consults), reinforcing good behaviors/skills/practices, and remaining more focused. Program directors and faculty were less sure if resident behaviors changed, citing a need for more time and experience with EPAs to detect behavior changes. Some specific changes noted by faculty included residents actively seeking feedback and EPAs providing a reminder of expectations when residents returned to a service they had been away from for some time.

Some free text comments suggested increased supervision and/or observation by faculty. A few comments noted faculty paid closer attention to the task, knowing they would have to fill out an assessment afterwards. More commonly, faculty behavior changed in terms of providing feedback, with half of the free text comments from faculty and residents citing that faculty's feedback included more specifics than before. Some faculty also noted that they were clearer on exactly what they should be evaluating the resident on, and one resident noted the EPA forced feedback to be more thorough. One faculty and one resident noted feedback was timelier.

Satisfaction with feedback

Program directors (N = 6) were significantly more satisfied with the quality of formative feedback (t(5) = 3.243, P = .023) and the quantity of summative feedback (t(5) = 4.472, P = .007) at the end of the pilot (Fig. 5A).

Fig. 5.

Satisfaction with the Quantity and Quality of Formative and Summative Feedback. Responses from Program Directors (A), Faculty (B), and Residents (C). 5-point scale ranging from 1 (Extremely Dissatisfied) to 5 (Extremely Satisfied). ∗P < .05; post-survey means were compared to the pre-survey via one-sample t-tests.

Faculty satisfaction with the quality and quantity of formative and summative feedback was overall slightly higher than for PDs but did not show any significant change between the pre- and post-surveys (Fig. 5B). Faculty were also asked how comfortable they felt giving feedback to a resident who needed to improve their performance. Results showed a slight but not statistically significant shift from 67% (pre-survey; N = 30 of 45) to 75% (post-survey; N = 32 of 43) reporting feeling comfortable or very comfortable giving feedback to a resident needing to improve.

Residents did not show a significant change in overall satisfaction with the quality and quantity of feedback between pre- and post-surveys (Fig. 5C). However, residents trended towards higher satisfaction with the quality and quantity of formative and summative feedback. Residents were also asked to rate the impact that each EPA formative assessment had on the quantity and quality of feedback they received (Fig. 6). Responses were very similar for each EPA, with the Frozen Section EPA having a slightly higher impact (non-significant) than the other EPAs. For each EPA, the mean rating was slightly higher for quality of feedback compared to quantity of feedback.

Fig. 6.

Resident assessment of the impact of EPA formative assessments on feedback. 6-point scale ranging from 0 (no impact) to 5 (significant impact).

It is not surprising that program directors had the most significant increase in satisfaction with feedback when compared to faculty and residents, as program directors review all assessment data, whereas faculty and residents may be reflecting on a narrower range of assessments. Among all survey respondents who selected dissatisfied or extremely dissatisfied with the quantity of formative and summative feedback, reasons included a continued lack of enough data points, faculty not completing evaluations, a desire for increased face-to-face or one-on-one feedback, and lack of time due to clinical volume. Dissatisfaction with quality of feedback focused on evaluations being “vague,” lacking free-text comments, or lacking specifics overall.

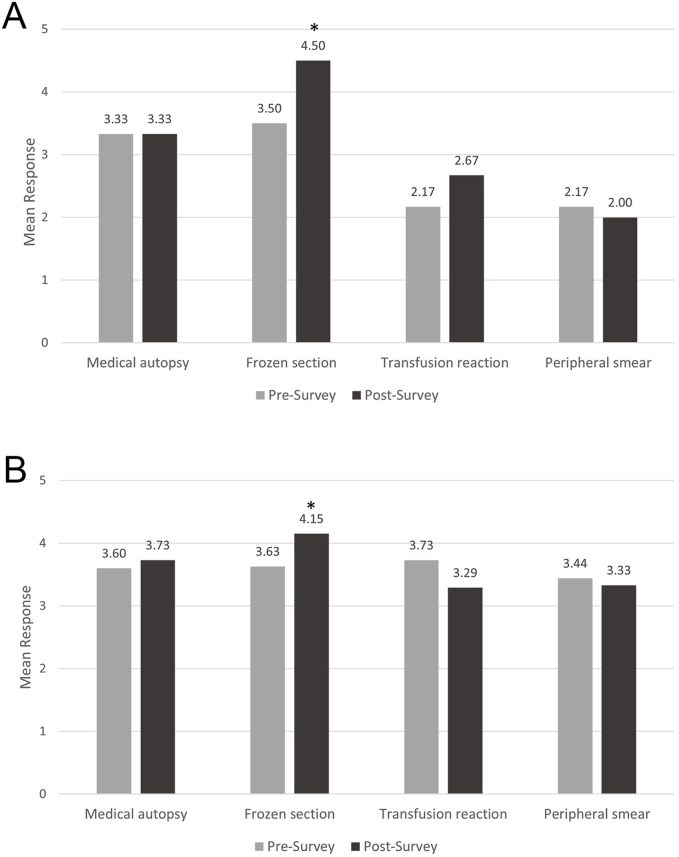

Understanding of abilities, strengths, and weaknesses

Both program directors (N = 6; t(5) = 4.472, P = .007) and faculty (Npre = 27 and Npost = 33; t(32) = 3.189, P = .003) reported a significantly better understanding of individual residents' abilities, strengths, and weaknesses at performing frozen sections in the post-survey compared to the pre-survey (Fig. 7A and B). Other EPAs showed no significant difference between pre- and post-surveys. When asked for which EPAs faculty supervise and evaluate residents, the frozen section EPA was most frequently selected (65% of faculty), more than double any other EPA (31% for medical autopsy, 16% for transfusion reaction, and 12% for peripheral smear review). A minimum number of assessments may be necessary to detect an impact on understanding of residents' abilities, strengths, and weaknesses. When asked if EPAs made it easier to assess residents’ abilities, knowledge, and skills, 67% of program directors (N = 4 of 6) and 50% of faculty (N = 21 of 42) agreed or strongly agreed with this statement. Faculty agreement with this statement (M = 3.48) is again significantly lower than program director agreement (M = 4.17; t(41) = -4.642, P < .001).

Fig. 7.

Understanding of resident abilities, strengths, and weaknesses. Responses from program directors (A) and faculty (B). 6-point scale ranging from 0 (not at all) to 5 (extremely well). ∗P < .05; post-survey means were compared to the pre-survey via one-sample t-tests.

Clinical competency committee

When asked to describe the top two challenges in assigning Milestone levels to residents, most responses cited a lack of data for assigning Milestones, with lack of faculty filling out evaluations as a common culprit. When asked specifically about the impact of EPAs on assigning Milestones, responses noted the data was useful, but two PDs said it did not have a big impact either way at this point. One faculty stated they might be used as a tiebreaker before assigning Milestone level.

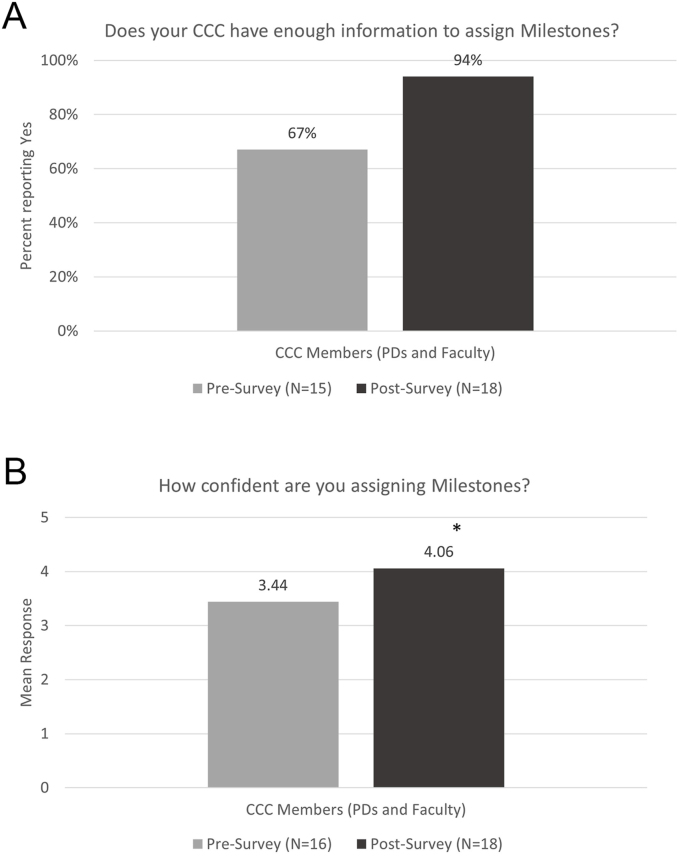

A significantly higher percentage of CCC members reported having enough information to assign Milestone levels at the end of the pilot compared to the beginning of the pilot (χ²(1) = 4.244, P = .039; Fig. 8A). CCC members also reported a significantly higher level of confidence in assigning Milestone levels at the end of the pilot compared to the beginning of the pilot (t(32) = -2.408, P = .024; Fig. 8B). Both groups of responses suggest the addition of EPAs to the assessment portfolio had a positive impact in assigning Milestones. However, when specifically asked to rate the extent to which EPA summative assessments helped the CCC to assign Milestone levels, responses were quite varied, with almost one-third of CCC members (28%, or 5 of 18) stating EPAs did not help at all. Thirty-nine percent (7 of 18) indicated they helped very much or for the most part.

Fig. 8.

Impact of EPAs on the clinical competency committee. (A) Yes/No. (B) 6-point scale ranging from 0 (no confidence) to 5 (extremely confident). ∗P < .05; one-way chi-square tests (A) and independent samples t-tests (B) were used to compare post-survey results to the pre-survey.

Continued use of EPAs

Four of the 6 program directors (67%) stated they would continue to use the EPA assessment tool after the conclusion of the pilot, with the remaining programs unsure. Three-quarters of all CCC members (N = 33 of 44) reported being likely or very likely to support further adoption of EPAs in the residency program. Program directors (N = 5) were overall more favorable than other CCC members were, with faculty (N = 39) having a significantly lower mean when responding to the likelihood of supporting further adoption of EPAs (M = 2.87 compared to 3.67 on a 4-point scale; t(38) = -5.770, P < .001). Half of the program directors (N = 3 of 6) stated they would implement other EPAs into their program, with the remaining programs unsure. Those who responded “unsure” cited lack of faculty buy-in or faculty “pushing back” about an additional evaluation. One PD noted a small group of faculty members found EPAs very helpful and plan to continue to use them. One PD who said they were “unsure” about adding other EPAs cited lack of faculty buy-in for current EPAs and needing to get the department used to the current four EPAs before adding any more. The use and support of EPAs by CCC members requires further investigation.

Barriers to implementation

The most frequently cited barriers to implementing EPAs included lack of time to complete the EPA assessments, issues with faculty buy-in, forgetting to fill out the assessments, challenges with incorporating the assessments into workflow, and understaffed sections of the laboratory. The institutional online assessment platforms were listed as significant challenges, as they were not user-friendly or easy to incorporate into workflow. All sites resorted to filling out EPA assessments on paper. While it was easier to complete the assessments this way, it created an additional burden of data collection and analysis. A key takeaway is the need to identify an easy-to-use, app-based platform that could be incorporated into workflow to fill out assessments. This was the most common recommendation to facilitate implementation of EPAs.

Other frequent recommendations were to simplify the EPA tool to one or two scales, make EPAs required (either require residents to ask for them or have the American Board of Pathology require them), include Pathologists’ Assistants or other mid-level providers as evaluators, and train faculty. One response noted how helpful the faculty training was in setting group-wide expectations for how to assess residents. The suggestion to add faculty training may stem from incomplete faculty development at some programs, highlighting how essential faculty development is in the implementation of EPAs. A last suggestion commented on EPAs being a cultural shift that may just take time, with new classes of residents being introduced to EPAs at the start of training.

Discussion

This is the first multi-institutional study of EPAs in pathology residency training programs. The study was able to demonstrate that the implementation of EPAs is feasible in anatomic and clinical pathology residency training programs. It also demonstrated how EPAs can benefit pathology residents, faculty, and program directors and provided insights on limitations of utility of EPAs and barriers to EPA implementation, which are quite similar to those described in other studies.25 Qualitative comments and periodic discussions throughout the study also garnered rich insights into successes and barriers to implementation.

The approach in this study, namely piloting the implementation of a small number of EPAs with a limited number of interested programs, is the same approach that was taken by general surgery in evaluating EPAs for primary surgery training.26 In the surgery study, data for feasibility essentially included numbers of assessments collected as proof of concept for achieving adequate numbers of assessments for resident evaluation. While we did not collect numbers of assessments in our study, we were able to demonstrate that sufficient evaluations were collected to impact program directors’ understanding of resident performance, and in many cases, to provide useful information to inform CCC Milestones evaluations. This was achieved despite using mainly paper-based EPA evaluations.

When considering Kirkpatrick's four-level evaluation model, this study mainly addressed levels one through three, with level four to be addressed in future studies (Table 4). Residents' reactions to the program [EPA Pilot] demonstrated the desirable outcome of more specific feedback in formative assessment, demonstrating the benefit of assessment for learning. Residents also had a better understanding of what competent performance looks like, reinforcing the benefits of behavioral descriptions in developing a shared mental model of a physician activity. In terms of learning that could be attributed to the program [EPA Pilot], in this case EPA implementation, program directors, faculty members, and CCC members noted that EPAs made it easier to assess residents' abilities and/or that they had sufficient data for resident assessment after implementation of EPAs. Finally, residents self-reported positive changes in their work performance with implementation and reported requiring less supervision for EPAs at the end of the study period, but it is uncertain whether this is due to the use of EPAs. Limitations of both Time-Series Experimental Design studies and the Kirkpatrick evaluation model include intervening variables that may also impact learning in addition to the study intervention.18 In the current study, these may include the passage of time (namely, one additional year of training in pathology), changes in faculty complement, changes in residency program curriculum or rotation experiences, changes in the learning environment, or other changes in the residency program not evaluated by this study. However, most survey questions were worded to specifically ask for the impact of EPA assessments, potentially mitigating the impact of intervening variables on participant responses.

Table 4.

Kirkpatrick's four-level evaluation model (adapted from Frye and Hemmer18).

| Level | Study application | Evidence of positive outcomes |
|---|---|---|
| 1 | “Learner satisfaction or reaction to the program [EPA Pilot]” | |
| 2 | “Measures of learning attributed to the program [EPA Pilot] (e.g. knowledge gained, skills improved, attitudes changed)” | |
| 3 | “Changes in learner behavior in the context for which they are being trained” | |
| 4 | “Program's final results in larger context” | |

The National Pilot of EPAs in pathology residency demonstrated that EPAs offered the most benefits in setting clear expectations for performance of each task and in providing more specific feedback to residents. The increased frequency of specific feedback is an exciting trend to see. Often in medical education, there is a discrepancy in the amount of feedback faculty report providing (more) versus the amount of feedback learners report receiving (less).27 Our results suggest the discrepancy is in the opposite direction: faculty report giving less feedback while residents report hearing more. The addition of EPAs likely contributed to residents hearing more frequent specifics in formative and summative assessments, and further exploration of this trend is warranted. Few studies in the literature have examined the impact of EPAs on the quantity or quality of associated feedback, instead focusing on numbers of evaluations completed or summarizing entrustment rating data by post-graduate year level.26,28 In a study of medical students, desired qualities of EPA feedback included addressing both domain-specific qualities and more general competencies such as communication, giving feedback focused on the entrustment level, providing timely feedback, and providing feedback on multiple occasions.29 Aspects of both the pathology formative evaluation design and the required pilot implementation may have helped address these needs. By delineating both the knowledge and skills of the EPA in addition to an entrustment scale, the tool gave faculty a framework for feedback on both domain-specific and general competency-related skills. Also, the study design required multiple formative assessments per unit of time, rotation, etc., encouraging repeated assessment of the same skills.

Faculty acceptance of EPAs was lower overall, with notable issues with faculty buy-in on a new assessment method and with the additional time taken to provide the specific feedback. This is likely one of the larger potential barriers to EPA implementation and is not unique to pathology.25,30 This may be mitigated by the ability to retire preexisting assessment forms once EPAs are well integrated into the residency workflow; during the pilot, faculty likely faced the double burden of filling out existing evaluation forms and the new EPA evaluation forms. A subset of faculty agreed with specific survey questions about EPAs standardizing expectations and evaluation efforts, as well as EPAs providing more focused feedback, suggesting that a subset of faculty see EPAs in a favorable light. Some faculty did provide free-text comments on benefits of EPA evaluations, specifically citing a better framework to assess residents and more direct observation of resident performance.

Program directors noticed a benefit in the overall assessment of residents, and CCC members showed increased confidence in assigning Milestones, with more members reporting having sufficient data. Overall, PDs and CCC members reported the highest acceptance of EPAs. This is likely due in part to the PDs' and CCC members' broad view of an individual resident's progress and the progress of residents as a whole, which may not be appreciated by individual faculty or individual residents. The improvement in ability to assess residents based on EPA assessment may encourage other PDs at other programs to consider updating the assessment portfolio of their residents to include EPAs.

One key finding, which represents a significant barrier pending resolution, is the need for an easily accessible and easy-to-use electronic platform for completing EPAs within the daily workflow. The institutional assessment platforms proved too cumbersome to integrate into workflow, so all programs resorted to using paper EPA assessments. While this supported immediate feedback to residents, collecting those paper assessments for global assessment by the CCC is impractical and not sustainable long term. It also hinders the ability of programs to do more sophisticated analysis or tracking of performance over time in training such as evaluation of learning curves.

Several subspecialties have tried to develop quick and easy electronic assessment platforms;31,32 however, cost remains an issue. The ACGME offers a free tool called Direct Observation of Clinical Care (DOCC);33 however, this tool lacks specificity and applicability to pathology. All of these platforms lack some of the specificity available in the EPA tools utilized in this study. Although there may be a need to streamline some of the longer EPAs, maintaining key coachable knowledge and skills statements is desirable in future iterations of this work.

The multi-institutional nature of the study required a highly adaptable framework that could fit into multiple clinical settings and workflows. The adaptability of EPAs allowed integration into a variety of workflows in both anatomic and clinical pathology settings. Frequent discussion with implementation groups allowed identification of core elements of EPAs and sharing of tips and tricks. Several common barriers were identified, most commonly around lack of faculty buy-in as mentioned above, short staffing in specific areas, or other major initiatives ongoing at the institution.

Acceptance of EPA assessments was low, which is primarily attributed to EPAs not being required and to the newness and unfamiliarity of the assessment tool. Because EPA assessments were filled out on paper, programs were not able to report the total number of EPA evaluations completed on each task; therefore, we do not have an objective measure of the volume of assessments used in each program. As such, we were also not able to tie observed improvements in quantity or quality of feedback to actual assessment volume. A likely way to increase participation would be to replace existing assessments with EPAs, decreasing the additional burden on faculty.

While some survey data did not show statistical significance, the pilot was designed to be a limited trial, focusing on four EPAs during one year of training (in a 4-year residency program). The results of the pilot offer a limited amount of data, but enough to support the continued study of EPAs in pathology residency training. Other limitations included low survey response rates for faculty and residents as compared to PDs. The numbers of PDs and CCC members were low by the nature of representing a subset of faculty, limiting statistical analysis. Many of these limitations are inherent to educational research in pathology, where program sizes, and therefore sample sizes, are small. Low participation is also a limitation of survey-based research where participation is not compulsory.

Bias may have been introduced at the time of study recruitment. PDs who chose to participate in the study were already motivated to introduce a new system of assessment and may not represent programs with less interest in adopting EPAs. Also, programs remaining in the study potentially demonstrated more stability and support for educational innovation within the department, as the majority of programs that dropped out did so because of a change in program leadership or insufficient faculty availability or buy-in.

Further work needs to be done as programs gain experience with EPAs. EPAs were mapped to Milestones to support CCC discussions. It is possible that, with continued use, EPAs may be able to replace many rotation evaluations, thus decreasing rather than adding to faculty evaluation burden. Also, replacing less intuitive summative evaluations with a more intuitive EPA-based evaluation may improve faculty completion rates for resident evaluations. This is an important area of study, as time constraints on faculty are a true concern. EPAs should not be just “one more thing,” but a conscious replacement of current assessment strategies that do not include formative assessment and assessment for learning. Continued work is needed to demonstrate the value of EPAs in global assessment and to ensure residents are being assessed according to the Milestones in all necessary aspects of their training. Also, further investigation into the use of EPAs in procedure-based skills versus clinical reasoning skills is needed.

There is a strong national push to incorporate competency-based assessments into residency training, with the American Board of Surgery even requiring EPA assessments for interns starting in July 2023.34,35 EPAs are one type of competency-based assessment, and this article shows the feasibility of EPA assessment in pathology residency training. Survey feedback suggests that EPA assessments support resident education by providing more specific feedback and helping to set expectations between faculty and residents for performance. PD and CCC survey feedback suggests that EPAs support the CCC process by providing more data for assigning Milestones. EPAs also provide program directors with a better understanding of resident competence. Definite hurdles remain, namely faculty support and development of an electronic platform that provides convenient access and the ability to analyze data. Continued use of EPAs over time will likely increase faculty and resident comfort with and acceptance of EPAs. The Pathology EPA Working Group continues to support the expanded use of EPAs in a way that makes sense for pathology residency, furthering the national goals of competency-based medical education.

Funding

This research is supported by the Association of Pathology Chairs through staff time and the cost of publication, and by the College of American Pathologists through staff time. No internal or external grant funding was used in this study.

Declaration of competing interest

The authors declare the following financial interests/personal relationships that may be considered as potential competing interests: Bronwyn H Bryant reports administrative support was provided by Association of Pathology Chairs. Bronwyn H Bryant reports statistical analysis was provided by College of American Pathologists. If there are other authors, they declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this article.

Acknowledgments

The authors would like to acknowledge Cheryl Hanau, MD, Kate Hatlak, EdD, Karen Kaul, MD, PhD, Priscilla Markwood, CAE, Madeleine Markwood, Douglas Miller, MD, PhD, Gary Procop, MD, and Cindy Riyad, PhD, for their support of this work. Dr. Bryant gratefully acknowledges the guidance of The University of Vermont Larner College of Medicine Teaching Academy.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.acpath.2024.100110.


References

- 1. Holmboe E. CBME and Certification: Essential Partners for Improving Health and Health Care. American Board of Medical Specialties; October 10, 2023. https://www.abms.org/newsroom/cbme-and-certification-essential-partners-for-improving-health-and-health-care/

- 2. ten Cate O. Nuts and bolts of entrustable professional activities. J Grad Med Educ. 2013;5(1):157–158. doi: 10.4300/JGME-D-12-00380.1.

- 3. Brasel K.J., Klingensmith M.E., Englander R., et al. Entrustable professional activities in general surgery: development and implementation. J Surg Educ. 2019;76(5):1174–1186. doi: 10.1016/j.jsurg.2019.04.003.

- 4. Thoma B., Hall A.K., Clark K., et al. Evaluation of a national competency-based assessment system in emergency medicine: a CanDREAM study. J Grad Med Educ. 2020;12(4):425–434. doi: 10.4300/JGME-D-19-00803.1.

- 5. Stahl C.C., Jung S.A., Rosser A.A., et al. Entrustable professional activities in general surgery: trends in resident self-assessment. J Surg Educ. 2020;77(6):1562–1567. doi: 10.1016/j.jsurg.2020.05.005.

- 6. Schnobrich D.J., Mathews B.K., Trappey B.E., Muthyala B.K., Olson A.P.J. Entrusting internal medicine residents to use point of care ultrasound: towards improved assessment and supervision. Med Teach. 2018;40(11):1130–1135. doi: 10.1080/0142159X.2018.1457210.

- 7. Hauer K.E., Soni K., Cornett P., et al. Developing entrustable professional activities as the basis for assessment of competence in an internal medicine residency: a feasibility study. J Gen Intern Med. 2013;28(8):1110–1114. doi: 10.1007/s11606-013-2372-x.

- 8. Hart D., Franzen D., Beeson M., et al. Integration of entrustable professional activities with the milestones for emergency medicine residents. West J Emerg Med. 2018;20(1):35–42. doi: 10.5811/westjem.2018.11.38912.

- 9. Moeller J.J., Warren J.B., Crowe R.M., et al. Developing an entrustment process: insights from the AAMC CoreEPA pilot. Med Sci Educ. 2020;30(1):395–401. doi: 10.1007/s40670-020-00918-z.

- 10. McCloskey C.B., Domen R.E., Conran R.M., et al. Entrustable professional activities for pathology: recommendations from the College of American Pathologists graduate medical education committee. Acad Pathol. 2017;4. doi: 10.1177/2374289517714283.

- 11. Powell D.E., Wallschlaeger A. Making sense of the milestones: entrustable professional activities for pathology. Hum Pathol. 2017;62:8–12. doi: 10.1016/j.humpath.2016.12.027.

- 12. Pagano M.B., Treml A., Stephens L.D., et al. Entrustable professional activities for apheresis medicine education. Transfusion. 2020;60(10):2432–2440. doi: 10.1111/trf.15983.

- 13. White K., Qualtieri J., Courville E.L., et al. Entrustable professional activities in hematopathology pathology fellowship training: consensus design and proposal. Acad Pathol. 2021;8. doi: 10.1177/2374289521990823.

- 14. Bryant B.H. Feasibility of an entrustable professional activity for pathology resident frozen section training. Acad Pathol. 2021;8. doi: 10.1177/23742895211041757.

- 15. Cotta C.V., Ondrejka S.L., Nakashima M.O., Theil K.S. Pathology residents as testing personnel in the hematology laboratory. Arch Pathol Lab Med. 2022;146:10. doi: 10.5858/arpa.2020-0630-OA.

- 16. Ju J.Y., Wehrli G. The effect of entrustable professional activities on pathology resident confidence in blood banking/transfusion medicine. Transfusion. 2020;60(5):912–917. doi: 10.1111/trf.15679.

- 17. Kemp W.L., Koponen M., Sens M.A. Forensic autopsy experience and core entrustable professional activities: a structured introduction to autopsy pathology for preclinical student. Acad Pathol. 2019;6. doi: 10.1177/2374289519831930.

- 18. Frye A.W., Hemmer P.A. Program evaluation models and related theories: AMEE guide no. 67. Med Teach. 2012;34(5):e288–e299. doi: 10.3109/0142159X.2012.668637.

- 19. Bryant B.H., Anderson S.R., Brissette M., et al. Leveraging faculty development to support validation of entrustable professional activities assessment tools in anatomic and clinical pathology training. Acad Pathol. 2024. doi: 10.1016/j.acpath.2024.100111.

- 20. Gofton W.T., Dudek N.L., Wood T.J., Balaa F., Hamstra S.J. The Ottawa Surgical competency operating room evaluation (O-SCORE): a tool to assess surgical competence. Acad Med. 2012;87(10):1401–1407. doi: 10.1097/ACM.0b013e3182677805.

- 21. Rekman J., Hamstra S.J., Dudek N., Wood T., Seabrook C., Gofton W. A new instrument for assessing resident competence in surgical clinic: the Ottawa clinic assessment tool. J Surg Educ. 2016;73(4):575–582. doi: 10.1016/j.jsurg.2016.02.003.

- 22. Accreditation Council for Graduate Medical Education. Pathology Milestones. Second revision. Published February 2019. https://www.acgme.org/globalassets/pdfs/milestones/pathologymilestones.pdf

- 23. Harris P.A., Taylor R., Thielke R., Payne J., Gonzalez N., Conde J.G. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inf. 2009;42(2):377–381. doi: 10.1016/j.jbi.2008.08.010.

- 24. Harris P.A., Taylor R., Minor B.L., et al. The REDCap consortium: building an international community of software platform partners. J Biomed Inf. 2019;95. doi: 10.1016/j.jbi.2019.103208.

- 25. Schultz K., Griffiths J. Implementing competency-based medical education in a postgraduate family medicine residency training program: a stepwise approach, facilitating factors, and processes or steps that would have been helpful. Acad Med. 2016;91(5):685–689. doi: 10.1097/ACM.0000000000001066.

- 26. Lindeman B., Brasel K., Minter R.M., Buyske J., Grambau M., Sarosi G. A phased approach: the general surgery experience adopting entrustable professional activities in the United States. Acad Med. 2021;96(7S):S9–S13. doi: 10.1097/ACM.0000000000004107.

- 27. Van De Ridder J.M.M., Stokking K.M., McGaghie W.C., Ten Cate O.T.J. What is feedback in clinical education? Med Educ. 2008;42(2):189–197. doi: 10.1111/j.1365-2923.2007.02973.x.

- 28. Brasel K.J., Lindeman B., Jones A., et al. Implementation of entrustable professional activities in general surgery: results of a national pilot study. Ann Surg. 2023;278(4):578–586. doi: 10.1097/SLA.0000000000005991.

- 29. Duijn C.C.M.A., Welink L.S., Mandoki M., Ten Cate O.T.J., Kremer W.D.J., Bok H.G.J. Am I ready for it? Students' perceptions of meaningful feedback on entrustable professional activities. Perspect Med Educ. 2017;6(4):256–264. doi: 10.1007/s40037-017-0361-1.

- 30. Emke A.R., Park Y.S., Srinivasan S., Tekian A. Workplace-based assessments using pediatric critical care entrustable professional activities. J Grad Med Educ. 2019;11(4):430–438. doi: 10.4300/JGME-D-18-01006.1.

- 31. Bohnen J.D., George B.C., Williams R.G., et al. The feasibility of real-time intraoperative performance assessment with SIMPL (system for improving and measuring procedural learning): early experience from a multi-institutional trial. J Surg Educ. 2016;73(6):e118–e130. doi: 10.1016/j.jsurg.2016.08.010.

- 32. Cooney C.M., Redett R.J., Dorafshar A.H., Zarrabi B., Lifchez S.D. Integrating the NAS Milestones and handheld technology to improve residency training and assessment. J Surg Educ. 2014;71(1):39–42. doi: 10.1016/j.jsurg.2013.09.019.

- 33. Accreditation Council for Graduate Medical Education. Direct Observation of Clinical Care (DOCC). https://docc.acgme.org

- 34. American Board of Medical Specialties. ABMS Member Boards Pave the Way for CBME. Published October 10, 2023. https://www.abms.org/newsroom/abms-member-boards-pave-the-way-for-cbme/

- 35. American Board of Surgery. Entrustable Professional Activities. https://www.absurgery.org/default.jsp?epahome
