Summary

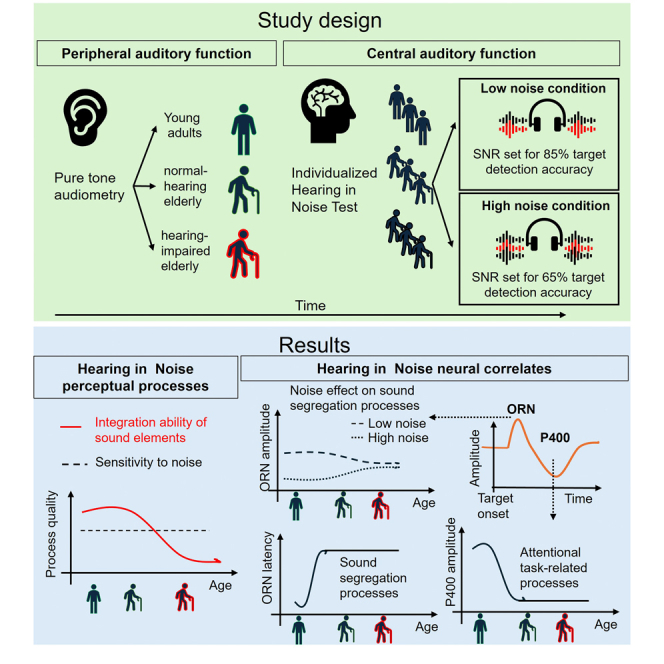

The study investigates age-related decline in listening abilities, particularly in noisy environments, where the challenge lies in extracting meaningful information from variable sensory input (figure-ground segregation). The research focuses on peripheral and central factors contributing to this decline using a tone-cloud-based figure detection task. Results based on behavioral measures and event-related brain potentials (ERPs) indicate that, despite delayed perceptual processes and some deterioration in attention and executive functions with aging, the ability to detect sound sources in noise remains relatively intact. However, even mild hearing impairment significantly hampers the segregation of individual sound sources within a complex auditory scene. The severity of the hearing deficit correlates with an increased susceptibility to masking noise. The study underscores the impact of hearing impairment on auditory scene analysis and highlights the need for personalized interventions based on individual abilities.

Subject areas: Neuroscience, Sensory neuroscience

Graphical abstract

Highlights

• The central and peripheral factors in age-related listening deficits were examined

• Behavioral and EEG data were analyzed from a figure-ground segregation task

• Aging alone does not markedly impact sound source detection in complex scenes

• Mild hearing impairment significantly reduces sound source detection ability


Introduction

In everyday situations, detecting auditory objects in noise (figure-ground segregation), such as understanding speech in a crowded restaurant, is essential for adaptive behavior. Difficulty in listening to speech under adverse listening conditions results in compromised communication and socialization, which are commonly reported phenomena in the aging population,1,2,3 affecting ca. 40% of the population over 50, and almost 71% over 70 years of age.4,5 Problems in figure-ground segregation are attributed to peripheral, central auditory, and/or cognitive factors.5,6 However, the relative contributions and interactions among these factors have yet to be studied in depth. Here, we jointly investigated the effects of peripheral (hearing loss) and central auditory processes on the deterioration of figure-ground segregation in aging by combining personalized psychoacoustics and electrophysiology.

Major changes in the peripheral auditory system likely contribute to age-related hearing loss. For instance, outer and inner hair cells within the basal end of the cochlea degrade at an older age, resulting in high-frequency hearing loss.5,7 The pure-tone audiometric threshold is a proxy of changes in cochlear function and structure,5 and it is well established that due to elevated hearing thresholds elderly people have difficulty hearing soft sounds.8,9 Age-related damage to the synapses connecting the cochlea to auditory nerve fibers (cochlear synaptopathy) results in higher thresholds with fibers having low spontaneous rates,10,11 which is assumed to contribute to comprehension difficulties when speech is masked by background sounds.12 However, older adults with similar pure-tone thresholds can differ in their ability to understand degraded speech, even after the effects of age are controlled for.13

Deficits in central auditory functions (decoding and comprehending the auditory message14,15) may also contribute to the difficulties elderly people experience in speech-in-noise situations. Specifically, these central functions may partly compromise concurrent sound segregation6,16,17 and lead to diminished auditory regularity representations and reduced inhibition of irrelevant information at higher levels of the auditory system.16,18,19

Navigating noisy scenes also depends on selective attention (independently of the modality of stimulation), which is known to be impaired in aging (for reviews see Friedman and Grady20,21). Specifically, increased distraction by irrelevant sounds1,2,3 suggests deficits in inhibiting irrelevant information, which is especially prominent in informational masking.17

Figure-ground segregation relies on grouping sound elements belonging to one sound source and segregating them from the rest of the competing sounds.22,23,24,25 Figure-ground segregation has been recently studied with the help of tone clouds, a series of short chords composed of several pure tones with random frequencies. The figure within the cloud consists of a set of tones progressing together in time, while the rest of the tones randomly vary from chord to chord (background24,25,26,27). When the frequency range of the figure and the background tone set spectrally overlap, the figure is only distinguishable by parsing the coherently behaving tones across frequency (concurrent grouping) and time (sequential grouping). With component tones of equal amplitude (which is typical in these studies), the ratio of the number of figure tones (figure coherence) and background tones (noise) determines the figure-detection signal-to-noise ratio (SNR). Figure detection performance within these tone clouds was found to predict performance in detecting speech in noise,12,28 making this well-controlled stimulus attractive as a model for studying real-life figure detection.
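As a rough illustration of how this tone-count SNR behaves, the following minimal Python sketch computes the ratio of figure tones to background tones. The function name and the example tone counts are hypothetical and are not taken from the study's own analysis code.

```python
def figure_snr(n_figure_tones: int, n_background_tones: int) -> float:
    """Figure-detection SNR for equal-amplitude tones:
    number of figure tones divided by number of background tones."""
    if n_figure_tones < 0:
        raise ValueError("number of figure tones must be non-negative")
    if n_background_tones <= 0:
        raise ValueError("number of background tones must be positive")
    return n_figure_tones / n_background_tones


# Hypothetical example: a 20-tone chord in which 10 tones belong to the figure.
low_noise_snr = figure_snr(10, 10)
# Adding 10 extra background tones to the same figure halves the SNR.
high_noise_snr = figure_snr(10, 20)
print(low_noise_snr, high_noise_snr)
```

With equal-amplitude tones, the SNR can thus be lowered either by removing figure tones or by adding background tones, which are the two manipulations this kind of stimulus allows.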

Figure-related neural responses commence as early as after two temporally coherent chords (ca. 150 ms from the onset of the figure24,27,29). Figure detection accuracy scales with figure coherence and duration (the number of consecutive figure tone-sets presented). This suggests that both spectral and temporal integration processes are involved in figure detection.25 Event-related brain potential (ERP) signatures of figure-ground segregation are characterized by the early (200–300 ms from stimulus onset) frontocentral object-related negativity response (ORN); a later (450–600 ms), parietally centered component (P400) is elicited when listeners are instructed to detect the figure.25 The former indexes the outcome of the process separating the figure from the background (ORN is elicited when separating concurrent sound streams;30,31), while the latter likely reflects a process leading to the perceptual decision, such as matching to a memorized pattern.25,30,31

While a mechanism based on tonotopic neural adaptation could explain the extraction of a spectrally coherent figure, it is more likely that this kind of figure detection is based on a more general process, such as the analysis of temporal coherence between neurons encoding various sound features.25,26,32 This is because spectrally constant and variable figures can be detected equally efficiently24,25,26 and the segregation process is robust against interruptions.27

Some results suggest that figure-ground segregation is primarily pre-attentive.26,27 However, there is also evidence that figure-ground segregation can be modulated by attention25 and cross-modal cognitive load.33

The current study aimed to test the causes of impaired listening in noise in aging. To separate the effects of age and age-related hearing loss, three groups of listeners (young adults, normal-hearing elderly, and hearing-impaired elderly) were tested. The group of hearing-impaired elderly was selected based on an elevated pure-tone audiometric threshold, thus assuring deterioration of peripheral function.5 The groups’ differences in peripheral gain and cognitive load (e.g., effects of the inter-individual variation in working memory capacity) were reduced by keeping task performance approximately equal across all listeners using individualized stimuli. Compared to psychoacoustic measures, electrophysiological measures have the advantage that they can provide supporting and additional information about the central processing stages.

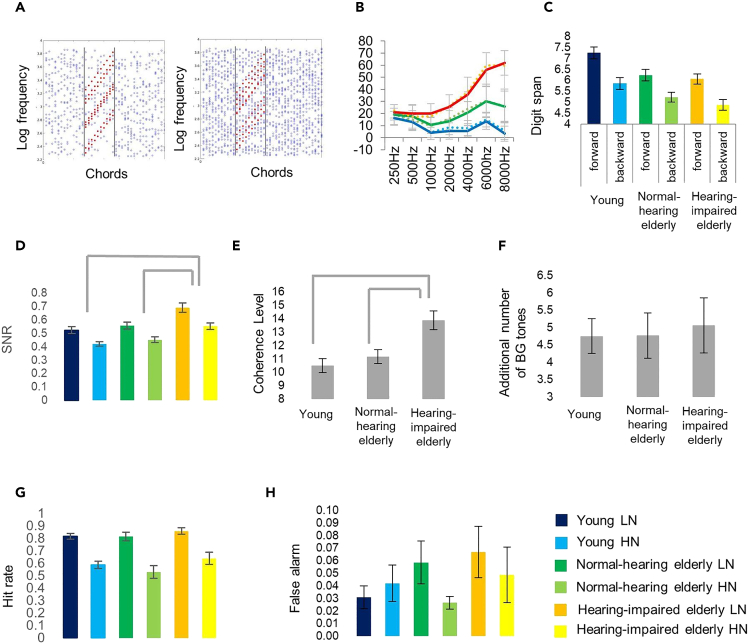

Participants were presented with stimuli concatenating 40 chords of 50 ms duration (Figure 1A). Half of the stimuli included a figure (a set of tones rising together in time embedded in a cloud of randomly selected tones; figure trials), while the other half consisted only of chords made up of randomly selected frequencies (no-figure trials; stimuli were adapted from previous studies by O’Sullivan et al., Toth et al., and Teki et al.24,25,26). Listeners performed the figure detection task under low noise (LN) and high noise (HN) conditions, which differed only in the number of concurrent randomly varying (background) tones. Stimulus individualization was achieved through two adaptive threshold detection procedures conducted before the main figure detection task. First, LN stimuli were adjusted for each participant by manipulating the number of tones belonging to the figure (figure coherence) so that participants performed figure detection at 85% accuracy. Second, HN stimuli were adjusted for each participant by increasing the number of background tones in the LN stimuli until the participant performed at 65% accuracy.
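To make the stimulus structure concrete, here is a minimal Python sketch that builds the frequency layout of one tone cloud (not the audio itself). Only the 40 chords of 50 ms come from the description above. The size of the frequency pool, the figure onset and length, the one-step-per-chord rise, and keeping the tone count constant outside the figure are all assumptions made for illustration, and the function name is hypothetical.

```python
import random

N_CHORDS = 40   # chords per stimulus (from the text)
CHORD_MS = 50   # chord duration in ms (from the text)


def make_tone_cloud(n_figure=10, n_background=10, figure_onset=15,
                    figure_len=12, pool_size=120, seed=None):
    """Return a list of chords; each chord is a sorted list of indices
    into an (assumed) ordered pool of candidate tone frequencies."""
    rng = random.Random(seed)
    # Assumption: figure tones start at distinct pool positions and all
    # rise by one pool step per chord, so they move together in time.
    starts = rng.sample(range(pool_size - figure_len), n_figure)
    chords = []
    for c in range(N_CHORDS):
        in_figure = n_figure > 0 and figure_onset <= c < figure_onset + figure_len
        figure = {s + (c - figure_onset) for s in starts} if in_figure else set()
        # Background tones are redrawn at random for every chord.
        free = [i for i in range(pool_size) if i not in figure]
        # Assumption: chords outside the figure keep the same total tone count.
        n_random = n_background if in_figure else n_background + n_figure
        chords.append(sorted(figure | set(rng.sample(free, n_random))))
    return chords


figure_trial = make_tone_cloud(n_figure=10, n_background=10, seed=1)
no_figure_trial = make_tone_cloud(n_figure=0, n_background=20, seed=1)
print(len(figure_trial) * CHORD_MS, "ms per stimulus")
```

In this sketch, a high-noise version of a stimulus would simply use a larger `n_background` with the same `n_figure`, mirroring the description that the LN and HN conditions differ only in the number of background tones.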

Figure 1.

Stimuli and behavioral results

(A) Examples of figure stimuli for the low noise (LN) and high noise (HN) conditions, respectively.

(B) Mean pure-tone audiometry thresholds for the young adult (blue line; N = 20), normal-hearing elderly (green line; N = 13), and hearing-impaired elderly groups (red line; N = 16) in the 250–8000 Hz range. Error bars depict SEM on all graphs.

(C) Forward and backward digit span performance (corresponding to working memory capacity and control) for the three groups.

(D) Mean SNR values derived from the threshold detection tasks (across LN and HN) of the stimulus individualization procedure.

(E) Mean figure coherence level of the LN stimuli, derived from the first threshold detection task (and employed later in the FG segregation task), for the three groups; significant group differences (p < 0.01) are marked by gray lines above the bar charts.

(F) Mean increase in the number of background tones from the LN to the HN condition for the three groups.

(G and H) Behavioral performance (hit rate and false alarm rate, respectively) in the FG segregation task, separately for LN and HN; color labels are at the lower right corner of the figure.

If integrating sound elements into a single object is more difficult for elderly than for young listeners, then elderly listeners will require more figure tones than young listeners to reach similar figure detection performance, as higher coherence helps figure integration.24,25,26 If elderly adults are more susceptible to masking, then their performance will be affected by fewer additional background tones than that of young adults when individual stimuli are created for the HN condition.

As for separating peripheral and central processes, peripheral effects are expected to be more pronounced in the elderly group with hearing loss than in the normal-hearing elderly: higher coherence and/or fewer background tones are needed for reaching the same performance. In contrast, both groups will be equally affected by the deterioration of central processes. One general effect often seen in aging is the slowing of information processing.20,21,33 One should therefore expect longer ORN or P400 latencies in both elderly groups compared to young adults. Finally, less efficient selective attention may lead to lower P400 amplitudes.

Results

Behavioral results

Participants were divided into three groups based on their age and pure-tone hearing threshold (Figure 1B), the latter measured by audiometry (young adults, normal-hearing elderly, and hearing-impaired elderly). None of the listeners reported issues with their hearing (“The Hearing Handicap Inventory for the Elderly” adopted from34). After measuring the participant’s digit span and introducing the stimuli in general, the LN and HN conditions were set up individually for each participant. First, the number of parallel figure tones allowing the participant to detect the figure with 85% accuracy was established by a procedure of increasing the number of figure tones while simultaneously decreasing the number of background tones, thus keeping the total number of tones constant (N = 20; LN condition). Then, extra background tones were added, until the participant’s performance declined to 65% (HN condition). The main experiment consisted of a series of trials mixing LN and HN, figure, and no-figure stimuli in equal proportion (25%, each). Figure detection responses and electroencephalogram (EEG) were recorded. Figure 1 summarizes behavioral results from the pure tone audiometry, digit span, and figure-ground segregation task.

Group differences in the number of figure and background tones in the LN and HN stimuli

The threshold detection procedure yielded distinct LN and HN conditions for each listener. The SNR was calculated from the ratio of the number of figure tones and background tones, separately for each group and the LN and HN conditions (Figure 1D). SNR values were log-transformed before analyses due to their heavily skewed distribution. As was set up by the procedure, there was a main effect of NOISE (LN vs. HN conditions) on SNR (F[1, 46] = 75.57, p < 0.001, ηp2 = 0.622), with larger SNR in the LN (before log transformation: M = 2.29, SD = 3.60) than in the HN (M = 0.99, SD = 0.53) condition.
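For readers who want to relate the reported F statistics to the effect sizes, partial eta squared can be recovered with the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The Python sketch below applies it to the NOISE effect reported above. The function name is hypothetical, and the sketch is only a consistency check, not a description of the software the authors used.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic and its degrees of freedom."""
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and both df values positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effect of NOISE on log-transformed SNR: F[1, 46] = 75.57
noise_effect = partial_eta_squared(75.57, 1, 46)
print(round(noise_effect, 3))  # 0.622, matching the reported value
```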

There was also a main effect of GROUP: F(2, 46) = 10.93, p < 0.001, ηp2 = 0.697. Post hoc pairwise comparisons revealed that SNR was larger in the hearing-impaired elderly group (M = 2.85, SD = 4.36) compared to both the normal-hearing elderly (M = 1.12, SD = 0.54; Tukey’s Honest Significant Difference (HSD) q[2, 95] = 5.35, p < 0.001) and the young adult group (M = 1.00, SD = 0.54; q[2, 95] = 7.30, p < 0.001). The interaction between GROUP and NOISE showed a tendency toward significance (F[2, 46] = 2.86, p = 0.067, ηp2 = 0.111). Separate pairwise comparisons between the two NOISE levels for each group showed that the effect size of NOISE was smaller in the hearing-impaired elderly than in the other two groups (all ps < 0.001; effect size for young adults: Cohen’s d = 1.78; for normal-hearing elderly: d = 1.98; for hearing-impaired elderly: d = 1.12).

To test whether the SNR effects were due to the number of coherent tones in the figure (coherence level; Figure 1E) or to the noise increase for the HN condition, separate ANOVAs were calculated for the coherence level (in LN) and the additional number of background tones (HN; Figure 1F). For coherence level, a one-way ANOVA with factor GROUP found a main effect (F[2, 46] = 9.62, p < 0.001, ηp2 = 0.295), with larger values in the hearing-impaired elderly (M = 13.88, SD = 2.80) than in the normal-hearing elderly (M = 11.15, SD = 1.86; q[2, 95] = 4.57, p = 0.006) or young adults (M = 10.5, SD = 2.28; q[2, 95] = 5.67, p < 0.001). For the number of additional background tones, a one-way ANOVA with factor GROUP yielded no significant effect (F[2, 46] = 0.07, p = 0.93, ηp2 = 0.003).

Figure detection results

The effects of GROUP (young adults, normal-hearing elderly, and hearing-impaired elderly) and NOISE (LN versus HN) on performance in the figure detection task (d’, hit rate, false alarm rate, and reaction time [RT]; Figures 1D–1H) were tested with two-way mixed-model ANOVAs. As was set up by the individualization procedures, there was no significant main effect of GROUP for any of the performance measures in the main figure detection task in either noise condition (all Fs[2, 46] < 1.73, ps > 0.18, ηp2’s < 0.03), and there was a main effect of NOISE for hit rate, d’, and RT (all three Fs > 24.9, ps < 0.001, ηp2’s > 0.35). Detailed results for the performance measures were as follows.

For hit rate, there was no significant interaction (F[2, 46] = 0.571, p = 0.569, ηp2 = 0.024). There was a significant main effect of NOISE (F[1, 46] = 92.854, p < 0.001, ηp2 = 0.669) with larger values in LN (M = 0.831, SD = 0.11) than in HN trials (M = 0.589, SD = 0.171). There was no significant main effect of GROUP (F[2, 46] = 1.726, p = 0.189, ηp2 = 0.015).

For false alarm rate, there was no significant interaction (F[2, 46] = 1.907, p = 0.16, ηp2 = 0.077). There was no significant main effect of NOISE (F[1, 46] = 2.057, p = 0.158, ηp2 = 0.043) or GROUP (F[2, 46] = 0.702, p = 0.501, ηp2 = 0.030).

For d’, there was no significant interaction (F[2, 46] = 0.611, p = 0.547, ηp2 = 0.026). There was a significant main effect of NOISE (F[1, 46] = 91.827, p < 0.001, ηp2 = 0.666) with larger values in LN (M = 2.959, SD = 0.768) than in HN trials (M = 2.205, SD = 0.644). There was no significant main effect of GROUP (F[2, 46] = 0.496, p = 0.547, ηp2 = 0.021).
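As a reminder of how the sensitivity index relates to the two rates reported above, d’ is conventionally computed as z(hit rate) − z(false alarm rate), where z is the inverse of the standard normal cumulative distribution. The Python sketch below uses hypothetical rates. The optional clipping of extreme rates to 1/(2N) and 1 − 1/(2N) is a common convention assumed here for illustration, since the text does not state how rates of 0 or 1 were handled.

```python
from statistics import NormalDist


def d_prime(hit_rate, fa_rate, n_signal=None, n_noise=None):
    """Sensitivity index d' = z(hit rate) - z(false alarm rate).

    If trial counts are given, rates are clipped to [1/(2N), 1 - 1/(2N)]
    so that rates of 0 or 1 do not yield infinite z-scores (assumed rule).
    """
    def clip(rate, n_trials):
        if n_trials is None:
            return rate
        lowest = 1.0 / (2 * n_trials)
        return min(max(rate, lowest), 1.0 - lowest)

    z = NormalDist().inv_cdf  # inverse standard normal CDF
    return z(clip(hit_rate, n_signal)) - z(clip(fa_rate, n_noise))


# Hypothetical listener: 84% hits and 16% false alarms.
print(round(d_prime(0.84, 0.16), 2))
# A perfect hit rate is clipped before conversion (50 figure trials assumed).
print(round(d_prime(1.0, 0.16, n_signal=50, n_noise=50), 2))
```

Because d’ combines both rates, it can fall from LN to HN even when the false alarm rate does not change, which is the pattern reported here.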

For RT, there was a tendency toward interaction: F(2, 46) = 2.88, p = 0.066, ηp2 = 0.111. There was also a main effect of NOISE (F[1, 46] = 24.912, p < 0.001, ηp2 = 0.351) with larger values in HN (M = 329.96 ms, SD = 88.51) than in LN trials (M = 318.09 ms, SD = 83.25). There was no significant main effect of GROUP (F[2, 46] = 0.355, p = 0.703, ηp2 = 0.015). Mean RT values for the young adult, normal-hearing elderly, and hearing-impaired elderly were M = 313.42 ms (SD = 93.09), M = 322.15 ms (SD = 74.85), and M = 337.80 ms (SD = 86.97), respectively.

Relationship between peripheral loss and figure-ground segregation

The relationship between peripheral hearing loss (average hearing threshold across frequencies and ears, as measured by pure-tone audiometry) and behavioral performance (log-transformed SNRs from the individualization procedure, as well as their difference; d’ and RT from the main task, separately for LN and HN) was tested with Pearson’s correlations. There were significant correlations between peripheral loss and SNR both in LN (r[47] = 0.556, p < 0.001; Bonferroni correction applied for all correlation tests) and HN (r[47] = 0.561, p < 0.001), as well as their difference (r[47] = −0.385, p = 0.044). The latter showed that larger peripheral loss (worse hearing) resulted in smaller noise differences between LN and HN. Confirming the success of stimulus individualization, no significant correlation was observed between peripheral loss and figure detection performance (d’ and RT in either LN or HN; all rs[47] < 0.11, all ps > 0.5).

Relationship between digit span and figure-ground segregation

The relationship between the digit span measures (working memory capacity and control, as measured by forward and backward digit span, respectively; Figure 1C) and behavioral performance (log-transformed SNRs of the individualization procedure and their difference; d’ and RT from the main task, separately for LN and HN) was not significant (all rs[47] < 0.265, ps > 0.5).

ERP results

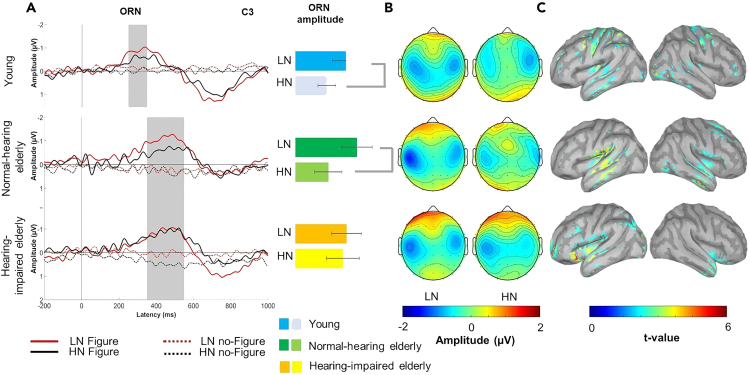

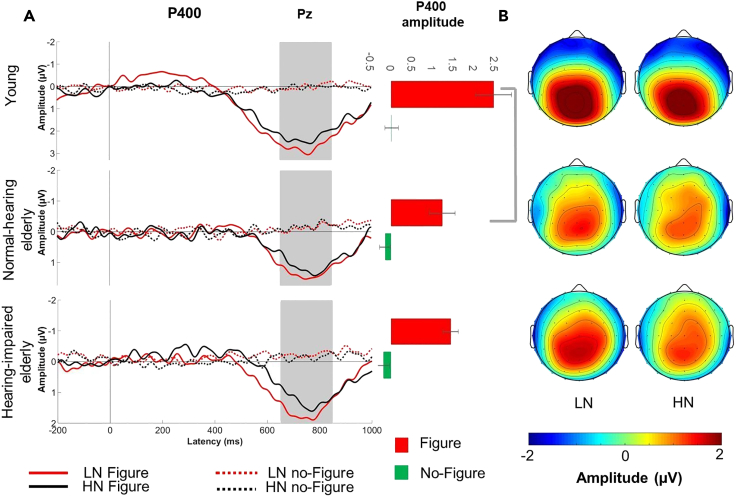

ERPs were separately collected for figure and no-figure trials, the LN and HN conditions, and the young adult, normal-hearing elderly, and hearing-impaired elderly groups. Only ERPs to figure and no-figure events with a correct response (hit for figure, correct rejection for no-figure) were analyzed. Two ERP components were identified based on a visual inspection of the group-averaged responses. Figure detection elicited the ORN response (Figures 2A and 2B) over fronto-central leads, followed by a parietally maximal P400 response (Figures 3A and 3B). Two contrasts were tested by mixed-model ANOVAs on the amplitudes and latencies of the ORN and P400 responses with factors of FIGURE (figure vs. no-figure), NOISE (LN vs. HN), LATERALITY (left vs. midline vs. right), and GROUP: one for exploring age effects by comparing the young adult and the normal-hearing elderly group (AGE factor), and one to test the effect of age-related hearing loss by comparing the normal-hearing and the hearing-impaired elderly group (HEARING IMPAIRMENT factor). Effects not including GROUP (AGE or HEARING IMPAIRMENT) or NOISE are reported separately at the end of the Results.

Figure 2.

EEG results: ORN response

(A) Group-averaged (young adult: N = 20; normal-hearing elderly: N = 13; hearing-impaired elderly: N = 16) central (C3; maximal ORN amplitude) ERP responses to figure (solid line) and no-figure (dashed line) stimuli obtained in the LN (red) and the HN condition (black). Zero latency is at the onset of the figure event. Gray vertical bands show the measurement window for ORN, while the yellow dashed line indicates the latency of ORN in young adults. The bar charts on the right side of the panel show the effect of NOISE on the mean ORN amplitude (with SEM) of figure trials, separately for the LN and HN conditions and groups. Significant NOISE effects (p < 0.05) are marked by gray lines beside the bar charts.

(B) Scalp distribution of the ORN responses elicited by figure stimuli for the LN and HN conditions and groups, with color scale below.

(C) Source localization results: brain areas sensitive to the NOISE effect (HN vs. LN condition) within the ORN time window. Significant NOISE effects on source activity (current source density based on dSPM) are shown separately for the groups, with color scale below.

Figure 3.

EEG results: P400 response

(A) Group-averaged (young adult: N = 20; normal-hearing elderly: N = 13; hearing-impaired elderly: N = 16) parietal (Pz; maximal P400 amplitude) ERP responses to figure (solid line) and no-figure (dashed line) stimuli obtained in the LN (red) and the HN condition (black). Zero latency is at the onset of the figure event. Gray vertical bands show the measurement window for P400. The bar charts on the right side of the panel show the effect of FIGURE on the mean P400 amplitude (with SEM) for figure and no-figure trials (collapsed across LN and HN), separately for the groups. Significant group effects (p < 0.05) are marked by gray lines beside the bar charts.

(B) Scalp distribution of the P400 responses elicited by figure stimuli for the LN and HN conditions and groups, with color scale below.

Object-related negativity (ORN)

AGE effect on the ORN amplitude

No main effect of AGE was found on the ORN amplitude. There was a tendency for the NOISE effect (F[1, 31] = 4.069, p = 0.0524; ηp2 = 0.116) with larger (more negative) ORN amplitudes in the LN compared to the HN condition. The interaction between NOISE and FIGURE also yielded a tendency (F[1, 31] = 4.1550, p = 0.05012; ηp2 = 0.118) with figure trials eliciting larger ORN for LN than HN (post hoc comparison: p = 0.032) but not no-figure trials.

HEARING IMPAIRMENT effect on the ORN amplitude

The three-way interaction of FIGURE × NOISE × HEARING IMPAIRMENT was significant (F[1, 27] = 4.942, p = 0.035; ηp2 = 0.15). This effect was further analyzed with repeated measures ANOVAs including only figure trials measured at the C3 electrode (due to the left central ORN distribution), separately for the normal-hearing and hearing-impaired elderly groups. In the normal-hearing elderly group, figure stimuli elicited significantly larger ORN in the LN relative to HN condition (F[1, 12] = 5.344, p = 0.039), whereas no significant NOISE effect was found in the hearing-impaired elderly group (F[1, 15] = 0.078, p = 0.783).

AGE and HEARING IMPAIRMENT effects on the ORN peak latency

Figure events elicited the ORN between 250 and 350 ms from figure onset in the young adult group, and between 350 and 550 ms in the normal-hearing and hearing-impaired elderly groups. There was a significant main effect of AGE (F[1,31] = 4.2662, p < 0.05, ηp2 = 0.12), with the ORN peak latency delayed in normal-hearing elderly (M = 421 ms) compared to young adults (M = 280 ms). NOISE and HEARING IMPAIRMENT did not significantly affect the ORN latency.

The brain regions activated during the ORN period were identified by source localization performed on the responses elicited by figure trials. The sensitivity to SNR was tested by comparing the source signals between the LN and HN conditions with permutation-based t-tests, separately for the young adult, normal-hearing elderly, and hearing-impaired elderly groups. Significant NOISE effects were found predominantly in higher-level auditory and associational areas such as the left temporal cortices, the planum temporale (PT), and the intraparietal sulcus (IPS; Figure 2C). In young listeners, precentral cortical regions were also significantly sensitive to the SNR.

P400

AGE and HEARING IMPAIRMENT effects on the P400 amplitude

The P400 amplitude was significantly lower in the normal-hearing elderly group compared to the young adults (F[1,31] = 5.00, p < 0.05, ηp2 = 0.14). A significant interaction was found between FIGURE and AGE (F[1,31] = 6.50, p < 0.05, ηp2 = 0.17). Post hoc comparisons revealed that the P400 response was lower in the normal-hearing elderly relative to young adults for the figure responses (p = 0.008) but not for the no-figure responses (p > 0.05). No significant effect including NOISE or HEARING IMPAIRMENT was found for P400 amplitude.

AGE and HEARING IMPAIRMENT effects on the P400 peak latency

Neither NOISE, nor AGE, nor HEARING IMPAIRMENT significantly affected the P400 latency.

EEG results not involving the GROUP (AGE or HEARING IMPAIRMENT) or NOISE factor

ORN

AGE effect on the ORN amplitude

There were main effects of FIGURE (F[1,31] = 72.7, p < 0.001, ηp2 = 0.70), with figure trials eliciting a stronger ORN response (more negative signal) than no-figure trials, and of LATERALITY (F[2,62] = 9.08, p < 0.001, ηp2 = 0.23). Post hoc pairwise comparisons revealed that the ORN was dominant on the left side, as the amplitude at C3 was larger than at C4 or Cz (p < 0.001, both).

HEARING IMPAIRMENT effect on the ORN amplitude

There were main effects of FIGURE (F[1,27] = 30.47, p < 0.001, ηp2 = 0.53), with figure trials eliciting a stronger ORN response than no-figure trials, and of LATERALITY (F[2,54] = 6.77, p < 0.05, ηp2 = 0.20). The significant interaction between FIGURE and LATERALITY (F[2, 54] = 5.5185, p = 0.007; ηp2 = 0.17) was caused by the amplitude at C3 being significantly higher than at Cz for figure (p < 0.001) but not for no-figure trials.

P400

AGE effects on the P400 amplitude

There were main effects of FIGURE (F[1,31] = 77.76, p < 0.001, ηp2 = 0.71), with figure trials eliciting a stronger P400 response (more positive signal) than no-figure trials, and of LATERALITY (F[2,62] = 14.71, p < 0.001, ηp2 = 0.32). The significant interaction between FIGURE and LATERALITY (F[2, 62] = 4.3340, p = 0.01731; ηp2 = 0.122) was caused by the amplitude at Pz being significantly higher than at P3 or P4 for figure (p < 0.001, both) but not for no-figure trials.

HEARING IMPAIRMENT effects on the P400 amplitude

There were main effects of FIGURE (F[1,27] = 55.60, p < 0.001, ηp2 = 0.67), with figure trials eliciting a stronger P400 response than no-figure trials, and of LATERALITY (F[2,54] = 17.15, p < 0.001, ηp2 = 0.39). The significant interaction between FIGURE and LATERALITY (F[2, 54] = 8.6854, p < 0.001; ηp2 = 0.24) was caused by the amplitude at Pz being significantly higher than at P3 or P4 for figure (p < 0.001, both) but not for no-figure trials.

Discussion

Using a tone-cloud-based figure detection task, we tested the contributions of peripheral and central auditory processes to age-related decline of hearing in noisy environments. We found that while aging slows the processing of the concurrent cues of auditory objects (longer ORN latencies in the elderly groups) and may affect processes involved in deciding the task-relevance of the stimuli (lower P400 amplitude in the normal-hearing elderly than the young adult group), overall, it does not significantly reduce the ability of auditory object detection in noise (no significant differences in the SNRs between the young adults and the normal-hearing elderly group). However, when aging is accompanied by higher levels of hearing loss, grouping concurrent sound elements suffers and, perhaps not independently, the tolerance to noise decreases. The latter was supported by the results that (1) higher coherence was needed by the hearing-impaired than the normal-hearing elderly group for the same figure detection performance; (2) hearing thresholds negatively correlated with the number of background tones reducing detection performance from the LN to the HN level; and (3) the ORN amplitudes did not significantly differ between the HN and LN condition in the hearing-impaired elderly group (while they differed in the other two groups). The inference about the effects of peripheral hearing loss is strongly supported by the efficacy of the stimulus individualization procedure that effectively eliminated correlations between hearing thresholds and performance measures of the figure detection task as well as between the current working memory indices and any of the behavioral measures. Further, the current results are fully compatible with those of prior studies showing that spectrotemporal coherence supports auditory stream segregation (better figure detection performance with higher coherence).31,35,36,37,38

We now discuss in more detail the general age-related changes in auditory scene analysis followed by the effects of age-related hearing loss.

Age-related changes in auditory scene analysis

We found no behavioral evidence for age-related decline either in the ability to integrate sound elements (coherence level) or in the sensitivity to the noise (number of added background tones). Evidence about aging-related changes in auditory scene analysis is contradictory. Some results suggest that the ability to exploit sequential stimulus predictability for auditory stream segregation degrades with age.39 A recent study, however, suggested that elderly listeners can utilize predictability, albeit with a high degree of inter-individual variation.36 Further, de Kerangal and colleagues40 also found that the ability to track sound sources based on acoustic regularities is largely preserved in old age. The current results strengthen this view, as figures within the tone clouds are detected by their temporally coherent behavior. Further, age did not significantly affect performance in an informational masking paradigm,41 a result fully compatible with the current finding of no significant behavioral effect of age, as tone clouds impose both energetic and informational masking on detecting figures.

Although figure detection performance was found preserved in the normal-hearing elderly group, the underlying neural activity significantly differed compared to the young adults both at the early (ORN) and later (P400) stage of processing figure trials.

ORN results

In line with our hypothesis, the early perceptual stage of central auditory processing was significantly slowed in the elderly compared to young adults (the peak latency of ORN was delayed by ca. 150 ms). Although Alain and colleagues,6,42 using the mistuned partial paradigm, did not report a significant delay of ORN in the elderly compared to young or middle-aged adults, a tendency toward longer ORN peak latencies with age can be observed in their responses (see42 Figure 2). ORN is assumed to reflect the outcome of cue evaluation, the likelihood of the presence of two or more concurrent auditory objects.43 Compared to the mistuned partial paradigm, in which only concurrent cues are present (i.e., there is no relationship between successive chords), the tone-cloud-based figure detection paradigm also includes a sequential element: the figure only emerges if the relationship between elements of successive chords is discovered. It is thus possible that the delay is due to slower processing of the temporal aspect of the segregation cues. Alternatively, the delay may be related to the higher complexity of concurrent cues in the current compared to the missing fundamental design, because the latter can rely on harmonicity, whereas the figure in the current paradigm links together tones with harmonically unrelated frequencies. Slower sensory information processing has often been found in the elderly compared to young adults (e.g., Alain and McDonald found an age-related delay of the latency of the P2 component).

A possible specific explanation of the observed aging-related delay of the ORN peak is that the auditory system at older age needs to accumulate more sensory evidence for the perceptual buildup of the object representation. The input from the periphery may be noisier at an older age (for a review, see Slade et al.5); therefore, more time is needed than at a young age to evaluate the relations between the current and previous chords or to separate and integrate the spectral elements into an object. This assumption is compatible with results showing that elderly listeners perform at a higher level in detecting mistuned partials with chords of 200 ms duration, compared to 40 ms duration. The ORN responses are similar to those obtained for young adults.6 Concordantly, some studies using speech-in-noise tasks suggested that older listeners required more time than younger listeners to segregate sound sources from either energetic or informational maskers.17,44 The study by Ben-David et al. demonstrated similar results for a speech babble masker but not for a noise masker.17 Since the current study employed a delayed response task, the reaction time data were not suitable for testing the hypothesis of age-related slowing of information processing. Young adults nevertheless responded slightly faster than the elderly groups, but the difference was not significant.

P400 results

The P400 amplitude was significantly lower in normal-hearing elderly compared to young adults. Considering the commonalities between the neural generators and sensitivity to stimulus and task variables between the P400 and the P3 components,16,20,45,46 P400 likely reflects attentional task-related processes.47 The P3 amplitude was found to be lower in healthy aging.48,49 This is interpreted as normal cognitive decline with aging.48,50,51 Therefore, the current finding of reduced P400 amplitude likely reflects general cognitive age-related changes in attention or executive functions.

Distraction by irrelevant sounds1,2,3 may be an important cause of the difficulties encountered by many elderly people in speech-in-noise situations. Deterioration of these central functions may partly compromise concurrent sound segregation,16,17,42 lead to diminished auditory regularity representations at the higher stations of the auditory system,16,18 and result in deficient inhibition of irrelevant information processing.19

Consequences of age-related hearing loss on stream segregation ability

The hypothesis that integrating sound elements into an object is more difficult for the elderly with moderate hearing loss than for normal-hearing elderly was confirmed: hearing-impaired elderly needed more figure tones and higher SNR than normal-hearing elderly listeners to reach similar figure-detection performance. Specifically, while for normal-hearing elderly listeners ca. 55% of the tones in the chord forming the figure were sufficient for an 85% figure-detection ratio (LN condition), hearing-impaired elderly needed ca. 70% of the tones to belong to the figure for the same performance. Further, hearing impairment may increase susceptibility to masking by the background tones, as the number of background tones reducing performance from 85% to 65% (HN condition) negatively correlated with the hearing threshold. Supporting this explanation, former studies investigating speech perception performance in background noise found that the impact of hearing impairment is as detrimental for young and middle-aged as it is for older adults. When the background noise becomes cognitively more demanding, there is a larger decline in speech perception due to age or hearing impairment.52,53

The ORN responses may provide further insight into the problems of figure-ground segregation caused by hearing impairment. Whereas young adults and normal-hearing elderly elicited larger ORNs in the LN than in the HN condition, the ORN amplitude did not differ between the two conditions for the hearing-impaired elderly. This suggests that, for the hearing-impaired elderly, the sensory-perceptual processes involved in detecting figures in noise were not made more effective by surrounding the figure with less noise. The lower amount of information arriving from the periphery limits their ability to find coherence or integrate concurrent sound elements. Consequently, there is no capacity to reduce the effect of additional noise, resulting in a steep performance decline in noisier situations.

In contrast to early (sensory-perceptual) processing, later processing did not differ between normal-hearing and hearing-impaired elderly listeners, as shown by the similar-amplitude P400 responses in the two groups. Thus, hearing deficits without general cognitive effects (as was promoted by the group selection criteria and the lack of working memory differences found between the groups) only affect early sensory-perceptual processes. Further, as the level of hearing impairment of the current hearing-impaired group is modest (none of the participants reported serious difficulties in the hearing handicap inventory for the elderly34), the current results suggest that the tone-cloud-based figure detection paradigm could be used to detect hearing loss before it becomes severe.

Conclusions

Results obtained in a well-controlled model of the speech-in-noise situation suggest that age-related difficulties in listening under adverse conditions are largely due to hearing impairment, making figure-ground segregation especially difficult for elderly people. Coherence levels needed by individuals to reliably detect figures were very sensitive to hearing impairment and may serve as a diagnostic tool for hearing decline before it becomes clinically significant.

Limitations of the study

The sample sizes for the normal-hearing and hearing-impaired elderly groups were relatively low, bringing the statistical power of the analyses into question. For ERP analyses statistical power might have been insufficient to detect all effects of interest (see e.g., Boudewyn et al. and Jensen et al.54,55 for simulations on power in ERP studies), thus caution is advised before generalizing the results of the current study. Further caution is needed due to results that remained slightly above the conventional significance threshold (see object-related negativity (ORN) section in results).

STAR★Methods

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Deposited data | | |
| EEG data | this paper | OSF: https://osf.io/q8n9k/; DOI: 10.17605/OSF.IO/Q8N9K |
| Behavioral data | this paper | OSF: https://osf.io/q8n9k/; DOI: 10.17605/OSF.IO/Q8N9K |
| Software and algorithms | | |
| Matlab (version R2017a) | The MathWorks, Inc. | https://www.mathworks.com |
| Code for Quest and other experimental procedure | Implemented with Psychtoolbox 3.0.16 | https://github.com/dharmatarha/SFG_aging_study/tree/master/threshold; https://doi.org/10.5281/zenodo.10657952 |
| EEGlab14_1_2b toolbox | Swartz Center for Computational Neuroscience | https://sccn.ucsd.edu/eeglab/index.php |
| Brainstorm v. 3.230810 | Brainstorm team | http://neuroimage.usc.edu/brainstorm |

Resource availability

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the Lead contact, Ádám Boncz (boncz.adam@ttk.hu).

Materials availability

This study did not generate new unique materials.

Data and code availability

- Behavioral and EEG data have been deposited at OSF and are publicly available as of the date of publication. The DOI is listed in the key resources table.

- All original code has been deposited at GitHub and Zenodo and is publicly available as of publication. DOIs are listed in the key resources table.

- Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

Experimental model and study participant details

Twenty young adults (14 females; mean age: 21.2 ± 2.4 years), thirteen older adults (9 females; mean age: 67.3 ± 4.3 years) with intact hearing, and sixteen older adults (11 females; mean age: 68.7 ± 4.0 years) with mild hearing impairment participated in the study. Participants were financially compensated for their participation (ca. 4 euros/hour). None of the participants reported any neurological diseases. Participants gave informed consent before the experiment. Study protocols adhered to the Declaration of Helsinki and were approved by the local review board of the Institute of Cognitive Neuroscience and Psychology at the Research Centre for Natural Sciences. Participants were of European ancestry (self-reported).

Method details

Audiometry

All participants underwent standard pure tone audiometric hearing tests in which hearing thresholds (HT) were screened at frequencies between 250 Hz and 8000 Hz (250, 500, 1000, 2000, 4000, 6000, 8000 Hz), separately for both ears. Based on the hearing test results, elderly participants were divided into two groups: elderly participants with any HT above 45 dB were categorized as having mild hearing loss.5 In contrast, those with no HT above 45 dB formed the normal-hearing elderly group. The threshold difference between the two ears was less than 20 dB in all participants. Average HT across tested frequencies was entered into a mixed-model ANOVA with between-subject factor GROUP (young, normal-hearing, and hearing-impaired elderly) and within-subject factor EAR (left or right). As expected, there was a significant main effect of GROUP (F(2, 46) = 101.18, p < 0.001, ηp2 = 0.788), but no main effect of the EAR (F(1, 46) = 1.39) or interaction (F(2, 46) = 0.83). Pairwise comparisons revealed that all three groups differed significantly regarding average HT. Young adults had a lower average HT (M = 9.69 dB, SD = 2.97) than normal-hearing (M = 19.75 dB, SD = 6.80; t(31) = 4.87, p < 0.001, d = 0.99) and hearing-impaired elderly (M = 34.31 dB, SD = 5.81; t(34) = 16.50, p < 0.001, d = 2.55). Normal-hearing elderly also had a significantly lower HT than hearing-impaired elderly (t(27) = 6.22, p < 0.001, d = 0.93).
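
The grouping rule described above can be sketched in a few lines. This is a minimal Python illustration, not the authors' code (the study's own analyses were run in MATLAB); the function names and the example thresholds are hypothetical and chosen only to show the rule "any HT above 45 dB in either ear".

```python
# Frequencies screened in the audiometric test (Hz), as listed in the text.
FREQUENCIES_HZ = (250, 500, 1000, 2000, 4000, 6000, 8000)
CUTOFF_DB = 45  # any threshold above this value -> hearing-impaired group


def classify_elderly(left_db, right_db, cutoff_db=CUTOFF_DB):
    """Return the group label for one elderly participant.

    left_db / right_db: hearing thresholds (dB), one per tested frequency.
    """
    if len(left_db) != len(FREQUENCIES_HZ) or len(right_db) != len(FREQUENCIES_HZ):
        raise ValueError("one threshold per tested frequency is expected")
    any_above = any(ht > cutoff_db for ht in list(left_db) + list(right_db))
    return "hearing-impaired" if any_above else "normal-hearing"


def average_threshold(left_db, right_db):
    """Average hearing threshold across both ears and all tested frequencies."""
    values = list(left_db) + list(right_db)
    return sum(values) / len(values)


# Hypothetical participants (values invented for illustration only).
p1 = ([10, 15, 15, 20, 25, 30, 40], [10, 10, 15, 20, 30, 35, 40])
p2 = ([15, 20, 25, 30, 40, 50, 60], [15, 20, 20, 30, 45, 50, 55])
print(classify_elderly(*p1), round(average_threshold(*p1), 2))
print(classify_elderly(*p2), round(average_threshold(*p2), 2))
```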

Subjective hearing impairment questionnaire

Subjective hearing impairment (i.e., hearing difficulties in everyday life, including their emotional and social aspects) was assessed by a subset of the “Hearing Handicap Inventory for the Elderly” questionnaire (HHIE, adapted from ref. 34; see the administered subset in Table S1). The short HHIE test scores did not differ across groups (F(2, 42) = 0.754, p = 0.47).

Working memory assessment

Working memory (WM) capacity was tested by the forward and backward digit span tasks56 (see Figure 1) and compared across groups by one-way ANOVAs with the between-subject factor GROUP. Both the WM capacity (forward digit span) and WM control function (backward digit span) were significantly higher in young adults than in either elderly group (pairwise all cases p < 0.005; forward digit span: F(2,46) = 6.694, p < 0.05, ηp2 = 0.225; backward digit span: F(2,46) = 3.99, p < 0.05, ηp2 = 0.148). No significant difference was found for either WM function between the two elderly groups of participants.

Stimuli

Sounds were generated with MATLAB (R2017a, MathWorks; Natick, MA, USA) at a sampling rate of 44.1 kHz and 16-bit resolution. Stimuli were adapted from previous studies25,26 (samples depicted in Figure 1A). Each stimulus consisted of 40 random chords (sets of concurrent pure tones) of 50 ms duration with 10 ms raised-cosine ramps and zero inter-chord interval (total sound duration: 2000 ms). Each chord in the low-noise condition was composed of 20 pure tones with the tone frequencies drawn with equal probability from a set of 129 frequency values equally spaced with one semitone step in the 179–7246 Hz interval (the “tone cloud” stimulus). (See the number of concurrent tones in the high-noise condition in the “threshold detection setting up LN/HN conditions” subsection.) A correction for equal loudness of tones with different frequencies was applied to the stimuli, based on the equal-loudness contours specified in the ISO 226:2003 standard.

In half of the stimuli (Figure stimuli), a subset of the tones increased in frequency by one semitone over ten consecutive chords. Thus, these tones were temporally coherent with each other, forming a figure within the tone cloud stimulus. Listeners can segregate temporally coherent tones from the remaining ones; perceiving this “figure” against the background of concurrent tones becomes more likely by increasing the number of temporally coherent tones (termed “coherence level”).25,26 In the current study, the figure appeared once for 500 ms randomly within the 300–1700 ms interval from stimulus onset (between the 7th and the 33rd position of the sequence of 40 chords). In the other half of the stimuli (No-figure stimuli) all tones of the chords were selected randomly.
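
To make the structure of the tone-cloud stimuli concrete, the following minimal Python sketch builds the frequency content of one stimulus as chords of grid indices. It is not the authors' MATLAB code. Several details are assumptions made for illustration: the grid is taken as 129 log-spaced values spanning 179–7246 Hz (with these endpoints the spacing works out to roughly half a semitone, so the exact step size should be checked against the original code), the figure tones rise by `step` grid positions per chord, and `make_stimulus` and its parameters are hypothetical names. Sound synthesis, ramps, and loudness correction are omitted.

```python
import random

N_CHORDS = 40          # chords per stimulus (50 ms each -> 2000 ms)
N_TONES = 20           # tones per chord in the low-noise condition
N_FREQS = 129          # size of the frequency grid
F_MIN, F_MAX = 179.0, 7246.0
FIGURE_CHORDS = 10     # figure duration in chords (10 x 50 ms = 500 ms)


def frequency_grid():
    """129 log-spaced frequencies between 179 and 7246 Hz (assumed spacing)."""
    ratio = (F_MAX / F_MIN) ** (1.0 / (N_FREQS - 1))
    return [F_MIN * ratio ** i for i in range(N_FREQS)]


def make_stimulus(coherence, figure_start, step=1, rng=None):
    """Return 40 chords, each a sorted list of indices into the grid.

    coherence: number of figure tones; 0 yields a No-figure stimulus.
    figure_start: 0-based index of the first chord carrying the figure.
    step: grid positions the figure tones rise per chord (an assumption).
    """
    rng = rng or random.Random()
    if not 0 <= coherence <= N_TONES:
        raise ValueError("coherence must be between 0 and N_TONES")
    if coherence and not 0 <= figure_start <= N_CHORDS - FIGURE_CHORDS:
        raise ValueError("figure does not fit into the stimulus")
    # Starting indices leave room for the upward ramp of the figure tones.
    max_start = N_FREQS - 1 - step * (FIGURE_CHORDS - 1)
    starts = rng.sample(range(max_start + 1), coherence)
    chords = []
    for c in range(N_CHORDS):
        k = c - figure_start
        in_figure = coherence > 0 and 0 <= k < FIGURE_CHORDS
        figure = {s + step * k for s in starts} if in_figure else set()
        free = [i for i in range(N_FREQS) if i not in figure]
        background = rng.sample(free, N_TONES - len(figure))
        chords.append(sorted(figure | set(background)))
    return chords


grid = frequency_grid()
stimulus = make_stimulus(coherence=12, figure_start=6, rng=random.Random(0))
print(len(stimulus), len(stimulus[0]))
```

Representing chords as grid indices keeps the temporally coherent subset easy to inspect: the figure tones are the indices that shift together across ten successive chords, while all other tones are redrawn independently for every chord.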

Procedure

Participants were tested in an acoustically attenuated and electrically shielded room at the Research Centre for Natural Sciences. Stimulus presentation was controlled with Psychtoolbox 3.0.16.57 Sounds were delivered to the listeners via HD600 headphones (Sennheiser electronic GmbH & Co. KG) at a comfortable listening level of 60–70 dB SPL (self-adjusted by each listener). A 20″ computer screen was placed directly in front of participants at 80 cm for displaying visual information.

Familiarization

At the beginning of the experiment, participants were familiarized with the stimuli by asking them to listen to Figure and No-figure stimuli until they were confident in perceiving the difference (∼5 min). To facilitate detection during familiarization, Figure stimuli were generated with a coherence level of 18 (out of the total 20 tones making up the chords). In each trial, the participant initiated the presentation of either a Figure or a No-figure stimulus by pressing a key on the left or the right side of a keyboard. The keys used in this phase were then consistently used for the Figure or the No-figure responses throughout the experiment; they were counterbalanced across participants. During familiarization, a fixation cross was shown at the center of the computer screen, and participants were instructed to focus on it. The familiarization phase ended when participants indicated their confidence in being able to distinguish between Figure and No-figure stimuli.

Training

Next, participants were trained in the Figure detection task (∼10 min). Each trial started with the presentation of a stimulus (2000 ms) followed by the response interval (maximum of 2000 ms), visual feedback (400 ms), and an inter-trial interval (ITI) of 500–800 ms (randomized). Participants were instructed to press one of the previously learned response keys during the response interval to indicate whether they detected the presence of a Figure or not (yes/no task). The instruction emphasized the importance of confidence in the response over speed. During the ITI and the stimulus, a fixation cross was shown centrally on the screen, which then switched to a question mark for the response interval. Feedback was provided in the form of a short text (“Right” or “Wrong”) displayed centrally on the screen. Participants were instructed to fixate on the cross or question mark throughout the task. The training phase consisted of 6 blocks of 10 trials each, with 5 Figure and 5 No-figure stimuli in each block, presented in a pseudorandom order. At the end of each block, summary feedback about accuracy was provided to participants. In the first block, Figure coherence level was 18, decreasing by 2 in each subsequent block (ending at a coherence level of 8). Blocks in the training phase were repeated until the participant’s accuracy was higher than 50% in at least one of the last three blocks (with Figure coherence levels of 12, 10, or 8). Repeated blocks started from the last block with higher than 50% accuracy.

Threshold detection setting up LN/HN conditions

After training, each participant performed two adaptive threshold detection tasks (∼15 min) to determine the stimuli parameters corresponding to 85% (termed the low-noise (LN) condition) and 65% accuracy (the high-noise (HN) condition) in the figure detection task. In both threshold detection tasks, the trial structure was as described for the training phase, except for the lack of feedback at the end of each trial. As in the training phase, participants were instructed to indicate the presence or absence of a figure in the stimulus, with an emphasis on accuracy.

In the first threshold detection task, the goal was to determine the participant’s individual Figure coherence level that corresponded to ca. 85% accuracy, while keeping the overall number of tones in each chord constant at 20. In the second task, the coherence level was kept constant at the level determined in the LN threshold detection task, and the number of background tones in the chord was increased until performance dropped to ca. 65% accuracy.

In both cases, thresholds were estimated using the QUEST procedure,58 an adaptive staircase method that sets the signal-to-noise ratio (SNR) of the next stimulus to the most probable level of the threshold, as estimated by a Bayesian procedure taking into account all past trials. SNR was determined as the ratio of the number of figure and background tones. Both tasks consisted of one block of 80 trials, with 20 trials added if the standard deviation of the threshold estimate was larger than the median difference between successive SNR levels allowed by FG stimuli parameters. The thresholding phase yielded stimulus parameters corresponding to 65 and 85% accuracy, separately for each participant. Thus, in the main experiment, the LN and HN condition tasks posed similar difficulty levels to each participant. The exact parameters used for the QUEST procedure can be found in the GitHub repository of the experiment.
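
The SNR definition used in the thresholding tasks (number of figure tones divided by number of background tones) can be illustrated with a short Python sketch. It does not implement the QUEST procedure itself; the coherence level and the number of added background tones below are hypothetical values, and the base-10 logarithm is an assumption, since the text does not state which base was used for the log-transformed SNR.

```python
import math


def snr(n_figure, n_total):
    """SNR as the ratio of figure tones to background tones in a chord."""
    n_background = n_total - n_figure
    if n_figure <= 0 or n_background <= 0:
        raise ValueError("both figure and background tones are required")
    return n_figure / n_background


# Hypothetical participant: coherence level 11 with 20 tones per chord (LN),
# then 8 extra background tones added at the same coherence level (HN).
coherence = 11
snr_ln = snr(coherence, 20)        # 11 figure vs. 9 background tones
snr_hn = snr(coherence, 20 + 8)    # 11 figure vs. 17 background tones

# Log-transformed values (base 10 assumed for illustration).
log_snr_ln = math.log10(snr_ln)
log_snr_hn = math.log10(snr_hn)
print(round(log_snr_ln, 3), round(log_snr_hn, 3))
```

In the LN task the SNR changes through the coherence level at a fixed chord size, whereas in the HN task it changes only through the number of added background tones.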

Main figure detection task

In the main part of the experiment (∼90 min), the trial structure and the instructions were identical to those used in the threshold detection procedure. Two conditions (LN and HN) were administered, resulting in four types of stimuli: Figure - LN, No-figure - LN, Figure - HN, and No-figure - HN. Participants received 200 repetitions of each stimulus type for a total of 800 trials. Trials were divided into 10 stimulus blocks of 80 trials each, with each block containing an equal number (20-20) of all four stimulus types in a randomized order. Summary feedback on performance (overall accuracy) was provided to participants after each block. Short breaks were inserted between successive stimulus blocks with additional longer breaks after the 4th and 7th blocks.
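
The block structure of the main task can be sketched as follows. This is a minimal Python illustration with hypothetical names (`build_blocks`, the stimulus-type labels); it assumes simple shuffling within each block, which may differ from the randomization constraints used in the actual experiment code.

```python
import random
from collections import Counter

STIMULUS_TYPES = ("Figure-LN", "No-figure-LN", "Figure-HN", "No-figure-HN")


def build_blocks(n_blocks=10, per_type=20, seed=None):
    """Return n_blocks lists, each with per_type trials of every stimulus type."""
    rng = random.Random(seed)
    blocks = []
    for _ in range(n_blocks):
        block = [stim for stim in STIMULUS_TYPES for _ in range(per_type)]
        rng.shuffle(block)  # randomized order within the block
        blocks.append(block)
    return blocks


blocks = build_blocks(seed=1)
totals = Counter(trial for block in blocks for trial in block)
print(len(blocks), len(blocks[0]), dict(totals))
```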

Analysis of behavioral data

From the threshold detection tasks, we analyzed the participants’ coherence level in the LN condition, the number of additional background tones in the HN condition, and log-transformed SNR values for both conditions. A mixed-model ANOVA with the within-subject factor NOISE (LN vs. HN) and the between-subject factor GROUP (young adult, normal-hearing elderly, hearing-impaired elderly) was conducted on SNR. For coherence levels (LN only) and the number of additional background tones (HN only), one-way ANOVAs were performed with the between-subject factor GROUP (young, normal-hearing old, hearing-impaired old).

From the main task, detection performance was assessed by the sensitivity index (d’),59 false alarm rate (FA), and mean reaction times (RT). Mixed-model ANOVAs were conducted with the within-subject factor NOISE, and the between-subject factor GROUP, separately on d’, FA, and RT. Statistical analyses were carried out in MATLAB (R2017a). The alpha level was set at 0.05 for all tests. Partial eta squared (ηp2) is reported as effect size. Post-hoc pairwise comparisons were computed by Tukey HSD tests.
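
As an illustration of the sensitivity index, the sketch below computes d’ as the difference between the z-transformed hit rate and false alarm rate. It is a minimal Python example, not the authors' MATLAB analysis; the log-linear correction (adding 0.5 to each cell) is an assumption introduced here to avoid infinite z-scores at rates of 0 or 1, and the trial counts are hypothetical.

```python
from statistics import NormalDist


def rates(hits, misses, false_alarms, correct_rejections):
    """Hit and false alarm rates with a log-linear correction (assumption)."""
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    return hit_rate, fa_rate


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index: z(hit rate) - z(false alarm rate)."""
    hit_rate, fa_rate = rates(hits, misses, false_alarms, correct_rejections)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts for one participant in one condition
# (200 Figure and 200 No-figure trials, as in the main task).
example_d = d_prime(hits=170, misses=30, false_alarms=30, correct_rejections=170)
print(round(example_d, 2))
```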

Pearson’s correlations were calculated between the average of pure-tone audiometry thresholds in the 250–8000 Hz range and working memory measures (capacity and control) on one side and behavioral variables from the threshold detection and the figure detection tasks on the other side. Bonferroni correction was used to reduce the potential errors resulting from multiple comparisons.
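
The correlation analysis with Bonferroni correction can be sketched as below. This is a minimal, stdlib-only Python illustration: `pearson_r` computes the correlation coefficient, and `bonferroni` adjusts a family of p values that are assumed to have been obtained elsewhere. The numbers are hypothetical and are not data from the study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("two sequences of equal length (>= 2) are required")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bonferroni(p_values, alpha=0.05):
    """Return (adjusted p, significant?) for each test in the family."""
    m = len(p_values)
    return [(min(1.0, p * m), p < alpha / m) for p in p_values]


# Hypothetical example: average hearing threshold (dB) vs. coherence level.
thresholds_db = [12.0, 18.5, 22.0, 30.5, 35.0, 38.5]
coherence_levels = [9, 10, 11, 13, 14, 14]
r = pearson_r(thresholds_db, coherence_levels)
corrected = bonferroni([0.01, 0.04, 0.20])
print(round(r, 3), corrected)
```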

Analysis of EEG data

EEG recording and preprocessing

EEG was recorded with a Brain Products actiCHamp DC 64-channel EEG system and actiCAP active electrodes. Impedances were kept below 15 kΩ. The sampling rate was 1 kHz with a 100 Hz online low-pass filter applied. Electrodes were placed according to the International 10/20 system with FCz serving as the reference. Eye movements were monitored with bipolar recording from two electrodes placed lateral to the outer canthi of the eyes.

EEG was preprocessed with the EEGlab14_1_2b toolbox60 implemented in Matlab 2018b. Signals were band-pass filtered between 0.5 and 80 Hz using a finite impulse response (FIR) filter (Kaiser windowed, with Kaiser β = 5.65326 and filter length n = 18112). A maximum of two malfunctioning EEG channels were interpolated using the default spline interpolation algorithm implemented in EEGlab. The Infomax algorithm of Independent Component Analysis (ICA) was employed for artifact removal.60 ICA components reflecting blink artifacts were removed after visual inspection of their topography and spectral content. No more than 10 percent of the overall number of ICA components (for a maximum of n = 3) were removed.
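
For readers unfamiliar with the Kaiser window parameter, the reported β can be related to a target stopband attenuation through Kaiser's standard empirical formula. The Python sketch below shows that a 60 dB target reproduces the reported value; the 60 dB figure is an inference made here for illustration and is not stated in the text, and `kaiser_beta` is a hypothetical helper name.

```python
def kaiser_beta(attenuation_db):
    """Kaiser's empirical formula for the window shape parameter beta."""
    a = attenuation_db
    if a > 50:
        return 0.1102 * (a - 8.7)
    if a >= 21:
        return 0.5842 * (a - 21) ** 0.4 + 0.07886 * (a - 21)
    return 0.0


beta_60 = kaiser_beta(60.0)  # assumed 60 dB stopband attenuation
print(round(beta_60, 5))
```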

Event-related brain activity analysis

Epochs were extracted from the continuous EEG records between −800 and +2300 ms relative to the onset of the Figure in Figure trials. For No-figure trials, onsets were selected randomly from the set of Figure onsets in the Figure trials (each Figure onset value from Figure trials was selected only once for a No-figure trial). Only epochs from trials with a correct response (hit for Figure and correct rejection for No-figure trials) were further processed. Baseline correction was applied by averaging voltage values in the [−800, 0] ms time window. Epochs exceeding the threshold of ±100 μV change throughout the whole epoch measured at any electrode were rejected. The remaining epochs were averaged separately for each stimulus type and group. The mean numbers of valid epochs (collapsed across groups) were Figure - HN: 107.78; No-figure - HN: 175.08; Figure - LN: 153.04; No-figure - LN: 173.29. Brain activity within the time windows corresponding to the ORN and P400 ERP components was measured separately for each stimulus type/condition/group. Time windows were defined by visual inspection of grand average ERPs.
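
The baseline correction and amplitude-based epoch rejection described above can be sketched as follows. This minimal Python example is not the EEGLAB pipeline used in the study. The rejection criterion is implemented as "any baseline-corrected sample exceeding ±100 μV in absolute value at any channel", which is one possible reading of the text and is an assumption; the function names and the synthetic epoch are hypothetical.

```python
def baseline_correct(samples, times_ms, start_ms=-800, end_ms=0):
    """Subtract the mean of the baseline window from every sample."""
    base = [v for v, t in zip(samples, times_ms) if start_ms <= t < end_ms]
    if not base:
        raise ValueError("baseline window contains no samples")
    mean = sum(base) / len(base)
    return [v - mean for v in samples]


def is_artifact(epoch, threshold_uv=100.0):
    """True if any channel has a sample beyond +/- threshold (assumed rule).

    epoch: dict mapping channel name -> list of baseline-corrected values (uV).
    """
    return any(abs(v) > threshold_uv for ch in epoch.values() for v in ch)


# Synthetic single-channel examples at 1 kHz, -800 to +2299 ms.
times = list(range(-800, 2300))
flat = baseline_correct([5.0] * len(times), times)
spike = [0.0] * len(times)
spike[1500] = 150.0  # one sample well beyond the threshold
clean_epoch = {"Cz": flat}
noisy_epoch = {"Cz": flat, "C3": baseline_correct(spike, times)}
print(is_artifact(clean_epoch), is_artifact(noisy_epoch))
```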

The amplitudes of the predominantly fronto-central ORN component25,30,31,47 were measured as the average signal in the 250–350 ms latency range from Figure onset for the young adult group and the 350–550 ms latency range for the normal-hearing and hearing-impaired elderly groups at the C3, Cz, and C4 leads. The amplitudes of the predominantly parietal P400 component25,30,31,47 were measured as the average signal in the 650–850 ms latency range from the P3, Pz, and P4 electrodes in all three groups.

Peak latency was measured as the latency value of the maximal amplitude within the latency range of ORN and P400, respectively.
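
The amplitude and latency measures can be illustrated with a short Python sketch operating on a single averaged waveform. It assumes that "maximal amplitude" means the most negative value for the ORN and the most positive value for the P400, which is an interpretation rather than something stated in the text; the function names and the synthetic waveform are hypothetical.

```python
# Measurement windows (ms from Figure onset), as given in the text.
WINDOWS_MS = {
    "ORN_young": (250, 350),
    "ORN_elderly": (350, 550),
    "P400": (650, 850),
}


def in_window(times_ms, values, start_ms, end_ms):
    """Pairs of (time, value) falling inside the inclusive window."""
    return [(t, v) for t, v in zip(times_ms, values) if start_ms <= t <= end_ms]


def mean_amplitude(times_ms, values, start_ms, end_ms):
    """Average signal within the window."""
    points = in_window(times_ms, values, start_ms, end_ms)
    return sum(v for _, v in points) / len(points)


def peak_latency(times_ms, values, start_ms, end_ms, polarity):
    """Latency of the extreme value; polarity -1 for ORN, +1 for P400."""
    points = in_window(times_ms, values, start_ms, end_ms)
    t_peak, _ = max(points, key=lambda tv: polarity * tv[1])
    return t_peak


# Synthetic waveform: a negative deflection peaking at 300 ms and
# a positive deflection peaking at 720 ms (arbitrary units).
times = list(range(0, 1000))
erp = [
    -max(0.0, 50 - abs(t - 300)) / 50 + max(0.0, 100 - abs(t - 720)) / 100
    for t in times
]
orn_lat = peak_latency(times, erp, *WINDOWS_MS["ORN_young"], polarity=-1)
p400_lat = peak_latency(times, erp, *WINDOWS_MS["P400"], polarity=+1)
orn_amp = mean_amplitude(times, erp, *WINDOWS_MS["ORN_young"])
print(orn_lat, p400_lat, round(orn_amp, 3))
```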

EEG source localization

The Brainstorm toolbox (version 2022 January)61 was used to perform EEG source reconstruction, following the protocol of previous studies.62,63,64 The MNI/Colin27 brain template was segmented based on the default setting and was entered, along with default electrode locations, into the forward boundary element head model (BEM) provided by the openMEEG algorithm.65 For the modeling of time-varying source signals (current density) of all cortical voxels, a minimum norm estimate inverse solution was employed using dynamical Statistical Parametric Mapping normalization,66 separately on Figure and No-Figure trials of the LN and HN conditions, and the three groups.

Quantification and statistical analysis

Statistical analysis of behavioral data

From the threshold detection tasks, we analyzed the participants’ coherence level in the LN condition, the number of additional background tones in the HN condition, and log-transformed SNR values for both conditions. A mixed-model ANOVA with the within-subject factor NOISE (LN vs. HN) and the between-subject factor GROUP (young adult, normal-hearing elderly, hearing-impaired elderly) was conducted on SNR. For coherence levels (LN only) and the number of additional background tones (HN only), one-way ANOVAs were performed with the between-subject factor GROUP (young, normal-hearing old, hearing-impaired old).

From the main task, detection performance was assessed by the sensitivity index (d’),59 false alarm rate (FA), and mean reaction times (RT). Mixed-model ANOVAs were conducted with the within-subject factor NOISE, and the between-subject factor GROUP, separately on d’, FA, and RT. Statistical analyses were carried out in MATLAB (R2017a). The alpha level was set at 0.05 for all tests. Partial eta squared (ηp2) is reported as effect size. Post-hoc pairwise comparisons were computed by Tukey HSD tests.

Pearson’s correlations were calculated between the average of pure-tone audiometry thresholds in the 250–8000 Hz range and working memory measures (capacity and control) on one side and behavioral variables from the threshold detection and the figure detection tasks on the other side. Bonferroni correction was used to reduce the potential errors resulting from multiple comparisons.

Statistical analysis of EEG data

Event-related brain activity

The effects of age were tested with mixed-model ANOVAs with within-subject factors FIGURE (Figure vs. No-figure), NOISE (LN vs. HN), LATERALITY (left vs. midline vs. right), and the between-subject factor AGE (young adult vs. normal-hearing elderly) on the two ERP amplitudes and peak latencies. Similar mixed-model ANOVAs were conducted to test the effects of hearing impairment by exchanging AGE for the between-subject factor HEARING IMPAIRMENT (normal-hearing vs. hearing-impaired older adults). Post-hoc pairwise comparisons were computed by Tukey HSD tests.

EEG source activity

Contrasts were evaluated on the average signal for the time window of interest (250–350 ms for young adults and 350–550 ms for normal-hearing and hearing-impaired older listeners), between Figure trials of the LN and HN conditions, separately for each group by a permutation-based (N = 1000) paired sample t-test (alpha level = 0.01).
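
A permutation-based paired-sample t-test of the kind named above can be sketched generically as a sign-flipping test on the within-participant differences. The Python example below is a minimal illustration for a single source location; it is not the Brainstorm implementation, whose exact settings are not described in the text, and the data values and function names are hypothetical.

```python
import math
import random


def paired_t(diffs):
    """Paired-sample t statistic computed from within-subject differences."""
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    if var == 0.0:
        return 0.0 if mean == 0.0 else math.copysign(math.inf, mean)
    return mean / math.sqrt(var / n)


def permutation_paired_test(a, b, n_perm=1000, seed=None):
    """Two-sided sign-flip permutation test; returns (t, p)."""
    rng = random.Random(seed)
    diffs = [x - y for x, y in zip(a, b)]
    observed = paired_t(diffs)
    count = 0
    for _ in range(n_perm):
        # Under the null hypothesis the sign of each difference is arbitrary.
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(paired_t(flipped)) >= abs(observed):
            count += 1
    return observed, (count + 1) / (n_perm + 1)


# Hypothetical source amplitudes for 12 participants (LN vs. HN Figure trials).
ln = [5.1, 6.3, 5.8, 7.2, 6.9, 6.1, 5.5, 6.7, 7.0, 6.4, 5.9, 6.6]
hn = [3.0, 4.1, 4.0, 5.3, 4.6, 4.2, 3.3, 4.9, 5.2, 4.1, 3.8, 4.7]
t_obs, p_val = permutation_paired_test(ln, hn, n_perm=1000, seed=0)
print(round(t_obs, 2), p_val < 0.01)
```

Adding one to both the count and the number of permutations keeps the estimated p value strictly above zero, which is a common convention for Monte Carlo permutation tests.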

Acknowledgments

We would like to express our gratitude to Roberto Barumerli for his advice on behavioral analysis.

This work was funded by the Hungarian National Research Development and Innovation Office (ANN131305 and PD123790 to B.T. and K132642 to I.W.), the János Bolyai Research grant awarded to B.T. (BO/00237/19/2), and the Austrian Science Fund (FWF, I4294-B) awarded to R.B. All data are available at OSF: https://osf.io/q8n9k/.

Author contributions

Conceptualization: Á.B., R.B., and B.T.; methodology: Á.B., R.B., B.T., and O.Sz.; formal analysis: Á.B., B.T., O.Sz., and L.B.; investigation: Á.B., B.T., O.Sz., and R.B.; writing – original draft: Á.B. and B.T.; writing – review and editing: Á.B., B.T., R.B., and I.W.; supervision: B.T.; funding acquisition: R.B. and I.W.; resources: B.T.; software: Á.B. and O.Sz.; data collection: P.K.V.; project administration: P.K.V.

Declaration of interests

The authors declare no competing interests.

Published: February 20, 2024

Footnotes

Supplemental information can be found online at https://doi.org/10.1016/j.isci.2024.109295.

Contributor Information

Ádám Boncz, Email: boncz.adam@ttk.hu.

Brigitta Tóth, Email: toth.brigitta@ttk.hu.

References

- 1.Prosser S., Turrini M., Arslan E. Effects of different noises on speech discrimination by the elderly. Acta Otolaryngol. 1991;111:136–142. doi: 10.3109/00016489109127268. [DOI] [PubMed] [Google Scholar]

- 2.Tun P.A., Wingfield A. One voice too many: Adult age differences in language processing with different types of distracting sounds. J. Gerontol. B-Psychol. 1999;54:317–327. doi: 10.1093/geronb/54B.5.P317. [DOI] [PubMed] [Google Scholar]

- 3.Schneider B.A., Daneman M., Murphy D.R., See S.K. Listening to discourse in distracting settings: the effects of aging. Psychol. Aging. 2000;15:110–125. doi: 10.1037/0882-7974.15.1.110. [DOI] [PubMed] [Google Scholar]

- 4.Cardin V. Effects of aging and adult-onset hearing loss on cortical auditory regions. Front. Neurosci. 2016;10:199. doi: 10.3389/fnins.2016.00199. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Slade K., Plack C.J., Nuttall H.E. The effects of age-related hearing loss on the brain and cognitive function. Trends Neurosci. 2020;43:810–821. doi: 10.1016/j.tins.2020.07.005. [DOI] [PubMed] [Google Scholar]

- 6.Alain C., McDonald K., Van Roon P. Effects of age and background noise on processing a mistuned harmonic in an otherwise periodic complex sound. Hear. Res. 2012;283:126–135. doi: 10.1016/j.heares.2011.10.007. [DOI] [PubMed] [Google Scholar]

- 7.Frisina R.D., Ding B., Zhu X., Walton J.P. Age-related hearing loss: prevention of threshold declines, cell loss and apoptosis in spiral ganglion neurons. Aging. 2016;8:2081. doi: 10.18632/aging.101045. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Kurakata K., Mizunami T., Matsushita K. Pure-tone audiometric thresholds of young and older adults. Acoust Sci. Technol. 2006;27:114–116. doi: 10.1250/ast.27.114. [DOI] [Google Scholar]

- 9.Profant O., Tintěra J., Balogová Z., Ibrahim I., Jilek M., Syka J. Functional changes in the human auditory cortex in ageing. PLoS One. 2015;10 doi: 10.1371/journal.pone.0116692. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Sergeyenko Y., Lall K., Liberman M.C., Kujawa S.G. Age-related cochlear synaptopathy: an early-onset contributor to auditory functional decline. J. Neurosci. 2013;33:13686–13694. doi: 10.1523/JNEUROSCI.1783-13.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Liberman M.C., Kujawa S.G. Cochlear synaptopathy in acquired sensorineural hearing loss: Manifestations and mechanisms. Hear. Res. 2017;349:138–147. doi: 10.1016/j.heares.2017.01.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Holmes E., Griffiths T.D. ‘Normal’ hearing thresholds and fundamental auditory grouping processes predict difficulties with speech-in-noise perception. Sci. Rep. 2019;9 doi: 10.1038/s41598-019-53353-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Vermiglio A.J., Soli S.D., Freed D.J., Fisher L.M. The relationship between high-frequency pure-tone hearing loss, hearing in noise test (HINT) thresholds, and the articulation index. J. Am. Acad. Audiol. 2012;23:779–788. doi: 10.3766/jaaa.23.10.4. [DOI] [PubMed] [Google Scholar]

- 14.Helfer K.S., Wilber L.A. Hearing loss, aging, and speech perception in reverberation and noise. J. Speech Lang. Hear. Res. 1990;33:149–155. doi: 10.1044/jshr.3301.149. [DOI] [PubMed] [Google Scholar]

- 15.Pichora-Fuller M.K., Souza P.E. Effects of aging on auditory processing of speech. Int. J. Audiol. 2003;42:11–16. doi: 10.3109/14992020309074638. [DOI] [PubMed] [Google Scholar]

- 16.Snyder J.S., Alain C. Age-related changes in neural activity associated with concurrent vowel segregation. Cognit. Brain Res. 2005;24:492–499. doi: 10.1016/j.cogbrainres.2005.03.002. [DOI] [PubMed] [Google Scholar]

- 17.Ben-David B.M., Vania Y.Y., Schneider B.A. Does it take older adults longer than younger adults to perceptually segregate a speech target from a background masker? Hear. Res. 2012;290:55–63. doi: 10.1016/j.heares.2012.04.022. [DOI] [PubMed] [Google Scholar]

- 18.Herrmann B., Buckland C., Johnsrude I.S. Neural signatures of temporal regularity processing in sounds differ between younger and older adults. Neurobiol. Aging. 2019;83:73–85. doi: 10.1016/j.neurobiolaging.2019.08.028. [DOI] [PubMed] [Google Scholar]

- 19.Bertoli S., Smurzynski J., Probst R. Effects of age, age-related hearing loss, and contralateral cafeteria noise on the discrimination of small frequency changes: psychoacoustic and electrophysiological measures. J. Assoc. Res. Oto. 2005;6:207–222. doi: 10.1007/s10162-005-5029-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Friedman D. In: The Oxford Handbook of Event-Related Potential Components. Kappenman E.S., Luck S.J., editors. Oxford Academic; 2011. The Components of Aging; pp. 514–536.

- 21. Grady C. The cognitive neuroscience of ageing. Nat. Rev. Neurosci. 2012;13:491–505. doi: 10.1038/nrn3256.

- 22. Bizley J.K., Cohen Y.E. The what, where and how of auditory-object perception. Nat. Rev. Neurosci. 2013;14:693–707. doi: 10.1038/nrn3565.

- 23. Bregman A.S., Liao C., Levitan R. Auditory grouping based on fundamental frequency and formant peak frequency. Can. J. Psychol. 1990;44:400–413. doi: 10.1037/h0084255.

- 24. O'Sullivan J.A., Shamma S.A., Lalor E.C. Evidence for neural computations of temporal coherence in an auditory scene and their enhancement during active listening. J. Neurosci. 2015;35:7256–7263. doi: 10.1523/JNEUROSCI.4973-14.2015.

- 25. Tóth B., Kocsis Z., Háden G.P., Szerafin Á., Shinn-Cunningham B.G., Winkler I. EEG signatures accompanying auditory figure-ground segregation. Neuroimage. 2016;141:108–119. doi: 10.1016/j.neuroimage.2016.07.028.

- 26. Teki S., Chait M., Kumar S., Shamma S., Griffiths T.D. Segregation of complex acoustic scenes based on temporal coherence. Elife. 2013;2 doi: 10.7554/eLife.00699.

- 27. Teki S., Barascud N., Picard S., Payne C., Griffiths T.D., Chait M. Neural correlates of auditory figure-ground segregation based on temporal coherence. Cerebr. Cortex. 2016;26:3669–3680. doi: 10.1093/cercor/bhw173.

- 28. Holmes E., Zeidman P., Friston K.J., Griffiths T.D. Difficulties with speech-in-noise perception related to fundamental grouping processes in auditory cortex. Cerebr. Cortex. 2021;31:1582–1596. doi: 10.1093/cercor/bhaa311.

- 29. Schneider F., Balezeau F., Distler C., Kikuchi Y., van Kempen J., Gieselmann A., Griffiths T.D. Neuronal figure-ground responses in primate primary auditory cortex. Cell Rep. 2021;35 doi: 10.1016/j.celrep.2021.109242.

- 30. Alain C., Schuler B.M., McDonald K.L. Neural activity associated with distinguishing concurrent auditory objects. J. Acoust. Soc. Am. 2002;111:990–995. doi: 10.1121/1.1434942.

- 31. Bendixen A., Jones S.J., Klump G., Winkler I. Probability dependence and functional separation of the object-related and mismatch negativity event-related potential components. Neuroimage. 2010;50:285–290. doi: 10.1016/j.neuroimage.2009.12.037.

- 32. Shamma S.A., Elhilali M., Micheyl C. Temporal coherence and attention in auditory scene analysis. Trends Neurosci. 2011;34:114–123. doi: 10.1016/j.tins.2010.11.002.

- 33. Molloy K., Lavie N., Chait M. Auditory figure-ground segregation is impaired by high visual load. J. Neurosci. 2019;39:1699–1708. doi: 10.1523/JNEUROSCI.2518-18.2018.

- 34. Weinstein B.E., Ventry I.M. Audiometric correlates of the hearing handicap inventory for the elderly. J. Speech Hear. Disord. 1983;48:379–384. doi: 10.1044/jshd.4804.379.

- 35. Andreou L.V., Kashino M., Chait M. The role of temporal regularity in auditory segregation. Hear. Res. 2011;280:228–235. doi: 10.1016/j.heares.2011.06.001.

- 36. Neubert C.R., Förstel A.P., Debener S., Bendixen A. Predictability-Based Source Segregation and Sensory Deviance Detection in Auditory Aging. Front. Hum. Neurosci. 2021;15 doi: 10.3389/fnhum.2021.734231.

- 37. Sohoglu E., Chait M. Detecting and representing predictable structure during auditory scene analysis. Elife. 2016;5 doi: 10.7554/eLife.19113.

- 38. Aman L., Picken S., Andreou L.V., Chait M. Sensitivity to temporal structure facilitates perceptual analysis of complex auditory scenes. Hear. Res. 2021;400 doi: 10.1016/j.heares.2020.108111.

- 39. Rimmele J., Schröger E., Bendixen A. Age-related changes in the use of regular patterns for auditory scene analysis. Hear. Res. 2012;289:98–107. doi: 10.1016/j.heares.2012.04.006.

- 40. de Kerangal M., Vickers D., Chait M. The effect of healthy aging on change detection and sensitivity to predictable structure in crowded acoustic scenes. Hear. Res. 2021;399 doi: 10.1016/j.heares.2020.108074.

- 41. Farahbod H., Rogalsky C., Keator L.M., Cai J., Pillay S.B., Turner K., Saberi K. Informational Masking in Aging and Brain-lesioned Individuals. J. Assoc. Res. Oto. 2023;24:67–79. doi: 10.1007/s10162-022-00877-9.

- 42. Alain C., McDonald K.L. Age-related differences in neuromagnetic brain activity underlying concurrent sound perception. J. Neurosci. 2007;27:1308–1314. doi: 10.1523/JNEUROSCI.5433-06.2007.

- 43. Alain C., Tremblay K. The role of event-related brain potentials in assessing central auditory processing. J. Am. Acad. Audiol. 2007;18:573–589. doi: 10.3766/jaaa.18.7.5.

- 44. Ezzatian P., Li L., Pichora-Fuller K., Schneider B.A. Delayed stream segregation in older adults: More than just informational masking. Ear Hear. 2015;36:482–484. doi: 10.1097/aud.0000000000000139.

- 45. Polich J., Kok A. Cognitive and biological determinants of P300: an integrative review. Biol. Psychol. 1995;41:103–146. doi: 10.1016/0301-0511(95)05130-9.

- 46. Alain C. Breaking the wave: effects of attention and learning on concurrent sound perception. Hear. Res. 2007;229:225–236. doi: 10.1016/j.heares.2007.01.011.

- 47. Alain C., Arnott S.R., Picton T.W. Bottom–up and top–down influences on auditory scene analysis: Evidence from event-related brain potentials. J. Exp. Psychol. Human. 2001;27:1072. doi: 10.1037/0096-1523.27.5.1072.

- 48. Polich J. Updating P300: an integrative theory of P3a and P3b. Clin. Neurophysiol. 2007;118:2128–2148. doi: 10.1016/j.clinph.2007.04.019.

- 49. Ashford J.W., Coburn K.L., Rose T.L., Bayley P.J. P300 energy loss in aging and Alzheimer's disease. J. Alzheimers Dis. 2011;26:229–238. doi: 10.3233/jad2011-0061.

- 50. van Dinteren R., Arns M., Jongsma M.L., Kessels R.P. P300 development across the lifespan: a systematic review and meta-analysis. PLoS One. 2014;9 doi: 10.1371/journal.pone.0087347.

- 51. O'Connell R.G., Balsters J.H., Kilcullen S.M., Campbell W., Bokde A.W., Lai R., Robertson I.H. A simultaneous ERP/fMRI investigation of the P300 aging effect. Neurobiol. Aging. 2012;33:2448–2461. doi: 10.1016/j.neurobiolaging.2011.12.021.

- 52. Goossens T., Vercammen C., Wouters J., van Wieringen A. Masked speech perception across the adult lifespan: Impact of age and hearing impairment. Hear. Res. 2017;344:109–124. doi: 10.1016/j.heares.2016.11.004.

- 53. Helfer K.S., Freyman R.L. Aging and speech-on-speech masking. Ear Hear. 2008;29:87. doi: 10.1097/AUD.0b013e31815d638b.

- 54. Boudewyn M.A., Luck S.J., Farrens J.L., Kappenman E.S. How many trials does it take to get a significant ERP effect? It depends. Psychophysiology. 2018;55 doi: 10.1111/psyp.13049.

- 55. Jensen K.M., MacDonald J.A. Towards thoughtful planning of ERP studies: How participants, trials, and effect magnitude interact to influence statistical power across seven ERP components. Psychophysiology. 2023;60 doi: 10.1111/psyp.14245.

- 56. Lamar M., Price C.C., Libon D.J., Penney D.L., Kaplan E., Grossman M., Heilman K.M. Alterations in working memory as a function of leukoaraiosis in dementia. Neuropsychologia. 2007;45:245–254. doi: 10.1016/j.neuropsychologia.2006.07.009.

- 57. Brainard D.H. The psychophysics toolbox. Spatial Vis. 1997;10:433–436. doi: 10.1163/156856897x00357.

- 58. Watson A.B., Pelli D.G. QUEST: A Bayesian adaptive psychometric method. Percept. Psychophys. 1983;33:113–120. doi: 10.3758/BF03202828.

- 59. Swets J.A. Measuring the accuracy of diagnostic systems. Science. 1988;240:1285–1293. doi: 10.1126/science.3287615.

- 60. Delorme A., Sejnowski T., Makeig S. Enhanced detection of artifacts in EEG data using higher-order statistics and independent component analysis. Neuroimage. 2007;34:1443–1449. doi: 10.1016/j.neuroimage.2006.11.004.

- 61. Tadel F., Baillet S., Mosher J.C., Pantazis D., Leahy R.M. Brainstorm: a user-friendly application for MEG/EEG analysis. Comput. Intell. Neurosci. 2011;2011:1–13. doi: 10.1155/2011/879716.

- 62. Song J., Davey C., Poulsen C., Luu P., Turovets S., Anderson E., Tucker D. EEG source localization: Sensor density and head surface coverage. J. Neurosci. Methods. 2015;256:9–21. doi: 10.1016/j.jneumeth.2015.08.015.

- 63. Huang Y., Parra L.C., Haufe S. The New York Head—A precise standardized volume conductor model for EEG source localization and tES targeting. Neuroimage. 2016;140:150–162. doi: 10.1016/j.neuroimage.2015.12.019.

- 64. Pizzagalli D.A. In: Handbook of psychophysiology. 3rd ed. Cacioppo J.T., Tassinary L.G., Berntson G.G., editors. Cambridge University Press; 2007. Electroencephalography and high-density electrophysiological source localization; pp. 56–84.

- 65. Gramfort A., Papadopoulo T., Olivi E., Clerc M. Forward field computation with OpenMEEG. Comput. Intell. Neurosci. 2011;2011 doi: 10.1155/2011/923703.

- 66. Stropahl M., Bauer A.K.R., Debener S., Bleichner M.G. Source-modeling auditory processes of EEG data using EEGLAB and brainstorm. Front. Neurosci. 2018;12:309. doi: 10.3389/fnins.2018.00309.

Associated Data

Data Availability Statement

- Behavioral and EEG data have been deposited at OSF and are publicly available as of the date of publication. The DOI is listed in the key resources table.

- All original code has been deposited at GitHub and Zenodo and is publicly available as of publication. DOIs are listed in the key resources table.

- Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.