Abstract

Optical processors, built with “optical neurons”, can efficiently perform high-dimensional linear operations at the speed of light. They are therefore a promising avenue for accelerating large-scale linear computations. With current advances in micro-fabrication, such optical processors can now be 3D fabricated, but only with limited precision. This limitation translates to quantization of the learnable parameters in optical neurons, and it should be handled during the design of the optical processor to avoid a model mismatch. Specifically, optical neurons should be trained or designed within the physical constraints at a predefined quantized precision level. To address this critical issue, we propose a physics-informed quantization-aware training framework. Our approach accounts for physical constraints during the training process, leading to robust designs. We demonstrate that our approach can design state-of-the-art optical processors using diffractive networks for multiple physics-based tasks despite quantized learnable parameters. We thus lay the foundation upon which improved optical processors may be 3D fabricated in the future.

Introduction

Current advancements in deep learning have renewed interest in optical processors as a promising avenue to accelerate large-scale linear computations. While such optical accelerators have not yet been fully realized for arbitrary linear operations, optical neural processors (i.e., optical processors equivalent to neural networks) have already shown great promise in a variety of applications. For instance, diffractive networks1, made of a cascade of passive diffractive layers, can perform all-optical classification1, all-optical quantitative phase imaging (QPI)2,3, optical logic operations4, spatiotemporal signal processing5, saliency segmentation6, and 3D object detection7. Similarly, learnable optical Fourier processors have been shown to be capable of all-optical quantitative phase imaging3, medical image processing8,9, optical image encryption10, and image classification11. Such optical processors have also been used as coding elements to design end-to-end optimized computational imaging systems12,13. All these optical processors linearly map an input light field to an output field. They thus perform specific linear operations “learned” from training data, using computational elements we term “optical neurons”.

Optical neurons are traditionally implemented using reconfigurable optical elements such as digital micromirror devices (DMDs) and spatial light modulators (SLMs). The state of each DMD micromirror or each SLM pixel is treated as the learnable parameter of the optical neuron. However, recent advancements in microfabrication have enabled the fabrication of 3D optics by voxel-wise modulation of the refractive index of an optical substrate. We can therefore now 3D print optical processors from a learned design. Here, the transmission coefficient at each 3D location is treated as the learnable parameter of the optical neuron. In either case, however, unlike the parameters of artificial neurons, which can represent any real value, the parameters of optical neurons can only represent a limited set of complex values, constrained by their physical characteristics and fabrication limitations. More precisely, parameters in optical neurons are quantized, complex-valued, and bounded. For example, typical SLM pixels enable 8-bit-quantized phase-only parameters bounded in ; DMD micromirrors enable 1-bit-quantized amplitude-only parameters bounded in {0, 1}. Similarly, in 3D optics, fabrication limitations essentially constrain the parameters to a set of quantized complex values that are bounded in phase (). These constraints may be ignored while designing (or training) the optical processor. But the change in the parameter distribution from design to fabrication, i.e., the model mismatch, frequently leads to a performance decline in the realized system14. For example, recent work on all-optical QPI using diffractive networks (D2NNs) shows that reducing the precision of physical parameters exponentially decreases the performance of the D2NN2. Nevertheless, beyond such isolated examples, there has been little exploration15 of how both boundedness and quantization of parameters affect the performance of optical neurons.

While quantization of optical neurons remains largely unexplored, quantization of parameters in artificial neural networks has been extensively studied in the machine learning literature to deploy models on resource-constrained devices16–18. These works address quantization either post hoc19–21 or during training, using quantization-aware training (QAT) methods18,22,23. Post-hoc-quantized models are easier to train but perform poorly during inference due to model mismatch; QAT models, on the other hand, are harder to train but perform faithfully to the trained model. The training difficulty lies in the quantization operation, which is not differentiable. Non-differentiability impedes gradient-based optimizers, making quantized models harder to train. QAT methods address this issue in a few ways. The most straightforward is to inject quantization noise during forward propagation so that the model learns to be robust; this technique has been used in optical neural networks to train neuromorphic models24. Another is to use the straight-through estimator (STE)25,26, which passes the output gradient directly to the input during backpropagation; STE has been used to train optical neurons with limited-precision controls and device variations26. A third approach is to use differentiable soft quantization functions22,23 that approximate the quantized model. Soft quantization functions have not been efficiently utilized in optical neurons, and are the focus of this work. Of note, however, Gumbel-Softmax (GS), which enjoys widespread use in neural networks as a differentiable approximation to the categorical distribution, has been used as a QAT technique in optical neural networks15. While conceptually similar, GS is not a direct approximation of the quantization operation and hence is not as effective as soft quantization functions (see Fig. 1).

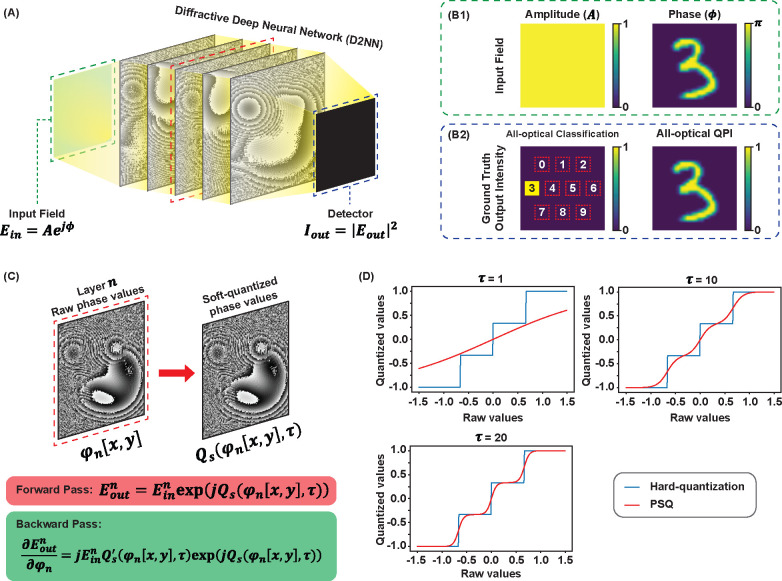

Figure 1. Representative results for performance of QuATON (PSQ-LT) compared to other QAT methods (PQ, STE, GS) used to train optical neurons.

Here, PSQ-LT, PQ, STE, and GS stand for progressive sigmoid quantization with learnable temperature, post-quantization, straight-through estimator, and Gumbel-Softmax, respectively.

To this end, inspired by recent work in computer vision22,23, we introduce “Quantization Aware Training of Optical Neurons”, or “QuATON”, a QAT framework specifically targeting optical neurons. The key elements of this framework are: (1) a soft quantization function constructed using shifted sigmoid functions that gradually evolve to the desired hard quantization levels; and (2) an auto-tuning temperature parameter to control the quantization function during training. In comprehensive performance comparisons, we show the superiority of QuATON over competing methods used to train optical neurons (see Fig. 1). Our work sets the foundation upon which improved optical processors may be designed and built in the future.

Results and Discussion

QuATON: Quantization-aware Training of Optical Neurons

The quantization operation maps a given continuous variable, , to a set of discrete values. These discrete values are known as quantization levels; in uniform quantization, they are evenly spaced, and the uniform quantization operation is defined as,

| (1) |

Here, is the rounding operation to the nearest integer, and is the step size. are the lower and upper bounds of the quantized range, where is the number of quantization levels. We hereon refer to the operation described in Eq. 1 as the hard-quantization function.
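To make the hard-quantization operation concrete, the following is a minimal Python sketch of uniform quantization. It is an illustration under stated assumptions, not a transcription of Eq. 1: the names `lower`, `upper`, and `n_levels` are hypothetical, the step size is assumed to be `(upper - lower) / (n_levels - 1)`, and inputs outside the range are assumed to be clipped to the nearest bound.

```python
import math

def hard_quantize(x, lower, upper, n_levels):
    """Map x to the nearest of n_levels evenly spaced values in [lower, upper]."""
    step = (upper - lower) / (n_levels - 1)  # assumed step size
    # Round half up explicitly; Python's built-in round() rounds halves to even.
    index = math.floor((x - lower) / step + 0.5)
    # Assumption: values outside the range snap to the lowest or highest level.
    index = min(max(index, 0), n_levels - 1)
    return lower + index * step

# Four levels on [0, 3]: the levels are 0, 1, 2, and 3.
samples = (-0.4, 0.2, 1.6, 2.49, 7.0)
print([hard_quantize(x, 0.0, 3.0, 4) for x in samples])
```

The output is piecewise constant in `x`, which is exactly why its derivative is zero almost everywhere.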

Due to its step-like nature, the hard-quantization function (Eq. 1) has a zero derivative almost everywhere; at the sharp transitions between two quantization levels, its derivative is undefined. Gradient-based optimizers, which depend on the derivative, thus fail in the presence of the hard-quantization function. In artificial (or deep) neural networks, this issue has been addressed using soft-quantization functions. For instance, differentiable soft quantization (DSQ)23 is a soft-quantization function based on the hyperbolic tangent (tanh). It has a learnable temperature parameter controlling the steepness of the transitions between two quantization levels. The temperature is updated during training, which moves the DSQ function closer to hard quantization. Inspired by this, we developed a separate soft-quantization function, named progressive sigmoid quantization (PSQ), for quantization-aware training of optical neurons. PSQ differs from DSQ in two ways. First, PSQ is based on the sigmoid function. Second, in DSQ, the weights outside the quantization range are clamped to the lower and upper bounds of the range. This results in a zero gradient for the weights outside the quantization range, causing them to freeze during training. In PSQ, we removed this clamping, which gives a non-zero gradient to weights outside the quantization range.

Our proposed progressive sigmoid quantization (PSQ) function is defined as,

| (2) |

where

| (3) |

and is the temperature factor that changes the steepness of the transition between two adjacent quantization levels (other variables are the same as in Eq. 1). As increases, the PSQ function approaches the hard-quantization function, as shown in Fig. 2–D. When using PSQ in QuATON, we start training with a smaller value (i.e., a relaxed quantization function). We then gradually increase as the training progresses, until PSQ ( in Eq. 2) approaches the hard quantization function ( in Eq. 1). We call this progressive training. In this work, we consider two progressive training approaches: 1) linearly increasing the temperature (PSQ-LI); and 2) treating the temperature as learnable (PSQ-LT). In PSQ-LI, we start with a small value and increase it linearly with the number of epochs. In PSQ-LT, we use gradient-based optimization to adjust as the training progresses. These two schemes are described in detail in the Methods section (under Progressive Training Schemes).
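The behaviour described above can be illustrated with a small Python sketch of a sigmoid-based soft quantizer. The exact form of Eqs. 2 and 3 is not reproduced here; this sketch assumes one plausible construction, a sum of sigmoids centred at the midpoints between adjacent levels, and all function and parameter names are hypothetical.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def soft_quantize(x, lower, upper, n_levels, temperature):
    """Smooth staircase: one sigmoid per transition between adjacent levels."""
    step = (upper - lower) / (n_levels - 1)
    total = 0.0
    for i in range(n_levels - 1):
        midpoint = lower + (i + 0.5) * step  # assumed transition location
        total += sigmoid(temperature * (x - midpoint))
    return lower + step * total

# With four levels on [0, 3], the nearest level to x = 1.2 is 1.0.
for temperature in (1.0, 5.0, 20.0, 100.0):
    print(temperature, soft_quantize(1.2, 0.0, 3.0, 4, temperature))
```

In this construction a larger temperature sharpens each transition, so the printed values move toward the hard-quantized level. No clamping is applied, so the slope stays non-zero (although small) for inputs outside the range.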

Figure 2. Quantization-aware training of diffractive deep neural networks (D2NNs) using progressive sigmoid quantization (PSQ):

A) D2NN architecture: D2NNs consist of several diffractive layers. The input field passes through the layers and the detector captures the output intensity. B1) an example of the amplitude and phase of the input field for the MNIST dataset. The input phase contains the information of interest. B2) the ground truth output intensities for the two tasks considered. For the all-optical classification task, the detector region is divided into 10 patches corresponding to each class shown in red dotted lines. For the example shown, the area corresponding to digit 3 is lighted up, and the other areas have zero intensity. For the all-optical quantitative phase imaging (QPI) task, the ground truth output intensity is proportional to the input phase. C) the training procedure optimizing only the phase coefficients of the D2NN. During forward propagation, the raw phase weights of the nth layer are sent through the PSQ function . The immediate output of the layer is obtained by modulating the input to the layer with the soft-quantized phase coefficients as shown in the red box. During the backward propagation through the layer, the partial derivatives with respect to phase weights are computed as shown in the green box. D) the evolution of the PSQ function with the temperature parameter . When increases from 1 to 20, the function gradually becomes closer to hard quantization while keeping the differentiability.

In the next section we show how QuATON can be used to train diffractive networks.

QuATON in Diffractive Networks

In this section we use QuATON to train diffractive networks (D2NNs), a type of optical processor first demonstrated by Lin et al. for all-optical image processing at terahertz wavelengths1. D2NNs have a set of diffractive layers through which the light passes, and they act as 2D fully connected networks. These diffractive layers have a set of discrete spatial locations (neurons), each having a complex transmission coefficient. The transmission coefficient of each neuron modulates the amplitude and the phase of the incoming light wave at each layer. The size of a typical D2NN neuron is on the order of half of the operating wavelength; the original terahertz D2NNs consisted of millimeter-scale neurons, whereas D2NNs that work at visible wavelengths, the preferred operational range for optical imaging and image processing, require nanometer-scale neurons. These nanometer-scale optical neurons are notoriously difficult to fabricate at full precision. The fabrication processes used to make them impose constraints on the precision and the bounds of the transmission coefficients. Thus, here we used QuATON to train visible-range D2NNs with quantized parameters, thereby relaxing the required fabrication precision.

Micro-fabrication technologies, such as two-photon lithography, allow the refractive index at any given 3D location on an optical substrate to be manipulated at diffraction-limited resolution. In the context of D2NNs, this capability translates to phase-only optical neurons. Thus, our study considers phase-only D2NNs. As shown in Fig. 2–A, let and be the input and output light fields of the D2NN. At the output light field, a detector is placed to capture its intensity . Let be the function of the D2NN that maps the input light field to the output light field.

| (4) |

is the composition of the individual functions of two types of “layers”, as shown below.

| (5) |

’s are propagation layers, and ’s are modulation layers. , and respectively propagate the input field to the first layer, from one layer to the next layer, and from the final layer to the detector. To model the function of the G’s, we use the Rayleigh-Sommerfeld diffraction theory (Ch. 3.5 in Goodman27). Details of the propagation operation are given in Eq. (S2)–(S4) in section A of the supplementary material. Next, , (where ) denotes the modulation happening at the ith layer. Modulation layers, ’s, consist of phase coefficients and are treated as quantization instances for QuATON. As shown in Fig. 2–C, during the forward propagation, the raw phase coefficients are sent through the PSQ (or DSQ) function to obtain the soft-quantized phase coefficients. Then the incoming light field to the layer is modulated with the soft-quantized phase coefficients. For the nth layer, this is given as

| (6) |

where and are the fields immediately before and after the layer and are the phase coefficients and quantization temperature of layer respectively. During the backpropagation, the partial derivative of the layer output with respect to phase coefficients is computed as shown in Fig. 2–C. Similarly, for the learnable temperature case, the partial derivatives with respect to are also computed for the optimization of the temperature parameters. A detailed description explaining the backpropagation is also given in the supplementary materials sec. A.
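As a rough illustration of the forward and backward steps through one phase-only neuron, the sketch below modulates a complex input field with a soft-quantized phase and computes the derivative of the output with respect to the raw phase via the chain rule. It is not the paper's Eq. 6: the soft quantizer is an assumed sum of sigmoids with levels at multiples of `step`, and every name in the code is hypothetical.

```python
import cmath
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def soft_phase(phi, step, n_levels, temperature):
    """Assumed soft quantizer with levels 0, step, 2*step, ..."""
    return step * sum(sigmoid(temperature * (phi - (i + 0.5) * step))
                      for i in range(n_levels - 1))

def soft_phase_grad(phi, step, n_levels, temperature):
    """Derivative of soft_phase with respect to the raw phase phi."""
    total = 0.0
    for i in range(n_levels - 1):
        s = sigmoid(temperature * (phi - (i + 0.5) * step))
        total += temperature * s * (1.0 - s)
    return step * total

def modulate(field_in, phi, step, n_levels, temperature):
    """Return the modulated field and its derivative with respect to phi."""
    q = soft_phase(phi, step, n_levels, temperature)
    field_out = field_in * cmath.exp(1j * q)
    # Chain rule: d(field_out)/d(phi) = j * field_out * dq/dphi
    d_field = 1j * field_out * soft_phase_grad(phi, step, n_levels, temperature)
    return field_out, d_field

field_in = 0.8 * cmath.exp(0.3j)
out, d_out = modulate(field_in, phi=1.0, step=math.pi / 2, n_levels=4, temperature=5.0)
print(abs(out), d_out)
```

Because the modulation is phase-only, the magnitude of the field is unchanged; only its phase is shifted by the soft-quantized coefficient.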

In the next section, we evaluate the performance of D2NNs trained with QuATON compared to other competing methods.

QuATON Designs State-of-the-art Diffractive Networks Despite Quantized Weights

As shown in Fig. 2–A and B, we designed D2NNs for two selected physics-based tasks: all-optical classification and all-optical quantitative phase imaging (QPI)2,3. In all-optical classification, the D2NN was trained to classify phase objects, whereas in all-optical QPI, it was trained to perform QPI of a phase object. For both tasks, the information of interest is in the phase of the input field. Therefore, the input light fields to the D2NN models were constructed by placing images (from the datasets) in the phase after scaling them into the range . The amplitude of the input field was set to one throughout the field. An example of the input field to a D2NN is shown in Fig. 2–B1, and the field can be given as,

| (7) |

where is the input phase, which is an image from the dataset scaled to . The D2NN training details and the two physics-based tasks are explained in detail in the Methods section.
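The construction of the input field can be sketched in a few lines of Python. This is an illustrative example only: the upper end of the phase range is passed in as a hypothetical parameter `phase_max`, and the scaling assumes pixel values between 0 and a hypothetical `pixel_max` of 255.

```python
import cmath

def input_field(image, phase_max, pixel_max=255.0):
    """Encode an image as a unit-amplitude field whose phase carries the image."""
    return [[cmath.exp(1j * phase_max * pixel / pixel_max) for pixel in row]
            for row in image]

image = [[0, 128], [255, 64]]
field = input_field(image, phase_max=cmath.pi)
print([[abs(value) for value in row] for row in field])
```

Every element has magnitude one, so all of the image information sits in the phase of the field.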

For each task we experimented on multiple datasets. Our experiments were organized as follows. For each task and each dataset, we first trained D2NNs with full-precision weights without quantization. These models (denoted by FP) established heuristic upper limits for the performance of a specific task on a particular dataset. We then hard-quantized the weights of the pre-trained full-precision models, generating results for post-quantized (PQ) weights. We then trained our D2NNs using two existing QAT methods used to train optical neurons: the straight-through estimator (STE)25 and Gumbel-Softmax based quantization (GS)15. These experiments, i.e., PQ, STE, and GS, set the baseline of the current state of the art (SOTA). A detailed description of these methods is given in the Methods section. Finally, we trained D2NNs using QuATON. We experimented with four QuATON variations. We first used the same soft quantization and training mechanisms as in differentiable soft quantization (DSQ)23 (note that DSQ has not been used to train optical neurons before). We then used our proposed PSQ while keeping the temperature () fixed (denoted by PSQ-FT). Last, we used PSQ with our two progressive training approaches, i.e., with a linearly increasing temperature (denoted by PSQ-LI) and with a learnable temperature (denoted by PSQ-LT).

In the following subsections we present the results of these experiments for the two physics-based tasks, all-optical classification and all-optical QPI.

All-optical Classification

We experimented on two classification datasets, MNIST and CIFAR10, as shown in Table 1. D2NN weights were quantized with 2, 4, and 8 quantization levels (Q2, Q4, and Q8). The full-precision D2NN achieved ≈ 90% accuracy on the MNIST dataset. However, on the more challenging CIFAR10 dataset, the classification accuracy was only 31.88%, even for the full-precision model. We observe that 8 quantization levels were sufficient to achieve full-precision accuracy on both datasets: even post-quantization, without any quantization-aware training framework, performed as well as the full-precision model. However, for 4 and 2 levels of quantization, our QuATON variants (DSQ, PSQ-FT, PSQ-LI, and PSQ-LT) showed a clear improvement in performance. The best-performing PSQ variant outperformed DSQ by a small margin on every dataset and quantization level. The qualitative results are shown in Figure 3. Of note, for 2 quantization levels on the MNIST dataset, the best QuATON variant (PSQ-LT) improved accuracy by more than 50 percentage points over the best current SOTA method (75.03% versus 21.84% for PQ).

Table 1.

Quantitative results of all-optical classification using D2NNs (classification accuracy).

| Method | Proposed by | Used for optical neurons in | MNIST Q2 | MNIST Q4 | MNIST Q8 | CIFAR10 Q2 | CIFAR10 Q4 | CIFAR10 Q8 |
|---|---|---|---|---|---|---|---|---|
| FP (unquantized, one value per dataset) | - | - | 89.99% | | | 31.88% | | |
| PQ | - | 2 | 21.84% | 86.89% | 90.06% | 14.54% | 26.79% | 31.89% |
| STE | 25 | 26 | 11.98% | 69.05% | 87.43% | 11.97% | 22.55% | 29.80% |
| GS | 28 | 15 | 12.79% | 55.19% | 83.50% | 9.86% | 19.88% | 30.62% |
| DSQ | 23 | This paper | 74.75% | 84.69% | 89.13% | 19.98% | 29.07% | 32.10% |
| PSQ-FT | This paper | This paper | 44.53% | 86.34% | 89.62% | 16.14% | 26.91% | 32.08% |
| PSQ-LI | This paper | This paper | 71.31% | 87.73% | 90.08% | 19.23% | 30.18% | 32.35% |
| PSQ-LT | This paper | This paper | 75.03% | 87.06% | 89.76% | 22.27% | 28.83% | 32.01% |

Figure 3. All-optical classification results:

A1-A2) two examples of the phase of the incoming wave to the D2NN for the two datasets considered. A3-A4) output intensities for the D2NNs trained with full precision (FP) weights. B-G) classification results for the quantization-aware trained D2NNs for each of the examples. Each row named as shows the results for dataset , using D2NNs trained with -level quantized weights (). Each column corresponds to different QAT methods considered which are stated above row B). H) comparison of quantitative results (classification accuracy over the test set of each dataset). I-L) confusion matrices for the FP model and the best-performing methods for each quantization level for the MNIST dataset.

All-optical Quantitative Phase Imaging (QPI)

All-optical quantitative phase imaging translates the phase information of the input light field to the intensity of the output light field. It is thus an image translation task that is more challenging than the previous classification task. As shown in Table 2, we experimented on three datasets: MNIST, TinyImageNet, and RBC (red blood cells). D2NN weights were quantized with 4, 8, and 16 quantization levels (Q4, Q8, and Q16). The comparison of the quantitative results (using the structural similarity index measure, SSIM29) for this task is given in Table 2. The full-precision D2NN (FP) achieved an SSIM of 0.8560, 0.7385, and 0.9227 on the MNIST, TinyImageNet, and RBC datasets, respectively. Post-quantization of FP models severely degraded model performance, especially for the Q4 and Q8 quantization levels (see row PQ in Table 2). STE and GS behaved differently on each dataset. On the MNIST and RBC datasets, STE improved the SSIM, while for the TinyImageNet dataset the improvement was not consistent. For the MNIST and TinyImageNet datasets, GS in fact degraded the SSIM values in most cases (compared to the PQ baseline), but surprisingly improved them by a large margin for the RBC dataset. Our QuATON variants (DSQ, PSQ-FT, PSQ-LI, and PSQ-LT) clearly and consistently improved the SSIM on all datasets and quantization levels (compared to the PQ baseline). In almost all cases, PSQ variants with progressive training (i.e., PSQ-LI and PSQ-LT) outperformed the others. Except for the RBC-Q4 case, at least one QuATON variant outperformed the current SOTA (i.e., PQ, STE, and GS). Surprisingly, GS showed the highest SSIM for the RBC-Q4 case. For a deeper analysis of these results, we consider the qualitative results shown in Fig. 4.

Table 2.

Quantitative results of all-optical quantitative phase imaging using D2NNs. The SSIM values are given for FP models and quantized models trained using different QAT methods.

| Method | MNIST Q4 | MNIST Q8 | MNIST Q16 | TinyImageNet Q4 | TinyImageNet Q8 | TinyImageNet Q16 | RBC Q4 | RBC Q8 | RBC Q16 |
|---|---|---|---|---|---|---|---|---|---|
| FP (unquantized, one value per dataset) | 0.8560 | | | 0.7385 | | | 0.9227 | | |
| PQ | 0.0674 | 0.3526 | 0.6555 | 0.0870 | 0.3234 | 0.5258 | 0.1232 | 0.3214 | 0.7140 |
| STE | 0.1200 | 0.4138 | 0.6698 | 0.0570 | 0.3689 | 0.5721 | 0.5578 | 0.7898 | 0.8774 |
| GS | 0.1114 | 0.2938 | 0.4595 | 0.0793 | 0.2182 | 0.2610 | 0.7188 | 0.7854 | 0.7891 |
| DSQ (QuATON) | 0.1207 | 0.5701 | 0.7321 | 0.1433 | 0.5610 | 0.6782 | 0.3095 | 0.8333 | 0.9208 |
| PSQ-FT (QuATON) | 0.1653 | 0.5348 | 0.7627 | 0.1709 | 0.5845 | 0.7038 | 0.5678 | 0.8646 | 0.9199 |
| PSQ-LI (QuATON) | 0.1411 | 0.5412 | 0.7822 | 0.1854 | 0.5924 | 0.7237 | 0.5394 | 0.8663 | 0.9206 |
| PSQ-LT (QuATON) | 0.1772 | 0.5374 | 0.7759 | 0.1832 | 0.6042 | 0.7125 | 0.6156 | 0.8723 | 0.9202 |

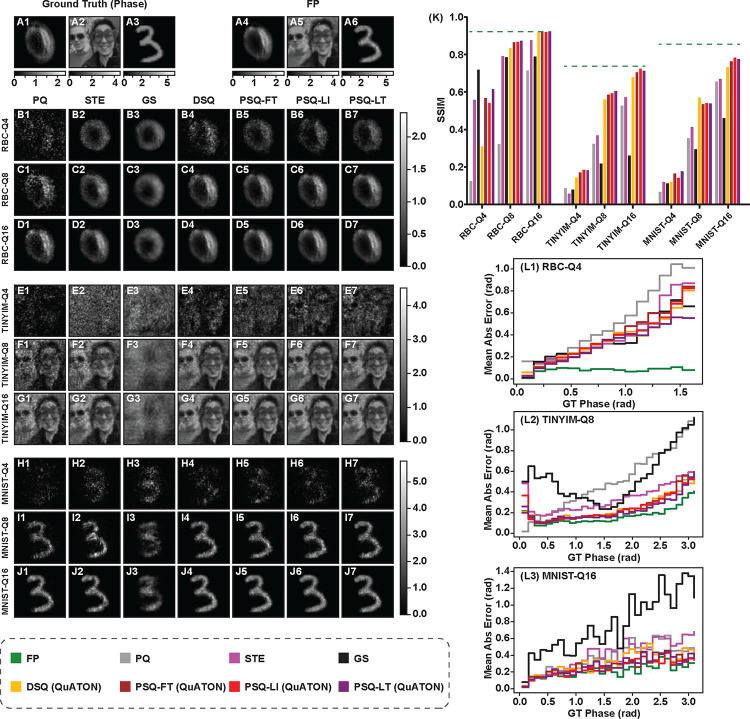

Figure 4. All-optical quantitative phase imaging results:

A1)-A3) three examples of the phase of the incoming wave to the D2NN for the three datasets considered. A4-A6) output intensities for the D2NNs trained with full precision (FP) weights. B)-J) QPI results for quantization-aware trained D2NNs for each of the examples. Each row named as shows the results for dataset , using D2NNs trained with -level quantized weights (. Each column corresponds to different QAT methods considered which are stated above row B). Note that all results are given as output intensity . K) the comparison of the quantitative results (mean SSIM over the test set of each dataset). L1)-L3) mean absolute phase error variation against ground truth phase for RBC-Q4, TINYIM-Q8, and MNIST-Q16 cases respectively. These plots are shown for the given examples in the figure.

Considering the qualitative results for the RBC dataset with 4-level quantization (RBC-Q4), although GS (Fig. 4–B3) resulted in a higher SSIM value, it failed to capture the morphological features of the red blood cell as well as the PSQ methods did (Figs. 4–B6 and B7). Further analysis revealed that, for the RBC dataset, the D2NNs trained with GS suffer from mode collapse. Additional examples showing this are given in supplementary materials Fig. S1. This is also evident from the mean phase error plot shown in Fig. 4–L1. For the considered example, PSQ-LT clearly has a lower mean phase error than the other methods throughout the entire phase range, especially for higher phase values. For RBC-Q8 and RBC-Q16 (Fig. 4–C and D), our methods perform similarly to the FP model.

For TinyImageNet with 4-level quantization (TINYIM-Q4), none of the methods produces good results. However, our QuATON variants (Figs. 4–E4–E7) managed to capture some of the features of the input phase. For the TINYIM-Q8 and TINYIM-Q16 cases, QuATON variants showed better performance than the current SOTA approaches. The qualitative results for the MNIST dataset show trends similar to those for TinyImageNet. However, for MNIST-Q4, PSQ captured the shape of the digit much better than the other methods (Fig. 4–H). Fig. 4–L3 shows the phase error plot for MNIST-Q16. It shows that, similar to TinyImageNet, although PSQ and DSQ perform on par with each other overall, PSQ methods have lower errors than DSQ for certain phase ranges (e.g., from 1.5 rad to 2.5 rad). To further support these observations, additional examples for each dataset are shown in supplementary materials Fig. S2. Furthermore, the progressive quantization of D2NN phase coefficients for the TINYIM-Q8 case using the PSQ-LT method is shown in supplementary materials Fig. S3.

Summary

In this study, we presented a quantization-aware training (QAT) framework for optical neurons called QuATON. QuATON is based on a soft differentiable quantization function and a progressive training approach. We demonstrated the results of this method on two physics-based tasks performed by diffractive networks (D2NNs).

Our results show that QuATON outperforms post-quantization, the straight-through estimator, and Gumbel-Softmax, which are the current state-of-the-art (SOTA) methods used for quantized optical neurons. D2NNs with quantized weights trained using our method achieved performance similar to that of a full-precision model with a small number of quantization levels (4 levels for all-optical classification and 16 levels for all-optical QPI). Furthermore, progressive-training-based methods outperformed fixed-temperature-based methods in almost all cases. This suggests that progressive training is particularly beneficial when the number of quantization levels is small. Altogether, this comprehensive evaluation of QAT methods for optical neural architectures sheds light on which method to choose to achieve a desired level of performance for a task while being constrained to a given precision of physical parameters.

Although not demonstrated in this work, our method can easily be extended to non-uniform quantization by combining multiple PSQ functions. It is important to note that this study presents a numerical simulation of the performance of the D2NNs. The physical realization process of these networks can introduce other noise and artifacts that should be considered during the training process. In future work, we aim to include other types of noise in the training process and fabricate them to experimentally validate the results.

In conclusion, our QAT framework addresses the limited precision of fabrication methods, optical devices, and analog-to-digital and digital-to-analog conversions. We believe that this work lays the foundation upon which optical neurons can be physically realized for challenging vision applications in the future.

Materials and Methods

Progressive Training Schemes

This section describes the two progressive training schemes used in QuATON: 1) linearly increasing temperature (PSQ-LI) and 2) learnable temperature (PSQ-LT).

Linearly Increasing Temperature

In this approach, we start by setting to a small value and increase it linearly over the training epochs. The scheduling of has three hyperparameters: the initial value of , the increment step size , and the interval between two consecutive increments . Using these hyperparameters, the temperature at epoch can be given as

| (8) |
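A schedule of this kind can be sketched as a small Python function. The sketch assumes a step-wise reading of the description, in which the temperature is raised by a fixed increment once every `interval` epochs; the parameter names `tau_init`, `tau_step`, and `interval` are hypothetical labels for the three hyperparameters and the exact form of Eq. 8 may differ.

```python
def linear_temperature(epoch, tau_init, tau_step, interval):
    """Temperature at a given epoch: one increment per completed interval."""
    return tau_init + tau_step * (epoch // interval)

# Start at 1.0 and add 2.0 every 5 epochs.
for epoch in (0, 4, 5, 12, 20):
    print(epoch, linear_temperature(epoch, tau_init=1.0, tau_step=2.0, interval=5))
```

Setting `interval=1` gives a temperature that grows by `tau_step` every epoch.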

Learnable Temperature

In learnable temperature scheduling, we optimize through backpropagation. In this context, we define quantization instances (QIs), each having a separate PSQ function that is characterized by its own specific temperature factor. A QI can consist of either a single trainable parameter or a set of such parameters. Consider an example of an optical neural architecture (ONA) with number of QIs. For the mth quantization instance, the temperature is defined as

| (9) |

where is a trainable parameter and is a constant setting the upper bound of . To promote the progressive nature in the optimization process of , we introduce a regularization term in the loss computation. For the tth training epoch, the regularization term is given by

| (10) |

In this equation, is the vector containing the parameters (Eq. 9) of each QI in the model and are hyperparameters. is a constant that is updated every epoch as . This term forces the temperature to increase every epoch while allowing it to be updated through backpropagation. The overall loss is computed as

| (11) |

where is the ground truth, is the predicted output, is the loss function specific to the task, and is the set of trainable parameters of the ONA. Note that the regularization term is added to the loss only when the temperature is learnable.
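One common way to keep a trainable temperature below a fixed upper bound is to pass an unconstrained parameter through a sigmoid and scale the result. The Python sketch below shows that idea together with a plain gradient step on the unconstrained parameter. It is an assumption made for illustration and not necessarily the parametrization of Eq. 9; the regularization term of Eq. 10 is not modelled, and the names `theta` and `tau_max` are hypothetical.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def temperature(theta, tau_max):
    """Assumed bounded temperature: grows with theta but never exceeds tau_max."""
    return tau_max * sigmoid(theta)

def temperature_grad(theta, tau_max):
    """Derivative of the temperature with respect to theta."""
    s = sigmoid(theta)
    return tau_max * s * (1.0 - s)

theta, tau_max, learning_rate = -2.0, 20.0, 0.5
for _ in range(3):
    # Placeholder gradient: pretend the loss decreases as the temperature grows.
    grad_loss_wrt_tau = -1.0
    theta -= learning_rate * grad_loss_wrt_tau * temperature_grad(theta, tau_max)
    print(temperature(theta, tau_max))
```

With this parametrization the optimizer updates `theta` freely while the resulting temperature stays within the bound.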

All-Optical Classification

We consider the all-optical classification of phase images using a D2NN. The task is evaluated on two datasets: MNIST digits30 and CIFAR1031. Since both datasets have 10 classes, in this task, the output plane has 10 spatially separated patches, as shown in Fig. 2–B2. The patch with the highest mean intensity determines the class. During training, the objective is to concentrate most of the light on the patch corresponding to the ground truth class and suppress the light that scatters to other patches. The loss function for this task is given in Eq. (12),

| (12) |

where is the squared error (SE) for the i-th pixel. is the label map corresponding to the ground truth digit. For example, as shown in Fig. 2, only the patch corresponding to digit 3 will equal 1 in the label map (Y) for digit 3. In essence, the loss function is a weighted mean squared error, where more weight is given to the non-target region to penalize light scattering to those areas.
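The decision rule described above, in which the patch with the highest mean intensity determines the class, can be sketched as follows. The toy detector image, the three rectangular patches, and the function names are hypothetical; the networks in this work use 10 patches.

```python
def patch_mean(intensity, patch):
    """Mean intensity inside a patch given as (row0, row1, col0, col1), end-exclusive."""
    row0, row1, col0, col1 = patch
    values = [intensity[r][c] for r in range(row0, row1) for c in range(col0, col1)]
    return sum(values) / len(values)

def classify(intensity, patches):
    """Return the index of the patch with the highest mean intensity."""
    means = [patch_mean(intensity, patch) for patch in patches]
    return max(range(len(means)), key=lambda k: means[k])

# Toy 2 x 6 detector image with three 2 x 2 patches side by side.
intensity = [
    [0.0, 0.1, 0.0, 0.9, 0.0, 0.1],
    [0.1, 0.0, 0.0, 0.8, 0.1, 0.0],
]
patches = [(0, 2, 0, 2), (0, 2, 2, 4), (0, 2, 4, 6)]
predicted = classify(intensity, patches)
print(predicted)  # prints 1: most of the light falls on the middle patch
```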

All-Optical Quantitative Phase Imaging

In the all-optical QPI task, we consider three datasets: MNIST digits30, TinyImageNet32, and an experimentally collected red blood cell (RBC) dataset. In this task, the D2NN is trained with the objective

| (13) |

such that the output intensity is proportional to the input phase. Here, denotes the phase coefficients of the D2NN, is the overall loss, is the output field of the D2NN, and is the vector containing parameters of each D2NN layer. The overall loss is computed according to Eq. (11) and reverse Huber loss33 is used as the loss function .
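For reference, the reverse Huber (berHu) loss behaves like an absolute error for small residuals and like a scaled squared error for large ones, with the two branches meeting continuously at a threshold. The sketch below uses the standard definition; the threshold value and the sample numbers are hypothetical, since the threshold used in this work is not stated in this section.

```python
def reverse_huber(error, threshold):
    """Reverse Huber (berHu) penalty for a single residual."""
    magnitude = abs(error)
    if magnitude <= threshold:
        return magnitude  # L1 branch for small residuals
    # Scaled L2 branch; equals threshold when magnitude == threshold.
    return (magnitude ** 2 + threshold ** 2) / (2.0 * threshold)

def mean_reverse_huber(predicted, target, threshold):
    """Average berHu penalty over paired predictions and targets."""
    losses = [reverse_huber(p - t, threshold) for p, t in zip(predicted, target)]
    return sum(losses) / len(losses)

predicted = [0.10, 0.50, 0.90]
target = [0.00, 0.55, 0.10]
print(mean_reverse_huber(predicted, target, threshold=0.2))
```

Large residuals are penalized more heavily than under a plain absolute error, while small residuals keep a constant-magnitude gradient.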

Training Details

In both tasks, only the phase coefficients of the D2NNs are optimized. During the training process, we apply PSQ for QAT of the phase coefficients. For a given task and a given dataset, we first train a D2NN with full-precision (FP) weights without quantization for 100 epochs (200 epochs for the CIFAR10 dataset). Then, starting from the FP weights, we apply PSQ to train the D2NN with quantized phase coefficients for another 100 epochs. Since we consider only the phase coefficients, they lie in the range rad. However, the trained phase coefficients of the FP model are unwrapped phase values and are thus distributed over several phase cycles. As a better initialization for the QAT process, we wrap the FP weights to bring them to the range . This prevents weights outside of from being clamped to the lowest or highest quantization level at the beginning of the QAT process.

Furthermore, during QAT we limit the range of quantization levels to rad, since 0 rad and 2π rad correspond to the same phase shift. For example, if we consider phase weights with 4-level quantization, the corresponding quantization levels will be rad. However, in the all-optical classification task, for 2-level quantization, we use the levels . We evaluate three versions of PSQ and compare their performance on both tasks: 1) PSQ with the temperature kept fixed (PSQ-FT), 2) PSQ with a linearly increasing temperature (PSQ-LI), and 3) PSQ with a learnable temperature (PSQ-LT).
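
The reason for restricting the range can be checked numerically: a level at 0 rad and a level at 2π rad produce the same complex transmission, so spanning the full closed cycle wastes one level. The sketch below is illustrative only. The helper names and the particular upper bound used for `half_open` are assumptions and are not the levels used in the paper.

```python
import cmath
import math


def uniform_levels(num_levels, lower, upper):
    """num_levels evenly spaced values from lower to upper, both inclusive."""
    if num_levels < 2:
        raise ValueError("need at least two levels")
    step = (upper - lower) / (num_levels - 1)
    return [lower + k * step for k in range(num_levels)]


def transmission(phase):
    """Complex transmission exp(j*phase) of a phase-only element."""
    return cmath.exp(1j * phase)


# Spanning the full closed interval [0, 2*pi] wastes a level:
# the first and last levels act identically on the field.
closed = uniform_levels(4, 0.0, 2.0 * math.pi)
duplicate = abs(transmission(closed[0]) - transmission(closed[-1])) < 1e-12

# Stopping one step short of 2*pi (an assumed choice) keeps all levels distinct.
half_open = uniform_levels(4, 0.0, 2.0 * math.pi * 3 / 4)
```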

For the all-optical classification task, we use 7-layer D2NNs trained with 2, 4, and 8 quantization levels for each case. For the all-optical QPI task, we train each D2NN with three different quantization levels (4, 8, and 16). For this task, we use 5-layer D2NNs for both TinyImageNet and RBC datasets, and 7-layer D2NNs for the MNIST dataset. Further details on D2NN specifications and the training process are included in the supplementary materials sec. B.

Comparison of Performance

We compare the performance of PSQ on the considered tasks with several QAT methods, the FP model, and the post-quantized FP model. For a fair comparison, the D2NN phase weights are initialized with the FP weights before QAT for every method.

Post-Quantization (PQ)

In this method, the phase weights of the FP model are directly quantized using Eq. (1).
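
As a rough illustration of post-quantization, the sketch below snaps each trained weight to its nearest level. Nearest-level rounding is an assumed stand-in for Eq. (1), and the levels and weights are hypothetical values chosen only to keep the arithmetic easy to follow.

```python
def post_quantize(weights, levels):
    """Snap each weight to its nearest quantization level (assumed form of Eq. (1))."""
    return [min(levels, key=lambda q: abs(w - q)) for w in weights]


levels = [0.0, 1.0, 2.0, 3.0]        # hypothetical levels
fp_weights = [0.2, 1.6, 2.9, 5.0]    # hypothetical trained FP weights
quantized = post_quantize(fp_weights, levels)
```

The last weight lies outside the level range and is clamped to the highest level, which is the effect that wrapping the FP weights before QAT is meant to avoid.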

Straight-through Estimator (STE)25

In this method, Eq. (1) is used in the forward pass, while during backpropagation the gradients are computed as

| (14) |

where is any trainable parameter in the network.
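
A toy example may make the straight-through idea concrete. It is a sketch under stated assumptions: the hard quantizer is nearest-level rounding (a stand-in for Eq. (1)), the loss is a single squared error, and the derivative of the quantizer with respect to the latent weight is replaced by 1 in the backward pass, which is the usual STE convention. All names and values are hypothetical.

```python
def quantize(w, levels):
    """Hard quantizer: nearest level (stand-in for Eq. (1))."""
    return min(levels, key=lambda q: abs(w - q))


def ste_step(w, target, levels, lr):
    """One gradient step on loss = (Q(w) - target)**2 using the STE.

    The forward pass uses the quantized weight; the backward pass treats
    dQ/dw as 1, so the gradient reaches the latent weight w unchanged.
    """
    q = quantize(w, levels)
    grad_q = 2.0 * (q - target)   # d(loss)/dQ
    grad_w = grad_q * 1.0         # STE: dQ/dw replaced by 1
    return w - lr * grad_w


levels = [0.0, 1.0, 2.0, 3.0]
w = 0.2                            # latent full-precision weight
for _ in range(20):
    w = ste_step(w, target=2.0, levels=levels, lr=0.1)
final_q = quantize(w, levels)
```

The latent weight keeps moving even though the true derivative of the rounding operation is zero almost everywhere, and it stops once its quantized value reaches the target level.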

Gumbel-Softmax based Quantization (GS)

This method uses Gumbel-Softmax as a soft quantization function and has been demonstrated as a QAT technique for D2NNs by Li et al.15. When training D2NNs using GS, we use the linearly annealed temperature schedule (the temperature starts at 50 and decreases by 0.5 each epoch), which they report as giving the best-performing results.
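
The schedule and the soft quantization step can be sketched as below. Only the starting temperature (50) and the per-epoch decrement (0.5) come from the text. The `floor` argument, the per-weight logits, and the use of a probability-weighted sum of levels as the soft weight are assumptions made for illustration.

```python
import math
import random


def temperature(epoch, start=50.0, decay=0.5, floor=0.5):
    """Linear annealing: start at 50, subtract 0.5 per epoch.

    The floor is an assumption added here so the temperature never reaches 0.
    """
    return max(start - decay * epoch, floor)


def gumbel_softmax(logits, tau, rng):
    """Soft one-hot sample over quantization levels at temperature tau."""
    noisy = []
    for logit in logits:
        u = rng.random()
        u = min(max(u, 1e-12), 1.0 - 1e-12)   # keep both logs finite
        # Gumbel(0, 1) noise is -log(-log(U)) with U ~ Uniform(0, 1).
        noisy.append((logit - math.log(-math.log(u))) / tau)
    peak = max(noisy)                          # subtract max for stability
    exps = [math.exp(v - peak) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
levels = [0.0, 1.0, 2.0, 3.0]                  # hypothetical levels
probs = gumbel_softmax([0.1, 2.0, 0.3, -1.0], temperature(99), rng)
soft_weight = sum(p * q for p, q in zip(probs, levels))
```

At high temperature the sample is close to uniform over the levels, and as the temperature decreases it approaches a one-hot selection of a single level.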

Differentiable Soft Quantization (DSQ)

This method was proposed by Gong et al.23 as a QAT method for DNNs. In their original work, DSQ is implemented with a learnable temperature and lower and upper bounds of the quantization range. However, since the phase weights of D2NNs are restricted to a fixed range, we keep the lower and upper bounds of the quantization range fixed.

Acknowledgements

This work was supported by the Center for Advanced Imaging at Harvard University (H.K., R.H., and D.N.W.), and NIH R21-MH130067-01 (D.N.W.). D.N.W. further acknowledges support from the John Harvard Distinguished Science Fellowship Program within the FAS Division of Science of Harvard University. Q.Y., P.T.S., and E.S.B. acknowledge support from Fujikura Inc. A.M. and P.T.S. also acknowledge support from NIH R01HL158102. E.S.B. further acknowledges support from HHMI, John Doerr, and Lisa Yang.

Footnotes

Additional information

Competing interests: The authors declare that they have no competing interests.

References

- 1. Lin X. et al. All-optical machine learning using diffractive deep neural networks. Science 361, DOI: 10.1126/science.aat8084 (2018).

- 2. Mengu D. & Ozcan A. All-optical phase recovery: diffractive computing for quantitative phase imaging. Adv. Opt. Mater. 10, 2200281 (2022).

- 3. Herath K. et al. Differentiable microscopy designs an all optical quantitative phase microscope. arXiv preprint arXiv:2203.14944 (2022).

- 4. Qian C. et al. Performing optical logic operations by a diffractive neural network. Light. Sci. & Appl. 9, 59 (2020).

- 5. Zhou J., Pu H. & Yan J. Spatiotemporal diffractive deep neural networks. Opt. Express 32, 1864–1877 (2024).

- 6. Yan T. et al. Fourier-space diffractive deep neural network. Phys. Rev. Lett. 123, 023901 (2019).

- 7. Shi J. et al. Multiple-view D2NNs array: realizing robust 3D object recognition. Opt. Lett. 46, 3388–3391 (2021).

- 8. Yelleswarapu C. S., Kothapalli S.-R. & Rao D. Optical Fourier techniques for medical image processing and phase contrast imaging. Opt. Commun. 281, 1876–1888 (2008).

- 9. Panchangam A. et al. Processing of medical images using real-time optical Fourier processing. Med. Phys. 28, 22–27 (2001).

- 10. Liu S., Mi Q. & Zhu B. Optical image encryption with multistage and multichannel fractional Fourier-domain filtering. Opt. Lett. 26, 1242–1244 (2001).

- 11. Miscuglio M. et al. Massively parallel amplitude-only Fourier neural network. Optica 7, 1812–1819 (2020).

- 12. Haputhanthri U. et al. From Hours to Seconds: Towards 100x Faster Quantitative Phase Imaging via Differentiable Microscopy. arXiv preprint (2022).

- 13. Kellman M. R., Bostan E., Repina N. A. & Waller L. Physics-Based Learned Design: Optimized Coded-Illumination for Quantitative Phase Imaging. IEEE Transactions on Comput. Imaging 5, DOI: 10.1109/tci.2019.2905434 (2019).

- 14. Metzler C. A., Ikoma H., Peng Y. & Wetzstein G. Deep Optics for Single-Shot High-Dynamic-Range Imaging. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, DOI: 10.1109/CVPR42600.2020.00145 (2020).

- 15. Li Y., Chen R., Gao W. & Yu C. Physics-Aware Differentiable Discrete Codesign for Diffractive Optical Neural Networks. In Proceedings of the 41st IEEE/ACM International Conference on Computer-Aided Design, 1–9, DOI: 10.1145/3508352.3549378 (ACM, New York, NY, USA, 2022).

- 16. Zafrir O., Boudoukh G., Izsak P. & Wasserblat M. Q8BERT: Quantized 8bit BERT. In 2019 Fifth Workshop on Energy Efficient Machine Learning and Cognitive Computing-NeurIPS Edition (EMC2-NIPS), 36–39 (IEEE, 2019).

- 17. Wu J., Leng C., Wang Y., Hu Q. & Cheng J. Quantized convolutional neural networks for mobile devices. In Proceedings of the IEEE conference on computer vision and pattern recognition, 4820–4828 (2016).

- 18. Esser S. K., McKinstry J. L., Bablani D., Appuswamy R. & Modha D. S. Learned step size quantization. arXiv preprint arXiv:1902.08153 (2019).

- 19. Liu Z. et al. Post-training quantization for vision transformer. Adv. Neural Inf. Process. Syst. 34, 28092–28103 (2021).

- 20. Nagel M., Amjad R. A., Van Baalen M., Louizos C. & Blankevoort T. Up or down? Adaptive rounding for post-training quantization. In International Conference on Machine Learning, 7197–7206 (PMLR, 2020).

- 21. Nahshan Y. et al. Loss aware post-training quantization. Mach. Learn. 110, 3245–3262 (2021).

- 22. Yang J. et al. Quantization Networks. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 7300–7308, DOI: 10.1109/CVPR.2019.00748 (IEEE, 2019).

- 23. Gong R. et al. Differentiable soft quantization: Bridging full-precision and low-bit neural networks. In Proceedings of the IEEE International Conference on Computer Vision, vol. 2019-October, DOI: 10.1109/ICCV.2019.00495 (2019).

- 24. Kirtas M. et al. Quantization-aware training for low precision photonic neural networks. Neural Networks 155, 561–573 (2022).

- 25. Bengio Y., Léonard N. & Courville A. Estimating or propagating gradients through stochastic neurons for conditional computation. arXiv preprint arXiv:1308.3432 (2013).

- 26. Gu J. et al. ROQ: A noise-aware quantization scheme towards robust optical neural networks with low-bit controls. In 2020 Design, Automation & Test in Europe Conference & Exhibition (DATE), 1586–1589 (IEEE, 2020).

- 27. Goodman J. W. Introduction to Fourier Optics, 3rd ed. (Roberts & Co. Publishers, Englewood, CO, 2005).

- 28. Jang E., Gu S. & Poole B. Categorical reparameterization with Gumbel-Softmax. In International Conference on Learning Representations (2017).

- 29. Wang Z., Bovik A. C., Sheikh H. R. & Simoncelli E. P. Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Process. 13, 600–612 (2004).

- 30. Deng L. The MNIST database of handwritten digit images for machine learning research. IEEE Signal Process. Mag. 29, 141–142 (2012).

- 31. Krizhevsky A. Learning multiple layers of features from tiny images. Tech. Rep. (2009).

- 32. Le Y. & Yang X. S. Tiny ImageNet visual recognition challenge (2015).

- 33. Zwald L. & Lambert-Lacroix S. The BerHu penalty and the grouped effect. arXiv preprint (2012).

- 34. Ratcliffe J. A. Some Aspects of Diffraction Theory and their Application to the Ionosphere. Reports on Prog. Phys. 19, 306, DOI: 10.1088/0034-4885/19/1/306 (1956).

- 35. Paszke A. et al. Automatic differentiation in PyTorch. (2017).

- 36. Kingma D. P. & Ba J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).
