Abstract

Purpose:

Applying effective learning strategies to address knowledge gaps is a critical skill for lifelong learning, yet prior studies demonstrate that medical students use ineffective study habits.

Methods:

To address this issue, the authors created and integrated study resources aligned with evidence-based learning strategies into a medical school course. Pre-/post-course surveys measured changes in students’ knowledge and use of evidence-based learning strategies. Eleven in-depth interviews subsequently explored the impact of the learning resources on students’ study habits.

Results:

Of 139 students, 43 and 66 completed the pre- and post-course surveys, respectively. Students’ knowledge of evidence-based learning strategies was unchanged; however, median time spent using flashcards (15% to 50%, p<.001) and questions (10% to 20%, p=.0067) increased while time spent creating lecture notes (20% to 0%, p=.003) and re-reading notes (10% to 0%, p=.009) decreased. In interviews, students described four ways their habits changed: increased use of active learning techniques, decreased time spent creating learning resources, reviewing content multiple times throughout the course, and increased use of study techniques synthesizing course content.

Conclusion:

Incorporating evidence-based study resources into the course increased students’ use of effective learning techniques, suggesting this may be more effective than simply teaching about evidence-based learning.

Keywords: Science of learning, study skills, learning techniques, undergraduate medical education

Introduction

Medical professionals must continue learning throughout their career given continual scientific and clinical discoveries. To be master adaptive learners, students need to appraise their knowledge gaps, apply effective learning strategies to address them, and evaluate the success of their learning to meet this lifelong challenge (Cutrer et al. 2017). Unfortunately, many students use ineffective study techniques and are unaware of evidence-based learning strategies that promote durable learning (Piza et al. 2019).

For-profit educational companies have created learning resources capitalizing on evidence-based techniques. Students increasingly use commercial resources to supplement or replace medical school curricula (Hirumi et al. 2022). One medical school showed that 84% of students used Boards and Beyond videos, 72% used USMLE Rx Question Bank, 65% used Anki cards, and 55% used Sketchy videos (Wu et al. 2021). Many educators have expressed frustration at this parallel curriculum over which they lack control; however, use of these resources has been shown to increase scores on standardized examinations, likely because they encourage evidence-based learning strategies such as retrieval practice, spaced repetition, and interleaving (Cutting and Saks 2012; Deng et al. 2015; Gooding et al. 2017; Lu et al. 2021; Hirumi et al. 2022). Because commercial materials are costly, they can exacerbate inequities already present among learners. Furthermore, these resources may not be aligned with local curricula and do not necessarily address the metacognitive awareness students must develop to become master adaptive learners.

To teach students effective study skills, we designed study resources aligned with our curriculum that encouraged evidence-based learning strategies. We aimed to 1) examine whether inclusion of these resources increased students’ use of evidence-based learning strategies, and 2) understand how and why the resources impacted learning habits to inform future curriculum design for our institution and others.

Materials and methods

The four-week ‘Introduction to Human Disease’ course for first-year medical students at Emory University teaches foundational concepts in immunology, microbiology, pharmacology, and pathology. This course occurs four months after matriculation to medical school and bridges coursework between physiology and organ system-based pathophysiology. Course evaluations and informal discussions with prior students revealed they found this course challenging as it includes substantial, intrinsically difficult new content and begins at the same time as anatomy coursework.

To address students’ concerns and use the opportunity to teach effective study habits, the course director (JOS) created learning materials aligned with evidence-based learning strategies in 2019 (Table 1). These learning materials were chosen by considering and balancing the following principles based on informal input from faculty and students:

- Do the learning materials promote evidence-based learning strategies?

- How easy will it be to create the materials (e.g. time required, faculty familiarity with technology)?

- How likely are students to use the materials (e.g. student familiarity with methods/technology, prior course feedback, ease of use)?

Table 1.

Learning materials created to teach evidence-based learning (EBL) skills in an undergraduate medical education course in 2019.

| Learning materials | Evidence-based learning (EBL) principle | Details | Citations |

|---|---|---|---|

| Overview of EBL principles | Metacognition | 1-h introduction by course director to EBL, master-adaptive learner framework, specific learning materials, and how to use them | (Cutrer et al. 2017; Chang et al. 2021; Biwer et al. 2023) |

| Lecture outlines | Scaffolding to reduce cognitive load; promotes generation and elaboration | Created by instructors for each lecture, meant to guide notetaking and help with synthesis of material by asking students to create lists and fill out tables, concept maps, etc. | (Cornelius and Owen-DeSchryver 2008) |

| Anki cards | Retrieval practice, spaced learning, interleaving | Electronic flashcards with focused open-ended questions that students answered to test basic understanding of terms and concepts; included recall & application questions; approximately 25 cards created per hour of lecture and available immediately after the lecture. The Anki application (https://apps.ankiweb.net/) is a freely available resource for electronic flashcards that was used due to its built-in spaced learning algorithm. Students were encouraged to add the flashcards for new lectures each day but include flashcards from all prior lectures in their review to promote interleaving of the topics covered within the course (i.e. microbiology, immunology, pathology, and pharmacology). | (Kornell 2009; Rawson and Dunlosky 2011; Deng et al. 2015; Lambers and Talia 2021; Lu et al. 2021) |

| Self-assessment | Retrieval practice, application of learning | Weekly, containing 20–35 multiple-choice questions on topics students learned that week meant to represent how content would be presented on exams | (Kerfoot et al. 2007; Larsen et al. 2009; Roediger and Butler 2011) |

| Team-based learning | Retrieval practice, elaboration, spaced learning, transfer | Closed-book session used to apply content that students learned in lectures to clinical scenarios; ungraded but included competition to enhance motivation | (Norman 2009; Krupat et al. 2016; Schmidt et al. 2019; Versteeg et al. 2019) |

We chose to focus on evidence-based learning principles from the cognitive sciences that have been shown to aid with knowledge acquisition in both higher education and health professions education given the preclinical nature of the course (Kerfoot et al. 2007; Kerfoot et al. 2010; Dunlosky et al. 2013; Schmidt et al. 2019; Schmidt and Mamede 2020). For example, Anki cards were chosen since they encouraged multiple evidence-based learning strategies (i.e. retrieval practice, spaced learning, and interleaving), and the technology platform was already familiar to a significant portion of the medical school class. Since this was a new technology for faculty, however, the course director created all Anki cards.
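
To make the idea of spaced retrieval practice concrete, the following is a minimal sketch of a flashcard scheduler. It is an illustration only and does not reproduce Anki's scheduling algorithm: the `Card` class, the `review` and `due_cards` functions, the interval-doubling rule, and the dates are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class Card:
    prompt: str
    interval_days: int = 1          # gap before the next review
    due: date = date(2020, 1, 6)    # hypothetical first review date


def review(card: Card, today: date, recalled: bool) -> Card:
    """Reschedule a card after one review (simplified rule, not Anki's scheduler)."""
    if recalled:
        # Successful retrieval: widen the gap so later reviews are spaced further apart.
        card.interval_days *= 2
    else:
        # Failed retrieval: reset the gap so the card returns the next day.
        card.interval_days = 1
    card.due = today + timedelta(days=card.interval_days)
    return card


def due_cards(cards: List[Card], today: date) -> List[Card]:
    """Cards whose scheduled review date has arrived."""
    return [card for card in cards if card.due <= today]


card = Card("example prompt")
review(card, date(2020, 1, 6), recalled=True)
print(card.interval_days, card.due)
```

Under this simplified rule, each successful recall pushes the next review further out, while a missed card comes back the following day, which is the general behavior that spaced learning relies on.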

The learning materials were first introduced in the 2019–2020 academic year, and the course director then modified them based on course evaluations and informal feedback from faculty and students. The following year (2020–2021 academic year), we used an explanatory sequential mixed methods design (Creswell and Plano Clark 2007) to understand how the course influenced students’ study habits. We created pre-/post-course surveys (Supplemental Digital Appendix 1) to determine students’ current study habits, knowledge of learning science, whether study habits changed after the course, and perception of the learning materials provided. We based our survey on others’ published work (Piza et al. 2019), piloted it with three students who had completed the course, and made minor revisions based on feedback. The surveys were distributed to students via email one week prior to the first day of the course and the day following their final course examination. Participation was voluntary and anonymous. Students were not required to answer all questions; thus, total responses varied slightly for each question. Student responses were included if they had answered any of the questions since each question was analyzed independently, and percentages are reported using the denominator for each question. Since only 14 students answered both the pre- and post-course surveys, we analyzed pooled responses. We analyzed the data using descriptive statistics and unpaired t-tests for means and chi-squared or Fisher’s exact test for proportions to compare pre- and post-course responses. Analyses were completed using SAS 9.4 (SAS Institute, Cary, NC).

We created a semi-structured interview guide to explore if/how students’ study habits changed, which learning materials they used and how/why they used them, if/how they used external resources, and recommendations for future iterations (Supplemental Digital Appendix 2). Students were invited to participate in the interviews after grades were finalized. Interviews were conducted via a video-conferencing platform (Zoom, Zoom Video Communications Inc, San Jose, California) by an author not involved in the course (KCU) with experience in qualitative methods. Interviews were transcribed verbatim and de-identified before analysis. Four authors read three transcripts and together developed a codebook of inductive and deductive codes: the course director (JOS), a pre-medical student (KCU), a medical educator not involved in the course (HCG), and a learning scientist from another institution (JM). Three separate authors applied the codebook to each transcript, met to discuss, and decided on final codes by consensus. Then one author (JOS) created initial and integrative memos, which were shared with the study team and used to develop themes. Throughout the process the authors considered their positionality with regard to the study subjects and the findings. We worked to ensure the trustworthiness of the findings by engaging in reflective discussion to decide on final codes and develop themes. The Emory University Institutional Review Board reviewed and deemed this study exempt.

Results

In 2020, 139 students participated in the course. Of these, 43 (31%) and 66 (47%) completed at least half of the pre- and post-course surveys, respectively. Only 23 of 40 (58%) students who completed the pre-course survey indicated they had been taught evidence-based learning methods prior to medical school. When presented learning scenarios comparing an evidence-based and a non-evidence-based option, the majority of students ranked the evidence-based option higher for the scenarios demonstrating generation, retrieval practice, and spacing (Table 2); however, the proportion of students selecting the evidence-based option did not change from the pre- to post-course survey.
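
The response rates above follow directly from the reported counts. As a small arithmetic check, the sketch below recomputes them; the `percent` helper is a hypothetical name introduced here, and it rounds halves up using integer arithmetic to avoid floating-point surprises.

```python
def percent(numerator: int, denominator: int) -> int:
    """Whole-number percentage, rounding halves up, using integer arithmetic only."""
    return (200 * numerator + denominator) // (2 * denominator)


enrolled = 139  # students in the course in 2020

pre_course = percent(43, enrolled)     # pre-course survey respondents
post_course = percent(66, enrolled)    # post-course survey respondents
taught_before = percent(23, 40)        # previously taught evidence-based methods

print(pre_course, post_course, taught_before)  # prints: 31 47 58
```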

Table 2.

Percentage of students providing higher rating for the evidence-based learning scenarios in the pre- and post-course surveys.

| Learning scenario | Pre-course (n = 37) | Post-course (n = 58) | p Value |

|---|---|---|---|

| Generation | 62.2% | 62.1% | .993 |

| Retrieval | 71.4% | 69.0% | .802 |

| Interleaving | 44.4% | 50.0% | .600 |

| Spacing | 94.3% | 96.5% | .634 |
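
As an illustration of how pre- and post-course proportions like those in Table 2 can be compared, the sketch below runs a Pearson chi-squared test on a 2x2 table using only the Python standard library. It is not the authors' SAS code. The counts for the generation scenario (23 of 37 and 36 of 58) are assumptions back-calculated from the reported percentages and the column totals, and the function name `chi_squared_2x2` is hypothetical.

```python
import math


def chi_squared_2x2(a: int, b: int, c: int, d: int):
    """Pearson chi-squared test (no continuity correction) for the table [[a, b], [c, d]].

    Returns the test statistic and the two-sided p-value with 1 degree of freedom.
    """
    n = a + b + c + d
    statistic = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom, the chi-squared survival function is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Assumed counts: 23/37 is about 62.2% (pre-course), 36/58 is about 62.1% (post-course).
stat, p = chi_squared_2x2(23, 37 - 23, 36, 58 - 36)
print(round(p, 3))  # prints: 0.993
```

With these assumed counts, the result agrees with the p value reported for the generation scenario. Other rows may have slightly different denominators because not every student answered every question.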

Hours spent studying did not change from pre- to post-course survey; however, students changed the percentage of study time spent on certain activities. They increased the median percentage of time spent using flashcards (15% to 50%, p<.001) and questions/practice problems (10% to 20%, p=.007) and decreased the median time spent creating their own lecture notes (20% to 0%, p=.003) and re-reading notes (10% to 0%, p=.009). An analysis performed on only participants with paired pre-/post-surveys (n=14) showed a similar magnitude of change in percentages of study time; however, some findings were no longer statistically significant (flashcards: 12.5% to 50%, p=.04; questions/practice problems: 15% to 20%, p=.31; creating lecture notes: 20% to 0%, p=.36; re-reading notes: 10% to 0%, p=.02). Most students already used spacing (38/42, 90%) and interleaving (31/42, 74%) during their studying prior to the course, which remained similar after the course. In the post-course survey, a higher number of students rated the Anki cards (51/55, 95%) and the self-assessments (54/55, 98%) as very/extremely effective as compared to the lecture outlines (26/55, 47%) and team-based learning sessions (33/55, 60%). Additionally, 43 of 55 (78%) students indicated they planned to change their study habits for future courses.

In the interviews, students’ comments about the learning resources were generally positive. All interviewed students used the Anki cards and self-assessments whereas only a portion (6 of 11 students) used the lecture outlines, primarily because the lecture outlines were more time-consuming to complete, and many students reported they did not have sufficient time to use all resources. Students described four ways in which their study habits changed after using the learning resources in this course: 1) increased time spent using active learning techniques, 2) more time spent studying rather than creating study resources, 3) reviewing content multiple times throughout the course rather than cramming before the examination, and 4) increased use of study techniques that synthesized course content (Table 3).

Table 3.

Themes identified from interviews with medical students regarding how their study habits changed during an undergraduate medical school course, 2020.

| Theme | Representative quotes |

|---|---|

| Increased time spent using active learning techniques | ‘I wanna prioritize my time and be as efficient as possible… It’s really easy to be studying all the time and be kind of drowning in information and not really feeling like you’re even processing it… my main study resource has been using Anki.… I think [the course] was helpful to reinforce how active recall is the most efficient and helpful way to study.’ ‘I definitely did not review PowerPoints in their entirety, there simply wasn’t enough time for that. I focused more on quizzing myself on the material through the learning objectives after each lecture. And then, wherever I felt weak, going back to the PowerPoint and reviewing that component. [I used] Anki cards religiously. I honestly think those helped a lot. And, then I would look at the [lecture outlines] that were provided for us and basically do those at the end of the week as a final review.’ |

| Devoted more time to studying rather than creating study resources | ‘[I am] transitioning from making all of my own study resources to kind of trying to be a little more time efficient, and make less of my own study resources so I can spend more of my time just actively studying. So, now [after that course], I try to use many more pre-made resources, because I think that it’s a more effective use of my time.’ ‘I think the biggest thing that changed in [the course] was that I did not have to make my own Anki cards. So, it became a lot less about, I’d say like 80% of my time with Anki was spent on making cards and 20% was spent reviewing them, and now it’s, you know, 100% reviewing them, so I think I got to just focus on drilling a lot of the concepts a lot more.’ |

| Reviewed content multiple times throughout the course rather than cramming before the examination | ‘We know that you should study things from the beginning of the semester or the class, you know, keep it up, but it’s really hard to prioritize that. So just having the Anki decks pre-made was incredible. Just using them at all, helps me realize how important it is to be reviewing stuff from the beginning of the module. And I’m just not sure that I would have figured that out on my own, frankly. And even though I would have told you that I know I should be studying stuff from week one, like it’s just hard to prioritize that. And so now … I learned how to make good cards from seeing her [Anki card] deck, but I also learned that I need to be reviewing. And so, for the last two classes, I’ve set up my decks to be like week one, week two, week three, so that I can make sure that every night, I’m coming back to week one and week two, even though we’re already in week three.’ ‘I did not do a full review [before the examination] because it was just like you’re constantly reviewing.’ |

| Increased use of study techniques that synthesize course content | ‘[The lecture outlines] did make me think about the content differently. [They] helped me look at a lecture and say, ‘Can I make a table out of this information? Is there something here that I should be comparing to something else we’ve learned?’ So it’s kind of a meta element to the handouts, even though I thought for [the course they] didn’t make a huge difference, [they] did just kinda teach me what kind of things to think about.’ ‘I use tables. [A professor from the course], he used to have a lot of tables [in the lecture outlines]. So, I still make tables whenever I want to compare and contrast multiple diseases. Like, for the last module, I had to learn seven types of arthritis. So, as you use the tables, the way he had them, it would have etiology, symptoms, something like that. So, I used that method.’ |

During our course, students used limited external resources since they felt they had sufficient learning materials provided within the course, as illustrated here:

At the beginning, I was using more external resources. But I think as the [course] went on, I feel like the materials that were provided were sufficient. And I think that’s pretty unique for me because normally I’m using a lot of external resources.

Having pre-made study materials that aligned with the course helped them focus their time on learning new content rather than searching for the best learning resources. Students reported they typically used external resources because they do ‘a better job of explaining complicated concepts in simple terms’; however, because the learning materials provided helped them learn and understand concepts, they did not feel the need to seek out additional external resources. Students said they believe faculty are responsible for curating, organizing, and signaling important content; therefore, having the learning resources from our curriculum helped. As one student said, ‘it’s not that we want to be spoon-fed, it’s that having that structure is helpful.’ For example, the partial lecture outlines helped students see how to organize material yet still required them to synthesize it on their own; likewise, Anki cards and self-assessment questions signaled key content.

Discussion

By providing students with evidence-based learning resources that aligned with our course, we increased students’ use of evidence-based learning strategies. Students not only used the instructor-developed evidence-based learning materials, but they also abandoned external resources that they had relied upon in previous courses. Faculty often express frustration with students’ increasing use of external commercial learning resources at the expense of the medical school curriculum (Kanter 2012; Hafferty et al. 2020; Wu et al. 2021). Students frequently turn to these resources because they provide clear, cohesive instruction and incorporate evidence-based learning principles (Coda 2019; Hirumi et al. 2022). Therefore, instructor-developed learning materials that incorporate these evidence-based learning principles may decrease use of external resources, which could promote equity among students and better align study materials with local program objectives.

Although creating these materials was time-intensive in the first year (approximately 1 h of time per lecture hour), subsequent upkeep has been minimal. Other faculty looking to replicate our efforts could create their own learning resources, leverage free open-access materials (e.g. student-developed Anki decks, like Anking, available at https://www.ankipalace.com/step-1-deck), or invest in commercial resources for all students. For faculty who lack the time or expertise to create resources, co-creation with students could combine students’ familiarity with learning platforms and faculty’s content expertise, as was used to create 200 multiple-choice questions in three months at one medical school (Harris et al. 2015).

Leveraging students’ opinions was critical for the success of our intervention. Before creating our materials, we had informal discussions with students to understand their challenges and current learning resources. We found that students were spending substantial time creating notes and re-reading notes or PowerPoint slides. Given the increase in difficulty and volume of our course content as compared to prior courses, we felt providing students with partial outlines would scaffold their learning by organizing and synthesizing the information and signaling important content. Additionally, faculty commented that creating the outlines helped them better organize their lectures, which was an unanticipated benefit.

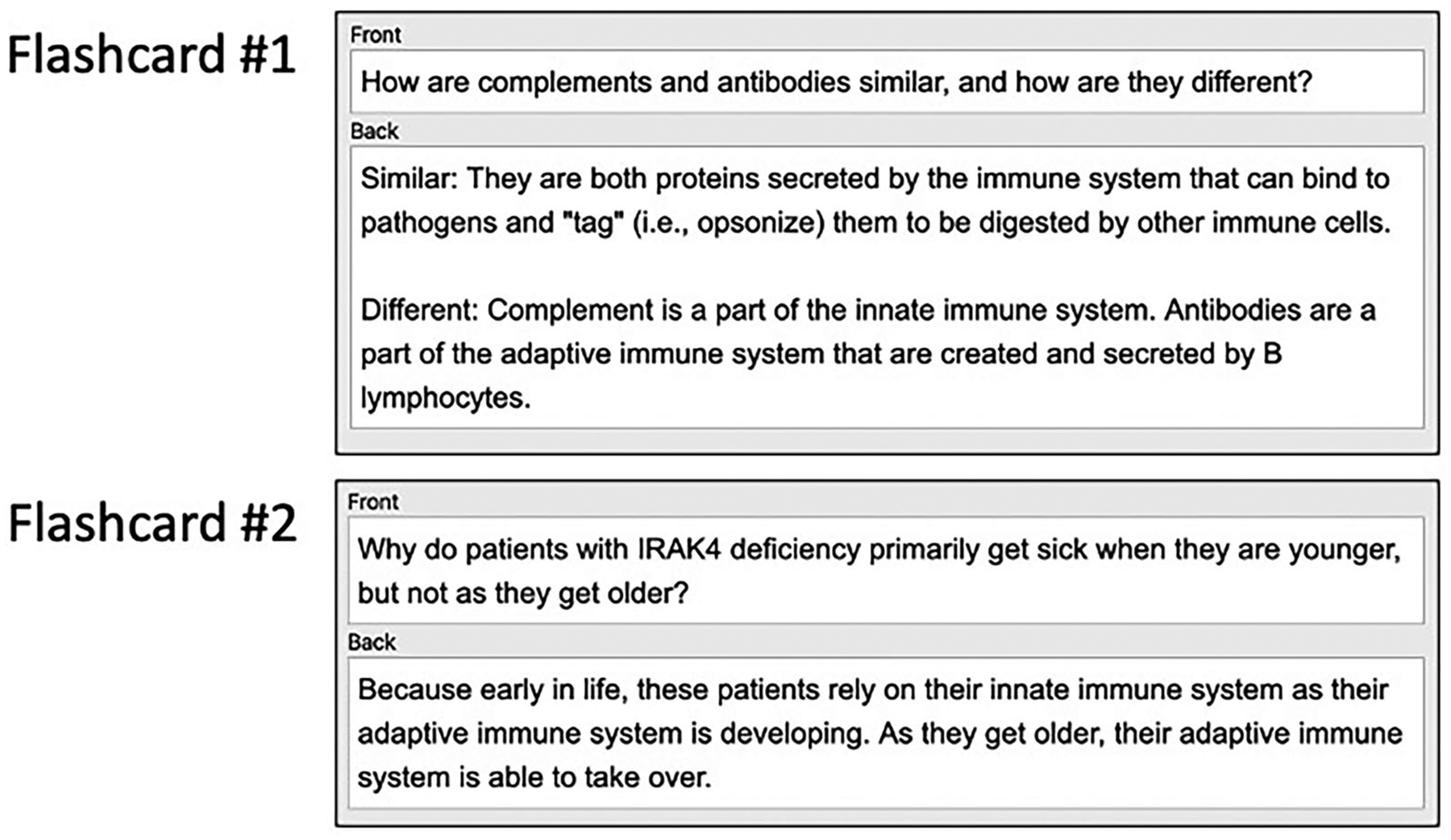

During our informal discussions, we also found some students were already using Anki cards to study for their courses, but their cards were used primarily for factual recall and often contained unimportant minutiae from lectures. We decided to use Anki since it had a built-in spaced repetition algorithm that encouraged interleaving, and we created cards that promoted higher order cognitive processes, like explaining concepts, applying content to new problems, and comparing/contrasting course material (Figure 1). Because many students were already familiar with the Anki platform, they provided invaluable tips on creating Anki cards efficiently, thus saving faculty time.

Figure 1.

Examples of Anki cards from our course that promoted higher order cognitive processes.

Getting students’ input initially and then encouraging students to give feedback helped us develop better resources and get buy-in from them to use the resources. Engaging students as partners in educational design is becoming more common within medical education (Harris et al. 2015; Scott et al. 2019; Kapadia 2021; Könings et al. 2021; Gheihman et al. 2021; Suliman et al. 2023). Student involvement can range from being an informant and/or tester, as in our intervention, to a full design partner who co-creates a curriculum (Martens et al. 2019). Moving forward, we believe there are substantial benefits to including students as full design partners in medical school curricula. Students have insight into popular learning resources, which can help faculty leverage existing resources and create new ones.

Students were less likely to recognize interleaving as a beneficial learning strategy in our pre-/post-survey as compared with retrieval, spacing, and generation. These findings are similar to another recent study (Piza et al. 2019), suggesting this as a potential area for future interventions. Since Anki allows users to create study sessions based on content from multiple different ‘decks’ or topics, this learning tool may provide a natural opportunity to encourage students to use interleaving and see the value of this study technique for long-term retention.
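
To illustrate what an interleaved study session looks like compared with studying one topic at a time, the sketch below builds a session by taking cards from several topic decks in turn. This is a hypothetical illustration, not a description of how Anki orders cards; the `interleave` function, the deck names, and the card labels are placeholders.

```python
from itertools import zip_longest
from typing import Dict, List


def interleave(decks: Dict[str, List[str]]) -> List[str]:
    """Round-robin across decks so that consecutive cards come from different topics."""
    session: List[str] = []
    for group in zip_longest(*decks.values()):
        # zip_longest pads exhausted decks with None; skip those placeholders.
        session.extend(card for card in group if card is not None)
    return session


decks = {
    "microbiology": ["micro 1", "micro 2"],
    "immunology": ["immuno 1", "immuno 2", "immuno 3"],
    "pharmacology": ["pharm 1"],
}

print(interleave(decks))
# prints: ['micro 1', 'immuno 1', 'pharm 1', 'micro 2', 'immuno 2', 'immuno 3']
```

A blocked session would instead exhaust one deck before starting the next; the round-robin order here forces the learner to switch topics between cards.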

There are several limitations to our study. First, we limited our intervention to evidence-based principles shown to assist with knowledge acquisition given the stage of learner and timing of this course in our curriculum. As students advance to the clinical years and beyond, they will need to incorporate other evidence-based principles to assist with clinical reasoning, such as illness scripts (Moghadami et al. 2021). Second, although we demonstrated changes in students’ study habits, we have limited data regarding how lasting changes were. Our interviews took place during the students’ next course, yet we do not have data beyond that time period. Furthermore, we did not demonstrate that our intervention resulted in a change in student performance. Because both course lectures and the examination were revised substantially at the same time we implemented the intervention, any comparison of examination performance to prior years could not be attributed only to the learning resource intervention. Third, we had a limited response rate with only fourteen paired responses, which restricts the strength of our inferences and introduces potential bias. Fourth, having the course director involved in the evaluation process may have biased responses. To mitigate this, we made it clear that we wanted honest responses, and we emphasized that survey data was anonymous. Moreover, interviews were conducted by a student from another program (KCU) after course grades were submitted, and students were assured that interviews were completely de-identified prior to analysis. Finally, since medical students adjust their study habits throughout medical school based on their experiences and peer advice, we cannot prove that all changes occurred due to our intervention; however, based on our interviews, we know that at least some students changed their habits due to our intervention.

In conclusion, we believe our study supports the value of providing students with evidence-based learning materials for their medical school courses. Schools looking to develop master adaptive learners may want to consider how they can integrate curricular elements to promote evidence-based study techniques outside of the classroom.

Supplementary Material

Practice points.

Incorporating instructor-developed evidence-based learning resources into a pre-clinical medical school course increased students’ use of effective study habits.

Students decreased use of external commercial learning resources when provided with instructor-developed electronic flashcards, lecture outlines, and self-assessment multiple-choice questions.

Seeking student input before developing learning resources and encouraging feedback after initial implementation led to student buy-in and higher-quality resources.

Faculty should incorporate evidence-based teaching and learning practices into their courses in addition to teaching general principles of effective study habits.

Acknowledgments

The authors wish to thank the Emory University School of Medicine students in the Class of 2023 for their feedback on the learning resources during the first year of implementation and the students in the Class of 2024 who volunteered to participate in the study. They would also like to thank all of the faculty teaching in the Introduction to Human Disease course for their help in the creation of learning resources for the students.

Funding

Research reported in this publication was supported in part by Imagine, Innovate and Impact (I3) from the Emory School of Medicine, a gift from Woodruff Fund Inc., and through the Georgia CTSA NIH award (UL1-TR002378). LSW is supported in part by the National Center for Advancing Translational Sciences of the National Institutes of Health under Award Number UL1TR002378 and Award Number TL1TR002382.

Footnotes

Ethical approval

This study was deemed exempt from review by the Emory University Institutional Review Board on November 24, 2020 (IRB ID: STUDY00001784).

Disclosure statement

The authors report no conflicts of interest. The authors alone are responsible for the content and writing of the article.

Supplemental data for this article is available online at https://doi.org/10.1080/0142159X.2023.2218537

References

- Biwer F, Bruin AD, Persky A. 2023. Study smart – impact of a learning strategy training on students’ study behavior and academic performance. Adv Health Sci Educ Theory Pract. 28(1): 147–167. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chang C, Colón-Berlingeri M, Mavis B, Laird-Fick HS, Parker C, Solomon D. 2021. Medical student progress examination performance and its relationship with metacognition, critical thinking, and self-regulated learning strategies. Acad Med. 96(2):278–284. [DOI] [PubMed] [Google Scholar]

- Coda JE. 2019. Third-party resources for the USMLE: reconsidering the role of a parallel curriculum. Acad Med. 94(7):924. [DOI] [PubMed] [Google Scholar]

- Cornelius TL, Owen-DeSchryver J. 2008. Differential effects of full and partial notes on learning outcomes and attendance. Teach Psychol. 35(1):6–12. [Google Scholar]

- Creswell J, Plano Clark V. 2007. Designing and conducting mixed methods research. Thousand Oaks, (CA): Sage Publishing. [Google Scholar]

- Cutrer WB, Miller B, Pusic MV, Mejicano G, Mangrulkar RS, Gruppen LD, Hawkins RE, Skochelak SE, Moore DE. 2017. Fostering the development of master adaptive learners. Acad Med. 92(1): 70–75. [DOI] [PubMed] [Google Scholar]

- Cutting MF, Saks NS. 2012. Twelve tips for utilizing principles of learning to support medical education. Med Teach. 34(1):20–24. [DOI] [PubMed] [Google Scholar]

- Deng F, Gluckstein JA, Larsen DP. 2015. Student-directed retrieval practice is a predictor of medical licensing examination performance. Perspect Med Educ. 4(6):308–313. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dunlosky J, Rawson KA, Marsh EJ, Nathan MJ, Willingham DT. 2013. Improving students’ learning with effective learning techniques. Psychol Sci Public Interest. 14(1):4–58. [DOI] [PubMed] [Google Scholar]

- Gheihman G, Callahan DG, Onyango J, Gooding HC, Hirsh DA. 2021. Coproducing clinical curricula in undergraduate medical education: student and faculty experiences in longitudinal integrated clerk-ships. Med Teach. 43(11):1267–1277. [DOI] [PubMed] [Google Scholar]

- Gooding HC, Mann K, Armstrong E. 2017. Twelve tips for applying the science of learning to health professions education. Med Teach. 39(1):26–31. [DOI] [PubMed] [Google Scholar]

- Hafferty FW, O’Brien BC, Tilburt JC. 2020. Beyond high-stakes testing: learner trust, educational Acad Med. 95(6):833–837. [DOI] [PubMed] [Google Scholar]

- Harris BHL, Walsh JL, Tayyaba S, Harris DA, Wilson DJ, Smith PE. 2015. A novel student-led approach to multiple-choice question generation and online database creation, with targeted clinician input. Teach Learn Med. 27(2):182–188. [DOI] [PubMed] [Google Scholar]

- Hirumi A, Horger L, Harris DM, Berry A, Daroowalla F, Gillum S, Dil N, Cendán JC. 2022. Exploring students’ [pre-pandemic] use and the impact of commercial-off-the-shelf learning platforms on students’ national licensing exam performance: a focused review – BEME Guide No. 72. Med Teach. 44(7):707–719. [DOI] [PubMed] [Google Scholar]

- Kanter SL. 2012. To be there or not to be there. Acad Med. 87(6):679. [DOI] [PubMed] [Google Scholar]

- Kapadia SJ. 2021. Perspectives of a 2nd-year medical student on ‘Students as Partners’ in higher education – what are the benefits, and how can we manage the power dynamics? Med Teach. 43(4):478–479. [DOI] [PubMed] [Google Scholar]

- Kerfoot BP, DeWolf WC, Masser BA, Church PA, Federman DD. 2007. Spaced education improves the retention of clinical knowledge by medical students: a randomised controlled trial. Med Educ. 41(1): 23–31. [DOI] [PubMed] [Google Scholar]

- Kerfoot BP, Fu Y, Baker H, Connelly D, Ritchey ML, Genega EM. 2010. Online spaced education generates transfer and improves long-term retention of diagnostic skills: a randomized controlled trial. J Am Coll Surg. 211(3):331.e1–337.e1.

- Könings KD, Mordang S, Smeenk F, Stassen L, Ramani S. 2021. Learner involvement in the co-creation of teaching and learning: AMEE Guide No. 138. Med Teach. 43(8):924–936.

- Kornell N. 2009. Optimising learning using flashcards: spacing is more effective than cramming. Appl Cognit Psychol. 23(9):1297–1317.

- Krupat E, Richards JB, Sullivan AM, Fleenor TJ, Schwartzstein RM. 2016. Assessing the effectiveness of case-based collaborative learning via randomized controlled trial. Acad Med. 91(5):723–729.

- Lambers A, Talia AJ. 2021. Spaced repetition learning as a tool for orthopedic surgical education: a prospective cohort study on a training examination. J Surg Educ. 78(1):134–139.

- Larsen DP, Butler AC, Roediger HL. 2009. Repeated testing improves long-term retention relative to repeated study: a randomised controlled trial. Med Educ. 43(12):1174–1181.

- Lu M, Farhat JH, Dallaghan GLB. 2021. Enhanced learning and retention of medical knowledge using the mobile flash card application Anki. Med Sci Educ. 31(6):1975–1981.

- Martens SE, Meeuwissen SNE, Dolmans DHJM, Bovill C, Könings KD. 2019. Student participation in the design of learning and teaching: disentangling the terminology and approaches. Med Teach. 41(10):1203–1205.

- Moghadami M, Amini M, Moghadami M, Dalal B, Charlin B. 2021. Teaching clinical reasoning to undergraduate medical students by illness script method: a randomized controlled trial. BMC Med Educ. 21(1):87.

- Norman G. 2009. Teaching basic science to optimize transfer. Med Teach. 31(9):807–811.

- Piza F, Kesselheim JC, Perzhinsky J, Drowos J, Gillis R, Moscovici K, Danciu TE, Kosowska A, Gooding H. 2019. Awareness and usage of evidence-based learning strategies among health professions students and faculty. Med Teach. 41(12):1411–1418.

- Rawson KA, Dunlosky J. 2011. Optimizing schedules of retrieval practice for durable and efficient learning: how much is enough? J Exp Psychol Gen. 140(3):283–302.

- Roediger HL, Butler AC. 2011. The critical role of retrieval practice in long-term retention. Trends Cogn Sci. 15(1):20–27.

- Schmidt HG, Mamede S. 2020. How cognitive psychology changed the face of medical education research. Adv Health Sci Educ Theory Pract. 25(5):1025–1043.

- Schmidt HG, Rotgans JI, Rajalingam P, Low-Beer N. 2019. A psychological foundation for team-based learning: knowledge reconsolidation. Acad Med. 94(12):1878–1883.

- Scott KW, Callahan DG, Chen JJ, Lynn MH, Cote DJ, Morenz A, Fisher J, Antoine VL, Lemoine ER, Bakshi SK, et al. 2019. Fostering student–faculty partnerships for continuous curricular improvement in undergraduate medical education. Acad Med. 94(7):996–1001.

- Suliman S, Könings KD, Allen M, Al-Moslih A, Carr A, Koopmans RP. 2023. Sailing the boat together: co-creation of a model for learning during transition. Med Teach. 45(2):193–202.

- Versteeg M, Blankenstein F v, Putter H, Steendijk P. 2019. Peer instruction improves comprehension and transfer of physiological concepts: a randomized comparison with self-explanation. Adv Health Sci Educ Theory Pract. 24(1):151–165.

- Wu JH, Gruppuso PA, Adashi EY. 2021. The self-directed medical student curriculum. JAMA. 326(20):2005.

Associated Data
