Summary

The concept of localization precision, which is essential to localization microscopy, is formally extended from optical point sources to microscopic rigid bodies. Measurement functions are presented to calculate the planar pose and motion of microscopic rigid bodies from localization microscopy data. Physical lower bounds on the associated uncertainties – termed centroid precision and orientation precision – are derived analytically in terms of the characteristics of the optical measurement system and validated numerically by Monte Carlo simulations. The practical utility of these expressions is demonstrated experimentally by an analysis of the motion of a microelectromechanical goniometer indicated by a sparse constellation of fluorescent nanoparticles. Centroid precision and orientation precision, as developed here, are useful concepts due to the generality of the expressions and the widespread interest in localization microscopy for super-resolution imaging and particle tracking.

Keywords: Centroid precision, localization microscopy, localization precision, orientation precision, uncertainty

1. Introduction

Localization microscopy comprises a class of rapidly advancing and broadly applicable experimental and computational methods for measuring the positions and motions of small optical indicators (Deschout et al., 2014a, b; Chenouard et al., 2014). Although the resolution of optical microscopy is ordinarily limited by diffraction to the Rayleigh limit of approximately half the imaging wavelength, the position of an optical point source that is individually resolved within an image with pixels and noise can be measured with an uncertainty that is orders of magnitude smaller (Bobroff, 1986). The physical lower bound of uncertainty in estimating the position of a fluorescent point source was derived (Thompson et al., 2002) and corrected (Mortensen et al., 2010) to:

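| $\sigma_{\mathrm{LP}} = \sqrt{\dfrac{\sigma_a^2}{N}\left(\dfrac{16}{9} + \dfrac{8\pi\sigma_a^2 b^2}{N a^2}\right)}, \quad \sigma_a^2 = \sigma^2 + \dfrac{a^2}{12}$ | (1) |

where $\sigma$ is the standard deviation of the Gaussian approximation of the microscope point spread function, $a$ is the pixel pitch of the imaging sensor, $b^2$ is the expected number of background photons per pixel and $N$ is the total number of detected signal photons. This expression of localization precision, $\sigma_{\mathrm{LP}}$, denotes one standard deviation along a single spatial axis.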
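As a rough numerical illustration of Eq. (1), the following sketch evaluates the single-axis localization precision in the form published by Mortensen et al. (2010). The function and argument names are hypothetical, and the input values are the representative values listed in Table 1, assuming that they correspond to the point spread function standard deviation, the pixel pitch in the object plane, and the background photons per pixel.

```python
import math


def localization_precision(sigma_psf, pixel_pitch, background, n_photons):
    """Single-axis localization precision, in the length unit of sigma_psf.

    Assumed form (Mortensen et al., 2010):
        var = (sa2 / N) * (16/9 + 8*pi*sa2*b2 / (N * a**2)),
        sa2 = sigma**2 + a**2 / 12
    where `background` is b2, the expected background photons per pixel.
    """
    sa2 = sigma_psf**2 + pixel_pitch**2 / 12.0
    shot_term = 16.0 / 9.0
    background_term = 8.0 * math.pi * sa2 * background / (n_photons * pixel_pitch**2)
    return math.sqrt(sa2 / n_photons * (shot_term + background_term))


# Representative values (nm, nm, photons per pixel), assumed from Table 1.
for n in (1e4, 1e5):
    lp = localization_precision(286.0, 127.0, 228.0, n)
    print(f"N = {n:.0e} photons -> localization precision = {lp:.2f} nm")
```

Under these assumptions, the result falls within the range of 1 to 10 nm that is typical of an individual optical indicator.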

Localization precision is widely used as a metric of minimum uncertainty for designing measurements for low uncertainty and for assessing empirical uncertainties (Thompson et al., 2002; Ober et al., 2004; Betzig et al., 2006; Huang et al., 2009, 2013; Mortensen et al., 2010; Smith et al., 2010; Deschout et al., 2014b; Chenouard et al., 2014; Endesfelder & Heilemann, 2014). For a given experimental measurement or analysis, Eq. (1) can be used to predict if a target uncertainty is physically possible to achieve. Eq. (1) can also be informative of critical parameters in the design of an experimental measurement system to achieve a target uncertainty. In an analysis of experimental measurement results, if the empirical uncertainty is significantly larger than the localization precision, then experimental errors not modelled by Eq. (1), such as microscope drift (Elmokadem & Yu, 2015), vibration, or fixed pattern noise (Fox-Roberts et al., 2014; Long et al., 2014), must dominate the uncertainty in the measurement. If the empirical uncertainty is approximately equal to the localization precision, however, then it might be possible to reduce the empirical uncertainty by changing one of the experimental parameters in Eq. (1), such as increasing the number of detected signal photons emitted by an optical indicator.

Common optical indicators for localization microscopy include individual fluorophores, which are widely used in super-resolution imaging, and subresolution particles containing many fluorophores, which are frequently tracked as probes and fiducials (Ribeck & Saleh, 2008). Such fluorescent nanoparticles are better localized than individual fluorophores in two ways. An ensemble of many fluorophores with random orientations increases the number of detected signal photons in Eq. (1), other things being equal, and produces approximately isotropic emission for which simple algorithms produce optimal results (Enderlein et al., 2006; Stallinga & Rieger, 2010) that otherwise require more complex methods (Stallinga & Rieger, 2012). For many practical applications, the localization precision of an individual optical indicator is on the order of 1 to 10 nm.

Many microscopic objects of interest are rigid bodies with planar motions which can be measured by localizing and tracking multiple optical indicators in or on the bodies. For such experimental systems, information from multiple optical indicators can be mathematically combined to further reduce the uncertainty of a position measurement and to enable an orientation measurement with low uncertainty. Related measurements have diverse applications in microtechnology, nanotechnology, biology, materials and metrology (Freeman, 2001; Ropp et al., 2013; Berfield et al., 2006, 2007; McGray et al., 2013; Samuel et al., 2007; Yoshida et al., 2011; Teyssieux et al., 2011; Ueno et al., 2010). Recently, constellations of fluorescent nanoparticles indicating the motion of microscopic actuators were localized and tracked, demonstrating the utility of the measurement method (McGray et al., 2013; Copeland et al., 2015). McGray et al. (2013) presented an expression for the minimum uncertainty of the centroid of a constellation of point sources of equal brightness. However, metrics comparable to localization precision have not been developed for either the centroid or the orientation of a sparse constellation of point sources of variable brightness on a microscopic rigid body. Such metrics would be useful to design measurements and assess uncertainties in these experimental systems.

In this paper, the concept of localization precision is extended to the analogous concepts of centroid precision and orientation precision. Just as localization precision provides the minimum uncertainty of localizing a point source by optical microscopy, centroid precision and orientation precision provide minimum uncertainties of the position and orientation of a sparse constellation of point sources of variable brightness on a microscopic rigid body in the image plane of an optical microscope. In Section 2, measurement functions and associated uncertainties for planar pose and motion are formally derived in terms of the localization precision and weighting of individual point sources in the constellation. This leads to the expressions for centroid precision and orientation precision that are summarized in Section 3. In Section 4, the expressions are numerically tested by Monte Carlo simulation, giving confidence in their validity. In Section 5, the expressions are applied to evaluate the experimental measurement uncertainties of the motion of a microelectromechanical goniometer labelled with fluorescent nanoparticles, providing a specific example of the type of analysis for which the expressions are useful. Future directions are indicated in Section 6, and conclusions are made in Section 7. Details of the measurement functions and associated uncertainties are presented in the Appendix.

2. Measurement functions and uncertainties for planar pose and motion

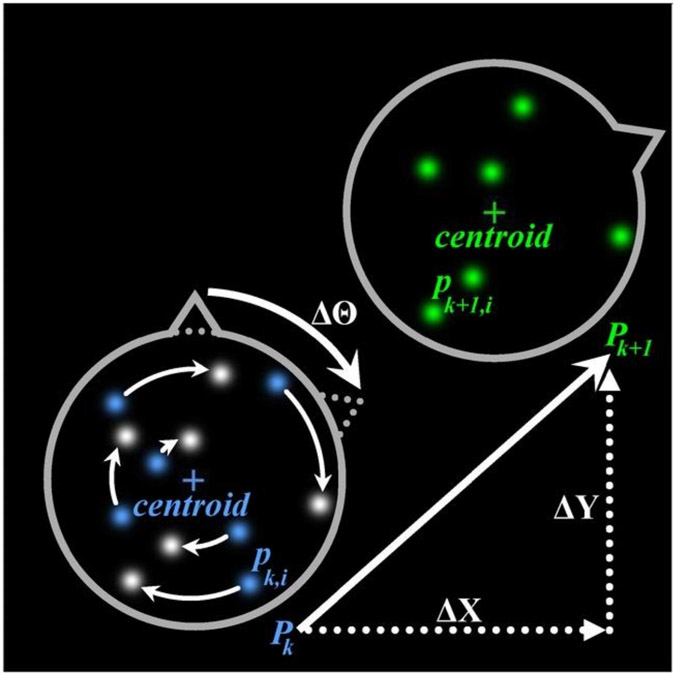

A sparse constellation of point sources in an invariant configuration can be used to measure the pose and motion of a rigid body in the imaging plane of an optical microscope by localizing and tracking the point sources, as illustrated in Figure 1. The optical indicators can be inherent to the body or applied for the purpose of the measurement. In such a measurement, an optical micrograph of a set of indicators in or on the body is captured, and the position of each point source is localized relative to the coordinate frame of the micrograph using an estimation technique, such as least squares or maximum likelihood (Mortensen et al., 2010). The images of the point sources in a micrograph captured after the body has moved are similarly localized. The full planar motion of the body can then be tracked by calculating the rigid planar transform that maps the points in the prior micrograph to the points in the subsequent micrograph. This transform can be expressed as a rotation followed by a translation.

Fig. 1.

Schematic illustrating the measurement concept, in which a sparse constellation of point sources in an invariant configuration indicates the planar motion of a microscopic rigid body. Planar motion of the body (gray) can be expressed by a rotation () followed by a translation, (, ). The initial positions of the point sources (blue) are , where is the index of a series of images, is the number of point sources, and is the position of the point source in the frame of an image sequence. After the body has moved, the final positions of the point sources (green) are . Uncertainties of each of the three motion parameters are derived from the uncertainties of the positions of the point sources. The fundamental limits of uncertainty of position and orientation measurements are termed centroid precision and orientation precision, respectively. These limits are derived from the localization precision and radial position of the individual point sources in the constellation.

Let be a set of point source positions estimated from an image of a rigid body, and let be the set of true positions of those point sources when an arbitrarily defined coordinate system intrinsic to the body is aligned with the (, ) axes of the measurement. The diacritical hat, such as appears in , is used to denote a true value, as opposed to an estimated value. Let be a transform over (, ) vectors in the Cartesian plane. The pose of the body is the triple (, , ) such that is the transform that best maps onto .

Similarly, if is a set of point source positions estimated prior to some planar rigid motion, and is the set of point source positions estimated subsequent to the motion, then is the transform characterizing the motion. Importantly, the triple (, , ) is independent of , allowing motion measurements to be performed independently of the choice of the origin, which can be arbitrarily defined. The sets and may represent the positions of all point sources observed in the two images or the intersection of two different sets of point sources observed in the two images, as, for example, in the case of super-resolution imaging (Betzig et al., 2006; Hess et al., 2006; Rust et al., 2006).

The measurement functions utilized in calculating from and are best selected to minimize the uncertainty of each coordinate of the motion (, , ). In selecting these measurement functions and in calculating the associated uncertainties of motion, random and independent errors in the position estimates of point sources are assumed. The rotation and translation components of the motion can be treated separately (Arun et al., 1987). The translation, (, ), is considered first. Each coordinate of each position estimate of a point source, or , has some associated measurement uncertainty, , , , or , which is at best the localization precision of that point source. If the uncertainties in , , , and are all equal, then (, ) can be estimated with minimum uncertainty by the centroid displacement. However, in the experimentally relevant case that some of the points have lower uncertainties than others, for example due to a larger number of detected signal photons, then it is appropriate to weight the contribution of each point to the measurement, producing the following measurement function and associated uncertainty:

| (2) |

where is the coordinate component of the weighted estimate of the object motion, is the associated uncertainty, and the set is a set of weights applied to the point sources. Optimal choices of and are inversely proportional to the sum of the variances, as shown in Eq. (2).
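The weighting described above can be sketched as follows. This is a minimal illustration rather than a transcription of Eq. (2): it assumes a normalized weighted mean of the per-point displacements, independent errors, and default weights inversely proportional to the sum of the variances of the two position estimates of each point. All names are hypothetical.

```python
import numpy as np


def weighted_displacement(x1, x2, s1, s2, weights=None):
    """Weighted single-axis displacement and its propagated uncertainty.

    x1, x2: estimated coordinates of each point in the first and second image.
    s1, s2: single-axis uncertainties of those estimates.
    weights: optional weights; default is inverse-variance weighting (assumed optimal).
    """
    x1, x2, s1, s2 = (np.asarray(v, dtype=float) for v in (x1, x2, s1, s2))
    var = s1**2 + s2**2                      # variance of each point's displacement
    w = 1.0 / var if weights is None else np.asarray(weights, dtype=float)
    w = w / w.sum()                          # normalize so the weights sum to one
    displacement = float(np.sum(w * (x2 - x1)))
    uncertainty = float(np.sqrt(np.sum(w**2 * var)))
    return displacement, uncertainty


# Two well-localized points and one poorly localized point (arbitrary units).
x1 = np.array([0.0, 10.0, 20.0])
x2 = x1 + 0.5
s = np.array([1.0, 1.0, 4.0])
print("uniform:", weighted_displacement(x1, x2, s, s, weights=np.ones(3)))
print("optimal:", weighted_displacement(x1, x2, s, s))
```

In this example, uniform weighting lets the poorly localized point dominate the uncertainty, whereas inverse-variance weighting nearly removes its contribution.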

Similarly, an estimate of the minimum uncertainty of object rotation, , can be calculated from the optimally weighted measurement function with uncertainty as follows:

| (3) |

where is the weighted estimate of the rotation of the object, (, ) and (, ) are the polar coordinates of the measured position of the point source with respect to the unweighted centroid of the constellation in the first and second image, respectively, is the uncertainty of , is the weight applied to the point source, and is the value of the weight in a normalized, optimized weighting. Derivations are presented in the Appendix.
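A comparable sketch for rotation is given below. It is an illustration under stated assumptions, not a transcription of Eq. (3): polar angles are taken with respect to the unweighted centroid in each image, the angular uncertainty of each point is approximated as its position uncertainty divided by its radius, the uncertainty of the centroid itself is neglected, and the default weights are inversely proportional to the variance of each angular difference. Points at or very near the centroid are not handled.

```python
import numpy as np


def weighted_rotation(p1, p2, s1, s2, weights=None):
    """Weighted rotation estimate (rad) and its approximate uncertainty.

    p1, p2: (n, 2) arrays of estimated positions in the first and second image.
    s1, s2: single-axis position uncertainties of each point in each image.
    """
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    s1 = np.asarray(s1, dtype=float)
    s2 = np.asarray(s2, dtype=float)
    q1 = p1 - p1.mean(axis=0)                  # offsets from the unweighted centroid
    q2 = p2 - p2.mean(axis=0)
    r1 = np.hypot(q1[:, 0], q1[:, 1])
    r2 = np.hypot(q2[:, 0], q2[:, 1])
    th1 = np.arctan2(q1[:, 1], q1[:, 0])
    th2 = np.arctan2(q2[:, 1], q2[:, 0])
    dth = np.angle(np.exp(1j * (th2 - th1)))   # wrap differences to (-pi, pi]
    var = (s1 / r1) ** 2 + (s2 / r2) ** 2      # small-angle approximation (assumed)
    w = 1.0 / var if weights is None else np.asarray(weights, dtype=float)
    w = w / w.sum()
    return float(np.sum(w * dth)), float(np.sqrt(np.sum(w**2 * var)))


# Noise-free check: rotate a square constellation by 10 mrad and translate it.
angle = 0.01
rot = np.array([[np.cos(angle), -np.sin(angle)], [np.sin(angle), np.cos(angle)]])
p1 = np.array([[10.0, 10.0], [-10.0, 10.0], [-10.0, -10.0], [10.0, -10.0]])
p2 = p1 @ rot.T + np.array([3.0, -2.0])
dtheta, sigma_dtheta = weighted_rotation(p1, p2, np.ones(4), np.ones(4))
print(dtheta, sigma_dtheta)
```

Because the angles are referenced to the centroid of each image, the estimate is unaffected by the translation component of the motion.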

If for all , then the uncertainty of each component of the motion is a factor of greater than the corresponding component of the pose. The true positions are not ordinarily known or estimated in measurement applications, since there is no axis to which the orientation of the rigid body is absolutely registered. In such cases, rotation is a meaningful metric, but orientation is not. In contrast, the true positions, , are typically known in simulations, such as the one reported in Section 4.

3. Centroid precision and orientation precision

The expression of localization precision given by Eq. (1) is the minimum uncertainty of a position measurement of a point source. Analogously, the equations in Table 3 can be used to express the minimum uncertainties of position and orientation measurements of a constellation of point sources on a microscopic rigid body in the image plane of a microscope. Most notable of these expressions are the centroid precision and the orientation precision. Centroid precision and orientation precision are minimum values of the centroid uncertainty and orientation uncertainty of the constellation, respectively, as determined from optimally weighted measurements of the pose of the constellation:

| (4) |

where (, ) is the centroid precision, is the orientation precision and is the total number of signal photons detected from the point source. The minimum values for the associated measurements of motion are given in Table 3. These expressions have several practical implications. Both centroid precision and orientation precision can be improved by increasing the brightness of individual point sources and the number of point sources in the constellation. Orientation precision can be further improved by increasing the radius of the constellation.
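The practical implications stated above can be explored numerically. The sketch below is not a transcription of Eq. (4). It assumes inverse-variance weighting of independent point sources, for which the minimum centroid uncertainty is $(\sum_i \sigma_i^{-2})^{-1/2}$ and the minimum orientation uncertainty is $(\sum_i r_i^2 \sigma_i^{-2})^{-1/2}$, where $\sigma_i$ is the localization precision of point source $i$ in the Mortensen et al. (2010) form and $r_i$ is its radial distance from the centroid. All names and the example constellation are hypothetical.

```python
import numpy as np


def localization_precision(sigma_psf, a, b2, n_photons):
    """Single-axis localization precision per point source (assumed Mortensen form)."""
    n_photons = np.asarray(n_photons, dtype=float)
    sa2 = sigma_psf**2 + a**2 / 12.0
    return np.sqrt(sa2 / n_photons * (16.0 / 9.0 + 8.0 * np.pi * sa2 * b2 / (n_photons * a**2)))


def centroid_precision(sigma_lp):
    """Minimum single-axis centroid uncertainty under inverse-variance weighting."""
    sigma_lp = np.asarray(sigma_lp, dtype=float)
    return float(1.0 / np.sqrt(np.sum(1.0 / sigma_lp**2)))


def orientation_precision(sigma_lp, radii):
    """Minimum orientation uncertainty (rad); radii in the same unit as sigma_lp."""
    sigma_lp = np.asarray(sigma_lp, dtype=float)
    radii = np.asarray(radii, dtype=float)
    return float(1.0 / np.sqrt(np.sum(radii**2 / sigma_lp**2)))


# Hypothetical constellation: 20 sources with variable brightness and radius.
rng = np.random.default_rng(0)
n_photons = rng.uniform(1e4, 1e5, size=20)
radii_nm = rng.uniform(5e3, 50e3, size=20)
sigma_lp = localization_precision(286.0, 127.0, 228.0, n_photons)
print("centroid precision (nm):", centroid_precision(sigma_lp))
print("orientation precision (rad):", orientation_precision(sigma_lp, radii_nm))
print("with doubled radii (rad):", orientation_precision(sigma_lp, 2.0 * radii_nm))
```

In this form, doubling every radius halves the orientation uncertainty, while the centroid uncertainty depends only on the brightness and number of the point sources.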

Table 3.

Measurement functions and associated uncertainties.

| Pose(, , ) |

Motion(,,) |

|||

|---|---|---|---|---|

| Measurand | Measurement | Uncertainty | Measurement | Uncertainty |

| , | ||||

| , | ||||

| , | ||||

| , | ||||

| Expressions for -axis pose and motion are isomorphic to those for -axis pose and motion. | ||||

is the number of point sources used in the measurement; , , the estimated coordinate of the ith point in the only image, image , and image ; , , the single-axis uncertainties of the point in the only image, image , and image ; , , the estimated coordinate of the point in the only image, image , and image ; , , the estimated coordinate of the point in the only image, image , and image ; , the true centroid-offset position of the point in polar coordinates; , the uniformly weighted and optimally weighted estimates of -axis centroid position; , the uniformly weighted and optimally weighted estimates of orientation; , the uniformly weighted and optimally weighted estimates of -axis centroid displacement; , the uniformly weighted and optimally weighted estimates of rotation.

4. Numerical validation of uncertainty equations

A Monte Carlo simulation was conducted to validate the measurement uncertainties shown in Table 3. Sets of points were randomly generated, and each set of points was subjected to a randomly generated rigid planar transformation. Two images were synthesized from each set of points, one from the untransformed configuration of the set and one from the transformed configuration. In the synthetic images, each point was represented by a two-dimensional Gaussian intensity function with a randomly generated total number of photons. After adding a randomly generated uniform background photon intensity to the image, the intensity value of each pixel in the image was used as the lambda parameter for generating a value from a Poisson distribution. In this way, each image was constructed to resemble the image of a set of point sources recorded by an ideal sensor (Geist et al., 1982), and to represent isotropic emitters, as opposed to dipole emitters, for which synthetic images can be found elsewhere (Sage et al., 2015). The ranges of the parameters used and other details of the simulation are summarized in Section A5 of the Appendix.
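The image synthesis described above can be sketched as follows. This is a minimal illustration, not the simulation code used for the results in this paper. It assumes that each Gaussian is sampled at pixel centres rather than integrated over pixel areas, which is a reasonable approximation when the Gaussian standard deviation is at least about one pixel. The function name and the parameter values are hypothetical.

```python
import numpy as np


def synthesize_image(shape, points, photons, sigma, background, rng):
    """Synthesize a shot-noise-limited image of isotropic point sources.

    shape: (rows, columns) of the image in pixels.
    points: iterable of (x, y) positions in pixels.
    photons: total expected signal photons for each point.
    sigma: standard deviation of the Gaussian point spread function in pixels.
    background: expected background photons per pixel (uniform).
    rng: a numpy.random.Generator.
    """
    yy, xx = np.mgrid[0:shape[0], 0:shape[1]]
    expected = np.full(shape, float(background))
    for (x0, y0), n in zip(points, photons):
        gauss = np.exp(-((xx - x0) ** 2 + (yy - y0) ** 2) / (2.0 * sigma**2))
        expected += n * gauss / (2.0 * np.pi * sigma**2)  # sampled at pixel centres
    # Each pixel's expected intensity is the lambda parameter of a Poisson draw.
    return rng.poisson(expected)


rng = np.random.default_rng(1)
image = synthesize_image((64, 64), [(20.3, 30.7), (45.1, 12.4)], [5e4, 2e4], 2.0, 10.0, rng)
print(image.shape, image.sum())
```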

The positions of the points in each synthetic image were estimated by regression to a bivariate Gaussian with parameters , where (, ) are the coordinates of the Gaussian peak, is the Gaussian amplitude, is the Gaussian standard deviation, is an offset approximating the background intensity, and is the ratio of camera pixel intensity counts to photon counts. Poses and motions of the simulated rigid body were calculated from the estimated point positions using the measurement functions in Table 3. For simulated pose measurements, the true positions of the untransformed points were treated as .

A set of image pairs was simulated according to the above procedure. Each pair was simulated times for a total of 1 million images. For each of the 12 measurands, , of Table 3, the standard deviation of the set of estimates is the simulated uncertainty of the measurement, . The calculated uncertainty, , is determined from the associated uncertainty equation in Table 3. For each measurand, the normalized root mean square residual is defined as , the residual bias is defined as , and the coefficient of determination is defined as . All of the normalized root mean square residuals were less than 2.5 %, the absolute values of all of the residual biases were less than 0.8 %, and the coefficients of determination for all of the measurands were greater than 0.996, indicating good agreement between the calculated and simulated uncertainties.

Normal probability plots (not shown) indicated that the residuals were normally distributed. Graphical residual analysis demonstrated good randomness in the residuals with respect to the calculated uncertainties and all model parameters except for the standard deviation of the Gaussian point spread function, . A slight positive relationship between and the normalized residual of each measurand was fit to a linear regression model. For each measurand, the slope of this trend line was less than 0.5 % per pixel of . This systematic difference between the analytical calculations and the Monte Carlo calculations may have been due to truncation of wide Gaussians, since the region of interest employed in calculating each point source position had a width of 30 pixels. The largest simulated Gaussians with were therefore truncated at . The systematic variation between the analytical and Monte Carlo models due to this effect was only 3% across the full domain of point spread functions tested.

5. Experimental pose and motion measurements

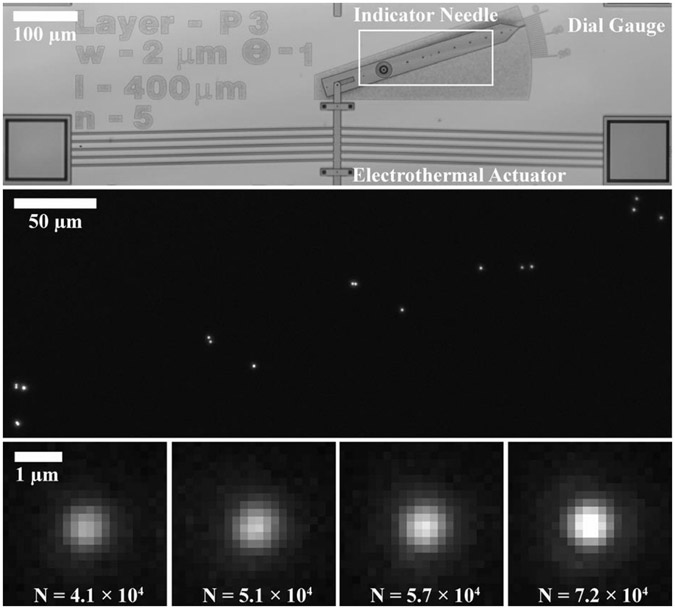

The utility of the derived measurement functions and associated uncertainties was demonstrated by analysis of the uncertainties from experimental measurements of the motion of a microelectromechanical system (MEMS). This particular MEMS (Oak et al., 2011) took the form of a goniometer, articulated by a chevron-type electrothermal actuator (Sinclair, 2000; Baker et al., 2004), with an indicator needle rotating around a pivot, pointing to a dial gauge with graduations at increments of 17.5 mrad (1°) for readout, as shown in Figure 2. The indicator needle was labelled with subresolution fluorescent nanoparticles, and the system was imaged using a widefield epifluorescence microscope equipped with a light emitting diode for excitation at approximately 630 nm, an objective lens with a nominal magnification of 50× and a numerical aperture of 0.55, and a complementary metal–oxide–semiconductor camera with a nominal pixel size of 6.5 μm × 6.5 μm for detection at approximately 660 nm. A region of the imaging sensor of 812 pixels × 1856 pixels was used, resulting in a field of view of 103 μm × 236 μm. Representative parameters of the experimental measurement system that are relevant to the calculation of uncertainties are given in Table 1.

Fig. 2.

(Top) Optical brightfield micrograph showing a microelectromechanical system (MEMS) in the form of a goniometer, with an electrothermal actuator linked to an indicator needle. Linear actuation of the electrothermal actuator resulted in rotary motion of the indicator needle around a pivot. Coarse measurement of rotation was enabled by a graduated dial gauge. A region of interest is indicated by a white box. (Middle) Optical fluorescence micrograph showing a constellation of fluorescent nanoparticles on the indicator needle in the region of interest. (Bottom) Optical fluorescence micrographs showing four of the nanoparticles that label the indicator needle, appearing as the point spread function of the imaging system. The nanoparticles are ordered from left to right by increasing numbers of detected signal photons (), which vary due to polydispersity in nanoparticle size and heterogeneity in illumination intensity. A larger number of detected signal photons results in a lower measurement uncertainty, motivating weighting of the contributions of individual nanoparticles to the overall measurement.

Table 1.

Representative parameters of the experimental measurement system.

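| Parameter | Value | Units | Subsystem |
|---|---|---|---|
| Pixel pitch in the object plane | 127 | nm | Microscope |
| Background photons per pixel | 228 | photons per pixel | Microscope |
| Standard deviation of the point spread function | 286 | nm | Microscope |
| Detected signal photons per particle | $10^4$ to $10^5$ | photons | Particles |
| Number of particles | 10 to 100 | | Particles |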

To evaluate the uncertainties of position measurements of individual fluorescent nanoparticles, 2000 sequential fluorescence micrographs of the labelled indicator needle were recorded at a rate of 8 Hz in the absence of intended motion. Images were processed after the experiment at a rate of 2.5 Hz, including target detection, registration, Gaussian estimation and motion calculation. The $x$ and $y$ positions of each nanoparticle were measured by least squares Gaussian estimation from the image data, which is equivalent to maximum likelihood estimation for the number of detected signal photons. The corresponding uncertainty of each coordinate of the position of each nanoparticle was determined from the root mean square displacement of the nanoparticle between successive images divided by $\sqrt{2}$, which is equivalent to a pooled standard deviation of successive image pairs (ISO Technical Advisory Group 4, 1995). The position uncertainties of individual nanoparticles were then used to calculate predicted uncertainties for motion measurements of the nominally rigid indicator needle, according to the uncertainty expressions of Table 3.
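The estimate of position uncertainty from successive images can be sketched as follows. The sketch assumes that the divisor is $\sqrt{2}$, which follows from the pooled standard deviation of pairs of independent measurements with equal variance. The simulated noise level and drift are hypothetical and serve only to show that the successive-difference estimate is insensitive to slow drift, unlike the standard deviation of the full series.

```python
import numpy as np


def successive_difference_uncertainty(x):
    """Single-coordinate position uncertainty from successive images.

    Root mean square displacement between successive images divided by sqrt(2),
    which equals the pooled standard deviation of successive image pairs.
    """
    d = np.diff(np.asarray(x, dtype=float))
    return float(np.sqrt(np.mean(d**2)) / np.sqrt(2.0))


# Hypothetical series: 2000 images, 0.01 pixel noise, slow linear drift of 1 pixel.
rng = np.random.default_rng(0)
x = rng.normal(0.0, 0.01, size=2000) + np.linspace(0.0, 1.0, 2000)
print("successive-difference estimate:", successive_difference_uncertainty(x))
print("standard deviation of full series:", float(np.std(x)))
```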

Empirical uncertainties for motion measurements of the indicator needle were then estimated from the apparent motion of the needle between each pair of successive images, due to vibration, drift, or other non-ideal behaviour, as well as photon shot noise described by Eq. (1). The motion of the indicator needle between each successive pair of images was estimated using the expressions of Table 3, after establishing a correspondence between the points in each image (Besl & McKay, 1992; Pennec & Thirion, 1997; Zitova & Flusser, 2003). The empirical uncertainty of each coordinate of this motion was calculated as the standard deviation of the set of estimates of the corresponding coordinate. The resulting empirical uncertainties are compared in Table 2 with the uncertainties predicted by the expressions of Table 3, and also with the minimum uncertainties given by the centroid and orientation precision.

Table 2.

Measurement uncertainties of the planar motion of a MEMS goniometer.

| Uncertainty | $x$ displacement, uniform (pixels) | $x$ displacement, optimal (pixels) | $y$ displacement, uniform (pixels) | $y$ displacement, optimal (pixels) | Rotation, uniform (μrad) | Rotation, optimal (μrad) |
|---|---|---|---|---|---|---|
| Predicted | 0.0093 | 0.0081 | 0.0096 | 0.0081 | 21.4 | 18.5 |
| Empirical | 0.0101 | 0.0093 | 0.0107 | 0.0097 | 23.4 | 18.9 |
| Minimum | 0.0031 | 0.0030 | 0.0031 | 0.0030 | 6.7 | 6.5 |

The predicted and empirical uncertainties agree to within 2.9–16.5%. This near equality of uncertainties validates the assumption of planar rigid motion, as violation of this assumption would lead to much higher empirical uncertainties. In contrast, the predicted and empirical uncertainties are approximately a factor of three greater than the minimum uncertainties defined by Eq. (4). This is a useful result, indicating that the limiting factor of the experimental measurement was not one of the parameters modelled by the measurement functions.

Such factors might include vibration and drift of the microscope system, or fixed pattern noise of the complementary metal–oxide–semiconductor camera. The empirical uncertainty might therefore be improved by up to a factor of three by additional investigation and mitigation of such factors, for example, by drift correction (Lee et al., 2012; Elmokadem & Yu, 2015) or camera calibration (Huang et al., 2013). If the empirical uncertainties and predicted uncertainties were then to become approximately equal to the associated minimum uncertainties, the signal-to-noise ratio would be the limiting factor of the measurement. Only at this point would the detection of more signal photons, for example, be useful to further reduce the measurement uncertainty. Such comparison of measured to minimum uncertainties provides practical guidance for ongoing improvement of the experimental measurement system.
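The comparison of measured to minimum uncertainties can be made explicit with a short calculation on the values of Table 2. In the sketch below, the column labels are assumptions, taking the first four columns as the two translation coordinates in pixels and the last two as rotation in μrad. The relative difference is referenced to the empirical value, which is also an assumption.

```python
# Values copied from Table 2; the column labels are assumed.
columns = ["x uniform", "x optimal", "y uniform", "y optimal", "rotation uniform", "rotation optimal"]
predicted = [0.0093, 0.0081, 0.0096, 0.0081, 21.4, 18.5]
empirical = [0.0101, 0.0093, 0.0107, 0.0097, 23.4, 18.9]
minimum = [0.0031, 0.0030, 0.0031, 0.0030, 6.7, 6.5]

# Ratio of empirical to minimum uncertainty: the potential for improvement.
excess_over_minimum = [e / m for e, m in zip(empirical, minimum)]

# Relative difference between empirical and predicted uncertainty.
relative_gap = [(e - p) / e for e, p in zip(empirical, predicted)]

for name, ratio, gap in zip(columns, excess_over_minimum, relative_gap):
    print(f"{name:17s} empirical/minimum = {ratio:.2f}, (empirical - predicted)/empirical = {gap:.1%}")
```

Each ratio of empirical to minimum uncertainty is dimensionless, so the translation and rotation columns can be compared directly despite their different units.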

Once the uncertainty of the rotation measurement was established, the repeatability of the MEMS rotation was tested. The actuator was driven alternately with an applied voltage of 0 and 5 V for 1000 cycles, which repeatedly rotated the indicator needle by an angle of ±5.00 mrad (±0.287°). Fluorescence micrographs were taken after each motion, and the rotation of the indicator needle was determined using the optimally weighted and uniformly weighted estimators, and . The rotational repeatability of the indicator needle, as determined by the standard deviation of the motion measurement, was 18.9 μrad – the same as the empirical uncertainty – indicating that the observed repeatability was dominated by measurement uncertainty. Therefore, the repeatability of the MEMS may have been even better than indicated by this measurement. This is an interesting result, considering the sliding contact in the linkage coupling the electrothermal actuator and indicator needle. The motions of such systems can be complex, as will be investigated in future studies.

6. Future directions

The measurement functions and associated uncertainties presented in this paper have expressed the physical lower bounds of uncertainty of measurements of planar pose and motion, in terms of the parameters of the experimental measurement system used to perform localization microscopy. The direct calculation of these minimum uncertainties was facilitated by the existing theoretical basis of localization precision. This is an important distinction between the mathematical expressions presented here, and many generic algorithms for image registration (Zitova & Flusser, 2003). Although measurement uncertainties derived from such algorithms are beginning to be addressed (Fitzpatrick & West, 2001; Simonson et al., 2007), a similar physical basis for uncertainty is not ordinarily available for such generic algorithms. Future work might trace the uncertainties of generic algorithms to a physical basis in the quantization of light.

The measurement functions and associated uncertainties presented in this paper apply to the broadly relevant case of the pose and motion of rigid bodies within the image plane of an optical microscope. However, in some applications of localization microscopy, microscopic rigid bodies exhibit a component of motion out of the image plane. Such motion may lead to systematic effects that are not modelled here, and would be indicated by a discrepancy between the empirical and predicted uncertainties. The effects of such motion will be addressed in future work by a generalization of the image mapping from rigid to affine transformations.

The measurement functions, associated uncertainties, and experimental analysis presented in this paper are predicated on the assumption stated in Section 2 that errors in position estimates of point sources are random and independent. However, this assumption could be invalidated by some motions of optical microscopes, such as drift and vibration, which could result in correlated errors in position estimates of multiple point sources. Related effects will be addressed in future work.

Finally, the experimental measurements in this paper were performed on a microfabricated device that implemented motion as an engineered function. The measurement functions and associated uncertainties presented in this paper are similarly applicable in different experimental contexts, for example, reference measurements of multiple point sources in a fiducial constellation for investigation and correction of errors from unintended motion of an optical microscope.

7. Conclusions

This paper has extended the concept of localization precision from optical point sources to microscopic rigid bodies in the imaging plane of a widefield microscope. This has established a firm foundation for related measurements involving localization microscopy. A complete set of measurement functions in closed form, along with the associated set of measurement uncertainties, was calculated for the planar pose and motion of rigid bodies indicated by a sparse constellation of multiple point sources of light in an invariant configuration. Physical limits on the minimum uncertainties, termed centroid precision and orientation precision, were expressed in terms of the characteristic properties of the optical measurement system. These measurement functions and uncertainties were numerically validated by Monte Carlo simulation. The utility of the expressions was demonstrated by analysis of the empirical uncertainty of motion measurements of a microelectromechanical goniometer. Because of the generality of centroid precision and orientation precision, and the widespread interest in super-resolution imaging and particle tracking, these innovations are broadly applicable to designing measurements for low uncertainty, interpreting the significance of measurement uncertainties, and identifying sources of uncertainty in measurement systems.

Acknowledgements

This research was performed in the Physical Measurement Laboratory and the Center for Nanoscale Science and Technology at the National Institute of Standards and Technology (NIST). The authors acknowledge support of this research under the NIST Innovations in Measurement Science Program. C.R.C. acknowledges support of this research under the Cooperative Research Agreement between the University of Maryland and the National Institute of Standards and Technology Center for Nanoscale Science and Technology, Award 70NANB10H193, through the University of Maryland.

Appendix

Detailed descriptions of measurement functions, associated uncertainties, analytical derivations, and numerical simulations are presented in this appendix.

A1. Measurement functions for planar motion of a rigid body

If a sparse constellation of multiple point sources in an invariant configuration is located in or on a rigid body, then the planar pose of the body can be determined from the positions of the point sources. Similarly, the motion of the body can be determined from the change in the positions of the point sources resulting from the motion. Measurement functions for each coordinate of pose and motion, reflecting uniform weighting of the point sources and optimal weighting of the point sources, along with associated uncertainties for the measurements, are presented in Table 3. Derivations and discussion of the measurement functions are presented in the remainder of this section. The associated uncertainties are derived and discussed in Section A2.

A1.1. Pose measurement functions.

Let be the true positions of a set of point sources on a rigid body and let be the set of true positions of those point sources when an arbitrarily-defined coordinate system intrinsic to the body is aligned with the (, ) axes of the measurement. The true pose of the body is defined by the proper rigid planar transform, over (, ) vectors in the Cartesian plane, that maps to . The transform can be expressed in terms of three scalar parameters as follows:

| (A1) |

where the triple, (x, y, θ), is an equivalent expression of the true object pose, with x corresponding to the x-axis position, y to the y-axis position, and θ to the orientation.

Let be a set of estimates of the positions in . Estimates of the object pose can be calculated from as follows:

| (A2) |

where , and are estimates of , and , respectively; and are the Cartesian coordinates of ; and are the polar coordinates of with respect to an origin at the (weighted) centroid of ; and are the polar coordinates of with respect to an origin at the (weighted) centroid of and the sets , and are weights applied to the measurements.

A1.2. Motion measurement functions.

If two images bracket a motion of a planar rigid body, then the motion can be determined from the change in the estimated positions of corresponding point sources in the two images, as determined by localization microscopy. Let be the true positions of a set of point sources on a rigid body prior to some motion, and let be the set of true positions of those point sources after the motion. The motion of the body is defined by the proper rigid planar transform, over (, ) vectors in the Cartesian plane, that maps to . The transform can be expressed in terms of three scalar parameters as follows:

| (A3) |

where (Δx, Δy, Δθ) is an equivalent expression of the true motion of the object, with Δx, Δy and Δθ corresponding to the x-axis displacement, y-axis displacement, and rotation, respectively.

Let be a set of estimates of the positions in , and let be a set of estimates of the positions in . Estimates of the motion of the object can be calculated from and as follows:

| (A4) |

where , and are estimates of , and , respectively; (, ) and (, ) are the Cartesian coordinates of and ; (, ) and (, ) are the polar coordinates of and with respect to origins at the (weighted) centroids of and , respectively; and the sets , and are weights applied to the measurements.
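The motion estimate described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the closed-form expressions of Eq. (A4): a single weight set is shared by all three coordinates, the displacement is reported as the displacement of the weighted centroid, and the rotation is taken as the weighted least-squares angle between the two centroid-referenced point sets. The names `weighted_centroid` and `estimate_motion` are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def weighted_centroid(points: Sequence[Point], weights: Sequence[float]) -> Point:
    """Weighted centroid of a set of (x, y) points."""
    total = sum(weights)
    cx = sum(w * x for w, (x, _) in zip(weights, points)) / total
    cy = sum(w * y for w, (_, y) in zip(weights, points)) / total
    return cx, cy


def estimate_motion(
    before: Sequence[Point],
    after: Sequence[Point],
    weights: Optional[Sequence[float]] = None,
) -> Tuple[float, float, float]:
    """Return (dx, dy, dtheta) for corresponding points in two images.

    Assumption: dx and dy are the displacement of the weighted centroid,
    and dtheta is the weighted least-squares rotation about the centroids.
    """
    if weights is None:
        weights = [1.0] * len(before)  # uniform weighting
    cb = weighted_centroid(before, weights)
    ca = weighted_centroid(after, weights)
    s = 0.0  # accumulates w * r * r' * sin(phi' - phi)
    c = 0.0  # accumulates w * r * r' * cos(phi' - phi)
    for w, (xb, yb), (xa, ya) in zip(weights, before, after):
        ub, vb = xb - cb[0], yb - cb[1]
        ua, va = xa - ca[0], ya - ca[1]
        s += w * (ub * va - vb * ua)
        c += w * (ub * ua + vb * va)
    return ca[0] - cb[0], ca[1] - cb[1], math.atan2(s, c)
```

A pose estimate follows from the same function by passing the reference configuration of the body as `before` and the localized positions as `after`.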

A1.3. Derivation of the orientation measurement function.

The expression for in Eq. (A2) is derived by finding the value of that minimizes the weighted sum of squared error between and :

| (A5) |

where (, ) are the coordinates of the point in and (, ) are the coordinates of the corresponding point in . The offset, , is the free variable for the optimization and represents a test rotation between the two sets of points. The error, , is the distance between and the point found by rotating by , and is the weight applied to the squared error . The optimal choice of rotation determined by this method, , is found by minimizing :

| (A6) |

The optimal value of can then be found by solving Eq. (A6), which yields:

| (A7) |

Alternatively, the residuals () in Eq. (A6) can be treated with a small angle approximation yielding the simpler expression:

| (A8) |

where the sums and are implicitly defined by the numerator and denominator to simplify use of the expression in the next section.
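As a worked illustration of the derivation in this section, the steps can be written out under an assumed notation. Here $(r_i, \varphi_i)$ and $(r_i', \varphi_i')$ are taken to be the centroid-referenced polar coordinates of corresponding true and estimated points, $w_i$ the weights, $\theta_o$ the test rotation, and $\hat{\theta}$ its optimal value. These symbols, and the exact arrangement of terms, are assumptions for illustration and may differ from the published Eqs. (A5) to (A8).

```latex
\begin{align}
E(\theta_o) &= \sum_i w_i e_i^2,
\qquad e_i^2 = r_i^2 + {r_i'}^2 - 2 r_i r_i' \cos(\varphi_i' - \varphi_i - \theta_o) \\
\left.\frac{dE}{d\theta_o}\right|_{\theta_o = \hat{\theta}}
  &= -2 \sum_i w_i r_i r_i' \sin(\varphi_i' - \varphi_i - \hat{\theta}) = 0 \\
\hat{\theta} &= \operatorname{atan2}\!\Big(\sum_i w_i r_i r_i' \sin(\varphi_i' - \varphi_i),\;
                                   \sum_i w_i r_i r_i' \cos(\varphi_i' - \varphi_i)\Big) \\
\hat{\theta} &\approx \frac{\sum_i w_i r_i r_i' (\varphi_i' - \varphi_i)}{\sum_i w_i r_i r_i'}
  \equiv \frac{S}{C}
\end{align}
```

The first line is the law of cosines applied to each rotated point and its estimated counterpart. The last line replaces the sine of each residual by the residual itself, which is the small angle approximation described above.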

A2. Uncertainties of motion measurements by localization microscopy

Given uncertainties, (, ), of the position of each point source , the combined standard uncertainty of each component of the pose of can be calculated from the associated measurement function using the law of propagation of uncertainty (ISO Technical Advisory Group 4, 1995). Here the pose and motion uncertainties are calculated for the common case in which for each value of .
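For reference, the law of propagation of uncertainty for a measurement function of uncorrelated input estimates takes the following first-order form. The symbols $f$, $x_i$, and $u(x_i)$ are generic placeholders, not notation taken from this paper.

```latex
\begin{equation}
u_c^2(f) = \sum_i \left( \frac{\partial f}{\partial x_i} \right)^2 u^2(x_i)
\end{equation}
```

Each of the uncertainties in this section follows from applying this expression to the corresponding measurement function, with the localized point coordinates as the inputs.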

A2.1. Position uncertainty.

The x-axis position uncertainty of a pose measurement by localization microscopy can be calculated from the x-axis position uncertainties of the individual points and the associated weights, using the law of propagation of uncertainty, as follows:

| (A9) |

The expression for the y-axis position uncertainty is isomorphic to that for the x-axis.
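A minimal Python sketch of this propagation is given below. It assumes that the position estimate is a weighted mean of independently localized coordinates, so that the combined uncertainty is the root sum of squares of the weighted point uncertainties; the function name and the normalization of the weights inside the function are illustrative choices.

```python
import math
from typing import Sequence


def weighted_position_uncertainty(weights: Sequence[float], u: Sequence[float]) -> float:
    """Standard uncertainty of a weighted mean of independent coordinates.

    weights: relative weights of the point sources (normalized internally)
    u: single-axis position uncertainty of each point source
    """
    total = sum(weights)
    return math.sqrt(sum((w / total) ** 2 * ui ** 2 for w, ui in zip(weights, u)))


# Four uniformly weighted points, each localized to 10 nm along one axis,
# give a combined uncertainty of 10 / sqrt(4) = 5 nm.
print(weighted_position_uncertainty([1, 1, 1, 1], [10.0, 10.0, 10.0, 10.0]))
```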

A2.2. Orientation uncertainty.

With Eq. (A8) defining the measured value of , the measurement uncertainty associated with can be calculated from the law of propagation of uncertainty (ISO Technical Advisory Group 4, 1995) as follows:

| (A10) |

where is the uncertainty of , is the uncertainty of , is the uncertainty of , is the uncertainty of , is the uncertainty of and and are defined in Eq. (A8). Since and are true point coordinates, and are both zero.

and can be derived from the position uncertainties, , again by using the law of propagation of uncertainty:

| (A11) |

The uncertainty of the rotation can then be calculated as:

| (A12) |

Noting that the residuals, (), are far smaller than unity and that the centroid distance errors, (), are far smaller than the centroid distances themselves in all but degenerate cases, the orientation uncertainty can then be approximated as:

| (A13) |

It is evident from Eq. (A13) that the orientation uncertainty depends neither on the choice of the (x, y, θ) origin, nor on the orientation, θ, but only on the weight, the position uncertainty, and the distance from the centroid of each point source.
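The dependence described above can be explored numerically with the sketch below. It assumes that the approximation of Eq. (A13) has the form sqrt(Σ wᵢ² rᵢ² uᵢ²) / Σ wᵢ rᵢ², which is what results from propagating isotropic position uncertainties through a small-angle weighted least-squares rotation estimate. The published expression may be arranged differently, and the function name is hypothetical.

```python
import math
from typing import Sequence


def orientation_uncertainty(
    weights: Sequence[float], u: Sequence[float], r: Sequence[float]
) -> float:
    """Approximate orientation uncertainty in radians (assumed form).

    weights: weight of each point source (any overall scale)
    u: single-axis position uncertainty of each point source
    r: distance of each point source from the centroid, in the same units as u
    """
    numerator = math.sqrt(sum((w * ri * ui) ** 2 for w, ui, ri in zip(weights, u, r)))
    denominator = sum(w * ri ** 2 for w, ri in zip(weights, r))
    return numerator / denominator


# Two points, each 1000 nm from the centroid and localized to 1 nm.
u_theta = orientation_uncertainty([1.0, 1.0], [1.0, 1.0], [1000.0, 1000.0])
print(u_theta)  # about 7.07e-4 rad, i.e. 1 / (1000 * sqrt(2))
```

Only the weights, the position uncertainties, and the centroid distances enter the function, and multiplying all weights by a common factor leaves the result unchanged.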

A3. Minimum uncertainty weights

An optimal weighting for each measurand can be determined from the estimated coordinates of the individual point sources and their associated uncertainties, such that the uncertainty of the measurand is minimized. The optimal weight calculations are presented in Sections A3.1 and A3.2, and the resulting optimally weighted measurement functions and uncertainties are presented in Table 3.

A3.1. Optimal weighting for position.

Weightings for measurements of the position of a rigid body are restricted to those in which the weights sum to unity, to avoid scaling the measurand. The method of Lagrange multipliers is used to minimize the uncertainties of the weighted position calculated in Section A2.1, subject to the normalization constraint.

Consider the square of the x-axis position uncertainty of Eq. (A9):

| (A14) |

To minimize the squared uncertainty subject to the constraint that the weights sum to unity, a Lagrangian is constructed as follows:

| (A15) |

where ℒ is the Lagrangian and λ is the Lagrange multiplier. The zeros of the partial derivatives of ℒ with respect to each weight, coupled with the normalization constraint, provide the following system of equations:

| (A16) |

which yields uniquely optimal weights:

| (A17) |

Substituting Eq. (A17) into Eq. (A9) produces the minimum uncertainty in x-axis position that is achievable:

| (A18) |

The weighting for minimum uncertainty of y-axis position is isomorphic.
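The outcome of this constrained minimization can be checked numerically. The sketch below assumes the standard result of minimizing Σ wᵢ² uᵢ² subject to Σ wᵢ = 1, namely inverse-variance weights, and compares the resulting uncertainty with that of uniform weighting. Function names are illustrative.

```python
import math
from typing import List, Sequence


def optimal_position_weights(u: Sequence[float]) -> List[float]:
    """Normalized inverse-variance weights, w_i = (1/u_i^2) / sum_j (1/u_j^2)."""
    inverse_variances = [1.0 / ui ** 2 for ui in u]
    total = sum(inverse_variances)
    return [iv / total for iv in inverse_variances]


def position_uncertainty(weights: Sequence[float], u: Sequence[float]) -> float:
    """Uncertainty of a weighted mean whose weights already sum to unity."""
    return math.sqrt(sum(w ** 2 * ui ** 2 for w, ui in zip(weights, u)))


u = [1.0, 2.0, 2.0]                      # per-point uncertainties, arbitrary units
w_opt = optimal_position_weights(u)      # [2/3, 1/6, 1/6]
u_opt = position_uncertainty(w_opt, u)   # equals (sum 1/u_i^2) ** -0.5
u_uniform = position_uncertainty([1.0 / 3.0] * 3, u)
print(w_opt, u_opt, u_uniform)
```

With these inputs the optimally weighted uncertainty is about 0.816, compared with 1.0 for uniform weighting, because the better-localized point receives the larger weight.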

A3.2. Optimal weighting for orientation.

Since the orientation estimate is invariant to a uniform scaling of the weights, the method of Lagrange multipliers need not be used, and a family of weightings can be determined, all of which are optimal with respect to orientation uncertainty. For simplicity, the unique normalized optimal weighting is selected.

Consider the square of the orientation uncertainty of Eq. (A13):

| (A19) |

Optimal weightings occur where the gradient of the squared orientation uncertainty with respect to the set of weights is zero. Each component of the gradient can be expressed as:

| (A20) |

The partials can then be calculated as follows:

| (A21) |

which at their roots yield a unique set of optimal normalized weights:

| (A22) |

Substituting Eq. (A22) into Eq. (A13) produces the minimum uncertainty in orientation:

| (A23) |
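A numerical check of this minimization is sketched below. It assumes the same form of the orientation uncertainty as above, sqrt(Σ wᵢ² rᵢ² uᵢ²) / Σ wᵢ rᵢ², for which setting the gradient to zero gives weights proportional to 1/uᵢ² and a minimum of (Σ rᵢ²/uᵢ²)^(-1/2). Both the assumed form and the function names are illustrative and may not match the arrangement of Eqs. (A22) and (A23).

```python
import math
from typing import List, Sequence


def orientation_uncertainty(
    weights: Sequence[float], u: Sequence[float], r: Sequence[float]
) -> float:
    """Approximate orientation uncertainty in radians (assumed form)."""
    numerator = math.sqrt(sum((w * ri * ui) ** 2 for w, ui, ri in zip(weights, u, r)))
    return numerator / sum(w * ri ** 2 for w, ri in zip(weights, r))


def optimal_orientation_weights(u: Sequence[float]) -> List[float]:
    """Normalized weights proportional to 1/u_i^2 (assumed optimum)."""
    inverse_variances = [1.0 / ui ** 2 for ui in u]
    total = sum(inverse_variances)
    return [iv / total for iv in inverse_variances]


def minimum_orientation_uncertainty(u: Sequence[float], r: Sequence[float]) -> float:
    """Closed-form minimum, (sum_i r_i^2 / u_i^2) ** -0.5."""
    return sum(ri ** 2 / ui ** 2 for ui, ri in zip(u, r)) ** -0.5


u = [1.0, 2.0, 4.0]        # position uncertainties, arbitrary length units
r = [100.0, 200.0, 50.0]   # centroid distances, same units
w_opt = optimal_orientation_weights(u)
u_opt = orientation_uncertainty(w_opt, u, r)
u_min = minimum_orientation_uncertainty(u, r)
u_uniform = orientation_uncertainty([1.0, 1.0, 1.0], u, r)
print(u_opt, u_min, u_uniform)
```

Rescaling `w_opt` by any positive constant gives the same `u_opt`, which illustrates why a normalization must be chosen to make the optimal weighting unique.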

Table A1. Parameters of the Monte Carlo simulation.

| Parameter | Units | Domain | Probability distribution |
|---|---|---|---|
| | Pixels | | |
| | Photons per pixel | | |
| | Points | | |
| | Photons | | |
| | Photons | | |
| | Photons | | |
| | Pixels | | |
| | Radians | | |
| | Pixels | | |
| | Pixels | | |
| | Radians | | |

A4. Motion uncertainty

Uncertainties of motion measurements can be calculated from the measurement functions of Eq. (A4) in the same way that uncertainties of pose measurements were calculated from Eq. (A2) in the previous section, resulting in the following expressions:

| (A24) |

When the position uncertainties of the points are the same in the two images that bracket the measured motion, the above motion uncertainties are simply a factor of √2 greater than the corresponding pose uncertainties.
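The factor of √2 follows from combining two independent estimates of equal uncertainty in quadrature. As a brief worked step, with $u_{x,1}$ and $u_{x,2}$ denoting (in assumed notation) the x-axis pose uncertainties in the first and second images:

```latex
\begin{equation}
u_{\Delta x} = \sqrt{u_{x,1}^2 + u_{x,2}^2} = \sqrt{2}\, u_x
\quad \text{when } u_{x,1} = u_{x,2} = u_x
\end{equation}
```

The same reasoning applies to the y-axis displacement and to the rotation, provided that the estimates from the two images are independent.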

A5. Optimal weightings for motion measurements

Optimal weightings for motion measurements can be calculated in the same way as those for pose measurements described in Section A3, resulting in the following expressions:

| (A25) |

A6. Range of simulation parameters

A total of 200 configurations were randomly generated for the Monte Carlo method described above. Each configuration consisted of a set of parameters, {, , , , , , , }, where is the standard deviation of the Gaussian intensity function used to approximate the image of each point source, is the expected number of background photons detected at each pixel, is the number of point sources, each of is the expectation value of the number of photons detected from the point source, is the set of untransformed points, and the triple (, , ) denotes the true transform of the points from the first image to the second. The parameters , , , and are generated from uniform distributions, whereas the distribution from which is generated is logarithmically scaled. The points are generated as polar coordinates , where is generated from a uniform distribution over the range 0 to 2π, and is generated over the range 0 to 150 from a distribution that is quadratically scaled to ensure that points are distributed uniformly over a circle of diameter 300. Each of the photon counts in is generated from a uniform distribution over the range of scale centred on a mean value, , that is generated from a logarithmic distribution. The domain and probability distribution of each parameter are shown in Table A1.
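Two of the sampling schemes described above can be sketched with the Python standard library. The sketch assumes that "quadratically scaled" means the radius is drawn as R·sqrt(U) with U uniform on [0, 1], which is the usual way to distribute points uniformly over a disk, and that "logarithmically scaled" means uniform in the logarithm of the parameter. The function names and the example range passed to `sample_log_uniform` are hypothetical and are not the domains of Table A1.

```python
import math
import random
from typing import Tuple


def sample_point_in_disk(rng: random.Random, radius: float = 150.0) -> Tuple[float, float]:
    """Polar coordinates (r, phi) of a point uniformly distributed over a disk."""
    r = radius * math.sqrt(rng.random())   # square root corrects for area growth with r
    phi = rng.uniform(0.0, 2.0 * math.pi)  # uniform angle over a full turn
    return r, phi


def sample_log_uniform(rng: random.Random, low: float, high: float) -> float:
    """Draw a value whose logarithm is uniform between log(low) and log(high)."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


rng = random.Random(0)  # fixed seed so that the configurations are reproducible
points = [sample_point_in_disk(rng) for _ in range(20000)]
mean_photons = sample_log_uniform(rng, 1.0e2, 1.0e5)  # placeholder range
```

For a uniform distribution over a disk of radius R, the mean of r² is R²/2, which gives a quick check that the radial scaling is correct.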

References

- Arun KS, Huang TS & Blostein SD (1987) Least-squares fitting of two 3-D point sets. IEEE Trans. Pattern Anal. Mach. Intell 9, 698–700.

- Baker MS, Plass RA, Headley TJ & Walraven JA (2004) Final report: compliant thermo-mechanical MEMS actuators, LDRD#52553. Sandia National Laboratory, Albuquerque, New Mexico.

- Berfield TA, Patel JK, Shimmin RG, Braun PV, Lambros J & Sottos NR (2006) Fluorescent image correlation for nanoscale deformation measurements. Small 2, 631–635.

- Berfield TA, Patel JK, Shimmin RG, Braun PV, Lambros J & Sottos NR (2007) Micro- and nanoscale deformation measurement of surface and internal planes via digital image correlation. Proc. Soc. Exp. Mech 64, 51–62.

- Besl PJ & McKay HD (1992) A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell 14, 239–256.

- Betzig E, Patterson GH, Sougrat R, et al. (2006) Imaging intracellular fluorescent proteins at nanometer resolution. Science 313, 1642–1645.

- Bobroff N (1986) Position measurement with a resolution and noise-limited instrument. Rev. Sci. Instrum 57, 1152–1157.

- Chenouard N, Smal I, de Chaumont F, et al. (2014) Objective comparison of particle tracking methods. Nat. Methods 11, 281–U247.

- Copeland CR, McGray CD, Geist J, Aksyuk VA & Stavis SM (2015) Characterization of electrothermal actuation with nanometer and microradian precision. In: Proceedings of the 18th International Conference on Solid-State Sensors, Actuators and Microsystems (TRANSDUCERS), 2015 Transducers, Anchorage, AK.

- Deschout H, Shivanandan A, Annibale P, Scarselli M & Radenovic A (2014a) Progress in quantitative single-molecule localization microscopy. Histochem. Cell Biol 142, 5–17.

- Deschout H, Zanacchi FC, Mlodzianoski M, Diaspro A, Bewersdorf J, Hess ST & Braeckmans K (2014b) Precisely and accurately localizing single emitters in fluorescence microscopy. Nat. Methods 11, 253–266.

- Elmokadem A & Yu J (2015) Optimal drift correction for superresolution localization microscopy with Bayesian inference. Biophys. J 109, 1772–1780.

- Enderlein J, Toprak E & Selvin PR (2006) Polarization effect on position accuracy of fluorophore localization. Opt. Express 14, 8111–8120.

- Endesfelder U & Heilemann M (2014) Art and artifacts in single-molecule localization microscopy: beyond attractive images. Nat. Methods 11, 235–238.

- Fitzpatrick JM & West JB (2001) The distribution of target registration error in rigid-body point-based registration. IEEE Trans. Med. Imaging 20, 917–927.

- Fox-Roberts P, Wen TQ, Suhling K & Cox S (2014) Fixed pattern noise in localization microscopy. ChemPhysChem 15, 677–686.

- Freeman DM (2001) Measuring motions of MEMS. MRS Bulletin 26, 305–306.

- Geist J, Gladden WK & Zalewski EF (1982) Physics of photon-flux measurements with silicon photodiodes. J. Opt. Soc. Am 72, 1068.

- Hess ST, Girirajan TPK & Mason MD (2006) Ultra-high resolution imaging by fluorescence photoactivation localization microscopy. Biophys. J 91, 4258–4272.

- Huang B, Bates M & Zhuang X (2009) Super-resolution fluorescence microscopy. Ann. Rev. Biochem 78, 993–1016.

- Huang F, Hartwich TMP, Rivera-Molina FE, et al. (2013) Video-rate nanoscopy using sCMOS camera-specific single-molecule localization algorithms. Nat. Methods 10, 653–658.

- ISO Technical Advisory Group 4, G. W (1995) Guide to the expression of uncertainty in measurement. International Standards Organization, Geneva, Switzerland.

- Lee SH, Baday M, Tjioe M, Simonson PD, Zhang RB, Cai E & Selvin PR (2012) Using fixed fiduciary markers for stage drift correction. Opt. Express 20, 12177–12183.

- Long F, Zeng SQ & Huang ZL (2014) Effects of fixed pattern noise on single molecule localization microscopy. Phys. Chem. Chem. Phys 16, 21586–21594.

- McGray CD, Stavis SM, Giltinan J, Eastman E, Firebaugh S, Piepmeier J, Geist J & Gaitan M (2013) MEMS kinematics by super-resolution fluorescence microscopy. J. Microelectromech. Syst 22, 115–123.

- Mortensen KI, Churchman LS, Spudich JA & Flyvbjerg H (2010) Optimized localization analysis for single-molecule tracking and super-resolution microscopy. Nat. Methods 7, 377–U359.

- Oak S, Rawool S, Sivakumar G, Hendrikse EJ, Buscarello D & Dallas T (2011) Development and testing of a multilevel chevron actuator-based positioning system. J. Microelectromech. Syst 20, 1298–1309.

- Ober RJ, Ram S & Ward ES (2004) Localization accuracy in single-molecule microscopy. Biophys. J 86, 1185–1200.

- Pennec X & Thirion JP (1997) A framework for uncertainty and validation of 3-D registration methods based on points and frames. Int. J. Comput. Vis 25, 203–229.

- Ribeck N & Saleh OA (2008) Multiplexed single-molecule measurements with magnetic tweezers. Rev. Sci. Instrum 79, 094301-1–094301-6.

- Ropp C, Cummins Z, Nah S, Fourkas JT, Shapiro B & Waks E (2013) Nanoscale imaging and spontaneous emission control with a single nano-positioned quantum dot. Nat. Commun. 4, 1–8.

- Rust MJ, Bates M & Zhuang XW (2006) Sub-diffraction-limit imaging by stochastic optical reconstruction microscopy (STORM). Nat. Methods 3, 793–795.

- Sage D, Kirshner H, Pengo T, Stuurman N, Min J, Manley S & Unser M (2015) Quantitative evaluation of software packages for single-molecule localization microscopy. Nat. Methods 12, 717–U737.

- Samuel BA, Demirel MC & Haque A (2007) High resolution deformation and damage detection using fluorescent dyes. J. Micromech. Microeng 17, 2324–2327.

- Simonson KM, Drescher SM & Tanner FR (2007) A statistics-based approach to binary image registration with uncertainty analysis. IEEE Trans. Pattern Anal. Mach. Intell 29, 112–125.

- Sinclair MJ (2000) A high force low area MEMS thermal actuator. In: Proceedings of the Itherm 2000: Seventh Intersociety Conference on Thermal and Thermomechanical Phenomena in Electronic Systems, Vol. I (ed. by Kromann GB, Culham JR & Ramakrishna K). IEEE, New York.

- Smith CS, Joseph N, Rieger B & Lidke KA (2010) Fast, single-molecule localization that achieves theoretically minimum uncertainty. Nat. Methods 7, 373–U352.

- Stallinga S & Rieger B (2010) Accuracy of the Gaussian point spread function model in 2D localization microscopy. Opt. Express 18, 24461–24476.

- Stallinga S & Rieger B (2012) Position and orientation estimation of fixed dipole emitters using an effective Hermite point spread function model. Opt. Express 20, 5896–5921.

- Teyssieux D, Euphrasie S & Cretin B (2011) MEMS in-plane motion/vibration measurement system based CCD camera. Measurement 44, 2205–2216.

- Thompson RE, Larson DR & Webb WW (2002) Precise nanometer localization analysis for individual fluorescent probes. Biophys. J 82, 2775–2783.

- Ueno H, Nishikawa S, Iino R, Tabata KV, Sakakihara S, Yanagida T & Noji H (2010) Simple dark-field microscopy with nanometer spatial precision and microsecond temporal resolution. Biophys. J 98, 2014–2023.

- Yoshida S, Yoshiki K, Namazu T, Araki N, Hashimoto M, Kurihara M, Hashimoto N & Inoue S (2011) Development of strain visualization system for microstructures using single fluorescent molecule tracking on three dimensional orientation microscope. In: Optics and photonics for information processing V (ed. by M. K A. A Iftekharuddin AS), pp. 81340E-1–81340E-7. SPIE Press, Bellingham WA.

- Zitova B & Flusser J (2003) Image registration methods: a survey. Image Vis. Comput 21, 977–1000.