Abstract

Vision provides animals with detailed information about their surroundings, conveying diverse features such as color, form, and movement across the visual scene. Computing these parallel spatial features requires a large and diverse network of neurons, such that in animals as distant as flies and humans, visual regions comprise half the brain’s volume. These visual brain regions often reveal remarkable structure-function relationships, with neurons organized along spatial maps with shapes that directly relate to their roles in visual processing. To unravel the stunning diversity of a complex visual system, a careful mapping of the neural architecture matched to tools for targeted exploration of that circuitry is essential. Here, we report a new connectome of the right optic lobe from a male Drosophila central nervous system FIB-SEM volume and a comprehensive inventory of the fly’s visual neurons. We developed a computational framework to quantify the anatomy of visual neurons, establishing a basis for interpreting how their shapes relate to spatial vision. By integrating this analysis with connectivity information, neurotransmitter identity, and expert curation, we classified the ~53,000 neurons into 727 types, about half of which are systematically described and named for the first time. Finally, we share an extensive collection of split-GAL4 lines matched to our neuron type catalog. Together, this comprehensive set of tools and data unlocks new possibilities for systematic investigations of vision in Drosophila and provides a foundation for a deeper understanding of sensory processing.

Introduction

Over a century of anatomical studies have carefully cataloged many cell types in the fly visual system1–3, and in parallel, behavioral and physiological experiments have examined the visual capabilities of flies. A wealth of high-performance visual behaviors and genetic tools for targeted access to specific cell types have made Drosophila uniquely suited for studying the neural circuit implementation of many visual computations4. Recently, the anatomical understanding of the Drosophila visual system has been revolutionized by connectomics, the reconstruction of neuron shapes and synaptic connections from Electron Microscopy (EM) data5–8. The motion vision pathway provides an impressive example. Despite decades of systematic behavioral and physiological studies, detailed EM circuit reconstructions of just a small piece of the Drosophila visual system transformed the field by proposing several testable hypotheses about the mechanism of directional selectivity in the T4 and T5 neurons7,9. Connectome analysis revealed that these cells receive asymmetric synaptic inputs from distinct neuron populations at different positions along their asymmetrically shaped dendrites. This structural information, together with a near-complete list of relevant cell types, launched a decade of experiments aiming to uncover the mechanism of directional selectivity. This prior work clearly illustrates the crucial insights into function that can come from describing the shapes of neurons and their connections. A complete visual system connectome will bring this level of analysis and incisive experimental design to all areas of visual processing.

While understanding the function of a brain region is an ongoing challenge in neuroscience, there is little mystery about the role of visual brain areas: their neurons and circuits must be involved in seeing. This core insight guides the analysis of the neurons engaged in vision: these neurons should sample and represent information from across the field of view, this information should then be transformed by a series of steps that extract increasingly selective signals, and finally, these signals should be conveyed to higher brain areas. While the mammalian retina is the iconic example of this ground plan, the same organization is found in the Drosophila visual system and even in the reduced visual system of the micro-wasp Megaphragma10. Studies in the mouse retina have used anatomical and functional methods to sort the retinal ganglion cells and other neurons into a comprehensive inventory of more than 40 retinal ganglion cell types11–13. A critical property in defining retinal cell types is their arrangement into anatomical mosaics: cells of the same type are usually evenly spaced and provide approximately uniform coverage, sampling all points in the tissue so that there are no blind spots12–14. We take inspiration from these efforts in fly and mouse vision that have resoundingly demonstrated the power of careful cell typing for functional analysis.

With recent progress in the acquisition and analysis of Drosophila EM connectomes6,15–17 and Light Microscopy (LM)18,19 data, we are struck by the unique role the fly can play in the study of vision. In Drosophila, these studies can be built on a foundation of comprehensive knowledge of the morphology of the relevant neurons, their neurotransmitters, and their synaptic connections, as well as access to cell-type-specific genetic tools to manipulate them20,21. To date, the systematic EM studies of Drosophila visual neurons have all been performed in female brains7,9,22–25. Here we introduce a new EM dataset and the first detailed examination of the visual system in a male brain, thereby establishing the most comprehensive survey of any insect optic lobe. This manuscript details this new dataset along with the cell typing of all the visual neurons and a matched collection of genetic drivers. We believe these insights and resources will empower an unprecedented, holistic understanding of vision.

Neurons of the Drosophila visual system

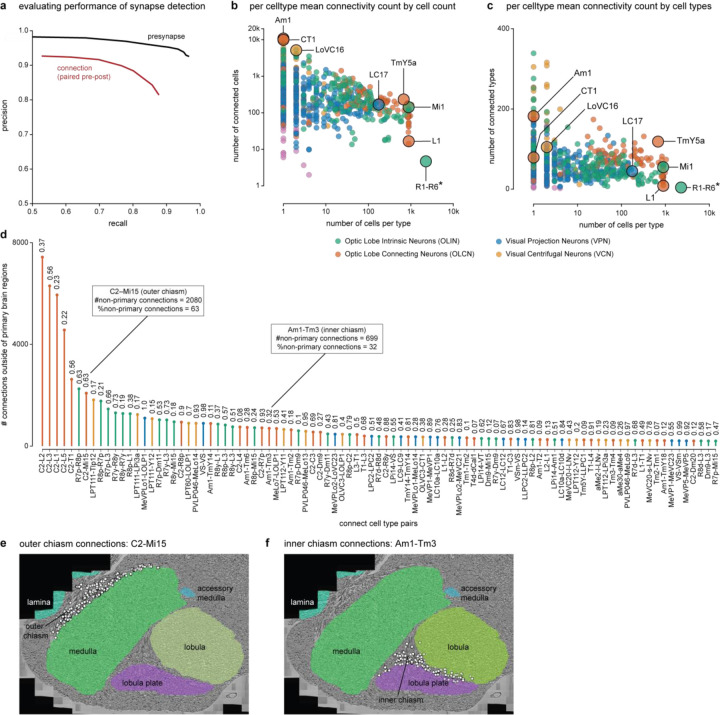

The complete Central Nervous System (CNS), including the entire brain and ventral nerve cord, of a male Drosophila was dissected, fixed, stained, and cut into 66 slabs, each 20 μm thick, which were then imaged using Focused Ion Beam milling and Scanning Electron Microscopy (FIB-SEM). Subsequently, the entire volume was aligned, synapses were detected (Extended Data Fig. 1a), and automatic segmentation was applied to extract neuron fragments. Proofreading of the visual regions on one side has been completed (Extended Data Table 1), establishing a new connectome (available via neuPrint database, see Data Availability), while proofreading of the rest of the CNS is ongoing. We provide here a complete catalog of the neurons in this visual system (Fig. 1a,b, Supplementary Table 1). The process by which individual neurons were assigned to cell types is described in Figs. 2–4, and the complete quantitative inventory is provided in Supplementary Fig. 1.

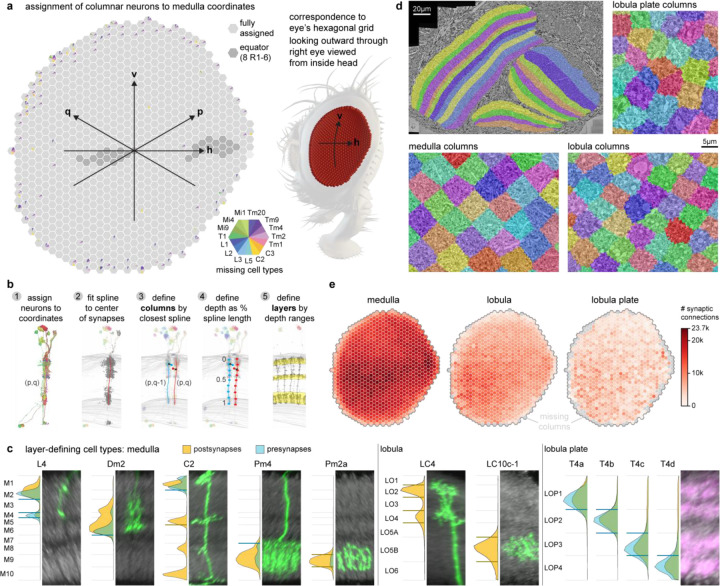

Fig. 1. The neurons of the male Drosophila visual system.

(a) Overview of the male central nervous system dataset, with the VNC attached. (b) This study describes the complete connectome and neuron inventory of the right optic lobe (blue). The optic lobe comprises five regions: lamina, medulla, accessory medulla, lobula, and lobula plate. (c) The four main groups of cell types, with one example each (number of cells shown / total cells of this type). (d) The number of cells and input and output synaptic connections for the 160 cell types with the largest contributions to the total connectivity in the visual system connectome are shown (all types in Supplementary Table 1). Fewer than 100 cell types account for most cells and connections. (e) Summarizing the inventory with the number of cell types, cells, and connections aggregated by cell type groups. The inventory includes a small group of “other” cell types with minimal connectivity. (f) Summary as in (e) but grouped by the five optic lobe brain regions. Counts include cell types and cells with >2% of their synapses contained within a region; many cells contribute to multiple regions. Contributions from the neurons in each cell type group are shown as pie charts. These counts summarize the connectome in the optic-lobe:v1.0 neuPrint database, and reflect the asymmetry between the completion percentage for presynapses and postsynapses (Extended Data Table 1). A few cell types are undercounted (an estimated 2,777 cells from the lamina and 459 R7/R8 photoreceptors and some Lat types in the lamina, see Methods). (g) Input/output connectivity in the optic lobe for all cell types in the inventory. The VPNs generally have more input cells than output cells, while the VCNs show the opposite connectivity pattern (excluding central brain connectivity). The example cell types from (c) and others with unusually high and low connectivity are highlighted.

Fig. 2. Sorting neurons into types based on morphology and connectivity.

(a) Examples of 15 cell types that occur once per column in nearly every column of the visual system, shown across a slice of the optic lobe. (b) Mi9 and Mi4, shown as LM images (see methods) and EM reconstructions, are an example of neurons that appear similar but can be uniquely identified by morphology in nearly all cases. (c) Mi4 and Mi9 can be distinguished by connectivity, as shown for selected input and output cell types. (d) After cell typing all neurons, connectivity clustering sorts the cells assigned to the 15 columnar cell types in (a) into distinct groups, confirming their assignments. (e) Two examples of each of Pm2a and Pm2b neurons, medulla amacrine cells with very similar morphology. (f) The distribution of cell volume is similar for the two types. (g) Pm2a and Pm2b are sorted into two types by connectivity clustering (Extended Data Figs. 2,3), revealing two overlapping mosaics. The first two principal components of the centers of mass of their synapse locations are plotted. This visualization preserves the spatial relationships of the cells and aligns with major anatomical axes. (h) Selected distinguishing input and output connections for Pm2a and Pm2b cells. The combination of consistent connectivity differences with overlapping cell distributions supports the split into two types. (i) Most cell types can be distinguished by their strong connections. Shown is the proportion of unique combinations of connections across all types for the indicated number of top-ranked connections.

Fig. 4. Diversity of neurotransmitter signaling in the visual system.

(a) Using prior neurotransmitter expression measurements in 59 cell types as ground truth data, a VGG-style deep neural network43 was trained to classify the presynaptic transmitter. (b) Performance of the per-(pre)synapse predictions evaluated on held-out synapses; cell types, cells, and synapses used for training and testing are tabulated. (c) Synapse predictions are aggregated for cell-type-level consensus transmitter assignments, shown for 16 TmY cell types; example morphologies below. Three cell types (asterisks) were included in training data; predictions for six types (names in gray boxes) were confirmed by new validation data. (d) Images of driver lines for those six TmY types that were assayed for neurotransmitter marker gene expression38,39, showing GAL4 cell marker (top), markers for ChAT, VGlut, and GAD1 (middle), and merged images (bottom), and the assigned transmitter below. (e) Performance of neurotransmitter predictions for 79 cell types evaluated with experimental data (Supplementary Table 5). (f) Neurotransmitter types counted for synapses, cells, cell types, and cell type groups. In these and following summaries, synapse-level predictions are inherited from consensus (cell-type-level) transmitters. Cells with limited predictions are scored as “unclear.” (g) Spatial distribution of presynapses with neurotransmitter classifications; color scale normalized for each column. (h) Median size for OLIN and OLCN cell types. (i) Linear regression estimates of synaptic fanout, the cell-type averaged ratio of output connections to presynapses, grouped by transmitter. The fanout values are significantly different between ACh and GABA (p-value < 0.001) and Glu and GABA (p-value < 0.001), but not between ACh and Glu (p-value ~0.64), ANCOVA.

The fly visual system comprises several distinct anatomical regions: the lamina, medulla, accessory medulla, lobula, and lobula plate, forming the structure called the optic lobe. Homologous regions are found in visual systems across pancrustaceans26. These regions are referred to as neuropils, structures dense with the synaptic connections of dendrites and axons, and are shown here as meshes (Fig. 1a,b) that were drawn using visible boundaries in the EM data. As in other invertebrate nervous systems, cell bodies are typically distributed around the neuropils.

We first classified neurons based on their relationship to anatomical regions. Optic Lobe Intrinsic Neurons (OLINs) have synapses confined to a single region. Optic Lobe Connecting Neurons (OLCNs) connect two or more visual regions. A large and diverse set of neuron types connects the visual regions with the central brain. The Visual Projection Neurons (VPNs) primarily convey signals to the central brain, while Visual Centrifugal Neurons (VCNs) primarily convey central brain signals to the optic lobes. Fig. 1c shows a representative cell type from each group, visualized on a slice of the principal visual regions. Several important features of visual neurons are immediately apparent: many types form a set of repeating neurons that collectively cover large swaths of the visual brain regions, with distinguishing innervation patterns that underlie their specific shapes. Nearly all connections between visual neurons are in the neuropils. However, we also found connections outside the neuropils, most frequently in the chiasmata that connect them (Supplementary Table 2, Extended Data Fig. 1d–f). For example, we find such connections in the outer chiasm involving nearly all lamina-associated neurons, extending a recent observation made for R7, R8 photoreceptors24.

We classified ~53k visual system neurons into 727 cell types (Supplementary Video 1). The number of neurons that comprise a type varies widely, with 60 cell types accounting for over half of the total synaptic connections and ~75% of the neurons. Fig. 1d highlights the 160 cell types with the largest contributions to total connectivity in the visual system connectome, including many well-studied neurons of the motion vision pathway9,27–29: L1, L2, L3, L5, Mi1, Mi4, Mi9, Tm1, Tm2, Tm3, Tm4, Tm9, and all 8 T4 and T5 types. It may be that the need for fast, high-resolution motion signals requires many cells and synapses. Moreover, this unbiased connectivity survey reveals many cells with substantial contributions to the network that have received minimal attention, including many Pm and TmY cell types. The inventory is summarized in Fig. 1e–f, which describes the breakdown of cell types by group and brain region. We include 26 cell types not placed in one of the four main groups, primarily central brain cell types with some limited (<2%) connectivity in visual brain regions.
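Statements such as "60 cell types account for over half of the total synaptic connections" are cumulative-share calculations over types ranked by their contribution. A minimal Python sketch follows, assuming a hypothetical dictionary of per-type connection totals; the function name and toy counts are illustrative and are not values from this connectome.

```python
def types_for_fraction(connections_by_type, fraction=0.5):
    """Smallest number of cell types, ranked by connection count, whose
    combined connections reach `fraction` of the total.

    connections_by_type: hypothetical {cell_type: total_connections} mapping.
    """
    total = sum(connections_by_type.values())
    ranked = sorted(connections_by_type.values(), reverse=True)
    running = 0
    for rank, n in enumerate(ranked, start=1):
        running += n
        if running >= fraction * total:
            return rank
    return len(ranked)


# Toy counts for four hypothetical types (not real data).
toy = {"type_A": 40, "type_B": 30, "type_C": 20, "type_D": 10}
print(types_for_fraction(toy))        # 2 (40 + 30 >= 50)
print(types_for_fraction(toy, 0.75))  # 3 (40 + 30 + 20 >= 75)
```

Whether each connection is credited once (for example, to its presynaptic type) or to both partner types is a bookkeeping choice this sketch leaves to the caller.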

The connectome contains ~49 million connections in the visual brain regions. Fig. 1g and Extended Data Fig. 1b,c summarize the input-output connectivity of all cell types, color-coded by their group. The number of connected cells in the network spans five orders of magnitude across the visual cell types. Individual R1–6 photoreceptors and Lamina Monopolar Cells (e.g. L1) are connected to just a few other cells, while on the other extreme, several giant individual inhibitory interneurons, such as Am1 and CT1, are connected to >10,000 other cells.
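The range of partner counts summarized in Fig. 1g can be tallied directly from a list of connections. The sketch below assumes a hypothetical edge list of `(pre_cell_id, post_cell_id, n_synapses)` tuples and an optional `min_synapses` threshold for counting a connection; neither reflects a specific neuPrint query.

```python
from collections import defaultdict


def partner_counts(edges, min_synapses=1):
    """Number of distinct input and output partner cells for every cell.

    edges: iterable of (pre_cell_id, post_cell_id, n_synapses) tuples.
    Only connections with at least `min_synapses` synapses are counted.
    Returns {cell_id: (n_input_cells, n_output_cells)}.
    """
    inputs, outputs = defaultdict(set), defaultdict(set)
    for pre, post, n in edges:
        if n >= min_synapses:
            outputs[pre].add(post)
            inputs[post].add(pre)
    cells = set(inputs) | set(outputs)
    return {c: (len(inputs[c]), len(outputs[c])) for c in cells}


# Hypothetical cells: "hub" contacts many partners, "a" only a few.
edges = [("a", "x", 5), ("a", "y", 3), ("hub", "x", 1), ("hub", "y", 1), ("hub", "z", 2)]
print(partner_counts(edges)["hub"])                  # (0, 3)
print(partner_counts(edges, min_synapses=2)["hub"])  # (0, 1)
```

Averaging these per-cell counts within each type gives type-level input and output partner numbers of the kind plotted against each other in Fig. 1g.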

Our naming scheme, explained in Methods, aims to be systematic while respecting well-established historical names. We introduced new, short names for all newly described cell types. For example, for VPNs and VCNs, the names combine an abbreviation of the principal neuropil, the suffix VP or VC, and a unique number (e.g., MeVP10). Where new evidence convinced us a previously defined cell type should be divided into several distinct types, we append a letter.

Cell typing visual neurons

To assign cell types, we adopted a practical cell type definition that directly follows the pioneering C. elegans connectome30, the mammalian retina12,13, and decades of work on fly visual neurons3,5,7,9,23,31. We grouped neurons sharing similar morphology and connectivity patterns into types, with the aim that all neurons assigned to a type are more similar to each other than to any neurons assigned to different types. We generated type annotations in an iterative process that combined visual inspection of each cell’s morphology with computational methods for grouping cells by connectivity. Nearly all neurons in the dataset were reviewed multiple times by several team members as part of this process.

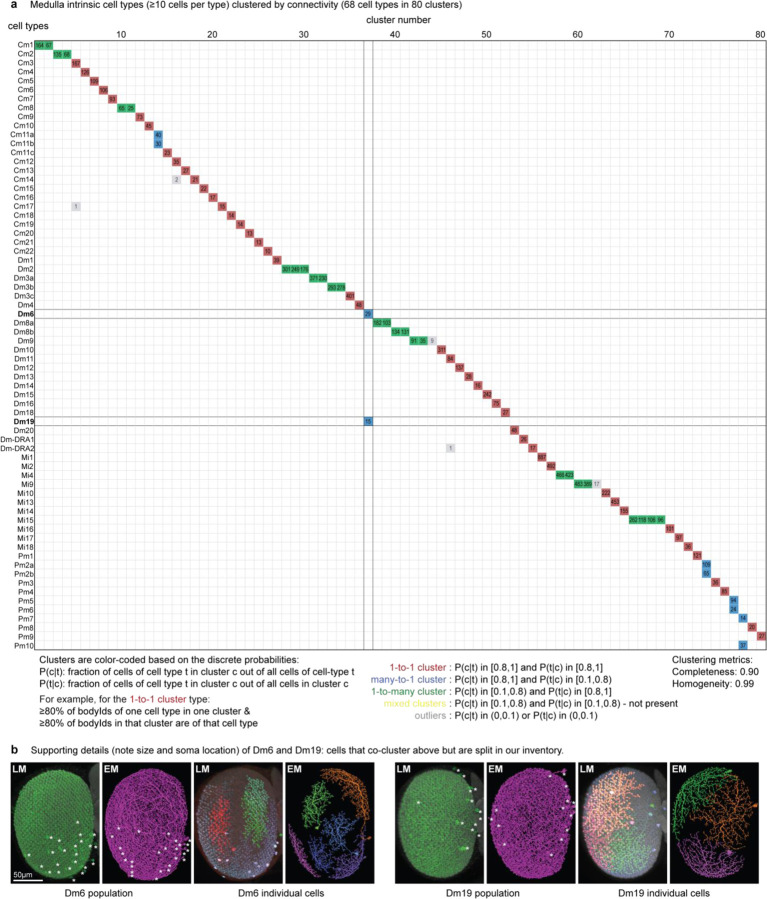

We started by naming easily recognizable, often well-known neurons, building on decades of LM studies3,18,19, prior EM work7, and our unpublished LM analyses. These neurons included the 15 columnar cell types in Fig. 2a, which are present in nearly every retinotopic medulla column (Supplementary Video 2). Each of these cell types can be identified by morphology; even cells such as Mi4 and Mi9 that appear similar are distinguishable at LM and, even more clearly, at EM resolution (Fig. 2b). Importantly, Mi4 and Mi9 neurons can be independently classified by connectivity, even when only considering a few strongly connected input and output types (Fig. 2c). Such connectivity differences can often be assessed only once connections are combined for all neurons of a cell type, because two putative type A neurons may both connect to type B cells but not to the same individual type B cells. For this reason, we employed an iterative process in which neurons are first given preliminary types for use in type-level connectivity analyses, which then confirm or refute the initial type assignments. In practice, comparisons of connectivity, including connectivity-based clustering, are already useful when only a minority of neurons is named, and their accuracy increases as more cells are typed. Indeed, with our current cell typing, the 15 columnar neurons are sorted perfectly into unique, connectivity-defined clusters (Fig. 2d).
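The point about type A and type B neurons can be made concrete: two cells of one type may share no individual partner cell yet have identical type-level connectivity. The sketch below assumes a hypothetical edge list and a `cell_type` lookup holding preliminary labels; it aggregates outputs only, and inputs would be handled symmetrically.

```python
from collections import defaultdict


def type_connectivity(edges, cell_type):
    """Sum cell-to-cell synapse counts into cell-to-type counts.

    edges: iterable of (pre_cell_id, post_cell_id, n_synapses)
    cell_type: {cell_id: preliminary type label}
    Returns {pre_cell_id: {post_type: total_synapses}} (outputs only).
    """
    out = defaultdict(lambda: defaultdict(int))
    for pre, post, n in edges:
        out[pre][cell_type[post]] += n
    return {cell: dict(partners) for cell, partners in out.items()}


# Two putative type A cells contact different individual type B cells.
edges = [("a1", "b1", 12), ("a2", "b2", 9)]
labels = {"a1": "A", "a2": "A", "b1": "B", "b2": "B"}
print(type_connectivity(edges, labels))
# {'a1': {'B': 12}, 'a2': {'B': 9}}: no shared partner cell, same partner type
```

Relabeling a single cell changes the type-level vectors of all its partners, which is one reason typing and connectivity analysis benefit from being iterated.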

When applying connectivity-based clustering with a preselected number of clusters to larger groups of cell types (e.g. medulla intrinsic cells, Extended Data Fig. 2), we encountered cases of (putative) cell types that clustered with other cell types or were split across multiple clusters. Such cases were resolved using additional criteria, such as cell morphology or, when available, genetic markers. For example, Dm6 and Dm19 cells have similar connectivity but different arbor sizes and soma locations (often indicative of their development32; Extended Data Fig. 2a,b) and are labeled by genetic driver lines with expression restricted to only one type. Our collection of cell-type-specific driver lines, discussed below, provided important clues about cell typing for dozens of cell types.
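A minimal sketch of connectivity clustering with a preselected number of clusters is shown below. It assumes cosine distance between per-cell, type-level connectivity vectors and average-linkage agglomeration; these are illustrative choices, and the distance, linkage, and features used for Extended Data Fig. 2 may differ. Partner labels and counts are hypothetical, and the naive loops are only meant for small inputs.

```python
import math


def cosine_distance(u, v):
    """1 - cosine similarity of two sparse {partner_type: count} vectors."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    nu = math.sqrt(sum(x * x for x in u.values()))
    nv = math.sqrt(sum(x * x for x in v.values()))
    if nu == 0 or nv == 0:
        return 1.0
    return 1.0 - dot / (nu * nv)


def cluster_cells(features, k):
    """Average-linkage agglomerative clustering into exactly k clusters.

    features: {cell_id: {partner_type: synapse_count}}
    Returns a list of k sets of cell ids.
    """
    clusters = [{c} for c in features]
    while len(clusters) > k:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                pairs = [(a, b) for a in clusters[i] for b in clusters[j]]
                avg = sum(cosine_distance(features[a], features[b])
                          for a, b in pairs) / len(pairs)
                if best is None or avg < best[0]:
                    best = (avg, i, j)
        _, i, j = best
        clusters[i] |= clusters.pop(j)  # j > i, so index i is unaffected
    return clusters


# Hypothetical cells with two distinct partner profiles.
features = {
    "c1": {"X": 10, "Y": 1}, "c2": {"X": 8, "Y": 2},
    "c3": {"Y": 1, "Z": 9}, "c4": {"Y": 2, "Z": 7},
}
print(sorted(sorted(c) for c in cluster_cells(features, 2)))
# [['c1', 'c2'], ['c3', 'c4']]
```

Because k is fixed in advance, two distinct types with similar connectivity (such as Dm6 and Dm19) can end up in one cluster, which is why morphology and genetic markers served as additional criteria.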

Another important criterion we used when deciding whether to split or merge cell types is spatial coverage. Like the cell types of the mouse retina, the populations of repeating neurons in the fly visual system also form mosaics achieving uniform coverage. To illustrate this biologically and methodologically important property, we consider two related amacrine-like cells of medulla layer M9, Pm2a and Pm2b. While Pm2a and Pm2b have similar morphologies (Fig. 2e,f), we found clear evidence for two populations that each separately cover visual space and have clear connectivity differences (Fig. 2g,h). We used similar analyses of repeating neurons to decide whether to divide groups into finer type categories (Extended Data Fig. 3).
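Fig. 2g visualizes the Pm2a and Pm2b mosaics by projecting each cell's synapse center of mass onto the first two principal components. A minimal numpy sketch of that projection follows; the input layout (a hypothetical `{cell_id: (n, 3) array}` of synapse coordinates) and the function name are assumptions.

```python
import numpy as np


def mosaic_pca(synapses_by_cell):
    """Project each cell's synapse center of mass onto the first two
    principal components of all cells' centers of mass.

    synapses_by_cell: {cell_id: array-like of shape (n_synapses, 3)}
    Returns {cell_id: (pc1, pc2)}.
    """
    ids = list(synapses_by_cell)
    com = np.array([np.asarray(synapses_by_cell[c], dtype=float).mean(axis=0)
                    for c in ids])
    centered = com - com.mean(axis=0)
    # Rows of vt are the principal axes, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    proj = centered @ vt[:2].T
    return {c: (float(p[0]), float(p[1])) for c, p in zip(ids, proj)}


# Four hypothetical cells whose centers of mass lie on a 10 x 10 square in z = 5.
cells = {
    "a": [[-1, 0, 5], [1, 0, 5]], "b": [[9, 0, 5], [11, 0, 5]],
    "c": [[0, 9, 5], [0, 11, 5]], "d": [[10, 9, 5], [10, 11, 5]],
}
proj = mosaic_pca(cells)
print(round(float(np.linalg.norm(np.subtract(proj["a"], proj["d"]))), 3))  # 14.142
```

The projection is an orthogonal transformation of the centered points followed by dropping the least variable axis, so distances within the dominant plane are preserved; this is why such plots retain the spatial layout of a mosaic.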

Some challenging cases could only be resolved by using multiple types of information: connectivity, morphology, size, spatial distribution, and cell body position. For example, in a previous analysis of the R7 and R8 photoreceptors and their targets in the pathways that process color information, we found that individual neuron morphologies were idiosyncratic, such that we could not confidently sort all Tm5 neurons into types24. The completeness of the new connectome data overcomes these issues; we identified independent sets of connected neurons that allowed us to separately cluster Tm5a, Tm5b, and Tm29, as well as Dm8a and Dm8b, into overlapping mosaics (Extended Data Fig. 4).

Our cell clustering demonstrates how connectivity places tight constraints on cell typing. We found that >99% of cell types can be distinguished by their top five connections (Fig. 2i). Although these top connections alone do not robustly classify individual neurons, they capture essential properties of cell types and are included in our cell type summaries (Fig. 5, Supplementary Fig. 1).
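The top-connection test in Fig. 2i asks how many types have a partner combination that no other type shares. The sketch below assumes a type's signature is its ordered top-k input types plus its ordered top-k output types, with ties broken alphabetically; how the figure treats ordering and ties is an assumption here, and the profiles are hypothetical.

```python
from collections import Counter


def top_k_signature(partner_counts, k):
    """Names of the k partner types with the most connections, as an ordered tuple."""
    ranked = sorted(partner_counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return tuple(name for name, _ in ranked[:k])


def fraction_unique(type_profiles, k):
    """Fraction of cell types whose top-k signature is shared with no other type.

    type_profiles: {cell_type: (input_counts, output_counts)}, each a
    {partner_type: connections} dict aggregated over all cells of the type.
    """
    sigs = {t: (top_k_signature(i, k), top_k_signature(o, k))
            for t, (i, o) in type_profiles.items()}
    counts = Counter(sigs.values())
    return sum(counts[s] == 1 for s in sigs.values()) / len(sigs)


profiles = {
    "A": ({"X": 10, "Y": 5}, {"Z": 3}),
    "B": ({"X": 9, "Y": 1}, {"Z": 4, "W": 2}),
    "C": ({"Y": 8, "X": 2}, {"Z": 1}),
}
print(round(fraction_unique(profiles, 1), 3))  # 0.333 (A and B collide)
print(fraction_unique(profiles, 2))            # 1.0
```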

Fig. 5. Quantitative summary of anatomy and connectivity of visual system neurons.

(a) Examples of 13 cell types associated with the Dm3 “line amacrine” neurons, including their major inputs and outputs. (b) Each row presents the cell type’s quantified summary. Number of neurons and consensus neurotransmitter prediction (left-most). Distribution of presynapses and postsynapses across visual region layers (left), top five connected input and output cell types by contributed connectivity (color shade indicates rank; center), and cell size measured by depth (mean number of innervated columns, right). The visual cell types are summarized in The Cell Type Catalog (Supplementary Fig. 1). (c) The stripe-like patterns of Dm3 types each cover all of layer M3, shown here in separate panels with neurons colored by their dominant column coordinate. The inset shows two individual Dm3s from adjacent coordinates and selected connected TmYs. (d) The number of Dm3c and TmY4 cells innervating each column as a spatial distribution by region. Similar plots for all visual cell types are in the Cell Type Explorer web resource. (e) The relationship between the number of cells (population size) and the average number of columns innervated by each cell (cell size) within the medulla (for types with >50 synapses and >5% of total connectivity therein). Color-coded by the coverage factor (per-type average number of cells per column). The 1X line indicates one-fold coverage: cell types above/below cover the whole medulla with more/fewer neurons per column. Selected cell types show coverage factors for different tiling arrangements: Dm4 (~1) and Dm20 (~5). (f) The per cell type density of medulla coverage, comparing the population’s column innervation to its convex area. Types close to the diagonal (e.g., MeVP10) densely cover the medulla, types above the diagonal (e.g., l-LNv) feature sparser coverage. Medulla layers shown face-on.
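The coverage factor in Fig. 5e is described as the per-type average number of cells per column. A minimal sketch follows, assuming each cell is summarized by the set of column coordinates it innervates and that the average runs over the columns the type innervates; both the data layout and that averaging choice are assumptions.

```python
from collections import Counter


def coverage_summary(columns_by_cell):
    """Population size, mean cell size, and coverage factor for one cell type.

    columns_by_cell: {cell_id: set of column coordinates the cell innervates}
    Returns (n_cells, mean columns per cell, mean cells per innervated column).
    """
    per_column = Counter(col for cols in columns_by_cell.values() for col in cols)
    n_cells = len(columns_by_cell)
    cell_size = sum(len(cols) for cols in columns_by_cell.values()) / n_cells
    # sum(per_column.values()) == n_cells * cell_size, so coverage is also
    # n_cells * cell_size / (number of innervated columns).
    coverage = sum(per_column.values()) / len(per_column)
    return n_cells, cell_size, coverage


# Hypothetical types: one tiling (1x), one overlapping (2x).
tiling = {"t1": {(0, 0), (0, 1)}, "t2": {(1, 0), (1, 1)}, "t3": {(2, 0), (2, 1)}}
overlap = {"o1": {(0, 0), (0, 1), (1, 0)}, "o2": {(0, 0), (0, 1), (1, 0)}}
print(coverage_summary(tiling))   # (3, 2.0, 1.0)
print(coverage_summary(overlap))  # (2, 3.0, 2.0)
```

For a type that innervates every medulla column, one-fold coverage means that population size times cell size equals the total column count, a relationship that traces a straight line on log-log axes.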

Visual system architecture from connectivity

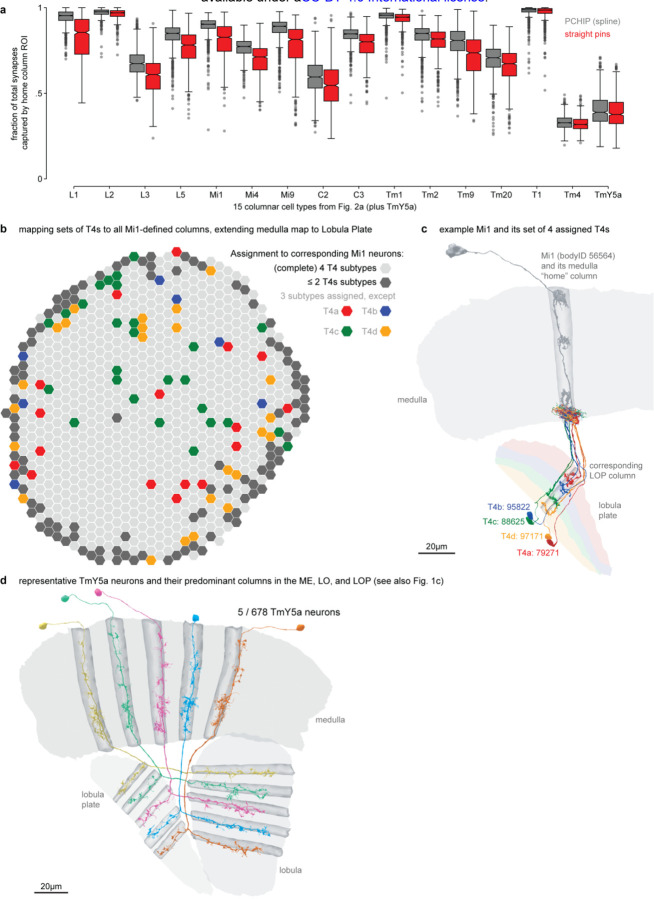

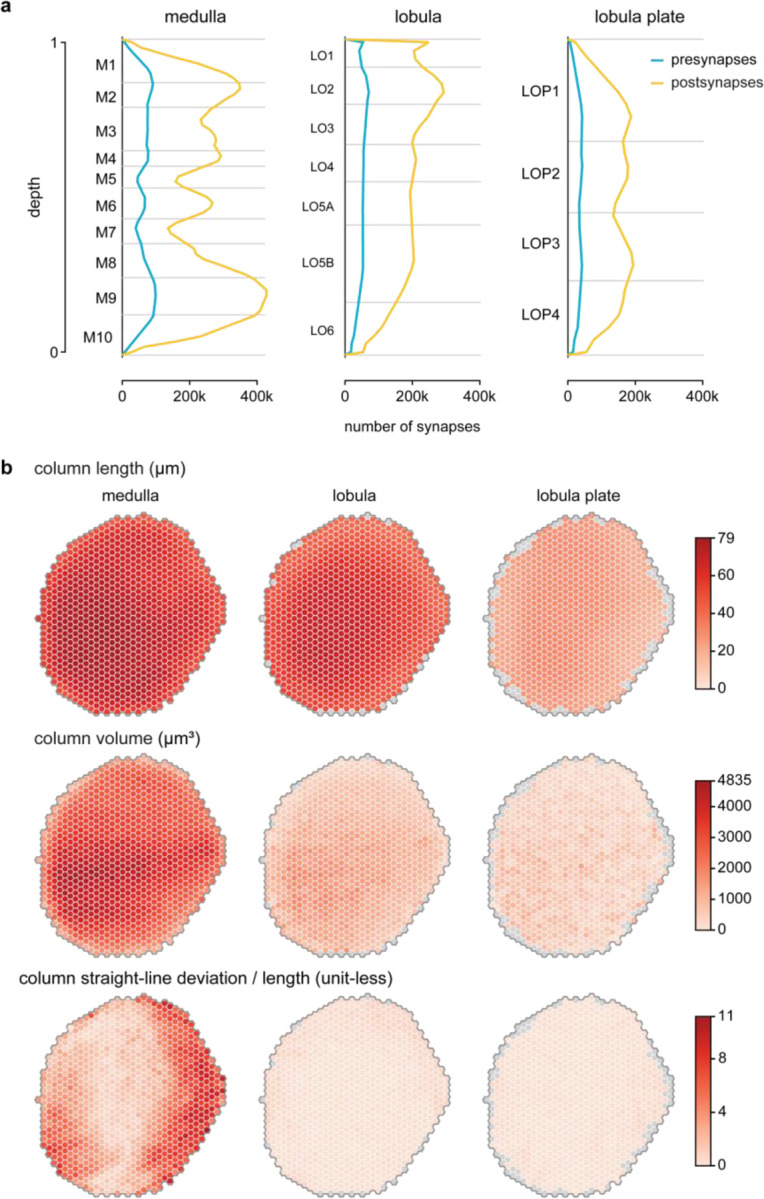

The regions of the fly visual system, like the mammalian neocortex, have been further divided into layers wherein connections between distinct neuron populations occur. Taken together, these layers and the approximately orthogonal columns that convey retinotopy provide a representation of visual space and a biologically meaningful framework for describing visual neurons. Indeed, the distributions of arbors of many fly visual neurons have been shown to provide strong clues about their function7,9,33,34. Although layered structures can be seen with LM35, they are abstractions that emerge from the collective organization of millions of connections between cells. We, therefore, developed a computational infrastructure using principal neurons as scaffolds to delineate the visual system’s layers and columns.

We first built a coordinate system in the medulla. We assigned each of the 15 columnar neurons of Fig. 2a to hexagonal coordinates using the approach we developed in the FAFB dataset36. The coordinates correspond one-to-one to lenses on the compound eye (except for some lenses at the edge). We also identified coordinates along the eye’s equator, a global anatomical reference (Fig. 3a). A complete set of the columnar neurons was assigned to most medulla coordinates, while we found some incomplete sets, mostly at the margins of the medulla (Fig. 3a, Supplementary Table 3).
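The completeness check summarized in Fig. 3a amounts to comparing, at each hexagonal coordinate, the set of assigned columnar types against the expected 15. A minimal sketch, assuming hypothetical `(cell_type, coordinate)` pairs and a caller-supplied set of required types; the coordinate tuples are placeholders rather than the FAFB convention, and duplicate assignments are not checked.

```python
from collections import defaultdict


def missing_by_coordinate(assignments, required_types):
    """Required columnar types absent at each coordinate.

    assignments: iterable of (cell_type, coordinate) pairs, e.g. ("type1", (3, 4)).
    required_types: types expected once per column.
    Returns {coordinate: set of missing types}; complete coordinates are omitted.
    Coordinates with no assigned cell at all are not in the input, so they
    are not reported either.
    """
    required = set(required_types)
    present = defaultdict(set)
    for cell_type, coord in assignments:
        present[coord].add(cell_type)
    return {coord: required - types
            for coord, types in present.items()
            if not required <= types}


required = {"type1", "type2", "type3"}  # hypothetical stand-ins for the 15 types
assigned = [("type1", (0, 0)), ("type2", (0, 0)), ("type3", (0, 0)),
            ("type1", (0, 1)), ("type3", (0, 1))]
print(missing_by_coordinate(assigned, required))  # {(0, 1): {'type2'}}
```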

Fig. 3. Capturing the architecture of the visual system by analyzing the connectivity of key cell types.

(a) The lenses of the fly eye form a hexagonal grid and are mapped onto a hexagonal coordinate system in the medulla. The darker hexagons correspond to locations along the eye’s equator (determined by counting photoreceptors in the corresponding lamina cartridges). The 15 columnar neuron types in Fig. 2a were assigned to each coordinate. Full gray hexagons indicate a medulla location that has been assigned a set of all 15 neurons (see Methods, Supplementary Table 3). The color-coded wedges indicate the cells of a type missing at that medulla location, most of which are along the edge. (b) Process for creating columns and layers. Lobula plate columns were based on sets of T4 neurons assigned to Mi1s (Extended Data Fig. 5). (c) Layer boundaries defined based on synapse distributions of “marker” cell types, established from LM images. For each type, we show the distribution of pre/post synapses across depth together with LM images of single-neuron clones (in green; rotated and rescaled to match top and bottom of the neuropil in light grey). The lobula plate image shows the neuropil (grey, nc82-antibody) and the axon terminals of the T4 neurons (magenta). The horizontal blue/orange lines indicate the distribution cutoff around a peak that defines a boundary. The collection of these defines all layer boundaries, shown in gray. (d) The layers and columns are shown as volumes superimposed on the grayscale EM data. (e) The spatial distribution of postsynapses, by region and column (see also Extended Data Fig. 6).

We then used the synapses of all neurons assigned to each medulla coordinate to create volumetric columns for the medulla and lobula (Fig. 3b, Extended Data Fig. 5a). Lobula plate columns were built by assigning sets of T4 neurons to each Mi1 in the medulla (Extended Data Fig. 5, Supplementary Table 4), which extends the same coordinate system to all three major visual regions. Volumetric layers were constructed by bounding depth positions along columns, calibrated using benchmark cell types (visualized alongside LM examples, Fig. 3c). These volumes establish an “addressable” visual system within the accompanying neuPrint database. They can be visualized alongside the gray-scale EM data (Fig. 3d) or reconstructed neurons (Extended Data Fig. 5c,d). These tools enable database queries that simplify otherwise complex data analyses, such as the spatial distribution of synaptic connections across the visual regions (Fig. 3e), or as a function of depth (Extended Data Fig. 6). Most significantly, the columns and layers facilitate quantification of connectivity and morphology, which form the core of our comparative dataset (Fig. 5).
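Assigning synapses to layers can be sketched as projecting each synapse onto its column's axis and binning the resulting depth by layer boundaries. This sketch simplifies each column to a straight segment between hypothetical `top` and `bottom` points and uses made-up boundaries and layer names; the real columns are curved volumes, and the boundaries come from the marker cell types in Fig. 3c.

```python
import bisect
from collections import Counter


def normalized_depth(point, top, bottom):
    """Position of a 3D point along a straight column axis: 0 at top, 1 at bottom."""
    axis = [b - t for t, b in zip(top, bottom)]
    rel = [p - t for p, t in zip(point, top)]
    frac = sum(a * r for a, r in zip(axis, rel)) / sum(a * a for a in axis)
    return min(max(frac, 0.0), 1.0)  # clamp points beyond the column ends


def synapses_per_layer(points, top, bottom, boundaries, names):
    """Count synapses per layer.

    boundaries: sorted interior layer boundaries in (0, 1)
    names: len(boundaries) + 1 layer names, ordered from top to bottom
    """
    counts = Counter()
    for p in points:
        depth = normalized_depth(p, top, bottom)
        counts[names[bisect.bisect_right(boundaries, depth)]] += 1
    return counts


# Hypothetical column from z = 0 to z = 10 with two made-up boundaries.
pts = [(0, 0, 1), (0, 0, 3), (0, 0, 6), (0, 0, 9)]
print(synapses_per_layer(pts, (0, 0, 0), (0, 0, 10), [0.2, 0.5],
                         ["layer_1", "layer_2", "layer_3"]))
```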

Neurotransmitters in the visual system

An essential component of a complete description of the fly visual system’s connectome is the neurotransmitters each neuron type uses to communicate across synapses. The functional sign of each synaptic connection, whether excitatory or inhibitory, depends primarily on presynaptic neurotransmitter expression, and on the receptors expressed by postsynaptic cells. Identifying the neurotransmitters expressed by each neuron has been challenging and imprecise, relying on genetic techniques such as reporter expression or on antibodies that label transmitters or their synthesizing enzymes. Moreover, we do not have robust methods for detecting neuromodulation via peptides37.

Recently, more reliable and scalable methods have been developed for assigning neurotransmitters to neurons. Detecting expression of neurotransmitter-synthesizing enzymes within cells using Fluorescence in situ hybridization (FISH)38,39 or RNAseq40–42 has provided transmitter identity for dozens of cell types in the fly visual system. Although EM data do not give direct information about transmitter identity, recent progress in applying machine learning to classify neurotransmitters from small image volumes around presynapses (Fig. 4a) has shown impressive accuracy43. We were therefore excited to apply these methods to the visual system, where the many repeating cell types and substantial prior data on neurotransmitter expression provide an unprecedented scale of training data and means to evaluate the accuracy of these predictions.

We trained a neural network to classify synapses into one of seven transmitters (acetylcholine, glutamate, GABA, histamine, dopamine, octopamine, and serotonin) using the known transmitters of 59 cell types, contributing nearly 2 million synapses (Fig. 4b). The per-synapse accuracy of the trained network was modestly higher than that of predictions for the hemibrain and FAFB datasets43. To further validate the predictions, we collected new data on neurotransmitter expression for 66 additional cell types, primarily using EASI-FISH38.

As an example, we show the neurotransmitter predictions for a complete anatomical group of neurons, the TmY cells (Fig. 4c). Three of the 16 cell types (indicated by asterisks) were included in the training data, while the neurotransmitters expressed by six of these types are covered in our new, independent experimental data (labels on gray in Fig. 4c, experimental data in Fig. 4d). The fraction of synapses assigned to the top transmitter is high: 14/16 TmY cell types have >85% of synapses classified as one neurotransmitter. The consistency and accuracy of predictions are comparable between the cell types in the training dataset and those not used in training. In all six cases, our experimental expression data were consistent with the top predictions.

The prediction accuracy is further improved when the single-synapse predictions are aggregated across the cells of each type, as shown for our validation dataset of 79 cell types not included in training the neural network (Fig. 4e, Supplementary Table 5). Despite this impressive performance, there are limitations to the reliability of these predictions. Many cell types, including >100 VPN types, have limited presynapses in our data volume. Another ~50 cell types, all with low cell counts, have predictions for octopamine, dopamine, or serotonin, which far exceeds the number of optic-lobe-associated cell types previously estimated to express these transmitters39. Precisely because of the scarcity of neurons confirmed to express these transmitters, they are not well represented in our training data, and accordingly, these predictions are less reliable. For cell types with low confidence predictions, unless independently confirmed by experimental data, the consensus transmitter is reported as “unclear” in the neuPrint database.
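Aggregating per-synapse predictions into a type-level consensus, with an “unclear” fallback, might look like the sketch below. The `min_synapses` and `min_fraction` thresholds are illustrative placeholders, not the values used for the neuPrint annotations, and the optional `confirmed` argument is a hypothetical stand-in for experimental data overriding the prediction.

```python
from collections import Counter


def consensus_transmitter(predictions, min_synapses=50, min_fraction=0.5, confirmed=None):
    """Type-level transmitter from per-synapse predictions pooled over a type's cells.

    predictions: list of predicted labels, one per presynapse.
    Returns (label, fraction of synapses with the top label).
    """
    if not predictions:
        return (confirmed or "unclear"), 0.0
    label, n = Counter(predictions).most_common(1)[0]
    frac = n / len(predictions)
    if confirmed is not None:
        return confirmed, frac  # experimental data take precedence
    if len(predictions) < min_synapses or frac < min_fraction:
        return "unclear", frac
    return label, frac


print(consensus_transmitter(["acetylcholine"] * 90 + ["glutamate"] * 10))  # ('acetylcholine', 0.9)
print(consensus_transmitter(["dopamine"] * 12))  # ('unclear', 1.0): too few presynapses
```

Pooling over all cells of a type is what lets a type with only a handful of presynapses per cell still reach a usable sample size, while types with few presynapses overall remain “unclear”.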

Applying the consensus neurotransmitters to the synaptic connectivity data provides new insights (Fig. 4f–i). Approximately half of the cell types express acetylcholine and are likely excitatory, and half express GABA or glutamate and are likely inhibitory (Fig. 4f). Excitatory neurons make up a majority of the region-connecting OLCN and VPN types, while the OLIN and VCN types are heavily skewed towards glutamatergic, GABAergic, and aminergic cells (Fig. 4f). There are also distinct differences in neurotransmitters between the layers in each region (Fig. 4g). Furthermore, the largest neurons tend to be glutamatergic or GABAergic, and thus likely inhibitory (Fig. 4h). We also examined the synaptic “fanout” of visual system neurons, i.e., the average ratio of output connections to presynapses. These data show a remarkably consistent relationship, with a significant and potentially interesting difference across the neurotransmitters: the average fanout of GABAergic neurons is lower than that of glutamatergic or cholinergic neurons, with GABAergic neurons participating in ~13% fewer connections (Fig. 4i).
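Fig. 4i summarizes fanout by regressing output connections on presynapse counts across cell types within each transmitter group. A minimal sketch follows, assuming a regression through the origin (so the slope reads directly as connections per presynapse) and hypothetical per-type averages; the paper's exact regression setup and its ANCOVA comparison of groups are not reproduced here.

```python
from collections import defaultdict


def fanout_by_transmitter(types):
    """Least-squares slope through the origin of connections vs presynapses.

    types: iterable of (transmitter, mean_presynapses, mean_output_connections),
    one entry per cell type.
    Returns {transmitter: fanout}, the fitted connections per presynapse.
    """
    sxy, sxx = defaultdict(float), defaultdict(float)
    for nt, pre, conn in types:
        sxy[nt] += pre * conn
        sxx[nt] += pre * pre
    return {nt: sxy[nt] / sxx[nt] for nt in sxy}


# Hypothetical per-type averages (not real data).
toy = [("ACh", 100, 600), ("ACh", 200, 1200), ("GABA", 100, 500), ("GABA", 300, 1500)]
fan = fanout_by_transmitter(toy)
print(fan)                           # {'ACh': 6.0, 'GABA': 5.0}
print(1 - fan["GABA"] / fan["ACh"])  # relative deficit of the GABA fanout
```

The relative deficit computed on the last line is the kind of quantity behind a statement such as "~13% fewer connections", although the toy numbers here give a different value.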

Most neurons appear to release the same neurotransmitter(s) across their various terminals, and molecular profiling suggests that most neurons signal with a single dominant neurotransmitter. However, there are two clear examples of co-transmission in the visual neurons: Mi15s express the markers for both dopamine and acetylcholine, while R8 (pale and yellow) express the markers for histamine and acetylcholine40 (Supplementary Table 5). Several visual neurons are peptide-releasing cells, such as the l-LNvs44, and EM-based prediction methods have not yet been developed for peptidergic transmission.

Quantified anatomy and connectivity

The shapes of neurons are varied and complex, but by representing visual brain regions as a coordinate system of layers and columns (Fig. 3), we could bypass much of this complexity to provide a compact, quantitative summary of innervation patterns and connections. Like a fingerprint, these summaries uniquely identify most cell types, and they are broadly applicable: for comparisons between cells and cell types within our dataset, with neurons imaged with LM (see Fig. 8), and with neurons in other EM volumes. Because the optic lobe ground plan is deeply conserved, these comparisons can be extended to other insect species.

Fig. 8: Genetic driver lines for targeting visual system neuron types, matched to the EM-defined cell types.

(a) In a split-GAL4 driver line, two distinct expression patterns are genetically intersected to produce selective expression in cells of interest. We report 577 driver lines that we matched to >300 cell types. (b–e) Examples of the types of LM information used to characterize driver lines for matching to EM-defined cell types. (b) Overall expression pattern of an example split-GAL4 driver line that primarily labels LPLC4 VPNs, with some “off-target” VNC expression. (c) LM image of LPLC4 neurons (driver line as in (b)) registered to and displayed on a standard brain (JRC2018U) (left) or overlaid with the skeletons of all EM-reconstructed LPLC4 neurons (registered to JRC2018U) (right). (d) (left) EM reconstructions of individual LPLC4 cells. (right) LM images of stochastically labeled (MCFO19) individual LPLC4 neurons. (e) A manually segmented, registered LM image of a single MCFO-labeled LPLC4 neuron with a slice of the template brain. This image shows a different view than the images in (b)–(d), to emphasize the layer patterns in the visual system. A graphical summary of LPLC4’s innervation, as in Fig. 5b, is shown below. (f) Selected split-GAL4 lines driving expression in OLIN and OLCN types. Layer patterns of genetically labeled cell populations are shown with a neuropil marker (anti-Brp). Corresponding layers are indicated on both the LM images and the EM summary figures (see e). (g) Selected split-GAL4 labeled VPN and VCN types. Images show overlays with registered EM skeletons. Detailed layer-specific patterns can be found in the cell type catalog (Supplementary Fig. 1). Supplementary Table 6 lists split-GAL4 lines.

We illustrate these summaries with a fascinating group of connected neurons that includes the three Dm3 types (Fig. 5a,b). Each Dm3 type forms a striking pattern of stripes across the medulla (Fig. 5c). The stripes of each type have a distinct orientation, and each type provides substantial input to a distinct TmY type, which itself has oriented medulla branches approximately orthogonal to those of the upstream Dm3 type (Fig. 5c, lower). Although prior LM studies have described Dm3 cells, also known as line amacrines, and their oriented arbors2,3,19, only the comprehensive EM reconstruction and annotation reveal this network in full detail. While we focus here on presenting our cell type inventory of the visual system, we note that the intriguing structure of the Dm3/TmY network has strong implications for function, as others have also recently noted45.

To make it easier to explore the circuits in our visual system inventory, we created compact summaries of many defining features of each cell type (example for the Dm3/TmY network in Fig. 5b). The summaries present the number of individual cells of that type, the consensus neurotransmitter prediction, the mean distribution of presynapses and postsynapses (left), the top five connected input and output cell types (middle), and the mean size of the neuron as the number of innervated columns (right). The top five input and output connections of the example neurons already capture key features of this circuit: each Dm3 type receives its main excitatory input from Tm1 neurons and provides glutamatergic (likely inhibitory) output to the other two Dm3 types and to one of the three TmYs. We were particularly intrigued to find that the three TmY types are the top inputs to LC15, a VPN with selective responses to moving long, thin bars46. A different VPN with a preference for small objects, LC1147, is instead a target of a large glutamatergic interneuron (Li16) that is itself another major target of the TmY cells. This arrangement suggests a potential opponent processing step that tunes the selectivity of these feature-detecting LC neurons.

The detailed morphology of the neurons was quantified as the synaptic distribution and column innervation as a function of depth within visual regions. The classic layer boundaries are shown as a reference, but the data are presented at higher depth resolution, since these established neuroanatomical features clearly do not fully capture the organization. An essential test of whether these measurements accurately profile each cell type’s distinctive morphology is whether they can be used to sort cells. Indeed, by compiling synapse-by-depth measurements into a per-neuron feature vector, we find that most medulla neurons are sorted into their respective types (Extended Data Fig. 7), and this sorting only slightly underperforms connectivity-based clustering (cluster completeness 0.88 vs. 0.9; Extended Data Fig. 2); however, this method does not require pre-assigning cell types beyond those used to establish the columns. The cell type summaries, along with a gallery of example neurons of each of the 727 types, can be found in Supplementary Fig. 1. We also developed a set of interactive webpages that summarize all visual neurons’ connectivity and serve as a browsable companion to the neuPrint web interface. This resource, the Drosophila Visual System Cell Type Explorer, is previewed in Extended Data Fig. 8.
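The sorting test above can be illustrated with a small sketch. It assumes synapse depths have been normalized to [0, 1] within a region, builds each neuron’s feature vector by concatenating binned presynapse and postsynapse depth profiles, and scores a clustering with the standard entropy-based completeness measure (the one used in the V-measure), which we assume is what “cluster completeness” refers to. The bin count and all function names are illustrative assumptions, and the clustering step itself is omitted.

```python
import math
from collections import Counter


def depth_histogram(depths, n_bins=10):
    """Fraction of synapses in each of n_bins equal bins of normalized depth [0, 1]."""
    counts = [0] * n_bins
    for d in depths:
        i = min(int(d * n_bins), n_bins - 1)  # a depth of exactly 1.0 goes in the last bin
        counts[i] += 1
    total = len(depths) or 1  # avoid dividing by zero for neurons without synapses
    return [c / total for c in counts]


def feature_vector(pre_depths, post_depths, n_bins=10):
    """Concatenate the presynapse and postsynapse depth profiles of one neuron."""
    return depth_histogram(pre_depths, n_bins) + depth_histogram(post_depths, n_bins)


def _entropy(counter, n):
    """Shannon entropy (natural log) of a label distribution given as counts."""
    return -sum((c / n) * math.log(c / n) for c in counter.values() if c)


def completeness(true_types, cluster_ids):
    """Entropy-based completeness: 1 - H(K|C) / H(K).

    C are the annotated cell types and K the cluster assignments. The score is
    1.0 when all cells of each type fall into a single cluster.
    """
    n = len(true_types)
    h_k = _entropy(Counter(cluster_ids), n)
    if h_k == 0:
        return 1.0  # a single cluster is trivially complete
    by_type = {}
    for c, k in zip(true_types, cluster_ids):
        by_type.setdefault(c, []).append(k)
    # H(K|C) = sum over types of p(type) * entropy of that type's cluster labels
    h_k_given_c = sum(
        (len(ks) / n) * _entropy(Counter(ks), len(ks)) for ks in by_type.values()
    )
    return 1.0 - h_k_given_c / h_k


print(completeness(["Dm4", "Dm4", "Dm20", "Dm20"], [0, 0, 1, 1]))  # 1.0
```

Completeness only penalizes splitting a type across clusters; a complementary homogeneity score would be needed to penalize merging several types into one cluster.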

To survey the distribution of each cell type’s neurons and connections, we analyzed their spatial coverage across visual regions (summed across layers, Fig. 5d). The example spatial coverage maps for Dm3c and TmY4 in the medulla share a trend of somewhat higher neuron density near the eye’s equator. Dm3c is a medulla intrinsic cell without processes in other regions, whereas TmY4 shows a noteworthy trend towards higher overlap in the lobula plate (the Cell Type Explorer web resource contains these maps for all cell types). This spatial analysis measures how neurons cover their retinotopic brain regions, which is directly linked to how they sample visual space. Fig. 5e shows the relationship between the number of cells per type, the size of each type in columns, and the coverage factor (average number of cells per column) for the medulla (lobula and lobula plate plots in Extended Data Fig. 9; interactive plots on the Cell Type Explorer web resource). As expected, the number of cells of a type is inversely related to their size, consistent with the uniform coverage property of visual neurons. However, interesting differences emerge within this trend: cell types above the “1X” line have higher coverage factors, with more cells than are strictly necessary to cover the region. For instance, there are exactly 48 cells each of Dm4 and Dm20, but individual Dm20 cells are much larger and therefore provide higher coverage. Dm4 neurons neatly tile medulla layer M3, while Dm20 cells overlap substantially, with nearly ten cells per column. Neurons below the “1X” line only partially cover the medulla, and this partial coverage takes one of two forms: regional but dense coverage, illustrated by MeVP10s, or broad but patchy coverage, illustrated by l-LNvs (Fig. 5f, Supplementary Video 3). These summary data, along with the complete inventory detailed in Supplementary Fig. 1 and the Cell Type Explorer web resource, provide a comprehensive starting point for circuit discovery and experiment design.
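The coverage quantities can be made explicit with a minimal sketch. It assumes each cell is represented by the set of column ids it innervates, takes the coverage factor as the total number of cell-column pairs divided by the number of distinct columns the type reaches (average cells per covered column), and places a type relative to the “1X” line by comparing the number of cells times the mean cell size with the region’s total column count. These definitions and the name `coverage_summary` are our illustrative reading of the text, not necessarily the exact computation behind Fig. 5e.

```python
from statistics import mean


def coverage_summary(cells_to_columns, n_region_columns):
    """Summarize how one cell type covers a retinotopic region.

    cells_to_columns: dict cell_id -> set of column ids innervated by that cell.
    n_region_columns: total number of columns in the region (e.g. the medulla).
    """
    if not cells_to_columns:
        raise ValueError("cell type has no cells")
    sizes = [len(cols) for cols in cells_to_columns.values()]
    covered = set().union(*cells_to_columns.values())
    n_cells, mean_size = len(sizes), mean(sizes)
    return {
        "n_cells": n_cells,
        "mean_size_columns": mean_size,
        # average number of cells per column, over the columns the type reaches
        "coverage_factor": sum(sizes) / len(covered),
        # fraction of the region's columns reached by at least one cell
        "fraction_covered": len(covered) / n_region_columns,
        # 1.0 means n_cells * size equals the region size (the "1X" line)
        "relative_to_1x": n_cells * mean_size / n_region_columns,
    }


tiling = {"a": {1, 2}, "b": {3, 4}}
overlapping = {"a": {1, 2, 3}, "b": {2, 3, 4}}
print(coverage_summary(tiling, 4)["coverage_factor"])       # 1.0
print(coverage_summary(overlapping, 4)["coverage_factor"])  # 1.5
```

Separating `coverage_factor` from `fraction_covered` distinguishes the two forms of partial coverage described above: a regional but dense type has a high coverage factor and a low covered fraction, while a broad but patchy type has a low coverage factor spread over many columns.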

Specialized cell types

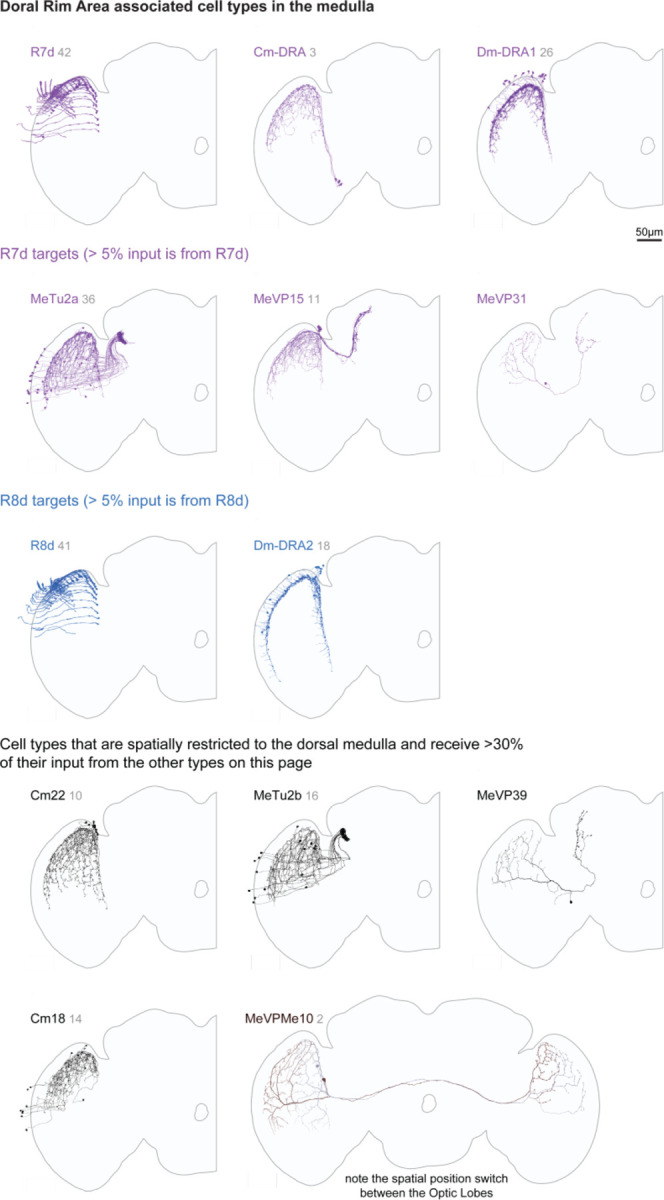

Our complete survey also allows us to highlight specialized sets of visual neurons defined by their anatomy and expected functional roles. The Dorsal Rim Area (DRA) is a zone of the eye whose photoreceptors are specialized for detecting polarized light24,48. Specialized medulla neurons integrate inputs from these R7d and R8d photoreceptors (Extended Data Fig. 10). Interestingly, we did not find DRA-specific OLCNs and, therefore, no specialized cells in the lobula.

The Accessory Medulla (AME) is a small brain region at the anterior-medial edge of the medulla, with a well-established role in clock circuitry and circadian behaviors44,49. Unlike the other visual brain areas, the AME does not exhibit obvious retinotopy, which supports the common view that photoentrainment of circadian rhythms should not require detailed visual-spatial information. Nevertheless, the diversity of neurons with significant connectivity in the AME (Extended Data Fig. 11) suggests an underappreciated complexity, with perhaps broader roles in sensory integration and behavior.

Communication with the central brain

The Visual Projection Neurons (VPNs) represent a remarkable compression of information. Signals from the nearly 50,000 local neurons that initially interpret the visual world are conveyed to the central brain by just ~4,500 neurons of 350 cell types (Fig. 1e, Supplementary Table 1). Many VPN types, including the Lobula Plate Tangential (LPT)33, Lobula Columnar (LC)47, and Medulla-Tubercle (MeTu)50 neurons, have been carefully described. Nevertheless, we identified novel cell types and further refined prior classifications within each of these well-studied groups. Among the VPNs, the small-field projection neurons of the lobula and lobula plate (the LCs, LPCs, LLPCs, and LPLCs) make up ~3,000 cells, while the MeTu neurons make up over 500 more. The remaining cells are morphologically varied and target many brain regions. We present all VPNs in Fig. 6 to illustrate the diverse pathways relaying vision to central behavioral control areas. The neurons are grouped based on rough anatomical similarities, mainly the visual region in which they receive inputs. We refer readers to the summaries in Supplementary Fig. 1 for details of the connectivity and morphology within visual brain regions.

Fig. 6: Visual Projection Neurons.

All Visual Projection Neurons that connect the right optic lobe with the central brain or the contralateral optic lobe are shown. We first divided the ~4,500 VPNs into the 350 types shown here, and then placed them into 51 groups of morphologically similar types. These groups are based on the main region in which they receive their optic lobe inputs, whether they project to the ipsi- or contralateral central brain or the contralateral optic lobe, and other aspects of their morphology. Unilateral cells, those whose projections do not cross the midline, are mainly shown in half-brain panels, and most cells with arbors in both brain hemispheres are shown in full-brain views. Each panel includes the names of the cell types (color-matched to the rendered neurons) and the number of individual cells of each type (in gray); types without numbers are present once per brain hemisphere. Detailed morphology within the visual brain regions can be found in the Cell Type Catalog (Supplementary Fig. 1).

The Visual Centrifugal Neurons (VCNs), 108 types across ~270 total cells, are the final group of neurons we present as a complete set (Fig. 7). Most of these types consist of a single neuron, although there are several populations of smaller-field inhibitory neurons mainly targeting the lobula plate. Among the larger VCNs are several octopaminergic cells51 that are likely critical for modulating visual neurons during active movement52,53. Many VCNs are large cells that target precise layers of the medulla (mostly named MeVC neurons), the lobula (the LoVC and some LT neurons), and the lobula plate (called LPTs for historical reasons). One of these types, LoVC1 (also called IB1126), has recently been reported to gate the flow of specific channels of visual information during social behaviors54. Although most VCNs are presumed to be inhibitory (glutamatergic or GABAergic), it is noteworthy that most MeVC neurons are predicted to be cholinergic. We present this full set as a single unit (Fig. 7) to serve as a reference for these understudied cells and to facilitate the systematic study of their roles in the central modulation of visual processing.

Fig. 7: Visual Centrifugal Neurons.

Visual centrifugal neurons receive major input in the central brain and project back to the optic lobes. We cataloged 108 VCN types (284 cells combined for the right optic lobe) and show them all here in groups organized by their main target regions in the optic lobes and other anatomical features (such as ipsi-, contra- or bilateral projection patterns). The figure also includes neurons (“other”) that have some optic lobe synapses in addition to central brain synapses but were not classified as VPN or VCN (see Methods). Each panel indicates the names of the cell types (color-matched to the rendered neurons) and the number of individual cells of each type (in gray); types without numbers are present once per brain hemisphere. Detailed morphology within the visual brain regions can be found in the Cell Type Catalog (Supplementary Fig. 1).

Cell-type-specific genetic driver lines

Analysis and simulations of connectomes can suggest functions for many of their constituent cell types55. Testing those predictions requires genetic tools that permit the activity of specific cell types to be manipulated or marked for functional imaging or electrophysiology. For over 15 years, we have been making genetic driver lines targeted to specific cell types in the Drosophila visual system using the split-GAL4 intersectional method56,57. Using light microscopy to analyze the morphology of neurons labeled by first-generation driver lines, we developed several collections that successfully matched groups of known cell types in the lamina21, medulla29, and lobula47. These lines enabled targeted expression control within these cell types for functional experiments, further analysis of their anatomy19, and measurements of high-resolution transcriptomes40. Matching driver lines to well-defined cell types can be quite involved, but LM images of single neurons and populations, along with classic Golgi surveys1,3, provided a sufficient basis for matching most of the high-count OLCN and OLIN types. An example of the success of this approach is that, compared with a prior LM study19, we find no new Dm types in the connectome, except for a new division of Dm3 cells and the dorsal rim types (Fig. 5c, Extended Data Fig. 10). However, the full visual system inventory (Supplementary Fig. 1) is the ideal reference for matching a much larger set of driver lines, especially for the less numerous cell types. We report here a collection of 577 split-GAL4 lines (Fig. 8a; Supplementary Table 6) that have been matched to >300 EM-defined cell types across all our inventory groups.

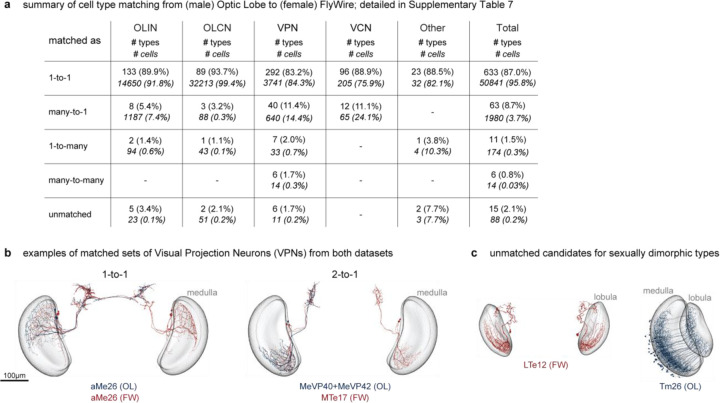

We found candidate matches for nearly all visual system cell types labeled by split-GAL4 lines, highlighting the completeness of our EM cell type inventory. This is all the more remarkable since most LM images are from female flies, whereas the EM reconstruction is of a male fly, suggesting that most optic lobe cell types are not sexually dimorphic. We matched 98% of the cell types in our inventory (accounting for 99.8% of cells) to cell types in the female FlyWire-FAFB dataset15–17,25 (Extended Data Fig. 13a,b; Supplementary Table 7), providing strong evidence for the completeness of our inventory and the absence of significant cell-type-level sexual dimorphism. The cell types that could not be matched between the datasets are candidates for sexually dimorphic neurons, with two examples shown in Extended Data Fig. 13c.

Figure 8b–e shows an example of morphological matching of a split-GAL4 driver’s expression pattern, which selectively labels a single population of visual neurons, to EM-reconstructed LPLC4 cells. We use brain registration to place EM-reconstructed neurons in the same reference space as registered LM data, to compare single-neuron morphology and population patterns (Fig. 8c–d). In most cases, the quantified innervation of the visual regions (Supplementary Fig. 1) provides a simple comparison that is sufficient for confident matching (Fig. 8e for LPLC4), especially for the OLIN and OLCN lines (Fig. 8f). Many VPN and VCN driver lines can be matched to EM-defined cell types using their central brain arborization patterns (visualized on a co-registered brain, Fig. 8g). We use additional information when the neurons labeled by a driver line appear to be credible matches for multiple EM-defined cell types. In these cases, we rely on finer differences in layer innervation and on features such as cell body distribution, regional coverage pattern, and arbor size and shape (Extended Data Fig. 12a–e). Our LM images often confirm the accuracy of the EM-reconstructed morphologies, even in a few cases where we find atypical neuron morphologies that appear to represent real but infrequent variants (Extended Data Fig. 12f). By linking genetic driver lines to EM-defined cell types, this collection establishes a powerful toolkit for probing the circuits of the visual system.

Inter-region connectivity

Understanding the flow of visual information throughout the fly brain is a substantial undertaking, but the infrastructure we developed to catalog the visual system’s neurons enables an initial analysis. Examining major cell type groups, we asked whether specific connectivity patterns (from particular layers to others across the visual regions) are most prevalent. The first matrix in Fig. 9a shows the inter-region connectivity of the OLINs, and as expected, we find a block-diagonal structure with prominent within-layer connections, indicated by the high connectivity along the diagonal. The organization of the medulla’s connections supports the anatomical division into three units of more tightly interacting layers and the separation of interneurons into Dm (distal), Cm (central), and Pm (proximal) types. The summarized connectivity matrices (Fig. 9a) highlight the major pathways and indicate representative cell types that contribute substantially to the strongest connections (Fig. 9b). The OLCNs have a much denser connectivity matrix (Fig. 9a), in which nearly every layer is connected to every other layer. Even so, some connection patterns are especially strong, typified by prominent region-connecting cell types (highlighted in Fig. 9b). Notably, the connectivity above the diagonal is much higher than below it, indicating that most connections flow in the feedforward direction, e.g., from the medulla to the lobula and lobula plate. Finally, the analysis of the VPNs’ connectivity to the central brain reflects the broad classes of projection neurons (Fig. 6), with prominent connections from multiple visual regions often targeting the same central brain regions (Fig. 9b).
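The above-versus-below-diagonal comparison can be expressed in a few lines. This sketch assumes rows are source regions and columns are target regions, both ordered from upstream (e.g., medulla layers) to downstream (lobula, lobula plate), so that entries above the diagonal represent feedforward connections; the function name and the toy matrix are hypothetical.

```python
def flow_asymmetry(matrix):
    """Compare connectivity above vs. below the diagonal of a square matrix.

    matrix[i][j] = connections from region i to region j, with regions assumed
    to be ordered from upstream to downstream. The diagonal (within-region
    connections) is excluded. Returns (above, below, feedforward_fraction).
    """
    n = len(matrix)
    above = sum(matrix[i][j] for i in range(n) for j in range(i + 1, n))
    below = sum(matrix[i][j] for i in range(n) for j in range(i))
    total = above + below
    return above, below, (above / total if total else float("nan"))


toy = [[5, 10, 4],
       [1, 6, 8],
       [0, 2, 3]]
print(flow_asymmetry(toy))  # (22, 3, 0.88)
```

The result depends entirely on the region ordering: the same matrix with regions listed downstream-first would show the opposite asymmetry.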

Fig. 9: Connectivity within visual brain regions and with the central brain.

(a) The connectivity between regions quantified as a weighted sum (see Methods) over all neurons from the OLIN (right), OLCN (middle), and VPN (left) groups. Optic lobe layers (Fig. 3) are treated as individual regions. The color code reflects the contributions to total connectivity: inter-region connections, the entries in each matrix, were ranked in ascending order and binned based on their contribution to the cumulative sum. (b) Schematic representation of the main conduits for visual information flow within and between brain regions based on the connectivity matrices in (a). The arrows are scaled to represent the number of connections between regions. Arrows are shown for each matrix entry in the highest 50% (darkest colors) with the addition of a few other prominent connections below this threshold. Major contributing cell types for every strong connection are indicated for each arrow. Layers of the visual regions and several central brain regions are grouped in this summary.

Interestingly, many central brain regions, such as the central complex, do not receive significant direct visual projections. This is partly because connections outside the top 93.75% of total connectivity are not shown in Fig. 9. For example, there are direct VPN connections to specific Kenyon Cell types in the mushroom body that respond to visual information58,59. Additionally, we expect that visual signals arrive via central brain interneurons, as found for the mushroom bodies58, further expanding the footprint of vision in the central brain. By aggregating the visual neurons into this “projectome,” we emphasize the most prevalent connections to major brain regions. Notably, all connections can be traced down to the individual cell types (Fig. 9b), which can be quickly found with a query of the neuPrint database. Together with the extensive collection of driver lines (Fig. 8, Supplementary Table 6), we now have a comprehensive toolkit for exploring this entire visual system and discovering how visual features are detected and where vision is integrated in the central brain.
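The cumulative-sum binning described in the Fig. 9 legend can be sketched as follows. The legend ranks matrix entries in ascending order; walking the ranking from the strongest entry down, as here, is equivalent. Each nonzero entry is binned by the fraction of total connectivity carried by strictly stronger entries, so bin 0 holds the entries needed to reach the top 50%. The halving thresholds (50%, 75%, 87.5%, 93.75%) are our assumption, inferred from the “top 93.75%” cutoff mentioned above, and `cumulative_bins` is a hypothetical name.

```python
from bisect import bisect_right

# Assumed thresholds on cumulative connectivity: top 50%, 75%, 87.5%, 93.75%.
THRESHOLDS = (0.5, 0.75, 0.875, 0.9375)


def cumulative_bins(matrix, thresholds=THRESHOLDS):
    """Bin nonzero matrix entries by their contribution to the cumulative sum.

    Returns a dict (i, j) -> bin. Bin 0 holds the strongest entries that are
    needed to reach the first threshold (top 50% of total connectivity);
    bin len(thresholds) holds entries outside the top 93.75%.
    """
    entries = sorted(
        ((v, (i, j)) for i, row in enumerate(matrix) for j, v in enumerate(row) if v > 0),
        reverse=True,
    )
    total = sum(v for v, _ in entries)
    bins, stronger = {}, 0.0
    for v, ij in entries:
        # fraction of total connectivity carried by strictly stronger-ranked entries
        bins[ij] = bisect_right(thresholds, stronger / total)
        stronger += v
    return bins


print(cumulative_bins([[1, 2], [3, 10]]))
# {(1, 1): 0, (1, 0): 1, (0, 1): 2, (0, 0): 4}
```

In this toy matrix the single strongest entry already carries 62.5% of the total, so it alone forms the top-50% bin, and the weakest entry falls outside the top 93.75% and would not be drawn.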

Concluding Remarks

Our survey is drawn from the new, exhaustively curated and proofread connectome of one optic lobe of a male brain. In the coming months, proofreading of the rest of the central brain, and eventually the ventral nerve cord, of this connected volume will be completed. With these updates, our knowledge about the VPNs and VCNs will increase substantially as details of their central inputs and outputs are filled in. These datasets, together with an emerging connectome of a female brain15–17, provide a spectacular resource for understanding how vision is processed in central brain circuits and, ultimately, how vision is used to guide behavior. By mapping neural connections at unprecedented scale and resolution, connectomics overcomes the traditional trade-offs between completeness and accuracy in cataloging cell type diversity13. This approach has been particularly transformative in visual systems, notably the mammalian retina and especially the fly, where it has facilitated detailed investigations of circuit function. In many cases, the analysis of these data directly suggests functional hypotheses. While some skepticism lingers about the interpretability of vast datasets or the translatability of structural maps into functional insights, these methods are already revolutionizing neuroscience on at least two levels: at their most powerful, the completeness of these surveys enables advanced analytical and modeling studies of integrative brain function; more straightforwardly, these methods outline countless experimental roadmaps for detailed investigation of brain circuit function. The combination of connectomic surveys, detailed analysis, and genetic tools for experimental manipulation finally provides the necessary elements for bridging the gap between the stunning structural intricacy of neuronal circuits and the functional understanding the field has long sought.

Methods

EM sample preparation

The sample preparation followed our recently described methods1,2, and the description is reproduced here with minimal modifications from the recent manuscript on the “MANC” Ventral Nerve Cord (VNC) Electron Microscopy (EM) volume3 to preserve the consistency and accuracy of the reported methods:

Five-day-old males from a cross between wild-type Canton S strain G1 x w1118 were raised on a 12-hour day/night cycle and dissected 1.5 hours after lights-on. The main difference from previous work occurred during this dissection step. For this new sample we dissected the entire central nervous system (CNS) as a unit, including the brain, ventral nerve cord, and the neck. The main difficulty, requiring many attempts and extreme care, was dissection without damaging the relatively fragile neck connective. An undamaged neck connective is necessary to reconstruct an entire CNS. The optic lobe reported here is a subset of this complete CNS reconstruction. Samples were fixed in aldehyde fixative, enclosed in bovine serum albumin (BSA) and post-fixed in osmium tetroxide. Samples were then treated with potassium ferricyanide and incubated with aqueous uranyl acetate (UA) followed by lead aspartate staining. A Progressive Lowering Temperature (PLT) dehydration procedure was applied to the samples. After PLT and low-temperature incubation with an ethanol-based UA stain, the samples were infiltrated and embedded in Epon (Poly/Bed 812; Luft formulations). Collectively, these methods optimize morphological preservation, allow full-brain preparation without distortion, and provide increased staining intensity, enabling faster FIB-SEM imaging. Each completed sample was examined by x-ray CT imaging (Zeiss Versa 520) to check for dissection damage and other potential flaws and to assess the quality of the staining.

EM sample preparation: hot knife cutting

The Drosophila CNS is roughly 700 μm wide and more than 1000 μm long, making it too large to image by FIB-SEM without milling artifacts. We therefore subdivided the brain right to left (vertical cuts in the orientation shown in Fig. 1a), and the VNC lengthwise (horizontal cuts in the orientation of Fig. 1a), both into 20 μm thick slabs using an approach developed during the hemibrain project1. This allowed imaging of individual slabs in parallel across multiple FIB-SEM machines. During embedding, the CNS was oriented such that the long axis of the VNC was perpendicular to the block face, with its caudal tip closest to the block face. The block was then trimmed into a fin shape4 (600 μm wide and several millimeters long in the cutting direction with a sloped trailing edge) to a depth encompassing all of the VNC and half of the neck, leaving the brain in the untrimmed part of the block. The VNC and lower half of the neck were then sectioned into a total of 31 slabs, each 20 μm thick, using our previously described “hot-knife” ultrathick sectioning procedure4 (oil-lubricated diamond knife (Diatome 25° angle Cryo-Dry), 90°C knife set point temperature, 0.05 mm/s). The remaining part of the block, containing the brain and upper half of the neck, was then reoriented and re-embedded in Epon so that the long axis of the brain was perpendicular to the new block face. This block was trimmed into a fin shape as before, and hot-knife sectioned (cutting speed of 0.025 mm/s) into a total of 35 slabs, each 20 μm thick. Each slab was imaged by light microscopy for quality control, then flat-embedded against a sturdy backing film, glued onto a metal FIB-SEM mounting tab, and laser-trimmed using previously described methods4. Each mounted slab was then x-ray CT imaged (0.67 μm voxel size) to check preparation quality, to provide a reference guide for FIB-SEM milling, and to establish a z-axis scale factor for subsequent volume alignment.
All 66 slabs were FIB-SEM imaged separately, and the resulting volume datasets were stitched together computationally (as discussed in the section EM volume alignment) and used in the ongoing reconstruction of the entire CNS. The right optic lobe was contained in 13 of these slabs.

EM volume imaging

The imaging methods in this section are reproduced with minimal modifications from the recent manuscript describing the MANC EM volume3 to ensure the consistency and accuracy of the reported methods:

The 35 brain and 31 VNC slabs were imaged using seven customized FIB-SEM systems in parallel over a period of almost a year. Unlike the FIB-SEM machines used for the hemibrain project1, this new platform replaced the FEI Magnum FIB column with the Zeiss Capella FIB column to improve the precision and stability of FIB milling control5. FIB milling was carried out by a 15-nA 30 kV Ga ion beam with a nominal 8-nm step size. SEM images were acquired at 8-nm XY pixel size at 3 MHz using a 3-nA beam with 1.2 kV landing energy. Specimens were grounded at 0 V to enable the collection of both secondary and backscattered electrons.

EM volume alignment

Alignment of the EM volumes was performed with an updated version of the pipeline used for the hemibrain1, which was also used for the MANC VNC3 volume. The “render” web services were used for serial section alignment of the 35 brain slabs, followed by automatic adjustment for milling thickness variation6. The surfaces of the slabs were automatically identified using a combination of hand-engineered7 and machine-learning-based cost estimation3 prior to graph-cut computation3,7, followed by manual refinements using a custom BigDataViewer8-based tool to interactively correct remaining issues. As with the MANC and hemibrain, the series of flattened slabs was then stitched using a custom, distributed method for large-scale deformable registration to account for deformations introduced during hot-knife sectioning. A custom BigDataViewer-based tool was developed to interactively assist the automatic alignment in challenging regions. The code used for the “render” web services can be found at https://github.com/saalfeldlab/render and the code used for surface finding and hot-knife image stitching is available at https://github.com/saalfeldlab/hot-knife.

EM volume segmentation

Segmentation was carried out as described for the MANC VNC volume3, but with some differences described below. Flood-filling network (FFN) inference was only applied in areas likely to contain segmentable tissue. To detect these, we used the following heuristic: the CLAHE-normalized images were downsampled to 32×32×32 nm³ voxel size and then filtered in-plane (XY) with a high-pass filter $I_{\mathrm{hp}} = I + \min_{3\times3}(255 - I)$, where $I$ is the pixel intensity and $\min_{3\times3}$ is the minimum function, applied convolutionally with a kernel size of 3 × 3 pixels. We then computed 2D (in-plane) connected components of the filtered images thresholded at a value of 220, and used the largest connected component within every section to exclude the corresponding parts of the EM images from segmentation.
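The tissue-detection heuristic can be sketched on a single downsampled section. Because $\min_{3\times3}(255 - I) = 255 - \max_{3\times3}(I)$, the filter equals $255 - (\max_{3\times3}(I) - I)$: featureless areas such as empty resin map to 255, while textured tissue mostly maps to lower values, so the largest above-threshold component presumably corresponds to the non-tissue region that is excluded. This pure-Python sketch assumes 8-bit intensities, a strict `> 220` comparison, 4-connectivity, and clipped 3 × 3 windows at the image border; the function names are hypothetical and it is not the production implementation.

```python
from collections import deque


def high_pass(img):
    """I_hp = I + min_3x3(255 - I) on a 2D list of 8-bit intensities.

    Border pixels use the clipped 3x3 window.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            m = min(255 - img[yy][xx]
                    for yy in range(max(0, y - 1), min(h, y + 2))
                    for xx in range(max(0, x - 1), min(w, x + 2)))
            out[y][x] = img[y][x] + m
    return out


def largest_component_mask(binary):
    """Boolean mask of the largest 4-connected component of True pixels."""
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    best = []
    for y in range(h):
        for x in range(w):
            if binary[y][x] and not seen[y][x]:
                comp, queue = [], deque([(y, x)])
                seen[y][x] = True
                while queue:  # breadth-first flood fill
                    cy, cx = queue.popleft()
                    comp.append((cy, cx))
                    for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                        if 0 <= ny < h and 0 <= nx < w and binary[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                if len(comp) > len(best):
                    best = comp
    mask = [[False] * w for _ in range(h)]
    for y, x in best:
        mask[y][x] = True
    return mask


def exclusion_mask(section, threshold=220):
    """Pixels of one downsampled section to exclude from FFN inference."""
    hp = high_pass(section)
    return largest_component_mask([[v > threshold for v in row] for row in hp])


print(high_pass([[10, 10], [10, 40]]))  # [[225, 225], [225, 255]]
```

Isolated local maxima inside tissue also reach 255, which is why taking only the largest connected component, rather than every above-threshold pixel, matters for this heuristic.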

We trained a semantic segmentation model for the volume by manually annotating selected segments from the FFN segmentation into 8 disjoint classes: “do-not-merge”, “glia”, “trachea”, “soma”, “non-glia-nuclei”, “neuropil”, “muscle”, “glia-nuclei”, and using these to train a convolutional neural network model to classify every voxel of the dataset at 16×16×16 nm³ resolution into one of these classes. For every segment, we then computed the corresponding class using a majority voting scheme and only included “soma”, “non-glia-nuclei” and “neuropil” segments in the agglomeration graph. The neural network used for this process had the same architecture as the FFN (“residual convstack”), but with all convolutional layers applied in the “VALID” mode.
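The per-segment majority vote and the class filter can be illustrated with a short sketch. It assumes voxel-level class predictions and segment ids are available as parallel sequences at a common resolution; in the actual pipeline the classifier runs at 16 nm, and how it is registered to the segmentation is not described here. The function names are hypothetical, and ties are broken by `Counter.most_common`, which favors the class first seen.

```python
from collections import Counter

# Classes whose segments are kept in the agglomeration graph (from the text).
KEEP = {"soma", "non-glia-nuclei", "neuropil"}


def segment_classes(voxel_segments, voxel_classes):
    """Majority-vote class per segment from per-voxel semantic predictions.

    voxel_segments, voxel_classes: parallel sequences giving, for each voxel,
    its FFN segment id and its predicted class label.
    """
    votes = {}
    for seg, cls in zip(voxel_segments, voxel_classes):
        votes.setdefault(seg, Counter())[cls] += 1
    return {seg: c.most_common(1)[0][0] for seg, c in votes.items()}


def segments_for_agglomeration(seg_class):
    """Segment ids whose majority class is allowed into the agglomeration graph."""
    return {seg for seg, cls in seg_class.items() if cls in KEEP}


segs = [1, 1, 1, 2, 2, 3]
classes = ["neuropil", "neuropil", "glia", "trachea", "trachea", "soma"]
sc = segment_classes(segs, classes)
print(sc)                              # {1: 'neuropil', 2: 'trachea', 3: 'soma'}
print(segments_for_agglomeration(sc))  # {1, 3}
```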

Instead of manually annotating all nuclei in the dataset, we relied on the predictions of the semantic segmentation model. We computed 3D connected components of voxels classified as glial and non-glial nuclei, and postprocessed them twice according to the following procedure: apply morphological erosion with a radius of 5 voxels, recompute 3D connected components, and then remove components smaller than 10,000 voxels. We took the centroids of the remaining objects to be nuclei centers and disallowed distinct nuclei to be merged in the agglomeration graph. We applied a heuristic procedure for merging nuclei segments and surrounding soma segments. We applied morphological dilation with a radius of 5 voxels to every nucleus generated in the previous step. We next computed the number of overlapping voxels between these expanded nuclei and FFN segments of at least 10,000 voxels at 16×16×16 nm³ with a majority class of “soma” or “non-glia-nuclei”. All segments matched with a specific nucleus were then merged.
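The dilation-and-overlap step of the nucleus-soma merging heuristic can be sketched with voxel sets. The sketch covers only that step (not the erosion and connected-component cleanup); it dilates each nucleus with a ball, counts overlapping voxels with each sufficiently large segment whose majority class is “soma” or “non-glia-nuclei”, and groups segments by nucleus. The text does not say how a segment overlapping several nuclei is matched, so assigning it to the nucleus with the largest overlap is our assumption, as are the names used here.

```python
from itertools import product


def ball_offsets(radius):
    """Integer (dz, dy, dx) offsets within a sphere of the given radius in voxels."""
    r = range(-radius, radius + 1)
    return [(dz, dy, dx) for dz, dy, dx in product(r, r, r)
            if dz * dz + dy * dy + dx * dx <= radius * radius]


def dilate(voxels, radius):
    """Morphological dilation of a set of (z, y, x) voxels with a ball."""
    offs = ball_offsets(radius)
    return {(z + dz, y + dy, x + dx) for z, y, x in voxels for dz, dy, dx in offs}


def match_segments_to_nuclei(nuclei, segments, seg_class, radius=5, min_size=10_000):
    """Group soma-like segments around each nucleus.

    nuclei:    dict nucleus_id -> set of voxels
    segments:  dict segment_id -> set of voxels
    seg_class: dict segment_id -> majority class label
    Returns nucleus_id -> set of segment ids to merge with that nucleus.
    """
    grown = {nid: dilate(vox, radius) for nid, vox in nuclei.items()}
    groups = {nid: set() for nid in nuclei}
    for sid, vox in segments.items():
        if len(vox) < min_size or seg_class[sid] not in {"soma", "non-glia-nuclei"}:
            continue
        overlaps = {nid: len(g & vox) for nid, g in grown.items()}
        # assumed rule: a segment joins the nucleus it overlaps most
        best = max(overlaps, key=overlaps.get, default=None)
        if best is not None and overlaps[best] > 0:
            groups[best].add(sid)
    return groups


nuclei = {"n1": {(0, 0, 0)}}
segments = {"s1": {(0, 0, 1), (0, 0, 2)}, "s2": {(0, 0, 10)}, "s3": {(0, 1, 0)}}
seg_class = {"s1": "soma", "s2": "soma", "s3": "glia"}
print(match_segments_to_nuclei(nuclei, segments, seg_class, radius=1, min_size=1))
# {'n1': {'s1'}}
```

The toy call shrinks `radius` and `min_size` so the example stays tiny; the values in the text (5 voxels, 10,000 voxels) are the defaults.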

EM volume synapse identification

We performed synapse prediction using the same methods as described for the MANC VNC reconstruction3. We obtained ground truth synapse data from the larger CNS sample and used it to train the networks for pre-synaptic T-bar detection and for post-synaptic partner detection. The network weights for T-bar detection were first initialized using the previously trained detector3, then fine-tuned using the available CNS training data. To quantify the performance of synapse identification within the optic lobe, 14 cubes of 300×300×300 voxels were randomly selected within the optic lobe volume, and synapses within each subvolume were densely annotated. In aggregate, the subvolumes contained 287 annotated T-bars and 2425 annotated post-synaptic partners. The overall precision-recall plots for T-bars alone and for the synapse as a whole (both components) are shown in Extended Data Fig. 1a. This performance is substantially better than the accuracy achieved in the hemibrain1 and similar to that of the more recent MANC reconstruction3.
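The text does not state how predicted T-bars were matched to annotations, so the following is only a hypothetical sketch of how a precision-recall curve for point detections can be computed: at each confidence threshold, predictions are greedily matched (highest confidence first) to the nearest unmatched annotation within a distance tolerance `tol`. The tolerance value, the greedy rule, and the name `precision_recall` are all assumptions.

```python
import numpy as np


def precision_recall(pred_xyz, pred_conf, gt_xyz, thresholds, tol=8.0):
    """Return (threshold, precision, recall) tuples for point detections."""
    pred_xyz = np.asarray(pred_xyz, dtype=float)
    pred_conf = np.asarray(pred_conf, dtype=float)
    gt_xyz = np.asarray(gt_xyz, dtype=float)
    curve = []
    for t in thresholds:
        keep = pred_conf >= t
        pts = pred_xyz[keep]
        order = np.argsort(-pred_conf[keep])  # match confident predictions first
        matched = np.zeros(len(gt_xyz), dtype=bool)
        tp = 0
        for i in order:
            if len(gt_xyz) == 0:
                break
            d = np.linalg.norm(gt_xyz - pts[i], axis=1)
            d[matched] = np.inf  # each annotation can be matched once
            j = int(np.argmin(d))
            if d[j] <= tol:
                matched[j] = True
                tp += 1
        n_pred = int(keep.sum())
        precision = tp / n_pred if n_pred else 1.0
        recall = tp / len(gt_xyz) if len(gt_xyz) else 1.0
        curve.append((t, precision, recall))
    return curve
```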

EM volume proofreading

Proofreading followed similar methods to those documented for the hemibrain1 and MANC3 connectomes and used a software toolchain for connectome reconstruction including NeuTu9, Neu310, and DVID11. One difference for this volume is that we automatically generated point annotations for cell bodies (as discussed in EM volume segmentation), then added point annotations to neck fibers and nerve bundles, at early stages of the proofreading process. These annotations were fed back into the agglomeration stage, where they were used to forbid merges between the corresponding segments. This considerably reduced the number of falsely merged bodies, which are difficult and time-consuming to separate. An important difference in the optic-lobe connectome was the role that cell typing played in quality control and setting some proofreading priorities (see Overview of cell typing). As we were typing cells from an early stage during the proofreading, we frequently compared the morphology and connectivity of cells assigned the same types, serving as a robust check for incomplete shapes or reconstruction errors. In the optic lobe dataset, the vast majority of cells and synapses belong to repeating cell types, so this parallel cell typing and proofreading effort was especially helpful in arriving at a high-quality connectome.

An important metric for evaluating the “completeness” of a connectome, or of a region of a connectome, is the percentage of all synapses where both the pre- and post-synaptic partners belong to identified neurons. We provide this completion percentage for the optic lobe regions in Extended Data Table 1; these summary metrics place this connectome among the highest-quality reconstructions to date. The completion rate is high and relatively uniform across regions. Across the whole dataset, the connection completeness is 52.7% (54.2% when the partial lamina, discussed below, is excluded). This is considerably higher than in the hemibrain, where the corresponding metric is 37.5%1.
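As a minimal illustration of this metric, assume a pandas table with one row per synaptic connection (pre-synaptic body, post-synaptic body, and the ROI of the postsynaptic site) and a set of identified body IDs. The column names `pre_body`, `post_body`, and `roi`, and the function names, are hypothetical.

```python
import pandas as pd


def connection_completeness(syn: pd.DataFrame, identified) -> float:
    """Percentage of connections whose two partners are both identified neurons."""
    both = syn["pre_body"].isin(identified) & syn["post_body"].isin(identified)
    return 100.0 * both.mean()


def completeness_by_roi(syn: pd.DataFrame, identified) -> pd.Series:
    """The same percentage, computed separately for each ROI."""
    both = syn["pre_body"].isin(identified) & syn["post_body"].isin(identified)
    return both.groupby(syn["roi"]).mean().mul(100.0)
```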

The lamina completion metrics (Extended Data Table 1) are considerably worse than those of the other optic lobe neuropils. The lamina is the most peripheral neuropil of the optic lobe, where the axons of outer photoreceptors contact their targets. In this volume, the lamina was particularly difficult to reconstruct for two reasons: 1) it is incomplete, with a noticeable stair-step profile due to the trimmed edges of the 20 μm slabs, and 2) the severed axons of photoreceptors are generally heavily stained and therefore much harder to segment than other neurons in the volume. Although we did not originally intend to analyze the lamina, its more complete parts are useful, especially for estimating the location of the eye’s equator12, as shown in the eye map of Fig. 3a. For this reason, and despite the sample’s limitations, we invested substantial effort in reconstructing the lamina, but its completeness metrics reflect the lower quality and completeness of the data for this neuropil. The heavily stained inner photoreceptors (R7/R8) that target the medulla also posed considerable challenges for segmentation and proofreading. Nevertheless, we proofread most of these cells, except in one patch of the medulla where their terminals were too fragmented to assemble into neurons. The distribution of these photoreceptors is detailed in Extended Data Fig. 4a. We estimate the counts of the missing cells in the section Estimating the count of photoreceptors and lamina intrinsic neurons.

LM-EM volume correspondence

To compare neurons imaged by Light Microscopy (LM) with those reconstructed from EM data, we transformed the 3D coordinates of the whole central brain EM volume, including the optic lobes, into the coordinates of a standard reference brain used for LM data. The spatial transformation was obtained by registering the synapse cloud of the male CNS EM volume onto the JRC2018M Drosophila male template13, following an approach similar to that described for the hemibrain volume1. To produce an image volume, the synapse predictions were rendered at a resolution close to that of the JRC2018M template (512 nm isotropic). Next, we performed a two-step automatic image registration using Elastix14, with the JRC2018M template as the fixed image and the rendered synapse cloud as the moving image. The first step estimates an affine transformation, which initializes the second step, in which a nonlinear (B-spline) transformation is estimated. We then manually fine-tuned this transformation in BigWarp15, placing 91 landmarks across the datasets to define a thin-plate spline transformation that corrects visible misalignments. This defined an initial transformation that we used to warp neuron skeletons traced from the hemibrain dataset1 into the coordinate system of this new (male CNS) EM volume. We identified putative correspondences between hemibrain neurons and preliminary neuron traces from this volume and used these to identify remaining misalignments. We corrected these in BigWarp by placing 82 additional landmarks, for a total of 173 landmarks. The composition of the transformations from these steps (affine, B-spline, thin-plate spline) is the spatial transformation from the JRC2018M template to the EM space. We also estimated the inverse of the total transformation using code found in https://github.com/saalfeldlab/template-building.
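To illustrate how landmark-based corrections compose with an earlier global transform, here is a sketch that uses SciPy's `RBFInterpolator` with a thin-plate-spline kernel as a stand-in for BigWarp's landmark transform, and a plain affine map as a stand-in for the Elastix stages (the B-spline stage is omitted). It is not the Elastix or BigWarp code used for the registration; `fit_tps`, `make_affine`, and `compose` are hypothetical helpers.

```python
import numpy as np
from scipy.interpolate import RBFInterpolator


def fit_tps(src_landmarks, dst_landmarks):
    """Thin-plate-spline map sending each source landmark onto its target.

    The default degree-1 polynomial term means affine maps are reproduced exactly.
    """
    return RBFInterpolator(np.asarray(src_landmarks, float),
                           np.asarray(dst_landmarks, float),
                           kernel="thin_plate_spline")


def make_affine(matrix, offset):
    """Affine map x -> A x + b applied row-wise to an (n, 3) point array."""
    A = np.asarray(matrix, float)
    b = np.asarray(offset, float)
    return lambda pts: np.asarray(pts, float) @ A.T + b


def compose(*transforms):
    """Apply transforms left to right, e.g. affine first, then the TPS correction."""
    def apply(pts):
        out = np.asarray(pts, float)
        for t in transforms:
            out = t(out)
        return out
    return apply
```

Composing the stages as callables keeps each one replaceable and makes the overall mapping a single function of point coordinates, which is how skeleton points would be warped between spaces.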
Because transformations are already defined between JRC2018M, JRC2018F, and JRC2018U, registering the EM volume to one of the three templates automatically establishes its correspondence with the other two. Fig. 8c,d,g and Extended Data Fig. 12b–f show many applications of this registration, comparing neurons from the optic lobe dataset with similar cells in LM and EM (hemibrain) images using the JRC2018U template.

Defining anatomical regions in the EM volume

Prior to segmentation, the neuropils were defined using synapse point clouds. The neuropil boundaries were initialized based on the JRC2018M Drosophila male template13 after registration with our EM volume, as described in the section LM-EM volume correspondence. The boundaries of the right optic lobe regions were then refined with hand-drawn corrections based on the grayscale data. While some boundaries are quite clear, others, such as those surrounding the inner chiasm of the optic lobe, are not sharp. The Regions of Interest (ROIs) for each neuropil were drawn to enclose the maximum number of synapses within the corresponding neuropil without overlapping adjacent neuropils. We named the regions following the standard nomenclature for the optic lobe16 and central brain17. For the optic lobe, we then used an iterative process to define columns and layers, first reported in classic neuroanatomical studies16,18,19. We match all of the classically defined layers except for the 5th layer of the lobula, which we divide into LO5A and LO5B following our previous definition from LM data20. This process is described in the sections Defining column centerlines and ROIs and Layer ROIs. The resulting ROIs are available in neuPrint and are systematically named. Column ROIs are named by concatenating the neuropil, brain hemisphere, and hexagonal coordinates (e.g., LOP R col 15 21), and layer ROIs are named by their neuropil, brain hemisphere, and layer number (e.g., ME R layer 07).
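Because the ROI names are systematic, they can be parsed and built programmatically. The sketch below is hypothetical: it accepts either spaces or underscores as separators, assumes two-digit zero padding (inferred only from the examples above), and keeps the layer as a string so that labels such as the split lobula layers could be accommodated, although their exact ROI names are not given here.

```python
import re

# Separators may be spaces or underscores (assumption).
_COLUMN = re.compile(
    r"^(?P<neuropil>[A-Z]+)[ _](?P<side>[LR])[ _]col[ _](?P<x>\d+)[ _](?P<y>\d+)$")
_LAYER = re.compile(
    r"^(?P<neuropil>[A-Z]+)[ _](?P<side>[LR])[ _]layer[ _](?P<layer>\w+)$")


def parse_roi(name: str):
    """Split a column or layer ROI name into its parts; None for other ROIs."""
    m = _COLUMN.match(name)
    if m:
        return {"kind": "column", "neuropil": m["neuropil"], "side": m["side"],
                "hex": (int(m["x"]), int(m["y"]))}
    m = _LAYER.match(name)
    if m:
        return {"kind": "layer", "neuropil": m["neuropil"], "side": m["side"],
                "layer": m["layer"]}
    return None


def column_roi(neuropil: str, side: str, x: int, y: int) -> str:
    return f"{neuropil} {side} col {x:02d} {y:02d}"  # zero padding assumed


def layer_roi(neuropil: str, side: str, layer: int) -> str:
    return f"{neuropil} {side} layer {layer:02d}"
```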

Connectome data access overview

The primary data source for our analysis is the optic-lobe:v1.0 dataset in neuPrint21, a Neo4J22 graph database. We access three levels of nodes in the database: (i) “Segment”, (ii) “SynapseSet”, which are collections of synaptic sites representing T-bars and postsynaptic densities (PSDs), and (iii) “Synapse”, which refers to an individual synaptic site. These nodes have a hierarchical relationship wherein “Segment” nodes contain “SynapseSet” nodes, and “SynapseSet” nodes contain “Synapse” nodes. “Segment” and “SynapseSet” nodes connect to other “Segment” and “SynapseSet” nodes, respectively. These connections are calculated from the relationships between individual “Synapse” nodes. All “Synapse” nodes derive from an “Element” type and inherit its properties, such as the 3D (x,y,z) coordinate in the EM volume. The overwhelming majority of “Segment” nodes correspond to small, unproofread, and unnamed fragments with few synapses that are not relevant to most analyses. To enable faster queries, the subset of “Segment” nodes relevant for typical analyses is additionally labeled as “Neuron” nodes. To qualify as a Neuron, a Segment must have at least 100 synaptic connections, a defined “type” or “instance”, or a known soma location. In most analyses presented here, we work with named Neurons, i.e. “Neuron” nodes with defined “type” and “instance” properties. We ignore non-Neuron Segments and further exclude Neurons whose types are suffixed with “_unclear”.
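The selection of named Neurons can be expressed either as a Cypher query sent through neuprint-python's `fetch_custom`, or as a pandas filter on a neuron table that is already loaded. The sketch below shows both; the query text, the helper names, and the server address are our assumptions, and the network call is wrapped in a function that is not executed here (it needs neuprint-python and a personal API token).

```python
import pandas as pd

# Hypothetical query mirroring the selection rules described above.
NAMED_NEURONS_CYPHER = """
MATCH (n:Neuron)
WHERE n.type IS NOT NULL AND n.instance IS NOT NULL
  AND NOT n.type ENDS WITH '_unclear'
RETURN n.bodyId AS bodyId, n.type AS type, n.instance AS instance
"""


def filter_named(df: pd.DataFrame) -> pd.DataFrame:
    """Client-side equivalent of the WHERE clause for an existing neuron table."""
    named = df["type"].notna() & df["instance"].notna()
    unclear = df["type"].fillna("").str.endswith("_unclear")
    return df[named & ~unclear].reset_index(drop=True)


def fetch_named_neurons(token: str) -> pd.DataFrame:
    """Run the query against neuPrint (requires network access and a token)."""
    from neuprint import Client, fetch_custom
    client = Client("neuprint.janelia.org", dataset="optic-lobe:v1.0", token=token)
    return fetch_custom(NAMED_NEURONS_CYPHER, client=client)
```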

For full clarity, in the graph database syntax21, neuron nodes carry both the “:Neuron” and “:Segment” labels and have a “:Contains” relationship to “:SynapseSet”s, which in turn have a “:Contains” relationship to “:Synapse”s. “:Neuron”s and “:SynapseSet”s have “:ConnectsTo” relationships to other “:Neuron”s and “:SynapseSet”s, respectively. These relationships are calculated from the “:SynapsesTo” relationships between individual “:Synapse” nodes.
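The two traversal patterns described above can be written as Cypher strings, for example to pass to `fetch_custom`. This is a sketch: the query builders are hypothetical, and the property names used (`bodyId`, `type`, `confidence`, `location`, `weight`) are assumed to follow the neuPrint schema and should be checked against the dataset's metadata before use.

```python
import json


def synapse_query(body_id: int, min_confidence: float = 0.5) -> str:
    """Walk Neuron -> SynapseSet -> Synapse and return synapse coordinates."""
    return (
        f"MATCH (n:Neuron {{bodyId: {int(body_id)}}})"
        "-[:Contains]->(:SynapseSet)-[:Contains]->(s:Synapse) "
        f"WHERE s.confidence >= {float(min_confidence)} "
        "RETURN s.type AS kind, s.location.x AS x, "
        "s.location.y AS y, s.location.z AS z"
    )


def connection_query(pre_type: str, post_type: str) -> str:
    """Neuron-level ConnectsTo edges (with synapse-count weight) between two types."""
    # json.dumps quotes and escapes the type names as string literals
    return (
        "MATCH (a:Neuron)-[w:ConnectsTo]->(b:Neuron) "
        f"WHERE a.type = {json.dumps(pre_type)} AND b.type = {json.dumps(post_type)} "
        "RETURN a.bodyId AS pre, b.bodyId AS post, w.weight AS weight"
    )
```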

In neuPrint data loaders, regions of interest (ROIs) are defined as connected volumes, i.e. spatially contiguous collections of voxels. Each element with a spatial location can therefore be assigned to any number of ROIs that contain it, such as the ROIs we defined for layers, columns, and neuropils. This assignment is straightforward for point objects such as postsynapses, presynapses, and column pins (defined below). Since a synaptic connection typically involves a single presynapse and several postsynaptic densities, neuPrint uses the convention of assigning each connection to the ROIs of its postsynaptic site.
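The postsynaptic convention can be illustrated with pandas: each PSD is joined to the T-bar it belongs to, and the resulting pre-to-post connections are counted per ROI of the PSD, so one T-bar whose partners lie in two ROIs contributes to both. The table layouts (`pre_id`, `pre_body`, `post_body`, `roi`) and the function name are hypothetical.

```python
import pandas as pd


def connections_per_roi(pre_sites: pd.DataFrame, post_sites: pd.DataFrame) -> pd.DataFrame:
    """Count pre->post connections per ROI, using the ROI of the postsynaptic site.

    pre_sites:  one row per T-bar, columns pre_id, pre_body.
    post_sites: one row per PSD, columns pre_id (its T-bar), post_body, roi.
    """
    conn = post_sites.merge(pre_sites, on="pre_id", how="inner")
    return (conn.groupby(["pre_body", "post_body", "roi"]).size()
                .rename("weight").reset_index())
```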

While our analysis focuses on the visual system, several cell types have processes in the central brain. Although proofreading and naming efforts are ongoing and, therefore, not as complete as for the visual system, the reconstructed arbors are included in the dataset, as skeletons (and meshes) but without the synaptic connections. In the database, the aggregated summaries of synaptic sites and their innervated brain regions representing the current state of the reconstruction effort are included as properties of neurons. These aggregations rely on the confidence threshold of 0.5 set for the optic-lobe:v1.0 dataset. As a result, the summaries are consistent with the late 2023 snapshot of the reconstruction (optic-lobe:v1.0), but the synapse properties of “:Neuron”s differ from the number of “:Synapse”s they contain (as central brain connections are incomplete, only half the connection is present).

Within the python environment, neuprint-python23 provides access to the neuPrint servers and translates function calls to Neo4J Cypher queries (https://connectome-neuprint.github.io/neuprint-python/docs/). We rely on the carefully selected, reasonable default values of the neuPrint database (the most relevant is the synapse confidence threshold of 0.5). To simplify analysis, we aggregate data as close to the source as possible either in pandas DataFrames or using neuprint-python’s “fetch_custom” function with optimized Neo4j Cypher queries. The code we share in the repository (see Code availability) uses a combination of these methods for data access. For estimating the spatial location of an element, such as a synapse, in relation to columns and layers, we added the data type “:ColumnPin” to neuPrint, which represents points positioned along the center lines of each column (of the medulla, lobula, and lobula plate) and with properties representing the identity of and depth within a column.
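One plausible way to use column pins is to assign each synapse the column identity and depth of its nearest pin. The text does not describe the exact lookup, so the nearest-neighbor rule, the input arrays, and the name `assign_to_pins` are assumptions in this SciPy sketch.

```python
import numpy as np
from scipy.spatial import cKDTree


def assign_to_pins(points, pin_xyz, pin_column, pin_depth):
    """Nearest-pin lookup: returns column IDs, depths, and distances to the pin."""
    tree = cKDTree(np.asarray(pin_xyz, dtype=float))
    dist, idx = tree.query(np.asarray(points, dtype=float))
    return np.asarray(pin_column)[idx], np.asarray(pin_depth)[idx], dist
```

Building the tree once and querying all synapses in a single call keeps the lookup fast for large synapse tables.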

In addition to the neuPrint Neo4J database, we provide neuron morphologies and ROI boundaries as meshes in Google Cloud Storage buckets. Meshes are generated from ROIs with marching cubes, followed by Laplacian smoothing and decimation by quadrics (https://github.com/sp4cerat/Fast-Quadric-Mesh-Simplification). Meshes for brain regions, columns, and layers are available at three spatial resolutions, with voxel edge lengths between 256 nm and 1024 nm, while meshes of most individual neurons were generated from 16 nm voxels.
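The Laplacian smoothing step can be sketched in NumPy for a triangle mesh given as vertex and face arrays, such as those produced by a marching cubes implementation (for example `skimage.measure.marching_cubes`, not called here). Each iteration moves every vertex a fraction `lam` toward the mean of its neighbors. The parameter values and the function name are assumptions, and the quadric decimation step is left to the linked tool.

```python
import numpy as np


def laplacian_smooth(verts, faces, iterations=10, lam=0.5):
    """Uniform (umbrella) Laplacian smoothing; returns new vertex positions."""
    v = np.asarray(verts, dtype=float).copy()
    f = np.asarray(faces, dtype=int)
    n = len(v)
    # Undirected, de-duplicated edge list from the triangle faces
    edges = np.concatenate([f[:, [0, 1]], f[:, [1, 2]], f[:, [2, 0]]])
    edges = np.unique(np.sort(edges, axis=1), axis=0)
    i, j = edges[:, 0], edges[:, 1]
    deg = np.bincount(np.concatenate([i, j]), minlength=n).astype(float)
    has_nbrs = deg > 0  # isolated vertices are left in place
    for _ in range(iterations):
        nbr_sum = np.zeros_like(v)
        np.add.at(nbr_sum, i, v[j])
        np.add.at(nbr_sum, j, v[i])
        mean = nbr_sum[has_nbrs] / deg[has_nbrs, None]
        v[has_nbrs] += lam * (mean - v[has_nbrs])
    return v
```

Marching cubes vertices are in voxel units, so they would be multiplied by the voxel size (e.g. 16 nm for neurons) before or after smoothing.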

Data specific to our analysis and retrieved by other methods are stored inside the code repository in the “/params” folder, such as:

The “Primary_cell_type_table” assigns the named neuron types to the four main groups OLIN, OLCN, VPN, and VCN, or to “other” (see section Cell type groups).

The example cells (and their bodyIDs) chosen for each neuron type, which we call “star neurons”, listed in the “all_stars” spreadsheet (see section Gallery plots).

We provide some heuristically defined “Rendering_parameters” in a second spreadsheet (see section Gallery plots).

Additional files contain parameters used in the generation of pins and layers: “pin_creation_parameters” (see Defining column centerlines and ROIs and Layer ROIs).

The data in the neuPrint database, the precomputed meshes stored in Google Cloud Storage, and the data in the params folder are sufficient for replicating our data analysis and figures, for example using the source code we provide in our repository (see Code availability).

We provide our code as “notebooks” (literate programming24) that explain analytical steps, and as command-line scripts for long-running processes. Both rely on shared functions for prototypical implementations and on object-oriented components used across several of our applications.

Visualization of reconstructed EM neurons