Abstract

EEG signals combined with deep learning play an important role in the study of human–computer interaction. However, the limited dataset makes it challenging to study EEG signals using deep learning methods. Inspired by the GAN network in image generation, this paper presents an improved generative adversarial network model L-C-WGAN-GP to generate artificial EEG data to augment training sets and improve the application of BCI in various fields. The generator consists of a long short-term memory (LSTM) network and the discriminator consists of a convolutional neural network (CNN) which uses the gradient penalty-based Wasserstein distance as the loss function in model training. The model can learn the statistical features of EEG signals and generate EEG data that approximate real samples. In addition, the performance of the compressed sensing reconstruction model can be improved by using augmented datasets. Experiments show that, compared with the existing advanced data amplification techniques, the proposed model produces EEG signals closer to the real EEG signals as measured by RMSE, FD and WTD indicators. In addition, in the compressed reconstruction of EEG signals, adding the new data reduces the loss by about 15% compared with the original data, which greatly improves the reconstruction accuracy of the EEG signals’ compressed sensing.

Keywords: EEG signals, generative adversarial networks, long short-term memory network, convolutional neural networks, compressed sensing

1. Introduction

An EEG signal is a random non-smooth signal reflecting the pattern of bioelectrical rhythmic activity in the brain [1], which has a significant reference value in clinical diagnosis and brain function research [2,3,4]. In the research of EEG compression sensing and emotion recognition, building a deep learning framework to train the model is necessary. However, the lack of EEG data is challenging to establish an effective, accurate and stable model. Therefore, the need for large training data remains challenging for researchers and developers [5]. Additionally, there are several restrictions on the acquisition of EEG signal data. First, the EEG signal data acquisition process requires as little noise interference as possible at the acquisition site; second, the subjects need to focus on completing a series of motion imagery or emotional performance tasks to record and classify different events [6]. Furthermore, the time during which the subjects can sustain the experiment under such a high concentration of brain energy is minimal [7]. Finally, the time cost is considerable if a standard process is followed to acquire high-quality EEG signals. Furthermore, the collected data are not always fully available because data acquisition of EEG signals is often accompanied by interference of other physiological signals such as noise and eye movement artifacts. Therefore, the data augmentation of EEG signals can expand the size of the training dataset, so that deep learning can dig deep into the internal features of the data, which will play an important role in emotion recognition, motor imagination recognition, epilepsy prediction and other fields [8,9,10]. Geometric methods like correlated combinations of initial trials, distortion and window sliding segmentation to increase the number of samples have been used by some researchers to address these issues [11,12,13,14]. Although such augmented samples can improve the classification performance, the results are unsatisfactory. In recent years, generative adversarial networks have developed rapidly [15,16,17]. They can effectively learn the distribution characteristics of real data and generate augmented samples with the same distribution. Many researchers have achieved breakthrough performance using GAN networks to augment EEG signal data [18,19].

Hartmann et al. [20] presented the first GAN framework for generating EEG signal data: the EEG–GAN, used to generate data with EEG characteristics and enhanced by the Wasserstein GAN. Abdelfattah SM et al. [21] proposed recursive GAN (RGAN), which can capture temporal features in EEG signals by replacing the fully connected layer in the GAN with the RNN. Luo et al. [22] proposed WGAN–GP to generate differential entropy features of EEG signals and used the generated differential entropy features for emotion recognition and classification tasks. A framework (E2SGAN) for synthesizing stereo electroencephalogram (SEEG) data from EEG signals was proposed in [23]. In addition, correlated spectral attention (CSA) was proposed to capture the correlation between each pair of EEG and SEEG frequencies, enhancing the discriminator of E2SGAN. The weighted patch prediction (WPP) technique was designed to ensure robust temporal results. Zhang Z et al. [24] proposed the generative adversarial network self-supervised data enhancement (GANSER) framework for data enhancement. For EEG-based emotion recognition, it combines adversarial training with self-supervised learning to produce high-quality and high-diversity simulated EEG samples. Abdelghaffar Y et al. [25] compared the performance of three different generative adversarial networks as data augmentation techniques. They investigated the effect of increasing the training data size on P300 classification performance using each GAN network. The experimental results showed that increasing the training data improves classification accuracy significantly. Zhi Zhang et al. [26] proposed the emotional subspace constrained generative adversarial network (ESC–GAN), using diversity perception loss to encourage diverse affective subspace by expanding sample differences, while boundary perception loss limits the enhancer space near the decision boundary to solve the problem of dataset imbalance, facilitating unbiased and secure EEG data augmentation.

In this paper, we propose an improved generative adversarial network model based on a combination of long short-term memory networks and convolutional neural networks to generate artificial EEG signal data more consistent with real EEG signal data. Compared with previous methods, the proposed method results in more similar distributions compared to real EEG signals. It addresses the lack of EEG signal datasets and boosts the accuracy of compressed perceptual reconstruction of EEG signals. Among them, the generator of this model consists of LSTM. The LSTM generator can extract the features of EEG data samples on time series and reconstruct the data distribution step by step to obtain the generated EEG data. The discriminator comprises a CNN rather than the forgetting unit in LSTM, which has a repeated connection structure. Therefore, the CNN can be trained faster in the case of long sequence data modeling. It can rapidly discriminate the similarity between real and generated data and direct the training of the generator based on the discriminator’s error. The public dataset of the EEG signal BCI competition serves as the basis for the simulation experiments. In addition, it is demonstrated that the proposed generative adversarial network model can learn the statistical features of the EEG signal and expand the dataset. Consequently, the EEG compressed sensing reconstruction model performs better.

The innovation and work of this study are mainly reflected in the following aspects:

Innovative generative adversarial network model: We propose an improved generative adversarial network model, L–C–WGAN–GP, to generate artificial EEG signal data. The model uses LSTM as generator and a CNN as discriminator, combining the advantages of deep learning to learn the statistical features of EEG signals and generate synthetic EEG signal data close to the real samples.

Data augmentation and training set augmentation: It can be used to enhance existing training sets by generating EEG data generated from an adversarial network model. This data-augmented approach can extend the scale and diversity of the training data, combined with using the gradient penalty-based Wasserstein distance as the loss function in model training to improve the performance and robustness of deep learning models.

Applied to the compressed perceptual reconstruction model: we added the generated EEG data to the original dataset to train the compressed perceptual reconstruction model of EEG signals. Experimental results show that using the enhanced dataset can significantly improve the accuracy of compressed perceptual reconstruction, and thus improve the reconstruction quality of EEG signal data.

2. Related Theories

2.1. Generative Adversarial Network

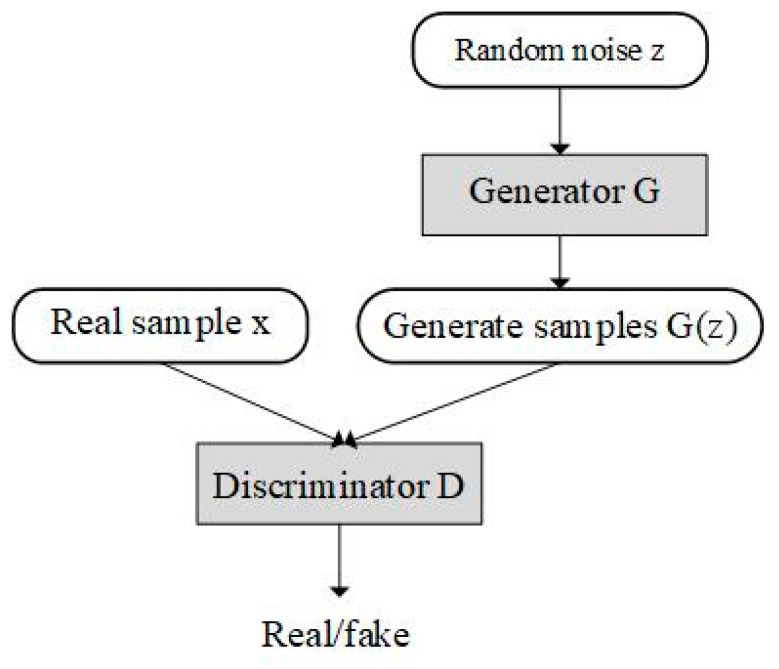

Goodfellow et al. [27] proposed a generative adversarial network in 2014. The GAN consists of two independent neural networks: the generator and the discriminator. The basic GAN structure is shown in Figure 1.

| (1) |

where x represents real data, z is random noise, G(z) represents generated data, D(x) is the probability of real data in the discriminator, D(G(z)) represents the probability of generating data in the discriminator, Pdata(x) represents the real data distribution and Pz(z) is the generator input data distribution.

Figure 1.

Basic GAN structure.

2.2. WGAN–GP

The GAN’s training difficulties and gradient disappearance are two of its drawbacks at times. The Wasserstein distance was introduced by an enhanced GAN known as the Wasserstein generative adversarial network (WGAN) [28,29] to address these issues. It eliminates the problem of the existence of Jensen–Shannon divergence and is utilized to measure the overlap between two distributions. The Wasserstein distance can also provide a meaningful gradient when the overlap between real and generated data distribution is small. The Wasserstein distance is defined as Equation (2):

| (2) |

Among them, represents the set of all joint distributions obtained by combining and , each joint distribution is represented by and denotes the distance between two samples in the joint distribution . The expected value of the sample distance in the distribution can be calculated by calculation, and the maximum lower bound of all expected values is the Wasserstein distance. In addition, the Lipschitz continuity theorem is introduced by the WGAN to resolve the issue that the Wasserstein distance cannot be directly solved. However, to satisfy Lipschitz continuity, the gradient of the discriminator is required to be no larger than a finite constant K. Weight clipping, which results in the concentration of the parameter value distribution on the two extremes of maximum and minimum. The discriminator will tend to learn simple mapping functions by this influence, resulting in poor discriminator performance.

Based on the WGAN, WGAN–GP uses gradient penalty to add constraints to the loss function, which solves the problem of gradient explosion, accelerates the training speed and makes the training more stable [30]. The WGAN–GP’s objective function is defined as Equation (3):

| (3) |

2.3. Long Short-Term Memory Network

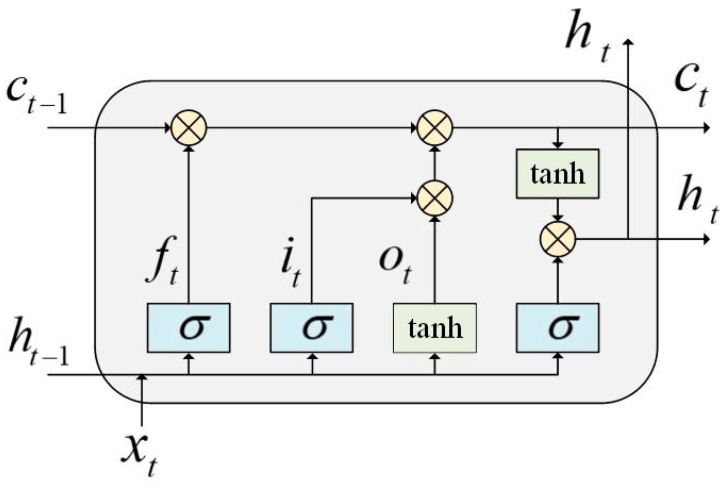

LSTM is an improvement of recurrent neural networks (RNNs) and is effective in learning sequence information at long intervals and solving the problem of gradient disappearance or explosion when training long sequence information [31]. Figure 2 depicts the structural elements of the LSTM network:

Figure 2.

LSTM basic building blocks.

Equations (4)–(8) display the LSTM calculation:

| (4) |

| (5) |

| (6) |

| (7) |

| (8) |

where is the weight parameter and bias term of input gate , is the weight parameter and bias term of the forget gate , is the weight parameter and bias term of the output gate , is the weight parameter and bias term of the memory unit and is the LSTM network’s output value.

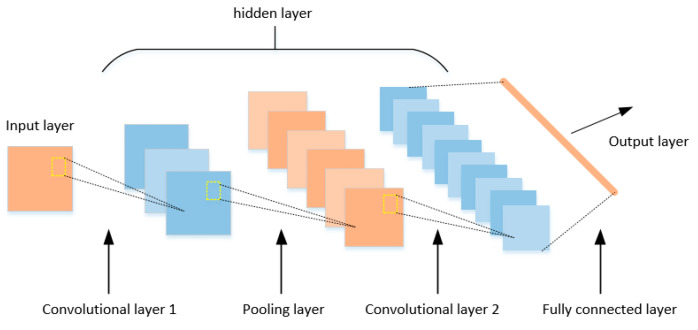

2.4. Convolutional Neural Network

The convolutional neural network (CNN) is a deep learning model similar to artificial neural network, as proposed by Le Cun et al. [32]. The basic CNN structure is shown in Figure 3. The CNN expands the network structure on the basis of artificial neural network, which consists of three parts: input layer, hidden layer, output layer. The input layer is used to receive the original input data. The hidden layer consists of the convolutional layer, pooling layer and fully connected layer, generating corresponding feature vectors. The internal structure of the hidden layer can modify the design until the optimal performance of the network is achieved. The output layer processes the feature vectors obtained from the hidden layer through activation functions and can be used for prediction in classification, regression or other tasks.

Figure 3.

CNN basic structure.

The core of the CNN is the convolutional layer, through which the CNN performs deep feature extraction from the input data through the convolution layer. Convolution operation is the operation of multiplying a small movable window with the input data element by element and then adding it [33]. This small window is actually a weight matrix, called a convolution kernel, and can also be seen as a specific filter. The 2D convolution can be written as Equation (9):

| (9) |

Adding pooling layers between adjacent convolutional layers effectively reduces the number of parameters. The main work of the pooling layer is feature selection and information filtering, as well as reducing the dimension of the deep extracted features to reduce the operation difficulty. For input maps, the output maps are generally smaller as given by Equation (10):

| (10) |

where and are the multiplicative and additive bias terms and down(·) is the pooling function. The output of PL is given as an input to an FC layer.

Finally, the fully connected layer integrates the convolutional layer and pooling layer, transforming them into one-dimensional feature maps as inputs to the output layer.

3. Approach

3.1. L–C–WGAN–GP Model

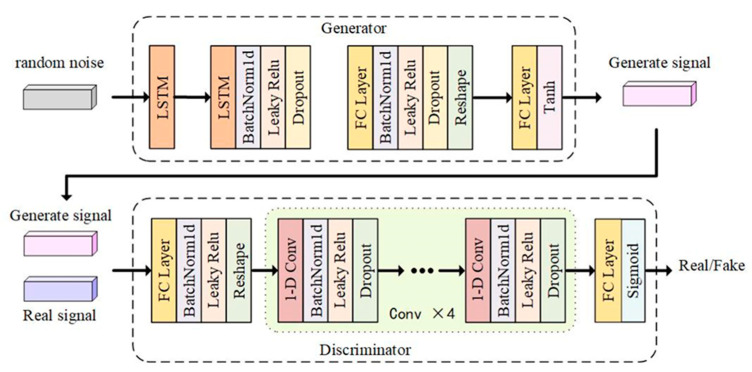

An improved generative adversarial network model named L–C–WGAN–GP is proposed in this study. It automatically learns from existing data and generates EEG signal data similar to the original distribution. It also can retain the characteristics of real data in the generated EEG signal data. Figure 4 shows the overall structure of the L–C–WGAN–GP model. Firstly, the model inherits the idea of WGAN–GP and uses the gradient penalty-based Wasserstein distance as the loss function in model training. Secondly, the generator’s input is a noise data point that follows Gaussian distribution sampling. The generator part comprises LSTM and a full connection layer, which selectively retains historical and current information and can effectively learn the temporal characteristics of EEG signals [34]. The inputs of the discriminator are the real data and the generated data. The discriminator can be considered a binary classifier for determining whether the input sample is true or false [35]. Therefore, unlike the forgetting unit in LSTM, the discriminator component comprises a convolutional neural network without repeatable connections. The training process is usually faster when modeling long sequence data using the CNN as a discriminator.

Figure 4.

Overall structure of the L–C–WGAN–GP model.

3.2. Generator Design

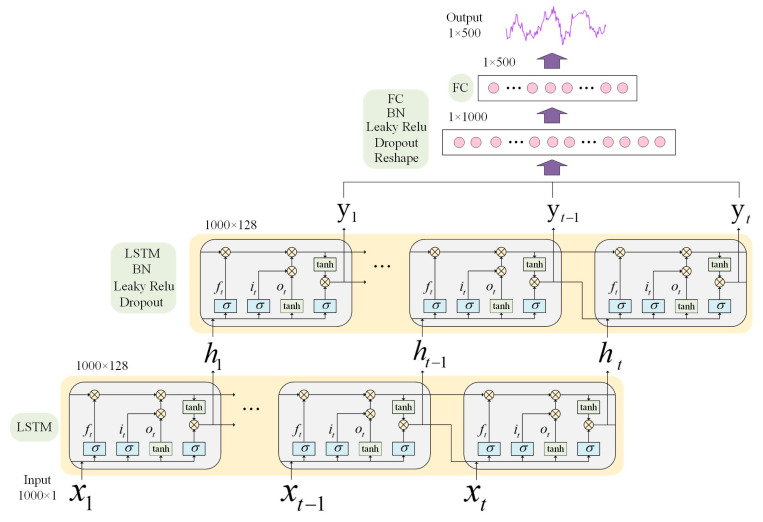

The L–C–WGAN–GP model’s generator structure is depicted in Figure 5. The composition of the generator from bottom to top is an input layer, two LSTM layers, a fully connected layer, reshape layer, a fully connected layer and an output layer. LSTM is better able to capture long-term dependencies in sequence data, has better memory performance and can retain distant contextual information when processing signal data.

Figure 5.

Generator structure of the L–C–WGAN–GP model.

The input layer of the generator is random noise with a length of 1000 dimensions, a random sequence that obeys a uniform distribution of U [0, 1]. Each point in the 1000-dimensional input sequence corresponds to the input value of different time steps of the LSTM layer, and the time step of the LSTM layer is set to 1000. Each hidden layer cell unit of the LSTM layer receives an input data dimension of 1 × 1. The dimension of the output value of the cell unit is 1 × 128 due to the 128 neurons that make up the departmental structure of the cell unit in the hidden layer of LSTM. The LSTM layer combines the data received at t time with the output state of the cell unit at time as a new input. The output value of the first LSTM layer will be the input for the second LSTM layer at time t in this model, which sets up two LSTM layers with the same structure. After the action of two layers of the LSTM layer, a two-dimensional tensor of 1000 × 128 is obtained. Then, the output dimension of the second layer LSTM layer is reduced to a vector of 500 × 1 through the full connection layer, the Reshape layer, and the full connection layer. The Tanh activation function then processes the network output to produce the final generated signal.

Leaky Relu is the generator’s activation function, and the LSTM and full connection layers use it. The activation function’s linear slope is 0.0001. A batch normalization (BN) layer is added before the activation function to normalize the data and remove the influence of distribution offset on the input of the lower layer network [36]. After activating the function, we perform the following steps:

Add the dropout layer to process the output of this layer;

Set the discard rate to 0.5;

Discard half of the network unit output in each layer;

Set it to 0 in the discard bit, which can effectively prevent the occurrence of the over-fitting phenomenon.

3.3. Discriminator Design

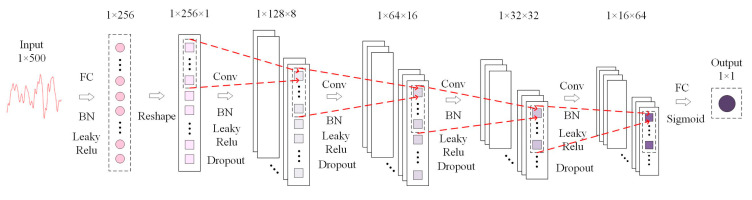

The L–C–WGAN–GP model’s discriminator structure is depicted in Figure 6. The discriminator comprises an input layer, full connection layer, reshape layer, four-layer convolution layer, full connection layer and output layer from left to right.

Figure 6.

Discriminator structure of L–C–WGAN–GP model.

The input of the discriminator is a one-dimensional sequence with a length of 500. After a full connection layer, it is reduced to 256. Each one-dimensional sequence in a Batch undergoes a Reshape transformation to become a feature sequence with a dimension of 1 × 256 × 1 to satisfy the dimensional requirements of the convolution function for the Tensor’s input data. After the four-layer convolution layer operation, the feature sequence size is reduced by half layer by layer, and finally, the sequence of 1 × 16 × 64 dimension is output. The convolution kernel size used in the discriminator is 3 × 1, and the convolution step is 2. The convolution kernel is weighted with the input of each layer of the neural network, and the features of the output sequence of the upper layer are extracted. With the increase in the number of convolution layers, more advanced features are extracted. The four-layer convolution layer filters are 8, 16, 32 and 64, respectively. The number of characteristic graphs output from each layer increases with the number of filters layer by layer. In addition, after each convolution layer, a BN layer is added to standardize the data, a dropout layer is added to avoid network over-fitting and the discard rate is set to 0.5. The Leaky Relu function, which has a slope of 0.0001, is the activation function utilized in each convolution layer. The network’s last layer is the fully connected layer. The output of the convolution layer is mapped to a value of 1 × 1 by the fully connected layer, which is processed by the Sigmoid activation function as the output value of the discriminator. Problems involving binary classification typically use the Sigmoid function, and the discriminator’s role is to determine whether the input data are authentic. Through the Sigmoid function, the output value of the full connection layer can be mapped to a probability value in the interval [0, 1], representing the discriminant result of the discriminator for the input data.

4. Experimental Simulation and Analysis

4.1. Experimental Datasets

The BCI-IV-2a dataset contains the data of the EEG signal that records the motor imagination of the subjects. Its amplification helps to improve the recognition rate of the motor imagination, and it is recognized and widely used in the field of EEG signals. This dataset is used to facilitate the comparison of subsequent experiments. The public dataset BCI-IV-2a, which contains EEG data from nine subjects, served as the basis for producing the experimental data. In brain–computer interface experiments, subjects must perform motor imaging tasks based on arrows pointing left, right, down or up that appear on a computer screen (corresponding to the left hand, right hand, foot or tongue, respectively). In these experiments, 22 Ag/AgCl electrodes (3.5 cm distance between electrodes) were used to record EEG signals and acquire EEG signal data from 22 channels. All the signals were recorded as unipolar, with the left mastoid as a reference and the right mastoid as a grind. The signal was sampled at 250 Hz and band-pass-filtered between 0.5 Hz and 100 Hz. The amplifier’s sensitivity was 100 μV, and an additional 50 Hz notch filter suppressed line noise. The datasets can be found in the data availability statement at the end of this article.

In the experiment in this paper, the entire EEG signal is intercepted into signal frames with a length of only 2 s per period. Each of the 29,150 signal frames has a length of 500, and the quality of the generated data is evaluated using 30% of the intercepted EEG signals randomly selected as the test set and 70% as the generative model training set.

4.2. Experimental Environment

This paper’s computer configuration for model training is as follows: the processor is AMD Ryzen5 5600H, and the main frequency is 3.3 GHz. The graphics card is an NVIDIA Geforce RTX 3050 with 4 GB of memory. This article uses the Python 3.6 programming language and the Tensorflow 1.0 development framework to implement the model.

4.3. Trial Protocol and Model Training

4.3.1. Data Preprocessing

During the data pre-processing phase, the signal data were first loaded and cleaned, including replacing the NaN value with the minimum non-NaN value and adjusting the signal shape for subsequent processing. Subsequently, the signals were segmented to ensure that each segment was of a specified single length, and the processed signal fragments were integrated into a two-dimensional array. Next, the entire dataset was normalized to calculate its mean and standard deviation, and the data were normalized to ensure that the data had distributional properties of zero mean and unit variance. Finally, the dataset was divided into training and test sets based on the specified proportion of training data and shuffled using pseudorandom seeds to ensure the randomness of the dataset.

4.3.2. Model Training Scheme

The training process of the generator and discriminator alternates, and the model is trained for 1000 epochs. We select a batch size of data from the real sample and mark the data from this part of the real sample as one during each training epoch; at the same time, we use the generator model to generate a batch size of the generated data, and set the label of the generated data to 0. The data of these two parts are input into the discriminant model, the loss gradient of the discriminant model is calculated and the gradient reverse update updates the network parameters of the discriminator. For the loss gradient calculation of the generative model, we set the label of the data generated by the generator to 1 and calculate the generator’s loss gradient by comparing the difference between the discriminant result of the generated data and the set label vector by the discriminator, and then fix the discriminator’s parameters and only update the generator’s parameters in reverse. Due to the significant difference in the training speed of the generator network and the discriminator network, to ensure that the two can progress together at a similar speed in each epoch, the training strategy we adopt is that the discriminator model is updated multiple times. The generator model is updated once. The discriminator is updated five times, and the generator is updated one time [37].

4.3.3. Experimental Detail

The generator and discriminator selected Adam as the optimizer, the learning rate was set to 0.001 and the batch size was 64. The beta parameters of the Adam optimizer (beta1 and beta2) control the decay rate of the exponential moving average of the gradient and the exponential moving average of the square gradient. Search was performed between 0.5 and 0.9. The gradient penalty term was set to 10 and was used to control the intensity of the gradient penalty. Model training protocol uses the Step LR scheduler to reduce the learning rate to half every 50 epochs. This learning rate scheduling method helps the model to converge better during training.

4.4. Evaluation Indicators

4.4.1. Similarity Evaluation Indicators

This paper uses three measures to judge the similarity of EEG signals to evaluate the generated EEG signals comprehensively. The three measures are root mean square error (RMSE) [38], Fréchet distance (FD) [39] and dynamic time warping (WTD) [40].

RMSE can effectively measure the deviation between the true and predicted values. The RMSE value will be lower if the generated EEG signal is more like the real EEG signal; otherwise, the RMSE value will be higher. RMSE is calculated as Equation (11):

| (11) |

where the values and are the true and predicted, respectively.

FD is used to measure the similarity between curves, which considers the location and order of the points along the curve. Smaller FD values usually represent higher quality and better diversity of the generated signals. Use and to represent the sequential set of two trajectory points, assuming that curve has trajectory points and curve has trajectory points. Then, there are and . Then, you can obtain a sequence of several points: , and the length ‖d‖ of this sequence is calculated by Equation (12):

| (12) |

where d represents the Euclidean distance, and , , , , and for any , there are or and . Finally, the FD is calculated as Equation (13):

| (13) |

Additionally, WTD is one of the tried-and-true methods for determining the difference between two time series. A lower WTD value indicates that the two-time series differ less. In DTW, one or more time series are distorted along the time axis to achieve “alignment”, and their similarity is calculated. Suppose there are two time series, and , whose lengths are n and m, respectively. A matrix of n × m size can be constructed to align the and implementations. Each matrix element represents the alignment of the point and the , and represents the distance corresponding to the two points, calculated by the Euclidean distance. Define a cumulative distance , where is the sum of the Euclidean distances between the points and and the cumulative distances of the smallest neighboring element capable of reaching the point:

| (14) |

4.4.2. Evaluation Index of Compressed Sensing Reconstruction

To verify that the reconstruction effect of adding generated data to train the compressed sensing reconstruction model is superior to that of the compressed sensing reconstruction model without adding generated data, this article employs the percentage root mean squared distortion (PRD) to compare the reconstruction accuracy of EEG signals with and without generated data [41]. Equation (15) shows reconstruction accuracy:

| (15) |

where and represent the original and reconstructed signals, respectively. Reconstruction accuracy is higher when PRD is lower.

4.5. Experimental Analysis

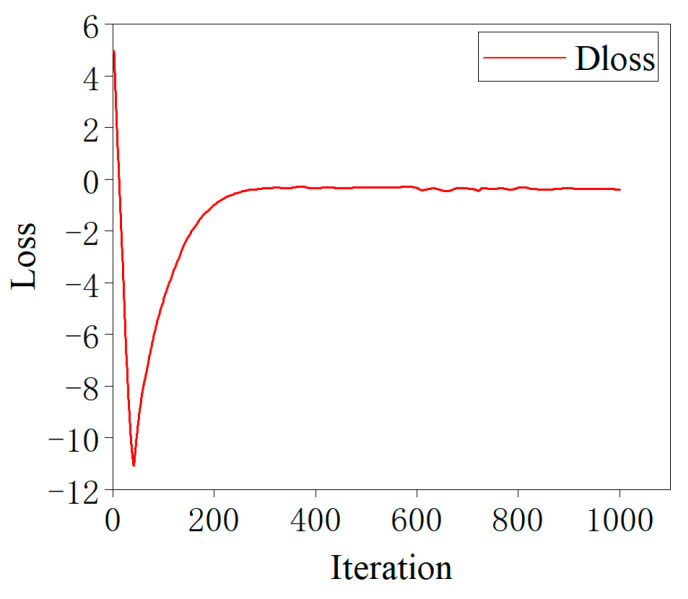

The loss curve of the L–C–WGAN–GP discriminator is depicted in Figure 7. The discriminator loss value will initially decrease significantly as the number of iterations increases, but it will soon rise above −1. After 250 iterations, Dloss rises to −0.3, indicating the network has good convergence performance. When the number of iterations reaches 600, although Dloss will oscillate slightly, it is also stable between the −0.3~0 range and continues to approach 0. The Wasserstein distance also describes the distinction between generated and real data distribution. Dloss convergence to a smaller value means that the two data distributions are increasingly similar. Furthermore, it can be observed that Dloss converges fast, and the training process is stable, thanks to the gradient penalty’s (GP) contribution to the gradient calculation process [42].

Figure 7.

Loss curve of the L–C–WGAN–GP discriminator.

The experimental results of the deep convolutional generative adversarial network (DCGAN) [43], the Wasserstein GAN (WGAN), the Wasserstein GAN-gradient penalty (WGAN-GP) [30] and the long short-term memory generation adversarial network (LSTM-GAN) [44] are contrasted with those of the proposed method to demonstrate that the latter is more effective at producing EEG data. The generator and discriminator of LSTM–GAN mentioned above are composed of long short-term memory networks.

Table 1 shows the similarity evaluation values between the model’s real EEG data and the generated EEG data from five different models. Since DCGAN uses cross-entropy as a loss function, it is simple to produce gradient disappearance during training, which results in insufficient generator learning of the characteristics of EEG data. That is why, as seen from the table, the three similarity evaluation values of DCGAN are the largest, indicating that the model produces poor-quality EEG data. The quality of the generated data cannot be improved during training. L–C–WGAN–GP has the smallest RMSE value, FD value and DTW value compared to DCGAN, WGAN, WGAN–GP and LSTM–GAN. Therefore, the EEG data generated by L–C–WGAN–GP are more similar to the real EEG data, and the model has more advantages in learning the characteristics of EEG signals.

Table 1.

The similarity evaluation values of RMSE, FD and WTD between EEG data and real EEG data were generated.

| Model | DCGAN | WGAN | WGAN–GP | LSTM–GAN | L–C–WGAN–GP |

|---|---|---|---|---|---|

| RMSE | 0.71 | 0.40 | 0.37 | 0.26 | 0.21 |

| FD | 0.99 | 0.92 | 0.89 | 0.81 | 0.75 |

| DTW | 23.71 | 16.89 | 15.23 | 12.87 | 10.38 |

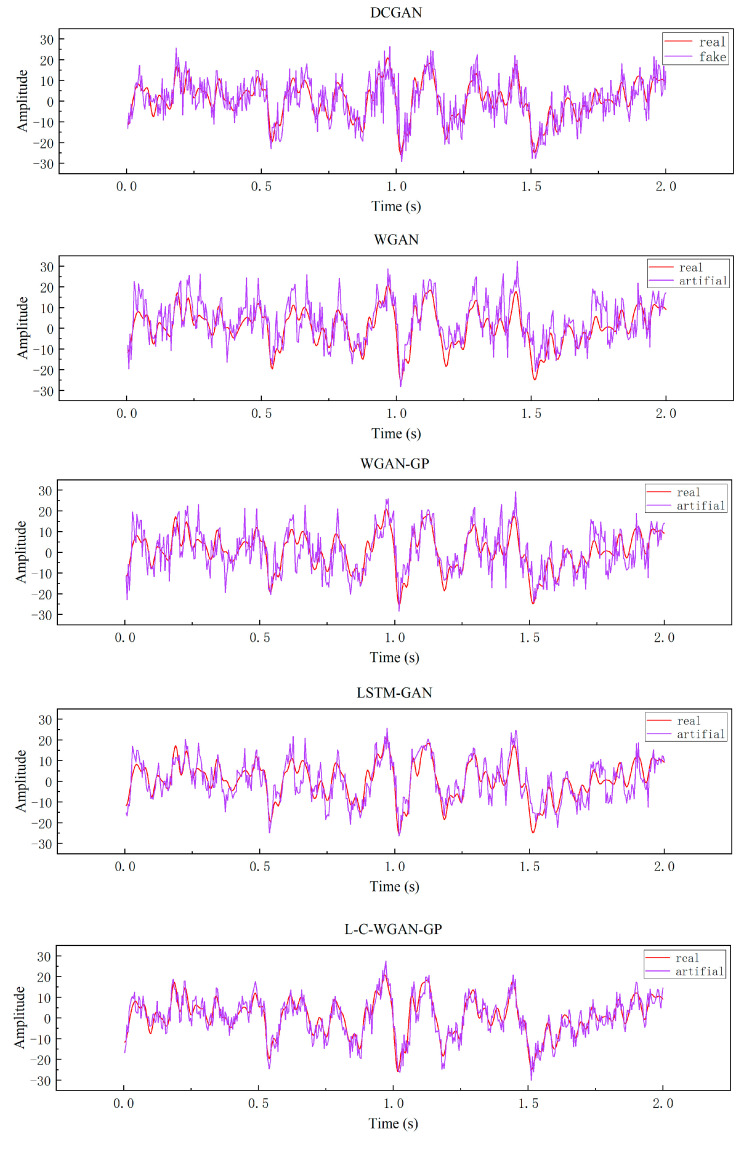

Secondly, this paper further illustrates the above conclusions by observing the generated sample’s time domain plot and time-frequency plot. Figure 8 shows the time domain plots of the generated data for five generative adversarial models. Among them, the abscissa denotes time, the ordinate denotes amplitude and the red and purple curves denote true and generated EEG signals. As can be seen from the figure, L–C–WGAN–GP has less noise than the waveforms generated by other methods. Although the trend of all methods is basically consistent with the direction of the original EEG signal, the other methods are much higher (lower) than the original signal at the maximum and the minimum. Our proposed method is still in good agreement with the original signal at the extreme value, which is due to the fact that the LSTM network can learn temporal continuity, rather than non-continuous data, to evaluate the signal. Moreover, the EEG data generated by L–C–WGAN–GP are the most similar to the real EEG data, and their waveform characteristics are more fully learned and closer to the real waveform morphology, indicating that the model generates EEG data best. DCGAN’s EEG signal data are less effective than that of other models.

Figure 8.

Time domain plots of generated data for five generative adversarial models.

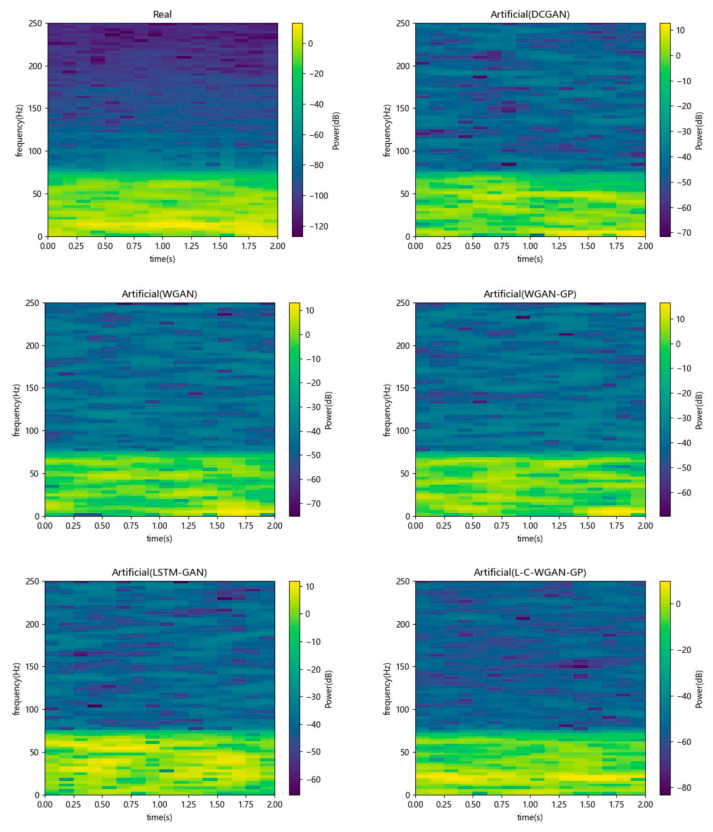

Figure 9 shows the time-frequency plots of the generated data for five generative adversarial models. The abscissa represents time, the left ordinate represents frequency and the right ordinate represents power. It can be seen from the figure that the EEG data generated by the five models have similarities with the real data. Specifically, the low-frequency part has a higher power value than the high-frequency one. In addition, as can be seen in the timeline, our proposed model is also more consistent with the original EEG signal data, and compared with other methods, the power of our proposed method is as stable as the original power. Additionally, the fact that L–C–WGAN–GP’s power distribution is more comparable to the power distribution of real data indicates that the proposed model has superior performance.

Figure 9.

Time-frequency plots of generated data for five generative adversarial models.

Finally, this paper adds the generated dataset as the training set of the compressed sensing reconstruction model based on the original dataset to verify whether the added generated dataset improves the training of the reconstructed model. We utilize the network models that have been published by us as an object for the comparison of the compressed perceptual reconstruction accuracy of the EEG signals [45]. In the EEG signal compressed sensing reconstruction experiment, the length of each sample is N = 500. Utilizing the measurement matrix to project the EEG signal to a lower dimension is necessary to obtain the compressed signal with length M. The sparse binary matrix is selected as the measurement matrix, and the compression ratio (CR) is defined as . Therefore, this paper constructs a measurement matrix of M = 50, 100, …, 400, 450, and the corresponding compression ratios CR = 10%, 20%, …, 80%, 90%. Figure 10 depicts the compressed sensing reconstruction model for CS-ResNet.

Figure 10.

CS-ResNet compressed sensing reconstruction model.

As shown, the compressed EEG signal is first converted into 500 dimensions by multiplying it by the measurement matrix’s pseudoinverse . The input is then converted into a 16-channel feature map using a convolutional layer with a convolution kernel size of 3 × 1 and several convolution kernels of 16. Then, through two residual blocks, each residual block contains six convolutional layers, in which the dimension size of the residual block input and output is the same, and both are feature maps of 16 channels to ensure that the input and output of the residual block in the residual learning network can be added. The number of convolution kernels in the six convolutional layers in the residual block is 32, 64, 128, 64, 32 and 16, and the corresponding convolution kernel sizes are 7 × 1, 7 × 1, 5 × 1, 5 × 1, 3 × 1 and 3 × 1. The expansion rate of each convolution kernel is set to 2. According to the size and expansion rate of the current convolution kernel, appropriate fill values are set in each convolution layer to keep the feature map’s size unchanged. The exponential linear unit (ELU) function is the activation function behind each convolution layer in the residual block. After the two residual blocks, a convolutional layer of 3 × 1 with one convolution kernel is added, and a fully connected layer is used to input the reconstructed EEG signal.

In the experiments, L–C–WGAN–GP was used to generate 20,000 generated data with a length of 500, and 25%, 50%, 75% and 100% of the total generated data were added to the original dataset, respectively, to train the compressed sensing reconstruction model fully. For reconstructing EEG signals using CNNs at various compression ratios, PRD values trained with the original dataset and PRD values trained with 25%, 50%, 75% and 100% of the generated data on top of the original dataset are all shown in Table 2.

Table 2.

CNN reconstructs the PRD values of EEG signals at different compression ratios.

| CR/% | CNN (PRD/%) | ||||

|---|---|---|---|---|---|

| None | Add 25% | Add 50% | Add 75% | Add 100% | |

| 90% | 0.9728 | 0.9291 | 0.8852 | 0.8477 | 0.8212 |

| 80% | 1.0507 | 0.9913 | 0.9343 | 0.9064 | 0.8796 |

| 70% | 1.2605 | 1.1954 | 1.1436 | 1.1108 | 1.0954 |

| 60% | 1.3053 | 1.2589 | 1.1997 | 1.1539 | 1.1297 |

| 50% | 1.5008 | 1.4321 | 1.3847 | 1.3583 | 1.3178 |

| 40% | 2.2074 | 2.1282 | 2.0693 | 2.0165 | 1.9855 |

| 30% | 6.2556 | 5.9215 | 5.7764 | 5.3842 | 5.0331 |

| 20% | 25.7751 | 24.8785 | 24.0494 | 22.7955 | 21.9786 |

| 10% | 44.2411 | 42.7291 | 41.2338 | 39.8773 | 38.6593 |

PRD values trained with the original dataset and 25%, 50%, 75% and 100% of the generated data based on the original dataset are shown in Table 3 for reconstructing EEG signals at various compression ratios with CS-ResNet.

Table 3.

CS-ResNet reconstructs the PRD value of EEG signals at different compression ratios.

| CR/% | CS-ResNet (PRD/%) | ||||

|---|---|---|---|---|---|

| None | Add 25% | Add 50% | Add 75% | Add 100% | |

| 90% | 0.5485 | 0.4889 | 0.4465 | 0.4178 | 0.3966 |

| 80% | 0.5976 | 0.5447 | 0.4979 | 0.4766 | 0.4498 |

| 70% | 0.6198 | 0.5623 | 0.5244 | 0.4981 | 0.4763 |

| 60% | 0.6506 | 0.5991 | 0.5493 | 0.5212 | 0.5049 |

| 50% | 0.7996 | 0.7268 | 0.6881 | 0.6549 | 0.6411 |

| 40% | 1.0016 | 0.9546 | 0.9173 | 0.8896 | 0.8588 |

| 30% | 3.9906 | 3.6824 | 3.4564 | 3.3151 | 3.189 |

| 20% | 20.1886 | 19.2756 | 18.2276 | 17.3934 | 16.4784 |

| 10% | 30.2299 | 29.3579 | 27.9547 | 26.6872 | 25.5369 |

The table demonstrates that, when the compression ratio is 40~90%, the average reconstruction accuracy with CNN reconstruction after adding 25~100% of the generated data is 0.06~0.17% higher than that without adding the generated data. The reconstruction accuracy of CS-ResNet is 0.05~0.14% higher on average. When the compression ratio is 30%, the reconstruction accuracy after adding 25~100% of the generated data using CNN reconstruction is 0.33~1.22% higher than without adding generated data. The reconstruction accuracy of CS-ResNet is 0.3~0.80% higher. When the compression ratio is 20%, the reconstruction accuracy after adding 25~100% of the generated data using CNN reconstruction is 0.89~3.79% higher than without adding generated data. CS-ResNet has a higher reconstruction accuracy of 0.91–3.71%. When the compression ratio is 10%, the reconstruction accuracy is 1.51~5.58% higher when 25–100% of the generated data are added through CNN reconstruction than when the generated data are not added. The reconstruction accuracy of CS-ResNet is 0.87~4.69% higher. Finally, it can be concluded that the compressed sensing reconstruction model is fully trained when the number of training sets increases, significantly improving the accuracy of EEG signal reconstruction. The network model’s performance is enhanced because overfitting is avoided during training. Therefore, L–C–WGAN–GP can produce approximate EEG data samples effectively.

4.6. Discussion

Our proposed model can generate EEG data while maintaining the original data features, and exhibits good performance in multiple indicators, but there are also limitations. Our method uses EEG signal data as a training dataset and focuses on the enhancement of EEG motor imagination, and in the future we will try to generalize this method to more datasets for validation. The calculation quantity of LSTM in the running process of operation is significant, and GRU [46] can solve the problem of calculation quantity, but LSTM shows better performance than GRU in the experiment, and the reasons for this are worth exploring. In the future, the proposed method can also be applied to repair damaged EEG signals, after mastering the generation rules of EEG signals, to help us to further understand EEG signals and further explore the functional characteristics of the brain at the same time, in a similar field of physiological signals. For example, for nonlinear skin electrical signals, this could include ECG signal or EMG signal activity. These physiological signals have similar properties to EEG signals, are nonlinear, non-stationary and temporally related, and our method can also be popularized to augment the dataset to meet the data needs of deep learning and accelerate the development of man–machine collaboration.

5. Conclusions

This paper proposes a new generative adversarial network model to address the problem of EEG signal scarcity. This model can generate EEG data from the original data while keeping the characteristics of the original data. This model, based on WGAN–GP, uses LSTM as the generator and a CNN as the discriminator. The similarity index of the EEG data generated by the proposed approach is superior to that of other generative adversarial network models, as demonstrated by simulation experiments. The generated EEG signals are more similar to the real EEG signals regarding morphological characteristics. In addition, 25%, 50%, 75% and 100% of the generated data are added to the EEG signal original dataset for model reconstruction training in the EEG signal compression perception reconstruction, and the results show that the loss of compressed EEG signal reconstruction also decreases with increasing augmented data. Consequently, the reconstruction accuracy of EEG signals can be effectively improved by reducing overfitting and significantly increasing model stability. Therefore, we believe that the proposed augmentation technology of EEG signal data will have a high application value in future EEG applications.

Author Contributions

Conceptualization, X.D. (Xiuli Du) and X.W.; methodology, X.D. (Xiaohui Ding) and Y.L.; software, X.W., L.Z. and S.Q.; validation, X.D. (Xiuli Du) and X.W.; formal analysis, X.W. and Y.L.; investigation, X.W., L.Z. and S.Q.; resources, X.D. (Xiuli Du) and S.Q.; data curation, X.D. (Xiaohui Ding) and X.W.; writing—original draft preparation, X.W., L.Z. and Y.L.; writing—review and editing, X.D. (Xiaohui Ding) and X.W.; visualization, X.W. and Q.L.; supervision, Q.L. and Y.L.; project administration, X.D. (Xiuli Du) and X.W.; funding acquisition, X.D. (Xiaohui Ding). All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this study came from publicly available datasets, which can be found at the following links: https://www.bbci.de/competition/iv/, accessed on 3 July 2008 (BCI CompetitionIV).

Conflicts of Interest

The authors declare no conflicts of interest.

Funding Statement

The project is sponsored by Liaoning Provincial Education Department “Research on emotion recognition of cross subject EEG signals based on deep learning”, grant number JYTMS20230377.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1.Vaid S., Singh P., Kaur C. EEG signal analysis for BCI interface: A review; Proceedings of the 2015 Fifth International Conference on Advanced Computing & Communication Technologies; Haryana, India. 21–22 February 2015; Piscataway, NJ, USA: IEEE; 2015. pp. 143–147. [Google Scholar]

- 2.Hosseini M.P., Hosseini A., Ahi K. IEEE Reviews in Biomedical Engineering. IEEE; Piscataway, NJ, USA: 2020. A Review on Machine Learning for EEG Signal Processing in Bioengineering; p. 1. [DOI] [PubMed] [Google Scholar]

- 3.Ahangi A., Karamnejad M., Mohammadi N., Ebrahimpour R., Bagheri N. Multiple classifier system for EEG signal classification with application to brain-computer interfaces. Neural Comput. Appl. 2013;23:1319–1327. doi: 10.1007/s00521-012-1074-3. [DOI] [Google Scholar]

- 4.Lalitharatne T.D., Teramoto K., Hayashi Y., Kiguchi K. Towards hybrid EEG-EMG-based control approaches to be used in bio-robotics applications: Current status, challenges and future directions. Paladyn J. Behav. Robot. 2013;4:147–154. doi: 10.2478/pjbr-2013-0009. [DOI] [Google Scholar]

- 5.Li J.Y., Du X.B., Zhu Z.L., Deng X.M., Ma C.X., Wang H.A. A review of deep learning research on EEG emotion recognition. J. Softw. 2023;34:255–276. [Google Scholar]

- 6.Koelstra S., Muhl C., Soleymani M., Lee J.S., Yazdani A., Ebrahimi T., Pun T., Nijholt A., Patras I. Deap: A Database for Emotion Analysis; Using Physiological Signals. IEEE Trans. Affect. Comput. 2012;3:18–31. doi: 10.1109/T-AFFC.2011.15. [DOI] [Google Scholar]

- 7.Fahimi F., Dosen S., Ang K.K., Mrachacz-Kersting N., Guan C. IEEE Transactions on Neural Networks and Learning Systems. IEEE; Piscataway, NJ, USA: 2020. Generative adversarial networks-based data augmentation for brain–computer interface; pp. 4039–4051. [DOI] [PubMed] [Google Scholar]

- 8.Veeranki Y.R., Ganapathy N., Swaminathan R. Analysis of Fluctuation Patterns in Emotional States Using Electrodermal Activity Signals and Improved Symbolic Aggregate Approximation. Fluct. Noise Lett. 2022;21:2250013. doi: 10.1142/S0219477522500134. [DOI] [Google Scholar]

- 9.Veeranki Y.R., McNaboe R., Posada-Quintero H.F. EEG-Based Seizure Detection Using Variable-Frequency Complex Demodulation and Convolutional Neural Networks. Signals. 2023;4:816–835. doi: 10.3390/signals4040045. [DOI] [Google Scholar]

- 10.Angus-Leppan H. Diagnosing Epilepsy in Neurology Clinics: A Prospective Study. Seizure. 2008;17:431–436. doi: 10.1016/j.seizure.2007.12.010. [DOI] [PubMed] [Google Scholar]

- 11.Zhang S., Mao X., Sun L., Yang Y. EEG data augmentation for personal identification using SF-GAN; Proceedings of the 2022 3rd International Conference on Computer Vision, Image and Deep Learning & International Conference on Computer Engineering and Applications (CVIDL & ICCEA); Changchun, China. 20–22 May 2022; Piscataway, NJ, USA: IEEE; 2022. pp. 1–6. [Google Scholar]

- 12.Hasan M.A., Khan M.U., Mishra D. A Computationally Efficient Method for Hybrid EEG-fNIRS BCI Based on the Pearson Correlation. BioMed Res. Int. 2020;2020:1838140. doi: 10.1155/2020/1838140. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Saini M., Satija U., Upadhyay D.M. IEEE Signal Processing Letters. IEEE; Piscataway, NJ, USA: 2020. Wavelet Based Waveform Distortion Measures for Assessment of Denoised EEG Quality with Reference to Noise-Free EEG Signal; pp. 1260–1264. [Google Scholar]

- 14.Gaur P., Gupta H., Chowdhury A., McCreadie K., Pachori R.B., Wang H. A Sliding Window Common Spatial Pattern for Enhancing Motor Imagery Classification in EEG-BCI. IEEE Trans. Instrum. Meas. 2021;70:4002709. doi: 10.1109/TIM.2021.3051996. [DOI] [Google Scholar]

- 15.de Souza V.L.T., Marques B.A.D., Batagelo H.C., Gois J.P. A review on Generative Adversarial Networks for image generation. Comput. Graph. 2023;114:13–25. doi: 10.1016/j.cag.2023.05.010. [DOI] [Google Scholar]

- 16.Contreras-Cruz M.A., Correa-Tome F.E., Lopez-Padilla R., Ramirez-Paredes J.P. Generative Adversarial Networks for anomaly detection in aerial images. Comput. Electr. Eng. 2023;106:108470. doi: 10.1016/j.compeleceng.2022.108470. [DOI] [Google Scholar]

- 17.Li S., Zhao X. High-resolution concrete damage image synthesis using conditional generative adversarial network. Autom. Constr. 2023;147:104739. doi: 10.1016/j.autcon.2022.104739. [DOI] [Google Scholar]

- 18.Tian C., Ma Y., Cammon J., Fang F., Zhang Y., Meng M. IEEE Transactions on Neural Systems and Rehabilitation Engineering: A Publication of the IEEE Engineering in Medicine and Biology Society. IEEE; Piscataway, NJ, USA: 2023. Dual-encoder VAE-GAN with Spatiotemporal Features for Emotional EEG Data Augmentation. [DOI] [PubMed] [Google Scholar]

- 19.Liu Q., Hao J., Guo Y. EEG Data Augmentation for Emotion Recognition with a Task-Driven GAN. Algorithms. 2023;16:118. doi: 10.3390/a16020118. [DOI] [Google Scholar]

- 20.Hartmann K.G., Schirrmeister R.T., Ball T. EEG-GAN: Generative adversarial networks for electroencephalograhic (EEG) brain signals. arXiv. 20181806.01875 [Google Scholar]

- 21.Abdelfattah S.M., Abdelrahman G.M., Wang M. 2018 International Joint Conference on Neural Networks (IJCNN) IEEE; Piscataway, NJ, USA: 2018. Augmenting the size of EEG datasets using generative adversarial net-works; pp. 1–6. [Google Scholar]

- 22.Luo Y., Lu B.L. EEG data augmentation for emotion recognition using a conditional Wasserstein GAN; Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Honolulu, HI, USA. 18–21 July 2018; pp. 2535–2538. [DOI] [PubMed] [Google Scholar]

- 23.Hu M., Chen J., Jiang S., Ji W., Mei S., Chen L., Wang X. E2SGAN: EEG-to-SEEG translation with generative adversarial networks. Front. Neurosci. 2022;16:971829. doi: 10.3389/fnins.2022.971829. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Zhang Z., Zhong S.H., Liu Y. IEEE Transactions on Affective Computing. IEEE; Piscataway, NJ, USA: 2022. GANSER: A self-supervised data augmentation framework for EEG-based emotion recognition. [Google Scholar]

- 25.Abdelghaffar Y., Hashem A., Eldawlatly S. Generative Adversarial Networks for Augmenting EEG Data in P300-based Applications: A Comparative Study; Proceedings of the 2022 IEEE 35th International Symposium on Computer-Based Medical Systems (CBMS); Shenzhen, China. 21–23 July 2022; pp. 1–6. [Google Scholar]

- 26.Zhang Z., Shenghua Z., Yan L. Beyond Mimicking Under-Represented Emotions: Deep Data Augmentation with Emotional Subspace Constraints for EEG-Based Emotion Recognition; Proceedings of the AAAI Conference on Artificial Intelligence; Vancouver, BC, Canada. 20–27 February 2024; pp. 10252–10260. [Google Scholar]

- 27.Goodfellow I., Pouget-Abadie J., Mirza M., Xu B., Warde-Farley D., Ozair S., Courville A., Bengio Y. Generative adversarial networks. Commun. ACM. 2020;63:139–144. doi: 10.1145/3422622. [DOI] [Google Scholar]

- 28.Arjovsky M., Chintala S., Bottou L. Wasserstein GAN. arXiv. 20171701.07875 [Google Scholar]

- 29.Villani C., Villani C. Optimal Transport: Old and New. Springer; Berlin/Heidelberg, Germany: 2009. The wasserstein distances; pp. 93–111. [Google Scholar]

- 30.Gulrajani I., Ahmed F., Arjovsky M., Dumoulin V., Courville A.C. Improved training of wasserstein gans; Proceedings of the Advances in Neural Information Processing Systems; Long Beach, CA, USA. 4–9 December 2017; [Google Scholar]

- 31.Singh K., Malhotra J. Two-layer LSTM network-based prediction of epileptic seizures using EEG spectral features. Complex Intell. Syst. 2022;8:2405–2418. doi: 10.1007/s40747-021-00627-z. [DOI] [Google Scholar]

- 32.LeCun Y., Bottou L., Bengio Y., Haffner P. Gradient-based learning applied to document recognition. Proc. IEEE. 1998;86:2278–2324. doi: 10.1109/5.726791. [DOI] [Google Scholar]

- 33.Khare S.K., Bajaj V. Time–Frequency Representation and Convolutional Neural Network-Based Emotion Recognition. IEEE Trans. Neural Netw. Learn. Syst. 2021;32:2901–2909. doi: 10.1109/TNNLS.2020.3008938. [DOI] [PubMed] [Google Scholar]

- 34.Pan X. Time series data anomaly detection based on LSTM-GAN. Front. Comput. Intell. Syst. 2022;1:35–37. doi: 10.54097/fcis.v1i2.1701. [DOI] [Google Scholar]

- 35.Liu Y., Jebelli H. Proceedings of the Canadian Society of Civil Engineering Annual Conference 2021: CSCE21 General Track Volume 1. Springer Nature Singapore; Singapore: 2022. Enhanced Robotic Teleoperation in Construction Using a GAN-Based Physiological Signal Augmentation Framework; pp. 295–307. [Google Scholar]

- 36.Ma Z., Mei G., Piccialli F. An Attention Based Cycle-Consistent Generative Adversarial Network for IoT Data Generation and Its Application in Smart Energy Systems. IEEE Trans. Ind. Inform. 2022;19:6170–6181. doi: 10.1109/TII.2022.3204282. [DOI] [Google Scholar]

- 37.Heusel M., Ramsauer H., Unterthiner T., Nessler B., Hochreiter S. Gans trained by a two time-scale update rule converge to a local nash equilibrium; Proceedings of the Advances in Neural Information Processing Systems; Long Beach, CA, USA. 4–9 December 2017; [Google Scholar]

- 38.Willmott C.J. Some comments on the evaluation of model performance. Bull. Am. Meteorol. Soc. 1982;63:1309–1313. doi: 10.1175/1520-0477(1982)063<1309:SCOTEO>2.0.CO;2. [DOI] [Google Scholar]

- 39.Aronov B., Har-Peled S., Knauer C., Wang Y., Wenk C. Fréchet distance for curves, revisited; Proceedings of the Algorithms–ESA 2006: 14th Annual European Symposium; Zurich, Switzerland. 11–13 September 2006; Berlin/Heidelberg, Germany: Springer; 2006. pp. 52–63. [Google Scholar]

- 40.Keogh J., Pazzani M.J. Derivative Dynamic Time Warping; Proceedings of the First SIAM International Conference on Data Mining; Arlington, VA, USA. 11–13 April 2002. [Google Scholar]

- 41.Cao W., Zhang J. Real-Time Deep Compressed Sensing Reconstruction for Electrocardiogram Signals; Proceedings of the 2022 14th International Conference on Machine Learning and Computing (ICMLC); Guangzhou, China. 18–21 February 2022; p. 490. [Google Scholar]

- 42.Abu-Srhan A., Abushariah M.A.M., Al-Kadi S.O. The effect of loss function on conditional generative adversarial networks. J. King Saud Univ. Comput. Inf. Sci. 2022;34:6977–6988. [Google Scholar]

- 43.Radford A., Metz L. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv. 20151511.06434 [Google Scholar]

- 44.Xu Z., Du J., Wang J., Jiang C., Ren Y. Proceedings of the ICC 2019-2019 IEEE International Conference on Communications (ICC) IEEE; Piscataway, NJ, USA: 2019. Satellite image prediction relying on GAN and LSTM neural networks; pp. 1–6. [Google Scholar]

- 45.Du X., Liang K., Lv Y., Qiu S. Fast reconstruction of EEG signal compression sensing based on deep learning. Sci. Rep. 2024;14:5087. doi: 10.1038/s41598-024-55334-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Chung J., Gulcehre C., Cho K., Bengio Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv. 20141412.3555 [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The data used in this study came from publicly available datasets, which can be found at the following links: https://www.bbci.de/competition/iv/, accessed on 3 July 2008 (BCI CompetitionIV).