Abstract

This work presents a multi-resolution physics-informed recurrent neural network (MR PI-RNN) for simultaneous prediction of musculoskeletal (MSK) motion and parameter identification of MSK systems. The MSK application was selected as the model problem due to its challenging nature in mapping the high-frequency surface electromyography (sEMG) signals to the low-frequency body joint motion controlled by the MSK and muscle contraction dynamics. The proposed method utilizes the fast wavelet transform to decompose the mixed-frequency input sEMG and output joint motion signals into nested multi-resolution signals. The prediction model is first trained on coarser-scale input–output signals using a gated recurrent unit (GRU), and the trained parameters are then transferred to the next level of training with finer-scale signals. These training processes are repeated recursively in a transfer-learning fashion until the full-scale training (i.e., with unfiltered signals) is achieved, while satisfying the underlying dynamic equilibrium. Numerical examples on recorded subject data demonstrate the effectiveness of the proposed framework in generating a physics-informed forward-dynamics surrogate, which yields higher accuracy in motion predictions of elbow flexion–extension of an MSK system compared to the case with single-scale training. The framework is also capable of identifying muscle parameters that are physiologically consistent with the subject’s kinematics data.

Keywords: Multi-resolution recurrent neural network, Physics-informed parameter identification, Musculoskeletal system, Gated recurrent unit, Fast wavelet transform

Introduction

The prediction of the evolution of state variables in dynamical systems is a vital component of many scientific fields, such as biology, geophysics, earthquake engineering, solid mechanics, robotics, and computer vision [1–7]. Black-box techniques based on data-driven mapping, as well as parameterized multi-physics models describing the progression of the data, have previously been used to predict the states. This task continues to be an active area of research due to challenges on many fronts, such as the quality and scarcity of relevant physical data, the dynamics and complexity of the system, and the reliability and accuracy of the prediction model.

On the other hand, the characterization of parameters in the multi-physics models of these dynamical systems is also critical [8–14]. The task is challenging due in part to potential noise pollution captured by sensors in the system’s measured data, as well as the possibility that the parameter space is high-dimensional, leading to ill-posed problems that pose difficulties in numerical solutions. Standard optimization techniques such as genetic algorithms [15, 16], simulated annealing [17], and non-linear least squares [18, 19] have been employed for parameter identification, but they can be computationally expensive and may not converge for the ill-posed, non-convex optimization problems that are encountered when solving inverse problems on MSK systems [15, 20].

In recent years, machine learning (ML) or deep-learning-based approaches have gained significant popularity for solving forward and inverse problems, owing to their capability to effectively extract complex features and patterns from data [21]. This has been successfully demonstrated in numerous engineering applications such as reduced-order modeling [22–26] and materials modeling [27–29], among others. Data-driven computing techniques that enforce conservation-law constraints in the learning algorithms of a material database have been developed in the field of computational mechanics [29–37]. More recently, physics-informed neural networks (PINNs) have been developed [11, 38, 39] to approximate the solutions of given physical equations by using neural networks (NNs). By minimizing the residuals of the governing partial differential equations (PDEs) and the associated initial and boundary conditions, PINNs have been successfully applied to solve forward problems [11, 40, 41] and inverse problems [11, 38, 42–44], where the unknown system characteristics are considered trainable parameters or functions [38, 45]. This method has also been applied extensively, along with other ML techniques [51, 52], in biomechanics and biomedical applications [1, 46–50]. These methods attempt to bridge the gap between ML-based data-driven surrogate models and the satisfaction of physical laws.

In this study, we focus on the application to musculoskeletal systems, aiming at utilizing non-invasive muscle activity measurements such as surface electromyography (sEMG) signals to predict joint kinetics or kinematics [1, 18, 19], which is of great significance to health assessment and rehabilitation purposes [15, 16]. These sEMG signals can be used as control inputs to drive the physiological subsystems that are governed by parameterized non-linear differential equations, and thus form the forward dynamics problem. Given information on muscle activations, the joint motion of a subject-specific MSK system can be obtained by solving a forward dynamics problem. Data-driven approaches for motion prediction have also been introduced to directly map the input sEMG signal to joint kinetics/kinematics, bypassing the forward dynamics equations and the need for parameter estimation [26–30]. However, the resulting ML-based surrogate models lack interpretability and may not satisfy the underlying physics. Another challenge is that the sEMG signal usually exhibits a wide range of frequencies that are non-trivial for ML models [1] to map to the joint motion.

In our previous work [1], a physics-informed parameter identification neural network (PI-PINN) was proposed for the simultaneous prediction of motion and parameter identification with application to MSK systems. Using the raw transient sEMG signals obtained from the sensors and the corresponding joint motion data, the PI-PINN learned a forward model to predict the motion while identifying the parameters of the Hill-type muscle models representing the contractile muscle–tendon complex. A feature-encoded approach was introduced to enhance the training of the PI-PINN, which yielded high motion prediction accuracy and identified system parameters within a physiological range, with only a limited number of training samples. However, this method relies on mapping in a feature domain constituted by Fourier and polynomial bases, which requires the input sEMG signal to span the entire duration of the motion. It therefore precludes real-time predictions made as the signal is acquired from the sensor.

To enhance the predictive accuracy of the time-dependent signals, recurrent neural networks (RNNs) such as gated recurrent units (GRUs) [29, 53] are utilized in this study to inform predictions with the history information of the motion. To overcome the limitation of the size of the data and provide more information from the composite frequency bands in the signals, a multi-resolution (MR) approach is proposed. In this approach, wavelets are used to decompose both the raw sEMG and joint motion signals into coarse-scale components at various frequency scales and the remaining fine-scale details. Using principles of multi-resolution theory and transfer learning, the multi-resolution training processes are repeated recursively from the coarse scale to the full scale in order to map the sEMG signal to the joint motion. Furthermore, Gaussian noise is introduced to the recorded motion data used for training to enhance the robustness and generalizability of the model [29]. The trained model can be applied for real-time motion predictions given the raw sEMG signal obtained from the sensor.

This manuscript is organized as follows. Section 2 introduces the subsystems and mathematical formulations of MSK forward dynamics, followed by an introduction of the proposed multi-resolution PI-RNN framework for simultaneous motion prediction and system parameter identification in Sect. 3. The following sections verify the proposed framework using synthetic data and validate it by modeling the elbow flexion–extension movement using subject-specific sEMG signals and recorded motion data in Sects. 4 and 5, respectively. Concluding remarks and future work are summarized in Sect. 6.

Formulations for muscle mechanics and musculoskeletal forward dynamics

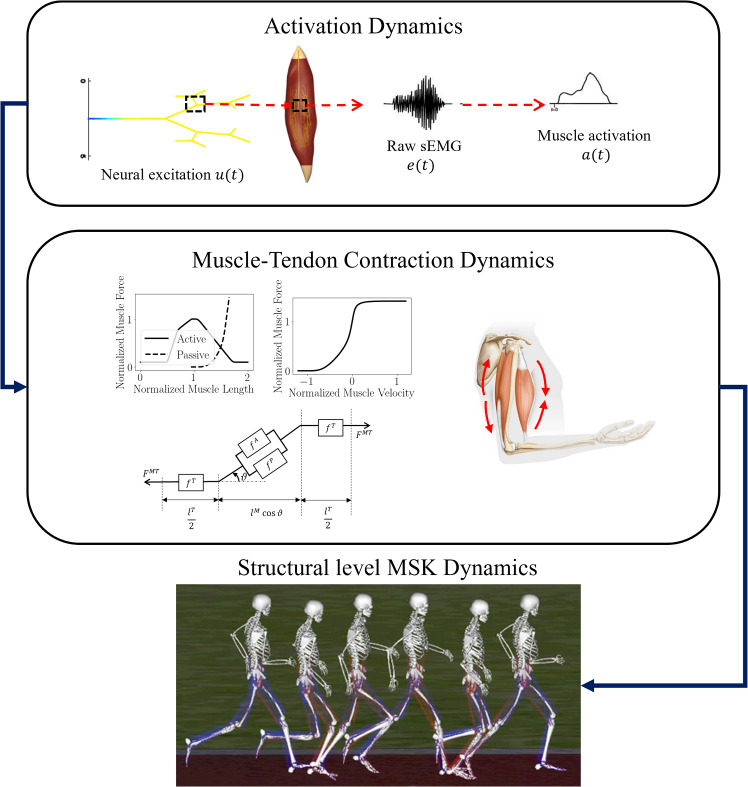

This section provides a brief overview of muscle mechanics and forward dynamics of the human MSK system, with details in “Appendices A and B”. As depicted in Fig. 1, multiple subsystems within the MSK forward dynamics interact hierarchically: 1) the neural excitation transforms into muscle activation (activation dynamics); 2) the muscle activation drives muscle fibers to produce force (muscle–tendon (MT) contraction dynamics); 3) the resultant forces produce joint motion q (translation and rotation) of MSK systems, called the MSK forward dynamics [18, 19].

Fig. 1.

The subsystems involved in the forward dynamics of an MSK system are depicted in this flowchart. Neural excitations are transmitted to muscle fibers (activation dynamics) that contract to produce force (muscle–tendon contraction dynamics). These forces generate torques at the joints (structural level MSK dynamics) leading to joint motion [1, 54]

Neural excitation-to-activation dynamics

While activations $a(t)$ in the muscle fibers can be obtained through a non-linear transformation of the neural excitations $e(t)$, the excitations are difficult to measure in vivo. Therefore, they are estimated from the raw sEMG signals $u(t)$ [15, 16], considering an electro-mechanical delay:

$$e(t) = u(t - d) \tag{1}$$

where $d$ measures the delay between the neural excitation originating and reaching the muscle group. The muscle activation signal is then expressed as

$$a(t) = \frac{\exp\left(A\,e(t)\right) - 1}{\exp(A) - 1} \tag{2}$$

where $A$ is a shape factor. These activations initiate muscle fiber contraction leading to force production from the muscle group (Fig. 2).
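The excitation-to-activation step can be sketched in a few lines of Python. The snippet assumes that Eq. (1) is a pure time shift of the sEMG signal by the electro-mechanical delay and that Eq. (2) is the exponential shape-factor relation commonly used in EMG-driven modeling. The function names, the zero padding before the delay has elapsed, and the sample values are illustrative assumptions rather than the authors' implementation.

```python
import math

def excitation_from_semg(u, dt, delay):
    """Assumed form of Eq. (1): e(t) = u(t - d) on a uniform time grid."""
    shift = min(int(round(delay / dt)), len(u))
    # Assumption: the excitation is zero until the delay has elapsed.
    return [0.0] * shift + list(u[:len(u) - shift])

def activation(e, shape_factor):
    """Assumed form of Eq. (2): a = (exp(A e) - 1) / (exp(A) - 1), with A != 0."""
    denom = math.exp(shape_factor) - 1.0
    return [(math.exp(shape_factor * ei) - 1.0) / denom for ei in e]
```

With a sampling step of 0.01 s and a delay of 0.02 s, the sEMG samples are shifted by two steps. The activation maps an excitation of 0 to 0 and an excitation of 1 to 1 for any non-zero shape factor.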

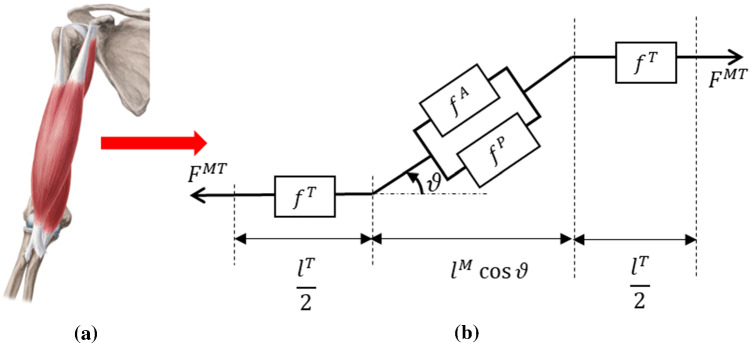

Fig. 2.

A muscle–tendon complex in the arm modelled by a homogenized Hill-type model, where the muscle groups in a are each represented as a homogenized muscle–tendon (MT) complex described by the model shown in b

Muscle–tendon force generation through contraction dynamics

Forces in the muscle–tendon (MT) complex are generated by the dynamics of MT contractions. To capture the behaviour of the MT complex at the structural length scale, homogenized Hill-type muscle models are utilized (described in “Appendix B”). Each muscle group can be characterized by a parameter vector,

| 3 |

containing constants such as the maximum isometric force in the muscle, the optimal muscle length corresponding to the maximum isometric force, the maximum contraction velocity, the slack length of the tendon, and the initial pennation angle [18, 19]. The total force produced by the MT complex can be expressed as:

| 4 |

where is the activation function in Eq. (2), is the normalized muscle length, is the normalized velocity of the muscle and is the current pennation angle. In this study, the tendon is assumed to be rigid which simplifies the MT contraction dynamics [57, 58] accounting for the interaction of the activation, force length, and force velocity properties of the MT complex. More details can be found in “Appendices A and B”.

MSK forward dynamics of motion

Body movement is the result of the force produced by actuators (MT complexes), converted to torques at the joints of the body, leading to rotation and translation of joints, which are considered as the generalized degrees of freedom of an MSK system . The dynamic equilibrium can be expressed as

| 5 |

where are the vectors of generalized angular motions, angular velocities, and angular accelerations, respectively; is the torque from the external forces acting on the MSK system, e.g., ground reactions and gravitational loads; is the inertial matrix; is the torque from all muscles in the model calculated by , where are the moment arms and are the forces from the MT complex. Given the muscle activation signals , initial conditions and parameters of involved muscle groups , the generalized angular motions and angular velocities of the joints can be obtained by solving Eq. (5). An example of these vectors is shown in Sect. 4 and “Appendix D”.

Multi-resolution recurrent neural networks for physics-informed parameter identification

This section describes the recurrent neural network algorithms, followed by the physics-informed parameter identification that enables the development of a forward dynamics surrogate and simultaneous parameter identification. The employment of multi-resolution analysis based on fast wavelet transform [59, 60] for training data augmentation is then defined. The computational framework for multi-resolution recurrent neural network for physics-informed parameter identification is also discussed.

Recurrent neural networks and gated recurrent units

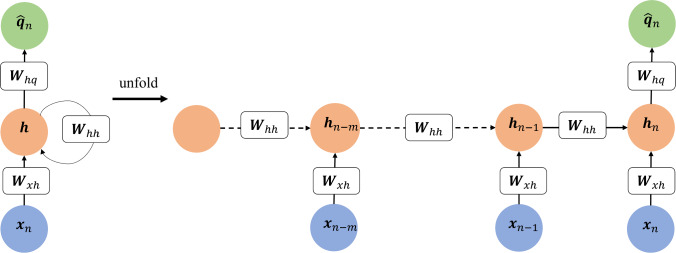

The computational graph of a standard recurrent neural network (RNN) and its unfolded graph are shown in Fig. 3. The hidden state allows RNNs to learn important history-dependent features from the data in sequential time steps [29, 53]. The unfolded graph shows the sharing of parameters across the architecture of the network, allowing for efficient training. The forward propagation of an RNN starts with an initial hidden state that embeds history-dependent features and propagates through all input steps. Considering an RNN with m history steps as shown in Fig. 3, the propagation of the hidden state can be expressed as follows [29]:

| 6 |

Fig. 3.

Computational graph of a standard recurrent neural network using ‘m’ history steps for prediction

The hidden state at the final (current) step is then used to inform the prediction.

| 7 |

Here, is the hyperbolic tangent function; and are the trainable weight coefficients; and are the trainable bias coefficients. The trainable parameters are shared across all RNN steps. Let be the current time and current sEMG data of the muscle components and be the predicted joint motions at the current time . Figure 4a illustrates the computational graph of an RNN model trained to predict the motion at step by using m history steps of and as well as the at step . The forward propagation is defined as

| 8 |

| 9 |

| 10 |

with trainable parameters comprising the weight and bias coefficients. During training, the ‘teacher-forcing’ method is used, where the measured motion data is given to the model in the history steps. In test mode, the previous predictions are fed back to the model as input to inform future predictions. The inputs received in this scenario could be quite different from those passed through in the training process, leading the network to make extrapolative predictions and therefore accumulate errors that pollute the predictions. To improve the testing performance and enhance model accuracy and robustness, a user-controlled amount of random Gaussian noise is added to the recorded motion data to introduce stochasticity, so that the network can learn variable input conditions resembling those in the test mode; see [29] for details.
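The difference between the two modes can be sketched as follows, assuming one history step and a generic callable `model(semg_k, q_prev)` that stands in for the trained network. All names here are hypothetical and the snippet is only meant to illustrate the data flow, not the authors' code.

```python
import random

def build_training_inputs(semg, motion, noise_std, rng):
    """Teacher forcing: pair each sEMG sample with the *measured* previous
    motion, perturbed by zero-mean Gaussian noise of user-chosen size."""
    return [(semg[k], motion[k - 1] + rng.gauss(0.0, noise_std))
            for k in range(1, len(semg))]

def rollout(model, semg, q0):
    """Test mode: the previous *prediction* is fed back as the history input."""
    preds = [q0]
    for k in range(1, len(semg)):
        preds.append(model(semg[k], preds[-1]))
    return preds
```

In `build_training_inputs` the history input never depends on the model, whereas in `rollout` every prediction depends on all earlier ones, which is why errors can accumulate at test time and why noisy history inputs during training can help.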

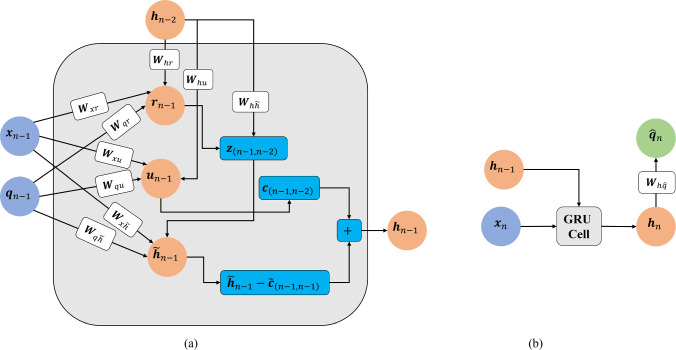

Fig. 4.

An example computational graph of an RNN that uses one history step: a The train mode and b the test mode, where the motion predicted from the previous step is used as part of the input to predict motion at the current step

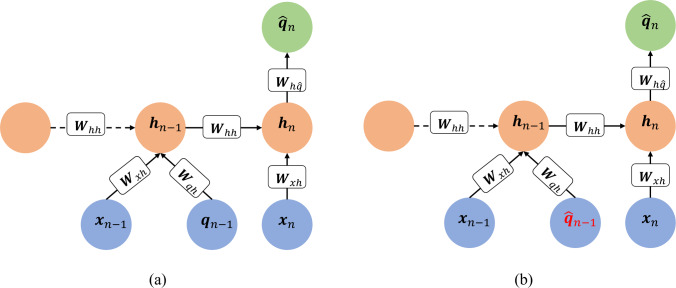

Standard RNNs, however, have difficulties in learning long-term dependencies due to vanishing and exploding gradient issues arising from the recurrent connections. To mitigate these issues, gated recurrent units (GRUs) have been developed [29, 53]. A standard GRU consists of a reset gate that removes irrelevant history information, an update gate that controls the amount of history information that is passed to the next step, and a candidate hidden state that is used to calculate the current hidden state. Considering a GRU with m history steps, the forward propagation can be expressed as follows [29]:

| 11 |

| 12 |

| 13 |

where $\odot$ denotes the element-wise (Hadamard) product; $\sigma$ is the sigmoid activation function and $\tanh$ is the hyperbolic tangent function; the remaining symbols are the trainable weight and bias coefficients. The current hidden state is calculated by a linear interpolation between the previous hidden state and the candidate hidden state, based on the update gate. The model is trained via the backpropagation through time algorithm applied to RNNs [21]. During training, the measured motion data are supplied in the history steps (shown in Fig. 5), a procedure known as teacher forcing [21]. For predictions, the prediction from the previous step is used to predict the current step. The addition of Gaussian noise to the measured data, as described before, is adopted in GRU models as well.

Fig. 5.

An example computational graph of a GRU in train mode that uses one history step: a Starting with an initial or previously obtained hidden state , the main GRU cell takes the input and motion that are used to obtain the GRU hidden state at step (Eq. (11)) and, b where the hidden state is plugged back in to the GRU along with input at step to predict the motion (Eq. (12)-(13)). The ‘ + ’ cell produces an output (arrow pointing outwards) that is the summation of the inputs (arrows pointing into the cell)
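A single GRU update, as described above, can be sketched in plain Python. The parameter names (`Wr`, `Ur`, `br`, and so on) are hypothetical, and the interpolation convention used here (update gate weighting the previous hidden state) is one common choice that may differ from the convention in [29].

```python
import math

def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-vi)) for vi in v]

def affine(W, x, U, h, b):
    """Return W x + U h + b for matrices stored as lists of rows."""
    return [sum(w * xj for w, xj in zip(Wi, x))
            + sum(u * hj for u, hj in zip(Ui, h)) + bi
            for Wi, Ui, bi in zip(W, U, b)]

def gru_step(x, h_prev, p):
    """One GRU step for input vector x and previous hidden state h_prev."""
    r = sigmoid(affine(p["Wr"], x, p["Ur"], h_prev, p["br"]))  # reset gate
    z = sigmoid(affine(p["Wz"], x, p["Uz"], h_prev, p["bz"]))  # update gate
    gated = [ri * hi for ri, hi in zip(r, h_prev)]             # r (Hadamard) h_prev
    h_cand = [math.tanh(v)
              for v in affine(p["Wh"], x, p["Uh"], gated, p["bh"])]
    # Assumed convention: h = z * h_prev + (1 - z) * h_cand
    return [zi * hp + (1.0 - zi) * hc
            for zi, hp, hc in zip(z, h_prev, h_cand)]
```

With all weights and biases set to zero, both gates equal 0.5 and the candidate state is zero, so the step simply halves the previous hidden state. This gives a quick sanity check of the interpolation.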

Simultaneous forward dynamics learning and parameter identification

Based on the governing equations for general MSK forward dynamics (Sect. 2.1), the following parameterized ODE system is defined:

| 14 |

where the differential operator is parameterized by a set of parameters . The right-hand side is parameterised by . is the operator for initial conditions, and is the vector of prescribed initial conditions. To simplify notations, the ODE parameters are denoted by . The solution to the ODE system depends on the choice of parameters .

Here, an RNN is used to relate data inputs containing discrete sEMG signals and discrete time from all the previous history time-steps of a trial, , to discrete joint motion data outputs at the current time-step, , approximating the MSK forward dynamics. Let the training input at the history step be defined as , where denotes the time at the time step, and denotes the sEMG signals of muscle groups involved in the MSK joint motion at . The motion at time step , is then predicted using the training input from all the previous steps using the RNN.

| 15 |

where denotes RNN evaluations (depending on model chosen) discussed in Eq. (11)-(13). The optimal RNN parameters and the ODE parameters are obtained by minimizing the composite loss function as follows,

| 16 |

where is the parameter to regularize the loss contribution from the ODE residual term in the loss function and can be estimated analytically [1]. The data loss is defined by,

| 17 |

where is the predicted motion, and is the recorded motion of MSK joints. In addition to training an MSK forward dynamics surrogate, the proposed framework aims to simultaneously identify important MSK parameters from the training data by minimizing residual of the governing equation of MSK system dynamics in Eq. (5).

with

| 18 |

where is the residual associated with Eq. (14) for the sample; represents the ODE parameters relevant to the MSK system. The gradients of the network outputs with respect to the network parameters , MSK parameters , and inputs are needed in the loss function minimization in Eq. (16), which can be obtained efficiently by automatic differentiation [61]. The formulation in Eq. (15) is general such that more advanced RNN frameworks can be used such as the GRU described in Eq. (11)-(13).
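The structure of the composite loss can be illustrated with a single-degree-of-freedom sketch. It assumes a residual of the form "inertia times angular acceleration minus net torque", with the acceleration approximated by central finite differences of the predicted motion. The function and argument names are hypothetical, and the actual framework obtains the required gradients by automatic differentiation.

```python
def composite_loss(q_pred, q_meas, torque, inertia, dt, beta):
    """Data loss (MSE) plus beta times the mean squared ODE residual."""
    n = len(q_pred)
    data = sum((p - m) ** 2 for p, m in zip(q_pred, q_meas)) / n
    # Assumed residual: r_k = I * qdd_k - T_k, with qdd_k by central differences.
    residuals = [
        inertia * (q_pred[k + 1] - 2.0 * q_pred[k] + q_pred[k - 1]) / dt ** 2
        - torque[k]
        for k in range(1, n - 1)
    ]
    physics = sum(r * r for r in residuals) / len(residuals)
    return data + beta * physics
```

In a training loop, `torque` would itself depend on the MSK parameters being identified, so minimizing this quantity updates the network parameters and the MSK parameters together.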

Multi-resolution training with transfer learning

To improve the training efficiency of RNN for MSK applications with mixed-frequency sEMG input signals and low-frequency output joint motion, a multi-resolution decomposition of the training input–output data is introduced in Sect. 3.3.1, followed by the transfer learning based multi-resolution training protocols to be discussed in Sect. 3.3.2.

Wavelet based multi-resolution analysis

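Consider a sequence of nested subspaces $\cdots \subset V_{-1} \subset V_0 \subset V_1 \subset \cdots \subset L^2(\mathbb{R})$, where $\bigcap_{j} V_j = \{0\}$ and $\overline{\bigcup_{j} V_j} = L^2(\mathbb{R})$. Each subspace $V_j$ of scale $j$ is spanned by a set of scaling functions $\{\phi_{j,k}\}_{k \in \mathbb{Z}}$, i.e.,

$$V_j = \mathrm{span}\left\{\phi_{j,k}(x) = 2^{j/2}\,\phi(2^j x - k),\ k \in \mathbb{Z}\right\}$$

Each subspace $V_j$ is related to the finer subspace $V_{j+1}$ through the law of dilation, i.e., if $f(x) \in V_j$ then $f(2x) \in V_{j+1}$. Translations of the scaling function span the same subspace, i.e., if $f(x) \in V_j$ then $f(x - 2^{-j}k) \in V_j$ for $k \in \mathbb{Z}$.

The orthogonal complement of $V_j$ in $V_{j+1}$ is $W_j$, such that

$$V_{j+1} = V_j \oplus W_j \tag{19}$$

where $\oplus$ is a direct sum. This subspace is spanned by a set of wavelet functions $\{\psi_{j,k}\}_{k \in \mathbb{Z}}$, i.e., $W_j = \mathrm{span}\{\psi_{j,k}(x) = 2^{j/2}\,\psi(2^j x - k),\ k \in \mathbb{Z}\}$, where $\psi$ is the mother wavelet. It follows that

$$V_{j+1} = V_{j_0} \oplus W_{j_0} \oplus W_{j_0+1} \oplus \cdots \oplus W_j, \qquad j_0 \le j \tag{20}$$

and therefore

$$L^2(\mathbb{R}) = \bigoplus_{j \in \mathbb{Z}} W_j \tag{21}$$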

The two-scale dilation and translation relations for the scaling functions can be written as

| 22 |

Orthogonal wavelet functions can be obtained by imposing orthogonality conditions between scaling and wavelet functions in the frequency domain using Fourier transform,

| 23 |

where is the coefficient.

Orthogonal scaling functions can be constructed by choosing a candidate function such that have reasonable decay and a finite support. In addition, . It should also satisfy the two-scale relation,

| 24 |

With these, an orthogonal scaling function can be expressed in terms of as

| 25 |

It is then possible to define the scaling function at the coarse scale in terms of the scaling function at the fine scale and the wavelet functions at the coarser scale,

| 26 |

Any function can be approximated at scale by using as a basis as well as using its coarse scale representation and details at the coarse scale, i.e.,

| 27 |

where and are the operators projecting onto the subspaces and details of at scale in the orthogonal subspace , respectively. and are the corresponding basis coefficients at the coarse scale . While the example shown here is for a one-dimensional case, this multi-resolution representation can be extended to multi-dimensions.

Multi-resolution data representation and training protocols

In this approach, a given signal is represented using the multi-resolution scaling functions and wavelets. A scale representation of signal can be obtained from the scale representation with the addition of wavelet components (high frequency components) of the scales higher than , using the discrete wavelet transform modified from Eq. (27),

| 28 |

where is the projection operator at scale and are the wavelet projectors of the signal that are added from scale to scale to reconstruct the signal at scale ; and are the scaling and wavelet function’s coefficients, obtained by the orthogonality condition as given in Sect. 3.3.1.

Using the wavelet transform to represent a time series at multiple resolutions offers advantages for feature extraction from signals. Compared to the Fourier transform, which offers localization only in the frequency domain, the wavelet transform provides both frequency and time domain localization, making it more suitable for time history (or sequence) learning algorithms such as the standard RNN and its enhanced variant, the GRU. More specifically, one can enhance training efficiency by using a sequential training strategy for the time-history input (sEMG) and output (joint motion) data. Applying the fast wavelet transform [59, 60] to obtain the input and output data from low to high resolutions results in better generalization performance of the RNN trained to map from sEMG signals to joint motion time history, as described below. The second-order Daubechies wavelets are used in this work.
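To make the coarse-scale projection concrete, the following Python sketch implements one possible fast wavelet transform with the 4-tap Daubechies filter and periodic boundary handling, and obtains a coarse-scale signal by discarding the finest detail bands. The periodic extension, the function names, and the choice to return the projection at the original sampling rate are assumptions made for illustration; the authors' boundary treatment is not stated in this section.

```python
import math

S3 = math.sqrt(3.0)
NORM = 4.0 * math.sqrt(2.0)
# 4-tap Daubechies low-pass filter and its quadrature-mirror high-pass filter.
H = [(1 + S3) / NORM, (3 + S3) / NORM, (3 - S3) / NORM, (1 - S3) / NORM]
G = [(-1) ** k * H[3 - k] for k in range(4)]

def analyze(x):
    """One periodic DWT level: (approximation, detail); len(x) even and >= 4."""
    n = len(x)
    approx = [sum(H[k] * x[(2 * i + k) % n] for k in range(4))
              for i in range(n // 2)]
    detail = [sum(G[k] * x[(2 * i + k) % n] for k in range(4))
              for i in range(n // 2)]
    return approx, detail

def synthesize(approx, detail):
    """Inverse of analyze (the periodic transform is orthogonal)."""
    n = 2 * len(approx)
    x = [0.0] * n
    for i in range(len(approx)):
        for k in range(4):
            x[(2 * i + k) % n] += H[k] * approx[i] + G[k] * detail[i]
    return x

def coarse_projection(x, levels):
    """Signal at scale [-levels]: drop the finest `levels` detail bands."""
    approx, sizes = list(x), []
    for _ in range(levels):
        approx, detail = analyze(approx)
        sizes.append(len(detail))
    for size in reversed(sizes):
        approx = synthesize(approx, [0.0] * size)
    return approx
```

Calling `coarse_projection(signal, 1)` and `coarse_projection(signal, 2)` on both the sEMG and the motion signals would give input–output pairs at scales [-1] and [-2], while `coarse_projection(signal, 0)` returns the unfiltered scale [0] data.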

Here we consider a general MSK system described in Sect. 2. The original unfiltered data is denoted as scale [0], which will be decomposed into a sequence of lower scales for multi-resolution training.

Let be the input training data at the full-scale of the raw signals i.e.,

| 29 |

and the motion of joints of the MSK system at the time-step at the full-scale is such that the array of the unfiltered motion data for the duration of the motion is .

From MR theory, subtracting the details from the fine-scale representation of the signal at the full scale results in a coarse-scale representation of the signal. The projected training data at coarse scale [-j] is defined as

| 30 |

where is the total number of data points and

| 31 |

is the input data of scale [-j] at time step . The motion of the MSK joints at the time-step at the scale is The data sets for a representative muscle group ‘’, and motion , are obtained from the original raw data and by wavelet projection using Eq. (27), that is,

| 32 |

where and are the projection operators in multi-dimensions. Hence, datasets that contain lower resolution representations of the original signal at scales can be expressed as:

| 33 |

where .

Instead of learning the signal mapping from input original raw sEMG data to motion data , we initiate learning the mapping by starting from a coarse scale representation of the input–output data at scale and map to . For multi-resolution RNN, the initial learning starts from the coarsest scale as follows:

| 34 |

| 35 |

| 36 |

At the next finer scale, the weights trained at the current scale (obtained with early stopping [62]) are used as the initial values of the trainable weight and bias coefficients, similar to the concept of transfer learning [63].

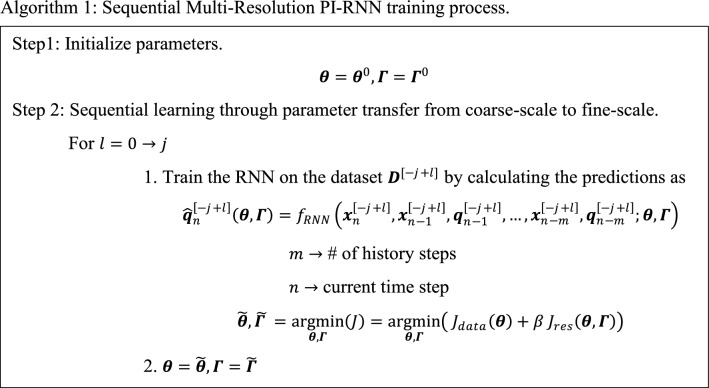

Similarly, for the multi-resolution GRU, the initial learning starts from the coarsest scale, as described in “Appendix C”. The same procedures to transfer the NN parameters in Eqs. (34)–(36) are repeated at successively finer scales until scale [0] is reached. To enhance model accuracy and robustness, variations based on Gaussian noise are added to the motion data in each sequential step, as suggested by [29]. The sequential MR training process is described in Algorithm 1.
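The coarse-to-fine protocol amounts to a warm-started training loop. The sketch below uses a deliberately simple stand-in model (a linear map fitted by gradient descent) in place of the GRU, so that the transfer of parameters between scales is the only thing on display. The function names, learning rate, and epoch counts are hypothetical, and the sketch omits early stopping, noise injection, and the physics residual.

```python
def train(params, xs, ys, epochs, lr=0.5):
    """Gradient descent on the MSE of a stand-in linear model y = w*x + b."""
    w, b = params
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def multires_train(datasets, init_params, epochs_per_scale):
    """datasets is ordered from the coarsest scale to the full scale [0].
    Parameters trained at one scale initialize training at the next."""
    params = init_params
    for xs, ys in datasets:
        params = train(params, xs, ys, epochs_per_scale)
    return params
```

The list `datasets` would hold the input–output pairs at scales [-2], [-1], and [0] for 3-scale training, or only the scale [0] pair for 1-scale training.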

Verification example

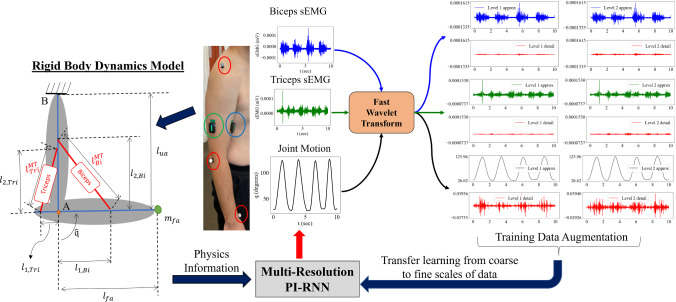

For verification of the proposed MR PI-RNN framework, an elbow flexion–extension model [1] and synthetic sEMG signals with Gaussian noise and associated motion responses were considered. The flowchart of the proposed computational framework for simultaneous forward dynamics prediction and parameter identification of MSK parameters is shown in Fig. 6.

Fig. 6.

An overview of the application of this framework to the recorded motion data. The location of motion capture markers is circled in red and the sEMG sensors on Biceps and Triceps muscle groups in blue and green, respectively. The simplified rigid body model was used in the forward dynamics equations within the framework with appropriately scaled anthropometric properties (for geometry) and physiological parameters (for muscle–tendon material models). The raw sEMG signals were mapped to the target angular motion of the elbow and used to simultaneously characterize the MSK system using the proposed Multi-Resolution PI-RNN framework

The model contained two rigid links corresponding to the upper arm and forearm with lengths and , respectively. They were connected at a hinge resembling the elbow joint “A”, while the upper arm link was fixed at the top joint “B”, and the biceps (Bi) and triceps (Tri) muscle–tendon complexes (modeled by Hill-type models with parameters and ) were represented by the lines connecting the links, as shown in Fig. 6. The degree of freedom of the model was the elbow flexion angle . The mass of the forearm was assumed to be concentrated at the wrist location; hence, a mass was attached to one end of the forearm link with a moment arm from the elbow joint. Tendons were assumed to be rigid [58] for ease of computation.

The equation of motion for this rigid body system is given in “Appendix D”. Given the synthetic sEMG signals (), the initial conditions and and the parameters in Table 1, the motion of the elbow joint, , can be obtained by solving the MSK forward dynamics problem using an explicit Runge–Kutta scheme, implemented in Python’s SciPy library [64].
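As a rough illustration of such a forward-dynamics solve, the sketch below integrates a one-degree-of-freedom forearm model with a hand-written classical fourth-order Runge–Kutta step, standing in for SciPy's explicit Runge–Kutta solver. The equation of motion used here (a point mass on a rigid link, angle measured from the hanging position, muscle torque supplied by a user function) is a simplifying assumption and is not the equation given in “Appendix D”. All names and default values are hypothetical.

```python
import math

def rk4_step(f, t, y, dt):
    """One classical Runge-Kutta step for y' = f(t, y), with y a list."""
    k1 = f(t, y)
    k2 = f(t + dt / 2, [yi + dt / 2 * ki for yi, ki in zip(y, k1)])
    k3 = f(t + dt / 2, [yi + dt / 2 * ki for yi, ki in zip(y, k2)])
    k4 = f(t + dt, [yi + dt * ki for yi, ki in zip(y, k3)])
    return [yi + dt / 6 * (a + 2 * b + 2 * c + d)
            for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]

def simulate_elbow(muscle_torque, q0, qd0, t_end, dt,
                   mass=1.0, arm=1.0, gravity=9.81):
    """Integrate I*qdd = T_muscle(t, q, qd) - m*g*l*sin(q) (assumed model)."""
    inertia = mass * arm ** 2

    def rhs(t, y):
        q, qd = y
        qdd = (muscle_torque(t, q, qd)
               - mass * gravity * arm * math.sin(q)) / inertia
        return [qd, qdd]

    y, trajectory = [q0, qd0], [q0]
    for k in range(int(round(t_end / dt))):
        y = rk4_step(rhs, k * dt, y, dt)
        trajectory.append(y[0])
    return trajectory
```

In the verification setting, `muscle_torque` would be computed from the synthetic sEMG signals through the activation and contraction dynamics of Sect. 2, using the parameters of Table 1.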

Table 1.

Parameters involved in the forward dynamics setup of elbow flexion–extension motion

| Parameter | Type | Value | Parameter | Type | Value |
|---|---|---|---|---|---|
| | Biceps Muscle Model | 0.6 m | | Equation of motion | 1.0 kg |
| | Biceps Muscle Model | 6 m | | Geometric | 1.0 m |
| | Biceps Muscle Model | 300 N | | Geometric | 1.0 m |
| | Biceps Muscle Model | 0.55 m | | Geometric | 0.3 m |
| | Biceps Muscle Model | 0.0 radians | | Geometric | 0.8 m |
| | Triceps Muscle Model | 0.4 m | | Geometric | 0.2 m |
| | Triceps Muscle Model | 4 m | | Geometric | 0.7 m |
| | Triceps Muscle Model | 300 N | | Activation Dynamics | 0.08 sec |
| | Triceps Muscle Model | 0.33 m | A | Activation Dynamics | 0.2 |
| | Triceps Muscle Model | 0.0 radians | | | |

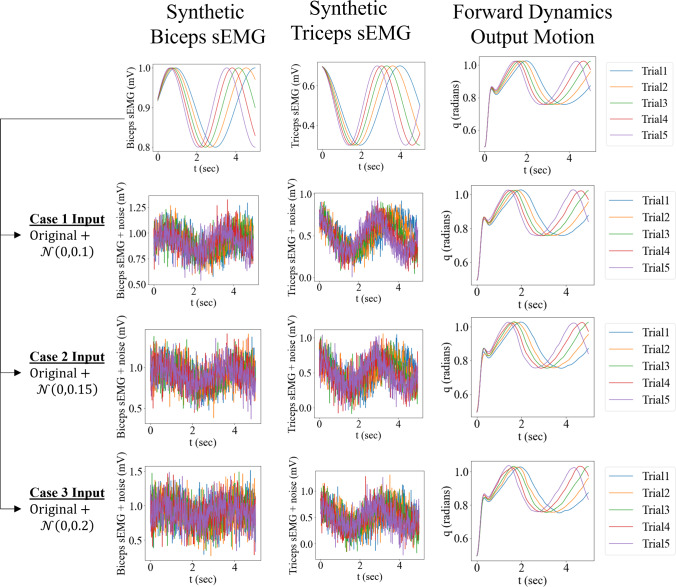

To verify the MR framework and check its robustness to different levels of noise in the input, the following test was performed. Originally, five synthetic samples, i.e., Trials 1 to 5, of noiseless synthetic muscle sEMG signals were considered, as shown in Fig. 7. In practical applications, signals obtained from measurement devices such as sEMG sensors contain noise. Therefore, three cases were developed by adding Gaussian noise with zero mean and increasing levels of standard deviation to the input synthetic sEMG signals, as listed in Table 2. As the maximum value of the noiseless sEMG signals is 1, the chosen standard deviations were kept within 10%–20% of the signal maximum for a reasonable level of noise. This range was chosen because higher noise levels (> 20%) would dominate the underlying ‘noiseless’ periodic sinusoidal signal, leading to non-physiological synthetic sEMG signals. The corresponding output motions are generated by passing the noisy sEMG as input to the FD equations in Sect. 2. The following training procedures were performed for each of the three cases.

Fig. 7.

The original ‘noiseless’ input–output data set with the synthetic biceps and triceps sEMG signals having variations in frequency for five trials are shown at the top. Increasing levels of noise are added to develop 3 cases of synthetic mixed frequency input sEMG, from which corresponding output motions are solved, using the forward dynamics equations. To verify the MR framework, these three cases with their respective mixed frequency input data are then mapped to their corresponding motion data

Table 2.

Input data and Gaussian noise level for each case

| Case ID | Input Synthetic sEMG data + |
|---|---|
| 1 | Original + |
| 2 | Original + |
| 3 | Original + |

1-scale training

The mixed frequency input sEMG signals and corresponding output motion data at scale [0], denoted by and , respectively, are mapped to get a baseline performance. This is termed as 1-scale training as only the full-scale (i.e., [0]) of the mixed frequency data is used for training.

2-scale training

Initiate learning from a coarse scale representation of the mixed frequency input data at scale and map to the corresponding motion data at scale [-1], , of that case.

Transfer parameters to the next scale training and finish the learning by mapping to .

3-scale training

Start learning from a coarse scale representation of the mixed frequency input data at scale and map to the corresponding motion data at scale [-2], , of that case.

Transfer parameters to the next scale training and continue learning by mapping to .

Transfer parameters to the next scale training and finish the learning by mapping to .

For each case and for each of the training scales in that case, the training data samples contained the data of trials 1, 2, 4, and 5, while trial 3 was used for testing; each trial contained 500 data points. The MSK parameters were chosen to be identified from the training data using the proposed framework. Due to differences in units and physiological nature of the parameters, the conditioning of the parameter identification system could be affected. To mitigate this issue, normalization [1, 44] was applied to each of the parameters,

| 37 |

where was the initial value of the parameter. Therefore, the parameters to be identified became .

The proposed framework, as described in Sect. 3, was applied to each case to simultaneously learn the MSK forward dynamics surrogate and identify the MSK parameters by optimizing Eq. (16), where the residual of the governing equation for the current time step , was expressed as

| 38 |

and is included in the residual term in the loss function in Eq. (16). While the training happens sequentially from coarse to fine-scales of the motion, the final identification of parameters happens at the scale , i.e., the full-scale in each of the 1-, 2- and 3-scale MR training types.
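To make the structure of such a physics-informed loss concrete, the sketch below combines a data misfit with a penalized ODE residual. It is an assumption-laden illustration, not a restatement of Eq. (16) or Eq. (38): it assumes a governing equation of the form I·θ'' = τ, approximates the angular acceleration with a central finite difference over the time-step, and uses hypothetical names (`ode_residual`, `composite_loss`, `penalty`).

```python
import numpy as np

def ode_residual(theta, torque, inertia, dt):
    """Residual of inertia * theta'' = torque at interior time steps.

    theta'' is approximated by a central difference (an assumed stencil).
    """
    theta = np.asarray(theta, dtype=float)
    torque = np.asarray(torque, dtype=float)
    accel = (theta[2:] - 2.0 * theta[1:-1] + theta[:-2]) / dt ** 2
    return inertia * accel - torque[1:-1]

def composite_loss(theta_pred, theta_data, torque, inertia, dt, penalty):
    """Data MSE plus penalty-weighted mean squared ODE residual."""
    theta_pred = np.asarray(theta_pred, dtype=float)
    theta_data = np.asarray(theta_data, dtype=float)
    data_term = np.mean((theta_pred - theta_data) ** 2)
    residual = ode_residual(theta_pred, torque, inertia, dt)
    return data_term + penalty * np.mean(residual ** 2)

# Illustrative check: theta = 0.5 * t**2 has unit acceleration,
# so a torque equal to the inertia makes the residual vanish.
dt, inertia = 0.01, 0.2
t = dt * np.arange(50)
theta = 0.5 * t ** 2
torque = inertia * np.ones_like(t)
print(composite_loss(theta, theta, torque, inertia, dt, penalty=1.0))
```

In the actual framework the torque depends on the muscle activations and on the MSK parameters being identified, so minimizing the residual term constrains both the predicted motion and those parameters.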

A GRU with 2 history steps, 1 hidden layer and 50 neurons in each layer was used. The training was performed after standardizing the data, such that the scale or range of the input and output have minimal influence on model performance [21]. The Adam algorithm [65] was used with an initial learning rate of and the penalty parameter for the MSK residual term in the loss function, . is the time-step between data points and is the moment of inertia in Eq. (38). Five parameter initialization seeds were used for an averaged response of the MR training.

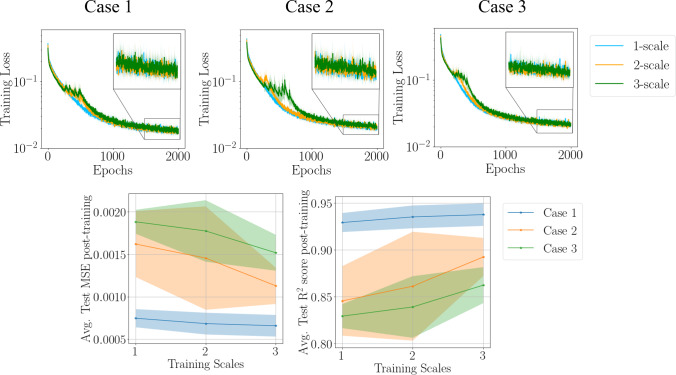

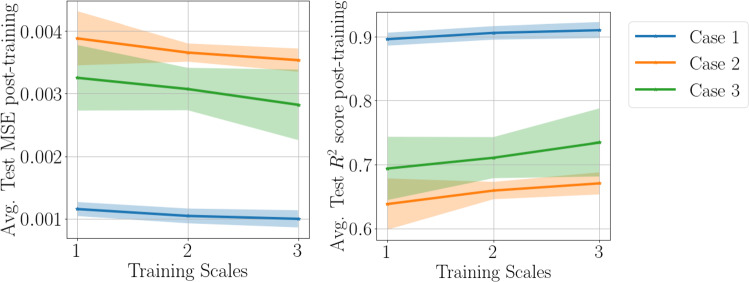

To compare the post-training performance of the 1-, 2- and 3-scale MR training, the average testing mean squared error (MSE) and testing scores were compared, where these measures for a single trial are defined as:

| 39 |

| 40 |

where is the motion data of the trial, is the trial’s predicted motion from the MR PI-RNN framework, and is the mean of trial’s motion data with being the number of data points in the trial. At each epoch in the MR training, the training loss is calculated by using the scale of the training data used in that training scale, i.e., scale of the data is used in -scale training.
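The two per-trial measures can be sketched in a few lines. The sketch assumes that the score is the standard coefficient of determination R², which matches the description above (it involves the mean of the trial's motion data and its ideal value is one); the function names are hypothetical.

```python
import numpy as np

def mse(target, pred):
    """Mean squared error between a trial's motion data and its prediction."""
    target = np.asarray(target, dtype=float)
    pred = np.asarray(pred, dtype=float)
    return np.mean((target - pred) ** 2)

def r2_score(target, pred):
    """Coefficient of determination (assumed form of the score)."""
    target = np.asarray(target, dtype=float)
    pred = np.asarray(pred, dtype=float)
    ss_res = np.sum((target - pred) ** 2)           # residual sum of squares
    ss_tot = np.sum((target - target.mean()) ** 2)  # spread about the trial mean
    return 1.0 - ss_res / ss_tot

theta = np.array([1.0, 2.0, 3.0, 4.0])
theta_hat = np.array([1.0, 2.0, 3.0, 5.0])
print(mse(theta, theta_hat), r2_score(theta, theta_hat))
```

A perfect prediction gives an MSE of zero and a score of one, while predicting the trial mean everywhere gives a score of zero.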

The gradual improvement in these metrics is evident from Fig. 8: as further scales of information are added and the training data is augmented, the generalization performance improves from 1-scale to 3-scale training. Overall, the test MSE decreases and the score moves closer to one, indicating an increase in generalization accuracy as more training scales are introduced. This can be explained through the bias-variance tradeoff: training on several scales of the data introduces more variance into the training, which helps the ML framework reduce the bias it develops when trained only on the full scale of the data. Together, this reduction in bias and growth in variance lead to better generalization performance. Computationally, the method improves accuracy with the same number of training epochs. Because post-training predictions are made using the full scale of the data, the time needed for the forward pass does not increase with the number of training scales.

Fig. 8.

The training loss and testing metrics are shown. The zoomed-in plots are included for clarity on the loss evolution for the last 500 epochs. The shaded area indicates one standard deviation from the mean (solid line), in both the loss and average test MSE and score figures. As more scales of data are introduced in the MR training, the average Test MSE and score calculated post-training improve in each case

Meanwhile, the MSK parameters, (maximum isometric force) and (optimal muscle length corresponding to the maximum isometric force), of both the biceps and the triceps were accurately identified from the motion data, as shown in Table 3. Compared with the parameter identification from our previous work [1] where in addition to , the maximum contraction velocity was independently identified, due to non-convergence of by the time-domain and feature-encoded trainings, the proposed method can accurately identify . can then be obtained from the experimentally observed relationship of [57, 66].

Table 3.

The average percentage error (shown as mean ± standard deviation) between predicted and true values of the parameters for 3-scale training for each case from five initialization points

| Parameter | Case 1 | Case 2 | Case 3 |

|---|---|---|---|

For the identification of optimal muscle length parameters , the initial points need to be chosen with respect to constraints applied by the geometry of the MSK system. The errors reported in Table 3 are calculated by taking the average of the percentage error of the identified MSK parameters from the 3-scale training with the multiple parameter initializations. It was observed that in MSK parameter identification, similar accuracy in characterization was obtained from all training scale approaches used within each case, with errors less than 1%. This indicates that the MR PI-RNN improves the generalization performance of the motion prediction, without loss in parameter identification accuracy. It is noted that this example investigates the predictivity of in-distribution testing data, i.e., testing data that lies within the range of the training data. The effect of MR PI-RNN training on out-of-distribution predictivity is also studied in “Appendix E”.

Validation: elbow flexion–extension motion

Application of MR PI-RNN to subject-specific data

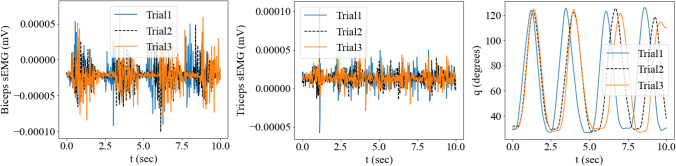

The recorded motion data and sEMG signals were collected and processed as per the data acquisition protocols mentioned in [1]. In brief, three elbow flexion–extension motion trials were performed by the subject for 10 s each, with two Delsys Trigno sEMG sensors placed on the biceps and triceps muscle groups, based on SENIAM recommendations [67]. The processed sEMG signals were transformed as described in Sect. 2.1 to obtain muscle activation signals, used to calculate the MSK forward dynamics ODE residual. The same simplified rigid body model was used as in Sect. 4 and appropriately scaled anthropometric properties (for the geometry of the model) and physiological parameters (for muscle–tendon material models used for the muscle groups) based on the generic upper body model defined in [68, 69] were used. Figure 9 shows the measured data of the three trials, including the transient raw sEMG signals and the corresponding angular motion of the elbow flexion–extension of the subject.

Fig. 9.

The measured raw sEMG signals and the corresponding angular motion of the elbow flexion–extension of the subject are plotted

In this example, the raw sEMG signals were used as input. A 5-scale MR training procedure as described in Sect. 4 was used on a GRU with 1 hidden layer with 50 neurons. The data of trials 1 and 3 were used for training, while trial 2 was used for testing, where each signal contained 500 temporal data points.

The muscle parameters to be identified by the framework include the maximum isometric force and the optimal muscle length from both muscle groups, which are denoted as . It was observed in our tests that, despite the normalization process described in Eqs. (37) and (38), the parameters obtained at the end of the MR training with motion data either diverged or converged to non-physiological values. To obtain physiologically consistent parameters, we used the values obtained from literature studies and constrained the space of parameter search [44].

Let the parameter to be identified be defined as

| 41 |

where is the value defined in the literature study and is the parameter to be optimized in the training such that it can be used to evaluate the sigmoid function and is the vector of these trainable parameters. Using the optimized , the desired MSK parameters can be estimated. This formulation constrains the identified parameters to be consistent with parameters obtained through experimental studies [68–70].
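The idea of constraining the search space with a sigmoid can be illustrated as follows. The exact form of Eq. (41) is not reproduced here; the sketch assumes one plausible variant in which an unconstrained trainable value `w` is mapped to a parameter lying within a fixed fraction of a literature reference value. The names `constrained_parameter`, `p_ref` and `half_width`, and the numbers used, are hypothetical.

```python
import numpy as np

def sigmoid(w):
    """Logistic function mapping the real line to (0, 1)."""
    return 1.0 / (1.0 + np.exp(-w))

def constrained_parameter(w, p_ref, half_width=0.5):
    """Map unconstrained w to a parameter near the reference value p_ref.

    The result stays between p_ref * (1 - half_width) and
    p_ref * (1 + half_width); w = 0 returns p_ref itself.
    """
    return p_ref * (1.0 + half_width * (2.0 * sigmoid(w) - 1.0))

# Any real-valued w produced by the optimizer yields a bounded parameter
for w in (-5.0, 0.0, 5.0):
    print(w, constrained_parameter(w, p_ref=100.0))
```

Because the optimizer updates `w` rather than the physical parameter, gradient steps of any size cannot push the identified value outside the prescribed interval.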

The proposed framework was then applied to simultaneously learn the MSK forward dynamics surrogate and identify the MSK parameters by optimizing Eq. (16), where the residual of the governing equation for the current time step , is expressed with a slight correction of Eq. (38) due to parameter space modification as

| 42 |

This is introduced into the residual term in the loss function and the optimization problem becomes,

| 43 |

As mentioned in the verification example (Sect. 4), the multi-resolution parameter identification is performed starting from the coarsest scale, transferring the learned parameters to the parameter identification at the next finer scale, and finally completing the parameter identification at the full-scale, i.e., at scale .
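The coarse-to-fine transfer of trained parameters can be sketched with a toy model. The example below is only an illustration of the warm-start pattern: it replaces the GRU and the physics-informed loss with a linear least-squares fit trained by gradient descent, and replaces the wavelet projection with simple block averaging. All function names and data are hypothetical.

```python
import numpy as np

def block_average(x, level):
    """Piecewise-constant coarse version of x over blocks of 2**level samples."""
    block = 2 ** level
    n = (len(x) // block) * block  # drop an incomplete trailing block, if any
    return np.repeat(x[:n].reshape(-1, block).mean(axis=1), block)

def train_linear(params, x, y, lr=0.1, epochs=300):
    """Gradient descent on the MSE of y ~ w * x + b, starting from params."""
    w, b = params
    for _ in range(epochs):
        err = w * x + b - y
        w -= lr * 2.0 * np.mean(err * x)
        b -= lr * 2.0 * np.mean(err)
    return w, b

def train_multiresolution(params, x, y, n_scales, train_fn):
    """Train from the coarsest scale up to the full scale, reusing parameters."""
    for level in range(n_scales - 1, -1, -1):
        params = train_fn(params, block_average(x, level), block_average(y, level))
    return params

rng = np.random.default_rng(1)
x = rng.standard_normal(512)
y = 2.0 * x - 1.0 + 0.05 * rng.standard_normal(512)
w, b = train_multiresolution((0.0, 0.0), x, y, n_scales=3, train_fn=train_linear)
print(w, b)
```

Each call to `train_fn` starts from the parameters returned at the previous, coarser scale, so the final full-scale training is initialized from a state that already fits the low-frequency content of the data.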

Results

To accelerate the training process, the training dataset is standardized to have zero mean and unit variance. The training was performed with the standardized data, using the Adam algorithm [65] with an initial learning rate of and 4 history steps were considered. Five parameter initialization seeds were used for an averaged response of the MR training. To quantify the error in the testing predictions, a normalized mean squared error (NMSE) was defined,

| 44 |

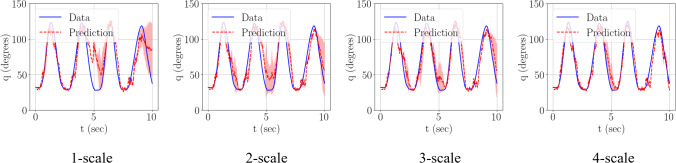

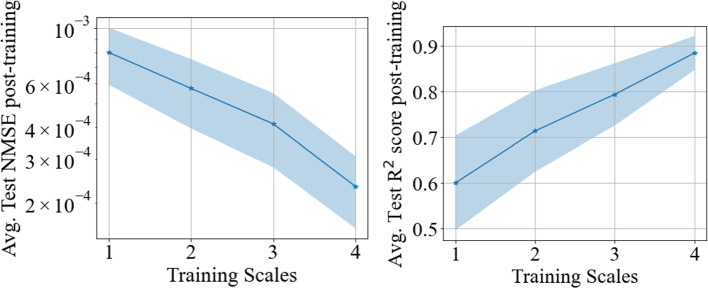

where is the target motion data point, is the predicted motion data point, from the MR PI-RNN framework, and is the mean of target motion data. The score was calculated using the metric defined in Eq. (40). Figures 10 and 11 and Table 4 show that adding training scales improves the motion predictions. Relative to 1-scale training, 4-scale training raises the average test score by 47% (from 0.599 to 0.884, bringing it closer to one) and lowers the average test NMSE by 71%, averaged over the five initialization seeds. Figure 10 shows the predictions improving progressively as more scales are involved in the training.

Fig. 10.

Comparison of test predictions post-training for each MR training scale performed. The solid dash line is the mean of the predictions post-training when various initialization points are utilized to begin the MR training, with shaded region indicating one standard deviation from the mean

Fig. 11.

The test normalized mean squared error (NMSE) and test score are plotted for the testing predictions post-training, averaged over five initialization seeds. The solid marker line is the mean of the metric, and the shaded region indicates one standard deviation from the mean

Table 4.

The test metrics such as NMSE and score averaged over five initialization seeds, for the various training scales involved are reported here

| Training Scale | Avg. Test NMSE | Decrease (%) in Avg. Test NMSE w.r.t. 1-Scale Training | Avg. Test Score | Increase (%) in Avg. Test Score w.r.t. 1-Scale Training |
|---|---|---|---|---|
| 1 | 8.00E–04 | - | 0.599 | - |
| 2 | 5.74E–04 | 28% | 0.713 | 19% |
| 3 | 4.14E–04 | 48% | 0.793 | 32% |
| 4 | 2.33E–04 | 71% | 0.884 | 47% |

The % decrease in average NMSE and % increase in average w.r.t 1-scale training are shown in the 3rd and 5th columns respectively
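The tabulated values can be cross-checked with simple arithmetic. The sketch below assumes that the score is the coefficient of determination R² and notes that, with 500 data points per signal, each tabulated NMSE agrees with (1 − score)/500 to within rounding. This relation is an inference from the numbers in Table 4, not a restatement of Eq. (44); the helper names are hypothetical.

```python
N_POINTS = 500  # temporal data points per signal, as stated for this example

# (average test NMSE, average test score) per training scale, copied from Table 4
TABLE_4 = {
    1: (8.00e-4, 0.599),
    2: (5.74e-4, 0.713),
    3: (4.14e-4, 0.793),
    4: (2.33e-4, 0.884),
}

def implied_nmse(score, n_points=N_POINTS):
    """NMSE implied by the score if NMSE = (1 - score) / n_points (assumed)."""
    return (1.0 - score) / n_points

def percent_change(value, baseline):
    """Signed relative change of value with respect to baseline, in percent."""
    return 100.0 * (value - baseline) / baseline

base_nmse, base_score = TABLE_4[1]
for scale, (nmse, score) in TABLE_4.items():
    print(
        scale,
        f"implied NMSE {implied_nmse(score):.2e}",
        f"tabulated NMSE {nmse:.2e}",
        f"NMSE decrease {-percent_change(nmse, base_nmse):.1f}%",
        f"score increase {percent_change(score, base_score):.1f}%",
    )
```

Small differences between the computed and tabulated percentages are expected, since the table entries are themselves rounded averages.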

The identified MSK parameters from the MR PI-RNN training are summarized in Table 5, which reports the mean of the final converged values of and obtained from multiple parameter initializations at 4-scale training. These values are consistent with the physiological estimates of these parameters reported in literature [68–70]. is slightly outside the estimated range, which could be attributed to the variance in population. Similar values were obtained across all scales of training; hence, the parameters obtained from a representative 4-scale training are shown here. The results demonstrate the effectiveness of the proposed MR PI-RNN framework and its potential for real applications.

Table 5.

The identified parameter estimates using MR PI-RNN training, and their values reported in literature [68–70]

| Parameter | Identified values | Estimates from literature |
|---|---|---|
| | | 158.4–845 |
| | | 0.115–0.142 |
| | | 554.4–2332.916 |
| | | 0.067–0.087 |

Discussion and conclusions

In this work, we proposed a multi-resolution physics-informed recurrent neural network (MR PI-RNN) for time-domain motion prediction and parameter identification in MSK systems. A GRU with a physics-informed loss function that minimized the error in the training data and the residual of the MSK forward dynamics equilibrium was used for this purpose. Wavelet-based multi-resolution techniques were used to decompose the input sEMG signals and output joint motion data into coarse-scale approximations at different scales and fine-scale details at those scales. The sEMG and joint motion multi-scale components were then mapped to each other starting from chosen coarse-scale components and then sequentially trained (via transfer learning) at finer scales, completing the training on the full-scale of the data.

By initializing training on the coarse-scale of the training data, the optimization reaches a local minimum that serves as a better initialization state for the training data that includes the sequential fine-scale details. The proposed transfer-learning based sequential training scheme can be used for learning datasets that have high frequency signals, as shown in the verification example with synthetic mixed frequency sEMG data. The numerical examples show improved testing predictions together with accurate parameter identification. We observe from the loss profiles that the testing loss decreased while the training loss increased as more scales of data were brought in. It was also observed that the average test MSE and metrics showed a clear improvement in the generalization accuracy. These phenomena can be explained through the bias-variance tradeoff: training on several scales of the data introduces more variance into the training, which helps the ML framework reduce the bias it develops when trained only on the full-scale of the data. Computationally, the proposed method achieves improved accuracy with the same number of training epochs.

The proposed MR framework was validated on recorded sEMG and motion data from a subject [1] and significant improvements were observed in the testing prediction accuracy, with 1-scale training often leading to large errors. The predicted motion at higher training scales showed improvements across all initialization points used, indicating the robustness of the method. The identified parameters were also consistent with the physiological range observed in literature.

This method also has the advantage of operating in the time-domain as compared to the feature-encoded (FE) training [1], where the input sEMG signals were projected onto the frequency domain using the Fourier basis. In the FE training, making a prediction required the input signal for the entire duration of the movement, whereas the physics-informed MR training of the RNN enables the trained model to make real-time predictions by using the information of the previous time-steps and the current sEMG signal. In addition, for mixed frequency signals, wavelet resolution can better capture the local frequency information as compared to the Fourier basis, which captures the global frequency information. As compared to the NN-based time-domain training performed at the original scale of the data (scale [0]) proposed in [1], the MR PI-RNN training approach described here achieved significant improvements due to the stronger sequence learning capability of the RNN and the coarse-to-fine MR training strategy.

This method is presented as a general approach where multi-resolution is applied to both input and output. For some applications, e.g., those that require only data mapping, the MR training can be applied by only considering the decomposition to the input, keeping the output at the full-scale (i.e., scale [0]) throughout, or vice versa. To apply this method to clinical studies, RNN hyperparameters may also be tuned to account for subject variability. The dependence of this method on the number of data points available in a signal can also be studied in the future. To further improve this method, we can consider the use of multi-resolution as activation functions of the ML framework, instead of relying on data filtration processes for better computational efficiency. This method will also be studied on other physics-informed ML techniques to solve forward problems with PDEs having mixed frequency source terms.

Acknowledgements

The support of this work by the National Institutes of Health under grant number 5R01AG056999-04 to K. Taneja & J. S. Chen is very much appreciated.

List of symbols

Raw sEMG signals captured by sensors

Neural excitations

Muscle activations

Electro-mechanical delay between origin of neural excitation from central nervous system and reaching the muscle group

Shape factor used to relate neural excitation to muscle activation

Maximum isometric force in the muscle

Optimal muscle length corresponding to the maximum isometric force

Maximum contraction velocity

Slack length of the tendon

Pennation angle between muscle and tendon

Set of muscle parameters for each muscle group

Normalized muscle length

Normalized muscle velocity

Total length of muscle–tendon complex

Active force component of Hill-type muscle model

Length dependent active force generation component

Velocity dependent active force generation component

Passive force component of Hill-type muscle model

Total muscle force

Total force produced by muscle–tendon complex

Torque produced by the muscle–tendon complex

Generalized angular motion of the MSK system

Hidden state after n history steps of the RNN/GRU

Weights connecting the variable to variable

Bias for the RNN to calculate variable

Output from the reset gate of the GRU

Output from the update gate of the GRU

Intermediate variables in GRU forward pass

Candidate hidden state after n history steps of the GRU

Set of all weights and biases of the RNN/GRU

Scaling function in multi-resolution analysis

Wavelet function in multi-resolution analysis

The nested and complementary subspaces containing and respectively

Projection operators on a function projecting it onto subspaces and respectively

Parameter characterizing the parameterized ODE system

Input data at scale to the MR PI-RNN framework at time-step i

Input data set projected to scale

Predicted angular motion at scale [-j] from the MR PI-RNN framework at time-step i

Composite loss function of the MR PI-RNN minimizing data and ODE residual

Forearm length

Upper arm length

Mass of forearm

Appendix A: Muscle–Tendon Force Generation

The total muscle force can be expressed as

| 45 |

where is the passive muscle length dependent force generation function. The active force component can be expressed as:

| 46 |

where is the activation function in Eq. (2), is the normalized muscle length, is the normalized velocity of the muscle. The total length of the MT system is given by,

| 47 |

Given the current joint angle and the angular velocity , the current length, of the MT system can be calculated using trigonometric relations.

The and are generic functions of the length and velocity dependent force generation properties of the active muscle, represented by dimensionless quantities. In this study, the tendon is assumed to be rigid . The total force produced by the MT complex, , can be expressed as:

| 48 |

The rigid-tendon model simplifies the MT contraction dynamics [57, 58] which accounts for the interaction of the activation, force length, and force velocity properties of the MT complex.
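The force relations of this appendix can be sketched for a rigid tendon as below. The sketch assumes the common Hill-type structure in which the active force is the product of activation, length and velocity factors and the maximum isometric force, the passive force is a length factor times the maximum isometric force, and the muscle force is projected along the tendon through the cosine of the pennation angle. The three curve functions are hypothetical placeholders, not the relations of [55] or [66].

```python
import numpy as np

def muscle_tendon_force(a, l_norm, v_norm, f_max, pennation, f_len, f_vel, f_pas):
    """Force of a muscle-tendon unit with a rigid tendon (assumed Hill-type form)."""
    active = a * f_len(l_norm) * f_vel(v_norm) * f_max   # activation-driven part
    passive = f_pas(l_norm) * f_max                      # stretch-driven part
    return (active + passive) * np.cos(pennation)        # projection on the tendon

# Placeholder curves for illustration only (hypothetical shapes and constants)
def f_len(l_norm):
    return np.exp(-((l_norm - 1.0) ** 2) / 0.45)         # peak at optimal length

def f_vel(v_norm):
    return np.clip(1.0 + v_norm, 0.0, 1.5)               # equals 1 at zero velocity

def f_pas(l_norm):
    return np.where(l_norm > 1.0, np.exp(5.0 * (l_norm - 1.0)) - 1.0, 0.0)

# Fully activated muscle at optimal length, zero velocity and zero pennation
print(muscle_tendon_force(1.0, 1.0, 0.0, 500.0, 0.0, f_len, f_vel, f_pas))
```

With these placeholders, a fully activated muscle at its optimal length and zero velocity produces its maximum isometric force, which is the defining property the length and velocity factors are normalized to satisfy.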

Appendix B: Hill-Type Muscle Models

For the length dependent muscle force relations, this work uses the equations given in [55]. The active muscle force dependent on variation in length is given as

| 49 |

| 50 |

where and correspond to parameters in the passive muscle force model related to an adult human. The muscle force velocity relationship is used directly from [66].

Appendix C: Multi-Resolution GRU Formulation

For multi-resolution GRU, the initial learning starts from the coarsest scale as follows with notations according to Sect. 3.3.2,

| 51 |

| 52 |

| 53 |

where the weights and biases are trainable parameters.
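For readers unfamiliar with the GRU forward pass, a minimal NumPy sketch is given below. It follows one widely used GRU convention (reset gate, update gate, candidate hidden state) and is not guaranteed to match the sign or ordering conventions of Eqs. (51)–(53); the output layer that maps the hidden state to the joint angle is omitted. The names `init_gru`, `gru_step` and `gru_forward`, the weight initialization, and the sequence length are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_gru(n_in, n_hidden, seed=0):
    """Small random weights and zero biases for the three GRU transformations."""
    rng = np.random.default_rng(seed)
    params = {}
    for gate in ("r", "z", "h"):
        params["W_" + gate] = 0.1 * rng.standard_normal((n_hidden, n_in))
        params["U_" + gate] = 0.1 * rng.standard_normal((n_hidden, n_hidden))
        params["b_" + gate] = np.zeros(n_hidden)
    return params

def gru_step(p, x, h):
    """One GRU update of the hidden state h given the input x."""
    r = sigmoid(p["W_r"] @ x + p["U_r"] @ h + p["b_r"])              # reset gate
    z = sigmoid(p["W_z"] @ x + p["U_z"] @ h + p["b_z"])              # update gate
    h_cand = np.tanh(p["W_h"] @ x + p["U_h"] @ (r * h) + p["b_h"])   # candidate state
    return (1.0 - z) * h + z * h_cand

def gru_forward(p, x_seq):
    """Run the GRU over a short input history, starting from a zero state."""
    h = np.zeros(p["b_h"].shape)
    for x in x_seq:
        h = gru_step(p, x, h)
    return h

# Two input channels (e.g., biceps and triceps sEMG) and 50 hidden neurons
params = init_gru(n_in=2, n_hidden=50)
history = np.array([[0.1, 0.0], [0.3, 0.1], [0.2, 0.4]])  # illustrative inputs
print(gru_forward(params, history).shape)
```

In the multi-resolution setting, the same set of weights and biases is trained first with coarse-scale inputs and outputs and then carried over, unchanged in structure, to the finer scales.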

Appendix D: Equation of Motion of the Simplified MSK Model

The equation of motion for the rigid body system used in Sect. 4 is,

| 54 |

where,

| 55 |

| 56 |

| 57 |

| 58 |

| 59 |

Appendix E: Study on Out-of-Distribution predictivity of the MR PI-RNN training

To investigate the performance of the MR training strategy on predictions made on trials that are out-of-distribution of the training data, two combinations of training and testing trials from the data used in the verification example (Sect. 4) were studied, as shown in Table 6.

Table 6.

Training and Testing data description for the Out-of-Distribution predictivity study

| | Training Data Trials | Testing Data Trial |
|---|---|---|
| Set A | 2, 3, 4, 5 | 1 |
| Set B | 1, 2, 3, 4 | 5 |

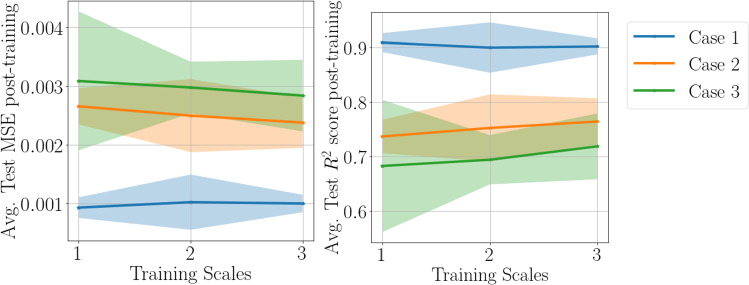

The setting of this study is the same as described in Sect. 4. The MSE and scores after applying 1-, 2- and 3-scale MR PI-RNN training are shown in Figs. 12–13. In both tests, the prediction accuracy of the testing trial increases with the number of training scales. This trend is more apparent when the sEMG signals contain more high-frequency content (from Case 1 to 3). The results demonstrate that the proposed approach remains effective for out-of-distribution predictions.

Fig. 12.

The average test MSE and score post MR training for Set A are shown. The shaded area indicates one standard deviation from the mean (solid line), in both figures

Fig. 13.

The average test MSE and score post MR training for Set B are shown. The shaded area indicates one standard deviation from the mean (solid line), in both figures


References

- 1. Taneja K, He X, He Q, Zhao X, Lin Y-A, Loh KJ, Chen J-S. A feature-encoded physics-informed parameter identification neural network for musculoskeletal systems. J Biomech Eng. 2022;144(121006):1–16. doi: 10.1115/1.4055238.

- 2. Costello Z, Martin HG. A machine learning approach to predict metabolic pathway dynamics from time-series multiomics data. npj Syst Biol Appl. 2018;4(1):1–14. doi: 10.1038/s41540-018-0054-3.

- 3. Damle C, Yalcin A. Flood prediction using time series data mining. J Hydrol. 2007;333(2):305–316.

- 4. Nishino T, Hokugo A. A stochastic model for time series prediction of the number of post-earthquake fire ignitions in buildings based on the ignition record for the 2011 Tohoku earthquake. Earthq Spectra. 2020;36(1):232–249.

- 5. Manevitz L, Bitar A, Givoli D. Neural network time series forecasting of finite-element mesh adaptation. Neurocomputing. 2005;63:447–463.

- 6. Frank RJ, Davey N, Hunt SP. Time series prediction and neural networks. J Intell Robotic Syst. 2001;31:91–103.

- 7. Längkvist M, Karlsson L, Loutfi A. A review of unsupervised feature learning and deep learning for time-series modeling. Pattern Recogn Lett. 2014;42:11–24.

- 8. Calderón-Macías C, Sen MK, Stoffa PL. Artificial neural networks for parameter estimation in geophysics. Geophys Prospect. 2000;48(1):21–47.

- 9. Hubbard SS, Rubin Y. Hydrogeological parameter estimation using geophysical data: a review of selected techniques. J Contam Hydrol. 2000;45(1):3–34.

- 10. Ebrahimian H, Astroza R, Conte JP. Extended Kalman filter for material parameter estimation in nonlinear structural finite element models using direct differentiation method. Earthquake Eng Struct Dynam. 2015;44(10):1495–1522.

- 11. Haghighat E, Raissi M, Moure A, Gomez H, Juanes R. A physics-informed deep learning framework for inversion and surrogate modeling in solid mechanics. Comput Methods Appl Mech Eng. 2021;379:113741.

- 12. Heine CB, Menegaldo LL. Numerical validation of a subject-specific parameter identification approach of a quadriceps femoris EMG-driven model. Med Eng Phys. 2018;53:66–74. doi: 10.1016/j.medengphy.2018.01.006.

- 13. Hirschvogel M, Bassilious M, Jagschies L, Wildhirt SM, Gee MW. A monolithic 3D–0D coupled closed-loop model of the heart and the vascular system: experiment-based parameter estimation for patient-specific cardiac mechanics. Int J Numer Methods Biomed Eng. 2017;33(8):e2842. doi: 10.1002/cnm.2842.

- 14. Schmid H, Nash MP, Young AA, Hunter PJ. Myocardial material parameter estimation: a comparative study for simple shear. J Biomech Eng. 2006;128(5):742–750. doi: 10.1115/1.2244576.

- 15. Pau JWL, Xie SSQ, Pullan AJ. Neuromuscular interfacing: establishing an EMG-driven model for the human elbow joint. IEEE Trans Biomed Eng. 2012;59(9):2586–2593. doi: 10.1109/TBME.2012.2206389.

- 16. Zhao Y, Zhang Z, Li Z, Yang Z, Dehghani-Sanij AA, Xie S. An EMG-driven musculoskeletal model for estimating continuous wrist motion. IEEE Trans Neural Syst Rehabil Eng. 2020;28(12):3113–3120. doi: 10.1109/TNSRE.2020.3038051.

- 17. Kian A, Pizzolato C, Halaki M, Ginn K, Lloyd D, Reed D, Ackland D. Static optimization underestimates antagonist muscle activity at the glenohumeral joint: a musculoskeletal modeling study. J Biomech. 2019;97:109348. doi: 10.1016/j.jbiomech.2019.109348.

- 18. Buchanan TS, Lloyd DG, Manal K, Besier TF. Neuromusculoskeletal modeling: estimation of muscle forces and joint moments and movements from measurements of neural command. J Appl Biomech. 2004;20(4):367–395. doi: 10.1123/jab.20.4.367.

- 19. Lloyd DG, Besier TF. An EMG-driven musculoskeletal model to estimate muscle forces and knee joint moments in vivo. J Biomech. 2003;36(6):765–776. doi: 10.1016/s0021-9290(03)00010-1.

- 20. Buongiorno D, Barsotti M, Barone F, Bevilacqua V, Frisoli A. A linear approach to optimize an EMG-driven neuromusculoskeletal model for movement intention detection in myo-control: a case study on shoulder and elbow joints. Front Neurorobot. 2018;12:74. doi: 10.3389/fnbot.2018.00074.

- 21. Goodfellow I, Bengio Y, Courville A. Deep Learning. Cambridge, MA, USA: MIT Press; 2016.

- 22. Kaneko S, Wei H, He Q, Chen J-S, Yoshimura S. A hyper-reduction computational method for accelerated modeling of thermal cycling-induced plastic deformations. J Mech Phys Solids. 2021;151:104385.

- 23. Kim B, Azevedo VC, Thuerey N, Kim T, Gross M, Solenthaler B. Deep fluids: a generative network for parameterized fluid simulations. Comput Graph Forum. 2019;38(2):59–70.

- 24. Xie X, Zhang G, Webster C. Non-intrusive inference reduced order model for fluids using deep multistep neural network. Mathematics. 2019;7(8):757.

- 25. Fries WD, He X, Choi Y. LaSDI: parametric latent space dynamics identification. Comput Methods Appl Mech Eng. 2022;399:115436.

- 26. He X, Choi Y, Fries WD, Belof JL, Chen J-S. GLaSDI: parametric physics-informed greedy latent space dynamics identification. J Comput Phys. 2023;489:112267.

- 27. Ghaboussi J, Garrett JH, Wu X. Knowledge-based modeling of material behavior with neural networks. J Eng Mech - ASCE. 1991;117(1):132–153.

- 28. Lefik M, Boso DP, Schrefler BA. Artificial neural networks in numerical modelling of composites. Comput Methods Appl Mech Eng. 2009;198(21):1785–1804.

- 29. He X, Chen J-S. Thermodynamically consistent machine-learned internal state variable approach for data-driven modeling of path-dependent materials. Comput Methods Appl Mech Eng. 2022;402:115348.

- 30. Kirchdoerfer T, Ortiz M. Data driven computing with noisy material data sets. Comput Methods Appl Mech Eng. 2017;326:622–641.

- 31. Eggersmann R, Kirchdoerfer T, Reese S, Stainier L, Ortiz M. Model-free data-driven inelasticity. Comput Methods Appl Mech Eng. 2019;350:81–99.

- 32. He Q, Chen J-S. A physics-constrained data-driven approach based on locally convex reconstruction for noisy database. Comput Methods Appl Mech Eng. 2020;363:112791.

- 33. He X, He Q, Chen J-S, Sinha U, Sinha S. Physics-constrained local convexity data-driven modeling of anisotropic nonlinear elastic solids. DCE. 2020;1:e19.

- 34. He Q, Laurence DW, Lee C-H, Chen J-S. Manifold learning based data-driven modeling for soft biological tissues. J Biomech. 2021;117:110124. doi: 10.1016/j.jbiomech.2020.110124.

- 35. Bahmani B, Sun W. Manifold embedding data-driven mechanics. J Mech Phys Solids. 2022;166:104927.

- 36. Carrara P, De Lorenzis L, Stainier L, Ortiz M. Data-driven fracture mechanics. Comput Methods Appl Mech Eng. 2020;372:113390.

- 37. He X, He Q, Chen J-S. Deep autoencoders for physics-constrained data-driven nonlinear materials modeling. Comput Methods Appl Mech Eng. 2021;385:114034.

- 38. Raissi M, Perdikaris P, Karniadakis GE. Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J Comput Phys. 2019;378:686–707.

- 39. Karniadakis GE, Kevrekidis IG, Lu L, Perdikaris P, Wang S, Yang L. Physics-informed machine learning. Nat Rev Phys. 2021;3(6):422–440.

- 40.He Q, Tartakovsky AM. Physics-informed neural network method for forward and backward advection-dispersion equations. Water Resour Res. 2021;57(7):e2020WR029479. [Google Scholar]

- 41.Zhu Q, Liu Z, Yan J. Machine learning for metal additive manufacturing: predicting temperature and melt pool fluid dynamics using physics-informed neural networks. Comput Mech. 2021;67(2):619–635. [Google Scholar]

- 42.Xu K, Tartakovsky AM, Burghardt J, Darve E. Learning viscoelasticity models from indirect data using deep neural networks. Comput Methods Appl Mech Eng. 2021;387:114124. [Google Scholar]

- 43.Tartakovsky AM, Marrero CO, Perdikaris P, Tartakovsky GD, Barajas-Solano D. Physics-informed deep neural networks for learning parameters and constitutive relationships in subsurface flow problems. Water Resour Res. 2020;56(5):e2019WR026731. [Google Scholar]

- 44.He Q, Stinis P, Tartakovsky AM. Physics-constrained deep neural network method for estimating parameters in a redox flow battery. J Power Sources. 2022;528:231147. [Google Scholar]

- 45.He Q, Barajas-Solano D, Tartakovsky G, Tartakovsky AM. Physics-informed neural networks for multiphysics data assimilation with application to subsurface transport. Adv Water Resour. 2020;141:103610. [Google Scholar]

- 46.Kissas G, Yang Y, Hwuang E, Witschey WR, Detre JA, Perdikaris P. Machine learning in cardiovascular flows modeling: predicting arterial blood pressure from non-invasive 4D flow MRI data using physics-informed neural networks. Comput Methods Appl Mech Eng. 2020;358:112623. [Google Scholar]

- 47.Sahli Costabal F, Yang Y, Perdikaris P, Hurtado DE, Kuhl E. Physics-informed neural networks for cardiac activation mapping. Front Phys. 2020;8:42. [Google Scholar]

- 48.Alber M, Buganza Tepole A, Cannon WR, De S, Dura-Bernal S, Garikipati K, Karniadakis G, Lytton WW, Perdikaris P, Petzold L, Kuhl E. “Integrating machine learning and multiscale modeling—perspectives, challenges, and opportunities in the biological, biomedical, and behavioral sciences”. npj Digit Med. 2019;2(1):1–11. doi: 10.1038/s41746-019-0193-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Yazdani A, Lu L, Raissi M, Karniadakis GE. Systems biology informed deep learning for inferring parameters and hidden dynamics. PLoS Comput Biol. 2020;16(11):e1007575. doi: 10.1371/journal.pcbi.1007575.

- 50.Zhang J, Zhao Y, Bao T, Li Z, Qian K, Frangi AF, Xie SQ, Zhang Z-Q. Boosting personalized musculoskeletal modeling with physics-informed knowledge transfer. IEEE Trans Instrum Meas. 2023;72:1–11.

- 51.Bartsoen L, Faes MGR, Andersen MS, Wirix-Speetjens R, Moens D, Jonkers I, Sloten JV. Bayesian parameter estimation of ligament properties based on tibio-femoral kinematics during squatting. Mech Syst Signal Process. 2023;182:109525.

- 52.Linden NJ, Kramer B, Rangamani P. Bayesian parameter estimation for dynamical models in systems biology. PLoS Comput Biol. 2022;18(10):e1010651. doi: 10.1371/journal.pcbi.1010651.

- 53.Lipton ZC, Berkowitz J, Elkan C. A critical review of recurrent neural networks for sequence learning. arXiv:1506.00019 [cs]. 2015.

- 54.Hamner SR, Seth A, Delp SL. Muscle contributions to propulsion and support during running. J Biomech. 2010;43(14):2709–2716. doi: 10.1016/j.jbiomech.2010.06.025.

- 55.Zhang Y, Chen J, He Q, He X, Basava RR, Hodgson J, Sinha U, Sinha S. Microstructural analysis of skeletal muscle force generation during aging. Int J Numer Methods Biomed Eng. 2020;36(1):e3295. doi: 10.1002/cnm.3295.

- 56.Chen J-S, Basava RR, Zhang Y, Csapo R, Malis V, Sinha U, Hodgson J, Sinha S. Pixel-based meshfree modelling of skeletal muscles. Comput Methods Biomech Biomed Eng Imaging Vis. 2016;4(2):73–85. doi: 10.1080/21681163.2015.1049712.

- 57.Millard M, Uchida T, Seth A, Delp SL. Flexing computational muscle: modeling and simulation of musculotendon dynamics. J Biomech Eng. 2013;135(2):021005. doi: 10.1115/1.4023390.

- 58.Winters JM. Hill-based muscle models: a systems engineering perspective. In: Multiple Muscle Systems. New York, NY: Springer; 1990. pp. 69–93.

- 59.Mallat SG. Multiresolution approximations and wavelet orthonormal bases of L²(R). Trans Am Math Soc. 1989;315(1):69.

- 60.Mallat SG. A theory for multiresolution signal decomposition: the wavelet representation. IEEE Trans Pattern Anal Mach Intell. 1989;11(7):674–693.

- 61.Baydin AG, Pearlmutter BA, Radul AA, Siskind JM. Automatic differentiation in machine learning: a survey. J Mach Learn Res. 2018;18:43.

- 62.Yao Y, Rosasco L, Caponnetto A. On early stopping in gradient descent learning. Constr Approx. 2007;26(2):289–315.

- 63.Weiss K, Khoshgoftaar TM, Wang D. A survey of transfer learning. J Big Data. 2016;3(1):9.

- 64.Virtanen P, Gommers R, Oliphant TE, Haberland M, Reddy T, Cournapeau D, Burovski E, Peterson P, Weckesser W, Bright J, van der Walt SJ, Brett M, Wilson J, Millman KJ, Mayorov N, Nelson ARJ, Jones E, Kern R, Larson E, Carey CJ, Polat İ, Feng Y, Moore EW, VanderPlas J, Laxalde D, Perktold J, Cimrman R, Henriksen I, Quintero EA, Harris CR, Archibald AM, Ribeiro AH, Pedregosa F, van Mulbregt P. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat Methods. 2020;17(3):261–272. doi: 10.1038/s41592-019-0686-2.

- 65.Kingma DP, Ba J. Adam: a method for stochastic optimization. arXiv:1412.6980 [cs]. 2017.

- 66.Thelen DG. Adjustment of muscle mechanics model parameters to simulate dynamic contractions in older adults. J Biomech Eng. 2003;125(1):70–77. doi: 10.1115/1.1531112.

- 67.Hermens HJ, Freriks B, Merletti R, Stegeman D, Blok J, Rau G, Disselhorst-Klug C, Hägg G. European recommendations for surface electromyography. Roessingh Res Dev. 1999;8(2):13–54.

- 68.Garner BA, Pandy MG. Estimation of musculotendon properties in the human upper limb. Ann Biomed Eng. 2003;31(2):207–220. doi: 10.1114/1.1540105.

- 69.Holzbaur KRS, Murray WM, Delp SL. A model of the upper extremity for simulating musculoskeletal surgery and analyzing neuromuscular control. Ann Biomed Eng. 2005;33(6):829–840. doi: 10.1007/s10439-005-3320-7.

- 70.An KN, Hui FC, Morrey BF, Linscheid RL, Chao EY. Muscles across the elbow joint: a biomechanical analysis. J Biomech. 1981;14(10):659–669. doi: 10.1016/0021-9290(81)90048-8.