Abstract

There is an increasing demand for efficient and precise plant disease detection methods that can quickly identify disease outbreaks. To meet this demand, researchers have developed various machine learning and image processing techniques. However, real-field images present challenges due to complex backgrounds, similarities between different disease symptoms, and the need to detect multiple diseases simultaneously. These obstacles hinder the development of a reliable classification model. Attention mechanisms have emerged as a critical factor in enhancing the robustness of classification models by selectively focusing on relevant regions or features within infected regions of an image. This paper describes the various types of attention mechanisms and explores how researchers have used them in machine learning solutions for image segmentation, feature extraction, object detection, and classification for efficient plant disease identification. Experiments are conducted on three models, MobileNetV2, EfficientNetV2, and ShuffleNetV2, to assess the effectiveness of attention modules. To this end, Squeeze-and-Excitation layers, the Convolutional Block Attention Module, and transformer modules have been integrated into these models, and their performance has been evaluated using different metrics. The outcomes show that adding attention modules improves the performance of the original models.

Keywords: Attention mechanism, Computer vision, Deep learning, Classification, Plant disease detection

1. Introduction

The global agriculture industry is facing significant challenges due to economic, environmental, and demographic pressures. With the growing global population, increasing agricultural production is necessary. However, achieving this goal is not easy. In this context, India stands out as a prominent player in the agricultural sector. It holds the second position in the production of essential crops such as sugarcane, rice, cotton, wheat, fruits, tea, and vegetables. Furthermore, around 60 % of India's workforce is employed in agriculture, contributing 17 % to the nation's gross domestic product (GDP). Despite these advantages, Indian agriculture struggles with low output, lagging behind that of other developing countries by 30-5%. This poses a significant challenge for the country. Factors such as pest and disease outbreaks, soil fertility deficiencies, insufficient water availability, and the impact of climate change are all responsible for the stagnation of agricultural productivity in India. Among these challenges, plant diseases, particularly fungal pathogens, emerge as a critical concern and a major limiting factor for crop yields [1]. To tackle these challenges, the integration of machine learning and attention mechanisms holds great promise for effective plant disease identification. Machine learning (ML) and deep learning (DL) methods can be leveraged to quickly and precisely identify agricultural diseases by analysing images of various plant components such as leaves, stems, flowers, and fruits. Among these components, leaves have become a widely adopted focal point for disease identification, as most disease symptoms prominently manifest on them. Accurately identifying plant diseases is difficult because real-field images often contain complex backgrounds, leaves may be obscured by other plant parts, and several diseases may occur on a single leaf.
In these scenarios, the incorporation of machine learning algorithms featuring attention mechanisms becomes crucial in overcoming these obstacles and improving disease recognition accuracy.

Attention mechanisms allow models to concentrate on particular segments of an image that are more informative for disease identification. By assigning different weights or attention scores to various parts of an image, these mechanisms enable the model to prioritize disease-related features and disregard irrelevant or misleading information. Selective attention has the potential to improve the precision of categorizing diseases, even when several diseases share similar symptoms. The attention mechanism draws inspiration from the way our biological systems function, as they prioritize unique characteristics when confronted with a lot of information. Since the advent of deep neural networks, several different application domains have made extensive use of attention mechanisms [2].

Attention mechanisms have proven successful across diverse fields like computer vision, speech recognition, and natural language processing. In the realm of computer vision, attention mechanisms play a crucial role by selectively focusing on relevant regions within an image, assisting networks to arrive at better decisions. The use of attention mechanisms has the potential to improve a variety of applications, including semantic segmentation, classification, object recognition, image captioning, 3D vision, and super resolution. The primary goal of this study is to investigate the various attention mechanisms that are used at different stages of identifying plant diseases and figure out how they help with feature extraction, which in turn makes disease recognition better. It is imperative to investigate the application of attention mechanisms in plant disease recognition, given their potential benefits in improving model performance and interpretability. This research attempts to provide a comprehensive analysis of attention mechanisms and how they affect different methods for identifying plant diseases.

The main contributions of this study are as follows:

- This work offers a comprehensive review of different attention mechanisms, categorizing them based on the input they consider, the level of granularity they operate on, and the methods used to calculate attention weights.
- This study focuses on the diverse attention mechanisms employed by researchers to efficiently identify plant diseases. To the best of our knowledge, no other study specifically examines the attention mechanisms employed in plant disease detection.
- This study provides an in-depth survey of research that utilizes attention mechanisms to improve the performance of various tasks like image segmentation, feature extraction, object detection, and classification that are involved in developing plant disease recognition systems.
- This study presents a detailed specification of the type of network design, loss function, and deep learning framework used by researchers to create solutions for plant disease identification.
- This work performs a comparative analysis to analyse the effect of attention mechanisms on DL models. Various attention mechanisms are integrated into state-of-the-art models, including ShuffleNetV2, EfficientNetV2, and MobileNetV2, to evaluate their effectiveness.

This study is categorized into six sections, each serving a specific purpose. The initial section serves as an introduction, providing an overview of the main motivation and objectives of the study. Subsequently, in the second section, we discuss various attention mechanisms and their categorization. The third section explores different types of attentional mechanisms. In the fourth section, various studies proposed by researchers are examined, concentrating on leveraging attention mechanisms to improve the model's performance for efficient plant disease identification. Additionally, the fifth section conducts a comparative analysis between three deep learning models, both with and without the various attention modules. A discussion about the future potential of these mechanisms and the remaining challenges associated with their utilization is given. The study conclusion is presented in Section 6.

2. Related work

This section covers recent studies that have reviewed various attention mechanisms. Study [3] conducted a thorough examination of attention mechanisms for computer vision tasks and categorized them based on their approach, encompassing temporal attention, channel attention, branch attention, spatial attention, and various combinations of these methods. The main focus of that study was to categorize attention approaches according to their data domain rather than their specific application area.

The authors of [4] classified image super-resolution models into different categories based on their network design, such as residual, dense, convolution, attention, distillation, and extremely lightweight solutions. That study's primary objective was to investigate lightweight approaches for image super-resolution that make use of deep learning techniques and attention mechanisms. This review serves as a valuable framework for our own study, as it provides us with a structured approach to follow.

In [5], details about the attention mechanisms used with neural networks are given, including their origins and recent advancements. The researchers provide comprehensive insights into various variants of attention models, including transformer and self-attention. Study [6] conducted a comprehensive analysis of the attention mechanism in deep learning, encompassing its key methodologies, practical implementations, diverse applications, and potential avenues for future development. That paper also describes how attention models are used to improve performance and what application domains can benefit from attention mechanisms.

Building upon this existing literature, our study contributes by categorizing attention mechanisms based on input nature, level of detail, and attention weight calculation method. A comprehensive examination of the attention mechanisms utilized in recent studies of plant disease identification for extracting relevant features at various stages, including segmentation, feature extraction, object identification, and classification, is done. This research conducted a comparative analysis by examining DL models, with or without attention modules to see the effectiveness of attention mechanisms on models. Furthermore, the paper addresses the current challenges of incorporating attention mechanisms into deep learning and explores potential future directions. It is worth noting that, to our knowledge, there is no existing literature review that delves as deeply into attention mechanisms for plant disease recognition, highlighting the significance of our study in advancing this field.

3. Understanding attention mechanisms

A significant advancement in deep learning, attention mechanisms revolutionize how models process information, enabling them to concentrate on specific features within a dataset. Modelled after human cognition, these mechanisms dynamically weigh various input components based on their importance, allowing the model to prioritize relevant information while disregarding irrelevant details. Notably, attention mechanisms are recognized for their ability to enhance model accuracy by focusing on essential information and filtering out noise. This capability is particularly advantageous in the context of plant disease identification, where precision is paramount. By selectively directing attention to specific regions or features within plant images, these mechanisms enable more precise and nuanced disease detection, resulting in more accurate diagnoses [7].

Moreover, attention mechanisms contribute to interpretability by highlighting which sections of an image are most influential in the classification process. This transparency provides valuable insights into how the model generates predictions, facilitating model validation and refinement. Additionally, attention mechanisms are highly adaptable and seamlessly integrate into a variety of deep learning architectures commonly used in plant disease detection, such as convolutional neural networks (CNNs) and Transformer models. This adaptability allows researchers to tailor models to specific task requirements and optimize performance across diverse datasets and plant species, underscoring their significance in advancing automated plant disease detection.

These mechanisms are classified based on a variety of factors. The first is the type of information they receive: spatial attention focuses on specific areas of an image, whereas channel attention improves CNN feature representations by highlighting specific channels or feature maps in the input. The second is the granularity at which they operate: global attention considers the entire input, whereas local attention focuses on specific regions of interest within the input. The third is the process used to calculate attention weights. Self-attention mechanisms calculate attention scores by examining the correlations between every pair of elements in the feature map or input sequence, whereas cross-attention mechanisms compute attention scores among elements from different input sequences or modalities and then use these scores to weight the information shared between them. Understanding these categorizations allows researchers to effectively use attention mechanisms to improve the performance of DL models. This study focuses on categorizing and describing the characteristics of these attention mechanisms.

3.1. Type of input considered

3.1.1. Channel attention

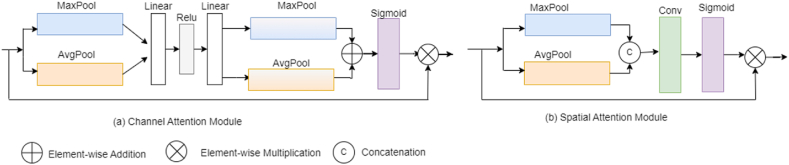

Attention mechanisms take distinct approaches tailored to different types of input. One such method uses channel information as input. In this paradigm, attention mechanisms refine feature representations within Convolutional Neural Networks (CNNs) by emphasizing specific channels or feature maps that are deemed critical for the task at hand. An image is represented by three channels, R, G, and B, which hold the red, green, and blue intensity levels of each pixel. Convolutional operations transform these channels into new channels (feature maps), each carrying distinct information. Each channel is then assigned a weight that indicates its relevance to key information; a higher weight signifies greater relevance and thus greater importance of that channel [8]. The channel attention module (CAM) looks for interactions and dependencies among feature representations across multiple channels. CAM utilizes the “squeeze-and-excitation” approach of Ref. [9]. The “squeeze” process reduces each feature map to a single value (i.e., a channel descriptor) by applying average pooling or max pooling. Fig. 1 (a) depicts the CAM [10]. The input tensor is first processed by an adaptive average pooling layer, which reduces the input tensor's spatial dimensions to 1 × 1 while keeping the channel dimension. The output is subsequently sent to a linear layer, which decreases the channel dimension by a predetermined reduction ratio. The rectified linear unit (ReLU) activation function is used to bring nonlinearity into the network. Following that, another linear transformation is applied to restore the original channel dimension. To confine values within the range of 0–1, the sigmoid activation function is utilized, producing channel attention weights. These weights are then multiplied element by element with the input tensor so as to emphasize or minimize the importance of individual channels.

Fig. 1.

(a) CAM (b) SAM [11].
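The CAM steps described above (global average pooling, channel reduction, ReLU, channel restoration, sigmoid, and channel-wise rescaling) can be illustrated with a minimal NumPy sketch. It is not the implementation used in [9] or [10]: the function name `channel_attention`, the random weights, the omission of bias terms, and the reduction ratio `r = 4` are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(x, w_reduce, w_restore):
    """Illustrative channel attention (bias terms omitted by assumption).

    x:         feature map of shape (C, H, W)
    w_reduce:  weights of shape (C // r, C), first linear layer
    w_restore: weights of shape (C, C // r), second linear layer
    """
    # Squeeze: global average pooling turns each H x W map into one value.
    descriptor = x.mean(axis=(1, 2))                  # shape (C,)
    # Reduce the channel dimension, then apply ReLU.
    hidden = np.maximum(w_reduce @ descriptor, 0.0)   # shape (C // r,)
    # Restore the channel dimension and squash to (0, 1) with a sigmoid.
    weights = sigmoid(w_restore @ hidden)             # shape (C,)
    # Rescale every channel of the input by its attention weight.
    return x * weights[:, None, None], weights

rng = np.random.default_rng(0)
C, H, W, r = 8, 4, 4, 4                               # hypothetical sizes
x = rng.standard_normal((C, H, W))
w_reduce = rng.standard_normal((C // r, C)) * 0.1     # untrained, random
w_restore = rng.standard_normal((C, C // r)) * 0.1
out, weights = channel_attention(x, w_reduce, w_restore)
print(out.shape, weights.round(3))
```

In a trained network the two weight matrices are learned, so the resulting weights reflect which channels are informative; here they are random and only demonstrate the data flow and shapes.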

The Shuffle Attention technique focuses on channel-wise attention, which involves dividing input channels into groups and creating attention maps to highlight relevant aspects within each group. Shuffle attention initially splits the input feature map into several groups and processes the sub-features within each group in parallel. Within each group, the sub-features are split into two branches, one for channel attention and one for spatial attention, and the outcomes from both branches are concatenated. All sub-features are then aggregated, and a channel shuffle operation makes it easier for the various sub-features to communicate with one another. Including the shuffle attention module in different CNN models has improved performance in tasks such as instance segmentation and object identification [12].
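The channel shuffle step mentioned above can be sketched in a few lines of NumPy. This is an illustrative version of the commonly used reshape-transpose-reshape formulation, assumed here rather than taken from [12]; the function name `channel_shuffle` is hypothetical.

```python
import numpy as np

def channel_shuffle(x, groups):
    """Interleave channels across groups.

    x: feature map of shape (C, H, W), with C divisible by `groups`.
    """
    c, h, w = x.shape
    if c % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    # View channels as (groups, channels_per_group), swap the two axes,
    # and flatten back so neighbouring channels come from different groups.
    x = x.reshape(groups, c // groups, h, w)
    x = x.transpose(1, 0, 2, 3)
    return x.reshape(c, h, w)

# Channel i holds the value i, so the new channel order can be read directly.
x = np.arange(6).reshape(6, 1, 1)
shuffled = channel_shuffle(x, groups=2)
print(shuffled.ravel().tolist())   # [0, 3, 1, 4, 2, 5]
```

With two groups, channels 0 to 2 and 3 to 5 are interleaved, so a subsequent grouped operation sees features that originated in both groups.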

3.1.2. Spatial attention

The spatial attention technique prioritizes spatial information for feature enhancement and can be applied at various scales, allowing the model to focus on both detailed information and broader contextual areas. By adaptively attending to different regions of the input, the model can handle variations in scale and appearance, leading to improved performance on visual tasks. As presented in Fig. 1 (b), the first step in the spatial attention module (SAM) [10] processes the input tensor via separate max pooling and average pooling operations along the channel dimension. The max pooling operation identifies the maximum value across channels at each spatial position, while the average pooling operation computes the mean value. These outcomes are then concatenated along the channel dimension, resulting in a tensor comprising two channels. Subsequently, a 2D convolution operation is applied to this tensor to generate a single-channel output. Following that, the sigmoid activation function is employed to compress the values between 0 and 1. These values represent the spatial attention weights, which measure the importance of specific spatial regions. Finally, the attention weights are element-wise multiplied with the input tensor, emphasizing or downplaying specific spatial regions based on their relevance.
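As an illustrative sketch of the SAM data flow, the NumPy code below pools along the channel dimension, applies a single 2D convolution, and rescales the input with sigmoid weights. It assumes the channel-wise average and max pooling of the CBAM formulation [10]; the function names, the 7 × 7 kernel size, the zero padding, and the random kernel are assumptions for illustration, and the convolution is written as a plain loop for readability rather than speed.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv2d_same(x, kernel):
    """Single-output convolution with zero padding ("same" size).

    x: (C_in, H, W); kernel: (C_in, k, k) with odd k. Returns (H, W).
    """
    _, h, w = x.shape
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return out

def spatial_attention(x, kernel):
    """x: feature map (C, H, W); kernel: (2, k, k)."""
    avg_map = x.mean(axis=0)                  # average over channels, (H, W)
    max_map = x.max(axis=0)                   # maximum over channels, (H, W)
    pooled = np.stack([avg_map, max_map])     # two-channel tensor, (2, H, W)
    weights = sigmoid(conv2d_same(pooled, kernel))   # (H, W), values in (0, 1)
    # Rescale every spatial position of every channel.
    return x * weights[None, :, :], weights

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 6, 6))            # hypothetical feature map
kernel = rng.standard_normal((2, 7, 7)) * 0.1 # untrained, random kernel
out, weights = spatial_attention(x, kernel)
print(out.shape, weights.shape)               # (8, 6, 6) (6, 6)
```

The same weight map is shared by all channels, which is what distinguishes spatial attention from the per-channel weights of the CAM.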

The term “soft attention” or “soft attention mechanism” refers to one particular type of spatial attention mechanism. In this approach, the model takes a feature map as input and generates attention weights for each spatial point. The attention weights represent the significance or relevance of each region, allowing the model to emphasize or de-emphasize certain regions during computation. Soft attention was introduced in Ref. [13], where an independent neural network module produced the attention weights. These weights were subsequently used to calculate a weighted sum of image features. The attention weights were learned during training, enabling automatic identification of the most pertinent image regions for generating precise and meaningful captions.

Coordinate attention is a type of spatial attention mechanism. Unlike typical channel attention mechanisms such as SENet [9], which only capture inter-channel information, coordinate attention includes spatial information as well as channel interactions. This technique captures long-range dependency along one spatial dimension while keeping precise positioning information along the other [14].

3.1.3. Mixed attention

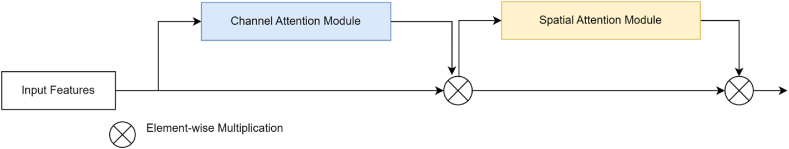

The mixed attention mechanism refers to a type of attention mechanism that combines different attention mechanisms or components within a neural network model. It integrates multiple sources of information obtained from diverse attention mechanisms, such as spatial attention, channel attention, or other variants, to enhance the model's ability to collect diverse information. The attention weights are learned during the training process. In Ref. [15], the Dual Attention Network (DAN) is proposed, which combines channel and spatial attention mechanisms for scene segmentation. In Ref. [10], the Convolutional Block Attention Module (CBAM) is proposed, which contains two sub-modules, CAM and SAM, as shown in Fig. 2. Because of its lightweight structure, CBAM can be incorporated into any CNN architecture for better feature refinement.

Fig. 2.

CBAM module.

3.2. Method of computing attention weights

3.2.1. Self attention

Various attention mechanisms use different approaches to calculate attention weights, which are adapted to their specific purposes and input characteristics. Self-attention mechanisms, for example, compute attention weights by examining the associations between each pair of components in an input sequence or feature map. The self-attention mechanism enables interaction between elements of the same input (“self”) and establishes the level of attention that should be given to each element. The resulting outputs are a combination of these interactions and the corresponding attention scores. By assigning attention weights to each pixel or region in the self-attention layers, the model can dynamically learn to emphasize the most relevant features of the input images. This allows the model to focus on locations that hold the most relevant information for discriminating between healthy and unhealthy plants.

3.2.2. Cross attention

In deep learning systems, cross-attention, often referred to as cross-modal attention or inter-attention, is a method that facilitates communication and interaction across several modalities or representations within a model. With cross-attention, a model can analyse data from one sequence or modality while attending to aspects from another, in contrast to self-attention, which concentrates on capturing dependencies inside a single sequence or feature set. When dealing with tasks that involve pairs of sequences, like machine translation, this variation of attention is very helpful since it helps the model identify the links between elements in various sequences [16]. A dual-branch transformer model named CrossViT is proposed in Ref. [17] for multi-scale feature learning in image classification. In order to maintain linear computing cost while enabling effective information flow between small-patch and large-patch tokens, cross-attention is utilized as an effective fusion technique. Cross-attention is used in the U-Transformer network [18] for medical image segmentation to improve the capacity of the U-Net decoder to recover spatial information from skip connections. Cross-attention is used to filter out noisy or unnecessary regions from the skip connection features, so that the model can concentrate on semantically rich areas for precise segmentation.
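The distinction from self-attention can be made concrete with a small NumPy sketch in which the queries come from one token sequence and the keys and values come from another. The scaled dot-product scoring is assumed here (it is the form used in [11]); the function name `cross_attention`, the token counts, and the random projection matrices are hypothetical and do not reproduce CrossViT [17] or U-Transformer [18].

```python
import numpy as np

def softmax(scores):
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(scores)
    return e / e.sum(axis=-1, keepdims=True)

def cross_attention(x_a, x_b, w_q, w_k, w_v):
    """Tokens in x_a attend to tokens in x_b.

    x_a: (n_a, d) query-side tokens; x_b: (n_b, d) key/value-side tokens.
    """
    q = x_a @ w_q                 # queries from the first sequence
    k = x_b @ w_k                 # keys from the second sequence
    v = x_b @ w_v                 # values from the second sequence
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]))     # (n_a, n_b)
    return weights @ v, weights   # one output row per query token

rng = np.random.default_rng(0)
d = 16
x_a = rng.standard_normal((4, d))   # e.g. 4 tokens from one branch
x_b = rng.standard_normal((9, d))   # e.g. 9 tokens from another branch
w_q, w_k, w_v = (rng.standard_normal((d, d)) * 0.1 for _ in range(3))
out, weights = cross_attention(x_a, x_b, w_q, w_k, w_v)
print(out.shape, weights.shape)     # (4, 16) (4, 9)
```

Passing the same array as both `x_a` and `x_b` reduces this sketch to self-attention, which shows that the two mechanisms differ only in where the keys and values come from.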

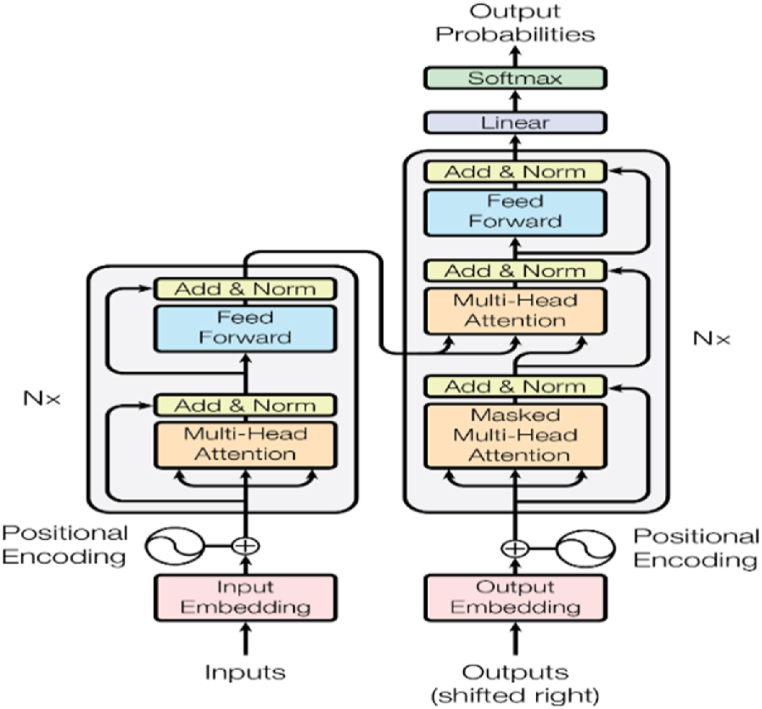

3.2.3. Transformer based attention

In 2017, study [11] achieved a significant advancement by introducing a neural network architecture known as the “Transformer.” The Transformer, which was first created for NLP tasks, demonstrated the power of self-attention. By utilizing self-attention, this design enables models to process sequences in parallel while effectively capturing long-range dependencies. The transformer model follows an encoder-decoder architecture, where multiple encoders and decoders are stacked (as represented by Nx in Fig. 3). This stacking implies that the output of one encoder becomes the input of the subsequent encoder, and similarly, the output of one decoder becomes the input of the next decoder [19]. In this approach, the encoder receives a series of symbols as input, and the decoder produces a series of symbols, one element at a time, as the output [11]. The transformer model relies mainly on the attention function, which maps a query and a set of key-value pairs to an output.

Fig. 3.

Transformer architecture [11].

Specifically, the attention method works on a set of queries that are arranged in a matrix Q. Similarly, the values and keys are organized into matrices V and K, respectively. The resulting matrix of outputs is computed through a specific process as shown in Eq. (1):

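$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V \tag{1}$$

where $d_k$ is the dimension of the keys.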
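The scaled dot-product attention of [11], referred to above as Eq. (1), can be sketched in NumPy as follows. The function name `scaled_dot_product_attention` and the three toy tokens are assumptions for illustration; the example uses the same matrix for the queries, keys, and values, which corresponds to self-attention without learned projections.

```python
import numpy as np

def scaled_dot_product_attention(q, k, v):
    """q: (n_q, d_k), k: (n_k, d_k), v: (n_k, d_v)."""
    d_k = k.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)                        # similarity of each query to each key
    scores = scores - scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)         # row-wise softmax
    return weights @ v, weights                            # weighted sum of values

# Self-attention: queries, keys and values all come from the same 3 tokens.
x = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])
out, weights = scaled_dot_product_attention(x, x, x)
print(weights.round(3))
```

Each row of `weights` sums to 1 and states how strongly one token attends to every token; the first token, for instance, attends more to itself and to the third token than to the orthogonal second token.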

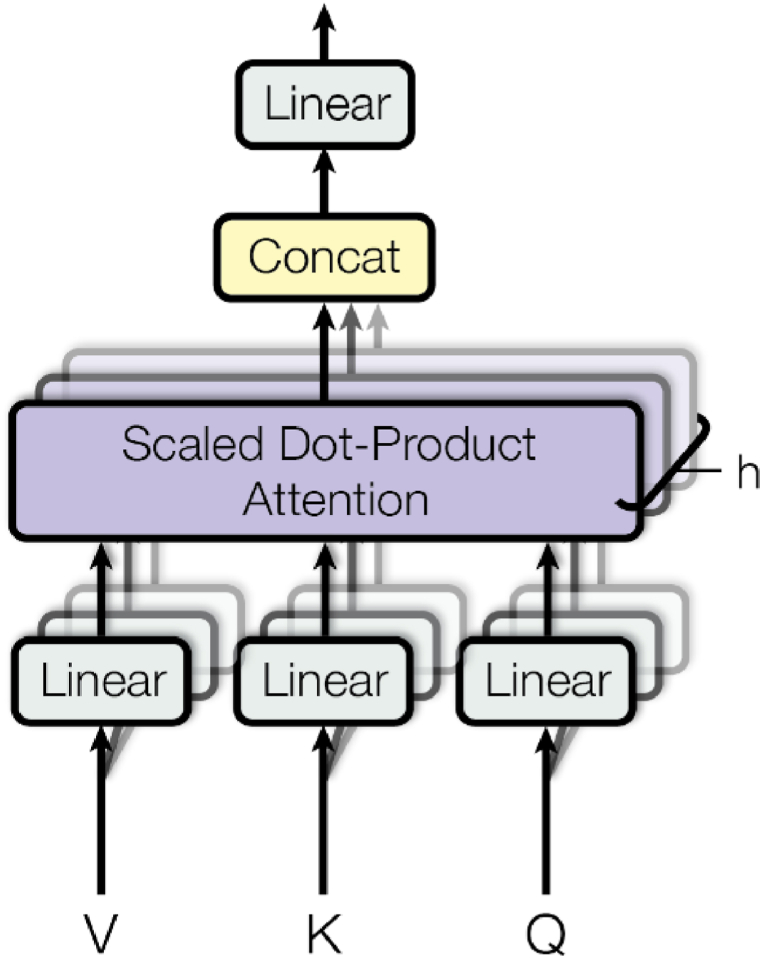

3.2.4. Multi-head attention

Multi-head attention, as seen in Fig. 4, allows the model to collectively focus on input from distinct representation subspaces and positions. In Ref. [11], researchers begin by applying a linear transformation to the input matrices Q, K, and V, then compute attention as in Eq. (2):

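$$\mathrm{Attention}(QW^{Q}, KW^{K}, VW^{V}) = \mathrm{softmax}\left(\frac{QW^{Q}\left(KW^{K}\right)^{T}}{\sqrt{d_k}}\right)VW^{V} \tag{2}$$

where $W^{Q}$, $W^{K}$, and $W^{V}$ are all learnable parameters. In multi-head attention, linear transformations are applied to the matrices, attention is performed on $h$ heads, the heads are concatenated, and a linear transformation is applied again, as shown in Eqs. (3), (4):

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)W^{O} \tag{3}$$

where

$$\mathrm{head}_i = \mathrm{Attention}\left(QW_i^{Q}, KW_i^{K}, VW_i^{V}\right) \tag{4}$$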

Fig. 4.

Multi-Head Attention [11].
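The multi-head computation of [11] (per-head projections, attention in each head, concatenation, and a final linear transformation) can be sketched in NumPy as below. The function name `multi_head_attention`, the sizes, and the random untrained weights are assumptions for illustration; the symbol names `w_q`, `w_k`, `w_v`, and `w_o` stand for the per-head projection matrices and the output projection.

```python
import numpy as np

def softmax(scores):
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(scores)
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_attention(q, k, v, w_q, w_k, w_v, w_o):
    """q: (n_q, d_model); k, v: (n_k, d_model).

    w_q, w_k, w_v: (h, d_model, d_k), one projection per head.
    w_o:           (h * d_k, d_model), output projection.
    """
    heads = []
    for wq_i, wk_i, wv_i in zip(w_q, w_k, w_v):
        # Project the inputs into this head's subspace.
        q_i, k_i, v_i = q @ wq_i, k @ wk_i, v @ wv_i
        # Scaled dot-product attention inside the head.
        attn = softmax(q_i @ k_i.T / np.sqrt(k_i.shape[-1]))
        heads.append(attn @ v_i)                  # (n_q, d_k)
    # Concatenate all heads and apply the final linear transformation.
    return np.concatenate(heads, axis=-1) @ w_o

rng = np.random.default_rng(0)
n, d_model, h = 5, 16, 4                          # hypothetical sizes
d_k = d_model // h
x = rng.standard_normal((n, d_model))
w_q, w_k, w_v = (rng.standard_normal((h, d_model, d_k)) * 0.1 for _ in range(3))
w_o = rng.standard_normal((h * d_k, d_model)) * 0.1
out = multi_head_attention(x, x, x, w_q, w_k, w_v, w_o)
print(out.shape)                                  # (5, 16)
```

Because each head works in a subspace of size `d_model // h`, the total cost stays close to that of a single full-width head while letting the heads attend to different relationships.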

Transformer-based attention employs self-attention and multi-head attention to handle input sequences. In the transformer architecture, a self-attention layer first processes the input sequence, followed by a feed-forward network, and finally another self-attention layer. This sequence of layers is repeated multiple times, with residual connections and layer normalization applied between each layer. Transformer-based attention has been shown to be highly effective in modelling sequential and spatial dependencies in natural language and computer vision tasks [19].

In summary, self-attention serves as a foundational element within multi-head attention, which in turn functions as a critical component in the overall transformer-based attention mechanism. Some common transformer-based attention variants used in computer vision are: Vision Transformer (ViT) [20], Data-efficient Image Transformers (DeiT) [21], Swin Transformer [22], Pooling-based Vision Transformer (PiT) [23], and DEtection TRansformer (DETR) [24].

3.3. Level of granularity

Attention mechanisms work at multiple levels of granularity, allowing models to concentrate on different sections of the input data according to their specific needs. On a broader scale, attention processes can be categorized according to the amount of information they consider. Global attention techniques allow models to focus on every element in an input sequence or feature map. Local attention mechanisms, on the other hand, concentrate on particular areas or portions of the information, enabling more tailored processing while also lowering computing complexity. To improve discriminative features for classification, the Squeeze-and-Excitation Network (SENet) [9] for instance, uses global attention to recalibrate feature maps over the entire image based on channel-wise relationships [25].
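The difference between global and local attention can be illustrated with a small NumPy sketch in which a window restricts each query to nearby keys. The one-dimensional token positions, the function name `attention_weights`, and the window size are assumptions for illustration. The dense mask used here only shows which positions are attended; it does not reduce computation, which in practice requires computing scores only inside each window.

```python
import numpy as np

def attention_weights(q, k, window=None):
    """Row-wise softmax attention weights.

    If `window` is given, each query attends only to keys at most
    `window` positions away (local attention); otherwise all keys
    are attended (global attention).
    """
    scores = q @ k.T / np.sqrt(k.shape[-1])
    if window is not None:
        rows = np.arange(q.shape[0])[:, None]
        cols = np.arange(k.shape[0])[None, :]
        # Positions outside the window get -inf, i.e. zero weight after softmax.
        scores = np.where(np.abs(rows - cols) > window, -np.inf, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
x = rng.standard_normal((6, 8))                  # 6 hypothetical tokens
global_w = attention_weights(x, x)               # every token sees every token
local_w = attention_weights(x, x, window=1)      # only immediate neighbours
print(np.count_nonzero(global_w), np.count_nonzero(local_w))  # 36 16
```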

3.3.1. Multi-scale attention

Multi-scale attention refers to the model's capacity to pay attention to and capture information at multiple scales or levels of detail within an input. This is typically achieved by incorporating mechanisms that can aggregate features from different scales, such as dilated convolutions, feature pyramid networks [26], or pyramid pooling modules [27]. The aim is to enable the model to grasp detailed as well as comprehensive contextual information, thereby enhancing its understanding of the input across various levels and scales. Examples of multi-scale attention models are Non-local Neural Networks [28], Deep Layer Aggregation (DLA) [29], Scale-Aware Trident Network [30], and Path Aggregation Network (PANet) [31].

In conclusion, attention mechanisms provide an adaptable framework for deep learning feature representation. Attention processes help models extract meaningful information and increase performance across a variety of tasks by carefully directing focus to key components within the input data. Importantly, various attention mechanisms are tailored to specific areas of data processing, with each providing distinct benefits based on the task requirements. However, it is important to note that attention processes are not mutually exclusive; rather, they can complement one another when utilized alone or in combination. For example, a task may benefit from the simultaneous use of global and local attention mechanisms to collect both global patterns and specific details within the data. This adaptability demonstrates attention mechanisms' versatility and usefulness in resolving a variety of machine learning problems in addition to their importance as a key tool in increasing model capabilities.

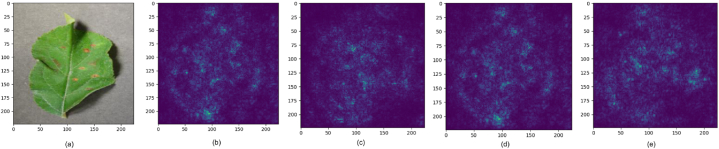

4. Attention mechanisms in plant disease identification

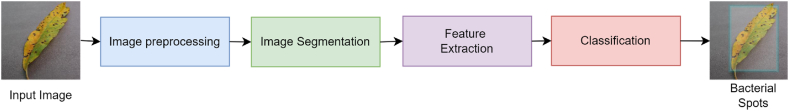

To create an automated system for identifying plant diseases, various stages must be undertaken, as depicted in Fig. 5. The initial stage involves capturing images of plants from real fields. While diseases can occur in various plant parts, such as roots, stems, or leaves, leaf images are commonly used for disease identification since most visible symptoms appear on leaves. Once the images are obtained, the subsequent stage involves image pre-processing. This entails resizing the images to suit the model's requirements and applying denoising techniques to eliminate distortions. Furthermore, image augmentation techniques like scaling, flipping, rotation, translation, cropping, and deep learning augmentation methods such as GANs are employed to augment the dataset, for better training of the data-hungry ML model.

Fig. 5.

Steps involved in plant disease identification.
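The geometric augmentations listed for the pre-processing stage (flipping, rotation, and cropping) can be sketched with plain NumPy array operations. This is an illustrative example only: the function name `augment`, the flip probability, the restriction to 90-degree rotations, and the 90 % crop size are assumptions, and real pipelines typically rely on a dedicated augmentation library.

```python
import numpy as np

def augment(image, rng):
    """Return a randomly flipped, rotated and cropped copy of `image`.

    image: array of shape (H, W, 3).
    """
    # Horizontal flip with probability 0.5.
    if rng.random() < 0.5:
        image = np.flip(image, axis=1)
    # Rotation by a random multiple of 90 degrees in the image plane.
    image = np.rot90(image, k=int(rng.integers(0, 4)), axes=(0, 1))
    # Random crop covering 90 % of each side.
    h, w = image.shape[:2]
    crop_h, crop_w = int(0.9 * h), int(0.9 * w)
    top = int(rng.integers(0, h - crop_h + 1))
    left = int(rng.integers(0, w - crop_w + 1))
    return image[top:top + crop_h, left:left + crop_w]

rng = np.random.default_rng(0)
leaf = rng.random((64, 64, 3))          # stand-in for a leaf image
augmented = [augment(leaf, rng) for _ in range(4)]
print([a.shape for a in augmented])
```

Each call yields a different view of the same leaf, which is how a small dataset is expanded before training.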

In the third stage, image segmentation is performed to identify regions of interest (ROIs) where infected areas are present in the image. Subsequently, in the fourth stage, feature extraction techniques are applied to extract relevant features from the images, which help the ML model identify diseased or healthy regions. The final stage involves classification, where the disease class affecting the plant leaf is determined. The output obtained from this stage is the set of probability values associated with the different types of diseases that could be present on the plant leaf. To enhance the plant disease identification system, attention mechanisms are integrated into the segmentation, feature extraction, object detection, and classification phases. This enables the models to allocate more attention or resources to crucial regions of an image while reducing emphasis on less relevant regions. This section focuses on the techniques and models proposed by researchers that utilize attention mechanisms to develop solutions for plant disease detection and classification. Detailed information regarding pre-processing, augmentation, segmentation, and classification techniques employed in recent studies, as well as the frameworks utilized and the achieved accuracy, can be found in Table 1, which provides a comprehensive summary of various plant disease recognition studies.

Table 1.

An overview of recent studies utilizing attention mechanisms for plant disease identification.

| Reference | Method | Network Design | Loss Function | Pre-Processing | Framework | Dataset & Accuracy | Performance Metrics & Results | Keywords |
|---|---|---|---|---|---|---|---|---|
| [32] | DeepLabV3+ (MobileNetV2 + CBAM + Shuffle Attention + ASPP) | Segmentation | Weighted Loss | Labelme Annotation Tool: To Label the spot at pixel level. Albumentations Data Augmentation Library: For Brightness Adjustments, Cropping, Flipping, Shifting | PyTorch | On Field - Sweetgum Leaf Spot Dataset (SLSD) | Accuracy: 94.5 % | Mixed Attention |
| [35] | U-Net + Wavelet Pooling + Attention Gate Module | Segmentation | Dice Loss | – | – | On Field - CWFID (Crop Weed Field Image Dataset) | IoU: 94.81 % | CNN |
| [38] | ResNet50 + PAB | Segmentation | – | Random Brightness Level, Random Rotation, Flipping, Hue, Contrast, Saturation Adjustment | PyTorch | Wheat Dataset – Real Field Images | Accuracy: 96.4 % | CNN |
| [43] | ResNet101 + CBAM + FPN + Segmentation Network (Protonet Branch and the Prediction Head Branch) | Segmentation | Cross-Entropy Loss + Smooth L1 Loss | Random Cropping, Random Contrast, Photometric Distortion, Flipping, Random Rotation | PyTorch | Maize Disease Dataset – Publicly Available | Precision: 98.7 %, Mean IoU (mIoU): 84.9 % | Residual Networks |
| [45] | ResNet50 + FCN | Segmentation | Cross-Entropy Loss | RGB to HSV Colour Space Conversion | PyTorch | Grape Downy Mildew (DM) and Powdery Mildew (PM) Images – Real Field Images | mIoU: 84.% (DM), 74 % (PM) | Hierarchical Multiscale Attention |
| [46] | ResNet50 + Transformer + MLP + MPH | Segmentation | – | Horizontal and Vertical Flipping, Rotations, Resizing, Normalizations, Segmentation: Copy-Paste Method | PyTorch | Tomato Leaf Disease Segmentation Dataset (TDSD) | Accuracy: 96.40 % | Coordinated Attention |
| [48] | GrabCut + ResNet50 + New-CA, GhostNet + New-CA | Segmentation | L1 Norm | Random Flipping, Random Rotation, Affine Transformation | PyTorch | Plant Disease Dataset | Top-1 err (%): 12.55 % | CNN With Channel Pruning |
| [50] | ResNet50 + SE Module | Feature Extraction | – | Rotation, Zooming, Noise Addition, Colour Jitter | PyTorch | Tomato Leaf PlantVillage Dataset | Accuracy: 96.81 % | Residual Network |
| [51] | Inception + RI-Block + CBAM | Feature Extraction | Cross-Entropy Loss | Random Rotation, Random Horizontal and Vertical Offsets, Cross-Cutting Transformation, Random Scaling, Flipping | TensorFlow | Potato, Corn, Tomato – PlantVillage Dataset | Accuracy: 99.55 % | Residual Network |
| [52] | Pre-Trained CNN (ResNet50, InceptionV3, VGG19, MobileNetV2, EfficientNetB0) + CBAM | Feature Extraction | Softmax Loss | – | Keras | DiaMOS Plant Dataset – Publicly Available | Accuracy: 86.89 % | Pre-Trained CNN |
| [55] | DenseNet + SE Module + AdaBound Optimization Algorithm | Feature Extraction | Cross-Entropy Loss | Random Brightness Level, Viewing Angles, Colours and Horizontal Inversion | PyTorch | Rice Disease Dataset – Publicly Available | Accuracy: 99.4 % | Deep Dense Network |
| [57] | Dilated CNN + HAM + Logistic Regression | Feature Extraction | Categorical Cross-Entropy Loss | Colour Space Conversion, Bilateral Filtering. Data Augmentation: CGAN. Segmentation: Otsu's Thresholding | – | Tomato Disease – PlantVillage Dataset | 96.6 % | Dilated CNN |
| [59] | DenseNet121 + MFA + SSA | Feature Extraction | – | Random Clipping, Random Zooming, Horizontal Flipping, Random Rotation | TensorFlow | PDR2018, FGVC8, and PlantDoc Datasets – Publicly Available | Accuracy: 88.32 % (PDR2018), 89.95 % (FGVC8), 89.75 % (PlantDoc) | Multi-Granularity Feature Aggregation |
| [60] | Alexnet + Down Sampling Attention Module + Miss Activation Function | Feature Extraction | Binary Cross-Entropy Loss | Random Rotation, Horizontal Flip, Horizontal and Vertical Shift, Random Shear, Zoom | Keras | Corn Disease Dataset- Real Field Images | Accuracy: 99.35 % |

Group Convolution |

| [62] | Inverted Bottleneck Module(With Maxpool) + Shuffle Attention Module | Feature Extraction | Cross-Entropy Loss | Rotation, Cropping, Flipping, Scaling | – | PlantDoc++ Dataset – Publicly Available | Accuracy: 94.6 % |

Residual Network |

| [63] | MS Branch (Atrous Convolution) + DA Branch (SE-Module) | Feature Extraction | Cross-Entropy Loss | – | Paddle | Apple Leaf Disease Dataset – Publicly Available | Accuracy: 96.66 % |

Dual Branch CNN |

| [64] | Efficientnetb6 + CBAM + MSF Module | Feature Extraction | Focal Loss | Image Reverse, Increasing and Decreasing in Brightness level, Horizontal Flipping | PyTorch | Cassava Leaf Disease Dataset- Publicly Available | Accuracy: 88.1 % |

Diluted Convolution |

| [65] | Resnet101 + SE Module + Vit | Feature Extraction | Cross-Entropy Loss | Blurring, Rotation, Noise Addition, Change Brightness, and Darkness level | PyTorch | Python Crawler Tool To Collect Data From Web | Accuracy: 88.34 % |

CNN |

| [66] | Spectral Convolution + Channel Attention Module | Feature Extraction | – | – | – | Fusarium Dataset – Real Field | Accuracy: 82.78 % |

Dilated Convolution With Residual Connections |

| [67] | Yolov5s + CBAM + CA + K-Means | Object Detection | SIoU Loss | Random Rotation, Random Colour Adjustment, Random Brightness Adjustment, Random Contrast Adjustment | PyTorch | Cucumber Root-Knot Nematode Image Dataset - Real Field Images | Mean Average Precision (mAP): 94.8 % |

Dual Attention Mechanism |

| [68] | YOLOX-S + Simam + G-Head | Object Detection | Efficient Intersection over Union (EIoU) loss | LabelImg: To Manually Label Image Data Enhancement - MixUp and Mosaic Method |

PyTorch | Fusarium Head Blight Severity Grading Dataset – Real Field Images | mAP: 99.23 % | Ghost Convolution |

| [70] | Yolov5 + Transformer + Bifpn + Shuffle Attention Module + ASFF | Object Detection | SIoU Loss: For Bounding Box, Binary Cross-Entropy Loss: For Class Probability |

Rotation, Colour Dithering, Random Erasing, Image Translation, Mirror Flip | PyTorch | Tea Disease Dataset- Real Field Images | mAP@0.5: 85.35 % |

Multiscale Feature Fusion |

| [71] | Inceptionv3 + Transformer + Yolov5 | Object Detection | Sparse Category Cross-Entropy Loss | Linear Contrast, Vertical Flip, Horizontal Flip, Superpixed, Sharpening, Grayscale Conversion, Embossing, Affine transformation | – | Crop Image Dataset – Publicly Available | BLEU Score: 64.96 %(ICM) mAP50: 0.382(ODM) |

Pretrained CNN |

| [72] | Yolov5n + CA + Swin Transformer | Object Detection | Image Annotation Tool: Make Sense AI Augmentation: Changing Colour Brightness, Hue Saturation, Cropping, Scaling, Rotation, Noise Addition, and Mosaic Method |

PyTorch | Maize Leaf Dataset -PlantVillage Dataset | mAP: 95.2 % |

CNN | |

| [73] | Yolov5s + CA | Object Detection | GIoU Loss | Cut and Paste Technique, Resizing, Rotation | PyTorch | Blueberry Disease Inages-Real-Fields Images | Precision: 96.30 % |

Feature Pyramid Network |

| [75] | MobileNetV3-Large + ECA + Digconv | Image Classification | Bias Loss | Mirror Transformation, Horizontal Flipping, Blurring, Noise, Clipping | Corn Leaf Disease Datasets – Publicly Available | Accuracy: 98.23 % |

Dilated Convolution | |

| [76] | Convolution Block + Vision Transformer | Image Classification | – | – | Keras | PlantVillage Dataset, Wheat Rust Classification Dataset, And Rice Leaf Disease Dataset | Hybrid Models | |

| [77] | Convolution + Swin-Transformer | Image Classification | Cross-Entropy Loss with Label Smoothing | Random Horizontal and Vertical Flipping, Bilinear Interpolation - To Adjust the Image Size | PyTorch | Potato Disease Leaf Dataset (PDLD), Tomato Images (Plant Village), Banana Leaf Disease Images (BLDI), And Cucumber Plant Diseases Dataset (CPDD), | Accuracy: 97.5 % (PDLD), 98.2 % (Tomato), 92.2 % (BLDI), 90.9 % (CPDD) |

Vision Transformer |

| [78] | MobileNet + Multi-Head Attention + Bayesian Optimization | Image Classification | – | Otsu's Thresholding, Morphology, Cropping | TensorFlow | Rice Image Dataset – Publicly Available | Accuracy: 94.65 % |

Depthwise Convolution |

| [79] | 3DCNN + EOS + CAA | Image Classification | Binary Cross-Entropy Loss | Image Enhancement, Noise Reduction, Scaling, Colour Space Transformation | Keras | Corn Leaf Disease Dataset -Plantvillage Dataset and PlanDoc | Accuracy: 98 % |

Feed Forward Neural Network |

| [81] | Resnet50-CBAM + SVM | Image Classification | First Stage Training - Categorical Cross-Entropy Loss Second Stage Training-Squared Hinge Loss |

Data Augmentation - Random Rotation, Random Horizontal Shift, Random Vertical Shift, Horizontal and Vertical Flipping, Zooming, RGB To BGR Conversion Images are Categorized using One-Hot Encoding |

– | Tomato Leaf Disease Dataset - Plantvillage Dataset | Accuracy: 97.2 % |

Residual Blocks |

Attention mechanisms, known for their versatility, provide crucial support at several phases of plant disease identification. In image segmentation tasks, mechanisms such as spatial attention or multi-scale attention target specific regions of interest within plant images, allowing for more exact demarcation of diseased areas. In the context of feature extraction, self-attention mechanisms prove particularly effective at identifying complex patterns and correlations in the data, which makes it easier to extract distinguishing traits linked to distinct diseases. In object detection, both channel and spatial attention mechanisms are essential because they direct focus to disease-related anomalies and allow for the identification of affected areas within complex plant leaf images. Finally, in the classification step, mechanisms such as global attention can be used to emphasize informative portions of the image, resulting in more accurate disease classification based on visual characteristics. By leveraging these various attention mechanisms at different phases, systems for identifying plant diseases can attain enhanced precision, resilience, and effectiveness, thereby making a substantial contribution to the advancement of agricultural sustainability and crop management.

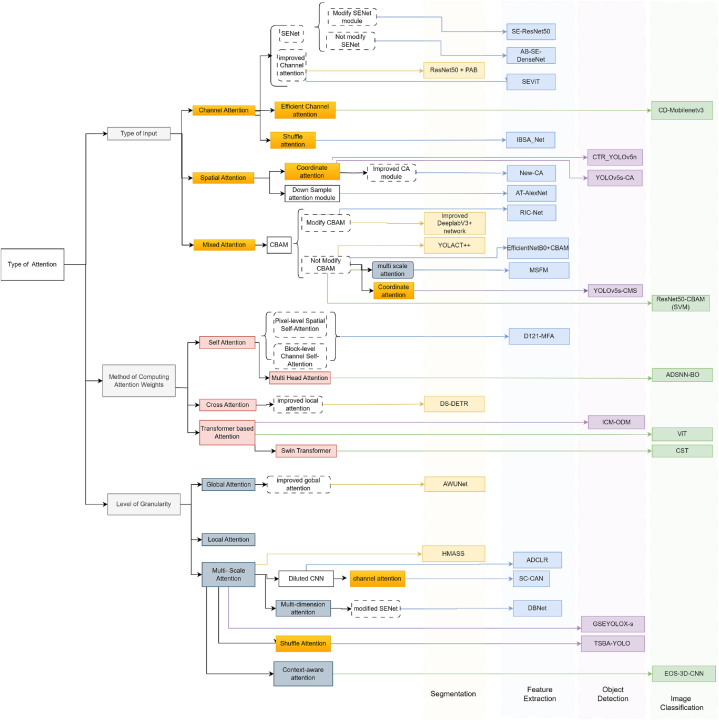

In this study, various techniques proposed by researchers are reviewed, which utilize attention mechanisms to enhance different aspects of plant disease identification, including segmentation, feature extraction, classification, and object detection. Throughout the analysis, a spectrum of approaches is observed, ranging from the direct incorporation of existing attention mechanisms to innovative modifications tailored to specific plant disease identification tasks. Fig. 6 is presented to offer a succinct overview of the studies included in the analysis. This figure categorizes the techniques based on the stages of the plant disease identification process they target, facilitating a detailed examination of the attention mechanisms employed across segmentation, feature extraction, classification, and object detection. By scrutinizing Fig. 6, readers can discern the specific attention mechanisms utilized to enhance segmentation processes, improve feature extraction, refine classification accuracy, and enhance object detection in plant disease identification scenarios.

Fig. 6.

Diagrammatic representation of various attention mechanisms along with the studies that employ these mechanisms.

This section examines the attention mechanisms that researchers have used to enhance the different tasks involved in plant disease identification. For each task, the accompanying figure shows where the attention modules are integrated within the model architecture and which specific modules are employed. The aim is to clarify how attention mechanisms are applied in practice and the role they play in advancing plant disease identification.

4.1. Leveraging attention mechanisms for image segmentation

Image segmentation is the task of dividing an image into semantically relevant areas. Attention mechanisms have emerged as an efficient strategy for improving image segmentation performance because they selectively focus on important image features. In recent studies, various attention mechanisms have been utilized to extract relevant features that improve the performance of segmentation tasks. Some of these studies in the area of plant disease identification are discussed in this section.

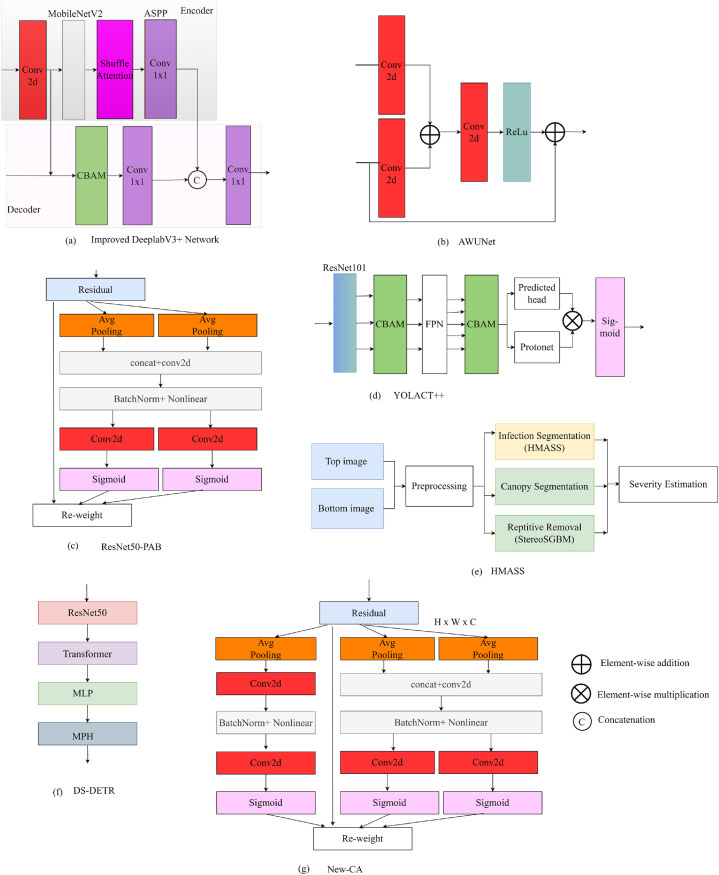

4.1.1. Improved DeeplabV3+ network

For better image segmentation, Ref. [32] proposed an improved DeeplabV3+ network. The encoder component, shown in Fig. 7 (a), employs MobileNetV2 [33] as the primary feature extraction network instead of the Xception network, which reduces computation and increases speed. The shuffle attention module is used to handle sub-features, while Atrous Spatial Pyramid Pooling (ASPP) [34] fuses image context information to obtain high-level information, which is passed to the decoder. In the decoder, CBAM [10] is incorporated to enhance segmentation accuracy. Furthermore, a weighted loss function assigns different weights to background and disease-spot pixels so that they are treated differently during training, as specified in Eq. (5):

$$L = -\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{C} w_j \, y_{ij} \log \hat{y}_{ij} \tag{5}$$

Fig. 7.

Network design of studies that focus on image segmentation utilizing attention mechanisms (a) Improved DeeplabV3+ Network; (b) AWUNet; (c) ResNet50-PAB; (d) YOLACT++; (e) HMASS; (f) DS-DETR; (g) New-CA

Here, the total pixel count is denoted by $N$ and the total number of categories by $C$; $i$ is the index of a specific training pixel and $j$ is the index of the class of that training pixel. The true disease spot category of the $i$th training pixel's annotation is denoted by $y_{ij}$, and $\hat{y}_{ij}$ is the predicted disease spot category for the $i$th training pixel. Additionally, $w_j$ is a weight parameter for category $j$, calculated from $N_j$, the pixel count for category $j$.
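The class-weighted loss described above can be illustrated with a minimal sketch. The weighting rule used here, the inverse-frequency form `N / (C * N_j)`, is an assumption chosen for illustration and is not taken from [32]; the function names and the toy data are likewise hypothetical.

```python
import math


def class_weights(labels, num_classes):
    """Per-class weights from pixel counts.

    ASSUMPTION: inverse-frequency weighting N / (C * N_j); the exact rule
    used in the reviewed study is not reproduced here.
    """
    n = len(labels)
    counts = [labels.count(j) for j in range(num_classes)]
    return [n / (num_classes * c) if c > 0 else 0.0 for c in counts]


def weighted_cross_entropy(probs, labels, weights):
    """Mean over pixels of -w_j * log(p_ij), where j is the true class of pixel i."""
    total = 0.0
    for p, j in zip(probs, labels):
        total -= weights[j] * math.log(p[j])
    return total / len(labels)


# Toy example: three background pixels (class 0) and one disease-spot pixel (class 1).
labels = [0, 0, 0, 1]
probs = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.4, 0.6]]
weights = class_weights(labels, num_classes=2)
loss = weighted_cross_entropy(probs, labels, weights)
```

With this rule the rare disease-spot class receives a larger weight than the background class, so errors on spot pixels contribute more to the loss.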

4.1.2. AWUNet

Study [35] introduced AWUNet (Attention-gated Wavelet pooled UNet), a variant of the U-Net architecture [18] that integrates wavelet pooling and attention-gated skip connections. The attention gate module used in the study is shown in Fig. 7 (b). Attention gate modules are used to remodel the skip connections, and wavelet pooling is used between convolution layers instead of max pooling to decrease the dimension of the feature map. The AWUNet model integrates global and local information from both encoder and decoder paths, leveraging learned attention weights to focus on salient features for improved semantic segmentation. The suggested approach was evaluated against various deep learning techniques including U-Net [18], Visual Geometry Group (VGG) [36], and ResNet [37]. Root Mean Square Propagation (RMSProp) optimizes the weights of the AWUNet model, and LeCun normal is used as the kernel initializer.
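To make the idea of wavelet pooling concrete, the following sketch halves the height and width of a single-channel feature map by keeping only the low-frequency (LL) sub-band of a one-level Haar transform. The choice of the Haar wavelet and of keeping only the LL sub-band are assumptions for illustration; the exact wavelet configuration of [35] is not reproduced here, and `haar_wavelet_pool` is a hypothetical name.

```python
def haar_wavelet_pool(fmap):
    """Downsample a 2D feature map by a factor of 2 in each direction.

    Each non-overlapping 2x2 block is replaced by its orthonormal Haar
    LL coefficient, (a + b + c + d) / 2. A trailing odd row or column
    is dropped.
    """
    h, w = len(fmap), len(fmap[0])
    return [
        [
            (fmap[r][c] + fmap[r][c + 1] + fmap[r + 1][c] + fmap[r + 1][c + 1]) / 2.0
            for c in range(0, w - 1, 2)
        ]
        for r in range(0, h - 1, 2)
    ]


feature_map = [[1.0] * 4 for _ in range(4)]
pooled = haar_wavelet_pool(feature_map)
```

Unlike max pooling, which keeps a single value per block, this form of pooling summarizes every value in the block.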

4.1.3. ResNet50 + PAB

A novel lightweight position attention block (PAB), shown in Fig. 7 (c), is proposed in Ref. [38]; it breaks channel attention into one-dimensional feature encodings. By decomposing global pooling into direction-specific encoding operations, the PAB captures long-range dependencies along one spatial direction while preserving precise position information along the other. PAB can be embedded in existing networks such as MobileNet [39], VGG [36], and ResNet [37]. The generalization ability of the position attention block was tested using object detection models such as YOLOv3 [40] and YOLOv5 [41], and semantic segmentation models such as Mask R-CNN [42].
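The direction-specific encoding can be sketched as follows for a single channel. This is a deliberately simplified illustration, not the PAB of [38]: the learned convolutions of the real block are omitted, and the pooled descriptors are passed directly through a sigmoid. The function names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def directional_attention(fmap):
    """Reweight a 2D feature map using two one-dimensional descriptors.

    Pooling along the width gives one value per row, and pooling along the
    height gives one value per column, so each descriptor keeps position
    information along one axis. ASSUMPTION: no learned transform is applied.
    """
    h, w = len(fmap), len(fmap[0])
    row_desc = [sum(row) / w for row in fmap]
    col_desc = [sum(fmap[r][c] for r in range(h)) / h for c in range(w)]
    return [
        [fmap[r][c] * sigmoid(row_desc[r]) * sigmoid(col_desc[c]) for c in range(w)]
        for r in range(h)
    ]


attended = directional_attention([[0.0, 0.0], [0.0, 4.0]])
```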

4.1.4. YOLACT++

For better segmentation and detection of diseased spots, YOLACT++ with an attention module is proposed in Ref. [43]. The suggested model has five components. In the initial stage, ResNet101 [37] is used as the feature extraction network, and in the second stage, the CBAM attention module [10] is applied. In the third stage, the Feature Pyramid Network (FPN) architecture [26] is utilized to obtain the feature maps, which are fed into a second CBAM, as shown in Fig. 7 (d). The fourth stage involves a segmentation network that uses a prediction head structure to enhance the speed of segmentation. The prediction head receives five feature maps as input and accomplishes three objectives: predicting the target classification, the bounding box, and the mask coefficients. The other component of the segmentation network uses Protonet to generate prototype masks that match the original image's size. The final step involves image post-processing, which includes thresholding, cropping, and fast mask re-scoring.
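In the YOLACT family, an instance mask is assembled as a linear combination of the prototype masks, weighted by the mask coefficients predicted for that instance, followed by a sigmoid and a threshold. A minimal sketch of this assembly step is given below; the function name, the toy prototypes, and the 0.5 threshold are illustrative assumptions rather than values from [43].

```python
import math


def assemble_mask(prototypes, coefficients, threshold=0.5):
    """Combine k prototype masks (each H x W) into one binary instance mask."""
    h, w = len(prototypes[0]), len(prototypes[0][0])
    mask = []
    for r in range(h):
        row = []
        for c in range(w):
            # Linear combination of the prototypes at this pixel.
            logit = sum(k * p[r][c] for k, p in zip(coefficients, prototypes))
            prob = 1.0 / (1.0 + math.exp(-logit))
            row.append(1 if prob > threshold else 0)
        mask.append(row)
    return mask


prototypes = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 1.0]],
]
mask = assemble_mask(prototypes, coefficients=[2.0, -2.0])
```

A positive coefficient switches a prototype on for the instance, while a negative coefficient suppresses it.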

4.1.5. HMASS

To analyse grape foliar disease infection across multiple scales, the hierarchical multi-scale attention for semantic segmentation (HMASS) network [44] was used in Ref. [45], which relies on a series of stereo images captured in the field using a utility task vehicle (UTV). The proposed solution consists of four primary components: disease infection segmentation, canopy segmentation, image overlap removal, and infection severity estimation, as depicted in Fig. 7 (e). Canopy segmentation uses colour filtering techniques to generate canopy masks in the images. The module responsible for identifying and removing overlapping regions between successive images makes use of depth and GPS data. The stereo semi-global block matching (StereoSGBM) approach is used to obtain the depth data. Finally, the ratio of infected areas to canopy areas in non-repetitive image regions is calculated to assess the severity of disease infections.
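The final severity estimate is a ratio of areas, which can be sketched on binary masks as below. Counting infected pixels only where the canopy mask is set is an assumption made for this illustration, and `infection_severity` is a hypothetical helper, not code from [45].

```python
def infection_severity(infected_mask, canopy_mask):
    """Return infected canopy pixels divided by all canopy pixels.

    Both masks are 2D lists of 0/1 values with the same shape.
    ASSUMPTION: infected pixels outside the canopy are ignored.
    """
    infected = 0
    canopy = 0
    for infected_row, canopy_row in zip(infected_mask, canopy_mask):
        for is_infected, is_canopy in zip(infected_row, canopy_row):
            if is_canopy:
                canopy += 1
                if is_infected:
                    infected += 1
    return infected / canopy if canopy else 0.0


severity = infection_severity(
    infected_mask=[[1, 0], [1, 1]],
    canopy_mask=[[1, 1], [0, 1]],
)
```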

4.1.6. DS-DETR

The authors of [46] proposed the Disease Segmentation Detection Transformer (DS-DETR). To increase the convergence speed and reduce the number of training epochs required, an unsupervised pre-trained UP-DETR model [24] is employed. In the DS-DETR model, ResNet50 is used to obtain the feature sequence vectors, as depicted in Fig. 7 (f). The extracted features are fed into the transformer. In the encoder phase of the transformer, an improved relative position encoding is utilized to give more attention to local features, and in the decoder phase, the Spatially Modulated Co-Attention (SMCA) module [47] is employed to extract features from various spatial positions. The results from the decoder phase are passed to the mask prediction heads (MPH) to accomplish pixel-level segmentation of the identified targets.

4.1.7. New-CA

To address the issue of disease recognition under complex background conditions, study [48] uses the GrabCut algorithm to process real-field images and make the background black, so that the real-field images resemble the images used for training the model. A new coordinate attention (CA) block is proposed, as shown in Fig. 7 (g), that captures long-range dependencies along the two spatial directions and along the channel direction as well, unlike the original CA, which captures information along the H- and W-directions only. Additionally, channel pruning is used to reduce the size of the model, and fine-tuning is performed to recover the performance of the model after pruning. With the new CA, ResNet50 [37] and GhostNet [49] both perform well in terms of minimising the error and shrinking the model size.

Attention mechanisms allow the model to concentrate on important regions of the input image, enhancing image segmentation by accurately identifying objects and boundaries. They facilitate the selection of valuable features from different spatial locations, capturing intricate details in scenarios with diverse object scales, shapes, or appearances. However, the integration of attention mechanisms may lead to increased memory usage and longer inference times, particularly in resource-intensive or time-critical applications.

4.2. Leveraging attention mechanisms for feature extraction

Feature extraction refers to the process of obtaining meaningful and informative representations, also known as features, from raw input data. In the context of computer vision, feature extraction typically involves transforming images or image patches into lower-dimensional representations that capture relevant visual information. The utilization of attention mechanisms enhances the discriminative capability of the model, allowing it to prioritize informative regions or features. This is particularly advantageous for tasks that necessitate precise discrimination, such as object recognition or fine-grained classification. Studies that utilized various attention mechanisms for extracting relevant features are discussed here.

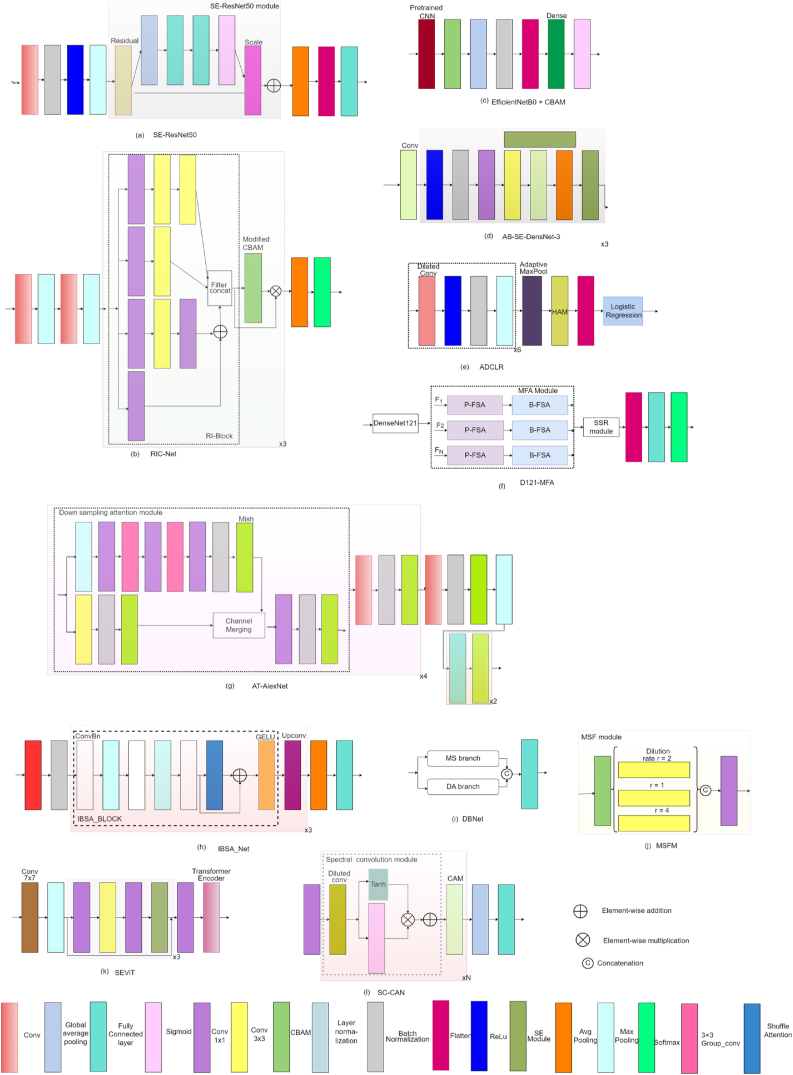

4.2.1. SE-ResNet50

A multi-scale CNN with residual blocks and Squeeze and Excitation (SE) module was introduced by Ref. [50] as shown in Fig. 8 (a) to identify tomato leaf diseases. The SE module recalibrates features by weighting feature channels by relevance. This recalibration approach improves the model's ability to extract complicated disease features by enhancing effective feature channels and reducing invalid ones. The SE module is directly integrated with the ResNet-50 architecture. This integration guarantees that the SE module works seamlessly with ResNet-50's existing layers, improving its feature extraction capabilities.
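The channel recalibration performed by an SE module can be sketched in three steps: squeeze (global average pooling per channel), excitation (a small two-layer network ending in a sigmoid), and scaling. The weights `w1` and `w2` below are hypothetical fixed values standing in for learned parameters, and biases are omitted for brevity; this is an illustration, not the implementation of [50].

```python
import math


def se_block(x, w1, w2):
    """Apply squeeze-and-excitation to x (C x H x W nested lists).

    w1 has shape (C // r) x C and w2 has shape C x (C // r), where r is the
    reduction ratio. Returns the rescaled tensor and the channel scales.
    """
    # Squeeze: one average value per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: fully connected layer + ReLU, then fully connected layer + sigmoid.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    scales = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Scale: multiply every value in a channel by that channel's weight.
    scaled = [[[v * s for v in row] for row in ch] for ch, s in zip(x, scales)]
    return scaled, scales


x = [[[1.0, 3.0]], [[0.0, 0.0]]]  # two channels, each 1 x 2
scaled, scales = se_block(x, w1=[[1.0, 1.0]], w2=[[1.0], [-1.0]])
```

Channels with a scale close to 1 are passed through almost unchanged, while channels with a scale close to 0 are suppressed.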

Fig. 8.

Network design of studies that focus on feature extraction utilizing attention mechanisms (a) SE-ResNet50; (b) RIC-Net; (c) EfficientNetB0 + CBAM; (d) AB-SE-DenseNet-3; (e) ADCLR; (f) D121-MFA; (g) AT-AlexNet; (h) IBSA_Net; (i) DBNet; (j) MSFM; (k) SEViT; (l) SC-CAN

4.2.2. RIC-Net

To reduce trainable parameters, an RI-Block is proposed in Ref. [51] by merging the Inception structure with the residual network. For accurate feature extraction, a modified CBAM module is introduced. In the channel attention module (CAM), the shared MLP is first replaced with two one-dimensional convolutions. Furthermore, a weighted operation is introduced to emphasize the significance of lesions. A fully trained RIC-Net model was then made available online to enable the real-time detection of plant diseases, as shown in Fig. 8 (b).

4.2.3. EfficientNetB0+CBAM

To emphasize significant local regions and extract more distinctive features from the output feature map of a CNN, Ref. [52] employed the CBAM module, as depicted in Fig. 8 (c). The effectiveness of CBAM was demonstrated using pre-trained CNN models such as MobileNetV2 [33], VGG19, ResNet50 [37], EfficientNetB0 [53], and InceptionV3 [54]. EfficientNetB0+CBAM achieved the best accuracy and outperformed the original EfficientNetB0 model.

4.2.4. AB-SE-DenseNet

Study [55] proposes an AB-SE-DenseNet model in which a DenseNet model [56] embedded with an SE module is used to extract useful features from global information, and the AdaBound algorithm is used to accelerate model fitting and enhance the generalisation capability of the model. The study developed three models, SE-DenseNet-1, SE-DenseNet-2, and SE-DenseNet-3, that differ in how the SE modules are embedded in the network. The SE-DenseNet-3 model, depicted in Fig. 8 (d), outperforms the other models by incorporating the SE module in both the transition layer and the dense block of DenseNet simultaneously.

4.2.5. ADCLR

The authors of study [57] proposed attention-based dilated CNN logistic regression (ADCLR), as illustrated in Fig. 8 (e). For image pre-processing, colour space conversion is used to extract brightness and saturation levels, and normalization is used to reduce computational complexity. To address the issue of imbalanced data, synthetic images are created using a Conditional Generative Adversarial Network (CGAN) [58]. A new feature extraction approach is developed that uses a dilated CNN with hierarchical attention mechanisms (HAM). Multiple hidden layers are used in the dilated CNN for efficient learning of discriminative features. Finally, a logistic regression model is used for the classification task.

4.2.6. D121-MFA

The model proposed in Ref. [59] consists of four modules: First, to extract input image features, DenseNet121 [56] is used. Secondly, to obtain local and global features, a multi-granularity feature aggregation module (MFA) is developed that consists of two components: picture-level feature self-attention (P-FSA), which enables the extraction of discriminative features from various disease regions, and block-level feature self-attention (B-FSA), which improves the model's capacity to recognise the traits of various crop species. The MFA module collectively improves the feature aggregation process by incorporating attention mechanisms at different levels of granularity. Thirdly, to capture spatial geometric relationships between feature blocks, sequential spatial reasoning (SSR) is introduced, and in the last step, a classical classification head is used to categorize plant diseases as shown in Fig. 8 (f).

4.2.7. AT-AlexNet

To improve the model's feature extraction capability and minimize information loss, Ref. [60] introduced a modified version of the AlexNet network [61] integrated with a sampling attention module, depicted in Fig. 8 (g). To augment the network's non-linear expression capability, the Mish activation function is employed in place of ReLU. This substitution leads to a notable 0.65 % improvement in the model's recognition accuracy. Group convolution (GC) is utilized to decrease the trainable parameters and increase the diagonal correlation between the convolution kernels of adjacent layers.
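For reference, the Mish activation is defined as Mish(x) = x · tanh(softplus(x)), where softplus(x) = ln(1 + eˣ). The short sketch below compares it with ReLU; the numerically stable softplus form is a standard implementation detail and is not taken from [60].

```python
import math


def softplus(x):
    """Numerically stable ln(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x):
    """Mish activation: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


def relu(x):
    return max(0.0, x)


# Unlike ReLU, Mish is smooth and lets small negative values pass through.
samples = [-2.0, -1.0, 0.0, 1.0, 2.0]
mish_values = [mish(v) for v in samples]
relu_values = [relu(v) for v in samples]
```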

4.2.8. IBSA_Net

The authors of [62] proposed the IBSA_Net model. Its IBMax_block uses an inverted bottleneck structure with batch normalization to reduce the number of parameters, and a MaxPool layer is placed between the ConvBN blocks to enhance the model's stability. To improve the model's capacity to capture spatial location information, the shuffle attention (SA) module is added together with a residual connection and the GELU activation function; the resulting block is named IBSA_block. The IBSA_Net model, shown in Fig. 8 (h), uses three IBSA_blocks, with channel up-convolution (UPconv) employed between them. In the final block, global average pooling is applied to extract relevant information, followed by the FC layer.

4.2.9. DBNet

The dual-branch network (DBNet) model suggested in Ref. [63] consists of a multiscale joint branch (MS) and a multi-dimensional attention branch (DA), as illustrated in Fig. 8 (i). Both branches use VGG-16 as their backbone network. MS uses dilated convolution and asymmetric convolution kernels to obtain various receptive fields in parallel, which is useful when lesion information in images is scattered. To obtain information about the exact lesion location in the image, a novel attention mechanism called DA is utilized. In DBNet, the outputs of the MS and DA branches are concatenated and then passed to the FC layer to produce the output.

4.2.10. MSFM

In [64], a lightweight multi-scale fusion model (MSFM) that uses EfficientNet-B6 [53] as the base network is introduced. The pre-trained network incorporates CBAM prior to each regularization stage to improve the model's capacity to select features. A multi-scale fusion module is used to extract precise colour, texture, and context information from the image. In this module, as illustrated in Fig. 8 (j), CBAM is applied first to enhance the representation of tiny lesion information by learning relevant features. Then, dilated convolutions with various dilation rates are used to extract features.

4.2.11. SEViT

A squeeze-and-excitation vision transformer (SEViT) model was developed in Ref. [65] for efficient detection of fine-grained and large-scale disease symptoms in plants; it contains two modules. The first module is the pre-processing network, in which the ResNet101 model [37] is improved by embedding the SE module, which enhances disease features. The second module is the pre-trained ViT model [20], which takes the enhanced feature representation from SE-ResNet101 as input and produces disease probabilities, as illustrated in Fig. 8 (k). The limitations of the developed model include a large number of parameters and a deep network. In addition, the model can only classify the disease; it cannot predict its severity level.

4.2.12. SC-CAN

In [66], the Spectral Convolution and Channel Attention Network (SC-CAN) is introduced to distinguish between the spectral responses of stressed and healthy crops. The input to this network is a sequence of spectral bands. To address the issue of class imbalance, the Synthetic Minority Oversampling Technique (SMOTE) is used to increase the sample size of the minority class. The SC-CAN comprises two components. The first is the spectral convolution module, which uses a dilated convolution layer with residual connections to increase the receptive field. This enables the extraction of global features even in shallow networks. The second is the channel attention module, which takes the refined feature maps obtained from the dilated convolution layer as input and computes inter-channel relationships, as illustrated in Fig. 8 (l).
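The effect of dilation on the receptive field can be seen in a one-dimensional sketch over a sequence of spectral bands. The example below is a plain "valid" dilated convolution without padding, residual connections, or learned weights, so it illustrates the operation only and is not the SC-CAN layer of [66]; the band values are made up.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """Valid 1D dilated convolution (cross-correlation form).

    With kernel size k and dilation d, each output value covers
    (k - 1) * d + 1 consecutive input positions.
    """
    span = (len(kernel) - 1) * dilation
    return [
        sum(k * signal[i + j * dilation] for j, k in enumerate(kernel))
        for i in range(len(signal) - span)
    ]


bands = [1.0, 2.0, 3.0, 4.0, 5.0]  # hypothetical reflectance values
kernel = [1.0, 1.0, 1.0]

dense = dilated_conv1d(bands, kernel, dilation=1)  # each output sees 3 bands
wide = dilated_conv1d(bands, kernel, dilation=2)   # each output sees 5 bands
```

The same three-weight kernel covers five bands when the dilation is 2, which is how a shallow network can capture wider spectral context without extra parameters.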

Attention mechanisms can be incorporated into various neural network architectures, such as recurrent neural networks (RNNs) and CNNs. They can complement existing architectures and improve their feature extraction capabilities. While embedding attention mechanisms improves feature extraction performance, they can make the interpretability of the learned features more challenging. Understanding which specific regions or features contribute to the model's decisions becomes more complex due to the attention mechanism's non-linear and implicit nature.

4.3. Leveraging attention mechanisms for object detection

Object detection is the process of identifying and locating objects in an image. This task can be difficult because objects vary in appearance and size and may be partially occluded. To tackle these challenges, attention mechanisms are employed in object detection models. These mechanisms allow models to concentrate on the pertinent aspects of an image, adjust to objects of varying sizes, integrate contextual details, optimize resource allocation, and effectively manage complex scenes. By utilizing attention mechanisms, the models can significantly enhance their accuracy in detecting and localizing objects in complex visual environments. Below are a few examples of models created by researchers that leverage attention mechanisms to enhance the ability to identify diseases.

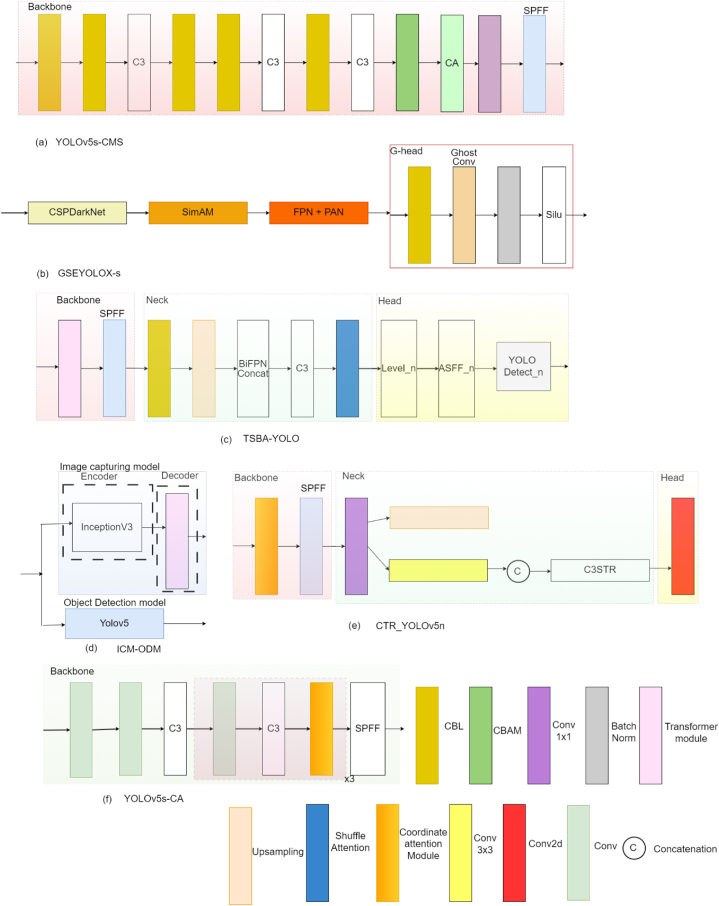

4.3.1. YOLOv5s-CMS

For detecting root-knot nematode in cucumber plants, Ref. [67] used the YOLOv5s [41] deep learning model, with the k-means++ clustering algorithm used to produce anchor boxes. To identify key regions and capture distinguishable features from small targets, a dual attention mechanism combining CBAM and coordinated attention (CA) is embedded in the backbone of YOLOv5s, as illustrated in Fig. 9 (a). The proposed model detects the affected region with a 3.1 % increase in mean average precision compared with the original model.

Fig. 9.

Network design of studies that focus on object detection utilizing attention mechanisms (a) YOLOv5s-CMS; (b) GSEYOLOX-s; (c) TSBA-YOLO; (d) ICM-ODM; (e) CTR_YOLOv5n; (f) YOLOv5s-CA

4.3.2. GSEYOLOX-s

To determine the severity level of Fusarium head blight (FHB) in wheat, Ref. [68] proposed a novel, lightweight model named GSEYOLOX-s, which is an improvement over the YOLOX-s model. In the suggested model, a simple, parameter-free attention module (SimAM) [69] is included after the CSPDarknet backbone network of the original model so that the model concentrates on crucial components without increasing the trainable parameters. After the FPN [26] and PAN [31] structures, a G-head module is introduced to reduce feature-map redundancy, which lowers complexity and increases speed, as shown in Fig. 9 (b). In place of the IoU loss, the Efficient Intersection over Union (EIoU) loss function is utilized to achieve a more precise localization of the disease area. GSEYOLOX-s reduces the parameters to 0.88 MB and increases the mean average precision (mAP) by 2.52 % compared with the original YOLOX-s model.

4.3.3. TSBA-YOLO

The TSBA-YOLO model is proposed in Ref. [70] to extract global information for identifying tea diseases that spread across entire areas of leaves and to effectively detect small disease spots. To increase the global receptive field of the model, the transformer's self-attention mechanism is integrated into the backbone of YOLOv5, as depicted in Fig. 9 (c). For effective fusion of multiscale features, BiFPN is employed. A shuffle attention mechanism is added to the neck of YOLOv5 to improve the model's capacity to recognise disease features and express semantic information. The detection head of YOLOv5 is replaced with the proposed adaptively spatial feature fusion (ASFF) detection head, which facilitates the removal of irrelevant information and enables efficient fusion of disease-related details at different scales.

4.3.4. ICM-ODM

To identify the severity of disease symptoms, the solution proposed in Ref. [71] consists of two modules: image captioning and object detection, as shown in Fig. 9 (d). Image captioning is used to generate sentences that contain information about the disease associated with the visible symptoms and its severity level. The Image Captioning Model (ICM) uses the pre-trained InceptionV3 [54] model as an encoder for feature extraction and a Transformer as a decoder that generates caption sentences from the features extracted by the encoder. For detecting the infected area and displaying a bounding box around it, the YOLOv5 object detection model (ODM) is used. Leaf images are provided simultaneously to both models, and the output image contains a bounding box with sentences that provide information about disease type, symptoms, and degree of damage. The limitation of the proposed model is that the ODM performance is very poor.

4.3.5. CTR_YOLOv5n

To enhance the efficiency of maize disease detection, the CTR_YOLOv5n model is proposed in Ref. [72], which embeds Coordinate Attention (CA) and the detection head of the Swin Transformer (STR) into YOLOv5n. This modification increases the model's accuracy by 2.8 % compared to the original version. The YOLOv5n object detection model is chosen for its compact size and fast recognition speed. The CA mechanism is introduced into the YOLOv5n backbone network to improve focus on small pixel blocks, since disease spots occupy only a few pixels in the image. To improve the model's capability to extract global information, the C3 structure is replaced with the C3STR structure by incorporating the Swin Transformer into a larger detection head, as illustrated in Fig. 9 (e).

4.3.6. YOLOv5s-CA

To accurately detect Mummy Berry disease, the authors of [73] introduced the YOLOv5s-CA model. This model incorporates Coordinate Attention into the backbone of YOLOv5s [41], allowing it to concentrate on visual features related to the disease and amplify the importance of relevant features. This enhancement significantly improves the model's ability to detect diseases, as depicted in Fig. 9 (f). To train the proposed model effectively, the cut-and-paste data augmentation method [74], which creates synthetic images, is employed. Additionally, the Generalized Intersection over Union (GIoU) loss function is used to enhance the localization and bounding-box regression performance of the model when identifying infected areas amidst complex backgrounds.
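
The GIoU loss mentioned above can be written in a few lines. The sketch below assumes axis-aligned boxes with positive area in `(x1, y1, x2, y2)` format and uses the standard definition GIoU = IoU - (C - U) / C, where U is the union area and C is the area of the smallest enclosing box; `giou_loss` is an illustrative name rather than the function used in [73].

```python
def giou_loss(box_a, box_b):
    """GIoU loss (1 - GIoU) for two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Intersection area (zero when the boxes do not overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    # Union area.
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    iou = inter / union

    # Area of the smallest box that encloses both inputs.
    enclose = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))

    giou = iou - (enclose - union) / enclose
    return 1.0 - giou


same = giou_loss((0.0, 0.0, 2.0, 2.0), (0.0, 0.0, 2.0, 2.0))
overlap = giou_loss((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0))
disjoint = giou_loss((0.0, 0.0, 1.0, 1.0), (2.0, 0.0, 3.0, 1.0))
print(same, overlap, disjoint)
```

Unlike the plain IoU loss, which is constant for all non-overlapping boxes, the enclosing-box term keeps the loss informative when the predicted and ground-truth boxes are disjoint.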

In object detection, attention mechanisms play a crucial role in considering contextual information for accurate detection. These mechanisms allow the model to focus on relevant regions surrounding an object, helping it gain a better understanding of the object's context and make more informed predictions. By selectively attending to these image regions, the model can emphasize discriminative features, resulting in more precise bounding box predictions. One potential concern with attention mechanisms is their tendency to overly rely on specific regions or features, potentially causing the model to disregard important cues in other parts of the image. To address this, it is essential to strike a balance between the attention mechanism's contribution and other components of the object detection model.

4.4. Leveraging attention mechanisms for image classification

Image classification refers to the task of assigning a label or category to an input image. The objective is to create models that can accurately categorize images into predetermined categories. To tackle challenges such as variations in scale, viewpoint, or occlusion, attention mechanisms can be employed. Following are a few models that researchers have recently developed, incorporating attention mechanisms to achieve precise classification of plant diseases.

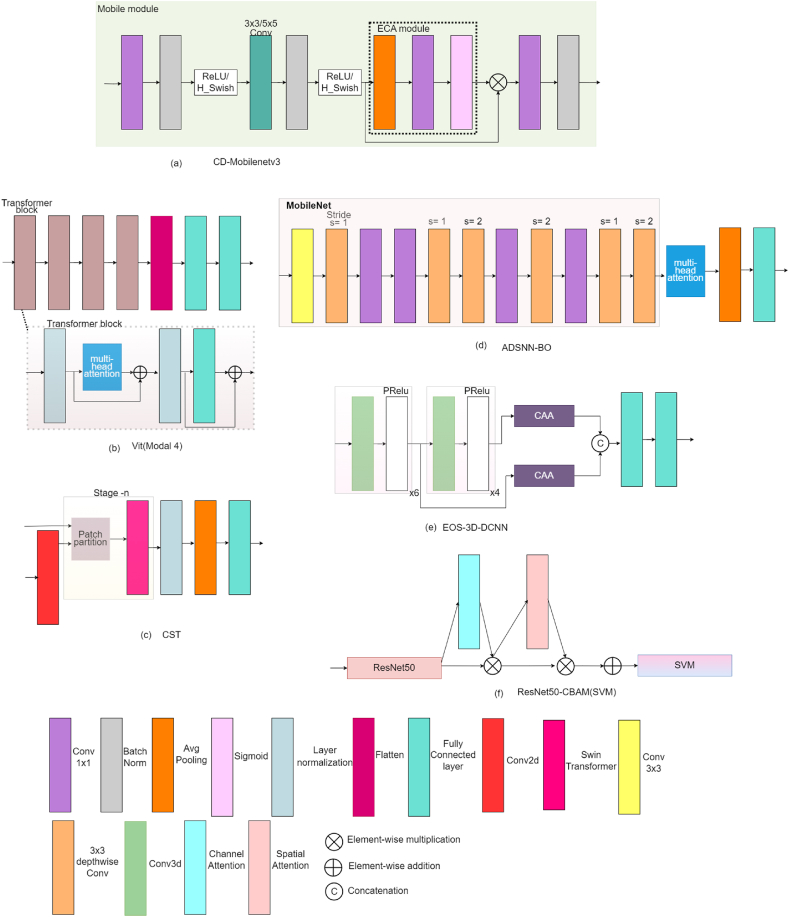

4.4.1. CD-Mobilenetv3

In [75], a CD-Mobilenetv3 model is proposed that uses dilated convolution (Digconv) to broaden the receptive field, so that the convolution gathers more information and the model keeps its attention on samples with distinctive features. To decrease the number of parameters while increasing accuracy, Efficient Channel Attention (ECA) is used in place of the SE module [9]. In addition, cross-layer connections between Mobile modules are introduced to bring in shallow features and to make use of both deep and local features, as shown in Fig. 10 (a).
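
The parameter saving of ECA over SE can be made concrete with a short sketch. It assumes the commonly used adaptive kernel-size rule for ECA (with `gamma = 2` and `b = 1`), an SE block built from two biased fully connected layers with reduction ratio 16, and hypothetical kernel weights; whether CD-Mobilenetv3 uses exactly these settings is not stated here.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Odd 1D kernel size chosen from the channel count (assumed ECA rule)."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def se_param_count(channels, reduction=16):
    """Weights and biases of an SE block with two fully connected layers."""
    hidden = max(1, channels // reduction)
    return (channels * hidden + hidden) + (hidden * channels + channels)


def eca_gates(channel_means, kernel):
    """Channel gates from a zero-padded 1D convolution across channels.

    `channel_means` holds one globally averaged value per channel and
    `kernel` holds the shared 1D convolution weights (the only parameters).
    """
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(channel_means) + [0.0] * pad
    gates = []
    for i in range(len(channel_means)):
        z = sum(w * padded[i + j] for j, w in enumerate(kernel))
        gates.append(1.0 / (1.0 + math.exp(-z)))  # sigmoid
    return gates


for c in (64, 256, 512):
    print(c, "channels: ECA weights =", eca_kernel_size(c),
          "| SE parameters =", se_param_count(c))

demo_gates = eca_gates([0.2, 0.5, 0.1, 0.9], kernel=[0.3, 0.4, 0.3])
print([round(g, 3) for g in demo_gates])
```

Under these assumptions ECA needs only as many weights as its kernel size, while the SE parameter count grows with the square of the channel count.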

Fig. 10.

Network design of studies that focus on image classification utilizing attention mechanisms: (a) CD-MobileNetV3; (b) ViT (Model 4); (c) CST; (d) ADSNN-BO; (e) EOS-3D-DCNN; (f) ResNet50-CBAM (SVM).

4.4.2. ViT

The authors of [76] proposed a novel vision transformer (ViT) and compared it with CNNs. For this comparison, eight models are proposed, each built from a combination of four blocks, where a block contains a transformer block, a CNN block, or a combination of the two. The first block contains only CNN blocks, the second block contains only transformer blocks, the third block contains a CNN block followed by a transformer block, and the fourth block contains a transformer block followed by a CNN block. These eight models were evaluated on three datasets. In every scenario, the ViT model trained from scratch outperforms the CNN and hybrid models in terms of accuracy. Although the proposed ViT model has fewer parameters, its attention blocks are slower than convolutional blocks. Model 4, shown in Fig. 10 (b), is made entirely of transformer blocks and has the highest recall, F1-score, and precision.

4.4.3. CST

In [77], a Convolutional Swin Transformer (CST) model is introduced to identify diseases and assess their severity. This model is built upon the Swin Transformer [22]. Three variants of the model (small, base, and large) have been developed, which differ in how the STR blocks are employed in each stage and in the number of channels used. To obtain the image feature map, a convolution block is incorporated within the Swin Transformer, allowing the feature map to be fed into the network for learning, as represented in Fig. 10 (c). This modification seeks to improve the model's accuracy and robustness. To address overfitting and overconfidence, the model incorporates the label smoothing regularization technique. The label smoothing cross-entropy is expressed in Eqs. (6) and (7):

$$L_{LS} = -\sum_{i=1}^{K} y_i^{LS} \log(p_i) \tag{6}$$

where

$$y_i^{LS} = y_i (1 - \alpha) + \frac{\alpha}{K} \tag{7}$$

Here, Eq. (7) presents a mathematical depiction of label smoothing. In the equation, $y_i$ represents the one-hot encoded representation of a sample i, K denotes the total number of label categories, α represents a small number (specifically 0.1 in this study), and $p_i$ represents the probability that sample i belongs to the positive class.
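
As a minimal sketch of this regularizer, the code below assumes the standard form of label smoothing, in which each one-hot target value y is replaced by y(1 - α) + α/K before the cross-entropy is computed. The function names and the example probabilities are illustrative and are not taken from [77].

```python
import math


def smooth_labels(one_hot, alpha=0.1):
    """Replace each one-hot entry y by y * (1 - alpha) + alpha / K."""
    k = len(one_hot)
    return [y * (1.0 - alpha) + alpha / k for y in one_hot]


def label_smoothing_cross_entropy(probs, one_hot, alpha=0.1):
    """Cross-entropy between predicted probabilities and smoothed targets."""
    targets = smooth_labels(one_hot, alpha)
    return -sum(t * math.log(p) for t, p in zip(targets, probs))


one_hot = [0.0, 1.0, 0.0, 0.0]        # true class is index 1, K = 4
probs = [0.1, 0.7, 0.1, 0.1]          # hypothetical softmax output

targets = smooth_labels(one_hot, alpha=0.1)
plain = label_smoothing_cross_entropy(probs, one_hot, alpha=0.0)
smoothed = label_smoothing_cross_entropy(probs, one_hot, alpha=0.1)
print("smoothed targets:", targets)
print("cross-entropy without smoothing:", plain)
print("cross-entropy with smoothing:", smoothed)
```

With α = 0 the loss reduces to the ordinary cross-entropy. With α = 0.1 a small share of the target mass is moved to the other classes, which penalizes overconfident predictions.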

4.4.4. ADSNN-BO

In [78], a novel model called Attention-based Depthwise Separable Deep Neural Network (ADSNN-BO) is proposed, as depicted in Fig. 10 (d). The authors incorporated an attention layer into the MobileNet model [39] and employed Bayesian optimization to tune the parameters. Using a filter visualization technique, the effectiveness of the suggested approach was tested and compared to existing deep learning models, allowing for detailed analysis and comparison.

4.4.5. EOS-3D-DCNN

In [79], a 3D-dense convolutional neural network (3D-DCNN) is introduced for accurately predicting corn disease. The authors employed the Ebola Optimization Search (EOS) algorithm to determine optimal weights, reduce overall error, and increase accuracy. The VGG16 [36] design is used as the base design for the 3D network. Ten 3D convolution layers are used, each employing a parametric rectified linear unit (PReLU). The model uses two Context-Aware Attention (CAA) [80] blocks, depicted in Fig. 10 (e), to capture information at different scales.

4.4.6. ResNet50-CBAM (SVM)

In Ref. [81], a support vector machine (SVM) is used in place of the FC layers of the CNN model, which also includes CBAM for feature extraction, as illustrated in Fig. 10 (f). After the model has initially been trained from scratch, the base layers of the network are kept “frozen” during the second round of training to minimize the number of trainable parameters.

Attention mechanisms in image classification have changed the field by improving several aspects of the process. Attention mechanisms enhance object distinction by selectively focusing on particular areas or features within an image, allowing classifiers to more accurately distinguish between objects even in cluttered or complicated scenes. Furthermore, these mechanisms enable more efficient feature selection, enabling models to ignore noisy or irrelevant input and prioritize pertinent data.

Table 1 gives an overview of recent studies that used attention mechanisms to create plant disease identification solutions.

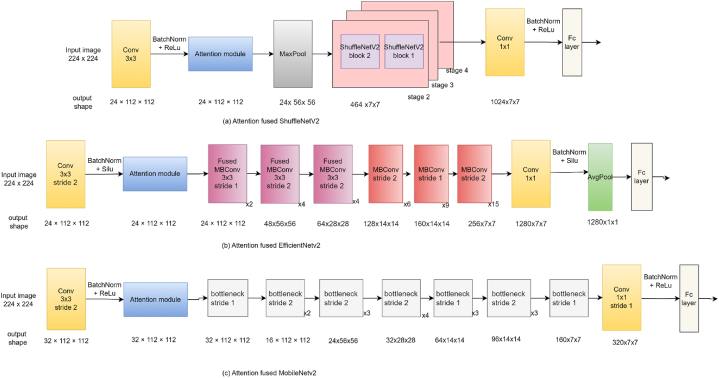

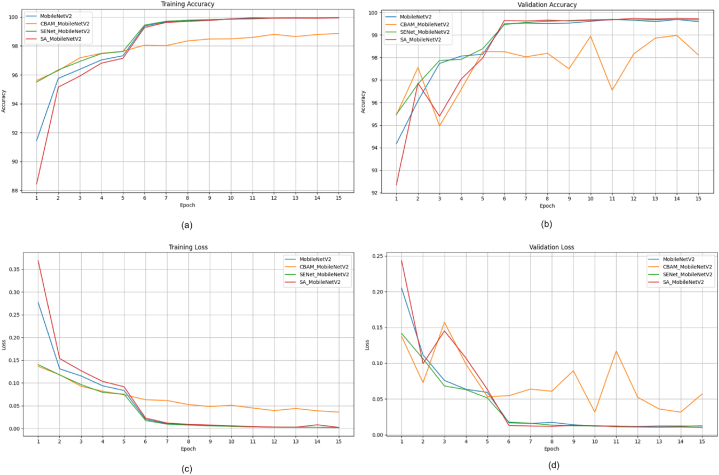

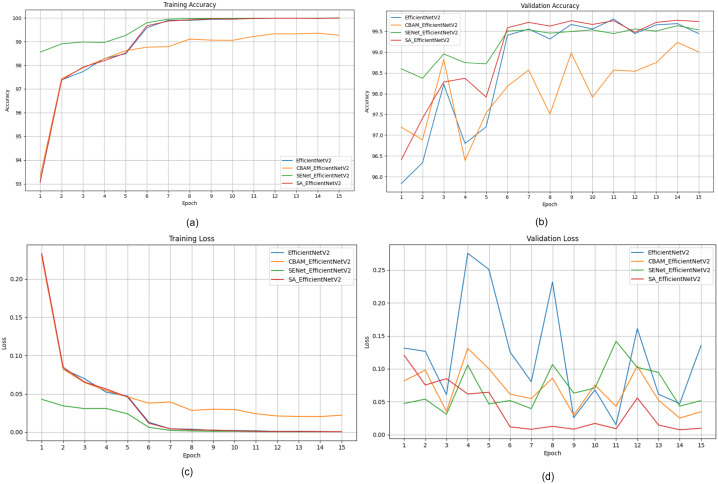

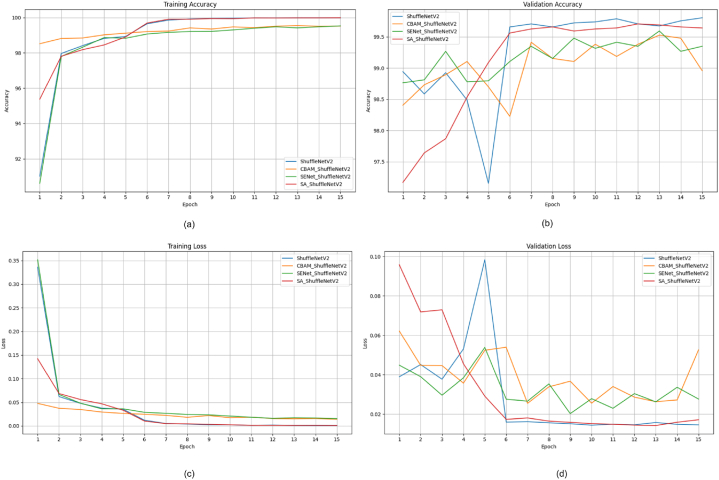

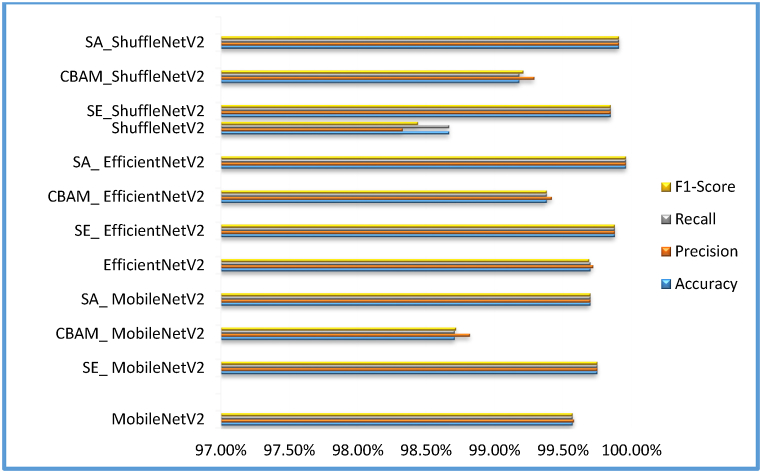

5. Comparative evaluation

This section presents a comparative study examining the impact of integrating attention modules, namely the SE module [9], CBAM [10], and Shuffle Attention [12], into state-of-the-art DL models: ShuffleNetV2 [82], EfficientNetV2 [83], and MobileNetV2 [33]. Our goal is to assess how these modules affect factors such as model performance, efficiency, and parameter count. To achieve this, we have developed attention-fused versions of the ShuffleNetV2, EfficientNetV2, and MobileNetV2 models. An attention module is added after the first convolution layer in each model to enhance the features passed on to subsequent layers. The attention module depicted in Fig. 11 marks the location where the various attention mechanisms are applied in the models. Fig. 11 (a) displays the attention-fused ShuffleNetV2 model, Fig. 11 (b) the attention-fused EfficientNetV2 model, and Fig. 11 (c) the attention-fused MobileNetV2 model.

Fig. 11.

Structure of the attention-fused models: (a) ShuffleNetV2; (b) EfficientNetV2; (c) MobileNetV2.

5.1. Material and methods

This comparative study uses a publicly available dataset [84] comprising 55,636 images and 5850 augmented images, the latter created through operations such as image flipping, scaling transformations, gamma correction, noise injection, rotation, and PCA colour augmentation. The dataset was selected because it includes images representing 26 diseases across 14 distinct crop species, which facilitates comprehensive testing of the model's generalizability across diverse classes. The images were captured under controlled conditions with a consistent background and resized to 224 × 224 pixels. The dataset is organized into 39 classes, each corresponding to a specific disease, as outlined in Table 2. To accommodate the various deep learning models, additional image pre-processing techniques, such as resizing, are applied. The dataset was divided into three groups, training, validation, and testing, with ratios of 0.8, 0.1, and 0.1, respectively. As a result, 49,188 images are used for the training set, 6149 for the validation set, and 6149 for the testing set.
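
The split sizes quoted above follow from the total image count. The sketch below assumes that the training count is obtained by truncating 80 % of the total and that the remainder is divided equally between validation and testing; this rounding scheme is an assumption that happens to reproduce the reported numbers, and `split_counts` is a hypothetical helper.

```python
def split_counts(total, train_ratio=0.8):
    """Return (train, validation, test) sizes for an 0.8 / 0.1 / 0.1 split."""
    n_train = int(total * train_ratio)        # truncate the training share
    n_val = (total - n_train) // 2            # half of the remainder
    n_test = total - n_train - n_val          # whatever is left
    return n_train, n_val, n_test


total_images = 55_636 + 5_850                 # original + augmented images
n_train, n_val, n_test = split_counts(total_images)
print(total_images, n_train, n_val, n_test)
```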

Table 2.

Classification results of the attention-fused models considered.

| Model | Accuracy | Precision | Recall | F1-Score | Parameters | Change in accuracy due to the attention mechanism | Parameters added |
|---|---|---|---|---|---|---|---|
| MobileNetV2 | 99.57 % | 99.58 % | 99.57 % | 99.57 % | 2,273,831 | – | – |
| SE_MobileNetV2 | 99.75 % | 99.75 % | 99.75 % | 99.75 % | 2,273,993 | +0.18 % | 162 |
| CBAM_MobileNetV2 | 98.71 % | 98.82 % | 98.71 % | 98.72 % | 2,284,247 | −0.86 % | 10,416 |
| SA_MobileNetV2 | 99.70 % | 99.70 % | 99.70 % | 99.70 % | 2,273,855 | +0.13 % | 24 |
| EfficientNetV2 | 99.70 % | 99.72 % | 99.70 % | 99.69 % | 20,227,447 | – | – |
| SE_EfficientNetV2 | 99.88 % | 99.88 % | 99.88 % | 99.88 % | 20,227,520 | +0.18 % | 73 |
| CBAM_EfficientNetV2 | 99.38 % | 99.42 % | 99.38 % | 99.38 % | 20,237,863 | −0.32 % | 10,343 |
| SA_EfficientNetV2 | 99.96 % | 99.96 % | 99.96 % | 99.96 % | 20,227,465 | +0.26 % | 18 |
| ShuffleNetV2 | 98.67 % | 98.33 % | 98.67 % | 98.44 % | 1,293,579 | – | – |
| SE_ShuffleNetV2 | 99.85 % | 99.85 % | 99.85 % | 99.85 % | 1,293,652 | +1.18 % | 73 |
| CBAM_ShuffleNetV2 | 99.18 % | 99.29 % | 99.18 % | 99.21 % | 1,303,995 | +0.51 % | 10,416 |
| SA_ShuffleNetV2 | 99.91 % | 99.91 % | 99.91 % | 99.91 % | 1,293,597 | +1.24 % | 18 |

5.2. Experiment setup

Most studies in Section 4 and Table 1 used PyTorch, an open-source deep-learning framework popular in research. This study used pretrained MobileNetV2, ShuffleNetV2, and EfficientNetV2 models for the comparative analysis; all were pretrained on the ImageNet dataset. More information and code can be found in Ref. [85]. The experiments were carried out on an NVIDIA GeForce GPU with 12,288 MB of memory, Driver Version 525.105.17, and CUDA Version 12.0. The models were trained for 15 epochs with a batch size of 32. The Adam optimizer with an initial learning rate of 0.001 was used, with CrossEntropyLoss as the loss function. Additionally, a learning rate decay of 10 % was applied every 5 epochs to enhance the efficiency of the training process.
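
The step-wise learning rate schedule can be sketched in plain Python. The text does not specify whether "decay of 10 %" means that the rate is multiplied by 0.1 or reduced by 10 % (multiplied by 0.9) every 5 epochs, so the sketch exposes the factor as `gamma` and prints both readings. Epochs are assumed to be numbered from 0, and `step_decay_lr` is a hypothetical helper rather than the authors' code.

```python
def step_decay_lr(epoch, base_lr=0.001, step_size=5, gamma=0.1):
    """Learning rate at a given epoch when it is scaled by gamma every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


EPOCHS = 15  # number of training epochs reported in the text

# Reading 1: the rate is multiplied by 0.1 every 5 epochs.
schedule_x01 = [step_decay_lr(e, gamma=0.1) for e in range(EPOCHS)]
# Reading 2: the rate is reduced by 10 % (multiplied by 0.9) every 5 epochs.
schedule_x09 = [step_decay_lr(e, gamma=0.9) for e in range(EPOCHS)]

for epoch in (0, 5, 10):
    print(epoch, schedule_x01[epoch], schedule_x09[epoch])
```

In PyTorch, the same behaviour is typically obtained with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=5, gamma=...)`, calling `scheduler.step()` once per epoch.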

5.3. Evaluation metrics

Several criteria are taken into account in order to evaluate the models' performance, including accuracy, precision, recall, and F1 score. These indicators are essential for assessing a model's quality. The degree to which the predicted and actual values agree is known as accuracy as shown in Eq. (8). The ratio of true positives to all of the model's positive predictions is known as precision which is given in Eq. (9). Recall measures the ratio of true positives to positive samples in the dataset as depicted in Eq. (10). The F1 score sheds light on the model's capacity to recognise positive samples by combining precision and recall as given in Eq. (11). True Positive (TP), False Positive (FP), True Negative (TN), and False Negative (FN) values are used to calculate evaluation metrics, as explained below:

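$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{8}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{9}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{10}$$

$$\text{F1 score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{11}$$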
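
The four metrics can be computed directly from the confusion counts. The sketch below uses the standard binary definitions described above; the counts in the example are made up for illustration, and how per-class scores are averaged for the multi-class results is not specified here.

```python
def classification_metrics(tp, fp, tn, fn):
    """Return (accuracy, precision, recall, f1) from confusion counts.

    Ratios with a zero denominator are reported as 0.0.
    """
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return accuracy, precision, recall, f1


# Hypothetical counts for one disease class treated as "positive".
accuracy, precision, recall, f1 = classification_metrics(tp=90, fp=10, tn=880, fn=20)
print(f"accuracy={accuracy:.4f} precision={precision:.4f} "
      f"recall={recall:.4f} f1={f1:.4f}")
```

Because the F1 score is the harmonic mean of precision and recall, it is pulled towards the lower of the two values.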

5.4. Results and discussion

The integration of attention mechanisms into deep learning models has resulted in significant increases in model performance across a variety of metrics. Table 2 presents the testing results and compares the performance metrics of the models before and after adding attention techniques such as SENet (SE), CBAM, and Shuffle Attention (SA).
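
The small parameter overhead of the SE variants in Table 2 can be checked with a short calculation. The sketch rests on several assumptions that are not stated in the text: the SE block consists of two biased fully connected layers with a reduction ratio of 16, and the first convolution outputs 32 channels in MobileNetV2 and 24 channels in ShuffleNetV2 and EfficientNetV2. The helper name is hypothetical.

```python
def se_param_count(channels, reduction=16):
    """Parameters of an SE block: two fully connected layers with biases."""
    hidden = max(1, channels // reduction)
    squeeze_fc = channels * hidden + hidden       # C -> C / r
    excite_fc = hidden * channels + channels      # C / r -> C
    return squeeze_fc + excite_fc


# Assumed output widths of the first convolution layer in each backbone.
first_conv_channels = {
    "MobileNetV2": 32,
    "EfficientNetV2": 24,
    "ShuffleNetV2": 24,
}

se_overhead = {name: se_param_count(c) for name, c in first_conv_channels.items()}
for name, added in se_overhead.items():
    print(f"SE_{name}: {added} parameters added")
```

Under these assumptions the computed overheads agree with the "parameters added" values listed for the SE variants in Table 2 (162 for MobileNetV2 and 73 for the other two backbones), which shows how cheap an attention block is when it is placed on a narrow early feature map.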