Abstract

Passive acoustic monitoring (PAM) is a powerful tool for studying ecosystems. However, its effective application in tropical environments, particularly for insects, poses distinct challenges. Neotropical katydids produce complex species-specific calls, spanning mere milliseconds to seconds and spread across broad audible and ultrasonic frequencies. Yet subtle differences in inter-pulse intervals or central frequencies are often the only discriminatory traits. These extremes, coupled with low source levels and susceptibility to masking by ambient noise, challenge species identification in PAM recordings. This study aimed to develop a deep learning-based solution to automate the recognition of 31 katydid species of interest in a biodiverse Panamanian forest with over 80 katydid species. Besides these innate challenges, our efforts were also encumbered by a limited and imbalanced initial training dataset comprising domain-mismatched recordings. To overcome these, we applied rigorous data engineering: we improved input variance through controlled playback re-recordings and physics-based data augmentation techniques, and we tuned signal-processing, model and training parameters to produce a custom, well-fit solution. Methods developed here are incorporated into Koogu, an open-source Python-based toolbox for developing deep learning-based bioacoustic analysis solutions. The parametric implementations offer a valuable resource, enhancing the capabilities of PAM for studying insects in tropical ecosystems.

This article is part of the theme issue ‘Towards a toolkit for global insect biodiversity monitoring’.

Keywords: passive acoustic monitoring, machine learning, katydid, data engineering, bioacoustics, tropics

1. Introduction

Recent advances in data processing have ushered in transformative changes across various biological disciplines. Among these, the realm of acoustic signal detection and classification in soundscape recordings offers immense potential, with the capacity to yield unparalleled insights into spatio-temporal occurrence patterns of species within ecosystems (e.g. [1]). Over the past few decades, passive acoustic monitoring (PAM) methods using automatic signal recognition have been widely employed for monitoring a variety of terrestrial and aquatic fauna [2,3]. In the past decade, the adoption of machine learning (ML)-based automation approaches, which typically offer superior accuracy and robustness [4] over conventional techniques, has greatly enhanced the success of PAM methods in monitoring fauna at scale (e.g. [5]). Despite the demonstrated successes, there have been fewer studies employing ML-driven PAM approaches for monitoring insect populations.

Comprehensive insights into the composition of insect communities offer invaluable data for the purposes of conservation and effective management [6,7]. Unsettled debates regarding the nature and extent of declines in insect populations underscore our limited knowledge of this class of fauna, which occupies a key trophic level in the food chain [8,9]. This knowledge gap extends to critical details such as seasonality and distribution patterns across the forest canopy and understory [10,11]. In evolving forest ecosystems and amidst diminishing insect populations, sustained assessment of insect populations will be key for generating interpretable and actionable data. In agricultural applications such as detecting crop pests and adaptively modulating deployment of pesticides and other control measures, adoption of automated PAM techniques can facilitate faster interventions.

Insect calls offer a proxy for their spatial and temporal dynamics [12]. However, insects pose unique challenges for acoustic monitoring. Chorusing insects, such as several species of crickets, are generally more straightforward to detect due to their repetitive and synchronized calling patterns [13]. By contrast, katydids are not typically associated with the formation of choruses. Some strike a balance between communicating with conspecifics and minimizing their exposure to potential predators [14–16], often resulting in low signalling rates. In addition, katydid calls and calling behaviour are the result of a complex interplay of selective forces. These include the preferences of females [17–19], competition among males [20,21], trade-offs between female preferences and male–male competition [22,23], the influence of parasites [24–26], the effects of environmental features on signal transmission [27–30] and energy constraints [31]. The resulting temporal, spectral and spectro-temporal niche partitioning (e.g. [32]; figure 1) drives greater distinctiveness and dynamism in their acoustic behaviour, making the automated recognition of katydid species a more challenging task.

Figure 1.

Waveforms and spectrograms demonstrating inter-specific distinctiveness in calls of a few of the many species of katydids found at the study site: (a) Euceraia atryx, (b) Erioloides longinoi, (c) Docidocercus gigliotosi, (d) Euceraia insignis, (e) Ischnomela pulchripennis, (f) Anaulacomera spatulata, (g) Anaulacomera furcata and (h) Montezumina bradleyi.

In this study, we developed a convolutional neural network (CNN)-based solution to automate the recognition of 31 katydid species of interest found on Barro Colorado Island (BCI), situated within the Panama Canal. BCI is home to a diverse community of over 80 katydid species, each contributing to the soundscape with calls spanning a wide bandwidth, including high ultrasonics, and varying in duration from mere milliseconds to multiple seconds [33]. Notably, certain katydid species emit calls with intricate multi-pulse structures, where subtle differences in inter-pulse intervals or central frequencies serve as the only discernible features. These nuanced differences, coupled with lower source levels and higher susceptibility to masking (even under moderate ambient noise), make species identification in PAM recordings very challenging. Further complicating matters, our initial training dataset was limited and characterized by significant class imbalances. To overcome these challenges, we engaged in extensive data engineering, implementing a range of techniques to expand class representation and variance in the training dataset. We carefully selected approaches and parameters for data preprocessing, class balancing, input conditioning and data augmentation. Each of these choices was informed by both practical considerations and domain awareness of katydid call diversity.

Another serious challenge to realising an ML solution was the atypical nature of the available training recordings: clean focal recordings, gathered by placing captured individuals in controlled settings, presented a stark domain mismatch with the real-world soundscapes where the model would ultimately be deployed. Though often less pronounced, domain mismatch problems are commonly encountered in bioacoustic ML across various taxa, including insects (e.g. [34,35]), birds (e.g. [36,37]) and marine fauna (e.g. [38,39]). Consequently, a variety of solutions have been explored, including transfer learning following pretraining on general-purpose datasets [37,40,41], source separation and denoising [42,43], discriminative training [44], domain adaptation based on covariance normalization [45], unsupervised domain adaptation [46,47] and domain/context adaptive neural networks [36,48]. Data augmentation techniques for addressing domain mismatch were common among the top-performing solutions in a public challenge focused on developing generalizable methods for detecting birds [49]. Besides expanding the available recordings, our solution also relied largely on augmentations. We considered physics-based augmentations, in both the time and spectral domains, to produce near-realistic outputs.

Here, we provide the rationale for and details of the considered techniques, along with an account of the design choices governing our model's development. We present an evaluation of the resulting trained model using real-world field recordings. This study's contributions extend beyond the confines of katydid recognition. It highlights the importance of adopting tailored approaches in developing machine-listening solutions, with greater emphasis on data engineering to surmount common data-related challenges. The proposed techniques are generic and parametric, and provide a robust and adaptable approach that caters to researchers looking to develop insect biodiversity monitoring solutions.

2. Material and methods

(a) Training dataset

At the start of our study, we had access only to a limited set of focal recordings from a prior study whose goal was to describe the call characteristics of katydids on BCI [33]. The focal recordings were obtained from caged male katydids placed in a controlled quasi-natural setting resembling their natural environment. Acoustic foam was used to suppress sound reflections and limit ambient noise. Audio recordings were collected at a sampling frequency of 250 kHz using a CM16 condenser microphone (AviSoft Bioacoustics, Germany) placed 30 cm from the focal insect and connected to an UltraSoundGate 416H A/D converter (AviSoft Bioacoustics, Germany). The resulting data were high-fidelity recordings of katydid sounds with minimal background noise and other interference. With little known of the call characteristics of Panamanian katydids prior to ter Hofstede et al. [33], their focal data collection protocol ensured the utmost confidence in establishing call-to-species associations. However, the ‘domain mismatch’ between these near-studio-quality recordings and typical field recordings made them far from ideal as a standalone dataset for training ML models. Furthermore, the focal recordings contained very few discernible non-katydid sounds, and this lack limits an ML model's ability to learn to reject ‘out of vocabulary’ sounds.

To overcome these shortcomings, we supplemented the training dataset with in situ ambient recordings. Using Swift autonomous recorders (K. Lisa Yang Center for Conservation Bioacoustics, Cornell University) programmed to record at a sampling rate of 96 kHz, we recorded BCI soundscapes at different times of day on 24 different days over a span of seven months in 2019. Following manual annotation (see below), we retained approximately 1.3 h of recordings that contained katydid sounds and other discernible sounds from the environment. Although these recordings would help the ML model adapt to the target application environment, they contained calls of only 22 of the 31 target species. Also, for many species, the numbers of detected calls were low (13 species with fewer than 10 calls; 10 species with fewer than 3 calls).

To address these limitations, we further supplemented the training set with playback recordings, wherein katydid sounds from the focal recordings were played back using an Avisoft Bioacoustics Vifa speaker with an Avisoft USGH 216 amplifier and re-recorded using a Swift recorder. Prior to playback, the speaker was characterized using a tone sweep, and the signals to be played back were filtered to compensate for deficiencies in the speaker's response. To mimic the attenuation and variations in spectrographic features that arise from sound propagation in vegetated environments, the speaker and the recorder were placed in six different spatial configurations among bushes and leaf cover, at varying relative distances (1–6 m).

Following an annotation protocol similar to that of Symes et al. [50], we produced call-level annotations using Raven Pro v.1.6 [51] for all three recording sets (i.e. focal, in situ and playback recordings). Annotations comprised boxes bounding the calls' time (within respective files) and frequency extents. In the in situ recordings, we also annotated calls of bats (one of the dominant predators of katydids) and other non-katydid sounds commonly occurring in the environment. Because bats’ echolocation clicks somewhat resemble the pulsed calls of a few katydid species (e.g. Anaulacomera furcata, Anaulacomera ‘ricotta’, Anaulacomera ‘goat’ and Anaulacomera spatulata) and occupy similar bandwidths, a separate detection-target class for bats helps train the ML model to discriminate between the two and lets us easily discard bat detections from the outputs. Similarly, annotations of other non-katydid sounds enable a ‘catch all’ reject class. Further, we annotated representative time periods that did not contain any discernible spectrographic components. Besides training the ML model not to produce high scores in the absence of target sounds, these recording periods were also used during data augmentation (§2c(ii)).

(b) Test dataset

Our test dataset comprised field recordings sampled pseudo-randomly from a long-term katydid monitoring programme at BCI. Audio recordings were collected at two locations on BCI using Swift recorders placed at a height of 24 m in the forest canopy. Vegetation density varied notably between the chosen locations, offering the potential to capture wider variation in species composition. The recorders were programmed to record for 10 min at the beginning of each hour from dusk until dawn, at a sampling frequency of 96 kHz. From a period spanning five months (in 2019) and covering both wet and dry seasons, we selected five dates corresponding to new moon nights, since katydids are known to be most active during darker nights [52,53]. For each of the five dates and each location, we randomly selected three 10-min recordings representative of soundscapes immediately after dusk, at midnight and shortly before dawn.

Using Raven Pro software, we manually annotated the selected recordings using a dual-observer dual-reviewer protocol [50], wherein each audio file was initially annotated by one of two observer analysts and was then reviewed iteratively by two expert analysts. Data corruption due to equipment malfunction at one of the sites on a dry-season day forced us to discard the three audio files from that day. The final test dataset contained a total of 270 min of soundscape recordings with annotations for 24 katydid species, 21 of which were among our target species.

(c) Data engineering

The calls of Anaulacomera ‘wallace’ and Hetaira sp. exhibit extreme similarity and are indistinguishable to human analysts. For the purposes of automatic classification, we merged annotations from these two species into a single class. We also combined annotations of all the uniquely identified non-katydid sounds into a single ‘catch all’ reject class. In total, we had 32 detection-target classes, of which 30 corresponded to 31 katydid species, one to bats, and one to the ‘catch all’ reject class. Annotations of the ‘background’ category were not assigned to a detection-target class. Instead, for training inputs (§2c(i)) belonging to this category, we set their ground-truth labels (a 32-dimensional vector containing values in the range 0–1) to contain all zeros.

(i) Input preparation

Considering that call energies of all the katydid species considered were dominantly above approximately 7 kHz, we applied a 12th-order Butterworth high-pass filter to suppress energies below 6.9 kHz. For consistency and to speed up downstream steps, we resampled all audio recordings to a sampling frequency of 96 kHz. The resulting Nyquist frequency (48 kHz) remained well above the dominant frequencies of the calls of all included katydid species. Furthermore, this choice simplifies the envisaged application of the trained model: field recordings would be gathered at a 96 kHz sampling frequency, so no resampling would be needed then. To produce fixed-dimension inputs for the CNN model, we first split the resampled continuous audio recordings into segments of 0.8 s duration. By choosing different amounts of segment advance (= segment length − segment overlap), we coarsely controlled the number of segments generated in different scenarios. When pre-processing focal recordings, we used different segment advance amounts for different species groups (table 1). These choices were driven primarily by the number of available annotations for each species and aimed to reduce class imbalance in the training inputs. For pre-processing in situ and playback recordings, we used a fixed segment advance of 0.2 s. This pre-processing of the in situ recordings also generated ‘background’ segments, corresponding to annotations from that category. Additionally, we repeated the pre-processing of in situ recordings with a segment advance of 0.1 s and retained only segments corresponding to ‘background’ annotations. These ‘additive background’ segments are used in data augmentation (§2c(ii)) and do not constitute class-assigned training inputs.
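The filtering, resampling and segmentation steps above can be sketched in Python as follows. This is a minimal illustration assuming SciPy is available; it is not Koogu's implementation, and the function names (`preprocess`, `segment`) are hypothetical. The 12th-order filter is applied in a single causal pass with `sosfilt`, since a forward-backward pass (`sosfiltfilt`) would square the magnitude response and effectively double the order.

```python
from math import gcd

import numpy as np
from scipy.signal import butter, resample_poly, sosfilt


def preprocess(x, fs_in, fs_out=96000, cutoff_hz=6900.0, order=12):
    """High-pass filter a recording, then resample it to fs_out (hypothetical helper)."""
    sos = butter(order, cutoff_hz, btype="highpass", fs=fs_in, output="sos")
    y = sosfilt(sos, np.asarray(x, dtype=np.float64))
    if fs_in != fs_out:
        g = gcd(int(fs_in), int(fs_out))
        # Rational resampling, e.g. 250 kHz -> 96 kHz uses up = 48, down = 125.
        y = resample_poly(y, int(fs_out) // g, int(fs_in) // g)
    return y


def segment(y, fs=96000, seg_s=0.8, advance_s=0.2):
    """Split a signal into fixed-length segments; advance = length - overlap."""
    seg_len = int(round(seg_s * fs))
    hop = int(round(advance_s * fs))
    if len(y) < seg_len:
        return np.empty((0, seg_len))
    n_seg = 1 + (len(y) - seg_len) // hop
    return np.stack([y[s:s + seg_len] for s in hop * np.arange(n_seg)])
```

For instance, one second of 250 kHz audio becomes 96 000 samples, which a 0.2 s advance splits into two 0.8 s segments; a smaller advance, as used for under-represented species, yields more (and more heavily overlapping) segments from the same audio.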

Table 1.

Parameters considered in pre-processing of focal recordings corresponding to different species. Chosen segment advance amounts included 0.05 s (♣), 0.15 s (♦), 0.2 s (♥), 0.4 s (♠), 0.55 s (□) and 0.6 s (▪). Sound centralization penalty was considered for 13 of the 31 species.


We set the per-class ground-truth scores (values in the range 0–1) in the 32-dimensional label vector of each segment to reflect the amount of temporal overlap between the segment and annotations from the corresponding class: where the segment fully contained an annotation or vice versa, we set the ground-truth score to 1.0, and where there was partial overlap between the segment and an annotation, we divided the overlap duration by the shorter of 0.8 s and the annotation's duration. For certain species with very short calls (annotations shorter than 50% of the segment duration, i.e. 0.4 s), we applied an additional ‘sound centralization’ penalty: for annotations that neither occurred within the central 25–75% of the segment nor spanned the segment's mid-point, we multiplied the ground-truth score by (1 − δ/0.4), where δ is the shortest temporal distance from the segment centre to the annotation. We applied the sound centralization penalty only to segments from focal recordings.
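The scoring rules above can be written compactly, as in the sketch below (function names are hypothetical). Two details are our assumptions rather than statements from the text: we read ‘occurred within the central 25–75%’ as full containment in that window, and when a segment overlaps several annotations of the same class we keep the maximum score. Note that a single ratio, overlap / min(segment length, annotation duration), already yields 1.0 for full containment in either direction, so no special case is needed.

```python
def gt_score(seg_start, ann_start, ann_end, seg_len=0.8, centralize=False):
    """Ground-truth score in [0, 1] for one segment and one annotation (times in s)."""
    seg_end = seg_start + seg_len
    ann_dur = ann_end - ann_start
    overlap = min(seg_end, ann_end) - max(seg_start, ann_start)
    if overlap <= 0:
        return 0.0
    # Full containment (either way) gives 1.0; partial overlap is normalized
    # by the shorter of the segment length and the annotation duration.
    score = min(1.0, overlap / min(seg_len, ann_dur))
    half = 0.5 * seg_len  # 0.4 s for 0.8 s segments
    if centralize and ann_dur < half:
        centre = seg_start + half
        in_core = (seg_start + 0.25 * seg_len <= ann_start
                   and ann_end <= seg_start + 0.75 * seg_len)
        spans_centre = ann_start <= centre <= ann_end
        if not (in_core or spans_centre):
            # Shortest distance from the segment centre to the annotation.
            delta = centre - ann_end if ann_end < centre else ann_start - centre
            score *= max(0.0, 1.0 - delta / half)
    return score


def label_vector(seg_start, annotations, n_classes=32, **kwargs):
    """annotations: list of (class_index, start, end). No annotations -> all zeros."""
    labels = [0.0] * n_classes
    for cls, a0, a1 in annotations:
        labels[cls] = max(labels[cls], gt_score(seg_start, a0, a1, **kwargs))
    return labels
```

For example, a 0.1 s call at 0.05–0.15 s in a segment starting at 0 s is fully contained (score 1.0), but with the centralization penalty its score drops to 1 − 0.25/0.4 = 0.375, because it lies 0.25 s from the segment centre.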

Power spectral density spectrograms of normalized audio segments (waveform values in the range [−1.0, 1.0]) were computed using a 5 ms Hann window with 50% overlap between frames, resulting in time- and frequency resolutions of 2.5 ms and 200 Hz, respectively. We clipped the spectrograms along the frequency axis to retain only portions between 6.8 kHz and 47.4 kHz, which resulted in model inputs having dimensions of 204 × 319 (height × width).
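The stated dimensions follow directly from these settings: at 96 kHz, a 5 ms window is 480 samples with a 240-sample hop, giving 1 + (76 800 − 480)/240 = 319 frames and a bin spacing of 96 000/480 = 200 Hz, so the bins from 6.8 kHz (bin 34) to 47.4 kHz (bin 237) number 204. The NumPy sketch below reproduces that arithmetic with a periodic Hann window and one-sided PSD scaling; it is an illustrative stand-in rather than Koogu's own spectrogram code, and `psd_spectrogram` is a hypothetical name. Frequency runs along rows, lowest first.

```python
import numpy as np


def psd_spectrogram(segment, fs=96000, win_s=0.005, f_lo=6800.0, f_hi=47400.0):
    """PSD spectrogram of a peak-normalized segment, clipped to [f_lo, f_hi]; shape (freq, time)."""
    x = np.asarray(segment, dtype=np.float64)
    peak = np.max(np.abs(x))
    if peak > 0:
        x = x / peak                                   # waveform values in [-1, 1]
    nwin = int(round(win_s * fs))                      # 480 samples (5 ms)
    hop = nwin // 2                                    # 50% overlap -> 2.5 ms
    win = 0.5 - 0.5 * np.cos(2 * np.pi * np.arange(nwin) / nwin)  # periodic Hann
    n_frames = 1 + (len(x) - nwin) // hop
    idx = np.arange(nwin)[None, :] + hop * np.arange(n_frames)[:, None]
    spec = np.abs(np.fft.rfft(x[idx] * win, axis=1)) ** 2
    spec /= fs * np.sum(win ** 2)                      # density scaling
    spec[:, 1:-1] *= 2                                 # one-sided: double all but DC and Nyquist
    df = fs / nwin                                     # 200 Hz bin spacing
    lo, hi = int(round(f_lo / df)), int(round(f_hi / df))
    return spec[:, lo:hi + 1].T
```

Selecting bins by index rather than by comparing floating-point frequencies avoids accidentally dropping the 47.4 kHz edge bin to rounding error.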

The generated audio segments and the associated ground-truth scores (labels) constituted intermediate outputs of the input preparation stage. Although conceptually part of the input preparation stage of typical bioacoustic ML workflows, computations to transform audio segments into spectrograms were performed on-the-fly as a part of the training process (§2d(ii)) to facilitate ease in applying different types of data augmentations.

(ii) Data augmentation

Given our modest-sized training dataset, we employed a variety of parametric data augmentation techniques (described below) to meaningfully inflate the training set while also increasing the variance among training inputs. These included both time-domain and spectral-domain augmentations. We set the sequence and probability of application of each augmentation type differently for focal and non-focal (in situ and playback) training inputs (table 2). We also selected different parameter ranges for each augmentation type for different input types. We made these choices (i.e. sequence and probability of application, parameter ranges) empirically, informed by our knowledge of the considered calls and while ensuring that the augmentations remained label-preserving. In the descriptions below, all ‘random choices’ are drawn from a uniform distribution within the respective ranges.

Table 2.

Probability of application of time-domain and spectral-domain augmentations to training inputs from focal and non-focal (in situ and playback) recordings. The ordering in the table reflects the sequence of application of the augmentations. NA: not applied.

| augmentation | domain | focal | non-focal |
|---|---|---|---|
| echo | time | 0.20 | NA |
| volume ramping | time | 0.33 | 0.33 |
| background infuse | time | 0.67 | NA |
| Gaussian noise | time | 0.25* | 0.75 |
| variable noise floor | spectral | 1.00 | 1.00 |
| alter distance | spectral | 0.33 | 0.25 |

*For focal inputs, Gaussian noise was only added to 25% of the inputs among those 33% that were not subject to background infusion.
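The ordering and probabilities in table 2, including the conditional rule for Gaussian noise on focal inputs, can be expressed as a small scheduling function. The sketch below (standard-library Python; `choose_augmentations` and the string names are hypothetical) only decides which augmentations apply to one input, in the tabulated order; the augmentations themselves would be applied by separate functions. It assumes each decision is an independent random draw.

```python
import random

# (augmentation, probability) in order of application, per table 2.
FOCAL_TIME = [("echo", 0.20), ("volume_ramping", 0.33), ("background_infuse", 0.67)]
NONFOCAL_TIME = [("volume_ramping", 0.33), ("gaussian_noise", 0.75)]
FOCAL_SPECTRAL = [("variable_noise_floor", 1.00), ("alter_distance", 0.33)]
NONFOCAL_SPECTRAL = [("variable_noise_floor", 1.00), ("alter_distance", 0.25)]


def choose_augmentations(is_focal, rng):
    """Return the augmentations to apply to one training input, in order."""
    chosen = []
    for name, p in (FOCAL_TIME if is_focal else NONFOCAL_TIME):
        if rng.random() < p:
            chosen.append(name)
    # Focal inputs receive Gaussian noise only if they were not background-infused,
    # and then with probability 0.25 (table 2, footnote).
    if is_focal and "background_infuse" not in chosen and rng.random() < 0.25:
        chosen.append("gaussian_noise")
    for name, p in (FOCAL_SPECTRAL if is_focal else NONFOCAL_SPECTRAL):
        if rng.random() < p:
            chosen.append(name)
    return chosen
```

Under this scheme a focal input receives Gaussian noise with overall probability of roughly 0.33 × 0.25 ≈ 0.08, and never together with background infusion.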

Echo. Synthesizes echo effect by adding a dampened and delayed copy of the input signal's waveform to itself. The dampening factor—a scalar multiplier applied across the copy—reflects a desired attenuation of l dB. The dampened copy is added to the original waveform with an offset of t ms. We set l to vary randomly in the range [−24, −15] and t to vary randomly in the range [1, 6].
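A minimal NumPy sketch of the echo augmentation follows. It assumes the dampening factor is the amplitude ratio 10^(l/20) for the (negative) level l in dB and that the delay is rounded to whole samples; `add_echo` and `random_echo` are hypothetical names.

```python
import numpy as np


def add_echo(x, fs, level_db, delay_ms):
    """Add a copy of x, scaled by level_db dB and delayed by delay_ms, to itself."""
    x = np.asarray(x, dtype=np.float64)
    d = int(round(delay_ms * 1e-3 * fs))          # delay in samples
    out = x.copy()
    if 0 <= d < len(x):
        out[d:] += 10.0 ** (level_db / 20.0) * x[:len(x) - d]
    return out


def random_echo(x, fs, rng):
    """l ~ U[-24, -15] dB and t ~ U[1, 6] ms, as in the text."""
    return add_echo(x, fs, rng.uniform(-24.0, -15.0), rng.uniform(1.0, 6.0))
```

At 96 kHz the 1–6 ms delay range corresponds to offsets of 96–576 samples.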

Volume ramping. Simulates the effect of a source moving away from or closer to the receiver by multiplying the input segment's waveform with an amplitude envelope that linearly decreases or increases, respectively. The envelope is set to 1.0 at one end and reduced linearly to a lower value (less than 1.0) at the other end, reflecting an attenuation of l dB at that end. We set the ramping direction to vary randomly (by randomly selecting the end to be attenuated) and set l to vary randomly in the range [0, 6].
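The envelope can be sketched with NumPy as below, taking l as a non-negative attenuation in dB so that the quieter end has gain 10^(−l/20); `volume_ramp` and `random_volume_ramp` are hypothetical names.

```python
import numpy as np


def volume_ramp(x, level_db, attenuate_end=True):
    """Multiply x by a linear envelope from 1.0 down to an attenuation of level_db dB."""
    x = np.asarray(x, dtype=np.float64)
    low = 10.0 ** (-abs(level_db) / 20.0)   # gain at the attenuated end
    env = np.linspace(1.0, low, len(x))     # quieter towards the end: source receding
    if not attenuate_end:
        env = env[::-1]                      # quieter at the start: source approaching
    return x * env


def random_volume_ramp(x, rng, max_db=6.0):
    """Random direction and l ~ U[0, max_db] dB (0-6 dB in the text)."""
    return volume_ramp(x, rng.uniform(0.0, max_db), attenuate_end=bool(rng.integers(2)))
```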

Background infuse. Adds real-world ambient noise to training inputs from focal recordings. The waveform of a randomly selected segment from the ‘additive background’ set is added to that of a focal segment after subjecting the former to volume ramping augmentation followed by attenuation. The attenuation, wherein a scalar multiplier is applied across the noise segment to reflect an attenuation level of l dB relative to the absolute peak in the focal segment, ensures that target signals in focal segments retain dominance in the resulting outputs. We set the ramping parameter (see above) to vary randomly in the range [0, 9] and set the attenuation level l to vary randomly in the range [−18, −12].
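The background infusion step can be sketched as below. It assumes that focal and ‘additive background’ segments have equal length and interprets the −18 to −12 dB level as a ratio between the absolute peaks of the ramped noise and the focal segment. The ramp is inlined so that the block stands alone; `background_infuse` is a hypothetical name.

```python
import numpy as np


def background_infuse(focal, background, rng, ramp_max_db=9.0, level_range_db=(-18.0, -12.0)):
    """Add a volume-ramped, attenuated ambient-noise segment to a focal segment."""
    focal = np.asarray(focal, dtype=np.float64)
    noise = np.asarray(background, dtype=np.float64)
    # 1) Volume ramping of the noise: l ~ U[0, 9] dB at a randomly chosen end.
    env = np.linspace(1.0, 10.0 ** (-rng.uniform(0.0, ramp_max_db) / 20.0), len(noise))
    if rng.integers(2):
        env = env[::-1]
    noise = noise * env
    # 2) Scale the noise so its absolute peak sits l dB relative to the focal peak,
    #    keeping the focal call dominant in the output.
    level_db = rng.uniform(*level_range_db)
    noise_peak = np.max(np.abs(noise))
    if noise_peak > 0:
        noise *= np.max(np.abs(focal)) * 10.0 ** (level_db / 20.0) / noise_peak
    return focal + noise
```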

Gaussian noise. Adds Gaussian noise to the input segment's waveform. We set the standard deviation of the distribution such that the resulting noise waveform's maximum absolute amplitude occurred at a level that was l dB below the absolute peak amplitude in the signal waveform. We set l to vary randomly in the range [−24, −15] for segments from focal recordings and in the range [−45, −30] for segments from non-focal recordings.
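One way to realize this rule, sketched below, is to draw unit-variance noise and rescale it so that its absolute peak lands exactly l dB below the signal's peak; the standard deviation then follows implicitly. This rescaling approach is our assumption about how the stated constraint could be met, and the function names are hypothetical.

```python
import numpy as np


def add_gaussian_noise(x, rng, level_db):
    """Add Gaussian noise whose absolute peak is level_db dB relative to the peak of x."""
    x = np.asarray(x, dtype=np.float64)
    noise = rng.standard_normal(len(x))
    target_peak = np.max(np.abs(x)) * 10.0 ** (level_db / 20.0)
    noise *= target_peak / np.max(np.abs(noise))
    return x + noise


def random_gaussian_noise(x, rng, focal):
    """l ~ U[-24, -15] dB for focal inputs, U[-45, -30] dB for non-focal inputs."""
    lo, hi = (-24.0, -15.0) if focal else (-45.0, -30.0)
    return add_gaussian_noise(x, rng, rng.uniform(lo, hi))
```

The much lower range for non-focal inputs reflects that these recordings already contain real ambient noise.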

Variable noise floor. Mimics the effect of the foreground having different loudness levels relative to the background. Typically, before converting linear-scale spectrograms to log scale, a small positive quantity ε is added to avoid computing log(0). Our choice of ε = 1 × 10⁻¹⁰ produces spectrograms with a noise floor of −100 dB full scale. During training, we set ε to vary randomly to reflect a noise floor in the range [−105, −85] dB full scale.
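Because 10·log10(ε) sets the floor, ε = 1 × 10⁻¹⁰ gives −100 dB, and a random floor in [−105, −85] dB corresponds to ε = 10^(floor/10), i.e. ε between roughly 3.2 × 10⁻¹¹ and 3.2 × 10⁻⁹. A minimal NumPy sketch follows (hypothetical function names); it assumes the input PSD is already scaled so that full scale corresponds to 0 dB.

```python
import numpy as np


def to_db(psd, floor_db=-100.0):
    """Log-scale a PSD spectrogram, adding eps = 10**(floor_db / 10) to avoid log(0)."""
    eps = 10.0 ** (floor_db / 10.0)   # -100 dB -> 1e-10
    return 10.0 * np.log10(np.asarray(psd, dtype=np.float64) + eps)


def random_noise_floor(psd, rng, floor_range_db=(-105.0, -85.0)):
    """Variable noise floor augmentation: draw the floor uniformly, then log-scale."""
    return to_db(psd, rng.uniform(*floor_range_db))
```

Strong components are essentially unaffected (a PSD value of 1.0 still maps to about 0 dB), whereas silent regions are lifted or lowered to the drawn floor, which changes the apparent foreground-to-background contrast.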

Alter distance. Mimics the effect of increasing or decreasing the distance between a source and the receiver by attenuating or amplifying, respectively, a spectrogram's components at higher frequencies. A quantity whose magnitude varies linearly from 0 to l dB over the bandwidth of the clipped spectrogram (see §2c(i)) is added to all frames of the spectrogram, with 0 and l dB corresponding to the lowest and highest frequencies, respectively. We set l to vary randomly in the range [−6, −3] for segments from focal recordings and in the range [−3, 3] for segments from non-focal recordings. For non-focal recordings, the range spanning both negative and positive values simulated both increases and decreases in source-to-receiver separation. For focal recordings, we applied only attenuation, and of greater magnitude, given the known small recording distance (30 cm).
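On a log-scale spectrogram this is a frequency-dependent offset that is constant across frames. The NumPy sketch below assumes rows are ordered from lowest to highest frequency (as in a 204 × 319 spectrogram clipped to 6.8–47.4 kHz) and uses hypothetical function names.

```python
import numpy as np


def alter_distance(log_spec, level_db):
    """Add a linear 0 -> level_db dB tilt from the lowest to the highest frequency row."""
    log_spec = np.asarray(log_spec, dtype=np.float64)
    tilt = np.linspace(0.0, level_db, log_spec.shape[0])
    return log_spec + tilt[:, None]      # same offset in every time frame


def random_alter_distance(log_spec, rng, focal):
    """l ~ U[-6, -3] dB for focal inputs (attenuation only), U[-3, 3] dB otherwise."""
    lo, hi = (-6.0, -3.0) if focal else (-3.0, 3.0)
    return alter_distance(log_spec, rng.uniform(lo, hi))
```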

(d) Machine learning

We built, trained and tested our ML model using Koogu (v.0.6.2; https://pypi.org/project/koogu/0.6.2/), an open-source framework for machine learning in bioacoustics. The underlying deep-learning framework was TensorFlow (v.2.3.1; [54]), running on Python v.3.6 (Python Software Foundation). We performed training and testing on an Ubuntu 20.04-based computer featuring an octa-core Intel Xeon Silver 4110 processor, 96 GB RAM and an NVIDIA Tesla GPU with 16 GB of video RAM.

(i) CNN architecture

We chose a quasi-DenseNet architecture [55] for its computational efficiency and its ability to train well with few samples. With 3 × 3 pooling at transition blocks (between successive quasi-dense blocks), the spatial dimensions of model inputs (204 × 319) diminish to 3 × 4 over five blocks, a shape small enough for global average pooling. We therefore implemented a CNN with five quasi-dense blocks, each having two layers and a growth rate of 32. The five-block network was preceded by a pre-convolution layer with 32 filters of size 3 × 3. Its outputs were connected to a global pooling layer, followed by a 64-node fully connected layer, a batch normalization layer [56], a ReLU activation layer [57] and, finally, a 32-node (one per class) fully connected layer with sigmoid activations.
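The 3 × 4 figure can be checked with a few lines of arithmetic. Assuming four transition blocks (one between each pair of the five quasi-dense blocks) that pool with a 3 × 3 window, a stride of 3 and ‘same’ padding (so each output dimension is the ceiling of the input divided by 3), the input shrinks as shown below; with ‘valid’ padding (floor division) it would instead end at 2 × 3. These pooling details are our inference from the stated shapes, not taken from the text.

```python
import math


def pooled_shapes(height, width, n_transitions=4, stride=3, same_padding=True):
    """Spatial shape after each transition-block pooling step."""
    shrink = (lambda n: math.ceil(n / stride)) if same_padding else (lambda n: n // stride)
    shapes = [(height, width)]
    for _ in range(n_transitions):
        height, width = shrink(height), shrink(width)
        shapes.append((height, width))
    return shapes


print(pooled_shapes(204, 319))
# [(204, 319), (68, 107), (23, 36), (8, 12), (3, 4)]
```

In Keras terms, such a step would correspond to, for example, `AveragePooling2D(pool_size=3, strides=3, padding="same")`; whether the transition blocks use average or max pooling is not stated here.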

(ii) Training

We used 90% of the prepared segments for training the model and the remaining 10% for evaluation during training. We weighted training losses to address class imbalance in the training inputs. To improve model generalization, we used dropout [58] with a rate of 0.05. We trained models for 60 epochs using the Adam optimizer [59] with a mini-batch size of 32. We used an initial learning rate of 0.01 and reduced it by a factor of 10 at epochs 20, 40 and 50.
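The step schedule above is piecewise constant in the epoch index. A plain-Python sketch follows, assuming 0-based epoch numbering (so epochs 0–19 use 0.01); `learning_rate` is a hypothetical name.

```python
def learning_rate(epoch, initial=0.01, boundaries=(20, 40, 50), factor=0.1):
    """Learning rate for a 0-based epoch: divided by 10 at each boundary passed."""
    drops = sum(epoch >= b for b in boundaries)
    return initial * factor ** drops
```

Over 60 epochs this yields 0.01, 10⁻³, 10⁻⁴ and 10⁻⁵ for epochs 0–19, 20–39, 40–49 and 50–59, respectively. With TensorFlow, such a function could be wired in through `tf.keras.callbacks.LearningRateScheduler(lambda epoch, lr: learning_rate(epoch))`; Koogu's own configuration mechanism for this schedule is not shown here.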

To assess the influence of the augmentation techniques and the added playback recordings on recognition performance, we also trained models (i) without the parametric augmentations and the playback set (using only the original focal and in situ recordings) and (ii) with parametric augmentations but without the playback set. To ascertain consistency, we replicated training in the three scenarios (i.e. original recordings only, original recordings + augmentations, and original recordings + playback recordings + augmentations) five times, producing a total of 3 × 5 = 15 fully trained models. For completeness, we also included a transfer learning experiment using a MobileNetV2 [60] model whose weights were pre-trained on the ImageNet dataset [61]. Single-channel spectrograms were transformed into 3-channel equivalents using the jet colour palette and resized to 224 × 224 before being fed to the model. Limiting the transfer learning experiments to the ‘all in’ scenario (i.e. original recordings + playback recordings + augmentations), we trained five replicates of the transfer learning model. To ensure that all four models (three quasi-DenseNet + one MobileNetV2) in a replication set trained from the same ‘starting point’, we deterministically seeded the randomized initialization of neural-network weights (seed values were the same for the different training scenarios in each replication, but differed across the five replications).

(e) Inferencing protocol

We subjected recordings from the test dataset to the same sequence of pre-processing operations as the training dataset (i.e. resampling, filtering, segmentation, label association and spectrogram generation), but did not apply any augmentations. In contrast to the ad hoc amounts of segment advance chosen when generating training inputs, we used a fixed segment advance of 0.2 s to generate test inputs.

Given the wide range of durations of the target calls (from a few milliseconds to multiple seconds; electronic supplementary material, figure S1) and their disparity with the chosen segment length, we employed a post-processing algorithm to convert raw segment-level detections (with segment boundaries as a detection's time extents) into ‘grouped and processed detections' whose temporal extents were better aligned with those of the detected targets (figure 2). Applying a detection threshold τ to the model's outputs, for each class we identified groups of contiguous segments having detection scores ≥τ. Given the chosen values for segment length and advance, the innermost overlapping periods within a group were 0.2 s, 0.4 s or 0.6 s long for groups of 4, 3 or 2 segments, respectively, with 4-segment groups having the most-overlapped periods. Treating each group as a single detection, the post-processing algorithm reported time extents of the innermost overlapping periods as the detection's time extents. This logic catered to short-duration targets (less than 0.8 s, such as the calls of Anaulacomera ‘goat’, Anaulacomera furcata, etc.; see left and centre columns in figure 2). A larger grouping (more than 4 segments) resulted in a contiguous sequence of 0.2 s-long most-overlapped periods. Treating each larger grouping as a single detection, the post-processing algorithm reported the period encompassing the first and last of the corresponding most-overlapped periods as the detection's time extents. This logic catered to longer-duration targets (greater than 0.8 s, such as the calls of Acantheremus major, Erioloides longinoi, etc.; see right-most column in figure 2). Treating positive segments (segments having scores ≥τ) that were not part of a grouping as independent detections, the post-processing algorithm reported the segments' temporal boundaries as detection time extents. We retained all reported detections for later analysis.
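The grouping rules reduce to simple index arithmetic when the segment length (0.8 s) is an exact multiple k of the advance (0.2 s; k = 4): a run of n contiguous positive segments starting at segment i is reported from (i + min(n, k) − 1) × advance to (i + k + max(n − k, 0)) × advance. This gives the segment boundaries for n = 1, the innermost overlap of length (k − n + 1) × advance for n ≤ 4, and the span from the first to the last 0.2 s most-overlapped period for n > 4 (n − 3 such periods; five for the eight-segment example in figure 2). The sketch below applies this per class; `group_detections` is a hypothetical name, and times are relative to the start of the recording.

```python
def group_detections(scores, threshold, seg_len=0.8, advance=0.2):
    """Convert per-segment scores for one class into (start, end) detections in seconds."""
    k = int(round(seg_len / advance))   # max number of segments covering one instant (4)
    detections = []
    i, n_seg = 0, len(scores)
    while i < n_seg:
        if scores[i] < threshold:
            i += 1
            continue
        j = i
        while j + 1 < n_seg and scores[j + 1] >= threshold:
            j += 1
        n = j - i + 1                        # length of the contiguous positive run
        start_units = i + min(n, k) - 1      # innermost (most-overlapped) start
        end_units = i + k + max(n - k, 0)    # innermost (most-overlapped) end
        detections.append((start_units * advance, end_units * advance))
        i = j + 1
    return detections
```

For example, with τ = 0.8, two positive segments starting at segment 2 yield a 0.6 s detection at 0.6–1.2 s, an isolated positive segment 7 yields its own boundaries (1.4–2.2 s), and eight positive segments starting at segment 10 yield a 1.0 s detection at 2.6–3.6 s.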

Figure 2.

Illustration of the post-processing algorithm using actual test data (top row) and model outputs. The middle row shows raw (as generated by the model) per-segment scores (for the corresponding species), with blue triangles (temporally centred within the segments’ time extents) indicating scores exceeding the detection threshold (0.8; chosen for demonstration). Blue arcs extending on either side of the triangles, along with the dotted vertical lines dropping down from their tips, show the time extents of each segment. The bottom row shows, for each example (columns from left to right), the number of innermost overlapping periods present (1, 1 and 5, respectively, resulting from 2, 3 and 8 successive segments exceeding the threshold) and the time extents of the combined detections reported by the algorithm.

(f) Performance assessment

Given our inference protocol, time extents of reported detections were always multiples of 0.2 s. However, annotations of ground-truthed (GT) calls had no such constraints, which made automated matching of detections to annotations non-trivial. Automation was further complicated (i) by the large variations in the durations of both detections and annotations and (ii) by the fact that, for closely spaced short-duration GT calls, the post-processing algorithm could report fewer detections (or just one), each encompassing multiple GT calls. We therefore employed ‘coverage’ constraints to classify each reported detection as either a true or a false positive (TP or FP, respectively) and to determine whether a GT call was recalled or missed. A reported detection was considered a TP if it overlapped temporally with one or more GT annotations of the same class and the sum of the overlapping durations exceeded 60% of the detection's duration. Similarly, a GT call was considered ‘recalled’ if its annotation overlapped temporally with one or more reported detections of the same class and the sum of the overlapping durations was ≥50% of the annotation's duration. Note that these matching rules deviate from the popular way of determining a detector's recall rate: owing to the absence of a direct correspondence between TPs and GT annotations (therefore TPs + false negatives ≠ GT calls), we simply take the ratio of the number of recalled GT calls to the total number of GT calls. To assess the precision versus recall characteristics of the trained model, we set τ to values in the range 0–1 (at intervals of 0.05), and for each threshold value, we applied the post-processing algorithm and computed precision (TP/(TP + FP)) and recall rates.
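The coverage rules and the modified recall can be sketched per class as follows (plain Python; `overlap` and `evaluate` are hypothetical names). In the usage line, one detection spans two closely spaced GT calls, so it counts as a single TP while both calls count as recalled, illustrating why TPs plus false negatives need not equal the number of GT calls.

```python
def overlap(a, b):
    """Temporal overlap (s) between intervals a = (start, end) and b = (start, end)."""
    return max(0.0, min(a[1], b[1]) - max(a[0], b[0]))


def evaluate(detections, annotations, tp_coverage=0.6, recall_coverage=0.5):
    """Precision and recall for one class from lists of (start, end) intervals."""
    tp = sum(
        1 for d in detections
        if sum(overlap(d, a) for a in annotations) > tp_coverage * (d[1] - d[0])
    )
    fp = len(detections) - tp
    recalled = sum(
        1 for a in annotations
        if sum(overlap(a, d) for d in detections) >= recall_coverage * (a[1] - a[0])
    )
    precision = tp / (tp + fp) if detections else float("nan")
    recall = recalled / len(annotations) if annotations else float("nan")
    return precision, recall


dets = [(0.6, 1.2), (3.0, 3.4)]
gts = [(0.65, 0.85), (0.9, 1.1), (5.0, 5.1)]
print(evaluate(dets, gts))  # precision 0.5, recall 2/3
```

Repeating this for τ from 0 to 1 in steps of 0.05, re-running the post-processing at each threshold, traces the precision–recall curve.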

(g) Manual validation

We manually reviewed the reported detections that were not matched to any GT annotation. In a substantial number of cases, these detections corresponded to faint calls that the analysts had either missed or did not annotate because they did not meet the ‘visible pulse structure’ criterion [50]. Often, the calls were so faint that they were not readily visible in spectrograms. By filtering the recordings with a bandpass filter (with pass-bands appropriate for each species) and adjusting visualization settings in Raven Pro, we were able to verify the signatures of many of the reported detections in the spectrogram, the waveform or both (for an example, see electronic supplementary material, figure S2). In other cases, the reported detections clearly corresponded to notable call-like spectrographic features, but with call structures so degraded that human observers could neither support nor refute the model-assigned classes. Finally, there were cases where no signal was observable despite intensive review (absolute false positives).
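For readers wishing to reproduce this kind of check outside Raven Pro, the sketch below applies a crude zero-phase, brick-wall bandpass filter via the FFT using NumPy. It is an illustration under stated assumptions (the function name, sampling rate, pass-band and test signal are all hypothetical), not the filter Raven Pro implements; a smoother filter design would normally be preferred to limit ringing.

```python
import numpy as np


def fft_bandpass(x: np.ndarray, fs: float, f_lo: float, f_hi: float) -> np.ndarray:
    """Zero every spectral component outside [f_lo, f_hi] Hz and transform back.

    Brick-wall and zero-phase: simple, but it can introduce ringing.
    """
    spec = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spec[(freqs < f_lo) | (freqs > f_hi)] = 0.0
    return np.fft.irfft(spec, n=len(x))


# Hypothetical example: a faint 30 kHz 'call' masked by a loud 2 kHz component.
fs = 192_000                                   # assumed sampling rate (Hz)
t = np.arange(fs // 10) / fs                   # 0.1 s of audio
call = 0.01 * np.sin(2 * np.pi * 30_000 * t)
noise = 1.0 * np.sin(2 * np.pi * 2_000 * t)
filtered = fft_bandpass(call + noise, fs, f_lo=25_000, f_hi=35_000)
```

After filtering, the faint component is recovered almost exactly because the loud one lies entirely outside the pass-band.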

3. Results

Applying the different segment advance choices to the training dataset generated 10 989 segments that were assigned to one or more species classes (see electronic supplementary material, table SI for a breakdown of per-class counts), 1057 ‘background’ segments and 2101 ‘additive background’ segments. With 10% of these set aside for validation, there were fewer than 10 000 species-assigned training samples. With only 476 k total (471 k trainable) parameters, our 15-layer quasi-DenseNet model was considerably smaller than popular off-the-shelf architectures such as MobileNetV2 (53 layers, >2 million parameters) and EfficientNetB0 (>4 million parameters; [62]). Training achieved convergence for all 15 + 5 models. The quasi-DenseNet models attained final training and evaluation accuracies of up to 99.68% and 99.64%, respectively, with binary cross-entropy losses as low as 4 × 10⁻³ and 8.9 × 10⁻³, respectively (figure 3 and electronic supplementary material, table SII). The fine-tuned MobileNetV2 models attained final training and evaluation accuracies of up to 98.81% and 98.46%, respectively, with binary cross-entropy losses as low as 2.5 × 10⁻² and 4.9 × 10⁻², respectively (electronic supplementary material, figure S6 and table SIII).

Figure 3.

Recognition performance of the trained model against the test dataset: distribution of the model's score outputs (left), categorized into true- and false-positive detections, and precision–recall characteristics (right). In practice, the actual performance of the model lies between the ‘base + confirmed’ and ‘base + confirmed + unable to reject’ assessments.

As the primary goal of the study was to develop a well-performing trained model for analysing field recordings, we limited the painstaking manual validation (§2g) to just one of the models from the ‘all in’ scenario. Following manual analyses of detections generated by the model on test set recordings, we report three versions of performance assessments. The first version, labelled ‘base’, matched reported detections to the original annotations of the test recordings. In the second version, labelled ‘base + confirmed’, we added to the TP set (and to the GT set) any apparent FP detections that human observers could confidently match to underlying call(s), and present model performance using the expanded GT set. For the third version, labelled ‘base + confirmed + unable to reject’, we also treated as TPs any detections that could not be confidently rejected as FPs. In practice, the true performance of the model lies between the latter two assessments. While the model assigned high scores to a large number of faint and degraded calls that human analysts had originally overlooked, the majority of the wrong classifications remained concentrated at low scores (figure 3). These observations are good indicators of the model's robustness in processing real-world field recordings.
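To make the three assessment versions concrete, the sketch below recomputes precision and recall from counts as unmatched detections are promoted to TPs. The counts are invented for illustration, the names are hypothetical, and we assume that each promoted detection adds exactly one call to the GT set that also counts as recalled; the study's exact bookkeeping (particularly for the third version) may differ.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    n_detections: int   # all reported detections
    n_tp: int           # detections matched to original annotations
    n_gt: int           # original GT annotations
    n_recalled: int     # original GT annotations that were recalled
    n_confirmed: int    # unmatched detections confirmed by reviewers
    n_unresolved: int   # unmatched detections reviewers could neither confirm nor reject


def assessments(c: Counts) -> dict:
    """Return {version: (precision, recall)} for the three assessment versions.

    Assumption: every promoted detection counts as one TP and adds one
    recalled call to the GT set.
    """
    versions = {
        "base": 0,
        "base + confirmed": c.n_confirmed,
        "base + confirmed + unable to reject": c.n_confirmed + c.n_unresolved,
    }
    return {
        name: ((c.n_tp + extra) / c.n_detections,
               (c.n_recalled + extra) / (c.n_gt + extra))
        for name, extra in versions.items()
    }


# Hypothetical counts, not results from the study.
counts = Counts(n_detections=100, n_tp=60, n_gt=200, n_recalled=120,
                n_confirmed=20, n_unresolved=10)
for name, (p, r) in assessments(counts).items():
    print(f"{name:38s} precision={p:.2f} recall={r:.2f}")
```

With these made-up numbers, precision rises from 0.60 to 0.90 while recall moves only from 0.60 to about 0.65, illustrating why manual validation shifts precision much more than recall when the GT set is large.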

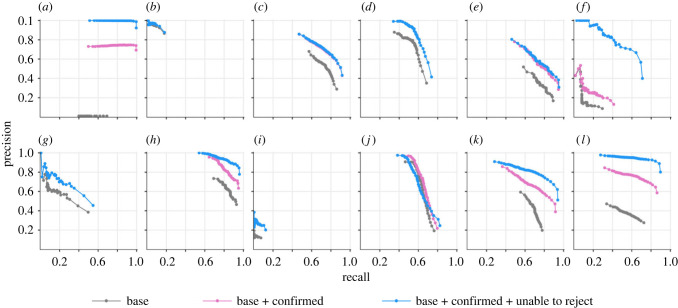

A class-specific breakdown of the recognition performance (figure 4) shows the discriminative power of the model in distinguishing between closely related call types (e.g. between the congeneric Phaneropterinae Anaulacomera ‘wallace’ and Anaulacomera furcata). The model recognized with high accuracy the longer calls of Conocephalines (e.g. Acantheremus major, Erioloides longinoi) and the loud calls of certain Pseudophyllinae (Pristonotus tuberosus and Thamnobates subfalcata). The lower overall recall rate of the model (figure 3) may be attributed to low recall rates for species having a large number of annotations in the test set, such as Agraecia festae (1155 annotations) and Ischnomela pulchripennis (1622 annotations). Among the species having no original annotations in the test dataset, no TP detections were reported for Balboana tibialis, Copiphora brevirostris and Steirodon stalli, whereas for Paracopioricus and Eubliastes pollonerae the model attained moderate-to-high F1-scores (electronic supplementary material, figure S4).

Figure 4.

Class-specific precision–recall curves for katydid species that had at least 20 ground-truth annotations in the test dataset: (a) Acantheremus major, (b) Agraecia festae, (c) Anaulacomera furcata, (d) Anaulacomera spatulata, (e) Anaulacomera ‘wallace’ or Hetaira sp., (f) Docidocercus gigliotosi, (g) Ectemna dumicola, (h) Erioloides longinoi, (i) Ischnomela pulchripennis, (j) Montezumina bradleyi, (k) Pristonotus tuberosus, and (l) Thamnobates subfalcata. In some panels, pink lines are not visible because they are obscured by blue lines; this occurs where there were no detections of the corresponding class that human observers could neither confirm nor refute.

The manual validation exercise (§2g), which categorized each reported detection that did not match any GT annotation as either TP or FP, had a larger influence on the model's precision rates than on its recall rates, as the categorization altered both terms (TP and FP) of the precision ratio TP/(TP + FP). Given our approach to computing recall rates (see §2f), we could still obtain a representative estimate of a model's recall in the absence of manual validation. As such, we present performance comparisons between quasi-DenseNet models from the three training scenarios (§2d(ii)) using only their estimated recalls (figure 5). For clearer pairwise comparisons, see electronic supplementary material, figure S5. Comparisons against the transfer learning approach, which was not among the primary objectives of the study, are also included in the electronic supplementary material (figure S7). Given that we did not conduct any formal search for optimal hyperparameter combinations, readers are advised to treat these comparisons as indicative.

Figure 5.

Class-specific recall rates (panels a–l correspond to the respective classes listed in figure 4). Upper and lower extents of the filled regions correspond to maximum and minimum recall rates, respectively, obtained for the five quasi-DenseNet models in each training scenario.

4. Discussion

In this study, we addressed the non-trivial task of developing an ML detector for neotropical katydid sounds. Our efforts highlighted two overarching categories of challenges: domain-specific challenges and data-related constraints. These are not unique to our work but are emblematic of the broader challenges faced when developing machine listening solutions for multi-species recognition of insects.

The first category of challenges stems from the unique characteristics of katydid calls. In contrast to avian or marine mammal recognition, where established solutions exist, katydid recognition in tropical soundscapes presents a distinctive set of complexities. Given the extreme variance in the temporal, spectral and spectro-temporal characteristics of the calls of neotropical katydids, automatic recognition of certain species' calls is akin to ‘finding a needle in a haystack’. For example, the pulse durations of Phaneropterinae (0.8–1.7 ms) are often far shorter than the frame widths typically used in computing spectrograms. On the other hand, inter-species discriminatory traits sometimes reduce to subtle differences in factors such as pulse repetition rates (e.g. Euceraia atryx versus Euceraia insignis) and peak frequencies (e.g. Anaulacomera furcata versus Anaulacomera ‘ricotta’; see figure 1 for examples). Such extreme conditions precluded the use of pre-trained models (more on this below) or off-the-shelf solutions like BirdNET (with its 3 s, limited-bandwidth inputs [63]). The idiosyncrasies of tropical insect calls necessitate an approach with meticulously tailored processing in every facet of an ML workflow.
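A quick calculation illustrates the mismatch between pulse durations and spectrogram frames. The sampling rate and frame lengths below are hypothetical values chosen for illustration (they are not specified in this passage), as are the helper names; only the 0.8–1.7 ms pulse-duration range comes from the text.

```python
def samples_for(duration_ms: float, fs_hz: float) -> float:
    """Number of samples spanned by an event of the given duration."""
    return duration_ms * 1e-3 * fs_hz


def pulse_fraction_of_frame(pulse_ms: float, frame_len: int, fs_hz: float) -> float:
    """Fraction of one analysis frame occupied by a single pulse."""
    return samples_for(pulse_ms, fs_hz) / frame_len


FS = 192_000  # hypothetical sampling rate (Hz)
for frame_len in (512, 1024, 2048):          # hypothetical FFT frame lengths
    frame_ms = 1000 * frame_len / FS
    lo = pulse_fraction_of_frame(0.8, frame_len, FS)
    hi = pulse_fraction_of_frame(1.7, frame_len, FS)
    print(f"{frame_len}-sample frame = {frame_ms:.2f} ms; a pulse fills {lo:.0%}-{hi:.0%} of it")
```

Under these assumptions, a 1024-sample frame lasts about 5.3 ms, so a single Phaneropterine pulse occupies only about 15–32% of it and its energy is spread over the whole time bin.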

The second category of challenges emerged from the nature of the available training dataset (relatively small size, imbalanced class distribution and atypical origins), which offered limited resources for ML model development. While some classes had very few annotated calls (e.g. Erioloides longinoi and Parascopioricus), many classes contained sounds from only one individual (e.g. Acantheremus major) or a few, presenting significant class representativeness issues. Furthermore, the primary recordings in the dataset were collected in controlled environments that minimized ambient noise, deviating from the real-world soundscapes in which the model would ultimately be deployed.

Use of pre-trained computer vision (CV) models in bioacoustics often necessitates resizing spectrograms to match a model's expected input shape. Commonly available pre-trained models typically support input shapes such as 224 × 224 and 96 × 96. While upsizing spectrograms may have little or no adverse effect, downsizing could introduce artefacts, and the ensuing compression along one or both axes could distort discriminatory traits. Few other studies have explicitly considered the impacts of input compression (e.g. [64]). In our case, we chose the 224 × 224 option as it was the closest match and would result in the least compression: approximately 30% along the horizontal (time) axis and none along the vertical (frequency) axis. Such a high compression ratio (1 : 0.7) along the time axis would cause, among other performance-lowering effects, a collapse of the closely spaced multi-pulse structure of some Phaneropterinae calls (especially Anaulacomera ‘wallace’/Heitara sp.) and a squeezing of the spectral features in the short-duration frequency-modulated calls (see [33]) of Copiphora brevirostris, Docidocercus gigliotosi, Eubliastes pollonerae, Montezumina bradleyi and Thamnobates subfalcata, resulting in a loss of their characteristic distinctiveness. Deviating from the common practice of adopting very large CV models in bioacoustics, a few other studies have also demonstrated the benefits of using bespoke, fully trained CNN architectures (e.g. [55,65,66]). Our choice of a modest-sized CNN not only made training and inference fast but also reduced the risk of overfitting given the small training dataset. While adding to the small but growing body of literature on custom-designed CNNs in bioacoustic pattern recognition, this study also highlights the importance of placing greater emphasis on data engineering (preprocessing, class balancing, input conditioning, near-realistic augmentations, etc.) in non-trivial recognition problems.
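The collapse of closely spaced pulses under time-axis compression can be demonstrated with a toy example. The sketch below is a hypothetical illustration, not the resizing routine of any particular framework: it linearly interpolates a spectrogram with 320 time frames (an assumed width, consistent with roughly 30% compression to 224 frames) onto 224 frames. A pulse train alternating on and off every frame has 159 interior peaks before resizing and necessarily fewer afterwards, because 224 frames cannot represent a one-frame-on, one-frame-off pattern spanning 320 frames.

```python
import numpy as np


def resize_time_axis(spec: np.ndarray, new_width: int) -> np.ndarray:
    """Linearly interpolate every frequency row of a (freq x time) spectrogram
    onto `new_width` evenly spaced time positions."""
    old_t = np.arange(spec.shape[1])
    new_t = np.linspace(0, spec.shape[1] - 1, new_width)
    return np.stack([np.interp(new_t, old_t, row) for row in spec])


def count_peaks(row: np.ndarray) -> int:
    """Number of strict interior local maxima in a 1-D profile."""
    mid = row[1:-1]
    return int(np.sum((mid > row[:-2]) & (mid > row[2:])))


# Hypothetical spectrogram: 64 frequency bins x 320 time frames.
spec = np.zeros((64, 320))
spec[40, ::2] = 1.0                      # a pulse in one bin every other frame

resized = resize_time_axis(spec, 224)    # 224 / 320 = 0.7 along time
print(count_peaks(spec[40]), count_peaks(resized[40]))
```

Interpolation-based resizers in common deep learning libraries differ in detail (e.g. antialiasing), but none can faithfully preserve periodic structure whose period falls below two output frames.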

Our choice of a 0.8 s segment length for breaking up continuous audio was a judicious one, as it strikes a balance between competing considerations. It is just long enough to capture sufficient portions of longer-duration calls (>0.8 s; e.g. Acantheremus major, Erioloides longinoi) to identify the source unambiguously. On the other hand, it is short enough for the shortest calls (e.g. Phaneropterinae calls) to constitute significant portions of model inputs. For species with a variable number of pulses (e.g. Anapolisia colossea, Thamnobates subfalcata), the 0.8 s window ensured that each input captured enough pulses (≥4) of the full call. This was vital, especially where inter-pulse intervals were a strong discriminatory trait (e.g. Euceraia atryx versus Euceraia insignis). While a 0.2 s segment advance during inference ensured that calls present in the recordings were captured by one or more segments, the ad libitum segment advance settings used during input preparation improved class balance among training inputs. The choice of 0.8 s segments was also a trade-off between larger inputs, which exact higher resource demands and slower processing, and smaller inputs, which may not capture sufficient discriminatory traits. When associating ground-truth values with training inputs, our choice to assign continuous (non-binary) values ensured that inputs with clipped calls (at segment boundaries) were penalized less during training. Consequently, the models also likely learned to produce partial scores for inference inputs with truncated calls, a behaviour that was also conducive to the post-processing stage.
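The segmentation and soft-labelling ideas can be sketched as follows. The 0.8 s segment length and 0.2 s advance come from the text; the toy 1 kHz sampling rate, the function names and, in particular, the soft-label formula (overlap divided by the shorter of the call and segment durations) are hypothetical choices for illustration, not necessarily how continuous ground-truth values were computed in this study or in Koogu.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (start_s, end_s)


def segment_bounds(n_samples: int, fs: int,
                   seg_len_s: float = 0.8, hop_s: float = 0.2) -> List[Interval]:
    """Start/end times of fixed-length segments taken every `hop_s` seconds.

    Working in integer samples avoids floating-point drift in the start times.
    """
    seg = int(round(seg_len_s * fs))
    hop = int(round(hop_s * fs))
    return [(s / fs, (s + seg) / fs) for s in range(0, n_samples - seg + 1, hop)]


def soft_label(seg: Interval, call: Interval) -> float:
    """Hypothetical continuous target in [0, 1]: 1.0 when the segment holds the
    whole call (or is entirely filled by it), less when the call is clipped."""
    ov = max(0.0, min(seg[1], call[1]) - max(seg[0], call[0]))
    return ov / min(call[1] - call[0], seg[1] - seg[0])


fs = 1000                                  # toy sampling rate (Hz)
segments = segment_bounds(2 * fs, fs)      # 2 s of audio
call = (0.5, 0.9)                          # one annotated 0.4 s call
labels = [round(soft_label(s, call), 3) for s in segments]
```

With a 0.8 s window advanced by 0.2 s, every point of the call falls in up to four overlapping segments, and the partially covering segments receive intermediate targets (here 0.75 and 0.25) rather than a hard 0 or 1.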

Many studies have treated audio conditioning and spectrogram generation as part of the ‘input preparation’ process (e.g. [4,67]), generating spectrograms as intermediate outputs (often stored as image files). A few studies have also applied augmentations as part of the same process (e.g. [68,69]), generating augmented replicates of the original training set. Such approaches, besides increasing storage needs, make it difficult to apply dynamic time-domain augmentations. Breaking up the input preparation workflow into disjoint processes, namely audio pre-processing (resampling, filtering, segmentation and label association) and on-the-fly spectrogram generation, allows additional flexibility in applying both time-domain and spectral-domain augmentations (e.g. [70]). By saving pre-processed audio segments (instead of spectrograms) as intermediate outputs and deferring the audio-to-spectrogram transformation to the data-flow pipeline during training, we could easily include a number of on-the-fly temporal and spectral augmentations before and after spectrogram generation, respectively. By randomizing the probability of application of each of the chained augmentations and the parameter values of each augmentation type, we were able to greatly improve variance in the training inputs, both within each training epoch and across epochs.
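The deferred, chained augmentation pipeline described above might be sketched as follows in plain NumPy. Everything here is a hypothetical stand-in: the specific augmentations (a volume ramp and additive noise in the time domain; a frequency-dependent attenuation loosely mimicking the greater loss of higher frequencies over distance in the spectral domain), their probabilities and parameter ranges, the STFT settings and the function names are our illustrative choices, not Koogu's implementations or the study's settings. The point is the structure: a stored audio segment passes through randomly applied, randomly parameterized time-domain augmentations, is converted to a spectrogram on the fly, and then passes through spectral-domain augmentations.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


# --- time-domain augmentations (operate on the audio segment) ---
def ramp_volume(x, rng):
    """Linearly ramp gain from 1.0 to a random end value."""
    return x * np.linspace(1.0, rng.uniform(0.3, 1.0), len(x))


def add_noise(x, rng):
    """Add white Gaussian noise at a random SNR between 0 and 20 dB."""
    snr_db = rng.uniform(0.0, 20.0)
    p_signal = np.mean(x ** 2) + 1e-12
    sigma = np.sqrt(p_signal / 10 ** (snr_db / 10))
    return x + sigma * rng.standard_normal(len(x))


# --- spectral-domain augmentation (operates on the spectrogram) ---
def attenuate_high_freqs(S, rng):
    """Apply a gain falling linearly (in dB) from 0 at DC to -max_db at the top bin."""
    max_db = rng.uniform(0.0, 12.0)
    gains_db = -np.linspace(0.0, max_db, S.shape[0])
    return S * (10 ** (gains_db / 20))[:, None]


def magnitude_spectrogram(x, n_fft=256, hop=128):
    """Hann-windowed STFT magnitude, shape (freq bins, time frames)."""
    frames = sliding_window_view(x, n_fft)[::hop] * np.hanning(n_fft)
    return np.abs(np.fft.rfft(frames, axis=1)).T


TIME_AUGS = [(ramp_volume, 0.5), (add_noise, 0.5)]   # (function, probability)
SPEC_AUGS = [(attenuate_high_freqs, 0.5)]


def make_training_input(segment, rng):
    """Produce one freshly augmented spectrogram from a stored audio segment."""
    x = segment
    for aug, p in TIME_AUGS:
        if rng.random() < p:
            x = aug(x, rng)
    S = magnitude_spectrogram(x)
    for aug, p in SPEC_AUGS:
        if rng.random() < p:
            S = aug(S, rng)
    return S


rng = np.random.default_rng(seed=0)
segment = np.sin(2 * np.pi * 0.05 * np.arange(4096))   # stand-in audio segment
epoch1 = make_training_input(segment, rng)
epoch2 = make_training_input(segment, rng)              # generally differs from epoch1
```

Because augmentation happens inside the data-flow pipeline, the same stored segment can yield a different spectrogram every time it is drawn, without storing any augmented replicates.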

Given the subtleties in the discernible traits of katydid sounds, synthetic masking (even minimal) risks occluding significant portions (sometimes the entirety) of short-duration or narrow-bandwidth calls, which precluded augmentation techniques such as SpecAugment [71]. The short duration of input segments left little scope for applying time-warping augmentations without considerable risk of altering or annulling labels. We also avoided commonly used techniques from the CV domain (like rotation and reflection) that could alter labels, and considered only simple, largely physics-based augmentation techniques whose outputs more closely resembled naturally occurring conditions and were therefore more likely to preserve labels. The data augmentations resulted in non-negative improvements in recall for every class (having more than 20 ground-truth annotations in the test set), with the exception of Docidocercus gigliotosi. The improvements were noteworthy for Agraecia festae, which had training inputs only from the focal set, and for Ectemna dumicola, which had no training inputs from the in situ set and only six from the playback set (see electronic supplementary material, table SI). Although Pristonotus tuberosus had the fewest training inputs (64) in the focal set, it attained high recall rates even without augmentations, likely because its large number of instances in the in situ set (219; the highest for any species) provided adequate variance and coverage of the target domain's characteristics. Other classes with high numbers of instances in the in situ set also saw little (Anaulacomera furcata) to no (Thamnobates subfalcata) improvement from augmentations, with the exception of Acantheremus major, which had a notable bump in recall.
For Montezumina bradleyi, which had no instances in the in situ set, recall improved notably with data augmentation, but these gains were dwarfed by those from including training inputs from the playback set. For Acantheremus major and Erioloides longinoi (both having long-duration calls), recall was already quite high following augmentations, leaving little scope for the playback set to improve performance further. The very short-duration calls of the Phaneropterinae species Anaulacomera furcata and Anaulacomera ‘wallace’ may have suffered intense signal degradation during the playback-recapture exercise (from propagation and/or from filtering of the original recordings). Small dips observed in their recall rates may be attributable to this phenomenon. Further, we observed an unexpected behaviour in which recall rates for some classes that had no training inputs in the playback set changed notably when models were trained with the playback set: recall rates of Agraecia festae and Ischnomela pulchripennis decreased, while that of Anaulacomera spatulata increased. These changes warrant further investigation. While we observed some improvements from the inclusion of the playback set, its influence across classes was not as consistent as that of data augmentations. In addition to potential signal degradation issues (see above), our playback set was also not well-rounded, providing coverage for only half of the classes and having very few samples for some classes. A meaningful expansion of the playback set with additional recaptured recordings may help stabilize performance improvements. Alternatively, one could simulate a playback set by convolving the focal recordings with impulse responses representative of the target environments that one attempts to mimic [72]. Such a technique offers a cost-effective alternative to using physical transmitters and receivers and makes it possible to ‘add’ new environments to a playback set without requiring physical access to those environments.
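A simulated playback set of this kind can be sketched by convolving focal recordings with an impulse response. In practice one would use impulse responses measured in the target habitat; the exponentially decaying noise tail generated below (with a hypothetical 0.1 s decay time and sampling rate) is only a synthetic stand-in, and the function names are ours rather than those of any library.

```python
import numpy as np


def synthetic_impulse_response(fs: int, rt60_s: float, rng) -> np.ndarray:
    """Direct-path spike followed by a noise tail whose amplitude decays by 60 dB over rt60_s."""
    n = int(rt60_s * fs)
    t = np.arange(n) / fs
    envelope = 10 ** (-3 * t / rt60_s)           # -60 dB in amplitude at t = rt60_s
    ir = 0.1 * rng.standard_normal(n) * envelope
    ir[0] = 1.0                                  # direct sound
    return ir


def simulate_playback(x: np.ndarray, ir: np.ndarray) -> np.ndarray:
    """Convolve a focal recording with an impulse response, keep the original
    length and restore the original peak amplitude."""
    y = np.convolve(x, ir)[: len(x)]
    return y * (np.max(np.abs(x)) / (np.max(np.abs(y)) + 1e-12))


rng = np.random.default_rng(1)
fs = 48_000                                                    # hypothetical sampling rate
focal = np.sin(2 * np.pi * 5_000 * np.arange(fs // 10) / fs)  # stand-in 0.1 s call
ir = synthetic_impulse_response(fs, rt60_s=0.1, rng=rng)
re_recorded = simulate_playback(focal, ir)
```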

As for the additional experiments with pre-trained models, training (and evaluation) accuracies and losses came close to those of the corresponding fully trained quasi-DenseNet models. However, recall rates were significantly lower (p < 10⁻³) across all thresholds for three classes (Anaulacomera furcata, Montezumina bradleyi and Thamnobates subfalcata) and considerably lower for most other classes. These findings lend credence to our concerns regarding the impacts of reducing spectrogram dimensions to suit an available model.

Starting with a dataset dominated by ‘studio quality’ focal recordings (see figure 1 for examples), we successfully developed an ML solution capable of analysing real-world field recordings. The model's performance underscores the effectiveness of our data engineering efforts. While it excelled in recognizing species with longer calls (high precision and recall), certain species, particularly those with pulsed and repetitive calls, posed challenges to its recall. This observation may, in part, reflect post-processing considerations and warrants further investigation. We acknowledge that, despite offering good coverage of environmental conditions, the test set was less substantial with respect to species coverage: it contained few or no calls from some of the target species. Subsequent work could expand the test set with additional annotated field recordings to strengthen the statistical power of assessments. However, we performed additional empirical checks on several random recordings from the area and noted promising outcomes: few FPs, few missed calls and high confidence (scores close to 1.0) for most TPs. Further, in a related study [73], large-scale analyses of multi-year recordings were performed using the trained model from this study, and daily calling patterns (inferred from reported detections) of several species were found to be in close agreement with prior knowledge.

Our work not only addresses the specific challenges of katydid sound recognition but, by successfully navigating the idiosyncrasies of domain-specific signal processing and data-related constraints, it also offers a valuable foundation for future endeavours in the realm of insect biodiversity monitoring through machine listening. The design considerations and techniques developed in this study, being generic and parametric, can be readily adopted and adapted by other researchers and initiatives focused on developing machine listening solutions for insect biodiversity monitoring. In our attempt to enrich the resource pool available to the broader scientific community, we have integrated the developed techniques into recent releases of the open-source Koogu toolbox and have also bundled the final trained model with releases of Raven Pro, starting from v.1.6.5.

Acknowledgements

We acknowledge the assistance of the staff of the Smithsonian Tropical Research Institute in facilitating logistics and site access. Hannah ter Hofstede kindly provided the equipment and methods for the re-recorded dataset.

Ethics

This work did not require ethical approval from a human subject or animal welfare committee.

Data accessibility

Data considered in this study were from previously published works [33,50]. The techniques developed in this study have been integrated into recent releases of the open-source Koogu toolbox: https://github.com/shyamblast/Koogu. The final trained model is also bundled with releases (starting from v.1.6.5) of Raven Pro software. For replication of the results presented in the manuscript, all training audio (focal, in situ and playback) and annotations (in Raven Pro selection table format) can be obtained from the Zenodo Repository: https://doi.org/10.5281/zenodo.10594015 [74] and all testing audio files and annotations (also Raven Pro format) are available from the Dryad Digital Repository: https://doi.org/10.5061/dryad.zw3r2288b [75].

Additional data and results are provided in electronic supplementary material [76].

Declaration of AI use

We have not used AI-assisted technologies in creating this article.

Authors' contributions

S.M.: conceptualization, data curation, formal analysis, funding acquisition, investigation, methodology, software, validation, visualization, writing—original draft, writing—review and editing; H.K.: funding acquisition, methodology, project administration, resources, writing—review and editing; L.B.S.: conceptualization, data curation, formal analysis, funding acquisition, investigation, methodology, project administration, resources, validation, writing—original draft, writing—review and editing.

All authors gave final approval for publication and agreed to be held accountable for the work performed therein.

Conflict of interest declaration

We declare we have no competing interests.

Funding

Funding for this work was provided by National Geographic and Microsoft AI for Earth Grants (grant nos NGS-57246T-18 and NGS-84198T-21) and the Cornell Lab of Ornithology Rose Postdoctoral Fellowship (L.B.S.).

References

- 1.Wood CM, Popescu VD, Klinck H, Keane JJ, Gutiérrez RJ, Sawyer SC, Peery MZ. 2019. Detecting small changes in populations at landscape scales: a bioacoustic site-occupancy framework. Ecol. Indic. 98, 492-507. ( 10.1016/j.ecolind.2018.11.018) [DOI] [Google Scholar]

- 2.Stowell D. 2022. Computational bioacoustics with deep learning: a review and roadmap. PeerJ 10, e13152. ( 10.7717/peerj.13152) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Sugai LS, Silva TS, Ribeiro JW Jr, Llusia D. 2019. Terrestrial passive acoustic monitoring: review and perspectives. BioScience 69, 15-25. ( 10.1093/biosci/biy147) [DOI] [Google Scholar]

- 4.Shiu Y, et al. 2020. Deep neural networks for automated detection of marine mammal species. Sci. Rep. 10, 607. ( 10.1038/s41598-020-57549-y) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Wood CM, Barceinas Cruz A, Kahl S. 2023. Pairing a user-friendly machine-learning animal sound detector with passive acoustic surveys for occupancy modeling of an endangered primate. Am. J. Primatol. 85, e23507. ( 10.1002/ajp.23507) [DOI] [PubMed] [Google Scholar]

- 6.Fischer FP, Schulz U, Schubert H, Knapp P, Schmöger M. 1997. Quantitative assessment of grassland quality: acoustic determination of population sizes of orthopteran indicator species. Ecol. Appl. 7, 909-920. ( 10.1890/1051-0761(1997)007[0909:QAOGQA]2.0.CO;2) [DOI] [Google Scholar]

- 7.Thomas JA. 2005. Monitoring change in the abundance and distribution of insects using butterflies and other indicator groups. Phil. Trans. R. Soc. B 360, 339-357. ( 10.1098/rstb.2004.1585) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Simmons BI, et al. 2019. Worldwide insect declines: an important message, but interpret with caution. Ecol. Evol. 9, 3678-3680. ( 10.1002/ece3.5153) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Didham RK, et al. 2020. Interpreting insect declines: seven challenges and a way forward. Insect Conserv. Divers. 13, 103-114. ( 10.1111/icad.12408) [DOI] [Google Scholar]

- 10.Janzen DH, Hallwachs W. 2019. Perspective: Where might be many tropical insects? Biol. Conserv. 233, 102-108. ( 10.1016/j.biocon.2019.02.030) [DOI] [Google Scholar]

- 11.Forister ML, Pelton EM, Black SH. 2019. Declines in insect abundance and diversity: We know enough to act now. Conserv. Sci. Pract. 1, e80. ( 10.1111/csp2.80) [DOI] [Google Scholar]

- 12.Riede K. 2018. Acoustic profiling of Orthoptera: present state and future needs. J. Orthoptera Res. 27, 203-215. ( 10.3897/jor.27.23700) [DOI] [Google Scholar]

- 13.Ganchev T, Potamitis I. 2007. Automatic acoustic identification of singing insects. Bioacoustics 16, 281-328. ( 10.1080/09524622.2007.9753582) [DOI] [Google Scholar]

- 14.Belwood JJ, Morris GK. 1987. Bat predation and its influence on calling behavior in neotropical katydids. Science 238, 64-67. ( 10.1126/science.238.4823.64) [DOI] [PubMed] [Google Scholar]

- 15.Morris GK, Mason AC, Wall P, Belwood JJ. 1994. High ultrasonic and tremulation signals in neotropical katydids (Orthoptera: Tettigoniidae). J. Zool. 233, 129-163. ( 10.1111/j.1469-7998.1994.tb05266.x) [DOI] [Google Scholar]

- 16.Symes LB, Martinson SJ, Kernan CE, Ter Hofstede HM. 2020. Sheep in wolves' clothing: prey rely on proactive defences when predator and non-predator cues are similar. Proc. R. Soc. B 287, 20201212. ( 10.1098/rspb.2020.1212) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Bailey WJ, Cunningham RJ, Lebel L. 1990. Song power, spectral distribution and female phonotaxis in the bushcricket Requena verticalis (Tettigoniidae: Orthoptera): active female choice or passive attraction. Anim. Behav. 40, 33-42. ( 10.1016/S0003-3472(05)80663-3) [DOI] [Google Scholar]

- 18.Ritchie MG. 1996. The shape of female mating preferences. Proc. Natl Acad. Sci. USA 93, 14 628-14 631. ( 10.1073/pnas.93.25.14628) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Dutta R, Tregenza T, Balakrishnan R. 2017. Reproductive isolation in the acoustically divergent groups of tettigoniid, Mecopoda elongata. PLoS ONE 12, e0188843. ( 10.1371/journal.pone.0188843) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Greenfield MD. 1983. Unsynchronized chorusing in the coneheaded katydid Neoconocephalus affinis (Beauvois). Anim. Behav. 31, 102-112. ( 10.1016/S0003-3472(83)80178-X) [DOI] [Google Scholar]

- 21.Dadour IR. 1989. Temporal pattern changes in the calling song of the katydid Mygalopsis marki Bailey in response to conspecific song (Orthoptera: Tettigoniidae). J. Insect Behav. 2, 199-215. ( 10.1007/BF01053292) [DOI] [Google Scholar]

- 22.Morris GK, Kerr GE, Fullard JH. 1978. Phonotactic preferences of female meadow katydids (Orthoptera: Tettigoniidae: Conocephalus nigropleurum). Can. J. Zool. 56, 1479-1487. ( 10.1139/z78-205) [DOI] [Google Scholar]

- 23.Deily JA, Schul J. 2009. Selective phonotaxis in Neoconocephalus nebrascensis (Orthoptera: Tettigoniidae): call recognition at two temporal scales. J. Comp. Physiol. A 195, 31-37. ( 10.1007/s00359-008-0379-2) [DOI] [PubMed] [Google Scholar]

- 24.Hunt J, Allen GR. 1998. Fluctuating asymmetry, call structure and the risk of attack from phonotactic parasitoids in the bushcricket Sciarasaga quadrata (Orthoptera: Tettigoniidae). Oecologia 116, 356-364. ( 10.1007/s004420050598) [DOI] [PubMed] [Google Scholar]

- 25.Lehmann GU, Heller KG. 1998. Bushcricket song structure and predation by the acoustically orienting parasitoid fly Therobia leonidei (Diptera: Tachinidae: Ormiini). Behav. Ecol. Sociobiol. 43, 239-245. ( 10.1007/s002650050488) [DOI] [Google Scholar]

- 26.Lakes-Harlan R, Lehmann GU. 2015. Parasitoid flies exploiting acoustic communication of insects—comparative aspects of independent functional adaptations. J. Comp. Physiol. A 201, 123-132. ( 10.1007/s00359-014-0958-3) [DOI] [PubMed] [Google Scholar]

- 27.Greenfield MD. 1988. Interspecific acoustic interactions among katydids Neoconocephalus: inhibition-induced shifts in diel periodicity. Anim. Behav. 36, 684-695. ( 10.1016/S0003-3472(88)80151-9) [DOI] [Google Scholar]

- 28.Stephen RO, Hartley JC. 1991. The transmission of bush-cricket calls in natural environments. J. Exp. Biol. 155, 227-244. ( 10.1242/jeb.155.1.227) [DOI] [Google Scholar]

- 29.Römer H. 1993. Environmental and biological constraints for the evolution of long-range signalling and hearing in acoustic insects. Phil. Trans. R. Soc. Lond. B 340, 179-185. ( 10.1098/rstb.1993.0056) [DOI] [Google Scholar]

- 30.Schmidt AK, Balakrishnan R. 2015. Ecology of acoustic signalling and the problem of masking interference in insects. J. Comp. Physiol. A 201, 133-142. ( 10.1007/s00359-014-0955-6) [DOI] [PubMed] [Google Scholar]

- 31.Symes LB, Robillard T, Martinson SJ, Dong J, Kernan CE, Miller CR, Ter Hofstede HM. 2021. Daily signaling rate and the duration of sound per signal are negatively related in Neotropical forest katydids. Integr. Comp. Biol. 61, 887-899. ( 10.1093/icb/icab138) [DOI] [PubMed] [Google Scholar]

- 32.Falk JJ, ter Hofstede HM, Jones PL, Dixon MM, Faure PA, Kalko EK, Page RA. 2015. Sensory-based niche partitioning in a multiple predator–multiple prey community. Proc. R. Soc. B. 282, 20150520. ( 10.1098/rspb.2015.0520) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.ter Hofstede HM, Symes LB, Martinson SJ, Robillard T, Faure P, Madhusudhana S, Page RA. 2020. Calling songs of neotropical katydids (Orthoptera: Tettigoniidae) from Panama. J. Orthoptera Res. 29, 137-201. ( 10.3897/jor.29.46371) [DOI] [Google Scholar]

- 34.Ferreira AI, da Silva NF, Mesquita FN, Rosa TC, Monzón VH, Mesquita-Neto JN. 2023. Automatic acoustic recognition of pollinating bee species can be highly improved by Deep Learning models accompanied by pre-training and strong data augmentation. Front. Plant Sci. 14, 1081050. ( 10.3389/fpls.2023.1081050) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35. Branding J, von Hörsten D, Wegener JK, Böckmann E, Hartung E. 2023. Towards noise robust acoustic insect detection: from the lab to the greenhouse. Künstl. Intell. 1-17. (doi:10.1007/s13218-023-00812-x)

- 36. Lostanlen V, Salamon J, Farnsworth A, Kelling S, Bello JP. 2019. Robust sound event detection in bioacoustic sensor networks. PLoS ONE 14, e0214168. (doi:10.1371/journal.pone.0214168)

- 37. Lauha P, Somervuo P, Lehikoinen P, Geres L, Richter T, Seibold S, Ovaskainen O. 2022. Domain-specific neural networks improve automated bird sound recognition already with small amount of local data. Methods Ecol. Evol. 13, 2799-2810. (doi:10.1111/2041-210X.14003)

- 38. Best P, Ferrari M, Poupard M, Paris S, Marxer R, Symonds H, Spong P, Glotin H. 2020. Deep learning and domain transfer for orca vocalization detection. In Int. Jt Conf. Neural Netw. (IJCNN), Glasgow, UK, 19–24 July 2020, pp. 1-7. New York, NY: IEEE. (doi:10.1109/IJCNN48605.2020.9207567)

- 39. Napoli A, White PR. 2023. Unsupervised domain adaptation for the cross-dataset detection of humpback whale calls. In Detection and Classification of Acoustic Scenes and Events 2023, Tampere, Finland, 20–22 September 2023. (See https://dcase.community/documents/workshop2023/proceedings/DCASE2023Workshop_Napoli_21.pdf.)

- 40. Çoban EB, Pir D, So R, Mandel MI. 2020. Transfer learning from YouTube soundtracks to tag Arctic ecoacoustic recordings. In IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), pp. 726-730. New York, NY: IEEE. (doi:10.1109/ICASSP40776.2020.9053338)

- 41. Yang W, Chang W, Song Z, Zhang Y, Wang X. 2021. Transfer learning for denoising the echolocation clicks of finless porpoise (Neophocaena phocaenoides sunameri) using deep convolutional autoencoders. J. Acoust. Soc. Am. 150, 1243-1250. (doi:10.1121/10.0005887)

- 42. Vickers W, Milner B, Risch D, Lee R. 2021. Robust North Atlantic right whale detection using deep learning models for denoising. J. Acoust. Soc. Am. 149, 3797-3812. (doi:10.1121/10.0005128)

- 43. Olvera M, Vincent E, Gasso G. 2021. Improving sound event detection with auxiliary foreground-background classification and domain adaptation. In Detection and Classification of Acoustic Scenes and Events, virtual/online event, 15–19 November 2021.

- 44. Tang T, Zhou X, Long Y, Li Y, Liang J. 2021. CNN-based discriminative training for domain compensation in acoustic event detection with frame-wise classifier. In Asia-Pac Signal Inf Process Assoc Annu Summit Conf, pp. 939-944. New York, NY: IEEE.

- 45. Liaqat S, Bozorg N, Jose N, Conrey P, Tamasi A, Johnson MT. 2018. Domain tuning methods for bird audio detection. In Workshop on Detection and Classification of Acoustic Scenes and Events 2018, Woking, UK, 19–20 November 2018. (See https://dcase.community/documents/challenge2018/technical_reports/DCASE2018_Liaqat_96.pdf.)

- 46. Ganin Y, Lempitsky V. 2015. Unsupervised domain adaptation by backpropagation. In 32nd Int. Conf. Machine Learn., PMLR, Lille, France, 6–11 July 2015. J. Mach. Learn. Res. 37, 1180-1189. (doi:10.48550/arXiv.1409.7495)

- 47. Gharib S, Drossos K, Cakir E, Serdyuk D, Virtanen T. 2018. Unsupervised adversarial domain adaptation for acoustic scene classification. arXiv preprint 1808.05777. (doi:10.48550/arXiv.1808.05777)

- 48. Delcroix M, Kinoshita K, Ogawa A, Huemmer C, Nakatani T. 2018. Context adaptive neural network based acoustic models for rapid adaptation. IEEE/ACM Trans. Audio, Speech, Language Process. 26, 895-908. (doi:10.1109/TASLP.2018.2798821)

- 49. Stowell D, Wood MD, Pamuła H, Stylianou Y, Glotin H. 2018. Automatic acoustic detection of birds through deep learning: the first bird audio detection challenge. Methods Ecol. Evol. 10, 368-380. (doi:10.1111/2041-210X.13103)

- 50. Symes LB, Madhusudhana S, Martinson SJ, Kernan CE, Hodge KB, Salisbury DP, Klinck H, ter Hofstede H. 2022. Estimation of katydid calling activity from soundscape recordings. J. Orthoptera Res. 31, 173-180. (doi:10.3897/jor.31.73373)

- 51. K. Lisa Yang Center for Conservation Bioacoustics at the Cornell Lab of Ornithology. 2023. Raven Pro: interactive sound analysis software (version 1.6.4) [computer software]. Ithaca, NY: Cornell Lab of Ornithology. (https://ravensoundsoftware.com/)

- 52. Lang AB, Kalko EK, Römer H, Bockholdt C, Dechmann DK. 2006. Activity levels of bats and katydids in relation to the lunar cycle. Oecologia 146, 659-666. (doi:10.1007/s00442-005-0131-3)

- 53. Römer H, Lang A, Hartbauer M. 2010. The signaller's dilemma: a cost–benefit analysis of public and private communication. PLoS ONE 5, e13325. (doi:10.1371/journal.pone.0013325)

- 54. Abadi M, et al. 2016. TensorFlow: large-scale machine learning on heterogeneous distributed systems. arXiv preprint 1603.04467. (doi:10.48550/arXiv.1603.04467)

- 55. Madhusudhana S, et al. 2021. Improve automatic detection of animal call sequences with temporal context. J. R. Soc. Interface 18, 20210297. (doi:10.1098/rsif.2021.0297)

- 56. Ioffe S, Szegedy C. 2015. Batch normalization: accelerating deep network training by reducing internal covariate shift. In 32nd Int. Conf. Machine Learn., PMLR, Lille, France, 6–11 July 2015, pp. 448-456. (doi:10.48550/arXiv.1502.03167)

- 57. Nair V, Hinton GE. 2010. Rectified linear units improve restricted Boltzmann machines. In 27th Int. Conf. Machine Learn. (ICML-10), Haifa, Israel, 21–24 June 2010, pp. 807-814. Athens, Greece: Omnipress.

- 58. Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R. 2014. Dropout: a simple way to prevent neural networks from overfitting. J. Machine Learn. Res. 15, 1929-1958.

- 59. Kingma DP, Ba J. 2014. Adam: a method for stochastic optimization. arXiv preprint 1412.6980. (doi:10.48550/arXiv.1412.6980)

- 60. Sandler M, Howard A, Zhu M, Zhmoginov A, Chen LC. 2018. MobileNetV2: inverted residuals and linear bottlenecks. In IEEE Comput. Soc. Conf. Computer Vis. Pattern Recognit., pp. 4510-4520. (doi:10.1109/CVPR.2018.00474)

- 61. Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L. 2009. ImageNet: a large-scale hierarchical image database. In IEEE Comput. Soc. Conf. Computer Vis. Pattern Recognit., pp. 248-255. New York, NY: IEEE. (doi:10.1109/CVPR.2009.5206848)

- 62. Tan M, Le Q. 2019. EfficientNet: rethinking model scaling for convolutional neural networks. In Int. Conf. Machine Learn., pp. 6105-6114. (doi:10.48550/arXiv.1905.11946)

- 63. Kahl S, Wood CM, Eibl M, Klinck H. 2021. BirdNET: a deep learning solution for avian diversity monitoring. Ecol. Inform. 61, 101236. (doi:10.1016/j.ecoinf.2021.101236)

- 64. Florentin J, Dutoit T, Verlinden O. 2020. Detection and identification of European woodpeckers with deep convolutional neural networks. Ecol. Inform. 55, 101023. (doi:10.1016/j.ecoinf.2019.101023)

- 65. Wang K, Wu P, Cui H, Xuan C, Su H. 2021. Identification and classification for sheep foraging behavior based on acoustic signal and deep learning. Comput. Electron. Agric. 187, 106275. (doi:10.1016/j.compag.2021.106275)

- 66. Miller BS, Madhusudhana S, Aulich MG, Kelly N. 2023. Deep learning algorithm outperforms experienced human observer at detection of blue whale D-calls: a double-observer analysis. Remote Sens. Ecol. Conserv. 9, 104-116. (doi:10.1002/rse2.297)

- 67. Müller J, et al. 2023. Soundscapes and deep learning enable tracking biodiversity recovery in tropical forests. Nat. Commun. 14, 6191. (doi:10.1038/s41467-023-41693-w)

- 68. Ruff ZJ, Lesmeister DB, Duchac LS, Padmaraju BK, Sullivan CM. 2020. Automated identification of avian vocalizations with deep convolutional neural networks. Remote Sens. Ecol. Conserv. 6, 79-92. (doi:10.1002/rse2.125)

- 69. Tsalera E, Papadakis A, Samarakou M. 2021. Comparison of pre-trained CNNs for audio classification using transfer learning. J. Sens. Actuator Netw. 10, 72. (doi:10.3390/jsan10040072)

- 70. Denton T, Wisdom S, Hershey JR. 2022. Improving bird classification with unsupervised sound separation. In 2022 IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), pp. 636-640. New York, NY: IEEE. (doi:10.1109/ICASSP43922.2022.9747202)

- 71. Park DS, Chan W, Zhang Y, Chiu CC, Zoph B, Cubuk ED, Le QV. 2019. SpecAugment: a simple data augmentation method for automatic speech recognition. arXiv preprint 1904.08779. (doi:10.48550/arXiv.1904.08779)

- 72. Faiß M, Stowell D. 2023. Adaptive representations of sound for automatic insect recognition. PLoS Comput. Biol. 19, e1011541. (doi:10.1371/journal.pcbi.1011541)

- 73. Symes LB, Madhusudhana S, Martinson SJ, Geipel I, ter Hofstede HM. 2024. Multi-year soundscape recordings and automated call detection reveals varied impact of moonlight on calling activity of Neotropical forest katydids. Phil. Trans. R. Soc. B 379, 20230110. (doi:10.1098/rstb.2023.0110)

- 74. Madhusudhana S, Symes LB, Martinson SJ, ter Hofstede HM. 2024. Data for: Sounds of neotropical katydids from Barro Colorado Island, Panama. Zenodo. (doi:10.5281/zenodo.10594016)

- 75. Symes LB, Madhusudhana S, Martinson SJ, Kernan C, Hodge KB, Salisbury D, Klinck H, ter Hofstede HM. 2023. Data from: Neotropical forest soundscapes with call identifications for katydids. Dryad Digital Repository. (doi:10.5061/dryad.zw3r2288b)

- 76. Madhusudhana S, Klinck H, Symes LB. 2024. Extensive data engineering to the rescue: building a multi-species katydid detector from unbalanced, atypical training datasets. Figshare. (doi:10.6084/m9.figshare.c.7151341)


Data Availability Statement

Data considered in this study were drawn from previously published works [33,50]. The techniques developed in this study have been integrated into recent releases of the open-source Koogu toolbox: https://github.com/shyamblast/Koogu. The final trained model is also bundled with releases of the Raven Pro software starting from v.1.6.5. For replication of the results presented in the manuscript, all training audio (focal, in situ and playback) and annotations (in Raven Pro selection table format) can be obtained from the Zenodo repository: https://doi.org/10.5281/zenodo.10594015 [74]. All testing audio files and annotations (also in Raven Pro format) are available from the Dryad Digital Repository: https://doi.org/10.5061/dryad.zw3r2288b [75].

Additional data and results are provided in electronic supplementary material [76].