Abstract

Emotion recognition concerns the experience of attitude in graphic language expression and composition. People use both verbal and non-verbal behaviours to communicate their emotions. Visual communication and graphic design are continually evolving to meet the demands of an increasingly affluent and culturally conscious populace. When creating graphic design works, designers should consider their own opinions about related works from the audience's or customer's standpoint so that the emotion between them can resonate. Hence, this study proposes a personalized emotion recognition framework based on convolutional neural networks (PERF-CNN) to create visual content for graphic design. Graphic designers prioritize the logic of showing objects in interactive designs and use visual hierarchy and page layout approaches to respond to users' demands via typography and imagery, which helps maximize the user experience. This research identifies three tiers of emotional thinking: expressive signal, emotional experience, and emotional infiltration, all of which affect graphic design. This article explores the subject of graphic design language and its ways of emotional recognition, as well as the relationship between graphic images, shapes, and feelings. The CNN first extracts expressive features from the user's face images and the poster's visual information. A clustering process then categorizes the poster or advertisement images into positive, negative, and neutral classes. The experimental results, which benefit research and applications of graphic design language, demonstrate that the proposed method outperforms conventional classification approaches on the dataset.
In comparison to other popular models, the experimental results demonstrate that the proposed PERF-CNN model improves each of the following: classification accuracy (97.4 %), interaction ratio (95.6 %), emotion recognition ratio (98.9 %), rate of influence of pattern and colour features (94.4 %), and prediction error rate (6.5 %).

Keywords: Personalized emotion recognition, Graphic design, Typography, Visual communication, Graphic language

1. Introduction

Graphic design encompasses the theory and practice of creating and executing visual and textual representations of ideas and experiences [1]. Visual language is a communication method that uses visual features, whereas graphical language may refer to a graphic modelling language or to graphical forms of artificial language used to convey information or knowledge [2]. Emotion is described as having three primary components; these include personal experiences like happiness, sadness, or anger, as well as outward manifestations like a person's body language and facial expressions, all of which contribute to the subjective experience of people's attitudes toward objective things [3]. In light of society's ongoing progress, emotion recognition alone is no longer sufficient to meet the needs of interpersonal and international interaction. In the data-driven era, the field of graphics language faces both opportunities and challenges. Consequently, converting data into graphics language is of great importance. Emotion detection methods based on physiological signals are becoming increasingly personalized because people's subjective responses to physical stimuli vary widely [4]. The creative and inventive pursuits of art and design have been essential to human society for countless generations [5]. The practice has progressed from its initial purpose of transmitting fundamental information to its present-day applications in expressing emotions, exploring concepts, and reflecting culture [6]. Graphic design, architecture, fashion, and industrial design are just a few of the contemporary art and design disciplines that have expanded in recent decades [7]. Graphic designers create visual communications using classic art and design concepts, yet how customers understand these messages is not well known. Visual expression is itself a form of communication [8].
Graphics that evoke strong emotional responses result from thoughtful application of colour psychology. Because of the wide range of feelings, ideas, and recollections that colours may evoke, they are a potent tool for graphic designers.

Graphic designers can convey ideas and feelings using shape, colour, line, and texture, among other visual components. Visual impact and emotional expression in works of art and design are influenced by the selection and combination of these components [9]. Therefore, designers should consider the traits of visual communication and use various visual components flexibly to express themselves with more emotion and create richer work [10]. The elements of visual communication include shape, colour, line, and texture. Colour is a powerful visual tool that may express many emotions and thoughts. Shape describes an object's structure, volume, and spatiality, whereas lines can imply movement and rhythm [11]. Textures communicate an object's tactile features and qualities. These visual components increase the expressiveness and persuasiveness of art and design works [12]. Typography and fonts are used first and foremost for visual communication. Creating visual hierarchies for arranging information and creating brand and logo signatures in commercial design are two of their many essential functions [13]. The therapeutic and emotional significance of colour in graphic design for products advertised in print and digital media is well established, because it elicits a positive or affective reaction from the target audience, who are then likelier to purchase the advertised goods. Visual media present messages through reading, hearing, and seeing; these messages appear on almost all retail product packaging and feature coloured, illustrated writing. One function of advertising is to generate interest in items that target a certain demographic by showcasing appealing concepts to the general public. Such messages are crucial for appealing to people's emotions since they let consumers know that manufactured goods are available.
Therefore, consumer goods advertising relies on graphic design for aesthetics, including font, graphics, and more. To understand the target audience's demographics, psychographics, and emotional triggers, designers do extensive audience research. Designers may target certain feelings and interests by dividing the audience into subsets defined by characteristics like age, gender, interests, and preferences.

Deep Learning (DL) technology has succeeded tremendously in several fields owing to its powerful pattern recognition and feature extraction abilities [14]. Computers and virtual reality allow thorough investigation and analysis of graphic design in animation scenes, which is important for using DL models in animation graphic design [15]. A Convolutional Neural Network (CNN) can extract emotive components from graphic poster designs [16]. DL models like CNNs are ubiquitous in computer vision and image recognition applications [17]. This study adds to the existing body of knowledge in the field while introducing new features to address unique challenges in emotion recognition and boost overall performance. Since PERF-CNN is a CNN architecture tailored to facial expression identification, combining it with graphical language models to recognize facial expressions is a novel approach.

This research primarily examines a collection of intelligent recommendation algorithms that can suggest better graphics descriptions to the user based on just a few pieces of field information. To perform tasks like image identification, localization, and classification, a CNN uses several layer types, including convolutional layers, pooling layers, and fully connected layers, to gradually extract feature information from input pictures [18]. Compared with traditional animated graphics, authoring tools tailored to data visualizations are still in their early stages, and programming libraries remain a common tool for developers making complex animated designs [19]. Prior work has examined user behaviour during interaction with mobile devices to achieve information visualization, good user-information interaction, and satisfaction of user needs in graphical design. It examined the visual aspects of the smartphone interface, beginning with the user's sensory interaction level and moving on to the user's operation mode level, covering everything from the expression of visual form to the most commonly used interface mode and the spacing of User Interface (UI) components [20].

The fast proliferation of applications and the constant enhancement of cloud-based technology greatly encourage prosperity and raise people's standard of living. Emotion recognition aims to employ pattern recognition to extract, evaluate, and comprehend aspects of people's emotional behaviour. The initial stage of facial expression identification is the detection and location of the face in an image.

Problems with system resources, efficiency, reliability, and complicated solutions are only a few operational concerns other academics have pointed out with picture recognition. Consequently, creating a system that can identify and categorize facial emotions is the primary objective of this work. Factors such as the 3D face posture, noise, opacity, and varying lighting conditions make facial emotion identification challenging.

According to this review, present techniques struggle to achieve high classification precision, a high sentiment analysis ratio, and a low error ratio. As a result, PERF-CNN is proposed as a tool for graphic designers to use while creating visual material.

The main contributions of the article are as follows.

• Developing graphic design visual content using the personalized emotion recognition framework based on convolutional neural networks (PERF-CNN).

• Examining the CNN mathematical model for emotion classification in the context of visual communication and graphical language.

• Demonstrating numerically that, compared with other methods, the proposed PERF-CNN model improves classification accuracy and sentiment analysis ratio and reduces the error ratio.

2. Literature survey

Luning Zhao [21] proposed the Animation Visual Guidance System (AVGS) for graphic language in an intelligent environment. This article brings animation design more in line with the features of the times by studying and analyzing the visual orientation of graphic language in creating an animation visual guiding system. It injects this language with orientation and examines its effects on animation. It may be easily modified to fit new media formats, allowing businesses and audiences to communicate more effectively. According to the surveys, respondents in the 21–35 age bracket (with a maximum of 69) were more concerned with selecting graphic colours. Hendrik N.J. Schifferstein et al. [22] suggested the Regression Analysis (RA) for graphic design to communicate the consumer benefits of food packaging. According to a dummy regression analysis, claims made orally favourably conveyed healthiness and environmental friendliness and a negative tendency for sensory preferences. The images revealed positive effects on conveying working conditions and negative effects on wellness. Based on the results, customers can manage many messages on packaging, yet choosing the best selection is still a design issue. Bingjie Zhou [23] recommended the information interaction system based on gesture recognition (IIS-GR) for graphic design. An information interaction system is built using gesture recognition as its foundation, with a graphics generation module built and tested to evaluate the impact of both graphics design and gesture recognition. Findings demonstrate that post-processing gesture recognition accuracy may approach 100 % and that the information interaction system interface's graphic design display effect satisfies fundamental graphic design standards.

Yongqiang Guo [24] discussed the artistic graphic symbols using an intelligent guidance marking system. The author investigates how the emotional qualities and presentation of graphic symbols influence the transmission of emotions through the guidance signage system, which affects human cognitive behaviour, all based on the interplay between these three factors. The author briefly overviews the importance of visual symbols that convey emotion in guide signs. Through their dynamic expression, these symbols provide a distinct visual style that is more in keeping with the aesthetic preferences of contemporary people. Fufeng Chu and Wentao Li [25] deliberated the Data-Driven Image Interaction-Based Software Infrastructure (DDII-SI) for Graphic Design. It begins by outlining the design needs for the placement of augmented reality scene labels, moves on to design the energy function for the characteristics of augmented reality scenes, and finally solves the label placement problem by implementing optimization. Quantifying geometric, perceptual, and stylistic properties, this work presents a feature representation form that is pixel-, element-, interelement-, plane-, and application-oriented. Zhenyu Wang [26] presented the Voice Perception Model and Internet of Things (VPM-IoT) for the aesthetic evaluation of multi-dimensional graphic design. Creating a static solid interface in a three-dimensional space and designing different spatial shapes in a two-dimensional plane are the two primary components of multi-dimensional graphic design. Being open, dissociated, and fuzzily defined, it can only be studied in continuous change. Hence, this research builds an aesthetic rating system for multi-dimensional graphic design using the speech perception model and technologies connected to the Internet of Things.

Vimalkrishnan Rangarajan [27] introduced the role of affective quality perception in graphic design stimulus. This article uses a descriptive phenomenological technique to describe the subjective experience of 7 design learners as they perceive graphic design inspiration. Students' interpretations of motivational content varied from surface to deep levels of understanding. The vivid first-person experiences prompted by inspirational VR content enhanced other aspects, such as the sense of emotional quality. XIAOYU YAN [28] offered Data Mining for Computer Graphic Image Technology with Visual Communication. Computer figures and picture designs are increasingly focused on visually conveying effects and enhancing the expressiveness and beauty of graphics and pictures rather than being restricted to plain image design. Data mining algorithms verify their efficacy and feasibility in computer graphic images by mining the final frequent set of association rules efficiently and accurately in the context of computer figure and picture design and visual sense transmitting design. Jing Cao [29] applied Colour Language in Computer Graphic Design. Colour is both an objective stimulus and a symbol for humans and a subjective behaviour and response in the context of social activities and natural enjoyment. Although people's perceptions of colour vary greatly, there are surprisingly consistent reactions to colour throughout cultures and eras. Graphic designers must investigate these similarities since they are often the key to understanding and influencing the aesthetic mystique of colour. Chen et al. [30] proposed the 3D Virtual Vision Technology for Graphic Design. The transition from 2D to 3D graphic design expanded the development area for graphic design and helped successfully communicate the design idea of the virtual vision technology. This research built an interactive platform for interior design using 3D virtual vision. 
Following a comprehensive overview of the platform's 3D virtual visual effect displays' function design, the interior design platform's 3D virtual rendering capabilities were developed.

The processing and conversion of images allow text to be transformed naturally into three-dimensional images within computer-based 3D graphics analysis. Li et al. [31] examine the fundamentals of picture analysis in computer 3D graphics technology and then suggest a method of visual communication that relies on extracting features from pixels and textures. The article primarily uses survey and part analysis methodologies to study the impact of people's perceptions in 3D movies. Regarding visual communication design, more than 80 % of respondents agreed that 3D image analysis equipment has an impact.

Leong et al. [32] examined the emotional designs, gadgets, designs, and classification strategies used by the chosen studies, determining the most common approaches and emerging trends in visual emotion recognition. This review lays the groundwork for applying relevant techniques in various fields and advocates for emotion recognition with visual data by identifying current trends, such as the increased use of deep learning algorithms and the need for further study on body gestures.

Fang et al. [33] present a model for a multimodal visual communication system (MVCS). This technique enables customized video communication by dynamically adjusting video material and playing mode based on users' moods and interests. A large-scale video dataset was used to train the MVCS model, which assesses videos along several dimensions, including vision, sound, and text. The model uses recurrent neural networks to assess the audio and text characteristics for emotions and a CNN to extract the visual elements of videos. The MVCS model improved the effectiveness of digital ad design, with 92.6 % of users satisfied with the modified commercials.

3. Proposed methodology

3.1. Problem statement

A graphic design language is a form of emotionally coding visual components and cognitive activities, and emotional activities are vital in current graphic design. The designer successfully communicates visual information to the audience through an emotional recognition approach that allows them to understand the essence and purpose of visuals; emotions are both an element of visual information content and the driving force behind visual meaning interpretation. Semantic meaning in graphic design is often constructed from expressive semantics and numerous meanings via the reconstruction of the relationship model between shape and meaning. An emotional appeal is constructed by integrating certain cultural elements, concepts, and styles with specific rhetorical strategies. If visual perception is a kind of picture cognition, then poetic comprehension and empathy highlight the emotional experience. Meaningful form is the stylized interpretation of graphic meaning under emotional experience; it corresponds to the progression of visual form from experience to taste and the progressive improvement of the graphics comprehension level. Graphic designers use their unique brand of expressive language to direct the production of visuals from real emotions, transforming abstract ideas into a visual language rich with meaning and passion. Aligning graphic design components with brand colours, typography, logos, and other visual assets helps maintain visual consistency with the company's identity. Ensure the company's visual identity is strengthened and complemented by the unique emotions conveyed via graphic design. This will help to create a consistent and easily identifiable brand presence across all platforms. Graphic designers now have access to multidisciplinary, multi-perspective solutions thanks to the development of contemporary disciplines such as language, psychology, communication, semiotics, and digital media technology. 
Graphics, a visual language, may serve as a means of conveying information, shaping the aesthetic norms and practices of a given time, and facilitating interaction across different cultures. Considering the history of art and design, it is apparent that theoretical frameworks cannot replace individual opinions and real-life experiences as the primary sources of inspiration. Great designers, therefore, must be deeply concerned with the state of society and the world at large to enhance their design inspiration and be adept at reading and responding to the emotional needs of their audiences. The visual languages of graphic design and typography are culturally and communally constructed ways of communication. How something is made has a significant impact on graphic design and typography. The typographic design business prospered through several generations of mechanical and photographic reproduction, from the discovery of movable type to the digital media platform of today. Computers and software eventually supplanted the expertise and machinery of the printing and typographic industries. The increased typesetting capabilities of personal computers have rendered typesetting machines and their operators obsolete. Typography, which was once the purview of professional typesetters, is now the responsibility of graphic designers. The availability of font options in international word-processing software may eventually replace designers.

This model provides a robust tool for accurately identifying and understanding facial expressions, facilitating applications in emotion identification, communication between humans and machines, cognitive computing, and related fields. Prior research has devised various deep-learning frameworks to address the task of individualized emotion recognition. Several studies exhibit adequate results when tested on datasets comprising photographs captured under controlled circumstances. However, they demonstrate limitations when confronted with more challenging datasets encompassing a more comprehensive range of images and partial facial features. This study utilized CNN and individualized emotion models to classify facial emotions.

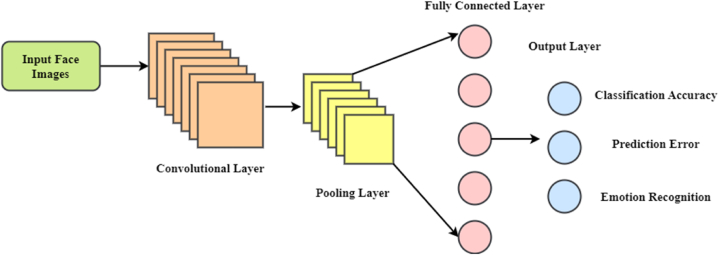

Hence, this study proposes a personalized emotion recognition framework based on convolutional neural networks (PERF-CNN) to create visual content for graphic design. For example, advertisements for consumer products in print and digital media could use a continuous colour line drawn in different directions to create the impression of movement. Advertising for electrical devices can evoke strong emotions in viewers when it employs well-organized colour schemes with regular form repetition. In short, colour values give graphic designers potent tools to convey consumers' innermost thoughts and sentiments. Various posters and billboards use colour as a linear representation in advertising. The proposed PERF-CNN model is illustrated in Fig. 1. The data are obtained from an emotion detection dataset. Under the constraints of the product's image and word descriptions, designers must frequently sift through various design components to find those that are aesthetically pleasing and functionally appropriate for the marketed product. Line, shape, texture, space, imagery, typography, and colour are all elements gathered from the input images. After collection, they are pre-processed so that the CNN can use them. Pre-processing involves several methods. Data cleansing: manual and automated methods are available to eliminate misclassified or wrongly added data. Data imputation: missing information is imputed. Bias reduction: methods such as preparing data, changing the algorithm, or using fairness-aware learning algorithms reduce bias in the model. Data augmentation and resampling are two pre-processing approaches that help ensure the training data fairly represent various demographic groups. Users may directly input their opinions on the accuracy of emotion identification into PERF-CNN.
Such feedback may concern the model's predictions, incorrectly identified emotions, or annotated outputs. The model parameters or individual components are then adjusted based on this input to align with user expectations and preferences.

Fig. 1. Proposed PERF-CNN model.

Fig. 1 shows the suggested PERF-CNN model. The information is taken from the emotion detection Kaggle dataset [31]. Designers often have to search for an extensive selection of design components (such as background images, signs, and text styles) for suitable matches to the advertised product and arrange these elements to satisfy aesthetic criteria, all given a product image and word descriptions. To help manage graphic designers and design, this research primarily employs data supplied by data resources to categorize and forecast graphic design features. Graphic designers may learn from and reference successful graphic design situations using information resource-sharing technologies. The Internet and other forms of technological innovation are the sources of these successful examples. The information resource compliments system must first collect data for this study. Pattern, text, colour, and form attributes should all be part of this data. If graphic design solutions are to be successful, their designers must possess these four qualities. The gathered data is then classified using clustering in this work; however, the feature data of these flat designs must be pre-processed based on normalization techniques before classification. Finally, this research must use the key features of these classified graphic designs to provide useful predictions. This research will use the data resource-sharing platform to classify patterns and colours, which will then be fed into a convolutional neural network (CNN) as an input layer. The four visual aspects of the design can be transformed into a matrix after undergoing pre-processing to ensure that the data distribution is uniform. The CNN's input layer will receive these features in matrix form. The convolutional layer of the CNN will receive input from the graphic design's pattern, text, and colour attributes via separate channels to extract these features. 
The pooling layer downsamples this data to make the CNN's computations easier. Provided that large amounts of data are accessible, the technique may be readily refined for emotion classification. Similar to previous hypergraph learning approaches, the suggested method is well suited to emotion classification but unsuitable for emotion regression. Emoticons expressing mood, fatigue, grievance, sorrow, hurt, tears, anger, and so on may communicate information quickly and intuitively, yet they may also represent emotional attitudes and psychological responses more quietly and indirectly. The complex emotional semantics connected to people's social requirements are typically formed in graphic design via induced forms and language structures, which in turn are derived from emotional stimulation. Training PERF-CNN on diverse and representative data greatly improves its adaptability to different cultural environments. Patterns and traits that are significant across cultures may be better learned by training a model on data that covers various cultural environments, languages, and visual styles. Reducing the number of dimensions in the feature space may help lower computing costs and avoid overfitting. Principal component analysis (PCA) and other feature selection techniques may be used to manage the complexity of the graphic design features. Colour psychology is a powerful tool for designers looking to convey meaning. Colours can provoke different emotions; for instance, warm colours like red and orange may make viewers feel passionate and energized, while cool colours like green and blue can make them feel relaxed and at peace. Designers may evoke a wide range of emotions via their work by choosing colours carefully.
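The normalization and pooling steps described above can be illustrated with a minimal sketch. It assumes min-max scaling and non-overlapping 2x2 max pooling, neither of which is specified in the text; the function names `min_max_normalise` and `max_pool_2x2` are hypothetical and are not part of PERF-CNN.

```python
def min_max_normalise(values):
    """Rescale a list of numbers to the range [0, 1] (assumed normalization)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature carries no contrast; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def max_pool_2x2(feature_map):
    """Downsample a 2-D feature map with non-overlapping 2x2 max pooling."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, cols - 1, 2)  # a trailing odd column is dropped
        ]
        for r in range(0, rows - 1, 2)      # a trailing odd row is dropped
    ]


# Toy values standing in for one colour feature and one 4x4 feature map.
colour_feature = min_max_normalise([2, 4, 6])
pooled = max_pool_2x2([
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
])
```

In this toy case the 4x4 map is reduced to 2x2, which shows how pooling cuts the amount of data passed to later layers.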

3.2. Emotion analysis based on graphic design elements

Emotion recognition uses computers to identify people's faces and evaluate their expression data based on specific traits, so that the system can recognize and understand emotional expressions. Viewed through the facial identification process, emotion recognition has three stages: face detection and positioning, expression feature extraction, and expression categorization.

Adaptive social behaviour relies heavily on recognizing emotions through body language and facial expressions. Equally important to effective interpersonal communication is the ability to identify and manage one's emotions.

The rationale for using the CNN model to detect facial expressions lies in its capacity to identify spatial characteristics, handle translation invariance, learn expressive feature representations, capture global context, and offer scalability, flexibility, and compatibility with transfer learning techniques.

The Local Binary Pattern (LBP) operator describes texture features in face images by computing a binary pattern at each pixel. Each pixel in turn is taken as the centre pixel, and its eight neighbouring pixels are thresholded against it: a neighbour is assigned 0 when its value is less than that of the centre pixel and 1 otherwise. The operator can be expressed as

$$\mathrm{LBP}(x_c, y_c) = \sum_{p=0}^{7} 2^{p}\, s(i_p - i_c), \qquad s(x) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases} \tag{1}$$

In equation (1), $(x_c, y_c)$ denotes the centre location of the neighbourhood and $i_c$ the pixel value at that location, while $i_p$ denotes the values of the other pixels in the neighbourhood and $s(\cdot)$ is the sign function.
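As an illustration of the thresholding rule just described, the following minimal sketch computes 8-neighbour LBP codes for the interior pixels of a small grey-level image stored as a list of lists. The neighbour ordering, and therefore the bit weights, is an assumption; the function names `lbp_code` and `lbp_image` are hypothetical.

```python
# Neighbour offsets (row, col), clockwise from the top-left pixel.
# The ordering is an assumption; any fixed ordering gives a valid LBP code.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image, row, col):
    """Return the 8-bit LBP code of the interior pixel at (row, col)."""
    centre = image[row][col]
    code = 0
    for p, (dr, dc) in enumerate(OFFSETS):
        # Threshold: 1 when the neighbour is >= the centre pixel, otherwise 0.
        bit = 1 if image[row + dr][col + dc] >= centre else 0
        code += bit << p  # weight the p-th neighbour by 2**p
    return code


def lbp_image(image):
    """Compute LBP codes for all interior pixels of a 2-D grey-level image."""
    rows, cols = len(image), len(image[0])
    return [[lbp_code(image, r, c) for c in range(1, cols - 1)]
            for r in range(1, rows - 1)]


patch = [
    [6, 5, 2],
    [7, 6, 1],
    [9, 8, 7],
]
codes = lbp_image(patch)  # a single interior pixel, so a 1x1 result
```

In practice, a histogram of these codes over a face region is typically used as the texture feature rather than the raw code map.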

Nevertheless, the traditional LBP operator works on a fixed neighbourhood and can only cover a restricted region. It is therefore limited when textures of varying sizes or frequencies must be described, and it is poorly suited to images that may be altered, including human faces. On this basis, the Central Local Binary Pattern (CLBP), the LBP operator of the centre pixel, is a revised version of the LBP method. CLBP is expressed as

$$\mathrm{CLBP}(x_c, y_c) = s(i_c - \bar{i}) \tag{2}$$

In equation (2), $\mathrm{CLBP}$ denotes the CLBP operator, $s(\cdot)$ denotes the sign function (1 for non-negative arguments and 0 otherwise), $i_c$ represents the central pixel of the image, and $\bar{i}$ represents the average grey value of the image. Using specialized networks, PERF-CNN can analyze several modalities (visual and textual) simultaneously, allowing significant information to be extracted from each independently. For instance, pictures may be fed into a convolutional neural network (CNN), while a recurrent neural network (RNN) or transformer model could handle textual inputs.
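The centre-pixel thresholding of equation (2) can be sketched as follows. This is a minimal illustration that assumes the average grey value is taken over the whole input image and that ties count as 1; the function name `clbp_centre` is hypothetical.

```python
def clbp_centre(image):
    """Binarise each pixel against the mean grey level of the whole image."""
    pixels = [value for row in image for value in row]
    mean_grey = sum(pixels) / len(pixels)
    # 1 when the pixel is at least as bright as the image average, else 0.
    return [[1 if value >= mean_grey else 0 for value in row] for row in image]


patch = [
    [6, 5, 2],
    [7, 6, 1],
    [9, 8, 7],
]
centre_map = clbp_centre(patch)  # mean grey level is 51 / 9, about 5.67
```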

Image processing factors such as face rotation reduce the robustness of the CNN in practice. The rotation-invariant properties of the LBP algorithm allow it to compensate for the CNN's errors. Integrating CNN features with CLBP features yields a face emotion recognition method. In this hybrid method, feature fusion is completed at the second fully connected layer of the CNN. Selecting an appropriate fusion approach and performing dimensionality reduction are part of the feature fusion process. This study uses a connection-based (concatenation) feature fusion approach, and equation (3) fuses the two output feature vectors for user and graphical design interaction.

$$F = [\,F_{\mathrm{CNN}},\ F_{\mathrm{CLBP}}\,] \tag{3}$$

Here $F_{\mathrm{CNN}}$ and $F_{\mathrm{CLBP}}$ denote the CNN and CLBP output feature vectors, and $F$ is the fused feature vector obtained by joining them end to end.
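A connection-based fusion of two feature vectors can be sketched as a simple concatenation. The L2 normalisation applied before joining is an assumption added here so that neither vector dominates by scale; it is not stated in the text, and the names `l2_normalise` and `fuse_features` are hypothetical.

```python
import math


def l2_normalise(vector):
    """Scale a vector to unit Euclidean length (left unchanged if all zeros)."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm > 0 else list(vector)


def fuse_features(cnn_features, clbp_features):
    """Concatenate the two (normalised) feature vectors into one fused vector."""
    return l2_normalise(cnn_features) + l2_normalise(clbp_features)


# Toy vectors standing in for the CNN output and the CLBP descriptor.
fused = fuse_features([3.0, 4.0], [0.0, 2.0])
```

The fused vector has length equal to the sum of the two input lengths, which is why a dimensionality reduction step often follows this kind of fusion.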

CNN is a DL model widely utilized for computer vision and image recognition applications. It performs tasks like image identification, location, and classification by gradually extracting feature data from input pictures via various hierarchies, including pooling, convolutional, and fully connected layers. Convolution is the heart of CNN. To create a new feature map, the fundamental concept is to run a convolution kernel called a filter over an image, give it some weight, and then add it up with the values of the corresponding pixels. To enable feature extraction for the whole face picture, the convolutional process aids the network in capturing the local features in the image. The following equation (4) shows the convolution procedure and prediction error.

$$y_{i,j} = \sum_{m}\sum_{n} x_{i+m,\,j+n}\, w_{m,n} + b \tag{4}$$

In equation (4), $y_{i,j}$ signifies the pixel value at row $i$ and column $j$ of the new feature map obtained after convolution; $x_{i+m,\,j+n}$ signifies the pixel value at row $i+m$ and column $j+n$ of the original image; $w_{m,n}$ indicates the weight at row $m$ and column $n$ of the convolutional kernel; and $b$ denotes the bias term.
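The convolution step can be made concrete with a short sketch. It assumes a single-channel image, a stride of one, and no padding, so each output value is the weighted sum of the pixels under the kernel plus a bias; the function name `conv2d_valid` is hypothetical.

```python
def conv2d_valid(image, kernel, bias=0.0):
    """Slide `kernel` over `image` (no padding, stride 1) and add `bias`."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = bias
            for m in range(kh):
                for n in range(kw):
                    # pixel under the kernel times the kernel weight
                    acc += image[i + m][j + n] * kernel[m][n]
            row.append(acc)
        output.append(row)
    return output


if __name__ == "__main__":
    img = [[1, 2, 3],
           [4, 5, 6],
           [7, 8, 9]]
    print(conv2d_valid(img, [[1, 0], [0, 1]], bias=1.0))
```

A 3 × 3 input with a 2 × 2 kernel gives a 2 × 2 feature map, which shows how each convolution without padding shrinks the spatial size.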

Fig. 2 displays the graphic design elements-based emotional analysis results. The first step is to create visual representations of the images using colour distributions and visual bag word models. The practice of producing signals for more than one imaging technology simultaneously is known as multimodal imaging or multiplexed imaging. Most of the language symbols used in graphic design are images, text, and colour, but the general shape of these symbols is an essential part of the profession and very aesthetic. CNNs can recognize emotions and classify images by gradually extracting high-level feature data from input images through continuous convolution and pooling processes. This study concludes the generative model-based clustering analysis of sentiment components by employing CNNs to extract features of participants' emotional components and sentiments from graphic design. In conclusion, this study contrasts emotional research-based deep-learning illustrations with shallow-learning visuals.

Fig. 2.

Emotion Analysis based on Graphic Design Elements.

Fig. 2 shows the emotional analysis based on Graphic Design Elements. The process begins by representing images using visual bag word models and colour distributions. It then uses a synonym library to extract negative and positive sentiment values from textual metadata. Then, it uses data processing methodology for feature analysis. Lastly, it uses machine learning methods for image classification, like convolutional neural networks. This work uses a trained visual attribute indicator to identify potential emotional qualities in pictures and then mine those areas for emotional content. Subsequently, it employs the attention model to autonomously extract the specific areas of images that are highly correlated with emotional descriptions. Graphics, pictures, text, and colours comprise the bulk of graphic design's overall language symbols, and the overall form of language symbols are crucial components of the field and have great aesthetic value. The image's original, dispersed points, lines, and surfaces are artistically processed with words, colours, and graphics to fill the whole visual space during the design process. This makes the design work more complete and less disorganized, and the information in the work is integrated from scattered. The overarching design form language symbol is necessary to integrate data into the last design and effect theme. Feature engineering and extracting areas favourable to emotional expression constitute the majority of image sentiment analysis; however, image categorization is its core. Image sentiment features at the lowest level, semantic sentiment features at the middle level, and deep network semantic sentiment features at the highest level make up the three phases. It takes a little longer to recognize picture emotion than text sentiment recognition. It is easier for users to convey their feelings when they utilize images with prominent objectives. 
Extracting targets and categorizing sentiment classes are both aided by target sentiment analysis. It takes the input document's entities and uses them to determine their sentiment. Products and services that get good or negative feedback may be identified via output data analysis. Lastly, the polarity of the final graphic image design, whether an advertisement or a poster, is obtained by fusing the findings predicted by each model. A designer must guarantee that the visual styles, including colours, shapes, and sizes, of all design components are in perfect harmony while making their selections and that the context of those pieces is consistent with the semantics provided by the product picture. For instance, decorative leaves and direct sunlight might give a poster advertising fruits a more natural and fresh appearance. The results show that the model can depict the arrangement of the graphic design. Concurrently, this arrangement makes changing the original parts' size and sequencing easy. Data pre-processing techniques include cleaning and normalization to remove irrelevant or noisy data and ensure the features are on a similar scale. Personalized emotional expression helps companies stand out by making brand experiences unique. Differentiating themselves in the marketplace, brands that successfully convey their values, personality, and emotional appeal via graphic design attract and keep consumers who empathize with their message.
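The final fusion of per-model predictions into one polarity can be sketched as follows. The sketch assumes a weighted average of class probabilities followed by an arg-max, which is only one possible fusion rule; the source does not specify the rule, and the name `fuse_predictions` and the example probabilities are hypothetical.

```python
CLASSES = ("positive", "negative", "neutral")


def fuse_predictions(model_probs, weights=None):
    """Weighted average of per-model class probabilities (late fusion)."""
    if weights is None:
        weights = [1.0] * len(model_probs)
    total = sum(weights)
    fused = {
        c: sum(w * p[c] for w, p in zip(weights, model_probs)) / total
        for c in CLASSES
    }
    label = max(CLASSES, key=lambda c: fused[c])
    return label, fused


if __name__ == "__main__":
    label, fused = fuse_predictions([
        {"positive": 0.6, "negative": 0.3, "neutral": 0.1},  # e.g. image model
        {"positive": 0.4, "negative": 0.4, "neutral": 0.2},  # e.g. text model
    ])
    print(label, fused)
```

Passing `weights` lets a more reliable model count for more in the final polarity.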

3.3. CNN model for classifying the emotions

CNNs often use pooling techniques to decrease the feature map's dimensionality and parameter count. The pooling procedure might collect the pixel value of every local area in the feature maps to generate novel pooled feature maps. As seen in equations (5), (6), the most frequent pooling processes are maximum and average pooling.

$$y_{i,j}^{\max} = \max_{0 \le m < M,\; 0 \le n < N} x_{iM+m,\; jN+n} \tag{5}$$

$$y_{i,j}^{\mathrm{avg}} = \frac{1}{MN}\sum_{m=0}^{M-1}\sum_{n=0}^{N-1} x_{iM+m,\; jN+n} \tag{6}$$

In equations (5) and (6), $x$ is the input feature map, $y$ is the pooled feature map, and $M$ and $N$ represent the height and width of the pooled area, with the pooling windows taken here as non-overlapping. By repeatedly applying convolution and pooling operations, a CNN progressively extracts high-level feature data from the input images and can thus perform tasks such as emotion recognition and classification. In this article, the generative model-based clustering analysis of sentiment components is completed by using CNNs to extract the features of participants' emotional elements and sentiments from graphic design. Finally, this study compares deep-learning and shallow-learning illustrations on the basis of emotional research, and provides a cognitive visual analysis of healing illustrations. In conventional convolution, all channels corresponding to an image region are considered concurrently. Architectures such as Xception and MobileNet instead use depth-wise separable convolutions, in which the spatial area and the channels of each image are examined separately, so that the convolution is divided into a depth-wise step and a point-wise step. To gain a deeper understanding of the target audience's wants, requirements, and emotional triggers, the audience is divided into subsets according to demographics, psychographics, and behavioural traits. Graphic material can then be targeted at specific audience groups, making communication more customized and relevant.
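The two pooling operations introduced at the start of this section can be sketched in a few lines. The sketch assumes non-overlapping windows, so the stride equals the window size; the function name `pool2d` and its `mode` argument are hypothetical.

```python
def pool2d(feature_map, size_h, size_w, mode="max"):
    """Max or average pooling over non-overlapping size_h x size_w windows."""
    pooled = []
    for i in range(len(feature_map) // size_h):
        row = []
        for j in range(len(feature_map[0]) // size_w):
            window = [
                feature_map[i * size_h + m][j * size_w + n]
                for m in range(size_h)
                for n in range(size_w)
            ]
            if mode == "max":
                row.append(max(window))
            else:
                row.append(sum(window) / len(window))
        pooled.append(row)
    return pooled


if __name__ == "__main__":
    fmap = [[1, 2, 3, 4],
            [5, 6, 7, 8],
            [9, 10, 11, 12],
            [13, 14, 15, 16]]
    print(pool2d(fmap, 2, 2, mode="max"))
    print(pool2d(fmap, 2, 2, mode="avg"))
```

With a 2 × 2 window the feature map shrinks from 4 × 4 to 2 × 2, which is the reduction in dimensionality that pooling is used for.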

A CNN can be implemented for facial expression identification so that users' facial expressions are processed in real time. The visual elements can then be changed in real time based on the detected emotions. By integrating gaze tracking with deep learning algorithms, the focal point of human attention can be determined, and graphical elements can be modified dynamically in response to eye movements to maximize user engagement.

The data collection includes grayscale images of faces with dimensions of 48 × 48 pixels. The faces have been automatically registered, so that each face is roughly centred and occupies about the same amount of space in every image. From the Kaggle open resource, we obtained the training set together with public and private test sets of equal size. The private test set is used to analyse the performance of the predictions, and the publicly available test set is used to validate our findings. Of the 35,887 images in total, 80 % are used for training, 10 % for validation, and 10 % for testing. The specific goal is to use CNNs for emotion recognition, so each face image is labelled with the corresponding emotion: surprise, neutral, anger, disgust, fear, happiness, or sadness.
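The 80 % and 10 % proportions mentioned above can be read as an 80/10/10 split into training, validation, and test subsets. That reading is an assumption, and the sketch below only shows how such a split could be produced reproducibly; the function name `split_indices` and the fixed seed are hypothetical.

```python
import random


def split_indices(n_samples, train_pct=80, val_pct=10, seed=0):
    """Shuffle sample indices and cut them into train/validation/test parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # fixed seed for reproducibility
    n_train = n_samples * train_pct // 100
    n_val = n_samples * val_pct // 100
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


if __name__ == "__main__":
    train, val, test = split_indices(35887)
    print(len(train), len(val), len(test))
```

Integer arithmetic is used for the cut points so that the three parts always add up to the full dataset.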

Fig. 3 shows the CNN model. Image and video recognition technologies make extensive use of convolutional neural networks (CNNs), a kind of deep neural network, because the parameter-sharing mechanism dramatically reduces the number of parameters. In this study, data enter the CNN through its input layer; converting speech or image data into a feature vector is standard practice before feeding it into a neural network. In the convolution layer, the convolution kernel applies the convolution operation to the input received from the preceding layer. Through local connectivity and weight sharing, the CNN significantly reduces the number of parameters and boosts learning efficiency. The low-level features extracted by multi-layer convolution are then passed to the linear rectification and pooling layers for down-sampling. The emotion identification capabilities of PERF-CNN may be fine-tuned according to each user's preferences, comments, and past interactions. By taking into account user-specific information, including demographics, explicit feedback, and previous interactions, the algorithm can tailor its predictions to each user's tastes and communication style. Evoking individual emotions through graphic design can help establish a stronger emotional connection with customers: people are more inclined to interact with a design they feel emotionally connected to, which increases their engagement, interest, and emotional investment.

Fig. 3.

CNN model.
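The effect of parameter sharing can be checked with simple arithmetic. The sketch compares a fully connected layer and a convolutional layer applied to one 48 × 48 grayscale image; the layer sizes (64 units, 64 filters of 3 × 3) are hypothetical values chosen for illustration and are not taken from the PERF-CNN architecture.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units


def conv_params(kernel_h, kernel_w, in_channels, out_channels):
    """Weights plus biases of a convolutional layer with shared kernels."""
    return kernel_h * kernel_w * in_channels * out_channels + out_channels


if __name__ == "__main__":
    dense = dense_params(48 * 48, 64)   # every pixel connected to every unit
    conv = conv_params(3, 3, 1, 64)     # 64 shared 3x3 kernels, one channel
    print(dense, conv)
```

Under these assumed sizes the dense layer needs 147,520 parameters and the convolutional layer 640, because each kernel is reused at every image position.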

Due to their ability to learn feature hierarchies, CNNs excel at computer vision tasks like picture recognition and classification. This is achieved by capturing basic features in the first layers of the network and more intricate patterns in the later levels. Using CNNs for picture categorization and feature identification has the critical benefit of not requiring human oversight. A key benefit of CNNs is their ability to learn directly from pixel input without human intervention. Without human intervention, this allows them to learn and improve upon the images' most prominent features—their edges, forms, colours, textures, and objects. For data analysis that requires transmission across a network, PERF-CNN may use secure communication protocols like Secure Socket Layer (SSL)/Transport Layer Security (TLS) to protect data privacy. These communication protocols protected sensitive user information from unwanted access or interception.

The network training parameters may be further reduced using the pooled data, and the model's fitting degree is somewhat strengthened. The last step is the output layer, which produces the result once the connection layer sends the input data to the neurons. The CNN's fully connected layer may link to local image features. The extraction and classification of image components relevant to facial emotions is critical in psychoanalytic face emotion identification technology. The CNN's hidden layer uses classifiers to finish feature extraction and picture feature classification. One popular classifier among them is Softmax, which mostly uses probability calculations to round out the categorization. The Softmax layer, mostly used in graphic design to predict the six distinct outputs of human facial emotions, corresponds to the output layer. As a discipline, graphic design offers a lot to help individuals with data organization, prioritization, and shape. When it comes to knowing how to control visual language, graphic designers are masters at influencing human behaviour. Both graphic designers and previous typesetters were confronted with the formidable issue of acquiring the skills necessary to set type using new software to get work in the profession. Graphic designers need to understand the emotional impact of their work. To make their clients and their goods' messages more successful, designers should try to appeal to people's emotions via their work. Hence, designers must acquire knowledge about emotional perception. Numerous visual design fields may benefit from this study's examination of four fundamental typographic design aspects: print, goods, experiences, information, and safety. Computer graphics and image processing technology are developing at the same rate as information technology. To simplify the process, improve the design effect, and better convey the design, graphic designers are turning to computer graphics and image processing technologies. 
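The probability calculation performed by the Softmax classifier can be sketched as follows. The emotion labels are taken from the seven categories named in Section 4 and are passed as a parameter, since the number of outputs is an assumption here; the function names and the example logits are hypothetical.

```python
import math

EMOTIONS = ["neutral", "fear", "happiness", "anger",
            "sadness", "disgust", "surprise"]


def softmax(logits):
    """Convert raw scores into probabilities that sum to one."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_emotion(logits, labels=EMOTIONS):
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


if __name__ == "__main__":
    print(predict_emotion([0.1, 0.3, 2.5, 0.2, 0.4, 0.0, 1.1]))
```

Subtracting the largest logit before exponentiating does not change the result but avoids overflow for large scores.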
The fast expansion of the manufacturing sector has raised the public's expectations for the quality of graphic design; nonetheless, mistakes are inevitable in any creative endeavour. The training dataset must be updated consistently with new graphic design examples to capture evolving emotional expressions and emerging design trends. To keep up with new patterns and trends, a continuous training method retrains the CNN model regularly on the most recent data. CNNs repeatedly apply convolution and pooling operations to gradually extract high-level feature data from the input images, which allows them to perform tasks such as emotion identification and classification. Here, CNNs extract features of participants' emotional components and sentiments from visual design and generate a model-based segmentation analysis of sentiment components. Businesses place a premium on thorough product quality assessment to guard against graphic design flaws that compromise a product's aesthetics and practicality, and the analysis of quality inspection findings helps to refine the graphic design process and reduce errors. This research therefore investigates the present state of the art and the application of convolutional neural networks to computer graphics and image processing for flaw detection in visual design. Parallel processing methods spread the computational load across several processing units. As a result, inference is accelerated, allowing real-time analysis of dynamic visual graphic content. Compared with other popular methodologies, the proposed PERF-CNN model improves classification accuracy, interaction ratio, emotion recognition, and the impact of pattern and colour features, and lowers the prediction error.

4. Results and discussion

This study suggests a personalized emotion recognition framework based on convolutional neural networks (PERF-CNN) to create visual content in graphic design. The data are taken from the emotion detection Kaggle dataset [31]. The dataset comprises 35,685 examples of 48 × 48 pixel greyscale images of faces separated into test and train datasets. Imageries are characterized based on the emotion demonstrated in the facial expressions (neutral, fear, happiness, anger, sadness, disgust, surprise). The performance of the recommended PERF-CNN model has been examined based on metrics such as classification accuracy, interaction ratio, emotion recognition, the impact of pattern and colour features and prediction error.

4.1. Classification accuracy ratio

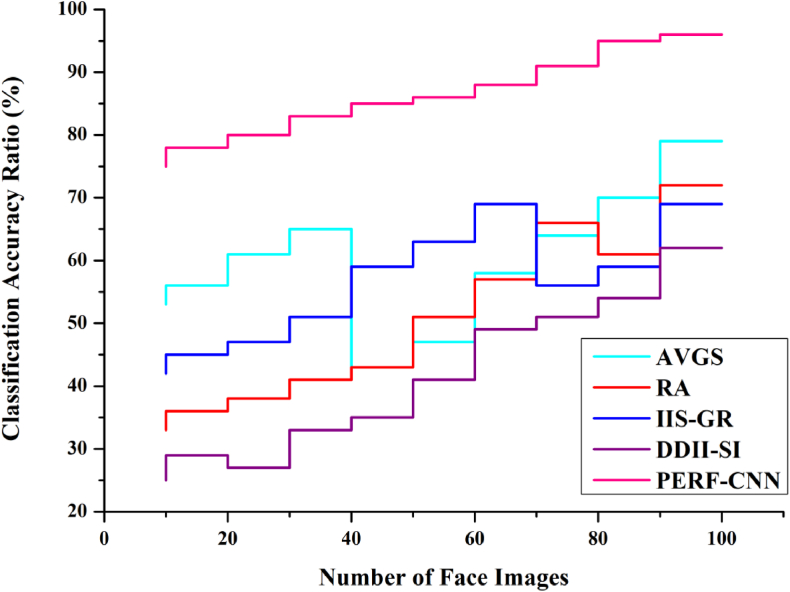

To better segment various areas of a facial image, this study first enhances a DL-based image classification approach. The approach employs a tri-branch network architecture to acquire detailed, semantic, and fusion data. Sentiment analysis and its recommendations are given classification in the deep learning model. Furthermore, several deep learning prototypes are compared according to datasets, the prototype features used, and the accuracy achieved. Accuracy is the most often utilized metric when evaluating a machine learning model. Utilizing fusion methods, PERF-CNN combines data across modalities after feature extraction from each modality. A unified image that conveys the graphic design's holistic emotional content is created via this fusion process, which merges the representations gained from each modality. The accuracy metric in the classification problem measures how many instances were correctly predicted relative to the total number of examples.

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{7}$$

In equation (7), TN signifies the true negatives, TP the true positives, FP the false positives, and FN the false negatives. Fig. 4 shows the classification accuracy ratio.

Fig. 4.

Classification accuracy ratio.
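The accuracy metric defined in this subsection, the share of correctly predicted instances among all instances, can be computed directly from the four confusion counts. The counts in the usage example are made-up numbers for illustration only and are not results from this study.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of correct predictions among all predictions."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("at least one prediction is required")
    return (tp + tn) / total


if __name__ == "__main__":
    # Hypothetical counts, not taken from the paper's experiments
    print(accuracy(tp=50, tn=40, fp=5, fn=5))
```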

4.2. Emotion recognition rate

People rely heavily on graphic design and visual communication in their daily interactions. Facial expressions are a visual means of communication, and this research investigates how computers might learn to detect them. The advancement of such techniques would have far-reaching implications for fields including human-computer interaction, low-bandwidth face data transfer, and dynamic picture face recognition, among others. This research suggests data-driven approaches that rely on convolutional neural networks (CNNs) to identify emotions from facial expressions. The first method repeatedly masks portions of the input photos while training a convolutional neural network (CNN) to learn characteristics from less apparent face areas. Emotion recognition, face registration, feature extraction, and face localization are the main components of this process. Compared to more conventional models, CNN models outperform them regarding expression recognition rate. PERF-CNN employs cross-modal attention mechanisms to attend to important information across multiple modalities selectively. The model can zero in on the most important signals in each modality for emotion detection due to attention mechanisms that dynamically prioritize audiovisual material and graphic language according to their significance. Emotion recognition has been identified based on equations (5), (6). Fig. 5 indicates the emotion recognition rate.

Fig. 5.

Emotion recognition rate.
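The masking idea mentioned in this subsection, where portions of the input photos are hidden during training so that the network also learns from less apparent face areas, can be sketched as below. The source does not give the mask shape or size, so a single square patch set to zero at a random position is assumed; the name `random_mask` is hypothetical.

```python
import random


def random_mask(image, patch=2, rng=None):
    """Return a copy of `image` with one patch x patch square set to 0."""
    rng = rng or random.Random()
    height, width = len(image), len(image[0])
    top = rng.randrange(height - patch + 1)
    left = rng.randrange(width - patch + 1)
    masked = [row[:] for row in image]  # leave the original untouched
    for i in range(top, top + patch):
        for j in range(left, left + patch):
            masked[i][j] = 0
    return masked


if __name__ == "__main__":
    face = [[1] * 6 for _ in range(6)]
    for row in random_mask(face, patch=2, rng=random.Random(0)):
        print(row)
```

Applying a fresh mask at every training step means the network cannot rely on any single face region being visible.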

4.3. Prediction error

The prediction error distribution for the pattern characteristics used in graphic design is shown in Fig. 6. As Fig. 6 demonstrates, all pattern feature prediction errors are below 3 %, which is the acceptable range for graphic designers. Patterns used in advertising graphics tend to include more intricate elements in the centre and simpler ones towards the borders. In line with this distribution of difficulty, the pattern feature prediction errors are concentrated mostly in the central region of the image. In the background region, the prediction error is mostly around 1 %, which is appealingly small, because the pattern characteristics there are fairly uniform. At present, the prediction accuracy of pattern features for graphical design can be improved only by enlarging the training set. Fusing successive network layers decreases memory bandwidth requirements and increases computing efficiency: with techniques such as layer fusion, kernel fusion, or operator fusion, a single optimized kernel reduces overhead and improves runtime performance. The prediction error is determined on the basis of equation (4).

Fig. 6.

Prediction error.

4.4. Interaction ratio

This research presents a smart graphic design system that may aid in the interactive process of creating posters automatically. The system shows user-uploaded product images and taglines on a poster and allows them to customize it according to their tastes. Consider the interplay between symbols while using fundamental symbols in graphic design. One must adhere to specific methods and rules while working with primary design languages like lines, dots, and surfaces. These rules vary based on the work's style, design theme, or form of expression. The goal is to convey information through the work, reflecting the author's inner emotions through a visually stunning and impactful presentation. In line with the results of experiments, the model can generate a high-quality layout using the visual semantics of the input image and keyword-based text summary. The model learns robust features from within to implement layout-aware design retrieval, which captures the complex relationship between contents and layouts. Adding time warping, speed variation, or temporal jittering to the training data augmentation allows PERF-CNN to be trained on a wider variety of temporal dynamics. Incorporating time-related data improves the model's ability to handle visual sequences with varying speeds and durations. Based on equation (3), the interaction rate has been identified. Fig. 7 shows the Interaction Ratio.

Fig. 7.

Interaction ratio.

4.5. Impact of pattern and colour features

Graphic rendering reduces an object's colour and texture complexity to a basic pattern while preserving its form and figure. According to a novel hypothesis, designers may find inspiration in how people's faces respond to different colour schemes and individual shades. Because colour information complements image texture, it has also been suggested as a way to improve face emotion identification. Following optical correction, the visual acuity of every subject was assessed as 0.8 or above, and no participant was colour-blind or had any other visual impairment. Correlating user reactions with eye movement patterns makes it possible to study the effect of emotions on visual attention and memory. Pattern, colour, form, and character features are all readily handled by the CNN approach in graphic design. Fig. 8 represents the impact of pattern and colour feature rate. Gradient masking methods are used to protect gradient information from direct access by attackers during optimization; by hiding this information, gradient masking makes it harder for attackers to create successful adversarial perturbations.

Fig. 8.

Impact of pattern and colour feature rate.

Design literacy is necessary to combine visual design, graphic composition, and the transmission of brand image successfully (see Fig. 8 for the impact of pattern and colour feature rate). Different colours evoke different feelings, and the colour palettes used in a design can influence viewers' emotions and state of mind. A well-designed colour scheme can improve the user experience and boost conversion rates and other desirable behaviours.

PERF-CNN can be trained to differentiate between real and fake customized emotions by learning to recognize their patterns. During training, supervised learning methods such as binary classification, or anomaly detection, may be used, with each emotion sample labelled as authentic or synthetic. PERF-CNN keeps track of user profiles and records details including preferences, dislikes, and past behaviour. These profiles include users' communication preferences, emotional expressions, and preferred forms of interaction and material. Such details can also cover the size or facial features given to a character when it is personified, among other physical and psychological attributes. Additionally, it is common practice to modify the body's proportions so that the character can move more freely while keeping human features in harmony with their natural shapes. Designers in the commercial design industry use graphic symbols or personified creatures to portray specific products or events. Appropriate materials, including packaging, promotional materials, and company visual displays, may be used. The expertise of outstanding graphic designers comes from a combination of extensive compositional practice, deep cultural understanding, and cumulative design activity. The ability to mix visual design, graphic composition, and the transmission of brand image effectively requires design literacy.

Other potential avenues for future research include studying facial expressions to better understand people's emotional and psychological states and provide them with treatment and care that will positively impact their well-being.

The methodology under consideration applies to both industrial and healthcare settings. A significant factor in human-computer interaction is how emotion recognition in healthcare utilizes EEG signals to assess the “internal” state of the user directly. Numerous techniques for feature extraction exist, which typically rely on neuroscience findings to determine appropriate feature selection and electrode placement. Comparing these features using machine learning techniques to perform feature selection on a self-recorded data set yielded findings regarding the electrode position selection process, the utilization of selected feature categories, and the performance of various feature selection methods.

5. Conclusion

As a means of conveying ideas and information and satisfying people's aesthetic demands, the graphic design language is increasingly vital in modern life due to the digital era. This study presents a personalized emotion recognition framework based on convolutional neural networks (PERF-CNN) to create visual content in graphic design. Developing one's skills as a designer requires familiarity with the language of graphic design. This article focuses on researching graphical design language from an AI standpoint. The article first enhances the facial classification of images. The model uses two independent branching networks to acquire semantic and detailed image data. Then, an end-to-end classification procedure is achieved by combining the feature maps learnt by the two networks. This research aims to develop an automated system to turn complex user inputs into sophisticated graphic designs. Creating professional-looking posters or advertisements using the suggested model and the user-specified product image and text is possible by moving and dropping design components onto a blank surface. One of the article's primary limitations is the lack of more in-depth data analysis. Thus, future research will focus on selecting face expression elements using transfer learning. Other potential avenues for future research in this area include studying facial expressions to understand people's emotional and psychological states better so that we can better provide them with treatment and care that will positively impact their well-being.

Due to real-time sentiment analysis integration, the system can continuously monitor and assess users' emotional states as they interact with the graphics. The graphics might respond based on the detected emotional fluctuations. The experimental results show that the suggested PERF-CNN model outperforms other popular models concerning the following metrics: prediction error rate (6.5 %), rate of influence of pattern and colour features (94.4 %), emotion recognition ratio (98.9 %), interaction ratio (95.6 %), and classification accuracy (97.4 %).

Funding

This work was supported by 2020 Anhui University Outstanding Young Talent Support plan project (gxyq2020190) and Anhui Province Philosophy and Social Science Project “Research on the Coordinated Development of Southern Anhui Folk Art and Huizhou Lacquer Art, Culture and Creative Industry under the Background of Rural Revitalization” (AHSKQ2021D143).

Data availability statement

This article does not cover data research. No data were used to support this study.

CRediT authorship contribution statement

Zhenzhen Pan: Writing – review & editing, Writing – original draft. Hong Pan: Writing – review & editing, Writing – original draft. Junzhan Zhang: Writing – review & editing, Writing – original draft.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Biographies

Zhenzhen Pan was born in Weifang, Shandong Province, P.R. China, in 1982. She received her master's degree from Xi'an Academy of Fine Arts. She now works in the School of Arts and Education, Chizhou College, and is studying for her PhD at Baekseok University, Korea. Her main research area is arts and crafts. E-mail: zhen080504@163.com

Hong Pan was born in Weifang, Shandong, P.R. China, in 1979. She received her master's degree from Xi'an Polytechnic University, China. Since May 2007, she has been an associate professor with the School of Design, Anhui Polytechnic University, Wuhu, Anhui, China. Her research interests include interior design and landscape design. E-mail: 16962298@qq.com

Junzhan Zhang was born in Tongxu, Henan, P.R. China, in 1981. He received his master's degree from Central China Normal University, P.R. China. His research direction is cultural resources and the cultural industry. E-mail: zjzhan2008@163.com

Contributor Information

Zhenzhen Pan, Email: zhen080504@163.com.

Hong Pan, Email: 16962298@qq.com.

Junzhan Zhang, Email: zjzhan2008@163.com.

References

- 1.Tian Z. Dynamic visual communication image framing of graphic design in a virtual reality environment. IEEE Access. 2020;8:211091–211103. [Google Scholar]

- 2.Ortis A., Farinella G.M., Battiato S. Survey on visual sentiment analysis. IET Image Process. 2020;14(8):1440–1456. [Google Scholar]

- 3.Breitfuss A., Errou K., Kurteva A., Fensel A. Representing emotions with knowledge graphs for movie recommendations. Future Generat. Comput. Syst. 2021;125:715–725. [Google Scholar]

- 4.Kendeou P., McMaster K.L., Butterfuss R., Kim J., Bresina B., Wagner K. The inferential language comprehension (iLC) framework: supporting children's comprehension of visual narratives. Topics in Cognitive Science. 2020;12(1):256–273. doi: 10.1111/tops.12457. [DOI] [PubMed] [Google Scholar]

- 5.Santos I., Castro L., Rodriguez-Fernandez N., Torrente-Patino A., Carballal A. Artificial neural networks and deep learning in the visual arts: a review. Neural Comput. Appl. 2021;33:121–157. [Google Scholar]

- 6.Habeeb R.M., Hassan N.A. The designer's artistic culture and its reflection in graphic design. Annals of the Romanian Society for Cell Biology. 2021;25(6):14914–14938. [Google Scholar]

- 7.Gultom E., Frans A., Cellay E. Adapting the graphic novel to improve speaking fluency for EFL learners. Al-Hijr. 2022;1(2):46–54. [Google Scholar]

- 8.Haotian W., Guangan L. Innovation and improvement of visual communication design of mobile app based on social network interaction interface design. Multimed. Tool. Appl. 2020;79(1–2):1–16. [Google Scholar]

- 9.Benke I., Knierim M.T., Maedche A. Chatbot-based emotion management for distributed teams: a participatory design study. Proceedings of the ACM on Human-Computer Interaction. 2020;4(CSCW2):1–30. [Google Scholar]

- 10.Dubois P.M.J., Maftouni M., Chilana P.K., McGrenere J., Bunt A. Gender differences in graphic design Q&AS: how community and site characteristics contribute to gender gaps in answering questions. Proceedings of the ACM on Human-Computer Interaction. 2020;4(CSCW2):1–26. [Google Scholar]

- 11.Li B., Sano A. Extraction and interpretation of deep autoencoder-based temporal features from wearables for forecasting personalized mood, health, and stress. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies. 2020;4(2):1–26. [Google Scholar]

- 12.Thompson J., Liu Z., Li W., Stasko J. Understanding the design space and authoring paradigms for animated data graphics. Comput. Graph. Forum. 2020, June;39(3):207–218. [Google Scholar]

- 13.Tembhurne J.V., Diwan T. Sentiment analysis in textual, visual and multimodal inputs using recurrent neural networks. Multimed. Tool. Appl. 2021;80:6871–6910. [Google Scholar]

- 14.Hwang Y.M., Lee K.C. An eye-tracking paradigm to explore the effect of online consumers' emotion on their visual behaviour between desktop screen and mobile screen. Behav. Inf. Technol. 2022;41(3):535–546. [Google Scholar]

- 15.Dhanesh G.S., Rahman N. Visual communication and public relations: visual frame building strategies in war and conflict stories. Publ. Relat. Rev. 2021;47(1) [Google Scholar]

- 16.Kucher K., Martins R.M., Paradis C., Kerren A. StanceVis Prime: visual analysis of sentiment and stance in social media texts. J. Visual. 2020;23:1015–1034. [Google Scholar]

- 17.Lelis C., Leitao S., Mealha O., Dunning B. Typography: the constant vector of dynamic logos. Vis. Commun. 2022;21(1):146–170. [Google Scholar]

- 18.Gourisaria M.K., Agrawal R., Sahni M., et al. Comparative analysis of audio classification with MFCC and STFT features using machine learning techniques. Discov. Internet Things. 2024;4:1.

- 19.Liu Y., Sivaparthipan C.B., Shankar A. Human-computer interaction based visual feedback system for augmentative and alternative communication. Int. J. Speech Technol. 2022;25(2):305–314.

- 20.Xie S., Pan Q., Wang X., Luo X., Sugumaran V. Combining prompt learning with contextual semantics for inductive relation prediction. Expert Syst. Appl. 2024;238(Part D).

- 21.Zhao L. The application of graphic language in animation visual guidance system under intelligent environment. J. Intell. Syst. 2022;31(1):1037–1054.

- 22.Schifferstein H.N., Lemke M., de Boer A. An exploratory study using graphic design to communicate consumer benefits on food packaging. Food Qual. Prefer. 2022;97.

- 23.Zhou B. Application of gesture recognition in graphic design and control of information interaction system. Int. J. Wireless Mobile Comput. 2023;24(3–4):322–328.

- 24.Guo Y. Design of artistic graphic symbols based on intelligent guidance marking system. Neural Comput. Appl. 2023;35(6):4255–4266.

- 25.Chu F., Li W. Data-driven image interaction-based software infrastructure for graphic design research and implementation. Sci. Program. 2022;2022.

- 26.Wang Z. Aesthetic evaluation of multi-dimensional graphic design based on voice perception model and Internet of things. Int. J. Syst. Assur. Eng. Manag. 2022;13(3):1485–1496.

- 27.Rangarajan V., Onkar P.S., De Kruiff A., Barron D. The role of perception of affective quality in graphic design inspiration. Des. J. 2022;25(5):867–886.

- 28.Yan X. A computer graphic image technology with visual communication based on data mining. WSEAS Trans. Signal Process. 2022;18:89–95.

- 29.Cao J. Research on the application of color language in computer graphic design. J. Phys.: Conf. Ser. 2021;1915(4).

- 30.Chen Y., Zou W., Sharma A. Graphic design method based on 3D virtual vision technology. Recent Adv. Electr. Electron. Eng. (Formerly Recent Patents on Electrical & Electronic Engineering) 2021;14(6):627–637.

- 31.https://www.kaggle.com/datasets/ananthu017/emotion-detection-fer.

- 32.Li C. Computer three-dimensional graphics and image analysis technology in visual communication design. In: 2023 International Conference on Distributed Computing and Electrical Circuits and Electronics (ICDCECE). IEEE; 2023. pp. 1–6.

- 33.Leong S.C., Tang Y.M., Lai C.H., Lee C.K.M. Facial expression and body gesture emotion recognition: a systematic review on the use of visual data in affective computing. Comput. Sci. Rev. 2023;48.

Data Availability Statement

This article does not cover data research. No data were used to support this study.