Summary

A critical goal of vision is to detect changes in light intensity, even when these changes are blurred by the spatial resolution of the eye and the motion of the animal. Here we describe a recurrent neural circuit in Drosophila that compensates for blur and thereby selectively enhances the perceived contrast of moving edges. Using in vivo two-photon voltage imaging, we measured the temporal response properties of L1 and L2, two cell types that receive direct synaptic input from photoreceptors. These neurons have biphasic responses to brief flashes of light, a hallmark of cells that encode changes in stimulus intensity. However, the second phase was often much larger than the first, creating an unusual temporal filter. Genetic dissection revealed that recurrent neural circuitry strongly shapes the second phase of the response, informing the structure of a dynamical model. By applying this model to moving natural images, we demonstrate that rather than veridically representing stimulus changes, this temporal processing strategy systematically enhances them, amplifying and sharpening responses. Comparing the measured responses of L2 to model predictions across both artificial and natural stimuli revealed that L2 tunes its properties, as the model predicts, in order to deblur images. Since this strategy is tunable to behavioral context, generalizable to any time-varying sensory input, and implementable with a common circuit motif, we propose that it could be broadly used to selectively enhance sharp and salient changes.

Introduction

Determining how the intensity of light changes over space and time is fundamental to vision. However, both the spatial acuity of the eye and movements of the animal introduce blur, obscuring these changes. As a result, a host of behavioral mechanisms, including rapid photoreceptor, retinal, head, and body movements, have evolved to facilitate image stabilization in sighted animals, improving sampling of the image and reducing blur1,2. Similarly, in artificial image processing systems, image blur can be reduced in both stationary and moving scenes using a wide range of computational algorithms3. However, whether biological systems use computational mechanisms downstream of retinal input to reduce image blur under naturalistic conditions is unknown. Here we show how recurrent circuit feedback dynamically tunes the temporal filtering properties of first-order interneurons in the fruit fly Drosophila to suppress image blur caused by movement of the animal.

Visual processing in Drosophila begins in the retina, where each point in space is sampled by eight photoreceptor cells4. Six of these cells, R1–6, are essential inputs for achromatic information processing, including motion detection, and relay their signals to the distal-most region of the optic lobe, the lamina. Within each lamina cartridge, the six R1–6 cells representing the same point in visual space make extensive synaptic connections with three feedforward projection neurons, L1–3. L1–3 then provide differential inputs to downstream neural circuits that are specialized to detect either increases or decreases in light intensity5–7. Extensive characterization of L1–3 using both artificial and naturalistic stimuli has revealed how each of these cell types can transmit distinct information about the luminance of a scene relative to the local spatial and temporal contrast8–12. However, how the temporal dynamics of L1 and L2 relate to the spatiotemporal structure of the stimulus, particularly over short time intervals, remains incompletely understood.

Photoreceptors depolarize to increases in light intensity and have monophasic impulse responses13–16. That is, following a brief flash of light, they transiently depolarize before returning to baseline, a response waveform with a single phase (Figure 1A). The photoreceptor-to-lamina-neuron synapse is inhibitory; therefore, L1–3 hyperpolarize to increases in light intensity and depolarize to decreases in light intensity. Like the photoreceptors, L3 has sustained responses to light and a monophasic impulse response. In contrast, L1 and L2 respond more transiently and have biphasic impulse responses, meaning that they encode information about the rate of change in light intensity, the temporal derivative17,18 (Figure 1A). These biphasic impulse responses have been interpreted in the context of efficient coding, a hypothesis in which these neurons maximize the amount of information they encode about the stimulus while minimizing the resources used and remaining robust to noise17,19–21. In this framework, contrast changes are preferentially encoded because they contain new, behaviorally salient information. However, blur limits the ability of the visual system to estimate the temporal derivative of its input: as the visual scene moves faster, the contrast changes measured at single points in space are increasingly attenuated. How, then, can neural processing compensate for blur so as to encode contrast changes that have been smeared out in time?

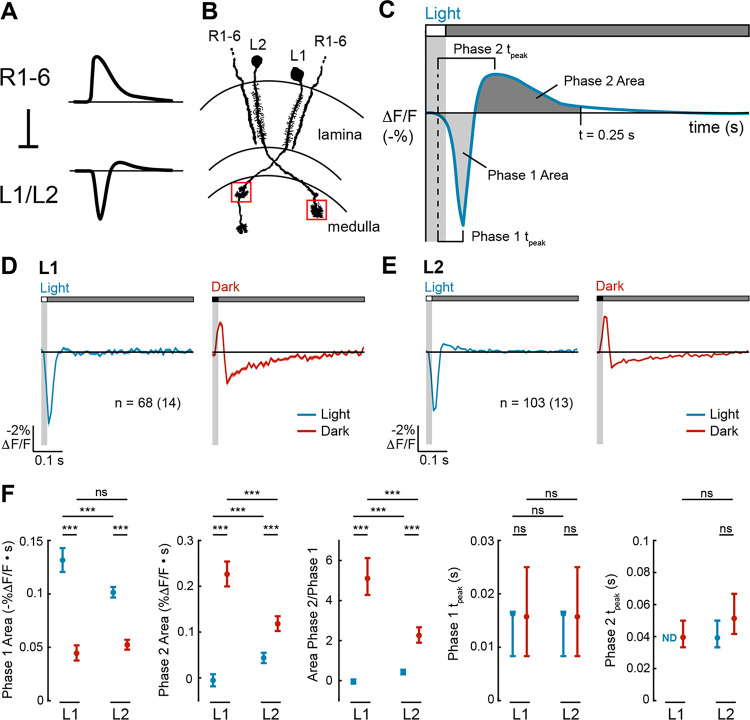

Figure 1. L1 and L2 have biphasic impulse responses that are asymmetric for light and dark.

(A) L1 and L2 are non-spiking neurons that receive inhibitory input from the R1–6 photoreceptors. R1–6 have monophasic responses to light, whereas L1 and L2 have biphasic responses to light.

(B) L1 and L2 project into the medulla neuropil, where L1 has two arbors (in layers M1 and M5), and L2 has one arbor (in layer M2). We imaged the layer M1 arbor of L1 and the layer M2 arbor of L2 (boxed in red). Diagram adapted from ref. 59.

(C) Schematic of how the metrics quantifying biphasic impulse responses are defined. The response to a light flash is shown here but equivalent metrics were used to quantify the response to a dark flash. Phase 1 Area: the area under the curve from the time the stimulus-evoked response begins to the time between the two phases. Phase 2 Area: the area under the curve from the time between the two phases to 0.25 s after the start of the stimulus. Phase 1 tpeak: time from when the stimulus-evoked response begins to when it peaks during the first phase. Phase 2 tpeak: time from when the stimulus-evoked response begins to when it peaks during the second phase.

(D-E) Responses of L1 (D) and L2 (E) to a 20-ms light flash (left, blue) or a 20-ms dark flash (right, red) with a 500-ms gray interleave. The solid line denotes the mean response and the shading is ± 1 SEM. n = number of cells (number of flies).

(F) Quantification of the responses. ND denotes when the Phase 2 Area was 0. The mean and 95% confidence interval are plotted. ns p>0.05, *** p<0.001.

Here we examine temporal processing by L1 and L2 in the fruit fly to address this issue. We find that L1 and L2 impulse responses are biphasic, as observed previously, but are surprisingly nonlinear with respect to light and dark and have larger second phases than expected. Informed by the effect of circuit manipulations on the second phase of L2 responses, we designed a recurrent dynamical model that recapitulates the biphasic responses of L1 and L2. This model uses the output of L1 and L2 to scale the strength of a feedback signal back to L1 and L2, creating a recurrent structure. We find that responses shaped by recurrent feedback emphasize rapid transitions between light and dark, producing sharper and faster responses than would be predicted by a model in which the visual system simply tries to reconstruct the “true” contrast change. We show that this sharpening would counteract blur introduced into photoreceptor responses by image motion and by the limited spatial resolution of the eye. Moreover, this recurrent circuitry is tuned by changes in neuromodulatory state associated with movement of the animal, and it enhances responses to naturalistic stimuli. Thus, temporal processing in early visual interneurons serves not to encode efficiently the signals they receive but instead to enhance a critical feature of natural scenes, thereby facilitating downstream extraction of salient visual features.

Results

L1 and L2 impulse responses are biphasic and nonlinear with respect to light and dark

We characterized the temporal tuning properties of L1 and L2 by expressing the voltage indicator ASAP2f22 cell type-specifically and recording visually evoked signals in the axonal arbors of individual cells (Figure 1B). To do so, we presented 20-millisecond full-field, contrast-matched light and dark flashes against a uniform gray background on a screen in front of the fly. These impulse responses represent a linear approximation of how each cell type weights visual inputs from different points in time and can be quantified with metrics capturing the magnitude and kinetics of each phase of the response (Figure 1C).
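The Figure 1C metrics can be computed from one baseline-subtracted trace. The Python sketch below is a minimal, hypothetical implementation rather than the analysis code used here: the function `biphasic_metrics`, its `phase1_sign` argument, the assumption that response onset is already known, and the rule that the boundary between phases is the first sign reversal after phase 1 begins are all our own choices.

```python
import numpy as np

def biphasic_metrics(t, r, onset, phase1_sign, window_end=0.25):
    """Phase areas and peak times for one baseline-subtracted flash response.

    t: uniformly spaced sample times (s), relative to stimulus start
    r: baseline-subtracted response at those times
    onset: time (s) at which the stimulus-evoked response begins (assumed known)
    phase1_sign: +1 if phase 1 is a depolarization (dark flash),
                 -1 if it is a hyperpolarization (light flash)
    """
    dt = t[1] - t[0]
    i0 = np.searchsorted(t, onset)
    i_end = np.searchsorted(t, window_end)    # window ends 0.25 s after stimulus start
    seg = phase1_sign * r[i0:i_end]           # flip so that phase 1 is positive
    started = np.flatnonzero(seg > 0)
    if started.size == 0:
        raise ValueError("no phase-1 response found after onset")
    k = started[0]
    # Assumed boundary: first sample after phase 1 starts where the response
    # reverses sign or returns to zero.
    reversal = np.flatnonzero(seg[k:] <= 0)
    b = k + reversal[0] if reversal.size else len(seg)
    phase1, phase2 = seg[:b], seg[b:]
    area1 = phase1.sum() * dt
    area2 = max(-phase2.sum() * dt, 0.0) if phase2.size else 0.0
    tpeak1 = t[i0 + np.argmax(phase1)] - onset
    # Phase 2 peak time is undefined ("ND") when the Phase 2 area is 0.
    tpeak2 = t[i0 + b + np.argmin(phase2)] - onset if area2 > 0 else None
    return {"phase1_area": area1, "phase2_area": area2,
            "phase1_tpeak": tpeak1, "phase2_tpeak": tpeak2}

# Usage on synthetic traces (illustrative only, not recorded data).
dt = 0.001
t = np.arange(0.0, 0.3, dt)

def lobe(start, width):
    """Half-sine lobe with unit peak on [start, start + width)."""
    x = (t - start) / width
    return np.where((x >= 0) & (x < 1), np.sin(np.pi * x), 0.0)

# Small depolarization followed by a larger, longer hyperpolarization.
r = lobe(0.01, 0.04) - 2.0 * lobe(0.05, 0.10)
m = biphasic_metrics(t, r, onset=0.01, phase1_sign=+1)
m_mono = biphasic_metrics(t, lobe(0.01, 0.04), onset=0.01, phase1_sign=+1)
print(m)
print(m_mono)
```

Using areas rather than peak amplitudes makes the comparison between phases sensitive to phase duration as well as height; in this synthetic example the second phase has about five times the area of the first, while the monophasic trace yields a Phase 2 area of 0 and no Phase 2 peak time.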

In response to the light flash, L1 and L2 both initially hyperpolarized, reaching peak amplitude at the same time (Figures 1D–F). The response of L2 then had a second depolarizing phase, while the response of L1 did not. In response to the dark flash, L1 and L2 initially depolarized, again with identical kinetics, and then hyperpolarized in a second phase. For both cell types, the light and dark impulse responses were not sign-inverted versions of each other, and therefore, their responses were not linear. Instead, the light and dark impulse responses of both cell types were asymmetric, differing in the extent to which they were biphasic. For L1, the light response was monophasic while the dark response was biphasic, with a substantially larger second phase than first phase (Figures 1D and 1F). For L2, the light and dark responses were both biphasic, but the second phase of the dark response was larger than that of the light response (Figures 1E and 1F). Thus, L1 and L2 process light and dark inputs with distinct kinetics, and strikingly, the second phase of the response was often (but not always) much larger than the first phase.

Recurrent synaptic feedback and cell-intrinsic properties together shape L2 impulse responses

Drosophila photoreceptors have monophasic impulse responses (Figure 1A)23, suggesting that the first phase of the response in both L1 and L2 reflects direct photoreceptor input, whereas the second phase of the response originates downstream of photoreceptors. Two non-mutually exclusive categories of biological mechanisms could explain the second phase: circuit elements (such as recurrent synaptic feedback) and cell-intrinsic mechanisms (such as voltage-gated ion channels). To test these possibilities, we took advantage of available genetic tools for cell type-specifically manipulating L2, first by limiting L2 inputs to the photoreceptors alone and then by selectively restoring recurrent feedback driven by L2.

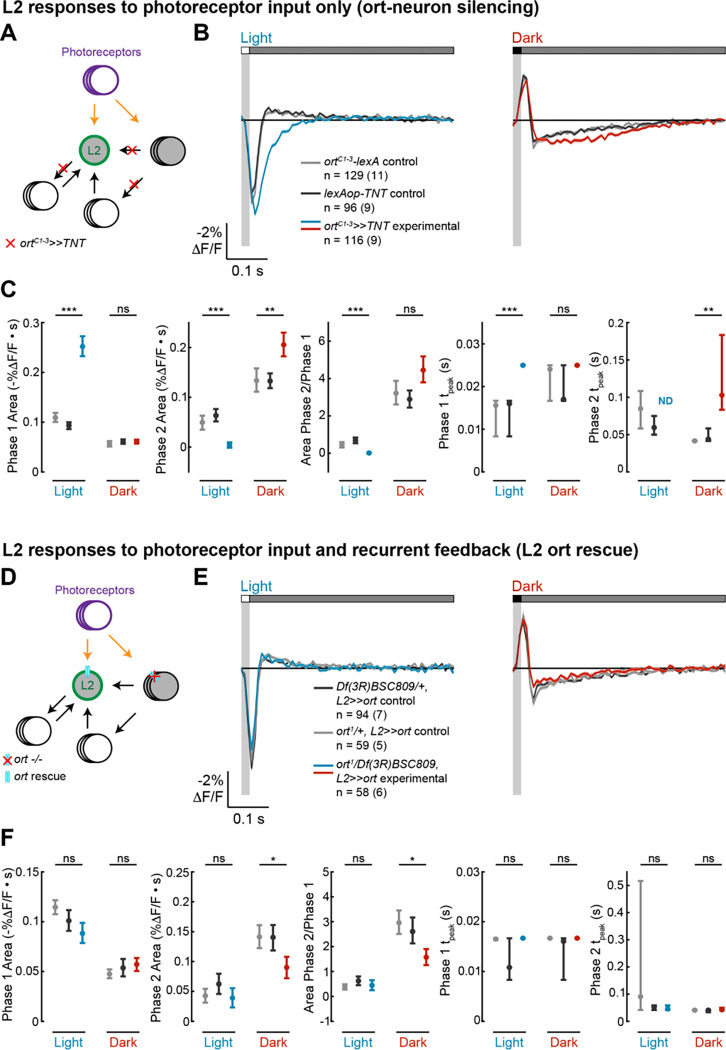

We first measured the impulse responses of L2 in the absence of all circuit inputs other than photoreceptors (Figure 2A). To do this, we blocked synaptic vesicle release by expressing tetanus toxin light chain (TNT) in all neurons directly postsynaptic to photoreceptors using a promoter fragment derived from ora transientless (ort), the histamine-gated chloride channel that serves as the neurotransmitter receptor for photoreceptor input24–27. As TNT blocks synaptic transmission instead of altering membrane potential, we could still measure the responses of L2 to photoreceptor input, even as L2 could not relay signals to downstream circuitry. In other words, we isolated L2 from both feedforward and feedback circuits while preserving only photoreceptor input, allowing us to observe how cell-intrinsic mechanisms shape its responses. Silencing circuit inputs to L2 eliminated the second phase of the response to light flashes while increasing the amplitude of the first phase and delaying the time to peak (Figures 2B and 2C). This observation suggests that circuit elements contribute a delayed, depolarizing input that truncates the initial hyperpolarizing response to photoreceptor signals and produces the depolarizing second phase. When we examined the response to dark flashes, we found, to our surprise, that blocking circuit input did not eliminate the second phase (Figures 2B and 2C). Instead, the second phase became larger and more sustained, peaking at a later time. The first phase was also unchanged, demonstrating that photoreceptor input and cell-intrinsic mechanisms are sufficient to produce it. Thus, the biphasic character of the light impulse response of L2 requires circuit input, while that of the dark impulse response is determined largely by cell-intrinsic mechanisms.

Figure 2. Circuit and intrinsic mechanisms shape L2 impulse responses.

(A–C) ort-neuron silencing. (D–F) L2 ort rescue.

(A) Diagram of the ort-neuron silencing experiment: ortC1–3-lexA-driven tetanus toxin (TNT) blocks synaptic vesicle release from all neurons that receive direct input from photoreceptors, thereby preventing visual information from being transmitted through circuits downstream of the first-order interneurons.

(D) Diagram of the L2 ort rescue experiment: in an ort null background, ort is rescued specifically in all L2 neurons. This preserves L2 responses to photoreceptor input and any L2-dependent circuit feedback but eliminates any circuit-based visual input that does not pass through L2.

(B, E) Responses of L2 to a 20-ms light flash (left) or a 20-ms dark flash (right) with a 500-ms gray interleave. The responses of the genetic controls are in gray; the experimental responses are in color. The solid line is the mean response and the shading is ± 1 SEM. n = number of cells (number of flies).

(C, F) Quantification of the responses in (B, E). The mean and 95% confidence interval are plotted. ND is shown instead of the Phase 2 tpeak value when the Phase 2 Area is 0. ns p>0.05, * p<0.05, ** p<0.01, *** p<0.001, Bonferroni correction was used for multiple comparisons.

We next performed a complementary experiment in which only L2 could respond to light and activate downstream circuitry. We leveraged the fact that ort, a histamine-gated chloride channel, is essential for photoreceptors to signal to neurons in the lamina24,25,28,29. As expected, L2 neurons in ort-null flies did not respond to light (Figure S1). Next, we rescued expression of ort specifically in L2 in an ort-null genetic background, thereby allowing L2 alone to receive photoreceptor input and signal to its postsynaptic partners (Figure 2D). Thus, circuit contributions that depend on direct visual input to neurons other than L2 were blocked, but L2-dependent recurrent circuits and cell-intrinsic mechanisms were preserved. Strikingly, L2-specific ort rescue not only restored the initial, photoreceptor-mediated phase but also produced biphasic impulse responses to both light and dark flashes (Figures 2E and 2F). The light impulse response was indistinguishable from those measured in control flies heterozygous for each of the ort null alleles, indicating that L2 function is sufficient to produce the biphasic light response and that the circuit mechanisms that generate the depolarizing second phase are L2-dependent and hence recurrent. Interestingly, however, while the dark impulse response was biphasic, the rescue was not complete: the first phase of the response was intact, but the amplitude of the second phase was smaller than that seen in control animals. Therefore, L2-independent circuit mechanisms contribute to this second phase, consistent with the results from the ort-TNT experiments (Figure 2B). Taken together, these results demonstrate that both cell-intrinsic properties and L2-driven recurrent feedback shape impulse responses in L2 in an asymmetric, stimulus-dependent fashion.

A recurrent model replicating L1 and L2 response dynamics counteracts motion-induced blurring

Given the stimulus-selective biphasic impulse responses (Figure 1) and their complex control mechanisms (Figure 2), we next examined how the temporal properties of L1 and L2 affect visual processing. Biphasic impulse responses are generally thought of as biological implementations of derivative-taking, or in the frequency domain, bandpass filters. In this framework, the biphasic waveform that best approximates derivative-taking, or equivalently is most strongly bandpass, has first and second phases with identical shapes and integrated areas but opposite signs. However, we never observed an impulse response of this form (Figures 1D–1F). Instead, the second phase of the response was often much stronger than the first phase (Figures 1D–1F), a form unlike the impulse responses of many other visual neurons16,23,30–35. A linear filter with that shape would be low-pass in the frequency domain and would predict responses to contrast steps that are inconsistent with physiological measurements (Figure S2)22,36. Furthermore, as noted above, the observation that the light and dark impulse responses are not sign-inverted versions of each other indicates that temporal processing by L1 and L2 is not linear (Figures 1D–1F).
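The relationship between phase areas and frequency tuning can be checked numerically, because the gain of a linear filter at 0 Hz equals its net signed area. In the sketch below, the half-sine lobes, their timing, and the area ratios are hypothetical choices, not fits to L1 or L2: with equal-area phases the DC gain vanishes and the filter rejects sustained input, whereas a second phase three times larger than the first leaves a substantial DC gain of inverted sign, so slow input is no longer rejected.

```python
import numpy as np

dt = 0.001                        # sample interval (s)
t = np.arange(0.0, 0.5, dt)

def lobe(start, width):
    """Half-sine lobe with unit peak, nonzero on [start, start + width)."""
    x = (t - start) / width
    return np.where((x >= 0) & (x < 1), np.sin(np.pi * x), 0.0)

def biphasic_filter(area_ratio):
    """Hypothetical impulse response: a brief positive phase followed by a
    longer negative phase whose integrated area is `area_ratio` times the first."""
    first = lobe(0.005, 0.03)
    second = lobe(0.035, 0.09)
    second = second * area_ratio * first.sum() / second.sum()
    return first - second

freqs = np.fft.rfftfreq(len(t), dt)
results = {}
for area_ratio in (1.0, 3.0):
    h = biphasic_filter(area_ratio)
    H = np.fft.rfft(h) * dt       # scaled to approximate the continuous transform
    dc_gain = H[0].real           # equals the net signed area of h
    peak_hz = freqs[np.argmax(np.abs(H))]
    results[area_ratio] = (dc_gain, peak_hz)
    print(f"area ratio {area_ratio}: DC gain {dc_gain:+.4f}, |H| peaks at {peak_hz:.0f} Hz")
```

Only the DC gain is tied directly to the phase areas; where the magnitude response peaks for the unbalanced filter depends on the detailed lobe shapes chosen here.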

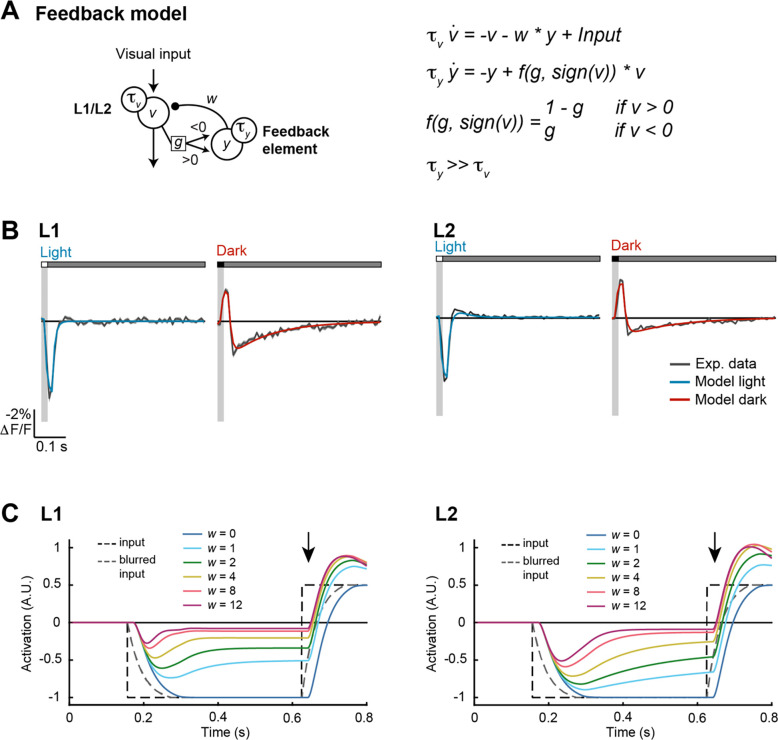

To explore the functional consequences of these properties, we modeled L1 and L2 responses using a set of differential equations that describe the time-varying activity of each cell (v) as a function of feedforward input from photoreceptors as well as feedback from recurrent circuitry (Figure 3A). The feedback (y) was modeled as a longer time-constant, sign-inverted signal scaled by the output of each cell type and served to generate the second phase of the L1 (or L2) response. One parameter (g) controlled how strongly depolarization or hyperpolarization of L1 (or L2) engages downstream feedback circuits, while a second parameter (w) controlled the overall strength of feedback. This recurrent, dynamical model captured the biphasic impulse responses of L1 and L2, including the nonlinear, asymmetric second-phase responses to light and dark flashes (Figure 3B). Moreover, different sets of model parameters could reproduce the differences between the two cell types (Figure 3B and Methods).
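Because the model equations appear only in the Figure 3A image, the sketch below writes down one plausible form consistent with the verbal description. The rectifying drive f_g(v), the time constants, the value w = 2, and the function name `simulate` are assumptions for illustration, not the fitted model. With g = 1, only depolarization recruits the slow, sign-inverted feedback, which qualitatively reproduces an L1-like pattern of a monophasic light response and a biphasic dark response; g = 0 reverses this.

```python
import numpy as np

def simulate(u, dt=0.5, tau_v=10.0, tau_y=50.0, w=2.0, g=1.0):
    """Forward-Euler integration of a hypothetical recurrent L1/L2 model (ms units).

    tau_v dv/dt = -v + u(t) - w * y      (feedback enters with inverted sign)
    tau_y dy/dt = -y + f_g(v),  f_g(v) = g * max(v, 0) + (1 - g) * min(v, 0)

    u is photoreceptor drive already sign-inverted by the inhibitory synapse,
    so u > 0 during a dark flash and u < 0 during a light flash.
    """
    v = np.zeros(len(u))
    y = np.zeros(len(u))
    for n in range(len(u) - 1):
        drive = g * max(v[n], 0.0) + (1.0 - g) * min(v[n], 0.0)
        v[n + 1] = v[n] + dt / tau_v * (-v[n] + u[n] - w * y[n])
        y[n + 1] = y[n] + dt / tau_y * (-y[n] + drive)
    return v

dt = 0.5
t = np.arange(0.0, 500.0, dt)                          # ms
flash = ((t >= 100.0) & (t < 120.0)).astype(float)     # 20-ms flash
dark = simulate(+flash, dt=dt, g=1.0)                  # only dark recruits feedback
light = simulate(-flash, dt=dt, g=1.0)
light_g0 = simulate(-flash, dt=dt, g=0.0)              # only light recruits feedback
for name, v in (("dark, g=1", dark), ("light, g=1", light), ("light, g=0", light_g0)):
    print(f"{name}: max {v.max():+.3f}, min {v.min():+.3f}")
```

Setting w = 0 removes the feedback entirely, so every response becomes a monophasic, low-pass copy of the input.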

Figure 3. A recurrent feedback model recapitulates L1 and L2 responses and deblurs moving edges.

(A) Schematic of the feedback model (left) and equations (right). v is the neural response of L1/L2, and dv/dt is its time derivative. y is the output of the feedback element, and dy/dt is its time derivative. Two time constants set the dynamics of v and y, respectively. w is the strength of feedback, and g controls how strongly positive and negative values of v engage feedback.

(B) Modeled L1 (left) and L2 (right) responses to 20-ms light (blue) or dark (red) flashes overlaid on experimentally measured responses of L1 and L2 from Figure 1D (gray).

(C) Modeled L1 (left) and L2 (right) responses to a motion-blurred edge (blurred input, gray dashed line; original input, black dashed line) for models with different feedback weights (w). No feedback corresponds to w = 0 (dark blue). As the weight increases, the model responds more rapidly to the dark-to-light transition (see responses at the arrow). Responses are plotted with inversion over the x-axis to facilitate comparisons with the inputs.

In this model, the sign of the feedback and its long time constant can generate responses that are of opposite sign and persist beyond the initial response. For example, a dark flash produces depolarization followed by sustained hyperpolarization. As the initial response to a light flash is a hyperpolarization, the second phase of the dark response could transiently enhance subsequent responses to light. We therefore reasoned that this property would be particularly impactful for stimuli that contain sequences of opposite contrast polarities. Such stimuli are common with motion; for example, a dark object passing over a light background will produce a temporal sequence of dark followed by light.

As a test case, we examined how the model responded to simple artificial stimuli corresponding to dark or light steps (Figure 3C). By definition, the transition in the stimulus was instantaneous; however, the input to the modeled L1 or L2 was delayed and blurred in time, reflecting the limited temporal resolution of photoreceptors and the upstream synapse. We then varied the weight of the feedback signal; when w = 0, the modeled L1 or L2 responses followed the time course of this delayed and blurred input. However, as the weight of the feedback increased, the transition reported by the modeled response became faster and sharper, more closely approaching the original instantaneous transition. The degree to which the model could accelerate responses to dark or light inputs depended on the degree of asymmetry in the strength of the feedback, set by g (Figure S3). In particular, when g was set so that only dark inputs drive feedback, responses to transitions that follow a dark input were selectively sharpened; in contrast, when g was set so that only light inputs drive feedback, responses to transitions that follow a light input were selectively sharpened. When both dark and light inputs drove feedback, transitions in both directions were sharpened. In this context, L1 had highly asymmetric feedback, and accordingly, edge enhancement occurred mainly for one polarity (Figure S4). By contrast, feedback in L2 was also asymmetric, but less so, and enhanced edges of both polarities. Notably, these effects on temporal sharpening would be preserved given the known contrast selectivities of downstream neurons (Figure S4). Thus, recurrent feedback generates a form of temporal edge enhancement that could be useful for downstream processing.
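The edge-sharpening effect can be sketched with a hypothetical recurrent model in which slow, sign-inverted feedback y is driven by rectified activity v (tau_v dv/dt = -v + u - w*y; tau_y dy/dt = -y + g*max(v, 0) + (1 - g)*min(v, 0)). Below, a long dark period is followed by a step to light; the step is blurred by a first-order low-pass filter (an assumed stand-in for photoreceptor and synaptic blur, with an illustrative 20-ms time constant), and we measure how long after the edge the output first turns negative, that is, first reports the new polarity. The model form, function names, and all parameter values are illustrative assumptions, not the fitted model.

```python
import numpy as np

def lowpass(x, dt, tau):
    """First-order low-pass filter, started at steady state for x[0]."""
    out = np.empty(len(x))
    acc = x[0]
    for n, xn in enumerate(x):
        acc += dt / tau * (xn - acc)
        out[n] = acc
    return out

def simulate(u, dt, tau_v=10.0, tau_y=50.0, w=2.0, g=1.0):
    """Hypothetical recurrent model, forward Euler, times in ms.
    tau_v dv/dt = -v + u - w*y
    tau_y dy/dt = -y + g*max(v, 0) + (1 - g)*min(v, 0)
    """
    v, y = 0.0, 0.0
    out = np.empty(len(u))
    for n, un in enumerate(u):
        out[n] = v
        drive = g * max(v, 0.0) + (1.0 - g) * min(v, 0.0)
        v, y = v + dt / tau_v * (-v + un - w * y), y + dt / tau_y * (-y + drive)
    return out

dt = 0.5                                   # ms
t = np.arange(0.0, 800.0, dt)
edge = 500.0                               # time of the dark-to-light step (ms)
raw = np.where(t < edge, 1.0, -1.0)        # u > 0 for dark, u < 0 for light
blurred = lowpass(raw, dt, tau=20.0)       # assumed photoreceptor/synaptic blur
i_edge = np.searchsorted(t, edge)

def polarity_latency(signal):
    """Time (ms) from the edge until the signal first becomes negative."""
    return np.flatnonzero(signal[i_edge:] < 0)[0] * dt

lat_input = polarity_latency(blurred)
lat_nofb = polarity_latency(simulate(blurred, dt, w=0.0))
lat_dark_fb = polarity_latency(simulate(blurred, dt, w=2.0, g=1.0))
lat_light_fb = polarity_latency(simulate(blurred, dt, w=2.0, g=0.0))
print(f"blurred input {lat_input} ms, no feedback {lat_nofb} ms, "
      f"dark-driven feedback {lat_dark_fb} ms, light-driven feedback {lat_light_fb} ms")
```

In this sketch, dark-driven feedback (g = 1) shortens the latency because the slowly decaying feedback built up during the dark period now pushes v toward hyperpolarization, whereas light-driven feedback (g = 0) is never recruited before the crossing and so gives exactly the same latency as no feedback.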

Recurrent feedback compensates for motion-induced blurring of natural images

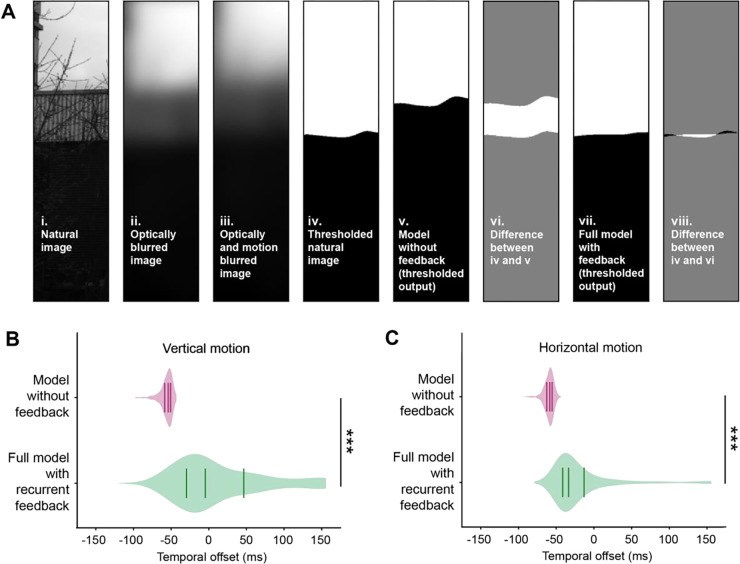

An animal’s own movement blurs stationary visual inputs by reducing their apparent contrast in both time and space and by imposing a temporal delay between the true and perceived location of objects in the environment. Given the dynamical model’s ability to sharpen an artificial moving edge (Figure 3C), we hypothesized that temporal sharpening could also reduce blur generated by self-motion, leading to responses that are closer in time to the original, non-blurred signal. To test this, we simulated the responses of L1 and L2 evoked by moving natural images37,38. In brief, the natural images were processed with spatial blurring corresponding to retinal optics and temporal blurring produced by the non-zero integration time of photoreceptors and the effects of self-motion (Figure 4A and Methods). The blurred images were then used as inputs to the dynamical model. For comparison, we also used these images as inputs to a model that lacked recurrent feedback (by setting w = 0). The outputs of these models were then compared with the input images to determine the temporal offset that maximized spatial alignment (Figure 4B). This offset captures the delay caused by both motion blur and circuit processing. Strikingly, the model that incorporated recurrent feedback significantly reduced the temporal offset relative to the model without feedback (Figure 4B). Intuitively, this effect arises because the temporal shift induced by feedback in the recurrent circuit compensates for the temporal shift induced by motion, thereby deblurring the image.
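The alignment step can be illustrated in one dimension. The hypothetical function below binarizes two signals by polarity, as in the thresholding of Figure 4A, and returns the lag that maximizes sign agreement; it is a simplified stand-in for the image-based procedure described in Methods, and its name and interface are our own. Multiplying the returned lag by the sample interval converts it to a temporal offset.

```python
import numpy as np

def temporal_offset(reference, response, max_lag):
    """Lag (in samples) that maximizes sign agreement between two signals.

    Both signals are binarized by polarity (sign). A positive lag means the
    response trails the reference; a negative lag means it leads.
    """
    ref, resp = np.sign(reference), np.sign(response)
    best_lag, best_score = 0, -np.inf
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = ref[:len(ref) - lag], resp[lag:]
        else:
            a, b = ref[-lag:], resp[:len(resp) + lag]
        score = np.mean(a == b)          # fraction of overlapping samples that agree
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

# Usage: synthetic responses that trail or lead a random reference.
rng = np.random.default_rng(0)
reference = rng.standard_normal(2000)
delayed = np.concatenate([np.zeros(7), reference[:-7]])    # trails by 7 samples
advanced = np.concatenate([reference[5:], np.zeros(5)])    # leads by 5 samples
print(temporal_offset(reference, delayed, max_lag=20))     # 7
print(temporal_offset(reference, advanced, max_lag=20))    # -5
```

Comparing only polarities makes the offset insensitive to the overall gain of the model output, which differs between models with and without feedback.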

Figure 4. A feedback model deblurs and strengthens responses to edges.

(A) Deblurring edges in natural images. Thresholding refers to binarizing the image according to contrast polarity (above zero: white, below zero: black).

(B) Violin plots of the temporal offset between the thresholded natural image (A-iv) and either the thresholded output of the model without feedback (A-v) or the full model with recurrent feedback (A-vii), for 464 natural images (see Methods). Images were blurred with motion in the vertical direction. Lines indicate the lower quartile, median, and upper quartile. *** p<0.001, Wilcoxon signed-rank test.

(C) As in (B), but with motion in the horizontal direction.

Octopaminergic neuromodulation enhances feedback and improves deblurring at fast speeds

The average speed of images across the retina differs greatly depending on whether a fly is stationary, walking, or flying, so the degree of motion blur varies with behavior. As a result, the optimal temporal processing strategy of L1 and L2 should depend on behavioral context. Indeed, many visual neurons downstream of L1 and L2 have different temporal tuning in moving and quiescent flies35,39–44. Using our model, we asked how changing the strength of the feedback element alters responses to high-speed and low-speed images. We found that increasing feedback improved deblurring of fast-moving images, diminishing the temporal offsets relative to a model with no feedback (Figures 5A and S5). Critically, increasing feedback also worsened the alignment of slow-moving images, leading to “over-corrected” temporal offsets that frequently exceeded 0 ms, particularly for motion in the vertical direction (Figure 5A). Thus, to be most useful, feedback should adapt to different contexts. Specifically, feedback should be stronger in behavioral states where visual image motion is faster, such as flight. To test this model prediction, we applied the octopamine receptor agonist chlordimeform (CDM) to the brain, a manipulation that has been used to mimic the change in neuromodulatory state associated with flight and that enhances tuning for faster image velocities in visual neurons35,40,43. Under these conditions, the measured impulse responses of L1 and L2 to dark flashes had larger second phases relative to their first phases, while their light responses were relatively unchanged, qualitatively matching predictions of the model with enhanced feedback (Figures 5B and 5C). Thus, temporal filtering by L1 and L2 can be shaped by octopamine to enhance deblurring under behaviorally relevant contexts.

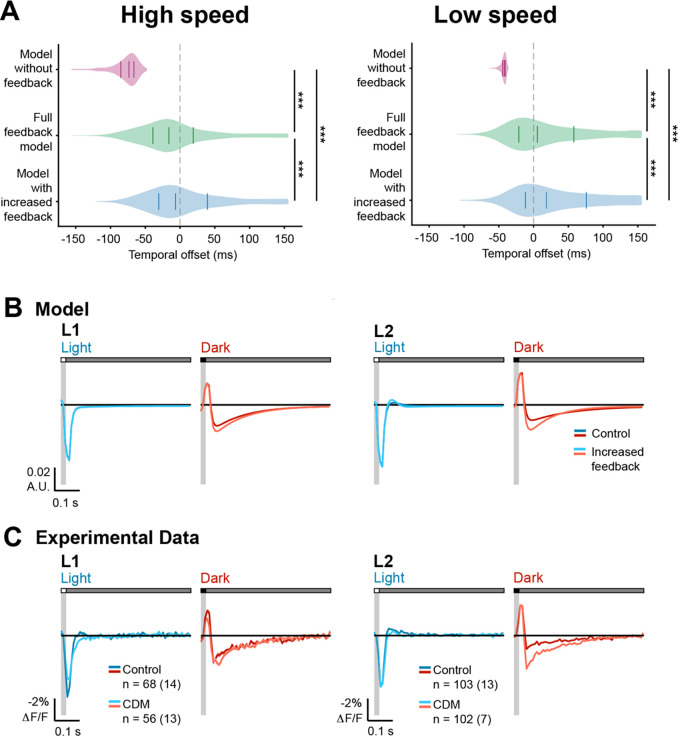

Figure 5. Octopaminergic neuromodulation enhances feedback and improves deblurring at fast image speeds.

(A) Violin plots of the temporal offset between the natural image and model output at high (left, 600 °/s) and low (right, 150 °/s) image speeds, using w = 0 (model without feedback), the fitted feedback weight (full feedback model), and an increased feedback weight (model with increased feedback). Images were blurred with motion in the vertical direction. Solid lines indicate the lower quartile, median, and upper quartile. Dotted gray line indicates temporal offset = 0 ms. *** p<0.001, Wilcoxon signed-rank test.

(B) Modeled L1 (left) and L2 (right) responses to 20-ms light (blue) or dark (red) flashes with a 500-ms gray interleave under control or enhanced feedback.

(C) Experimentally measured responses of L1 and L2 to a 20-ms light flash (blue) or a 20-ms dark flash (red) with or without bath application of the octopamine receptor agonist CDM (20 μM). The control data are repeated from Figure 1. The solid line is the mean response and the shading is ± 1 SEM. n = number of cells (number of flies).

Feedback sharpens intensity changes in naturalistic stimuli over time

To test the generalizability of these predictions, we recorded the voltage responses of L2 to a temporally naturalistic stimulus. This stimulus simulated the sequence of light intensities that would be detected by the photoreceptors upstream of a single L2 cell as a fly moves relative to the visual scene (Figures 6A, S6A, and S6B). Under these conditions, the voltage responses of L2 broadly followed the changes in stimulus intensity, depolarizing as the stimulus became dimmer and hyperpolarizing as the stimulus became brighter (Figure 6B). Interestingly, responses to small contrast changes were accentuated and responses to large contrast changes were accelerated (Figure 6B, insets), resulting in temporally sharpened transitions.

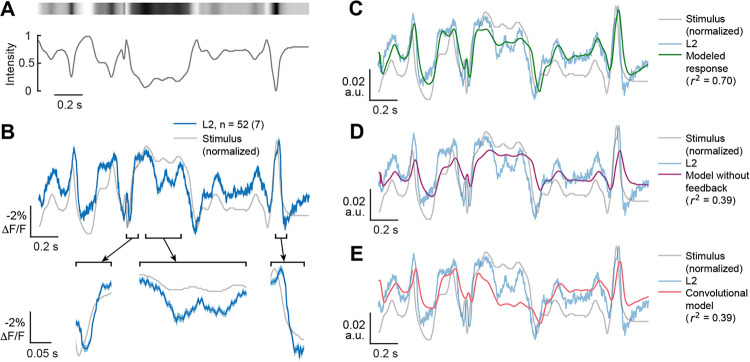

Figure 6. Both measured and modeled L2 responses sharpen transitions in a naturalistic stimulus.

(A) The time-varying changes in intensity of a full-field naturalistic stimulus (bottom) and a visual representation of the stimulus intensity (top).

(B) The measured response of L2 (blue, top) to this naturalistic stimulus, overlaid on an inverted stimulus trace (gray). Selected segments are depicted below as expanded insets (bottom) to better visualize response dynamics. The solid line is the mean response and the shading is ± 1 SEM. n = number of cells (number of flies).

(C) Full feedback model of L2 responses to the naturalistic stimulus using the same parameters as for the impulse responses, overlaid on the inverted stimulus (gray) and the measured response (light blue).

(D) As in (C), but using the model without feedback (magenta).

(E) As in (C), but with a convolutional model using the impulse response as a linear filter (red).

We then modeled L2 responses to the naturalistic stimulus using the same parameters that were used to predict the impulse response. Despite the distinct stimulus statistics, this recurrent model predicted the response of L2 to the naturalistic stimulus well (r² = 0.72) (Figure 6C). Removing the feedback component from the model, or using a convolutional model with the impulse response as a linear filter, dramatically reduced performance (r² = 0.39 for both) (Figures 6D and 6E). Fitting the model directly to the naturalistic stimulus response improved performance (r² = 0.90), whereas the same model without feedback yielded only r² = 0.56 (Figure S6). Taken together, these measured and modeled results suggest that the recurrent structure of the dynamical model captures the temporal features of L2 responses under naturalistic conditions and further support a role for L2 in deblurring stimuli.
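Why a convolutional model underperforms can be illustrated with a sketch. Here a hypothetical recurrent model (tau_v dv/dt = -v + u - w*y; tau_y dy/dt = -y + g*max(v, 0) + (1 - g)*min(v, 0), with g = 1 and w = 2) stands in for the measured response, a linear kernel is estimated from its response to a 20-ms dark flash divided by the flash duration, and r² is computed as the squared Pearson correlation. That definition of r² is an assumption, since the text does not state whether the squared correlation or the coefficient of determination was used, and the piecewise-constant stimulus is synthetic rather than the naturalistic stimulus of Figure 6A.

```python
import numpy as np

def simulate(u, dt, tau_v=10.0, tau_y=50.0, w=2.0, g=1.0):
    """Hypothetical recurrent model (forward Euler, ms units):
    tau_v dv/dt = -v + u - w*y;  tau_y dy/dt = -y + g*max(v,0) + (1-g)*min(v,0)."""
    v, y = 0.0, 0.0
    out = np.empty(len(u))
    for n, un in enumerate(u):
        out[n] = v
        drive = g * max(v, 0.0) + (1.0 - g) * min(v, 0.0)
        v, y = v + dt / tau_v * (-v + un - w * y), y + dt / tau_y * (-y + drive)
    return out

def linear_prediction(stimulus, kernel, dt):
    """Causal convolution of the stimulus with a linear filter."""
    return np.convolve(stimulus, kernel)[:len(stimulus)] * dt

def r_squared(measured, predicted):
    """Squared Pearson correlation (assumed definition of r^2)."""
    return np.corrcoef(measured, predicted)[0, 1] ** 2

dt, flash_ms = 0.5, 20.0
# Kernel: response to a 20-ms dark flash, divided by the flash duration
# to approximate an impulse response.
t_k = np.arange(0.0, 400.0, dt)
flash = (t_k < flash_ms).astype(float)
kernel = simulate(flash, dt) / flash_ms

# Synthetic drive: piecewise-constant contrasts in [-1, 1], 50 ms each, 5 s total.
rng = np.random.default_rng(1)
stimulus = np.repeat(rng.uniform(-1.0, 1.0, 100), int(50.0 / dt))
recurrent = simulate(stimulus, dt)
linear = linear_prediction(stimulus, kernel, dt)
print(f"r^2, linear filter vs. recurrent model: {r_squared(recurrent, linear):.2f}")
```

Because only depolarization recruits feedback in this sketch, light and dark inputs are filtered differently, and no single kernel estimated from dark flashes can reproduce both.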

Discussion

Responding strongly and precisely to changes in contrast is a critical step in visual processing. Using in vivo two-photon voltage imaging and computational modeling to measure and describe the temporal properties of the first-order interneurons L1 and L2, we reveal surprising complexity in this computation. Examining the impulse responses of these cells, we show that L1 and L2 have distinct responses to flashes of light and dark. Across both cell types, the responses to these oppositely signed flashes differ in waveform and thus are not consistent with a linear system. Intriguingly, we observed that the response to dark flashes had an unusual biphasic character, where the second phase of the response was much larger than the first. Using genetic manipulations that isolated different elements of the circuit, we show that while cell-intrinsic mechanisms are important, recurrent circuit feedback plays a central role in shaping the biphasic responses of L2 to both light and dark. To test hypotheses about the functional roles of these responses, we built a dynamical model with recurrent feedback that was fit to experimental data. Using simulation, we find that the recurrent feedback element of the model enables faster responses to contrast changes, compensating for motion-induced blurring of natural scenes. This feedback can be tuned by modulating octopaminergic signaling, consistent with adapting the feedback to the expected degree of blur imposed by self-motion. Finally, we show that L2 responds sharply to contrast changes in a naturalistic stimulus, as does the recurrent feedback model, even when fit only to the impulse responses. Taken together, tunable recurrent feedback early in visual processing represents a computational mechanism that can compensate for spatiotemporal blurring.

Our study builds upon previous work extensively characterizing the role of L1 and L2 in relaying information from R1–6 photoreceptors to downstream circuits. Prior work has shown that L1 and L2 encode information about contrast and provide inputs to downstream circuits that become selective to light and dark5,6,8,9,22,33,45–48. In addition to encoding contrast, L1 and L2 also adapt to recent stimulus history, rescaling their responses relative to the frequency and luminance distributions of the input over a timescale of hundreds of milliseconds to seconds49. Genetic and circuit dissection experiments have suggested that the response properties of L1 and L2 are not solely dependent on photoreceptor input, but rather are a product of dynamic lateral interactions among multiple cell types, as well as recurrent feedback connections with photoreceptors36,50–52. We have built upon these previous findings by taking temporally precise, cell type-specific voltage measurements of L1 and L2 axon terminals in response to both artificial and naturalistic stimuli. As expected, the responses of L1 and L2 to these visual stimuli are consistent with contrast tuning. Interestingly, these contrast tuned responses were unusually sharp in time, particularly in response to the naturalistic stimulus at times when the stimulus contrast changed abruptly, creating a temporal “edge”. Using dynamical modeling informed by circuit perturbations, we show that this phenomenon emerges from oppositely signed recurrent feedback. Intuitively, this effect arises because if the stimulus maintains one intensity and then transitions to a different intensity, the recurrent input’s slower time constant causes a normally dampening input to become transiently facilitating, accelerating responses to large contrast changes. This sharpening effect emerges over timescales on the order of 100 milliseconds, a timescale over which changes commonly occur in moving natural stimuli.

Predictive coding provides one explanation for the careful temporal shaping seen in L1 and L2 by positing that a goal of early sensory processing is to efficiently encode sensory information17,19. In this view, temporal impulse responses are biphasic so as to encode information only about changes in a stimulus, minimizing the use of neural resources when inputs are constant and therefore predictable. However, this assumes that the main goal of the initial steps in sensory processing is to recode the input ensemble in a more efficient manner. Rather, nervous systems could recode the information in a way that is not necessarily veridical with respect to the information in the stimulus but instead in a way that would make it easier to perform downstream computations useful for behavior53,54.

In parallel, control theory in engineering stresses the ability of feedback to change the output dynamics of a system that has constrained input components55. We interpret our findings in this light. Feeding back a delayed, scaled, and sign-inverted version of a sensory neuron's input through a recurrent circuit combined with carefully tuned intrinsic properties makes the system hypersensitive to input changes. This can create responses that occur earlier and are disproportionately larger than specified by the sensory input. This strategy is useful in that it can not only undo blurring of edges during self-motion but can also overemphasize them. In other words, it exaggerates critical features, creating a caricature-like representation of the sensory input to feed into downstream computations. Importantly, the strength of the feedback is adjustable, thereby allowing deblurring to be matched to the expected characteristics of the input. Thus, in this view, even the most peripheral visual circuits in the fly implement critical processing steps that enhance specific visual features.

This type of tunable temporal sharpening could be applied to many sensory systems. In vertebrates, bipolar cells, as the first neurons downstream of the photoreceptors, are the circuit analogs of L1 and L2. However, while the linear filters of many bipolar cell types are biphasic, the second phase is the same size as or smaller than the first phase and is not extended in time, unlike in L1 and L2 (refs. 32,56,57). We speculate that this difference between Drosophila and vertebrates arises because phototransduction is faster in flies, perhaps better preserving the contrast changes to be sharpened. Conversely, auditory processing in vertebrates can detect rapid changes in stimulus features and use this information to guide behavior. Remarkably, in echolocating bats, neurons in the inferior colliculus display biphasic responses in which the second phase is extended in time, a receptive field structure that enhances contrast along multiple stimulus axes58. Thus, in sensory systems where detecting rapid stimulus changes is critical, this form of temporal deblurring might provide a generalizable strategy for beginning to abstract the complexity of the natural world into a set of enhanced features primed for downstream computations.

STAR Methods

EXPERIMENTAL MODEL AND STUDY PARTICIPANT DETAILS

Flies were raised on standard molasses food at 25 °C on a 12/12-h light-dark cycle. Female flies of the following genotypes were used:

L1>>ASAP2f (Figures 1 and 5): w/+; UAS-ASAP2f/+; GMR37E04-GAL4/+

ortC1–3>>TNT experimental (Figure 2): +; UAS-ASAP2f/ortC1–3-lexA::VP16AD; 21D-GAL4/13XLexAop2-IVS-TNT::HA

ortC1–3-lexA control (Figure 2): +; UAS-ASAP2f/ortC1–3-lexA::VP16AD; 21D-GAL4/+

lexAop-TNT control (Figure 2): +; UAS-ASAP2f/+; 21D-GAL4/13XLexAop2-IVS-TNT::HA

Df(3R)BSC809/+, L2>>ort control (Figure 2): w/+; UAS-ASAP2f/UAS-ort; 21D-GAL4/Df(3R)BSC809

ort1/+, L2>>ort control (Figure 2): +; UAS-ASAP2f/UAS-ort; 21D-GAL4, ort1/+

ort1/Df(3R)BSC809, L2>>ort experimental (Figure 2): +; UAS-ASAP2f/UAS-ort; 21D-GAL4, ort1/Df(3R)BSC809

ort1/Df(3R)BSC809 control (Figure S1): +; UAS-ASAP2f/+; 21D-GAL4, ort1/Df(3R)BSC809

L2>>ASAP2f, jRGECO1b (Figure 6): +; UAS-ASAP2f/+; 21D-GAL4/UAS-jRGECO1b

METHOD DETAILS

Fly preparation

Flies were prepared for imaging as previously described22,66. Briefly, flies were collected on CO2 1–2 days post-eclosion and imaged 5–8 days post-eclosion. The flies were cold-anesthetized, positioned in a custom-cut hole in a steel shim, and immobilized with UV glue (NOA 68T from Norland Products). The back of the head capsule was dissected with fine forceps to expose the left optic lobe, which was perfused with a room-temperature, carbogen-bubbled saline-sugar solution (modified from ref.67). The saline composition was as follows: 103 mM NaCl, 3 mM KCl, 5 mM TES, 1 mM NaH2PO4, 4 mM MgCl2, 1.5 mM CaCl2, 10 mM trehalose, 10 mM glucose, 7 mM sucrose, and 26 mM NaHCO3. The pH of the saline equilibrated near 7.3 when bubbled with 95% O2/5% CO2. For the CDM experiments, a 200 mM stock solution was made by dissolving CDM powder in distilled water and was stored at 4 °C. Saline-sugar solution containing 20 μM CDM was made fresh from the stock solution daily. Each fly was imaged for up to 1 h.
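As a quick check on the CDM dilution above, the C1V1 = C2V2 arithmetic for going from the 200 mM stock to the 20 μM working solution is shown below. The 500 mL batch volume is a hypothetical example, not a value from the protocol.

```python
# Concentrations from the protocol, expressed in micromolar so the
# arithmetic stays exact in floating point.
stock_uM = 200_000      # 200 mM CDM stock
working_uM = 20         # 20 uM CDM in saline-sugar solution

dilution_factor = stock_uM / working_uM        # C1 / C2
batch_mL = 500.0                               # hypothetical batch volume
stock_uL = batch_mL * 1000 / dilution_factor   # V1 = C2 * V2 / C1, in microliters

print(dilution_factor)  # 10000.0
print(stock_uL)         # 50.0
```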

Two-photon imaging

The L1 arbor was imaged in medulla layer M1, and the L2 arbor was imaged in medulla layer M2.

In Figures 1–3 and 5, we used a Leica TCS SP5 II system with a Leica HCX APO 20X/1.0-NA water immersion objective and a Chameleon Vision II laser (Coherent). Data were acquired at a frame rate of 82.4 Hz using a frame size of 200 × 20 pixels, a pixel size of 0.2 μm × 0.2 μm, a line scan rate of 1400 Hz, and bidirectional scanning. ASAP2f was excited at 920 nm with 5–15 mW of post-objective power, and photons were collected through a 525/50-nm filter (Semrock). Imaging was synchronized with visual stimulation (see below) using triggering functions in the LAS AF Live Data Mode software (Leica), a data acquisition device (NI DAQ USB-6211, National Instruments), and a photodiode (Thorlabs, SM05PD1A) directly capturing the timing of stimulus frames. Data were acquired on the DAQ at 5000 Hz.

In Figure 6, we used a Bruker Ultima system with a Leica HCX APO 20X/1.0-NA water immersion objective and a Mai Tai BB DS laser (Spectra-Physics). Data were acquired at a frame rate of 354.4 Hz using a frame size of 200 × 40 pixels, a pixel size of 0.2 μm × 0.2 μm, an 8 kHz line scan rate, and bidirectional resonant scanning. ASAP2f was excited at 920 nm with 5–15 mW of post-objective power, and photons were collected through a 525/50-nm filter (Semrock). To identify cells that respond to the search stimulus during imaging (see below), jRGECO1b signals were excited at 1070 nm using a Fidelity II laser (Coherent) and collected through a 595/50-nm filter (Semrock). Imaging was synchronized with visual stimulation (see below) using a photodiode (Thorlabs, SM05PD1A) connected to the Bruker system to record the timing of stimulus frames.

Visual stimulation

Visual stimuli were generated with custom-written software using MATLAB (MathWorks) and Psychtoolbox-3 (refs. 63–65) and presented using the blue LED of a DLP LightCrafter 4500 projector (Texas Instruments), as previously described66. The projector light was filtered with a 482/18-nm bandpass filter (Semrock) and refreshed at 300 Hz. The stimulus used 6 bits/pixel, allowing for 64 distinct luminance values. The radiance at 482 nm was approximately 78 mW·sr−1·m−2. In Figures 1–3 and 5, the stimulus was rear-projected onto a screen positioned approximately 8 cm anterior to the fly that spanned approximately 70° of the fly’s visual field horizontally and 40° vertically. In Figure 6, the stimulus was rear-projected onto a screen positioned approximately 6 cm anterior to the fly that spanned approximately 80° of the fly’s visual field horizontally and 50° vertically. All stimuli also included a small square simultaneously projected onto a photodiode to synchronize visual stimulus presentation with the imaging data. Stimulus presentation details were saved in MATLAB .mat files for subsequent processing.

The visual stimuli used were:

Search stimulus: alternating light and dark flashes, each 300 ms in duration, were presented at the center of the screen (8° from each edge of the screen from the perspective of the fly). The responses to this stimulus were used during analysis to exclude cells with receptive fields located at the edges of the screen, which would have uneven center-surround stimulation during full-field stimuli. Cells with receptive fields at the edge of the screen would have opposite-signed responses to the search stimulus and the full-field stimulus, whereas cells with receptive fields at the center of the screen would have responses with the same sign to the two stimuli. The search stimulus was presented for 61 s per field of view for data shown in Figures 1–3 and 5, and 30 s per field of view for data shown in Figure 6.
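The ROI inclusion logic above amounts to a sign-agreement test. The sketch below makes two assumptions. First, each ROI has already been reduced to one signed summary value per stimulus, for example the mean response during the dark phase minus the light phase. Second, `min_abs` is a hypothetical threshold standing in for whatever criterion defined a missing response.

```python
import numpy as np


def select_centered_rois(search_resp, fullfield_resp, min_abs=0.0):
    """Return indices of ROIs whose search and full-field responses share a sign.

    ROIs at the screen edge respond with opposite signs to the two stimuli;
    ROIs with |search response| <= min_abs are treated as unresponsive.
    """
    search_resp = np.asarray(search_resp, dtype=float)
    fullfield_resp = np.asarray(fullfield_resp, dtype=float)
    responsive = np.abs(search_resp) > min_abs
    same_sign = np.sign(search_resp) == np.sign(fullfield_resp)
    return np.flatnonzero(responsive & same_sign)


keep = select_centered_rois([0.5, -0.3, 0.0, 0.2], [0.4, 0.1, 0.2, 0.3])
print(keep)  # [0 3]
```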

Impulse stimulus (Figures 1–3 and 5): 20 ms light and dark flashes were presented over the entire screen, with 500 ms gray interleaves between flashes. Whether each flash was light or dark was chosen randomly at each presentation. The Weber contrast of the flashes relative to the gray interleave had a magnitude of 1 (+1 for light flashes and −1 for dark flashes). This stimulus was presented for 121 s per field of view.
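At the 300 Hz projector refresh rate, the impulse stimulus is a frame sequence in which 20 ms is 6 frames and 500 ms is 150 frames. The sketch below assumes intensities are normalized so that gray is 0.5. A Weber contrast of ±1 then places light flashes at 1.0 and dark flashes at 0.0. The function name and the normalization are illustrative and are not taken from the authors' stimulus code.

```python
import numpy as np

REFRESH_HZ = 300
FLASH_FRAMES = round(0.020 * REFRESH_HZ)   # 6 frames
GRAY_FRAMES = round(0.500 * REFRESH_HZ)    # 150 frames
GRAY = 0.5                                 # normalized mid-gray


def impulse_stimulus(duration_s, rng):
    """Random sequence of light/dark 20 ms flashes separated by 500 ms of gray."""
    trial_frames = FLASH_FRAMES + GRAY_FRAMES
    n_trials = int(duration_s * REFRESH_HZ) // trial_frames
    signs = rng.choice([-1, 1], size=n_trials)        # -1 = dark, +1 = light
    frames = []
    for s in signs:
        # Weber contrast (I - I_gray) / I_gray = s, so I = I_gray * (1 + s).
        frames.append(np.full(FLASH_FRAMES, GRAY * (1 + s)))
        frames.append(np.full(GRAY_FRAMES, GRAY))
    return np.concatenate(frames), signs


stim, signs = impulse_stimulus(121, np.random.default_rng(0))
print(len(signs), stim.size)   # 232 36192
```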

Naturalistic stimulus (Figure 6): a 2 s sequence of varying intensities extracted from a moving panoramic natural image was presented over the entire screen. The natural image68 was processed to remove gamma correction and converted to grayscale. A Gaussian filter was applied to the image to simulate optical blur due to the angular resolution of the fly eye38,69. To simulate motion blur, we used a time-varying sequence of yaw rotation angles converted from the angular velocity trajectory of a fly freely walking on a flat surface70,71. The intensities of the pixels corresponding to the yaw angle sequence were obtained from the image and normalized to a range of 0 to 1. This stimulus was presented for 360 s per field of view.
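The steps used to build the naturalistic stimulus can be sketched as follows. This is an illustration under stated assumptions, not the authors' pipeline:

- The panorama is already linearized and grayscale, spans 360° of azimuth, and has square pixels.
- `blur_sigma_deg` is a hypothetical blur width.
- Yaw angle is obtained by integrating angular velocity.
- A single fixed image row is sampled.
- The 300 Hz sample rate is assumed.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def naturalistic_sequence(panorama, yaw_velocity_dps, frame_rate_hz, blur_sigma_deg, row):
    """Intensity seen along one viewing direction as a panorama rotates in yaw."""
    n_rows, n_cols = panorama.shape
    deg_per_px = 360.0 / n_cols                    # panorama spans 360 deg of azimuth
    # Optical blur: Gaussian with sigma in degrees; azimuth wraps around.
    blurred = gaussian_filter(panorama.astype(float), sigma=blur_sigma_deg / deg_per_px,
                              mode=("nearest", "wrap"))
    # Integrate angular velocity (deg/s) to yaw angle (deg), one value per frame.
    yaw_deg = np.cumsum(yaw_velocity_dps) / frame_rate_hz
    cols = np.round(yaw_deg / deg_per_px).astype(int) % n_cols
    seq = blurred[row, cols]
    # Rescale the sequence to span 0 to 1.
    return (seq - seq.min()) / (seq.max() - seq.min())


rng = np.random.default_rng(1)
panorama = rng.random((90, 720))                         # stand-in image, 0.5 deg/px
yaw_velocity = np.cumsum(rng.normal(0.0, 20.0, 600))     # hypothetical deg/s trace
seq = naturalistic_sequence(panorama, yaw_velocity, frame_rate_hz=300,
                            blur_sigma_deg=2.5, row=45)
```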

In vivo imaging data analysis

Imaging data were analyzed as previously described66. Time series were read into MATLAB using Bio-Formats and motion-corrected by maximizing the cross-correlation, computed in Fourier space, between each image and the average of the first 30 images. ROIs (regions of interest) corresponding to individual cells were manually selected from the average image of the whole time series, and the fluorescence of a cell over time was extracted by averaging the pixels within the ROI in each imaging frame. The mean background value was subtracted either frame by frame (Leica) or line by line (Bruker) to correct for visual stimulus bleedthrough. To correct for bleaching, the time series for each ROI was fitted with the sum of two exponentials, and the fitted value at each time t was used as F0(t) in the calculation of ΔF/F. For the search stimulus, all imaging frames were used to compute the fit, thereby placing ΔF/F = 0 at the mean response after correction for bleaching. For the impulse stimulus, only imaging frames that fell in the last 25% of the gray period were used to fit the bleaching curve. This places ΔF/F = 0 at the mean baseline to which the cell returns after responding to the flash, instead of at the mean of the entire trace (which is inaccurate when the responses to the light and dark flashes are not equal and opposite). Time series were manually inspected and discarded if they contained uncorrected movement. For the search and impulse stimuli, the stimulus-locked average response was computed for each ROI by reassigning the timing of each imaging frame to be relative to the stimulus transitions and then averaging within windows of 8.33 ms. This effectively resampled the data from 82.4 Hz to 120 Hz. For the naturalistic stimulus, responses were recorded at 354.4 Hz, so the stimulus-locked average response was computed simply by assigning the first frame after the start of each stimulus presentation to t = 0 and averaging across trials.
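Two of the preprocessing steps above can be sketched compactly:

- the double-exponential bleaching baseline used as F0(t) in ΔF/F;
- the stimulus-locked averaging that pools frames by their time since the preceding transition into 1/120 s bins.

The initial guess for the fit, the function names, and the binning details are assumptions for illustration. `fit_mask` is where one would restrict the fit, for example to frames in the last 25% of each gray period.

```python
import numpy as np
from scipy.optimize import curve_fit


def double_exp(t, a1, k1, a2, k2):
    """Sum of two exponentials used as the bleaching baseline."""
    return a1 * np.exp(-k1 * t) + a2 * np.exp(-k2 * t)


def dff_bleach_corrected(t, F, fit_mask=None):
    """Delta F / F with a fitted double-exponential curve used as F0(t).

    fit_mask selects the frames used for the fit; default is all frames.
    """
    if fit_mask is None:
        fit_mask = np.ones(F.shape, dtype=bool)
    p0 = (0.6 * F[0], 0.01, 0.4 * F[0], 0.1)     # hypothetical slow + fast guess
    params, _ = curve_fit(double_exp, t[fit_mask], F[fit_mask], p0=p0, maxfev=20000)
    F0 = double_exp(t, *params)
    return (F - F0) / F0


def stimulus_locked_average(frame_times, y, transition_times, window_s, bin_s=1 / 120):
    """Pool frames by time since the preceding transition; average per bin."""
    idx = np.searchsorted(transition_times, frame_times, side="right") - 1
    valid = idx >= 0
    rel = frame_times[valid] - transition_times[idx[valid]]
    vals = y[valid]
    keep = rel < window_s
    bins = (rel[keep] / bin_s).astype(int)
    n_bins = int(round(window_s / bin_s))
    sums = np.bincount(bins, weights=vals[keep], minlength=n_bins)[:n_bins]
    counts = np.bincount(bins, minlength=n_bins)[:n_bins]
    with np.errstate(invalid="ignore", divide="ignore"):
        return sums / counts                      # NaN for bins with no frames


# Synthetic check 1: pure bleaching should give Delta F / F close to 0.
t = np.arange(0, 60, 1 / 82.4)
F = 100 * np.exp(-t / 50) + 50 * np.exp(-t / 5)
dff = dff_bleach_corrected(t, F)

# Synthetic check 2: 82.4 Hz frames, transitions every 520 ms, signal equal to
# time since the last transition; binned averages should sit near bin centers.
ft = np.arange(0, 10, 1 / 82.4)
tr = np.arange(0, 10, 0.52)
y = ft - tr[np.searchsorted(tr, ft, side="right") - 1]
avg = stimulus_locked_average(ft, y, tr, window_s=0.5)
centers = (np.arange(avg.size) + 0.5) / 120
```

Because the 82.4 Hz frames drift in phase relative to the stimulus transitions from trial to trial, pooling across trials fills the finer 120 Hz bins. This is the sense in which the averaging resamples the data.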

As the screen on which stimuli were presented did not span the fly’s entire visual field, only a subset of imaged ROIs experienced the stimulus across the entire extent of their spatial receptive fields. These ROIs were identified as those with a response of the appropriate sign to the search stimulus. ROIs lacking a response to this stimulus or having a response of the opposite sign were not considered further, except in the ort1/Df(3R)BSC809 control condition (Figure S1). Because L2 responded to neither the search stimulus nor the impulse stimulus in this genotype, all ROIs not discarded for motion artifacts were considered valid. All traces are presented as the mean ± 1 SEM across all valid ROIs. Because ASAP2f decreases in brightness upon depolarization, y-axes are plotted as negative ΔF/F (%) so that depolarization is up and hyperpolarization is down.

The metrics quantifying the impulse responses are defined as:

Phase 1 Area: The area under the curve from the time when the stimulus-evoked response first starts to the time when the response equals zero between the first and second phases. The start of the response was defined as the first time at which the change in the response (ΔF/F, arbitrary units, or normalized amplitude) between one frame and the next is more than the change between that frame and the previous one plus 10%. After the signed area is computed, the sign is flipped if necessary so that the expected direction of the response is positive (e.g., L1 and L2 initially depolarize to dark, which produces a negative ΔF/F, so the Phase 1 Area for the dark flash is multiplied by −1).

Phase 2 Area: The area under the curve from the time when the response equals zero between the first and second phases to 0.25 seconds after the start of the stimulus. As with the Phase 1 Area, positive is defined as the area in the expected direction of the response.

Area Phase 2/Phase 1: The Phase 2 Area divided by Phase 1 Area.

Phase 1 tpeak: The time from the start of the stimulus-evoked response to the time at which the magnitude of the first phase is maximal.

Phase 2 tpeak: The time from the start of the stimulus-evoked response to the time at which the magnitude of the second phase is maximal. If the Phase 2 Area was not statistically significantly different from 0, Phase 2 tpeak was not reported.
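The impulse-response metrics can be computed from a mean trace as sketched below. This is one reading of the definitions, with these assumptions:

- The onset rule reads "plus 10%" as a 10% relative margin.
- Areas use a simple rectangle rule.
- The trace starts at stimulus onset.
- The significance test that gates Phase 2 tpeak is omitted.

```python
import numpy as np


def impulse_metrics(r, fs, expected_sign=1, phase2_end_s=0.25):
    """Phase areas and peak times for a trace r whose first sample is stimulus onset.

    expected_sign = +1 if the first phase is expected to be positive, -1 otherwise.
    """
    s = expected_sign * np.asarray(r, dtype=float)   # first phase now positive
    d = np.abs(np.diff(s))
    # Onset: first frame n where |s[n+1]-s[n]| > 1.1 * |s[n]-s[n-1]|.
    onset = int(np.flatnonzero(d[1:] > 1.1 * d[:-1])[0]) + 1
    # Zero crossing: first sample <= 0 after the first phase has gone positive.
    first_pos = onset + int(np.argmax(s[onset:] > 0))
    zero = first_pos + int(np.argmax(s[first_pos:] <= 0))
    end = int(round(phase2_end_s * fs))
    area1 = s[onset:zero].sum() / fs
    area2 = -s[zero:end].sum() / fs                  # second phase counted positive
    tpeak1 = int(np.argmax(s[onset:zero])) / fs
    tpeak2 = (zero + int(np.argmax(-s[zero:end])) - onset) / fs
    return {"phase1_area": area1, "phase2_area": area2,
            "area_ratio": area2 / area1, "tpeak1": tpeak1, "tpeak2": tpeak2}


trace = np.array([0, 0, 1, 3, 2, 0, -1, -4, -3, -2, -1, 0], dtype=float)
m = impulse_metrics(trace, fs=10, phase2_end_s=1.1)
print(m)
```

In this toy trace the second phase has a larger area than the first (ratio above 1). This is the unusual shape that the Phase 2/Phase 1 ratio is meant to capture.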

The responses of all valid ROIs of a particular genotype or condition were bootstrapped (sampled with replacement, with the sample size equal to the original sample size), and the quantification metrics were computed on the mean trace. This was repeated 10,000 times to calculate the 95%, 99%, and 99.9% confidence intervals. The mean and 95% confidence interval were plotted. The confidence intervals were used to test for a statistically significant difference between two genotypes or conditions: if the confidence intervals did not overlap, the difference was considered statistically significant at the corresponding level (e.g., 95% for p < 0.05, 99% for p < 0.01, and 99.9% for p < 0.001). To correct for multiple comparisons (Figure 2), Bonferroni correction was applied, and the confidence levels were adjusted accordingly.
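The bootstrap procedure can be sketched as follows. The percentile method for constructing the intervals and the helper names are assumptions, since the interval construction is not specified above. The Bonferroni helper simply maps alpha / n_comparisons to a confidence level.

```python
import numpy as np


def bootstrap_ci(traces, metric, n_boot=10_000, levels=(95.0, 99.0, 99.9), rng=None):
    """Percentile bootstrap CIs for metric(mean trace), resampling ROIs.

    traces has shape (n_rois, n_timepoints). Each replicate draws n_rois ROIs
    with replacement, averages them, and applies metric to the mean trace.
    """
    rng = np.random.default_rng() if rng is None else rng
    n = traces.shape[0]
    boot = np.array([metric(traces[rng.integers(0, n, size=n)].mean(axis=0))
                     for _ in range(n_boot)])
    cis = {}
    for level in levels:
        tail = (100.0 - level) / 2
        cis[level] = tuple(np.percentile(boot, [tail, 100.0 - tail]))
    return metric(traces.mean(axis=0)), cis


def bonferroni_level(alpha, n_comparisons):
    """Confidence level (%) after Bonferroni adjustment of alpha."""
    return 100.0 * (1.0 - alpha / n_comparisons)


def cis_disjoint(ci_a, ci_b):
    """Non-overlapping intervals are treated as a significant difference."""
    return ci_a[1] < ci_b[0] or ci_b[1] < ci_a[0]


rng = np.random.default_rng(0)
a = rng.normal(0.0, 1.0, size=(20, 30))    # synthetic ROIs x timepoints
b = rng.normal(1.0, 1.0, size=(20, 30))
level = bonferroni_level(0.05, 3)          # e.g., three pairwise comparisons
_, ci_a = bootstrap_ci(a, np.mean, n_boot=2000, levels=(95.0, level, 99.9), rng=rng)
_, ci_b = bootstrap_ci(b, np.mean, n_boot=2000, levels=(95.0, level, 99.9), rng=rng)
print(cis_disjoint(ci_a[level], ci_b[level]))
```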

Computational model

To maximize interpretability, a feedback model consisting of two differential equations was used: one corresponding to an effective voltage variable that captures the membrane potential of L1 or L2 (Eq. 1) and the second to the dynamics of the recurrent feedback circuit (Eq. 2A). The simplified linear form of the model is:

| Eq. 1 |

| Eq. 2A |

where the two time constants govern the neural response and the feedback element, respectively, and a gain parameter sets the strength of the feedback. However, this linear form of the model cannot capture the strong asymmetry between the impulse responses to light and dark, which in turn reflects differences in the ability of light and dark to drive feedback. Therefore, Eq. 2A was modified to capture this asymmetry (Eq. 2B):

| Eq. 2B |

Accordingly, when the asymmetry parameter in Eq. 2B is equal to one, only light drives the feedback element; when it is equal to zero, only dark responses drive the feedback element; and at an intermediate value, light and dark responses drive feedback equally. In addition, to account for the asymmetry of the first phase of the response, in which the feedback plays little role, we assume that the input term in Eq. 1 is weighted differently for light and dark stimuli, with a separate constant weight for each. An affine transformation is also applied to the model output to match the scale of the experimental data. All parameters of the computational model were fitted by gradient descent to minimize the mean squared error (MSE) between the model's predictions and the experimental responses to the light and dark flashes. The model was simulated with the Euler method, using a time step matching the experimental sampling interval. The Adam optimizer was used with an initial learning rate of 0.005 for the first 3000 steps, followed by a reduced learning rate of 0.001 for an additional 2000 steps.
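The two-stage optimization schedule can be sketched with a hand-written Adam update using the standard default moment parameters. The example fits only an affine output transformation to a synthetic target, standing in for the full model fit. The gradient function, the synthetic data, and the choice to carry Adam's moment estimates across the learning-rate change are assumptions.

```python
import numpy as np


def adam(grad_fn, params, schedule, beta1=0.9, beta2=0.999, eps=1e-8):
    """Minimize with Adam under a piecewise-constant learning-rate schedule.

    schedule is a list of (learning_rate, n_steps) stages; moment estimates
    carry over from one stage to the next.
    """
    params = np.array(params, dtype=float)
    m = np.zeros_like(params)
    v = np.zeros_like(params)
    t = 0
    for lr, n_steps in schedule:
        for _ in range(n_steps):
            t += 1
            g = grad_fn(params)
            m = beta1 * m + (1 - beta1) * g          # first-moment estimate
            v = beta2 * v + (1 - beta2) * g ** 2     # second-moment estimate
            m_hat = m / (1 - beta1 ** t)             # bias corrections
            v_hat = v / (1 - beta2 ** t)
            params -= lr * m_hat / (np.sqrt(v_hat) + eps)
    return params


x = np.linspace(-1.0, 1.0, 50)
y = 2.0 * x - 0.5                  # synthetic target: scale 2, offset -0.5


def mse_grad(p):
    """Gradient of mean((a * x + b - y)**2) with respect to (a, b)."""
    a, b = p
    resid = a * x + b - y
    return np.array([2 * np.mean(resid * x), 2 * np.mean(resid)])


p = adam(mse_grad, [0.0, 0.0], schedule=[(0.005, 3000), (0.001, 2000)])
print(np.round(p, 3))
```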

When fitting the data, we found a latency difference between the model output and the experimental data. To reconcile this difference, the model inputs were delayed by a fixed latency. A range of delays was swept, and the delay that best explained the experimental data was used. This delay approximates the delays associated with phototransduction, which were not accounted for in the model51.
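The delay sweep can be sketched as follows: shift the model input later by a whole number of samples, simulate, and keep the delay with the lowest MSE. Here the model is a hypothetical first-order low-pass filter with fixed parameters, and the "data" are synthetic. In practice the model parameters might be refit at each delay, which this sketch does not do.

```python
import numpy as np


def delay_input(u, n):
    """Delay u by n samples, padding the start with its first value."""
    if n == 0:
        return u.copy()
    return np.concatenate([np.full(n, u[0]), u[:-n]])


def lowpass(u, a=0.3):
    """Hypothetical stand-in model: discrete first-order low-pass filter."""
    y = np.zeros(len(u))
    for k in range(len(u) - 1):
        y[k + 1] = y[k] + a * (u[k] - y[k])
    return y


def best_delay(u, response, model, fs, delays_ms):
    """Return the delay (ms) whose delayed-input simulation best matches response."""
    errors = [np.mean((model(delay_input(u, int(round(d * fs / 1000)))) - response) ** 2)
              for d in delays_ms]
    return delays_ms[int(np.argmin(errors))], errors


fs = 120
u = np.zeros(120)
u[10:13] = 1.0                                   # a light flash
u[60:63] = -1.0                                  # a dark flash
response = lowpass(delay_input(u, 3))            # synthetic data, 25 ms late
delays_ms = np.arange(8) * 1000 / fs             # 0, 8.33, ..., 58.3 ms
best, _ = best_delay(u, response, lowpass, fs, delays_ms)
print(best)                                      # 25.0
```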

In Figure 3B, the fitted values for L1 were: , , , . The values used for L2 were: seconds, , , . Figure 3C used L1 and L2 parameter values, aside from , which was systematically swept across a range of values. The models in Figure 4 used the values corresponding to L2. Qualitatively similar results held when using parameters corresponding to L1. In Figure 5, the increased feedback model had for L1 and for L2, obtained by fitting the model to the CDM responses. All other model parameters were identical to those used in Figure 3. In Figures 3C, S3, and S4, the model was simulated with an input of length 0.47 seconds starting at 0.155 seconds. In Figure S4, the model of the L1 pathway was implemented by adding a subsequent sign-inversion followed by half-wave rectification; the model of the L2 pathway was implemented by adding a subsequent half-wave rectification.
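The downstream readouts used for Figure S4 reduce to two elementwise operations on the model output. The sketch assumes the model output uses a depolarization-positive convention. Sign-inverting L1 before rectification then passes its light-evoked hyperpolarizations, and rectifying L2 passes its dark-evoked depolarizations, in line with the light- and dark-selective downstream circuits discussed earlier. The function names are hypothetical.

```python
import numpy as np


def l1_pathway(v):
    """Sign inversion followed by half-wave rectification."""
    return np.maximum(-np.asarray(v, dtype=float), 0.0)


def l2_pathway(v):
    """Half-wave rectification only."""
    return np.maximum(np.asarray(v, dtype=float), 0.0)


v = np.array([-2.0, 0.0, 3.0])   # hyperpolarized, resting, depolarized
print(l1_pathway(v), l2_pathway(v))
```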

In Figures 6C and 6D, the L2 parameters fitted from the impulse responses were used, and only the final affine transformation was refit. The model without feedback was obtained by setting the feedback strength to zero. The full model was also fit to the naturalistic stimulus response, as shown in Figure S6. The model parameters were initialized to the L2 parameters, and the Adam optimizer was then used to train the model with an initial learning rate of 0.002 for 2000 steps, followed by a reduced learning rate of 0.001 for an additional 1000 steps. The fitted parameters were: seconds, seconds, , . The model without feedback was trained with the feedback strength fixed at zero, and the initial and reduced learning rates were changed to 0.0002 and 0.0001, respectively. The fitted parameters for the model without feedback were: . For the convolutional model, the light or dark impulse responses of L2 were used depending on the input sign:

Here, the two kernels are the L2 responses to the light and dark impulse stimuli, respectively.
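The convolutional model can be sketched as a sign-dependent linear filter. This is a sketch under three assumptions:

- each kernel is the response to a unit-magnitude flash;
- responses scale linearly with contrast magnitude within each sign;
- the measured 20 ms flash response is treated as if it were a single-sample impulse response.

```python
import numpy as np


def convolutional_model(s, h_light, h_dark):
    """ASSUMED sign-dependent linear model:
    r = h_light * [s]_+ + h_dark * [-s]_+   (causal convolution, same sample rate)
    """
    s = np.asarray(s, dtype=float)
    light = np.maximum(s, 0.0)     # positive contrast drives the light kernel
    dark = np.maximum(-s, 0.0)     # magnitude of negative contrast drives the dark kernel
    n = len(s)
    return np.convolve(light, h_light)[:n] + np.convolve(dark, h_dark)[:n]


s = np.zeros(10)
s[2], s[6] = 1.0, -0.5
r = convolutional_model(s, h_light=[1.0, 2.0, 3.0], h_dark=[-1.0, -1.0])
print(r)
```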

The van Hateren dataset of natural images was used to conduct simulations37. To preprocess and subsample the dataset, we first standardized (Z-scored) each image and then analyzed the standardized images before and after binarizing them with a threshold of 0. Contrast values were determined for each standardized and thresholded image by taking the standard deviation of each column (vertical) or row (horizontal) and averaging those values. These contrast values were used to subsample the highest-contrast images in the dataset, such that both the standardized and the thresholded images had contrast values in the top quartile. Our simulation results hold only for these high-contrast images.

Natural images were translated into model input by incorporating photoreceptor optics and rigid translational motion, as in ref.38. In brief, this transformation comprised two steps:

- a spatial blur introduced by the optics of the fly visual system, modeled by a Gaussian blur;
- a temporal blur corresponding to the visual scene moving during the non-zero photoreceptor integration time, modeled by a temporal exponential blur.

An image speed of 300 degrees per second was used for Figure 4. Speeds of 150 and 600 degrees per second were used as the low and high speeds, respectively, in Figure 5. The original image was first transformed into a spatially and temporally blurred image. Each column (vertical) or row (horizontal) of this transformed image was then used as the temporal input to the feedback model. A final output image was constructed by combining the outputs of the model run on each column or row.

Denote the original image as and the model-reconstructed image as . We infer the latency between the original image and the model-reconstructed image from the following optimization for the vertical direction of motion:
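The input construction for vertical motion can be sketched as follows. The sketch assumes:

- square pixels;
- an isotropic Gaussian for the optics;
- linear interpolation for sub-pixel positions;
- a first-order causal exponential filter for the integration-time blur.

The blur width and integration time constant used in the paper are not reproduced here. The values in the usage example are hypothetical, apart from the 300 deg/s speed.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def columns_to_inputs(image, deg_per_px, speed_dps, fs, sigma_deg, tau_s):
    """Time series seen at a fixed point as an image translates vertically.

    Returns (t, out) where out[:, c] is the model input derived from column c.
    """
    # 1) Optical blur: isotropic Gaussian, sigma given in degrees.
    blurred = gaussian_filter(np.asarray(image, dtype=float), sigma=sigma_deg / deg_per_px)
    # 2) Rigid translation at speed_dps: position (in pixels) along each column.
    n_rows, n_cols = blurred.shape
    n_t = int((n_rows - 1) * deg_per_px / speed_dps * fs)
    t = np.arange(n_t) / fs
    pos = t * speed_dps / deg_per_px
    rows = np.arange(n_rows)
    seq = np.stack([np.interp(pos, rows, blurred[:, c]) for c in range(n_cols)], axis=1)
    # 3) Temporal blur from photoreceptor integration: causal exponential
    #    filter with time constant tau_s and unit gain at steady state.
    a = np.exp(-1.0 / (fs * tau_s))
    out = np.empty_like(seq)
    out[0] = seq[0]
    for n in range(1, n_t):
        out[n] = a * out[n - 1] + (1 - a) * seq[n]
    return t, out


img = np.zeros((100, 3))
img[50:] = 1.0                                   # a horizontal edge
t, out = columns_to_inputs(img, deg_per_px=1.0, speed_dps=300.0, fs=120,
                           sigma_deg=2.0, tau_s=0.01)
```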

where we choose and . For the horizontal direction of motion, we use the transposed image in the above equation. To reduce boundary effects, we only sum over pixels 400 to 800 (for vertical motion) and 400 to 1336 (for horizontal motion). To save compute time, we sampled from 200 to 400 with a stride of 2.
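The objective of the latency optimization is not reproduced above, so the sketch below uses one plausible choice as an explicit assumption. It minimizes the mean squared difference between z-scored rows of the original image and the correspondingly shifted rows of the model output, over a grid of candidate shifts. The row window (400 to 800) and the candidate grid (200 to 400 in steps of 2) follow the ranges in the text. Treating the candidates as row shifts is itself an assumption.

```python
import numpy as np


def zscore(a):
    return (a - a.mean()) / a.std()


def estimate_latency(original, output, rows, candidates):
    """Shift k (in rows) minimizing MSE between original[rows] and output[rows + k]."""
    target = zscore(original[rows])
    errors = [np.mean((target - zscore(output[rows + k])) ** 2) for k in candidates]
    return candidates[int(np.argmin(errors))]


rng = np.random.default_rng(0)
original = rng.standard_normal((1300, 8))
output = np.roll(original, 300, axis=0)          # synthetic output lagging by 300 rows
rows = np.arange(400, 800)
latency = estimate_latency(original, output, rows, list(range(200, 401, 2)))
print(latency)                                   # 300
```

For horizontal motion the same function would be applied to the transposed images.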

Idealized impulse responses (Figure S2)

Idealized impulse responses were constructed in MATLAB by defining three things: the time at which the response starts relative to the start of the stimulus, the times at which the first and second phases reach peak amplitude, and their amplitudes at those times. Linear interpolation defined the values between the response start and the peak of the first phase, as well as between the peaks of the two phases. The decay of the second phase was a single exponential with the same decay constant across all of the idealized impulse responses. The peak amplitude of the first phase was held constant, and the peak amplitude of the second phase was varied to change the relative size of the second phase. The monophasic impulse response had a second-phase peak amplitude of 0. The parameters were chosen to approximate the measured impulse responses and produce the desired area ratio but were not directly fit. The sample rate was 120 Hz, matching that of the measured responses. The magnitude across frequencies was computed as the absolute value of the Fourier transform of each idealized impulse response after normalization to unit energy. The simulated response to a 250 ms dark step from gray was computed by convolving the impulse responses with that stimulus.
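The construction of the idealized impulse responses and the two derived quantities can be sketched as follows. The timings, amplitudes, and decay constant below are placeholders, because the values used for Figure S2 are not listed. "Normalized to energy" is taken to mean scaling each response to a unit sum of squares, and gray is treated as 0 so that the dark step is −1.

```python
import numpy as np

FS = 120  # Hz, matching the measured responses


def idealized_ir(t_start, t_peak1, a1, t_peak2, a2, tau_decay, duration_s=0.5):
    """Piecewise-linear rise and transition, then single-exponential decay."""
    t = np.arange(int(round(duration_s * FS))) / FS
    h = np.zeros_like(t)
    rise = (t >= t_start) & (t <= t_peak1)
    h[rise] = a1 * (t[rise] - t_start) / (t_peak1 - t_start)
    mid = (t > t_peak1) & (t <= t_peak2)
    h[mid] = a1 + (a2 - a1) * (t[mid] - t_peak1) / (t_peak2 - t_peak1)
    tail = t > t_peak2
    h[tail] = a2 * np.exp(-(t[tail] - t_peak2) / tau_decay)
    return t, h


def amplitude_spectrum(h):
    """|FFT| of the impulse response after normalizing it to unit energy."""
    h_unit = h / np.sqrt(np.sum(h ** 2))
    return np.fft.rfftfreq(len(h), d=1 / FS), np.abs(np.fft.rfft(h_unit))


def dark_step_response(h, step_s=0.25, total_s=1.0):
    """Convolve h with a dark step off of gray (gray = 0, dark = -1)."""
    s = np.zeros(int(round(total_s * FS)))
    s[: int(round(step_s * FS))] = -1.0
    return np.convolve(s, h)[: len(s)]


# Hypothetical timing/amplitudes; a2 sets the relative size of the second phase.
_, h_mono = idealized_ir(0.02, 0.04, 1.0, 0.08, 0.0, 0.05)
_, h_bi = idealized_ir(0.02, 0.04, 1.0, 0.08, -1.5, 0.05)
freqs, spec_mono = amplitude_spectrum(h_mono)
_, spec_bi = amplitude_spectrum(h_bi)
r_mono, r_bi = dark_step_response(h_mono), dark_step_response(h_bi)
```

A nonnegative (monophasic) response always has its largest spectral magnitude at 0 Hz. Increasing the second phase shifts weight away from DC, which is what the spectra in Figure S2 compare.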

QUANTIFICATION AND STATISTICAL ANALYSIS

All statistical details are described briefly in the figure legends and in depth in the Method Details. Statistical significance was defined at p < 0.05, and Bonferroni correction for multiple comparisons was applied when appropriate. Statistical analyses were performed in MATLAB and Python.

RESOURCE AVAILABILITY

Lead contact

Further information and requests for resources and reagents should be directed to and will be fulfilled by the lead contact, Helen Yang (helen_yang2@hms.harvard.edu).

Materials availability

This study did not generate new unique reagents.

Supplementary Material

KEY RESOURCES TABLE.

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Chemicals, peptides, and recombinant proteins | | |
| Chlordimeform (CDM) hydrochloride | Sigma-Aldrich | Cat#35914; CAS: 19750-95-9 |
| Experimental models: Organisms/strains | | |
| D. melanogaster: L1-Gal4: w[1118]; P{y[+t7.7] w[+mC]=GMR37E04-GAL4}attP2 | Bloomington Drosophila Stock Center | RRID:BDSC_49955 |
| D. melanogaster: L2-Gal4: +; +; 21D-Gal4/TM6B | Obtained from C.-H. Lee47 | |
| D. melanogaster: w[*]; P{y[+t7.7] w[+mC]=20XUAS-ASAP2f}attP40; + | Yang et al.22 | RRID:BDSC_65414 |
| D. melanogaster: +; ortC1-3-lexA::VP16AD/CyO; + | Obtained from C.-H. Lee26 | |
| D. melanogaster: +; +; PBac{y[+mDint2]w[+mC]=13XLexAop2-IVS-TNT::HA}VK00033/TM6B | Obtained from C.-H. Lee60 | |
| D. melanogaster: w[1118]; +; Df(3R)BSC809/TM6C, Sb[1] cu[1] | Bloomington Drosophila Stock Center | RRID:BDSC_27380 |
| D. melanogaster: +; UAS-ort/CyO; + | Obtained from C.-H. Lee47 | |
| D. melanogaster: +; +; ort1 | Gengs et al.24 | RRID:BDSC_1133 |
| D. melanogaster: w[*]; +; PBac{y[+mDint2] w[+mC]=20XUAS-IVS-NES-jRGECO1b-p10}VK00005 | Dana et al.61 | RRID:BDSC_63795 |
| Software and algorithms | | |
| MATLAB | MathWorks | |
| Python 3.6.10 | | |
| Google Colab (Python 3.10.12) | | |
| LAS AF Live Data Mode software | Leica | |
| Prairie View | Bruker | |
| Bio-Formats | Open Microscopy Environment62 | |
| Psychtoolbox-3 | Brainard, Pelli, and Kleiner63–65 | http://psychtoolbox.org/ |
| Other | | |
| UV glue: NOA 68T | Norland Products | |
| Leica HCX APO 20X/1.0-NA water immersion objective | Leica | |
| Leica TCS SP5 II microscope | Leica | |
| Chameleon Vision II laser | Coherent | |
| Bruker Ultima microscope | Bruker | |
| Mai Tai BB DS laser | Spectra-Physics | |
| Fidelity II laser | Coherent | |
| Data Acquisition Device: NI DAQ USB-6211 | National Instruments | |
| Photodiode: SM05PD1A | Thorlabs | |
| DLP LightCrafter 4500 projector | Texas Instruments | |
| 482/18-nm filter | Semrock | |
| 525/50-nm filter | Semrock | |
| 595/50-nm filter | Semrock | |
| Code for imaging analysis, visual stimulus, and computational model | This paper | https://github.com/ClandininLab/L1L2-deblur |

Acknowledgements

Work done at Stanford is performed on unceded land of the Muwekma Ohlone Tribe. We thank Chi-Hon Lee for sharing 21D-GAL4, ortC1–3-lexA, LexAop-TNT, and UAS-ort flies. We thank Bella Brezovec for collecting the fly walking data used to generate the naturalistic stimulus and members of the Clandinin lab for feedback and troubleshooting assistance. This work was supported by R01EY022638 (T.R.C.), by a National Defense Science and Engineering Graduate (NDSEG) Fellowship (M.M.P.), a Stanford Interdisciplinary Graduate Fellowship (H.H.Y.), a Jane Coffin Childs Fellowship (H.H.Y.), an NIH K99 (NS129759 to H.H.Y.), R01EB028171 (F.C. and S.D.), and U19NS104655 (S.D. and T.R.C.). T.R.C. is a Chan-Zuckerberg Biohub Investigator.

Footnotes

Declaration of Interests

The authors declare no competing interests.

Data and code availability

All data reported in this paper will be shared by the lead contact upon request.

All original code has been deposited at Github and listed in the key resources table.

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

References

- 1.Land M. (2019). Eye movements in man and other animals. Vision Res. 162, 1–7. 10.1016/j.visres.2019.06.004. [DOI] [PubMed] [Google Scholar]

- 2.Walls G.L. (1962). The evolutionary history of eye movements. Vision Res. 2, 69–80. 10.1016/0042-6989(62)90064-0. [DOI] [Google Scholar]

- 3.Zhang K., Ren W., Luo W., Lai W.-S., Stenger B., Yang M.-H., and Li H. (2022). Deep Image Deblurring: A Survey. Int. J. Comput. Vis. 130, 2103–2130. 10.1007/s11263-022-01633-5. [DOI] [Google Scholar]

- 4.Currier T.A., Pang M.M., and Clandinin T.R. (2023). Visual processing in the fly, from photoreceptors to behavior. Genetics 224. 10.1093/genetics/iyad064. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Joesch M., Schnell B., Raghu S.V., Reiff D.F., and Borst A. (2010). ON and OFF pathways in Drosophila motion vision. Nature 468, 300–304. [DOI] [PubMed] [Google Scholar]

- 6.Clark D.A., Bursztyn L., Horowitz M.A., Schnitzer M.J., and Clandinin T.R. (2011). Defining the Computational Structure of the Motion Detector in Drosophila. Neuron 70, 1165–1177. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Takemura S. (2013). A visual motion detection circuit suggested by Drosophila connectomics. Nature 500, 175–181. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Ketkar M.D., Sporar K., Gür B., Ramos-Traslosheros G., Seifert M., and Silies M. (2020). Luminance Information Is Required for the Accurate Estimation of Contrast in Rapidly Changing Visual Contexts. Curr. Biol. 30, 657–669.e4. 10.1016/j.cub.2019.12.038. [DOI] [PubMed] [Google Scholar]

- 9.Ketkar M.D., Gür B., Molina-Obando S., Ioannidou M., Martelli C., and Silies M. (2022). First-order visual interneurons distribute distinct contrast and luminance information across ON and OFF pathways to achieve stable behavior. Elife 11. 10.7554/eLife.74937. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Matulis C.A., Chen J., Gonzalez-Suarez A.D., Behnia R., and Clark D.A. (2020). Heterogeneous Temporal Contrast Adaptation in Drosophila Direction-Selective Circuits. Curr. Biol. 30, 222–236.e6. 10.1016/j.cub.2019.11.077. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Drews M.S., Leonhardt A., Pirogova N., Richter F.G., Schuetzenberger A., Braun L., Serbe E., and Borst A. (2020). Dynamic Signal Compression for Robust Motion Vision in Flies. Curr. Biol. 30, 209–221.e8. 10.1016/j.cub.2019.10.035. [DOI] [PubMed] [Google Scholar]

- 12.Kemppainen J., Scales B., Razban Haghighi K., Takalo J., Mansour N., McManus J., Leko G., Saari P., Hurcomb J., Antohi A., et al. (2022). Binocular mirror-symmetric microsaccadic sampling enables Drosophila hyperacute 3D vision. Proc. Natl. Acad. Sci. U. S. A. 119, e2109717119. 10.1073/pnas.2109717119. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Järvilehto M., and Zettler F. (1971). Localized intracellular potentials from pre- and postsynaptic components in the external plexiform layer of an insect retina. Z. Vgl. Physiol. 75, 422–440. [Google Scholar]

- 14.Hardie R.C. (1987). Is histamine a neurotransmitter in insect photoreceptors? J. Comp. Physiol. A 201.–. [DOI] [PubMed] [Google Scholar]

- 15.Weckström M. (1989). Light and dark adaptation in fly photoreceptors: Duration and time integral of the impulse response. Vision Res. 29, 1309–1317. [DOI] [PubMed] [Google Scholar]

- 16.Juusola M., and Hardie R.C. (2001). Light adaptation in Drosophila photoreceptors: I. Response dynamics and signaling efficiency at 25 degrees C. J. Gen. Physiol. 117, 3–25. 10.1085/jgp.117.1.3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Srinivasan M.V., Laughlin S.B., and Dubs A. (1982). Predictive coding: a fresh view of inhibition in the retina. Proc. R. Soc. Lond. B Biol. Sci. 216, 427–459. [DOI] [PubMed] [Google Scholar]

- 18.van Hateren J.H. (1992). Theoretical predictions of spatiotemporal receptive fields of fly LMCs, and experimental validation. Journal of Comparative Physiology A 171, 157–170. 10.1007/BF00188924. [DOI] [Google Scholar]

- 19.Barlow H.B. (1961). Possible Principles Underlying the Transformations of Sensory Messages. In Sensory Communication (The MIT Press; ), pp. 216–234. 10.7551/mitpress/9780262518420.003.0013. [DOI] [Google Scholar]

- 20.Laughlin S.B. (1981). A simple coding procedure enhances a neuron’s information capacity. Zeitschrift für Naturforsch. B 36, 910–912. [PubMed] [Google Scholar]

- 21.van Hateren J.H. (1992). A theory of maximizing sensory information. Biol. Cybern. 68, 23–29. 10.1007/BF00203134. [DOI] [PubMed] [Google Scholar]

- 22.Yang H.H., St-Pierre F., Sun X., Ding X., Lin M.Z., and Clandinin T.R. (2016). Subcellular Imaging of Voltage and Calcium Signals Reveals Neural Processing In Vivo. Cell 166, 245–257. 10.1016/j.cell.2016.05.031. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Juusola M., and Hardie R.C. (2001). Light adaptation in Drosophila photoreceptors: II. Rising temperature increases the bandwidth of reliable signaling. J. Gen. Physiol. 117, 27–42. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Gengs C., Leung H.-T., Skingsley D.R., Iovchev M.I., Yin Z., Semenov E.P., Burg M.G., Hardie R.C., and Pak W.L. (2002). The target of Drosophila photoreceptor synaptic transmission is a histamine-gated chloride channel encoded by ort (hclA). J. Biol. Chem. 277, 42113–42120. 10.1074/jbc.M207133200. [DOI] [PubMed] [Google Scholar]

- 25. Pantazis A., Segaran A., Liu C.-H., Nikolaev A., Rister J., Thum A.S., Roeder T., Semenov E., Juusola M., and Hardie R.C. (2008). Distinct roles for two histamine receptors (hclA and hclB) at the Drosophila photoreceptor synapse. J. Neurosci. 28, 7250–7259. 10.1523/JNEUROSCI.1654-08.2008.

- 26. Gao S., Takemura S.-Y., Ting C.-Y., Huang S., Lu Z., Luan H., Rister J., Thum A.S., Yang M., Hong S.-T., et al. (2008). The neural substrate of spectral preference in Drosophila. Neuron 60, 328–342. 10.1016/j.neuron.2008.08.010.

- 27. Sweeney S.T., Broadie K., Keane J., Niemann H., and O’Kane C.J. (1995). Targeted expression of tetanus toxin light chain in Drosophila specifically eliminates synaptic transmission and causes behavioral defects. Neuron 14, 341–351.

- 28. Hardie R.C. (1989). A histamine-activated chloride channel involved in neurotransmission at a photoreceptor synapse. Nature 339, 704–706. 10.1038/339704a0.

- 29. Witte I., Kreienkamp H.-J., Gewecke M., and Roeder T. (2002). Putative histamine-gated chloride channel subunits of the insect visual system and thoracic ganglion. J. Neurochem. 83, 504–514. 10.1046/j.1471-4159.2002.01076.x.

- 30. Schnapf J.L., Nunn B.J., Meister M., and Baylor D.A. (1990). Visual transduction in cones of the monkey Macaca fascicularis. J. Physiol. 427, 681–713.

- 31. Baccus S.A., and Meister M. (2002). Fast and slow contrast adaptation in retinal circuitry. Neuron 36, 909–919.

- 32. Saszik S., and DeVries S.H. (2012). A Mammalian Retinal Bipolar Cell Uses Both Graded Changes in Membrane Voltage and All-or-Nothing Na+ Spikes to Encode Light. J. Neurosci. 32, 297–307.

- 33. Silies M., Gohl D.M., Fisher Y.E., Freifeld L., Clark D.A., and Clandinin T.R. (2013). Modular use of peripheral input channels tunes motion-detecting circuitry. Neuron 79, 111–127. 10.1016/j.neuron.2013.04.029.

- 34. Behnia R., Clark D.A., Carter A.G., Clandinin T.R., and Desplan C. (2014). Processing properties of ON and OFF pathways for Drosophila motion detection. Nature 512, 427–430. 10.1038/nature13427.

- 35. Arenz A., Drews M.S., Richter F.G., Ammer G., and Borst A. (2017). The Temporal Tuning of the Drosophila Motion Detectors Is Determined by the Dynamics of Their Input Elements. Curr. Biol. 27, 929–944.

- 36. Freifeld L., Clark D.A., Schnitzer M.J., Horowitz M.A., and Clandinin T.R. (2013). GABAergic Lateral Interactions Tune the Early Stages of Visual Processing in Drosophila. Neuron 78, 1075–1089.

- 37. van Hateren J.H., and van der Schaaf A. (1998). Independent component filters of natural images compared with simple cells in primary visual cortex. Proc. Biol. Sci. 265, 359–366. 10.1098/rspb.1998.0303.

- 38. Fitzgerald J.E., and Clark D.A. (2015). Nonlinear circuits for naturalistic visual motion estimation. Elife 4, 1–49.

- 39. Chiappe M.E., Seelig J.D., Reiser M.B., and Jayaraman V. (2010). Walking modulates speed sensitivity in Drosophila motion vision. Curr. Biol. 20, 1470–1475.

- 40. Suver M.P., Mamiya A., and Dickinson M.H. (2012). Octopamine neurons mediate flight-induced modulation of visual processing in Drosophila. Curr. Biol. 22, 2294–2302.

- 41. Maimon G., Straw A.D., and Dickinson M.H. (2010). Active flight increases the gain of visual motion processing in Drosophila. Nat. Neurosci. 13, 393–399.

- 42. Tuthill J.C., Nern A., Rubin G.M., and Reiser M.B. (2014). Wide-Field Feedback Neurons Dynamically Tune Early Visual Processing. Neuron 82, 887–895.

- 43. Strother J.A., et al. (2018). Behavioral state modulates the ON visual motion pathway of Drosophila. Proc. Natl. Acad. Sci. U. S. A. 115, E102–E111.

- 44. Kohn J.R., Portes J.P., Christenson M.P., Abbott L.F., and Behnia R. (2021). Flexible filtering by neural inputs supports motion computation across states and stimuli. Curr. Biol. 31, 5249–5260.e5. 10.1016/j.cub.2021.09.061.

- 45. Takemura S.-Y., Nern A., Chklovskii D.B., Scheffer L.K., Rubin G.M., and Meinertzhagen I.A. (2017). The comprehensive connectome of a neural substrate for “ON” motion detection in Drosophila. Elife 6. 10.7554/eLife.24394.

- 46. Shinomiya K., Huang G., Lu Z., Parag T., Xu C.S., Aniceto R., Ansari N., Cheatham N., Lauchie S., Neace E., et al. (2019). Comparisons between the ON- and OFF-edge motion pathways in the Drosophila brain. Elife 8. 10.7554/eLife.40025.

- 47. Rister J., Pauls D., Schnell B., Ting C.-Y., Lee C.-H., Sinakevitch I., Morante J., Strausfeld N.J., Ito K., and Heisenberg M. (2007). Dissection of the peripheral motion channel in the visual system of Drosophila melanogaster. Neuron 56, 155–170. 10.1016/j.neuron.2007.09.014.

- 48. Tuthill J.C., Nern A., Holtz S.L., Rubin G.M., and Reiser M.B. (2013). Contributions of the 12 neuron classes in the fly lamina to motion vision. Neuron 79, 128–140. 10.1016/j.neuron.2013.05.024.

- 49. Zheng L., Nikolaev A., Wardill T.J., O’Kane C.J., de Polavieja G.G., and Juusola M. (2009). Network adaptation improves temporal representation of naturalistic stimuli in Drosophila eye: I dynamics. PLoS One 4, e4307. 10.1371/journal.pone.0004307.

- 50. Zheng L., de Polavieja G.G., Wolfram V., Asyali M.H., Hardie R.C., and Juusola M. (2006). Feedback network controls photoreceptor output at the layer of first visual synapses in Drosophila. J. Gen. Physiol. 127, 495–510. 10.1085/jgp.200509470.

- 51. Nikolaev A., Zheng L., Wardill T.J., O’Kane C.J., de Polavieja G.G., and Juusola M. (2009). Network adaptation improves temporal representation of naturalistic stimuli in Drosophila eye: II mechanisms. PLoS One 4, e4306. 10.1371/journal.pone.0004306.

- 52. Wu J., Ji X., Gu Q., Liao B., Dong W., and Han J. (2021). Parallel Synaptic Acetylcholine Signals Facilitate Large Monopolar Cell Repolarization and Modulate Visual Behavior in Drosophila. J. Neurosci. 41, 2164–2176. 10.1523/JNEUROSCI.2388-20.2021.

- 53. DiCarlo J.J., and Cox D.D. (2007). Untangling invariant object recognition. Trends Cogn. Sci. 11, 333–341.

- 54. Gollisch T., and Meister M. (2010). Eye Smarter than Scientists Believed: Neural Computations in Circuits of the Retina. Neuron 65, 150–164.

- 55. Åström K.J., and Murray R.M. (2008). Feedback Systems (Princeton University Press).

- 56. Schreyer H.M., and Gollisch T. (2021). Nonlinear spatial integration in retinal bipolar cells shapes the encoding of artificial and natural stimuli. Neuron 109, 1692–1706.e8. 10.1016/j.neuron.2021.03.015.

- 57. Franke K., Berens P., Schubert T., Bethge M., Euler T., and Baden T. (2017). Inhibition decorrelates visual feature representations in the inner retina. Nature 542, 439–444. 10.1038/nature21394.

- 58. Hoffmann S., Warmbold A., Wiegrebe L., and Firzlaff U. (2013). Spatiotemporal contrast enhancement and feature extraction in the bat auditory midbrain and cortex. J. Neurophysiol. 110, 1257–1268. 10.1152/jn.00226.2013.

- 59. Fischbach K.-F., and Dittrich A.P. (1989). The optic lobe of Drosophila melanogaster. I. A Golgi analysis of wild-type structure. Cell Tissue Res. 258, 441–475.

- 60. Karuppudurai T., et al. (2014). A Hard-Wired Glutamatergic Circuit Pools and Relays UV Signals to Mediate Spectral Preference in Drosophila. Neuron 81, 603–615.

- 61. Dana H., Mohar B., Sun Y., Narayan S., Gordus A., Hasseman J.P., Tsegaye G., Holt G.T., Hu A., Walpita D., et al. (2016). Sensitive red protein calcium indicators for imaging neural activity. Elife 5. 10.7554/eLife.12727.

- 62. Linkert M., Rueden C.T., Allan C., Burel J.-M., Moore W., Patterson A., Loranger B., Moore J., Neves C., Macdonald D., et al. (2010). Metadata matters: access to image data in the real world. J. Cell Biol. 189, 777–782. 10.1083/jcb.201004104.

- 63. Brainard D.H. (1997). The Psychophysics Toolbox. Spat. Vis. 10, 433–436. 10.1163/156856897X00357.

- 64. Pelli D.G. (1997). The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat. Vis. 10, 437–442. 10.1163/156856897X00366.

- 65. Kleiner M., Brainard D., and Pelli D. (2007). What’s new in Psychtoolbox-3? Preprint.

- 66. Liu Z., Lu X., Villette V., Gou Y., Colbert K.L., Lai S., Guan S., Land M.A., Lee J., Assefa T., et al. (2022). Sustained deep-tissue voltage recording using a fast indicator evolved for two-photon microscopy. Cell 185, 3408–3425.e29. 10.1016/j.cell.2022.07.013.

- 67. Wilson R.I., Turner G.C., and Laurent G. (2004). Transformation of olfactory representations in the Drosophila antennal lobe. Science 303, 366–370.

- 68. Juusola M., Dau A., Song Z., Solanki N., Rien D., Jaciuch D., Dongre S.A., Blanchard F., de Polavieja G.G., Hardie R.C., et al. (2017). Microsaccadic sampling of moving image information provides Drosophila hyperacute vision. Elife 6. 10.7554/eLife.26117.

- 69. Stavenga D.G. (2003). Angular and spectral sensitivity of fly photoreceptors. II. Dependence on facet lens F-number and rhabdomere type in Drosophila. J. Comp. Physiol. A Neuroethol. Sens. Neural Behav. Physiol. 189, 189–202. 10.1007/s00359-003-0390-6.

- 70. York R.A., Brezovec L.E., Coughlan J., Herbst S., Krieger A., Lee S.-Y., Pratt B., Smart A.D., Song E., Suvorov A., et al. (2022). The evolutionary trajectory of drosophilid walking. Curr. Biol. 32, 3005–3015.e6. 10.1016/j.cub.2022.05.039.

- 71. Yang H.H., Brezovec L.E., Capdevila L.S., Vanderbeck Q.X., Rayshubskiy S., and Wilson R.I. (2023). Fine-grained descending control of steering in walking Drosophila. bioRxiv, 2023.10.15.562426. 10.1101/2023.10.15.562426.


Data Availability Statement

All data reported in this paper will be shared by the lead contact upon request.

All original code has been deposited at GitHub and is listed in the key resources table.

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.