Abstract

This review paper aims to serve as a comprehensive guide and instructional resource for researchers seeking to effectively implement language models in medical imaging research. First, we present the fundamental principles and evolution of language models, with particular attention to large language models. We then review the current literature on how language models are being used to improve medical imaging, covering applications such as image captioning, report generation, report classification, findings extraction, visual question answering, and interpretable diagnosis. Notably, we spotlight the capabilities of ChatGPT to help researchers explore its further applications. Furthermore, we cover the benefits that accurate and efficient language models bring to medical imaging analysis, such as improved clinical workflow efficiency, fewer diagnostic errors, and support for clinicians in providing timely and accurate diagnoses. Overall, our goal is to promote better integration of language models with medical imaging, thereby inspiring new ideas and innovations. We hope that this review will serve as a useful resource for researchers in this field and stimulate continued investigation and innovation in the application of language models to medical imaging.

Keywords: medical imaging, ChatGPT, large language model, BERT, multimodal learning

1. Introduction

Medical physics has always been at the forefront of translating novel scientific discoveries and innovative technologies into medicine. The origins of medical physics extend back as early as 3000–2500 BC (Keevil 2012). In the history of modern medical physics, one of the most important milestones was the discovery of x-rays by Wilhelm Conrad Röntgen, which revolutionized medical diagnostics and treatment. Many other developments have significantly transformed medical practice, including the application of radiation therapy to cancer, the development of the gamma camera, the advent of positron emission tomography (PET) imaging, the invention of computed tomography (CT), and the advancement of magnetic resonance imaging (MRI). Recently, there has been a growing consensus that artificial intelligence (AI) is driving a fourth industrial revolution. The integration of AI into medicine is therefore of great interest to the medical physics community.

Over the past decade, deep learning (DL), a branch of AI, has developed rapidly and found widespread applications, including autonomous vehicles, facial recognition, natural language processing, and medical diagnostics. DL models can be categorized by data modality (vision, language, and sensor), and vision and language models in particular have advanced rapidly owing to their adaptability and efficiency. Vision models, which interpret and process visual data, have transformed medical imaging by improving the accuracy and efficiency of organ delineation (Pan et al 2022), advancing early disease detection (Lee et al 2022, Li et al 2022), achieving high-quality medical image synthesis (Lei et al 2018, Lei et al 2019, Yamashita and Markov 2020, Pan et al 2021), and facilitating personalized treatment planning (Florkow et al 2020). By harnessing vision models, medical imaging has shifted from conventional methods to AI-driven techniques, leading to improved patient outcomes and healthcare efficiency.

Conversely, language models (LMs), designed to understand, interpret, and generate human language, have also advanced significantly in recent years. They have transformed various sectors by enabling more sophisticated text analysis, improving machine translation, and enhancing user interaction with technology through natural language processing (NLP). Despite the extensive deployment and rapid progression of LMs in applications such as customer service chatbots, automated content creation, and translation services, their integration into medical domains, particularly medical imaging, did not attract researchers' attention until recently, for several reasons. First, medical reports are replete with specialized terminology that requires precise interpretation and thorough analysis to ensure accurate and effective communication, and LMs have historically struggled to comprehend and use this terminology and the subtleties essential for accurate diagnosis and effective patient care. Second, developing robust models requires large amounts of data and comprehensive corpora, which are difficult to obtain in medical imaging because of stringent privacy regulations and the inherently confidential nature of medical records. This scarcity of accessible, annotated medical datasets has hindered the development and fine-tuning of LMs for medical applications. Third, certain applications in medical imaging require integrating vision and language models into vision-language models, which poses significant challenges: ensuring coherent and meaningful correspondence between image content and textual descriptions, and adapting models to interpret medical images, which often contain highly specialized and nuanced information.
Lastly, there has been a noticeable lack of comprehensive review literature that could help inspire collaboration between researchers and medical professionals and thereby lead to broad applications of LMs in medical imaging.

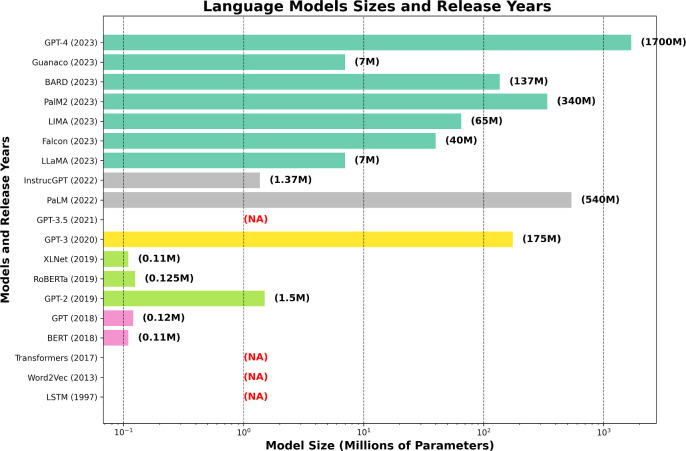

The recent surge in interest in LMs for medical imaging can be attributed to several key developments. One pivotal factor is the release of large-scale language models by several technology companies, as shown in figure 1, which exhibit exceptional proficiency in parsing and generating complex language structures. This breakthrough has prompted researchers to explore potential applications of LMs in medical imaging. Another contributing factor is the wide accessibility of these advanced pre-trained large models, which are engineered for immediate use or minimal customization and therefore align well with the specialized requirements of medical imaging tasks. This flexibility is advantageous because specialized tasks can often be addressed by fine-tuning existing models on domain-specific datasets, yielding more targeted and effective applications within medical contexts. Moreover, the emergence of multimodal large language models, such as GPT-4-V, has significantly reduced the complexity of adopting vision-language models in medical imaging. These models offer an integrated framework that merges visual and linguistic analysis, streamlining the interpretation of medical images, which frequently requires a combination of visual and textual analysis.

Figure 1.

The bar chart illustrates the evolution of several influential language models, beginning with long short-term memory (LSTM) in 1997. Models released in the same year share a color, and ‘NA’ denotes models whose sizes are undisclosed or not applicable. The chart shows a remarkable increase in the number of parameters of language models in recent years. While some models, such as the generative pre-trained transformer (GPT) by OpenAI and LLaMA (Large Language Model Meta AI) by Meta AI, have scaled up considerably to enhance their capabilities, others, such as the pathways language model (PaLM) by Google, have employed diverse strategies to balance performance gains with efficiency, resulting in more compact models.

This review responds to the challenges identified above and aims to advance the use of language models in medical imaging. It is designed to lay a foundation of understanding and to motivate researchers in the medical imaging domain to pursue innovative uses of language models. Our review begins with a historical and conceptual survey of LMs, with special emphasis on the significant strides made by large language models and their expanding influence. We then examine current research and applications specifically related to medical physics, exploring uses of LMs in medical imaging that include extracting findings from radiology reports (section 3.1), generating captions for images and videos (section 3.2), providing interpretable radiology diagnoses (section 3.3), classifying reports (section 3.4), generating detailed reports (section 3.5), learning from multimodal data (section 3.6), and answering visual questions (section 3.7).

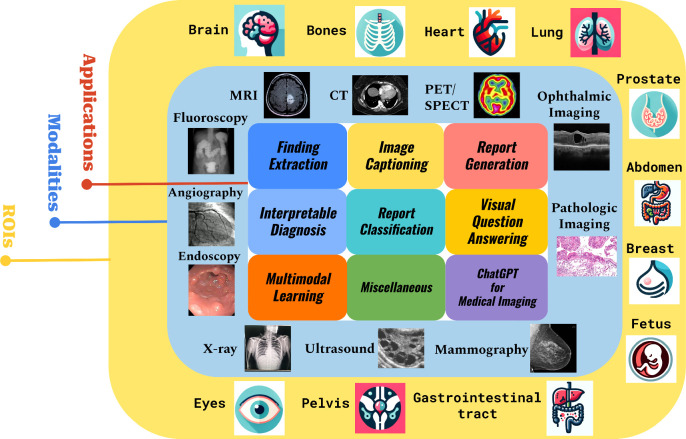

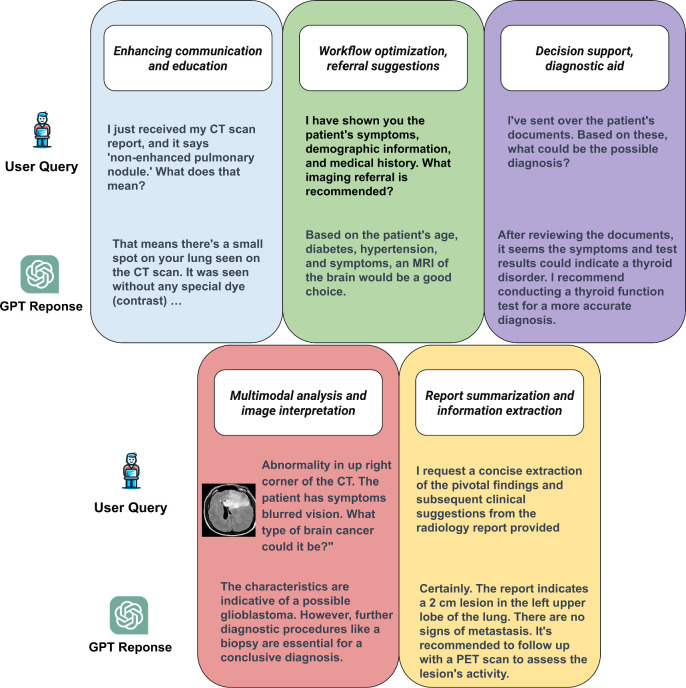

In recognition of ChatGPT's profound advances in natural language understanding and generation, we include a dedicated section on it (section 3.8). This section highlights ChatGPT's capabilities, the multimodality of its derivative GPT-4-V, and the significant attention it has attracted from the academic community, which has made it one of the most discussed topics in the field. The paper continues with an analysis of the current literature, elucidating the diverse ways in which language models are enhancing medical imaging, as outlined in figure 2. We conclude with a review of the current research landscape and a forward-looking discussion of potential future directions.

Figure 2.

Comprehensive overview of topics in our review paper. The innermost part represents the specific applications discussed in our review, the middle circle encompasses the imaging modalities covered, and the outermost circle indicates the regions of interest (ROIs) addressed in the papers we reviewed.

2. Basics of language models

2.1. Glossary

Table 1 lists common terms used in computer vision (CV) and NLP. It is intended to help readers without a solid background in machine learning or NLP follow our discussion, and it is not meant to be exhaustive.

Table 1.

Common terms in natural language processing (NLP).

| Term | Definition | Term | Definition | Term | Definition |
|---|---|---|---|---|---|
| Machine learning (ML) | A branch of AI in which computers learn patterns from data to make predictions or decisions without being explicitly programmed | Deep learning (DL) | A subset of ML that uses multi-layered neural networks to learn hierarchical representations of data | Convolutional neural networks (CNNs) | A type of deep neural network, primarily used to analyze visual imagery |
| Natural language processing (NLP) | A branch of AI focused on enabling computers to understand, interpret, and manipulate human language | Transfer learning | A method in ML where a model developed for one task is reused as the starting point for a model on a second task | Reinforcement learning | An area of ML concerned with how agents should take actions in an environment to maximize cumulative reward |
| Vision-language models | Models that understand and generate content combining visual and textual data | Bag of words (BoW) | A simple text representation in NLP that treats text as a collection of words, regardless of their order or grammar | Corpus | A large collection of text documents or spoken language data used for training and testing NLP models |
| Embedding | Vector representations of words in a continuous space that capture semantic relationships | Feature engineering | Selecting and transforming linguistic features from raw text to prepare input for NLP models | Hidden Markov model (HMM) | A statistical model of sequence data in which observations are generated by unobserved (hidden) states, used in tasks such as speech recognition |
| Information retrieval | The process of retrieving relevant information from large text collections based on user queries | Jaccard similarity | A measure of the similarity of two sets (e.g. of words), computed as the size of their intersection divided by the size of their union | Keyword extraction | Automatically identifying and extracting key words or phrases from a document for summarization |
| Lemmatization | Reducing inflected word forms (e.g. singular/plural, verb tenses) to their dictionary base form (lemma) | Machine translation | An NLP task of automatically translating text from one language to another | Named entity recognition (NER) | Identifying and classifying named entities (e.g. people, places, organizations) in text |
| Ontology | A formal representation of knowledge that defines concepts, entities, and the relationships among them | Question answering | An NLP task of generating accurate answers to questions posed in natural language | Recurrence | Processing sequences step by step with recurrent neural networks (RNNs), whose hidden state carries information across time steps |
| Sentiment analysis | Determining the emotional tone or sentiment of text, typically as positive, negative, or neutral | Tokenization | Breaking text into individual units (tokens), such as words, subwords, or n-grams | Unsupervised learning | Training models on data without explicit labels, allowing them to discover patterns independently |
| Vector space model (VSM) | A mathematical model that transforms text into numerical vectors for similarity calculations | Word sense disambiguation (WSD) | Identifying the correct meaning of a word in context, especially for words with multiple meanings | Zero-shot learning | A model's ability to perform tasks or recognize classes it was not explicitly trained on |
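Several terms in table 1 (tokenization, bag of words, Jaccard similarity, and the vector space model) can be illustrated together in a short Python sketch. It assumes a deliberately simple regular-expression tokenizer and two made-up report snippets; practical NLP pipelines typically use subword tokenizers and weighted vectors such as TF-IDF instead of raw counts.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on runs of letters/digits (a deliberately simple tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def bag_of_words(tokens: list[str]) -> Counter:
    """Bag of words: token counts with word order discarded."""
    return Counter(tokens)


def jaccard(a: list[str], b: list[str]) -> float:
    """Jaccard similarity of the two token sets: |A & B| / |A | B|."""
    sa, sb = set(a), set(b)
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def cosine(bow_a: Counter, bow_b: Counter) -> float:
    """Vector space model: cosine similarity between two count vectors."""
    dot = sum(bow_a[t] * bow_b[t] for t in bow_a)  # missing keys count as 0
    norm_a = math.sqrt(sum(v * v for v in bow_a.values()))
    norm_b = math.sqrt(sum(v * v for v in bow_b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Two hypothetical report snippets.
report_a = "No acute cardiopulmonary process. No pleural effusion."
report_b = "Small left pleural effusion. No pneumothorax."

tok_a, tok_b = tokenize(report_a), tokenize(report_b)
print(tok_a)  # ['no', 'acute', 'cardiopulmonary', 'process', 'no', 'pleural', 'effusion']
print(round(jaccard(tok_a, tok_b), 3))  # 0.333 (3 shared words out of 9 distinct)
print(round(cosine(bag_of_words(tok_a), bag_of_words(tok_b)), 3))  # 0.544
```

Jaccard similarity ignores how often a word occurs, whereas the count-based cosine similarity weights the repeated "no" in the first snippet more heavily.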

Introductory articles on CV (Voulodimos et al 2018, Khan et al 2021) and NLP (Harrison and Sidey-Gibbons 2021, Lauriola et al 2022) can give readers useful background for the topics reviewed in depth here.

2.2. Evaluation metrics

Understanding the evaluation metrics for language models in medical imaging is essential. Because our readership ranges from machine learning researchers to medical physicists and physicians, in this subsection we list these metrics and explain how to interpret them, laying the foundation for quantitatively assessing the performance of language models in medical imaging.

• Accuracy: The overall proportion of correct predictions. With TP, TN, FP, and FN denoting true positives, true negatives, false positives, and false negatives, it is expressed as:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

• Precision: The ratio of correctly predicted positive observations to all predicted positives. It is crucial for models where false positives are a significant concern.

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

• Recall: The proportion of actual positives that are correctly identified. It is important in scenarios where missing a positive (a false negative) is more costly than a false alarm (a false positive).

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

• F1 score: The harmonic mean of precision and recall, balancing the two. It is particularly useful when the class distribution is imbalanced.

$$F_1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

• Area under the ROC curve (AUC): Measures a model's ability to distinguish between classes. An AUC of 1 represents a perfect model, while 0.5 indicates no discriminative power (chance level).

• BLEU (bilingual evaluation understudy): Measures the precision of n-gram overlap between generated and reference texts (originally translations), combined with a brevity penalty for overly short outputs.

• ROUGE (recall-oriented understudy for gisting evaluation): Measures how much of the reference text is covered by the generated text, with an emphasis on recall. There are multiple ROUGE variants, each with its own focus and calculation method; for example, ROUGE-N counts overlapping n-grams, and ROUGE-L is based on the longest common subsequence.

• METEOR (metric for evaluation of translation with explicit ordering): Matches unigrams between generated and reference text using exact matching, stemming, and synonym matching, combines unigram precision and recall (weighted toward recall), and applies a fragmentation penalty for differences in word order. Unlike BLEU, it therefore credits synonyms and morphological variants.

• Perplexity: Measures how well a language model predicts a sample; lower perplexity indicates better performance. For a sequence x = (x_1, ..., x_N) of N tokens,

$$\mathrm{PPL}(x) = P(x)^{-1/N} = \exp\left(-\frac{1}{N}\sum_{i=1}^{N} \log P(x_i \mid x_{<i})\right)$$

Here, P(x) is the probability of the sequence x according to the model.

• Word error rate (WER): The proportion of word errors in generated text relative to a reference, calculated as the number of substitutions S, deletions D, and insertions I divided by the number of words N in the reference:

$$\mathrm{WER} = \frac{S + D + I}{N}$$

• Mean reciprocal rank (MRR): The average, over a set of queries Q, of the reciprocal rank of the first relevant answer in each ranked list of candidates:

$$\mathrm{MRR} = \frac{1}{|Q|}\sum_{i=1}^{|Q|} \frac{1}{\mathrm{rank}_i}$$

• CIDEr (consensus-based image description evaluation): An image captioning metric that measures how closely a generated caption agrees with the consensus of human reference captions. It computes term frequency-inverse document frequency (TF-IDF) weighted n-gram vectors for the generated and reference captions and averages their similarity to derive a consensus score. A higher CIDEr score indicates closer agreement with the reference captions.

• CLIPScore (contrastive language–image pre-training score): A reference-free metric that evaluates how well an image description corresponds to the image content. It uses the CLIP model to embed the generated description and the image in a shared semantic space and computes the cosine similarity between the two embeddings. A higher CLIPScore indicates that the description better matches the visual content of the image.
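Several of these metrics can be computed directly from their definitions. The Python sketch below implements accuracy, precision, recall, F1, WER (via word-level edit distance), perplexity, and MRR; the function names and toy inputs are illustrative assumptions. For BLEU, ROUGE, METEOR, and CIDEr, established reference implementations should be preferred, because they include tokenization and smoothing details that a short sketch would omit.

```python
import math


def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def word_error_rate(reference: str, hypothesis: str) -> float:
    """(S + D + I) / N, using word-level Levenshtein distance (reference must be non-empty)."""
    ref, hyp = reference.split(), hypothesis.split()
    # d[i][j] = minimum edits to turn ref[:i] into hyp[:j]
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # i deletions
    for j in range(len(hyp) + 1):
        d[0][j] = j  # j insertions
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution or match
    return d[len(ref)][len(hyp)] / len(ref)


def perplexity(token_probs: list[float]) -> float:
    """P(x)^(-1/N), computed in log space from per-token conditional probabilities."""
    log_p = sum(math.log(p) for p in token_probs)
    return math.exp(-log_p / len(token_probs))


def mean_reciprocal_rank(ranks: list[int]) -> float:
    """ranks[i] is the 1-based position of the first relevant answer for query i."""
    return sum(1.0 / r for r in ranks) / len(ranks)


print(classification_metrics(tp=80, fp=20, fn=10, tn=90))  # accuracy 0.85, precision 0.8, recall ≈ 0.889, F1 ≈ 0.842
print(word_error_rate("no pleural effusion is seen", "no pleural effusions seen"))  # 0.4 (1 substitution + 1 deletion)
print(perplexity([0.5, 0.25, 0.5, 0.25]))  # ≈ 2.828
print(mean_reciprocal_rank([1, 2, 4]))     # ≈ 0.583
```

Working in log space for perplexity avoids numerical underflow when many small token probabilities are multiplied together.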

2.3. The evolution of language models

2.3.1. Pre-transformer era

Before the Transformer era, statistical and early neural network approaches dominated language modeling. The most common were n-gram models, which estimate the probability of the next word from the preceding n−1 words. However, these models could not capture long-range dependencies and suffered from data sparsity, since many valid word sequences never appear in the training data.
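To make the n-gram idea and its sparsity problem concrete, here is a minimal bigram (n = 2) model estimated by maximum likelihood on a hypothetical three-sentence corpus. An unseen bigram receives probability zero; add-one (Laplace) smoothing, shown as an option, is one simple remedy among several.

```python
from collections import Counter


def train_bigram(sentences: list[list[str]]) -> tuple[Counter, Counter]:
    """Count context words and bigrams, padding each sentence with <s> and </s>."""
    history, bigrams = Counter(), Counter()
    for tokens in sentences:
        padded = ["<s>"] + tokens + ["</s>"]
        for prev, word in zip(padded, padded[1:]):
            history[prev] += 1
            bigrams[(prev, word)] += 1
    return history, bigrams


def bigram_prob(prev, word, history, bigrams, vocab_size=None):
    """MLE estimate of P(word | prev); add-one (Laplace) smoothing if vocab_size is given."""
    if vocab_size is None:
        return bigrams[(prev, word)] / history[prev] if history[prev] else 0.0
    return (bigrams[(prev, word)] + 1) / (history[prev] + vocab_size)


# Hypothetical toy corpus of report phrases.
corpus = [
    ["no", "pleural", "effusion"],
    ["small", "pleural", "effusion"],
    ["no", "pneumothorax"],
]
history, bigrams = train_bigram(corpus)
vocab = {w for sent in corpus for w in sent} | {"</s>"}  # tokens the model can predict

print(bigram_prob("no", "pleural", history, bigrams))                      # seen bigram: 0.5
print(bigram_prob("small", "pneumothorax", history, bigrams))              # unseen bigram: 0.0
print(bigram_prob("small", "pneumothorax", history, bigrams, len(vocab)))  # smoothed: 1/7
```

The zero probability for "small pneumothorax", a perfectly plausible phrase, is exactly the data sparsity problem described above.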

Later, probabilistic frameworks such as hidden Markov models (HMMs) (Rabiner 1989) were introduced, offering improved sequence modeling, but they still struggled with the complexities of natural language. The introduction of recurrent neural networks (RNNs), particularly long short-term memory (LSTM) networks (Hochreiter and Schmidhuber 1997), marked a significant milestone because they could capture information over longer sequences than earlier models. However, RNNs still faced challenges such as the vanishing gradient problem, which LSTM gating mitigates but does not eliminate, limiting their efficacy on very long sequences.
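The gating that lets LSTMs retain information over longer sequences can be sketched as a single time step in NumPy. This follows the now-standard formulation with input, forget, and output gates (the forget gate was added after the original 1997 paper); the weight layout, sizes, and random initialization are arbitrary assumptions for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) bias,
    stacked in the (assumed) order input gate, forget gate, candidate, output gate.
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much of the old cell state to keep
    g = np.tanh(z[2 * H:3 * H])  # candidate cell content
    o = sigmoid(z[3 * H:4 * H])  # output gate: how much of the cell to expose
    c = f * c_prev + i * g       # additive cell update carries long-range information
    h = o * np.tanh(c)           # hidden state passed to the next step
    return h, c


rng = np.random.default_rng(0)
D, H, T = 8, 16, 20  # input size, hidden size, sequence length (arbitrary)
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(T, D)):  # iterate over the T input vectors
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape, c.shape)  # (16,) (16,)
```

Because the cell state is updated additively (scaled by the forget gate) rather than squashed through a nonlinearity at every step, gradients can flow across many time steps more easily than in a plain RNN.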

This pre-Transformer period is important because it laid the foundation for Transformer models: the efforts of this era overcame several limitations in language modeling and paved the way for the more sophisticated models used today in fields such as medical imaging.

2.3.2. Transformers

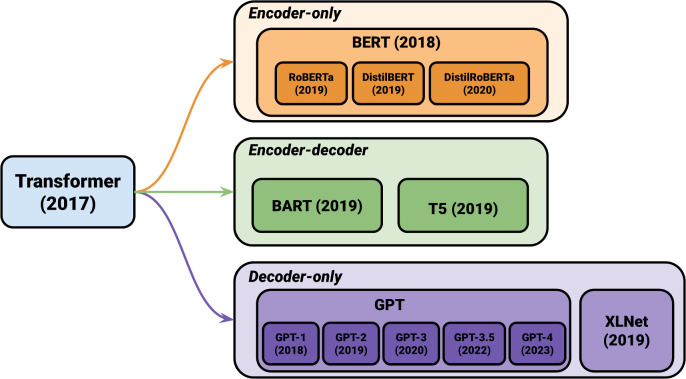

Transformers, introduced by Vaswani et al (2017), have revolutionized natural language processing with their innovative architecture. Attention mechanisms had already substantially improved recurrent and convolutional encoder–decoder models; the Transformer was the first model to rely entirely on self-attention to compute representations of its input and output, and it outperformed both recurrent and convolutional networks. A notable advantage of the Transformer is that it can be trained in parallel across sequence positions, reducing training time. In the original model, the encoder is composed of six identical blocks, each consisting of two sub-layers: a multi-head self-attention mechanism and a position-wise fully connected feed-forward network. The decoder likewise comprises six identical blocks, but each adds a third sub-layer that performs multi-head attention over the encoder output, and its self-attention sub-layer is masked so that each position can attend only to earlier positions. The attention used throughout is scaled dot-product attention: the dot products of each query with all keys are divided by the square root of the key dimension, and a softmax function turns the results into weights that are applied to the value vectors. Although additive and dot-product attention are both common attention functions, dot-product attention is favored because it is faster and more space-efficient in practice, thanks to highly optimized matrix multiplication code. A key aspect of the Transformer's impact is its role as the foundational architecture for subsequent language models. Models such as bidirectional encoder representations from transformers (BERT), generative pre-trained transformer (GPT), T5 (text-to-text transfer transformer) (Raffel et al 2020), bidirectional and auto-regressive transformers (BART) (Lewis et al 2019), and XLNet (Yang et al 2019) all build on the Transformer architecture, as illustrated in figure 3.
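Scaled dot-product attention computes softmax(QKᵀ/√d_k)V. The NumPy sketch below implements that formula, with an optional boolean mask showing how the decoder's masked self-attention restricts each position to earlier positions. Multi-head projections, residual connections, and layer normalization are omitted, and the shapes and random inputs are arbitrary.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V, mask=None):
    """Compute softmax(Q K^T / sqrt(d_k)) V.

    Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v).
    mask: optional boolean (n_q, n_k) array, True where attention is allowed.
    """
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)            # query-key similarities
    if mask is not None:
        scores = np.where(mask, scores, -1e9)  # effectively remove disallowed positions
    weights = softmax(scores, axis=-1)         # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
n, d_k, d_v = 4, 8, 8
Q, K, V = (rng.normal(size=(n, d)) for d in (d_k, d_k, d_v))
causal = np.tril(np.ones((n, n), dtype=bool))  # decoder-style mask: no attending to later positions
out, w = scaled_dot_product_attention(Q, K, V, mask=causal)
print(out.shape)  # (4, 8)
print(np.round(w, 2))  # lower-triangular attention weights
```

The division by √d_k keeps the dot products from growing with the vector dimension, which would otherwise push the softmax into regions with very small gradients.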

Figure 3.

Transformer-based models. The graph illustrates models of different architectures—encoder-only (autoencoding), decoder-only (autoregressive), and encoder–decoder models.

2.3.3. BERT

BERT (Lee and Toutanova 2018), a pre-trained LLM, has garnered significant attention in the NLP community for its outstanding performance across a range of tasks. Unlike its predecessors, which relied solely on unidirectional training, BERT adopts a bidirectional training approach: it predicts masked words within a sentence by considering both their left and right contexts. This enables the model to capture intricate relationships between words and phrases.
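BERT's masked-word prediction relies on corrupting the input before training. The sketch below follows the recipe reported for BERT pre-training: about 15% of positions are selected, and a selected token is replaced with [MASK] 80% of the time, with a random token 10% of the time, and left unchanged 10% of the time. It operates on whole words with a tiny hypothetical vocabulary, whereas BERT works on WordPiece subword tokens.

```python
import random

MASK = "[MASK]"
VOCAB = ["no", "pleural", "effusion", "small", "left", "pneumothorax"]  # hypothetical vocabulary


def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """BERT-style masked-LM corruption.

    Returns (corrupted, labels): labels[i] holds the original token at selected
    positions (where the loss is computed) and None elsewhere.
    """
    rng = random.Random(seed)
    corrupted, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif r < 0.9:
            corrupted[i] = rng.choice(VOCAB)  # 10%: replace with a random token
        # remaining 10%: keep the original token
    return corrupted, labels


tokens = "there is a small left pleural effusion".split()
corrupted, labels = mask_tokens(tokens, mask_prob=0.5, seed=1)
print(corrupted)
print(labels)
```

The model then sees the corrupted sequence and is trained to recover the original tokens at the labeled positions, using context on both sides.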

BERT’s pre-training process involves training on a diverse and extensive corpus of text, allowing it to learn the nuances and intricacies of natural language. As a result, BERT has achieved state-of-the-art performance on various benchmarks, including sentiment analysis, question-answering, and natural language inference. The impact of BERT on the field of NLP has been revolutionary, paving the way for the development of more advanced language models.

2.3.4. PaLM

Parameterized language model (PaLM) (Peng et al 2019) is an LLM proposed in 2019. Unlike traditional language models, which use a fixed set of parameters to generate text, PaLM employs a dynamic parameterization approach: its parameterization adapts to the given context and input, enabling it to generate accurate and contextually relevant text. PaLM is trained on large-scale text data with unsupervised learning techniques, which helps it learn the underlying patterns and structures of natural language, and it can be fine-tuned for diverse NLP tasks, including text classification, question answering, and language modeling. A notable feature of PaLM is its ability to handle words that do not appear in the training data: the model can generate contextually relevant replacements for such out-of-vocabulary (OOV) words, improving its overall text generation performance.

2.3.5. ChatGPT

ChatGPT, developed by OpenAI, is built on the GPT family of models (Radford et al 2018), which has evolved remarkably through successive iterations. ChatGPT itself was released in November 2022, and the underlying models have advanced with each version, reflecting OpenAI's continued efforts to enhance language model capabilities.

The trajectory of GPT's development can be traced through its successive versions:

(1) GPT-2: A significant step forward from the original GPT, featuring a larger model and an expanded training dataset. GPT-2 was notable for its improved text generation capabilities, setting new standards in language model performance.

(2) GPT-3: A major leap in the series, distinguished by its massive scale in both parameters and training data. It gained acclaim for generating text that closely mimics human writing, with substantial improvements in context understanding and nuanced response generation.

(3) GPT-3.5: An intermediate update bridging GPT-3 and GPT-4. It refined the capabilities of its predecessor with enhanced text generation and understanding, preparing the ground for the next significant advance.

(4) GPT-4: The most recent in the series, GPT-4 not only continues to generate human-like text but also introduces multimodal capabilities: it can process and respond to both textual and visual inputs, substantially broadening its applicability. This multimodality, available through GPT-4-V, enables more complex and dynamic interactions that more closely resemble human cognitive abilities.

2.3.6. LLaMA

LLaMA (large language model Meta AI) is a collection of foundation language models (Touvron et al 2023). The models were trained with self-supervised next-token prediction (causal language modeling) on an extensive corpus of tokens drawn exclusively from publicly available sources, including English CommonCrawl, C4, GitHub, Wikipedia, books, arXiv, and Stack Exchange. By training only on publicly available data, the LLaMA team demonstrated that cutting-edge performance can be attained without relying on proprietary datasets.
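Decoder-only models such as LLaMA are trained to predict each token from the tokens before it. The NumPy sketch below computes the mean next-token cross-entropy from a matrix of logits; it is a simplified illustration with no model, batching, or padding, and the vocabulary size and token ids are made up. Exponentiating this loss gives the perplexity defined in section 2.2.

```python
import numpy as np


def next_token_loss(logits, token_ids):
    """Mean cross-entropy for next-token prediction.

    logits: (T, V) scores, where row t is the model's prediction for token t + 1.
    token_ids: (T + 1,) integer ids of the full sequence.
    """
    targets = token_ids[1:]                                        # shift left by one position
    z = logits - logits.max(axis=-1, keepdims=True)                # stabilize the softmax
    log_probs = z - np.log(np.exp(z).sum(axis=-1, keepdims=True))  # log-softmax
    nll = -log_probs[np.arange(len(targets)), targets]             # -log P(correct next token)
    return nll.mean()


V = 50                                   # hypothetical vocabulary size
token_ids = np.array([3, 17, 42, 8, 1])  # hypothetical token ids
logits = np.zeros((len(token_ids) - 1, V))  # uniform predictions: every token has probability 1/V
loss = next_token_loss(logits, token_ids)
print(loss, np.exp(loss))  # ≈ 3.912 (= ln 50) and ≈ 50, the perplexity of a uniform guess
```

A model that always guesses uniformly has a perplexity equal to the vocabulary size; training drives the loss, and hence the perplexity, down.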

3. Language models for medical imaging

3.1. Finding extraction from radiology report

Extracting meaningful information from radiology reports is essential for secondary applications in clinical decision-making, research, and outcome prediction. Several challenges remain, however, such as reducing radiologists' workload and improving communication with referring physicians. Furthermore, extracting detailed semantic representations of radiological findings from reports requires substantial, meticulous manual effort.

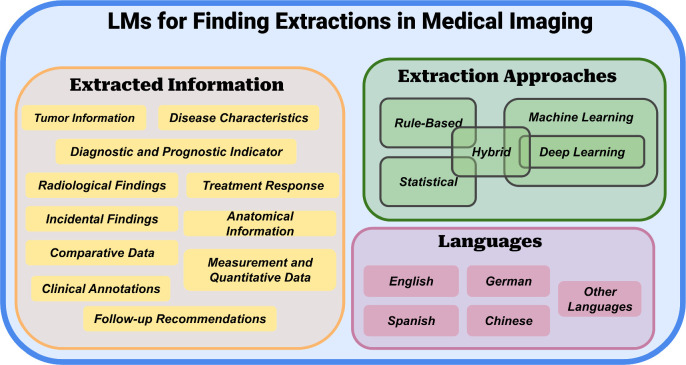

In this section, we review the current state of findings extraction from radiology reports, examining existing methods, their challenges and limitations, and the potential benefits of adopting innovative frameworks and techniques. Figure 4 shows a schematic view of LMs for information extraction in medical imaging. By exploring these advances, we aim to provide insights into using language models to improve clinical decision-making in radiology.

Figure 4.

Schematic view of LMs for information extraction in medical imaging. As shown in the diagram, these methods can be roughly categorized by the information extracted, the extraction approach, and the language of the source documents.

Smit et al (2020) presented CheXbert, a BERT-based method for labeling chest x-ray radiology reports, developed using the CheXpert and MIMIC-CXR datasets. They employed a modified BERT architecture with 14 linear heads, one for each medical observation, trained the model with cross-entropy loss and Adam optimization while fine-tuning all BERT layers, and evaluated performance with weighted F1 on positive, negative, and uncertainty extraction tasks, reporting 95% confidence intervals obtained by bootstrapping. CheXbert achieved an F1 score of 0.798, surpassing previous models, including the state-of-the-art CheXpert labeler, and matching the performance of a board-certified radiologist. By combining the scalability of rule-based systems with the quality of expert annotations, it marked a significant advance in radiology report labeling.
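The bootstrapped confidence intervals mentioned above can be sketched with a percentile bootstrap. This minimal version uses binary F1 on synthetic labels for simplicity, whereas the study reported weighted F1 across observations and classes; the number of resamples, the seed, and the synthetic data are arbitrary assumptions.

```python
import numpy as np


def f1_score(y_true, y_pred):
    """Binary F1 = 2TP / (2TP + FP + FN) for 0/1 label arrays."""
    tp = np.sum((y_true == 1) & (y_pred == 1))
    fp = np.sum((y_true == 0) & (y_pred == 1))
    fn = np.sum((y_true == 1) & (y_pred == 0))
    denom = 2 * tp + fp + fn
    return float(2 * tp / denom) if denom else 0.0


def bootstrap_ci(y_true, y_pred, metric=f1_score, n_boot=1000, alpha=0.05, seed=0):
    """Point estimate plus a percentile-bootstrap (1 - alpha) confidence interval.

    Reports are resampled with replacement and the metric is recomputed on each resample.
    """
    rng = np.random.default_rng(seed)
    n = len(y_true)
    stats = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)  # indices of one bootstrap resample
        stats.append(metric(y_true[idx], y_pred[idx]))
    lo, hi = np.percentile(stats, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return metric(y_true, y_pred), (float(lo), float(hi))


# Synthetic labels: predictions agree with the truth except for ~10% random flips.
rng = np.random.default_rng(42)
y_true = rng.integers(0, 2, size=500)
flip = rng.random(500) < 0.1
y_pred = np.where(flip, 1 - y_true, y_true)

point, (lo, hi) = bootstrap_ci(y_true, y_pred)
print(f"F1 = {point:.3f}, 95% CI [{lo:.3f}, {hi:.3f}]")
```

Resampling whole reports (rather than individual labels) preserves any correlation between observations within the same report.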

Domain adaptation can also significantly enhance the performance of language models in findings extraction. Dhanaliwala et al (2023) demonstrated that the domain-adapted RadLing system surpasses the general-purpose GPT-4 in extracting common data elements (CDEs) from x-ray and CT reports, emphasizing the importance of specialized language models for medical information extraction. RadLing's two-phase approach uses a Sentence Transformer and addresses data imbalance, achieving higher sensitivity than GPT-4 (94% versus 70%) at slightly lower precision (96% versus 99%). Importantly, RadLing offers practical benefits such as local deployment and cost-efficiency, making it a promising tool for structured information extraction in radiology.

Extracting intricate spatial details from radiology reports can be even more challenging. Datta and Roberts (2020) compared two methods for extracting spatial triggers from radiology reports. The baseline method fine-tuned a BERT-Base model on an annotated corpus, treating trigger extraction as a sequence labeling task. The proposed hybrid method first generated candidate triggers through exact matching and domain-specific constraints and then used a BERT-based classification model to decide whether each candidate was correct. Both methods used BERT-Base pre-trained on MIMIC-III clinical notes, and rule-based approaches were also evaluated. The sequence labeling method achieved high precision but low recall, whereas exact matching improved recall significantly but produced many false positives; applying constraints to the exact-matched triggers improved precision only slightly. The hybrid approach, which combined domain-inspired constraints with a BERT-based classifier, achieved balanced precision and recall, improving F1 by 24 points over standard BERT sequence labeling, with an average accuracy of 88.7% over 10-fold cross-validation.
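The hybrid pipeline described above (candidate generation by exact matching, domain-inspired filtering, then classification) can be sketched as follows. The trigger lexicon and the filtering rule are hypothetical placeholders rather than the study's resources, and the BERT classifier is replaced by a stub that accepts every surviving candidate.

```python
import re

# Hypothetical lexicon and constraint, for illustration only (not the study's resources).
SPATIAL_TRIGGERS = {"in", "within", "at", "along", "above", "below", "near", "adjacent to"}
NON_SPATIAL_NEXT = {"comparison", "addition", "interval", "size"}  # e.g. "in comparison", "in size"


def generate_candidates(sentence):
    """Step 1: exact, whole-word matching of lexicon entries -> (start, end, trigger)."""
    lowered = sentence.lower()
    cands = []
    for trig in SPATIAL_TRIGGERS:
        for m in re.finditer(r"\b" + re.escape(trig) + r"\b", lowered):
            cands.append((m.start(), m.end(), trig))
    return sorted(cands)


def apply_constraints(sentence, cands):
    """Step 2: domain-inspired filter that drops obvious non-spatial uses."""
    kept = []
    for start, end, trig in cands:
        following = sentence[end:].lower().split()
        if following and following[0].strip(",.;") in NON_SPATIAL_NEXT:
            continue
        kept.append((start, end, trig))
    return kept


def classify(sentence, cand):
    """Step 3 stub: the reviewed work uses a fine-tuned BERT classifier here;
    this placeholder accepts every candidate that survived the constraints."""
    return True


def extract_spatial_triggers(sentence):
    cands = apply_constraints(sentence, generate_candidates(sentence))
    return [c for c in cands if classify(sentence, c)]


print(extract_spatial_triggers("Opacity in the right lower lobe, unchanged in comparison to prior."))
# [(8, 10, 'in')]: the second "in" is filtered out by the constraint
```

Exact matching gives high recall, the constraints remove cheap false positives, and the classifier is left to resolve the remaining ambiguous cases.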

Pursuing detailed information extraction also raises the issue of linguistic diversity, specifically the challenges of processing medical texts in German. Jantscher et al (2023) developed an information extraction framework to improve the comprehension of medical texts in low-resource languages such as German. Their approach, which applied a transformer-based language model to named entity recognition (NER) and relation extraction (RE) in German radiology reports, showed enhanced performance for medical predictive studies. By incorporating active learning and strategic data sampling, they reduced the effort required for data labeling, and they introduced a comprehensive annotation scheme that integrates medical ontologies. The results showed significant performance gains, particularly in NER and RE, when moving from random to strategic sampling. Furthermore, adapting the models across different clinical domains and anatomical regions proved highly effective, underscoring their versatility.
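Active learning reduces labeling effort by asking annotators to label the examples the current model is least sure about. The exact sampling strategy of the study is not described here, so the sketch below shows one common generic choice, least-confidence uncertainty sampling, on made-up predicted class probabilities. For NER, token-level confidences would first need to be aggregated per sentence or document.

```python
import numpy as np


def least_confidence_sampling(probs, k):
    """Return indices of the k examples whose top predicted class probability is lowest,
    i.e. where the current model is least confident.

    probs: (n_examples, n_classes) predicted class probabilities.
    """
    confidence = probs.max(axis=1)
    return np.argsort(confidence)[:k]


# Hypothetical model outputs for four unlabeled reports.
probs = np.array([
    [0.98, 0.01, 0.01],  # confident
    [0.40, 0.35, 0.25],  # uncertain
    [0.70, 0.20, 0.10],
    [0.34, 0.33, 0.33],  # most uncertain
])
print(least_confidence_sampling(probs, k=2))  # [3 1]
```

In an active learning loop, the selected examples are labeled, added to the training set, and the model is retrained before the next selection round.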

Building on this, German BERT models pre-trained on radiological data offer tailored solutions for language-specific radiology data. Dada et al (2024) demonstrated the effectiveness of such models for extracting information from loosely structured radiology reports, with significant implications for clinical workflows and patient care. Using two training datasets and two BERT models, they achieved noteworthy results. RadBERT performed best during pre-training, with an HST accuracy of 48.05% and a 5HST accuracy of 66.46%. After fine-tuning, G-BERT+Rad+Flex achieved the highest F1 score (83.97%) and GM-BERT+Rad+Flex the highest exact match (EM) score (71.81%) on the RCQA task. GM-BERT+Rad+Flex also showed the highest accuracy on unanswerable questions, and the models performed slightly better on CT reports. Strong generalization was observed across various categories.
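EM and F1 for extractive question answering are commonly computed SQuAD-style after light text normalization. The sketch below approximates that scheme under stated assumptions: it lowercases, strips ASCII punctuation, and collapses whitespace; it skips SQuAD's English article removal because the reports are German; and it scores unanswerable questions as correct only when both the prediction and the gold answer are empty. The example strings are hypothetical.

```python
import string
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, drop ASCII punctuation, and collapse whitespace.

    SQuAD's English article removal ("a", "an", "the") is deliberately omitted
    because the reports in question are German.
    """
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    return " ".join(text.split())


def exact_match(prediction: str, gold: str) -> float:
    return float(normalize(prediction) == normalize(gold))


def token_f1(prediction: str, gold: str) -> float:
    pred, ref = normalize(prediction).split(), normalize(gold).split()
    if not pred or not ref:
        # Unanswerable questions (empty gold): credit only an empty prediction.
        return float(pred == ref)
    overlap = sum((Counter(pred) & Counter(ref)).values())  # shared tokens, with multiplicity
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(pred), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


print(exact_match("Pleuraerguss links.", "pleuraerguss links"))      # 1.0
print(token_f1("kleiner Pleuraerguss links", "Pleuraerguss links"))  # about 0.8
print(token_f1("", ""))                                              # 1.0
```

EM gives no partial credit, whereas token-level F1 rewards predictions that overlap the gold answer, which is why the two scores can rank models differently.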

Beyond German, researchers have also addressed other languages, such as Spanish. López-Úbeda et al (2021) introduced pre-trained BERT-based models for automatically extracting biomedical entities from Spanish radiology reports, addressing a crucial need in Spanish medical text processing. They compared three approaches (a BERT multi-class entity model, a BERT binary-class entity model, and a BERT pipeline) on a dataset of 513 annotated ultrasonography reports covering seven entity types and three radiological concept hedging cues. The BERT binary-class entity approach performed best, achieving a precision of 86.07% and an F1 score of 67.96%, a 14% F1 improvement over the BERT multi-class entity method. It particularly excelled at recognizing specific entities, including negated entities, underscoring its effectiveness for entity detection in Spanish radiology reports.
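Entity-level precision and F1 for NER are commonly computed by decoding BIO tags into spans and counting exact matches. The sketch below assumes strict matching of both span boundaries and entity type; the tag names (NEG, FINDING, ANATOMY) are hypothetical and not the dataset's actual label set.

```python
def bio_to_spans(tags):
    """Convert BIO tags (e.g. B-FINDING, I-FINDING, O) to a set of (start, end, type) spans, end exclusive."""
    spans, start, etype = set(), None, None
    for i, tag in enumerate(tags + ["O"]):  # sentinel "O" closes a trailing span
        continues_span = tag.startswith("I-") and tag[2:] == etype
        if not continues_span:
            if start is not None:
                spans.add((start, i, etype))  # close the open span
                start, etype = None, None
            if tag != "O":
                start, etype = i, tag[2:]     # B- tag (or stray I- tag) opens a new span
    return spans


def entity_prf(gold_tags, pred_tags):
    """Strict entity-level precision, recall, and F1: a predicted span counts
    only if both its boundaries and its type match a gold span."""
    gold, pred = bio_to_spans(gold_tags), bio_to_spans(pred_tags)
    tp = len(gold & pred)
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = ["O", "B-NEG", "B-FINDING", "I-FINDING", "O", "B-ANATOMY"]
pred = ["O", "B-NEG", "B-FINDING", "O", "O", "B-ANATOMY"]  # FINDING span truncated
print(entity_prf(gold, pred))  # precision, recall, and F1 are all 2/3
```

Under strict matching, the truncated FINDING span counts as both a false positive and a false negative, even though it overlaps the gold span.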

The summary of publications related to finding extraction is presented in table 2. Table 3 summarizes the specific limitations and future perspectives of the reviewed papers, along with the common limitations and future perspectives discussed in this section.

Table 2.

Overview of LMs for extracting findings from medical imaging documents. The asterisks (*) indicate terms that are either not present in the original paper or do not apply in this context.

| References | ROI | Modality | Dataset | Model name | Base model/structure |
|---|---|---|---|---|---|
| Datta and Roberts (2020) | Brain, Chest | x-ray, MRI | MIMIC-III | * | BERT |
| Smit et al (2020) | Chest | x-ray | CheXpert, MIMIC-CXR | CheXbert | BERT |
| Dhanaliwala et al (2023) | Chest | x-ray, CT | Institutional | RadLing | ELECTRA, BERT |
| Jantscher et al (2023) | Head, Pediatric | CT, MRI, x-ray | Institutional | * | BERT |
| Dada et al (2024) | * | CT, MRI | Institutional, DocCheck Flexikon | G-BERT, GM-BERT | BERT |
| Moon et al (2022) | Chest | x-ray | MIMIC-CXR, Open-I | MedViLL | ResNet-50, BERT |
| Singh et al (2022) | Cardiac structure | MRI | EWOC | * | BERT |
| López-Úbeda et al (2021) | * | * | SpRadIE | BETO | BERT |

Table 3.

Comparative assessment of limitations and future perspectives for research in finding extraction.

| References | Specific limitation | Specific future perspective |
|---|---|---|
| Datta and Roberts (2020) | Rare phrases, common English terms, verb phrases | Generalization, rule expansion, POS information |
| Smit et al (2020) | Dependency on existing labeler, token limitation, limited to 14 observations, single radiologist ground truth, generalization across institutions | Reducing dependency on labelers, handling longer reports, expanding to rarer conditions, multiple radiologists for ground truth, cross-institutional evaluation |
| Dhanaliwala et al (2023) | Limited generalizability, evaluation scope, small cohort, data and model version, benchmarking method | Expanded scope, incorporating real-world complexity, model and data updates, comparative evaluation, integration into clinical workflows |
| Jantscher et al (2023) | Single-center study, limited open data sources, specific clinical domain | Evaluation across clinical sites, open language models, expansion to different clinical domains |
| Dada et al (2024) | Single text span answers, language restriction, interpretability | Multiple span models, language extension, interpretability research |
| Singh et al (2022) | Small test set, postprocessing requirements, portability, clinical implementation challenges, quality control | Larger test set, reduced postprocessing, enhanced portability, clinical implementation strategies, continuous monitoring |
| López-Úbeda et al (2021) | Entity recognition variability, dependency on pre-trained models | Error analysis, linguistic features, ontologies |

3.2. Image/video captioning

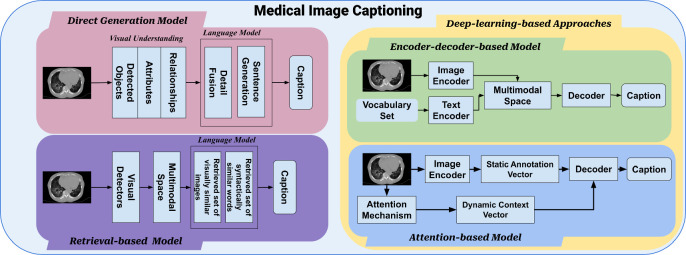

Building accurate and reliable systems that automatically generate reports for medical images poses several challenges, including learning from limited numbers of medical images with machine learning approaches and generating informative captions for images that involve multiple organs. Captioning fetal ultrasound images, for instance, presents a unique set of challenges because of their inherent complexity and variability: these images are often noisy and low-resolution, and they vary greatly with fetal position, gestational age, and imaging plane. In addition, large-scale annotated datasets for this task are lacking. To address these challenges, researchers have proposed novel approaches that integrate visual and textual information to generate informative captions for images and videos. Figure 5 illustrates the different approaches to medical image captioning using language models.

Figure 5.

This diagram illustrates the process of medical image captioning using language models. Captioning methods can be broadly categorized into direct generation approaches, retrieval-based approaches, and deep learning-based approaches. Deep learning approaches are further divided, according to whether an attention mechanism is involved, into encoder–decoder-based approaches and attention-based approaches. Most medical image captioning methods focus on encoder–decoder approaches.

Nicolson et al (2021) applied a sequence-to-sequence model, incorporating pre-trained ViT and PubMedBERT checkpoints, to medical image captioning. The model followed a vision-language architecture: a ViT-based encoder processed the medical images, and a decoder initialized from the pre-trained PubMedBERT checkpoint generated the captions. The encoder split each image into non-overlapping patches, added position embeddings, and aggregated the patch representations. The decoder combined token embeddings, position embeddings, and segment embeddings. Interestingly, the best-performing configuration, ‘vit2mrt-0.1.1_5_e131’, omitted various additional steps. However, a notable gap between validation and test scores suggested possible overfitting or differences between the datasets, and the study highlighted that optimizing models for medical image captioning is complex and has no universal solution.
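
The encoder step described above, splitting an image into non-overlapping patches and adding position embeddings, can be sketched in NumPy. The sizes (224×224 input, 16×16 patches, 64-dimensional embeddings) and the random projection and position matrices are illustrative assumptions; in a pre-trained ViT both are learned, and a classification token is usually prepended.

```python
import numpy as np


def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into flattened, non-overlapping patches.

    Returns shape (num_patches, patch * patch * C), patches in row-major order.
    """
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch"
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)  # (rows, cols, patch, patch, C)
    return grid.reshape(-1, patch * patch * c)


def embed_patches(image: np.ndarray, patch: int = 16, dim: int = 64, seed: int = 0) -> np.ndarray:
    rng = np.random.default_rng(seed)
    patches = patchify(image, patch)
    # Random stand-ins for the learned linear projection and position embeddings.
    projection = rng.normal(scale=0.02, size=(patches.shape[1], dim))
    positions = rng.normal(scale=0.02, size=(patches.shape[0], dim))
    return patches @ projection + positions


image = np.random.default_rng(1).random((224, 224, 3))
tokens = embed_patches(image)
print(tokens.shape)  # (196, 64)
```

The resulting 196 patch tokens are what the transformer layers attend over; the decoder then cross-attends to these encoder outputs.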

Building on pre-trained models, another study introduced a specialized approach for CT image captioning that combines advanced neural network architectures. Kim et al (2023b) proposed a CT image captioning model that paired 3D-CNN encoders (ResNet-50, EfficientNet-B5, DenseNet-201, and ConvNeXt-S) with a DistilGPT2 decoder to caption continuous CT scan sequences. The approach employed cross-attention, teacher forcing, and a penalty-applied loss function, which particularly improved intracranial hemorrhage detection. The EfficientNet-B5 model trained with the penalty-applied loss achieved the highest BLEU, METEOR, and ROUGE-L scores, outperforming models trained with standard loss functions. This highlights the efficacy of penalty-based loss functions in capturing critical medical information.
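
The penalty-applied loss is not spelled out in the text above. One plausible reading, assumed here rather than taken from Kim et al (2023b), is a token-weighted cross-entropy computed under teacher forcing: the decoder is fed the ground-truth previous tokens, and mistakes on clinically critical tokens (for example, a hemorrhage term) are up-weighted. The vocabulary, probabilities, and penalty factor are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Set


def penalized_cross_entropy(
    step_probs: Sequence[Dict[str, float]],
    target: List[str],
    critical: Set[str],
    penalty: float = 2.0,
) -> float:
    """Mean token negative log-likelihood under teacher forcing.

    step_probs[t] is the decoder distribution at step t, computed after feeding
    the ground-truth tokens target[:t]. Tokens in `critical` have their loss
    multiplied by `penalty` (an assumed form of the penalty).
    """
    assert len(step_probs) == len(target), "one distribution per target token"
    total = 0.0
    for probs, token in zip(step_probs, target):
        weight = penalty if token in critical else 1.0
        total += -weight * math.log(max(probs.get(token, 0.0), 1e-12))
    return total / len(target)


steps = [{"acute": 0.8, "chronic": 0.2}, {"hemorrhage": 0.5, "infarct": 0.5}]
target = ["acute", "hemorrhage"]
print(round(penalized_cross_entropy(steps, target, critical=set()), 3))  # 0.458
print(round(penalized_cross_entropy(steps, target, critical={"hemorrhage"}), 3))  # 0.805
```

The weighted version pushes the gradient toward the uncertain hemorrhage token, which is the intuition behind penalizing errors on critical findings.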

Moving from specific imaging modalities to a more general approach, the next study used an encoder–decoder model designed to handle the diversity of medical images. Nicolson et al (2023) proposed a concise encoder–decoder model for medical image captioning, optimized with self-critical sequence training (SCST) (Rennie et al 2017) using BERTScore as the reward. This approach addressed the challenge of generating coherent captions for diverse medical images, which is relevant to clinical documentation and multimodal analysis. The model used CvT-21 as the image encoder and DistilGPT2 as the caption decoder and was trained in two stages, with teacher forcing (TF) (Toomarian and Barhen 1992) and then SCST. It achieved top-ranking BERTScore results and performed well on other metrics such as CLIPScore, ROUGE, and CIDEr, although it ranked lower on METEOR and BLEU because SCST optimized BERTScore rather than these metrics.
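
Self-critical sequence training is a REINFORCE variant that uses the reward of the model's own greedy caption as the baseline. The sketch below computes the per-image SCST loss. In Nicolson et al (2023) the reward was BERTScore; here a toy unigram-overlap F1 stands in for it, and the log-probability of the sampled caption is a made-up number rather than real model output.

```python
from typing import Callable, List


def scst_loss(
    sampled_logprob_sum: float,
    sampled_caption: List[str],
    greedy_caption: List[str],
    reference: List[str],
    reward_fn: Callable[[List[str], List[str]], float],
) -> float:
    """Self-critical policy-gradient loss for one image.

    advantage = r(sampled) - r(greedy) is treated as a constant (no gradient);
    minimizing -advantage * log p(sampled) raises the probability of samples
    that beat the greedy baseline and lowers it for samples that do worse.
    """
    advantage = reward_fn(sampled_caption, reference) - reward_fn(greedy_caption, reference)
    return -advantage * sampled_logprob_sum


def unigram_f1(candidate: List[str], reference: List[str]) -> float:
    """Toy reward standing in for BERTScore (an assumption for illustration)."""
    common = len(set(candidate) & set(reference))
    if common == 0:
        return 0.0
    p, r = common / len(set(candidate)), common / len(set(reference))
    return 2 * p * r / (p + r)


reference = "no acute cardiopulmonary abnormality".split()
greedy = "no abnormality".split()
sampled = "no acute cardiopulmonary process".split()
# reward(sampled) = 0.75 beats reward(greedy), about 0.667, so the advantage is positive.
print(round(scst_loss(-4.0, sampled, greedy, reference, unigram_f1), 3))  # 0.333
```

Because the baseline is the model's own greedy output, no separate value network is needed, which is one reason SCST is popular for captioning.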

Further enhancing medical image captioning, the next study adopted the BLIP framework and demonstrated its efficacy in a medical context. Wang and Li (2022) used BLIP for medical image captioning to improve verbal fluency and semantic interpretation. They employed BLIP's multimodal mixture of encoder–decoder (MED) and two pre-training strategies. Pre-training from scratch on the ImageCLEFmedical dataset had limited success, whereas secondary pre-training starting from the original BLIP model achieved a BLEU score of 0.2344 when fine-tuned on the validation set, demonstrating its effectiveness for medical image captioning.
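
BLEU, reported by many studies in this section, is the geometric mean of clipped (modified) n-gram precisions multiplied by a brevity penalty. The sketch below is a minimal single-reference, sentence-level version without smoothing; challenge organizers typically use library implementations with their own tokenization, corpus-level aggregation, and smoothing, so scores from this sketch will not match published values such as 0.2344.

```python
import math
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: List[str], reference: List[str], max_n: int = 4) -> float:
    """Unsmoothed sentence-level BLEU against a single reference."""
    if not candidate:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(candidate, n)
        total = sum(cand_counts.values())
        # Clip each n-gram count by its count in the reference ("modified" precision).
        clipped = sum((cand_counts & ngrams(reference, n)).values())
        if total == 0 or clipped == 0:
            return 0.0  # any zero precision makes the geometric mean zero
        log_precisions.append(math.log(clipped / total))
    # Brevity penalty discourages candidates shorter than the reference.
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(sum(log_precisions) / max_n)


reference = "the heart size is within normal limits".split()
candidate = "the heart size is normal".split()
print(round(sentence_bleu(candidate, reference), 3))  # 0.474
```

Here the n-gram precisions are 1, 3/4, 2/3, and 1/2 (geometric mean about 0.707), and the brevity penalty exp(1 - 7/5) reduces the score further.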

Extending the use of BLIP, the following study applied it to a more focused problem and introduced a novel classification pipeline. Ding et al (2023) proposed a mitosis classification pipeline built on large vision-language models (BLIP) that framed the problem as both image captioning and visual question answering, incorporating metadata for context. BLIP outperformed CLIP models, with significantly higher F1 scores and AUC on the MIDOG22 dataset. Adding metadata further improved BLIP's performance, whereas CLIP did not surpass vision-only baselines, highlighting the advantage of integrating vision and language for mitosis detection in histopathology images.

Moving from specific applications to a more integrative approach, MSMedCap (Wang et al 2023a) combined dual image encoders with large language models, a distinctive methodology for image captioning. Guided by the Segment Anything Model (SAM), the model captured diverse information in medical images. MSMedCap integrated two image encoders (fCLIP and fSAM) to capture general and fine-grained features, respectively, improving caption quality over the baseline models BLIP2 and SAM-BLIP2. Its mixed semantic pre-training effectively combined CLIP's general knowledge with SAM's fine-grained understanding, yielding high-quality medical image captions.

During captioning, interaction with human users may sometimes be desirable. Zheng and Yu (2023) developed an interactive medical image captioning framework that involves human users, integrates evidence-based uncertainty estimation, and provides a theoretical basis for incorporating uncertainty, thereby improving caption accuracy in a data-efficient manner. Their approach combined evidential learning with interactive machine learning and proceeded in stages from keyword prediction to caption generation, with user feedback guiding refinement. Evaluations on two medical image datasets, PEIR and MIMIC-CXR, showed that the model learned efficiently from limited labeled data and outperformed other models across various metrics.
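
Evidential learning places a Dirichlet distribution over class probabilities so that a single forward pass yields both a prediction and an uncertainty estimate. The sketch below uses the common subjective-logic formulation (non-negative evidence e_k, α_k = e_k + 1, S = Σα_k, belief b_k = e_k / S, uncertainty u = K / S) to decide which predicted keywords to route to a user for feedback. Whether Zheng and Yu (2023) use exactly this parameterization, and the 0.5 review threshold, are assumptions.

```python
from typing import Dict, List, Tuple


def dirichlet_opinion(evidence: List[float]) -> Tuple[List[float], float]:
    """Return per-class beliefs and overall uncertainty from non-negative evidence."""
    k = len(evidence)
    alpha = [e + 1.0 for e in evidence]
    strength = sum(alpha)  # S, the Dirichlet strength
    beliefs = [e / strength for e in evidence]
    uncertainty = k / strength  # beliefs and uncertainty sum to 1
    return beliefs, uncertainty


def keywords_needing_review(
    keyword_evidence: Dict[str, List[float]], threshold: float = 0.5
) -> List[str]:
    """Keywords whose present/absent prediction is too uncertain to accept automatically."""
    flagged = []
    for keyword, evidence in keyword_evidence.items():
        _, u = dirichlet_opinion(evidence)
        if u > threshold:
            flagged.append(keyword)
    return flagged


# Hypothetical evidence for [present, absent] from a keyword predictor.
evidence = {"effusion": [18.0, 0.0], "nodule": [0.5, 0.5], "cardiomegaly": [2.0, 0.0]}
print(keywords_needing_review(evidence))  # ['nodule']
```

Only the low-evidence keyword is sent to the user, which is how uncertainty estimates can make human feedback data-efficient.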

The summary of publications related to medical image/video captioning is presented in table 4. Table 5 summarizes the specific limitations and future perspectives of the reviewed papers, along with the common limitations and future perspectives discussed in this section.

Table 4.

Overview of LMs for captioning medical images. The asterisks (*) indicate terms that are either not present in the original paper or do not apply in this context.

| References | ROI | Modality | Dataset | Model name | Vision model | Language model |
|---|---|---|---|---|---|---|
| Nicolson et al (2023) | * | x-ray, Ultrasound, CT, MRI | Radiology objects in context (ROCO) | CvT2DistilGPT2 | CvT-21 | DistilGPT2 |
| Nicolson et al (2021) | * | CT, Ultrasound, x-ray, Fluoroscopy, PET, Mammography, MRI, Angiography | ROCO, ImageCLEFmed Caption 2021 | * | ViT | PubMedBERT |
| Kim et al (2023b) | Brain | CT | Institutional | * | ResNet-50, EfficientNet-B5, DenseNet-201, and ConvNeXt-S | DistilGPT2 |
| Zheng and Yu (2023) | * | * | IU-Xray | * | CDGPT (Conditioned Densely-connected Graph Transformer) | AlignTrans (Alignment Transformer) |
| Wang and Li (2022) | * | * | ImageCLEFmedical Caption 2022 | BLIP | Vision Transformer (ViT-B) | BERT |
| Ding et al (2023) | * | Histopathology | MIDOG 2022 | CLIP, BLIP | Vision Transformer (ViT-B) | BERT |
| Wang et al (2023a) | * | * | ImageCLEFmedical Caption 2022 | BLIP | Vision Transformer (ViT-B) | BERT |
| Zhou et al (2023) | * | Ultrasound, CT, x-ray, MRI | ROCO | BLIP-2 | ViT-g/14 | OPT-2.7B |

Table 5.

Comparative assessment of limitations and future perspectives for research in image and video captioning.

| References | Specific limitation | Specific future perspectives |
|---|---|---|
| Nicolson et al (2023) | Discrepancy between validation and test scores; sensitivity of SCST; noise in PubMed Central datasets | Clinical relevance metrics; ethical considerations; learning rate optimization |
| Kim et al (2023b), Wang et al (2023a) | Spatial information handling; performance on normal CT scans | Specialized language models; advanced spatial analysis; contextual information |
| Wang et al (2023a) | Suboptimal results with BLIP2; confusion due to irrelevant training details | Incorporating medical knowledge; specialized evaluation metrics |

3.3. Diagnosis interpretability

Medical image analysis and diagnosis have long been challenging because of the limited interpretability of deep neural networks, the scarcity of annotated data, and complex biomarker information. These challenges have inspired researchers to develop AI-based methods that automate diagnostic reasoning, provide interpretable predictions, and answer medical questions based on raw images.

Monajatipoor et al (2022) proposed BERTHop, a vision-and-language model tailored for medical applications. BERTHop addressed the challenge of interpretability in medical imaging by combining PixelHop++ (Chen et al 2020b) and VisualBERT (Li et al 2019), which allowed the model to capture connections between clinical notes and chest x-ray images and improved interpretability in disease diagnosis. On the OpenI dataset, BERTHop achieved an average AUC of 98.23% when diagnosing thoracic diseases from CXR images and radiology reports, outperforming existing models for most diseases. The study also showed that initializing with in-domain text data further improved model performance.
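
Most diagnosis studies reviewed here report the area under the ROC curve (AUC). AUC equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half; the dependency-free sketch below computes it directly from that definition. An average AUC such as the one reported for BERTHop is obtained by averaging per-disease values like these. The labels and scores are invented.

```python
from typing import List, Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Pairwise (Mann-Whitney) AUC for labels in {0, 1}; O(P*N) for clarity."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def mean_auc(per_disease: List[float]) -> float:
    """Unweighted average of per-disease AUCs."""
    return sum(per_disease) / len(per_disease)


print(roc_auc([1, 0, 1, 0], [0.9, 0.3, 0.4, 0.5]))  # 0.75
```

Library implementations sort the scores instead of comparing all pairs, which scales better but gives the same value.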

Building on the interpretability gains of BERTHop, ChatCAD (Wang et al 2023b) integrated large language models (LLMs) with computer-aided diagnosis (CAD) networks, further bridging AI and clinical practice. The framework enhanced diagnostic interpretability by summarizing and reorganizing CAD network outputs into natural-language, user-friendly diagnostic reports. ChatCAD translated CAD model results into natural language, used LLMs for summarization and diagnosis, and supported patient interaction. On the MIMIC-CXR dataset, ChatCAD outperformed existing methods for key disease observations in precision, recall, and F1-score. It produced informative and reliable medical reports, enhancing diagnostic capabilities and report trustworthiness.
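
The first ChatCAD step, translating numeric CAD outputs into text that an LLM can summarize, can be illustrated with a simple templating function. The probability bands, wording, and prompt text below are hypothetical choices for illustration, not those used by Wang et al (2023b), and the call to an actual LLM is omitted.

```python
from typing import Dict


def describe_probability(finding: str, prob: float) -> str:
    """Map a classifier probability to a hedged phrase (assumed thresholds)."""
    if prob >= 0.9:
        return f"{finding} is very likely present ({prob:.2f})"
    if prob >= 0.5:
        return f"{finding} may be present ({prob:.2f})"
    if prob >= 0.2:
        return f"{finding} is unlikely ({prob:.2f})"
    return f"no sign of {finding} ({prob:.2f})"


def build_llm_prompt(cad_outputs: Dict[str, float], draft_report: str = "") -> str:
    """Assemble a prompt asking an LLM to turn CAD findings into a report."""
    lines = [describe_probability(f, p) for f, p in sorted(cad_outputs.items())]
    prompt = "Network findings for this chest x-ray:\n- " + "\n- ".join(lines)
    if draft_report:
        prompt += f"\nDraft report: {draft_report}"
    prompt += "\nWrite a concise radiology report consistent with these findings."
    return prompt


print(build_llm_prompt({"cardiomegaly": 0.93, "pleural effusion": 0.41}))
```

Keeping the probabilities in the prompt lets the LLM, and a reader of its output, see how confident the underlying CAD network was.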

Moving beyond specific integrations of AI and language models, Yan et al (2023a) proposed a unified framework that uses natural language concepts to make medical image classification more robust and interpretable. By associating visual features with medical concepts, the approach improved diagnostic accuracy, trustworthiness, and interpretability. The framework outperformed various baselines on datasets with confounding factors, demonstrating its robustness. It also exposed concept scores, classification layer weights, and instance-level predictions, making the classification easier to interpret.
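
The concept-based design described above can be sketched as a concept bottleneck: concept scores are cosine similarities between an image embedding and text embeddings of concept phrases, and a linear layer over these scores yields class logits, so each weight reads as how strongly a concept supports a class. The concepts, embeddings, and weights below are hand-set placeholders; in the original work the embeddings come from pre-trained models (table 6 lists GPT-4 and BioViL).

```python
import numpy as np


def cosine_scores(image_emb: np.ndarray, concept_embs: np.ndarray) -> np.ndarray:
    """Cosine similarity between one image embedding and each concept embedding."""
    img = image_emb / np.linalg.norm(image_emb)
    con = concept_embs / np.linalg.norm(concept_embs, axis=1, keepdims=True)
    return con @ img


def concept_bottleneck_predict(image_emb, concept_embs, weights, bias):
    """Class logits depend on the image only through the concept scores."""
    scores = cosine_scores(image_emb, concept_embs)
    logits = weights @ scores + bias  # weights: (num_classes, num_concepts)
    return scores, logits


concepts = ["consolidation", "air bronchograms", "clear lung fields"]  # illustrative
concept_embs = np.eye(3)  # placeholder: one orthogonal axis per concept
weights = np.array([[1.0, 1.0, -1.0],    # class 0: pneumonia
                    [-1.0, -1.0, 1.0]])  # class 1: normal
image_emb = np.array([0.8, 0.6, 0.0])
scores, logits = concept_bottleneck_predict(image_emb, concept_embs, weights, np.zeros(2))
contributions = weights[0] * scores  # per-concept evidence for "pneumonia"
print(concepts[int(np.argmax(contributions))])  # consolidation
```

Because the classifier only sees concept scores, an explanation can be read directly from the per-concept contributions.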

Narrowing the scope from a general framework to a specialized application, BCCX-DM (Chen et al 2021) targeted breast cancer diagnosis, improving accuracy with a novel explainable AI (XAI) model. The model comprised three components: structuring mammography reports, transforming TabNet's structure with causal reasoning, and using a depth-first search algorithm with gated neural networks to handle non-Euclidean data. In experiments classifying benign and malignant breast tumors from 21 features, Causal-TabNet achieved higher accuracy (0.7117) than TabNet (0.6825). The model was also highly interpretable, identifying critical diagnostic factors such as tumor boundaries and lymph nodes that align with clinical significance.

Further connecting medical images and textual data, the MONET model (Kim et al 2023a) represented a significant advance in AI transparency in dermatology. MONET connects medical images with text and generates dense concept annotations. Combined with a concept bottleneck model (MONET+CBM), it consistently outperformed other methods in malignancy and melanoma prediction, with mean AUROC values of 0.805 for malignancy and 0.892 for melanoma. These results were statistically significant (p < 0.001). Also in dermatology, Patrício et al (2023) improved language models for skin lesion diagnosis, enhancing both interpretability and performance. Their method achieved consistent gains over CLIP variants across three datasets and provided concept-based explanations for melanoma diagnosis.

The summary of publications related to interpretability improvement is presented in table 6. Table 7 summarizes the specific limitations and future perspectives of the reviewed papers, along with the common limitations and future perspectives discussed in this section.

Table 6.

Overview of LMs for improving diagnosis interpretability. The asterisks (*) indicate terms that are either not present in the original paper or do not apply in this context.

| References | ROI | Modality | Dataset | Model name | Base model/structure |
|---|---|---|---|---|---|
| Chen et al (2021) | Breast | Mammography | Institutional | BCCX-DM | TabNet, causal Bayesian networks, gated neural networks (GNN) |
| Monajatipoor et al (2022) | Chest | x-ray | Open-I | BERTHop | PixelHop++, BlueBERT |
| Wang et al (2023b) | Chest | x-ray | MIMIC-CXR, CheXpert | ChatCAD | GPT-3, CAD |
| Kim et al (2023a) | Skin | Dermatology images | Fitzpatrick 17k, DDI | MONET | ResNet, CLIP |
| Yan et al (2023a) | Chest | x-ray | NIH-CXR, Covid-QU, Pneumonia, Open-I | * | GPT4, BioVil |
| Patrício et al (2023) | Skin | Dermoscopy | PH2, Derm7pt, ISIC2018 | CLIP | BERT |

Table 7.

Comparative assessment of limitations and future perspectives for research in diagnosis interpretability.

| References | Specific limitation | Specific future perspective |
|---|---|---|
| Monajatipoor et al (2022) | Data annotation; visual encoder choices; evaluation scope | Larger annotated datasets; visual encoder flexibility; diverse medical imaging tasks |
| Wang et al (2023b) | Non-human-like language generation; limited prompts; impact of LLM size | Language model refinement; exploration of more complex information; investigation of vision classifiers; quantitative analysis of prompt design; engagement with clinical professionals |
| Yan et al (2023a) | Potential biases; accuracy of concept scores | Improved language models; robustness enhancements; reducing model dependency; biased data mitigation |
| Patrício et al (2023) | Interpretable concept | Expanding to other imaging modalities; enhanced interpretability |

3.4. Report classification

This section discusses the challenges of report classification in medical imaging. Manually labeling radiology reports for computer vision applications is time-consuming and labor-intensive, creating a bottleneck in model development. Extracting clinical information from these reports is also challenging, which limits the efficiency and accuracy of clinical decision-making. Research in this area aims to automate these processes, improving efficiency and accuracy in the medical field.

Bressem et al (2020) applied BERT models to classify chest radiographic reports, achieving high accuracy in identifying important findings. They trained and evaluated four BERT-based models (GER-BERT, MULTI-BERT, FS-BERT, and RAD-BERT) on a large dataset of radiological reports. RAD-BERT performed best, with a pooled AUC of 0.98 and an AUPRC of 0.93 when fine-tuned on a training set of 4000 reports. Performance varied by finding, however: pneumothorax was challenging because of class imbalance. RAD-BERT also performed well in classifying CT reports, showing promise for radiology report classification.
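
Bressem et al (2020) report AUPRC alongside AUC. For rare findings such as pneumothorax, the area under the precision-recall curve is often considered more informative because it ignores true negatives. A common estimator is average precision, the mean of the precision values at the rank of each positive case; the sketch below implements it with ties broken by input order. The exact estimator used in the paper is not stated here, and the toy labels are invented.

```python
from typing import Sequence


def average_precision(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Average precision: mean precision at the rank of each positive case.

    Cases are ranked by descending score; ties keep their input order.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    num_pos = sum(labels)
    if num_pos == 0:
        raise ValueError("average precision is undefined without positives")
    hits, precision_sum = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / num_pos


print(round(average_precision([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1]), 3))  # 0.833
```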

Building on this use of BERT models, FlexR (Keicher et al 2022) applied few-shot learning to automated reporting of chest x-rays, addressing the need for standardized and automated reporting in radiology. FlexR leveraged self-supervised pretraining to extract clinical findings from structured report templates, mapped them into a joint language-image embedding space, and fine-tuned a classifier. On the MIMIC-CXR-JPG dataset, it performed well in tasks such as cardiomegaly severity assessment and pathology localization with limited annotated data, achieving significant AUC improvements over baselines in both 1-shot and 5-shot settings.

Moving beyond chest x-rays, the following study automated the labeling of MRI radiology reports with the ALARM model (Wood et al 2020). ALARM modified and fine-tuned the BioBERT language model and outperformed expert neurologists and stroke physicians in classification tasks. It achieved high accuracy, sensitivity, and specificity in both binary classification and granular abnormality categorization, showing its potential for radiology report labeling.
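
For reference, the accuracy, sensitivity, and specificity used to evaluate ALARM follow directly from the binary confusion matrix. The sketch below computes them; the labels are invented toy data, not results from Wood et al (2020).

```python
from typing import Dict, Sequence


def binary_metrics(labels: Sequence[int], preds: Sequence[int]) -> Dict[str, float]:
    """Accuracy, sensitivity (recall on abnormal), and specificity (recall on normal)."""
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    tn = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 0)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(labels),
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
    }


# 1 = abnormal report, 0 = normal report (toy labels).
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
preds = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
print(binary_metrics(labels, preds))
# {'accuracy': 0.8, 'sensitivity': 0.75, 'specificity': 0.8333333333333334}
```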

Turning to more specialized neural networks for imaging, Wood et al (2022) developed an efficient deep learning approach to automate the labeling of head MRI datasets. Their neural network model, trained on a large dataset with meticulous labels, classified head MRI examinations from their radiology reports with an AUC-ROC above 0.95 for all categories against reference-standard report labels. Although performance dropped slightly when tested against reference-standard image labels, the model proved robust for automated MRI dataset labeling, particularly for neuroradiology reports.

Finally, Zhang et al (2023b) introduced connect image and text embeddings (CITE), a framework that combines biomedical text with foundation models to enhance pathological image classification. CITE pairs a pre-trained vision encoder with a biomedical language model to align image embeddings with class-name embeddings, significantly improving classification accuracy. On the PatchGastric dataset of gastric adenocarcinoma subtypes, CITE consistently outperformed all baseline models by a significant margin, showing its effectiveness with limited medical data and its value for pathological image diagnosis.
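
The core idea behind CITE, treating class names as text whose embeddings act as classifier weights, resembles CLIP-style classification. The sketch below computes temperature-scaled cosine logits between an image embedding and class-name embeddings and applies a softmax. The embeddings, subtype names, and temperature are placeholders, and how CITE adapts its encoders to the limited labeled data is not shown.

```python
import numpy as np


def class_probabilities(image_emb, class_text_embs, temperature=0.07):
    """Softmax over cosine similarities between an image and each class-name embedding."""
    img = image_emb / np.linalg.norm(image_emb)
    txt = class_text_embs / np.linalg.norm(class_text_embs, axis=1, keepdims=True)
    logits = (txt @ img) / temperature
    logits = logits - logits.max()  # numerical stability before exponentiation
    exp = np.exp(logits)
    return exp / exp.sum()


# Placeholder embeddings for three hypothetical subtype names.
class_names = ["well differentiated", "moderately differentiated", "poorly differentiated"]
class_text_embs = np.eye(3)
image_emb = np.array([0.2, 0.9, 0.1])
probs = class_probabilities(image_emb, class_text_embs)
print(class_names[int(np.argmax(probs))])  # moderately differentiated
```

Because new classes only require new text embeddings, this formulation suits settings with few labeled images per class.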

A summary of publications related to report classification is presented in table 8. Table 9 summarizes the specific limitations and future perspectives of the reviewed papers, along with the common limitations and future perspectives discussed in this section.

Table 8.

Overview of LMs for report classification. The asterisks (*) indicate terms that are either not present in the original paper or do not apply in this context.

| References | ROI | Modality | Dataset | Model name | Base model/structure |
|---|---|---|---|---|---|
| Wood et al (2020) | Head | MRI | Institutional | ALARM | BioBERT, custom attention mechanism |
| Wood et al (2022) | Head | MRI | Institutional | BioBERT | BERT |
| Huemann et al (2023b) | * | PET, CT | Institutional | BERT, BioClinicalBERT, RadBERT, and RoBERTa | BERT |
| Keicher et al (2022) | Chest | x-ray | MIMIC-CXR-JPG v2.0.0 | FlexR | CLIP, language embedding, fine-tuning classifier |
| Bressem et al (2020) | Chest | x-ray, CT | Institutional | GER-BERT, MULTI-BERT, FS-BERT, and RAD-BERT | BERT |
| Zhang et al (2023b) | * | Histopathology | PatchGastric | CITE | CLIP, ImageNet-21k, CLIP textual encoder, BioBERT (BB), and BioLinkBERT (BLB) |

Table 9.

Comparative assessment of limitations and future perspectives for research in report classification.

| References | Specific limitation | Specific future perspective |
|---|---|---|
| Wood et al (2020) | Granular classifiers as work in progress; semi-supervised image labeling for unlabeled data | Expanding training dataset; utilizing web-based annotation tools for refined labeling |
| Wood et al (2022) | Less accurate granular label assignment; mislabeling in neuroradiology report classifiers | Investigating classifier generalizability; refining model to reduce label noise |
| Huemann et al (2023b) | Data source diversity; label variability; single prediction task focus; limited human versus AI comparison; token limitation in language models; image processing methodology | Expanding task range; utilizing diverse pretraining data; conducting multi-reader studies; addressing token limitation; incorporating full-text reports; evaluating in diverse institutions |
| Keicher et al (2022) | Data availability for self-supervised pretraining; disease localization challenges | Refining pretraining; explicit modeling of label dependencies; developing standardized reporting templates; implementing negative prompts strategy; enhancing fine-grained clinical findings; improving data accessibility |
| Bressem et al (2020) | Model size limitation; language specificity; bias in labeling short reports | Integration of single-finding reports; utilizing larger BERT models; developing multilingual models; handling longer texts efficiently; generalization of RAD-BERT |
| Zhang et al (2023b) | Data-limited challenges; dependency on medical domain knowledge | Use of synthetic pathological images; foundation training on multi-modal medical images |

3.5. Report generation

This section explores advances in language models for generating medical reports, which has become an increasingly important task in computer-aided diagnosis. The difficulty of generating accurate and readable medical reports from various types of medical images has motivated researchers to explore new methods for automatic report generation. The studies discussed here aim to generate interpretable diagnostic reports for various medical imaging modalities, including computed tomography (CT) volumes, skin pathologies, ultrasound images, and brain CT imaging. Precise and efficient language models designed for medical report generation have the potential to make clinical workflows more effective, mitigate diagnostic errors, and support healthcare professionals in delivering timely and precise diagnoses.

Leonardi et al (2023) proposed a methodology centered on reusing pre-trained language models, with the potential to automate radiological report generation from x-ray images. The model was based on a decoder-only transformer architecture customized to generate textual reports from radiological images, comprising input embeddings, self-attention mechanisms, and an output layer. To make the approach multimodal, an image encoder was added. Training used teacher forcing with a cross-entropy loss. The evaluation focused on generating textual descriptions of medical images on datasets such as MIMIC-CXR. This adaptation significantly improved the model's capacity to process visual data and generate precise textual reports. The best combination, ViT + GPT-2 with beam search, achieved a precision of 0.79, a recall of 0.76, and an F1-score of 0.78, outperforming the alternative architectures and decoding methods and highlighting the importance of capturing semantic and contextual information to generate high-quality text that aligns closely with human judgment.
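
The decoding comparison above contrasts strategies such as greedy decoding and beam search. Beam search keeps the `beam_width` highest-scoring partial reports (by summed log-probability) at each step instead of committing to the single most likely token. The sketch below runs it over a hypothetical `toy_next_token_probs` function that stands in for the ViT + GPT-2 decoder; the probability table is invented so that greedy and beam search disagree, and length normalization is omitted.

```python
import math
from typing import Callable, Dict, List, Tuple

BOS, EOS = "<s>", "</s>"

# Invented next-token table conditioned only on the previous token.
TOY_TABLE: Dict[str, Dict[str, float]] = {
    BOS: {"no": 0.6, "mild": 0.4},
    "no": {"effusion": 0.4, "edema": 0.35, EOS: 0.25},
    "mild": {"cardiomegaly": 0.9, EOS: 0.1},
    "effusion": {EOS: 1.0},
    "edema": {EOS: 1.0},
    "cardiomegaly": {EOS: 1.0},
}


def toy_next_token_probs(seq: List[str]) -> Dict[str, float]:
    return TOY_TABLE[seq[-1]]


def beam_search(
    next_token_probs: Callable[[List[str]], Dict[str, float]],
    beam_width: int,
    max_len: int,
) -> List[str]:
    """Return the highest log-probability sequence found with the given beam width."""
    beams: List[Tuple[float, List[str]]] = [(0.0, [BOS])]
    finished: List[Tuple[float, List[str]]] = []
    for _ in range(max_len):
        candidates = [
            (logp + math.log(p), seq + [token])
            for logp, seq in beams
            for token, p in next_token_probs(seq).items()
        ]
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for logp, seq in candidates[:beam_width]:
            (finished if seq[-1] == EOS else beams).append((logp, seq))
        if not beams:
            break
    finished.extend(beams)  # keep unfinished beams if max_len was reached
    best = max(finished, key=lambda c: c[0])[1]
    return [t for t in best if t not in (BOS, EOS)]


print(beam_search(toy_next_token_probs, beam_width=1, max_len=5))  # ['no', 'effusion']
print(beam_search(toy_next_token_probs, beam_width=2, max_len=5))  # ['mild', 'cardiomegaly']
```

With width 1 (greedy) the decoder commits to "no" and ends with probability 0.24, whereas width 2 finds "mild cardiomegaly" with probability 0.36.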

Building on pre-trained models, Clinical-BERT (Yan and Pei 2022) is a vision-language pre-training model tailored to integrate domain-specific knowledge into radiology diagnosis and report generation. Clinical-BERT adds domain-specific pre-training tasks (Clinical Diagnosis, Masked MeSH Modeling, and Image-MeSH Matching) to general pre-training tasks such as masked language modeling. Radiographic visual features and report text were combined to form the token embeddings. The model was pre-trained on the MIMIC-CXR dataset, with BERT-base as the base model and DenseNet121 as the visual feature extractor. Fine-tuning for specific tasks involved different data ratios, tokenizations, and loss functions. Clinical-BERT performed strongly in radiograph report generation, achieving top rankings on NLG metrics on IU x-ray and substantially improving clinical efficacy metrics, with precision, recall, and F1 scores improving by 8.6% on average over a competing model. Ablation studies confirmed the effectiveness of the domain-specific pre-training tasks.
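
Masked MeSH Modeling is described here only at a high level. One plausible reading, assumed for illustration rather than taken from Yan and Pei (2022), is that instead of masking random tokens as in standard masked language modeling, every token belonging to a MeSH term is masked, so the model must recover the medical concept from the image and the remaining text. The MeSH vocabulary and report below are made up.

```python
from typing import List, Set, Tuple

MASK = "[MASK]"


def mask_mesh_terms(
    tokens: List[str], mesh_terms: Set[Tuple[str, ...]]
) -> Tuple[List[str], List[int]]:
    """Mask every token that belongs to a (possibly multi-word) MeSH term.

    Returns the masked tokens and the masked positions (the prediction targets).
    """
    masked = list(tokens)
    positions: List[int] = []
    max_len = max((len(term) for term in mesh_terms), default=0)
    i = 0
    while i < len(tokens):
        # Greedily match the longest MeSH term starting at position i.
        for n in range(min(max_len, len(tokens) - i), 0, -1):
            if tuple(tokens[i:i + n]) in mesh_terms:
                for j in range(i, i + n):
                    masked[j] = MASK
                    positions.append(j)
                i += n
                break
        else:
            i += 1  # no term starts here
    return masked, positions


report = "mild cardiomegaly with small pleural effusion".split()
mesh = {("cardiomegaly",), ("pleural", "effusion")}
print(mask_mesh_terms(report, mesh))
```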

Furthering report generation, the next study focused on cross-institutional solutions, addressing the challenge of model generalization across diverse healthcare environments. ChatRadio-Valuer (Zhong et al 2023) combined computer vision and advanced language models to extract clinical information from radiology images and generate coherent reports. Trained on a diverse dataset from multiple institutions, it showed robust performance and high clinical relevance. It outperformed 15 other models, particularly on Recall-1, Recall-2, and ROUGE-L scores, and especially on datasets from Institutions 2, 5, and 6. Within Institution 1, it adapted well across various systems, making it a promising tool for enhancing diagnostics and clinical decision-making in diverse medical settings.
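
ROUGE-L, reported by several studies in this section, scores the longest common subsequence (LCS) between a generated and a reference report. The sketch below computes the LCS-based F-measure with equal weighting of precision and recall (β = 1); published toolkits may weight recall more heavily and use their own tokenization, so values are not directly comparable to reported scores. The example sentences are invented.

```python
from typing import List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence via dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate: List[str], reference: List[str], beta: float = 1.0) -> float:
    """LCS-based F-measure: (1 + beta^2) P R / (R + beta^2 P)."""
    lcs = lcs_length(candidate, reference)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(candidate), lcs / len(reference)
    return (1 + beta ** 2) * precision * recall / (recall + beta ** 2 * precision)


reference = "there is no pleural effusion or pneumothorax".split()
candidate = "no pleural effusion or pneumothorax".split()
print(round(rouge_l(candidate, reference), 3))  # 0.833
```

Unlike BLEU, the LCS does not require matched words to be contiguous, only in the same order.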

Building on these advances, MedEPT (Li 2023) improved data and parameter efficiency, reducing the reliance on extensive human-annotated data. MedEPT has three key components: a CLIP-inspired feature extractor with separate image and text encoders and projection heads, a lightweight transformer-based mapping network, and a parameter-efficient training approach. It used soft prompts for text generation and was trained on various medical datasets, including MIMIC-CXR, RSNA Pneumonia, and COVID. MedEPT consistently outperformed existing models on metrics such as BLEU-1, BLEU-4, ROUGE, and CIDEr while requiring less training time and fewer parameters. The study emphasized the importance of the text generation technique, the choice of language model, and parameter-efficient tuning for optimizing performance.
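
A mapping network of the kind described for MedEPT turns an image feature into a short sequence of soft prompt vectors that are prepended to a frozen language model's token embeddings, so only the small mapper (and any prompt parameters) needs training. The NumPy sketch below shows only that shape bookkeeping. The single linear layer, the small dimensions, and the random weights are assumptions; the source describes a lightweight transformer-based mapping network.

```python
import numpy as np

rng = np.random.default_rng(0)
IMG_DIM, LM_DIM, PREFIX_LEN = 64, 32, 4  # assumed toy sizes; real models are larger


class LinearMapper:
    """Hypothetical stand-in for the mapping network: image feature -> soft prompts."""

    def __init__(self) -> None:
        self.weight = rng.normal(scale=0.02, size=(IMG_DIM, PREFIX_LEN * LM_DIM))
        self.bias = np.zeros(PREFIX_LEN * LM_DIM)

    def __call__(self, image_feature: np.ndarray) -> np.ndarray:
        flat = image_feature @ self.weight + self.bias
        return flat.reshape(PREFIX_LEN, LM_DIM)


def build_decoder_input(image_feature, token_embeddings, mapper):
    """Prepend the soft prompts to the (frozen) token embeddings of the report prefix."""
    prefix = mapper(image_feature)
    return np.concatenate([prefix, token_embeddings], axis=0)


mapper = LinearMapper()
image_feature = rng.normal(size=IMG_DIM)  # e.g. output of a CLIP-style image encoder
token_embeddings = rng.normal(size=(7, LM_DIM))  # embeddings of 7 report tokens
inputs = build_decoder_input(image_feature, token_embeddings, mapper)
print(inputs.shape)  # (11, 32)
print(mapper.weight.size + mapper.bias.size)  # 8320 trainable parameters
```

Only the mapper's parameters would be updated during training, which is the source of the parameter efficiency.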

RGRG (Tanida et al 2023) integrated anatomical region detection with interactive report generation, improving clinical relevance and report completeness. The method supports radiology report generation, anatomy-based sentence generation, and selection-based sentence generation, mirroring a radiologist's workflow. It comprises four main modules: object detection, region selection, abnormality classification, and a transformer-based language model. The model was trained in three stages, beginning with the object detector, and was evaluated with standard language generation metrics and clinical efficacy (CE) metrics. In radiology report generation, it outperformed previous models, setting a new state of the art in METEOR and excelling in clinically relevant CE metrics. For anatomy-based sentence generation, it produced pertinent anatomy-related sentences with a notable anatomy-sensitivity ratio. In selection-based sentence generation, the model was robust to deviations in the bounding boxes.

Advancing the integration of clinical knowledge, KiUT (Huang et al 2023) represented a significant leap in report generation, incorporating a novel architecture and a symptom graph to produce more accurate and clinically informed reports. KiUT generates informative radiology reports from 2D images using three core components: a cross-modal U-Transformer, an injected knowledge distiller, and a region relationship encoder. The U-Transformer enhanced feature aggregation through multimodal interaction, while the injected knowledge distiller aligned the model with medical expertise by combining visual, contextual, and clinical knowledge. The region relationship encoder captured relationships among image regions to produce contextually relevant reports. On the MIMIC-CXR dataset, KiUT outperformed competing models on natural language generation (NLG) metrics, with a BLEU-1 score of 0.393 and a ROUGE-L score of 0.285, demonstrating its proficiency in generating coherent and clinically relevant reports. Additionally, KiUT excelled in describing clinical abnormalities, as assessed with CheXpert labels for 14 disease categories, and it surpassed most models on the IU-Xray dataset.
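BLEU-1 and BLEU-4 appear throughout this section. BLEU-N multiplies a brevity penalty by the geometric mean of clipped n-gram precisions for n = 1 to N. The sketch below computes it for a single candidate/reference pair with whitespace tokenization and no smoothing. Published scores such as KiUT's are typically computed at corpus level with a standard evaluation toolkit, so the sketch illustrates the definition rather than reproducing benchmark numbers.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, reference, max_n=1):
    """BLEU-N for one candidate/reference pair: clipped n-gram precisions, geometric mean, brevity penalty."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_ngrams = ngram_counts(cand, n)
        ref_ngrams = ngram_counts(ref, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(count, ref_ngrams[g]) for g, count in cand_ngrams.items())
        if overlap == 0:
            return 0.0  # no smoothing: any zero precision gives BLEU = 0
        log_precisions.append(math.log(overlap / sum(cand_ngrams.values())))
    brevity_penalty = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)
```

For "the heart is normal" against "the heart size is normal", every candidate word appears in the reference (unigram precision 1.0), but the candidate is one word short, so BLEU-1 equals the brevity penalty exp(1 - 5/4), about 0.78. Clipping prevents a degenerate candidate such as "the the the" from scoring well: only one "the" is credited, which gives a unigram precision of 1/3.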

Setting a new standard for both clinical efficacy and explainability in medical report generation, the transformer-based semantic query (TranSQ) model (Kong et al 2022) introduced a novel candidate set prediction and selection method. The model consisted of three components: a visual extractor, a semantic encoder, and a report generator. It used a ViT to convert medical images into visual features, which semantic queries then processed into semantic features. The report generator retrieved sentence candidates and predicted their selection probabilities with a multi-label classifier. TranSQ achieved state-of-the-art results, including a BLEU score of 0.608 and an F1 score of 0.642 on the IU x-ray dataset, and demonstrated strong clinical efficacy on the MIMIC-CXR dataset, with a precision of 0.482, a recall of 0.563, and an F1 score of 0.519, surpassing the previous state-of-the-art model KGAE. Notably, TranSQ achieved these improvements without introducing abnormal terms and maintained its state-of-the-art performance even when the report sentences were randomly ordered.
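Two steps in this paragraph can be written down directly: keeping the retrieved candidate sentences whose predicted selection probability passes a threshold, and scoring clinical efficacy as precision, recall, and F1 over findings labels extracted from generated and reference reports (commonly with a labeler such as the CheXpert labeler). In the sketch below, the 0.5 threshold and the micro-averaging are assumptions, since papers differ in how they average CE metrics.

```python
from typing import List, Set, Tuple


def select_sentences(candidates: List[str], probs: List[float], threshold: float = 0.5) -> List[str]:
    """Keep retrieved candidate sentences whose predicted selection probability exceeds the threshold."""
    return [s for s, p in zip(candidates, probs) if p > threshold]


def harmonic_f1(precision: float, recall: float) -> float:
    """F1 is the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def clinical_efficacy(pred: List[Set[str]], ref: List[Set[str]]) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall and F1 over the positive findings labels of each report."""
    tp = sum(len(p & r) for p, r in zip(pred, ref))
    fp = sum(len(p - r) for p, r in zip(pred, ref))
    fn = sum(len(r - p) for p, r in zip(pred, ref))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, harmonic_f1(precision, recall)
```

As a consistency check on the reported MIMIC-CXR figures, the harmonic mean of a precision of 0.482 and a recall of 0.563 is 2(0.482)(0.563)/(0.482 + 0.563) ≈ 0.519, which matches the reported F1 score.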

The summary of publications related to report generation is presented in table 10. Table 11 summarizes the specific limitations and future perspectives of the reviewed papers, along with the common limitations and future perspectives discussed in this section.

Table 10.

Overview of LMs for report generation. The asterisks (*) indicate terms that are either not present in the original paper or do not apply in this context.

| References | ROI | Modality | Dataset | Model name | Vision model | Language model |
|---|---|---|---|---|---|---|
| Zhong et al (2023) | Chest, abdomen, musculoskeletal system, head, maxillofacial and neck | CT, MRI | Institutional (six Chinese hospitals) | ChatRadio-Valuer | * | Llama2 |
| Yan and Pei (2022) | Chest | x-ray | MIMIC-CXR, IU x-ray, COV-CTR, NIH ChestX-ray14 | Clinical-BERT | DenseNet121 | BERT-base |
| Leonardi et al (2023) | Chest | x-ray | MIMIC-CXR | * | ViT, CheXNet | Transformer |
| Li (2023) | Chest | x-ray | MIMIC-CXR, RSNA Pneumonia, COVID, IU Chest x-ray | * | CLIP | GPT-2, OPT-1.3B, OPT-2.7B |
| Tanida et al (2023) | Chest | x-ray | Chest ImaGenome v1.0.0 | RGRG Method | ResNet-50, Faster R-CNN | Transformer |
| Huang et al (2023) | Chest | x-ray | IU-Xray, MIMIC-CXR | KiUT | ResNet101, U-Transformer | BERT |
| Cao et al (2023) | Gastrointestinal tract, chest | Endoscope, x-ray | Gastrointestinal endoscope image dataset (GE), IU-CX, MIMIC-CXR | MMTN | DenseNet-121 | BERT |
| Moon et al (2022) | Chest | x-ray | MIMIC-CXR, Open-I | MedViLL | CNN | BERT |
| Kong et al (2022) | Chest | x-ray | IU x-ray, MIMIC-CXR | TranSQ | ViT-B/32 | MPNet |

Table 11.

Comparative assessment of limitations and future perspectives for research in report generation.

| References | Specific limitation | Specific future perspective |
|---|---|---|
| Zhong et al (2023) | Focus only on Chinese radiology reports; limited to CT and MRI reports; inferior performance in certain report types; challenges in causal reasoning report generation; generalizability concerns in global contexts | Investigate performance with more diverse data; expand to other image modalities; improve performance in complex reports; enhance causal reasoning in reports; test and adapt in varied healthcare systems |
| Yan and Pei (2022) | MeSH word prediction accuracy | Incorporate organ localization; expand downstream tasks |
| Leonardi et al (2023) | Scarcity of data; localization errors; computational resource limitations; usability validation issues | Explore data augmentation techniques; improve localization accuracy; develop resource-efficient models; conduct usability assessments |
| Li (2023) | Limited data availability; model comparison constraints; caption diversity issues | Develop effective data augmentation methods; explore various advanced language models; enhance caption diversity and control |
| Tanida et al (2023) | Strong supervision reliance; focus on isolated chest x-rays; incomplete coverage of reference reports | Adapt for limited supervision; utilize sequential exam information; create hybrid systems for comprehensive sentence generation |
| Kong et al (2022) | Limited focus on abnormality term generation | Apply semantic features to a conditional linguistic decoder for enhanced sentence generation |

3.6. Multimodal learning

Advancements in medical imaging technologies have led to an increase in the volume of image and text data generated in healthcare systems. To analyze these vast amounts of data and support medical decision-making, there is a growing interest in leveraging machine learning for automated image analysis and diagnosis. However, the effectiveness of these techniques is often limited by the challenges associated with bridging multimodal data, such as text and images.

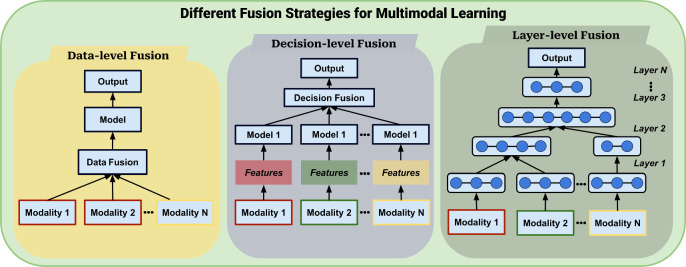

Multimodal learning has emerged as a promising approach for addressing these challenges in medical imaging. By leveraging both visual and textual information, multimodal learning techniques have the potential to improve diagnostic accuracy and enable more efficient analysis of medical imaging data. Figure 6 depicts the common strategies for fusing different data modalities, primarily text and image data, in the papers reviewed in our study.

Figure 6.

Illustration of various fusion strategies in multi-modal learning. This includes Data-level (Early) Fusion, where information from different modalities is combined at the input; Layer-level (Intermediate) Fusion, where fusion occurs at intermediate model layers; and Decision-level (Late) Fusion, where modalities are integrated at the final decision stage.
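The three fusion strategies in figure 6 differ only in where the modalities meet. The sketch below expresses each with toy linear scorers on plain Python lists. The encoders, weights, and the averaging weight `alpha` are hypothetical placeholders. Intermediate fusion is shown as simple concatenation of hidden features; several of the reviewed models instead fuse at intermediate layers with cross-attention.

```python
from typing import Callable, List

Vector = List[float]


def dot(w: Vector, x: Vector) -> float:
    """Score of a toy linear model with weights w."""
    return sum(a * b for a, b in zip(w, x))


def early_fusion(img: Vector, txt: Vector, w: Vector) -> float:
    """Data-level fusion: concatenate the raw inputs, then apply a single model."""
    return dot(w, img + txt)


def intermediate_fusion(img: Vector, txt: Vector,
                        enc_img: Callable[[Vector], Vector],
                        enc_txt: Callable[[Vector], Vector], w: Vector) -> float:
    """Layer-level fusion: encode each modality separately, join the hidden features, then predict."""
    return dot(w, enc_img(img) + enc_txt(txt))


def late_fusion(img: Vector, txt: Vector,
                model_img: Callable[[Vector], float],
                model_txt: Callable[[Vector], float], alpha: float = 0.5) -> float:
    """Decision-level fusion: separate per-modality predictions combined by a weighted average."""
    return alpha * model_img(img) + (1 - alpha) * model_txt(txt)
```

The trade-off is visible in the signatures. Early fusion needs a single model but a fixed joint input format. Late fusion allows fully independent unimodal models but lets the modalities interact only through their final scores. Intermediate fusion sits between the two.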

Khare et al (2021) developed MMBERT, a Multimodal Medical BERT model, through self-supervised pretraining on a large medical image+caption dataset. This resulted in state-of-the-art visual question answering (VQA) performance for radiology images and improved interpretability via attention maps. MMBERT utilized a specialized Transformer encoder with self-attention, ResNet152 for image features, and BERT WordPiece tokenizer for text. Fine-tuning on medical VQA datasets achieved superior accuracy and BLEU scores compared to existing models, and qualitative analysis showed its ability to attend to relevant image regions and even surpass ground truth answers in some cases.
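Self-supervised pretraining of BERT-style models such as MMBERT rests on masked language modeling: some caption tokens are corrupted and the model learns to recover them. The sketch below implements the corruption step using BERT's standard recipe (about 15% of positions selected; of those, 80% replaced by `[MASK]`, 10% by a random token, and 10% left unchanged). Whether MMBERT uses exactly these proportions is an assumption here.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"


def mask_tokens(tokens: List[str], vocab: List[str], mask_prob: float = 0.15,
                rng: Optional[random.Random] = None) -> Tuple[List[str], List[Optional[str]]]:
    """Return the corrupted sequence and per-position targets (None where no prediction is needed)."""
    rng = rng or random.Random()
    corrupted: List[str] = []
    targets: List[Optional[str]] = []
    for tok in tokens:
        if rng.random() < mask_prob:
            targets.append(tok)  # the model must recover the original token here
            r = rng.random()
            if r < 0.8:
                corrupted.append(MASK)
            elif r < 0.9:
                corrupted.append(rng.choice(vocab))  # random replacement
            else:
                corrupted.append(tok)  # kept unchanged but still predicted
        else:
            corrupted.append(tok)
            targets.append(None)
    return corrupted, targets
```

In a multimodal setting, image features (from ResNet152 in MMBERT's case) are fed alongside the corrupted caption, so the encoder can draw on visual evidence when predicting the masked words.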

Expanding on the multimodal concept, ConTEXTual Net (Huemann et al 2023a) focused specifically on enhancing pneumothorax segmentation in chest radiographs. By combining a U-Net-based vision encoder with the T5-Large language model and cross-attention modules, ConTEXTual Net achieved a Dice score of 0.716 for pneumothorax segmentation on the CANDID-PTX dataset, outperforming the baseline U-Net and performing comparably to the primary physician annotator. Vision augmentations significantly improved performance, while text augmentations did not. The choice of language model had minimal impact, and integrating cross-attention at lower levels improved results, with the L4 module performing slightly better than the L3 module.
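Cross-attention is the mechanism that lets text steer a segmentation network. The sketch below shows scaled dot-product cross-attention in plain Python, assuming, as is common in text-guided segmentation, that image features act as queries and report-token embeddings act as keys and values. The learned query, key, and value projections and the multi-head split are omitted for brevity, so this is the core operation rather than ConTEXTual Net's module.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul_t(a: Matrix, b: Matrix) -> Matrix:
    """Compute a @ b^T for row-major lists."""
    return [[sum(x * y for x, y in zip(row_a, row_b)) for row_b in b] for row_a in a]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Each image-feature query attends over text tokens: softmax(Q K^T / sqrt(d)) V."""
    d = len(queries[0])
    scores = matmul_t(queries, keys)
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return [[sum(w * v[j] for w, v in zip(w_row, values)) for j in range(len(values[0]))]
            for w_row in weights]
```

Each output row is a text-derived summary tailored to one image location. In a U-Net decoder, such outputs would typically be added to or concatenated with the visual features at the level where the module is inserted.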

Building further on multimodal interactions, GLoRIA (global-local representations for images using attention mechanism) (Huang et al 2021) represented a significant step forward in jointly learning global and local representations of medical images. GLoRIA learned these multimodal representations from attention-weighted image regions and the paired radiology reports, using attention mechanisms during training to emphasize the image regions most relevant to the report text. GLoRIA excelled in image-text retrieval, achieving high precision on the CheXpert dataset. In fine-tuned image classification, it performed remarkably well, with AUROC scores of 88.1% (CheXpert) and 88.6% (RSNA) despite limited training data. In zero-shot image classification, it generalized robustly, with F1 scores of 0.67 (CheXpert) and 0.58 (RSNA). Furthermore, in segmentation on the SIIM Pneumothorax dataset, GLoRIA attained competitive Dice scores, up to a maximum of 0.634.
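The local half of GLoRIA's objective pairs each report word with an attention-weighted summary of image regions. The simplified sketch below shows the idea. For each word, region features are weighted by a temperature-scaled softmax of their cosine similarity to the word. The word is then compared with the resulting context vector, and the per-word similarities are combined with a smooth maximum (log-sum-exp). The temperature and `gamma` values are assumptions, and GLoRIA's exact aggregation and contrastive loss are not reproduced here.

```python
import math
from typing import List

Vec = List[float]


def cosine(a: Vec, b: Vec) -> float:
    """Cosine similarity of two non-zero vectors."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def softmax(xs: Vec, temp: float) -> Vec:
    """Softmax of xs / temp, computed stably."""
    m = max(xs)
    exps = [math.exp((x - m) / temp) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def word_context(word: Vec, regions: List[Vec], temp: float = 0.25) -> Vec:
    """Attention-weighted sum of region features, weighted by their similarity to one word."""
    weights = softmax([cosine(word, r) for r in regions], temp)
    return [sum(w * r[k] for w, r in zip(weights, regions)) for k in range(len(regions[0]))]


def local_similarity(words: List[Vec], regions: List[Vec], gamma: float = 5.0) -> float:
    """Smooth-max (log-sum-exp) aggregation of word-to-context cosine similarities."""
    sims = [cosine(w, word_context(w, regions)) for w in words]
    return math.log(sum(math.exp(gamma * s) for s in sims)) / gamma
```

In training, an image-report score of this kind would be contrasted against scores for mismatched pairs in the batch, so that words such as "pneumothorax" learn to attend to the regions that actually show the finding.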

Leveraging textual annotations to enhance image segmentation, the language meets vision transformer (LViT) model (Li et al 2023d) introduced a novel approach to medical image segmentation that specifically addresses the scarcity of labeled data. LViT uses a double-U structure that combines a U-shaped CNN branch with a U-shaped Transformer branch, facilitating the integration of image and text information. A pixel-level attention module (PLAM) retains local image features, while an Exponential Pseudo-label Iteration mechanism and a language-vision (LV) loss enable training on unlabeled images using text information directly. The tiny version, LViT-T, achieved significant improvements over nnUNet on the QaTa-COV19 dataset, raising the Dice score by 3.24% and the mean intersection over union (mIoU) by 4.3% even with only a quarter of the training labels, which demonstrates its effectiveness for segmentation when labels are scarce.
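The Dice score and intersection over union (IoU) quoted throughout this section both measure the overlap between a predicted mask A and a ground-truth mask B. Dice is 2|A∩B|/(|A|+|B|) and IoU is |A∩B|/|A∪B|, so the two are related by Dice = 2·IoU/(1 + IoU). The sketch below computes both for flattened binary masks. Treating two empty masks as a perfect match is a convention chosen here, and mIoU averaging conventions (over classes or over images) vary between papers.

```python
from typing import Sequence, Tuple


def dice_and_iou(pred: Sequence[int], target: Sequence[int]) -> Tuple[float, float]:
    """Dice = 2|A∩B| / (|A| + |B|) and IoU = |A∩B| / |A∪B| for flattened binary masks."""
    inter = sum(1 for p, t in zip(pred, target) if p and t)
    size_sum = sum(1 for p in pred if p) + sum(1 for t in target if t)
    union = size_sum - inter
    # Convention (an assumption): two empty masks count as a perfect match.
    dice = 2 * inter / size_sum if size_sum else 1.0
    iou = inter / union if union else 1.0
    return dice, iou


def mean_iou(pairs) -> float:
    """Average IoU over several (prediction, target) mask pairs."""
    ious = [dice_and_iou(p, t)[1] for p, t in pairs]
    return sum(ious) / len(ious)
```

For a four-pixel example with prediction [1, 1, 0, 0] and target [1, 0, 1, 0], one pixel overlaps, giving Dice = 0.5 and IoU = 1/3, consistent with Dice = 2·IoU/(1 + IoU).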

Beyond segmentation, Huemann et al (2022) applied NLP methods to clinical report interpretation in lymphoma PET/CT imaging. Their multimodal model, which combined language models such as RoBERTa-Large pretrained on clinical reports with vision models processing PET/CT images, predicted 5-class visual Deauville scores (DS). Using clinical reports alone, the RoBERTa language model achieved a 5-class accuracy of 73.7% and a Cohen's kappa (κ) of 0.81, while the multimodal model combining text and images reached an accuracy of 74.5% with a κ of 0.82. Pretraining the language models with masked language modeling (MLM) further improved performance, with RoBERTa reaching an accuracy of 77.4%.
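Cohen's kappa corrects raw agreement for the agreement expected by chance: κ = 1 − (observed disagreement)/(expected disagreement). Deauville scores are ordinal, so a weighted variant that penalizes a 1-versus-5 disagreement more than a 4-versus-5 disagreement is often used. Which variant underlies the κ values above is not stated here. The sketch below supports unweighted, linear, and quadratic weighting and assumes labels are encoded as 0 to n_classes − 1 (for example, Deauville score minus one).

```python
from typing import List, Optional


def cohen_kappa(rater_a: List[int], rater_b: List[int], n_classes: int,
                weights: Optional[str] = None) -> float:
    """Cohen's kappa for labels 0..n_classes-1.

    weights=None gives the unweighted statistic; 'linear' or 'quadratic' penalise
    disagreements by their distance on the ordinal scale.
    """
    n = len(rater_a)
    # Joint distribution of the two raters' labels.
    observed = [[0.0] * n_classes for _ in range(n_classes)]
    for a, b in zip(rater_a, rater_b):
        observed[a][b] += 1.0 / n
    row = [sum(observed[i]) for i in range(n_classes)]
    col = [sum(observed[i][j] for i in range(n_classes)) for j in range(n_classes)]

    def penalty(i: int, j: int) -> float:
        if weights is None:
            return 0.0 if i == j else 1.0
        d = abs(i - j) / (n_classes - 1)
        return d if weights == "linear" else d * d

    cells = [(i, j) for i in range(n_classes) for j in range(n_classes)]
    obs_dis = sum(penalty(i, j) * observed[i][j] for i, j in cells)
    exp_dis = sum(penalty(i, j) * row[i] * col[j] for i, j in cells)
    return 1.0 - obs_dis / exp_dis
```

A κ of 1 means perfect agreement, 0 means agreement no better than chance, and negative values mean systematic disagreement. Values around 0.8, as reported above, are conventionally read as strong agreement.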

Further exploring the synergy of vision and language, multi-modal masked autoencoders (M3AE) (Chen et al 2022) advanced self-supervised learning for medical vision-and-language tasks. The M3AE architecture employs Transformer-based vision and language encoders whose outputs are combined by a multi-modal fusion module. In this self-supervised paradigm, the model masks and then reconstructs portions of both images and text, learning visual and textual representations jointly. It outperformed previous uni-modal and multi-modal methods on medical visual question answering and medical image-text classification under the non-continued pre-training setting.
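The "mask and reconstruct" step starts by randomly hiding a fraction of image patches and text tokens. The sketch below shows only that sampling step. The sizes and ratios are assumptions borrowed from common conventions rather than M3AE's published configuration: 196 patches (a 224 × 224 image cut into 16 × 16 patches), a 75% image mask ratio as in MAE, and a 15% text mask ratio as in BERT.

```python
import random
from typing import List, Tuple


def random_mask(n_items: int, mask_ratio: float, rng: random.Random) -> Tuple[List[int], List[int]]:
    """Split indices 0..n_items-1 into (visible, masked), masking round(n_items * mask_ratio) of them."""
    n_mask = int(round(n_items * mask_ratio))
    order = list(range(n_items))
    rng.shuffle(order)
    return sorted(order[n_mask:]), sorted(order[:n_mask])


rng = random.Random(0)
# Hypothetical sizes and ratios (see lead-in): image patches first, then caption tokens.
visible_patches, masked_patches = random_mask(196, 0.75, rng)
visible_tokens, masked_tokens = random_mask(32, 0.15, rng)
```

In MAE-style designs only the visible patches are passed through the vision encoder, which keeps pretraining inexpensive. The decoders are then trained to reconstruct the pixels of the masked patches and the identities of the masked words.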

The summary of publications related to multimodal learning is presented in table 12. Table 13 summarizes the specific limitations and future perspectives of the reviewed papers, along with the common limitations and future perspectives discussed in this section.

Table 12.

Overview of LMs for multimodal learning. The asterisks (*) indicate terms that are either not present in the original paper or do not apply in this context.

| References | ROI | Modality | Dataset | Model name | Vision model | Language model |
|---|---|---|---|---|---|---|
| Huemann et al (2023a) | Chest | x-ray | CANDID-PTX | ConTEXTual Net | U-Net | T5-Large |
| Huang et al (2021) | Chest | x-ray | CheXpert, RSNA pneumonia detection challenge, SIIM-ACR pneumothorax segmentation, NIH ChestX-ray14 | GLoRIA | CNN | Transformer |
| Li et al (2023) | * | CT, x-ray | MosMedData+, ESO-CT, QaTa-COV19 | LViT | U-shaped CNN | U-shaped ViT (BERT-Embed) |
| Khare et al (2021) | * | * | VQA-Med 2019, VQA-RAD, ROCO | MMBERT | ResNet152 | BERT |
| Huemann et al (2022) | Lymphoma | PET, CT | Institutional | * | ViT, EfficientNet B7 | ROBERTA-Large, Bio ClinicalBERT, and BERT |
| Chen et al (2022) | * | x-ray, MRI, CT | ROCO, MedICaT | M3AE | ViT | BERT |
| Delbrouck et al (2022) | * | * | MIMIC-CXR, Indiana University x-ray collection, PadChest, CheXpert, VQA-Med 2021 | ViLMedic | CNN | BioBERT |

Table 13.

Comparative assessment of limitations and future perspectives for research in multimodal learning.

| References | Specific limitation | Specific future perspective |
|---|---|---|
| Huemann et al (2023a) | Single dataset; limited multimodal datasets; primary annotator bias; language as input requirement; comparison challenges | Diverse datasets; inter-observer variability; task expansion; reducing language dependency; AI-assisted clinical workflow |
| Huang et al (2021) | Focus on chest radiographs; dependence on report quality; designed for English-language reports | Expand to other modalities and regions; improve robustness to report quality; adapt for different languages |
| Li et al (2023d) | 2D segmentation limitation; manual text annotation requirement | 3D segmentation; automating text annotation generation |