Abstract

Objectives

To compare the performance of a composite citation score (c-score) and its six constituent citation indices, including H-index, in predicting winners of the Weisenfeld Award in ophthalmologic research. Secondary objectives were to explore career and demographic characteristics of the most highly cited researchers in ophthalmology.

Methods

A publicly available database was accessed to compile a set of top researchers in the field of clinical ophthalmology and optometry based on Scopus data from 1996 to 2021. Each citation index was used to construct a multivariable model adjusted for author demographic characteristics. Using area under the receiver operating characteristic curve (AUC) analysis, each index’s model was evaluated for its ability to predict winners of the Weisenfeld Award in Ophthalmology, a research distinction presented by the Association for Research in Vision and Ophthalmology (ARVO). Secondary analyses investigated authors’ self-citation rates, career length, gender, and country affiliation over time.

Results

Approximately one thousand unique authors publishing primarily in clinical ophthalmology/optometry were analyzed. The c-score outperformed all other citation indices at predicting Weisenfeld Awardees, with an AUC of 0.99 (95% CI: 0.97–1.00). The H-index had an AUC of 0.89 (95% CI: 0.83–0.96). Authors with higher c-scores tended to have longer career lengths and similar self-citation rates compared to other authors. Sixteen percent of authors in the database were identified as female, and 64% were affiliated with the United States of America.

Conclusion

The c-score is an effective metric for assessing research impact in ophthalmology, as seen through its ability to predict Weisenfeld Awardees.

Subject terms: Scientific community, Education, Events

Introduction

Assessing a researcher’s scientific contributions can impact decisions regarding hiring, funding, and promotion [1–3]. Traditionally, these assessments relied on peer-review. Numeric citation indices can offer the advantages of greater perceived objectivity and lower costs compared to peer-review [3]. However, each index is inherently limited by the data that it incorporates, and indices have varying utility depending on the field to which they are applied.

Within ophthalmology, as with other fields, the most universally applied bibliometric tool is the H-index, developed by Jorge Hirsch in 2005 [4–6]. It is calculated as the number of publications h that have each received at least h citations; for instance, an author with four papers that each received four citations would have an H-index of 4 [7]. An author with six papers, of which four received four citations and two received none, would also have an H-index of 4. While the H-index has the advantages of broad applicability and straightforward interpretation, it is limited by the simplicity of its components [3], and recent work has found a substantial decline in the correlation between H-index and receiving reputable scientific awards [8].
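The two worked cases above can be checked with a short calculation. The following is a minimal sketch of the H-index definition, not code from this study; the function name `h_index` is chosen here for illustration.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Four papers with four citations each.
print(h_index([4, 4, 4, 4]))
# Adding two uncited papers leaves the H-index unchanged.
print(h_index([4, 4, 4, 4, 0, 0]))
```

Both calls return 4, which illustrates why the H-index cannot distinguish between these two publication records.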

Several attempts have been made to improve upon existing bibliometrics, often incorporating additional data to describe the complexities of multiple authorship and author ranking [8]. Schreiber developed the Hm-index in 2008 to account for multiple authorships [9]. The Hm-index, along with metrics representing single, first, and last author contributions, was combined into a single composite score by Ioannidis et al. in 2016 [10]. This composite score, or c-score, was evaluated broadly across several fields including medicine; however, the applicability of the c-score to specific specialties of medicine is unknown.
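To illustrate how co-authorship can be taken into account, the sketch below follows our reading of the Hm-index in [9]: each paper counts fractionally as one divided by its number of authors, and the index is the largest cumulative ("effective") rank that does not exceed the citation count of the paper at that rank. The function name and the input format are assumptions made for illustration, and ties between equally cited papers may be ordered differently in other implementations.

```python
def hm_index(papers):
    """papers: list of (citations, n_authors) tuples.

    Assumed definition: papers are ranked by citations, each paper adds
    1 / n_authors to an effective rank, and the index is the largest
    effective rank that is still <= the citations of the paper at that rank.
    """
    ranked = sorted(papers, key=lambda p: p[0], reverse=True)
    effective_rank = 0.0
    hm = 0.0
    for cites, n_authors in ranked:
        effective_rank += 1.0 / n_authors  # fractional credit for this paper
        if cites >= effective_rank:
            hm = effective_rank
        else:
            break
    return hm


# Four single-author papers with four citations each.
print(hm_index([(4, 1)] * 4))
# The same citation record, but every paper has two authors.
print(hm_index([(4, 2)] * 4))
```

Under this definition the single-author record scores 4.0 and the two-author record scores 2.0, although both would have an H-index of 4.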

As ophthalmology is a field where publications often include multiple authors with the first and last authors as primary contributors, we tested the application of c-score to ophthalmology researchers with the hypothesis that c-score would accurately predict the recipients of an internationally renowned ophthalmology research career honor, the Weisenfeld Award [11]. In addition, we examined the gender, nationality, and career characteristics of the most highly cited authors in ophthalmology as ranked by c-score.

Methods

This study utilized a publicly available database of the world’s top scientists compiled by researchers at Stanford University and SciTech Strategies© using Scopus-indexed papers published after 1996 [12]. We included scientists from this database, referred to here as the “c-score database,” whose primary research fields were ophthalmology/optometry and clinical medicine. We analyzed six citation indices: number of total citations (NC), number of single author citations (NCS), number of single or first author citations (NCSF), number of single, first or last author citations (NCSFL), Hirsch index (H), and Schreiber adjusted co-authorship index (Hm). These indices were log-transformed to a number between 0 and 1, then added together to create the combined c-score. A detailed description of this method is provided by Ioannidis et al. [10]. We utilized c-score databases containing data up to 2017, 2018, 2019, 2020, and 2021, in order to measure annual trends between versions of the database.
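The composite calculation can be sketched as follows. This is an illustration only: it assumes the normalization we understand from [10], in which each index x is transformed as ln(1 + x) and divided by the largest such value among all authors, so that each of the six terms lies between 0 and 1. The author names and index values below are hypothetical and are not taken from the database.

```python
import math

# The six constituent indices described in the Methods.
INDICES = ("nc", "h", "hm", "ncs", "ncsf", "ncsfl")


def c_scores(authors):
    """authors: dict mapping name -> dict of the six raw indices.

    Assumption: each index is scaled as ln(1 + x) / max(ln(1 + x)) over
    all authors, and the six scaled values are summed.
    """
    max_log = {
        k: max(math.log1p(a[k]) for a in authors.values()) for k in INDICES
    }
    return {
        name: sum(
            math.log1p(a[k]) / max_log[k] for k in INDICES if max_log[k] > 0
        )
        for name, a in authors.items()
    }


# Hypothetical authors for illustration only.
authors = {
    "author_a": {"nc": 20000, "h": 70, "hm": 30.0, "ncs": 500, "ncsf": 4000, "ncsfl": 12000},
    "author_b": {"nc": 8000, "h": 45, "hm": 18.0, "ncs": 50, "ncsf": 1500, "ncsfl": 5000},
}
scores = c_scores(authors)
print(scores)
```

An author who holds the maximum of all six indices receives the highest possible composite of 6, and the logarithm dampens the influence of very large raw citation counts.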

We chose the Mildred Weisenfeld Award for Excellence in Ophthalmology, presented by the Association for Research in Vision and Ophthalmology (ARVO), as a “ground truth” for evaluating citation metrics. This award recognizes individuals with distinguished scholarly contributions to clinical research in ophthalmology, which closely matches our inclusion criterion that authors’ primary research fields be clinical medicine and ophthalmology/optometry.

To compare citation metrics, first a correlation matrix of all the citation indices was generated using Pearson correlation coefficients. To assess the performance of each citation metric against the Weisenfeld award, each metric was used to create a ranked list of authors. From these ranked lists, we compared the number of Weisenfeld awardees captured by the top N authors by the citation metrics’ ranking.
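The ranked-list comparison described above can be sketched as follows. The author records, metric values, and awardee set are hypothetical placeholders, and the function name is our own.

```python
def awardees_in_top_n(authors, metric, awardees, n):
    """Count awardees among the top n authors when ranked by one metric."""
    ranked = sorted(authors, key=lambda a: a[metric], reverse=True)
    return sum(1 for a in ranked[:n] if a["name"] in awardees)


# Hypothetical data for illustration only.
authors = [
    {"name": "A", "c": 4.1, "h": 60},
    {"name": "B", "c": 3.9, "h": 75},
    {"name": "C", "c": 3.5, "h": 40},
    {"name": "D", "c": 3.0, "h": 80},
    {"name": "E", "c": 2.2, "h": 30},
]
awardees = {"A", "C"}

for metric in ("c", "h"):
    counts = [awardees_in_top_n(authors, metric, awardees, n) for n in (2, 3)]
    print(metric, counts)
```

Repeating this count over a range of N for each metric gives one capture curve per metric, which is the kind of comparison plotted against author rank.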

To compare citation metric performance with adjustment for other variables, seven generalized linear models (GLMs) were constructed with Weisenfeld Award as the binary outcome and each citation metric as a predictor variable, with one citation metric per model. All seven models also included predictor variables of author demographic characteristics including gender, career length, nationality (USA vs other), self-citation rate, and year of first publication. Each model was trained on a stratified training set of 70% of all Weisenfeld awardees and 70% of all other authors, then tested on the remaining 30% of author data. To compare model performance, Receiver Operating Characteristic (ROC) curves were constructed and the Area under the ROC curve (AUC) with confidence intervals was calculated using the pROC package in R version 4.2.1 [13].
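The analysis itself was run in R with pROC; the Python sketch below is not that code and does not fit a GLM. It only illustrates, under simplifying assumptions, the two generic steps named above: a 70/30 split stratified by awardee status, and the AUC computed as the probability that a randomly chosen awardee receives a higher model score than a randomly chosen non-awardee. Function names and the example labels are hypothetical.

```python
import random


def stratified_split(labels, train_frac=0.7, seed=0):
    """Split indices so each class contributes train_frac of its members to training."""
    rng = random.Random(seed)
    train, test = [], []
    for cls in (0, 1):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        cut = round(train_frac * len(idx))
        train += idx[:cut]
        test += idx[cut:]
    return sorted(train), sorted(test)


def auc(scores, labels):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels: 10 awardees (1) among 100 authors.
labels = [1] * 10 + [0] * 90
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))

# Hypothetical predicted scores for four held-out authors.
print(auc([0.9, 0.2, 0.3, 0.1], [1, 1, 0, 0]))
```

In practice the scores passed to `auc` would be the predicted probabilities from a model fitted on the training indices and evaluated on the test indices.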

Additional analyses compared the top 50 authors by c-score vs other authors with regard to self-citation rate, annual movement in percentile ranking, career length, gender, and country affiliation. These comparisons used Wilcoxon rank-sum tests, with a p value < 0.05 considered significant. Gender was assigned based on authors’ first names using Gender-API©, which has shown a classification accuracy of over 98% in previous studies [14, 15].

Linear regression was used to investigate the relationship between self-citation rate and c-score ranking. Linear regression was also used to measure annual changes in author characteristics within the overall sample and top 50 authors by c-score. These characteristics included career length, percentile movement, gender, and country affiliation. The number of authors per 1 million population in their affiliate country was used to compare country contributions to the c-score database. For author characteristics whose linear time trend differed significantly between top 50 and other authors, we constructed multivariate GLMs with the primary outcome of a 5-year change in the characteristic with predictor variables of whether an individual belonged to the top 50 authors, combined with time-fixed characteristics including country, gender, and first year of publication. The top 50 indicator variable was also investigated as an interaction term with all other variables.
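Two of the quantities described above reduce to simple arithmetic, sketched here with hypothetical inputs. The slope function is ordinary least squares for a single predictor; the regressions in this study may have been fitted on author-level rather than yearly summary data, so this sketch is not expected to reproduce the reported slopes.

```python
def ols_slope(xs, ys):
    """Least-squares slope of y on x for a single predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def authors_per_million(n_authors, population):
    """Number of authors per 1 million population of the affiliated country."""
    return n_authors / (population / 1_000_000)


# Hypothetical yearly values of a characteristic, for illustration only.
years = [2017, 2018, 2019, 2020, 2021]
values = [10.0, 10.5, 11.0, 11.5, 12.0]
print(ols_slope(years, values))

# Hypothetical country with 10 authors and 5 million inhabitants.
print(authors_per_million(10, 5_000_000))
```

The slope is interpreted as the average yearly change in the characteristic, and the per-million figure allows countries of very different sizes to be compared.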

Results

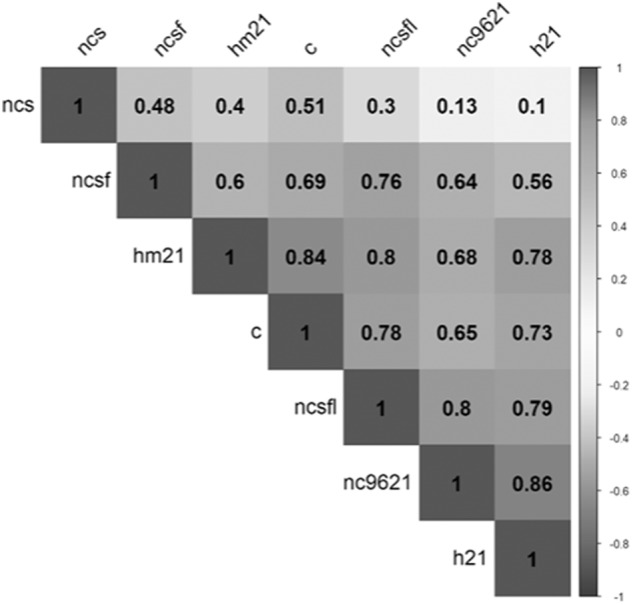

A total of 4603 unique authors in clinical ophthalmology or optometry were included in five annual editions of the c-score database. Figure 1 shows a correlogram of the Pearson correlation coefficients between citation indices among 1417 researchers in the 2021 database. The H21 and NC9621 indices had the strongest correlation of 0.86. In general, H21, HM21, and NCSFL were the indices that most frequently showed strong correlations (r > 0.70) with other indices. The NCS was most weakly correlated (r < 0.60) with other citation indices.

Fig. 1. Correlogram of Pearson correlation coefficients between various citation indices.

nc9621 total citations from 1996 to 2021, h21 Hirsch H-index in 2021, hm Schreiber Hm-index in 2021, ncs total citations to papers for which the scientist is single author, ncsf total citations to papers for which scientist is single or first author, ncsfl total citations to papers for which author is single, first, or last author, c composite citation indicator.

Figure 2 shows the relationship between author rank and number of Weisenfeld awardees captured for each citation metric. The top 50 authors by c-score capture the highest number of Weisenfeld awardees in every annual edition of the c-score database from 2017 to 2021. The HM21 consistently captured the second-most awardees, and NCSF consistently captured the fewest awardees. The individual Weisenfeld Awardees’ inclusion among the top 50 authors by each citation metric is seen in Supplementary Table 1.

Fig. 2. Number of Weisenfeld awardees captured by rankings based on different citation indices.

nc9621 total citations from 1996 to 2021, h20 Hirsch H-index in 2021, hm Schreiber Hm-index in 2021, ncs total citations to papers for which the scientist is single author, ncsf total citations to papers for which scientist is single or first author, ncsfl total citations to papers for which author is single, first, or last author, c composite citation indicator.

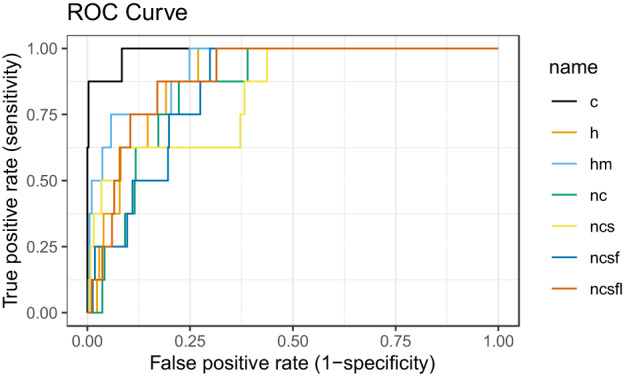

Among the multivariable generalized linear models that were generated for each citation metric, c-score performed the best at capturing Weisenfeld awardees, with an AUC of 0.99 (95% CI: 0.97–1.00). The Hm-index performed comparably well, with an AUC of 0.93 (95% CI: 0.86–0.99). The H-index performed significantly less well than c-score, with an AUC of 0.89 (95% CI: 0.83–0.96) (p < 0.05). All seven GLMs found that demographic characteristics were not significantly associated with Weisenfeld Awardee status (p > 0.05), while each citation metric was significantly associated with Weisenfeld Awardee status (p < 0.05). Table 1 shows odds ratios (ORs) and 95% CI associated with one point of increase in each citation metric. ROC curves are shown in Fig. 3, and the results of an AUC analysis are shown in Table 1.

Table 1.

Performance of seven different citation indices in capturing Weisenfeld awardees from 1996 to 2021 using multivariable generalized linear models adjusted for career length, gender, first year of publication, self-citation rate, and US vs non-US affiliation.

Multivariate analysis (see footnote a)

| Citation index | Odds ratio | p value | AUC (95% CI) |
|---|---|---|---|
| c-score | 0.10 | 6.39e-13 | 0.99 (0.97–1.00) |
| H-index | 1.4e-03 | 2.18e-08 | 0.89 (0.83–0.96) |
| Hm-index | 4.1e-03 | 2.87e-12 | 0.93 (0.86–0.99) |
| nc | 1.9e-06 | 7.28e-06 | 0.85 (0.77–0.94) |
| ncs | 5.9e-05 | 3.05e-13 | 0.83 (0.70–0.97) |
| ncsf | 9.9e-06 | 1.97e-06 | 0.85 (0.77–0.93) |
| ncsfl | 5.7e-06 | 4.00e-08 | 0.89 (0.82–0.97) |

a. Each row represents a separate generalized linear model containing the citation index and the following demographic characteristics: career length, gender, first year of publication, self-citation rate, and US vs non-US affiliation.

Fig. 3. Receiver operating characteristic (ROC) curves for citation indices used to predict Weisenfeld Awardees.

nc9621 total citations from 1996 to 2021, h20 Hirsch H-index in 2021, hm Schreiber Hm-index in 2021, ncs total citations to papers for which the scientist is single author, ncsf total citations to papers for which scientist is single or first author, ncsfl total citations to papers for which author is single, first, or last author, c composite citation indicator.

When comparing the top 50 authors by c-score to other authors, a two-sample Wilcoxon test showed no significant difference in the self-citation rate, and χ² tests showed no significant difference in gender proportion (Supplementary Table 2). Wilcoxon tests found significantly longer career lengths and lower changes in rank among the top 50 authors across all annual editions of the database (p < 0.01).

A Wilcoxon test comparing the c-scores of all female and male authors showed no significant difference (p > 0.1). A linear regression between self-citation and author ranking by c-score revealed a small but statistically significant negative relationship between self-citations and author ranking (p < 0.03, R² = 0.001) (Supplementary Fig. 1). The median overall self-citation rate was 11.60% (IQR = 8.15%).

Table 2 shows the annual changes in author career and demographic characteristics among all authors and top 50 authors from 2017 to 2021. Gender-API produced gender assignments for 99.5% of author names, including 100% of the top 50 authors as ranked by c-score. The genders assigned to the top 50 authors were cross-checked against gender-specific pronouns found in online profiles, and 98% of these authors had concordance between the gender assigned by Gender-API and their online pronouns. The single author who was misclassified by Gender-API was re-classified according to their online pronouns.

Table 2.

Career and demographic characteristics of top-publishing authors in ophthalmology.

Results of linear regression for change in characteristic from 2017 to 2021

| Characteristic | 2017 | 2018 | 2019 | 2020 | 2021 | Average yearly change (slope; 95% CI) | p value |
|---|---|---|---|---|---|---|---|
| Mean career length, years (all authors) | 36.1 | 38.6 | 38.2 | 38.3 | 40.0 | 0.52 (0.34 to 0.71) | <0.01 |
| Mean career length, years (top 50) | 38.8 | 42.2 | 42.8 | 43.2 | 40.6 | 1.19 (0.52 to 1.86) | <0.01 |
| Mean change in percentile from previous year (all authors) | – | 5.6 | 14.9 | 10.4 | 2.4 | –1.8 (–2.1 to –1.6) | <0.01 |
| Mean change in percentile from previous year (top 50) | – | 0.71 | 0.97 | 0.54 | 0.097 | –0.2 (–0.3 to –0.1) | <0.01 |
| Mean self-citation percentage (all authors) | 12.0 | 12.2 | 12.4 | 12.2 | 12.2 | –1.0 (–1.2 to –0.88) | <0.01 |
| Mean self-citation percentage (top 50) | 13.2 | 12.9 | 13.1 | 12.8 | 15.9 | –1.6 (–2.1 to –1.0) | <0.01 |
| Proportion female, % (all authors) | 15.6 | 16.0 | 16.4 | 16.6 | 16.7 | 0.28 (0.15 to 0.41) | <0.01 |
| Proportion female, % (top 50) | 14.6 | 18.4 | 19.1 | 20.0 | 20.0 | 1.24 (–0.02 to 2.50) | 0.051 |

Linear regressions showed significant positive trends (p < 0.01) in mean career length for both the top 50 authors and all authors overall, as well as for gender proportion overall. Between 2017 and 2021, the mean career length among top 50 authors increased from 38.8 to 40.6 years (p < 0.01), and the mean career length among all authors increased from 36.1 to 40.0 years (p < 0.01). The proportion of female authors increased from 15.6% to 16.7% overall (p < 0.01), and from 14.6% to 20% among the top 50 authors (p > 0.05). The latter regression did not fit a linear trend, showing an exponential increase instead of linear.

Linear regressions showed significant negative trends (p < 0.01) in mean change in percentile and self-citation rate. The self-citation rate decreased annually by 1% among all authors (p < 0.01) and 1.6% among top 50 authors (p < 0.01). The mean change in percentile decreased by an average of 1.8 percentiles per year among all authors (p < 0.01) and 0.2 percentiles per year among the top 50 authors (p < 0.01).

Among all time trends investigated, the mean change in percentile was the single characteristic whose linear time trend differed between the top 50 authors and other authors. A generalized linear model was generated with the outcome variable of 5-year change in percentile and predictor variables of whether an individual was among the top 50 authors by c-score, as well as time-fixed characteristics of gender, year of first publication, and country of origin (US vs non-US). In this multivariable analysis, the top 50 variable was not found to be a significant predictor of an individual’s change in percentile, on its own or as an interaction term. An earlier year of first publication and origin within the United States were both associated with a lower 5-year change in percentile (p < 0.001).

The countries that have produced the highest number of authors within the c-score database are shown in Supplementary Table 3. The United States of America has produced the greatest number of authors (n = 811). However, after accounting for population size, Denmark produced the greatest number of authors per 1 million population (3.07). France had the highest percentage of female authors (33.33%). The countries that have produced at least one top 50 author are also shown in Supplementary Table 3. The United States produced the greatest number of top 50 authors (n = 41). After accounting for population size, Singapore produced the greatest number of top 50 authors per 1 million population (0.37), followed by the United States (0.12). Singapore had the highest percentage of female top 50 authors (50%; 1 of its 2 top 50 authors was female).

Discussion

This study investigated the application of a c-score, or composite citation indicator, to the field of ophthalmology, assessing its ability to capture noteworthy ophthalmology researchers and describing the population of high-ranking researchers as defined by the c-score. We chose the Weisenfeld Award to represent the ground truth for research impact in this study because it internationally recognizes “distinguished scholarly contributions to the clinical practice of ophthalmology,” which corresponds to the subset of researchers included in our analysis whose primary research fields are listed as ophthalmology/optometry and clinical medicine [11]. Our method parallels prior studies that use notable scientific awards as a gold standard for comparing the performance of citation metrics [8, 10, 16]. We found that the c-score showed the best performance (AUC: 0.99, 95% CI: 0.97–1.00) in capturing Weisenfeld Awardees, outperforming H-index and performing similarly to Hm-index. In addition, we found that the c-score outperforms its constituent citation metrics in capturing Weisenfeld Awardees in its highest ranks. These findings suggest that the c-score has a substantial correlation with subjective research impact derived from peer-review within the field of clinical ophthalmology. A likely explanation is that, compared to H-index and Hm-index, the c-score gives additional credit to first and last authors, who often represent people who contribute significantly to clinical research in ophthalmology.

Among all authors, the strongest correlations in citation metrics were seen between c-score and Hm. The main difference between these two indicators is that c-score incorporates slightly more impact from first and last authorship. Because the c-score captured more Weisenfeld awardees in its top ranks, it is likely that it reflects ophthalmology peer-review more accurately than Hm.

When considering the career lengths of authors included in this study, authors in the top 50 as ranked by c-score tended to have longer career lengths. While this is unsurprising, it was notable that the significance of the disparity between career length in the top 50 vs non-top 50 increased from 2017 to 2021, while the mean career length of all ophthalmologists included in the database increased. A larger difference in career length between the top 50 and other researchers could have reflected an increasing pool of young ophthalmology researchers in the overall database, but this explanation is contradicted by an increase in the average career length from 2017 to 2021. Another possible explanation is that the advantage offered by a longer career increased from 2017 to 2021. The change in percentile was consistently different between the top 50 and other authors, with the top 50 generally experiencing less percentile shift from year to year. This is reasonable, as the top 50 authors’ ability to climb in rank is naturally limited, and their ability to fall in rank is limited by their existing research impact.

With regard to self-citation rate, a very small negative association was observed between self-citation percentage and ranking by c-score. This may suggest that self-citation rate can positively influence a researcher’s impact; however, the very small size of this association greatly limits its practical meaning. This is consistent with previous studies, which show minimal differences in self-citation rate across groups of scientists with a wide range of reputability [10, 17].

Among the top 50 authors and other authors, female authors comprised 15%–20% of all researchers, with a slight increase in representation from 2017 to 2021. This proportion of women is generally smaller than that reported by other studies into gender balance in ophthalmology, which report that women comprise 20% of all ophthalmologists [18], 25% of ophthalmology award recipients [15], and 33% of authors in clinical ophthalmology journals [19].

Several limitations are present in this study. First, we utilized a limited sample of authors selected by their having high c-scores as defined by Ioannidis et al. [10]. Our comparison of multiple citation indices is limited by the sole inclusion of authors with a high-ranking c-score, which may exclude authors who have lower c-scores but rank highly in other citation metrics. Second, our evaluation of each citation metric's performance was based on comparison to a "gold standard" of the Weisenfeld Award, whose selection process relies on subjective peer review. While this award was well-suited to our authors' inclusion criteria, there is no perfect "gold standard" for research impact, inherently limiting our study’s generalizability. Third, beyond the author-specific data pulled directly from the Ioannidis database, gender assignments were generated with Gender-API. This software is limited by its corpus of non-English names (among the top 50 authors, only one name was misclassified, and the author had an Asian name) and its binary gender assignment. However, it is regarded as the most accurate automated gender assignment application, and the broad trends it finds over a thousand-plus names are likely reliable [14, 15, 20].

Finally, all citation metrics we considered are limited by only representing data enumerated in Scopus. Other search engines have different means of counting citations, which can create substantial diversity in an author’s raw citation count between different databases such as Google Scholar, Web of Science, and Scopus [21]. Metrics such as PlumX and Altmetrics also utilize data sources beyond academic citations including online user engagement, news media references, and social media coverage [22–24]. Since there are many more citation indices beyond those considered here that can influence an author’s “impact,” future work should further investigate how other metrics correlate with subjective outcomes such as award allocations and career trajectories.

The professional relevance of citation indices has contributed to the creation of numerous bibliometric strategies for capturing an individual’s research impact in a single number. This is the first investigation into applying the c-score, a composite citation indicator, to the field of clinical ophthalmology research. By assessing the c-score’s ability to predict Weisenfeld Awardees, we found that the c-score more accurately predicts peer-review-based awards compared to its constituent citation indices including the H-index, suggesting that the c-score has promise in accurately describing individuals’ research impact in ophthalmology.

Summary

What was known before

Citation indices are important for assessing a researcher’s scientific contributions and can impact decisions regarding hiring, funding, and promotion.

The c-score, a composite citation score, can capture more Nobel laureates in its top ranks compared to its constituent citation indices, which include H-index.

What this study adds

This study evaluates the ability of citation indices to predict winners of the Weisenfeld award, a peer-review-based honor in the field of clinical ophthalmology research.

The c-score, a composite citation index, more accurately predicts Weisenfeld Awardees (AUC: 0.99, CI: 0.97–1.00) compared to its constituent indices including the H-index (AUC: 0.89, CI: 0.83–0.96).

The top 1000 authors by c-score in ophthalmology are predominantly male (84%) and affiliated with the United States of America (64%).

Disclaimer

The view(s) expressed herein are those of the author(s) and do not reflect the official policy or position of the U.S. Army Medical Department, the U.S. Army Office of the Surgeon General, the Department of the Air Force, the Department of the Army, Department of Defense, the Uniformed Services University of the Health Sciences or any agency of the U.S. Government.

Author contributions

GJ, AAP, and FW contributed to the conception and design of the work. AAP and ATP contributed to extracting and analyzing the data and drafting initial sections of the paper. BA, GJ, GL, and FW provided feedback during manuscript revision and approved of the submitted version.

Data availability

All data used in this project are publicly available in the “Updated science-wide author databases of standardized citation indicators” found at the following website: https://elsevier.digitalcommonsdata.com/datasets/btchxktzyw/6.

Competing interests

The authors declare no competing interests.

Supplementary information

The online version contains supplementary material available at 10.1038/s41433-023-02912-2.

References

- 1. Abbott A, Cyranoski D, Jones N. Metrics: do metrics matter? Nature. 2010;465:860–2. doi: 10.1038/465860a.

- 2. Holden G, Rosenberg G, Barker K. Bibliometrics: a potential decision making aid in hiring, reappointment, tenure and promotion decisions. Soc Work Health Care. 2005;41:67–92. doi: 10.1300/J010v41n03_03.

- 3. Haustein S, Larivière V. The use of bibliometrics for assessing research: possibilities, limitations and adverse effects. In: Welpe I, Wollersheim J, Ringelhan S, Osterloh M, eds. Incentives and performance. Cham: Springer; 2015. doi: 10.1007/978-3-319-09785-5_8.

- 4. Tanya SM, He B, Tang J, He P, Zhang A, Sharma E, et al. Research productivity and impact of Canadian academic ophthalmologists: trends in H-index, gender, subspecialty, and faculty appointment. Can J Ophthalmol. 2022;57:188–94. doi: 10.1016/j.jcjo.2021.03.011.

- 5. Lopez SA, Svider PF, Misra P, Bhagat N, Langer PD, Eloy JA. Gender differences in promotion and scholarly impact: an analysis of 1460 academic ophthalmologists. J Surg Educ. 2014;71:851–9. doi: 10.1016/j.jsurg.2014.03.015.

- 6. Huang G, Fang CH, Lopez SA, Bhagat N, Langer PD, Eloy JA. Impact of fellowship training on research productivity in academic ophthalmology. J Surg Educ. 2015;72:410–7. doi: 10.1016/j.jsurg.2014.10.010.

- 7. Hirsch JE. An index to quantify an individual’s scientific research output. Proc Natl Acad Sci USA. 2005;102:16569–72. doi: 10.1073/pnas.0507655102.

- 8. Koltun V, Hafner D. The h-index is no longer an effective correlate of scientific reputation. PLOS ONE. 2021;16:e0253397. doi: 10.1371/journal.pone.0253397.

- 9. Schreiber M. A modification of the h-index: the hm-index accounts for multi-authored manuscripts. J Informetr. 2008;2:211–6. doi: 10.1016/j.joi.2008.05.001.

- 10. Ioannidis JPA, Klavans R, Boyack KW. Multiple citation indicators and their composite across scientific disciplines. PLOS Biol. 2016;14:e1002501. doi: 10.1371/journal.pbio.1002501.

- 11. ARVO Achievement Awards. https://www.arvo.org/awards-grants-and-fellowships/arvo-achievement-awards/.

- 12. Ioannidis JPA, Boyack KW, Baas J. Updated science-wide author databases of standardized citation indicators. PLOS Biol. 2020;18:e3000918. doi: 10.1371/journal.pbio.3000918.

- 13. Robin X, Turck N, Hainard A, Tiberti N, Lisacek F, Sanchez JC, et al. pROC: an open-source package for R and S+ to analyze and compare ROC curves. BMC Bioinforma. 2011;12:77. doi: 10.1186/1471-2105-12-77.

- 14. Nielsen MW, Andersen JP, Schiebinger L, Schneider JW. One and a half million medical papers reveal a link between author gender and attention to gender and sex analysis. Nat Hum Behav. 2017;1:791–6. doi: 10.1038/s41562-017-0235-x.

- 15. Nguyen AX, Ratan S, Biyani A, Trinh XV, Saleh S, Sun Y, et al. Gender of award recipients in major ophthalmology societies. Am J Ophthalmol. 2021;231:120–33. doi: 10.1016/j.ajo.2021.05.021.

- 16. Sinatra R, Wang D, Deville P, Song C, Barabási AL. Quantifying the evolution of individual scientific impact. Science. 2016;354:aaf5239. doi: 10.1126/science.aaf5239.

- 17. Szomszor M, Pendlebury DA, Adams J. How much is too much? The difference between research influence and self-citation excess. Scientometrics. 2020;123:1119–47. doi: 10.1007/s11192-020-03417-5.

- 18. Reddy AK, Bounds GW, Bakri SJ, Gordon LK, Smith JR, Haller JA, et al. Representation of women with industry ties in ophthalmology. JAMA Ophthalmol. 2016;134:636. doi: 10.1001/jamaophthalmol.2016.0552.

- 19. Mimouni M, Zayit-Soudry S, Segal O, Barak Y, Nemet AY, Shulman S, et al. Trends in authorship of articles in major ophthalmology journals by gender, 2002–2014. Ophthalmology. 2016;123:1824–8. doi: 10.1016/j.ophtha.2016.04.034.

- 20. Santamaría L, Mihaljević H. Comparison and benchmark of name-to-gender inference services. PeerJ Comput Sci. 2018;4:e156. doi: 10.7717/peerj-cs.156.

- 21. Yang K, Meho LI. Citation analysis: a comparison of Google Scholar, Scopus, and Web of Science. Proc Am Soc Inf Sci Technol. 2006;43:1–15. doi: 10.1002/meet.14504301185.

- 22. Allen P. World-Wide News Coverage in PlumX. 2017. https://plumanalytics.com/world-wide-news-coverage-plumx/.

- 23. Meschede C, Siebenlist T. Cross-metric compatability and inconsistencies of altmetrics. Scientometrics. 2018;115:283–97. doi: 10.1007/s11192-018-2674-1.

- 24. How Outputs Are Tracked and Measured. Altmetric. https://help.altmetric.com/support/solutions/articles/6000234171-how-outputs-are-tracked-and-measured.
