Abstract

Bayesian statistics plays a pivotal role in advancing medical science by enabling healthcare companies, regulators, and stakeholders to assess the safety and efficacy of new treatments, interventions, and medical procedures. The Bayesian framework offers a unique advantage over the classical framework, especially when incorporating prior information into a new trial with quality external data, such as historical data or another source of co-data. In recent years, there has been a significant increase in regulatory submissions using Bayesian statistics due to its flexibility and ability to provide valuable insights for decision-making, addressing the modern complexity of clinical trials where frequentist trials are inadequate. For regulatory submissions, companies often need to consider the frequentist operating characteristics of the Bayesian analysis strategy, regardless of the design complexity. In particular, the focus is on the frequentist type I error rate and power for all realistic alternatives. This tutorial review aims to provide a comprehensive overview of the use of Bayesian statistics in sample size determination, control of type I error rate, multiplicity adjustments, external data borrowing, etc., in the regulatory environment of clinical trials. Fundamental concepts of Bayesian sample size determination and illustrative examples are provided to serve as a valuable resource for researchers, clinicians, and statisticians seeking to develop more complex and innovative designs.

Supplementary Information

The online version contains supplementary material available at 10.1186/s12874-024-02235-0.

Keywords: Bayesian hypothesis testing, Sample size determination, Regulatory environment, Frequentist operating characteristics

Background

Clinical trials are a critical cornerstone of modern healthcare, serving as the crucible in which medical innovations are tested, validated, and ultimately brought to patients [1]. Traditionally, since the 1940s, these trials have adhered to frequentist statistical methods, offering valuable insights into decision-making to demonstrate treatment effects. However, they may fall short in addressing the increasing complexity of modern clinical trials, such as personalized medicine [2, 3], innovative study designs [4, 5], and the integration of real-world data into randomized controlled trials [6–8], among many other challenges [9–11].

These new challenges commonly necessitate innovative solutions. The US 21st Century Cures Act and the US Prescription Drug User Fee Act VI include provisions to advance the use of complex innovative trial designs [12]. Generally, complex innovative trial designs have been considered to refer to complex adaptive, Bayesian, and other novel clinical trial designs, but there is no fixed definition because what is considered innovative or novel can change over time [12–15]. A common feature of many of these designs is the need for simulations rather than mathematical formulae to estimate trial operating characteristics. This highlights the growing embrace of complex innovative trial designs in regulatory submissions.

In this paper, our particular focus is on Bayesian methods. Guidance from the U.S. Food and Drug Administration (FDA) [16] defines Bayesian statistics as an approach for learning from evidence as it accumulates. Bayesian methods offer a robust and coherent probabilistic framework for incorporating prior knowledge, continuously updating beliefs as new data emerge, and quantifying uncertainty in the parameters of interest or outcomes for future patients [17]. The Bayesian approach aligns well with the iterative and adaptive nature of clinical decision-making, offering opportunities to maximize clinical trial efficiency, especially in cases where data are sparse or costly to collect.

The past two decades have seen notable demonstrations of Bayesian statistics addressing various types of modern complexities in clinical trial designs. For example, Bayesian group sequential designs are increasingly used for seamless modifications in trial design and sample size to expedite the development process of drugs or medical devices, while potentially leveraging external resources [18–22]. One recent example is the COVID-19 vaccine trial, which includes four Bayesian interim analyses with the option for early stopping to declare vaccine efficacy before the planned trial end [23]. Other instances where Bayesian approaches have demonstrated their promise are umbrella, basket, or platform trials under master protocols [24]. In these cases, Bayesian adaptive approaches facilitate the evaluation of multiple therapies in a single disease, a single therapy in multiple diseases, or multiple therapies in multiple diseases [25–32]. Moreover, Bayesian approaches provide an effective means to integrate multiple sources of evidence, a particularly valuable aspect in the development of pediatric drugs or medical devices where small sample sizes can impede traditional frequentist approaches [33–35]. In such cases, Bayesian borrowing techniques enable the integration of historical data from previously completed trials, real-world data from registries, and expert opinion from published resources. This integration provides a more comprehensive and probabilistic framework for information borrowing across different sub-populations [36–39].

It is important to note that the basic tenets of good trial design are consistent for both Bayesian and frequentist trials. Sponsors using the Bayesian approach for sizing a trial should adhere to the principles of good clinical trial design and execution, including minimizing bias, as outlined in regulatory guidance [16, 40, 41], following almost the same standards as those given to frequentist approaches. For example, regulators often recommend that sponsors submit a Bayesian design that effectively maintains the frequentist type I and type II error rates (or some analog of it) at the nominal levels for all realistic scenarios by carefully calibrating design parameters.

In the literature, numerous articles [13, 42–47] and textbooks [17, 48] extensively cover both basic and advanced concepts of Bayesian designs. While several works focus on regulatory issues in developing Bayesian designs [49–51], there seems to be a lack of tutorial-type review papers explaining how to develop Bayesian designs for regulatory submissions within the evolving regulatory environment, along with providing tutorial-type examples. Such papers are crucial for sponsors, typically pharmaceutical or medical device companies, preparing to use Bayesian designs to gain insight and build more complex Bayesian designs.

In this paper, we provide a pedagogical understanding of Bayesian designs by elucidating key concepts and methodologies through illustrative examples and address the existing gaps in the literature. For the simplicity of explanation, we apply Bayesian methods to construct single-stage designs, two-stage designs, and parallel designs for single-arm trials, but the illustrated key design principles can be generalized to multiple-arm trials. Specifically, our focus in this tutorial is on Bayesian sample size determination, which is most useful in confirmatory clinical trials, including late-phase II or III trials in the drug development process or pivotal trials in the medical device development process. We highlight the advantages of Bayesian designs, address potential challenges, examine their alignment with evolving regulatory science, and ultimately provide insights into the use of Bayesian statistics for regulatory submissions.

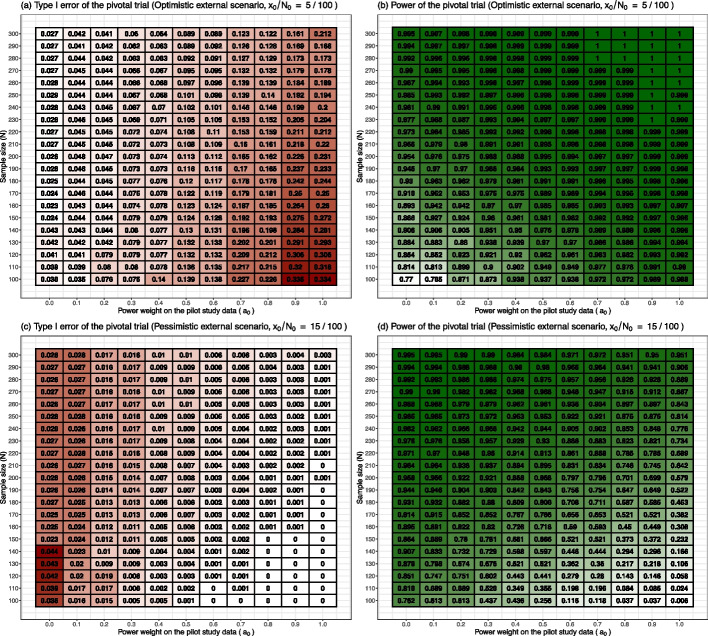

This tutorial paper is organized as follows. Figure 1 displays the diagram of the paper organization. We begin by explaining a simulation-based approach to determine the sample size of a Bayesian design in Sizing a Bayesian trial section, which is consistently used throughout the paper as the building blocks to develop many kinds of Bayesian designs. Next, the specification of the prior distribution for Bayesian submission is discussed in Specification of prior distributions section, and two important Bayesian decision rules, namely, the posterior probability approach and the predictive probability approach, are illustrated in Decision rule - posterior probability approach and Decision rule - predictive probability approach sections, respectively. These are essential in the development of Bayesian designs for regulatory submissions. Advanced design techniques for multiplicity adjustment using Bayesian hierarchical modeling are illustrated in Multiplicity adjustments section, and incorporating external data using power prior modeling is explained in External data borrowing section. We conclude the paper with a discussion in Conclusions section.

Fig. 1.

Topics, key concepts, and organization of paper

Sizing a Bayesian trial

A simulation principle of Bayesian sample size determination

Although practical and ethical issues need to be considered, one’s initial reasoning when determining the trial size should focus on the scientific requirements [52]. Scientific requirements refer to the specific criteria, conditions, and standards that must be met in the design, conduct, and reporting of scientific research to ensure the validity, reliability, and integrity of the findings. Much like frequentist approaches for determining the sample size of the study [53], its Bayesian counterpart also proceeds by first defining a success criterion to align with the primary objective of the trial. Subsequently, the number of subjects is determined to provide a reliable answer to the questions addressed within regulatory settings.

In the literature, various studies have explored the sizing of Bayesian trials [54–60]. Among these, the simulation-based method proposed by [60] stands out as popular, and it was further explored by [61, 62] for practical applications. This method is widely used by many healthcare practitioners, including design statisticians at companies or universities, for its practical applicability in a broad range of Bayesian designs. Furthermore, this method, with a particular prior setting, is well-suited for the regulatory submission, where the evaluation of the frequentist operating characteristics of the Bayesian design is critical. This will be discussed in Calibration of Bayesian trial design to assess frequentist operating characteristics section.

In this section, we outline the framework of the authors’ work [60]. Similar to the notation in Reference [63] assume that the endpoint has probability density function , where the represents the parameter of main interest. The hypotheses to be investigated are the null and alternative hypotheses,

| 1 |

where and represent the disjoint parameter spaces for the null and alternative hypotheses, respectively. denotes the entire parameter space. Suppose that the objective of the study is to evaluate the efficacy of a new drug, achieved by rejecting the null hypothesis. Let denotes a set of N outcomes such that () is identically and independently distributed according a distribution .

Throughout the paper, we assume that the parameter space is a subset of real numbers. The range of the parameter space is determined by the type of outcomes. For example, for continuous outcomes y, the distribution may be a normal distribution, where the parameter space is the set of real numbers, ; and for binary outcomes, the distribution is the Bernoulli distribution, where the parameter space is the set of fractional numbers, . In this formulation, typically, the hypotheses (1) are one-sided; for example, versus or versus . Throughout the paper, when we denote hypotheses in the abstract form (1), it is considered a one-sided superiority test for the coherency of the paper. The logic explained in this paper can be generalized to a form of a two-sided test, non-inferiority test, or equivalence test in a similar manner, but discussion on these forms is out of scope for this paper.

The simulation-based approach incorporates two essential components: the ‘sampling prior’ and the ‘fitting prior’ . The sampling prior is utilized to generate observations by considering the scenario of ‘what if the parameter is likely to be within a specified portion of the parameter space?’ The fitting prior is employed to fit the model once the data has been obtained upon completion of the study. We note that the sampling prior should be a proper distribution, while the fitting prior does not need to be proper as long as the resulting posterior, , is proper. We also note that the sampling prior is a unique Bayesian concept adopted in the simulation-based approach, whereas the fitting prior refers to the prior distributions used in the daily work of Bayesian data analyses [64], not confined to the context of sample size determination.

In the following, we illustrate how to calculate the Bayesian test statistic, denoted as , using the posterior probability approach by using a sampling prior and a fitting prior. (Details of the posterior probability approach will be explained in Decision rule - posterior probability approach section). First, one generates a value of parameter of interest from the sampling prior , and then generates the outcome vector based on that . This process produces N outcomes from its prior predictive distribution (also called, marginal likelihood function)

| 2 |

After that, one calculates the posterior distribution of given the data , which is

| 3 |

Eventually, a measure of evidence to reject the null hypothesis is summarized by the Bayesian test statistics, the posterior probability of the alternative hypothesis being true given the observations , which is

where the indicator function is 1 if A is true and 0 otherwise. A typical success criterion takes the form of

| 4 |

where is a pre-specified threshold value.

At this point, we introduce a key quantity to measure the expected behavior of the Bayesian test statistics – the probability of study success based on the Bayesian testing procedure – by considering the idea of repeated sampling of the outcomes :

| 5 |

In the notation (5), the superscript ‘N’ indicates the dependence on the sample size N, and the subscript ‘’ represents the support of the sampling prior . Note that in the Eq. (5), the probability inside of (that is, ) is computed with respect to the posterior distribution (3) under the fitting prior, while the probability outside (that is, ) are taken with respect to the marginal distribution (2) under the sampling prior. Note that the value (5) also depends on the choice of the threshold (), the parameter spaces corresponding to the null and alternative hypothesis ( and ), and the sampling and fitting priors ( and ).

Monte Carlo simulation is employed to approximate the value of (Eq. 5) in cases where it is not expressed as a closed-form formula:

where R is the number of simulated datasets. When Monte Carlo simulation is used for regulatory submission in a Bayesian design to estimate the expected behavior of the Bayesian test statistics , typically, one uses or 100, 000 and also reports a 95% confidence interval for to describe the precision of the approximation. Often, for complex designs, computing the Bayesian test statistic itself requires the use of Markov Chain Monte Carlo (MCMC) sampling techniques, such as the Gibbs sampler or Metropolis-Hastings algorithm [65–67]. In such cases, a nested simulation technique is employed to approximate (5) (Algorithm 1 in Supplemental material). It is important to note that when MCMC techniques are used, regulators recommend sponsors check the convergence of the Markov chain to the posterior distribution [16], using various techniques to diagnose nonconvergence [64, 65].

Now, we are ready to apply the above concept to Bayesian sample size determination. We consider two different populations from which the random sample of N observations may have been drawn, with one population corresponding to the null parameter space and another population corresponding to the alternative parameter space – similar to Neyman & Pearson’s approach (based on hypothesis testing and type I and II error rates) [68].

This can be achieved by separately considering two scenarios: ‘what if the parameter is likely to be within a specified portion of the null parameter space?’ and ‘what if the parameter is likely to be within a specified portion of the alternative parameter space?’ Following notations from [62], let and denote the closures of and , respectively. In this formulation, the null sampling prior is the distribution supported on the boundary , and the alternative sampling prior is the distribution supported on the set . For a one-sided test, such as versus , one may choose the null sampling prior as a point-mass distribution at , and the alternative sampling prior as a distribution supported on .

Eventually, for a given and , the Bayesian sample size is the value

| 6 |

where and are given in (5) corresponding to and , respectively. The values of and are referred to as the Bayesian type I error and power, while is referred to as the Bayesian type II error. The sample size N satisfying the condition meets the Bayesian type I error requirement. Similarly, the sample size N satisfying the condition meets the Bayesian Power requirement. Eventually, the selected sample size N (6) is the minimum value that simultaneously satisfies the Bayesian type I error and power requirement. Typical values for are 0.025 for a one-sided test and 0.05 for a two-sided test, and is typically set to 0.1 or 0.2 regardless of the direction of the alternative hypothesis [16].

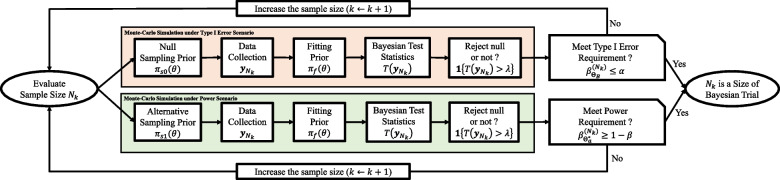

Figure 2 provides a flowchart illustrating the process of Bayesian sample size determination. We explain the practical algorithm for selecting an optimal Bayesian sample size N (6), subject to the maximum sample size – typically chosen under budgetary limits. To begin, we consider a set of K candidate sample sizes, denoted as . Often, one may include the frequentist sample size as a reference.

Fig. 2.

Flow chart of Bayesian sample size determination within the collection of possible sizes of Bayesian trial

The process commences with the evaluation of the smallest sample size, , checking whether it meets the Bayesian type I error and power requirements, i.e., and . To that end, we independently generate outcomes, , from the marginal distributions and , which are based on the null and alternative sampling priors and , respectively. The data drawn in this manner corresponds to the type I error and power scenarios, respectively. Subsequently, we independently compute the Bayesian test statistics, , using the common fitting prior , and record the testing results, whether it rejects the null hypothesis or not, (4) for each scenario. By repeating this procedure R times (for example, ), we can estimate the expected behaviors of the Bayesian test statistics and through Monte-Carlo approximation and evaluate whether the size meets both Bayesian type I error and power requirements. If these requirements are met, then is deemed the Bayesian sample size for the study. If not, we evaluate the next sample size, , and reassess its suitability for meeting the requirements. This process continues until we identify the Bayesian sample size meeting the requirements within the set . If it cannot be found within this set , it may be necessary to explore a broader range of candidate sizes, adjust the values of and under regulatory consideration, modify the threshold , or consider other potential modifications such as changing the hyper-parameters of the fitting prior.

It is evident that Bayesian sample size determination is computationally intensive. It becomes even more intense when the complexity of the design increases. For instance, one needs to consider factors like the number and timing of interim analyses for Bayesian group sequential design, as well as the number of sub-groups and ratios in Bayesian platform design. Moreover, the computational complexity increases when the Bayesian test statistic requires MCMC sampling, as the convergence of the Markov chain should be diagnosed for each iteration within the Monte Carlo simulation. In such scenarios, the use of parallel computation techniques or modern sampling schemes can significantly reduce computation time [69, 70].

Calibration of Bayesian trial design to assess frequentist operating characteristics

Scientifically sound clinical trial planning and rigorous trial conduct are important, regardless of whether trial sponsors use a Bayesian or frequentist design. Maintaining some degree of objectivity in the interpretation of testing results is key to achieving scientific soundness. The central question here is how much we can trust a testing result based on a Bayesian hypothesis testing procedure, which is driven by the Bayesian type I error and power in the planning phase. More specifically, suppose that such a Bayesian test, where the threshold of the decision rule was chosen to meet the Bayesian type I error rate of less than 0.025 and power greater than 0.8, yielded the rejection of the null hypothesis, while a frequentist test did not upon completion of the study. Then, can we still use the result of the Bayesian test for registration purposes? Perhaps, this can be best addressed by calculating the frequentist type I error and power of the Bayesian test during the planning phase so that the Bayesian test can be compared with some corresponding frequentist test in an apple-to-apple comparison, or as close as possible.

In most regulatory submissions, Bayesian trial designs are ‘calibrated’ to possess good frequentist properties. In this spirit, and in adherence to regulatory practice, regulatory agencies typically recommend that sponsors provide the frequentist type I and II error rates for the sponsor’s proposed Bayesian analysis plan [16, 71].

The simulation-based approach for Bayesian sample size determination [60], as illustrated in A simulation principle of Bayesian sample size determination section, is calibrated to measure the frequentist operating characteristics of a Bayesian trial design if the null sampling prior is specified by a Dirac measure with the point-mass at the boundary value of the null parameter space (i.e., for some where is the Direc-Delta function), and the alternative sampling prior is specified by a Dirac measure with the point-mass at the value inducing the minimally detectable treatment effect, representing the smallest effect size (i.e., for some ).

In this calibration, the expected behavior of the Bayesian test statistics can be represented as the frequentist type I error and power of the design as follow:

| 7 |

| 8 |

Throughout the paper, we interchangeably use the notations and . The former notation is simpler, yet it omits specifying which values are being treated as random and which are not; hence, the latter notation is sometimes more convenient for Bayesian computation.

With the aforementioned calibration, the prior specification problem of the Bayesian design essentially boils down to the choice of the fitting prior . This is because the selection of the null and alternative sampling prior is essentially determined by the formulation of the null and alternative hypotheses, aligning with the frequentist framework. In other words, the fitting prior provides the unique advantage of Bayesian design by incorporating prior information about the parameter , which is then updated by Bayes’ theorem, leading to the posterior distribution. The choice of the fitting prior will be discussed in Specification of prior distributions section. In what follows, to avoid notation clutter, we omit the subscript ‘f’ in the notation of the fitting prior .

Example - standard single-stage design based on beta-binomial model

Suppose a medical device company aims to evaluate the primary safety endpoint of a new device in a pivotal trial. The safety endpoint is the primary adverse event rate through 30 days after a surgical procedure involving the device. The sponsor plans to conduct a single-arm study design in which patient data is accumulated throughout the trial. Only once the trial is complete, the data will be unblinded, and the pre-planned statistical analyses will be executed. Suppose that the null and alternative hypotheses are: versus . Here, represents the performance goal of the new device, a numerical value (point estimate) that is considered sufficient by a regulator for use as a comparison for the safety endpoint. It is recommended that the performance goal not originate from a particular sponsor or regulator. It is often helpful if it is recommended by a scientific or medical society [72].

A fundamental regulatory question is “when a device passes a safety performance goal, does that provide evidence that the device is safe?”. To answer this question, the sponsor sets a performance goal by , and anticipates that the safety rate of the new device is . The objective of the study is, therefore, to detect a minimum treatment effect of in reducing the adverse event rate of patients treated with the new medical device compared to the performance goal. The sponsor targeted to achieve a statistical power of with the one-sided level test of a proposed design. The trial is successful if the null hypothesis is rejected after observing the outcomes from N patients upon completion of the study.

The following Bayesian design is considered:

One-sided significance level: ,

Power: ,

Null sampling prior: , where ,

Alternative sampling prior: , where ,

Prior: ,

Hyper-parameters: and ,

Likelihood: ,

Decision rule: Reject null hypothesis if .

Under the setting, (frequentist) type I error and power of the Bayesian design can be expressed as:

Here, the integral expression () can be further simplified to summation expression () by using a binomial distribution, similar to [73].

The Bayesian sample size satisfying the type I & II error requirements are then

Due the conjugate relationship between the binomial distribution and beta prior, the posterior distribution is the beta distribution, such that . Therefore, the Bayesian test statistics can be represented as a closed-form in this case.

We consider and 200 as the possible sizes for the Bayesian trial. We evaluate three prior options: (1) a non-informative prior with (prior mean is 50%), (2) an optimistic prior with and (prior mean is 4.76%), and (3) a pessimistic prior with and (prior mean is 14.89%). An optimistic prior assigns a probability mass that is favorable for rejecting the null hypothesis before observing any new outcomes, while a pessimistic prior assigns a probability mass that is favorable for accepting the null hypothesis before observing any new outcomes. As a reference, we consider a frequentist design in which the decision criterion is determined by the p-value associated with the z-test statistic, , being less than the one-sided significance level of to reject the null hypothesis.

Table 1 shows the results of the power analysis obtained by simulation. Designs satisfying the requirement of type I error 2.5% and power 80%, are highlighted in bold in the table. The results indicate that the operating characteristics of the Bayesian design based on a non-informative prior are very similar to those obtained using the frequentist design. This similarity is typically expected because a non-informative prior has minimal impact on the posterior distribution, allowing the data to play a significant role in determining the results.

Table 1.

Frequentist operating characteristics of Bayesian designs with different prior options

| Bayesian Design (Non-informative prior) | Bayesian Design (Optimistic prior) | Bayesian Design (Pessimistic prior) | Frequentist Design (Z-test statistics) | |||||

|---|---|---|---|---|---|---|---|---|

| Sample Size (N) | Type I Error | Power | Type I Error | Power | Type I Error | Power | Type I Error | Power |

| 100 | 0.0148 | 0.6181 | 0.0755 | 0.8767 | 0.0148 | 0.6181 | 0.0155 | 0.6214 |

| 150 | 0.0231 | 0.8690 | 0.0448 | 0.9268 | 0.0114 | 0.7838 | 0.0242 | 0.8690 |

| 200 | 0.0164 | 0.9184 | 0.0467 | 0.9767 | 0.0164 | 0.9184 | 0.0158 | 0.9231 |

Note: Bayesian designs are based on the beta-binomial models with prior options: (1) a non-informative prior with , (2) an optimistic prior with and , and (3) a pessimistic prior with and

The results show that the Bayesian design based on an optimistic prior tends to increase power at the expense of inflating the type I error. Technically, the inflation is expected because, by definition, the type I error is evaluated by assuming the true treatment effect is null (i.e. ), then it is calculated under a scenario where the prior is in conflict with the null treatment effect, resulting in the inflation of the type I error. In contrast, the Bayesian design based on a pessimistic prior tends to decrease the type I error at the cost of deflating the power. The deflation is expected because, by definition, the power is evaluated by assuming the true treatment effect is alternative (i.e. ), then it is calculated under a scenario where the prior is in conflict with the alternative treatment effect, resulting in the deflation of the power.

Considering the trade-off between power and type I error, which is primarily influenced by the prior specification, thorough pre-planning is essential for selecting the most suitable Bayesian design on a case-by-case basis for regulatory submission. Particularly, when historical data is incorporated into the hyper-parameter of the prior as an optimistic prior, there may be inflation of the type I error rate, even after appropriately discounting the historical data [74]. In such cases, it may be appropriate to relax the type I error control to a less stringent level compared to situations where no prior information is used. This is because the power gains from using external prior information in clinical trials are typically not achievable when strict type I error control is required [75, 76]. Refer to Section 2.4.3 in [77] for relevant discussion. The extent to which type I error control can be relaxed is a case-by-case decision for regulators, depending on various factors, primarily the confidence in the prior information [16]. We discuss this in more detail by taking the Bayesian borrowing design based on a power prior [36] as an example in External data borrowing section.

Numerical approximation of power function

In this subsection, we illustrate a numerical method to approximate the power function of a Bayesian hypothesis testing procedure. The power function of a test procedure is the probability of rejecting the null hypothesis, with the true parameter value as the input. The power function plays a crucial role in assessing the ability of a statistical test to detect a true effect or relationship between the design parameters. Visualizing the power function over the parameter space, as provided by many statistical software (SAS, PASS, etc), is helpful for trial sizing because it displays the full spectrum of the behavior of the testing procedure. Understanding such behaviors is crucial for regulatory submission, as regulators often recommend simulating several likely scenarios and providing the expected sample size and estimated type I error for each case.

Consider the null and alternative hypotheses, versus , where , and and are disjoint. Let outcomes () be identically and independently distributed according to a density . Given a Bayesian test statistics , suppose that a higher value of raises more doubt about the null hypothesis being true. We reject the null hypothesis if , where is a pre-specified threshold. Then, the power function is defined as follows:

| 9 |

Eventually, one needs to calculate over the entire parameter space to explore the behavior of the testing procedure. However, the value of is often not expressed as a closed-form formula, mainly due to two reasons: no explicit formula for the outside integral or the Bayesian test statistics . Thus, it is often usual that the value of is approximated through a nested simulation strategy. See Algorithm 1 in Supplemental material. The idea of the Algorithm 1 is that the outside integral in (9) is approximated by a Monte-Carlo simulation (with R number of replicated studies), and the test statistics is approximated by Monte-Carlo or Markov Chain Monte-Carlo simulation (with S number of posterior samples) when the test statistics are not expressed in closed form. It is important to note that this approximation is exact in the sense that if R and S go to infinity, then converges to the truth . This contrasts with the formulation of the power functions of many frequentist tests, which are derived based on some large sample theory [78], to induce a closed-form formula.

Specification of prior distributions

Classes of prior distributions

The prior distributions for regulatory submissions can be broadly classified into non-informative priors and informative priors. A non-informative prior is a prior distribution with no preference for any specific parameter value. A Bayesian design based on a non-informative prior leads to objective statistical inference, resembling frequentist inference, and is therefore the least controversial. It is important to note that choosing a non-informative prior distribution can sometimes be challenging, either because there may be more than one way to parameterize the problem or because there is no clear mathematical justification for defining non-informativeness. [79] reviews the relevant literature but emphasizes the continuing difficulties in defining what is meant by ‘non-informative’ and the lack of agreed reference priors in all but simple situations.

For example, in the case of a beta-binomial model (as illustrated in Calibration of Bayesian trial design to assess frequentist operating characteristics section), choices such as , , , or could all be used as non-informative priors. Refer to Subsection 5.5.1 of [17] and the paper by [80] for a relevant discussion. In Bayesian hierarchical models, the mathematical meaning of a non-informative prior distribution is not obvious due to the complexity of the model. In those cases, we typically set the relevant hyper-parameters to diffuse the prior evenly over the parameter space and minimize the prior information as much as possible, leading to a nearly non-informative prior.

On the other hand, an informative prior is a prior distribution that expresses a preference for a particular parameter value, enabling the incorporation of prior information. Informative priors can be further categorized into two types: prior distributions based on empirical evidence from previous trials and prior distributions based on personal opinions, often obtained through expert elicitation. The former class of informative priors is less controversial when the current and previous trials are similar to each other. Possible sources of prior information include: clinical trials conducted overseas, patient registries, clinical data on very similar products, and pilot studies. Recently, there has been breakthrough development of informative prior distribution that enables incorporating the information from previous trials, and eventually reducing sample size of a new trial, while providing appropriate mechanism of discounting [81–84]. We provide details on the formulation of an informative prior and relevant regulatory considerations in External data borrowing section. Typically, informative prior distribution based on personal opinions is not recommended for Bayesian submissions due to subjectivity and controversy [85].

Incorporating prior information formally into the statistical analysis is a unique feature of the Bayesian approach but is also often criticized by non-Bayesians. To mitigate any conflict and skepticism regarding prior information, it is crucial that sponsors and regulators meet early in the process to discuss and agree upon the prior information to be used for Bayesian clinical trials.

Prior probability of the study claim

The prior predictive distribution plays a key role in pre-planning a Bayesian trial to measure the prior probability of the study claim – the probability of the study claim before observing any new data. Regulators recommend that this probability should not be excessively high, and what constitutes ‘too high’ is a case-by-case decision [16]. Measuring this probability is typically recommended when an informative prior distribution is used for the Bayesian submission. Regulatory agencies make this recommendation to ensure that prior information does not overwhelm the data of a new trial, potentially creating a situation where unfavorable results from the proposed study get masked by a favorable prior distribution. In an evaluation of the prior probability of the claim, regulators will balance the informativeness of the prior against the efficiency gain from using prior information, as opposed to using noninformative priors.

To calculate the prior probability of the study claim, we simulate multiple hypothetical trial data using the prior predictive distribution (2) by setting the sampling prior as the fitting prior, and then calculate the probability of rejecting the null hypothesis based on the simulated data. We illustrate the procedure for calculating this probability using the beta-binomial model illustrated in Calibration of Bayesian trial design to assess frequentist operating characteristics section as an example. First, we generate the data (), where R represents the number of simulations. Here, f is the Bernoulli likelihood, and is the beta prior with hyper-parameters a and b. In this particular example, a and b represent the number of hypothetical patients showing adverse events and not showing adverse events a priori, hence is the prior effective sample size. The number of patients showing adverse events out of N patients, , is distributed according to a beta-binomial distribution [86], denoted as -. One can use a built-in function within the package to generate the r-th outcome . Second, we compute the posterior probability and make a decision whether to reject the null or not, i.e., if is rejected and 0 otherwise. Finally, the value of is the prior probability of the study claim based on the prior choice of .

We consider four prior options where the hyper-parameters have been set to induce progressively stronger prior information to reject the null a priori. Table 2 shows the results of the calculations of this probability. For the non-informative prior, the prior probability of the study claim is only 5.8%, implying that the outcome from a new trial will most likely dominate the final decision. However, the third and fourth options provide probabilities greater than 50%, indicating overly strong prior information; hence, appropriate discounting on the prior effective sample size is recommended.

Table 2.

Prior probability of the study claim based on beta-binomial model

| Prior Distribution | Number of hypothetical patients showing adverse events | Number of hypothetical patients not showing adverse events | Prior mean (standard deviation) | Prior probability of a study claim |

|---|---|---|---|---|

| 1 | 1 | 50% (5.8%) | 5.8% | |

| 1 | 9 | 10% (9%) | 47.1% | |

| 1 | 19 | 5% (4.9%) | 77.3% | |

| 1 | 49 | 2% (2%) | 99.1% |

Decision rule - posterior probability approach

Posterior probability approach

The central motivation for utilizing the posterior probability approach in decision-making is to quantify the evidence to address the question, “Does the current data provide convincing evidence in favor of the alternative hypothesis?” The key quantity here is the posterior probability of the alternative hypothesis being true based on the data observed up to the point of analysis. This Bayesian tail probability can be used as the test statistic in a single-stage Bayesian design upon completion of the study, similar to the role of the p-value in a single-stage frequentist design [77]. Furthermore, one can measure it in both interim and final analyses within the context of Bayesian group sequential designs [19, 46], akin to a z-score in a frequentist group sequential design [87, 88].

It is important to note that if the posterior probability approach is used in decision-making at the interim analysis, it does not involve predicting outcomes of the future remaining patients. This distinguishes it from the predictive probability approach, where the remaining time and statistical information to be gathered play a crucial role in decision-making at the interim analysis (as discussed in Decision rule - predictive probability approach section). Consequently, the posterior probability approach is considered conservative, as it may prohibit imputation for incomplete data or partial outcomes. For this reason, the posterior probability approach is standardly employed in interim analyses to declare early success or in the final analysis to declare the trial’s success to support marketing approval of medical devices or drugs in the regulatory submissions [23, 89].

Suppose that denotes an analysis dataset, and is the parameter of main interest. A sponsor wants to test versus , where , and and are disjoint. Bayesian test statistics following the posterior probability approach can be represented as a functional , such that:

| 10 |

where represents the collection of posterior distributions. Finally, to induce a dichotomous decision, we need to pre-specify the threshold . By introducing an indicator function (referred as a ‘critical function’ in [63]), the testing result is determined as follow:

where 1 and 0 indicate the rejection and acceptance of the null hypothesis, respectively.

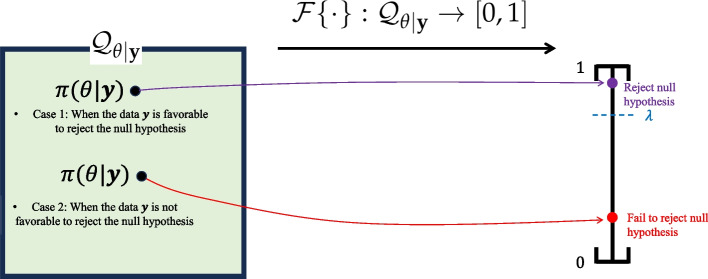

In the interim analysis, rejecting the null can be interpreted as claiming the early success of the trial, and in the final analysis, rejecting the null can be interpreted as claiming the final success of the trial. Figure 3 displays a pictorial description of the decision procedure.

Fig. 3.

Pictorial illustration of the decision rule based on the posterior probability approach: If the data were generated from the alternative (or null) density where (or ), then the posterior distribution would be more concentrated on the alternative space (or null parameter ), resulting in a higher (or lower) value of the test statistic . The pre-specified threshold is used to make the dichotomous decision based on the test statistic

The formulation of Bayesian test statistics is universal regardless of the hypothesis being tested (e.g., mean comparison, proportion comparison, association), and it does not rely on asymptotic theory. The derivation procedure for Bayesian test statistics based on the posterior probability approach is intuitive, considering the backward process of the Bayesian theorem. A higher value of implies that more mass has been concentrated on the alternative parameter space a posteriori. Consequently, there is a higher probability that the data were originally generated from the density indexed with parameters belonging to , that is, , . The prior distribution in this backward process acts as a moderator by appropriately allocating even more or less mass on the parameter space before seeing any data . If there is no prior information, the prior distribution plays a minimal role in this process.

This contrasts with the derivation procedure for frequentist test statistics, which involves formulating a point estimator such as sufficient statistics from the sample data to make a decision about a specific hypothesis. The derivation may vary depending on the type of test (e.g., t-test, chi-squared test, z-test) and the hypothesis being tested. Furthermore, asymptotic theory is often used if the test statistics based on exact calculation are difficult to obtain [53].

For a single-stage design with the targeted one-sided significance level of , the threshold is normally set to , provided that the test is a one-sided test and the prior distribution is a non-informative prior. This setting is frequently chosen, particularly when there is no past historical data to be incorporated into the prior; see the example of the beta-binomial model in Calibration of Bayesian trial design to assess frequentist operating characteristics section. If an informative prior is used, this convention (that is, ) should be carefully used because the type I error rate can be inflated or deflated based on the direction of the informativeness of prior distribution (see Table 1).

Asymptotic property of posterior probability approach

Bernstein-Von Mises theorem [90, 91], also called Bayesian central limit theorem, states that if the sample size N is sufficiently large, the influence of the prior diminishes, and the posterior distribution closely resembles the likelihood under suitable regularity conditions (for e.g., conditions stated in [91] or Section 4.1.2 of [92]). Consequently, it simplifies the complex posterior distribution into a more manageable normal distribution, independent of the form of prior, as long as the prior distribution is continuous and positive on the parameter space.

By using Bernstein-Von Mises theorem, we can show that if the sample size N is sufficiently large, the posterior probability approach asymptotically behaves similarly to the frequentist testing procedure based on the p-value approach [93] under the regularity conditions. For the ease of exposition, we consider a one-sided testing problem. In this specific case, we further establish an asymptotic equation between the Bayesian tail probability (10) and p-value.

Theorem 1

Let a random sample of size N, , be independently and identically taken from a distribution depending on the real parameter . Consider a one-sided testing problem versus where denotes the performance goal. Consider testing procedures with two paradigms:

where is the maximum likelihood estimator and is the Bayesian test statistics based on posterior probability approach, that is, . and denote threshold values for the testing procedures. For frequentist testing procedure, we assume that itself serves as the frequentist test statistics of which higher values cast doubt against the null hypothesis , and denotes the p-value. For Bayesian testing procedure, assume that the prior density is continuous and positive on the parameter space .

Under the regularity conditions necessary for the validity of normal asymptotic theory of the maximum likelihood estimator and posterior distribution, and assuming the null hypothesis to be true, it holds that

| 11 |

independently of the form of .

The proof can be found in Supplemental material.

Typically, for regulatory submissions, the significance level of the one-sided superiority test (e.g., versus , with the performance goal ) is . To achieve a one-sided significance level of for a frequentist design, one would use the decision rule to reject the null hypothesis, where denotes the p-value. The p-value is often called the ‘observed significance level’ because the value by itself represents the evidence against a null hypothesis based on the observed data [94].

Theorem 1 states that the value of the Bayesian tail probability (10) itself also serves as the evidence for the statistical significance. Furthermore, a Bayesian decision rule of will lead to the one-sided significance level of 0.025, regardless of the choice of prior, whether it is informative or non-informative, under regularity conditions, if the sample size N is sufficiently large.

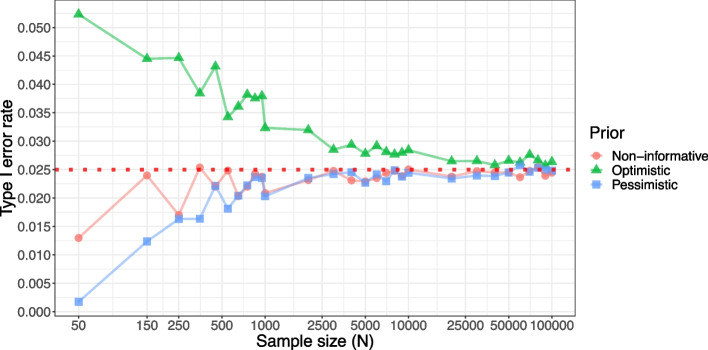

We illustrate Theorem 1 by using the beta-binomial model described in Calibration of Bayesian trial design to assess frequentist operating characteristics section as an example. Recall that, under sample sizes of , , and , Bayesian designs with non-informative priors meet the type I error requirement, while Bayesian designs with optimistic and pessimistic priors inflate and deflate the type I error, respectively (see Table 1). Under the same settings (that is, Bayesian threshold ), we now increase the sample size N up to 100,000 to explore the asymptotic behavior of the Bayesian designs. Figure 4 shows the results, where the inflation and deflation induced by the choice of the prior are getting washed out as N increases. When N is as large as 25,000 or more, the type I errors of all the Bayesian designs approximately achieve the type I error rate of 2.5%, implying that the asymptotic Eq. (10) holds.

Fig. 4.

Type I error rates of Bayesian designs based on the beta-binomial model with three prior options for testing versus , where . Prior options are (1) a non-informative prior with , (2) an optimistic prior with and , and (3) a pessimistic prior with and

In practice, the sample size (N) for pivotal trials in medical device development and phase II trials in drug development often leads to a modest sample size, and there are practical challenges limiting the feasibility of conducting larger studies [95]. Consequently, the asymptotic Eq. (10) may not hold in such limited sample sizes. Therefore, sponsors need to conduct extensive simulation experiments in the pre-planning of Bayesian clinical trials to best leverage existing prior information while controlling the type I error rate.

Bayesian group sequential design

An adaptive design is defined as a clinical study design that allows for prospectively planned modifications based on accumulating study data without undermining the study’s integrity and validity [16, 40, 41]. In nearly all situations, to preserve the integrity and validity of a study, modifications should be prospectively planned and described in the clinical study protocol prior to initiation of the study [16]. Particularly, for Bayesian adaptive designs, including Bayesian group sequential designs, clinical trial simulation is a fundamental tool to explore, compare, and understand the operating characteristics, statistical properties, and adaptive decisions to answer the given research questions [96].

Posterior probability approach is widely adopted as a decision rule for complex innovative designs. In such designs, the choice of the threshold value(s) often depends on several factors, including the complexity of trial design, specific objectives, the presence of interim analyses, ethical considerations, statistical methodology, prior information, and type I & II error requirements.

Consider a multi-stage design where the sponsor wants to use the posterior probability approach as an early stopping option for the trial success at interim analyses as well as the success at the final analysis. Let () denote the analysis dataset at the k-th interim analysis (thus, the K-th interim analysis is the final analysis), and is the parameter of main interest. The sponsor wants to test versus , where , and and are disjoint. One can use the following sequential decision criterion:

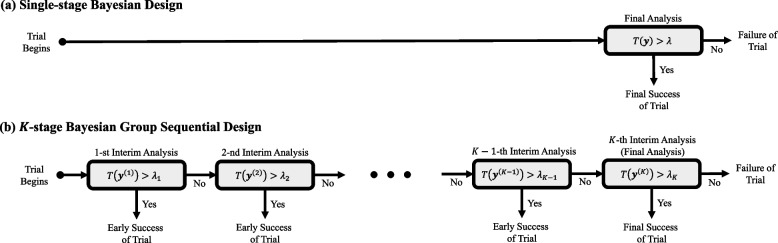

Figure 5 displays the processes of decision rules based on single-stage design and K-stage group sequential design. In practice, a general rule suggests that planning for a maximum of five interim analyses () is often sufficient [52]. In single-stage design, there is only one opportunity to declare the trial a success. In contrast, sequential design offers K chances to declare success at interim analyses and the final analysis. However, having K opportunities to declare success implies that there are K ways the trial can be falsely considered successful when it is not truly successful. These are the K false positive scenarios, and controlling the overall type I error rate is crucial to maintain scientific integrity for regulatory submission [16].

Fig. 5.

Processes of fixed design (a) and sequential design (b). The former allows only a single chance to declare success for the trial, while the latter allows K chances to declare success. The test statistic for the former design is denoted as , and for the latter design, they are , where . In both designs, threshold values ( and , ) should be pre-specified before the trial begins to control the type I error rate

Similar to frequentist group sequential designs, our primary concern here is to control the overall type I error rate of the sequential testing procedure. The overall type I error rate refers to the probability of falsely rejecting the null hypothesis at any analysis, given that is true. In this example, the overall type I error rate is given by:

| 12 |

where denotes the null value which leads to the maximum type I error rate (for e.g., is the performance goal for a single-arm superiority design). Noting from Eq. (12), the overall type I error rate is a summation of the error rates at each interim analysis. For the relevant calculations corresponding to the frequentist group sequential design, refer to page 10 of [97], where Bayesian test statistics and thresholds () are replaced by Z-test statistics based on interim data and pre-specified critical values, respectively.

The crucial design objective in the development of a Bayesian group sequential design is to control the overall type I error rate to be less than a significance level of (typically, 0.025 for a one-sided test and 0.05 for a two-sided test). This objective is similar to what is typically achieved in its frequentist counterparts, such as O’Brien-Fleming [98] or Pocock plans [99], or through the alpha-spending approach [100]. To achieve this objective, adjustments to the Bayesian thresholds are important, and this adjustment necessitates extensive simulation work. Failing to make these adjustments may result in an inflation of the overall type I error. For example, if one were to use the same thresholds of () for all the interim analyses, then the overall type I error would lead to the value greater than regardless of the maximum number of interim analyses. Furthermore, the overall type I error may eventually converge to 1 as the number of interim analyses K goes to infinity, similar to the behavior observed in a frequentist group sequential design [101]. Additionally, compared to single stage designs, group sequential designs may require a larger sample size to achieve the same power all else being equal, as there is an inevitable statistical cost for repeated analyses.

Example - two-stage group sequential design based on beta-binomial model

We illustrate the advantage of using a Bayesian group sequential design compared to the single-stage Bayesian design described in Calibration of Bayesian trial design to assess frequentist operating characteristics section. Similar research using frequentist designs can be found in [102]. Recall that the previous fixed design based on a non-informative prior led to a power of 86.90% and a type I error rate of 2.31% with a sample size of 150 and a threshold of (Table 1). Our goal here is to convert the fixed design into a two-stage design that is more powerful, while controlling the overall the type I error rate . For fair comparison, we aim for the expected sample size E(N) of the two-stage design to be as close to 150 as possible. Having a smaller value of E(N) than 150 is even more desirable in our setting because it means that two-stage design can shorten the length of the trial of the fixed design. To compensate for the inevitable statistical cost of repeated analyses, the total sample size of the two-stage design is set to , representing an 8% increase in the final sample size of the single-stage design. The stage 1 sample size and stage 2 sample size are divided in the ratios of 3 : 7, 5 : 5, or 7 : 3 to see the pattern of probability of early termination with different timing of interim analysis. Finally, we choose and as the thresholds for the interim analysis and the final analysis, respectively. Note that a more stringent stopping rule has been applied for early interim analyses than for the final analysis, similar to the proposed design of O’Brien and Fleming [98]. The same adaptation procedure will be taken to the single-stage designs with final sample sizes of 100 and 200 as reference.

Table 3 shows the results of the power analysis. It is observed that the overall type I error rates have been protected at 2.5% for all the considered designs. The expected sample sizes of the two-stage designs using a total sample size of are (), (), and (), with the power improved from 86.9% (single-stage design, see Table 1) to approximately 88.6% for all three cases. The power gain is even greater for the two-stage designs using a total sample size of , where the expected sample sizes are smaller than , which is advantageous for using a group-sequential design. Power gains occur for the two-stage designs using a total sample size of as well, but the expected sample sizes are larger than ; therefore, the single-stage design would be preferable in terms of expected sample sizes.

Table 3.

Operating characteristics of two-stage designs based on beta-binomial model

| Total Sample Size () | Stage 1 Sample Size () | Stage 2 Sample Size () | Expected Sample Size() | Probability of Early Termination () | Type I Error () | Power () | % Change in Power Compared with Single-stage Design |

|---|---|---|---|---|---|---|---|

| 108 | 32 | 76 | 108 | 0.0000 | 0.0199 | 0.7053 | +14.10 |

| 54 | 54 | 105 | 0.0603 | 0.0220 | 0.6945 | +12.36 | |

| 76 | 32 | 100 | 0.2632 | 0.0219 | 0.7094 | +14.77 | |

| 162 | 49 | 113 | 153 | 0.0819 | 0.0200 | 0.8865 | +2.01 |

| 81 | 81 | 145 | 0.2202 | 0.0228 | 0.8862 | +1.97 | |

| 113 | 49 | 146 | 0.3348 | 0.0208 | 0.8860 | +1.95 | |

| 216 | 65 | 151 | 191 | 0.1659 | 0.0219 | 0.9598 | +4.50 |

| 108 | 108 | 177 | 0.3642 | 0.0205 | 0.9570 | +4.20 | |

| 151 | 65 | 183 | 0.5120 | 0.0197 | 0.9568 | +4.18 |

Note: All two-stage designs are based on the beta-binomial model with a non-informative prior. The expected sample size (E(N)) and the probability of early termination (PET) have been calculated under alternative, . Formula of E(N) is given by , where and denote the sample sizes for stages 1 and 2, respectively. Thresholds for stage 1 and stage 2 are and , respectively, for all designs. The percentage change in the last column has been calculated by comparing the powers between the two-stage design and the single-stage design (non-informative) in Table 1

To summarize, the results show that, with an 8% increase in the final sample size of the single-stage design, we can construct a two-stage design in which the expected sample size is smaller or equal to the final sample size of the single-stage design. This is while still protecting the type I error rate below 2.5% and benefiting from an increase in the overall power of the designs by as much as 14% (), 2% (), and 4% (), assuming the alternative hypothesis is true. In other words, a Bayesian group sequential design allowing the claim of early success at interim analysis can help save costs by possibly reducing length of a trial when there is strong evidence of a treatment effect for the new medical device. Even if the evidence turns out to be not as strong as expected upon completion of the study (the null hypothesis seems more likely to be true in the observed final results), the potential risk for the sponsor would be the additional cost spent on enrolling 8% more patients than with the single-stage design.

Decision rule - predictive probability approach

Predictive probability approach

The primary motivation for employing the predictive probability approach in decision-making is to answer the question at an interim analysis: “Is the trial likely to present compelling evidence in favor of the alternative hypothesis if we gather additional data, potentially up to the maximum sample size?” This question fundamentally involves predicting the future behavior of patients in the remainder of the study, where the prediction is based on the interim data observed thus far. Consequently, its idea is akin to measuring conditional power given interim data in the stochastic curtailment method [103, 104]. The key quantity here is the predictive probability of observing a statistically significant treatment effect if the trial were to proceed to its predefined maximum sample size, calculated in a fully Bayesian way.

One of the most standard applications of predictive probability approach for regulatory submission is the interim analysis for futility stopping (i.e., early stopping the trial in favor of the null hypothesis) [23, 105–107]. This is motivated primarily by an ethical imperative; the goal here is to assess whether the trial, based on interim data, is unlikely to demonstrate a significant treatment effect even if it continues to its planned completion. This information can then be utilized by the monitoring committee to assess whether the trial is still viable midway through the trial [108]. The study will stop for lack of benefit if the predictive probability of success at the final analysis is too small. Other areas where this approach are useful include the early termination for success with consideration of the current sample size (i.e., early stopping the trial in favor of the alternative hypothesis) [18, 109, 110], or sample size re-estimation to evaluate whether the planned sample size is sufficiently large to detect the true treatment effect [111].

We focus on illustrating the use of the predictive probability approach for futility interim analysis. To simplify the discussion, we consider the two-stage futility design where only one interim futility analysis exists. The idea illustrated here can be extended to a multi-stage design by implementing the following testing procedure at each of the interim analyses in the multi-stage design. The logic explained here can be extended to the applications of early success claims and sample size re-estimation after a few modifications.

Suppose that and denote the datasets at the interim and final analyses, respectively, and is the main parameter of interest. We distinguish all incremental quantities from cumulative ones using the notation “tilde”. Therefore, and represent the incremental stage 2 data and the final data, respectively.

At the final analysis, a sponsor plans to test the null hypothesis versus the alternative hypothesis , where , and and are disjoint sets. Suppose that is the final test statistic to be used, and a higher value casts doubt that the null hypothesis is true. Therefore, the sponsor will claim the success of the trial if it is demonstrated that with a predetermined threshold , where the threshold is chosen to satisfy the type I & II error requirement of the futility design. It is at the sponsor’s discretion whether to use frequentist or Bayesian statistics to construct the final test statistic . This is because the purpose of using the predictive probability approach is to make a decision at the interim analysis, not at the final analysis.

At the interim analysis, the outcomes from stage 1 patients are observed. We measure the predictive probability of success at the final analysis, which is the Bayesian test statistics of the predictive probability approach represented as a functional , such that:

| 13 |

where represents the collection of posterior predictive distributions of stage 2 patient outcome given the interim data . As seen from the integral (13), the fully Bayesian nature of the predictive probability approach is characterized by its integration of final decision results over the data space of all possible scenarios of future patients’ outcome , with the weight of the integral respecting the posterior predictive distribution . Note that the posterior predictive distribution is again a mixture distribution of the likelihood function of the future outcome and the posterior distribution given the interim data:

It is important to note that the predictive probability (13) differs from the predictive power [112, 113], which represents a weighted average of the conditional power, given by . The calculation of the predictive probability (13) follows the fully Bayesian paradigm. However, the predictive power is a mix of both frequentist and Bayesian paradigms, constructed based on the conditional power (frequentist statistics) and posterior distribution (Bayesian statistics). Both can be used as the metric of a Bayesian stochastic curtailment method [114], but the recent trend seems to be that the predictive probability is more prevalently used for regulatory submissions than predictive power [23, 115].

Finally, to induce a dichotomous decision at the interim analysis, we need to pre-specify the futility threshold . By introducing an indicator function , the testing result for the futility analysis is determined as follow:

where 1 and 0 indicate the rejection and acceptance of the null hypothesis, respectively. Figure 6 displays a pictorial description of the decision procedure.

Fig. 6.

Pictorial illustration of the decision rule based on the predictive probability approach for futility analysis. If the interim data favors accepting the null hypothesis (Case 2 in the figure), it is also likely that the future remaining patients’ outcomes would be predicted to be more favorable for accepting the null hypothesis. This prediction results in a lower value of the test statistic (13). The pre-specified threshold is then used to make the dichotomous decision based on the test statistic

Theoretically, it is important to note that allowing early termination of a trial for futility tends to reduce both the trial’s power and the type I error rate [107]. To explain this, suppose that one uses the identical final threshold in both of the two-stage futility design, as explained above, and the fixed design. Then, the following inequality holds:

| 14 |

which means that the power function of the fixed design is uniformly greater or equal to the power function of the two-stage futility design over the entire parameter space . This implies that equipping a futility rule to a fixed design leads to a reduction of both the type I error rate and power compared to the fixed design.

We briefly discuss the choice of the futility threshold and the final threshold in the two-stage futility design. Futility threshold is typically chosen within the range of 1% to 20% in many problems. Having fixed the threshold , a higher threshold for increases the likelihood of discontinuing a trial involving an ineffective treatment, which is desirable because it shortens the trial length when there is a true negative effect. However, it may reduce both the type I error rate and power compared to a lower threshold for . On the other hand, the final threshold of the futility design is typically chosen to align with the nominal significance level of the corresponding fixed design. This is mainly due to the relevant operational risk of inflating the type I error rate if futility stopping were not executed as planned, even after the final threshold has been chosen to make rejection easier to reclaim the lost type I error rate [107, 116]. In summary, when constructing a futility design, the sponsor needs to choose the futility threshold that does not substantially affect the operating characteristics of the original fixed-sample size, while also curtailing the trial length when there is a negative effect.

Example - two-stage futility design with Greenwood test

Suppose that a sponsor considers a single-arm design for a phase II trial to assess the efficacy of a new antiarrhythmic drug in treating patients with a mild atrial fibrillation [117]. The primary efficacy endpoint is the freedom from recurrence of the indication at 52 weeks (1 year) after the intervention. The sponsor sets the null and alternative hypotheses by versus , where denotes the probability of freedom from recurrence at 52 weeks. Let S(t) represent the survival function; then the main parameter of interest is . At the planning stage, regulator agreed on the proposal of sponsor that the time to recurrence follows a three-piece exponential model, with a hazard function given as if , if , and if , where is a positive number. In order to simulate the survival data in the power calculation, the value of will be derived to set the true data-generating parameter to be and 0.7. Note that corresponds to the type I error scenario, and the rest of the settings correspond to power scenarios.

We first construct a single-stage design with the final sample size of patients. The final analysis is conducted by a frequentist hypothesis testing based on the one-sided level-0.025 Greenwood test using a confidence interval approach [118]. More specifically, the testing procedure is that the null hypothesis is rejected if the lower bound of the 95% two-sided confidence interval evaluated at is greater than 0.5, that is,

| 15 |

Here, the mean estimate is the Kaplan-Meier estimate of S(t) [119], and its variance estimate is based on the Greenwood formula [120], and notation represents the final data from patients. The results of the power analysis obtained by simulation indicate that the probabilities of rejecting the null hypothesis are 0.0185, 0.1344, 0.461, 0.8332, and 0.9793 when the effectiveness success rates () are 0.5, 0.55, 0.60, 0.65, and 0.7, respectively. Note that the type I error rate is 0.0185 less than the 0.025.

Next, we construct a two-stage futility design by equipping the above single-stage design with a non-binding futility stopping option based on the predictive probability approach. Non-binding means that the investigators can freely decide whether they really want to stop or not. This is more common in practice because a stopping decision is typically influenced not only by interim data but also by new external data or safety information [121]. The final sample size of the futility design is again , and we keep the decision criterion for the study success of the final test the same as that of the single-stage design (15). This means that there are no adjustments to the final threshold to reclaim a loss of type I error rate. The futility analysis will be performed when patients have completed the 52 weeks of follow-up (30% of participants). A non-informative Gamma prior will be used for each of the hazard rate parameters of the three-piece exponential model. Futility stopping (i.e., accepting the null hypothesis) is triggered if the predictive probability of trial success at the maximum sample size is less than the pre-specified futility threshold . Technically, the predictive probability is

where and denote the time-to-event outcomes from patients and patients, respectively, and denotes the posterior predictive distribution of outcomes of the future remaining patients .

In the power analysis, we vary the number of stage 1 patients, , to 50 and 70 and set the futility threshold, , to 0.1 and 0.15 to explore the operating characteristics of the futility design. Figure 7 illustrates the testing procedures of the single-stage design and the two-stage futility design. In this setting, the only difference between the futility and single-stage designs is that the former has the option to stop the trial due to futility when patients had completed the follow-up of 52 weeks, while the latter does not. Table 4 shows the power analysis results of the two-stage futility designs.

Fig. 7.

Testing procedures of the single-stage design and the two-stage futility design are as follows: at the final analysis, both designs employ the one-sided level-0.025 Greenwood test with a final sample size of . Only the futility design has the option to stop the trial due to futility when patients had completed 52 weeks of follow-up. In the power analysis, we use , and 70, along with , 0.1, and 0.15 to assess the operating characteristics of the design

Table 4.

Operating characteristics of two-stage futility designs with the final sample size

| Stage 1 Sample Size | Stage 1 Sample Size | Stage 1 Sample Size | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Futility Threshold | Effectiveness Success Rate | Probability of Rejecting Null Hypothesis | Expected Sample Size () | Probability of Early Termination () | Probability of Rejecting Null Hypothesis | Expected Sample Size () | Probability of Early Termination () | Probability of Rejecting Null Hypothesis | Expected Sample Size () | Probability of Early Termination () |

| 0.05 | 0.5 | 0.017 | 70.11 | 0.427 | 0.017 | 66.80 | 0.664 | 0.018 | 76.12 | 0.796 |

| 0.55 | 0.116 | 84.18 | 0.226 | 0.117 | 80.75 | 0.385 | 0.12 | 85.06 | 0.498 | |

| 0.6 | 0.441 | 93.98 | 0.086 | 0.439 | 92.85 | 0.143 | 0.446 | 93.97 | 0.201 | |

| 0.65 | 0.818 | 98.32 | 0.024 | 0.817 | 97.95 | 0.041 | 0.829 | 98.74 | 0.042 | |

| 0.7 | 0.975 | 99.58 | 0.006 | 0.973 | 99.50 | 0.01 | 0.976 | 99.88 | 0.004 | |

| 0.10 | 0.5 | 0.016 | 59.68 | 0.576 | 0.016 | 63.05 | 0.739 | 0.016 | 74.11 | 0.863 |

| 0.55 | 0.114 | 76.48 | 0.336 | 0.115 | 76.45 | 0.471 | 0.117 | 82.00 | 0.600 | |

| 0.6 | 0.428 | 89.01 | 0.157 | 0.427 | 89.65 | 0.207 | 0.44 | 92.08 | 0.264 | |

| 0.65 | 0.799 | 95.31 | 0.067 | 0.810 | 96.95 | 0.061 | 0.826 | 97.93 | 0.069 | |

| 0.7 | 0.966 | 98.74 | 0.018 | 0.969 | 99.15 | 0.017 | 0.971 | 99.55 | 0.015 | |

| 0.15 | 0.5 | 0.016 | 57.93 | 0.601 | 0.016 | 61.20 | 0.776 | 0.015 | 73.15 | 0.895 |

| 0.55 | 0.110 | 73.61 | 0.377 | 0.114 | 74.40 | 0.512 | 0.115 | 80.56 | 0.648 | |

| 0.6 | 0.419 | 87.12 | 0.184 | 0.423 | 88.20 | 0.236 | 0.429 | 90.67 | 0.311 | |

| 0.65 | 0.795 | 94.47 | 0.079 | 0.806 | 96.60 | 0.068 | 0.817 | 97.27 | 0.091 | |

| 0.7 | 0.964 | 98.6 | 0.020 | 0.968 | 99.05 | 0.019 | 0.969 | 99.31 | 0.023 | |

Note: Probabilities of rejecting null hypothesis of single-stage design with the final sample size of are 0.0185, 0.1344, 0.461, 0.8332, and 0.9793 when the effectiveness success rates are 0.5, 0.55, 0.60, 0.65, and 0.7, respectively

The results demonstrate that the probability of rejecting the null hypothesis in the futility design is consistently lower than that in the single-stage design across various effectiveness success rates ( and 0.7). This finding aligns with the theoretical result (refer to inequality (14)). For example, in the case where the futility threshold with a stage 1 sample size of , the percentage change in the probability of rejecting the null hypothesis compared to a single-stage design is , , , , and when the true effectiveness success rate () is 0.5, 0.55, 0.6, 0.65, and 0.7, respectively.

We examine the general pattern of the reduction in the type I error rate and power of the futility design compared to the single-stage design as the futility threshold changes. Note that the average of type I error rates across three different stage 1 sample size for the futility design are 0.0173, 0.0160, and 0.0156 when the futility thresholds are set at 0.05, 0.10, and 0.15, respectively. These results reflect reductions of 6.4%, 13.5%, and 15.6% in the type I error rate compared to the single-stage design. (Recall that the type I error rate of the single-stage design is 0.0185.) This implies that a higher value for the futility threshold leads to a more substantial reduction in the type I error rate compared to the single-stage design. A similar pattern of reduction is observed in the power scenarios when and 0.7.

Notably, the probability of early termination tends to increase as the stage 1 sample size grows from to . This increase is particularly significant in the type I error scenario when . Across all the scenarios examined, the expected sample size consistently stays below . This indicates that the futility design outperforms the single-stage design in terms of expected sample size as a performance criterion. Furthermore, this reduction in expected sample size is even more pronounced in the type I error scenarios. In conclusion, it is evident that for long-term survival endpoints, like the example discussed here, the futility design can lead to substantial resource savings by allowing the trial to be terminated midway when the lack of clinical benefit becomes clear.

Multiplicity adjustments

Multiplicity problem - primary endpoint family

Efficacy endpoints are measures designed to reflect the intended effects of a drug or medical device. Clinical trials are often conducted to evaluate the relative efficacy of two or more modes of treatment. For instance, consider a new drug developed for the treatment of heart failure [122]. In this case, it may be unclear whether the heart failure drug primarily promotes a decrease in mortality, a reduction in heart failure hospitalization, or an improvement in quality of life (such as Kansas City Cardiomyopathy Questionnaire score overall summary score [123]). However, demonstrating any of these effects individually would hold clinical significance; there are multiple chances to ‘win.’ Consequently, all three endpoints – mortality rate, number of heart failure hospitalizations, and an index for quality of life – might be designated as separate primary endpoints. This is an illustrative example of a primary endpoint family, and failure to adjust for multiplicity can lead to a false conclusion that the heart failure drug is effective. Here, multiplicity refers to the presence of numerous comparisons within a clinical trial [124–127]. See Section III of the FDA guidance document for the multiple endpoints for more details on the primary endpoint family [128].

In the following, we formulate the multiplicity problem of the primary endpoint family. We consider a family of K primary endpoints, any one of which could support the conclusion that a new treatment has a beneficial effect. For simplicity, we assume that the outcomes of the patients are binary responses, where a response of 1 (yes) indicates that the patient shows a treatment effect. Using the example of a heart failure drug, the first efficacy endpoint measures mortality: whether a patient has survived (yes/no), the second endpoint measures morbidity: whether a patient experienced heart failure hospitalization (no/yes), and the third endpoint measures the quality of life: whether the Kansas City Cardiomyopathy Questionnaire overall summary score has improved by more than 15 points (yes/no) during a defined period after the treatment. The logic explained in the following can be applied to various types of outcomes, including continuous outcomes and time-to-event outcomes.

We consider a form of parallel group trial design, each associated with hypotheses given by:

| 16 |

where denotes the response rate for the i-th endpoint (where a higher rate indicates a better treatment effect), and represents the performance goal associated with the i-th endpoint.