Abstract

Background

Adequate staffing is crucial for high quality patient care and nurses’ wellbeing. Nurses’ professional assessment on adequacy of staffing is the gold standard in measuring staffing adequacy. However, available measurement instruments lack reliability and validity.

Objectives

To develop and psychometrically test an instrument to measure nurses’ perceived adequacy of staffing (PAS) of general hospital wards.

Design

A multicenter cross-sectional psychometric instrument development and validation study using item response theory.

Settings

Ten general nursing wards in three teaching hospitals in the Netherlands.

Participants

A sample of 881 participants, including third and fourth year nursing students and nurse/care assistants.

Methods

The 13-item self-reported questionnaire was developed based on a previous Delphi study and two focus groups. We interviewed five nurses to evaluate the content validity of the instrument. The field test for psychometric evaluation was conducted on ten general wards of three teaching hospitals in the Netherlands. Structural validity of the item bank was examined by fitting a graded response model (GRM) and inspecting GRM fit. Measurement invariance was assessed by evaluating differential item functioning (DIF) of education level and work experience. We examined convergent validity by testing the hypothesis that there was at least a moderate correlation between the PAS instrument and a single item measuring adequacy of staffing. Internal consistency and reliability across the scale were also examined.

Results

A total of 881 measurements were included in the analysis. The data fitted the GRM adequately and item fit statistics were good. DIF was detected for work experience for the protocols item, but the impact on total scores was negligible. The hypothesis was confirmed and the item bank reliably measured two standard deviations around the mean.

Conclusions

The Nurse Perceived Adequacy of Staffing Scale (NPASS) for nurses of general hospital wards in the Netherlands has sufficient reliability and validity and is ready for use in nurse staffing research and practice.

Keywords: Instruments, Nurse staffing, Perceptions, Personnel staffing and scheduling, Psychometric properties, Staffing adequacy, Workforce planning

Contribution of the paper

What is already known about the topic?

• Many measurement instruments to facilitate nurse managers in making evidence-based staffing decisions have been developed, but there is insufficient evidence in favor of a particular instrument.

• Nurses’ professional judgment on nurse staffing is the nearest to a gold standard.

• Instruments available for perceived adequacy of nurse staffing lack reliability and validity.

What this paper adds

• The Nurse Perceived Adequacy of Staffing Scale (NPASS) consists of 13 reflective items to measure nurses’ perceived adequacy of staffing.

• Psychometric evaluation of the NPASS proved sufficient reliability and validity; the instrument is ready for use in practice.

1. Introduction

Adequate matching of demand for nursing work and nursing resources is critical to avoid unfavorable outcomes for patients and nurses (Shin et al., 2018; Unruh, 2008). Both understaffing and overstaffing are undesirable; understaffing leads to adverse events, while overstaffing wastes scarce nursing resources (Dall'Ora et al., 2021). Nowadays, increasing demand for care, nursing labor shortages, and budget constraints have made understaffing part of the daily routine. To optimally utilize the available nursing resources, staffing levels need to fit demand for nursing work precisely. To determine the most appropriate staffing levels, it is important to measure what constitutes sufficient nursing staff in a given situation.

Over the past 50 years, many attempts have been made to develop adequate nurse staffing methods and tools (Fasoli and Haddock, 2010). A variety of methods are available including volume-based methods (e.g., patient-to-nurse ratios), patient prototype/classification, timed-task approaches and professional judgement (Griffiths et al., 2020). None of these have been implemented on a large scale and there is no evidence to favor the use of a particular method over others (Griffiths et al., 2020). Available instruments are time-consuming and lack reliability and validity testing (Royal College of Nursing, 2010). Among the many methods available, measuring the adequacy of staffing based on the opinion of nursing experts is considered the nearest to the gold standard (Griffiths et al., 2020). This is supported by the association between positive perceived adequacy of staffing (PAS) by nurses and positive patient, nurse, and hospital outcomes (van der Mark et al., 2021). The Telford instrument is an early example of an approach that incorporates nurses’ perceptions on adequacy of staffing to set nurse rosters, and subsequently converts this into establishments (Telford, 1979). This approach is criticized because it does not account for daily fluctuations in demand for nursing work (Royal College of Nursing, 2010). The RAFAELA system is a resource planning system at shift level. It combines a patient classification instrument and a retrospective assessment of the adequacy of staffing to determine the amount of nursing staff needed (Fagerström et al., 2000). This system is barely used in non-Nordic countries because of validity and acceptability issues (van Oostveen et al., 2016). Moreover, it focuses mainly on patient-related work, while nursing work includes non-patient related work such as supervising nursing students and quality- and organization-related work (van den Oetelaar et al., 2016; Morris et al., 2007).

Although PAS has many merits, the instruments available to measure it are not valid or reliable (van der Mark et al., 2021). The construct is oversimplified by the use of single items (van der Mark et al., 2021). For example, the unit and staff characteristics that are part of the MISSCARE and Nursing Teamwork surveys contain the item ‘% of the time perceived staffing adequacy in the unit’ (Kalisch and Williams, 2009; Kalisch et al., 2010). A single item leaves the definition of the construct to the rater. This leads to over- and underestimation of PAS, so a single item is inappropriate for a complex construct like PAS (de Vet et al., 2011; Kramer and Schmalenberg, 2005). Available multi-item PAS instruments focus solely on direct patient care or were developed for non-staffing purposes, such as the Practice Environment Scale, which was developed to measure the nurses’ work environment (van der Mark et al., 2021; Lake, 2002). These instruments do not fit the resource planning purpose because they include items such as satisfaction with the salary (van der Mark et al., 2021). To support resource planning decisions, it is relevant to measure PAS at the shift level rather than with aggregated measures (Story, 2010). Fluctuations in the demand for nursing work (e.g., due to varying patient needs) and nurse supply (e.g., due to leave or sickness) typically cause strong variability in staffing adequacy. On average, nurse staffing may be adequate, but frequent understaffing may increase the risk of patient death (Fagerström et al., 2018; Griffiths et al., 2019; Rochefort et al., 2020; Musy et al., 2021) and nurse fatigue (Blouin and Podjasek, 2019).

In response to the deficiencies outlined above, we aimed to develop a valid and reliable PAS instrument. We previously defined adequacy of shift staffing by identifying the items involved (van der Mark et al., 2022). In the present study, we used these items to develop an instrument to measure nurses’ PAS and explore the psychometrical validity and reliability of this instrument.

2. Methods

This is a multicenter cross-sectional psychometric instrument development and validation study. The instrument was developed as described by de Vet et al. (2011). First, we developed the measurement instrument in focus groups with nurses working on general wards. Subsequently, five nurses with a special interest in nurse staffing were interviewed to evaluate the content validity of the instrument. Second, we conducted a field test in three teaching hospitals in the Netherlands to test construct validity (i.e., structural validity, measurement invariance, and hypothesis testing) and reliability (de Vet et al., 2011).

Item Response Theory (IRT) was used to evaluate construct validity and reliability and to calculate test scores (de Vet et al., 2011; Edelen and Reeve, 2007). IRT was developed for psychological and educational measurement and is increasingly being used in other fields, such as quality of life research (Reeve et al., 2007). This theory creates a common sustainable scale by item banking and locates each item on the scale depending on its difficulty. The item bank makes it possible to create highly efficient instruments, such as short forms or computer adaptive tests (Edelen and Reeve, 2007). This offers many opportunities in the daily application of an instrument to measure PAS.

This study was conducted and reported according to the ‘Consensus-based Standards for the Selection of Health Measurement Instruments’ (COSMIN) checklist for study design (Mokkink et al., 2019) and associated reporting guideline (Gagnier et al., 2021).

2.1. Instrument development

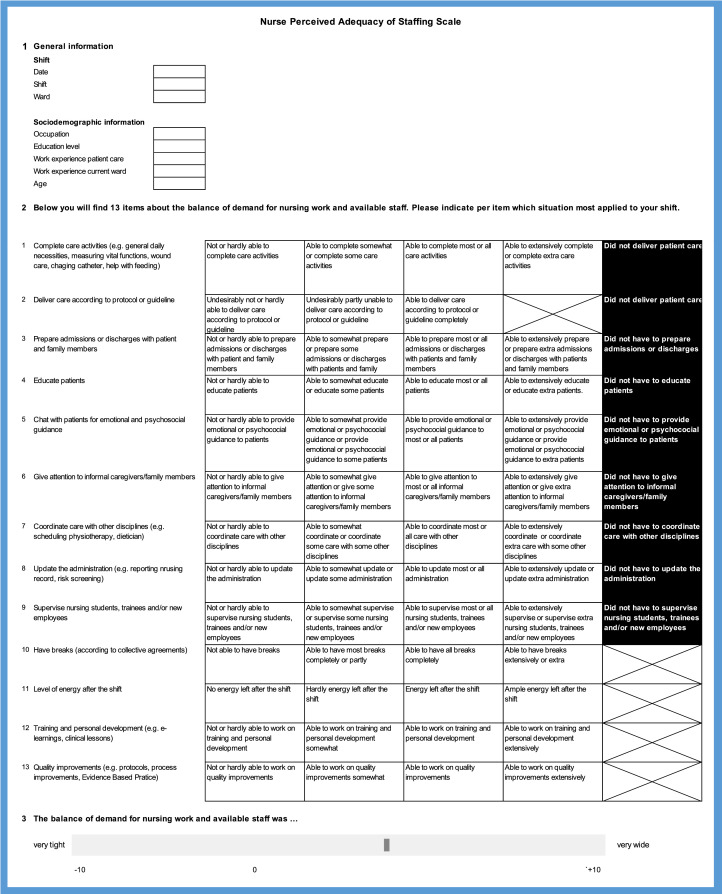

We conducted a three-round Delphi study to find consensus on which items determine adequacy of shift staffing. Of the 27 items entered in the Delphi study, six were relevant to adequacy of staffing, nine were not relevant, and 12 were unsure. These results have been published elsewhere (van der Mark et al., 2022). In two nurse focus groups, the unsure items of adequacy of staffing were discussed in depth. Focus group meetings were recorded and the discussion was analyzed by two researchers (CO, CM), who divided the discussed items into three categories: (1) items to include, (2) items to reword and include, and (3) items to exclude. Two researchers (CO, CM) reviewed and discussed this list until consensus was reached on which items to include in the instrument. Five items were not included (Appendix 1). Participants stated that these items were too subjective, overlapped with other included items, or were not clearly an effect indicator of staffing adequacy. The other seven unsure items were included (Appendix 1). The 13 items of the measurement instrument were scored at an ordinal level so that several response options could generate a variety of staffing adequacy levels. There were four to five possible responses for each item, including a ‘did not have to’ option for items that did not necessarily apply to each respondent or shift (Fig. 1). One example of such an item was ‘being able to supervise nursing students’, with ‘did not have to supervise nursing students’ as a response option. The instrument consisted of three parts: (1) general questions on shift information e.g., date, shift, and ward, and sociodemographic information of the respondent, e.g. education level and working experience, (2) the 13 items on adequacy of shift staffing, and (3) a single item to assess the overall adequacy of staffing on a scale ranging from −10 (very tight) to +10 (very wide) for convergent validity testing.

Fig. 1.

Nurse Perceived Adequacy of Staffing Scale (NPASS), translated from Dutch for this article.

The content of the instrument was pilot tested. One researcher (CM) conducted five semi-structured interviews with nurses. Participants were members of the nursing board and nurses with a special interest in nurse staffing from the researchers’ network. They were asked to fill out the instrument using the ‘think aloud’ method, in which participants are asked to say exactly what they are thinking (Eccles and Arsal, 2017). This technique is useful to gain a thorough understanding of how participants interpret and respond to the items. Subsequently, they were asked to comment on the instructions, items, and response categories and to consider the content validity (relevance, comprehensiveness, comprehensibility), acceptability, and feasibility of the instrument. The interviews were recorded and analyzed by one researcher (CM). Two researchers (CO, CM) reviewed the outcomes. Overall, respondents described the instrument as ‘clear’ and ‘complete’ and were motivated to apply the instrument in practice. Some minor textual adaptations were made, e.g. trainees and new employees were added to item nine on supervising nursing students, and examples were added to the descriptions of items 1, 7, 8, 12, and 13. The instrument is shown in Fig. 1.

2.2. Psychometric evaluation

2.2.1. Participants and procedure

The field test was conducted in three teaching hospitals in the Netherlands: VieCuri Medical Center, Spaarne Gasthuis, and Rijnstate hospital. Thirteen wards with 19–48 beds each were interested in participation. These wards participated voluntarily.

Inclusion criteria were registered nurses, third- and fourth-year nursing students, nurse assistants, or care assistants working on general hospital wards (medical and surgery). Under- and overstaffed shifts should be balanced by reallocating nurses or patients. This balancing act is applicable to interchangeable capacity, i.e. general wards, and not to highly specialised wards (e.g. intensive care unit and paediatrics). Additionally, in resource planning it is relevant to cover adequacy of staffing of the total amount of nursing work for resources rostered at ward level. Hence, nurses, nurse/care assistants and third- and fourth-year nursing students were included since their work represents the total nursing work, i.e. direct and indirect patient care activities, and non-patient care-related nursing activities (Morris et al., 2007). Moreover, these occupations are commonly part of the wards’ full-time equivalent (FTE) budget. Occupations not contributing to nursing work or not included in the wards’ FTE budget were excluded, e.g. first- and second-year nursing students, nutrition assistants, and facility services.

Participants received an information letter, in which the aim, procedure, and duration of the study were explained. We also stated that informed consent was assumed by filling out the instrument. Further information was given on request in two presentations.

For four to six weeks, each shift was individually evaluated by all staff present for adequacy of staffing. Participants were asked to fill out the instrument at the end of each shift by means of a digital questionnaire especially developed for this research. To prevent participants from having to provide sociodemographic information repeatedly when evaluating each shift, each participant entered a unique code before filling in the questionnaire; this code was requested again at subsequent measurements. Participation was anonymous as no traceable personal information was requested. To avoid missing data, participants were not allowed to skip any question. The questionnaire could be completed using a mobile device or desktop. Posters with QR codes were provided for easy access to the questionnaire. The questionnaire was administered in Dutch.

Five wards spent two to three weeks testing the questionnaire for technical reliability, accessibility, speed, flow, and clarity, before the final version was released. Data was gathered between May and August 2022. Head nurses of participating wards were requested to coordinate the field test and to motivate nurses to fill out the instrument. They received a weekly update on the number of measurements per shift.

2.3. Statistical analysis

We performed all statistical analyses using R (version 4.1.2) (Team, 2013). The R-package LTM (version 1.1–1) was used for descriptive statistics. Packages used for the IRT analysis are provided in Table 1.

Table 1.

Descriptions of the IRT concepts, tests, and acceptable values per test, adapted from van Kooten et al. (2021).

| Investigated property | Explanation | Test | Criteria for acceptable values |
|---|---|---|---|
| Assumptions IRT model: unidimensionality | All items in the item bank should reflect the same construct. | Unidimensionality was examined by CFA (R-package Lavaan; version 0.6–10 (Rosseel, 2012)). One-factor model fit was tested using the WLSMV estimation. In case of insufficient one-factor model fit, a bi-factor analysis and EFA were used (R-package Psych; version 2.2.5 (Revelle and Revelle, 2015)). A bi-factor analysis tests the item loadings on other group factors in addition to the general factor. EFA tests how much of the variance in the data is explained by the first factor (the construct we want to measure) (Reise, 2012; Rodriguez et al., 2016). | 1. CFA: scaled CFI > 0.95, scaled TLI > 0.95, scaled RMSEA < 0.06, SRMR < 0.08 (Schermelleh-Engel et al., 2003); 2. Bi-factor analysis: ωH > 0.80; 3. EFA: ECV > 0.70 |
| Assumptions IRT model: local independence | Items should be independent of each other when controlled for the construct measured. | Local independence was examined by evaluating residual correlations in the CFA model after controlling for the dominant factor. | Residual correlations ≤ 0.20 (Reeve et al., 2007) |
| Assumptions IRT model: monotonicity | The probability of selecting an item response indicative of more problems (i.e. less adequate staffing) increases with an increasing level of the construct. | Monotonicity was examined by a non-parametric IRT model fit with Mokken scaling (R-package Mokken; version 3.0.6 (Van der Ark, 2007)). | Scalability coefficient H (scale) > 0.50; scalability coefficient Hi (item) > 0.30 (Mokken, 2011) |
| IRT-model fit | IRT models describe the relationship between a person's response to an item and the level of construct measured by the total item bank. | The GRM was fitted (R-package Mirt; version 1.35.1 (mirt, 2012)). Discrimination and threshold parameters were estimated per item using marginal maximum likelihood. | Orlando and Thissen's S-X² p value ≥ 0.001 (Reeve et al., 2007; Kang and Chen, 2011) |
| DIF | DIF assesses whether measurements with different participant characteristics, having the same level of theta (i.e. adequacy of staffing), have different probabilities of selecting a certain response category. | Both uniform (magnitude is constant across the scale) and non-uniform DIF (magnitude is not constant across the scale) were tested. McFadden's pseudo R² test was used to test DIF (R-package lordif, version 0.3–3 (Choi et al., 2011)). | Change in McFadden's R² ≤ 0.02 (Swaminathan and Rogers, 1990) |
| Reliability | Assesses reliability across the PAS scale, where reliability is expressed as the standard error of theta (SE(θ)). | SE(θ) were calculated using the EAP estimator. | SE(θ) ≤ 0.32 |

Abbreviations: CFA, confirmatory factor analysis; CFI, Comparative fit index; DIF, Differential Item Functioning; EAP, Expected A Posteriori; ECV, Explained Common Variance; EFA, exploratory factor analysis; GRM, Graded Response Model; RMSEA, root mean square error of approximation; SE, standard error; SRMR, Standardized root mean square residual; TLI, Tucker-Lewis index; WLSMV, weighted least squares with mean and variance adjustment; ωH, Omega hierarchical.
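As a reading aid for the DIF criterion in Table 1, the pseudo R² comparison can be written out as below. This is a sketch of the standard McFadden definition applied to nested ordinal logistic regression models; the symbols $\ell_0$ and $\ell_m$ and the numbering of models 1 to 3 are notation assumed here for illustration and are not taken from the study itself.

```latex
R^2_{\mathrm{McF}}(m) = 1 - \frac{\ell_m}{\ell_0}, \qquad
\Delta R^2_{12} = R^2_{\mathrm{McF}}(2) - R^2_{\mathrm{McF}}(1), \qquad
\Delta R^2_{23} = R^2_{\mathrm{McF}}(3) - R^2_{\mathrm{McF}}(2)
```

Here $\ell_0$ is the log-likelihood of an intercept-only model and $\ell_m$ that of model $m$, where model 1 is assumed to predict the item response from θ alone, model 2 adds the group variable (uniform DIF), and model 3 adds the θ-by-group interaction (non-uniform DIF). Under the criterion in Table 1, an item would be flagged when the relevant change exceeds 0.02.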

Sociodemographic variables and item-level descriptives. Descriptive statistics (mean, proportions) were used to present sociodemographic variables. The data was cross-sectional and each shift was a unit of measurement. We used the unique participant codes to insert sociodemographic information automatically at the start of each shift measurement. Wards with fewer than 25 measurements were removed from the database to prevent measurement bias. This threshold was arbitrarily chosen.

Prior to the psychometric evaluation of the PAS instrument, we calculated the median and mean response category for all items, as well as the response percentages. Inter-item correlations of >0.75 were flagged because they may indicate similarity and therefore redundancy of items. The ‘did not have to’ answer option was an option for nine items (items 1–9) and was statistically treated as missing data.
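The screening step described above can be sketched in a few lines. The example below is illustrative only: it assumes Pearson correlations and pairwise-complete observations (the text does not state which coefficient or deletion rule was used for this step), encodes the ‘did not have to’ option as `None`, and uses the hypothetical helper names `pearson` and `flag_redundant_items`.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")  # undefined when one item has no variance
    return sxy / sqrt(sxx * syy)


def flag_redundant_items(responses, threshold=0.75):
    """Return (item_a, item_b, r) for item pairs with r above the threshold.

    `responses` maps an item name to a list of scores, one per measurement;
    None stands for 'did not have to' and is treated as missing.
    """
    flagged = []
    for a, b in combinations(responses, 2):
        # Keep only measurements where both items were answered (assumption).
        pairs = [(u, v) for u, v in zip(responses[a], responses[b])
                 if u is not None and v is not None]
        if len(pairs) < 3:
            continue
        xs, ys = zip(*pairs)
        r = pearson(xs, ys)
        if r > threshold:
            flagged.append((a, b, round(r, 2)))
    return flagged
```

Calling `flag_redundant_items` with a dictionary of item responses returns the pairs that would be flagged for possible redundancy; an empty list means no pair exceeded 0.75.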

We conducted the psychometric analyses of the PAS data according to the PROMIS analysis plan for IRT applications in quality of life research (Reeve et al., 2007). There are no guidelines on sample size requirements for IRT analysis (Edelen and Reeve, 2007), so we followed Tsutakawa and Johnson's recommendation of a sample size of approximately 500 for accurate parameter estimates (Tsutakawa and Johnson, 1990).

Structural validity. We assessed structural validity of the PAS item bank by fitting a graded response model (GRM) and exploring item fit. A GRM was considered a suitable IRT model for instrument items with ordinal response categories that allow for a varying number of response categories per item (Nguyen et al., 2014). Discrimination and threshold parameters were estimated per item using marginal maximum likelihood. The discrimination parameter (α) represents the ability of an item to distinguish between shifts with a different level of PAS (θ). The threshold parameters (β) represent the levels of perceived staffing adequacy at which the probability of selecting a higher response category rather than a lower one exceeds 50%. Prior to fitting the model, we examined the required assumptions of the IRT model: unidimensionality, local independence, and monotonicity (Edelen and Reeve, 2007). Missing data were listwise deleted during our examination of IRT assumptions and exploration of item fit. We found that other methods to handle missing data (pairwise deletion and imputed data) led to the same conclusions. Table 1 provides further descriptions of the IRT concepts, tests, and acceptable values per test.
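To make the roles of α and β concrete, the sketch below computes GRM response-category probabilities for a single item. It assumes the standard logistic (Samejima) form, in which the probability of responding in category k or higher is logistic(α(θ − β)), and it assumes the estimates reported in Table 3 follow that parameterization. The function name `grm_category_probabilities` is hypothetical; the parameter values are those reported for item 1.

```python
from math import exp


def grm_category_probabilities(theta, alpha, betas):
    """Category probabilities for one item under a graded response model.

    theta : level of the construct (here, PAS)
    alpha : discrimination parameter of the item
    betas : ascending threshold parameters; K - 1 values for K categories
    """
    # Cumulative probabilities P(X >= k), padded with 1 (lowest) and 0 (beyond highest).
    cumulative = [1.0]
    cumulative += [1.0 / (1.0 + exp(-alpha * (theta - b))) for b in betas]
    cumulative.append(0.0)
    # Each category probability is the difference of adjacent cumulative values.
    return [cumulative[k] - cumulative[k + 1] for k in range(len(betas) + 1)]


# Item 1 estimates as reported in Table 3.
ITEM1_ALPHA = 2.66
ITEM1_BETAS = [-2.30, -1.35, 0.79]

probs_at_mean = grm_category_probabilities(0.0, ITEM1_ALPHA, ITEM1_BETAS)
```

Under these assumptions, the third response category is the most likely response to item 1 at θ = 0, and at θ = β1 the probability of responding above the lowest category is exactly 0.5.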

Measurement invariance. We assessed measurement invariance by inspecting differential item functioning (DIF). DIF was evaluated for education level and work experience, as both factors were significantly associated with adequacy of staffing in previous research (Asiret et al., 2017; Spence et al., 2006; Choi and Staggs, 2014). If DIF was found, test characteristic curves (TCC) were plotted to test the impact of DIF on total scores by comparing total scores of all items with total scores of DIF items only. The TCC shows the relation between the total item scores (y-axis) and theta (x-axis).

Hypothesis testing. Convergent validity was examined by hypothesis testing (de Vet et al., 2011). We hypothesized that there was a positive, moderately high correlation between the PAS item bank and the single item that assesses the overall adequacy of staffing on a scale ranging from −10 (very tight) to +10 (very wide). Theta estimates were calculated using the expected a posteriori estimator. The hypothesis was accepted when the correlation coefficient Pearson's r was ≥ 0.5 (moderate) (Cohen, 1992).

Reliability. We examined reliability by assessing the internal consistency of the total PAS item bank. The classical test theory-based Cronbach's alpha ≥ 0.70 was considered sufficient (Prinsen et al., 2018). We also tested reliability across the PAS scale. Standard errors of measurement were plotted over the range of theta scores. An SE(θ) of 0.32 or lower was considered a reliable measurement, which corresponds to a reliability coefficient of 0.90 or higher.
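The two reliability measures mentioned above can be sketched as follows. The conversion from SE(θ) to a reliability coefficient assumes that theta is scaled to unit variance, so that reliability = 1 − SE(θ)²; the Cronbach's alpha function assumes complete response vectors. Both function names are hypothetical and the sketch is not the analysis code used in the study.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for complete response vectors (one list of item scores per row)."""
    k = len(rows[0])
    item_variances = [variance([row[j] for row in rows]) for j in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1.0 - sum(item_variances) / total_variance)


def reliability_from_se(se_theta):
    """Reliability implied by a standard error of theta, assuming Var(theta) = 1."""
    return 1.0 - se_theta ** 2


# SE(theta) = 0.32 gives 1 - 0.1024 = 0.8976, i.e. about 0.90.
implied_reliability = reliability_from_se(0.32)
```

This makes the link between the two cut-offs explicit: a standard error of 0.32 on a unit-variance theta scale corresponds to a reliability of roughly 0.90.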

2.3.1. Ethical considerations

The Dutch Law on Medical Research Involving Human Subjects (WMO) did not require us to seek ethical approval as the research would not contribute to clinical medical knowledge and no participation by patients or use of patients’ data was involved. Hence, the medical ethical review board waived the need to approve the study. Local review boards of the participating institutions assessed the study for feasibility.

Personal information of participants was requested by predefined answer-options to avoid any form of traceability. Data were saved according to the rules and legislations of the participating institutions.

3. Results

3.1. Sample characteristics

In total, 906 measurements were collected. Three wards were excluded from the analysis because they did not meet the minimum measurement requirements, leaving 881 measurements from 10 wards in the final analysis. Sociodemographic characteristics of the participants are summarized in Table 2. Most measurements were performed by nurses aged 35 or younger, with vocational or higher professional education, and five years or less working experience.

Table 2.

Sociodemographic characteristics.

| Characteristic | n (%) |

|---|---|

| Total number of measurements | 881 |

| Age (years) | |

| < 25 | 287 (33) |

| 25–35 | 350 (40) |

| 36–45 | 115 (13) |

| 46–55 | 76 (9) |

| > 55 | 53 (6) |

| Education level | |

| Secondary education | 19 (2) |

| Vocational education | 374 (42) |

| Higher professional education | 474 (54) |

| University education | 8 (1) |

| Other | 6 (1) |

| Occupation | |

| Care assistant | 13 (1) |

| Nurse assistant | 61 (7) |

| Nursing student | 35 (4) |

| Nurse | 772 (88) |

| Work experience patient care (years) | |

| < 3 | 286 (32) |

| 3–5 | 205 (23) |

| 6–10 | 122 (14) |

| 11–20 | 102 (12) |

| > 20 | 166 (19) |

| Work experience current ward (years) | |

| < 3 | 421 (48) |

| 3–5 | 182 (21) |

| 6–10 | 91 (10) |

| 11–20 | 141 (16) |

| > 20 | 46 (5) |

The item responses (median, mean and frequencies) are presented in Table 3. All scale values were used in at least 2% of the responses. Respondents were least able to work on training and personal development (item 12) or on quality improvements (item 13) and response option one (‘not or hardly able to’) was most frequently chosen for these items. The ‘did not have to’ response option was most frequently used for the item on supervising nursing students, trainees, and new employees. The inter-item correlation ranged from 0.23 to 0.71 (Appendix 2). The highest correlations were found between items 5 and 6 (0.71) and items 12 and 13 (0.70).

Table 3.

Item median, mean and frequencies and IRT item characteristics of the NPASS item bank (N = 881).

| Item | Median | Mean | Scale value 1 | Scale value 2 | Scale value 3 | Scale value 4 | Scale value 5* | Item fit S-X² | Item fit p S-X² | Discrimination α | Difficulty β1 | Difficulty β2 | Difficulty β3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 3 | 3.1 | 3% | 10% | 61% | 25% | 1% | 18.75 | 0.28 | 2.66 | −2.30 | −1.35 | 0.79 |
| 2 | 3 | 2.8 | 2% | 13% | 84% | - | 1% | 13.98 | 0.17 | 2.43 | −2.54 | −1.28 | NA |
| 3 | 3 | 2.8 | 8% | 17% | 31% | 15% | 28% | 16.23 | 0.70 | 2.43 | −1.55 | −0.53 | 0.95 |
| 4 | 3 | 2.8 | 6% | 21% | 38% | 14% | 20% | 27.54 | 0.04 | 3.52 | −1.67 | −0.53 | 0.94 |
| 5 | 3 | 2.5 | 14% | 31% | 31% | 14% | 10% | 16.70 | 0.67 | 2.58 | −1.29 | −0.05 | 1.12 |
| 6 | 3 | 2.5 | 10% | 25% | 28% | 10% | 27% | 17.12 | 0.45 | 2.70 | −1.43 | −0.17 | 1.20 |
| 7 | 3 | 2.7 | 5% | 16% | 35% | 7% | 36% | 18.61 | 0.35 | 2.93 | −1.82 | −0.66 | 1.19 |
| 8 | 3 | 2.9 | 6% | 20% | 53% | 18% | 3% | 20.32 | 0.32 | 2.60 | −1.87 | −0.76 | 1.03 |
| 9 | 3 | 2.6 | 4% | 10% | 12% | 5% | 68% | 19.44 | 0.37 | 2.83 | −1.36 | −0.20 | 1.06 |
| 10 | 3 | 2.6 | 5% | 36% | 51% | 9% | - | 26.80 | 0.03 | 1.99 | −2.30 | −0.32 | 1.81 |
| 11 | 2 | 2.3 | 17% | 35% | 45% | 2% | - | 27.03 | 0.41 | 1.53 | −1.45 | 0.07 | 3.01 |
| 12 | 1 | 1.6 | 61% | 20% | 17% | 3% | - | 19.81 | 0.34 | 2.10 | 0.35 | 1.08 | 2.49 |
| 13 | 1 | 1.6 | 61% | 23% | 14% | 2% | - | 37.44 | 0.01 | 1.89 | 0.35 | 1.28 | 2.77 |

*'did not have to'.

3.2. Structural validity

All IRT assumptions were met. The confirmatory factor analysis showed suboptimal evidence of unidimensionality (scaled CFI = 0.87, scaled TLI = 0.84, scaled RMSEA = 0.1, SRMR = 0.06), so bi-factor analysis and exploratory factor analysis were performed (Omega-H = 0.88 and ECV = 0.82). The results of these analyses indicated that the item bank is sufficiently unidimensional. Items were locally independent based on residual correlations that ranged from −0.05 to 0.19. In addition, all items and the total scale complied with monotonicity (item H = 0.62–0.73; total scale H = 0.68). All 13 PAS items showed sufficient GRM model fit (p S-X² ≥ 0.001) (Table 3). Discrimination parameters ranged from 1.53 (item 11 on energy) to 3.52 (item 4 on patient education). Threshold parameters ranged from −2.54 (item 2 on protocols) to 3.01 (item 11 on energy). The curves of the item characteristics of the total item bank are presented in Appendix 3.
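The stepwise reasoning behind the unidimensionality conclusion can be sketched as a small decision function that applies the cut-offs from Table 1 to the reported values. This is an illustration only: the function name and return labels are hypothetical, and requiring both the ωH and the ECV criterion in the fallback step is an assumption about how the two were combined.

```python
# Cut-offs for one-factor CFA fit as listed in Table 1: (comparison, threshold).
CFA_CRITERIA = {
    "cfi": (">", 0.95),
    "tli": (">", 0.95),
    "rmsea": ("<", 0.06),
    "srmr": ("<", 0.08),
}


def meets(value, comparison, cutoff):
    """True when the value satisfies the strict inequality against the cutoff."""
    return value > cutoff if comparison == ">" else value < cutoff


def assess_unidimensionality(cfa_fit, omega_h, ecv):
    """Apply the CFA criteria first, then fall back to the bi-factor and EFA criteria."""
    cfa_ok = all(meets(cfa_fit[name], comparison, cutoff)
                 for name, (comparison, cutoff) in CFA_CRITERIA.items())
    if cfa_ok:
        return "unidimensional (one-factor CFA fit)"
    # Assumption: both omega-H > 0.80 and ECV > 0.70 are required in the fallback.
    if omega_h > 0.80 and ecv > 0.70:
        return "sufficiently unidimensional (bi-factor analysis and EFA)"
    return "not unidimensional"


# Values reported in this section.
reported_cfa = {"cfi": 0.87, "tli": 0.84, "rmsea": 0.10, "srmr": 0.06}
conclusion = assess_unidimensionality(reported_cfa, omega_h=0.88, ecv=0.82)
```

With the reported values, the CFA step fails on CFI, TLI, and RMSEA, and the fallback step is satisfied, which mirrors the conclusion stated in the text.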

3.3. Measurement invariance

We tested for DIF between vocational education (n = 374) and higher professional education (n = 474). To obtain sufficient instances in response categories, a dichotomized variable for work experience was created: <3 years (n = 286) vs ≥3 years (n = 595). Uniform DIF was found for work experience on the item ‘delivering care according to protocol or guideline’ (item 2) (R²₁₂ = 0.025); the magnitude was constant across the scale (Fig. 2). The threshold parameter was lower for work experience < 3 years than for work experience ≥ 3 years, indicating that less experienced nurses endorse higher response categories at the same level of PAS. Fig. 3 presents the overall impact of DIF for work experience on the TCC for all items and for item 2 alone. The DIF item affected the total score by less than 0.5 points across the entire theta range. No DIF was found for education.

Fig. 2.

Item response function DIF item.

Fig. 3.

Test characteristic curves for all items and DIF items.

3.4. Hypothesis testing

An expected moderate correlation was found between the PAS item bank and the single item that assesses the overall PAS (r = 0.66).

3.5. Reliability

Cronbach's alpha of the PAS item bank was 0.94. Fig. 4 presents the standard errors of measurement over the theta range. Measurement was reliable (SE(θ) ≤ 0.32) in the −2 to +2 theta range. Most of the respondents’ measurements (84.2%) were estimated by the PAS item bank with a reliability of ≥ 0.9.

Fig. 4.

Standard errors over the range of theta.

4. Discussion

This is the first study to develop a multi-item instrument to measure nurses’ PAS of a shift and to assess the psychometric properties of the item bank by IRT. The reliability and validity of the item bank were sufficient. Structural validity was sufficient because all items fitted the IRT model well, and satisfactory convergent validity was provided by hypothesis testing. The internal consistency was adequate and the full item bank measured PAS reliably at the mean and two standard deviations around the mean. Taken together, a new valid and reliable PAS instrument was developed: the Nurse Perceived Adequacy of Staffing Scale (NPASS).

The NPASS measure consists of 13 reflective items, i.e. effect indicators of the construct (de Vet et al., 2011). Some items are commensurate with other constructs, e.g. getting the work done corresponds to the Practice Environment Scale, and completing care activities and patient education correspond to the MISSCARE survey (Kalisch and Williams, 2009; Lake, 2002). While concepts like missed care and work environment have been introduced in the context of patient safety and attracting and retaining nurses, PAS was developed for resource planning (Maassen et al., 2020; Kalisch et al., 2009). However, the overlap between these tools and the NPASS suggests that PAS is the link between nursing work, work environment and available staffing resources. This is confirmed by the associations previously found between PAS and patient and nurse outcomes, e.g. mortality and job satisfaction (Tvedt et al., 2014; Bruyneel et al., 2009). The set of NPASS items is unique in that it combines the broad spectrum of nursing work, including supervising students, quality and organizational work, training and development, and other relevant indicators of staffing adequacy such as level of energy and having breaks.

The DIF analysis showed that less experienced nurses were more likely than experienced nurses to report that care during a shift was delivered according to protocol or guideline. Benner defined a less experienced nurse with 0–2 years of clinical experience as a novice/advanced beginner (Benner, 1982). These nurses rely on rules and protocols or guidelines to integrate knowledge into practice, whereas competent, proficient, and expert nurses have already mastered the protocols and use their experience and discretionary judgment to make decisions in clinical situations (Manias et al., 2005). A possible explanation of the difference between these groups is that less experienced nurses feel less confident to deliberately deviate from protocol or guidelines because of possible consequences for safety and quality of care delivery (McNab et al., 2016; Allen, 2007). Removing the item from the measurement instrument would reduce the content validity; of all items, this was the one on which experts agreed most regarding inclusion in the definition of staffing adequacy (van der Mark et al., 2022). The DIF on the item also had a negligible impact on the total score, so we retained the item in the NPASS item bank.

As expected, we found a moderate correlation between the total NPASS item bank and the single item measuring PAS, which is rated from very tight to very wide. This moderate association may be explained by nurses’ visions and definitions of their work and their professional identity (Hoeve et al., 2014; Allen, 2018). When scoring the single item, nurses implicitly weigh the relevance of the elements of nursing work according to their values and beliefs (Youngblut and Casper, 1993). As a consequence, nurses could rate staffing as relatively wide even though some work was left undone, or was not recognized as an essential element of nursing work and therefore not weighted at all, which weakens the correlation. As Kramer and Schmalenberg (2005) described, “When ‘adequacy of staffing’ is measured as a perception, a process rather than as a structure, it is necessary to include the steps in the process and/or the attributes and conditions that augment or ameliorate the perceptual process” (p.189). Hence, a global rating for PAS is not sufficient for such a complex concept. The PAS item bank developed in this study guides this perceptual process.

The location of the items on the NPASS scale tells us something about nurses’ views of nursing practice. Nurses were not able or hardly able to work on training and personal development (item 12) and quality improvement (item 13), possibly because of time constraints or because of the organization of nursing work and the prioritization of activities within a limited amount of time (Allen, 2007; Cho et al., 2020). As a result, these items are located relatively high on the scale (i.e. they have high difficulty parameters) in the current model and are relevant for detecting wider levels of staffing. However, if nurses have more time for training and development and for quality improvement in the future, the model should be updated to fit the nursing standards of that time.
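The role of item location can be illustrated with a minimal sketch of the graded response model. The two items below, their discrimination value, their thresholds, and the use of five response categories are hypothetical values chosen for illustration only; they are not the calibrated NPASS parameters. The sketch computes category probabilities and item information, and shows that an item with high thresholds contributes more information at the wide end of the scale.

```python
import math


def _cumulative(theta, a, thresholds):
    """P(X >= k | theta) for k = 0..K+1, padded with 1.0 and 0.0."""
    inner = [1.0 / (1.0 + math.exp(-a * (theta - b))) for b in thresholds]
    return [1.0] + inner + [0.0]


def grm_category_probs(theta, a, thresholds):
    """Graded response model: probability of each response category.

    K increasing thresholds define K + 1 ordered categories.
    """
    cum = _cumulative(theta, a, thresholds)
    return [cum[k] - cum[k + 1] for k in range(len(cum) - 1)]


def grm_item_information(theta, a, thresholds):
    """Fisher information of a single graded-response item at theta."""
    cum = _cumulative(theta, a, thresholds)
    info = 0.0
    for k in range(len(cum) - 1):
        p_k = cum[k] - cum[k + 1]
        # Derivative of the category probability with respect to theta.
        d_k = a * (cum[k] * (1 - cum[k]) - cum[k + 1] * (1 - cum[k + 1]))
        if p_k > 0:
            info += d_k ** 2 / p_k
    return info


# Hypothetical items: same discrimination, different locations.
A = 2.0
LOW_ITEM = [-1.5, -0.5, 0.5, 1.5]   # thresholds centred on the mean
HIGH_ITEM = [0.5, 1.5, 2.5, 3.5]    # thresholds shifted towards "wide"

for theta in (0.0, 2.0):
    print(
        f"theta={theta:+.1f}",
        f"info(low item)={grm_item_information(theta, A, LOW_ITEM):.2f}",
        f"info(high item)={grm_item_information(theta, A, HIGH_ITEM):.2f}",
    )
```

Under these assumed values, the item with high thresholds is rarely endorsed in its top category at average staffing levels, but it is the more informative of the two items two standard deviations above the mean.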

4.1. Strengths and limitations

This is the first study to develop and validate a PAS measurement instrument by setting up an item bank using IRT. This is a sustainable approach as the item bank can be easily expanded as needed. Another strength of this study is that we tested our instrument using both classical and modern test theory methods (Edelen and Reeve, 2007). Despite these strengths, there are also some limitations to the current study.

First, the content validity was assessed by qualitative methods. These are often less objective than quantitative methods, e.g. the content validity index (Smith, 1983; Polit and Beck, 2006). Nevertheless, the NPASS items were identified in a quantitative Delphi study (van der Mark et al., 2022) and our qualitative approach ensured adequate methodological quality according to the COSMIN checklist for study design (Mokkink et al., 2019; Mokkink et al., 2018).

Second, we did not test all psychometric properties as formulated by the COSMIN guideline, such as criterion validity, reliability, and responsiveness (Prinsen et al., 2018). Criterion validity requires a gold standard of the construct (de Vet et al., 2011). No gold standard is available for PAS, so we extensively tested construct validity. In the absence of a proper objective PAS measure, convergent validity was tested with a subjective item of PAS. Regarding reliability, we tested internal consistency and reliability across the scale, but additional strategies that evaluate variation across repeated measurements are available, e.g. test-retest reliability, which could be assessed by asking nurses to fill out the instrument directly after the evaluated shift and again the day after that shift. Furthermore, responsiveness could be tested to detect change over time in a longitudinal study (de Vet et al., 2011). These issues were beyond the scope of the current study and should be explored in future research.
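As an illustration of how such a test-retest design could be analysed, the sketch below computes an intraclass correlation coefficient for absolute agreement (two-way random effects, single measurement). The choice of this coefficient is an assumption, since the present study did not perform this analysis, and the scores are invented for illustration.

```python
def icc_agreement(scores):
    """ICC for absolute agreement, two-way random effects, single measurement.

    scores: one row per nurse; each row holds that nurse's scores for the
    same shift on the repeated occasions (all rows have equal length).
    """
    n = len(scores)          # number of nurses
    k = len(scores[0])       # number of measurement occasions
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Two-way ANOVA sums of squares without replication.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical scores: (directly after the shift, the day after the shift).
hypothetical_scores = [
    [-0.8, -0.6],
    [0.1, 0.3],
    [0.9, 0.7],
    [1.4, 1.5],
    [-0.2, 0.0],
]
print(round(icc_agreement(hypothetical_scores), 2))
```

Because this coefficient measures absolute agreement, a systematic shift between the two occasions lowers it even when nurses keep the same rank order.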

Third, this study was conducted in teaching hospitals, so no measurements from general or academic hospitals were included. Furthermore, most respondents were relatively young: 73% of responses were given by persons under 35. This could reflect the age distribution on the included wards, or young nurses may have found it easier to participate in the study because of their familiarity with mobile phones and QR codes, which we used for measuring PAS. This may have reduced the generalizability of our results. Although we expect that our instrument can be used in other hospital settings, since we included three different settings and our sample size far exceeded the number of measurements necessary, we recommend a check on model fit when using data from other hospital types. Moreover, the NPASS was developed and tested in Dutch. We expect that a translation of the instrument will be applicable in other countries, since the content fits international definitions of nursing work (https://www.icn.ch/nursing-policy/nursing-definitions).

4.2. Practical implications

The results support the use of the NPASS in practice. It is a longstanding misconception that objective measurements outperform subjective measurements (Hahn et al., 2007). The multiple items of the NPASS item bank objectify the inherently subjective construct of adequacy of staffing. Moreover, using the NPASS in practice incorporates nurses’ professional assessments in nurse staffing decisions and can enhance nurses’ professional commitment to business operations (Royal College of Nursing, 2010; An Roinn Sláinte, 2018; Jacob et al., 2021). Measuring PAS also gives nurses a voice and helps in the transition from staffing as a managerial decision to a decision by and for nurses.

Measuring PAS helps to recognize patterns of tight(er) or wider adjustments in the staff-shift schedule, which contains the selection of shifts to be worked (Burke et al., 2004). This reveals the required number of staff that need to be employed. Additionally, PAS is a common scale, so measurements can be compared within wards or between hospitals, allowing wards and hospitals to learn from one another. It offers a basis for further discussion on strategies for the daily allocation of nursing work to individuals, the prioritization of nursing work, and the lean organization of work processes.

Optimizing tactical and strategic staffing decisions does not guarantee optimal staffing when it is needed, because the demand for care is uncertain. Therefore, short-term forecasting of staff requirements is essential to align demand for nursing work with nurse supply and to allocate the scarce nursing resources fairly (Story, 2010; Litvak et al., 2005). Currently available machine learning and artificial intelligence techniques offer many opportunities to predict PAS using data extracted from hospital information systems. Applying these techniques opens a new era of decision support for nurse staffing.

5. Conclusions

This is the first study to describe the development and calibration of a PAS item bank, the NPASS. The NPASS showed sufficient reliability and validity among nurses in Dutch hospitals. It can be used by nurses to evaluate the PAS of a shift in general hospital wards and gives nurses a voice in staffing plans. More research is needed to test the responsiveness and test-retest reliability of the instrument, to fit the model to other hospital contexts, and to explore practical applications in nurse staffing decision making.

Funding sources

No external funding.

Declaration of Competing Interest

None.

Acknowledgements

The authors thank all participants of the focus groups and interviews, and the nurses who tested the measurement instrument, for their willingness and enthusiasm to participate. We especially thank Michiel Luijten, Department of Epidemiology and Data Science, Amsterdam UMC, the Netherlands, for his advice on the IRT analysis.

Footnotes

Supplementary material associated with this article can be found, in the online version, at doi:10.1016/j.ijnsa.2023.100138.

References

- Allen D. What do you do at work? Profession building and doing nursing. Int. Nurs. Rev. 2007;54:41–48. doi: 10.1111/j.1466-7657.2007.00496.x.

- Allen D. Translational Mobilisation Theory: a new paradigm for understanding the organisational elements of nursing work. Int. J. Nurs. Stud. 2018;79:36–42. doi: 10.1016/j.ijnurstu.2017.10.010.

- An Roinn Sláinte. Framework for safe nurse staffing and skill mix in general and specialist medical and surgical care settings in adult hospitals in Ireland. 2018.

- Asiret G.D., Kapucu S., Kurt B., Ersoy N.A., Kose T.K. Attitudes and satisfaction of nurses with the work environment in Turkey. Int. J. Caring Sci. 2017;10:771–780.

- Benner P. From Novice to Expert. Am. J. Nurs. 1982;82:402–407.

- Blouin A.S., Podjasek K. The continuing saga of nurse staffing: historical and emerging challenges. J. Nurs. Adm. 2019;49:221–227. doi: 10.1097/NNA.0000000000000741.

- Bruyneel L., Heede K.V., Diya L., Aiken L., Sermeus W. Predictive Validity of the International Hospital Outcomes Study Questionnaire: an RN4CAST Pilot Study. J. Nurs. Scholarsh. 2009;41:202–210. doi: 10.1111/j.1547-5069.2009.01272.x.

- Burke E.K., De Causmaecker P., van der Berghe G., van Landeghem H. The State of the Art of Nurse Rostering. J. Sched. 2004;7:441–499. doi: 10.1023/B:JOSH.0000046076.75950.0b.

- Cho S., Lee J., You S.J., Song K.J., Hong K.J. Nurse staffing, nurses prioritization, missed care, quality of nursing care, and nurse outcomes. Int. J. Nurs. Pract. 2020;26:e12803. doi: 10.1111/ijn.12803.

- Choi J., Staggs V.S. Comparability of nurse staffing measures in examining the relationship between RN staffing and unit-acquired pressure ulcers: a unit-level descriptive, correlational study. Int. J. Nurs. Stud. 2014;51:1344–1352. doi: 10.1016/j.ijnurstu.2014.02.011.

- Choi S.W., Gibbons L.E., Crane P.K. Lordif: an R package for detecting differential item functioning using iterative hybrid ordinal logistic regression/item response theory and Monte Carlo simulations. J. Stat. Softw. 2011;39:1. doi: 10.18637/jss.v039.i08.

- Cohen J. A power primer. Psychol. Bull. 1992;112:155–159. doi: 10.1037//0033-2909.112.1.155.

- Dall'Ora C., Saville C., Rubbo B., Turner L.Y., Jones J., Griffiths P. Nurse staffing levels and patient outcomes: a systematic review of longitudinal studies. MedRxiv. 2021. doi: 10.1101/2021.09.17.21263699.

- de Vet H.C.W., Terwee C.B., Mokkink L.B., Knol D.L. Measurement in Medicine: A Practical Guide. Cambridge University Press; Cambridge: 2011.

- Eccles D.W., Arsal G. The think aloud method: what is it and how do I use it? Qual. Res. Sport Exerc. Health. 2017;9:514–531.

- Edelen M.O., Reeve B.B. Applying item response theory (IRT) modeling to questionnaire development, evaluation, and refinement. Qual. Life Res. 2007;16:5–18. doi: 10.1007/s11136-007-9198-0.

- Fagerström L., Kinnunen M., Saarela J. Nursing workload, patient safety incidents and mortality: an observational study from Finland. BMJ Open. 2018;8. doi: 10.1136/bmjopen-2017-016367.

- Fagerström L., Rainio A.-K., Rauhala A., Nojonen K. Validation of a new method for patient classification, the Oulu Patient Classification. J. Adv. Nurs. 2000;31:481–490. doi: 10.1046/j.1365-2648.2000.01277.x.

- Fasoli D.R., Haddock K.S. Results of an integrative review of patient classification systems. Annu. Rev. Nurs. Res. 2010;28:295–316. doi: 10.1891/0739-6686.28.295.

- Gagnier J.J., Lai J., Mokkink L.B., Terwee C.B. COSMIN reporting guideline for studies on measurement properties of patient-reported outcome measures. Qual. Life Res. 2021;30:2197–2218. doi: 10.1007/s11136-021-02822-4.

- Griffiths P., Maruotti A., Recio Saucedo A., Redfern O.C., Ball J.E., Briggs J., et al. Nurse staffing, nursing assistants and hospital mortality: retrospective longitudinal cohort study. BMJ Qual. Saf. 2019;28:609. doi: 10.1136/bmjqs-2018-008043.

- Griffiths P., Saville C., Ball J., Jeremy J., Natalie P., Thomas M. Nursing workload, Nurse Staffing Methodologies & Tools: a systematic scoping review & discussion. Int. J. Nurs. Stud. 2020;103. doi: 10.1016/j.ijnurstu.2019.103487.

- Hahn E.A., Cella D., Chassany O., Fairclough D.L., Wong G.Y., Hays R.D., et al. Precision of Health-Related Quality-Of-Life Data Compared With Other Clinical Measures. Mayo Clin. Proc. 2007;82:1244–1254.

- Hoeve Y. ten, Jansen G., Roodbol P. The nursing profession: public image, self-concept and professional identity. A discussion paper. J. Adv. Nurs. 2014;70:295–309. doi: 10.1111/jan.12177.

- Jacob N., Burton C., Hale R., Jones A., Lloyd A., Rafferty A.M., et al. Pro-judge study: nurses’ professional judgement in nurse staffing systems. J. Adv. Nurs. 2021;77:4226–4233. doi: 10.1111/jan.14921.

- Kalisch B.J., Landstrom G.L., Hinshaw A.S. Missed nursing care: a concept analysis. J. Adv. Nurs. 2009;65:1509–1517. doi: 10.1111/j.1365-2648.2009.05027.x.

- Kalisch B.J., Lee H., Salas E. The Development and Testing of the Nursing Teamwork Survey. Nurs. Res. 2010;59:42. doi: 10.1097/NNR.0b013e3181c3bd42.

- Kalisch B.J., Williams R.A. Development and Psychometric Testing of a Tool to Measure Missed Nursing Care. J. Nurs. Adm. 2009;39:211–219. doi: 10.1097/NNA.0b013e3181a23cf5.

- Kang T., Chen T.T. Performance of the generalized S-X2 item fit index for the graded response model. Asia Pac. Educ. Rev. 2011;12:89–96.

- Kramer M., Schmalenberg C. Revising the essentials of magnetism tool. J. Nurs. Adm. 2005;35:188–198. doi: 10.1097/00005110-200504000-00008.

- Lake E.T. Development of the practice environment scale of the nursing work index. Res. Nurs. Health. 2002;25:176–188. doi: 10.1002/nur.10032.

- Litvak E., Buerhaus P.I., Davidoff F., Long M.C., McManus M.L., Berwick D.M. Managing Unnecessary Variability in Patient Demand to Reduce Nursing Stress and Improve Patient Safety. Jt. Comm. J. Qual. Patient Saf. 2005;31:330–338. doi: 10.1016/S1553-7250(05)31044-0.

- Maassen S.M., Weggelaar Jansen A.M.J., Brekelmans G., Vermeulen H., van Oostveen C.J. Psychometric evaluation of instruments measuring the work environment of healthcare professionals in hospitals: a systematic literature review. Int. J. Qual. Health Care. 2020;32:545–557. doi: 10.1093/intqhc/mzaa072.

- Manias E., Aitken R., Dunning T. How graduate nurses use protocols to manage patients’ medications. J. Clin. Nurs. 2005;14:935–944. doi: 10.1111/j.1365-2702.2005.01234.x.

- McNab D., Bowie P., Morrison J., Ross A. Understanding patient safety performance and educational needs using the ‘Safety-II’ approach for complex systems. Educ. Prim. Care. 2016;27:443–450. doi: 10.1080/14739879.2016.1246068.

- Chalmers R.P. mirt: a multidimensional item response theory package for the R environment. J. Stat. Softw. 2012;48:1–29.

- Mokken R.J. A Theory and Procedure of Scale Analysis. De Gruyter Mouton; 2011.

- Mokkink L., de Vet H., Prinsen C. COSMIN Study Design checklist for Patient-reported outcome measurement instruments. 2019:1–32.

- Mokkink L.B., de Vet H.C.W., Prinsen C.A.C., Patrick D.L., Alonso J., Bouter L.M., et al. COSMIN Risk of Bias checklist for systematic reviews of Patient-Reported Outcome Measures. Qual. Life Res. 2018;27:1171–1179. doi: 10.1007/s11136-017-1765-4.

- Morris R., MacNeela P., Scott A., Treacy P., Hyde A. Reconsidering the conceptualization of nursing workload: literature review. J. Adv. Nurs. 2007;57:463–471. doi: 10.1111/j.1365-2648.2006.04134.x.

- Musy S.N., Endrich O., Leichtle A.B., Griffiths P., Nakas C.T., Simon M. The association between nurse staffing and inpatient mortality: a shift-level retrospective longitudinal study. Int. J. Nurs. Stud. 2021;120. doi: 10.1016/j.ijnurstu.2021.103950.

- Nguyen T.H., Han H.-R., Kim M.T., Chan K.S. An introduction to item response theory for patient-reported outcome measurement. Patient-Patient-Centered Outcomes Res. 2014;7:23–35. doi: 10.1007/s40271-013-0041-0.

- Polit D.F., Beck C.T. The content validity index: are you sure you know what's being reported? Critique and recommendations. Res. Nurs. Health. 2006;29:489–497. doi: 10.1002/nur.20147.

- Prinsen C.A.C., Mokkink L.B., Bouter L.M., Alonso J., Patrick D.L., de Vet H.C.W., et al. COSMIN guideline for systematic reviews of patient-reported outcome measures. Qual. Life Res. 2018;27:1147–1157. doi: 10.1007/s11136-018-1798-3.

- Reeve B.B., Hays R.D., Bjorner J.B., Cook K.F., Crane P.K., Teresi J.A., et al. Psychometric Evaluation and Calibration of Health-Related Quality of Life Item Banks: plans for the Patient-Reported Outcomes Measurement Information System (PROMIS). Med. Care. 2007;45. doi: 10.1097/01.mlr.0000250483.85507.04.

- Reise S.P. The rediscovery of bifactor measurement models. Multivar. Behav. Res. 2012;47:667–696. doi: 10.1080/00273171.2012.715555.

- Revelle W., Revelle M.W. Package ‘psych’. Compr. R Arch. Netw. 2015;337:338.

- Rochefort C.M., Beauchamp M.-E., Audet L.-A., Abrahamowicz M., Bourgault P. Associations of 4 nurse staffing practices with hospital mortality. Med. Care. 2020;58:912. doi: 10.1097/MLR.0000000000001397.

- Rodriguez A., Reise S.P., Haviland M.G. Applying bifactor statistical indices in the evaluation of psychological measures. J. Pers. Assess. 2016;98:223–237. doi: 10.1080/00223891.2015.1089249.

- Rosseel Y. lavaan: an R package for structural equation modeling. J. Stat. Softw. 2012;48:1–36.

- Royal College of Nursing. Guidance on safe nurse staffing levels in the UK. 2010.

- Schermelleh-Engel K., Moosbrugger H., Müller H. Evaluating the fit of structural equation models: tests of significance and descriptive goodness-of-fit measures. Methods Psychol. Res. Online. 2003;8:23–74.

- Shin S., Park J., Bae S. Nurse staffing and nurse outcomes: a systematic review and meta-analysis. Nurs. Outlook. 2018;66:273–282. doi: 10.1016/j.outlook.2017.12.002.

- Smith J.K. Quantitative versus qualitative research: an attempt to clarify the issue. Educ. Res. 1983;12:6–13. doi: 10.3102/0013189X012003006.

- Spence K., Tarnow-Mordi W., Duncan G., Jayasuryia N., Elliott J., King J., et al. Measuring nursing workload in neonatal intensive care. J. Nurs. Manage. 2006;14:227–234. doi: 10.1111/j.1365-2934.2006.00609.x.

- Story P. Dynamic Capacity Management for Healthcare: Advanced Methods and Tools for Optimization. CRC Press; 2010.

- Swaminathan H., Rogers H.J. Detecting differential item functioning using logistic regression procedures. J. Educ. Meas. 1990;27:361–370.

- R Core Team. R: a language and environment for statistical computing. 2013.

- Telford W. Determining nursing establishments. Health Serv. Manpow. Rev. 1979;5:11–17.

- Tsutakawa R.K., Johnson J.C. The effect of uncertainty of item parameter estimation on ability estimates. Psychometrika. 1990;55:371–390. doi: 10.1007/BF02295293.

- Tvedt C., Sjetne I.S., Helgeland J., Bukholm G. An observational study: associations between nurse-reported hospital characteristics and estimated 30-day survival probabilities. BMJ Qual. Saf. 2014;23:757–764. doi: 10.1136/bmjqs-2013-002781.

- Unruh L. Nurse staffing and patient, nurse, and financial outcomes. AJN. 2008;108:62–71. doi: 10.1097/01.NAJ.0000305132.33841.92.

- van den Oetelaar W., van Stel H., van Rhenen W., Stellato R., Grolman W. Balancing nurses’ workload in hospital wards: study protocol of developing a method to manage workload. BMJ Open. 2016;6:1–11. doi: 10.1136/bmjopen-2016-012148.

- Van der Ark L.A. Mokken scale analysis in R. J. Stat. Softw. 2007;20:1–19.

- van der Mark C., Vermeulen H., Hendriks P., van Oostveen C. Measuring perceived adequacy of staffing to incorporate nurses’ judgement into hospital capacity management: a scoping review. BMJ Open. 2021. doi: 10.1136/bmjopen-2020-045245.

- van der Mark C.J., Kraan J., Hendriks P.H., Vermeulen H., van Oostveen C.J. Defining adequacy of staffing in general hospital wards: a Delphi study. BMJ Open. 2022;12. doi: 10.1136/bmjopen-2021-058403.

- van Kooten J.A., Terwee C.B., Luijten M.A., Steur L.M., Pillen S., Wolters N.G., et al. Psychometric properties of the Patient-Reported Outcomes Measurement Information System (PROMIS) Sleep Disturbance and Sleep-Related Impairment item banks in adolescents. J. Sleep Res. 2021;30:e13029. doi: 10.1111/jsr.13029.

- van Oostveen C.J., Ubbink D.T., Mens M.A., Pompe E.A., Vermeulen H. Pre-implementation studies of a workforce planning tool for nurse staffing and human resource management in university hospitals. J. Nurs. Manage. 2016;24:184–191. doi: 10.1111/jonm.12297.

- Youngblut J.M., Casper G.R. Single-item indicators in nursing research. Res. Nurs. Health. 1993;16:459–465. doi: 10.1002/nur.4770160610.
