Abstract

There have been numerous calls for increased transparency and disclosure in forensic science. However, there is a paucity of guidance on how to achieve this transparency in reports, and the impacts it may have on criminal justice proceedings. We describe one multi-disciplinary forensic laboratory's journey to fully transparent reporting, disclosing matters of scientific relevance and importance. All expert reports across 17 disciplines now contain information regarding the fundamental principles and methodology, validity and error, assumptions and limitations, competency testing and quality assurance, cognitive factors, and areas of scientific controversy. Staff support for transparent reporting increased following introduction, with most reporting largely positive impacts. A slight increase in questioning in court has been experienced, with increased legal attention paid to the indicia of scientific validity. Transparency in expert forensic science reports is possible, and can improve the use of scientific evidence in courts without compromising the timeliness of service.

Keywords: Forensic science, Expert reports, Communicating opinion, Expert evidence, Transparency

1. Introduction

“[It is a fundamental duty of the expert witness] to furnish the judge or jury with the necessary scientific criteria for testing the accuracy of their conclusions, so as to enable the judge or jury to form their own independent judgement by the application of these criteria to the facts proved in evidence … [T]he bare ipse dixit¹ of a scientist, however eminent, upon the issue in controversy, will normally carry little weight, for it cannot be tested by cross-examination nor independently appraised …”

Davie v Magistrates of Edinburgh [1953] SC 34, 39–40 (Lord President Cooper).

Forensic science must be transparent to be appropriately utilised within the criminal justice system. As noted in Davie v Magistrates of Edinburgh [1], and numerous cases since, unexplained conclusions and opinions from scientific experts do not allow the courts, or any end-user of the forensic science results obtained, to adequately determine the admissibility of the evidence, the potential probative value within the case, or the impartiality and competence of the forensic practitioner. Indeed, a recent Australian High Court case noted that the opinion of an expert has probative value only if the opinion can assist the trier of fact in forming its own opinion as to the inferences that can be drawn from the evidence [2]. Failure to disclose key information regarding the accuracy, reliability, limitations and uncertainties within methods and opinions could contribute to the overvaluing or misuse of forensic science evidence, and in the worst-case scenario, lead to miscarriages of justice.

The communication of forensic science results and opinions, whether in written reports or oral testimony, can be difficult. Unlike other modes of scientific communication which are intradisciplinary and between peers with similar levels of foundational knowledge, forensic scientists must predominantly communicate their findings to a lay audience. They must also work within the confines of the criminal justice system, which may result in restrictions on the means and substance of what is to be communicated. Written reports may need to comply with organisational policy, legal codes of practice or legislation, while oral testimony may be restricted based on the questioning and chosen strategy of the prosecution and defence.

Ineffective or incomplete communication of results, and of the scientific foundations that underpin those results, has the potential to undermine the provision of justice – threatening the ability of investigators to identify perpetrators and details of crimes, the fairness of investigative and legal processes, the accuracy of justice outcomes, and the early identification of salient issues within cases and trials. The seminal 2009 report of the US National Academy of Sciences (NAS) [3] noted that forensic laboratory reports at that time largely did not meet the expected level of completeness and disclosure, while subsequent research, although limited in jurisdictional scope, has suggested that reports do not contain all recommended information and can be misunderstood, or not understood at all, by relevant stakeholders [3–12].

Authoritative reports, academic commentators, and legal bodies have variously recommended that written reports contain critical disclosures to assist understanding, appropriate use, and critical scrutiny of forensic science results. Although the recommended inclusions differ somewhat, Table 1 summarises the main areas of disclosure recommended, with representative references for each.

Table 1.

Recommended aspects of disclosure in forensic reports.

| Disclosure | Recommending body/report |
|---|---|
| Qualifications & experience | [13–17] |
| Proficiency | [13,17–21] |
| Procedures and methods | [3,12–14,17,22,23] |
| Assumptions and facts underlying their approach | [13,14,24] |
| Foundational validity of the discipline | [3,13,23,25] |
| Human factors and performance | [13,17,24,25] |
| Uncertainties, limitations, and error rates | [3,12–14,17,22,23,25,26] |

Disclosure of these attributes is vital in light of the critical reports on the scientific validity and reliability of many forensic science methods, and the growing number of wrongful convictions and miscarriages of justice caused by improper, or improperly applied, forensic science [3,23,27]. There is, at present, limited information on the impact of transparent reporting, or of a failure to disclose, on how legal professionals and lay decision-makers weigh and utilise forensic science opinions. However, some empirical research has examined the importance of disclosing several of the aspects above.

Knowledge of a practitioner's qualifications or experience did not affect the evaluation of hair testimony [28] or gait analysis testimony, regardless of whether the testimony was considered strong or weak [29]. However, Koehler and colleagues found that disclosure of information about a practitioner's experience did affect juror perceptions of bitemark and fingerprint evidence [21]. Disclosure of a practitioner's proficiency has been shown to affect the weight that lay decision-makers place on the practitioner's opinions in some, but not all, studies, with higher individual error rates reducing the weight placed on the evidence [18,19,29,30]. Information on the method employed, and its limitations, similarly has mixed empirical support: Garrett & Mitchell [31] found that including method information in fingerprint testimony increased jurors' belief that the defendant had deposited the fingerprints and counteracted the decrease in weight caused by acknowledging the possibility of error. Goodman-Delahunty & Hewson [32] found that providing information on DNA methods improved understanding and increased skepticism of the evidence. However, others have found that information about methods and limitations had no impact on evidentiary weight [28,33]. Similarly, the evidence is inconsistent on whether jurors are sensitive to information regarding scientific validity [21].

In general, there appears to be substantial interplay between the different aspects recommended for disclosure. It appears that decision-makers have their own prior assumptions and knowledge regarding forensic science generally and specific forensic disciplines which impact on their utilisation of expert testimony. Case context, mode of presentation, individual aspects of juror knowledge and cognition, and personal characteristics of witnesses all interact with the opinion and its presentation mode, the scientific information and validity of the method, and the likelihood of error in a given case. Therefore, definitive empirical guidance is difficult to obtain regarding the potential impact of transparent disclosure. In the absence of this, forensic practitioners have been urged to use mainstream scientific methods and norms regarding the communication of forensic science to lay decision makers [13].

This article summarises how an operational forensic science laboratory transitioned to transparent reporting, with all reports containing opinion evidence being fully compliant with best practice recommendations listed in Table 1.

2. Transition to transparent reporting

Victoria Police Forensic Services Department (VPFSD) is the main provider of forensic science services for the state of Victoria, Australia. With a cohort of over 500 police, forensic officers and public servant staff, the Department delivers operational casework services across some 17 different disciplines, issuing approximately 4000 expert reports per year. The Department is structured in three operational divisions: field operations, providing crime scene, ballistics, CBR/DVI, collision reconstruction and multi-disciplinary evidence recovery services; analytical services, providing illicit drug, clandestine laboratory, document, chemical trace, fire and explosion, vehicle examination and botanical examination services; and biometric services, providing biology, fingerprints and facial comparison services. Requests for examinations are received primarily from police investigators or from crime scene examiners working across the State. VPFSD has a case management service model, in which items and examinations are considered holistically against the investigative questions or issues that require scientific investigation. The examiners from each discipline therefore assess the request for examination and derive appropriate examination strategies to address the pertinent issues. Reports are issued automatically to the investigator at the conclusion of the examination. Some disciplines issue full expert reports at this point, while others issue shorter preliminary reports, with full expert reports issued for contested matters and trials.

Over the past decades, different disciplines have gradually increased the transparency and level of comprehensibility of their reports and opinions. In the early to mid-2000s, a number of disciplines adopted the use of appendices to statements, providing explanations of the methods used, listing scales of conclusions, or defining key terms used in reports and testimony. However, these appendices were discipline specific in structure and content, and were not provided for all methods within a discipline.

Within the state of Victoria, Australia, courts have paid attention to the need for expert reports to contain the disclosures necessary to facilitate understanding. The Forensic Evidence Working Group, a multidisciplinary group of judges, forensic science and medicine practitioners, and legal practitioners, developed a Practice Note on Expert Evidence in Criminal Trials [14,34]. This Practice Note, which came into effect in the Supreme and County Courts of Victoria in 2014, specifies in detail what an expert report must contain, with the stated goal of enhancing the quality and reliability of expert evidence and improving its utility by ensuring that it is focused on relevant issues [14]. The Practice Note requires that experts outline, amongst other requirements:

- Whether and to what extent the opinion(s) in the report are based on the expert's specialised knowledge, and the training, study or experience on which that specialised knowledge is based;
- The reasons for each opinion, with any literature, research or other materials or processes relied on in support of that opinion;
- Any examinations or tests on which the expert has relied, including the relevant accreditation standard under which they were performed;
- Any qualification of an opinion, without which the report would or might be incomplete or misleading;
- Any limitation or uncertainty affecting the reliability of the methods or techniques used, or the data relied on;
- Any limitation or uncertainty affecting the reliability of the opinions as a result of insufficient research or data.

The Practice Note was intended to be triggered on request – when expert opinions were at issue, either party in a case could request a full expert report containing all of the required material, tailored to the issue at hand. As a result of the introduction of the Practice Note, VPFSD developed new standardised templates in 2014 for all expert reports containing expert opinion evidence. For each method, information that fulfilled the requirements of the Practice Note was developed, reviewed, and approved for use in a standardised report template. When a Practice Note compliant report was required, experts could therefore easily include the relevant material within the body of their statement, disclosing necessary methodological, validation, cognitive factor and quality management information, as well as information regarding any significant or recognised disagreements within the field. The templating of all such information was designed to enable full transparency and rapid provision of expert reports while limiting ongoing resource imposts.

However, since the introduction of the Practice Note in 2014, there have been very few requests for expert reports compliant with it. Such requests were received in only a handful of cases over the 10 years since its introduction, despite regular promotion to legal stakeholders, including both prosecution and defence, of the value of the Practice Note and the type of material that would be provided. Although the reasons why legal practitioners did not request transparent expert reports are not known, it may be surmised, as other authors have, that they were not fully cognisant of the issues raised regarding forensic science validity and reliability [3,23,35,36], nor of the importance of surfacing limitations, case-specific methodological issues and cognitive factors such as bias, or of exploring potential controversies within methods that may affect the probative value of evidence. Additionally, research has demonstrated a general skepticism of forensic science amongst legal practitioners compared to lay people [37,38], and it is therefore possible that their pre-existing notions led them to believe they were already aware of what they perceived to be the important factors, noting that these may differ from the factors that scientists perceive to be important.

It is the duty of an expert to assist the Court impartially, by giving objective, unbiased opinions on matters within their area of specialised knowledge. As forensic scientists, regardless of organisational affiliation, it is vital that we adhere to both scientific and legal expectations of conduct: presenting evidence within the bounds of scientific knowledge, disclosing factors that may influence, in any way, the consideration of the evidence, and ensuring, to the best of our ability, that all users of our service have sufficient understanding to use the evidence appropriately and achieve just outcomes. Legal actors untrained in forensic science cannot be expected to fully understand all relevant scientific issues surrounding forensic methodologies and techniques, given their vast array and complexity. Having considered the limited uptake of Practice Note compliant reports, scientific norms and expectations, legal obligations to disclose, and a primary mission to ensure that forensic science is used correctly to obtain fair justice outcomes, VPFSD therefore decided in 2019 to introduce fully transparent reporting for all opinion evidence provided by our Department.

3. Transparent reporting – standardised annexures

It is a reality of forensic science service provision that turnaround times are important. Providing a report long after the case is finalised, or after an offender has committed numerous other crimes, may not meet the goals of forensic science to assist the community and the criminal justice system. It was therefore important to find a means of making all reports fully transparent without adversely affecting production and review time. It was also important to ensure that the information provided was consistent between experts and, wherever possible, evidence-based and empirically supported. For this reason, it was decided that information about a forensic discipline or technique that was relevant across cases would be communicated via annexures attached to each statement, providing the necessary scientific context for the opinions. The statement containing case-specific material and the annexure containing standardised material would therefore jointly form an expert report. Annexures were selected rather than appendices, as annexures are specifically designed to be read as stand-alone documents, whereas appendices require the context of the main document. As the annexures contained material that was method or discipline specific rather than case specific, they could be written to be referred to across multiple cases, rather than tailored as appendices would be. The broad content and layout of each annexure was standardised to enable end-users to easily locate specific material, and to compare between annexures where a case included multiple reports and disciplines. Specific annexures were created for each type of analysis and opinion provided; for example, separate annexures were created for different types of DNA analysis, different ballistics/firearms analyses, or document examination methods.

Each discipline therefore created multiple annexures to report on each type of analysis/opinion provided, with each annexure potentially describing multiple methods that may be used to derive the type of opinion reported. For example, the illicit drug analysis annexure describes multiple techniques (e.g. colour tests, mass spectrometry, infrared spectroscopy) to explain how substances are identified.

Table 2 provides the sections and indicative content for each annexure.

Table 2.

Standardised annexure structure and content.

| Section | Content |
|---|---|
| Fundamental Principles of Discipline/Method | Outlines the premises on which the discipline relies, and the empirical support that exists for the claims |
| Methodology | Briefly describes how the method is conducted, with reference to published texts or standards if applicable |
| Scale of Conclusions | Outlines the entire scale of conclusions and definitions of each conclusion if applicable |
| Considerations of Discipline/Method | |
| Validity & Error Rates | Describes the validation (internal and external) status of the technique with references provided. If indicative error rates are available, they are provided with an explanation as to whether they are directly applicable to the methodology in use at VPFSD, or whether there are differences in approach/considerations from the published study |
| Assumptions | Methodological assumptions that are common across all cases are outlined; case-specific assumptions are disclosed within the statement |
| Limitations | Methodological limitations that are common across all cases are outlined; case-specific limitations are disclosed within the statement |
| Competency | Briefly outlines the ongoing competency requirements (e.g. internal and external proficiency tests, annual recertification) for the practitioners |
| Quality Assurance | Discloses the standards to which the laboratory is accredited for that discipline/method |
| Cognitive Factors associated with Discipline/Method | Information provided regarding: |
| Discipline-specific limitations (e.g. meaning of absence, ability to age deposits) | Information provided on important discipline-specific considerations, such as what an absence of evidence should or should not be interpreted to mean, whether the age of a deposit can be determined, and the difference between source (or sub-source) and activity level opinions |
| Controversies relevant to Discipline/Method | Outlines the findings of the NAS and the President's Council of Advisors on Science and Technology (PCAST) reports [3,23] where relevant to the discipline, and includes information about any progress made since these reports, including relevant validation or error rate studies |
| Glossary | Defines and explains key terms present in the annexure or statement |

The “Considerations” section was explicitly separated under a large heading to alert readers to the importance of considering the information provided in the context of the case, and in evaluating the probative value, reliability and validity of the evidence provided. The first sections provide background information to assist in understanding the technique, while the Considerations section is intended to help place the evidence in the context of the case.

Each annexure was drafted by subject matter experts in the discipline to ensure that the content was relevant and appropriate for the methodology in use, and that all relevant procedural factors were included. While the drafting process was similar for all disciplines, the availability of accessible, lay-appropriate citations and further reading material differed significantly between areas and methods. Some areas of forensic science, such as biology or chemistry, have large bodies of supporting literature and research that are relatively easily available. Other areas have lower publication rates, or discipline-specific journals that are not openly accessible to non-forensic science organisations. Careful thought was therefore given to providing references and supporting material that were both scientifically appropriate and, wherever possible, accessible to the reader. Following drafting by subject matter experts and internal review by experts within their individual work areas, each draft was reviewed by non-specialists to ensure comprehension, standardisation, coverage of relevant issues and appropriate disclosure of critical factors. All annexures were reviewed and approved by senior scientific and operational managers prior to introduction.

In total, a suite of 52 annexures was created, covering methods across crime scene/reconstructive disciplines, forensic chemistry, forensic biology, comparative sciences (e.g. fingerprints, firearms, handwriting), and vehicle forensic sciences. The annexures are updated regularly as feedback on the content is obtained, as additional knowledge is gained through research and publications, and as methods are improved and updated. Examples of sections of annexures are provided in Supplementary Material A, with further explanation of the content provided below.

4. Annexure content

4.1. Fundamental principles

All forensic science methods are based on underpinning natural or scientific principles. For example, the principle may be that a particular type of evidence (e.g. fingerprints, DNA, handwriting) provides sufficient discriminatory power to differentiate between individuals/sources, and is sufficiently stable within an individual/source to enable the same individual/source to be connected. Alternatively, it may be that specific activities or actions consistently result in the same or similar outcomes in relation to a specific type of evidence (e.g. vehicle collisions or blood patterns), or that the characteristics of the evidence can be determined with sufficient resolution to enable a substance to be identified (e.g. gunshot residue or chemical substances). Within the annexure, the principles upon which the discipline or method is based are outlined, with a summary of the empirical support for these principles, describing the studies that have been undertaken to investigate the extent of evidence for the claim.

4.2. Methodology

The main steps, equipment, and procedures that form the method are outlined in brief. The annexures are not intended to be exhaustive training tools for non-experts, nor to provide every possible step in a method. However, to enable a broad understanding of the method used to form the opinion, the main steps are described, including evidence recovery, analysis, interpretation, evaluation and review/verification. Where external standards, best practice guidelines, or recommended procedures exist nationally or internationally, adherence to, or deviation from, these is noted. It is also noted when procedures involve multiple staff, as in many analytical procedures. Where appropriate, diagrams and flow charts are used to indicate the sequence of processing.

4.3. Scale of conclusions

As research has demonstrated that many scales of conclusion are not intuitive to lay decision-makers [28,33,39–42], and as some forms of expression have the potential to be misunderstood, the entire scale of possible conclusions is provided for those disciplines that report against such a scale. This includes scales using a verbal expression of the likelihood ratio, discipline-specific scales, and explanations of the likelihood ratio together with the upper reporting bound applied.

4.4. Considerations of the method

The previous sections are aimed at increasing the understanding of the method and the opinion provided. In contrast, the Considerations section aims to provide information that may impact on the admissibility, probative value, and relative weighting or incorporation with other evidence in the case during the decision-making process. This is split across 8 sections, which may be tailored depending on the specific discipline or method being discussed.

4.4.1. Validity and error rates

Proof of validation, both fundamental and applied, is necessary to ensure that the opinion being provided can be considered reliable and robust. Where available, studies are described that demonstrate fundamental validity of the method, including any important findings of note and error rates. If the published studies are of only partial applicability to the exact method utilised, such as if the study omits the verification of the result by an independent examiner, this is disclosed, but it is also noted that the impact of this difference is not known. Internal validation studies are disclosed with key findings, including if the internal validation differs from published sources.

Where no validation studies or error rates exist, this is disclosed. It is noted, however, that an absence of validation studies does not indicate that a technique is unreliable, but rather that without validation it is not possible to determine the error rates or accuracy of the specific methodology.

Regarding the provision of error rates within the annexures, we accept that method-wide error rates from published studies, which may not have been conducted with VPFSD staff or the exact methods used, may not reflect the potential or actual rate of error in the specific case being reported. However, in our view it is vital to communicate that error is possible, even when the best-trained experts apply a valid method under suitable conditions within a robust quality management system. Citing published literature is one means of doing this, even if the rate is imperfect: providing an indicative rate enables the end-user to place the evidence in greater context within the case and, ideally, to incorporate the potential for an opinion to be erroneous into their weighting and decision making.

4.4.2. Assumptions & limitations

These sections provide the methodological, interpretative, or technological assumptions and limitations that are associated with the method. This may include describing the impact of low quality and quantity evidence, the effects of environmental exposure, the reproducibility of features across different depositions, any limitations in databases or research on specific factors, or issues regarding contamination. These assumptions and limitations apply broadly across all, or many, of the analyses performed with the method – case-specific assumptions are detailed within the body of the statement.

4.4.3. Competency

A brief description is given of how examiners demonstrate their ongoing competence. This includes whether they sit proficiency tests, whether those tests are developed and assessed internally or externally, and how frequently assessment occurs. Qualifications, training, study and experience are detailed within the statement.

4.4.4. Quality assurance

A short paragraph is included to note that the quality management system is accredited to the requirements of ISO/IEC 17025 and AS 5388 [43,44].

4.4.5. Cognitive factors

As all forensic methods require some form of human decision making, this section describes potential cognitive factors that may affect the validity, accuracy, or reliability of the opinion or result. This includes whether the examiner was exposed to contextual information and whether blinding occurred. If the method is based on a subjective interpretation or weighting of features and characteristics, and this may result in differences of opinion between examiners, this is described, with references to published or internal studies where rates of such differences are known empirically.

4.4.6. Discipline-specific limitations

This section is included for some methods where there may be a risk of misunderstanding the meaning or implications of the opinion, or where examiners are commonly asked whether they can provide specific information that is not yet obtainable with a validated technique. Examples include the inability to determine when a latent fingerprint was deposited, and how an absence of evidence should be interpreted. For disciplines such as DNA or trace evidence (glass, paint, fibres), where the propositions may be at different levels in the hierarchy (sub-source, source, or activity level), a section explains at which level the propositions were set, and why the opinion should not be taken to provide support (or otherwise) for propositions at a different level in the hierarchy.

4.4.7. Controversies relevant to the discipline/method

This section is required under the Practice Note and is intended to promote disclosure in areas where there is legitimate scientific disagreement about the method. For many disciplines present at VPFSD, the methods are internationally accepted and relatively standardised. However, this section is used to alert readers to the NAS and PCAST reports and their main findings in relation to each discipline, and, where appropriate, to indicate what knowledge, changes to practice, or governance have emerged within the discipline and the Australian forensic landscape since these reports were published. Other inclusions under this section, specific to particular methods, include criticisms of the identification paradigm and discipline-specific reports that outline limitations or problematic practices within fields (e.g. Refs. [45,46]).

5. Impact of transparent reporting

The annexures were introduced over a three-year period, with each discipline commencing use once its annexure had been reviewed and approved. By January 1, 2022, all reports containing opinion evidence released by VPFSD complied with the transparent reporting principles and the Practice Note through the provision of standardised annexures. To gather information on the impact of the annexures since their introduction, VPFSD staff were surveyed regarding the annexures and any changes to engagement with end-users. Altogether, 58 of the approximately 140 VPFSD staff authorised to provide opinion evidence in court completed the survey (∼40 % response rate). Further details regarding the survey, respondents and the survey questions are provided in Supplementary Material B.

5.1. Staff perceptions of transparent reporting prior to introduction

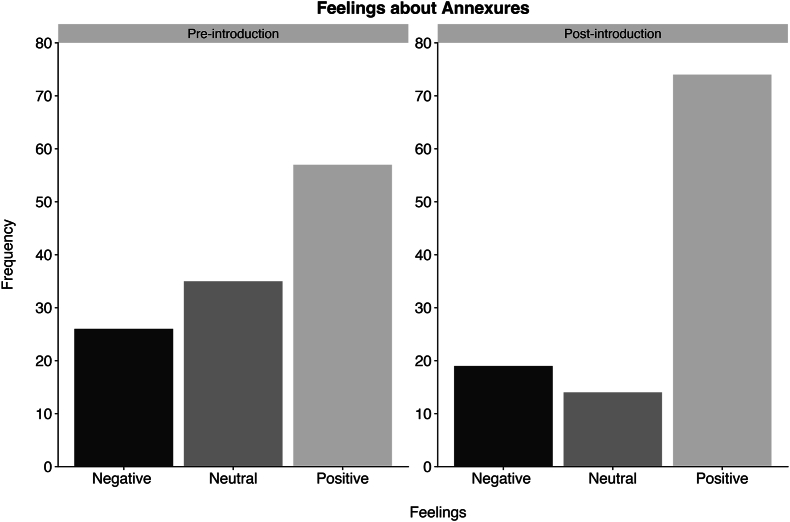

We surveyed staff about their feelings towards transparent reporting prior to the introduction of the annexures, noting that the survey was conducted 1–3 years after their initial experiences, so their recollections may have been influenced by subsequent experience. Respondents were asked to select from a list the feelings that most accurately reflected their attitudes towards the annexures during the pre-implementation phase, with no limit on the number of feelings they could select. The feelings were categorised into three groups: negative (resistant, pessimistic, skeptical, concerned, conflicted and fearful), neutral (indifferent, cautious, curious and anticipatory) and positive (optimistic, enthusiastic, confident, supportive and excited). The majority of the feelings selected by respondents were positive (see Fig. 1). Among the positive feelings, the most frequently chosen was supportive (n = 28). The most frequently chosen neutral feeling was cautious (n = 15), while the most frequent negative feeling was skeptical (n = 10), reflecting skepticism about the annexures and their potential benefits to practitioners and stakeholders.

Fig. 1.

Distribution of responses regarding pre- and post-introduction feelings about the annexures.

Most respondents (72 %, n = 42) indicated that they had no specific fears or concerns about the proposal to include an annexure with every written opinion provided to courts. Twenty-four percent (n = 14)2 of respondents expressed some specific fears or concerns. These centred on four main themes: increased scrutiny and questioning of their evidence, the large amount of material to review before each court appearance, the potential for stakeholders to misunderstand or misuse the material, and the environmental cost of printing additional paper for court. Comments did not indicate a fear of transparency or of questioning; rather, they suggested some anxiety about the additional court preparation that increased transparency might require, and its implications for workloads and the time needed before each appearance.

5.2. Staff perceptions of transparent reporting 1 year after introduction

We also asked respondents to select from a list the feelings that most accurately reflected their attitudes towards the annexures after implementation (see Fig. 1). The number of positive feelings selected increased, while the numbers of neutral and negative feelings selected decreased, suggesting that staff felt more positive once the annexures had been implemented. Again, supportive was the most frequently chosen positive feeling (n = 37). Selections of cautious (n = 2, down from 15) and skeptical (n = 5, down from 10) decreased notably after implementation of the standardised annexures. The most frequently chosen neutral feeling post-implementation was indifferent (n = 10), and the most frequent negative feeling was concerned (n = 6).

Again, most participants (74 %, n = 43) reported no specific fears or concerns now that the annexures have been introduced in all Units, while 26 % (n = 15) did. These concerns centred on seven main themes: increased scrutiny and questioning of their evidence, the large amount of material to review, the potential for stakeholders to misunderstand or misuse the material, the environmental cost of printing additional paper for court, stakeholders not reading the material, the material being overwhelming for stakeholders, and the need to continually update the annexures in light of new research and developments.

Just over one-third of respondents (34 %, n = 20) felt that the annexures were making their jobs easier, 40 % (n = 23) reported no effect, and 26 % (n = 15) reported that the annexures had made their jobs harder. Two-thirds of respondents (67 %, n = 39) reported that the annexures had not changed how they write their statements. For the respondents who found their jobs harder and the 33 % (n = 19) who reported that the annexures had changed how they write their statements, the most common impacts described were adding references to the annexures within statements and removing details now included in the annexures.

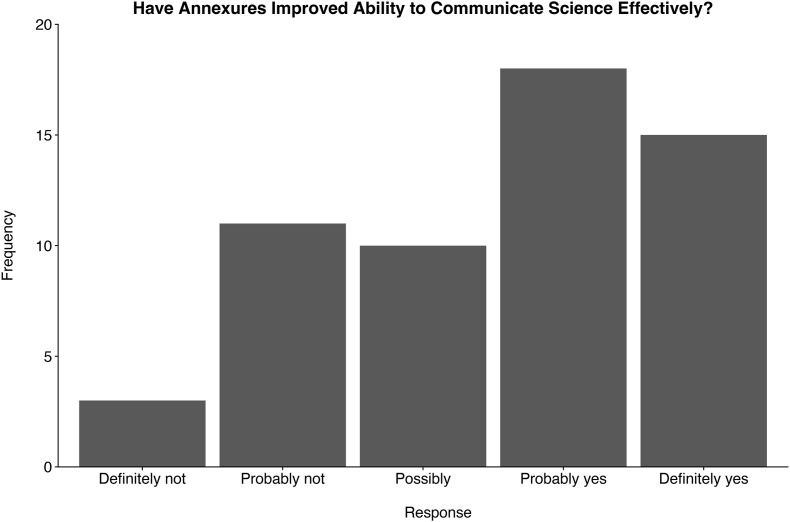

Encouragingly, over half (57 %) of respondents felt that the annexures were helping them to better explain their science to a lay audience (see Fig. 2). This suggests that the annexures are achieving one of their primary goals: enhancing science communication.

Fig. 2.

Distribution of responses regarding whether the annexures have improved practitioners' ability to explain their science effectively.

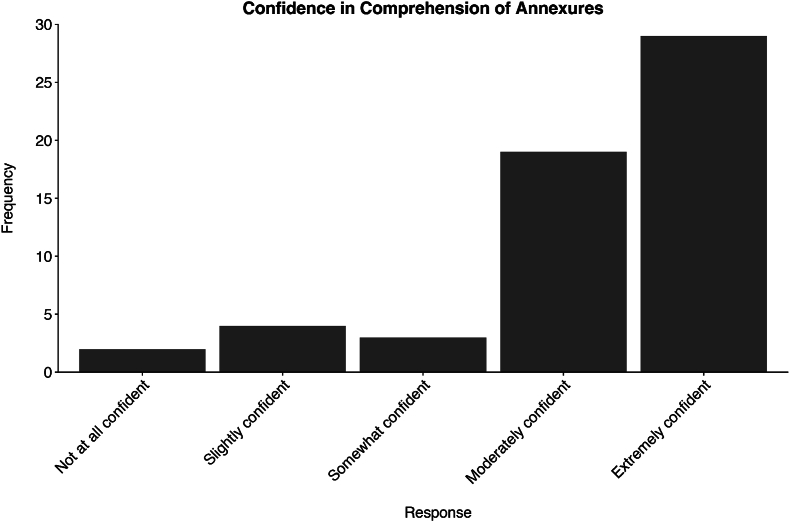

Lastly, regarding education and training, 41 % (n = 24) of respondents reported receiving specific training prior to the introduction of the annexures. This training lasted no more than 5 h and consisted predominantly of self-directed learning, workshops or seminars. Over half of the respondents (57 %, n = 33) reported receiving no education or training. Disciplines whose members received no additional training reported that their existing training programs adequately covered the annexure material, so further training on the content and process for providing annexures was not required. Disciplines that did provide training used it as a vehicle for refresher training, for further information about the literature, or for supporting examiners with potential questions about the literature cited or concepts covered. Encouragingly, 83 % of respondents reported feeling moderately or extremely confident in their comprehension of the material contained in the annexures (see Fig. 3), suggesting that the annexure content may be clear enough that little dedicated training is needed. Further training, ongoing support and monitoring have demonstrated high levels of staff competency in explaining the concepts and details contained within the annexures, suggesting that the lower confidence reported by some respondents reflects individual perception rather than an inability to communicate the material.

Fig. 3.

Distribution of responses regarding practitioners' confidence in their comprehension of the material in the annexures.

5.3. Court experiences with transparent reporting

We also asked staff about their experiences of using the annexures in cases being prepared for court. Most respondents (79 %) reported that the number of questions they received from stakeholders, such as police investigators and lawyers, prior to court was unchanged after the annexures were introduced in their Unit (see Table 3). Obtaining stakeholder impressions of the annexures in future research will be critical to understanding why the annexures may not be prompting more pre-court questions of our practitioners.

Table 3.

Responses regarding changes in the number of pre-court questions after the introduction of annexures.

| Number of questions prior to court | Frequency, n (%) |
|---|---|
| Decreased | 5 (8.62) |
| Increased | 3 (5.17) |
| Remained the same | 46 (79.31) |
| No response | 4 (6.90) |

Most respondents (72 %) likewise reported that the number of requests to appear in court as an expert witness was unchanged after the annexures were introduced in their Unit (see Table 4). Interestingly, 14 % of respondents reported receiving fewer requests to appear in court after the introduction of the annexures, compared with 10 % who reported receiving more. This could indicate that the disclosures contained in the annexures are addressing some stakeholder questions that would previously have been raised during court proceedings.

Table 4.

Responses regarding changes in the number of requests to appear in court after the introduction of annexures.

| Requested to appear in court | Frequency, n (%) |
|---|---|
| Less frequently | 8 (14) |
| No change | 42 (72) |
| More frequently | 6 (10) |
| No response | 2 (4) |

Lastly, 53 % of respondents reported that the number of questions they were asked about their evidence in court had remained the same, while 22 % reported an increase since the introduction of the annexures and none reported a decrease (see Table 5). This increase could be due to the annexures giving legal stakeholders clearer guidance on potential questions to ask in court. Consistent with this interpretation, respondents reported in free-text responses that, after the introduction of the annexures, stakeholders asked them questions before and during court about error rates, methodology, cognitive factors and PCAST.

Table 5.

Responses regarding changes in the number of in-court questions after the introduction of annexures.

| Number of questions in court | Frequency, n (%) |
|---|---|
| Decreased | 0 (0) |
| Increased | 13 (22) |
| Remained the same | 31 (53) |
| No response | 14 (24) |

Overall, the survey results show that while some staff had concerns prior to the introduction of transparent reporting, these were largely allayed after implementation. Transparency slightly increased the number of questions about relevant indicia of scientific validity and reliability during oral testimony, but this was balanced by staff confidence in the material, supported by the ability to refer to the annexures, and by a slight decrease in court appearances for some matters.

6. Conclusion

There is a clear expectation from courts, legal practitioners, scientists and authoritative bodies that forensic scientists should disclose all relevant facts and material associated with expert opinions. It is therefore incumbent on organisations to meet this expectation to ensure that forensic science contributes to accurate, just and fair outcomes within the criminal justice system. Although transparent reporting represented a shift in custom and practice, in our experience it is achievable with limited ongoing impost on resourcing. Adequate support for staff during the change process, including education where required, is vital, as is a mechanism for regularly updating the disclosed information as scientific knowledge grows. In our experience, transparency is helping to direct legal attention to appropriate matters that affect the validity and contextualisation of opinions within cases. However, engagement with such matters remains limited in some cases, so transparency must be accompanied by education for legal stakeholders on the importance of considering the information disclosed.

Over the coming years, VPFSD will continue to refine and improve the annexure content with assistance from internal and external stakeholder reviews and feedback. Avenues to provide the content proactively for all legal and forensic practitioners are currently being explored.

Ethics

The nature and scope of this research were considered minimal risk under the National Health and Medical Research Council National Statement on Ethical Conduct in Human Research; the research was therefore conducted as a business improvement activity.

Data, code and materials

The data and materials that support the findings of this study are available from the corresponding author on reasonable request.

CRediT authorship contribution statement

Kaye N. Ballantyne: Writing – original draft, Supervision, Resources, Project administration, Methodology, Investigation, Formal analysis, Conceptualization. Stephanie Summersby: Writing – original draft, Visualization, Methodology, Investigation, Formal analysis. James R. Pearson: Writing – review & editing, Project administration, Investigation, Conceptualization. Katherine Nicol: Writing – review & editing, Project administration, Investigation. Erin Pirie: Writing – review & editing, Project administration, Investigation. Catherine Quinn: Writing – review & editing, Supervision, Conceptualization. Rebecca Kogios: Writing – review & editing, Supervision, Conceptualization.

Declaration of competing interest

The authors declare the following financial interests/personal relationships which may be considered as potential competing interests.

All authors are employees of Victoria Police Forensic Services Department. The authors were offered a waiver on the article publishing charge for open access publication of this article.

Acknowledgements

We would like to thank the staff of VPFSD for their work in developing, continually improving and supporting the transparent reporting framework. The subject matter experts that provided their expertise were critical to the implementation of this project, and we are grateful for their efforts.

Supplementary data to this article can be found online at https://doi.org/10.1016/j.fsisyn.2024.100474.

1. “He, himself, said it”, referring to an unsupported assertion.

2. Two participants did not provide a response.

Appendix A. Supplementary data

The following are the Supplementary data to this article:

References

- 1. Davie v Lord Provost, Magistrates and Counsellors of the City of Edinburgh [1953] SC 34.

- 2. Lang v The Queen [2023] HCA 29.

- 3. The US National Academy of Sciences (NAS), National Research Council Committee on Identifying the Needs of the Forensic Science Community. Strengthening Forensic Science in the United States: A Path Forward. 2009. https://www.ojp.gov/pdffiles1/nij/grants/228091.pdf (accessed 11 January 2024).

- 4. Bali A.S., Edmond G., Ballantyne K.N., Kemp R.I., Martire K.A. Communicating forensic science opinion: an examination of expert reporting practices. Sci. Justice. 2020;60:216–244. doi: 10.1016/j.scijus.2019.12.005.

- 5. Howes L.M., Julian R., Kelty S.F., Kemp N., Kirkbride K.P. The readability of expert reports for non-scientist report-users: reports of DNA analysis. Forensic Sci. Int. 2014;237:7–18. doi: 10.1016/j.forsciint.2014.01.007.

- 6. Howes L.M., Kirkbride K.P., Kelty S.F., Julian R., Kemp N. The readability of expert reports for non-scientist report-users: reports of forensic comparison of glass. Forensic Sci. Int. 2014;236:54–66. doi: 10.1016/j.forsciint.2013.12.031.

- 7. Howes L.M., Kirkbride K.P., Kelty S.F., Julian R., Kemp N. Forensic scientists' conclusions: how readable are they for non-scientist report-users? Forensic Sci. Int. 2013;231:102–112. doi: 10.1016/j.forsciint.2013.04.026.

- 8. Howes L.M. ‘Sometimes I give up on the report and ring the scientist’: bridging the gap between what forensic scientists write and what police investigators read. Policing Soc. 2017;27:541–559. doi: 10.1080/10439463.2015.1089870.

- 9. Reid C.A., Howes L.M. Communicating forensic scientific expertise: an analysis of expert reports and corresponding testimony in Tasmanian courts. Sci. Justice. 2020;60:108–119. doi: 10.1016/j.scijus.2019.09.007.

- 10. de Keijser J., Elffers H. Understanding of forensic expert reports by judges, defense lawyers and forensic professionals. Psychol. Crime Law. 2012;18:191–207. doi: 10.1080/10683161003736744.

- 11. Cashman K., Henning T. Lawyers and DNA: issues in understanding and challenging the evidence. Curr. Issues Crim. Justice. 2012;24:69–83. doi: 10.1080/10345329.2012.12035945.

- 12. Siegel J.A., King M., Reed W. The laboratory report project. Forensic Sci. Policy Manag. Int. J. 2013;4:68–78. doi: 10.1080/19409044.2013.858798.

- 13. Edmond G., Found B., Martire K., Ballantyne K., Hamer D., Searston R., Thompson M., Cunliffe E., Kemp R., San Roque M., Tangen J., Dioso-Villa R., Ligertwood A., Hibbert D., White D., Ribeiro G., Porter G., Towler A., Roberts A. Model forensic science. Aust. J. Forensic Sci. 2016;48:496–537. doi: 10.1080/00450618.2015.1128969.

- 14. Supreme Court of Victoria. Expert evidence in criminal trials, Practice Note SC CR 3. 2017. https://www.supremecourt.vic.gov.au/areas/legal-resources/practice-notes/sc-cr-3-expert-evidence-in-criminal-trials

- 15. Federal Rules of Evidence, Rule 702, 28 U.S.C. App. 1975. https://www.govinfo.gov/app/details/USCODE-2010-title28/USCODE-2010-title28-app-federalru-dup2-rule702

- 16. The Criminal Procedure Rules 2020 (SI 2020/759), rule 19.4. https://www.legislation.gov.uk/uksi/2020/759/contents/made

- 17. Found B., Edmond G. Reporting on the comparison and interpretation of pattern evidence: recommendations for forensic specialists. Aust. J. Forensic Sci. 2012;44:193–196. doi: 10.1080/00450618.2011.644260.

- 18. Crozier W.E., Kukucka J., Garrett B.L. Juror appraisals of forensic evidence: effects of blind proficiency and cross-examination. Forensic Sci. Int. 2020;315:110433. doi: 10.1016/j.forsciint.2020.110433.

- 19. Mitchell G., Garrett B. The impact of proficiency testing information and error aversions on the weight given to fingerprint evidence. Behav. Sci. Law. 2019;37:195–210. doi: 10.1002/bsl.2402.

- 20. Garrett B.L., Mitchell G. Forensics and fallibility: comparing the views of lawyers and judges. W. Va. Law Rev. 2016;119:100–117.

- 21. Koehler J.J., Schweitzer N.J., Saks M.J., McQuiston D.E. Science, technology, or the expert witness: what influences jurors' judgments about forensic science testimony. Psychol. Publ. Pol. Law. 2016;22:401–413. doi: 10.1037/law0000103.

- 22. Howes L.M. A step towards increased understanding by non-scientists of expert reports: recommendations for readability. Aust. J. Forensic Sci. 2015;47:456–468. doi: 10.1080/00450618.2015.1004194.

- 23. The President's Council of Advisors on Science and Technology (PCAST). Forensic Science in Criminal Courts: Ensuring Scientific Validity of Feature-Comparison Methods. 2016. https://obamawhitehouse.archives.gov/sites/default/files/microsites/ostp/PCAST/pcast_forensic_science_report_final.pdf

- 24. Expert Working Group on Human Factors in Latent Print Analysis. Latent print examination and human factors: improving the practice through a systems approach. 2012.

- 25. Carr S., Piasecki E., Gallop A. Demonstrating reliability through transparency: a scientific validity framework to assist scientists and lawyers in criminal proceedings. Forensic Sci. Int. 2020;308:110110. doi: 10.1016/j.forsciint.2019.110110.

- 26. Martire K.A., Edmond G. Rethinking expert opinion evidence. Melb. Univ. Law Rev. 2017;40:967–998.

- 27. Morgan J. Wrongful convictions and claims of false or misleading forensic evidence. J. Forensic Sci. 2023;68:908–961. doi: 10.1111/1556-4029.15233.

- 28. McQuiston-Surrett D., Saks M.J. Communicating opinion evidence in the forensic identification sciences: accuracy and impact. Hastings Law J. 2008;59:1159.

- 29. Martire K.A., Edmond G., Navarro D. Exploring juror evaluations of expert opinions using the Expert Persuasion Expectancy framework. Leg. Criminol. Psychol. 2020;25:90–110. doi: 10.1111/lcrp.12165.

- 30. Nance D.A., Morris S.B. Juror understanding of DNA evidence: an empirical assessment of presentation formats for trace evidence with a relatively small random-match probability. J. Leg. Stud. 2005;34:395–444.

- 31. Garrett B., Mitchell G. How jurors evaluate fingerprint evidence: the relative importance of match language, method information, and error acknowledgment. J. Empir. Leg. Stud. 2013;10:484–511.

- 32. Goodman-Delahunty J., Hewson L. Improving Jury Understanding and Use of DNA Expert Evidence. Report to the Criminology Research Council, CRC 05/07-08. 2009.

- 33. McQuiston-Surrett D., Saks M.J. The testimony of forensic identification science: what expert witnesses say and what factfinders hear. Law Hum. Behav. 2009;33:436–453. doi: 10.1007/s10979-008-9169-1.

- 34. Maxwell C. Preventing miscarriages of justice: the reliability of forensic evidence and the role of the trial judge as gatekeeper. Aust. Law J. 2019;93:642–654.

- 35. Faigman D.L., Porter E., Saks M.J. Check your crystal ball at the courthouse door, please: exploring the past, understanding the present, and worrying about the future of scientific evidence. Cardozo Law Rev. 1994;15:1799.

- 36. Edmond G. Forensic science and the myth of adversarial testing. Curr. Issues Crim. Justice. 2020;32:146–179. doi: 10.1080/10345329.2019.1689786.

- 37. Garrett B., Crozier W., Grady R. Error rates, likelihood ratios, and jury evaluation of forensic evidence. J. Forensic Sci. 2020;65:1199–1209. doi: 10.1111/1556-4029.14323.

- 38. Summersby S., Edmond G., Kemp R., Ballantyne K., Martire K. The effect of following best practice reporting recommendations on legal and community evaluations of forensic examiners' reports. Forensic Sci. Int. (in press).

- 39. Bali A.S., Martire K.A., Edmond G. Lay comprehension of statistical evidence: a novel measurement approach. Law Hum. Behav. 2021;45:370–390. doi: 10.1037/lhb0000457.

- 40. Martire K.A., Kemp R.I., Sayle M., Newell B.R. On the interpretation of likelihood ratios in forensic science evidence: presentation formats and the weak evidence effect. Forensic Sci. Int. 2014;240:61–68. doi: 10.1016/j.forsciint.2014.04.005.

- 41. Martire K.A., Kemp R.I., Watkins I., Sayle M.A., Newell B.R. The expression and interpretation of uncertain forensic science evidence: verbal equivalence, evidence strength, and the weak evidence effect. Law Hum. Behav. 2013;37:197–207. doi: 10.1037/lhb0000027.

- 42. Thompson W.C., Newman E.J. Lay understanding of forensic statistics: evaluation of random match probabilities, likelihood ratios, and verbal equivalents. Law Hum. Behav. 2015;39:332–349. doi: 10.1037/lhb0000134.

- 43. International Organization for Standardization. ISO/IEC 17025:2017 General requirements for the competence of testing and calibration laboratories. 2017.

- 44. Standards Australia. AS 5388 Forensic analysis. 2013.

- 45. AAAS. Forensic science assessments: a quality and gap analysis – latent fingerprint examination (report prepared by William Thompson, John Black, Anil Jain and Joseph Kadane). 2017. doi: 10.1126/srhrl.aag2874.

- 46. AAAS. Forensic science assessments: a quality and gap analysis – fire investigation (report prepared by José Almirall, Hal Arkes, John Lentini, Fred Mowrer and Janusz Pawliszyn). 2017.
