Abstract

Distal radius fractures rank among the most common fractures in humans, and their optimal diagnosis and treatment require accurate radiological imaging and interpretation. In addition to human radiologists, artificial intelligence systems are increasingly used for radiological assessment. Since 2023, ChatGPT 4 has offered image analysis capabilities, which can also be applied to wrist radiographs. This study evaluates the diagnostic power of ChatGPT 4 in identifying distal radius fractures and compares it with that of a hand surgery resident, a medical student and the established AI software Gleamer BoneView™, using the reports of a board-certified radiologist as the reference standard. ChatGPT 4 showed good diagnostic accuracy (sensitivity 0.88, specificity 0.98, diagnostic power (AUC) 0.93) and significantly outperformed the medical student (sensitivity 0.98, specificity 0.72, AUC 0.85; p = 0.04). Nevertheless, its diagnostic power lagged behind that of the hand surgery resident (sensitivity 0.99, specificity 0.98, AUC 0.985; p = 0.014) and Gleamer BoneView™ (sensitivity 1.00, specificity 0.98, AUC 0.99; p = 0.006). This study highlights the utility and potential applications of artificial intelligence in modern medicine and emphasises ChatGPT 4 as a valuable tool for enhancing diagnostic capabilities in medical imaging.

Supplementary Information

The online version contains supplementary material available at 10.1007/s00402-024-05298-2.

Keywords: Fracture detection, Distal radius fracture, Radiology, ChatGPT, Artificial intelligence, Hand surgery

Introduction

In 2019, distal radius fractures were the third most common fracture in Germany, with a total of 72,087 cases, surpassed only by femoral neck and pertrochanteric femoral fractures. Unlike femoral fractures, which are 20 times more common in people over 70 years of age than in those under 70, distal radius fractures also frequently affect younger people [1]. Distal radius injuries in older individuals are typically caused by low-energy trauma, whereas younger individuals tend to sustain higher-energy trauma [2]. Treatment may involve surgery for complex and displaced fractures or non-operative management for simple and non-displaced fractures, although the indications for the two approaches may overlap [3]. Given the high incidence of forearm and hand fractures and the complications that arise if they are not adequately diagnosed and treated, selecting the appropriate diagnostic and treatment regimen is of considerable importance [4]. The financial burden is also substantial, as illustrated by annual treatment costs of €540 million in the Netherlands [5].

In addition to the clinical examination of the hand and any concomitant injuries, radiological imaging plays a key role in diagnosing a fracture and determining the treatment regimen. The classification of the Osteosynthesis Working Group (AO) is an established scheme for assessing distal radius fractures. Using this system, work processes can be systematised and optimised, leading to more precise diagnoses, more effective treatment strategies and, ultimately, improved clinical outcomes for patients [6]. In addition, since 2022 the medical staff at LMU University Hospital Munich have been supported by Gleamer BoneView™ (Gleamer, Paris, France) [7], an artificial intelligence (AI) software optimised for radiological fracture detection. Unlike AI systems based on large language models (LLMs), Gleamer BoneView™ cannot translate radiological image information into language or into a precise classification system. ChatGPT, an LLM-based chatbot developed by OpenAI and publicly released in November 2022, generates natural language and has already demonstrated its ability to perform medical tasks, such as passing the United States Medical Licensing Examination (USMLE) [8]. A previous study showed that chatbots based on ChatGPT 4 can generate AO codes from radiological reports; they did so significantly faster than human radiologists but were considerably less accurate [9]. On 25 September 2023, ChatGPT 4, previously a text-only language model, received an update enabling image input and processing. OpenAI describes GPT-4 as a Transformer-style model and has not disclosed further architectural details; its visual capabilities were achieved through a training process similar to that used for text processing [10]. First, the model was pretrained to predict the next token in a document using text and image data. Second, it was refined with additional data using Reinforcement Learning from Human Feedback (RLHF) [11].

This update suggests a promising use of ChatGPT 4 in clinical practice to diagnose and classify fractures and to support clinicians. To assess this potential, we compared the accuracy of ChatGPT 4, Gleamer BoneView™, a hand surgery resident and a medical student in detecting distal radius fractures in patients presenting to the Division of Hand, Plastic and Aesthetic Surgery at LMU University Hospital Munich, using the reports of a board-certified radiologist as the reference standard.

Methods

In the present study, we examined the diagnostic power of the AI chatbot ChatGPT 4 in detecting distal radius fractures on wrist X-rays and compared it with that of a hand surgery resident, a medical student and Gleamer BoneView™ (Gleamer AI, France), a commercially available AI algorithm for fracture detection on radiographs, using the radiological report of a board-certified radiologist as the reference. For this purpose, we included wrist X-rays of patients who had undergone radiography for a suspected fracture: 100 with and 50 without a distal radius fracture. The X-ray images were irreversibly anonymised, and a combined image was created from the anteroposterior (ap) and lateral views (Figs. 1 and 2). The order of the images was then randomised for the subsequent assessment.
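The paper does not describe how the reading order was randomised. A minimal sketch, assuming a seeded shuffle of the pooled 100 fracture and 50 control images, is shown below; the case identifiers, the function name `randomised_reading_order` and the seed value are all hypothetical.

```python
import random

# Hypothetical case identifiers; the study's real image IDs are not reported.
fracture_cases = [f"frac_{i:03d}" for i in range(100)]
control_cases = [f"ctrl_{i:03d}" for i in range(50)]


def randomised_reading_order(cases, seed):
    """Return a shuffled copy of the case list.

    A fixed seed makes the order reproducible, so every reader
    (human or software) can be shown the images in the same sequence.
    """
    order = list(cases)
    random.Random(seed).shuffle(order)
    return order


reading_order = randomised_reading_order(fracture_cases + control_cases, seed=2023)
print(len(reading_order), reading_order[:3])
```

Seeding a dedicated `random.Random` instance, rather than the global generator, keeps the order reproducible without affecting any other random draws in an analysis script.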

Fig. 1.

Combined image of wrist x-rays of a patient with distal radius fracture

Fig. 2.

Combined image of wrist x-rays of a patient without distal radius fracture

For the radiological evaluation with ChatGPT 4, the radiological images were uploaded one after the other, and the following standardised sequence of consecutive questions was used. If ChatGPT 4 did not answer one of the questions adequately, the question was paraphrased and asked again.

The following image shows the ap and lateral view of a wrist x-ray of the same person. Can you detect a fracture on the image? Yes or No.

If the answer was yes – Which bone is broken in the uploaded image?

A hand surgery resident and a medical student in the clinical phase of training also examined the images in the same order, answering the same questions. In addition, the images were analysed with the AI software Gleamer BoneView™. Because the software only marks fractures with a square, a marking on the distal radius of a fractured wrist was counted as correct detection and localisation of the fracture. The radiological reports of a board-certified radiologist served as the reference standard.
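The paper does not publish its scoring procedure. The sketch below shows one way the rule above could be encoded, assuming that a positive call only counts when it is localised to the radius; `ReaderAnswer` and `score` are hypothetical names. How a reported fracture of another bone on a radius-negative image was scored is not stated, so this sketch treats it as a true negative for distal radius fracture.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReaderAnswer:
    """One reader's response to the two standardised questions (hypothetical structure)."""
    fracture_detected: bool      # "Can you detect a fracture on the image? Yes or No."
    bone: Optional[str] = None   # "Which bone is broken?" (None if no fracture was reported)


def score(answer: ReaderAnswer, reference_has_drf: bool) -> str:
    """Return 'TP', 'FN', 'FP' or 'TN' relative to the radiologist's reference report.

    Assumption: a reading counts as a positive distal radius call only if a
    fracture is reported AND the named bone (or BoneView box) is the radius.
    """
    radius_call = (
        answer.fracture_detected
        and answer.bone is not None
        and "radius" in answer.bone.lower()
    )
    if reference_has_drf:
        return "TP" if radius_call else "FN"
    return "FP" if radius_call else "TN"


# Usage: a correct localisation, a missed fracture and a mislocalised call
print(score(ReaderAnswer(True, "distal radius"), reference_has_drf=True))
print(score(ReaderAnswer(False), reference_has_drf=True))
print(score(ReaderAnswer(True, "scaphoid"), reference_has_drf=True))
```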

For the statistical analysis of the distal radius fracture detection rate, sensitivity and specificity were calculated and a receiver operating characteristic (ROC) analysis was performed. McNemar’s test was used to compare sensitivity and specificity of fracture detection between readers. All data are given as mean (standard error of the mean). A p-value < 0.05 was considered statistically significant.
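Because each case is scored as correct (1) or incorrect (0), a sensitivity or specificity is simply the mean of these per-case outcomes. The sketch below computes that mean and its standard error with Python's `statistics` module. With the sample standard deviation (denominator n - 1), it reproduces the standard errors given in the Results, e.g. 0.033 for ChatGPT 4's sensitivity of 0.88 and 0.064 for the medical student's specificity of 0.72; that the authors computed them this way is an inference from the numbers, not something the paper states. The function name `proportion_with_sem` is hypothetical.

```python
import math
import statistics
from typing import Tuple


def proportion_with_sem(correct: int, total: int) -> Tuple[float, float]:
    """Mean and standard error of the mean of per-case 0/1 outcomes.

    Each case is coded 1 if the reader classified it correctly and 0 otherwise;
    statistics.stdev uses the sample standard deviation (n - 1 denominator).
    """
    outcomes = [1] * correct + [0] * (total - correct)
    mean = statistics.mean(outcomes)
    sem = statistics.stdev(outcomes) / math.sqrt(total)
    return mean, sem


# ChatGPT 4: 88 of 100 fractures detected, 49 of 50 controls correctly negative
sens, sens_sem = proportion_with_sem(88, 100)
spec, spec_sem = proportion_with_sem(49, 50)
print(f"sensitivity {sens:.2f} ({sens_sem:.3f})")  # sensitivity 0.88 (0.033)
print(f"specificity {spec:.2f} ({spec_sem:.3f})")  # specificity 0.98 (0.020)
```

Algebraically this standard error equals sqrt(p(1 - p) / (n - 1)), slightly larger than the more common binomial form sqrt(p(1 - p) / n).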

Results

A total of 150 wrist radiographs from the Division of Hand, Plastic and Aesthetic Surgery at LMU University Hospital Munich were included in this study. Of the 100 distal radius fractures, 20 were classified as type A, 4 as type B and 76 as type C according to the AO classification for distal radius fractures.

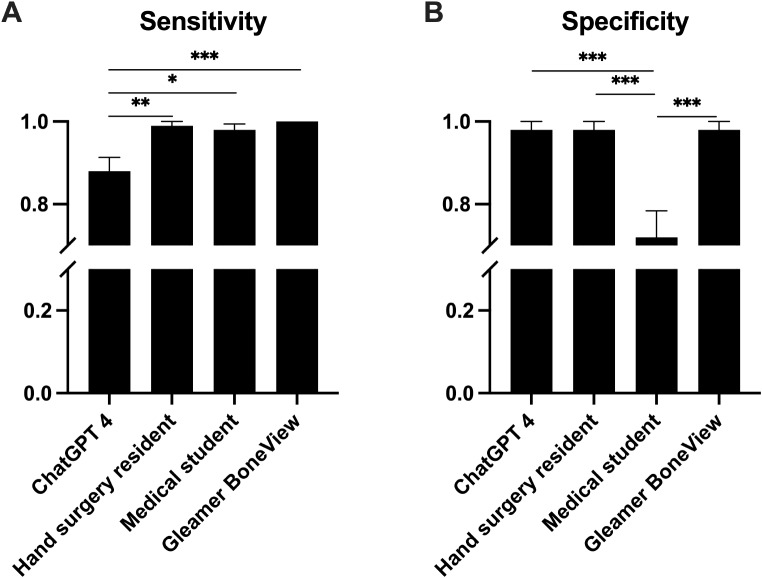

We analysed the sensitivity (n = 100) and specificity (n = 50) of ChatGPT 4, the hand surgery resident, the medical student and Gleamer BoneView™ for distal radius fracture detection, using McNemar’s test for statistical comparison (Fig. 3). Sensitivity was 0.88 (0.033) for ChatGPT 4, 0.99 (0.010) for the hand surgery resident, 0.98 (0.014) for the medical student and 1.00 (0.000) for Gleamer BoneView™. McNemar’s test indicated significantly lower sensitivity for ChatGPT 4 than for the hand surgery resident (p = 0.003), the medical student (p = 0.013) and Gleamer BoneView™ (p < 0.001). Specificity was 0.98 (0.020) for ChatGPT 4, 0.98 (0.020) for the hand surgery resident, 0.72 (0.064) for the medical student and 0.98 (0.020) for Gleamer BoneView™. The medical student’s specificity was significantly lower than that of ChatGPT 4, the hand surgery resident and Gleamer BoneView™ (all p < 0.001).
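McNemar's test compares two readers on the same cases using only the discordant pairs (cases one reader got right and the other got wrong). The paper does not report the discordant counts or say whether a continuity-corrected or exact version of the test was used, so the sketch below implements both with the standard library. The counts in the usage lines (11 fractures detected by one reader and missed by the other, 0 in the opposite direction) are hypothetical, chosen only to be compatible with sensitivities of 0.99 and 0.88 on 100 fractures.

```python
import math


def mcnemar(b: int, c: int, continuity: bool = True):
    """Chi-square McNemar test for paired binary outcomes.

    b: cases reader 1 got right and reader 2 got wrong
    c: cases reader 1 got wrong and reader 2 got right
    Returns (chi-square statistic, two-sided p-value) with 1 degree of freedom.
    """
    if b + c == 0:
        return 0.0, 1.0
    diff = abs(b - c) - (1 if continuity else 0)
    stat = max(diff, 0) ** 2 / (b + c)
    # Survival function of chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2))
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p


def mcnemar_exact(b: int, c: int) -> float:
    """Exact McNemar test: two-sided binomial test of b vs c with p = 0.5."""
    n = b + c
    if n == 0:
        return 1.0
    k = min(b, c)
    tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical discordant counts for a sensitivity comparison (fracture cases only)
stat, p = mcnemar(11, 0)
print(f"chi2 = {stat:.2f}, p = {p:.4f}")
print(f"exact p = {mcnemar_exact(11, 0):.4f}")
```

For sensitivity only the 100 fracture cases enter the table, and for specificity only the 50 controls; the two comparisons are tested separately.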

Fig. 3.

Sensitivity (A) and Specificity (B) of distal radius fracture detection rate. A Sensitivity is 0.88 (0.033) for ChatGPT 4, 0.99 (0.010) for hand surgery resident, 0.98 (0.014) for medical student, and 1.00 (0.000) for Gleamer BoneView™. McNemar’s test revealed significantly lower sensitivity of ChatGPT 4 than hand surgery resident (p = 0.003), medical student (p = 0.013), and Gleamer BoneView™ (p < 0.001). B Specificity is 0.98 (0.020) for ChatGPT 4, 0.98 (0.020) for hand surgery resident, 0.72 (0.064) for medical student, and 0.98 (0.020) for Gleamer BoneView™. McNemar’s test revealed significantly lower specificity of medical student than ChatGPT 4, hand surgery resident, and Gleamer BoneView™ (all p < 0.001)

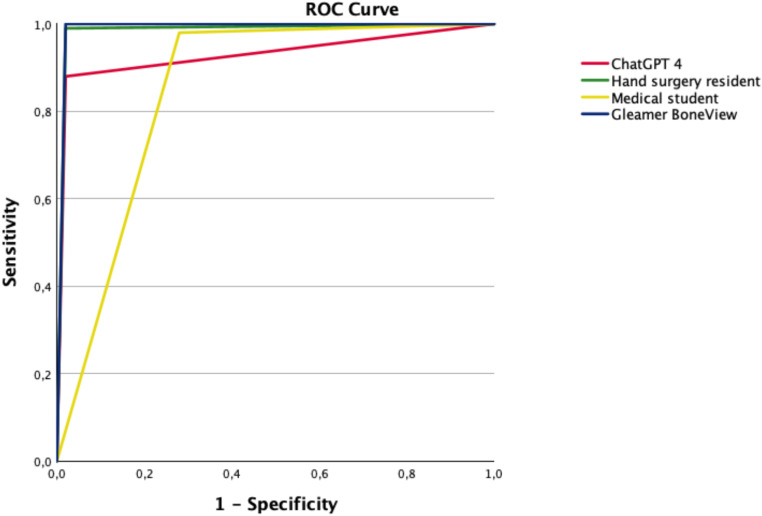

The diagnostic power of each reader was assessed using receiver operating characteristic (ROC) curves based on sensitivity and specificity (Fig. 4). The area under the curve (AUC) was 0.93 (0.023) for ChatGPT 4, 0.985 (0.013) for the hand surgery resident, 0.85 (0.040) for the medical student and 0.99 (0.012) for Gleamer BoneView™. The hand surgery resident and Gleamer BoneView™ showed the highest diagnostic power, with no statistically significant difference between them (p = 0.741). Both had significantly higher diagnostic power than ChatGPT 4 (p = 0.014 and p = 0.006, respectively) and the medical student (both p < 0.001). ChatGPT 4 in turn showed significantly higher diagnostic power than the medical student (p = 0.04; Table 1).
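Each reader gave a single binary call per image, so each empirical ROC curve has only one operating point between (0, 0) and (1, 1). The trapezoidal area under such a curve simplifies to (sensitivity + specificity) / 2, and all four reported AUCs are consistent with this, e.g. (0.88 + 0.98) / 2 = 0.93 for ChatGPT 4. The sketch below assumes this single-point construction; the paper does not name the ROC software it used, and `single_point_auc` is a hypothetical helper.

```python
def single_point_auc(sensitivity: float, specificity: float) -> float:
    """Area under the ROC curve through (0, 0), (1 - spec, sens) and (1, 1),
    computed with the trapezoidal rule."""
    fpr, tpr = 1.0 - specificity, sensitivity
    points = [(0.0, 0.0), (fpr, tpr), (1.0, 1.0)]
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Sensitivity and specificity as reported in the Results
readers = {
    "ChatGPT 4": (0.88, 0.98),
    "Hand surgery resident": (0.99, 0.98),
    "Medical student": (0.98, 0.72),
    "Gleamer BoneView": (1.00, 0.98),
}
for name, (sens, spec) in readers.items():
    auc = single_point_auc(sens, spec)
    # Algebraically the trapezoid area reduces to (sens + spec) / 2
    print(f"{name}: AUC {auc:.3f}, (sens + spec) / 2 = {(sens + spec) / 2:.3f}")
```

This also explains why a reader with very high sensitivity but low specificity, such as the medical student, can end up with a lower AUC than a reader with balanced but slightly lower sensitivity.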

Fig. 4.

Receiver operating characteristic curve of the distal radius fracture detection rate. Area under the curve is 0.93 (0.023) for ChatGPT 4, 0.985 (0.013) for hand surgery resident, 0.85 (0.040) for medical student, and 0.99 (0.012) for Gleamer BoneView™

Table 1.

Comparison of the area under the ROC curve (AUC) of ChatGPT 4, hand surgery resident, medical student, and Gleamer BoneView™

| Comparison | Difference in AUC | Confidence interval | p-value |
|---|---|---|---|
| ChatGPT 4 – Hand surgery resident | -0.055 | -0.099 / -0.011 | 0.014 |
| ChatGPT 4 – Medical student | 0.08 | 0.004 / 0.156 | 0.04 |
| ChatGPT 4 – Gleamer BoneView™ | -0.06 | -0.103 / -0.017 | 0.006 |
| Hand surgery resident – Medical student | 0.135 | 0.067 / 0.203 | < 0.001 |
| Hand surgery resident – Gleamer BoneView™ | -0.005 | -0.035 / 0.146 | 0.741 |
| Medical student – Gleamer BoneView™ | -0.140 | -0.209 / -0.071 | < 0.001 |

In summary, ChatGPT 4 demonstrates good diagnostic power in detecting distal radius fractures in wrist radiographs.

Discussion

The diagnostic accuracy of ChatGPT 4 was compared with that of a hand surgery resident, a medical student and the AI algorithm Gleamer BoneView™. ChatGPT 4 had lower sensitivity than the hand surgery resident, the medical student and Gleamer BoneView™, but higher specificity and overall diagnostic power (AUC) than the medical student.

We performed receiver operating characteristic (ROC) curve analysis to quantify the diagnostic power of each reader. The area under the curve (AUC) for ChatGPT 4 was high at 0.93, reflecting good diagnostic capability, although it was lower than that of the hand surgery resident and Gleamer BoneView™. In direct comparison, ChatGPT 4 had significantly higher diagnostic power than the medical student (AUC 0.93 vs. 0.85; p = 0.04).

Recent studies have shown various applications of ChatGPT in medicine. In radiology, these include translating medical reports into plain language to improve patients’ understanding [12–14], supporting radiological decision-making [15–18] and generating AO codes from radiologists’ reports [9]. To the best of our knowledge, no study to date has analysed medical X-ray images using ChatGPT 4, which would represent a new application for the model.

Previous studies have investigated the use of artificial intelligence systems to help radiologists diagnose fractures, including distal radius fractures. Guermazi et al. showed that AI reduced the average reading time per examination by 6.3 s and increased sensitivity [7]. Oka et al. achieved a good fracture detection rate using a VGG16 model [19]. In 2021, Tobler et al. used a deep convolutional neural network (DCNN) to detect and classify distal radius fractures [20]. Their study demonstrated that DCNNs can be used effectively as adjunctive tools for second readings, although models intended for fracture classification were not yet ready for clinical application. This work may provide a basis for applying ChatGPT 4, a general-purpose model rather than a dedicated DCNN, to a similar task. Consistent with these findings, Zech et al. demonstrated high accuracy in detecting pediatric wrist fractures using a deep-learning-based object detection approach [21].

Olczak et al. [22], Anttila et al. [23], Gan et al. [24], Kim and MacKinnon [25], Thian et al. [26], Oka et al. [19] and Lindsey et al. [27] have all reported high accuracies in fracture detection on radiographs, with AUCs ranging from 0.918 to 0.98. These results are consistent with the AUC of 0.93 we observed for ChatGPT 4, even though it was not specifically developed for this task.

Our study has several limitations. Firstly, the study was retrospective and the radiographs were not accompanied by clinical information, so important parameters such as pain localisation were unavailable [28]. Secondly, the training data of ChatGPT 4 are not publicly known, so we cannot comment on the size or composition of the dataset on which the model was trained. Deep learning models are known to perform worse when applied to new datasets and different patient populations [29]. Because our radiographs were not available for training, the task was presumably more difficult for ChatGPT 4. Thirdly, our cohort of 100 distal radius fractures was dominated by AO type C fractures (20 type A, 4 type B and 76 type C), whereas other trauma centres report fewer type C and more type A and B fractures [30, 31]. Because type C fractures are usually easier to detect, our case mix may have favoured higher diagnostic accuracy.

Conclusion

In this study, we analysed the diagnostic power of ChatGPT 4 and compared it with that of a hand surgery resident, a medical student and Gleamer BoneView™. ChatGPT 4 showed good sensitivity (0.88), specificity (0.98) and diagnostic power as measured by the AUC (0.93). Although ChatGPT 4 had significantly lower diagnostic power than the hand surgery resident and Gleamer BoneView™, it had significantly higher diagnostic power than the medical student. It should be borne in mind that ChatGPT was not designed for fracture detection and that its image function had been available for only a few months.

Our findings collectively suggest that, while ChatGPT 4 is a valuable tool for distal radius fracture detection, it currently lacks the diagnostic proficiency of hand surgery professionals and of dedicated fracture-detection software such as Gleamer BoneView™. As the technology continues to advance, future versions of ChatGPT may further improve their diagnostic capabilities. Our study contributes insights into the evolving landscape of artificial intelligence applications in medical imaging and emphasises the importance of continued collaboration between technology developers and healthcare professionals to optimise diagnostic outcomes.


Acknowledgements

The authors declare no potential conflicts of interest with respect to the research, authorship, and/or publication of this article. This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors. This study was conducted in accordance with the relevant ethical and legal guidelines and regulations. No patient-related data was used. All x-ray images have been irreversibly anonymized. A separate ethical approval is not required.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Footnotes

This article has been retracted. Please see the retraction notice for more detail: 10.1007/s00402-024-05554-5


Change history

9/14/2024

This article has been retracted. Please see the Retraction Notice for more detail: 10.1007/s00402-024-05554-5

References

- 1. Rupp M, Walter N, Pfeifer C, Lang S, Kerschbaum M, Krutsch W, Baumann F, Alt V (2021) The incidence of Fractures among the Adult Population of Germany-an analysis from 2009 through 2019. Dtsch Arztebl Int 118(40):665–669. 10.3238/arztebl.m2021.0238

- 2. Amin S, Achenbach SJ, Atkinson EJ, Khosla S, Melton LJ 3rd (2014) Trends in fracture incidence: a population-based study over 20 years. J Bone Miner Res 29(3):581–589. 10.1002/jbmr.2072

- 3. Lichtman DM, Bindra RR, Boyer MI et al (2010) Treatment of distal radius fractures. J Am Acad Orthop Surg 18(3):180–189. 10.5435/00124635-201003000-00007

- 4. Cavalcanti Kussmaul A, Kuehlein T, Langer MF, Ayache A, Unglaub F (2023) The treatment of closed finger and metacarpal fractures. Dtsch Arztebl Int 120(50):855–862. 10.3238/arztebl.m2023.0226

- 5. de Putter CE, Selles RW, Polinder S, Panneman MJM, Hovius SER, van Beeck EF (2012) Economic impact of hand and wrist injuries: health-care costs and productivity costs in a population-based study. J Bone Joint Surg Am 94(9):e56. 10.2106/JBJS.K.00561

- 6. Waever D, Madsen ML, Rölfing JHD, Borris LC, Henriksen M, Nagel LL, Thorninger R (2018) Distal radius fractures are difficult to classify. Injury 49(Suppl 1):S29–S32. 10.1016/S0020-1383(18)30299-7

- 7. Guermazi A, Tannoury C, Kompel AJ et al (2022) Improving Radiographic fracture Recognition Performance and Efficiency using Artificial Intelligence. Radiology 302(3):627–636. 10.1148/radiol.210937

- 8. Gilson A, Safranek CW, Huang T, Socrates V, Chi L, Taylor RA, Chartash D (2023) How does ChatGPT perform on the United States Medical Licensing examination? The implications of Large Language Models for Medical Education and Knowledge Assessment. JMIR Med Educ 9:e45312. 10.2196/45312

- 9. Russe MF, Fink A, Ngo H, Tran H, Bamberg F, Reisert M, Rau A (2023) Performance of ChatGPT, human radiologists, and context-aware ChatGPT in identifying AO codes from radiology reports. Sci Rep 13(1):14215. 10.1038/s41598-023-41512-8

- 10. OpenAI, Achiam J, Adler S, Agarwal S et al (2023) GPT-4 Technical Report. arXiv:2303.08774 [cs.CL]. 10.48550/arXiv.2303.08774

- 11. Ouyang L, Wu J, Jiang X, Almeida D et al (2022) Training language models to follow instructions with human feedback. arXiv:2203.02155 [cs.CL]. 10.48550/arXiv.2203.02155

- 12. Lyu Q, Tan J, Zapadka ME, Ponnatapura J, Niu C, Myers KJ, Wang G, Whitlow CT (2023) Translating radiology reports into plain language using ChatGPT and GPT-4 with prompt learning: results, limitations, and potential. Vis Comput Ind Biomed Art 6(1):9. 10.1186/s42492-023-00136-5

- 13. Jeblick K, Schachtner B, Dexl J et al (2023) ChatGPT makes medicine easy to swallow: an exploratory case study on simplified radiology reports. Eur Radiol. 10.1007/s00330-023-10213-1

- 14. Li H, Moon JT, Iyer D et al (2023) Decoding radiology reports: potential application of OpenAI ChatGPT to enhance patient understanding of diagnostic reports. Clin Imaging 101:137–141. 10.1016/j.clinimag.2023.06.008

- 15. Barash Y, Klang E, Konen E, Sorin V (2023) ChatGPT-4 assistance in optimizing Emergency Department Radiology referrals and Imaging Selection. J Am Coll Radiol 20(10):998–1003. 10.1016/j.jacr.2023.06.009

- 16. Rao A, Kim J, Kamineni M, Pang M, Lie W, Succi MD (2023) Evaluating ChatGPT as an Adjunct for Radiologic decision-making. medRxiv. 10.1101/2023.02.02.23285399

- 17. Huang Y, Gomaa A, Semrau S et al (2023) Benchmarking ChatGPT-4 on a radiation oncology in-training exam and Red Journal Gray Zone cases: potentials and challenges for Ai-assisted medical education and decision making in radiation oncology. Front Oncol 13:1265024. 10.3389/fonc.2023.1265024

- 18. Patil NS, Huang RS, van der Pol CB, Larocque N (2023) Using Artificial Intelligence Chatbots as a radiologic decision-making Tool for Liver Imaging: do ChatGPT and Bard communicate information consistent with the ACR appropriateness Criteria? J Am Coll Radiol 20(10):1010–1013. 10.1016/j.jacr.2023.07.010

- 19. Oka K, Shiode R, Yoshii Y, Tanaka H, Iwahashi T, Murase T (2021) Artificial intelligence to diagnosis distal radius fracture using biplane plain X-rays. J Orthop Surg Res 16(1):694. 10.1186/s13018-021-02845-0

- 20. Tobler P, Cyriac J, Kovacs BK et al (2021) AI-based detection and classification of distal radius fractures using low-effort data labeling: evaluation of applicability and effect of training set size. Eur Radiol 31(9):6816–6824. 10.1007/s00330-021-07811-2

- 21. Zech JR, Carotenuto G, Igbinoba Z, Tran CV, Insley E, Baccarella A, Wong TT (2023) Detecting pediatric wrist fractures using deep-learning-based object detection. Pediatr Radiol 53(6):1125–1134. 10.1007/s00247-023-05588-8

- 22. Olczak J, Pavlopoulos J, Prijs J, Ijpma FFA, Doornberg JN, Lundström C, Hedlund J, Gordon M (2021) Presenting artificial intelligence, deep learning, and machine learning studies to clinicians and healthcare stakeholders: an introductory reference with a guideline and a Clinical AI Research (CAIR) checklist proposal. Acta Orthop 92(5):513–525. 10.1080/17453674.2021.1918389

- 23. Anttila TT, Karjalainen TV, Mäkelä TO, Waris EM, Lindfors NC, Leminen MM, Ryhänen JO (2023) Detecting Distal Radius fractures using a segmentation-based Deep Learning Model. J Digit Imaging 36(2):679–687. 10.1007/s10278-022-00741-5

- 24. Gan K, Xu D, Lin Y et al (2019) Artificial intelligence detection of distal radius fractures: a comparison between the convolutional neural network and professional assessments. Acta Orthop 90(4):394–400. 10.1080/17453674.2019.1600125

- 25. Kim DH, MacKinnon T (2018) Artificial intelligence in fracture detection: transfer learning from deep convolutional neural networks. Clin Radiol 73(5):439–445. 10.1016/j.crad.2017.11.015

- 26. Thian YL, Li Y, Jagmohan P, Sia D, Chan VEY, Tan RT (2019) Convolutional Neural Networks for Automated Fracture Detection and localization on wrist radiographs. Radiol Artif Intell 1(1):e180001. 10.1148/ryai.2019180001

- 27. Lindsey R, Daluiski A, Chopra S et al (2018) Deep neural network improves fracture detection by clinicians. Proc Natl Acad Sci U S A 115(45):11591–11596. 10.1073/pnas.1806905115

- 28. Castillo C, Steffens T, Sim L, Caffery L (2021) The effect of clinical information on radiology reporting: a systematic review. J Med Radiat Sci 68(1):60–74. 10.1002/jmrs.424

- 29. Raisuddin AM, Vaattovaara E, Nevalainen M et al (2021) Critical evaluation of deep neural networks for wrist fracture detection. Sci Rep 11(1):6006. 10.1038/s41598-021-85570-2

- 30. Sander AL, Leiblein M, Sommer K, Marzi I, Schneidmuller D, Frank J (2020) Epidemiology and treatment of distal radius fractures: current concept based on fracture severity and not on age. Eur J Trauma Emerg Surg 46(3):585–590. 10.1007/s00068-018-1023-7

- 31. Koo OT, Tan DM, Chong AK (2013) Distal radius fractures: an epidemiological review. Orthop Surg 5(3):209–213. 10.1111/os.12045
