Abstract

Simulation is a crucial tool for the evaluation and comparison of statistical methods. How to design fair and neutral simulation studies is therefore of great interest for both researchers developing new methods and practitioners confronted with the choice of the most suitable method. The term simulation usually refers to parametric simulation, that is, computer experiments using artificial data made up of pseudo-random numbers. Plasmode simulation, that is, computer experiments using the combination of resampling feature data from a real-life dataset and generating the target variable with a known user-selected outcome-generating model, is an alternative that is often claimed to produce more realistic data. We compare parametric and Plasmode simulation for the example of estimating the mean squared error (MSE) of the least squares estimator (LSE) in linear regression. If the true underlying data-generating process (DGP) and the outcome-generating model (OGM) were known, parametric simulation would obviously be the best choice in terms of estimating the MSE well. However, in reality, both are usually unknown, so researchers have to make assumptions: in Plasmode simulation studies for the OGM, in parametric simulation for both DGP and OGM. Most likely, these assumptions do not exactly reflect the truth. Here, we aim to find out how assumptions deviating from the true DGP and the true OGM affect the performance of parametric and Plasmode simulations in the context of MSE estimation for the LSE and in which situations which simulation type is preferable. Our results suggest that the preferable simulation method depends on many factors, including the number of features, and on how and to what extent the assumptions of a parametric simulation differ from the true DGP. Also, the resampling strategy used for Plasmode influences the results. In particular, subsampling with a small sampling proportion can be recommended.

Introduction

Simulation studies are usually defined as computer experiments using artificial data generated by a pseudo-random number generator for which some truth about the data-generating process (DGP) and the outcome-generating model (OGM) is known, e.g., the true parameter values of the OGM or the distribution of the features. Well-designed, fair simulation studies are needed both for the evaluation of newly introduced methods and, in particular, for the neutral comparison of existing methods [1]. The DGP and OGM are usually chosen to either reflect realistic scenarios or edge cases for the application of the method of interest. We investigate the first case here.

We call the kind of simulation with artificial data, where the DGP and OGM are fully known, “parametric” simulations. Non-parametric simulation, where all data are real-life data, is not part of our analysis. Plasmode simulation is a special case of semi-parametric simulation, which is characterized by parts of the data being real-life data and parts of the DGP or OGM being specified. [2] give a technical introduction to (parametric) simulation studies and focus on guidance for best practices in performing and reporting simulation studies (“ADEMP” criteria). [3] give a general and more applied introduction to (parametric) simulation studies.

Parametric simulation studies are a crucial tool in the performance evaluation and comparison of statistical methods since they can offer insights beyond analytical results [2, 3] and can be used to evaluate criteria that cannot be assessed on real data where the DGP and OGM are unknown [3]. An example is the bias of an estimator, which can only be evaluated if the true parameter value can be controlled within the simulation. Therefore, one of the main advantages of parametric simulation studies is the full knowledge of the parameters of the DGP and OGM within the study. Another advantage is the possibility of investigating large numbers of different scenarios which permits analyzing how the performance of methods depends on the choice of the DGP and OGM. Moreover, it is possible to generate very large numbers of datasets. One of the main disadvantages is the simplification of real-life DGPs. Often very simple DGPs and OGMs are chosen arbitrarily which then do not reflect the often complex real-life processes. This may lead to wrong conclusions [3]. The over-simplification gets even worse for high-dimensional data, as it gets harder, for example, to specify realistic distributions and correlation structures for an increasing number of variables [4].

A different approach is the use of so-called statistical Plasmodes, first introduced by [5]. [4] distinguish between statistical and biological Plasmodes depending on the procedure used for generating data. The motivation of statistical Plasmodes is to preserve a realistic data structure by resampling feature data from real-life datasets instead of using pseudo-random numbers, as is usually done in parametric simulation. At the same time, some control over the generated data is retained by generating outcome variables for the resampled feature data according to a known outcome-generating model, as in parametric simulation. So, parametric and Plasmode simulations differ in the generation of features, while outcomes are generated in the same manner. For the feature resampling, different resampling approaches can be utilized [4]. Biological Plasmodes are generated by natural biological processes, for example in a wet lab by manipulating biological samples. In this paper, only statistical Plasmodes are considered.

The main advantage of Plasmode simulations is that the DGP does not have to be specified. The resampling is claimed to ensure the generation of realistic feature data. At the same time, quantities depending on the parameters of the OGM can still be assessed in contrast to fully non-parametric simulations. However, the resampling requires suitable datasets from the true DGP of interest with not too few observations. Depending on the application, this might be a major limitation. A more detailed discussion of the advantages and disadvantages of parametric vs. Plasmode simulation is given in [4]. The authors especially point out that evidence for the often-made claim of Plasmode simulations producing more realistic data, i.e. data that is closer to the true DGP, is missing. Also, the authors emphasize the lack of studies on the effect of the often arbitrary choice of OGMs, which might affect both Plasmode and parametric simulation studies.

We aim to compare the ability of Plasmode and parametric simulation to assess the performance of statistical methods, especially concerning how misspecifications affect the results in both cases. We do this in a controlled simulation scenario so that we know both the true DGP and the true OGM, for evaluation purposes. In general, if we knew the truth, parametric simulation using this truth would be best. Since the truth is usually unknown in real-life applications, for parametric simulation researchers instead have to make assumptions about the DGP. These assumptions might deviate from the truth. Without deviations, the parametric simulation will always perform best since it accurately reflects the truth. On the other hand, when the parametric assumptions about the DGP are far from the truth, we expect Plasmode to be superior since the resampling is expected to give results that are rarely very far from the true DGP. Our goal is to determine the extent of deviation for which the parametric simulation gets worse than Plasmode. Therefore, we aim to find out

How much the DGP chosen in the parametric simulation can deviate from the truth before the parametric simulation becomes worse than Plasmode.

How deviations of the chosen OGM from the true OGM affect both parametric and Plasmode simulations.

How the choice of the resampling type affects the Plasmode simulation.

Based on the results, we are able to give guidance in which situations to choose parametric or Plasmode simulation and how to perform it.

We restrict our analysis to a simple scenario and focus on the estimation of the mean squared error (MSE) of the least squares estimator (LSE) in a linear regression model. We therefore focus on the explanatory performance of the linear model and do not consider predictive performance. Moreover, we restrict ourselves to the low-dimensional setting, i.e., at most p = 50 features. We compare how well parametric simulations with different assumptions about the DGP and the OGM, and Plasmode simulations using different resampling strategies, estimate the true MSE. So here, we check how well parametric and Plasmode simulation perform for one particular example of application. We investigate this for different true DGPs and OGMs. For comparing different methods via simulation, such a check matters because the simulation studies should closely approximate the performance of the methods under the true DGP and OGM.

The article is structured as follows. First, we describe parametric and Plasmode simulation in general and our specific simulation setup. Afterwards, we present the results of our simulations and provide recommendations for performing simulations based on our results. Finally, the results are summarized and discussed.

Methods

In the following, we briefly explain parametric and Plasmode simulation in general, pointing out, in particular, the different options for the resampling strategy in Plasmode simulations.

Parametric simulation

In parametric simulation studies, the whole data-generating process (DGP) and the outcome-generating model (OGM) have to be specified and are therefore known within the study. They are usually set up to either be as close as possible to a certain kind of data that the researcher is interested in (e.g. gene expression data) or to cover as many situations as possible, possibly including extreme situations. We focus on the first case. Given the specified DGP, a large number of feature datasets is generated using pseudo-random number generators. In all cases that we are investigating, a target variable is then generated from these features by applying the OGM. This yields a large number of datasets which are then used for applying the methods of interest. This procedure allows the researcher to evaluate the performance of the methods with respect to a metric of interest. The process of generating the datasets can be seen as mimicking the repeated collection of samples from a large population. The results can provide insights into how the methods under study perform on average for datasets that are similar to the chosen DGPs and OGMs [3]. For more details on how to design, perform, analyze, and report parametric simulation studies, refer to [2].

Plasmode simulation

In Plasmode simulation studies, no assumptions on the DGP for the feature data are made. Instead, a representative real-life dataset that resulted from the true DGP has to be at hand [4]. If only real data were used, we would have no control over the DGP and OGM in our simulation. This means that we could not estimate certain quantities (e.g. the bias of an estimator) that directly depend on the true unknown parameters [3]. To enable us to estimate the quantities that directly depend on the true parameters of the OGM (which covers most quantities of interest for the performance evaluation of models), Plasmode simulation combines the use of real feature data with a known OGM. A Plasmode simulation study then works as follows. In each iteration, a Plasmode dataset is drawn from the real-life dataset at hand. The researcher has to decide on the resampling method. Possible methods include

n out of n Bootstrap [6], i.e. drawing with replacement a dataset of the same size as the original dataset,

m out of n Bootstrap [7–9], i.e. drawing with replacement m < n observations of the original dataset,

subsampling, i.e. drawing without replacement m < n observations of the original dataset,

or other adaptations of Bootstrap like

smoothed Bootstrap [10–13], i.e. applying kernel-estimation to the empirical distribution of the original dataset and resampling from this smoothed empirical distribution,

wild Bootstrap [14], i.e. adding the standardized values of each variable scaled by a random number to the original variable, or

no resampling, i.e. using the whole dataset as it is.

A discussion of the first three options in the context of Plasmode simulation can be found in [4]. In the case of m out of n Bootstrap and subsampling, the researcher also has to decide on the number of observations to draw. For m out of n Bootstrap, there exists a data-dependent algorithm to find the optimal value of m [15]. After resampling a number of Plasmode datasets, the OGM is applied to each of the datasets to generate the outcomes. The resulting datasets can then be used for computing the performance metrics of interest like in parametric simulation. For more details, see [4].
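The first three resampling options and the no-resampling case can be sketched in a few lines. The following is a minimal Python illustration using only the standard library, not the authors' implementation; the function name `resample_rows` and the method labels are hypothetical, and a dataset is represented simply as a list of rows.

```python
import random


def resample_rows(rows, method, m=None, seed=None):
    """Return one resampled feature dataset (a list of rows).

    `method` is a hypothetical label: "n_out_of_n", "m_out_of_n",
    "subsampling" or "none". `m` is the number of rows to draw (m < n).
    """
    rng = random.Random(seed)
    n = len(rows)
    if method == "n_out_of_n":
        # Draw n rows with replacement: same size as the original dataset.
        return rng.choices(rows, k=n)
    if method in ("m_out_of_n", "subsampling"):
        if m is None or not 0 < m < n:
            raise ValueError("m must satisfy 0 < m < n")
        if method == "m_out_of_n":
            # Draw m < n rows with replacement.
            return rng.choices(rows, k=m)
        # Subsampling: draw m < n rows without replacement.
        return rng.sample(rows, k=m)
    if method == "none":
        # No resampling: use the whole dataset as it is.
        return list(rows)
    raise ValueError(f"unknown resampling method: {method}")
```

Subsampling never repeats a row, whereas both Bootstrap variants may; this is the only difference between `m_out_of_n` and `subsampling` in the sketch.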

Setup of the comparison study

In the following, we first describe the general approach and then the detailed setup of our comparison study.

General approach

We conduct our comparisons with respect to different true DGPs and OGMs. For each scenario, we calculate the true MSE of the least squares estimator (LSE). We then perform a parametric and a Plasmode simulation for estimating the MSE. For these simulations, we choose different DGPs and OGMs. The estimated MSEs resulting from these simulations are then compared to the true MSEs to assess how well the parametric and Plasmode simulation approximate the true values.

The outcomes are in all cases generated according to a linear model

$$y = X\beta + \varepsilon \tag{1}$$

for the true scenarios as well as for the parametric and Plasmode simulations. Note that the intercept is included in this model. The true MSE of the LSE depends on the number of observations n, the residual variance of ε, and the distribution of the features X. For fixed X, the LSE is unbiased and thus the MSE reduces to the variance, which is given by $\operatorname{Var}(\hat{\beta} \mid X) = \sigma^2 (X^\top X)^{-1}$, where $\sigma^2$ denotes the residual variance.
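The statement for fixed X can be made explicit with the standard textbook derivation. It assumes, beyond what is stated above, that the errors have mean zero, are uncorrelated with common variance $\sigma^2$, and that X (including the intercept column) has full column rank:

```latex
\begin{aligned}
\hat{\beta} &= (X^\top X)^{-1} X^\top y = \beta + (X^\top X)^{-1} X^\top \varepsilon, \\
\mathrm{E}[\hat{\beta} \mid X] &= \beta, \\
\operatorname{Var}(\hat{\beta} \mid X) &= (X^\top X)^{-1} X^\top (\sigma^2 I_n) X (X^\top X)^{-1} = \sigma^2 (X^\top X)^{-1}, \\
\operatorname{MSE}(\hat{\beta}_j \mid X) &= \operatorname{Var}(\hat{\beta}_j \mid X) + \bigl(\mathrm{E}[\hat{\beta}_j \mid X] - \beta_j\bigr)^2 = \sigma^2 \bigl[(X^\top X)^{-1}\bigr]_{jj}.
\end{aligned}
```

The component-wise MSE therefore depends on the features only through the diagonal of $(X^\top X)^{-1}$, which is why the feature distribution and the sample size n drive the true MSE, while the value of β does not enter.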

To define the true DGP and OGM, we have to determine

the true distribution of the features,

the true parameter vector β, and

the true distribution of the error term ε.

In the context of the parametric simulation, both DGP and OGM have to be chosen, so the distribution of the features, the coefficient vector, and the error distribution have to be specified. Inside the Plasmode simulation, only the OGM has to be chosen, so the coefficient vector and the error distribution have to be specified.

Simulation setup

In this section, we describe the true scenarios used in the simulation as well as the deviations from these true scenarios that are assumed for the parametric or Plasmode simulation.

True scenarios

We use different scenarios as our truth for the comparison. Table 1 gives an overview of these scenarios. The scenarios differ in the number of features (p) and observations (n) as well as the true correlation structure. In all scenarios, we assume that our features come from a multivariate normal distribution with mean zero and variances of one, that the true vector of coefficients (β) consists of all ones, and that the true error distribution is $N(0, 0.3^2)$.

Table 1. Parameters for true data generating processes (DGP) and outcome generating models (OGM).

In all scenarios, the true vector of coefficients is equal to $1_{p+1}$ and the error distribution is set to $\varepsilon \sim N(0, 0.3^2)$. $0_p$ denotes the p-dimensional vector of zeros.

| Name | p | n | Distribution of features |
|---|---|---|---|
| (p2n100ρ0.2) | 2 | 100 | $(X_1, X_2)^\top \sim N_2(0_2, \Sigma)$ with $\Sigma_{ij} = 0.2\ \forall i \neq j$, $\Sigma_{ii} = 1$ |
| (p2n50ρ0.2) | 2 | 50 | $(X_1, X_2)^\top \sim N_2(0_2, \Sigma)$ with $\Sigma_{ij} = 0.2\ \forall i \neq j$, $\Sigma_{ii} = 1$ |
| (p2n100ρ0.5) | 2 | 100 | $(X_1, X_2)^\top \sim N_2(0_2, \Sigma)$ with $\Sigma_{ij} = 0.5\ \forall i \neq j$, $\Sigma_{ii} = 1$ |
| (p10n100ρ0.2) | 10 | 100 | $(X_1, \dots, X_{10})^\top \sim N_{10}(0_{10}, \Sigma)$ with $\Sigma_{ij} = 0.2\ \forall i \neq j$, $\Sigma_{ii} = 1$ |
| (p10n50ρ0.2) | 10 | 50 | $(X_1, \dots, X_{10})^\top \sim N_{10}(0_{10}, \Sigma)$ with $\Sigma_{ij} = 0.2\ \forall i \neq j$, $\Sigma_{ii} = 1$ |
| (p50n100ρ0.2) | 50 | 100 | $(X_1, \dots, X_{50})^\top \sim N_{50}(0_{50}, \Sigma)$ with $\Sigma_{ij} = 0.2\ \forall i \neq j$, $\Sigma_{ii} = 1$ |
| (p50n100ρ0.2\|i−j\|) | 50 | 100 | $(X_1, \dots, X_{50})^\top \sim N_{50}(0_{50}, \Sigma)$ with block-diagonal covariance matrix $\Sigma$, where within each of 5 blocks of 10 features the pairwise covariance/correlation between the $i$th and $j$th feature of the block is $0.2^{\lvert i-j \rvert}$ and all variances are equal to 1 |
| (p50n100ρ0.5\|i−j\|) | 50 | 100 | $(X_1, \dots, X_{50})^\top \sim N_{50}(0_{50}, \Sigma)$ with block-diagonal covariance matrix $\Sigma$, where within each of 5 blocks of 10 features the pairwise covariance/correlation between the $i$th and $j$th feature of the block is $0.5^{\lvert i-j \rvert}$ and all variances are equal to 1 |
| (quake) | 3 | 100 | $(X_1, \dots, X_3)^\top \sim N_3(0_3, \Sigma)$ with covariance matrix $\Sigma$ estimated from real dataset quake [16] |
| (wine_quality) | 11 | 100 | $(X_1, \dots, X_{11})^\top \sim N_{11}(0_{11}, \Sigma)$ with covariance matrix $\Sigma$ estimated from real dataset wine_quality [17] |
| (pol) | 26 | 100 | $(X_1, \dots, X_{26})^\top \sim N_{26}(0_{26}, \Sigma)$ with covariance matrix $\Sigma$ estimated from real dataset pol [18] |
| (Yolanda) | 100 | 200 | $(X_1, \dots, X_{100})^\top \sim N_{100}(0_{100}, \Sigma)$ with covariance matrix $\Sigma$ estimated from real dataset Yolanda [19] |

We start with simple scenarios with only two features (p = 2), which are sampled from a bivariate Gaussian distribution with mean zero, variances of one, and a pairwise correlation of 0.2 or 0.5, and 50 or 100 observations. We use the same parameter settings for p = 10 except that we only look at pairwise correlations of 0.2. For p = 50, we always use 100 observations for identifiability reasons. Once again, we set all pairwise correlations to 0.2. Additionally, we use block diagonal correlation matrices with five blocks of ten features each. Within each block, the correlations are once set to $0.2^{\lvert i-j \rvert}$ and once to $0.5^{\lvert i-j \rvert}$ for all i ≠ j. Features from different blocks are assigned a correlation of 0.
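The two synthetic correlation structures can be written down compactly. The sketch below is an illustration in Python with NumPy rather than the authors' R code; the helper names `equicorrelation` and `block_decay` are hypothetical.

```python
import numpy as np


def equicorrelation(p, rho):
    """p x p matrix with unit variances and constant pairwise correlation rho."""
    return np.full((p, p), rho) + (1.0 - rho) * np.eye(p)


def block_decay(n_blocks, block_size, rho):
    """Block-diagonal matrix; within a block, entry (i, j) equals rho ** |i - j|.

    Features from different blocks get a correlation of 0.
    """
    idx = np.arange(block_size)
    block = rho ** np.abs(idx[:, None] - idx[None, :])
    return np.kron(np.eye(n_blocks), block)


# Example: 100 observations of 50 features with five blocks of ten features.
rng = np.random.default_rng(0)
sigma = block_decay(5, 10, 0.5)
X = rng.multivariate_normal(np.zeros(50), sigma, size=100)
```

Because all variances are one, covariance and correlation matrices coincide here, so the same matrix can be passed directly to the multivariate normal sampler.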

The scenarios are chosen to represent a low, a moderate, and a higher number of features for which the estimation process is still stable for 100 observations.

Moreover, we use covariance matrices estimated from real datasets to see how the simulations behave with more complicated correlation structures. We chose regression datasets that were available on OpenML [20], were used in the benchmark in [21], had at most 100 features, no constant features, no missing values and pairwise correlations with absolute values of at most 0.95. With these criteria, we ended up with four datasets: quake [16], wine_quality [17], pol [18], and Yolanda [19].

Deviations from true scenarios

We choose DGPs and OGMs for parametric and Plasmode simulation that present different kinds of deviations from the truth described in the previous section. The general structures of these deviations are listed in Table 2. A complete list of the specific parameter values that were chosen can be found in S1 Table. As a baseline, we assume the true scenario, which reflects the case that we, by chance, correctly specify all parameters in the simulations. Then we consider choices for each part of the DGP and OGM that reflect increasing deviations from the truth. For the coefficients, we use different values that are either wrong but of the same order, or that even differ by a large factor. We also include the case of assuming no effect (β = 0), which is an important special case that might be of interest in many studies. For the distribution of ε, we either only misspecify its standard deviation or misspecify the distribution as either more heavy-tailed (scaled t-distribution) or skewed (scaled and shifted $\chi^2$-distribution). As deviations from the true feature distribution, we first still assume a multivariate normal distribution but with wrong correlations, expectations, or variances. We then look at entirely wrong distributions, namely Gaussian mixture, log-normal, and Bernoulli distributions. The true correlation structure is preserved in those cases. We achieve this by generating Gaussian mixture, log-normal, and Bernoulli variables from multivariate normals and setting the covariance matrix of the underlying normals such that the resulting variables have the desired variances and covariances. For log-normal and Bernoulli variables paired with variables of the same distribution, the calculation can be found in [22, 23]. The calculation for log-normal and Bernoulli variables in combination with normal variables as well as all calculations for Gaussian mixture variables can be found in S1 Appendix.

Table 2. Deviations from true DGP and OGM for parametric and Plasmode simulation.

| Scenario name | Description |
|---|---|
| True model | Assumptions coincide with truth |
| Coefficients misspecified I | Assumed β vector instead of $1_{p+1}$ |
| Coefficients misspecified II | Assumed β vector $0.05_{p+1}$ instead of $1_{p+1}$ |
| Coefficients misspecified III | Assumed β vector $10_{p+1}$ instead of $1_{p+1}$ |
| Coefficients misspecified IV | Assumed β vector $0_{p+1}$ instead of $1_{p+1}$ |
| Error sd misspecified c | Assumed σ = c instead of σ = 0.3 for $\varepsilon \sim N(0, \sigma^2)$ |
| Correlation misspecified ρ | Assumed fixed pairwise correlation of ρ |
| Correlation misspecified ρ\|i−j\| | Assumed pairwise correlation of $\rho^{\lvert i-j \rvert}$ for $i$th and $j$th feature for p = 10, or $i$th and $j$th feature within each of 5 blocks of 10 features for p = 50, respectively |
| Coefficients (I) and correlation (ρ) misspec. | Assumed β vector $0.05_{p+1}$ instead of $1_{p+1}$ and fixed pairwise correlation of ρ instead of $\rho_{\text{true}}$ |
| Coefficients (II) and correlation (ρ) misspec. | Assumed β vector $10_{p+1}$ instead of $1_{p+1}$ and fixed pairwise correlation of ρ instead of $\rho_{\text{true}}$ |
| Error sd (0.4) and correlation (ρ) misspec. | Assumed σ = 0.4 instead of σ = 0.3 for $\varepsilon \sim N(0, \sigma^2)$ and fixed pairwise correlation of ρ instead of $\rho_{\text{true}}$ |
| Feature distribution misspecified N(0,1), N(μ,1) | Assumed expectation of μ for second half of features |
| Feature distribution misspecified N(μ,1) | Assumed expectation of μ for all features |
| Feature distribution misspecified N(0,1), N(0,σ²) | Assumed variance of $\sigma^2$ for second half of features |
| Feature distribution misspecified N(0,σ²) | Assumed variance of $\sigma^2$ for all features |
| Feature distribution misspecified N(0,1), (1 − α)N(0,1)+αN(0,10) | Assumed marginal distribution of second half of features as Gaussian mixture with 100α% outliers sampled from N(0, 10) and marginal distribution of first half of features misspecified as normal with mean 0 and variance that matches the variance $\sigma^2$ of the second half of features; $\operatorname{Cor}(X_i, X_j) = \rho_{\text{true}}$, $i \neq j$, still holds |
| Feature distribution misspecified N(μ,σ²), (1 − α)N(0,1)+αN(3,1) | Assumed marginal distribution of second half of features as Gaussian mixture with 100α% of the observations sampled from N(3, 1) and marginal distribution of first half of features misspecified as normal with mean μ and variance $\sigma^2$ chosen such that they match mean and variance of the second half of features; $\operatorname{Cor}(X_i, X_j) = \rho_{\text{true}}$, $i \neq j$, still holds |
| Feature distribution misspecified N(1.65,2.83), logN(0,1) | Assumed marginal distribution of second half of features misspecified as log-normal with parameters 0 and 1 and marginal distribution of first half of features misspecified as normal with matching mean and variance; $\operatorname{Cor}(X_i, X_j) = \rho_{\text{true}}$, $i \neq j$, still holds |
| Feature distribution misspecified Bin(π) | Assumed marginal distribution of second feature misspecified as Bernoulli with a success probability of π; $\operatorname{Cor}(X_i, X_j) = \rho_{\text{true}}$, $i \neq j$, still holds |
| Error distribution misspecified t(df) scaled | Assumed $\varepsilon \sim t_{df}$ and scaled ε to still have sd 0.3 |
| Error distribution misspecified chisq(df) scaled | Assumed $\varepsilon \sim \chi^2_{df}$ and shifted and scaled ε to still have mean 0 and sd 0.3 |
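The scaling and shifting of the misspecified error distributions in the last two rows follows from standard moments: a $t_{df}$ variable has variance df/(df − 2) for df > 2, and a $\chi^2_{df}$ variable has mean df and variance 2·df. The Python sketch below illustrates this; the function name `draw_errors` and its arguments are hypothetical, and the degrees of freedom actually used in the study are listed in S1 Table, not here.

```python
import numpy as np


def draw_errors(rng, n, kind="normal", sd=0.3, df=None):
    """Draw n errors with mean 0 and standard deviation `sd`.

    kind: "normal", "t" (heavy-tailed) or "chisq" (skewed); hypothetical labels.
    """
    if kind == "normal":
        return rng.normal(0.0, sd, size=n)
    if kind == "t":
        if df is None or df <= 2:
            raise ValueError("a finite variance requires df > 2")
        raw = rng.standard_t(df, size=n)
        # Var(t_df) = df / (df - 2), so divide by its sd and rescale.
        return raw * sd / np.sqrt(df / (df - 2.0))
    if kind == "chisq":
        if df is None or df <= 0:
            raise ValueError("df must be positive")
        raw = rng.chisquare(df, size=n)
        # E(chi2_df) = df and Var(chi2_df) = 2 * df: shift, then rescale.
        return (raw - df) * sd / np.sqrt(2.0 * df)
    raise ValueError(f"unknown error distribution: {kind}")
```

All three variants share the first two moments, so any difference in the estimated MSE between them reflects the shape of the error distribution rather than its scale.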

Simulation procedure

The overall simulation structure is described in Algorithm 1. For each true scenario, we first approximate the true MSE by drawing 25 000 000 datasets of size n from the true distribution of X with the first column being a vector of ones, corresponding to the intercept of the model. We then calculate Xβ and add random noise ε according to the true distribution of ε and define this as our outcome vector y belonging to the respective dataset. For each pair of data X and corresponding target y, we estimate $\hat{\beta}$ using least squares estimation. We then calculate the component-wise means over the replications of the simulation of $(\hat{\beta}_j - \beta_j)^2$, $j = 1, \dots, p + 1$, with p denoting the number of features, as estimates of the true component-wise MSEs. We refer to these quantities as the “true” component-wise MSEs. In each true scenario, we then perform parametric and Plasmode simulations for estimating the component-wise MSEs under the assumption that we do not know the respective true scenario.
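The approximation of the "true" component-wise MSE can be sketched as a plain Monte Carlo loop. This Python illustration is not the authors' R code: the names `lse` and `monte_carlo_mse` are hypothetical, and the number of replications is deliberately far smaller than the 25 000 000 used in the study so that the example runs quickly.

```python
import numpy as np


def lse(X, y):
    """Least squares estimate for design matrix X (first column = ones)."""
    return np.linalg.lstsq(X, y, rcond=None)[0]


def monte_carlo_mse(sigma, beta, n, n_rep, error_sd=0.3, seed=0):
    """Approximate the component-wise MSE of the LSE by simulation.

    sigma: p x p covariance matrix of the (zero-mean normal) features
    beta:  coefficient vector of length p + 1, intercept first
    """
    rng = np.random.default_rng(seed)
    p = sigma.shape[0]
    squared_errors = np.zeros(p + 1)
    for _ in range(n_rep):
        features = rng.multivariate_normal(np.zeros(p), sigma, size=n)
        X = np.column_stack([np.ones(n), features])  # intercept column first
        y = X @ beta + rng.normal(0.0, error_sd, size=n)
        squared_errors += (lse(X, y) - beta) ** 2
    return squared_errors / n_rep


# Scenario with p = 2, n = 100 and pairwise correlation 0.2.
sigma = np.array([[1.0, 0.2], [0.2, 1.0]])
true_mse = monte_carlo_mse(sigma, beta=np.ones(3), n=100, n_rep=2000)
```

With only 2000 replications the result is a rough approximation; the very large replication count in the study serves to make the Monte Carlo error of this reference value negligible.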

Algorithm 1 Structure of simulation process

Require: n > 0 (number of observations), 0 < p < n (number of features), n.mse > 0 (number of MSE estimations), n.mod > 0 (number of LSEs, i.e. model estimates, used for estimation of one estimated MSE), true DGP (distribution of features), true OGM (β, distribution of ε), assumed DGP (assumed distribution of features), assumed OGM (βa, assumed distribution of ε), type of Bootstrap, proportion π for resampling (= 1 for n out of n Bootstrap, Wild Bootstrap, and Smoothed Bootstrap)

Ensure: Error in estimated MSE for parametric simulation

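1: $\mathrm{MSE}_{\text{true}}$ ← true component-wise MSE for the LSE in the true model

2: for k = 1, …, n.mse do

3: X(k,i) ← design matrix generated with Algorithm 2 or 3 for i = 1, …, n.mod

4: for i = 1, …, n.mod do

5: ε(k,i) ← noise sampled from assumed distribution of ε

6: y(k,i) ← X(k,i) βa + ε(k,i)

7: $\hat{\beta}^{(k,i)} \leftarrow \bigl(X^{(k,i)\top} X^{(k,i)}\bigr)^{-1} X^{(k,i)\top} y^{(k,i)}$ ⊳ LSE

8: end for

9: $\widehat{\mathrm{MSE}}^{(k)} \leftarrow \frac{1}{n.mod} \sum_{i=1}^{n.mod} \bigl(\hat{\beta}^{(k,i)} - \beta_a\bigr)^2$ ⊳ component-wise estimated MSE

10: $\mathrm{error}^{(k)} \leftarrow \widehat{\mathrm{MSE}}^{(k)} - \mathrm{MSE}_{\text{true}}$ ⊳ error in estimated MSE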

11: end for

The process for data generation for parametric simulation is described in Algorithm 2. We make different assumptions on the distribution of the features (X), the values of the coefficients β, and the distribution of ε. We then generate n.mod = 1000 datasets according to these assumptions using pseudo-random numbers.

Algorithm 2 Structure of feature data generation for parametric simulation

Require: n > 0, 0 < p < n, assumed DGP, k (iteration number of Algorithm 1)

Ensure: Generated datasets

1: for i = 1, …, n.mod do ⊳ Inner Simulation

2: X(k,i) ← design matrix drawn from assumed data generating process using a pseudo-random number generator

3: end for

In some scenarios, we use parametric simulation with estimation of mean and covariance. For this, at the beginning of each simulation, one dataset of size n = 1000 is sampled from the true DGP and the mean and covariance are estimated from this dataset and used as the assumed mean and covariance of the assumed DGP. This corresponds to the case that researchers might have data at hand from which they estimate some characteristics of the DGP to incorporate them into a parametric simulation in order to perform a more realistic simulation.

The procedure for data generation for Plasmode simulation is described in Algorithm 3. Here, we have to specify the resampling method to use. As a first step for each Plasmode simulation, one dataset is drawn from the true DGP. Note that in each case, the number of observations after resampling has to match the number of observations used for parametric simulation and for the true scenario to ensure a fair comparison of methods. For a more detailed discussion of this issue, see below. We then draw n.mod = 1000 resampled datasets from our dataset according to the chosen resampling method.

Algorithm 3 Structure of feature data generation for Plasmode simulation

Require: n > 0, 0 < p < n, true DGP, type of Bootstrap, proportion π for resampling (= 1 for n out of n Bootstrap, Wild Bootstrap and Smoothed Bootstrap), k (iteration number of Algorithm 1)

Ensure: Plasmode datasets

1: $\tilde{X}$ ← design matrix with ⌈n/π⌉ rows drawn from true DGP

2: for i = 1, …, n.mod do ⊳ Inner Simulation

3: if type == “m out of n Bootstrap” or type == “n out of n Bootstrap” then

4: X(k,i) ← n rows sampled from $\tilde{X}$ with replacement

5: else

6: if type == “Subsampling” then

7: X(k,i) ← n rows sampled from $\tilde{X}$ without replacement

8: else

9: if type == “Wild Bootstrap” then

10: a ← vector of p numbers sampled from N(0, 1)

11: $X^{(k,i)}_{\cdot,1} \leftarrow 1_n$ ⊳ intercept column

12: $X^{(k,i)}_{\cdot,j} \leftarrow \tilde{X}_{\cdot,j} + a_{j-1} \cdot \bigl(\tilde{X}_{\cdot,j} - \operatorname{mean}(\tilde{X}_{\cdot,j})\bigr) / \operatorname{sd}(\tilde{X}_{\cdot,j})$, j = 2, …, p + 1

13: else

14: if type == “Smoothed Bootstrap” then

15: X(k,i) ← n rows sampled from $\tilde{X}$ with replacement + random noise from a multivariate normal distribution centered at the data points and parameterized by the corresponding bandwidth matrix estimated by Silverman's rule [24]

16: end if

17: end if

18: end if

19: end if

20: end for

We utilize the following Bootstrap versions:

m out of n Bootstrap [7–9] with resampling proportion π ∈ {0.01, 0.1, 0.5, 0.632, 0.8, 0.9}, i.e. drawing with replacement n observations out of ⌈n/π⌉ observations,

n out of n Bootstrap [6], i.e. drawing with replacement n observations out of n (special case of m out of n Bootstrap for π = 1),

Smoothed Bootstrap [10–13], i.e. drawing with replacement n observations out of the smoothed empirical distribution of n observations,

Wild Bootstrap [14], i.e. adding the standardized version of each observed feature vector scaled with a noise factor sampled from N(0, 1) to the observed feature vectors, and

subsampling with resampling proportion π ∈ {0.01, 0.1, 0.5, 0.632, 0.8, 0.9}, i.e. drawing without replacement n observations out of ⌈n/π⌉ observations,

no resampling, equivalent to subsampling with resampling proportion π = 1.

We do not determine an optimal resampling proportion, e.g. with the algorithm introduced in [15], since it takes too much time to repeat this for every dataset in the simulation. Instead, we try a range of resampling proportions.
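The feature generation for the Bootstrap, subsampling, and Wild Bootstrap variants can be sketched as follows. This is an illustrative Python version under stated assumptions, not the authors' R implementation: the names `source_size` and `plasmode_features` are hypothetical, the feature matrix is handled without the intercept column (which would be prepended afterwards), and the Smoothed Bootstrap is omitted because it relies on the kernel settings of the kernelboot package.

```python
import math

import numpy as np


def source_size(n, proportion):
    """Rows needed in the source dataset so that n = proportion * size (rounded up)."""
    # Rounding before ceil guards against floating-point noise in n / proportion.
    return math.ceil(round(n / proportion, 9))


def plasmode_features(rng, source, n, method):
    """Return one Plasmode feature matrix with n rows drawn from `source`."""
    if method == "bootstrap":
        # m out of n (or n out of n) Bootstrap: n rows with replacement.
        idx = rng.integers(0, source.shape[0], size=n)
        return source[idx]
    if method == "subsampling":
        # n rows without replacement.
        idx = rng.choice(source.shape[0], size=n, replace=False)
        return source[idx]
    if method == "wild":
        if source.shape[0] != n:
            raise ValueError("Wild Bootstrap keeps all rows, so source must have n rows")
        # One N(0, 1) noise factor per feature, applied to the standardized column.
        a = rng.normal(size=source.shape[1])
        standardized = (source - source.mean(axis=0)) / source.std(axis=0, ddof=1)
        return source + a * standardized
    raise ValueError(f"unknown resampling method: {method}")
```

For example, with n = 100 and π = 0.5 the source dataset needs 200 observations, so a smaller resampling proportion requires a correspondingly larger real dataset.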

On each dataset generated either according to the parametric or the Plasmode approach, the linear model (1) using the chosen parameters for the OGM is then applied to generate the outcome variable. From these, $\hat{\beta}$ is estimated for each dataset. The MSE is estimated as the average component-wise squared difference of the estimated and assumed coefficient vectors. One estimated MSE value corresponds to the result of one parametric or Plasmode simulation study. The whole process is repeated 100 times so we can see how much variation exists in the MSE estimation when repeating the parametric or Plasmode simulation study.

Performance evaluation

To compare the performance of parametric and Plasmode simulation, we look at their errors in MSE estimation. For each type of simulation (parametric, parametric with estimation of mean and variance, Plasmode with different resampling methods and proportions) we obtain 100 estimated MSEs for each deviation from the true DGP and OGM. We calculate the component-wise absolute errors as the differences between estimated MSEs and corresponding true MSEs in each case. Additionally, we calculate the relative errors by dividing the absolute errors by the corresponding true MSEs. We aggregate the absolute and relative errors per simulation over the coefficients by taking the arithmetic mean over the absolute component-wise values. We aggregate over the repetitions of the simulation studies by taking the median of the aggregated values. With this strategy, runs with large errors in single coefficients obtain large aggregated values, while the overall aggregated value across simulation repetitions is robust against single simulations with large aggregated errors.
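The two-stage aggregation described above can be expressed in a few lines. The following Python sketch is an illustration only; the function name `aggregate_errors` and the array layout (one row per simulation study, one column per coefficient) are assumptions.

```python
import numpy as np


def aggregate_errors(estimated, true_mse):
    """Aggregate errors in MSE estimation.

    estimated: array of shape (n_studies, p + 1) with estimated component-wise MSEs
    true_mse:  array of shape (p + 1,) with the true component-wise MSEs
    Returns (median aggregated absolute error, median aggregated relative error).
    """
    estimated = np.asarray(estimated, dtype=float)
    true_mse = np.asarray(true_mse, dtype=float)
    abs_err = estimated - true_mse   # signed difference per coefficient
    rel_err = abs_err / true_mse     # relative to the true MSE
    # Stage 1: mean of absolute values over the coefficients of one study.
    per_study_abs = np.abs(abs_err).mean(axis=1)
    per_study_rel = np.abs(rel_err).mean(axis=1)
    # Stage 2: median over the repetitions of the simulation study.
    return float(np.median(per_study_abs)), float(np.median(per_study_rel))
```

Taking the mean within a study lets a large error in a single coefficient show up in that study's value, while the median across studies keeps a single badly behaved repetition from dominating the overall result.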

We examine the errors graphically using boxplots. An example with a corresponding explanation will be shown later. Additionally, we analyze how much the assumptions in parametric simulation can deviate from the truth until the results are worse than with Plasmode simulation. Therefore, we sort the parametric deviations within each subgroup (e.g. deviation from the variance of the multivariate normal) in increasing order of the magnitude of the deviation (e.g. if the true variance is 1 and the tested values are 0.1, …, 0.99, these are ordered decreasingly) and identify the first value in this order for which the fully aggregated error for parametric simulation is larger than that for the considered type of Plasmode simulation. In this way, we can quantitatively compare parametric simulation to the different Plasmode variants. If the first value where parametric is worse than Plasmode is close to the true value, it follows that for deviations of this type, the parametric simulation is very sensitive to small deviations and we have to be very confident in our parameter settings for the DGP if we want to use parametric simulation. These are the cases where Plasmode might be superior to parametric simulation.
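The search for the first deviation at which parametric simulation becomes worse than Plasmode can be sketched as below. This is a minimal Python illustration; the function name `first_worse_deviation` is hypothetical, and measuring the magnitude of a deviation as the absolute difference from the true value is an assumption made for this sketch.

```python
def first_worse_deviation(true_value, parametric_errors, plasmode_error):
    """Find the smallest deviation at which parametric simulation is worse.

    parametric_errors: dict mapping an assumed parameter value to the fully
                       aggregated error of the parametric simulation
    plasmode_error:    fully aggregated error of the considered Plasmode variant
    Returns the assumed value, or None if parametric is never worse.
    """
    # Order the assumed values by increasing distance from the truth.
    ordered = sorted(parametric_errors, key=lambda value: abs(value - true_value))
    for value in ordered:
        if parametric_errors[value] > plasmode_error:
            return value
    return None


# Hypothetical aggregated errors for assumed variances when the truth is 1.
errors = {0.99: 0.01, 0.9: 0.05, 0.5: 0.4, 0.1: 2.0}
threshold = first_worse_deviation(1.0, errors, plasmode_error=0.1)
```

In this made-up example the returned value is 0.5, meaning that assumed variances of 0.99 and 0.9 still give a smaller aggregated error than the Plasmode variant.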

Software

All analyses are performed using R 4.2.2 [25]. We use the mvtnorm package [26, 27] to simulate data from multivariate normal distributions. For smoothed Bootstrap, the R package kernelboot [28] is used. For visualization of the results, we use the ggplot2 package [29] and ggh4x [30]. The R code and results for the simulation are available on Zenodo (https://doi.org/10.5281/zenodo.10567144, https://doi.org/10.5281/zenodo.10567059).

Results

In this section, we evaluate the results of the simulations. First, we explain the plots for one simple scenario and type of deviation. Then, the different resampling strategies for Plasmode are compared. Afterward, we discuss the results for the different types of deviations. Finally, we consider the results for correlation structures estimated from real data as well as the effect of the size of the resampled dataset.

Example

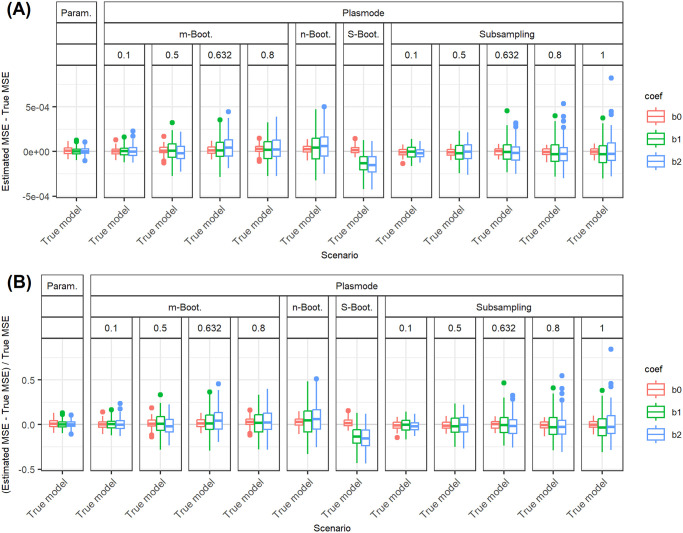

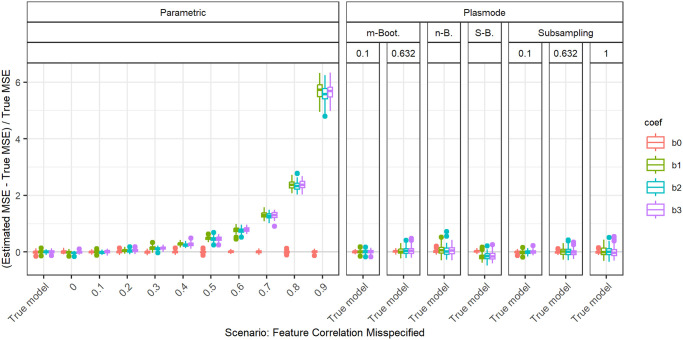

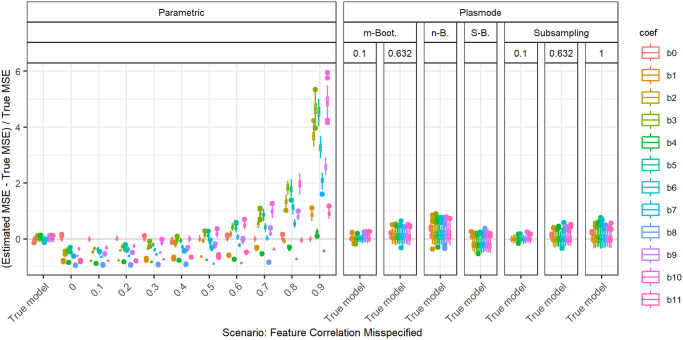

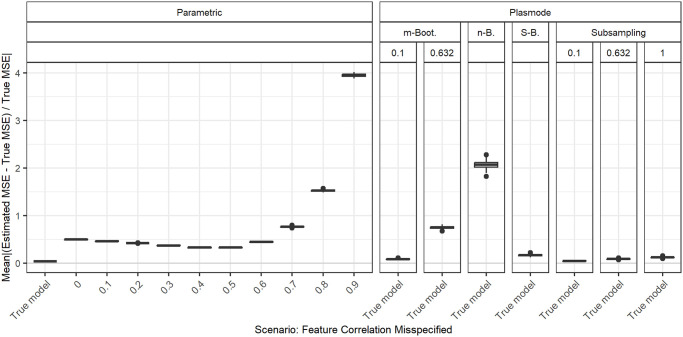

In the following, we explain the displays that we use in the subsequent sections using one concrete example. We again consider the two scenarios with p = 2, n = 100, pairwise correlation of 0.2, $\beta = \mathbf{1}_3$, and $\varepsilon \sim N(0, 0.3^2)$ or $\varepsilon \sim N(0, 3^2)$. We calculated the errors in the MSE estimation using parametric and Plasmode simulation as described in the previous section. We display the errors in different ways using boxplots. We display the absolute or relative errors for each coefficient individually as in Figs 1 and 3, and S1 Fig, or aggregated over the coefficients as in Fig 2. We use the unaggregated version in cases where the errors for different coefficients might behave differently. This is for example the case for deviations in the feature distribution of the second half of features. Otherwise, if all coefficients behave similarly, we use the aggregated version.

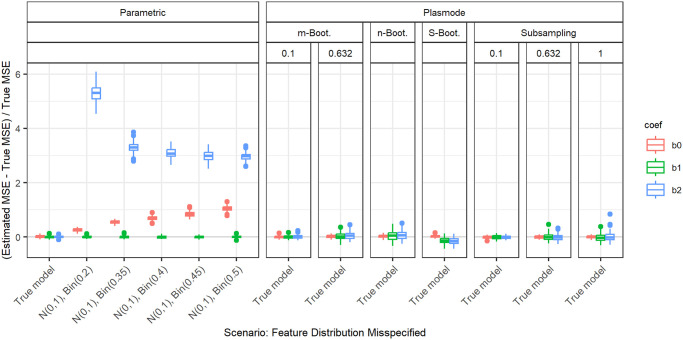

Fig 1. Relative error in MSE estimation for individual coefficients for different types of Plasmode simulation compared to parametric simulation under assumption of true DGP and OGM.

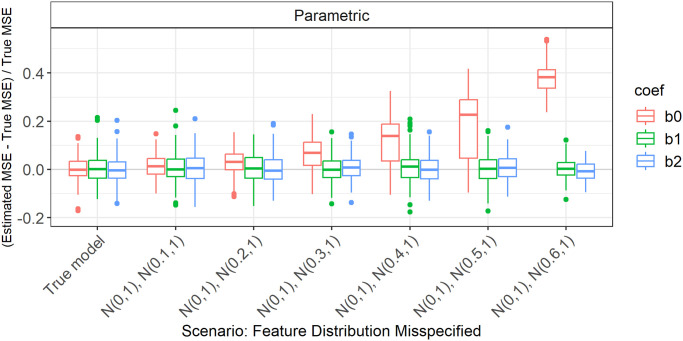

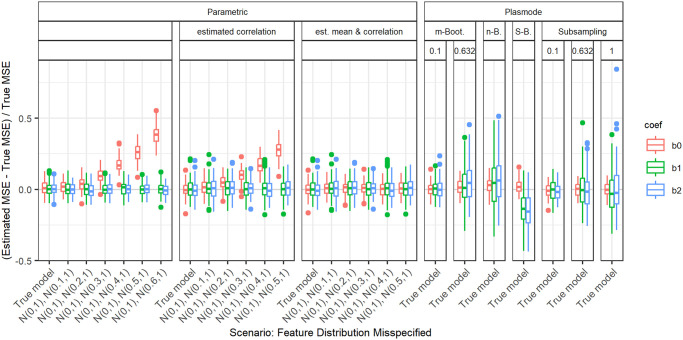

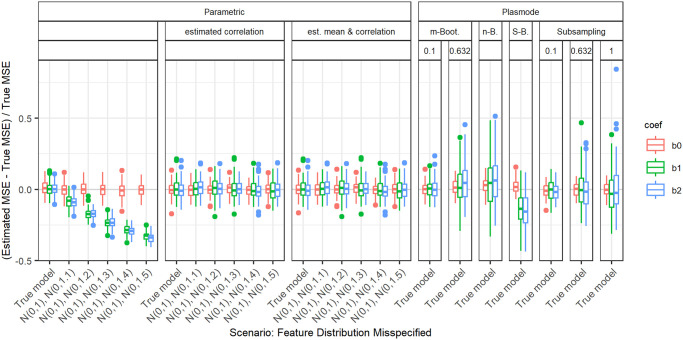

Fig 3. Absolute value of relative error in MSE estimation for individual coefficients when the assumed feature distribution in parametric simulation deviates from the true distribution, for p = 2, n = 100, $\beta = (1, 1, 1)^\top$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.2\ \forall i \neq j$.

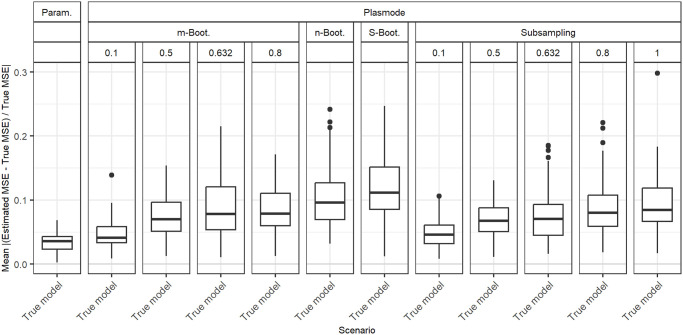

Fig 2. Absolute value of the relative error in MSE estimation averaged over individual coefficients, for different types of Plasmode simulation compared to parametric simulation under the assumption of the true DGP and OGM, for p = 2, n = 100, $\beta = (1, 1, 1)^\top$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.2\ \forall i \neq j$.

For the individual coefficients, the absolute or relative errors of the 100 repetitions of each type of simulation (parametric, different types of Plasmode) are displayed in one box per coefficient. This is done separately for the true model and each deviation. The deviations are described on the x-axis and coefficients are distinguished by differently colored boxes. The headers give information about the type of simulation used. The first row is the distinction between parametric and Plasmode simulation. The second row gives the type of Plasmode simulation. The third row gives the resampling proportion. For example in Fig 1 in the third facet, the relative errors per coefficient for Plasmode using m out of n Bootstrap with a resampling proportion of 0.5 are displayed. This corresponds to sampling with replacement 100 observations from a dataset of 200 observations for each simulation. For parametric simulation, n out of n Bootstrap and Smoothed Bootstrap, there is no subsampling proportion so this field is left empty. We leave out Wild Bootstrap in the following analyses since it produces very large outliers and is consistently outperformed by all other Bootstrap types (see e.g. Fig 4). We abbreviate m out of n Bootstrap as m-Bootstrap, n out of n Bootstrap as n-Bootstrap and Smoothed Bootstrap as S-Bootstrap. If necessary we further abbreviate Bootstrap as Boot. or B., Parametric as Param. or Prm. and Subsampling as Sub.

Fig 4. Absolute value of relative error in the MSE estimation averaged over individual coefficients for different types of Plasmode simulation compared to parametric simulation, under the assumption of the true data generating process and outcome generating model, for p = 50, n = 100, $\beta = \mathbf{1}_{51}$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.2\ \forall i \neq j$.

For the aggregated errors, we display the mean over the absolute values of the errors of the individual coefficients per deviation, i.e. the mean error per coefficient of one simulation, for the 100 repetitions of each simulation type in one box. Apart from the aggregation, the figures are constructed in the same way as for the individual coefficients.

There are two types of comparisons: we can compare the performance of different types of simulation for the true model like in Figs 1 and 2, and S1 Fig to see how well each simulation type would perform if we knew the truth. Or we can compare the performance for differently strong deviations like in Fig 3. This allows us to assess the impact of different deviations on the performance. We can also combine both displays and show the performance for one kind of deviation for all those types of simulation that are affected by it and the performance for all other simulation types for the true model only. For example, in the case of deviation from the mean of the second feature distribution, we can show the errors for parametric simulation for different amounts of deviation as in Fig 3 along with the performance of the Plasmode types under the true model as in Fig 1 or S1 Fig. We cannot misspecify the feature distribution in Plasmode simulation, so only the true model is shown. This combined version is the display that we will use for the rest of our analysis.

In general, we might be interested in both absolute and relative errors. As can be seen in S1 Fig, the absolute errors for our specific problem depend directly on the chosen error standard deviation: if the standard deviation changes by a factor of 10, e.g. here from σ = 0.3 to σ = 3, the errors change by a factor of approximately $10^2 = 100$, which can easily be checked by the theoretical relation $\mathrm{MSE}(\hat{\beta}_j \mid X) = \mathrm{Var}(\hat{\beta}_j \mid X) = \sigma^2 [(X^\top X)^{-1}]_{jj}$, using that the LSE is unbiased for fixed X. The relative errors, on the other hand, are independent of σ since the factor affects both the absolute error and the true MSE, by which the absolute error is divided, in the same way. This is for example demonstrated in Fig 1. Therefore, we will only display the relative version for the rest of this analysis since the absolute values could be scaled to be arbitrarily small or large by choosing the error variance accordingly. We will also restrict our analysis to the case σ = 0.3, since this leads to more stable simulations than σ = 3, as the latter corresponds to an extremely low signal-to-noise ratio.
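The scaling argument can be written out in one line. The following is a sketch for fixed X, under the assumption that the estimated MSE scales with the assumed error variance in the same way as the true MSE does; the factor $c$ and the notation $\widehat{\mathrm{MSE}}_j$ for the simulation-based estimate are introduced here for illustration only.

$$
\mathrm{MSE}_{c\sigma}(\hat{\beta}_j \mid X) = (c\sigma)^2 \left[(X^\top X)^{-1}\right]_{jj} = c^2\, \mathrm{MSE}_{\sigma}(\hat{\beta}_j \mid X),
\qquad
\frac{c^2\, \widehat{\mathrm{MSE}}_j - c^2\, \mathrm{MSE}_j}{c^2\, \mathrm{MSE}_j} = \frac{\widehat{\mathrm{MSE}}_j - \mathrm{MSE}_j}{\mathrm{MSE}_j}.
$$

With $c = 10$ the absolute error is multiplied by $c^2 = 100$, while the relative error is unchanged.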

Comparison of different Plasmode types and resampling proportions

Fig 4 shows the aggregated relative errors for p = 50 with fixed pairwise correlations of 0.2 for the true model for all types of simulation. This example confirms that overall, Plasmode using Wild Bootstrap performs worst. All values for its relative errors lie outside the range of all other resampling types. This is similar for other scenarios, so we do not show the results for Wild Bootstrap in any other plot. Among the other simulation types, Plasmode using the n out of n Bootstrap performs worst with mean relative errors of around 2.5 and also relatively high variation. m out of n Bootstrap and subsampling perform better with decreasing resampling proportion, both in terms of the median aggregated error and in terms of variability, i.e. the larger the dataset from which the 100 observations are sampled, the lower the variability. m out of n Bootstrap converges towards n out of n Bootstrap for increasing subsampling proportions. Except for very low subsampling proportions (0.1 and 0.01), Bootstrap performs worse than subsampling both with regard to median aggregated error and variability. It is interesting to note that no resampling (i.e. subsampling with a subsampling rate of one), which means using the same feature data for the whole simulation and only sampling new observations of the target, still outperforms m out of n Bootstrap with subsampling proportions from 0.5 on as well as the Smoothed, n out of n, and Wild Bootstrap. Smoothed Bootstrap performs worse than all subsampling versions, but better than the m out of n Bootstrap for subsampling proportions from 0.5 on. With Smoothed Bootstrap, the true MSE of the slope coefficients is consistently underestimated under the true model (see e.g. Fig 1). Subsampling and m out of n Bootstrap are indistinguishable for very low subsampling proportions since the impact of duplicate observations decreases with increasing size of the dataset from which we resample.
For a proportion of 0.01, both these approaches perform as well as the parametric simulation. These results reflect what we have also seen in all other scenarios, although for lower p, the differences between the simulation types become very small. It should be noted that in these simulations, subsampling and m out of n Bootstrap require a larger dataset to resample from for lower resampling proportions. This might give them an advantage. Due to its very poor performance, we will exclude the Wild Bootstrap from now on. For more clarity, we will also reduce the values of resampling proportions to 0.1 and 0.632 for m out of n Bootstrap and to 0.1, 0.632, and 1 for subsampling. The numbers were chosen to represent a relatively low and a relatively high resampling proportion. Moreover, 0.632 has been used in Plasmode simulations, motivated by the expected proportion of non-duplicated observations for n out of n Bootstrap [31].
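To make the resampling schemes and the origin of the value 0.632 concrete, here is a small sketch using only the Python standard library. It resamples row indices of the feature data; all function names are hypothetical and the study itself uses R.

```python
import math
import random

def subsample(indices, m, rng):
    """Subsampling: draw m observations without replacement."""
    return rng.sample(indices, m)

def m_out_of_n_bootstrap(indices, m, rng):
    """m out of n Bootstrap: draw m observations with replacement.
    With m == len(indices) this is the n out of n Bootstrap."""
    return rng.choices(indices, k=m)

def source_size(n, proportion):
    """Size of the dataset to resample from so that n observations
    correspond to the given resampling proportion."""
    return round(n / proportion)

def expected_unique_fraction(n):
    """Expected share of distinct observations in an n out of n
    Bootstrap sample; tends to 1 - 1/e (about 0.632) as n grows."""
    return 1 - (1 - 1 / n) ** n

rng = random.Random(1)
pool = list(range(source_size(100, 0.632)))  # 158 source observations
print(len(set(subsample(pool, 100, rng))))   # no duplicates possible
print(len(set(m_out_of_n_bootstrap(pool, 100, rng))))  # duplicates likely
print(expected_unique_fraction(100), 1 - math.exp(-1))
```

The helper `source_size` reflects the design choice discussed above: keeping the simulated sample size fixed at n means that lower resampling proportions require a larger dataset to resample from.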

Deviations from true feature distribution

We will now take a look at the different deviations from the true feature distribution. These only affect the parametric simulation. Since in all cases, different coefficients are affected differently, we always show the individual errors per coefficient. We focus on the case p = 2 and n = 100 since for this we can still display the errors for individual coefficients in a clear manner. The results can be transferred to higher numbers of features or lower numbers of observations. As expected, the absolute values of the errors are larger for higher values of p or smaller values of n, but the qualitative results are the same. In all cases, we only display the range of deviations that is relevant to the comparison of parametric and Plasmode simulation.

Gaussian with wrong expectation

Fig 5 shows the relative errors in case of deviations from the expectation of the second feature (Feature distribution misspecified N(0,1), N(μ,1), cf. Table 2). We can observe that the second coefficient stays unaffected while the errors for the intercept increase with increasing deviations from the true mean. This result is to be expected, as can be seen by reparametrization. If the truth is $X_2 \sim N(0, 1)$ and we assume $\tilde{X}_2 \sim N(\mu, 1)$, we can rewrite the resulting linear model using $\tilde{X}_2 = X_2 + \mu$ instead of $X_2$ as

$$
Y = \beta_0 + \beta_1 X_1 + \beta_2 \tilde{X}_2 + \varepsilon = (\beta_0 + \beta_2 \mu) + \beta_1 X_1 + \beta_2 X_2 + \varepsilon .
$$

As μ > 0 and $\beta_2 > 0$ in our case, it holds $\beta_0 + \beta_2 \mu > \beta_0$.

Fig 5. Relative error in MSE estimation for individual coefficients when the assumed mean of the marginal distribution of the second feature in parametric simulation deviates from the true mean, for p = 2, n = 100, $\beta = (1, 1, 1)^\top$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.2\ \forall i \neq j$.

N(0,1), N(μ,1) denotes that the first feature is generated from a standard normal (truth), and the second feature is generated from a normal distribution with mean μ instead (deviation).

The errors in the intercept can be prevented by estimating the mean using a dataset sampled from the true DGP. This leads to slightly increased variance in the errors of the parametric simulation, but stable median errors that are close to zero for all coefficients.

Gaussian with wrong variance

Fig 6 shows the relative errors for deviations from the true variance of the second feature. We see that both slope coefficients are affected. The true MSE is underestimated by the simulation and this underestimation gets worse for increasing (misspecified) variance of the second feature.

Fig 6. Relative error in MSE estimation for individual coefficients when the assumed variance of the marginal distribution of the second feature in parametric simulation deviates from the true variance, for p = 2, n = 100, $\beta = (1, 1, 1)^\top$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.2\ \forall i \neq j$.

N(0,1), N(0,σ²) denotes that the first feature is generated from a standard normal (truth), and the second feature is generated from a normal distribution with variance σ² instead (deviation).

Again, this behavior can be prevented by estimating the covariance matrix from a dataset from the true DGP at the cost of slightly increased variation. For estimation of the covariance matrix, the dataset from the true DGP must have sufficiently many observations. Here, we used 1000 observations which is sufficient for p = 2, as well as for p = 50. Smaller numbers of observations are insufficient for p = 50 as can be seen in S2 Fig. When increasing the variance of the second feature, the error in MSE converges to an upper bound corresponding to the true MSE, since the estimated MSE converges to zero for increasing variances. This can lead to problems later on, when we look for the first deviation where the aggregated error for parametric simulation exceeds the error for Plasmode simulation. For low p, the upper bound of the error for increasing the feature variance in parametric simulation is still larger than the errors obtained with Plasmode simulation. However, for large p, where Plasmode performs worse, the error reached even with very high values for the variance of the second half of features is smaller than that of some Plasmode types. This is demonstrated in S3 Fig for the case of p = 50. There, we show the mean of the relative errors of the coefficients per simulation run. In that case, we do not take the absolute values before averaging, to demonstrate the direction of the errors. In the present case, this is no problem since either the MSEs for all coefficients are overestimated or all are underestimated, so there is no risk of the errors of different coefficients cancelling out in the mean. Decreasing instead of increasing the variance of the second half of features leads to an overestimation of the true MSE and this overestimation is unbounded. Therefore, in settings where the upper bound does not exceed the errors of all Plasmode types, we use decreasing instead of increasing variances, see e.g. S4 Fig.

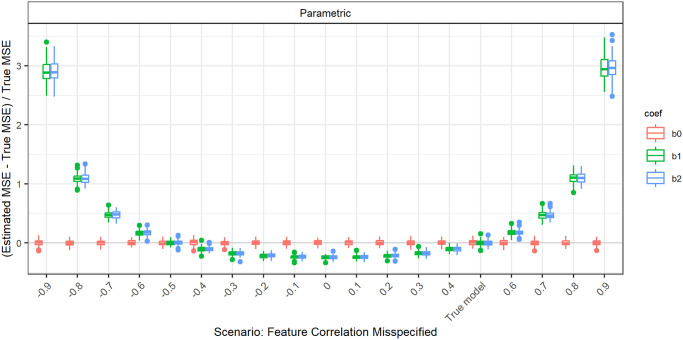

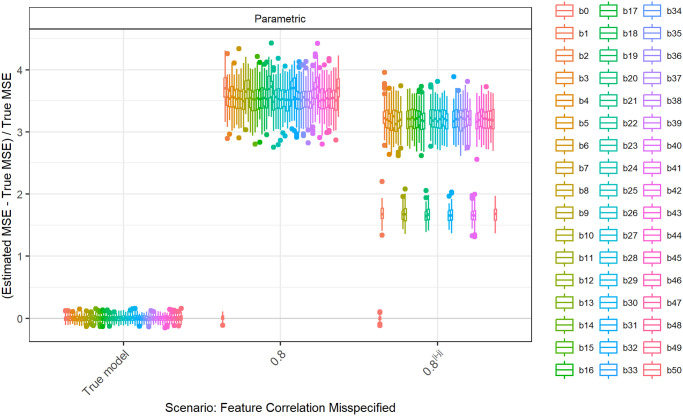

Gaussian with wrong correlations

The overall influence of misspecifying the pairwise correlations of the features is more easily demonstrated when the true pairwise correlations are 0.5 instead of 0.2. The relative errors for parametric simulation in this case are shown in Fig 7.

Fig 7. Relative error in MSE estimation for individual coefficients when the assumed correlation of the features in parametric simulation deviates from true correlation, for p = 2, n = 100, $\beta = (1, 1, 1)^\top$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.5\ \forall i \neq j$.

The intercept is unaffected when misspecifying the correlation. For the errors in the slopes, we observe a parabolic shape that intersects with zero at the true correlation of 0.5 and at −0.5. For the MSE estimation, the sign of the correlation does not seem to have any influence, only the absolute value, as the parabolic shape is symmetrical around zero. When the absolute value of the true correlation is overestimated, the true MSE is overestimated. When it is underestimated, the true MSE is underestimated.

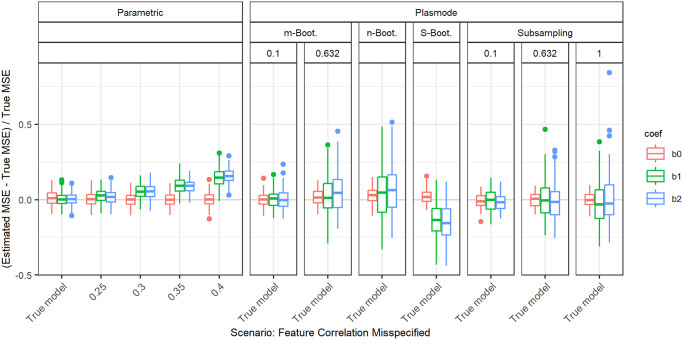

This pattern is also observed for a true correlation of 0.2 (Fig 8). For the comparison of parametric and Plasmode simulation, we concentrate on assuming a correlation that is higher than the true correlation, since for these deviations, the errors are monotonically increasing. This can for example be seen in the comparison for true fixed pairwise correlations of 0.2 and p = 2, n = 100 in Fig 8.

Fig 8. Relative error in MSE estimation for individual coefficients when the assumed correlation of the features in parametric simulation deviates from true correlation, for p = 2, n = 100, $\beta = (1, 1, 1)^\top$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.2\ \forall i \neq j$.

The observed shape is plausible from a theoretical point of view. The MSE of the LSE given X is equal to its variance, as it is unbiased. This variance is given by the diagonal of $\sigma^2 (X^\top X)^{-1}$. For X drawn from a multivariate normal distribution, i.e. ignoring the intercept term, $(X^\top X)^{-1}$ follows an inverse Wishart distribution. Its expectation is proportional to the inverse covariance matrix $\Sigma^{-1}$ of this multivariate normal. When explicitly calculating the diagonal values of $\Sigma^{-1}$ in the case of fixed pairwise correlations of ρ, we can see that this expectation depends quadratically on ρ, which matches the observed form.
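For p = 2 and unit variances, the calculation can be made explicit. The following is a sketch under the simplifications named above (no intercept, and the same proportionality constant of the inverse Wishart expectation for the assumed and the true model because n and p are equal); the symbol $\tilde{\rho}$ for the assumed correlation is introduced here for illustration.

$$
\Sigma = \begin{pmatrix} 1 & \rho \\ \rho & 1 \end{pmatrix},
\qquad
\Sigma^{-1} = \frac{1}{1 - \rho^2} \begin{pmatrix} 1 & -\rho \\ -\rho & 1 \end{pmatrix},
\qquad
\left[\Sigma^{-1}\right]_{jj} = \frac{1}{1 - \rho^2}.
$$

If the simulation assumes $\tilde{\rho}$ while the truth is $\rho$, the approximate relative error of the slope MSE is

$$
\frac{\frac{1}{1 - \tilde{\rho}^2} - \frac{1}{1 - \rho^2}}{\frac{1}{1 - \rho^2}} = \frac{1 - \rho^2}{1 - \tilde{\rho}^2} - 1 .
$$

This expression depends on $\tilde{\rho}$ only through $\tilde{\rho}^2$, is zero exactly at $\tilde{\rho} = \pm\rho$, is positive for $|\tilde{\rho}| > |\rho|$, and is negative for $|\tilde{\rho}| < |\rho|$, in line with the symmetric shape and the directions of over- and underestimation described for Fig 7.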

When the true correlation matrix has a block structure, we observe lower errors for the coefficients at the margins of the blocks if the value of the correlations but not their structure is misspecified (Fig 9). Again, this can be derived theoretically for the very simple case described above when inserting the block diagonal structure for Σ.

Fig 9. Relative error in MSE estimation for individual coefficients when the assumed correlation of the features in parametric simulation deviates from true correlation, for p = 2, n = 100, $\beta = (1, 1, 1)^\top$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.2^{|i-j|}$ for the $i$th and $j$th feature within each of the 5 blocks.

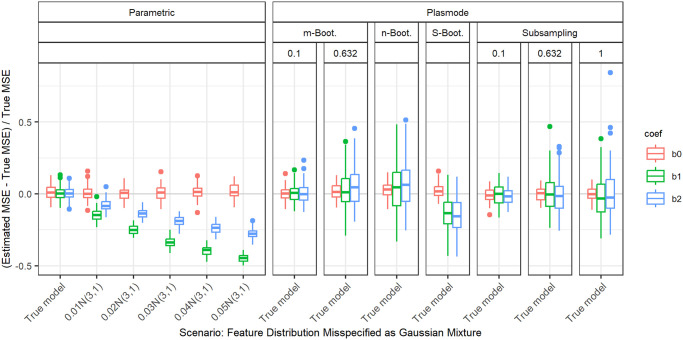

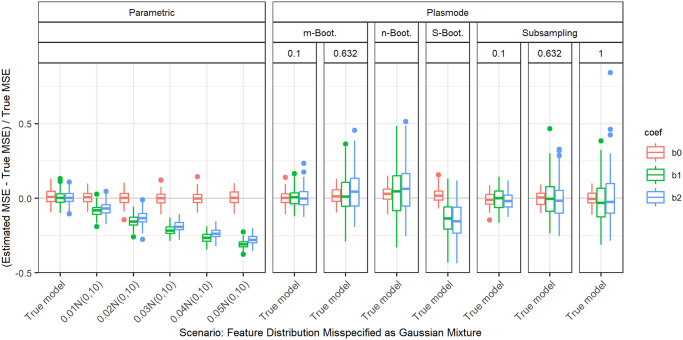

Gaussian mixture

Next, we use two different versions of Gaussian mixtures as feature distributions for the second half of the features. With this, not only the parameter but the whole shape of the distribution is altered. For the first type of Gaussian mixture, a proportion of α of the observations stems from a normal distribution with mean 3 and variance 1. This yields a bimodal distribution. For the second type of Gaussian mixture, a proportion of α of the observations stems from a normal distribution with mean 0 and variance 10. This represents a contamination model with outliers. In both cases, the remaining proportion of 1 − α stems from the standard normal, in agreement with the true distribution. We always set the marginal distribution of the first feature to a normal that has the same mean and variance as the Gaussian mixture for the second marginal distribution and successively increase the proportion in the mixing distribution. This enables us to separate the influence of the change in expectation and variance of the distribution from the effect of the bimodality and outliers.
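Matching the normal distribution of the first feature to the mixture requires the mean and variance of the mixture. A minimal sketch of this computation follows; the function name and the chosen α are illustrative assumptions, while the component parameters are those stated above.

```python
def mixture_moments(alpha, mean_alt, var_alt, mean_base=0.0, var_base=1.0):
    """Mean and variance of a two-component Gaussian mixture in which a
    proportion alpha comes from N(mean_alt, var_alt) and the remaining
    1 - alpha from N(mean_base, var_base)."""
    mean = alpha * mean_alt + (1 - alpha) * mean_base
    # Second moment of each component is variance + mean^2.
    second = (alpha * (var_alt + mean_alt ** 2)
              + (1 - alpha) * (var_base + mean_base ** 2))
    return mean, second - mean ** 2

alpha = 0.1  # illustrative mixing proportion
# Bimodal case: alpha * N(3, 1) + (1 - alpha) * N(0, 1)
print(mixture_moments(alpha, mean_alt=3.0, var_alt=1.0))
# Contamination case: alpha * N(0, 10) + (1 - alpha) * N(0, 1)
print(mixture_moments(alpha, mean_alt=0.0, var_alt=10.0))
```

In closed form, the bimodal mixture has mean 3α and variance 1 + 9α(1 − α), and the contamination model has mean 0 and variance 1 + 9α, so both moments move away from the standard normal as α grows.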

In the bimodal case (Fig 10) we see that with an increasing proportion of observations from the N(3, 1) distribution, the underestimation of the MSE for the corresponding second coefficient also increases. It is still less pronounced than for the first coefficient which corresponds to the normal with wrong expectation and variance. This might be due to the fact that most of the observations in the mixture distribution belong to the true distribution. In the case of a normal with wrong expectation and variance, all observations come from a distribution that differs from the true one.

Fig 10. Relative error in MSE estimation for individual coefficients when the assumed marginal distribution of the second feature in parametric simulation is misspecified as Gaussian mixture with increasing proportion of data drawn from Gaussian with different expectations (bimodal distribution).

The mean and the variance of the marginal normal distribution of the first feature are set to match those of the second. The mixing proportion is given on the x-axis.

For the contamination model (Fig 11) we observe the same behavior, but the differences between the coefficients are smaller there.

Fig 11. Relative error in MSE estimation for individual coefficients when the assumed marginal distribution of the second feature in parametric simulation is misspecified as Gaussian mixture with increasing proportion of data drawn from Gaussian with different variance (contaminated distribution), for p = 2, n = 100, $\beta = (1, 1, 1)^\top$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.2\ \forall i \neq j$.

The mean and the variance of the marginal normal distribution of the first feature are set to match those of the second. The mixing proportion is given on the x-axis.

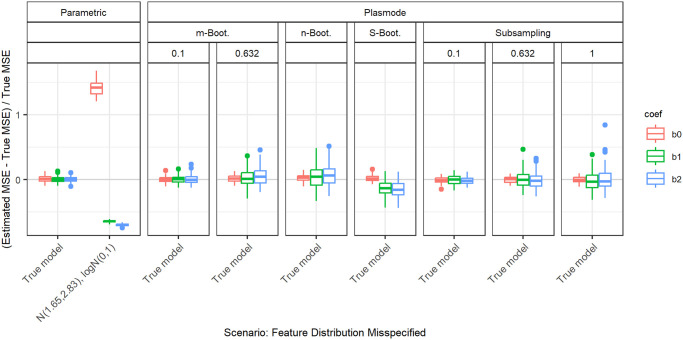

Log-normal

Fig 12 shows the relative errors for the individual coefficients when the distribution of the second feature is misspecified as log-normal and the distribution of the first feature is misspecified as a normal with matching mean and variance. There is a large overestimation of the MSE for the intercept, while the MSEs for the other coefficients are underestimated. The underestimation is slightly worse for the second coefficient than for the first, so the additional skewness of the log-normal leads to worse MSE estimation compared to a normal with the same mean and variance. The errors in all coefficients for this deviation are considerably higher than the ones of any Plasmode variant that is compared here.

Fig 12. Relative error in MSE estimation for individual coefficients when the assumed marginal distribution of the second feature in parametric simulation is misspecified as log-normal, for p = 2, n = 100, $\beta = (1, 1, 1)^\top$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.2\ \forall i \neq j$.

The mean and the variance of the marginal normal distribution of the first feature are set to match those of the second.

Bernoulli

Fig 13 shows the relative errors for the individual coefficients when the distribution of the second feature is misspecified as Bernoulli and the distribution of the first feature is correctly specified as a standard normal. We observe an increasing overestimation of the MSE for the intercept with increasing success probabilities. The MSE for the first coefficient is unaffected. The MSE for the coefficient belonging to the binary feature is also clearly overestimated where the overestimation decreases towards success probabilities of 0.5. The errors for the intercept and the second coefficient for this deviation are considerably higher than the ones of any Plasmode variant that is compared here.

Fig 13. Relative error in MSE estimation for individual coefficients when the assumed marginal distribution of the second feature in parametric simulation is misspecified as Bernoulli with different success probabilities, for p = 2, n = 100, $\beta = (1, 1, 1)^\top$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.2\ \forall i \neq j$.

Deviations from true coefficients

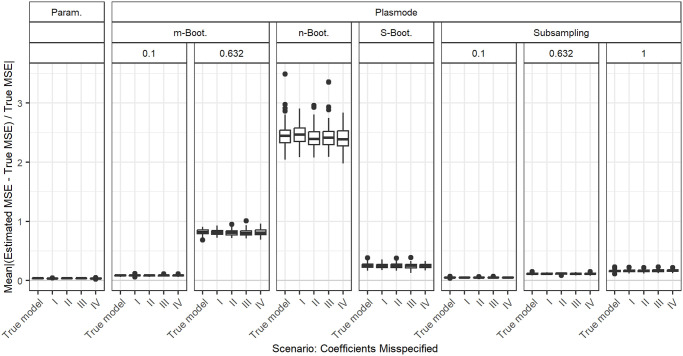

Fig 14 shows the aggregated relative errors in MSE estimation for p = 50 and fixed correlations of 0.2 for misspecifications of the coefficient vector β. Since the specification of the coefficient vector is part of the OGM, this concerns all types of simulations. For each simulation type, the errors for the misspecified coefficients do not differ from the errors for the true model. Therefore, we conclude that the assumed values for the coefficients do not affect the simulation results. The theoretical MSE formula for given X is also only dependent on σ and X, so independent of β.

Fig 14. Absolute value of relative error in MSE estimation averaged over individual coefficients when the assumed coefficients in parametric and Plasmode simulation are misspecified, for p = 50, n = 100, $\beta = \mathbf{1}_{51}$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.2\ \forall i \neq j$, $\beta_{I} = (0, 0.02, \ldots, 1)^\top$, $\beta_{II} = 0.05 \cdot \mathbf{1}_{51}$, $\beta_{III} = 10 \cdot \mathbf{1}_{51}$, $\beta_{IV} = \mathbf{0}_{51}$.

Large outliers for n out of n Bootstrap are not displayed.

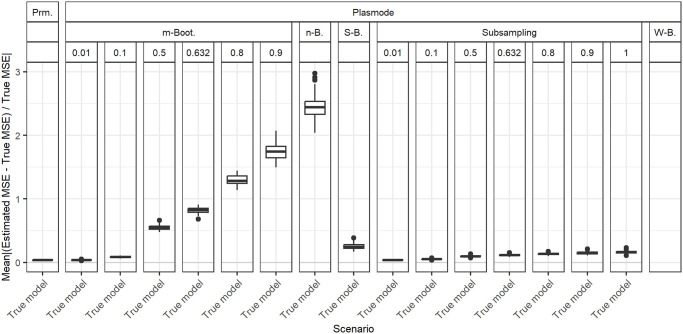

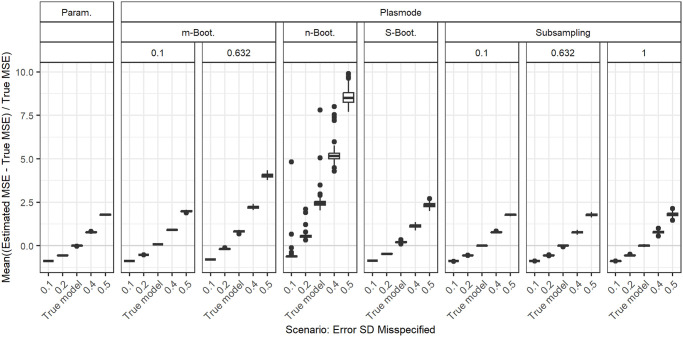

Deviations from true error variance

In Fig 15, the aggregated relative errors in MSE estimation for p = 50 and fixed correlations of 0.2 for misspecifications of the standard deviation of the error term ε are shown. Here, we use the relative errors directly without taking the absolute value to demonstrate under- and over-estimation. This again concerns all types of simulation. In general, for too small error standard deviations, the true MSE is underestimated, and for too large error standard deviations, the true MSE is overestimated. This pattern is visible for nearly all types of simulations. For m out of n Bootstrap with large resampling proportions as well as for n out of n Bootstrap, the MSE is overestimated even for the true model, and the errors for other values of the error standard deviation are shifted up accordingly. This leads to values closest to zero for too small error standard deviations. In all cases, the variability of the errors increases with increasing error standard deviation. We observe the same ordering that has already resulted for the true model (see Fig 4) when comparing the errors from different simulation types for misspecified error standard deviations.

Fig 15. Absolute value of relative error in MSE estimation averaged over individual coefficients when the assumed error variance in parametric and Plasmode simulation is misspecified, for p = 50, n = 100, $\beta = \mathbf{1}_{51}$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.2\ \forall i \neq j$.

Large outliers for n out of n Bootstrap are not displayed.

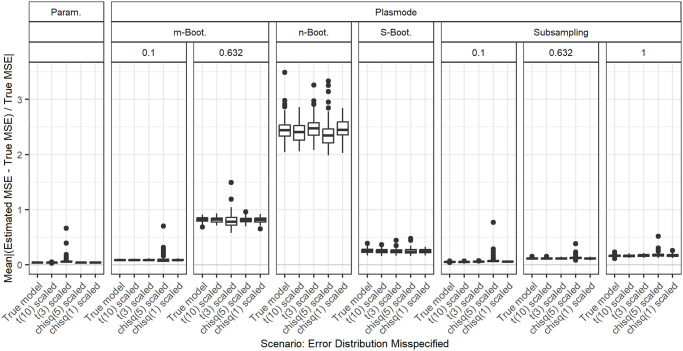

Deviations from true error distribution

In Fig 16, the aggregated relative errors in MSE estimation for p = 50 and fixed correlations of 0.2 for misspecifications of the distribution of the error term are shown. There are two types of misspecifications that we compare. We use t-distributed errors as an example of a heavier-tailed distribution and χ²-distributed errors as an example of a skewed distribution. Both are scaled and shifted in a way that the errors still have zero expectation and a standard deviation of 0.3. Overall, the distribution of the errors does not seem to have any influence on the error in MSE estimation as long as the error standard deviation and zero mean are preserved.
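The shifting and scaling of the error distributions can be sketched as follows, using that a t distribution with ν > 2 degrees of freedom has mean 0 and variance ν/(ν − 2), and a χ² distribution with k degrees of freedom has mean k and variance 2k. The degrees of freedom used below (5 and 3) and the function names are assumptions chosen for illustration; the values used in the study are not stated in this section.

```python
import math
import random
from statistics import mean, pstdev

def scaled_t_error(df, sd, rng):
    """t-distributed error rescaled to mean 0 and standard deviation sd.
    Requires df > 2 so that the variance df / (df - 2) exists."""
    z = rng.gauss(0.0, 1.0)
    v = rng.gammavariate(df / 2, 2.0)  # chi-square with df degrees of freedom
    t = z / math.sqrt(v / df)
    return sd * t / math.sqrt(df / (df - 2))

def scaled_chisq_error(df, sd, rng):
    """Chi-square error shifted by its mean df and scaled by its standard
    deviation sqrt(2 * df), then multiplied by the target sd."""
    x = rng.gammavariate(df / 2, 2.0)
    return sd * (x - df) / math.sqrt(2 * df)

rng = random.Random(42)
t_draws = [scaled_t_error(5, 0.3, rng) for _ in range(20000)]
chi_draws = [scaled_chisq_error(3, 0.3, rng) for _ in range(20000)]
print(mean(t_draws), pstdev(t_draws))
print(mean(chi_draws), pstdev(chi_draws))
```

Both empirical means should be close to 0 and both empirical standard deviations close to 0.3, while the shapes (heavy tails, skewness) differ from the normal distribution.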

Fig 16. Absolute value of relative error in MSE estimation averaged over individual coefficients when the assumed error distributions in parametric and Plasmode simulation are misspecified, for p = 50, n = 100, $\beta = \mathbf{1}_{51}$, σ = 0.3, $\mathrm{Cor}(X_i, X_j) = 0.2\ \forall i \neq j$.

Large outliers for n out of n Bootstrap are not displayed.

True DGP: Correlation estimated from real data

We now analyze the results for the scenarios where the true correlation matrix is estimated from a real dataset. In the following, we only discuss the results that differ from those for the simpler correlation structures we looked at before. These are all deviations that do not alter the correlation matrix. For deviations from the true correlations, it gets more complicated. In the case of small correlations which differ little, the results are still similar to those that we saw before. For example, Fig 17 shows the results for the correlation estimated from the dataset quake. The true pairwise correlations are $\mathrm{Cor}(X_1, X_2) = -0.1286$, $\mathrm{Cor}(X_1, X_3) = -0.0151$, and $\mathrm{Cor}(X_2, X_3) = 0.1353$. The results look similar to those we saw before for fixed correlations of 0.2. On the other hand, for the other datasets, the estimated pairwise correlations show higher variation, which means that no fixed value can approximate all correlations well simultaneously. This is for example clearly visible in Fig 18 for the correlation matrix estimated from the dataset wine_quality. For each choice of fixed pairwise correlation, there are some coefficients with very large relative errors. This can also lead to errors showing a pattern that differs from the parabolic shape we observed before (Fig 7), as can be seen in Fig 19 for the dataset Yolanda. For those cases where no constant correlation approximates all real correlations well, many of the Plasmode variants outperform parametric simulation for all assumed oversimplified correlation structures. A possible cure for parametric simulation would be to estimate the correlation structure from real data, which in this case corresponds to the true model. Overall, assuming some simple correlation structure, as is often done in parametric simulations, might lead to high errors in the estimation of the MSE in cases where the true correlation structure is more complicated.
Correctly guessing this correlation structure is highly unlikely, and it might even be impossible to specify complicated correlation structures in high-dimensional settings.

Fig 17. Absolute value of relative error in MSE estimation for individual coefficients when the assumed feature correlation matrix in parametric simulation is misspecified.

True correlation matrix is estimated from the benchmark dataset quake (p = 3, n = 100, $\beta = \mathbf{1}_{4}$, σ = 0.3).

Fig 18. Absolute value of relative error in MSE estimation for individual coefficients when the assumed feature correlation matrix in parametric simulation is misspecified.

True correlation matrix is estimated from benchmark dataset wine_quality (p = 11, n = 100, $\beta = \mathbf{1}_{12}$, σ = 0.3).

Fig 19. Absolute value of relative error in MSE estimation averaged over individual coefficients when the assumed feature correlation matrix in parametric simulation is misspecified.

True correlation matrix is estimated from benchmark dataset Yolanda (p = 100, n = 200, $\beta = \mathbf{1}_{101}$, σ = 0.3).

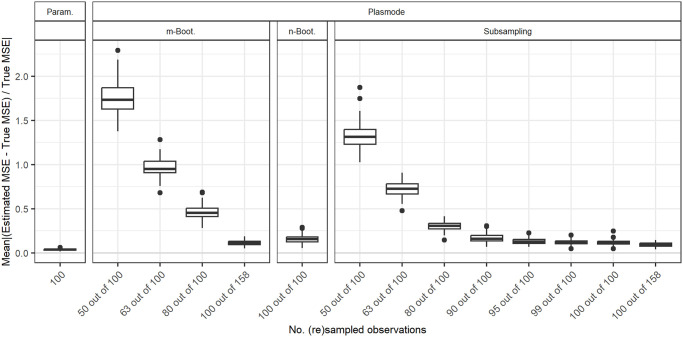

Size of resampled datasets

Until now, we have always compared simulations that use the same number of observations, which leads to differently sized datasets from which the Plasmode data is resampled. This might seem unintuitive, but it is necessary to ensure a fairer comparison of the simulation methods, since the true MSE that the estimates are compared to is monotonically decreasing in the number of observations in the dataset. Therefore, if we set the size of the dataset that we are resampling from to 100 and resample smaller datasets from this, the MSE will always be overestimated, even for the true model. This means that if we want to estimate the MSE for datasets of a certain size n, we have to use datasets of that exact size in our simulations. However, it might be unrealistic that we have a dataset of the correct size at hand to resample from for our simulation. For example, if we use simulation to estimate a quantity that cannot be estimated directly from the data since it depends on unknown parameters (e.g. the bias of an estimator), we might have a concrete dataset at hand for which we want to estimate this quantity. In this case, Plasmode would be a natural choice, and since the number of observations is limited, we might use resampled datasets of smaller size to estimate the quantity for the whole dataset. We now discuss the results for this case for p = 10 for the true model. For p = 2, differences between the resampling methods are very small anyway. For p = 50, it will be hard to differentiate between the errors occurring due to the differently sized datasets and the errors caused by approaching the boundary of identifiability. Fig 20 shows the results for the different Bootstrap methods compared to parametric simulation for differing sizes of datasets resampled from a dataset of size 100. For comparison, the case of resampling 100 out of 158 observations, which has been used in the analysis so far for a resampling proportion of 0.632, is also included.
The estimated MSEs are compared to the true MSE for n = 100 in all cases. Higher errors are observed for smaller sizes of the resampled dataset. The smallest errors are observed for subsampling with the subsample size approaching the number of observations in the dataset. So in the case where the number of available observations is limited to the number of observations that we are interested in, it might even be the best choice to do no resampling at all and just generate different responses for the MSE estimation. It should be noted that when fixing the size of the dataset to resample from, the n out of n Bootstrap performs comparably well. A reason for this might be that it uses a dataset of size 100 for estimating the MSE. Therefore, no errors occur due to the dependency of the MSE on n. Moreover, the n out of n Bootstrap can use the dataset more efficiently since it uses more samples for the MSE estimation than subsampling or the m out of n Bootstrap with lower resampling proportions.

Fig 20. Comparison of different resampling types for different numbers of observations resampled from a dataset with 100 observations.

Absolute value of relative error in MSE estimation averaged over individual coefficients when the true model is assumed in parametric and Plasmode simulation, for p = 10, n = 100, β = 111, σ = 0.3, Cor(Xi, Xj) = 0.2 ∀i ≠ j.
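
The dependence of the MSE on the number of observations discussed above can be illustrated with a minimal Python sketch. It is not the code used for our analyses. It assumes normally distributed features with unit variances and pairwise correlation 0.2, homoscedastic errors with σ = 0.3, and uses the standard result that, given the design matrix Z (features plus intercept column), the MSE of the j-th LSE coefficient is σ² times the j-th diagonal entry of (ZᵀZ)⁻¹. The function names and the number of simulated designs are illustrative choices.

```python
import numpy as np

def conditional_mse(X, sigma):
    """MSE of each LSE coefficient given the features X (intercept column added)."""
    Z = np.column_stack([np.ones(len(X)), X])
    return sigma**2 * np.diag(np.linalg.inv(Z.T @ Z))

def average_mse(n, p=10, rho=0.2, sigma=0.3, n_designs=200, seed=0):
    """Average the conditional MSE over randomly drawn designs of size n."""
    rng = np.random.default_rng(seed)
    # Equicorrelated covariance: ones on the diagonal, rho elsewhere.
    cov = np.full((p, p), rho) + (1 - rho) * np.eye(p)
    mses = [
        conditional_mse(rng.multivariate_normal(np.zeros(p), cov, size=n), sigma)
        for _ in range(n_designs)
    ]
    return np.mean(mses, axis=0)

# MSE averaged over all coefficients for datasets of different sizes.
mse_by_n = {n: float(average_mse(n).mean()) for n in (25, 50, 100)}
print(mse_by_n)
```

In this sketch, the averaged MSE for n = 25 and n = 50 is larger than for n = 100, which is why resampled datasets that are smaller than the dataset size of interest lead to an overestimated MSE.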

Conclusions and recommendations

In the following, we summarize what we have learned from the comparisons that we performed. First, we provide some general insights. Then, we present detailed comparisons showing for which types of deviations from the data-generating process Plasmode was superior to parametric simulation in our analyses.

General insights

We looked at different true data-generating processes (DGP) and deviations from those for the estimation of the MSE of the least squares estimator (LSE) in linear regression to compare how well different simulation types perform in this case. Overall, we saw that if there is no deviation from the true scenario, parametric simulation outperforms all Plasmode simulations. The same holds for deviations that affect parametric as well as Plasmode simulation, i.e., deviations from the outcome-generating model (OGM), given that the DGP used for parametric simulation is close to the truth. We saw that the misspecification of the coefficients and of the error distribution (as long as expectation and variance are preserved) does not have any effect on the quality of the MSE estimation, while the misspecification of the error standard deviation does have an effect.
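
The last observation is in line with the standard expression for the MSE of the LSE. The following short derivation is a sketch in notation that is assumed here for illustration: X denotes the design matrix including the intercept column (assumed to have full column rank), ε the error vector with E[ε | X] = 0 and Cov(ε | X) = σ²I, and β̂ the LSE.

$$
\hat{\beta} - \beta = (X^\top X)^{-1} X^\top \varepsilon
\quad\Longrightarrow\quad
\mathrm{E}\!\left[(\hat{\beta} - \beta)(\hat{\beta} - \beta)^\top \mid X\right] = \sigma^2 (X^\top X)^{-1},
\qquad
\mathrm{MSE}(\hat{\beta}_j) = \sigma^2 \, \mathrm{E}\!\left[\left((X^\top X)^{-1}\right)_{jj}\right].
$$

Under these assumptions, the MSE depends on the OGM only through σ² and neither on β nor on the shape of the error distribution beyond its first two moments, whereas it depends on the feature distribution through (XᵀX)⁻¹.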

Misspecifications of the DGP only affect parametric simulation. For all kinds of misspecifications of the DGP in parametric simulation (misspecification of expectation, variance, correlation, whole distribution), parametric simulation can become worse than Plasmode. The degree of misspecification needed for Plasmode to be superior depends on the type of misspecification, the resampling method used in the context of Plasmode that we compare with, and on the number of observations n and the number of features p. A detailed analysis of the degree of misspecification needed for Plasmode to be superior is given in Subsection 2.

Among the different resampling strategies for Plasmode simulation, we observed that, in general, Wild Bootstrap performed worst, followed by n out of n Bootstrap. m out of n Bootstrap performed better than n out of n Bootstrap, and subsampling usually performed best. For both m out of n Bootstrap and subsampling, smaller resampling proportions are favorable. This means that for a fixed number of subsampled observations n of interest, larger datasets to resample from are required. Smoothed Bootstrap usually performs worse than subsampling and even than no resampling (subsampling proportion of one), but better than m out of n Bootstrap with moderate resampling rates, i.e., rates larger than 0.5. When the number of observations for resampling is limited to the number of observations that we are interested in, we are restricted to n out of n Bootstrap, Smoothed Bootstrap, Wild Bootstrap, no resampling at all (i.e., subsampling with a proportion of one), or resampling a dataset of smaller size for Plasmode. Our analyses suggest that no resampling at all or subsampling with a subsampling proportion very close to one might be the best choice in this case. This is due to the dependence of the MSE on the number of observations, which leads to biased estimates of the MSE if the number of observations used for the simulation differs from the number of observations of interest.
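
To make the difference between the resampling strategies concrete, the following Python sketch draws row indices for n out of n Bootstrap, m out of n Bootstrap, subsampling, and no resampling. It is a minimal illustration under simplifying assumptions and not the implementation used in our study. Smoothed and Wild Bootstrap are omitted, and the function names `resample_indices` and `dataset_size_for` are hypothetical.

```python
import numpy as np

def dataset_size_for(n_out, proportion):
    """Dataset size needed so that n_out observations make up the given proportion."""
    return int(round(n_out / proportion))

def resample_indices(n_data, n_out, method, rng):
    """Draw row indices from a dataset with n_data rows."""
    if method == "n_out_of_n":   # with replacement, as many rows as the dataset has
        return rng.integers(0, n_data, size=n_data)
    if method == "m_out_of_n":   # with replacement, fewer rows than the dataset has
        return rng.integers(0, n_data, size=n_out)
    if method == "subsampling":  # without replacement, fewer rows than the dataset has
        return rng.choice(n_data, size=n_out, replace=False)
    if method == "none":         # no resampling: use the dataset as it is
        return np.arange(n_data)
    raise ValueError(f"unknown method: {method}")

rng = np.random.default_rng(42)
n_data = dataset_size_for(100, 0.632)  # 158 rows for a proportion of 0.632
idx_sub = resample_indices(n_data, 100, "subsampling", rng)
idx_m = resample_indices(n_data, 100, "m_out_of_n", rng)
idx_n = resample_indices(100, 100, "n_out_of_n", rng)
```

With a resampling proportion of 0.1, the same helper gives a dataset of 1000 observations for n = 100, which illustrates why small resampling proportions require large datasets to resample from.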

Detailed comparisons

Table 3 presents the values for each scenario and deviation at which certain types of Plasmode simulation are superior to parametric simulation. As discussed before, this is only applicable to deviations regarding the data-generating process (DGP). The numbers given in the Plasmode columns are calculated as follows. For the given scenario, deviation and Plasmode type, the deviations are ordered increasingly. Then, the first deviation for which the median aggregated relative error of parametric simulation is higher than that of the Plasmode type is identified. These values correspond to the medians in the aggregated boxplots. For example, in the first row, the case of p = 2, n = 100 and fixed pairwise correlations of 0.2 is analyzed for deviations of the assumed expected value for the second feature. The true expectation is 0. Plasmode with m out of n Bootstrap or subsampling with a resampling proportion of 0.1 is superior to parametric simulation for assumed expectations of 0.25 and higher. Plasmode with m out of n Bootstrap or subsampling with a resampling proportion of 0.632 is only superior for assumed expectations of 0.4 and higher, n out of n Bootstrap for values of 0.5 and higher, Smoothed Bootstrap for values of 0.55 and higher, and Plasmode without resampling (subsampling with a proportion of 1) for values of 0.45 and higher.
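
The rule for determining these values can be sketched in a few lines of Python. The sketch assumes that deviations are ordered by their distance from the true value and that the Plasmode errors do not depend on the assumed DGP. The function name and all error values in the toy example are hypothetical and not taken from our results.

```python
import numpy as np

def first_superior_deviation(deviations, err_parametric, err_plasmode, true_value):
    """Return the smallest deviation at which Plasmode has the lower median error.

    err_parametric[i] holds the relative errors of parametric simulation
    for deviations[i]; err_plasmode holds the relative errors of one Plasmode type.
    Returns None if Plasmode is never superior.
    """
    deviations = np.asarray(deviations, dtype=float)
    order = np.argsort(np.abs(deviations - true_value), kind="stable")
    plasmode_median = np.median(err_plasmode)
    for i in order:
        if np.median(err_parametric[i]) > plasmode_median:
            return float(deviations[i])
    return None

# Toy example with made-up errors (true expectation 0, steps of 0.05).
deviations = [0.0, 0.05, 0.1, 0.15]
err_parametric = [
    np.array([0.01, 0.01, 0.02]),
    np.array([0.02, 0.02, 0.03]),
    np.array([0.04, 0.05, 0.06]),
    np.array([0.07, 0.08, 0.09]),
]
err_plasmode = np.array([0.03, 0.04, 0.05])
threshold = first_superior_deviation(deviations, err_parametric, err_plasmode, true_value=0.0)
```

In the toy example, the median error of parametric simulation first exceeds the Plasmode median of 0.04 at an assumed expectation of 0.1. A return value of `None` corresponds to the case in which Plasmode is not superior for any of the considered deviations.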

Table 3. Smallest deviations in parametric simulations for which Plasmode simulation is superior to parametric simulation.

p denotes the number of features, n the number of observations. True ρ gives the true correlation structure, scenario type the type of deviation, and true value the true parameter value that the deviation refers to. For m out of n Bootstrap and subsampling, results are given for resampling proportions of 0.1 and 0.632.

| p | n | True ρ | Scenario type | True value | m-Bootstrap (0.1) | m-Bootstrap (0.632) | n-Bootstrap | Smoothed Bootstrap | Subsampling (0.1) | Subsampling (0.632) | No resampling |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 | 100 | 0.2 | Expectation of 2nd feature misspecified | 0 | 0.25 | 0.4 | 0.5 | 0.55 | 0.25 | 0.4 | 0.45 |
| 2 | 100 | 0.2 | Variance of 2nd feature misspecified | 1 | 1.05 | 1.15 | 1.15 | 1.2 | 1.1 | 1.1 | 1.15 |
| 2 | 100 | 0.2 | Distribution misspecified: Gaussian mixture with N(0,10) | 0 | 0.01 | 0.02 | 0.02 | 0.03 | 0.01 | 0.02 | 0.02 |
| 2 | 100 | 0.2 | Distribution misspecified: Gaussian mixture with N(3,1) | 0 | 0.01 | 0.01 | 0.02 | 0.02 | 0.01 | 0.01 | 0.01 |
| 2 | 100 | 0.2 | Feature correlation misspecified | N(0,1) | 0.28 | 0.35 | 0.39 | 0.4 | 0.29 | 0.35 | 0.36 |
| 2 | 50 | 0.2 | Expectation of 2nd feature misspecified | 0 | 0.3 | 0.5 | 0.55 | 0.6 | 0.3 | 0.5 | 0.55 |
| 2 | 50 | 0.2 | Variance of 2nd feature misspecified | 1 | 1.1 | 1.2 | 1.25 | 1.3 | 1.1 | 1.2 | 1.2 |
| 2 | 50 | 0.2 | Distribution misspecified: Gaussian mixture with N(0,10) | 0 | 0.01 | 0.03 | 0.03 | 0.04 | 0.01 | 0.03 | 0.03 |
| 2 | 50 | 0.2 | Distribution misspecified: Gaussian mixture with N(3,1) | 0 | 0.01 | 0.02 | 0.02 | 0.03 | 0.01 | 0.02 | 0.02 |
| 2 | 50 | 0.2 | Feature correlation misspecified | N(0,1) | 0.33 | 0.41 | 0.41 | 0.41 | 0.29 | 0.39 | 0.41 |
| 2 | 50 | 0.5 | Expectation of 2nd feature misspecified | 0 | 0.25 | 0.35 | 0.4 | 0.5 | 0.25 | 0.35 | 0.4 |
| 2 | 50 | 0.5 | Variance of 2nd feature misspecified | 1 | 1.05 | 1.15 | 1.15 | 1.25 | 1.05 | 1.1 | 1.15 |
| 2 | 50 | 0.5 | Distribution misspecified: Gaussian mixture with N(0,10) | 0 | 0.01 | 0.02 | 0.02 | 0.03 | 0.01 | 0.02 | 0.02 |
| 2 | 50 | 0.5 | Distribution misspecified: Gaussian mixture with N(3,1) | 0 | 0.01 | 0.02 | 0.02 | 0.03 | 0.01 | 0.01 | 0.02 |
| 2 | 50 | 0.5 | Feature correlation misspecified | N(0,1) | 0.54 | 0.57 | 0.57 | 0.61 | 0.54 | 0.56 | 0.57 |
| 10 | 100 | 0.2 | Expectation of 2nd half of features misspecified | 0 | 0.25 | 0.45 | 0.6 | 0.7 | 0.25 | 0.4 | 0.5 |
| 10 | 100 | 0.2 | Variance of 2nd half of features misspecified | 1 | 1.1 | 1.25 | 1.4 | 1.55 | 1.1 | 1.2 | 1.25 |
| 10 | 100 | 0.2 | Distribution misspecified: Gaussian mixture with N(0,10) | 0 | 0.01 | 0.02 | 0.03 | 0.04 | 0.01 | 0.02 | 0.02 |
| 10 | 100 | 0.2 | Distribution misspecified: Gaussian mixture with N(3,1) | 0 | 0.01 | 0.02 | 0.02 | 0.03 | 0.01 | 0.01 | 0.02 |
| 10 | 100 | 0.2 | Feature correlation misspecified | N(0,1) | 0.24 | 0.29 | 0.33 | 0.36 | 0.24 | 0.28 | 0.3 |
| 10 | 100 | 0.2 | Feature correlation misspecified, ρ^\|i−j\| | N(0,1) | 0.24 | 0.38 | 0.41 | 0.44 | 0.24 | 0.36 | 0.39 |
| 10 | 50 | 0.2 | Expectation of 2nd half of features misspecified | 0 | 0.3 | 0.7 | 0.9 | 0.7 | 0.3 | 0.55 | 0.65 |
| 10 | 50 | 0.2 | Variance of 2nd half of features misspecified | 1 | 1.1 | 1.55 | 2.45 | 1.55 | 1.1 | 1.3 | 1.45 |
| 10 | 50 | 0.2 | Distribution misspecified: Gaussian mixture with N(0,10) | 0 | 0.01 | 0.05 | 0.08 | 0.04 | 0.01 | 0.03 | 0.04 |
| 10 | 50 | 0.2 | Distribution misspecified: Gaussian mixture with N(3,1) | 0 | 0.01 | 0.03 | 0.06 | 0.03 | 0.01 | 0.02 | 0.03 |
| 10 | 50 | 0.2 | Feature correlation misspecified | N(0,1) | 0.26 | 0.36 | 0.43 | 0.36 | 0.25 | 0.31 | 0.34 |
| 10 | 50 | 0.2 | Feature correlation misspecified, ρ^\|i−j\| | N(0,1) | 0.33 | 0.44 | 0.5 | 0.44 | 0.22 | 0.39 | 0.42 |
| 50 | 100 | 0.2 | Expectation of 2nd half of features misspecified | 0 | 0.4 | 1.55 | 2.7 | 0.8 | 0.25 | 0.5 | 0.65 |
| 50 | 100 | 0.2 | Variance of 2nd half of features misspecified (too small) | 1 | 0.88 | 0.38 | 0.17 | 0.69 | 0.94 | 0.84 | 0.77 |
| 50 | 100 | 0.2 | Distribution misspecified: Gaussian mixture with N(0,10) | 0 | 0.02 | 0.57 | | 0.05 | 0.01 | 0.02 | 0.03 |
| 50 | 100 | 0.2 | Distribution misspecified: Gaussian mixture with N(3,1) | 0 | 0.01 | 0.98 | | 0.04 | 0.01 | 0.02 | 0.02 |
| 50 | 100 | 0.2 | Feature correlation misspecified | N(0,1) | 0.27 | 0.57 | 0.78 | 0.37 | 0.24 | 0.29 | 0.32 |
| 50 | 100 | 0.2 | Feature correlation misspecified, ρ^\|i−j\| | N(0,1) | 0.25 | 0.62 | 0.79 | 0.46 | 0.34 | 0.21 | 0.42 |
| 50 | 100 | 0.2^\|i−j\| in 5 blocks | Expectation of 2nd half of features misspecified | 0 | 0.4 | 2.05 | 2.6 | 0.8 | 0.25 | 0.5 | 0.6 |
| 50 | 100 | 0.2^\|i−j\| in 5 blocks | Variance of 2nd half of features misspecified (too small) | 1 | 0.88 | 0.39 | 0.17 | 0.68 | 0.94 | 0.84 | 0.77 |
| 50 | 100 | 0.2^\|i−j\| in 5 blocks | Distribution misspecified: Gaussian mixture with N(0,10) | 0 | 0.02 | 0.51 | | 0.05 | 0.01 | 0.02 | 0.03 |
| 50 | 100 | 0.2^\|i−j\| in 5 blocks | Distribution misspecified: Gaussian mixture with N(3,1) | 0 | 0.01 | 0.26 | | 0.03 | 0.01 | 0.02 | 0.02 |
| 50 | 100 | 0.2^\|i−j\| in 5 blocks | Feature correlation misspecified | N(0,1) | 0.2 | 0.5 | 0.74 | 0.28 | 0.2 | 0.2 | 0.22 |
| 50 | 100 | 0.2^\|i−j\| in 5 blocks | Feature correlation misspecified, ρ^\|i−j\| | 0.2^\|i−j\| | 0.3 | 0.59 | 0.78 | 0.41 | 0.25 | 0.32 | 0.36 |
| 50 | 100 | 0.5^\|i−j\| in 5 blocks | Expectation of 2nd half of features misspecified | 0 | 0.5 | 2.05 | 3.4 | 2.05 | 0.3 | 0.6 | 0.8 |
| 50 | 100 | 0.5^\|i−j\| in 5 blocks | Variance of 2nd half of features misspecified (too small) | 1 | 0.88 | 0.39 | 0.17 | 0.68 | 0.94 | 0.84 | 0.78 |
| 50 | 100 | 0.5^\|i−j\| in 5 blocks | Distribution misspecified: Gaussian mixture with N(0,10) | 0 | 0.02 | 0.43 | | 0.05 | 0.01 | 0.02 | 0.04 |
| 50 | 100 | 0.5^\|i−j\| in 5 blocks | Distribution misspecified: Gaussian mixture with N(3,1) | 0 | 0.01 | 0.26 | | 0.02 | 0.01 | 0.01 | 0.01 |
| 50 | 100 | 0.5^\|i−j\| in 5 blocks | Feature correlation misspecified | N(0,1) | 0.5 | 0.67 | 0.83 | 0.51 | 0.5 | 0.5 | 0.5 |
| 50 | 100 | 0.5^\|i−j\| in 5 blocks | Feature correlation misspecified, ρ^\|i−j\| | 0.5^\|i−j\| | 0.54 | 0.72 | 0.85 | 0.6 | 0.53 | 0.55 | 0.58 |
| 3 | 100 | quake | Expectation of 2nd half of features misspecified | 0 | 0.3 | 0.5 | 1 | 1 | 0.3 | 0.45 | 1 |
| 3 | 100 | quake | Variance of 2nd half of features misspecified (too small) | 1 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 |
| 3 | 100 | quake | Distribution misspecified: Gaussian mixture with N(0,10) | 0 | 0.01 | 0.02 | 0.02 | 0.03 | 0.01 | 0.02 | 0.02 |
| 3 | 100 | quake | Distribution misspecified: Gaussian mixture with N(3,1) | 0 | 0.01 | 0.01 | 0.02 | 0.02 | 0.01 | 0.01 | 0.01 |

When using correlation matrices estimated from real datasets, the order for the deviations in the correlations is unclear, as discussed before. Therefore, they are excluded from the comparison. Also, in all cases, assuming log-normal or binary data instead of normal data is worse than all Plasmode variants and therefore also excluded.

For these analyses, in the parametric simulations, the expectations and high variances were increased in steps of 0.05, and the low variances were decreased in steps of 0.1. The mixing proportion for Gaussian mixtures and the pairwise correlations were increased in steps of 0.01.

For p = 50 and assumed Gaussian mixtures, in some cases even drawing 100% of the data for the second half of the features from the wrong distribution is not sufficient for Plasmode to be superior, as can be concluded from the values found for deviating expectations and variances. The corresponding entries in Table 3 are left empty in these cases.

Summary and discussion

We performed a simulation study to compare the performance of parametric and Plasmode simulation in the context of MSE estimation for the least squares estimator (LSE) in the linear regression model. For parametric simulation, artificial data is generated according to a fully user-specified data-generating process (DGP) for generating the feature data and an outcome-generating model (OGM) for generating the outcome variable. In contrast, in Plasmode simulation the feature data is generated by resampling from a real-life dataset and only the OGM has to be specified. For comparing the two approaches, we need control over the true underlying DGP and OGM. We used different true DGPs and OGMs. Since the true DGP and OGM are unknown in practice, they must be specified when conducting a simulation study. For Plasmode simulation, the DGP is implicitly given by the chosen dataset. This specification is likely a deviation from the truth. Therefore, we examined the influence of different deviations on both types of simulation studies. Note that for Plasmode, there is no explicit deviation from the DGP. When resampling from a dataset, one samples from the empirical DGP, which ideally converges to the true DGP.
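
The difference between the two simulation types can be summarized in a short Python sketch. It is a simplified illustration and not the code used in our study. It assumes p = 2 normally distributed features with pairwise correlation 0.2, a linear OGM with all coefficients equal to one and normal errors with σ = 0.3, and a stand-in "real" dataset of 158 observations that is itself simulated. All function and variable names are illustrative.

```python
import numpy as np

def fit_lse(X, y):
    """Least squares estimate for a linear model with intercept."""
    Z = np.column_stack([np.ones(len(X)), X])
    return np.linalg.lstsq(Z, y, rcond=None)[0]

def estimate_mse(draw_features, beta, sigma, n_rep, rng):
    """Estimate the MSE of each LSE coefficient by repeated simulation."""
    sq_err = np.zeros_like(beta, dtype=float)
    for _ in range(n_rep):
        X = draw_features(rng)                                # DGP step: feature data
        Z = np.column_stack([np.ones(len(X)), X])
        y = Z @ beta + rng.normal(0.0, sigma, size=len(X))    # OGM step: outcome
        sq_err += (fit_lse(X, y) - beta) ** 2
    return sq_err / n_rep

rng = np.random.default_rng(1)
p, n, sigma = 2, 100, 0.3
beta = np.ones(p + 1)                           # intercept and slopes (assumed OGM)
cov = np.full((p, p), 0.2) + 0.8 * np.eye(p)    # unit variances, correlation 0.2

# Stand-in for a real-life dataset; in practice this would be observed data.
data = rng.multivariate_normal(np.zeros(p), cov, size=158)

# Parametric simulation: features are drawn from a user-specified DGP.
def parametric(r):
    return r.multivariate_normal(np.zeros(p), cov, size=n)

# Plasmode simulation: features are subsampled from the dataset (proportion 0.632).
def plasmode(r):
    return data[r.choice(len(data), size=n, replace=False)]

mse_parametric = estimate_mse(parametric, beta, sigma, 2000, rng)
mse_plasmode = estimate_mse(plasmode, beta, sigma, 2000, rng)
```

Both functions share the OGM step and differ only in how the feature data is obtained. A misspecified DGP would enter the sketch through the mean vector or covariance matrix passed to `parametric`, whereas `plasmode` depends only on the dataset at hand.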