Abstract

Purpose of review

This article explores the impact of recent applications of artificial intelligence on clinical anesthesiologists’ decision-making.

Recent findings

Naturalistic decision-making, a rich research field that aims to understand how cognitive work is accomplished in complex environments, provides insight into anesthesiologists’ decision processes. Given the complexity of clinical work and the limits of human decision-making (e.g. fatigue, distraction, and cognitive biases), attention to the role of artificial intelligence in supporting anesthesiologists’ decision-making has grown. Artificial intelligence, a computer’s ability to perform human-like cognitive functions, is increasingly used in anesthesiology. Examples include aiding in the prediction of intraoperative hypotension and postoperative complications, as well as enhancing structure localization for regional and neuraxial anesthesia through the integration of artificial intelligence with ultrasound.

Summary

To fully realize the benefits of artificial intelligence in anesthesiology, several important considerations must be addressed, including its usability and workflow integration, the appropriate level of trust placed in artificial intelligence, its impact on decision-making, the potential de-skilling of practitioners, and issues of accountability. Further research is needed to enhance anesthesiologists’ clinical decision-making in collaboration with artificial intelligence.

Keywords: anesthesiology, artificial intelligence, decision-making, safety

INTRODUCTION

In the not-too-distant future, artificial intelligence will be a frequent partner in the operating room, embedded in clinical devices and technology [1–3]. In this article, we first discuss what is known about how anesthesiologists currently make decisions in the operating room. Next, we discuss the opportunities that increasingly sophisticated artificial intelligence technologies offer to support practicing anesthesiologists’ decision-making. Lastly, we discuss the risks and challenges of using artificial intelligence in intraoperative anesthesia decision-making and the research needed in this space.

DECISION-MAKING

How anesthesiologists currently make decisions in the operating room

Decision-making in the dynamic, complex, high-stakes operative environment has been studied for decades with the aim of reducing errors and improving patient safety. Insights into the thought processes of anesthesia providers have informed medical education and assessment, the development of new technologies and techniques, and stressor mitigation. Naturalistic decision-making (NDM), a research field that aims to understand how cognitive work is accomplished in complex environments, provides insight into why and how decisions are made in high-stress situations [4]. NDM research primarily involves observing real-world, dynamic settings rather than laboratory simulations. Researchers apply NDM principles to workers in dynamic, high-tempo, high-consequence domains such as anesthesiology and surgery.

A recent systematic review of NDM studies across domains including healthcare, fire and rescue, aviation, and the military identified four primary decision-making strategies: recognition-primed (or intuitive), analytical (or deliberative), rule-based, and innovative [5■]. Recognition-primed decision-making (RPD) was the predominant strategy in high-risk, time-pressured crisis events. Practitioners tended to use analytical strategies in less time-dependent settings, where multiple options could be deliberately considered. Rule-based and innovative strategies were used infrequently.

The RPD model includes assessment, recognition (i.e. mapping to similar prior situations), mental simulation of action, and action implementation. What makes RPD distinctive is the near-immediate sense of familiarity and recognition that experts experience when confronting a situation, in contrast to a deliberative process in which several potential actions are generated and systematically evaluated before one is selected. The ‘assessment’ and ‘recognition’ steps of RPD are complementary to the first two levels of situational awareness described by Endsley and co-workers [6].

RPD’s immediacy is the mainstay of time-constrained decision-making. Recognition of a situation triggers potential actions and their consequences. Mental simulation involves modeling the likely pathways that could follow from the recognition-generated action (e.g. choice of fluid, esmolol, and/or phenylephrine for a tachycardic, hypotensive patient with 2-mm lateral ST depressions) to confirm whether it will work in that specific situation. Following implementation of an action, the response is assessed to determine whether it matches expectations (i.e. diagnosis, somewhat analogous to the third level of situational awareness [6]) and to develop new expectations of the anticipated future trajectory (the fourth level of situational awareness). The loop repeats as necessary to reassess the situation and take other possibilities into account. In domains like anesthesiology, an action may be chosen to clarify the diagnosis, to correct or improve the situation (therapy), or both (e.g. if administration of phenylephrine and fluids in the situation above resolved all abnormalities, this would suggest hypovolemia with associated supply–demand ischemia).
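Structurally, the RPD cycle is a loop: assess, recognize, mentally simulate, act, then reassess. The Python sketch below encodes that loop only to make its structure concrete. It is not a model proposed by Klein or by this review; the "experience library", the Jaccard similarity used for recognition, and every cue and action name are hypothetical placeholders with no clinical meaning.

```python
from typing import Callable, Dict, FrozenSet, List, Optional, Tuple

# Hypothetical "experience library": each stored pattern of cues maps to the
# candidate actions an expert would typically consider, in order of preference.
PatternLibrary = Dict[FrozenSet[str], List[str]]


def recognize(cues: FrozenSet[str], library: PatternLibrary) -> Optional[List[str]]:
    """Recognition: return the actions of the most similar prior situation
    (Jaccard similarity of cue sets), or None if no pattern overlaps."""
    best_actions, best_score = None, 0.0
    for pattern, actions in library.items():
        union = pattern | cues
        if not union:
            continue
        score = len(pattern & cues) / len(union)
        if score > best_score:
            best_actions, best_score = actions, score
    return best_actions


def rpd_loop(
    observe: Callable[[], FrozenSet[str]],
    library: PatternLibrary,
    simulate: Callable[[str, FrozenSet[str]], bool],
    act: Callable[[str], None],
    max_cycles: int = 5,
) -> List[Tuple[str, FrozenSet[str]]]:
    """Assessment -> recognition -> mental simulation -> action, repeated."""
    history: List[Tuple[str, FrozenSet[str]]] = []
    for _ in range(max_cycles):
        cues = observe()                           # assessment
        if not cues:                               # nothing abnormal left: stop
            break
        candidates = recognize(cues, library)      # recognition
        if candidates is None:                     # unfamiliar situation
            history.append(("<no match>", cues))
            break
        # Mental simulation: keep the first candidate expected to work here.
        chosen = next((a for a in candidates if simulate(a, cues)), None)
        if chosen is None:
            history.append(("<no workable action>", cues))
            break
        act(chosen)                                # implementation
        history.append((chosen, cues))             # then loop back to reassess
    return history


# Toy usage with placeholder cues and actions (not clinical content).
state = {"cues": {"cue_x", "cue_y"}}
effects = {"action_a": {"cue_y"}, "action_c": {"cue_x"}}  # cues each action clears
library: PatternLibrary = {
    frozenset({"cue_x", "cue_y"}): ["action_a", "action_b"],
    frozenset({"cue_x"}): ["action_c"],
}
history = rpd_loop(
    observe=lambda: frozenset(state["cues"]),
    library=library,
    simulate=lambda a, cues: bool(effects.get(a, set()) & cues),
    act=lambda a: state["cues"].difference_update(effects.get(a, set())),
)
```

In this toy run the first action clears one cue, the reassessment maps the remaining cue to a different stored pattern, and the loop ends once nothing abnormal remains. When no stored pattern matches, the sketch simply stops, which is roughly the point at which a deliberative strategy would presumably be needed.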

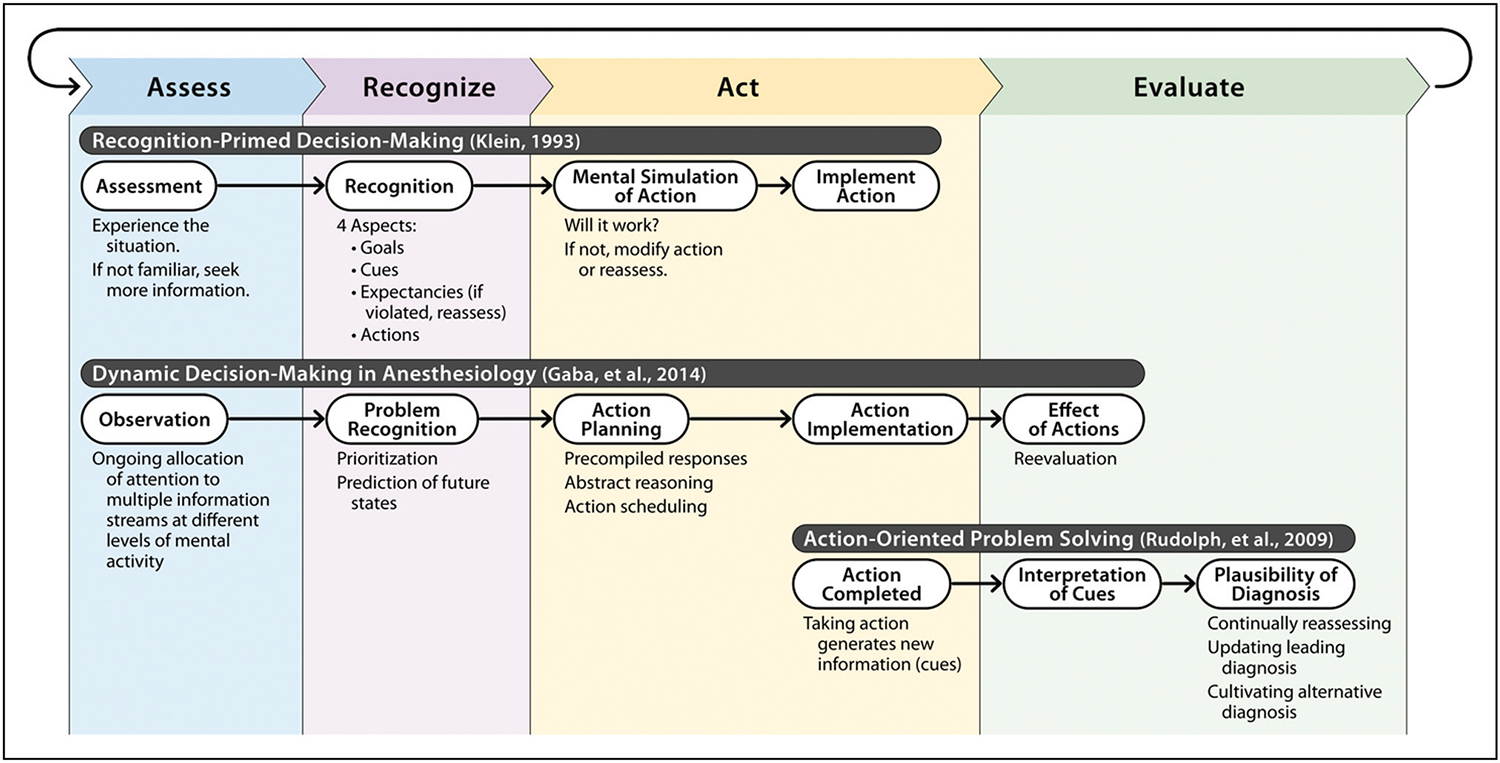

Other models of clinical and intraoperative decision-making have been proposed independently by Rudolph et al. [7] and Gaba et al. [8]. Both the Rudolph and Gaba models are compatible with RPD. Figure 1 provides an overview of these three models.

FIGURE 1.

Summary of three naturalistic decision-making models relevant to anesthesiology practice. Model synthesis was adapted from: Klein [9], Gaba et al. [8], and Rudolph et al. [7].

Like RPD, the Gaba model describes an observation, decision, action, and reevaluation loop. It is specific to intraoperative anesthesiologist decision-making, incorporating elements such as diagnosis, treatment, and escalation of care. This process enables adaptation to dynamic changes in patient status, surgery, and the operating room environment.

Rudolph’s problem-solving model, based on studies of medical diagnosis in crisis situations, focuses on clinicians’ diagnostic decision-making. It describes a cycle of acting, interpreting, and cultivating feedback and alternatives. In contrast to RPD, it starts by interpreting new information generated through actions rather than by observing the situation.

These models are now years to decades old, and there has been limited work in the field of dynamic clinical decision-making since. In the next few years, we expect new empiric data from an ongoing national multicenter trial (IMPACTS, Improving Medical Performance during Acute Crises Through Simulation) on the clinical performance and decision-making strategies of physician anesthesiologists during simulated critical perioperative events.

Many factors can adversely affect anesthesiologists’ decision-making, including stress, fatigue, burnout, and distraction [10–13]. Human factors engineering (HFE), a cornerstone of anesthesia patient safety for three decades, is a discipline focused on optimizing the design and implementation of tools and processes to enhance human performance [14]. HFE researchers and cognitive scientists have also studied the ways in which humans may think about, or frame, a situation that could lead to suboptimal decisions (i.e. cognitive biases). As technologies continue to advance, the potential for artificial intelligence to support anesthesiologists’ decision-making, reducing errors and improving safety, has gained attention.

ARTIFICIAL INTELLIGENCE TO SUPPORT DECISION-MAKING

What is artificial intelligence

Artificial intelligence, in general terms, is a computer’s ability to perform human cognitive functions such as problem-solving, object and word recognition, inference of world states, decision-making, and potentially even reasoning. Machine learning is a subset of artificial intelligence, and deep learning is in turn a subset of machine learning. Machine learning is a set of algorithms that detect patterns and make predictions after exposure to training data. These algorithms adapt in response to new data and experiences to improve their performance over time. Deep learning is a specific machine learning framework that identifies very complex relationships through a structure of multiple interconnected layers (i.e. artificial neural networks) [15]. The greatest anticipated potential for artificial intelligence in healthcare may lie in precision medicine, optimization of available resources, and reduction of inequalities [16].
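To make "detect patterns and make predictions after exposure to training data" concrete, the sketch below trains a logistic regression model one example at a time, so that each new data point nudges its parameters. This is a generic textbook technique chosen for illustration, not an algorithm from any study cited here, and the data are synthetic. A deep learning model would replace the single weighted sum with many stacked layers of such units.

```python
import math
import random
from typing import List, Sequence, Tuple


class OnlineLogisticRegression:
    """Logistic regression updated one example at a time (stochastic gradient descent)."""

    def __init__(self, n_features: int, learning_rate: float = 0.5) -> None:
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x: Sequence[float]) -> float:
        """Probability that the label is 1, given features x."""
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x: Sequence[float], y: int) -> None:
        # Gradient of the log-loss with respect to z is (prediction - label).
        error = self.predict_proba(x) - y
        self.weights = [w - self.learning_rate * error * xi for w, xi in zip(self.weights, x)]
        self.bias -= self.learning_rate * error


def make_example(rng: random.Random) -> Tuple[List[float], int]:
    """Synthetic data: the label is 1 when the two features sum to more than zero."""
    x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    return x, int(x[0] + x[1] > 0)


rng = random.Random(0)
model = OnlineLogisticRegression(n_features=2)
for _ in range(2000):  # each new example adjusts the learned pattern
    x, y = make_example(rng)
    model.update(x, y)

# Evaluate on fresh synthetic examples the model has not seen.
test_set = [make_example(rng) for _ in range(500)]
accuracy = sum((model.predict_proba(x) > 0.5) == bool(y) for x, y in test_set) / len(test_set)
```

Because the model keeps updating as examples arrive, it also illustrates why such systems can change after deployment, a point that returns later in the discussion of drift.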

Artificial intelligence in anesthesia

Artificial intelligence used in anesthesia today can increase the vigilance of anesthesia professionals and predict, or potentially prevent, perioperative complications. For instance, machine learning techniques have been used to generate meaningful alarms during general anesthesia using two distinct approaches: complication detection and anomaly detection [17]. Using these approaches, artificial intelligence algorithms predict intraoperative hypotension before it occurs by analyzing arterial blood pressure [18], electrocardiogram, and electroencephalogram waveforms [19]. Models predicting postoperative acute kidney injury use preoperative demographic, past medical history, medication, and flowsheet data [20].
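The two alarm strategies mentioned above can be contrasted with a toy example. The sketch reflects our reading of the terms, not the methods of [17]: complication detection flags readings that meet a predefined definition of a known problem (here, mean arterial pressure below 65 mmHg, an illustrative threshold chosen as an assumption), whereas anomaly detection flags readings that depart sharply from the patient’s own recent baseline (a rolling z-score with hypothetical window and cut-off values). Neither is a validated clinical algorithm, and neither predicts hypotension in the way the waveform-based models [18,19] aim to.

```python
from statistics import mean, stdev
from typing import List, Sequence

MAP_THRESHOLD_MMHG = 65.0  # illustrative definition of hypotension (assumption)


def complication_alarms(map_mmhg: Sequence[float], threshold: float = MAP_THRESHOLD_MMHG) -> List[int]:
    """Complication detection: indices where a predefined complication criterion is met."""
    return [i for i, value in enumerate(map_mmhg) if value < threshold]


def anomaly_alarms(series: Sequence[float], window: int = 5, z_limit: float = 3.0) -> List[int]:
    """Anomaly detection: indices whose value departs from the mean of the
    preceding `window` samples by more than `z_limit` standard deviations."""
    flagged = []
    for i in range(window, len(series)):
        baseline = series[i - window:i]
        mu, sd = mean(baseline), stdev(baseline)
        if sd == 0:
            deviates = series[i] != mu  # flat baseline: any change is unusual
        else:
            deviates = abs(series[i] - mu) / sd > z_limit
        if deviates:
            flagged.append(i)
    return flagged


# Synthetic mean arterial pressure readings (mmHg), one per sampling interval.
readings = [82, 80, 81, 79, 80, 81, 70, 69, 68, 64, 63]
threshold_hits = complication_alarms(readings)
anomaly_hits = anomaly_alarms(readings)
```

On this synthetic series the anomaly alarm fires at the sudden drop at index 6, while the threshold alarm first triggers at index 9. A real system would also have to suppress false alarms caused by signal artifact.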

In another artificial intelligence application, nociception monitor-guided analgesia (monitoring of pain ‘perception’ during anesthesia) significantly reduced postoperative pain in patients undergoing major abdominal surgery [21]. A computer-aided diagnosis system identified the parts remaining to be examined during real-time endoscopic procedures, which may help anesthesia professionals titrate the appropriate duration and depth of sedation, minimizing emergence and recovery times [22].

Artificial intelligence appears to have appreciable potential for supporting image-guided procedures. For example, the Food and Drug Administration (FDA) recently authorized ScanNav, a software-based medical device designed to help anesthesiologists and other health professionals, especially those less experienced in regional anesthesia, identify important anatomical structures on ultrasound images before inserting needles. The device uses deep learning to create color overlays of key anatomical structures on live ultrasound images [23–28]. Integrating artificial intelligence with ultrasound for image enhancement and analysis improves clinicians’ ability to localize structures in patients with challenging anatomy. For instance, using artificial intelligence-guided ultrasound to identify vertebral bodies increased the first-attempt success rate of spinal anesthesia in severely obese patients compared with traditional palpation methods. In epidural anesthesia, artificial intelligence decreased needle placement time, partly by predicting the optimal needle direction and the depth of the target structure [29]. Researchers have also studied artificial intelligence-based Tuohy needle path planning, which allows an anesthesiologist to control the tip of the needle, with robot assistance, during epidural placement [30].

In the future, the optimal design, implementation, and use of artificial intelligence tools are likely to differ depending on the application (e.g. image recognition and analysis, patient monitoring and alarms, and diagnostic or therapeutic decision support). In the next section, we discuss some of the challenges and concerns of artificial intelligence-assisted anesthesia procedures and practices.

CONSIDERATIONS FOR THE IMPLEMENTATION OF ARTIFICIAL INTELLIGENCE IN ANESTHESIA

While there is some evidence that artificial intelligence can improve clinical decision-making and patient safety [31], important considerations must be addressed before widespread implementation. Several national organizations, including the National Academy of Medicine, the Coalition for Health AI, and the National Institute of Standards and Technology, have outlined considerations for the use of artificial intelligence in medicine, describing issues around bias, explainability, and regulation [32–34]. Char and Burgart [35] described four considerations for the implementation of artificial intelligence in anesthesiology: impact on workflow, skill atrophy, accountability, and clinician autonomy. Below, we briefly review four issues specific to artificial intelligence’s impact on anesthesiologists’ decision-making and on patient safety. Table 1 outlines these considerations and areas for future research.

Table 1.

Considerations for the future implementation of artificial intelligence in anesthesiology

| Consideration | Research gaps |
|---|---|
| Appropriate trust | What information is needed for anesthesiologists to appropriately rely on AI? What competencies do anesthesiologists need to use AI safely? How will anesthesiologists develop these competencies? |
| Impact on decision-making | How does AI support and alter anesthesiologist decision-making? How can AI be safely deployed in time-pressured situations? |
| De-skilling | How do we maintain skill and expertise? How do we train new anesthesiologists who regularly rely on AI? |
| Accountability | Who will be responsible when AI fails? How can we support learning from AI failures? |

AI, artificial intelligence.

Appropriate trust

Trust is a major concern in the implementation and use of artificial intelligence [34]. Because of its inherent ‘black box’ nature, establishing trust in artificial intelligence can be challenging [1,36]. Miscalibrated trust can go both ways: inappropriate trust in artificial intelligence decisions (more often by novices, or by experts placed in rare situations) and inappropriate lack of trust (more often by experts in what appear to be common situations). Benda et al. [37] proposed that the goal should be appropriate trust in artificial intelligence based on ‘the purpose of the AI, its process for making recommendations, and its performance in the given context’ (p. 1). Appropriate trust reflects how well clinicians’ use of and reliance on a system match the actual capabilities of the artificial intelligence in a specific context [38], and it can be measured with two specific constructs:

calibration: ‘the extent to which a user’s trust matches the performance of the system’

resolution: ‘the users’ ability to adapt their trust based on changing functions and goals, or how the AI performance changes over time’
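Calibration and resolution are defined qualitatively above. One hypothetical way to operationalize them from logged cases is sketched below: calibration as the gap between how often a clinician follows the artificial intelligence and how often it is correct, and resolution as whether that gap stays small when performance shifts between periods. These formulas are illustrative assumptions, not the measures proposed in [37,38], and the case log is synthetic.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Case:
    ai_correct: bool  # was the AI recommendation correct in this case?
    relied: bool      # did the clinician act on the recommendation?
    period: str       # hypothetical label for a context or time window


def reliance_rate(cases: List[Case]) -> float:
    return sum(c.relied for c in cases) / len(cases)


def ai_accuracy(cases: List[Case]) -> float:
    return sum(c.ai_correct for c in cases) / len(cases)


def calibration_gap(cases: List[Case]) -> float:
    """Illustrative calibration proxy: |reliance rate - AI accuracy| (0 = matched)."""
    return abs(reliance_rate(cases) - ai_accuracy(cases))


def appropriate_reliance(cases: List[Case]) -> float:
    """Share of cases where the clinician followed a correct recommendation
    or declined an incorrect one."""
    return sum(c.relied == c.ai_correct for c in cases) / len(cases)


def gap_by_period(cases: List[Case]) -> Dict[str, float]:
    """Illustrative resolution proxy: does reliance track performance as it changes?"""
    groups: Dict[str, List[Case]] = {}
    for c in cases:
        groups.setdefault(c.period, []).append(c)
    return {period: calibration_gap(group) for period, group in groups.items()}


# Synthetic log: AI accuracy falls from 80% to 50% between periods,
# while the clinician's reliance rises from 80% to 90%.
log = (
    [Case(True, True, "early") for _ in range(8)]
    + [Case(False, False, "early") for _ in range(2)]
    + [Case(True, True, "late") for _ in range(5)]
    + [Case(False, True, "late") for _ in range(4)]
    + [Case(False, False, "late") for _ in range(1)]
)
gaps = gap_by_period(log)
late = [c for c in log if c.period == "late"]
```

In this synthetic log the gap is zero in the early period but widens to 0.4 once the tool’s accuracy drops while reliance rises, the pattern of poor resolution described above.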

To be most effective, artificial intelligence tools should satisfy the five rights of decision support: providing the right information, to the right person, in the right format, through the right channel, at the right time in the workflow [39]. The quality of an algorithm’s output depends heavily on the quality of its input, which in turn affects the clinical decision support it provides [15]. Furthermore, anesthesiologists will want to understand why artificial intelligence has made a particular decision or recommendation, which can be especially difficult for neural network-based artificial intelligence. Limited explainability (or transparency) of artificial intelligence can be an obstacle to clinician acceptance and is an important area of current research.

When using artificial intelligence in the operating room, anesthesiologists will need to determine if they can trust the artificial intelligence-based information in a time-sensitive environment and then make potentially life-critical decisions based on this information. Future research is needed to determine what information is needed to support anesthesiologists’ appropriate trust in artificial intelligence. One aspect of improving appropriate trust will be the development of new clinician competencies to support interpretation and understanding of artificial intelligence algorithms [40,41]. Research is needed to determine the competencies required and how these can best be taught to current and future generations of anesthesiologists.

Impact on decision-making

Questions remain around how artificial intelligence will influence anesthesiologists’ decision-making. Rather than a human-alone or artificial intelligence-alone approach, the future will more likely reflect a human–artificial intelligence collaboration to provide optimal care to patients [15]. It remains unclear when and how to optimally provide artificial intelligence-based information to clinicians in the operating room. For instance, building on the RPD model of decision-making, how will expert anesthesiologists utilize new information or ‘cues’ from artificial intelligence algorithms to guide clinical decisions? Artificial intelligence-provided treatment recommendations could lead an experienced clinician to rapidly accept and pursue an incorrect course of action against their better judgement. An artificial intelligence-provided differential diagnosis may lead to treatment delays while the clinician awaits diagnostic test results to rule out proposed alternative diagnoses. There is limited research on what information will be needed and how it needs to be presented by artificial intelligence tools to support (and not hinder) decision-making and clinical workflows [15,42■].

De-skilling

Another concern is the potential for artificial intelligence to ‘de-skill’ the workforce. In anesthesia, patient safety may depend on anesthesiologists’ ability to retake control from an automated system, so maintenance of psychomotor and cognitive skills will be necessary [35]. Performance-based fields such as aviation recognize the growing challenge of maintaining critical emergency skills when operators routinely work in progressively more automated contexts [43]. Several artificial intelligence algorithms guide and support the completion of tasks, such as Tuohy needle path planning [30,35]. Routine reliance on artificial intelligence may lead to skill degradation, making it harder for anesthesiologists to take over and perform well when artificial intelligence fails or when complexity exceeds its ability to cope. Further, how will anesthesiologists in training develop expertise without regular exposure to tasks that, unaided, would be complex? We must address how to train anesthesiologists and maintain their skills as artificial intelligence systems are introduced.

Accountability

As with any new technology introduced into a complex system, artificial intelligence tools will invariably create new potential failure modes. Who will be held responsible when the artificial intelligence inevitably fails? One can envision two scenarios that present concern: when artificial intelligence makes an ‘incorrect’ recommendation and the clinician acts on it, and when artificial intelligence makes a ‘correct’ recommendation and the clinician decides, perhaps with good reason in the moment, not to follow that recommendation. New policies, procedures, and laws will need to be developed to manage these complex situations. Feedback mechanisms should be developed to support clinician learning when artificial intelligence recommendations were, or were not, correct. Additional research is needed to determine how to maintain clinician autonomy and to develop clear guidelines on culpability in these complex cases.

WILL ARTIFICIAL INTELLIGENCE BE EQUITABLE AND SAFE?

There are many additional issues to be considered as we design and implement artificial intelligence-based tools in the operating room. Will different artificial intelligence tools (including those for surgeons and nurses) talk with each other and ‘play nicely’? Will artificial intelligence be able to accommodate and adjust its behavior for the invariably noisy and incomplete input signals from the patient and other sources? Will the algorithms indeed ensure more equitable care, or will they exaggerate existing care inequities? How will artificial intelligence accommodate the numerous ‘special’ situations associated with modern high-acuity, high-risk surgery? For machine learning systems, how will clinicians (and regulators) be able to detect drift from optimal performance over time as the systems ‘learn’ and change based on new input data (including changes in patient population characteristics)? Additional research is needed to fully understand and mitigate these pressing issues.
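Drift in patient population characteristics, one of the questions raised above, can be monitored by comparing the distribution of a model input across time windows. The sketch below uses the population stability index (PSI), a distribution-shift statistic borrowed here from other domains as an illustration. The choice of PSI, the age bins, the 0.25 cut-off (a commonly quoted rule of thumb, not a clinical standard), and the patient ages are all assumptions. Detecting input drift does not by itself show that model performance has degraded; confirming that would also require outcome data.

```python
import math
from typing import List, Sequence

# Hypothetical age bins (years); the last bin is open-ended so every value is counted.
AGE_EDGES = [0.0, 40.0, 60.0, 80.0, math.inf]
PSI_ALERT = 0.25  # assumed rule-of-thumb cut-off for a "major" shift


def bin_proportions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values falling in each half-open bin [edges[i], edges[i + 1])."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(edges) - 1):
            if edges[i] <= v < edges[i + 1]:
                counts[i] += 1
                break
    return [c / len(values) for c in counts]


def population_stability_index(
    reference: Sequence[float], current: Sequence[float], edges: Sequence[float], eps: float = 1e-6
) -> float:
    """PSI = sum over bins of (current - reference) * ln(current / reference)."""
    psi = 0.0
    for r, c in zip(bin_proportions(reference, edges), bin_proportions(current, edges)):
        r, c = max(r, eps), max(c, eps)  # floor empty bins to avoid log(0)
        psi += (c - r) * math.log(c / r)
    return psi


# Synthetic ages: the model was developed on one population; later cases skew older.
reference_ages = [30, 35, 45, 50, 55, 62, 65, 70, 75, 85]
recent_ages = [45, 62, 66, 70, 74, 78, 81, 84, 88, 90]

psi_same = population_stability_index(reference_ages, reference_ages, AGE_EDGES)
psi_shift = population_stability_index(reference_ages, recent_ages, AGE_EDGES)
drift_flagged = psi_shift > PSI_ALERT
```

Identical distributions give a PSI of zero, while the older recent cohort pushes the index well past the assumed cut-off, which would prompt a closer review of the model’s performance in that population.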

CONCLUSION

Artificial intelligence-driven technologies to support clinical decision-making have considerable potential to improve patient safety. However, there are real dangers and appreciable unknowns. Additional human factors and qualitative research on the design and implementation of these tools in clinical workflows is needed to elucidate the optimal approach to glean the benefits of artificial intelligence while preserving patient and clinician safety.

KEY POINTS.

The current models of anesthesiologist intraoperative decision-making processes are years to decades old and there are limited empirical data to support their practical application.

Artificial intelligence implementation has begun across healthcare with some early progress in anesthesiology, most notably in image recognition and associated procedural guidance.

There are several important considerations, including usability, trust, explainability, skill maintenance, accountability, equity, and safety, that require further research before widespread implementation of artificial intelligence can be recommended.

Acknowledgements

This research was made possible by funding from the Agency for Healthcare Research and Quality (AHRQ) grants R18HS26158 (M.B.W., PI) and K01HS029042 (M.E.S., PI). The content is solely the responsibility of the authors and does not necessarily represent the official views of the AHRQ. The authors are members of the Simulation Assessment Research Group (SARG, www.vumc.org/sarg) and the support of the following individuals is recognized: David Gaba, Laura Militello, Shilo Anders, Jason Slagle, Amanda Burden, Arna Banerjee, Lisa Sinz, Bill McIvor, Michael Andreae, John Rask, Jeff Cooper, Laurence Torsher, Adam Levine, Jack Boulet, Randy Steadman, & Huaping Sun.

Footnotes

Conflicts of interest

All the authors are involved in an ongoing national multicenter trial, Improving Medical Performance during Acute Crises Through Simulation (IMPACTS). M.B.W. is a consultant for Fresenius-Kabi/Ivenix on matters completely unrelated to the content of this manuscript. Otherwise, the authors do not have any conflicts of interest in the manuscript, including financial, consultant, institutional, and other relationships that might lead to bias or a conflict of interest.

REFERENCES AND RECOMMENDED READING

Papers of particular interest, published within the annual period of review, have been highlighted as:

■ of special interest

■■ of outstanding interest

1. Zaouter C, Joosten A, Rinehart J, et al. Autonomous systems in anesthesia: where do we stand in 2020? A narrative review. Anesth Analg 2020; 130:1120–1132.

2. London MJ. Back to the OR of the future: how do we make it a good one? Anesthesiology 2021; 135:206–208.

3. Tremper KK. Back to the OR of the future: comment. Anesthesiology 2022; 136:393.

4. Naturalistic Decision Making Association. Principles of naturalistic decision making. 2023. Available at: https://naturalisticdecisionmaking.org/ndm-principles/. [Accessed 30 June 2023]

5. ■ Reale C, Salwei ME, Militello LG, et al. Decision-making during high-risk events: a systematic literature review. J Cogn Eng Decis Making 2023; 17:188–212. This systematic literature review identified 32 empiric research articles that examine how trained professionals make naturalistic decisions under pressure across multiple domains.

6. Schulz CM, Endsley MR, Kochs EF, et al. Situation awareness in anesthesia: concept and research. Anesthesiology 2013; 118:729–742.

7. Rudolph JW, Morrison JB, Carroll JS. The dynamics of action-oriented problem solving: linking interpretation and choice. Acad Manage Rev 2009; 34:733–756.

8. Gaba DM, Fish KJ, Howard SK, et al. Crisis management in anesthesiology. 2nd ed. Philadelphia: Elsevier Health Sciences; 2014.

9. Klein GA. A recognition-primed decision (RPD) model of rapid decision making. Decision making in action: models and methods 1993; 5(4):138–147.

10. Biebuyck JF, Weinger MB, Englund CE. Ergonomic and human factors affecting anesthetic vigilance and monitoring performance in the operating room environment. J Am Soc Anesthesiol 1990; 73:995–1021.

11. Hyman SA, Card EB, De Leon-Casasola O, et al. Prevalence of burnout and its relationship to health status and social support in more than 1000 subspecialty anesthesiologists. Reg Anesth Pain Med 2021; 46:381–387.

12. Liberman JS, Slagle JM, Whitney G, et al. Incidence and classification of nonroutine events during anesthesia care. Anesthesiology 2020; 133:41–52.

13. Slagle JM, Porterfield ES, Lorinc AN, et al. Prevalence of potentially distracting noncare activities and their effects on vigilance, workload, and nonroutine events during anesthesia care. Anesthesiology 2018; 128:44–54.

14. Weinger MB, Gaba DM. Human factors engineering in patient safety. Anesthesiology 2014; 120:801–806.

15. Bellini V, Carna ER, Russo M, et al. Artificial intelligence and anesthesia: a narrative review. Ann Transl Med 2022; 10:.

16. Bellini V, Valente M, Gaddi AV, et al. Artificial intelligence and telemedicine in anesthesia: potential and problems. Minerva Anestesiol 2022; 88:729–734.

17. Maciąg TT, van Amsterdam K, Ballast A, et al. Machine learning in anesthesiology: detecting adverse events in clinical practice. Health Informatics J 2022; 28:14604582221112855.

18. Monge García MI, García-López D, Gayat É, et al. Hypotension prediction index software to prevent intraoperative hypotension during major non-cardiac surgery: protocol for a European Multicenter Prospective Observational Registry (EU-HYPROTECT). J Clin Med 2022; 11:5585.

19. Jo Y-Y, Jang J-H, Kwon J-m, et al. Predicting intraoperative hypotension using deep learning with waveforms of arterial blood pressure, electroencephalogram, and electrocardiogram: retrospective study. PLoS One 2022; 17:e0272055.

20. Bishara A, Wong A, Wang L, et al. Opal: an implementation science tool for machine learning clinical decision support in anesthesia. J Clin Monit Comput 2022; 36:1367–1377.

21. Fuica R, Krochek C, Weissbrod R, et al. Reduced postoperative pain in patients receiving nociception monitor guided analgesia during elective major abdominal surgery: a randomized, controlled trial. J Clin Monit Comput 2023; 37:481–491.

22. Xu C, Zhu Y, Wu L, et al. Evaluating the effect of an artificial intelligence system on the anesthesia quality control during gastrointestinal endoscopy with sedation: a randomized controlled trial. BMC Anesthesiol 2022; 22:1–11.

23. Bowness J, El-Boghdadly K, Burckett-St Laurent D. Artificial intelligence for image interpretation in ultrasound-guided regional anaesthesia. Anaesthesia 2021; 76:602–607.

24. Bowness JS, Burckett-St Laurent D, Hernandez N, et al. Assistive artificial intelligence for ultrasound image interpretation in regional anaesthesia: an external validation study. Br J Anaesth 2023; 130:217–225.

25. Bowness JS, El-Boghdadly K, Woodworth G, et al. Exploring the utility of assistive artificial intelligence for ultrasound scanning in regional anesthesia. Reg Anesth Pain Med 2022; 47:375–379.

26. Bowness JS, Macfarlane AJ, Burckett-St Laurent D, et al. Evaluation of the impact of assistive artificial intelligence on ultrasound scanning for regional anaesthesia. Br J Anaesth 2023; 130:226–233.

27. Lloyd J, Morse R, Taylor A, et al. Artificial intelligence: innovation to assist in the identification of sono-anatomy for ultrasound-guided regional anaesthesia. Adv Exp Med Biol 2022; 1356:117–140.

28. Larkin HD. FDA approves artificial intelligence device for guiding regional anesthesia. JAMA 2022; 328:2101.

29. Compagnone C, Borrini G, Calabrese A, et al. Artificial intelligence enhanced ultrasound (AI-US) in a severe obese parturient: a case report. Ultrasound J 2022; 14:34.

30. Haro-Mendoza D, Perez-Escamirosa F, Pineda-Martínez D, Gonzalez-Villela VJ. Needle path planning in semiautonomous and teleoperated robot-assisted epidural anaesthesia procedure: a proof of concept. Int J Med Robot 2022; 18:e2434.

31. Nair BG, Gabel E, Hofer I, et al. Intraoperative clinical decision support for anesthesia: a narrative review of available systems. Anesth Analg 2017; 124:603–617.

32. National Institute of Standards and Technology. Artificial intelligence risk management framework (AI RMF 1.0). U.S. Department of Commerce; 2023.

33. Matheny M, Israni ST, Ahmed M, et al. Artificial intelligence in healthcare: the hope, the hype, the promise, the peril. Natl Acad Med 2020; 94–97.

34. Coalition for Health AI. Blueprint for trustworthy AI: implementation guidance and assurance for healthcare. The MITRE Corporation; Duke University. Version 1.0. 4 April 2023.

35. Char DS, Burgart A. Machine learning implementation in clinical anesthesia: opportunities and challenges. Anesth Analg 2020; 130:1709.

36. van der Ven WH, Veelo DP, Wijnberge M, et al. One of the first validations of an artificial intelligence algorithm for clinical use: the impact on intraoperative hypotension prediction and clinical decision-making. Surgery 2021; 169:1300–1303.

37. Benda NC, Novak LL, Reale C, Ancker JS. Trust in AI: why we should be designing for APPROPRIATE reliance. J Am Med Inform Assoc 2022; 29:207–212.

38. Benda NC, Reale C, Ancker J, et al. Purpose, process, performance: designing for appropriate trust of AI in healthcare. In: Proceedings of the CHI Conference on Human Factors in Computing Systems; Yokohama; 2021.

39. Ray JM, Ratwani RM, Sinsky CA, et al. Six habits of highly successful health information technology: powerful strategies for design and implementation. J Am Med Inform Assoc 2019; 26:1109–1114.

40. Garvey KV, Craig KJT, Russell RG, et al. The potential and the imperative: the gap in AI-related clinical competencies and the need to close it. Med Sci Educ 2021; 31:2055–2060.

41. Russell RG, Lovett Novak L, Patel M, et al. Competencies for the use of artificial intelligence–based tools by healthcare professionals. Acad Med 2023; 98:348–356.

42. ■ Salwei ME, Carayon P. A sociotechnical systems framework for the application of artificial intelligence in healthcare delivery. J Cogn Eng Decis Making 2022; 16:194–206. This article describes current challenges of integrating artificial intelligence into clinical care and proposes a sociotechnical systems (STS) approach for artificial intelligence design and implementation.

43. Casner SM, Geven RW, Recker MP, Schooler JW. The retention of manual flying skills in the automated cockpit. Hum Factors 2014; 56:1506–1516.