Abstract

With the growing implementation and use of health IT such as Clinical Decision Support (CDS), there is increasing attention on the potential negative impact of these technologies on patients (e.g., medication errors) and clinicians (e.g., increased workload, decreased job satisfaction, burnout). Human-Centered Design (HCD) and Human Factors (HF) principles are recommended to improve the usability of health IT and reduce its negative impact on patients and clinicians; however, challenges persist. The objective of this study is to understand how an HCD process influences the usability of health IT. We conducted a systematic retrospective analysis of the HCD process used in the design of a CDS for pulmonary embolism diagnosis in the emergency department (ED). Guided by the usability outcomes (e.g., barriers and facilitators) of the CDS use “in the wild” (see Part 1 of this research in the accompanying manuscript), we performed deductive content analysis of 17 documents (e.g., design session transcripts) produced during the HCD process. We describe if and how the design team considered the barriers and facilitators during the HCD process. We identified 7 design outcomes of the HCD process, for instance designing a workaround and making a design change to the CDS. We identify gaps in the current HCD process and demonstrate the need for a continuous health IT design process.

Keywords: Human-centered design, Health IT, Clinical decision support, Usability evaluation, Retrospective analysis

1. Introduction

Health care is evolving at a rapid pace with major changes related to the explosion of available data (e.g. genomics, biological data) (Brennan, 2019) and the increasing use of Health Information Technology (IT), such as the Electronic Health Record (EHR). These technological advances provide opportunities to improve patient safety and care quality as well as clinician efficiency and effectiveness (El-Kareh et al., 2013; King et al., 2014). For instance, Clinical Decision Support (CDS) can improve clinical decision-making by providing patient-specific assessments and recommendations at the point of care (Hunt et al., 1998; Patterson et al., 2019). Yet, major problems with the usability of health IT persist (Han et al., 2005; Hill et al., 2013; Karsh et al., 2010; Ratwani et al., 2019; Schulte & Fry, 2019). The Office of the National Coordinator for Health Information Technology as well as several other agencies recommend the use of Human-Centered Design (HCD) and Human Factors (HF) principles in order to improve the usability of health IT and reduce negative impacts on patients and clinicians (The Office of the National Coordinator for Health Information Technology, 2020). The aim of this study is to explore how an HCD process impacts the usability of health IT after the technology is implemented.

1.1. Human-centered design

HCD is an HF methodology aimed at identifying (and meeting) user needs in the design of systems such as technologies. HCD has two primary goals: (1) determine the right problem to solve, and (2) develop an appropriate solution to address that problem (Melles et al., 2021). The HCD process involves 4 main phases: (a) understanding and specifying the context of use, (b) specifying the user and sociotechnical systems requirements, (c) developing design solutions, and (d) evaluating the developed designs (International Organization for Standardization, 2010; Melles et al., 2021). This process can increase a technology’s usability, defined by the International Organization for Standardization (ISO) as “the extent to which a system, product, or service can be used by specific users to achieve specified goals with effectiveness, efficiency, and satisfaction” (International Organization for Standardization, 2018) (pg. 2).

1.2. Evaluating the human-centered design process

While HCD can be beneficial for health IT usability (Carayon et al., 2020; Russ et al., 2014), few studies have performed systematic evaluations of HCD processes (Haims & Carayon, 1998; Hose et al., 2023; Xie et al., 2015). Xie et al. (2015) examined collaboration between design team members in a project re-designing the family-centered rounds process for hospitalized children. After completing the design process, researchers interviewed 10 design team members to understand the different stakeholder experiences in the collaborative design process. The specific challenge of managing multiple perspectives (i.e. multiple stakeholders) was highlighted. Extending on this work, Hose et al. (2023) examined how multiple roles (e.g., ICU nurse, emergency physician) with differing perspectives collaborated during the design of a team health IT solution to support pediatric trauma care transitions. Researchers outlined processes of collaboration that occurred among design team members during 4 design sessions. In particular, they described how various methods and approaches implemented in the HCD process supported the development of common ground and clarification of perspectives from the different team members. Despite this work, we do not fully understand how the HCD process results in usability outcomes. As described by Harte and colleagues (2017) there is “a lack of descriptive detail of the activities carried out within the design process, particularly in regard to ISO 9241–210 (human-centered design), and a lack of reporting on how successful or unsuccessful these activities were”. To ensure we are optimizing the design of health IT, we need to better understand how the HCD process leads to technology usability outcomes after the technology is implemented.

1.3. Archival analysis

Archival analysis, also referred to as retrospective analysis or historiography, uses pre-existing data (e.g. incident or operating records, annual reports, planning documents), and is a useful research method for understanding a process over a long period of time (Bisantz & Drury, 2005). As archival data are not collected for a research purpose, archival analysis can provide a more realistic view of the events that occurred and is typically an “inexpensive” research method. The goal of an archival analysis is “examining data from the past, integrating it into a coherent unity and putting it to some pragmatic use for the present and the future” (Sarnecky, 1990). Compared to other types of secondary analyses, archival analysis is commonly focused on finding associations and causes of events that have occurred, which is based on knowledge of outcomes. For instance, archival analysis can be used to understand why adverse events, such as accidents, occurred (Bisantz & Drury, 2005). There are 3 approaches to analyze the data: (1) for content (i.e. content analysis), (2) as commentary, and (3) as actors (Miller & Alvarado, 2005). Archival analysis has been used to understand the development of a patient portal in clinics in Canada (Avdagovska et al., 2020), to evaluate the professional gains of a therapist training program for master’s students (Niño et al., 2015), and to describe public health response to rural mass gatherings (Polkinghorne et al., 2013). In this study, we conduct a retrospective archival analysis to examine the HCD process used to design a CDS; the retrospective analysis is based on our knowledge of the usability outcomes of the CDS use “in the wild” following implementation in the emergency department.

1.4. Human-centered design of PE Dx CDS

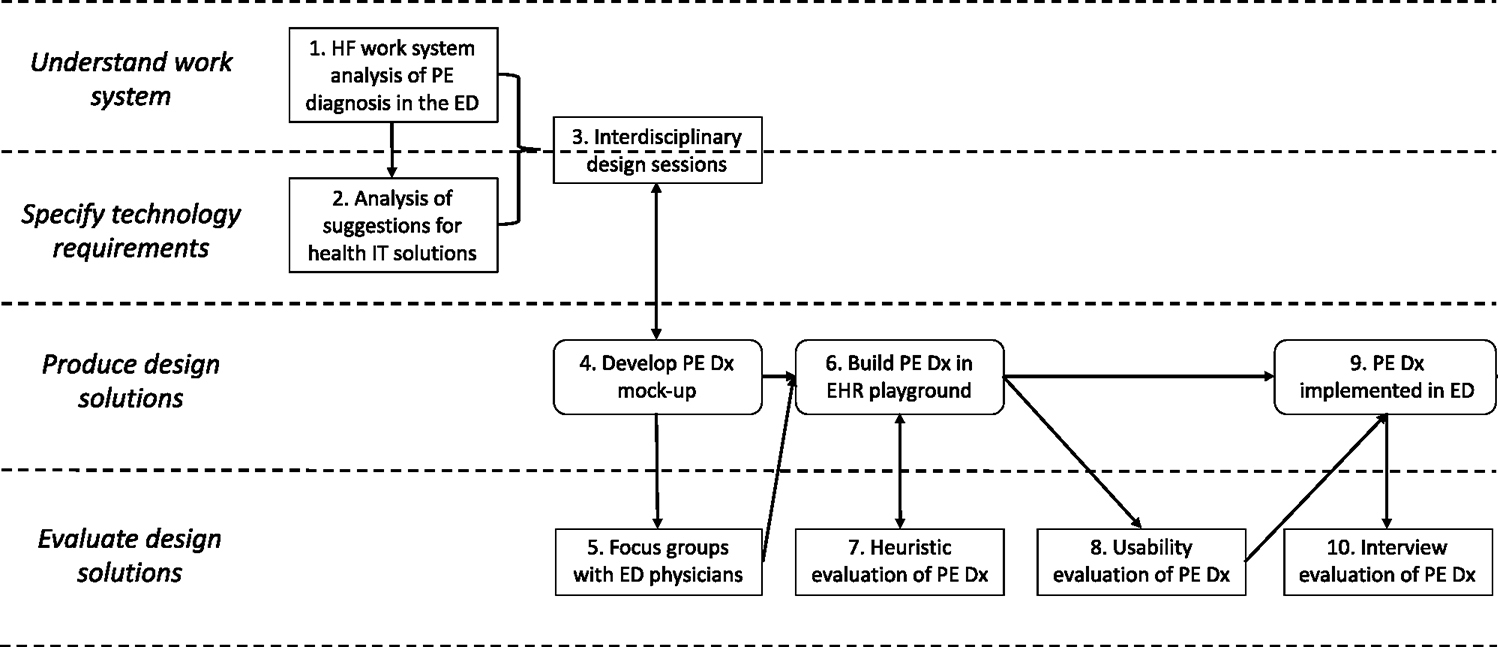

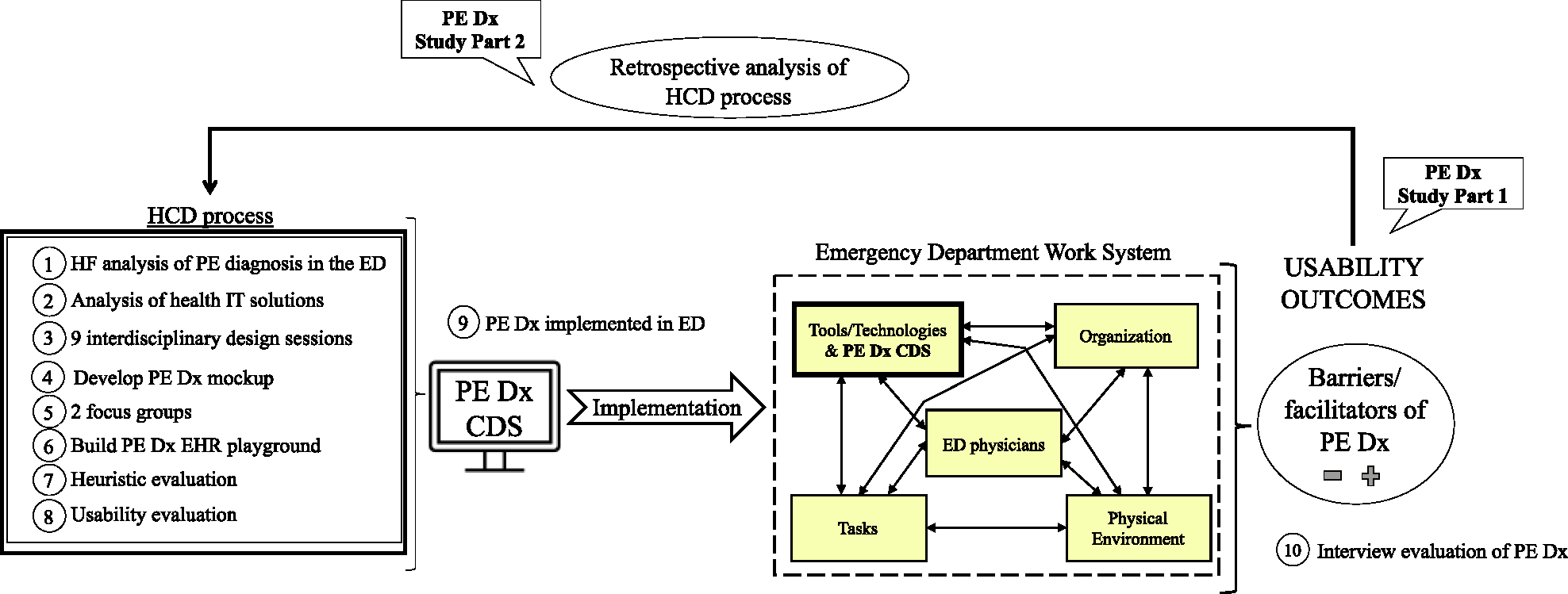

An interdisciplinary team of 7 HF engineers, 2 emergency physicians, and 1 IT specialist designed a CDS to support Pulmonary Embolism (PE) Diagnosis (Dx) in the Emergency Department (ED) (i.e. PE Dx). All authors of this paper were members of the interdisciplinary design team (4 HF engineers and 2 emergency physicians). Fig. 1 depicts the HCD process for PE Dx, which followed the 4 phases of HCD described by ISO (International Organization for Standardization, 2010); this is fully described in three published articles (Carayon et al., 2020; Hoonakker et al., 2019; Salwei et al., 2022a). Throughout the design of PE Dx, we systematically considered several HF design principles (left side of Table 2). The PE Dx CDS combined two risk scoring algorithms recommended for diagnosing PE, the Wells’ criteria (Wells et al., 2001) and the Pulmonary Embolism Rule-out Criteria (PERC) rule (Kline et al., 2010) (henceforth referred to as Wells’ and PERC). The PE Dx automatically populated patient data from the EHR, calculated the patient’s risk for PE using the Wells’ and PERC, and provided a recommendation on the appropriate diagnostic pathway; the PE Dx then supported ordering the recommended diagnostic test and automatically documented the decision in the physician EHR note. We implemented PE Dx in one ED in December 2018 (Salwei et al., 2022b). Following the implementation of PE Dx, Salwei et al. (2023) investigated the usability outcomes (i.e., barriers and facilitators) of the CDS in the ED. Guided by our knowledge of the usability outcomes of the CDS in use (documented in the accompanying Part 1 manuscript), in this study we perform an archival analysis of the HCD process to understand if and how these usability outcomes were considered in the HCD process. Fig. 2 depicts the conceptual framework guiding this study.

Fig. 1.

Human-centered design (HCD) of PE Dx CDS.

Table 2.

Categories of usability outcomes identified in the implementation of PE Dx (full details in Salwei et al., 2023). A code was created for each of these categories.

| Expected usability outcomes related to HF principles (9 codes) | Emergent usability outcomes (18 codes) |
|---|---|
| Automation of information acquisition | Mobile workflow |
| Automation of information analysis | Computer access |
| Support of decision selection | Resident workflow in other services |
| Explicit control/flexibility | Preference for another CDS |
| Minimization of workload | Integration of multiple CDS |
| Consistency | Time pressure |
| Chunking/grouping | Integration within EHR |
| Visibility | Physician-patient workflows |
| Error prevention | Availability of residents |
| | Interruptions |
| | Preference for another PE workflow |
| | Attending-resident tasks |
| | Gestalt and memorization of criteria |
| | Teaching tool |
| | Adaptation for patient risk |
| | Ordering workflow |
| | Access to CDS evidence |
| | Alerts |

Fig. 2.

Conceptual framework of the study.

2. Problem statement

Based on our knowledge of the usability outcomes of an HF-based CDS in the ED (Salwei et al., 2023), we aim to understand how the HCD process contributed to these outcomes. By better understanding this, we can improve our HF methods and the usability of health IT.

3. Methods

This study was part of a larger project aimed at supporting Venous ThromboEmbolism (VTE) diagnosis and management; the study took place at one ED of an academic health system in Wisconsin, USA. This study was approved by the Institutional Review Board.

3.1. Data collection

To systematically analyze the HCD process, we performed a retrospective archival analysis of the HCD process used to design PE Dx. The HCD process included 10 steps (see Fig. 1); each step generated one or more documents, i.e., design artifacts, that contained information about the design process. Table 1 details the steps of the HCD process and the document(s) created in each step.

Table 1.

Step of HCD process and corresponding documents produced.

| HCD phase | HCD step | Design activities | Documents produced |
|---|---|---|---|
| Understand work system | 1 | Human factors work system analysis of PE diagnosis in the ED | Excel spreadsheet of VTE cues and work system barriers and facilitators |
| Specify technology requirements | 2 | Analysis of suggestions for health IT solutions made by ED physicians | Health IT solutions of PE diagnosis analysis summary document |
| Understand work system & specify technology requirements | 3 | Interdisciplinary design sessions over one year for a total of 13.5 h (each session was between 1 and 2 h) | Design session 1 transcript |
| | | | Design session 2 transcript |
| | | | Design session 3 transcript |
| | | | Design session 4 meeting minutes |
| | | | Design session 5 transcript |
| | | | Design session 6 transcript |
| | | | Design session 7 meeting minutes |
| | | | Design session 8 transcript |
| | | | Design session 9 transcript |
| Produce design solutions | 4 | Develop PE Dx mock-up | N/A |
| Evaluate design solutions | 5 | Focus groups with four ED physicians (one hour each, interspersed between design sessions) for review of PE Dx mock-up | Focus group 1 transcript |
| | | | Focus group 2 transcript |
| Produce design solutions | 6 | Build PE Dx in EHR playground | N/A |
| Evaluate design solutions | 7 | Heuristic evaluation of PE Dx with 7 participants (HF engineers, physician, IT specialist) for a total of 2 h | Heuristic evaluation final report |
| Evaluate design solutions | 8 | Usability evaluation of PE Dx | Team meeting minutes (5/11/18) |
| | | | Team meeting minutes (7/6/18) |
| | | | Email with PE Dx programmer |
| Produce design solutions | 9 | PE Dx implemented in ED | N/A |
| Evaluate design solutions | 10 | Interview evaluation of PE Dx | N/A |
| Total documents | | | 17 |

3.2. Data analysis

We previously identified 27 categories of usability outcomes (Table 2) of PE Dx in a post-implementation evaluation (Salwei et al., 2023). The categories on the left side of Table 2 represent “expected usability outcomes” of the PE Dx, as these correspond to the HF design principles we applied during the HCD process of PE Dx. In contrast, the categories on the right side of Table 2 represent “emergent usability outcomes”; these were unexpected barriers and facilitators that we identified in the post-implementation evaluation of PE Dx. Full details on the post-implementation evaluation and interview methods can be found in an accompanying Part 1 manuscript (Salwei et al., 2023).

To understand if and how these usability outcomes were addressed in our HCD process, we created a code for each usability outcome. A total of 27 codes guided the analysis: 9 codes were expected usability outcomes and 18 codes emerged as usability outcomes (i.e., barriers or facilitators) when the PE Dx was implemented.

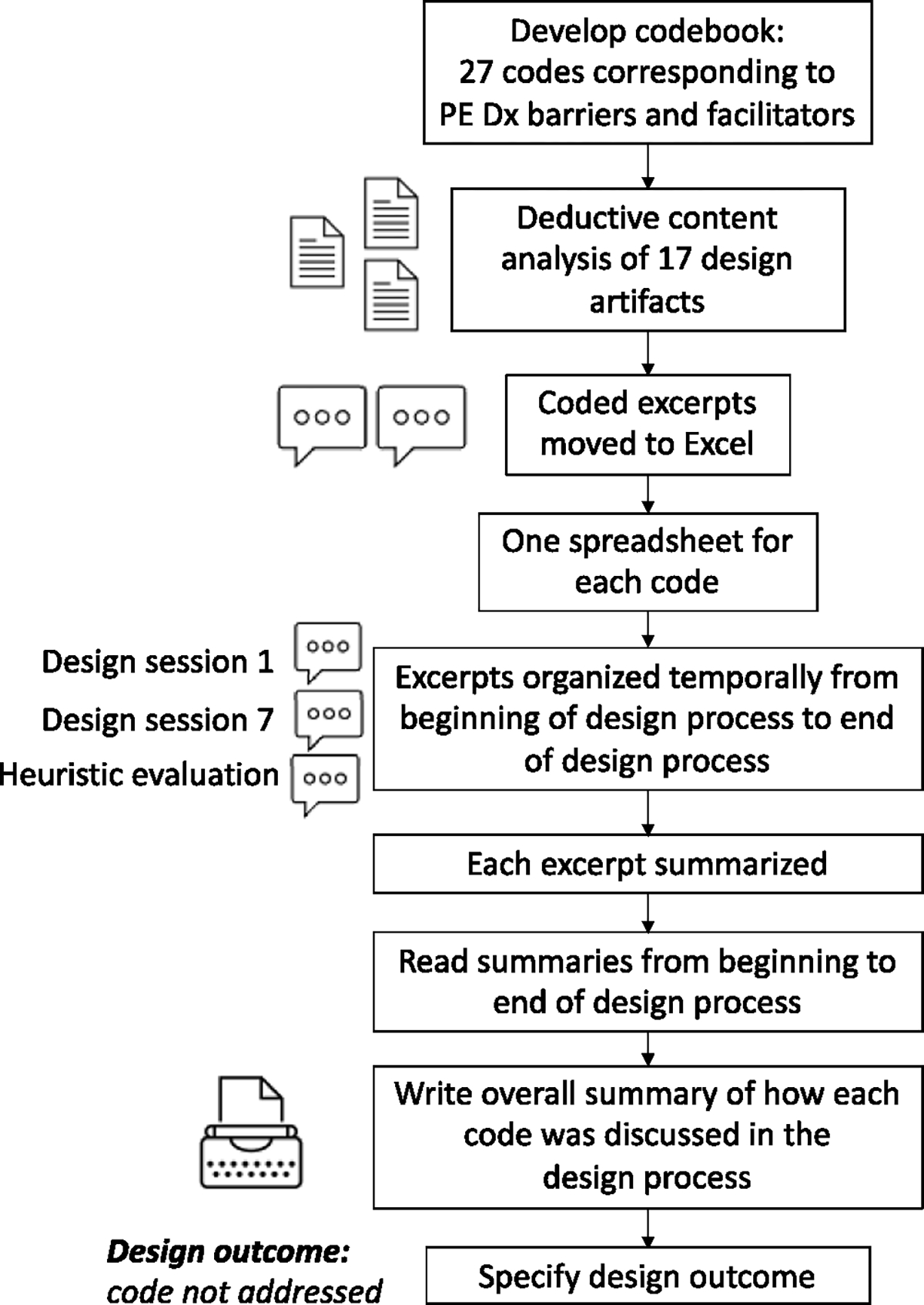

Using these codes, we performed deductive content analysis (Elo & Kyngäs, 2008) of the 17 documents produced in the HCD process of PE Dx. First, we developed a codebook with a description and examples of each code. We coded the documents in Microsoft Word and then exported the coded excerpts into Microsoft Excel for analysis. The data analysis process is depicted in Fig. 3. In Excel, we iteratively analyzed the data in several steps. First, we created an Excel spreadsheet for each code (e.g., consistency, error prevention). Each Excel spreadsheet contained the code’s excerpts organized temporally as they occurred throughout the HCD process. If more than one code was discussed at a time, we assigned multiple codes to the excerpt (e.g., consistency, support of decision selection) and then copied the excerpt into both codes’ tabs in Excel. Next, one researcher created a summary describing each excerpt. After writing a summary for each excerpt, one researcher read through the summaries from the start of the HCD process until the end. Next, an overall summary was written to describe how each code was considered in the HCD process. The summaries and results were discussed with another researcher to get their input and feedback. Table 3 shows an example of the data analysis performed in Excel. Finally, we classified each code according to the design outcome that resulted from the code’s discussion in the HCD process. For instance, in Table 3, the design outcome was “code not addressed”.
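As an illustration only, the organization of excerpts described above could be sketched as follows in Python. The analysis itself was performed in Microsoft Word and Excel; the data structure, the function name `group_by_code`, and the sample excerpts are hypothetical, and ordering by HCD step number is assumed here as a stand-in for temporal order.

```python
from collections import defaultdict

# Hypothetical coded excerpts: (HCD step, document, excerpt text, assigned codes).
excerpts = [
    (3, "Design session 3 transcript", "excerpt A",
     ["consistency", "support of decision selection"]),
    (1, "Analysis of cues spreadsheet", "excerpt B", ["consistency"]),
]


def group_by_code(coded_excerpts):
    """Return one list of excerpts per code, analogous to one tab per code."""
    by_code = defaultdict(list)
    for step, document, text, codes in coded_excerpts:
        # An excerpt with several codes is copied into every matching group.
        for code in codes:
            by_code[code].append((step, document, text))
    for rows in by_code.values():
        # Assumption: step number approximates the order of occurrence.
        rows.sort(key=lambda row: row[0])
    return dict(by_code)


tabs = group_by_code(excerpts)
```

In this sketch, "excerpt A" appears under both of its codes, mirroring the copying of multi-coded excerpts into each code's tab.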

Fig. 3.

Flowchart with data analysis steps.

Table 3.

Example data analysis.

Code: Integration of multiple CDS

| Step in HCD process | HCD document | Excerpt | Excerpt summary | Character count |
|---|---|---|---|---|
| 3 | Design session 3 transcript | “Design team member 1: Well, one of the reasons I wanted to spend some time on this is, first of all, the broader context is important. I mean, we not only designing, we design this CDS, but we have to keep other CDS in mind as well. It’s very easy to design one good CDS, but it depends on the relationship with other CDS. Right? I mean, if, if all the bad ones they will override everything so include in this one. The other thing is a lot of the CDS are currently built at one place into the system, and you could build them into several places in a system, because it’s a process. So I don’t want to lose that part it, I mean, you can do it here, but you don’t have to do it here.” | Design team member discusses considering the broader context in designing PE Dx. He mentions it is easy to design one good CDS, but we need to consider other CDS as well. Other CDS are built in one place in the system. | 680 |
| 3 | Design session 9 transcript | “Design team member 2: Because my thought would be we put in the PE one, and then in an ideal scenario, in ten years, that little thing you drop down and it’s PE versus like the other many successful tools that we do” | Design team member discusses a future state in which there is a drop down in Epic with PE Dx and many other CDS. | 207 |

Total length of discussion: 887 characters

Overall summary: In design session 3, one design team member mentioned that we need to consider the CDS in the context of other CDS. No design decisions were made as a result of this comment. At the end of the design sessions in design session 9, another design team member mentions a future state in which more CDS would be available in the EHR like PE Dx.

Design outcome: Code not addressed

To further characterize how the codes were considered during the HCD process, we calculated the number of documents and steps (see Table 1) in which codes were discussed and the length of discussion for each code. As a proxy for length of discussion, we calculated the total number of characters in the excerpts for each code. Finally, we organized the codes according to how frequently they were discussed during the HCD process. We identified two natural breaks in the frequency of code discussion based on the length of discussion and the total number of design documents in which each code was discussed. This resulted in 3 groups: (1) codes that were not discussed (0 characters coded; 0 design documents), (2) codes that were briefly discussed (399–1779 characters coded; 1–4 design documents), and (3) codes that were frequently discussed (3153–107,641 characters coded; 4–16 design documents).
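The length-of-discussion proxy and the three groups can be illustrated with a minimal Python sketch, using the character counts reported in Table 3 for the code integration of multiple CDS. The function name `discussion_group` is hypothetical, and the cut-points simply reuse the observed ranges reported above; totals falling between 1779 and 3153 characters were not observed, so the sketch rejects them rather than guessing a boundary.

```python
# Character counts per document for one code, as reported in Table 3.
excerpt_lengths = {
    "Design session 3 transcript": 680,
    "Design session 9 transcript": 207,
}


def discussion_group(total_chars):
    """Assign a code to a discussion group from its total coded characters."""
    if total_chars == 0:
        return "not discussed"
    if total_chars <= 1779:   # upper end of the observed "briefly" range
        return "briefly discussed"
    if total_chars >= 3153:   # lower end of the observed "frequently" range
        return "frequently discussed"
    # Assumption: no rule is defined for the unobserved gap between groups.
    raise ValueError("total falls between the observed groups")


total_chars = sum(excerpt_lengths.values())   # length-of-discussion proxy
n_documents = len(excerpt_lengths)            # documents mentioning the code
group = discussion_group(total_chars)
```

For this code, the total is 887 characters across 2 documents, which places it in the briefly discussed group.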

4. Results

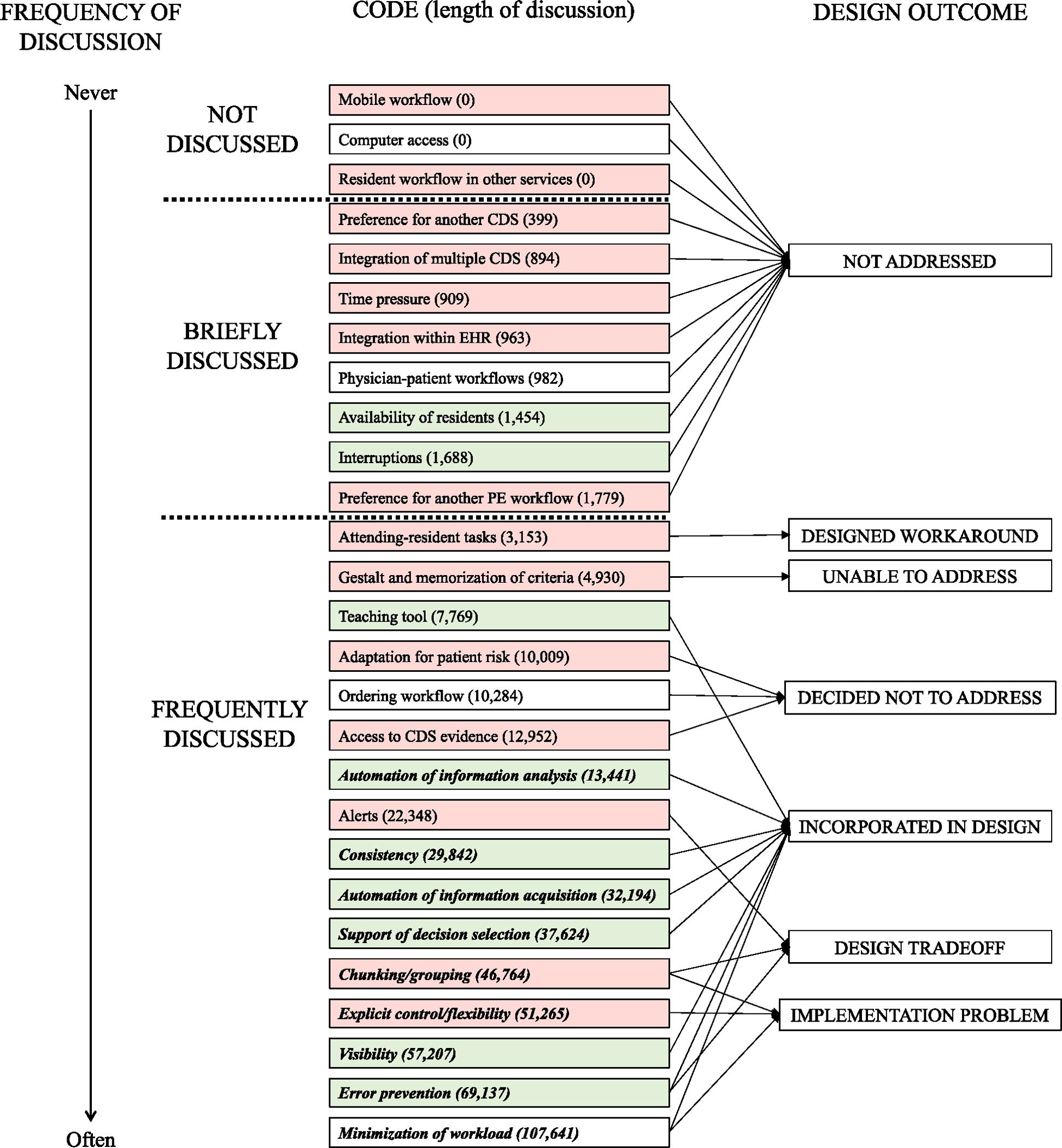

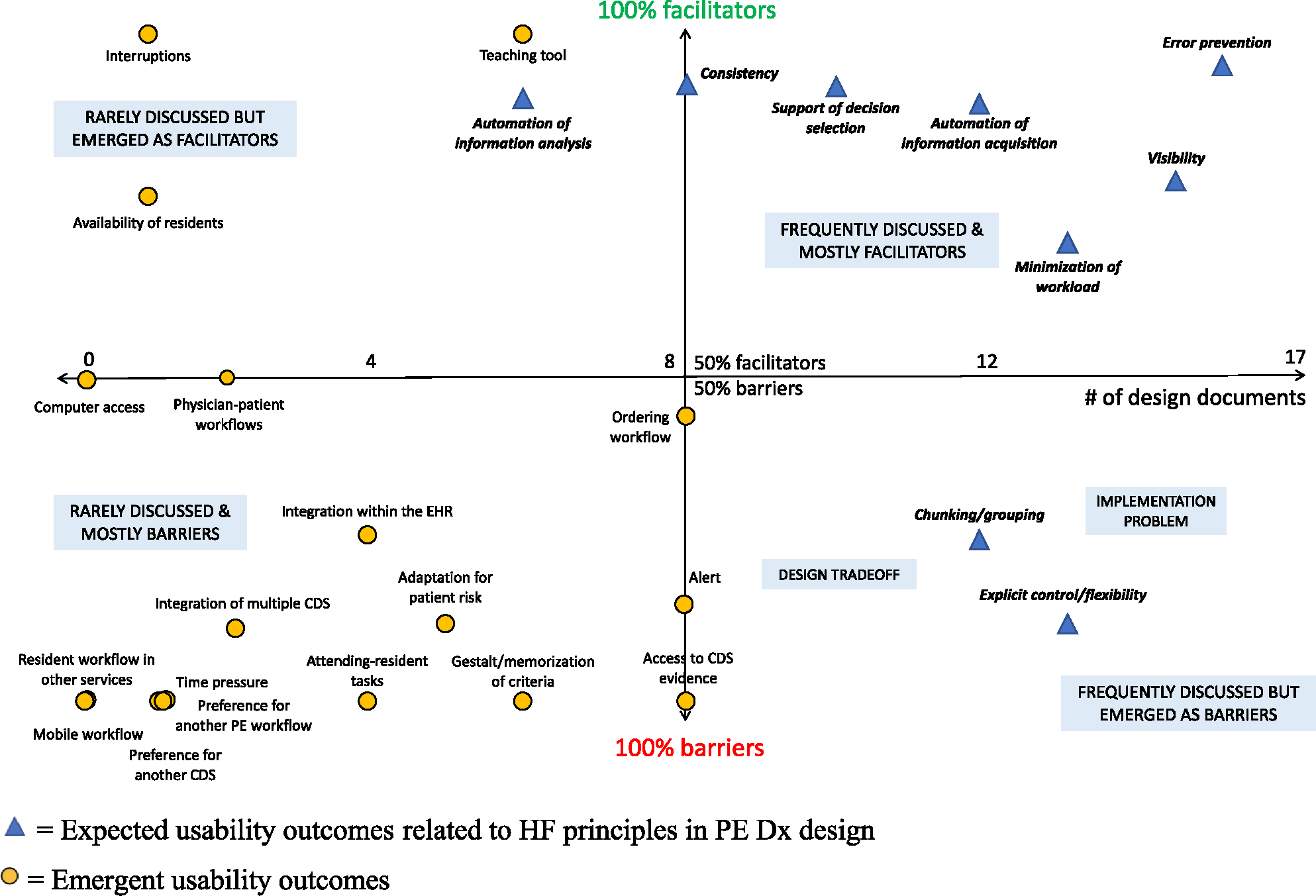

Through a qualitative analysis of the PE Dx design process, we identified differences in how each of the 27 codes was discussed in the HCD process as well as differences in how each code was (or was not) incorporated in the design of PE Dx; these findings are described in Sections 4.1 and 4.2, respectively. Finally, in Section 4.3, we explore the relationship between how frequently a code was discussed in the PE Dx HCD process and the resulting usability outcomes (i.e., proportion of barriers and facilitators) identified when the technology was implemented.

4.1. How were the usability outcomes (i.e., barriers and facilitators) considered in the HCD process?

We found that 24 out of the 27 codes were discussed during the HCD process. Eight of these 24 codes were only briefly discussed during the HCD process (see Appendix 1) with the remaining 16 codes being frequently discussed (see Appendix 2). Three of the codes (mobile use, computer access, and resident workflow in other services) were never discussed in the HCD process; this means that we did not find discussion of these topics in any of the 17 documents coded. Fig. 4 depicts a model of how the 27 codes were incorporated in the design of PE Dx. In this figure, the codes are organized according to how frequently they were discussed in the HCD process spanning from codes that were never discussed at the top of the figure and codes that were frequently discussed at the bottom.

Fig. 4.

Frequency of code discussion in the HCD process and impact on PE Dx design.

Bold, italics = Codes that were expected usability outcomes related to HF principles in the design of PE Dx

Green = More than 66 % of excerpts coded as facilitators in post-implementation evaluation (Salwei et al., 2023)

White = 34–66 % of excerpts coded as facilitators

Red = Less than 34 % of excerpts coded as facilitators.

4.2. What was the result of the discussion in the PE Dx HCD process?

Consideration (or lack thereof) of the codes during the HCD process resulted in one of 7 design outcomes:

(1) The problem was not addressed.

(2) The problem was addressed with the design of a workaround.

(3) The problem was unable to be addressed through design.

(4) The designers decided not to address the problem.

(5) The problem was addressed with a CDS design change.

(6) The problem represented a design tradeoff that needed to be managed.

(7) The problem was addressed but issues emerged due to the CDS roll-out, go-live, and/or following implementation of the technology.

Below we describe each of these design outcomes.

4.2.1. Not addressed in the PE Dx design

We identified 11 codes that were not addressed or incorporated in the design of PE Dx; all of these codes emerged as barriers/facilitators after the implementation of PE Dx (right side of Table 2). Three of these codes were never discussed (mobile use, computer access, resident workflow in other services), while the other 8 codes were only discussed in 1, 2, or 4 design documents (preference for another CDS, integration of multiple CDS, time pressure, integration with EHR, physician-patient workflows, availability of residents, interruptions, and preference for another PE workflow). For example, the code integration of multiple CDS was briefly discussed during design session 3 (see example in Table 3). One design team member mentioned that we should consider the broader context of PE Dx use, including other CDS that physicians use: “Well, one of the reasons I wanted to spend some time on this is, first of all, the broader context is important. I mean, we’re not only designing, we design this CDS, but we have to keep other CDS in mind as well. It’s very easy to design one good CDS, but it depends on the relationship with other CDS. Right?” The design team member went on to discuss how other CDS are currently in one part of the system: “The other thing is a lot of the CDS are currently built at one place into the system, and you could build them into several places in a system, because it’s a process. So, I don’t want to lose that part”. We did not make any design changes based on this comment. Later in the HCD process, during design session 9, an ED physician described a future state in which PE Dx would be one of many CDS integrated in the EHR. After the implementation of PE Dx, we identified a barrier to use of PE Dx since it was the only CDS integrated in the EHR.

4.2.2. Design of a workaround

One code, attending-resident tasks, was discussed by the design team and resulted in the design of a workaround to address this issue. In design session 2, a design team member stated: “there may be a scenario where the resident, APP need to talk to the attending”; an ED physician agreed. In design session 5, an ED physician discussed how the attending should have the ability to create a new entry in the CDS if they do not agree with how the resident filled out the CDS; this was reiterated in design session 8 and implemented in the CDS design. Rather than designing the CDS to support the discussion between residents and attendings using the CDS (mentioned in design session 2), we designed a workaround so that physicians could edit the CDS results if they disagreed with the resident’s use of the CDS.

4.2.3. Unable to address in PE Dx design

One code, gestalt and memorization of criteria, was frequently discussed during the HCD process; however, we were unable to make a design change to address this code. The design team identified this as a potential barrier early in the design process in the analysis of cues (i.e., design step 1). While this challenge was acknowledged in our design process, we could not address this factor with any design changes. When PE Dx was implemented, this code was a barrier to using the CDS as physicians said they had a clinical gestalt of PE risk or had the Wells’ and/or PERC criteria memorized and therefore did not need to use PE Dx.

4.2.4. Decided not to address in PE Dx design

We identified 3 codes, adaptation for patient risk, ordering workflow, and access to CDS evidence, that were explicitly considered in the HCD process, but which the design team decided not to address in PE Dx. For instance, the code adaptation for patient risk was discussed in the analysis of cues, design sessions 2, 8, and 9, and in focus group 2. In each of these design steps, we considered different patient scenarios that would alter the use of the CDS such as patient age over 50, pregnancy, and patients with cancer. For example, one design team member, an ED physician, described a scenario where the patient risk is low: “There’s definitely another bucket here, where it’s a young person, if I’m just basically trying to see if I need to even think about PE at all, in a way, and I’m just sort of quickly applying PERC and then being done with it.” Because use of PE Dx was not mandatory and physicians could exit the tool at any time, we decided not to adapt the CDS to specific patient scenarios. Instead, we concluded that physicians would not use PE Dx in these cases, or they could ignore the recommendation if it did not apply to a specific patient. When the CDS was implemented, several physicians stated that they would not use the CDS in certain situations such as for oncology patients who are very high risk; physicians thought that the CDS was useful in other patient scenarios.

4.2.5. Incorporated in PE Dx design

Discussion of 8 out of the 27 codes resulted in design changes to PE Dx. These codes were primarily facilitators when the PE Dx was implemented, with between 65 % and 100 % coded as facilitators (Salwei et al., 2023). All but one of these codes (teaching tool) were “expected usability outcomes” (left side of Table 2): automation of information analysis, consistency, automation of information acquisition, support of decision selection, visibility, error prevention, and minimization of workload. These codes were frequently considered by the team when making design decisions. For instance, during the design sessions, the team discussed the order to list the Wells’ and PERC criteria, deciding to list the criteria in the same order as the currently used CDS, MDCalc, to support consistency.

The code, minimization of workload, was the most frequently discussed code throughout the HCD process and prompted many design changes. For instance, early on in design session 1, an ED physician explained “I think in my mind, that’s the big problem, is the data is out there but it’s scattered. It’s inefficiently, it’s fragmented”. This prompted the design team to automatically populate data (e.g., heart rate, age) into PE Dx to reduce physician workload from searching and remembering information from one screen to another. Minimization of workload resulted in the most facilitators out of all the codes; however, several barriers emerged related to implementation problems (see Section 4.2.7 on Implementation Problems).

4.2.6. Design tradeoffs

Three of the codes, alerts, chunking/grouping, and error prevention, were frequently discussed in the design process and resulted in design trade-offs. For example, the code alerts was discussed in 8 different documents throughout the HCD process. Early in the HCD process, we decided that the CDS would be “pull” rather than “push” via an alert, meaning the physician would have the option of whether to use the CDS. The decision to have the CDS “pull” versus “push” was related to balancing physician autonomy with error prevention (e.g., ensuring that the physician does not forget to consider PE for a patient). For example, in design session 3 we discussed alerts: “I think we’re designing this interface, and, definitely, physicians will be able to call up the interface on their own, and then I would leave it as an open question for now. Whether or not we push that interface on people at various points where they seem to be doing the wrong thing, because that’s a more invasive type of decision support”. We made the decision as a team to respect physician autonomy and not “push” the CDS on the physician (i.e., with an alert). This decision was supported by a discussion about how challenging it can be to determine the appropriate triggers for an alert (e.g., chief complaint of chest pain, elevated heart rate). It is also difficult to determine the appropriate timing in the workflow for the alert to be placed. These challenges further supported our decision not to push the CDS with an alert to the physician. Our team discussed a future state in which the CDS could be both pull and push after the initial implementation.

4.2.7. Implementation problems

Three codes were discussed and addressed in the PE Dx design; however, problems after the implementation of PE Dx resulted in barriers to use of the CDS. For example, we identified a barrier related to the location of PE Dx within a section of the EHR called the “ED navigator” (code: chunking/grouping). In design sessions 2, 3, 5, 6, 8, and 9, we discussed where the CDS should be placed within the EHR and concluded that multiple different locations would be possible. Because of the flexibility in the location of the CDS, we decided to ask physicians about their preferred location for PE Dx during the usability testing. At the end of the usability testing session, we asked physicians to select one of three possible locations for the PE Dx to be located in the EHR. Seventy-two percent of physicians agreed that the ED navigator was the best spot for the CDS. Five months after PE Dx was implemented, the EHR was upgraded making the ED navigator much less prominent. This ultimately resulted in many barriers to PE Dx use; specifically, that it was inconvenient to go to the ED navigator to use the CDS. Although we considered the PE Dx location during the HCD process, the change in the EHR functionality after implementation resulted in many barriers to using PE Dx.

We also identified several barriers related to implementation problems for the code minimization of workload. For instance, one barrier was due to challenges with the automatic documentation feature of the CDS. We discussed the CDS documentation feature throughout the HCD process, and in design sessions 8 and 9, we discussed that the CDS documentation should be editable. However, physicians were not aware that the documentation could be edited. This resulted in barriers reported by physicians stating that the CDS documentation does not always work if the physician has already started their note and that the automatic documentation cannot be edited after it is pulled into the physician note. This reflects a problem communicating the CDS functionality and physician training during the implementation of the technology.

4.3. Relationship between frequency of discussion in HCD process and usability outcomes

Fig. 5 shows the distribution of codes according to their frequency of discussion in the HCD process and the percentage of barriers and facilitators identified in the post-implementation evaluation (Salwei et al., 2023). In the figure, the x-axis represents how often the code was discussed based on the number of design documents that the code appeared in during the HCD process. The y-axis represents the proportion of barriers and facilitators for each code based on the interviews with ED physicians. For example, in the post-implementation evaluation of PE Dx (Salwei et al., 2023), the code teaching tool was described by ED physicians as a facilitator 100 % of the time, meaning the code was never mentioned as a barrier; this code appeared in 6 design documents. The code physician-patient workflows was described as both a barrier and facilitator, with ED physicians describing 2 barriers and 2 facilitators relating to this code; this code appeared in 2 design documents.
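The proportion plotted on the y-axis can be made concrete with a short Python sketch that also applies the color bands from the Fig. 4 legend (more than 66 % facilitators, 34–66 %, less than 34 %). The function names are hypothetical; the input counts for physician-patient workflows (2 barriers, 2 facilitators) are those reported above.

```python
def percent_facilitators(n_facilitators, n_barriers):
    """Share of a code's coded excerpts that were facilitators, in percent."""
    total = n_facilitators + n_barriers
    if total == 0:
        raise ValueError("code has no barrier or facilitator excerpts")
    return 100.0 * n_facilitators / total


def legend_band(pct):
    """Map a facilitator percentage to the color bands of the Fig. 4 legend."""
    if pct > 66:
        return "green"   # more than 66 % facilitators
    if pct < 34:
        return "red"     # less than 34 % facilitators
    return "white"       # 34-66 % facilitators


# Physician-patient workflows: 2 facilitators and 2 barriers were described.
ppw_pct = percent_facilitators(2, 2)
ppw_band = legend_band(ppw_pct)

# Teaching tool: described as a facilitator 100 % of the time.
teaching_band = legend_band(100.0)
```

Under these bands, physician-patient workflows (50 % facilitators) falls in the white band and teaching tool in the green band.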

Fig. 5.

Distribution of codes based on their consideration in the HCD process and the percentage of barriers and facilitators identified after implementation.
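As an illustrative sketch (not the analysis code used in this study), the two coordinates plotted for each code in Fig. 5 can be derived from the counts reported in Appendix 2. The class and field names below are hypothetical, and the sketch assumes that the y-axis value is the percentage of coded interview excerpts that were facilitators.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodeSummary:
    """One code from the archival analysis (hypothetical structure)."""
    name: str
    n_documents: int      # x-axis: design documents in which the code appeared
    n_barriers: int       # interview excerpts coded as barriers
    n_facilitators: int   # interview excerpts coded as facilitators

    def pct_facilitators(self) -> float:
        """Assumed y-axis value: share of excerpts that were facilitators."""
        total = self.n_barriers + self.n_facilitators
        if total == 0:
            raise ValueError(f"No coded excerpts for {self.name!r}")
        return 100.0 * self.n_facilitators / total


# Counts copied from Appendix 2 for three of the frequently discussed codes.
codes = [
    CodeSummary("Teaching tool", n_documents=6, n_barriers=0, n_facilitators=10),
    CodeSummary("Chunking/grouping", n_documents=12, n_barriers=27, n_facilitators=10),
    CodeSummary("Alerts", n_documents=8, n_barriers=10, n_facilitators=3),
]

# (name, x, y) tuples, with y rounded to one decimal place.
points = [(c.name, c.n_documents, round(c.pct_facilitators(), 1)) for c in codes]

for name, x, y in points:
    print(f"{name}: discussed in {x} documents, {y}% facilitators")
```

Under these assumptions, teaching tool falls at 6 documents and 100% facilitators, which matches the example given in the text.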

The four quadrants in Fig. 5 provide further perspective on the outcomes of the HCD process. The upper right quadrant represents codes that were frequently discussed in the HCD process and that were primarily facilitators to PE Dx use when implemented in the ED. All these codes were expected usability outcomes after the implementation of PE Dx (left side of Table 2), which makes sense; these codes were systematically considered and incorporated in the CDS design. In contrast, the lower left quadrant represents codes that were only briefly discussed in the HCD process and that emerged as barriers when the CDS was implemented. Again, not surprisingly, these codes all represent factors that were unexpected and emerged as usability barriers following implementation. In the upper left quadrant, we see several codes that were not frequently discussed but that emerged as facilitators when the CDS was implemented. Finally, in the lower right quadrant, there are codes that were frequently discussed during the HCD process, but that emerged as barriers when the CDS was implemented. These codes were related to design tradeoffs and/or implementation problems of the CDS.
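The quadrant logic described above can be sketched as a simple classification rule. This is a minimal illustration only: the cutoffs separating "briefly" from "frequently" discussed codes and barriers from facilitators are not stated in the text, so `doc_cutoff` and `pct_cutoff` are hypothetical parameters, and the function name is our own. The counts in the usage lines are taken from Appendices 1 and 2.

```python
def quadrant(n_documents: int, n_barriers: int, n_facilitators: int,
             doc_cutoff: int = 5, pct_cutoff: float = 50.0) -> str:
    """Assign a code to a quadrant of a Fig. 5 style plot.

    doc_cutoff and pct_cutoff are hypothetical thresholds chosen for
    illustration; they are not the values used to draw Fig. 5.
    """
    total = n_barriers + n_facilitators
    if total == 0:
        raise ValueError("A code needs at least one coded excerpt")
    pct_facilitators = 100.0 * n_facilitators / total
    # Right half: code appeared in many design documents.
    horizontal = "right" if n_documents >= doc_cutoff else "left"
    # Upper half: code was mostly described as a facilitator.
    vertical = "upper" if pct_facilitators > pct_cutoff else "lower"
    return f"{vertical} {horizontal}"


# Counts from Appendix 2 (frequently discussed codes).
print("Teaching tool:", quadrant(6, 0, 10))
print("Chunking/grouping:", quadrant(12, 27, 10))
# Counts from Appendix 1 (briefly discussed codes).
print("Time pressure:", quadrant(1, 3, 0))
print("Availability of residents:", quadrant(1, 1, 2))
```

With these illustrative cutoffs, chunking/grouping lands in the lower right quadrant (frequently discussed, yet mostly a barrier), consistent with the implementation problem described for the ED navigator.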

5. Discussion

In this study, we analyzed the HCD process used in the design of an HF-based CDS in light of the outcomes of use in the clinical environment. HCD is essential to ensure the usability of health IT (The Office of the National Coordinator for Health Information Technology, 2020); however, little is known about how the HCD process relates to usability outcomes when health IT is implemented in the clinical environment (Harte et al., 2017). Only a few studies have performed a systematic evaluation of the HCD process (Haims & Carayon, 1998; Hose et al., 2023; Xie et al., 2015). Our study helps to better understand HCD processes, and to evaluate (and improve) the impact of our HF methods and approaches on the usability of health IT. We analyzed the PE Dx HCD process and identified 7 outcomes of the design process. We found that the HCD process can produce benefits, e.g., usable technologies, but there are many challenges, such as design tradeoffs and consideration of all the work system elements. This work sheds light on a specific HCD process, including the work and role of HF professionals in the design of health IT. We expanded our understanding of how the HCD process leads to usability outcomes of health IT. Below we outline strengths and gaps in the HCD process and opportunities for improvement in the future design of CDS. Table 6 provides specific recommendations corresponding to each of the design outcomes.

Table 6.

Design outcomes and recommendations for designers.

| Design outcome | Recommendation for designers |
|---|---|
| Not addressed | - These factors should be incorporated as guiding design principles in future HCD processes. - For example, how will the presence/absence of other CDS in the EHR impact use of the technology? Will physician movement throughout the physical environment hinder use of the technology? |
| Designed a workaround | N/A |
| Unable to address | - Principles of change management should be employed to promote the technology and why it is important. - For instance, why is the technology better than current practice? What evidence is there that the technology improves patient safety? |
| Decided not to address | - Continue monitoring the technology after implementation to determine if these factors cause issues to technology use. - Consider design modifications if issues arise. |
| Incorporated in the design | N/A |
| Design tradeoff | - Consider the priorities for the technology and which design principles will take precedence when in conflict, e.g., safety vs efficiency. - Communicate design decisions based on the tradeoff analysis to users during training and implementation. |
| Implementation problem | - Develop organizational processes to support a continuous design process for the technology after implementation. For instance, build a feedback mechanism into the technology to easily gather end user input and flag potential design issues. - Designate a role to monitor the technology’s use after implementation and investigate issues if use is consistently low. - Continually learn about design and implementation issues in order to improve future HCD processes. |

5.1. Design tradeoffs

We identified several design tradeoffs in our HCD process. For instance, in the design of the CDS, we frequently discussed the use of an alert as a reminder to use the CDS, as this is a common approach in CDS implementation (Mann et al., 2011; Press et al., 2015; Tan et al., 2020). We ultimately decided not to use an alert in the PE Dx; this decision was a design tradeoff between physician autonomy and error prevention, as physicians may forget to use the CDS without an alert. We also identified a design tradeoff between the codes minimization of workload (i.e., showing overlapping criteria together and skipping Wells’ if it is not needed), explicit control/flexibility (i.e., allowing the physician to use the CDS in any order they would like), and error prevention (i.e., following hospital policy and guidelines that recommend Wells’ to be used before PERC). One of our key goals was to ensure that the CDS did not recommend an incorrect decision to the physician, making error prevention the dominant design principle when the HF principles were in conflict. In the design of health IT, it is important to understand the priorities and design objectives so that design tradeoffs can be discussed and systematically addressed based on the values of the team, the goals for the technology, and organizational guidance.

We found that a code being frequently discussed in the HCD process was generally a positive sign as it indicated that the code was explicitly considered by the design team in making design decisions. However, frequent discussion of a code throughout the design process can also indicate that there was ambiguity, uncertainty or disagreement in the HCD process, with no clear design decision indicated; this resulted in more frequent discussion among the team to make design decisions. Following implementation of health IT, designers should monitor any barriers that emerge related to design tradeoffs and assess if modifications are needed to manage these barriers.

5.2. Consideration of the work system elements

We identified a lack of consideration of all of the work system elements (Smith & Carayon-Sainfort, 1989) in our HCD process. Most of the codes not discussed in the HCD process related to the ‘physical environment’ and ‘organization’ work system elements. For instance, the codes mobile workflow, computer access, and physician-patient workflows all described aspects of the physical environment that influenced workflow integration of the CDS. Several other codes briefly considered in the HCD process related to the organization work system element including resident workflow in other services, time pressure, availability of residents, and interruptions. These codes represent topics that should be more systematically integrated in future design of CDS to prevent barriers after implementation. Our findings demonstrate that the ‘physical environment’ and ‘organization’ work system elements were not adequately considered in our HCD process.

The lack of consideration of all of the work system elements indicates a need to expand the HCD methods used to design health IT. Based on the SEIPS model (Carayon et al., 2006), SEIPS-based process modeling (Wooldridge et al., 2017) is one method that could be used to help take into account the physical environment and organization work system elements during the HCD process. Role network analysis (Salwei et al., 2019) is another method that could be used to better understand organizational workflow, including the workflow of teams, during the design process; these methods systematically take into account each of the work system elements and visually depict the interactions between system elements. Designers could develop SEIPS-based process maps and role networks depicting clinic workflows. These tools could then be used throughout the HCD process to support consideration of all work system elements and their interactions. These tools may help to identify individual, team, and organizational workflows that can inform the design of the technology and improve its integration in the ED workflow.

5.3. Continuous design of health IT

We propose that a continuous design perspective is needed in the design of health IT. We identified several barriers that emerged after the implementation of the PE Dx. The most common barrier was the location of the PE Dx within the EHR (code: chunking/grouping), which was discussed in 6 design sessions and in the usability testing of PE Dx. After we implemented the PE Dx, the EHR vendor updated the EHR system; this upgrade altered much of the structure and workflow within the EHR and resulted in the ED navigator becoming less prominent. This modification of the workflow after our CDS was implemented made the PE Dx difficult to access in physicians’ new workflow. Our findings demonstrate the importance of a continuous design process, which entails regular monitoring and feedback loops to support the design of a technology after its implementation (Carayon, 2006, 2019; Carayon et al., 2008, 2017; Carayon & Salwei, 2021; Salwei et al., 2021). Following the implementation of the PE Dx, we could have continued to monitor the design and use of the CDS, which would have helped to identify the problem and prompted a change to the location of PE Dx in the EHR to better support physician workflow. Designers cannot anticipate and correct all design issues before a technology is implemented due to emergent properties of complex systems (Flach, 2012; Wilson, 2014). Therefore, it is important to monitor technologies after implementation (i.e., continuous design) to understand issues that arise and continue designing the technology to address the evolving work system (Carayon, 2006, 2019). We found that issues relating to the physical environment, organization, and some aspects of the task (teamwork) are more likely to be found after implementation, as these work system factors can be more difficult to simulate and test prior to implementation. In a continuous design process, additional attention may be needed relating to these work system elements.

One limitation of this study is that we did not have transcripts for all design sessions, and so we used a combination of meeting minutes and transcripts to analyze the design sessions. There may have been discussion of HF design principles or inductive categories that were missed in those meetings. Another limitation is that the retrospective archival analysis focused on the documents created throughout the steps in the HCD process; there were discussions about the CDS design that were not captured as a part of the documents analyzed in the formal HCD process. However, we diligently recorded all design decisions made throughout the CDS design process, so it is unlikely that we missed any significant decisions. Another limitation is that the researcher analyzing the data was involved in the design process, which may have limited the objectivity of the analysis; the use of skeptical peer review and member checking helped to reduce potential biases in the analysis (Devers, 1999). Finally, these findings only represent one HCD process used to design one type of health IT, a CDS; the findings may not be applicable to other types of HCD processes or the design of other types of health IT. However, we followed a rigorous HCD process, including all of the steps outlined by ISO, and we systematically applied HF principles throughout the HCD process. We believe the gaps identified in our study will be applicable across other HCD processes.

Future research should continue to explore how HCD processes impact health IT usability outcomes, especially in other settings and with other types of health IT. We found that archival analysis is a useful method for reflecting and learning about the HCD process. We recommend that those interested in archival analysis of HCD processes create a plan upfront on how they will generate archival data (e.g., design session transcripts) to be analyzed at the end of the design process; this upfront planning (e.g., audio-recording design meetings; keeping track of design decisions made) can reduce the back end “costs” associated with archival analysis and can streamline the analysis process.

6. Conclusion

HCD and HF principles can improve the usability of health IT and its integration in clinical workflow. We identified benefits as well as several gaps in our HCD process. In the design of health IT, it is important to not only consider the technology, tasks, and people, but also the physical environment and organization, i.e., all of the work system elements. This study can inform improvements for future HCD processes to support consideration of each of the work system elements and the workflow of clinicians in the design of health IT.

Implications and applications

This work helps us to understand how a rigorous HCD process and systematic consideration of HF design principles lead to technology usability outcomes. We found many benefits of the HCD process on CDS usability as well as many challenges such as balancing design tradeoffs and managing CDS design after implementation. Health IT designers should employ additional methods in the design of CDS to support consideration of all the work system elements. Following the implementation of health IT, designers should also adopt a continuous design approach to systematically monitor and modify the technology’s design as issues emerge. The gaps identified in our HCD process can be used to improve future design of health IT and enhance CDS usability.

Impact statement

Despite promise, there is increasing attention on the negative impact of health IT on patients (e.g., medication errors) and clinicians (e.g., increased workload, burnout). HCD and HF principles may improve the usability of health IT; however, there is limited information on how the HCD process leads to usability outcomes. This work sheds light on how an HCD process leads to usability outcomes and provides guidance on how to improve the design of health IT. By improving the design (and usability) of health IT, we can increase technology effectiveness and improve patient safety.

Acknowledgments

Research reported in this publication was supported by the Agency for Healthcare Research and Quality (AHRQ) [grant numbers K12HS026395, K01HS029042, K08HS024558, and R01HS022086] and was supported by the Clinical and Translational Science Award (CTSA) program, through the NIH National Center for Advancing Translational Sciences (NCATS) [grant number 1UL1TR002373]. The content is solely the responsibility of the authors and does not necessarily represent the official views of AHRQ or the NIH.

Appendix 1. Overview of the codes briefly discussed in the HCD process

| HCD process | Design document | Codes Briefly Discussed in the HCD Process | |||||||

| Preference for another CDS | Integration of multiple CDS | Time pressure | Integration within EHR | Physician-patient workflows | Availability of residents | Interruptions | Preference for another PE workflow | ||

| VTE cues WSB-F | ✓ | ||||||||

| Health IT solutions PE diagnosis | ✓ | ||||||||

| Design session 1 transcript | ✓ | ||||||||

| Design session 2 transcript | ✓ | ✓ | ✓ | ||||||

| Design session 3 transcript | ✓ | ✓ | |||||||

| Design session 4 meeting minutes | |||||||||

| Design session 5 transcript | |||||||||

| Design session 6 transcript | ✓ | ||||||||

| Focus group 1 transcript | ✓ | ||||||||

| Focus group 2 transcript | |||||||||

| Design session 7 meeting minutes | |||||||||

| Design session 8 transcript | ✓ | ||||||||

| Design session 9 transcript | ✓ | ||||||||

| Heuristic evaluation report | |||||||||

| Team Meeting Minutes 5-11-18 | |||||||||

| Team Meeting Minutes 7-6-18 | |||||||||

| Email with PE Dx programmer | |||||||||

| Archival analysis data | # of design documents | 1 | 2 | 1 | 4 | 2 | 1 | 1 | 1 |

| Length of discussion (characters) | 399 | 894 | 909 | 963 | 982 | 1454 | 1688 | 1779 | |

| Usability outcomes (interview data) | Total excerpts coded | 5 | 8 | 3 | 6 | 6 | 3 | 1 | 3 |

| # of barriers | 5 | 7 | 3 | 4 | 3 | 1 | 0 | 3 | |

| # of facilitators | 0 | 1 | 0 | 2 | 3 | 2 | 1 | 0 | |

Checkmarks indicate that the code was discussed in the corresponding design document

Appendix 2. Overview of the codes frequently discussed in the HCD process

| HCD process | Design document | Codes Frequently Discussed in the HCD Process | |||||||||||||||

| Attending-resident tasks | Gestalt and memorization of criteria | Teaching tool | Adaptation to patient risk | Ordering workflow | Access to CDS evidence | Automation of information analysis | Alerts | Consistency | Automation of information acquisition | Support of decision selection | Chunking/grouping | Explicit control/flexibility | Visibility | Error prevention | Minimization of workload | ||

| VTE cues WS B-F | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||||

| Health IT solutions PE diagnosis | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||

| Design session 1 transcript | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||||

| Design session 2 transcript | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||

| Design session 3 transcript | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||

| Design session 4 meeting minutes | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||||

| Design session 5 transcript | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||

| Design session 6 transcript | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||||

| Focus group 1 transcript | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||

| Focus group 2 transcript | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||

| Design session 7 meeting minutes | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||

| Design session 8 transcript | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||

| Design session 9 transcript | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||

| Heuristic evaluation report | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||

| Team Meeting Minutes 5-11-18 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||||

| Team Meeting Minutes 7-6-18 | ✓ | ✓ | ✓ | ✓ | |||||||||||||

| Email with PE Dx programmer | ✓ | ✓ | ✓ | ✓ | |||||||||||||

| Archival analysis data | # of design documents | 4 | 6 | 6 | 5 | 8 | 8 | 6 | 8 | 8 | 12 | 10 | 12 | 13 | 15 | 16 | 13 |

| Length of discussion (characters) | 3153 | 4930 | 7769 | 10009 | 10284 | 12952 | 13441 | 22348 | 29842 | 32194 | 37624 | 46764 | 51265 | 57207 | 69137 | 107641 | |

| Usability outcomes (interview data) | Total excerpts coded | 2 | 9 | 10 | 8 | 7 | 2 | 9 | 13 | 7 | 12 | 28 | 37 | 6 | 8 | 10 | 82 |

| # of barriers | 2 | 9 | 0 | 6 | 4 | 2 | 2 | 10 | 1 | 3 | 4 | 27 | 5 | 2 | 1 | 29 | |

| # of facilitators | 0 | 0 | 10 | 2 | 3 | 0 | 7 | 3 | 6 | 9 | 24 | 10 | 1 | 6 | 9 | 53 | |

Checkmarks indicate that the code was discussed in the corresponding design document

Footnotes

Declaration of Competing Interest

The authors declare the following financial interests/personal relationships which may be considered as potential competing interests: Megan Salwei reports financial support was provided by Agency for Healthcare Research and Quality. Brian Patterson reports financial support was provided by Agency for Healthcare Research and Quality. Pascale Carayon reports financial support was provided by Agency for Healthcare Research and Quality.

References

- Avdagovska M, Stafinski T, Ballermann M, Menon D, Olson K, & Paul P (2020). Tracing the decisions that shaped the development of MyChart, an electronic patient portal in Alberta, Canada: Historical research study. Journal of Medical Internet Research, 22(5), e17505.

- Bisantz AM, & Drury CG (2005). Applications of archival and observational data. Evaluation of Human Work, 3, 61–82.

- Brennan P (2019). Prepared statement of Patricia Flatley Brennan, director. National Library of Medicine.

- Carayon P (2006). Human factors of complex sociotechnical systems. Applied Ergonomics, 37(4), 525–535.

- Carayon P (2019). Human factors in health (care) informatics: Toward continuous sociotechnical system design. Studies in Health Technology and Informatics, 265, 22.

- Carayon P, Du S, Brown R, Cartmill R, Johnson M, & Wetterneck TB (2017). EHR-related medication errors in two ICUs. Journal of Healthcare Risk Management, 36(3), 6–15.

- Carayon P, Hoonakker P, Hundt AS, Salwei ME, Wiegmann D, Brown RL, et al. (2020). Application of human factors to improve usability of clinical decision support for diagnostic decision-making: A scenario-based simulation study. BMJ Quality & Safety, 29(4), 329–340.

- Carayon P, Hundt AS, Karsh BT, Gurses AP, Alvarado CJ, Smith M, et al. (2006). Work system design for patient safety: The SEIPS model. Quality & Safety in Health Care, 15(Suppl 1), i50–i58.

- Carayon P, & Salwei ME (2021). Moving towards a sociotechnical systems approach to continuous health IT design: The path forward for improving EHR usability and reducing clinician burnout. Journal of the American Medical Informatics Association, 28(5), 1026–1028.

- Carayon P, Wetterneck TB, Hundt AS, Rough S, & Schroeder M (2008). Continuous technology implementation and sustainability of sociotechnical change: A case study of advanced intravenous infusion pump technology implementation in a hospital. Corporate sustainability as a challenge for comprehensive management (pp. 139–151). Springer.

- Devers KJ (1999). How will we know “good” qualitative research when we see it? Beginning the dialogue in health services research. Health Services Research, 34(5), 1153–1188.

- El-Kareh R, Hasan O, & Schiff GD (2013). Use of health information technology to reduce diagnostic errors. BMJ Quality & Safety, 22(Suppl 2), ii40–51. 10.1136/bmjqs-2013-001884

- Elo S, & Kyngäs H (2008). The qualitative content analysis process. Journal of Advanced Nursing, 62. 10.1111/j.1365-2648.2007.04569.x

- Flach JM (2012). Complexity: Learning to muddle through. Cognition, Technology & Work, 14(3), 187–197.

- Haims MC, & Carayon P (1998). Theory and practice for the implementation of ‘in-house’, continuous improvement participatory ergonomic programs. Applied Ergonomics, 29(6), 461–472.

- Han YY, Carcillo JA, Venkataraman ST, Clark RSB, Watson RS, Nguyen TC, et al. (2005). Unexpected increased mortality after implementation of a commercially sold computerized physician order entry system. Pediatrics, 116(6), 1506–1512. 10.1542/peds.2005-1287

- Harte R, Glynn L, Rodríguez-Molinero A, Baker PM, Scharf T, Quinlan LR, et al. (2017). A human-centered design methodology to enhance the usability, human factors, and user experience of connected health systems: A three-phase methodology. JMIR Human Factors, 4(1), e8. 10.2196/humanfactors.5443

- Hill RG Jr, Sears LM, & Melanson SW (2013). 4000 clicks: A productivity analysis of electronic medical records in a community hospital ED. The American Journal of Emergency Medicine, 31(11), 1591–1594.

- Hoonakker P, Carayon P, Salwei ME, Hundt AS, Wiegmann D, Kleinschmidt P, et al. (2019). The design of PE Dx, a CDS to support pulmonary embolism diagnosis in the ED. Studies in Health Technology and Informatics, 265, 134–140.

- Hose BZ, Carayon P, Hoonakker PL, Ross JC, Eithun BL, Rusy DA, et al. (2023). Managing multiple perspectives in the collaborative design process of a team health information technology. Applied Ergonomics, 106, Article 103846.

- Hunt DL, Haynes RB, Hanna SE, & Smith K (1998). Effects of computer-based clinical decision support systems on physician performance and patient outcomes: A systematic review. JAMA, 280(15), 1339–1346.

- International Organization for Standardization. (2010). ISO 9241-210:2010(E) ergonomics of human-system interaction - Part 210: Human-Centred Design for Interactive Systems. Switzerland.

- International Organization for Standardization. (2018). ISO 9241-11:2018(E) ergonomics of human-system interaction - Part 11: Usability: Definitions and Concepts. Switzerland.

- Karsh BT, Weinger MB, Abbott PA, & Wears RL (2010). Health information technology: Fallacies and sober realities. Journal of the American Medical Informatics Association, 17(6), 617–623. 10.1136/jamia.2010.005637

- King J, Patel V, Jamoom EW, & Furukawa MF (2014). Clinical benefits of electronic health record use: National findings. Health Services Research, 49(1pt2), 392–404.

- Kline JA, Peterson CE, & Steuerwald MT (2010). Prospective evaluation of real-time use of the pulmonary embolism rule-out criteria in an academic emergency department. Academic Emergency Medicine, 17(9), 1016–1019.

- Mann DM, Kannry JL, Edonyabo D, Li AC, Arciniega J, Stulman J, et al. (2011). Rationale, design, and implementation protocol of an electronic health record integrated clinical prediction rule (iCPR) randomized trial in primary care. Implementation Science, 6, Article 109. 10.1186/1748-5908-6-109

- Melles M, Albayrak A, & Goossens R (2021). Innovating health care: Key characteristics of human-centered design. International Journal for Quality in Health Care, 33(Supplement_1), 37–44.

- Miller FA, & Alvarado K (2005). Incorporating documents into qualitative nursing research. Journal of Nursing Scholarship, 37(4), 348–353.

- Niño A, Kissil K, & Apolinar Claudio FL (2015). Perceived professional gains of master’s level students following a person-of-the-therapist training program: A retrospective content analysis. Journal of Marital and Family Therapy, 41(2), 163–176.

- Patterson BW, Pulia MS, Ravi S, Hoonakker PL, Hundt AS, Wiegmann D, et al. (2019). Scope and influence of electronic health record–integrated clinical decision support in the emergency department: A systematic review. Annals of Emergency Medicine.

- Polkinghorne BG, Massey PD, Durrheim DN, Byrnes T, & MacIntyre CR (2013). Prevention and surveillance of public health risks during extended mass gatherings in rural areas: The experience of the Tamworth Country Music Festival. Public Health, 127(1), 32–38.

- Press A, McCullagh L, Khan S, Schachter A, Pardo S, & McGinn T (2015). Usability testing of a complex clinical decision support tool in the emergency department: Lessons learned. JMIR Human Factors, 2(2), e14. 10.2196/humanfactors.4537

- Ratwani RM, Reider J, & Singh H (2019). A decade of health information technology usability challenges and the path forward. JAMA, 321(8), 743–744.

- Russ A, Zillich AJ, Melton BL, Russell SA, Chen S, Spina JR, et al. (2014). Applying human factors principles to alert design increases efficiency and reduces prescribing errors in a scenario-based simulation. Journal of the American Medical Informatics Association, 21(e2), e287–e296.

- Salwei ME, Carayon P, Hoonakker P, Hundt AS, Wiegmann D, Patterson BW, et al. (2021). Workflow integration analysis of a human factors-based clinical decision support in the emergency department. Applied Ergonomics, 97, 1–16.

- Salwei ME, Carayon P, Hundt AS, Hoonakker P, Agrawal V, Kleinschmidt P, et al. (2019). Role network measures to assess healthcare team adaptation to complex situations: The case of venous thromboembolism prophylaxis. Ergonomics, 62(7), 864–879. 10.1080/00140139.2019.1603402

- Salwei ME, Carayon P, Wiegmann D, Pulia MS, Patterson BW, & Hoonakker PL (2022a). Usability barriers and facilitators of a human factors engineering-based clinical decision support technology for diagnosing pulmonary embolism. International Journal of Medical Informatics, 158, Article 104657. 10.1016/j.ijmedinf.2021.104657

- Salwei ME, Hoonakker P, Carayon P, Wiegmann D, Pulia M, & Patterson B (2022b). Usability of a human factors-based clinical decision support (CDS) in the emergency department: Lessons learned for design and implementation. Human Factors. 10.1177/00187208221078625

- Salwei ME, Hoonakker PLT, Pulia MS, Wiegmann D, Patterson BW, & Carayon P (2023). Post-implementation usability evaluation of a human factors based clinical decision support for pulmonary embolism diagnosis: PE Dx Study Part 1. Human Factors in Healthcare, 4, Article 100056. 10.1016/j.hfh.2023.100056

- Sarnecky MT (1990). Historiography: A legitimate research methodology for nursing. ANS. Advances in Nursing Science, 12(4), 1–10.

- Schulte F, & Fry E (2019). Death by 1,000 clicks: Where electronic health records went wrong (p. 18). Kaiser Health News.

- Smith MJ, & Carayon-Sainfort P (1989). A balance theory of job design for stress reduction. International Journal of Industrial Ergonomics, 4(1), 67–79.

- Tan A, Durbin M, Chung FR, Rubin AL, Cuthel AM, McQuilkin JA, et al. (2020). Design and implementation of a clinical decision support tool for primary palliative care for emergency medicine (PRIM-ER). BMC Medical Informatics and Decision Making, 20(1), 13.

- The Office of the National Coordinator for Health Information Technology. (2020). Strategy on reducing regulatory and administrative burden relating to the use of Health IT and EHRs. https://www.healthit.gov/sites/default/files/page/2020-02/BurdenReport_0.pdf.

- Wells PS, Anderson DR, Rodger M, Stiell I, Dreyer JF, Barnes D, et al. (2001). Excluding pulmonary embolism at the bedside without diagnostic imaging: Management of patients with suspected pulmonary embolism presenting to the emergency department by using a simple clinical model and d-dimer. Annals of Internal Medicine, 135(2), 98–107.

- Wilson JR (2014). Fundamentals of systems ergonomics/human factors. Applied Ergonomics, 45(1), 5–13.

- Wooldridge AR, Carayon P, Hundt AS, & Hoonakker PL (2017). SEIPS-based process modeling in primary care. Applied Ergonomics, 60, 240–254.

- Xie A, Carayon P, Cartmill R, Li Y, Cox ED, Plotkin JA, et al. (2015). Multi-stakeholder collaboration in the redesign of family-centered rounds process. Applied Ergonomics, 46(Part A), 115–123. 10.1016/j.apergo.2014.07.011