Communication among health care personnel is vulnerable to error during patient handoffs (ie, the transfer of responsibility for patient care between health care professionals). Handoffs occur with high frequency in the hospital and have been increasing following restrictions of resident work hours.1 However, to our knowledge, there remains a lack of rigorously performed studies that help guide best practices in handoffs of hospitalized adult patients. In this study, we implemented a web-based handoff tool and training for health care professionals, and evaluated the association of the tool with rates of medical errors in adult medical and surgical patients.

Methods

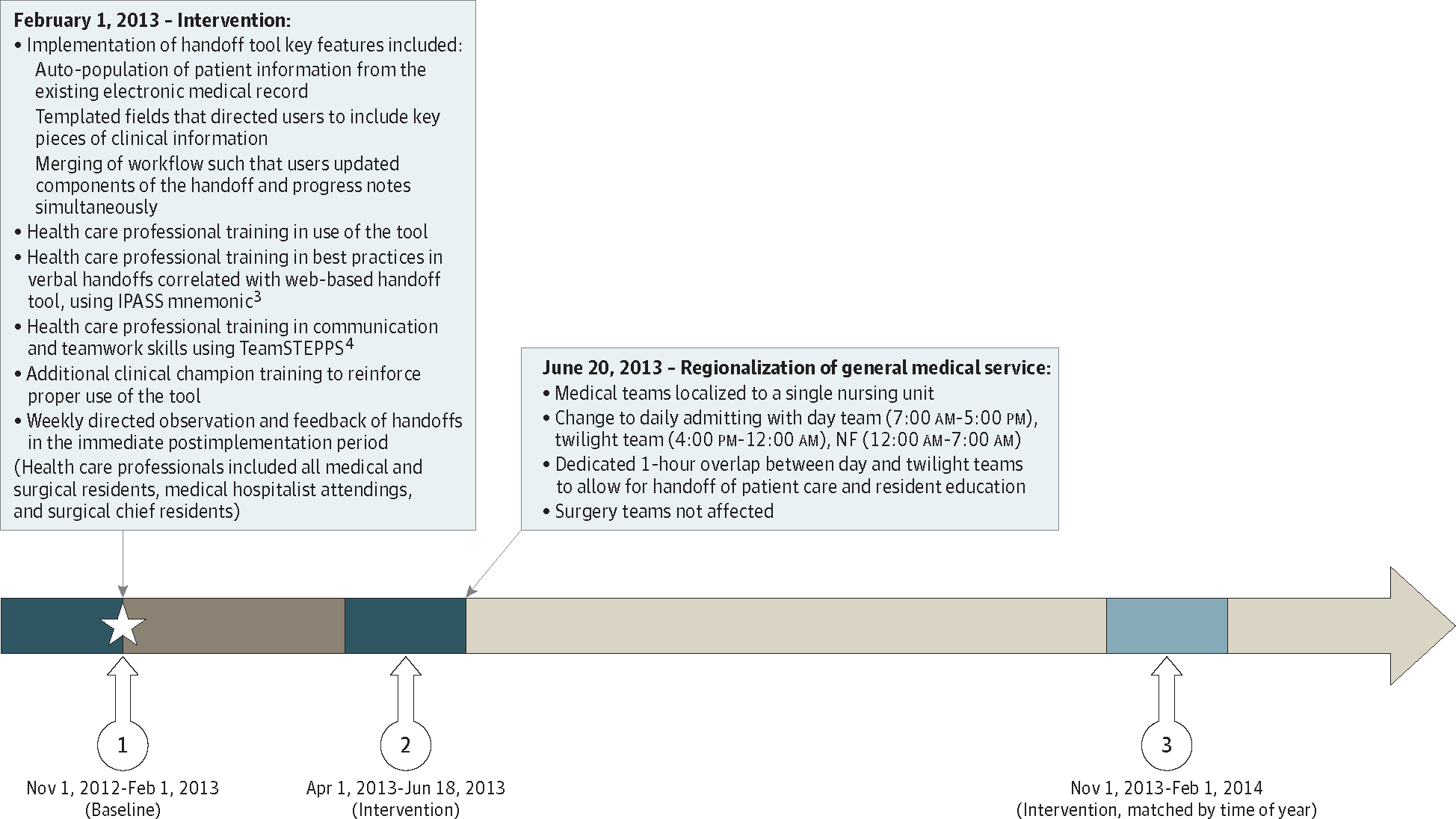

From November 1, 2012, to February 1, 2014, we conducted a prospective cohort analysis of 5407 patients cared for by 3 general medicine services and 2 general surgery services at Brigham and Women’s Hospital, spanning 1 data collection period before implementation of a web-based handoff tool and 2 periods after implementation.2 Between periods 2 and 3, the general medicine services (but not the surgical services) were restructured into regionalized care teams (Figure).3,4

Figure. Intervention Timeline With 3 Periods of Data Collection.3,4

The 3 data collection periods were (1) preimplementation of the web-based handoff tool, (2) postimplementation, and (3) postimplementation, matched to period 1 by time of year. Regionalization of the general medicine services occurred after data collection period 2. I-PASS indicates Illness severity, Patient summary, Action items, Situational awareness, Synthesis by receiver; and NF, nightfloat.

To screen for potential errors, validated surveillance surveys3 were administered to “nightfloat” residents (working 12 AM to 7 AM) and “twilight” residents (working 4 PM to 12 AM) at the end of their shifts, and to residents and attending physicians 2 days after they started on the general medical or surgical service. The surveys queried for potential errors, and reported incidents were followed by targeted review of medical records. All incidents were rated for the presence of error, for level of harm using the National Coordinating Council for Medication Error Reporting and Prevention scale,5 and for attribution to failures in communication and handoff. Incidents with harm (adverse events) were additionally rated for preventability.3 All ratings were adjudicated by a physician who was blinded to the time period; discrepancies in ratings prompted review of the medical records, with final determination by the adjudicator (S.K.M.). The study was approved by the Partners Healthcare Human Subjects Review Committee. The institutional review board waived the need for patient consent because this was a hospital-wide quality improvement initiative, with additional focused teamwork and tool training on the intervention units.
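The rating scheme described above can be illustrated as a small classifier. This is a minimal sketch, not the study's adjudication tool: the `Incident` type and `classify` function are hypothetical, and it assumes that categories E through I of the National Coordinating Council for Medication Error Reporting and Prevention (NCC MERP) index denote patient harm, and that an error that caused harm counts as a preventable adverse event, following the Table's labeling. In the study itself, preventability was adjudicated by a physician rather than derived by a fixed rule.

```python
from dataclasses import dataclass

# Assumption: NCC MERP categories A-D involve no patient harm,
# and categories E-I involve harm (temporary harm through death).
HARM_CATEGORIES = frozenset({"E", "F", "G", "H", "I"})


@dataclass
class Incident:
    """A screened incident after adjudication (hypothetical structure)."""
    description: str
    is_error: bool          # adjudicated: did an error in care occur?
    ncc_merp_category: str  # harm category, "A" through "I"


def classify(incident: Incident) -> str:
    """Map adjudicated ratings to the outcome labels used in the Table."""
    harm = incident.ncc_merp_category.upper() in HARM_CATEGORIES
    if incident.is_error and harm:
        # An error that caused harm is counted as a preventable adverse event.
        return "preventable adverse event"
    if harm:
        # Harm without an underlying error is a nonpreventable adverse event.
        return "nonpreventable adverse event"
    if incident.is_error:
        return "error without harm"
    return "no error or harm"


if __name__ == "__main__":
    examples = [
        Incident("wrong dose given after handoff omission", True, "E"),
        Incident("expected drug reaction, correctly ordered", False, "F"),
        Incident("missed to-do item caught before reaching patient", True, "B"),
    ]
    for inc in examples:
        print(f"{inc.description}: {classify(inc)}")
```

Keeping error status and harm as separate fields mirrors the two independent ratings described in the text, so the same record can feed both the total-error count and the adverse-event counts.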

Patient characteristics were compared using χ² or t tests. All outcomes were converted to errors per 100 patient-days (error rates), which were compared between period 1 and periods 2 and 3 combined using multivariable Poisson regression (SAS, version 9.3; SAS Institute), clustering by role and adjusting for covariates.
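The rate conversion and Poisson model can be sketched without any statistics package. This minimal example uses hypothetical counts and patient-days (the letter does not report patient-days), fits a Poisson regression with log(patient-days) as an offset by Newton-Raphson, and reads exp(coefficient) as the incident rate ratio. Unlike the study's SAS analysis, it does not cluster by role, and covariates would have to be added as extra columns of `X`; the function names are illustrative only.

```python
import math


def rate_per_100_patient_days(errors, patient_days):
    """Convert an error count into errors per 100 patient-days."""
    return 100.0 * errors / patient_days


def solve_linear(a, b):
    """Solve a @ x = b for a small dense system (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def poisson_regression(X, y, patient_days, max_iter=50, tol=1e-10):
    """Fit log E[y_i] = X_i . beta + log(patient_days_i) by Newton-Raphson.

    Assumes the first column of X is an intercept. exp(beta[j]) is the
    incident rate ratio for a one-unit change in column j.
    """
    p = len(X[0])
    offsets = [math.log(t) for t in patient_days]
    beta = [0.0] * p
    beta[0] = math.log(sum(y) / sum(patient_days))  # start at the pooled log rate
    for _ in range(max_iter):
        mu = [math.exp(sum(x * b for x, b in zip(row, beta)) + o)
              for row, o in zip(X, offsets)]
        # Score vector X'(y - mu) and Fisher information X' diag(mu) X
        score = [sum(row[j] * (yi - mi) for row, yi, mi in zip(X, y, mu))
                 for j in range(p)]
        info = [[sum(row[j] * row[k] * mi for row, mi in zip(X, mu))
                 for k in range(p)] for j in range(p)]
        step = solve_linear(info, score)
        beta = [b + s for b, s in zip(beta, step)]
        if max(abs(s) for s in step) < tol:
            break
    return beta


if __name__ == "__main__":
    # Hypothetical data: one row per period (intercept, postintervention indicator).
    X = [[1, 0], [1, 1]]
    errors = [30, 18]
    patient_days = [1000, 1200]
    beta = poisson_regression(X, errors, patient_days)
    for e, t in zip(errors, patient_days):
        print(f"{rate_per_100_patient_days(e, t):.2f} errors per 100 patient-days")
    print(f"IRR (post vs pre): {math.exp(beta[1]):.2f}")
```

With only a period indicator, the fitted IRR equals the crude ratio of rates, here (18/1200)/(30/1000) = 0.50; adding covariate columns is what turns this into an adjusted estimate.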

Results

Among the 5407 patients, 77 medical errors were detected before the intervention vs 45 after the intervention. The primary and secondary outcomes (Table) showed significant reductions in error rates per 100 patient-days for total medical errors (period 1 rate, 3.56; 95% CI, 1.70–7.44; periods 2 and 3 rate, 1.76; 95% CI, 0.93–3.31; P < .001), errors owing to failures in communication (period 1 rate, 2.88; 95% CI, 1.22–6.82; periods 2 and 3 rate, 1.15; 95% CI, 0.76–1.74; P < .001), errors owing to mistakes in handoffs (period 1 rate, 2.47; 95% CI, 1.00–6.07; periods 2 and 3 rate, 0.95; 95% CI, 0.56–1.61; P < .001), errors from end-of-shift (but not end-of-rotation) handoffs (period 1 rate, 6.93; 95% CI, 5.36–8.76; periods 2 and 3 rate, 3.59; 95% CI, 2.55–4.87; P = .001), and errors on both the medical services (period 1 rate, 3.18; 95% CI, 2.45–4.05; periods 2 and 3 rate, 1.30; 95% CI, 0.85–1.87; P < .001) and the surgical services (period 1 rate, 13.11; 95% CI, 7.69–20.63; periods 2 and 3 rate, 5.45; 95% CI, 3.40–8.20; P < .001). Total error rates on the medical services were also significantly reduced in period 1 vs period 3 (incident rate ratio, 0.47; 95% CI, 0.33–0.66) and in period 2 vs period 3 (incident rate ratio, 0.40; 95% CI, 0.17–0.96), whereas no such reductions were seen on the surgical services.
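As a worked check on the Table, the incident rate ratio for total medical errors can be recovered from the two reported rates. The ratio agrees with the reported IRR of 0.49 to two decimal places, which corresponds to roughly a 51% relative reduction in the total error rate.

```latex
\mathrm{IRR}_{\text{total}}
  = \frac{\text{rate}_{\text{periods 2 and 3}}}{\text{rate}_{\text{period 1}}}
  = \frac{1.76}{3.56}
  \approx 0.494,
\qquad
1 - 0.494 \approx 0.51
```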

Table. Adjusted Effect of Web-Based Handoff Tool and Training of Health Care Professionals on Rates of Medical Errors

| Outcome | Period 1, before intervention (n = 2406): rate per 100 patient-days (95% CI)ᵃ | Periods 2 and 3, after intervention (n = 3001): rate per 100 patient-days (95% CI)ᵃ | IRR (95% CI) | P value |
|---|---|---|---|---|
| Total medical errors | 3.56 (1.70–7.44) | 1.76 (0.93–3.31) | 0.49 (0.42–0.58) | <.001 |
| Medical errors owing to failures in communication | 2.88 (1.22–6.82) | 1.15 (0.76–1.74) | 0.40 (0.25–0.63) | <.001 |
| Medical errors owing to mistakes in handoff | 2.47 (1.00–6.07) | 0.95 (0.56–1.61) | 0.38 (0.27–0.56) | <.001 |
| Medical errors that caused harm (preventable adverse events)ᵇ | 0.49 (0.25–0.86) | 0.26 (0.10–0.53) | 0.53 (0.19–1.47) | .22 |
| Nonpreventable adverse eventsᵇ | 0.39 (0.18–0.73) | 0.74 (0.44–1.15) | 1.89 (0.82–4.38) | .14 |
| Subgroup analysesᵇ | | | | |
| Medical errors by type of handoff | | | | |
| End of shift | 6.93 (5.36–8.76) | 3.59 (2.55–4.87) | 0.52 (0.35–0.78) | .001 |
| End of rotation | 1.16 (0.64–1.92) | 0.63 (0.29–1.18) | 0.55 (0.23–1.13) | .18 |
| Medical errors by service | | | | |
| Medical | 3.18 (2.45–4.05) | 1.30 (0.85–1.87) | 0.41 (0.26–0.65) | <.001 |
| Surgical | 13.11 (7.69–20.63) | 5.45 (3.40–8.20) | 0.11 (0.06–0.22) | <.001 |

Abbreviation: IRR, incident rate ratio.

ᵃ Except where noted below, results are clustered by health care professional and controlled for service and patients’ age, sex, race/ethnicity, length of stay, and diagnosis-related group weight.

ᵇ Unadjusted results are shown because the low number of events precluded adjusted analyses.

Discussion

We found that implementation of a web-based handoff tool and training for health care professionals was associated with a significant reduction in rates of medical errors, driven largely by fewer errors attributable to communication failures and fewer errors during end-of-shift handoffs. The tool may have been more effective at improving end-of-shift handoffs than end-of-rotation handoffs; alternatively, our study may have been underpowered to detect a change in end-of-rotation handoffs, a possibility supported by the nonsignificant trend toward fewer errors in that subgroup.

More important, the reduction in rates of medical errors remained significant in the time-matched comparison of periods 1 and 3; because these periods fell at the same time of year, this comparison accounts for potential effects of resident experience. In addition, error rates on the general medicine services declined stepwise across the study periods, suggesting that regionalization between periods 2 and 3 had an additive or synergistic effect; this interpretation is supported by the absence of a similar reduction on the surgical services, which were not regionalized. As noted in the Figure, regionalization included dedicated time for handoffs. These results add to the existing literature, which has focused mainly on the connection between poor-quality handoffs and medical errors,6 or on evaluating the effects of interventions in limited patient populations with variable use of information technology tools.3

This study has limitations. Because it was conducted at a single site, our findings may not be generalizable to other institutions; however, the components of the handoff tool are readily adaptable to other sites,2 including those that use vendor electronic health records. In addition, we could not separate the effect of the handoff tool from that of the training for health care professionals.

Conclusions

Our findings suggest that implementation of a web-based handoff tool and training for health care professionals is associated with fewer medical errors, particularly those owing to communication failures. In addition, the intervention appeared to be additive or synergistic with concurrent regionalization of care teams, suggesting effectiveness in a real-world context.

Funding/Support:

This research was supported by funds within the Department of Medicine, Brigham and Women’s Hospital.

Role of the Funder/Sponsor:

The funding source had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; and decision to submit the manuscript for publication.

Footnotes

Conflict of Interest Disclosures: Dr Schnipper reported receiving grant funding from Sanofi Aventis for an investigator-initiated study to design and evaluate an intensive discharge and follow-up intervention in patients with diabetes. No other disclosures were reported.

Contributor Information

Stephanie K. Mueller, Division of General Internal Medicine, Brigham and Women’s Hospital, Boston, Massachusetts; Harvard Medical School, Boston, Massachusetts.

Catherine Yoon, Division of General Internal Medicine, Brigham and Women’s Hospital, Boston, Massachusetts.

Jeffrey L. Schnipper, Division of General Internal Medicine, Brigham and Women’s Hospital, Boston, Massachusetts; Harvard Medical School, Boston, Massachusetts.

References

1. Accreditation Council for Graduate Medical Education. Duty hours. http://www.acgme.org/What-We-Do/Accreditation/Duty-Hours. Accessed May 10, 2016.

2. Schnipper JL, Karson A, Morash S, et al. Design and evaluation of a multi-disciplinary web-based handoff tool. Presented at: Society of General Internal Medicine 35th Annual Meeting; May 12, 2012; Orlando, Florida.

3. Starmer AJ, Spector ND, Srivastava R, et al; I-PASS Study Group. Changes in medical errors after implementation of a handoff program. N Engl J Med. 2014;371(19):1803–1812.

4. Agency for Healthcare Research and Quality, US Dept of Health & Human Services. TeamSTEPPS: strategies and tools to enhance performance and patient safety. http://www.ahrq.gov/professionals/education/curriculum-tools/teamstepps/index.html. Updated May 2016. Accessed April 12, 2016.

5. National Coordinating Council for Medication Error Reporting and Prevention. Types of medication errors. http://www.nccmerp.org/types-medication-errors. Accessed May 3, 2016.

6. Riesenberg LA, Leitzsch J, Massucci JL, et al. Residents’ and attending physicians’ handoffs: a systematic review of the literature. Acad Med. 2009;84(12):1775–1787.