Abstract

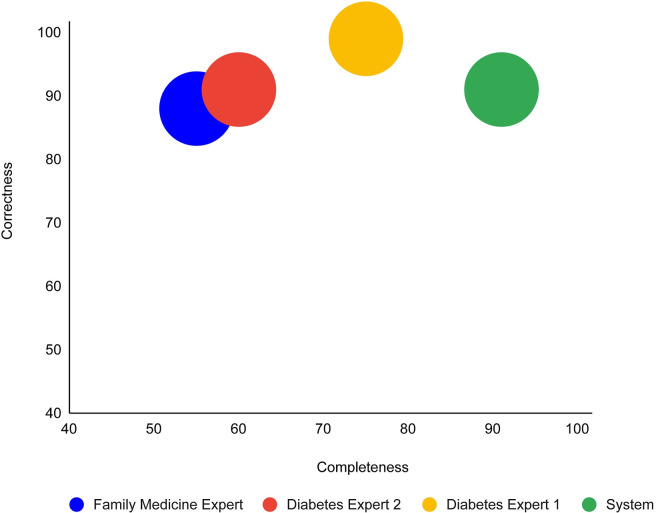

We introduce a new approach for automated guideline-based-care quality assessment, the bidirectional knowledge-based assessment of compliance (BiKBAC) method, and the DiscovErr system, which implements it. Our methodology compares the guideline’s Asbru-based formal representation, including its intentions, with the longitudinal medical record, using a top-down and bottom-up approach. Partial matches are resolved using fuzzy temporal logic. The system was evaluated in the type 2 Diabetes management domain, comparing it to three expert clinicians, including two diabetes experts. The system and the experts commented on the management of 10 patients, randomly selected from 2,000 diabetes patients. On average, each record spanned 5.23 years; the data included 1,584 medical transactions. The system provided 279 comments. The experts made 181 different unique comments. The completeness (recall) of the system was 91% when the gold standard was comments made by at least two of the three experts, and 98%, compared to comments made by all three experts. The experts also assessed all of the 114 medication-therapy-related comments, and a random 35% of the 165 tests-and-monitoring-related comments. The system’s correctness (precision) was 81%, compared to comments judged as correct by both diabetes experts, and 91%, compared to comments judged as correct by one diabetes expert and at least as partially correct by the other. 89% of the comments were judged as important by both diabetes experts, 8% were judged as important by one expert, and 3% were judged as less important by both experts. Adding the validated system comments to the experts’ comments, the completeness scores of the experts were 75%, 60%, and 55%; the expert correctness scores were respectively 99%, 91%, and 88%. Thus, the system could be ranked first in completeness and second in correctness. We conclude that systems such as DiscovErr can effectively assess the quality of continuous guideline-based care.

1. Introduction

1.1. The necessity of assessing the quality of medical care

The necessity of common standards for medical care is becoming increasingly clear to the medical community. Evidence-based recommendations are published world-wide in the form of clinical guidelines and protocols. These guidelines are usually published in a text format, and are intended to be used by clinicians to provide the state of the art care. Evidence also indicates that implementation of these guidelines may improve medical care and reduce its costs [1–5].

However, as demonstrated repeatedly in multiple clinical domains, clinicians and patients often do not sufficiently and uniformly adhere to the clinical guidelines in a manner that is sensitive to the context of each patient. A typical recent example is the type 2 diabetes domain, in which significant variance has been demonstrated across five European countries with respect to the application of the American Diabetes Association (ADA) and the Kidney Disease: Improving Global Outcomes (KDIGO) guidelines with respect to metabolic and blood pressure control, and the use of renin–angiotensin system–blocking agents, statins, and acetylsalicylic acid [6]. Another example is the Preeclampsia-Toxemia (PET) domain, in which compliance of clinicians, when not assisted by a guideline-based decision-support system (DSS), had a completeness, out of the total guideline-based recommendations relevant to the patient at hand, of only 41% to 49%, rising to 93% when guideline-based recommendations were first suggested by the DSS; furthermore, 68% of the clinician proposed actions were correct, but redundant, when compared with the patient-specific recommendations of the guidelines and the patient record; the redundancy was reduced to 3% when the clinicians were first exposed to the DSS suggestions [7].

Such gaps in adherence to clinical guidelines are important to detect; efficient, automated, large-scale detection might lead to fast, specific, focused adjustments, usually of the clinician management patterns, but possibly of the guidelines themselves.

Efforts had been made to develop automated systems that can perform some form of evidence-based assessment of the quality of care, the major examples include the HyperCritic [8], Trauma-AID [9], Trauma-TIQ [10], AsthmaCritic [11], IGR [12], RoMA [13], and the model checking for critiquing [14].

In addition, a growing number of medical centers and organizations have established internal quality and risk assessment units that perform quality assessment of the medical treatment, typically on a random subset of the patient population, or in dire circumstances in which mistakes have already been made. These risk assessment units usually examine the medical records manually, or by using relatively simple computational methods, and compare the medical records to a deterministic set of quality measures that are created specifically for that purpose.

Typically, though, these earlier efforts did not include the combination of (1) a complete formal representation of the original guideline; (2) explicit, context-sensitive temporal reasoning regarding missing or redundant actions at each context over the patient’s complete longitudinal record; (3) an assignment of a level of adherence to every action or intention of the guideline; and (4) a rigorous evaluation of the completeness and correctness of the approach.

The current study was intended to fill this gap.

1.2. The objective of this research: design, implementation, and rigorous evaluation a new methodology for quality assessment

In the current study, we first introduce a new approach for automated guideline-based quality assessment of the care process, the bidirectional knowledge-based assessment of compliance (BiKBAC) method. Our BiKBAC methodology assesses the degree of compliance when applying clinical guidelines, with respect to multiple different aspects of the guideline (e.g., the guideline’s process and outcome objectives). The assessment is performed through a highly detailed, automated quality-assessment retrospective analysis, which compares a formal representation of the guideline and of its process and outcome intentions with the longitudinal electronic medical record of its continuous application over a significant time period, using both a top-down and a bottom-up approach, which we explain in the Methods section. Partial matches of the data to the process and to the outcome objectives are resolved using fuzzy temporal logic.

We then introduce the DiscovErr system, which implements the BiKBAC approach, and present its detailed architecture.

Then, in the rest of the current paper, we present our rigorous, realistic evaluation of the DiscovErr system in the Type II Diabetes domain, by comparing its performance to a panel of three clinicians, two experts in diabetes-patient management and a senior family practitioner highly experienced in diabetes treatment. The system and the three experts commented on the management of 10 patients who were randomly selected before the evaluation from a database containing longitudinal records of 2,000 type 2 diabetes patients.

Our evaluation methodology for assessing the DiscovErr system is most similar in its spirit to the well-known evaluation of the HyperCritic system [15], a system that critiqued the care of patients who had hypertension. HyperCritic was evaluated with the help of a panel of eight expert physicians, whose critiques of the care provided by general practitioners were compared to those of the system. However, as we shall see in the Methods section, the computational methodology underlying the DiscovErr system is quite different from that underlying the HyperCritic system, and is based directly on a formal representation of the original clinical guideline and on application of a temporal fuzzy logic.

As we describe in detail in the Results section, the DiscovErr system’s completeness and correctness with respect to the comments it made regarding the patient records were quite high. In fact, if the DiscovErr system were to be considered as an additional, fourth expert, it would have ranked first in completeness and second in correctness (if these measures were to be computed also for the human experts, using a majority consensus of opinions as the gold standard).

2. Methods

We start by briefly describing the languages that we are using to represent the procedural (“How”) and declarative (“What”) aspects of the guideline and its objectives. We then proceed to describe the new quality assessment methodology, which exploits these languages, and the system that implements this methodology.

2.1. Formal representation of the guideline’s procedural and declarative knowledge

2.1.1. Procedural knowledge representation: The asbru guideline-representation language

Asbru is an expressive guideline-representation language that enables guideline designers to represent procedural sequential, concurrent, conditional, and repeating actions as a hierarchy of execution plans. To control the application of the plans, Asbru uses multiple types of entry conditions (specifically, compulsory filter conditions such as age or gender that must be true on entry, and achievable setup conditions such as having access to the patient’s glucose-tolerance test, which can be satisfied using some action or plan), as well as abort, suspend, restart, and complete conditions, represented as temporal-abstraction patterns (see below). Furthermore, to support quality assessment, Asbru includes a meta-level of explicit intermediate and overall process (e.g., administration of a beta blocker twice a day) and outcome (e.g., reduction of diastolic blood pressure below 85 mmHg) intentions, also represented as temporal-abstraction patterns, whose objective is to maintain (if they already exist), achieve (if they are currently false), or avoid (assuming they are false and should stay so) some temporally extendable goal [16, 17]. The temporal semantics of Asbru have also been shown to be consistent and clear [18].

Asbru has been used in multiple clinical decision-support projects, such as in the MobiGuide EU project, in which it was used to represent a gestational-diabetes and gestational hypertension guideline to manage patients in Spain, and an atrial-fibrillation guideline to manage patients in Italy [19, 20]. The BiKBAC compliance-assessment method assumes that the guideline is formally represented in Asbru or in some other language equivalent to it (i.e., which includes a semantic equivalent to all of Asbru’s actions, conditions, and intentions).

The Asbru language had been successfully used in several projects for formal representation of clinical guidelines in multiple clinical domains, some examples include: Neonatal Intensive Care [21], Hyperbilirubinemia in healthy term newborns [22], breast cancer [14], preeclampsia / toxemia of pregnancy [7], gestational diabetes, and atrial fibrillation [19]. The temporal semantics of Asbru have also been shown to be consistent and clear [18].

2.1.2. Declarative knowledge representation: The knowledge-based temporal abstraction method

All conditions and actions and intentions in Asbru are based on domain knowledge that is represented as temporal-abstraction patterns. Temporal abstractions are interval-based, abstract concepts (e.g., “moderate anemia for three weeks”) derived from the input raw, time-stamped clinical data (e.g., a series of point-based Hemoglobin-value measurements).

In our study, we have derived all temporal abstractions from the raw time-stamped data using domain knowledge that is formally represented using the ontology underlying the knowledge-based temporal-abstraction (KBTA) method [23, 24], and used the KBTA method to compute these temporal abstractions, such as to decide whether a particular entry condition holds, or whether an outcome intention was achieved.

The KBTA ontology defines measurable raw concepts (e.g., blood-glucose value); interval-based abstract concepts, which include abstractions of type State (e.g., High and Low value), Gradient (e.g., Increasing or Decreasing or Stable blood pressure), Rate (e.g., changing quickly), Trend; Events, which include external, usually volitional actions such as administration of a medication or a surgical procedure; Patterns (e.g., proper administration of a certain medication), which are interval-based combinations of events, raw and abstract concepts, and possibly other patterns, which need to satisfy various value and time constraints for each interval and among the intervals; and interpretation contexts (e.g., the period during which short-acting insulin affects blood glucose), which are induced by all other ontological entities (e.g., for 30 minutes and up to eight hours following the administration of a regular insulin injection), and change, in a context-sensitive manner, the specific knowledge (e.g., the functional knowledge that determines the definition of a High blood-glucose state that is applied in that context, such as before or after a meal). Several types of temporal-abstraction knowledge exist—structural, functional, logical, and probabilistic [23].

As we describe later in this section, we also added a fuzzy extension to the KBTA method, the fuzzy temporal reasoner, which assigns a membership value between 0 and 1 to each abstraction with respect to fulfilling a certain (temporal) condition or intention.

2.2. The bidirectional knowledge-based compliance-analysis methodology

We shall start by introducing our computational method, the Bidirectional Knowledge-Based Assessment of Compliance (BiKBAC) method, and the architecture of the DiscovErr system implementing it.

The BiKBAC methodology assumes that the guideline is represented in a formal fashion at two levels. These levels include (1) its overall process (recommended actions) and its transition conditions (e.g., entry condition, stop condition) and (2) its process and outcome intentions. The representation need not be identical, but should be expressively equivalent to that of the Asbru guideline-representation language. For example, the subtypes of the entry condition must include some version of the Asbru Filter (i.e., compulsory) and Setup (i.e., a state to be achieved) entry conditions, while the subtypes of the stop condition must include some version of the Asbru Complete, Abort, and Suspend conditions, with similar semantics to those of the Asbru language. Both of these conditions, as well as the process and outcome intentions, are represented formally using the KBTA ontology and computed using the KBTA method, as explained in the previous section.

The goal of this compliance analysis methodology is to evaluate the data of each single patient with respect to its compliance with the process and outcome objectives of the full set of operative formal clinical guidelines that are represented in the system’s library, and in particular, those guidelines that are relevant to the patient’s state at each point in time. When invoked, the algorithm accepts the complete set of patient’s data as an argument; the data are represented using an in-memory object that holds both the demographic and the temporal data of the patient.

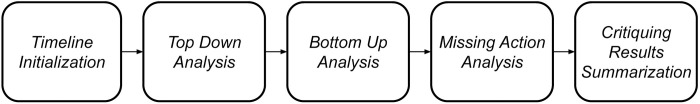

The algorithm that is at the core of the BiKBAC method combines two computational approaches: top-down and bottom-up. The algorithm consists of several sequential steps, in which each step can directly analyze the raw patient data or use the outputs calculated in the previous steps of the algorithm. The outputs of the steps are stored in a central data structure called a TimeLine, which supports fast storage and retrieval of the time-stamped data items. The main steps of the algorithm and their respective order are presented in Fig 1 and are described in the following sections.

Fig 1. The key steps in the Bidirectional Knowledge-Based Assessment of Compliance (BIKBAC) method.

The TimeLine data structure is used to store and retrieve time-stamped data items, which are called TimePoints, which represent multiple events in the patient’s history. Each TimePoint includes an annotation regarding its type, a timestamp, and references to additional relevant information according to its type. The TimePoints are divided into two main types: Data-Item points represent the raw data items that exist in the original medical record, while Computed-Explanation points represent new higher-level information that is created during the compliance analysis. The description of the core algorithm of the BiKBAC method in the following sections clarifies the context in which the various types of computed-explanation points are added to the TimeLine.

2.2.1. Top-down analysis

The Top-Down analysis is a computational process that analyzes the time-stamped patient data from the perspective of the set of potentially relevant guidelines, i.e., a knowledge-driven process. In general, it is performed for the detection, within each time-stamped patient data series, of the high-level interval-based temporal abstractions that are related to the entry conditions, stop conditions, and/or outcome intentions of any operative guideline that is available in the knowledge library.

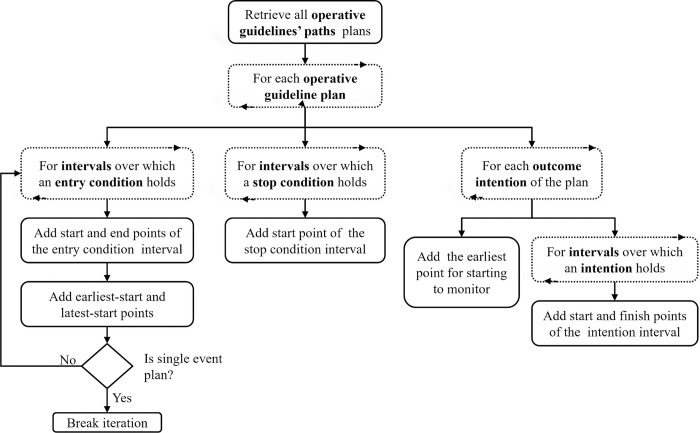

Fig 2 presents a flowchart illustrating the steps of top-down analysis; a description of the process is provided in the following paragraphs.

Fig 2. The top-down analysis step of the BiKBAC method’s compliance analysis algorithm.

The dashed rectangles represent iterative steps performed on a specific collection. The top-down analysis is performed on each operative guideline plan in the library.

When starting the top-down analysis, the system retrieves the complete set of operative guideline plans, and then breaks composite guidelines into a virtual set of guideline-plans, each describing a single clinical path of the original composite guideline. The virtual plans are assigned the guideline-conditions (i.e., Asbru’s conditions: Filter, Setup, Complete, Abort, Suspend, and Restart) of the original “parent” guideline. We call this process “Condition Propagation”, and it involves the following logic: When propagating an entry condition of a guideline (i.e., Filter, Setup, and Restart) from a parent plan to one of its sub-plans, the condition of the parent plan is added as a conjunction (i.e., AND relation) to the existing entry condition of the sub-plan, for example “Diabetes AND Pregnancy”. That is, all of the entry conditions must hold to enter the sub-plan, including those of its parent plan. When propagating a stop condition of a guideline (i.e., Complete, Abort, or Suspend) from a parent plan to one of its sub-plans, the condition of the parent plan is added as a disjunction (i.e., OR relation) to the existing stop condition of the sub-plan, for example “Patient Hospitalization OR Severe Pain”. That is, it is sufficient that any of the stop conditions will hold, including one of those propagated from the parent plan, to stop the sub-plan.

For each operative guideline path, the declarative concepts of the entry conditions, stop conditions, and outcome-intentions are extracted and sent to the Fuzzy Temporal Reasoner (which we describe later in this section), which evaluates them on the patient data. For each of these declarative concepts, the Fuzzy Temporal Reasoner returns a set of zero or more temporal abstractions, in the form of time stamped intervals assigned with a membership score (from 0 to 1). The system filters the returned intervals and accepts only those with a membership score that passes certain configurable thresholds. The remaining intervals are then used for adding data points to the TimeLine according to the logic described in the flowchart in Fig 2.

Thus, for example, in the case of the type 2 diabetes management guideline, an entry condition (in this case, a compulsory Filter condition) of HbA1C level greater than 6.5%, would be used to label time intervals during which this condition held, indicating also to what (fuzzy) degree the condition held (on a scale of 0.1).

2.2.2. Bottom-up analysis

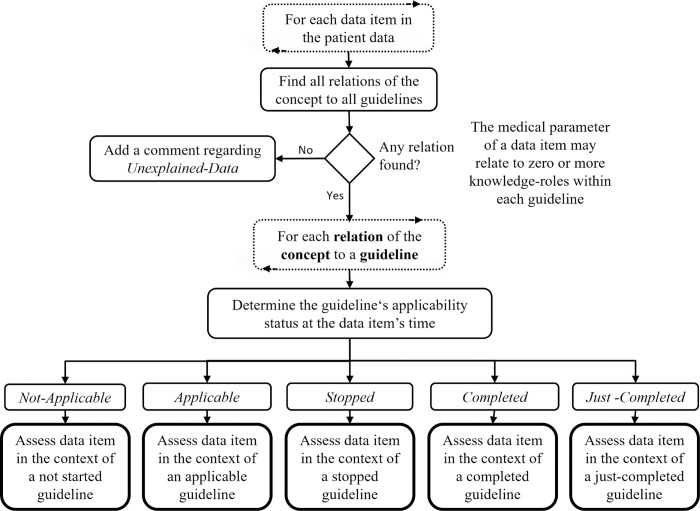

The Bottom-Up analysis is a computational process that analyzes the guideline compliance from the perspective of the patient’s data, i.e., a data-driven process. In general, the bottom-up analysis consists of a process that examines each data item in the patient’s medical record, and provides it with a set of possible knowledge-based (i.e., clinical guideline-based) explanations.

In the bottom-up analysis, the system scans each item in the patient data, and tries to provide as many computed explanations for each data item as possible. The computed explanations are structured semantic comments that evaluate the data item in the context of any guideline-plan that is related to this type of item, i.e., the item is included in the formal definition of a certain part of the guideline. This, of course, is done according to what is known to the system, i.e., the full set of formal operative guidelines.

For example, consider a hemoglobin A1c measurement that is potentially related to a diabetes guideline in two ways: It can be a pre-diabetic (screening) test that is taken to decide if the patient has diabetes (included in the guideline’s filter-condition), or it can be an ongoing periodic test step (included in the guideline’s plan-body), to monitor a patient who was already diagnosed. The data item is examined in the context of these two different guideline knowledge roles, and a possible computed explanation is provided for each. Each of these computed explanations that are added for a specific data point, is represented in a data structure that holds additional details. For example, computed explanations in the context of guideline step points, are assigned a quality score for the assessment of the timing of the action, with a corresponding description of the temporal relation, which can be assigned step-too-early, step-on-time, or step-is-late. Note that each data point that represents a clinical parameter in the patient’s TimeLine, is assigned with a collection of one or more possible computed explanations. In a later step of the compliance assessment, the computed explanations are summarized to select the most reasonable one. Fig 3 presents a hierarchical flow chart illustrating the main flow of the bottom-up analysis.

Fig 3. The bottom-up analysis step of the BiKBAC method’s compliance analysis algorithm.

In the next step, for data items that were found with relations to the knowledge roles (e.g., conditions) of potentially relevant operative guidelines, each of the relations is examined to determine the Applicability-Status of all potentially applicable guidelines. The applicability status can be generally described as an answer to the question “Was the guideline’s plan applicable in the time referred to by the data item?”, and is determined by scanning the previous events recorded in the TimeLine during the Top-Down analysis phase. These events include the guideline’s (earliest and latest) start and stop points, which were added in the Top-Down analysis (see Fig 2), and the computed explanations of all of the clinical steps that occurred prior to the current examined time (recall that the data items are scanned chronologically). The applicability-status is used to determine the context in which to further analyze the data item, in which additional logic is applied to determine the type of explanation to add. For example, in the context of a Stopped-Guideline, as determined during the Top-Down analysis, a plan body item of this guideline, found in the Bottom-Up analysis, would be assigned the explanation Stopped-Guideline-Step, whereas in the context of a previously determined Completed-Guideline, the same plan body item would be explained after the Bottom-Up analysis as Redundant-Step-Repeated.

Thus, for example, while critiquing the management over time of a diabetes type 2 patient, (1) a HbA1C measurement result might be associated with the Filter Condition and the Outcome Intention of the type 2 diabetes management guideline (assuming that guideline exists in the guideline library); (2) an LDL cholesterol measurement result might be associated with the Filter Condition and the Outcome Intention of a Hyperlipidemia management guideline, if that guideline also happens to be in the guideline library; and (3) a Lithium medication (possibly given to control the patient’s Bipolar disorder) would be labeled as “unexplained data” if the Bipolar Disorder guideline, or any other clinical guideline that involves administration of Lithium, does not exist in the guideline library.

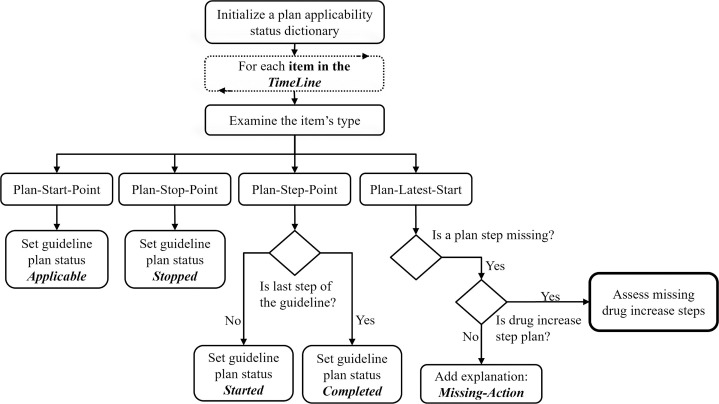

2.2.3. Missing actions analysis

The Missing-Actions-Analysis, illustrated in Fig 4, is an important step of the compliance analysis, due to the fact that missing an action is one of the more common problems with respect to compliance to clinical guidelines. In this step, the system scans the TimeLine again, this time to detect missing actions. There is a need for an additional scan of (i.e., a third pass over) the TimeLine, due to the fact that in the previous steps of the compliance analysis the system could not detect the missing actions; in the Top-Down analysis, the system examines the guidelines’ conditions and outcome-intentions but does not directly consider the clinical actions (which are represented in the plan-body of the formal guidelines); in the Bottom-Up analysis, the process scans the existing data items in the patient’s record, thus, in this manner, it cannot detect actions that are missing. While scanning the TimeLine chronologically, when detecting a Plan-Latest-Start of a guideline, a Missing-Action comment is generated for each missing clinical action of that guideline.

Fig 4. The missing-actions analysis of the BiKBAC compliance-analysis methodology.

In the specific and important case of assessing a missing Drug-Increase clinical steps item, the system is trying to explain the missing actions by considering two common scenarios in which the medication-increase action should be cancelled even if it is required according to the patient’s data. The first scenario is reaching a maximal dose of a medication, a situation in which a further dose increase is not recommended. The second scenario is cases in which the compliance to the medication was low to begin with, at the time of the assessment, meaning that the patient did not take the medication; in such a situation, it is more reasonable to improve the core compliance to the taking of the medication, rather than to increase the dose of the medication, and thus, that should be the output of the quality-assessment process.

2.2.4. Critiquing results summarization

During the application of the previous steps of the compliance analysis algorithm, the system is generating a large amount of computed explanations, which are represented as TimePoints objects that are stored in the TimeLine. Some of these computed explanations represent useful comments regarding compliance with one or more relevant clinical guidelines (e.g. a late drug administration action), whereas other computed explanations are less useful for potential users and relevant mostly to support the analysis process itself (e.g. an internal notion of the system regarding a detected drug-increase action). In this phase, the system extracts the useful computed explanations from the TimeLine, and filters out the less useful ones. The useful computed explanations represent the system’s Comments regarding compliance to the guidelines. The Comments are the BiKBAC method’s main output.

Intention Related Comments are extracted from the outputs of the top-down analysis, in which each outcome-intention of a guideline-plan was evaluated using the Fuzzy-Reasoner. The outcome intentions-related explanations are represented as scored temporal-intervals, and for each outcome-intention the following sets of temporal-intervals are extracted: a set of temporal-intervals with assessment regarding the achievement of the intention (e.g., the hemoglobin A1c goals were almost on target in a certain period of time, therefore, the fuzzy membership score might be, say, 0.85); a set of temporal-intervals in which the intentions should have been monitored (e.g., for a patient who was diagnosed with diabetes in January, the outcome-intentions should have been monitored from April); and a set of temporal intervals in which the system detects insufficient data to determine the achievement of the outcome intention, i.e., intervals in which an intention was not monitored.

Data Item Comments are extracted from the outputs of the bottom-up analysis, in which, as explained, each data item in the patient record is evaluated and assigned zero or more computed explanations. In general, it is reasonable to provide multiple explanations when analyzing data in a retrospective manner, however, for practical reasons, there is a need to organize these multiple computed-explanations in a manner that emphasizes the most reasonable computed explanations provided for each data item. The computed-explanations of each data item are sorted using a score that represents the “reasonableness” (i.e., likelihood of being relevant) of the computed explanations. The computed explanation with the highest “reasonableness” score is selected as the compliance comment and assigned to the examined data item. The “reasonableness” score is the mean of the following scores given to each explanation: (1) a score that represents the level of applicability of the guideline at the valid time of the data item; (2) a score that describes the strength of the relation between the data item to the knowledge role of the relevant clinical guideline (e.g., the score of a data item unique to a particular guideline is higher, for that particular guideline, than the score of a data item that might be equally relevant to three different guidelines, with respect to each of the three guidelines); (3) a score for the timing of the clinical step that is represented by the data item, available only for clinical steps that were expected according to an applicable guideline.

The full set of data item comment types include the following: step-not-supported (by an applicable known guideline), stopped-plan-step, redundant-step-repeated, duplicate-step, wrong-path-selection (another guideline path is more suitable), step-too-early, step-on-time, step-too-late.

Missing Actions Comments are extracted from the output of the Missing-Actions-Analysis, in which the system scans the TimeLine and adds computed explanations regarding any missing action of the operative guideline. Extracting these computed explanations is important, since, as noted above, missing actions are a common problem of guideline compliance.

2.3. The fuzzy temporal reasoner

Recall that the Top-Down analysis exploits fuzzy temporal reasoning to assign values to the degree to which certain conditions or intentions hold over time. The Fuzzy Temporal Reasoner is a KBTA-based engine (extending the KBTA method) that is used to extract high level interval-based interpretations from the raw, time-stamped temporal data, and assign fuzzy membership scores to the resultant abstractions. It uses formal declarative knowledge about medical concepts, which are represented according to the KBTA ontology. In addition, the Fuzzy Temporal Reasoner applies techniques of fuzzy logic [25, 26] that take part in the reasoning process, enabling it to provide a (fuzzy) membership score for each abstraction it generates. The use of fuzzy logic techniques distinguishes the Fuzzy Temporal Reasoner from other existing KBTA engines that are able to extract from raw data only deterministic abstractions based on classical First Order Logic.

In addition to applying temporal logic for the evaluation of the various types of KBTA abstract concepts (e.g., State, Gradient, Rate), the Fuzzy Temporal Reasoner uses techniques of fuzzy logic both in the evaluation of logical relations (e.g., x < y), and in the evaluation of logical operators (i.e., AND, OR operators) that are part of compound logical expressions.

To better understand the motivation behind the use of fuzzy temporal logic in the compliance analysis process of the BIKBAC methodology, consider the following example of a Clinical Quality Measure (CQM) in the domain of the current study, i.e., Type II Diabetes: “Low Density Lipoprotein (LDL-C) Control in Diabetes Mellitus”, defined as “Percentage of patients aged 18 through 75 years with diabetes mellitus who had most recent LDL-C level in control (less than 100 mg/dL)”. A simplistic algorithm using this rigid cut-off value would assign a care provider who manages a group of 50 patients, whose LDL-C values at the point in which they were examined were all just slightly higher than 100 mg/dL, say 106, a quality measure of zero. Such an extreme assignment is not likely to be well received by the clinical community, and would decrease trust and acceptance of quality assessment results. However, due to the use of the fuzzy temporal logic mechanism to assess quality measures, the DiscovErr system would assess the quality of care of that group as quite high, say, by assigning them a membership score of, say, 0.88 or even 0.93, although the compliance would not be perfect. However, an LDL-C value of, say, 130 or higher would be assigned a membership score of 0, while intermediate values would be assigned some membership score between 0 and 1, using a linear or another membership function to interpolate between the two extreme values.

The Fuzzy Temporal Reasoner is an internal component of the Analysis Framework and is used by the guideline Compliance Analysis Engine to evaluate the state of the patient with regard to the conditions specified in the guidelines. For example, when evaluating the compliance to a guideline regarding a medication that should be stopped in the case of reduced kidney function, the Compliance Analysis Engine uses the Fuzzy Temporal Reasoner to retrieve the time intervals during which the reduced kidney function state was equal to True according to the patient’s data (i.e., the State abstraction “reduced-kidney-function-state” = ‘True’). The results of the Fuzzy Temporal Reasoner include a set of time-intervals, each assigned with a membership score represented as a continuous number between 0 and 1. In time periods during which the raw data of the patient completely satisfy the constraints specified in definition of the “reduced-kidney-function-state” concept, the membership score is assigned the value of 1; during periods when these constraints are fully contradicted, the membership score is assigned the value of 0; during periods in which these constraints are partially satisfied, due to raw data that are close to satisfying the relation, the membership score is assigned a rational number between 0 and 1.

The Fuzzy Temporal Reasoner is described in detail in S1 Appendix.

2.4. The DiscovErr system

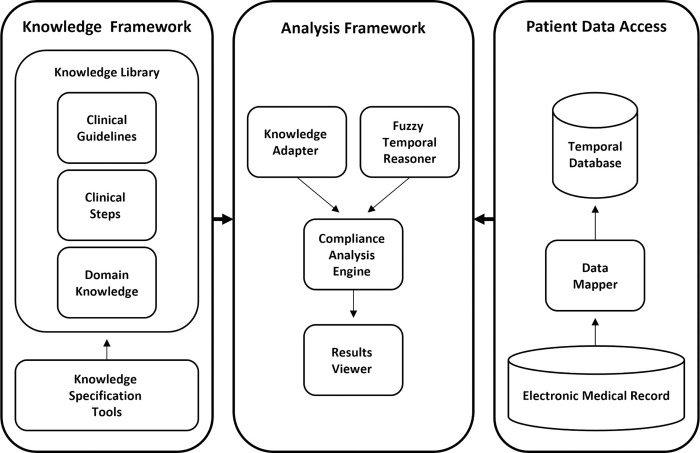

The architecture of the DiscovErr system, which fully implements the BiKBAC compliance-assessment methodology, is presented in Fig 5. The DiscovErr system includes three main modules that are integrated to support the guideline compliance analysis task; the Knowledge Framework, including the knowledge library and knowledge specification tool, the Patient Data Access module, which retrieves the data from the electronic medical record, and the Analysis Framework, which performs the compliance analysis and provides a graphical interface for the users to view the compliance analysis results.

Fig 5. The DiscovErr system’s architecture, implementing the BiKBAC compliance-assessment methodology.

For the formal representation of the guideline, the DiscovErr system uses the Asbru language [16, 17], due to its formal representation of multiple types of process and outcome intentions as temporal patterns to be achieved, avoided, or maintained, and of multiple types of explicit conditions, such as several types of eligibility conditions and of guideline-completion conditions.

The Knowledge Framework includes a library that facilitates a knowledge model that integrates three types of formal knowledge: procedural knowledge of clinical guidelines (represented using the Asbru language), declarative medical domains knowledge (using the KBTA method), and knowledge about clinical steps with references to controlled medical vocabularies, such as the WHO’s Anatomical Therapeutic Chemical Classification System (ATC) for drug related steps, and Logical Observation Identifiers Names and Codes (LOINC) for laboratory test orders and results. The Knowledge Framework includes a graphical tool for knowledge specification, which is used by expert physicians and knowledge engineers to create and maintain the formal knowledge and store it in the knowledge library.

The Patient Data Access module includes the Data Mapper module for importing data from the electronic medical record and for storing it in a time-oriented (technically, historical) database according to the internal schema of the system. The Data Mapper performs three main tasks during the data import process: (1) map the concept identifiers used in the external electronic medical record to the concept identifiers used in the formal knowledge; (2) convert the data when required due to different units of measurement; and (3) store the time-oriented patient data in a time-oriented database, in which each raw data item (e.g., Hemoglobin level) is mapped to a corresponding concept in the formal knowledge, and includes the relevant valid-start and valid-stop time stamps. The data in the time-oriented database consists of two types of parameters; primitive parameters that represent information about the state of the patient (e.g., lab results, physical examinations) and event parameters that represent the medical treatment (e.g., medication orders, procedures).

The Analysis Framework is responsible for the computational task of compliance analysis. The core of the Analysis Framework is the Compliance Analysis Engine, which applies the computational algorithms to analyze the patient data regarding the compliance to the clinical guidelines. The analysis engine accesses the knowledge through the Knowledge Adapter that provides sophisticated methods to query the knowledge library. The analysis engine uses the Fuzzy Temporal Reasoner to extract high level temporal abstractions regarding the patient’s state. In addition, the Analysis Framework includes the Results Viewer, a graphical interface that allows users to view and explore the compliance analysis results.

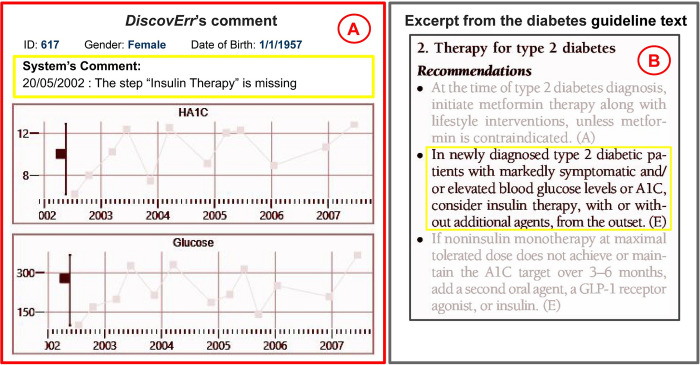

Fig 6 displays a typical view of the output of the DiscovErr system, from the point of view of a medical expert using it during the evaluation of that system, and a relevant excerpt from the original guideline’s text.

Fig 6. An example of a comment made by the DiscovErr system.

The comment (A), regarding missing insulin therapy for a newly diagnosed patient with very high levels of HbA1c (>10%) and Glucose (>290 mg/dL), and the relevant excerpt from the original guideline (B).

3. Evaluation

The evaluation of the DiscovErr system was designed to assess the feasibility of implementing it in a real clinical quality-assessment setting. The experiment was performed in the diabetes domain, in which we specified knowledge from a state-of-the-art guideline in a formal representation, and applied the system to real retrospective patient data, to assess to what extent the treatment complied with the standard guideline. The data were presented to expert physicians. The expert physicians were then asked to manually evaluate the compliance of the treatment of a set of patients to the guideline by examining the electronic medical records. Then, the comments given by the system regarding compliance to the guidelines were presented to the experts, and they assessed the correctness and importance of these comments.

The general objective of the evaluation was to enable assessing the correctness (i.e., precision) and completeness (i.e., recall or coverage) of the comments provided by the system relative to the gold standard comments of the clinicians, when automatically analyzing electronic medical records for guideline-based compliance of the therapy manifested by these records.

Note that the terms completeness and correctness used here refer to different measures than the formal definitions of first-order logic, and describe a continuous grade for the level of coverage and level of correctness of the comments given by the system regarding guidelines compliance issues.

3.1. Research questions

The general objective of the research was to evaluate the feasibility of applying the system in realistic clinical settings. This led to the definition of several more specific research questions, that aim to assess the quality and significance of the results of the compliance analysis. For each research question presented in this section (i.e., specific objective), we describe here the general idea of how it was measured, as more details are available in the experimental design and the Results section.

Question 1: Completeness: Does the system produce all or most of the important comments relevant to the task of assessing compliance to a guideline?

To use such a system in real clinical settings, it is necessary to evaluate the quality of its results. One dimension of the quality of the results is the completeness, or level of coverage, as it is important to know if all or most of the deviations from the guideline are detected by the system.

To answer this question, we conducted an experiment in which the system and three medical experts examined the medical records of the same set of patients, and provided comments regarding the compliance to the same clinical guideline for the management of diabetes mellitus. The measure for completeness was defined as the portion of compliance-related comments that were mentioned by the system from the comments mentioned by the majority of the medical experts.

Question 2: Correctness: Is the system correct in its comments regarding the compliance to the guideline?

Another dimension of the quality of the results is the level of correctness of the system output, sometimes referred to as precision. It is important to know the proportion of correct compliance comments provided by the system out of all the system comments.

Question 3: Importance: Are the comments provided by the system significant and important for understanding the quality of treatment?

In addition to measuring the correctness and completeness of the compliance analysis results, we were also interested in measuring the level of significance of the comments provided by the system regarding the compliance.

To answer questions 2 and 3, we conducted an experiment in which two diabetes experts evaluated the correctness and importance of the compliance-related comments provided by the system when analysing the medical records of a set of patients. The measure for correctness was defined as the portion of system comments that were evaluated as correct by the two experts. The measure for importance was defined as the portion of system comments that were evaluated as important by the two experts.

3.1.1. Secondary research issues

In addition to the primary research questions presented above, we were also interested in examining two secondary issues that focus on the human aspects of the experiment, and to answer them to some extent.

Issue 1: Comparison of the quality-assessment comments of the experts and the system: What is the completeness and correctness of the comments of the expert physicians, when using the comments of the colleagues of each of the experts as a gold standard? How does the performance of the DiscovErr system measure up, using this method?

Issue 2: Similarity in the experts’ meta-critiquing: What is the level of agreement between several experts regarding the quality of the system assessments?

We found these issues interesting, as one of the major motivations for clinical guideline implementation, is its contribution to the reduction of the variance among treatments provided by different physicians. It was important for us to learn whether the experts mostly agree with each other when performing the task of compliance assessment, which is a different task from providing a real treatment. Due to the fact that in the compliance analysis task, the experts refer to the same guideline, we assumed that the experts would have a reasonable level of consensus.

In addition, evaluating the level of agreement between the experts is also essential in order to enable the evaluation of the system itself, as there is no true meaning for the experts’ evaluation of the system if there is no agreement between them.

To address the first issue, we defined indirect measures to evaluate the completeness and correctness of the experts themselves. The specific details of these measures are described in the Results section. To address the second issue, we used Cohen’s Kappa statistic, and applied it to the evaluations of the experts regarding the correctness and importance of the system comments (i.e., as part of addressing Research Questions 2 and 3).

3.2. Experimental design

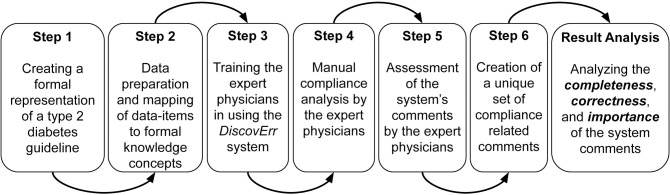

To answer these research questions, we designed a study that included several experimental steps (see Fig 7), which enabled evaluation of several aspects of the overall framework.

Fig 7. A diagram of the experimental steps in our evaluation of the DiscovErr system.

A preliminary step before designing the study was to find a medical domain. After examining several medical domains, we decided to perform the experiment in the Diabetes domain. This domain is suitable for clinical guideline application, with well-established evidence, and in which the diagnostic and therapeutic process is performed over a sufficiently long period of time (i.e., a period of time of sufficient duration for performing meaningful longitudinal patient monitoring and therapeutic decisions that might adhere to a continuous-care guideline). It is important to mention that although we wished to evaluate the system in more than one medical domain, we had limited resources and decided, instead of performing several smaller experiments in several medical domains (collaborating with one expert in each domain), to focus more deeply on a larger experiment within a single domain.

We obtained a dataset that included anonymized information about 2,038 patients diagnosed with type 2 diabetes. The data were collected between Jan 15, 2002 to July 15, 2007, and were obtained as part of a research collaboration with the Quality Assessment Department of a large Israeli HMO, and included 378,273 time-oriented data records, including details about test results and medication orders and purchases that are relevant for assessment of compliance to the diabetes-management guideline. The records in this data set covered up to five years of continuous treatment for each of the patients, together with general demographic information about the patient, such as gender and age. We had no access to information that could identify individual participants during or after data collection.

In the following sections, we describe the structure of the study and each experimental step.

Step 1: Creating a formal representation of an established diabetes-management guideline

The first step in the evaluation focused on the formal representation of a clinical guideline within the selected clinical domain, using the knowledge specification interface of DiscovErr, which uses, for procedural knowledge representation, such as specific recommendations regarding the performance of hemoglobin A1c monitoring under certain conditions, the Asbru language; and for declarative knowledge representation, such as for the definition of different abstractions of the Blood-Glucose-value raw-data concept into abstract-concept values, such as Hypoglycemia, Normoglycemia, or Hyperglycemia, the knowledge-based temporal-abstraction (KBTA) ontology [23]. The guideline that was selected was the Standards of Medical Care in Diabetes [27], that roughly corresponded to the time in which the patients were managed. This is a comprehensive guideline that is based on an extensive literature review and is updated every year. The guideline addresses multiple aspects of diabetes, from screening through diagnosis to multiple aspects of the long-term treatment.

The overall guideline specification was performed in two steps. In the first step, we created a prototype version of the formal guideline by detecting the relevant sections in the guideline, deciding on the optimal representation according to the formal model, and using the system knowledge acquisition interface to formally specify the knowledge. In the second step, one of the expert physicians was involved, and assisted in validating the knowledge represented in the first prototype and extending it with additional knowledge that was not explicit in the original guideline. An example of such knowledge that did not exist in the original guideline is the definition of the concept “reduced kidney function”, which is important for the management of Metformin drug therapy. The definition of this concept was not mentioned in the guideline and had to be added by the expert by referring to additional sources. Another example of a decision that was made by the expert physician during the specification process, is a general decision regarding the specification of the temporal aspect of recommendations about patient monitoring. In the first prototype, when specifying a recommendation about the temporal aspect of periodic monitoring (e.g., test hemoglobin A1c every 3 months), we used the temporal annotations of the Asbru language, “earliest-start” and “latest-start”, to define a flexible time range for the next measurement. After consulting with the expert physician, the “earliest-start” was removed in some sections of the guideline to prevent the system from providing comments regarding tests that were performed too early. This was decided because in some cases in clinical practice, such as hemoglobin A1c and LDL monitoring, there is no limit to the number of tests (frequency of testing) of the same clinical variable that the physician can order. This relaxed constraint is of course true only for certain tests, which are simple to perform, are not too expensive, and are useful for assessing response to a treatment change and improvement of patient engagement.

The process of formal representation of the guideline was concluded after applying the system to a small set of longitudinal patient records, and briefly examining the results to perform the validation (note that since the objective of the system is not to manage patients, but rather to perform a retrospective assessment of compliance, we did not need to extend the performance evaluation beyond a reasonable assurance that the representation seems accurate).

The overall knowledge specification process was completed in two weeks, in which the first week was dedicated for the first step of the process, of creating a prototype representation, and the second week was dedicated for collaborating with the medical expert to validate and improve the guideline’s representation.

Step 2: Preparation of the data and mapping it to concepts in the formal knowledge base

The data preparation is a preliminary step that enables application of the system on the medical records. To allow the system to retrieve the relevant items in the patient data at run time, there is a need to map between the concepts in the guideline’s formal knowledge base and the terms in the patient database. This can be completed only after finalizing the specification of the guideline in a formal representation, when the complete set of relevant concepts is determined.

Regarding tests and measurements, the original data set did not include codes from standardized vocabularies for medical concept identification. Therefore, we had to manually examine each term in the database, and map it to the relevant declarative concept in the system knowledge-base, and if necessary, perform a conversion to the same units of measurement specified in the knowledge case. Examples of relevant test and measurement concepts include blood glucose, hemoglobin A1c, blood cholesterol, and creatinine lab tests.

Regarding drug therapy concepts, the data included codes according to the standard of WHO’s ATC classification system, a fact that simplified the mapping process. Using the interface of DiscovErr, we only had to select the relevant ATC terms and attach them to the concepts appearing within the appropriate steps in the formal clinical steps’ library. For example, the concept used within the step “initiate-insulin-therapy” was mapped to several potentially relevant ATC items, such as “A10B: Insulins and analogues for injection, fast-acting” or “A10AC: Insulins and analogues for injection, intermediate-acting”. Mapping the concepts appearing within the clinical steps to these higher level classes of the ATC hierarchy allows the system to automatically detect the drug identifiers found in the electronic medical record and to relate them to the relevant items in the knowledge.

Step 3: Training the expert physicians

A training session was conducted with each of the expert physicians. The session covered the following topics:

A general overview of the research and its specific goals.

Presentation of the diabetes guideline, which was provided to the experts in a printed copy format and in an electronic format; in both formats, the relevant sections were visually marked. It is important to note that all of the experts were familiar with the selected guideline, so this topic was covered within a short time.

Description of the patients’ dataset, its source, structure, available clinical variables, and data format.

Demonstration of the DiscovErr system and its comment-making interface, by performing a compliance analysis of two or three demonstration patients. We found that the demonstration allowed the experts to better understand the idea of the system, and to understand the nature of the comments provided by the system regarding compliance to the guideline.

A short training regarding the specialized user interface that we had created solely for this evaluation, which was used by the expert physicians to both insert their own evaluations of the actions found within the patient record, and to add their evaluation of the assessment performed by the system of these actions (see the interface description below).

Step 4: Manual compliance analysis of the patients’ management, by the expert physicians, performed on a randomly selected set of patients

Following the training step, the experts were provided with a convenient (visual) interactive interface for browsing the complete set of longitudinal data of multiple types of a randomly selected subset of patients from the database that was introduced earlier to them, for the full duration of time for which the patient was followed (up to five years per patient). They were then asked to perform the two evaluation tasks (i.e., (1) assessment of the quality of care given by each patient’s care provider, and (2) assessment of the quality of the DiscovErr system comments).

Specifically, we first asked each of the expert physicians to review the data of the same 10 randomly selected patients, which comprised, altogether, 1584 time-oriented records, and manually add comments regarding the compliance of the patient treatment to the diabetes guideline. At this point, the experts could not yet see the system comments regarding the compliance of these patients to the guideline. They were provided with a user interface for the visualization of the raw temporal data of the patient, and for adding their comments using this interface.

For each of their comments, the experts were asked to provide data about the date of the clinical event, the importance/significance of the clinical issue, the type of the comment, and a textual description of the clinical issue. For the type of the comment they could select from a given set of comment types, or insert their own type of comment. The given set of values is presented in Table 2 in the results.

Table 2. The distribution of the comments given by the experts with respect to the type of compliance issue.

| Compliance Issue Type | Comments | % |

|---|---|---|

| Late Action (Expected action that was performed too late) | 118 | 36% |

| Action is On Time (An action expected by the guideline is performed on time) | 61 | 19% |

| Missing Actions (Missing expected action) | 59 | 18% |

| Patient Compliance ((Low compliance to medications) | 56 | 17% |

| No Support (Action should not be started at this time) | 32 | 10% |

| Redundant (Another action with same intention performed previously) | 1 | 0.3% |

| Early Action (Action performed too early) | 1 | 0.3% |

| Guideline Contradicted (Action that contradicts the guideline’s recommendation) | 1 | 0.3% |

| All | 329 | 100% |

The experts were not limited regarding the time they could invest for the evaluation of each patient, except their own limitation on time constraints; they could browse several years of data for each patient, and add as many comments as they wanted.

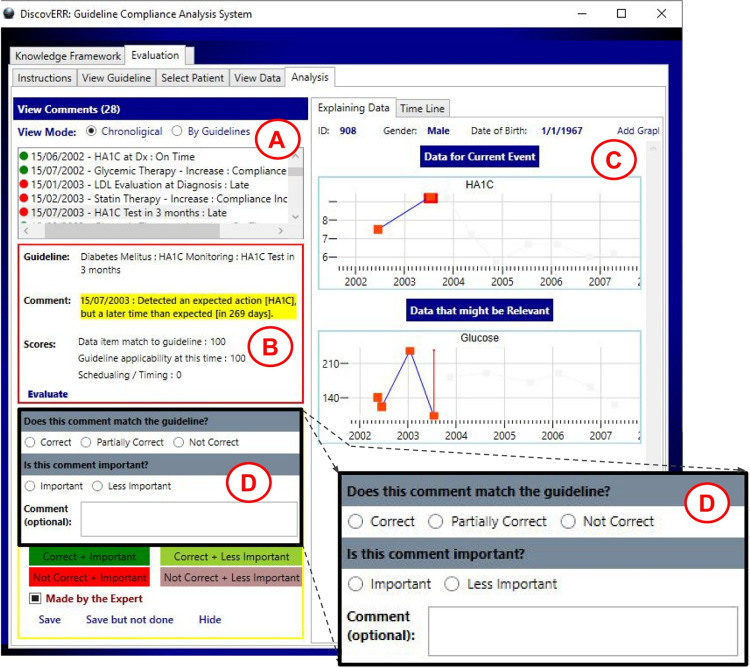

Step 5: Assessment, by the expert physicians, of the comments provided by the system

In this step, we asked the two diabetes experts to evaluate the comments of the system regarding the compliance to the diabetes guideline. The experts used the output interface of the system, to explore the guideline compliance comments. The comments were presented together with the relevant patient data in a visual manner. Using the meta-commentary part of the user interface, the experts assessed the comments given by the system regarding correctness and importance (see Fig 8). For each system comment, the expert marked whether the comment is correct according to the guideline when considering the data that was available to the care provider at the specific point in time. In addition, the expert marked whether the comment is important, to express an opinion regarding the level of clinical significance of the issue referred to by this comment (regardless of whether the comment itself is judged as correct or incorrect). For the correctness evaluation, the expert could select from three options: correct, partially correct, and not correct; for the importance evaluation, the expert could select from two options: important and less important. (The binary scale emerged from the discussions with the experts; the pilot experiment included originally an additional level, very-important, but it turned out to create problems in maintaining consistency when assessing the system comments: for example, different instances of the same comment regarding late HA1C lab tests were annotated both as important and as very-important by the same expert). In addition to annotating regarding the correctness and importance of the system comment, the experts could enter an optional free text comment when they wanted to add additional information.

Fig 8. The interface used by the experts for evaluating the comments of the system.

This interface was designed as part of our study. The expert can view the list of comments on the top left side of the screen (A). When selecting a specific comment, additional explanation is displayed (B) together with graphs showing raw data (C). The explanation includes the specific path of the guideline and a textual description with details about the system scores. The graphs are of one or more parameters that lead the system to its comment. Zoom into the part of the UI for meta-critiquing (D), shows the manner in which the experts expressed their opinion of the DiscovErr system comments.

Step 6: Creation of a unique set of compliance-related comments

The final step of the result evaluation included additional preparation that was required for supporting a deeper quantitative analysis of the results. In this step, the knowledge engineer scanned and analyzed each of the comments resulting from the previous steps of the evaluation, both of the system and of the experts, and extended it with additional metadata.

3.2.1. Cross-tabulation and annotation of the experts’ comments

In the first step of this preparatory analysis, we examined the comments that were added by the experts in the step of manual evaluation of the management of the treatment of patients. We examined each comment given by each expert, and compared it to the comments of the other two experts and to those of the system. Each comment was then annotated with three additional attributes that indicated whether it was detected by (1) the system, (2) the other first expert, (3) the other second expert. For example, when examining a comment of the first diabetes expert, for each patient we scanned the full set of comments of the system, of the second diabetes expert, and of the family physician expert; and annotated whether the comment was included in each of these comment sets. By opening four instances of the system, we scanned hundreds of comments provided by each expert, and annotated whether each comment was detected by the system and by the other experts.

It is important to mention that in this part of the preparatory analysis, we had to deal with cases in which comments were phrased differently by each expert. This was solved by manually reviewing the comments and relating to the semantic meaning of each comment. For example, the same scenario of a (too) late clinical action can be described as a “late action” by one expert, or as a “missing action” in the earlier period of the patients record by the other expert, based on the same data and the same guideline-based clinical knowledge. Thus, the result of this phase was essentially a determination of a set of (semantically) unique comments.

An additional issue that had to be addressed in the preparatory analysis of the expert comments is the fact that some of the comments provided by the experts were regarding knowledge that belonged to sections of the source guideline that were not included in the formal representation, or that belonged to other clinical guidelines. Comments of this type were annotated as “out-of-scope” and were excluded from the formal analysis of system completeness.

3.2.2. Cross-tabulation and annotation of the evaluations given by the experts on the system comments

In the second step of the preparatory analysis, we re-examined the comments provided by the system. As explained earlier the system comments were already evaluated in the previous step regarding correctness and importance by each of the diabetes experts. However, to support additional levels of result analysis, we re-examined each comment to determine whether it exists in the sets of patient management comments provided by each diabetes expert when they made their own management compliance comments, before they were exposed to the comments of the system. In this manner, each comment of the system was extended with additional meta-attributes that annotated whether it was, in fact, mentioned by each of the two diabetes experts.

4. Results

Recall that our main objectives in the current study were to assess the completeness of the DiscovErr system comments, relative to the comments that need to be made; the correctness of the comments made by the system; and the importance of the comments that were made or missed by the system. We also wanted to examine several secondary issues, such as the completeness and correctness of the medical experts themselves, and their internal agreement.

4.1. Completeness of the system comments

The results in this section relate to the first part of the experiment, namely, the manual compliance analysis of the patient management, by the three expert physicians, performed on a randomly selected set of patients. The results in this section provide insights about several interesting aspects; these aspects include the time needed for the experts to complete the compliance analysis task, the distribution of the types of compliance issues found by the experts in the records of the patient management, a comparison between the experts based on their compliance comments, the completeness of the comments given by the system relative to one or more comments given by the experts, and more. Our objective in this phase of the evaluation was to measure the completeness of the DiscovErr system comments, namely, the portion, out of the comments mentioned by the experts, that were made by the system (we shall present a more precise definition later).

In this first part of the experiment, which included two diabetes experts and one family medicine expert, each expert evaluated the longitudinal record of each patient within a group of 10 patients who were randomly selected before the evaluation. Table 1 presents information about the randomly selected patient set in comparison to the whole dataset. The mean duration of the period on which we had patient data was 5.23 years. The overall data of the 10 patients consisted of 1,584 time-oriented single medical transactions (e.g., a single laboratory test result or a single administration of a medication), i.e., an average of 158 time-oriented medical transactions per patient, which had to be examined by the experts. The mean time for an expert to examine a single patient was 27 minutes. The experts made a total of 381 comments, from which 31 were labelled as insights (i.e., comments that are not directly related to guideline compliance), 21 were out of the scope of the guideline’s sections that were used in the experiment, and 329 were compliance comments within the scope of the guideline. A total of 66% of the comments were regarding drug actions and 34% regarding test and monitoring actions.

Table 1. Description of the data set used in the experiment.

| Entire Dataset | Randomly Selected Patients | |

|---|---|---|

| Number of patients | 2038 | 10 |

| Total medical transactions | 378,273 | 1,584 |

| Age in years [Mean /(StDev)] | 49 / 15.78 | 50 / 11.93 |

| Male patients percentage | 49% | 60% |

Table 2 presents the distribution of the comments given by the experts regarding the type of compliance comments. Note that “Action is on time” is the only positive type comment, denoting the fact that in the expert’s opinion, the action is a correct one and was performed in a timely manner.

To analyze the completeness of the system, the comments were divided into three groups according to the level of support by the experts: comments mentioned by only one expert, by exactly two experts, or by all three experts; then, by meticulously examining the text of all comments, the number of unique compliance issues was counted for each group, where a unique issue is a specific clinical management topic that can be mentioned, possibly using other words, or as part of another comment by one or more experts and the system. There were 50 (27.5%) unique compliance issues that were mentioned by all three; 48 (26.7%) of the unique compliance issues that were mentioned by two experts; and 83 (46%) of the unique compliance issues that were mentioned by only one expert. In total, 98 (55%) of the 181 unique compliance issues were mentioned by two or more experts out of the three, i.e., by a majority of the experts. Table 3 displays the completeness results analyzed for each level of support.

Table 3. Completeness of the comments given by the experts relative to the unique compliance issues, by level of support of the three experts.

| Unique Compliance Issues | Compliance Issues Detected by System | Completeness Regarding the exact no. of supporting experts | Completeness at that support level or higher | |

|---|---|---|---|---|

| Mentioned by one Expert | 83 | 55 | 66% | 80% |

| Mentioned by two Experts | 48 | 40 | 83% | 91% |

| Mentioned by three Experts | 50 | 49 | 98% | 98% |

| All | 181 | 144 |

The system completeness for comments that had the support of a majority of the experts (i.e., two or three out of three) was 91%, and the completeness was significantly higher for comments that had higher levels of support by the experts.

4.1.2. The completeness, as defined in the context of our evaluation, was expressed by the portion of unique compliance issues detected by the system, relative to the overall number of unique compliance issues mentioned by a group of experts

Applying in each case a proportion test, the completeness by the system of the unique compliance issues mentioned by all three experts was found to be significantly higher than the completeness by the system of the unique compliance issues that were mentioned by only two experts (z = 2.51, p = .012), which, in turn, was found to be significantly higher than the completeness of the system with respect to unique compliance issues that were mentioned by only one expert (z = 2.11, p = .035).

4.2. Correctness of the system comments

The results in this section relate to the second part of the experiment, in which the two diabetes experts evaluated the correctness and importance of the compliance comments mentioned by the system. This evaluation was performed on the data of the same 10 patients who were examined by the experts in the first part of the experiment. The mean time for the experts to evaluate the comments provided by the system on a single patient was 9.5 minutes.

In this phase of the evaluation, we wanted to determine the correctness of the DiscovErr system comments, defined as the proportion of correct comments provided by the system, out of all of the comments made by the system.

The system provided a total of 279 comments when applied to the data of all 10 patients, 165 (59%) regarding issues related to the compliance to tests and monitoring recommendations, and 114 (41%) regarding issues related to the compliance to medication therapy recommendations. A total of 172 comments were evaluated (62% of the comments by the system): 100% of the 114 medication-therapy-related comments and 35% of the 165 tests-and-monitoring-related comments. The reason for this discrepancy is that only the data of three of the patients were fully evaluated regarding the correctness of the tests and monitoring comments made by the system, due to constraints on the experts’ time.

To validate the consistency of the expert assessments of the comments given by the system, we started by calculating the level of agreement about the validity of these comments between the experts themselves, before proceeding further in the analysis. We measured the inter-expert agreement using a weighted version of Cohen’s Kappa coefficient, assigning linear disagreement weights according to the distance between the ordered values of the correctness ordinal scale (correct, partially-correct, not-correct). Cases in which both experts agreed were assigned a weight of 0; cases in which the experts did not agree, but with a distance of only one level between the assessments (i.e., correct/partially-correct, partially-correct/not correct), were assigned a weight of 1; and cases in which the experts did not agree, with a distance of two levels between the assessments (correct/not-correct), were assigned a weight of 2.

The weighted Kappa was 0.61, a value that represents a good agreement [28], and is significantly higher compared to a chance value of 0 (p < .05).

In addition to the Kappa, we measured the level of agreement between the diabetes experts regarding the truth value of the correctness of the system comment that was being assessed (whether agreeing that the system is correct or agreeing that the system comment is incorrect). The experts fully agreed on the correctness, partial correctness, or incorrectness of 151 of the 172 evaluated system comments (87.8%), partially agreed on the truth value of an additional 20 of the 172 comments (11.6%) (i.e., the comment was evaluated as partially correct by one expert and as correct or not-correct by the other expert), and did not agree at all on only one of the system comments (0.6%).

Since it was clear that the experts significantly agree on the correctness or incorrectness of the system comments, we now looked at what they actually said about these comments. According to the judgment of diabetes expert 1, 84% of the comments were correct, 11% were partially correct, and 5% were not correct; according to the judgment of diabetes expert 2, 88% of the comments were correct, 7% were partially correct, and 5% were not correct. The next step was to integrate the results of the two diabetes experts.

Table 4 displays the correctness when integrating the result of both diabetes experts. To integrate the evaluation of the two experts, the possible combinations of the correctness results were organized into six combination groups. The groups were ordered from top to bottom, from the most correct combination to the most incorrect combination. For each combination the number of comments is presented together its proportion to the total number of comments.

Table 4. Correctness of the system comments according to both diabetes experts.

| Comments | % | Cumulative % | |

|---|---|---|---|

| Comments judged as correct by both experts | 139 | 81% | 81% |

| Comments judged as correct by one expert and as partially correct by the other | 17 | 10% | 91% |

| Comments judged as partially correct by both experts | 6 | 3% | 94% |

| Comments judged as not correct by one expert and as correct by the other | 1 | 1% | 95% |

| Comments judged as not correct by one expert and as partially correct by the other | 3 | 2% | 97% |

| Comments judged as not correct by both experts | 6 | 3% | 100% |

| All | 172 | 100% |

To present the complete picture, Table 4 shows the full results for all levels of agreement of the experts with the comments made by the DiscovErr system. The cumulative percentage represents the proportion between the number of comments that are at least as correct as the current combination to the total number of comments; thus, 81% of the evaluated comments were judged as correct by both experts, while 91% of the evaluated comments were judged as correct by both experts or judged as correct by one expert and partially correct by the other. Only 3% of the evaluated comments were judged as not correct by both experts.

Thus, 91% of the comments given by the system were fully supported by at least one of the experts, while the other expert did not disagree with the system (i.e., partially or completely agreed with its comments). We consider that 91% portion as representing, for practical purposes, the level of correctness of the system comments.

4.3. Importance of the system comments

Regarding the importance of the guideline compliance issues that were referred by the comments given by the system; 153 comments (89%) were judged as referring to important issues by both experts, 14 comments (8%) were judged as referring to important issues by only one of the experts, and 5 comments (3%) were judged as less important by both experts.

We measured the inter-expert agreement regarding the importance assessment, using the standard (0/1 weights) version of Cohen’s Kappa coefficient, and the Kappa coefficient was 0.37. This value, although significantly higher than a chance value of 0 (p < .05), technically represents only a fair agreement [28], but that is probably an artifact, due to the highly skewed distribution of importance values.

Please note that the experts agreed on the importance (or less importance) of 92% of the comments (158 from a total of 172 comments), a number that reflects a high level of agreement, in contrast to the relatively low Kappa. In order to estimate the number of experts required for achieving a higher Kappa of 0.7, we used the Spearman-Brown prediction formula, as suggested by [29], and found that it will require to multiply the number of experts by 3.97, i.e., include eight experts.

The overall voting score for importance of the issues referred to by all of the comments was 93%, which is the portion of the number of judgments of a comment as important (320 votes) out of the number of all importance judgments (344 votes, i.e., 172 instances, commented by both of the diabetes experts).

4.4. A comparison of the correctness and completeness of the experts and the system

As an additional aspect in the correctness and completeness analysis, we were interested in comparing the results of the different experts, and to compare their results to those of the system (See issue 1 in the Research questions sub-section). Although the experiment did not include a step in which the experts directly evaluated the comments of each other, we could use their evaluations from the two experimental steps to indirectly calculate their level of completeness and correctness. For this, we have defined two additional objective measures to evaluate the quality of the experts’ evaluations: Indirect Correctness and Indirect Completeness.

4.4.1. Indirect correctness of the experts

The Indirect Correctness of an expert was calculated by using the comments that were mentioned by the expert in the first step of manual evaluation of the treatment’s compliance, in which each expert added their comments regarding the compliance to the guideline as manifested in the medical records of each of the 10 patients. The indirect correctness is measured by the portion of the expert’s comments that were mentioned by at least one additional expert.

It is important to note that the level of completeness of an expert has an effect on the results of the indirect correctness of the other experts, since an expert who makes only a relatively small number of the relevant comments artificially reduces the correctness of the comments made by the other experts. Due to this fact, we were interested in adding the system as an additional compliance evaluation agent. In the previous steps of the analysis, the completeness and correctness of the system were found relatively high; therefore, we assumed it is reasonable to use the comments of the system as part of the evaluations of the indirect correctness of the experts.