Abstract

This study summarizes current trends and future directions in projection mapping technologies. Projection mapping seamlessly merges the virtual and real worlds by projecting imagery onto physical surfaces, creating an augmented reality environment. Beyond its traditional applications in advertising, art, and entertainment, projection mapping has been embraced in various fields, including medical surgery, product design, and telecommunications. This study classifies recent techniques for accurately reproducing desired appearances on physical surfaces through projected imagery into four groups according to the technical challenge they address: geometric registration, radiometric compensation, defocus compensation, and shadow removal. It then introduces unconventional projectors developed to resolve specific technical issues and discusses two approaches for overcoming inherent limitations of projector hardware, such as the inability to display images floating above physical surfaces. Finally, it concludes with possible future directions for projection mapping technologies.

Keywords: projection mapping, augmented reality, projector-camera systems

1. Introduction

Projection mapping (PM) overlays computer-generated imagery onto physical surfaces using projectors, creating an augmented reality (AR) environment where the virtual and real worlds seamlessly merge. These surfaces include not only flat, uniform white screens but also general non-planar, textured surfaces in our surroundings. PM-based AR can present additional information, such as annotations, directly on the target physical surface.1) Furthermore, it can visually alter the material of a physical surface, making a plaster statue appear metallic, transparent, or furry.2) PM-based AR, often referred to as spatial AR,3) offers several advantages over other AR display technologies, such as video and optical see-through displays. For instance, PM does not require users to wear or hold displays, such as head-mounted displays or smartphones, and thus does not restrict the user’s field of view (FOV). Furthermore, it enables multiple users to share in-situ AR experiences simultaneously.

Thanks to these advantages, PM has been explored in various application fields beyond typical ones such as advertising, art, and entertainment.4–6) For example, PM is used to navigate users to target locations by superimposing arrows onto the physical environment.7) Similarly, PM supports object search in physical space by highlighting the searched object.8–12) The highlighting technique is also beneficial in medical surgery, where projected imagery visualizes an invisible emission signal indicating the resection area in a human organ.13) PM can also support artwork creation, with projected patterns indicating where to paint on a canvas or where to dig in clay.14–16) For museum guides, rich graphical information, such as a navigator’s avatar, is projected onto the artwork or its surrounding surfaces.17,18) Projected avatars of distant people are also used for telecommunication.19–21) Similarly, projecting a silhouette of the human body extends the user’s reach.22–24) PM on a human face supports makeup.25,26) Apparent material transformation is useful in product design.27–30)

More conceptually, PM can make any real-world surface, such as a table, a wall, or even our skin, visually programmable. When combined with proper sensing technologies, these surfaces become responsive to user actions, and artificial intelligence (AI) technologies further enhance their interactive capabilities. This enables a paradigm shift in human-computer interaction from inorganic, tangible-based input and output schemas, which rely on conventional mice, keyboards, touch panels, and 2D monitors, to organic ones. In this paradigm, real-world objects, including our bodies, are covered by so-called smart skins through which we interact intimately with AI. More specifically, we might perceive AI as symbiotically embedded in our body or as existing anywhere around us, which could fundamentally change our perceptual and cognitive model of AI. Such an ultimate application would be realized when PM becomes ubiquitous and typical room lights are replaced by projectors.

Technically speaking, these applications work properly only when desired appearances can be accurately replicated on real-world surfaces through projected imagery. However, this is not straightforward, as projection targets are unconstrained, arbitrary surfaces (e.g., non-planar and textured) that are often unsuitable for projection. Without careful consideration, the projected image is severely degraded.

First, when a target surface is non-planar, the projected image is distorted. Thus, geometric registration of a projector to the surface is required so that a PM system can determine which projector pixel illuminates which surface point. Second, even when pixel correspondence is established, reproducing the desired colors on the surface, especially a textured one, is rarely straightforward. Thus, radiometric compensation is necessary to correct color distortions. Third, when the surface is non-planar, parts of the projected result are out of focus and consequently appear blurred. Defocus blur must be compensated; otherwise, it significantly attenuates the high spatial frequency components (i.e., details) of a projected image. Finally, shadows, which occur when a user occludes projected light, significantly reduce the sense of immersion in the AR experience. Therefore, shadow removal is also an important technical issue in PM.

Over the past 25 years, researchers have worked to address these technical challenges. They mathematically model the image degradation processes and solve the corresponding inverse problems to generate compensation images that reproduce desired appearances on target surfaces through projection. However, owing to technical limitations of projector hardware, such as a limited dynamic range (displayable luminance range) and a shallow depth-of-field, compensation images are not always displayable. Researchers have therefore developed ways to go beyond the capabilities of the original projector hardware; recent trends include combining near-eye optics with PM and applying perceptual tricks.

This review briefly introduces technical solutions for each challenge and discusses future research directions in PM technologies. Comprehensive surveys on this topic were previously published in 2008,31) and 2018.32) Therefore, this review focuses on recent works, particularly those not discussed in these surveys. In addition, it concentrates on the technological aspects of PM and does not provide an overview of trends in recent PM applications.

2. Geometric registration

To display a desired appearance on a surface, a PM system needs to determine which projector pixel illuminates which surface point. Conventional keystone correction addresses this issue only when the surface is flat. However, non-planar surfaces are frequently used in PM, making it necessary to establish proper geometric registration between a projector and the target surface.

2.1. Projector calibration.

The geometric relationship between the two-dimensional (2D) coordinates of a projector pixel $(x, y)$ and the three-dimensional (3D) coordinates of the corresponding surface point $(X, Y, Z)$ is described using a pinhole camera model as $s\,[x, y, 1]^\top = P M [X, Y, Z, 1]^\top$, where $P$ and $M$ are $3 \times 4$ and $4 \times 4$ matrices, respectively, and $s$ is the scale factor of the homogeneous coordinates. $P$ contains the projector’s intrinsic parameters, such as its focal length, and $M$ contains the extrinsic parameters that determine the pose of the projector relative to the target surface.
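The pinhole relation can be sketched in a few lines of Python. This is a minimal illustration, not a calibration routine: the intrinsic matrix (focal length 1000 px, principal point (640, 360)) and the pose (surface frame 2 m in front of the projector) are hypothetical values, and $P$ is written in the common $[K \mid 0]$ form.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def project_point(P: Matrix, M: Matrix, X: Sequence[float]) -> Tuple[float, float]:
    """Map a 3D surface point (X, Y, Z) to projector pixel coordinates (x, y).

    P is the 3x4 intrinsic matrix and M the 4x4 extrinsic (pose) matrix. The
    product is homogeneous, so the result is divided by its third component s.
    """
    Xh = [[X[0]], [X[1]], [X[2]], [1.0]]  # homogeneous column vector
    u = matmul(matmul(P, M), Xh)
    s = u[2][0]
    return u[0][0] / s, u[1][0] / s


# Hypothetical intrinsics: focal length 1000 px, principal point (640, 360).
P = [[1000.0, 0.0, 640.0, 0.0],
     [0.0, 1000.0, 360.0, 0.0],
     [0.0, 0.0, 1.0, 0.0]]
# Hypothetical pose: the surface frame lies 2 m along the projector's optical axis.
M = [[1.0, 0.0, 0.0, 0.0],
     [0.0, 1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, 2.0],
     [0.0, 0.0, 0.0, 1.0]]

x, y = project_point(P, M, (0.1, -0.05, 0.0))
```

Here the surface point (0.1, -0.05, 0) maps to projector pixel (690, 335); calibration is the inverse task of estimating $P$ and $M$ from many such correspondences.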

Efficient and accurate calibration of these parameters has been well established for static PM setups based on a camera calibration framework.33) The standard method uses a camera to capture projected calibration patterns, from which the parameters are estimated. Recent studies have also tailored projector-camera (ProCam) calibration to unique setups. For example, Xie et al. introduced a user-friendly calibration technique in which a user captures the patterns with a handheld mobile phone camera.34) Sugimoto et al. proposed an attachment-type system for calibrating the intrinsic parameters of a projector in a limited space.35) Another group aimed to achieve precise calibration by incorporating a LiDAR (light detection and ranging) sensor into a ProCam system.36) The calibration of multiple projectors using various camera setups, including multiple cameras and a depth camera, is also an active area of research.37–39)
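The text does not specify which calibration patterns are projected. Binary-reflected Gray-code stripes are one widely used choice, sketched below under simplifying assumptions: the camera images have already been thresholded into bits, only projector columns are encoded (rows work the same way), and the function names are hypothetical. Each camera pixel that decodes a column index yields one projector-camera correspondence for the calibration.

```python
from typing import List


def gray_encode(n: int) -> int:
    """Binary-reflected Gray code of n (adjacent columns differ in one bit)."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Invert gray_encode by XOR-ing all right shifts of g."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def column_patterns(width: int) -> List[List[int]]:
    """One stripe pattern per bit plane, most significant bit first (1 = white)."""
    bits = max(1, (width - 1).bit_length())
    return [[(gray_encode(x) >> b) & 1 for x in range(width)]
            for b in reversed(range(bits))]


def decode_column(observed_bits: List[int]) -> int:
    """Projector column seen by a camera pixel, from its thresholded bits (MSB first)."""
    g = 0
    for bit in observed_bits:
        g = (g << 1) | bit
    return gray_decode(g)


patterns = column_patterns(1024)                # 10 patterns for a hypothetical 1024-column projector
seen = [pattern[300] for pattern in patterns]   # bits observed by a camera pixel that sees column 300
```

Gray codes are often preferred over plain binary because a thresholding error at a stripe boundary shifts the decoded column by at most one.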

2.2. Dynamic projection mapping.

PM research has shifted dramatically from static PM to dynamic PM (DPM) in recent years. In DPM, the projected image must remain aligned with the surface of a moving object. Because a projector’s intrinsic parameters remain constant as long as the lens settings are unchanged, they can be pre-calibrated. Therefore, the primary challenge in DPM is the rapid estimation of the extrinsic parameters, that is, fast tracking of the moving surface. Researchers have tackled this challenge by capturing distinctive visual markers attached to the surface. The 3D positions of these markers on the surface are predetermined, so the extrinsic parameters can be computed from the correspondences between these 3D positions and the markers’ 2D positions in the captured images.
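For the special case of a planar marker, the 3D–2D relationship reduces to a 3 × 3 homography that can be estimated from four corner correspondences. The sketch below uses the direct linear transform with $h_{33}$ fixed to 1 (an assumption that fails only in degenerate configurations); the marker size and the "true" homography used to synthesize observations are hypothetical. Recovering full 6-DoF extrinsics additionally requires the intrinsic parameters, for example via a perspective-n-point solver, which is beyond this sketch.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    aug = [list(row) + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def homography_from_markers(plane_pts: Sequence[Point], image_pts: Sequence[Point]) -> List[List[float]]:
    """3x3 homography mapping marker-plane coordinates to image pixels, with h33 fixed to 1."""
    A: List[List[float]] = []
    b: List[float] = []
    for (X, Y), (u, v) in zip(plane_pts, image_pts):
        A.append([X, Y, 1.0, 0.0, 0.0, 0.0, -u * X, -u * Y])
        b.append(u)
        A.append([0.0, 0.0, 0.0, X, Y, 1.0, -v * X, -v * Y])
        b.append(v)
    h = solve_linear(A, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_h(H: List[List[float]], p: Point) -> Point:
    """Map a plane point through H, including the perspective division."""
    X, Y = p
    w = H[2][0] * X + H[2][1] * Y + H[2][2]
    return ((H[0][0] * X + H[0][1] * Y + H[0][2]) / w,
            (H[1][0] * X + H[1][1] * Y + H[1][2]) / w)


# Hypothetical 10 cm square marker; its corners are synthesized through a known homography.
corners = [(0.0, 0.0), (0.1, 0.0), (0.1, 0.1), (0.0, 0.1)]
H_true = [[800.0, 20.0, 300.0], [-15.0, 790.0, 200.0], [0.1, 0.05, 1.0]]
observed = [apply_h(H_true, c) for c in corners]
H = homography_from_markers(corners, observed)
```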

Interestingly, multiple research groups have aligned projected images onto deformable surfaces such as cloth by tracking dot-array markers captured by an RGB camera.40–43) Even when fast marker tracking is available, typical projectors inevitably introduce a noticeable delay between the motion of the target surface and the projected image in DPM. Maeda and Koike addressed this problem by predicting the object pose using deep neural networks (DNNs).44) Another issue in marker-based DPM is marker visibility: visible markers under projection significantly reduce a user’s immersion in the DPM experience. Notably, this is also a critical concern in low-latency DPM (see Sec. 2.3). Researchers have reduced marker visibility by using imperceptible materials (e.g., infrared (IR) ink detectable only with an IR camera)45) or IR LEDs46) as markers (Fig. 1), and have reduced it further by projecting complementary colors onto the marker area.47) Edible markers have been developed for projecting images onto food; their visibility is also reduced by using transparent materials48) or by embedding the markers in the internal structures of the food.49)
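The benefit of pose prediction can be illustrated with a much simpler predictor than a DNN: constant-velocity extrapolation of a tracked marker position over the known system latency. The sketch below is an assumption-laden stand-in (translation only, no rotation, a hypothetical 50 ms latency), not the method of Maeda and Koike.

```python
from typing import Sequence, Tuple


def predict_position(t0: float, p0: Sequence[float], t1: float, p1: Sequence[float],
                     latency: float) -> Tuple[float, ...]:
    """Extrapolate a tracked 3D position to the time the projected frame appears.

    Assumes constant velocity between the last two tracking samples (t0 < t1),
    both in seconds, and a known motion-to-projection latency in seconds.
    """
    dt = t1 - t0
    return tuple(b + (b - a) / dt * latency for a, b in zip(p0, p1))


# Hypothetical samples 10 ms apart: the marker moves 5 mm along x (0.5 m/s).
predicted = predict_position(0.0, (0.0, 0.0, 1.0), 0.01, (0.005, 0.0, 1.0), latency=0.05)
```

Rendering for the predicted position (x of about 0.03 m) instead of the last measured x = 0.005 m keeps the image aligned only as long as the motion stays roughly uniform, which is why learned predictors are attractive for irregular motion.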

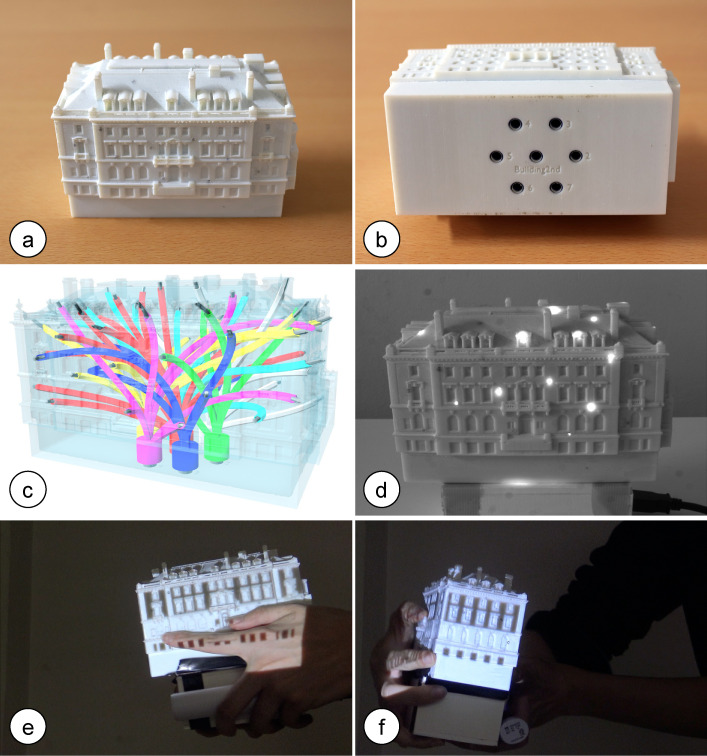

Figure 1.

(Color online) Invisible markers for the geometric registration in DPM.46) (a) Projection target. (b) Multiple holes on the bottom of the projection target, into which IR LEDs are inserted. (c) Internal structure of the projection target, created using a multi-material 3D printer with embedded optical fibers. The IR light from the LEDs is routed to the surface by the fibers. (d) Captured IR image of the target surface. (e, f) DPM results. (IEEE Trans. Vis. Comput. Graph. 2020, 26, 2030–2040)

Marker-less tracking has also been explored. For example, the pose of a rigid target object was robustly tracked from silhouette information captured by multiple cameras, even in the presence of occlusions caused by the user’s hands.50) However, the marker-less approach is generally more error-prone. When multiple projectors are used in DPM, tracking errors can cause noticeable misalignments between the images from different projectors. To address this challenge, Kurth et al. proposed a scalable online solution for their depth camera-based marker-less DPM. Their approach optimizes overlapping projection images by reducing the pixel values of all projectors except the one providing the finest and brightest pixels, particularly in areas with discontinuities in the depth of the surface point from the projector and in the color of the projected image.51)

2.3. Low-latency dynamic projection mapping.

Typical projectors with a 60 Hz refresh rate are ill-suited for DPM because the human visual system detects misalignment when the delay from motion to projection exceeds 6–7 ms.52) A promising game-changer is a recently developed high-speed projector capable of full-color video projection at almost 1,000 frames per second (fps).53) Alongside the high-speed projector, researchers have used high-speed cameras running at nearly 1,000 fps to track the 3D pose of a target surface. Marker-based tracking techniques have proven effective for both rigid54–56) and non-rigid surfaces.57) Researchers have also sought to enhance the quality of projected images while meeting low-latency demands. Nomoto et al. introduced a distributed cooperative approach in multi-projection DPM to ensure that projected images cover the entire surfaces of target objects.58) The same research group enhanced the realism of the projected results using a ray tracing technique.59)
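The arithmetic behind this threshold is simple: a single 60 Hz frame lasts 1/60 s, about 16.7 ms, already more than twice the 6–7 ms budget. The sketch below adds up a deliberately simplified worst-case motion-to-projection latency (one camera frame, a processing time, and one projector frame); the processing times are hypothetical, and real pipelines have further delays.

```python
def motion_to_projection_ms(camera_fps: float, projector_fps: float, processing_ms: float) -> float:
    """Simplified worst-case latency: capture one camera frame, process, display one projector frame."""
    return 1000.0 / camera_fps + processing_ms + 1000.0 / projector_fps


BUDGET_MS = 6.0  # lower end of the 6-7 ms detection threshold cited in the text

conventional = motion_to_projection_ms(60, 60, processing_ms=5.0)      # about 38.3 ms
high_speed = motion_to_projection_ms(1000, 1000, processing_ms=2.0)    # 4.0 ms
print(conventional > BUDGET_MS, high_speed <= BUDGET_MS)
```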

Marker-less tracking is key to making DPM more widely applicable. The most successful field for marker-less DPM is makeup, mainly because robust and fast face tracking technologies are already established in computer vision research.60) However, achieving sufficiently fast marker-less tracking in other application fields remains a technical challenge, as it requires projecting calibration patterns onto surfaces to estimate the extrinsic parameters. Researchers have proposed projecting calibration patterns and their complementary patterns at high speed to meet low-latency demands while keeping the calibration patterns imperceptible to human observers.61) Another team used a high-speed IR projector.62) An alternative approach that avoids pattern projection altogether uses a co-axial high-speed ProCam system in which the projector and camera share their optical axes.63)
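The complementary-pattern idea can be illustrated in one dimension. In the sketch below (the ±delta modulation and all function names are illustrative assumptions, not the cited implementation), each displayed frame is split into two sub-frames, image + pattern and image − pattern. An observer integrating over both sees only the image, whereas a camera synchronized to the sub-frames recovers the pattern from their difference.

```python
from typing import List, Sequence, Tuple


def embed_pattern(image: Sequence[float], pattern: Sequence[int],
                  delta: float) -> Tuple[List[float], List[float]]:
    """Split one frame into a positive and a complementary sub-frame.

    image: desired intensities, assumed to lie in [delta, 1 - delta] so both
    sub-frames stay displayable. pattern: binary calibration pattern (0 or 1).
    """
    signed = [delta if bit else -delta for bit in pattern]
    pos = [i + s for i, s in zip(image, signed)]
    neg = [i - s for i, s in zip(image, signed)]
    return pos, neg


def perceived(pos: Sequence[float], neg: Sequence[float]) -> List[float]:
    """Time average seen by a human observer when the sub-frames alternate at high speed."""
    return [(a + b) / 2 for a, b in zip(pos, neg)]


def recover_pattern(pos: Sequence[float], neg: Sequence[float]) -> List[int]:
    """A camera synchronized to the sub-frames recovers the pattern from their difference."""
    return [1 if a - b > 0 else 0 for a, b in zip(pos, neg)]


image = [0.5, 0.3, 0.7, 0.4]   # hypothetical desired intensities
pattern = [1, 0, 0, 1]          # hypothetical binary calibration pattern
pos, neg = embed_pattern(image, pattern, delta=0.1)
```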

Low-latency DPM can also be achieved without high-speed projectors. A promising alternative combines a typical projector with a dual-axis galvanometer mirror that rapidly redirects the projector’s illumination. Although this approach cannot quickly adjust the projected image to local pose changes of the target surface, it allows the projected image to follow the target smoothly without noticeable latency, even when the target moves over a large area. For instance, researchers have demonstrated DPM on a screen mounted on a flying drone.64) A downsized version of the galvanometer-based system can even be worn and used for PM on a moving hand.65) Previous studies have shown that the drawback mentioned above can be overcome by replacing the typical projector with a high-speed projector.54,55)
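A useful fact when steering projection with a mirror is the law of reflection: rotating a mirror by an angle θ deflects the reflected beam by 2θ. The single-axis sketch below computes the mechanical mirror angle needed to aim the projection at a target displaced by a lateral offset at a given distance; it is a hypothetical simplification that ignores the separation between the two mirrors of a real dual-axis galvanometer and treats each axis independently.

```python
import math


def mirror_angle(offset_m: float, distance_m: float) -> float:
    """Mechanical rotation (radians) of one galvanometer mirror that steers the beam
    from its neutral direction to a target `offset_m` to the side at `distance_m`."""
    optical_deflection = math.atan2(offset_m, distance_m)
    return optical_deflection / 2.0  # reflection doubles the mirror rotation


# Hypothetical target 1 m to the side at a range of 1 m: 45 degrees optical, 22.5 degrees mechanical.
angle_deg = math.degrees(mirror_angle(1.0, 1.0))
```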

3. Radiometric compensation

Radiometric (or photometric) compensation is another essential technique in PM; it calculates projector pixel values that display a desired color even on a textured surface, making the surface appear as if it were uniformly white. The typical forward model used in radiometric compensation is $\mathbf{c}_i = f_i(\mathbf{p}_j) + \mathbf{e}_i$, where the RGB color vectors $\mathbf{c}_i$, $\mathbf{p}_j$, and $\mathbf{e}_i$ represent, respectively, the observed color at a surface point $i$ as captured by a camera, the input pixel value of a projector pixel $j$ incident on $i$, and the surface appearance under environmental lighting. $f_i$ is the function that transforms the input pixel value into the observed color, accounting for the color distortion caused by the surface reflectance properties and the spectral characteristics of the camera and projector.

To reproduce a desired appearance on the surface, the model is inverted: the pixel value to be projected is computed as $\mathbf{p}_j = f_i^{-1}(\mathbf{c}_i - \mathbf{e}_i)$, where $\mathbf{c}_i$ now denotes the desired color at surface point $i$. Note that, although a recent study66) indicated that converting the captured camera colors into the device-independent XYZ color space during compensation yields slightly more accurate results, the majority of studies have directly used the captured colors.
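For the linear models used in pioneering studies, $f_i$ can be written as a 3 × 3 color mixing matrix $V_i$, so that $\mathbf{c}_i = V_i \mathbf{p}_j + \mathbf{e}_i$ (observed color $\mathbf{c}_i$, projector input $\mathbf{p}_j$, environmental term $\mathbf{e}_i$) and the inverse is $\mathbf{p}_j = V_i^{-1}(\mathbf{c}_i - \mathbf{e}_i)$. The per-pixel sketch below assumes that $V_i$ and $\mathbf{e}_i$ have already been calibrated (the numbers are hypothetical) and clips the result to the displayable range [0, 1]; clipping is needed whenever the desired color lies outside what the projector can produce on that surface.

```python
from typing import List, Sequence

Mat3 = List[List[float]]


def inv3(m: Mat3) -> Mat3:
    """Inverse of a 3x3 matrix via its adjugate."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("color mixing matrix is singular")
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[v / det for v in row] for row in adj]


def compensate(V: Mat3, env: Sequence[float], desired: Sequence[float]) -> List[float]:
    """Projector input p = V^-1 (c - e) for one pixel, clipped to [0, 1]."""
    Vi = inv3(V)
    diff = [c - e for c, e in zip(desired, env)]
    p = [sum(Vi[r][k] * diff[k] for k in range(3)) for r in range(3)]
    return [min(1.0, max(0.0, v)) for v in p]


# Hypothetical calibration for one pixel on a reddish surface.
V = [[0.80, 0.05, 0.02],
     [0.10, 0.50, 0.05],
     [0.02, 0.05, 0.30]]
env = [0.05, 0.04, 0.03]
p = compensate(V, env, desired=[0.40, 0.30, 0.20])
```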

3.1. Compensation techniques based on hand-crafted color transformation models.

Two decades ago, pioneering studies applied linear transformation models as $f_i$.31) However, owing to the nonlinear nature of color processing in projector hardware, these models suffered from limited compensation accuracy. Subsequently, Grundhöfer demonstrated that a nonlinear model outperforms linear ones.67,68) Specifically, a thin-plate spline (TPS) was used to approximate the nonlinear transformation; however, calibrating the model parameters required projecting hundreds of uniformly colored images onto the target surface in advance.

A recent study simplified the model by using a second-order polynomial.69) It continuously updates the model parameters in a real-time projection-and-capture feedback loop and adjusts the projected colors accordingly, enabling it to handle changing lighting conditions. Li et al. approximated the nonlinear transformation using a piecewise linear function and significantly reduced the number of calibration patterns to be projected by embedding multiple colors into a single pattern, assuming that the spectral reflectance of most real-world materials is smooth.70)
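Inverting a piecewise linear response is straightforward once it is monotonic. The sketch below treats a single color channel independently (ignoring cross-channel mixing, a simplification relative to full color models) and inverts a response measured at a few hypothetical calibration levels by linear interpolation; it illustrates the inversion step only, not how the calibration samples are obtained.

```python
from bisect import bisect_left
from typing import Sequence


def invert_response(inputs: Sequence[float], captured: Sequence[float], target: float) -> float:
    """Projector input that yields `target` under a piecewise linear, strictly increasing
    response measured at calibration samples (inputs[k] -> captured[k])."""
    if target <= captured[0]:
        return inputs[0]
    if target >= captured[-1]:
        return inputs[-1]
    k = bisect_left(captured, target)  # first sample with captured[k] >= target
    c0, c1 = captured[k - 1], captured[k]
    p0, p1 = inputs[k - 1], inputs[k]
    return p0 + (target - c0) * (p1 - p0) / (c1 - c0)


levels = [0.0, 0.25, 0.5, 0.75, 1.0]        # hypothetical projector input levels
measured = [0.02, 0.06, 0.20, 0.45, 0.80]   # hypothetical camera readings (strictly increasing)
p = invert_response(levels, measured, target=0.30)
```

Targets outside the measured range are clamped to the extreme inputs, which mirrors the dynamic-range limit of the projector.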

Beyond efforts focused solely on improving compensation accuracy, other research groups have explored various extensions of the radiometric compensation framework. Some researchers have estimated the reflectance properties of target surfaces by decomposing images captured under different color projections.71,72) The estimated reflectance maps were subsequently used to create novel target appearances, such as reduced color saturation. Amano’s group applied distributed multiple ProCam systems to control the appearance of a surface with view-dependent reflectance properties.73–76) As other extensions, Hashimoto and Yoshimura adapted a radiometric compensation technique to a moving fabric, supporting DPM,77) and Pjanic et al. achieved seamless multi-projection displays using a TPS-based color transformation model,78) ensuring smooth transitions in areas where images from different projectors overlap.

3.2. DNN-based end-to-end compensation techniques.

Very recently, Huang et al. found that DNNs can approximate the nonlinear transformation more accurately than hand-crafted models. They first demonstrated that DNNs comprising a UNet-like backbone network and an autoencoder subnet outperformed the classical TPS-based technique79) (Fig. 2). Subsequently, they extended their DNNs to perform geometric registration and radiometric compensation for PM on non-planar surfaces.80) They further improved compensation accuracy by introducing a Siamese architecture into their network.81) Other researchers used a differentiable rendering framework for radiometric compensation.82) Handling high-resolution images typically requires long training times and high memory costs; Wang et al. mitigated this issue by incorporating a sampling scheme into the network and introducing attention blocks.83) In their latest work, Li et al. reduced the network size by using a network solely for the color transformation of the projector.84) Interestingly, they also demonstrated that a precise, hand-crafted physics-based model of the PM process with limited reliance on neural networks outperformed the end-to-end compensation techniques described above.

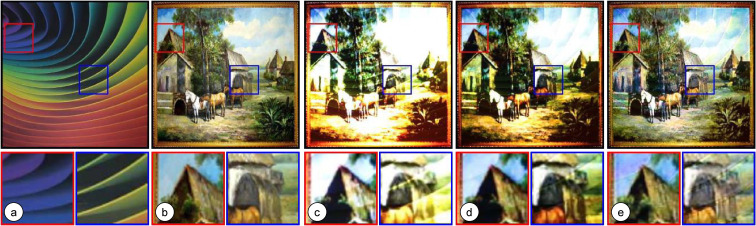

Figure 2.

(Color online) Radiometric compensation using DNNs.79) (a) Projection target under uniformly white projection. (b) Target appearance. (c) PM result of the target appearance without any compensation. (d) PM result using a classical TPS-based technique. (e) PM result using the DNN-based technique. (2019 IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR) 2019, 6803–6812)

DNNs can be applied to various tasks in addition to radiometric compensation. Huang and Ling demonstrated that their networks could reconstruct the shape of a projected scene and simulate the scene’s appearance under a novel image projection.85) The latter is particularly useful for testing or debugging PM without the requirement for actual PM operations. Erel et al. successfully decomposed scene geometry and view-dependent reflectance properties and estimated the projector’s intrinsic and extrinsic parameters by training neural representations of the scene from multi-view captures under PM with different color patterns.86) They showcased that their DNNs can handle geometric registration and radiometric compensation for novel viewpoints.

4. Defocus compensation

Because projectors are designed to emit maximum brightness through their lenses, they have a large aperture, resulting in a shallow depth-of-field (DOF). The typical forward model of defocus blur is $I' = K * I$, where $I$, $I'$, and $K$ represent the projected image without defocus, the defocused result, and a spatially varying 2D defocus kernel, respectively, and $*$ denotes 2D convolution. The projected result can be deblurred by inverting this forward model. However, standard deconvolution algorithms such as the Wiener filter are unsuitable because the dynamic range of a projector is finite: the maximum luminance is limited, and negative light cannot be physically displayed, whereas such algorithms typically produce pixel values outside the displayable range.

A classical study solved this problem using an iterative, constrained steepest-descent algorithm.87) A recent work introduced a non-iterative technique that simply enhances pixel intensities around edge areas, whose details are lost to defocus blur, reducing computational time.88) These techniques require projecting a dot pattern to obtain spatially varying blur kernels every time either the projector or the surface moves. Kageyama et al. recently addressed this issue using DNNs89,90) (Fig. 3). Specifically, their DNNs estimate the blur kernels from the PM result of a natural image and generate a projection image that compensates for defocus blur.
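The constrained steepest-descent idea can be sketched in one dimension. The example below is a simplification, not the cited algorithm: the kernel is a single hypothetical symmetric blur (whereas $K$ in the forward model varies spatially), and each gradient step on $\tfrac{1}{2}\|K * I - T\|^2$ for a target $T$ is followed by clipping $I$ to the displayable range [0, 1], which enforces the dynamic-range constraint that rules out plain Wiener filtering. The step size 1.0 is safe here because this kernel’s weights are nonnegative and sum to 1.

```python
from typing import List, Sequence


def blur(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """'Same'-size 1D filtering with zero padding; for the symmetric, odd-length
    kernels assumed here this equals convolution."""
    r = len(kernel) // 2
    n = len(signal)
    return [sum(kernel[k] * signal[i + k - r]
                for k in range(len(kernel)) if 0 <= i + k - r < n)
            for i in range(n)]


def precompensate(target: Sequence[float], kernel: Sequence[float],
                  steps: int = 500, step_size: float = 1.0) -> List[float]:
    """Projected steepest descent on 0.5 * ||blur(I) - target||^2 subject to 0 <= I <= 1."""
    image = [min(1.0, max(0.0, t)) for t in target]  # start from the target itself
    for _ in range(steps):
        residual = [b - t for b, t in zip(blur(image, kernel), target)]
        grad = blur(residual, kernel)  # the blur operator is self-adjoint for symmetric kernels
        image = [min(1.0, max(0.0, v - step_size * g)) for v, g in zip(image, grad)]
    return image


def error(image: Sequence[float], target: Sequence[float], kernel: Sequence[float]) -> float:
    """Squared error between the defocused projection and the target."""
    return sum((b - t) ** 2 for b, t in zip(blur(image, kernel), target))


kernel = [0.25, 0.5, 0.25]          # hypothetical defocus kernel
target = [0.2] * 10 + [0.8] * 10    # a step edge the projection should reproduce
compensated = precompensate(target, kernel)
```

Near the bright end of the step, the ideal compensation would exceed 1 and is clipped, which is exactly where software-only compensation runs out of headroom.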

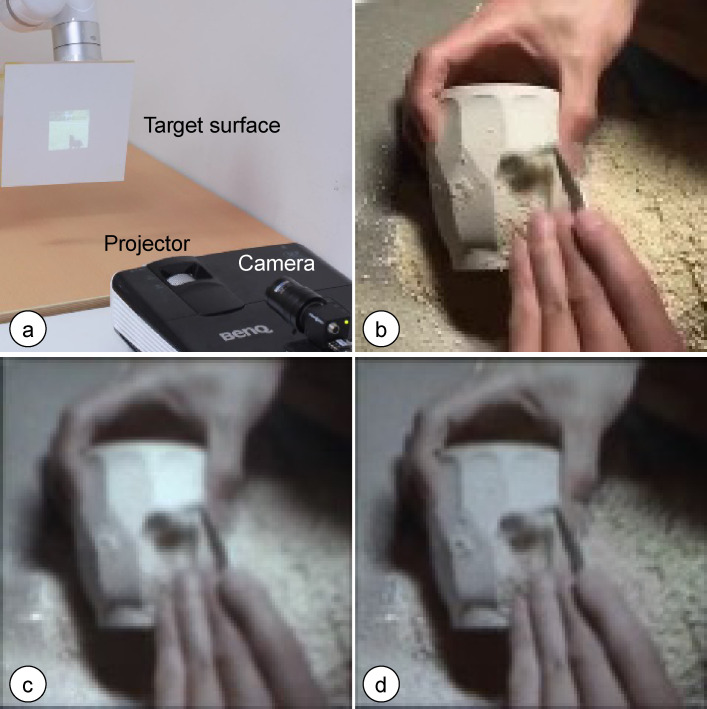

Figure 3.

(Color online) Software-based defocus compensation using DNNs.90) (a) Experimental setup: a robotic arm repeatedly moves the target surface along the same path for comparison. (b) Target appearance. (c) PM result without compensation. (d) Compensated PM result. (IEEE Trans. Vis. Comput. Graph. 2022, 28, 2223–2233)

The compensation capacity of the software-based solutions mentioned above is restricted by the limited dynamic range of projector hardware. Researchers have developed hardware-based solutions to overcome this limitation. Xu et al. proposed a multifocal projector comprising an electrically focus-tunable lens (ETL) and a synchronized high-speed projector.91) They modulate the focal length of the ETL at more than 60 Hz, making the modulation imperceptible to human observers, and project each image precisely when the focusing distance of the projector matches the target surface. The same setup also achieved a varifocal projector in which the ETL’s focal length was continuously adjusted to match the target surface.92) Although a large-aperture ETL would be suitable for these systems, the response time of such ETLs is limited. An ETL consists of an optical fluid sealed by an elastic polymer membrane; an actuator ring exerts pressure on the outer zone of the container, changing the curvature of the lens. The response time is limited by the rippling of the optical fluid after actuation. Researchers demonstrated that input signals computed using sparse optimization can shorten the response time.93)
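The timing in a multifocal projector can be illustrated with a simple model. The sketch below is hypothetical in every parameter: it assumes the ETL is driven sinusoidally so that the projector’s focusing power is $D(t) = D_0 + A \sin(2\pi f t)$ diopters and the in-focus distance is $1/D(t)$ meters, then finds the two instants per sweep period at which a surface at a given distance is in focus. A synchronized high-speed projector would display the image for that surface region only at those instants.

```python
import math
from typing import Tuple


def focus_times(distance_m: float, f_hz: float, mid_diopter: float,
                amp_diopter: float) -> Tuple[float, float]:
    """Two instants (seconds within one sweep period) at which the swept focus reaches distance_m.

    Hypothetical model: focusing power D(t) = mid + amp * sin(2*pi*f*t) diopters,
    in-focus distance 1 / D(t) meters.
    """
    s = (1.0 / distance_m - mid_diopter) / amp_diopter
    if abs(s) > 1.0:
        raise ValueError("distance outside the sweep range")
    period = 1.0 / f_hz
    phase = math.asin(s)
    t1 = (phase / (2 * math.pi * f_hz)) % period
    t2 = ((math.pi - phase) / (2 * math.pi * f_hz)) % period
    return t1, t2


# Hypothetical sweep: 120 Hz around 1.0 D with 0.5 D amplitude (in focus from about 0.67 m to 2 m).
t1, t2 = focus_times(0.8, f_hz=120.0, mid_diopter=1.0, amp_diopter=0.5)
```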

Other hardware-based solutions control the wavefront of the projected light. Li et al. proposed optimizing a diffractive optical element to preserve the high spatial frequency components of the projected image over various distances, thereby extending the DOF of the projector.94) Other researchers proposed spatially adaptive focal projection, which they termed “focal surface projection,” using a phase-only spatial light modulator. This approach enables focusing on all parts of a non-planar target surface.95)

5. Shadow removal

Cast shadows significantly degrade the sense of immersion in PM. Previous studies removed shadows using synthetic aperture approaches: multiple projectors are spatially distributed so that users cannot occlude a projection target from all projectors simultaneously. Once cameras detect either an occluder or its shadow, the system compensates for the shadow by illuminating that area from an unoccluded projector.96) Whereas early systems computed the projection images for all projectors on a single central server, research since around 2015 has shifted toward cooperative distributed algorithms.97) Uesaka and Amano proposed a technique in which multiple co-axial ProCam systems cooperatively remove shadows.98) Nomoto et al. demonstrated shadow removal in DPM with multiple high-speed projectors using a cooperative algorithm.58) However, these synthetic aperture approaches suffer from a delay in the computational compensation process; in other words, a shadow cannot be completely removed while the occluder is moving.
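The core rule of synthetic aperture shadow removal can be sketched per surface point: light that an occluded projector can no longer deliver is supplied by the projectors that still see the point. The function below is a hypothetical simplification that assumes occlusion flags are already known from the cameras, that contributions add linearly, and that every projector delivers the same radiance per unit input at this point; the cited systems compute this cooperatively and account for real geometry.

```python
from typing import List, Sequence


def distribute_intensity(target: float, visible: Sequence[bool]) -> List[float]:
    """Split the input needed at one surface point among projectors that are not occluded.

    Each projector's input is clipped to [0, 1], so very bright targets may become
    unreachable when only a few projectors remain unoccluded.
    """
    n = sum(1 for v in visible if v)
    if n == 0:
        return [0.0] * len(visible)  # shadowed from every projector: nothing can be done
    share = min(1.0, target / n)
    return [share if v else 0.0 for v in visible]


# Three projectors; the user's hand blocks projector 1 at this surface point.
inputs = distribute_intensity(0.6, [True, False, True])
```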

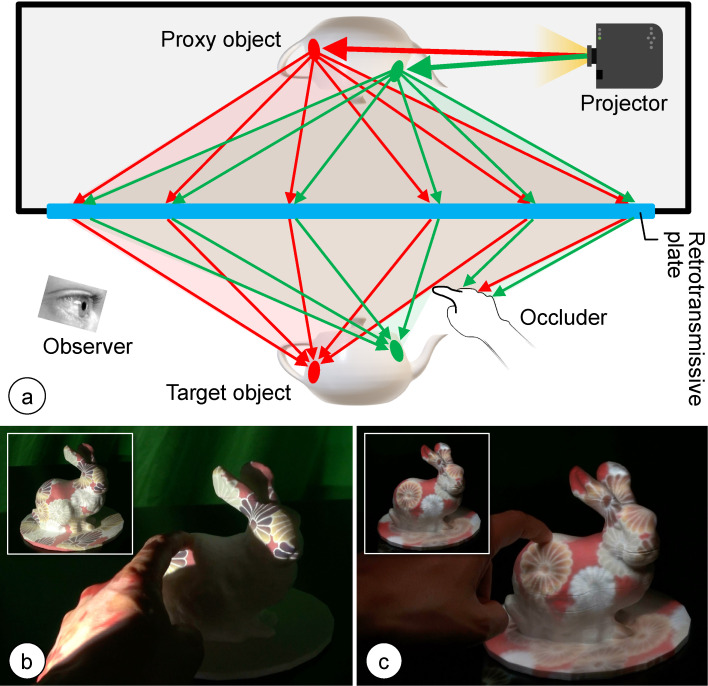

By contrast, optical approaches achieve delay-free shadow removal and have also attracted significant attention. Hiratani et al. used a large-format retrotransmissive plate to project images onto a surface from wide viewing angles99,100) (Fig. 4). The retrotransmissive plate converges the light rays emitted from a point in space onto the point that is plane-symmetrical to it with respect to the plate. They prepared a white diffuse object (proxy object) whose shape is plane-symmetrical to the projection target and placed the target and proxy objects in a plane-symmetrical arrangement with respect to the retrotransmissive plate. When an image is projected onto the proxy object, the reflected light rays pass through the retrotransmissive plate and converge on the target object. Consequently, the appearance of the proxy object is duplicated on the target object’s surface. When the retrotransmissive plate is sufficiently large relative to an occluder, shadowless PM is achieved without the shadow removal computations required by conventional synthetic aperture approaches.

Figure 4.

(Color online) Shadow removal using a large-format retrotransmissive plate.99) (a) Schematic illustrating the principle. (b) Typical PM result with an occluder. (c) PM result using the shadow removal system with the same occluder. (IEEE Trans. Vis. Comput. Graph. 2023, 29, 2280–2290)
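The plane-symmetrical arrangement is easy to compute. Assuming an ideal retrotransmissive plate, a point on the target and the corresponding point on the proxy are mirror images across the plate’s plane, so the proxy geometry can be obtained by reflecting the target geometry. The sketch below (function name and coordinates hypothetical) reflects a point across a plane given by a point on it and its normal.

```python
from typing import Sequence, Tuple


def mirror_point(p: Sequence[float], plane_point: Sequence[float],
                 normal: Sequence[float]) -> Tuple[float, ...]:
    """Reflect p across the plane through plane_point with (not necessarily unit) normal."""
    nn = sum(n * n for n in normal)
    d = sum((pi - qi) * ni for pi, qi, ni in zip(p, plane_point, normal)) / nn
    return tuple(pi - 2.0 * d * ni for pi, ni in zip(p, normal))


# Hypothetical setup: the plate lies in the plane z = 0 and the target is in front of it.
proxy = mirror_point((0.1, 0.2, 0.5), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
```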

This optical solution is restricted to static PM because the proxy and target objects must remain at plane-symmetrical positions. Other researchers have overcome this limitation by moving the proxy object with a robotic arm to match its pose to that of the target object.101) The same research group also proposed using a volumetric display102) or a light field display103) instead of a physical proxy object, generating light rays as if they were emitted from the surface of a proxy object whose pose matches that of the target object.

6. Unconventional projectors

As discussed in the previous sections, unconventional projectors, such as those with ETLs and high-speed projectors, can fundamentally resolve specific technical issues that cannot be addressed with standard projectors. This section introduces three types of unconventional projectors, each of which is currently being explored by multiple research groups.

6.1. Wearable projectors.

Following pioneering work,104) several researchers have explored PM using wearable projectors.105,106) The recent downsizing of projector hardware, coupled with bright light sources such as LEDs and lasers, has driven research in this direction. Wearable projectors are valuable for PM onto nearby surfaces or the user’s body. For example, a tiny projector was used as the display component of a smartwatch, enabling a user to interact with smartwatch content overlaid on their arm.107) Another study combined a wearable projector with a pan-tilt mirror and a high-speed camera so that projected images follow a moving target surface without perceivable latency.65) Head-mounted setups have also been explored; placing the projector close to the user’s eyes enables nearly occlusion-free PM.108)

A current research trend involves combining actuated head-mounted projectors with head-mounted displays. Wang et al. proposed attaching a projector to a virtual reality (VR) headset.109) Their system projects the VR scene that the headset user is watching onto the floor around them, enabling the user to share the VR experience with others. Hartmann et al. suggested using a head-mounted projector with an optical see-through AR headset and demonstrated sharing the augmented image content displayed in the headset with nearby people through projected imagery.110) They also proposed displaying auxiliary information and user interface widgets with the head-mounted projector to support interaction with the content displayed in the headset.

6.2. Omnidirectional projectors.

The FOV of a typical projector is limited, necessitating multiple projectors for large-area PM. An omnidirectional projector using a fisheye lens with a nearly 180-degree FOV is a potential solution to this issue. A research group proposed an omnidirectional projector and demonstrated various PM applications using it.111,112) Geometric registration of an omnidirectional projector is non-trivial because the pinhole camera model is no longer valid. The researchers addressed this problem with a co-axial approach in which a projector and a camera share their optical axes via a beam splitter placed before the fisheye lens. Their co-axial omnidirectional ProCam system can project images without distortion onto physical surfaces to which visual markers are attached.
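Why the pinhole model breaks down is easy to see numerically. A pinhole maps a ray at angle θ from the optical axis to image radius $r = f \tan\theta$, which diverges as θ approaches 90°, whereas many fisheye lenses are approximately described by the equidistant model $r = f\theta$. The sketch below uses the equidistant model as an assumption (real lenses, and the cited system, may follow a different mapping) with a hypothetical focal length and image center.

```python
import math
from typing import Tuple


def equidistant_fisheye(direction: Tuple[float, float, float], f_px: float,
                        cx: float, cy: float) -> Tuple[float, float]:
    """Pixel for a ray direction under the equidistant model r = f * theta.

    theta is the angle from the optical axis (+z); unlike the pinhole model
    r = f * tan(theta), this stays finite as theta approaches 90 degrees.
    """
    x, y, z = direction
    theta = math.atan2(math.hypot(x, y), z)
    phi = math.atan2(y, x)
    r = f_px * theta
    return cx + r * math.cos(phi), cy + r * math.sin(phi)


# Hypothetical panel with focal length 400 px and image center (960, 540).
side = equidistant_fisheye((1.0, 0.0, 0.0), f_px=400.0, cx=960.0, cy=540.0)
```

A ray perpendicular to the optical axis still lands at a finite pixel (about 628 px from the center), which a pinhole model cannot represent.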

Yamamoto et al. implemented an omnidirectional ProCam system using another unique approach.113) They proposed a monocular ProCam system in which the projector and camera share the same objective lens. Using relay optics, they optically transferred the image panels of the camera and projector to the focal point of the objective lens, overlaying the two panels so that each overlaid pixel has both sensing and display capabilities. They realized an omnidirectional ProCam system within this framework by using a fisheye lens as the objective. Furthermore, they demonstrated the high scalability of their approach by implementing a high dynamic range ProCam system using a traditional double modulation framework.114,115)
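The appeal of double modulation follows from multiplying transmittances: when light passes through two modulators in series, the output is the product of their settings, so two panels with a hypothetical 1,000:1 contrast each yield up to about 1,000,000:1. The sketch below splits a relative luminance target evenly between the two panels with a square root, which is one simple choice assumed here for illustration, not necessarily the factorization used in the cited system.

```python
import math
from typing import Tuple


def split_dual_modulation(target: float, min_transmittance: float) -> Tuple[float, float]:
    """Factor a relative luminance target into two serial modulator settings.

    Each modulator transmits a fraction in [min_transmittance, 1]; the output is the
    product of both settings. Targets outside the achievable range are clamped.
    """
    lowest = min_transmittance ** 2
    t = min(1.0, max(lowest, target))
    m = math.sqrt(t)  # even split between the two panels (assumed strategy)
    return m, m


MIN_T = 1e-3                     # hypothetical per-panel contrast of 1,000:1
contrast = 1.0 / MIN_T ** 2      # combined contrast of about 1,000,000:1
m1, m2 = split_dual_modulation(1e-5, MIN_T)
```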

6.3. Visible light communication projectors.

Embedding invisible code independently in each projected pixel enables the control of electronic devices equipped with photosensors within the projector’s FOV, while simultaneously presenting meaningful images to human observers, who remain unaware of the embedded information. In other words, the projector is capable of pixel-level visible light communication. This can be achieved by modulating the projected light intensity at a very high rate, such as 1 MHz, which is far above the critical flicker fusion frequency of the human visual system. Although pioneering work in which fixed information was embedded in grayscale images was published in 2007,116) this topic is still actively explored by multiple research groups.
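One way to keep per-pixel data invisible is to make each pixel’s time-averaged brightness independent of the data. The sketch below uses Manchester coding as an illustrative assumption (the cited systems use their own modulation schemes and must also display varying gray levels, which this ignores): every bit becomes one on slot and one off slot, so the average is always 50%. If slots were switched at the 1 MHz rate mentioned above, each bit would take 2 µs, that is, 500 kbit/s per pixel.

```python
from typing import List, Sequence


def manchester_encode(bits: Sequence[int]) -> List[int]:
    """Map each bit to two on/off slots (1 -> on, off; 0 -> off, on).

    Every bit contributes exactly one 'on' slot, so the pixel's time-averaged
    brightness stays at 50 % regardless of the data.
    """
    out: List[int] = []
    for b in bits:
        out.extend((1, 0) if b else (0, 1))
    return out


def manchester_decode(slots: Sequence[int]) -> List[int]:
    """Recover bits from photosensor readings sampled once per slot."""
    return [1 if slots[i] > slots[i + 1] else 0 for i in range(0, len(slots) - 1, 2)]


data = [1, 0, 1, 1, 0]            # hypothetical command bits for one pixel
slots = manchester_encode(data)   # on/off sequence for that projector pixel
```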

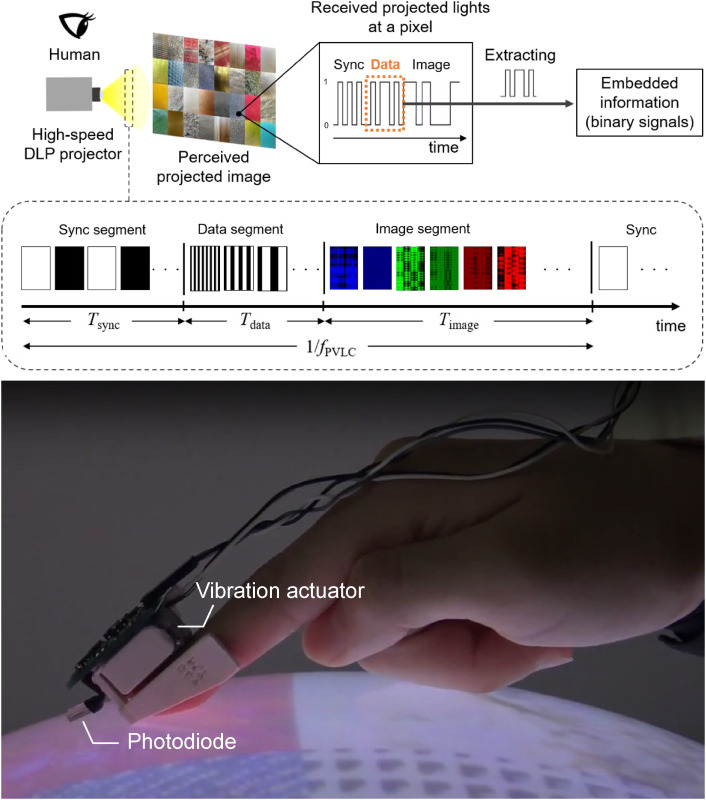

A recent study embedded information in full-color images that can be updated interactively.117) It has been demonstrated that such embedded information, in cooperation with graphical images, can control multiple robots118,119) and wearable haptic displays120) (Fig. 5). Whereas these systems read the embedded information with photosensors, Kumar et al. demonstrated that a high-speed camera can simultaneously read the information embedded in different pixels.121) Researchers have also developed a projector that emits IR as well as RGB light and embeds information in the IR channel.122)

Figure 5.

(Color online) Pixel-level visible light communication.120) (Top) Schematic illustrating the embedding of unique information into each projector pixel while presenting an image to human observers. Note that f_PVLC is higher than the critical flicker fusion frequency. (Bottom) A user wearing a haptic device experiences different vibration patterns based on the touched position in a projected image. (IEEE Trans. Vis. Comput. Graph. 2023, 29, 2005–2019)

7. Overcoming technical limitations

Projector hardware has inherent limitations that cannot be overcome by the projector alone. This section summarizes two approaches to tackling these limitations: one combines near-eye optics with PM, and the other exploits perceptual tricks.

7.1. Combining near-eye optics.

In typical PM, projectors alter the appearance of target surfaces but cannot display images that float above those surfaces. Stereoscopic PM overcomes this limitation, allowing users to perceive 3D objects that appear to float above physical surfaces of arbitrary shape. This effect is achieved by tracking the observer’s viewpoint, rendering perspectively correct images with the appropriate disparity for each eye, and projecting the two images time-sequentially within each frame. The projected images are viewed through active-shutter glasses equipped with liquid crystal shutters, which prevent crosstalk between the two eyes. Researchers have recognized the potential of stereoscopic PM in various fields, including museum guides,18) product design,30) architectural planning,123) and teleconferencing.124)
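The core geometric step of stereoscopic PM can be sketched for the simplest case. Assuming a flat projection surface at z = 0 and a virtual point floating above it, each eye must see light arriving from the direction of that point, so the surface must be lit where the eye-to-point ray hits it. The helper `surface_point_for_eye` and the eye and point coordinates below are hypothetical; mapping the resulting surface points into projector pixels (which requires a calibrated projector model) is omitted.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def surface_point_for_eye(eye: Vec3, virtual_point: Vec3) -> Vec3:
    """Return where the ray eye -> virtual_point hits the surface plane z = 0.

    Lighting this surface point makes the eye perceive light coming from the
    direction of virtual_point. Assumes a flat surface at z = 0 and an eye
    higher than the virtual point (eye z > point z >= 0).
    """
    ex, ey, ez = eye
    px, py, pz = virtual_point
    if ez <= pz:
        raise ValueError("the eye must be higher than the virtual point")
    t = ez / (ez - pz)  # ray parameter at which z reaches 0 (t >= 1)
    return (ex + t * (px - ex), ey + t * (py - ey), 0.0)


# Hypothetical setup: eyes 64 mm apart, 0.6 m above a table, and a virtual
# point floating 0.1 m above the table surface.
point = (0.0, 0.0, 0.1)
left = surface_point_for_eye((-0.032, 0.0, 0.6), point)
right = surface_point_for_eye((0.032, 0.0, 0.6), point)
print(left, right)
```

The two surface points swap sides (crossed disparity, about ±6.4 mm here), which is what makes the point appear in front of the table. In a full system, the left-eye and right-eye images would be shown in alternate shutter phases of each frame.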

A recent study applied the principle of stereoscopic PM to alter the appearance of a mirror surface.125) Rather than projecting images directly onto the mirror, this technique projects images onto diffuse surfaces that the observer sees reflected in the mirror. Stereoscopic PM then makes the projected imagery appear at the depth of the mirror surface rather than at the depth of the reflected diffuse surfaces.
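A planar mirror shows the observer the same view that a virtual observer standing at the mirrored eye position would see directly. One way to reuse ordinary stereoscopic PM rendering in the mirror case, assumed here and not necessarily the formulation of the cited study, is therefore to reflect each tracked eye position across the mirror plane and render for those virtual eyes. `reflect_point` and the coordinates below are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def reflect_point(p: Vec3, plane_point: Vec3, normal: Vec3) -> Vec3:
    """Mirror p across the plane through plane_point with normal `normal`.

    The normal does not need to be unit length.
    """
    n2 = dot(normal, normal)
    if n2 == 0.0:
        raise ValueError("normal must be non-zero")
    offset = (p[0] - plane_point[0], p[1] - plane_point[1], p[2] - plane_point[2])
    d = dot(offset, normal) / n2  # signed distance in units of |normal|
    return (p[0] - 2 * d * normal[0],
            p[1] - 2 * d * normal[1],
            p[2] - 2 * d * normal[2])


# Hypothetical wall mirror in the plane x = 1.0 and an eye at x = 3.0.
virtual_eye = reflect_point((3.0, 0.0, 1.6), (1.0, 0.0, 0.0), (1.0, 0.0, 0.0))
print(virtual_eye)  # (-1.0, 0.0, 1.6)
```

Feeding the two reflected eye positions into the per-eye rendering of ordinary stereoscopic PM would yield images that, seen via the mirror, appear at the depth chosen for the virtual content.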

Typical stereoscopic PM addresses only binocular cues and cannot provide accurate focus cues. This leads to a vergence-accommodation conflict (VAC), which causes significant discomfort, fatigue, and distorted 3D perception. Recent studies have attempted to mitigate VAC. Fender et al. optimized the placement of the displayed 3D objects to minimize the depth difference between the physical projection surface and the displayed objects.126) Kimura et al. proposed multifocal stereoscopic PM to address VAC.127) They attached ETLs to active-shutter glasses and applied fast, periodic focal sweeps to the ETLs, causing the “virtual image” (in the optical sense) of every part of the real scene seen through the ETLs to move back and forth during each sweep period. In each frame, a synchronized high-speed projector displayed the 3D objects at the exact moment when the virtual image of the imagery projected on the real surface was located at the desired distance from the ETLs.
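To see how this timing works, the sketch below treats each ETL as a thin lens at the eye with a sinusoidal power sweep. Both are simplifying assumptions; the actual drive waveform, lens-eye separation, and calibration in Kimura et al.’s system may differ, and `required_power` and `flash_time` are hypothetical names. An object at distance d seen through a lens of power P (diopters) has a virtual image at distance d′ with 1/d′ = 1/d − P, so placing the image at d′ requires P = 1/d − 1/d′. The projector then fires when the sweep passes through that power.

```python
import math


def required_power(surface_dist_m: float, target_dist_m: float) -> float:
    """ETL power (diopters) that moves the virtual image of a surface at
    surface_dist_m to target_dist_m, using the thin-lens relation
    1/d' = 1/d - P with the lens assumed to sit at the eye."""
    return 1.0 / surface_dist_m - 1.0 / target_dist_m


def flash_time(power: float, offset: float, amplitude: float, freq_hz: float) -> float:
    """Time in [0, period) at which the sweep
    P(t) = offset + amplitude * sin(2*pi*freq_hz*t) equals `power`
    while the power is rising."""
    s = (power - offset) / amplitude
    if not -1.0 <= s <= 1.0:
        raise ValueError("requested power lies outside the sweep range")
    period = 1.0 / freq_hz
    # asin returns an angle in [-pi/2, pi/2], where the sine is increasing.
    return (math.asin(s) / (2.0 * math.pi * freq_hz)) % period


# Hypothetical numbers: real surface 1 m away, virtual object meant to
# appear 0.5 m away, ETL swept +/-2 D around 0 D at 60 Hz.
p = required_power(1.0, 0.5)  # -1.0 D, a diverging setting
t = flash_time(p, offset=0.0, amplitude=2.0, freq_hz=60.0)
print(p, t)
```

A negative (diverging) power brings the virtual image closer than the real surface, and a positive one pushes it farther away, so a single sweep can serve several depths by firing the projector at several phases.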

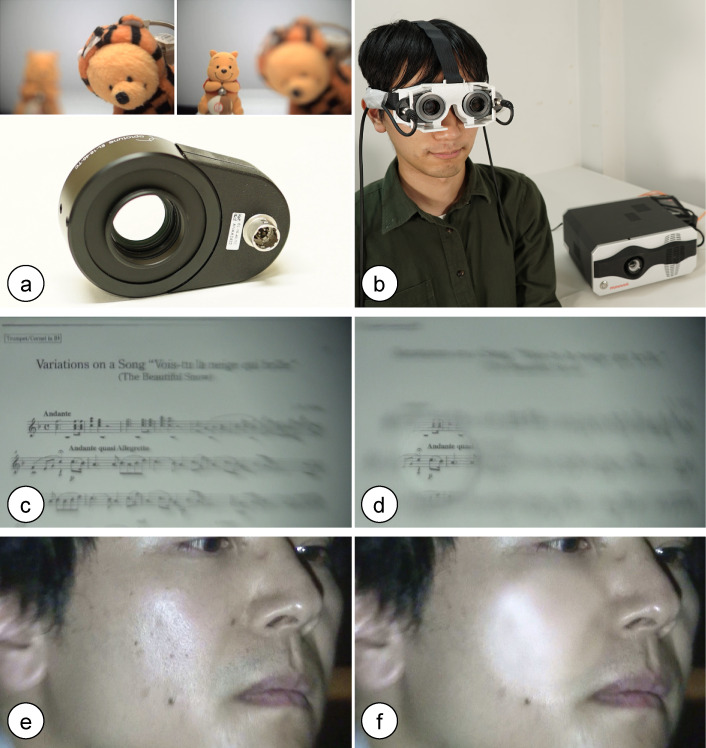

Using ETLs as eyeglasses together with a synchronized high-speed projector creates other novel visual experiences that cannot be achieved with projectors alone. Ueda et al. proposed using a high-speed projector to illuminate a real scene rather than to overlay images onto it. This approach makes real-world objects appear defocused in a spatially non-uniform manner, irrespective of their distance from the user’s eyes128) (Fig. 6). They achieved this by periodically modulating the focal length of the glasses at a rate exceeding 60 Hz. During the phase in which the optical power of the ETLs is too high for the user to focus on the scene, the projector illuminates the part of the scene intended to appear blurred; the part intended to appear in focus is illuminated during a different phase. This spatial defocusing effect can be used for gaze navigation.129) Based on a similar principle, Ueda et al. used two ETLs for each eye to achieve spatial zooming, in which only part of a scene appears magnified.130)
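The phase-dependent illumination described above can be sketched as a per-slot schedule. This is a simplified illustration, not Ueda et al.’s implementation: it assumes that the scene is lit only by the projector, that the ETL power is sampled once per projector time slot, and that a hypothetical threshold `focus_limit` (in diopters) separates powers the viewer can still accommodate from those they cannot. Region names are also hypothetical.

```python
from typing import Dict, List


def illumination_schedule(powers: List[float],
                          regions: Dict[str, bool],
                          focus_limit: float) -> Dict[str, List[bool]]:
    """Decide, for every projector time slot in one ETL sweep, which scene
    regions to illuminate.

    powers       ETL optical power (diopters) during each time slot.
    regions      region name -> True if it should appear sharp, False if blurred.
    focus_limit  hypothetical |power| up to which the viewer can still focus.
    """
    focusable = [abs(p) <= focus_limit for p in powers]
    # Sharp regions are lit only while the viewer can focus through the ETLs;
    # blurred regions are lit only while the ETL power defeats accommodation.
    return {name: [ok if sharp else not ok for ok in focusable]
            for name, sharp in regions.items()}


sweep = [0.0, 2.0, 4.0, 2.0, 0.0, -2.0, -4.0, -2.0]  # hypothetical samples
plan = illumination_schedule(sweep, {"score_bar": True, "rest": False}, focus_limit=1.0)
print(plan)
```

Because every region is lit for only part of each sweep, the perceived brightness of sharp and blurred regions would differ unless the projector intensities are balanced, a detail this sketch leaves out.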

Figure 6.

(Color online) Combining near-eye optics with a high-speed projector enables spatially non-uniformly defocused real-world appearances.128) (a, b) Two ETLs, capable of quick focus modulation, are used as eyeglasses. (c) The appearance of a music score without the eyeglasses, and (d) with the eyeglasses, where a spatially non-uniform blurring effect guides a player at a fixed tempo. (e) The appearance of a human face without the eyeglasses, and (f) with the eyeglasses, where the facial impression becomes younger by reducing wrinkles and minimizing stains. (IEEE Trans. Vis. Comput. Graph. 2020, 26, 2051–2061)

Although PM can visually alter the material of a real surface, it cannot simultaneously reproduce view-dependent effects, such as specular reflections, for multiple observers. Hamasaki et al. addressed this issue by incorporating optical see-through displays into PM.131) They displayed the view-dependent components on an optical see-through display worn by each observer and also demonstrated that the system can extend the dynamic range (i.e., contrast) of the displayed results. Another intriguing research direction at the intersection of PM and optical see-through displays has also emerged. Itoh et al. realized a lightweight optical see-through display consisting only of a screen and thin optics, onto which a pan-tilt telescopic projector installed in the environment projects the displayed images.132) They have continued to improve the system, for example, by developing thinner optics using holographic optical elements (HOEs)133) and by achieving low-latency PM onto the screen using a 2D lateral-effect position sensor.134)
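The division of labor in this hybrid approach can be illustrated with a standard reflection model. The sketch below assumes Blinn-Phong shading (chosen here for illustration; the cited work may use a different model) and hypothetical parameter names. The view-independent diffuse term is the same for every observer and can be projected, whereas the view-dependent specular term differs per observer and would go to each observer’s optical see-through display.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if n == 0.0:
        raise ValueError("zero-length vector")
    return (v[0] / n, v[1] / n, v[2] / n)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def split_shading(normal: Vec3, light: Vec3, view: Vec3,
                  kd: float, ks: float, shininess: float) -> Tuple[float, float]:
    """Return (diffuse, specular) Blinn-Phong terms for one surface point.

    diffuse  depends only on the surface and light: shared, sent to the projector.
    specular depends on the viewing direction: per observer, sent to the
             observer's see-through display.
    """
    n, l, v = normalize(normal), normalize(light), normalize(view)
    diffuse = kd * max(0.0, dot(n, l))
    half = (l[0] + v[0], l[1] + v[1], l[2] + v[2])
    if half == (0.0, 0.0, 0.0):  # light and view exactly opposite
        return diffuse, 0.0
    specular = ks * max(0.0, dot(n, normalize(half))) ** shininess
    return diffuse, specular


surface_n, light_dir = (0.0, 0.0, 1.0), (0.0, 0.0, 1.0)
for viewer in [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0)]:  # two hypothetical observers
    print(split_shading(surface_n, light_dir, viewer, kd=0.6, ks=0.4, shininess=20.0))
```

Both observers receive the same diffuse value, while the specular highlight is strong for the first observer and much weaker for the second, which is why a single projected image cannot serve both.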

7.2. Perceptual tricks.

Considering human perceptual properties is useful in PM. Even if a desired appearance cannot be physically reproduced by projecting imagery onto a physical surface, PM can be considered successful as long as observers perceive the desired appearance. Researchers have therefore explored reproducing physically infeasible appearances in PM in a perceptually equivalent manner. As an extreme example, Sato et al. used a high-speed projector to make the motion trajectory of a real object appear bent, although the object physically moved in a straight line.135)

The performance of radiometric compensation (see Sec. 3) is significantly restricted by the limited color gamut and dynamic range of projectors. Researchers have addressed this issue by leveraging the nonlinear properties of the human visual system. Akiyama et al. developed a unique radiometric compensation technique that exploits the color constancy of the human visual system and demonstrated that their method can perceptually enlarge the displayable color gamut beyond the capability of the projector alone.136) Similarly, Nagata and Amano used the glare illusion to perceptually enhance the glossiness of projected results.137)
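Why the gamut limits compensation can be seen from the simplest per-pixel, per-channel linear model, assumed here purely for illustration: the observed color equals reflectance times projector input plus ambient light, with projector inputs restricted to [0, 1]. Solving for the input and clipping it shows which targets are physically unreachable; these are the cases that perceptual approaches such as those above aim to handle. `compensate` and the sample values are hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def compensate(target: RGB, reflectance: RGB, ambient: RGB) -> Tuple[RGB, bool]:
    """Solve target = reflectance * p + ambient per channel for projector input p.

    Inputs are clipped to the displayable range [0, 1]. The returned flag is
    True when any channel had to be clipped, i.e. when this projector cannot
    physically reproduce the target on this surface.
    """
    p: List[float] = []
    clipped = False
    for t, r, a in zip(target, reflectance, ambient):
        if r <= 0.0:
            raise ValueError("reflectance must be positive")
        raw = (t - a) / r
        value = min(1.0, max(0.0, raw))
        clipped = clipped or value != raw
        p.append(value)
    return (p[0], p[1], p[2]), clipped


# Hypothetical patches: a light gray target on a neutral surface, then on a
# textured surface that reflects little green light.
print(compensate((0.4, 0.4, 0.4), (0.8, 0.8, 0.8), (0.0, 0.0, 0.0)))
print(compensate((0.4, 0.4, 0.4), (0.8, 0.2, 0.8), (0.0, 0.0, 0.0)))
```

In the second case, the green channel would need twice the projector’s maximum output, so the target falls outside the physically displayable gamut for that surface.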

Although projected light cannot physically deform a real surface, Kawabe et al. demonstrated that overlaying monochrome motion patterns onto a static 2D textured object, such as a painting, induces an illusory perception of movement.138) Specifically, observers perceive the static picture as if it were moving along with the projected motion. Recent studies have extended this technique to DPM, modulating the perceived stiffness of fabric139) and producing low-latency illusory deformation of handheld rigid objects with a high-speed projector.140) Fukiage et al. developed a computational framework that optimizes the projected motion pattern to maximize the illusory effect.141) Okutani et al. found that illusory 3D deformation is also possible when the technique is combined with stereoscopic PM.142)
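As we understand the principle behind this illusion, the projector adds only a grayscale pattern equal to the luminance difference between a deformed version of the picture and the original. The picture’s own colors and textures therefore stay in place while the motion signal comes from the projection. The one-dimensional sketch below illustrates that idea under simplifying assumptions: a single luminance row, a uniform horizontal shift per frame, and a hypothetical `offset` that keeps the pattern non-negative because a projector can only add light. The function names are hypothetical, and the sketch does not reproduce the optimized patterns of Fukiage et al.

```python
import math
from typing import List


def sample(row: List[float], x: float) -> float:
    """Linearly interpolate row at position x, clamping at the edges."""
    x = min(max(x, 0.0), len(row) - 1.0)
    i = int(math.floor(x))
    j = min(i + 1, len(row) - 1)
    f = x - i
    return (1 - f) * row[i] + f * row[j]


def deformation_pattern(lum: List[float], shift: float,
                        gain: float = 1.0, offset: float = 0.5) -> List[float]:
    """Grayscale pattern = offset + gain * (shifted luminance - original luminance).

    `lum` is one row of the picture's luminance in [0, 1]; `shift` is a
    hypothetical horizontal displacement in pixels for the current frame.
    Values are clamped to the projector's range [0, 1].
    """
    out = []
    for x, v in enumerate(lum):
        d = sample(lum, x - shift) - v
        out.append(min(1.0, max(0.0, offset + gain * d)))
    return out


print(deformation_pattern([0.0, 0.25, 0.5, 0.75, 1.0], shift=1.0))
# [0.5, 0.25, 0.25, 0.25, 0.25]
```

Animating `shift` back and forth over successive frames (for example, sinusoidally) is what would turn this static difference pattern into the perceived motion of the picture.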

Researchers have experimentally demonstrated that the perceived depth of a physical surface can be manipulated without stereoscopic PM. Kawabe et al. found that adding shadows modulates the perceived depth of a 2D picture.143) Through a series of psychophysical experiments, Schmidt et al. investigated how projected color temperature, luminance contrast, and blur affect the perceived depth of the projection surface.144) They found that projected illusions can influence perceived depth; in particular, increasing the luminance contrast between an object and its surroundings made the object appear closer to the observer.

PM is a visual medium: it primarily provides visual stimuli without directly engaging other sensory modalities. Researchers have sought to overcome this limitation by exploring cross-modal interactions between visual stimuli and other sensory experiences. A recent study demonstrated that, in stereoscopic PM, altering a user’s finger position in response to the deformation of a physically touched surface can change the perceived shape of that surface.145) Another study reported that haptic sensations can be induced by applying various visual effects to a virtual hand projected onto physical surfaces. In that study, the projected hand movement was obtained by magnifying the user’s physical hand movement on a touch panel, and users reported experiencing haptic sensations when the projected hand movement was inconsistent with their actual hand movement.146) Researchers have also recently investigated how manipulating the appearance of food with PM affects its taste. Suzuki et al. projected a dynamic boiling texture onto food and found that it influences perceived taste, such as saltiness.147) Fujimoto demonstrated that modifying the color saturation or the intensity of the highlight region of food enhances its perceived deliciousness.148)
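The magnified virtual hand can be sketched as a control-display gain applied to the touch position. The anchor point, the gain value, and the function name below are hypothetical; the exact mapping used in the cited study may differ.

```python
from typing import Tuple

Vec2 = Tuple[float, float]


def projected_hand(touch: Vec2, anchor: Vec2, gain: float) -> Vec2:
    """Position of the projected virtual hand for a touch-panel position.

    Movement relative to `anchor` (e.g. where the touch began) is scaled by
    `gain`; gain > 1 magnifies the physical hand movement.
    """
    return (anchor[0] + gain * (touch[0] - anchor[0]),
            anchor[1] + gain * (touch[1] - anchor[1]))


# A 2 cm physical movement shown as a 6 cm virtual movement (units: metres).
print(projected_hand((0.12, 0.05), anchor=(0.10, 0.05), gain=3.0))
```

Changing `gain` during a movement makes the seen motion diverge from the felt motion, which is the kind of visuo-motor inconsistency that the study associates with induced haptic sensations.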

8. Future directions

A future direction derived by simple extrapolation of current research trends is a low-latency DPM technique that simultaneously addresses radiometric compensation, deblurring, and shadow removal. This goal is not particularly challenging when the projection targets are rigid objects tracked with markers, as a recent study has already addressed some of the required issues.58) By contrast, achieving this goal for non-rigid surfaces without markers is far from simple. Furthermore, a recent study revealed that even with a 1,000-fps projector and a high-speed camera achieving a motion-to-projection latency of less than 6 ms, the misalignment between a moving target object and the projected image is sometimes noticeable.149) Therefore, achieving latency well below 6 ms is crucial for DPM. Anticipating this need, Nakagawa and Watanabe developed a 5,600-fps projector.150)
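The scale of the problem follows from simple arithmetic: assuming constant velocity, the spatial misalignment equals the target speed multiplied by the motion-to-projection latency. The sketch below uses a hypothetical target speed of 1 m/s. The frame period is only a lower bound on one stage of the pipeline, since sensing and processing also add latency.

```python
def misalignment_mm(speed_m_per_s: float, latency_ms: float) -> float:
    """Offset between a moving target and its projected image, assuming the
    target moves at constant velocity during the latency (m/s * ms = mm)."""
    return speed_m_per_s * latency_ms


def frame_period_ms(fps: float) -> float:
    """Duration of one projector frame in milliseconds."""
    return 1000.0 / fps


print(misalignment_mm(1.0, 6.0))  # 6.0 (mm) at 1 m/s and 6 ms
print(frame_period_ms(1000.0))    # 1.0 ms per frame at 1,000 fps
print(frame_period_ms(5600.0))    # about 0.18 ms per frame at 5,600 fps
```

A 6 mm offset on a hand-held object is easily visible at close range, which illustrates why latency well below 6 ms is needed.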

Technical issues persist even in static PM scenarios. Recently, an intriguing grand challenge for display technologies was articulated: achieving “perceptual realism,” that is, producing imagery indistinguishable from real-world 3D scenes.151) The most significant discrepancy between PM and real-world 3D scenes arises from the fact that PM works properly only in dark environments, and PM in a dark room tends to give a projected object a self-luminous impression.152) Researchers have recently started to address this issue by using projectors in place of room lights, reproducing the environmental illumination everywhere except on the projection target.153–156) Because many technical difficulties, including this dark-room constraint, still stand in the way of perceptual realism in PM, this research topic will be explored intensively over the next decade.
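The projector-as-room-light idea can be sketched as a per-pixel composite: pixels that fall on the projection target carry the PM content, and all other pixels reproduce the environmental illumination. This is a deliberately simplified illustration with hypothetical names and values; the cited systems must also handle calibration, multiple projectors, and realistic lighting, all of which are omitted here.

```python
from typing import List

Image = List[List[float]]
Mask = List[List[bool]]


def compose_room_light(target_mask: Mask, pm_content: Image, environment: Image) -> Image:
    """Per projector pixel: PM content on the target, environmental light elsewhere."""
    return [[c if on_target else e
             for on_target, c, e in zip(mrow, crow, erow)]
            for mrow, crow, erow in zip(target_mask, pm_content, environment)]


mask = [[False, True, False]]
content = [[0.0, 0.9, 0.0]]
env = [[0.6, 0.6, 0.6]]
print(compose_room_light(mask, content, env))  # [[0.6, 0.9, 0.6]]
```

Because the surroundings are lit by the same projectors, the room no longer needs to be dark, which is the constraint this line of work tries to remove.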

Integrating projection systems for other sensory modalities (referred to as X) into full-color PM (i.e., RGB-X PM) to expand user experiences is another promising research direction. For instance, researchers have combined a typical RGB projector with a thermal projector that can steer a far-infrared light spot, simultaneously providing visible and thermal sensations on a user’s body.157) In addition, aerial vibrotactile displays based on ultrasound phased arrays can provide vibrotactile sensations to a user’s body without contact,158,159) suggesting their potential integration with a typical projector in PM. Olfactory projection systems160,161) could also be integrated into PM to provide novel visual-olfactory experiences.

9. Conclusion

This review has introduced current trends in PM research from 2018 onward, as well as future directions. To recap, the notable trends include low-latency DPM, high-quality radiometric compensation and deblurring using DNNs, delay-free shadow removal using large-aperture optics, unconventional projectors for various tasks, and overcoming technical limitations by combining near-eye optics and perceptual tricks. Expected future directions include much lower-latency DPM, the pursuit of perceptual realism, and the development of RGB-X PM. PM research will continue to evolve, integrating diverse disciplines such as computer science, optics, psychology, and electrical and electronic engineering.

Non-standard abbreviation list

- AR: augmented reality
- DNN: deep neural network
- DPM: dynamic projection mapping
- ETL: electrically focus-tunable lens
- IR: infrared
- PM: projection mapping

Profile

Daisuke Iwai was born in Ehime in 1980. After receiving his PhD degree from Osaka University in 2007, he started his academic career at Osaka University. He was also a visiting scientist at Bauhaus-University Weimar, Germany, from 2007 to 2008 and a visiting Associate Professor at ETH, Switzerland, in 2011. He is currently an Associate Professor at the Graduate School of Engineering Science, Osaka University, Japan. His research interests include augmented reality, projection mapping, and human–computer interaction. He is currently serving as an Associate Editor of IEEE Transactions on Visualization and Computer Graphics and has previously served as Program Chair of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (2021, 2022) and the IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (2022). His publications have received Best Paper Awards at IEEE VR (2015), the IEEE Symposium on 3D User Interfaces (3DUI) (2015), and IEEE ISMAR (2021). He is a recipient of the JSPS Prize (2023).

References

- 1) Iwai, D., Yabiki, T. and Sato, K. (2013) View management of projected labels on nonplanar and textured surfaces. IEEE Trans. Vis. Comput. Graph. 19, 1415–1424.

- 2) Siegl, C., Colaianni, M., Thies, L., Thies, J., Zollhöfer, M., Izadi, S. et al. (2015) Real-time pixel luminance optimization for dynamic multi-projection mapping. ACM Trans. Graph. 34, 237.

- 3) Bimber, O. and Raskar, R. (2005) Spatial Augmented Reality: Merging Real and Virtual Worlds. A. K. Peters, Ltd., Wellesley, MA, USA.

- 4) Jones, B.R., Benko, H., Ofek, E. and Wilson, A.D. (2013) IllumiRoom: Peripheral projected illusions for interactive experiences. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’13, Association for Computing Machinery, New York, NY, USA, pp. 869–878.

- 5) Mine, M.R., van Baar, J., Grundhöfer, A., Rose, D. and Yang, B. (2012) Projection-based augmented reality in Disney theme parks. Computer 45, 32–40.

- 6) Wilson, A., Benko, H., Izadi, S. and Hilliges, O. (2012) Steerable augmented reality with the Beamatron. In Proceedings of the 25th Annual ACM Symposium on User Interface Software and Technology, UIST ’12, Association for Computing Machinery, New York, NY, USA, pp. 413–422.

- 7) Pinhanez, C. (2001) Using a steerable projector and a camera to transform surfaces into interactive displays. In CHI ’01 Extended Abstracts on Human Factors in Computing Systems, pp. 369–370.

- 8) Iwai, D. and Sato, K. (2006) Limpid Desk: See-through access to disorderly desktop in projection-based mixed reality. In Proceedings of the ACM Symposium on Virtual Reality Software and Technology, VRST ’06, Association for Computing Machinery, New York, NY, USA, pp. 112–115.

- 9) Iwai, D. and Sato, K. (2011) Document search support by making physical documents transparent in projection-based mixed reality. Virtual Real. 15, 147–160.

- 10) Kitajima, Y., Iwai, D. and Sato, K. (2017) Simultaneous projection and positioning of laser projector pixels. IEEE Trans. Vis. Comput. Graph. 23, 2419–2429.

- 11) Matsushita, K., Iwai, D. and Sato, K. (2011) Interactive bookshelf surface for in situ book searching and storing support. In Proceedings of the 2nd Augmented Human International Conference (Tokyo, 2011), Article No. 2.

- 12) Raskar, R., Beardsley, P., van Baar, J., Wang, Y., Dietz, P., Lee, J. et al. (2004) RFIG lamps: Interacting with a self-describing world via photosensing wireless tags and projectors. ACM Trans. Graph. 23, 406–415.

- 13) Nishino, H., Hatano, E., Seo, S., Nitta, T., Saito, T., Nakamura, M. et al. (2018) Real-time navigation for liver surgery using projection mapping with indocyanine green fluorescence: Development of the novel medical imaging projection system. Ann. Surg. 267, 1134–1140.

- 14) Bandyopadhyay, D., Raskar, R. and Fuchs, H. (2001) Dynamic shader lamps: Painting on movable objects. In Proceedings IEEE and ACM International Symposium on Augmented Reality, pp. 207–216.

- 15) Flagg, M. and Rehg, J.M. (2006) Projector-guided painting. In Proceedings of the 19th Annual ACM Symposium on User Interface Software and Technology, pp. 235–244.

- 16) Rivers, A., Adams, A. and Durand, F. (2012) Sculpting by numbers. ACM Trans. Graph. 31, 157.

- 17) Bimber, O., Coriand, F., Kleppe, A., Bruns, E., Zollmann, S. and Langlotz, T. (2005) Superimposing pictorial artwork with projected imagery. IEEE Multimed. 12, 16–26.

- 18) Schmidt, S., Bruder, G. and Steinicke, F. (2019) Effects of virtual agent and object representation on experiencing exhibited artifacts. Comput. Graph. 83, 1–10.

- 19) Iwai, D., Matsukage, R., Aoyama, S., Kikukawa, T. and Sato, K. (2018) Geometrically consistent projection-based tabletop sharing for remote collaboration. IEEE Access 6, 6293–6302.

- 20) Pejsa, T., Kantor, J., Benko, H., Ofek, E. and Wilson, A. (2016) Room2Room: Enabling life-size telepresence in a projected augmented reality environment. In Proceedings of the 19th ACM Conference on Computer-Supported Cooperative Work and Social Computing, CSCW ’16, pp. 1716–1725.

- 21) Raskar, R., Welch, G., Cutts, M., Lake, A., Stesin, L. and Fuchs, H. (1998) The office of the future: A unified approach to image-based modeling and spatially immersive displays. In Proceedings of the 25th Annual Conference on Computer Graphics and Interactive Techniques, SIGGRAPH ’98, pp. 179–188.

- 22) Duan, T., Punpongsanon, P., Iwai, D. and Sato, K. (2018) FlyingHand: Extending the range of haptic feedback on virtual hand using drone-based object recognition. In SIGGRAPH Asia 2018 Technical Briefs, SA ’18, Association for Computing Machinery, New York, NY, USA, Article No. 28.

- 23) Ueda, Y., Asai, Y., Enomoto, R., Wang, K., Iwai, D. and Sato, K. (2017) Body cyberization by spatial augmented reality for reaching unreachable world. In Proceedings of the 8th Augmented Human International Conference, AH ’17, Association for Computing Machinery, New York, NY, USA, Article No. 19.

- 24) Xu, H., Iwai, D., Hiura, S. and Sato, K. (2006) User interface by virtual shadow projection. In 2006 SICE-ICASE International Joint Conference, pp. 4814–4817.

- 25) Bermano, A.H., Billeter, M., Iwai, D. and Grundhöfer, A. (2017) Makeup lamps: Live augmentation of human faces via projection. Comput. Graph. Forum 36, 311–323.

- 26) Siegl, C., Lange, V., Stamminger, M., Bauer, F. and Thies, J. (2017) FaceForge: Markerless non-rigid face multi-projection mapping. IEEE Trans. Vis. Comput. Graph. 23, 2440–2446.

- 27) Cascini, G., O’Hare, J., Dekoninck, E., Becattini, N., Boujut, J.-F., Ben Guefrache, F. et al. (2020) Exploring the use of AR technology for co-creative product and packaging design. Comput. Ind. 123, 103308.

- 28) Marner, M.R., Smith, R.T., Walsh, J.A. and Thomas, B.H. (2014) Spatial user interfaces for large-scale projector-based augmented reality. IEEE Comput. Graph. Appl. 34, 74–82.

- 29) Menk, C., Jundt, E. and Koch, R. (2011) Visualisation techniques for using spatial augmented reality in the design process of a car. Comput. Graph. Forum 30, 2354–2366.

- 30) Takezawa, T., Iwai, D., Sato, K., Hara, T., Takeda, Y. and Murase, K. (2019) Material surface reproduction and perceptual deformation with projection mapping for car interior design. In 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pp. 251–258.

- 31) Bimber, O., Iwai, D., Wetzstein, G. and Grundhöfer, A. (2008) The visual computing of projector-camera systems. Comput. Graph. Forum 27, 2219–2245.

- 32) Grundhöfer, A. and Iwai, D. (2018) Recent advances in projection mapping algorithms, hardware and applications. Comput. Graph. Forum 37, 653–675.

- 33) Zhang, Z. (2000) A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 22, 1330–1334.

- 34) Xie, C., Shishido, H., Kameda, Y. and Kitahara, I. (2019) A projector calibration method using a mobile camera for projection mapping system. In 2019 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), pp. 261–262.

- 35) Sugimoto, M., Iwai, D., Ishida, K., Punpongsanon, P. and Sato, K. (2021) Directionally decomposing structured light for projector calibration. IEEE Trans. Vis. Comput. Graph. 27, 4161–4170.

- 36) Yoon, D., Kim, J., Jo, J. and Kim, K. (2023) Projection mapping method using projector-LiDAR (light detection and ranging) calibration. In 2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), pp. 857–858.

- 37) Kurth, P., Lange, V., Siegl, C., Stamminger, M. and Bauer, F. (2018) Auto-calibration for dynamic multi-projection mapping on arbitrary surfaces. IEEE Trans. Vis. Comput. Graph. 24, 2886–2894.

- 38) Tehrani, M.A., Gopi, M. and Majumder, A. (2021) Automated geometric registration for multi-projector displays on arbitrary 3D shapes using uncalibrated devices. IEEE Trans. Vis. Comput. Graph. 27, 2265–2279.

- 39) Ueno, A., Amano, T. and Yamauchi, C. (2022) Geometric calibration with multi-viewpoints for multi-projector systems on arbitrary shapes using homography and pixel maps. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), pp. 828–829.

- 40) Ahmed, B. and Lee, K.H. (2019) Projection mapping onto deformable nonrigid surfaces using adaptive selection of fiducials. J. Electron. Imaging 28, 063008.

- 41) Ibrahim, M.T., Gopi, M. and Majumder, A. (2023) Self-calibrating dynamic projection mapping system for dynamic, deformable surfaces with jitter correction and occlusion handling. In 2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 293–302.

- 42) Ibrahim, M.T., Meenakshisundaram, G. and Majumder, A. (2020) Dynamic projection mapping of deformable stretchable materials. In Proceedings of the 26th ACM Symposium on Virtual Reality Software and Technology, VRST ’20, Association for Computing Machinery, New York, NY, USA, Article No. 35.

- 43) Miyazaki, D. and Hashimoto, N. (2018) Dynamic projection mapping onto non-rigid objects with dot markers. In 2018 International Workshop on Advanced Image Technology (IWAIT), doi: 10.1109/IWAIT.2018.8369679.

- 44) Maeda, K. and Koike, H. (2020) MirAIProjection: Real-time projection onto high-speed objects by predicting their 3D position and pose using DNNs. In Proceedings of the International Conference on Advanced Visual Interfaces, AVI ’20, Association for Computing Machinery, New York, NY, USA, Article No. 59.

- 45) Lee, K., Sim, K., Uhm, T., Lee, S.H. and Park, J.-I. (2020) Design of imperceptible metamer markers and application systems. IEEE Access 8, 53687–53696.

- 46) Tone, D., Iwai, D., Hiura, S. and Sato, K. (2020) FibAR: Embedding optical fibers in 3D printed objects for active markers in dynamic projection mapping. IEEE Trans. Vis. Comput. Graph. 26, 2030–2040.

- 47) Asayama, H., Iwai, D. and Sato, K. (2018) Fabricating diminishable visual markers for geometric registration in projection mapping. IEEE Trans. Vis. Comput. Graph. 24, 1091–1102.

- 48) Oku, H., Nomura, M., Shibahara, K. and Obara, A. (2018) Edible projection mapping. In SIGGRAPH Asia 2018 Emerging Technologies, SA ’18, Association for Computing Machinery, New York, NY, USA, Article No. 2.

- 49) Miyatake, Y., Punpongsanon, P., Iwai, D. and Sato, K. (2022) InteriQR: Unobtrusive edible tags using food 3D printing. In Proceedings of the 35th Annual ACM Symposium on User Interface Software and Technology, UIST ’22, Association for Computing Machinery, New York, NY, USA, Article No. 84.

- 50) Halvorson, Y., Saito, T. and Hashimoto, N. (2022) Robust tangible projection mapping with multi-view contour-based object tracking. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), pp. 756–757.

- 51) Kurth, P., Leuschner, M., Stamminger, M. and Bauer, F. (2022) Content-aware brightness solving and error mitigation in large-scale multi-projection mapping. IEEE Trans. Vis. Comput. Graph. 28, 3607–3617.

- 52) Ng, A., Lepinski, J., Wigdor, D., Sanders, S. and Dietz, P. (2012) Designing for low-latency direct-touch input. In Proceedings of the 25th Annual ACM Symposium on User Interface Software and Technology, UIST ’12, Association for Computing Machinery, New York, NY, USA, pp. 453–464.

- 53) Watanabe, Y. and Ishikawa, M. (2019) High-speed and high-brightness color single-chip DLP projector using high-power LED-based light sources. In 26th International Display Workshops, IDW 2019, pp. 1350–1352.

- 54) Mikawa, Y., Sueishi, T., Watanabe, Y. and Ishikawa, M. (2018) VarioLight: Hybrid dynamic projection mapping using high-speed projector and optical axis controller. In SIGGRAPH Asia 2018 Emerging Technologies, SA ’18, Association for Computing Machinery, New York, NY, USA, Article No. 17.

- 55) Mikawa, Y., Sueishi, T., Watanabe, Y. and Ishikawa, M. (2022) Dynamic projection mapping for robust sphere posture tracking using uniform/biased circumferential markers. IEEE Trans. Vis. Comput. Graph. 28, 4016–4031.

- 56) Sueishi, T. and Ishikawa, M. (2021) Ellipses ring marker for high-speed finger tracking. In Proceedings of the 27th ACM Symposium on Virtual Reality Software and Technology, VRST ’21, Association for Computing Machinery, New York, NY, USA, Article No. 31.

- 57) Peng, H.-L. and Watanabe, Y. (2021) High-speed dynamic projection mapping onto human arm with realistic skin deformation. Appl. Sci. 11, 3753.

- 58) Nomoto, T., Li, W., Peng, H.-L. and Watanabe, Y. (2022) Dynamic multi-projection mapping based on parallel intensity control. IEEE Trans. Vis. Comput. Graph. 28, 2125–2134.

- 59).Nomoto, T., Koishihara, R. and Watanabe, Y. (2020) Realistic dynamic projection mapping using real-time ray tracing. In ACM SIGGRAPH 2020 Emerging Technologies, SIGGRAPH ’20, Association for Computing Machinery, New York, NY, USA, Article No. 13. [Google Scholar]

- 60).Tsurumi, N., Ohishi, K., Kakimoto, R., Tsukiyama, F., Peng, H.-L., Watanabe, Y. et al. (2023) Rediscovering your own beauty through a highly realistic 3D digital makeup system based on projection mapping technology. In 33rd IFSCC Congress, RB-03. [Google Scholar]

- 61).Kagami S., Hashimoto K. (2019) Animated stickies: Fast video projection mapping onto a markerless plane through a direct closed-loop alignment. IEEE Trans. Vis. Comput. Graph. 25, 3094–3104. [DOI] [PubMed] [Google Scholar]

- 62).Hisaichi, S., Sumino, K., Ueda, K., Kasebe, H., Yamashita, T., Yuasa, T. et al. (2021) Depth-aware dynamic projection mapping using high-speed rgb and ir projectors. In SIGGRAPH Asia 2021 Emerging Technologies, SA ’21, Association for Computing Machinery, New York, NY, USA, Article No. 3. [Google Scholar]

- 63).Miyashita L., Watanabe Y., Ishikawa M. (2018) Midas projection: Markerless and modelless dynamic projection mapping for material representation. ACM Trans. Graph. 37, 196. [Google Scholar]

- 64).Iuchi, M., Hirohashi, Y. and Oku, H. (2023) Proposal for an aerial display using dynamic projection mapping on a distant flying screen. In 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR), pp. 603–608. [Google Scholar]

- 65).Miyashita, L., Yamazaki, T., Uehara, K., Watanabe, Y. and Ishikawa, M. (2018) Portable Lumipen: Dynamic sar in your hand. In 2018 IEEE International Conference on Multimedia and Expo (ICME), doi: 10.1109/ICME.2018.8486514. [Google Scholar]

- 66).Post, M., Fieguth, P., Naiel, M.A., Azimifar, Z. and Lamm, M. (2019) Fresco: Fast radiometric egocentric screen compensation. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 1899–1906. [Google Scholar]

- 67).Grundhöfer, A. (2013) Practical non-linear photometric projector compensation. In 2013 IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 924–929. [Google Scholar]

- 68).Grundhöfer A., Iwai D. (2015) Robust, error-tolerant photometric projector compensation. IEEE Trans. Image Process. 24, 5086–5099. [DOI] [PubMed] [Google Scholar]

- 69).Kurth, P., Lange, V., Stamminger, M. and Bauer, F. (2020) Real-time adaptive color correction in dynamic projection mapping. In 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 174–184. [Google Scholar]

- 70).Li Y., Majumder A., Gopi M., Wang C., Zhao J. (2018) Practical radiometric compensation for projection display on textured surfaces using a multidimensional model. Comput. Graph. Forum 37, 365–375. [Google Scholar]

- 71).Akiyama R., Yamamoto G., Amano T., Taketomi T., Plopski A., Sandor C., et al. (2021) Robust reflectance estimation for projection-based appearance control in a dynamic light environment. IEEE Trans. Vis. Comput. Graph. 27, 2041–2055. [DOI] [PubMed] [Google Scholar]

- 72).Nishizawa, M. and Okajima, K. (2018) Precise surface color estimation using a non-diagonal reflectance matrix on an adaptive projector-camera system. In 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), pp. 190–195. [Google Scholar]

- 73).Amano, T. and Yoshioka, H. (2020) Viewing-direction dependent appearance manipulation based on light-field feedback. In Virtual Reality and Augmented Reality (eds. Bourdot, P., Interrante, V., Kopper, R., Olivier, A.-H., Saito, H. and Zachmann, G.). Springer International Publishing, Cham, pp. 192–205. [Google Scholar]

- 74).Kanaya, J. and Amano, T. (2022) Apparent shape manipulation by light-field projection onto a retroreflective surface. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), pp. 880–881. [Google Scholar]

- 75).Kimura, K. and Amano, T. (2022) Perceptual brdf manipulation by 4-degree of freedom light field projection using multiple mirrors and projectors. In 2022 EuroXR Conference, pp. 95–99. [Google Scholar]

- 76). Murakami, K. and Amano, T. (2018) Materiality manipulation by light-field projection from reflectance analysis. In ICAT-EGVE 2018 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments (eds. Bruder, G., Yoshimoto, S. and Cobb, S.). The Eurographics Association, pp. 99–105.

- 77). Hashimoto N., Yoshimura K. (2021) Radiometric compensation for non-rigid surfaces by continuously estimating inter-pixel correspondence. Vis. Comput. 37, 175–187.

- 78). Pjanic P., Willi S., Iwai D., Grundhöfer A. (2018) Seamless multi-projection revisited. IEEE Trans. Vis. Comput. Graph. 24, 2963–2973.

- 79). Huang, B. and Ling, H. (2019) End-to-end projector photometric compensation. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 6803–6812.

- 80). Huang, B. and Ling, H. (2019) CompenNet++: End-to-end full projector compensation. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV), pp. 7164–7173.

- 81). Huang B., Sun T., Ling H. (2022) End-to-end full projector compensation. IEEE Trans. Pattern Anal. Mach. Intell. 44, 2953–2967.

- 82). Park J., Jung D., Moon B. (2022) Projector compensation framework using differentiable rendering. IEEE Access 10, 44461–44470.

- 83). Wang, Y., Ling, H. and Huang, B. (2023) CompenHR: Efficient full compensation for high-resolution projector. In 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR), pp. 135–145.

- 84). Li Y., Yin W., Li J., Xie X. (2023) Physics-based efficient full projector compensation using only natural images. IEEE Trans. Vis. Comput. Graph. doi: 10.1109/TVCG.2023.3281681.

- 85). Huang B., Ling H. (2021) DeProCams: Simultaneous relighting, compensation and shape reconstruction for projector-camera systems. IEEE Trans. Vis. Comput. Graph. 27, 2725–2735.

- 86). Erel Y., Iwai D., Bermano A.H. (2023) Neural projection mapping using reflectance fields. IEEE Trans. Vis. Comput. Graph. 29, 4339–4349.

- 87). Zhang, L. and Nayar, S. (2006) Projection defocus analysis for scene capture and image display. In ACM SIGGRAPH 2006 Papers, SIGGRAPH ’06, Association for Computing Machinery, New York, NY, USA, pp. 907–915.

- 88). He Z., Li P., Zhao X., Zhang S., Tan J. (2021) Fast projection defocus correction for multiple projection surface types. IEEE Trans. Industr. Inform. 17, 3044–3055.

- 89). Kageyama Y., Isogawa M., Iwai D., Sato K. (2020) ProDebNet: projector deblurring using a convolutional neural network. Opt. Express 28, 20391–20403.

- 90). Kageyama Y., Iwai D., Sato K. (2022) Online projector deblurring using a convolutional neural network. IEEE Trans. Vis. Comput. Graph. 28, 2223–2233.

- 91). Xu H., Wang L., Tabata S., Watanabe Y., Ishikawa M. (2021) Extended depth-of-field projection method using a high-speed projector with a synchronized oscillating variable-focus lens. Appl. Opt. 60, 3917–3924.

- 92). Wang L., Tabata S., Xu H., Hu Y., Watanabe Y., Ishikawa M. (2023) Dynamic depth-of-field projection mapping method based on a variable focus lens and visual feedback. Opt. Express 31, 3945–3953.

- 93). Iwai D., Izawa H., Kashima K., Ueda T., Sato K. (2019) Speeded-up focus control of electrically tunable lens by sparse optimization. Sci. Rep. 9, 12365.

- 94). Li, Y., Fu, Q. and Heidrich, W. (2023) Extended depth-of-field projector using learned diffractive optics. In 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR), pp. 449–459.

- 95). Ueda F., Kageyama Y., Iwai D., Sato K. (2023) Focal surface projection: Extending projector depth of field using a phase-only spatial light modulator. J. Soc. Inf. Disp. 31, 651–656.

- 96). Nagase M., Iwai D., Sato K. (2011) Dynamic defocus and occlusion compensation of projected imagery by model-based optimal projector selection in multi-projection environment. Virtual Real. 15, 119–132.

- 97). Tsukamoto J., Iwai D., Kashima K. (2015) Radiometric compensation for cooperative distributed multi-projection system through 2-DOF distributed control. IEEE Trans. Vis. Comput. Graph. 21, 1221–1229.

- 98). Uesaka, S. and Amano, T. (2022) Cast-shadow removal for cooperative adaptive appearance manipulation. In ICAT-EGVE 2022 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments (eds. Uchiyama, H. and Normand, J.-M.). The Eurographics Association, pp. 11–16.

- 99). Hiratani K., Iwai D., Kageyama Y., Punpongsanon P., Hiraki T., Sato K. (2023) Shadowless projection mapping using retrotransmissive optics. IEEE Trans. Vis. Comput. Graph. 29, 2280–2290.

- 100). Hiratani, K., Iwai, D., Punpongsanon, P. and Sato, K. (2019) Shadowless projector: Suppressing shadows in projection mapping with micro mirror array plate. In 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pp. 1309–1310.

- 101). Kiyokawa, M., Okuda, S. and Hashimoto, N. (2019) Stealth projection: Visually removing projectors from dynamic projection mapping. In SIGGRAPH Asia 2019 Posters, SA ’19, Association for Computing Machinery, New York, NY, USA, Article No. 41.

- 102). Kiyokawa, M. and Hashimoto, N. (2021) Dynamic projection mapping with 3D images using volumetric display. In 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), pp. 597–598.

- 103). Watanabe, T. and Hashimoto, N. (2021) Light-field projection for tangible projection mapping. In ACM SIGGRAPH 2021 Posters, SIGGRAPH ’21, Association for Computing Machinery, New York, NY, USA, Article No. 18.

- 104). Karitsuka, T. and Sato, K. (2003) A wearable mixed reality with an on-board projector. In The Second IEEE and ACM International Symposium on Mixed and Augmented Reality, 2003. Proceedings, pp. 321–322.

- 105). Harrison, C., Benko, H. and Wilson, A.D. (2011) OmniTouch: Wearable multitouch interaction everywhere. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, UIST ’11, Association for Computing Machinery, New York, NY, USA, pp. 441–450.

- 106). Mistry, P., Maes, P. and Chang, L. (2009) WUW - wear Ur world: A wearable gestural interface. In CHI ’09 Extended Abstracts on Human Factors in Computing Systems, CHI EA ’09, Association for Computing Machinery, New York, NY, USA, pp. 4111–4116.

- 107). Xiao, R., Cao, T., Guo, N., Zhuo, J., Zhang, Y. and Harrison, C. (2018) LumiWatch: On-arm projected graphics and touch input. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, CHI ’18, Association for Computing Machinery, New York, NY, USA, Paper No. 95.

- 108). Cortes G., Marchand E., Brincin G., Lécuyer A. (2018) MoSART: Mobile spatial augmented reality for 3D interaction with tangible objects. Front. Robot. AI 5, 93.

- 109). Wang, C.-H., Yong, S., Chen, H.-Y., Ye, Y.-S. and Chan, L. (2020) HMD Light: Sharing in-VR experience via head-mounted projector for asymmetric interaction. In Proceedings of the 33rd Annual ACM Symposium on User Interface Software and Technology, UIST ’20, Association for Computing Machinery, New York, NY, USA, pp. 472–486.

- 110). Hartmann, J., Yeh, Y.-T. and Vogel, D. (2020) AAR: Augmenting a wearable augmented reality display with an actuated head-mounted projector. In Proceedings of the 33rd Annual ACM Symposium on User Interface Software and Technology, UIST ’20, Association for Computing Machinery, New York, NY, USA, pp. 445–458.

- 111). Hoffard, J., Miyafuji, S., Pardomuan, J., Sato, T. and Koike, H. (2022) OmniTiles - A User-Customizable Display Using An Omni-Directional Camera Projector System. In ICAT-EGVE 2022 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments (eds. Uchiyama, H. and Normand, J.-M.). The Eurographics Association, pp. 149–158.

- 112). Xu, R., Sato, T., Miyafuji, S. and Koike, H. (2022) OmniLantern: Design and implementations of a portable and coaxial omnidirectional projector-camera system. In Proceedings of the 2022 International Conference on Advanced Visual Interfaces, AVI 2022, Association for Computing Machinery, New York, NY, USA, Article No. 13.

- 113). Yamamoto K., Iwai D., Tani I., Sato K. (2022) A monocular projector-camera system using modular architecture. IEEE Trans. Vis. Comput. Graph. 29, 5586–5592.

- 114). Kusakabe Y., Kanazawa M., Nojiri Y., Furuya M., Yoshimura M. (2008) A YC-separation-type projector: High dynamic range with double modulation. J. Soc. Inf. Disp. 16, 383–391.

- 115). Seetzen H., Heidrich W., Stuerzlinger W., Ward G., Whitehead L., Trentacoste M., et al. (2004) High dynamic range display systems. ACM Trans. Graph. 23, 760–768.

- 116). Kimura, S., Kitamura, M. and Naemura, T. (2007) EmiTable: A tabletop surface pervaded with imperceptible metadata. In Second Annual IEEE International Workshop on Horizontal Interactive Human-Computer Systems (TABLETOP’07), pp. 189–192.

- 117). Hiraki T., Fukushima S., Watase H., Naemura T. (2019) Dynamic PVLC: Pixel-level visible light communication projector with interactive update of images and data. ITE Transactions on Media Technology and Applications 7, 160–168.

- 118). Hiraki, T., Fukushima, S., Kawahara, Y. and Naemura, T. (2019) NavigaTorch: Projection-based robot control interface using high-speed handheld projector. In SIGGRAPH Asia 2019 Emerging Technologies, SA ’19, Association for Computing Machinery, New York, NY, USA, pp. 31–33.

- 119). Hiraki T., Kawahara Y., Fukushima S., Naemura T. (2018) Phygital field: An integrated field with physical robots and digital images using projection-based localization and control method. SICE Journal of Control, Measurement, and System Integration 11, 302–311.

- 120). Miyatake Y., Hiraki T., Iwai D., Sato K. (2023) HaptoMapping: Visuo-haptic augmented reality by embedding user-imperceptible tactile display control signals in a projected image. IEEE Trans. Vis. Comput. Graph. 29, 2005–2019.

- 121). Kumar D., Raut S., Shimasaki K., Senoo T., Ishii I. (2021) Projection-mapping-based object pointing using a high-frame-rate camera-projector system. ROBOMECH J. 8, 8.

- 122). Kamei I., Hiraki T., Fukushima S., Naemura T. (2019) PILC projector: Image projection with pixel-level infrared light communication. IEEE Access 7, 160768–160778.

- 123). Bimber, O., Wetzstein, G., Emmerling, A. and Nitschke, C. (2005) Enabling view-dependent stereoscopic projection in real environments. In Fourth IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR’05), pp. 14–23.

- 124). Benko, H., Jota, R. and Wilson, A. (2012) MirageTable: Freehand interaction on a projected augmented reality tabletop. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’12, Association for Computing Machinery, New York, NY, USA, pp. 199–208.

- 125). Kaminokado, T., Iwai, D. and Sato, K. (2019) Augmented environment mapping for appearance editing of glossy surfaces. In 2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 55–65.

- 126). Fender, A., Herholz, P., Alexa, M. and Müller, J. (2018) OptiSpace: Automated placement of interactive 3D projection mapping content. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, CHI ’18, Association for Computing Machinery, New York, NY, USA, Paper No. 269.

- 127). Kimura S., Iwai D., Punpongsanon P., Sato K. (2021) Multifocal stereoscopic projection mapping. IEEE Trans. Vis. Comput. Graph. 27, 4256–4266.

- 128). Ueda T., Iwai D., Hiraki T., Sato K. (2020) Illuminated focus: Vision augmentation using spatial defocusing via focal sweep eyeglasses and high-speed projector. IEEE Trans. Vis. Comput. Graph. 26, 2051–2061.

- 129). Miyamoto, J., Koike, H. and Amano, T. (2018) Gaze navigation in the real world by changing visual appearance of objects using projector-camera system. In Proceedings of the 24th ACM Symposium on Virtual Reality Software and Technology, VRST ’18, Association for Computing Machinery, New York, NY, USA, pp. 91–98.

- 130). Ueda T., Iwai D., Sato K. (2021) IlluminatedZoom: spatially varying magnified vision using periodically zooming eyeglasses and a high-speed projector. Opt. Express 29, 16377–16395.

- 131). Hamasaki T., Itoh Y., Hiroi Y., Iwai D., Sugimoto M. (2018) HySAR: Hybrid material rendering by an optical see-through head-mounted display with spatial augmented reality projection. IEEE Trans. Vis. Comput. Graph. 24, 1457–1466.

- 132). Itoh Y., Kaminokado T., Akşit K. (2021) Beaming displays. IEEE Trans. Vis. Comput. Graph. 27, 2659–2668.

- 133). Akşit, K. and Itoh, Y. (2023) HoloBeam: Paper-thin near-eye displays. In 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR), pp. 581–591.

- 134). Hiroi Y., Watanabe A., Mikawa Y., Itoh Y. (2023) Low-latency beaming display: Implementation of wearable, 133 µs motion-to-photon latency near-eye display. IEEE Trans. Vis. Comput. Graph. 29, 4761–4771.