Abstract

Learning multimodal representations involves integrating information from multiple heterogeneous sources of data. It is a challenging yet crucial area with numerous real-world applications in multimedia, affective computing, robotics, finance, human-computer interaction, and healthcare. Unfortunately, multimodal research has seen limited resources to study (1) generalization across domains and modalities, (2) complexity during training and inference, and (3) robustness to noisy and missing modalities. In order to accelerate progress towards understudied modalities and tasks while ensuring real-world robustness, we release MultiBench, a systematic and unified large-scale benchmark for multimodal learning spanning 15 datasets, 10 modalities, 20 prediction tasks, and 6 research areas. MultiBench provides an automated end-to-end machine learning pipeline that simplifies and standardizes data loading, experimental setup, and model evaluation. To enable holistic evaluation, MultiBench offers a comprehensive methodology to assess (1) generalization, (2) time and space complexity, and (3) modality robustness. MultiBench introduces impactful challenges for future research, including scalability to large-scale multimodal datasets and robustness to realistic imperfections. To accompany this benchmark, we also provide a standardized implementation of 20 core approaches in multimodal learning spanning innovations in fusion paradigms, optimization objectives, and training approaches. Simply applying methods proposed in different research areas can improve the state-of-the-art performance on 9/15 datasets. Therefore, MultiBench presents a milestone in unifying disjoint efforts in multimodal machine learning research and paves the way towards a better understanding of the capabilities and limitations of multimodal models, all the while ensuring ease of use, accessibility, and reproducibility. 
MultiBench, our standardized implementations, and leaderboards are publicly available, will be regularly updated, and welcome inputs from the community.

1. Introduction

Our perception of the natural world surrounding us involves multiple sensory modalities: we see objects, hear audio signals, feel textures, smell fragrances, and taste flavors. A modality refers to a way in which a signal exists or is experienced. Multiple modalities then refer to a combination of multiple signals each expressed in heterogeneous manners [10]. Many real-world research problems are inherently multimodal: from the early research on audio-visual speech recognition [48] to the recent explosion of interest in language, vision, and video understanding [48] for applications such as multimedia [102, 116], affective computing [101, 127], robotics [84, 91], finance [70], dialogue [126], human-computer interaction [47, 117], and healthcare [51, 172]. The research field of multimodal machine learning (ML) brings unique challenges for both computational and theoretical research given the heterogeneity of various data sources [10]. At its core lies the learning of multimodal representations that capture correspondences between modalities for prediction; the field has emerged as a vibrant interdisciplinary area of immense importance and extraordinary potential.

Limitations of current multimodal datasets:

Current multimodal research has led to impressive advances in benchmarking and modeling for specific domains such as language and vision [4, 103, 105, 132]. However, other domains, modalities, and tasks are relatively understudied. Many of these tasks are crucial for real-world intelligence such as improving accessibility to technology for diverse populations [62], accelerating healthcare diagnosis to aid doctors [78], and building reliable robots that can engage in human-AI interactions [16, 83, 137]. Furthermore, current benchmarks typically focus on performance without quantifying the potential drawbacks involved with increased time and space complexity [148], and the risk of decreased robustness from imperfect modalities [101, 123]. In real-world deployment, a balance between performance, robustness, and complexity is often required.

MultiBench:

In order to accelerate research in building general-purpose multimodal models, our main contribution is MultiBench (Figure 1), a systematic and unified large-scale benchmark that brings us closer to the requirements of real-world multimodal applications. MultiBench is designed to comprehensively evaluate 3 main components: generalization across domains and modalities, complexity during training and inference, and robustness to noisy and missing modalities:

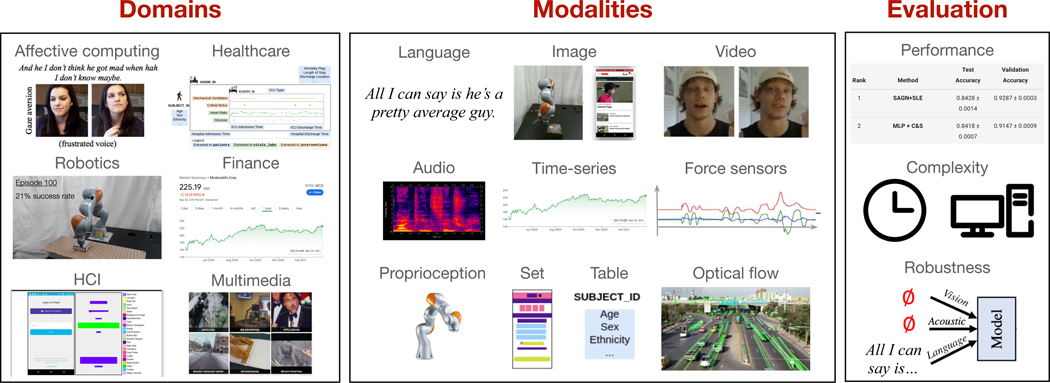

Figure 1:

MultiBench contains a diverse set of 15 datasets spanning 10 modalities and testing for more than 20 prediction tasks across 6 distinct research areas, thereby enabling standardized, reliable, and reproducible large-scale benchmarking of multimodal models. To reflect real-world requirements, MultiBench is designed to holistically evaluate (1) performance across domains and modalities, (2) complexity during training and inference, and (3) robustness to noisy and missing modalities.

Generalization across domains and modalities: MultiBench contains a diverse set of 15 datasets spanning 10 modalities and testing for 20 prediction tasks across 6 distinct research areas. These research areas include important tasks understudied from a multimodal learning perspective, such as healthcare, finance, and HCI. Building upon extensive data-collection efforts by domain experts, we worked with them to adapt datasets that reflect real-world relevance, present unique challenges to multimodal learning, and enable opportunities in algorithm design and evaluation.

Complexity during training and inference: MultiBench also quantifies potential drawbacks involving increased time and space complexity of multimodal learning. Together, these time and space metrics summarize the tradeoffs of current models as a step towards efficiency in real-world settings [142].


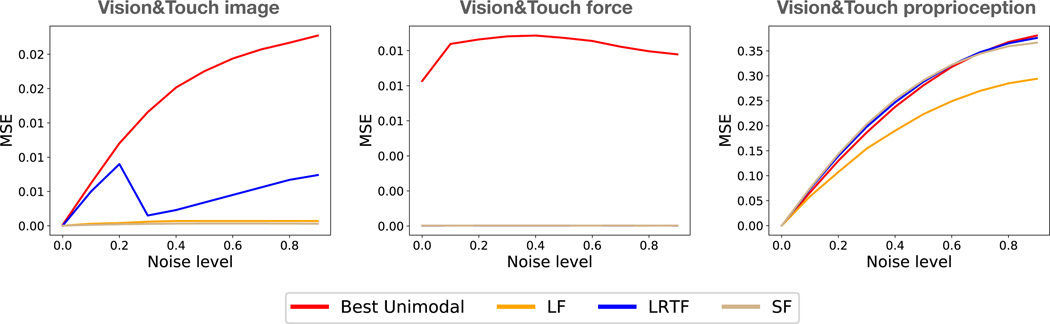

Robustness to noisy and missing modalities: Different modalities often display different noise topologies, and real-world multimodal signals may suffer from missing or noisy data in at least one of the modalities [10]. MultiBench provides a standardized way to assess the risk of decreased robustness from imperfect modalities through a set of modality-specific and multimodal imperfections that reflect real-world noise, thereby providing a benchmark towards safe and robust deployment.

In sum, MultiBench unifies efforts across separate research areas in multimodal learning to enable quick and accurate benchmarking across a wide range of datasets and metrics.

To help the community accurately compare performance and ensure reproducibility, MultiBench includes an end-to-end pipeline including data preprocessing, dataset splits, multimodal algorithms, evaluation metrics, and cross-validation protocols. This includes an implementation of 20 core multimodal approaches spanning innovations in fusion paradigms, optimization objectives, and training approaches in a standard public toolkit called MultiZoo. We perform a systematic evaluation and show that directly applying these methods can improve the state-of-the-art performance on 9 out of the 15 datasets. Therefore, MultiBench presents a step towards unifying disjoint efforts in multimodal research and paves a way towards a deeper understanding of multimodal models.

Most importantly, our public zoo of multimodal benchmarks and models will ensure ease of use, accessibility, and reproducibility. Finally, we outline our plans to ensure the continual availability, maintenance, and expansion of MultiBench, including using it as a theme for future workshops and competitions and to support the multimodal learning courses taught around the world.

2. MultiBench: The Multiscale Multimodal Benchmark

Background:

We define a modality as a single particular mode in which a signal is expressed or experienced. Multiple modalities then refer to a combination of multiple heterogeneous signals [10]. The first version of MultiBench focuses on benchmarking algorithms for multimodal fusion, where the main challenge is to join information from two or more modalities to perform a prediction (e.g., classification, regression). A classic example of multimodal fusion is audio-visual speech recognition, where visual lip motion is fused with speech signals to predict spoken words [48]. Multimodal fusion can be contrasted with multimodal translation, where the goal is to generate a new and different modality [162], grounding and question answering, where one modality is used to query information in another (e.g., visual question answering [4]), and unsupervised or self-supervised multimodal representation learning [109, 143]. We outline plans for future versions of MultiBench that study these important topics in Appendix I.

Each of the following 15 datasets in MultiBench contributes a unique perspective on the technical challenges in multimodal learning, including learning and aligning complementary information, scaling to a large number of modalities, and robustness to realistic imperfections.

2.1. Datasets

Table 1 shows an overview of the datasets provided in MultiBench. We provide a brief overview of the modalities and tasks for each of these datasets and refer the reader to Appendix C for details.

Table 1:

MultiBench provides a comprehensive suite of 15 multimodal datasets to benchmark current and proposed approaches in multimodal representation learning. It covers a diverse range of research areas, dataset sizes, input modalities (language, image, video, audio, time-series, tabular, force sensor, proprioception sensor, set, and optical flow), and prediction tasks. We provide a standardized data loader for datasets in MultiBench, along with a set of state-of-the-art multimodal models.

| Research Area | Size | Dataset | Modalities | # Samples | Prediction task |

|---|---|---|---|---|---|

| Affective Computing | S M L L |

MUStARD [24] CMU-MOSI [181] UR-FUNNY [64] CMU-MOSEI [183] |

{, , } {, , } {, , } {, , } |

690 2,199 16,514 22,777 |

Sarcasm sentiment humor sentiment, emotions |

| Healthcare | L | MIMIC [78] | {, } | 36,212 | mortality, ICD-9 codes |

| Robotics | M L |

MuJoCo Push [90] Vision&Touch [92] |

{, , } {, , } |

37,990 147,000 |

object pose contact, robot pose |

| Finance | M M M |

Stocks-F&B Stocks-Health Stocks-Tech |

{ ×18} { ×63} { ×100} |

5,218 5,218 5,218 |

stock price, volatility stock price, volatility stock price, volatility |

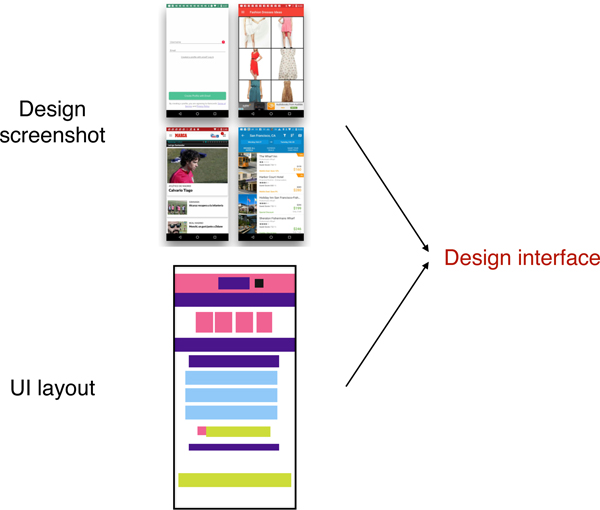

| HCI | S | ENRICO [93] | {, } | 1,460 | design interface |

| Multimedia | S M M L |

Kinetics400-S [80] MM-IMDb [8] AV-MNIST [161] Kinetics400-L [80] |

{, , } {, } {, } {, , } |

2,624 25,959 70,000 306,245 |

human action movie genre digit human action |

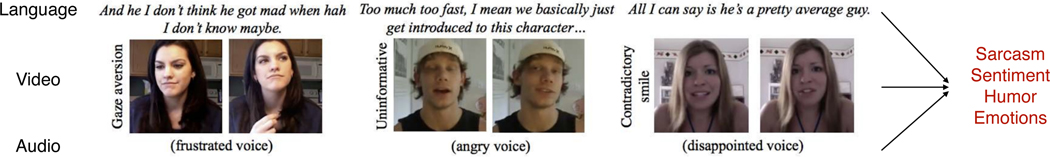

Affective computing studies the perception of human affective states (emotions, sentiment, and personalities) from our natural display of multimodal signals spanning language (spoken words), visual (facial expressions, gestures), and acoustic (prosody, speech tone) [124]. It has broad impacts towards building emotionally intelligent computers, human behavior analysis, and AI-assisted education. MultiBench contains 4 datasets involving fusing language, video, and audio time-series data to predict sentiment (CMU-MOSI [181]), emotions (CMU-MOSEI [183]), humor (UR-FUNNY [64]), and sarcasm (MUStARD [24]). Complementary information may occur at different moments, requiring models to address the multimodal challenges of grounding and alignment.

Healthcare:

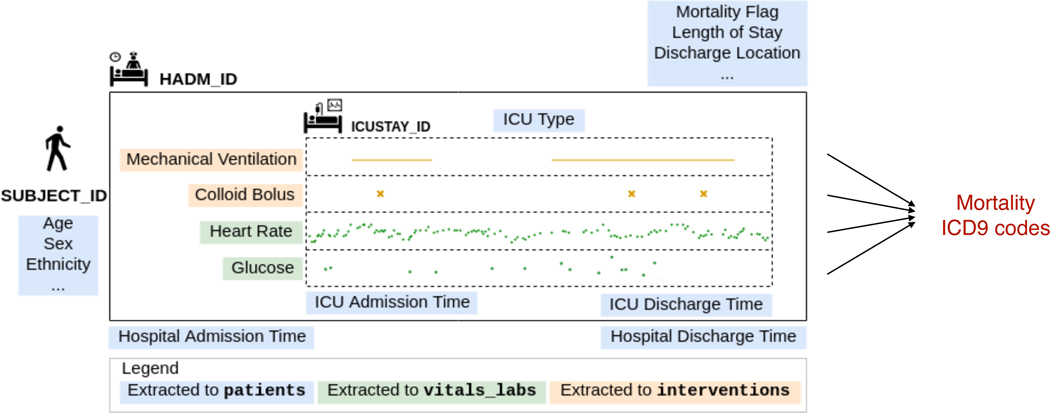

Modern medical decision-making often involves integrating complementary information and signals from several sources such as lab tests, imaging reports, and patient-doctor conversations. Multimodal models can help doctors make sense of high-dimensional data and assist them in the diagnosis process [5]. MultiBench includes the large-scale MIMIC dataset [78], which records ICU patient data including time-series data measured every hour and other demographic variables (e.g., age, gender, ethnicity in the form of tabular numerical data). These are used to predict the disease ICD-9 code and mortality rate. MIMIC poses unique challenges in integrating time-varying and static modalities, reinforcing the need for aligning multimodal information at the correct granularities.

Robotics:

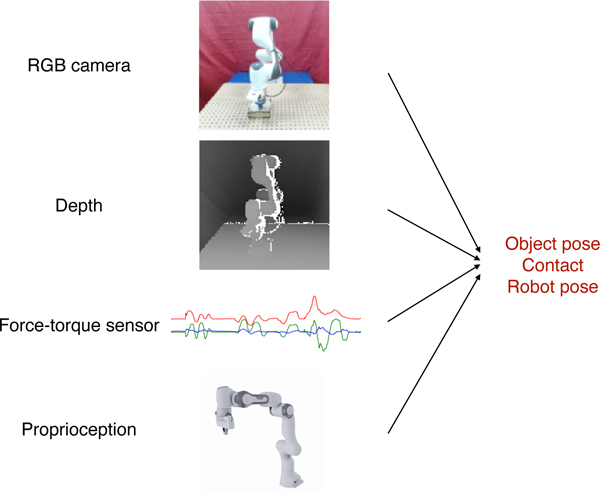

Modern robot systems are equipped with multiple sensors to aid in their decision-making. We include the large-scale MuJoCo Push [90] and Vision&Touch [92] datasets which record the manipulation of simulated and real robotic arms equipped with visual (RGB and depth), force, and proprioception sensors. In MuJoCo Push, the goal is to predict the pose of the object being pushed by the robot end-effector. In Vision&Touch, the goal is to predict action-conditional learning objectives that capture forward dynamics of the different modalities (contact prediction and robot end-effector pose). Robustness is important due to the risk of real-world sensor failures [89].

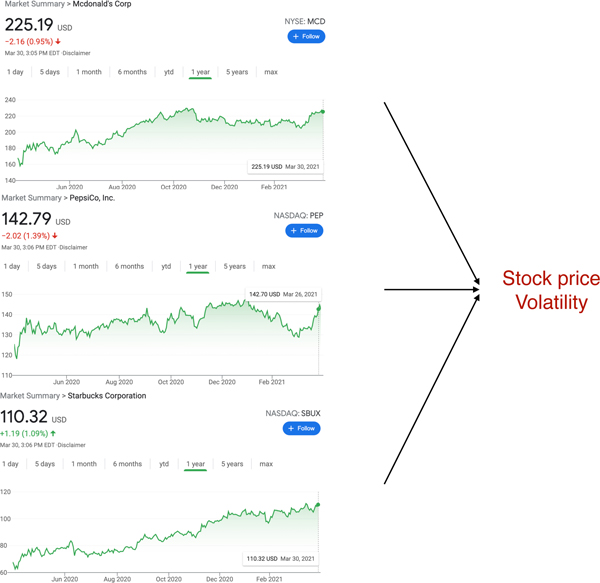

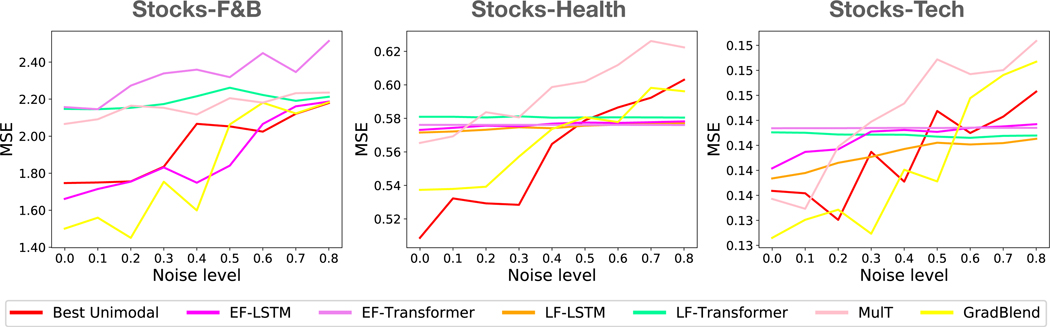

Finance:

We gathered historical stock data from the internet to create our own dataset for financial time-series prediction across 3 groups of correlated stocks: Stocks-F&B, Stocks-Health, and Stocks-Tech. Within each group, the previous stock prices of a set of stocks are used as multimodal time-series inputs to predict the price and volatility of a related stock (e.g., using Apple, Google, and Microsoft data to predict future Microsoft prices). Multimodal stock prediction [136] presents scalability issues due to a large number of modalities (18/63/100 vs 2/3 in most datasets), as well as robustness challenges arising from real-world data with an inherently low signal-to-noise ratio.

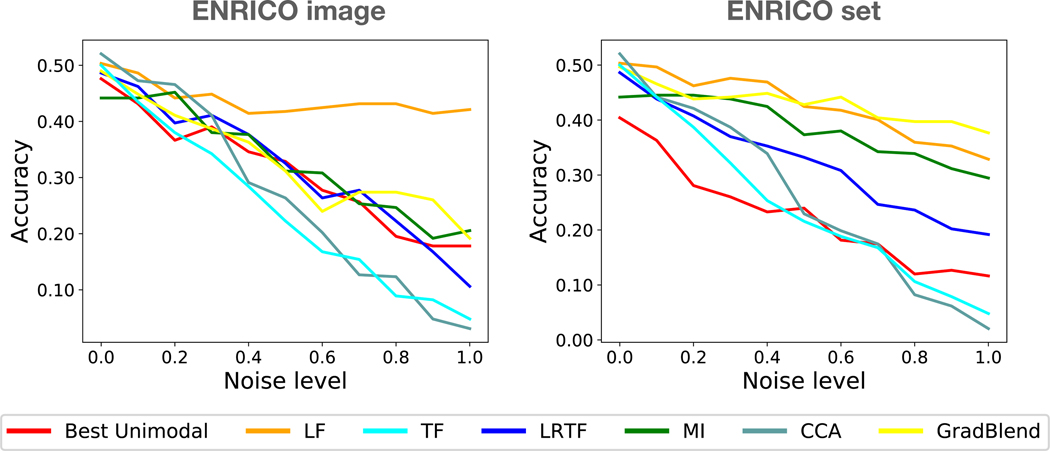

Human Computer Interaction (HCI) studies the design of computer technology and interactive interfaces between humans and computers [43]. Many real-world problems involve multimodal inputs such as language, visual, and audio interfaces. We use the Enrico (Enhanced Rico) dataset [40, 93] of Android app screens (consisting of an image as well as a set of apps and their locations) categorized by their design motifs and collected for data-driven design applications such as design search, user interface (UI) layout generation, UI code generation, and user interaction modeling.

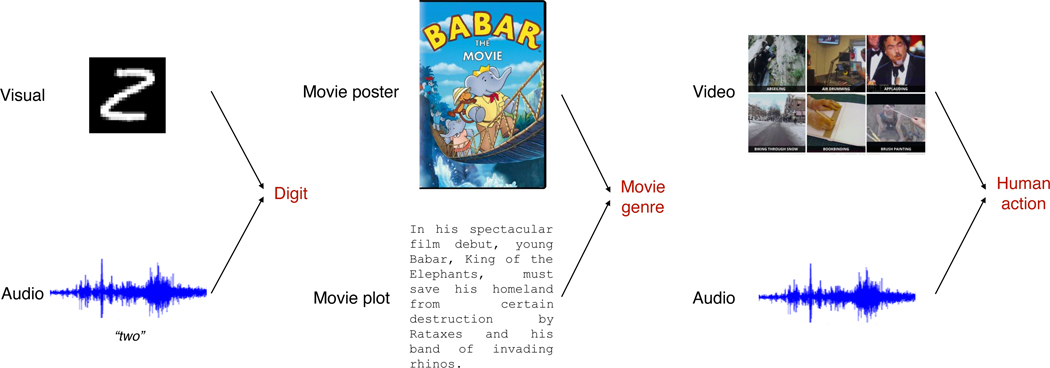

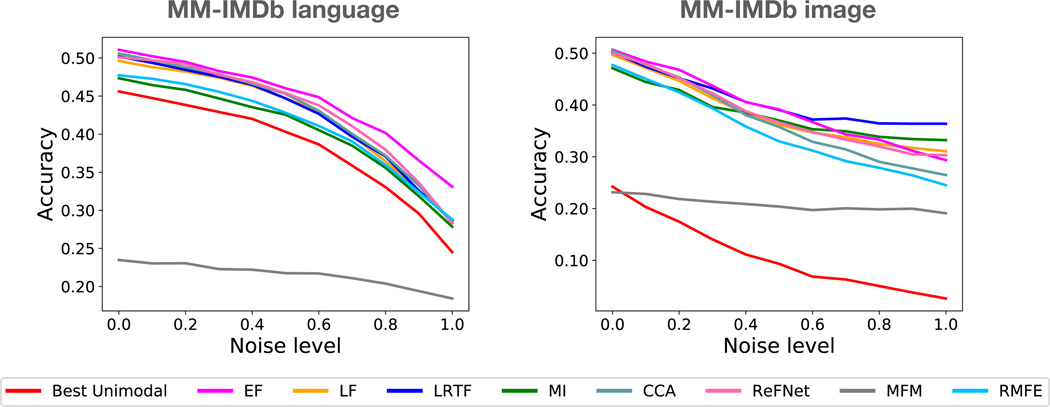

Multimedia:

A significant body of research in multimodal learning has been fueled by the large availability of multimedia data (language, image, video, and audio) on the internet. MultiBench includes 3 popular large-scale multimedia datasets with varying sizes and levels of difficulty: (1) AV-MNIST [161] is assembled from images of handwritten digits [88] and audio samples of spoken digits [94], (2) MM-IMDb [8] uses movie titles, metadata, and movie posters to perform multi-label classification of movie genres, and (3) Kinetics [80] contains video, audio, and optical flow of 306,245 video clips annotated for 400 human actions. To ease experimentation, we split Kinetics into small and large partitions (see Appendix C).

2.2. Evaluation Protocol

MultiBench contains evaluation scripts for the following holistic desiderata in multimodal learning:

Performance:

We standardize evaluation using metrics designed for each dataset, ranging from MSE and MAE for regression to accuracy, micro and macro F1-score, and AUPRC for classification.
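As an illustration of how these metrics are conventionally computed (this is not MultiBench's evaluation script), the following pure-Python sketch defines MSE, MAE, accuracy, and micro/macro F1. The function names are hypothetical, and the F1 helper assumes multi-label targets encoded as 0/1 indicator rows, as in a multi-label genre task such as MM-IMDb; AUPRC is omitted for brevity.

```python
from typing import List, Sequence, Tuple


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error for regression targets."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error for regression targets."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of exactly matching class labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def _f1(tp: int, fp: int, fn: int) -> float:
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def micro_macro_f1(y_true: List[List[int]], y_pred: List[List[int]]) -> Tuple[float, float]:
    """Micro and macro F1 for multi-label 0/1 indicator rows (one column per label)."""
    n_labels = len(y_true[0])
    counts = []  # (tp, fp, fn) for each label
    for j in range(n_labels):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t[j] == 1 and p[j] == 1)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t[j] == 0 and p[j] == 1)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t[j] == 1 and p[j] == 0)
        counts.append((tp, fp, fn))
    # Micro: pool the counts over all labels, then compute a single F1.
    micro = _f1(*(sum(c[i] for c in counts) for i in range(3)))
    # Macro: compute F1 per label, then average with equal weight.
    macro = sum(_f1(*c) for c in counts) / n_labels
    return micro, macro
```

Micro-F1 pools true and false positives over all labels, so frequent labels dominate it; macro-F1 averages per-label scores, so rare labels count as much as common ones.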

Complexity:

Modern ML research unfortunately has a significant impact on energy consumption [142], a phenomenon often exacerbated when processing high-dimensional multimodal data. As a step towards quantifying energy complexity and recommending lightweight multimodal models, MultiBench records the amount of information taken in bits (i.e., data size), the number of model parameters, and the time and memory resources required during the entire training process. Real-world models may also need to be small and compact to run on mobile devices [131], so we also report inference time and memory on CPU and GPU (see Appendix D.2).
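To make the complexity metrics concrete, here is a minimal standard-library sketch that times a training (or inference) callable and records peak Python heap memory with `tracemalloc`, plus a helper that counts parameters from a list of weight shapes. The helper names are hypothetical and MultiBench's own instrumentation may differ; in particular, `tracemalloc` does not see GPU memory, which needs framework-specific tools.

```python
import time
import tracemalloc
from typing import Callable, Dict, Sequence, Tuple, TypeVar

T = TypeVar("T")


def measure_complexity(fn: Callable[[], T]) -> Tuple[T, Dict[str, float]]:
    """Run fn once and record wall-clock time and peak traced Python heap memory.

    Only allocations made through Python's allocator are traced; memory held
    on a GPU (e.g., CUDA tensors) is invisible to tracemalloc.
    """
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = fn()
    finally:
        elapsed = time.perf_counter() - start
        _, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    return result, {"seconds": elapsed, "peak_mb": peak / 2**20}


def count_parameters(weight_shapes: Sequence[Sequence[int]]) -> int:
    """Total number of scalar parameters, given the shape of every weight tensor."""
    total = 0
    for shape in weight_shapes:
        n = 1
        for dim in shape:
            n *= dim
        total += n
    return total
```

For example, a linear layer mapping 10 features to 5 outputs contributes the shapes `(10, 5)` and `(5,)`, i.e. 55 parameters.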

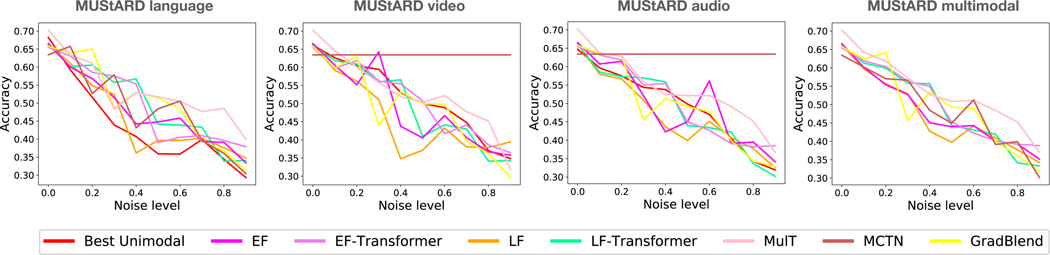

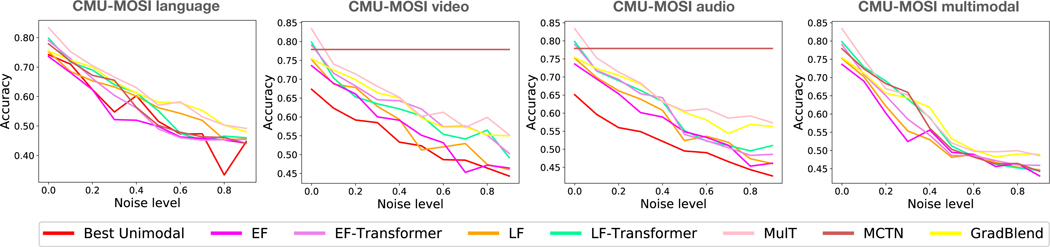

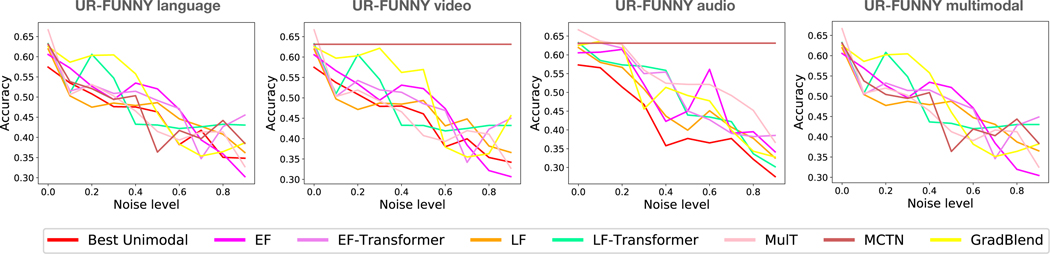

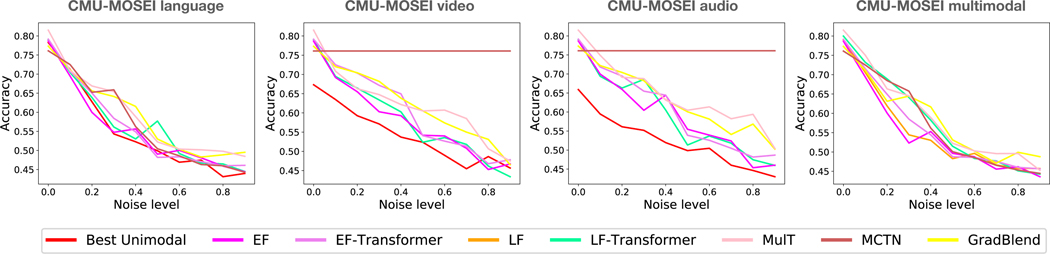

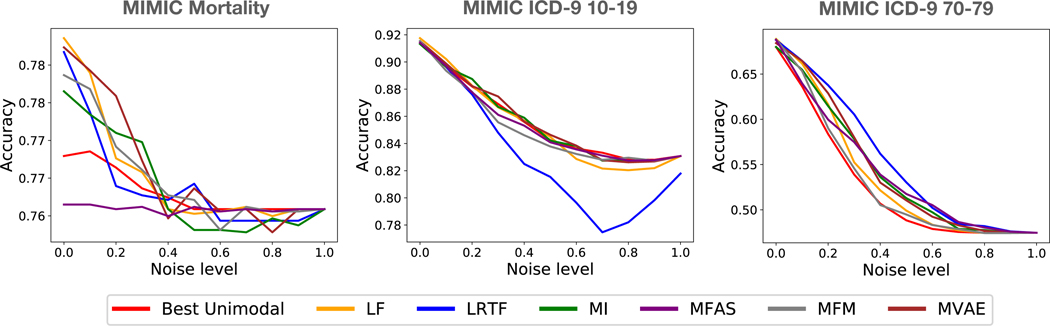

Robustness:

Real-world multimodal data is often imperfect as a result of missing entries, noise corruption, or missing modalities entirely, which calls for robust models that can still make accurate predictions despite only having access to noisy and missing signals [101, 123]. To standardize efforts in evaluating robustness, MultiBench includes the following tests: (1) Modality-specific imperfections are independently applied to each modality, taking into account its unique noise topologies (e.g., flips and crops of images, natural misspellings in text, abbreviations in spoken audio). (2) Multimodal imperfections capture correlations in imperfections across modalities (e.g., missing modalities, or a chunk of time missing in multimodal time-series data). We use both qualitative measures (performance-imperfection curves) and quantitative metrics [149] that summarize (1) relative robustness, measuring accuracy under imperfections, and (2) effective robustness, measuring the rate of accuracy drops after equalizing for initial accuracy on clean test data (see Appendix D.3 for details).
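The two kinds of tests and the two summary metrics can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions, not the exact formulas of Appendix D.3: `add_gaussian_noise` and `drop_time_chunk` (hypothetical names) implement one modality-specific and one multimodal imperfection, relative robustness is taken as the area under the accuracy-versus-imperfection-level curve, and effective robustness is approximated as the area under the same curve after subtracting clean accuracy, so that models are compared on how fast they drop rather than where they start.

```python
import random
from typing import List, Sequence

Series = List[List[float]]  # one modality: timesteps x features


def add_gaussian_noise(series: Series, level: float, rng: random.Random) -> Series:
    """Modality-specific imperfection: add N(0, level^2) noise to every value of one modality."""
    return [[v + rng.gauss(0.0, level) for v in step] for step in series]


def drop_time_chunk(modalities: List[Series], level: float, rng: random.Random) -> List[Series]:
    """Multimodal imperfection: zero out the same contiguous chunk of timesteps in every modality.

    `level` in [0, 1] is the fraction of timesteps removed. All modalities are assumed to
    share one time axis of equal length (e.g., after word-level alignment).
    """
    length = len(modalities[0])
    width = round(level * length)
    start = rng.randrange(length - width + 1)
    return [
        [[0.0] * len(step) if start <= t < start + width else list(step)
         for t, step in enumerate(series)]
        for series in modalities
    ]


def _trapezoid(ys: Sequence[float], xs: Sequence[float]) -> float:
    """Trapezoidal-rule area under the piecewise-linear curve through (xs[i], ys[i])."""
    return sum((xs[i + 1] - xs[i]) * (ys[i] + ys[i + 1]) / 2 for i in range(len(xs) - 1))


def relative_robustness(levels: Sequence[float], accs: Sequence[float]) -> float:
    """Area under the accuracy-vs-imperfection curve; levels ascending, levels[0] == 0 (clean)."""
    return _trapezoid(accs, levels)


def effective_robustness(levels: Sequence[float], accs: Sequence[float]) -> float:
    """Area under the curve after subtracting clean accuracy accs[0] (an assumed normalization).

    Values closer to 0 mean accuracy falls off more slowly, regardless of where it started.
    """
    return _trapezoid([a - accs[0] for a in accs], levels)
```

Under this reading, a model that starts high but degrades quickly can score well on relative robustness and poorly on effective robustness, which is the tension examined in Section 4.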

3. MultiZoo: A Zoo of Multimodal Algorithms

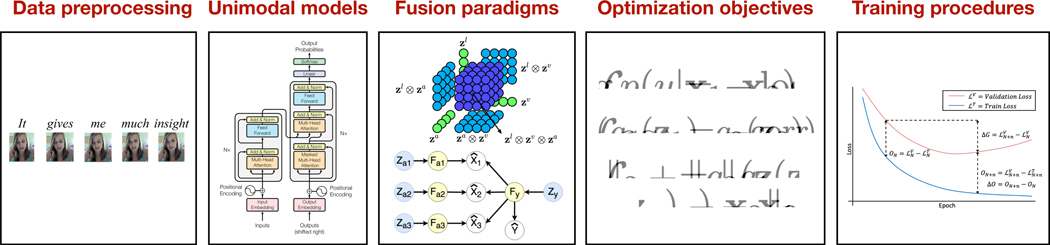

To complement MultiBench, we release a comprehensive toolkit, MultiZoo, as starter code for multimodal algorithms which implements 20 methods spanning different methodological innovations in (1) data preprocessing, (2) fusion paradigms, (3) optimization objectives, and (4) training procedures (see Figure 2). To introduce these algorithms, we use the simple setting with 2 modalities for notational convenience but refer the reader to Appendix E for detailed descriptions and implementations. We use $\mathbf{x}_1, \mathbf{x}_2$ for input modalities, $\mathbf{h}_1, \mathbf{h}_2$ for unimodal representations, $\mathbf{h}_m$ for the multimodal representation, and $\hat{y}$ for the predicted label.

Figure 2:

MultiZoo provides a standardized implementation of a suite of multimodal methods in a modular fashion to enable accessibility for new researchers, compositionality of approaches, and reproducibility of results.

3.1. Data Preprocessing

Temporal alignment [26] has been shown to help tackle the multimodal alignment problem for time-series data. This approach assumes a temporal granularity of the modalities (e.g., at the level of words for text) and aligns information from the remaining modalities to the same granularity. We call this approach WordAlign [26] for temporal data where text is one of the modalities.
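A minimal sketch of word-level alignment, assuming each word comes with start and end timestamps and each non-text frame with a single timestamp: frames falling inside a word's interval are averaged, so the non-text modality ends up with one feature vector per word. The function name and the zero-vector fallback for words without frames are illustrative assumptions, not MultiZoo's implementation.

```python
from typing import List, Sequence, Tuple


def word_align(
    word_intervals: Sequence[Tuple[float, float]],
    frame_times: Sequence[float],
    frame_feats: Sequence[Sequence[float]],
) -> List[List[float]]:
    """Average the frames of a non-text modality that fall inside each word's [start, end) span.

    Returns one feature vector per word, so the modality ends up at word granularity.
    Words with no overlapping frames get a zero vector (one of several possible choices).
    """
    dim = len(frame_feats[0])
    aligned = []
    for start, end in word_intervals:
        inside = [f for t, f in zip(frame_times, frame_feats) if start <= t < end]
        if inside:
            # Column-wise mean over the frames covered by this word.
            aligned.append([sum(col) / len(inside) for col in zip(*inside)])
        else:
            aligned.append([0.0] * dim)
    return aligned
```

Averaging is the simplest pooling choice; other pooling functions could be substituted without changing the overall idea of mapping every modality onto the text's word boundaries.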

3.2. Fusion Paradigms

Early and late fusion:

Early fusion performs concatenation of input data before using a model (i.e., $\hat{y} = f([\mathbf{x}_1, \mathbf{x}_2])$) while late fusion applies suitable unimodal models to each modality to obtain their feature representations, concatenates these features, and defines a classifier to the label (i.e., $\hat{y} = f([\mathbf{h}_1, \mathbf{h}_2])$) [10]. MultiZoo includes their implementations denoted as EF and LF respectively. Tensors are specifically designed to tackle the multimodal complementarity challenge by explicitly capturing higher-order interactions across modalities [179]. Given unimodal representations $\mathbf{h}_1, \mathbf{h}_2$, tensors are defined as $\mathbf{h}_m = \begin{bmatrix} \mathbf{h}_1 \\ 1 \end{bmatrix} \otimes \begin{bmatrix} \mathbf{h}_2 \\ 1 \end{bmatrix}$, where $\otimes$ denotes an outer product. However, computing tensor products is expensive since their dimension scales exponentially with the number of modalities, so several efficient approximations have been proposed [71, 101, 106]. MultiZoo includes Tensor Fusion (TF) [179] as well as the approximate Low-rank Tensor Fusion (LRTF) [106].
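The three fusion patterns in this paragraph can be contrasted in a few lines of plain Python. Early fusion concatenates the raw inputs before one model `f`; late fusion concatenates the outputs of unimodal encoders `g1`, `g2` before a classifier `f`; tensor fusion takes the outer product of each unimodal representation with a constant 1 appended. The function names are hypothetical, and models are passed in as plain callables for illustration only.

```python
from typing import Callable, List, Sequence

Vec = List[float]


def early_fusion(x1: Vec, x2: Vec, f: Callable[[Vec], float]) -> float:
    """EF: concatenate the raw inputs, then apply a single model f."""
    return f(x1 + x2)


def late_fusion(
    x1: Vec,
    x2: Vec,
    g1: Callable[[Vec], Vec],
    g2: Callable[[Vec], Vec],
    f: Callable[[Vec], float],
) -> float:
    """LF: encode each modality separately, concatenate the features, then classify."""
    return f(g1(x1) + g2(x2))


def tensor_fusion(h1: Sequence[float], h2: Sequence[float]) -> List[List[float]]:
    """TF: outer product of [h1, 1] and [h2, 1], a (len(h1)+1) x (len(h2)+1) matrix.

    The appended constant 1 keeps the unimodal features themselves (last column holds
    h1, last row holds h2) alongside every bimodal product h1[i] * h2[j].
    """
    a = list(h1) + [1.0]
    b = list(h2) + [1.0]
    return [[ai * bj for bj in b] for ai in a]
```

With M modalities the fused tensor has $(d_1 + 1)(d_2 + 1)\cdots(d_M + 1)$ entries, which is the exponential growth that motivates low-rank approximations such as LRTF.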

Multiplicative Interactions (MI) generalize tensor products to include learnable parameters that capture multimodal interactions [77]. In its most general form, MI defines a bilinear product $\mathbf{h}_m = \mathbf{x}_1^\top \mathbb{W} \mathbf{x}_2 + \mathbf{x}_1^\top \mathbf{U} + \mathbf{V} \mathbf{x}_2 + \mathbf{b}$, where $\mathbb{W}$, $\mathbf{U}$, $\mathbf{V}$, and $\mathbf{b}$ are trainable parameters. By appropriately constraining the rank and structure of these parameters, MI recovers HyperNetworks [61] (unconstrained parameters resulting in a matrix output), Feature-wise linear modulation (FiLM) [120, 188] (diagonal parameters resulting in vector output), and Sigmoid units [37] (scalar parameters resulting in scalar output). MultiZoo includes all 3 as MI-Matrix, MI-Vector, and MI-Scalar respectively.
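For intuition, the general bilinear form can be evaluated directly for a vector-valued output: each output unit k combines a bilinear term $\mathbf{x}_1^\top \mathbf{W}_k \mathbf{x}_2$, two linear terms, and a bias. The sketch below is a naive pure-Python illustration with a hypothetical function name and explicit loops; it does not show the rank or structure constraints that yield MI-Matrix, MI-Vector, or MI-Scalar.

```python
from typing import List, Sequence

Vec = Sequence[float]
Mat = Sequence[Sequence[float]]


def multiplicative_interaction(
    x1: Vec, x2: Vec, W: Sequence[Mat], U: Mat, V: Mat, b: Vec
) -> List[float]:
    """General MI with a k-dimensional output.

    Shapes: W[k] is (d1 x d2) for each output unit, U is (d1 x k), V is (k x d2), b has length k.
        h[k] = x1^T W[k] x2 + (x1^T U)[k] + (V x2)[k] + b[k]
    """
    out = []
    for k in range(len(b)):
        bilinear = sum(
            x1[i] * W[k][i][j] * x2[j] for i in range(len(x1)) for j in range(len(x2))
        )
        linear1 = sum(x1[i] * U[i][k] for i in range(len(x1)))  # depends on x1 only
        linear2 = sum(V[k][j] * x2[j] for j in range(len(x2)))  # depends on x2 only
        out.append(bilinear + linear1 + linear2 + b[k])
    return out
```

Only the bilinear term mixes the two modalities; the linear terms and bias are what a purely additive (late-fusion-like) model would already have.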

Multimodal gated units learn representations that dynamically change for every input [25, 167, 171]. Their general form can be written as $\mathbf{h}_m = \mathbf{x}_1 \odot h(\mathbf{x}_2)$, where $h$ represents a function with sigmoid activation and $\odot$ denotes element-wise product. $h(\mathbf{x}_2)$ is commonly referred to as “attention weights” learned from $\mathbf{x}_2$ to attend on $\mathbf{x}_1$. Attention is conceptually similar to MI-Vector, but recent work has explored more expressive forms of $h$ such as using a Query-Key-Value mechanism [167] or fully-connected layers [25]. We implement the Query-Key-Value mechanism as NL Gate [167].
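A minimal sketch of a gated unit, assuming the gating function is a single linear layer followed by a sigmoid (one of many possible choices; NL Gate instead uses a Query-Key-Value mechanism). The gate computed from `x2` has the same size as `x1` and rescales it element by element. Names and shapes are illustrative.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def gated_fusion(
    x1: Sequence[float],
    x2: Sequence[float],
    A: Sequence[Sequence[float]],
    c: Sequence[float],
) -> List[float]:
    """h_m = x1 ⊙ sigmoid(A x2 + c).

    A has shape (len(x1) x len(x2)) so that the gate matches x1 element-wise;
    each gate value lies in (0, 1) and decides how much of x1[i] passes through.
    """
    gate = [sigmoid(sum(a * v for a, v in zip(row, x2)) + ci) for row, ci in zip(A, c)]
    return [xi * gi for xi, gi in zip(x1, gate)]
```

Because the gate is recomputed from `x2` for every example, the same `x1` can be emphasized or suppressed differently depending on the other modality.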

Temporal attention models tackle the challenges of multimodal alignment and complementarity. Transformer models [158] are useful for temporal data because they automatically align and capture complementary features at different time-steps [154, 174]. We include the Multimodal Transformer (MulT) [154], which applies a Crossmodal Transformer block using $\mathbf{x}_1$ to attend to $\mathbf{x}_2$ (and vice versa) to obtain a multimodal representation $\mathbf{h}_m$.
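The core crossmodal operation can be sketched as scaled dot-product attention in which queries come from one modality and keys and values from the other. This simplified pure-Python version is an illustration under strong assumptions: it omits the learned query/key/value projections, multiple heads, residual connections, layer normalization, and layer stacking of the actual MulT architecture, and it assumes queries and keys already share a feature size (real models project them to a common size).

```python
import math
from typing import List, Sequence

Seq = Sequence[Sequence[float]]  # timesteps x features


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Seq, keys: Seq, values: Seq) -> List[List[float]]:
    """Scaled dot-product attention with queries from one modality and keys/values
    from another; the output has one row per query timestep."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how much this query step attends to each source step
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return out
```

Because each query step attends over all timesteps of the other modality, the two sequences may have different lengths, which is why crossmodal attention can be applied to unaligned data. In this sketch, $\mathbf{x}_1$ attending to $\mathbf{x}_2$ would be `cross_attention(x1, x2, x2)`.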

Algorithm 1.

PyTorch code integrating MultiBench datasets and MultiZoo models.

```python
import torch

from datasets.get_data import get_dataloader
# MLP is assumed to be provided by unimodals.common_models alongside the encoders
from unimodals.common_models import ResNet, Transformer, MLP
from fusions.common_fusions import MultInteractions
from training_structures.gradient_blend import train, test

# loading Multimodal IMDB dataset
traindata, validdata, testdata = get_dataloader('multimodal_imdb')

out_channels = 3
# defining ResNet and Transformer unimodal encoders
encoders = [ResNet(in_channels=1, out_channels=out_channels, layers=5),
            Transformer(in_channels=1, out_channels=out_channels, layers=3)]

# defining a Multiplicative Interactions fusion layer
fusion = MultInteractions([out_channels*8, out_channels*32], out_channels*32, 'matrix')
classifier = MLP(out_channels*32, 100, labels=23)

# training using Gradient Blend algorithm
model = train(encoders, fusion, classifier, traindata, validdata, epochs=100,
              optimtype=torch.optim.SGD, lr=0.01, weight_decay=0.0001)

# testing
performance, complexity, robustness = test(model, testdata)
```

Architecture search:

Instead of hand-designing architectures, several approaches define a set of atomic operations (e.g., linear transformation, activation, attention, etc.) and use architecture search to learn the best order of these operations for a given task [122, 173], which we call MFAS.

3.3. Optimization Objectives

In addition to the standard supervised losses (e.g., cross entropy for classification, MSE/MAE for regression), several methods have proposed new objective functions based on:

Prediction-level alignment objectives tackle the challenge of alignment by learning a representation space where semantically similar concepts from different modalities are close together [9, 33, 91, 151]. Alignment objectives have been applied at both prediction and feature levels. In the former, we implement Canonical Correlation Analysis (CCA) [7, 145, 166], which maximizes correlation by adding a loss term $\ell_{\text{CCA}} = -\operatorname{corr}\left(g_1(\mathbf{h}_1), g_2(\mathbf{h}_2)\right)$, where $g_1, g_2$ are auxiliary classifiers mapping each unimodal representation to the label.
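For scalar auxiliary outputs, a correlation-based loss of the form $-\operatorname{corr}(g_1(\mathbf{h}_1), g_2(\mathbf{h}_2))$ reduces to the negative Pearson correlation over a batch. The sketch below (hypothetical names) shows only this one-dimensional case; full (deep) CCA over multi-dimensional outputs additionally requires whitening the covariance matrices, which is omitted here.

```python
import math
from typing import Sequence


def pearson_corr(a: Sequence[float], b: Sequence[float]) -> float:
    """Sample Pearson correlation between two equal-length batches of scalar outputs.

    Undefined (division by zero) if either batch is constant.
    """
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def correlation_loss(g1_out: Sequence[float], g2_out: Sequence[float]) -> float:
    """-corr(g1(h1), g2(h2)): minimizing it maximizes cross-modal correlation."""
    return -pearson_corr(g1_out, g2_out)
```

The loss ranges from -1 (perfectly correlated auxiliary predictions) to 1 (perfectly anti-correlated), so gradient descent pushes the two modalities' predictions to co-vary.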

Feature-level alignment:

In the latter, contrastive learning has emerged as a popular approach to bring similar concepts close in feature space and different concepts far away [33, 91, 151]. We include REFNET [135], which uses a self-supervised contrastive loss between unimodal representations $\mathbf{h}_1, \mathbf{h}_2$ and the multimodal representation $\mathbf{h}_m$, i.e., $\ell_{\text{contrast}} = 1 - \cos\left(\mathbf{h}_m, g_1(\mathbf{h}_1)\right) + 1 - \cos\left(\mathbf{h}_m, g_2(\mathbf{h}_2)\right)$, where $g_1, g_2$ are layers mapping each modality’s representation into the joint multimodal space.
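A cosine-based objective of the form $(1 - \cos(\mathbf{h}_m, g_1(\mathbf{h}_1))) + (1 - \cos(\mathbf{h}_m, g_2(\mathbf{h}_2)))$ can be written directly. This pure-Python sketch uses hypothetical names and takes the already-projected vectors $g_1(\mathbf{h}_1)$ and $g_2(\mathbf{h}_2)$ as inputs; as written, the term only pulls each projection toward $\mathbf{h}_m$ and contains no negative pairs.

```python
import math
from typing import Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; assumes neither vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def refnet_style_loss(
    h_m: Sequence[float], g1_h1: Sequence[float], g2_h2: Sequence[float]
) -> float:
    """(1 - cos(h_m, g1(h1))) + (1 - cos(h_m, g2(h2))).

    Each term is 0 when the projection points in the same direction as h_m
    and grows to 2 when it points in the opposite direction.
    """
    return (1 - cosine(h_m, g1_h1)) + (1 - cosine(h_m, g2_h2))
```

Cosine similarity ignores vector length, so the objective only constrains the direction of each projected unimodal representation relative to the joint one.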

Reconstruction objectives based on generative-discriminative models (e.g., VAEs) aim to reconstruct the input (or some part of the input) [91, 155]. These have been shown to better preserve task-relevant information learned in the representation, especially in settings with sparse supervised signals such as robotics [91] and long videos [155]. We include the Multimodal Factorized Model (MFM) [155] that learns a representation $\mathbf{h}_m$ that can reconstruct input data $\mathbf{x}_1, \mathbf{x}_2$ while also predicting the label, i.e., adding an objective $\ell_{\text{rec}} = \lVert g_1(\mathbf{h}_m) - \mathbf{x}_1 \rVert_2 + \lVert g_2(\mathbf{h}_m) - \mathbf{x}_2 \rVert_2$, where $g_1, g_2$ are auxiliary decoders mapping $\mathbf{h}_m$ to each raw input modality. MFM can be paired with any multimodal model from section 3.2 (e.g., learning $\mathbf{h}_m$ via tensors and adding a term to reconstruct input data).
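In code, a reconstruction term of this kind is the sum of L2 distances between each decoder's output and its raw input, added to the usual task loss. The sketch assumes decoders `g1`, `g2` given as plain callables and introduces a hypothetical weighting hyperparameter `rec_weight`; how MFM actually factorizes and weights its terms is described in [155].

```python
import math
from typing import Callable, List, Sequence

Vec = List[float]


def l2_distance(u: Sequence[float], v: Sequence[float]) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def reconstruction_loss(
    h_m: Vec,
    x1: Vec,
    x2: Vec,
    g1: Callable[[Vec], Vec],
    g2: Callable[[Vec], Vec],
) -> float:
    """||g1(h_m) - x1||_2 + ||g2(h_m) - x2||_2: both inputs must be recoverable from h_m."""
    return l2_distance(g1(h_m), x1) + l2_distance(g2(h_m), x2)


def total_objective(task_loss: float, rec_loss: float, rec_weight: float = 1.0) -> float:
    """Task loss plus a weighted reconstruction term (rec_weight is a hypothetical knob)."""
    return task_loss + rec_weight * rec_loss
```

The reconstruction term acts as a regularizer: even when labels are sparse, $\mathbf{h}_m$ is discouraged from discarding information about either input.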

Improving robustness:

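These approaches modify the objective function to account for robustness to noisy [101] or missing [89, 111, 123] modalities. MultiZoo includes MCTN [123], which uses cycle-consistent translation to predict the noisy/missing modality from present ones (i.e., a path $\mathbf{x}_1 \rightarrow \mathbf{h}_m \rightarrow \hat{\mathbf{x}}_2 \rightarrow \mathbf{h}_m \rightarrow \hat{\mathbf{x}}_1$, with additional reconstruction losses $\ell_{\text{rec}} = \lVert \mathbf{x}_1 - \hat{\mathbf{x}}_1 \rVert_2 + \lVert \mathbf{x}_2 - \hat{\mathbf{x}}_2 \rVert_2$). While MCTN is trained with multimodal data, it only takes in one modality at test time, which makes it robust to the remaining modalities.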
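The cyclic path can be sketched with plain callables: translate $\mathbf{x}_1$ into $\hat{\mathbf{x}}_2$ through a joint representation, translate $\hat{\mathbf{x}}_2$ back into $\hat{\mathbf{x}}_1$, and penalize both reconstruction errors. All names are hypothetical, and using separate encoders for $\mathbf{x}_1$ and $\hat{\mathbf{x}}_2$ is an assumption of this sketch; note that only $\mathbf{x}_1$ is needed to produce the representation used at test time.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vec = List[float]
Fn = Callable[[Vec], Vec]


def l2_distance(u: Sequence[float], v: Sequence[float]) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def cyclic_translation_losses(
    x1: Vec, x2: Vec, enc1: Fn, dec_to_x2: Fn, enc2: Fn, dec_to_x1: Fn
) -> Tuple[float, Vec]:
    """Return (reconstruction loss, joint representation) for x1 -> h_m -> x2_hat -> h' -> x1_hat."""
    h_m = enc1(x1)              # joint representation computed from x1 only
    x2_hat = dec_to_x2(h_m)     # forward translation into the second modality
    h_back = enc2(x2_hat)       # re-encode the translated modality
    x1_hat = dec_to_x1(h_back)  # cycle back to the first modality
    loss = l2_distance(x1, x1_hat) + l2_distance(x2, x2_hat)
    return loss, h_m
```

During training both $\mathbf{x}_1$ and $\mathbf{x}_2$ supply targets; at test time a downstream classifier would consume `h_m`, so a corrupted or missing $\mathbf{x}_2$ never enters the prediction.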

3.4. Training Procedures

Improving generalization:

Recent work has found that directly training a multimodal model is sub-optimal since different modalities overfit and generalize at different rates. MultiZoo includes Gradient Blending (GradBlend), which computes generalization statistics for each modality to determine their weights during fusion [167], and Regularization by Maximizing Functional Entropies (RMFE), which uses functional entropy to balance the contribution of each modality to the result [53].
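The weighting idea behind GradBlend can be sketched from losses recorded at two checkpoints. Following the overfitting-to-generalization ratio of [167] as we understand it, each modality's weight is taken proportional to $\Delta G / \Delta O^2$, where $\Delta G$ is the decrease in validation loss and $\Delta O$ is the increase in the gap between validation and training loss, then normalized to sum to 1. This is a simplified, hypothetical helper (including the clipping of negative gains and the uniform fallback), not MultiZoo's implementation.

```python
from typing import Dict, Tuple

LossPair = Tuple[float, float]  # (train_loss, val_loss) at one checkpoint


def gradient_blend_weights(
    before: Dict[str, LossPair], after: Dict[str, LossPair], eps: float = 1e-8
) -> Dict[str, float]:
    """Per-modality blending weights proportional to dG / dO^2, normalized to sum to 1."""
    raw = {}
    for name, (train0, val0) in before.items():
        train1, val1 = after[name]
        delta_g = val0 - val1                        # generalization gain (val loss drop)
        delta_o = (val1 - train1) - (val0 - train0)  # growth of the overfitting gap
        raw[name] = max(delta_g, 0.0) / (delta_o ** 2 + eps)
    total = sum(raw.values())
    if total == 0.0:
        # No modality improved on validation: fall back to uniform weights.
        return {name: 1.0 / len(raw) for name in raw}
    return {name: w / total for name, w in raw.items()}
```

For example, with `before = {"audio": (1.0, 1.2), "video": (1.0, 1.2)}` and `after = {"audio": (0.8, 1.1), "video": (0.5, 1.1)}`, both modalities gain equally on validation, but `video` opens a much larger train/validation gap, so `audio` receives roughly 0.94 of the weight and `video` roughly 0.06.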

3.5. Putting Everything Together

In Algorithm 1, we show a sample code snippet in Python that loads a dataset from MultiBench (section C.2), defines the unimodal and multimodal architectures, optimization objective, and training procedures (section 3), before running the evaluation protocol (section 2.2). Our MultiZoo toolkit is easy to use and trains entire multimodal models in fewer than 10 lines of code. By standardizing the implementation of each module and disentangling the individual effects of models, optimizations, and training, MultiZoo ensures both accessibility and reproducibility of its algorithms.

4. Experiments and Discussion

Setup:

Using MultiBench, we load each of the datasets and test the multimodal approaches in MultiZoo. We only vary the contributed method of interest and keep all other possibly confounding factors constant (i.e., using the exact same training loop when testing a new multimodal fusion paradigm), a practice unfortunately not consistent in previous work. Our code is available at https://github.com/pliang279/MultiBench. Please refer to Appendix G for experimental details. MultiBench allows for careful analysis of multimodal models and we summarize the main take-away messages below (see Appendix H for full results and analysis).

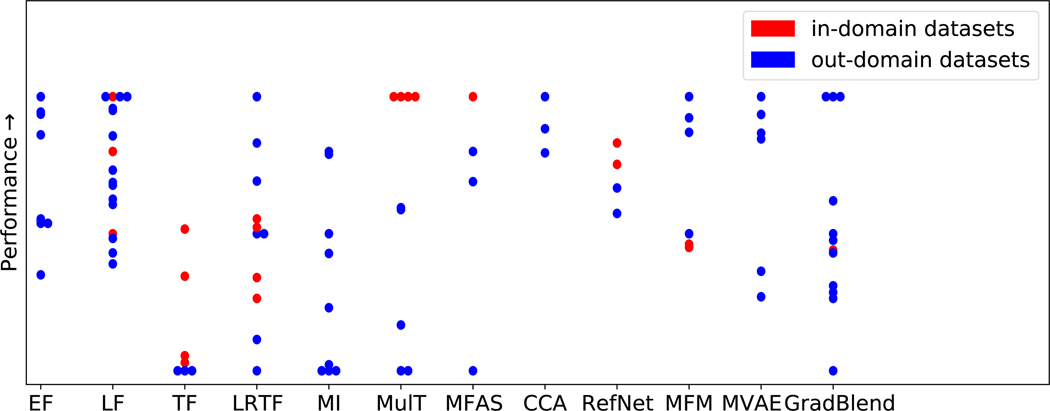

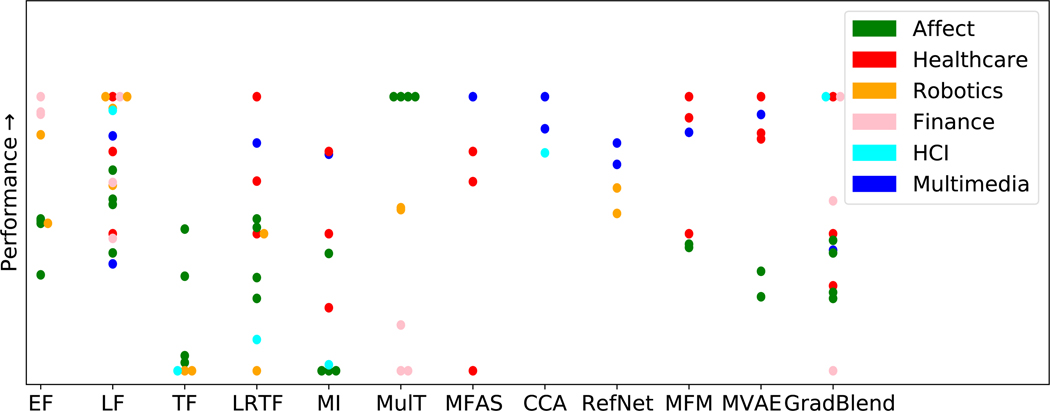

Benefits of standardization:

From Table 2, simply applying methods proposed outside of the same research area can improve the state-of-the-art performance on 9 of the 15 MultiBench datasets, especially for relatively understudied domains and modalities (e.g., healthcare, finance, HCI).

Table 2:

Standardizing methods and datasets enables quick application of methods from different research areas which achieves stronger performance on 9/15 datasets in MultiBench, especially in healthcare, HCI, robotics, and finance. In-domain refers to the best performance across methods previously proposed on that dataset and out-domain shows best performance across remaining methods. ↑ indicates metrics where higher is better (Acc, AUPRC), ↓ indicates lower is better (MSE).

| Dataset | MUStARD ↑ | CMU-MOSI ↑ | UR-FUNNY ↑ | CMU-MOSEI ↑ | MIMIC ↑ |
|---|---|---|---|---|---|
| Unimodal | 68.6±0.4 | 74.2±0.5 | 58.3±0.2 | 78.8±1.5 | 76.7±0.3 |
| In-domain | 66.3±0.3 | 83.0±0.1 | 62.9±0.2 | 82.1±0.5 | 77.9±0.3 |
| Out-domain | 71.8±0.3 | 75.5±0.5 | 66.7±0.3 | 78.1±0.3 | 78.2±0.2 |
| Improvement | 4.7% | - | 6.0% | - | 0.4% |

| Dataset | MuJoCo Push ↓ | V&T EE ↓ | Stocks-F&B ↓ | Stocks-Health ↓ | Stocks-Tech ↓ |
|---|---|---|---|---|---|
| Unimodal | 0.334±0.034 | 0.202±0.022 | 1.856±0.093 | 0.541±0.010 | 0.125±0.004 |
| In-domain | 0.290±0.018 | 0.258±0.011 | 1.856±0.093 | 0.541±0.010 | 0.125±0.004 |
| Out-domain | 0.402±0.026 | 0.185±0.011 | 1.820±0.138 | 0.526±0.017 | 0.120±0.008 |
| Improvement | - | 8.4% | 1.9% | 2.8% | 4.0% |

| Dataset | ENRICO ↑ | MM-IMDb ↑ | AV-MNIST ↑ | Kinetics-S ↑ | Kinetics-L ↑ |
|---|---|---|---|---|---|
| Unimodal | 47.0±1.6 | 45.6±4.5 | 65.1±0.2 | 56.5 | 72.6 |
| In-domain | 47.0±1.6 | 49.8±1.7 | 72.8±0.2 | 56.1 | 74.7 |
| Out-domain | 51.0±1.4 | 50.2±0.9 | 72.3±0.2 | 23.7 | 71.7 |
| Improvement | 8.5% | 0.8% | - | - | - |
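The Improvement row appears consistent with the relative gain of the Out-domain result over the better of the Unimodal and In-domain results (lower is better for the ↓ metrics), reported only when positive. This formula is our reading of the table rather than a definition stated here; the sketch below recomputes it from the table's means and counts the improved datasets.

```python
from typing import Dict, Optional, Tuple

# (unimodal, in-domain, out-domain, higher_is_better); means copied from Table 2
TABLE2: Dict[str, Tuple[float, float, float, bool]] = {
    "MUStARD": (68.6, 66.3, 71.8, True),
    "CMU-MOSI": (74.2, 83.0, 75.5, True),
    "UR-FUNNY": (58.3, 62.9, 66.7, True),
    "CMU-MOSEI": (78.8, 82.1, 78.1, True),
    "MIMIC": (76.7, 77.9, 78.2, True),
    "MuJoCo Push": (0.334, 0.290, 0.402, False),
    "V&T EE": (0.202, 0.258, 0.185, False),
    "Stocks-F&B": (1.856, 1.856, 1.820, False),
    "Stocks-Health": (0.541, 0.541, 0.526, False),
    "Stocks-Tech": (0.125, 0.125, 0.120, False),
    "ENRICO": (47.0, 47.0, 51.0, True),
    "MM-IMDb": (45.6, 49.8, 50.2, True),
    "AV-MNIST": (65.1, 72.8, 72.3, True),
    "Kinetics-S": (56.5, 56.1, 23.7, True),
    "Kinetics-L": (72.6, 74.7, 71.7, True),
}


def relative_improvement(
    unimodal: float, in_domain: float, out_domain: float, higher_is_better: bool = True
) -> Optional[float]:
    """Relative gain of out-domain over the best of unimodal and in-domain, or None if no gain."""
    if higher_is_better:
        best = max(unimodal, in_domain)
        gain = (out_domain - best) / best
    else:
        best = min(unimodal, in_domain)
        gain = (best - out_domain) / best
    return gain if gain > 0 else None


improved = {}
for name, row in TABLE2.items():
    gain = relative_improvement(*row)
    if gain is not None:
        improved[name] = round(100 * gain, 1)  # percent, one decimal as in the table

print(len(improved), "of", len(TABLE2))  # prints: 9 of 15
```

Comparing against the better of the two baselines means a dataset counts as improved only if methods from other research areas beat both the best single modality and the best in-domain method.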

Generalization across domains and modalities:

MultiBench offers an opportunity to analyze algorithmic developments across a large suite of modalities, domains, and tasks. We summarize the following observations regarding performance across datasets and tasks (see details in Appendix H.7):

Many multimodal methods show their strongest performance on in-domain datasets and do not generalize across domains and modalities. For example, MFAS [122] works well on domains it was designed for (AV-MNIST and MM-IMDb in multimedia) but does not generalize to other domains such as healthcare (MIMIC). Similarly, MulT [154] performs extremely well on the affect recognition datasets it was designed for but struggles on other multimodal time-series data in the finance and robotics domains. Finally, GradBlend [167], an approach specifically designed to improve generalization in multimodal learning and tested on video and audio datasets (e.g., Kinetics), does not perform well on other datasets. In general, we observe high variance in the performance of multimodal methods across datasets in MultiBench. Therefore, there still does not exist a one-size-fits-all model, especially for understudied modalities and tasks.

There are methods that are surprisingly generalizable across datasets. These are typically general modality-agnostic methods such as LF. While simple, it is a strong method that balances simplicity, performance, and low complexity. However, it does not achieve the best performance on any dataset.

Several methods such as MFAS and CCA are designed for only 2 modalities (usually image and text), and TF and MI do not scale efficiently beyond 2/3 modalities. We encourage the community to generalize these approaches across datasets and modalities on MultiBench.

Tradeoffs between modalities:

How far can we go with unimodal methods? Surprisingly far! From Table 2, we observe that decent performance can be obtained with the best-performing modality alone. Further improvement via multimodal models may come at the expense of around 2 to 3× the parameters.

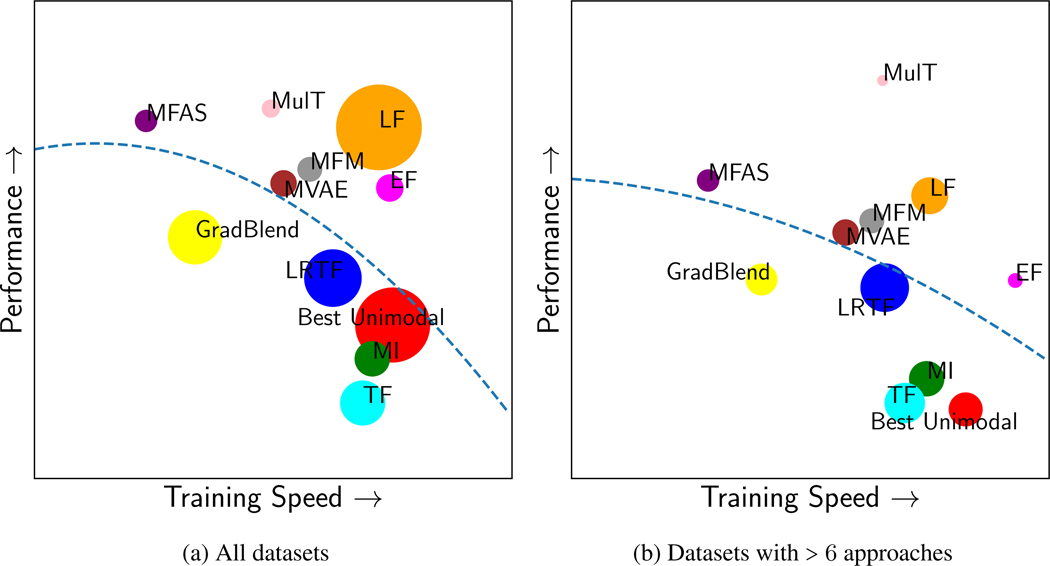

Tradeoffs between performance and complexity:

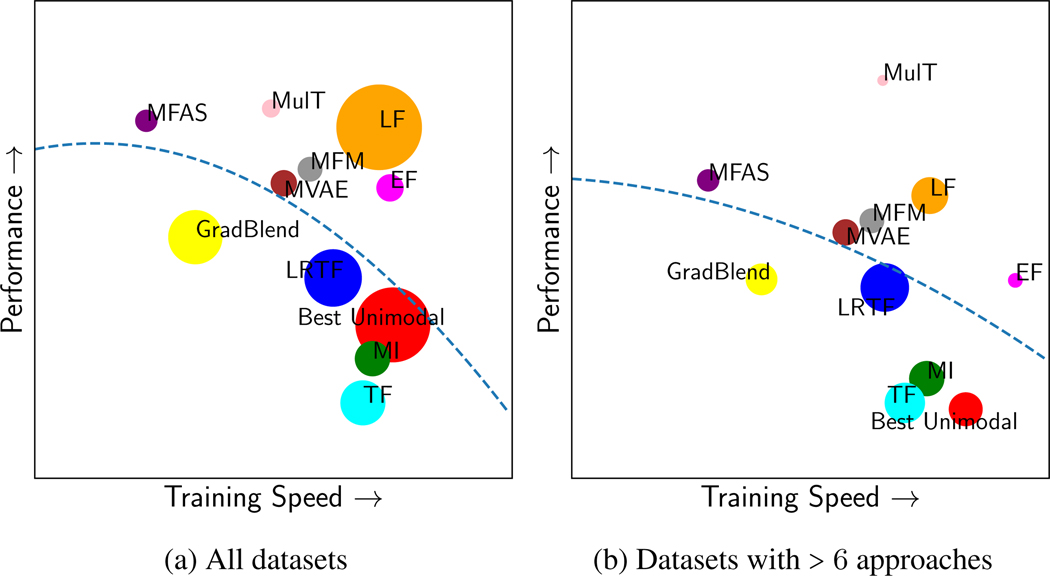

In Figure 3(a), we summarize the performance of all methods in terms of performance and complexity. We find a strong tradeoff between these two desiderata: simple fusion techniques (e.g., LF) are actually appealing choices which score high on both metrics, especially when compared to complex (but slightly better performing) methods such as architecture search (MFAS) or Multimodal Transformers (MulT). While LF is the easiest to adapt to new datasets and domains, we encountered difficulties in adapting several possibly well-performing methods (such as MFAS or MulT) to new datasets and domains. Therefore, while their average performance is only slightly better than LF on all datasets (see Figure 3(a)), they perform much better on well-studied datasets (see Figure 3(b)). We hope that the release of MultiBench will greatly accelerate research in adapting complex methods on new datasets (see full results in Appendix H.8).

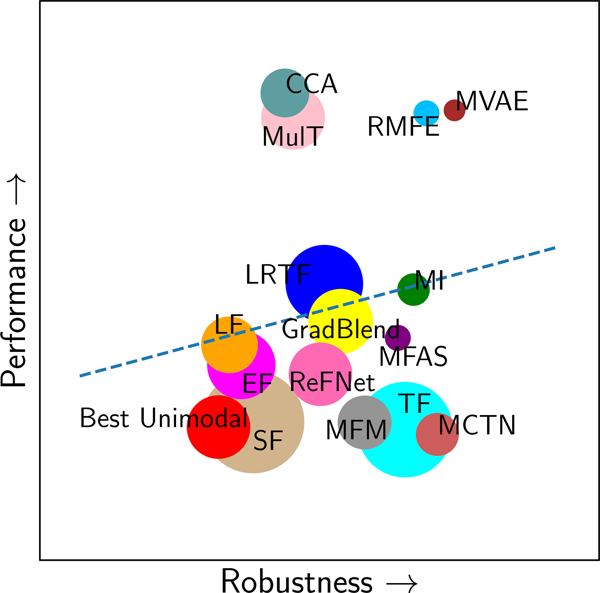

Figure 3: Tradeoff between performance and complexity.

Size of circles shows variance in performance across (a) all datasets and (b) datasets on which we tested >6 approaches. We plot a dotted blue line of best quadratic fit to show the Pareto frontier. These strong tradeoffs should encourage future work in lightweight multimodal models that generalize across datasets, as well as in adapting several possibly well-performing methods (such as MFAS or MulT) to new datasets and domains.
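The caption summarizes the Pareto frontier with a best-fit quadratic. As a minimal sketch of both ideas, assuming complexity is lower-is-better and performance is higher-is-better, the snippet below fits a quadratic with NumPy's `np.polyfit` and, separately, identifies the methods that no other method dominates. The helper names `quadratic_fit` and `pareto_front` and all numbers are hypothetical illustrations, not MultiBench code or results.

```python
import numpy as np

def quadratic_fit(x, y):
    """Least-squares fit y ~ a*x**2 + b*x + c; returns the coefficients (a, b, c)."""
    a, b, c = np.polyfit(np.asarray(x, dtype=float), np.asarray(y, dtype=float), deg=2)
    return a, b, c

def pareto_front(complexity, performance):
    """Indices of methods that no other method dominates.

    Method j dominates method i if it is no more complex and no worse,
    and strictly better on at least one of the two axes.
    """
    points = list(zip(complexity, performance))
    front = []
    for i, (ci, pi) in enumerate(points):
        dominated = any(
            cj <= ci and pj >= pi and (cj < ci or pj > pi)
            for j, (cj, pj) in enumerate(points)
            if j != i
        )
        if not dominated:
            front.append(i)
    return front

# Hypothetical (complexity, performance) pairs, not results from the paper.
complexity = [1.0, 2.0, 3.0, 4.0, 5.0]
performance = [0.60, 0.70, 0.72, 0.71, 0.74]
print(pareto_front(complexity, performance))  # [0, 1, 2, 4]
print(quadratic_fit(complexity, performance))
```

A fitted quadratic gives a smooth visual summary, while the explicit dominance check names the methods that actually lie on the frontier; the two can disagree when points are sparse.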

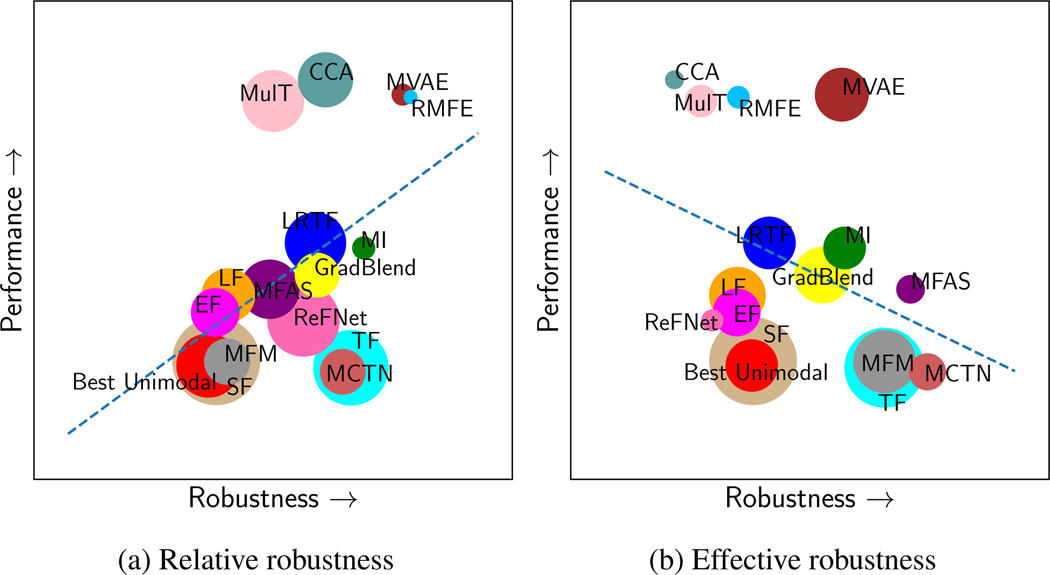

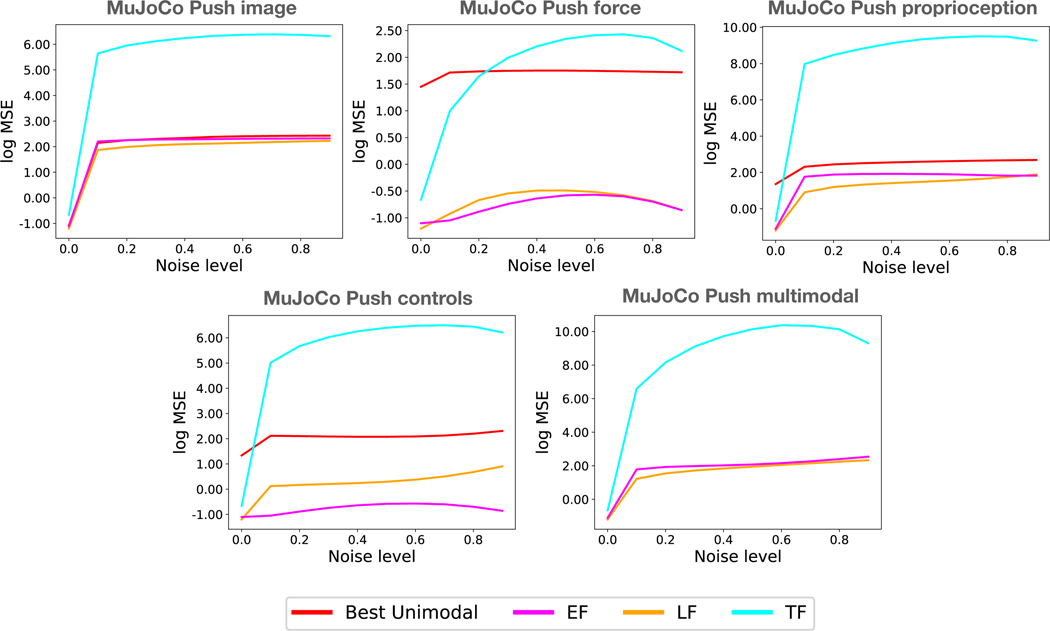

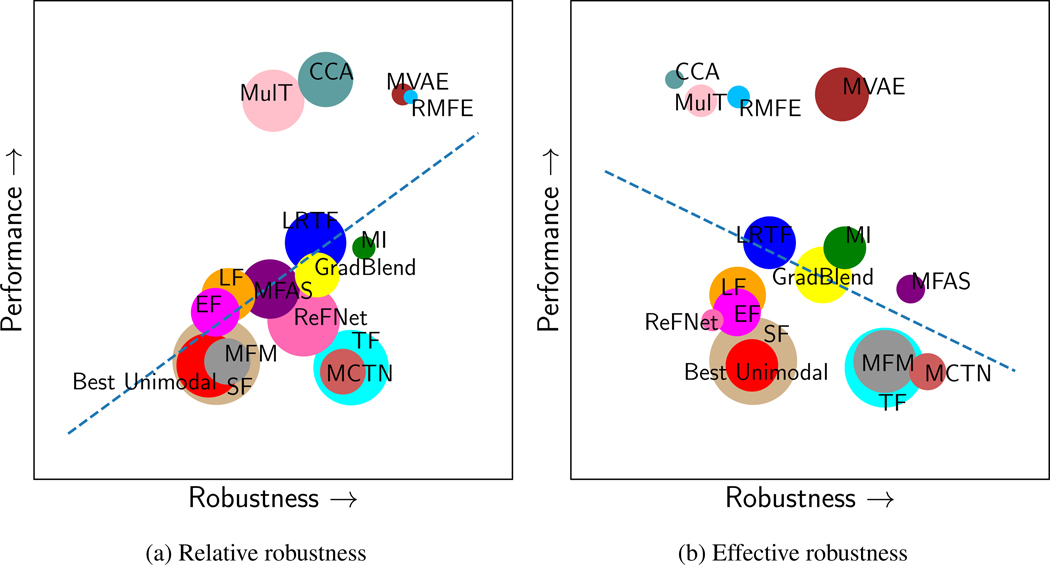

Tradeoffs between performance and robustness:

In Figure 4, we plot a similar tradeoff between accuracy and (relative and effective) robustness. As a reminder, relative robustness directly measures accuracy under imperfections, while effective robustness measures the rate at which accuracy drops after equalizing for initial accuracy on clean test data (see Appendix D.3 for details). We observe a positive correlation between performance and relative robustness (see Figure 4(a)), implying that models starting off with higher accuracy tend to stay above other models on the performance-imperfection curve. However, the best-fit line between performance and effective robustness has a negative slope (see Figure 4(b)), because several well-performing methods such as MulT, CCA, and MVAE tend to drop off faster after equalizing for initial accuracy on clean test data. Furthermore, very few models currently achieve both positive relative and effective robustness, which is a crucial area for future multimodal research (see full results in Appendix H.9).
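To make the two notions concrete, here is a minimal sketch under stated assumptions: accuracy is measured at increasing imperfection levels starting at 0 (clean data), relative robustness is taken as the area under the accuracy-vs-imperfection curve, and effective robustness as the same area after subtracting the clean accuracy from every point. This is one simple operationalization for illustration only; the exact definitions in Appendix D.3 may differ (for example, in normalization or choice of baseline). Function names and accuracy values are hypothetical.

```python
import numpy as np

def _area(levels, values):
    """Trapezoidal area under `values` plotted against imperfection `levels`."""
    x = np.asarray(levels, dtype=float)
    y = np.asarray(values, dtype=float)
    return float(np.sum((y[1:] + y[:-1]) / 2.0 * np.diff(x)))

def relative_robustness(levels, accuracies):
    """Assumed definition: area under the accuracy-vs-imperfection curve (higher is better)."""
    return _area(levels, accuracies)

def effective_robustness(levels, accuracies):
    """Assumed definition: area after subtracting clean accuracy (levels[0] must be 0).
    Values closer to 0 mean accuracy drops off more slowly."""
    acc = np.asarray(accuracies, dtype=float)
    return _area(levels, acc - acc[0])

levels = [0.0, 0.5, 1.0]      # hypothetical fraction of noisy/missing inputs
model_a = [0.90, 0.70, 0.50]  # hypothetical: accurate on clean data, drops quickly
model_b = [0.70, 0.65, 0.60]  # hypothetical: less accurate, but a flatter curve

for name, acc in [("model_a", model_a), ("model_b", model_b)]:
    print(name, relative_robustness(levels, acc), effective_robustness(levels, acc))
```

Here `model_a` scores higher on relative robustness (0.70 vs. 0.65) but lower on effective robustness (-0.20 vs. -0.05), the kind of split between the two metrics that Figure 4 illustrates.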

Figure 4: Tradeoff between performance and robustness.

Size of circles shows variance in robustness across datasets. We show the line of best linear fit in dotted blue. While better performing methods show better relative robustness (a), some suffer in effective robustness since performance drops off faster (b). Few models currently achieve both relative and effective robustness, which suggests directions for future research.

5. Related Work

We review related work on standardizing datasets and methods in multimodal learning.

Comparisons with related benchmarks:

To the best of our knowledge, MultiBench is the first multimodal benchmark with such a large number of datasets, modalities, and tasks. Most previous multimodal benchmarks have focused on a single research area such as within affective computing [56], human multimodal language [177], language and vision-based question answering [50, 138], text classification with external multimodal information [60], and multimodal learning for education [65]. MultiBench is specifically designed to go beyond the commonly studied language, vision, and audio modalities to encourage the research community to explore relatively understudied modalities (e.g., tabular data, time-series, sensors, graph and set data) and build general multimodal methods that can handle a diverse set of modalities.

Our work is also inspired by recent progress in better evaluation benchmarks for a suite of important tasks in ML such as language representation learning [163, 164], long-range sequence modeling [150], multilingual representation learning [72], graph representation learning [74], and robustness to distribution shift [85]. These well-crafted benchmarks have accelerated progress in new algorithms, evaluation, and analysis in their respective research areas.

Standardizing multimodal learning:

There have also been several attempts to build a single model that works well on a suite of multimodal tasks [95, 109, 143]. However, these are limited to the language and vision space, and their multimodal training is highly tailored to text and images. Transformer architectures have emerged as a popular choice due to their suitability for both language and image data [27, 73], and a recent public toolkit was released for incorporating multimodal data on top of text-based Transformers for prediction tasks [60]. By going beyond Transformers and text data, MultiBench opens the door to important research questions involving a much more diverse set of modalities and tasks while holistically evaluating performance, complexity, and robustness.

Analysis of multimodal representations:

Recent work has begun to carefully analyze and challenge long-standing assumptions in multimodal learning. These studies have shown that certain models do not actually learn cross-modal interactions but instead rely on ensembles of unimodal statistics [68], and that certain datasets and models are biased toward the most dominant modality [22, 59], sometimes ignoring the others completely [3]. These analyses have so far been conducted only on specific datasets and models without testing whether the findings generalize to others, a shortcoming we hope to address with MultiBench, which enables scalable analysis across modalities, tasks, and models.

6. Conclusion

Limitations:

While MultiBench can help to accelerate research in multimodal ML, we are aware of the following possible limitations (see detailed future directions in Appendix I):

Tradeoffs between generality and specificity: While it is desirable to build models that work across modalities and tasks, there is undoubtedly merit in building modality- and task-specific models that can often utilize domain knowledge to improve performance and interpretability (e.g., see neurosymbolic VQA [159], or syntax models for the language modality [31]). MultiBench is not at odds with research in this direction: in fact, by easing access to data, models, and evaluation, we hope that MultiBench will challenge researchers to design interpretable models leveraging domain knowledge for many multimodal tasks. It remains an open question how to define “interpretability” for modalities beyond image and text, a question we hope MultiBench will help drive research on.

Scale of datasets, models, and metrics: We plan for MultiBench to be a continuously-growing community effort with regular maintenance and expansion. While MultiBench currently does not include several important research areas outside of multimodal fusion (e.g., question answering [4, 63], retrieval [187], grounding [32], and reinforcement learning [110]), and is also limited by the models and metrics it supports, we outline our plan to expand in these directions in Appendix I.

Projected expansions of MultiBench:

In this subsection, we describe concrete ongoing and future work towards expanding MultiBench (see details in Appendix I).


Other multimodal research problems: We are genuinely committed to building a community around these resources and to continue improving them over time. While we chose to focus on multimodal fusion by design for this first version to have a more coherent way to standardize and evaluate methods across datasets, we acknowledge the breadth of multimodal learning and look forward to expanding MultiBench in other directions in collaboration with domain experts. We have already included 2 datasets in captioning (and, more generally, for non-language outputs, retrieval): (1) Yummly-28K of paired videos and text descriptions of food recipes [114], and (2) the Clotho dataset for audio captioning [45]. We have also included a language-guided RL environment, Read to Fight Monsters (RTFM) [188], and are working towards more datasets in QA, retrieval, and multimodal RL.

To help in scalable expansion, we plan for an open call to the community for suggestions and feedback about domains, datasets, and metrics. As a step in this direction, we have concrete plans to use MultiBench as a theme for future workshops and competitions (building on top of the multimodal workshops we have been organizing at NAACL 2021, ACL 2020, and ACL 2019) and in multimodal learning courses (starting with the course taught annually at CMU). Since MultiBench is public and will be regularly maintained, the existing benchmark, code, evaluation, and experimental protocols can greatly accelerate any dataset and modeling innovations added in the future. In our public GitHub repository, we have included a section on contributing through task proposals or additions of datasets and algorithms. The authors will regularly monitor new proposals through this channel.

New evaluation metrics: We also plan to include evaluation for distribution shift, uncertainty estimation, tests for fairness and social biases, as well as labels/metrics for interpretable multimodal learning. In the latter, we plan to include the EMAP score [68] as an interpretability metric assessing whether cross-modal interactions improve performance.
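As we understand the EMAP procedure of [68], it replaces each prediction of a two-modality model with its projection onto additive functions: average the model's output over all re-pairings of the example's first modality with every second modality in the set, add the analogous average for the second modality, and subtract the grand mean. If performance barely drops under this projection, the model is likely not relying on cross-modal interactions. The sketch below assumes a binary task with scalar logits and a precomputed matrix `F` of outputs on all N² cross pairings (which is costly; in practice one may need to subsample); the function names are hypothetical and this is not the MultiBench implementation.

```python
import numpy as np

def emap_projection(F):
    """Additive (EMAP-style) projection of a two-modality model's scalar outputs.

    F[i, j] is the model output (e.g. a logit) when modality A comes from
    example i and modality B from example j, so np.diag(F) holds the ordinary
    predictions. Entry i of the result is
        mean_j F[i, j] + mean_j F[j, i] - mean_{j,k} F[j, k].
    """
    F = np.asarray(F, dtype=float)
    return F.mean(axis=1) + F.mean(axis=0) - F.mean()

def emap_accuracy_gap(F, labels):
    """Accuracy of the original predictions minus accuracy of the additive
    projection, for binary labels in {0, 1} with logits thresholded at 0.
    A gap near zero suggests little reliance on cross-modal interactions."""
    F = np.asarray(F, dtype=float)
    labels = np.asarray(labels)
    original = (np.diag(F) > 0).astype(int)
    projected = (emap_projection(F) > 0).astype(int)
    return float(np.mean(original == labels) - np.mean(projected == labels))

# Usage with a purely additive "model": f(a_i, b_j) = u[i] + v[j].
u = np.array([1.0, -2.0, 0.5])
v = np.array([0.3, 0.1, -0.4])
F_additive = u[:, None] + v[None, :]
print(np.allclose(emap_projection(F_additive), np.diag(F_additive)))  # True
```

For an additive model the projection reproduces the original predictions exactly, so the gap is zero; a model whose outputs depend on products of the two modalities generally changes under the projection.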

Multimodal transfer learning and co-learning: Can training data in one dataset help learning on other datasets? MultiBench enables easy experimentation with such research questions: our initial experiments on transfer learning found that pre-training on larger datasets in the same domain and then fine-tuning on a smaller dataset can improve performance on the smaller dataset. Performance on the smaller CMU-MOSI dataset improved from 75.2 to 75.8 using the same late fusion model with transfer learning from the larger UR-FUNNY and CMU-MOSEI datasets. Furthermore, recent work has shown that multimodal training can help improve unimodal performance as well [140, 170, 180]. While previous experiments were on a small scale and limited to a single domain, we plan to expand significantly on this phenomenon (multimodal co-learning) in future versions of MultiBench.

Multitask learning across modalities: Multitask learning across multimodal tasks with a shared set of input modalities is a promising direction that can enable statistical strength sharing across datasets and efficiency in training a single model. Using MultiBench, we also ran an additional experiment on multi-dataset multitask learning. We used the 4 datasets in the affective computing domain and trained a single model across all 4 of them, with adjustable input embedding layers when the input features differed and separate classification heads for each dataset’s task. We found promising initial results: performance on the largest dataset, CMU-MOSEI, improved from 79.2 to 80.9 for a late fusion model and from 82.1 to 82.9 for a multimodal transformer model, although performance on the smaller CMU-MOSI dataset decreased from 75.2 to 70.8. We believe that these potential future studies in co-learning, transfer learning, and multitask learning are strengths of MultiBench, since they show the range of interesting experiments it supports.
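The multitask setup described above (per-dataset input embeddings, shared layers, per-dataset heads) can be sketched as a NumPy forward pass. This is a hypothetical illustration, not the MultiBench implementation: the class name, the assumption that each modality arrives as a single (e.g., time-averaged) feature vector, the shared per-modality encoders, and all dimensions are assumptions, and training (e.g., alternating batches from each dataset) is omitted.

```python
import numpy as np

MODALITIES = ("text", "audio", "vision")

class MultiTaskLateFusion:
    """Hypothetical multitask late-fusion model (forward pass only)."""

    def __init__(self, feature_dims, num_outputs, hidden=16, seed=0):
        rng = np.random.default_rng(seed)

        def init(rows, cols):
            return rng.normal(scale=0.1, size=(rows, cols))

        # Dataset-specific input embeddings: raw feature size -> shared hidden size.
        self.embed = {ds: {m: init(d, hidden) for m, d in dims.items()}
                      for ds, dims in feature_dims.items()}
        # Per-modality encoders shared by every dataset (where strength is shared).
        self.encoders = {m: init(hidden, hidden) for m in MODALITIES}
        # Dataset-specific heads on the concatenated (late-fused) representation.
        self.heads = {ds: init(hidden * len(MODALITIES), k)
                      for ds, k in num_outputs.items()}

    def forward(self, dataset, inputs):
        reps = []
        for m in MODALITIES:
            h = inputs[m] @ self.embed[dataset][m]              # (batch, hidden)
            reps.append(np.maximum(h @ self.encoders[m], 0.0))  # shared layer + ReLU
        fused = np.concatenate(reps, axis=-1)                   # late fusion by concatenation
        return fused @ self.heads[dataset]                      # (batch, num_outputs[dataset])

# Hypothetical feature sizes and output counts for two datasets.
feature_dims = {"small_ds": {"text": 8, "audio": 4, "vision": 6},
                "large_ds": {"text": 8, "audio": 5, "vision": 7}}
num_outputs = {"small_ds": 1, "large_ds": 2}
model = MultiTaskLateFusion(feature_dims, num_outputs)

rng = np.random.default_rng(1)
batch = {m: rng.normal(size=(4, d)) for m, d in feature_dims["large_ds"].items()}
print(model.forward("large_ds", batch).shape)  # (4, 2)
```

Only the embeddings and heads are dataset-specific, so gradients from every dataset would update the shared encoders; this is also where negative transfer, such as the drop observed on the smaller dataset, could arise.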

In conclusion, we present MultiBench, a large-scale benchmark unifying previously disjoint efforts in multimodal research with a focus on ease of use, accessibility, and reproducibility, thereby paving the way towards a deeper understanding of multimodal models. Through its unprecedented range of research areas, datasets, modalities, tasks, and evaluation metrics, MultiBench highlights several future directions in building more generalizable, lightweight, and robust multimodal models.

Acknowledgements

This material is based upon work partially supported by the National Science Foundation (Awards #1722822 and #1750439), National Institutes of Health (Awards #R01MH125740, #R01MH096951, #U01MH116925, and #U01MH116923), BMW of North America, and SquirrelAI. PPL is supported by a Facebook PhD Fellowship and a Center for Machine Learning and Health Fellowship. RS is supported in part by NSF IIS1763562 and ONR Grant N000141812861. Any opinions, findings, conclusions, or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation, National Institutes of Health, Facebook, CMLH, Office of Naval Research, BMW of North America, and SquirrelAI, and no official endorsement should be inferred. We are extremely grateful to Amir Zadeh, Chaitanya Ahuja, Volkan Cirik, Murtaza Dalal, Benjamin Eysenbach, Tiffany Min, and Devendra Chaplot for helpful discussions and feedback, as well as Ziyin Liu and Chengfeng Mao for providing tips on working with financial time-series data. Finally, we would also like to acknowledge NVIDIA’s GPU support.

Appendix

A. Broader Impact Statement

Multimodal data and models are ubiquitous in a range of real-world applications. MultiBench and MultiZoo are our attempt to systematically categorize the plethora of datasets and models currently in use. While these contributions will accelerate research towards multimodal datasets and models as well as their real-world deployment, we believe that special care must be taken in the following regards to ensure that these models are safely deployed for real-world benefit:

Time & space complexity:

Modern multimodal datasets and models are large, especially when building on already large pretrained unimodal datasets and models such as BERT or ResNets. The increasing time and space complexity of these models can cause financial impacts resulting from the cost of hardware, electricity, and computation, as well as environmental impacts resulting from the carbon footprint required to fuel modern hardware. Therefore, there has been much recent interest in building lightweight machine learning models [142].

MultiBench also provides several efforts in this direction:

Firstly, MultiBench alleviates the need for separate research groups to repeat preprocessing efforts when beginning to work on a new multimodal dataset, which often takes significant time when large video & audio datasets and feature extractors are involved.

Secondly, our standardized implementation of core approaches in MultiZoo prevents duplicate efforts in adapting approaches to new datasets. We found that while many authors of these multimodal methods released their code publicly on GitHub, there was still some effort needed to adapt their code and tune their models to achieve the best performance on our standardized implementation in MultiZoo. By standardizing these experimentation efforts, we can facilitate the sharing of code and trained models, ensure reproducibility across implementations, and save time and effort in the future.

Finally, MultiBench explicitly tests for complexity and encourages researchers to build lightweight models. While this has been less studied in multimodal research, we hope that our efforts will pave the way for greener multimodal learning.

Privacy and security:

There may be privacy risks associated with making predictions from multimodal data of recorded human behaviors. The datasets potentially in question include those in affective computing (recorded video data labeled for sentiment, emotions, and personality attributes) and healthcare (health data labeled for disease and mortality rate). Therefore, it is crucial to obtain user consent before collecting device data. In our experiments with real-world data involving people (i.e., healthcare and affective computing), the creators of these datasets have taken the appropriate steps to only access public data that participants/content creators have consented to release to the public (see details in Appendix C.2). We only use these datasets for research purposes. All data was anonymized and stripped of all personal (e.g., personally identifiable information) and protected attributes (e.g., race, gender).

To deploy these algorithms at scale in the real world, it is also important to keep data and features private on each device without sending it to other locations using techniques such as federated learning [96, 100], differential privacy [55], or encryption [35].

Social biases:

We acknowledge that there is a risk of exposure bias due to imbalanced datasets, especially when human-centric data and possibly sensitive labels are involved. For example, will models trained on imbalanced data disproportionately classify videos of a particular gender as displaying a particular emotion? Models trained on biased data have been shown to amplify the underlying social biases especially when they correlate with the prediction targets [108]. This leaves room for future work in exploring methods tailored for specific scenarios such as mitigating social biases in words [18], sentences [99], images [118], and other modalities. Future research in multimodal models should also focus on quantifying the trade-offs between fairness and performance [186]. MultiBench enables the large-scale study of these crucial research questions and we outline some of our ongoing and future efforts in expanding the evaluation metrics in MultiBench to take these into account in Appendix I.

Possible biases within each dataset:

In this section, we expand upon the previous two points regarding privacy and social biases by describing the possible biases in each domain/dataset included in MultiBench.


Affective computing: Analysis of sentiment, emotions, and personality might carry biases if care is not taken to appropriately anonymize the video data used. In MultiBench, all models trained on affect recognition datasets use only pre-extracted non-invertible features that rely on general visual or audio features such as the presence of a smile or magnitude of voice. Therefore, the features used in this paper cannot be used to identify the speaker [181, 183]. Furthermore, videos within the datasets all follow the Creative Commons license and the fair use guidelines of YouTube. This license is the standard way for content creators to grant someone else permission to use and redistribute their work. We use no information regarding gender, ethnicity, identity, or video identifier in online sources. We emphasize that models trained to perform automated affect recognition should not in any way be used to harm individuals and should only be used for scientific study.

In addition to privacy issues, we also studied the videos collected in these affective computing datasets and found no offensive content. While there are clearly expressions of highly negative sentiment or strong displays of anger and disgust, there are no offensive words used or personal attacks recorded in the video. All videos are related to movie or product reviews, TED talks, and TV shows.

Healthcare: The MIMIC dataset [78] has been rigorously de-identified in accordance with the Health Insurance Portability and Accountability Act (HIPAA) such that all possible personal information has been removed from the dataset. Removed personal information includes patient names, telephone numbers, addresses, and dates. Dates of birth for patients aged over 89 were shifted to obscure their true age. Please refer to Appendix C.2.2 for de-identification details. Again, we emphasize that any multimodal models trained to perform prediction should only be used for scientific study and should not in any way be used for real-world prediction.

Finance: There is no personal/human data included and there is no risk of personally identifiable information and offensive content.

Robotics: There is no personal/human data included and there is no risk of personally identifiable information and offensive content.

HCI: There is no personal/human data included and there is no risk of personally identifiable information and offensive content.

Multimedia: For MM-IMDb and AV-MNIST, there is no personal/human data included and there is no risk of personally identifiable information and offensive content. For Kinetics, all videos within the dataset are obtained from public YouTube videos that follow the Creative Commons license, which allows content creators to grant permission to use and redistribute their work. We use no information regarding gender, ethnicity, identity, or video identifier in online sources. We emphasize that the models trained to perform action recognition should not in any way be used to harm individuals and should only be used for scientific study. We also checked to make sure that these videos do not contain offensive content. All videos are related to human actions and do not contain any offensive words/audio.

Overall, MultiBench offers opportunities to study these potential issues at scale across modalities, tasks, datasets, and domains. We plan to continue expanding this benchmark to rigorously test for these social impacts to improve the safety and reliability of multimodal models. For example, in Appendix I.3.3, we describe some concrete extensions to include evaluations for fairness and privacy of multimodal models trained on the datasets in MultiBench. Our holistic evaluation metrics will also encourage the research community to quantify the tradeoffs between performance, complexity, robustness, fairness, and privacy in human-centric multimodal models.

B. Background: Multimodal Representation Learning

We first provide background focusing on multimodal representation learning and several core technical challenges in this area.

B.1 Problem Statement

We define a modality as a single particular mode in which a signal is expressed or experienced. Multiple modalities then refer to a combination of multiple signals each expressed or experienced in heterogeneous manners [10]. We distinguish between the possible temporal resolution of modalities that will impact the types of approaches used:

Static modalities include inputs without a time dimension, such as images and tabular data (i.e., a table of numerical data).

Temporal modalities include those coming in a sequence with a time-dimension such as language (a sequence of tokens), video (a sequence of frames/audio features/optical flow features), or time-series data (a sequence of data points indexed by time).

The first version of MultiBench focuses on benchmarks and algorithms for multimodal fusion, where the main challenge is to join information from two or more modalities to perform a prediction. A classic example is audio-visual speech recognition, where visual lip motion is fused with speech signals to predict spoken words [48]. Note that in fusion problems, it should be well-defined to predict the label from a single modality alone, which marks an important distinction from tasks in question answering and grounding, where one modality is used to query information in another (e.g., visual question answering [4], which uses a text question to query information in the image). We outline our plans to extend future versions of MultiBench to include more multimodal challenges such as question answering, retrieval, and grounding in Appendix I.

Formally, the multimodal fusion problem is defined as follows. We suppose there is a set of modalities $\{X_1, X_2, \dots, X_M\}$ drawn from a joint distribution $p(X_1, X_2, \dots, X_M, Y)$, where $X_i$ is a random variable denoting data distributed according to modality $i$ and $Y$ is a random variable representing the label. If modality $i$ is a static modality, $X_i$ is a random vector without the time dimension. If modality $i$ is a temporal modality, $X_i$ is a random vector with a time dimension, which can be represented as $X_i = [X_i^{(1)}, X_i^{(2)}, \dots, X_i^{(T_i)}]$, where $T_i$ is the number of time steps in modality $i$.

A multimodal dataset is a collection of $N$ draws of (data, label) pairs from the joint distribution $p(X_1, \dots, X_M, Y)$. We denote a dataset as $\mathcal{D} = \{(x_{1,j}, \dots, x_{M,j}, y_j)\}_{j=1}^{N}$. These draws from the true distribution are possibly biased (e.g., across individuals, topics, or labels) and noisy (e.g., due to noisy or missing modalities). A multimodal model is a set of functions $f = \{f_1, \dots, f_M, g\}$, where each $f_i$ is a unimodal encoder, one for each modality, and $g$ is a multimodal fusion network. The unimodal encoders are specially designed with domain knowledge to learn representations from each modality (e.g., convolutional networks for images, temporal models for time-series data), resulting in unimodal representations $h_i = f_i(x_i)$. The multimodal network $g$ is designed to capture information across the unimodal representations and summarize it in a multimodal representation $h_m = g(h_1, \dots, h_M)$ that can be used to predict the label $y$. The goal of multimodal fusion is to learn a model with the lowest prediction error as measured on a held-out test set, while also balancing other potential objectives such as low complexity and robustness to imperfect data.
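The notation above maps directly onto code. The following minimal NumPy sketch, with hypothetical helper names and shapes, builds one encoder $f_i$ per modality (a static one, and a temporal one that mean-pools over its $T_i$ steps) and a fusion network $g$ that concatenates the unimodal representations $h_i$. Concatenation-based late fusion is only one possible choice of $g$, and for brevity this $g$ also includes the final prediction layer.

```python
import numpy as np

rng = np.random.default_rng(0)

def static_encoder(in_dim, out_dim):
    """f_i for a static modality; x_i has shape (batch, in_dim)."""
    W = rng.normal(scale=0.1, size=(in_dim, out_dim))
    return lambda x: np.tanh(x @ W)

def temporal_encoder(in_dim, out_dim):
    """f_i for a temporal modality; x_i has shape (batch, T_i, in_dim).
    Mean-pooling over the T_i time steps is a deliberately simple stand-in
    for a sequence model such as an LSTM or Transformer."""
    W = rng.normal(scale=0.1, size=(in_dim, out_dim))
    return lambda x: np.tanh(x.mean(axis=1) @ W)

def concat_fusion(total_dim, num_classes):
    """g: concatenates h_1, ..., h_M and maps the result to class logits."""
    W = rng.normal(scale=0.1, size=(total_dim, num_classes))
    return lambda hs: np.concatenate(hs, axis=-1) @ W

def predict(encoders, fusion, xs):
    hs = [f_i(x_i) for f_i, x_i in zip(encoders, xs)]  # h_i = f_i(x_i)
    return fusion(hs)                                   # g(h_1, ..., h_M)

# Hypothetical shapes: one static modality (32-d vectors) and one temporal
# modality (T = 50 steps of 10-d features), batch of 4, 3 classes.
encoders = [static_encoder(32, 8), temporal_encoder(10, 8)]
fusion = concat_fusion(16, 3)
xs = [rng.normal(size=(4, 32)), rng.normal(size=(4, 50, 10))]
logits = predict(encoders, fusion, xs)
print(logits.shape)  # (4, 3)
```

Swapping in a different $g$ (e.g., tensor fusion or cross-modal attention) changes only `concat_fusion`, which mirrors how the formalism separates unimodal encoders from the fusion network.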

B.2 Technical Challenges

MultiBench tests for the following holistic desiderata in multimodal fusion:

- Performance: We summarize the following core challenges across all prediction tasks for multimodal learning, following Baltrusaitis et al. [10]. Solving these challenges is essential in any multimodal prediction problem, regardless of domain and task.

  - Unimodal structure and granularity: Information from each modality follows its own underlying structure and invariances, which must be processed by suitable unimodal encoders. While there are generally adopted unimodal encoders for commonly studied modalities such as images and text, it remains challenging to design unimodal encoders with the right inductive biases for less-studied modalities such as tabular and time-series data. Representations extracted from unimodal encoders should contain task-relevant information from that modality, expressed at the right granularity.

  - Multimodal complementarity: Different modalities have varying predictive power on their own and when combined with each other. We describe this complementarity in terms of interactions of increasing order: first-order interactions define a predictive signal from a single granular unit of information in one modality to the label (e.g., the presence of a smile indicating positive sentiment); second-order interactions define a predictive signal from a pair of granular units of information across two modalities to the label (e.g., the presence of an eye-roll together with a positive word indicating sarcasm); and nth-order interactions extend this definition to n modalities. Many possible interactions can explain the labels in a dataset, and only some of them may generalize to unseen data. It remains a challenge to discover these higher-order interactions using suitably expressive models. At the same time, the space of possible interactions is very large, which requires suitable inductive biases in model design (see the complexity challenge below).

  - Multimodal alignment: Information from different modalities often comes at different granularities. To learn predictive signals from higher-order interactions, one must first identify the relations between granular units from two or more modalities. This requires a measure of the relationship between modalities, which we call cross-modal alignment. When dealing with temporal data, it also requires capturing possible long-range dependencies across time, which we call temporal alignment. For example, recognizing sarcasm requires aligning an eye-roll with a positive word even when the two signals occur at different times. This challenge extends cross-modal alignment to the temporal dimension.

- Complexity: The space of possible interactions is very large, which requires suitable inductive biases in model design. While more expressive models may perform better, they often come at the cost of time and space complexity during training and inference. To enable real-world deployment of multimodal models in a variety of settings [142], there is a need to build lightweight models with cheap training and inference.

- Robustness: Information from different modalities often displays different noise topologies, and real-world multimodal signals may suffer from missing or noisy data in at least one of the modalities [10]. While most methods are trained on carefully curated and cleaned datasets, there is a need to benchmark their robustness in realistic scenarios. The core challenge here is to build models that still perform well despite the presence of unimodal-specific or multimodal imperfections.
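To illustrate the difference between first- and second-order interactions in the complementarity challenge, consider a toy, hypothetical version of the sarcasm example in which the label is 1 only when an eye-roll and a positive word co-occur. Measured by least-squares error, an additive (first-order) model cannot fit this label exactly, while adding a single cross-modal product term fits it perfectly. This is an illustrative sketch with made-up binary features, not a MultiBench experiment; `fit_mse` is a hypothetical helper built on `np.linalg.lstsq`.

```python
import numpy as np

# Toy binary features: x_v = eye-roll present, x_t = positive word present.
x_v = np.array([0.0, 0.0, 1.0, 1.0])
x_t = np.array([0.0, 1.0, 0.0, 1.0])
y = x_v * x_t  # "sarcastic" only when both cues co-occur

def fit_mse(features, target):
    """Least-squares fit of target on [1, features...]; returns the mean squared error."""
    X = np.column_stack([np.ones_like(target)] + list(features))
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    return float(np.mean((X @ coef - target) ** 2))

first_order = fit_mse([x_v, x_t], y)              # additive model: a + b*x_v + c*x_t
second_order = fit_mse([x_v, x_t, x_v * x_t], y)  # adds the cross-modal product term
print(first_order, second_order)
```

The additive fit leaves a mean squared error of 0.0625, while the model with the product term reaches (numerically) zero. With more modalities, the number of such product terms grows combinatorially, which is why the complexity challenge calls for suitable inductive biases.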

Figure 5:

MultiBench provides a standardized machine learning pipeline across data processing, data loading, multimodal models, evaluation metrics, and a public leaderboard to encourage future research in multimodal representation learning. MultiBench aims to present a milestone in unifying disjoint efforts in multimodal machine learning research and paves the way towards a better understanding of the capabilities and limitations of multimodal models, all the while ensuring ease of use, accessibility, and reproducibility.

C. MultiBench Datasets

MultiBench provides a standardized machine learning pipeline spanning data loading, multimodal models, evaluation metrics, and a public leaderboard to encourage future research in multimodal representation learning (see Figure 5).

In this section, we provide additional details on the distribution, release, and maintenance of each of the datasets in MultiBench as well as the maintenance of MultiBench as a whole.

C.1 Dataset Selection

In this section, we discuss our choices of datasets in MultiBench. We select each dataset based on its data collection method, input modalities, evaluation tasks, evaluation metric, and train/test splits that reflect real-world multimodal applications. We consulted with domain experts in each of the application areas to select datasets that satisfy the following properties:

Realism in data collection, input modalities, preprocessing, and task: Each of the datasets in MultiBench reflects a subset of real-world sensory modalities collected in the wild. Realism is important since it brings natural noise topologies in each modality and in the prediction task. It is crucial that these datasets reflect real-world data such that capturing these imperfections through machine learning models can potentially bridge the gap towards real-world deployment.

Diversity in research area: We chose these research areas through a survey of recent research papers in multimodal learning across conferences in machine learning and beyond (e.g., HCI, NLP, vision, and robotics conferences). Furthermore, we consulted with domain experts in applying multimodal learning to their respective application areas to determine areas of large potential. Through engaging with domain experts, we were able to select research areas and datasets that reflected realism in data collection, input modalities, preprocessing, and tasks, which present challenges for machine learning models and potential for real-world transfer of learned algorithms. These research areas are designed to span both human-centric and data-centric machine learning. In the former, we selected HCI, healthcare, and robotics, since these are fast-growing research areas with increasingly specialized tracks in machine learning conferences dedicated to them. In the latter, financial data analysis is an area with an inherently low signal-to-noise ratio, reflecting extremely noisy, imperfect, and uncertain real-world datasets that provide challenges for current multimodal models. We also included several multimedia datasets due to the large resources publicly available on the internet, which result in multimodal datasets of the largest scale.

Diversity in modalities: We started with a set of commonly studied modalities such as language, image, video, and audio. For each of the following research areas, we consulted with domain experts to choose datasets that are established, but not overstudied. More importantly, we aimed for diversity in modalities to truly test the generalization capabilities of modern multimodal models outside of commonly studied domains and modalities. For example, while there is much work in HCI involving images and text, we chose a modality representing a set of mobile applications for coverage. Similarly, in robotics, we consulted with domain experts to obtain datasets with high-frequency force and proprioception sensors that provide a unique challenge to machine learning researchers.

Challenging for ML models: We chose datasets on which current state-of-the-art machine learning models still perform far below human performance (as reported where available, and otherwise as judged by a domain expert). This ensures that there is room for the community to make progress in each research area.

Community expansion: Finally, we emphasize that we strongly encourage and actively seek out community participation in expanding MultiBench to keep up with the rapid pace of multimodal machine learning research. We describe our plans for an open call for proposals of new research areas, datasets, and prediction tasks in Section I.

Figure 6: Affective computing studies the perception of human affective states (emotions, sentiment, and personalities) from our natural display of multimodal signals spanning language (spoken words), visual (facial expressions, gestures), and acoustic (prosody, speech tone) [124]. MultiBench contains 4 datasets in this category involving fusing language, video, and audio time-series data to predict sentiment (CMU-MOSI [181] and CMU-MOSEI [183]), emotions (CMU-MOSEI [183]), humor (UR-FUNNY [64]), and sarcasm (MUStARD [24]).

C.2 Dataset Details

We provide details for each of the research areas and datasets selected in MultiBench. In each of the categories, we describe the research area, the datasets and their associated data collection process, their access restrictions and licenses, and any data preprocessing or feature extraction we used following current work in each of these domains.

C.2.1 Affective Computing

1. MUStARD is a multimodal video corpus for research in automated sarcasm discovery [24]. The dataset is compiled from popular TV shows including Friends, The Golden Girls, The Big Bang Theory, and Sarcasmaholics Anonymous. MUStARD consists of audiovisual utterances annotated with sarcasm labels. Each utterance is accompanied by its context, which provides additional information on the scenario in which the utterance occurs and thereby poses a further challenge for long-range modeling of multimodal information. Sarcasm was specifically chosen as an annotation task because it requires careful modeling of complementary information, particularly when the semantic information from different modalities does not agree.

Data collection:

According to Castro et al. [24], the authors searched YouTube using keywords such as "Friends sarcasm", "Chandler sarcasm", "Sarcasm 101", and "Sarcasm in TV shows" to obtain videos with sarcastic content from three main TV shows: Friends, The Golden Girls, and Sarcasmaholics Anonymous. To obtain non-sarcastic videos, they used a subset of 400 videos from MELD, a multimodal emotion recognition dataset derived from the Friends TV series [128]. They also collected videos from The Big Bang Theory by segmenting episodes using the audience's laughter cues.

Access restrictions:

While we do not have the license to this dataset, it is a public dataset free to download by the research community from https://github.com/soujanyaporia/MUStARD.

Licenses:

MIT, see https://github.com/soujanyaporia/MUStARD/blob/master/LICENSES

Dataset preprocessing:

We followed these preprocessing steps for each modality as suggested in the original paper [24]:

Language: Textual utterances are represented using pretrained BERT representations [42] as well as Common Crawl pre-trained 300-dimensional GloVe word vectors [119] for each token.

Visual: Visual features are extracted for each frame using the pool5 layer of an ImageNet [41] pretrained ResNet-152 [66] model. Every frame is first preprocessed by resizing, center-cropping, and normalizing it. We also use the OpenFace facial behavioral analysis tool [11] to extract facial expression features.

Audio: Low-level features from the audio data stream are extracted using the speech processing library Librosa [112]. We also extract COVAREP [39] features as is commonly used for the other datasets in the affective computing domain (see below).
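The frame-level visual preprocessing above (resize, center-crop, and normalize each frame before the CNN) can be sketched in NumPy as follows. This is an illustrative sketch, not the exact MUStARD or MultiBench code: the 256-pixel resize target, the 224-pixel crop, the ImageNet mean/std constants, the nearest-neighbor resizing, and the helper names are all assumptions (common ImageNet conventions). Extracting the pool5 features themselves would additionally require a pretrained ResNet-152, which is not shown.

```python
import numpy as np

# Assumed ImageNet normalization constants (the usual torchvision values);
# the text only says frames are "normalized", not with which statistics.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def resize_shorter_side(frame: np.ndarray, target: int = 256) -> np.ndarray:
    """Nearest-neighbor resize so the shorter side equals `target` (assumed size)."""
    h, w = frame.shape[:2]
    scale = target / min(h, w)
    new_h, new_w = max(target, round(h * scale)), max(target, round(w * scale))
    rows = (np.arange(new_h) * h / new_h).astype(int)  # source row per output row
    cols = (np.arange(new_w) * w / new_w).astype(int)  # source col per output col
    return frame[rows][:, cols]


def center_crop(frame: np.ndarray, size: int = 224) -> np.ndarray:
    """Take the central size x size window of an HxWxC frame."""
    h, w = frame.shape[:2]
    top, left = (h - size) // 2, (w - size) // 2
    return frame[top:top + size, left:left + size]


def preprocess_frame(frame_uint8: np.ndarray) -> np.ndarray:
    """HxWx3 uint8 RGB frame -> 3x224x224 float32 array ready for a CNN."""
    x = center_crop(resize_shorter_side(frame_uint8)).astype(np.float32) / 255.0
    x = (x - IMAGENET_MEAN) / IMAGENET_STD  # per-channel normalization
    return x.transpose(2, 0, 1)             # channels-first layout
```

In a full pipeline, the preprocessed frames would be batched and passed through the pretrained network, keeping the pool5 (global average pooling) activations, which are 2048-dimensional for ResNet-152.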

Train, validation, and test splits:

There are 414, 138, and 138 video segments in the train, valid, and test splits respectively, for a total of 690 data points.

2. CMU-MOSI is a collection of 2,199 opinion video clips, each rigorously annotated with labels for subjectivity and sentiment intensity, along with per-frame and per-opinion annotated visual features and per-millisecond annotated audio features [181]. Sentiment intensity is annotated in the range [−3, +3], which enables fine-grained prediction of sentiment beyond the classical positive/negative split. Each video is collected from YouTube with a focus on video blogs, or vlogs, which reflect the real-world distribution of speakers expressing their behaviors through monologue videos. CMU-MOSI is a realistic multimodal dataset for affect recognition and is regularly used in competitions and workshops.
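Because sentiment intensity is a continuous score in [−3, +3], it can be evaluated either as regression or as classification. A minimal sketch of deriving coarser labels from the scores is shown below; rounding into seven classes and splitting on the sign are common conventions that we assume here for illustration, not steps stated in this appendix, and the function names are hypothetical.

```python
import numpy as np


def to_seven_class(scores: np.ndarray) -> np.ndarray:
    """Map scores in [-3, 3] to classes 0..6 (i.e., -3..+3 shifted by 3).

    Note: np.round rounds exact halves to the nearest even integer.
    """
    return (np.clip(np.round(scores), -3, 3) + 3).astype(int)


def to_binary(scores: np.ndarray) -> np.ndarray:
    """1 for positive sentiment (score > 0), 0 otherwise (neutral counts as 0)."""
    return (scores > 0).astype(int)


scores = np.array([-2.6, -0.4, 0.0, 1.2, 3.0])
print(to_seven_class(scores))  # [0 3 3 4 6]
print(to_binary(scores))       # [0 0 0 1 1]
```

Whether neutral (zero) scores are grouped with the negative class, as in this sketch, or dropped entirely is a convention choice that affects reported binary accuracy.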

Data collection:

According to Zadeh et al. [181], videos were collected from YouTube with a focus on video blogs indexed by #vlog. A total of 93 videos were randomly selected. The final set of videos contained 89 distinct speakers, including 41 female and 48 male speakers. Most speakers were approximately 20 to 30 years old and came from different backgrounds (e.g., Caucasian, African-American, Hispanic, Asian). All speakers expressed themselves in English, and the videos originated from either the United States of America or the United Kingdom.

Access restrictions:

The authors are part of the team who collected the CMU-MOSI dataset [181] so we have the license and right to redistribute this dataset. CMU-MOSI was originally downloaded from https://github.com/A2Zadeh/CMU-MultimodalSDK.

Licenses:

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the “Software”), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the conditions in https://raw.githubusercontent.com/A2Zadeh/CMU-MultimodalSDK/master/LICENSE.txt

Train, validation, and test splits:

Each dataset contains several videos, and each video is further split into short annotated segments (roughly 10 to 20 seconds each). We split the data at the video level so that segments from the same video never appear in more than one of the train, valid, and test splits. This lets us train user-independent models rather than models that may memorize the average affective state of a particular user. There are 52, 10, and 31 videos in the train, valid, and test splits respectively. These videos yield 1,284, 229, and 686 segments respectively, for a total of 2,199 data points.
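The video-level splitting described above can be sketched as follows. The function name, its arguments, and the seeded random shuffling of videos are assumptions made for illustration; the actual MultiBench splits may be fixed lists of video IDs rather than a shuffle. The sketch only demonstrates the key property that all segments of a video land in the same split.

```python
import random
from collections import defaultdict


def split_by_video(segment_ids, video_of, n_train, n_valid, seed=0):
    """Assign whole videos to train/valid/test so no video spans two splits.

    segment_ids:      list of segment identifiers
    video_of:         dict mapping segment id -> video id
    n_train, n_valid: number of *videos* in train and valid; the rest go to test
    """
    videos = sorted({video_of[s] for s in segment_ids})
    random.Random(seed).shuffle(videos)  # hypothetical: seeded video shuffle
    split_of_video = {}
    for i, v in enumerate(videos):
        if i < n_train:
            split_of_video[v] = "train"
        elif i < n_train + n_valid:
            split_of_video[v] = "valid"
        else:
            split_of_video[v] = "test"
    # Every segment inherits the split of the video it came from.
    splits = defaultdict(list)
    for s in segment_ids:
        splits[split_of_video[video_of[s]]].append(s)
    return dict(splits)
```

With n_train=52 and n_valid=10 on CMU-MOSI's 93 videos, the remaining 31 videos form the test split, matching the video counts above; the segment counts per split then depend on how many segments each video contains.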

Dataset preprocessing:

We follow current work [103, 183] and apply the following preliminary feature extraction for the CMU-MOSI dataset:

Language: GloVe word embeddings [119] were used to embed the sequence of individual words in each video segment's transcript into a sequence of word vectors representing the spoken text. The GloVe embeddings used are 300-dimensional and were trained on 840 billion tokens from the Common Crawl dataset, resulting in a T × 300 sequence after alignment, where T is the number of time steps. The timing of word utterances is extracted using the P2FA forced aligner [176], which enables alignment between text, audio, and video.

Visual: We use the Facet library [75] to extract a set of visual features including facial action units, facial landmarks, head pose, gaze tracking, and HOG features. These features are extracted from the full video segment at 30Hz to form a sequence of facial gesture changes over time, resulting in a T × 35 sequence. In addition to Facet, the OpenFace facial behavioral analysis tool [11] is used to extract facial expression features, including facial Action Units (AU) based on the Facial Action Coding System (FACS) [49].

Audio: The COVAREP software [39] is used to extract acoustic features including 12 Mel-frequency cepstral coefficients, pitch tracking and voiced/unvoiced segment features [46], glottal source parameters [28], peak slope parameters, and maxima dispersion quotients [79]. These acoustic features are extracted from the full audio clip of each segment at 100Hz to form a sequence that represents variations in tone of voice over the segment, resulting in a T × 74 sequence.
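The word-level alignment enabled by the forced-aligner timings can be sketched as follows: each modality's frame-level features are pooled over the time span of every word, so the text, visual, and audio sequences share one length. Averaging the frames inside each word interval is an assumption (one common choice), as are the zero vectors for out-of-vocabulary words and for words that cover no frames. The function names and the toy embedding table are hypothetical; a real pipeline would load the 300-dimensional GloVe vectors instead.

```python
import numpy as np

WORD_DIM, VISUAL_DIM, AUDIO_DIM = 300, 35, 74  # feature sizes stated in the text


def embed_words(words, table):
    """Stack word vectors into a (num_words, 300) array; unknown words -> zeros."""
    out = np.zeros((len(words), WORD_DIM), dtype=np.float32)
    for i, w in enumerate(words):
        if w in table:
            out[i] = table[w]
    return out


def align_to_words(features, rate_hz, word_spans):
    """Average frame-level features over each word's [start, end) interval.

    features:   (num_frames, dim) array sampled at rate_hz
    word_spans: list of (start_sec, end_sec) word timings from a forced aligner
    returns:    (num_words, dim) array; words covering no frames get zeros
    """
    times = np.arange(len(features)) / rate_hz  # timestamp of each frame
    out = np.zeros((len(word_spans), features.shape[1]), dtype=np.float32)
    for i, (start, end) in enumerate(word_spans):
        mask = (times >= start) & (times < end)
        if mask.any():
            out[i] = features[mask].mean(axis=0)
    return out


# Toy example: three words spanning 1.5 s of video (30 Hz) and audio (100 Hz).
rng = np.random.default_rng(0)
words = ["this", "is", "great"]
spans = [(0.0, 0.4), (0.4, 0.9), (0.9, 1.5)]
table = {w: rng.random(WORD_DIM) for w in ["this", "great"]}  # hypothetical table
visual = rng.random((45, VISUAL_DIM))  # 1.5 s * 30 Hz frames
audio = rng.random((150, AUDIO_DIM))   # 1.5 s * 100 Hz frames

fused = np.concatenate(
    [embed_words(words, table),
     align_to_words(visual, 30, spans),
     align_to_words(audio, 100, spans)],
    axis=1,
)
print(fused.shape)  # (3, 409)
```

After alignment, every modality has one row per word, so early fusion can be as simple as concatenating along the feature axis (300 + 35 + 74 = 409 dimensions per word here).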

3. UR-FUNNY is the first large-scale multimodal dataset for humor detection in human speech [64]. UR-FUNNY is a realistic representation of multimodal language (including text, visual, and acoustic modalities). This dataset opens the door to understanding and modeling humor in a multimodal framework, which is crucial since humor is an inherently multimodal communicative tool involving the effective use of words (text), accompanying gestures (visual), and prosodic cues (acoustic). UR-FUNNY consists of more than 16,000 video samples from TED talks, which are among the most diverse idea-sharing channels, covering speakers from various backgrounds, ethnic groups, and cultures who discuss topics ranging from discoveries in science and the arts to motivational speeches and everyday events. The diversity of speakers, topics, and unique annotation targets makes it a realistic dataset for multimodal language modeling.

Data collection:

According to Hasan et al. [64], 1,866 videos and their English transcripts were collected from the TED portal, chosen from 1,741 different speakers and across 417 topics. The laughter markup in the transcripts is used to extract 8,257 humorous punchlines. The context of each punchline is extracted from the sentences preceding it, going back until the previous humor instance or the beginning of the video is reached. Using a similar approach, 8,257 negative samples are chosen at random intervals where the last sentence is not immediately followed by a laughter marker. After this negative sampling, the dataset is evenly split (50% each) between positive and negative humor examples.
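The context extraction and negative sampling described above can be sketched for a single transcript as follows. This is a simplified illustration rather than the UR-FUNNY authors' code: the function name and input format (a list of sentences plus a per-sentence laughter flag) are hypothetical, and balancing positives and negatives within each transcript is an assumption, since the source only states that the corpus-wide totals are balanced (8,257 of each).

```python
import random


def make_humor_samples(sentences, laughter, seed=0):
    """Build (context, punchline, label) triples from one transcript.

    sentences: list of sentence strings in order
    laughter:  list of bools; laughter[i] is True if sentence i is
               immediately followed by a laughter marker
    """
    def context_before(i):
        # Walk back until the previous humor instance or the start of the talk.
        j = i - 1
        while j >= 0 and not laughter[j]:
            j -= 1
        return sentences[j + 1:i]

    # Positives: every sentence followed by laughter is a punchline.
    positives = [(context_before(i), sentences[i], 1)
                 for i in range(len(sentences)) if laughter[i]]
    # Negatives: randomly chosen sentences NOT followed by laughter.
    candidates = [i for i in range(len(sentences)) if not laughter[i]]
    rng = random.Random(seed)
    chosen = rng.sample(candidates, min(len(positives), len(candidates)))
    negatives = [(context_before(i), sentences[i], 0) for i in sorted(chosen)]
    return positives + negatives
```

For example, with laughter after the third and sixth of six sentences, the two punchlines receive the two preceding sentences as context, and two non-humorous sentences are sampled as negatives.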

Access restrictions:

This is a public dataset free to download by the research community from https://github.com/ROC-HCI/UR-FUNNY. The authors of the dataset also note that videos on www.ted.com are publicly available for download [64].

Licenses:

No license was provided with this dataset.

Dataset preprocessing:

We follow current work [103, 183] and apply the same preliminary feature extraction as the CMU-MOSI dataset described above.

Train, validation, and test splits:

Each dataset contains several videos, and each video is further split into short annotated segments (roughly 10 to 20 seconds each). We split the data at the video level so that segments from the same video never appear in more than one of the train, valid, and test splits. This lets us train user-independent models rather than models that may memorize the average affective state of a particular user. There are 1,166, 300, and 400 videos in the train, valid, and test splits respectively. These videos yield 10,598, 2,626, and 3,290 segments respectively, for a total of 16,514 data points.

4. CMU-MOSEI is the largest dataset of sentence-level sentiment analysis and emotion recognition in real-world online videos [102, 183]. CMU-MOSEI contains more than 65 hours of annotated video from more than 1,000 speakers and 250 topics. Each video is annotated for sentiment, for the presence of 9 discrete emotions (angry, excited, fear, sad, surprised, frustrated, happy, disappointed, and neutral), and for continuous emotions (valence, arousal, and dominance). This diversity of prediction tasks makes CMU-MOSEI a valuable dataset for testing multimodal models across a range of real-world affective computing tasks. The dataset has been used continuously in workshops and competitions on human multimodal language.

Data collection:

According to Zadeh et al. [183], YouTube videos are automatically analyzed with face detection to check that a single speaker is present in the frame, ensuring that the video is a monologue, and videos with moving cameras are rejected. A diverse set of 250 frequently used topics in online videos serves as the seed for acquisition. The authors cap the number of videos acquired from each channel at 10 and keep only videos with manual, properly punctuated transcriptions. After manual quality inspection, they also performed automatic checks on video and transcript quality using facial feature extraction confidence and forced alignment confidence, before balancing gender in the dataset using the data provided by annotators (57% male to 43% female).

Access restrictions:

The authors are part of the team who collected the CMU-MOSEI dataset [183] so we have the license and right to redistribute this dataset. CMU-MOSEI was originally downloaded from https://github.com/A2Zadeh/CMU-MultimodalSDK.

Figure 7: Healthcare: Medical decision-making often involves integrating complementary signals from several sources such as lab tests, imaging reports, and patient-doctor conversations. Multimodal models can help doctors make sense of high-dimensional data and assist them in the diagnosis process [5]. MultiBench includes the MIMIC dataset [78], which records ICU patient data including time-series data measured every hour and other tabular numerical data about the patient (e.g., age, gender, ethnicity), to predict mortality and the patient's ICD-9 disease code. Figure adapted from [165].

Licenses:

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the “Software”), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the conditions in https://raw.githubusercontent.com/A2Zadeh/CMU-MultimodalSDK/master/LICENSE.txt

Dataset preprocessing:

We follow current work [103, 183] and apply the same preliminary feature extraction as the CMU-MOSI and UR-FUNNY datasets described above.

Train, validation, and test splits:

Each dataset contains several videos, and each video is further split into short annotated segments (roughly 10 to 20 seconds each). We split the data at the video level so that segments from the same video never appear in more than one of the train, valid, and test splits. This lets us train user-independent models rather than models that may memorize the average affective state of a particular user. There are a total of 16,265, 1,869, and 4,643 segments in the train, valid, and test splits respectively, for a total of 22,777 data points.

C.2.2 Healthcare