Abstract

Objective

To identify methods to increase response to postal questionnaires.

Design

Systematic review of randomised controlled trials of any method to influence response to postal questionnaires.

Studies reviewed

292 randomised controlled trials including 258 315 participants.

Intervention reviewed

75 strategies for influencing response to postal questionnaires.

Main outcome measure

The proportion of completed or partially completed questionnaires returned.

Results

The odds of response were more than doubled when a monetary incentive was used (odds ratio 2.02; 95% confidence interval 1.79 to 2.27) and almost doubled when incentives were not conditional on response (1.71; 1.29 to 2.26). Response was more likely when short questionnaires were used (1.86; 1.55 to 2.24). Personalised questionnaires and letters increased response (1.16; 1.06 to 1.28), as did the use of coloured ink (1.39; 1.16 to 1.67). The odds of response were more than doubled when the questionnaires were sent by recorded delivery (2.21; 1.51 to 3.25) and increased when stamped return envelopes were used (1.26; 1.13 to 1.41) and questionnaires were sent by first class post (1.12; 1.02 to 1.23). Contacting participants before sending questionnaires increased response (1.54; 1.24 to 1.92), as did follow up contact (1.44; 1.22 to 1.70) and providing non-respondents with a second copy of the questionnaire (1.41; 1.02 to 1.94). Questionnaires designed to be of more interest to participants were more likely to be returned (2.44; 1.99 to 3.01), but questionnaires containing questions of a sensitive nature were less likely to be returned (0.92; 0.87 to 0.98). Questionnaires originating from universities were more likely to be returned than were questionnaires from other sources, such as commercial organisations (1.31; 1.11 to 1.54).

Conclusions

Health researchers using postal questionnaires can improve the quality of their research by using the strategies shown to be effective in this systematic review.

What is already known on this topic

Postal questionnaires are widely used in the collection of data in epidemiological studies and health research

Non-response to postal questionnaires reduces the effective sample size and can introduce bias

What this study adds

This systematic review includes more randomised controlled trials than any previously published review or meta-analysis on questionnaire response

The review has identified effective ways to increase response to postal questionnaires

The review will be updated regularly in the Cochrane Library

Introduction

Postal questionnaires are widely used to collect data in health research and are often the only financially viable option when collecting information from large, geographically dispersed populations. Non-response to postal questionnaires reduces the effective sample size and can introduce bias.1 As non-response can affect the validity of epidemiological studies, assessment of response is important in the critical appraisal of health research. For the same reason, the identification of effective strategies to increase response to postal questionnaires could improve the quality of health research. To identify such strategies we conducted a systematic review of randomised controlled trials.

Methods

Identification of trials

We aimed to identify all randomised controlled trials of strategies to influence the response to a postal questionnaire. Eligible studies were not restricted to medical surveys and included any questionnaire topic in any population. Studies in languages other than English were included. Strategies requiring telephone contact were included, but strategies requiring home visits by investigators were excluded for reasons of cost. We searched 14 electronic bibliographical databases (table 1). Two reviewers independently screened each record for eligibility by examining titles, abstracts, and keywords. Records identified by either reviewer were retrieved. We searched the reference lists of relevant trials and reviews, and two journals in which the largest number of eligible trials had been published (Public Opinion Quarterly and American Journal of Epidemiology). We contacted authors of eligible trials and reviews to ask about unpublished trials. Reports of potentially relevant trials were obtained, and two reviewers assessed each for eligibility. We estimated the sensitivity of the combined search strategy (electronic searching and manual searches of reference lists) by comparing the trials identified by using this strategy with the trials identified by manually searching journals. We used ascertainment intersection methods to estimate the number of trials that may have been missed during screening.2
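As a rough illustration of how an ascertainment intersection estimate can work, the sketch below applies the simple two-source capture-recapture (Lincoln-Petersen) estimator to the records flagged by two independent screeners. Whether the review used exactly this estimator is an assumption, and the counts are hypothetical, not data from the review.

```python
def estimate_missed(found_by_a, found_by_b, found_by_both):
    """Two-source capture-recapture estimate of eligible records.

    Assumes the two reviewers screen independently and that every
    eligible record is equally likely to be spotted (a simplification).
    Returns (estimated total eligible, estimated number missed by both).
    """
    if found_by_both == 0:
        raise ValueError("estimator is undefined when there is no overlap")
    total_estimate = found_by_a * found_by_b / found_by_both
    found_by_either = found_by_a + found_by_b - found_by_both
    return total_estimate, total_estimate - found_by_either


# Hypothetical counts: reviewer A flags 90 records, reviewer B flags 80,
# and 75 records are flagged by both.
total, missed = estimate_missed(90, 80, 75)
print(f"estimated eligible records: {total:.0f}, estimated missed: {missed:.0f}")
```

A large overlap between the two reviewers implies that few eligible records escaped both of them, which is the intuition behind the estimate.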

Table 1.

Electronic bibliographical databases and search strategies used in systematic review of response to postal questionnaires

| Database (time period or version) | Search strategy |
|---|---|
| With study type filters of known sensitivity and positive predictive value†: | |
| CINAHL (1982-07/1999); Cochrane Controlled Trials Register (1999.3); Dissertation Abstracts (1981-08/1999); Embase (1980-08/1999); ERIC (1982-09/1998); Medline (1966-1999); PsycLIT (1887-09/1999) | A. questionnair* or survey* or data collection B. respon* or return* C. remind* or letter* or postcard* or incentiv* or reward* or money* or monetary or payment* or lottery or raffle or prize or personalis* or sponsor* or anonym* or length or style* or format or appearance or color or colour or stationery or envelope or stamp* or postage or certified or registered or telephon* or telefon* or notice or dispatch* or deliver* or deadline or sensitive D. control* or randomi* or blind* or mask* or trial* or compar* or experiment* or “exp” or factorial E. A and B and C and D |
| Without study type filters of known sensitivity and positive predictive value‡: | |
| Science Citation Index (1980-1999); Social Science Citation Index (1981-1999) | (survey* or questionnair*) and (return* or respon*) |
| Social Psychological Educational Criminological Trials Register (1950-1998) | (survey* or questionnair*) and (return* or respon*) |
| EconLit (1969-2000); Sociological Abstracts (1963-2000) | ((survey$ or questionn$) and (return$ or respon$)).ti or ((survey$ or questionn$) and (mail$ or post$)).ti or ((return$ or respon$) and (mail$ or post$)).ti |
| Index to Scientific and Technical Proceedings (1982-2000) | ((survey*, questionn*)+(return*,respon*))@TI,((return*,respon*)+ (mail,mailed,postal))@TI, ((survey*,questionn*)+(mail,mailed,postal))@TI |
| National Research Register (Web version: 2000.1) | ((survey*:ti or questionn*:ti) and (return*:ti or respon*:ti)) or ((return*:ti or respon*:ti) and (mail:ti or mailed:ti or postal:ti)) or ((survey*:ti or questionn*:ti) and (mail:ti or mailed:ti or postal:ti)) |

Search strategies were developed to achieve a balance between sensitivity and positive predictive value.

†Highly sensitive subject searches (search statements A, B, C) were designed and their positive predictive value increased by using study type filters (search statement D). These searches were not restricted to the abstract or title fields.

‡The positive predictive value of the search strategies was increased by restricting search terms to the title field only, by using permutations of subject term combinations, or by using fewer search terms.

Data extraction and outcome measures

Two reviewers independently extracted data from eligible reports by using a standard form. Disagreements were resolved by a third reviewer. We extracted data on the type of intervention evaluated, the number of participants randomised to intervention or control groups, the quality of the concealment of participants' allocation, and the types of participants, materials, and follow up methods used. Two outcomes were used to estimate the effect of each intervention on response: the proportion of completed or partially completed questionnaires returned after the first mailing and the proportion returned after all follow up contacts had been made. We wrote to the authors of reports when these data were missing or the methods used to allocate participants were unclear (for example, where reports said only that participants were “divided” into groups).

Interventions were classified and analysed within distinct strategies to increase response. In trials with factorial designs, interventions were classified under two or more strategies. When interventions were evaluated at more than two levels (for example, highly, moderately, and slightly personalised questionnaires), we combined the upper levels to create a dichotomy. To assess the influence of a personalised questionnaire on response, for example, we compared response to the least personalised questionnaire with the combined response for the moderately and highly personalised questionnaires.
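The dichotomy described above can be sketched as follows. The counts are hypothetical and the function names are illustrative only; the point is that the lowest level serves as the comparison group while all higher levels are pooled into a single intervention group.

```python
def dichotomise(levels):
    """Collapse a multi-level intervention into intervention v control.

    `levels` is a list of (label, responded, randomised) tuples ordered
    from the lowest level of the intervention to the highest. The lowest
    level becomes the control; the upper levels are summed.
    """
    _, control_responded, control_n = levels[0]
    upper = levels[1:]
    intervention_responded = sum(responded for _, responded, _ in upper)
    intervention_n = sum(n for _, _, n in upper)
    return (intervention_responded, intervention_n), (control_responded, control_n)


def odds_ratio(int_responded, int_n, ctl_responded, ctl_n):
    """Odds of response in the intervention group relative to control."""
    int_odds = int_responded / (int_n - int_responded)
    ctl_odds = ctl_responded / (ctl_n - ctl_responded)
    return int_odds / ctl_odds


# Hypothetical three-level personalisation trial (not data from the review).
trial = [
    ("slightly personalised", 40, 100),
    ("moderately personalised", 50, 100),
    ("highly personalised", 60, 100),
]
intervention, control = dichotomise(trial)
effect = odds_ratio(*intervention, *control)
print(intervention, control, round(effect, 2))
```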

Data analysis and statistical methods

We used Stata statistical software to analyse our data. For each strategy, we estimated pooled odds ratios in a random effects model. We calculated 95% confidence intervals and two sided P values for each outcome. Selection bias was assessed by using Egger's weighted regression method and Begg's rank correlation test and funnel plot.3 Heterogeneity among the trials' odds ratios was assessed by using a χ2 test at a 5% significance level. In trials of monetary incentives, we specified a priori that the amount of the incentive might explain any heterogeneity between trial results. To investigate this, we used regression to examine the relation between response and the current value of the incentive in US dollars. When the year of the study was not known, we used the average delay between year of study and year of publication for other trials (three years). We also specified a priori that, in trials of questionnaire length, the number of pages used might explain any heterogeneity between trial results, and to investigate this, the odds of response were regressed on the number of pages.
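For readers who want to see the mechanics of pooling, the following is a minimal sketch of a random effects meta-analysis of odds ratios together with the χ2 heterogeneity statistic (Cochran's Q). It uses the DerSimonian-Laird estimator of between-trial variance, which is an assumption: the text states only that a random effects model was fitted in Stata. The trial counts are hypothetical.

```python
import math


def pool_random_effects(trials):
    """Pool odds ratios across trials with a DerSimonian-Laird model.

    `trials` is a list of (int_responded, int_n, ctl_responded, ctl_n).
    Returns (pooled OR, lower 95% limit, upper 95% limit, Q, df).
    Assumes no cell of any 2x2 table is zero.
    """
    log_ors, variances = [], []
    for a, n1, c, n0 in trials:
        b, d = n1 - a, n0 - c  # non-responders in each group
        log_ors.append(math.log((a * d) / (b * c)))
        variances.append(1 / a + 1 / b + 1 / c + 1 / d)

    # Fixed effect (inverse variance) estimate, needed to compute Q.
    weights = [1 / v for v in variances]
    sum_w = sum(weights)
    fixed = sum(w * y for w, y in zip(weights, log_ors)) / sum_w
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, log_ors))
    df = len(trials) - 1

    # Between-trial variance (tau squared), truncated at zero.
    scale = sum_w - sum(w * w for w in weights) / sum_w
    tau2 = max(0.0, (q - df) / scale) if scale > 0 else 0.0

    # Random effects weights add tau squared to each trial's variance.
    re_weights = [1 / (v + tau2) for v in variances]
    pooled = sum(w * y for w, y in zip(re_weights, log_ors)) / sum(re_weights)
    se = math.sqrt(1 / sum(re_weights))
    return (
        math.exp(pooled),
        math.exp(pooled - 1.96 * se),
        math.exp(pooled + 1.96 * se),
        q,
        df,
    )


# Hypothetical trials of one strategy (not data from the review).
example = [(60, 100, 40, 100), (130, 200, 110, 200), (45, 80, 30, 80)]
pooled_or, lower, upper, q_stat, q_df = pool_random_effects(example)
print(f"pooled OR {pooled_or:.2f} ({lower:.2f} to {upper:.2f}); Q={q_stat:.2f} on {q_df} df")
```

Q is referred to a χ2 distribution on the stated degrees of freedom; a small P value suggests that the trials' odds ratios differ by more than chance alone would explain.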

Results

We identified 292 eligible trials, including a total of 258 315 participants, that evaluated 75 different strategies for increasing response to postal questionnaires. The average number of participants per trial was 1091 (range 39-10 047). The trials were published in 251 reports: 80 (32%) in medical, epidemiological, or health related journals; 58 (23%) in psychological, educational, or sociological journals; 105 (42%) in marketing, business, or statistical journals; and 8 (3%) in engineering journals or dissertations, or not yet published (see Appendix A).

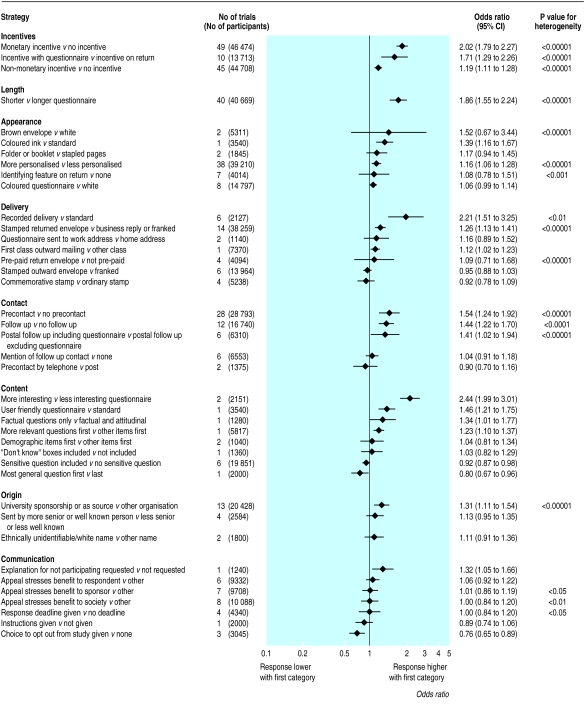

All tests for selection bias were significant (P<0.05) in five strategies: monetary incentives, varying length of questionnaire, follow up contact with non-respondents, saying that the sponsor will benefit if participants return questionnaires, and saying that society will benefit if participants return questionnaires. Tests were not possible in 15 strategies where fewer than three trials were included. The method of randomisation was not known in most of the eligible trials. Where information was available, the quality of the concealment of participants' allocation was poor in 30 trials and good in 12 trials. The figure shows the pooled odds ratios and 95% confidence intervals for the 40 different strategies in which the combined trials included more than 1000 participants.

Table 2 may be used to translate odds ratios into response rates from different baseline rates. At least one strategy in each category was found to influence response. For example, when incentives were used the odds of response were more than doubled when money was the incentive (odds ratio 2.02; 95% confidence interval 1.79 to 2.27) and were almost doubled when incentives were not conditional on response (1.71; 1.29 to 2.26). The length of questionnaires influenced response: short questionnaires made response more likely (1.86; 1.55 to 2.24). The use of coloured ink as opposed to blue or black ink increased response (1.39; 1.16 to 1.67) as did making questionnaires and letters more personal (1.16; 1.06 to 1.28). When recorded delivery was used the odds of response were more than doubled (2.21; 1.51 to 3.25), and they were increased when stamped return envelopes were used (1.26; 1.13 to 1.41) and questionnaires were sent by first class post (1.12; 1.02 to 1.23). Contacting participants before sending questionnaires increased response (1.54; 1.24 to 1.92), as did follow up contact (1.44; 1.22 to 1.70) and providing non-respondents with a second copy of the questionnaire (1.41; 1.02 to 1.94). Questionnaires designed to be of more interest to participants were more likely to be returned (2.44; 1.99 to 3.01), but questionnaires containing questions of a sensitive nature were less likely to be returned (0.92; 0.87 to 0.98). Questionnaires originating from universities were more likely to be returned than questionnaires from other sources, such as commercial organisations (1.31; 1.11 to 1.54).

Table 2.

Conversion of odds ratios to response rates from different baseline rates

| Baseline rate (%) | OR 0.50 | OR 0.75 | OR 1.00 | OR 1.25 | OR 1.50 | OR 1.75 | OR 2.00 | OR 2.25 | OR 2.50 | OR 2.75 | OR 3.00 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 10 | 5 | 8 | 10 | 12 | 14 | 16 | 18 | 20 | 22 | 23 | 25 |
| 20 | 11 | 16 | 20 | 24 | 27 | 30 | 33 | 36 | 38 | 41 | 43 |
| 30 | 18 | 24 | 30 | 35 | 39 | 43 | 46 | 49 | 52 | 54 | 56 |
| 40 | 25 | 33 | 40 | 45 | 50 | 54 | 57 | 60 | 63 | 65 | 67 |
| 50 | 33 | 43 | 50 | 56 | 60 | 64 | 67 | 69 | 71 | 73 | 75 |
| 60 | 43 | 53 | 60 | 65 | 69 | 72 | 75 | 77 | 79 | 80 | 82 |
| 65 | 48 | 58 | 65 | 70 | 74 | 76 | 79 | 81 | 82 | 84 | 85 |
| 70 | 54 | 64 | 70 | 74 | 78 | 80 | 82 | 84 | 85 | 87 | 88 |
| 75 | 60 | 69 | 75 | 79 | 82 | 84 | 86 | 87 | 88 | 89 | 90 |
| 80 | 67 | 75 | 80 | 83 | 86 | 88 | 89 | 90 | 91 | 92 | 92 |
| 85 | 74 | 81 | 85 | 88 | 89 | 91 | 92 | 93 | 93 | 94 | 94 |
| 90 | 82 | 87 | 90 | 92 | 93 | 94 | 95 | 95 | 96 | 96 | 96 |
| 95 | 90 | 93 | 95 | 96 | 97 | 97 | 97 | 98 | 98 | 98 | 98 |
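The conversion behind Table 2 is the standard one: turn the baseline rate into odds, multiply by the odds ratio, and turn the result back into a rate. The short sketch below (function name illustrative) may be useful for baseline rates or odds ratios that fall between the tabulated values.

```python
def response_rate(baseline_rate, odds_ratio):
    """Expected response rate after applying an odds ratio to a baseline rate.

    Both rates are proportions between 0 and 1 (exclusive).
    """
    baseline_odds = baseline_rate / (1 - baseline_rate)
    new_odds = baseline_odds * odds_ratio
    return new_odds / (1 + new_odds)


# A 50% baseline response with an odds ratio of 2.00 gives about 67%.
print(round(100 * response_rate(0.50, 2.00)))

# The pooled odds ratio reported for monetary incentives (2.02) applied
# to an illustrative 40% baseline response.
print(round(100 * response_rate(0.40, 2.02)))
```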

We found significant heterogeneity among trial results in 17 out of the 31 strategies that included more than one trial. For trials of monetary incentives the heterogeneity among the results was significant (P<0.0001). Regression analysis showed a positive relation between the size of the incentive and the odds of response:

Log(OR) = 0.69 [SE 0.05] + 0.084 [SE 0.03] × Log(Amount in US$)

The model predicts, for example, that the odds of response with a $1 incentive will be twice those with no incentive. It also predicts a diminishing marginal benefit: each additional $1 increases the odds of response by less than the previous one (for example, the odds of response with a $15 incentive will be only 2.5 times those with no incentive). For trials examining the effect of questionnaire length, heterogeneity between results was apparent on inspection of the forest plot (P<0.00001). In regression analysis a relation was found between the number of pages used and the odds of response:

Log(OR) = 0.36 [SE 0.21] – 0.64 [SE 0.15] × Log(Pages in short) + 0.32 [SE 0.18] × Log(Pages in long)

The model predicts, for example, that the odds of response with a single page will be twice that with three pages.
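The worked predictions from the two regression models can be reproduced with a few lines of code. The sketch assumes that "Log" denotes the natural logarithm; this is not stated in the text, but it is the reading under which the coefficients give the quoted predictions. The standard errors are ignored, so the outputs are point predictions only.

```python
import math


def incentive_odds_ratio(amount_usd):
    """Predicted odds ratio for a monetary incentive of `amount_usd` dollars."""
    return math.exp(0.69 + 0.084 * math.log(amount_usd))


def length_odds_ratio(pages_short, pages_long):
    """Predicted odds ratio for a shorter v a longer questionnaire."""
    return math.exp(
        0.36 - 0.64 * math.log(pages_short) + 0.32 * math.log(pages_long)
    )


# $1 v no incentive: odds ratio close to 2.
print(round(incentive_odds_ratio(1), 2))
# $15 v no incentive: odds ratio close to 2.5.
print(round(incentive_odds_ratio(15), 2))
# One page v three pages: odds ratio close to 2.
print(round(length_odds_ratio(1, 3), 2))
```

Because the incentive enters on a logarithmic scale, increasing it fifteen-fold raises the predicted odds ratio only from about 2.0 to about 2.5, which is the diminishing return described in the text.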

Discussion

Several reviews and meta-analyses of strategies to increase response to postal questionnaires have been published in the literature on research surveys over the past 40 years. Our review, which was based on a systematic search of published and unpublished literature in English and other languages, includes more than twice as many trials as any previously published review.4 The trials identified were not restricted to medical surveys: one third were medical, epidemiological, or health related; one quarter were psychological, educational, or sociological; and two fifths were marketing, business, or statistical.

We have identified a range of strategies that seem to increase response to postal questionnaires. The pooled effect measures for some strategies are precise because large numbers of participants were included in the combined trials. Before these results are implemented, several methodological issues must be considered.

Identification and inclusion of all relevant trials

Identifying and including all relevant trials in systematic reviews reduces random error in meta-analyses and, because ease of identification of trials is associated with the size of treatment effects, complete ascertainment may reduce bias.5 We estimate that our search strategy retrieved nearly all eligible trials (estimated sensitivity 95%; 84% to 99%) and that we missed very few relevant records during screening.2 We excluded some trials because we could not confirm that participants had been randomly allocated to intervention or control groups, and we have not examined whether the results of these trials differ systematically from the included trials. Tests for selection bias were significant in five strategies. Although these results may be due to true heterogeneity between trial results rather than bias in the selection of trials,3 we cannot rule out the possibility of selection bias having an effect on the results.

Methodological quality of trials

Inadequate allocation concealment can bias the results of clinical trials.6 In our review, information on allocation concealment was unavailable for most of the included trials. Inadequate concealment in these trials could have biased the results, although this is unlikely in this context because the researchers making the allocations would find it difficult to predict a participant's propensity to respond to a questionnaire.

Heterogeneity among trial results

We found substantial heterogeneity among the results of trials in half of the strategies, and for these it may be inappropriate to combine results to produce a single estimate of effect.7 Before undertaking the analyses we developed hypotheses concerning underlying differences in the trials of monetary incentives and length of questionnaire that might explain heterogeneity. Regression analyses identified relations between response and amounts of incentive and between response and questionnaire length. These models explain some of the heterogeneity. For other strategies, variation between trial interventions and populations is likely to explain some of the heterogeneity. For example, among trials evaluating non-monetary incentives, the types of incentive used are very different, ranging from donations to charity to free key rings. Much of the heterogeneity between results may disappear when subgroups of trials are analysed. Further exploratory subgroup analyses may show important sources of variation—for example, according to methodological quality, questionnaire topic, age of the study, or type of population. In this review, our aim was to identify eligible trials systematically, critically appraise them, and present the relevant data. We did not intend to produce single effect estimates for every strategy. For many statistically heterogeneous strategies the direction of the effects is the same. For these strategies we cannot be sure about the size of the effect, but we can be reasonably confident that there was an effect on response.

Conclusions

Researchers can increase response to postal questionnaires by using the strategies shown to be effective in this systematic review. Some strategies will require additional materials or administrative time, whereas others can be implemented at little extra cost.

We have presented odds ratios for methodological reasons,7 but the practical implications of an odds ratio may be difficult to interpret without knowing the baseline response, that is, the response expected without the strategy. Researchers for whom the expected size of the effect of a specific strategy is an important consideration may access the data used in this review through the Cochrane Library, where they will be updated regularly.8

Figure.

Effects on questionnaire response of 40 strategies where combined trials included over 1000 participants

Acknowledgments

We thank Peter Sandercock, Iain Chalmers, and Catherine Peckham for their help and advice with the study.

References to reviewed trials

Adams LL, Gale D. Solving the quandary between questionnaire length and response rate in educational research. Res Higher Educ 1982;17:231-40.

Albaum G. Do source and anonymity affect mail survey results? J Acad Marketing Sci 1987;15:74-81.

Albaum G, Strandskov J. Participation in a mail survey of international marketers: effects of pre-contact and detailed project explanation. J Global Marketing 1989;2:7-23.

Alutto JA. Some dynamics of questionnaire completion and return among professional and managerial personnel: the relative impacts of reception at work site or place of residence. J Appl Psychol 1970;54:430-2.

Andreasen AR. Personalizing mail questionnaire correspondence. Public Opin Q 1970;34:273-7.

Asch DA, Christakis NA. Different response rates in a trial of two envelope styles in mail survey research. Epidemiology 1994;5:364-5.

Asch DA. Use of a coded postcard to maintain anonymity in a highly sensitive mail survey: cost, response rates, and bias. Epidemiology 1996;7:550-1.

Asch DA, Christakis NA, Ubel PA. Conducting physician mail surveys on a limited budget. A randomized trial comparing $2 bill versus $5 bill incentives. Med Care 1998;36:95-9.

Bachman DP. Cover letter appeals and sponsorship effects on mail survey response rates. J Marketing Educ 1987;9:45-51.

Barker PJ, Cooper RF. Do sexual health questions alter the public's response to lifestyle questionnaires? J Epidemiol Community Health 1996;50:688.

Berdie DR. Questionnaire length and response rate. J Appl Psychol 1973;58:278-80.

Bergen AV, Spitz JC. De Introductie van een schriftelijke enquete. Nederlandsche Tijdschrift voor Psychologie 1957;12:68-96.

Berk ML, Edwards WS, Gay NL. The use of a prepaid incentive to convert non responders on a survey of physicians. Eval Health Professions 1993;16:239-45.

Berry S. Physician response to a mailed survey. An experiment in timing of payment. Public Opin Q 1987;51:102-14.

Biggar RJ, Melbye M. Responses to anonymous questionnaires concerning sexual behaviour: a method to examine potential biases. Am J Public Health 1992;82:1506-12.

Biner PM. Effects of cover letter appeal and monetary incentives on survey response: a reactance theory application. Basic Appl Soc Psychol 1988;9:99-106.

Biner PM, Barton DL. Justifying the enclosure of monetary incentives in mail survey cover letters. Psychol Marketing 1990;7:153-62.

Biner PM, Kidd HJ. The interactive effects of monetary incentive justification and questionnaire length on mail survey response rates. Psychol Marketing 1994;11:483-92.

Blass T, Leichtman SR, Brown RA. The effect of perceived consensus and implied threat upon responses to mail surveys. J Soc Psychol 1981;113:213-6.

Blass-Wilhelms W. Influence of ‘real’ postage stamp versus stamp ‘postage paid’ on return rate of response cards. Zeitschrift für Soziologie 1982;11:64-8.

Blomberg J, Sandell R. Does a material incentive affect response on a psychotherapy follow-up questionnaire? Psychother Res 1996;6:155-63.

Blythe BJ. Increasing mailed survey responses with a lottery. Soc Work Res Abst 1986;22:18-9.

Boser JA. Surveying alumni by mail: effect of booklet/folder questionnaire format and style of type on response rate. Res Higher Educ 1990;31:149-59.

Brechner K, Shippee G, Obitz FW. Compliance techniques to increase mailed questionnaire return rates from alcoholics. J Stud Alcohol 1976;37:995-6.

Brennan M. The effect of a monetary incentive on mail survey response rates. J Market Res Soc 1992;34:173-7.

Brennan M, Seymour P, Gendall P. The effectiveness of monetary incentives in mail surveys: further data. Marketing Bull 1993;4:43-51.

Brook LL. The effect of different postage combinations on response levels and speed of reply. J Market Res Soc 1978;20:238-4.

Brown ML. Use of a postcard query in mail surveys. Public Opin Q 1965;29:635-7.

Brown GH. Randomised inquiry vs conventional questionnaire method in estimating drug usage rates through mail surveys (technical report). Human Resources Research Organisation (HumRRO). Alexandria VA: US Army Research Institute for the Behavioural and Social Sciences, 1975.

Buchman TA, Tracy JA. Obtaining responses to sensitive questions: conventional questionnaire versus randomized response technique. J Account Res 1982;20:263-71.

Campbell MJ, Waters WE. Does anonymity increase response rate in postal questionnaire surveys about sensitive subjects? A randomised trial. J Epidemiol Community Health 1990;44:75-6.

Camunas C, Alward RR, Vecchione E. Survey response rates to a professional association mail questionnaire. J NY State Nurses Assoc 1990;21:7-9.

Carpenter EH. Personalizing mail surveys: a replication and reassessment. Public Opin Q 1974;38:614-20.

Cartwright A. Some experiments with factors that might affect the response of mothers to a postal questionnaire. Stat Med 1986;5:607-17.

Childers TL, Skinner SJ. Gaining respondent cooperation in mail surveys through prior commitment. Public Opin Q 1979;43:558-61.

Childers TL, Pride WM, Ferrell OC. A reassessment of the effects of appeals on response to mail surveys. J Marketing Res 1980;17:365-70.

Childers TL, Skinner SJ. Theoretical and empirical issues in the identification of survey respondents. J Market Res Soc 1985;27:39-53.

Choi BC, Pak AW, Purdham JT. Effects of mailing strategies on response rate, response time, and cost in a questionnaire study among nurses. Epidemiology 1990;1:72-4.

Clarke R, Breeze E, Sherliker P, Shipley M, Youngman L. Design, objectives, and lessons from a pilot 25 year follow up re-survey of survivors in the Whitehall study of London civil servants. J Epidemiol Community Health 1998;52:364-9.

Corcoran KJ. Enhancing the response rate in survey research. Soc Work Res Abstracts 1985;21:2.

Cox EP, Anderson T, Fulcher DG. Reappraising mail survey response rates. J Marketing Res 1974;11:413-7.

Deehan A, Templeton L, Taylor C, Drummond C, Strang J. The effect of cash and other financial inducements on the response rate of general practitioners in a national postal study. Br J Gen Pract 1997;47:87-90.

Del Valle ML, Morgenstern H, Rogstad TL, Albright C, Vickrey BG. A randomised trial of the impact of certified mail on response rate to a physician survey, and a cost-effectiveness analysis. Eval Health Professions 1997;20:389-406.

Denton J, Tsai C-Y, Chevrette P. Effects on survey responses of subjects, incentives, and multiple mailings. J Exper Educ 1988;56:77-82.

Denton JJ, Tsai C-Y. Two investigations into the influence of incentives and subject characteristics on mail survey responses in teacher education. J Exper Educ 1991;59:352-66.

Dillman DA, Sinclair MD, Clark JR. Effects of questionnaire length, respondent-friendly design, and a difficult question on response rates for occupant-addressed census mail surveys. Public Opin Q 1993;57:289-304.

Dodd DK, Markwiese BJ. Survey response rate as a function of personalized signature on cover letter. J Soc Psychol 1987;127:97-8.

Dommeyer CJ. Experimentation on threatening appeals in the follow-up letters of a mail survey. Doctoral dissertation, University of Santa Clara, California, 1980.

Dommeyer CJ. Does response to an offer of mail survey results interact with questionnaire interest? J Market Res Soc 1985;27:27-38.

Dommeyer CJ. The effects of negative cover letter appeals on mail survey response. J Market Res Soc 1987;29:445-51.

Dommeyer CJ. How form of the monetary incentive affects mail survey response. J Market Res Soc 1988;30:379-85.

Dommeyer CJ. Offering mail survey results in a lift letter. J Market Res Soc 1989;31:399-408.

Dommeyer CJ, Elganayan D, Umans C. Increasing mail survey response with an envelope teaser. J Market Res Soc 1991;33:137-40.

Doob A, Zabrack M. The effect of freedom-threatening instructions and monetary inducement on compliance. Can J Behav Sci 1971;3:408-12.

Doob AN, Freedman JL, Carlsmith JM. Effects of sponsor and prepayment on compliance with a mailed request. J Appl Psychol 1973;57:346-7.

Dorman PJ, Slattery JM, Farrell B, Dennis MS, Sandercock PAG. A randomised comparison of the EuroQol and SF-36 after stroke. United Kingdom Collaborators in the International Stroke Trial. BMJ 1997;315:461.

Eaker S, Bergstrom R, Bergstrom A, Hans-Olov A, Nyren O. Response rate to mailed epidemiologic questionnaires: a population-based randomized trial of variations in design and mailing routines. Am J Epidemiol 1998;147:74-82.

Easton AN, Price JH, Telljohann SK, Boehm K. An informational versus monetary incentive in increasing physicians' response rates. Psychol Rep 1997;81:968-70.

Edwards P, Roberts I. A comparison of two questionnaires for assessing outcome after head injury. 2001 (unpublished).

Enger JM. Survey questionnaire format effect on response rate and cost per return. Paper presented at the Annual Meeting of the American Educational Research Association, Atlanta 1993.

Etter J-F, Perneger TV, Rougemont A. Does sponsorship matter in patient satisfaction surveys? A randomized trial. Med Care 1996;34:327-35.

Etter JF, Perneger TV. Questionnaire color and response patterns in mailed surveys: a randomised trial and meta-analysis. Institute of Social and Preventive Medicine, Geneva, 1997 (unpublished).

Etter J-F, Perneger TV, Ronchi A. Collecting saliva samples by mail. Am J Epidemiol 1998;147:141-6.

Etter J-F, Perneger TV, Laporte J-D. Unexpected effects of a prior feedback letter and a professional layout on the response rate to a mail survey in Geneva. J Epidemiol Community Health 1998;52:128-9.

Everett SA, Price JH, Bedell A, Telljohann SK. The effect of a monetary incentive in increasing the return rate of a survey to family physicians. Eval Health Professions 1997;20:207-4.

Faria AJ, Dickinson JR. Mail survey response, speed, and cost. Ind Marketing Manage 1992;21:51-60.

Faria MC, Mateus CL, Coelho F, Martins R, Barros H. Postal questionnaires: a useful strategy for the follow up of stroke cases. Acta Med Portuguesa 1997;10:61-5.

Feild HS. Effects of sex of investigator on mail survey response rates and response bias. J Appl Psychol 1975;60:772-3.

Ferrell OC, Childers TL, Reukert RW. Effects of situational factors on mail survey response. Educ Conf Proc 1984:364-7.

Finn DW. Response speeds, functions, and predictability in mail surveys. J Acad Marketing Sci 1983;11:61-70.

Fiset L, Milgrom P, Tarnai J. Dentists' response to financial incentives in a mail survey of malpractice liability experience. J Public Health Dent 1994;54:68-72.

Ford NM. The advance letter in mail surveys. J Marketing Res 1967;4:202-4.

Friedman HH, Goldstein L. Effect of ethnicity of signature on the rate of return and content of a mail questionnaire. J Appl Psychol 1975;60:770-1.

Friedman HH, San Augustine AJ. The effects of a monetary incentive and the ethnicity of the sponsors signature on the rate and quality of response to a mail survey. J Acad Marketing Sci 1979;7:95-101.

Furse DH, Stewart DW. Monetary incentives versus promised contribution to charity: new evidence on mail survey response. J Marketing Res 1982;XIX:375-80.

Furst LG, Blitchington WP. The use of a descriptive cover letter and secretary pre-letter to increase response rate in a mailed survey. Personnel Psychol 1979;32:155-9.

Futrell CM, Swan J. Anonymity and response by salespeople to a mail questionnaire. J Marketing Res 1977;14:611-6.

Futrell CM, Stem DE, Fortune BD. Effects of signed versus unsigned internally administered questionnaires for managers. J Business Res 1978;6:91-8.

Futrell CM, Lamb C. Effect on mail survey return rates of including questionnaires with follow up letters. Percept Mot Skills 1981;52:11-5.

Futrell CM, Hise RT. The effects of anonymity and a same-day deadline on the response rate to mail surveys. Eur Res 1982;10:171-5.

Gajraj AM, Faria AJ, Dickinson JR. A comparison of the effect of promised and provided lotteries, monetary and gift incentives on mail survey response rate, speed and cost. J Market Res Soc 1990;32:141-62.

Gendall P, Hoek J, Brennan M. The tea bag experiment: more evidence on incentives in mail surveys. J Market Res Soc 1998;40:347-51.

Gibson PJ, Koepsell TD, Diehr P, Hale C. Increasing response rates for mailed surveys of medicaid clients and other low-income populations. Am J Epidemiol 1999;149:1057-62.

Giles WF, Feild HS. Effects of amount, format, and location of demographic information on questionnaire return rate and response bias of sensitive and non sensitive items. Personnel Psychol 1978;31:549-59.

Gillespie DF, Perry RW. Survey return rates and questionnaire appearance. Austr N Z J Sociol 1975;11:71-2.

Gillpatrick TR, Harmon RR, Tseng LP. The effect of a nominal monetary gift and different contacting approaches on mail survey response among engineers. IEEE Transact Engineering Manage 1994;41:285-90.

Gitelson RJ, Drogin EB. An experiment on the efficacy of a certified final mailing. J Leisure Res 1992;24:72-8.

Glisan G, Grimm JL. Improving response rate in an industrial setting: will traditional variables work? Southern Marketing Assoc Proc 1982;20:265-8.

Godwin K. The consequences of large monetary incentives in mail surveys of elites. Public Opin Q 1979;43:378-87.

Goldstein L, Friedman HH. A case for double postcards in surveys. J Advertising Res 1975;15:43-7.

Goodstadt MS, Chung L, Kronitz R, Cook G. Mail survey response rates: their manipulation and impact. J Marketing Res 1977;14:391-5.

Green KE, Stager SF. The effects of personalization, sex, locale, and level taught on educators' responses to a mail survey. J Exper Educ 1986;54:203-6.

Green KE, Kvidahl RF. Personalization and offers of results: effects on response rates. J Exper Educ 1989;57:263-70.

Greer TV, Lohtia R. Effects of source and paper color on response rates in mail surveys. Ind Marketing Manage 1994;23:47-54.

Gullahorn JT, Gullahorn JE. Increasing returns from non-respondents. Public Opin Q 1959;23:119-21.

Gullahorn JE, Gullahorn JT. An investigation of the effects of three factors on response to mail questionnaires. Public Opin Q 1963;27:294-6.

Gupta L, Ward J, D'Este C. Differential effectiveness of telephone prompts by medical and nonmedical staff in increasing survey response rates: a randomised trial. Aust N Z J Public Health 1997;21:98-9.

Hackler JC, Bourgette P. Dollars, dissonance and survey returns. Public Opin Q 1973;37:276-81.

Hancock JW. An experimental study of four methods of measuring unit costs of obtaining attitude toward the retail store. J Appl Psychol 1940;24:213-30.

Hansen RA, Robinson LM. Testing the effectiveness of alternative foot-in-the-door manipulations. J Marketing Res 1980;17:359-64.

Hansen RA. A self-perception interpretation of the effect of monetary and nonmonetary incentives on mail survey respondent behaviour. J Marketing Res 1980;17:77-83.

Hawes JM, Crittenden VL, Crittenden WF. The effects of personalisation, source, and offer on mail survey response rate and speed. Akron Business Econ Rev 1987;18:54-63.

Hawkins DI. The impact of sponsor identification and direct disclosure of respondent rights on the quantity and quality of mail survey data. J Business 1979;52:577-90.

Heaton E. Increasing mail questionnaire returns with a preliminary letter. J Advertising Res 1965;5:36-9.

Hendrick C, Borden R, Giesen M, Murray EJ, Seyfried BA. Effectiveness of ingratiation tactics in a cover letter on mail questionnaire response. Psychonom Sci 1972;26:349-51.

Henley JR. Response rate to mail questionnaires with a return deadline. Public Opin Q 1976;40:374-5.

Hensley WE. Increasing response rate by choice of postage stamp. Public Opin Q 1974;38:280-3.

Hewett WC. How different combinations of postage on outgoing and return envelopes affect questionnaire returns. J Market Res Soc 1974;16:49-50.

Hoffman SC, Burke AE, Helzlsouer KJ, Comstock GW. Controlled trial of the effect of length, incentives, and follow-up techniques on response to a mailed questionnaire. Am J Epidemiol 1998;148:1007-11.

Hopkins KD, Hopkins BR, Schon I. Mail surveys of professional populations: the effects of monetary gratuities on return rates. J Exper Educ 1988;56:173-5.

Hornik J. Time cue and time perception effect on response to mail surveys. J Marketing Res 1981;18:243-8.

Horowitz JL, Sedlacek WE. Initial returns on mail questionnaires: a literature review and research note. Res Higher Educ 1974;2:361-7.

Houston MJ, Jefferson RW. The negative effects of personalization on response patterns in mail surveys. J Marketing Res 1975;12:114-7.

Houston MJ, Nevin JR. The effect of source and appeal on mail survey response patterns. J Marketing Res 1977;14:374-8.

Hubbard R, Little EL. Promised contributions to charity and mail survey responses: replication with extension. Public Opin Q 1988;52:223-30.

Hubbard R, Little EL. Cash prizes and mail survey response rates: a threshold analysis. J Acad Marketing Sci 1988;16:42-4.

Huck SW, Gleason E. Using monetary inducements to increase response rates from mailed surveys. J Appl Psychol 1974;59:222-5.

Hyett GP, Farr DJ. Postal questionnaires: double-sided printing compared with single-sided printing. Eur Res 1977;5:136-7.

Iglesias CP, Torgerson DJ. Does length of questionnaire matter? A randomised trial of response rates to a mailed questionnaire. J Health Serv Res Policy 2000;5:219-21.

Jacobs LC. Effect of the use of optical scan sheets on survey response rate. Paper presented at the annual meeting of the American Educational Research Association, 1986.

Jacoby A. Possible factors affecting response to postal questionnaires: findings from a study of general practitioner services. J Public Health Med 1990;12:131-5.

James J, Bolstein R. The effect of monetary incentives and follow-up mailings on the response rate and response quality in mail surveys. Public Opin Q 1990;54:346-61.

James J, Bolstein R. Large monetary incentives and their effect on mail survey response rates. Public Opin Q 1992;56:442-53.

Jensen JL. The effect of survey format on response rate and patterns of response. Doctoral dissertation, University of California, 1994.

Jobber D, Sanderson S. The effects of a prior letter and coloured questionnaire paper on mail survey response rates. J Market Res Soc 1983;25:339-49.

Jobber D, Sanderson S. The effect of two variables on industrial mail survey returns. Ind Marketing Manage 1985;14:119-21.

Jobber D, Birro K, Sanderson SM. A factorial investigation of methods of stimulating response to mail surveys. Eur J Operational Res 1988;37:158-64.

Jobber D. An examination of the effects of questionnaire factors on response to an industrial mail survey. Int J Res Marketing 1989;6:129-40.

Johansson L, Solvoll K, Opdahl S, Bjorneboe G-E, Drevon CA. Response rates with different distribution methods and reward, and reproducibility of a quantitative food frequency questionnaire. Eur J Clin Nutr 1997;51:346-53.

John EM, Savitz DA. Effect of a monetary incentive on response to a mail survey. Ann Epidemiol 1994;4:231-5.

Jones WH, Linda G. Multiple criteria effects in a mail survey experiment. J Marketing Res 1978;15:280-4.

Jones WH, Lang JR. Sample composition bias and response bias in a mail survey: a comparison of inducement methods. J Marketing Res 1980;17:69-76.

Kahle LR, Sales BD. Personalization of the outside envelope in mail surveys. Public Opin Q 1978;42:547-50.

Kaplan S, Cole P. Factors affecting response to postal questionnaires. Br J Prev Soc Med 1970;24:245-7.

Keown CF. Foreign mail surveys: response rates using monetary incentives. J Int Business Studies 1985;16:151-3.

Kephart WM, Bressler M. Increasing the response to mail questionnaires: a research study. Public Opin Q 1958;21:123-32.

Kerin RA. Personalization strategies, response rate and response quality in a mail survey. Soc Sci Q 1974;55:175-81.

Kerin RA, Harvey MG. Methodological considerations in corporate mail surveys: a research note. J Business Res 1976;4:277-81.

Kerin RA, Peterson RA. Personalization, respondent anonymity, and response distortion in mail surveys. J Appl Psychol 1977;62:86-9.

Kerin RA, Barry TE, Dubinsky AJ, Harvey MG. Offer of results and mail survey response from a commercial population: a test of Gouldner's Norm of Reciprocity. Proc Am Inst Decision Sci 1981:283-5.

Kernan JB. Are ‘bulk rate occupants’ really unresponsive? Public Opin Q 1971;35:420-2.

Kindra GS, McGown KL, Bougie M. Stimulating responses to mailed questionnaires. An experimental study. Int J Res Marketing 1985;2:219-35.

King JO. The influence of personalization on mail survey response rates. Arkansas Business Econ Rev 1978;11:15-8.

Koo MM, Rohan TE. Printed signatures and response rates. Epidemiology 1995;6:568.

Koo MM, Rohan TE. Types of advance notification in reminder letters and response rates. Epidemiology 1996;7:215-6.

Kurth LA. Message responses as functions of communication mode: a comparison of electronic mail and typed memoranda. Doctoral dissertation, Arizona State University, 1987.

La Garce R, Kuhn LD. The effect of visual stimuli on mail survey response rates. Ind Marketing Manage 1995;24:11-8.

Labrecque DP. A response rate experiment using mail questionnaires. J Marketing 1978;42:82-3.

Larsson I. Increasing the rate of returns in mail surveys. A methodological study. Didakometry Sociometry 1970;2:43-70.

Leigh Brown AP, Lawrie HE, Kennedy AD, Webb JA, Torgerson DJ, Grant AM. Cost effectiveness of a prize draw on response to a postal questionnaire: results of a randomised trial among orthopaedic outpatients in Edinburgh. J Epidemiol Community Health 1997;51:463-4.

Linsky AS. A factorial experiment in inducing responses to a mail questionnaire. Sociol Soc Res 1965;49:183-9.

London SJ, Dommeyer CJ. Increasing response to industrial mail surveys. Ind Marketing Manage 1990;19:235-41.

Lund DB, Malhotra NK, Smith AE. Field validation study of conjoint analysis using selected mail survey response rate facilitators. J Business Res 1988;16:351-68.

Lund E, Gram IT. Response rate according to title and length of questionnaire. Scand J Soc Med 1998;26:154-60.

Maheux B, Legault C, Lambert J. Increasing response rates in physicians' mail surveys: an experimental study. Am J Public Health 1989;79:638-9.

Marrett LD, Kreiger N, Dodds L, Hilditch S. The effect on response rates of offering a small incentive with a mailed questionnaire. Ann Epidemiol 1992;2:745-53.

Martin JD, McConnell JP. Mail questionnaire response induction: the effect of four variables on the response of a random sample to a difficult questionnaire. Soc Sci Q 1970;51:409-14.

Martin WS, Duncan WJ, Powers TL, Sawyer JC. Costs and benefits of selected response inducement techniques in mail survey research. J Business Res 1989;19:67-79.

Martin CL. The impact of topic interest on mail survey response behaviour. J Market Res Soc 1994;36:327-38.

Mason WS, Dressel RJ, Bain RK. An experimental study of factors affecting response to a mail survey of beginning teachers. Public Opin Q 1961;25:296-9.

Matteson MT. Type of transmittal letter and questionnaire colour as two variables influencing response rates in a mail survey. J Appl Psychol 1974;59:535-6.

McConochie RM, Rankin CA. Effects of monetary premium variations on response/non response bias: Representation of black and non black respondents in surveys of radio listening. American Statistical Association—Proceedings of the Section on Survey Research Methods, 1985:42-5.

McKee D. The effect of using a questionnaire identification code and message about non-response follow-up plans on mail survey response characteristics. J Market Res Soc 1992;34:179-91.

McKillip J, Lockhart DC. The effectiveness of cover-letter appeals. J Soc Psychol 1984;122:85-91.

Miller MM. The effects of cover letter appeal and non monetary incentives on university professors' response to a mail survey. Paper presented at the annual meeting of the American Educational Research Association, 1994.

Mortagy AK, Howell JB, Waters WE. A useless raffle. J Epidemiol Community Health 1985;39:183-4.

Moss VD, Worthen BR. Do personalization and postage make a difference on response rates to surveys of professional populations. Psychol Rep 1991;68:692-4.

Mullen P, Easling I, Nixon SA, Koester DR, Biddle AK. The cost-effectiveness of randomised incentive and follow-up contacts in a national mail survey of family physicians. Eval Health Professions 1987;10:232-45.

Mullner RM, Levy PS, Byre CS, Matthews D. Effects of characteristics of the survey instrument on response rates to a mail survey of community hospitals. Public Health Rep 1982;97:465-9.

Murawski MM, Carroll NV. Direct mail performance of selected health related quality of life scales. J Pharmacoepidemiol 1996;5:17-38.

Murphy PM, Daley JM. Exploring the effects of postcard prenotification on industrial firms' response to mail surveys. J Market Res Soc 1991;33:335-41.

Myers JH, Haug AF. How a preliminary letter affects mail survey returns and costs. J Advertising Res 1969;9:37-9.

Nagata C, Hara S, Shimizu H. Factors affecting response to mail questionnaire: research topics, questionnaire length, and non-response bias. J Epidemiol 1995;5:81-5.

Nakai S, Hashimoto S, Murakami Y, Hayashi M, Manabe K, Noda H. Response rates and non-response bias in a health-related mailed survey. Nippon-Koshu-Eisei-Zasshi 1997;44:184-91.

Nevin JR, Ford NM. Effects of a deadline and veiled threat on mail survey responses. J Appl Psychol 1975;61:116-8.

Newland CA, Waters WE, Standford AP, Batchelor BG. A study of mail survey method. Int J Epidemiol 1977;6:65-7.

Nichols RC, Meyer MA. Timing postcard follow-ups in mail questionnaire surveys. Public Opin Q 1966;30:306-7.

Nichols S, Waters WE, Woolaway M, Hamilton-Smith MB. Evaluation of the effectiveness of a nutritional health education leaflet in changing public knowledge and attitudes about eating and health. J Human Nutr Dietetics 1988;1:233-8.

Ogbourne AC, Rush B, Fondacaro R. Dealing with nonrespondents in a mail survey of professionals. Eval Health Professions 1986;9:121-8.

Olivarius NdeF, Andreasen AH. Day-of-the-week effect on doctors' response to a postal questionnaire. Scand J Primary Health Care 1995;13:65-7.

Osborne MO, Ward J, Boyle C. Effectiveness of telephone prompts when surveying general practitioners: a randomised trial. Austr Fam Physician 1996;25:S41-3.

Parasuraman A. Impact of cover letter detail on response patterns in a mail survey. Am Inst Decision Sci 1981;13th meeting:289-91.

Parsons RJ, Medford TS. The effect of advance notice in mail surveys of homogenous groups. Public Opin Q 1972;36:258-9.

Peck JK, Dresch SP. Financial incentives, survey response, and sample representativeness: does money matter. Rev Publ Data Use 1981;9:245-66.

Perneger TV, Etter J-F, Rougemont A. Randomized trial of use of a monetary incentive and a reminder card to increase the response rate to a mailed questionnaire. Am J Epidemiol 1993;138:714-22.

Perry N. Postage combinations in postal questionnaire surveys—another view. J Market Res Soc 1974;16:245-6.

Peters TJ, Harvey IM, Bachmann MO, Eachus JI. Does requesting sensitive information on postal questionnaires have an impact on response rates? A randomised controlled trial in the south west of England. J Epidemiol Community Health 1998;52:130.

Peterson RA. An experimental investigation of mail survey responses. J Business Res 1975;3:199-210.

Phillips WM. Weaknesses of the mail questionnaire: a methodological study. Sociol Soc Res 1951;35:260-7.

Pirotta M, Gunn J, Farish S, Karabatsos G. Primer postcard improves postal survey response rates. Aust N Z J Public Health 1999;23:196-7.

Poe GS, Seeman I, McLaughlin J, Mehl E, Dietz M. ‘Don’t know’ boxes in factual questions in a mail questionnaire. Public Opin Q 1988;52:212-22.

Powers DE, Alderman DL. Feedback as an incentive for responding to a mail questionnaire. Res Higher Educ 1982;17:207-11.

Pressley MM, Tullar WL. A factor interactive investigation of mail survey response rates from a commercial population. J Marketing Res 1977;14:108-11.

Pressley MM. Care needed when selecting response inducements in mail surveys of commercial populations. J Acad Marketing Sci 1978;6:336-43.

Pressley MM, Dunn MG. A factor-interactive experimental investigation of inducing response to questionnaires mailed to commercial populations. AMA Educators Conference Proceedings, 1985:356-61.

Pucel DJ, Nelson HF, Wheeler DN. Questionnaire follow-up returns as a function of incentives and responder characteristics. Vocational Guidance Q 1971;March:188-93.

Rimm EB, Stampfer MJ, Colditz GA, Giovannuci E, Willet WC. Effectiveness of various mailing strategies among nonrespondents in a prospective cohort study. Am J Epidemiol 1990;131:1068-71.

Roberts RE, McCrory OF, Forthofer RN. Further evidence on using a deadline to stimulate responses to a mail survey. Public Opin Q 1978;42:407-10.

Roberts H, Pearson JC, Dengler R. Impact of a postcard versus a questionnaire as a first reminder in a postal lifestyle survey. J Epidemiol Community Health 1993;47:334-5.

Roberts I, Coggan C, Fanslow J. Epidemiological methods: the effect of envelope type on response rates in an epidemiological study of back pain. Aust N Z J Occ Health Safety 1994;10:55-7.

Rolnick SJ, Gross CR, Garrard J, Gibson RW. A comparison of response rate, data quality, and cost in the collection of data on sexual history and personal behaviours. Am J Epidemiol 1989;129:1052-61.

Romney VA. A comparison of responses to open-ended and closed ended items on a state-level community education needs assessment instrument. Doctoral dissertation, University of Virginia, 1993.

Roszkowski MJ, Bean AG. Believe it or not! Longer questionnaires have lower response rates. J Business Psychol 1990;4:495-509.

Rucker MH, Arbaugh JE. A comparison of matrix questionnaires with standard questionnaires. Educ Psychol Measurement 1979;39:637-43.

Rucker M, Hughes R, Thompson R, Harrison A, Vanderlip N. Personalization of mail surveys: too much of a good thing? Educ Psychol Measurement 1984;44:893-905.

Sallis JF, Fortmann SP, Solomon DS, Farquhar JW. Increasing returns of physician surveys. Am J Public Health 1984;74:1043.

Salomone PR, Miller GC. Increasing the response rates of rehabilitation counselors to mailed questionnaires. Rehab Counseling Bull 1978;22:138-41.

Salvesen K, Vatten L. Effect of a newspaper article on the response to a postal questionnaire. J Epidemiol Community Health 1992;46:86.

Schweitzer M, Asch D. Timing payments to subjects of mail surveys: cost-effectiveness and bias. J Clin Epidemiol 1995;48:1325-9.

See Tai S, Nazareth I, Haines A, Jowett C. A randomized trial of the impact of telephone and recorded delivery reminders on the response rate to research questionnaires. J Public Health Med 1997;19:219-21.

Shahar E, Bisgard KM, Folsom AR. Response to mail surveys: effect of a request to explain refusal to participate. Epidemiology 1993;4:480-2.

Shiono PH, Klebanoff MA. The effect of two mailing strategies on the response to a survey of physicians. Am J Epidemiol 1991;134:539-42.

Simon R. Responses to personal and form letters in mail surveys. J Advertising Res 1967;7:28-30.

Skinner SJ, Ferrell OC, Pride WM. Personal and nonpersonal incentives in mail surveys: immediate versus delayed inducements. J Acad Marketing Sci 1984;12:106-14.

Sletto R. Pretesting of questionnaires. Am Sociol Rev 1940;5:193-200.

Sloan M, Kreiger N, James B. Improving response rates among doctors: randomised trial. BMJ 1997;315:1136.

Smith K, Bers T. Improving alumni survey response rates: An experiment and cost-benefit analysis. Res Higher Educ 1987;27:218-25.

Spry VM, Hovell MF, Sallis JG, Hofsteter CR, Elder JP, Molgaard CA. Recruiting survey respondents to mailed surveys: controlled trials of incentives and prompts. Am J Epidemiol 1989;130:166-72.

Stafford JE. Influence of preliminary contact on mail returns. J Marketing Res 1966;3:410-1.

Stevens RE. Does precoding mail questionnaires affect response rates. Public Opin Q 1975;38:621-2.

Sutherland HJ, Beaton M, Mazer R, Kriukov V, Boyd NF. A randomized trial of the total design method for the postal follow-up of women in a cancer prevention trial. Eur J Cancer Prevention 1996;5:165-8.

Sutton RJ, Zeitz LL. Multiple prior notifications, personalization, and reminder surveys. Marketing Res 1992;4:14-21.

Svoboda P. A comparison of two questionnaires for assessing outcome after head injury in the Czech Republic. 2001 (unpublished).

Swan JE, Epley DE, Burns WL. Can follow-up response rates to a mail survey be increased by including another copy of the questionnaire? Psychol Rep 1980;47:103-6.

Tambor ES, Chase GA, Faden RR, Geller G, Hofman KJ, Holtzman NA. Improving response rates through incentives and follow-up: the effect on a survey of physicians' knowledge of genetics. Am J Public Health 1993;83:1599-603.

Temple-Smith M, Mulvey G, Doyle W. Maximising response rates in a survey of general practitioners. Lessons from a Victorian survey on sexually transmissible diseases. Austr Fam Physician 1998;27(suppl 1):S15-S18.

Trice AD. Maximizing participation in surveys: hotel ratings VII. J Soc Behav Pers 1985;1:137-41.

Tullar WL, Pressley MM, Gentry DL. Toward a theoretical framework for mail survey response. Proceedings of the Third Annual Conference of the Academy of Marketing Science 1979;2:243-7.

Urban N, Anderson GL, Tseng A. Effects on response rates and costs of stamps vs business reply in a mail survey of physicians. J Clin Epidemiol 1993;46:455-9.

Vocino T. Three variables in stimulating responses to mailed questionnaires. J Marketing 1977;41:76-7.

Vogel PA, Skjostad K, Eriksen L. Influencing return rate by mail of alcoholics' questionnaires at follow-up by varying lottery procedures and questionnaire lengths. Two experimental studies. Eur J Psychiatry 1992;6:213-22.

VonRiesen RD. Postcard reminders versus replacement questionnaires and mail survey response rates from a professional population. J Business Res 1979;7:1-7.

Waisanen FB. A note on the response to a mailed questionnaire. Public Opin Q 1954;18:210-2.

Ward J, Wain G. Increasing response rates of gynaecologists to a survey: a randomised trial of telephone prompts. Austr J Public Health 1994;18:332-4.

Ward J, Boyle C, Long D, Ovadia C. Patient surveys in general practice. Austr Fam Physician 1996;25:S19-S20.

Ward J, Bruce T, Holt P, D'Este K, Sladden M. Labour-saving strategies to maintain survey response rates: a randomised trial. Aust N Z J Public Health 1998;22(3 suppl):394-6.

Warriner K, Goyder J, Gjertsen H, Hohner P, Mcspurren K. Charities, no; lotteries, no; cash, yes. Public Opin Q 1996;60:542-62.

Weilbacher WM, Walsh HR. Mail questionnaires and the personalized letter of transmittal. Marketing Notes 1952;16:331-6.

Weir N. Methods of following up stroke patients. Neurosciences Trials Unit, University of Edinburgh, 2001 (unpublished).

Wells DV. The representativeness of mail questionnaires as a function of sponsorship, return postage, and time of response. Doctoral dissertation, Hofstra University, 1984.

Weltzien RT, McIntyre TJ, Ernst JA, Walsh JA, Parker JK. Crossvalidation of some psychometric properties of the CSQ and its differential return rate as a function of token financial incentives. Community Mental Health J 1986;22:49-55.

Wensing M, Mainz J, Kramme O, Jung HP, Ribacke M. Effect of mailed reminders on the response rate in surveys among patients in general practice. J Clin Epidemiol 1999;52:585-7.

Whitmore WJ. Mail survey premiums and response bias. J Marketing Res 1976;13:46-50.

Willits FK, Ke B. Part-whole question order effects. Public Opin Q 1995;59:392-403.

Windsor J. What can you ask about? The effect on response to a postal screen of asking about two potentially sensitive questions. J Epidemiol Community Health 1992;46:83-5.

Woodward A, Douglas B, Miles H. Chance of a free dinner increases response to mail questionnaire. Int J Epidemiol 1985;14:641-2.

Worthen BR, Valcarce RW. Relative effectiveness of personalized and form covering letters in initial and follow-up mail surveys. Psychol Rep 1985;57:735-44.

Wynn GW, McDaniel SW. The effect of alternative foot-in-the-door manipulations on mailed questionnaire response rate and quality. J Market Res Soc 1985;27:15-26.

Zagumny MJ, Ramsey R, Upchurch MP. Is anonymity important in AIDS survey research? Psychol Rep 1996;78:270.

Zusman BJ, Duby P. An evaluation of the use of monetary incentives in postsecondary survey research. J Res Dev Educ 1987;20:73-8.

Editorial by Smeeth and Fletcher

Footnotes

Funding: The study was supported by a grant from the BUPA Foundation and a Nuffield Trust short term fellowship.

Competing interests: None declared.

References

- 1. Armstrong BK, White E, Saracci R. Principles of exposure measurement in epidemiology. Monographs in epidemiology and biostatistics. Vol. 21. New York: Oxford University Press; 1995. pp. 294–321.

- 2. Edwards P, Clarke M, DiGuiseppi C, Pratap S, Roberts I, Wentz R. Identification of randomised controlled trials in systematic reviews: accuracy and reliability of screening records. Stat Med (in press).

- 3. Egger M, Davey Smith G, Schneider M, Minder C. Bias in meta-analysis detected by a simple graphical test. BMJ. 1997;315:629–634. doi: 10.1136/bmj.315.7109.629.

- 4. Yammarino FJ, Skinner SJ, Childers TL. Understanding mail survey response behavior: a meta-analysis. Publ Opin Q. 1991;55:613–639.

- 5. Clarke MJ, Stewart LA. Obtaining data from randomised controlled trials: how much do we need for reliable and informative meta-analyses? BMJ. 1994;309:1007–1010. doi: 10.1136/bmj.309.6960.1007.

- 6. Schulz KF, Chalmers I, Hayes RJ, Altman DG. Empirical evidence of bias: dimensions of methodological quality associated with estimates of treatment effects in controlled trials. JAMA. 1995;273:408–412. doi: 10.1001/jama.273.5.408.

- 7. Engels EA, Schmid CH, Terrin N, Olkin I, Lau J. Heterogeneity and statistical significance in meta-analysis: an empirical study of 125 meta-analyses. Stat Med. 2000;19:1707–1728. doi: 10.1002/1097-0258(20000715)19:13<1707::aid-sim491>3.0.co;2-p.

- 8. Edwards P, Roberts I, Clarke M, DiGuiseppi C, Pratap S, Wentz R, Kwan I. Cochrane Library. Issue 2. Oxford: Update Software; 2002. Methods to influence response to postal questionnaires.