Summary

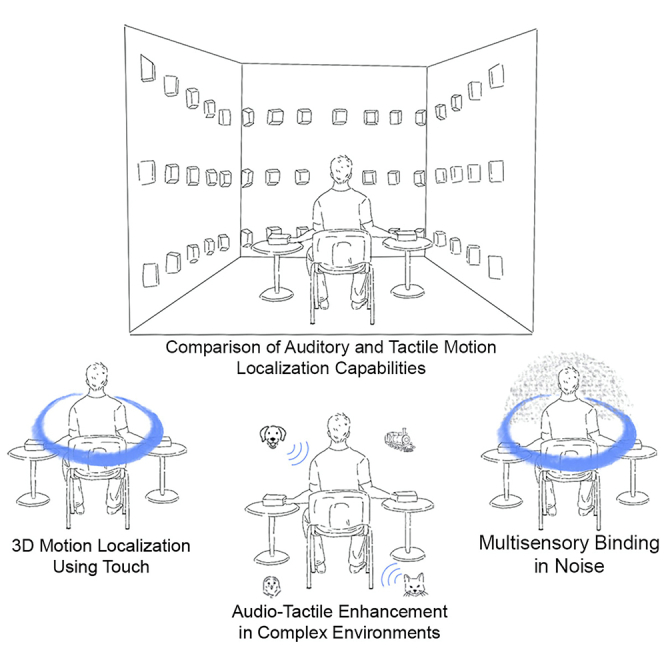

Each sense serves a different specific function in spatial perception, and they all form a joint multisensory spatial representation. For instance, hearing enables localization in the entire 3D external space, while touch traditionally only allows localization of objects on the body (i.e., within the peripersonal space alone). We use an in-house touch-motion algorithm (TMA) to evaluate individuals’ capability to understand externalized 3D information through touch, a skill that was not acquired during an individual’s development or in evolution. Four experiments demonstrate quick learning and high accuracy in localization of motion using vibrotactile inputs on fingertips and successful audio-tactile integration in background noise. Subjective responses in some participants imply spatial experiences through visualization and perception of tactile “moving” sources beyond reach. We discuss our findings with respect to developing new skills in an adult brain, including combining a newly acquired “sense” with an existing one and computation-based brain organization.

Subject areas: Biological sciences, Neuroscience, Techniques in neuroscience

Graphical abstract

Highlights

- Intensity-weighted tactile information enables representation of 3D spatial motion
- Tactile stimulation enables motion localization accuracy near auditory capabilities
- Rapid learning of a sensory capability never experienced in development or evolution
- Binding auditory and tactile information enhances performance in noisy environments


Introduction

Each of our senses is separately capable of acquiring spatial information, yet spatial perception is intrinsically multisensory and acquired as such from the early years of life.1 Hearing is capable of perceiving external locations and moving sources in all angles of the 3D environment. Vision has a significantly narrower frontal field of view of approximately 120° and is used to refine auditory localization precision through head movement.2 Both vision and hearing receive information from both the extrapersonal space and the peripersonal space (the space within which one can reach or be physically reached by other entities).3 The tactile sense is conventionally associated solely with locations on the body and thus limited to the peripersonal space.

Meanwhile, some mammals, such as elephants, have the ability to comprehend both content and spatial locations through tactile vibrations. Specifically, they receive seismic cues sent over long distances by another elephant’s stomping or low-frequency vocalizations. To enhance source localization, the receiving elephant lifts a single foot off the ground to perform triangulation.4 Elephants perform this task using specialized vibration-encoding mechanoreceptors concentrated in the feet; this information is then combined with the perceived sounds.5 In humans, these receptors are most prominent on the fingertips (but are also found in the feet and the mouth).6,7

Auditory and tactile senses in humans bear certain similarities. Interestingly, the organ of Corti in the inner ear is believed to have originally developed from epithelium (skin).8 Both senses encode vibrations within a partially overlapping frequency range using mechanoreceptors (for the tactile sense, Pacinian corpuscles respond to vibrations up to 700–1000 Hz,6,9,10,11 while the healthy hearing range is 20–20,000 Hz), and their neural pathways converge through common or related brain networks.12,13,14,15,16,17 Both the auditory and the tactile sense have also been referred to as “dynamic”, as they are capable of encoding inputs in motion with high accuracy (as opposed to vision, which is most accurate in detecting static inputs).18

Possibly due to the similarities between the two senses, a number of authors have shown that multisensory perceptual interactions between the auditory and the tactile systems may be inherent.17,19,20,21,22,23 Specifically designed tactile inputs have been successfully used in the context of improving speech perception,24,25,26,27,28 music perception,29,30,31,32 and auditory-based spatial localization.33,34 In spatial tasks, a bias in sound localization has been shown toward congruent tactile inputs, similar to the audiovisual ventriloquist effect.35,36,37,38

Auditory localization is a computational task occurring at various levels of the auditory system, starting as early as the olivary complex in the brainstem.39,40 In order to localize sound source positions, first interaural comparisons are carried out, combining minute interaural level differences (ILD), interaural time differences (ITD), and spectral differences of the sounds arriving at each ear caused by the interfering head and ear shape (otherwise known as the head-related transfer function, HRTF). The combination of these cues is used to create the binaural auditory model.41 The process by which the nervous system integrates multiple static locations to perceive smooth motion remains largely unknown.42
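To give a sense of how small the interaural time differences mentioned above are, the sketch below uses Woodworth's classical spherical-head approximation, ITD = (a/c)(θ + sin θ). This formula is not part of the current study; the head radius (0.0875 m) and speed of sound (343 m/s) are assumed typical values, and the function name is ours.

```python
import math

def woodworth_itd(azimuth_deg, head_radius_m=0.0875, speed_of_sound=343.0):
    """Approximate ITD (seconds) for a distant source.

    azimuth_deg: 0 = straight ahead, 90 = directly to one side.
    The approximation is intended for azimuths between 0 and 90 degrees.
    head_radius_m and speed_of_sound are assumed typical values.
    """
    theta = math.radians(azimuth_deg)
    return (head_radius_m / speed_of_sound) * (theta + math.sin(theta))

for az in (0, 30, 60, 90):
    print(f"{az:3d} deg -> {woodworth_itd(az) * 1e6:6.1f} microseconds")
```

Under these assumptions the largest ITD, for a source directly to the side, is well under one millisecond.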

Several classical works have hinted that a type of tactile “binaural” mechanism of cue comparisons might exist for source localization.43,44,45,46,47 In one of his pioneering works, von Békésy43 found that a time delay between two tactile inputs of the same intensity moves the locus of the sensation closer to the vibrator that received the input first, and that the latter is perceived louder. A series of works in the 1970s further showed that intensity differences (ILDs) can be used as cues for cutaneous localization with a resolution much closer to that of hearing than temporal cues (ITDs, with significantly poorer resolution for touch).44,45,46 Only frontal sources have been studied thus far, while the tactile localization of sources simulating motion around the entire 3D space has yet to be explored.

In the current study, we attempt to assess whether a mediating technology would enable humans to decipher extrapersonal spatial information and content through touch. For this purpose, one of the authors of the manuscript (A.S.) designed a touch-motion algorithm (TMA) that represents positions on a virtual sphere around the participant as vibrations on four fingertips (see STAR Methods). The solution fits within the general framework of sensory substitution devices (SSDs), which deliver certain features of one (primary) sensory modality through another, based on conversion algorithms. Following dedicated training, participants develop capabilities of performing sensory tasks using the SSD (e.g., recognizing visual object shapes through sounds or using tactile inputs for navigation).48,49,50,51,52,53,54 We also test the capabilities of the auditory system in the same tasks using Higher Order Ambisonics (HOA).
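The idea of representing an azimuth through intensity weights on four actuators can be illustrated with a generic constant-power panning sketch. This is not the TMA itself, whose details are given in the STAR Methods; the actuator layout (one nominal azimuth per fingertip, 90° apart), the cosine weighting, and all names below are hypothetical choices made only for illustration.

```python
import math

# Hypothetical layout: each fingertip actuator is assigned a nominal azimuth in degrees
# (0 = front, angles increase clockwise). These values are assumptions, not the TMA's.
ACTUATOR_AZIMUTHS = {
    "left_front": 315.0,
    "right_front": 45.0,
    "right_back": 135.0,
    "left_back": 225.0,
}

def actuator_gains(source_azimuth_deg):
    """Return one vibration gain per actuator for a virtual source azimuth.

    Actuators within 90 degrees of the source get a cosine-weighted gain,
    the others get zero; gains are then normalized to unit total power.
    """
    gains = {}
    for name, azimuth in ACTUATOR_AZIMUTHS.items():
        # Smallest angular distance between source and actuator (0..180 degrees).
        diff = abs((source_azimuth_deg - azimuth + 180.0) % 360.0 - 180.0)
        gains[name] = math.cos(math.radians(diff)) if diff < 90.0 else 0.0
    norm = math.sqrt(sum(g * g for g in gains.values()))
    return {name: g / norm for name, g in gains.items()}

# A source sweeping from front to right shifts intensity between neighboring actuators.
for az in (0, 45, 90):
    print(az, {k: round(v, 3) for k, v in actuator_gains(az).items()})
```

In this sketch a source midway between two actuators drives both equally, and a source at an actuator's nominal azimuth drives that actuator alone.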

The initial two experiments test the potential of performing motion localization of simple sound waves using an input-weighting design (similar to how the auditory system performs such tasks) through touch in 360° azimuth (Experiment 1), and on a semi-sphere (Experiment 2). We hypothesize that, given the intrinsic resemblance between the auditory and the tactile senses and their natural interactions, the task should be achievable if the conversion of spatial information using the TMA is well fitted to the tactile modality. Furthermore, we assume that using moving rather than static sources, thus better mirroring natural environments, should also contribute to the fast learning process. Experiment 3 is similar to Experiment 1 but tests the effect of adding background noise on spatial localization accuracy. In addition, in experiments 1–2, we use questionnaires to inquire about participants’ subjective experiences. We hypothesize that if the information received from the complex tactile inputs becomes spatially perceived, the participants will attribute the motion to sources other than the devices themselves, i.e., to sources beyond their reach, and/or will imagine/visualize the moving sources. In experiment 4, we investigate audio-tactile integration within a naturalistic auditory scene containing multiple moving environmental sounds. As in Experiment 3, we test integration of the new technologically based “novel sense” (which was present neither during critical periods nor in evolution) with one that is natural and familiar since early childhood.55 Specifically, we hypothesize that if the relevant sound sources are indeed integrated with the tactile input, directed attention using TMA could diminish the effects of “change deafness” (i.e., not noticing that one source has disappeared from a scene).56,57

Results

All the experiments included in the current study were carried out using higher order Ambisonics for the synthesis of motion in audio. The tactile inputs were provided on two fingers of both hands placed on the sides of the body (see Figure 1). Tactile spatial locations were decoded using a TMA which controlled weighted differences among four vibrating actuators to produce locations on four fingertips (for further information see the STAR Methods section). Experiments 1–3 were motion localization tasks. Participants reported the start point, the endpoint and the direction of each stimulus. The scores were calculated based on the error in degrees between the center point of the reported trajectory and that of the target. A perfect score of 1 represented 0° error, and a score of 0 represented a 180° error. Chance was 0.5 (corresponding to a 90° error), considering there was an equal distribution of possible response positions along the full azimuthal circle. Experiment 4 applied a “change deafness” paradigm with visual (text) and tactile cues (see STAR Methods).
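The scoring rule described above (0° error gives 1, 180° gives 0, 90° gives the chance level of 0.5) is consistent with a linear mapping, score = 1 − error/180. The sketch below assumes that linear form and assumes the inputs are the center azimuths of the reported and target trajectories; the function names are ours.

```python
def angular_error_deg(response_deg, target_deg):
    """Smallest absolute angular difference on the circle, in the range 0..180 degrees."""
    return abs((response_deg - target_deg + 180.0) % 360.0 - 180.0)

def localization_score(response_deg, target_deg):
    """Map angular error to a score: 0 deg -> 1.0, 90 deg -> 0.5, 180 deg -> 0.0."""
    return 1.0 - angular_error_deg(response_deg, target_deg) / 180.0

def score_to_error_deg(score):
    """Invert the mapping to express a score as an error in degrees."""
    return (1.0 - score) * 180.0

print(localization_score(350.0, 80.0))        # trajectory centers 90 degrees apart
print(round(score_to_error_deg(0.873), 1))    # a score of 0.873 expressed in degrees
```

The inverse mapping is how score confidence intervals can be read as error ranges in degrees in the results that follow.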

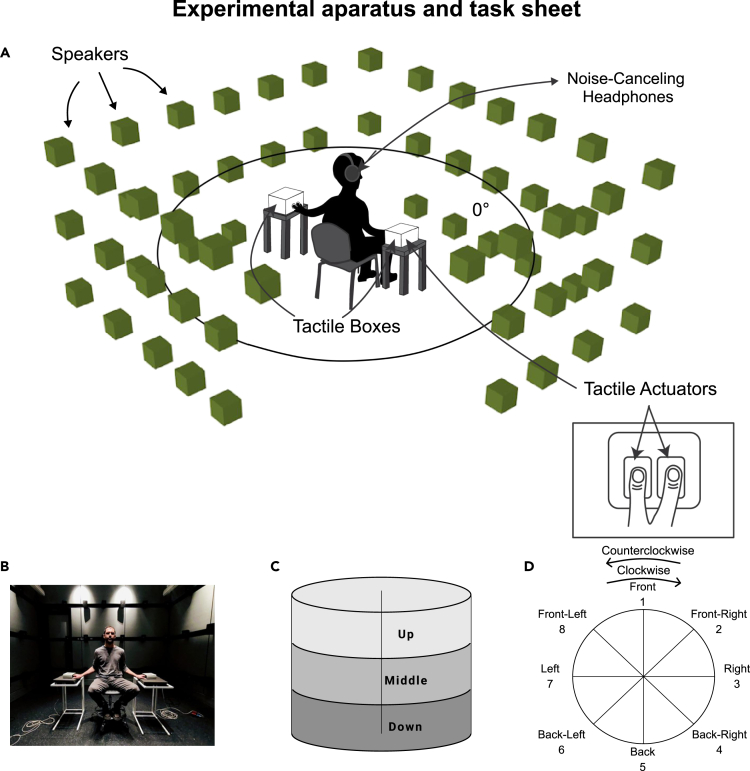

Figure 1.

Experimental apparatus and task sheet

The experimental set-up. The participant was seated in the middle of a cubic sound-attenuated room with two fingers of both hands placed in two vibrating boxes on the sides of the body (A and B); audio and vibrotactile stimuli were designed to induce perception of objects moving around the space. During the audio condition, sounds were played from speakers mounted on the walls, with the 3D sound environment as a virtual sphere created using 12th order Ambisonics.

(C and D) The participant was given an answer sheet showing 3 possible elevation levels and 8 possible horizontal locations of start and endpoints for each moving trajectory. The task of the participant was to report the start points, the endpoints and the direction of audio, tactile or audio-tactile stimuli.

Experiment 1: High accuracy of the tactile modality in localizing 360° horizontal motion

In experiment 1, participants were asked to identify the trajectories of stimuli “moving” around them on a horizontal plane (azimuth). The stimuli were 250 Hz sawtooth waves. The experiment consisted of four test conditions, each indicating the modality used to perform the spatial task: audio only 1 (A1), combined audio-tactile (AT), tactile only (T), audio only 2 (A2). No training was provided to the participants. The group scores (N = 29) for the tactile task were relatively high (M = 0.885, SD = 0.179; CI 0.873–0.898, IQR = 0.25; 18.4–22.9° error) and significantly higher than chance (Z = −24.405, p < 0.001). This was also the case for the multisensory audio-tactile task (Z = −25.288, p < 0.001), and the two auditory tasks (A1: Z = −24.478, p < 0.001; A2: Z = −24.778, p < 0.001). Comparisons between the scores in different test conditions showed higher performance for A1 (M = 0.905, SD = 0.007; CI 0.892–0.918, IQR = 0.25, 14.8–19.4° error; z = −2.849, p = 0.004), A2 (M = 0.915, SD = 0.007; CI 0.902–0.928; IQR = 0.902–0.927, 13.1–17.6° error; z = −4.177, p < 0.001), and AT (M = 0.913, SD = 0.005; CI 0.908–0.930, IQR = 0.25, 12.6–16.6° error; z = −4.216, p < 0.001), when each was directly compared to T. There was no statistically significant difference found between any other pairs of conditions. All comparisons were performed with the Wilcoxon signed-rank tests, with adjusted p < 0.0083. The results are depicted in Figure 2.
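The adjusted threshold of p < 0.0083 matches a Bonferroni correction of α = 0.05 over all pairwise comparisons among the four conditions. The sketch below assumes that this is how the threshold was derived; the condition labels come from the text, and the remaining names are ours.

```python
from itertools import combinations

conditions = ["A1", "AT", "T", "A2"]
alpha = 0.05

# All unordered pairs of the four conditions.
pairs = list(combinations(conditions, 2))
adjusted_alpha = alpha / len(pairs)

print(len(pairs), "pairwise comparisons")
print(f"adjusted threshold: p < {adjusted_alpha:.4f}")

# Example: the reported A1 vs. T comparison (p = 0.004) passes the adjusted threshold.
print(0.004 < adjusted_alpha)
```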

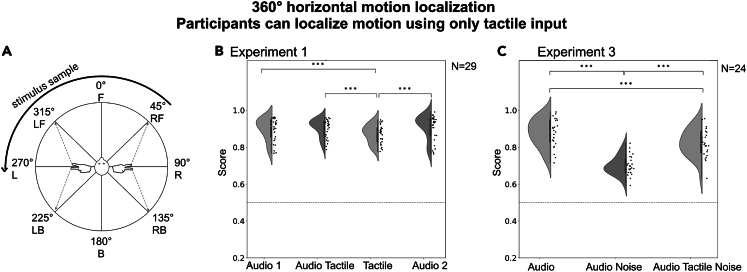

Figure 2.

360° horizontal motion localization

Participants can localize motion using tactile input only.

The task and the results of experiment 1 and experiment 3 with the sources moving on a 360° horizontal plane.

(A) After each stimulus, the participant was asked to report the start and the endpoint, as well as the direction of the motion trajectory (F = front, RF = right-front, R = right, RB = right-back, B = back, LB = left-back, L = left, LF = left-front).

(B) Group results in experiment 1. There were four test conditions in the following order: (1) audio only [Audio 1], (2) tactile only [Tactile], (3) audio and tactile combined [Audio Tactile], (4) audio only [Audio 2]. In all conditions, the results were significantly above chance (marked with a dashed line). Data are represented as mean ± SD.

(C) Group results in experiment 3. The experiment included three test conditions: (1) a baseline audio condition [Audio], (2) audio in noise [Audio Noise], (3) audio in noise with concurrent vibrotactile input [Audio Tactile Noise]; Statistical comparisons with a Wilcoxon signed-rank test (∗∗∗p < 0.001, ∗∗p < 0.01, ∗p < 0.05). Data are represented as mean ± SD.

Experiment 2: High accuracy of the tactile modality in localizing combined horizontal & vertical motion on a partial sphere

In order to further assess tactile spatial localization, experiment 2 replicated experiment 1 in both the setup and the azimuthal spatial conversion, while also adding a vertical motion component. This component was produced using changes in the frequency, based on the crossmodal correspondence between frequency and spatial elevation.58 A sawtooth wave within a range of 35–700 Hz was mapped logarithmically onto elevation between −30° and 30°, with regard to the participant’s head position. Scoring for the task was performed in the same manner as in experiment 1, except that the participant also had to report the elevation of the start and endpoint (i.e., choose to indicate “up”, “middle”, or “down”), and a diagonal distance error was calculated. There were two study conditions: auditory (A) and tactile (T). A ∼30 min training session preceded the tactile test.
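A logarithmic mapping between the 35–700 Hz range and the ±30° elevation range can be sketched as follows. The exact mapping used in the study is described in the STAR Methods; here we assume that the lowest frequency corresponds to the lowest elevation and that frequency grows by a constant ratio per degree. The function names are ours.

```python
import math

F_MIN, F_MAX = 35.0, 700.0      # frequency range from the text (Hz)
EL_MIN, EL_MAX = -30.0, 30.0    # elevation range from the text (degrees)

def elevation_to_frequency(elevation_deg):
    """Assumed mapping: equal steps in elevation give equal frequency ratios."""
    t = (elevation_deg - EL_MIN) / (EL_MAX - EL_MIN)
    return F_MIN * (F_MAX / F_MIN) ** t

def frequency_to_elevation(frequency_hz):
    """Inverse of the assumed mapping."""
    t = math.log(frequency_hz / F_MIN) / math.log(F_MAX / F_MIN)
    return EL_MIN + t * (EL_MAX - EL_MIN)

for el in (-30, 0, 30):
    print(f"{el:4d} deg -> {elevation_to_frequency(el):7.2f} Hz")
```

Under this assumption the middle elevation (0°) falls at the geometric mean of the two range limits rather than at their arithmetic mean.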

In Experiment 2 (N = 44) the T scores were relatively high (M = 0.772, SD = 0.009; CI 0.7527–0.7913, IQR = 0.17, 37.6–44.5° error) and significantly higher than chance (Z = −18.22, p < 0.001). The results for A (M = 0.797, SD = 0.009; CI 0.7781–0.8167, IQR = 0.19; 33–40° error) were also significantly above chance (Z = −18.976, p < 0.001). The audio scores were higher than the T scores (z = −2.902; p = 0.004; Wilcoxon signed-rank test; p < 0.05). The group results are shown in Figure 3. One-third of the participants reproduced motion trajectories better through the tactile modality, while two-thirds performed better in Audio (Figure 3C). In an additional analysis, we found that while for Audio, the scores were higher when reporting the endpoints of the trajectories compared to the start points (start: M = 0.806, SD = 0.009; CI 0.7877–0.8251, IQR = 0.25, 31.5–38.2° error; end: M = 0.833, SD = 0.009; CI 0.8156–0.8513, IQR = 0.25; 22.2–26.8° error; z = −2.647, p = 0.008), for the Tactile modality the reverse was true (start: M = 0.817, SD = 0.007; CI 0.8031–0.8303, IQR = 0.25; 30.5–35.4° error; end: M = 0.783, SD = 0.008; CI 0.7680–0.7988, IQR = 0.30; 36.2–41.8° error; z = −2.991, p = 0.003) (not shown in figures).

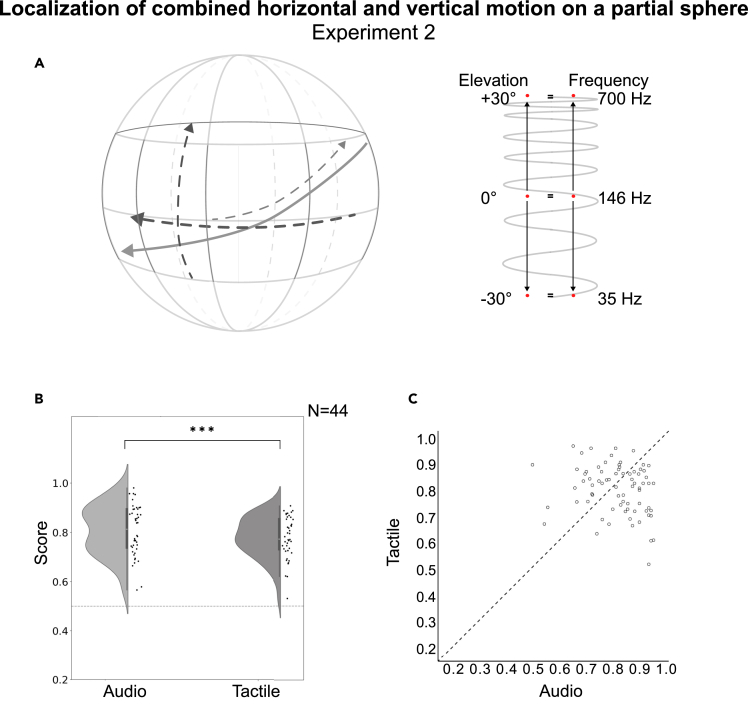

Figure 3.

Localization of combined vertical and horizontal motion on a partial sphere

Experiment 2.

The task and the results of experiment 2 with the sources moving on a semi-sphere.

(A) Example trajectories of “moving” stimuli. Participants were asked to reproduce the start and endpoints, as well as the direction of sources moving both horizontally (within the entire 360° azimuth range) and vertically (+/−30° in elevation) on a partial sphere.

(B) Group results (chance level marked as a dashed line). There were two test conditions: Audio and Tactile. Data are represented as mean ± SD. Statistical comparisons with a Wilcoxon signed-rank test (∗∗∗p < 0.001,∗∗p < 0.01,∗p < 0.05).

(C) Single participants’ scores (with one-third of the participants performing better in the task using touch, as compared to audio).

Experiment 3: Multisensory effect for motion localization in noise

Experiment 3 was designed to test the effect of adding background noise on spatial localization accuracy and multisensory integration. The sawtooth sounds moved along the 360° azimuth, as in Experiment 1, but were presented with white noise in the background (SNR = −10 dB). There were three experimental conditions: audio (A), audio-in-noise (AN) and audio-tactile in noise (ATN). No training was provided to the participants. The scoring system was the same as in Experiment 1. As in Experiment 1, the participants achieved high scores for the auditory condition in quiet (M = 0.862, SD = 0.008; CI 0.845–0.880, IQR = 0.13; 21.6–27.9° error). Adding background noise significantly decreased the scores (M = 0.699, SD = 0.01; CI 0.678–0.720, IQR = 0.38; 50.4–57.96° error; A vs. AN: z = 11.4, p < 0.001). Performance in the audio-tactile task in noise was almost as high as in the audio condition in quiet (M = 0.820, SD = 0.008; CI 0.803–0.838, IQR = 0.13; 29.16–35.46° error) but was still significantly lower (A vs. ATN: z = 4.35, p < 0.001). There was also a statistically significant difference between scores in the AN and the ATN conditions, the latter being significantly higher (z = 8.85, p < 0.001). All results were significantly above chance (p < 0.001). The results are depicted in Figure 2.
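An SNR of −10 dB means the noise carries ten times the power of the signal. The sketch below shows one generic way to scale white noise against a sawtooth so that the mixture has a chosen SNR. It is not the stimulus-generation code of the study; the 250 Hz frequency is taken from Experiment 1, while the sample rate, duration, Gaussian noise, and all function names are assumptions.

```python
import math
import random

def sawtooth(freq_hz, duration_s, sample_rate):
    """Naive sawtooth in [-1, 1); adequate for illustrating power scaling."""
    n = int(duration_s * sample_rate)
    return [2.0 * ((i * freq_hz / sample_rate) % 1.0) - 1.0 for i in range(n)]

def mean_power(samples):
    return sum(v * v for v in samples) / len(samples)

def mix_at_snr(signal, snr_db, seed=0):
    """Add Gaussian white noise scaled so that 10*log10(P_signal / P_noise) = snr_db."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    target_noise_power = mean_power(signal) / (10.0 ** (snr_db / 10.0))
    scale = math.sqrt(target_noise_power / mean_power(noise))
    noise = [scale * v for v in noise]
    mixed = [s + n for s, n in zip(signal, noise)]
    return mixed, noise

signal = sawtooth(250.0, 0.1, 48000)
mixed, noise = mix_at_snr(signal, -10.0)
measured = 10.0 * math.log10(mean_power(signal) / mean_power(noise))
print(f"measured SNR: {measured:.2f} dB")
```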

Experiment 4: Decreased “change deafness” through audio-tactile integration

To investigate multisensory integration in a naturalistic environment consisting of multiple natural sound sources, a “change deafness” experiment was designed. As in the prior experiments, HOA was used for audio and TMA for source azimuth using touch. The participants experienced pairs of identical 5-s scenes with eight sound sources moving individually along the horizontal plane, separated by a short burst of white noise (0.5 s long). The task was to recognize whether one of the sounds disappeared upon the second presentation of the scene, in three test conditions: (1) with no cue as to which sound is going to disappear, (2) with a visual cue showing the name of the sound that was going to disappear, and (3) with concurrent tactile vibrations (matching in content and location) representing the disappearing sound delivered on 4 fingertips (see Figure 4A). There was no training provided to the participants.

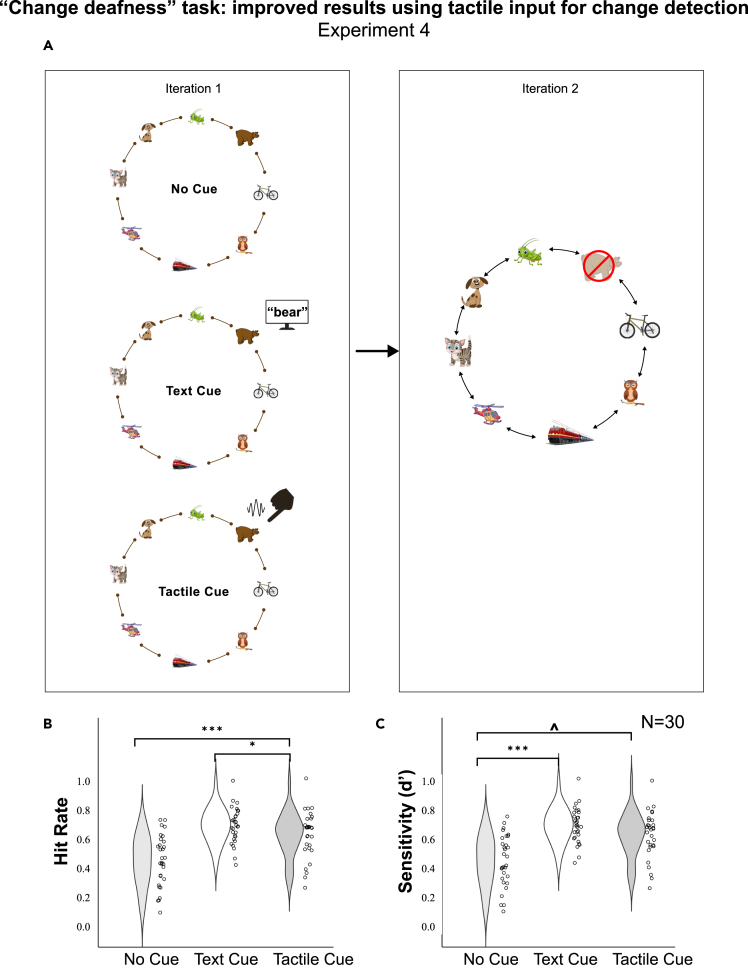

Figure 4.

“Change deafness” task: improved results using tactile inputs for change detection

Experiment 4.

The task and results of a “change deafness” experiment (experiment 4) assessing the role of directed attention for detecting a single sound disappearance within a complex auditory scene in motion.

(A) The participant listened twice to a sound scene. The first iteration always consisted of 8 moving sound sources. In the second iteration, the participants were asked to detect whether one of the sound sources disappeared. In 75% of the cases one sound disappeared, while in the remaining 25% the two iterations were identical. There were three test conditions: (1) with no directed attention [No Cue], (2) with directed attention to the disappearing source using text on a screen showing its name in the first iteration [Text Cue], (3) directed attention to the disappearing source using matching tactile input in the first iteration [Tactile Cue].

(B) Hit rate results (1 signifying 100% accuracy) for the three conditions (Wilcoxon signed-rank tests).

(C) Sensitivity (d’) results for the three conditions (Wilcoxon signed-rank tests; ∗∗∗p < 0.001, ∗∗p < 0.01, ∗p < 0.05, p < 0.1).

When hit rates (detecting the disappearance of a sound when it actually disappeared) were compared between the three test conditions, we found that performance in the tactile cue condition (M = 0.666, IQR = 0.20, CI 0.559–0.680) was significantly higher than performance in the no cue condition (M = 0.433, IQR = 0.28, CI 0.370–0.505; Z = −3.909, p < 0.001). The same effect was found for the text cue condition (M = 0.666, IQR = 0.20, CI 0.641–0.737), as compared to no cue (Z = −4.398, p < 0.001). At a tendency level, hit rates were also higher in the text cue condition, in comparison to the tactile condition (Z = −2.093, p = 0.036). When false alarms were also taken into account, sensitivity to change (d prime) was significantly higher when a text cue was presented before the experiment with the name of the disappearing object (d’ visual = 1.2179, C = 0.3653), as compared to the no-cue condition (i.e., with no directed attention; d’ no cue = 0.3747, C = 0.4414; Z = −3.754, p < 0.001). There was also a tendency for a difference between the tactile directed attention condition (d’ tactile = 0.8112, C = 0.268) and the no cue condition (Z = −1.914, p = 0.056). D prime values for the tactile and visual directed attention conditions were not statistically significantly different from one another (Z = −1.872; p = 0.067). The results are depicted in Figure 4.
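For readers unfamiliar with the sensitivity measures used here, the sketch below shows the standard signal-detection definitions, d′ = z(H) − z(FA) and C = −(z(H) + z(FA))/2, where z is the inverse of the standard normal cumulative distribution. The hit and false-alarm rates in the example are illustrative values only, not data from this study, and any correction applied to extreme rates in the actual analysis is not reproduced.

```python
from statistics import NormalDist

def dprime_and_criterion(hit_rate, false_alarm_rate):
    """Return (d', C) from a hit rate and a false-alarm rate.

    Both rates must lie strictly between 0 and 1; rates of exactly 0 or 1
    need a correction before the z-transform, which is not handled here.
    """
    z = NormalDist().inv_cdf
    z_hit, z_fa = z(hit_rate), z(false_alarm_rate)
    return z_hit - z_fa, -0.5 * (z_hit + z_fa)

# Illustrative (hypothetical) rates: 75% hits, 25% false alarms.
d_prime, criterion = dprime_and_criterion(0.75, 0.25)
print(f"d' = {d_prime:.3f}, C = {criterion:.3f}")
```

A d′ of 0 means changes are not distinguished from no-change trials, while a positive C indicates a conservative tendency to report "no change".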

Subjective experience of the participants

In experiments 1–2, the participants were asked about their subjective experience during the tactile test condition. We found that when tasked with localizing motion changing in azimuth only (experiment 1, N = 29), 21 participants said they visualized/imagined the moving sources (>72%). When the induced motion was in both azimuth and elevation (experiment 2), 35 participants out of 43 said they visualized their experience (>80%).

Here are selected quotes from participants in experiments 1 and 2 who imagined or visualized the tactile stimuli moving around them: “The sound was moving around me/up and down”, “Some kind of energy that moved in space”, “A ball that moves in a circle”, “A moving ball of light”, “I imagined the vibrations traveling in a circle”, “It felt like I was on a rollercoaster”, “I imagined a thin thread passing through my fingers”, “As a wind that moves from place to place”.

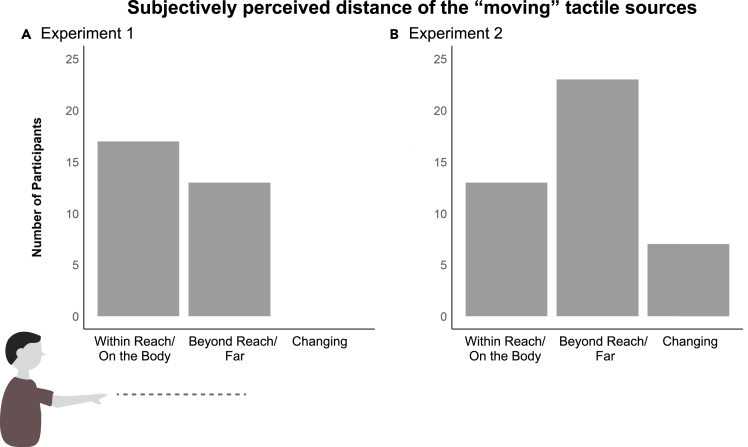

With respect to subjectively judging the distance of the tactile inputs, in experiment 1 (only horizontal motion) 16 participants said that the stimuli were within reach, and 13 that they were beyond reach/far. This result translates to 45% of the participants reporting the sources as not, or not consistently, within reach (while the stimuli were always delivered in the same way on the fingertips). During experiment 2 (combined horizontal and vertical motion), when asked about the perceived distance, 13 participants said the stimuli were within reach, while 23 said that the sources were out of reach/far. The remaining 8 subjects perceived the distance as changing. This means that 70% of the subjects perceived the moving trajectories as not, or not consistently, within reach.

Figure 5 depicts the responses of the participants, regarding their subjective experience of distance.

Figure 5.

Subjectively perceived distance of the “moving” tactile sources

Subjective experience of the participants who were asked about the perceived distance of the “moving” tactile stimuli delivered on their fingertips following experiments 1 and 2 (A and B, respectively). The respondents chose their response from among three categories: within reach/on the body, beyond reach/far, changing in distance.

Discussion

Spatial localization is possible through the tactile sense

Four experiments were carried out with the objective of assessing the tactile modality’s potential for representing spatial sources moving in three dimensions in space. We also tested whether inputs coming from a newly acquired tactile “sense” for localization can be integrated with the information coming from the existing auditory sense. Despite the novel nature of the tasks, participants were able to localize the “moving” tactile sources with accuracy significantly above chance levels, and in challenging acoustic conditions integrate the inputs with the auditory stream. The human tactile system was thus able to engage in this new complex task (similar to other mammals for remote locations) following technology-mediated perceptual learning.

Motion localization through touch vs. audio, potential brain mechanisms

Auditory detection and tracking the trajectories of moving sources in the environment is a complex task, requiring integration of multiple shifts in frequency, intensity and time properties of the sources. The underlying mechanisms (e.g., temporal vs. spatial snapshots, the role of prediction, existence of motion-sensitive brain networks) are still a subject of debate.41,59 In the current study, tactile motion localization was found to be high for a completely novel task, and on average very close to the auditory performance (with some individuals even showing higher results while performing the tactile task, as opposed to the auditory). This entails that the tactile system is indeed capable of utilizing weighted cues to understand source positions and motion, despite its generally inferior temporal, frequency and intensity discrimination capabilities, as compared to the auditory system.44,47

We believe that these results contribute to the body of evidence demonstrating the adult brain’s ability to perform tasks using an “atypical” sensory modality, given that the design properly fits the nature of the modality.60 In species such as elephants which are capable of using seismic cues for localization, tactile information is used (as well as integrated with audio) seamlessly, without a mediating technology.4 The results might also indicate task-based brain organization, maintaining that a certain computation is performed in a specific brain network regardless of the sensory modality.60,61,62,63 In fact, the temporo-occipital region of hMT+/V5, classically associated with processing visual motion, has been found to process motion and localization of sounds,64,65 as well as tactile motion such as brush strokes on the skin or Braille pin patterns.66,67,68 In addition (and what might have contributed to the quick learning in the current experiment) neuroimaging findings show extensive neuronal links between the sensorimotor and auditory neuronal systems,69,70 as well as the auditory system’s engagement in encoding vibrotactile inputs.14,16,17 Further research is warranted into the underlying neuronal mechanisms of 3D tactile motion localization, and the representation of a novel tactile localization “sense”, as designed in the current study.

Notably, two tactile tasks in the study required no training whatsoever, while only a relatively short training session was needed to perform the more challenging localization of combined horizontal and vertical motion. This intuitive learning stands in contrast to other classical SSDs (particularly visual to auditory and visual to tactile), including devices that were developed by some of the co-authors and/or studied extensively in the past, which required days or weeks of training for accurate task performance.48,51,52,71,72,73 Possibly, due to the more “natural” translation between the auditory and the tactile sensory inputs, as well as by using stimuli in motion, i.e., more closely resembling natural environments, the novel task used in the current experiment felt more intuitive.33,74,75

For both modalities the results were also generally lower when the task became more complex with vertical motion added to the horizontal motion (although no actual comparison of performance was possible). While the azimuth was represented using intensity cues, through TMA, resembling auditory localization mechanism, as well as fitted for the tactile domain, the vertical representation (experiment 2) was based on a crossmodal correspondence between the auditory and tactile systems connecting perception of elevation and frequency.34,58,76 Further testing, taking into account intensity vs. frequency sensitivity of the tactile system, is required to check whether vertical cues are more challenging to decipher for tactile perception of space (through TMA), similar to audition.2,40,77

Audio-tactile integration in noise

In natural conditions, vision is typically used to pinpoint the exact sound source locations, and is specifically useful in challenging auditory situations, e.g., in order to discern front vs. back sound locations or in the presence of multiple competing sound sources/talkers.77,78,79,80 Based on our findings in experiments 3–4, we speculate that touch may also carry the potential of improving auditory localization.33,35,74,81

In experiment 3, specifically developed to test the effects of multisensory integration, we added noise to the auditory environment. We did so in order to assess multisensory effects in light of the rule of inverse effectiveness. The rule posits that as information from one sense becomes less reliable, the prominence of information received through other senses increases, and thus multisensory integration provides greater benefit.12,82 Indeed, we showed improved performance in the audio-tactile spatial task as compared to auditory only, when the latter was presented in background noise (SNR = −10 dB). In fact, adding touch caused the performance to become almost as good as auditory performance in quiet. This finding is in agreement with several other multisensory experiments in which noise was used to vary the reliability of unisensory target information and probe multisensory integration for improved perception.25,83,84

Furthermore, in order to test integration of TMA within a more naturalistic acoustically complex environment, we designed experiment 4, involving multiple sound sources, necessitating selective attention and sound stream segregation for task performance.39,40,85,86 The results showed that participants were able to use a combination of complex vibrations along with location information from TMA, with the auditory input, for directed attention. In all previous studies on “change deafness”, text explicitly depicting the name of the intended source was used before presenting the auditory scene for the same purpose.56,57 We speculate that the substantial challenge to the auditory system with the high number of concurrent competing sources prompted integration with the tactile cues to improve the results. We believe the integration was also enabled due to the convergence of the multisensory stimuli in a number of parameters, including content, spatial location, and timing, adhering to the established principles of optimal multisensory integration.87

The demonstrated enhancement of auditory perception and attention using tactile means, including in a complex ecological environment, is rather unique, as it involves one sensory modality that has been used for the task of localization since early years of development (and in evolution), with a modality that has just developed this skill. Taking into account the abundance of examples of sensory integration for spatial perception among natural senses, this finding further demonstrates similarities between the SSD and behavior of the natural senses (in effect acting as a “novel sense”).

In addition, in experiment 1, we showed high performance for audio-tactile horizontal motion perception (higher than for A1 and T, and almost as high as for A2) but there was no clear multisensory benefit over auditory performance. Considering the results of experiments 1 and 3, the reason was most probably higher reliability of the auditory sources and the already obtained high auditory performance. Interestingly, however, further analysis demonstrated improved detection of motion endpoints, as compared to the start points in audition, while the opposite was true for the tactile modality. For the auditory sense this might be related to threat evasion, where it is more critical for survival to understand the endpoint of an object’s motion. The potentially divergent behavior of the two sensory modalities suggests a further possibility for complementary roles for enhanced overall performance in spatial perception.34

Subjective experiences of 3D tactile spatial localization

Certain further parallels can be drawn between the tactile and the auditory modality based on the subjective reports of the participants. With 45–70% of the subjects attesting to perceiving the tactile stimuli as coming from beyond their reach (even though the vibrations were delivered directly on their fingertips) in combination with the objective localization results, it seems that at least for some people the experience resembled that of auditory motion perception, i.e., one whose sources are located in the extrapersonal space. Further results showed that most participants (70–80%) also visualized or imagined the tactile stimuli as moving around them (and referred to them as, for example, “a ball of light”, “a wave”, “a bee”). Such visualization has often been described as an almost automatic phenomenon for localizing auditory objects in motion,41,88 and can be considered further evidence that spatial perception is naturally multisensory. These results also indicate initial signs of “distal attribution” for moving tactile sources, i.e., perceiving them as external to the sensory receptors, contributing to the debate regarding whether SSD-rendered objects can be perceived as existing directly in the external space, as with natural senses.89,90,91,92 Nevertheless, further research is warranted to better decipher this phenomenon, with one possible addition including representing looming-receding effects using touch (e.g., through vibrations changing in amplitude).91,92

Application in the hearing-impaired population

We believe that the TMA shows potential, both for research and practical applications. Nevertheless, translating our technology into a real-world environment with multiple sound sources still requires further development. First and foremost, we envision its application in the hearing-impaired population, who are known to have reduced localization capabilities, such as cochlear implant users and individuals with single-sided deafness. These groups show limited ability to extract binaural auditory information due to both the sensory impairment and the applied algorithms for sound encoding.93,94,95,96,97 Although localization of sources is of utmost importance for source segregation and comprehension, particularly in noisy environments, no dedicated localization training/treatment is typically performed in audiology/ENT centers around the world (with substantial emphasis on improving speech perception).

Limitations of the study and future directions

Our in-house technology for localization of “moving” sources is more elaborate than other existing solutions, in that it encodes both horizontal and vertical 3D space and motion via touch, while requiring minimal learning time. However, certain limitations need to be mentioned with respect to our setup and the experimental design. Studying motion that encircled the participant in 360° impacted the design and the data collection in two ways: (1) the trajectory of the experienced motion could not be tracked online (i.e., we cannot be certain regarding the individual’s moment-by-moment tracking of the motion) and (2) the responses were given verbally, limiting their resolution to 45° in azimuth (8 locations on a circle) and 30° in elevation (3 levels). This response manner was chosen because the fingers were inserted into the tactile devices during tactile tasks, which prevented hand/body motion. In the future, in order to test audio/tactile localization abilities with a higher resolution, the design can incorporate online motion tracking and/or responses given with a foot pedal. Furthermore, elevation cues were not combined with complex sounds (content) in the current study, due to the applied tactile algorithm and its representation of elevation via frequency content. The possibility of combining these two cues (content and elevation changes), or of using other cues for elevation (such as level differences, time differences, spectral cues), warrants further research. It should also be mentioned that during experiment 2 participants received vertical auditory cues both as changes in frequency and as locations in space, while the tactile modality received only the former. Future investigation including only a single cue for the auditory modality is needed. Finally, in experiment 4 there was a difference in the provided attentional cues. While the text cue was an explicit indication that preceded the stimulus, the tactile cue (preserving content and location of the disappearing sound) was simultaneous with the first iteration of the auditory scene. The study was designed to compare the well-established paradigm using a visual cue (maintained in working memory),56 with one that applied multisensory integration for improved task performance. It is possible that lower performance for the tactile vs. text cue was due to the task being more demanding. Future studies should aim to directly compare the impact of audiovisual and audio-tactile integration on “change deafness”.

STAR★Methods

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Max MSP (Version 8.15) | Cycling '74 | https://cycling74.com/ |
| Spat Library (Version 5.2) | Institute for Research and Coordination in Acoustics/Music (IRCAM) | https://forum.ircam.fr/projects/detail/spat/ |
| Touch-Motion Algorithm (TMA) | This paper | This paper |
| SPSS Statistics ver. 29 | IBM | https://www.ibm.com/products/spss-statistics |
| Python ver. 3.12.2 | Python Software Foundation | https://www.python.org/ |
| UMIK-1 (measurement microphone) | MiniDSP | https://www.minidsp.com/products/acoustic-measurement/umik-1 |
| Control 23-1L (passive loudspeakers) | JBL | https://jblpro.com/en/products/jbl-professional-control-23-1 |
| DCi 8\|600DA (amplifier) | Crown Audio | https://www.crownaudio.com/en/products/dci-8-600da |
| VAS (vibrotactile device) | Neurodevice | Cieśla et al. 2019 (ref. 24) |

Resource availability

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the lead contact, Dr Adi Snir (adisaxophone@gmail.com).

Materials availability

This study did not generate new unique materials.

Data and code availability

- Data and code reported in this paper will be shared by the lead contact upon request.

- All original code reported in this paper will be shared by the lead contact upon request.

- Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

Experimental model and study participants details

We present here four sequential and complementary experiments in a total of 127 adult individuals. All participants reported normal hearing and had no history of tactile deficits. All participants were of Middle Eastern and/or European descent. Furthermore, none of the participants was a professional musician (98/127 answered this screening question). Thirty randomly chosen subjects underwent a hearing test at the Communication Aging and Neuropsychology lab at Reichman University (in a sound booth, with a MAICO MA-51 audiometer, using a staircase procedure of 5 dB up-10 dB down) and were found to have normal hearing, i.e., <15 dB for 250 Hz-8 kHz (ASHA 2023). All experiments were approved by the Institutional Review Board of the Reichman University, Herzliya, Israel and were in accordance with the regulations of the Declaration of Helsinki 2013.

Group sizes were defined based on a power analysis using G*Power: for a medium effect size of 0.5, alpha of 0.05 and power of 0.8, the minimum sample size for within-group comparisons using the Wilcoxon signed-rank test is 28 participants.98 Experiments 1, 2 and 4 were designed taking this minimum sample size into account, while Experiment 3 had 24 participants.

Experiment 1

Twenty-nine (29) young adults (8 male, 21 female, mean age 23.6+/-8.75) participated in Experiment 1.

Experiment 2

Forty-four (44) young adults (29 female, 15 male, age: M=23.7+/-2.3 years) took part in Experiment 2.

Experiment 3

24 participants took part in experiment 3 (17 female, 7 male, age M=23.75+/-1.4).

Experiment 4

Thirty participants (30) took part in experiment 4 (19 female, 11 male, age M=25.66+/-7.55).

Method details

The methodology of experiments 1-2-3 was very similar and focused on localizing the trajectories of induced spatial motion through the tactile modality. In Experiment 1 and Experiment 3 the motion was limited to the horizontal axis around the body, while Experiment 2 included stimuli also moving vertically on a semi-sphere, producing more complex motion trajectories. Experiment 4 was designed to test the plausibility of using the set-up in a naturalistic setting containing complex sound sources and vibrations.

The subjects were recruited from among the students of the University and their friends. All signed an informed consent form and were either paid for their participation or received student credits.

Experimental space

All experiments took place in a cube-shaped (4 x 4 x 4 meter) dimly lit room at The Baruch Ivcher Institute For Brain, Cognition & Technology at Reichman University. The room was acoustically sealed from the outside and controlled for reflections on the inside using mineral wool soundproofing, with a reverberation time of < 0.3 s. The room was equipped with 97 loudspeakers placed on the walls and on the ceiling. For all experiments, the participant was seated on a chair in the center of the room, with their head at the level of the middle loudspeaker ring. The researcher communicated with the participant from a separate control room using a studio talkback system. For the tactile stimulation, the participant placed two fingers (index and middle) of each hand into two Vibrating Auditory Stimulators (VAS) (https://www.neurodevice.pl/en/),24,25 each consisting of a vibrating interface with two piezoelectric plates. The devices were placed on two small tables to the sides of the participant (see Figure 1).

Apparatus: Audio

Seventy-two out of the 97 loudspeakers (JBL Control 23-1L, powered by 13 Dante-enabled amplifiers, Crown Audio DCi 8|600DA) were organized in three horizontal rings on the walls, each containing 24 speakers. A uniform azimuthal angle difference of 15° (in relation to the center point) between the neighboring speakers defined the position of each speaker on the ring. The three rings were placed at heights of 48cm, 148cm, 248cm from the ground. The 25 additional speakers were placed on the ceiling, on a 5x5 speaker grid. All loudspeaker outputs were measured from the center position of the space (participant’s head position) and corrected for the frequency response and the delay, to create an optimal uniform audio response (measurements and corrections were configured using the Audio Architect software by HiQnet). The All-Round Ambisonic Decoding (AllRAD) method with High Order Ambisonics (HOA) encoding was used to synthesize motion and location on a virtual sphere, configured to 12th order Ambisonics. This set-up in combination with the density of speakers in the room allowed for very accurate source angle reproduction (exceeding the resolution needed for the current experiments).99,100,101 All stimuli were generated and decoded using Max MSP. Ambisonic encoding and decoding was done utilizing the Spat library (version 5.2) developed at the Institut de Recherche et Coordination Acoustique/Musique (IRCAM).

Apparatus: Tactile

Two identical VAS tactile devices, each containing two piezoelectric actuators, were used in all four experiments (see Figure 1). To perceive the vibrations emitted by the piezoelectric plates, the participant placed two fingers of each hand into two holes on the side of the VAS. The index and the middle finger were chosen due to their high acuity.102 Each finger was placed on a separate piezoelectric plate, which delivered vibrations corresponding directly to the original audio files. Each box was powered through an amplifier and connected to an electrical socket. The tactile device boxes were internally padded with a foam layer and the openings for the fingers had silicone inserts with slits. To ensure no perceivable sound was emitted by the tactile devices during combined audio and touch conditions, a measurement microphone (MiniDSP UMIK-1, frequency response 8Hz-20kHz) was used to gauge sound levels at the participants’ ear position. The measurement was performed using 20 random stimuli selected from each experiment, with the tactile devices ON and OFF. The mean levels were the following: a) Experiment 1 - tactile devices OFF: 65.3+/-2.2dB vs ON: 64.6+/-1.7dB; b) Experiment 2 - tactile devices OFF: 61.1+/-2.9dB vs ON: 61.6+/-3.2dB; c) Experiment 3 (target with noise combined) - tactile devices OFF: 72.2+/-0.6dB vs ON: 71.8+/-0.25dB; d) Experiment 4 - tactile devices OFF: 74.0+/-1.5dB vs ON: 74.6+/-1.3dB.

Touch motion algorithm (TMA)

Our in-house solution (TMA) used vibration level differences (among four vibration plates) to represent locations in the horizontal plane of 3D space. Intensity differences, found in a number of cases to be more effective for tactile comparisons than temporal differences,44,74 were weighted on 4 actuators, enabling intermediate source positions as well as smooth motion. This technique, which recreates virtual source positions and maps them to multiple actuators, was inspired by vector base amplitude panning (VBAP) decoding techniques.101,103,104 As schematically depicted in Figure 2, each of the 4 fingers represented a certain diagonal orientation of the surrounding space (i.e., azimuthal 45°, 135°, 225° and 315°; the azimuthal front being at 0°). This method rendered a 90° angle between adjacent fingers (the homologous fingers of both hands, as well as the index and the middle finger of each hand). As an example, if both fingers of the right hand received vibrations of equal intensity, this indicated the right (90°) azimuth. Similarly, when only the middle finger of the left hand received inputs, this indicated a stimulus in the back left position (225°).

To represent horizontal locations of the stimulus through touch, the TMA compared the angle of the intended virtual source’s position to each tactile actuator’s intended diagonal angle, in order to define the gain differences among the four actuators. This enabled representation of both static and moving sources. These gain comparisons changed as the source moved. Gain scaling of each actuator utilized a logarithmic amplitude scale.
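The angle comparison described above can be illustrated with a minimal Python sketch. It is not the patent-pending TMA, whose details are not disclosed here. It assumes a simple linear cross-fade between the two actuators nearest to the source, and the finger-to-angle assignment (`ACTUATORS`) is inferred from the two examples in the text. The names `actuator_gains`, `to_db` and the `floor_db` cutoff are hypothetical.

```python
import math

# Actuator azimuths in degrees (front = 0, right = 90), as listed in the text.
# The finger labels are inferred from the two worked examples, not stated outright.
ACTUATORS = {
    "right_index": 45.0,
    "right_middle": 135.0,
    "left_middle": 225.0,
    "left_index": 315.0,
}


def angular_distance(a: float, b: float) -> float:
    """Smallest absolute difference between two azimuths, in degrees (0 to 180)."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def actuator_gains(azimuth: float, spacing: float = 90.0) -> dict:
    """Assumed weighting: an actuator's linear weight falls from 1 to 0 as the
    source moves from that actuator's angle to a neighbouring actuator's angle."""
    return {
        name: max(0.0, 1.0 - angular_distance(azimuth, angle) / spacing)
        for name, angle in ACTUATORS.items()
    }


def to_db(weight: float, floor_db: float = -60.0) -> float:
    """Express a linear weight on a logarithmic (dB) scale; floor_db is an assumed cutoff."""
    if weight <= 0.0:
        return floor_db
    return max(floor_db, 20.0 * math.log10(weight))
```

With this weighting, a source at 90° drives both right-hand fingers equally and a source at 225° drives only the left middle finger, matching the two examples given for the TMA. Sweeping `azimuth` over time yields smoothly changing gains, which is how continuous "motion" could be rendered under these assumptions.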

Vertical positions of the “moving” stimuli (Experiment 2 only), ranging between -30° and 30°, were represented as changes in frequency. Specifically, the vertical range was divided into three elevation ranges (levels) of 20° each (with regard to the participant’s head position), which corresponded to three frequency subranges, scaled logarithmically in accordance with an octave pitch base: 1) the bottom level as 37-87Hz, 2) the middle level as 88-233Hz, and 3) the upper level as 234-622Hz (see Figure 3). The applied frequency ranges as well as the velocities of the “moving sources” were well perceivable through touch as vibrations (all below 700 Hz).7 The logarithmic, octave-based scaling of frequency differences allowed for recreating intuitive vertical positions, considering that higher frequencies are more naturally perceived as coming from higher positions in space and vice versa, while maintaining familiarity with general auditory perception (also shown for tactile and visual perception).37,58,76
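As an illustration of the elevation-to-frequency mapping, the following Python sketch uses the three elevation ranges and frequency subranges listed above. The geometric (log-scale) interpolation inside each band and the function name `elevation_to_frequency` are assumptions made for this example; the exact curve used by the TMA is not specified in the text.

```python
# Elevation bands (degrees) and their frequency subranges (Hz), as listed in the text.
BANDS = [
    ((-30.0, -10.0), (37.0, 87.0)),   # bottom level
    ((-10.0, 10.0), (88.0, 233.0)),   # middle level
    ((10.0, 30.0), (234.0, 622.0)),   # upper level
]


def elevation_to_frequency(elevation: float) -> float:
    """Map an elevation in [-30, 30] degrees to a vibration frequency in Hz.

    Assumption: frequency is interpolated geometrically (log scale) within a band.
    """
    if not -30.0 <= elevation <= 30.0:
        raise ValueError("elevation must lie between -30 and 30 degrees")
    for (lo_deg, hi_deg), (lo_hz, hi_hz) in BANDS:
        if elevation <= hi_deg:
            t = (elevation - lo_deg) / (hi_deg - lo_deg)
            return lo_hz * (hi_hz / lo_hz) ** t
    raise AssertionError("unreachable for validated input")
```

Under these assumptions, the lowest elevation maps to 37 Hz, ear level (0°) maps to the geometric midpoint of the middle band, and the highest elevation maps to 622 Hz.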

The TMA algorithm is currently patent pending. For further details, please contact the corresponding author (A.S.).

Experiment 1

Experimental design

During Experiment 1 the participants took part in four test conditions: a) with sounds “moving” in the horizontal plane (azimuth) around them produced by the Ambisonic system (auditory condition, A1), b) with vibrations on fingertips of both hands representing “moving” locations in space (tactile condition, T), c) combined moving audio-tactile stimuli (audio-tactile condition, AT), d) another audio only test, same as A1 (A2). There were 28 stimuli used in each test condition (A, T, AT).

During the T and the AT test conditions (when both sound and tactile devices were active), none of the participants said they heard any sounds coming from the boxes. For both A conditions (A1 and A2), all the sounds were presented at the height of the participant’s ears (the middle loudspeakers’ ring).

Participants had their eyes open during the entire duration of the experiment and were asked to face forward. They could move their heads while the rest of the body and the hands were fixed in position.96

Stimuli

Both the audio and tactile stimuli were constructed of a synthesized 250 Hz sawtooth wave. This frequency was chosen to ensure that the stimuli are easily perceivable through vibrotactile receptors on fingertips (this frequency is also where the Pacinian corpuscles have their peak sensitivity).7,10,11 Tactile horizontal positions were conveyed using the horizontal component of TMA, i.e., combined level differences across the four tactile actuators, with gradual changes in level weighting in order to recreate “motion”. Level differences (analogous to interaural level differences, ILDs) were chosen, as opposed to time differences (analogous to interaural time differences, ITDs), due to the higher sensitivity of the tactile system to intensity cues.44,74 All the stimuli were moving in the horizontal plane surrounding the participant on the full 360° azimuth.

Before the experiment, four different sets of stimuli were automatically generated for each person using a pseudo-randomizing algorithm, from 9 optional sets. It was ensured for all test sheets that the stimuli were equally distributed in terms of their azimuthal orientation (defined by the horizontal center point of the motion), their velocity (moving across a certain number of horizontal and vertical positions), and the direction of motion (clockwise vs counterclockwise). The 28 stimuli consisted of 12 stimuli moving clockwise, 12 stimuli moving counterclockwise and 4 that were not moving at all. Each moving stimulus covered a distance of 45°, 90°, 135° or 180°. Each distance appeared six times (3 in each direction) at different orientations. In the A and the AT conditions, the level of sounds was in the range of approximately 65+/-3 dB (SPL).
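The composition of one 28-trial set can be sketched in Python as follows. This is only an illustration of the counts stated above and is not the pseudo-randomizing algorithm used in the study. Start locations are drawn at random from the 8 response locations, which is an assumption and does not enforce the balance of orientations described in the text. Azimuth is taken to increase clockwise, and `make_stimulus_set` is a hypothetical name.

```python
import random
from collections import Counter

LOCATIONS = [0, 45, 90, 135, 180, 225, 270, 315]  # response locations (degrees azimuth)
DISTANCES = [45, 90, 135, 180]                    # travelled distances (degrees)


def make_stimulus_set(seed: int) -> list:
    """Build one 28-trial set: 12 clockwise, 12 counterclockwise and 4 static trials."""
    rng = random.Random(seed)
    trials = []
    for direction, sign in (("clockwise", 1), ("counterclockwise", -1)):
        for distance in DISTANCES:
            for _ in range(3):  # each distance appears 3 times per direction
                start = rng.choice(LOCATIONS)  # assumption: random start location
                end = (start + sign * distance) % 360
                trials.append({"start": start, "end": end,
                               "direction": direction, "distance": distance})
    for start in rng.sample(LOCATIONS, 4):  # four static stimuli at distinct locations
        trials.append({"start": start, "end": start,
                       "direction": "static", "distance": 0})
    rng.shuffle(trials)
    return trials


if __name__ == "__main__":
    counts = Counter(t["direction"] for t in make_stimulus_set(seed=1))
    print(counts)
```

Using a seeded `random.Random` instance keeps each generated sheet reproducible, which is convenient when the same set has to be presented again or audited later.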

The task

The participant gave a verbal response after each stimulus, identifying its start and end points (in terms of horizontal position), as well as the direction of motion (clockwise, counterclockwise or static). Verbal responses were chosen instead of pointing because the participant’s hands remained inside the tactile devices and some locations were behind the head. Each participant was given a response sheet indicating all possible locations of the incoming stimuli that he/she could use for reference (Figure 1).

Experiment 2

Experimental design

Experiment 2 was identical to Experiment 1 in terms of the experimental space, equipment and the general technical features. However, the auditory and the tactile stimuli were now “moving” in both the horizontal and vertical plane surrounding the participant. There were two test conditions : auditory (A) and tactile (T). In 22 participants, the order of the conditions was A then T, and in the other 22 the order was reversed (T-A). Each test condition consisted of 14 trials. The tactile test was preceded by a short tactile training session (mean duration: 30.6 +/- 6.55 min), which included stimuli of a similar type to those used in the tactile test (see the Stimuli section for details). The participants were given direct verbal feedback by the researcher about their answers and allowed to request stimuli to be repeated multiple times. The stages of the training included: 1) horizontal motion, 2) vertical motion, 3) combined horizontal and vertical motion. One dedicated sheet with a set of stimuli was used for the training session in all participants (this sheet was never used in the test session). In order to proceed to the subsequent section of the training, the participants had to provide at least 2 correct answers in a row.

Stimuli

Both auditory and tactile stimuli were constructed of sawtooth waves in the frequency range between 37Hz-622Hz. This frequency range is easily perceivable by touch.7 The level of sounds was in the range of approximately 61+/-3.5 dB (SPL). For both the auditory and the tactile task, changes in frequency between the stimulus’ start and end point were carried out in a linear fashion (musical glissando), in order to maintain an impression of linear motion rather than stepwise jumps.20,21 Horizontal motion was induced by TMA using the same level weighting as in Experiment 1. Specifically, the 14 stimuli were made up of two that were not “moving” horizontally, 6 that moved clockwise and 6 that moved counterclockwise. Of the 12 horizontally moving stimuli, three covered each of the angle distances: 45°, 90°, 135°, 180°. In terms of the vertical motion: six stimuli had upwards motion, six had downwards motion, and two were not “moving” vertically. Out of the 12 vertically moving stimuli, 4 moved within 1 level, 4 moved across 2 levels, and 4 moved across all 3 elevation levels.

The task

Horizontal positions were reported in the same form as in Experiment 1, while the added vertical positions were reported as “up”, “middle” or “down” (see Figure 1 for the response sheet). An example of a possible response could be that the sound started in the “front… [0° horizontal] …up [30° vertical]” position, and moved counterclockwise towards the “back-left… [225° horizontal] …middle [0° vertical]” position.

Experiment 3

Experimental design, task

The same apparatus, experimental set-up and task were used as in Experiment 1.

Stimuli

The moving target stimuli in experiment 3 were the same as in Experiment 1. The background noise was produced by white noise generators distributed evenly around the space, at eight positions (0°, 45°, 90°, 135°, 180°, 225°, 270°, 315° in azimuth) within the Ambisonic environment. Each of the white noise positions was synthesized using a separate noise generator in order to avoid illusions of motion caused by multiple instances of the same source. SNR was set to approximately -10dB (target: 60.5+/-0.8dB SPL, wavering noise: 68-71 dB SPL), with the overall level of combined target and noise measured at 72+/-0.9dB (SPL).

The experiment consisted of three conditions: a) audio in quiet (A), b) audio with background noise (AN), c) audio-tactile with background noise (ATN). In half of the participants (9), the order of conditions was A, AN, ATN and in the other half the order was A, ATN, AN.

Experiment 4

Experimental design

Using the same apparatus and experimental set-up as in experiments 1-3, we performed a “change deafness” experiment based on the classical findings by Eramudugolla and colleagues.56 The participants were seated in the middle of the room, with their head facing the front. They were first introduced to 8 possible environmental sounds used in the experiment and heard each one separately. All were able to name each with 100% accuracy. All sounds were placed at an elevation of 0° (at the height of the participants’ ears). Then, each participant took part in 3 study conditions, each with 20 pairs of sound scenes. The second iteration of the scene in a pair was either exactly the same (25%), or with one sound source redacted (75%). The intention behind having the three conditions was to test the impact of drawing attention to the potentially disappearing sound source through different means. In condition 1 (audio only), no attentional cue was given and participants experienced just the audio scene pair. In condition 2, the participants saw the name of the disappearing source appear as text on an iPad display prior to exposure to the paired audio scenes. In condition 3, the disappearing sound was paired with matching tactile vibrations of the target sound during the first iteration of the scene, and the second scene of the pair had no attentional cue. The order of conditions (1-2-3) was counterbalanced among the participants. Horizontal locations of the sound source were rendered via TMA as described in experiments 1-2, while the content of the relevant sound source was sent directly to the vibration actuators.

Stimuli

Each auditory scene was 5 seconds in duration and repeated twice, with 500 milliseconds of white noise in between. The paired scenes could only differ by redacting one sound source on the second appearance, while everything else including the sounds used, their timing, the locations and the source motion patterns remained exactly the same within each pair. Eight sounds, placed at various different locations, were used to comprise the first iteration of the scene. The center points of the motion in azimuth around the participant for the 8 sounds were at 0, 45, 90, 135, 180, 225, 270, 315 degrees, with motion direction (clockwise vs counterclockwise) randomly selected. The sounds included: a dog, a cat, an owl, a crow, a cricket, a bear, a train and a helicopter, and were randomly distributed among 8 orientations. In each scene, all 8 sounds were slightly moving within a range of 10-35 degrees in azimuth with no overlap between the 8 positions. All sounds in the scene included frequencies perceivable by touch and were normalized for matching levels (74+/-1.5dB). The tactile stimuli were a direct rendering of the actual target sounds.

The task

After a pair of auditory scenes was presented, the participant was tasked with a two alternative forced choice (2AFC). The question was whether the second scene presentation was different or the same as the first scene presentation (“same/different” task). The responses were verbal and recorded by the researcher through the talkback system.

Quantification and statistical analysis

Experiment 1

This experiment was conducted on n=29 human participants. The main analysis of the scores in the localization tasks involved comparing the center point of each stimulus and that of the given response. The actual center point was placed at half the azimuthal distance between the start and end point, considering the motion direction as well. To obtain the maximum score (“1”), all points of the actual and the reported trajectory had to perfectly overlap, i.e. with a 0° difference. A 90° error translated into a score of 0.5, an error of 180° was scored 0 points, and any intermediate angle distance error was converted to an intermediate score, based on a linear scale. Considering that all selectable points were evenly distributed around the circle, and 180° was the maximum possible distance error, chance error was 90°, which on a 0-1 scale translated to a chance score of 0.5. The scores obtained by each participant for the 28 stimuli in the auditory, audio-tactile and tactile test conditions were then compared with one another (using the Wilcoxon signed-rank test): A1/A2 with T, A1 with A2, A1/A2 with AT, T with AT (840 data points per condition: 30 people x 28 trials). Non-parametric tests were chosen due to lack of normal distribution of the data (Shapiro-Wilk, p<0.001). All the statistical analysis was done in IBM SPSS Statistics version 2020. The results can be seen in Figure 2.
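The center-point scoring described above can be written as a short Python sketch. It follows the stated rules (center point at half the travelled arc, linear score from 1 at 0° error to 0 at 180° error). The function names, and the convention that azimuth increases clockwise from the front, are assumptions made for this illustration.

```python
def center_point(start: float, end: float, direction: str) -> float:
    """Azimuth (degrees, clockwise from front) halfway along the travelled arc."""
    if direction == "static":
        return start % 360.0
    if direction == "clockwise":
        travelled = (end - start) % 360.0
        return (start + travelled / 2.0) % 360.0
    if direction == "counterclockwise":
        travelled = (start - end) % 360.0
        return (start - travelled / 2.0) % 360.0
    raise ValueError(f"unknown direction: {direction}")


def angular_error(a: float, b: float) -> float:
    """Smallest absolute difference between two azimuths, in degrees (0 to 180)."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def localization_score(stimulus_center: float, response_center: float) -> float:
    """Linear score: 1.0 for a 0 degree error, 0.5 for 90 degrees, 0.0 for 180 degrees."""
    return 1.0 - angular_error(stimulus_center, response_center) / 180.0
```

For example, a stimulus moving counterclockwise from the front (0°) to the back-left (225°) travels 135°, so its center point is 292.5°. A response whose center point is 90° away from that would score 0.5, the chance level noted in the text.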

Subjective experience

Following the tactile localization test, the participants were asked to respond to a short questionnaire pertaining to their subjective experience. Each participant was asked two questions: 1. “How far was the stimulus from you?”: “far”/“outside of reach” vs “within reach/on the body” vs “changing” (see Figure 5), 2. “Did you imagine/visualize the stimuli?”: “yes” vs “no”. The participants were also encouraged to provide free responses regarding their general experience during the task.

Experiment 2

This experiment was conducted on n=44 human participants. Data analysis for experiment 2 was identical to experiment 1, while adding into the calculation the vertical responses as well. In order to assess the combined distance (both horizontal and vertical) between the stimulus and the reported locations, distances were calculated separately in each axis and converted into a diagonal, using a simple Pythagorean equation (horizontal error² + vertical error² = final error²). The final error was then divided by 180 and subtracted from 1, arriving at scores within a range between 0 and 1 for each trial. As in Experiment 1, the chance within an equally distributed spatial environment where 180° error is the maximum, remained at 90° (0.5). The obtained single-trial scores were compared between the two (A vs T) conditions (616 data points per condition: 44 people x 14 trials) using the Wilcoxon signed-rank test (non-parametric tests were chosen due to the non-normal distribution of the data points, Shapiro-Wilk p<0.001). Scores for reporting the start vs end points of the trajectories were also compared within the two sensory modalities.
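A minimal Python sketch of the combined score is given below. It takes both errors in degrees and applies the Pythagorean combination and the division by 180 described above. The text does not state how the vertical error was expressed or how combined errors above 180° were handled, so the clipping at 0 and the name `combined_score` are assumptions.

```python
import math


def combined_score(horizontal_error: float, vertical_error: float) -> float:
    """Score in [0, 1] from horizontal and vertical errors given in degrees."""
    final_error = math.hypot(horizontal_error, vertical_error)  # sqrt(h^2 + v^2)
    # Assumption: clip at 0 so that extreme combined errors cannot go negative.
    return max(0.0, 1.0 - final_error / 180.0)
```

For instance, a horizontal error of 30° combined with a vertical error of 40° gives a final error of 50° and a score of about 0.72, while a purely horizontal error of 90° gives 0.5 as in Experiment 1.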

After the tactile localization test, the participants answered the same two questions regarding their subjective experience as in Experiment 1. The results can be seen in Figures 3 and 5.

Experiment 3

This experiment was conducted on n=24 human participants. The scores were calculated as in Experiment 1. Wilcoxon Signed-ranks tests were applied to compare the scores in the three experimental conditions. The results can be seen in Figure 2.

Experiment 4

This experiment was conducted on n=30 human participants. For the analysis of the results, we considered as “hits” those trials in which there was a difference between the two scenes in the pair (one source disappeared in the second iteration) and the participant correctly reported a difference. We considered as “false alarms” those instances when there was no difference in the pair of scenes but the participant claimed there was. Using Wilcoxon Signed-Rank tests, hit rates were compared across the three test conditions. In addition, based on signal detection theory, the results were also analyzed taking into account the sensitivity and the bias of the responses. The D’prime index and the Criterion were calculated using a method described in Brophy and colleagues105 (see also106). The D’prime values were compared across the three study conditions using Wilcoxon Signed-Rank tests. The results can be seen in Figure 4.
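For readers unfamiliar with these indices, the Python sketch below computes them from raw counts using the textbook signal detection formulas, d' = z(hit rate) - z(false alarm rate) and criterion = -0.5 × (z(hit rate) + z(false alarm rate)). It is not necessarily the exact procedure of the cited method: the log-linear correction for rates of 0 or 1 is an assumption, and `sdt_indices` is a hypothetical name.

```python
from statistics import NormalDist

_z = NormalDist().inv_cdf  # inverse of the standard normal CDF


def sdt_indices(hits: int, n_change: int, false_alarms: int, n_same: int):
    """Return (d_prime, criterion) from raw counts.

    Assumption: a log-linear correction (add 0.5 to counts, 1 to totals) keeps
    hit or false alarm rates of 0 or 1 finite; the text does not say which
    correction, if any, was applied.
    """
    hit_rate = (hits + 0.5) / (n_change + 1.0)
    fa_rate = (false_alarms + 0.5) / (n_same + 1.0)
    z_hit, z_fa = _z(hit_rate), _z(fa_rate)
    d_prime = z_hit - z_fa
    criterion = -0.5 * (z_hit + z_fa)
    return d_prime, criterion


if __name__ == "__main__":
    # 20 pairs per condition: 15 "different" pairs (75%) and 5 "same" pairs (25%).
    print(sdt_indices(hits=12, n_change=15, false_alarms=1, n_same=5))
```

A d' of 0 means the participant could not tell changed from unchanged pairs, and larger values mean better sensitivity. A criterion above 0 indicates a tendency to answer "same", and a criterion below 0 indicates a tendency to answer "different".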

Acknowledgments

This project has received funding from the European Union's Horizon 2020 research and innovation programme (grant agreement No 101017884). This project has received funding from the European Research Council (ERC) under the European Union's Horizon 2020 research and innovation programme (grant agreement No 773121). This project has received funding from Joy Ventures (to AA). We would like to thank several lab members (Amber Maimon, Tamar Ziv, Noam Efraty) for their support with collecting the data and for their general comments on the manuscript, and Hili Ben-Or for the graphical abstract.

Author contributions

Writing–Original Draft: A.S. and K.C.; Writing – Review and Editing Programming: A.S., K.C., and A.A.; Software development: A.S.; Methodology Development: A.S. and K.C.; Conceptualization: A.S., K.C., and A.A.; Supervision: A.S., K.C., and A.A.; Project Administration: A.S., K.C., and R.V.; Investigation: G.O. and R.V.; Visualization: G.O. and R.V.; Data Curation: R.V.; Resources: A.A.

Declaration of interests

As mentioned also in the STAR Methods section, we declare a patent has been filed based on the technology featured in the current manuscript.

The author A.A. is a co-founder and a member of the scientific advisory board of Remepy and Davinci Neuroscience Inc.

Published: April 26, 2024

Footnotes

Supplemental information can be found online at https://doi.org/10.1016/j.isci.2024.109820.

Contributor Information

Adi Snir, Email: adisaxophone@gmail.com.

Amir Amedi, Email: amir.amedi@runi.ac.il.

References

- 1. Bruns P., Röder B. Development and experience-dependence of multisensory spatial processing. Trends Cognit. Sci. 2023;27:961–973. doi: 10.1016/j.tics.2023.04.012.

- 2. Spector R.H. 2011. Visual Fields.

- 3. Clery J., Guipponi O., Wardak C., Ben Hamed S. Neuronal bases of peripersonal and extrapersonal spaces, their plasticity and their dynamics: Knowns and unknowns. Neuropsychologia. 2015;70:313–326. doi: 10.1016/j.neuropsychologia.2014.10.022.

- 4. O’Connell-Rodwell C.E. Keeping an “ear” to the ground: seismic communication in elephants. Physiology. 2007;22:287–294. doi: 10.1152/physiol.00008.2007.

- 5. Bouley D.M., Alarcón C.N., Hildebrandt T., O'Connell-Rodwell C.E. The distribution, density and three-dimensional histomorphology of Pacinian corpuscles in the foot of the Asian elephant (Elephas maximus) and their potential role in seismic communication. J. Anat. 2007;211:428–435. doi: 10.1111/j.1469-7580.2007.00792.x.

- 6. Stark B., Carlstedt T., Hallin R.G., Risling M. Distribution of human Pacinian corpuscles in the hand: a cadaver study. J. Hand Surg. Br. 1998;23:370–372. doi: 10.1016/s0266-7681(98)80060-0.

- 7. Rowe M.J. Synaptic transmission between single tactile and kinaesthetic sensory nerve fibers and their central target neurons. Behav. Brain Res. 2002;135:197–212. doi: 10.1016/s0166-4328(02)00166-3.

- 8. Pisciottano F., Cinalli A.R., Stopiello J.M., Castagna V.C., Elgoyhen A.B., Rubinstein M., Gómez-Casati M.E., Franchini L.F. Inner ear genes underwent positive selection and adaptation in the mammalian lineage. Mol. Biol. Evol. 2019;36:1653–1670. doi: 10.1093/molbev/msz077.

- 9. Pongrac H. Vibrotactile perception: examining the coding of vibrations and the just noticeable difference under various conditions. Multimed. Syst. 2007;13:297–307.

- 10. Merchel S., Altinsoy M.E. Psychophysical comparison of the auditory and tactile perception: a survey. J. Multimodal User Interfaces. 2020;14:271–283.

- 11. Bolanowski S.J., Jr., Gescheider G.A., Verrillo R.T., Checkosky C.M. Four channels mediate the mechanical aspects of touch. J. Acoust. Soc. Am. 1988;84:1680–1694. doi: 10.1121/1.397184.

- 12. Kayser C., Petkov C.I., Augath M., Logothetis N.K. Integration of touch and sound in the auditory cortex. Neuron. 2005;48:373–384. doi: 10.1016/j.neuron.2005.09.018.

- 13. Caetano G., Jousmäki V. Evidence of vibrotactile input to the human auditory cortex. Neuroimage. 2006;29:15–28. doi: 10.1016/j.neuroimage.2005.07.023.

- 14. Auer E.T., Jr., Bernstein L.E., Sungkarat W., Singh M. Vibrotactile activation of the auditory cortices in deaf versus hearing adults. Neuroreport. 2007;18:645–648. doi: 10.1097/WNR.0b013e3280d943b9.

- 15. Beauchamp M.S., Yasar N.E., Frye R.E., Ro T. Touch, sound and vision in human superior temporal sulcus. Neuroimage. 2008;41:1011–1020. doi: 10.1016/j.neuroimage.2008.03.015.

- 16. Levänen S., Jousmäki V., Hari R. Vibration-induced auditory-cortex activation in a congenitally deaf adult. Curr. Biol. 1998;8:869–872. doi: 10.1016/s0960-9822(07)00348-x.

- 17. Schürmann M., Caetano G., Hlushchuk Y., Jousmäki V., Hari R. Touch activates the human auditory cortex. Neuroimage. 2006;30:1325–1331. doi: 10.1016/j.neuroimage.2005.11.020.

- 18. Katz D., Krueger L.E. Psychology Press; 2013. The World of Touch.

- 19. Landelle C., Caron-Guyon J., Nazarian B., Anton J.L., Sein J., Pruvost L., Amberg M., Giraud F., Félician O., Danna J., Kavounoudias A. Beyond sense-specific processing: Decoding texture in the brain from touch and sonified movement. iScience. 2023;26 doi: 10.1016/j.isci.2023.107965.

- 20. Crommett L.E., Madala D., Yau J.M. Multisensory perceptual interactions between higher-order temporal frequency signals. J. Exp. Psychol. Gen. 2019;148:1124–1137. doi: 10.1037/xge0000513.

- 21. Bernard C., Monnoyer J., Wiertlewski M., Ystad S. Rhythm perception is shared between audio and haptics. Sci. Rep. 2022;12:4188. doi: 10.1038/s41598-022-08152-w.

- 22. Gick B., Derrick D. Aero-tactile integration in speech perception. Nature. 2009;462:502–504. doi: 10.1038/nature08572.

- 23. Hayward V. Scholarpedia of Touch. Atlantis Press; 2016. Tactile illusions; pp. 327–342.

- 24. Cieśla K., Wolak T., Lorens A., Heimler B., Skarżyński H., Amedi A. Immediate improvement of speech-in-noise perception through multisensory stimulation via an auditory to tactile sensory substitution. Restor. Neurol. Neurosci. 2019;37:155–166. doi: 10.3233/RNN-190898.

- 25. Cieśla K., Wolak T., Lorens A., Mentzel M., Skarżyński H., Amedi A. Effects of training and using an audio-tactile sensory substitution device on speech-in-noise understanding. Sci. Rep. 2022;12:1–16. doi: 10.1038/s41598-022-06855-8.

- 26. Fletcher M.D., Hadeedi A., Goehring T., Mills S.R. Electro-haptic enhancement of speech-in-noise performance in cochlear implant users. Sci. Rep. 2019;9:11428. doi: 10.1038/s41598-019-47718-z.

- 27. Huang J., Sheffield B., Lin P., Zeng F.G. Electro-tactile stimulation enhances cochlear implant speech recognition in noise. Sci. Rep. 2017;7:2196–2205. doi: 10.1038/s41598-017-02429-1.

- 28. Drullman R., Bronkhorst A.W. Speech perception and talker segregation: effects of level, pitch, and tactile support with multiple simultaneous talkers. J. Acoust. Soc. Am. 2004;116:3090–3098. doi: 10.1121/1.1802535.

- 29. Karam M., Russo F.A., Branje C., Price E., Fels D.I. Graphics Interface. 2008, May. Towards a model human cochlea: sensory substitution for crossmodal audio-tactile displays; pp. 267–274.

- 30. Russo F.A., Ammirante P., Fels D.I. Vibrotactile discrimination of musical timbre. J. Exp. Psychol. Hum. Percept. Perform. 2012;38:822–826. doi: 10.1037/a0029046.

- 31. Young G.W., Murphy D., Weeter J. Haptics in music: the effects of vibrotactile stimulus in low frequency auditory difference detection tasks. IEEE Trans. Haptics. 2017;10:135–139. doi: 10.1109/TOH.2016.2646370.

- 32. Sharp A., Houde M.S., Maheu M., Ibrahim I., Champoux F. Improved tactile frequency discrimination in musicians. Exp. Brain Res. 2019;237:1575–1580. doi: 10.1007/s00221-019-05532-z.

- 33. Gori M., Vercillo T., Sandini G., Burr D. Tactile feedback improves auditory spatial localization. Front. Psychol. 2014;5:1121. doi: 10.3389/fpsyg.2014.01121.

- 34. Occelli V., Spence C., Zampini M. Audiotactile interactions in front and rear space. Neurosci. Biobehav. Rev. 2011;35:589–598. doi: 10.1016/j.neubiorev.2010.07.004.

- 35. Caclin A., Soto-Faraco S., Kingstone A., Spence C. Tactile “capture” of audition. Percept. Psychophys. 2002;64:616–630. doi: 10.3758/bf03194730.

- 36. Bruns P., Röder B. Tactile capture of auditory localization: an event-related potential study. Eur. J. Neurosci. 2010;31:1844–1857. doi: 10.1111/j.1460-9568.2010.07232.x.

- 37. Occelli V., Spence C., Zampini M. Compatibility effects between sound frequency and tactile elevation. Neuroreport. 2009;20:793–797. doi: 10.1097/WNR.0b013e32832b8069.

- 38. Driver J., Spence C. Cross-modal links in spatial attention. Philos. Trans. R. Soc. Lond. B Biol. Sci. 1998;353:1319–1331. doi: 10.1098/rstb.1998.0286.

- 39. Schnupp J., Nelken I., King A. MIT Press; 2011. Auditory Neuroscience: Making Sense of Sound.

- 40. Middlebrooks J.C. In: Aminoff M.J., Boller F., Swaab D.F., editors. Vol. 129. Elsevier; 2015. Chapter 6 - Sound localization; pp. 99–116. (Handbook of Clinical Neurology).

- 41. Carlile S., Leung J. The perception of auditory motion. Trends Hear. 2016;20 doi: 10.1177/2331216516644254.

- 42. Leung J. University of Sydney; 2016. Auditory Motion in Motion. PhD thesis.

- 43. Von Békésy G. Sensations on the skin similar to directional hearing, beats, and harmonics of the ear. J. Acoust. Soc. Am. 1957;29:489–501.

- 44. Gescheider G. Some comparisons between touch and hearing. IEEE Trans. Man Mach. Syst. 1970;11:28–35.

- 45. Frost B.J., Richardson B.L. Tactile localization of sounds: Acuity, tracking moving sources, and selective attention. J. Acoust. Soc. Am. 1976;59:907–914. doi: 10.1121/1.380950.

- 46. Richardson B.L., Frost B.J. Tactile localization of the direction and distance of sounds. Percept. Psychophys. 1979;25:336–344. doi: 10.3758/bf03198813.

- 47. Borg E. Cutaneous senses for detection and localization of environmental sound sources: a review and tutorial. Scand. Audiol. 1997;26:195–206. doi: 10.3109/01050399709048007.

- 48. Bach-y-Rita P., Collins C.C., Saunders F.A., White B., Scadden L. Vision substitution by tactile image projection. Nature. 1969;221:963–964. doi: 10.1038/221963a0.

- 49. Auvray M., Hanneton S., O'Regan J.K. Learning to perceive with a visuo-auditory substitution system: localization and object recognition with ‘The vOICe’. Perception. 2007;36:416–430. doi: 10.1068/p5631.

- 50. Hamilton-Fletcher G., Wright T.D., Ward J. Cross-modal correspondences enhance performance on a colour-to-sound sensory substitution device. Multisensory Res. 2016;29:337–363. doi: 10.1163/22134808-00002519.

- 51. Abboud S., Hanassy S., Levy-Tzedek S., Maidenbaum S., Amedi A. EyeMusic: Introducing a “visual” colorful experience for the blind using auditory sensory substitution. Restor. Neurol. Neurosci. 2014;32:247–257. doi: 10.3233/RNN-130338.

- 52. Meijer P.B.L. An experimental system for auditory image representations. IEEE Trans. Biomed. Eng. 1992;39 doi: 10.1109/10.121642.

- 53. Amedi A., Stern W.M., Camprodon J.A., Bermpohl F., Merabet L., Rotman S., Hemond C., Meijer P., Pascual-Leone A. Shape conveyed by visual-to-auditory sensory substitution activates the lateral occipital complex. Nat. Neurosci. 2007;10:687–689. doi: 10.1038/nn1912.

- 54. Richardson M.L., Lloyd-Esenkaya T., Petrini K., Proulx M.J. Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems. 2020, April. Reading with the tongue: Individual differences affect the perception of ambiguous stimuli with the BrainPort; pp. 1–10.

- 55. Heimler B., Amedi A. Are critical periods reversible in the adult brain? Insights on cortical specializations based on sensory deprivation studies. Neurosci. Biobehav. Rev. 2020;116:494–507. doi: 10.1016/j.neubiorev.2020.06.034.

- 56. Eramudugolla R., Irvine D.R., McAnally K.I., Martin R.L., Mattingley J.B. Directed Attention Eliminates ‘change deafness’ in Complex Auditory Scenes. Curr. Biol. 2005;15:1108–1113. doi: 10.1016/j.cub.2005.05.051.

- 57. Gaston J., Dickerson K., Hipp D., Gerhardstein P. Change deafness for real spatialized environmental scenes. Cogn. Res. Princ. Implic. 2017;2:29. doi: 10.1186/s41235-017-0066-3.

- 58. Mudd S.A. Spatial stereotypes of four dimensions of pure tone. J. Exp. Psychol. 1963;66:347–352. doi: 10.1037/h0040045.

- 59. Roggerone V., Vacher J., Tarlao C., Guastavino C. Auditory motion perception emerges from successive sound localizations integrated over time. Sci. Rep. 2019;9 doi: 10.1038/s41598-019-52742-0.

- 60. Heimler B., Striem-Amit E., Amedi A. Origins of task-specific sensory-independent organization in the visual and auditory brain: neuroscience evidence, open questions and clinical implications. Curr. Opin. Neurobiol. 2015;35:169–177. doi: 10.1016/j.conb.2015.09.001.

- 61. Zimmermann M., Mostowski P., Rutkowski P., Tomaszewski P., Krzysztofiak P., Jednoróg K., Marchewka A., Szwed M. The extent of task specificity for visual and tactile sequences in the auditory cortex of the deaf and hard of hearing. J. Neurosci. 2021;41:9720–9731. doi: 10.1523/JNEUROSCI.2527-20.2021.

- 62. Cecchetti L., Kupers R., Ptito M., Pietrini P., Ricciardi E. Are supramodality and cross-modal plasticity the yin and yang of brain development? From blindness to rehabilitation. Front. Syst. Neurosci. 2016;10:89. doi: 10.3389/fnsys.2016.00089.

- 63. Bola Ł., Zimmermann M., Mostowski P., Jednoróg K., Marchewka A., Rutkowski P., Szwed M. Task-specific reorganization of the auditory cortex in deaf humans. Proc. Natl. Acad. Sci. USA. 2017;114:E600–E609. doi: 10.1073/pnas.1609000114.