Significance

We provide the largest-scale evidence available to date on the effect of Facebook and Instagram access on political knowledge, attitudes, and behavior in a presidential election season. This study is part of the U.S. 2020 Facebook and Instagram Election Study, a unique collaboration between academics and researchers at Meta that allowed unprecedented access to Meta platform data and algorithms while also including extensive safeguards to guarantee the integrity of the research.

Keywords: social media, Facebook, Instagram, election, polarization

Abstract

We study the effect of Facebook and Instagram access on political beliefs, attitudes, and behavior by randomizing a subset of 19,857 Facebook users and 15,585 Instagram users to deactivate their accounts for 6 wk before the 2020 U.S. election. We report four key findings. First, both Facebook and Instagram deactivation reduced an index of political participation (driven mainly by reduced participation online). Second, Facebook deactivation had no significant effect on an index of knowledge, but secondary analyses suggest that it reduced knowledge of general news while possibly also decreasing belief in misinformation circulating online. Third, Facebook deactivation may have reduced self-reported net votes for Trump, though this effect does not meet our preregistered significance threshold. Finally, the effects of both Facebook and Instagram deactivation on affective and issue polarization, perceived legitimacy of the election, candidate favorability, and voter turnout were all precisely estimated and close to zero.

Many people argue that social media has profoundly affected democracy in America. Social media may increase political polarization, in part by facilitating ideological echo chambers among like-minded people (1–6). It may make voters more (5, 7–9) or less (10–13) knowledgeable, depending on the balance of accurate and inaccurate information that they receive. It may either increase (4, 14, 15) or decrease (16, 17) political engagement and voter turnout, and it may impact overall trust and confidence in the electoral system (18, 19). Observers on each side of the political spectrum speculate that social media may tilt elections in favor of their opponents (20–23). Much of this discussion has focused on Facebook, but Instagram is now a more widely used platform among younger voters and contains a significant amount of political content (24, 25).

These concerns were particularly relevant in the 2020 U.S. presidential election. Affective polarization among U.S. voters reached an all-time high in 2020 (26). Popular media articles argued that misinformation on social media was a key driver of the 2016 election outcome (20, 21, 27), and several observers speculated that its impact in 2020 could be as large or larger (28–30). Concerns about the integrity of the electoral process were widely aired on social media, including concerns about fraud and vote-by-mail (31), and social media played an important role in the events following the election, including the “Stop the Steal” movement questioning the election outcome and the storming of the U.S. Capitol on January 6 (32, 33). Some have highlighted ways that social media may have advantaged Joe Biden (34, 35); others have emphasized ways it benefited Donald Trump (36).

We use a randomized experiment to measure the effects of access to Facebook and Instagram on individual-level political outcomes during the 2020 election. We recruited Facebook users and Instagram users who used the platform for more than 15 min per day at baseline. We randomly assigned 27% to a treatment group that was paid to deactivate their Facebook or Instagram accounts for the 6 wk before election day, and the remainder to a control group that was paid to deactivate for just 1 wk. We estimate effects of deactivation on consumption of other apps and news sources, factual knowledge, political polarization, perceived legitimacy of the election, political participation, and candidate preferences.

Existing experimental evidence on the effects of social media access is limited. Most related are earlier experiments that measured the effect of Facebook deactivation on political outcomes in the United States (5, 37) and other countries (38, 39). Our paper differs from this prior literature in studying social media access in a U.S. presidential election and in evaluating the effects of both Facebook and Instagram. Our Facebook sample alone is more than 10 times larger than any previous deactivation experiment of which we are aware. Our study also improves on earlier experiments by incorporating directly measured outcomes and administrative platform data from Meta, including a wider range of survey-based outcomes, and using a longer deactivation period. Our study builds on a broader literature using randomized experiments (40–42) and observational “natural experiments” (4, 43–48) to study political effects of social media.

Our study has several important limitations, related to generalizability, a time-limited intervention, general equilibrium effects, self-reported outcomes, and attrition. We discuss these in more detail below. While all of these limitations are common in experimental studies of social media, they suggest caution in interpreting our results.

This project is part of the U.S. 2020 Facebook and Instagram Election Study (49–52). Although both Meta researchers and academics were part of the research team, the lead academic authors had the final say on the analysis plan, collaborated with Meta researchers on the code implementing the analysis plan, and had control rights over data analysis decisions and the manuscript text. Under the terms of the collaboration, Meta could not block any results from being published. The academics were not financially compensated and the analysis plan was preregistered prior to data availability. More details of this partnership are in SI Appendix, sections F and G. We have posted answers to frequently asked questions online at https://medium.com/@2020_election_research_project/us-2020-facebook-instagram-election-study-frequently-asked-questions-faq-266d30cbe95b.

Theoretical Mechanisms

Prior research suggests mechanisms by which social media access could affect each of our primary outcomes.

Social media access may affect political knowledge by increasing exposure to both true and false content. Many Americans regularly get news through social media (53), and the great majority of political content on social media is from major news outlets (54). However, misinformation often spreads through social media (55), and a small share of users see significant amounts of false content (54, 56, 57).

Social media access may affect polarization by exposing users to predominantly like-minded content, due to segregated friendship networks, algorithmic filtering, and/or their own decisions (1–3, 50–52, 58). Such users may be pulled toward more extreme attitudes or views consistent with their initial ideology. These effects may be enhanced by the prevalence of content that is extreme, sensational, or focused on negative portrayals of the outgroup (2, 59). Polarization may also be increased by exposure to content from the opposite side of the political spectrum due to backlash effects or distorted perceptions (2, 41).

Social media access may affect perceived legitimacy of the election by exposing users to content that undermines and/or supports legitimacy, including messages about electoral fraud, unreliability of voting machines, voter suppression, foreign interference, and infringement of press freedom and free speech. Concerns about legitimacy played a particularly large role in the 2020 election, with Democrats emphasizing issues of voter access and Republicans emphasizing the possibility of voter fraud. Many also remained concerned about the possibility of foreign interference similar to what had occurred in 2016 and about infringement of press freedom and free speech (60–64). Claims and allegations about all of these issues were widely circulated on social media both before and after the election (31, 64–66).

Social media access may affect participation, including turning out to vote, attending protests, signing petitions, and discussing politics online. Social media provides the most important online forum for political discussions (2), so it could directly increase the likelihood of online participation. It could affect the likelihood of offline participation and turnout through several channels, including increasing or decreasing overall interest in the election, providing information about polling places and candidates, and simply crowding out time that would have been used for these other activities.

Finally, social media access may affect vote choice and candidate favorability. Both candidates deployed substantial resources to sway voters to their side on social media and/or encourage their supporters to turn out and vote. These efforts included an estimated 1.6 billion dollars spent on digital advertising, as well as various forms of unpaid content and promotion (67). It may have been that one campaign or the other was more effective in these efforts on net, and/or that different groups of voters were swayed in different directions. The broader body of content voters see on social media could also sway them to one side or the other.

Experimental Design

This section provides a high-level overview of the experimental design. Details are in SI Appendix, section A.

We ran two parallel experiments, with Facebook and Instagram as the respective “focal platform.” For each focal platform, Meta drew a stratified random sample of users who lived in the United States, were at least 18 y old, and had logged into their account at least once in the past month. Meta placed survey invitations in these users’ focal platform news feeds from August 31 to September 12, 2020. Users who clicked on the invitations were told about the study and asked whether they were willing to deactivate their focal platform account for 1 wk in exchange for $25 and also whether they were willing to deactivate their focal platform account for 6 wk in exchange for $150. Those who were willing to deactivate were asked to complete a series of surveys, including baseline (responses collected September 8 to 21), endline (November 4 to 18), and postendline (December 9 to 23).

Individual-level participation in the experimental analyses and surveys was compensated and required informed consent. We believe that the societal benefits of the study (i.e., the knowledge about Facebook’s and Instagram’s impact in the election that will be generated) outweigh its potential harms to respondents, which are not larger than what individuals experience in their ordinary life. The overall project was evaluated and approved by the Institutional Review Board (IRB) of the National Opinion Research Center at the University of Chicago (NORC). Meta sought review from and was granted approval to conduct the experimental studies by the NORC Institutional Review Board (Protocol number 20.08.10, Project number 8870). Academic collaborators also worked with their respective university IRBs to ensure compliance with Human Subjects Research regulations in their authorship of papers, including analysis of aggregated, deidentified data collected by Meta and NORC.

Just after the baseline survey, participants were randomized into two groups: Deactivation (27%) and Control (73%). The Control group was informed that they would receive $25 if they did not log in to their focal platform for the next week, while the Deactivation group was informed that they would receive $150 if they did not log in to their focal platform for the next 6 wk. Since the Deactivation condition was more expensive than Control, we allocated fewer participants to the former to increase statistical power per dollar of cost. We included a short deactivation period for the Control group to ensure that the Control and Deactivation experiences were identical except for the deactivation length and payment amount, limiting the risk of differential attrition and experimenter demand effects.* On September 23, Meta began deactivating all participants’ focal platform accounts. The account deactivations were completed by the end of the day on September 23 for Instagram and September 24 for Facebook. Meta reactivated Control and Deactivation group accounts on September 30th and November 4th, respectively.
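The assignment step can be illustrated with a minimal sketch. It assumes that randomization happens within strata and that each stratum sends roughly 27% of its members to Deactivation; the function name, the input format, and the rounding rule are hypothetical and are not taken from the study's actual procedure (see SI Appendix, section A).

```python
import random
from collections import defaultdict


def assign_within_strata(strata, treat_share=0.27, seed=0):
    """Map each participant id to 'Deactivation' or 'Control'.

    strata: dict of participant id -> stratum label (hypothetical format).
    treat_share: target Deactivation share within each stratum.
    """
    rng = random.Random(seed)
    by_stratum = defaultdict(list)
    for pid, stratum in strata.items():
        by_stratum[stratum].append(pid)

    assignment = {}
    for stratum in sorted(by_stratum):
        members = sorted(by_stratum[stratum])
        rng.shuffle(members)  # random order within the stratum
        n_treat = round(treat_share * len(members))
        for rank, pid in enumerate(members):
            assignment[pid] = "Deactivation" if rank < n_treat else "Control"
    return assignment


# Illustrative data only: 100 participants in stratum A, 200 in stratum B.
example_strata = {f"user{i}": ("A" if i < 100 else "B") for i in range(300)}
example_assignment = assign_within_strata(example_strata)
```

Fixing the share within each stratum, rather than flipping an independent coin per participant, keeps the two groups balanced on the stratifying variables by construction.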

While accounts were deactivated, participants could use their Facebook and Instagram credentials to log in to other apps and services, including WhatsApp and Facebook Messenger. The experiment therefore focuses on the impact of the core Facebook and Instagram products. Participants could also choose to log in to (and thereby reactivate) the focal platform at any time. Those who did so were reminded that they would forfeit their deactivation payments, but they were asked to remain in the study and complete the remaining surveys. In addition to the deactivation incentives, participants received base payments of $5 for completing the baseline survey and $20 each for the endline and postendline surveys.

In addition to variables drawn from the baseline, endline, and postendline surveys, our analysis incorporates a number of directly measured variables. First, we matched participants to state voting records to produce a validated measure of voter turnout. Second, we matched participants to public records of campaign donations. Third, a subset of participants opted into passive tracking that directly recorded their use of news and social media apps and websites in exchange for an additional payment. Finally, we matched all participants in our primary analysis sample to Meta platform data, including time spent on the platforms.

Empirical Strategy

Our preanalysis plan was registered with the Open Science Framework on September 22, 2020, and updated on November 3, 2020, the day before endline data collection began.† It specified the primary analysis sample, the target population for creating sample weights, the primary and secondary outcomes, the subgroup analyses, the estimating equation, and the handling of missing data. It also included shells of key tables and figures. It specified that we would base inference on two-sided tests and that we would use sharpened false discovery rate (FDR) adjusted q-values (68) to control for multiple hypothesis testing, with a preregistered threshold on these q-values as our measure of statistical significance for all tests. We did not substantively deviate from the preanalysis plan. SI Appendix, section H describes clarifications and minor modifications, mainly driven by changes in data availability. We characterize our null results using 95% CIs. We note that in some cases, the 95% CI bounds both have the same sign even though the effect is not statistically significant at our preregistered thresholds.
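For readers unfamiliar with sharpened FDR-adjusted q-values, the sketch below shows one common way to compute them: a grid search over candidate FDR levels combined with a two-stage Benjamini-Hochberg step-up rule. Whether this matches the procedure in ref. 68 in every detail is an assumption, and the function names, grid step, and example p-values are hypothetical.

```python
def bh_rejections(sorted_p, level):
    """Number of rejections from the Benjamini-Hochberg step-up rule."""
    m = len(sorted_p)
    rejected = 0
    for rank, p in enumerate(sorted_p, start=1):
        if p <= level * rank / m:
            rejected = rank  # step-up: keep the largest rank that passes
    return rejected


def sharpened_qvalues(pvals, step=0.001):
    """Smallest grid level q at which each hypothesis is rejected."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    sorted_p = [pvals[i] for i in order]
    q_sorted = [1.0] * m  # hypotheses never rejected keep q = 1
    for k in range(round(1 / step), 0, -1):
        q = k * step
        q_stage1 = q / (1 + q)
        r1 = bh_rejections(sorted_p, q_stage1)
        if r1 == m:
            r2 = m  # everything is already rejected in stage 1
        else:
            # Stage 2 sharpens the level using the estimated number of true nulls.
            q_stage2 = q_stage1 * m / (m - r1)
            r2 = bh_rejections(sorted_p, q_stage2)
        for j in range(r2):
            q_sorted[j] = q  # q decreases, so the last write is the smallest
    qvals = [0.0] * m
    for pos, i in enumerate(order):
        qvals[i] = q_sorted[pos]
    return qvals


# Hypothetical p-values for three outcomes (not taken from the study).
example_q = sharpened_qvalues([0.04, 0.001, 0.2])
```

A hypothesis is then called significant when its q-value falls below the preregistered threshold.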

Our primary analysis samples are limited to participants who used the focal platform for more than 15 min per day at baseline. We weight the samples to be representative of U.S. focal platform users on race, political party, education, and (among those with more than 15 min of daily use) baseline account activity. See SI Appendix, section A.5 for additional details.

We use an instrumental variables regression to estimate the causal effect of deactivation while accounting for imperfect compliance with deactivation. In SI Appendix, section D.1, we report intent-to-treat effects based on ordinary least squares regressions of outcomes on an indicator for assignment to treatment. We define \(D_i\) as a measure of participant \(i\)’s deactivation compliance, \(X_i\) as a vector of controls, \(\nu_s\) as a vector of randomization stratum indicators, and \(Y_i\) as an outcome. The estimating equation is

\[ Y_i = \tau D_i + \beta X_i + \nu_s + \varepsilon_i, \tag{1} \]

where we instrument for \(D_i\) with a Deactivation group indicator variable \(T_i\).
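To make the logic of the instrumental variables estimator concrete, the sketch below computes the simplest special case: a single binary instrument (assignment to Deactivation), no controls, no stratum indicators, and no sample weights. In that case the estimate reduces to the ratio of the intent-to-treat effect on the outcome to the effect of assignment on compliance. The variable names and toy data are hypothetical, and the full specification in Eq. 1 is richer than this.

```python
from statistics import mean


def wald_iv_estimate(y, d, t):
    """IV estimate with one binary instrument and no covariates.

    y: outcomes, d: compliance measure, t: 1 if assigned to Deactivation.
    """
    y1 = [yi for yi, ti in zip(y, t) if ti == 1]
    y0 = [yi for yi, ti in zip(y, t) if ti == 0]
    d1 = [di for di, ti in zip(d, t) if ti == 1]
    d0 = [di for di, ti in zip(d, t) if ti == 0]
    reduced_form = mean(y1) - mean(y0)  # intent-to-treat effect on the outcome
    first_stage = mean(d1) - mean(d0)   # effect of assignment on compliance
    if first_stage == 0:
        raise ValueError("assignment does not shift compliance")
    return reduced_form / first_stage


# Toy data in which the outcome is exactly 2 times compliance.
t = [1, 1, 0, 0]
d = [1.0, 0.8, 0.1, -0.1]
y = [2.0 * di for di in d]
tau_hat = wald_iv_estimate(y, d, t)
```

Scaling by the first stage is what converts an effect of being assigned to deactivate into an effect of actually staying off the platform.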

SI Appendix, section B includes more details on variable construction. As prespecified, the controls \(X_i\) are the variables selected in a lasso regression of the outcome \(Y_i\) on the baseline value of \(Y_i\) (if available) and a vector of demographics and baseline survey variables. We use the same control vector \(X_i\) in all subgroup analyses for a given outcome.

The compliance measure \(D_i\) is defined as \(D_i = 1 - U_i/\bar{U}_C\), where \(U_i\) is the share of days that participant \(i\) used the platform (defined by viewing five or more pieces of content) during the September 30 to November 3 treatment period and \(\bar{U}_C\) is the Control group average. Thus, \(D_i = 1\) for participants who never use the platform, \(D_i = 0\) for participants with usage equal to the Control group average, and the coefficient \(\tau\) on \(D_i\) in Eq. 1 measures the local average treatment effect of never using the platform instead of using the Control group average, for people induced to deactivate by the $150 payment.
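The compliance measure can be sketched in a few lines. The code assumes the linear form implied by the two anchor points in the text: compliance equals 1 when a participant never uses the platform and 0 when usage equals the Control group average. The function name and the usage shares below are hypothetical.

```python
from statistics import mean


def compliance(usage_share, control_mean_usage):
    """1 for zero usage, 0 for usage equal to the Control group average."""
    return 1.0 - usage_share / control_mean_usage


# Hypothetical shares of treatment-period days with platform use.
control_usage = [0.95, 0.85, 0.90]
u_bar_c = mean(control_usage)

never_used = compliance(0.0, u_bar_c)
average_user = compliance(u_bar_c, u_bar_c)
partial_complier = compliance(0.18, u_bar_c)
```

Under this form, a participant who uses the platform more than the Control group average receives a negative compliance value.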

We emphasize a number of important limitations that readers should keep in mind in interpreting the results. Similar caveats also apply to prior experimental studies of social media including refs. 5, 37, and 38. First, readers should be cautious about generalizing beyond our specific sample and time period. Our estimates are only directly informative about the set of people who agreed to participate in the study and were willing to deactivate their accounts for the payments we offered, and we do not know what the effects might have been in a different year or outside of an election period. However, we do think our results can inform readers’ priors about the potential effects of social media in the final weeks of high-profile national elections.

Second, we can only estimate the effect of 5 wk of individual-level deactivation. Longer-term deactivation could have different effects, and deactivating many users or eliminating Facebook or Instagram altogether could have broader “general equilibrium” effects on how people get information and what media outlets cover. Third, although we include a number of directly measured outcomes, many key outcome variables are self-reported, raising the possibility of various forms of measurement error. Fourth, although we designed our experiment to minimize the possibility of experimenter demand effects (e.g., requiring both Control and Deactivation participants to deactivate their accounts for at least some time), and although past work (5, 69) implies that experimenter demand effects in our context may be limited, participants were aware that they were part of an experiment, and we cannot rule out the possibility that this influenced their behavior.

Sample, Compliance, and Substitution

Sample Characteristics.

We summarize the characteristics of our sample in SI Appendix, section C. On Facebook, invitations to the study went out to a pool of users on the order of millions. A subset clicked the invitation, and a subset of those consented to participate and were willing to deactivate. Of these, the users who completed the baseline survey, could be linked to platform data, and had more than 15 min of baseline use per day form our “primary analysis sample.” A subset of the primary analysis sample opted into passive tracking. On Instagram, recruitment passed through the same stages: invites, clicks, consents, the primary analysis sample, and passive tracking.

Relative to the broader target populations of Facebook and Instagram users, our sample overrepresents users who are more liberal and more engaged with civic content. Our weighting strategy addresses selection on these variables. In SI Appendix, Tables S6 and S7, we show that differential selection on these characteristics is mainly driven by the users who select into our screening survey at the initial stage, and that characteristics of the sample are relatively stable from then on.

The degree of selection into our experiment is comparable to that in the prior literature. In ref. 5, recruitment ads were shown to 1.9 million users; 2,897 completed baseline and were randomized. In ref. 38, recruitment ads were shown to 365,599 users; 556 completed baseline and were randomized. In ref. 37, recruitment ads were shown to a large set of Texas A&M undergraduates, with the exact number who received them not reported; of the 1,929 individuals who responded, 167 completed baseline and were randomized.

Balance and Attrition.

The Deactivation and Control groups were balanced on observable characteristics at both baseline and endline; see SI Appendix, Tables S12 and S13. In our Facebook primary analysis sample, 91% of the Deactivation group and 89% of the Control group completed the endline survey. In the Instagram sample, the corresponding shares are 88% and 86%. Our attrition rates of 9 to 14% compare favorably to prior work—e.g., they are similar to ref. 37, lower than ref. 38, and lower than the mean of 96 field experiments published in top economics journals that were surveyed by Ghanem et al. (70).

The roughly 2 percentage point difference between attrition rates in Deactivation and Control is also comparable to prior work (70). Given our large sample, these differences are statistically significant for both samples; see SI Appendix, Table S14. There were also differences in the timing of response, with Deactivation participants generally completing the endline survey earlier than Control participants; see SI Appendix, Fig. S2. While this attrition is an important potential concern, we discuss a range of evidence below suggesting that it is unlikely to be a source of substantial bias in our estimates.
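A back-of-the-envelope check shows why a 2 percentage point gap can be statistically significant at this sample size. The sketch runs an unweighted two-proportion z-test on the rounded Facebook completion rates reported above. It assumes that the 19,857 Facebook participants were split exactly 27% to 73%; these group sizes are an approximation, and the test reported in SI Appendix, Table S14 may be specified differently.

```python
from math import erfc, sqrt


def two_proportion_z(p1, n1, p2, n2):
    """Pooled two-proportion z statistic and two-sided normal p-value."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Assumed group sizes: an exact 27/73 split of the Facebook sample.
n_total = 19857
n_deact = round(0.27 * n_total)
n_control = n_total - n_deact

# Rounded endline completion rates from the text: 91% vs. 89%.
z_stat, p_val = two_proportion_z(0.91, n_deact, 0.89, n_control)
```

With groups this large, the standard error of the difference is about half a percentage point, so a 2 point gap is several standard errors from zero.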

Compliance.

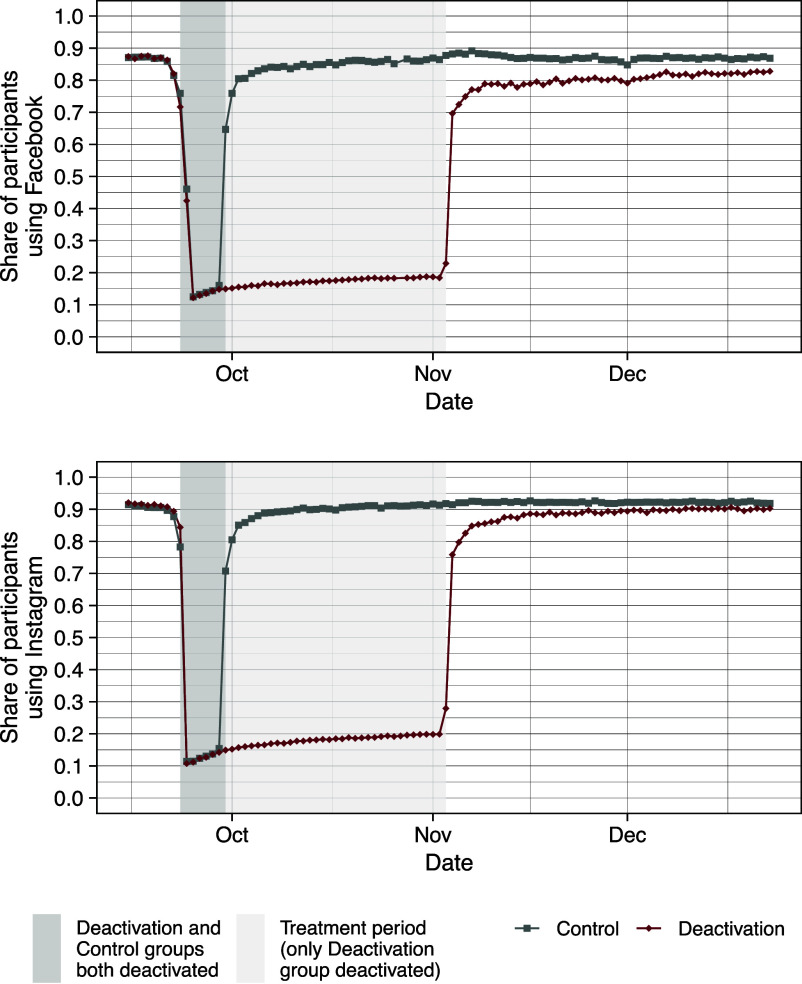

Fig. 1 presents the share of participants who used Facebook and Instagram (defined as logging in and seeing at least five pieces of content) for each day of the experiment. Before the experiment, about 90% of users in the Deactivation and Control groups accessed the focal platform on a given day. During the period when both groups were being paid to avoid logging in and the deactivation process had been completed for all users (September 25 to 29 for Facebook and September 24 to 29 for Instagram), the daily usage rate was roughly 10 to 15%. From September 30 to November 3, the 5-wk “treatment period” when only the Deactivation group was being paid to avoid logging in, that group’s daily usage rate was roughly 15 to 20%, while the Control group’s usage rate returned close to baseline levels.

Fig. 1.

Share of participants using Facebook and Instagram during study period. Note: This figure presents the share of Deactivation and Control groups that used Facebook and Instagram on each day. “Use” is defined as logging in and seeing five or more pieces of content. The dark gray shaded area indicates the Control group’s 7-d deactivation period, while the light gray shaded area indicates the Deactivation group’s 35-d additional deactivation period. We exclude Facebook use data from October 27th due to a logging error.

Thus, over the treatment period, the Deactivation group’s daily usage rate was roughly 15 to 20%, compared with a Control group rate close to the baseline level of about 90%. This illustrates the first stage of the instrumental variables estimator in Eq. 1. Consistent with results in refs. 5 and 71, the Deactivation group was still less likely to use the platforms at the end of December, almost 2 mo after the deactivation incentive had ended. In SI Appendix, Fig. S5 and Table S27, we show this amounted to reductions (relative to Control) of 23% and 15% in average time spent over the postdeactivation period for Facebook and Instagram, respectively.

Substitution.

The treatment effects we estimate below are the combined effect of reducing Facebook or Instagram use and reallocating that time to other activities. How that time is reallocated is a key determinant of the effects (72). For example, if participants reallocated most of their social media time to consuming high-quality news sources that generally provide accurate information, the impacts could be quite different than if they allocated most of that time to less accurate sources or to activities unrelated to news or politics.

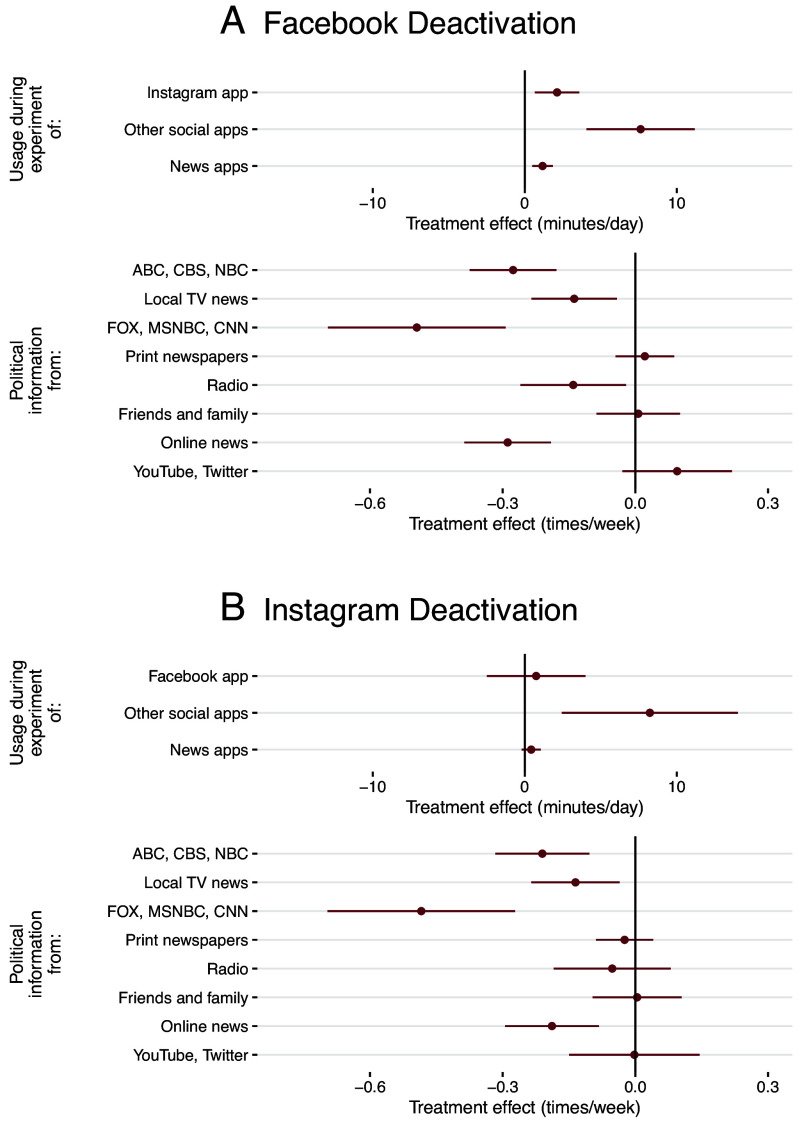

Fig. 2 presents the effects of Facebook and Instagram deactivation on use of substitutes. The first three rows of each panel show effects on time spent on other mobile apps, as directly measured among participants who opted into passive tracking. As a point of reference, the Facebook and Instagram Control group participants in the passive tracking sample spent about 43 and 16 min per day, respectively, on the Facebook and Instagram mobile apps during the treatment period. Facebook deactivation increased time spent on Instagram, other social media apps (such as YouTube, Twitter, and Snapchat), and news apps (such as the New York Times and Fox News), by point estimates of about 2, 8, and 1 min per day, respectively. Together, these point estimates sum to about 26% of the passive tracking sample Control group’s average Facebook mobile app use during the treatment period, with the remaining time going to other apps and to activities we did not observe. Instagram deactivation caused almost no substitution to Facebook or news apps, but it increased time spent on other social media apps by a point estimate of 8 min per day. Together, the point estimates sum to about 57% of the passive tracking sample Control group’s average Instagram mobile app use.
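The Facebook substitution share quoted above follows directly from the rounded point estimates. The short calculation below reproduces it; the variable names are illustrative, and because the inputs are rounded, the result is only approximate.

```python
# Rounded point estimates from the text, in minutes per day.
facebook_control_minutes = 43
substitution_minutes = {
    "Instagram": 2,
    "other social media apps": 8,
    "news apps": 1,
}

total_substitution = sum(substitution_minutes.values())
substitution_share = total_substitution / facebook_control_minutes
substitution_percent = round(100 * substitution_share)
```

About 11 of the 43 min per day are accounted for by observed substitutes, which is the roughly 26% figure in the text.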

Fig. 2.

Effects of Facebook and Instagram Deactivation on use of substitutes. Note: This figure presents local average treatment effects of Facebook (A) or Instagram (B) deactivation estimated using Eq. 1. Facebook/Instagram app minutes, other news apps minutes, and minutes on other social apps are measured only for participants who opted into passive tracking of apps. The bottom rows in each panel use self-reported answers to the question, “How often in the past week have you gotten political information from the following sources?” Responses were coded as “every day” = 7, “several times” = 3, “once” = 1, and “never” = 0. The horizontal lines represent 95% CI.

The bottom eight rows of each panel show that both Facebook and Instagram deactivation reduced the amount of political information that participants self-reported getting from online news outlets, as well as from network, cable, and local TV news outlets. Facebook deactivation also reduced political information from radio. In total, across all sources reported, the Facebook and Instagram Deactivation groups reported getting political information just over one time per week less. This is a reduction of about 4% relative to the Control group average of 26 times per week. One explanation is that deactivation reduces the amount of content from these outlets that people receive through Facebook or Instagram, and this is not outweighed by any increase in direct access. Other possible explanations are that deactivation reduced attention to political content on these other media or that these self-reports are inaccurate (73).
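The self-reported totals can be reconstructed from the response coding given in the Fig. 2 note. The sketch below applies that coding to one hypothetical respondent and then checks the relative reduction quoted above, using a reduction of exactly one time per week as a rounded stand-in for "just over one." The source names and answers are made up for illustration.

```python
# Coding from the Fig. 2 note: times per week implied by each answer.
FREQUENCY_CODES = {"every day": 7, "several times": 3, "once": 1, "never": 0}


def weekly_total(responses):
    """Sum coded frequencies across all sources for one respondent."""
    return sum(FREQUENCY_CODES[answer] for answer in responses.values())


# Hypothetical respondent (not study data).
example_responses = {
    "online news": "every day",
    "cable TV": "several times",
    "local TV": "once",
    "radio": "never",
}
example_total = weekly_total(example_responses)

# Relative reduction: about one time per week off a Control mean of 26.
relative_reduction_percent = round(100 * 1 / 26)
```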

Effects on Primary and Secondary Outcomes

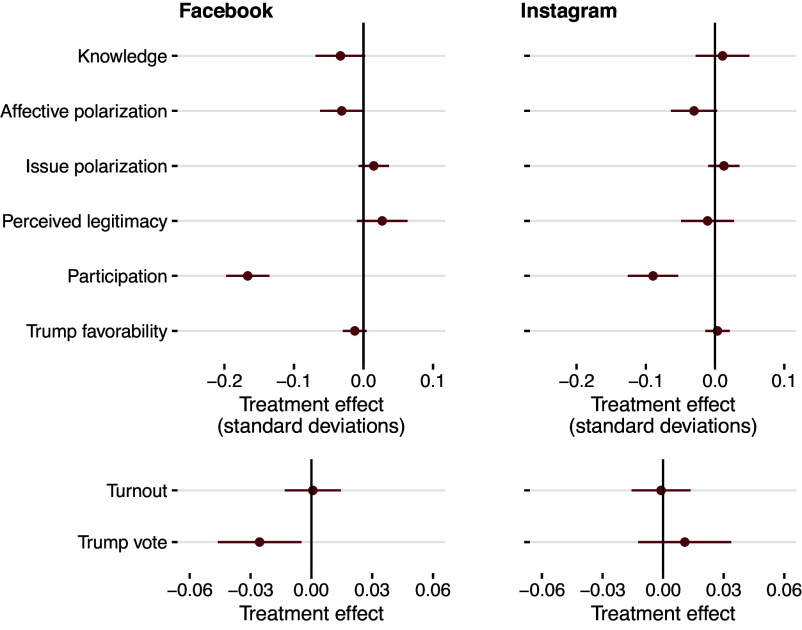

Fig. 3 presents the effects of deactivation on our eight prespecified primary outcome variables. The first six variables are standardized into units of SD within the Control groups. The bottom two variables (turnout and Trump vote) are reported in their original units. SI Appendix, section D.1 presents point estimates, p-values, and sharpened false discovery rate–adjusted two-stage q-values for all primary outcomes.

Fig. 3.

Effects of Facebook and Instagram Deactivation on primary outcomes. Note: This figure presents local average treatment effects of Facebook and Instagram deactivation estimated using Eq. 1. The horizontal lines represent 95% CI.

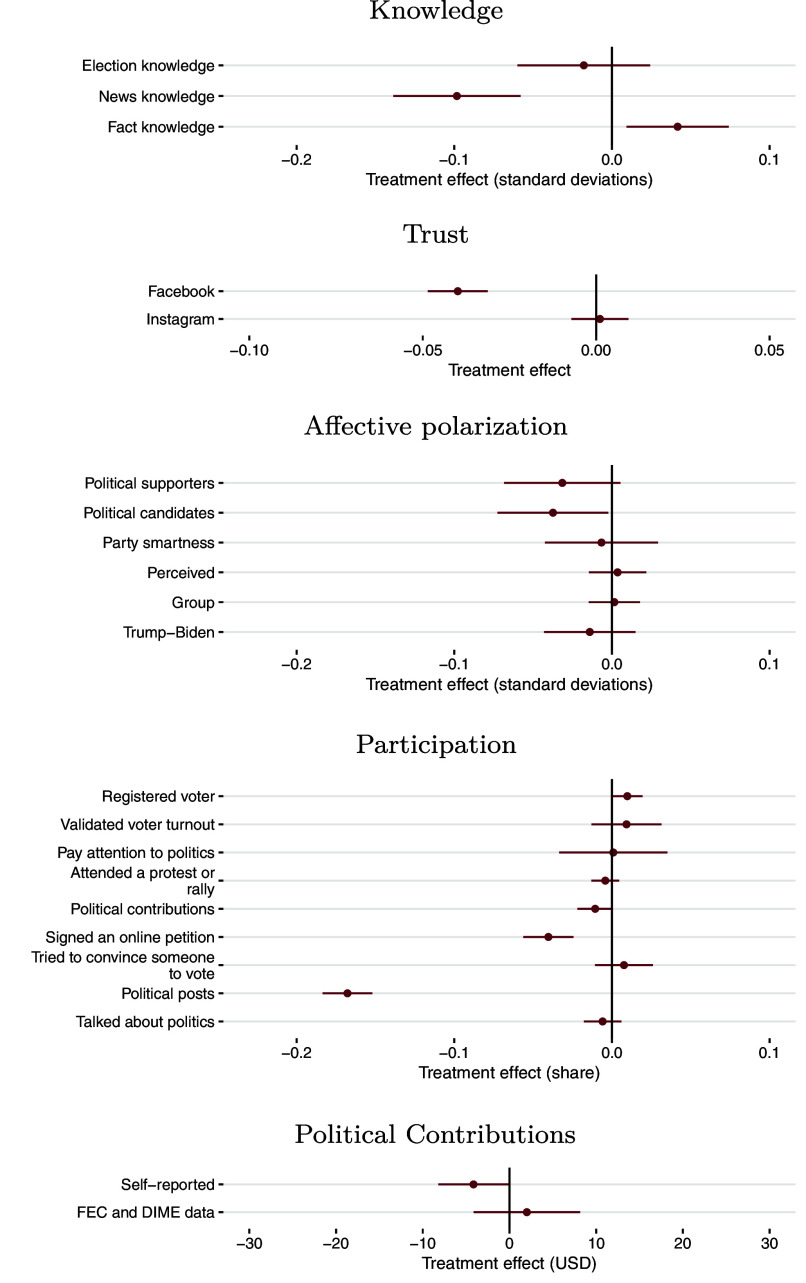

Fig. 4 presents the effects of Facebook deactivation on selected outcomes that we labeled as “secondary” in the preanalysis plan but are useful in interpreting the results in Fig. 3. SI Appendix, Fig. S3 presents the analogous results for Instagram. SI Appendix, section D.2 presents point estimates and p-values for the full set of preregistered secondary outcomes.

Fig. 4.

Effects of Facebook Deactivation on selected secondary outcomes. Note: This figure presents local average treatment effects of Facebook deactivation estimated using Eq. 1. “Trust” uses answers to the question, “How much do you think political information from each of these sources can be trusted?” Responses were coded as “not at all” = 0, “a little” = 0.25, “a moderate amount” = 0.5, “a lot” = 0.75, and “a great deal” = 1. The horizontal lines represent 95% CI.

Knowledge.

The first primary outcome is political knowledge. The knowledge variable is the average of standardized scores on three sets of factual questions: i) election knowledge (knowledge of candidates’ policy positions); ii) news knowledge (correctly distinguishing recent news events from plausible placebo events that had not happened); and iii) fact knowledge (correctly distinguishing true statements from misinformation that was circulating about topics such as COVID-19 and fraudulent ballots). These questions were based on a set of true and false news stories that circulated widely during the period in which our treatments took place. Stories are defined to be false based on determinations by Meta’s third-party fact checkers.
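The index construction can be sketched as follows: each component score is expressed in Control group SD units, and the index is the average of the standardized components. The use of the sample SD, the absence of sample weights, and the choice not to restandardize the final average are assumptions made for illustration; the toy scores are hypothetical.

```python
from statistics import mean, stdev


def standardize(values, control_values):
    """Express values in units of the Control group's SD."""
    mu = mean(control_values)
    sd = stdev(control_values)  # sample SD; an assumption of this sketch
    return [(v - mu) / sd for v in values]


def index_from_components(components, control_components):
    """Average standardized component scores for each participant."""
    z_scores = [
        standardize(vals, ctrl)
        for vals, ctrl in zip(components, control_components)
    ]
    return [mean(person) for person in zip(*z_scores)]


# Toy scores for two participants on election, news, and fact knowledge.
components = [[3, 1], [3, 2], [3, 3]]
control_components = [[1, 2, 3], [1, 2, 3], [1, 2, 3]]
knowledge_index = index_from_components(components, control_components)
```

Standardizing against the Control group lets effects on different question sets be read on a common SD scale before they are averaged.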

The effects of both Facebook and Instagram deactivation on our overall knowledge index are small and statistically insignificant. For Facebook, the point estimate is that deactivation reduced knowledge by 0.033 SD. For Instagram, the point estimate is closer to zero.

This overall knowledge index masks two partially offsetting components, as shown in the first panel of Fig. 4. First, Facebook deactivation decreased news knowledge by a point estimate of 0.098 SD. This is directionally consistent with the reduction in self-reported news consumption from Fig. 2, and the effect size is similar to that in ref. 5. Second, the (statistically insignificant) point estimate is that Facebook deactivation increased fact knowledge by 0.042 SD. Increased fact knowledge implies that participants in the Deactivation group were better able to distinguish misinformation from true stories. As a point of comparison for these magnitudes, college graduates in the Control groups scored 0.54 and 0.57 SD higher than noncollege graduates on news knowledge and fact knowledge, respectively.

Auxiliary analyses in SI Appendix, Fig. S7 show effects on the individual survey questions used to construct these knowledge indices. Within the news knowledge index, Facebook deactivation decreased correct beliefs in three events that did happen (i) “a militia’s plot to kidnap Michigan governor Gretchen Whitmer was foiled by undercover agents,” ii) “Pope Francis voiced support for same-sex civil unions,” and iii) “Amy Coney Barrett became the newest Supreme Court justice”) and increased incorrect beliefs in one event that did not (“Donald Trump announced that he would stop holding public rallies out of concern for COVID-related risks”). Within the fact knowledge index, Facebook deactivation did not affect beliefs in any true statements but decreased belief in three false statements: i) “evidence found on Hunter Biden’s laptop proves Joe Biden took bribes from foreign powers,” ii) “millions of fraudulent ballots were cast in the 2020 presidential election,” and iii) “Joe Biden is a pedophile.” This evidence is consistent with Facebook access increasing belief in misinformation.

The second panel of Fig. 4 shows that Facebook deactivation reduced trust in political information from Facebook by a point estimate of 0.040 SD, without affecting trust in Instagram. SI Appendix, Fig. S3 similarly shows that Instagram deactivation reduced trust in information from Instagram without affecting trust in Facebook. One potential explanation is that time away from a platform made users more aware of the amount of low-quality or inaccurate information to which they had been exposed. The effect echoes the finding in ref. 5 that Facebook deactivation reduced postexperiment Facebook use and in ref. 74 that Fox News viewers who had been given incentives to watch CNN for a month reported lower trust in Fox News. SI Appendix, Tables S19 and S20 show that deactivation did not impact trust in other news sources.

Polarization.

We construct two measures of political polarization: affective polarization and issue polarization. For both variables, we exclude Independents who report that they do not lean toward one party or the other.

Affective polarization is the standardized average of three underlying variables: i) political supporters polarization (the difference in participants’ favorability toward people who support their own party vs. the other party); ii) political candidates polarization (the difference in participants’ favorability toward people running for office in their own party vs. the other party); and iii) party smartness polarization (the difference in participants’ perceived smartness of people in their own party vs. people in the other party). Neither Facebook nor Instagram deactivation had a significant effect. The (statistically insignificant) point estimates are that Facebook and Instagram deactivation reduced affective polarization by 0.031 and 0.030 SD, respectively (Facebook: , , 95% CI bounds ; Instagram: , , 95% CI bounds ).
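As an illustration of how a "standardized average" index of this kind can be built, the following minimal sketch computes each component as an own-party minus other-party gap, z-scores each component, averages them, and re-standardizes the average. Standardizing against the Control group, the use of population SDs, the omission of sample weights, and all function and variable names are assumptions made for the example; the paper's exact construction is specified in its preanalysis plan and SI Appendix.

```python
import statistics


def polarization_gap(own_party, other_party):
    """Per-participant difference between own-party and other-party ratings."""
    return [own - other for own, other in zip(own_party, other_party)]


def standardize(values, ref_values):
    """Z-score values using the mean and SD of a reference group (assumed: Control)."""
    mean = statistics.mean(ref_values)
    sd = statistics.pstdev(ref_values)
    return [(v - mean) / sd for v in values]


def affective_polarization_index(components, control_mask):
    """Standardized average of several component variables.

    components: list of equal-length lists, one per component.
    control_mask: list of bools marking Control-group participants.
    """
    z_components = []
    for comp in components:
        ref = [v for v, is_ctrl in zip(comp, control_mask) if is_ctrl]
        z_components.append(standardize(comp, ref))
    # Average the z-scored components participant by participant.
    avg = [statistics.mean(vals) for vals in zip(*z_components)]
    # Re-standardize so the index has mean 0 and SD 1 in the reference group.
    ref_avg = [v for v, is_ctrl in zip(avg, control_mask) if is_ctrl]
    return standardize(avg, ref_avg)
```

With this normalization, a treatment effect of 0.031 on the index reads directly as 0.031 Control-group SDs.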

The third panel of Fig. 4 presents the effects of Facebook deactivation on the three components of the affective polarization index, plus the other three preregistered affective polarization secondary outcomes: perceived polarization, polarization in views toward groups such as immigrants and minorities, and polarization in feeling thermometer ratings for Trump and Biden. All six effects are statistically indistinguishable from zero after adjusting for multiple hypothesis testing. The largest point estimates are for political candidate polarization and political supporters polarization.

The issue polarization variable is an index of eight political opinions (on immigration, repeal of Obamacare, unemployment benefits, mask requirements, foreign policy, policing, racial justice, and gender relations), with the signs of the variables adjusted so that the difference between the own-party and other-party averages is positive. These questions were chosen to focus on issues that were prominent during the study period. Neither Facebook nor Instagram deactivation significantly affected issue polarization, and the 95% CI bounds rule out effects of SD.

As a point of comparison for these magnitudes, ref. 5 find that Facebook deactivation reduced an overall index of political polarization prior to the 2018 midterm elections. This includes a statistically insignificant reduction of 0.06 SD in a measure of affective polarization, and a significant reduction of 0.10 SD in a measure of issue polarization. One possible explanation for the difference in effects on issue polarization is that our study took place during a presidential election, where the environment was saturated with political information and opinion from many sources outside of social media. Another possible explanation is that the set of specific issues on which we focus here may have produced different responses. As another comparison point, ref. 26 estimate that affective polarization has grown by an average of 0.021 SD per year since 1978.

Perceived Legitimacy of Election.

The perceived legitimacy variable is an index of agreement with six statements: i) Elections are free from foreign influence, ii) all adult citizens have equal opportunity to vote, iii) elections are conducted without fraud, iv) government does not interfere with journalists, v) government protects individuals’ right to engage in unpopular speech, and vi) voters are knowledgeable about candidates and issues. Neither Facebook nor Instagram deactivation had a significant effect, and the 95% CI bounds rule out effects of SD.

These null results are important in light of the prominent challenges to election legitimacy that took place around the 2020 election, including Trump’s emphasis on potential fraud in the run-up to the election and the Stop the Steal movement that followed it. Of the subcomponents of our election legitimacy index, one might expect (iii) to be most related to these events. SI Appendix, Tables S19 and S20 show that effects of deactivation on this component are also small and insignificant. We show below that the same is true for effects on our postendline survey in December 2020. We also discuss below the separate effects on Republicans and Democrats.

Political Participation and Turnout.

The participation variable is the sum of indicators for whether a participant reported doing the following six activities: i) attended a protest or rally, ii) contributed money to a political candidate or organization, iii) signed an online petition, iv) tried to convince someone how to vote (online or in-person), v) wrote and posted political messages online, and vi) talked about politics with someone they know. Turnout is an indicator for whether the participant reported voting.
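The construction of these two variables can be sketched as follows. The dictionary keys are hypothetical labels for the six survey items and the turnout question, not the study's actual variable names, and the sketch returns the raw count; the effects reported in SD units would additionally require standardizing that count.

```python
# Hypothetical item labels for the six self-reported activities.
ACTIVITIES = (
    "attended_protest_or_rally",
    "contributed_money",
    "signed_online_petition",
    "tried_to_convince_vote",
    "posted_political_messages",
    "talked_about_politics",
)


def participation_score(responses):
    """Sum of indicators: how many of the six activities were reported."""
    return sum(1 for item in ACTIVITIES if responses.get(item, False))


def turnout_indicator(responses):
    """1 if the participant reported voting, else 0."""
    return 1 if responses.get("reported_voting", False) else 0
```

Treating a missing item as "not reported" is a simplification made for the example.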

Facebook and Instagram deactivation significantly reduced participation, by point estimates of 0.167 and 0.090 SD, respectively (, for both variables). Neither Facebook nor Instagram deactivation significantly affected turnout, and the 95% CI bounds rule out effects larger than about 1.6 percentage points in either direction.

The fourth panel of Fig. 4 presents effects of Facebook deactivation on the six components of participation, plus the five other preregistered participation secondary outcomes. The effects of Facebook deactivation on participation are mainly driven by online activities: posting about politics and signing petitions online. This panel also shows that the effect on turnout is close to zero and statistically insignificant when we use the directly measured validated voter turnout variable. These results are similar for Instagram; see SI Appendix, Fig. S3.

The final panel of Fig. 4 presents effects on political contributions measured in dollars, using both our survey-based outcome and our directly measured outcome based on administrative contribution records. Both effects are statistically insignificant after adjusting for multiple hypothesis testing.

Candidate Evaluations and Vote Choice.

The Trump favorability variable is the average of two components: i) Trump approval ratings and ii) the difference between Trump and Biden feeling thermometer ratings. The Trump vote variable is defined as 1 for people who reported voting for Trump, −1 for people who reported voting for Biden, and 0 for those who did not vote or voted for some other candidate.

Neither Facebook nor Instagram deactivation significantly affected Trump favorability. The point estimate is negative for Facebook (95% CI bounds ) and very close to zero for Instagram (95% CI bounds ). The point estimate for the effect of Facebook deactivation on Trump vote is a reduction of 0.026 units (, , 95% CI bounds ). This effect falls just short of our preregistered significance threshold of . Instagram deactivation did not significantly affect Trump vote (95% CI bounds ).

To put the magnitude of a 0.026 unit effect on our Trump vote variable in context, note that this would result if Facebook deactivation caused % of Trump voters to switch to Biden, or if it caused % of Trump voters to not vote. SI Appendix, section D.4 shows that the associated point estimate for the effect on the Trump–Biden two-party vote share within our sample is 1.16 percentage points.
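The logic behind this magnitude comparison can be illustrated with a short sketch. It assumes the Trump vote variable measures net votes for Trump, coded +1 for Trump, −1 for Biden, and 0 otherwise, so that a Trump voter who switches to Biden moves the sample average twice as far as one who abstains. The sample of 100 participants is hypothetical and does not reflect the composition of the study sample.

```python
def trump_vote(choice):
    """Code a self-reported vote choice as a net-Trump-vote value.

    Assumed coding: +1 for Trump, -1 for Biden, 0 for abstention or any
    other candidate. The string labels are hypothetical.
    """
    if choice == "trump":
        return 1
    if choice == "biden":
        return -1
    return 0


def mean_trump_vote(choices):
    """Average of the coded variable across participants."""
    return sum(trump_vote(c) for c in choices) / len(choices)


# Hypothetical sample of 100 participants (not the study's composition).
baseline = ["trump"] * 40 + ["biden"] * 50 + ["none"] * 10

# One Trump voter switches to Biden: that person's value moves from +1 to -1.
switched = ["trump"] * 39 + ["biden"] * 51 + ["none"] * 10

# One Trump voter abstains instead: that person's value moves from +1 to 0.
abstained = ["trump"] * 39 + ["biden"] * 50 + ["none"] * 11

effect_switch = mean_trump_vote(switched) - mean_trump_vote(baseline)
effect_abstain = mean_trump_vote(abstained) - mean_trump_vote(baseline)
```

In this toy sample, one switcher in 100 lowers the average by about 0.02 units and one abstainer by about 0.01 units, which is why a given unit effect corresponds to half as many switchers as abstainers.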

SI Appendix, section D.4 presents additional discussion of the vote share effect, including a comparison to prior estimates of the impact of television, newspapers, and social media on vote choice. As discussed in SI Appendix, section G.2, the samples were far too small for the experiment itself to have changed any actual election outcomes.

Several additional facts may be useful to assess whether the effect of Facebook deactivation on Trump vote share implied by our point estimate is plausible. First, some people did change their minds over the study period: about 20% of Control group participants report voting differently at endline than they had reported intending to vote at baseline (SI Appendix, Table S22). Second, several (statistically insignificant) point estimates on other variables are directionally consistent with an effect on vote choice: deactivation (statistically insignificantly) decreased Trump favorability as described above, (statistically insignificantly) decreased turnout among Republicans and increased turnout among Democrats (SI Appendix, Fig. S21), and (statistically insignificantly) decreased three secondary outcomes reported in SI Appendix, section D.2: pro-Republican affect, pro-Republican issue positions, and votes for Republicans in state-level elections. However, the fact that any effects on correlated outcomes are small is an important note of caution.‡

Postendline Effects.

There are no statistically significant effects of Facebook or Instagram deactivation on the affective polarization, perceived legitimacy, and Trump favorability questions on the postendline survey; see SI Appendix, Fig. S6. This is not surprising, given that there were no statistically significant effects on these outcomes at endline.

Attrition and Robustness.

Earlier, we reported that 9 to 14% of the participants in our primary analysis sample failed to complete the endline survey, and that these attrition rates were somewhat higher for the Deactivation groups than for the Control groups. As shown formally by Ghanem et al. (70), the key question for assessing the potential for attrition to introduce bias is not whether it is differential per se, but instead the extent to which it generates different distributions of potential outcomes across the treatment conditions. We present several pieces of evidence that together suggest such bias may be limited.

First, we apply the test proposed by Ghanem et al. (70) to evaluate potential bias using the distribution of baseline outcomes among attritters and nonattritters. This test, which is presented in SI Appendix, Tables S23 and S24, fails to reject the null hypothesis that the distribution of potential outcomes remains balanced postattrition for 15 of the 16 primary outcomes across the two experiments. The one rejection is for affective polarization in the Facebook sample. The baseline means of affective polarization are balanced in the endline sample, meaning that there is no evidence of systematic distortion in our endline treatment effect estimates, but the baseline means are less balanced among attritters.

Second, we present additional evidence on the extent to which attrition is related to observed determinants of outcomes. SI Appendix, Table S13 shows that the Deactivation and Control groups are balanced on observables at endline. Consistent with this, SI Appendix, Fig. S10 shows that the estimates depend little on the choice of control variables. SI Appendix, Fig. S15 shows that treatment effects on endline outcomes predicted using baseline observables are all indistinguishable from zero. SI Appendix, Tables S25 and S26 present bias tests recommended by Oster (75) which suggest minimal bias from attrition under the assumption that the distribution of unobserved outcome determinants is similar to the distribution of observables.

Third, in SI Appendix, Table S27, we use our directly measured outcomes (which are available for both attritters and nonattritters) to compare the results for the full sample vs. the postattrition sample. This provides a direct test of bias due to attrition for these outcomes. In all cases, the full and postattrition samples yield very similar results, with differences between the two of less than one SE. This shows that attrition is not a significant source of bias in the treatment effects for these outcomes.

Finally, we construct Lee (76) bounds for the worst-case bias that could have been produced by attrition; see SI Appendix, Tables S28 and S29. These show how the estimates would change if those who attrited were maximally selected on unobserved determinants of outcomes. Following ref. 76, we delete the highest or lowest values in the distribution of Deactivation group outcomes until the resulting response rate equals the Control group’s response rate; we also “tighten” the estimates using baseline characteristics. The Lee bounds exclude zero for three primary outcomes: participation for both Facebook and Instagram and Trump vote for Facebook.
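The trimming idea behind these bounds can be sketched as follows. This is a simplified illustration rather than the estimator used in the paper: it trims whichever arm has the higher response rate (the general form of the procedure in ref. 76), ignores sample weights, and omits the tightening with baseline characteristics. The function name and arguments are hypothetical.

```python
def lee_bounds(treat_outcomes, n_treat, control_outcomes, n_control):
    """Basic trimming bounds on a treatment effect under differential attrition.

    treat_outcomes, control_outcomes: observed outcomes of respondents.
    n_treat, n_control: number randomized to each arm, including attriters.
    Returns (lower, upper) bounds on the difference in means.
    """
    def mean(xs):
        return sum(xs) / len(xs)

    rate_t = len(treat_outcomes) / n_treat
    rate_c = len(control_outcomes) / n_control

    if rate_t >= rate_c:
        # Treatment arm responded more: trim its excess respondents.
        share = (rate_t - rate_c) / rate_t
        k = int(round(share * len(treat_outcomes)))
        s = sorted(treat_outcomes)
        lower = mean(s[: len(s) - k]) - mean(control_outcomes)  # drop highest
        upper = mean(s[k:]) - mean(control_outcomes)            # drop lowest
    else:
        # Control arm responded more: trim its excess respondents.
        share = (rate_c - rate_t) / rate_c
        k = int(round(share * len(control_outcomes)))
        s = sorted(control_outcomes)
        lower = mean(treat_outcomes) - mean(s[k:])              # drop lowest
        upper = mean(treat_outcomes) - mean(s[: len(s) - k])    # drop highest
    return lower, upper
```

When the two response rates are equal, nothing is trimmed and both bounds collapse to the simple difference in means.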

In SI Appendix, Figs. S10–S12, we present effects on our primary outcomes under several alternative specifications: i) weighting observations equally; ii) controlling for party by date of survey response to address possible underlying time trends in partisan views in the days after the election§; iii) excluding the lasso-selected control variables from the estimating equation; iv) excluding the 8% of participants in the Facebook sample and 33% of participants in the Instagram sample who reported having multiple accounts; and v) using alternative weights that vary the threshold for winsorization and omit individual variables from the set used to calculate the weights one at a time. None of these robustness checks substantially changes the qualitative or quantitative pattern of our estimates.

Subgroup Analysis.

As prespecified, we estimate heterogeneous effects using four primary moderators (political party, baseline use, and indicators for undecided voters and for voters who identify as Black/Hispanic) and five secondary moderators (age, gender, education, urban status, and an indicator for swing state residence). These results are reported in SI Appendix, section E with the relevant CI for each subgroup.

The effects of Facebook deactivation on perceived legitimacy and participation are larger for the above-median use group. There are no other significant differences by baseline use. The effects on undecided voters and Black/Hispanic voters are generally indistinguishable from both the full sample estimates and from zero. Furthermore, there are generally no significant differences in primary effects within each of these subgroups (minority vs. nonminority voters; undecided vs. decided voters).

Effects for political party subgroups are generally similar to each other, with several exceptions. First, Facebook deactivation significantly reduced our overall index of knowledge among Democrats. Second, Facebook deactivation increased issue polarization among strong Democrats and (insignificantly) decreased it among strong Republicans; see SI Appendix, Fig. S21. This could reflect Facebook deactivation making the issue views of both Republicans and Democrats more pro-Democratic. Third, both Facebook and Instagram deactivation initially appear to have increased perceived legitimacy among Republicans, but this result does not survive controlling for the timing of survey response; see SI Appendix, Figs. S21 and S22.

In terms of the secondary moderators, most differences are not significant. One notable pattern is that there are several subgroups (above-median age, men, people with college degrees, and rural residents) in which Facebook deactivation had somewhat larger and more significant negative effects on affective polarization. SI Appendix, section E provides additional discussion of these and other differences, including relevant CI for each subgroup.

Discussion

Our findings paint a nuanced picture of the way Facebook and Instagram influenced attitudes, beliefs, and behavior in the 2020 election.

We replicate in a new context and a larger sample the prior finding by ref. 5 and Asimovic et al. (38) that access to Facebook leads users to be more informed about events in the news. We present large-scale experimental evidence suggesting that access to Facebook may increase belief in misinformation. Together, these findings are consistent with the fact that both trustworthy news and misinformation circulate widely on the platform, and they highlight the importance of continuing efforts to decrease exposure to the latter.

We find no significant effects of social media access on polarization. Our point estimates are consistent with both Facebook and Instagram access slightly increasing affective polarization, but the effects are small relative to the long-term trend in affective polarization. We find no significant effects of either Facebook or Instagram access on issue polarization. This suggests that if Facebook access contributes to political polarization, the effect is either small or accumulates over a longer period than 5 wk.

Our estimated effect of Facebook access on self-reported vote choice is large enough to be meaningful in a close election, although it is not significant at our preregistered threshold. The point estimate would be consistent with the Trump campaign using Facebook more effectively than the Biden campaign. It could also reflect the posts from users, pages, and groups to which users were exposed favoring Trump on net. We reiterate that our vote choice estimate applies to the specific population that selected into our experiment and that it cannot be extrapolated to the broader population without strong assumptions.

Our results also provide large-scale evidence on the political impact of access to Instagram. Aside from a reduction in online participation, we find no significant impacts of Instagram deactivation on any other primary outcomes. This is true even among younger users, and it suggests that despite Instagram’s rapid growth, Facebook likely remains the platform with the largest impacts on political outcomes.

We find precisely estimated zero effects of both Facebook and Instagram access on the perceived legitimacy of the electoral process, including perceptions of electoral fraud (although we do detect negative effects of Facebook access on perceived legitimacy among heavy users). Of course, we do not capture the effect of content shared after election day because our deactivation period had ended.

Finally, we find no significant effects of either Facebook or Instagram access on turnout, with CIs that rule out moderate effects. This is true for turnout measured with our survey as well as validated turnout from the voter file.

All of these findings are subject to the important caveats described above, including generalizability, a time-limited intervention, general equilibrium effects, self-reported outcomes, and attrition. Notwithstanding its limitations, we believe that this study can usefully inform and constrain the discussion of the effects of social media on American democracy.

Supplementary Material

Appendix 01 (PDF)

Acknowledgments

We thank participants at the Social Science Research Council (SSRC) Workshop on the Economics of Social Media, the Bay Area Tech Economics Seminar, the Winter Business Economics Conference, and seminars at Stanford, University of California Berkeley, and the University of Chicago Harris School for helpful comments and suggestions.

Author contributions

H.A., M.G., W.M., A.W., P.B., T.B., A.C.-T., D.D., D.F., S.G.-B., A.M.G., Y.M.K., D.L., N.M., D.M., B.N., J.P., J.S., E.T., R.T., C.V.R., M.W., A.F., C.K.d.J., N.J.S., and J.A.T. designed research; H.A., M.G., W.M., A.W., J.C.C., S.N.-D., H.N.E.B., A.C.P.d.Q., B.W., and S.Z. performed research; H.A., M.G., W.M., A.W., J.C.C., S.N.-D., H.N.E.B., A.C.P.d.Q., B.W., and S.Z. analyzed data; N.J.S. and J.A.T. are joint principal investigators of the broader study; and H.A., M.G., W.M., and A.W. wrote the paper.

Competing interests

Some authors are or have been employed by Meta: W.M., A.W., P.B., T.B., D.M., A.F., and C.K.d.J. are current employees. A.C.-T., D.D., and C.V.R. are former employees. All of their work on the study was conducted while they were employed by Meta. The following academic authors have had one or more of the following funding or personal financial relationships with Meta (paid consulting work, received direct grant funding, received an honorarium or fee, served as an outside expert, or own Meta stock): M.G., A.M.G., B.N., J.P., J.S., R.T., M.W., N.J.S., and J.A.T. The costs of the study were funded by Meta. Neither the academic researchers nor their institutions received financial compensation from Meta for their participation in the project. The overall project was evaluated and approved by the National Opinion Research Center (NORC) Institutional Review Board (IRB). Academic researchers coordinated with their specific university IRBs to ensure they followed regulations concerning human subject research when analyzing data collected by NORC and Meta as well as when authoring papers based on the results. Additionally, the research group was provided ethical counsel by the independent company Ethical Resolve to inform the study designs. For additional information about the above disclosures as well as a review of the steps taken to protect the integrity of the research, see SI Appendix, sections F and G.

Footnotes

Reviewers: L.B., University of Chicago; and R.D., National University of Singapore.

*For more context on the design choices, see SI Appendix, section A.4.

†The PAP is publicly available from https://osf.io/t9q2f.

‡For example, a one SD increase in Trump favorability is associated with a 0.6 unit increase in Trump vote in the Control group. Since deactivation reduced Trump favorability by a point estimate of 0.013 SD (with a 95% CI lower bound of 0.030 SD), one might thus expect Trump vote to decrease by 0.6 × 0.013 ≈ 0.008 units (with a 95% CI lower bound of 0.018 units), which is closer to zero than the actual point estimate of 0.026 units.

§From November 4 to 16, perceived legitimacy dropped substantially among Republicans while increasing substantially among Democrats; see SI Appendix, Figs. S13 and S14.

Data, Materials, and Software Availability

Deidentified data and analysis code from this study will be stored in the Social Media Archive (SOMAR) housed by the Inter-university Consortium for Political and Social Research (ICPSR) (77). This will be accessible for university research approved by Institutional Review Boards (IRBs) related to elections or for the purpose of validating the results of this study. ICPSR will handle and vet all applications requesting access to these data. Anonymized data (De-identified data and analysis code) will be deposited.

References

- 1.C. R. Sunstein, #Republic: Divided Democracy in the Age of Social Media (Princeton University Press, 2017).

- 2.J. E. Settle, Frenemies: How social media polarizes America (Cambridge University Press, 2018).

- 3.P. Barberá, “Social media, echo chambers, and political polarization” in Social Media and Democracy: The State of the Field, Prospects for Reform. SSRC Anxieties of Democracy, N. Persily, J. A. Tucker, Eds. (Cambridge University Press, 2020), pp. 34–55.

- 4.E. Zhuravskaya, M. Petrova, R. Enikolopov, Political effects of the internet and social media. Annu. Rev. Econ. 12, 415–438 (2020).

- 5.H. Allcott, L. Braghieri, S. Eichmeyer, M. Gentzkow, The welfare effects of social media. Am. Econ. Rev. 110, 629–676 (2020).

- 6.J. J. Van Bavel et al., How social media shapes polarization. Sci. Soc. 25, 913–916 (2021).

- 7.Y. Benkler, The Wealth of Networks: How Social Production Transforms Markets and Freedom (Yale University Press, 2008).

- 8.R. Fletcher, R. K. Nielsen, Are people incidentally exposed to news on social media? A comparative analysis. New Media Soc. 20, 2450–2468 (2018).

- 9.K. Munger, P. J. Egan, J. Nagler, J. Ronen, J. Tucker, Political knowledge and misinformation in the era of social media: Evidence from the 2015 UK election. Br. J. Polit. Sci. 52, 107–127 (2022).

- 10.C. Silverman, This analysis shows how viral fake election news stories outperformed real news on Facebook (2016). https://www.buzzfeednews.com/article/craigsilverman/viral-fake-election-news-outperformed-real-news-on-facebook. Accessed 16 April 2024.

- 11.L. McIntyre, Post-Truth (MIT Press, 2018).

- 12.S. Vosoughi, D. Roy, S. Aral, The spread of true and false news online. Science 359, 1146–1151 (2018).

- 13.A. M. Guess, B. A. Lyons, “Misinformation, disinformation, and online propaganda” in Social Media and Democracy: The State of the Field, Prospects for Reform. SSRC Anxieties of Democracy, N. Persily, J. A. Tucker, Eds. (Cambridge University Press, 2020), pp. 10–33.

- 14.R. M. Bond et al., A 61-million-person experiment in social influence and political mobilization. Nature 489, 295–298 (2012).

- 15.J. J. Jones, R. M. Bond, E. Bakshy, D. Eckles, J. H. Fowler, Social influence and political mobilization: Further evidence from a randomized experiment in the 2012 U.S. presidential election. PLoS ONE 12, 1–9 (2017).

- 16.A. Ravel, A new kind of voter suppression in modern elections. U. Mem. L. Rev. 49, 1019 (2018).

- 17.S. Overton, State power to regulate social media companies to prevent voter suppression. UC Davis L. Rev. 53, 1793 (2020).

- 18.J. A. Tucker, Y. Theocharis, M. E. Roberts, P. Barberá, From liberation to turmoil: Social media and democracy. J. Democracy 28, 46–59 (2017).

- 19.S. McKay, C. Tenove, Disinformation as a threat to deliberative democracy. Polit. Res. Q. 74, 703–717 (2021).

- 20.H. J. Parkinson, Click and elect: How fake news helped Donald Trump win a real election. The Guardian. https://www.theguardian.com/commentisfree/2016/nov/14/fake-news-donald-trump-electionalt-right-social-media-tech-companies. Accessed 16 April 2024.

- 21.M. Read, Donald Trump won because of Facebook (2016). https://nymag.com/intelligencer/2016/11/donald-trump-won-because-of-facebook.html. Accessed 16 April 2024.

- 22.J. Clayton, Social media: Is it really biased against US Republicans? BBC. https://www.bbc.com/news/technology-54698186. Accessed 16 April 2024.

- 23.Pew Research Center, Most Americans think social media sites censor political viewpoints. Pew Research Center. https://www.pewresearch.org/internet/2020/08/19/most-americans-think-social-media-sites-censor-political-viewpoints/. Accessed 16 April 2024.

- 24.K. Taylor, Instagram is gen Z’s go-to source of political news—And it’s already having an impact on the 2020 election. Business Insider. https://www.businessinsider.com/gen-z-gets-its-political-news-from-instagram-accounts-2019-6. Accessed 16 April 2024.

- 25.H. Murphy, D. Sevastopulo, Why US politicians are turning to Instagram ahead of 2020 election (2019). https://www.ft.com/content/737d2428-2fdf-11e9-ba00-0251022932c8. Accessed 16 April 2024.

- 26.L. Boxell, M. Gentzkow, J. M. Shapiro, Cross-country trends in affective polarization. Rev. Econ. Stat. 106, 557–565 (2024).

- 27.C. Dewey, Facebook fake-news writer: ‘I think Donald Trump is in the White House because of me’. The Washington Post, 17 November 2016. https://www.washingtonpost.com/news/the-intersect/wp/2016/11/17/facebook-fake-news-writer-i-think-donald-trump-is-in-the-white-house-because-of-me/. Accessed 16 April 2024.

- 28.M. Parks, Social media usage is at an all-time high. that could mean a nightmare for democracy (2020). https://www.npr.org/2020/05/27/860369744/social-media-usage-is-at-an-all-time-high-that-could-mean-a-nightmare-for-democr. Accessed 16 April 2024.

- 29.E. Prapuolenis, Election 2020: Misinformation has never been more of a threat (2020). https://www.youtube.com/watch?v=aS_ZjeWhuo8. Accessed 16 April 2024.

- 30.A. Sebenius, Why disinformation is a major threat to the 2020 election. Bloomberg, 14 May 2020. https://www.bloomberg.com/news/articles/2020-05-14/how-disinformation-has-morphed-for-the-2020-election-quicktake. Accessed 16 April 2024.

- 31.A. C. Estes, R. Heilweil, Why mail-in voting conspiracy theories are so dangerous in 2020 (2020). https://www.vox.com/recode/21454529/vote-by-mail-voter-fraud-trump-qanon-conspiracy-theory-2020-election. Accessed 16 April 2024.

- 32.M. Graham, S. Rodriguez, Twitter and Facebook race to label a slew of posts making false election claims before all votes counted. CNBC. https://www.cnbc.com/2020/11/04/twitter-and-facebook-label-trump-posts-claiming-election-stolen.html. Accessed 16 April 2024.

- 33.S. Alcorn, Behind the tweet that became the rallying cry for the insurrection (2021). https://revealnews.org/article/behind-the-tweet-that-became-the-rallying-cry-for-the-insurrection. Accessed 16 April 2024.

- 34.P. Suciu, Social media proved crucial for Joe Biden—It allowed him to connect with young voters and avoid his infamous gaffes. Forbes. https://www.forbes.com/sites/petersuciu/2020/11/17/social-media-proved-crucial-for-joe-biden–it-allowed-him-to-connect-with-young-voters-and-avoid-his-infamous-gaffes/?sh=3bc612d44148. Accessed 16 April 2024.

- 35.K. Roose, How Joe Biden’s digital team tamed the MAGA internet. The New York Times, 6 December 2020. https://www.nytimes.com/2020/12/06/technology/joe-biden-internet-election.html. Accessed 16 April 2024.

- 36.J. C. Wong, One year inside Trump’s monumental Facebook campaign. The Guardian. https://www.theguardian.com/us-news/2020/jan/28/donald-trump-facebook-ad-campaign-2020-election. Accessed 16 April 2024.

- 37.R. Mosquera, M. Odunowo, T. McNamara, X. Guo, R. Petrie, The economic effects of Facebook. Exp. Econ. 23, 575–602 (2019).

- 38.N. Asimovic, J. Nagler, R. Bonneau, J. A. Tucker, Testing the effects of Facebook usage in an ethnically polarized setting. Proc. Natl. Acad. Sci. U.S.A. 118, e2022819118 (2021).

- 39.N. Asimovic, J. Nagler, J. A. Tucker, Replicating the effects of Facebook deactivation in an ethnically polarized setting. Res. Polit. 10, 20531680231205157 (2023).

- 40.A. M. Guess, “Experiments using social media data” in Advances in Experimental Political Science, J. N. Druckman, D. P. Green, Eds. (Cambridge University Press, 2021), pp. 184–198.

- 41.C. A. Bail et al., Exposure to opposing views on social media can increase political polarization. Proc. Natl. Acad. Sci. U.S.A. 115, 9216–9221 (2018).

- 42.R. Levy, Social media, news consumption, and polarization: Evidence from a field experiment. Am. Econ. Rev. 111, 831–870 (2021).

- 43.M. Petrova, A. Sen, P. Yildirim, Social media and political contributions: The impact of new technology on political competition. Manag. Sci. 67, 2997–3021 (2021).

- 44.T. Rotesi, The impact of Twitter on political participation (Working paper, Bocconi University, 2019).

- 45.K. Müller, C. Schwarz, From hashtag to hate crime: Twitter and antiminority sentiment. Am. Econ. J.: Appl. Econ. 15, 270–312 (2023).

- 46.T. Fujiwara, K. Müller, C. Schwarz, The effect of social media on elections: Evidence from the United States. J. Eur. Econ. Assoc. 2023, jvad058 (2023).

- 47.N. Persily, J. A. Tucker, Eds., Social Media and democracy: The State of the Field, Prospects for Reform. SSRC Anxieties of Democracy (Cambridge University Press, 2020).

- 48.J. A. Tucker et al., Social media, political polarization, and political disinformation: A review of the scientific literature. SSRN. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3144139. Accessed 16 April 2024.

- 49.S. González-Bailón et al., Asymmetric ideological segregation in exposure to political news on Facebook. Science 381, 392–398 (2023).

- 50.A. M. Guess et al., How do social media feed algorithms affect attitudes and behavior in an election campaign? Science 381, 398–404 (2023).

- 51.A. M. Guess et al., Reshares on social media amplify political news but do not detectably affect beliefs or opinions. Science 381, 404–408 (2023).

- 52.B. Nyhan et al., Like-minded sources on Facebook are prevalent but not polarizing. Nature 620, 137–144 (2023).

- 53.Pew Research Center, More than eight-in-ten Americans get news from digital devices (2020). https://www.pewresearch.org/short-reads/2021/01/12/more-than-eight-in-ten-americans-get-news-from-digital-devices/. Accessed 16 April 2024.

- 54.J. Allen, B. Howland, M. Mobius, D. Rothschild, D. J. Watts, Evaluating the fake news problem at the scale of the information ecosystem. Sci. Adv. 6, eaay3539 (2020).

- 55.H. Allcott, M. Gentzkow, Social media and fake news in the 2016 election. J. Econ. Perspect. 31, 211–236 (2017).

- 56.A. M. Guess, B. Nyhan, J. Reifler, Exposure to untrustworthy websites in the 2016 US election. Nat. Human Behav. 4, 472–480 (2020).

- 57.N. Grinberg, K. Joseph, L. Friedland, B. Swire-Thompson, D. Lazer, Fake news on Twitter during the 2016 US presidential election. Science 363, 374–378 (2019).

- 58.E. Pariser, The Filter Bubble: How the New Personalized Web Is Changing What We Read and How We Think (Penguin Books, 2011).

- 59.S. Rathje, J. J. Van Bavel, S. van der Linden, Out-group animosity drives engagement on social media. Proc. Natl. Acad. Sci. U.S.A. 118, e2024292118 (2021).

- 60.H. Hartig, 75% of Americans say it’s likely that Russia or other governments will try to influence 2020 election (2020). https://www.pewresearch.org/fact-tank/2020/08/18/75-of-americans-say-its-likely-that-russia-or-other-governments-will-try-to-influence-2020-election.

- 61.H. Hartig, The Trump administration and the media (2020). https://cpj.org/reports/2020/04/trump-media-attacks-credibility-leaks. Accessed 16 April 2024.

- 62.M. Sullivan, Trump is leaving press freedom in tatters. Biden can take these bold steps to repair the damage (2020). https://www.washingtonpost.com/lifestyle/media/trump-biden-press-freedom/2020/12/04/251c669e-3583-11eb-a997-1f4c53d2a747_story.html. Accessed 16 April 2024.

- 63.D. Kemp, E. Ekins, New poll: 75% don’t trust social media to make fair content moderation decisions, 60% want more control over posts they see (2020). https://www.cato.org/blog/new-poll-75-dont-trust-social-media-make-fair-content-moderation-decisions-60-want-more. Accessed 16 April 2024.

- 64.A. Akala, Don’t censor! Stop the hoaxes! Facebook, Twitter face a catch-22 (2020). https://www.npr.org/2020/10/16/924625825/dont-censor-stop-the-hoaxes-facebook-twitter-face-a-catch-22?t=1660562843875. Accessed 16 April 2024.

- 65.E. Ferrara, H. Chang, E. Chen, G. Muric, J. Patel, Characterizing social media manipulation in the 2020 US presidential election. First Monday 25, 10.5210/fm.v25i11.11431 (2020).

- 66.M. Scott, R. Kern, The online world still can’t quit the ‘Big Lie’ (2022). https://www.politico.com/news/2022/01/06/social-media-donald-trump-jan-6-526562. Accessed 16 April 2024.

- 67.H. Homonoff, 2020 Political ad spending exploded: Did it work? (2020). https://www.forbes.com/sites/howardhomonoff/2020/12/08/2020-political-ad-spending-exploded-did-it-work/?sh=584a80d73ce0. Accessed 16 April 2024.

- 68.Y. Benjamini, A. M. Krieger, D. Yekutieli, Adaptive linear step-up procedures that control the false discovery rate. Biometrika 93, 491–507 (2006).

- 69.J. de Quidt, J. Haushofer, C. Roth, Measuring and bounding experimenter demand. Am. Econ. Rev. 108, 3266–3302 (2018).

- 70.D. Ghanem, S. Hirshleifer, K. Ortiz-Becerra, Testing attrition bias in field experiments. J. Human Res. 59, 0920-11190R2 (2023).

- 71.H. Allcott, M. Gentzkow, L. Song, Digital addiction. Am. Econ. Rev. 112, 2424–2463 (2022).

- 72.S. DellaVigna, E. La Ferrara, “Economic and social impacts of the media” in Handbook of Media Economics, S. P. Anderson, J. Waldfogel, D. Stromberg, Eds. (Elsevier, 2015), vol. 1, pp. 723–768.

- 73.D. A. Parry et al., A systematic review and meta-analysis of discrepancies between logged and self-reported digital media use. Nat. Human Behav. 5, 1535–1547 (2021).

- 74.D. Broockman, J. Kalla, The manifold effects of partisan media on viewers’ beliefs and attitudes: A field experiment with Fox News viewers. OSF Preprint (2022). https://edisciplinas.usp.br/pluginfile.php/7883470/mod_resource/content/1/Partisan_Media.pdf. Accessed 16 April 2024.

- 75.E. Oster, Unobservable selection and coefficient stability: Theory and evidence. J. Bus. Econ. Stat. 37, 187–204 (2019).

- 76.D. S. Lee, Training, wages, and sample selection: Estimating sharp bounds on treatment effects. Rev. Econ. Stud. 76, 1071–1102 (2009).

- 77.SOMAR @ICPSR, U.S. 2020 Facebook and Instagram Election Study: Inter-university Consortium for Political and Social Research [distributor]. SOMAR. https://socialmediaarchive.org/collection/US2020. Accessed 16 April 2024.

Supplementary Materials

Appendix 01 (PDF)

Data Availability Statement

Deidentified data and analysis code from this study will be stored in the Social Media Archive (SOMAR) housed by the Inter-university Consortium for Political and Social Research (ICPSR) (77). These materials will be accessible for university research on elections that has been approved by Institutional Review Boards (IRBs), or for the purpose of validating the results of this study. ICPSR will handle and vet all applications requesting access to these data.