ABSTRACT

Background

Novel influenza viruses pose a potential pandemic risk, and rapid detection of infections in humans is critical to characterizing the virus and facilitating the implementation of public health response measures.

Methods

We use a probabilistic framework to estimate the likelihood that novel influenza virus cases would be detected through testing in different community and healthcare settings (urgent care, emergency department, hospital, and intensive care unit [ICU]) while circulating at low frequencies in the United States. Parameters were informed by data on seasonal influenza virus activity and existing testing practices.

Results

In a baseline scenario reflecting the presence of 100 novel virus infections with similar severity to seasonal influenza viruses, the median probability of detecting at least one infection per month was highest in urgent care settings (72%) and when community testing was conducted at random among the general population (77%). However, urgent care testing was over 15 times more efficient than community testing (measured as the number of cases detected per 100,000 tests) because community testing requires a far larger number of tests. In scenarios that assumed increased clinical severity of novel virus infection, median detection probabilities increased across all healthcare settings, particularly in hospitals and ICUs (up to 100%), where testing also became more efficient.

Conclusions

Our results suggest that novel influenza virus circulation is likely to be detected through existing healthcare surveillance, although the most efficient testing setting depends on the disease severity profile. These analyses can help inform future testing strategies to maximize the likelihood of novel influenza detection.

Keywords: detection, H5N1, healthcare testing, influenza, novel virus

1. Introduction

Novel influenza viruses are different from the seasonal influenza viruses currently circulating in humans (A/H3N2, A/H1N1, and B/Victoria). Human infections with novel influenza viruses are generally rare and isolated events that occur through exposure to infected animals (such as livestock) during recreational or occupational activities. At the time of writing (May 10, 2024), widespread avian influenza A(H5N1) virus outbreaks occurring among wild and commercial birds since January 2022 have been associated with just two detected human cases of H5N1 in the United States: one individual who was exposed to infected poultry and one who was exposed to infected dairy cattle [1, 2]. The H5N1 viruses associated with these outbreaks do not easily bind to receptors in the human upper respiratory tract, and the risk to the general public is currently low [1]. However, a novel influenza virus that transmits efficiently between humans could pose a pandemic risk. Rapid detection of human infection with a novel influenza virus is critical to characterizing the virus causing the infection and facilitating a rapid public health response [3].

Testing is particularly important to distinguish novel influenza virus infection from seasonal influenza or other respiratory virus infections with similar symptom profiles [4]. Although active monitoring and testing of individuals with exposure to infected animals can identify new spillover infections [2], such measures are not designed to detect cases in the wider community following sustained human‐to‐human transmission. Public health surveillance systems must be equipped to detect novel influenza cases through testing in the community or in healthcare settings where infected individuals might seek care.

We use a probabilistic framework to estimate the likelihood of detection of novel influenza virus cases once sustained human‐to‐human transmission is occurring at low frequencies within the United States (i.e., 1000 total cases or fewer). We consider testing of individuals presenting to different healthcare settings with no known previous exposure to infected animals or humans and use information on testing for seasonal influenza viruses to develop assumptions about plausible testing probabilities. Our findings can help inform testing strategies to improve detection of novel influenza virus cases occurring at low frequencies.

2. Methods

2.1. Model

We adapted an existing framework to estimate detection probabilities for a novel influenza virus in the United States [5]. For a given case of novel influenza virus infection, the probability of detection in a particular healthcare setting can be expressed as

$$p_{\text{detect}} = p_c \times p_v \times p_{\text{sens}} \times p_f,$$

where $p_c$ is the probability that someone is tested in that setting given that they are a case; $p_v$ is the probability that testing occurs while virus is still detectable; $p_{\text{sens}}$ is the test sensitivity; and $p_f$ is the probability that a positive test is forwarded to a public health laboratory for further testing. Most commercial assays currently used for human influenza virus testing cannot distinguish novel influenza A viruses from seasonal influenza A viruses. Thus, further testing at a public health laboratory is required for a positive specimen to be identified as a novel virus (until tests specific for that virus become more widely available). We initially assumed that 50% of positive specimens are forwarded (i.e., $p_f$ = 50%), informed by the average percentage of influenza A hospitalizations that were subtyped between 2010 and 2019 [6], and considered a range of forwarding levels (25%, 50%, 75%, and 100%) in sensitivity analyses. All specimens forwarded for further testing were assumed to be correctly identified as a novel influenza virus.
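As a minimal sketch (Python; the function name `per_case_detection_prob` is hypothetical and the inputs are illustrative values, roughly the midpoints of the UC/ED ranges in Table 1), the per‐case detection probability is a plain product of the four step probabilities, so it scales linearly with the forwarding probability $p_f$:

```python
def per_case_detection_prob(p_c: float, p_v: float, p_sens: float, p_f: float) -> float:
    """Probability that one novel-virus case is detected in a given setting.

    p_c    -- probability the case is tested in the setting
    p_v    -- probability testing occurs while virus is still detectable
    p_sens -- test sensitivity
    p_f    -- probability a positive specimen is forwarded to a public health lab
    """
    for name, value in (("p_c", p_c), ("p_v", p_v), ("p_sens", p_sens), ("p_f", p_f)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {value}")
    # Every step must succeed for the case to be identified as a novel virus.
    return p_c * p_v * p_sens * p_f


# Hypothetical illustrative inputs; only the forwarding probability differs.
half_forwarded = per_case_detection_prob(p_c=0.045, p_v=0.675, p_sens=0.9, p_f=0.5)
all_forwarded = per_case_detection_prob(p_c=0.045, p_v=0.675, p_sens=0.9, p_f=1.0)
print(f"p_f = 50%: {half_forwarded:.4f}; p_f = 100%: {all_forwarded:.4f}")
```

Doubling $p_f$ doubles the per‐case probability, but the probability of detecting at least one case among many cases rises less than proportionally because it saturates toward 100%.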

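The per‐case probability of being tested, $p_c$, is the combined probability that a case will develop symptoms ($p_{\text{symp}}$), seek care for those symptoms in a particular healthcare setting ($p_{\text{seek}}$), and be tested in that setting ($p_{\text{test}}$), that is,

$$p_c = p_{\text{symp}} \times p_{\text{seek}} \times p_{\text{test}}.$$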
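A short illustrative sketch of $p_c = p_{\text{symp}} \times p_{\text{seek}} \times p_{\text{test}}$ for the three healthcare settings. It assumes midpoints of the uniform ranges in Table 1 and the means of the truncated‐normal testing distributions, and it assumes ICU presentation equals hospital presentation multiplied by the share of hospitalizations admitted to the ICU (Table 1 expresses the ICU range as a percentage of hospitalizations). The names `SETTINGS` and `prob_case_tested` are hypothetical.

```python
# Hypothetical midpoints of the Table 1 ranges (means for the truncated-normal p_test).
P_SYMP = 0.60  # midpoint of 40%-80%

SETTINGS = {
    # setting: (p_seek among symptomatic cases, mean p_test)
    "UC/ED": (0.15, 0.50),
    "Hospital": (0.015, 0.53),
    # Assumption: ICU presentation = hospital presentation x ICU share of hospitalizations.
    "ICU": (0.015 * 0.175, 0.46),
}


def prob_case_tested(p_symp: float, p_seek: float, p_test: float) -> float:
    """p_c: the case is symptomatic AND seeks care in this setting AND is tested there."""
    return p_symp * p_seek * p_test


per_case = {name: prob_case_tested(P_SYMP, seek, test) for name, (seek, test) in SETTINGS.items()}
for name, p_c in per_case.items():
    print(f"{name:8s} p_c = {p_c:.5f}")
```

Using midpoints only illustrates orders of magnitude; the analysis itself samples each parameter from its full distribution.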

For a monthly incidence of novel cases, I, in a population of size N (where I is the fraction of the population infected with the novel influenza virus), there are IN novel cases. We estimate the probability of detecting at least one novel case as 1 minus the probability of detecting no cases among the entire population, or

$$P = 1 - \left(1 - p_{\text{detect}}\right)^{IN}$$

(see the supporting information for further details). The expected number of clinical tests used per month, $T$, is the combined number of tests conducted among cases and noncases. Noncases represent individuals presenting at healthcare settings with respiratory illness symptoms that are not due to novel influenza virus infection. The expected number of tests can be expressed as

$$T = IN\,p_c + (1 - I)N\,p_{nc},$$

where $p_{nc}$ is the probability that someone without novel influenza virus infection is tested. This quantity is estimated as the background rate of presentation with respiratory illness symptoms to a given healthcare setting among the general population ($p_{\text{bg}}$) multiplied by the probability of being tested in that setting ($p_{\text{test}}$), that is, $p_{nc} = p_{\text{bg}} \times p_{\text{test}}$. To compare testing efficiency in different settings, we estimated the expected number of detected cases per 100,000 clinical tests conducted as

$$E = \frac{IN\,p_{\text{detect}}}{T} \times 100{,}000.$$
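The three quantities $P = 1 - (1 - p_{\text{detect}})^{IN}$, $T = IN\,p_c + (1 - I)N\,p_{nc}$, and $E = 100{,}000 \times IN\,p_{\text{detect}} / T$ can be sketched together as below. This is an illustrative Python version under stated assumptions, not the authors' R code: the helper names are hypothetical, and the UC/ED inputs are midpoints of the Table 1 ranges (with $p_{\text{bg}}$ for UC/ED taken as ILI occurrence times $p_{\text{seek}}$, as described in the Table 1 footnote).

```python
def prob_at_least_one(p_detect: float, n_cases: float) -> float:
    """P = 1 - (1 - p_detect)^(I N), with n_cases = I * N."""
    return 1.0 - (1.0 - p_detect) ** n_cases


def expected_tests(n_cases: float, population: float, p_c: float, p_nc: float) -> float:
    """T = I N p_c + (1 - I) N p_nc: tests among cases plus tests among noncases."""
    return n_cases * p_c + (population - n_cases) * p_nc


def efficiency_per_100k(n_cases: float, p_detect: float, n_tests: float) -> float:
    """E = expected detected cases per 100,000 clinical tests."""
    return 100_000 * n_cases * p_detect / n_tests


# Hypothetical UC/ED inputs built from midpoints of the Table 1 ranges.
N = 330e6
n_cases = 100                          # I * N
p_c = 0.60 * 0.15 * 0.50               # p_symp * p_seek * p_test
p_detect = p_c * 0.675 * 0.90 * 0.50   # * p_v * p_sens * p_f
p_bg = 0.033 * 0.15                    # ILI occurrence * p_seek
p_nc = p_bg * 0.50                     # * p_test

P = prob_at_least_one(p_detect, n_cases)
T = expected_tests(n_cases, N, p_c, p_nc)
E = efficiency_per_100k(n_cases, p_detect, T)
print(f"P = {P:.2f}, T = {T:,.0f} tests/month, E = {E:.3f} per 100,000 tests")
```

Because I is tiny, $T$ is dominated by the noncase term, which is why background respiratory illness drives test usage.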

Finally, we considered random testing in the general community as a supplemental strategy that could be deployed in addition to healthcare testing. Given that community testing does not depend on symptom presentation or care‐seeking behavior, $p_c$ was simply the frequency of community tests conducted per month, and the expected number of tests was $T = N p_c$. Similarly, $p_v$ was the approximate time (in months) that virus would remain detectable and was parameterized to capture individual variation in virus shedding dynamics. Since community testing would be initiated specifically to seek out novel influenza virus infection, we did not adjust for specimen forwarding (i.e., $p_f$ = 100%; we assumed all specimens would be tested to distinguish novel influenza virus from seasonal influenza viruses).
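A minimal Python sketch of the community variant, assuming $T = N p_c$ and no forwarding step. The function name is hypothetical, and the inputs are illustrative: the endpoints and midpoint of the 3%–6% community testing range and the midpoint (0.375) of the 25%–50% detectable window in Table 1, with 100 novel cases.

```python
def community_testing(n_cases, population, p_c, p_v, p_sens):
    """Community variant: p_c = monthly fraction of the population tested,
    p_v = fraction of the month virus is detectable, no forwarding step (p_f = 1)."""
    p_detect = p_c * p_v * p_sens
    prob_any = 1.0 - (1.0 - p_detect) ** n_cases
    n_tests = population * p_c                        # T = N p_c
    efficiency = 100_000 * n_cases * p_detect / n_tests
    return prob_any, n_tests, efficiency


# Hypothetical inputs spanning the Table 1 community testing range.
results = {
    p_c: community_testing(100, 330e6, p_c, p_v=0.375, p_sens=0.9)
    for p_c in (0.03, 0.045, 0.06)
}
for p_c, (prob_any, n_tests, eff) in results.items():
    print(f"p_c = {p_c:.3f}: P = {prob_any:.2f}, T = {n_tests / 1e6:.1f}M, E = {eff:.4f}")
```

Under these formulas the community efficiency reduces to $100{,}000 \times I \times p_v \times p_{\text{sens}}$, independent of $p_c$: testing more people raises the probability of detection but not the number of detections per 100,000 tests.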

For each healthcare and community setting, we drew 10,000 parameter combinations from data‐informed distributions (outlined below) and calculated the quantities described above. All analyses and visualizations were generated in R version 4.0.3 using the data.table, truncnorm, here, scales, patchwork, colorspace, and tidyverse packages [7, 8, 9, 10, 11, 12, 13, 14].

2.2. Healthcare Settings and Model Parameterization

We considered three distinct healthcare settings to reflect different care‐seeking behaviors and testing practices: (i) outpatient urgent care and emergency departments (UC/ED); (ii) inpatient hospital settings; and (iii) intensive care units (ICU). Settings were assumed to be independent, such that a person presenting to more than one (for example, a hospital admission followed by a subsequent ICU admission) could be tested in each, according to the corresponding testing probabilities. Data were collated from various existing influenza surveillance platforms to inform parameters for each setting (Table 1). We defined N = 330 million to approximate the US population [25] and considered incidence values that corresponded to 100 and 1000 total novel influenza cases.

TABLE 1.

Baseline care‐seeking and testing parameters.

| Parameter | Assumed distribution | Source and available timeframe (if applicable) |
|---|---|---|
| Proportion of novel cases developing symptoms, $p_{\text{symp}}$ | Uniform with range 40%–80% | [15] |
| Care‐seeking and presentation of novel symptomatic cases at specific sites, $p_{\text{seek}}$: | Uniform with range: | |
| UC/ED | 10%–20% of symptomatic cases | FNY, ONM: 2018–2023 |
| Hospital | 1%–2% of symptomatic cases | CDC burden estimates: 2010–2021 [16] |
| ICU | 15%–20% of hospitalizations | VISION: 2020–2021; FluSurv‐Net: 2022–2023 |
| Testing of individuals with ARI, $p_{\text{test}}$: | Truncated normal with mean/SD/range: | VISION: December 2021–May 2022 |
| UC/ED | 50%/10%/10%–90% | |
| Hospital | 53%/10%/20%–95% | |
| ICU | 46%/10%/1%–95% | |
| Community testing as a proportion of the general population, regardless of symptoms | 3%–6% of general population per month | Assumption following [17] |
| Tests that occur while virus is detectable, $p_v$: | Uniform with range: | |
| Healthcare settings | 50%–85% | Proportion seeking care ≤ 7 days after symptom onset [18] |
| Community settings | 25%–50% | Proportion of month that virus is detectable [19] |
| Test sensitivity, $p_{\text{sens}}$ | Uniform with range 80%–100% | [20] |
| Proportion of positive specimens that are forwarded to a public health laboratory, $p_f$ | 50% | Assumption following [6] |
| Background occurrence in the general population, $p_{\text{bg}}$, of: | Uniform with range: | |
| ILI^a | 0.6%–6% | FNY, ONM: 2019, 2022 |
| Hospital ARI admissions | 0.03%–0.1% | MarketScan: 2015–2021 |
| ICU ARI admissions | 0.02%–0.03% | MarketScan: 2015–2021 |

Note: Surveillance platforms are Flu Near You (FNY), Outbreaks Near Me (ONM), VISION Vaccine Effectiveness Network, FluSurv‐Net, and IBM MarketScan Commercial Claims and Encounters Database (MarketScan) [21, 22, 23, 24]. Further details of each platform are provided in the supporting information.

Abbreviations: ARI = acute respiratory illness, ED = emergency department, ICU = intensive care unit, ILI = influenza‐like‐illness, SD = standard deviation, UC = urgent care.

^a Background ILI occurrence is multiplied by care‐seeking probabilities in urgent care or emergency departments ($p_{\text{seek}}$) to estimate the rate of presentation to urgent care or emergency departments with influenza symptoms in the general population. We omitted data from 2020 and 2021 due to atypically low levels of respiratory virus circulation.
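A rough, standard‐library Python sketch of the Monte Carlo step for the baseline UC/ED rows of Table 1, with 100 novel cases. It is not the authors' R implementation (which used the truncnorm package); the truncated normal here is drawn by simple rejection sampling, the function names and seed are hypothetical, and the summaries will differ in detail from the published estimates.

```python
import random
import statistics


def truncated_normal(rng: random.Random, mean: float, sd: float, lo: float, hi: float) -> float:
    """Draw from Normal(mean, sd) restricted to [lo, hi] by rejection sampling."""
    while True:
        x = rng.gauss(mean, sd)
        if lo <= x <= hi:
            return x


def simulate_uc_ed(n_draws: int = 10_000, n_cases: int = 100, seed: int = 2024) -> list:
    """Sample the baseline UC/ED parameters and return P for each draw."""
    rng = random.Random(seed)
    probs = []
    for _ in range(n_draws):
        p_symp = rng.uniform(0.40, 0.80)
        p_seek = rng.uniform(0.10, 0.20)
        p_test = truncated_normal(rng, 0.50, 0.10, 0.10, 0.90)
        p_v = rng.uniform(0.50, 0.85)
        p_sens = rng.uniform(0.80, 1.00)
        p_f = 0.50                                   # fixed at baseline
        p_detect = p_symp * p_seek * p_test * p_v * p_sens * p_f
        probs.append(1.0 - (1.0 - p_detect) ** n_cases)
    return probs


probs = simulate_uc_ed()
cuts = statistics.quantiles(probs, n=40)             # cut points at 2.5%, 5.0%, ..., 97.5%
print(f"median P = {statistics.median(probs):.2f} "
      f"(95% interval {cuts[0]:.2f}-{cuts[-1]:.2f})")
```

The median and the 2.5th/97.5th percentiles summarize the spread of P across parameter draws, which is how the shaded bands in the figures are constructed.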

Our baseline scenario reflected a novel influenza virus with similar severity to seasonal influenza. We also considered scenarios of increased severity, ranging from severity similar to COVID‐19 to the severity of recent H5N1 virus infections in humans (Table 2). For these scenarios, we assumed similar or increased probabilities of developing symptoms and seeking care in each healthcare setting, while ensuring that the combined percentages did not exceed 100%. We initially assumed testing probabilities were fixed (Table 1) but explored alternative scenarios with increased testing ($p_{\text{test}}$ mean = 90%) to compare the effect of enhanced surveillance across healthcare settings.

TABLE 2.

Scenarios for increased symptom severity.

| Scenario | Symptomatic | UC/ED^a | Hospital | ICU^b | Source(s) |
|---|---|---|---|---|---|
| Baseline | 40%–80% | 10%–20% | 1%–2% CHR^a | 15%–20% | [15, 16] |
| COVID‐like | 40%–80% | 10%–20% | 1%–2% IHR^c | 20%–30% | [17, 26] |
| Intermediate 1 | 25% > baseline^d | 25% > baseline^d | 4.5%–5.5% IHR^c | 30%–40% | [17] |
| Intermediate 2 | 50% > baseline^d | 50% > baseline^d | 9.5%–10.5% IHR^c | 45%–55% | [17] |
| Recent H5‐like | 50% > baseline^d | 50% > baseline^d | 60%–70% IHR^c | 75%–85% | [1] |

Note: All parameters are assumed to follow a Uniform distribution with the reported range.

Abbreviations: CHR = case‐hospitalization ratio, ED = emergency department, ICU = intensive care unit, IHR = infection‐hospitalization ratio, UC = urgent care.

^a Expressed as a percentage of symptomatic individuals.

^b Expressed as a percentage of hospitalizations.

^c Expressed as a percentage of infected individuals.

^d Up to a maximum of 100%.
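The Table 2 scenarios can be turned into hospital and ICU presentation probabilities as in the Python sketch below. Several points are assumptions, not taken from the source: "25% > baseline" is read as a relative increase, the 100% cap is applied to the range endpoints (capping each sampled value would give a slightly different distribution), ICU presentation is hospital presentation multiplied by the ICU share of hospitalizations, and range midpoints stand in for full uniform draws. The UC/ED column is not used here. All names are hypothetical.

```python
# Table 2 as (symptomatic uplift %, hospital range, hospital basis, ICU share of hospitalized).
SCENARIOS = {
    "Baseline":       (0,  (0.01, 0.02),   "CHR", (0.15, 0.20)),
    "COVID-like":     (0,  (0.01, 0.02),   "IHR", (0.20, 0.30)),
    "Intermediate 1": (25, (0.045, 0.055), "IHR", (0.30, 0.40)),
    "Intermediate 2": (50, (0.095, 0.105), "IHR", (0.45, 0.55)),
    "Recent H5-like": (50, (0.60, 0.70),   "IHR", (0.75, 0.85)),
}
BASE_SYMPTOMATIC = (0.40, 0.80)


def midpoint(bounds):
    return (bounds[0] + bounds[1]) / 2


def scale_range(bounds, uplift_pct):
    """Raise both endpoints by uplift_pct percent (relative), capped at 100%."""
    factor = 1.0 + uplift_pct / 100.0
    return tuple(min(b * factor, 1.0) for b in bounds)


def presentation_probs(name):
    uplift, hosp, basis, icu_share = SCENARIOS[name]
    symptomatic = scale_range(BASE_SYMPTOMATIC, uplift)
    # A CHR applies to symptomatic cases; an IHR already applies to all infections.
    p_hosp = midpoint(hosp) * (midpoint(symptomatic) if basis == "CHR" else 1.0)
    p_icu = p_hosp * midpoint(icu_share)
    return symptomatic, p_hosp, p_icu


for name in SCENARIOS:
    symptomatic, p_hosp, p_icu = presentation_probs(name)
    print(f"{name:15s} symptomatic {symptomatic[0]:.0%}-{symptomatic[1]:.0%}, "
          f"hospital {p_hosp:.4f}, ICU {p_icu:.4f}")
```

The key distinction is the denominator: the baseline hospital range is a case‐hospitalization ratio and must be multiplied by the symptomatic proportion, whereas the other scenarios specify infection‐hospitalization ratios that already apply to every infection.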

We also considered scenarios in which testing practices changed according to seasonal influenza activity. For example, clinicians may be less likely to test for influenza viruses during summer months when background respiratory virus activity is low. To explore this, we first defined distinct probability distributions for the background rates of presentation to each healthcare setting, $p_{\text{bg}}$, during peak (November–February) and off‐peak (May–August) time periods (Table 3). We then simulated the model for each time period and healthcare setting, assuming that the care‐seeking behavior of novel influenza cases did not change but that testing in off‐peak periods was either equal to, or 50% of, testing in peak periods.

TABLE 3.

Baseline occurrence of ILI or ARI symptoms partitioned by peak versus off‐peak activity.

| Parameter | Range of uniform distribution | Period | Source and available timeframe |
|---|---|---|---|
| Occurrence of: | | | |
| ILI^a | 1.0%–6.0% | Peak | FNY, ONM: 2019, 2022 |
| | 0.6%–2.5% | Off‐peak | |
| Hospital ARI admission | 0.04%–0.10% of general population | Peak | MarketScan: 2015–2021 |
| | 0.03%–0.09% of general population | Off‐peak | |
| ICU ARI admission | 0.014%–0.035% of general population | Peak | MarketScan: 2015–2021 |
| | 0.010%–0.030% of general population | Off‐peak | |

Abbreviations: ARI = acute respiratory illness, ICU = intensive care unit, ILI = influenza‐like‐illness, FNY = Flu Near You, ONM = Outbreaks Near Me.

^a Background ILI occurrence is multiplied by care‐seeking probabilities in urgent care or emergency departments ($p_{\text{seek}}$) to estimate the rate of presentation to urgent care or emergency departments in the general population. We omitted data from 2020 and 2021 due to atypically low levels of respiratory virus circulation.
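A small Python sketch of the peak versus off‐peak comparison for the UC/ED setting. It is illustrative only: it assumes midpoints of the Table 3 ILI ranges (3.5% peak, 1.55% off‐peak) and of the Table 1 UC/ED ranges, keeps the care‐seeking of novel cases fixed across periods, and halves the testing probability for the reduced‐testing case. The function name `uc_ed_month` is hypothetical.

```python
def uc_ed_month(ili_occurrence, p_test, n_cases=100, population=330e6,
                p_symp=0.60, p_seek=0.15, p_v=0.675, p_sens=0.90, p_f=0.50):
    """Return (P, T, E) for UC/ED; novel-case care-seeking is the same in every period."""
    p_c = p_symp * p_seek * p_test
    p_detect = p_c * p_v * p_sens * p_f
    p_nc = ili_occurrence * p_seek * p_test        # background presentation * testing
    prob_any = 1.0 - (1.0 - p_detect) ** n_cases
    n_tests = n_cases * p_c + (population - n_cases) * p_nc
    efficiency = 100_000 * n_cases * p_detect / n_tests
    return prob_any, n_tests, efficiency


periods = {
    "Peak": uc_ed_month(ili_occurrence=0.035, p_test=0.50),
    "Off-peak, equal testing": uc_ed_month(ili_occurrence=0.0155, p_test=0.50),
    "Off-peak, 50% testing": uc_ed_month(ili_occurrence=0.0155, p_test=0.25),
}
for label, (prob_any, n_tests, eff) in periods.items():
    print(f"{label:24s} P = {prob_any:.2f}, T = {n_tests:,.0f}, E = {eff:.3f}")
```

With equal testing, the detection probability is unchanged (it does not depend on background illness) while efficiency rises because fewer background tests are run; halving testing halves $p_{\text{detect}}$ and lowers the probability of detection.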

3. Results

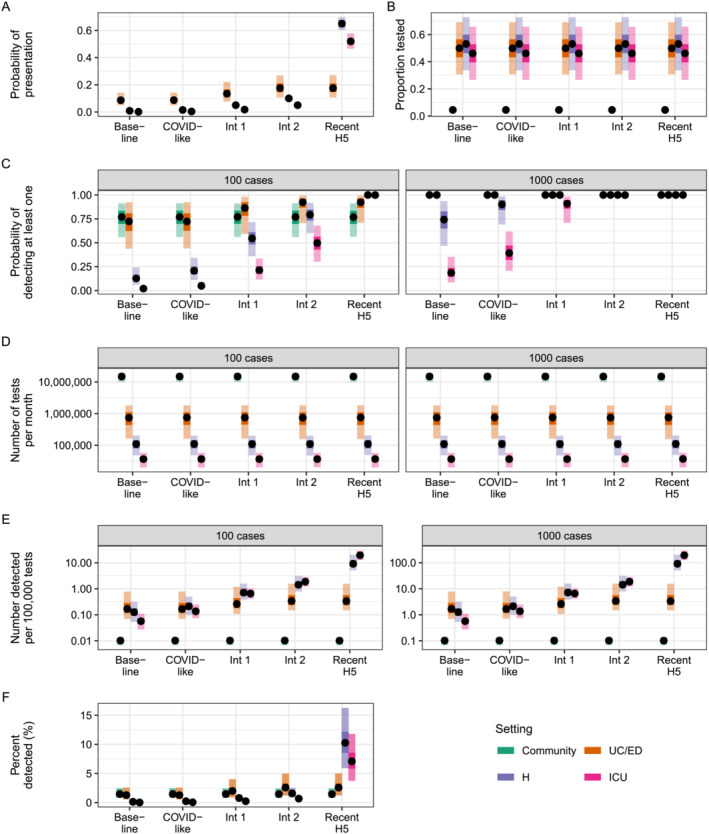

We first simulated the model with baseline severity assumptions and no distinction between peak and off‐peak time periods. At the lowest incidence (100 novel cases in the population), the median probability of detecting at least one case was highest in community and UC/ED settings, at 77% (95% interval: 56%–91%) and 72% (44%–92%), respectively (Figure 1C; Baseline scenario). In comparison, median detection probabilities in hospital and ICU settings were less than 15%. The probability of detection increased across all settings when there were 1000 assumed novel cases in the population, to 100% (100%–100%) in UC/EDs and the community, 74% (47%–94%) in hospitals, and 19% (9%–35%) in ICUs. Testing in UC/ED settings was always the most efficient, detecting more cases per 100,000 tests than the other settings (Figure 1E). Notably, community testing was least efficient due to the greater number of tests required (more than 10 million per month; Figure 1D), and no setting detected more than 3% of all novel cases under our assumptions (Figure 1F). Increasing the percentage of influenza‐positive specimens forwarded to public health laboratories to 75% or 100% increased detection probabilities and test efficiency across all healthcare settings (Figure S1). For example, the median detection probability in UC/EDs at the lowest incidence increased to 85% (58%–98%) and 92% (69%–99%), respectively. Conversely, decreasing the percentage forwarded to 25% decreased detection probabilities and test efficiencies, although the relative ordering of setting efficiency was preserved. Thus, for a novel influenza virus with similar severity to seasonal influenza, UC/ED settings are likely to provide the greatest opportunities for case detection.

FIGURE 1.

Probabilities of detection and test usage under different severity scenarios. (A) Assumed probabilities of presentation to a particular setting, calculated as $p_{\text{symp}} \times p_{\text{seek}}$ for UC/ED, hospital, and ICU settings. All cases are assumed to be in the community, resulting in a probability of one for that setting (not shown). (B) Assumed proportion of individuals with ILI or ARI tested in UC/ED, hospital and ICU settings, or proportion of all individuals tested in the community. (C) Estimated probability of detecting at least one novel case per month. Panels indicate different assumed levels of incidence (100 and 1000 novel cases). (D) Expected number of clinical tests used per month. (E) Estimated test efficiency, calculated as the number of detected novel cases per 100,000 tests. (F) Percent of all novel cases detected per month. In all panels, points represent median values across 10,000 simulations, inner shaded bands show 50% intervals, and outer shaded bands show 95% intervals. ARI = acute respiratory illness; ED = emergency department; H = hospital; ICU = intensive care unit; ILI = influenza‐like illness; Int 1 = Intermediate 1; Int 2 = Intermediate 2; UC = urgent care.

Given uncertainty in the potential severity of a novel influenza virus, we explored additional scenarios in which cases were more likely to develop symptoms and/or present to a particular healthcare setting than in the baseline severity scenario (Table 2 and Figure 1A). As severity increased, the probability of detection also increased across all healthcare settings due to the greater probability of requiring medical attention (Figure 1C). The difference between detection probabilities in UC/ED compared with hospital and ICU settings also decreased as cases became more likely to be severe and require admission to the latter. For example, median detection probabilities for ICU settings increased from 2% (1%–4%) and 19% (9%–35%) at baseline with 100 and 1000 novel cases, respectively, to 100% (98%–100%) and 100% (100%–100%) in the Recent H5‐like scenario. There were also substantial increases in testing efficiency in hospital and ICU settings (Figure 1E) and increases in the percent of novel cases detected (for example, from a maximum of 0.3% in hospital settings at baseline to 16% in the Recent H5‐like scenario; Figure 1F). Test usage is driven primarily by background seasonal influenza virus testing and thus did not change across severity scenarios (Figure 1D). Simulating an increase in clinical testing probabilities ($p_{\text{test}}$ mean = 90%) substantially increased detection probabilities and test usage for all healthcare settings but did not change the relative performance among settings (Figure S2).
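A quick arithmetic check, in Python, of why test usage barely moves across severity scenarios: even with 1000 novel cases, tests on novel cases are a small fraction of all hospital tests. The inputs are illustrative assumptions: midpoints of the hospital rows in Tables 1 and 2 (background ARI admissions 0.065%, mean $p_{\text{test}}$ of 53%, and a 65% infection‐hospitalization ratio for the Recent H5‐like scenario); the helper name is hypothetical.

```python
def case_share_of_tests(n_cases, p_c, population, p_nc):
    """Fraction of all expected clinical tests that are performed on novel cases."""
    case_tests = n_cases * p_c
    background_tests = (population - n_cases) * p_nc
    return case_tests / (case_tests + background_tests)


N = 330e6
p_test_hosp = 0.53                          # mean hospital testing probability
p_nc_hosp = 0.00065 * p_test_hosp           # midpoint hospital ARI admissions * p_test

baseline_p_c = 0.60 * 0.015 * p_test_hosp   # p_symp * CHR-based p_seek * p_test
h5_p_c = 0.65 * p_test_hosp                 # IHR already applies to all infections

shares = {
    label: case_share_of_tests(1000, p_c, N, p_nc_hosp)
    for label, p_c in (("Baseline", baseline_p_c), ("Recent H5-like", h5_p_c))
}
for label, share in shares.items():
    print(f"{label:15s} share of hospital tests from novel cases: {share:.4%}")
```

Under these inputs novel cases account for well under 1% of hospital tests even in the most severe scenario, so total test usage is set almost entirely by background respiratory illness.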

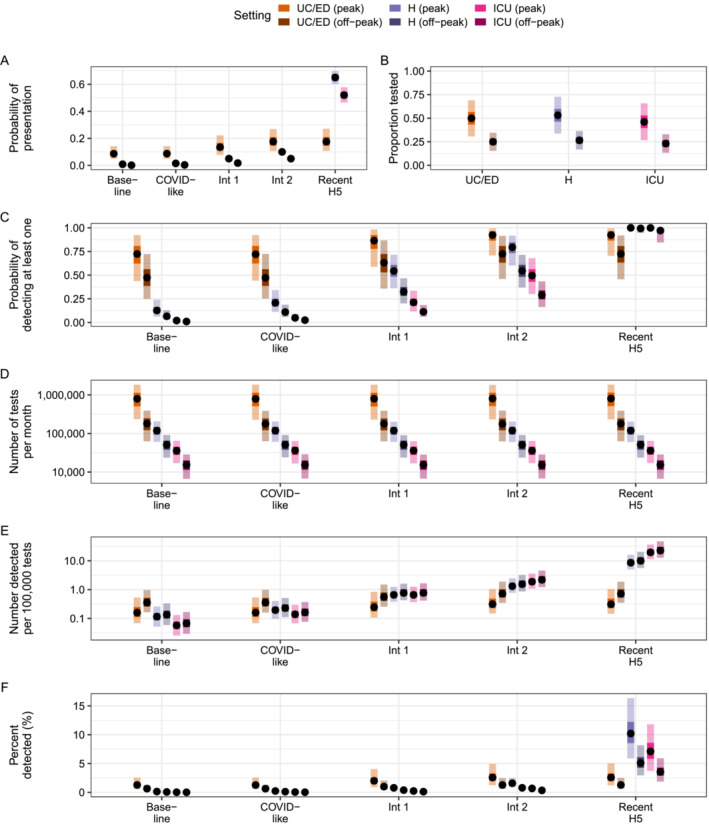

Finally, we assessed how seasonal changes in background activity could impact probabilities of case detection and testing efficiency. Assuming testing practices did not change seasonally led to equal probabilities of detection in peak and off‐peak periods, although testing efficiencies were increased in off‐peak periods due to the lower number of background tests conducted (Figure S3). Conversely, assuming a 50% reduction in testing across all healthcare settings in off‐peak periods (Figure 2B) reduced the corresponding probabilities of detection (Figure 2C). However, for the most severe scenarios (Intermediate 1, Intermediate 2, and Recent H5), there was always at least one healthcare setting with a median detection probability greater than 60% in off‐peak periods at the lowest incidence.

FIGURE 2.

Probabilities of detection and test usage in healthcare settings assuming reduced testing probabilities during periods of off‐peak seasonal activity. Incidence is fixed at 100 novel cases in the population. (A) Assumed probabilities of presentation to a particular setting, calculated as $p_{\text{symp}} \times p_{\text{seek}}$. Ranges are constant in peak and off‐peak periods. (B) Assumed proportion of individuals with ILI or ARI tested in peak and off‐peak periods. Ranges are constant across severity scenarios. (C) Estimated probability of detecting at least one novel case per month. (D) Expected number of clinical tests used per month. (E) Estimated test efficiency, calculated as the number of detected novel cases per 100,000 tests. (F) Percent of all novel cases detected per month. In all panels, points represent median values across 10,000 simulations, inner shaded bands show 50% intervals, and outer shaded bands show 95% intervals. ARI = acute respiratory illness; ED = emergency department; H = hospital; ICU = intensive care unit; ILI = influenza‐like illness; Int 1 = Intermediate 1; Int 2 = Intermediate 2; UC = urgent care.

4. Discussion

We modeled the likelihood of detection of novel influenza virus cases occurring at low incidence in the United States. We adapted a simple probabilistic framework that accounted for symptom severity, care‐seeking behavior, and testing practices in different healthcare settings, and used care‐seeking and testing information from recent influenza seasons to inform model parameters. We found that the most efficient setting for detection depends on the severity profile of the novel influenza virus. Although the percent of total novel influenza cases detected was relatively low, the probabilities of detecting at least one case, and thus identifying novel influenza virus circulation, were high in at least one setting across a range of different testing, severity, and specimen forwarding assumptions.

The high probabilities of detecting at least one case that we have estimated here are relevant for public health pandemic preparedness. The detection of one case would facilitate the implementation of public health actions including increased testing strategies, further virus characterization, vaccine development (if warranted), the implementation of appropriate public health control measures, and updated recommendations for the use of influenza antiviral medications. One key parameter influencing the detection probability was the probability of testing in each healthcare setting. We found that detection probabilities could decrease if influenza testing is substantially reduced below in‐season values (for example, during off‐peak months). However, it is also possible that clusters of cases and outbreaks could be more likely to be detected and tested during off‐peak months if clinicians remain vigilant for evidence of atypical respiratory virus signs or symptoms. The detection probability was also influenced by assumptions about the forwarding of clinical specimens. Our baseline value of 50% was informed by subtyping information from hospitalized influenza infections between 2010 and 2019 [6]. However, we included a lower bound of 25% to reflect recent post–COVID‐19 pandemic trends and potentially reduced forwarding in UC/ED outpatient settings [23]. We also included higher values up to 100% to explore maximum attainable detection probabilities if all tests were forwarded and found a substantial improvement in our estimates. Therefore, during the current H5N1 situation, it is critical that clinicians maintain high testing frequencies and forward influenza A positive specimens to public health laboratories for further testing when recommended. 
Finally, given the severity of prior H5N1 cases (for example, there has been a 50% case‐fatality proportion in cases identified since 1997 [1]), additional strategies to increase testing in ICU settings may help increase the likelihood of detection and testing efficiency, particularly during summer months when background acute respiratory illness rates are low.

Although the probability of detecting one case was generally high, the percent of total cases detected was low, especially in the lower severity scenarios. This finding assumes there are no immediate changes to testing or healthcare seeking behavior once the first case is detected and arises because detection of influenza through clinical settings requires someone to become symptomatic, seek care, be tested in a timely manner, and have a positive specimen forwarded for further characterization. Although community testing removes these barriers to identification, it is resource intensive and would need to occur even in the absence of perceived novel influenza virus spread to be effective, potentially requiring over 100 million tests per year at the level modeled in this analysis. Similarly, at‐home or self‐administered tests could alleviate issues associated with care‐seeking and clinical testing practices. However, such tests would need to be specific to the novel influenza virus and undergo potentially lengthy development and authorization procedures before being available for widespread use. Pandemic planning efforts should therefore include strategies to rapidly increase testing of acute respiratory illness cases in clinical settings once human‐to‐human spread of a novel influenza virus has been identified or is likely. Such strategies should account for the possibility that many cases may not be detected, even with increased testing.
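As a back‐of‐the‐envelope check of the annual volume, using the community testing range in Table 1 (3%–6% of the population per month) and N = 330 million:

$$T_{\text{year}} = 12 \times p_c \times N \in \left[12 \times 0.03 \times 330\times10^{6},\; 12 \times 0.06 \times 330\times10^{6}\right] \approx \left[1.2\times10^{8},\; 2.4\times10^{8}\right],$$

so even the lower end of the modeled range exceeds 100 million tests per year.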

There are several caveats to our modeling framework. First, as our primary aim was to estimate detection capabilities once sustained human‐to‐human transmission is occurring within the United States, we did not consider surveillance for earlier events that might spark such transmission, such as spillover from infected animals or introductions from outside the United States. It is possible that these events would be associated with a greater probability of testing due to relevant exposure histories and thus have a greater likelihood of being detected compared with our estimates. Second, we did not stratify detection probabilities by age. The severity of seasonal influenza can vary substantially among different age groups [27], and age patterns of severity may differ for a novel influenza virus compared to seasonal influenza viruses due to immunological imprinting and age‐related exposures to previous circulating viruses [28, 29]. Age may also impact testing probabilities and healthcare seeking behavior [30, 31], although mean testing probabilities for children < 18 years were similar to those of adults ≥ 18 years in the VISION data used to parameterize $p_{\text{test}}$ (for example, 55% vs. 50% in UC/ED for children and adults, respectively). Including age in the current framework would require additional assumptions regarding the cross‐reactivity of the novel influenza virus with seasonal influenza viruses to infer age‐specific severity distributions and thus reduce the generalizability of our results. Third, we did not explicitly incorporate delays in case admission to hospital or ICU that could reduce the window for viable virus detection relative to other settings. These delays are likely on the order of several days and are captured within our conservative range for the proportion of care‐seekers who are tested while virus is still detectable [32].
Fourth, we modeled the United States as a single population and did not explicitly consider spatial or other heterogeneities in care‐seeking and testing practices. If such data were available, our analysis could be replicated at finer resolution to assess local response and detection capabilities. Fifth, data were not available to fully inform our test forwarding assumptions. Although we considered a range in sensitivity analyses, further information would increase the accuracy of our detection probability estimates. We also assumed perfect sensitivity and specificity for all forwarded tests in line with evaluation of real‐time RT‐PCR tests for novel H1N1 variant influenza viruses [33]. Although minor reductions in sensitivity should not substantially impact our detection probability estimates, reductions in specificity could lead to false positive results that we have not considered. However, the number of false positive results is likely to be small unless testing reaches extremely high levels, such as considered here in the community setting.
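To illustrate why specificity matters mainly at community‐testing volumes, the Python sketch below computes expected false positives as (tests among people without novel infection) × (1 − specificity). The specificity values are hypothetical (the analysis itself assumed perfect specificity), and the monthly volume uses the midpoint of the 3%–6% community range in Table 1 applied to N = 330 million.

```python
def expected_false_positives(n_tests_noncases: float, specificity: float) -> float:
    """Expected false-positive results among tests of people without novel infection."""
    return n_tests_noncases * (1.0 - specificity)


COMMUNITY_TESTS = 0.045 * 330e6   # midpoint community volume, about 14.85 million per month

fp = {spec: expected_false_positives(COMMUNITY_TESTS, spec) for spec in (0.999, 0.9999, 0.99999)}
for spec, n_fp in fp.items():
    print(f"specificity {spec:.3%}: ~{n_fp:,.0f} false positives per month")
```

With only hundreds of true novel cases in the population, even 99.99% specificity at this volume would yield on the order of a thousand false positives per month, whereas forwarded specimens from healthcare settings number far fewer.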

Finally, our assumed inputs for baseline testing and background activity were informed by previous influenza seasons and may not reflect future changes to these values. Where possible, we developed parameter distributions based on data from multiple influenza seasons, before and after the COVID‐19 pandemic, to account for broad fluctuations in care‐seeking behavior, testing practices, and seasonal influenza dynamics. We also explored scenarios with increased testing to capture the potential impacts of changes to healthcare surveillance following additional policy recommendations. More generally, our estimates of detection probabilities and test efficiency reflect the combined uncertainty in each underlying parameter value and should thus be robust to small changes in any single parameter.

Novel influenza viruses pose a potential pandemic risk, and prompt detection is critical to characterizing the virus causing the infection and facilitating a rapid public health response. Here we demonstrate how a simple probabilistic framework can be used to estimate novel influenza virus detection probabilities through testing in different community and healthcare settings, and can help inform the targeting of future testing efforts. Our work was motivated by the 2022–2024 H5N1 situation in the United States but could be applied more broadly to other locations and/or other potential novel influenza viruses.

Author Contributions

Sinead E. Morris: conceptualization, formal analysis, investigation, methodology, visualization, writing–original draft, writing–review and editing. Matthew Gilmer: data curation, writing–review and editing. Ryan Threlkel: data curation, writing–review and editing. Lynnette Brammer: conceptualization, writing–review and editing. Alicia P. Budd: conceptualization, writing–review and editing. A. Danielle Iuliano: data curation, writing–review and editing. Carrie Reed: conceptualization, investigation, supervision, writing–review and editing. Matthew Biggerstaff: conceptualization, investigation, methodology, supervision, writing–review and editing.

Conflicts of Interest

The authors declare no conflicts of interest.

Peer Review

The peer review history for this article is available at https://www.webofscience.com/api/gateway/wos/peer-review/10.1111/irv.13315.

Supporting information

Figure S1. Test forwarding, $p_f$, impacts probabilities of detection in healthcare settings under baseline assumptions. (A) Estimated probability of detecting at least one novel case per month. (B) Expected number of clinical tests used per month. (C) Estimated test efficiency, calculated as the number of detected novel cases per 100,000 tests. (D) Percent of all novel cases detected per month. In all panels, points represent median values across 10,000 simulations, inner shaded bands show 50% intervals, and outer shaded bands show 95% intervals. Abbreviations: ED = emergency department; H = hospital; ICU = intensive care unit; UC = urgent care.

Figure S2. Increasing mean healthcare testing probabilities, $p_{\text{test}}$, to 90% increases probabilities of detection across all healthcare settings and severity scenarios. The number of novel cases in the population is fixed at 100. (A) Estimated probability of detecting at least one novel case per month. Panels indicate different assumed testing probabilities (baseline values and increased values). (B) Expected number of clinical tests used per month. (C) Estimated test efficiency, calculated as the number of detected novel cases per 100,000 tests. (D) Percent of all novel cases detected per month. In all panels, points represent median values across 10,000 simulations, inner shaded bands show 50% intervals, and outer shaded bands show 95% intervals. Abbreviations: ED = emergency department; H = hospital; ICU = intensive care unit; Int 1 = Intermediate 1; Int 2 = Intermediate 2; UC = urgent care.

Figure S3. Probabilities of detection and test usage in healthcare settings assuming equal testing probabilities in periods of peak and off‐peak seasonal activity. The number of novel cases in the population is fixed at 100. (A) Assumed probabilities of presentation to a particular setting, calculated as $p_{\text{symp}} \times p_{\text{seek}}$. (B) Assumed proportion of individuals with ILI or ARI tested in peak and off‐peak periods. (C) Estimated probability of detecting at least one novel case per month. (D) Expected number of clinical tests used per month. (E) Estimated test efficiency, calculated as the number of detected novel cases per 100,000 tests. (F) Percentage of all novel cases detected per month. In all panels, points represent median values across 10,000 simulations, inner shaded bands show central 50% intervals, and outer shaded bands show central 95% intervals. Abbreviations: ARI = acute respiratory illness; ED = emergency department; H = hospital; ICU = intensive care unit; ILI = influenza‐like illness; Int 1 = Intermediate 1; Int 2 = Intermediate 2; UC = urgent care.
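The summary quantities that recur in these captions (presentation probability as $p_{\text{symp}} \times p_{\text{seek}}$, test efficiency per 100,000 tests, percentage of the 100 novel cases detected, and medians with shaded bands across 10,000 simulations) can be illustrated with a short sketch. This is not the authors' code: it assumes per-simulation arrays of detected cases and tests used are already available, and it treats the shaded bands as central 50% and 95% intervals. The function names (`efficiency_per_100k`, `percent_detected`, `presentation_probability`, `summarize`) and all toy inputs, including the binomial and Poisson draws, are hypothetical placeholders, not the study's model or parameter values.

```python
import numpy as np


def efficiency_per_100k(detected_cases, tests_used):
    """Detected novel cases per 100,000 tests, computed per simulation."""
    detected = np.asarray(detected_cases, dtype=float)
    tests = np.asarray(tests_used, dtype=float)
    return detected / tests * 100_000


def percent_detected(detected_cases, n_novel_cases=100):
    """Percentage of all novel cases in the population that were detected."""
    return np.asarray(detected_cases, dtype=float) / n_novel_cases * 100


def presentation_probability(p_symp, p_seek):
    """Probability that a novel case presents to a setting: p_symp * p_seek."""
    return p_symp * p_seek


def summarize(draws):
    """Median with central 50% and 95% intervals across simulation draws."""
    q = np.percentile(draws, [2.5, 25, 50, 75, 97.5])
    return {
        "median": q[2],
        "interval_50": (q[1], q[3]),
        "interval_95": (q[0], q[4]),
    }


if __name__ == "__main__":
    # Toy draws only: the binomial/Poisson choices and every number below are
    # hypothetical placeholders, not the study's model or parameter values.
    rng = np.random.default_rng(2024)
    n_sims = 10_000
    p_present = presentation_probability(p_symp=0.6, p_seek=0.3)
    detected = rng.binomial(n=100, p=p_present * 0.2, size=n_sims)
    tests = rng.poisson(lam=50_000, size=n_sims)
    print(summarize(efficiency_per_100k(detected, tests)))
    print(summarize(percent_detected(detected)))
```

Computing efficiency per simulation before summarizing, rather than dividing the median detections by the median tests, keeps the reported median and intervals consistent with how each caption describes the metric.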

The findings and conclusions in this report are those of the authors and do not necessarily represent the views of the Centers for Disease Control and Prevention.

Data Availability Statement

All model inputs and code needed to perform the analysis are available at https://github.com/CDCgov/novel-flu-detection. Data were used solely to inform model inputs and were the result of secondary analyses; the original sources are cited in the text.

References

- 1. CDC, “H5N1 Bird Flu: Current Situation Summary,” (2024), cited 2024 May 10, https://www.cdc.gov/flu/avianflu/avian-flu-summary.htm.

- 2. Kniss K., Sumner K. M., Tastad K. J., et al., “Risk for Infection in Humans After Exposure to Birds Infected With Highly Pathogenic Avian Influenza A(H5N1) Virus, United States, 2022,” Emerging Infectious Diseases 29, no. 6 (2023): 1215–1219.

- 3. CDC, “Pandemic Influenza,” (2023), cited 2024 May 10, https://www.cdc.gov/flu/pandemic-resources/index.htm.

- 4. NASPHV, “Zoonotic Influenza: Detection, Response, Prevention, and Control Reference Guide,” (2022), cited 2024 May 10, http://www.nasphv.org/documentsCompendiaZoonoticInfluenza.html.

- 5. Russell S., Ryff K., Gould C., Martin S., and Johansson M., “Detecting Local Zika Virus Transmission in the Continental United States: A Comparison of Surveillance Strategies,” PLoS Currents 9 (2017), https://doi.org/10.1371/currents.outbreaks.cd76717676629d47704170ecbdb5f820.

- 6. Sumner K., Masalovich S., O'Halloran A., et al., “Severity of Influenza‐Associated Hospitalisations by Influenza Virus Type and Subtype in the USA, 2010–19: A Repeated Cross‐Sectional Study,” Lancet Microbe 4, no. 11 (2023): e903–e912.

- 7. Wickham H., Averick M., Bryan J., et al., “Welcome to the Tidyverse,” Journal of Open Source Software 4, no. 43 (2019): 1686.

- 8. Pedersen T. L., “patchwork: The Composer of Plots. R Package Version 1.1.1,” (2020).

- 9. R Core Team, “R: A Language and Environment for Statistical Computing,” R Foundation for Statistical Computing (2020).

- 10. Dowle M., and Srinivasan A., “data.table: Extension of ‘data.frame’. R Package Version 1.14.2,” (2021).

- 11. Zeileis A., Fisher J. C., Hornik K., et al., “colorspace: A Toolbox for Manipulating and Assessing Colors and Palettes,” Journal of Statistical Software 96, no. 1 (2020): 1–49.

- 12. Mersmann O., Trautmann H., Steuer D., and Bornkamp B., “truncnorm: Truncated Normal Distribution. R Package Version 1.0.9,” (2023).

- 13. Müller K., “here: A Simpler Way to Find Your Files. R Package Version 1.0.1,” (2020).

- 14. Wickham H., and Seidel D. P., “scales: Scale Functions for Visualization. R Package Version 1.2.1,” (2022).

- 15. Cohen C., Kleynhans J., Moyes J., et al., “Asymptomatic Transmission and High Community Burden of Seasonal Influenza in an Urban and a Rural Community in South Africa, 2017–18 (PHIRST): A Population Cohort Study,” The Lancet Global Health 9, no. 6 (2021): e863–e874.

- 16. CDC, “Past Seasons Estimated Influenza Disease Burden,” (2023), cited 2023 April 17, https://www.cdc.gov/flu/about/burden/index.html.

- 17. UKHSA, “Investigation Into the Risk to Human Health of Avian Influenza (Influenza A H5N1) in England: Technical Briefing 3,” (2023).

- 18. Biggerstaff M., Jhung M. A., Reed C., Fry A. M., Balluz L., and Finelli L., “Influenza‐Like Illness, the Time to Seek Healthcare, and Influenza Antiviral Receipt During the 2010–2011 Influenza Season—United States,” The Journal of Infectious Diseases 210, no. 4 (2014): 535–544.

- 19. Ip D. K., Lau L. L., Leung N. H., et al., “Viral Shedding and Transmission Potential of Asymptomatic and Paucisymptomatic Influenza Virus Infections in the Community,” Clinical Infectious Diseases 64, no. 6 (2017): 736–742.

- 20. Millman A. J., Reed C., Kirley P. D., et al., “Improving Accuracy of Influenza‐Associated Hospitalization Rate Estimates,” Emerging Infectious Diseases 21, no. 9 (2015): 1595–1601.

- 21. Outbreaks Near Me, “COVID‐19 and Flu in Your Community,” (2023), cited 2023 October 20, https://outbreaksnearme.org/us/en-US.

- 22. CDC, “VISION Vaccine Effectiveness Network,” (2023), cited 2023 April 17, https://www.cdc.gov/flu/vaccines-work/vision-network.html.

- 23. CDC, “Influenza Hospitalization Surveillance Network (FluSurv‐NET),” (2023), cited 2023 April 17, https://www.cdc.gov/flu/weekly/influenza-hospitalization-surveillance.htm.

- 24. “IBM MarketScan® Commercial Claims and Encounters Database,” cited 2023 October 20, https://www.ibm.com/downloads/cas/OWZWJ0QO.

- 25. United States Census Bureau, “Census Data,” (2023), cited 2023 May 31, https://data.census.gov/.

- 26. CDC, “COVID Data Tracker,” (2023), cited 2023 April 17, https://covid.cdc.gov/covid-data-tracker/#datatracker-home.

- 27. Reed C., Chaves S. S., Daily Kirley P., et al., “Estimating Influenza Disease Burden From Population‐Based Surveillance Data in the United States,” PLoS ONE 10, no. 3 (2015): e0118369.

- 28. Shrestha S. S., Swerdlow D. L., Borse R. H., et al., “Estimating the Burden of 2009 Pandemic Influenza A (H1N1) in the United States (April 2009‐April 2010),” Clinical Infectious Diseases 52, Suppl 1 (2011): S75–S82.

- 29. Gostic K. M., Ambrose M., Worobey M., and Lloyd‐Smith J. O., “Potent Protection Against H5N1 and H7N9 Influenza Via Childhood Hemagglutinin Imprinting,” Science 354, no. 6313 (2016): 722–726.

- 30. Dalton A. F., Couture A., DeSilva M. B., et al., “Patient and Epidemiological Factors Associated With Influenza Testing in Hospitalized Adults With Acute Respiratory Illnesses, 2016–2017 to 2019–2020,” Open Forum Infectious Diseases 10 (2023): 10.

- 31. Baltrusaitis K., Reed C., Sewalk K., Brownstein J. S., Crawley A. W., and Biggerstaff M., “Healthcare‐Seeking Behavior for Respiratory Illness Among Flu Near You Participants in the United States During the 2015–2016 Through 2018–2019 Influenza Seasons,” The Journal of Infectious Diseases 226, no. 2 (2020): 270–277.

- 32. Tenforde M. W., Cummings C. N., O'Halloran A. C., et al., “Influenza Antiviral Use in Patients Hospitalized With Laboratory‐Confirmed Influenza in the United States, FluSurv‐NET, 2015–2019,” Open Forum Infectious Diseases 10, no. 1 (2023): ofac681.

- 33. Ellis J., Iturriza M., Allan R., et al., “Evaluation of Four Real‐Time PCR Assays for Detection of Influenza A(H1N1)v Viruses,” Eurosurveillance 14, no. 22 (2009): 19230.
