Practice guidelines are valid if “they lead to the health gains and costs predicted for them.”1 When implemented, valid guidelines lead to changes in clinical practice and improvements in outcomes for patients.2–5 Invalid guidelines, however, may lead to the use of ineffective interventions that waste resources, or even to harm.

Guidelines must offer recommendations for both effective and efficient care, and such guidelines have not previously been available in the United Kingdom. We have reported the development and content of guidelines for primary care in the United Kingdom based explicitly on evidence of effectiveness.6–9 Here, we present the methods used to develop evidence based guidelines on the use in primary care of four important groups of drugs: angiotensin converting enzyme inhibitors in patients with heart failure, choice of antidepressants, non-steroidal anti-inflammatory drugs in patients with osteoarthritis, and aspirin as an antithrombotic agent.10–13 Abridged versions of the guidelines on angiotensin converting enzyme inhibitors, aspirin, and non-steroidal anti-inflammatory drugs will be published in subsequent articles.14–16

Summary points

Guideline development groups defined important clinical questions, produced search criteria, and drew up protocols for systematic review and, where appropriate, meta-analysis

Medline and Embase were searched for systematic reviews and meta-analyses, randomised trials, quality of life studies, and economic studies

Meta-analysis was used extensively by the group to answer specific clinical questions

Statements on evidence were categorised in relation to study design, reflecting their susceptibility to bias

Strength of recommendations was graded according to the category of evidence and its applicability, economic issues, values of the guideline group and society, and the groups’ awareness of practical issues

Recommendations cease to apply in December 1999, by which time new results that may affect them are likely to be known

Guideline development groups

Guideline development groups comprised three broad classes of members—relevant healthcare professionals (up to five general practitioners (all with an interest and postgraduate training in primary care therapeutics), up to two hospital consultants, a health authority medical or pharmaceutical adviser, and a pharmacist); specialist resources (an epidemiologist (NF) and a health economist (JM)); and a specialist in guideline methodology and in leading small groups (ME). All group members were offered reimbursement of their travelling expenses and general practitioners could also claim for any expenses incurred in employing a locum.

Evidence: identification and overview

As a first step, the guideline development groups defined a set of clinical questions within the area of the guideline. This ensured that guideline development work done outside the meetings focused on issues that practitioners considered important, and it provided the criteria for the search and the protocol for systematic review and, where appropriate, meta-analysis.

Search strategy

Searches were undertaken using Medline and, where appropriate, Embase. Using a combination of subject heading and free text terms, the search strategies located systematic reviews and meta-analyses, randomised trials, quality of life studies, and economic studies. Further details of the specific search strategies are provided in the full versions of the guidelines.10–13 Recent high quality review articles, bibliographies, and contacts with experts were used extensively. New searches concentrated on areas where existing systematic reviews could not provide valid or up to date answers. The search strategy was backed up by the expert knowledge and experience of group members.

Synthesising published reports

We assessed the quality of relevant studies retrieved and their ability to provide valid answers to the questions posed. Assessment of the quality of studies considered issues of internal, external, and construct validity.17 The criteria used are shown in the box. Once individual papers had been assessed for methodological rigour and clinical importance, the information was synthesised.

Criteria for assessing quality of randomised trials

Appropriateness of inclusion and exclusion criteria

Concealment of allocation

Blinding of patients

Blinding of health professionals

Objective or blind method of data collection

Valid or blind method of data analysis

Completeness and length of follow up

Appropriateness of outcome measures

Statistical power of results

Describing evidence

We used meta-analysis to summarise and describe the results of studies, conducting analyses to answer specific questions raised by the guideline development groups. Our primary aim was to provide valid estimates of treatment effects using approaches that provided results in a form that could best inform treatment recommendations.

Meta-analyses statistically combine the results from similar studies and provide a weighted average of the studies' estimates of effect. The most important criterion for combining studies is that their combination makes practical sense, so that the results are interpretable. Statistical procedures for meta-analysis of different types of outcome are essentially analogous: all rely on large sample theory and differ mainly in how standard errors are calculated and bias is corrected.18 Fixed effects models assume a common underlying effect and weight each study by the inverse of its variance. Random effects models assume a distribution of effects and incorporate this heterogeneity into the overall estimate of effect and its precision.19,20 Decisions on whether fixed or random effects models were appropriate were based primarily on a priori assumptions about the construct being tested in each case. Where heterogeneity between studies was identified, we also routinely reported random effects results.
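The article does not spell out the pooling formulas. As a minimal sketch, assuming each study contributes an effect estimate on an additive scale (for example a log odds ratio or a risk difference) together with its variance, the code below shows inverse variance fixed effect pooling and the DerSimonian and Laird moment estimator for the random effects model. The function name `pool_inverse_variance` is illustrative only; this is not the software the group used.

```python
import math


def pool_inverse_variance(estimates, variances):
    """Fixed effect (inverse variance) and DerSimonian-Laird random effects pooling.

    estimates: per-study effects on an additive scale (e.g. log odds ratios)
    variances: the corresponding within-study variances
    """
    k = len(estimates)
    if k != len(variances) or k < 2:
        raise ValueError("need at least two studies with matching variances")

    # Fixed effect: weight each study by the inverse of its variance.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum_w
    se_fixed = math.sqrt(1.0 / sum_w)

    # Cochran's Q: weighted dispersion of the studies around the fixed estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))

    # DerSimonian-Laird moment estimate of between-study variance, truncated at 0.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c)

    # Random effects: add tau^2 to every within-study variance before weighting.
    w_re = [1.0 / (v + tau2) for v in variances]
    sum_w_re = sum(w_re)
    re_estimate = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sum_w_re
    se_random = math.sqrt(1.0 / sum_w_re)

    return {"fixed": fixed, "se_fixed": se_fixed, "q": q, "tau2": tau2,
            "random": re_estimate, "se_random": se_random}
```

When the studies agree closely, Q does not exceed its degrees of freedom, tau² is truncated at zero, and the two models give identical answers; when they disagree, the random effects standard error widens to reflect the extra between-study variation.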

Publication bias

Publication bias and missing data can substantially undermine the validity of meta-analyses.21 Besides using sensitive search strategies, we went to considerable lengths to obtain missing data from the trials identified. We wrote to investigators and to the companies sponsoring the trials, and followed up non-respondents with further letters and, where appropriate, other forms of communication.

Binary outcomes

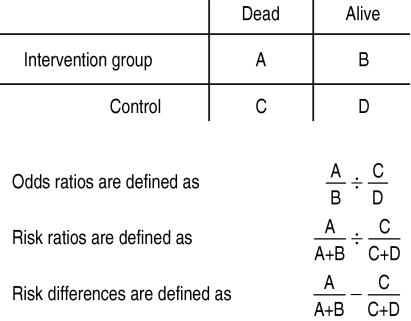

Meta-analysis of binary data, such as the number of deaths in a randomised trial, enables the results of a group of trials to be expressed in several ways (box). The pooled odds ratio is a statistically robust measure but is hard to interpret clinically; risk ratios are easier to interpret. Both are inadequate for exploring the practical implications of interventions in primary care. Risk differences are less helpful for exploring underlying effects, but are useful for describing the importance of the effects of an intervention in practice. Pooled risk differences can be adjusted for time of exposure when reviews include trials of varying lengths. This provides estimates of annual risk that can also be expressed as numbers needed to treat.22
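One simple way to put trials of different lengths on a common footing is sketched below. It assumes, purely for illustration, that risk accumulates roughly linearly over follow up, so each trial's risk difference (and its binomial variance) is scaled to a per-year value before fixed effect pooling; the adjustment actually used in the guidelines may differ. `Trial`, `annual_risk_difference`, and `pooled_annual_nnt` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Trial:
    events_treated: int
    n_treated: int
    events_control: int
    n_control: int
    years: float  # length of follow up in years


def annual_risk_difference(trial):
    """Per-year risk difference (treated minus control) and its variance.

    Simplifying assumption: risk accrues roughly linearly over follow up, so the
    trial-level risk difference is divided by the years of follow up and its
    variance by the square of the years.
    """
    p1 = trial.events_treated / trial.n_treated
    p0 = trial.events_control / trial.n_control
    rd = p1 - p0
    var = p1 * (1 - p1) / trial.n_treated + p0 * (1 - p0) / trial.n_control
    if var == 0:
        # No variability (e.g. no events in either arm): a continuity correction
        # would be needed, which this sketch does not implement.
        raise ValueError("zero variance; continuity correction needed")
    return rd / trial.years, var / trial.years ** 2


def pooled_annual_nnt(trials):
    """Inverse variance pooled annual risk difference and number needed to treat per year."""
    pairs = [annual_risk_difference(t) for t in trials]
    weights = [1.0 / var for _, var in pairs]
    pooled = sum(w * rd for w, (rd, _) in zip(weights, pairs)) / sum(weights)
    nnt = math.inf if pooled == 0 else 1.0 / abs(pooled)
    return pooled, nnt
```

For example, a pooled annual risk difference of −0.05 corresponds to treating 20 patients for one year to prevent one event.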

Meta-analysis of binary data

Worked example: In the Studies of Left Ventricular Dysfunction (SOLVD) treatment trial of enalapril in patients with heart failure, there were 452 deaths among 1285 patients randomised to receive enalapril and 510 deaths among 1284 patients allocated to placebo by the end of the four years' follow up.25,26 In a two by two table these data give an odds ratio of 0.82, a risk ratio of 0.89, and a risk difference of −0.045 (an absolute reduction of 4.5% in the risk of death).
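The arithmetic in the worked example can be checked with a few lines of Python. The helper `two_by_two` is a hypothetical name, and the number needed to treat here is simply 1/|risk difference| over the whole trial follow up, with no adjustment for time.

```python
def two_by_two(events_t, n_t, events_c, n_c):
    """Odds ratio, risk ratio, risk difference and NNT for treatment versus control."""
    risk_t = events_t / n_t
    risk_c = events_c / n_c
    odds_t = events_t / (n_t - events_t)
    odds_c = events_c / (n_c - events_c)
    rd = risk_t - risk_c
    return {
        "odds_ratio": odds_t / odds_c,
        "risk_ratio": risk_t / risk_c,
        "risk_difference": rd,
        "nnt": 1 / abs(rd),  # over the full follow up, not per year
    }


# SOLVD treatment trial figures from the worked example
result = two_by_two(452, 1285, 510, 1284)
print(f"odds ratio {result['odds_ratio']:.2f}")
print(f"risk ratio {result['risk_ratio']:.2f}")
print(f"risk difference {result['risk_difference']:.3f}")
print(f"number needed to treat {result['nnt']:.0f}")
```

With the SOLVD figures this reproduces the odds ratio of 0.82, risk ratio of 0.89, and risk difference of −0.045 quoted above, and gives a number needed to treat of about 22 over the trial's follow up.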

Continuous outcomes

Where continuous outcomes are measured in the same way in different studies, meta-analysis can be used to calculate a weighted mean difference. If studies do not measure an outcome on a common metric, because different instruments are used or because poor reliability between raters is likely, a standardised score based on the variance within each study may be calculated for each trial. This approach, for example, enables the statistical pooling of outcomes expressed in different versions of the Hamilton depression rating scale, of which the 17 and 21 item forms are commonly used. We used the straightforward approach proposed by Hedges and Olkin,23 in which the variance estimate is pooled from the intervention and control groups and the effect size is corrected for bias due to small sample size.
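A minimal sketch of the standardised mean difference with the Hedges and Olkin small sample correction follows. It assumes each trial reports a mean, standard deviation, and size for each arm; the correction factor uses the common approximation J = 1 − 3/(4(n_t + n_c) − 9), and the variance is a standard large sample approximation. `hedges_g` is a hypothetical name.

```python
import math


def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Bias-corrected standardised mean difference (treatment minus control).

    Returns (g, approximate variance of g).
    """
    df = n_t + n_c - 2
    # Standard deviation pooled from the intervention and control groups.
    sd_pooled = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / sd_pooled
    # Small sample correction: 4*df - 1 equals 4*(n_t + n_c) - 9.
    j = 1 - 3 / (4 * df - 1)
    g = j * d
    # Large sample approximation to the variance of the effect size.
    var_g = (n_t + n_c) / (n_t * n_c) + g ** 2 / (2 * (n_t + n_c))
    return g, var_g
```

Because g is unit-free, trials scored on the 17 and 21 item Hamilton forms can be placed on the same scale and pooled with inverse variance weights using the returned variance. On a depression scale, where lower scores are better, a negative g favours the treatment group.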

Economic analysis

The guidelines include systematic appraisals of the effectiveness, compliance, safety, health service resource use, and costs of medical interventions in British general practice. The economic analysis is presented in a straightforward manner, showing the possible bounds of cost effectiveness that may result from treatment. Lower and higher estimates of cost effectiveness reflect the available evidence and the concerns of the guideline development group. Because economic analyses are susceptible to bias through the methods used, we avoided making strong statements where uncertainty existed. However, the simple presentation allows the analysis to be reworked easily with values different from those used by the group. This practice reflects group members' desire for understandable and robust information on which to base recommendations.
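The idea of reporting bounds rather than a single figure can be illustrated with a short sketch: an incremental cost effectiveness ratio (extra cost divided by extra health gain) is computed under a favourable and an unfavourable set of assumptions. The inputs, the choice of health outcome, and the function names are all hypothetical; the guidelines' own analyses may be structured differently.

```python
def cost_per_unit_gain(extra_cost, health_gain):
    """Incremental cost divided by incremental health gain (e.g. events avoided)."""
    if health_gain <= 0:
        # With zero or negative gain the ratio cannot be read as cost per unit gained.
        raise ValueError("health gain must be positive")
    return extra_cost / health_gain


def cost_effectiveness_bounds(favourable, unfavourable):
    """Return (lower, higher) ratios from two (extra_cost, health_gain) scenarios."""
    ratios = sorted([cost_per_unit_gain(*favourable), cost_per_unit_gain(*unfavourable)])
    return ratios[0], ratios[1]


# Hypothetical inputs per 1000 patients treated for a year: (extra cost, events avoided)
low, high = cost_effectiveness_bounds((20000.0, 10.0), (30000.0, 5.0))
print(f"cost per event avoided between {low:.0f} and {high:.0f}")
```

Reworking the analysis with different values means changing only the two input pairs.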

Presenting a review of previous economic analyses that have adopted differing perspectives, analytic techniques, and baseline data was not considered helpful. However, economic reports were reviewed to compare the findings of the guideline project with representative published economic analyses and to interpret any differences that occurred.

Categorising evidence

Summarised evidence was categorised according to study design, reflecting its susceptibility to bias. The box shows the categories in descending order of importance. Categories of evidence were adapted from the classification of the United States Agency for Health Care Policy and Research.24 Questions were answered using the best evidence available. If, for example, a question on the effect of an intervention could be answered by category I evidence, then studies of weaker design (such as controlled studies without randomisation) were not reviewed. This categorisation is most appropriate to questions about causal relations; similar taxonomies for other types of research question do not yet exist.

Categories of evidence

Ia: Evidence from meta-analysis of randomised controlled trials

Ib: Evidence from at least one randomised controlled trial

IIa: Evidence from at least one controlled study without randomisation

IIb: Evidence from at least one other type of quasi-experimental study

III: Evidence from descriptive studies, such as comparative studies, correlation studies, and case-control studies

IV: Evidence from expert committee reports or opinions or clinical experience of respected authorities, or both

Strength of recommendation

Recommendations were derived by informal consensus and reflect the certainty with which the effectiveness and cost effectiveness of a medical intervention can be recommended. Recommendations are based on consideration of the strength of evidence, the applicability of the evidence to the population of interest, economic considerations, the values of the guideline developers and society, and the guideline developers' awareness of practical issues. Although interpreting evidence inevitably involves value judgments, we clarified the basis of these judgments as far as possible by making the process explicit. The relation between the strength of a recommendation and the category of evidence is shown in the box.

Strength of recommendation

A: Directly based on category I evidence

B: Directly based on category II evidence or extrapolated recommendation from category I evidence

C: Directly based on category III evidence or extrapolated recommendation from category I or II evidence

D: Directly based on category IV evidence or extrapolated recommendation from category I, II, or III evidence
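The mapping in the box between categories of evidence and grades of recommendation can be encoded as a small lookup. This sketch returns only the strongest grade the scheme permits; how far an extrapolated recommendation is downgraded beyond that remained a matter for the group's informal consensus. The names `CATEGORY_LEVEL` and `strongest_grade` are hypothetical.

```python
# Evidence categories collapsed to their level (Ia and Ib are both category I, etc.)
CATEGORY_LEVEL = {"Ia": 1, "Ib": 1, "IIa": 2, "IIb": 2, "III": 3, "IV": 4}
GRADES = "ABCD"


def strongest_grade(category, extrapolated=False):
    """Strongest recommendation grade allowed for a category of evidence.

    Directly based recommendations take the matching grade (I->A, II->B,
    III->C, IV->D). Extrapolated recommendations are at least one grade lower,
    and the scheme lists no extrapolation from category IV evidence.
    """
    level = CATEGORY_LEVEL[category]
    if extrapolated:
        if level == 4:
            raise ValueError("the scheme lists no extrapolation from category IV")
        level += 1
    return GRADES[level - 1]
```

For example, a recommendation extrapolated from a meta-analysis of randomised trials (category Ia) can be graded B at best, while one directly based on such evidence can be graded A.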

Areas without evidence

Informal consensus methods were used to develop recommendations in areas where there was no evidence. This process sometimes identified important unanswered research questions. These are recorded at the end of the relevant section of the guideline.

Review of the guideline

External

External reviewers were chosen to reflect three groups: potential users of the guidelines, experts in the subject area, and guideline methodologists. Although the reviewers’ comments influenced the style and content of the guidelines, these remained the responsibility of the development group.

Scheduled review

The recommendations of these guidelines cease to apply at the end of 1999, by which time new, relevant results that may affect recommendations are likely to be available.

Acknowledgments

We thank the following for their contribution to the functioning of the guidelines development group and the development of the practice guideline: Janette Boynton, Anne Burton, Julie Glanville, Susan Mottram.

Footnotes

Funding: The development of the guideline was funded by the Prescribing Research Initiative of the Department of Health.

Conflict of interest: None.

References

- 1. Field MJ, Lohr KN, editors. Institute of Medicine. Guidelines for clinical practice. From development to use. Washington, DC: National Academy Press; 1992.

- 2. Grimshaw JM, Russell IT. Effect of clinical guidelines on medical practice: a systematic review of rigorous evaluations. Lancet. 1993;342:1317–1322. doi: 10.1016/0140-6736(93)92244-n.

- 3. Grimshaw JM, Russell IT. Achieving health gain through clinical guidelines. I. Developing scientifically valid guidelines. Qual Health Care. 1993;2:243–248. doi: 10.1136/qshc.2.4.243.

- 4. Grimshaw JM, Russell IT. Achieving health gain through clinical guidelines. II. Ensuring guidelines change medical practice. Qual Health Care. 1994;3:45–52. doi: 10.1136/qshc.3.1.45.

- 5. Implementing clinical practice guidelines: can guidelines be used to improve clinical practice? Leeds: University of Leeds; 1994. (Effective Health Care Bulletin No 8.)

- 6. Eccles M, Clapp Z, Grimshaw J, Adams PC, Higgins B, Purves I, et al. Developing valid guidelines: methodological and procedural issues from the North of England evidence based guideline development project. Qual Health Care. 1996;5:44–50. doi: 10.1136/qshc.5.1.44.

- 7. Eccles M, Clapp Z, Grimshaw J, Adams PC, Higgins B, Purves I, et al. North of England evidence based guideline development project: methods of guideline development. BMJ. 1996;312:760–762. doi: 10.1136/bmj.312.7033.760.

- 8. North of England Stable Angina Guideline Development Group. North of England evidence based guidelines development project: summary version of evidence based guideline for the primary care management of stable angina. BMJ. 1996;312:827–832.

- 9. North of England Asthma Guideline Development Group. North of England evidence based guidelines development project: summary version of evidence based guideline for the primary care management of asthma in adults. BMJ. 1996;312:762–766. doi: 10.1136/bmj.312.7033.762.

- 10. North of England Evidence Based Guideline Development Project. Evidence based clinical practice guideline: ACE inhibitors in the primary care management of adults with symptomatic heart failure. Newcastle upon Tyne: Centre for Health Services Research; 1997.

- 11. North of England Evidence Based Guideline Development Project. Evidence based clinical practice guideline: the choice of antidepressants for depression in primary care. Newcastle upon Tyne: Centre for Health Services Research; 1997.

- 12. North of England Evidence Based Guideline Development Project. Evidence based clinical practice guideline: the use of aspirin for the secondary prophylaxis of vascular disease in primary care. Newcastle upon Tyne: Centre for Health Services Research; 1997.

- 13. North of England Evidence Based Guideline Development Project. Evidence based clinical practice guideline: non-steroidal anti-inflammatory drugs (NSAIDs) versus basic analgesia in the treatment of pain believed to be due to degenerative arthritis. Newcastle upon Tyne: Centre for Health Services Research; 1997.

- 14. Eccles M, Freemantle N, Mason J, for the North of England ACE-inhibitor Guideline Development Group. North of England evidence based development project: guideline for angiotensin converting enzyme inhibitors in primary care management of adults with symptomatic heart failure. BMJ (in press).

- 15. Eccles M, Freemantle N, Mason J, for the North of England ACE-inhibitor Guideline Development Group. Evidence based guideline for the use of aspirin for the secondary prophylaxis of vascular disease in primary care. BMJ (in press).

- 16. Eccles M, Freemantle N, Mason J, for the North of England Non-steroidal Anti-inflammatory Drug Guideline Development Group. Evidence based guideline for the use of non-steroidal anti-inflammatory drugs versus basic analgesia in the treatment of pain believed to be due to degenerative arthritis. BMJ (in press).

- 17. Cook TD, Campbell DT. Quasi-experimentation: design and analysis issues for field settings. Chicago: Rand McNally; 1979.

- 18. Hedges LV. Meta-analysis. J Educ Stat. 1992;17:279–296.

- 19. DerSimonian R, Laird N. Meta analysis in clinical trials. Controlled Clin Trials. 1986;7:177–188. doi: 10.1016/0197-2456(86)90046-2.

- 20. Whitehead A, Whitehead J. A general parametric approach to the meta-analysis of randomised clinical trials. Stat Med. 1991;10:1665–1677. doi: 10.1002/sim.4780101105.

- 21. Freemantle N, Mason JM, Haines A, Eccles MP. CONSORT: an important step toward evidence-based health care. Ann Intern Med. 1997;126:81–83. doi: 10.7326/0003-4819-126-1-199701010-00011.

- 22. Ioannidis JPA, Cappelleri JC, Lau J, Skolnik PR, Melville B, Chalmers TC, et al. Early or deferred zidovudine therapy in HIV-infected patients without an AIDS-defining illness. Ann Intern Med. 1995;122:856–866. doi: 10.7326/0003-4819-122-11-199506010-00009.

- 23. Hedges LV, Olkin I. Statistical methods for meta-analysis. London: Academic Press; 1985.

- 24. United States Department of Health and Human Services, Public Health Service, Agency for Health Care Policy and Research. Acute pain management: operative or medical procedures and trauma. Rockville, MD: Agency for Health Care Policy and Research Publications; 1992.

- 25. SOLVD Investigators. Effect of enalapril on mortality and the development of heart failure in asymptomatic patients with reduced left ventricular ejection fractions. N Engl J Med. 1992;327:685–691. doi: 10.1056/NEJM199209033271003.

- 26. SOLVD Investigators. Effect of enalapril on survival in patients with reduced left ventricular ejection fractions and congestive heart failure. N Engl J Med. 1991;325:293–302. doi: 10.1056/NEJM199108013250501.